
ORIGINAL RESEARCH article

Front. Commun., 23 May 2024

Sec. Science and Environmental Communication

Volume 9 - 2024 | https://doi.org/10.3389/fcomm.2024.1384403

The expansion of science communication underscores the growing importance of understanding what constitutes good science communication. This question concerns the public's understanding of and engagement with science. The scholarly discussion has shifted from the traditional deficit model to a more dialog-oriented approach, yet it remains normatively anchored. The audience's perspective on what good science communication is has received little attention, and different science communication formats have hardly been researched thus far. Therefore, this paper introduces a multi-dimensional scale to capture the audience's assessment of specific science communication formats. We used a multi-step process to identify relevant criteria from both theoretical and practical perspectives. The instrument integrates 15 distinct quality dimensions, such as comprehensibility, credibility, fun, and applicability, structured according to different quality levels (functional, normative, user-oriented, and communication-oriented). It draws on both theory-driven and practice-derived categories and was validated through confirmatory factor analyses conducted on a German representative sample (n = 990). For validation, the scale was applied to a science blog post and a science video on homeopathy. Based on this seven-step process, we conclude that the newly devised scale effectively assesses the perceived quality of both blog and video science communication content. The overall assessment aligns with common target variables, such as interest and attitudes. The results for the quality subdimensions provide a nuanced understanding of how each contributes to the perceived overall quality. In this way, the scale helps improve science communication in line with audience perceptions of quality.
This is the first comprehensive measurement instrument designed to gauge quality from the audience's standpoint, making it applicable for both researchers and practitioners.

Science communication is pivotal in making scientific knowledge understandable to the broader population and matters at both the individual and the societal level (Gibbons, 1999; Kohring, 2012). Its importance is particularly pronounced in crises such as the COVID-19 pandemic or in the context of climate change (Su et al., 2015; Newman et al., 2018; Scheufele et al., 2020; Loomba et al., 2021; Dempster et al., 2022), yet it remains equally relevant in routine circumstances (Burns et al., 2003; Bromme and Goldman, 2014; Taddicken et al., 2020; Freiling et al., 2023). However, communicating scientific knowledge is challenging because it involves complex relationships, is often highly abstract, and is characterized by ambiguities and uncertainties (Popper, 2002). This makes it difficult for laypeople, who lack access to scientific sources, methodologies, and processes (Fischhoff and Scheufele, 2013). This raises the question of what constitutes appropriate science communication for laypeople: how much scientific detail it should include and how it should be presented, for example, how entertaining it should be or how closely it should relate to everyday life.

Science communication takes many forms, from classic offerings such as discussion events and television documentaries, to digital formats such as online blogs and science videos, to citizen science projects. Distinct formats of science communication have been shown to elicit different expectations from the audience (Wicke and Taddicken, 2020; Wicke, 2022). Against this background, the suitability of science communication must be considered in relation to the specific format employed. Put differently, the question arises which criteria define good science communication content and whether and how these evaluative criteria differ across communication formats.

However, the examination of such quality issues in science communication is still in its infancy. Standards or benchmarks for quality assurance have not been uniformly defined or established (Wormer, 2017). So far, existing standards mostly refer to specific fields of application, such as the "Guidelines for Good Science PR" (Siggener Kreis, 2016) or the evaluation of science journalistic reporting (Rögener and Wormer, 2017, 2020).

A vast body of literature examines communication training and its evaluation, commonly focusing on improving oral and written proficiency and on using language comprehensibly (Besley and Tanner, 2011; Sharon and Baram-Tsabari, 2014; Besley et al., 2015, 2016; Dudo and Besley, 2016; Baram-Tsabari and Lewenstein, 2017; Rakedzon and Baram-Tsabari, 2017; Robertson Evia et al., 2018; David and Baram-Tsabari, 2020; Rodgers et al., 2020; Akin et al., 2021; Rubega et al., 2021; Besley and Dudo, 2022). While research in this area is invaluable, it mainly focuses on the communicators' performance and often relies solely on trainees' self-assessments, which are frequently flawed in substantive ways (Dunning et al., 2004). Only recently has research begun to offer promising methods for integrating audience perspectives into evaluation processes (Rodgers et al., 2020; Rubega et al., 2021). However, because the impact on target audiences is considered the gold standard for science communication training (Rodgers et al., 2020), it is imperative to incorporate the audience's viewpoint comprehensively.

Therefore, it is beneficial to consider not only research in science education but also approaches from communication studies. Communication theories have long established that users play an active role not only in selecting content but also in directing their attention to and processing specific elements (Chaiken, 1980; Ruggiero, 2000; LaRose and Eastin, 2004). Consequently, the degree to which science communication aligns with audience expectations influences how audiences use such content, as well as their awareness and learning experiences (Hart and Nisbet, 2011; Hendriks et al., 2015; Romer and Jamieson, 2021). Prior research has shown that designing science communication in line with expectations can foster interest and thus increase the application of scientific knowledge (Serong et al., 2019; Wicke and Taddicken, 2021). Findings from journalism research also suggest links between audience perceptions of "good journalism" and their media use (Gil de Zúñiga and Hinsley, 2013; Loosen et al., 2020). Conversely, dissatisfaction and frustration can even lead to a loss of trust (Donsbach, 2009; Fawzi and Obermaier, 2019; Arlt et al., 2020). Science communication should therefore meet the quality expectations of its audience (Wicke and Taddicken, 2020) in order to reach the population and provide it with access to science.

Because usage metrics and high reach do not reveal what the audience likes about the content (Hasebrink, 1997), audiences should be asked directly. This can improve understanding of how science communication should be designed and yield ideas for modifying or optimizing formats.

However, until now, no comprehensive measure has been developed to assess audiences’ perspectives on the quality of specific science communication offers. While the goal of “effectiveness” in science communication is frequently emphasized, achieving a unified understanding of what constitutes effectiveness appears challenging (Bray et al., 2012; Bullock et al., 2019; Rubega et al., 2021). Clarity, credibility, and engagement of the public are key quality criteria underlying science communication (Rubega et al., 2021), yet they alone do not provide a comprehensive assessment, which should also encompass considerations of public relevance and societal meaningfulness.

Hence, in the current research, we constructed and validated a theory-guided "Quality Assessment Scale from the Audience's Perspective" (QuASAP). The scale comprises 65 items on 15 dimensions. This standardized instrument allows both academics and practitioners to examine individuals' evaluations, and it is easily applicable to different science communication content. During scale development, we deployed the scale across two distinct science communication modalities, namely science blog posts and science videos, to gauge its applicability. As a dependable and robust tool, the scale enables scholars to compare individuals' quality assessments across various dimensions and to investigate the predictive or explanatory potential of personal and situational attributes in this context.

This perspective shifts the focus from assessing the effectiveness of science communication to a normative approach that emphasizes aims oriented toward society and democracy. The question of what constitutes 'good' science communication can be embedded in the normative frameworks of the science communication paradigms, ranging from Public Understanding of Science to Public Engagement with Science and Technology (Mede and Schäfer, 2020). In the context of public sphere theories, the inquiry into quality claims and criteria has a long tradition in communication research. Here, it has been acknowledged that distinct quality dimensions and perspectives are relevant for journalistic content, given the tension between democratic-theoretical and economic requirements (Arnold, 2009, 2023).

A debate about the quality of science communication has also recently unfolded, especially in light of the COVID-19 pandemic and the Fridays for Future movement (Wormer, 2017; Loomba et al., 2021; Dempster et al., 2022). It is accompanied, for example, by concerns about a possible loss of trust in and credibility of science (Kohring, 2005; Weingart and Guenther, 2016; Cologna et al., 2024). However, even within this research tradition, uniform definitions or standards for quality assurance have not been systematically delineated or established (Wormer, 2017; Wicke, 2022). One reason may be the difficulty of formulating generally applicable, objective quality criteria, as these depend, among other things, on the respective format, audience, and reference point and are thus dynamic and variable (Serong, 2015; Arnold, 2023). Likewise, quality judgments depend to a large extent on the particular perspective and are therefore also subjective (Saxer, 1981; Hasebrink, 1997; Dahinden, 2004; Wicke, 2022). It is consequently crucial to acknowledge the audience's viewpoint. Taking the audience's perspectives, ideas, and values into account leads to positive quality assessments within the audience, and quality assessments significantly influence usage decisions (Katz et al., 1974; Ruggiero, 2000; Wolling, 2004, 2009; Mehlis, 2014).

Consistent with the limited theoretical work on defining good science communication, empirical findings in this domain are also scarce. At the intersection of journalism and science communication research, investigations have so far mainly focused on assessing environmental reporting as well as medical and health communication [e.g., Oxman et al. (1993), Schwitzer (2008), Wilson et al. (2009), Anhäuser and Wormer (2012), Bartsch et al. (2014), Rögener and Wormer (2017), and Serong et al. (2019)]. The quality criteria presented there are content-specific, primarily tailored to topics, and specifically relevant to print and online science journalism. Consequently, they cannot be applied without limitations to the broad evaluation of diverse science communication content. A standardized framework for assessing the quality of science communication is conspicuously absent: "science communication [seems] to have initially pursued little effort to establish its own quality benchmarks in 15 years of PUSH [Public Understanding of Science and Humanities]" (Wormer, 2017; own translation). This gap concerns both the lack of comprehensive theoretical development and the shortage of corresponding empirical measurement instruments that could be deployed to evaluate various forms of science communication.

Wormer suggests using established quality criteria from (science) journalism, which he considers plausible "given the stated lack of established criteria for ensuring the journalistic quality of such products" (Wormer, 2017). Kohring (2012) argues that the relationship between science and the public has become increasingly problematic because of the challenge of conveying scientific knowledge. Consequently, science communication serves functions parallel to those of (science) journalism: information transfer, education and enlightenment, criticism and control, and acceptance (Kohring, 2012; Weitze and Heckl, 2016; Gantenberg, 2018). Therefore, Anhäuser and Wormer (2012) contend that, rather than evaluating the quality of (science) journalism and science communication with distinct criteria, cross-actor dimensions for quality assessment need to be developed.

To date, assessments of science communication have predominantly relied on qualitative and rarely theory-driven research approaches (de Cheveigné and Véron, 1996; Cooper et al., 2001; Milde, 2009; Olausson, 2011; Maier et al., 2016; Milde and Barkela, 2016; Taddicken et al., 2020). For instance, research has shown that audiences place considerable emphasis on the comprehensibility of scientific content, which tends to be abstract and complex and therefore challenging to grasp. This entails avoiding technical terms and scientific jargon and contextualizing scientific findings (Bullock et al., 2019; Taddicken et al., 2020; Wicke and Taddicken, 2020, 2021). Furthermore, audiences seek information about the processes and methods of scientific research and about uncertainties and discrepancies in scientific results (Maier et al., 2016; Milde, 2016; Milde and Barkela, 2016). From the audience's perspective, good science communication also presents a variety of (scientific) viewpoints and the multidisciplinary nature of research on a given topic and emphasizes its relevance to everyday life, thereby enabling the "practical application" of scientific discoveries. In addition, content is perceived positively when it offers an element of entertainment and novelty (Wicke and Taddicken, 2021).

The integrative quality concept of Arnold (2009) provides a suitable theoretical basis for measuring an audience's quality assessments. This concept has been called a "milestone" (Wyss, 2011) of journalistic quality research. Arnold (2009) positions the quality criteria within a tripartite theoretical framework encompassing functional-system-oriented, normative-democracy-oriented, and audience-action-oriented dimensions. In this framework, the quality of journalism can be ascertained in two ways. First, it can be derived from journalism's fundamental functions, responsibilities, self-perceived role, and professional norms, emanating "from within," so to speak. Second, quality can be assessed by relating journalism to societal values and media regulations and by considering how journalism actively engages with and caters to its audience.

In the context of science communication, there are additional specific tasks and functions that extend beyond the traditional roles of journalism (Wicke, 2022). Building on the quality dimensions that Arnold (2009) defined for journalistic media, Wicke (2022) introduces a dimension catalog extended by criteria specific to science communication. This catalog distinguishes four levels. First, the functional-system-oriented level captures the quality dimensions of diversity, timeliness, relevance, credibility, independence, investigation, criticism, and accessibility/comprehensibility. Second, the normative-democratic level includes balance, neutrality, and respect for personality. Third, the user-action-oriented level covers applicability and entertainability. Fourth and last, the science communication/format-specific level considers education, enlightenment and information, legitimacy and acceptance, dialog and participation, emotions, and context/situation (Wicke, 2022; own translation). In her study, this catalog forms the basis for investigating congruences between audiences' and science communicators' perceptions of specific functions of science communication. Furthermore, she captures the audience's quality expectations toward an expert debate. According to the results, credibility, accessibility, independence, neutrality, and plurality are the most important expectations toward the science communication format "expert debate." However, although these expectations were almost completely fulfilled, the overall assessment of the expert debate was only at a medium level (Wicke, 2022). It therefore remains unclear whether the survey adequately addressed all quality dimensions pertinent to the intended audience. In addition, Wicke (2022) advocates developing a standardized survey tool that is easy to use and facilitates cross-format, cross-topic, and cross-temporal comparisons.

In pursuit of this objective, we will integrate this compendium of quality criteria into a fundamental scale construction process, encompassing theoretical foundations and expert assessments derived from academic research and practical domains.

The development of new scales must undergo multiple iterations to ensure their validity. In this context, validity refers to the degree to which the instrument accurately measures its intended criteria. Therefore, rigorous testing and refinement were essential to ensure the scale had clear and precise wording, well-defined parameters and encompassed the critical aspects required to evaluate science communication. In order to assess the quality of science communication from an audience’s perspective, we developed a new questionnaire through a foundational seven-step process. We will describe this process in the following.

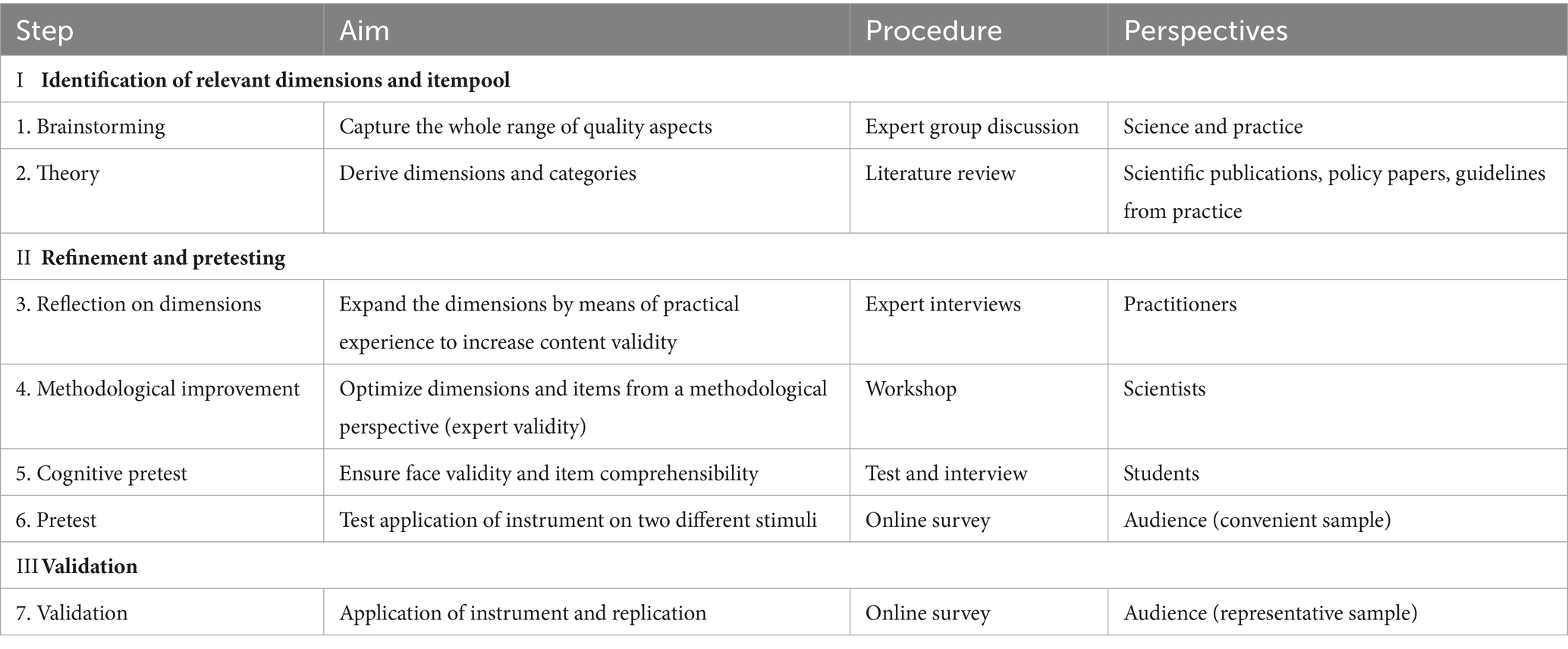

The multi-step process is composed of a total of seven steps in three different development phases (Table 1):

Table 1. Applied multi-step approach to identify relevant criteria from science and practice to develop a multi-dimensional quality assessment scale from the audience’s perspective.

In the first phase, the identification of relevant dimensions and the item pool, we started with a collaborative brainstorming session with our research project's practice partners (step 1). The panel of expert practitioners involved in this group discussion comprised professionals from various domains, including university communication, research institution communication, individual science communication by scientists, a science museum, and a science center dedicated to bridging the gap between scientific knowledge and the public. The aim of this step was to capture the whole range of quality aspects, considering the practitioners' experiences, ideas, and aims.

Further, to incorporate the current state of research, we conducted an extensive literature review (step 2: theory). This review was the foundation for deriving quality dimensions and items, drawing insights from academic publications, policy documents, and available practical guidelines. As introduced above, the categories of Arnold (2009) and Wicke (2022) were important sources.

As a result, we developed an extensive pool of various items. Our aim was to create at least two different items for each aspect to achieve a pool at least two times larger than needed (Schinka and Velicer, 2003; Kline, 2013).

In the second phase of refinement and pretesting, we started with reflection on dimensions (third step) in expert interviews conducted with six practitioners, three from our collaboration partners and three from other positions. The objective was to enhance the content validity of dimensions and items by incorporating practical insights and experiences from these experts.

In the fourth step, we focused on methodological improvement by crafting specific items aligned with the identified dimensions. To enhance the robustness of our questionnaire, we convened a workshop with six experienced survey and science communication researchers, who served as expert judges for testing content validity (Boateng et al., 2018). During this workshop, the experts were asked for feedback on the conceptualization of the construct, on the chosen underlying dimensions, and on each specific item, alongside an assessment of the entire instrument. Participants were encouraged to articulate any feedback, such as suggestions for improving items, potential exclusions, or modifications to enhance item clarity. We ensured that each item underwent rigorous scrutiny, with particular attention to wording (clarity, comprehensibility, and balance) and to the logical sequence within the questionnaire.

In the fifth step, we invited five advanced communication students to complete the resultant questionnaire and engage in discussions about the comprehensibility of the items. This cognitive pretest aimed to confirm the face validity of the questionnaire. These interviews served the following purposes: (1) to ascertain if participants comprehended the items, (2) to gauge if participants perceived the items in the same manner as intended by the researchers, and (3) to explore how participants calibrated the item along with its response options.

In the sixth step, following the final modifications, the scale underwent an empirical pretest. We conducted an online survey with a convenience sample of n = 69 participants. Here, we were interested in how suitable our two stimuli (blog post, video) were for use in an online survey. Moreover, despite the relatively small sample size, we conducted an exploratory factor analysis to assess how the items interrelated. We used the results only to identify potentially problematic items with low standard deviations or high kurtosis values. We then revisited and refined the identified items in another round of methodological improvement.
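The item screening described above can be sketched as a simple rule that flags items with a low standard deviation or a high kurtosis. This is a minimal illustration, not the authors' procedure (their analyses were run in R): the cut-offs `min_sd = 0.8` and `max_kurtosis = 2.0`, the function names, and the pilot data are hypothetical choices, and kurtosis is computed as moment-based excess kurtosis without small-sample correction.

```python
from statistics import mean, stdev


def excess_kurtosis(values):
    """Moment-based excess kurtosis m4 / m2**2 - 3 (no small-sample correction)."""
    m = mean(values)
    n = len(values)
    m2 = sum((v - m) ** 2 for v in values) / n
    m4 = sum((v - m) ** 4 for v in values) / n
    return m4 / m2 ** 2 - 3


def flag_items(responses, min_sd=0.8, max_kurtosis=2.0):
    """Return {item: [reasons]} for items that look problematic.

    responses: dict mapping item name -> list of ratings (missing values removed).
    min_sd and max_kurtosis are illustrative, hypothetical cut-offs.
    """
    flagged = {}
    for item, values in responses.items():
        reasons = []
        sd = stdev(values)  # sample SD (n - 1 denominator)
        if sd < min_sd:
            reasons.append(f"low SD ({sd:.2f})")
        if sd > 0:  # kurtosis is undefined for constant items
            kurt = excess_kurtosis(values)
            if kurt > max_kurtosis:
                reasons.append(f"high kurtosis ({kurt:.2f})")
        if reasons:
            flagged[item] = reasons
    return flagged


if __name__ == "__main__":
    # Hypothetical pilot ratings: item_b is almost constant and peaked.
    pilot = {
        "item_a": [1, 2, 3, 4, 5, 1, 2, 3, 4, 5],
        "item_b": [4, 4, 4, 4, 4, 4, 4, 4, 4, 5],
    }
    print(flag_items(pilot))
```

Flagged items are candidates for rewording, not automatic deletions, which matches the text's use of the pretest only to direct another round of item revision.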

In the final validation step, step seven of our multi-step scale development process, we administered another online survey using the refined scale for an initial test and its replication. For this phase, we commissioned Qualtrics to obtain a sample representative in terms of sex, age, and education. Given the significance of this concluding step, it is introduced in the next section alongside the presentation of the final scale.

To present the instrument, the Quality Assessment Scale from the Audience's Perspective (QuASAP), we follow the systematization suggested by Wicke (2022), adapted from Arnold (2009).

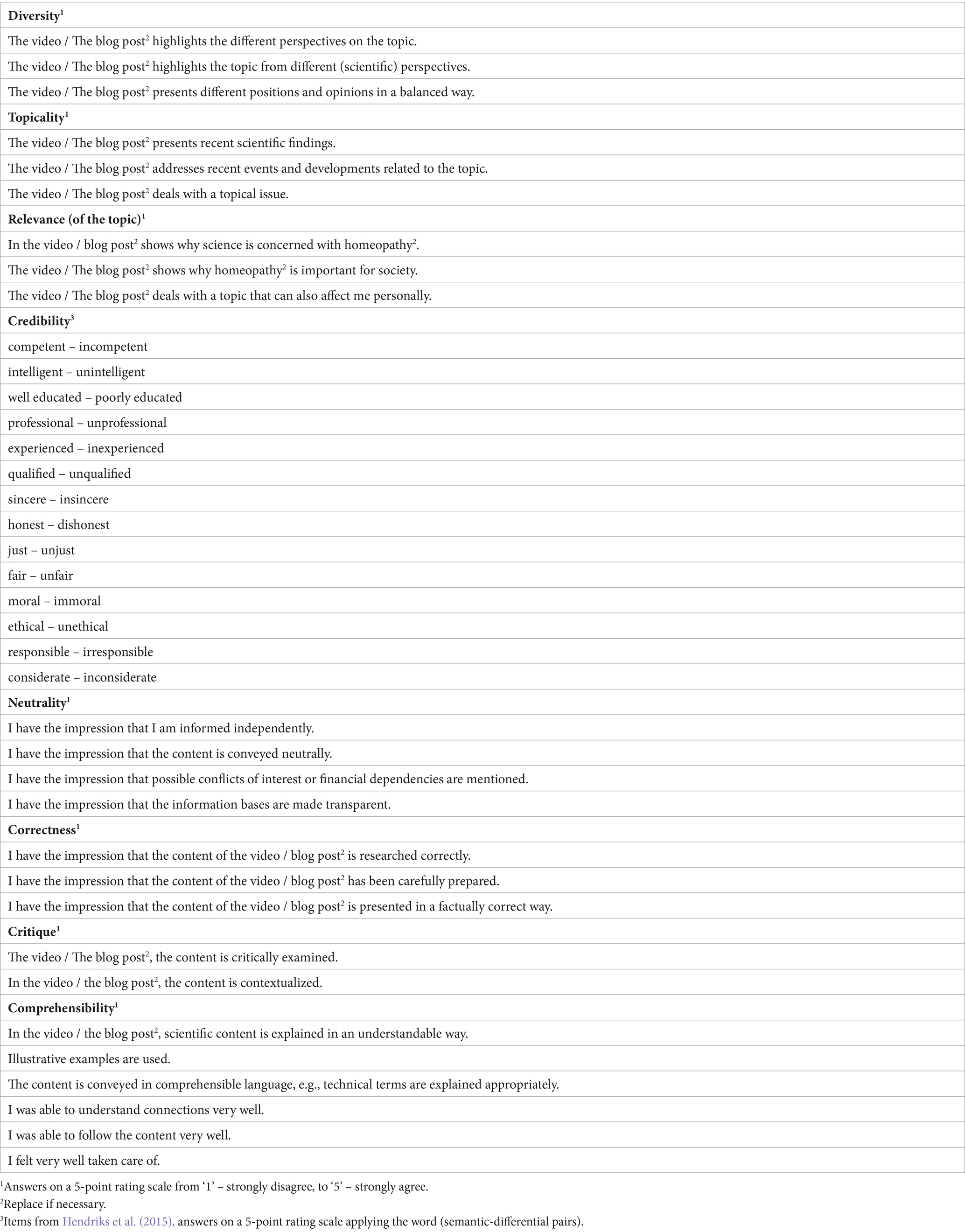

On the functional-system-oriented level, our scale development process confirmed the dimensions introduced by Arnold (2009) and Wicke (2022) while reflecting the peculiarities of science communication (Table 2).

Table 2. Items for dimensions diversity, topicality, relevance, credibility, neutrality, correctness, critique and comprehensibility (functional-system-oriented level).

For diversity, the presentation of distinct scientific perspectives from different disciplines and research fields was identified as important. Topicality refers to including the latest research findings. Relevance is understood as societal relevance. Individual relevance was also considered important but is captured under applicability, one of the dimensions discussed below. Credibility was identified as a major quality dimension for science communication, in line with prior research [e.g., Bucchi (2017), Vraga et al. (2018), and Wicke (2022)]. Because Hendriks et al. (2015) introduced a well-adopted scale for laypeople's trust in experts (METI), we relied on this scale.

The classic quality dimensions of neutrality and correctness were confirmed as relevant dimensions, although correctness is seen more as a result of research than (journalistic) investigation. Further, a critical perspective on science, including the contextualization of findings (critique), was highlighted as relevant.

Comprehensibility of science communication is one of the most discussed quality aspects. The focus is often on using technical terms and scientific jargon, and the recommendation is to reduce it (Dean, 2012; Scharrer et al., 2012; Sharon and Baram-Tsabari, 2014; Bullock et al., 2019; Willoughby et al., 2020; Meredith, 2021). However, the overall comprehensibility of the content, together with the comprehensibility of structures and illustration through examples related to the layperson’s world, was also mentioned as important [see also Taddicken et al. (2020) and Siggener Kreis (2016)].

Two dimensions of our instrument refer to the normative-democracy-oriented level of Arnold (2009). The idea of objectivity through a separation of news and opinion was adopted. The aspect of balanced reporting mentioned by Arnold (2009) converged with plurality and is thus represented. Respect for personality was acknowledged as important when asked about but was not actively mentioned by the practitioners (Table 3).

Applicability and entertainingness, originally introduced by Arnold (2009), were confirmed here. For applicability, relevance to people's everyday life and, thus, usefulness for their daily life was mentioned as an important quality aspect (Table 4).

In addition to the original integrative quality concept of Arnold (2009), Wicke (2022) added five further dimensions to account for aspects specific to science communication or to particular formats. These include education, enlightenment, and information, as well as legitimation and acceptance of science as central tasks of science communication: the population should be informed and educated, and its understanding of and for science should be promoted. Since science depends, among other things, on societal resources and the trust of the population, it must also secure its public legitimacy (Weingart, 2011). An increasing orientation toward the public engagement paradigm in outreach has increased the importance of interactions between science and the public and produced numerous formats, making dialog and participation another dimension (van der Sanden and Meijman, 2008; Bubela et al., 2009). Furthermore, emotions were included, as science and environmental topics are not always communicated in an objective and factual way; in many cases, emotionalization can be observed (Huber and Aichberger, 2020; Lidskog et al., 2020; Taddicken and Reif, 2020). Last, Wicke (2022) added situational contexts to consider pragmatic and organizational aspects, similar to criteria developed to capture the aesthetic design of a media product (Göpfert, 1993; Dahinden, 2004).

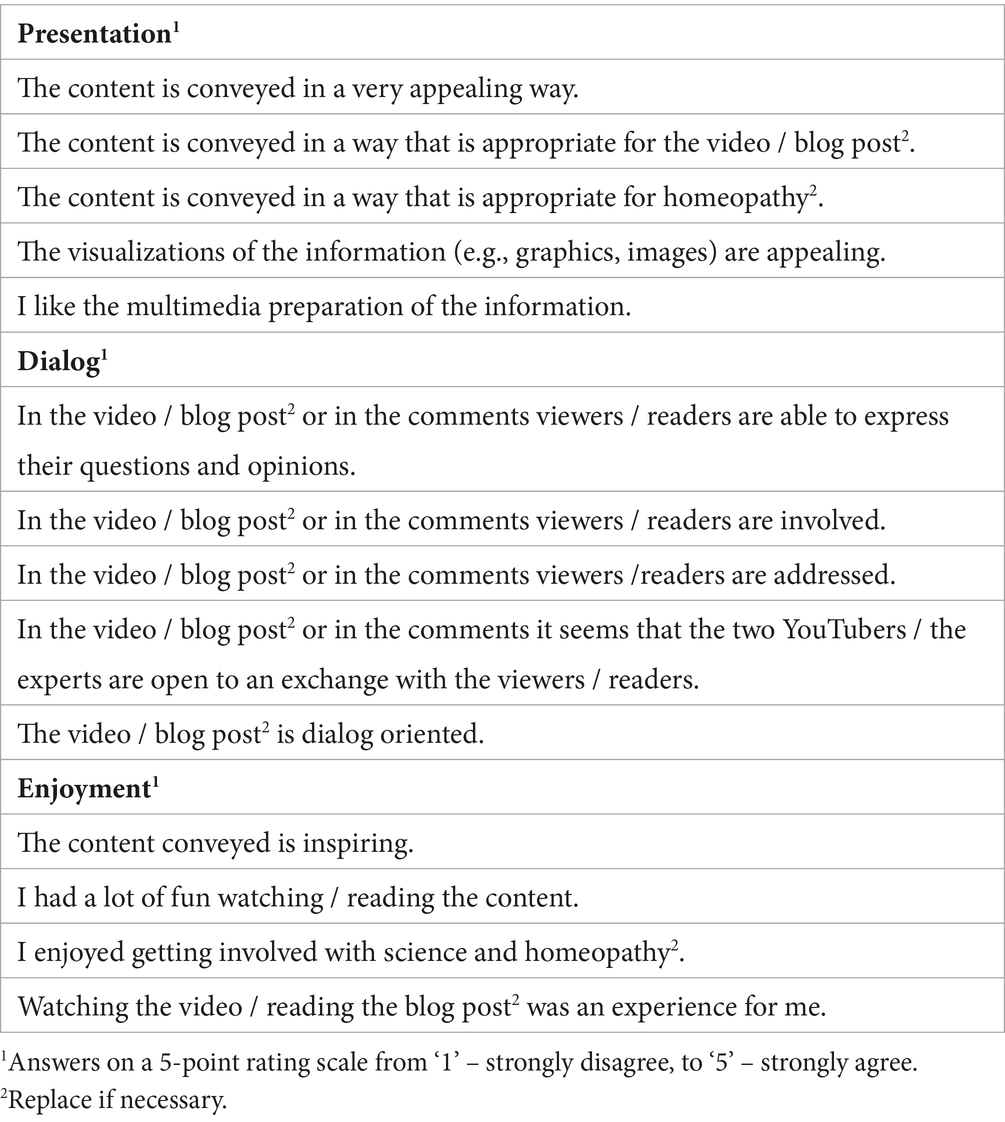

The multi-step process was applied at this level as well, initially relying on the ideas of Arnold (2009) and Wicke (2022) described above, and only three dimensions were identified (Table 5). First, the presentation of the content covers aspects of education, enlightenment, and information; aspects of multimodality were integrated here. Second, dialog and participation were identified as relevant quality aspects (in line with the Public Engagement with Science paradigm); the items therefore ask about perceived opportunities to comment, interactivity, and dialog. Last, enjoyment was identified as an important emotion and is captured in the third dimension.

Table 5. Items for dimensions presentation, dialog, and enjoyment (science communication specific level).

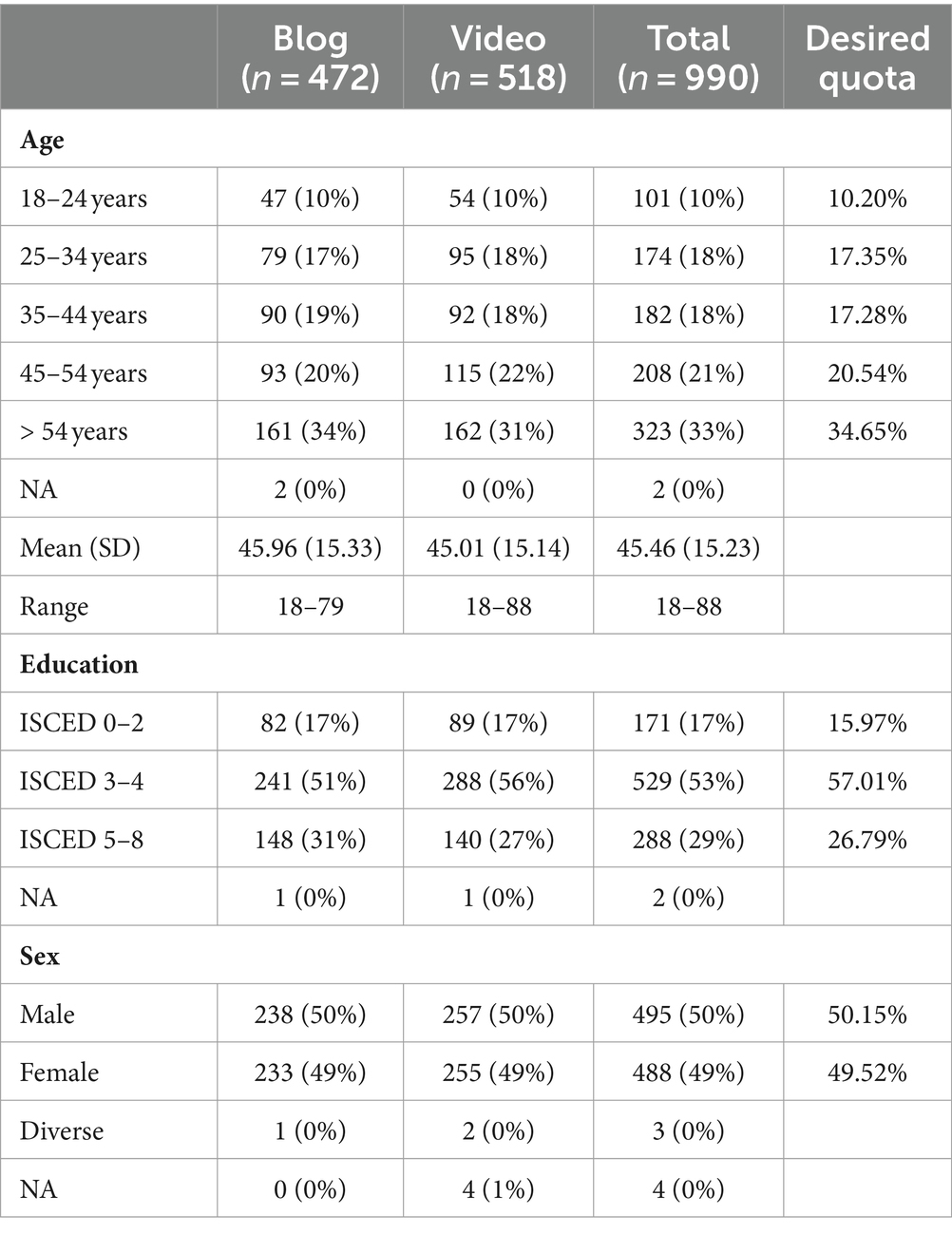

We commissioned the survey company Qualtrics to collect a sample of participants representing the adult German population in terms of age, education, and sex (see quotas in Table 6). Fieldwork ran from October 22 to November 9, 2021. Of the initial 1,075 participants, the first 77 respondents had to be excluded because of technical issues with the survey implementation, five because all or all but one of the relevant variables were missing, and three because they took more than 15 h to complete the online survey. Of the remaining 990 participants, 472 were randomly assigned to read the blog post and 518 to watch the video. Splitting the sample across the two stimuli allowed the scale to be administered on two occasions. The participants' key characteristics are shown in Table 6. Participation took 17.3 min on average (SD = 15.63, min = 7.00, max = 275.27; Blog: M = 18.10 min, SD = 19.19, min = 7.00, max = 275.27; Video: M = 16.57 min, SD = 11.41, min = 7.00, max = 126.18).
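The three exclusion rules can be expressed as an ordered filter that also counts how many respondents each rule removes. This is a sketch under stated assumptions: the `Respondent` record and its field names are hypothetical (the article does not publish its data structure), and each respondent is attributed to the first rule it fails, in the order the text lists them.

```python
from dataclasses import dataclass


@dataclass
class Respondent:
    # Hypothetical fields; not taken from the article's dataset.
    technical_issue: bool      # affected by the survey implementation problem
    n_relevant_missing: int    # number of missing relevant variables
    n_relevant_total: int      # number of relevant variables
    duration_hours: float      # time taken to complete the survey


def apply_exclusions(respondents, max_hours=15.0):
    """Apply the exclusion rules in order; return kept respondents and per-rule counts."""
    counts = {"technical": 0, "missing": 0, "duration": 0}
    kept = []
    for r in respondents:
        if r.technical_issue:
            counts["technical"] += 1
        elif r.n_relevant_missing >= r.n_relevant_total - 1:
            # all, or all but one, of the relevant variables are missing
            counts["missing"] += 1
        elif r.duration_hours > max_hours:
            counts["duration"] += 1
        else:
            kept.append(r)
    return kept, counts
```

With synthetic records matching the reported flow (77 + 5 + 3 excluded out of 1,075), the filter keeps 990 respondents, which in turn equals the 472 blog and 518 video participants.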

Table 6. Overview of basic demographic variables in the sample separated by group (blog vs. video) and desired quota.

First, participants were informed in a written statement about their privacy rights and that the data would be analyzed anonymously, and they were asked for their written consent to participate in the survey and to provide personal data. Basic demographics as well as reception prerequisites (e.g., prior knowledge and attitudes) were assessed. Second, participants were randomly assigned to a blog or video condition.

Subsequently, participants either read a blog post or watched a video. Both were authentic materials presenting science's critical view of homeopathy. We selected homeopathy because it is a typical topic within alternative medicine that is familiar to many and closely tied to everyday life; despite its widespread recognition, there is limited or no evidence supporting its effectiveness. The blog post was published on "scienceblogs.de," a private German popular science platform, and was written by a health scientist [https://scienceblogs.de/gesundheits-check/2014/03/22/10-gruende-an-die-homoeopathie-zu-glauben-oder-es-sein-zu-lassen/ (own translation: 10 reasons to believe in homeopathy or to drop it)]. The video, approximately 3 min long and with more than 40,000 views, was created by a popular German YouTube channel [https://www.youtube.com/watch?v=FkEMbhH2gms (own translation: Homeopathy – The most expensive placebos in the world?)]. Both stimuli address the lack of evidence and are detailed in Supplementary Appendix S1.

After the stimulus, participants were asked whether they had interacted with it as instructed. Individuals who did not watch the whole video or read the whole article were excluded from further participation. Third, participants were asked to rate the quality of the blog post or video on the introduced quality dimensions. Fourth, they were asked about their subjective impression of the stimulus's impact on them.

To assess the quality of science communication from the audience's perspective, we applied the QuASAP (see 3.2).

While the overarching quality factor of our scale indicates the general quality judged by the audience, the subdimensions and items offer insights into how this quality perception is formed. The strength of this scale lies in its ability to provide a nuanced understanding of the various quality subdimensions.
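The link between the overarching quality factor and the subdimensions can be written as a generic second-order (hierarchical) factor model. The notation below is a standard textbook formulation chosen for illustration; the symbols are our own assumption and are not taken from the article:

$$
\begin{aligned}
x_{ij} &= \lambda_{ij}\,\eta_j + \varepsilon_{ij} && \text{(item } i \text{ of subdimension } j\text{)}\\
\eta_j &= \gamma_j\,\xi + \zeta_j && (j = 1, \dots, 15)
\end{aligned}
$$

Here $\xi$ is the overarching quality factor, $\eta_j$ are the subdimension factors, $\lambda_{ij}$ and $\gamma_j$ are the first- and second-order loadings, and $\varepsilon_{ij}$ and $\zeta_j$ are residuals. In this form, a large standardized $\gamma_j$ means that subdimension $j$ closely tracks overall perceived quality, while the residual $\zeta_j$ captures what the subdimension adds beyond the general judgment.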

To assess the extent to which the overarching quality factor correlates with single-item quality ratings (criterion validity), we included two items: the first asked participants how much they liked the blog post (the video) overall [original: "Wie gut hat Ihnen der Blogbeitrag (das Video) insgesamt gefallen?"], and the second asked whether they would recommend the blog post (the video) to others [original: "Würden Sie den Blogbeitrag (das Video) weiterempfehlen?"].
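A criterion-validity check of this kind reduces to correlating an overall quality score with each single-item rating. The sketch below computes a Pearson correlation with pairwise deletion of missing values. It assumes an observed overall score per respondent (for example, factor scores exported from the CFA or a mean score); the article's latent-factor approach may differ, and the function name is hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation using only pairs where both values are present (pairwise deletion).

    None marks a missing value in either list.
    """
    pairs = [(x, y) for x, y in zip(xs, ys) if x is not None and y is not None]
    n = len(pairs)
    if n < 3:
        raise ValueError("need at least three complete pairs")
    mx = sum(x for x, _ in pairs) / n
    my = sum(y for _, y in pairs) / n
    sxy = sum((x - mx) * (y - my) for x, y in pairs)
    sxx = sum((x - mx) ** 2 for x, _ in pairs)
    syy = sum((y - my) ** 2 for _, y in pairs)
    return sxy / sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical data: overall quality scores and "how much did you like it" ratings.
    quality = [3.2, 4.1, None, 2.8, 3.9, 4.4]
    liking = [3, 4, 5, 2, 4, 5]
    print(round(pearson_r(quality, liking), 3))
```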

As the participants were not asked any sensitive questions, e.g., regarding political, religious or sexual attitudes, a statement from the ethics committee was not obtained.

All data analyses were completed using R (R Core Team, 2022) and RStudio (Posit Team, 2022). The confirmatory factor analysis (cfa) was calculated using the lavaan package (Rosseel, 2012). A detailed description of all packages used is provided in Supplementary Table S2. To increase comparability between the original study and its replication, results for both are presented in each paragraph. The replication analysis, however, was conducted only after the original analysis had been completed.

All items were left-skewed (skewness range: −1.13 to −0.19), with means ranging from 3.20 (SD = 1.12) to 4.10 (SD = 0.89), revealing no evidence of a severe ceiling effect. The number of non-responses per item ranged from 2 to 30. Missing values were excluded from the analysis of the respective item.

Replicating the analysis with the video data yielded similar results. All items were left-skewed (skewness range: −1.19 to −0.27), and the means ranged from 3.16 (SD = 1.26) to 4.04 (SD = 1.08). Up to 25 missing values per item were excluded from the analysis.
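As an illustration of the item-level statistics reported above, the following Python sketch computes the non-response count, mean, standard deviation, and skewness of a single item, dropping missing answers for that item only. It is not the authors' R code: the function name `item_descriptives` and the example responses are hypothetical, and the skewness estimator (the adjusted Fisher-Pearson coefficient) is an assumption, since software packages differ slightly in the variant they use by default. A negative value corresponds to the left skew reported here, i.e., most answers cluster at the upper end of the scale.

```python
import math
from typing import Optional, Sequence


def item_descriptives(responses: Sequence[Optional[float]]) -> dict:
    """Descriptives for one item; None marks a non-response.

    Missing answers are dropped for this item only (item-wise exclusion).
    """
    values = [x for x in responses if x is not None]
    n = len(values)
    if n < 3:
        raise ValueError("skewness needs at least three valid answers")
    missing = len(responses) - n
    mean = sum(values) / n
    # Sample standard deviation with the n - 1 denominator.
    sd = math.sqrt(sum((x - mean) ** 2 for x in values) / (n - 1))
    # Adjusted Fisher-Pearson skewness (assumed estimator; others exist).
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    g1 = m3 / m2 ** 1.5
    skew = g1 * math.sqrt(n * (n - 1)) / (n - 2)
    return {"n": n, "missing": missing, "mean": mean, "sd": sd, "skew": skew}


# Hypothetical 5-point ratings for one item; None = non-response.
example = [5, 4, 4, 5, 3, None, 4, 5, 2, 4]
print(item_descriptives(example))
```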

A detailed analysis of the characteristics of all items is provided in Supplementary Table S3.

Internal consistency: With one exception, Cronbach’s alpha of the subscales ranged from 0.76 to 0.95, showing medium to high internal consistency. The exception was the critique subscale, which showed lower internal consistency (α = 0.64). This lower value is unsurprising, as alpha depends on the number of items and this subscale consists of only two items.
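The dependence of alpha on the number of items can be made concrete. The Python sketch below computes raw Cronbach's alpha, k/(k − 1) · (1 − sum of item variances / variance of the sum score), for complete data. It then uses the standardized (Spearman-Brown) form, α = k·r̄ / (1 + (k − 1)·r̄), to back out the average inter-item correlation r̄ implied by α = 0.64 with two items, and to project the alpha that the same r̄ would yield with five items. The standardized form only approximates raw alpha when item variances are similar, the five-item projection is a hypothetical comparison rather than a result of this study, and the function names are illustrative.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Raw Cronbach's alpha for complete data; items[j][i] = respondent i on item j."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # per-respondent sum score
    sum_item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


def standardized_alpha(mean_r: float, k: int) -> float:
    """Standardized alpha from the average inter-item correlation."""
    return k * mean_r / (1 + (k - 1) * mean_r)


def mean_r_from_alpha(alpha: float, k: int) -> float:
    """Inverse of standardized_alpha: average inter-item r implied by alpha."""
    return alpha / (k - (k - 1) * alpha)


r_bar = mean_r_from_alpha(0.64, k=2)  # critique subscale: two items
print(f"implied mean inter-item r: {r_bar:.2f}")
print(f"same r with five items: alpha = {standardized_alpha(r_bar, 5):.2f}")
```

Under these assumptions, an average inter-item correlation of roughly 0.47 would yield an alpha above 0.8 with five items, which illustrates why a two-item subscale tends to show lower internal consistency.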

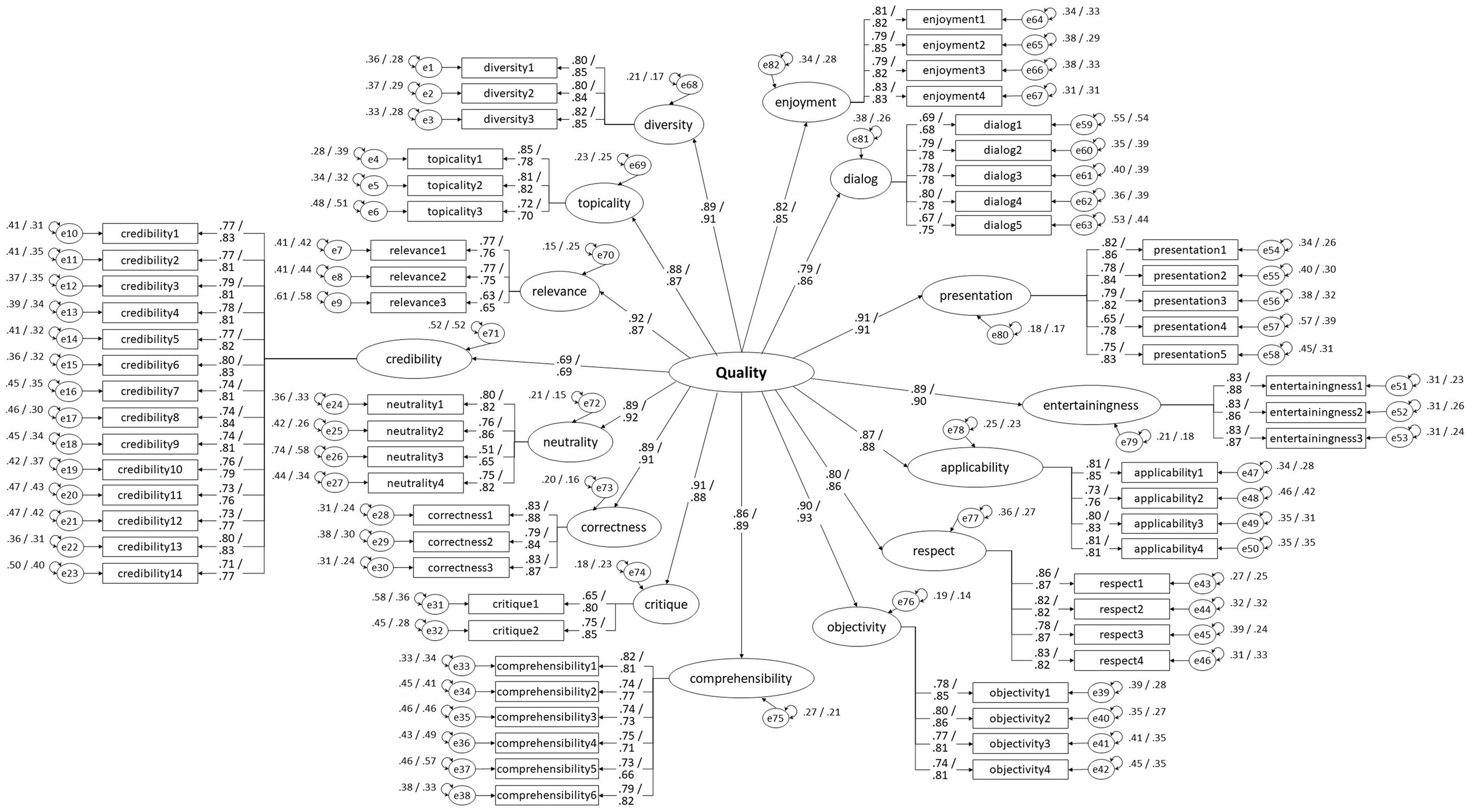

Factorial validity: The factorial validity was tested using a cfa (n = 472, df = 2,130). The model was estimated using maximum likelihood with robust (Huber-White) standard errors and a scaled test statistic. Missing data were assumed to be missing at random (MAR) and were handled using full information maximum likelihood (FIML) estimation. Although the chi-square test indicated a significant discrepancy between the model-implied and the observed data [Chi2 (2130) = 3640.49, p < 0.001], relative to the degrees of freedom the model reached a close fit to the data (rRMSEA = 0.046, 90% CI [0.043, 0.048]; MacCallum et al., 1996). The model also outperformed the baseline model (robust CFI = 0.91, robust TLI = 0.90) and fit the data acceptably. All standardized factor loadings (betas) of the items on their subdimensions differed significantly from 0 (p < 0.001) and ranged from 0.51 to 0.86, that is, from weak to good. All standardized loadings of the superordinate quality factor on the subdimensions reached significance (p < 0.001) and ranged from 0.69 to 0.92, indicating acceptable to good loadings. An overview of all standardized factor loadings is provided in Figure 1.

Figure 1. Measurement model consisting of one second-order quality factor, 15 first-order quality factors (subdimensions), and 65 quality indicators. The numbers represent the standardized loadings and variances, separated by group (blog group/video group). Blog: cfa (n = 472, df = 2,130): Chi2 (2130) = 3640.49, p < 0.001, rRMSEA = 0.046, 90% CI [0.043, 0.048]; rCFI = 0.91, rTLI = 0.90. Video: cfa (n = 518, df = 2,130): Chi2 (2130) = 3708.75, p < 0.001, rRMSEA = 0.045, 90% CI [0.042, 0.047]; rCFI = 0.93, rTLI = 0.92.
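Judging the fit "relative to the degrees of freedom" can be unpacked with the numbers reported in Figure 1. The Python sketch below computes χ²/df and the conventional, uncorrected RMSEA point estimate, √(max(χ² − df, 0) / (df · n)), from the reported χ², df, and n. This is a simplification under stated assumptions: the published rRMSEA is a robust version that additionally depends on the scaling correction of the test statistic (not reported here), so the sketch yields somewhat lower values (about 0.039 and 0.038) than the published 0.046 and 0.045. Some software also uses n − 1 instead of n in the denominator, which makes no practical difference at these sample sizes. Function names are illustrative.

```python
import math


def chi2_per_df(chi2: float, df: int) -> float:
    """Chi-square relative to df (df is its expected value under exact fit)."""
    return chi2 / df


def rmsea_uncorrected(chi2: float, df: int, n: int) -> float:
    """Conventional, non-robust RMSEA point estimate (n in the denominator: assumption)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * n))


# Chi-square, df, and n as reported in Figure 1.
fits = {"blog": (3640.49, 2130, 472), "video": (3708.75, 2130, 518)}
for label, (chi2, df, n) in fits.items():
    print(f"{label}: chi2/df = {chi2_per_df(chi2, df):.2f}, "
          f"uncorrected RMSEA = {rmsea_uncorrected(chi2, df, n):.3f}")
```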

Criterion validity: To assess criterion validity, participants were asked how well they liked the blog post (n = 459, M = 3.65, SD = 1.06) and whether they would recommend it to others (n = 457, M = 3.47, SD = 1.18). The two criterion variables were intercorrelated (n = 450, r = 0.76, p < 0.001). The latent quality factor was significantly correlated with both the direct overall evaluation (n = 459, r = 0.63, p < 0.001) and the recommendation (n = 457, r = 0.65, p < 0.001). We take this as evidence of high criterion validity.
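To gauge how precisely these criterion correlations are estimated, the Python sketch below computes an approximate 95% confidence interval via Fisher's z-transformation, together with the t statistic for the null hypothesis of zero correlation, from the reported r and n. It treats the reported coefficients as ordinary Pearson correlations, which is an assumption: correlations involving a latent factor estimated in a cfa have their own model-based standard errors, so the interval is indicative only. Function names are illustrative.

```python
import math
from statistics import NormalDist


def pearson_ci(r: float, n: int, level: float = 0.95) -> tuple[float, float]:
    """Approximate CI for a correlation using Fisher's z-transformation."""
    z = math.atanh(r)
    se = 1 / math.sqrt(n - 3)
    crit = NormalDist().inv_cdf(0.5 + level / 2)  # e.g. 1.96 for 95%
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


def t_statistic(r: float, n: int) -> float:
    """t value for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Blog study: correlations of the quality factor with the two criterion items.
for label, r, n in (("overall evaluation", 0.63, 459), ("recommendation", 0.65, 457)):
    lo, hi = pearson_ci(r, n)
    print(f"{label}: r = {r}, 95% CI [{lo:.2f}, {hi:.2f}], "
          f"t({n - 2}) = {t_statistic(r, n):.1f}")
```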

Internal consistency: Replicating the scale analysis with the video data again yielded similar results. Cronbach’s alpha ranged from 0.76 to 0.96, indicating medium to high internal consistency.

Factorial validity: Again, a cfa (n = 518, df = 2,130) with the same specification and estimation methods as in the original study was conducted and revealed similar results. Relative to the degrees of freedom, the model showed a close fit to the data [Chi2 (2130) = 3708.75, p < 0.001, rRMSEA = 0.045, 90% CI (0.042, 0.047)] and outperformed the baseline model (rCFI = 0.93, rTLI = 0.92). All standardized loadings reached significance (p < 0.001). Loadings of the items on their subdimensions ranged from 0.65 to 0.88, and loadings of the subdimensions on the superordinate factor ranged from 0.69 to 0.93, indicating acceptable to good loadings. Figure 1 provides an overview of all loadings.

Criterion validity: The two criterion variables, the overall evaluation (n = 511, M = 3.63, SD = 1.22) and the recommendation to others (n = 513, M = 3.42, SD = 1.34), were intercorrelated (n = 509, r = 0.83, p < 0.001). Again, the latent quality factor was significantly correlated with the direct overall evaluation (n = 511, r = 0.72, p < 0.001) and the recommendation (n = 513, r = 0.69, p < 0.001), indicating good criterion validity.

The correlations among the dimensions are reported in Supplementary Table S4, and the factor loadings of each item in Supplementary Table S5.

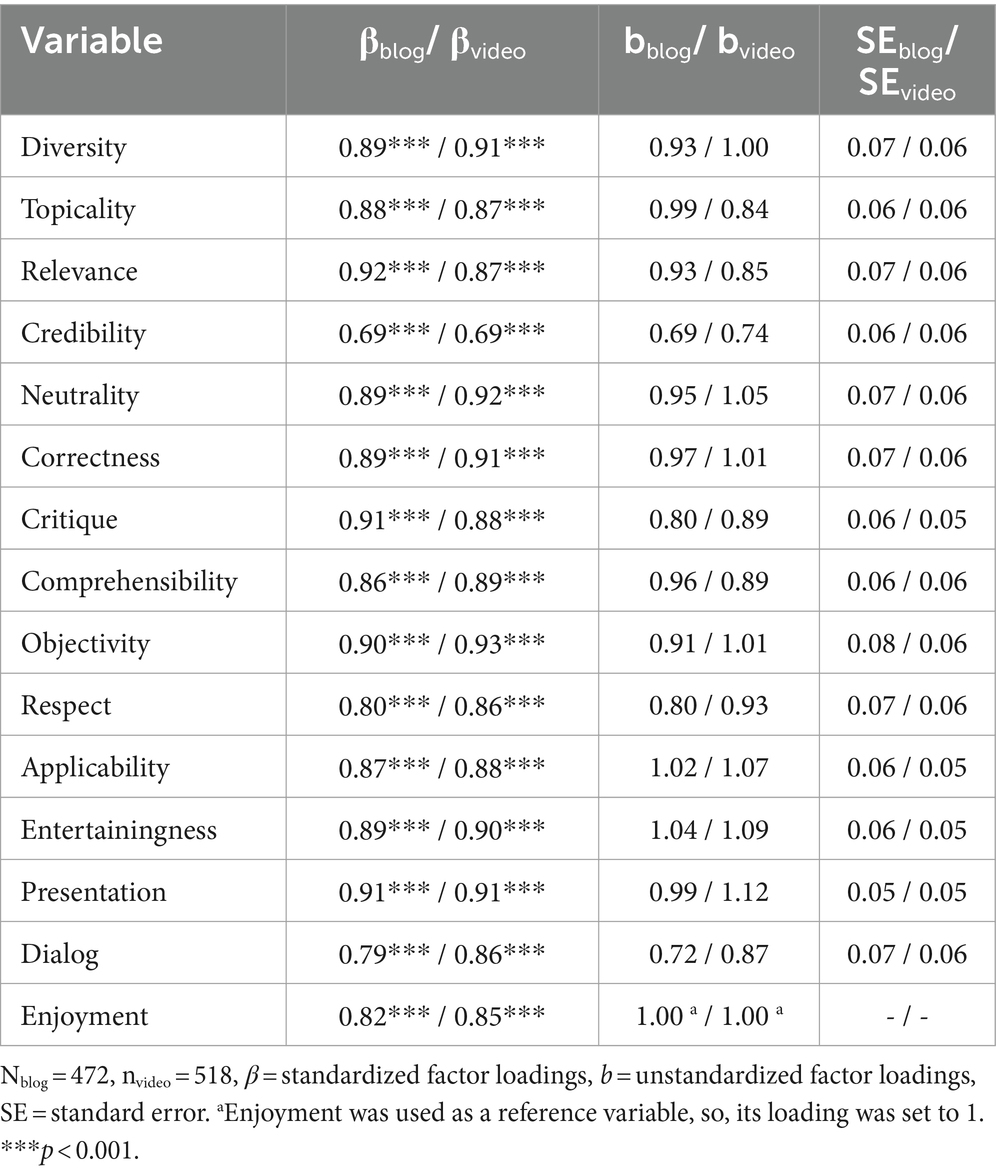

Table 7 shows the extent to which the 15 quality dimensions relate to the audience’s overall quality assessment. All 15 dimensions load highly on the overall quality factor and thus contribute substantially to explaining it. With the exception of credibility, all loadings are in an exceptionally high range.

Table 7. Factor loadings of quality dimensions on the overall quality assessment of the audience (blog / video).

The finding that all 15 dimensions explain the overarching quality factor to a high degree deserves emphasis, as it shows that every dimension is relevant to the overall quality perception. This had been assumed on theoretical grounds, but the empirical data need not have confirmed it.
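Because the loadings of the subdimensions on the overall quality factor are standardized, squaring a loading gives the share of that subdimension's variance accounted for by the overall factor. The Python sketch below applies this to the endpoints of the loading ranges reported in the validity analyses (0.69 to 0.92 for the blog, 0.69 to 0.93 for the video). It assumes a standard higher-order model in which each subdimension loads only on the overall factor; the function name is illustrative.

```python
def explained_share(std_loading: float) -> float:
    """Share of a first-order factor's variance explained by the second-order factor."""
    return std_loading ** 2


# Endpoints of the reported second-order loading ranges (blog and video).
for loading in (0.69, 0.92, 0.93):
    print(f"loading {loading:.2f} -> {explained_share(loading):.0%} of variance explained")
```

Under this reading, even the lowest loading corresponds to nearly half of a subdimension's variance being shared with the overall quality factor.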

The results (Figure 1) indicate that several subdimensions, notably objectivity, presentation, neutrality, correctness, diversity, relevance, critique, and entertainingness, had high path coefficients in relation to overall quality. Applicability, topicality, and comprehensibility were also highly important. With slightly lower values, the subdimensions of respect, dialog, and enjoyment also contributed significantly to the overall assessment. Credibility showed the lowest values, albeit still at a moderate level. One possible explanation for this discrepancy is the different item format of the original METI scale, which was used in this study to measure credibility.

Overall, the subdimensions related to societal meaningfulness were no less important than those oriented toward the individual user.

Therefore, a comprehensive quality assessment oriented toward the audience’s expectations should cover all 15 dimensions. However, the QuASAP scale also allows users to select a subset of quality dimensions.

The extent to which the quality dimensions correlate with typical target variables of science communication is also relevant for assessing the appropriateness of the QuASAP instrument. We examined six concepts: interest, knowledge, attitudes, behavior, abilities (to understand and engage), and self-efficacy, each pertaining both to science in general and to the specific topic of homeopathy. Each concept was measured with 4 to 8 items (see Supplementary Table S6 for details and the specific items), and Cronbach’s alpha indicated satisfactory reliability for each variable (Table 8).

Table 8. Correlation analyses of quality dimensions and typical target concepts of science communication.

The correlation analyses revealed that all correlations were of medium to high magnitude and statistically significant at p ≤ 0.001 (Table 8). This indicates a moderate to strong relationship between how participants perceived the quality of the science communication content and their interest in, and perceptions of, the science topic. It therefore suggests that the QuASAP scale effectively captures associations between its measures and external target variables.
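With samples of several hundred respondents, significance alone is a low bar, which is why the magnitude of these correlations matters. The Python sketch below computes the smallest correlation that reaches two-sided significance at p ≤ 0.001, using r = t / √(t² + n − 2). The n behind each correlation in Table 8 is not given in this section, so the sketch assumes the subsample sizes of the blog (n = 472) and video (n = 518) analyses, and it uses the normal approximation to the t distribution, which is very close at several hundred degrees of freedom. The function name is illustrative.

```python
import math
from statistics import NormalDist


def critical_r(n: int, alpha: float = 0.001) -> float:
    """Smallest |r| that is two-sided significant at `alpha` for sample size n.

    Normal approximation to the t distribution (close for large n).
    """
    t_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return t_crit / math.sqrt(t_crit ** 2 + n - 2)


for n in (472, 518):  # assumed: blog and video subsample sizes
    print(n, round(critical_r(n), 3))
```

Under these assumptions, correlations of about 0.15 would already be significant, so the medium-to-high magnitudes in Table 8 carry the substantive evidence.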

As mentioned, the primary advantage of the scale is its ability to elucidate the various subdimensions and their connections to various external concepts. Notably, upon examining the correlation values, credibility consistently exhibits the lowest correlation. It is reasonable to posit that credibility serves as a fundamental prerequisite, becoming more salient when perceived as lacking—specifically when the presented content is not deemed credible.

Which quality dimension correlates most strongly differs across the target concepts. This yields a nuanced picture and highlights the strength of a quality scale with distinct subdimensions: different science communication offerings may pursue different goals, and examining the individual dimensions reveals how strongly each relates to a given goal.

Looking at the individual target variables, interest and behavior correlate most strongly with enjoyment. That is, a higher level of enjoyment is associated with greater interest in the topic and the corresponding research, as well as with more of the behaviors queried here, namely searching for information and participating in discussions.

Attitudes and abilities correlate most strongly with the quality dimension of applicability. Individuals who perceived the science communication content as more applicable reported a greater capacity to form opinions and to comprehend and engage with the content. This is plausible, because perceiving science as applicable to one’s own life should lower the threshold for thinking about and engaging with science issues.

For knowledge, the strongest correlation is with correctness: the more truthful participants judged the presented information to be, the more they felt they had learned about science and about homeopathy. This correlation is also plausible.

In addition, science communication can aim to enhance self-efficacy, defined as an individual’s belief in their capacity to execute the behaviors necessary for specific performance attainments (Bandura, 1977). Interestingly, entertainingness shows the highest correlation here, suggesting that an entertaining presentation of science may strengthen the intention to engage with scientific information, such as reading academic content.

However, it is important to note that these correlation analyses primarily provide insights into the suitability of different quality dimensions in explaining selected goals of science communication. This study was not intended to investigate the specific effects of the science communication content on the participants, but rather to indicate whether the QuASAP scale can accurately identify relationships between the scale’s measures and external variables.

This paper aimed to introduce a quality assessment scale for science communication that acknowledges the audience’s perspective. A valuable strand of research assesses the effectiveness of science communication and science communication training (Besley and Dudo, 2022) and integrates the audience perspective on communicators’ outcomes. Alongside it runs an ongoing, normatively anchored discussion of the societal and democratic quality of science communication (Fähnrich, 2017; Mede and Schäfer, 2020), and it is striking how far this second strand neglects the audience perspective. Yet impact on target audiences is considered the gold standard for science communication training (Rodgers et al., 2020), and the audience perspective on journalism has been found to relate to its use and to information processing (Gil de Zúñiga and Hinsley, 2013; Loosen et al., 2020). There is therefore an urgent need for a comprehensive assessment that captures not only clarity and credibility but also public relevance and societal meaningfulness. Introducing such an assessment was the aim of this study.

The question of what good science communication is should not be answered from an academic standpoint alone, even one based on the idea of considering the public perspective. Practitioners’ viewpoints should also be acknowledged, as they have discussed this question extensively and accumulated manifold experience (e.g., the “Guidelines for Good Science PR” (Siggener Kreis, 2016)). The variety of science communication offerings has increased massively over the last few decades (Bubela et al., 2009; Bucchi and Trench, 2014). This underscores the importance of measurement instruments based on cross-actor dimensions rather than distinct criteria for assessing a single format’s success.

This study follows the suggestion of Wormer (2017) to adopt established quality criteria from (science) journalism and builds on prior work by journalism and science communication researchers (Arnold, 2009; Wicke, 2022). In a multi-step process of seven stages, prior academic research and practitioners’ experiences were integrated to develop and validate a multi-dimensional scale for assessing the quality of science communication from the audience’s perspective: the QuASAP.

The instrument integrates 15 distinct quality dimensions: Diversity, Topicality, Relevance, Credibility, Neutrality, Correctness, Critique, Comprehensibility, Objectivity, Respect, Applicability, Entertainingness, Presentation, Dialog, and Enjoyment. It was applied to a science blog post and a science video on homeopathy to allow for advanced statistical analyses. Confirmatory factor analyses indicated that quality is effectively assessed for both science communication formats.

All 15 subdimensions, originating from functional-system-oriented, normative-democracy-oriented, user-action-oriented, and science communication-specific perspectives, contributed substantially to the overarching quality factor. This confirmation underscores the importance of these subdimensions as key contributors to the audience’s overall perception of science communication quality.

A major benefit of multidimensional assessment scales such as the QuASAP is their ability to illuminate the individual subdimensions and their connections to external concepts. Correlation analyses between the QuASAP scale and different external variables, representing typical target variables of science communication, revealed that different quality dimensions were associated with different science communication target concepts, such as interest and knowledge. This resulted in a nuanced depiction, enabling a detailed exploration of individual dimensions to reveal the extent of relationships present.

This also underscores the practical value of the QuASAP scale: it allows for the focused assessment of content on specific dimensions that are most likely to facilitate the achievement of science communication goals. The impact of quality subdimensions could be analyzed in future effect studies that consider the information provided in the science communication content. However, such analysis was not conducted in this study, as its primary aim was to introduce the multidimensional quality scale.

Naturally, the study has several limitations. First, the science issue chosen was homeopathy. We controlled neither for general interest in this issue nor for individuals’ beliefs in this form of alternative medicine. The QuASAP should therefore be applied to other science issues and its validity tested there. Moreover, we focused on social-media-based science communication formats, as the research project was mainly interested in these because of their higher potential for public engagement. As much of the prior work included in the scale development was carried out in the context of expert discussions, we expect that the QuASAP can also be used for other content and formats, but this should be tested in future projects.

If the QuASAP scale were to be applied to other science communication formats and content, such as television programs, science exhibitions, barcamps, and others, it could facilitate the establishment of benchmarks for assessing the perceived quality of science communication. This would provide valuable insights for science communication practitioners to enhance their offerings and optimize them accordingly, resulting in significant practical benefits.

Beyond the limitations arising from our own design decisions, the validation of the scale relies on data from a single survey. Although the sample was representative of the German population regarding age, sex, and education, sample effects cannot be excluded. Further studies applying the QuASAP to other samples are needed to test the robustness of the results.

Finally, as a first step, we were interested in developing a holistic assessment scale, including the societal-normative perspective, that can be applied to all science communication formats. However, using this instrument to learn more about the format-specific benefits and advantages for specific communication aims might also be possible. Here, we could not compare, for example, whether the level of entertainingness is higher in science videos than in text-based blog posts as we had not controlled for the content but relied on field material. This could be a fruitful approach to learning more about the specific advantages of science communication formats and even channels.

In general, we aimed to highlight the relevance of the audience perspective with this introduction of the multi-dimensional quality assessment scale, and thus hope to see this reflected in future research.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

MT: Writing – original draft, Writing – review & editing, Validation, Resources, Methodology, Funding acquisition, Formal analysis, Conceptualization. JF: Writing – review & editing, Visualization, Validation, Methodology, Formal analysis, Data curation. NW: Writing – review & editing, Project administration, Methodology, Data curation, Conceptualization.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was part of the project ‘Science Communication and Public Engagement’ (SCoPE), funded by the Ministry of Science and Culture of Lower Saxony, grant number: 74ZN1806. Grant applicant is MT. The project was coordinated by NW. Further members of the research group were Kaija Biermann, Lennart Banse, Julian Fick, Lisa-Marie Hortig and Maria Prechtl.

We would like to thank numerous researchers and colleagues who provided valuable insights at various stages of the process. Additionally, we highly appreciate the cooperation with our partners from science communication practice for consistently enabling us to integrate practical experiences and insights into our project work.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomm.2024.1384403/full#supplementary-material

Akin, H., Rodgers, S., and Schultz, J. (2021). Science communication training as information seeking and processing: a theoretical approach to training early-career scientists. JCOM 20:A06. doi: 10.22323/2.20050206

Anhäuser, M., and Wormer, H. (2012). “A question of quality: criteria for the evaluation of science and medical reporting and testing their applicability” in Quality, honesty and beauty in science and technology communication. eds. B. Trench and M. Bucchi, 335–337.

Arlt, D., Schumann, C., and Wolling, J. (2020). Upset with the refugee policy: exploring the relations between policy malaise, media use, trust in news media, and issue fatigue. Communications 45, 624–647. doi: 10.1515/commun-2019-0110

Arnold, K. (2009). “Qualität im Journalismus,” in Journalismusforschung: Stand und Perspektiven. eds. K. Meier and C. Neuberger 3., aktualisierte und erweiterte Auflage (Baden-Baden: Nomos), Konstanz: UVK.

Arnold, K. (2023). “Qualität im Journalismus” in Journalismusforschung: Stand und Perspektiven. eds. K. Meier and C. Neuberger, 93–110.

Bandura, A. (1977). Self-efficacy: toward a unifying theory of behavioral change. Psychol. Rev. 84, 191–215. doi: 10.1037/0033-295X.84.2.191

Baram-Tsabari, A., and Lewenstein, B. V. (2017). Science communication training: what are we trying to teach? Int. J. Sci. Educ. Part B 7, 285–300. doi: 10.1080/21548455.2017.1303756

Bartsch, J., Dege, C., Grotefels, S., and Maisel, L. (2014). “Gesund und munter? Qualität von Gesundheitsberichterstattung aus Nutzersicht” in Qualität im Gesundheitsjournalismus: Perspektiven aus Wissenschaft und Praxis. ed. V. Lilienthal (Wiesbaden: Springer VS), 119–138.

Besley, J. C., and Dudo, A. (2022). Strategic science communication: Why setting the right objective is essential to effective public engagement. Baltimore: Johns Hopkins University Press.

Besley, J. C., Dudo, A., and Storksdieck, M. (2015). Scientists’ views about communication training. J. Res. Sci. Teach. 52, 199–220. doi: 10.1002/tea.21186

Besley, J. C., Dudo, A. D., Yuan, S., and Abi Ghannam, N. (2016). Qualitative interviews with science communication trainers about communication objectives and goals. Sci. Commun. 38, 356–381. doi: 10.1177/1075547016645640

Besley, J. C., and Tanner, A. H. (2011). What science communication scholars think about training scientists to communicate. Sci. Commun. 33, 239–263. doi: 10.1177/1075547010386972

Boateng, G. O., Neilands, T. B., Frongillo, E. A., Melgar-Quiñonez, H. R., and Young, S. L. (2018). Best practices for developing and validating scales for health, social, and behavioral research: a primer. Front. Public Health 6:149. doi: 10.3389/fpubh.2018.00149

Bray, B., France, B., and Gilbert, J. K. (2012). Identifying the essential elements of effective science communication: what do the experts say? Int. J. Sci. Educ., Part B 2, 23–41. doi: 10.1080/21548455.2011.611627

Bromme, R., and Goldman, S. R. (2014). The Public’s bounded understanding of science. Educ. Psychol. 49, 59–69. doi: 10.1080/00461520.2014.921572

Bubela, T., Nisbet, M. C., Borchelt, R., Brunger, F., Critchley, C., Einsiedel, E., et al. (2009). Science communication reconsidered. Nat. Biotechnol. 27, 514–518. doi: 10.1038/nbt0609-514

Bucchi, M. (2017). Credibility, expertise and the challenges of science communication 2.0. Pub. Understand. Sci. 26, 890–893. doi: 10.1177/0963662517733368

Bucchi, M., and Trench, B. (2014). Routledge handbook of public communication of science and technology. 2nd Edn. London, New York: Routledge Taylor & Francis Group.

Bullock, O. M., Colón Amill, D., Shulman, H. C., and Dixon, G. N. (2019). Jargon as a barrier to effective science communication. Evidence from metacognition. Pub. Understand. Sci. 28, 845–853. doi: 10.1177/0963662519865687

Burns, T. W., O’Connor, D. J., and Stocklmayer, S. M. (2003). Science communication: a contemporary definition. Pub. Understand. Sci. 12, 183–202. doi: 10.1177/09636625030122004

Chaiken, S. (1980). Heuristic versus systematic information processing and the use of source versus message cues in persuasion. J. Pers. Soc. Psychol. 39, 752–766. doi: 10.1037/0022-3514.39.5.752

Cologna, V., Mede, N. G., Berger, S., Besley, J. C., Brick, C., Joubert, M., et al. (2024). Trust in scientists and their role in society across 67 countries. OSFpreprints, doi: 10.31219/osf.io/6ay7s

Cooper, C. P., Burgoon, M., and Roter, D. L. (2001). An expectancy-value analysis of viewer interest in television prevention news stories. Health Commun. 13, 227–240. doi: 10.1207/S15327027HC1303_1

Dahinden, U. (2004). “Gute Seiten – schlechte Seiten. Qualität in der Onlinekommunikation” in Content is King – Gemeinsamkeiten und Unterschiede bei der Qualitätsbeurteilung aus Angebots-vs. Rezipientenperspektive. eds. K. Beck, W. Schweiger, and W. Wirth (München: Fischer), 103–126.

David, Y. B.-B., and Baram-Tsabari, A. (2020). “Evaluating science communication training: going beyond self-reports” in Theory and best practices in science communication training. ed. T. P. Newman (Abingdon, Oxon, New York, NY: Routledge), 122–138.

de Cheveigné, S., and Véron, E. (1996). Science on TV: forms and reception of science programmes on French television. Pub. Understand. Sci. 5, 231–253. doi: 10.1088/0963-6625/5/3/004

Dean, C. (2012). Am I making myself clear? A scientist’s guide to talking to the public. Cambridge, Massachusetts: Harvard University Press.

Dempster, G., Sutherland, G., and Keogh, L. (2022). Scientific research in news media: a case study of misrepresentation, sensationalism and harmful recommendations. JCOM 21:A06. doi: 10.22323/2.21010206

Donsbach, W. (2009). Entzauberung eines Berufs. Was die Deutschen vom Journalismus erwarten und wie sie enttäuscht werden. Konstanz: UVK.

Dudo, A., and Besley, J. C. (2016). Scientists’ prioritization of communication objectives for public engagement. PLoS One 11:e0148867. doi: 10.1371/journal.pone.0148867

Dunning, D., Heath, C., and Suls, J. M. (2004). Flawed self-assessment: implications for health, education, and the workplace. Psychol. Sci. Pub. Int. 5, 69–106. doi: 10.1111/j.1529-1006.2004.00018.x

Fähnrich, B. (2017). “Wissenschaftsevents zwischen Popularisierung, Engagement und Partizipation” in Forschungsfeld Wissenschaftskommunikation. eds. H. Bonfadelli, B. Fähnrich, C. Lüthje, J. Milde, M. Rhomberg, and M. S. Schäfer (Wiesbaden: Springer Fachmedien), 165–182.

Fawzi, N., and Obermaier, M. (2019). Unzufriedenheit – Misstrauen – Ablehnung journalistischer Medien. Eine theoretische Annäherung an das Konstrukt Medienverdrossenheit. M&K 67, 27–44. doi: 10.5771/1615-634X-2019-1-27

Fischhoff, B., and Scheufele, D. A. (2013). The science of science communication. Proceed. Nat. Acad. Sci. 110, 14031–14032. doi: 10.1073/pnas.1312080110

Freiling, I., Krause, N. M., Scheufele, D. A., and Brossard, D. (2023). Believing and sharing misinformation, fact-checks, and accurate information on social media: the role of anxiety during COVID-19. New Media Soc. 25, 141–162. doi: 10.1177/14614448211011451

Gantenberg, J. (2018). Wissenschaftskommunikation in Forschungsverbünden: Zwischen Ansprüchen und Wirklichkeit. Wiesbaden: Springer VS.

Gibbons, M. (1999). Science’s new social contract with society. Nature 402, C81–C84. doi: 10.1038/35011576

Gil de Zúñiga, H., and Hinsley, A. (2013). The press versus the public. Journal. Stud. 14, 926–942. doi: 10.1080/1461670X.2012.744551

Göpfert, W. (1993). “Publizistische Qualität: Ein Kriterien-Katalog” in Publizistische Qualität. Probleme und Perspektiven ihrer Bewertung. eds. A. Bammé, E. Kotzmann, and H. Reschenberg (München: Profil), 99–109.

Hart, P. S., and Nisbet, E. C. (2011). Boomerang effects in science communication: how motivated reasoning and identity cues amplify opinion polarization about climate mitigation policies. Commun. Res. 39, 701–723. doi: 10.1177/0093650211416646

Hasebrink, U. (1997). “Die Zuschauer als Fernsehkritiker?” in Perspektiven der Medienkritik: Die gesellschaftliche Auseinandersetzung mit öffentlicher Kommunikation in der Mediengesellschaft. Dieter Roß zum 60. Geburtstag. eds. H. Weßler, C. Matzen, O. Jarren, and U. Hasebrink (Wiesbaden: VS Verlag für Sozialwissenschaften), 201–215.

Hendriks, F., Kienhues, D., and Bromme, R. (2015). Measuring Laypeople’s Trust in Experts in a digital age. The muenster epistemic trustworthiness inventory (METI). PLoS One 10:e0139309. doi: 10.1371/journal.pone.0139309

Huber, B., and Aichberger, I. (2020). Emotionalization in the media coverage of honey bee Colony losses. MaC 8, 141–150. doi: 10.17645/mac.v8i1.2498

Katz, E., Blumler, J. G., and Gurevitch, M. (1974). “Utilization of mass communication by the individual” in The uses of mass communication: Current perspectives on gratifications. eds. J. G. Blumler and E. Katz (Beverly Hills: Sage), 19–34.

Kohring, M. (2005). “Wissenschaftsjournalismus” in Handbuch Journalismus und Medien. eds. S. Weischenberg, H. J. Kleinsteuber, and B. Pörksen (Konstanz: UVK Verlagsgesellschaft), 458–488.

Kohring, M. (2012). “Die Wissenschaft des Wissenschaftsjournalismus. Eine Forschungskritik und ein Alternativvorschlag” in Öffentliche Wissenschaft und Neue Medien: Die Rolle der Web 2.0-Kultur in der Wissenschaftsvermittlung. eds. C. Y. R.-v. Trotha and J. M. Morcillo (Karlsruhe), 127–148.

LaRose, R., and Eastin, M. S. (2004). A social cognitive theory of internet uses and gratifications. Toward a new model of media attendance. J. Broadcast. Electron. Med. 48, 358–377. doi: 10.1207/s15506878jobem4803_2

Lidskog, R., Berg, M., Gustafsson, K. M., and Löfmarck, E. (2020). Cold science meets hot weather: environmental threats, Emotional Messages and Scientific Storytelling. MaC 8:118. doi: 10.17645/mac.v8i1.2432

Loomba, S., de Figueiredo, A., Piatek, S. J., de Graaf, K., and Larson, H. J. (2021). Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nat. Hum. Behav. 5, 337–348. doi: 10.1038/s41562-021-01056-1

Loosen, W., Reimer, J., and Hölig, S. (2020). What journalists want and what they ought to do (in)Congruences between journalists’ role conceptions and audiences’ expectations. Journal. Stud. 21, 1744–1774. doi: 10.1080/1461670X.2020.1790026

MacCallum, R. C., Browne, M. W., and Sugawara, H. M. (1996). Power analysis and determination of sample size for covariance structure modeling. Psychol. Methods 1, 130–149. doi: 10.1037/1082-989X.1.2.130

Maier, M., Milde, J., Post, S., Günther, L., Ruhrmann, G., and Barkela, B. (2016). Communicating scientific evidence: scientists’, journalists’ and audiences’ expectations and evaluations regarding the representation of scientific uncertainty. Communications 41, 239–264. doi: 10.1515/commun-2016-0010

Mede, N. G., and Schäfer, M. S. (2020). “Kritik der Wissenschaftskommunikation und ihrer Analyse: PUS, PEST, Politisierung und wissenschaftsbezogener Populismus” in Medienkritik zwischen ideologischer Instrumentalisierung und kritischer Aufklärung. ed. H.-J. Bucher (Köln: Herbert von Halem Verlag), 297–314.

Mehlis, K. (2014). “Von der Sender-zur Nutzerqualität” in Journalismus und (sein) Publikum. eds. W. Loosen and M. Dohle (Wiesbaden: Springer VS), 253–271.

Meredith, D. (2021). Explaining research: How to reach key audiences to advance your work. 2nd Edn. Oxford: Oxford University Press.

Milde, J. (2009). Vermitteln und Verstehen: Zur Verständlichkeit von Wissenschaftsfilmen im Fernsehen. Wiesbaden: VS Research.

Milde, J. (2016). “Wie Rezipienten mit wissenschaftlicher Ungesichertheit umgehen: Erwartungen und Bewertungen bei der Rezeption von Nanotechnologie im Fernsehen” in Wissenschaftskommunikation zwischen Risiko und (Un-)Sicherheit. eds. G. Ruhrmann, L. Guenther, and S. H. Kessler (Köln: Herbert von Halem Verlag), 193–211.

Milde, J., and Barkela, B. (2016). “Wie Rezipienten mit wissenschaftlicher Ungesichertheit umgehen: Erwartungen und Bewertungen bei der Rezeption von Nanotechnologie im Fernsehen” in Wissenschaftskommunikation zwischen Risiko und (Un-)Sicherheit. eds. G. Ruhrmann, S. H. Kessler, and L. Guenther (Köln: Herbert von Halem Verlag), 193–211.

Newman, T. P., Nisbet, E. C., and Nisbet, M. C. (2018). Climate change, cultural cognition, and media effects: worldviews drive news selectivity, biased processing, and polarized attitudes. Pub. Understand. Sci. 27, 985–1002. doi: 10.1177/0963662518801170

Olausson, U. (2011). We’re the ones to blame. Citizens’ representations of climate change and the role of the media. Environ. Commun. 5, 281–299. doi: 10.1080/17524032.2011.585026

Oxman, A. D., Guyatt, G. H., Cook, D. J., Jaeschke, R., Heddle, N., and Keller, J. (1993). An index of scientific quality for health reports in the lay press. J. Clin. Epidemiol. 46, 987–1001. doi: 10.1016/0895-4356(93)90166-X

Popper, K. R. (2002). Conjectures and refutations: the growth of scientific knowledge. Reprinted (twice) Edn. London: Routledge.

Posit team (2022). RStudio: Integrated Development Environment for R [Computer Software]. Posit Software. Available at: http://www.posit.co/

Rakedzon, T., and Baram-Tsabari, A. (2017). To make a long story short: a rubric for assessing graduate students’ academic and popular science writing skills. Assess. Writ. 32, 28–42. doi: 10.1016/j.asw.2016.12.004

R Core Team (2022). R: A Language and Environment for Statistical Computing [Computer Software]. R Foundation for Statistical Computing. Available at: https://www.R-project.org/

Robertson Evia, J., Peterman, K., Cloyd, E., and Besley, J. (2018). Validating a scale that measures scientists’ self-efficacy for public engagement with science. Int. J. Sci. Educ. Part B 8, 40–52. doi: 10.1080/21548455.2017.1377852

Rodgers, S., Wang, Z., and Schultz, J. C. (2020). A scale to measure science communication training effectiveness. Sci. Commun. 42, 90–111. doi: 10.1177/1075547020903057

Rögener, W., and Wormer, H. (2017). Defining criteria for good environmental journalism and testing their applicability: an environmental news review as a first step to more evidence based environmental science reporting. Pub. Understand. Sci. 26, 418–433. doi: 10.1177/0963662515597195

Rögener, W., and Wormer, H. (2020). Gute Umweltkommunikation aus Bürgersicht. Ein Citizen-Science-Ansatz in der Rezipierendenforschung zur Entwicklung von Qualitätskriterien. M&K 68, 447–474. doi: 10.5771/1615-634X-2020-4-447

Romer, D., and Jamieson, K. H. (2021). Conspiratorial thinking, selective exposure to conservative media, and response to COVID-19 in the US. Soc. Sci. Med. 291:114480. doi: 10.1016/j.socscimed.2021.114480

Rosseel, Y. (2012). lavaan: an R package for structural equation modeling. J. Stat. Softw. 48, 1–36. doi: 10.18637/jss.v048.i02

Rubega, M. A., Burgio, K. R., MacDonald, A. A. M., Oeldorf-Hirsch, A., Capers, R. S., and Wyss, R. (2021). Assessment by audiences shows little effect of science communication training. Sci. Commun. 43, 139–169. doi: 10.1177/1075547020971639

Ruggiero, T. E. (2000). Uses and gratifications theory in the 21st century. Mass Commun. Soc. 3, 3–37. doi: 10.1207/S15327825MCS0301_02

Saxer, U., and Kull, H. (1981). Publizistische Qualität und journalistische Ausbildung. Zürich: Publizistisches Seminar der Universität.

Scharrer, L., Bromme, R., Britt, M. A., and Stadtler, M. (2012). The seduction of easiness: how science depictions influence laypeople’s reliance on their own evaluation of scientific information. Learn. Instr. 22, 231–243. doi: 10.1016/j.learninstruc.2011.11.004

Scheufele, D. A., Krause, N. M., Freiling, I., and Brossard, D. (2020). How not to lose the COVID-19 communication war. Issues Sci. Technol. Available at: https://issues.org/covid-19-communication-war/

Schwitzer, G. (2008). How do US journalists cover treatments, tests, products, and procedures? An evaluation of 500 stories. PLoS Med. 5:e95. doi: 10.1371/journal.pmed.0050095

Serong, J. (2015). Medienqualität und Publikum: Zur Entwicklung einer integrativen Qualitätsforschung. Konstanz: UVK.

Serong, J., Lang, B., and Wormer, H. (2019). Handbuch der Gesundheitskommunikation: Kommunikationswissenschaftliche Perspektiven. 1st Edn. Wiesbaden: Springer VS.

Sharon, A. J., and Baram-Tsabari, A. (2014). Measuring mumbo jumbo: a preliminary quantification of the use of jargon in science communication. Pub. Understand. Sci. 23, 528–546. doi: 10.1177/0963662512469916

Siggener Kreis (2016). Guidelines for good science PR. Available at: https://www.wissenschaft-im-dialog.de/fileadmin/user_upload/Ueber_uns/Gut_Siggen/Dokumente/Guidelines_for_good_science_PR_final.pdf

Su, L. Y.-F., Akin, H., Brossard, D., Scheufele, D. A., and Xenos, M. A. (2015). Science news consumption patterns and their implications for public understanding of science. J. Mass Commun. Quart. 92, 597–616. doi: 10.1177/1077699015586415

Taddicken, M., and Reif, A. (2020). Between evidence and emotions: emotional appeals in science communication. Media Commun. 8, 101–106. doi: 10.17645/mac.v8i1.2934

Taddicken, M., Wicke, N., and Willems, K. (2020). Verständlich und kompetent? Eine Echtzeitanalyse der Wahrnehmung und Beurteilung von Expert*innen in der Wissenschaftskommunikation. M&K 68, 50–72. doi: 10.5771/1615-634X-2020-1-2-50

van der Sanden, M. C., and Meijman, F. J. (2008). Dialogue guides awareness and understanding of science: an essay on different goals of dialogue leading to different science communication approaches. Pub. Understand. Sci. 17, 89–103. doi: 10.1177/0963662506067376

Vraga, E., Myers, T., Kotcher, J., Beall, L., and Maibach, E. (2018). Scientific risk communication about controversial issues influences public perceptions of scientists’ political orientations and credibility. R. Soc. Open Sci. 5:170505. doi: 10.1098/rsos.170505

Weingart, P. (2011). “Die Wissenschaft der Öffentlichkeit und die Öffentlichkeit der Wissenschaft” in Wissenschaft und Hochschulbildung im Kontext von Wirtschaft und Medien. eds. B. Hölscher and J. Suchanek (Wiesbaden: VS Verlag für Sozialwissenschaften), 45–61.

Weingart, P., and Guenther, L. (2016). Science communication and the issue of trust. J. Sci. Commun. 15:C01. doi: 10.22323/2.15050301

Weitze, M.-D., and Heckl, W. M. (2016). “Aktuelle Herausforderungen und Ziele” in Wissenschaftskommunikation - Schlüsselideen, Akteure, Fallbeispiele. eds. M.-D. Weitze and W. M. Heckl (Berlin, Heidelberg: Springer), 269–275.

Wicke, N. (2022). Eine Frage der Erwartungen? Publizistik 67, 51–84. doi: 10.1007/s11616-021-00701-z

Wicke, N., and Taddicken, M. (2020). Listen to the audience(s)! Expectations and characteristics of expert debate attendants. JCOM 19:A02. doi: 10.22323/2.19040202

Wicke, N., and Taddicken, M. (2021). I think it’s up to the media to raise awareness. Quality expectations of media coverage on climate change from the audience’s perspective. SComS 21. doi: 10.24434/j.scoms.2021.01.004

Willoughby, S. D., Johnson, K., and Sterman, L. (2020). Quantifying scientific jargon. Pub. Understand. Sci. 29, 634–643. doi: 10.1177/0963662520937436

Wilson, A., Bonevski, B., Jones, A., and Henry, D. (2009). Media reporting of health interventions: signs of improvement, but major problems persist. PLoS One 4:e4831. doi: 10.1371/journal.pone.0004831

Wolling, J. (2004). Qualitätserwartungen, Qualitätswahrnehmungen und die Nutzung von Fernsehserien. Publizistik 49, 171–193. doi: 10.1007/s11616-004-0035-y

Wolling, J. (2009). “The effect of subjective quality assessments on media selections” in Media choice: A theoretical and empirical overview. ed. T. Hartmann (New York: Routledge), 84–101.

Wormer, H. (2017). “Vom public understanding of science zum public understanding of journalism” in Forschungsfeld Wissenschaftskommunikation. eds. H. Bonfadelli, B. Fähnrich, C. Lüthje, J. Milde, M. Rhomberg, and M. S. Schäfer (Wiesbaden: Springer VS), 429–451.

Wyss, V. (2011). Klaus Arnold: Qualitätsjournalismus. Available at: https://www.rkm-journal.de/archives/630

Keywords: science communication, quality, audience perspective, evaluation, multidimensional

Citation: Taddicken M, Fick J and Wicke N (2024) Is this good science communication? Construction and validation of a multi-dimensional quality assessment scale from the audience’s perspective. Front. Commun. 9:1384403. doi: 10.3389/fcomm.2024.1384403

Received: 09 February 2024; Accepted: 22 April 2024;

Published: 23 May 2024.

Edited by:

Anabela Carvalho, University of Minho, Portugal

Reviewed by:

Alessandra Fornetti, Venice International University, Italy

Copyright © 2024 Taddicken, Fick and Wicke. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Monika Taddicken, m.taddicken@tu-braunschweig.de

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
