- Department of Media Studies, Maynooth University, Maynooth, Ireland

Through habitual engagement, technologies infiltrate our lives, and we come to be constituted through our machines. This provocation underpins the digital performance artworks Ghost Work and Friction, which creatively repurpose everyday digital technologies as poetic operations, presenting an embodiment of algorithms that engages with their performativity. The execution of these performance algorithms intervenes in data collection, crafting feminist fabulations in the algorithmic empire of what Couldry and Mejias refer to as data colonialism. Using methods of data feminism in conjunction with Hui's philosophy of technology, these performances cultivate aesthetic experiences that are multifaceted instances of data visceralization. Ghost Work and Friction use artistic idioms thick with meaning, reflexively engaging with the processes of contingency and recursivity present in human-technological relations. The resulting digital performances are aesthetic experiences that are affective and ambivalent, introducing alternative logics to a hegemonic algorithmic thinking that emphasizes extraction and optimization.

1 Introduction

Media theorist Wendy Chun describes how “through habits users become their machines” (Chun, 2016, p. 1). This provocation underpins my approach to technical systems in the performance artworks Ghost Work (2023) and Friction (2023). As a performance and digital artist, I engage with human-technological relations using the human body as interface. The body functions both as the creator of an artwork and as its medium. I develop a score of performance actions (an algorithm) that is implemented as a creative repurposing of everyday digital technologies, presenting an embodiment of algorithms that engages with their performativity. This process instigates interventions into data collection, crafting feminist fabulations in the algorithmic empire of what Nick Couldry and Ulises Ali Mejias refer to as data colonialism, or “an emerging order for the appropriation of human life so that data can be continuously extracted from it for profit” (Couldry and Mejias, 2019, p. xiii). In this paper, I analyze these performances, bringing together principles of data feminism (D'Ignazio and Klein, 2020) with Yuk Hui's (2019, p. 114) philosophy of technology, specifically his notion of algorithmic thinking, or the realization of “general recursive thinking” with the rise of digital technological systems. The resulting works are aesthetic experiences that are affective and ambivalent, engaging with the recursion and contingency of human-technological relations through idiosyncratic, alternative logics.

2 Algorithms of/as performance

I use the term algorithm to describe the series of steps followed in the execution of a performance. An algorithm is commonly understood as a set of instructions that leads to a particular outcome. However, Gillespie (2016) describes how the term evokes different meanings in different contexts. In computing, algorithm takes on a literal meaning tied to problem solving in software development; within the social sciences, the term becomes more nuanced, and its apparent objectivity is called into question. For instance, social scientist and African American studies scholar Benjamin (2019) argues that even determining which problems get addressed involves a range of judgments rooted in human preference and bias. Bucher (2018) emphasizes that the significance of algorithms comes through their enactment, which is material, relational, and cultural, shaping how people engage with the world. Algorithms are not simply sets of instructions; they define an operational logic, a way of thinking.

The algorithms of my performances set forth the steps to be followed, presented in pseudocode (see Supplementary material). The outcomes of the described actions are not known in advance; each performance functions as a unique iteration of the algorithm's execution (Chun, 2011). This type of creative and critical engagement with computation through practice-based research is what cárdenas (2022, p. 29) refers to as poetic operations, or when “algorithmic poetics use the performativity of digital code to bring multiple layers of meaning to life in networks of signification.” Rather than serving only as an object of critique, digital code becomes a means of producing alternative forms of engagement.

My approach to artistic production and to this subsequent analysis draws on methods of data feminism. Catherine D'Ignazio and Lauren Klein (2020) define data feminism as an approach to data science and ethics informed by intersectional feminism. Their method offers an alternative to the currently dominant approach to data analysis, which emphasizes large-scale data collection, objective presentation, and an unquestioning faith in statistical analysis devoid of context. They propose seven core principles of data feminism, several of which I engage with in the discussed performances: elevate emotion and embodiment, consider context, and make labor visible. I also engage in methods of data visceralization, a means of extending beyond the visual through “representations of data that the whole body can experience, emotionally as well as physically” (D'Ignazio and Klein, 2020, pp. 84–85).

I produce art from the position of an artist-philosopher, which Smith (2018) describes as an artist who does not just reflect on philosophy through art but acts as a poetic logician, for whom art becomes the means of practicing philosophy. My method of producing an artwork tends to focus first on the medium as a means of inquiry and production, with myself acting as instrument in arts-based research (Eisner, 1981). When writing about my own work, as in this article, I reflect on the process of production and execution, unpacking the theoretical insights that arise, expanding on ideas, and drawing explicit connections to the theoretical groundings of the work. My artistic practice is also shaped by my situated experience and knowledge as a white, non-gender conforming woman and American citizen who has lived in Ireland since 2013. Ireland, while part of the European Union, is a former British colony. Because my body functions as both instrument and medium, such qualities of my situated identity are evident within the performances.

3 Ghost Work: body as extractable

In Ghost Work, I act as the interface between two computer systems (Figure 1).1 One computer generates a series of five animations that are played on a television monitor. The animations appear in random order, each running for a random period between 1 and 5 min. I have assigned an exercise to each of four animations, with the fifth designating rest, and I perform whichever exercise corresponds to the animation currently being generated. I wear a Bluetooth heart rate monitor that connects to a second computer, where a slit-scan camera captures my movements. My heart rate controls the color of the video and the frequency of the sound that this second computer generates. Every time something goes wrong with the technology, I scream “Crash” and fix it. Once the problem is resolved, I scream “Override” and return to exercising. The performance ends after 1 hour. This performance was presented twice (23 and 25 January 2023) at Emerson Contemporary Media Gallery in Boston, MA, USA.

Figure 1. Performance documentation of Ghost Work (2023) by EL Putnam at Emerson Contemporary. Presented as part of the solo exhibition PseudoRandom, curated by Leonie Bradbury. Photo courtesy of the artist.
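The timing rules of the Ghost Work score (five animations, random order, random durations between 1 and 5 min, a one-hour performance) can be sketched in Python. This is a minimal illustration under stated assumptions, not the software used in the performance: the animation labels are hypothetical placeholders, each next animation is assumed to be drawn uniformly at random with repeats allowed, and the final segment is assumed to be cut short so that the performance ends at exactly 60 min.

```python
import random

# Hypothetical placeholder labels: four exercise animations and one rest animation.
ANIMATIONS = ["exercise_1", "exercise_2", "exercise_3", "exercise_4", "rest"]
PERFORMANCE_MINUTES = 60.0           # the performance ends after 1 hour
MIN_SEGMENT, MAX_SEGMENT = 1.0, 5.0  # each animation runs for 1 to 5 min


def build_schedule(seed=None):
    """Return a list of (animation, minutes) segments that fills one hour.

    Assumption: the next animation is drawn uniformly at random (repeats allowed),
    and the last segment is truncated so the total is exactly one hour.
    """
    rng = random.Random(seed)
    schedule = []
    remaining = PERFORMANCE_MINUTES
    while remaining > 0:
        animation = rng.choice(ANIMATIONS)
        # Never schedule past the end of the hour.
        duration = min(rng.uniform(MIN_SEGMENT, MAX_SEGMENT), remaining)
        schedule.append((animation, duration))
        remaining -= duration
    return schedule


if __name__ == "__main__":
    for animation, minutes in build_schedule(seed=2023):
        print(f"{animation:<11} {minutes:4.1f} min")
```

Seeding the generator makes a run reproducible, which may help when debugging; in performance, leaving `seed` unset lets each iteration unfold differently.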

The title Ghost Work refers to Gray and Suri's (2019) description of the hidden labor that powers current digital technologies. Despite hyped-up claims of automation and artificial intelligence, humans are vital to the structure and functioning of computation. Much of this labor is hidden beneath the interface, partitioned into microtasks that are distributed through crowd work platforms and increasingly carried out in the global south (Gray and Suri, 2019; Crawford, 2021; Perrigo, 2023). Ghost Work is a performed manifestation of work (both the work of debugging code and that of physical exercise) that is operationalized through the algorithmic instructions of the performance and the algorithms of the animations.

Within Ghost Work, there is a series of repeating loops. From the looping computer functions that create the generative animations to the repetitive actions of my exercise, these iterative cycles are not mere recurrences of the same but create potentials for difference in how the performance unfolds. Media philosopher Yuk Hui uses the term recursion, which he derives from computation, to describe this process. He emphasizes that recursion is not simply a loop but is “a function [that] calls itself in each iteration until a halting state is reached, which is either a predefined and executable goal or a proof of being incomputable” (Hui, 2019, p. 114). That is, recursion involves the repetition of an action that produces and incorporates feedback, like a spiral. Hui defines contingency as a rupture in the functioning of systems. He extends these concepts beyond computation to consider relations between “human and machine, technology and environment, the organic and the inorganic” (Hui, 2021, pp. 232–233). Taken together, recursivity and contingency “lead to the emergence and constant improvement of technical systems” (Hui, 2019, p. 1).
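Hui's distinction between a loop and recursion can be illustrated with a small Python sketch. The example is an assumption chosen for clarity, not drawn from the performance software: `refine` calls itself, feeding each output back in as the next input (here, Newton's method for a square root), until either a predefined goal is met or a depth limit is hit. The depth limit is only a practical stand-in for Hui's second halting condition, since a program cannot in general prove its own non-termination. `repeat`, by contrast, is a plain loop whose iterations do not feed into one another.

```python
def repeat(action, times):
    """A plain loop: the same action runs each time, with no feedback between runs."""
    return [action() for _ in range(times)]


def refine(estimate, target, tolerance=1e-9, depth=0, max_depth=100):
    """Recursively approximate sqrt(target), feeding each result back in as input.

    Assumes target > 0 and estimate > 0. Returns (estimate, depth).
    """
    # Halting state 1: the predefined, executable goal has been reached.
    if abs(estimate * estimate - target) <= tolerance:
        return estimate, depth
    # Halting state 2 (a stand-in, not a proof): give up after max_depth calls.
    if depth >= max_depth:
        raise RuntimeError("no halting state reached within max_depth")
    # Feedback: the next call starts from the output of this one (Newton's method).
    next_estimate = (estimate + target / estimate) / 2
    return refine(next_estimate, target, tolerance, depth + 1, max_depth)


if __name__ == "__main__":
    print(repeat(lambda: "jumping jack", 3))
    print(refine(1.0, 2.0))
```

Each call to `refine` differs from the last because its input is the previous output, which is the spiral-like quality the text describes; `repeat` returns the same result every time.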

While recursivity is present in Ghost Work through the looping of functions and actions, contingency manifests in moments of breakdown. Even though I had spent time debugging the software to ensure functionality, different issues arose during each iteration of the performance. I drew attention to each software malfunction by screaming, then live coded to resolve the issue and keep the system functioning. Notably, my screaming “Crash” referred only to technological breakdown and not to my physical state. For instance, during the second iteration of the performance, the heart rate value passed to the audio tone generator fell outside the function's designated range, which prevented playback of the slit-scan video. It took some time for me to identify this issue, and the troubleshooting took place during a designated rest period, in accordance with the generated animations. This meant I was unable to physically recover from an extended series of burpees and went straight into a sequence of jumping jacks. To let my body recover, I performed these jumping jacks slowly, lifting my arms and spreading my legs without jumping off the ground. While I appeared to be exercising strenuously, my heart rate lowered during this period as I took the time to rest. I developed a strategy to cope with the demands of the machine, adapting my movements to an algorithmic thinking under which maintaining the functionality of the system was physically exhausting.
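A range error like the one described above can be guarded against by clamping the sensor reading before it reaches the tone generator. The following Python sketch is hypothetical: the article does not specify the software, the designated input range, or the frequency range, so the limits below (40 to 200 bpm, 220 to 880 Hz) are assumed values, and a linear mapping is assumed. A missing reading (`None`) or a NaN, as might follow a dropped Bluetooth connection, falls back to a default value.

```python
import math

# Assumed designated input range of the tone generator, in beats per minute.
HR_MIN, HR_MAX = 40.0, 200.0
# Assumed output range of the tone, in hertz.
FREQ_MIN, FREQ_MAX = 220.0, 880.0


def heart_rate_to_frequency(bpm, fallback_bpm=HR_MIN):
    """Map a heart-rate reading linearly onto a tone frequency.

    Out-of-range readings are clamped to [HR_MIN, HR_MAX] instead of being
    passed through, so the generator never receives a value outside its range.
    """
    if bpm is None or math.isnan(bpm):
        bpm = fallback_bpm  # missing reading: use a safe default
    clamped = max(HR_MIN, min(HR_MAX, bpm))
    position = (clamped - HR_MIN) / (HR_MAX - HR_MIN)  # 0.0 at HR_MIN, 1.0 at HR_MAX
    return FREQ_MIN + position * (FREQ_MAX - FREQ_MIN)


if __name__ == "__main__":
    for reading in (35, 72, 120, 185, 240, None):
        print(reading, "->", round(heart_rate_to_frequency(reading), 1), "Hz")
```

Clamping is only one design choice: it keeps sound and video running but flattens extreme readings, whereas raising an error would surface the problem at the cost of halting playback.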

Hui (2019) characterizes modernity in terms of resilience, or the capacity to tolerate contingency. That is, recursivity integrates contingency as feedback to improve systems, resulting in what he describes as a giant or general machinic organism within which we live. As we move toward higher degrees of automation on all levels, dominated by algorithmic thinking, or calculative reason and rationalism, other modes of thought, such as speculative reason and techno-diversity, are precluded. Couldry and Mejias (2019) describe how every aspect of human experience and relationality is subjected to profitable extraction. They argue that these processes perpetuate colonization through data, which includes the use of apps and other technical devices to track biometric data, such as the Bluetooth heart rate monitor I use in Ghost Work. While historical colonialism involved the annexation of territories and inhabitants for resources and profit, data colonialism encompasses “the capture and control of human life itself through appropriating the data that can be extracted from it for profit” (Couldry and Mejias, 2019, p. xi). To facilitate such capture, our behaviors and activities are encouraged, nudged, and mediated in ways that make them more extractable. The “resources” extracted in Ghost Work include time, labor, and biometric data. Emphasis is placed on the functioning of the technical system at the expense of the physical body and its cognitive labor. Colonialism is present not just in the extraction of resources but also in the thinking that underpins this process, or what Mignolo (2011) defines as coloniality: the logic and rationale that underpin colonization. That is, the algorithmic thinking of data colonialism functions as a form of data coloniality.2 As such, Ghost Work offers another way of thinking, where breakdown becomes a means of interrupting the logics of data colonialism. What is at stake is not just processes of extraction, but the algorithmic thinking that motivates such extraction.

4 Friction: snagging information

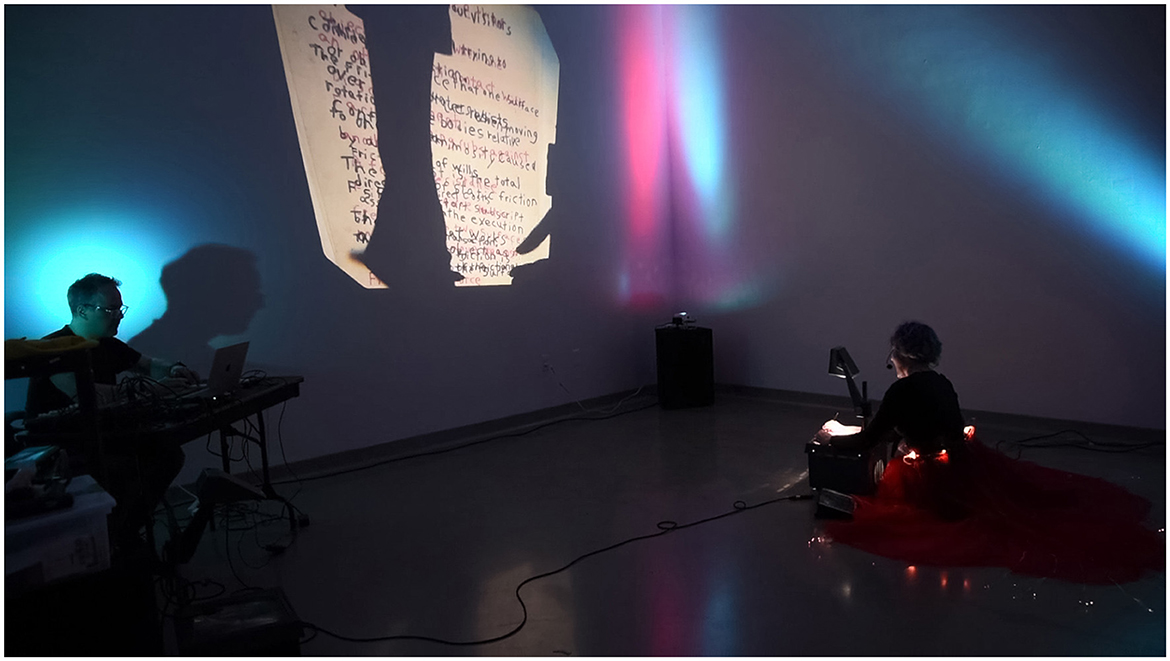

Friction was developed and performed in collaboration with sound artist and composer David Stalling (Figure 2).3 It was presented at Emerson Contemporary on 22 March 2023. Inspired by machine learning models that scrape the World Wide Web for data to produce generated text, I google the term “friction,” writing my results without source information onto acetate transparencies on an overhead projector while vocalizing my findings. Stalling turns my vocalizations, along with sounds from a contact microphone on the projector, into an improvised electroacoustic composition. In contrast to large language models (Bender et al., 2021), the pace and scale of my resulting data set are slow and small. I transcribed text in the order in which it appeared in the search results, exercising discretion in selecting from what was already written. I did not click through to any page but limited what I transcribed to the text presented in the search results. I did not provide context or citations, intentionally merging the text into a single document that built up in layers as I added pieces of acetate.

Figure 2. Performance documentation of Friction by EL Putnam and David Stalling. Presented as part of the solo exhibition PseudoRandom, curated by Leonie Bradbury. Photo courtesy of the artists.

Recursivity occurred through the repetition of performed gestures, with contingency introduced through the difficulties of collecting and transcribing information within the context of the performance. I was writing upside down and vocalizing the text at the pace of writing, and the space for writing became crowded as acetate sheets accumulated. Through the execution of the performance algorithm, recursivity and contingency triggered differences that instigated creative responses. As the performance progressed, my recitations took on a musical quality as I became aware of the improvised soundscape filling the room. At some moments, I picked up my pace and my voice grew louder. At other times, I paused in the flow and rhythm of the experience, meditating on the output as I slowly moved my hand over the projector's light. This improvised gathering of information was productive because of, not despite, the frictions that emerged, including the differences that come from two people working together in a context of improvisation, which demands attunement not just to the mechanics of technology but also to each other.

The use of the Google search engine is intentional in Friction, as Google currently dominates the market to the extent that “Google” is used to refer to any act of searching for information on the web. Google's organization and display of search results grew out of its PageRank algorithm, which Larry Page and Sergey Brin developed in 1998. Despite the ubiquity of Google and its minimal interface (the landing page for the search engine conveys only a search bar on a blank screen with the Google logo above it), the exact workings of its proprietary algorithms are not disclosed but protected as trade secrets (Vaidhyanathan, 2012). Minimal design enables illusions of transparency, building trust in the accuracy and credibility of seemingly objective search results. Siva Vaidhyanathan describes the extent to which Google has infiltrated our engagement with information, putting “unimaginable resources at our finger tips,” while cultivating a faith in the Google brand where “Google is the lens through which we view the world” (Vaidhyanathan, 2012, pp. 2, 7). This influence extends from our engagement with information to our identity as subjects, as digital proxies come to determine what information we access (Cheney-Lippold, 2017). In addition, as scholars have made evident (Introna and Nissenbaum, 2000; Noble, 2018; Benjamin, 2019), search results are rife with biases, including the influence of political beliefs, racism, sexism, and other prejudices, despite being presented as objective.
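The core idea of PageRank as published by Brin and Page in 1998 can be sketched as a power iteration: each page repeatedly passes a share of its score to the pages it links to, and a damping factor (0.85, the value the original paper reports using) mixes in a uniform jump to any page. Each round's scores become the input to the next, until the change between rounds falls below a tolerance. This is a minimal Python illustration over a hypothetical three-page link graph; it says nothing about Google's current ranking systems, which remain proprietary.

```python
def pagerank(links, damping=0.85, tol=1e-10, max_iter=1000):
    """Compute PageRank scores by power iteration.

    `links` maps each page to a list of distinct pages it links to.
    Returns a dict of scores that sum to 1.
    """
    # Collect every page that appears as a source or a target.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(max_iter):
        # Random-jump share: every page receives (1 - damping) / n.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's damped score evenly among its outlinks.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page with no outlinks: spread its score over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        converged = sum(abs(new_rank[p] - rank[p]) for p in pages) < tol
        rank = new_rank
        if converged:
            break
    return rank


if __name__ == "__main__":
    toy_web = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}  # hypothetical link graph
    for page, score in sorted(pagerank(toy_web).items(), key=lambda kv: -kv[1]):
        print(page, round(score, 3))
```

In this toy graph, page c ranks highest because it receives links from both other pages; the ordering reflects link structure only, not relevance to any query.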

This array of concerns about how information is ordered and accessed through Google has been scaled up and made more opaque with the rise of natural language processing and predictive chatbots like ChatGPT. While Google search results at least allow users to visit websites and view information in its original context of presentation, affording some check on credibility, these qualities are absent from ChatGPT's results, as information is provided without context. The operational logic of organizing information for relevance through opaque algorithmic thinking is evident in the development of ChatGPT and its subsequent celebration as a means of increasing productivity and improving optimization. However, what gets lost when emphasis is placed on efficiency? Who and what benefits from increased optimization?

In her analysis of smart cities, Powell (2021, p. 6) states: “The important point here is that sociotechnical imaginaries are not mere visions; they are sustained also by the creation and maintenance of technological systems and by the alignment of particular ideas about how things ought to be with what technologies have made possible.” She emphasizes how cybernetic systems require data that are cleaned, ordered, and parsed to improve predictability and provide optimal results. A consequence of these processes, she notes, is the reduction of friction and difference in data, with the logic of computation extending from software to social relations as platforms influence how we engage with the world. For Powell, friction is vital for countering processes of optimization that reinforce technology-driven assumptions rooted in a calculative logic designed to serve the visions of technology companies. Tsing (2005, p. 4) defines friction as “the awkward, unequal, unstable, and creative qualities of interconnection across difference.” Rather than being simply problems to be resolved, frictions are what make connections possible. Reducing friction may improve the optimization of cybernetic systems, but removing it from systems forecloses the potential for alternative processes (Powell, 2021). Hence, I end Friction with the phrase: “Friction is the snags that keep us together.”

5 Techno-diversity: other logics

At the same time, this friction produces contingencies that can be productive for cybernetic systems, as these introduce feedback that is recursively integrated into the machine (Hui, 2019). The more data that are input into a system, and the more feedback it receives on the accuracy of its results, the more its model can be improved through recursion. The existence of friction alone is therefore not sufficient: as contingencies, frictions can be recursively integrated, enabling machinic logic to expand through the colonization of information. Countering this totalizing system requires a logic different from the rationale of coloniality that underpins algorithmic thinking. Data coloniality, like coloniality more generally, involves power relations between regimes of privilege and disadvantage, including the economic, geographic, and racial divides between the global north and the global south. The discussed performances speak to this darker side (Mignolo, 2011) of the technology industries (Putnam, 2023). For instance, the hidden labor used to develop AI, increasingly performed in the global south, contrasts with my situated experience in the global north, evident in my presence in the work as medium, since I benefit from the privileges that data colonialism perpetuates.

However, these performances are not just critiques. Performance art enables the creation and use of idiosyncratic gestures and meanings developed through artistic production. As an artist, I engage with everyday technologies but implement them in atypical ways in the scenarios of performance to defamiliarize our relationship to them, as noted in the analyses of Ghost Work and Friction above. These processes introduce alternative logics in response to the non-rational, or what Hui (2019, p. 33) describes as “the limit of the rational.” Logic systems, epistemologies, and different sensibilities all attempt to bring consistency to the non-rational, with technology functioning as a means of inscribing these systems (Hui, 2021). Data coloniality is one such attempt at cultivating consistency with a global reach. Art can function as a means of bringing out the non-rational, or what is “beyond the realm of demonstration,” through engagement with the unconventional and the paradoxical (Hui, 2021, p. 123). Here, contradictions are not resolved through the Boolean logic of algorithmic thinking but are allowed to exist. Such qualities are present in both Ghost Work and Friction. For instance, Ghost Work functions as a performance because of, not despite, its breakdown. Attention is verbally drawn to the breakdown of the machine, while the breakdown of my body through exhaustion, though evident in heavy breathing and physical strain, is not acknowledged in the same way. In Friction, the collection of information produces a palimpsest: the performance builds through the cultivation of sonic and visual noise, resulting in a compilation of data that confuses rather than clarifies. The success of both works depends on cultivating these tensions, introducing a temporary intervention into ubiquitous technologies that invites a different engagement through poetic operations. Aesthetic experiences like these cannot be easily quantified. According to Noel Fitzpatrick, “there are modes of mediation in the world which lie outside measurability and calculation” (Fitzpatrick, 2021, p. 124). When technologies are engaged in this manner, they can “enable reflection, deliberation, conflict and reason” (Fitzpatrick, 2021, p. 124), potentially challenging the totalizing logic of algorithmic thinking.

6 Conclusion

Ghost Work and Friction employ the performativity of algorithms through processes of recursivity and contingency that refuse simply to become machine. That is, these performances offer alternatives to the operational logics of algorithmic thinking in order to counter dominant, colonizing regimes of sociotechnical imaginaries. In the discussed performances, emphasis is placed on engagements with technologies rather than on output. The purpose of this approach is to counter the treatment of human bodies, their actions, and their relations as standing reserve for data colonialism. My approach as an artist engages with the simple operations of the performance algorithms, and the resulting aesthetic experiences are multifaceted instances of data visceralization. I intend to cultivate affective responses from the audience that hold rather than resolve contradictions, which may invoke ambiguous and changing emotions such as confusion, interest, boredom, and/or meditative engagement. I create an alternative logic for presentations of data gathering and analysis as temporary interventions into such systems, while critically engaging with the technologies of data colonialism and their underlying logics. These alternative logics introduce difference while making visible the processes of contingency and recursivity at work in human-technological relations, using idiosyncratic gestures that are thick with meaning, which can be neither easily extracted from the work nor easily quantified.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

EP: Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

The author would like to thank Leonie Bradbury, Jim Manning, and Shana Dumont Garr of Emerson Contemporary for support in presenting the performances Ghost Work and Friction as part of the exhibition PseudoRandom. I would also like to thank the editor Abdelmjid Kettioui for providing vital insights and feedback for revising this article.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomm.2024.1352628/full#supplementary-material

Footnotes

1. For additional images and video documentation, refer to the website: EL Putnam, “Ghost Work,” http://www.elputnam.com/ghostwork/.

2. I would like to thank Abdelmjid Kettioui for this suggestion and drawing this to my attention.

3. For additional images and video documentation, refer to the website: EL Putnam, “Friction,” http://www.elputnam.com/friction/.

References

Bender, E. M., Gebru, T., McMillan-Major, A., and Shmitchell, S. (2021). “On the dangers of stochastic parrots: can language models be too big?” in Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency. Virtual Event Canada (Toronto, ON: ACM), 610–623. doi: 10.1145/3442188.3445922

Benjamin, R. (2019). Race after Technology: Abolitionist Tools for the New Jim Code. Newark: Polity Press. doi: 10.1093/sf/soz162

Bucher, T. (2018). If...Then: Algorithmic Power and Politics. New York, NY: Oxford University Press.

cárdenas, m. (2022). Poetic Operations: Trans of Color Art in Digital Media. Asterisk. Durham: Duke University Press. doi: 10.1515/9781478022275

Cheney-Lippold, J. (2017). We Are Data: Algorithms and the Making of Our Digital Selves. New York, NY: New York University Press. doi: 10.18574/nyu/9781479888702.001.0001

Chun, W. H. K. (2011). Programmed Visions: Software and Memory. Cambridge, MA: MIT Press. doi: 10.7551/mitpress/9780262015424.001.0001

Chun, W. H. K. (2016). Updating to Remain the Same: Habitual New Media. Cambridge, MA: The MIT Press. doi: 10.7551/mitpress/10483.001.0001

Couldry, N., and Mejias, U. A. (2019). The Costs of Connection: How Data Is Colonizing Human Life and Appropriating It for Capitalism. Stanford, CA: Stanford University Press. doi: 10.1515/9781503609754

Crawford, K. (2021). Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence. New Haven, CT: Yale University Press. doi: 10.12987/9780300252392

D'Ignazio, C., and Klein, L. (2020). Data Feminism. Cambridge, MA: MIT Press. doi: 10.7551/mitpress/11805.001.0001

Eisner, E. W. (1981). On the differences between scientific and artistic approaches to qualitative research. Educ. Res. 10, 5. doi: 10.2307/1175121

Fitzpatrick, N. (2021). “The neganthropocene: introduction,” in Aesthetics, Digital Studies and Bernard Stiegler, eds N. Fitzpatrick, N. O'Dwyer, and M. O'Hara (London; New York, NY: Bloomsbury), 123–125.

Gillespie, T. (2016). “Algorithm,” in Digital Keywords: A Vocabulary of Information Society and Culture, ed. B. Peters (Princeton, NJ: Princeton University Press), 18–30. doi: 10.2307/j.ctvct0023.6

Gray, M. L., and Suri, S. (2019). Ghost Work: How to Stop Silicon Valley from Building a New Global Underclass. Boston, MA: Houghton Mifflin Harcourt.

Hui, Y. (2019). Recursivity and Contingency. Media Philosophy. London: Rowman and Littlefield International.

Introna, L. D., and Nissenbaum, H. (2000). Shaping the web: why the politics of search engines matters. Inf. Soc. 16, 169–185. doi: 10.1080/01972240050133634

Mignolo, W. D. (2011). The Darker Side of Western Modernity: Global Futures, Decolonial Options. Durham, NC: Duke University Press. doi: 10.2307/j.ctv125jqbw

Noble, S. U. (2018). Algorithms of Oppression: How Search Engines Reinforce Racism. New York, NY: New York University Press. doi: 10.18574/nyu/9781479833641.001.0001

Perrigo, B. (2023). Exclusive: the $2 per hour workers who made ChatGPT safer. TIME January 18, 2023. Available online at: https://time.com/6247678/openai-chatgpt-kenya-workers/ (accessed January 9, 2024).

Powell, A. B. (2021). Undoing Optimization: Civic Action in Smart Cities. New Haven, CT: Yale University Press. doi: 10.12987/9780300258660

Putnam, E. L. (2023). The question concerning technology in Ireland?: art, decoloniality and speculations of an Irish cosmotechnics. Arts 12, 92. doi: 10.3390/arts12030092

Smith, G. (2018). The Artist-Philosopher and New Philosophy. London: Routledge. doi: 10.4324/9781315643823

Tsing, A. L. (2005). Friction: An Ethnography of Global Connection. Princeton, NJ: Princeton University Press. doi: 10.1515/9781400830596

Keywords: digital performance art, algorithmic thinking, poetic operations, data feminism, data visceralization

Citation: Putnam EL (2024) On (not) becoming machine: countering algorithmic thinking through digital performance art. Front. Commun. 9:1352628. doi: 10.3389/fcomm.2024.1352628

Received: 08 December 2023; Accepted: 10 January 2024;

Published: 23 January 2024.

Edited by:

Abdelmjid Kettioui, Moulay Ismail University, Morocco

Reviewed by:

Miren Gutierrez, University of Deusto, Spain

Copyright © 2024 Putnam. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: EL Putnam, el.putnam@mu.ie
