- 1Integrated Science Lab, School of Interactive Arts and Technology, Simon Fraser University Surrey, Surrey, BC, Canada

- 2Quantellia LLC, Office of the Chief Scientist, Sunnyvale, CA, United States

Visual analytics was introduced in 2004 as a “grand challenge” to build an interdisciplinary “science of analytical reasoning facilitated by interactive visual interfaces”. The goal of visual analytics was to develop ways of interactively visualizing data, information, and computational analysis methods that augment human expertise in analysis and decision-making. In this paper, we examine the role of human reasoning in data analysis and decision-making, focusing on issues of expertise and objectivity in interpreting data for purposes of decision-making. We do this by integrating the visual analytics perspective with Decision Intelligence, a cognitive framework that emphasizes the connection between computational data analyses, predictive models, actions that can be taken, and predicted outcomes of those actions. Because Decision Intelligence models factors of operational capabilities and stakeholder beliefs, it necessarily extends objective data analytics to include intuitive aspects of expert decision-making such as human judgment, values, and ethics. By combining these two perspectives, we believe that researchers will be better able to generate actionable decisions that make effective use of human expertise while eliminating bias. This paper aims to provide a framework for how Decision Intelligence leverages visual analytics tools and human reasoning to support the decision-making process.

1 Introduction

Businesses and organizations are shaped by micro- and macro-level decisions about actions to be taken, made on a daily basis (Spetzler et al., 2016, p. 3). Approximately three billion of these decisions are made every year (Larson, 2017). Research has shown that there is a 95% correlation between decision effectiveness and financial performance (Blenko et al., 2014). Human reasoning is the bridge that connects analysis of available data and information to decisions about potential actions and their links to desired outcomes. Recent advancements in Artificial Intelligence have allowed for more robust decision automation, where AI can tackle problems in the real world (Autor, 2015; Brynjolfsson and McAfee, 2018). However, these advancements have also raised questions regarding the integration of AI into organizational decision-making processes (Pratt et al., 2023), where the expertise and intuition of the decision-maker are known to play a major role (Lufityanto et al., 2016).

Visual analytics aims to augment this process, by providing guidance on how to effectively visualize representations of data for understanding, and interaction with those data to better support analytic workflows and improve outcomes (Thomas and Cook, 2005). The data needed to be analyzed for any specific decision can be extremely large and complex and can be presented in a clearer manner using interactive information visualization displays. Through such analyses, users are able to identify patterns, trends, outliers, correlations, or other types of relationships between variables. These analyses may include predictive modeling. These observations and analyses can lead to formal or informal formulations of hypotheses about situations and potential outcomes, which can be further analyzed to uncover potential causal relationships. In some situations there is sufficient resulting data about outcomes that allows validation of the hypothesis.

Given visual analytics' goal of driving higher-quality intuition in the analysis process (Lufityanto et al., 2016), this quest for knowledge is inherently subject to objectivity challenges. According to Kahneman's (2013) account of System 1 and System 2 thinking, intuition is applied automatically in tasks such as pattern recognition. This intuitive system is more difficult to justify, given that it isn't directly derived from facts or evidence, but rather from the experience of the decision-maker. However, most organizational decisions ideally integrate individual expertise with System 2 thinking, or in other words, conscious analytical processing. When justifying a decision to a stakeholder, decision-makers within an organization must be able to provide reasoning based on facts and what is assumed to be accurate, or “objective,” data. This challenge can be mitigated if the decision-maker has a proven record of prior decisions that have resulted in achieving the desired outcomes. The discussions around this dilemma have made room for further investigation into the role of human reasoning in data-informed decision-making and how this intuitive process is conducted alongside the inherently analytic and objective forms of the visualized data.

A recent development that attempts to address this challenge is Decision Intelligence (DI). DI supports decision-making processes through an analytical connection between data presented using visual analytics guidelines, potential actions, and intended outcomes. This new field aims to provide a structured process for more efficient data-driven decision making by ensuring productive collaboration between the people who map the decision. The mapped decisions are then supported using predictive analytics and machine learning models, and are presented to the decision maker in the form of visualizations. DI also utilizes the interactivity component of visual analytics, as the dynamic exploration of visualized data and the decision process promotes a deeper understanding of the consequences of certain actions. DI supports decision making at three levels: decision support, decision augmentation, and decision automation (Bornet, 2022). The choice of level depends on the amount of automation required and the type of decision involved (e.g., action-to-outcome vs. classification vs. regression) (Kozyrkov, 2019; Pratt, 2019). Visual analytics is involved in all three levels, although in different stages. Decision support and decision augmentation utilize visual analytics in the exploration of data and the decision process visualizations. Decision automation refers to automating the descriptive and predictive analytics processes. The goal of a hybrid intelligent system, such as a DI simulation, is partial automation, which requires human input for prescriptive analytics and optimization, which is often communicated to the human through visual analytics tools.

2 Visualization and human reasoning

Data, in its raw form, is often interpreted to be inherently objective and provides seemingly objective information and insight on the subject. Human reasoning plays an important epistemological role in data-informed decision-making, as it provides the connection between the provided data and the ultimate decision. The process of interpreting the available data, identifying potential trends or patterns, and eventually creating an analytical and structured mental decision map is entirely a product of human reasoning.

There are multiple cognitive processes that play a role in human reasoning, such as memory, attention, and sensory perception. These mechanisms help create meaningful representations of the raw data and identify meaningful patterns. Human reasoning allows us to judge the provenance of the data, to assess its reliability given the circumstances. It also helps a decision-maker to determine the significance of the available data and how it pertains to solving the problem of making the current decision. Raw data may be seen as inherently objective, but the appropriateness of said data, as well as any analysis conducted on it, poses questions regarding validity, biases, and limitations.

In order to identify deeply embedded patterns in the data, humans utilize more complex mechanisms and skills, such as logic and inference. When reasoning about a decision-making event, causal reasoning is mainly used to connect actions to outcomes. Causal reasoning, a top-down approach, in contrast to the bottom-up approach of causal learning, allows an individual to ask how and why questions.

One of the common challenges present both in this human reasoning process and in information and data visualization in general is bias (Dimara et al., 2018). Biases appear in many distinct forms, which Dimara et al. group into seven main categories. Biases may be present in the raw data, which is a separate challenge to be addressed through analysis of data provenance, but conclusions that are developed through human-centered analysis are also naturally affected by the analyst's cognitive biases. Furthermore, developments in machine learning and predictive modeling built to test hypotheses through visual analytics have exacerbated the issues around biases, given that the training datasets are also at risk of similar challenges of data provenance. Even assuming the data is accurate and no biases exist in the analysis phase, decision-makers might subconsciously favor information that confirms their preexisting thought patterns, which is referred to as confirmation bias. Overconfidence in one's domain knowledge, or a mismatch between one's domain of expertise and actual proficiency, are additional challenges the decision-maker may knowingly or unknowingly face, as is at times the case when there is resistance to change within the organization. Overreliance on AI models or simulations is an additional challenge, given that humans sometimes utilize System 1 thinking and “outsource” System 2 thinking (Buçinca et al., 2021). Buçinca et al. (2021) report that this challenge can potentially be addressed through cognitive forcing functions that force the decision-maker to consciously deliberate on the output of the AI model.

These limitations may also be addressed via reasoning, given that, with enough time and the ability to look at the decision process as a whole, humans can, in theory, uncover any biases or limitations present in the process, either originating from the decision-maker themselves, from the mechanism by which data was created or collected, originating within the system using the technology, or originating from a low-fidelity model. Just as software developers or aeronautical engineers routinely review their work for defects, decision makers can do the same for decision models. The efficacy of this validation process is notably enhanced when undertaken as a collaborative venture among a diverse cohort of subject-matter experts and stakeholders.

In such data-informed decision making, the specific role of human reasoning is variable and depends on factors such as the expertise of the decision maker, the validity of the data presented, and the ability to identify potential biases and limitations before they occur. These factors are central to the visual analytics approach to visualization design, which has historically focused on the creation of a “science of analytical reasoning facilitated by interactive visual interfaces” (Thomas and Cook, 2005) and a proposed research shift from the design of visualization per se to support for “visually-enabled reasoning” (Meyer et al., 2010).

3 Decision intelligence

Decision Intelligence (DI) is a term popularized by Pratt and Zangari (2008), Pratt (2019), Pratt and Malcolm (2023), and Pratt et al. (2024). DI is a field that aims to support, augment, and potentially to automate decision-making processes using multiple methods including Artificial Intelligence. DI has gained popularity quite quickly due to its implications for decision making in businesses and other organizations. Cassie Kozyrkov, former Chief Decision Scientist at Google, regularly provides insight into data-driven decision processes and strategies, especially regarding the safe and ethical use of Artificial Intelligence tools, so that appropriate data and analyses can lead to better actions (Kozyrkov, 2019; Sheppard, 2019). Bornet (2022), a widely-recognized AI and Automation expert, has even gone so far as deeming DI to be the “next Artificial Intelligence.”

DI is becoming increasingly standardized (The Open Decision Intelligence Initiative, 2023). DI provides a framework that considers stakeholder assumptions, the scope of the organization's capabilities, external factors, and subject-matter expertise, integrating these factors into a graphical map of causal factors that should inform the decision-making process (Pratt and Malcolm, 2023). The DI approach begins by creating a causal map of these factors, which may be achieved through automated document analysis, use of LLMs (Pratt, 2023), or in a collaborative elicitation process led by DI Analysts along with Subject-Matter Experts (SMEs). This specific approach to DI was pioneered by Pratt (2019), who argues that Artificial Intelligence (AI) and Machine Learning (ML) systems are not used effectively in organizations, despite access to the data and the models required to make informed decisions.

Pratt (2019) describes the process of mapping decisions into a “Causal Decision Diagram” (CDD), that illustrates the components of a decision including: Actions/Levers that are under the control of the organization, External factors that are not, desired Outcomes, and Intermediate elements along a causal chain from Externals and Actions to Outcomes. Data, AI, and more inform this causal chain in various ways as described in Pratt et al. (2023). After creating this shared decision diagram, the role of the DI builder is to connect these dots with causal models that perform calculations, which results in a system that can visually simulate how actions lead to outcomes. The decision maker then considers what they can and cannot control with this decision—either simply using the diagram or using simulation software based on the diagram—and ends up making an informed decision, being aware of any shortcomings, problems, and/or unintended outcomes. These decision models can be recycled and updated over time, to include new data, new measurements/metrics, new externals, and so on. The goal of DI is to provide a better framework for decision makers to fully utilize AI and ML systems in making informed organizational decisions.

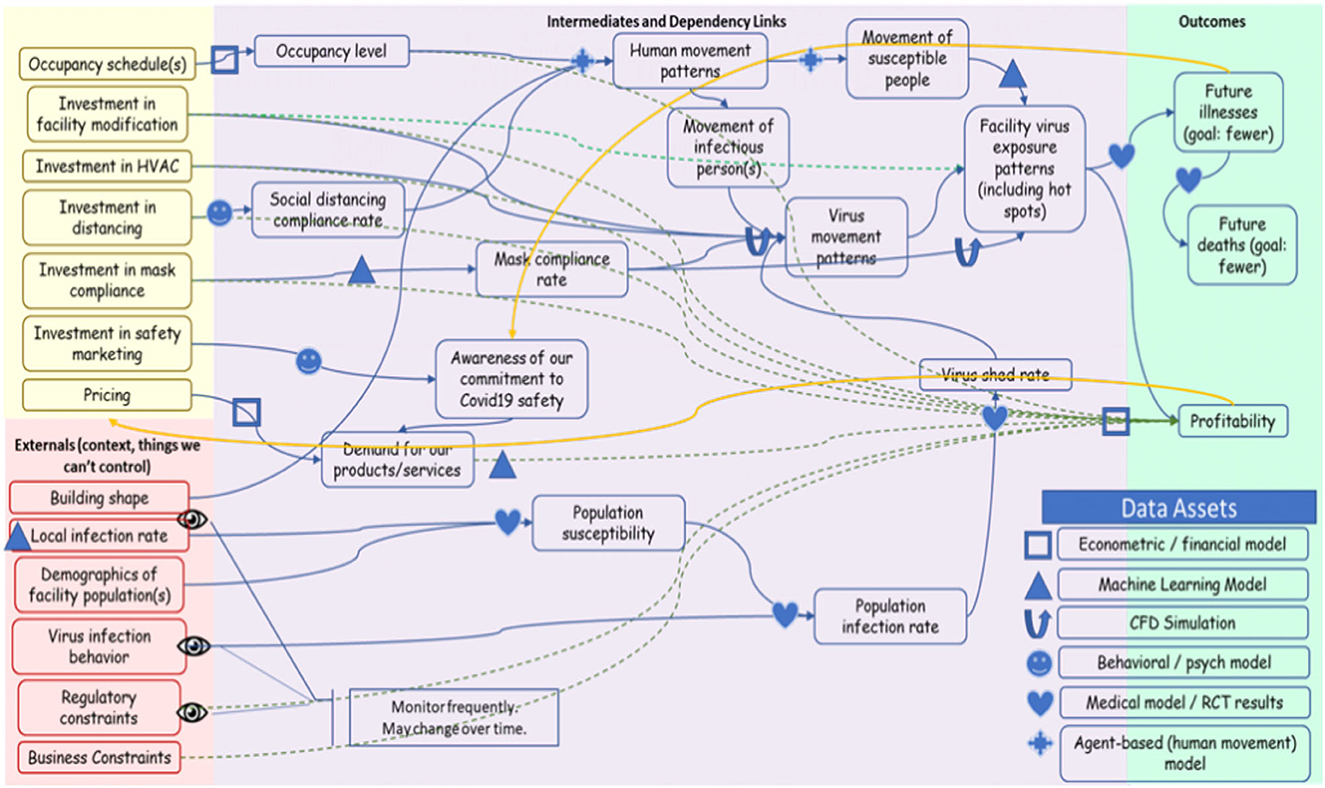

The initial step of DI is to create a map of the decision, or CDD, as illustrated in Figure 1. Here, the top-left section—colored in yellow—shows potential actions or decisions. The bottom-left section shows Externals, colored in red. Desired Outcomes are shown on the right, with the middle of the CDD showing the Intermediates and the Dependency Links that connect the decision elements.

Figure 1. An example of a Causal Decision Diagram. Source: Zangari (2022). Reprinted with permission.

The CDD is created, reviewed, and finalized through a social and collaborative process, in which stakeholders and subject-matter experts discuss the intended outcome, potential actions that can be taken (or potential decisions), as well as intermediate (controllable) and external (uncontrollable) factors that may play a role. In this example, a potential action or decision point is the “Investment in distancing,” which directly influences the “Social distancing compliance rate,” calculated using a “Behavioral/psych model.” Coupled with other intermediate elements, such as “Human movement pattern,” and external factors, such as “Building shape,” the decision of how much to invest in distancing has an effect on the desired outcomes, which in this example are fewer “Future illnesses” and “Future deaths.”
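To make the structure of this example concrete, the following is a minimal sketch of how such a CDD could be represented and simulated in software. It is not the authors' implementation: the `Element` class, the `simulate` function, the dependency links, and every numeric formula (including the stand-in for the “Behavioral/psych model” and the encoding of “Building shape” as a crowding factor between 0 and 1) are hypothetical placeholders chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Element:
    """One box in a Causal Decision Diagram (hypothetical representation)."""
    name: str
    kind: str  # "action", "external", "intermediate", or "outcome"
    parents: List[str] = field(default_factory=list)
    # Maps parent values (keyed by name) to this element's value.
    # Actions and externals have no model; their values are supplied as inputs.
    model: Optional[Callable[[Dict[str, float]], float]] = None


def simulate(cdd: Dict[str, Element], inputs: Dict[str, float]) -> Dict[str, float]:
    """Propagate action and external values along dependency links to outcomes."""
    values = dict(inputs)

    def evaluate(name: str) -> float:
        if name not in values:
            element = cdd[name]
            if element.model is None:
                raise KeyError(f"no input value supplied for {name!r}")
            values[name] = element.model({p: evaluate(p) for p in element.parents})
        return values[name]

    return {n: evaluate(n) for n, e in cdd.items() if e.kind == "outcome"}


# All links and formulas below are illustrative assumptions, not taken from Figure 1.
ELEMENTS = [
    Element("Investment in distancing", "action"),
    Element("Building shape", "external"),  # assumed crowding factor in [0, 1]
    Element("Social distancing compliance rate", "intermediate",
            ["Investment in distancing"],
            lambda v: min(1.0, 0.2 + 0.008 * v["Investment in distancing"])),
    Element("Human movement pattern", "intermediate",
            ["Building shape", "Social distancing compliance rate"],
            lambda v: 10.0 * v["Building shape"]
            * (1.0 - 0.5 * v["Social distancing compliance rate"])),
    Element("Future illnesses", "outcome", ["Human movement pattern"],
            lambda v: 100.0 * v["Human movement pattern"]),
    Element("Future deaths", "outcome", ["Future illnesses"],
            lambda v: 0.01 * v["Future illnesses"]),
]
CDD = {e.name: e for e in ELEMENTS}

if __name__ == "__main__":
    for investment in (0.0, 50.0, 100.0):
        outcomes = simulate(CDD, {"Investment in distancing": investment,
                                  "Building shape": 0.5})
        print(investment, outcomes)
```

Keeping each element's model as a separate callable mirrors the idea that each dependency can be reviewed, replaced, or refined on its own without redrawing the diagram.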

The CDD elicitation is crucial for mapping the decision, given that, when coupled with models rendered in software that connect the decision elements (nodes/boxes), decision makers can interactively simulate and explore the consequence of each action. Importantly, the outcome of a decision model like this one is not the same as the outcome of the decision-making process, which is the choice of actions on the left-hand-side of the CDD (so chosen because, in the decision makers' mental model or computer-augmented model of reality, they lead to the best outcomes).

Pratt and Malcolm (2023) suggest beginning the elicitation by listing the outcomes and asking how questions to connect these outcomes to potential actions, referred to as the How-chain. The opposite direction is guided through the Why-chain. For example, if the stakeholders are debating whether to pursue a specific action, asking why questions should ideally lead them to the desired outcome. The connections between Actions and Outcomes are uncovered through a human interview process, possibly augmented by LLMs or other NLP methods. Working with the CDD can be seen as a new visually-enabled reasoning task that complements the more familiar visual data analysis process that has been the historical goal of visual analytics.
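Once a CDD exists, the two chains can be read as traversals of its dependency links in opposite directions. The sketch below illustrates this under stated assumptions: the `LINKS` dictionary is a hypothetical set of cause-to-effect links loosely based on the element names in the example above, and `how_chain` and `why_chain` are illustrative helper names, not part of any DI tool.

```python
from collections import deque
from typing import Dict, List

# Hypothetical cause -> effects links; not a transcription of Figure 1.
LINKS: Dict[str, List[str]] = {
    "Investment in distancing": ["Social distancing compliance rate"],
    "Social distancing compliance rate": ["Human movement pattern"],
    "Building shape": ["Human movement pattern"],
    "Human movement pattern": ["Future illnesses"],
    "Future illnesses": ["Future deaths"],
}


def _reachable(start: str, graph: Dict[str, List[str]]) -> List[str]:
    """Breadth-first list of every element reachable from start (start excluded)."""
    seen, order, queue = {start}, [], deque([start])
    while queue:
        for nxt in graph.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order


def why_chain(element: str, links: Dict[str, List[str]]) -> List[str]:
    """'Why do this?': follow links forward from an action toward outcomes."""
    return _reachable(element, links)


def how_chain(outcome: str, links: Dict[str, List[str]]) -> List[str]:
    """'How do we achieve this?': follow links backward from an outcome."""
    reverse: Dict[str, List[str]] = {}
    for cause, effects in links.items():
        for effect in effects:
            reverse.setdefault(effect, []).append(cause)
    return _reachable(outcome, reverse)


if __name__ == "__main__":
    print(how_chain("Future deaths", LINKS))
    print(why_chain("Investment in distancing", LINKS))
```

In an elicitation session the links themselves come from the interview process; a traversal like this only helps check that every proposed action connects to at least one desired outcome, and vice versa.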

We argue that, because the goal of visual data analytics has always been, ultimately, to allow decision makers to achieve better outcomes through the use of data, the creation, review, maintenance, and reuse of CDDs and CDD-based software should be considered a new and important topic of study within the field of visual analytics.

4 Objectivity, subjectivity, and decision intelligence

Generally speaking, “objectivity” refers to representation based on facts and evidence, free of biases or favoritism. In an epistemological sense, objectivity is crucial in determining how knowledge is gathered and validated. When an objective knowledge claim is made, it should be justifiable through solid evidence and structured reasoning, which necessitates a process that is free of cognitive biases and independent of the analyst's individual perspective.

In decision making, data that is less than objective is, as a general rule, considered less desirable. In theory, decision maker(s) apply a variety of techniques to assess data quality, and therefore the quality of any trends or models based on that data, in order to detect and remove any errors that may have crept into the data through subjectivity or other means. The choice of such methods, along with the degree of rigor that is applied, is by necessity at the discretion of the data scientist, and is therefore imperfect.

Even with perfect and objectively-validated data, however, the decision model in which it is embodied is by necessity an imperfect representation of reality. How can decision makers thereby make good decisions based on flawed models—either mental ones or those supported by computers? Fletcher et al. (2020) argue that it is here where intuition bridges the gap between whatever analytical support is available and good decisions. Thus, finding ways to integrate intuition and expertise, while eliminating any potential biases that could hinder claims of an objective decision process, is essential. Pratt and Malcolm characterize this as, “High-quality decisions using low-fidelity models” (Pratt and Malcolm, 2023).

DI processes constitute a systematic approach to this connection between subjective and objective decision elements, by integrating complex decision models into a data visualization or dashboard (a graphical user interface portraying key performance indicators of an operation) of the CDD, with an easy-to-navigate user interface, so that decision makers can fully understand the implications of various action choices in combination with the external factors that together affect the outcome.

The question of how humans and machines best collaborate is nothing new. In particular, the notion of mechanical objectivity—as defined by Daston and Galison (2021)—is highly relevant to the DI decision-making process. Daston and Galison discuss that, even if their knowledge is external, or in other words, found in nature, an atlasmaker's attempt to capture and illustrate that knowledge is inherently subject to the atlasmaker's perspective. Similarly for the present purpose of considering a decision model that has been used and evaluated as correct in the past, its knowledge (its ability to accurately predict) can be said to have been so established, to some level of empirically-measured confidence. However, when intuition and expertise are integral parts of this prediction process, the concept of knowledge moves from this objectively-measurable, empirical position to something that is internal and so by its nature, subjective. The rub is that, as illustrated here, such intuitive internal knowledge is essential, despite the fact that it introduces the threat of bias and favoritism. This question of subjective lenses goes back, of course, many centuries to the very nature of science itself: insights from which are relevant here as we move beyond hypothesis testing—studied for millennia—to the use of the results of those hypotheses as elements in complex decisions that integrate with AI and data.

How does the use of a CDD address this dilemma: between essential subjectivity and the need to avoid bias? It may appear that a first answer to this question is that capturing the decision as accurately as possible is important, but the truth is actually more nuanced. All models are wrong, yet some are useful. CDDs—or for that matter any model meant to represent some aspect of reality—should ideally represent only those attributes of a decision to which the outcomes are sensitive. Including additional attributes constitutes unnecessary effort with—tautologically—no benefit to the decision outcomes. In the CDD of Figure 1, for example, including the color of people's hair or their height is most likely—and obviously—irrelevant. So increased accuracy in these dimensions is not of value. Other factors are less obvious, and require careful analysis of the CDD involved.
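One simple way to probe which attributes the outcomes are sensitive to is a one-at-a-time perturbation of each input. The sketch below is illustrative only: `outcome_model` is a hypothetical toy model with invented coefficients, `average_height_cm` is included purely to mirror the irrelevant-attribute example above, and the 10% perturbation size is an arbitrary assumption. More rigorous sensitivity methods exist and may be more appropriate in practice.

```python
from typing import Callable, Dict


def outcome_model(x: Dict[str, float]) -> float:
    """Hypothetical toy outcome (e.g., future illnesses); height is deliberately unused."""
    compliance = min(1.0, 0.2 + 0.008 * x["investment"])
    return 1000.0 * x["crowding"] * (1.0 - 0.5 * compliance)


def one_at_a_time_sensitivity(
    model: Callable[[Dict[str, float]], float],
    baseline: Dict[str, float],
    perturbation: float = 0.10,
) -> Dict[str, float]:
    """Absolute change in the outcome when each input alone is scaled up by `perturbation`."""
    base = model(baseline)
    changes = {}
    for name, value in baseline.items():
        perturbed = dict(baseline)
        perturbed[name] = value * (1.0 + perturbation)
        changes[name] = abs(model(perturbed) - base)
    return changes


if __name__ == "__main__":
    baseline = {"investment": 50.0, "crowding": 0.5, "average_height_cm": 170.0}
    for name, change in one_at_a_time_sensitivity(outcome_model, baseline).items():
        print(f"{name}: {change:.2f}")
```

An attribute whose perturbation leaves the outcome unchanged is a candidate to leave out of the diagram. Note that this relative perturbation cannot detect sensitivity for inputs whose baseline value is zero, and it ignores interactions between inputs.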

But it is more than the CDD that determines which elements are worth modeling; indeed, the value of a CDD is a function of two factors: the map and the user of that map. This is where the field of visual analytics has tremendous relevance to Decision Intelligence: in integrating our understanding of how humans interact with technical systems we can better understand answers to many questions about their construction, including the one at hand regarding their necessary fidelity. Much as the best subway map does not show the exact route of the trains, and much as many users of GPS systems do not value the “satellite” view, which shows all features of the landscape, the question posed by DI to our field is, “what attributes of the decision are most valuable to model, as measured by the degree to which users of this diagram or computerized asset based on the diagram do indeed make better decisions?” This question is about far more than simple fidelity (accuracy).

To the degree that fidelity of a decision model to reality is indeed relevant, however, the CDD formalism moves the needle significantly. First, simply moving this knowledge “out of the head” of decision makers and into a shared map invites the ability to check for bias, in a way that is much harder for knowledge that remains invisible. The knowledge being captured in the CDD is typically socially constructed and strengthened with cross-validation and critical reflection. Decision Intelligence aims to involve as many subject-matter experts in the CDD elicitation process as possible, while ensuring they each have expertise in the area and a scope of responsibility within the organization that is applicable to the decision at hand.

Multiple experts with a wide range of expertise areas may complement each other; however, given that the CDD elicitation process is collaborative, redundancy among participants is not desirable. Through this systematic exploration and collaborative elicitation process, individual knowledge claims are discussed thoroughly until an agreement is reached. The objectivity claims made in a CDD thus depend heavily on the number of participants present in the elicitation session, as well as their respective levels of expertise and authority.

Secondly, by decomposing a decision explicitly into its constituent elements, the cognitive load of such validation appears prima facie to be qualitatively—and substantially—lowered. One CDD element at a time (external, dependency, intermediate, and so on) can be examined for its provenance, potential bias, and fidelity to reality. However, this apparent benefit has yet to be subjected to rigorous research and analysis—a topic that is being explored by our research, but which we also consider an open challenge to the field of visual analytics as a whole.

5 DI and objectivity

By fostering a culture of critical thinking and reasoning, the collaborative CDD elicitation process allows individuals to systematically explore and scrutinize a decision. The goal of this process is to identify key drivers, and ultimately create a model at the right fidelity (see above) of the causal chain from actions to outcomes. This diagram-based model then forms a scaffold so that predictive modeling and other computational tools can be integrated (Pratt et al., 2023). The resulting CDD should ideally capture any initially obscure factors or unintended outcomes, which are uncovered through the utilization of expertise. This process involves engaging subject matter experts who contribute their individual expertise, intuition, and analytical perspectives. The SME's expertise, given its subjective nature, is not immune to biases. However, through reasoning, expertise can be justified and defended in argumentation. In other words, the basis for their expertise can be communicated clearly and can withstand scrutiny.

Although this collaboration seems to present an ideal environment for creating a universally accepted process for any given decision, it is subject to challenges inherent in social collaboration between people with different values and beliefs. For the DI experts to move into the second phase of integrating predictive models into the CDD, the subject-matter experts must first agree on the final form of the CDD and on each and every element connection. The process consists of the subject-matter experts providing what they think to be accurate relationships between these elements, while at the same time analyzing, evaluating, and at times challenging elements suggested by others. This continuous dialogue is reminiscent of a negotiation, where stakeholders strive to collaboratively agree on a subject, while attempting to make as few sacrifices as possible to their perspectives. If the stakeholders are already aligned in terms of priorities, goals, and values, however, this process is reminiscent of an engineering review (Wiegers, 2002).

The resulting CDD is then used for future causal reasoning about whether a certain action will lead to an intended outcome: an important use case within the general arena exploring the socio-technical system of human-AI interaction (Westhoven and Herrmann, 2023). Decision Intelligence utilizes predictive models and machine learning tools to generate simulations of the action-to-outcome causal pathway, as defined within the CDD.

In some cases, these models are developed with the best data available, but remain imperfect. For example, for the decision in Figure 1 above that includes a model of the spread of airborne viruses in a closed space, DI experts can make use of widely-acknowledged models that calculate the movement of particulates in the air. In this example, the knowledge of how particulates move in the air is, at least partially, external to the stakeholder, and they simply capture this knowledge and use it in their epistemological quest to model reality at the right level of fidelity. In other examples, these predictions may be heavily based on the input of subject-matter experts, which initiates a deeper conversation into whether this knowledge is internal or external to them, and therefore embodies a greater risk of bias or inaccuracy introduced through this subjective pathway.

Analyzing this view alongside Daston and Galison's mechanical objectivity perspective, it is clear that the perfectionist sense of objectivity is an elusive goal but fortunately not a necessary one. Expertise of SMEs is integrated through a collaborative reasoning process that is based on establishing a common ground (Kaastra and Fisher, 2014) of where and how to integrate individual expertise. The difference between expertise and bias is highly dependent on justification and defensibility, which is a confirmatory process that is largely subjective, given that other experts' and stakeholders' confirmation is what determines an informative individual input as expertise. Thus, the common ground built by the experts and stakeholders, and the continuous negotiation and analysis required to do so, establishes an acceptable level of objectivity for the decision at hand. Over time, with the addition of more data and different experts, CDDs may be reused, and the previously-established common grounds can be readapted, which also counters any mechanical objectivity (Daston and Galison, 2021) claims. An ideal DI process aims to leverage expertise, justifications, and discussions to establish a working framework that can be refined over time, incorporating updated data, insights from new stakeholders, and evolving understanding of the decision domain.

6 Conclusion

Decision Intelligence provides the decision maker with a complete toolset to show potential outcomes of each action or decision; however, it is up to the decision maker's expertise, or intuition, to make a good decision at the end of the day. This reliance may, as we have discussed, introduce substantial intuition, and thereby the risk of subjective bias.

Human reasoning plays two important roles in the CDD elicitation phase of Decision Intelligence. First, collaborative reasoning between experts and stakeholders helps establish common ground, specifically regarding the desired outcome(s) and the potential actions the organization could take to reach those outcomes. Additionally, the collaborative aspect also serves to address objectivity issues, as it aims to eliminate potential biases that may arise from individual insights or analyses, through cross-validation among subject matter experts and stakeholders. If a potential bias is spotted, the expert is asked to provide justification for the input, and the justification is discussed among the elicitation group to determine its robustness. Once a CDD that is generally accepted by the experts and stakeholders is derived, predictive models are integrated to simulate the process, which will be used to determine the effectiveness of a specific decision.

From an epistemological perspective, the collaborative elicitation session within Decision Intelligence provides the environment for social construction of knowledge. Through the dialogue and interactions between subject-matter experts, stakeholders and DI analysts and modelers, a shared, social process is conducted, in which the problem or decision at hand is explored collectively. The group of experts provide feedback and reflections regarding other members' inputs, identifying any unwarranted assumptions or biases, which serve to continuously improve the decision process in the pursuit of knowledge.

This process is inherently limited by a wide variety of potential biases, ranging from biases in raw data to biases in the Artificial Intelligence models being used to simulate decisions. Therefore, it is crucial to identify any biases or limitations before the CDD elicitation process is completed. Given their expertise in the specific area, subject-matter experts are more likely to identify any bias present; however, this challenge is too elusive and demanding to fall on their shoulders alone. The DI process should therefore encourage and support continuous examination and re-examination, to the extent that improved fidelity is justified by the problem at hand.

As the field of DI continues to evolve, it is evident that the convergence of human expertise with machine learning models and simulations will raise both opportunities and challenges. Biases already present in decision-making processes may be compounded by the still largely unexplored dynamics of human-AI collaboration.

The field of visual analytics embodies at its core the idea that people interact with visualizations, bringing along their own subjectivity and preconceptions. DI is no different in this regard; however, the nature of what is visualized is fundamentally different: models of actions within the sphere of control of a decision maker, leading to outcomes for which they are responsible. DI therefore represents an important new frontier for researchers in visual analytics, one that bridges not only to data but also to AI, digital twin simulations, and our evolving understanding of how decision makers wish to use these assets to maximize their desired outcomes. DI is, indeed, a natural next step in the evolution of our field, offering rich opportunities for research as well as for comprehensive frameworks that address multiple areas, including cognitive bias, ethics, fidelity, and subjectivity.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

This article came about through the efforts of AZ, who provided the core concepts and structure for the article and who integrated the contributions of the other participants in that frame. LP contributed her deep understanding of DI and its many applications, while BF provided his perspective on visual analytics as an applied cognitive science.

Acknowledgments

We would like to extend our gratitude to SFU Library for the support.

Conflict of interest

LP was employed by Quantellia LLC, United States.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Autor, D. H. (2015). Why are there still so many jobs? The history and future of workplace automation. J. Econ. Perspect. 29, 3–30. doi: 10.1257/jep.29.3.3

Blenko, M. W., Rogers, P., and Mankins, M. (2014). The Decision-Driven Organization. Harvard Business Review. Available online at: https://hbr.org/2010/06/the-decision-driven-organization (retrieved November 21, 2022).

Bornet, P. (2022). Is Decision Intelligence The New AI? Available online at: https://www.forbes.com/sites/forbestechcouncil/2022/05/25/is-decision-intelligence-the-new-ai/?sh=b41d91c4e425 (retrieved October 10, 2022).

Brynjolfsson, E., and McAfee, A. (2018). The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies. New York, NY: Langara College.

Buçinca, Z., Malaya, M. B., and Gajos, K. Z. (2021). To trust or to think: cognitive forcing functions can reduce overreliance on AI in AI-assisted decision-making. Proc. ACM Hum. Comput. Interact. 5, 1–21. doi: 10.1145/3449287

Daston, L., and Galison, P. (2021). Objectivity. New York, NY: Zone Books. doi: 10.2307/j.ctv1c9hq4d

Dimara, E., Franconeri, S., Plaisant, C., Bezerianos, A., and Dragicevic, P. (2018). A task-based taxonomy of cognitive biases for information visualization. IEEE Trans. Vis. Comput. Graph. 26, 1413–1432. doi: 10.1109/TVCG.2018.2872577

Fletcher, A. C., Wagner, G. A., and Bourne, P. E. (2020). Ten simple rules for more objective decision-making. PLoS Comput. Biol. 16:e1007706. doi: 10.1371/journal.pcbi.1007706

Kaastra, L., and Fisher, B. (2014). “Field experiment methodology for pair analytics,” in Proceedings of the Fifth Workshop on Beyond Time and Errors: Novel Evaluation Methods for Visualization (BELIV'14). doi: 10.1145/2669557.2669572

Kahneman, D. (2013). Thinking, Fast and Slow. Great Britain: Penguin Books.

Kozyrkov, C. (2019). What Is Decision Intelligence? Available online at: https://towardsdatascience.com/introduction-to-decision-intelligence-5d147ddab767

Larson, E. (2017). Don't Fail At Decision Making Like 98% of Managers Do. Forbes. Available online at: https://www.forbes.com/sites/eriklarson/2017/05/18/research-reveals-7-steps-to-better-faster-decision-making-for-your-business-team/?sh=4862304140ad (retrieved November 21, 2022).

Lufityanto, G., Donkin, C., and Pearson, J. (2016). Measuring intuition. Psychol. Sci. 27, 622–634. doi: 10.1177/0956797616629403

Meyer, J., Thomas, J., Diehl, S., Fisher, B. D., Keim, D., Laidlaw, D., et al. (2010). “From visualization to visually enabled reasoning,” in Scientific Visualization: Advanced Concepts, Vol. 1 (Schloss Dagstuhl: Leibniz-Zentrum fuer Informatik), 227–245.

Pratt, L. (2019). Link: How Decision Intelligence Connects Data, Actions, and Outcomes for a Better World. Bingley: Emerald Publishing. doi: 10.1108/9781787696532

Pratt, L. (2023). ChatGPT Does Decision Intelligence for Net Zero. Available online at: https://www.lorienpratt.com/chatgpt-does-decision-intelligence-for-net-zero/

Pratt, L., Bisson, C., and Warin, T. (2023). Bringing advanced technology to strategic decision-making: the Decision Intelligence/Data Science (DI/DS) integration framework. Futures 152:103217. doi: 10.1016/j.futures.2023.103217

Pratt, L., and Malcolm, N. E. (2023). The Decision Intelligence Handbook: Practical Steps for Evidence-Based Decisions. Sebastopol, CA: O'Reilly.

Pratt, L., Roberts, D. L., Malcolm, N. E., Fisher, B., Barnhill-Darling, K., Jones, D. S., et al. (2024). How decision intelligence needs foresight (and how foresight needs decision intelligence). Foresight.

Pratt, L., and Zangari, M. (2008). Overcoming the Decision Complexity Ceiling Through Design. Available online at: https://www.quantellia.com/Data/WP-OvercomingComplexityv1_1.pdf

Sheppard, E. (2019). Google's Got a Chief Decision Scientist. Here's What She Does. Wired. Available online at: https://www.wired.co.uk/article/google-chief-decision-scientist-cassie-kozyrkov

Spetzler, C. S., Winter, H., and Meyer, J. (2016). Decision Quality: Value Creation From Better Business Decisions. Hoboken, NJ: John Wiley and Sons, Inc. doi: 10.1002/9781119176657

The Open Decision Intelligence Initiative (2023). Available online at: https://www.opendi.org

Thomas, J. J., and Cook, K. A. (2005). Illuminating the path: the research and development agenda for visual analytics. IEEE Comput. Soc. 28–63.

Westhoven, M., and Herrmann, T. (2023). Interaction design for hybrid intelligence: the case of work place risk assessment. Artif. Intell. HCI 629–639. doi: 10.1007/978-3-031-35891-3_39

Zangari, M. (2022). Guest Post: Why We Need Decision Modeling and Simulation. Available online at: https://www.lorienpratt.com/why-we-need-decision-modeling-and-simulation/ (retrieved August 3, 2023).

Keywords: epistemology, reasoning, objectivity, decision-making, intuition, decision intelligence, artificial intelligence, socio-technical systems

Citation: Zaimoglu AK, Pratt L and Fisher B (2023) Epistemological role of human reasoning in data-informed decision-making. Front. Commun. 8:1250301. doi: 10.3389/fcomm.2023.1250301

Received: 29 June 2023; Accepted: 30 October 2023;

Published: 23 November 2023.

Edited by:

Wolfgang Aigner, St. Pölten University of Applied Sciences, Austria

Reviewed by:

Tumasch Reichenbacher, University of Zurich, Switzerland

Copyright © 2023 Zaimoglu, Pratt and Fisher. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Abdullah Kaan Zaimoglu, akz@sfu.edu
