
ORIGINAL RESEARCH article

Front. Commun., 02 February 2022

Sec. Psychology of Language

Volume 6 - 2021 | https://doi.org/10.3389/fcomm.2021.797485

This article is part of the Research Topic Capturing Talk: The Institutional Practices Surrounding the Transcription of Spoken Language

By comparing two distinct governmental organizations (the US military and NASA), this paper unpacks two main issues. First, the paper examines the transcripts that are produced as part of work activities in these worksites and what the transcripts reveal about the organizations themselves. Second, the paper analyses what the transcripts disclose about the practices involved in their creation and use for practical purposes in these organizations. These organizations have been chosen because transcription forms a routine part of how they operate as worksites. Further, the everyday working environments in both organizations involve complex technological systems, as well as multi-party interactions in which speakers are frequently spatially and visually separated. In order to explicate these practices, the article draws on the transcription methods employed in ethnomethodology and conversation analysis research as a comparative resource. In these approaches, audio-video data is transcribed in a fine-grained manner that captures temporal aspects of talk, as well as how speech is delivered. Using these approaches to transcription as an analytical device enables us to investigate when and why transcripts are produced by the US military and NASA in the specific ways that they are, as well as what exactly is being re-presented in the transcripts and thus what was treated as worth transcribing in the interactions they are intended to document. By analysing these transcription practices, it becomes clear that these organizations create huge amounts of audio-video “data” about their routine activities. 
One major difference between them is that the US military selectively transcribe this data (usually for the purposes of investigating incidents in which civilians might have been injured), whereas NASA’s “transcription machinery” aims to capture as much of their mission-related interactions as is organizationally possible (i.e., within the physical limits and capacities of their radio communications systems). As such, the paper adds to our understanding of transcription practices and how they relate to the internal working, accounting and transparency practices within different kinds of organization. The article also examines how the original transcripts have been used by researchers (and others) outside of the organizations themselves for alternative purposes.

This article compares two distinct governmental organizations (the US military and NASA) as perspicuous worksites that produce written transcripts as part of their routine work activities and practices. It examines the transcription practices of these organizations with respect to everyday working environments made up of complex, multiple-party interactions in which speakers are frequently spatially and visually separated while engaged in collaborative work. These are technical worksites with multiple communication channels open and in-use to co-ordinate disparate and varied courses of action. How these complexities are re-presented in the transcripts produced provides researchers with a window into the priorities and purposes of transcription, and the “work” transcripts are produced to do in terms of these organizations’ tasks. This paper thus examines how transcription fits within the accounting practices of the organizations and how these serve various internal and external purposes. Above all, then, it is interested in how transcripts make the practices they detail “accountable” in Harold Garfinkel’s terms (Garfinkel, 1967:1), that is, differently observable and reportable, in their specific contexts of use. By attending to transcription practices in these terms, it becomes possible to draw out lessons about the internal working, accounting and transparency practices within different kinds of organization. With our focus on transcription practices in organizational contexts, this represents a particular kind of “study of work” (Garfinkel, 1986). To aid this comparative exercise the transcription practices routinely used in ethnomethodology and conversation analysis will be deployed as an analytical device to consider decisions made about the level of detail included in any given transcript and the consequences of these decision-making processes.

As with all social scientific research methods and tools, transcription is built upon a set of assumptions about the social settings and practices under investigation. Whether in academia or professional contexts, the work of transcription always requires that a set of decisions be made (explicitly acknowledged or otherwise) in accordance with the goals and purposes of the work, the background understandings which underpin it, and prior knowledge about transcribed interactions. As Bucholtz (2000) argues, these decisions can be grouped into two categories: “interpretive” decisions concerning the content of the transcript and “representational” decisions concerning the form it takes. In this regard, written transcripts are never “natural data”, neutral imprints of the transcribed interaction, but professional artifacts whose production is ultimately contingent upon organization-specific ways of maintaining and preserving what happened “for the record” for particular practical purposes.

The methodological research literature in this area has suggested that transcription rarely receives the same level of scrutiny and critique applied to research topics or data collection processes, which are frequently the focus of accusations of bias, subjectivity, selectivity, and so on (Davidson, 2009). As Lapadat (2000) frames the issue, transcription is too often treated as holding a “mundane and unproblematic” position in the research process, characterised as being neutral, objective, and concerned solely with re-presenting the words spoken in the original recorded data. In the vast majority of cases, little to no effort is made to account for the transcription practices which have been employed, with their reliability usually “taken for granted”, a process in which the “contingencies of transcription” are often hidden from view (Davidson, 2009).

For those seeking to open those contingencies up, a key feature of transcription is how original audio/visual data is converted into text for analytical and practical purposes (Ochs, 1979; Duranti, 2006). As Ochs (1979) has demonstrated, the very “format” and re-presentation of audio and/or video-recorded data directly impacts how researchers and readers “interpret” the communication transcribed so that, in her field for instance, talk between adults and children is almost automatically compared to adult-adult interactional practices. Likewise, seemingly trivial omissions of spoken words can considerably shift the readers’ understanding of the overall interaction and situation, as Bucholtz shows in a highly consequential analysis of how the transcription of a police interview can impact legal proceedings and outcomes (Bucholtz, 2000). However, when taking a practice-based view of transcripts, the work of reading and interpreting a written transcript is just as important to consider as the work involved in producing it. Crucially, both activities are part of the organizational work of accounting for and preserving organizational actions (Lynch and Bogen, 1996). Just as presuppositions and organizational purposes influence the production of the transcripts, they also guide the use of the transcripts, where the transcribed situations are woven into broader narratives. In military-connected investigations these narratives include legal assessments based on assumptions of normal/regular soldierly work and the defining operational context. For NASA, these narratives center on communicating the significance of their missions to domestic public and political audiences as more or less direct stakeholders on whom future funding depends, alongside underlining organizational contributions to scientific and technical knowledge.

Transcription practices are, on the whole, then, opaque. A notable exception in this regard, however, is the discipline of conversation analysis, which, in its perennial focus on transcription techniques and conventions, tends to be more transparent with regards to the contingencies, challenges and compromises which are an unavoidable feature of transcription (see section 2.2 for full details). Tellingly, for the current analysis, when set against the example of conversation analysis, we find that neither the US military nor NASA explains its transcription practices in any of the documents created. The assumption is that the “work” of explicating the transcription method is not necessary to the organizations’ actual work. However, one reason why these worksites represent “perspicuous” settings for comparison is that it is possible to learn lessons from the “complexities” inherent in the production of transcripts in technology-driven, spatially/visually separated, multi-party interactions (Garfinkel, 2002; Davidson, 2009: 47). That is why, after some additional background, we want to unpack what is involved below (sections 3, 4).

This paper draws together our findings regarding the transcription processes and practices employed by US military personnel following a range of high-profile incidents and accidents that led to the death and injury of civilians during operations involving a combination of ground force and air force units (e.g., planes, helicopters and drones). Table 1 provides an overview of the key military incidents covered in this paper (listed in chronological order of occurrence).

What unites these tragic incidents for the purposes of our comparison is that each resulted in a formal internal investigation (an Army Regulation 15-6, or AR 15-6, investigation) and that transcripts of both events were produced from the original audio-visual recordings to capture the various parties speaking, though by different parties in each case. Given the loss of civilian life involved, these incidents achieved notoriety when they were eventually made public, and they thus require careful scrutiny. How transcripts help in that regard is worth some consideration.

The National Aeronautics and Space Administration (NASA) is an independent agency of the American government that oversees the US national civilian space program as well as aeronautics and space research activity. From their earliest human-crewed spaceflights, NASA have kept detailed “Air-to-Ground” conversation transcripts covering every available minute of communications throughout human-crewed missions. These transcription practices mobilise a vast pool of human resources in their production: from the crew and ground teams themselves, to technical operators of radio/satellite communications networks across Earth, to teams of transcribers tasked with listening to the recorded conversational data and putting it to paper. This makes it all the more impressive that NASA have been consistently able to produce such transcripts within approximately 1 day of the talk on which they were based. Even with NASA’s Skylab program (America’s first space station, which was occupied by nine astronauts in the early 1970s), it was possible to record and transcribe every available minute of talk occurring when the vehicle was in range of a communications station, amounting to approximately 246,240 min of audio and many thousands of pages of typed transcripts. Though granular detail is difficult to acquire, the annual NASA budget indicates the size of the enterprise, with the mid-Apollo peak of close to $60 billion levelling out to between $18 billion and $25 billion from the 1970s to today (between 0.5 and 1% of all U.S. government public spending) (Planetary Society, 2021). Just why such a huge transcribing machine has been constructed and put to work as part of that effort remains, however, curiously unclear. 
Ostensibly, the transcripts capture talk for various purposes: to support journalistic reportage of missions, as a kind of telemetry that allows a ground team to learn more about space missions in operation, for its scientific functions (e.g., astronaut crews reporting experimental results) and as a matter of historical preservation. Yet as these transcripts are not drawn on in their fullness for any of these purposes, an exploration of the transcripts themselves is required to learn more about their practical organizational relevance.

This study is informed by the principles and practices of ethnomethodology (hereafter EM) and conversation analysis (hereafter CA). These sociological traditions have had an enduring connection with transcription practices and processes as a matter of practical and analytical interest. Given these traditions’ preoccupation with transcription, how transcripts fit into these academic enterprises is worth exploring.

In outlining what gave CA its distinctive creative spark, Harvey Sacks (1984: 25-6, our emphasis) suggested CA’s novel approach to sociology needed to be understood in the following way:

[This kind of] research is about conversation only in this incidental way: that conversation is something that we can get the actual happenings of on tape and that we can get more or less transcribed; that is, conversation is something to begin with.

Yet despite this emphasis on transcripts as something to begin analytical investigations with, for researchers working in these areas the re-production and re-presentation of audio/visual data has, in part, also been a technical issue. While it was Sacks who instigated the focus on conversations as data, it was Gail Jefferson who worked to develop and revise transcription techniques and conventions that reflected the original recordings as closely as possible (Schegloff, 1995; Jefferson, 2015). The now established Jeffersonian transcription conventions were designed to capture the temporal or sequential aspects of talk (e.g., overlap, length of pauses, latched utterances) and the delivery of the utterances (e.g., stretched talk/cut-off talk, emphasis/volume, intonation, laughter). For analysts in these fields, transcripts were intended to re-present the original recordings as accurately as possible in order for the resulting analysis to be open to scrutiny by the reader, even if the recording was not available.

In this paper, we use these same transcription techniques as an analytical resource to investigate the transcription practices of a specific set of organizational and institutional settings. Unlike those who produce verbatim transcripts, researchers working under the aegis of EM and/or CA routinely document the transcription procedures and processes applied to any given dataset (audio and/or video). Using these conventions as comparative tools allows us, at least partially, to recover the sense-making and reasoning practices which shaped how transcripts were produced and to what ends in the organizations we examine. A key issue we will take up in this paper is why a specific transcript was created and disseminated in a particular form, something which, we will argue, the transcript itself as an organizational artifact gives us insight into.

Using transcription conventions as an analytical device and method allows researchers to explore the following issues (Davidson, 2009:47):

• What is included in a transcript?

• What is considered pertinent? What is missing (e.g., speaker identifiers and utterance designations)?

• What is deliberately missing or omitted?

• What is/was the purpose/use of the transcript?

• When was it originally produced?

• What is the wider context of the transcript’s production and release (e.g., legal/quasi-legal inquiry, inquest, leak)?

• Who is/was the intended audience?

• Is the original recording available? Is the transcript an aid to follow the audio/video or intended to replace it?

These research questions will be applied to the transcription practices in two contrasting work contexts, namely US military investigative procedures and the documentary work of space agencies, in order to provide a window into these settings and to explore issues of record-keeping, self-assessment and accountability. These organizations’ transcription practices are compared because they adopt different approaches as to what is transcribed and when. For instance, whereas NASA operates a “completist” approach to transcription (i.e., with a setup for recording and transcribing all interactions relating to day-to-day space activities within the limits of the physical capacity of their communication setups), the US military audio/video record all missions conducted, but only selectively transcribe when there is a military “incident” requiring formal investigation. This is an important distinction as it speaks to the motives for transcribing and the practical purposes that transcripts are used for. The relevance of this distinction and its implications will be unpacked below.

This section outlines how and why the US military and NASA use the “data” they collect as part of their work. It also unpacks how this data is re-presented, what is transcribed, and the transcription practices that are recoverable from transcripts as artifacts, alongside their uses within these worksites.

All airborne military missions and a growing number of ground missions are routinely audio-video recorded. Alongside training and operations reviews, this is done for the purposes of retrospectively collecting evidence in case of the reporting of incidents that occur during operations. As outlined above, such incidents include actions resulting in the injury or death of civilians. However, it is normally only when an incident is declared and a formal internal inquiry is organized that the audio-video recording will be scrutinized for the purposes of producing a transcript. Fundamental differences between the cases we have previously analysed become apparent at this stage. First, not all types of inquiries require transcripts for their investigative work. Depending on the objective, scope and purpose of the investigation, the recorded talk may be treated as more (or less) sufficient on its own. Secondly, the transcripts produced can, at times, be made available either as a substitute for the original audio-video data or as a supplement to it. To demonstrate the relevance of these issues, we will examine two cases in which transcription was approached in divergent ways. By describing, explicating and scrutinizing the transcription practices used in each case, we can contrast the “work” these practices accomplish. The analysis in this section focuses on the Uruzgan incident as it provides documentary evidence of transcription practices in conjunction with how military investigators read, interpret, and use transcripts as part of their internal accounting practices. The “Collateral Murder” case will be taken up more fully in sections 3.1.2 and 4.2.

The Uruzgan incident, which took place in Afghanistan in 2010, was the result of a joint US Air Force and US Army operation in which a special forces team, or “Operational Detachment Alpha” (ODA), were tasked with finding and destroying an improvised explosive device factory in a small village in Uruzgan province. Upon arriving in the village, however, the ODA discovered that the village was deserted. Intercepted communications revealed that a Taliban force had been awaiting the arrival of US forces and were preparing to attack the village under cover of darkness. As the situation on the ground became clearer, three vehicles were identified travelling towards the village from the north, and the crew of an unmanned MQ-1 Predator drone were tasked with uncovering evidence that these vehicles were a hostile force and thus could be engaged in compliance with the rules of engagement. In communication with the ODA’s Joint Terminal Attack Controller (JTAC), the individual responsible for coordinating aircraft from the ground, the Predator crew surveilled the vehicles for well over 3 hours as they drove through the night and early morning. Despite their journey having taken the vehicles away from the special forces team for the vast majority of this period, the vehicles were eventually engaged and destroyed by a Kiowa helicopter team at the request of the ODA commander. It did not take long for the reality of the situation to become clear. Within 6 min the first call was made that women had been seen near the wreckage, and within 25 min the first children were identified. The vehicles had not been carrying a Taliban force. In fact, the passengers were a group of civilians seeking safety in numbers as they drove through a dangerous part of the country. Initial estimates claimed that as many as 23 civilians had been killed in the strike, though subsequent investigations by the US would conclude there had been between fifteen and sixteen civilian casualties. 
Though investigations into what took place identified numerous shortcomings in the conduct of those involved in the incident, the strike was ultimately deemed to have been compliant with the US rules of engagement and, by extension, the laws of war.

In this first section of analysis, we will approach the investigative procedures which took place following the Uruzgan incident, identifying the ways in which investigators made use of transcripts in order to: re-construct the finer details of what unfolded; make assessments of the conduct of those involved in the incident; make explanatory claims about the incident’s causes; and, finally, contest the adequacy and relevance of other accounts of the incident. The Uruzgan incident is distinctive as a military incident because of the vast body of documentation which surrounds it. There are two publicly available investigations into the incident which not only provide access to the details of the operation itself, but also make visible the US armed forces’ mechanisms of self-assessment in response to a major civilian casualty incident. The analysis will exhibit how the three transcripts that were produced following the incident were employed within the two publicly available investigations in order to achieve different conclusions.

The first investigation to be conducted into the Uruzgan incident was an “Army Regulation (AR) 15-6 investigation” (United States Central Command, 2010). AR 15-6 investigations are a type of administrative (as opposed to judicial) investigation conducted internally to the US armed forces concerning the conduct of its personnel. Principally, AR 15-6 investigations are structured as fact-finding procedures, with investigating officers being appointed with the primary role of investigating “the facts/circumstances” surrounding an incident (Department of the Army, 2016: 10). In order to tailor specific investigations to the details of each case, the appointing letter by which a lead investigator is selected includes a series of requests for information. AR 15-6 investigations are intended to serve as what Lynch and Bogen might call the “master narrative” of military incidents, providing a “plain and practical version” of events “that is rapidly and progressively disseminated through a relevant community” (1996: 71). Within this process, AR 15-6s represent initial investigations that are routinely conducted where possible mistakes or problems have arisen (see the Collateral Murder analysis in sections 3.1.2 and 4.2 for another example).

The task of conducting the AR 15-6 investigation into the Uruzgan incident was given to Major General Timothy P. McHale, whose appointing letter stated that he must structure his report as a response to 15 specific requests for information listed from a-t. These questions included:

1) “what were the facts and circumstances of the incident (the 5 Ws: Who, What, When, Where, and Why)?”

2) “was the use of force in accordance with the Rules of Engagement (ROE)?”

3) “what intelligence, if any, did the firing unit receive that may have led them to believe the vans were hostile?” (United States Central Command, 2010: 14–15)

In producing responses to these requests, the appointing letter clearly stated that McHale’s findings “must be supported by a preponderance of the evidence” (United States Central Command, 2010: 16). In accumulating evidence during the AR 15-6 investigation, McHale travelled to Afghanistan to conduct interviews with US personnel, victims of the incident, village elders, members of local security groups, and others. He reviewed an extensive array of documents relating to the incident, including personnel reports, battle damage assessments, intelligence reports, and medical records alongside the video footage from aerial assets involved in the operation. Crucially, he also analyzed transcripts of communications that were recorded during the incident. In this way, it can be said that McHale’s investigative procedures were demonstrative of concerns similar to those of any individual tasked with producing an account of an historical event. That is, he sought to “use records as sources of data… which permit inferences… about the real world” (Raffel, 1979: 12). Transcripts of recordings produced during the incident were central among McHale’s sources of data and, before making assessments of the character of their use in the AR 15-6, it is necessary to introduce the three different transcripts to which McHale refers in the course of his report: the Predator, Kiowa and mIRC Transcripts.

The first transcript, which will be referred to as “the Predator transcript”, was produced using recordings from the Predator drone crew’s cockpit. This transcript documents over 4 hours of talk and includes almost a dozen individuals. That said, as the recordings were made in the Predator crew’s cockpit, the bulk of the talk takes place between the three crew members who are co-located at Creech Air Force Base in Nevada. The crew includes the pilot, the mission intelligence coordinator (also known as MC/MIC), and the camera operator (also known as “sensor”). Though the conversations presented in this transcript cover a diversity of topics, they are broadly unified by a shared concern for ensuring that the desired strike on the three vehicles could be conducted in compliance with the rules of engagement. This involved, but was not limited to, efforts to identify weapons onboard the vehicles, efforts to assess the demographics of the vehicles’ passengers, and efforts to assess the direction, character, and destination of the vehicles’ movements. In terms of format, the Predator transcript is relatively simple (containing little information beyond the utterances themselves, the speakers, and the timing of utterances), though the communications themselves are extremely well preserved, as Figure 1 shows.

The second transcript is “the Kiowa transcript”. As above, this document was produced using recordings from the cockpit of one of the Kiowa helicopters which conducted the strike. This document is far more restricted than the Predator transcript in several important ways. For one thing, it is far shorter, around six pages, and largely documents the period immediately surrounding the strike itself. There are far fewer speakers, with only two members of the Kiowa helicopter crew, the JTAC, and some unknown individuals being presented in the document. Additionally, the subject matter of the talk presented is far more focused, almost exclusively concerning the work of locating and destroying the three vehicles. In terms of transcription conventions, the Kiowa transcript is far more rudimentary than the Predator transcript, crucially lacking the timing of utterances and (in the publicly available version) the identification of speakers (see WikiLeaks’ Collateral Murder transcript in sections 3.1.2 and 4.2 for comparison). As such, the transcript offers a series of utterances separated by paragraph breaks which do not necessarily signify a change of speaker, as exhibited in Figure 2.

Though the Kiowa transcript presents significant analytic challenges in terms of accessing the details of the incident, our present concern lies in the ways in which this transcript was used in McHale’s AR 15-6 report, and as such the opacity of its contents constitutes a secondary concern in the context of this paper.

Where the Kiowa transcript is opaque, the final transcript to which McHale refers in the AR 15-6 report is almost entirely inaccessible. That transcript, known as “the mIRC transcript”, is constituted by the record of typed chatroom messages sent between the Predator crew and a team of image analysts, known as “screeners”, who were reviewing the Predator’s video feed in real time from bases in different parts of the US. “mIRC” (or military internet relay chat) communications are text-based messages sent in secure digital chatrooms which are used to distribute information across the US intelligence apparatus. Except for some small fragments, the mIRC transcripts cited in the AR 15-6 report remain classified, and as such, the only means of accessing their contents is through their quotation in the course of the report. As it happens, McHale frequently makes reference to the contents of the mIRC transcript because, as we shall see, he considers faulty communications between the image analysts and the Predator crew to have played a causal role in the incident.

Though the transcripts which are present in the Uruzgan incident’s AR 15-6 investigation each, in different ways, fall short of the standards established by the Jeffersonian transcription conventions, the following sections will identify three ways in which investigators made use of transcripts in order to make, substantiate, and contest claims about what took place.

The first and most straightforward manner in which transcripts were used in the AR 15-6 investigation was as a means of reconstructing the minutiae of the incident. This usage of the transcript is most clearly evident in the response to request number 2 of the appointing letter, which asked that McHale “describe in specific detail the circumstances of how the incident took place”. In response to this question McHale provides something akin to a timeline of events, though not a straightforward one. It does not contain any explicitly normative assessments of the activities it describes and makes extensive reference to various documentary materials which were associated with the incident, including both the Kiowa and the Predator transcripts. In the following excerpt, McHale uses the Kiowa transcript to provide a detailed account of the period during which the strike took place:

“The third missile struck immediately in front of the middle vehicle, disabling it. After the occupants of the second vehicle exited, the rockets were fired at the people running from the scene referred to as “squirters”; however, the rockets did not hit any of the targets. (Kiowa Radio Traffic, Book 2, Exhibit CC). The females appeared to be waving a scarf or a part of the burqas. (Kiowa Radio Traffic, Book 2. Exhibit CC). The OH-58Ds immediately ceased engagement, and reported the possible presence of females to the JTAC. (Kiowa Radio Traffic, Book 2, Exhibit CC).” (United States Central Command, 2010: 24).

Passages such as this are a testament to the ability of the US military to produce vast quantities of information regarding events which only become significant in retrospect. Though the fact that every word spoken by the Kiowa and Predator crews was recorded is a tiny feat in the context of the US military’s colossal data management enterprise (Lindsay, 2020), McHale’s ability to reconstruct the moment-by-moment unfolding of the Uruzgan incident remains noteworthy. Where the task of establishing the “facts and circumstances of the incident” is concerned, the transcripts provide McHale with a concrete resource by which “what happened” can be well established, and the AR 15-6’s status as a “master narrative” can be secured. As we shall see, however, in those parts of the report where McHale proceeds beyond descriptive accounts of what took place, and into causal assessments of why the Uruzgan incident happened, allowing the transcript to “speak for itself” is no longer sufficient. As such, the second relevant reading of the transcripts in the AR 15-6 report was as an evidentiary basis by which causal claims could be substantiated.

Though McHale’s AR 15-6 report identified four major causes for the incident, our focus here will be upon his assertion that “predator crew actions” played a critical role in the incident’s tragic outcome. The following excerpt is provided in response to the appointing letter’s request that McHale establish “the facts and circumstances surrounding the incident (5 Ws)”:

“The predator crew made or changed key assessments to the ODA (commander) that influenced the decision to destroy the vehicles. The Predator crew has neither the training nor the tactical expertise to make these assessments. First, at 0517D, the Predator crew described the actions of the passengers of the vehicles as “tactical maneuvering”. At that point, the screeners located in Hurlburt field described the movement as adult males, standing or sitting [(redacted) Log, book 5, Exhibit X, page 2]. At the time of the strike “tactical maneuver” is listed by the ODA Joint Tactical Air Controller (JTAC), as one of the elements making the vehicle a proper target [(Redacted) Logbook 5, Exhibit T, page 57]” (United States Central Command, 2010: 21–22).

In this section, the citation of “[(redacted) Log, Book 5, Exhibit X, page 2]” is a reference to the mIRC transcript. As such, though it is not explicitly stated, the communications at 0517D took the form of typed messages between the Predator crew and the Florida-based image analysts1. It should be immediately clear that this passage is of a different character to our previous excerpt. Most notably, the assertion of a causal relation between the Predator crew’s assessments of the vehicles’ movements and the commander’s decision to authorize the strike is rooted in McHale’s own interpretation of events. In line with the appointing letter’s request that McHale’s assertion be based upon a “preponderance of the evidence”, McHale seeks to use the mIRC transcript to substantiate that claim as this section proceeds.

As a first step towards doing so, McHale sets up a contrast between the Predator crew’s assessment that the vehicles were engaged in “tactical maneuvering” and the image analysts’ apparently contradictory assessment that there were “adult males, standing or sitting”. In establishing the incongruity between these conflicting assessments, McHale presents tactical maneuvering as a contestable description that the Predator crew put forward without the requisite training or tactical expertise. As McHale proceeds, he proposes a link between the Predator crew’s use of the term and its appearance in the JTAC’s written justification for the strike. In this way, McHale uses the transcript not only as a mechanism by which assessments of the Predator crew’s inadequate conduct could be made, but also as a means by which a causal relationship between the Predator crew’s actions and the incident’s outcome could be empirically established. As we shall see, however, assessments secured by reference to the record of what took place are ultimately open to contestation, and McHale’s own analysis in this regard would itself be criticised from elsewhere.

Following the completion of the AR 15-6 investigation, McHale recommended that a Command Directed Investigation be undertaken to further examine the role of the Predator crew in the incident. This was undertaken by Brigadier General Robert P. Otto. At that time Otto was the Director of Surveillance and Reconnaissance in the US Air Force and, in Otto’s own words, the investigation took a “clean sheet of paper approach” to the Predator crew’s involvement in the operation (Department of the Air Force, 2010: 34). Despite McHale’s initial findings, Otto’s commentary on the incident resulted in a different assessment of the adequacy and operational significance of the Predator crew’s actions. One particularly notable example concerns McHale’s criticism of the Predator crew’s use of the term ‘tactical maneuvering’. Otto writes:

“The ground force commander cited “tactical maneuvering with (intercepted communications) chatter as one of the reasons he felt there was an imminent threat … Tactical maneuvering was identified twice before Kirk 97 began tracking the vehicles. Although not specifically trained to identify tactical maneuvering, Kirk 97 twice assessed it early in the incident sequence. However, for 3 hours after Kirk 97’s last mention of tactical maneuvering, the (commander) got frequent reports on convoy composition, disposition, and general posture (…) I conclude that Kirk 97’s improper assessment of tactical maneuvering was only a minor factor in the final declaration”. (Department of the Air Force, 2010: 36)

In this passage, McHale’s causal claim regarding the significance of the Predator crew’s reference to tactical maneuvering is rejected, initially on the grounds that the Predator crew were not responsible for introducing the concept. As Otto observes, “Tactical maneuvering was identified twice before Kirk 97 began tracking the vehicles” (ibid.). Interestingly, this counter-analysis charges McHale with having straightforwardly misread the record of what took place. Recall that McHale’s analysis of the term tactical maneuvering cited the mIRC transcript as evidence of the Predator crew’s shortcomings without making any reference to the Predator transcript. As Otto notes, analysis of the Predator transcript reveals that the first reference to tactical maneuvering took place at 0503, where the term was used by the JTAC himself. This being the case, McHale’s causal claim regarding the Predator crew’s characterization of the vehicles’ movements as tactical maneuvering is significantly weakened.

This is not the end of Otto’s criticism, however. As the passage goes on, Otto also rejects McHale’s account as having overstated the operational relevance of the Predator crew’s reference to tactical maneuvering. Though Otto does not cite the Predator transcript explicitly, he notes that in the hours following the final use of the term, the crew routinely provided detailed accounts of the “composition, disposition, and general posture” (ibid.) of the vehicles. The proposal here is that by the time the strike took place, so much had been said about the vehicles and their movements that the reference to tactical maneuvering hours previously was unlikely to have been a crucial element in the strike’s justification. Again, Otto’s criticism is rooted in an accusation that McHale’s account misinterprets what the transcript reveals about the Uruzgan incident. On this occasion, however, the error stemmed not from a misreading but from a failure to appreciate the ways in which transcripts warp the chronology of events. There is a lesson to be learned here: though transcripts effectively preserve the details of talk, they do not provide instructions for assessing the relevance of those details. The relevance of a particular utterance within a broader course of action depends upon a considerable amount of contextualizing information, as well as upon the place of that utterance within an ongoing sequence of talk. Of course, Otto does not articulate McHale’s error in these terms (he has no reason to), but his critical engagement with McHale’s analysis has clear parallels with conversation analytic considerations when working with transcripts.

Not all military investigations seek to use transcripts as the primary means by which the details of what took place can be accessed. The “Collateral Murder” case (so named following the infamous Wikileaks publication of video footage from the incident under that title) took place in 2007 and involved the killing of 11 civilians, two of whom were Reuters journalists, in a US strike conducted by a team of two Apache helicopters (Reuters Staff, 2007; Rubin, 2007). It took 3 years for the incident to come into the public eye. On April 5th, 2010, Wikileaks published a 39-min video depicting the gunsight footage from one of the Apache helicopters involved in the strike. As with the Uruzgan incident, the collateral murder case had been the subject of an AR 15-6 investigation soon after the incident, but the investigation’s resulting report was not made publicly available until the day the WikiLeaks video was published. Once again, the investigation declared that the strike had taken place in compliance with the laws of war, though it was not nearly so critical of the conduct of those involved as McHale’s account of the Uruzgan incident had been.

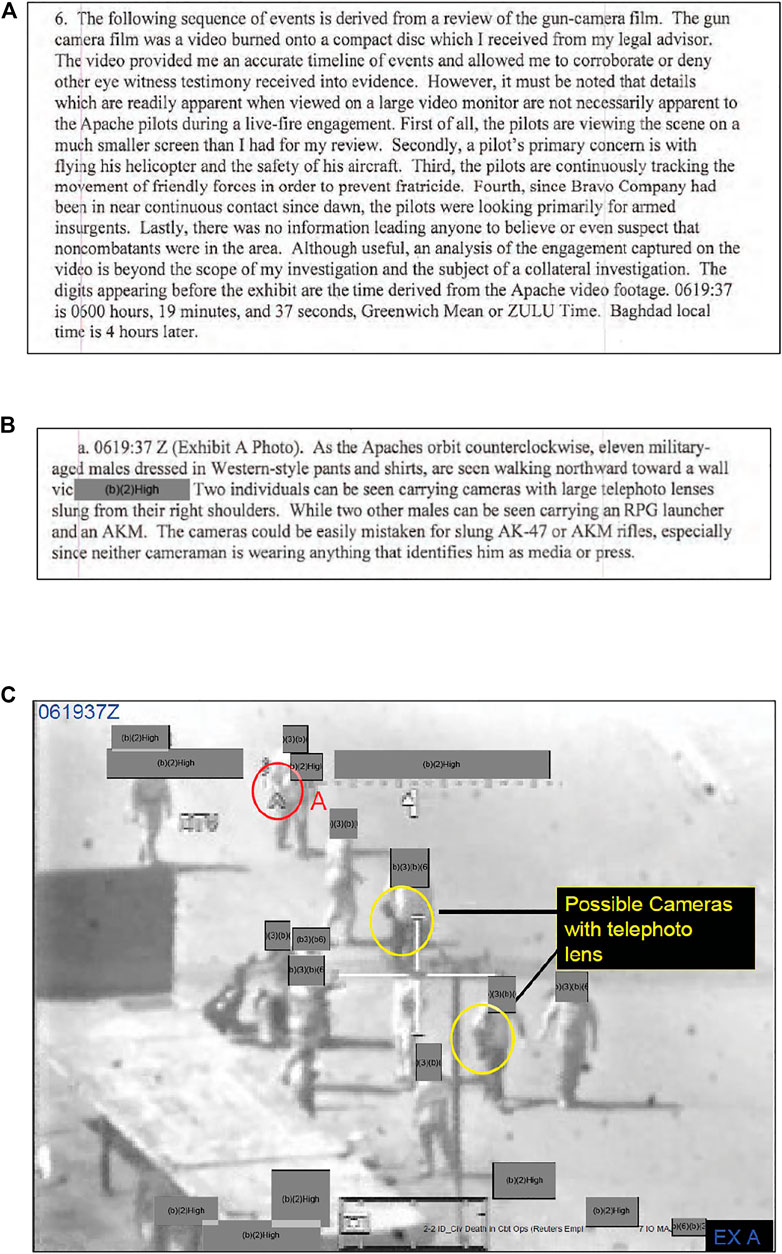

Based on the completed report, we are able to ascertain what evidence was gathered in support of the investigation (Investigating Officer 2nd Brigade Combat Team 2nd Infantry Division, 2007). Fundamentally, the Investigating Officer (IO) drew on two main forms of evidence: witness testimony from the US personnel involved, and the Apache video footage, which the IO used to produce a timeline of what happened on the day (Figure 3 below). No transcript was produced in support of the investigation. As such, the report displays the ways in which visual materials were used in combination with after-the-fact interviews to establish how the incident had unfolded.

Instead of making use of a transcript to reconstruct the details of the incident, the IO decided that the combination of timestamps (actual time, taken from the video recording), still images taken from the video (displayed as exhibits in the appendices with IO annotations) and visual descriptions of the action taken from the video could be compiled into a “sequence of events”, or timeline, covering those actions deemed to constitute the incident. This offers a neat contrast with Sacks’ understanding of the analytic value of transcription. For Sacks, in-depth transcriptions allowed interaction to be closely examined, forming “a ‘good enough’ record of what happened” in real-time interactions (Sacks, 1984: 25–26). Transcription would become a consistent feature of CA, but not, as we see here, a consistent feature of US military investigations, which have various other ways of arriving at a “good enough record” for their own analytic purposes.

An example of the alternative “pairing” of evidence and reporting is provided in the extracts from the official report (Figure 4).

FIGURE 4. (A) and (B) Paired extracts from the US military AR 15-6 investigation (Iraq, July 12, 2007). (C) ‘Exhibit A Photo’ from the US military AR 15-6 investigation (Iraq, July 12, 2007).

The report itself was fairly brief (amounting to 43 pages), and in its course the IO was able to identify the primary features of the incident, all without a transcript. Using the kinds of materials outlined above, the IO was able to provide an adequate account of the mission objectives, who was killed and their status (as either civilians or combatants), and how and why the Reuters journalists were misidentified (i.e., their large cameras could reasonably be, and were, mistaken for RPGs; there were no known journalists in the area; etc.). Within the understood scope of the AR 15-6’s administrative parameters and functions, a transcript was not, therefore, required.

The evidence from the witness testimony and the video recording was deemed sufficient to ascertain that the troops had come under fire from a “company of armed insurgents” with whom the Reuters journalists were said to be moving. The identities of the journalists were later verified in the report (via the presence of their cameras, the photographic evidence on the memory cards, and the recovered “press identification badges from the bodies”). Despite this, the conduct of the US military personnel (Apache crews and ground forces) was given the all-clear by the report (see Figure 5 below):

Thus, whilst the strikes in both the Uruzgan incident and the collateral murder case were deemed legal by their respective investigations, their conclusions differ significantly insofar as the AR 15-6 for the collateral murder case does not identify shortcomings in the conduct of the US personnel involved. In our analysis of the AR 15-6 investigation into the Uruzgan incident, we have demonstrated that McHale’s (and subsequently Otto’s) assessments of the incident were, to a large extent, preoccupied with the adequacy of the conduct of those involved. We would here propose that the documentary materials used to reconstruct the facts and circumstances of each incident reflect this preoccupation, with transcripts of talk treated as a primary means of reconstructing what had taken place in the former case but deemed superfluous in the latter.

Even in relation to one of the most seemingly egregious aspects of the incident, the injuries to the two young children, the report concluded that their presence could not have been expected, anticipated or known as they were not known to the Apache crews and could not be identified on the video—the Apache’s means of accessing the scene below them—prior to contact. Beyond a short, redacted set of recommendations, these conclusions meant the incident was not deemed sufficiently troublesome to require a more formal legal investigation of the kind that would have generated a transcript.

Having presented two contrasting cases of the use of transcripts in US military AR 15-6 investigations, we will now turn to our other institutional setting, namely NASA’s Skylab Program.

As noted previously in section 2.1.2, the transcription machinery that NASA deployed in the service of its Skylab program formed an extraordinarily large collective effort to meet the needs of NASA’s first long-duration missions. NASA’s Skylab space station was launched in May 1973 and was occupied by three successive crews for a total of 171 days until February 1974, producing (amongst its scientific achievements) 246,240 min of audio, all of which was transcribed and archived as a legacy of the program. Elaborating the justification for and purpose of such vast collaborative labor inevitably involves tracing NASA’s transcription practices back to Skylab’s predecessors, NASA’s major human spaceflight programs: Mercury (1958–1963), Gemini (1961–1966) and Apollo (1960–1972).

The Mercury program was NASA’s early platform for researching the initial possibility (technical and biological) of human-crewed orbital spaceflight, hosting a single pilot for missions lasting from just over 15 min to approximately 34 h. Once it was proven that a vessel could be successfully piloted into low Earth orbit and sustain human life there, the Gemini program extended NASA’s reach by building craft for two-person crews that could be used to develop human spaceflight capabilities further: for instance, Gemini oversaw the first EVA (extra-vehicular activity, i.e., a “spacewalk” outside of a craft) by an American, the first successful rendezvous and docking between two spacecraft, and tests of whether human bodies could endure long-duration zero-gravity conditions for up to 14 days. Building on the successes of Gemini, Apollo’s goal, famously, was to transport three-person crews to the Moon, orbit and land on it, undertake various EVA tasks and return safely to Earth; Apollo mission durations ranged from 6 to just over 12 days. For all three programs, owing to the relatively short duration of individual missions and the experimental nature of the missions themselves, not only were spaceflight technical systems tested but so were auxiliary concerns such as food and water provision, ease of use of equipment and various measures of crew health and wellbeing, and all possible communications were tape-recorded and transcribed2. In this sense, while live communications with an astronaut crew flying a mission were vital for monitoring health, vehicles and performance, the transcriptions of talk between astronauts and mission control had a different function: they stand as a more or less full record of significant historical moments for journalistic purposes, but also as a record of source data for the various experiments that were built into these missions.

The Skylab transcription machine of the 1970s might then be seen as a direct continuation of a system that had already worked to great effect for NASA since the late 1950s. Despite the obvious differences between Skylab and its predecessor programs (far longer missions of up to 84 days, and a different substantive focus on laboratory-based scientific experimentation), Skylab sought to implement a tried-and-tested transcription machinery without questioning its need or purpose in this markedly new context. There are seemingly two interrelated reasons for this: first, that NASA’s achievements were iteratively built on risk aversion (as the adage goes, “if it ain’t broke, don’t fix it”) (Newell, 1980; Hitt et al., 2008); and second, that in the scientific terms under which Skylab was designed and managed (Compton and Benson, 1983; Hitt et al., 2008), extending mission duration was treated as merely scaling up a variable, a technically achievable and predictable matter, rather than as an opportunity or need to revisit the social organization of NASA itself. To some degree, producing full supplementary transcriptions did serve some purposes for Skylab where mission activities aligned with those of earlier programs: for instance, in scientific work where crews could verbally report such experimental metadata as camera settings, which could then be transcribed and linked to actual frames of film once a mission had returned its scientific cache to Earth upon re-entry, or where various daily medical measurements could be read down verbally from crew to ground to be transcribed and passed along to the flight surgeon teams. For these kinds of activities, having a timestamped transcript from which to recover such details post-mission was useful. However, given the longer duration of Skylab missions generally, and the intention for those missions to help routinise the notion of “Living and Working in Space” (cf. Brooker, forthcoming; Froehlich, 1971; Compton and Benson, 1983), much was also transcribed that seemingly served very little purpose: for instance, regularly-occurring humdrum procedural matters such as morning wake-up calls, and calls with no defined objective other than keeping a line open between ground and crew.

It is perhaps useful at this point to introduce excerpts of transcriptions that illuminate the ends to which such an enormous collaborative transcribing effort was put, and to provide further detail on just what is recorded and how. The transcripts that follow are selected to represent relevant aspects of the Skylab 4 mission specifically [as this forms the basis of ongoing research covering various aspects of Skylab (Brooker and Sharrock, forthcoming)], reflecting 1) a moment of scientific data capture (Figure 6), and 2) a moment where nothing especially significant happens (see Figure 7)3. Timestamps are given in the format “Day-of-Year: Hour: Minute: Second”, and speakers are denoted by their role profile: CDR is Commander Gerald Carr, PLT is Pilot William Pogue, SPT is Science-Pilot Ed Gibson, and the CCs are CapComs Henry “Hank” Hartsfield Jr and Franklin Story Musgrave4.

Figure 6 commences with a call at 333 16 01 56, with CC announcing their presence, the communications relay they are transmitting through, and the time they will be available before the next loss of signal (LOS) (“Skylab, Houston through Ascension for 7 min”); it closes at 333 16 08 11 with CC announcing the imminent loss of signal and the timings for the next call. In the intervening 7 min, SPT and CDR take turns reporting the progress of their current, recent and future experimental work in what proves to be a tightly-packed call with several features worth attending to. Immediately, SPT takes the opportunity to report on an ongoing experiment (e.g., “Hello, hank. S054 has got their 256 exposure and now I’m sitting in their flare wait mode of PICTURE RATE, HIGH, and EXPOSURE, 64. I believe that’s what they’re [the scientists in charge of experiment S054] after.”). This report delivers the key metadata: the experiment designation (S054) and various details pertaining to camera settings. In the transcript, these salient details are all the more visible for being typed out in all caps, a strategically useful visual marker for science teams on the ground seeking to identify their metadata in transcripts replete with all manner of information. That it is SPT delivering this information is also important, as it was he who was designated to perform this particular experiment on this particular day (another clue for transcript readers seeking to gather details of a particular experiment post hoc). This allows specific timestamps to be catalogued by ground-based science teams according to their relevance to any given scientific task.

CC then (333 16 03 36) requests a report from CDR on a recently-completed photography activity, and CDR and CC are able both to talk about the live continuation of that activity (e.g., an instruction to use a particular headset in future instead of the malfunctioning microphones) and to record, for the benefit of the eventual transcript, CDR’s evaluation of the performance of that activity to complement what will eventually be seen on film (e.g., “I did not see the laser at all. I couldn’t find it, so I just took two 300-mm desperation shots on the general area, hoping that it’ll show up on film.”). In this call, SPT also suggests a continuation of his current experiment (333 16 04 48). Again, this serves a live function in providing details that CC can pass on to the relevant ground teams (mission control and scientific investigators) for consideration, but it also records, for the transcript, the specific parameters that SPT intends to use in that experiment (e.g., “I think the persistent image scope, as long as you keep your eye on it, will work real well. I’m able to see four or five different bright points in the active regions of 87, 80, 89 and 92 or may be even an emerging flux region.”). On this latter report, SPT also notes an intention to “put some more details on this on the tape” (the tape records “offline” notes that can be reviewed and transcribed at a later point), flagging for the transcript that a future section of the transcribed tape recordings may contain relevant details for the scientific teams on the ground. These tape recordings form another set of volumes capturing the talk of astronauts, though not talk held on the air-to-ground channel.

At moments such as these, where scientific work is in train and there is much to be reported, the transcripts reveal strategies for making that work visible post hoc and, in doing so, for supporting the analysis of the data that astronauts are gathering by flagging the location and type of metadata that, it is known, will be transcribed. At other moments, however, such as the between-times of experiments or longer-running experiments where little changes minute by minute, there may be less of a defined use for the transcripts, as we will see in the following excerpt (Figure 7).

This excerpt, in fact, features two successive calls with seemingly little content that might be used to elaborate the practical work the astronauts are undertaking at the time of the call. CC announces the opening of a call (333 12 14 48), the transmission relay in use, and the expected duration of the signal (“Good morning, Skylab. Got you through Goldstone for 9 min”). Good-mornings are exchanged between CDR and CC, but the call is brought to an end 9 min later with no substantive content other than an announcement of loss of signal and a pointer to when and where the next call will take place (CC at 333 12 23 36: “Skylab, we’re a minute to LOS and 5 min to Ber—Bermuda.”). The next call (333 12 28 00) opens similarly (CC: “Skylab, we’re back with you through Bermuda for 5 min”). In contrast to the previous call, however, the astronauts remain silent throughout, and the call closes shortly thereafter with a similar announcement from CC of the imminent loss of signal, the location of the relay for the next call, and a note that the next call will begin with the ground team retrieving audio data to be fed into the transcription machine (333 12 32 58: “Skylab, we’re a minute to LOS and 5 min to Canaries; be dumping the data/voice at Canaries”).

Despite the seeming inaction on display here, the transcripts might still be used to elicit an insight into various features of the ways in which NASA is organized. For instance, we learn that transcribing activity is comprehensive rather than selective—it is applied even when nothing overtly interesting is taking place, to keep the fullest record possible. Communication lines are accountably opened and closed in the eventuality that there might be things worth recording, even if that isn’t always the case. There are procedural regularities to conversations between ground and astronauts that bookend periods of communications (e.g., a sign-on and a sign-off), which do not necessarily operate according to the general conventions of conversation (e.g., it would be a noticeable breach for a person not to respond to a greeting on the telephone, but not here) (Schegloff, 1968). However, it is worth noting that what we might learn from these episodes is of no consequence to NASA or their scientific partners—for them, the purpose of transcribing these episodes can only be to ensure their vast transcription machine continues rolling; here, producing an extraordinarily elaborate icing on what could at times be the blandest of cakes.

This section will explore the ways in which the materials we have introduced up to this point have been put to use post hoc, for different ends, by other institutions with differing sets of interests, beginning with the NASA case.

Post-mission, various researchers have attempted to tap into the insights contained in Skylab’s volumes of transcripts, particularly as part of computationally-oriented studies that process the data captured therein (scientific results and talk alike) in order to elaborate on the work of doing astronautics and propose algorithmic methods for organising that work more efficiently. Kurtzman et al. (1986), for instance, draw on astronaut-recorded data to propose a computer system, MFIVE, that would remove the need for insights to be recorded in transcripts at all by mechanising the processes of space station workload planning and inventory management. The addition of a computerised organisational tool, which would record and process information about workload planning and inventory management issues, is envisaged as follows:

“The utility and autonomy of space station operations could be greatly enhanced by the incorporation of computer systems utilizing expert decision making capabilities and a relational database. An expert decision making capability will capture the expertise of many experts on various aspects of space station operations for subsequent use by nonexperts (i.e., spacecraft crewmembers).” (Kurtzman et al., 1986: 2)

From their report, then, we get a sense that what the computer requires and provides is a fixed, variable-analytic codification of the work of doing astronautics that can form the basis for artificially intelligent decision-making and deliver robust instructions on core tasks to astronaut crews. The crew autonomy that is promised is therefore partial, inasmuch as Kurtzman et al.’s (1986) MFIVE system is premised on having significant components of the work operate mechanistically (e.g., with a computer determining the optimum ways to complete given core tasks, and astronauts then following the computer-generated instructions). In this sense, their recommendations might be read as de-emphasising the need for transcriptions, since they argue that much of the decision-making might be taken off-comms in the first place.

The notion of standardising and codifying the work of astronautics for the benefit of computerised methods (especially work which has previously been captured in and mediated through talk and its resultant transcriptions) is developed further by DeChurch et al. (2019), who leverage natural language processing techniques to analyze the conversation transcripts produced by Skylab missions. Chiefly, the text corpus is treated with topic modelling, “computational text analysis that discovers clusters of words that appear together and can be roughly interpreted as themes or topics of a document” (DeChurch et al., 2019: 1), to demonstrate a standardised model of “information transmission” (DeChurch et al., 2019: 1) which can be organised and managed in ways that mitigate communicative troubles between astronaut crews and mission control. As with the Kurtzman et al. (1986) study, the notion embedded in DeChurch et al.’s (2019) use of the transcripts is one of standardisation: that astronauts’ talk can be construed as a topically-oriented, discoverable phenomenon whose verbal content maps directly onto the work of doing astronautics. This is problematic for conceptual as well as practical reasons. Conceptually, the talk that is represented in a transcript does not necessarily fully elaborate on the goings-on of the settings and work within which that talk is situated (cf. Garfinkel, 1967, on “good” organizational reasons for “bad” clinic records). Practically, it is important to recognise that Skylab spent 40 min of every hour out of radio contact with mission control, as its orbital trajectory took it out of range of communications relay stations (and, naturally, there is more to the work of doing astronautics than talking about doing astronautics; the astronauts were of course busy even during periods of loss of signal).

An interesting question, then, might be: if using conversation transcripts in the ways outlined above is problematic in terms of how a transcript maps onto the practices that produce it, how else might we use them? An ethnomethodological treatment might instead focus on how the audio-only communications link is used to make the work of both astronauts and mission control accountable, and on how the notions of “life” and “work” in space are defined and negotiated in terms of how they are to be undertaken, achieved and evaluated. The difference being pointed to here is between two positions. The first is an approach that follows, as a more-or-less direct continuation, NASA’s own staunchly scientific characterisations of living and working in space: conceptualising the work of astronauts and other spaceflight personnel as if it could be described in abstract universal terms (i.e., as if it could be codified as a set of rules and the logical statements connecting them, such that a computer technology, whether artificial intelligence or natural language processing, could ‘understand’ this work as well as the human astronauts designated to carry it out). The second leverages the transcripts as a (non-comprehensive, imperfect) record through which we might learn something of what astronauts do and how they do it (which is often assumed a priori rather than described).

Earlier, we accounted for the absence of a military-produced transcript documenting the talk of the individuals involved in the Collateral Murder incident by reference to the fact that the IO for the incident’s AR 15-6 did not believe that the conduct of US personnel had played a causal role in the deaths of the 11 civilians killed in the strike. As we know, however, the US military were not the only organization to take an interest in the Collateral Murder case. As noted, in 2010 Wikileaks published leaked gunsight footage from one of the Apache helicopters that carried out the strike. Alongside the video, Wikileaks released a rudimentary transcript of talk (Figure 8 below), produced using recordings from the cockpit of that same Apache helicopter, the audio from which was included in the leaked video (see Mair et al., 2016).

Whereas the military's decision not to produce a transcript reflected the IO's view that, in contrast to the Uruzgan incident, the conduct of US personnel had played no causal role in the outcome, Wikileaks' subsequent production of a transcript for the Collateral Murder case can be accounted for by examining their organization-specific practical purposes in taking up the video. In approaching the materials surrounding the strike, Wikileaks' objectives were radically different from those of the US military. Most notably, the Wikileaks approach is characterized by a significantly different perspective on the culpability of the US personnel involved in the operation. Although Wikileaks had relatively little to say about the incident itself, what little commentary does accompany the transcript and the video footage points clearly towards a belief that the US personnel involved had acted both immorally and illegally. The first piece of evidence for this belief can be found in the name given to the incident: Collateral Murder (Elsey et al., 2018). Implicit in such a title is an accusation that the strike did not constitute a legitimate killing in the context of an armed conflict. The brief commentary surrounding the video reinforces this claim, describing the strike as an "unprovoked slaying" of a wounded journalist (WikiLeaks, 2010). As in the Uruzgan incident, therefore, the production of a transcript has emerged alongside accusations regarding the failures of military personnel, with the transcript providing a record by which the conduct of those personnel can be assessed in detail.
As with the other cases we have presented up to this point, the Wikileaks transcript has several shortcomings, and in this final section of the paper it is worth giving these apparent inadequacies serious consideration in light of the Jeffersonian transcription system and Sacks' own reflections on the nature of transcripts.

The rudimentary character of the transcripts we have presented up to this point is particularly conspicuous when contrasted with transcripts produced using the Jeffersonian transcription conventions. Consider the following excerpt (Table 2 below), taken from a study of a United Kingdom memory clinic where dementia assessments are conducted by neurologists (Elsey 2020: 201):

If we compare this transcript to the Wikileaks transcript of the Collateral Murder case (Figure 8), we can see various similarities. They both capture the "talk" recorded; they both separate the talk into distinct "utterances" which appear in sequence; and they both preserve the temporal aspects of the talk through the use of time stamps or line numbers. Nevertheless, the Wikileaks transcript differs from the memory clinic transcript insofar as it does not mark the pauses which appear in natural conversation and, crucially, it does not include a distinct column recording "who" is speaking. The audio recordings for the Collateral Murder case include the talk of two Apache helicopter crews, who are communicating both with one another and with numerous parties on the ground; without speaker identifiers, the action depicted in the Wikileaks transcript is extremely difficult to follow when read on its own. The memory clinic transcript, by comparison, notes whether the neurologist (Neu), the patient (Pat) or the accompanying person (AP) is speaking, although the participants' actual identities are anonymized for ethical purposes in the research findings.

From a CA perspective, therefore, the way in which talk has been presented in the Wikileaks transcript, and indeed in the Uruzgan and Skylab transcripts, fails to preserve a sufficient level of detail for serious fine-grained analysis of the action and interaction to be possible. In rendering speakers indistinguishable from one another, many of CA's central phenomena, most prominently sequentiality and turn-taking, are obscured (Sacks et al., 1978; Heritage, 1984; Jefferson, 2004; Schegloff, 2007; Elsey et al., 2016). This matters because individual utterances in interaction both rely on and reproduce the immediate context of the ongoing interaction. The intelligibility and sense of any utterance are therefore tied to what was previously said and to whom it was addressed. In military and space settings this is a critical issue, given the number of communication channels and speakers involved.

The lesson to be learned here, however, is not that the transcripts presented over the course of this paper are, in any objective sense, inadequate. They may well be inadequate for the stated objectives of CA, but if this paper has demonstrated anything, it is that conversation analysts are by no means the only ones interested in transcripts. The lesson, rather, is that questions regarding what constitutes an adequate record of "what happened" are asked and answered within a field of organisationally specific relevancies. Over the course of this paper, we have shown that a diversity of transcripts, many of which bear little resemblance to one another, can be adequately put to use towards a variety of ends depending upon the requirements of the organisation in question. Naturally, the same point applies to transcripts produced using the Jeffersonian conventions, which are ultimately just one benchmark for adequate transcription amongst countless others (see, e.g., Gibson et al., 2014 for a discussion). To that end, it is worth returning to a passage from Sacks quoted earlier, this time given more fully, in which he outlines his methodological position regarding audio-recordings in research.

“I started to work with tape-recorded conversations. Such materials had a single virtue, that I could replay them. I could transcribe them somewhat and study them extendedly—however long it might take. The tape-recorded materials constituted a “good enough” record of what happened. Other things, to be sure, happened, but at least what was on the tape had happened.”

Coming from the founder of conversation analysis, this could be read as a deflationary account of how recordings of talk can be analyzed. However, Sacks' explanation speaks directly to what transcripts make possible. In preserving talk and making it available for inspection, transcripts afford analysts the opportunity to make empirical assessments regarding 'what happened'. The distinctive move this paper has made, then, has been to treat the production and use of transcripts as a phenomenon in and of itself, topicalizing their contingent and institutionally produced character in order to gain insight into the motives and objectives behind the transcription practices of the US military and NASA. What we recommend on the basis of our research is that transcripts be seen as contextually embedded artifacts-in-use. Understanding them therefore means understanding the embedding context, how the transcript achieves its specific work of transcription and, crucially, what it allows relevant personnel subsequently to do.

The wide range of transcripts (re)-presented in this paper indicates that we are dealing with huge organizations, with staff and technology to match. What also becomes apparent from our research is the huge amount of "data" that NASA and the US military collect as part of their routine work activities. However, for various reasons (e.g., secrecy, sensitivity and so on), military organizations can be characterised as somewhat reluctant actors with respect to the transparency of their routine operations and procedures and the intelligibility of the materials they release. As a result, public access to existing "data" (e.g., mission recordings, transcripts, documents) is severely restricted, and what is released is often difficult to make sense of. NASA's transcription machinery, on the other hand, is more oriented to transparency, although the sheer volume of transcription materials arguably works against that aim.

While much of the literature has pointed out the political significance of omitted content (conversational details that were not included in the transcript), our comparison of NASA and US military transcription work adds a new perspective: transcripts can document too little or too much, and each creates distinct problems for the people relying on or using them. While in military contexts there is typically too little material, NASA's transcription machinery produced what latter-day social science, following NASA's treatment of them, might construe as "Big Data" (Kitchin, 2014): large interactional corpora that, by virtue of their volume, must necessarily rely on computational processing for their analysis (cf. DeChurch et al. (2019) and Kurtzman et al. (1986), discussed elsewhere in this paper). This reliance itself embeds the assumption that talk is just one more scientific variable at the analytic disposal of NASA's scientists. However, these scientistic efforts appear to deepen, rather than diminish, the "representational gap" in NASA's understanding of the work of astronautics, inasmuch as completist, one-size-fits-all approaches do not seem to acknowledge the various mismatches between transcript and transcribed interaction. EM and CA have a long-standing tradition of drawing attention to precisely such mismatches, which underscores their relevance here. In contrast to our previously published work (Mair et al., 2012, Mair et al., 2013, Mair et al., 2016, Mair et al., 2018; Elsey et al., 2016; Elsey et al., 2018; Kolanoski, 2017; Kolanoski, 2018), which used the available "data" to describe and explicate military methods and procedures (e.g., communication practices and target identification methods), this study has used the available "data", and specifically the transcripts produced internally, to demonstrate aspects of how these organizations work.
For instance, the transcripts we have examined here can open a window onto the accounting practices of these specific organizations. One key use of transcripts in the military examples relates to the insights we gain into how transcripts are treated as evidentiary documents during investigations following deadly "incidents". Though this may also be the case in how NASA leverages its transcriptions (cf. Vaughan (1996) on the use of various data, including conversation transcripts, as diagnostic telemetry for the forensic and legal examination of disasters such as the 1986 Space Shuttle Challenger explosion), it is more typical that NASA's transcripts stand as a record of achievements of various kinds. That said, as we have seen, the transcripts that NASA produces are designed to feed into a broad range of activities (e.g., "doing spaceflight", "doing research", "doing public relations"). This both resists attempts to treat them as the standardisable documentation NASA often conceives them to be (cf. DeChurch et al. (2019) and Kurtzman et al. (1986)) and points towards the value of an EM/CA approach, which can attune more carefully to the interactional nuances that NASA's various teams draw on to extract useful information for their specific and discrete purposes.

One observation this paper makes plain is that transcripts are rarely, if ever, read and used on their own in any of the examples we have examined. Transcripts do not offer "objective" accounts that can speak for themselves in the way that videos are occasionally treated as doing (Lynch, 2020). Reading and making sense of a transcript requires context and background obtained from supplementary sources (e.g., interviews with participants, other documents). This is closely linked to questions about the veracity of the original recordings themselves.

A key question to which this paper has continually returned concerns the reasons why transcripts are produced by the different organizations. The military examples reveal that transcribing audio-video recordings is not a routine part of military action. Instead, it is treated as a required step in formal and/or legal investigations of incidents involving possible civilian casualties or friendly fire. The analysis presented here unpacks the relationship between the audio/video and the transcript produced, and raises questions about which (re)presentation of a mission takes primacy. In stark contrast, NASA's "transcription machinery" displays a systematic and completist approach to transcript production, covering everything from scientific experiments and mundane greeting exchanges to all the daily press conferences with mission updates (or lack thereof).

The whats and whys of transcription practices in these contexts are relatively easy to ascertain and describe. The transcription methods themselves, by contrast, remain obscured and are recoverable only from the documents produced. This applies to both the military and NASA, where transcription practices and methods are rarely explicitly described or articulated, in contrast to the Jeffersonian transcription techniques in CA. As a result, we do not learn who actually produced the transcripts, and there is no account of the "conventions" used to format them. Answering those questions thus requires additional investigative work. In the military cases, we can use the military "logs" to ascertain when transcripts were produced in relation to the original events and the investigations. These logs and timelines document when transcription occurred (including when transcripts were corrected and approved) and what was transcribed (e.g., witness testimony, gunsight camera/comms audio-video).