- ¹ Disaster and Emergency Management, School of Administrative Studies, York University, Toronto, Canada

- ² Institute for Methods Innovation (IMI), Eureka, CA, United States

The COVID-19 outbreak has prompted a massive amount of global research on the social and human dimensions of the disease. Academic researchers, governments, and polling firms have launched thousands of survey projects worldwide, tracking public opinion, social impacts, and drivers of disease transmission and mitigation. This deluge of research has created numerous risks and problems, including methodological concerns, duplication of efforts, and inappropriate selection and application of social science research techniques. These concerns are more acute when projects are launched under quick-response, time-pressured conditions, and they are magnified because such research is often intended for rapid consumption by the public and policymakers, given the topic's massive public importance.

Introduction

The COVID-19 pandemic has illustrated the deadly consequences of ineffective science communication and decision-making. Globally, millions of people have fallen prey to scientific misinformation about mitigation and treatment of the virus, fuelling behaviors that put themselves and their loved ones in mortal danger.¹ Nurses have described COVID-19 patients gasping for air and dying while still insisting the disease was a hoax (e.g., Villegas, 2020). While science communication has always had real-world implications, the magnitude of the COVID-19 crisis shows just how consequential it can be. The crisis has also demonstrated how complex it is to make robust, evidence-informed policy amid uncertain evidence, divergent public views, and heterogeneous impacts. This adds urgency to seemingly abstract or academic questions about how the evidence that informs science communication practice and decision-making can be made more robust, even during rapidly evolving crises and grand challenges.

There has been a massive surge of science communication-related survey research projects in response to the COVID-19 crisis. These projects cover a wide range of topics, from assessing psychosocial impacts to evaluating different interventions and containment measures. Many of the issues being investigated connect to core themes in science communication, including (mis)information on scientific issues (e.g., Gupta et al., 2020; Pickles et al., 2021), trust in scientific technologies and interventions such as vaccines (e.g., Jensen et al., 2021a; Kennedy et al., 2021a; Kwok et al., 2021; Ruiz and Bell, 2021), and more general issues of scientific literacy (e.g., Biasio et al., 2021). These themes are being investigated in a context of heightened public interest, significant pressure for effective interventions, and highly polarized, contentious debate. Such survey research can be instrumental in informing effective government policies and interventions, for example, by evaluating the acceptability of different mitigation strategies, identifying vulnerable populations experiencing disproportionate negative effects, and clarifying information needs (Van Bavel et al., 2020).

However, the rush of COVID-19 survey research has exposed challenges in using questionnaires in emergency contexts, such as methodological flaws, duplication of efforts, and lack of transparency. These issues are especially apparent when projects are launched under time-pressured conditions and conducted exclusively online. Addressing these challenges head-on is essential to reduce the flow of questionable results into the policymaking process, where problematic methods can go undetected. Succeeding at evidence-based science communication (see Jensen and Gerber, 2020), and supporting evidence-based decision-making through good science communication, requires that survey-based research in emergency settings be conducted according to the best feasible practices.

In this article, we highlight the utility of questionnaire-based research during COVID-19 and other emergencies and outline best practices. We offer guidance to help researchers navigate key methodological choices, including sampling strategies, validation of measures, harmonization of instruments, and conceptualization and operationalization of research frameworks. Finally, we summarize emerging networks, remaining gaps, and best practices for international coordination of survey-based research relating to COVID-19 and future disasters, emergencies, and crises.

Suitability of Survey-Based Research

The social and behavioural sciences have much to offer in understanding emergency situations broadly, including the COVID-19 crisis and post-disaster reactions (Solomon and Green, 1992), and in informing policy responses (see Van Bavel et al., 2020). Questionnaires have distinctive advantages and limitations in terms of the information they can gather and the insights they can generate, particularly when used in isolation from other research approaches (e.g., see Jensen and Laurie, 2016). For this reason, researchers should carefully assess whether survey-based methods suit their research questions.

In emergency contexts, survey research can offer several advantages. Questionnaire-based work can:

• Allow for relatively straightforward recruitment and consent procedures with large numbers of participants, and extend the geographical scale that researchers can target (compared with, for example, interview or observational research).

• Gather data directly from individuals about their subjective memories or personal accounts, knowledge, attitudes, appraisals, interpretations, and perceptions of experiences.

• Support mixed or integrated data collection strategies, combining qualitative and quantitative, cross-sectional and longitudinal, and closed- and open-ended approaches, among others.

• Integrate effectively with other research methods (e.g., interviews, case studies, biosampling) as supplemental or complementary approaches (see Morgan, 2007), maximising strengths, offsetting weaknesses, and allowing for data triangulation.

• Allow for consistent administration of questions across a sample, as well as carefully crafted administration in multilingual contexts (e.g., validating translated versions of a survey so that results are consistent across languages).

• Enable complex back-end rules (“survey logic”) that tailor the respondent experience so that only relevant questions are presented.

• Create opportunities for carefully crafted experimental designs, such as manipulating a variable of interest or comparing responses to different scenarios across a population.

• Deploy with relatively low costs and rapid timeframes compared to in-person methodologies.
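To make the “survey logic” and experimental-design points above more concrete, here is a minimal Python sketch of display (skip) rules and simple random assignment to a scenario. The item wording, the `Question` class, the functions `next_question`, `administer`, and `assign_condition`, and the condition labels are all hypothetical illustrations, not features of any particular survey platform; commercial survey tools implement the same ideas through their own configuration interfaces.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Answers = Dict[str, str]


@dataclass
class Question:
    qid: str
    text: str
    # Display rule: given the answers so far, should this question be shown?
    show_if: Callable[[Answers], bool] = lambda answers: True


# Hypothetical items for illustration only (not from a validated instrument).
QUESTIONS: List[Question] = [
    Question("employed", "Were you employed before the pandemic? (yes/no)"),
    Question("job_loss", "Have you lost that job since then? (yes/no)",
             show_if=lambda a: a.get("employed") == "yes"),
    Question("job_search", "Are you currently looking for work? (yes/no)",
             show_if=lambda a: a.get("job_loss") == "yes"),
]

# Hypothetical experimental conditions (e.g., alternative message framings).
CONDITIONS: List[str] = ["control", "message_a", "message_b"]


def next_question(answers: Answers) -> Optional[Question]:
    """Return the first unanswered question whose display rule passes, if any."""
    for q in QUESTIONS:
        if q.qid not in answers and q.show_if(answers):
            return q
    return None


def administer(respondent: Answers) -> List[str]:
    """Simulate one respondent; `respondent` maps qid -> the answer they would give.

    Returns the ids of the questions actually displayed, in order.
    """
    answers: Answers = {}
    shown: List[str] = []
    q = next_question(answers)
    while q is not None:
        shown.append(q.qid)
        answers[q.qid] = respondent[q.qid]
        q = next_question(answers)
    return shown


def assign_condition(rng: random.Random) -> str:
    """Simple randomization: each respondent independently gets one condition."""
    return rng.choice(CONDITIONS)


if __name__ == "__main__":
    print(administer({"employed": "no"}))  # ['employed']
    print(administer({"employed": "yes", "job_loss": "yes", "job_search": "no"}))
    # ['employed', 'job_loss', 'job_search']
    print(assign_condition(random.Random(42)))
```

Simple randomization like this can leave conditions unbalanced in small samples; block or stratified randomization is a common alternative when group sizes matter.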

At the same time, surveys can have significant limitations in the context of crisis research that can undermine their reliability or create temptations for methodological shortcuts. For example:

• Surveys face important limits on what information can be reliably obtained. For example, respondents generally cannot accurately report on the attitudes, experiences, and behaviors of other people in their social groups. Likewise, self-reports can be systematically distorted by psychological processes, especially regarding behavioural intentions and projected future actions. Retrospective accounts can also be unreliable, particularly for complex event sequences or events that took place long ago (e.g., Wagoner and Jensen, 2015).

• The quality of survey data can degrade rapidly when participants are not representative of the broader population (low external validity), whether through sampling problems, systematic attrition in longitudinal research, or other factors.

• Seemingly simple designs may require extensive methodological or statistical expertise to optimise questionnaire design and data analysis (e.g., ensuring valid measures, following best practice, and avoiding common mistakes).

• Once a survey has been released, measures cannot easily be adjusted without compromising the comparability of data needed for inference, which is challenging in crisis contexts where the relevant issues change rapidly.

• Cross-sectional surveys can give a false impression of personal attributes that are prone to change if they assume cross-situational consistency, that is, that the measured factors remain stable over time (e.g., Hoffman, 2015).
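The representativeness problem noted above is often partly addressed by weighting. Below is a minimal Python sketch of post-stratification on a single variable (age group), assuming the population share of each stratum is known, for example from census figures. The strata labels, shares, and responses are hypothetical, and this is one common adjustment technique rather than a method prescribed in this article. Weighting only corrects imbalance on the variables used to build the weights; it cannot repair bias from unmeasured differences between respondents and non-respondents, and very large weights inflate variance.

```python
from collections import Counter
from typing import Dict, Sequence


def poststratification_weights(sample_strata: Sequence[str],
                               population_shares: Dict[str, float]) -> Dict[str, float]:
    """Weight per stratum = population share / sample share.

    Assumes `population_shares` covers every stratum and sums to 1.
    """
    n = len(sample_strata)
    counts = Counter(sample_strata)
    unknown = set(counts) - set(population_shares)
    if unknown:
        raise ValueError(f"Strata missing from population shares: {sorted(unknown)}")
    weights: Dict[str, float] = {}
    for stratum, pop_share in population_shares.items():
        if counts[stratum] == 0:
            # An empty cell cannot be weighted up; strata would need collapsing.
            raise ValueError(f"No respondents in stratum {stratum!r}")
        weights[stratum] = pop_share / (counts[stratum] / n)
    return weights


def weighted_mean(values: Sequence[float], strata: Sequence[str],
                  weights: Dict[str, float]) -> float:
    """Mean of `values`, each respondent weighted by their stratum's weight."""
    total = sum(weights[s] * v for v, s in zip(values, strata))
    norm = sum(weights[s] for s in strata)
    return total / norm


if __name__ == "__main__":
    # Hypothetical sample that over-represents younger respondents.
    strata = ["18-39"] * 6 + ["40-64"] * 2 + ["65+"] * 2
    supports = [0] * 6 + [1] * 4          # 1 = supports a given measure
    shares = {"18-39": 0.40, "40-64": 0.35, "65+": 0.25}
    w = poststratification_weights(strata, shares)
    print(sum(supports) / len(supports))                  # 0.4 (unweighted)
    print(round(weighted_mean(supports, strata, w), 3))   # 0.6 (weighted)
```

The same logic extends to several variables (cell weighting or raking), but sparse cells quickly become a problem, which is one reason sampling design matters more than after-the-fact correction.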

Given these advantages and limitations, surveys have several appropriate targets in crises and emergencies. Alongside other methods, such as observational, ethnographic, and interview-based work chosen according to the specific research questions, surveys can help to gather reliable data on:

• Knowledge: What people currently believe to be true about the disease (e.g., the origin of the coronavirus, how they could catch it, or how they could reduce exposure).

• Trust: Confidence in different political and government institutions and actors, media and information sources, and other members of one's community (e.g., neighbors, strangers; see Jensen et al., 2021a).

• Opinions: Approval of particular interventions to slow the spread; beliefs about whether policies or behaviours have been effective or changed the course of the emergency; or personal views about vaccine efficacy or safety.

• Personal impacts: Reports from individuals who have been exposed or negatively affected, such as through chronic stress, stigmatization, or loss of loved ones, employment, or health.

• Risk perceptions: Hopes and fears related to the disease, the end of the emergency, and a return to normalcy.

Awareness of these limitations is not enough: launching and conducting survey research is a specialized skill that requires training, experience, and mentorship, comparable to the expertise needed for epidemiological, biomedical, or statistical research. Even when questionnaires appear ‘simple’ because of the skillful use of plain language and straightforward user interfaces, proper research design involves a substantial methodological learning curve. In the following sections, we provide project design, methodological, and coordination recommendations for researchers launching or conducting rapid-response research on these topics in emergency contexts, both for COVID-19 and beyond.

Project Design

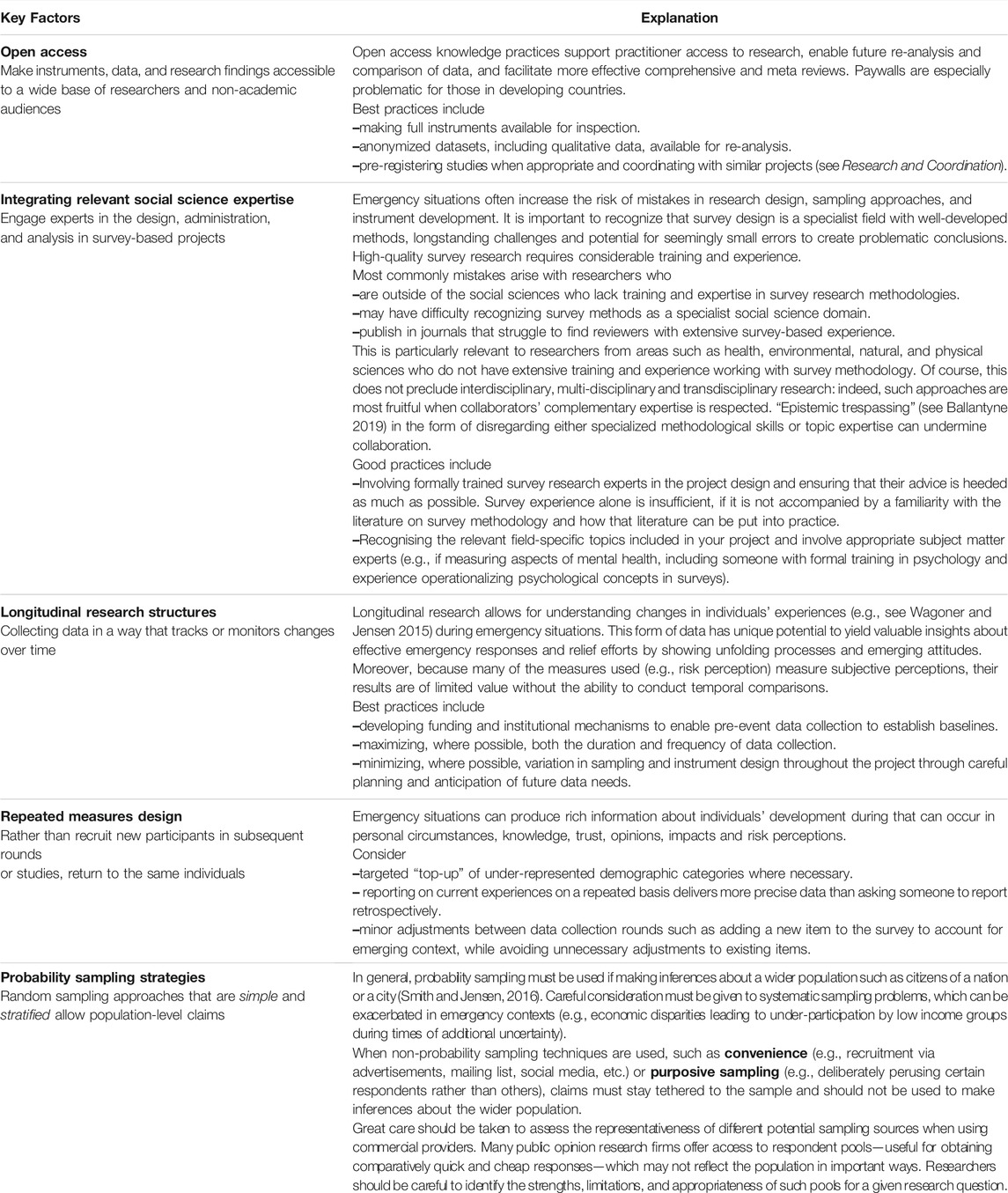

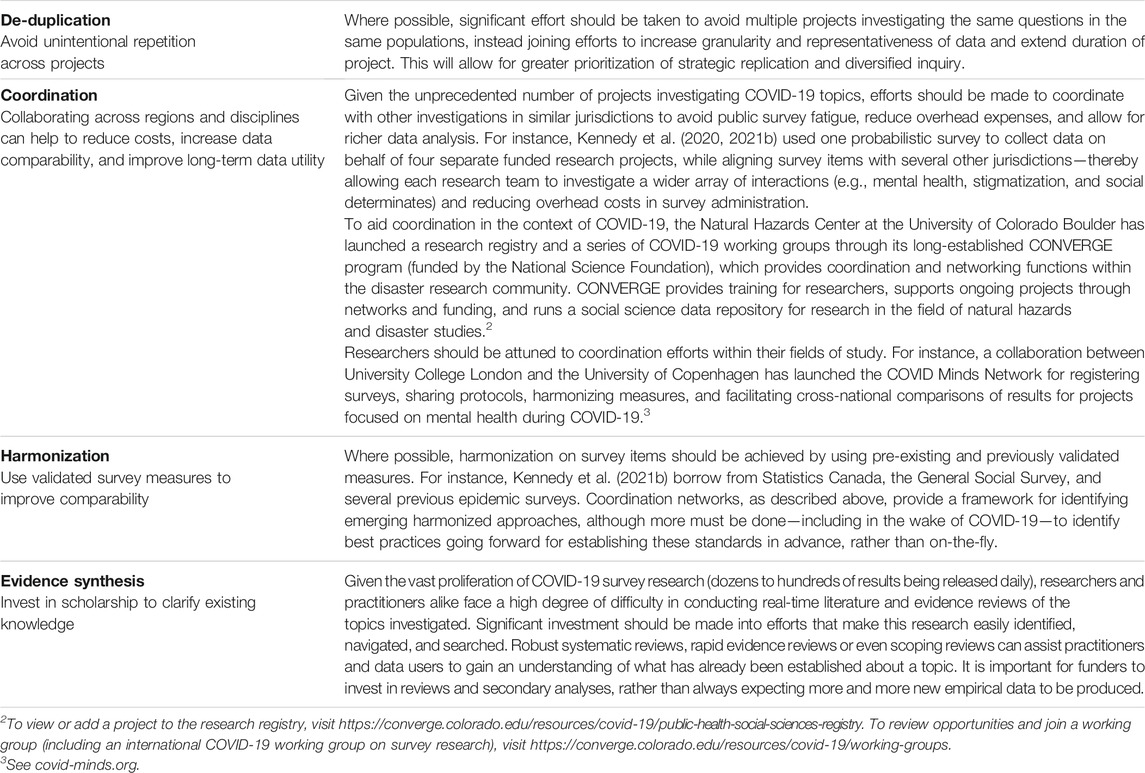

Researchers face important choices when designing survey-based research within the fast-moving context of disasters and emergencies. There can be substantial pressure to conduct research quickly, arising from funder timelines, the perceived race to publish, or the need to collect ephemeral data. Each of these factors can force difficult decisions about project and research designs. At a high level, we recommend that survey-based projects on COVID-19 adopt the standards summarized in Table 1.

Methodological Considerations

In emergency situations, avoiding common pitfalls in methodological design can be challenging because of time pressures and the distinctive conditions of each emergency. We recommend the methodological standards for COVID-19 research summarized in Table 2.

We also encourage readers to explore other resources that support methodological rigour in emergency contexts. In particular, the CONVERGE facility at the Natural Hazards Center at the University of Colorado Boulder maintains a substantial set of community resources, including tutorials and “check sheets” that support research design and implementation (see https://converge.colorado.edu/resources/check-sheets/).
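As one concrete example of the measure-validation checks that methodological standards typically call for, the Python sketch below computes Cronbach's alpha, a widely used index of internal-consistency reliability for multi-item scales: α = k/(k − 1) · (1 − Σ item variances / variance of total scores), where k is the number of items. It is a sketch under stated assumptions: responses are numeric, complete (no missing data), and all items are scored in the same direction. The example data are hypothetical. Alpha alone does not establish validity (whether the items measure the intended concept), and commonly cited thresholds such as 0.7 are rules of thumb rather than fixed standards.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix.

    responses[i][j] is respondent i's score on item j. Sample variances are
    used throughout; the n/(n-1) factor cancels in the ratio.
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("Need at least two items and two respondents.")
    items = list(zip(*responses))  # transpose rows into item columns
    sum_item_var = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("Total scores have zero variance; alpha is undefined.")
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


if __name__ == "__main__":
    # Hypothetical 3-item trust scale (1-5) answered by five respondents.
    data = [[4, 5, 4], [2, 2, 3], [3, 3, 3], [5, 4, 5], [1, 2, 1]]
    print(round(cronbach_alpha(data), 2))  # 0.95
```

In practice, reliability checks like this sit alongside piloting, cognitive interviewing, and checks of construct validity, which no single coefficient can replace.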

Research Coordination

Research coordination during emergencies requires pragmatic strategies to maximise the impact of evidence from rapid-response research. Despite massive government attention and the resulting funding schemes, research needs outstrip the funds available for social science research, a situation worsened by duplication of research, overproduction, and inefficient use of resources on some topics. As a result, fewer topics and populations receive research attention, and investigations span shorter periods. This situation also generates a “wave profile” of investigation that is temporary and transient, disappearing as funding tightens under economic constraints or as attention shifts to new topics.

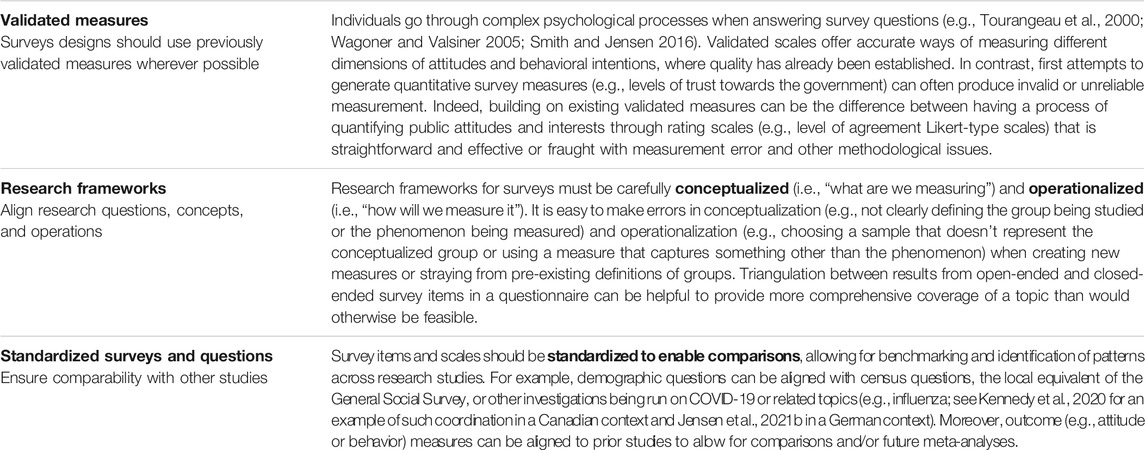

Table 3 summarizes the practical considerations we recommend for maximizing the efficiency, coordination, and effectiveness of survey-based research efforts.
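Harmonization, one of the coordination practices discussed in this article, is easiest when projects reuse identical items and response options. Where that did not happen, one simple and limited way to put differently scaled items on a common metric before comparison or pooling is linear rescaling to the 0 to 1 range (multiplied by 100, this is sometimes called POMP, “percent of maximum possible”, scoring). The Python sketch below assumes two hypothetical trust items, one on a 1 to 5 scale and one on a 0 to 10 scale; `ResponseScale` and `to_unit_interval` are illustrative names. Linear rescaling assumes comparable wording and anchors and evenly spaced response options; it does not make items equivalent when wording, anchors, or populations differ, so it complements rather than replaces coordinated instrument design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResponseScale:
    low: int   # code of the lowest response option (e.g., 1 = "no trust at all")
    high: int  # code of the highest response option (e.g., 5 = "complete trust")


def to_unit_interval(response: int, scale: ResponseScale) -> float:
    """Linearly map a response code onto [0, 1] (equivalently, POMP score / 100)."""
    if scale.high <= scale.low:
        raise ValueError("Scale must have high > low.")
    if not scale.low <= response <= scale.high:
        raise ValueError(f"Response {response} outside scale {scale.low}-{scale.high}")
    return (response - scale.low) / (scale.high - scale.low)


# Hypothetical instruments from two separate projects.
SURVEY_A = ResponseScale(1, 5)    # 5-point item
SURVEY_B = ResponseScale(0, 10)   # 11-point item

if __name__ == "__main__":
    print(to_unit_interval(4, SURVEY_A))  # 0.75
    print(to_unit_interval(8, SURVEY_B))  # 0.8
    # Scale midpoints map to the same harmonized value.
    print(to_unit_interval(3, SURVEY_A) == to_unit_interval(5, SURVEY_B))  # True
```

Documenting each project's response scale explicitly, as the `ResponseScale` records do here, is itself a small aid to coordination: later analysts can see exactly how values were transformed.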

Conclusion

Evidence-based science communication and decision-making depend on the reliability and robustness of the underlying research. Survey-based research can be valuable in supporting communication and policy-making efforts. However, it is also vulnerable to significant limitations and common mistakes in the rush to deploy instruments in an emergency context. The best practices outlined above not only help to ensure more rigorous data, but also serve as valuable intermediate steps when developing a project (e.g., meta-analysis informing more robust question formulations; methodological transparency allowing more scrutiny of instruments before deployment). For example, by drawing on existing survey designs prepared by well-qualified experts, researchers can both enable comparability of data and reduce the risk of using flawed survey questions and response options.

In this article, we have presented a series of principles for effective crisis and emergency survey research. We argued that it is essential to begin by assessing the suitability of questionnaire-based approaches (including the distinctive strengths of surveys, potential limitations related to design and self-reporting, and the types of information that can be collected). We then outlined best practices essential to reliable research, such as open access designs, engaging requisite social science expertise, using longitudinal and repeated-measure designs, and selecting suitable sampling strategies. Next, we discussed three methodological issues (validation of items, use of standardized items, and alignment between concepts and operationalizations) that can prove challenging in rapid-response contexts. Finally, we highlighted best practices for funding and project management in crisis contexts, including de-duplication, coordination, harmonization, and evidence synthesis.

Survey research is challenging work that requires methodological expertise, and the relevant best practices cannot be satisfactorily learned in the immediate race to respond to a crisis. Indeed, even for those with significant expertise in survey methods, issues like open access, de-duplication of projects, and harmonization between designs can pose significant challenges. Ultimately, the same principles hold true in emergency research as in more “normal” survey operations, and “the quality of a survey is best judged not by its size, scope, or prominence, but by how much attention is given to dealing with all the many important problems that can arise” (American Statistical Association, 1998, p. 11).

The emergency context should not weaken commitments to best practice principles, given the need for robust evidence that can inform policy and practice during crises. For researchers, this means creating multidisciplinary teams with sufficient expertise to ensure methodological quality. For practitioners and policy makers, this means being conscientious consumers of survey data and seeking ways to engage expert perspectives in critical reviews of the best available evidence. For funders of such research, it means redoubling a commitment to rigorous approaches and building infrastructure that supports pre-crisis design and implementation, as well as effective coordination during events. Building resilience for future crises requires investment in survey methodology capacity building and network development before emergencies strike.

Author Contributions

All three authors contributed to the drafting and editing of the manuscript, with EBK as lead.

Funding

This project is supported in part by funding from the Social Sciences and Humanities Research Council (1006-2019-0001). This project was also supported through the COVID-19 Working Group effort supported by the National Science Foundation-funded Social Science Extreme Events Research (SSEER) Network and the CONVERGE facility at the Natural Hazards Center at the University of Colorado Boulder (NSF Award #1841338). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of SSHRC, NSF, SSEER, or CONVERGE.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

¹ As just one example, Loomba et al. (2021) found that exposure to misinformation reduced vaccination intent by over 6 percentage points in the United States, equivalent to approximately 21 million prospective American vaccine recipients.

References

American Statistical Association (1998). Judging the Quality of a Survey. ASA: Section Surv. Res. Methods, 1–13.

Biasio, L. R., Bonaccorsi, G., Lorini, C., and Pecorelli, S. (2021). Assessing COVID-19 Vaccine Literacy: a Preliminary Online Survey. Hum. Vaccin. Immunother. 17 (5), 1304–1312. doi:10.1080/21645515.2020.1829315

Dong, E., Du, H., and Gardner, L. (2020). An Interactive Web-Based Dashboard to Track COVID-19 in Real Time. Lancet Infect. Dis. 20 (5), 533–534. doi:10.1016/s1473-3099(20)30120-1

Gupta, L., Gasparyan, A. Y., Misra, D. P., Agarwal, V., Zimba, O., and Yessirkepov, M. (2020). Information and Misinformation on COVID-19: a Cross-Sectional Survey Study. J. Korean Med. Sci. 35 (27), e256. doi:10.3346/jkms.2020.35.e256

Hoffman, L. (2015). Longitudinal Analysis: Modeling Within-Person Fluctuation and Change. New York: Routledge. doi:10.4324/9781315744094

Jensen, E. A., and Gerber, A. (2020). Evidence-based Science Communication. Front. Commun. 4 (78), 1–5. doi:10.3389/fcomm.2019.00078

Jensen, E. A., Kennedy, E. B., and Greenwood, E. (2021a). Pandemic: Public Feeling More Positive about Science. Nature 591, 34. doi:10.1038/d41586-021-00542-w

Jensen, E. A., Pfleger, A., Herbig, L., Wagoner, B., Lorenz, L., and Watzlawik, M. (2021b). What Drives Belief in Vaccination Conspiracy Theories in Germany. Front. Commun. 6. doi:10.3389/fcomm.2021.678335

Jensen, E., and Laurie, C. (2016). Doing Real Research: A Practical Guide to Social Research. London: SAGE.

Jensen, E., and Wagoner, B. (2014). “Developing Idiographic Research Methodology: Extending the Trajectory Equifinality Model and Historically Situated Sampling,” in Cultural Psychology and its Future: Complementarity in a New Key. Editors B. Wagoner, N. Chaudhary, and P. Hviid.

Kennedy, E. B., Daoust, J. F., Vikse, J., and Nelson, V. (2021a). “Until I Know It’s Safe for Me”: The Role of Timing in COVID-19 Vaccine Decision-Making and Vaccine Hesitancy. Vaccines 9 (12), 1417. doi:10.3390/vaccines9121417

Kennedy, E. B., Nelson, V., and Vikse, J. (2021b). Survey Research in the Context of COVID-19: Lessons Learned from a National Canadian Survey. Working Paper.

Kennedy, E. B., Vikse, J., Chaufan, C., O’Doherty, K., Wu, C., Qian, Y., et al. (2020). Canadian COVID-19 Social Impacts Survey - Summary of Results #1: Risk Perceptions, Trust, Impacts, and Responses. Technical Report #004. Toronto, Canada: York University Disaster and Emergency Management. doi:10.6084/m9.figshare.12121905

Kwok, K. O., Li, K.-K., Wei, W. I., Tang, A., Wong, S. Y. S., and Lee, S. S. (2021). Influenza Vaccine Uptake, COVID-19 Vaccination Intention and Vaccine Hesitancy Among Nurses: A Survey. Int. J. Nurs. Stud. 114, 103854. doi:10.1016/j.ijnurstu.2020.103854

Loomba, S., de Figueiredo, A., Piatek, S. J., de Graaf, K., and Larson, H. J. (2021). Measuring the Impact of COVID-19 Vaccine Misinformation on Vaccination Intent in the UK and USA. Nat. Hum. Behav. 5 (3), 337–348. doi:10.1038/s41562-021-01056-1

Mauss, I. B., and Robinson, M. D. (2009). Measures of Emotion: A Review. Cogn. Emot. 23 (2), 209–237. doi:10.1080/02699930802204677

Morgan, D. L. (2007). Paradigms Lost and Pragmatism Regained: Methodological Implications of Combining Qualitative and Quantitative Methods. J. Mix. Methods Res. 1 (1), 48–76. doi:10.1177/2345678906292462

Pickles, K., Cvejic, E., Nickel, B., Copp, T., Bonner, C., Leask, J., et al. (2021). COVID-19 Misinformation Trends in Australia: Prospective Longitudinal National Survey. J. Med. Internet Res. 23 (1), e23805. doi:10.2196/23805

Ruiz, J. B., and Bell, R. A. (2021). Predictors of Intention to Vaccinate against COVID-19: Results of a Nationwide Survey. Vaccine 39 (7), 1080–1086. doi:10.1016/j.vaccine.2021.01.010

Schwarz, N., Kahneman, D., Xu, J., Belli, R., Stafford, F., and Alwin, D. (2009). “Global and Episodic Reports of Hedonic Experience,” in Using Calendar and Diary Methods in Life Events Research, 157–174.

Smith, B. K., and Jensen, E. A. (2016). Critical Review of the United Kingdom's “Gold Standard” Survey of Public Attitudes to Science. Public Underst. Sci. 25, 154–170. doi:10.1177/0963662515623248

Smith, B. K., Jensen, E., and Wagoner, B. (2015). “The International Encyclopedia of Communication Theory and Philosophy,” in International Encyclopedia of Communication Theory and Philosophy. Editors K. B. Jensen, R. T. Craig, J. Pooley, and E. Rothenbuhler (New Jersey: Wiley-Blackwell). doi:10.1002/9781118766804.wbiect201

Solomon, S. D., and Green, B. L. (1992). Mental Health Effects of Natural and Human-Made Disasters. PTSD Res. Q. 3 (1), 1–8.

Tourangeau, R., Rips, L., and Rasinski, K. (2000). The Psychology of Survey Response. Cambridge: Cambridge University Press.

Van Bavel, J. J., Baicker, K., Boggio, P., Capraro, V., Cichocka, A., Cikara, M., et al. (2020). Using Social and Behavioural Science to Support COVID-19 Pandemic Response. Nat. Hum. Behav. 4, 460–471. doi:10.1038/s41562-020-0884-z

Villegas, P. (2020). South Dakota Nurse Says Many Patients Deny the Coronavirus Exists – Right up until Death. Washington, DC: Washington Post. Available at: https://www.washingtonpost.com/health/2020/11/16/south-dakota-nurse-coronavirus-deniers.

Wagoner, B., and Jensen, E. (2015). “Microgenetic Evaluation: Studying Learning in Motion,” in The Yearbook of Idiographic Science. Volume 6: Reflexivity and Change in Psychology. Editors G. Marsico, R. Ruggieri, and S. Salvatore (Charlotte, N.C.: Information Age Publishing).

Keywords: survey, questionnaire, research methods, COVID-19, emergency, crises

Citation: Kennedy EB, Jensen EA and Jensen AM (2022) Methodological Considerations for Survey-Based Research During Emergencies and Public Health Crises: Improving the Quality of Evidence and Communication. Front. Commun. 6:736195. doi: 10.3389/fcomm.2021.736195

Received: 04 July 2021; Accepted: 18 October 2021;

Published: 15 February 2022.

Edited by:

Anders Hansen, University of Leicester, United Kingdom

Reviewed by:

Richard Stoffle, University of Arizona, United States

Ivan Murin, Matej Bel University, Slovakia

Copyright © 2022 Kennedy, Jensen and Jensen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Eric B Kennedy, ebk@yorku.ca
