- Department of Philosophy, Bucknell University, Lewisburg, PA, United States

Efforts to cultivate scientific literacy in the public are often aimed at enabling people to make more informed decisions, both in their own lives (e.g., personal health, sustainable practices, &c.) and in the public sphere. Implicit in such efforts is the cultivation of some measure of trust of science. To what extent does science reporting in mainstream newspapers contribute to these goals? Is what is reported likely to improve the public's understanding of science as a process for generating reliable knowledge? What are its likely effects on public trust of science? In this paper, we describe a content analysis of 163 instances of science reporting in three prominent newspapers from three years in the last decade. The dominant focus, we found, was on particular outcomes of cutting-edge science; it was comparatively rare for articles to attend to the methodology or the social–institutional processes by which particular results come about. We argue that this represents, at best, a missed opportunity.

Introduction

It is widely acknowledged that many Americans do not consistently see science as an authoritative source of information about matters of social importance (Funk and Goo, 2015). For instance, despite the robust scientific consensus on the dangers of anthropogenic climate change or the safety of childhood vaccines or GMOs, significant public dissent remains (stifling action and leading to health crises in the former two cases). Some might point to the poor state of scientific literacy (Miller, 2004) as a salient factor. Or perhaps scientists are not making their messages compelling enough to capture public attention (Olson, 2015) or otherwise failing to earn their trust or respect (Fiske and Dupree, 2014). Science communication researchers have been hard at work attempting to understand the dynamics of successful messaging strategies from scientists (or dedicated science communicators) to the public.1 Relatively little attention has been paid to the role that science journalists play in addressing the consensus gaps or improving the public's understanding of science more generally.

For the journalists' part, there seems to be a general recognition that they have an important role to play in this arena. Many definitions of journalism gesture at its function of supporting democracy by helping to maintain an informed citizenry: “Journalism is a set of transparent, independent procedures aimed at gathering, verifying and reporting truthful information of consequence to citizens in a democracy” (Craft and Davis, 2016, p. 34) or “Journalism is the business or practice of regularly producing and disseminating information about contemporary affairs of public interest and importance” (Schudson, 2013, p. 3).2 Angler's introduction (and guide) for aspiring science journalists notes, specifically, that “science journalism is a journalistic genre that primarily deals with scientific achievements and breakthroughs, the scientific process itself, scientists' quests and difficulties in solving complex problems” (Angler, 2017, p. 3). This is not to say that journalists should be regarded as the ultimate gatekeepers of scientific knowledge; but given their likely influence on many members of the public, it is worth asking how contributions of these various sorts (and potentially others) are distributed.3

At first glance, this variety of contribution types (reporting on the latest developments in science, taking a more thematic or historical approach, focusing on individual scientists' thought processes, and so on) might seem “all to the good” in that they'd presumably help develop the public's understanding of science and thus (again, presumably) bolster appropriate levels of public trust of science. But things are considerably more complicated than this. First, what counts as “appropriate levels” of such trust is a matter of considerable debate; nor is it clear what the public ought to understand about science (Shen, 1975; Shamos, 1995). Second, we cannot assume that each of these contribution types promotes scientific literacy (or trust or anything else) equally. Indeed, we shall offer some general reasons for thinking that some can be expected not only to fail to improve important aspects of scientific literacy but also, in fact, to damage public trust of science. Third, the presumption that more scientific literacy predicts better outcomes when it comes to the public's recognition of (and/or trust of) scientific consensus turns out not to hold up when it comes to “ideologically-entangled” scientific issues like anthropogenic climate change. On such issues, recognition of relevant expertise or the existence of a consensus itself often seems to be polarized; as is by now well-known, there's evidence that ideological polarization on such issues is worse among those who are more scientifically literate (Kahan et al., 2011, 2012).4

Yet it is not entirely clear why one should expect that science literacy — understood as a grasp of discrete scientific facts or basic methodologies5 — should dispose members of the public to be more deferential to consensus messages. We assume that any normatively appropriate deference to scientific consensus should turn on a recognition of the prima facie epistemic significance of such consensus — e.g., its tendency to reliably indicate knowledge or understanding. Indeed, some scholars have argued that the precise nature of consensus (Miller, 2013) and how it comes about (Longino, 1990; Beatty, 2017; Oreskes, 2019) makes a normative difference to how seriously we should take a matter of consensus.6 This recognition, in turn, arguably depends on recognizing certain features of science as a social enterprise: viz. the prevalence of pro-social, collectivist norms (working together to get it right) à la Merton (1973) and yet its being propelled in many cases by the self-interest of individual scientists in competition with each other — to be first to a discovery, to say something new, to correct the record (Kuhn, 1962; Kitcher, 1990; Strevens, 2003, 2017, 2020).

There is obviously much more to say about the nexus of science communication, public trust, and science literacy. What sort of scientific literacy would best support certain social goals, such as cultivating appropriate trust of scientists or the scientific community as a whole, under certain conditions? Just what are those conditions? Should science journalists affirmatively aim at the cultivation of trust of science when doing so might be seen as (or amount to) turning a blind eye to problems within science such as misconduct, questionable research practices, replication failures, and the like (Figdor, 2017; Stegenga, 2018; Ritchie, 2020)? In what follows, we set these questions aside and assume that producing a public with a more nuanced grasp of the ways in which science functions as a social enterprise (including, inter alia, the normal means of regulating its activities, forming research agendas, vetting and solidifying its results, policing and educating its members, and so on) is a valuable social goal. If science is deserving of our trust in certain circumstances, we believe that it will only be so in the context of an understanding of how science works as a social institution (Slater et al., 2019). The broad question of the paper, then, is to what extent the distribution of different types of articles about science can be expected to contribute to such an understanding. In the next section, we describe a content analysis of a random sampling of science journalism from three leading newspapers that provides some insight into this question. Our results suggest a strong bias (at least in this area of the “prestige press”) toward reporting on breakthroughs without much context about the scientific enterprise itself. We discuss this and related results and comment on consequences and alternatives below.

Content Analysis of Science Journalism in Popular Newspapers

Research Questions

To address our broad question, we conducted a content analysis of a random sampling of articles about science from three mainstream newspapers with a broad readership — The New York Times, the Washington Post, and USA Today (n = 163) — coding these articles so as to be able to address the following more specific research questions:

RQ1: To what extent do newspaper articles discussing science provide insight into the scientific process or enterprise (the social structure of science, its nature as an ongoing process)?

Given widely-recognized journalistic norms and practices favoring what Boykoff and Boykoff (2007) term “personalization, dramatization, and novelty” (as well as our own anecdotal observations as consumers of science journalism), we hypothesized that articles focusing on outcomes without substantive information about method or process would make up the majority of the dataset. The following two research questions were exploratory and we made no particular hypotheses concerning their answers at the outset:

RQ2: Are articles which address the scientific process more likely to do so in a negative (e.g., emphasizing conflict) or positive way (emphasizing normalcy or self-correction)?

RQ3: Are there specific genres of articles (length, topic, and source) which are more likely to convey information about the scientific process?

The focus on print journalism was motivated in part by the expectation that many Americans regularly receive news about science from such sources (Funk et al., 2017) and in part by our expectation that insights into the scientific process would be more likely to appear in outlets with reputations for more sober adherence to journalistic norms of accuracy than, say, television news programs or internet sources. This choice was made in full recognition of the fall in the popularity of print newspapers (it's worth noting that these publishers have developed strong online presences, allowing them to maintain their relevance in the digital age). Nevertheless, there is a sense in which our choice was arbitrary and our results are limited in what they can tell us about the media landscape generally; future research might usefully expand the range of sources considered to include, e.g., long-form magazine articles (such as those found in Scientific American, The New Yorker, The Atlantic, and so on), documentary television (e.g., NOVA, Nature, Planet Earth) and film (Particle Fever, March of the Penguins), blogs, podcasts, and other quasi-journalistic sources.

Methods: Generating the Dataset

Articles were collected using the ProQuest newspaper database, as it offered easy access to the archived articles of our chosen publications in one place and allowed for precise filtered searching. Using a random number generator (random.org), we first randomly selected 3 years between 2010 and 2018 and then 4 months for each year (possibly different for each year), also at random. We chose this range of years so as to include relatively recent reporting — since that is the science communication context that most matters for subsequent efforts — and also (potentially) to sample from reporting across two U.S. presidential administrations, election years, and so on. The years/months randomly chosen were 2012 (March, April, June, July), 2015 (February, April, July, October), and 2018 (February, June, July, August).
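The two-stage draw described above can be sketched in a few lines of Python. This is only an illustration: the authors used random.org rather than a software pseudo-random generator, and the function name `draw_sampling_frame`, its parameters, and the reading of "between 2010 and 2018" as an inclusive range are assumptions made here.

```python
import random


def draw_sampling_frame(first_year=2010, last_year=2018,
                        n_years=3, n_months=4, seed=None):
    """Pick distinct years at random, then distinct months for each year.

    Hypothetical helper: months are drawn independently for each year,
    so they may differ from one year to the next.
    """
    rng = random.Random(seed)
    # Stage 1: choose the years without replacement (range assumed inclusive).
    years = sorted(rng.sample(range(first_year, last_year + 1), n_years))
    # Stage 2: for each chosen year, choose months (1 = January, 12 = December).
    return {year: sorted(rng.sample(range(1, 13), n_months)) for year in years}


frame = draw_sampling_frame(seed=0)
for year, months in frame.items():
    print(year, months)
```

A different seed gives a different frame; the sketch is not expected to reproduce the particular years and months reported above.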

Next, restricting our search to these years/months (one month at a time), we searched the ProQuest newspaper database using the search string, “pub (“USA TODAY” OR “New York Times” OR “Washington Post”) AND (science OR scientists),”7 randomly selecting results so as to collect around 15 articles per month with the aim of assembling a dataset of between 150 and 180 articles in total.8 In practice, this involved loading a results page with 100 articles, selecting an article at random, and verifying that it fit our inclusion criteria — that is, being an instance of science journalism. Inevitably, however, this strategy returned articles that did not fit — e.g., articles where “science” occurred only incidentally or in non-relevant contexts (e.g., “political science”). We also automatically excluded media reviews (books, movies, &c.), letters to the editor, donor lists, and corrections. When grounds for exclusion were evident at the outset, another article was selected at random from the items on the same results page. Questionable articles were initially included but marked for later examination and kept or dropped with the concurrence of all of the authors (without replacement). In some cases, it only became clear that an article fell outside our inclusion criteria during the coding process; such articles were also removed (again, with the concurrence of the authors) without replacement: thus a final dataset of 163 articles rather than 180.
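The selection step for a single results page might be sketched as follows. Everything named here is hypothetical: `select_from_results_page`, the `meets_inclusion_criteria` callback, and the toy page are illustrations, and the sketch covers only exclusions that are evident at the outset (articles dropped later were removed without replacement, which this code does not model).

```python
import random


def select_from_results_page(results, meets_inclusion_criteria, target, seed=None):
    """Draw articles at random from one results page until `target` are kept.

    Shuffling and walking the list is equivalent to repeatedly drawing an
    unseen article at random; an article that fails the inclusion check is
    skipped and the next random draw from the same page takes its place.
    """
    rng = random.Random(seed)
    candidates = list(results)
    rng.shuffle(candidates)
    selected = []
    for article in candidates:
        if len(selected) >= target:
            break
        if meets_inclusion_criteria(article):
            selected.append(article)
    return selected


# Toy results page of 100 made-up records; every fourth one only mentions
# "political science" and so would be excluded at the outset.
page = [
    {"id": i, "headline": "political science poll" if i % 4 == 0 else "new study"}
    for i in range(100)
]


def looks_like_science_journalism(article):
    # Stand-in for the human judgment the authors applied.
    return "political science" not in article["headline"]


picked = select_from_results_page(page, looks_like_science_journalism,
                                  target=15, seed=0)
print(len(picked))
```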

Two matters of discussion among the research team involved whether to exclude op-eds or obituaries. In the former case, we opted to include them, since many media consumers do not distinguish these from journalism — and anyway they remain a potential source of information about science. In the latter case, we found that many obituaries of scientists in fact provided a context for reviewing a scientist's work and career in richer detail than reports on recent developments or discoveries typically did, so we decided to include these as well.

Methods: Coding/Analysis

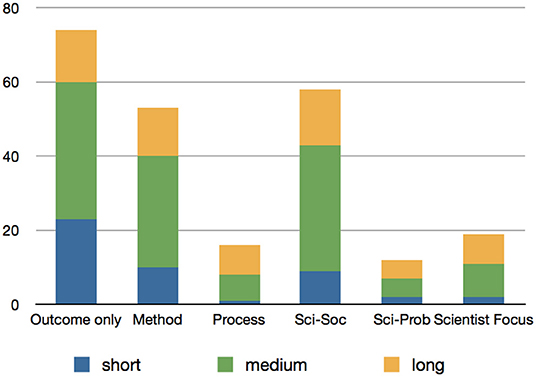

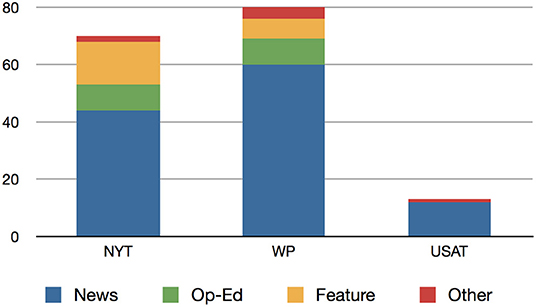

Each article was initially categorized by source, length (short, medium, and long)9, and type (news, op-ed, feature, or other). While the first two types speak for themselves, “features” included interviews, infographics, and other “atypical” news articles; the “other” category included for the most part obituaries and served as a catch-all for articles whose format did not fit neatly into any of the other categories (see Figure 1 for a breakdown). Once these descriptors were assigned and checked by the lead author for consistency, articles were randomly divided into three groups for initial coding by one of the authors.

Figure 1. Article types by source (raw numbers of 163 total articles).10

After each article had been coded once, they were all randomly assigned to one of the two remaining coders. This second coder then either confirmed the original coding or flagged it for discussion by all three coders (if additions or changes were proposed or if the second coder judged that a close look by all coders would be worthwhile); on some occasions, first coders also flagged articles for review by all three authors. A common coding scheme was developed from an initial review of the articles in light of our research questions and used by each coder; it contained the following elements:

Basic Focus (6)

Each article in our dataset was coded as falling into at least one of the following six basic foci, the first three of which centered on the way in which scientific results were presented:

• Outcome only: article presents the outcomes of science without any discussion of how they were produced or insight into the scientific enterprise.

• Method: article presents some non-incidental indication of what methods were used to obtain the outcomes discussed.

• Process: article goes beyond method at the level of the individual researcher or lab to convey information about the scientific enterprise or social processes of scientific research (e.g., discussing peer-review, replication or its failure, inter-lab controversies or collaboration, and related institutional or social aspects of science). Such articles were judged to engage with the scientific enterprise in a way that would enlighten the reader about how it works.

The above three codes were treated as mutually exclusive or, more accurately, additive, in the sense that articles discussing the scientific enterprise often included discussion of outcomes or individual methods by which particular results were obtained. Thus, coding an article with process is not meant to imply that only process, and not outcomes or method, was discussed. Articles were also coded for:

• Science as problematic: articles which prominently portrayed problems within the scientific process that could negatively impact its status as a knowledge-producing enterprise — e.g., scientific misconduct (in behavioral or research contexts), questionable research practices, bias, failure to respond to societal goals or limitations, and related ethical lapses, &c.

• Science and society connection: articles which focused on a scientific topic directly tied to human activities — e.g., political issues, education, health policy, &c.

• Scientist focus: articles which describe the life and/or work of a particular scientist or small group of scientists, either on a specific project or over longer stretches of their career.

The above three codes could overlap with outcome only, method, or process codes and were permitted to overlap with each other. Some articles received only one of the latter three codes; all articles received at least one of the above six.
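The constraints on the Basic Focus codes can be summarized as a small validation routine. This is a sketch of the rules as stated in this section, not the authors' tooling; the function name `validate_basic_focus` and the lowercase code strings are hypothetical.

```python
# The first three codes describe how results were presented; at most one applies.
PRESENTATION_CODES = {"outcome only", "method", "process"}
# The remaining three may overlap with each other and with a presentation code.
OVERLAPPING_CODES = {
    "science as problematic",
    "science and society connection",
    "scientist focus",
}


def validate_basic_focus(codes):
    """Return the codes as a set, or raise ValueError if a rule is broken."""
    codes = set(codes)
    unknown = codes - PRESENTATION_CODES - OVERLAPPING_CODES
    if unknown:
        raise ValueError(f"unknown Basic Focus code(s): {sorted(unknown)}")
    if not codes:
        raise ValueError("every article receives at least one of the six codes")
    if len(codes & PRESENTATION_CODES) > 1:
        raise ValueError("outcome only, method, and process are mutually exclusive")
    return codes


# A process article that also portrays science as problematic is allowed.
print(validate_basic_focus(["process", "science as problematic"]))
# So is an article carrying only one of the latter three codes.
print(validate_basic_focus(["scientist focus"]))
```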

Scientific Area (4)11

• medical/health

• life sciences

• physical sciences

• social sciences.

Controversial Topics (4)12

• climate change

• GMOs/genetic engineering

• nuclear power13

• vaccines.

Content Codes (7)

• Science itself: articles concerning aspects of the process or enterprise of science (including the lives and work of scientists, scientific conferences and publishing, the funding of science, and similar topics), rather than any particular project or finding.

• Scientific conflict: articles that mention either synchronic or diachronic disagreement or conflict between scientists or labs, or articles that present findings that stand in contrast to previous findings [in the manner of what Nagler and LoRusso (2018) refer to as “contradictory health information” or what Nabi et al. (2018) call “reversals”].

• Self-correction: articles conveying information about the internal oversight structures of the scientific enterprise (e.g., peer-review, replication, retraction).

• Cited science: articles mentioning a specific journal article (or specific public presentation, e.g., a conference presentation).

• Curiosity: articles that discussed uncertainty surrounding a question or referenced the need for further investigation to settle remaining questions.

• Education: any reference to education, whether touched on by the reported science or not.

• Politics: as with education.

A note on our coding practice: we are not reporting a coefficient of intercoder reliability (e.g., Krippendorff's alpha) since our practice effectively allowed us to reach a consensus about the coding of each article. For full disclosure, this practice stemmed from an early, unsuccessful attempt to judge our reliability: after each coder evaluated 30 of the same articles, the lead author calculated Krippendorff's alpha for the Basic Focus variable (the most important variable for our analysis) as 0.63. While outcome only articles were generally easily and reliably recognized (as were most of the rest of our variables), whether an article revealed enough about the scientific process to deserve the process and (to a lesser degree) method codes sometimes involved a difficult judgment call; we found the same to be the case for some instances of the science as problematic category.14 Though we discussed renorming and trying again, we ultimately decided that we would feel more confident that we were treating the articles consistently if we approached any disagreement or feeling of a code's being a close call as a matter for consensus decision-making (as described above). These discussions often involved revisiting (and sometimes revising) previous determinations. Approximately half of our articles were coded according to this consensus-building process; the other half were judged as straightforward determinations by two coders (the lead author spot-checked around 30 of these for consistency and found no questionable cases or reason for revision).
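For readers unfamiliar with the coefficient, the following is a minimal sketch of Krippendorff's alpha for nominal data, computed from a coincidence matrix. It assumes each coder assigns a single label per article, which simplifies the overlapping Basic Focus codes used here. The function name and the toy ratings are hypothetical, and the sketch does not reproduce the 0.63 figure, since the underlying ratings are not given in this section.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal labels.

    `units` holds one list of labels per article (one label per coder);
    None marks a missing rating. Articles with fewer than two ratings
    carry no information about agreement and are skipped.
    """
    coincidences = Counter()
    for ratings in units:
        values = [v for v in ratings if v is not None]
        m = len(values)
        if m < 2:
            continue
        # Each ordered pair of ratings from different coders adds 1 / (m - 1).
        for a, b in permutations(values, 2):
            coincidences[(a, b)] += 1.0 / (m - 1)

    totals = Counter()
    for (a, _b), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())

    # Disagreement mass actually observed (off-diagonal coincidences).
    observed = sum(w for (a, b), w in coincidences.items() if a != b)
    # Disagreement expected by chance, up to the 1 / (n - 1) factor below.
    expected = sum(totals[a] * totals[b] for a in totals for b in totals if a != b)
    if expected == 0:
        return float("nan")  # undefined when only one label is ever used
    return 1.0 - (n - 1) * observed / expected


# Toy ratings from three hypothetical coders on five articles.
toy = [
    ["outcome only", "outcome only", "outcome only"],
    ["method", "method", "process"],
    ["process", "process", "process"],
    ["outcome only", "method", "outcome only"],
    ["method", "method", None],
]
print(round(krippendorff_alpha_nominal(toy), 3))
```

Values near 1 indicate agreement well above chance, while values near 0 indicate agreement no better than chance.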

Results

Top-Line Results

The main result is the dominance, as expected, of the outcome only code, followed by methodology and science and society connection (see Figure 2 below). This suggests that the most common view of science conveyed to the public by these newspapers in the last decade has been only minimally informative regarding the actual workings of the scientific enterprise. News articles about science tend to focus only on the results of recent experiments and studies, somewhat more rarely discussing the methodologies producing these outcomes, and rarely contextualizing the science in the work that led up to the study in question or the institutional–social processes in which the study was embedded.

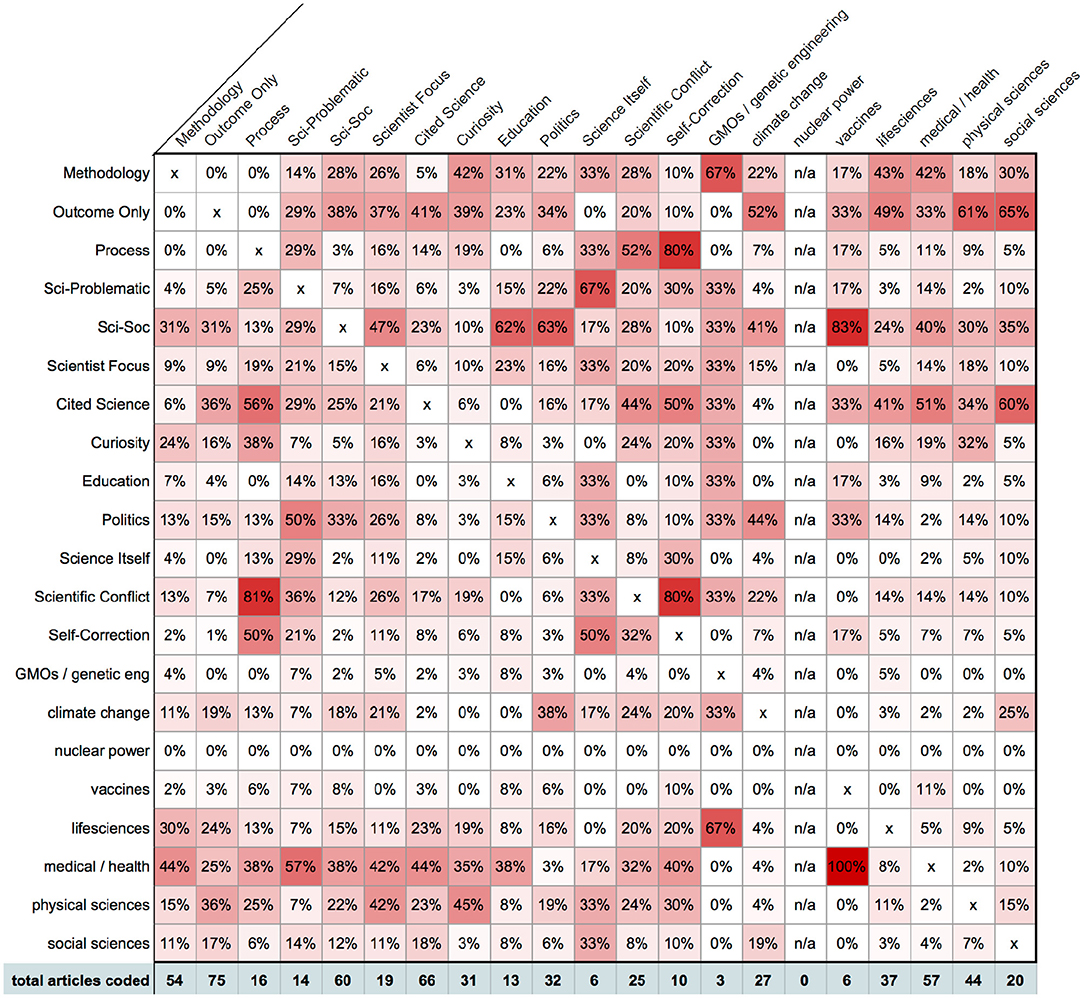

Regarding RQ2, we noted that of the sixteen articles coded as process, thirteen (81%) were also coded for scientific conflict whereas only half were coded as involving self-correction, revealing that the usual way for the public to be exposed to information about the scientific process is in reference to some kind of disagreement among scientists — either at a given time (e.g., “while team A says X; team B says Y”) or over time (e.g., “while previous studies have indicated that X, team A says Y”). Of these thirteen, seven were also coded as involving self-correction, so there is a sense in which conflict is being recognized as part of a broader process. In general, however, only 32% of scientific conflict codes co-occurred with self-correction codes.15 Figure 3 presents a summary of code co-occurrence.16

Figure 3. Code co-occurrence; percentages represent the proportion of the articles on the horizontal axis (totals in the bottom row) that also had the code on the vertical axis. For example, the third column shows that there were 16 articles coded as process; 25% of these co-occurred with science as problematic and 81% co-occurred with scientific conflict. Raw coding data at the article level is available at https://osf.io/6mtcj/.
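The percentages in such a table are column-conditional shares, which a short sketch can make concrete. The toy articles below are fabricated so that the counts match the example in the caption (16 process articles, 13 of them with scientific conflict and 4 with science as problematic); how those codes were actually distributed across individual articles is an assumption, and `cooccurrence_share` is a hypothetical helper name.

```python
def cooccurrence_share(articles, column_code, row_code):
    """Share of articles carrying `column_code` that also carry `row_code`."""
    column_articles = [codes for codes in articles if column_code in codes]
    if not column_articles:
        return None  # no articles with the column code, so no share to report
    both = sum(1 for codes in column_articles if row_code in codes)
    return both / len(column_articles)


# Made-up article-level codes arranged to match the counts in the caption.
toy_articles = (
    [{"process", "scientific conflict", "science as problematic"}] * 4
    + [{"process", "scientific conflict"}] * 9
    + [{"process"}] * 3
    + [{"outcome only"}] * 5
)

for row in ("scientific conflict", "science as problematic"):
    share = cooccurrence_share(toy_articles, "process", row)
    print(f"process & {row}: {share:.0%}")
```

Because the denominator is the column total, the table is not symmetric: the share of process articles coded for scientific conflict generally differs from the share of scientific conflict articles coded as process.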

Concerning RQ3, articles discussing process were most commonly medium or long, with only one short article earning the code. Of the four scientific area codes, medical/health articles were the most likely to be coded as process, with 38% of all process articles in this area. While life sciences, physical sciences, and social sciences articles showed their highest percentages of co-occurrence with the outcome only and methodology codes, medical/health articles showed the highest percentages of co-occurrence in methodology and process, suggesting a relatively high level of sophistication in news regarding this scientific area. This may be due to how commonly clinical trials are mentioned in articles on medicine and to the relative ease of describing methods involved in medical research compared to those used in other sciences. For example, physical sciences was the scientific area with the highest percentage of co-occurrence with outcome only at 36%, likely because the methodology used in topics like physics, astronomy, and chemistry tends to be more complex and removed from the lay public. Thus, research in these disciplines tends to be mentioned in passing within a broader article rather than focused on and explained thoroughly. As for the appearance of process across sources, articles from the Post were the most likely to include discussion of process, followed by the Times, then USA Today. This variation across sources suggests that, while some newspapers do make an attempt to include information regarding the scientific process, others still leave much to be desired in this realm. Further, USA Today tended to have relatively few articles regarding science at all, and its articles are thus the least common in our data set.

Simply by virtue of common practice in print journalism, the most common length of article in our data set is medium. As a result, medium-length articles are (or are tied for) the most common length of article in all of the main frames, as shown in Figure 2. Thus, while it is significant that long- and medium-length articles are much more likely to include discussion of process than short articles, the prevalence of medium over short articles with the outcome only code is not necessarily as meaningful, since it is merely a result of having a large majority of medium-length articles in the data set.

Limitations

One obvious limitation of our study noted at the outset is that it is limited to print journalism and only samples from three newspapers. While we have no grounds for suspecting this, it is possible that the articles we collected (~1% of the total number of instances of science journalism for our chosen sources in the date ranges we selected) are, by chance, atypical in some way of the broader coverage either of these three publications, or of print newspapers more generally, or of science journalism in other media forms. We thus offer the concluding reflections below tentatively and with the acknowledgment that more research would be needed to establish the patterns we've reported here with more confidence.

Discussion and Conclusions

There is a straightforward sense in which our top-line result (the dominance of outcome only stories) should not be surprising. Previous studies of news coverage have likewise found a prevalence of “episodic” rather than “thematic” framing strategies — the former depicting “issues in terms of concrete instances or special events,” whereas the latter place “issues in some general or abstract context” (Iyengar and Simon, 1993, p. 369; see also Hart, 2011). When it comes to science, of course, the latter contexts correspond much more readily to reporting on science as an ongoing social process. Herein lies a problematic tension. As the very name “news” suggests, “newsworthiness” often presupposes novelty. Indeed, as Boykoff and Boykoff (2007) note, the journalistic norms of personalization, dramatization, and novelty (and the concomitant “taboo” against repetition, sensu Gans, 1979) generally militate against taking the “long view” on science — the view that captures the practices which generate robust scientific consensus and thus durable scientific knowledge — in most journalistic contexts. In short, we might say that journalistic norms and practices encourage a focus on discrete events whereas the epistemic significance of science for the broader public's use arguably stems from a longer-run social process (Longino, 1990; Oreskes, 2019; Slater et al., 2019).17

Beyond representing a missed opportunity, we believe that this limited portrayal of science poses a risk of further compromising public trust of science. This is especially obvious when the framing focuses on the drama of highlighting scientific misconduct, conflicts of interest, and so on (de Melo-Martín and Intemann, 2018; Jamieson, 2018). But even setting these sorts of articles aside, there is a potential harm in the episodic, outcome-focused reporting on “recent breakthroughs” in science. Despite the popular assumption that the latest is “the best,” from the perspective of the scientific enterprise's ability to subject claims to critical scrutiny, the latest is generally regarded by scientists as the most tentative and thus the most apt to be subsequently rethought or revised. Thus, reporting that focuses mainly on the latest results can be expected to produce a series of contradictions and reversals. This is especially worrisome (and well-documented) in medical reporting. Perceptions of “ambiguity” stemming from conflicting health reports (a component of our scientific conflict code) have been observed to lead to reduced uptake of even basic screening strategies like mammography (Han et al., 2007). Nagler (2014) suggests that these effects may be cumulative, writing that:

there may be important carryover effects of contradictory message exposure and its associated cognitions. Exposure to conflicting information on the health benefits and risks of, for example, wine, fish, and coffee consumption was associated with confusion about what foods are best to eat and the belief that nutrition scientists keep changing their minds. We found evidence that confusion and backlash beliefs, in turn, may lead people to doubt nutrition and health recommendations more generally — including those that are not surrounded by conflict and controversy (e.g., fruit/vegetable consumption, exercise) (p. 35; our italics).18

Something analogous may be happening in cases where beliefs about the so-called “replicability crisis” in social psychology and some biomedical fields (or the growing number of journal retractions) are leveraged to bolster a general science-in-crisis narrative. If scientists are always changing their minds, if they are always fighting with each other, then perhaps the best, most rational epistemic response would be to adopt a (limited) Pyrrhonian stance of belief suspension (Fogelin, 1994; Slater et al., 2018).

One might wonder at this point to what extent this is an artifact of the outcome-focused coverage, and to what extent this is an outcome of the nature of science itself.19 In other words, would a more nuanced coverage of the scientific process result in greater trust of science? We cannot provide a clear answer to this question, as the dynamics here are obviously quite complex. To greatly simplify: perhaps a public with an improved grasp of the social dynamics of the scientific enterprise would be better equipped to judge which findings deserved their trust and which were more tentative; or perhaps “seeing how the sausage is made” would seriously compromise one's appetite for consuming it. But even if we cannot say with any certainty what “ideal” news coverage of science looks like or what its social-epistemic effects might be, it is still possible to identify what is non-ideal about present coverage.

Now, to be clear, our claim is not that science as a whole deserves our uncritical trust (let alone uncritical deference). It is still a matter of legitimate debate whether the fact that, as Jamieson puts it, scientists are “not simply indicting [replication failures], but are as well working to implement correctives” (Jamieson, 2018, p. 5) sufficiently vindicates the scientific enterprise as a self-correcting endeavor (Ioannidis, 2012; Stroebe et al., 2012). This is clearly not an all-or-nothing status; self-correction comes in degrees and may be present to different degrees in different areas of science, national contexts, and so on. Yet in articles focusing on scientific conflict, even potential responses or relevant mechanisms of response were only present a third of the time. It is a matter for further research into the details of such representations whether they are more often lacunae (e.g., due to a journalistic bias toward drama) or a straightforward representation of the fact that scientists sometimes just find themselves at loggerheads with no obvious resolution.

To what extent is the outcome-only focus of science journalism inevitable? We must recognize the pressures on newsrooms to be first to present what their readership (or increasingly, readers who come across articles via social media algorithm or intentional search) will find newsworthy — not to mention doing so in the midst of shrinking budgets and numbers of dedicated science reporters. In an era of strenuous competition for “clicks,” “eat your peas” journalism cannot be expected to be common (Rusbridger, 2018, p. 83). More generally, journalists are to a great extent bound by prevailing assumptions and narratives in the wider culture (Phillips, 2015, p. 10). Stuart Hall glosses “newsworthiness” with an implicit nod to this tension between what is new and what is familiar:

Things are newsworthy because they represent the changefulness, the unpredictability and the conflictful nature of the world. But such events cannot be allowed to remain in the limbo of the “random” — they must be brought within the horizon of the “meaningful.” In order to bring events “within the realm of meanings” we refer unusual or unexpected events to the “maps of meaning,” which already form the basis of our cultural knowledge (Hall et al., 1996, p. 646, quoted in Phillips, 2015, p. 16–17).

Thus, there is a tendency in science reporting to play toward simplistic models of scientific methodology or the scientific enterprise and to understand conflict and contestation as 1:1, pathological, and indicative of a lack of understanding.

However, we think that there is reason for optimism. We are already seeing a shift away from standard practices for applying norms of objectivity and fairness — viz. representing “both sides” of an issue without an indication of the weight of evidence. While this practice may make sense in the context of politics or legal reporting, it is increasingly recognized as inaccurately conveying science (Boykoff and Boykoff, 2007), and more nuanced ways of realizing these norms are being developed by editors (and demanded by media consumers).20 It is also possible to imagine ways of achieving the balance that Hall notes with only modest changes of focus (or supplementation). At the conclusion of Jamieson's discussion of the media's tendency to default to crisis framing, she offers four recommendations for science journalism:

(i) supplement the [various] narratives with content that reflects the practice and protections of science; (ii) treat self-correction as a predicate not an afterthought; (iii) link indictment to aspiration; and (iv) focus on problems without shortchanging solutions and in the process hold the science community accountable for protecting the integrity of science.

To these we might add ‘try to take a broader view of the social context of science’, even when the focus is on “gee whiz” science reporting (Angler, 2017, p. 3). That is, instead of simply stating the results of a given study, offer insight into the ongoing relevant research being done and how it relates to the results being described. More boldly, one can imagine the “novelty” being described involving not some outcome of an individual scientist (or team thereof) but their experiences, even their failures or confusions; this too, we submit, can help to deepen and nuance the public's mental model of the scientific enterprise. Though it might not be as alluring a story to participate in from an individual investigator's perspective, it may be a greater public service.

Granted: there is something undeniably tempting about reporting on the latest “gee whiz!” or “betcha didn't expect this!” outcomes from science. After all, at least for the science-curious, it's often enjoyable to learn about these; perhaps such results likewise serve to cultivate science curiosity.21 On the other hand, it's often enjoyable to eat ice cream for dinner! Insofar as consuming science as a series of isolated scientific “discoveries” (if discoveries they be) may compromise our trust of the scientific enterprise, perhaps we should abstain from this — or at least balance our epistemic diets. Science journalists have a significant role to play in improving the menu of options.

Data Availability Statement

The raw data (in the form of PDFs of the articles), along with a summary of the code presence and other descriptors for each article, are available at https://osf.io/6mtcj/.

Author Contributions

MS wrote the introduction and discussion sections with help from ES. ES, JM, and MS co-wrote the middle sections and contributed equally to the data collection and analysis.

Funding

The authors gratefully acknowledge financial support from the U.S. National Science Foundation (award SES-1734616) and Bucknell University.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Thanks to Natalie Minella for early assistance in the formulation of the project and initial data collection. Thanks to the editor and the referees for their good questions and constructive suggestions that led to a much-improved paper.

Footnotes

1. ^To which the existence of entire journals dedicated to the subject (such as Public Understanding of Science, Science Communication, and the Journal of Science Communication) attests.

2. ^Both definitions were quoted in the introductory chapter of Vos (2018), which provides a useful general discussion of the attempts to define journalism.

3. ^Social epistemologists are increasingly turning their attention to the importance of journalism in the context of knowledge transmission; for an interesting overview relevant to our concerns, see Godler et al. (2020).

4. ^We hasten to point out that the ramifications of such results for science communication practice are still controversial. Some argue that recognition of a scientific consensus about climate change acts as a “gateway belief” for increased acceptance and concern (van der Linden et al., 2015); mutatis mutandis, perhaps, for other issues. However, this model has proved difficult to replicate or extend to other domains (see, for example, Cook and Lewandowsky, 2016; Landrum et al., 2019; cf. van der Linden et al., 2019); it has also been criticized on methodological grounds (Kahan, 2017; for a reply, see van der Linden et al., 2017). For a review of the state of the debate and its main questions, see Landrum and Slater (2020).

5. ^This is what is measured by Kahan's “Ordinary Science Intelligence” instrument (which also includes some popular numeracy and cognitive reflection items) (Kahan, 2016).

6. ^Such points have been conspicuously ignored in much of the research on science communication surrounding extensions of the Gateway Belief Model (op. cit.).

7. ^We used this (limited) full-text search approach because the results from ProQuest's subject search were obviously partial and inaccurate: we found that many articles that were clearly and centrally about science would not be captured by searching for ‘science’ as a subject. This may have been due to inconsistent coding of data within the ProQuest database; we judged the simple full-text search of ‘science’ or ‘scientists’ as the best available alternative, though it is conceivable that some science reporting omits these words and thus would not appear in our search. We also considered the inclusion of topical search terms, such as ‘biology’ or ‘physics,’ but we decided that the judgment of which topics to include would introduce too much subjectivity into the search process. When we made this decision, we conducted searches with and without these topical search terms and saw a negligible difference in output results.

8. ^12 months sampled × 15 articles/each = 180; as discussed below, we expected that some articles initially included in our dataset would later be dropped upon further review.

9. ^These were operationally defined in terms of their length in the standard ProQuest output format as less than a full page, 1–2 pages, or >2 pages, respectively (corresponding to approximately 0–300 words, 300–800 words, or >800 words, respectively).

10. ^You will notice that USA Today accounted for very few articles in our dataset (13 compared to 70 and 80 from the Times and Post, respectively). While we considered normalizing our sample, we opted to let our random sampling procedure yield whatever articles it would yield so as not to bias the overall picture.

11. ^We understood these areas according to what we see as standard academic practice: medical/health articles referred to content focused on human/animal health and well-being; life sciences articles to biology, physiology, ecology, neuroscience, and related fields (as long as these weren't oriented toward medical/veterinary concerns); physical sciences comprised fields such as geology, astronomy, physics, chemistry; social sciences comprised such fields as education, psychology, sociology, and anthropology including their applied subfields. Some academic disciplines — such as engineering — did not cleanly map onto any one of these areas; such articles were judged on a case-by-case basis and coded according to the dominant focus (if any) of the article as attending to the science in one of the four areas.

12. ^For this variable, a mere mention of these topics (e.g., as an illustration) sufficed to earn the relevant code.

13. ^None of the articles in our sample focused on this topic.

14. ^One strategy the authors employed for making this judgment call for the Process code, in particular, involved asking whether the article contained enough discussion of the social processes of the scientific enterprise to potentially expand or improve readers' grasp of this enterprise; thus, mere en passant mention of peer review, for example, would not suffice to code an article as Process. The Science as Problematic category involved a similar judgment call concerning the overall valence of an article: whether one might reasonably expect a reader to come away with a more negative impression of the scientific enterprise (thus, for example, mere passing mention of an issue like misconduct would not trigger this code if it was mentioned in the context of means the scientific community was using to help decrease its incidence).

15. ^It is worth mentioning that the threshold we used for applying the process code was relatively low (e.g., that concepts related to the scientific enterprise were at minimum mentioned in a non-trivial way); thus such articles would in many cases only offer a mere glimpse at the processes by which the scientific community functions.

16. ^Co-occurrence was noted at the article level, not at the level of overlap of strings of text.

17. ^Note that we do not mean to be making a general claim about science as representing an accumulation of knowledge or understanding, nor that scientists aim for consensus. Part of the insight of Kuhn and subsequent thinkers (e.g., Strevens, 2020) is that science can generate knowledge and consensus without explicitly striving for its production.

18. ^As Nabi et al. (2018) point out, citing earlier work by Nabi and Prestin (2016), this is a common problem: “the scientific community is in a constant state of discovery and correction, the nuances of which do not translate well to the more ‘black or white’ reporting style that is more typical of modern journalism” (20).

19. ^Indeed, as one of the referees for this journal did; we appreciate their raising this question.

20. ^It has been reported, for instance, that the BBC issued internal guidance on the avoidance of “false balance”: https://www.carbonbrief.org/exclusive-bbc-issues-internal-guidance-on-how-to-report-climate-change.

21. ^It is worth acknowledging the evidence that science curiosity, as a disposition, is associated with a suppression of the politically biased information processing observed for controversial subjects like climate change (Kahan et al., 2017). It is an open question, however, whether such curiosity is (or must be) sustained by the kind of “gee whiz” (outcome only) stories we have in mind.

References

Angler, M. W. (2017). Science Journalism: An Introduction. Routledge: Taylor & Francis Group. doi: 10.4324/9781315671338

Beatty, J. (2017). “Consensus: sometimes it doesn't add up,” in Landscapes of Collectivity, eds S. Gissis, E. Lamm, and A. Shavit (Cambridge, MA: MIT Press).

Boykoff, M. T., and Boykoff, J. M. (2007). Climate change and journalistic norms: a case-study of US mass-media coverage. Geoforum 38, 1190–1204. doi: 10.1016/j.geoforum.2007.01.008

Cook, J., and Lewandowsky, S. (2016). Rational irrationality: modeling climate change belief polarization using bayesian networks. Topics Cognit. Sci. 8, 160–179. doi: 10.1111/tops.12186

Craft, S., and Davis, C. N. (2016). Principles of American Journalism: An Introduction, 2nd Edn. Routledge: Taylor & Francis Group. doi: 10.4324/9781315693484

de Melo-Martín, I., and Intemann, K. (2018). The Fight Against Doubt. New York, NY: Oxford University Press. doi: 10.1093/oso/9780190869229.001.0001

Figdor, C. (2017). (When) is science reporting ethical? The case for recognizing shared epistemic responsibility in science journalism. Front. Commun. 2:3. doi: 10.3389/fcomm.2017.00003

Fiske, S. T., and Dupree, C. (2014). Gaining trust as well as respect in communicating to motivated audiences about science topics. Proc. Natl. Acad. Sci. U.S.A. 111(Suppl. 4), 13593–13597. doi: 10.1073/pnas.1317505111

Fogelin, R. J. (1994). Pyrrhonian Reflections on Knowledge and Justification. New York, NY: Oxford University Press. doi: 10.1093/0195089871.001.0001

Funk, C., and Goo, S. K. (2015). A Look at What the Public Knows and Does Not Know About Science. Pew Research Center. Available online at: http://www.pewinternet.org/2015/09/10/what-the-public-knows-and-does-not-know-about-science/ (accessed February 10, 2020).

Funk, C., Gottfried, G., and Mitchell, A. (2017). Science News and Information Today. Pew Research Center. Available online at: https://www.journalism.org/2017/09/20/science-news-and-information-today/ (accessed February 10, 2020).

Godler, Y., Reich, Z., and Miller, B. (2020). Social epistemology as a new paradigm for journalism and media studies. New Media Soc. 22, 213–229. doi: 10.1177/1461444819856922

Hall, S., Critcher, C., Jefferson, T., Clarke, J., and Roberts, B. (1996). “The social production of news,” in Media Studies: A Reader, eds P. Marris and S. Thornham (Edinburgh: Edinburgh University Press).

Han, P. K. J., Kobrin, S. C., Klein, W. M. P., Davis, W. W., Stefanek, M., and Taplin, S. H. (2007). Perceived ambiguity about screening mammography recommendations: association with future mammography uptake and perceptions. Cancer Epidemiol. Prevent. Biomarkers 16, 458–466. doi: 10.1158/1055-9965.EPI-06-0533

Hart, P. S. (2011). One or many? The influence of episodic and thematic climate change frames on policy preferences and individual behavior change. Sci. Commun. 33, 28–51. doi: 10.1177/1075547010366400

Ioannidis, J. P. A. (2012). Why science is not necessarily self-correcting. Perspect. Psychol. Sci. 7, 645–654. doi: 10.1177/1745691612464056

Iyengar, S., and Simon, A. (1993). News coverage of the gulf crisis and public opinion: a study of agenda-setting, priming, and framing. Commun. Res. 20, 365–383. doi: 10.1177/009365093020003002

Jamieson, K. H. (2018). Crisis or self-correction: rethinking media narratives about the well-being of science. Proc. Natl. Acad. Sci. 115, 2620–2627. doi: 10.1073/pnas.1708276114

Kahan, D. (2016). ‘Ordinary science intelligence’: a science-comprehension measure for study of risk and science communication, with notes on evolution and climate change. J. Risk Res. 20, 995–1016. doi: 10.1080/13669877.2016.1148067

Kahan, D. (2017). The ‘Gateway Belief’ illusion: reanalyzing the results of a scientific-consensus messaging study. J. Sci. Commun. 16:A03. doi: 10.22323/2.16050203

Kahan, D., Jenkins-Smith, H., and Braman, D. (2011). Cultural cognition of scientific consensus. J. Risk Res. 14, 147–174. doi: 10.1080/13669877.2010.511246

Kahan, D., Landrum, A., Carpenter, K., Helft, L., and Jamieson, K. H. (2017). Science curiosity and political information processing. Polit. Psychol. 38, 179–199. doi: 10.1111/pops.12396

Kahan, D., Peters, E., Wittlin, M., Slovic, P., Ouellette, L. L., Braman, D., et al. (2012). The polarizing impact of science literacy and numeracy on perceived climate change risks. Nat. Clim. Change 2, 732–735. doi: 10.1038/nclimate1547

Kuhn, T. S. (1962). The Structure of Scientific Revolutions. Chicago, IL: University of Chicago Press.

Landrum, A. R., Hallman, W. K., and Jamieson, K. H. (2019). Examining the impact of expert voices: communicating the scientific consensus on genetically-modified organisms. Environ. Commun. 13, 51–70. doi: 10.1080/17524032.2018.1502201

Landrum, A. R., and Slater, M. H. (2020). Open questions in scientific consensus messaging research. Environ. Commun. 14, 1033–1046. doi: 10.1080/17524032.2020.1776746

Longino, H. E. (1990). Science as Social Knowledge: Values and Objectivity in Scientific Inquiry. Princeton, NJ: Princeton University Press. doi: 10.1515/9780691209753

Merton, R. K. (1973). The Sociology of Science: Theoretical and Empirical Investigations. Chicago, IL: University of Chicago Press.

Miller, B. (2013). When is consensus knowledge based? Distinguishing shared knowledge from mere agreement. Synthese 190, 1293–1316. doi: 10.1007/s11229-012-0225-5

Miller, J. D. (2004). Public understanding of, and attitudes toward, scientific research: what we know and what we need to know. Public Understand. Sci. 13, 273–294. doi: 10.1177/0963662504044908

Nabi, R., Gustafson, A., and Jensen, R. (2018). “Effects of scanning health news headlines on trust in science: an emotional framing perspective,” in 68th Annual Convention of the International Communication Association (Prague).

Nabi, R. L., and Prestin, A. (2016). Unrealistic hope and unnecessary fear: exploring how sensationalistic news stories influence health behavior motivation. Health Commun. 31, 1115–1126. doi: 10.1080/10410236.2015.1045237

Nagler, R. H. (2014). Adverse outcomes associated with media exposure to contradictory nutrition messages. J. Health Commun. 19, 24–40. doi: 10.1080/10810730.2013.798384

Nagler, R. H., and LoRusso, S. M. (2018). “Conflicting information and message competition in health and risk messaging,” in The Oxford Encyclopedia of Health and Risk Message Design and Processing, Vol. 1, ed R. L. Parrott (New York, NY: Oxford University Press). doi: 10.1093/acref/9780190455378.001.0001

Olson, R. (2015). Houston, We Have a Narrative. Chicago, IL: University of Chicago Press. doi: 10.7208/chicago/9780226270982.001.0001

Oreskes, N. (2019). Why Trust Science? Princeton, NJ: Princeton University Press. doi: 10.2307/j.ctvfjczxx

Phillips, A. (2015). Journalism in Context: Practice and Theory for the Digital Age. Abingdon, VA: Routledge. doi: 10.4324/9780203111741

Ritchie, S. (2020). Science Fictions: How Fraud, Bias, Negligence, and Hype Undermine the Search for Truth. New York, NY: Metropolitan Books.

Schudson, M. (2013). Reluctant stewards: journalism in a democratic society. Daedalus 142, 159–176. doi: 10.1162/DAED_a_00210

Slater, M. H., Huxster, J. K., and Bresticker, J. E. (2019). Understanding and trusting science. J. Gen. Philos. Sci. 50, 247–261. doi: 10.1007/s10838-019-09447-9

Slater, M. H., Huxster, J. K., Bresticker, J. E., and LoPiccolo, V. (2018). Denialism as applied skepticism: philosophical and empirical considerations. Erkenntnis 85, 871–890. doi: 10.1007/s10670-018-0054-0

Stegenga, J. (2018). Medical Nihilism. Oxford: Oxford University Press. doi: 10.1093/oso/9780198747048.001.0001

Strevens, M. (2003). The role of the priority rule in science. J. Philos. 100, 55–79. doi: 10.5840/jphil2003100224

Strevens, M. (2017). “Scientific sharing: communism and the social contract,” in Scientific Collaboration and Collective Knowledge, eds T. Boyer-Kassem, C. Mayo-Wilson, and M. Weisberg (New York, NY: Oxford University Press). doi: 10.1093/oso/9780190680534.003.0001

Strevens, M. (2020). The Knowledge Machine: How Irrationality Created Modern Science. New York, NY: Liveright Publishing.

Stroebe, W., Postmes, T., and Spears, R. (2012). Scientific misconduct and the myth of self-correction in science. Perspect. Psychol. Sci. 7, 670–688. doi: 10.1177/1745691612460687

van der Linden, S. L., Leiserowitz, A., and Maibach, E. (2017). Gateway illusion or cultural cognition confusion? J. Sci. Commun. 16:A04. doi: 10.22323/2.16050204

van der Linden, S. L., Leiserowitz, A., and Maibach, E. (2019). The gateway belief model: a large-scale replication. J. Environ. Psychol. 62, 49–58. doi: 10.1016/j.jenvp.2019.01.009

van der Linden, S. L., Leiserowitz, A. A., Feinberg, G. D., and Maibach, E. W. (2015). The scientific consensus on climate change as a gateway belief: experimental evidence. PLoS ONE 10:e0118489. doi: 10.1371/journal.pone.0118489

Keywords: science journalism, distrust of science, scientific process, scientific enterprise, content analysis, newspapers

Citation: Slater MH, Scholfield ER and Moore JC (2021) Reporting on Science as an Ongoing Process (or Not). Front. Commun. 5:535474. doi: 10.3389/fcomm.2020.535474

Received: 16 February 2020; Accepted: 02 December 2020;

Published: 12 January 2021.

Edited by:

Carrie Figdor, The University of Iowa, United States

Reviewed by:

Justin Reedy, University of Oklahoma, United States

Boaz Miller, Zefat Academic College, Israel

Copyright © 2021 Slater, Scholfield and Moore. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Matthew H. Slater
