- 1Science Communication Unit, University of the West of England, Bristol, United Kingdom

- 2Centre for Sustainable Planning and Environments, University of the West of England, Bristol, United Kingdom

In this study, we describe and present an evaluation of how the Q Method was used to engage members of local communities, to examine how those living in former metal mining landscapes value their heritage and understand their preferences for the long-term management of mine waste. The evaluation focused on the participants' experiences, thoughts and views of the Q Method, as a method of collecting individual preferences. The Q Method is a form of discourse analysis that allows a systematic analysis of participants' subjective perspectives. This paper presents a small-scale evaluation of the Q Method. The results indicate that although this method is time-consuming (both for the researchers and for the participants) and demanding, it is a suitable and successful engagement strategy. The Q Method helped the participants feel that their opinion was being sought and valued, and allowed them to express their views on mining heritage in the context of their lives. The method was also a valuable tool in challenging the participants' views and it reinforced the complexity of the decision-making process.

Introduction

Policy decisions often require an assessment of competing values. One of the goals of engaging publics is to ensure that there is public input into the decision-making process (House of Commons, 2013). Researchers use engagement tools to ensure the public have an opportunity to input into policy decisions (Institute of Medicine, 2013). Members of the public may not have the expertise to contribute or comment on technical issues but are more than qualified to reflect on the values underlying public policy decisions. Reflection necessarily involves dialogue: engaging with publics provides an opportunity to collect people's views, values and opinions on difficult issues, as well as to share information about sensitive policy decisions. Policymakers can draw on publics' reflections to inform policy, or at the least ensure that they have been considered (Institute of Medicine, 2013).

However, “public engagement” means different things to different people and researchers often struggle to explain what it means to them. Engagement with the public may take many forms, such as public lectures, hands-on demonstrations, websites, citizen science projects, community engagement, patient involvement, and public consultations. Bauer and Jensen (2011) detail the kinds of activities that can be classed as public engagement:

Public engagement activities include a wide range of activities such as lecturing in public or in schools, giving interviews to journalists for newspapers, radio or television, writing popular science books, writing the odd article for newspapers or magazines oneself, taking part in public debates, volunteering as an expert for a consensus conference or a “café scientifique”, or collaborating with non-governmental organisations (NGOs) and associations as advisors or activists, and more (Bauer and Jensen, 2011, p. 4).

Public engagement is routinely used to communicate and disseminate scientific findings, but when used as a tool for dialogue, it can be a medium to ensure public input. The National Co-ordinating Centre for Public Engagement (NCCPE) includes this quality in its definition of public engagement:

Engagement is by definition a two-way process, involving interaction and listening, with the goal of generating mutual benefit. (NCCPE, online)

In other words, public engagement has the potential to bring new perspectives to the policymaking arena. It is this form of public engagement, dialogue to inform policymaking, that is the subject of this research.

Q Method is a form of discourse analysis that allows subjective views to be investigated and differing perspectives formulated. Participants are asked to decide what they personally value and find significant. It is well-suited to the discussion of contentious issues where there is no consensus of opinion (Webler et al., 2009) and is effective at ensuring participants prioritise different outcomes. The premise underlying the Q Method is that there are a finite number of discourses in any given topic (Doody et al., 2009). In the method, participants rank and sort a series of statements based on the degree to which the statement represents their perspective on a subject (Van Exel and de Graaf, 2005), usually by arranging statements printed on cards in a quasi-normal distribution. The resulting rankings (Q sorts) are then analysed using Principal Component Analysis to reveal the nature of a smaller number of shared perspectives (Danielson et al., 2012).
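The analysis step described above can be illustrated with a minimal sketch. It assumes NumPy, uses invented Q sorts (4 participants, 5 statements) rather than any data from this study, and extracts unrotated principal components only; Q studies commonly apply a rotation such as varimax afterwards, which is omitted here. The function name `q_components` is hypothetical.

```python
import numpy as np

# Invented Q sorts for illustration only: 4 participants x 5 statements,
# each row ranking the statements from -2 (least like me) to +2 (most like me).
q_sorts = np.array([
    [-2, -1,  0,  1,  2],   # participant 1
    [-2,  0, -1,  1,  2],   # participant 2 (close to participant 1)
    [ 1, -2,  2,  0, -1],   # participant 3
    [ 1, -2,  2, -1,  0],   # participant 4 (close to participant 3)
])


def q_components(sorts, n_components=2):
    """Unrotated principal components of the person-by-person correlations."""
    # np.corrcoef treats each row as a variable, so this correlates
    # participants with each other rather than statements.
    corr = np.corrcoef(sorts)
    eigvals, eigvecs = np.linalg.eigh(corr)            # ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_components]   # keep the largest ones
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Loadings: how strongly each participant is associated with each component.
    loadings = eigvecs * np.sqrt(np.clip(eigvals, 0.0, None))
    # Share of total variance carried by each retained component.
    explained = eigvals / corr.shape[0]
    return loadings, explained


loadings, explained = q_components(q_sorts)
print(np.round(loadings, 2))
print(np.round(explained, 2))
```

In this toy example, participants 1 and 2 load on the first component with one sign and participants 3 and 4 with the opposite sign, which is how a shared (here, bipolar) perspective shows up in the loadings. The overall sign of a component is arbitrary.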

Yoshizawa et al. (2016, p. 6281) argue that “in participatory research, the Q Method has also been regarded as a practical tool to select participants for a stakeholder dialogue, as well as to identify changes in perspectives before and after the dialogue” but few studies have focused on the use of Q Method in decision-making and public policy to support stakeholder consensus and to address specific research aims (Durning and Brown, 2006; Brown et al., 2008). Previous successful uses of the Q Method include discussion of locally-contentious proposals such as wind farms (Ellis et al., 2007), analyses of environmental policy in the context of conflicts and disagreement (Barry and Proops, 1999; Van Eeten, 2000), and studies of environmental politics (Barry and Proops, 1999). O'Neill and Nicholson-Cole (2009, p. 355) used Q Method to uncover “the impacts that fearful messages in climate change communications have on people's senses of engagement with the issue”. The same study tried to understand the implications of Q Method for public engagement strategies, concluding that participants are more likely to engage deeply with the topic when the communications approaches consider their values, attitudes, beliefs, and experiences, which the Q Method aspires to do. Ellis et al. (2007) argue that using the Q Method provides different perspectives on the theme explored (wind farms and the conflicts around them) and that this method might be the missing connection between different approaches to policy analysis. O'Neill and Nicholson-Cole (2009), on the other hand, used visual images instead of statements and their results showcase the most relevant points of view held by the participants.

The Q Method is oriented to subjectivity and uses a statistical model to analyse the data. In contrast, other methods, such as discourse analysis, focus on text products and processes by using linguistic analysis (Kaufmann, 2012). One other aspect that sets the Q Method apart from other methods is that it exposes and allows subjectivity in the participants' views (Coogan and Herrington, 2011). The Q Method is unique in capturing the collective voices of a group of people while, at the same time, identifying subtle differences between some of these voices (Coogan and Herrington, 2011). Understanding different perspectives will assist in recognizing views that are little known, marginalised or hidden (Mazur and Asah, 2013). Since Q Method is done individually and anonymously, less vocal participants are able to express their views, even when these are controversial. Therefore, a wider range of views will emerge, compared to using other methods, such as focus groups or more traditional methods used in public engagement such as public consultations and workshops.

Many other methods have been used in public participation and engagement. Facilitated workshops have been used in the context of environmental management to assist in resolving conflicts of interest in the management of alien species (Novoa et al., 2018). They were perceived as an appropriate and useful method but the authors noted that conflicts were not always resolved through facilitated workshops with stakeholders. These workshops were very costly and the duration of the engagement process quite long (Novoa et al., 2018).

The Delphi process (also known as the Delphi technique) is a well-known method used when trying to reach a consensus around a certain topic. The Delphi process can be used, for example, in patient and public engagement and it allows expert opinion to be reached using a systematic design, even when the participants' knowledge on the topic is limited (Fink et al., 1984). This process is highly effective in reaching consensus over time, as opinions are swayed and it can be done without having a physical meeting with all participants. On the other hand, while the Delphi process can sometimes include commentary from a diverse group of participants, it does not involve the same interaction between participants as a live discussion, which usually produces a better example of consensus (Hsu and Sandford, 2007). Corbie-Smith et al. (2018) have used the Delphi process in reaching consensus around the design of an ethical framework and guidelines for engaged research. They conclude that even in such a rigorous multi-stage process, there remain some aspects that are omitted.

Long-term public consultations are another approach used in research. Maguire et al. (2019) ran a 3-year engagement initiative on public involvement in environmental change and health research. The authors argue that their approach allowed them to sustain public involvement “knowledge spaces” and that these spaces allowed the researchers to gain different understandings than would otherwise have been possible. However, the research also indicates that it is impossible to reach participatory parity, even over a long-term relationship.

Focus groups have often been used as a means for democratic participation in research (Bloor et al., 2001), as well as an approach to collect information on public understandings and opinions. Focus groups often involve a long process of preparation and, in return, provide rich data and insights into the thoughts and views of a particular group of people (Acocella, 2012). Focus groups, however, are not free from bias and stereotypical answers, as the presence of other people can mean some participants are pressured into socially desirable answers or feel they might be judged based on their opinions (Acocella, 2012).

For successful public engagement in policy-making, practitioners need to understand which methods are effective, but there is often a lack of evaluation of the methods employed. We used the Q Method, as a tool for dialogue, to engage with people living in former metal mining communities in England and Wales to examine how they value their heritage and their preferences for the long-term management of mine waste. There are around 5,000 former metal mines in England and Wales, concentrated in the Pennines and south west of England, and mid to north Wales (Sinnett, 2019). The main period of activity was the eighteenth and nineteenth centuries, with most mines ceasing production by the mid-twentieth century (Bradshaw and Chadwick, 1980). The age of the mines predates current environmental regulations, so the vast majority were abandoned, unrestored, leaving unvegetated spoil heaps across the landscapes (Sinnett, 2019). Despite some sites being restored in the 1980s and 1990s, abandoned spoil heaps often contain elevated concentrations of toxic metals, which can pollute surface water and reduce local air quality, but could also be processed using modern techniques to extract more metals (Crane et al., 2017). However, the historic nature of the mining activity means that many of the sites are protected for their ecological, geological, or cultural significance (Howard et al., 2015; Sinnett, 2019). The tensions between the environmental impact of the mines, these designations and their role in the cultural identity of the areas and as visitor attractions mean that it is essential that local residents have a say in their future.
The results from the Q Method can be found in Sinnett and Sardo (2020), but briefly we identified five perspectives of local residents: those who valued the cultural heritage and wanted the sites left as they are, those who prioritised restoring the sites for nature conservation, reducing pollution or enhancing the appearance of the mines, and those focused on the local economy who were the most receptive to reworking the mines (Sinnett and Sardo, 2020).

In this paper, we describe and present an evaluation of the Q Method, focusing on exploring how participants experienced the method. Specifically, we wanted to understand what participants thought of the Q Method and if, from the participants' perspective, this is an appropriate method for gaining insights into their priorities and values. It is important to state that the researchers implemented the Q Method to answer different research questions during which it appeared, from the comments made by the participants, that it was also acting as a science communication and/or public engagement tool. Therefore, the evaluation was not undertaken in the context of a public engagement project, but the results indicate a strong potential for using the method in public engagement activities and research.

Methodology

Background: The Workshops and Statements

Residents of six former mining areas in England and Wales (Tavistock, Redruth, Matlock, Reeth, Capel Bangor, and Barmouth) were invited to participate in workshops exploring how they value their local mining heritage. Six workshops, each engaging with a different group of residents, were held between September and November 2017. At each paired location one workshop was held in the evening (Tavistock, Matlock, and Capel Bangor) and the other over the subsequent lunchtime (Redruth, Reeth, and Barmouth), as our aim was to engage with people from different demographic groups (employed people, retired people, etc.). All workshops were held in informal locations such as town centre hotels, village halls, etc.

A mix of purposive and snowball sampling was used to recruit participants (Watts and Stenner, 2005). To try to avoid the workshops attracting only people with a specific interest in mining heritage and achieve an unbiased sample (Watts and Stenner, 2005), 100 to 200 addresses lying within 5 km of the workshop venue were randomly sampled, using the Royal Mail's Postcode Address File. Households received an invitation to the workshop, explaining its purpose and giving a brief explanation of the method. The only conditions for attendance were being over 18 years old and a resident of the area for at least 2 years. The invitations included a pre-paid reply slip, email address, and telephone number to reply to the invitation. Snowball sampling was then used with those residents who replied to the invitation. A total of 38 residents attended the six workshops, in line with recommended sample sizes (Watts and Stenner, 2005).

The statements used in the Q Method process were derived from the academic and policy literature, as well as articles in the local press related to mining. Initially, the research team identified 240 statements representing the breadth of opinion on the mining heritage and its management, which were reviewed iteratively by the research team using a sampling grid (Webler et al., 2009). Q Method demands that statements should be carefully worded, so that they are easily understandable and fit on a small card (Institute of Medicine, 2013). Statements were combined or adjusted, where necessary, to avoid repetition and technical language, or to use similar language to convey differing priorities. A final set of 33 statements was selected for the workshops. The statements covered a range of opinions of mining legacy, its value, and options for its management, with a particular focus on the potential for metal recovery from the wastes. Example statements are: Mine wastes should be reworked to extract more metals from the waste; The absence of greenery in large areas increases the negative impact of mine waste on the landscape; Mine wastes always have a negative impact on the landscape; The development of greenery on mine wastes should be left to natural processes; Those responsible for the future of mine wastes should prioritise cleaning up pollution; The remnants of the former metal mining industry are an important part of the culture, history, and identity of this area; The public should not be responsible for funding the management of abandoned mines (Sinnett and Sardo, 2020).

Ahead of the workshops with residents, a pilot session was conducted with six participants (randomly recruited at the University) to ensure that the method, instructions, and statements were clear.

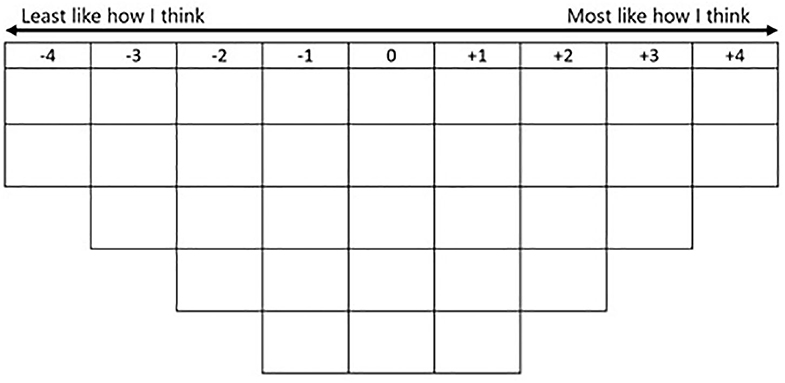

In the workshops, participants were first given a brief overview of the research and wider project, as well as a detailed explanation of the Q Method and what was expected of them. There was an opportunity to ask questions and clarify any issues. Participants were then asked to individually sort the statements on a grid with 33 positions in an inverted normal distribution from “least like how I think (−4)” to “most like how I think (+4)” (Figure 1). After they were happy with their “Q sorts” the participants were asked to complete a paper questionnaire that asked them to provide some explanation for their ranking of the statements that most strongly and least strongly represented their views. The paper questionnaire also asked them to share any statement they found particularly easy or difficult to place, whether there was anything missing from the statements, and some basic sociodemographic information. Participants were encouraged to complete the ranking and questionnaire individually, without discussing it with the wider group. On average the workshops lasted from 60 to 90 min and participants were free to leave after they finished, although most chose not to leave until the end of the workshop. After all participants finished, the workshop concluded with an informal chat and discussion about the statements, research project, and the Q Method. Although the participants were asked to do the activity individually, the researchers opted for a group session for the workshops. There is always value in bringing people together and useful information, questions, and comments were exchanged both during the introduction session and the final debrief. The group setting was also more efficient in terms of time than individual Q Methods.
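To make the forced-distribution idea concrete, the sketch below checks that a completed Q sort fits a grid of 33 positions scored from −4 to +4. The column capacities in `GRID` are an assumption chosen only so that they sum to 33; the actual column heights used in the workshops are those shown in Figure 1 and are not reproduced here. The function names are hypothetical.

```python
from collections import Counter

# Hypothetical column capacities for a 33-position grid scored -4 to +4.
# These values are an assumption for illustration; they are NOT taken from
# the study's Figure 1.
GRID = {-4: 2, -3: 3, -2: 4, -1: 5, 0: 5, 1: 5, 2: 4, 3: 3, 4: 2}


def validate_q_sort(sort, grid=GRID):
    """Return a list of problems with a Q sort (an empty list means valid).

    `sort` maps each statement number (1..N) to the column score it was
    placed in, where N is the total number of grid positions.
    """
    problems = []
    expected = set(range(1, sum(grid.values()) + 1))
    placed = set(sort)
    if expected - placed:
        problems.append(f"unplaced statements: {sorted(expected - placed)}")
    if placed - expected:
        problems.append(f"unknown statements: {sorted(placed - expected)}")
    # Every column must hold exactly as many cards as it has positions.
    counts = Counter(sort.values())
    for score in sorted(set(grid) | set(counts)):
        allowed = grid.get(score, 0)
        found = counts.get(score, 0)
        if found != allowed:
            problems.append(f"column {score:+d}: {found} placed, {allowed} allowed")
    return problems


def fill_in_order(grid=GRID):
    """Build a trivially valid sort by filling the columns from left to right."""
    sort, statement = {}, 1
    for score in sorted(grid):
        for _ in range(grid[score]):
            sort[statement] = score
            statement += 1
    return sort


valid_sort = fill_in_order()
broken_sort = dict(valid_sort)
broken_sort[1] = 4  # move statement 1 from the -4 column to the +4 column

print(validate_q_sort(valid_sort))
print(validate_q_sort(broken_sort))
```

A check of this kind could be run when transcribing paper grids, since a misplaced or missing card would otherwise only surface during analysis.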

Online Questionnaire: Design

To evaluate the participants' experience of Q Method, an online questionnaire was designed. A link to the questionnaire was sent to the workshop participants shortly after the workshop. Online methods have been shown to be a convenient way to quickly and effectively gather data soon after workshops (Sardo and Weitkamp, 2017). Since there is no human interviewer (Couper et al., 2002), participants tend to feel more comfortable and are likely to be more honest in the answers they give. As Q Method requires considerable time commitment and effort from the participants, the researchers decided against asking participants to complete a traditional paper questionnaire at the end of the workshop, or gathering any other type of immediate feedback.

However, despite being an acceptable and popular evaluation method, online questionnaires have some disadvantages. As argued by Grand and Sardo (2017), one disadvantage is that people are free to ignore the invitation to complete the questionnaire. Grand and Sardo advise that researchers can address this issue by “sending a carefully worded, friendly–and short–email alongside the link” (Grand and Sardo, 2017, p. 3). Those attending the workshops were made aware during the workshop that they would be asked to complete the questionnaire, and they met the researcher conducting the evaluation. To further encourage participation, the online questionnaire was designed to be short and quick to complete; together, these strategies usually help mitigate a low response rate.

The questionnaire comprised mostly closed questions offering a list of response options. Closed questions are more inclusive, as they do not discriminate against less responsive participants (De Vaus, 2002). The questionnaire included some open-ended questions, allowing the respondents to express themselves in their own terms (Groves et al., 2004), as well as adding any further comments or thoughts. However, as open-ended questions traditionally have a lower response rate and may be perceived as laborious by respondents, they were kept to a minimum (De Vaus, 2002). The questionnaire was organised in four sections: method, statements, information about the participants, and any further comments they would like to add. It was set up using the Online Surveys tool (previously the Bristol Online Survey, BOS).

In addition to the online questionnaire, unstructured observations were conducted during the workshops, as these offer a useful way to complement other evaluation methods. Observation allows the evaluator to become aware of subtle or routine aspects of a process and gather more of a sense of an activity as a whole (Bryman, 2004). Grand and Sardo (2017) note that observations are a particularly useful evaluation method when the researchers want to find out people's reactions to an activity and how they interact with it. The evaluator made detailed notes immediately after each workshop, and these were supplemented by additional reflections in the days after the workshops.

Online Questionnaire: Participant Recruitment

The workshops engaged with 38 local residents, of which 32 provided an email address and agreed to be contacted. As some participants were couples, sharing one email address, a total of 28 invitations was sent. The invitations asked participants to complete the online questionnaire and included a direct link to the questionnaire. The email invitations were personally addressed, using participants' names. Where couples shared one email address, they were asked to complete the questionnaire separately.

The invitation was emailed to participants within 2 days of them attending the workshop. It included brief information about the project and an acknowledgment that they had attended the workshop (including reference to its time and location), to make it personal and relevant. There was information on what was expected from the participants, asking them to follow a web link to access the online questionnaire and indicating approximately how long it would take to complete. The invitation mentioned that more information could be found on the questionnaire's landing page and that it was anonymous. The landing page included a background to the project and its funders, and information on research ethics and how the data would be used, stored and handled. A reminder was sent 1 week after the original email, followed by a final reminder 2 weeks after participants attended the workshop. The evaluation was approved by the University of the West of England's Research Ethics Committee. Participants were informed how the data collected would be used, including the publication of their verbatim quotes, and gave their informed consent prior to participation.

Data Analysis

Data from the questionnaires were collated and answers to each question analysed using Microsoft Excel. For open-ended questions, the researchers looked for common (most frequent) themes in answer to each question and/or common and emergent theme areas, sorting them into excerpt files to locate patterns throughout the responses. An inductive approach allowed concepts and themes to be derived from the raw data, rather than approaching the project with pre-conceived ideas (Thomas, 2006). Once coded by hand, themes were reviewed and re-organised to create a thematic hierarchy (Braun and Clarke, 2006). To illustrate the main points of discussion using the participants' own words, relevant quotes were extracted from the data (Chandler et al., 2015). Observation notes and reflections were looked at in the context of the thematic hierarchy.

Results and Discussion

Demographics

A total of 21 completed questionnaires was available for analysis, a 65.6% response rate among the 32 participants who agreed to be contacted. Of the respondents, 61.9% (n = 13) self-identified as male and 38.1% (n = 8) as female. Most respondents were 45–64 years old (38.1%, n = 8) or over 65 years old (57.1%, n = 12) with only one respondent in the 25–44 years age category. Most participants were either retired (42.9%, n = 9) or self-employed (33.3%, n = 7). In terms of the highest degree or level of education, 14.3% (n = 3) had a first degree, 47.6% (n = 10) had completed a post-graduate or professional degree, and another 14.3% (n = 3) had completed a PhD. The remaining three respondents stated they had completed an associate degree (n = 1), had attended some college, but had no degree (n = 1), or had completed secondary education (n = 1).

Previous Experience With Q Method

For the vast majority of respondents (90.5%, n = 19) this was their first experience of the Q Method, with only two (9.5%) stating they had some knowledge about the method. Almost half the respondents found the method difficult (47.6%, n = 10), while 23.8% (n = 5) found it easy or very easy.

Perceptions of Using Q Method

Respondents were asked what the easiest and the most challenging aspects of using Q Method were. The majority of participants (61.9%, n = 13) found reading the statements the easiest part of the process. They went on to explain that the statements were clear and easy to interpret, the questions were short and they were clearly printed on the card. Some respondents added that the typeface and font size were good.

Ranking the statements was found to be the most challenging aspect of the Q Method by 61.9% (n = 13) of the respondents, with a further 14.3% (n = 3) stating that providing explanations for their ranking on the paper questionnaire was the most challenging part.

Language

The respondents were asked to reflect specifically on the statements, with a series of questions about the language used, the number of statements available and overall comments. Despite some comments about ambiguous statements (see below), the vast majority (71.4%, n = 15) found the language to be clear or very clear:

[statements were] Succinct and well written. (Respondent 17)

Only three respondents (14.3%) found the language on the statements to be unclear:

Some questions contradicted each other. (Respondent 1)

Some were unclear and required further information. (Respondent 16)

Some respondents also found there were a few ambiguous statements:

Some statements were ambiguous or required context. (Respondent 16)

Although respondents were broadly comfortable with the language used, a few commented it would have been useful to have access to a glossary:

It may have been useful for some people to have had a glossary – e.g., I heard one person asking what remediation meant. (Respondent 7)

This is interesting; while planning and designing the workshops and materials, the researchers contemplated including a glossary. However, after careful consideration and an examination of the Q Method literature, we decided not to include one. Providing predefined definitions to participants violates the purpose of Q Method, which is to understand the perspectives of the participants across the set of statements (Watts and Stenner, 2005). Given that terms such as “value” and “restoration” often mean different things to different people, including experts from different fields of research, not including a glossary was decided on as a way to avoid “researcher interference” (Webler et al., 2009) and influencing people's ranking of statements. In selecting the statements, we gave preference to those with little or no technical language, but decided to include the statement “To achieve a successful restoration the mine waste has to be remediated and the greenery re-established” as an accurate representation of the breadth of opinion. However, the question related to remediation highlighted above was actually asked following the introduction to the research project at the beginning of the workshop, and a verbal explanation was provided in the context of the overall research project. We are yet to find any previous studies that have used a glossary as an aid to the Q Method. Part of the method is to include contradictory statements, as they are supposed to represent the breadth of opinion on a subject. The fact that people place different interpretations on the statements is welcomed in the Q Method (Watts and Stenner, 2005).

Although Q Method relies on statements containing “excess meaning” (Webler et al., 2009) to allow different interpretations of the statements, this may affect its utility as a public engagement tool if participants do not understand the terms used in statements to such a degree that they cannot place them to accurately reflect their perspective. We prioritised statements written in plain English without unnecessary jargon and provided an opportunity for people to ask questions to avoid this, and given the feedback this appears to have been largely successful. There is a balance between removing technical language enough that statements can be understood by participants, and maintaining sufficient detail that the statements are representative of the breadth of opinion concerning a topic (Doody et al., 2009). We would suggest that those considering using Q Method for public participation are mindful of this balance and remove unnecessary technical language, whilst maintaining “excess meaning” in statements to ensure that different perspectives can be explored.

Of the six workshops, two were held in Wales, where both English and Welsh are official languages. It is important to adapt to the audience and to be aware of details such as different local languages; in 2013, the Institute of Medicine used the Q Method to engage the public in critical disaster planning and decision-making. Although the majority of the sessions were conducted in English, one workshop comprised only Spanish-speaking residents and was conducted in Spanish, with all materials available in that language (Institute of Medicine, 2013). Providing participants with materials in the language they feel most comfortable with, or conducting interviews in their native language, is good practice. Sardo and Weitkamp (2017, p. 225) concluded that doing so helped “to eliminate any barriers that might arise from a potential lack of participants' confidence in spoken English and to increase informants' willingness to participate and to facilitate open and frank discussion.”

Therefore, all materials were made available in both English and Welsh, and a translator was present for workshops in Wales for those wishing to speak in Welsh. Where materials were available in Welsh, respondents commented on how they welcomed this, particularly in one location:

Great to have them in Welsh - which I used throughout. (Respondent 19)

Even participants that decided not to do the activity in Welsh were pleasantly surprised by having the two languages available.

In addition to adapting to local languages, the researchers also catered for participants' individual needs. For example, during one of the workshops, one participant self-identified as dyslexic and was given further support by the researchers. One researcher sat with the participant and completed the paper questionnaire for them. In another case, a resident completed the sorting with help from a relative to read the statements.

Content and Quantity

Q Method is highly structured, and guidance on developing the Q set of statements indicates that it often consists of 40–50 statements (Van Exel and de Graaf, 2005), although it is also possible to use fewer or more statements (Van Eeten, 1998). Opinions were split on the number of statements used in these workshops: 52.4% (n = 11) felt the number of statements was about right, while 47.6% (n = 10) thought there were too many statements to sort and rank. No one felt there should be more statements. The main reason given for feeling there were too many statements was that they felt some were repetitive (24.1%, n = 7) or very similar, making it difficult to distinguish them and rank accordingly:

Though generally well written some statements gave the impression at first of being repetitious. (Respondent 10)

This comment is interesting as it suggests that they found the statements repetitive initially but perhaps less so as the activity progressed. Some of the statements were deliberately similar, to reflect nuances in management. One respondent found it “difficult to judge” the number of statements available, adding that “perhaps less statements would help focus and make ordering them easier” (Respondent 21). Another participant recognised that the number of statements was a result of the wide variety of topics included, which was welcomed:

Seemed to be a good variety of issues addressed - requiring a lot of statements. (Respondent 19)

Based on the participants' feedback, the decision to use a Q set of 33 statements was appropriate, as a bigger set might have overwhelmed the participants.

Respondents were asked if they had any opinion about the content of the statements, and were able to choose from a range of options or add their own. A high number of respondents indicated they found the statements thought-provoking (44.8%, n = 13), well-written (17.2%, n = 5), and interesting (6.9%, n = 2). No one stated there was too much technical language:

Found it interesting, thought provoking and challenging. (Respondent 4)

The Q-sort method seemed quite effective, it's certainly thought provoking! (Respondent 21)
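As a quick arithmetic check on the percentages reported in this subsection, the short Python sketch below recomputes them from the stated counts. The denominators (21 and 29) are assumptions inferred from the reported figures; they are not stated in the text, and the function name `pct` is purely illustrative.

```python
def pct(count, total):
    """Return count as a percentage of total, rounded to one decimal place."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total, 1)


# Assumed denominators, inferred from the reported percentages (not stated in the text).
N_QUANTITY = 21  # responses to the question on the number of statements
N_CONTENT = 29   # responses to the question on the content of the statements

quantity = {
    "about right": pct(11, N_QUANTITY),
    "too many": pct(10, N_QUANTITY),
}
content = {
    "repetitive": pct(7, N_CONTENT),
    "thought-provoking": pct(13, N_CONTENT),
    "well-written": pct(5, N_CONTENT),
    "interesting": pct(2, N_CONTENT),
}

for label, value in {**quantity, **content}.items():
    print(f"{label}: {value}%")
```

Under these assumed denominators the recomputed values match the reported ones, which suggests that the two questions were summarised over different numbers of responses.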

Workshops

When designing the workshops, the researchers were conscious that the Q Method could pose challenges and that it would be time-consuming, as emphasised by Van Exel and de Graaf (2005). Some open comments reflected that concern, with respondents stating the activity made them reflect but was demanding:

It really made you think. (Respondent 4)

It was clear but demanding. (Respondent 15)

Respondents found the overall workshop and method itself to be stimulating. “Straightforward” was used many times to describe the method:

The process was well explained and quite straightforward. (Respondent 21)

Q-sort method is straight forward and well presented in the card/grid format. (Respondent 17)

Overall, the method was well received by the participants:

The method used was well explained by the researchers, not sure how it could be improved. (Respondent 21)

Some participants commented that the format of the workshop was appropriate and that having the statements on paper made the process easier:

I feel handling the statements on paper was useful (probably less easy to order thoughts if it were a digital format). (Respondent 18)

Overall, for all workshops, the participants were observed to be enthusiastic about the topic and very engaged throughout the sessions. They dedicated a considerable amount of time and effort and came across as very capable of reflecting on the complex and controversial issues under discussion, which echoes earlier research (Institute of Medicine, 2013).

The Q Method made the participants feel that their opinion was being sought and valued, and allowed them to express their views on mining heritage in the context of their lives. The evaluator observed the participants mentioning during informal discussions that the method was a good way for them to express their opinions, concerns, and priorities regarding local mining heritage; this was observed across all workshops, in particular during the final discussion before the workshops concluded. This led them to feel generally happy and positive about their participation, and some were observed mentioning that they felt “heard.” Similar results were reported by Doody et al. (2009), who found the Q Method facilitated an inclusive approach and that participants improved their understanding of the relevance of sustainable development, the topic discussed. However, it must be noted that our workshop participants were quite highly educated and this may influence the results. Therefore, the decision to use Q Method and whether to do this in a workshop setting or individually should consider the needs of intended participants.

Pre- and Post-Q Method Activities

The researchers were conscious of how demanding the Q Method is and how sensitive the subject of mining heritage is for local residents. The main purpose of the workshops was to find out how local residents value their mining heritage and if (and how) they would like it managed in the future. Therefore, the researchers did not want to introduce any bias or present solutions that could influence the participants' ranking of statements, and so decided not to present any data or facts during the workshop.

The observations and reflections showed that the participants were interested in a wider discussion on the subject and keen to ask questions about specific statements, the overall project and the method itself; this was observed across all workshops. Although the method was deemed to be demanding and time-consuming, participants nonetheless suggested possible “add-ons” to the session. One suggestion was to include more discussion amongst the participants and researchers:

More discussion beforehand, maybe some debate. (Respondent 9)

More oral discussion. (Respondent 13)

Institute of Medicine (2013, p. 37) integrated discussion between participants and reported that “the ranking process also stimulates conversation as participants discuss why they ranked a particular statement the way they did.” Having time to reflect on the activities and their individual ranking of statements or an opportunity to review their rankings would have been welcomed by participants in this study, who expressed interest in a follow-up session:

Though time consuming (and therefore perhaps not welcome) repeating the procedure after an interval (of say a week) would be interesting to check on the correspondence between the two responses. (Respondent 8)

Allow time for assimilation and reflection. Opportunity to review after a day or two. (Respondent 10)

It would be interesting to repeat the same Q sort after a period of time (say two weeks) to ascertain how stable the results remained. (Respondent 15)

It should be noted that the “improvement” question was open: an empty text box where participants could add any suggestion, with no pre-conditioning or bias. Although the suggested time for reflection varies (from 1 day to 2 weeks), it seems that revisiting their individual ranking would be something these participants would welcome. The literature is scarce in describing opportunities to re-do the ranking, but in one study participants were given the opportunity to modify their Q-sort rank after group discussion (Institute of Medicine, 2013). However, one participant stated that going back and forth with the rank might not be ideal:

As there is no right answer there is a tendency to keep altering the rankings. It might have been interesting (if not accurate!) to make people rank them quickly on a first reaction. (Respondent 3)

Although the researchers acknowledge the value of group discussion, it felt important to capture individual opinions and views, not those shaped and influenced by a wider group discussion, as these would be of less value for our research.

An individual interview, questionnaire, or ranking activity allows the personal worldview of the participant to be explored in detail. On the other hand, group discussions add richness and a level of emotion that is not present in individual activities (Lambert and Loiselle, 2008). There is no consensus regarding individual vs. collective approaches. However, some groups suggest that focusing on the individual might be a good strategy when sensitive topics are involved. Gaskell (2000) argues that there is not enough evidence to draw conclusions and that, in the end, it is down to the nature of the research topic, the research objectives and the types of respondents.
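Several respondents quoted above suggested repeating the Q sort after an interval to check how stable the rankings remain. This was not done in the present study. As a minimal sketch of how such a check could be made, the Python example below computes the Pearson correlation between two Q sorts from the same participant. The nine-statement sorts, the -2 to +2 scale and the function name `pearson` are hypothetical and chosen only for illustration; they are not data or procedures from this study.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length lists of rankings."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("both sorts must rank the same statements (at least two)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("a sort with identical rankings has no defined correlation")
    return cov / sqrt(var_x * var_y)


# Hypothetical rankings of the same nine statements on a -2..+2 grid.
# Position i in each list is the score given to statement i.
first_sort = [-2, -1, -1, 0, 0, 0, 1, 1, 2]
second_sort = [-1, -2, -1, 0, 0, 1, 0, 1, 2]

r = pearson(first_sort, second_sort)
print(f"test-retest correlation: {r:.2f}")
```

A value close to 1 would indicate that the participant placed the statements in much the same positions on both occasions; what counts as acceptably stable would be a judgment for the researchers.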

Final Remarks

The Q Method seems to demand more energy and focus from the participants than other methods such as surveys and focus groups, but it appears to be a fairly straightforward and pleasant approach; participants stated they had enjoyed the experience. Van Exel and de Graaf (2005) stated their participants “spontaneously indicate they have enjoyed participating in the study and that they experienced it as instructive” (p. 17). Danielson et al. (2012) found participants were happy with the method and found it interesting or stimulating, although some participants did not feel they could express themselves. This was not evidenced in this study; on the contrary, participants felt their opinions were of value and that they were, somehow, making a contribution:

It was an interesting exercise in decision-making. (Respondent 15)

From the researchers' point of view, the Q Method was a labour-intensive experience. As stated by Barry and Proops (1999, p. 344), “the first cost of Q is that it is time intensive for the researcher.” Creating the statement cards is a long and intricate process (Danielson et al., 2012; Institute of Medicine, 2013); however, it is a powerful methodology that encourages reflection on complicated subjects and is suitable for exploring and explaining patterns in subjectivities (Van Exel and de Graaf, 2005). The Q Method proved successful in engaging local residents, while respecting their individual views on a potentially contentious subject, making the participants feel that they had a voice and that there was value in their personal opinions on local mining heritage.

Conclusions and Recommendations

Most participants found the Q Method challenging but at the same time straightforward. It was also frequently described as stimulating and thought-provoking. This evaluation also uncovered factors that contribute to the successful implementation and use of the Q Method in the context of public engagement and elsewhere.

We advise having a clear briefing session at the beginning of the workshop, allowing enough time to explain to participants what is expected of them and what materials are available. For example, it would be worth justifying why there is no glossary available.

Clear statements are crucial when using the Q Method. Statements should be clear both in their language (unambiguous) and in their physical appearance. Attention should be paid to font type and size, for example. Our participants reported that reading the statements was the easiest part of the process, made possible by most of the statements being clear, easy to interpret, short, and clearly printed on card. However, attention should also be paid to the choice and style of the wording, and to creating interesting, well-written and thought-provoking statements; this is what participants welcomed the most. In our case we removed as much technical language as possible from statements taken from academic and policy literature, and piloted them prior to developing a final set.

Like all public engagement activities and interventions, adapting to the audience is critical. Researchers need to understand the context in which Q Method will be administered. It is important to be aware of participants' different needs, such as language.

Based on our data and experience, we can say that Q Method is an effective way to engage with a group of people and to assess their preferences on specific issues. Q Method makes the participants feel heard and their opinions valued—it is, in other words, an inclusive public engagement method.

In summary, our recommendations for using the Q Method are:

• Use clear and succinct language, making it easy to interpret.

• Avoid repetitive statements.

• Do not use too many statements.

• Adapt the method to the participants and their preferences (for example, language, sorting the statements on paper or electronically, using questionnaires or interviews to elicit qualitative responses, etc.).

• It is crucial to make people feel listened to and that their opinion is valued.

• Participants enjoy a challenge and like to feel “thought-provoked”; do not oversimplify.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by The University of the West of England's Research Ethics Committee. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

AS and DS have both contributed to this paper. AS designed the evaluation, with input from DS. Data collection was done online and administered by AS. AS analysed the data with help from DS. The paper's first draft was prepared by AS with input and later revision by DS. All authors contributed to the article and approved the submitted version.

Funding

This research was conducted through the INSPIRE: In situ Processes In Resource Extraction from waste repositories project funded by the Natural Environment Research Council and Economic and Social Research Council (Grant Reference NE/L013916/1).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

1. ^NCCPE website. Available online at: https://www.publicengagement.ac.uk/what

References

Acocella, I. (2012). The focus groups in social research: advantages and disadvantages. Qual. Quant. 46, 1125–1136. doi: 10.1007/s11135-011-9600-4

Barry, J., and Proops, J. (1999). Seeking sustainability discourses with Q methodology. Ecol. Econ. 28, 337–345. doi: 10.1016/S0921-8009(98)00053-6

Bauer, M., and Jensen, P. (2011). The mobilization of scientists for public engagement. Public Understanding Sci. 20, 3–11. doi: 10.1177/0963662510394457

Bloor, M., Frankland, J., Thomas, M., and Robson, K. (2001). Focus Groups in Social Research. London: Sage. doi: 10.4135/9781849209175

Bradshaw, A. D., and Chadwick, M. J. (1980). The Restoration of Land: The Ecology and Reclamation of Derelict and Degraded Land. Berkeley, CA; Los Angeles, CA: University of California Press.

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101. doi: 10.1191/1478088706qp063oa

Brown, S. R., Durning, D. W., and Selden, S. C. (2008). “Q methodology,” in Handbook of Research Methods in Public Administration, eds G. J. Miller and K. Yang (Portland, OR: CRC Press), 721–763. doi: 10.1201/9781420013276.ch37

Chandler, R., Anstey, E., and Ross, H. (2015). Listening to voices and visualizing data in qualitative research. SAGE Open 5, 1–8. doi: 10.1177/2158244015592166

Coogan, J., and Herrington, N. (2011). Q methodology: an overview. Res. Second. Teach. Educ. 1, 24–28.

Corbie-Smith, G., Wynn, M., Richmond, A., Rennie, S., Green, M., Hoover, S. M., et al. (2018). Stakeholder-driven, consensus development methods to design an ethical framework and guidelines for engaged research. PLoS ONE 13:e0199451. doi: 10.1371/journal.pone.0199451

Couper, M., Traugott, M., and Lamias, M. (2002). Web survey design and administration. Public Opin. Q. 65, 230–253. doi: 10.1086/322199

Crane, R., Sinnett, D., Cleall, P., and Sapsford, D. (2017). Physicochemical composition of wastes and co-located environmental designations at legacy mine sites in south west England and Wales: implications for their resource potential. Res. Conserv. Recycl. 123, 117–134. doi: 10.1016/j.resconrec.2016.08.009

Danielson, S., Tuler, S. T., Santos, S. L., Webler, T., and Chess, C. (2012). Three tools for evaluating participation: focus groups, Q method, and surveys. Environ. Pract. 14, 101–109. doi: 10.1017/S1466046612000026

De Vaus, D. (2002). Surveys in Social Research. Social Research Today, 5th Edn. New York, NY: Routledge. doi: 10.4135/9781446263495

Doody, D. G., Kearney, P., Barry, J., Moles, R., and O'Regan, B. (2009). Evaluation of the Q-method as a method of public participation in the selection of sustainable development indicators. Ecol. Indic. 9, 1129–1137. doi: 10.1016/j.ecolind.2008.12.011

Durning, D. W., and Brown, S. R. (2006). “Q methodology and decision making,” in Handbook of Decision Making, ed G. Morçöl (New York, NY: CRC Press), 537–563. doi: 10.1201/9781420016918.ch29

Ellis, G., Barry, J., and Robinson, C. (2007). Many ways to say ‘no' and different ways to say ‘yes': applying Q-method to understanding public acceptance of wind farm proposals. J. Environ. Plann. Manag. 50, 517–551. doi: 10.1080/09640560701402075

Fink, A., Kosecoff, J., Chassin, M., and Brook, R. H. (1984). Consensus methods: characteristics and guidelines for use. Am. J. Public Health 74, 979–983. doi: 10.2105/AJPH.74.9.979

Gaskell, G. (2000). “Individual and group interviewing,” in Qualitative Researching With Text, Image and Sound: A Practical Handbook for Social Research, eds P. Atkinson, M. W. Bauer, and G. Gaskell (London: SAGE) 38–48.

Grand, A., and Sardo, A. M. (2017). What works in the field? Evaluating informal science events. Front. Commun. 2:22. doi: 10.3389/fcomm.2017.00022

Groves, R., Fowler, F., Couper, M., Lepkowski, J., Singer, E., and Tourangeau, R. (2004). Survey Methodology. Wiley Series in Survey Methodology, 1st Edn. Hoboken, NJ: Wiley-Interscience.

House of Commons (2013). Public Engagement in Policy-Making. Second Report of Session 2013–14. House of Commons Public Administration Select Committee, London, United Kingdom. Available online at: https://publications.parliament.uk/pa/cm201314/cmselect/cmpubadm/75/75.pdf (accessed March, 2019).

Howard, A. J., Kincey, M., and Carey, C. (2015). Preserving the legacy of historic metal-mining industries in light of the water framework directive and future environmental change in mainland Britain: challenges for the heritage community. Hist. Environ. Policy Pract. 6, 3–15. doi: 10.1179/1756750514Z.00000000061

Hsu, C.-C., and Sandford, B. A. (2007). The Delphi technique: making sense of consensus. Pract. Assess. Res. Eval. 12:10. doi: 10.7275/pdz9-th90

Institute of Medicine (2013). Engaging the Public in Critical Disaster Planning and Decision Making: Workshop Summary. Washington, DC: The National Academies Press.

Kaufmann, G. (2012). Environmental Justice and Sustainable Development With a Case Study in Brazil's Amazon Using Q Methodology. Available online at: https://d-nb.info/1029850070/34 (accessed February, 2019).

Lambert, S. D., and Loiselle, C. G. (2008). Combining individual interviews and focus groups to enhance data richness. J. Adv. Nurs. 62, 228–237. doi: 10.1111/j.1365-2648.2007.04559.x

Maguire, K., Garside, R., Poland, J., Fleming, L. E., Alcock, I., Taylor, T., et al. (2019). Public involvement in research about environmental change and health: a case study. Health 23:215233. doi: 10.1177/1363459318809405

Mazur, K. E., and Asah, S. T. (2013). Clarifying standpoints in the gray wolf recovery conflict: procuring management and policy forethought. Biol. Conserv. 167, 79–89. doi: 10.1016/j.biocon.2013.07.017

Novoa, A., Shackleton, R., Canavan, S., Cybèle, C., Davies, S. J., Dehnen-Schmutz, K., et al. (2018). A framework for engaging stakeholders on the management of alien species. J. Environ. Manag. 205, 286–297. doi: 10.1016/j.jenvman.2017.09.059

O'Neill, S., and Nicholson-Cole, S. (2009). “Fear Won't Do It”: promoting positive engagement with climate change through visual and iconic representations. Sci. Commun. 30, 355–379. doi: 10.1177/1075547008329201

Sardo, A. M., and Weitkamp, E. (2017). Environmental consultants, knowledge brokering and policy-making: a case study. Int. J. Environ. Policy Decis. Making 2, 221–235. doi: 10.1504/IJEPDM.2017.085407

Sinnett, D. (2019). Going to waste? The potential impacts on nature conservation and cultural heritage from resource recovery on former mineral extraction sites in England and Wales. J. Environ. Plann. Manag. 62, 1227–1248. doi: 10.1080/09640568.2018.1490701

Sinnett, D., and Sardo, A. M. (2020). Former metal mining landscapes in England and Wales: five perspectives from local residents. Landsc. Urban Plan. 193:103685. doi: 10.1016/j.landurbplan.2019.103685

Thomas, D. R. (2006). A general inductive approach for analyzing qualitative evaluation data. Am. J. Eval. 27, 237–246. doi: 10.1177/1098214005283748

Van Eeten, M. (1998). Dialogues of the Deaf: Defining New Agendas for Environmental Deadlocks. Delft: Eburon.

Van Eeten, M. (2000). “Recasting environmental controversies,” in Social Discourse and Environmental Policy, eds H. Addams and J. Proops (Cheltenham: Edward Elgar), 41–70.

Van Exel, N. J. A., and de Graaf, G. (2005). Q Methodology: A Sneak Preview. Available online at: www.jobvanexel.nl (accessed March, 2019).

Watts, S., and Stenner, P. (2005). Doing Q methodology: theory, method and interpretation. Qual. Res. Psychol. 2, 67–91. doi: 10.1191/1478088705qp022oa

Webler, T., Danielson, S., and Tuler, S. (2009). Using Q Method to Reveal Social Perspectives in Environmental Research. Greenfield, MA: Social and Environmental Research Institute. Available online at: http://www.seri-us.org/sites/default/files/Qprimer.pdf (accessed March, 2019).

Keywords: Q Method, Q Sort, evaluation, public engagement, consultation, public perspectives, preferences

Citation: Sardo AM and Sinnett D (2020) Evaluation of the Q Method as a Public Engagement Tool in Examining the Preferences of Residents in Metal Mining Areas. Front. Commun. 5:55. doi: 10.3389/fcomm.2020.00055

Received: 30 October 2019; Accepted: 03 July 2020;

Published: 11 August 2020.

Edited by:

Tracylee Clarke, California State University, Channel Islands, United States

Reviewed by:

Justin Reedy, University of Oklahoma, United States

Helen Featherstone, University of Bath, United Kingdom

Copyright © 2020 Sardo and Sinnett. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ana Margarida Sardo, margarida.sardo@uwe.ac.uk