94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Commun., 08 April 2020

Sec. Psychology of Language

Volume 5 - 2020 | https://doi.org/10.3389/fcomm.2020.00017

This article is part of the Research TopicWords in the WorldView all 18 articles

Both localist and connectionist models, based on experimental results obtained for English and French, assume that the degree of semantic compositionality of a morphologically complex word is reflected in how it is processed. Since priming experiments using English and French morphologically related prime-target pairs reveal stronger priming when complex words are semantically transparent (e.g., refill–fill) compared to semantically more opaque pairs (e.g., restrain–strain), localist models set up connections between complex words and their stems only for semantically transparent pairs. Connectionist models have argued that the effect of transparency should arise as an epiphenomenon in PDP networks. However, for German, a series of studies has revealed equivalent priming for both transparent and opaque prime-target pairs, which suggests mediation of lexical access by the stem, independent of degrees of semantic compositionality. This study reports a priming experiment that replicates equivalent priming for transparent and opaque pairs. We show that these behavioral results can be straightforwardly modeled by a computational implementation of Word and Paradigm Morphology (WPM), Naive Discriminative Learning (NDL). Just as WPM, NDL eschews the theoretical construct of the morpheme. NDL succeeds in modeling the German priming data by inspecting the extent to which a discrimination network pre-activates the target lexome from the orthographic properties of the prime. Measures derived from an NDL network, complemented with a semantic similarity measure derived from distributional semantics, predict lexical decision latencies with somewhat improved precision compared to classical measures, such as word frequency, prime type, and human association ratings. We discuss both the methodological implications of our results, as well as their implications for models of the mental lexicon.

Current mainstream models of lexical processing assume that complex words such as unmanagability comprise several morphemic constituents, un-, manage, -able, and -ity, that recur in the language in many other words. Since early research in the seventies (e.g., Taft and Forster, 1975), it has been argued that the recognition of morphologically complex words is mediated by such morphemic units (for a review of models of morphological processing, see Milin et al., 2017b).

One of the issues under investigation in this line of research is whether visual input is automatically decomposed into morphemes before semantics is accessed. Several studies have argued in favor of early morpho-orthographic decomposition (Longtin et al., 2003; Rastle et al., 2004; Rastle and Davis, 2008), but others argue that semantics is involved from the start (Feldman et al., 2009), that the effect is task dependent and is limited to the lexical decision task (Norris and Kinoshita, 2008; Dunabeitia et al., 2011; Marelli et al., 2013), or fail to replicate experimental results central to decompositional accounts (Milin et al., 2017a).

Another issue that is still unresolved is whether complex words are potentially accessed through two routes operating in parallel, one involving decomposition and the other whole-form based retrieval (Frauenfelder and Schreuder, 1992; Marslen-Wilson et al., 1994b; Baayen et al., 1997, 2003). Recent investigations that make use of survival analysis actually suggest that whole-word based effects precede in time constituent-based effects (Schmidtke et al., 2017).

A third issue concerns the role of semantic transparency. Priming studies conducted on English and French prefixed derivations that are semantically transparent, such as distrust, have reported facilitation of the recognition of their stems ( trust), as well as other prefixed or suffixed derivations, such as entrust or trustful. The same holds for suffixed derivations that are semantically transparent like production and productivité in French or confession and confessor in English, which prime each other and their stem (confess). The critical condition in this discussion, however, concerns semantically opaque (i.e., non-compositional) derivations, such as successor, which appear not to facilitate the recognition of stems like success. This latter finding was replicated under auditory prime presentations or visual priming at long exposure durations at 230 or 250 ms (e.g., Rastle et al., 2000; Feldman and Prostko, 2001; Pastizzo and Feldman, 2002; Feldman et al., 2004; Meunier and Longtin, 2007; Lavric et al., 2011). Localist accounts take these findings to indicate that only semantically transparent complex words are processed decompositionally, via their stem, while semantically opaque words are processed as whole word units. Although on different grounds, also distributed connectionist approaches assume that the facilitation between complex words and their stem depends on their meaning relation. In a series of cross-modal priming experiments, Gonnerman et al. (2007) showed for English that morphological effects vary according to the gradual overlap of form and meaning between word pairs. Indeed, word pairs with a strong phonological and semantic relation like preheat–heat induced stronger priming than words with a moderate phonological and semantic relation like midstream–stream, and words holding a low semantic relation like rehearse–hearse induced no priming at all. According to connectionist accounts of lexical processing, this result arises as the consequence of the extent to which orthographic, phonological, and semantic codes converge.

However, these findings for English and French contrast with results repeatedly obtained for German, where morphological priming appears to be unaffected by semantic transparency (Smolka et al., 2009, 2014, 2015, 2019). Under auditory or overt visual prime presentations, morphologically related complex verbs facilitated the recognition of their stem regardless of whether they were semantically transparent (aufstehen–stehen, “stand up”–“stand”) or opaque (verstehen–stehen, “understand”–“stand”). Smolka et al. interpreted these findings to indicate that a German native speaker processes a complex verb like verstehen by accessing the stem stehen irrespective of the whole-word meaning, and argued that morphological structure overrides meaning in the lexical processing of German complex words. To account for such stem effects without effects of semantic transparency, they hypothesized a model in which the frequency of the stem is the critical factor, such that stems of complex words are accessed and activated, independent of the meaning composition of the complex word.

These findings for German receive support from experiments on Dutch—a closely related language with a highly similar system of verbal prefixes, separable particles, and non-separable particles. Work by Schreuder et al. (1990), using an intramodal visual short SOA partial priming technique to study Dutch particle verbs, revealed morphological effects without modulation by semantic transparency. Experiments addressing speech production in Dutch (Roelofs, 1997a,b; Roelofs et al., 2002) likewise observed, using the implicit priming task, that priming effects were equivalent for transparent and opaque prime-target pairs. Morphological priming without effects of semantic transparency have recently been replicated in Dutch under overt prime presentations (Creemers et al., 2019; De Grauwe et al., 2019). Unprimed and primed visual lexical decision experiments on Dutch low-frequency suffixed words with high-frequency base words revealed that the semantics of opaque complex words were equally quickly available as the semantics of transparent complex words (Schreuder et al., 2003), contradicting the original prediction of this study that transparent words would show a processing advantage compared to their opaque counterparts.

Importantly, there are some studies in English, e.g., Gonnerman et al. (2007, Exp. 4) and Marslen-Wilson et al. (1994a, Exp. 5), that applied a similar cross-modal priming paradigm with auditory primes and visual targets, and with similar prefixed stimuli as in the abovementioned studies by Smolka and collaborators, but found no priming for semantically opaque pairs like rehearse–hearse (for similar ERP-results in English see Kielar and Joanisse, 2011). Thus, results for German and results for English appear at present to be genuinely irreconcilable1.

In what follows, we first present an overt visual priming experiment that provides further evidence for the equivalent facilitation effects seen for German transparent and opaque prime-target pairs. The behavioral results are consistent with localist models in which connections between stems and derived words are hand-wired into a network, as argued by, e.g., Smolka et al. (2007, 2009, 2014, 2015) and Smolka and Eulitz (2018). However, this localist model is a post-hoc description of the experimental findings, and a computational implementation for this high-level theory is not available.

In this study, we proceed to show that the observed stem priming effects can be straightforwardly modeled by naive discriminative learning (NDL, Baayen et al., 2011, 2016a,b; Arnold et al., 2017; Divjak et al., 2017; Sering et al., 2018b; Tomaschek et al., 2019) without reference to stems or other morphological units, and without requiring hand-crafting of connections between such units. In fact, measures derived from an NDL network, complemented with a semantic similarity measure derived from distributional semantics, turn out to predict lexical decision latencies with greater precision compared to classical measures, such as word frequency, prime type, and semantic association ratings. Importantly, the NDL model predicts the effects of stem priming without a concomitant effect of semantic compositionality. According to the NDL model, the crucial predictor is the extent to which the target is pre-activated by the sublexical form features of the prime. In the final section, we discuss both the methodological implications of our results, as well as their implications for models of the mental lexicon.

German complex verbs present a very useful means to study the effects of morphological structure with or without meaning relatedness to the same base verb. German complex verbs are very productive and frequently used in standard German. The linguistic literature (Fleischer and Barz, 1992; Eisenberg, 2004) distinguishes two word formations: prefix verbs and particle verbs. Both consist of a verbal root and either a verbal prefix or a particle.

In spite of some prosodic and morphosyntactic differences (see Smolka et al., 2019), prefix and particle verbs share many similar semantic properties. Both may differ in the degree of semantic transparency with respect to the meaning of their base. For example, the particle an (“at”) only slightly alters the meaning of the base führen (“guide”) in the derivation anführen (“lead”), but radically does so with respect to the base schicken (“send”) in the opaque derivation anschicken (“get ready”). Similarly, the prefix ver- produces the transparent derivation verschicken (“mail”) as well as the opaque derivation verführen (“seduce”). Prefix and particle verbs are thus a particularly useful means by which the effects of meaning relatedness to the same base verb can be studied. For instance, derivations of the base tragen (“carry”), such as hintragen (“carry to”), forttragen (“carry away”), zurücktragen (“carry back”), abtragen (“carry off”), auftragen (“apply”), vertragen (“get along”), ertragen (“suffer”), alter the meaning relatedness from fully transparent to fully opaque with respect to the base. It is important to note that, in general, complex verbs in German are true etymological derivations of their base, regardless of the degree of semantic transparency they share with it. Because morphological effects of prefix and particle verbs are alike in German (see Smolka and Eulitz, 2018; Smolka et al., 2019) and Dutch (Schriefers et al., 1991), henceforth, we refer to them as “complex verbs” or “derived verbs.”

Previous findings on complex verbs in German have shown that these verbs strongly facilitate the recognition of their stem, without any effect of semantic transparency (Smolka et al., 2009, 2014, 2015, 2019; Smolka and Eulitz, 2018). That is, semantically opaque verbs, such as verstehen (“understand”) primed their base stehen (“stand”) to the same extent as did transparent verbs, such as aufstehen (“stand up”). Further, the priming by both types of morphological primes was stronger than that by either purely semantically related primes like aufspringen (“jump up”) or purely form-related primes like bestehlen (“steal”). The morphological effects remained unaffected by semantic transparency under conditions that were sensitive to detecting semantic and form similarity, that is, when semantic controls like verlangen–fordern (“require”–“demand”) and Biene–Honig (“bee”–“honey”) induced semantic facilitation or when form-controls with embedded stems, as in bekleiden–leiden (“dress”–“suffer”) and Bordell–Bord (“brothel”–“board”) induced form inhibition (see Exp. 3 in Smolka et al., 2014). This offered assurance that the lack of a semantic transparency effect between semantically transparent and opaque complex verbs was not a null effect but rather indicated that morphological relatedness overrides both semantic and form relatedness.

Further studies explored the circumstances of stem facilitation in more detail. For example, in spite of several differences in the phonological and morpho-syntactic properties of prefix and particle verbs, prefix verbs showed processing patterns that were substantially the same as those for particle verbs and, crucially, were uninfluenced by semantic transparency (Smolka et al., 2019). Furthermore, stem access occurs regardless of the directionality of prime and target entwerfen–werfen vs. werfen–entwerfen vs. entwerfen–bewerfen (Smolka and Eulitz, 2011).

Stem access is modality independent, as it occurs under both intra-modal (visual-visual) and cross-modal (auditory-visual) priming conditions (Smolka et al., 2014, 2019). Finally, event-related brain potentials revealed wide-spread N400 brain potentials in response to semantically transparent and opaque verbs without effects of semantic transparency—N400 brain potentials that are generally taken to be characteristic to indicate expectancy and (semantic) meaning integration. Most importantly, these brain potentials revealed that stem facilitation in German occurs without an overt behavioral response and is stronger than the activation by purely semantically related verbs or form-related verbs (Smolka et al., 2015).

The present experiment was closely modeled after previous experiments addressing priming effects for German verb pairs (e.g., Smolka et al., 2009, 2014).

We compared the differential effects of semantic, form, or morphological relatedness between complex verbs and a base verb in four priming conditions: (a) semantic condition, where the complex verb was a synonym of the target verb, (b) morphological transparent condition, where the complex verb was a semantically transparent derivation of the target verb, (c) morphological opaque condition, where the complex verb was a semantically opaque derivation of the target verb, and (d) form condition, where the base of the complex verb was form-related with the base of the target. We measured lexical decision latencies to the target verbs and calculated priming relative to an unrelated control condition. In addition to the (unrelated minus related) priming effects, the influence of the stem should surface in the comparison of the conditions (a) and (b), where both types of primes are synonyms of the base verb—the former holding a different stem as the target, the latter holding the same stem as the target; the influence of the degree of semantic transparency should surface in the comparison between conditions (b) and (c), where both types of primes are true morphological derivations of the base target. The influence of form similarity should surface in the comparison between conditions (c) and (d), where both types of primes have stems that are form-similar with the target.

As in our previous experiments, we were interested in tapping into lexical processing, when participants are aware of the prime and integrate its meaning, and thus applied overt visual priming at a long SOA (see Milin et al., 2017b, for a comparison between masked and overt priming paradigms). We used only verbs as materials to avoid word category effects, and inserted a large number of fillers to prevent expectancy or strategic effects. Different from our previous experiments, though, we applied a between-subject and between-target design.

In summary, the primes in all conditions were complex verbs with the same morphological structure and were thus (a) of the same word category, and (b) closely matched on distributional variables like lemma frequency, number of syllables and letters. They differed only with respect to the morphological, semantic, or form-relatedness with the target. Prime conditions are exemplified in Table 1; all critical items are listed in Appendix A. Our prediction is that both semantically transparent and opaque complex verbs will induce the same amount of priming to their base, and that this priming will be stronger than the priming by either semantically related or form-related verbs.

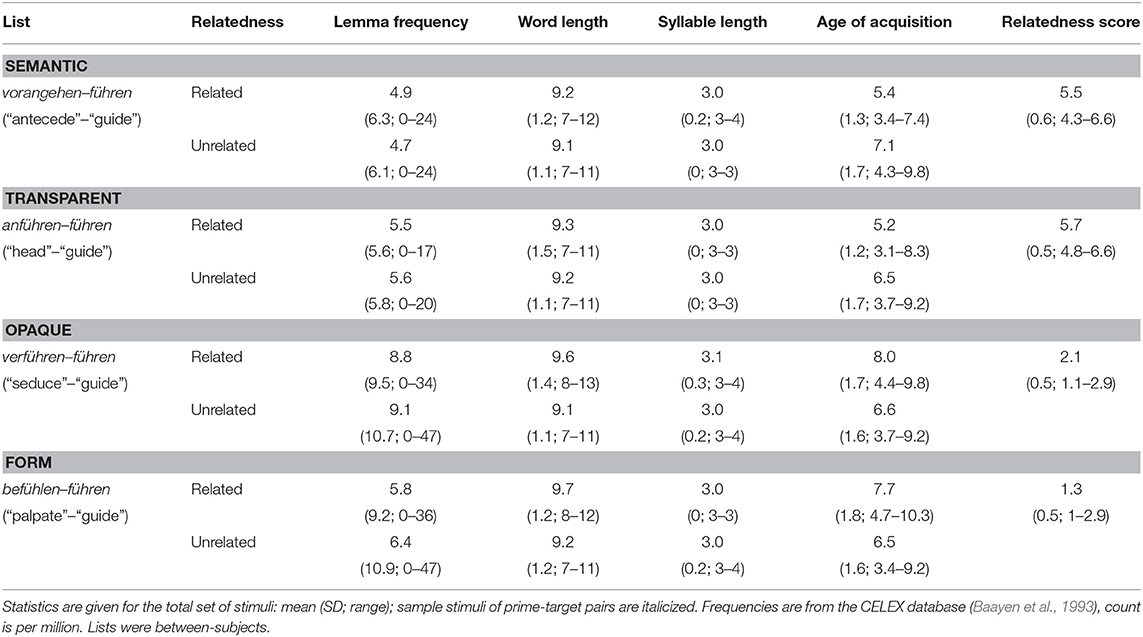

Table 1. Stimulus characteristics of related primes and their matched unrelated controls in the semantic synonym list, semantically transparent list, semantically opaque list, and form control list.

Fifty students of the University of Konstanz participated in the experiment (14 males; mean age = 22.69, range 19–32). All were native speakers of German, were not dyslexic, and had normal or corrected-to-normal vision. They were paid for their participation.

As critical stimuli, 88 prime-target pairs with complex verbs as primes and base verbs as targets were selected from the CELEX German lexical database (Baayen et al., 1993), 22 pairs in each of four conditions (see also Table 1): (a) morphologically unrelated synonyms of the base (e.g., vorangehen–führen, “antecede”–“guide”), (b) morphologically related synonyms of the base, these were semantically transparent derivations of the base, (e.g., anführen–führen, “head”–“guide”), (c) semantically opaque derivations of the base (e.g., verführen–führen, “seduce”–“guide”), and (d) semantically and morphologically unrelated form controls that changed a letter in the stem (by retaining the stem's onset and changing a letter in the rime, e.g., befühlen–führen, “palpate”–“guide”). Complex verbs in conditions (a) and (b) were synonyms of the target base and were selected by means of the online synonym dictionaries http://www.canoo.net/ and https://synonyme.woxikon.de/.

For each of the 88 related primes, we selected an unrelated control that served as baseline and (a) was morphologically, semantically, and orthographically unrelated to the target and (b) matched the related prime in word class, morphological complexity (i.e., it was a complex verb), number of letters and syllables. In addition, control primes were pair-wise matched to the related primes on lemma frequency according to CELEX. Furthermore, primes across conditions were matched on lemma frequency according to CELEX.

The critical set of 88 prime-target pairs was selected from a pool of verb pairs that had been subjected to semantic association tests, in which participants rated the meaning relatedness between the verbs of each prime-target pair on a 7-point scale from completely unrelated (1) to highly related (7) (for a detailed description of the database see Smolka and Eulitz (2018). The following criteria determined whether a verb pair was included in the critical set: The mean ratings for a semantically-related pair (in the synonym and semantically transparent conditions) had to be higher than 4, and those for a semantically unrelated pair (in the semantically opaque and form-related conditions) lower than 3. The set of words that were included in the experiment had mean ratings of 5.5 (range 4.3–6.7) for synonyms, 5.7 (range 4.78–6.56) for semantically transparent derivations, 2.13 (range 1.5–2.88) for semantically opaque derivations, and 1.7 (range 1.0–2.89) for form-related pairs. Table 1 provides the prime characteristics (lemma frequency, number of letters and syllables, meaning relatedness); the Appendix lists all stimuli.

In order to prevent strategical processes, a total of 140 prime-target pairs was added as fillers. All had complex verbs as primes, 48 had verbs and 92 had pseudoverbs as targets. With respect to the former, 18 of the 48 prime-verb fillers comprised related prime-target pairs of the other lists. These types were included to assure that participants would not detect a certain type of prime-target relatedness in a list. For example, list A held six items of list B, six of list C, and six of list D as fillers. The other 30 prime-target pairs were semantically, morphologically, and orthographically unrelated.

Regarding the prime-pseudoverb fillers, 44 of the pseudoverb targets were closely matched to the critical verb targets by keeping the onset of the verbs' first syllable (e.g., binden–binken). To further ensure that participants did not respond with “word” decisions for any trial where prime and target were orthographically similar, eleven pseudoverbs were preceded by a form-related prime (e.g., umwerben–wersen) to mimick the form condition. All pseudoverbs were constructed by exchanging one or two letters in real verbs, while preserving the phonotactic constraints of German.

The between-subject design had the following list composition: Each list comprised 184 prime-target pairs, half of these holding verbs, the other half pseudoverbs as targets. Of the 92 prime-verb pairs, 22 were related prime-target pairs of either condition (a), (b), (c), or (d), 22 were matched unrelated prime-target pairs, and 48 were filler pairs (30 unrelated and 18 of other related conditions). The 92 prime-pseudoverb-target pairs included 44 form-matched and 48 unrelated pairs.

Overall, the large amount of fillers in the present study reduced the proportion of (a) critical prime-target pairs to 24% per list or 48% of prime-verb pairs, and (b) related prime-target pairs (including critical and filler pairs) to 22% per list or 43% of verb pairs. Napps and Fowler (1987) showed that a reduction in the proportion of related items from 75% to 25% reduced both facilitatory and inhibitory effects. A significant reduction of related items in the present study should thus discourage participants from expecting a particular related verb target and thus prevent both expectancy and failed expectancy effects. All filler items differed from the critical items. Throughout the experiment, all primes and targets were presented in the infinitive (stem/-en), which is also the citation form in German.

Stimuli were presented on a 18.1″ monitor, connected to an IBM-compatible AMD Atlon 1.4 GHz personal computer. Stimulus presentation and data collection were controlled by the Presentation software developed by Neurobehavioral Systems (https://www.neurobs.com). Response latencies were recorded from the left and right buttons of a push-button box.

Each participant saw only one list. Each list was divided into four blocks, each block containing the same amount of stimuli per condition. The critical prime-target pairs were rotated over the four blocks according to a Latin Square design in such a way that the related and unrelated primes of the same target were separated by a block. The related fillers (form-related prime-pseudoverb pairs, related prime-verb pairs) and unrelated filler pairs were evenly allocated to the blocks.

In total, an experimental session comprised 184 prime-target pairs, with 66 pairs per block. Within blocks, prime-target pairs were randomized separately for each participant. Twenty additional prime-target pairs were used as practice trials. Participants were tested individually in a dimly lit room, seated at a viewing distance of about 60 cm from the screen. Stimuli were presented in Sans-Serif letters on a black background. To ensure that primes and targets were perceived as physically distinct stimuli, primes were presented in uppercase letters, point 32, in light blue (RGB: 0-255-255), 20 points above the center of the screen. Targets were presented centrally in lowercase letters, point 36, in yellow (RGB: 255-255-35).

Each trial started with a fixation cross in the center of the screen for 300 ms. This was followed by the presentation of the prime for 400 ms, followed by an offset (i.e., a blank screen) for 100 ms, resulting in a stimulus onset asynchrony (SOA) of 500 ms. After the offset, the target immediately followed and remained on the screen until a participant's response. The intertrial interval was 1,500 ms. Participants were instructed to make lexical decisions to the targets, as fast and as accurately as possible. “Word” responses were given with the index finger of the dominant hand, “pseudoword” responses with the subordinate hand. Feedback was given on both correct (“richtig”) and incorrect (“falsch”) responses during the practice session, and on incorrect responses during the experimental session. The experiment lasted for about 12 min, during which participants self-administered the breaks between blocks.

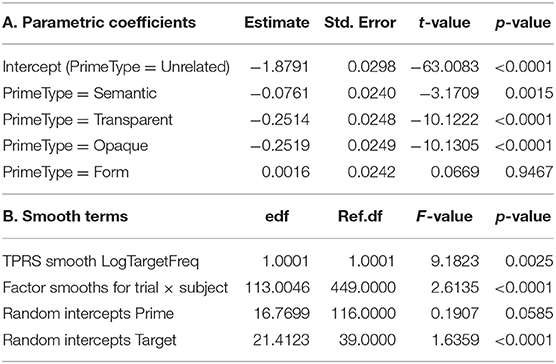

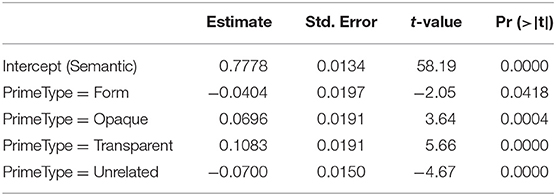

A generalized additive mixed model (Wood, 2017) was fitted to the inverse-transformed reaction times with predictors Prime Type (using treatment coding, with the unrelated condition as reference level) and log target frequency2. Random intercepts were included for target and prime, and a factor smooth for the interaction of subject by trial number (see Baayen et al., 2017, for detailed discussion of this non-linear counterpart to what in a linear mixed model would be obtained with by-subject random intercepts and by-subject random slopes for trial). Table 2 presents the model summary. Prime-target pairs in the semantic condition were responded to slightly more quickly than prime-target pairs in the unrelated condition. Prime-target pairs in the transparent and opaque conditions showed substantially larger facilitation of equal magnitude. Prime-target pairs in the form condition elicited reaction times that did not differ from those seen in the control condition.

Table 2. Generalized additive mixed model fitted to inverse-transformed primed lexical decision latencies.

To obtain further insight into the effects of the predictors not only for the median, but across the distribution of reaction times, we fitted quantile GAMs to the deciles 0.1, 0.2, …, 0.9, using the qgam package (Fasiolo et al., 2017)3. For the median, the quantile GAM also complements the Gaussian GAMM reported in Table 2. The Gaussian GAMM could have been expanded with further random effects for the interaction of subject by priming effect, but such models run the risk of overspecification (Bates et al., 2015). More importantly, the distribution of the residuals showed clear deviation from normality that resisted correction. As quantile GAMS are distribution free, simple main effects can be studied without having to bring complex random effects into the model as a safeguard against anti-conservative p-values.

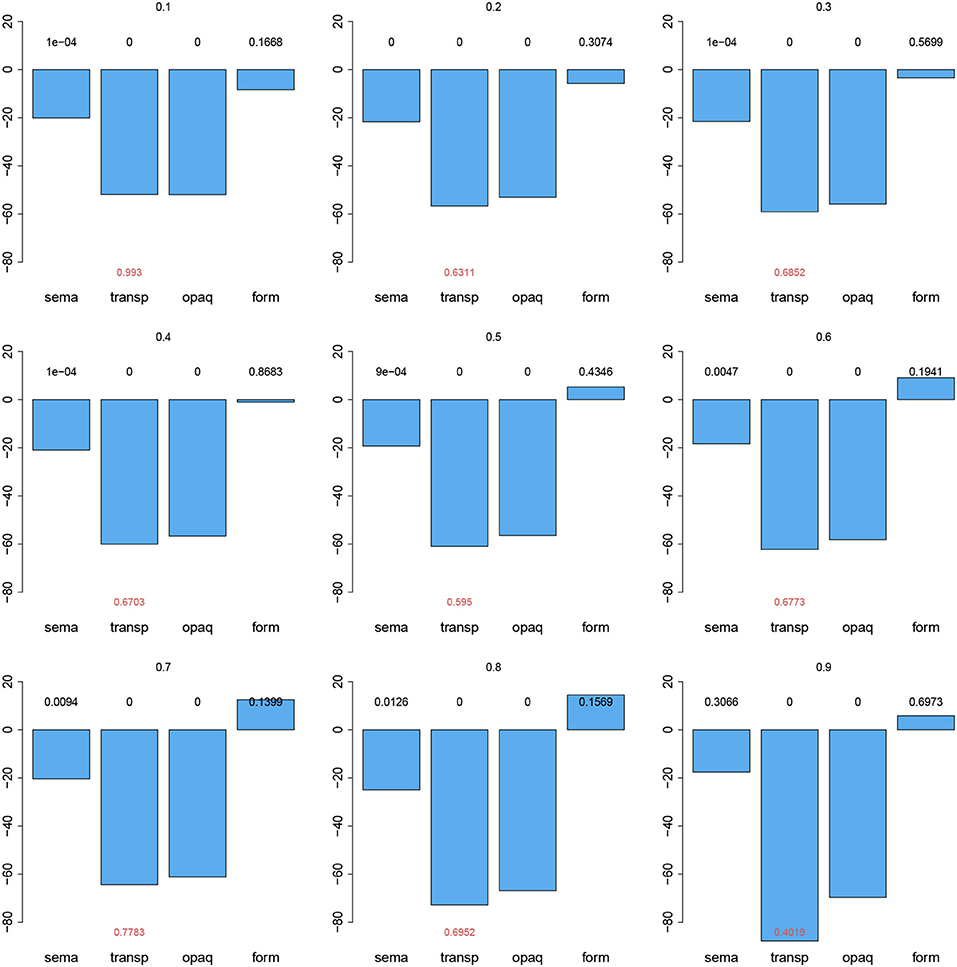

Figure 1 presents, from top left to bottom right, the effects of Prime Type for the deciles 0.1, 0.2, …, 0.9. The p-values above the bars concern the contrasts with the unrelated condition (the reference level). Across the distribution, the form condition was never significantly different from the unrelated condition. The small effect of the semantic condition hardly varied in magnitude across deciles, but was no longer significant at the last decile. The magnitude of the effects for the transparent and opaque conditions was significantly different from that for the unrelated condition across all deciles, and increased in especially the last three deciles. Across the deciles, the transparent condition showed a growing increase in facilitation compared to the opaque condition, but as indicated by the p-values in red, the difference between these two conditions was never significant.

Figure 1. Effects of Prime Type (with unrelated as reference level) in a Quantile GAM fitted to primed lexical decision latencies, for deciles 0.1, 0.2, …, 0.9.

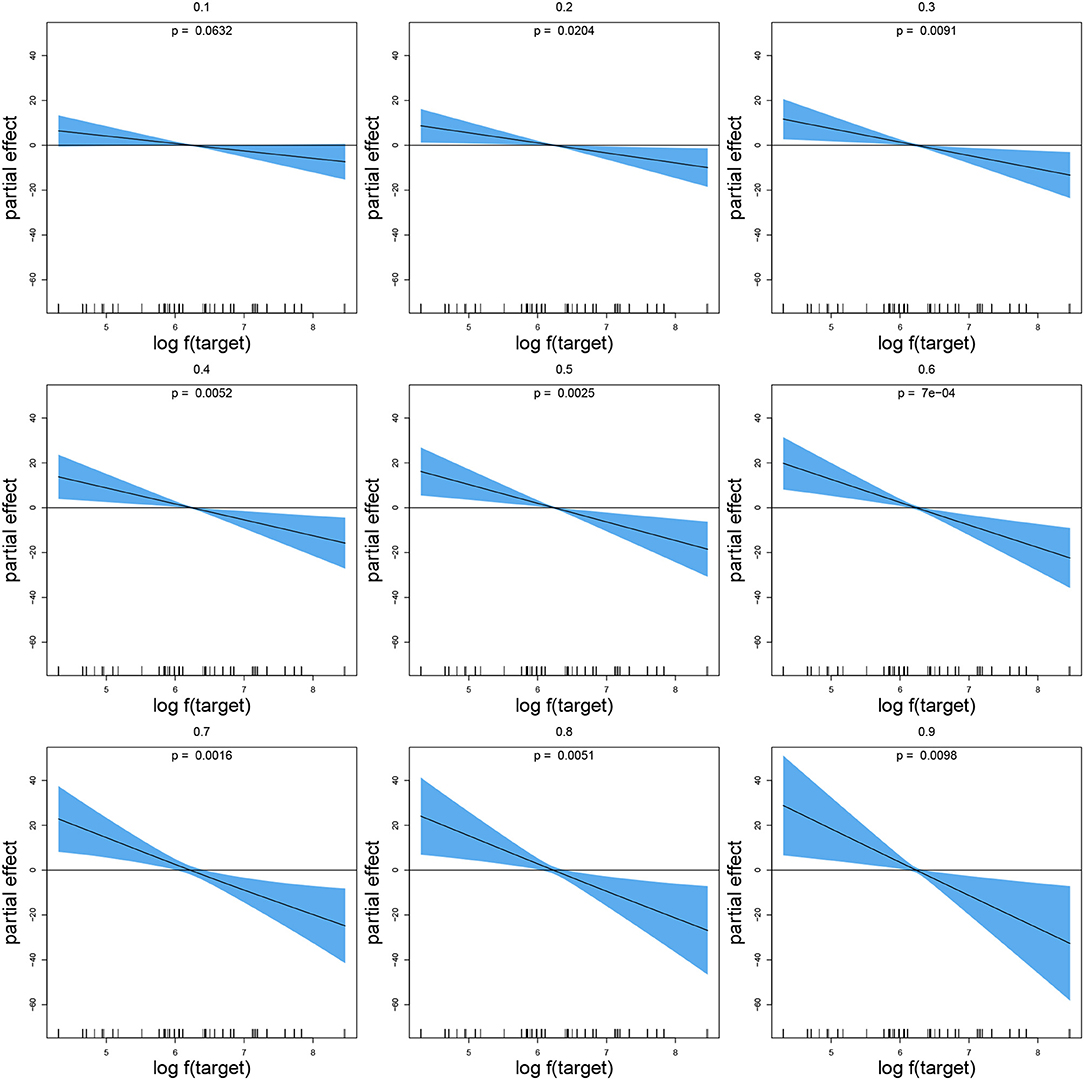

Figure 2 visualizes the effect of target frequency. From the second decile onwards, target frequency was significant, with greater frequencies affording shorter reaction times, as expected. The magnitude of the frequency effect, as well as its confidence interval, increased across deciles.

Figure 2. Partial effect of log target frequency in a Quantile GAM fitted to primed lexical decision latencies, for deciles 0.1, 0.2, …, 0.9.

In summary, replicating earlier studies, morphologically related primes elicited a substantial priming effect that did not vary with semantic transparency. The priming effect tended to be somewhat stronger at larger deciles, for which the effect of target frequency was also somewhat larger.

When the transparent and opaque priming conditions are considered in isolation, it might be argued that participants are superficially scanning primes and targets for shared stems, providing a word response when a match is found, and a non-word response otherwise. Such a task strategy can be ruled out, however, due to the high proportion of unrelated fillers, and once the other two priming conditions are taken into consideration. If subjects would indeed have been scanning for stems shared by prime and target, then the Unrelated and Semantic conditions should have elicited the same mean reaction times, contrary to fact. Clearly, participants processed the word stimuli more deeply than a form-based scan suggests. Furthermore, since form-based evaluation of pairs in the Form condition is more difficult given the substantial similarity of the stems, longer reaction times are predicted for the Form condition compared to the Unrelated condition. Also this prediction is falsified by the data: The means for the Form and Unrelated condition do not differ significantly.

At first sight, it might be argued that the present between-participants design has strengthened the opaque priming effects. However, given that all our previously conducted experiments used within-participants designs (e.g., three visual priming experiments in Smolka et al., 2009; one cross-modal and two visual priming experiments in Smolka et al., 2014; two cross-modal priming experiments in Smolka et al., 2019; and one visual EEG priming experiment in Smolka et al., 2015) and yielded equivalent priming by opaque and transparent prime-target pairs, we are confident that our present findings are not due to a design limitation.

We therefore conclude that the behavioral results of “pure morphological priming” without semantic transparency effects in German, as well as the older results obtained for speech production in Dutch, appear to indicate a fundamental role in lexical processing for morphemic units, such as the stem. However, perhaps surprisingly, developments in current linguistic morphology indicate that the theoretical construct of the morpheme is in many ways problematic. In what follows, we show that the present results can be explained within the framework of naive discriminative learning, even though this theory eschews morphemic units altogether.

Before introducing naive discriminative learning (NDL), we first provide a brief overview of developments in theoretical morphology over the last decades that motivated the development of NDL.

The concept of the morpheme, as the minimal linguistic sign combining form and meaning, traces its history to the American structuralists that sought to further systematize the work of Leonard Bloomfield (see Blevins, 2016, for detailed discussion). The morpheme as minimal sign has made it into many introductory textbooks (e.g., Plag, 2003; Butz and Kutter, 2016). The hypothesis that semantically transparent complex words are processed compositionally, whereas semantically opaque words are processed as units, is itself motivated by the belief that morphemes are linguistic signs. For semantically opaque words, the link between form and meaning is broken, the morpheme is no longer a true sign, and hence the rules operating over true signs in comprehension and production are no longer relevant.

The theoretical construct of the morpheme as smallest sign of the language system has met with substantial criticism (see e.g., Matthews, 1974; Beard, 1977; Aronoff, 1994; Stump, 2001; Blevins, 2016). Whereas the morpheme-as-sign appears a reasonably useful construct for agglutinating languages, such as Turkish, as well as for morphologically simple languages, such as English (but see Blevins, 2003), it fails to provide much insight for typologically very dissimilar languages, such as Latin, Estonian, or Navajo (see e.g., Baayen et al., 2018, 2019; Chuang et al., 2019, for detailed discussion). One important insight from theoretical morphology is that systematicities in form are not coupled in a straightforward one-to-one way with systematicities in meaning. Realizational theories of morphology (e.g., Stump, 2001) therefore focus on how sets of semantic features are expressed in phonological form, without seeking to find atomic form features that line up with atomic semantic features. Interestingly, as pointed out by Beard (1977), form and meaning are subject to their own laws of historical change or resistance to change.

Within realizational theories, two main approaches have been developed, Realizational Morphology and Word and Paradigm Morphology. Realizational Morphology formalizes how bundles of semantic (typically inflectional) features are realized in phonological form by making use of units for stems, stem variants, and the morphs (now named exponents) that realize (or express) sets of inflectional or derivational features (see e.g., Stump, 2001). Realizational Morphology is to some extent compatible with localist models in psychology, in that the stems and exponents of realizational morphology can be seen as corresponding to the “morphemes” (now understood strictly as form-only units, henceforth “morphs”) in localist networks. The compatibility is only partial, however, as current localist models typically remain underspecified as to how, in comprehension, the pertinent semantic feature bundles are activated once the proper exponents have been identified. For instance, in the localist interactive activation model of Veríssimo (2018)4, the exponent -er that is activated by the form teacher has a connection to a lemma node for ER as deverbal nominalization, but no link is given from the -er exponent to an inflectional function that in English is also realized with -er, namely, the comparative. Furthermore, even the node for deverbal -ER is semantically underspecified, as -ER realizes a range of semantic functions, including AGENT, INSTRUMENT, CAUSER, and PATIENT (Booij, 1986; Bauer et al., 2015).

A further, empirical, problem for decompositional theories that take the first step in lexical processing to be driven by units for morphs are experiments indicating that quantitative measures tied to properties of whole words, rather than their component morphs, are predictive much earlier in time than expected. For eye-tracking studies on Dutch and Finnish, see Kuperman et al. (2008, 2009, 2010) and for reaction times analyzed with survival analysis, see Schmidtke et al. (2017). These authors consistently find that measures linked to whole words are predictive for shorter response times, and that measures linked to morphs are predictive for longer response times. This strongly suggests that properties of whole words determine early processing and properties of morphs arise later in processing.

There is a more general problem specifically with models that make use of localist networks and the mechanism of interactive activation to implement lexical access. First of all, interactive activation is a very expensive mechanism, as inhibitory connections between morpheme nodes grow quadratically with the number of nodes, and access times increase polynomially or even exponentially. Furthermore, interactive activation as a method for candidate selection in a what amounts to a straightforward classification task is unattractive as it would have to be implemented separately for each classification task that the brain has to carry out. Redgrave et al. (1999) and Gurney et al. (2001) therefore propose a central single mechanism, supposed to be carried out by the basal ganglia, that receives a probability distribution of alternatives as input from any system requiring response selection, and returns the best-supported candidate (see Stewart et al., 2012, for an implementation of their algorithm with spiking neurons).

The second main approach within morpheme-free theories, Word and Paradigm Morphology, rejects the psychological reality of stems and exponents, and calls upon proportional analogies between words to explain how words are produced and comprehended (Matthews, 1974; Blevins, 2003, 2006, 2016). Although attractive at a high level of abstraction, without computational implementation, supposed proportional analogies within paradigms do not generate quantitative predictions that can be tested experimentally. As discussed in detail by Baayen et al. (2019), discrimination learning provides a computational formalization of Word and Paradigm Morphology that does generate testable and falsifiable predictions.

In what follows, we will use NDL to estimate a distribution of activations (which, if so desired, could be transformed into probabilities using softmax) over the set of possible word meanings given the visual input. Specifically, we investigate whether we can predict how prior presentation of a prime word affects the activation of the target meaning.

Naive discriminative learning is not the first cognitive computational model that seeks to move away from morphemes. The explanatory adequacy of the morpheme for understanding lexical processing has also been questioned within psychology by the parallel distributed processing programme (McClelland and Rumelhart, 1986; Rumelhart and McClelland, 1986). As mentioned previously, the triangle model (Harm and Seidenberg, 2004) has been argued to explain the effects of semantic transparency observed for English derived words as reflecting the convergence of phonological and semantic codes (Plaut and Gonnerman, 2000; Gonnerman and Anderson, 2001; Gonnerman et al., 2007). It is noteworthy, however, that to our knowledge, actual simulation studies demonstrating this have not been forthcoming. Importantly, if indeed the triangle model makes correct predictions for English, then one would expect its predictions for German to be wrong, because it would predict semantic transparency effects and no priming for semantically opaque word pairs.

Like the PDP programme, the twin theories of Naive Discriminative Learning (NDL Baayen et al., 2011, 2016b; Sering et al., 2018b) and Linear Discriminative Learning (Baayen et al., 2018, 2019), eschew the construct of the morpheme. But instead of using backpropagation multi-layer networks, NDL and LDL build on simple networks with input units that are fully connected to all output units.

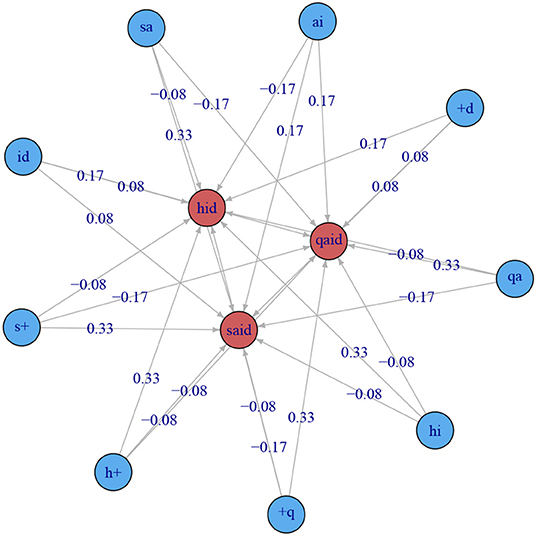

An NDL network is defined by its weight matrix W. By way of example, consider the following weight matrix,

which is visualized in Figure 3. The output nodes are on the inner circle in red, and the input nodes in the outer circle in blue. A star layout was chosen in order to guarantee readability of the connection weights. The network corresponding to this weight matrix comprises nine sublexical input units, shown in the left margin of the matrix. We refer to these units, here the letter bigrams of the words qaid, said, and hid, as cues; the # symbol (a + in Figure 3 represents the space character). There are three output units, the outcomes, shown in the upper margin of the matrix. The entries in the matrix present the connection strengths of the digraphs to the lexical outcomes. The digraph qa provides strong support (0.33) for QAID (“tribal chieftain”), and sa provides strong support (0.33) for SAID. Conversely, ai, which is a valid cue for two words, QAID and SAID, has connection strengths to these lexomes of only 0.17. The weights from hi and sa to QAID are negative, −0.08 and −0.17, respectively. For QAID, the cue that best discriminates this word from the other two words is qa. Conversely, sa is a (somewhat less strong) discriminative cue arguing against QAID. Informally, we can say that the model concludes the outcome must be QAID given qa, and that the outcome cannot be QAID given sa.

Figure 3. The two-layer network corresponding to W. The outer vertices in blue represent the input nodes, the inner vertices in red represent the output nodes. All input nodes are connected to each of the three output nodes. Each edge in the graph is associated with a weight which specifies the support that an input node provides for an output node.

In the present example, form cues are letter pairs, but other features have been found to be effective as well. Depending on the language and its writing conventions, larger letter or phone n-grams (typically with 1 < n ≤ 4) may outperform letter bigrams. For auditory comprehension, low-level acoustic features have been developed for modeling auditory comprehension (Arnold et al., 2017; Shafaei Bajestan and Baayen, 2018; Baayen et al., 2019). For visual word recognition, low-level visual “histograms of gradient orientation” features have been applied successfully in (Linke et al., 2017).

The total support aj for an outcome j given the set of cues in the visual input to the model, henceforth its activation, is obtained by summing the weights on the connections from these cues to that outcome:

For QAID (j = 1), the total evidence a1 given the cues #q, qa, ai, id, and d# is 0.33 + 0.33 + 0.17 + 0.08 + 0.08 = 1.

The values of the weights are straightforward to estimate. We represent the digraph cues of the words by a matrix C, with a 1 representing the presence of a cue in the word, and a 0 its absence:

We also represent the outcomes using a matrix, again using binary coding:

The vectors representing the outcomes in an NDL network are orthogonal: each pair of row vectors of T is uncorrelated. The weight matrix W follows by solving5

In other words, W projects words' forms, represented by vectors in a form space {C}, onto words' meanings, represented by vectors in a semantic space {T}6.

The outcomes of an NDL network represent lexical meanings that are discriminated in a language. Milin et al. (2017a) refer to these outcomes as lexomes, which they interpret as pointers to (or identifiers of) locations (or vectors) in some high-dimensional space as familiar from distributional semantics (see e.g., Landauer and Dumais, 1997; Mikolov et al., 2013). However, as illustrated above with the T matrix, NDL's lexomes can themselves be represented as high-dimensional vectors, the length k of which is equal to the total number of lexomes. The vector for a given lexome has one bit on and all other bits off (cf. Sering et al., 2018b). Thus, the lexomes jointly define a k-dimensional orthonormal space.

However, the orthonormality of the outcome space does not do justice to the fact that some lexomes are more similar to each other than others. Within the general framework of NDL, such similarities can be taken into account, but to do so, measures gauging semantic similarity have to be calculated from a separate semantic space that constructs lexomes' semantic vectors (known as word embeddings in computational linguistics) from a corpus. A technical complication is that, because many words share semantic similarities, the dimensionality of NDL's semantic space, k, is much higher than it need be. As a consequence, the classification accuracy of the model is lower than it could be (see Baayen et al., 2019, for detailed discussion).

The twin model of NDL, LDL, therefore replaces the one-hot encoded semantic vectors as exemplified by T by real-valued vectors. For the present example, this amounts to replacing T by a matrix, such as S:

Actual corpus-based semantic vectors are much longer than this simple example suggests, with hundreds or even thousands of elements. The method implemented in Baayen et al. (2019) produces vectors the values of which represent a given lexome's collocational strengths with all the other lexomes in the corpus.

Model accuracy is evaluated by examining how close a predicted semantic vector is to the targeted semantic vector s, a row vector of S. In the case of NDL, this evaluation is straightforward: The lexome that is best supported by the form features in the input, and that thus receives the highest activation, is selected. In the case of LDL, that word ω is selected as recognized for which the predicted semantic vector is most strongly correlated with the targeted semantic vector sω.

As the dimensionality of the row vectors of S, T, and C can be large, with thousands or tens of thousands of values, we refer to the network W as a “wide learning” network, as opposed to “deep learning” networks which have multiple layers but usually much smaller numbers of units on these layers.

Of specific relevance to the present study is how NDL and LDL deal with morphologically complex words. With respect to the forms of complex words, exactly the same encoding scheme is used as for simple words, with either n-grams or low-level modality-specific features used as descriptor sets. No attempt is made to find morpheme boundaries, stems, affixes, or allomorphs.

At the semantic level, both NDL and LDL are analytical. NDL couples inflected words, such as walked and swam with the lexomes WALK and PAST, and SWIM and PAST, respectively. In the example worked out in Table 3, the word form walk has LX6 as identifier; the lexome for past is indexed by LX4. The form walked is linked with both LX6 and LX4. For clarity of exposition, instead of using indices, we refer to lexomes using small caps: WALK and PAST. An NDL network is thus trained to predict, for morphologically complex words, on the basis of the form features in the input, the simultaneous presence of two (or more) lexomes. Mathematically, as illustrated in the top half of Table 3, this amounts to predicting the sum of the one-hot encoded vectors for the stem (WALK) and the inflectional function (PAST). Thus, NDL treats the recognition of complex words as a multi-label classification problem (Sering et al., 2018b).

LDL proceeds in exactly the same way, as illustrated in the bottom half of Table 3. Again, the semantic vector of the content lexome and the semantic vector of the inflection are added. The columns now label semantic dimensions. In the model of Baayen et al. (2019), these dimensions quantify collocational strengths with—in the present example—10 well-discriminated lexomes. Regular past tense forms, such as walked and irregular past tense forms, such as swam are treated identically at the semantic level. It is left to the mapping W (the network taking form vectors as input and producing semantic vectors as output) to ensure that the different forms of regular and irregular verbs are properly mapped on the pertinent semantic vectors.

The NDL model as laid out by Baayen et al. (2011) treats transparent derived words in the same way as inflections, but assigns opaque derived words their own lexomes. For opaque words in which the semantics of the affix are present, even though there is no clear contribution from the semantics of the base word, a lexome for the affix is also included (e.g., employer: EMPLOY + ER; cryptic: CRYPTIC + IC).

The LDL model, by contrast, takes the idea seriously that derivation serves word formation, in the onomasiological sense. Notably, derived words are almost always characterized by semantic idiosyncracies, the exception being inflection-like derivation, such as adverbial -ly in English7. For instance, the English word worker denotes not just “someone who works,” but “one that works especially at manual or industrial labor or with a particular material,” a “factory worker,” “a member of the working class,” or “any of the sexually underdeveloped and usually sterile members of a colony of social ants, bees, wasps, or termites that perform most of the labor and protective duties of the colony” (https://www.merriam-webster.com/dictionary/worker, s.v.). Given these semantic idiosyncracies, when constructing semantic vectors from a corpus, LDL assigns each derived word its own lexome. However, in order to allow the model to assign an approximate interpretation to unseen derived words, each occurrence of a derived word is also coupled with a lexome for the semantic function of the affix. For instance, worker (in the sense of the bee) is associated with the lexomes WORKER and AGENT, and amplifier with the lexomes AMPLIFIER and INSTRUMENT. In this way, semantic vectors are created for derivational functions, along with semantic vectors for the derived words themselves (see Baayen et al., 2019, for detailed discussion and computational and empirical evaluation)8.

To put LDL and NDL in perspective, consider the substantial advances made in recent years in machine learning and its applications in natural language engineering. Computational linguistics initially worked with deterministic systems applying symbolic units and formal grammars defined over these units. It then became apparent that considerable improvement in performance could be obtained by making these systems probabilistic. The revolution in machine learning that has unfolded over the last decade has made clear that yet another substantial step forward can be made by moving away from hand-crafted systems building on rules and representations, and to make use instead of deep learning networks, such as autoencoders, LSTM networks for sequence to sequence modeling, and deep convolutional networks, outperforming almost all classical symbolic algorithms on tasks as diverse as playing Go (AlphaGo, Silver et al., 2016) and speech recognition (deep speech, Hannun et al., 2014). How far current natural language processing technology has moved away from concepts in classical (psycho)linguistics theory is exemplified by Hannun et al. (2014), announcing in their abstract that they “…do not need a phoneme dictionary, nor even the concept of a ‘phoneme”' (p. 1).

The downside of the algorithmic revolution in machine learning is that what exactly the new networks are doing often remains a black box. What is clear, however, is that these networks are sensitive to what in regression models would be higher-order non-linear interactions between predictors (Cheng et al., 2018). Crucially, such complex interactions are impossible to reason through analytically. As a consequence, models for lexical processing that are constructed analytically by hand-crafting lexical representations for stems and exponents, and hand-crafting inhibitory or excitatory connections between these representations, as in standard interactive activation models, are unable to generate sufficiently accurate estimates for predicting with precision aspects of human lexical processing.

We note here that NDL and LDL provide high-level functional formalizations of lexical processing. They should not be taken as models for actual neural processing: biological neural networks involve cells that fire stochastically, with connections that are stochastic (Kappel et al., 2015, 2017) as well. Furthermore, most neural computations involve ensembles of spiking neurons (Eliasmith et al., 2012).

NDL and LDL are developed to provide a linguistically fully interpretable model using mathematically well-understood networks that, even though very simple, are powerful enough to capture important aspects of the interactional complexities in language, and to generate predictions that are sufficiently precise to be pitted against experimental data. Although NDL and LDL make use of the simplest possible networks, these networks can, in combination with carefully chosen input features, be surprisingly effective. For instance, for auditory word recognition, an NDL model trained on the audio of individual words extracted from 20 hours of German free conversation correctly recognized around 20% of the words, an accuracy that was subsequently found to be within the range of human recognition accuracy (Arnold et al., 2017). Furthermore, Shafaei Bajestan and Baayen (2018) observed that NDL outperforms deep speech networks by a factor 2 on isolated word recognition. With respect to visual word recognition, Linke et al. (2017) showed, using low-level visual features, that NDL outperforms deep convolutional networks (Hannagan et al., 2014) on the task of predicting word learning in baboons (Grainger et al., 2012). For a systematic comparison of NDL/LDL with interactive activation and parallel distributed processing approaches, the reader is referred to Appendix B.

In the present study, we model our experiment with NDL, rather than LDL, for two reasons. First, it turns out that NDL, the simpler model, is adequate. Second, work is in progress to derive corpus-based semantic vectors for German along the lines of Baayen et al. (2019), which will include semantic vectors for inflectional and derivational semantic functions, but these vectors are not yet available to us.

The steps in modeling with NDL are the following. First, the data on which the network is to be trained have to be prepared. Next, the weights on the connections from the form features to the lexomes are estimated. Once the network has been trained, it can be used to generate predictions for the magnitude of the priming effect. In the present study, we generate these predictions by inspecting the extent to which the form features of the prime support the lexome of the target.

The data on which we trained our NDL network comprised 18,411 lemmas taken from the CELEX database, under the restrictions that (i) they contained no more than two morphemes according to the CELEX parses, (ii) that the word was not a compound, and (iii) that it either had a non-zero CELEX frequency or occurred as a stimulus in the experiment. One stimulus word, betraten, was not listed in CELEX, and hence this form was not included in the simulation study. For each lemma, its phonological representation and its frequency were retrieved from CELEX. As form cues, we used triphones (for the importance of the phonological route in reading, see Baayen et al., 2019, and references cited there).

Each lemma was assigned its own lexome (but homophones were collapsed). The decision to assign each lemma its own lexome follows Baayen et al. (2019) and departs from Baayen et al. (2011). This similar treatment of transparent and opaque verbs is motivated by several theoretical considerations. First, there is no binary distinction between transparent and opaque. The meanings of particle verbs lie on a continuum between relatively semantically compositional and relatively semantically opaque. Second, even the compositional interpretation of a supposedly transparent verb, such as aufstehen (“stand up”) is not straightforward in the absence of situational experience—the particle auf (roughly meaning on or onto) may express a wide range of meanings, depending on cotext and context. In what follows, we therefore assume that even transparent complex words possess somewhat idiosyncratic meanings, and hence should receive their own lexomes in the NDL network.

The resulting input to the model was a file with 4,492,525 rows and two columns, one column spelling out a word's triphones, and the other column listing its lexome. Each word appeared in the file with a number of tokens equal to its frequency in CELEX. The order of the words in the file was randomized.

An NDL network with 10,180 input nodes (triphones) and 18,404 output nodes (lexomes) was trained on the input list, with incremental updating of the weights on the connections from features in the input to the lexomes, using the learning rule of Rescorla and Wagner (1972) (λ = 1, α = 0.001, β = 1; i.e., with a learning rate of 0.001). As there were 4,492,525 learning events in the input file, the total number of times that weights were updated was 4,492,5259.

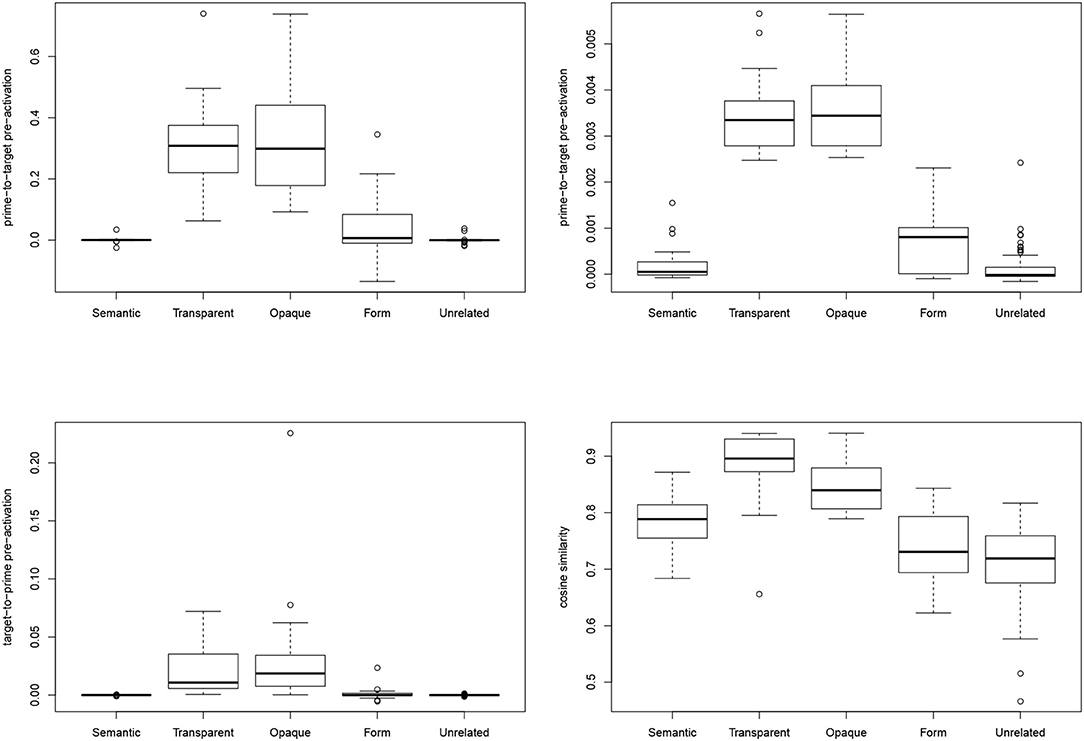

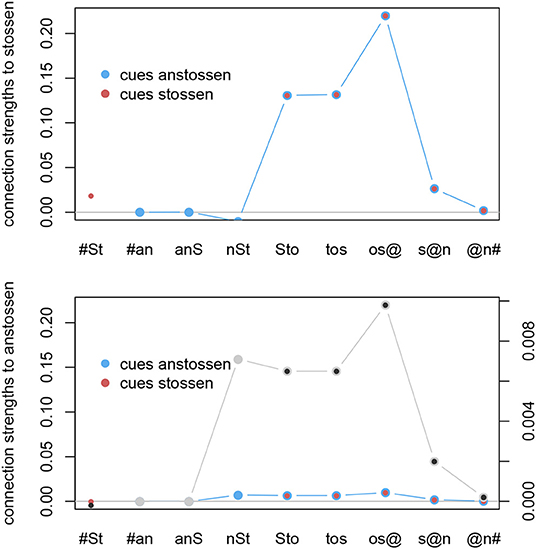

To model the effect of priming, we presented the triphones of the prime to the network, and summed the weights on the connections from these triphones to the pertinent target to obtain a measure of the extent to which the prime pre-activates its target (henceforth PrimeToTargetPreActivation). Figure 4, upper left panel, presents a boxplot for PrimeToTargetPreActivation as a function of PrimeType. Interestingly, the opaque and transparent prime types comprise prime-target pairs for which the prime provides substantial and roughly the same amount of pre-activation for the target. For the other prime types, pre-activation is close to zero. Form-related prime-target pairs show some pre-activation, but this pre-activation is much reduced compared to the prime-target pairs in the opaque and transparent conditions.

Figure 4. Predicted NDL Prime-to-Target Pre-Activation using empirical word frequencies (upper left panel), Predicted NDL Prime-to-Target Pre-Activation using uniformly distributed frequencies (upper right panel), Target-to-Prime Pre-Activation using empirical frequencies (lower left panel), and Prime-target Cosine Similarity (lower right panel), broken down by prime type.

The upper right panel of Figure 4 presents the results obtained when the empirical frequencies with which words were presented to the NDL network are replaced by uniformly distributed frequencies. This type-based simulation generates predictions that are very similar to those of the token-based simulation. This result shows that imprecisions in the frequency counts underlying the token-based analysis are not responsible for the model's predictions.

Above, we called attention to the finding of Smolka and Eulitz (2011) that very similar priming effects are seen when the order of prime and target is reversed. We therefore also ran a simulation in which we reversed the order of prime and target, and investigated the extent to which the current targets (now primes) co-activate the current primes (now targets). The distributions of the predicted pre-activations are presented in the lower left panel of Figure 4 (target-to-prime pre-activation). Apart from one extreme outlier for the opaque condition, the pattern of results is qualitatively the same as for the Prime-to-Target Pre-Activation presented in the upper panel. For both simulations, there is no significant difference in the mean for the opaque and transparent conditions, whereas these two conditions have means that are significantly larger than those of the other three condition (Wilcoxon-tests with Bonferroni correction). In summary, our NDL model generates the correct prediction that the priming effect does not depend on the order of prime and target.

Reaction times are expected to be inversely proportional to PrimeToTargetPreActivation. We therefore ran a linear model on the stimuli, and used the reciprocal of PrimeToTargetPreActivation as response variable, based on the simulation in which the model was presented with the empirical word frequencies. As the resulting distribution is highly skewed, the response variable was transformed to log(1/(PrimeToTargetPreActivation + 0.14)10. The opaque and transparent priming conditions were supported as having significantly shorter simulated reaction times compared to the unrelated condition (both p ≪ 0.0001), in contrast to the other two conditions (both p > 0.5).

Recall that the outcome vectors of NDL are orthogonal, and that hence the present NDL models all make predictions that are driven purely by form similarity. The model is blind to potential semantic similarities between primes and targets, not only for the primes and targets in the transparent and opaque conditions, but also to semantic similarities present for the other prime types. To understand to what extent semantic similarities might be at issue in addition to form similarities, we therefore inspected prime and target's semantic similarity using distributional semantics.

As LDL-based semantic vectors for German are currently under construction, we fell back on the word embeddings (semantic vectors) provided at http://www.spinningbytes.com/resources/wordembeddings/ (Cieliebak et al., 2017; Deriu et al., 2017). These embeddings (obtained with word2vec, Mikolov et al., 2013) are 300-dimensional vectors derived from a 50 million word corpus of German tweets. Tweets are relatively short text messages that reflect spontaneous and rather emotional conversation. Tweets from facebook have been shown to outperform frequencies from standard text corpora in predicting lexical decision latencies (Herdağdelen and Marelli, 2017).

Cieliebak et al. (2017) and Deriu et al. (2017) provide separate semantic vectors for words' inflected variants. For instance, the particle verb vorwerfen (“accuse”) occurs in their database in the forms vorwerfen (infinitive and 1st or 3rd person plural present), vorwerfe (1st person singular present), vorwirfst (2nd person singular present), vorwirft (3rd person singular present), vorwerft (2nd person plural present), vorgeworfen (past participle), and vorzuwerfen (infinitive construction with zu). As we can expect for tweets, not all inflected forms, in particular the more formal ones, appear in the database. Importantly, the semantic vectors are probably obtained without taking into account that the particle of a particle verb can appear separated from its verb, sometimes at a considerable distance (see Schreuder, 1990, for discussion of the cognitive consequences of this separation), as in the sentence “Sie wirft ihm seinen Leichtsinn vor,” “She accuses him of his thoughtlessness.” Given the computational complexity of identifying particle-verb combinations when the particle appears at a distance, it is highly likely that for split particle verbs, the base verb of the verb-particle combination is processed as if it were a simple verb (e.g., werfe, wirfst, wirft, werfen, and werft, 1st, 2nd, and 3rd person singular and plural present, respectively). As a consequence, the semantic similarity of simple verbs and particle verbs computed from the word embeddings provided by Cieliebak et al. (2017) and Deriu et al. (2017) is in all likelihood larger than it should be.

Not all words in the experiment are in this database; but for six words, we were able to replace the infinitive by a related form (einpassen → reinpassen, verqualmen → verqualmt, fortlaufen → fortlaufend, bestürzen → bestürzend, verfinstern → verfinstert, beschneien → beschneites).

For each prime-target pair for which we had data, we calculated the cosine similarity of the semantic vectors of prime and target, henceforth Prime-to-Target Cosine Similarity. Figure 4, lower right panel, shows that the transparent pairs have the greatest semantic similarity, followed by the opaque pairs, then the semantic pairs and the form pairs, and the least semantic similarity by the unrelated pairs.

Surprisingly, the semantic controls have a rather low semantic similarity, substantially less than that of the opaque pairs. A linear model with the semantic primes as reference level clarifies that the semantic pairs are on a par with the form controls, more similar than the unrelated pairs, but less similar than both the opaque and transparent pairs (Table 4).

Table 4. Effect of PrimeType in a linear model predicting cosine similarity, using treatment coding with the semantic condition as reference level.

There is a striking discrepancy between the assessment of semantic similarity across prime types based on the cosine similarity of the semantic vectors on the one hand, and an assessment based on the ratings for semantic relatedness between word pairs, as documented in Table 1. In the former, semantic pairs pattern with form controls and differ from transparent ones, while in the latter, semantic pairs pattern with transparent pairs (5.5 and 5.7, respectively), and opaque pairs with form-related ones (2.1 and 1.7, respectively).

Most important to our study is that the opaque pairs show significantly less semantic similarity than the transparent ones (p < 0.0047, Wilcoxon test): The analysis of word embeddings confirms that there is a true difference in semantic transparency between the transparent and opaque prime-target pairs. And yet, this difference is not reflected in our reaction times.

Given the strong track record of semantic vectors in both psychology and computational linguistics, the question arises of whether the prime-target cosine similarities are predictive for reaction times, and how the magnitude of their predictivity compares to that of the NDL Prime-to-Target Pre-Activation.

To address these questions, we fitted a new GAMM to the inverse-transformed reaction times of our experiment, replacing the factorial predictor PrimeType with the model-based predictor Prime-to-Target Pre-Activation. We also replaced target frequency by the activation that the target word receives from its own triphones (henceforth TargetActivation, see Baayen et al. (2011) for detailed analyses using this measure). Target activation is proportional to frequency, and hence larger values of target activation are expected to indicate shorter response times11.

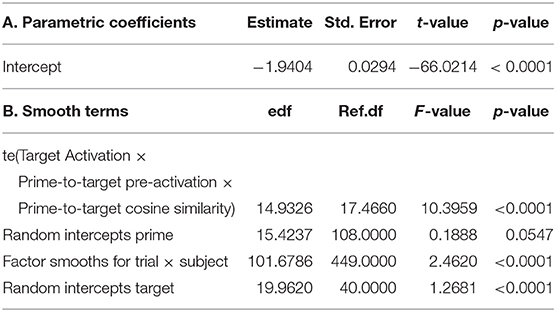

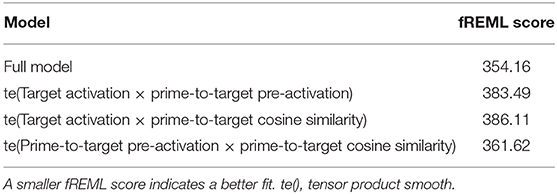

Of the experimental dataset, about 7% of the observations was lost due to 7 words not being available in CELEX or in the dataset of word embeddings. To set a baseline for model comparison, we refitted the GAMM discussed above to the 1999 datapoints of the reduced dataset. The fREML score for this model was 360.76. A main effects model replacing Target Frequency by Target Activation, PrimeType by Prime-to-Target Pre-Activation, and as additional predictor the Prime-to-Target Cosine Similarity had a slightly higher fREML score, 370.97. An improved model was obtained by allowing the three new covariates to interact, using a tensor product smooth. The fREML score of this model, summarized in Table 5, was 354.16. A chi-squared test for model comparison (implemented in the compareML function of the itsadug package van Rij et al., 2017) suggests that the investment of 4 additional effective degrees of freedom is significant (p = 0.010). As the models are not nested and the increase in goodness of fit is moderate (6.6 fREML units), we conclude that—to obtain a model that is at least equally good—it is possible to replace the classical predictors, such as Frequency and PrimeType by model-based predictors without loss of prediction accuracy12.

Table 5. GAMM fitted to inverse transformed reaction times using model-based predictors; te(): tensor product smooth.

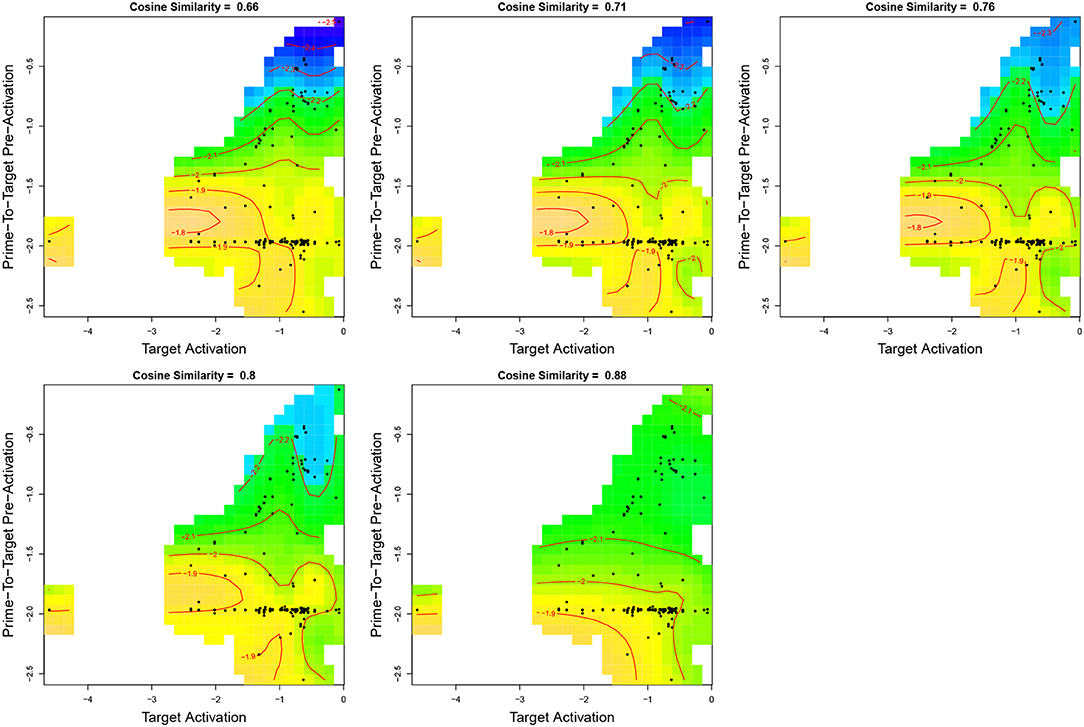

The three-way interaction involving Target Frequency, Prime-To-Target Pre-Activation, and Prime-to-TargetCosine Similarity is visualized in Figure 5. The five panels show the joint effect of the first two predictors for selected quantiles of Prime-to-TargetCosine Similarity: From top left, to bottom right, Prime-to-Target Cosine Similarity is set to its 0.1, 0.3, 0.5, 0.7, and 0.9 deciles. Darker shades of blue indicate shorter reaction times, and darker shades of yellow longer RTs.

Figure 5. Interaction of Target Activation by Prime-to-Target Pre-Activation by Prime-to-Target Cosine Similarity in a GAMM fitted to the inverse-transformed reaction times. Darker shades of blue indicate shorter reaction times, and darker shades of yellow longer RTs. For visualization, one extreme outlier value for Prime-to-Target Pre-Activation was removed (13 datapoints). Contour lines are 0.1 inverse RT units apart.

As can be seen in the upper left panel (for the first decile of Cosine Similarity), reaction times decrease slightly as Target Activation is increased, but only when there is little Prime-To-Target Pre-Activation. A clear effect of Prime-to-TargetPre-Activation is present for the larger values of Target Activation.

Recall that, as shown in Figure 4, transparent and opaque prime-target pairs have the same mean pre-activation, whereas the mean cosine similarity is greater for transparent prime-target pairs compared to opaque pairs. If both pre-activation and cosine similarity would have independent effects, one would expect a difference in the mean reaction times for these two prime types, contrary to fact. The interaction of pre-activation by target activation by cosine similarity resolves this issue by decreasing the effect of pre-activation as cosine similarity increases. When prime and target are more similar semantically, the effect of pre-activation is reduced, and reaction times are longer than would otherwise have been the case. This increase in RTs may reflect the cognitive system slowing down to deal with two signals for very similar meanings being presented in quick succession in a way that is extremely rare in natural language.

We checked whether the association ratings that were used for stimulus preparation were predictive for the reaction times. This turned out not to be the case, not for the model with classical predictors, nor when the association ratings were added to the model with discrimination-based predictors.

Finally, Table 6 presents the fREML scores for the full model, and the three models obtained when one covariate is removed at a time. Since smaller fREML scores indicate a better fit, Table 6 clarifies that Prime-to-Target Pre-Activation is the most important covariate, as its exclusion results in the worst model fit (386.11). The variable importances of Prime-to-TargetCosine Similarity is also substantial (383.49) whereas removing Target Activation from the model specification reduces model fit only slightly (361.62). The model that best predicts (AIC 354.16) pure morphological priming includes all three factors: Prime-to-Target Pre-Activation (capturing Prime Type), Prime-to-Target Cosine Similarity (capturing minor semantic effects), and Target Activation (capturing the frequency effect).

Table 6. fREML scores for four models: the full model, a model without Prime-to-Target Cosine Similarity, a model without Prime-to-Target Pre-Activation, and a model without Target Activation.

We presented an overt primed visual lexical decision experiment that replicated earlier results for German complex verbs: Priming effects were large and equivalent for semantically transparent and semantically opaque prime-target pairs.

These findings add to the cumulative evidence of “pure morphological priming” patterns that suggest stem access independent of semantic compositionality in German, in contrast to English and French, where semantic compositionality has been reported to co-determine word processing.

One could argue that the fact that we used verbs might account for these cross-language differences, in particular because the majority of the verbs in German hold prefixes or particles. Indeed, there are only few studies in English that applied prefixed stimuli that can be interpreted as verbs, such as preheat–heat (e.g., Exp. 5 in Marslen-Wilson et al., 1994b; Exp. 4 in Gonnerman et al., 2007; EEG-experiment in Kielar and Joanisse, 2011). In these experiments, though, opaque prime-target pairs like rehearse–hearse did not induce priming, which contrasts with our findings in German. Furthermore, in a previous study (Exp. 3 in Smolka et al., 2014), we applied both verbs and nouns or adjectives in the same experiment to make sure that the opaque priming effects are not due to the fact that participants see verbs only. It might indeed be the case that, in German, morphological constituents assume a more prominent role than prefixes and suffixes in nouns and adjectives in languages as English and French, and that the complexity and structure of the language affects its (lexical) processing (Günther et al., 2019).

Most importantly, because neither localist nor connectionist models of lexical processing are able to account for the German findings, Smolka et al. (2009, 2014, 2019) proposed a stem-based frequency account, according to which stems constitute the crucial morphological units regulating lexical access in German, irrespective of semantic transparency.

In the present study, we took the next step and modeled the German stem priming patterns using naive discriminative learning (NDL). This morpheme-free computational model clarifies that the observed priming effects across all prime types may follow straightforwardly from basic principles of discrimination learning. The extent to which sublexical features of the prime (letter triphones) pre-activate the lexome of the target is the strongest predictor for the reaction times. A substantially smaller effect emerged for the activation of the target (comparable to a frequency effect). The semantic similarity of prime and target as gauged by the cosine similarity measure also had a solid effect.

The semantic vectors (word embeddings) used for calculating the cosine similarity between primes and their targets were taken from a database of German tweets. It is noteworthy that the cosine similarity measure provided good support for the transparent prime-target pairs being on average more semantically similar than the opaque prime-target pairs. However, this difference between the two prime types was not reflected in the corresponding mean reaction times.

A three-way interaction between prime-to-target pre-activation, target activation, and prime-target cosine similarity detected by a generalized additive mixed model fitted to the reaction times clarified that as the semantic similarity of primes and targets increases, the facilitatory effect of pre-activation decreases. Apparently, when primes and targets are more similar, pre-activation by the prime forces the cognitive system to slow down in order to resolve the near simultaneous activation of two very similar, but conflicting, meanings.

Interestingly, even though across prime types stimuli were matched for association ratings, these ratings were not predictive for reaction times. Stimuli were not matched across prime types for the cosines of the angles between primes' and targets' tweet-based semantic vectors, yet, surprisingly, these were predictive for reaction times. This finding is particularly surprising for the present data on German, as in previous work semantic similarity measures (not only human but also vector-based measures like LSA (Landauer and Dumais, 1997) and HAL (Lund and Burgess, 1996)) were observed not to be predictive of reaction times. It is conceivable that the present semantic measure is superior to LSA and HAL, due to it being calculated from a large volume of tweets—Herdağdelen and Marelli (2017) point out that measures based on distributional semantics calculated from corpora of social media provide excellent predictivity for lexical processing.

A caveat is in order, though, with respect to the cosine similarity measure, as in all likelihood particle verbs and their simple counterparts are estimated to be somewhat more similar than they should be. Particles can be separated by several words from their stems, and these stems will therefore be treated as simple verbs by the algorithm constructing semantic vectors (especially when the particle falls outside word2vec's 5-word moving window). This, however, implies that the cosine similarity measure must be less sensitive than it could have been: The vector for the simple verb is artifactually shifted in the direction of the vector of its particle verb, with the extent of this shift depending on the frequency of the simple verb, and the frequencies of the separated and non-separated derived particle verbs. As simple verbs typically occur with several particles, the semantic vectors for these simple verbs are likely to have shifted somewhat in the direction of the centroid of the vectors of its particle verbs.

Nevertheless, the present tweet-based semantic vectors contribute to the prediction of reaction times. Importantly, there is no a-priori reason for assuming that the rate at which particles occur separated from their stem would differ across prime types. As a consequence, the partial confounding of particle verbs and simple verbs by the distributional semantics algorithm generating the semantic vectors cannot be the main cause of the different distributions of cosine similarities across the different prime types (in this context, it is worth noting that any skewing in frequency counts of complex and simple verbs does not affect the qualitative pattern of results for the predictor with the greatest effect size, the prime-to-target activation measure, as shown by the simulation in which all words are presented to the network with exactly the same frequency. We leave answering the question of why this particular frequency measure appears to be effective as predictor for the present reaction times to further research).

An important result is that a generalized additive mixed model fitted to the reaction times provides a fit that is at least as good, if not slightly better, when the activation, pre-activation, and cosine similarity measures are used, compared to when prime type and frequency of occurrence are used as predictors (reduction in fREML score: 6.6, which, for 4 degrees of freedom is significant (p = 0.010) according to the chi-squared test implemented in the compareML function of the itsadug package)13.