- 1School of Journalism and Communication, Carleton University, Ottawa, ON, Canada

- 2Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, ON, Canada

- 3Department of Psychiatry, University of Ottawa, Ottawa, ON, Canada

Introduction: Since the launch of Web 2.0, we have witnessed a trend toward digitizing healthcare tools for use by both patients and providers. Clinical trials focus on the ways that digital health technologies result in better outcomes for patients, increase access to healthcare and reduce costs. Critical approaches which explore how these technologies result in changes in patient embodiment, power relations, and the patient-provider relationship are badly needed.

Objective: To provide an instructive case example of how Light et al.'s (2018) walkthrough method can be mobilized to study apps to address critical health communication research questions.

Methods: We apply the walkthrough method to the BEACON Rx Platform. In doing so, we conduct a detailed technical walkthrough and evaluate the environment of expected use to answer the following questions: How does the platform shape (and how is it shaped by) understandings of what it means to be healthy? Who are its ideal users? How does this impact its environment of expected use?

Conclusions: This paper demonstrates the potential contributions of the walkthrough method to critical health communication research, namely how it enables a detailed consideration of how an app's technical architecture and environment of expected use are embedded with symbolic representations of what it means to be healthy and what practices should be engaged in to maintain “good” health. It also demonstrates that, despite the rhetoric that digital health technologies democratize healthcare, the BEACON Rx platform is a risk monitoring tool by its very design.

Introduction

“It's like having a physician in your pocket” (Lupton and Jutel, 2015, p. 130). This quote reflects current trends toward digitizing healthcare tools for use by both patients and healthcare providers. This shift toward digital solutions is especially prominent in mental healthcare, with ~29% of all health smartphone applications (“apps”) being used for the diagnosis, treatment, or support of psychiatric disorders (Anthes, 2016). Currently, there are ~965 and 470 apps targeting mental health disorders in the Google Play and iTunes stores, respectively (Larsen et al., 2019). This includes apps such as Headspace, a guided-meditation app that targets stress, anxiety and sleep; Moodpath: Depression and Anxiety, in which users can monitor their own depression symptoms through daily assessments and access personalized guidance and resources based on their results; and, Youpper, an “emotional health assistant” which uses artificial intelligence to “[interact] with you, [learn] from you, and [become] attuned to your needs over time” (Youpper, 2019).

In addition to commercially available apps, clinicians are now receiving funding to develop their own apps for incorporation into routine clinical care to better monitor their patients' treatment outcomes. For instance, the CanImmunize app was developed by a physician to create a digital version of individual and family immunization records, including: information about vaccinations and related diseases; customized vaccination schedules for each family member; reminders for upcoming vaccination appointments; and, information about regional disease outbreaks (Houle et al., 2017). The BEACON Rx platform, which is the focus of this case study, was similarly developed through a partnership between the Ottawa Hospital Research Institute (OHRI) and private industry to facilitate psychiatric treatment among men who present to the Emergency Department for an episode of self-harm. Digital solutions such as CanImmunize and the BEACON Rx platform are packaged as a means of putting patients in charge of their own healthcare and disease prevention; however, scholars have expressed a need to examine these technologies critically, with a view to understanding how they are embedded within a larger discourse of health surveillance in which patients are disciplined into self-tracking and self-regulating subjects. As explained by Ayo (2012), the intent is not to classify digital tools as either “good” or “bad” but, instead, “to demonstrate how such self-regulating, individualized practices become championed over other forms of well-established knowledge such as the social determinants of health” (p. 102).

In order to take up this call for critical scholarship, this paper seeks to provide an instructive example of how to mobilize Light et al.'s (2018) walkthrough method to answer critical health communication research questions specific to health apps. To demonstrate this, we will apply this method to an analysis of the BEACON Rx Platform to assess the following questions: How does the BEACON Rx platform shape (and how is it shaped by) understandings of what it means to be healthy? Who are its ideal users? How does this impact its environment of expected use? In answering these questions, we will advance the argument, using the BEACON Rx platform as a case example, that, despite the neoliberal rhetoric that mental health apps encourage users to take control of their own care, they are, in fact, risk monitoring tools by their very design.

Literature Review

The Use of Digital Health Technologies in Psychiatric Care

In this paper, the term “digital health technologies” will be used to refer to internet-based tools which facilitate the delivery of healthcare, also termed “eHealth,” “Medicine 2.0” and “Health 2.0” (Lupton, 2013b). This can refer to a broad range of products and services including, but not limited to, electronic medical information systems; telemedicine tools which facilitate medical evaluation and diagnosis at a distance; computerized therapies which are designed to deliver health interventions; and, mobile, wireless or wearable technologies which allow patients to monitor their health and well-being (Lupton, 2013b). This paper will specifically focus on the combination of technology and psychotherapy in the treatment of psychiatric disorders such as depression and anxiety. This has also been termed “blended care” in the public health literature and is similar to Lupton's (2013b) definition of telemedicine as the use of “digital and other technologies to encourage patients to self-monitor their medical conditions at home, thus reducing visits to or from healthcare providers, and to communicate with healthcare providers via these technologies rather than face to face” (p. 259). Here, however, digital health technologies are not intended to replace face-to-face contact with healthcare providers, but instead, refer to “any possible combination of regular face-to-face treatments and web-based interventions” (Krieger et al., 2014, p. 285).

To date, few studies have examined the use of digital health technologies in conjunction with routine psychiatric care, but those that have demonstrate promising results (Wright et al., 2005; Carroll et al., 2008; Kooistra et al., 2014; Krieger et al., 2014; Kleiboer et al., 2016). These trials highlight numerous benefits to both patients and healthcare providers, including increasing the intensity of mental health treatment without a reduction in the number of sessions (Kooistra et al., 2014); case management benefits for mental health professionals (Wright et al., 2005); and, the potential to reduce the number of face-to-face therapy sessions required by patients, in turn, decreasing the total cost of mental health treatments to the health care system (Kleiboer et al., 2016).

While few rigorous clinical trials have been conducted examining the use of blended therapy in mental health care, even fewer studies specifically examine the role of apps in psychiatric care (Watts et al., 2013). However, it has been argued that these devices can significantly enhance therapeutic outcomes by increasing exposure to treatments as well as reducing the demands on clinician time (Boschen and Casey, 2008). Additionally, the use of smartphone apps for the self-management and monitoring of mental health has generally been viewed favorably by both research participants (Reid et al., 2011) and providers (Kuhn et al., 2015; Miller et al., 2019a,b).

While research in the fields of medicine, public health and epidemiology focuses on how these technologies result in better outcomes for patients, increase access to healthcare and reduce costs to the healthcare system, there is a need to approach these technologies critically, with a focus on how they contribute to changes in patient embodiment, power relations and the patient-provider relationship (Lupton, 2013b). In response, Lupton (2013b) advocates for an approach that she terms “critical digital health studies,” which focuses on the social, cultural, economic and ethical components of digital health technologies. This approach is interdisciplinary in nature, involving theorists from the fields of sociology, anthropology, science and technology studies (STS), media studies and cultural studies. These scholars focus not only on the instrumental uses of digital health technologies but also explore their development within established ideological and discursive contexts (Andreassen et al., 2006; Beer and Burrows, 2010; Greenhalgh et al., 2013; Ritzer, 2014).

“Healthism” and the Neoliberal Rationality

The language of neoliberalism is often invoked in critical analyses of digital health technologies, highlighting how it shapes how health is defined and what practices are promoted to ensure the maintenance of “good” health (Crawford, 2006; Zoller and Dutta, 2011; Ayo, 2012; Lupton, 2014b,c, 2015, 2016; Millington, 2014; Ajana, 2017; Fotopoulou and O'Riordan, 2017; Elias and Gill, 2018). Ayo (2012) describes neoliberalism as “a political and economic approach which favors the expansion and intensification of markets, while at the same time minimizing government intervention” (p. 101). This framework is characterized by minimal government intervention, market fundamentalism, risk management, individual responsibility, and, as a result, inevitable inequality (Ericson et al., 2000). These principles are highly value laden, extending far beyond the economic or the political. Neoliberalism can, thus, be understood as shaping how citizens are “governed and expected to conduct [themselves], right from the privacy of one's own home to the administration of public institutions across all demographics” (Ayo, 2012, p. 101).

This discourse has significant consequences for health and healthcare policy and has led to an “ideology of healthism,” first coined by Crawford (1980), which positions the achievement and maintenance of “good” health as the central component of identity, “so that an individual's everyday activities and thoughts are continually directed toward this goal” (Lupton, 2013a, p. 397). Rather than improving social conditions related to health, such as access to basic income, food, clean water and shelter, the state has reverted to frameworks of health that emphasize the importance of individual lifestyle choices (Ayo, 2012). This is necessarily a privileged position in that our ability to achieve healthfulness is conditioned by factors such as gender, race, and class (Lupton, 2013a). Under the discourse of healthism, individuals “choose” to take proactive steps to ensure their own health. This moralistic position leads to understandings of poor health as a failure of personal accountability, rather than one of the state (Ayo, 2012). Here, healthy citizens are equated with “good” citizens. This, in turn, legitimizes discriminatory and exclusionary health policies and practices (French and Smith, 2013). This, consequently, permeates how we understand what it means to be healthy (e.g., Depper and Howe, 2017).

Modes of Self-Tracking and the “Digitally Engaged Patient”

Digital health technologies are an important part of this trend toward taking personal responsibility for our health as they facilitate the self-tracking of health and related conditions that are necessary in order for individuals to make health-related choices. Lupton (2016) describes self-tracking as “practices in which people knowingly and purposively collect information about themselves, which they then review and consider applying to the conduct of their lives” (p. 2). Digital health technologies, therefore, encourage self-surveillance through what has been termed dataveillance, or surveillance via the collection of mass amounts of personal information to be stored, sorted and analyzed electronically. Invoking the language of surveillance often implicitly signals coercion and, thus, negative consequences. However, here, Lupton's (2014c, 2016) typology of the modes of self-tracking is instructive in that it highlights that various modes and technologies of self-tracking will necessarily vary in their repressive effects. While these modes are not mutually exclusive, it is a useful framework for understanding how self-tracking can, in some instances, be voluntary or even pleasurable. 
First, she explains that private self-tracking refers to engagement in tracking practices that is purely voluntary, self-initiated and pleasurable; second, pushed self-tracking refers to that which is initiated or suggested by a third party who “nudges” the user toward behavior change; third, communal self-tracking refers to groups or communities of trackers who share their data via social media or other avenues for the purposes of engaging with and learning from one another; fourth, imposed self-tracking occurs when self-tracking is initiated by institutions (e.g., one's employer or school) where individuals have little choice in whether or not to engage in self-tracking practices due to either limited opportunities for refusal or the consequences of non-tracking; and, finally, exploited self-tracking refers to the repurposing of self-tracking data by commercial entities (e.g., market research firms) for the financial benefit of others (Lupton, 2014c, 2016). Lupton's (2014c, 2016) typology highlights how surveillance, as articulated through self-tracking practices, is not necessarily repressive, but instead, speaks to Foucault's concept of governmentality in which social control “[operates] on autonomous individuals willfully regulating themselves in the best interest of the state” (Ayo, 2012, p. 100). His concept of the panopticon likewise demonstrates how control and discipline work together to ensure the production of “good” citizens (Foucault, 1977). Self-tracking further illustrates how the creation and monitoring of identity via categorization has also figured prominently in contemporary surveillance practices. Ericson and Haggerty (2006) claim that there are two important dimensions of identity politics in relation to surveillance practices: (1) the monitoring of pre-constituted social groups; and, (2) the creation of new forms of identity through risk categorization. 
Borrowing from Guattari and Deleuze (2000), Haggerty and Ericson (2000) describe a nearly invisible model of surveillance in which individuals are de-territorialized and separated into discrete flows of information. These flows, referred to as “data doubles,” are highly mobile, reproducible, transmittable, and continually updated. Barnard-Willis (2012) describes these electronic profiles as taking on a life of their own as they are often seen as more real, accurate and accessible than the individual themselves. Within this understanding, he explains that “identity is shifted from the individual to their representation in multiple databases” (p. 33).

This is similar to Foucault's (1977) concept of biopower, or the subjugation of bodies and the control of populations (p. 140), which instructs citizens on the “right” ways to live and govern oneself. Here, understandings of “risk” and “normality” are essential, especially in relation to health. Digital health technologies, thus, allow for new refinements of categorization, enabling increased specificity in the identification of “risk factors” and “at-risk groups” in need of medical targeting (Lupton, 2012). Normative expectations are inherent to risk calculations in the sense that their categorizations are infused with an amount of moral certainty and legitimacy (Ericson and Haggerty, 1997). This presents the achievement of “good health” as an ongoing, forever unfinished project in which even healthy individuals are potentially “at risk” and must, therefore, engage in proactive self-monitoring practices in order to remain in good health (Lupton, 1995).

These practices, therefore, produce a very specific kind of subject: an entrepreneurial citizen who uses digital health technologies to engage in self-surveillance in order to ensure the most accurate representation of their health, which they can then act upon as necessary (French and Smith, 2013; Doshi, 2018). The work of Foucault has been especially relevant in mobilizing critical examinations of digital health technologies and subjectivity (French and Smith, 2013; Williamson, 2015; Esmonde and Jette, 2018). Engagement in self-tracking practices via digital health technologies has been theorized as giving rise to a “quantified self” (Lupton, 2014c; Nafus and Sherman, 2014), or a self that uses apps “to collect, monitor, record and share a range of – quantified and non-quantifiable – information about herself or himself while engaging in ‘the process of making sense of this information as part of the ethical project of selfhood’” (para. 9). The engagement in self-tracking practices under the guise of health has been likened to technologies of the self, in which individuals take actions to “transform themselves in order to attain a certain state of happiness, purity, wisdom, perfection, or immortality” (Foucault, 1977, p. 18). Technologies of the self, in this case digital health technologies, then, interpellate particular subjects or identities (Ayo, 2012; Depper and Howe, 2017; Esmonde and Jette, 2018). Lupton (2013b) uses the term “digitally engaged patient” to describe the subject that emerges through interactions with digital health technologies, highlighting that, through discourses which emphasize patient empowerment and the availability of digital health monitoring tools, the patient is constructed as one that is “at the center of action-taking in relation to health and healthcare” (Swan, 2012; as cited in Lupton, 2013b, p. 258). 
Lupton (2012) explains that the digitally engaged patient is far from disembodied but, instead, is involved in a continuous circuit of data production and response, with information generated by digital health technologies being fed back to the user in a format that encourages the user to act in particular ways.

Objectives

The primary objective of this study is to provide an instructive case example of how the walkthrough method (Light et al., 2018) can be mobilized in the study of apps to address critical health communication research questions. To demonstrate this, we will apply this method to an analysis of the BEACON Rx platform, assessing its environment of expected use to address how it shapes (and is shaped by) understandings of what it means to be healthy, its construction of ideal or default users, and who is, consequently, rendered invisible through these constructions.

The BEACON Rx Platform and Cluster Randomized Controlled Trial

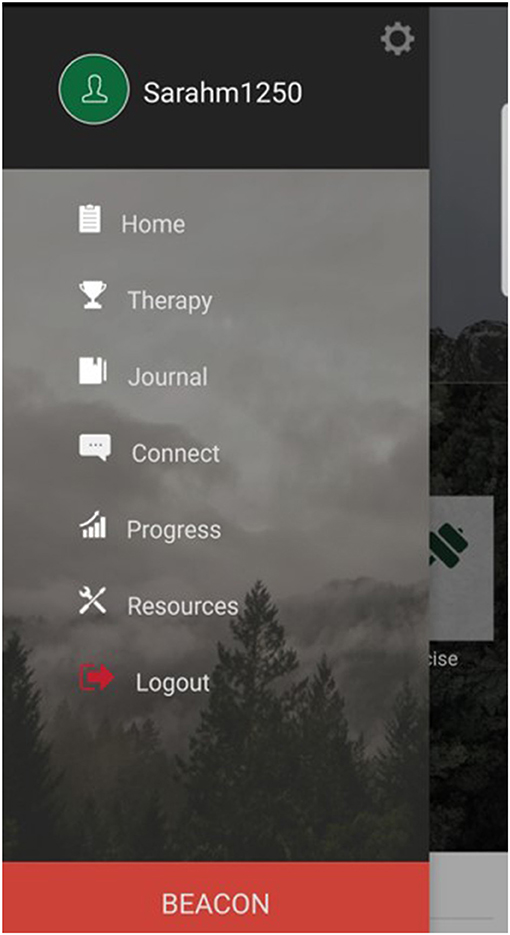

The BEACON Rx Platform was developed through a partnership between CHESS Health Inc. and the Ottawa Hospital Research Institute (OHRI) and is designed to extend the reach of face-to-face psychotherapy for men who present to the Emergency Department for an episode of self-harm. The digital health solution is one component of a complex clinical intervention that is being evaluated through a cluster randomized trial (CRT) in seven Emergency Departments across the province of Ontario. The BEACON Rx platform includes both a patient-facing app and a health provider-facing dashboard. The app includes eight integrated sections: a user profile; a home page, where users can monitor any number of “trackables” (e.g., diet, hygiene and exercise) and mental health outcomes (e.g., mood); materials designed to support face-to-face psychotherapy; a journaling function; a connect feature which allows users to instant message their healthcare provider in between visits; a progress tab where users can review changes to their trackables and mood over time; a resource section which allows their healthcare provider to push out targeted content through the app; and, “the BEACON button” which users can press should they find themselves in a mental health crisis to be connected to their provider, emergency contact or crisis support line (Figure 1). The BEACON Rx Clinician Dashboard facilitates provider case management through the monitoring of patients' progress. Through the Clinician Dashboard, the provider can access anything that is inputted into the app by the patient, including responses on mood logs, trackables, journal entries, and BEACON button presses.

Both authors were involved in the development of the BEACON Rx platform, as Principal Investigator [SH] and Clinical Research Coordinator [SM], assisting with the development of the clinical content to be included in the platform and providing commentary and feedback on its technical architecture. Beyond this, both authors were involved in usability testing of both the beta and current versions of the BEACON Rx platform. As such, it is possible that our familiarity with the platform impacted our findings in this study.

The Walkthrough Method

Despite the proliferation of apps that accompanied the emergence of Web 2.0 innovations, their empirical study brings with it significant methodological challenges. For instance, unlike webpages, they are technically closed systems and researchers rarely have access to their proprietary source code, rendering examinations of their operating structure difficult, if not impossible (Light et al., 2018). While researchers have attempted to solve this problem via queries of Application Programming Interfaces (APIs) (e.g., Bivens, 2017), the data gathered are often incomplete, limited to variables which are relevant for commercial or advertising purposes.

To address these challenges, Light et al. (2018) developed the walkthrough method, which combines science and technology studies (STS) and cultural studies to allow for a systematic step-by-step exploration of apps through their various screens, features, and flow of activities: “slowing down the mundane actions and interactions that form part of normal app use in order to make them salient and therefore available for critical analysis” (Light et al., 2018, p. 882). Through this approach, connections are elucidated between an app's technical interface and discursive and symbolic representations (Light et al., 2018). This, in turn, provides an understanding of the environment of expected use; that is, how designers anticipate the technological artifact will be received by users, how it will generate profit and regulate user activities within the app (Light et al., 2018). This approach is ontologically grounded in Actor-Network Theory (ANT) and understands technology as “never merely technical or social” (Wajcman, 2010, p. 149) but, instead, sociocultural and technological processes are understood to be mutually shaping (Light et al., 2018). As explained by Baym (2010), the consequences of technologies are the result of both their affordances and the ways that these affordances are then appropriated by users. To account for this, we need to consider how social conditions give rise to technological artifacts; how these technologies, in turn, promote or constrict behavior; and, how this is taken up, reworked or resisted through everyday use (Baym, 2010). The walkthrough method also draws on aspects from both textual and semiotic analysis in that it involves an analysis of how apps, through their embedded symbolic and representational features, construct our understanding of gender, ethnicity, race, sexuality, and class. However, as explained by Light et al. (2018), the walkthrough method extends these analyses to provide an understanding of how an app “seeks to configure relations among actors, such as how it guides users to interact (or not) and how these actors construct or transfer meaning” (p. 891).

The application of the walkthrough method involves two key components. First, researchers must conduct a technical walkthrough of the app in which they navigate through the app's various screens, menus and functions, generating detailed fieldnotes or recordings (e.g., screenshots). Elements to be explored during the technical walkthrough include: registration and entry, everyday use, and app suspension, closure and leaving (Light et al., 2018). In addition to documenting an app's technological architecture, researchers should also take note of any mediating characteristics throughout the app, including the user interface; its functions and features; its textual content and tone; and, its symbolic representations conveyed through branding, color, and font choices (Light et al., 2018). Second, researchers must establish an app's environment of expected use, which considers the social, political, cultural, and economic context in which it was developed and gives researchers an understanding of who its intended users are and how they are expected to integrate the app into their everyday practices. This involves an assessment of an app's vision (e.g., its purpose, default users, and conditions of expected use); its operating model (e.g., its business model and its mechanisms for generating profit); and, its governance structure (e.g., how an app regulates user activity in service of its vision and operating model) (Light et al., 2018).
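
As an illustration only, and not a description of the procedure followed in this study, the fieldnotes generated during a technical walkthrough could be organized along the stages and mediating characteristics listed above. The sketch below is a minimal Python example; the `FieldNote` class, its field names, the `group_by_stage` helper and the screenshot file name are all hypothetical conveniences rather than part of Light et al.'s (2018) method.

```python
from collections import defaultdict
from dataclasses import dataclass

# Stages of the technical walkthrough, as listed by Light et al. (2018).
STAGES = (
    "registration and entry",
    "everyday use",
    "suspension, closure and leaving",
)

# Mediating characteristics to note while moving through the app.
MEDIATORS = (
    "user interface",
    "functions and features",
    "textual content and tone",
    "symbolic representation",
)


@dataclass
class FieldNote:
    """One observation recorded during the walkthrough (hypothetical schema)."""
    stage: str
    screen: str
    mediator: str
    observation: str
    screenshot: str = ""  # hypothetical file name of a supporting screenshot

    def __post_init__(self):
        # Reject notes that do not map onto the method's own categories.
        if self.stage not in STAGES:
            raise ValueError(f"unknown stage: {self.stage!r}")
        if self.mediator not in MEDIATORS:
            raise ValueError(f"unknown mediating characteristic: {self.mediator!r}")


def group_by_stage(notes):
    """Return a dict mapping each walkthrough stage to its fieldnotes."""
    grouped = defaultdict(list)
    for note in notes:
        grouped[note.stage].append(note)
    return dict(grouped)
```

Grouping notes in this way simply keeps observations about an app's architecture and its symbolic features side by side for each stage, which is the pairing the method asks researchers to attend to.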

The walkthrough method has been applied by a limited number of scholars in the fields of communication, media, and cultural studies. Bivens and Haimson (2016), for instance, used the walkthrough method to explore how gender is represented in the 10 most popular English-language social media platforms, including Facebook, Twitter, and Instagram. Similarly, Duguay (2017) deployed this method to explore how the concept of authenticity is mobilized on Tinder, a popular mobile dating app. We seek to add to this literature by applying the walkthrough method to the BEACON Rx platform. To our knowledge, at the time of writing, this is the first study to apply this methodology to health apps.

Data Collection

In applying the walkthrough method to an analysis of the BEACON Rx platform, we created a patient-user profile, an administrator-user profile, and a provider-user profile to tour the platform environment. We then took photos of all screens presented to each type of user, which are included in the Supplementary Material to this paper. All app screenshots were generated using a Samsung S8 with Android version 9 and all clinician dashboard screenshots were generated using version 75.0.3770.142 of Google Chrome. These screenshot data are supplemented by analysis of study materials made available through the BEACON Study cluster randomized trial, including the informed consent form and study training manual.

Findings

The Technical Walkthrough

Registration and Entry

We began our technical walkthrough by downloading the BEACON Rx app from the Google Play Store. While anyone can download the app in the Google Play Store, only those with a valid agency identification number (“Agency ID”) can create an account. Once a user creates an account, this account must then be validated by an administrator-user through the Clinician Dashboard. Only once this has been done can the patient-user successfully log in to the BEACON Rx app. Clinician Dashboard registration and entry for administrator- and provider-users occurs in much the same way, with potential users logging on to the clinician dashboard website (https://dashboard.beacon.ohriprojects.ca/) and selecting “Create Account.” In order to sign up for an account, these users must also input a valid Agency ID number and have their accounts validated by another Clinician Dashboard administrator-user. It is also important to note that developers created a central administrator account to allow for the onboarding of additional administrator-users.
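
The two-step gate described above (a valid Agency ID to create an account, then validation by an administrator-user before login) can be sketched as follows. This is a minimal model of the behavior we observed from the outside, not the platform's actual implementation, to which we did not have source-level access; the `Platform` and `Account` classes, their method names, and the example Agency ID are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Account:
    username: str
    role: str  # "patient", "provider" or "administrator"
    validated: bool = False


class Platform:
    """Hypothetical model of the observed registration and entry flow."""

    def __init__(self, valid_agency_ids, central_admin="central-admin"):
        self.valid_agency_ids = set(valid_agency_ids)
        # Developers created a central administrator account so that
        # additional administrator-users can be onboarded.
        self.accounts = {
            central_admin: Account(central_admin, "administrator", validated=True)
        }

    def create_account(self, username, role, agency_id):
        # Anyone can download the app, but account creation needs a valid Agency ID.
        if agency_id not in self.valid_agency_ids:
            raise PermissionError("a valid Agency ID is required")
        account = Account(username, role)
        self.accounts[username] = account
        return account

    def validate(self, admin_username, target_username):
        # Only an already-validated administrator-user can validate an account.
        admin = self.accounts.get(admin_username)
        if admin is None or admin.role != "administrator" or not admin.validated:
            raise PermissionError("only a validated administrator can validate accounts")
        self.accounts[target_username].validated = True

    def can_log_in(self, username):
        # Login succeeds only after administrator validation.
        account = self.accounts.get(username)
        return account is not None and account.validated
```

Read this way, entry to the platform is never self-service: every account, including those of new administrators, passes through an existing administrator-user.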

Everyday Use

Patient-users

Once a patient-user has created an account and successfully logged in to the BEACON Rx app, they can navigate to their profile by accessing the app's side menu (Figure 1). Here, they are encouraged to upload a profile image and/or cover image by either taking a photo of themselves or accessing their photo library to select a photo; update their name as per their preference (e.g., patient-users can use pseudonyms if they do not want to use their real names); and, add a mantra for themselves. In this section of the BEACON Rx app, patient-users can also create or edit an existing safety plan, which is “a procedure that is collaboratively developed to support the participant [to] problem-solve [in] moments when they feel they may be at risk of harm to themselves” (Dunn, 2018, p. 22). The safety plan includes the following elements: warning signs, which are “negative thoughts, moods, and behaviors, that you develop or experience during a crisis”; coping strategies, or “things you can do for yourself to take your mind off a crisis”; high risk locations which “are places or unhealthy social settings you want to avoid to stay on track with recovery”; safe locations, or “places where you can go where you feel safe”; support contacts, described as “people who are good to be around”; and, environment actions, or “things you can do to limit access to ways of hurting yourself and keeping your environment safe” (BEACON Rx, 2019). While users are encouraged to create a safety plan with their health provider during their first therapy sessions, the app does not prevent them from editing these fields outside of therapy. Patient-users can also access the app's settings through its side menu, where they can access push notification settings (e.g., “Alerts”); options to clear their history (although, at the time of writing, users were not able to clear their app history); and, “BEACON” settings, where they can update their emergency contact person.

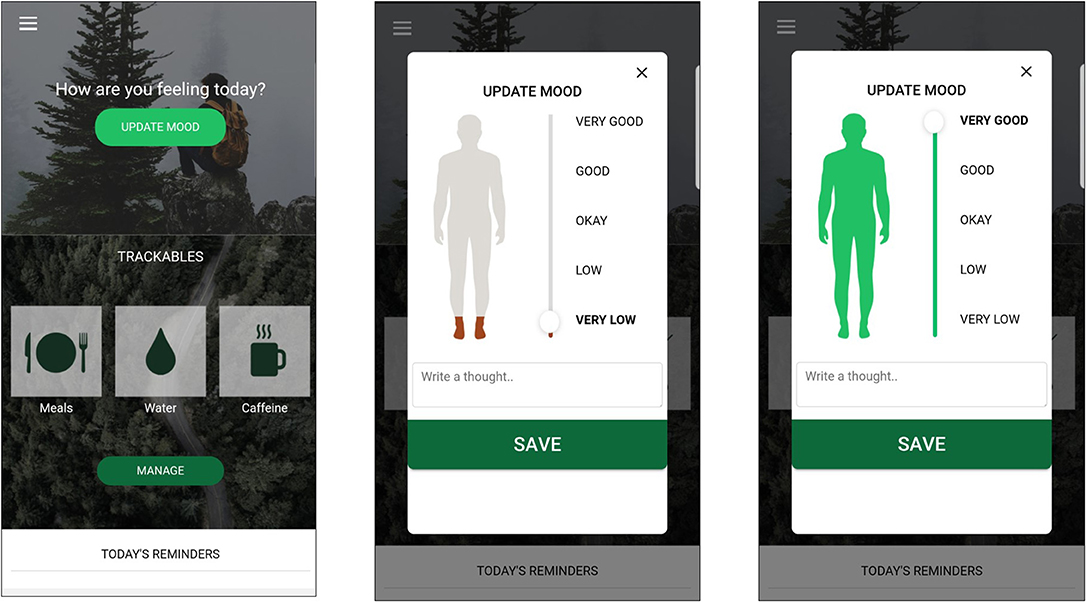

Once a patient-user logs in to the BEACON Rx app, they are immediately taken to the app's home screen, but the home screen can also be accessed from the side menu (Figure 2). Here, patient-users are encouraged to update their mood. Once they select “Update Mood,” the patient-user is prompted to rate their mood on a five-point scale, from “very low” to “very good.” As they toggle this button, the animated figure of a man changes color from deep red (indicating very low mood) to bright green (indicating very good mood) (Figure 2). Following this, as the patient-user scrolls through the home screen, they are also encouraged to log any number of the following trackables, each with its own icon: hygiene, sleep, exercise, meals, water, caffeine intake, alcohol consumption, use of tobacco products or drug use. Provider- and administrator-users also have the option to create a “custom trackable” to capture any other variable of interest. Finally, at the bottom of the home screen, the patient-user can find a list of their day's reminders, which they can set in other parts of the app to remind them to attend appointments, take medication or complete goals they have set during face-to-face therapy with their healthcare provider.

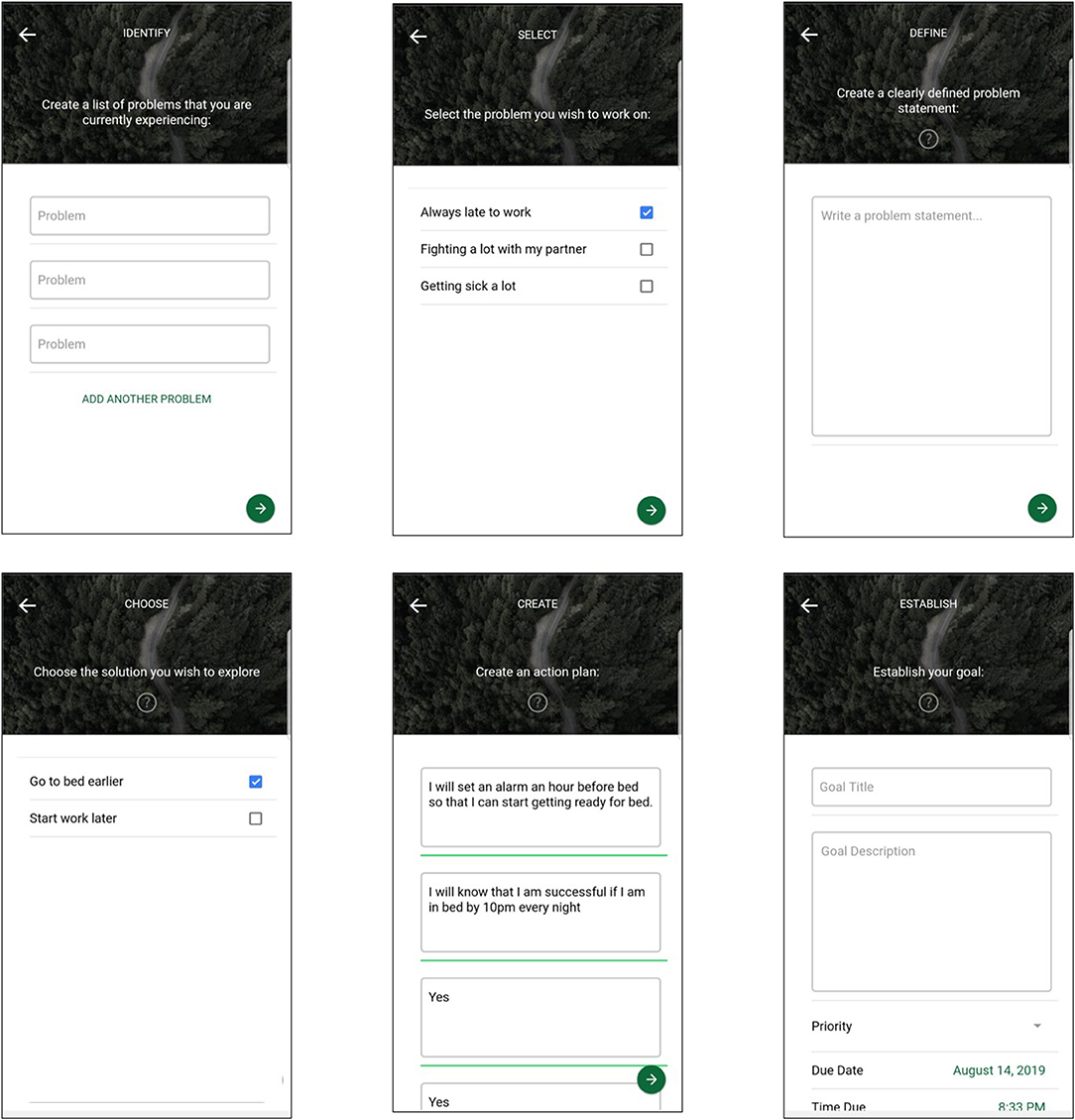

Patient-users can then navigate to the side menu to access the “Therapy” section of the BEACON Rx app. This section is designed to house clinical tools to be used either in therapy with their healthcare provider or by the patient-user as homework. Here, the patient-user can create goals and access therapy materials, such as therapy worksheets, that have been uploaded by their healthcare provider. In creating new goals, a key part of the psychotherapeutic intervention, the patient-user is taken through seven separate screens: “identify,” in which users can identify problems that they are currently experiencing; “select,” where users must select a problem they would like to work on; “define,” where users are asked to write a problem statement; “generate,” in which patient-users can brainstorm potential solutions to the problem they have identified; “choose,” where they must choose which solution they would like to implement; “create,” which prompts users to develop a SMART action plan (i.e., one that is Specific, Measurable, Achievable, Relevant and Time-bound); and, finally, a screen in which patient-users must name their goal in order to save it. In this final screen, users also have the option to set alerts to remind them to complete their goals. In this section of BEACON Rx, the app is designed to elicit particular information and behaviors from patient-users; for instance, it is not possible to move forward from the “identify” screen without inputting at least one problem. Similarly, patient-users are not able to save their goal unless they have gone through and inputted all required information in all goal screens (Figure 3).
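
The way the goal-setting flow elicits particular inputs can be made concrete with a small sketch. The Python example below models only the two constraints we observed: the “identify” screen cannot be left without at least one problem, and a goal cannot be saved until every screen has been completed. It is an assumption-laden illustration rather than the app's code: the `GoalDraft` class and its methods are hypothetical, the label `"name"` for the final screen is our own shorthand, and we treat every screen as required for saving.

```python
# The seven goal screens in order; "name" is our own label for the final screen.
GOAL_SCREENS = ("identify", "select", "define", "generate", "choose", "create", "name")


class GoalDraft:
    """Hypothetical model of a goal being built across the seven screens."""

    def __init__(self):
        self.answers = {}

    def record(self, screen, value):
        if screen not in GOAL_SCREENS:
            raise ValueError(f"unknown goal screen: {screen!r}")
        self.answers[screen] = value

    def _is_filled(self, screen):
        value = self.answers.get(screen)
        if isinstance(value, str):
            return bool(value.strip())  # whitespace-only text counts as empty
        return bool(value)              # empty lists and missing entries count as empty

    def can_leave_identify(self):
        # Observed constraint: at least one problem must be entered.
        return self._is_filled("identify")

    def missing_screens(self):
        return [screen for screen in GOAL_SCREENS if not self._is_filled(screen)]

    def can_save(self):
        # Observed constraint: all required information on all screens.
        return not self.missing_screens()
```

A draft with only a list of problems recorded would pass `can_leave_identify()` but fail `can_save()`, with `missing_screens()` naming the six remaining screens. The user's progress is thus conditional on supplying the kind of information the intervention expects.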

Next, in the “Journal” section, patient-users may select one of 35 writing prompts or write a journal entry on a topic of their choosing. They may also append audio notes recorded via the app or photos from their camera or photo library to their entries. In the “Connect” section of the BEACON Rx app, patient-users may enter a list of their contacts, which will appear in a list in this section, as well as access a chat section. Patient-users can call anyone from their contact list directly from the BEACON Rx app. In the chat section, a patient-user can send a message directly to their healthcare provider in between face-to-face sessions. Messages are read by the provider via the Clinician Dashboard. Through the side menu, patient-users can also access the “Progress” section, where they can track changes to their moods and trackables over the previous week, month, or year. In the “Resources” section of BEACON Rx, the patient-user can access targeted content that has been uploaded by their provider, including links to YouTube videos, audio files and website links. In this section, there is also a tab for local mental health services and crisis lines which have been inputted by their provider and suggested based on a patient-user's location.

Finally, should patient-users find themselves in a mental health crisis, they are encouraged to press the “BEACON Button,” which is a large red button located in the app's side menu (Figure 1). When the patient-user selects the “BEACON” button, they are prompted with a warning measure to ensure that they meant to select this emergency button. If the user selects “yes,” they are taken to the “BEACON” screen where they have access to their safety plan, which can provide them with coping skills to de-escalate their crisis. They are also able to select “Assess Situation” to complete a questionnaire about how they are feeling and which warning signs they are exhibiting. The app then provides them with a recommendation that has been inputted by their provider with advice on what to do next. Finally, patient-users can directly call their emergency contact, healthcare provider, or other support contact from this screen, which they have programmed via the settings and connect menus.

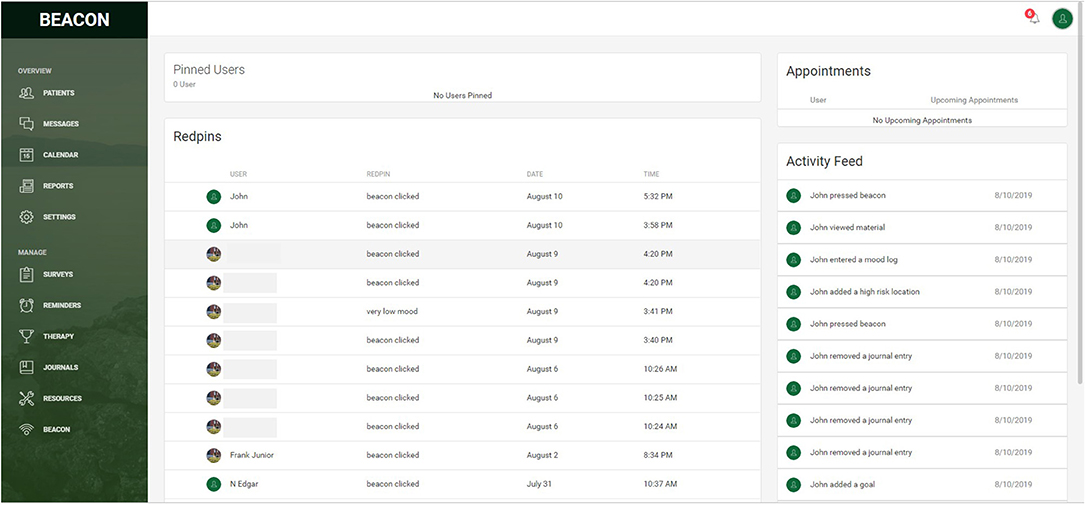

Provider-users

When a provider-user first logs into the BEACON Rx Clinician Dashboard, they are taken to their landing page which mirrors the look and style of the BEACON Rx app. On the clinician- and administrator-user landing page is a list of their pinned users (e.g., their assigned patient-users); a list of user “red pins,” which are pre-established events that might signal that a patient-user is in distress, such as a decline in mood, clicking of the BEACON button, entering a high-risk location, or any custom red pins created by their provider-user (e.g., a visit to the Emergency Department); a list of upcoming appointments; and, their patient-user activity feed which logs all actions by their assigned patient-users in the BEACON Rx app (Figure 4).
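The logic of “red pins” can be illustrated with a short sketch in which each pin is a rule evaluated against a patient-user's logged events. This is an assumption-laden illustration rather than a description of the platform's implementation: the `Event` and `RedPinRule` types, the event names, and the mood threshold are all hypothetical, and a “decline in mood” is simplified here to a rating at or below a fixed cut-off on the five-point scale.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Event:
    patient_id: str
    kind: str      # hypothetical event names, e.g. "mood_logged", "beacon_pressed"
    payload: dict


@dataclass(frozen=True)
class RedPinRule:
    label: str
    matches: Callable[[Event], bool]


# Assumption: ratings run from 1 ("very low") to 5 ("very good"); 2 or below triggers a pin.
LOW_MOOD_THRESHOLD = 2

DEFAULT_RULES = [
    RedPinRule("low mood",
               lambda e: e.kind == "mood_logged"
               and e.payload.get("rating", 5) <= LOW_MOOD_THRESHOLD),
    RedPinRule("BEACON button pressed",
               lambda e: e.kind == "beacon_pressed"),
    RedPinRule("high-risk location",
               lambda e: e.kind == "location_entered"
               and e.payload.get("high_risk", False)),
]


def red_pins_for(event: Event, rules=DEFAULT_RULES) -> list:
    """Return the labels of every rule that the event triggers."""
    return [rule.label for rule in rules if rule.matches(event)]


# A provider-defined custom pin, such as a visit to the Emergency Department.
ed_visit = RedPinRule("emergency department visit", lambda e: e.kind == "ed_visit")
```

Framed this way, a red pin is a provider-side classification applied to patient-generated data: the patient-user supplies the events, while the rules that define what counts as distress are set elsewhere.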

From here, provider-users can navigate to the top right corner to their profile where they can update their contact information and upload a picture from their computer. They can also navigate to the preferences menu to toggle the extent to which they receive notifications, both in the Clinician Dashboard and by email. In module settings, provider- and administrator-users can edit the look of their landing page by modifying what elements appear here (e.g., patient activity feed, red pins, pinned users, and reminders). Here, they can also edit their pinned users, which appear at the top of their landing page (Figure 4).

Next, by clicking on the “Patients” tab, a provider-user can access a list of their assigned patients. Patients whose profile pictures are outlined in blue are “active” patient-users, which refers to patients in the active treatment period of the study intervention, and patients whose profile pictures are outlined in red are “passive” patient-users, which refers to patients who are in the follow-up period of the study. From this screen, provider-users can access each of their assigned patient-users' profiles by selecting their profile picture. In the patient profile screen, the provider-user can access any information that is inputted into the BEACON Rx app by their assigned patient-user, including: the details for their safety plan, results on mood logs, reminders, goals created by the patient-user, journal entries, chat message history between the patient- and provider-user, achievements (however, at the time of writing, these were disabled); information relating to the BEACON button, including any red pins that have been triggered and completed BEACON assessments; and, finally, settings, where provider-users can modify alert settings and patient-users' ability to change these features in the BEACON Rx app. In this screen, provider-users can also edit or add information which will then appear in the patient-users' app; for instance, a provider-user can add an appointment to the patient-user's profile, which will then appear in the reminders section of that patient-user's BEACON Rx app.

Through the “Messages” tab, a provider-user can access the chat history with all of their assigned patient-users. Here, rather than loading each patient-user's profile to respond to potential messages, they are all consolidated in one place. The “Calendar” tab provides a consolidated view of all scheduled patient-user appointments. From this menu, the provider-user can also easily add an upcoming appointment during a face-to-face therapy session which will be reflected in the patient-user's reminders. In the “Reports” section, a provider-user can generate patient-user reports on any number of activities of interest, including but not limited to red pins, modifications to the safety plan, and changes in reported mood. However, at the time of writing, this section of the BEACON Rx Clinician Dashboard was not yet enabled. Next, through the “Settings” tab, provider-users may toggle the notifications and email settings associated with the BEACON Rx Clinician Dashboard, including low mood notifications, BEACON Button presses, and high-risk location notifications.

In addition to these day-to-day case management activities, provider-users can also manage the content of the app through the BEACON Rx Clinician Dashboard. In the “Surveys” tab, provider-users are provided with an overview of the mood logs for all of their assigned patient-users, are able to add different trackables to their patient-users' home screens, and, add customized trackables which can be targeted to particular patient-users. Provider-users are also able to send out surveys which have been hard-coded into the BEACON Rx app; however, at the time of writing, this portion of the BEACON Rx platform had not yet been deployed due to technological difficulties. The “Reminders” section allows provider-users to view all assigned patient-user reminders as well as create new reminders for their assigned patients as needed. The “Therapy” section allows provider-users to view all of the goals created by their assigned patient-users and upload relevant therapy materials, such as patient worksheets and psychoeducational materials.

Next, via the “Journals” tab, provider-users can view a consolidated list of all journal entries created by their patients as well as any appended audio-visual materials. The “Resources” tab allows provider- and administrator-users to upload targeted content to the app. It also provides a list of all resource content uploaded to the BEACON Rx app by all provider- and administrator-users as well as a list of services and crisis lines to be consulted by patient-users. All of the content uploaded in this screen can be either sent to all patient-users or to only a specific few who might find it useful. Finally, the “BEACON” tab allows provider-users to view a consolidated list of all completed BEACON Assessments. It is also here that provider-users may add to a consolidated list of warning signs, feelings, and recommendations which appear in the BEACON Rx app's “Assess Situation” screens.

Administrator-users

Administrator-user accounts are identical to provider-user accounts with two important exceptions: the ability to approve new provider-users, and the ability to approve and assign new patient-users to provider-users. When the “Approve” tab is selected, an administrator-user can view all new patient- and provider-user accounts as well as approve or deny these requests. This screen also provides a list of all current administrator- and provider-user accounts. Through this screen, administrator-users have the ability to enable or disable user access, delete provider-users, and toggle a user's account type (e.g., provider/administrator). Next, via the “Assign” tab, administrator-users can approve new patient-user accounts and assign them to the appropriate provider-user(s). In this section, an administrator-user may also select a provider-user to view or modify their list of assigned patients.

App Suspension, Closure, and Leaving

Administrator-users

While patient- and provider-users can log out of the BEACON Rx platform, they are not currently permitted to delete or close their accounts. While an administrator-user can delete a provider-user account through the “Approve” section of the BEACON Rx Clinician Dashboard, currently, patient-user accounts cannot be deleted; they can only be disabled at the end of their participation in the study (e.g., either at withdrawal or study completion).
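The asymmetry in who can close which accounts can be summarized in a small permission sketch. The role names follow the walkthrough, but the functions are hypothetical, and two points are assumptions rather than observations: that administrator-users can disable any account type, and that administrator-user accounts themselves cannot be deleted (the walkthrough only establishes that provider-user accounts can be).

```python
from enum import Enum


class Role(Enum):
    PATIENT = "patient"
    PROVIDER = "provider"
    ADMINISTRATOR = "administrator"


def can_log_out(actor: Role) -> bool:
    """Every account type can log out of the platform."""
    return True


def can_delete_account(actor: Role, target: Role) -> bool:
    """Only administrator-users can delete, and only provider-user accounts.

    Assumption: administrator-user accounts are treated as non-deletable here,
    since the walkthrough does not describe their deletion.
    """
    return actor is Role.ADMINISTRATOR and target is Role.PROVIDER


def can_disable_account(actor: Role, target: Role) -> bool:
    """Assumption: administrator-users can disable access for any account type."""
    return actor is Role.ADMINISTRATOR
```

Read against this sketch, a patient-user has no route to removing their own account: `can_delete_account` returns `False` for every actor when the target is a patient-user, leaving disabling by an administrator-user as the only form of closure.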

Terms of Use

Given that the BEACON Rx Platform is currently designed to support an interventional treatment, no terms of use content exists within the platform. Instead, this information is reviewed with patient-, provider-, and administrator-users prior to use. Prior to enrollment in the study, patient-users are asked to review the study's Informed Consent Form, which includes information related to the BEACON Rx platform as an intervention, how it is to be used, and data privacy and confidentiality information. Prior to onboarding, provider- and administrator-users are trained using the Study Treatment Manual which details how each session of face-to-face therapy is to be conducted and how the BEACON Rx platform is to be incorporated into these sessions.

The BEACON Study's Informed Consent Form explains to patient-users that the app is designed to use “the power and convenience of the internet to allow simultaneous and time delayed communication between an individual and their therapist, as well as the delivery of cognitive behavior therapy and access to resources designed specifically for self-harm” (Hatcher, 2018, p. 2). Similarly, it summarizes research revealing that men are less likely to seek mental healthcare services for the treatment of self-harm and are, thus, more likely to die by suicide than their female counterparts. The BEACON Rx platform has therefore been designed to fill this gap in care and encourage men to seek support by making it available to them with the click of a few buttons, on devices that they carry with them everywhere. In explaining the functionality of the app, the Informed Consent Form highlights the importance of the mood log and GPS functionality embedded within the app which, while optional, are designed to help patient- and provider-users monitor the risk of subsequent self-harm or suicide. Finally, it is explained to patient-users that provider- and administrator-users will have access to all information that is inputted into the app by patient-users and, as a result, they may “discover thoughts or behaviors that raise concern about harm to yourself or others. If the research team sees anything that suggests you or others face imminent risk of harm, they will contact appropriate staff members to intervene” (Hatcher, 2018, p. 5).

Similarly, the Study Treatment Manual, which is used to train all new staff members (i.e., provider- and administrator-users), introduces the BEACON Rx Clinician Dashboard as a tool to “manage and monitor both progress and setbacks experienced by participants” (Dunn, 2018, p. 12). The manual also encourages study staff to download the BEACON Rx app to become familiar with it in order to build trust and rapport with future patient-users. Provider- and administrator-users are encouraged to set aside at least 30 min each day to “track daily check-ins, receive and send messages, and review participant [app] usage” (p. 14). Prior to detailing the session-by-session breakdown of the therapy being used in the study, the Study Treatment Manual includes a section on safety planning and relapse prevention. This section guides future provider-users through the steps to establish a patient-user's safety plan. This is an essential task that begins at the start of the therapeutic relationship. Central to this plan is the identification of risk and protective factors.

Discussion: The Environment of Expected Use

Vision

The BEACON Rx platform's vision is clearly laid out for prospective patient-, provider-, and administrator-users through the terms of use documentation (i.e., the Informed Consent and Study Treatment Manual documents). Through the analysis of these documents we see that the BEACON Rx Platform, despite language around patient empowerment, is designed as a risk-monitoring tool for men, identified as a high-risk, treatment-averse group. The language of risk is mobilized throughout both the BEACON Rx platform and its terms of use documentation. Patient-users are informed that the platform will be used to “monitor their risk of self-harm” and the extent to which they move in and out of “risky” locations. Through the explanation of the safety and suicide risk management protocols embedded within the platform and larger study, it is explained to patient-users that their usage data will also be used to monitor risk, and should the combination of data suggest that they are at risk, the appropriate members of their care team will intervene. Similarly, the technical structure of the BEACON Rx platform is also based around risk, which can be seen through the establishment of the safety plan, the use of red pins to notify the provider-user that their assigned patient-users are engaging in risky behaviors, and notifications received by patient-users when they enter “risky” locations. Within this conceptualization, the healthcare provider now has access to unlimited amounts of information about their patients to facilitate diagnosis and risk categorization. The BEACON Rx platform, thus, separates patients into innumerable categories based on their risk of self-harm. Patients who are perceived as being at greater risk for self-harm, in turn, receive greater follow-up from their providers. This categorization of individuals is essential to the neoliberal rationality in which “at risk” populations are targeted as requiring a greater degree of disciplinary control (Lupton, 2012).

This constructs the ideal BEACON Rx patient-user as one who proactively and dutifully tracks his mental health symptoms and triggers and who, upon review with his healthcare provider, modifies his behavior accordingly. This mirrors Lupton's (2013b) description of the “digitally engaged patient.” Patient-users are engaged in a feedback loop in which they not only generate endless amounts of data for their provider through the use of the BEACON Rx app, but they are then expected to modify their behavior accordingly based on the data that have been generated. Not only are patient-users expected to truthfully report their mood and other variables of interest to their provider, they are also expected to proactively consult online and community resources available through the app in between face-to-face sessions with their provider. Further to this, they are also expected to alert their provider when they are in a mental health crisis by pressing the BEACON button, which directly connects them to their provider, an emergency contact or a mental health crisis line. This renders invisible men who are hesitant to seek health services; those who are not comfortable with using or do not have access to a smartphone; and men who are chronically suicidal, which impacts their willingness to engage with digital health technologies. These patient-users are then viewed as “bad” citizens.

Operating Model

When apps are not being sold for commercial use, such as those produced by governments or healthcare institutions, Light et al. (2018) encourage researchers to consider what other forms of revenue might figure into their operating structure. The BEACON Rx platform is an experimental research technology that is being funded by federal grant money, and therefore, providing evidence for its proof of concept is essential. Study sites and their patients receive access to the platform free of charge in exchange for their data, both clinical data, such as scores on standardized mental health assessments, and platform usage data, in order to demonstrate its effectiveness in the treatment of self-harm among men. These data are essential to the next phase of the platform's development, garnering interest from investors for its commercialization. Consider, for instance, the A-CHESS platform, which is designed to reduce problematic substance use. Gustafson et al. (2014) first evaluated this platform for clinical effectiveness among veterans in the United States and it is now being sold commercially to addiction management providers across the country. The data initially supplied by patients and providers in the Gustafson et al. (2014) clinical trial were an essential step to establishing commercial interest in the A-CHESS platform. As explained by Crawford (2006), this emphasis on the commercialization potential of digital health technologies is made possible by a capitalist, neoliberal climate in which “good” citizens are those who engage in self-monitoring practices for the purposes of self-improvement.

Governance Structure

The BEACON Rx platform's governance structure is evident in the access and data management structure embedded within it. Access to the BEACON Rx platform is tightly controlled. While the app and Clinician Dashboard website are publicly available, in order for an account to be created, potential users must be provided with a valid Agency ID. Additionally, even when users have a valid Agency ID, all accounts must first be validated by an administrator-user who has the ability to approve or deny access to potential users. Patient-users are also not permitted to clear their data histories, functionality which can only be accessed through provider- or administrator-user accounts. Finally, while both patient- and provider-users can log out of the BEACON Rx app and/or Clinician Dashboard, it is not possible for them to delete their accounts; these can only be disabled by an administrator-user. This highlights the complexity of designing digital health technologies for integration in clinical care. Here, we see a distinction between primary and secondary default users. Despite the fact that patient-users input the majority of the information into the BEACON Rx platform, they appear to be its secondary users, with the technical architecture of the platform designed to first meet the risk monitoring and case management needs of its provider-users.

We argue that Lupton's (2013b) concept of the “digitally engaged patient” can be expanded to the imagined provider-user promoted by the BEACON Rx platform in what we are calling the “digitally informed provider.” Digital health technologies are part of what Davis (2012) terms a “techno-utopia” in which health-related technological innovations are understood as normatively good and necessary to health and happiness. In this vein, data is equated with knowledge which is seen as necessarily good. The more data one has about their body (or the bodies of their patients), the more knowledge one has. More knowledge is necessarily better as it is key to the prevention of illness and disease, negating the role played by social determinants of health. This is reflective of an ideological discourse which, in the case of health surveillance, is reflective of the neoliberal emphasis on personal responsibility (Ayo, 2012). That is, the encouragement of citizens to voluntarily subject themselves to increased levels of surveillance under the guise of self-improvement is central to the neoliberal agenda (Lupton, 2014a).

These constructions of the digitally engaged patient and the digitally informed provider have significant impacts on the patient-provider relationship. Interactions that once took place in person and involved the provider physically meeting with the patient to diagnose a problem are now routinely delegated to technological solutions (Lupton, 2014a). Through the use of mental health apps as part of clinical care, providers have a greater ability to monitor and act upon their patients than ever before as a result of the volume of data that these technologies collect. The relationship to the patient, in a sense, is secondary to the relationship to the patient's data. This results in a shifting of the onus for healthcare from the provider to the patient that is characteristic of a neoliberal climate. Here, we also witness a shift in the types of knowledge that are privileged in healthcare interactions. Specifically, on the surface, we see a shift from an emphasis on the instrumental knowledge of the provider to the introspective knowledge of the patient. However, we argue that this perceived shift is part of the rhetoric of patient empowerment in which self-monitoring is presented as a choice that is made by the patient; this is, at least in part, an illusion. This is similar to what Lupton (2014c, 2016) refers to as imposed self-tracking. That is, while patients have a choice as to whether or not to engage with self-tracking practices, these choices are constrained by the consequences of non-engagement. In the case of the BEACON Rx platform, patients' choices are limited by the fact that a possible consequence of non-tracking is the potential of receiving little to no treatment. We need to question what meaningful consent looks like when self-tracking technologies are deployed as part of routine psychiatric care.

Conclusion

In this paper, we have sought to provide a case example of how to effectively deploy Light et al.'s (2018) walkthrough method to answer research questions relevant to critical health communication studies. As demonstrated in this paper, this method allows for the detailed consideration of how an app's technical architecture and environment of expected use are embedded with symbolic representations of what it means to be healthy and what practices should be engaged in to maintain “good” health. It also allows for an analysis of not only the textual content of the technological artifact but also the content developed around it (e.g., websites, blogs, marketing material, employee recruitment documents) as well as how its representative or stylistic elements, such as icons, colors and fonts, come together to produce the conditions under which an app should be used, by whom, and to what end.

In the current example of the BEACON Rx platform, the walkthrough method allowed for a consideration of how mental health apps which purportedly allow users to take control of their own health through self-tracking are designed as tools of risk monitoring. Further, it demonstrated that the ideal (healthy) patient-user is one who is invested in his own health, which he demonstrates through the dutiful tracking of his mood and self-harm triggers, and who is in constant contact with his provider, ready to modify his behavior to ensure his own health at a moment's notice. Similarly, the ideal provider-user is one who spends a significant amount of his or her clinical time reviewing usage data generated by patients via the BEACON Rx app to ensure that their risk factors are closely monitored, and who is prepared to intervene as needed. This construction does not take account of the impact of the digital divide or of the impact that chronic suicidality may have on one's willingness to engage with self-tracking technologies. These users, who are rendered invisible through the BEACON Rx platform, are then, within a neoliberal framework that emphasizes personal accountability for actions related to health and healthcare, viewed as “bad” citizens.

However, as explained by French and Smith (2013), “social control through health-related surveillance is neither straightforward, nor a foregone conclusion” (p. 387). The walkthrough method, as deployed in the current analysis, cannot account for patient-, provider-, and administrator-users' perceptions of mental health app use. In order to address these questions, researchers would need to employ, in addition to a technical walkthrough of the technological artifact, an interview with users or, perhaps, a guided walkthrough with users in which they explain their use of the app (e.g., Light, 2007). In doing so, researchers would be able to describe how users ignore, resist, or even enjoy self-tracking practices, an essential component of understanding health-related surveillance in our neoliberal climate.

Data Availability Statement

The raw data supporting the conclusions of this manuscript will be made available by the authors, without undue reservation, to any qualified researcher.

Author Contributions

SH was the grant holder, conceived of The BEACON Study, and developed the BEACON platform in collaboration with CHESS Health Inc. SM conceived of the current sub-study, completed the data collection and analysis, and took the lead in drafting the manuscript. SM and SH reviewed and approved the final manuscript.

Funding

The BEACON Study clinical trials were supported by an Ontario SPOR Support Unit (OSSU) IMPACT grant, developed via a partnership between the Canadian Institutes of Health Research (CIHR) and the Ontario Ministry of Health and Long-Term Care. The funders had no role in the review or approval of the manuscript for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomm.2019.00052/full#supplementary-material

References

Ajana, B. (2017). Digital health and the biopolitics of the quantified self. Digit. Health 3:205520761668950. doi: 10.1177/2055207616689509

Andreassen, H. K., Trondsen, M., Kummervold, P. E., Gammon, D., and Hjortdahl, P. (2006). Patients who use e-mediated communication with their doctor: new constructions of trust in the patient-doctor relationship. Qual. Health Res. 16, 238–248. doi: 10.1177/1049732305284667

Ayo, N. (2012). Understanding health promotion in a neoliberal climate and the making of health conscious citizens. Crit. Public Health 22, 99–105. doi: 10.1080/09581596.2010.520692

Barnard-Wills, D. (2012). Surveillance and Identity: Discourse, Subjectivity and the State. Surrey: Ashgate.

Beer, D., and Burrows, R. (2010). Consumption, prosumption and participatory Web cultures: an introduction. J. Consumer Cult. 10, 3–12. doi: 10.1177/1469540509354009

Bivens, R. (2017). The gender binary will not be deprogrammed: Ten years of coding gender on Facebook. New Media Soc. 19, 880–898. doi: 10.1177/1461444815621527

Bivens, R., and Haimson, O. L. (2016). Baking gender into social media design: how platforms shape categories for users and advertisers. Soc. Media Soc. 2:205630511667248. doi: 10.1177/2056305116672486

Boschen, M. J., and Casey, L. M. (2008). The use of mobile telephones as adjuncts to cognitive behavioral psychotherapy. Prof. Psychol. Res. Pract. 39, 546–552. doi: 10.1037/0735-7028.39.5.546

Carroll, K. M., Ball, S. A., Martino, S., Nich, C., Babuscio, T. A., Nuro, K. F., et al. (2008). Computer-assisted delivery of cognitive-behavioral therapy for addiction: a randomized trial of CBT4CBT. Am. J. Psychiatry 165, 881–888. doi: 10.1176/appi.ajp.2008.07111835

Crawford, R. (1980). Healthism and the medicalization of everyday life. Int. J Health Services 10, 365–388.

Crawford, R. (2006). Health as a meaningful social practice. Health 10, 401–420. doi: 10.1177/1363459306067310

Davis, J. L. (2012). Social media and experiential ambivalence. Fut. Internet 4, 955–970. doi: 10.3390/fi4040955

Depper, A., and Howe, P. D. (2017). Are we fit yet? English adolescent girls' experiences of health and fitness apps. Health Sociol. Rev. 26, 98–112. doi: 10.1080/14461242.2016.1196599

Doshi, M. J. (2018). Barbies, goddesses, and entrepreneurs: discourses of gendered digital embodiment in women's health apps. Women's Stud. Commun. 41, 183–203. doi: 10.1080/07491409.2018.1463930

Duguay, S. (2017). Dressing up Tinderella: interrogating authenticity claims on the mobile dating app Tinder. Inform. Commun. Soc. 20, 351–367. doi: 10.1080/1369118X.2016.1168471

Dunn, N. (2018). BEACON Study Treatment Manual. Unpublished BEACON Study document. Ottawa, ON: Clinical Epidemiology Program; Ottawa Hospital Research Institute.

Elias, A. S., and Gill, R. (2018). Beauty surveillance: the digital self-monitoring cultures of neoliberalism. Eur. J. Cult. Stud. 21, 59–77. doi: 10.1177/1367549417705604

Ericson, R., Barry, D., and Doyle, A. (2000). The moral hazards of neo-liberalism: lessons from the private insurance industry. Econ. Soc. 29, 532–558. doi: 10.1080/03085140050174778

Ericson, R. V., and Haggerty, K. D. (1997). Policing the Risk Society. Toronto, ON: University of Toronto Press.

Ericson, R. V., and Haggerty, K. D. (eds.). (2006). The New Politics of Surveillance and Visibility. Toronto, ON: University of Toronto Press.

Esmonde, K., and Jette, S. (2018). Assembling the ‘Fitbit subject’: a Foucauldian-sociomaterialist examination of social class, gender and self-surveillance on Fitbit community message boards. Health. doi: 10.1177/1363459318800166

Fotopoulou, A., and O'Riordan, K. (2017). Training to self-care: fitness tracking, biopedagogy and the healthy consumer. Health Sociol. Rev. 26, 54–68. doi: 10.1080/14461242.2016.1184582

French, M., and Smith, G. (2013). “Health” surveillance: new modes of monitoring bodies, populations, and polities. Crit. Public Health 23, 383–392. doi: 10.1080/09581596.2013.838210

Greenhalgh, T., Wherton, J., Sugarhood, P., Hinder, S., Procter, R., and Stones, R. (2013). What matters to older people with assisted living needs? A phenomenological analysis of the use and non-use of telehealth and telecare. Soc. Sci. Med. 93, 86–94. doi: 10.1016/j.socscimed.2013.05.036

Guattari, F., and Deleuze, G. (2000). A Thousand Plateaus: Capitalism and Schizophrenia. London: Athlone Press.

Gustafson, D. H., McTavish, F. M., Chih, M.-Y., Atwood, A. K., Johnson, R. A., Boyle, M. G., et al. (2014). A smartphone application to support recovery from alcoholism. JAMA Psychiatry 71:566. doi: 10.1001/jamapsychiatry.2013.4642

Haggerty, K. D., and Ericson, R. V. (2000). The surveillant assemblage. Br. J. Sociol. 51, 605–622. doi: 10.1080/00071310020015280

Hatcher, S. (2018). Informed Consent Form for Participation in a Research Study. Unpublished BEACON Study document. Ottawa, ON: Clinical Epidemiology Program, Ottawa Hospital Research Institute.

Houle, S. K. D., Atkinson, K., Paradis, M., and Wilson, K. (2017). CANImmunize: a digital tool to help patients manage their immunizations. Can. Pharm. J. 150, 236–238. doi: 10.1177/1715163517710959

Kleiboer, A., Smit, J., Bosmans, J., Ruwaard, J., Andersson, G., Topooco, N., et al. (2016). European COMPARative Effectiveness research on blended Depression treatment versus treatment-as-usual (E-COMPARED): study protocol for a randomized controlled, non-inferiority trial in eight European countries. Trials 17:387. doi: 10.1186/s13063-016-1511-1

Kooistra, L. C., Wiersma, J. E., Ruwaard, J., van Oppen, P., Smit, F., Lokkerbol, J., et al. (2014). Blended vs. face-to-face cognitive behavioural treatment for major depression in specialized mental health care: study protocol of a randomized controlled cost-effectiveness trial. BMC Psychiatry 14:290. doi: 10.1186/s12888-014-0290-z

Krieger, T., Meyer, B., Sude, K., Urech, A., Maercker, A., and Berger, T. (2014). Evaluating an e-mental health program (“deprexis”) as adjunctive treatment tool in psychotherapy for depression: design of a pragmatic randomized controlled trial. BMC Psychiatry 14:285. doi: 10.1186/s12888-014-0285-9

Kuhn, E., Crowley, J., Hoffman, J. E., Eftekhari, A., Ramsey, K., Owen, J., et al. (2015). Clinician characteristics and perceptions related to use of the PE (prolonged exposure) coach mobile app. Prof. Psychol. Res. Pract. 46, 437–443. doi: 10.1037/pro0000051

Larsen, M. E., Huckvale, K., Nicholas, J., Torous, J., Birrell, L., Li, E., et al. (2019). Using science to sell apps: evaluation of mental health app store quality claims. Npj Digit. Med. 2:18. doi: 10.1038/s41746-019-0093-1

Light, B. (2007). Introducing masculinity studies to information systems research: the case of Gaydar. Eur. J. Inf. Sys. 16, 658–665.

Light, B., Burgess, J., and Duguay, S. (2018). The walkthrough method: an approach to the study of apps. New Media Soc. 20, 881–900. doi: 10.1177/1461444816675438

Lupton, D. (2012). M-health and health promotion: the digital cyborg and surveillance society. Soc. Theory Health 10, 229–244. doi: 10.1057/sth.2012.6

Lupton, D. (2013a). Quantifying the body: monitoring and measuring health in the age of mHealth technologies. Crit. Public Health 23, 393–403. doi: 10.1080/09581596.2013.794931

Lupton, D. (2013b). The digitally engaged patient: self-monitoring and self-care in the digital era. Soc. Theory Health 11, 256–270. doi: 10.1057/sth.2013.10

Lupton, D. (2014b). “Self-tracking cultures: towards a sociology of personal informatics,” in Proceedings of the 26th Australian Computer-Human Interaction Conference, OzCHI 2014, 77–86. doi: 10.1145/2686612.2686623

Lupton, D. (2014c). Self-tracking modes: reflexive self-monitoring and data practices. SSRN Electron. J. 1–19. doi: 10.2139/ssrn.2483549

Lupton, D. (2015). Quantified sex: a critical analysis of sexual and reproductive self-tracking using apps. Cult. Health Sexuality 17, 440–453. doi: 10.1080/13691058.2014.920528

Lupton, D. (2016). The diverse domains of quantified selves: self-tracking modes and dataveillance. Econ. Soc. 45, 101–122. doi: 10.1080/03085147.2016.1143726

Lupton, D., and Jutel, A. (2015). “It's like having a physician in your pocket!” A critical analysis of self-diagnosis smartphone apps. Soc. Sci. Med. 133, 128–135. doi: 10.1016/j.socscimed.2015.04.004

Miller, K. E., Kuhn, E., Owen, J. E., Taylor, K., Yu, J. S., Weiss, B. J., et al. (2019a). Clinician perceptions related to the use of the CBT-I Coach mobile app. Behav. Sleep Med. 17, 481–491. doi: 10.1080/15402002.2017.1403326

Miller, K. E., Kuhn, E., Yu, J., Owen, J. E., Jaworski, B. K., Taylor, K., et al. (2019b). Use and perceptions of mobile apps for patients among VA primary care mental and behavioral health providers. Prof. Psychol. Res. Pract. 50, 204–209. doi: 10.1037/pro0000229

Millington, B. (2014). Smartphone apps and the mobile privatization of health and fitness. Crit. Stud. Media Commun. 31, 479–493. doi: 10.1080/15295036.2014.973429

Nafus, D., and Sherman, J. (2014). This one does not go up to 11: the Quantified Self Movement as an alternative big data practice. Int. J. Commun. 8, 1784–1794.

Reid, S. C., Kauer, S. D., Hearps, S. J., Crooke, A. H., Khor, A. S., Sanci, L. A., et al. (2011). A mobile phone application for the assessment and management of youth mental health problems in primary care: a randomised controlled trial. BMC Fam. Pract. 12:131. doi: 10.1186/1471-2296-12-131

Ritzer, G. (2014). Prosumption: evolution, revolution, or eternal return of the same? J. Consumer Cult. 14, 3–24. doi: 10.1177/1469540513509641

Swan, M. (2012). Health 2050: the realization of personalized medicine through crowdsourcing, the quantified self, and the participatory biocitizen. J. Pers. Med. 2, 93–118. doi: 10.3390/jpm2030093

Wajcman, J. (2010). Feminist theories of technology. Cambridge J. Econ. 34, 143–152. doi: 10.1093/cje/ben057

Watts, S., Mackenzie, A., Thomas, C., Griskaitis, A., Mewton, L., Williams, A., et al. (2013). CBT for depression: a pilot RCT comparing mobile phone vs. computer. BMC Psychiatry 13:49. doi: 10.1186/1471-244X-13-49

Williamson, B. (2015). Algorithmic skin: health-tracking technologies, personal analytics and the biopedagogies of digitized health and physical education. Sport Educ. Soc. 20, 133–151. doi: 10.1080/13573322.2014.962494

Wright, J. H., Wright, A. S., Albano, A. M., Basco, M. R., Goldsmith, L. J., Raffield, T., et al. (2005). Computer-assisted cognitive therapy for depression: maintaining efficacy while reducing therapist time. Am. J. Psychiatry 162, 1158–1164. doi: 10.1176/appi.ajp.162.6.1158

Youpper. (2019). Youper - Emotional Health Assistant Powered by AI. Retrieved from: https://www.youper.ai/ (accessed August 15, 2019)

Keywords: mental health, apps, surveillance, neoliberalism, walkthrough method, critical health communication

Citation: MacLean S and Hatcher S (2019) Constructing the (Healthy) Neoliberal Citizen: Using the Walkthrough Method “Do” Critical Health Communication Research. Front. Commun. 4:52. doi: 10.3389/fcomm.2019.00052

Received: 27 April 2019; Accepted: 12 September 2019; Published: 04 October 2019.

Edited by:

Ambar Basu, University of South Florida, United States

Reviewed by:

Vian Bakir, Bangor University, United Kingdom

Patrick J. Dillon, Kent State University at Stark, United States

Marissa Joanna Doshi, Hope College, United States

Copyright © 2019 MacLean and Hatcher. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sarah MacLean, sarah.maclean3@carleton.ca
