- Department of Communication, Wayne State University, Detroit, MI, United States

Though the contemporary media environment is filled with many different sources disseminating communications about science (including journalists, politicians and opinion leaders, and researchers), few studies have examined how messages about science communicated by different sources directly influence audience opinion about scientific research itself. This experiment (N = 170) used stimulus articles that reflected different presentation sources (3: political/public relations/researcher) and types of science research (2: “hard”/“soft”) to examine effects on people's attitudes toward the featured research project's (a) utility and (b) worthiness of federal grant funding, while controlling for individual differences in political attitudes and interest in science. Overall, political-source messages suppressed ratings of the project's worthiness of funding and utility, and while messages from the researcher source produced greater utility ratings compared to the public relations source for soft science projects, this pattern was reversed for hard science research. Additionally, different sources influenced people's comprehension of articles they read. We interpret these results within the larger landscape of science communication and conclude with brief practical recommendations for those engaging in science communication.

Introduction

In a 2017 report, the National Academies of Sciences, Engineering, and Medicine (NAS) argued that the study of science communication and science journalism should be updated to reflect the contemporary media environment. The NAS report contends that a modern approach to investigating the production and effects of science information should mirror its real-world complexity by incorporating the influences of format, market segmentation, the channels through which science information can be received, opinion leaders' framing of science, and the political and social realities against which science communication takes place (pp. 65–69). Science journalism was once a more robust field, but traditional news reporting about science has dwindled sharply in recent years, in line with broad declines in news employment. The print space devoted to science has steadily decreased: in 1989, 95 U.S. newspapers had weekly science sections, and by 2012, that number had shrunk to 19 (Morrison, 2013). Although newspaper coverage has declined, the amount of science research featured on online sites has risen; 23% of Americans report relying primarily on the Internet for their information about science and technology (National Science Board, 2016).

The proliferation of online sources about science suggests that the information environment is crowded with many voices, all competing for audiences' attention. Recent research has examined many of these sources of science communication, including science journalists (Hayden and Check Hayden, 2018), public relations (PR) professionals (Autzen, 2014), and scientists themselves (Liang et al., 2014). One source that has received less scholarly attention is politicians. Much has been written about policymakers' use of science as a political issue (see Gauchat, 2012), and policymakers have been considered a unique type of audience for science communication (see National Academies of Sciences, Engineering, and Medicine, 2017), but less attention has been paid to how the public receives the messages that politicians broadcast about science.

That policymakers have fueled the political polarization of science in current popular discourse is not a new realization. Hofstadter (1964) saw such a division emerging in the New Deal era and expanding during the Cold War: events and technology leave the ordinary citizen “at the mercy of experts,” but citizens can strike back by making fun of intellectuals themselves or by “applauding the politicians as they pursue the subversive teacher, the suspect scientist” (p. 37). A well-known example of this phenomenon was Senator William Proxmire's “Golden Fleece Award,” which he used to draw attention to federally funded research projects that he considered frivolous or wasteful. Though Proxmire was a Democrat, historians like Hofstadter (1964) and Lipset and Raab (1978) trace trends such as distrust of expertise and a preference for simpler solutions primarily to conservative movements. More recent research has suggested that the degree to which a science topic is seen as controversial depends on its interplay with cultural and social identities (Hart and Nisbet, 2012; Drummond and Fischhoff, 2017). Indeed, contemporary incarnations of this politicization of science have come from conservative politicians. Senator Tom Coburn (R-Oklahoma) published The Wastebook annually from 2009 to 2014, with the purpose of providing a report that “details 100 of the countless unnecessary, duplicative, or just plain stupid projects spread throughout the federal government and paid for with your tax dollars this year that highlight the out-of-control and shortsighted spending excesses in Washington” (Coburn, 2011, p. 2). Since Coburn's retirement, other U.S. Senators have begun to publish their own editions of The Wastebook. Notable examples have come from Senator Rand Paul, Senator Jeff Flake, and Senator James Lankford, who noted, “There shouldn't be one [federal Wastebook]. There should be 535. Every office in the House should put it out, every office in the Senate. We all see the different issues that are out there” (Heckman, 2018). Though the treatment of science as a fiscal issue is not new, the number of Senators joining the discussion reflects its rising popularity within the political sphere.

Although academics have written about politicians' fiscal framing of science for many decades, these articles typically offer a “call to arms” for researchers to rally against it (e.g., Shaffer, 1977; Atkinson, 1999; Hatfield, 2006) rather than empirical investigation of the effects such science communications actually produce on the audiences who attend to them. The current study sets out to examine these audience effects. Specifically, we compare three sources: the satirical political source exemplified by The Wastebook, the formal scientific source of the National Science Foundation (NSF) project abstract, and the science press release (PR), which serves to “promote science and/or the work of scientific institutions” (Schmitt, 2018). The goal of this study is to determine whether articles reflecting each of these three genres influence readers' attitudes toward (a) the utility of the featured scientific research project and (b) its worthiness of federal funding. We also examine the effects of these different sources on readers' attitudes toward research in “hard science” disciplines (e.g., engineering, mathematics, biology) and “soft science” disciplines (e.g., social sciences such as psychology, anthropology, communication). In doing so, this research takes a step toward understanding the larger landscape of science journalism and how political takes on science, which are rising in popularity, compare with other sources of information in shaping audiences' interpretation of the message and their attitudes toward science.

The Politicization of Science

Research in the current era of contingent-effects media studies has demonstrated that over the past 20 years, political actors on both sides of the aisle have contributed to a stark divide in scientific trust, in which liberals' trust in the scientific community has endured while conservatives' trust has steadily declined (Gauchat, 2012). Nisbet et al. (2015) argue that this divide is not due to inherent personality differences between liberals and conservatives but is attributable to two other factors, one stemming from information sources and the other from audiences. The first is the strategic use of science in the “ongoing competition between American political actors attempting to differentiate themselves and mobilize base constituencies” (p. 40). Second, because both liberal and conservative audiences engage in motivated reasoning when processing information about science, they interpret messages in ways consistent with their own ideological beliefs and pre-existing attitudes, which creates even greater polarization.

Consistent with Nisbet et al.'s (2015) assertion, existing discourse reflects the competitive use of science by political actors as an issue to mobilize their constituent base. In their attempt to align science with fiscal policy, politicians have cultivated what Hofstadter (1964) called an anti-intellectualist attitude toward science by spotlighting a “resentment and suspicion of the life of the mind and of those who are considered to represent it; and a disposition constantly to minimize the value of that life” (p. 7). In positioning science as a fiscal issue in publications like The Wastebook, politicians emphasize the careless use of tax dollars invested in government-funded projects. A recent content analysis of 600 entries from six editions published between 2010 and 2015 found that although The Wastebook featured projects funded by many different agencies, projects funded by the NSF were mentioned most frequently. The primary focus of The Wastebook was on research projects based in the NSF's Social, Behavioral & Economic Science directorate, as opposed to other directorates such as Biology, Computer Science, or Engineering (Tong et al., 2016). The fixation on social science in The Wastebook harks back to what Hofstadter (1964) deemed the “curious cult of practicality,” in which basic research in the social and behavioral sciences is criticized more severely than research in the “hard” sciences for its “softer” application and seeming lack of immediate utility. The soft/hard distinction played out along cultural lines as well; a Republican congressman offered this caution about NSF funding in 1945:

“If the impression becomes prevalent in the Congress that this legislation is going to establish some sort of an organization in which there would be a lot of short-haired women and long-haired men messing into everybody's personal affairs and lives … you are not going to get your legislation” (Hofstadter, 1964; Gieryn, 1999).

The politicization of science as a form of “cultural status competition” (Kahan, 2015) is reflected today in the growing split between Republicans, who generally favor less federal spending on research, and Democrats, who generally favor federal grant support for research projects. A recent Pew Research study (Funk, 2017) on the partisan gap in attitudes toward science found little difference between Republicans and Democrats in 2001, but the gap had widened to 16 percentage points by 2011 and to 27 points by 2017. Democrats and Republicans also differ in their evaluations of science news reporting: another Pew study (Funk et al., 2017) found that only 47% of Republicans believe the mainstream media's coverage of science research is solid, compared to 64% of Democrats. Such evidence indicates how the use of science as a political issue has contributed to a growing rift in how Democrats and Republicans interpret the science communications they encounter in the larger information environment.

Classifying Kinds of Contemporary Science Communication

The new landscape of science communication is filled with messages that differ in how they are positioned to grab audiences' attention. Marcinkowski and Kohring (2014) offer three dimensions along which to describe and differentiate kinds of science communication. The first dimension is the difference between individual sources, such as an academic who publicizes his or her research projects, and institutional sources, such as the PR office of the university where that academic works. Second, there is a difference between communication by science and communication about science, which “concerns the question of whether academics or academic institutions provide self-descriptions of their own action, or whether external observers (especially journalists) communicate their assessments of scientific processes and findings, and place them in a social context” (p. 1). Lastly, they point to two modes of science communication: push communications, in which the source purposefully selects desired recipients (such as a university press office contacting various journalists or donor lists), and pull communications, in which the information is made available to the public at large, allowing interested audiences to pull the message in (see also Autzen, 2014; Marcinkowski and Kohring, 2014, p. 2; Schmitt, 2018).

We apply Marcinkowski and Kohring's dimensions as a framework for organizing the three kinds of science communication tested in the current study. All three are published by an institution rather than an individual (though The Wastebook may be associated with a specific U.S. Senator, it is his or her office that prepares and publishes the document). Along the second dimension, only the NSF project abstract can be considered communication “by science,” because the research team drafts that kind of message; we consider both the PR and political messages “about science” genres, as they are written by authors outside the scientific research process. Along the third dimension, we considered how the messages featured in our study were disseminated: we classified research abstracts as “pull” messages available on the NSF website, and PR messages as “push” messages sent to targeted audiences. The Wastebook rests in the middle of this dimension, as it can function both as a push message (when sent to specific constituencies or journalists) and as a pull message (since almost all Senators make their editions of The Wastebook publicly available, any interested individual can access it freely).

Finally, a fourth dimension along which these kinds of communication may vary is style or genre. The PR version adopts the basic principles of journalistic style, often incorporating headlines, lead paragraphs that focus on news values like proximity, timeliness, and impact, and other structural characteristics that would be familiar to most news-reading audiences (see also Autzen, 2014). The political Wastebook incorporates journalistic elements but adds features more associated with commentary than reporting: overt evaluation, a declared stance for the public to take, and, especially, satire, or what Garver (2017) calls a “heavy dose of snark and mockery,” to describe federally funded research. By contrast, the project abstract tends to be highly technical with specialized vocabulary, which gives the NSF version an aura of authority through scientese, “the use of scientific jargon to create the impression of a sound foundation in science.” Indeed, this elevated, technical style is designed to “appropriate the credibility granted to science” (Haard et al., 2004, p. 412)1.

Individual Interest in Science

As people's political backgrounds clearly influence their evaluation of message sources and interpretation of message content, a related question is which other individual differences may have a similar effect. Within science communication, an important factor known to affect information seeking and interpretation is individual interest in science. Bubela et al. (2009) argue that people who are interested in science are more motivated to seek out information across diverse media outlets, whereas those who are less interested in science might simply “avoid science media altogether” (p. 514).

Stewart (2013) argued that those who have a strong background in science are also more motivated and able to process science communications centrally, instead of relying on heuristics, such as political values or emotional appeals. In his study, various frames (e.g., Scientific Progress, Economic Prospects, or Political Conflict) produced different effects depending on participants' background in science (which was measured as college science majors vs. non-science majors): “The results of this study show interaction effects between major and frame condition, consistent with the idea that frames cue heuristics and that these heuristics vary based on participants' background in science” (p. 106). Thus, a person's individual interest, familiarity, or background in science might affect how they attend to and interpret messages about science communicated in different ways by different sources.

The Current Study

Having defined and classified the elements of the political, scientific, and PR sources, the current study investigates whether variations in the source of science communications influence two outcomes. First, we ask whether the styles representative of each source affect comprehension of the message, or what Robinson and Levy (1986) refer to as the “main point” of the message:

RQ1: Do messages from different sources of science communication (political, scientific, PR) affect individuals' comprehension?

Second, we test how different message sources affect people's attitudes toward science. Evidence indicates that conservative politicians have often criticized basic scientific research as lacking practical utility and as a wasteful use of federal money. Because such critiques have been aimed primarily at research in the social sciences, the current study examines how the three approaches detailed above operate in conjunction with “hard” vs. “soft” science research to affect attitudes about the utility of different scientific projects and their worthiness of federal funding.

Furthermore, the literature reviewed above indicates that people are motivated to interpret information in line with their political attitudes (Klayman and Ha, 1987) and intellectual interests, including science (Bubela et al., 2009; Stewart, 2013). Thus, we advance the following research questions, which examine the interaction between our two experimental variables, source and science type, while controlling for each individual difference variable.

First, with respect to the main effect of source, we advance the following research question:

RQ2: Do messages from different sources of science communication (political, scientific, PR) affect individuals' judgments regarding a research project's (a) utility and (b) worthiness of federal funding?

We then ask a follow-up question regarding the interaction effect of source with type of science research on audience judgment of science:

RQ3: Does the type of science research (hard vs. soft) interact with messages communicated by different sources to affect individuals' judgments of a research project's (a) utility and (b) worthiness of federal funding?

Materials and Methods

A sample of 170 participants (86 female; 1 did not disclose gender) was recruited via a national online survey panel through Qualtrics Panels. Participants ranged in age from 18 to 80 (M = 50.35, SD = 15.51). Participants reported their highest level of formal education: 4.7% indicated less than high school, 14.7% high school graduate, 22.4% some college, 19.4% graduate of a 2-year college, 21.8% graduate of a 4-year college, and 17.1% post-baccalaureate training. After indicating informed consent, all participants completed pretest questions, read three articles that corresponded to the message source and science type manipulations, and then answered a series of post-test questions (described below). Participants were paid for their participation in the study. All procedures were approved by the Wayne State University institutional review board.

Participants first read an online information sheet that detailed the purpose and procedures of the study, as well as their rights. To indicate consent, they clicked through this online form and began the pretest. Participants then viewed, in random order, three articles that reflected the source variable (Wastebook entry, university news bureau press release, and researcher-authored project abstract distributed by NSF). Nested within the source condition was the science type variable (hard vs. soft): in the hard science condition, two of the three articles featured research projects from either biology or computer science and one featured a project from communication or psychology; in the soft science condition, the ratio was two soft science articles to a single hard science article. These two variables created a 3 × 2 nested experimental design, with source varied within subjects and science type varied between subjects. Participants then completed the post-test and were thanked for their participation.

Stimulus Materials

Because all stimulus materials were based on real sources, a research project had to have three versions available to be included in the final stimulus set: a version from The Wastebook, a PR version written by media professionals (e.g., a public relations press release or article), and a project abstract available through the NSF website. Stimulus selection began by consulting findings from a representative content analysis of all Wastebook entries from 2010 to 2015, which varied by discipline, funding source, and research type (e.g., hard/soft basic science research) (Tong et al., 2016). After indexing the Wastebook entries, we searched the NSF website for the corresponding research abstracts and verified that corresponding PR versions were available online. We verified that all PR versions used in the current study were pushed out by university press offices; although many contained quotes from the principal investigator, all PR materials were written by media professionals (as evidenced by the authorship byline), and none appeared to be written by the researcher. Stimulus materials were edited to be comparable in overall length, without changing features such as sentence length, evaluative statements, or choice of verb voice that characterize the three styles. Following Nisbet et al. (2015), we relied on stimulus sampling (see Wells and Windschitl, 1999) and used a total of six research projects, three each from hard and soft science disciplines. However, unlike Nisbet et al. (2015), we deliberately selected topics that were not ideologically divisive.

Measures

In the pretest, two individual difference variables were assessed to see how they interacted with the experimental manipulations described above. Because this study examines the politicization of science as a fiscal issue, participants answered a single item adapted from Pratto et al. (1994) measuring their level of economic conservatism, where 1 = very liberal and 6 = very conservative (M = 4.24, Mdn = 4.00, SD = 2.00). For interest in science, four items adapted from Stewart et al. (2009) assessed people's self-reported interest in science, their knowledge about scientific topics, the amount of multimedia content about science they consume (e.g., video, TV), and the amount of information about science they read in the popular media. This scale ranged from 1 to 7, with higher scores indicating greater self-reported interest (M = 4.51, Mdn = 4.75, SD = 1.43, alpha = 0.89). Participants also answered basic demographic questions (age, education, etc.) before moving on to the first article.

After reading each article, participants answered three questions designed to test their ability to process each article. As recognition tests offer a measure of encoding and processing (e.g., Lang, 2000; Stewart, 2013), a total of 18 questions (three questions about the research project featured in each stimulus article) checked participants' ability to recall facts about the particular research project (e.g., “What kind of monkeys were involved in this study?”).

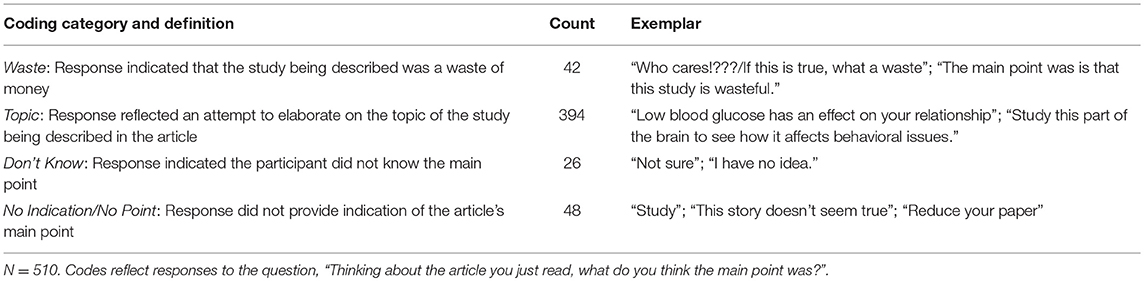

Article comprehension was assessed using the open-ended question: “Thinking about the article you just read, what do you think the main point was?” Three raters examined responses to this question to generate coding categories inductively using the constant comparison method (Glaser and Strauss, 1967). The goal of this procedure is to allow categories “to emerge from the discourse itself” (Benoit and McHale, 2003, emphasis in original). Four discrete categories emerged after two rounds of comparison: (1) “waste” responses indicated that the project was a waste of federal money, (2) “topic” responses reflected the specific topic of the scientific project, (3) “don't know” responses indicated that the participant did not know, and (4) “no indication/no point” responses were deliberately inconsistent with the main point question being asked (see Table 1 for category counts and exemplars). “Main point” responses were then reanalyzed using these categories; intercoder reliability was sufficient (Krippendorff's alpha = 0.83; 95% confidence interval [0.76, 0.90]; see Hayes and Krippendorff, 2007).
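For readers unfamiliar with the reliability statistic, the following Python sketch computes Krippendorff's alpha for nominal categories from a coincidence matrix. It is an illustration under stated assumptions, not the authors' analysis code: the function name and the input layout (one list of category labels per coded response) are our own, the example codes are hypothetical, and the bootstrapped confidence interval reported above (Hayes and Krippendorff, 2007) is not reproduced.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal codes (hypothetical helper).

    `units` holds one list per coded response; each list contains the
    category labels the coders assigned to that response. Missing codes are
    left out, and units with fewer than two codes are not pairable.
    """
    # Coincidence matrix: every ordered pair of codes within a unit,
    # weighted by 1 / (m_u - 1), where m_u is the number of codes in the unit.
    coincidences = Counter()
    for codes in units:
        m = len(codes)
        if m < 2:
            continue
        for c, k in permutations(codes, 2):
            coincidences[(c, k)] += 1.0 / (m - 1)

    # Marginal totals n_c and the total number of pairable values n.
    marginals = Counter()
    for (c, _k), weight in coincidences.items():
        marginals[c] += weight
    n = sum(marginals.values())

    # Observed vs. expected disagreement (nominal metric: any mismatch counts 1).
    observed = sum(w for (c, k), w in coincidences.items() if c != k)
    expected = sum(
        marginals[c] * marginals[k] for c in marginals for k in marginals if c != k
    )
    if expected == 0:
        raise ValueError("alpha is undefined when only one category is used")
    return 1.0 - (n - 1) * observed / expected


# Hypothetical codes from two coders for four responses (not study data).
example = [["waste", "waste"], ["waste", "topic"], ["topic", "topic"], ["topic", "topic"]]
print(round(krippendorff_alpha_nominal(example), 3))  # 0.533
```

Because the nominal metric treats every mismatch as equally severe, it suits unordered categories such as "waste," "topic," "don't know," and "no point."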

Participants also reported their attitudes toward each research project they read about. Items assessing the research project's utility were adapted from Stewart et al. (2009). Scores on these items could range from 1 to 7 with higher scores indicating greater usefulness. Three original items were used to assess whether or not participants believed the research project was worthy of federal funding, where 1 = strongly disagree and 7 = strongly agree: “This project was worthy of the federal monetary support it received,” “This research was a good use of federal funds,” “The US Government should not have paid for this research project” (reverse coded). Composite scores were created for usefulness (M = 4.19, Mdn = 4.50, SD = 1.95, alpha = 0.84), and worthiness of funding (M = 3.63, Mdn = 4.00, SD = 1.81, alpha = 0.85).
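The reverse-coded funding item and the reported alpha values can be illustrated with a short Python sketch. It assumes that the reported alphas are Cronbach's alpha (the text says only "alpha") and that reverse coding on the 1 to 7 scale maps a score x to 8 − x; the helper names and the four respondents' answers are hypothetical, not study data.

```python
from statistics import mean, variance

SCALE_MIN, SCALE_MAX = 1, 7  # 7-point agreement scale (assumed for reverse coding)


def reverse_code(score, lo=SCALE_MIN, hi=SCALE_MAX):
    """Flip a reverse-keyed response so that 1 <-> 7, 2 <-> 6, and so on."""
    return lo + hi - score


def cronbach_alpha(rows):
    """Cronbach's alpha for rows of item scores (one row per respondent).

    All items must already be keyed in the same direction.
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses to the three worthiness items (not study data);
# the third item ("should not have paid") is reverse-keyed.
raw = [[6, 7, 2], [3, 2, 5], [5, 5, 3], [2, 3, 6]]
keyed = [[a, b, reverse_code(c)] for a, b, c in raw]
composites = [mean(row) for row in keyed]  # one composite score per respondent
alpha = cronbach_alpha(keyed)
print(round(alpha, 2))  # 0.97
```

Reverse coding has to happen before alpha is computed; left unreversed, the negatively keyed item would lower the inter-item covariances and deflate alpha.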

Results

Manipulation Checks

Prior to the main analyses, checks on participants' ability to process the text were conducted. Processing ability was assessed using the 3-item recognition memory questions that appeared after each article. Results indicated no significant differences in processing across source type or science type; recall scores were also not significantly correlated with either self-reported interest in science or political attitudes (0.00 < |r| < 0.07), suggesting that these variables did not affect participants' ability to understand the information presented in each article. The average number of questions answered correctly across all conditions was 1.68 (SD = 0.04). Together, these results suggest that participants processed each story similarly across conditions.

Effects on Comprehension

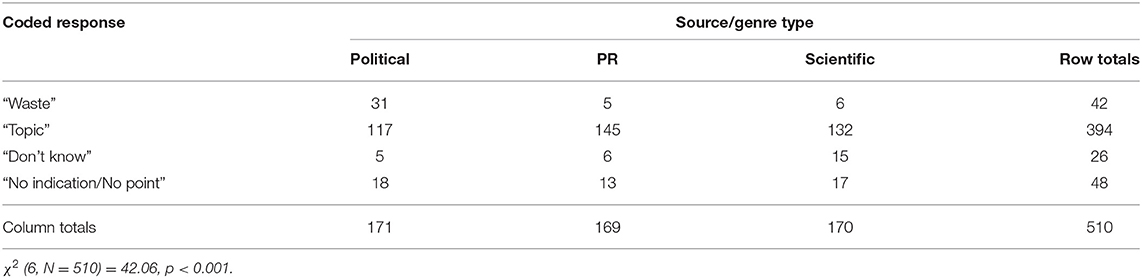

A chi-square test of independence examined the relationship between source type and participants' coded responses to the main point question, as asked in RQ1. Results indicated a significant association between source type and participants' comprehension, χ²(6, N = 510) = 42.06, p < 0.001, where N counts three coded responses from each of the 170 participants. Responses identifying the story's main point as wasting taxpayer dollars on science came disproportionately from the political style (73.8% of all waste responses) rather than from the PR (11.9%) or scientific (14.3%) styles. Topic-based responses, which accounted for the overall majority of responses, were least likely to occur in the political style (29.5% of topic responses), compared with 36.9% in the PR style and 33.6% in the scientific style. Don't know responses occurred significantly more frequently in the scientific condition (57.7%) than in The Wastebook (19.2%) or PR (23.1%) conditions. Finally, no point responses were equally likely in each of the three conditions (see Table 2 for counts).
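The test used for RQ1 can be sketched in Python as follows. The function names and the small example table are hypothetical and do not use the study's counts (those appear in Table 2); the p-value helper relies on the closed-form chi-square upper tail that holds only for even degrees of freedom, which covers the df = 6 reported here. In practice, `scipy.stats.chi2_contingency` computes the same Pearson statistic, though it applies Yates' continuity correction to 2 × 2 tables by default.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c
    contingency table of counts (every row and column total must be > 0)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected under independence
            statistic += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, df


def chi2_sf_even_df(x, df):
    """Upper-tail p-value P(X >= x) for a chi-square variable with even df,
    using the closed form exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)**i / i!."""
    if df <= 0 or df % 2:
        raise ValueError("this closed form only covers positive, even df")
    half = x / 2.0
    term = total = 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


# Four response categories x three sources gives df = (4 - 1) * (3 - 1) = 6.
print(chi2_sf_even_df(42.06, 6) < 0.001)  # True
```

For the reported statistic, the tail probability is roughly 2 × 10⁻⁷, consistent with p < 0.001.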

Effects on Audience Attitudes Toward Science Research

To test effects on attitude judgments toward science (RQ2 and RQ3), the analyses reported below used a mixed analysis of covariance (ANCOVA) with source (political, scientific, PR) as a within-subjects factor and science type (hard, soft) as a between-subjects factor. The two individual difference variables, political attitudes and interest in science, were entered as covariates. For ease of interpretation, we present the analyses for each dependent variable separately, beginning with judgments of a research project's utility, followed by judgments of the project's worthiness of funding.

Judgments of Research Projects' Utility

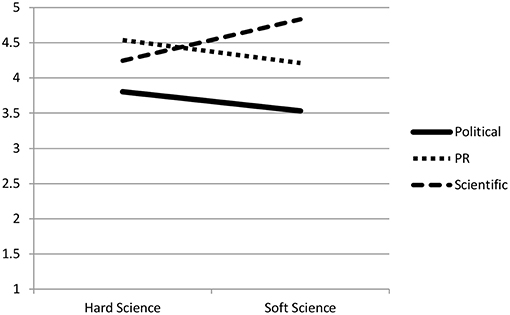

Using the mixed ANCOVA, participants' judgments of the research project's utility were analyzed first. Regarding RQ3a, a significant two-way interaction between source and science type on judgments of research project utility emerged, F(2, 342.17) = 3.136, p = 0.045. Examination of the simple effects with Bonferroni corrections indicated that within the political source, soft science projects (M = 3.53, SD = 0.22) received slightly lower judgments of usefulness than hard science projects (M = 3.80, SD = 0.20), t(168) = −1.00, p = 0.37. Within the PR genre, soft science projects (M = 4.21, SD = 0.22) were again judged as less useful than hard science projects (M = 4.54, SD = 0.20), t(168) = −1.10, p = 0.27. The pattern was reversed for the scientific source, where soft science projects (M = 4.83, SD = 0.21) were rated as more useful than hard science projects (M = 4.25, SD = 0.20), t(168) = 2.06, p = 0.04 (see Figure 1). Thus, with respect to RQ3a, message source and type of science do interact to influence audience attitudes, with the main difference occurring in the scientific condition. We return to this finding in the Discussion section. Lastly, although the main effect of science type was not significant, a significant main effect of source on judgments of utility was observed (RQ2a), F(2, 342.18) = 9.81, p < 0.001. Overall, the political version produced the lowest ratings of research project usefulness (M = 3.66, SD = 0.15), compared to either the scientific (M = 4.37, SD = 0.15) or PR conditions (M = 4.53, SD = 0.14), which did not differ from each other.

Figure 1. Two-way interaction effect between message source and science type on judgments of research project utility.

Judgments of Research Projects' Worthiness of Federal Funding

The mixed ANCOVA was again conducted to test RQ2b and RQ3b. As in the utility analysis, a main effect of source type on ratings of the research project's worthiness of funding was observed, F(2, 344.31) = 9.16, p < 0.001. Addressing RQ2b, the pattern of means again indicated that while the scientific (M = 3.85, SD = 0.14) and PR conditions (M = 3.91, SD = 0.13) did not differ from each other, both produced significantly higher ratings of a research project's worthiness of federal funding than the political condition (M = 3.16, SD = 0.14). With respect to RQ3b, the two-way interaction effect was not significant, nor was the main effect of science type.

Additional Analyses

Though we chose to assess participants' political attitudes using a continuous measure of fiscal conservatism, we also wanted to compare the findings of the current study with those of previous research that has operationalized political attitudes as affiliation with either the Democratic or Republican party (Funk, 2017; Funk et al., 2017). Thus, we conducted a second set of analyses examining the interaction among source type, science type, and political party on judgments of research project utility and worthiness of federal funding. For judgments of research project utility, the significant source × science type interaction was replicated, F(2, 339.39) = 3.055, p = 0.048, as was the main effect of source type, F(2, 339.29) = 9.72, p < 0.001. Although no other effects emerged for science type, there was a main effect of political party affiliation. Consistent with politicians' framing of science research as a fiscal spending issue, Republican participants (M = 3.95, SD = 0.11) were less inclined to find research projects useful than Democrats (M = 4.50, SD = 0.13), F(1, 496.54) = 10.51, p = 0.001. With respect to judgments of funding worthiness, the main effect of source detected previously was found again, F(2, 340.66) = 9.38, p < 0.001. Finally, the main effect of political party on judgments of funding worthiness was also significant, with Republicans also less likely to believe projects were worthy of the funding they received (M = 3.36, SD = 0.14) than Democrats (M = 3.99, SD = 0.16), F(1, 496.54) = 16.15, p < 0.001.

Discussion

The current study used naturally occurring examples from contemporary media to explore how messages from political, professionalized, and scientific sources explaining hard and soft science research projects affected audience opinion toward basic scientific research. We examined the effects of source and science type while controlling for key individual difference variables of self-reported political attitudes and personal interest in science. The robust effect of source type on judgments of a research project's worthiness of funding underscores the effects that various kinds of science journalism can have on readers' attitudes. Participants in this study appeared to learn information equally well, regardless of the way it was presented; instead, the effect of various kinds of science communication was to set the tone in which readers compared this information about science with other information and ideas they already held. With regard to the political message, raising the salience of government spending seemed to be a way of “activating schemas that encourage target audiences to think, feel, and decide in a particular way” (Entman, 2007, p. 164).

Overall, the effect of each genre on comprehension was also evident in participants' responses to the main point question. Those who read stories in the political genre were the most likely to see a project as wasting taxpayer dollars, as reported in RQ1. But did Democratic and Republican participants internalize this message at different rates? To examine this pattern, we conducted a post-hoc analysis comparing the proportions of Republican and Democratic participants who reported the main point of articles as “wasteful.” Frequency counts indicated that of the 42 “wasteful” main point responses, 33 were generated by Republican participants and 9 by Democratic participants. A binomial test of proportions indicated that the share of these responses coming from Republicans exceeded what would be expected by chance, Z = 3.69, p < 0.001. The political genre thus seems to amplify the commitment to demonstrating membership in a cultural community that Kahan (2015) described, here the community of guardians of the public purse.
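To make the arithmetic behind the binomial test concrete, the sketch below computes a normal-approximation Z for 33 Republican responses out of 42, with and without a continuity correction, plus an exact one-sided binomial tail probability. It assumes a null proportion of 0.5 (an equal chance that a “wasteful” response came from either party), which the text does not state; if the sample's party split was unequal, the Republican share of the sample would be the more appropriate null. The function names are hypothetical and chosen only for illustration.

```python
import math


def binomial_z(successes: int, trials: int, p0: float = 0.5,
               continuity: bool = False) -> float:
    """Normal-approximation z for a one-sample test of a proportion.

    p0 is the assumed null proportion (hypothetical default of 0.5).
    """
    expected = trials * p0
    sd = math.sqrt(trials * p0 * (1 - p0))
    diff = successes - expected
    if continuity:
        # Continuity correction: move the count 0.5 toward the null expectation.
        diff = math.copysign(max(abs(diff) - 0.5, 0.0), diff)
    return diff / sd


def binomial_upper_tail(successes: int, trials: int, p0: float = 0.5) -> float:
    """Exact one-sided P(X >= successes) under Binomial(trials, p0)."""
    return sum(
        math.comb(trials, k) * p0 ** k * (1 - p0) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Counts from the post-hoc analysis: 33 of 42 "wasteful" responses were Republican.
republican, total = 33, 42
z = binomial_z(republican, total)
z_cc = binomial_z(republican, total, continuity=True)
p_one_sided = binomial_upper_tail(republican, total)
print(f"z = {z:.2f}, continuity-corrected z = {z_cc:.2f}, "
      f"exact one-sided p = {p_one_sided:.6f}")
```

Under the 0.5 assumption the uncorrected Z comes to roughly 3.70, close to the reported 3.69; the small gap may reflect rounding or a different variant of the test, which the text does not specify.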

In comparison to the more concrete issue of federal spending, evaluating a scientific project's utility was perhaps a more abstract judgment for participants to make. The interactions between message types and kinds of science research on judgments of utility indicated that projects from the hard science side of the scale were seen as more useful when described in the language of the university PR bureau. The softer sciences, by contrast, appeared to gain in perceived utility from the style of the scientific abstract. In interpreting this effect, it may be that the utility of hard science research is more immediately obvious to readers, so that its usefulness continued to resonate even in the PR version. Readers may, however, have needed additional cues regarding the utility of social science research; if so, such cues may be made more salient by the “scientese” of the scientific genre, and less so by the PR genre.

Implications for Contemporary Science Journalism and Communication

It is important to consider how these findings fit within the larger ecosystem of public information. As Autzen (2014) noted, we are living in a “copy-and-paste” era of journalism, in which press releases from PR professionals get “printed word-for-word in newspapers and on internet media platforms of any kind with ‘pressing the copy-paste buttons’ being the only contribution from the journalists working in the media” (Autzen, 2014, p. 2). Such press releases certainly serve important functions, notifying the public of newsworthy accomplishments and creating “buzz” around scholarly work, but more often than not their authors are tempted to hype findings from individual projects, or to report on large grants awarded to investigators or given out by funders without offering any larger context for the research (Schmitt, 2018; see also Dumas-Mallet et al., 2017; Weingart, 2017). Researchers have a unique role to play here: as the people closest to the actual science, they can offer that context to the public. But to do so, they must communicate it in an accessible, interpretable style.

In short, researchers describing their work can gain from the style of PR. Because the results of RQ1 also showed that “don't know” responses occurred more frequently in the scientific abstract condition than in any other condition, researchers (particularly those working in the hard sciences) might communicate science more effectively by blending scientific vocabulary with some features of PR writing. This may be a difficult task for researchers who have spent years honing a carefully crafted technical vocabulary, and the sounds of scientese do appear to add a sense of gravitas. But if those cues spur readers to associate with a cultural group that distances itself from academics “messing into everybody's personal affairs,” the cognitive effort of processing scientific prose seems less attractive. The PR style's use of recognized journalistic techniques might also draw skeptical audience members toward the information-gathering aspect of science communication rather than its culture-affirming side (Kahan, 2015). The same interests that help to drive political polarization around an issue (Drummond and Fischhoff, 2017) could also make it less likely that motivated partisan readers would select themselves into media “echo chambers” (Dubois and Blank, 2018); the less political approach favored in the PR style thus stands a good chance of reaching those who could be persuaded by a story that does not threaten their identities.

Furthermore, researchers who actively “push” their messages to journalists and PR professionals can further boost the impact of their work (Liang et al., 2014). Such translation efforts are difficult, because they are often thought of as yet another task that researchers must add to their already full to-do lists. We challenge researchers to think of providing accessible explanations of their own work for lay publics as the truly final step in the research and publication process, rather than simply circulating the PDF among other academics.

Limitations

The limitations of the current study include the manipulation of the source variable. In an effort to answer the NAS's call to examine contemporary media environments, we chose to maximize experimental realism and external validity by selecting existing research studies as the basis for our stimuli across the broad categories of “hard” and “soft” science; however, the specific topic of each stimulus was not systematically varied. Future work should incorporate specifically selected topics to see how different message sources interact with ideologically divisive topics (e.g., Nisbet et al., 2015). Future work that employs more divisive topics may also include more detailed measures of political attitudes by assessing individuals' specific ideological beliefs on single issues, beyond fiscal conservatism. Additionally, the current study was limited to three purposively selected exemplars of political, PR, and researcher messages in an effort to maintain experimental control. Future work might explore other kinds of science communication, including news editorial commentary, interviews with politicians about science, or blog posts written by scientists. We encourage other researchers to continue adapting naturally occurring media materials as study stimuli and to expand the investigation of this topic.

Conclusion

Knowing exactly which message features to apply in accordance with which attitudes remains an issue for future research, but the current study demonstrates the effects of various sources, types of science, and people's pre-existing political beliefs and interest in science on both abstract and concrete judgments about science. The current study contributes to existing literature by demonstrating that the effects of various message sources and genres of science communication depend on the audience's existing attitudes and values and also on the specific judgments they are being asked to make.

This study's use of existing articles from a variety of sources is a step toward understanding how the diverse information ecology that we now live in may affect how audiences interpret information, and how that information may shape their opinions of scientific research. Journalists, scientists, and politicians who are entering the arena of science communication should be mindful of how their messages influence the public, and also what other kinds of messages their audiences are able to access.

Data Availability

The datasets generated for this study are available on request to the corresponding author.

Author Contributions

ST conceptualized and designed the study method, analyzed data, and contributed writing. FV contributed writing, assisted with study design, and consulted on data analyses. SK contributed writing and performed data coding. AE and MA assisted with experimental stimuli preparation and performed data coding.

Funding

This research was supported by the National Science Foundation #1520723. These NSF funds were used to pay for data collection and open access publication fees.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors are grateful to Ms. Rachelle Prince for her assistance with data collection and survey development.

Footnotes

1. ^We note that these dimensions (individual/institution, by/about science, push/pull, and style) may differ across various scientific publications. Our purpose in applying these four dimensions here is to help our readers organize and differentiate the specific articles we featured and tested in this study, but we do not intend our organizing framework to be representative of all science publications of like kinds in the larger media environment.

References

Atkinson, R. C. (1999). The golden fleece, science education, and US science policy. Proc. Am. Philos. Soc. 143, 407–417.

Autzen, C. (2014). Press releases—the new trend in science communication. J. Sci. Commun. 13:C02. doi: 10.22323/2.13030302

Benoit, W. L., and McHale, J. P. (2003). Presidential candidates' television spots and personal qualities. South. J. Commun. 68, 319–334. doi: 10.1080/10417940309373270

Bubela, T., Nisbet, M. C., Borchelt, R., Brunger, F., Critchley, C., Einsiedel, E., et al. (2009). Science communication reconsidered. Nat. Biotechnol. 27, 514–518. doi: 10.1038/nbt0609-514

Coburn, T. (2011). The Wastebook: A Guide to Some of the Most Wasteful and Low Priority Government Spending of 2011. Retrieved from: http://lcweb2.loc.gov/service/gdc/coburn/2011215850.pdf

Drummond, C., and Fischhoff, B. (2017). Individuals with greater science literacy and education have more polarized beliefs on controversial science topics. Proc. Natl. Acad. Sci. U.S.A. 114, 9587–9592. doi: 10.1073/pnas.1704882114

Dubois, E., and Blank, G. (2018). The echo chamber is overstated: the moderating effect of political interest and diverse media. Inf. Commun. Soc. 21, 729–745. doi: 10.1080/1369118X.2018.1428656

Dumas-Mallet, E., Smith, A., Boraud, T., and Gonon, F. (2017). Poor replication validity of biomedical association studies reported by newspapers. PLoS ONE 12:e0172650. doi: 10.1371/journal.pone.0172650

Entman, R. M. (2007). Framing bias: media in the distribution of power. J. Commun. 57, 163–173. doi: 10.1111/j.1460-2466.2006.00336.x

Funk, C. (2017). Democrats Far More Supportive Than Republicans of Federal Spending for Scientific Research. Pew Research Center. Retrieved from: http://www.pewresearch.org/fact-tank/2017/05/01/democrats-far-more-supportive-than-republicans-of-federal-spending-for-scientific-research/

Funk, C., Gottfried, J., and Mitchell, A. (2017). Science News and Information Today. Pew Research Center. Retrieved from: http://www.journalism.org/2017/09/20/science-news-and-information-today

Garver, T. (2017). The Federal Spending ‘Wastebook' is Outrageous in More Ways Than One. Fiscal Times. Retrieved from: http://www.thefiscaltimes.com/2017/01/10/Federal-Spending-Wastebook-Outrageous-More-Ways-One

Gauchat, G. (2012). Politicization of science in the public sphere: a study of public trust in the United States, 1974 to 2010. Am. Sociol. Rev. 77, 167–187. doi: 10.1177/0003122412438225

Gieryn, T. F. (1999). Cultural Boundaries of Science: Credibility on the Line. Chicago, IL: University of Chicago Press.

Glaser, B. G., and Strauss, A. L. (1967). The Discovery of Grounded Theory: Strategies for Qualitative Research. Chicago, IL: Aldine.

Haard, J., Slater, M. D., and Long, M. (2004). Scientese and ambiguous citations in the selling of unproven medical treatments. Health Commun. 16, 411–426. doi: 10.1207/s15327027hc1604_2

Hart, P. S., and Nisbet, E. C. (2012). Boomerang effects in science communication: how motivated reasoning and identity cues amplify opinion polarization about climate mitigation policies. Communic. Res. 39, 701–723. doi: 10.1177/0093650211416646

Hatfield, E. (2006). The Golden Fleece award: Love's labours almost lost. APS Obs. 19. Retrieved from: https://www.psychologicalscience.org/observer/the-golden-fleece-award-loves-labours-almost-lost

Hayden, T., and Check Hayden, E. (2018). Science journalism's unlikely golden age. Front. Commun. 2:24. doi: 10.3389/fcomm.2017.00024

Hayes, A. F., and Krippendorff, K. (2007). Answering the call for a standard reliability measure for coding data. Commun. Methods Meas. 1, 77–89. doi: 10.1080/19312450709336664

Heckman, J. (2018). Lankford: There Shouldn't be One Federal Wastebook. ‘There Should be 535'. Federal News Network. Retrieved from: https://federalnewsnetwork.com/agency-oversight/2018/01/lankford-there-shouldnt-be-one-federal-wastebook-there-should-be-535/ (accessed April 4, 2019).

Kahan, D. M. (2015). Climate-science communication and the measurement problem. Polit. Psychol. 36, 1–43. doi: 10.1111/pops.12244

Klayman, J., and Ha, Y.-W. (1987). Confirmation, disconfirmation and information in hypothesis testing. Psychol. Rev. 94, 211–228. doi: 10.1037//0033-295X.94.2.211

Lang, A. (2000). The limited capacity model of mediated message processing. J. Commun. 50, 46–70. doi: 10.1111/j.1460-2466.2000.tb02833.x

Liang, X., Su, L. Y. F., Yeo, S. K., Scheufele, D. A., Brossard, D., Xenos, M., et al. (2014). Building Buzz: (Scientists) communicating science in new media environments. J. Mass Commun. Q. 91, 772–791. doi: 10.1177/1077699014550092

Lipset, S. M., and Raab, E. (1978). The Politics of Unreason: Right-Wing Extremism in America, 1790–1977. Chicago, IL: University of Chicago Press.

Marcinkowski, F., and Kohring, M. (2014). The changing rationale of science communication: a challenge to scientific authority. J. Sci. Commun. 13, 1–6. doi: 10.22323/2.13030304

Morrison, S. (2013). Hard numbers, weird science. Columbia J. Rev. Retrieved from: https://archives.cjr.org/currents/hard_numbers_jf2013.php

National Academies of Sciences, Engineering, and Medicine. (2017). Communicating Science Effectively: A Research Agenda. Washington, DC: The National Academies Press. doi: 10.17226/23674

National Science Board (2016). Chapter 7: Science and Technology: Public Attitudes and Understanding. Science and Engineering Indicators 2016. Arlington, VA: National Science Foundation.

Nisbet, E. C., Cooper, K. E., and Garrett, R. K. (2015). The partisan brain: how dissonant science messages lead conservatives and liberals to (dis)trust science. Ann. Am. Acad. Pol. Soc. Sci. 658, 36–66. doi: 10.1177/0002716214555474

Pratto, F., Sidanius, J., Stallworth, L. M., and Malle, B. F. (1994). Social dominance orientation: a personality variable predicting social and political attitudes. J. Pers. Soc. Psychol. 67, 741–763. doi: 10.1037//0022-3514.67.4.741

Robinson, J., and Levy, M. (1986). The Main Source: Learning From Television News. Beverly Hills, CA: Sage.

Schmitt, C. V. (2018). Push or pull: recommendations and alternative approaches for public science communicators. Front. Commun. 3:13. doi: 10.3389/fcomm.2018.00013

Shaffer (1977). The golden fleece: anti-intellectualism and social science. Am. Psychol. 32, 814–823. doi: 10.1037/0003-066X.32.10.814

Stewart, C. O. (2013). The influence of news frames and science background on attributions about embryonic and adult stem cell research: frames as heuristic/biasing cues. Sci. Commun. 35, 86–114. doi: 10.1177/1075547012440517

Stewart, C. O., Dickerson, D. L., and Hotchkiss, R. (2009). Beliefs about science and news frames in audience evaluations of embryonic and adult stem cell research. Sci. Commun. 30, 427–452. doi: 10.1177/1075547008326931

Tong, S. T., Rochiadat, A., Corriero, E. F., Wibowo, K., Matheny, R., Jefferson, B., et al. (2016). “Under attack: anti-intellectualism and the future of communication research,” Paper Presented at the Annual Conference of the National Communication Association (Philadelphia, PA).

Weingart, P. (2017). “Is there a hype problem in science? If so, how is it addressed?” in The Oxford Handbook of the Science of Science Communication, eds K. H. Jamieson, D. Kahan, and D. Scheufele (New York, NY: Oxford University Press), 111–118.

Keywords: science communication, experiment, quantitative methods, content analysis, audience opinion

Citation: Tong ST, Vultee F, Kolhoff S, Elam AB and Aniss M (2019) A Source of a Different Color: Exploring the Influence of Three Kinds of Science Communication on Audience Attitudes Toward Research. Front. Commun. 4:43. doi: 10.3389/fcomm.2019.00043

Received: 06 June 2019; Accepted: 22 July 2019;

Published: 07 August 2019.

Edited by:

Dara M. Wald, Iowa State University, United States

Reviewed by:

Ann Grand, University of Exeter, United Kingdom

James G. Cantrill, Northern Michigan University, United States

Copyright © 2019 Tong, Vultee, Kolhoff, Elam and Aniss. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Stephanie Tom Tong, stephanie.tong@wayne.edu
