- ¹University of Michigan, Ann Arbor, MI, United States

- ²The School for Environment and Sustainability, University of Michigan, Ann Arbor, MI, United States

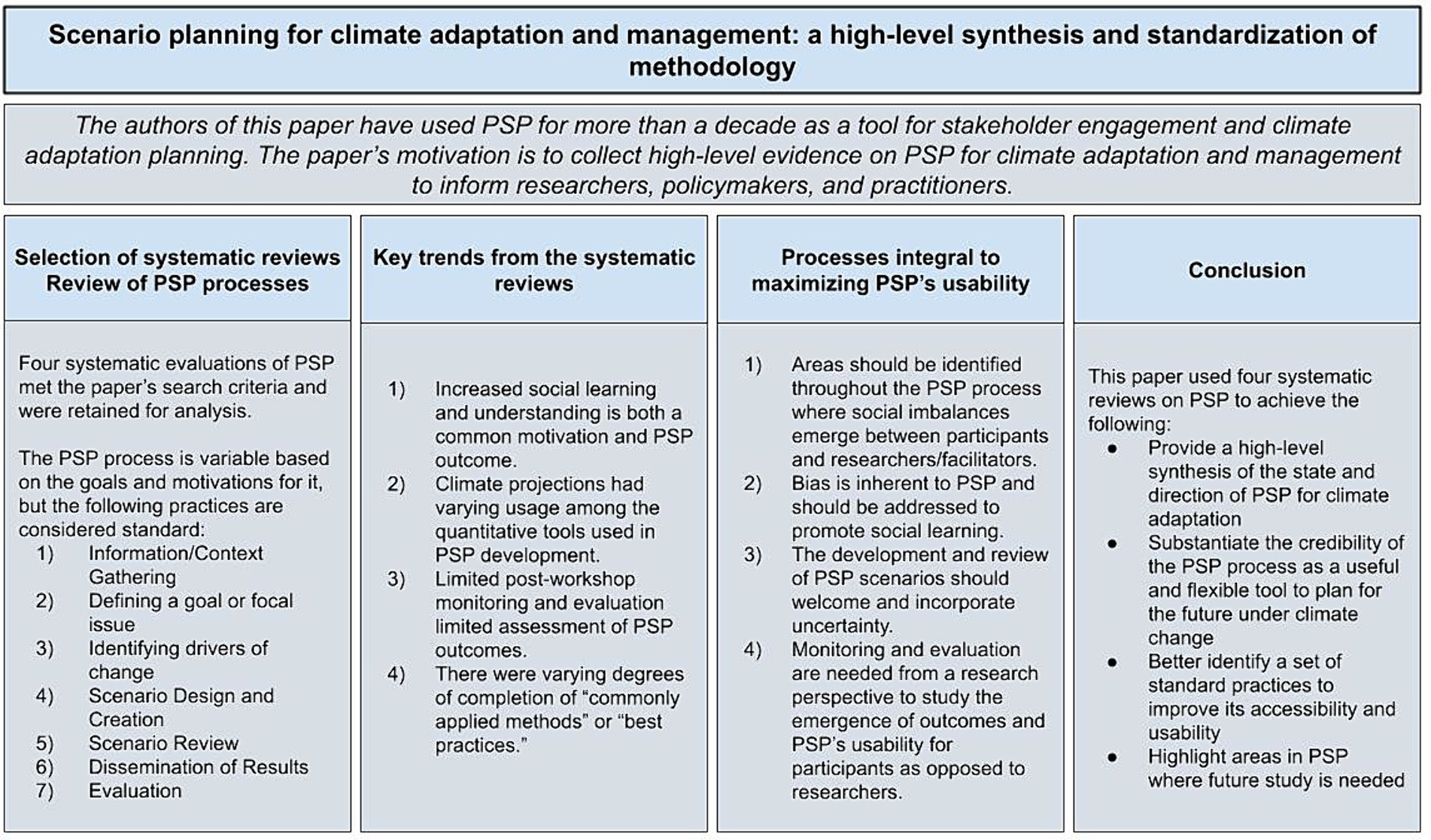

Scenario planning is a tool used to explore a set of plausible futures shaped by specific trajectories. When applied in participatory contexts, it is known as participatory scenario planning (PSP), whose use for planning, policy, and decision-making in the context of climate change has grown. However, there has been no high-level synthesis of systematic reviews covering the overall state and direction of PSP for climate adaptation and management. We draw from four systematic reviews on PSP published between 2015 and 2021 to substantiate the credibility of the process and to identify a set of standard practices that could make PSP a more accessible and usable tool, not only for researchers but also for policymakers, practitioners, and other end users who may benefit from it. We summarize and synthesize the range of PSP processes and characteristics, highlighting four common trends that warrant further inquiry: PSP’s contribution to social learning and bias, the varying use of quantitative information in scenario development, issues related to carrying out monitoring and evaluation, and the varying completion of practices recommended by established PSP literature. We propose four processes as integral to maximizing PSP’s usability for end users and recommend them as areas for further study: identifying social imbalances throughout the PSP process, recognizing bias as inherent to PSP, explicitly addressing and incorporating uncertainty, and allocating resources for monitoring and evaluation.

1 Introduction

Scenario Planning (SP) has long been a strategic technique for envisioning the future. SP formally emerged as a defense strategy after World War II (Wohlstetter, 2010), and its scope later expanded to a range of sectors: long range business planning (Bradfield et al., 2005); ecosystems and natural resource management (Wollenberg et al., 2000; Millennium Ecosystem Assessment, 2005; Palomo et al., 2011); energy (Cornelius et al., 2005); social capital and political decision-making (Galer, 2004; Kahane, 2004; Lang and Ramírez, 2017); public health and health systems (Sustainable Health Systems Visions, Strategies, Critical Uncertainties and Scenarios, 2013; Public Health 2030: A Scenario Exploration, 2014); and urban and regional planning (Goodspeed, 2017; Norton et al., 2019). One of SP’s more recent applications, and the one of greatest interest to us, is climate change (Pachauri and Meyer, 2014; Birkmann et al., 2015).

The use of scenario-based climate adaptation for planning, policy, and decision-making has grown (Garfin et al., 2015; Star et al., 2016). Unlike technical modeling approaches (e.g., predictive and forecasting models), SP can use both qualitative and quantitative methods (Swart et al., 2004) while allowing for creativity and storytelling (Bennett et al., 2003). It encourages convening participants of various backgrounds and building consensus, trust, cooperation, and learning among them (Barnaud et al., 2007; Johnson et al., 2012; Kohler et al., 2017; Allington et al., 2018). Policy recommendations and adaptation actions can emerge from SP processes (Johnson et al., 2012; Butler et al., 2016), although this is not a universal outcome (Totin et al., 2018). Finally, SP can help bridge the gap between science and policy (Peterson et al., 2003; Wilkinson, 2009; Ferrier et al., 2016).

A climate scenario may vary by method of development, content, and application. Scenarios have been used to describe a range of radiatively forced future climates due to increasing greenhouse gases (e.g., carbon dioxide and methane), population growth, and other socioeconomic pathways (e.g., the Shared Socioeconomic Pathway/Representative Concentration Pathway framework) (Briley et al., 2021b). Other applications include describing future world states achievable only through certain actions (i.e., normative or prescriptive scenarios); evaluating alternative policy or management options; and analyzing the extent to which outcomes achieved under a newly implemented policy matched those projected by modeling (e.g., Intergovernmental Science-Policy Platform on Biodiversity and Ecosystem Services [IPBES], Intergovernmental Panel on Climate Change [IPCC]).

The authors of this paper have used SP for more than a decade as a tool for stakeholder engagement and climate adaptation planning, first applying it in partnership with the U.S. National Park Service. Using an SP technique described in Star et al. (2016), we treated climate scenarios as a means of envisioning a set of plausible and divergent climate futures in concert with stakeholder management priorities. In this paper, we define plausible as consistent with the known physical processes of a system, and divergent as describing a broad range of plausible conditions (Gates and Rood, 2021). The manner in which we applied SP is called participatory scenario planning (PSP), which emphasizes participant involvement and input in scenario development. Climate change scenario narratives (scenarios), as used in this paper, are the products of identifying and responding to future climate change impacts through PSP. We use both SP and PSP throughout this paper; the key difference between the two is the participatory element of PSP.

When we first participated in a PSP process with the U.S. National Park Service in 2012 (see Fisichelli et al., 2013), we treated it as a technique for engaging end users (e.g., planners, practitioners, decision-makers, current and future PSP facilitators) as well as an effective approach to co-development. We also used it to manage the intrinsic uncertainty of climate in adaptation planning and application. A particular focus of our PSP process is to incorporate both scientific uncertainty from climate information (i.e., climate model projections) and management-based uncertainty (i.e., uncertainty grounded in participants’ management experiences) into decision-making about future climate change impacts.

Since that first use, we have treated PSP as a framework through which groups can interact with climate information, explore extreme outcomes, build consensus, and make decisions. After each iteration, we refined the process based on participant evaluations and our own self-evaluations. As our process evolved, natural questions emerged regarding the efficacy and efficiency of our methodology and practice, such as:

• How can we standardize the practices in our approach to support easier, scalable use of the method?

• Are participants able to apply the outcomes to advance their needs?

• How can we better evaluate the PSP process?

To help answer questions about standards of practice and evaluation of outcomes, we initiated an effort to document the state of knowledge about SP. Because SP is used in many fields and in many different ways, we narrowed our efforts to approaches similar in design and execution to our applications in climate adaptation. We rely on the SP literature to identify standard practices, existing challenges, and research gaps, which we then explore for our own scenario planning framework. To our knowledge, there has been no synthesis of reviews covering the state and direction of PSP for climate adaptation.

A broad synthesis of reviews that collects high-level evidence on PSP for climate adaptation can inform not only researchers but also policymakers and practitioners of key trends and gaps in the practice, without the resource-intensive demands of a systematic review (see Aromataris et al., 2015). We aim to substantiate the credibility of the PSP process and better identify a set of standard practices, with the hope of making PSP a more accessible and usable tool. We also aim to highlight areas in PSP where future study is needed (Figure 1).¹

Figure 1. Paper structure.

¹ … and thus are not using previously published and/or copyrighted figures in our article.

In Section 2, we outline our criteria for selecting existing systematic reviews and our methods for analyzing them. We also provide a broad overview of the PSP process based on the steps described in the systematic reviews, qualitatively synthesizing these processes into an overarching set of non-chronological guidelines. We expected PSP processes to vary across the reviews, a reasonable expectation given the diversity of goals and motivations warranting PSP.

Section 3 describes four key trends that we found to cut across all PSP processes outlined in the systematic reviews. After assessing the characteristics and implications of these trends, we proceed in Section 4 to describe four processes that, based on our findings in Sections 2 and 3, we consider integral to maximizing PSP’s usability for end users during and after the PSP process. We conclude in Section 5 with a summary, additional insights, and recommendations for future research and practice.

We recognize that our findings are based on the analytical frameworks employed by the systematic reviews to assess their respective case studies, and these frameworks may have prioritized certain PSP information and omitted others. However, this high-level synthesis focuses on the shared outcomes of case studies as presented by the systematic reviews, with any assessment of the systematic reviews’ evaluation frameworks considered as secondary analysis. Please note that the authors had no part in any of the PSP activities mentioned in this synthesis of reviews.

2 Methods

By summarizing and synthesizing evidence from multiple systematic reviews on PSP in the context of climate change, this paper allows scenario end users to gain a broad yet clear understanding of PSP as used in climate adaptation and management (Aromataris et al., 2015).

2.1 Selection of systematic reviews

This paper’s literature review was conducted over a three-month period in 2021 and stems from an undergraduate thesis performed under the guidance of an academic advisor. Due to time constraints, we did not follow a pre-registered, time-stamped research plan. Moreover, we included only peer-reviewed literature (and excluded non-peer-reviewed literature) because we anticipated finding objective evaluations of PSP methods. Because our findings exclude non-peer-reviewed literature on PSP trends, they are biased and risk omitting PSP practices, resources, and outcomes that may be predominant in non-peer-reviewed literature, reports, and manuals produced by non-academic entities, such as businesses, community organizations, and government entities, among others.

We limited our scope to systematic reviews of PSP and applied five criteria when selecting papers: (1) the paper was peer-reviewed; (2) the paper was published between 2015 and 2021 (there was no year limit on the individual case studies in any systematic review); (3) the paper had at least five citations; (4) the paper systematically evaluated at least two case studies; and (5) the paper was published in English. We applied these criteria to gather a number of papers that could be reviewed within the available time frame and that held substantial influence in the PSP literature. We anticipated that some case studies might be referenced in more than one review, but this was not grounds for exclusion unless all of the case studies in one review were reused in a subsequent review.
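As a minimal sketch, the five inclusion criteria can be expressed as a single filter over candidate papers. The `Candidate` record, its field names, and the example values are hypothetical and purely illustrative; the overlap rule for reused case studies is not modeled, and this is not a description of any tooling the authors used.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical record for one search result (illustrative fields only)."""
    title: str
    peer_reviewed: bool
    year: int
    citations: int
    case_studies_evaluated: int
    language: str


def meets_inclusion_criteria(paper: Candidate) -> bool:
    """Return True only if a paper satisfies all five inclusion criteria."""
    return (
        paper.peer_reviewed                     # (1) peer-reviewed
        and 2015 <= paper.year <= 2021          # (2) published 2015-2021
        and paper.citations >= 5                # (3) at least five citations
        and paper.case_studies_evaluated >= 2   # (4) evaluated two or more case studies
        and paper.language == "English"         # (5) published in English
    )


if __name__ == "__main__":
    # Made-up candidates, not real search results.
    candidates = [
        Candidate("Hypothetical review A", True, 2019, 12, 6, "English"),
        Candidate("Hypothetical review B", True, 2012, 80, 10, "English"),  # fails (2)
        Candidate("Hypothetical review C", True, 2020, 3, 4, "English"),    # fails (3)
    ]
    print([c.title for c in candidates if meets_inclusion_criteria(c)])
    # prints ['Hypothetical review A']
```

Expressing the criteria as one boolean conjunction makes it easy to see that a paper failing any single criterion is excluded.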

We initially found papers using combinations of the keywords participatory scenario planning, systematic* [review], and climate* [change, adaptation] in Scopus (an abstract and citation database) and Web of Science (a platform consisting of multiple databases). The specific search string used for Scopus included “participatory AND ‘scenario planning’ AND review.” The specific search strings used for Web of Science included “participatory scenario planning (Topic) and systematic* (Topic)” as well as “participatory scenario planning (Topic) and climate* (Topic) and review (Topic).” All Web of Science searches were run through its citation database, the Web of Science Core Collection, and included all indices within that database. We then screened the results for the phrases “participatory scenario planning,” “systematic review,” and “climate” in the title before retaining them. However, it soon became apparent during the literature review that few papers on PSP had been conducted explicitly for climate change. Climate-related keywords (e.g., climate change, climate adaptation) returned papers on cases that were not conducted explicitly in the context of climate but in adjacent fields of research, in most cases social-ecological systems.² To address this, we retained papers that mentioned either climate* or social-ecological systems in the title and/or abstract for further review. From that point, we iteratively refined the searches with keywords from subsequent papers.
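The refined topic screen described above can be sketched as a case-insensitive pattern match on the title and abstract. This is an illustrative assumption rather than the authors' procedure: we treat `climate*` as any word beginning with "climate" and accept both singular and plural forms of "social-ecological system"; the function name `passes_topic_screen` is hypothetical.

```python
import re

# climate* wildcard: any word that starts with "climate" (climate, climates, ...).
CLIMATE = re.compile(r"\bclimate\w*", re.IGNORECASE)
# "social-ecological system(s)", allowing a hyphen or a space between the two words.
SOCIAL_ECOLOGICAL = re.compile(r"\bsocial[- ]ecological systems?\b", re.IGNORECASE)


def passes_topic_screen(title: str, abstract: str) -> bool:
    """Retain a paper if its title or abstract mentions climate* or social-ecological systems."""
    text = f"{title} {abstract}"
    return bool(CLIMATE.search(text) or SOCIAL_ECOLOGICAL.search(text))


if __name__ == "__main__":
    print(passes_topic_screen("Participatory scenario planning in mountain regions",
                              "We review cases from social-ecological systems."))  # True
    print(passes_topic_screen("Scenario planning for retail strategy",
                              "A review of corporate foresight."))  # False
```

Matching on the concatenated title and abstract mirrors the "title and/or abstract" rule; a real screen would still need human judgment for borderline papers.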

Some database search results led us to Ecology & Society, a journal of integrative science for resilience and sustainability with its own search function. In Ecology & Society, we entered the search string “participatory scenario planning” into the search bar, which returned papers focused on PSP in the context of “social-ecological systems.”

We also searched White Rose (a shared, open access repository from the Universities of Leeds, Sheffield, and York), but it returned findings already discovered through Scopus and Web of Science. In addition, we used EBSCO Open Dissertations to gather scholarly background context on PSP, but this source was not considered part of the literature review.

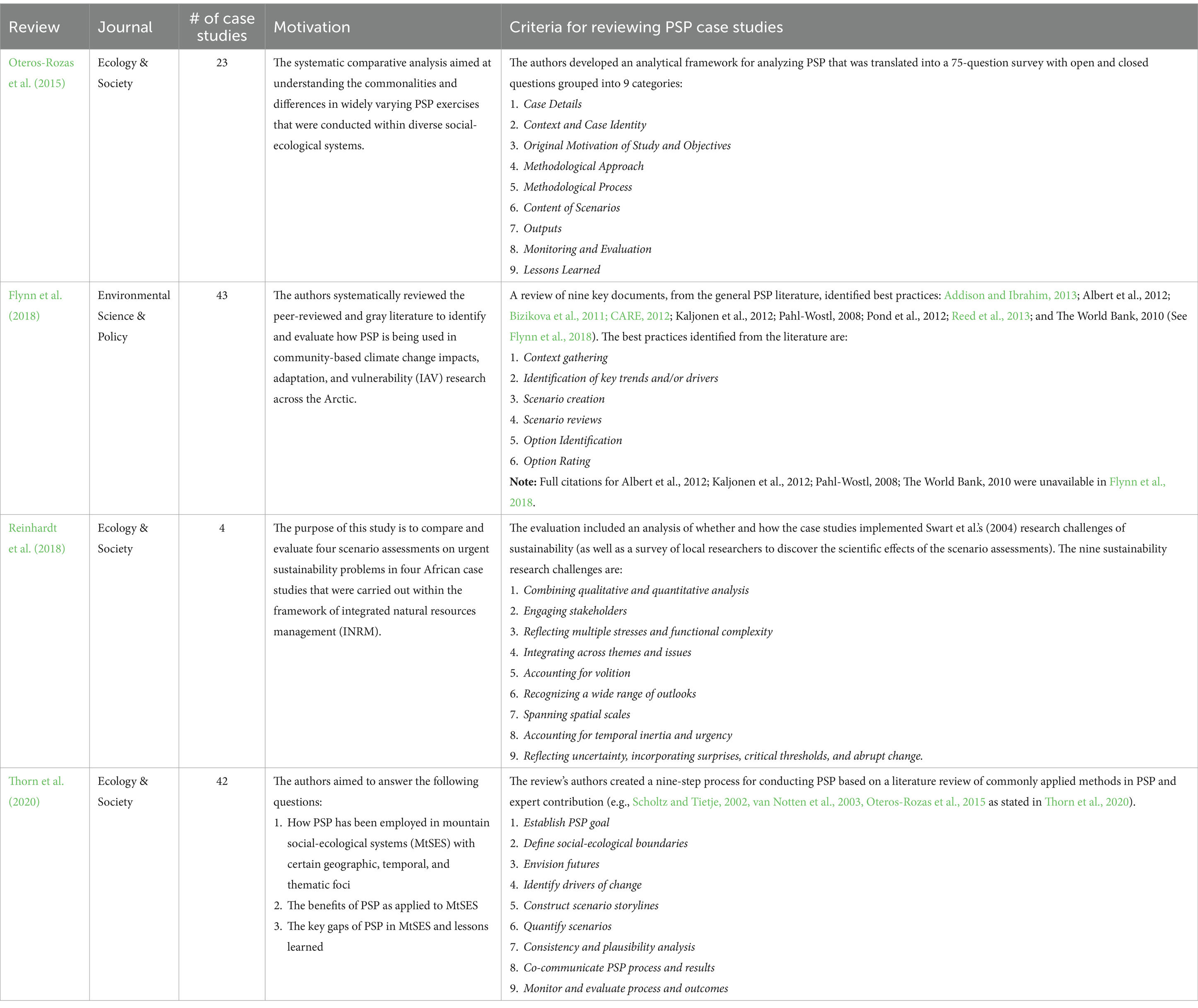

We retained four systematic evaluations of SP, three of which focused solely on PSP. To the best of our knowledge, these were the only four systematic evaluations that met the search criteria as of 2021. Three were published in the journal Ecology & Society (Oteros-Rozas et al., 2015; Reinhardt et al., 2018; Thorn et al., 2020), and the fourth in Environmental Science & Policy (Flynn et al., 2018).

Oteros-Rozas et al. (2015) systematically reviewed 23 place-based case studies that used PSP as a research tool for evaluating alternative futures of social-ecological systems. They identified their cases through a synthesis activity in 2014 and a snowball search. Their systematic comparative analysis aimed to understand the commonalities and differences among widely varying PSP exercises conducted in diverse social-ecological systems. To analyze the PSP exercises, the reviewers developed a 75-question survey with open and closed questions grouped into nine categories. Two rounds of data collection took place to clarify the responses to the categorical questions and to incorporate additional questions arising from the first round. The reviewers then analyzed the information using a four-step process: (1) coding responses into pre-existing or emergent typologies, (2) summarizing responses to each question, including notable outliers, (3) identifying strong trends, dominant approaches, common findings, or lessons, and (4) performing descriptive and multivariate analyses. See Table 1 for the nine categories into which Oteros-Rozas et al. (2015) grouped their survey questions (the 75 questions are not included).

Flynn et al. (2018) systematically reviewed 43 case studies on how PSP is employed in community-based research on climate change impacts, adaptation, and vulnerability in the Arctic. The review identified its cases using the systematic review approach outlined by Berrang-Ford et al. (2015). Flynn et al. (2018) created an evaluation rubric to examine the extent to which Arctic PSP studies had incorporated “best practices” and “participation” into their research design. The review identified six key stages, consistently reported across nine key documents from the general PSP literature, that underpin PSP work in diverse contexts. See Table 1 for the six key stages present in Flynn et al. (2018).

Thorn et al. (2020) systematically reviewed 42 peer-reviewed and non-peer-reviewed case studies from mountainous social-ecological systems (MtSES) to understand (1) how PSP has been employed in MtSES with certain geographic, temporal, and thematic foci, (2) the benefits of PSP as applied to MtSES, and (3) the key gaps of PSP in MtSES and lessons learned. Thorn et al. (2020) conducted their systematic review following methods established by the Collaboration for Environmental Evidence Guidelines and Standards for Evidence Synthesis in Environmental Management, as well as the PRISMA checklist. Once they had gathered their case studies, they developed a nine-step process for conducting PSP, based on expert contributions and a literature review of methods commonly applied in PSP tools and typologies both within and beyond MtSES. They used the nine-step process (along with other tools) to develop a codebook and 89 questions to evaluate the extent to which the studies included information about each step. See Table 1 for the nine-step process used in Thorn et al. (2020).

Reinhardt et al. (2018) systematically evaluated four regional case studies in Africa that used the framework of integrated natural resources management (INRM) to assess how climate scenarios supported sustainability science. The review evaluated these scenario assessments against a set of indicators directly linked to nine sustainability research challenges outlined by Swart et al. (2004), and against survey responses from local researchers on their perceptions of the usefulness of these scenario assessments for promoting sustainability in their case study areas. See Table 1 for the nine sustainability research challenges.

It should be noted that only two cases in Reinhardt et al. (2018) were described as participatory (hence, PSP). We kept these two participatory cases in the results because (1) the review as a whole fulfilled the inclusion criteria, and (2) case studies in Africa were underrepresented (by number) among systematically reviewed case studies relative to other continents (see Oteros-Rozas et al., 2015; Thorn et al., 2020). This inclusion did not eliminate the overall underrepresentation in the selected papers, and other geographic regions were also reported as underrepresented in the literature (e.g., Thorn et al., 2020).

In addition to the bias toward peer-reviewed literature, our analysis carries over biases from the systematic reviews, whether through case study selection or method of analysis. For example, Oteros-Rozas et al. (2015) disclosed that their case studies are not necessarily representative of all PSP exercises and that the reviewers may have underreported or omitted information in their analysis. The reviewers in both Flynn et al. (2018) and Thorn et al. (2020) disclosed geographic biases. Flynn et al. (2018) possibly underreported the prevalence of studies based in European and Russian Arctic communities or in specific regions (e.g., Nunavik in Northern Quebec), while the case studies in Thorn et al. (2020) are biased toward European countries, with less focus on Asian, African, and Latin American mountainous social-ecological systems. The findings in this paper may therefore reflect the outcomes of overreported geographic regions and may not apply to other geographic regions with different needs.

2.2 Review of PSP processes

Due to the different motivations behind the systematic evaluations, as well as the different methods of evaluating case studies, we qualitatively assessed and compared the completion of PSP steps across case studies in the systematic reviews. Our assessment relied on the systematic reviews’ evaluation criteria and the results they chose to include in their articles and supplementary material.

The goal of this assessment is to provide an overview of common practices and their variations for those wishing to understand PSP or to develop their own process.³ This synthesis informed our next step: identifying common PSP trends.

2.2.1 Information/context gathering

A consistent step in the PSP process was collecting “background information” (Oteros-Rozas et al., 2015), or “context gathering,” about the case study (Flynn et al., 2018). Context gathering could include the geographical location of the case study; relevant system boundaries (e.g., political boundaries, geographical scale, projected year); the types of stakeholders in the case study; thematic focus; and important ecological, socioeconomic, and/or governance features (Oteros-Rozas et al., 2015). Information was gathered mostly through desk research, followed by participatory processes (e.g., workshops or focus groups; Oteros-Rozas et al., 2015). In Thorn et al. (2020), case studies conducted initial assessments to obtain background information, often by building on long-term research collaborations, interviews with key stakeholders and consultations, or a literature review. The reviews did not specify who gathered the background information, except for Oteros-Rozas et al. (2015), who found that researchers and/or research teams primarily directed the case studies, and thus the gathering of background information.

2.2.2 Defining a goal or focal issue

“Goals” (Oteros-Rozas et al., 2015; Thorn et al., 2020) or “focal issues” (Reinhardt et al., 2018) were explicitly reported in three reviews at the beginning of the PSP process. Oteros-Rozas et al. (2015) and Thorn et al. (2020) categorized the goals of their respective case studies using the typology of van Notten et al. (2003): exploratory (i.e., creating scenarios to examine plausible drivers of change), pre-policy or decision support (i.e., building scenarios to examine futures according to their desirability), or both exploratory and pre-policy. The functions of case studies were categorized using the same typology: process-oriented (e.g., enhanced awareness about the future of the social-ecological system), product-oriented (e.g., a set of narratives of plausible scenarios), or both process- and product-oriented (Oteros-Rozas et al., 2015; Thorn et al., 2020).

Flynn et al. (2018) did not state a typology but inferred from its case studies that decision making and planning were key PSP goals. In Reinhardt et al. (2018) “focal issues” were presented as pointed questions or specific topics from which scenarios were developed (e.g., How to preserve and manage the water resources and the socio-agro-ecological system for sustainable development?).

2.2.3 Identifying drivers of change

Drivers of change were integral to the case studies according to all systematic reviews, and they were generally framed as important issues or trends that PSP participants wanted to examine in scenarios. Drivers of change were derived from the physical world (e.g., climate, geological, vegetation changes), from the man-made world (e.g., population growth, market conditions), or from a combination of both.

Two reviews found that their case studies identified drivers of change through participatory methods, such as workshops, interviews, and surveys (Oteros-Rozas et al., 2015; Thorn et al., 2020). These methods were occasionally supported by formal scientific knowledge supplied outside the participatory process (e.g., previous research or drivers predefined by researchers; Oteros-Rozas et al., 2015). In Thorn et al. (2020), drivers of change were given more attention in exploratory scenarios, whereas pre-policy or decision support scenarios focused on examining the desirability of selected futures.

Most case studies in Oteros-Rozas et al. (2015) prioritized drivers based on their impact, probability of influence, importance, and relevance for a given social-ecological system. Usually, participants identified no more than 10 drivers, and the majority of drivers pertained to social issues (Oteros-Rozas et al., 2015). Thorn et al. (2020) also found that drivers of change were identified through participatory workshops but reported that most cases did not rank drivers in terms of importance or threat to the system.
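The reviews do not report a specific scoring formula, so the following is only a hypothetical sketch of how a facilitator might rank participant-identified drivers. It assumes each driver receives a 1 to 5 rating for impact and for probability of influence, multiplies the two into a single score, and keeps at most 10 drivers, echoing the typical upper bound reported by Oteros-Rozas et al. (2015). The `Driver` type, the `prioritize` function, the driver names, and the ratings are all invented for illustration.

```python
from typing import List, NamedTuple


class Driver(NamedTuple):
    name: str
    impact: int       # hypothetical participant rating, 1 (low) to 5 (high)
    probability: int  # hypothetical rating of probability of influence, 1 to 5


def prioritize(drivers: List[Driver], max_drivers: int = 10) -> List[Driver]:
    """Rank drivers by impact x probability (highest first) and keep the top max_drivers."""
    ranked = sorted(drivers, key=lambda d: d.impact * d.probability, reverse=True)
    return ranked[:max_drivers]


if __name__ == "__main__":
    drivers = [
        Driver("Tourism demand", impact=4, probability=3),     # score 12
        Driver("Drought frequency", impact=5, probability=4),  # score 20
        Driver("Land abandonment", impact=3, probability=2),   # score 6
    ]
    for d in prioritize(drivers, max_drivers=2):
        print(d.name, d.impact * d.probability)
    # Drought frequency 20
    # Tourism demand 12
```

A multiplicative score is only one design choice; groups could equally weight importance and relevance, or rank by discussion rather than numbers.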

Flynn et al. (2018) found drivers of change to be important when examining future vulnerabilities and adaptation options. Most of its case studies considered both environmental drivers (e.g., changes in temperature and/or precipitation) and social drivers of change (e.g., economic influences), and over half used climate change projections to determine drivers of change.

In Reinhardt et al. (2018), both PSP case studies selected scenario drivers using stakeholder input, concept maps, and the STEEP Framework.⁴ One of its participatory cases also assessed drivers based on a range of natural resource management figures and climate change projections.

2.2.4 Scenario design and creation

Scenario design and creation varied according to the goal of PSP, the type(s) of scenario, scenario boundaries, scenario projected year, and the chosen qualitative and/or quantitative tools. Typically, researchers guided participants through discussions to share perspectives, experiences, and reflections during the scenario design and creation process. Sometimes discussions were accompanied by tools such as prompts (e.g., individual reflections or facilitation), creative visuals (e.g., drawings, maps), or models, both qualitative (e.g., mental models, cognitive conceptualization diagrams) and quantitative (e.g., data about climate/land-use change, applied GIS; Oteros-Rozas et al., 2015; Thorn et al., 2020). Case studies in all reviews created storylines or narratives to describe the future, and Thorn et al. (2020) reported that nearly half of its cases also incorporated quantitative scenarios into their qualitative ones.

Oteros-Rozas et al. (2015) found that most of its cases used stakeholder-driven approaches to develop scenarios around conservation, biodiversity, and human well-being. Workshops generally included facilitators, who often came from the research team itself, either after facilitation training or with previous experience in PSP workshops. Participants engaged in the process by selecting years for their scenarios, identifying drivers, and imagining how drivers interacted with each other. Sometimes participants developed the storylines; at other times, the research team did. Over half of the cases in Oteros-Rozas et al. (2015) created four scenarios, and the scenarios’ content tested how different stakeholder groups benefited or lost from ecosystem services supply in each scenario. Just under half of the cases carried out quantitative analyses (e.g., modeling trends or the tendency of drivers to change).

In Flynn et al. (2018), cases focused on three sectors likely to be influenced by climate change: traditional livelihoods, resource management, and community planning. Scenario creation followed one of two approaches: forecasting (i.e., considering the future from the vantage point of the present) or backcasting (i.e., creating a desirable future situation and determining the steps needed at present to reach that future). The choice of vantage point depended on the purpose of the workshop. Visual tools such as maps or collages, storylines, and narratives were effective for presenting scenarios. The creation process varied, ranging from community members creating their own narratives of possible or desirable futures to researchers completing this step and presenting it back to the community (Flynn et al., 2018).

Case studies in Thorn et al. (2020) created scenarios thematically focused on governance and policy change, land use change, maintenance of cultural or biological biodiversity, demographic change, technological/infrastructure change, and climate change. The majority of Thorn et al. (2020)’s case studies created three or four scenarios.

The PSP case studies in Reinhardt et al. (2018) focused on supporting sustainable development by improving integrated natural resource management of specific natural resources under pressure. The two participatory cases used a scenario axes technique inspired by Schwartz (1996) and developed four qualitative scenarios each. Participants created concept maps to provoke discussion and description of key factors, drivers, and processes.
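In its common 2×2 form, the scenario axes technique crosses two key uncertainties, each with two contrasting poles, so that every quadrant becomes one scenario; this is consistent with the four scenarios each participatory case produced. The sketch below assumes that form; the function name `scenario_axes`, the axis names, and the poles are hypothetical and are not taken from the Reinhardt et al. (2018) cases.

```python
from itertools import product
from typing import Dict, List, Tuple

Axis = Tuple[str, Tuple[str, str]]  # (axis name, (first pole, second pole))


def scenario_axes(axis_a: Axis, axis_b: Axis) -> List[Dict[str, str]]:
    """Cross two two-pole axes of uncertainty into four scenario quadrants."""
    (name_a, poles_a), (name_b, poles_b) = axis_a, axis_b
    return [{name_a: a, name_b: b} for a, b in product(poles_a, poles_b)]


if __name__ == "__main__":
    quadrants = scenario_axes(
        ("water availability", ("scarce", "abundant")),             # hypothetical axis
        ("resource governance", ("centralized", "community-led")),  # hypothetical axis
    )
    for number, quadrant in enumerate(quadrants, start=1):
        print(f"Scenario {number}: {quadrant}")
```

Each quadrant is only a skeleton; in practice, participants would then write a narrative describing how the system evolves under that combination of conditions.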

2.2.5 Scenario review

Two systematic reviews identified processes by which their case studies reviewed scenarios once they were developed. In Flynn et al. (2018), reviewing scenarios was a best practice found in the literature, and most case studies completed this step in a participatory manner. These reviews acted as forums for stakeholders to discuss the scenarios’ impacts on locally relevant sectors and to consider the information included in the scenarios, which was reported to increase social learning and understanding among stakeholders. Stakeholders then identified options to address the highlighted impacts (i.e., option identification) and rated them (i.e., option rating). Flynn et al. (2018) considered community-identified options convincing for community buy-in because they are typically more contextually and culturally appropriate, and most cases were able to identify adaptation options in a participatory manner. However, option rating, which reportedly could increase the transparency of policy choices and aid decision-making (Flynn et al., 2018), was not completed in most cases. It is unclear whether the inability to rate options hindered the cases in Flynn et al. (2018).

In Thorn et al. (2020) about half of its case studies conducted either a check for plausibility (i.e., whether a completed scenario fell within limits of what might plausibly happen) or consistency, but very few tested for both. Cases tested for consistency either internally (i.e., reviewing whether impact variables within a narrative can occur in combination) or externally (i.e., whether diverse future states, or local/global scenarios contradict one another). Although they were not placed in defined steps of the process, we found other scenario review methods that involved referring to historical or expert validation, comparing findings to published results, and having participants comment on scenarios (Oteros-Rozas et al., 2015; Thorn et al., 2020).
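An internal consistency check can be sketched as a pairwise test: a scenario is treated as internally consistent if no two of its variable states appear on a list of combinations judged unable to co-occur. The reviews do not prescribe this implementation; the list of inconsistent pairs, the variables, and the function name `internally_consistent` are hypothetical, and in practice such judgments would come from participants or experts.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Set, Tuple

State = Tuple[str, str]  # (variable, state), e.g. ("snow cover", "increasing")

# Hypothetical judgments of state pairs that cannot plausibly occur together.
INCONSISTENT_PAIRS: Set[FrozenSet[State]] = {
    frozenset({("temperature", "strong warming"), ("snow cover", "increasing")}),
}


def internally_consistent(scenario: Dict[str, str]) -> bool:
    """Return False if any pair of the scenario's variable states is flagged inconsistent."""
    states = list(scenario.items())
    return all(frozenset(pair) not in INCONSISTENT_PAIRS
               for pair in combinations(states, 2))


if __name__ == "__main__":
    print(internally_consistent({"temperature": "strong warming",
                                 "snow cover": "decreasing"}))  # True
    print(internally_consistent({"temperature": "strong warming",
                                 "snow cover": "increasing"}))  # False
```

Storing each pair as a `frozenset` makes the lookup independent of the order in which variables appear in a scenario.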

2.2.6 Dissemination of results

As with scenario creation, PSP outputs and dissemination varied by workshop. Case studies in Oteros-Rozas et al. (2015) geared results toward local communities, academic audiences, workshop participants, and policy and decision makers. The outputs were creative or artistic (e.g., collages, drawings, or illustrations), visualizing the scenarios and facilitating the PSP processes.

Some case studies in Thorn et al. (2020) disseminated results to stakeholders involved in the PSP process in order to summarize findings, ensure results were understood, obtain feedback, and discuss intentions to apply the evidence to real-world challenges. The target audiences were the participants who developed the scenarios and researchers, but results were occasionally intended for decision-makers at subnational or national levels (Thorn et al., 2020).

Flynn et al. (2018) did not describe in detail how results were shared across its case studies, although it emphasized the importance of involving community members and decision makers in research on climate change impacts, adaptation, and vulnerability (IAV). Reinhardt et al. (2018) disclosed that scenario outcomes could be shared with the scientific community but not with the broader public, owing to mixed perceived levels of participation in PSP and scenario relevance.

2.2.7 Evaluation

Across the reviews, case studies evaluated PSP workshops through self-reflexive assessments in focus groups, interviews, surveys, participant observation, and/or meetings. Researcher-based monitoring and evaluation of outcomes was explicitly mentioned in every review except Flynn et al. (2018), which did not consider post-workshop monitoring and evaluation a best practice and consequently did not include it in its evaluation rubrics.

Oteros-Rozas et al. (2015) reported that fewer than half of the case studies conducted monitoring, and those that did usually did so within the project timeframe. For evaluations, case studies conducted "informal" evaluations (i.e., unstructured and without contractual obligation) to assess social learning, determine the usefulness of the process, and provide immediate feedback to the research team (see Oteros-Rozas et al., 2015).

Thorn et al. (2020) found that no case studies conducted both monitoring and evaluation. Some performed informal evaluations and found evidence of short- and long-term outcomes, but most did not formally evaluate the design, implementation, results, or consequences of social learning from PSP.

Reinhardt et al. (2018) conducted evaluation but not monitoring, and took a different approach: the review authors invited local researchers to evaluate the case studies' scenarios using surveys designed by the review team. These surveys were distributed a year after the local scenario projects had been finalized.

3 Findings

We applied thematic analysis, a method for identifying, analyzing, and reporting patterns within data (Braun and Clarke, 2006), to each systematic review and to whichever steps and outcomes it reported. In a thematic analysis, the patterns capture aspects of the data deemed salient in relation to the research question(s). Our thematic analysis used an inductive approach (i.e., deriving patterns from data without preconceptions or pre-existing theories), so patterns emerged as we reviewed the PSP processes reported by the reviews (and their supplemental material, when available).

We present four patterns (hereafter referred to as trends) that we found to be common across the systematic reviews. We believe these trends are significant for understanding PSP's current usability in climate adaptation planning and management. We also find them to be useful points of comparison for our boundary organization's evaluation of our own PSP practice and outcomes:

1. Increased social learning and understanding is a common motivator and outcome of PSP.

2. Climate projections had varying usage among the quantitative tools used in PSP development.

3. There is limited post-workshop monitoring and evaluation, and limited assessment of PSP outcomes.

4. There were varying degrees of completion of “commonly applied methods” or “best practices.”

3.1 Increased social learning and understanding is a common motivator and outcome of PSP

Social learning, in which group interactions exchange individual knowledge and understanding, was a primary objective for conducting PSP. Oteros-Rozas et al. (2015) reported that over a quarter of its cases considered social learning the primary workshop goal, while a smaller share reported decision support as the primary goal. In Thorn et al. (2020), most case studies pursued social learning by integrating participants' views into shared representations of the future, along with understanding the local context and comparing trajectories of change in and across social-ecological systems.

Social learning was also a recorded outcome for participants in PSP workshops. In Thorn et al. (2020), which found substantial evidence of increased learning, this meant that participants better understood the complexities of mountainous social-ecological systems. For case studies in Oteros-Rozas et al. (2015), it meant increased participant knowledge of future management challenges. Flynn et al. (2018) also observed that discussing the impacts of scenarios on locally relevant sectors increased social learning and understanding between participants.

Sometimes these exchanges revealed biases (e.g., personal or cultural bias) that affected social learning. Some biases could be traced back to historical disruptions, such as local political turmoil, that affected the whole workshop. For example, participants in one case study reviewed by Reinhardt et al. (2018) were reluctant to develop unpleasant scenarios because of a long and unstable transition period following a revolution. Natural disasters occurring during a workshop were examples of external influences that could shape its discussion and outcome (Flynn et al., 2018), as could present tensions and individuals' day-to-day needs (Oteros-Rozas et al., 2015; Reinhardt et al., 2018). As another form of social learning, differing cultural perspectives between participants and researchers revealed important tensions that needed to be considered. These included contrasting priorities, values, and interpretations of metaphysical concepts such as time; time and uncertainty were found to be interpreted differently in Western and Arctic Indigenous cultures (Flynn et al., 2018).

Finally, there were contrasting philosophies in Inuit and Western understandings of planning for the future. Flynn et al. (2018) cautioned that this could have implications for future research and could create situations where Western worldviews are imposed on communities whose ways of planning are different. Reinhardt et al. (2018) considered PSP's potential to reveal biases a benefit because it could reduce bias and improve decision quality, although the review's authors acknowledged that this claim requires further analysis.

3.2 Climate projections had varying usage among the quantitative tools used in PSP development

Climate change projections are just one of several sources of quantitative information, and their use in PSP varied. In Flynn et al. (2018), over half of the case studies used climate projections or broad trends and expected changes derived from global or regional climate models. Those that did not use climate projections turned to other methods: (1) focusing on specific locally observed impacts, (2) using environmental modeling of hydrology, vegetation, or snow cover to create future scenarios, (3) creating scenarios based on an axis with opposite sentiment at each end, or (4) using future scenarios based on broad trends in the literature (e.g., one case study applied the globally shared socio-economic pathways to local drivers under different scenarios).

In Reinhardt et al. (2018), one case study used drivers of change that encompassed climate change projections in addition to a “range of numbers” on natural resources management. These projections were also “indirectly considered” by participants as additional information on general future changes (Reinhardt et al., 2018). The review does not describe whether the participants felt comfortable with the projections, nor how the information further served the participants.

The other reviews did not explicitly mention climate model projections but discussed case studies that included quantitative information. In Oteros-Rozas et al. (2015), fewer than half of the case studies applied quantitative analysis to assess or model trends in ecosystem services, human well-being, policy response, and drivers of change. Thorn et al. (2020) did not identify many quantitative tools, so it is unclear whether its case studies used any climate projections. However, it reported that Geographic Information Systems (GIS) were used to model dynamic relationships over time and space.

Sometimes the lack of quantitative information or tools was cited as a weakness, but it is not clear whether this weakness influenced researchers or participants. Oteros-Rozas et al. (2015) reported that a fraction of its cases cited the lack of quantitative information, statistical and data-based testing, or modeling to support trends analysis as weaknesses. However, other case studies reported an “unavoidable” trade-off, where increasing the accuracy of scientific information came at the cost of decreasing the “social relevance” of the process (Oteros-Rozas et al., 2015). In Thorn et al. (2020), the review suggested that mountainous social-ecological systems require fine-scale data to capture high spatio-temporal complexity for effective resource management, yet there is a lack of available data.

Flynn et al. (2018) considered climate projections underutilized and proposed reasons why their use in the Arctic might be limited: uncertainties surrounding climate projections, limited capacity to use these projections, reservations among Arctic Indigenous populations about discussing possible future events, and the limited availability of projections for key Arctic environmental factors. These explanations were considered in the context of the Arctic, and no other review reported on other stakeholder groups' attitudes toward climate projections. The alternatives to climate projections (e.g., environmental modeling, extrapolating current trends, and using observations of present-day vulnerabilities) were helpful in some cases. However, these alternatives provided only present-day quantitative information. Given the magnitude of climate change projected for the Arctic, relying on this information could lead to misconceptions about how to prepare for the future (Flynn et al., 2018).

3.3 There is limited post-workshop monitoring and evaluation, and limited assessment of PSP outcomes

Broadly, PSP findings and outcomes had strong policy relevance, and the process itself was a useful tool to build cooperation and collaboration, create shared understanding, and support decision-making (Oteros-Rozas et al., 2015; Thorn et al., 2020). However, case studies struggled to conduct monitoring (i.e., systematic collection of data to track the extent of progress and achievement of outcomes and impacts using indicators) and evaluation (i.e., assessment of the scenario design, implementation, and results through a formal methodological approach; definitions from Oteros-Rozas et al., 2015). This makes it challenging to produce evidence that PSP led to new management actions, partnerships and collaborations, or social learning processes, as stated by Oteros-Rozas et al. (2015).

According to the reviewers in Oteros-Rozas et al. (2015) and Thorn et al. (2020), the lack of evaluations was a weakness or gap in PSP because the extent to which the process and its scenarios achieved their outcomes was unknown. In Oteros-Rozas et al. (2015), the evaluations that were conducted detected evidence of short-term impacts, but the evidence was inconclusive on whether long-term outcomes occurred after the PSP process.

Reviewers recommended greater attention to monitoring and evaluation because they could inform future iterations of PSP, policy and management priorities, and research (Oteros-Rozas et al., 2015; Thorn et al., 2020). To initiate long-term monitoring and evaluation, Oteros-Rozas et al. (2015) suggested adopting an explicit adaptive management approach; that is, embedding PSP within larger and longer-term projects (see Peterson et al., 2003). This would help researchers plan their projects, facilitate them, and then evaluate outcomes more formally (McBride et al., 2017, as cited in Thorn et al., 2020). However, this requires researchers to adjust project timescales and budgets to allow for deeper evaluation and monitoring of certain outcomes (Oteros-Rozas et al., 2015). In most case studies, monitoring and evaluation of long-term impacts was likely limited by resource constraints, whether of time, personnel capacity, or funding (Oteros-Rozas et al., 2015; Flynn et al., 2018). Since Thorn et al. (2020) reported that approximately two-fifths of its case studies had PSP processes embedded in a larger research program, it would be useful to understand whether such integration makes monitoring and evaluation easier.

3.4 There were varying degrees of completion of “commonly applied methods” or “best practices”

In evaluating case studies, the systematic reviews relied on a body of "established" literature that proposes a set of PSP standards and thereby demonstrates PSP's potential. However, we found that "commonly applied methods" (Thorn et al., 2020) or "best practices" (Flynn et al., 2018) did not appear to be uniformly applied or fully completed.5

In Flynn et al. (2018), although PSP case studies commonly followed the stages recognized as "best practices" in the general PSP literature, community participation varied between case studies. Some steps were completed without any community participation (i.e., by researchers instead). In Thorn et al. (2020), half of the case studies failed to address at least eight of the nine steps that the reviewers outlined based on the literature. Although these nine steps were derived from a literature review and expert contribution, they were not uniformly applied or followed (Thorn et al., 2020). Moreover, a year after the PSP process was finalized, Reinhardt et al. (2018) distributed self-administered surveys to local researchers, who were knowledge brokers in their region. The surveys captured the usefulness of the PSP process from the researchers' perspective but did not appear to collect participants' perspectives, casting doubt on the efficacy of addressing certain research challenges for practical applications (Reinhardt et al., 2018).

4 Discussion

From Section 2, we find that the methods used to create scenarios in PSP vary. Such variability is perhaps expected given the wide range of applications and the diversity of people and organizations carrying out scenario planning. Taking note of this variability, in Section 3 we observe four common outcomes across the systematic reviews that raise issues related to social learning and bias, the varying use of quantitative information, carrying out monitoring and evaluation, and the varying completion of practices recommended by established PSP literature. Based on the combined findings from both sections, we suggest the following four processes as integral to maximizing PSP's usability for end users. Note that the limitations of our selection criteria (see Section 2) extend to these suggestions: our exclusion of non-peer-reviewed sources limits our ability to understand whether the processes described below are active outside of peer-reviewed literature.

(1) When gathering information, defining goals and focal issues, and identifying participants, researchers and/or PSP facilitators are encouraged to identify areas where social imbalances may emerge among participants and between participants and researchers/facilitators. Visible and hidden power relations are inherent in these multi-stakeholder processes and manifest through (1) participants who are powerful or influential and (2) participants who have historically been excluded from decision-making (e.g., women and young people in Oteros-Rozas et al., 2015). Left unchecked, these social imbalances among participants can influence PSP outcomes. The absence of powerful stakeholders has consequences for information accessibility and decision-making. In Thorn et al. (2020), bi- and multilateral institutions were absent, which could have unintended consequences for international cooperation and budget allocation. Oteros-Rozas et al. (2015) noted reports in its case studies of absent or underrepresented powerful stakeholders and decision-makers, such as industry or large landowners, which undermined the credibility of the PSP process. The absence of those in powerful roles could undermine any PSP-derived decisions that require their support. Equally pertinent, however, is the absence of those considered less powerful and influential, especially groups historically excluded from decision-making. Both powerful and powerless actors must be present in PSP to avoid misconstruing power asymmetries and to observe and analyze the power relations that do reveal themselves. For example, Flynn et al. (2018) noticed a tendency among certain participants to "defer" to others perceived as authorities, which occurred when the mix of Arctic stakeholders was limited and most of the group belonged to one stakeholder group. Thorn et al. (2020) observed that although Indigenous organizations were among the most prominent institutions participating in PSP for mountainous social-ecological systems, some case studies in its review found limited Indigenous engagement due to cultural barriers.

Social imbalances may also emerge between participants and researchers. Researchers were characterized as the main facilitators and evaluators of the PSP process, and they risk imposing personal bias on the process by incorporating their own preferences or interests (Oteros-Rozas et al., 2015). Unless researchers/facilitators are trained to manage the power balance between themselves and participants, their authority and voices are liable to undermine participants' ownership of the process, and hence the scenarios' usability (Oteros-Rozas et al., 2015; Thorn et al., 2020). Strategies are needed not only to ensure inclusion but also to ensure that all relevant decision makers, not just the most powerful, have the capacity to implement outcomes. Researchers do not always reside in the community of interest to support PSP-based adaptation, and researcher-driven preferences could lead to outputs that do not integrate local planning needs.

(2) Bias is inherent to PSP and should be addressed and either incorporated into scenarios or overcome to promote social learning. Scenarios created during a single workshop are inherently "contextual snapshots," primarily capturing the perspectives, experiences, and knowledge of the participants present at the workshop. They may also capture perspectives that contain bias or are influenced by external factors occurring at the time of the workshop. Bias therefore shapes the participatory process of a PSP workshop itself.

One example we observed is that global and long-term context was not robustly integrated into local planning, because spatial and temporal mismatches made it difficult to balance global drivers of change with regional/internal drivers (although climate change was generally an underlying motivator). Local participants were likely to disregard external or long-term drivers of change because they were seen as more abstract and less clearly linked to local impacts. There may be limited capacity at the local level to consider global drivers that may influence local impacts (Wesche and Armitage, 2010, 2014, as cited in Flynn et al., 2018). Butler et al. (2020) explain that participants tended to perceive the future in more immediate terms and favor shorter timeframes, so current needs were prioritized over systemic change and long-term context was possibly left out. As a result, participants do not explore feedbacks, trade-offs, and linkages between processes at multiple spatial scales, and adaptation options may not properly connect larger issues (e.g., climate change) to local problems. For example, Reinhardt et al. (2018) stated that two case studies found it challenging to use qualitative approaches to address climate change during participatory exercises, which hampered qualitative assessments of management options. Reinhardt et al. (2018) went on to suggest that linking information across local and larger scales could increase policy relevance, but recognized that integrating the global context of climate change was challenging in its case studies' participatory exercises.

The literature proposed solutions such as community-based research to find potential tension in worldviews and knowledge systems before they appear in the workshop (Flynn et al., 2018) and actor-mapping to identify all stakeholders in the system and their relationships to others (Reed et al., 2009; Oteros-Rozas et al., 2015, as cited by Thorn et al., 2020). These suggestions could lead to more participation in the PSP process and potentially more meaningful outcomes, but their implementation requires careful and respectful coordination with the community of interest.

(3) The development and review of PSP scenarios should welcome and incorporate uncertainty to improve scenario usability for end users. Uncertainty (e.g., uncertain information from models, unexpected events, surpassed thresholds, new vulnerabilities, and risks) may not be studied as rigorously within scenario development as it should be. In Oteros-Rozas et al. (2015), participants were encouraged to embrace uncertainty. Over half of the cases explicitly mentioned uncertainty, usually when analyzing drivers of change, but only a smaller fraction addressed it and incorporated it into their solutions. Three of the case studies in Oteros-Rozas et al. (2015) that explicitly incorporated uncertainty aimed to promote community-based solutions and engage underrepresented social actors in decision making. In Reinhardt et al. (2018), case studies tried to address uncertainty by incorporating surprises, abrupt changes, and unexpected trajectories related to natural disasters and sociopolitical events, but uncertainty remained one of the least addressed components of the workshops. In Thorn et al. (2020), fewer than half of the cases explicitly addressed or evaluated uncertainty, possibly due to insufficient or erroneous data used to construct and test models, problems in understanding local systems, or the lack of a full range of perspectives in the participatory workshops. If uncertainty is poorly studied and addressed, participants may struggle to understand scenario outputs or to employ them confidently in assessments and decision-making processes (Thorn et al., 2020). Additionally, PSP participants in decision-making or influential roles may not acquire the tools needed to evaluate uncertainty and transform it into usable information (Oteros-Rozas et al., 2015; Thorn et al., 2020).

Expanding the use of plausibility and consistency tests (see Thorn et al., 2020) could help address uncertainty and its sources, although the uncertainties most important to consider can be expected to vary between cases. Flynn et al. (2018) also noticed that combining bottom-up (i.e., scenarios led by local communities at smaller spatial scales) and top-down (i.e., scenarios led by the scientific community at larger spatial scales) approaches allowed for the production of "local scenarios embedded in global pathways" (Nilsson et al., 2015, as cited by Flynn et al., 2018). In other words, local scenarios became consistent with the global drivers and boundary conditions influencing local futures. This may improve the incorporation of global uncertainty, but reviewers noted the difficulty of multilevel analyses, which are inherently more complicated and require a greater diversity of actors and resources (Thorn et al., 2020). We also emphasize that incorporating global context may be restricted by participant bias and would require good data availability, resources, and appropriate assessment tools. However, quantitative information is not strictly necessary to address uncertainty.

Although uncertainty in climate projections is large, it is not necessarily a fundamental barrier to applying climate knowledge to real-world problems. In fact, in the context of planning for the future, climate change may be one of the most certain pieces of knowledge. The difficulty lies in localizing the global phenomenon and anchoring it in known or experienced local vulnerabilities to weather events. Attempting to reduce uncertainty in order to increase a scenario's usability is unlikely to succeed and could even be framed as a fallacy (Lemos and Rood, 2010). Rather than forcing quantitative information from simulations into PSP, scenarios could incorporate model information through storytelling. Qualitative narratives developed in PSP then stand as model-informed alternatives: once the overarching aspects of a scenario are decided (e.g., coastal compound flooding events), model simulations and ensembles are used to ground it in physical plausibility. Any quantitative requirements would follow from more tailored use of models designed for specific purposes. This reframing involves two shifts, in (1) the use of model projections and (2) the representation of uncertainty, and will be discussed in more detail in a separate, forthcoming publication.

Even when the scenarios are formed and ready for end users, they risk losing their usability. The scenarios made during PSP are static representations of a community's state of vulnerability, but that vulnerability can fluctuate over time as new risks appear and disappear. If the scenarios are not attached to iterative processes that capture unexpected future disruptions, they risk becoming outdated (Butler et al., 2020). This could thwart attempts to build upon specific PSP processes or to understand how the uncertainties found within scenarios change over time, as discussed by two systematic reviews (Oteros-Rozas et al., 2015; Flynn et al., 2018). Furthermore, uncertainty may not be communicated consistently or well to participants and stakeholders beyond the workshop. Thorn et al. (2020) suggested "standards for best practices" for providing robust evaluations of uncertainty and communicating them regardless of PSP goals.

(4) Monitoring and evaluation are needed from a research perspective to study the emergence of outcomes and PSP's usability for participants, as opposed to researchers. Based on the reviews, PSP for climate adaptation and management is a credible tool with enormous flexibility in its motivations and methodologies, stakeholder engagement processes, quantitative and qualitative tools, and more. However, the peer-reviewed literature offers inconclusive evidence on whether PSP leads to sustained, long-term outcomes (e.g., policy change), and the broader use of PSP is not fully understood, in part because of limited long-term monitoring and evaluation. Our review also reveals possible friction in the process arising from the different value PSP holds for researchers and for participants. This contrast in value, combined with the varying levels of completion of "best" or "commonly applied" practices, suggests that there is room to further enhance PSP's practical usability for end users.

Our decision to explore this phenomenon further is motivated by one of our objectives, which is to better assess the efficacy of our own PSP evaluation techniques. Our observations of certain features in the evaluation frameworks used by the systematic reviews, together with the researcher-participant dynamics we observed across them, suggest that PSP has a mixed purpose. Its value to participants lies in developing outcomes that help them address their particular challenges. In contrast, its value to researchers lies in investigating its methodology or the motivations, experiences, and outcomes of the participants. In most case studies, as reported by the systematic reviews, it is researchers who facilitate the workshops. Researchers' protocols may add time requirements, may potentially objectify stakeholders, and are not perceived to benefit participants' outcomes. Alternatively, when those protocols face time and resource constraints, researchers may expedite the process by filling in information or completing steps that were intended to be participatory. A PSP process in which participants end up unable or unwilling to participate is problematic for end users.

Continued research to establish PSP's value and effectiveness will influence people's willingness to use the method. Such research is also of great interest to us, as it may improve our co-creation of usable climate information with end users. It might be conducted by researchers expanding their capacity for long-term monitoring and evaluation of PSP. Long-term monitoring and evaluation could allow researchers and workshop organizers to record benefits that take longer to appear, and could prompt participants to share their feedback. We noted some suggestions on monitoring and evaluation offered by the systematic reviews (e.g., adopting an explicit adaptive management approach or embedding PSP into a larger research program).

We also note that iterative PSP (i.e., designing PSP for several iterations), a possible mechanism to catalyze long-term monitoring and evaluation, could serve the dual purpose of evaluating PSP outcomes and incorporating uncertainty. Iterative PSP could capture uncertainty by compelling users to reassess their planning and management (i.e., adaptive management; Peterson et al., 2003). This may be a useful option for end users who would like to minimize the challenge of managing changing vulnerabilities and risks. Building upon existing work could increase the efficiency and policy relevance of the process (Oteros-Rozas et al., 2015) and improve understanding of the systems at play (Reinhardt et al., 2018). Note that these recommendations will likely increase financial and logistical costs. The exact costs of PSP were not quantified in the literature, and quantifying them was outside the scope of this review.

5 Conclusion

Participatory scenario planning (PSP) has been increasingly used for planning, policy, and decision-making within the context of climate change, but there has been no high-level synthesis of systematic reviews covering the overall state and direction of PSP for climate adaptation and management. The authors of this paper, who have used PSP for over a decade, initiated a synthesis of reviews to collect, synthesize, and present high-level evidence on the current conditions and benefits of PSP. Using four systematic reviews published between 2015 and 2021, we reviewed and synthesized common practices and outcomes in PSP. Our findings are biased toward peer-reviewed literature because we decided to exclude non-peer-reviewed literature produced by non-academic entities. Consequently, our findings and suggestions are not representative of all PSP experiences.

We first identified common practices and outcomes in PSP among the various approaches that exist in the literature, and from these identified four trends in PSP: (1) increased social learning and understanding, (2) varying usage of climate projections, (3) limited post-workshop monitoring and evaluation, and (4) varying degrees of completion of "commonly applied methods" or "best practices."

We then proposed four processes as integral to addressing the four trends and maximizing PSP’s usability for end users: (1) identify social imbalances and determine how they impact decision-making processes in PSP; (2) recognize bias as inherent to the PSP process and incorporate it into scenario development to promote social learning and cohesion; (3) explicitly address uncertainties from quantitative and/or qualitative information and incorporate them into scenario development; and (4) set up robust monitoring and evaluation to record short-term and long-term PSP outcomes. Further action on any of the four processes, however, must include research into whether these processes are active outside of academic entities. This will, in turn, provide further insights into ‘desirable’ or ‘standard’ PSP practices and outcomes.

We intend for this high-level synthesis to deliver useful insights into the state of PSP and possible future directions for its adoption by entities in both academic and non-academic spheres as a tool for climate adaptation and management.

Author contributions

LO: Writing – original draft, Writing – review & editing. JJ: Funding acquisition, Investigation, Writing – review & editing. KC: Writing – review & editing. RR: Conceptualization, Methodology, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by NOAA awards NA15OAR4310148 and NA20OAR4310148A to the University of Michigan.

Acknowledgments

We thank Laura Briley for her discussions and development of scenario planning over her years of scientific contributions to the subject matter. Furthermore, we thank Maria Carmen Lemos for her comments on the manuscript and her counsel on bridging social science and physical science perspectives. Finally, we thank the reviewers for taking the necessary time and effort to review the manuscript and provide valuable and insightful feedback.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. ^A synthesis of reviews can also assess current systematic evaluation methods employed by researchers to assess the outcomes of case studies in their reviews, but that is not the primary objective of this paper.

2. ^Social-ecological systems are defined as nested, multilevel systems that provide essential services to society, such as the supply of food, fiber, energy, and drinking water (Berkes and Folke, 1998).

3. ^Please note that we did not evaluate PSP steps for chronological order but for their appearance and/or completion in the systematic reviews.

4. ^STEEP is an acronym for Social, Technical/Technological, Economic, Environmental, and Political. It is used by futurists and scenario planners when considering the future.

5. ^Please note that our review of the degree to which these steps were completed is influenced by the systematic reviews’ evaluation frameworks and by the authors’ decisions to include or exclude certain information.

References

Addison, A., and Ibrahim, M. (2013). Participatory scenario planning for community resilience. UK: World Vision.

Allington, G., Fernandez-Gimenez, M., Chen, J., and Brown, D. (2018). Combining participatory scenario planning and systems modeling to identify drivers of future sustainability on the Mongolian plateau. Ecol. Soc. 23. doi: 10.5751/ES-10034-230209

Aromataris, E., Fernandez, R., Godfrey, C. M., Holly, C., Khalil, H., and Tungpunkom, P. (2015). Summarizing systematic reviews. Int. J. Evid. Based Healthc. 13, 132–140. doi: 10.1097/xeb.0000000000000055

Barnaud, C., Promburom, T., Trébuil, G., and Bousquet, F. (2007). An evolving simulation/gaming process to facilitate adaptive watershed management in northern mountainous Thailand. Simul. Gaming 38, 398–420. doi: 10.1177/1046878107300670

Bennett, E., Carpenter, S., Peterson, G., Cumming, G., Zurek, M., and Pingali, P. (2003). Why global scenarios need ecology. Front. Ecol. Environ. 1, 322–329. doi: 10.1890/1540-9295(2003)001[0322:WGSNE]2.0.CO;2

Berkes, F., and Folke, C. (1998). Linking social and ecological systems: Management practices and social mechanisms for building resilience. Cambridge, UK: Cambridge University Press.

Berrang-Ford, L., Pearce, T., and Ford, J. D. (2015). Systematic review approaches for climate change adaptation research. Reg. Environ. Chang. 15, 755–769. doi: 10.1007/s10113-014-0708-7

Birkmann, J., Cutter, S. L., Rothman, D. S., Welle, T., Garschagen, M., van Ruijven, B., et al. (2015). Scenarios for vulnerability: opportunities and constraints in the context of climate change and disaster risk. Clim. Chang. 133, 53–68. doi: 10.1007/s10584-013-0913-2

Bizikova, L., Burch, S., Robinson, J., Shaw, A., and Sheppard, S. (2011). “Utilizing participatory scenario-based approaches to design proactive responses to climate change in the face of uncertainties” in Climate change and policy: The calculability of climate change and the challenge of uncertainty. eds. G. Gramelsberger and J. Feichter (Springer), 171–190.

Bradfield, R., Wright, G., Burt, G., Cairns, G., and Van Der Heijden, K. (2005). The origins and evolution of scenario techniques in long range business planning. Futures 37, 795–812. doi: 10.1016/j.futures.2005.01.003

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101. doi: 10.1191/1478088706qp063oa

Briley, L., Dougherty, R., Wells, K., Hercula, T., and Notaro, M. (2021). A practitioner's guide to climate model scenarios. Great Lakes Integrated Sciences and Assessments (GLISA). Available at: https://glisa.umich.edu/wp-content/uploads/2021/03/A_Practitioners_Guide_to_Climate_Model_Scenarios.pdf

Butler, J. R. A., Bergseng, A. M., Bohensky, E., Pedde, S., Aitkenhead, M., and Hamden, R. (2020). Adapting scenarios for climate adaptation: practitioners’ perspectives on a popular planning method. Environ. Sci. Pol. 104, 13–19. doi: 10.1016/j.envsci.2019.10.014

Butler, J. R. A., Suadnya, W., Yanuartati, Y., Meharg, S., Wise, R. M., Sutaryono, Y., et al. (2016). Priming adaptation pathways through adaptive co-management: design and evaluation for developing countries. Clim. Risk Manag. 12, 1–16. doi: 10.1016/j.crm.2016.01.001

CARE (2012). Decision-making for climate resilient livelihoods and risk reduction: A participatory scenario planning approach. Available at: https://careclimatechange.org/wp-content/uploads/2018/07/ALP_PSP_EN.pdf

Cornelius, P., Van de Putte, A., and Romani, M. (2005). Three decades of scenario planning in Shell. Calif. Manag. Rev. 48, 92–109. doi: 10.2307/41166329

Ferrier, S., Ninan, K. N., Leadley, P., Alkemade, R., Acosta, L., Akcakaya, H. R., et al. (2016). Summary for policymakers of the methodological assessment of scenarios and models of biodiversity and ecosystem services of the intergovernmental science-policy platform on biodiversity and Ecosystem services. Secretariat of the Intergovernmental Science-Policy Platform on Biodiversity and Ecosystem Services 39:1676.

Fisichelli, N., Hoffman, C. H., Welling, L., Briley, L., and Rood, R. (2013). Using climate change scenarios to explore management at Isle Royale National Park: January 2013 workshop report. Fort Collins, Colorado: National Park Service.

Flynn, M., Ford, J. D., Pearce, T., and Harper, S. L. (2018). Participatory scenario planning and climate change impacts, adaptation and vulnerability research in the Arctic. Environ. Sci. Pol. 79, 45–53. doi: 10.1016/j.envsci.2017.10.012

Galer, G. (2004). Preparing the ground? Scenarios and political change in South Africa. Development 47, 26–34. doi: 10.1057/palgrave.development.1100092

Garfin, G., Black, M., and Rowland, E. (2015). Advancing scenario planning for climate decision making. Eos 96. doi: 10.1029/2015EO037933

Gates, O. C., and Rood, R. B. (2021). (In global climate models, we trust?) An introduction to trusting global climate models and bias correction. Available at: https://glisa.umich.edu/wp-content/uploads/2021/03/Global-Climate-Model-Bias-and-Bias-Correction.pdf

Goodspeed, R. (2017). An evaluation framework for the use of scenarios in urban planning. Cambridge, MA, USA: Lincoln Institute of Land Policy, 7–8.

Johnson, K., Dana, G., Jordan, N., Draeger, K., Kapuscinski, A., Schmitt Olabisi, L., et al. (2012). Using participatory scenarios to stimulate social learning for collaborative sustainable development. Ecol. Soc. 17. doi: 10.5751/ES-04780-170209

Kahane, A. (2004). Solving tough problems: An open way of talking, listening, and creating new realities. Berrett-Koehler Publishers, Inc. Available at: https://books.google.com/books?hl=en&lr=&id=FJkFXd8GikcC&oi=fnd&pg=PR9&ots=QCWd_yO2yI&sig=VAU0IvrmkCCLsgCijKwe9lq0om4#v=onepage&q&f=false

Kohler, M., Stotten, R., Steinbacher, M., Leitinger, G., Tasser, E., Schirpke, U., et al. (2017). Participative spatial scenario analysis for alpine ecosystems. Environ. Manag. 60, 679–692. doi: 10.1007/s00267-017-0903-7

Lang, T., and Ramírez, R. (2017). Building new social capital with scenario planning. Technol. Forecast. Soc. Chang. 124, 51–65. doi: 10.1016/j.techfore.2017.06.011

Lemos, M. C., and Rood, R. B. (2010). Climate projections and their impact on policy and practice. WIREs Climate Change 1, 670–682. doi: 10.1002/wcc.71

McBride, M., Lambert, K., Huff, E., Theoharides, K., Field, P., and Thompson, J. (2017). Increasing the effectiveness of participatory scenario development through codesign. Ecol. Soc. 22. doi: 10.5751/ES-09386-220316

Millennium Ecosystem Assessment (2005). Available at: https://www.millenniumassessment.org/en/index.html

Nilsson, A. E., Carlsen, H., and van der Watt, L.-M. (2015). Uncertain futures: The changing global context of the European Arctic, report of a scenario-building workshop in Pajala, Sweden. Stockholm: Stockholm Environment Institute.

Norton, R. K., Buckman, S., Meadows, G. A., and Rable, Z. (2019). Using simple, decision-centered, scenario-based planning to improve local coastal management. J. Am. Plan. Assoc. 85, 405–423. doi: 10.1080/01944363.2019.1627237

Oteros-Rozas, E., Martín-López, B., Daw, T., Bohensky, E., Butler, J., Hill, R., et al. (2015). Participatory scenario planning in place-based social-ecological research: insights and experiences from 23 case studies. Ecol. Soc. 20. doi: 10.5751/ES-07985-200432

Pachauri, R. K., and Meyer, L. A. (Eds.). (2014). Climate change 2014: Synthesis report (contribution of working groups I, II and III to the fifth assessment report of the intergovernmental panel on climate change). IPCC. Available at: https://www.ipcc.ch/site/assets/uploads/2018/02/SYR_AR5_FINAL_full.pdf

Palomo, I., Martín-López, B., López-Santiago, C., and Montes, C. (2011). Participatory scenario planning for protected areas management under the Ecosystem services framework: the Doñana social-ecological system in southwestern Spain. Ecol. Soc. 16. doi: 10.5751/ES-03862-160123

Peterson, G. D., Cumming, G. S., and Carpenter, S. R. (2003). Scenario planning: A tool for conservation in an uncertain world. Conserv. Biol. 17, 358–366. doi: 10.1046/j.1523-1739.2003.01491.x

Public Health 2030: A Scenario Exploration (2014). Institute for Alternative Futures. Available at: http://www.altfutures.org/pubs/PH2030/IAF-PublicHealth2030Scenarios.pdf

Reed, M. S., Graves, A., Dandy, N., Posthumus, H., Hubacek, K., Morris, J., et al. (2009). Who’s in and why? A typology of stakeholder analysis methods for natural resource management. J. Environ. Manag. 90, 1933–1949. doi: 10.1016/j.jenvman.2009.01.001

Reed, M. S., Kenter, J., Bonn, A., Broad, K., Burt, T. P., Fazey, I. R., et al. (2013). Participatory scenario development for environmental management: A methodological framework illustrated with experience from the UK uplands. J. Environ. Manag. 128, 345–362. doi: 10.1016/j.jenvman.2013.05.016

Reinhardt, J., Liersch, S., Abdeladhim, M., Diallo, M., Dickens, C., Fournet, S., et al. (2018). Systematic evaluation of scenario assessments supporting sustainable integrated natural resources management: evidence from four case studies in Africa. Ecol. Soc. 23. doi: 10.5751/ES-09728-230105

Scholtz, R. W., and Tietje, O. (2002). "Formative scenario analysis" in Embedded case study methods: Integrating quantitative and qualitative knowledge. Thousand Oaks, California, USA: Sage.

Star, J., Rowland, E. L., Black, M. E., Enquist, C. A. F., Garfin, G., Hoffman, C. H., et al. (2016). Supporting adaptation decisions through scenario planning: enabling the effective use of multiple methods. Clim. Risk Manag. 13, 88–94. doi: 10.1016/j.crm.2016.08.001

Sustainable Health Systems Visions, Strategies, Critical Uncertainties and Scenarios (2013). World Economic Forum. Available at: https://www3.weforum.org/docs/WEF_SustainableHealthSystems_Report_2013.pdf

Swart, R. J., Raskin, P., and Robinson, J. (2004). The problem of the future: sustainability science and scenario analysis. Glob. Environ. Chang. 14, 137–146. doi: 10.1016/j.gloenvcha.2003.10.002

Thorn, J., Klein, J., Steger, C., Hopping, K., Capitani, C., Tucker, C., et al. (2020). A systematic review of participatory scenario planning to envision mountain social-ecological systems futures. Ecol. Soc. 25. doi: 10.5751/ES-11608-250306

Totin, E., Butler, J. R., Sidibé, A., Partey, S., Thornton, P. K., and Tabo, R. (2018). Can scenario planning catalyse transformational change? Evaluating a climate change policy case study in Mali. Futures 96, 44–56. doi: 10.1016/j.futures.2017.11.005

van Notten, P. W. F., Rotmans, J., van Asselt, M. B. A., and Rothman, D. S. (2003). An updated scenario typology. Futures 35, 423–443. doi: 10.1016/S0016-3287(02)00090-3

Wesche, S., and Armitage, D. R. (2010). "'As long as the sun shines, the rivers flow and grass grows': vulnerability, adaptation and environmental change in Deninu Kue traditional territory, Northwest Territories" in Community adaptation and vulnerability in Arctic regions. eds. G. K. Hovelsrud and B. Smit (Netherlands: Springer), 163–189.

Wesche, S. D., and Armitage, D. R. (2014). Using qualitative scenarios to understand regional environmental change in the Canadian north. Reg. Environ. Chang. 14, 1095–1108. doi: 10.1007/s10113-013-0537-0

Wohlstetter, J. (2010). Herman Kahn: public nuclear strategy 50 years later. Hudson Institute. Available at: https://www.hudson.org/content/researchattachments/attachment/842/kahnpublicnuclearstrategywohlstetter.pdf

Keywords: climate adaptation, methodological insights, uncertainty, scenario planning, scenarios

Citation: Oriol LE, Jorns J, Channell K and Rood RB (2024) Scenario planning for climate adaptation and management: a high-level synthesis and standardization of methodology. Front. Clim. 6:1415070. doi: 10.3389/fclim.2024.1415070

Edited by:

Tomas Halenka, Charles University, Czechia

Reviewed by:

Matthew Grainger, Norwegian Institute for Nature Research (NINA), Norway
Gregg Garfin, University of Arizona, United States