- MaREI Centre, Environmental Research Institute, University College Cork, Cork, Ireland

Evidence-based decision-making has been a focus of academic scholarship and debate for many decades. The advent of global, complex problems like climate change, however, has focused the efforts of a broader pool of scholarship on this endeavor than ever before. The “linear model” of expertise, despite obvious problems, continues to be a touchstone for many policy practitioners as well as for academic understandings of evidence development and use. Knowledge co-production, by contrast, is increasingly proposed as both the antithesis and the solution to the linear model's difficulties. In this paper I argue that, appropriately considered, both models have their uses for understanding evidence for policy, yet neither adequately accounts for the political contexts in which expert knowledge has often been asserted to address climate change. The paper proposes that the difficulty with both models lies in lingering assumptions about the information value of evidence for decision-making, the sensitivity of decision-making to scientific expertise, and the assumed mendacity or irrationality of decision-makers when they seem to fail to heed expert advice. This paper presents a model of evidence use that incorporates the aspirations of linear and co-production frameworks, while providing appropriate guidance for evaluating the role of expert knowledge in climate change policy-making.

Introduction

Since the 1990s, a series of initiatives worldwide has sought to translate the science of climate change into good evidence for policy-making. Judging by the regularity with which frustration is voiced about governments' failure to use this knowledge (e.g., Santer, 2017; Glavovic et al., 2021; O'Sullivan, 2022), climate science is nowhere near as influential as experts and advocates believe it should be. The reasons for this apparent failure have, arguably, not been the subject of nearly enough research or debate. The environmental “decision sciences” increasingly advocate knowledge co-production between experts and decision-makers for problems like climate change (Lemos et al., 2018). Climate scientists and campaigners (e.g., Gelles and Friedman, 2022; McGreal, 2022; Singh Mann, 2022), on the other hand, continue to promote a “linear model”, which assumes that impartial expertise can directly and decisively inform decision-makers, helping them to constrain policy options and to take “rational” action (Tangney, 2019b; Karhunmaa, 2020). As discussed here, there are good reasons to believe that more appropriate conceptual models of evidence use are needed. The default assumptions used by scholars and practitioners to date, I argue, have often been inadequate for the purposes of prescription, evaluation or description of the science-policy interface.

There are many perspectives from which to consider evidence use for policymaking, including from fields not immediately associated with this focus of study, such as psychology and philosophy. Policy and political studies have perhaps expended the most energy examining evidence-based decision-making. The range of viewpoints arising from this literature has previously been characterized as sitting between two broad churches. In one is a rationalism that aspires to the use of objective knowledge, and in the other a constructivism that is suspicious of notions that policy can ever be impartially informed by evidence (Newman, 2017). These perspectives arise in policy and institutional analysis alongside the many popular policy-making models concerned with, to give just two examples, windows of policy-making opportunity (Kingdon, 2002) and the competing efforts of advocacy coalitions (Sabatier, 1988). Within science and technology studies (STS), meanwhile, anticipatory governance (Barben et al., 2007) has been proposed to effectively address the risks and uncertainties of advancing technological development and deployment. And environmental sustainability research has proposed, amongst others, a reflexive governance model to account for the complexity of social-ecological problems (Voß and Kemp, 2006). These and other models have clear implications for evidence-based policy-making for climate change. Yet arguably none of these perspectives has been embraced by scholars and practitioners as pervasively and implicitly as the linear model. Moreover, few models of policy-making or governance directly address the liberal democratic mandate for expert privilege in decision processes. The models of evidence use critiqued here, and the synthesis I propose, by contrast, specifically address the role for experts in policymaking.

Despite much recognition of its failings, the linear model still abounds in the expectations and practices of experts and policy-makers alike (Beck, 2010; Kunseler, 2016). Durant (2016) humorously describes the linear model as “undead”; it has received so many mortal wounds, yet continually returns to life. The linear aspiration matters, it is argued, because it highlights a key relationship between experts and democratic government. At the heart of healthy liberal democracy, on this account, lie experts with access to true (or at least useful) knowledge who are granted elite status and privileged access to decision-making. On this view, experts act as a rational check on the egalitarian and democratic irrationality of representative authority (Habermas, 1971). Tensions between experts and decision-makers can rise to unhelpful levels, however, when experts' access to truth is perceived to be tentative, politicized, or illusory. Since the 1960s, the epistemic authority of elite experts has come under continual attack from scholarship that highlights the partial nature of privileged knowledge, particularly over policy-making timescales (Weinberg, 1972; Jasanoff and Wynne, 1998; Haas, 2004). Some have also noted the rather poor performance of expert judgment when problem-solving for multi-variate complex systems (Johnson, 1988). The linear model nonetheless remains an aspiration in many instances, one that yearns for inviolable expertise as the central constituent of rational decision-making.

Alongside the linear model, in recent years an advancing scholarship on the co-production of knowledge between experts and other decision stakeholders has sought a participatory alternative to evidence development and use. Co-production is an ideal that can be subsumed within several of the aforementioned governance models to accommodate aspirations for reflexivity and anticipatory thinking, while also acknowledging institutional norms and expert privilege. This paper argues that, while attempting valiantly to accommodate the political priorities and institutional norms relevant for decision-makers and their interactions with experts, co-production models have nonetheless omitted some key considerations for understanding the political challenges of evidence development and use. These challenges are especially prevalent for those climate change problems characterized by contested responsibilities for policy action, polarized partisanship and inequitable burdens placed on communities or particular community groups. To accommodate these sorts of policy contexts, this paper presents what I term a critical model of evidence use. The most modest purpose of this model is to advance a heuristic for understanding political parameters likely to influence the use of expert knowledge for climate policy-making. The model attempts to shore up limitations in theories of (linear) expert privilege and knowledge co-production, by questioning how and to what extent we should reasonably expect political decisions to be informed by experts or by knowledge production processes, or for evidence to be used instrumentally to design and implement policy.

The paper begins by summarizing the linear model of expertise and its assumptions relating to policy-making. I identify a category of so-called boundary organizations at the science-policy interface taking a science-driven approach (SDA) to evidence development that is particularly attuned to the linear model. The SDA has proven to be a popular starting point from which government-sponsored institutions have sought to address the perceived science-policy gap on climate change. I then contrast this SDA with contemporary understandings of knowledge co-production and explain why even these apparently more sophisticated approaches omit consideration of the most intransigent politics of evidence use. In the second part of the paper, I summarize some key considerations for understanding those political dynamics that have been omitted from both linear and co-production conceptualizations to date. The frameworks describing these considerations are then used as the building blocks of the critical model of evidence use that I describe. Finally, I discuss how, although existing decision models hold important aspirational value for governments' use of good evidence, care is needed when using them for prescriptive purposes.

The Linear Model

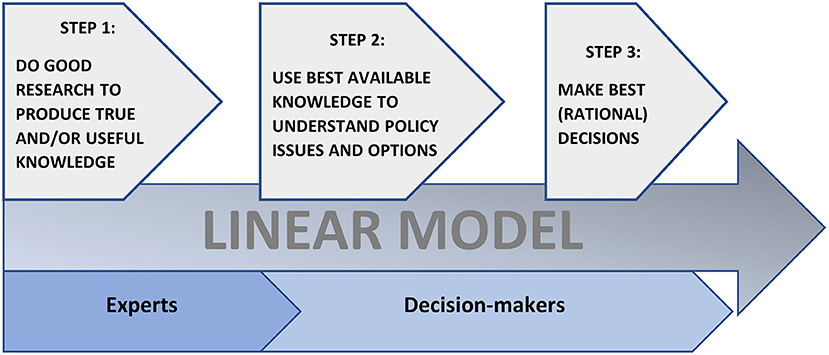

In this section, I present a brief overview of the main assumptions and characteristics surrounding the linear model. For the purposes of this discussion, I distill the central tenets of the linear model into a simplified graphic, as per Figure 1 below.

Linear Assumptions

The linear model has received continual criticism from political studies and cognate fields since the 1950s at least (Grundmann and Stehr, 2012). Following the pioneering critiques of linear-rational policy models (e.g., Lindblom, 1959), the linear model of expertise then became a whipping boy for STS, which focused on the model's tendency to perpetuate the following erroneous assumptions:

- that expert knowledge is not imbued with values and norms, is not politicized (or politicizable) and is epistemically separated (spatially and temporally) from society (Jasanoff, 1990; Jaeger et al., 1998; Jasanoff and Wynne, 1998; Tangney, 2017a; Karhunmaa, 2020);

- that experts can provide correct answers to policy problems, and these can be made meaningful for non-experts as a matter of course (Sutton, 1999; Sarewitz, 2000, 2004; Beck, 2010; Durant, 2016);

- that decision-makers can be uniformly, systematically rational¹ and that expert knowledge is the principal evidentiary constituent in the reckoning of policy issues and options (Haas, 2004; Head, 2008; Tangney, 2021).

Despite these well-documented difficulties, Karhunmaa (2020) proposes that the linear model persists today in three ways. First, it is a means to spark reflection from science-policy practitioners about the value of participatory approaches to evidence development and use. We see this sort of reflection, for instance, in case studies of environmental management in the Netherlands, where practitioners seek to overcome the lingering linear assumptions of their fellow policy actors (e.g., Kunseler, 2016). Second, as noted in the introduction to this paper, linearity persists in the narratives and rhetoric of experts and advocates when justifying the role of experts in the policy process and describing what the ideal form of this role should be. Third, as discussed in the section on the science-driven approach to evidence development below, the linear model persists in the spatial and temporal separation maintained between experts and non-experts during evidence development, even in contexts where participatory processes are promoted.

Taking the aforementioned criticisms as valid, the critical model proposed here addresses three further assumptions that are frequently implied, yet seldom acknowledged, in the contemporary scholarship and practice of evidence development for climate change:

1) That the principal value of evidence for decision-makers lies in its information content;

2) That political decision-making can be entirely sensitive to good evidence which should thereby constrain (if not supersede) political debate and normative choice; and,

3) That where evidence appears to have been dismissed, decision-makers are necessarily acting either irrationally or mendaciously.

I propose that these assumptions are some of the most subtle and persistent in the rhetoric surrounding, and framing of, evidence development even when the broader critique of rational-linear thinking is recognized. To clarify, I am not proposing that these assumptions are always misguided or incorrect; the first two might be reasonable starting points, for instance, if presupposing the tamest forms of uncontested, rational decision process. However, I argue that these assumptions have not been very helpful when seeking to conceptualize the politics of evidence-based policy-making. They are particularly unhelpful when applied to contentious environmental problems, for which multiple conflicting yet valid perspectives and rationalities can be, and often are, brought to bear (Tangney, 2021).

The Science-Driven Approach to Evidence Development

One of the most overt manifestations to date of the linear model and the additional assumptions noted above has been what I term the “science-driven approach” (SDA) to policy-evidence development. While often incorporating many of the linear model's ideas, the SDA is noteworthy because, as the name suggests, it is initiated by the research community (often at the behest of government) and entails an approach that tends to conflate research with evidence (see also, Hoppe, 2005). In so doing, the SDA is prone to perpetuate spatial and temporal linearities that segregate expertise from politics. This SDA mirrors the perspective of a good proportion of the empirical research that has examined evidence-based policy-making since the 1970s. Much of this scholarship conceives of two communities, those of experts and policy-makers, the latter being principally in need of knowledge transfer from the former to optimize decisions (Innvaer et al., 2002). But as Oliver et al. (2014) note, much of this research is methodologically limited by its constraining assumptions about the primacy and utility of research as evidence.

Conventionally, of course, research is undertaken by scholars and expert knowledge-claims develop through the scientific peer-review process. Evidence, by contrast, refers to the information used by decision-makers. While it is true that research and evidence may sometimes amount to the same information, perhaps more frequently evidence is orchestrated by decision-makers as a distillation of available data and research (Oliver et al., 2014), either in consultation/collaboration with experts, or not (e.g., Radaelli and Meuwese, 2010). Evidence is considered in contemporary political studies scholarship to distinguish itself from research through its more overt linkages between facts and what is valued (Beck et al., 2014; Strassheim, 2017). While both research and evidence may constitute available “knowledge” in any given context, conflating these concepts is generally not recommended when developing usable or “actionable” knowledge (Oliver et al., 2014; Dewulf et al., 2020).

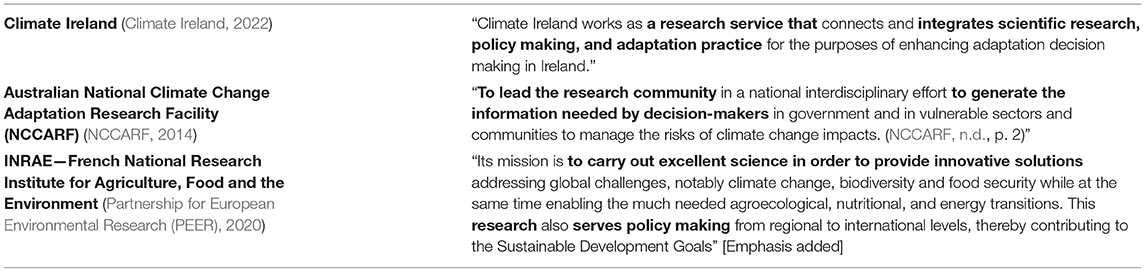

The SDA tends to view expert privilege within the framework of the liberal democratic ideal of experts as arbiters of independent objectivity. Table 1 below highlights some examples of how the SDA is implied by research-based boundary organizations concerned with climate change. To be fair, many contemporary examples of the SDA appear to embrace notions of reflexivity, transparency and humility in the provision of advisory services (e.g., Jones et al., 2014; Kunseler, 2016). Yet, even when research development initiatives are explicit about their intent to take a user-needs or participatory approach (as many initiatives are now careful to be), an SDA may still eventuate, presumably because of expectations for expert privilege (Turnhout et al., 2013; Kunseler, 2016; Dewulf et al., 2020). Australia's National Climate Change Adaptation Research Facility (NCCARF) provides a useful example in this regard.

NCCARF was established by Australia's federal government in 2008 and made many appropriate noises about being user-needs focused in designing its research (NCCARF, 2014). Over the course of 10 years, the Facility delivered a very extensive research programme: some 150 research reports, along with many more policy briefings and other communications material. From the outset NCCARF's rhetoric was clear in its intent for co-designed research, which it sought to inform with a series of National Adaptation Research Plans to which adaptation practitioners and evidence users contributed (NCCARF, n.d.). Yet, there appears to have been rather shallow analysis of the methods of and provisions for evidence development, as distinct from research design, in the development of these plans. In particular, due consideration of political contexts, of a need for knowledge co-production, or of strategies to address Australia's notoriously polarized climate politics was largely absent from the Facility's programme. NCCARF's many outputs, likewise, are largely devoid of meaningful intelligence on the likely influence of political opposition on evidence-based decision processes in local, state or federal constituencies (NCCARF, n.d.). A principal criticism that may be leveled at the linear SDA, as observed in the case of NCCARF as with others, is an inadequate consideration of the political constituents of evidence-based decision-making. It is noteworthy, given these limitations, that NCCARF failed to retain public funding much beyond the term of its initial government sponsorship.

How Can Knowledge Be (Co-)Produced?

By contrast to the linear model, here I present an overview of the concept of knowledge co-production. The co-production of knowledge between experts and other interested stakeholders has been proposed both as best practice for informing social-ecological problems in most instances (Lemos et al., 2018) and, in its ideal form, as the antithesis to the linear model when considering expert influence in policy-making (Maas et al., 2022). Co-production methods developed on the back of theoretical work recognizing that the ways in which we know and represent the world through expert knowledge are not meaningfully distinguishable from the social practices, identities and institutional norms of knowledge creators (Jasanoff, 2004, p. 2). Under the linear model, the value of research has traditionally been judged on its technical credibility for decision-making. By contrast, knowledge co-production envisages a participatory practice between a range of policy actors that recognizes the need for the outward legitimacy of evidence development processes, the value of uncertified forms of expertise for knowledge production, and the importance of producing relevant and usable knowledge for decision-makers. In this vein, the Knowledge Systems Framework of Cash, Clark, Alcock, Dickson, Eckley and Jager (2002) itemized three interdependent ingredients of useful knowledge (credibility, legitimacy and salience) and was a seminal influence on early advances toward co-production practice. The Knowledge Systems Framework informed subsequent scholarship in developing best efforts to produce usable, legitimate knowledge for decision-makers, although Cash et al. did not go so far as to provide a prescription in this regard.

Today, proponents of co-production, mostly situated within a burgeoning discipline of environmental “decision sciences”, recognize well the importance of considering political influence and meaning, reflexivity, institutional norms and user needs in evidence development (Dewulf et al., 2020). Co-production in its ideal form seeks to close, rather than to bridge, the spatial and temporal gaps between the “two communities” of policy-makers and experts. Whereas the linear SDA conflates research and evidence, an ideal co-production process better recognizes these distinctions and seeks to incorporate a range of voices and perspectives in bureaucratically driven evidence development, in an effort to democratize expertise. The co-production model understands that knowledge production and policy-making in many instances should not be meaningfully distinct endeavors and that co-production can often better account for the institutional and political challenges impeding knowledge use in particular decision contexts (Lemos et al., 2018). Even so, critics highlight continuing problems in agreeing on a strict definition of what constitutes “evidence,” “impact,” or “learning” through co-production, in ways that may perpetuate negative stereotypes of, and thereby the gap between, expert and policy communities (Oliver et al., 2014). Proponents also concede that, try as they might to advance co-production methods and perspectives in institutional settings, linear aspirations concerning the provision and availability of objective, independent expertise commonly prevail (Kunseler, 2016; Maas et al., 2022).

An example of this reluctance to relinquish linear expectations was the UK's first national climate change risk assessment in 2012, an exercise mandated under the UK's pioneering Climate Change Act (2008). The assessment made considerable efforts to undertake participatory co-production of the risk assessment, drawing in stakeholders from a wide range of national and municipal government departments, agencies and institutions, as well as non-governmental organizations. As recounted by interviewees closely involved in the assessment (Tangney, 2017c), however, the assessment's conclusions appear to have been heavily edited in line with government departments' policies, while the minister sponsoring the assessment, in his introduction to the final report, described the assessment as “a world class independent research exercise” drawing on the “best scientific evidence” (Department of Environment, Food and Rural Affairs, 2012, p. 3). Others have also noted that knowledge legitimacy and credibility garnered from the supposed worth of objective expertise continue to hold primacy in many policymaking circumstances (Turnhout et al., 2013; Kunseler, 2016), despite the possibility that such expertise holds purely performative worth in practice. This raises important questions about the evaluative and prescriptive worth of co-production models, suggesting that co-produced learning and evidence are at risk of being ineffectual in contexts where linear expectations still prevail.

Building on the work of Shove et al. (2012), Turnhout et al. (2013), and Dewulf et al. (2020), Maas et al. (2022, p. 2) have recently proposed a Social Practice model describing science-policy interaction in terms of the repertoires of justification, practitioner competencies and institutional contexts of evidence production and use. Their framework is grounded in institutional theory that conceptualizes social practices as “routinized patterns of behavior” that incorporate “individual, intersubjective, and institutional factors.” This conceptualization provides a significant advance in understanding the role of values, norms, and contrasting rationalities in knowledge production. They propose a continuum between linear aspirations for expert knowledge and privilege at one end, and ideal co-production as a facilitative co-learning exercise between science and politics at the other. Yet, questions remain about the extent to which even this synthesis of linear and co-production theory fully accounts for the political dynamics that may be at play.

On Maas et al.'s view, a causal link is presumed between the co-productive agility of practitioners, the instrumental utility of participatory learning and co-produced evidence, and the sensitivity of decision-making to that knowledge. But what of those instances in which the institutionally legitimate expert-policy interface does not accommodate certain forms of evidence or expertise due to their normative implications? Why assume that co-produced evidence will be used for its information and learning content as opposed to merely its performative worth for winning arguments or demonstrating that due process has been followed? And what of those circumstances in which co-production participants have insufficient political influence to appreciably affect executive decision-making? It is because of these unanswered questions that I propose a gap remains in the existing conceptualizations and heuristics used by the decision sciences as they seek to balance the demands for expert privilege and the democratization of policy knowledge.

The Politics of Expert Knowledge

In this section, I wish to highlight some key characteristics of evidence development and use that relate to political decision-making. As noted by Tangney (2019b) and Dewulf et al. (2020), although the decision sciences are well-versed in the principal failings of the linear model, much of this co-production scholarship has failed to draw on key insights from political studies, science and technology studies, and organizational theory, fields that have long grappled with questions of political influence in evidence development and use. This scholarship in many instances long predates the conceptual advances provided by the decision sciences concerning knowledge co-production. Although some branches of policy studies perpetuate the idea of two communities (experts and policy-makers) between which bridges must be built (Oliver et al., 2014), key theoretical insights have also arisen that have helped to advance a more sophisticated appreciation of the politics of evidence-based decision-making. As I discuss below, the omission of key lessons from this literature has implications for both the evaluative and prescriptive worth of co-production frameworks in practice.

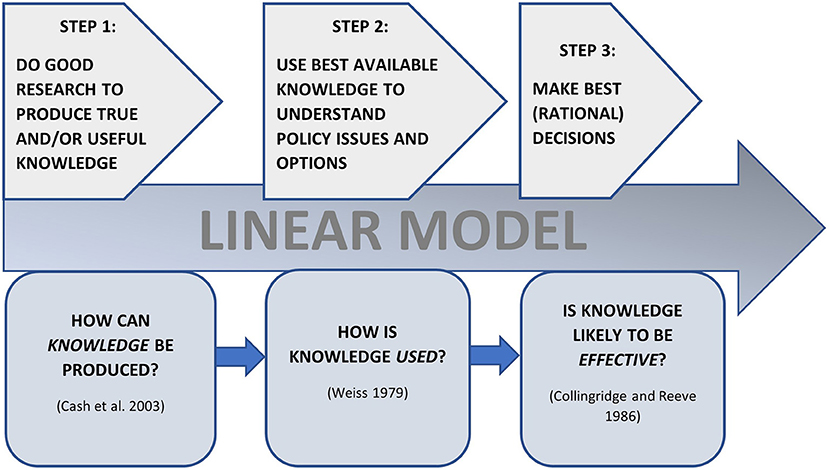

To explain the politics of evidence development and use, readers may be surprised to note that I use the linear model as a scaffold. Contemporary decision tools and methods have avoided explicitly accommodating the linear model, for example, by prescribing an iterative decision cycle (Jones et al., 2014) or through notions of a dispersed participatory process (Kunseler, 2016). As discussed above, these prescriptions often do a poor job of disguising lingering linear assumptions, or of accommodating the linear aspirations of participants. Yet as Karhunmaa (2020) notes, the linear model can provide helpful points of comparison for understanding the political realities of evidence development and use. In Figure 2 above, I use the linear model to situate key questions about political influence in evidence-based decision processes. The knowledge co-production framework has focused most of its efforts on step 1 of this model (although, as noted above, more recent advancements also consider step 2—i.e., Dewulf et al., 2020; Maas et al., 2022), but the linear model is useful for highlighting further political considerations across all three steps, which I discuss below.

Figure 2. The linear model as a conceptual aid to understanding political influences in evidence development and use.

How Is Knowledge Used?

Both the linear and co-production models to date have assumed that knowledge outputs are primarily useful in terms of their information content as opposed to, for instance, their performative worth for demonstrating decision-makers' credentials as rational actors. Co-production has raised the ante by incorporating co-productive learning into the mix, whereby the exact conclusions of research or evidence may be less useful than the learning process involved in knowledge development itself (Lemos et al., 2018). Yet, political scientists have long pointed out that research and evidence may serve a wider range of contrasting uses for political decision-making. Most influentially, Weiss (1979) proposed six contrasting ways by which research may be used, only a portion of which constitute instrumental use; that is, uses of the explicit information contained in, or gained through the development of, the research or evidence in question in order to design and implement policy. Weiss highlighted how knowledge may be used symbolically or tactically as a form of armor to enhance government's legitimacy as a rational actor, or politically as a “sword” to wield in order to bolster pre-conceived priorities, decisions, or value positions. Additionally, research can provide enlightenment, whereby key concepts arising from the available knowledge (not necessarily the conclusions or explicit content of that research) resonate in ways that change the prevailing worldviews of the decision-making community over time.

Weiss's framework is important in the context of polarizing political issues like climate change. As I shall argue in the following section, by assuming that the principal worth of evidence is by default its instrumental utility, we may limit our ability to evaluate effective evidence use in political realms. While instrumentality is a worthy aspiration per se, here I propose that, to better account for the politics of evidence-based processes, evaluating knowledge use must be advanced beyond conventional instrumental expectations.

Is Knowledge Likely to be Effective?

The final and, in my view, most important consideration for understanding the politics of evidence-based policy concerns the sensitivity of decision-making to evidence; that is, the extent to which evidence is used in whatever way to help effect policy. As discussed above, both the linear and co-production models frequently assume that decision-makers and the decision process can and should be sensitive to a robust evidence development process and its outputs. Much as with assumptions of evidence instrumentality, I propose that these assumptions represent a significant limitation in existing decision models. Here I propose two principal modes by which political decision-making is likely to be sensitive to evidence, as a means to highlight the ways in which it may not be. In the first mode, sensitivity arises only in so far as evidence is, in Weiss's (1979) terms, tactically or politically useful for decision-makers in bolstering the legitimacy of preceding decisions. In the second mode, decision-making is sensitive only to bodies of evidence that are instrumentally helpful to the preferred rationalizations of decision-makers. Let us look at each of these modes in turn.

The tactical and political worth of evidence for policy was a topic elaborated at length by Collingridge and Reeve (1986) (hereafter, C&R). In their seminal book Science Speaks to Power, C&R propose that cynicism is a worthwhile starting point for understanding and evaluating the dynamics of policy decision-making and the influence of experts. C&R suggest that decision-making is not particularly instrumentally sensitive to available knowledge even though it may be ostensibly “evidence-based.” Decision-makers may engage with a prescribed linear/cyclical or co-production knowledge process for the purposes of tactical and/or political utility but, under C&R's framework, we should anticipate that problem identification and decision-making are directed almost entirely by preceding values, norms and political priorities, regardless of the conclusions of expert knowledge. C&R present two opposing modes for evidence-use in the public realm which have resonated with many STS scholars as being a useful caricature of the frequently superficial character of ostensibly “evidence-based” decision-making.

The first mode of evidence use is what C&R call over-criticality, which arises when there is a fundamental conflict between policy actors over values and priorities in respect of complex, uncertain policy problems. Such conflict can concern the nature of the problem at hand, the proposed solution to that problem, or some other key decision variable to which expert knowledge can be brought to bear. When value conflict arises, each side of a debate brings their preferred evidence and expertise to the table, and the realms of technical debate over the problem-space expand accordingly. In lieu of explicit deliberation over underlying conflicting values, “proxy debates” ensue, ostensibly over which sets of evidence hold greatest weight and where the technical bounds (and therefore, realms of expertise) for the problem reside². As a result, the problem expands beyond its possible resolution by just one evidence base, knowledge production process, or set of experts. In an over-critical scenario, evidence is wielded by those with decision-making power in a way that is unlikely to ever be acceptable to those with opposing value positions, regardless of the technical credibility and salience of the evidence wielded. And those with decision-making power are only sensitive to their preferred evidence to the extent that it holds political and tactical value for maintaining democratic legitimacy and winning political disputes. Evidence may subsequently be used instrumentally to design and adjust policy, but only to the extent that this knowledge aligns with preconceived values and objectives.

The second mode of evidence use is what C&R call under-criticality. Under-criticality prevails when there is broad coherence between all significant voices in a policy debate concerning what the problem or issue is, what options are viable, and/or which policy should be chosen. Expert knowledge and the evidence development process are principally wielded for their tactical worth, the latter becoming a performative “tick-box” exercise to demonstrate due process. If conflict between experts does arise, those experts whose perspectives dissent from political orthodoxy are silenced or ignored, and their preferred evidence or knowledge interpretation is suppressed. Under-criticality in the policy-making sphere looks ostensibly evidence-based, but only because value conflict was not at issue between the principal deliberating actors in the first place. Again, inasmuch as the decision process has made instrumental use of evidence, this has occurred only because the evidence was tactically useful for legitimating preceding objectives and values.

Alongside C&R's description of over- and under-criticality, there is an alternative school of thought concerning the sensitivity of decision-making to evidence. Tangney (2021), drawing on the work of Max Weber, has described how those who appear to disregard the available scientific evidence on climate change may in fact be acting entirely rationally. To explain this line of thought, let us again assume an excess of objectivity: that decision-makers concerned with complex, uncertain, multidisciplinary problems can prioritize between a range of alternative bodies of credible evidence and expertise in line with their preferred political views. Rather than alternative forms of expertise fulfilling first and foremost tactical or political functions, as in C&R's model, on this view expert knowledge is used for wholly rational purposes. Decisions are derived instrumentally and logically from credible evidence and directed by a priori moral principles. To understand this view, we can ask: do the ends justify the means when addressing climate change? The answer depends on a decision-maker's values, yet it may nonetheless be wholly rational. Consider first that decision-makers build their political legitimacy on the back of promoted values and ideals that are roughly congruent with their electorate's views. These values and ideals determine the moral principles that, in turn, dictate decision-makers' choice among the available means and ends of public action, and the relative priority given to moral means versus moral ends when framing policy issues. Given an excess of objectivity, decision-makers will prioritize evidence and expertise in line with these moral prioritizations. For rationality to prevail, the prioritized knowledge must hold technical credibility and epistemic primacy for determining rational action given the moral premises of choice.

A classic example observed in relation to climate change, as in other social-ecological problems, is the conflict between scientific and economic rationalities. Tangney (2021) has shown how, even when perfectly informed by the best climate science, economic rationalism can result in a means-based prioritization of cost effectiveness that may be fundamentally at odds with climate scientists' ends-based reasoning favoring rapid, transformative decarbonization. Conflict arises between one rationality that prioritizes moral means (i.e., cost-effective decisions that minimize “opportunity costs” given intractable uncertainties) and an opposing rationality that prioritizes moral ends regardless of uncertainties, given the enormity of potential consequences (i.e., a zero-emissions economy to avoid climate catastrophe). On this view, the economic rationalist's decisions are entirely logical and evidence-based, yet deployed under principles that prioritize moral means over moral ends (Tangney, 2021). For the economic rationalist, the opportunity costs associated with transformative precautionary policies may be too great in many contexts, given the prevailing uncertainties concerning the speed and trajectory of future climate change. When considering disadvantaged communities dependent on the fossil fuel industry, or developing economies whose societal needs demand rapid economic growth, for instance, scientists' normative demands for transformative precautionary policies may be fundamentally at odds with economic rationalists' prioritization of moral means, even when the latter genuinely accept the scientific certainties. In this way, decision-makers' logics of “consequentiality,” “appropriateness,” or “meaningfulness” (Dewulf et al., 2020) may not be amenable to scientists' judgments about the need for transformative action where those judgments rest on a conflicting rationality.

On C&R's view, rational decision-making, signified by instrumental evidence use, occurs incidentally (or in an associative way), if at all: evidence is used primarily for its tactical or political value. Tangney's outline of contrasting valid rationalities, on the other hand, suggests that the “excess of objectivity” arising from the complexity and uncertainty of social-ecological problems makes it entirely possible to construct a range of logical, evidence-based rationales congruent with contrasting decision-making values. Undoubtedly, the distinction between these two descriptions of evidence (in)sensitivity is slight. The heuristic worth of the distinction, however, lies in recognizing that there is a range of rational and irrational, mendacious and principled reasons for appearing to disregard available evidence. These possibilities lay bare significant limitations of both the linear and co-production models, individually or in combination, as foundations for evaluating evidence development and use. Both models presuppose expert-political interfaces that are instrumentally determinable by evidence, when no such determinability may exist.

How Sensitive Is the Socio-Political Realm to Expert Knowledge?

Max Weber (2019, p. 98) proposed that most decision-makers went about their responsibilities only “half-aware” of the extent to which they were being rational, and were often guided more by instinct or habit than by reason. Although notions of complete instrumental sensitivity or insensitivity to evidence are likely exaggerations for many decision contexts, recognizing the ways that decision-making can be insensitive to evidence is important for understanding how humans manifest cognitive bias. On both the political left and the right in many liberal democracies, well-established scientific propositions appear to be repeatedly rejected. The political right, for example, frequently rejects climate change science and, in some constituencies, advocates (or at least appears tolerant of) creationism being taught in schools alongside theories of evolution (Shermer, 2013). However, social psychologists have pointed out that left-wing pundits and policy-makers also appear frequently to reject expert evidence concerning (to take just one example) biological understandings of human sexual dimorphism and the biological and psychological differences between men and women (Goldhill, 2018; Washburn and Skitka, 2018). The politically unacceptable implications of well-established scientific findings often preclude their use in policy-making, regardless of which side of the political divide holds power. Haidt (2013) proposes that this tendency to reject or de-emphasize science arises from the innately tribal disposition of human beings when science conflicts with the “sacred narratives” held by any particular group.

The modes of evidence insensitivity described above highlight how evidence can become instrumentally redundant in ways that have often evaded the scholarship examining research use and “evidence-based” decision-making for climate change (e.g., Jones et al., 2014; New et al., 2022). Yet such insensitivity can be observed in various case studies of climate change policy-making in polarized constituencies. In relation to the NCCARF case and Australia's associated climate politics, for instance, we can observe the aforementioned conflict between scientific and economic rationalities. In the Australian federal legislature, the Labor Party and the political left tend to wield scientific evidence and expertise, while the political right wields economic evidence. Each side accuses its opponents of being motivated more by ideology than by evidence (Tangney, 2019a). As noted above, however, even if both sides were wholly rational in justifying their political perspectives, the rationalities they deployed would likely conflict, since they rest on contrasting value premises concerning the relative importance of the means and ends of policy-making to address the problem.

Echoes of C&R's under-critical mode, on the other hand, can be observed in Australia's state-level constituencies. In Queensland, the controversial Adani-Carmichael coal mine proposal received bipartisan support despite its well-recognized environmental risks locally, regionally, and globally. Scientific and economic expertise (under the auspices of successive corporate-sponsored Environmental Impact Assessments mandated under state legislation) has been wielded tactically by government and the Adani corporation to advance the project through successive steps of legislative approval. Experts who dissented from state-sponsored expert orthodoxy during the approval process and who sought purely instrumental evidence use were either suppressed [e.g., Prof. Adrian Werner from Flinders University, who provided judgments contradicting those of the State's preferred experts on the hydrogeological risks of the project at a Queensland Land Court appeal in 2015 (pers. comm., 2019)] or ignored [e.g., Prof. John Quiggin from the University of Queensland, who has repeatedly contested the evidentiary basis for the economic benefits of the mine promoted by Adani's economists and the state government (Quiggin, 2017)].

Of course, unlike in the example above, many (and hopefully most) determinations by judicial systems are not unduly colored by the broader political priorities of government or by a partisan judiciary. Sound legal judgments must make instrumental use of key bodies of evidence and the testimony of experts, which in some cases may then legally mandate the instrumental use of evidence by policymakers. Yet even these forms of legally enforced instrumentalism may arise despite the existence of alternative bodies of valid evidence and expert interpretation that could also have been called upon to arrive at an alternative policy mandate (i.e., in instances characterized by an “excess of objectivity”). In the following section, I characterize the contrasting ways in which evidence may be instrumentally useful under an excess of objectivity, and the contrasting degrees of sensitivity of decision-makers to expert knowledge in any given context.

A Critical Model of Evidence Use

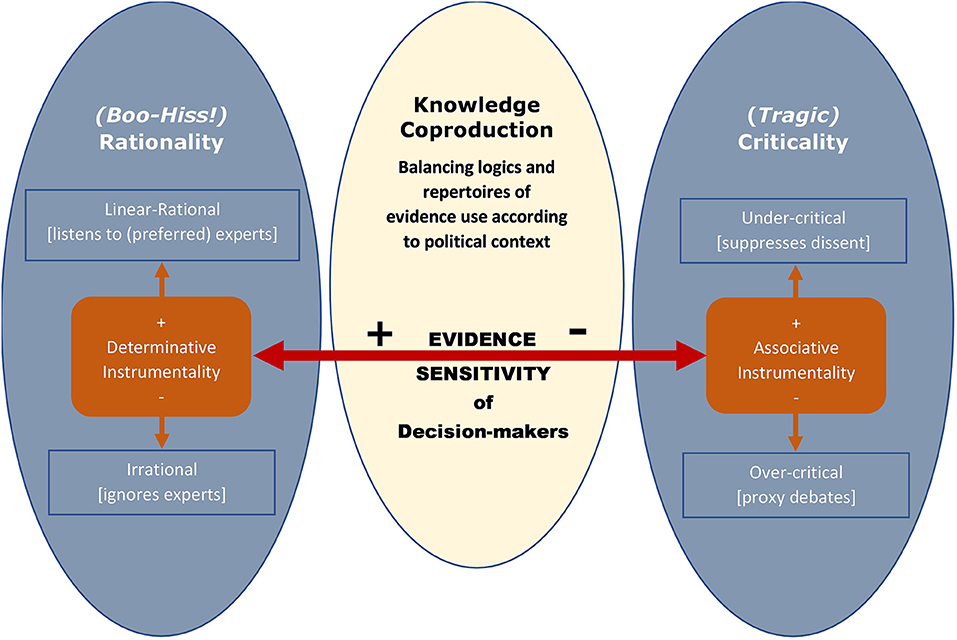

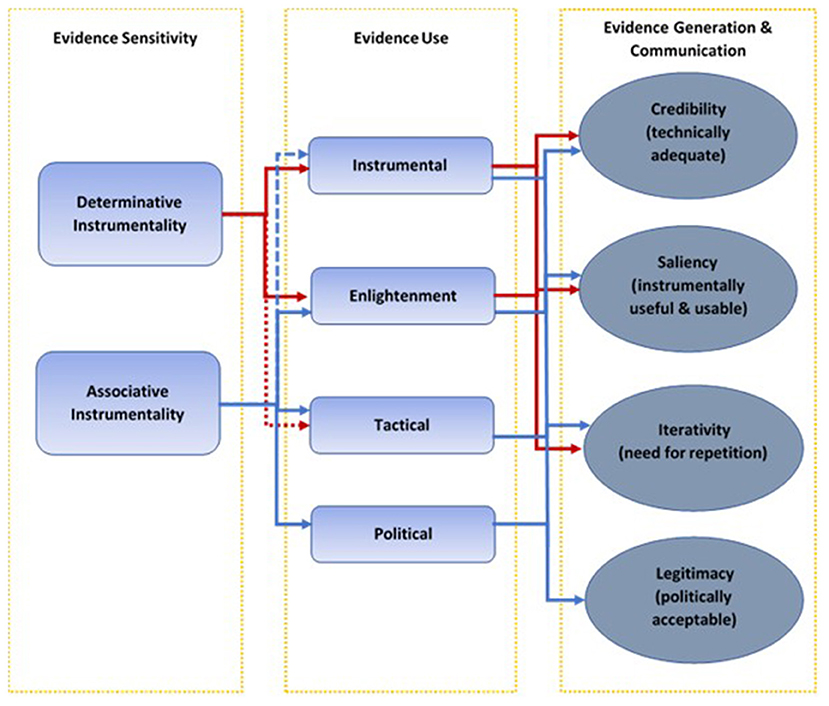

Given the contrasting available uses of evidence and the potential for insensitivity of decision-making to available bodies of expert knowledge, the critical model described here is proposed as an advance on the conceptual frameworks currently available (see Figure 3). Evaluating evidence development and use, I argue, should begin by understanding possible evidence uses within a particular political context. As Grundmann and Stehr (2012, p. 20) note, the successful deployment of knowledge for policy action demands that:

“the possibilities for action, as well as an understanding of the actors' latitude for action and their chances of shaping events, must be linked together, in order that knowledge may become practical knowledge.”

Figure 3. A Critical Model of evidence use—Part 1: Rational vs. Critical orientations for evaluating evidence use. Political context determines the type and extent of evidence sensitivity, and thereby, knowledge instrumentality. Both aspirations for expert privilege and democratization must account for potential insensitivity of decision-making to evidence.

The principal starting point I propose, therefore, is first to understand the prevailing political narratives likely to determine whether and to what extent evidence is used instrumentally, and to compare these with the expectations of experts. Combining the various frameworks outlined above into a politically literate model requires some additional interpretive work. I propose two opposing vantage points from which to consider the role of expert knowledge and to help contextualize the politics of evidence development and use. I call these the Tragic and Boo-Hiss! perspectives; they speak to contrasting ideas about the nature of expert privilege and its role in the design, development and implementation of climate policies. I frame this model in terms of expert privilege because I propose that this aspiration is still of value, albeit in need of being tempered by political realities concerning evidence use for policy.

The Boo-Hiss! perspective describes the standard viewpoint from which experts and advocates construct narratives of expert influence on politics and engage with public decision processes. This perspective can be seen in much expert advocacy and in orthodox media commentary surrounding climate change to date (e.g., Mann, 2017; Santer, 2017). It centers on the perceived failure of governments to take on board the accumulated expert knowledge on climate change. It assumes that decision-making can and should be instrumentally sensitive to evidence in all contexts and that, where it is not, irrationality or mendacity should necessarily be inferred (Tangney, 2021). This perspective aligns with traditional notions of expert privilege in liberal democracy and with the SDA to evidence-based decision-making. A challenge arising for this vantage point, however, is that it limits the conceptualization of evidence-based policy-making to a rather narrow subset of expertise, knowledge types and uses in line with one's ideological or value commitments.

As Head (2008) points out, evidence-based decision-making has never been solely about weighing the (social-)scientific research for one course of action over another. Decision-makers must grapple with a range of incommensurable variables and knowledge types in the construction of decision rationales. The assumption underpinning the Boo-Hiss! narrative, however, is that our preferred bodies of expert knowledge and accompanying rational judgments are the only relevant sources of expertise and should be the most significant, if not primary, determinants of decision outcomes. As a result, this perspective frequently interprets any political demurral as irrationality or corruption. In this mode, when expert knowledge is used, it should be used in a determinatively instrumental way; that is, preferred expert knowledge should be the primary determinant of policy design and implementation, used for its explicit information content and/or learning outcomes.

The Tragic perspective³, by contrast, takes on board C&R's cynicism about the extent to which decision processes are instrumentally sensitive to expert knowledge. I use the term Tragic to refer to a pragmatic orientation that expects evidence, by default, to be used primarily in non-instrumental ways. In contrast to the Boo-Hiss! disapproval of decision-makers perceived as irrational or corrupt, the Tragic perspective anticipates primarily performative uses of available evidence in line with preceding values and objectives. On this view, we expect evidence to hold only an associative, as opposed to a determinative, instrumentality. That is, evidence is used instrumentally only to the extent that the same evidence performs tactical or political functions in justifying prevailing values and ideals. Evidence may nonetheless frequently be used to good effect overall. The greater the political consensus around the issue in question, the greater this associatively instrumental use will be.

Tempering linear and co-productive expectations of instrumentality and sensitivity in this way is, I argue, important for heuristic purposes. The Tragic perspective not only emphasizes the susceptibility of expert knowledge to performative use; it also highlights the absurdity of an opposing set of extreme assumptions that have become the default for contemporary expert advocacy: that political decision-making can and should be fully sensitive to determinatively instrumental expert knowledge in all instances. The problem with failing to recognize variable instrumentality and sensitivity is that it limits our understanding of the range of characteristics that allow well-designed and well-delivered research and evidence to achieve maximal influence (see Figure 4).

Figure 4. A Critical Model of evidence use—Part 2: Evaluating evidence development and use based on evidence sensitivity invokes the broadest range of criteria for consideration at each step of the linear model.

Assuming maximum sensitivity and instrumentality, as the Boo-Hiss! view does, suggests a limit to the capacity of knowledge producers to account for the “political knowledge” influencing the production, communication and framing of evidence (Head, 2008). Under the Tragic view, what we anticipate and should prepare for is limited decision sensitivity and an associative instrumentality. The critical model therefore presents a choice, not between all or none of the available evidence, but between the contrasting political, tactical and instrumental utilities of different branches of evidence, depending on political context. This, I argue, is an important basis on which to consider evidence design and use for climate change. Accounting for variable decision sensitivity and for the possibility of both determinative and associative instrumentality allows a fuller understanding of how the plural perspectives inevitably brought to bear on climate-related policy problems may manifest, each with its own preferred logics and repertoires of evidence use.

The possibility of decision insensitivity and associative instrumentality requires the evaluator to consider a broad range of possible evidence use-types (see Figure 4). When an evaluator assumes a unimodal determinative instrumentality, however, they imply an expectation of a wholly rational process, in which any tactical uses of evidence are incidental or immaterial and political uses are anathema. Failing this, any instrumental non-use by the decision-maker may be considered irrational or mendacious, in which case the decision-maker is interpreted as having made no meaningful use of evidence whatsoever. Considering the contrasting perspectives of determinative and associative instrumentality helps the evaluator to understand experts' and advocates' narratives concerning the complacency or complicity of governments in climate inaction, and the prevalence of the associated linear-model expectations of expert influence.

Discussion

A Need for Critical Perspective

The critical model outlined here seeks to build on the conceptual work of political studies, STS and the decision sciences to date, to advance understanding of climate change decision-making while accounting for contrasting aspirations for expert privilege and the democratization of expertise. The principal problem with the linear model is not its inability to reflect the realities of expert influence per se. At least some of the model's assumptions arguably serve as useful aspirations for perpetuating healthy liberal democratic decision-making, regardless of their attainability. Likewise, knowledge co-production is a useful framework for understanding the need to distill plural perspectives into usable evidence, and for ensuring that decision-makers with values congruent with expert knowledge have a hand in evidence development. The limitation of both models, rather, lies in their failure to consider the possibility that scientific knowledge and expertise may hold little or no instrumental traction on their own due to wider (i.e., non-institutional) political factors.

The critical model asks us to anticipate that, even when there is congruence between facts and values, available evidence may nonetheless be used primarily for its performative worth. Inasmuch as its information content is used, this may be only in an associative way. Alternatively, determinatively instrumental use may occur, but the instrumental worth of alternative bodies of knowledge and expertise is de-prioritized under an excess of objectivity and conflicting decision principles. Ultimately, the critical model proposes that the information and learning content of knowledge and its co-production will be used only when it aligns with prior political or legislative positions. On this view, we begin to see that there is a range of evidence use-types and logics beyond the mandates laid down by climate scientists and their advocates under the linear model. Likewise, prior political commitments may negate the instrumental worth of co-production initiatives in contexts where these activities are in fact used for their performative worth.

What Are Decision Models for?

A general tendency observable among those striving for conceptual development at the science-policy interface has been to underspecify the intended utility of their preferred models, methods, and tools (Tangney, 2017b). These devices have often been advanced in ways that heavily imply their prescriptive use (e.g., Cash et al., 2002; Jones et al., 2014; Dunn and Laing, 2017), that is, prescribing what steps one should take or how one should act, in ways that can be problematic in practice (Oliver et al., 2014). Allow me to clarify, therefore, that the model set out here is intended as a heuristic to assist the evaluation of evidence development and use, rather than for wholly descriptive or prescriptive purposes.

Prescriptions for climate change decision-making abound. Care is needed, however, when transposing whatever criteria we use for evaluation into prescriptions. Many of the conceptual models used to understand evidence development and use are incomplete in one way or another because they adhere to idealized assumptions that have only a tentative basis. Moreover, the prescriptive use of decision models of any sort is challenging and, I argue, often ill-advised in the context of complex, contentious public problems. For example, although we should recognize the importance of knowledge legitimacy for successful evidence use (Cash et al., 2002), designing evidence to maximize its political and tactical utility, alongside (or instead of) its instrumental worth, amounts to an attempt at overt knowledge politicization, thereby compromising credibility.

I propose this critical model as a means to help understand the ways in which climate change frequently involves problem types and contexts that are not generally amenable to prescription, owing to their complexity, uncertainty, and tendency toward political contest. Instead, I argue, we should seek out heuristics that help policy practitioners and experts to recognize the aspirations and pitfalls of both linear rationality and knowledge co-production, while characterizing both the political influences on expert knowledge and the potential uses and utility that may be derived from it beyond its assumed instrumentality. A significant challenge for knowledge co-production in this regard has been a tendency to assume that government institutions are the sole sphere of political influence bearing on evidence-based policy-making. Yet public narratives concerning the importance of expertise for decision-making, and the success or failure of government as perceived by political opponents, can and do also play a significant role in determining what evidence is used and how (Tangney, 2019a). It is for this reason that the critical model outlined here combines aspirations for co-production and expert privilege with contrasting narratives concerning the role of expertise in decision-making.

Conclusion

The linear model promotes an important aspiration for evidence-based policy-making, even though it has long been criticized for its failure to adequately characterize technical expertise, the knowledge that experts produce, or the process by which that knowledge is disseminated. Linear aspirations may also have hindered the conceptual development of decision models in the past by popularizing unrealistic expectations about the sensitivity of decision-making to particular bodies of evidence, the instrumentality of evidence for policy, and the supposedly irrational or mendacious disposition of decision-makers who are perceived to disregard expert knowledge. While models of knowledge co-production have done much to advance understanding of the relationship between politics and expertise, they nonetheless perpetuate some of these assumptions in a way that struggles to account for polarized political contexts such as those of climate change policy-making.

In this paper, I present a critical model of evidence use to aid our understanding of decision-making for climate change. Climate change policy-making must inevitably proceed across a range of political landscapes if humanity is to address the problem effectively. This includes contexts characterized by polarized political opposition arising from, for example, the perpetuation of traditional mining and fossil-fuel-intensive economies, or the perceived inequitable burden of policies on particular communities. To advance beyond default perceptions of ill intent by political opponents in such contexts, a more sophisticated understanding of evidence use can assist those who seek to understand the contrasting rationales at play. Additionally, understanding the institutional norms arising from the linear model can help explain what drives policy-makers toward performative uses of expert knowledge, as well as the range of information types that actually influence decisions. The critical model presented here synthesizes some influential conceptual advancements from political studies, STS and the burgeoning decision sciences to situate existing models of evidence development and use within their political contexts. I conclude that decision-making prescriptions are ill-suited to the policy-making challenges associated with climate change. What is likely more useful for experts and decision-makers alike is an effective evaluative heuristic that aids our understanding of the political parameters at play for experts and decision-makers and the challenges these parameters pose for evidence development and use.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The ideas outlined in this article were originally presented in draft form at a workshop organized by Prof. Brian Head, Assoc. Prof. Cassandra Star, and Assoc. Prof. Joshua Newman, sponsored by the Australian Political Studies Association on 30 November 2021.

Footnotes

1. ^The term rational here is used to denote a process of principled decision-making logically derived from good evidence. Rationality or linear-rationality is sometimes also used in the policy sciences to denote the assumption that there is only one, or a finite subset of, correct answers to any given policy problem (Bridgman and Davis, 2003). By contrast, I employ the term rational here in line with the ideas of Max Weber, indicating that what counts as rational (and thereby correct) depends on one's starting values and decision-making principles when prioritizing between the means and ends of public action (see Tangney, 2021).

2. ^This problem has been described by Sarewitz (2000) as an “Excess of Objectivity” which arises when a policy issue is stymied by uncertainty and complexity, allowing multiple valid expert perspectives on a problem in ways that produce conflicting but equally evidence-based conclusions.

3. ^A moniker I shamelessly appropriate from Sowell's (2017) description of the conservative political worldview. Sowell asserts that conservatives hold a tragic viewpoint on humanity, one that has limited faith in humans' capacity for rationality or to avoid institutional corruption.

References

Barben, D., Fisher, E., Selin, C., and Guston, D. H. (2007). “Anticipatory governance of nanotechnology: foresight, engagement, and integration,” in The Handbook of Science and Technology Studies, 3rd Edn, eds E. J. Hackett, O. Amsterdamska, M. P. Lynch, J. Wajcman (Cambridge, MA: MIT Press), 979–1000.

Beck, S. (2010). Moving beyond the linear model of expertise? IPCC and the test of adaptation. Region. Environ. Change 11, 297–306. doi: 10.1007/s10113-010-0136-2

Beck, S., Borie, M., Chilvers, J., Esguerra, A., Heubach, K., Hulme, M., et al. (2014). Towards a reflexive turn in the governance of global environmental expertise. The Cases of the IPCC and the IPBES. Gaia 23, 80–87. doi: 10.14512/gaia.23.2.4

Bridgman, P., and Davis, G. (2003). What use is a policy cycle? Plenty, if the aim is clear. Austr. J. Publ. Administr. 62, 98–102. doi: 10.1046/j.1467-8500.2003.00342.x

Cash, D., Clark, W., Alcock, F., Dickson, N., Eckley, N., and Jager, J. (2002). Salience, Credibility, Legitimacy and Boundaries: Linking Research, Assessment and Decision Making. John F. Kennedy School of Government, Harvard University, Faculty Research Working Papers Series. doi: 10.2139/ssrn.372280

Climate Ireland (2022). About Us. Available online at: https://www.climateireland.ie/#!/about

Collingridge, D., and Reeve, C. (1986). Science Speaks to Power: The Role of Experts in Policy Making. London: Frances Pinter Publishers.

Department for Environment, Food and Rural Affairs (2012). UK Climate Change Risk Assessment: Government Report. HMSO, Norwich, United Kingdom. Available online at: http://www.defra.gov.uk/publications/files/pb13698-climate-risk-assessment.pdf

Dewulf, A., Klenk, N., Wyborn, C., and Lemos, M. C. (2020). Usable environmental knowledge from the perspective of decision-making: the logics of consequentiality, appropriateness, and meaningfulness. Curr. Opin. Environ. Sustain. 42, 1–6. doi: 10.1016/j.cosust.2019.10.003

Dunn, G., and Laing, M. (2017). Policy-makers perspectives on credibility, relevance and legitimacy (CRELE). Environ. Sci. Policy 76, 146–152.

Durant, D. (2016). “The undead linear model of expertise,” in Policy Legitimacy, Science and Political Authority - Knowledge and Action in Liberal Democracies, eds M. Heazle and J. Kane (London: Routledge). doi: 10.4324/9781315688060-2

Gelles, D., and Friedman, L. (2022). There's a Messaging Battle Right Now Over America's Energy Future. The New York Times Available online at: https://www.nytimes.com/2022/03/19/climate/energy-transition-climate-change.html (accessed March 19, 2022).

Glavovic, B., Smith, T. F., and White, I. (2021). The Tragedy of Climate Change Science. Climate and Development. doi: 10.1080/17565529.2021.2008855

Goldhill, O. (2018). The Left Is Also Guilty of Unscientific Dogma. Quartz. Available online at: https://qz.com/1177154/political-scientific-biases-the-left-is-guilty-of-unscientific-dogma-too/ (accessed January 18, 2018).

Grundmann, R., and Stehr, N. (2012). The Power of Scientific Knowledge - From Research to Public Policy. Cambridge, UK: Cambridge University Press. doi: 10.1017/CBO9781139137003

Haas, P. (2004). When does power listen to truth? A constructivist approach to the policy process. J. Eur. Publ. Policy 11, 569–592. doi: 10.1080/1350176042000248034

Haidt, J. (2013). Why So Many Americans Don't Want Social Justice and Don't Trust Scientists. Boyarsky Lecture in Law, Medicine and Ethics. Duke University. Available online at: https://www.youtube.com/watch?v=b86dzTFJbkc&list=LL&index=2

Head, B. (2008). Three lenses of evidence-based policy. Aust. J. Public Admin. 67, 1–11. doi: 10.1111/j.1467-8500.2007.00564.x

Hoppe, R. (2005). Rethinking the science-policy nexus: from knowledge utilization and science technology studies to types of boundary arrangements. Poiesis Prax 3, 199–215. doi: 10.1007/s10202-005-0074-0

Innvaer, S., Vist, G., Trommald, M., and Oxman, A. (2002). Health policy-makers' perceptions of their use of evidence: a systematic review. J. Health Serv. Res. Policy 7, 239–244. doi: 10.1258/135581902320432778

Jaeger, C. C., Renn, O., Rosa, E. A., and Webler, T. (1998). "Decision analysis and rational action," in Human Choice and Climate Change Volume 3: Tools for Policy Analysis, eds S. Rayner and E. L. Malone (Columbus, OH: Battelle Press), 141–215.

Jasanoff, S. (1990). The Fifth Branch: Science Advisers as Policy-Makers. Cambridge, MA: Harvard University Press.

Jasanoff, S. (2004). States of Knowledge: The Co-production of Science and Social Order. London: Routledge.

Jasanoff, S., and Wynne, B. (1998). "Science and decision-making," in Human Choice and Climate Change Volume 1: The Societal Framework, eds S. Rayner and E. L. Malone (Columbus, OH: Battelle Press), 1–36.

Johnson, E. J. (1988). "Expertise and decision under uncertainty: performance and process," in The Nature of Expertise, eds M. T. H. Chi, R. Glaser, and M. J. Farr (Hillsdale, NJ: Lawrence Erlbaum Associates, Inc.), 209–228.

Jones, R. N., Patwardhan, A., Cohen, S. J., Dessai, S., Lammel, A., Lempert, R. J., et al. (2014). "Foundations for decision making," in IPCC 2014, Climate Change 2014: Impacts, Adaptation, and Vulnerability. Part A: Global and Sectoral Aspects. Contribution of Working Group II to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change (Cambridge, UK: Cambridge University Press).

Karhunmaa, K. (2020). Performing a linear model: the professor group on energy policy. Environ. Sci. Policy 114, 587–594. doi: 10.1016/j.envsci.2020.09.005

Kunseler, E. M. (2016). Revealing a paradox in scientific advice to governments: the struggle between modernist and reflexive logics within the PBL Netherlands Environmental Assessment Agency. Palgrave Commun. 2:16029. doi: 10.1057/palcomms.2016.29

Lemos, M. C., Arnott, J. C., Ardoin, N. M., Baja, K., Bednarek, A. T., Dewulf, A., et al. (2018). To co-produce or not to co-produce. Nat. Sustain. 1, 722–724. doi: 10.1038/s41893-018-0191-0

Lindblom, C. E. (1959). The science of “muddling through”. Publ. Administr. Rev. 19, 79–88. doi: 10.2307/973677

Maas, T. Y., Pauwelussen, A., and Turnhout, E. (2022). Co-producing the science-policy interface: towards common but differentiated responsibilities. Human. Soc. Sci. Commun. 9, 1–11. doi: 10.1057/s41599-022-01108-5

Mann, M. E. (2017). Climate Catastrophe is a Choice: Downplaying the Risk is the Real Danger. Foreign Affairs. Available online at: https://www.foreignaffairs.com/articles/2017-04-21/climate-catastrophe-choice (accessed April 21, 2017).

McGreal, C. (2022). The Man Who Could Help Big Oil Derail America's Climate Fight. The Guardian. Available online at: https://www.theguardian.com/environment/2022/mar/17/the-stealth-operative-for-big-oil-whos-out-to-derail-americas-climate-fight (accessed March 17, 2022).

NCCARF (2014). NCCARF 2008–2013: The First Five Years. National Climate Change Adaptation Research Facility. Available online at: https://nccarf.edu.au/wp-content/uploads/2019/04/NCC030-report-FA.pdf

NCCARF (n.d.). National Climate Change Adaptation Research Facility. Available online at: https://nccarf.edu.au/

New, M., Reckien, D., and Viner, D. (2022). "Chapter 17: Decision making options for managing risk," in Intergovernmental Panel on Climate Change, Climate Change 2022: Impacts, Adaptation and Vulnerability. Working Group II Contribution to the Sixth Assessment Report. Cambridge: Cambridge University Press.

Newman, J. (2017). Deconstructing the debate over evidence-based policy. Crit. Policy Stud. 11, 211–226. doi: 10.1080/19460171.2016.1224724

Oliver, K., Lorenc, T., and Innvaer, S. (2014). New directions in evidence-based policy research: a critical analysis of the literature. Health Res. Policy Syst. 12, 1–11. doi: 10.1186/1478-4505-12-34

O'Sullivan, C. (2022). Irish Scientists Call for Action on Climate Change. RTE News. Available online at: https://www.rte.ie/news/world/2022/0316/1286787-climate-change-report/ (accessed March 16, 2022).

Partnership for European Environmental Research (PEER) (2020). INRAE - National Research Institute for Agriculture, Food and the Environment (France). Available online at: https://www.peer.eu/about-peer/centres/inrae-national-research-institute-for-agriculture-food-and-the-environment

Quiggin, J. (2017). The Economic (non)viability of the Adani Galilee Basin Project. University of Queensland School of Economics. Available online at: https://australiainstitute.org.au/wp-content/uploads/2020/12/The-economic-non-viability-of-the-Adani-Galilee-Basin-Project.pdf

Radaelli, C. M., and Meuwese, A. C. M. (2010). Hard questions, hard solutions: proceduralisation through impact assessment in the EU. West Eur. Polit. 33, 136–153. doi: 10.1080/01402380903354189

Sabatier, P. A. (1988). An advocacy coalition framework of policy change and the role of policy-oriented learning therein. Pol. Sci. 21, 129–168. doi: 10.1007/BF00136406

Santer, B. (2017). I'm a Climate Scientist. And I'm Not Letting Trickle-Down Ignorance Win. The Washington Post. Available online at: https://www.washingtonpost.com/news/posteverything/wp/2017/07/05/im-a-climate-scientist-and-im-not-letting-trickle-down-ignorance-win/?utm_term=.da48e633eef6#comments (accessed July 5, 2017).

Sarewitz, D. (2000). “Science and environmental policy: an excess of objectivity,” in Earth Matters: The Earth Sciences, Philosophy, and the Claims of Community, ed R. Frodeman (Prentice Hall, Michigan, USA).

Sarewitz, D. (2004). How science makes environmental controversies worse. Environ. Sci. Pol. 7, 385–403. doi: 10.1016/j.envsci.2004.06.001

Shermer, M. (2013). The Liberal's War on Science: How Politics Distorts Science on Both Ends of the Political Spectrum. Scientific American. Available online at: https://www.scientificamerican.com/article/the-liberals-war-on-science/

Shove, E., Pantzar, M., and Watson, M. (2012). The Dynamics of Social Practice: Everyday Life and How it Changes. London: SAGE.

Singh Mann, A. (2022). Climate change: 'Critical' weeks ahead for Great Barrier Reef as concerns grow over 'severe' coral bleaching. Sky News. Available online at: https://news.sky.com/story/climate-change-critical-weeks-ahead-for-great-barrier-reef-as-concerns-grow-over-severe-coral-bleaching-12569466 (accessed March 18, 2022).

Strassheim, H. (2017). Bringing the political back in: reconstructing the debate over evidence-based policy. A response to Newman. Crit. Policy Stud. 11, 235–245. doi: 10.1080/19460171.2017.1323656

Sutton, R. (1999). The Policy Process: An Overview. London: Chameleon Press; Overseas Development Institute.

Tangney, P. (2017a). Climate Adaptation Policy and Evidence: Understanding the Tensions Between Politics and Expertise in Public Policy. London: Routledge-Earthscan. doi: 10.4324/9781315269252

Tangney, P. (2017b). What use is CRELE? A response to Dunn and Laing. Environ. Sci. Policy. 77, 147–150. doi: 10.1016/j.envsci.2017.08.012

Tangney, P. (2017c). The UK's 2012 climate change risk assessment: how the rational assessment of science develops policy-based evidence. Sci. Publ. Policy 44, 225–234. doi: 10.1093/scipol/scw055

Tangney, P. (2019a). Between conflation and denial: the politics of climate expertise in Australia. Austr. J. Polit. Sci. 54, 131–149. doi: 10.1080/10361146.2018.1551482

Tangney, P. (2019b). Does Risk-Based Decision-Making Present an 'Epistemic Trap' for Climate Change Policy-Making? Evidence and Policy. Available online at: https://www.ingentaconnect.com/content/tpp/ep/pre-prints/content-pppevidpold1900070r2. doi: 10.1332/174426419X1557747600211

Tangney, P. (2021). Are “climate deniers” rational actors? Applying Weberian rationalities to advance climate change policy-making. Environ. Commun. 15, 1077–1091. doi: 10.1080/17524032.2021.1942117

Turnhout, E., Stuiver, M., Klostermann, J., Harms, B., and Leeuwis, C. (2013). New roles of science in society: different repertoires of knowledge brokering. Sci. Publ. Policy 40, 354–365. doi: 10.1093/scipol/scs114

Voß, J. P., and Kemp, R. (2006). "Sustainability and reflexive governance: introduction," in Reflexive Governance for Sustainable Development, eds J. P. Voß, D. Bauknecht, and R. Kemp (Cheltenham; Northampton, MA: Edward Elgar), 3–26. doi: 10.4337/9781847200266

Washburn, A. N., and Skitka, L. J. (2018). Science denial across the political divide: liberals and conservatives are similarly motivated to deny attitude-inconsistent science. Soc. Psychol. Pers. Sci. 9, 972–980. doi: 10.1177/1948550617731500

Weber, M. (2019). Economy and Society. Trans. K. Tribe. Cambridge, MA: Harvard University Press.

Keywords: climate change, experts, evidence-based policy, linear model, knowledge co-production, science and technology studies (STS), rationalities

Citation: Tangney P (2022) Examining Climate Policy-Making Through a Critical Model of Evidence Use. Front. Clim. 4:929313. doi: 10.3389/fclim.2022.929313

Received: 26 April 2022; Accepted: 31 May 2022;

Published: 22 June 2022.

Edited by:

Peter Haas, University of Massachusetts Amherst, United States

Reviewed by:

Nils Matzner, Technical University of Munich, Germany
Arden Rowell, University of Illinois at Urbana-Champaign, United States

Copyright © 2022 Tangney. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Peter Tangney, ptangney@ucc.ie
