METHODS article

Front. Clim., 22 April 2021

Sec. Climate Risk Management

Volume 3 - 2021 | https://doi.org/10.3389/fclim.2021.620497

This article is part of the Research Topic Open Citizen Science Data and Methods

Holli A. Kohl1,2*†
Peder V. Nelson3†
John Pring4
Kristen L. Weaver1,2
Daniel M. Wiley5
Ashley B. Danielson5
Ryan M. Cooper5
Heather Mortimer1,2
David Overoye6
Autumn Burdick6
Suzanne Taylor7
Mitchell Haley8
Samual Haley8
Josh Lange8
Morgan E. Lindblad5,9

Land cover and land use are highly visible indicators of climate change and human disruption to natural processes. While land cover is frequently monitored over a large area using satellite data, ground-based reference data is valuable as a comparison point. The NASA-funded GLOBE Observer (GO) program provides volunteer-collected land cover photos tagged with location, date and time, and, in some cases, land cover type. When making a full land cover observation, volunteers take six photos of the site: one facing each cardinal direction (north, south, east, and west; N-S-E-W), one pointing straight up to capture canopy and sky, and one pointing down to document ground cover. Together, the photos document a 100-meter square of land. Volunteers may then optionally tag each N-S-E-W photo with the land cover types present. Volunteers collect the data through a smartphone app, also called GLOBE Observer, resulting in consistent data. While land cover data collection through GLOBE Observer is ongoing, this paper presents the results of a data challenge held between June 1 and October 15, 2019. Called “GO on a Trail,” the challenge resulted in more than 3,300 land cover data points from around the world with concentrated data collection in the United States and Australia. GLOBE Observer collections can serve as reference data, complementing satellite imagery for the improvement and verification of broad land cover maps. Continued collection using this protocol will build a database documenting climate-related land cover and land use change into the future.

Global land cover and land use (LCLU) mapping is critical in understanding the impact of changing climatic conditions and human decisions on natural landscapes (Sleeter et al., 2018). Modeling the biophysical aspects of climatic change requires accurate baseline vegetation data, often from satellite-derived global LCLU data products (Frey and Smith, 2007). Satellite-based global LCLU products are generated through classification algorithms and verified through the visual interpretation of satellite images, detailed regional maps, and ground-based field data (Tsendbazar et al., 2015). However, an assessment of such LCLU data products found that land cover classifications agreed with reference data between 67 and 78% of the time (Herold et al., 2008). Some classes, such as urban land cover, are more challenging to accurately identify. At high latitudes, where land cover change has the potential to generate several positive feedback loops enhancing CO2 and methane emissions, field observations agreed with global LCLU data as little as 11% of the time (Frey and Smith, 2007). The high volume of reference data needed to refine global LCLU products can be impractical to obtain, but geotagged photographs may have potential to inform multiple global LCLU products at relatively low cost (Tsendbazar et al., 2015).

Citizen science can be a tool for collecting widespread reference data in support of studies of land cover and land use change, particularly if multiple people document the same site (Foody, 2015a). For example, both the Geo-Wiki Project (Fritz et al., 2012) and the Virtual Interpretation of Earth Web-Interface Tool (VIEW-IT) generated early citizen science-based land cover and land use reference datasets by asking volunteers to provide a visual interpretation of high-resolution satellite imagery and maps (Clark and Aide, 2011; Fritz et al., 2017). Other citizen science efforts, such as the Degree Confluence Project (Iwao et al., 2006), Geo-Wiki Project (Antoniou et al., 2016), Global Geo-Referenced Field Photo Library (Xiao et al., 2011), and PicturePost (Earth Observation Modeling Facility, 2020), have built libraries of geotagged photographs that may also serve as reference data. In this paper, we present a subset of GLOBE Observer Land Cover citizen science data as another potential LCLU reference dataset of geotagged photographs collected following a uniform protocol.

GLOBE Observer (GO) is a mobile application compatible with Android and Apple devices used to collect environmental data in support of Earth science (Amos et al., 2020). GLOBE Observer includes four observation protocols, one of which is called GLOBE Observer Land Cover. The land cover protocol first trains citizen scientists and then facilitates recording land cover with georeferenced photographs and classifications. GLOBE Observer is a component of the Global Learning and Observations to Benefit the Environment (GLOBE) Program (https://www.globe.gov), an international science and education program in operation since 1995 (GLOBE, 2019). As such, GLOBE Observer Land Cover data is submitted and stored in the GLOBE Program database with the GLOBE Land Cover measurement protocol data, in addition to 25 years of student-collected environmental data (biosphere, atmospheric, hydrologic, soils). The GLOBE Observer Land Cover protocol, which launched in September 2018, is built on an existing paper-based GLOBE Land Cover measurement protocol that has its roots early in the GLOBE Program (Becker et al., 1998; Bourgeault et al., 2000; Boger et al., 2006; GLOBE, 2020b). The connection to this deep history and well-established, experienced volunteer community makes GLOBE Land Cover unique.

This paper documents the method used to collect geotagged land cover reference photos through citizen science with GLOBE Observer, including data collection, the use of a data challenge to motivate data collection, and a description and assessment of the data collected in one such challenge, GO on a Trail, held June 1 through October 15, 2019.

All GLOBE Observer Land Cover data, including the data resulting from the GO on a Trail challenge, were collected through the NASA GLOBE Observer app. Data collection is contained entirely within the app to ensure that data are uniform following the defined land cover protocol. No external equipment is required. The app automatically collects date, time, and location when a user begins an observation. Location is recorded in latitude and longitude coordinates determined through the mobile device's location services [cellular, Wi-Fi, Global Positioning System (GPS)]. The accuracy of these coordinates is shown on-screen, giving the user the opportunity to improve the location accuracy (to a best-case accuracy of 3 meters) or to manually adjust the location using a map.

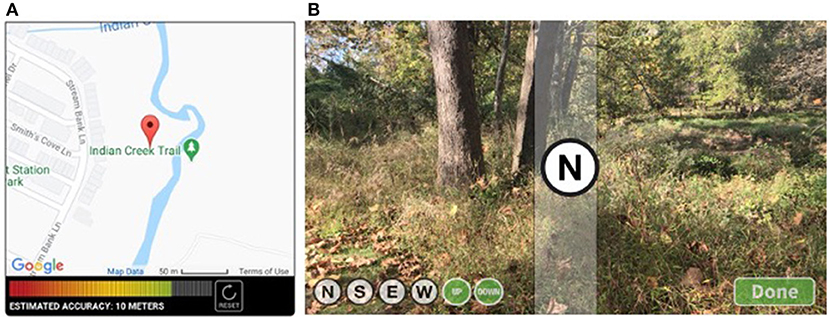

The collection of geotagged photographs also builds on embedded phone technology. The GLOBE Observer app integrates the phone's native compass sensor with the camera sensor to help users center the photographs in each cardinal direction. The direction is superimposed on the camera view; the user then taps the screen to capture a photograph when the camera is centered on North, South, East, or West. To collect uniform up and down photos, the phone's gyroscope sensor detects when the phone is pointed straight up and straight down and automatically takes a photo when the camera is appropriately oriented. Users may also upload photos directly from their device, a measure put in place to allow participation on devices that do not have a compass or gyroscope (manually uploaded photos are flagged in the database). Direction indicators on the bottom of the screen turn green when a photo exists so that the user can clearly see if more photos are needed to complete the observation. The end user may review all photos and retake them as needed. Both the location and photography tools are shown in Figure 1.

Figure 1. Screenshot of the location accuracy interface (A) and the photo collection tool (B) in the GLOBE Observer app. These tools integrate the phone's functions (GPS, compass, and gyroscope) to ensure land cover photographs are collected uniformly.

Volunteer-collected geotagged photographs have been shown to provide useful reference data if a protocol is followed (Foody et al., 2017). At minimum, photos should include date, location, and standardized tags; ideally, a photograph should be taken in each cardinal direction to fairly sample the land cover at that location (Antoniou et al., 2016). The GLOBE Observer Land Cover protocol meets these requirements. The protocol contains two components: definition of the observation site and definition of the attributes of the site.

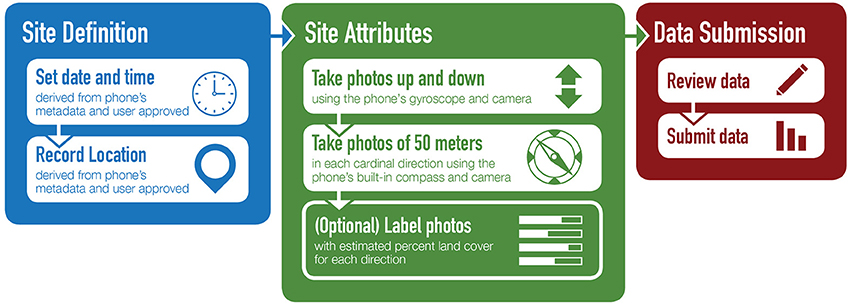

Data collection begins with the definition of the observation site, a 100-meter square area centered on the observer. The date, time, and location derived from carrier and phone settings are autofilled and verified by the user.

Site attributes identified in the second phase of the data-collection protocol include ephemeral surface conditions (snow/ice on the ground, standing water, muddy or dry ground, leaves on trees, raining/snowing), site photos, and optionally, land cover classification labels. Up to six photographs are taken at each location: horizontal landscape views focused on the nearest 50 meters and centered on each cardinal direction, up to show canopy and cloud conditions, and down to show ground cover at the center of the site. Volunteers may label each N-S-E-W photo with the primary land cover types visible in the image and estimate the percentage of the 50-meter area that includes each land cover type. The classification step is optional, and an observation may be submitted without classifications.

To collect high quality data, users are trained before data collection and are required to review data before submission. Before data collection begins, users complete an interactive in-app tutorial to unlock the protocol tools. Training includes definitions of land cover, animations demonstrating how to photograph the landscape, and an interactive labeling exercise. The animation screens cannot be advanced until the animation finishes, preventing the user from skipping the training. The tutorial and a simple land cover classification guide with photo examples are accessible from any screen during data collection and classification. After collecting data, the volunteer sees a summary of the observation and has the opportunity to correct errors before final submission. The data collection process is documented in Figure 2.

Figure 2. Task flow for a GLOBE Observer Land Cover observation. Users review all data submitted, including metadata.

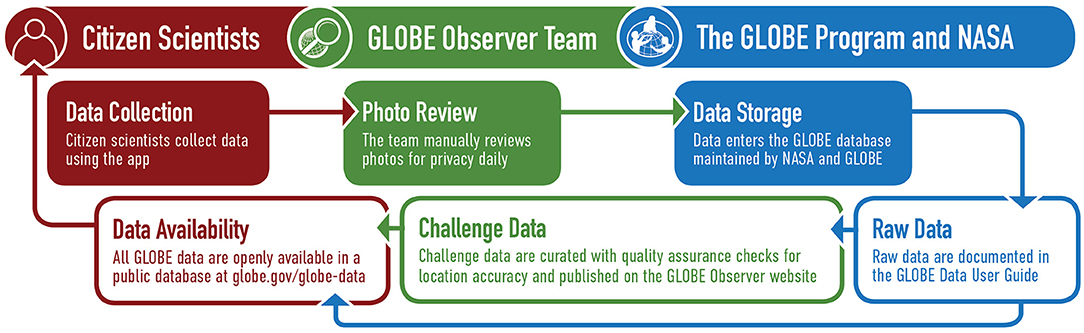

Upon submission, all data are stored in the GLOBE Program database. Before storage, data, including metadata, are lightly sanitized for privacy. Quality assessment/quality control measures are performed on subsets of data and published separately (Figure 3).

Figure 3. Data collection and data management workflow. Raw data are sanitized to ensure volunteer privacy, and are entirely user-inputted data. Challenge data and other curated datasets include quality assessments done after data submission.

Photos are manually reviewed by GLOBE Observer staff daily. If a staff member sees a photo with an identifiable person, identifying text (primarily car license plate numbers), violence, or nudity, the photo is moved to a non-public database. During the GO on a Trail challenge, 1% of photos (234 photos) were rejected. All remaining photos are entered into the public database. Photos taken with the app's camera plugin are compressed to a standard size (1,920 by 1,080 pixels), while manually uploaded images maintain their original size.

Camera Exchangeable Image File Format (EXIF) metadata is stripped from the photos to maintain user privacy before images are stored in the system. Relevant metadata (date, time, location) are contained in the user-reviewed data entry. The app's location accuracy estimate is also stored with the location data. The app requests location from the phone up to 10 times or until repeat measurements are <50 meters apart. The distance between repeat measurements is the location accuracy. The location and accuracy estimate are visible to the end user on the location screen, and the user has the opportunity to repeatedly refresh the location to improve accuracy. This means that the volunteer sees and approves all metadata associated with each observation to ensure accuracy and privacy.
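The repeat-request logic described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the app's actual code: the function names `haversine_m` and `estimate_accuracy`, the `get_fix` callback, and the choice to compare consecutive fixes using the great-circle distance are all hypothetical. The source specifies only the limit of 10 requests and the 50-meter threshold.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def estimate_accuracy(get_fix, max_requests=10, threshold_m=50.0):
    """Request fixes until two consecutive fixes agree or the limit is hit.

    get_fix is a hypothetical callback returning a (lat, lon) tuple.
    Returns (last_fix, accuracy_m, requests_made); accuracy_m is the
    distance between the last two fixes, or None if only one was taken.
    """
    previous = get_fix()
    requests = 1
    accuracy = None
    while requests < max_requests:
        current = get_fix()
        requests += 1
        # Assumption: accuracy is the distance between consecutive fixes.
        accuracy = haversine_m(*previous, *current)
        previous = current
        if accuracy < threshold_m:
            break
    return previous, accuracy, requests
```

In this sketch a caller would pass a function that reads the device's location service; a stored accuracy above the screening limit would then mark the observation for removal during later quality checks.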

Volunteer privacy is further protected in the database through anonymity. Each observation is associated with a unique number assigned to each person that is not publicly associated with their name or email address. For quality assurance, it is possible to gauge a volunteer's experience level by looking at all data associated with the anonymous user number, but a volunteer's name or contact information is never made public.

Approved photos and all inputs from the volunteer are available through the GLOBE Program database (globe.gov/globe-data) as a comma-separated values (CSV) file. The file includes fields for date, time, location, user ID, elevation (based on the location, not from the phone's metadata), URLs to each photo within the database, LCLU overall classification, classification percent estimates for each photo, and user-submitted field notes. These data should be viewed as “raw” primary data. Subsets of data on which additional quality assurance or data validation analysis has been done after submission, such as the GO on a Trail data described in this paper, are provided on the GLOBE Observer website (observer.globe.gov/get-data). GLOBE Observer Land Cover data and data access are documented in the GLOBE Data User Guide (GLOBE, 2020a).

While GLOBE Observer Land Cover data collection began in September 2018 and continues through the present, this paper focuses on applications of the method to generate data during a citizen science challenge called GO on a Trail, held June 1 through October 15, 2019. The challenge was modeled on other successful challenges conducted through GLOBE Observer, particularly the Spring Clouds Challenge held March-April 2018 (Colón Robles et al., 2020), which resulted in increased rates of data submission during and after the challenge period. During the GO on a Trail challenge, citizen scientists from all 123 countries that participate in The GLOBE Program were encouraged to submit observations of land cover. (The GLOBE Program, of which GLOBE Observer is a part, operates through bilateral agreements between the U.S. government and the governments of partner nations.) GLOBE countries are grouped into six regions. To motivate data collection, the three observers who collected the most data in each region during the challenge period were publicly recognized (if they wished to be) as top observers for the challenge and awarded a certificate.

To generate geographically dense data in selected regions within the challenge, GLOBE Observer partnered with Lewis and Clark National Historic Trail (LCNHT) and requested data at specific locations of historical and scientific interest along the Trail, and with John Pring of Geoscience Australia and Scouts Australia for data in remote locations in Australia. Top participants in the partner-led challenges were awarded a Trail patch and poster (LCNHT) or recognized in a formal ceremony (Scouts Australia).

In the United States, the GO on a Trail data challenge was planned and implemented as a partnership between the NASA-based GLOBE Observer team and Lewis and Clark National Historic Trail (LCNHT) under the U.S. National Park Service. LCNHT was deemed an ideal education partner because the trail covers ~7,900 km (4,900 miles) over 16 states, transecting North America from Pittsburgh, PA, to Astoria, OR. The Trail crosses eight ecoregions, encompassing a variety of land cover types, and consists of 173 independently operated partner visitor centers and museums that could support volunteer recruitment and training.

Interested visitor centers and museums were trained on the land cover protocol and given challenge support material including data collection instructions and a large cement sticker to be placed at a training site near the building. Called Observation Stations, the stickers were designed to be locations where on-site educators could train new citizen scientists how to collect data. Observation Stations were intended to generate repeat observations to gauge the variability in data collection and classification across citizen scientists. More than 150 Observation Station stickers were distributed, but it is unclear how many were placed.

Many successful citizen science projects use game theory to improve volunteer retention and to increase data creation (Bayas et al., 2016; National Academies of Sciences, Engineering, and Medicine, 2018). To encourage data collection at Observation Stations, a point system was implemented that awarded the most points (4) for observations collected at an Observation Station. Participants could earn 2 points per observation taken at designated historic sites (United States Code, 2011) along the Trail, and 1 point per observation taken anywhere else along the Trail. While the single point was meant to enable opportunistic data collection, we also awarded a single point to data collected at the center points of Moderate Resolution Imaging Spectroradiometer (MODIS) pixels to encourage observations that could be matched to the global 500-meter MODIS Land Cover Type (MCD12Q1) version 6 data product. The participants with the most points were recognized as the challenge top observers and received a Trail patch and poster.
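As a rough illustration of how such a point system could be tallied, the sketch below sums points per observer and ranks them. The point values come from the text; the category keys, the function names `tally_points` and `top_observers`, and the input format of (observer ID, site category) pairs are assumptions made for the example and do not describe the scoring tool actually used.

```python
from collections import Counter

# Point values as stated in the text; the category keys are hypothetical labels.
POINTS = {
    "observation_station": 4,
    "historic_site": 2,
    "modis_pixel_center": 1,
    "elsewhere_on_trail": 1,
}


def tally_points(observations):
    """Sum points per observer from (observer_id, site_category) pairs."""
    totals = Counter()
    for observer_id, category in observations:
        totals[observer_id] += POINTS[category]
    return totals


def top_observers(observations, n):
    """Return the n highest-scoring observers as (observer_id, points) pairs."""
    return tally_points(observations).most_common(n)
```

Weighting Observation Stations most heavily reflects the stated goal of generating repeat observations at the same site, while the single point for other locations keeps opportunistic contributions worthwhile.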

Concentrated data collection in Australia resulted from a land cover data collection competition for youth participating in Scouts, an organization for children and youth (male and female) aged 5–26, and associated adults. John Pring, Geoscience Australia, hosted the competition, which ran June 15–October 15, timed to coincide with both state-based school holidays and cooler weather. The competition incorporated a points system intended to encourage observations in non-metropolitan settings, with point value increasing with distance from built-up areas. While 23 scouting-based teams registered through the GLOBE Teams function and contributed data, two were extremely active, adding nearly 200 observations across 5 Australian states and territories. The winning team of three [aged 10, 11 (Team Captain) and 15] collected 111 LCLU observations. They also ranked among the top GO on a Trail observers in the Asia and Pacific region, contributing just over a quarter of all data submitted from the region during the challenge.

To prepare data for scientific use, challenge data were assessed for quality assurance focusing on location, data completeness, and classification completeness. All data with location accuracy error > 100 meters were removed, as were submissions that lacked photographs. Ninety percent of the observations submitted passed screening. QA fields include location accuracy error, image count (0–6), number of images rejected (0–6), image null (0–6), classification for each direction (0–4), completeness [(image count + classification direction count)/10, range of 0–1], presence/absence for each directional photo (1 is present, 0 is blank), and the sequence of values for image presence/absence to indicate which directions are absent in the observation.
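The screening rule and completeness score can be expressed compactly. The sketch below assumes the completeness score is (image count + number of classified directions)/10, which is the reading consistent with the stated 0–1 range. The `Observation` class and its field names are hypothetical and do not correspond to column names in the published CSV file.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Hypothetical record holding the fields needed for screening."""
    location_accuracy_m: float   # app-reported location accuracy error
    image_count: int             # photos present, 0-6
    classified_directions: int   # N-S-E-W photos with labels, 0-4


def completeness(obs):
    """Score from 0 to 1: six photos plus four classified directions is 1.0."""
    return (obs.image_count + obs.classified_directions) / 10


def passes_screening(obs, max_error_m=100.0):
    """Keep observations with at least one photo and error <= max_error_m."""
    return obs.location_accuracy_m <= max_error_m and obs.image_count > 0
```

Under this reading, an observation with all six photos but no classification labels scores 0.6, so the score separates photo-only submissions from fully classified ones.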

As an initial assessment of user classification labeling, the MODIS/Terra + Aqua Land Cover Type Yearly L3 Global 500-meter classification data are included in the final data file for each GO observation site. A mismatch between user classification and MODIS data does not necessarily indicate that the volunteer incorrectly labeled the land cover. Differences can also result from LCLU change, differences in scale, or errors in the satellite data product. Discussion of additional planned quality assessment of user classifications follows.

GLOBE Observer Land Cover GO on a Trail challenge data are in the supplemental data and are archived on the GLOBE Observer website, https://observer.globe.gov/get-data/land-cover-data, as a CSV file. An accompanying folder of GO on a Trail photos is provided on the website.

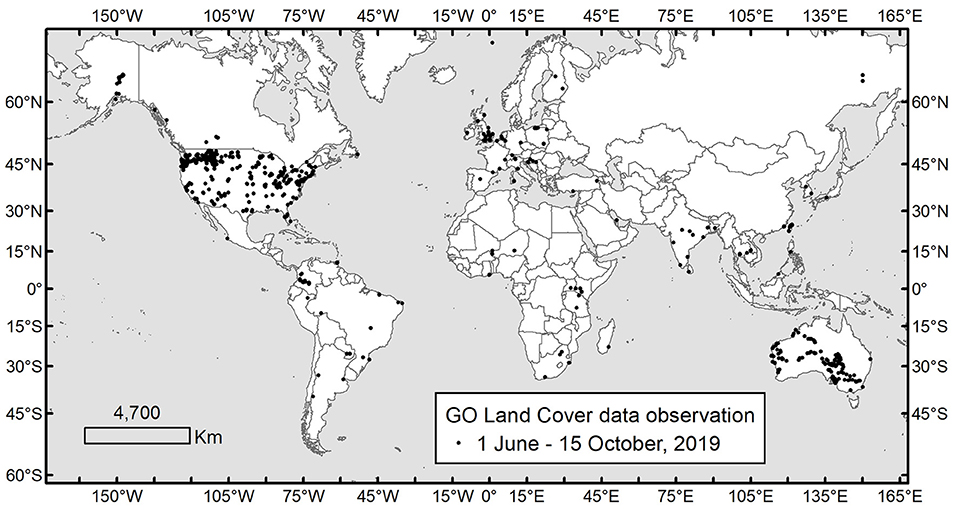

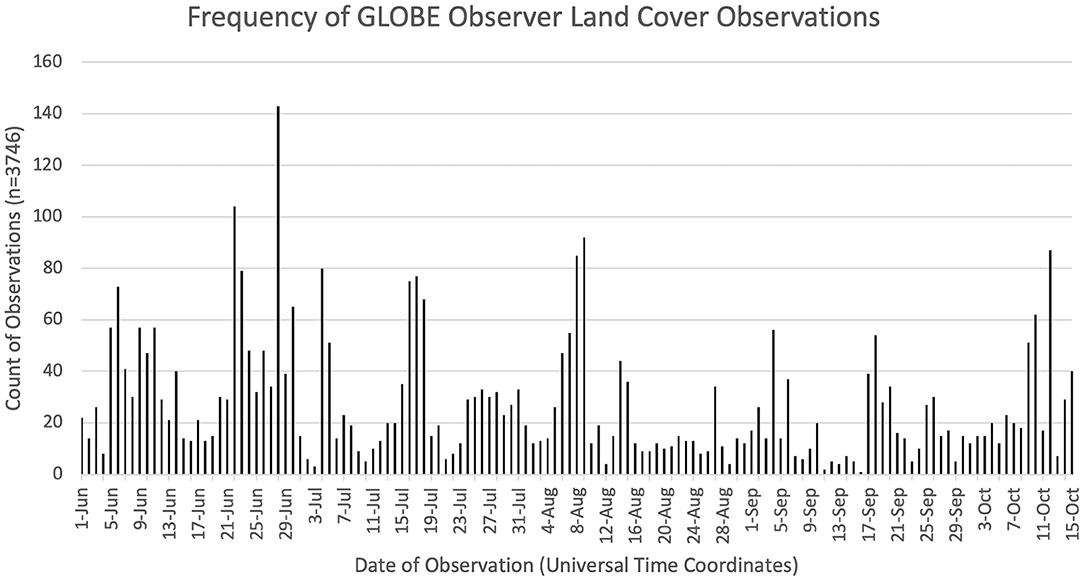

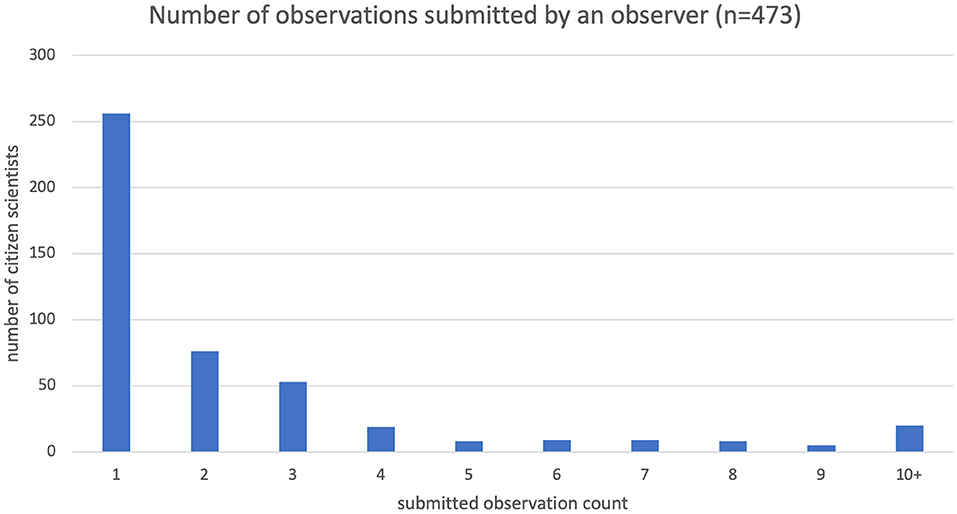

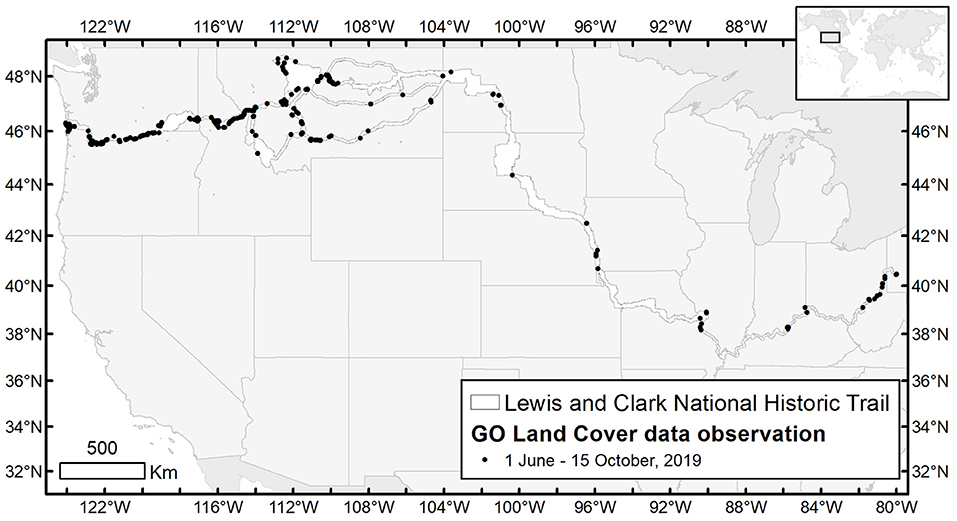

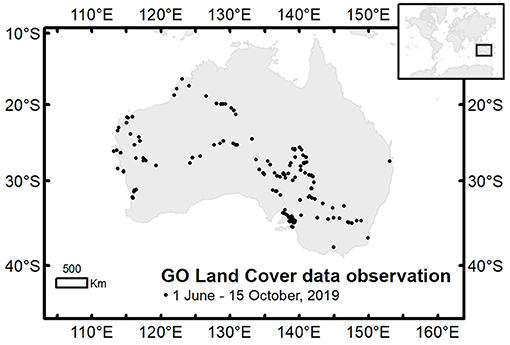

GO on a Trail data were collected opportunistically between June 1 and October 15, 2019, by a group of 473 citizen scientists who created 3,748 (3,352 after QA/QC) LCLU point observations consisting of 18,836 photos and 906 classification labels using the GLOBE Observer mobile app with the Land Cover protocol. Observations were submitted from 37 countries in North and South America, Africa, Europe, Asia, and Australia with concentrations in the United States (70% of all data) and Australia (Figure 4). Other top contributors included Poland, United Kingdom, and Thailand. Participation varied throughout the period, with a peak in late June when the challenge was heavily promoted, as shown in Figure 5. Most of the data were collected by experienced volunteers. As is typical in many citizen science projects, 6% of the participants (super users) collected 75% of the data, while 54% of users submitted just one observation (Figure 6).
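Participation statistics of this kind can be derived from the anonymous user number attached to each observation. The following sketch is one way to compute them; the function name `contribution_summary`, its return format, and the input (a flat list of user IDs, one per observation) are assumptions for illustration and not the analysis code used for this paper.

```python
import math
from collections import Counter


def contribution_summary(user_ids, top_fraction):
    """Summarize how unevenly observations are spread across users.

    user_ids holds one entry per observation. Returns a tuple of
    (share of observations from the top fraction of users,
     share of users who submitted exactly one observation).
    """
    per_user = sorted(Counter(user_ids).values(), reverse=True)
    n_users = len(per_user)
    # At least one user always counts as a top contributor.
    n_top = max(1, math.ceil(n_users * top_fraction))
    top_share = sum(per_user[:n_top]) / sum(per_user)
    single_share = sum(1 for count in per_user if count == 1) / n_users
    return top_share, single_share
```

Called with `top_fraction=0.06` on the challenge data, a function like this would be expected to return values near the 75% and 54% figures reported above.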

Figure 4. Distribution of ground reference images collected globally using the GLOBE Observer mobile app with the Land Cover protocol during the GO on a Trail data campaign that occurred from June 1 to October 15, 2019. Observations were reported from every continent except Antarctica, but are most concentrated in North America and Australia where partner-led challenges drove data collection.

Figure 5. Participation of citizen scientists in data collection varied but was consistent throughout the time period of 1 June−15 October, 2019.

Figure 6. The overall challenge engaged 473 citizen scientists who primarily submitted between 1 and 9 observations while there were 20 highly-engaged participants who each contributed >10 observations.

Twenty-seven percent (902) of the total observations were within the focus area of the LCNHT, defined as an area five kilometers on either side of the Trail, as shown in Figure 7. Ten percent of the LCNHT observations came from visitor centers (potential Observation Stations), resulting in 578 images collected within 500 meters of the visitor centers. Too few repeat observations were submitted from Observation Stations to do the intended assessment of variability in data collection and classification across citizen scientists.

Figure 7. This map shows the results of a geographically-focused portion of the data challenge with partner Lewis and Clark National Historic Trail (LCNHT) under the U.S. National Park Service.

In Australia, the challenge resulted in 183 new land cover observations with 1,028 photos. Teams traveled more than 20,000 kilometers between them based on known home locations and the farthest data point from home collected by each team. New data include contributions from extremely remote locations where other LCLU reference data are scarce (Figure 8).

Figure 8. Distribution of GLOBE Observer Land Cover observation sites during the geographically-focused Australian data challenge June 15–October 15, 2019.

Most observations were collected in dry conditions when leaves were on the trees (Table 1). Twenty-five percent of the observations include optional classification data (Table 2). For all observations with classification labels submitted during this data challenge, the most common LCLU type mapped was herbaceous land (grasses and forbs, 387 sites) followed by urban/developed land (197 sites). The high number of urban/developed sites likely reflects opportunistic data collection: participants received “credit” for data collected anywhere, and these land cover types are most accessible to volunteers. Along the LCNHT, classified sites were also primarily herbaceous, followed by open water and urban. Considering that grassland is the dominant land cover type (78%: MODIS IGBP) and that the Trail itself is defined by roadways along rivers, these LCLU class results are not surprising.

The data that results from the GO Land Cover protocol is a series of six photographs tagged with date, time, location, and, in some cases, land cover classification estimates. Other projects create collections of similar geotagged photographs. The Degree Confluence Project encourages users to photograph integer latitude-longitude confluence points in each cardinal direction. By the nature of the project, the spatial density of the photographs is limited (Fonte et al., 2015) to 24,482 potential points on land (Iwao et al., 2006). The Geo-Wiki Project also accepts geotagged photos of specific, pre-defined locations for brief project periods during which users upload a single photo from a requested location or land cover type (Fonte et al., 2015).

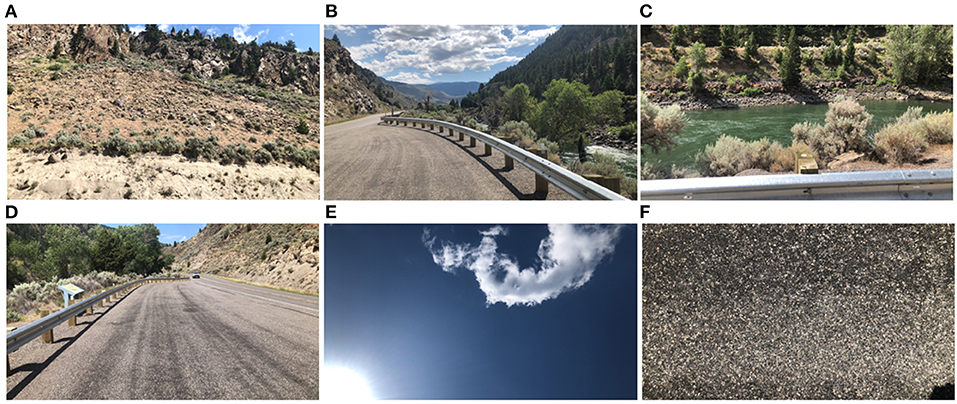

GLOBE data offers photographs of the four cardinal directions and adds up and down photos for additional context. Figure 9 and Table 3 show the raw data from a single user-submitted observation. While this paper focuses on data collected during the GO on a Trail challenge in 2019, GLOBE data collection is ongoing with data reported at more than 17,000 locations in 123 countries.

Figure 9. Sample GLOBE Observer land cover photos: (A) North, (B) East, (C) South, (D) West, (E) Up, and (F) Down.

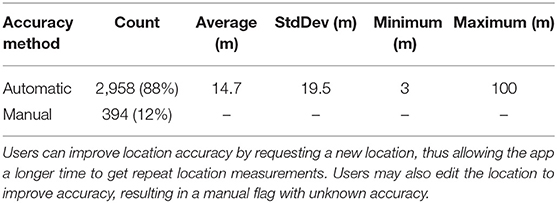

Since the primary data are geotagged photos, location accuracy is the most significant data quality check done on the GO on a Trail data to facilitate mapping the photos to other LCLU data. Further, a published quality assessment of all GLOBE Observer land cover data collected between 2016 and 2019, including GO on a Trail data, found that location errors are the most common errors (Amos et al., 2020). The GLOBE Observer app reports location accuracy estimates based on repeated queries of the phone's GPS receiver. The minimum accuracy error is 3 meters and the maximum is 100 meters with an average error of 14.7 meters (Table 4). Data with location accuracy errors > 100 meters (242 observations, 6%) have been eliminated from the dataset.

Table 4. Location accuracy estimates are derived by pinging the phone's GPS location service up to 10 times or until the accuracy error is <50 meters.

The dataset may include a degree of LCLU bias introduced by citizen observers. Foody (2015a) notes that a weakness of citizen-collected geotagged photos, such as GLOBE Observer photos, is that certain types of land cover may be over-represented. People are known to show preferences for visiting particular land cover types. Han (2007) highlighted a preference for coniferous forest landscapes compared to grassland/savanna biomes. Buxton et al. (2019) noted preferences for greener landscapes in urban neighborhoods. White et al. (2010) observed preferences for water landscapes. Kisilevich et al. (2010) highlighted a trend to visit and document scenic locations. Understanding these potential biases, LCNHT staff used the GO on a Trail challenge to identify particularly scenic locations along the trail. Also, because the data collection was more directed in this area, the sample of land cover types observed by citizen scientists along the LCNHT has more heterogeneity than the global GO on a Trail data. This bias will be mitigated in future challenges by encouraging data collection at pre-selected sites within the app in addition to allowing user-driven opportunistic data collection.

The third area to assess is the quality of volunteer-assigned land cover classification labels. The optional land cover labels are adapted from a hierarchical global land cover classification system (UNESCO, 1973) developed for the GLOBE Program's 1996 land cover protocol, on which GLOBE Observer Land Cover is based, and named the Modified UNESCO Classification (MUC) system (Becker et al., 1998; GLOBE, 2020b). The subsequent GLOBE Observer land cover data labels were required to be consistent with historic GLOBE data to maintain continuity.

As stated in the GLOBE MUC Field Guide, the original goal of the GLOBE Land Cover measurement protocol was “the creation of a global land cover data set to be used in verifying remote sensing land cover classifications.” Selecting a land cover classification system for citizen science poses a challenge because many of the current global LCLU datasets (e.g., the Moderate Resolution Imaging Spectroradiometer, or MODIS) employ different classification systems (Herold et al., 2008), making them difficult to compare without harmonizing to similar land cover definitions (Yang et al., 2017; Li et al., 2020; Saah et al., 2020). To make GLOBE Observer Land Cover data comparable to global LCLU maps central to NASA-funded science while maintaining continuity with prior GLOBE Land Cover measurement data, the MUC system was cross-mapped with the International Geosphere-Biosphere Programme (IGBP) Land Cover Type Classification (Loveland and Belward, 1997) used in the MODIS Land Cover Product (Sulla-Menashe and Friedl, 2018). MODIS pixel-level LCLU values are reported for each observation site in the GO on a Trail data. The MODIS Land Cover Product was selected because it was the highest resolution global NASA product available at the time. Alignments are shown in Supplementary Table 1. Because GO data include raw percent estimates in addition to the overall land cover for each photograph, a similar process could be used to harmonize with other classification systems, such as the Land Cover Classification System used by the Food and Agriculture Organization of the United Nations.

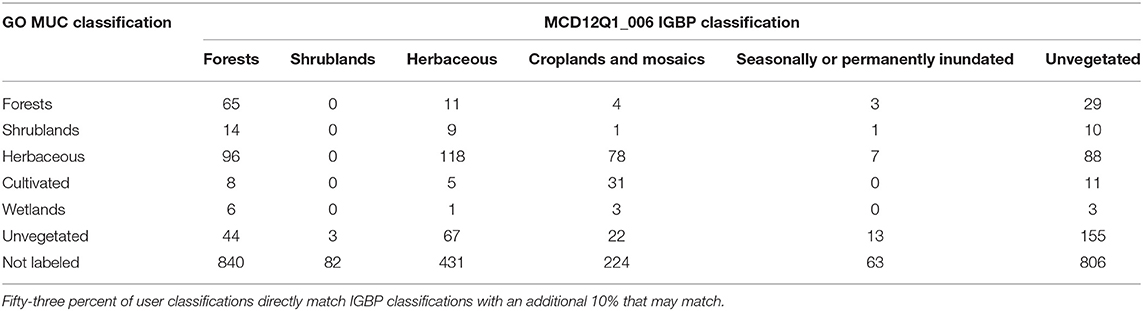

Since building a library of geotagged photos is the primary objective of the protocol and classification is optional, only 25% of the data includes classification labels. Fifty-three percent of the volunteer labels match the IGBP classification for that location (Table 5). Another 37% of classifications do not match because of LCLU change between 2018 and 2019 (e.g., GO-classified cultivated vs. IGBP forest), volunteer misclassification (e.g., GO-classified herbaceous grassland vs. IGBP urban), or differences in scale (e.g., GO-classified barren vs. IGBP sparse herbaceous). The final 10% of volunteer classifications may match, such as a volunteer classification of herbaceous land cover assigned to a location classified as savanna or open forest in the MODIS product. In this case, grasses may cover a greater percentage of the 100-m area mapped with GO than trees. Of the observations that are classified, it is unclear how well volunteers are estimating percent cover. Dodson et al. (2019) reported that GLOBE citizen scientists estimating percent cloud cover in the GLOBE Observer Clouds tool tend to overestimate percent cover compared to concurrent satellite data. This means that GLOBE Observer Land Cover percent estimates, which are done following a similar protocol, may also be high and should be viewed not as quantitative data, but as a means to gauge general land cover representation in the area.

Table 5. Confusion matrix for GLOBE Observer Land Cover classes based on comparison with IGBP classification.
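A comparison of this kind reduces to counting paired labels. The sketch below shows one way to build confusion counts and an overall agreement rate from (volunteer label, reference label) pairs. It assumes the volunteer's MUC label has already been mapped to an IGBP class using the cross-mapping in Supplementary Table 1, which is not reproduced here; the function names and the example class names in the usage note are hypothetical.

```python
from collections import Counter


def confusion_counts(pairs):
    """Count occurrences of each (volunteer_label, reference_label) pair."""
    return Counter(pairs)


def overall_agreement(pairs):
    """Fraction of pairs in which the volunteer and reference labels match."""
    pairs = list(pairs)
    if not pairs:
        raise ValueError("at least one labeled pair is required")
    matches = sum(1 for volunteer, reference in pairs if volunteer == reference)
    return matches / len(pairs)
```

For example, four pairs such as `("herbaceous", "herbaceous")`, `("urban", "urban")`, `("herbaceous", "urban")`, and `("barren", "herbaceous")` give an overall agreement of 0.5. As noted in the text, a mismatch in such a tally does not by itself show that the volunteer label is wrong.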

GLOBE Observer is implementing two initiatives to further assess and remove errors in classification. First, GLOBE Observer is exploring the potential use of artificial intelligence/machine learning (AIML) to identify land cover in the photos. The second initiative is a secondary classification of the photos by other citizen science volunteers, an approach similar to those employed by Geo-Wiki Project's Picture Pile (Danyo et al., 2018) or classification projects on the Zooniverse platform (Rosenthal et al., 2018). Further analysis is required to compare the accuracy of AIML classification and secondary classification to primary classification.

AIML and secondary classification will also expand the number of geotagged photos that include classification labels. GLOBE Observer is additionally pursuing incentives to encourage volunteers to submit complete and accurate observations by completing phase two of data collection. While recognition for “winning” does motivate people to participate in challenges, feedback may provide a more powerful mechanism for encouraging routine data completeness and accuracy. A survey of GLOBE Observer users found that the majority of active participants contribute because they are interested in contributing to NASA science and that some who stop participating do so because they feel that a lack of feedback from the project indicates that their contributions aren't useful (Fischer et al., 2021). Clear feedback will help users understand the value of complete and accurate data and will improve data accuracy by identifying classification success or offering correction.

Bayas et al. (2020) report significant improvement in the quality of volunteers' land cover classifications when users were provided with timely feedback. As documented in Amos et al. (2020), GLOBE Observer provides such feedback for volunteers who submit clouds data. Volunteers are encouraged to take cloud observations when a satellite is overhead through an alert that appears 15 min before the overpass. Data concurrent with an overpass are matched to the satellite-derived cloud product from that overpass, and the user is sent an email that compares their observation to the satellite classification. Daily cloud data submissions peak during satellite overpass times, indicating that the alert combined with feedback motivates data collection. We are exploring mechanisms to provide a similar satellite match email for land cover data. Such a system would not only provide feedback, but also flag observations that report land cover that differs from the matched data product. These sites could be reviewed by experts to identify volunteer errors and offer feedback or to identify change or errors in the satellite-based land cover product.
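The flagging step described above might look like the following minimal sketch. The `Observation` fields, the `flag_for_review` helper, and the example records are all hypothetical; the system is still being explored, so this illustrates the idea rather than any actual GLOBE Observer implementation.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    site_id: str           # hypothetical site identifier
    volunteer_class: str   # land cover label assigned by the volunteer
    satellite_class: str   # label from the matched satellite product


def flag_for_review(observations, plausible_pairs=frozenset()):
    """Return observations whose volunteer label disagrees with the satellite label.

    plausible_pairs lists (volunteer, satellite) label pairs that are treated
    as compatible and therefore not flagged.
    """
    flagged = []
    for obs in observations:
        pair = (obs.volunteer_class, obs.satellite_class)
        if obs.volunteer_class != obs.satellite_class and pair not in plausible_pairs:
            flagged.append(obs)
    return flagged


# Hypothetical records for illustration only.
records = [
    Observation("site-a", "forest", "forest"),
    Observation("site-b", "herbaceous", "savanna"),
    Observation("site-c", "cultivated", "forest"),
]
to_review = flag_for_review(records, {("herbaceous", "savanna")})
print([obs.site_id for obs in to_review])
```

A flagged site is not necessarily a volunteer error: as noted above, a mismatch may also indicate land cover change or an error in the satellite-based product, which is why the sketch only selects sites for expert review.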

Since a desire to contribute meaningfully to science motivates GLOBE Observer users, data completeness may also improve if volunteers are asked to collect specific types of data to meet a particular science objective. To that end, an in-app mechanism is under development to enable scientists to request observations at designated observation sites. By communicating the scientific need for data and making it simple for volunteers to identify where to collect the most useful data, we will provide motivation for complete and accurate data collection.

The GLOBE Observer Land Cover dataset is relatively new but growing, and the authors suggest some potential data applications. First, the photos could be used on their own in a standard photo monitoring approach (e.g., Sparrow et al., 2020) to estimate current conditions or to track LCLU changes over time. Second, photos that were not classified by a GO citizen scientist could be labeled automatically, drawing on improvements in computer vision processing that identify land cover (Xu et al., 2017) or elements like woody vegetation (Bayr and Puschmann, 2019), and thus be incorporated in a variety of software workflows. Third, the ground reference photos could support remote sensing activities that rely on human cognition (White, 2019) and readily accessible datasets to accurately label satellite imagery, such as developing datasets for LCLU mapping and monitoring with tools such as TimeSync (Cohen et al., 2010) and Collect Earth (Bey et al., 2016; Saah et al., 2019) or the attribution of land cover change (e.g., Kennedy et al., 2015). The growing dataset could support these applications via the provision of landscape-level observations across widely dispersed areas. A deep review is underway to assess how well the GO photos map to satellite data and which of these applications are most viable.

Foody (2015b) and Stehman et al. (2018) both note the potential large impact on statistical or area estimation in LCLU mapping if reference data is not collected in a specific manner. The increasing temporal and spatial breadth of the GO Land Cover dataset should support the verification of remote sensing Land Cover mapping and the determination of “error-adjustment[s]” suggested by both Foody and Stehman. Indeed, use of geotagged photos as a supporting data source to inform land cover maps is not without precedent, and LCLU data could be “radically improved” with the introduction of more quality volunteer-produced data (Fonte et al., 2015). Iwao et al. (2006) established that photos collected in the Degree Confluence Project provided useful validation information for three global land cover maps. The Geo-Wiki Project also demonstrated the potential value of geotagged photos in a handful of case studies (Antoniou et al., 2016).

The GLOBE Land Cover photo library similarly has the potential to contribute quality reference data to the land cover and land use research community. The location accuracy of GLOBE Observer georeferenced photos is 100 meters or better for 80% of the data and 10 meters or better for 60% of the data. This is sufficient to place the photos within a single pixel of moderate-resolution satellite-based LCLU products, such as the MODIS Land Cover Map. Up to 63% of volunteer classification labels align with the MODIS Land Cover product. Cases of mismatched labeling require deeper investigation, but ongoing assessment of volunteer and expert classification labels will add value to GO on a Trail data.

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://observer.globe.gov/get-data/land-cover-data.

HK and KW manage GLOBE Observer and conceived of the GO on a Trail data challenge. HK, PN, and DO designed the GLOBE Observer Land Cover protocol, and DO led the development of the app data collection tool. AD, RC, and DW planned and implemented the LCNHT portion of the GO on a Trail challenge, contributed to communications, and supported trail partners. ML did the secondary classification of trail data. JP led the Australia Scouts challenge. PN, AD, and ST collected the most GLOBE Observer land cover data along the LCNHT. MH, SH, and JL were the Scouts who collected the most data in the Australia data challenge. AB managed communication to recruit citizen scientists to participate in the challenge. PN did much of the data analysis presented in this paper. HK wrote most of the manuscript, and PN contributed significantly, particularly to the discussion. JP and KW also contributed to the text. HM designed challenge graphics and Figures 2, 3. All authors contributed to the final manuscript.

GLOBE Observer was funded by the NASA Science Activation award number NNX16AE28A for the NASA Earth Science Education Collaborative (NESEC; Theresa Schwerin, PI). The GLOBE Program was sponsored by the National Aeronautics and Space Administration (NASA); supported by the National Science Foundation (NSF), National Oceanic and Atmospheric Administration (NOAA), and U.S. Department of State; and implemented by the University Corporation for Atmospheric Research. The GLOBE Data Information Systems Team developed and supports the GLOBE Observer app.

HK, KW, HM, DO, and AB were employed by the company Science Systems and Applications, Inc.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors express appreciation for the citizen scientists who volunteer their time to collect GLOBE Observer data. The authors acknowledge the contribution of the broader GLOBE Observer team to implementing the GO on a Trail challenge. Finally, the authors thank the three reviewers for their thoughtful and constructive feedback.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fclim.2021.620497/full#supplementary-material

Amos, H. M., Starke, M. J., Rogerson, T. M., Colón Robles, M., Andersen, T., et al. (2020). GLOBE Observer Data: 2016–2019. Earth Space Sci. 7:e2020EA001175. doi: 10.1029/2020EA001175

Antoniou, V., Fonte, C. C., See, L., Estima, J., Arsanjani, J. J., Lupia, F., et al. (2016). Investigating the feasibility of geo-tagged photographs as sources of land cover input data. Int. J. Geo Inform. 5:64. doi: 10.3390/ijgi5050064

Bayas, J. C. L., See, L., Bartl, H., Sturn, T., Karner, M., et al. (2020). Crowdsourcing LUCAS: citizens generating reference land cover and land use data with a mobile app. Land 9:446. doi: 10.3390/land9110446

Bayas, J. C. L., See, L., Fritz, S., Sturn, T., Preger, C., Durauer, M., et al. (2016). Crowdsourcing in-situ data on land cover and land use using gamification and mobile technology. Remote Sens. 8:905. doi: 10.3390/rs8110905

Bayr, U., and Puschmann, O. (2019). Automatic detection of woody vegetation in repeat landscape photographs using a convolutional neural network. Ecol. Inform. 50, 220–233. doi: 10.1016/j.ecoinf.2019.01.012

Becker, M. L., Congalton, R. G., Budd, R., and Fried, A. (1998). A GLOBE collaboration to develop land cover data collection and analysis protocols. J. Sci. Educ. Technol. 7, 85–96. doi: 10.1023/A:1022540300914

Bey, A., Sánchez-Paus Díaz, A., Maniatis, D., Marchi, G., Mollicone, D., Ricci, S., et al. (2016). Collect earth: land use and land cover assessment through augmented visual interpretation. Remote Sens. 8:807. doi: 10.3390/rs8100807

Boger, R., LeMone, P., McLaughlin, J., Sikora, S., and Henderson, S. (2006). “GLOBE ONE: a community-based environmental field campaign,” in Monitoring Science and Technology Symposium: Unifying Knowledge for Sustainability in the Western Hemisphere Proceedings RMRS-P-42CD, eds C. Aguirre-Bravo, J. P. Pellicane, D. P. Burns, and S. Draggan (Fort Collins, CO: U.S. Department of Agriculture, Forest Service, Rocky Mountain Research Station), 500–504.

Bourgeault, J. L., Congalton, R. G., and Becker, M. L. (2000). “GLOBE MUC-A-THON: a method for effective student land cover data collection,” in IGARSS 2000. IEEE 2000 International Geoscience and Remote Sensing Symposium. Taking the Pulse of the Planet: The Role of Remote Sensing in Managing the Environment. Proceedings Cat. No.00CH37120 (Honolulu, HI: IEEE), 551–553.

Buxton, J. A., Ryan, R. L., and Wells, N. M. (2019). Exploring preferences for urban greening. Cities Environ. 12:3. Available online at: https://digitalcommons.lmu.edu/cate/vol12/iss1/3

Clark, M. L., and Aide, T. M. (2011). Virtual Interpretation of Earth Web-Interface Tool (VIEW-IT) for collecting land-use/land-cover reference data. Remote Sens. 3, 601–620. doi: 10.3390/rs3030601

Cohen, W. B., Yang, Z., and Kennedy, R. (2010). Detecting trends in forest disturbance and recovery using yearly Landsat time series: 2. TimeSync - Tools for calibration and validation. Rem. Sens. Environ. 114, 2911–2924. doi: 10.1016/j.rse.2010.07.010

Colón Robles, M., Amos, H. M., Dodson, J. B., Bowman, J., Rogerson, T., Bombosch, A., et al. (2020). Clouds around the world: how a simple citizen science data challenge became a worldwide success. Bull. Amer. Meteor. Soc. 101, E1201–E1213. doi: 10.1175/BAMS-D-19-0295.1

Danyo, O., Moorthy, I., Sturn, T., See, L., Laso Bayas, J.-C., Domian, D., et al. (2018). “The picture pile tool for rapid image assessment: a demonstration using hurricane matthew,” in ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences. IV-4, 2018.

Dodson, J. B., Colón Robles, M., Taylor, J. E., DeFontes, C. C., and Weaver, K. L. (2019). Eclipse across America: citizen science observations of the 21 August 2017 total solar eclipse. J. Appl. Meteorol. Climatol. 58, 2363–2385. doi: 10.1175/JAMC-D-18-0297.1

Earth Observation Modeling Facility University of Oklahoma. (2020). Picture Post. Available online at: https://picturepost.ou.edu/ (accessed October 21, 2020).

Fischer, H. A., Cho, H., and Storksdieck, M. (2021). Going beyond hooked participants: the nibble and drop framework for classifying citizen science participation. Citizen Sci. Theory Pract. 6, 1–18. doi: 10.5334/cstp.350

Fonte, C. C., Bastin, L., See, L., Foody, G., and Lupia, F. (2015). Usability of VGI for validation of land cover maps. Int. J. Geograph. Inform. Sci. 29, 1269–1291. doi: 10.1080/13658816.2015.1018266

Foody, G., Fritz, S., Fonte, C. C., Bastin, L., Olteanu-Raimond, A.-M., Mooney, P., et al. (2017). “Mapping and the citizen sensor,” in Mapping and the Citizen Sensor, eds G. Foody, L. See, S. Fritz, P. Mooney, A. M. Olteanu-Raimond, C. C. Fonte, and V. Antoniou (London: Ubiquity Press), 1–12.

Foody, G. M. (2015a). “An assessment of citizen contributed ground reference data for land cover map accuracy assessment,” in ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, II-3/W5, 219–225. doi: 10.5194/isprsannals-II-3-W5-219-2015

Foody, G. M. (2015b). Valuing map validation: the need for rigorous land cover map accuracy assessment in economic valuation of ecosystem services. Ecol. Econ. 111, 23–28. doi: 10.1016/j.ecolecon.2015.01.003

Frey, K. E., and Smith, L. C. (2007). How well do we know northern land cover? Comparison of four global vegetation and wetland products with a new ground-truth database for West Siberia. Glob. Biogeochem. Cycles 21:1. doi: 10.1029/2006GB002706

Fritz, S., McCallum, I., Schill, C., Perger, C., See, L., Schepaschenko, D., et al. (2012). Geo-Wiki: an online platform for improving global land cover. Environ. Model. Software 31, 110–123. doi: 10.1016/j.envsoft.2011.11.015

Fritz, S., See, L., Perger, C., McCallum, I., Schill, C., Schepaschenko, D., et al. (2017). A global dataset of crowdsourced land cover and land use reference data. Sci. Data 4:170075. doi: 10.1038/sdata.2017.75

GLOBE (2019). About GLOBE. Global Learning and Observations to Benefit the Environment. Available online at: https://www.globe.gov/about/overview (accessed October 21, 2020).

GLOBE (2020a). GLOBE Data User Guide. Global Learning and Observations to Benefit the Environment. Available online at: https://www.globe.gov/globe-data/globe-data-user-guide (accessed October 21, 2020).

GLOBE (2020b). MUC Field Guide, A Key to Land Cover Classification. Global Learning and Observations to Benefit the Environment. Available online at: https://www.globe.gov/documents/355050/355097/MUC+Field+Guide/5a2ab7cc-2fdc-41dc-b7a3-59e3b110e25f (accessed October 20, 2020).

Han, K-T. (2007). Responses to six major terrestrial biomes in terms of scenic beauty, preference, and restorativeness. Environ. Behav. 39, 529–556. doi: 10.1177/0013916506292016

Herold, M., Mayaux, P., Woodcock, C. E., Baccini, A., and Schmullius, C. (2008). Some challenges in global land cover mapping: an assessment of agreement and accuracy in existing 1 km datasets. Remote Sens. Environ. 112, 2538–2556. doi: 10.1016/j.rse.2007.11.013

Iwao, K., Nishida, K., Kinoshita, T., and Yamagata, Y. (2006). Validating land cover maps with degree confluence project information. Geophys. Res. Lett. 33:L23404. doi: 10.1029/2006GL027768

Kennedy, R. E., Yang, Z., Braaten, J., Copass, C., Antonova, N., Jordan, C., and Nelson, P. (2015). Attribution of disturbance change agent from Landsat time-series in support of habitat monitoring in the Puget Sound region, USA. Remote Sens. Environ. 166, 271–285. doi: 10.1016/j.rse.2015.05.005

Kisilevich, S., Krstajic, M., Keim, D., Andrienko, N., and Andrienko, G. (2010). “Event-based analysis of people's activities and behavior using Flickr and Panoramio geotagged photo collections,” in Proceedings of the 14th International Conference on Information Visualisation (IV), London, UK, 26–29 July 2010, 289–296.

Li, Z., White, J. C., Wulder, M., Hermosilla, T., Davidson, A. M., and Comber, A. J. (2020). Land cover harmonization using Latent Dirichlet allocation. Int. J. Geograph. Inform. Sci. 35, 348–374. doi: 10.1080/13658816.2020.1796131

Loveland, T. R., and Belward, A. S. (1997). The IGBP-DIS global 1km land cover data set, DISCover: first results. Int. J. Remote Sens. 18, 3289–3295. doi: 10.1080/014311697217099

National Academies of Sciences Engineering and Medicine. (2018). Learning Through Citizen Science: Enhancing Opportunities by Design. Washington, DC: The National Academies Press.

Rosenthal, I. S., Byrnes, J. E. K., Cavanaugh, K. C., Bell, T. W., Harder, B., Haupt, A. J., et al. (2018). Floating forests: quantitative validation of citizen science data generated from consensus classifications. Phys. Soc. arXiv. arXiv:1801.08522

Saah, D., Johnson, G., Ashmall, B., Tondapu, G., Tenneson, K., Patterson, M., et al. (2019). Collect Earth: An online tool for systematic reference data collection in land cover and use applications. Environ. Model. Softw. 118, 166–171. doi: 10.1016/j.envsoft.2019.05.004

Saah, D., Tenneson, K., Poortinga, A., Nguyen, Q., Chishtie, F., Aung, K. S., et al. (2020). Primitives as building blocks for constructing land cover maps. Int. J. Appl. Earth Observ. Geoinform. 85:101979. doi: 10.1016/j.jag.2019.101979

Sleeter, B. M. T., Loveland, T., Domke, G., Herold, N., Wickham, J., et al. (2018). “Land Cover and Land-Use Change,” in Impacts, Risks, and Adaptation in the United States: Fourth National Climate Assessment, Vol II, eds D. R. Reidmiller, C. W. Avery, D. R. Easterling, K. E. Kunkel, K. L.M. Lewis, T. K. Maycock, and B. C. Stewart (Washington, DC: U.S. Global Change Research Program), 202–231.

Sparrow, B., Foulkes, J., Wardle, G., Leitch, E., Caddy-Retalic, S., van Leeuwen, S., et al. (2020). A vegetation and soil survey method for surveillance monitoring of rangeland environments. Front. Ecol. Evol. 8:157. doi: 10.3389/fevo.2020.00157

Stehman, S. V., Fonte, C. C., Foody, G. M., and See, L. (2018). Using volunteered geographic information (VGI) in design-based statistical inference for area estimation and accuracy assessment of land cover. Remote Sens. Environ. 212, 47–59. doi: 10.1016/j.rse.2018.04.014

Sulla-Menashe, D., and Friedl, M. A. (2018). User Guide to Collection 6 MODIS Land Cover (MCD12Q1 and MCD12C1) Product. Land Processes Distributed Active Archive Center. Available online at: https://lpdaac.usgs.gov/documents/101/MCD12_User_Guide_V6.pdf

Tsendbazar, N. E., de Bruin, S., and Herold, M. (2015). Assessing global land cover reference datasets for different user communities. ISPRS J. Photogram. Remote Sens. 103, 93–114. doi: 10.1016/j.isprsjprs.2014.02.008

UNESCO (1973). International Classification and Mapping of Vegetation, Series 6, Ecology and Conservation. Paris, France: United Nations Educational, Scientific and Cultural Organization.

United States Code, 2006 Edition, Supplement 5. (2011). “Chapter 27 - National Trails System” in Title 16 - Conservation. U.S. Government Publishing Office. Available online at: https://www.govinfo.gov/app/details/USCODE-2011-title16/USCODE-2011-title16-chap27 (accessed April 7, 2021).

White, A. R. (2019). Human expertise in the interpretation of remote sensing data: A cognitive task analysis of forest disturbance attribution. Int. J. Appl. Earth Observ. Geoinform. 74, 37–44. doi: 10.1016/j.jag.2018.08.026

White, M., Smith, A., Humphryes, K., Pahl, S., Snelling, D., et al. (2010). Blue space: The importance of water for preference, affect, and restorativeness ratings of natural and built scenes. J. Environ. Psychol. 30, 482–493. doi: 10.1016/j.jenvp.2010.04.004

Xiao, X., Dorovskoy, P., Biradar, C., and Bridge, E. (2011). A library of georeferenced photos from the field. Eos Trans. AGU 92:453. doi: 10.1029/2011EO490002

Xu, G., Zhu, X., Fu, D., Dong, J., and Xiao, X. (2017). Automatic land cover classification of geo-tagged field photos by deep learning. Environ. Model. Software 91, 127–134. doi: 10.1016/j.envsoft.2017.02.004

Keywords: citizen science, community engagement, science technology engineering mathematics (STEM), reference data, geotagged photographs, land cover - land use

Citation: Kohl HA, Nelson PV, Pring J, Weaver KL, Wiley DM, Danielson AB, Cooper RM, Mortimer H, Overoye D, Burdick A, Taylor S, Haley M, Haley S, Lange J and Lindblad ME (2021) GLOBE Observer and the GO on a Trail Data Challenge: A Citizen Science Approach to Generating a Global Land Cover Land Use Reference Dataset. Front. Clim. 3:620497. doi: 10.3389/fclim.2021.620497

Received: 23 October 2020; Accepted: 23 March 2021;

Published: 22 April 2021.

Edited by:

Alex de Sherbinin, Columbia University, United States

Reviewed by:

Celso Von Randow, National Institute of Space Research (INPE), Brazil

Copyright © 2021 Kohl, Nelson, Pring, Weaver, Wiley, Danielson, Cooper, Mortimer, Overoye, Burdick, Taylor, Haley, Haley, Lange and Lindblad. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Holli A. Kohl, holli.kohl@nasa.gov

†These authors have contributed equally to this work and share first authorship

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
