- 1 The First Affiliated Hospital of Chongqing Medical University, Chongqing Key Laboratory of Ophthalmology and Chongqing Eye Institute, Chongqing Branch of National Clinical Research Center for Ocular Diseases, Chongqing, China

- 2 School of Computer Science and Technology, Harbin Institute of Technology, Harbin, China

Fuchs’ uveitis syndrome (FUS) is one of the most under- or misdiagnosed uveitis entities. Many undiagnosed FUS patients are unnecessarily overtreated with anti-inflammatory drugs, which may lead to serious complications. To assist ophthalmologists in the screening and diagnosis of FUS, we developed seven deep convolutional neural networks (DCNNs) to detect FUS using slit-lamp images, including a new optimized model with a mixed “attention” module designed to improve test accuracy. On the same independent test set, we compared the performance of these DCNNs with that of ophthalmologists in detecting FUS. The seven network models (Xception, Resnet50, SE-Resnet50, ResNext50, SE-ResNext50, ST-ResNext50, and SET-ResNext50) predicted FUS automatically with areas under the receiver operating characteristic curve (AUCs) ranging from 0.951 to 0.977. Our proposed SET-ResNext50 model (accuracy = 0.930; precision = 0.918; recall = 0.923; F1 measure = 0.920), with an AUC of 0.977, consistently outperformed the other networks and outperformed general ophthalmologists by a large margin. Heat-map visualizations of the SET-ResNext50 model were generated to identify the target areas in the slit-lamp images. In conclusion, a trained classification method based on DCNNs was highly effective in distinguishing FUS from other forms of anterior uveitis. The DCNNs performed better than general ophthalmologists and could be of value in the diagnosis of FUS.

Introduction

Fuchs’ uveitis syndrome (FUS) is a chronic, mostly unilateral, non-granulomatous anterior uveitis that accounts for 1–20% of all uveitis cases at referral centers and is the second most common form of non-infectious uveitis (Yang et al., 2006; Kazokoglu et al., 2008; Abano et al., 2017). It is reported to be one of the most under- or misdiagnosed uveitis entities, and its diagnosis is often delayed for years (Norrsell and Sjödell, 2008; Tappeiner et al., 2015; Sun and Ji, 2020). Patients with FUS generally present with asymptomatic, mild inflammation of the anterior segment of the eye (Sun and Ji, 2020). The syndrome is characterized by distinctive keratic precipitates (KPs), iris depigmentation with or without heterochromia, and the absence of posterior synechiae (Tandon et al., 2012). Heterochromia is a striking feature of FUS in white patients (Bonfioli et al., 2005). However, iris depigmentation may be absent or subtle, especially in Asian or African patients, whose irides have a higher melanin density (Tabbut et al., 1988; Arellanes-García et al., 2002; Yang et al., 2006; Tugal-Tutkun et al., 2009). In a previous study of Chinese FUS patients, we described varying degrees of diffuse iris depigmentation without posterior synechiae, rather than heterochromia (Yang et al., 2006). Diffuse iris depigmentation of varying degree may be considered the most sensitive and reliable sign of FUS in Chinese and other highly pigmented populations (Mohamed and Zamir, 2005; Yang et al., 2006). However, subtle iris depigmentation is often overlooked, leading to misdiagnosis (Tappeiner et al., 2015). Many undiagnosed FUS patients are unnecessarily treated, chronically or intermittently, with topical or systemic corticosteroids or even other immunosuppressive agents, which may lead to cataract formation and severe glaucoma (Menezo and Lightman, 2005; Accorinti et al., 2016; Touhami et al., 2019).
To date, the diagnosis has depended heavily on uveitis specialists with broad experience in detecting subtle abnormalities of iris pigmentation in patients with mild anterior uveitis.

Deep learning (DL), one of the most promising artificial intelligence technologies, has been shown to learn from data sets and make predictions on them (He et al., 2019). Deep convolutional neural networks (DCNNs), a subtype of DL, have proven useful in image-centric specialties, especially ophthalmology (Hogarty et al., 2019). Because DCNNs can learn complex representations of data, they are well suited to classification problems that support the accurate diagnosis of various diseases (Kapoor et al., 2019; Ting et al., 2019). To assist ophthalmologists in the screening and diagnosis of FUS, we developed DCNNs that classify slit-lamp images automatically, and in this report we demonstrate their feasibility for detecting FUS.

Materials and Methods

Data Sets

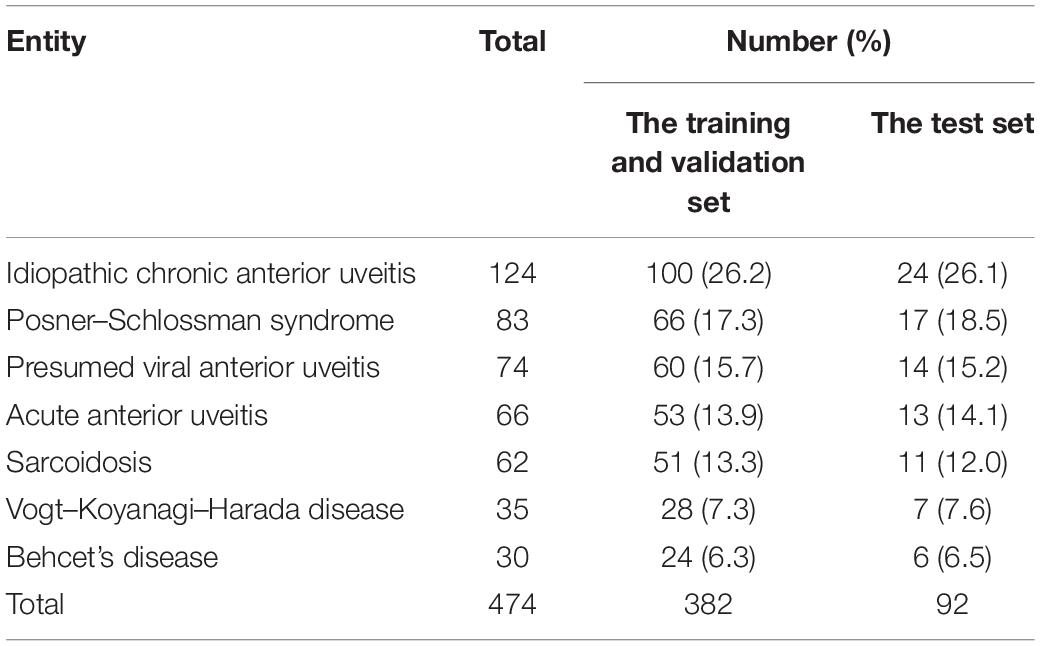

We designed a retrospective study based on slit-lamp images of 478 FUS patients and 474 non-FUS controls from the uveitis center of the First Affiliated Hospital of Chongqing Medical University between January 2015 and October 2020. FUS was diagnosed according to the criteria of La Hey et al. (1991), combined with the description of Chinese FUS patients in a previous report from our group (Yang et al., 2006). Patients with other uveitis entities (Table 1) who presented with signs and symptoms of anterior uveitis and had images comparable with those of FUS patients served as non-FUS controls. All enrolled patients were diagnosed by more than two specialists from referring hospitals, and the diagnoses were then verified by uveitis specialists at our center. Slit-lamp images of each patient were collected using a digital slit-lamp microscope (Photo-Slit Lamp BX 900; Haag-Streit, Koeniz, Switzerland). The images were taken at a 30° angle using direct illumination, focused on the iris, at magnifications of 10×, 16×, or 25×. To capture the diffuse and uniform character of the iris depigmentation, only images covering about half of the iris were included.

A total of 2,000 standard slit-lamp images were collected anonymously, with all personal data except disease type removed. These images formed the basis for training the DCNNs and comprised 872 images of affected eyes from FUS patients showing diffuse and uniform iris depigmentation without posterior synechiae (FUS group) and 1,128 images of eyes from non-FUS patients (non-FUS group). Twenty percent of all images were held out as an independent test set to evaluate the effectiveness and generalization ability of the DCNNs. The remaining 80% were randomly divided into a training set and a validation set at an 8:2 ratio. The training set was used to train the DCNNs, whereas the validation set was used to tune their hyper-parameters. Images from the same patient (both eyes or multiple sessions) were kept together, so that no patient contributed images to both the test set and the other two sets. The study was approved by the Ethical Committee of First Affiliated Hospital of Chongqing Medical University (No. 2019356) and was conducted in accordance with the Declaration of Helsinki for research involving human subjects.
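The patient-level hold-out described above can be sketched as follows. This is a minimal illustration, not the authors' code: `patient_ids` is a hypothetical list giving the anonymized patient of each image, whole patients are moved into the test set until it holds roughly 20% of the images (so it can overshoot by one patient's images), and the remaining images are then split 8:2 at the image level, since the text only states that patients are not shared between the test set and the other two sets.

```python
import random
from collections import defaultdict


def patient_level_split(patient_ids, test_fraction=0.2, val_fraction=0.2, seed=0):
    """Split image indices into train/val/test so no patient spans test and train/val.

    patient_ids[i] is a hypothetical anonymized patient identifier for image i.
    """
    rng = random.Random(seed)

    # Group image indices by patient so both eyes / all sessions stay together.
    images_by_patient = defaultdict(list)
    for idx, pid in enumerate(patient_ids):
        images_by_patient[pid].append(idx)

    patients = sorted(images_by_patient)
    rng.shuffle(patients)

    # Move whole patients into the test set until it reaches ~test_fraction of images.
    target_test = round(test_fraction * len(patient_ids))
    test, rest = [], []
    for pid in patients:
        if len(test) < target_test:
            test.extend(images_by_patient[pid])
        else:
            rest.extend(images_by_patient[pid])

    # Image-level random split of the remaining pool (8:2 when val_fraction=0.2).
    rng.shuffle(rest)
    n_val = round(val_fraction * len(rest))
    val, train = rest[:n_val], rest[n_val:]
    return sorted(train), sorted(val), sorted(test)
```

For example, with ten hypothetical patients contributing two images each, `patient_level_split([f"P{i // 2}" for i in range(20)])` places two whole patients (4 images) in the test set and divides the other 16 images into 13 training and 3 validation images.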

Development of the DL Algorithm

The slit-lamp images were first preprocessed. Each image was resized to 224 × 224 pixels to match the input size of the networks, and pixel values were scaled to the range 0 to 1. To increase the diversity of the data set and reduce the risk of overfitting, we applied several augmentations to each image, including random cropping, random rotation, random brightness changes, and random flipping. Data augmentation is an essential approach for automatically generating new annotated training samples and improving the generalization of DL models (Tran et al., 2017). We generated samples with shearing in the range [−15%, +15%] of the image width, rotation in the range [0°, 360°], brightness changes in the range [−10%, +10%], and with or without flipping, producing 10 augmented images per photograph.
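A minimal sketch of the pixel scaling and of drawing the augmentation parameters from the ranges above is shown below. It only samples parameters and applies the brightness change to a flat list of pixel values; the geometric transforms themselves would be applied by an image library, which is assumed rather than shown. The dictionary keys are hypothetical names, the flip direction is not specified in the text, and the ±15% width range is treated here as a shear fraction.

```python
import random


def scale_pixels(pixels):
    """Map 8-bit pixel values (0-255) to the range [0, 1]."""
    return [p / 255.0 for p in pixels]


def adjust_brightness(pixels, delta):
    """Scale brightness by (1 + delta) and clip the result to [0, 1]."""
    return [min(1.0, max(0.0, p * (1.0 + delta))) for p in pixels]


def sample_augmentations(n_copies=10, seed=None):
    """Draw random augmentation parameters for n_copies augmented versions of one photo.

    Key names are hypothetical; ranges follow the text (shear +/-15% of width,
    rotation 0-360 degrees, brightness +/-10%, optional flip).
    """
    rng = random.Random(seed)
    return [
        {
            "shear_frac": rng.uniform(-0.15, 0.15),      # fraction of image width
            "rotation_deg": rng.uniform(0.0, 360.0),
            "brightness_delta": rng.uniform(-0.10, 0.10),
            "flip": rng.random() < 0.5,                  # direction unspecified in the text
        }
        for _ in range(n_copies)
    ]
```

Usage: scale a photograph once with `scale_pixels`, then loop over `sample_augmentations(seed=42)` and apply each parameter set (for instance `adjust_brightness(pixels, params["brightness_delta"])`) to obtain the 10 augmented copies.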

Resnet, a residual deep neural network, is widely used because it is easy to optimize and can gain accuracy from considerably increased depth (He et al., 2016). Resnet is available at various depths (Resnet50, Resnet101, and Resnet152); in this study, we used Resnet50 as the experimental model. ResNext, a network derived from Resnet, was also included because it can improve accuracy while retaining Resnet’s easy portability (Xie et al., 2017). In addition, we incorporated an “attention” unit, the Squeeze-and-Excitation (SE) module, which allows the network to selectively emphasize informative features and suppress less useful ones (Hu et al., 2017). Combining these backbones with the SE module yielded four networks (Resnet50, SE-Resnet50, ResNext50, and SE-ResNext50). For comparison, we also selected the Xception network, which relies on depth-wise separable convolutions, a convolutional structure that uses fewer parameters and is easy to define and modify (Chollet, 2017). These five classical DCNNs were pre-trained and then applied to the detection of FUS.
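The SE recalibration described by Hu et al. can be illustrated with a small, framework-free sketch. It assumes C feature maps given as nested lists and explicit fully connected weights (`w1`, `b1` for the bottleneck of size C/r, `w2`, `b2` for the expansion back to C); in a real network these would be learned tensors inside each residual block, and the exact placement used in this study is not shown here.

```python
import math


def squeeze_excite(feature_maps, w1, b1, w2, b2):
    """Squeeze-and-Excitation recalibration of C channels.

    feature_maps: list of C channels, each an H x W list of floats.
    w1: (C/r) x C weights, b1: C/r biases  -> bottleneck FC + ReLU
    w2: C x (C/r) weights, b2: C biases    -> expansion FC + sigmoid
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_maps]

    # Excitation: FC -> ReLU -> FC -> sigmoid yields per-channel weights in (0, 1).
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z)) + b) for row, b in zip(w1, b1)]
    scales = [1.0 / (1.0 + math.exp(-(sum(w * h for w, h in zip(row, hidden)) + b)))
              for row, b in zip(w2, b2)]

    # Scale: multiply every activation in channel c by its weight s_c.
    return [[[s * v for v in row] for row in ch] for ch, s in zip(feature_maps, scales)]
```

With all weights and biases set to zero, every channel is scaled by sigmoid(0) = 0.5; a large positive or negative output bias drives a channel's weight toward 1 (kept) or 0 (suppressed), which is the "emphasize informative, suppress less useful" behavior described above.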

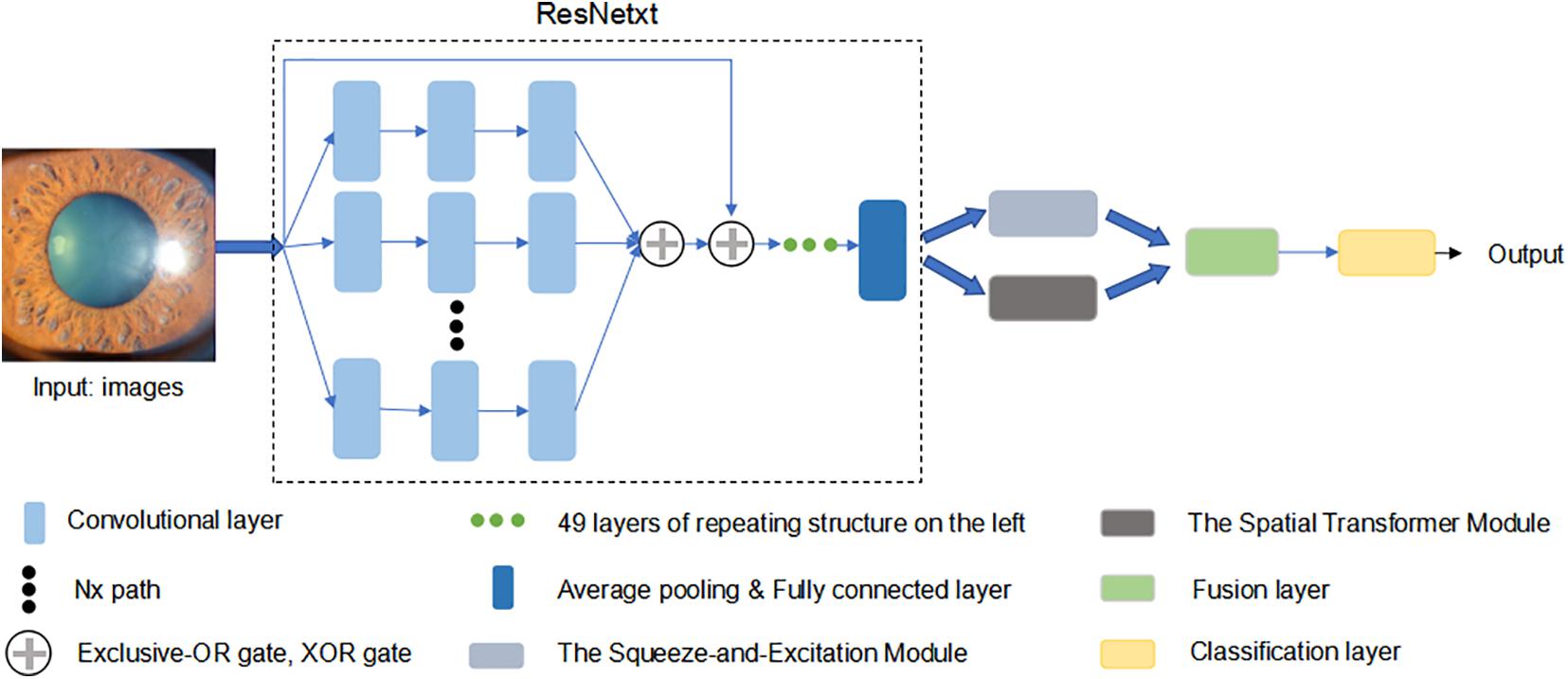

To improve test accuracy, we constructed a new optimized model using ResNext50 as the backend. Because ResNext50 lacks the ability to be spatially invariant to the input data, we introduced a Spatial Transformer (ST) module, another “attention” unit that provides explicit spatial transformation capabilities. This module allows a network to learn invariance to translation, scale, rotation, and more generic warping, and it has been reported to achieve state-of-the-art performance (Jaderberg et al., 2015). With a mixed “attention” module (SE and ST modules), our new model (SET-ResNext50) can learn not only which features are informative but also where the informative regions are located. We also conducted ablation experiments (ST-ResNext50: ResNext50 with the ST module only) to verify the effectiveness of the proposed mixed “attention” module. Moreover, we manually tuned various combinations of hyper-parameters to ensure that the trained models met our experimental requirements. The architecture of SET-ResNext50 is shown in Figure 1.
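The sampling step at the heart of a spatial transformer (Jaderberg et al., 2015) can be sketched as below. In the actual module a small localization network predicts the 2 × 3 affine matrix `theta` from the input; here `theta` is supplied directly, the image is a single-channel nested list, coordinates are normalized to [−1, 1] with the corner pixels at ±1, and points outside the image read as zero. These are illustrative assumptions, not details reported for SET-ResNext50.

```python
import math


def _pixel(image, y, x):
    """Return image[y][x], or 0.0 outside the image (zero padding)."""
    if 0 <= y < len(image) and 0 <= x < len(image[0]):
        return image[y][x]
    return 0.0


def spatial_transform(image, theta):
    """Warp a 2-D image with a 2x3 affine matrix theta using bilinear sampling.

    Each output pixel's normalized coordinates (x_t, y_t) in [-1, 1] are mapped
    through theta to source coordinates, where the input is bilinearly interpolated.
    """
    h, w = len(image), len(image[0])
    (a, b, tx), (c, d, ty) = theta
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            x_t = -1.0 + 2.0 * j / (w - 1)
            y_t = -1.0 + 2.0 * i / (h - 1)
            # Affine map from the output (target) grid to the input (source) grid.
            x_s = a * x_t + b * y_t + tx
            y_s = c * x_t + d * y_t + ty
            # Convert normalized source coordinates back to pixel units.
            px = (x_s + 1.0) * (w - 1) / 2.0
            py = (y_s + 1.0) * (h - 1) / 2.0
            x0, y0 = math.floor(px), math.floor(py)
            fx, fy = px - x0, py - y0
            # Bilinear interpolation over the four neighboring pixels.
            out[i][j] = ((1 - fx) * (1 - fy) * _pixel(image, y0, x0)
                         + fx * (1 - fy) * _pixel(image, y0, x0 + 1)
                         + (1 - fx) * fy * _pixel(image, y0 + 1, x0)
                         + fx * fy * _pixel(image, y0 + 1, x0 + 1))
    return out
```

The identity matrix `[[1, 0, 0], [0, 1, 0]]` returns the image unchanged; on a 3 × 3 image, setting `tx = 1.0` (one pixel in normalized units) shifts the content one column to the left and zero-fills the last column. Bilinear sampling is used because it keeps the warp differentiable with respect to `theta`, which is what lets the localization network be trained end to end.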

Figure 1. The architecture of SET-ResNext50. We used ResNext50 as the backend combined with a mixed “attention” module (the Squeeze-and-Excitation module and the Spatial Transformer module). The network was pre-trained on the ImageNet classification dataset to initialize its parameters. We then modified the last layer to output a two-dimensional vector and updated all parameters using a cross-entropy loss.

We developed DCNNs to classify slit-lamp images into two categories: FUS and non-FUS. To optimize the models and achieve a better training effect, each model was pre-trained on the ImageNet classification dataset (Deng et al., 2009) to initialize its parameters. The last layer of each DCNN was modified to output a two-dimensional vector. Training used a 2080 Ti GPU with mini-batches of 96 inputs. Cross-entropy was used as the loss function to update all network parameters, and the Adam optimizer was used with a learning rate of 10⁻⁴. We applied fivefold cross-validation to each DCNN to test the statistical significance of the developed models. Heat maps were generated to highlight lesions and show the locations on which the algorithm based its decisions (Zhou et al., 2016).
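The loss and optimizer named above can be made concrete with a small sketch: a numerically stable softmax cross-entropy on the two-dimensional output, and the standard Adam update with the stated learning rate of 10⁻⁴. The β₁, β₂, and ε values are the common Adam defaults and are an assumption, since the text does not report them; a real training loop would average the loss over each mini-batch of 96 and obtain gradients by backpropagation.

```python
import math


def softmax_cross_entropy(logits, label):
    """Cross-entropy loss for one image given its 2-D logit vector (FUS / non-FUS)."""
    m = max(logits)                                   # subtract max for stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]


class Adam:
    """Adam update rule for a flat list of parameters (betas/eps are assumed defaults)."""

    def __init__(self, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = self.v = None
        self.t = 0

    def step(self, params, grads):
        if self.m is None:
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        updated = []
        for k, (p, g) in enumerate(zip(params, grads)):
            # Exponential moving averages of the gradient and its square.
            self.m[k] = self.beta1 * self.m[k] + (1 - self.beta1) * g
            self.v[k] = self.beta2 * self.v[k] + (1 - self.beta2) * g * g
            # Bias correction for the zero-initialized moments.
            m_hat = self.m[k] / (1 - self.beta1 ** self.t)
            v_hat = self.v[k] / (1 - self.beta2 ** self.t)
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated
```

Two equal logits give a loss of ln 2 (a 50/50 prediction), and Adam's first step moves each parameter by roughly the learning rate regardless of the gradient's scale.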

Evaluation of the DL Algorithm

The performance of our models was evaluated on an independent test set. Images from the same patient were not split between the test set and the other two sets. The binary classification results of each model across the five cross-validation folds were used to calculate the mean and standard deviation for testing the statistical significance of the developed models. Receiver operating characteristic (ROC) curves and the area under the ROC curve (AUC) were used as indices of the performance of our automated models (Carter et al., 2016). AUCs were computed for each finding, with 95% confidence intervals computed by the exact Clopper–Pearson method using the Python scikit-learn package version 0.18.2. Accuracy, precision, and recall were used to evaluate the FUS classification performance of the models, and the F1 measure, the harmonic mean of precision and recall, was added to balance the trade-off between the two. SPSS version 24.0 (IBM) was used to compare quantitative variables with Student’s t-test.
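The evaluation metrics above can be written out explicitly. This sketch treats FUS as the positive class (label 1) and computes the AUC through its rank interpretation (the probability that a randomly chosen FUS image scores higher than a randomly chosen non-FUS image, with ties counted as one half). It is an illustrative re-implementation, not the scikit-learn code used in the study; `sklearn.metrics.roc_auc_score` gives the same AUC value.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 with FUS (label 1) as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1


def roc_auc(y_true, scores):
    """AUC as the probability that a random FUS image outscores a random non-FUS one.

    Ties count as one half (the Mann-Whitney formulation of the ROC AUC).
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

As an arithmetic check on the reported results, SET-ResNext50's precision of 0.918 and recall of 0.923 give F1 = 2(0.918)(0.923)/(0.918 + 0.923) ≈ 0.920, matching the stated F1 measure.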

Comparison of the Networks With Human Ophthalmologists

We compared the performance of the seven DCNNs with the clinical diagnoses of ophthalmologists. Six ophthalmologists at two levels of experience took part: attending ophthalmologists with at least 5 years of clinical training in uveitis from our center (Dr. Zi Ye, Shenglan Yi, and Handan Tan) and resident ophthalmologists with 1–3 years of clinical training in ophthalmology from other eye institutes (Dr. Jun Zhang, Yunyun Zhu, and Liang Chen). None of them had participated in the current research. The slit-lamp images were presented to each ophthalmologist independently, and each was asked to assign one of three labels to every image: FUS, uncertain, or non-FUS. They were strongly advised not to choose the uncertain label because it was counted as a wrong answer in the final evaluation.
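The scoring rule for the human readers, in which an "uncertain" answer always counts as incorrect, can be sketched as follows. The label strings are hypothetical placeholders for the three answer options.

```python
def reader_accuracy(true_labels, reader_labels):
    """Accuracy of one human reader; an 'uncertain' answer is always scored as wrong."""
    correct = sum(1 for t, r in zip(true_labels, reader_labels)
                  if r != "uncertain" and r == t)
    return correct / len(true_labels)
```

Because "uncertain" can never match a ground-truth label, it lowers a reader's accuracy exactly as a wrong FUS/non-FUS call would, which is why readers were discouraged from using it.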

Results

Baseline Characteristics

A total of 2,000 slit-lamp images from 478 FUS patients and 474 non-FUS controls were collected and assessed during the study period. The non-FUS group included various forms of anterior uveitis and panuveitis presenting with anterior uveitis. The types and proportions of non-FUS cases are listed in Table 1. The 2,000 images were assigned to the training, validation, and test sets. The training and validation sets (1,600 images) included 698 images from 380 FUS patients and 902 images from 382 non-FUS patients, and the test set (400 images) consisted of 174 images from 98 FUS patients and 226 images from 92 non-FUS patients.

Performance of the DL Algorithm

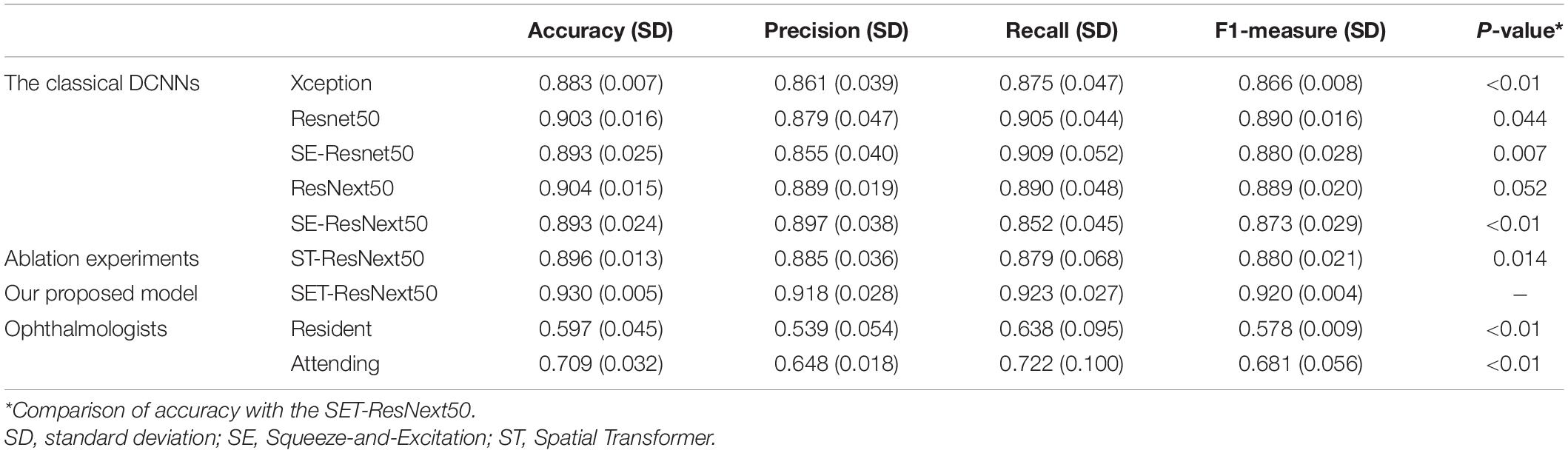

After fivefold cross-validation, we calculated the mean and standard deviation of each metric to evaluate the performance of our models. Performance results are reported in Table 2. Overall, all trained models performed well on the selected metrics (accuracy, precision, recall, and F1 measure). On the test set of 400 images, the seven DCNNs achieved accuracies of 0.883–0.930 and F1 measures of 0.866–0.920. ResNext50 performed better than both SE-ResNext50 and ST-ResNext50, indicating that adding either the SE or the ST module alone did not improve the effectiveness of our networks. However, with the mixed “attention” module, our SET-ResNext50 model consistently outperformed the other networks (accuracy = 0.930; precision = 0.918; recall = 0.923; F1 = 0.920). Accuracy differed significantly between SET-ResNext50 and each of the other models except ResNext50 (p < 0.05). The F1 measure of SET-ResNext50 was higher than that of ResNext50 (p = 0.043), further supporting the superiority of SET-ResNext50 over the other models.
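The fold-level statistics behind these comparisons can be sketched with the standard library: the mean and sample standard deviation of a metric over the five folds, and a two-sample Student's t statistic with pooled variance. The text does not state whether the SPSS t-tests were paired or unpaired, so the unpaired version shown here is an assumption; converting t to a p-value needs the t distribution (for example `scipy.stats.ttest_ind`, whose default `equal_var=True` is Student's test). The example inputs in the tests are arbitrary numbers, not results from this study.

```python
import math
import statistics


def fold_summary(fold_scores):
    """Mean and sample standard deviation of one metric across cross-validation folds."""
    return statistics.mean(fold_scores), statistics.stdev(fold_scores)


def student_t(a, b):
    """Two-sample Student's t statistic (pooled variance) and its degrees of freedom."""
    n_a, n_b = len(a), len(b)
    pooled_var = ((n_a - 1) * statistics.variance(a)
                  + (n_b - 1) * statistics.variance(b)) / (n_a + n_b - 2)
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (statistics.mean(a) - statistics.mean(b)) / se, n_a + n_b - 2
```

With five folds per model, each comparison has 5 + 5 − 2 = 8 degrees of freedom, which is why fairly consistent fold-to-fold differences are needed to reach p < 0.05.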

Table 2. Performance of the deep convolutional neural networks with fivefold cross-validation and the compared methods in the test set.

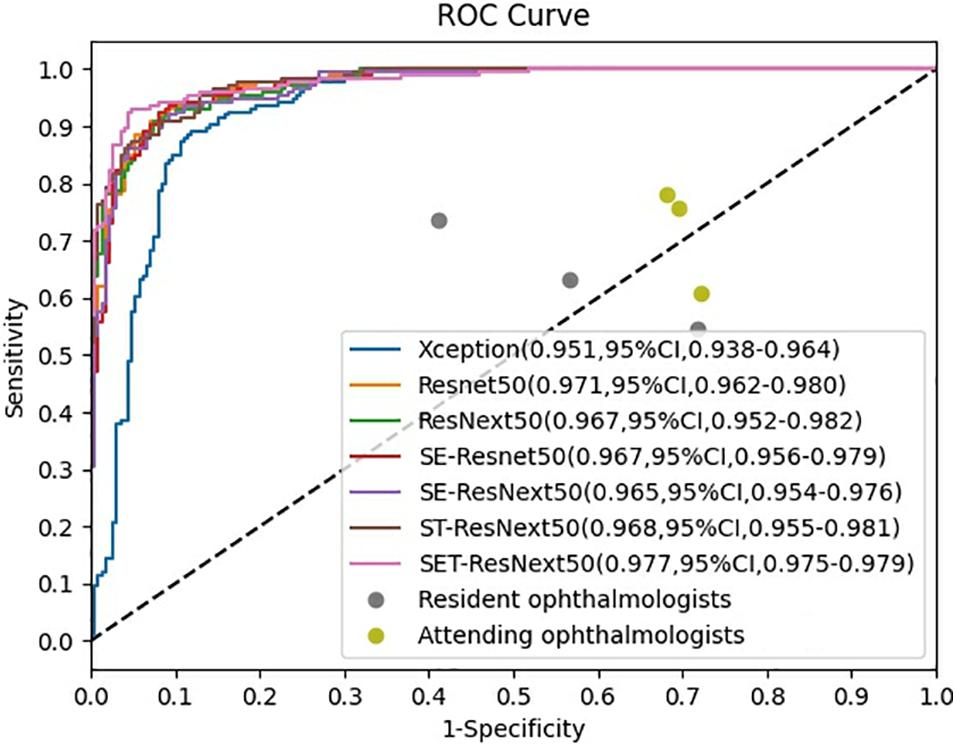

The ROC curves and AUCs are shown in Figure 2. The AUCs of the DCNNs ranged from 0.951 to 0.977, further demonstrating the good performance of the developed models. With the highest AUC (0.977), SET-ResNext50 may be the optimal choice among the seven networks for facilitating the diagnosis of FUS. The other metrics in Table 2 echo these observations.

Figure 2. Receiver operating characteristic curves of the performance for diagnosis of Fuchs uveitis syndrome in the test set. SET-ResNext50 achieved an AUC of 0.977 (95% CI, 0.975–0.979), which outperformed the other developed networks and outperformed all the ophthalmologists by a large margin.

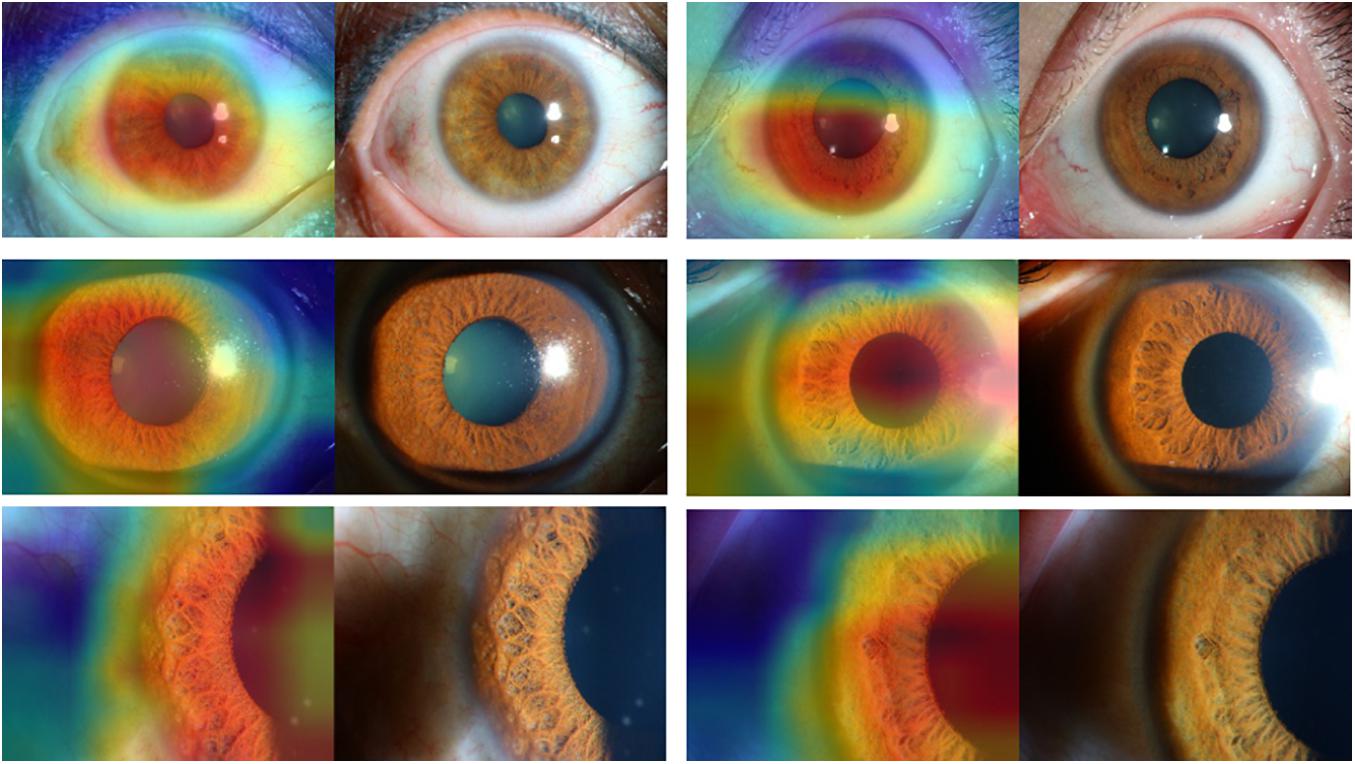

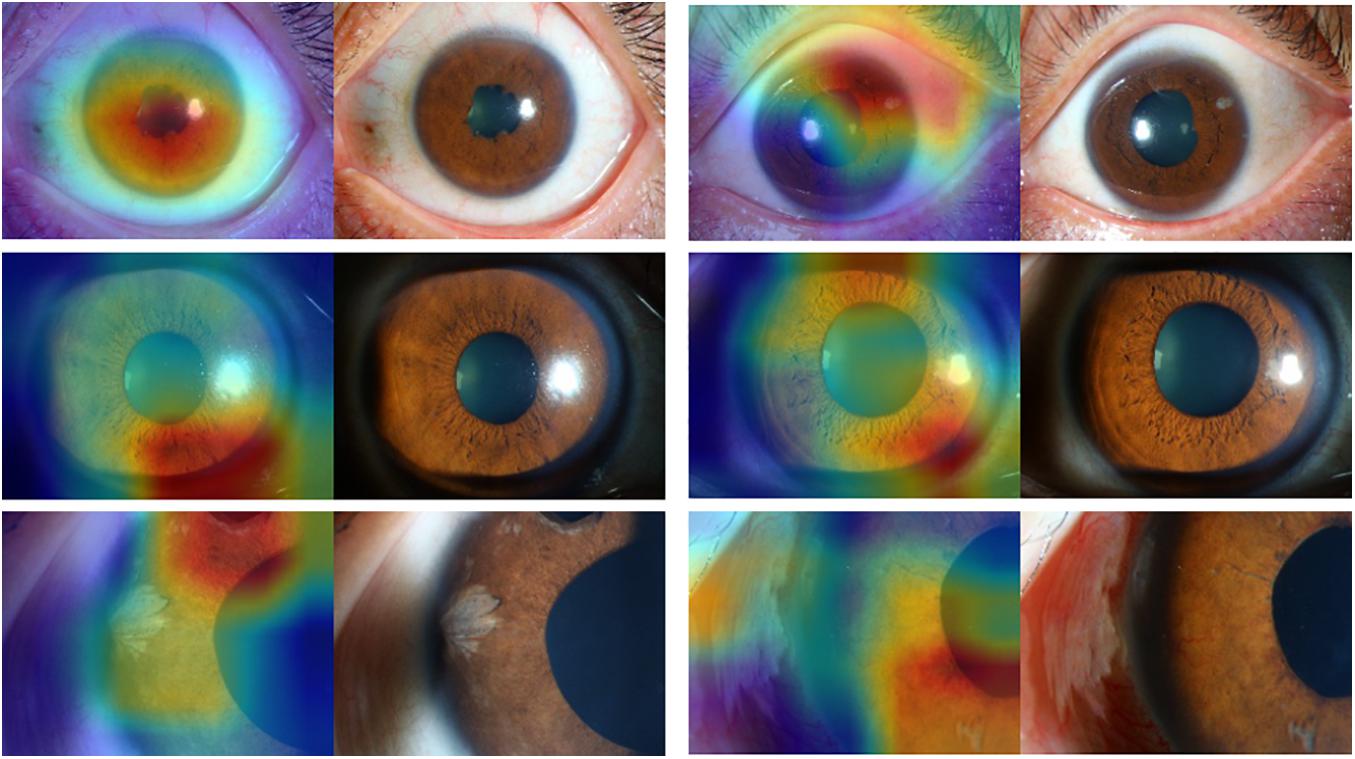

Figures 3 and 4 present examples of heat maps from the SET-ResNext50 model for each finding, together with the corresponding original images. The heat maps show the most prominently affected region of each slit-lamp image, which was the most important indicator for distinguishing FUS from non-FUS. In Figure 3, the affected area in FUS accounted for nearly half of the total iris and was mostly located at the pupillary collar. In contrast, the affected areas in non-FUS images (Figure 4) were unevenly distributed, including areas within the pupil or around the periphery of the iris. The affected areas identified by our SET-ResNext50 model correspond to those used by clinicians for diagnosis. In summary, SET-ResNext50 showed the best performance in our study and highlighted the image regions that most strongly supported its classification results.
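Heat maps of this kind can be produced by class activation mapping (CAM; Zhou et al., 2016), which the Methods cite. The sketch below shows the core computation under stated assumptions: the network ends with global average pooling followed by a fully connected layer, `feature_maps` are the K maps of the last convolutional layer, and `class_weights` are that layer's weights for the target class. Whether the study used plain CAM or a gradient-based variant is not stated, and upsampling the map to 224 × 224 and overlaying it on the slit-lamp image are omitted.

```python
def class_activation_map(feature_maps, class_weights):
    """CAM: weight the last conv layer's K feature maps by one class's FC weights.

    feature_maps: K maps, each H x W; class_weights: K weights w_k^c of the target
    class in the final fully connected layer (after global average pooling).
    Returns an H x W map min-max normalized to [0, 1].
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    # M_c(i, j) = sum_k w_k^c * f_k(i, j)
    cam = [[sum(wk * fm[i][j] for wk, fm in zip(class_weights, feature_maps))
            for j in range(w)] for i in range(h)]
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    span = hi - lo or 1.0          # avoid division by zero for a constant map
    return [[(v - lo) / span for v in row] for row in cam]
```

High values mark locations whose features contributed most to the chosen class score, which is what the highlighted regions in Figures 3 and 4 represent.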

Figure 3. Heat maps of the SET-ResNext50 model for slit-lamp images of eyes with Fuchs uveitis syndrome, showing representative findings: the original slit-lamp image (right) and the corresponding heat map of target areas (left).

Figure 4. Heat maps of the SET-ResNext50 model for slit-lamp images of eyes with non-Fuchs uveitis syndrome (non-FUS) uveitis, showing representative findings: the original slit-lamp image (right) and the corresponding heat map of target areas (left).

Comparison With Ophthalmologists

Three resident ophthalmologists and three attending ophthalmologists were asked to detect FUS. The average accuracy of the attending ophthalmologists from our uveitis center was 0.709, higher than that of the resident ophthalmologists from other eye institutes (0.597), and the difference between the two groups was significant (p = 0.024). Moreover, there was a large performance gap between the ophthalmologists and the DCNNs (Table 2): the average accuracy of the ophthalmologists was significantly lower than that of the DCNNs (p < 0.01). In particular, the SET-ResNext50 model, with an accuracy of 0.930, was superior to both resident (0.597) and attending (0.709) ophthalmologists in detecting FUS.

Discussion

In this study, we developed DCNNs and showed that a well-trained DL method can distinguish FUS from various forms of anterior uveitis and can even identify diagnostic clues that many clinical ophthalmologists overlook. Our study has three main findings. First, to our knowledge, this is the first initiative to assist ophthalmologists in making a correct diagnosis of FUS using slit-lamp images. Second, we trained seven DCNNs, including a new optimized model (SET-ResNext50) that achieved both high accuracy and high precision and consistently outperformed the other models and general ophthalmologists. Third, our study provided heat maps that highlighted the location of lesions in slit-lamp images. Using DCNNs to assist in detecting subtle lesions can facilitate clinical diagnosis and treatment.

DCNNs have recently been widely adopted to predict diseases automatically. Schlegl et al. (2018) achieved excellent accuracy in detecting and quantifying macular fluid in OCT images using DL-based retinal image analysis. Several studies have suggested that DCNN-based automated assessment of age-related macular degeneration from fundus images can achieve results similar to human performance (Burlina et al., 2017; Grassmann et al., 2018; Russakoff et al., 2019). These intensive efforts to develop automated methods highlight the appeal of such tools for the advanced management of clinical disease, especially for diseases like FUS. The slit-lamp microscope is the most widely used auxiliary instrument in ophthalmic clinical practice (Jiang et al., 2018). In busy clinics, reviewing large numbers of slit-lamp images is impractical and error-prone for ophthalmologists. Automated DCNNs could therefore be used to screen slit-lamp image data sets, direct ophthalmologists’ attention to lesions, and, in the near future, perform diagnosis independently. Our DCNNs detected FUS with high accuracy, highlighted the location of lesions, and may become widely applicable.

Building and optimizing a new DCNN may require substantial hyper-parameter tuning time, so many studies have used classical networks such as Resnet as the backend (Wu et al., 2017). In this study, using ResNext50 as the basis, we proposed a mixed “attention” module that combines feature (SE) attention and spatial (ST) attention in our optimized model architecture. SET-ResNext50, with the mixed “attention” module, outperformed the models combined with only one of the SE and ST modules, suggesting that the two modules are complementary. This mixed module may also be useful in other areas of ophthalmology. With too few images or too many training steps, a DL classifier may overfit, resulting in poor generalization (Treder et al., 2018). In this study, we used data augmentation to generate new annotated training samples and held out an independent test set to evaluate the generalization ability of the DCNNs. The performance of our DCNNs remained consistently good on the test set, indicating that the models generalized well without evident overfitting. We also compared the effectiveness of the DCNNs against ophthalmologists with different levels of experience. Attending ophthalmologists performed better than resident ophthalmologists, suggesting that misdiagnosis of FUS may stem from limited clinical experience. As expected, the DCNNs, which achieved the highest sensitivity while maintaining high specificity, outperformed both resident and attending ophthalmologists by a large margin. Moreover, our model produced heat map visualizations that identify target areas in the images alongside its classification output.
In the heat maps, the most prominently affected regions of FUS images were mainly located in areas of depigmentation at the pupillary collar and accounted for nearly half of the overall iris (Figure 3), consistent with the characteristic diffuse and uniform iris depigmentation near the pupil in FUS patients. Other uveitis entities, such as Posner–Schlossman syndrome, may show heterogeneous and uneven iris depigmentation. Iris depigmentation can also be detected in patients with herpetic uveitis, but it is usually localized. These signs correspond to the irregular affected areas on the non-FUS heat maps (Figure 4). Some affected areas in non-FUS cases (such as idiopathic chronic anterior uveitis) located in the pupil may arise from posterior synechiae without iris depigmentation. However, heat maps produced by DCNNs can be difficult to interpret (Ramanishka et al., 2017). In image-based diagnostic specialties, interpreting heat maps may facilitate a better understanding of the diagnosis.

Our study has several limitations. First, our slit-lamp images included only Chinese patients with highly pigmented irides, so our findings need to be validated in other ethnic populations. Unfortunately, no public dataset of FUS patients from other populations is available to validate our models. Such a dataset would be of significant value for further research and for evaluating the performance of other DCNNs in the future. Second, the available data set is relatively small for training and validation; unlike common eye diseases such as age-related macular degeneration or diabetic retinopathy, FUS has relatively few cases. Third, our program can only distinguish FUS from non-FUS based on iris changes. Further research should combine DCNNs with other clinical findings in the diagnosis of complex diseases. Nevertheless, we believe that the method presented here is a meaningful step toward the automated analysis of slit-lamp images and may aid in the detection of FUS.

Conclusion

In conclusion, we developed various DCNNs and validated a sensitive automated model (SET-ResNext50) for detecting FUS using slit-lamp images. Our models achieved both high accuracy and high precision and outperformed general ophthalmologists by a large margin. The SET-ResNext50 model may be the optimal choice to facilitate the diagnosis of FUS. Moreover, the heat maps highlighted important features of the iris, showing that DCNNs can be trained to detect specific disease-related changes. DCNNs could be integrated into auxiliary imaging instruments for preliminary disease screening, which may be of value in future clinical practice.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Ethical Committee of First Affiliated Hospital of Chongqing Medical University (No. 2019356). The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

PY, WZ, and ZC: had full access to all of the data in the study and took responsibility for the integrity of the data and the accuracy of the data analysis. WZ, ZC, and HZ: acquisition, analysis, or interpretation of data. RC, LC, and GS: statistical analysis. YZ, QC, CZ, and YW: methodology supervision. WZ and ZC: writing—review and editing. PY: funding acquisition. All authors contributed to the article and approved the submitted version.

Funding

The work was supported by Chongqing Outstanding Scientists Project (2019), Chongqing Key Laboratory of Ophthalmology (CSTC, 2008CA5003), Chongqing Science and Technology Platform and Base Construction Program (cstc2014pt-sy10002), and the Chongqing Chief Medical Scientist Project (2018).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Dr. Zi Ye, Handan Tan, Shenglan Yi, Jun Zhang, Yunyun Zhu, and Liang Chen for their helpful and valuable discussions.

References

Abano, J. M., Galvante, P. R., Siopongco, P., Dans, K., and Lopez, J. (2017). Review of epidemiology of uveitis in Asia: pattern of uveitis in a tertiary hospital in the Philippines. Ocul. Immunol. Inflamm. 25(Suppl. 1), S75–S80. doi: 10.1080/09273948

Accorinti, M., Spinucci, G., Pirraglia, M. P., Bruschi, S., Pesci, F. R., and Iannetti, L. (2016). Fuchs’ Heterochromic Iridocyclitis in an Italian tertiary referral centre: epidemiology, clinical features, and prognosis. J. Ophthalmol. 2016:1458624. doi: 10.1155/2016/1458624

Arellanes-García, L., del Carmen Preciado-Delgadillo, M., and Recillas-Gispert, C. (2002). Fuchs’ heterochromic iridocyclitis: clinical manifestations in dark-eyed Mexican patients. Ocul. Immunol. Inflamm. 10, 125–131. doi: 10.1076/ocii.10.2.125.13976

Bonfioli, A. A., Curi, A. L., and Orefice, F. (2005). Fuchs’ heterochromic cyclitis. Semin. Ophthalmol. 20, 143–146. doi: 10.1080/08820530500231995

Burlina, P. M., Joshi, N., Pekala, M., Pacheco, K. D., Freund, D. E., and Bressler, N. M. (2017). Automated grading of age-related macular degeneration from color fundus images using deep convolutional neural networks. JAMA Ophthalmol. 135, 1170–1176. doi: 10.1001/jamaophthalmol.2017.3782

Carter, J. V., Pan, J., Rai, S. N., and Galandiuk, S. (2016). ROC-ing along: evaluation and interpretation of receiver operating characteristic curves. Surgery 159, 1638–1645. doi: 10.1016/j.surg.2015.12.029

Chollet, F. (2017). “Xception: deep learning with depthwise separable convolutions,” in Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), (Honolulu, HI: IEEE), 1800–1807. doi: 10.1109/CVPR.2017.195

Deng, J., Dong, W., Socher, R., Li, L. J., Li, K., and Fei-Fei, L. (2009). “Imagenet: a large-scale hierarchical image database,” in Proceedings of IEEE Computer Vision & Pattern Recognition, Miami, FL, 248–255. doi: 10.1109/CVPR.2009.5206848

Grassmann, F., Mengelkamp, J., Brandl, C., Harsch, S., Zimmermann, M. E., Linkohr, B., et al. (2018). A deep learning algorithm for prediction of age-related eye disease study severity scale for age-related macular degeneration from color fundus photography. Ophthalmology 125, 1410–1420. doi: 10.1016/j.ophtha.2018.02.037

He, J., Baxter, S. L., Xu, J., Xu, J., Zhou, X., and Zhang, K. (2019). The practical implementation of artificial intelligence technologies in medicine. Nat. Med. 25, 30–36. doi: 10.1038/s41591-018-0307-0

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, (Las Vegas, NV: IEEE Computer Society). doi: 10.1109/CVPR.2016.90

Hogarty, D. T., Mackey, D. A., and Hewitt, A. W. (2019). Current state and future prospects of artificial intelligence in ophthalmology: a review. Clin. Exp. Ophthalmol. 47, 128–139. doi: 10.1111/ceo.13381

Hu, J., Shen, L., Sun, G., and Albanie, S. (2017). “Squeeze-and-excitation networks,” in Proceedings of the IEEE Transactions on Pattern Analysis and Machine Intelligence, Salt Lake City, 7132–7141. doi: 10.1109/TPAMI.2019.2913372

Jaderberg, M., Simonyan, K., Zisserman, A., and Kavukcuoglu, K. (2015). “Spatial transformer networks,” in Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, 2017–2025.

Jiang, J., Liu, X., Liu, L., Wang, S., Long, E., Yang, H., et al. (2018). Predicting the progression of ophthalmic disease based on slit-lamp images using a deep temporal sequence network. PLoS One 13:e0201142. doi: 10.1371/journal.pone.0201142

Kapoor, R., Walters, S. P., and Al-Aswad, L. A. (2019). The current state of artificial intelligence in ophthalmology. Surv. Ophthalmol. 64, 233–240. doi: 10.1016/j.survophthal.2018.09.002

Kazokoglu, H., Onal, S., Tugal-Tutkun, I., Mirza, E., Akova, Y., Ozyazgan, Y., et al. (2008). Demographic and clinical features of uveitis in tertiary centers in Turkey. Ophthalmic Epidemiol. 15, 285–293. doi: 10.1080/09286580802262821

La Hey, E., Baarsma, G. S., De Vries, J., and Kijlstra, A. (1991). Clinical analysis of Fuchs’ heterochromic cyclitis. Doc. Ophthalmol. 78, 225–235. doi: 10.1007/BF00165685

Menezo, V., and Lightman, S. (2005). The development of complications in patients with chronic anterior uveitis. Am. J. Ophthalmol. 139, 988–992. doi: 10.1016/j.ajo.2005.01.029

Mohamed, Q., and Zamir, E. (2005). Update on Fuchs’ uveitis syndrome. Curr. Opin. Ophthalmol. 16, 356–363. doi: 10.1097/01.icu.0000187056.29563.8d

Norrsell, K., and Sjödell, L. (2008). Fuchs’ heterochromic uveitis: a longitudinal clinical study. Acta Ophthalmol. 86, 58–64. doi: 10.1111/j.1600-0420.2007.00990.x

Ramanishka, V., Das, A., Zhang, J., and Saenko, K. (2017). “Top-down visual saliency guided by captions,” in Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, 3135–3144. doi: 10.1109/CVPR.2017.334

Russakoff, D. B., Lamin, A., Oakley, J. D., Dubis, A. M., and Sivaprasad, S. (2019). Deep learning for prediction of AMD progression: a pilot study. Invest. Ophthalmol. Vis. Sci. 60, 712–722. doi: 10.1167/iovs.18-25325

Schlegl, T., Waldstein, S. M., Bogunovic, H., Endstraßer, F., Sadeghipour, A., Philip, A. M., et al. (2018). Fully automated detection and quantification of macular fluid in OCT using deep learning. Ophthalmology 125, 549–558. doi: 10.1016/j.ophtha.2017.10.031

Sun, Y., and Ji, Y. (2020). A literature review on Fuchs uveitis syndrome: an update. Surv. Ophthalmol. 65, 133–143. doi: 10.1016/j.survophthal

Tabbut, B. R., Tessler, H. H., and Williams, D. (1988). Fuchs’ heterochromic iridocyclitis in blacks. Arch. Ophthalmol. 106, 1688–1690. doi: 10.1001/archopht.1988.01060140860027

Tandon, M., Malhotra, P. P., Gupta, V., Gupta, A., and Sharma, A. (2012). Spectrum of Fuchs uveitic syndrome in a North Indian population. Ocul. Immunol. Inflamm. 20, 429–433. doi: 10.3109/09273948.2012.723113

Tappeiner, C., Dreesbach, J., Roesel, M., Heinz, C., and Heiligenhaus, A. (2015). Clinical manifestation of Fuchs uveitis syndrome in childhood. Graefes Arch. Clin. Exp. Ophthalmol. 253, 1169–1174. doi: 10.1007/s00417-015-2960-z

Ting, D. S. W., Peng, L., Varadarajan, A. V., Keane, P. A., Burlina, P. M., Chiang, M. F., et al. (2019). Deep learning in ophthalmology: the technical and clinical considerations. Prog. Retin. Eye Res. 72:100759. doi: 10.1016/j.preteyeres.2019.04.003

Touhami, S., Vanier, A., Rosati, A., Bojanova, M., Benromdhane, B., Lehoang, P., et al. (2019). Predictive factors of intraocular pressure level evolution over time and glaucoma severity in Fuchs’ heterochromic iridocyclitis. Invest. Ophthalmol. Vis. Sci. 60, 2399–2405. doi: 10.1167/iovs.18-24597

Tran, T., Pham, T., Carneiro, G., Palmer, L., and Reid, I. (2017). “A Bayesian data augmentation approach for learning deep models,” in Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, 2794–2803.

Treder, M., Lauermann, J. L., and Eter, N. (2018). Automated detection of exudative age-related macular degeneration in spectral domain optical coherence tomography using deep learning. Graefes Arch. Clin. Exp. Ophthalmol. 256, 259–265. doi: 10.1007/s00417-017-3850-3

Tugal-Tutkun, I., Güney-Tefekli, E., Kamaci-Duman, F., and Corum, I. (2009). A cross-sectional and longitudinal study of Fuchs uveitis syndrome in Turkish patients. Am. J. Ophthalmol. 148, 510–515.e1. doi: 10.1016/j.ajo.2009.04.007

Wu, S., Zhong, S., and Liu, Y. (2017). Deep residual learning for image steganalysis. Multimedia Tools Appl. 77, 10437–10453. doi: 10.1007/s11042-017-4440-4

Xie, S., Girshick, R., Dollár, P., Tu, Z., and He, K. (2017). "Aggregated residual transformations for deep neural networks," in Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, 5987–5995. doi: 10.1109/CVPR.2017.634

Yang, P., Fang, W., Jin, H., Li, B., Chen, X., and Kijlstra, A. (2006). Clinical features of Chinese patients with Fuchs’ syndrome. Ophthalmology 113, 473–480. doi: 10.1016/j.ophtha

Keywords: Fuchs’ uveitis syndrome, diffuse iris depigmentation, slit-lamp images, deep convolutional neural model, deep learning

Citation: Zhang W, Chen Z, Zhang H, Su G, Chang R, Chen L, Zhu Y, Cao Q, Zhou C, Wang Y and Yang P (2021) Detection of Fuchs’ Uveitis Syndrome From Slit-Lamp Images Using Deep Convolutional Neural Networks in a Chinese Population. Front. Cell Dev. Biol. 9:684522. doi: 10.3389/fcell.2021.684522

Received: 23 March 2021; Accepted: 30 April 2021;

Published: 18 June 2021.

Edited by:

Wei Chi, Sun Yat-sen University, China

Reviewed by:

Monica Trif, Centre for Innovative Process Engineering, Germany
Zuhong He, Wuhan University, China

Copyright © 2021 Zhang, Chen, Zhang, Su, Chang, Chen, Zhu, Cao, Zhou, Wang and Yang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Peizeng Yang, peizengycmu@126.com

†These authors have contributed equally to this work

Wanyun Zhang1†