- S.M.A.R.T. Construction Research Group, Division of Engineering, New York University Abu Dhabi (NYUAD), Abu Dhabi, United Arab Emirates

Introduction: The use of robots can boost productivity and reduce costs in construction projects. However, choosing the right robotic platform for the right application can be challenging, costly, and time-consuming. This paper presents a guide for researchers and construction practitioners interested in using robotic systems.

Methods: A methodology is presented that covers the different aspects to be considered for a robotic platform: the framework (both hardware and software), the environment in which the robot will operate, the level of supervision (i.e., autonomy) the robot requires, the hardware required on board (i.e., sensors and computers), and the control strategies, systems, and communication network. The methodology is implemented in a practical application in which a semi-autonomous robotic system is designed and developed with the simple goal of collecting data on construction sites, ensuring that all the steps covered in the methodology are addressed.

Results: The results show that the methodology is applicable to a wide range of solutions, with a focus on the development of the platform itself and not the final application.

Discussion: This guide is meant to assist in developing a flexible open platform that can be customized to the specific requirements needed.

1 Introduction

In recent years, the integration of robotics has emerged as a transformative force across various industries, offering unprecedented opportunities for automation and enhanced efficiency. This paradigm shift is often referred to as Industry 4.0 (Javaid et al., 2021). Construction 4.0 embodies a set of principles that align with the broader Industry 4.0 framework. These principles include interoperability, information transparency, decentralized decision-making, and integrating cyber-physical systems (Pärn et al., 2020). Positioned as a key component of Construction 4.0, robotics is the linchpin connecting physical construction processes with digital technologies. However, the built environment poses unique challenges, such as the dynamic and unpredictable nature of construction sites, coupled with the diversity of tasks involved, which necessitate a specialized approach when incorporating robotic technologies compared to other industries. This integration fosters a more intelligent and responsive construction ecosystem to address the industry’s challenges, such as labor shortages, safety concerns, and the need for sustainable practices.

The construction industry has evolved to adapt to new processes and methodologies. With the development of robotic technologies, more robotic applications for construction activities have emerged. Bock and Linner (Bock et al., 2016) summarized single-task construction robots, from academic and commercial perspectives, that could efficiently conduct repetitive tasks, assist human workers with extra work, or even replace workers in dangerous situations. Most of the current applications are stationary and focus on the mechanical programming of the manipulators’ trajectory, which allows construction robots to be used for tasks such as bricklaying (Gambao et al., 2000), concrete placement (Reichenbach and Kromoser, 2021), or earth moving (Bock et al., 2016). Gradually, due to the complexity and unstructured environments of construction sites, new functionalities such as perception algorithms (Premebida et al., 2018), pose and state estimation algorithms (Doumbia and Cheng, 2020), and onboard autonomy (motion/path planning (Xu et al., 2022), target/waypoint selection (Moreno et al., 2020), etc.) have been applied to allow robotic systems to operate in more complicated situations. With mobility added, construction robots are capable of more demanding construction activities such as progress monitoring (Lee et al., 2018), mapping and reconstruction of specific areas (Shang and Shen, 2018), and the inspection and maintenance of buildings (García and García, 2019). For example, mobile robots for data collection and site management are trending research areas due to advances in computer vision technologies; advanced algorithms such as simultaneous localization and mapping (SLAM) allow them to sense evolving sites and transfer accurate data in real time (Kim et al., 2018a). This leaves more room for developing mobile robotic systems suitable for construction sites. 
However, developing such a platform with functionalities for construction activities is challenging because it requires expertise in robotics (Davila Delgado et al., 2019). Current robot applications on the market are not sufficient to address the challenges on the construction site. Construction practitioners are required to combine knowledge of the structure and algorithms of robotic platforms with practical use cases in the real construction environment. Thus, it is hard for civil engineers to design their own robots from scratch to fulfill their requirements for specific designs. Although much research has been done on specific applications, to the authors’ knowledge, no studies provide a process for developing a robotic platform for construction projects. When considering the integration of robots into construction sites to enhance efficiency, there is plenty of room for researchers to answer open research questions, such as finding the optimal process for developing the system from the ground up, selecting suitable platforms equipped with compatible automation solutions to support construction activities effectively, and providing a reliable procedure to build, test, and deploy such a system.

To address these research questions, the objective of this paper is to present a guide that allows construction practitioners without particular expertise in robotics to follow simple steps to develop their own robotic systems for their specific applications. It aims to help civil engineers interested in implementing their own platforms by explaining how to integrate different robotic components into a user-defined platform with the flexibility to address the challenges in construction activities. The ultimate goal of this guide is to help construction practitioners who might not be experts in the robotics field to choose all the features and characteristics their platform should present (i.e., equipped sensors, means of locomotion, framework and operating system, main algorithms to consider). As an example of this decision process in action, we place a programmable robotic platform within a generic framework that sets out the methodology to design and implement an autonomous robotic platform, integrating hardware and software, the operation environment, automatic control, and the communication network for such systems. This construction robotic design decision process could be a valuable resource for anyone wanting to integrate a similar flexible system (i.e., not dependent on proprietary/closed products) in construction projects and to learn about the software and hardware requirements, the challenges to be aware of, and the benefits of such systems. To test the validity of the proposed framework, the methodology created in this study has been applied to develop a practical case of a robotic platform for data collection on construction sites, which is meant to showcase only the applicability of the developed methodology and not the novelty of the methods applied in the case study. 
With the same logic, the proposed methodology can be utilized on other mobile applications on the construction site for other usages, with different open/closed products embedded with different sensors and algorithms. The decision-making process presented in this paper is a high-level overview of construction robotics design; each process presented here is unique and would need to be specifically tailored for each application.

The remainder of this article is organized as follows. Section 2 provides a literature review regarding the use of robotic and semi-autonomous systems with an emphasis on construction applications. This section summarizes the state-of-the-art application of construction robots, identifies challenges in building such platforms, and points out possible solutions. Based on that, Section 3 presents the methodology (i.e., all the necessary steps) followed to develop a robotic system in a theoretical way. To verify the validity of the proposed method, Section 4 illustrates the different steps from the methodology applied to a case study, using data collection as the sample application. In that section, the software and hardware requirements for the selected platform are presented, and the outcome and testing of the developed platform are shown. To summarize the tested results, Section 5 identifies the limitations of the proposed methodology. Finally, Section 6 summarizes the main points and provides directions for further development.

2 Literature review

2.1 Robotic applications in the construction industry

Construction 4.0, as an extension of the fourth industrial revolution, recognizes the potential of automation to revolutionize the industry. By integrating advanced technologies such as robotics, autonomous vehicles, and intelligent machinery, Construction 4.0 seeks to mitigate labor constraints, enhance precision in construction processes, and optimize resource utilization. Automation not only accelerates project timelines but also significantly improves safety conditions by automating hazardous or repetitive tasks. With the development of new technologies to make the best use of the robotic system for the benefit of humankind, most of the research nowadays is conducted in the categories shown in Table 1.

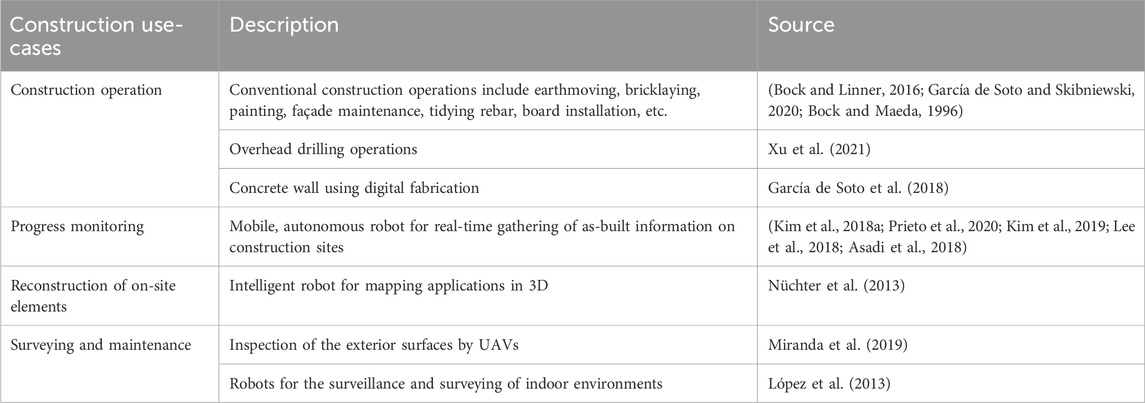

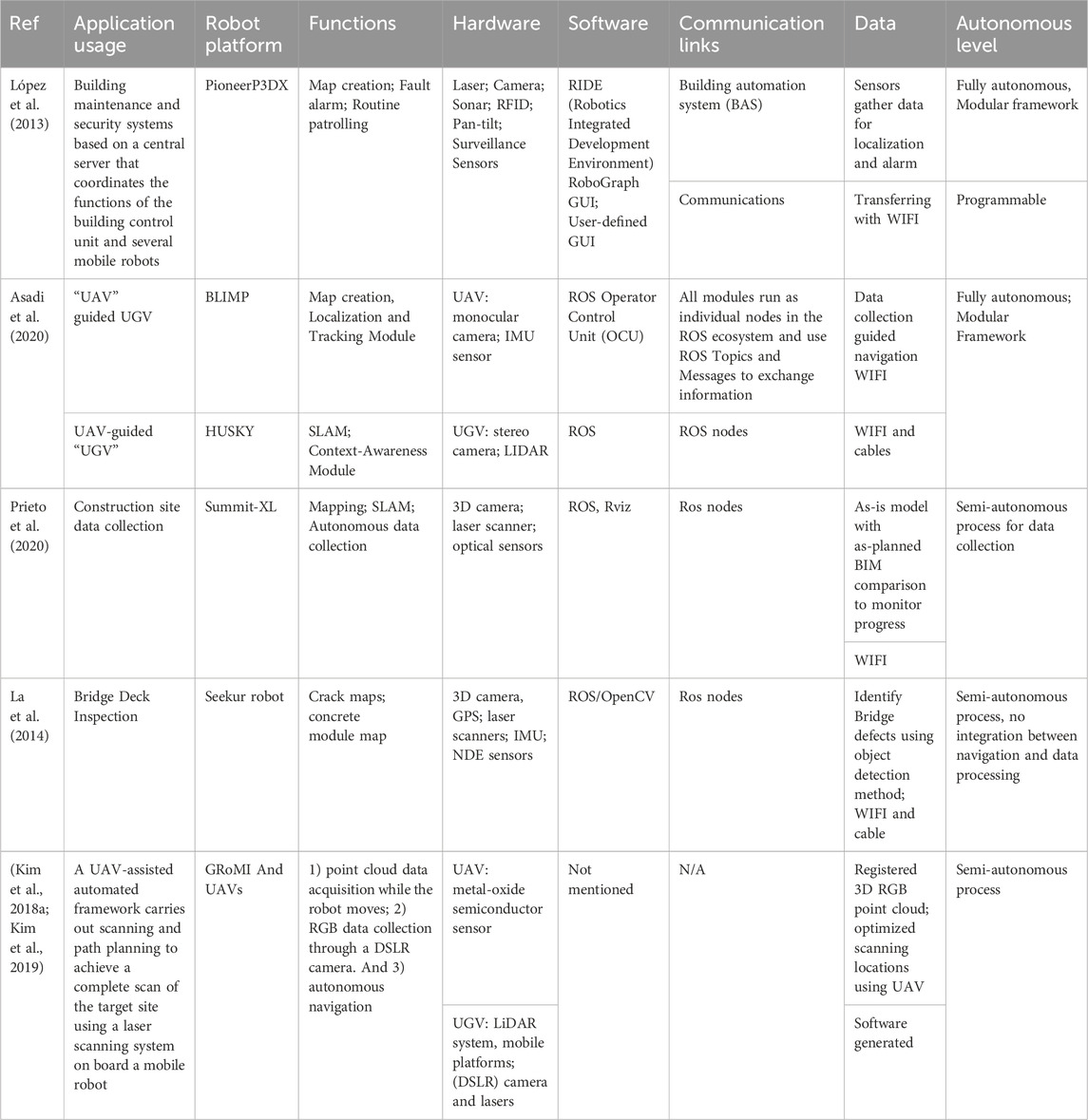

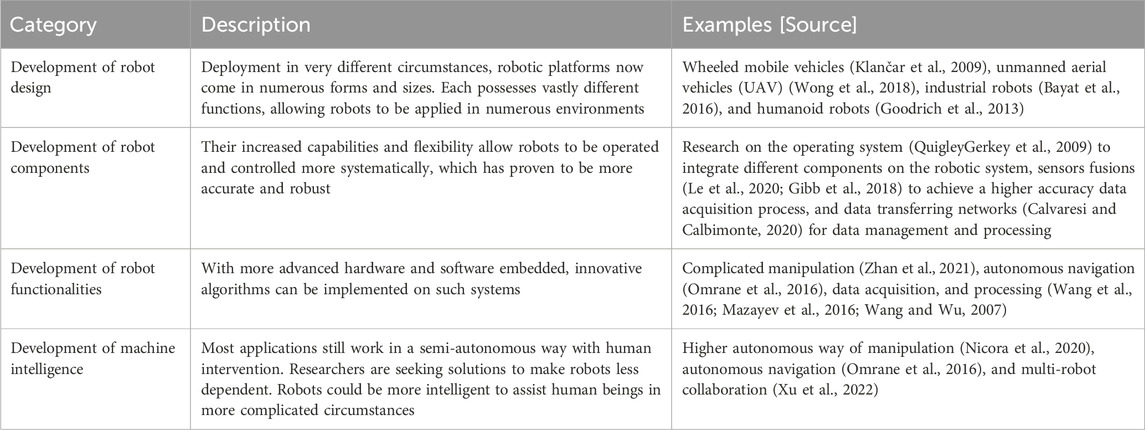

TABLE 1. Summary of different categories of research areas and development of robotic systems in the construction industry.

Construction robots are a subset of industrial robots used for building and infrastructure construction (Elattar, 2008) designed for single-task operations, typically in a dynamic but still controlled environment (Feng et al., 2015). Automating construction would be helpful, particularly in settings where human presence is dangerous or problematic. Repetitive and labor-intense tasks, such as bricklaying, painting, loading, and bulldozing, are good candidates for automation. Using robots can assist in making construction sites less labor-intensive and creating safer work environments.

Table 2 summarizes some typical uses of robots in the construction industry. Although much research has been conducted to supplement these categories of construction robotic platforms, few studies have used these findings to systematically integrate the hardware and software, the operation environment, automatic control, and the communication network of such systems to advance the functionalities of existing construction robotic applications. Thus, the use of robotic applications in the construction industry is not as extensive as in other fields. Several challenges contribute to the limited adoption of robots in construction, including the variability of construction tasks and projects, resistance to change within the industry, insufficient training and knowledge, high initial costs, and the lack of a standardized framework. To address some of these challenges, a fundamental solution involves creating a guide tailored for non-robotic experts in the construction sector. This guide aims to facilitate the integration of robotic and automation technologies into construction-related tasks, ultimately boosting their widespread use. By providing specific use cases and considering the diverse perspectives of stakeholders, this guide equips individuals interested in adopting robots with a generic yet comprehensive procedure. Such an approach allows them to build their applications effectively and leverage the advantages offered by robotic systems in diverse construction scenarios. There is plenty of room for researchers to investigate how to integrate these subareas into a higher-level application and how they perform in the real world, in order to support non-robotic experts in the construction sector (e.g., project managers or contractors) who are interested in implementing a solution for needs that could benefit from automation (i.e., robotic implementation).

2.2 Robotic morphology in the construction industry

To better help the researchers focus on developing integrated robotic systems within the construction environment, robots can be classified according to how automated platforms are developed and operated in the construction domain. Those categories are summarized below.

2.2.1 Single-task construction robots (STCRs)

The use of single-task robots aims at simplifying the complicated construction process. They were designed primarily for one specified construction process, such as painting, material delivery, or bricklaying (Gharbia et al., 2020). STCRs are mainly designed to substitute or supplement human physical work in repetitive activities, which do not require extra effort to control the robots for desired motions and trajectories during the operation. They can complete the tasks automatically over many iterations. However, they are preprogrammed and more suitable for a static working environment with all variables set up. They can only handle problems that have been pre-defined in their working schemes. In most cases, because of the preprogrammed settings and the working space requirement, the environment needs to be consistent to allow duplication of the same trajectory in a pre-defined working space. Also, for the safety of workers, these systems cannot work simultaneously with the workers on the site. Besides, setting up such systems is time-consuming, significantly reducing the productivity gains from automation (Bock and Linner, 2016). In general, for better use in the construction industry, construction robots need to be more flexible and easier to use, with better dynamic sensing capabilities.

2.2.2 Teleoperated systems in construction

Teleoperated systems have been introduced to the construction domain to allow flexibility in handling different situations for construction tasks. These systems make it easier for the workers to control the robots. In telerobotics, the machine does not operate autonomously but is under the control of a human operator. The operator performs data sensing, interpretation, and cognitive activities such as task planning (La et al., 2014). Telerobotics could significantly reduce the uncertainties in designing and preprogramming. These telerobotic systems make it more reliable for the robots to work in a dynamic and constantly evolving environment such as a construction site. Moreover, they significantly reduce testing time when the application needs to be changed. However, the drawback is also apparent: these systems are semi-autonomous and require a high level of robotics expertise, which makes them difficult to implement on an actual site. The effective use of construction robots demands a workforce equipped with specialized knowledge in robotics, automation, and technology integration. Skilled workers not only enhance the overall productivity and performance of robotic applications but also contribute to the safe and optimal use of these technologies on construction sites. In light of this, investing in training programs and fostering a culture of continuous learning becomes crucial. However, such training is costly and time-consuming. In a well-established industry such as construction, stakeholders are also skeptical about the productivity gained through automation, so robotic systems need to be more robust before they can be fully utilized and add value to construction sites (Javaid et al., 2021).

2.2.3 Programmable construction machines

Programmable automation allows machine configurations and operation sequences to change based on signals sent from electronic controls (Ding et al., 2018). With a programmable automation system, machine operations and sequences can be preprogrammed into different functionalities. Besides, programmable construction machines can use an electronic representation of a portion of the construction site where their work will be conducted to control all or part of the machine’s operation (Saidi et al., 2016). The final goal of this kind of system is to allow the operators to vary the task to be accomplished within certain constraints by choosing from a preprogrammed menu of functions or by teaching the machine a new function. These machines introduce a critical shift along two distinct dimensions. First, they alleviate the demand for the significant physical effort traditionally required in various tasks. Second, they target a reduction in human cognitive effort, streamlining processes and allowing human workers to focus on higher-order decision-making rather than routine, repetitive tasks. Explicitly addressing these dimensions is essential when assessing the impact and effectiveness of programmable robots in both enhancing efficiency and optimizing the allocation of human skills. For example, Ding et al. (2018) introduced a programmable hydraulic controller for a robotic excavator to adapt to dynamic changes during excavation. Prieto et al. (2020) proposed an integrated programmable robotic platform to extract building elements from IFC to generate a progress report in changing environments. Programmable machines are a further development of the two categories mentioned above that achieves a higher level of autonomous control, which is easier to use on the job site and more suitable to address the challenges in adoption. 
The final goal of these systems is to build up a collection of robotic construction activities, a standardized database or reference that could be adopted into many construction tasks in different projects. However, a systematic framework needs to be developed to guide the integration of different robotic activities and functionalities to build up a programmable machine. This is a challenge for construction practitioners without particular expertise in robotics to set up sensors, autonomous control schemes, and communication networks for different robotic components. To the best of the authors’ knowledge, there is no general framework to guide construction practitioners to build up their own programmable machines from scratch. Thus, a guide is needed to boost the wide usage of the integrated robotic system on the construction site.

2.3 Challenges for robot design in the construction industry

2.3.1 Sensing capabilities for construction environments

Automated and robotized manufacturing facilities are typically structured environments since the machines and evolving products either stay in their pre-defined locations or move on predesigned and typically fixed paths (Milberg and Tommelein, 2003). Construction robots, however, face more complicated situations than the robotic systems used in factories/manufacturing. Construction sites can typically be considered unstructured since they constantly evolve, dramatically changing shape and form in response to construction tasks. Building components are moved around without fixed paths or laydown/staging areas. Various physical connections are established through improvisation in response to in situ conditions, making tight tolerances hard to maintain and enforce (M et al., 2005). This means that pre-defined actions may not be suitable for all circumstances as construction sites and workspaces constantly change. Therefore, robots need to perceive the environment and understand how to react to changes (Feng et al., 2015). For example, Mantha and García de Soto (Mantha et al., 2019; Mantha and García de Soto, 2022) utilized fiducial markers to allow the accurate positioning of robots. Wang et al. (2020) used digital twins to update the construction environment and guided robots to execute tasks as instructed by humans in the virtual environment. Prieto et al. (2021) proposed an autonomous robot equipped with different sensors to collect data to automatically assess the state of construction. Ko et al. (2022) integrated V-SLAM with an RGBD camera on an autonomous Unmanned Aerial Vehicle (UAV) platform for concrete crack detection on construction sites. These systems are embedded with new technologies, and a more advanced construction robot platform could address dynamic changes, allowing other robotic activities to be integrated to achieve a higher level of autonomy.

2.3.2 Control and locomotion of mobility

In manufacturing, factory robotics typically involves robotic platforms that are generally stationary. Precision is achieved by controlling the pose of the moving (and evolving) product, and the robots are programmed to manipulate the products through fixed trajectories. Thus, from a mobility and cognitive perspective, a factory robot has little responsibility and autonomy (Feng et al., 2015). However, this spatial relationship is reversed in construction. A construction robot must travel to its next workstation, perceive its environment, account for tight tolerances, and perform manipulation activities. This places a significant mobility and cognitive burden on a robot intended for construction tasks, even if the job itself is repetitive. The mobility control of construction robots is also a trending topic in recent research. For example, Lee et al. (1997) proposed a fuzzy logic controller that allows robots to navigate massive construction sites based on feedback from real-time sensors; Xu et al. (2022) suggested using reinforcement learning so that robots learn from their past failures and experience how to locate themselves. Researchers have put much effort into enhancing the mobility performance of robots, and advanced strategies such as enhanced sensing capabilities, localization, and mapping algorithms are the priority to address the problem. However, only a few investigate how these algorithms can benefit the whole construction process in a broader view. For example, Chen et al. (2020) analyzed robotic mobility and manipulation performance when working with workers in an intelligent warehouse system to order and place bricks. Xu et al. (2021) analyzed the performance of a drilling robot and identified some challenges to productivity gains because of the complicated setup procedure and the time-consuming localization process using a total station. However, they did not devise a solution to enhance the whole process. 
Almost none analyze the mobility performance and benefits from a systemic view. Instead, they only evaluate the accuracy and performance of the mobility strategies. There is plenty of room for researchers to optimize mobility strategies more autonomously and systematically.

2.3.3 Autonomy: higher-autonomous intelligent systems

An autonomous construction robot is expected to accomplish its task(s) within a defined scope, with minimal or no human intervention (Saidi et al., 2016). However, most of the applications in the literature mentioned above work in semi-autonomous configurations, which require human effort to assess and inspect. For example, Elfes et al. (1998) investigated a semi-autonomous airship for environmental monitoring, with humans giving orders on the actions to take during navigation. Sutter et al. (2018) proposed a semi-autonomous bridge inspection system that needs humans to inspect and modify the position at which each scan is taken; this semi-autonomous approach separates the localization and data acquisition processes, which adds an extra burden to system management and data transfer. Few previous studies investigate how to automate defined construction tasks with added functions. For example, Mantha and García de Soto (2019) proposed a system using fiducial markers to localize the robotic system and plan paths according to the data collected. The proposed system is semi-autonomous and relies on static markers with human instructions, which adds much complexity when using such a robotic system. In a higher-level autonomous setting, the construction robot is expected to adapt to its sensed environment, formulate plans for executing its task, and re-plan as necessary. The intelligent construction robot should also determine when its tasking is not executable and request assistance (Eiben et al., 2021). All these processes need an integrated control system and communication networks to execute different services or actions in a given scenario. To the authors’ knowledge, previous research has not provided a general solution to this problem. This paper proposes a general framework to handle and standardize the process (see Section 3). 
The application is tested using an integrated autonomous platform for data collection (see Section 4).
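The higher-level autonomous behavior described above (adapt to the sensed environment, plan, re-plan as necessary, and request assistance when the task is not executable) can be illustrated with a minimal sketch. This is a toy example and not the framework proposed in this paper: the grid world, the breadth-first planner, and the names `plan_path` and `run_task` are assumptions introduced purely for illustration.

```python
from collections import deque


def plan_path(start, goal, known_obstacles, width, height):
    """Breadth-first search on a 4-connected grid.

    Returns the list of cells from start to goal, or None if the goal
    cannot be reached given the obstacles known so far.
    """
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        x, y = cell
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nxt[0] < width and 0 <= nxt[1] < height
            if inside and nxt not in known_obstacles and nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return None


def run_task(start, goal, true_obstacles, width, height):
    """Drive toward the goal, re-planning whenever a blocked cell is sensed.

    The robot starts with no obstacle knowledge (hypothetical setup) and
    only "senses" an obstacle when it is about to enter that cell.
    """
    known = set()
    position = start
    replans = 0
    while position != goal:
        path = plan_path(position, goal, known, width, height)
        if path is None:
            # Task is not executable with current knowledge: ask for help.
            return {"status": "assistance_requested",
                    "position": position, "replans": replans}
        for nxt in path[1:]:
            if nxt in true_obstacles:
                known.add(nxt)   # adapt to the sensed environment
                replans += 1     # trigger a new plan from the current cell
                break
            position = nxt
    return {"status": "completed", "position": position, "replans": replans}
```

For instance, on a 3 x 3 grid with a single obstacle between start and goal, `run_task((0, 0), (2, 0), {(1, 0)}, 3, 3)` finishes after one re-plan, whereas blocking both neighbors of the start cell leads to an assistance request. A real platform would replace the grid and the sensing rule with its own map representation and sensors.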

2.4 Existing construction robotic platforms

Given the wide range and availability of robotic platforms, a distinction needs to be made between the framework they are based on. In the following subsections, the terms “closed” and “open-source” platforms are used to refer to platforms that keep the development of the algorithms private and to those that make all the development available, respectively.

2.4.1 Existing “closed” platforms

Construction robots are generally creating interest in the academic and industrial domains. Gradually, more and more robotic applications have been brought into the construction industry to assist construction activities. Many researchers have tried to adopt robotic systems mostly based on robotic platforms that are commercially available in the market. However, most commercial products are “closed” products, meaning that the company behind the product’s manufacturing might not be willing to fully disclose the working principles of the control behind their platform or even allow the end user to modify it to suit their application better. A software development kit (SDK) is sometimes provided, although it often does not provide access to all the platform’s capabilities. For example, Brosque and Fischer (2022) proposed a reference framework to evaluate the performance of multiple commercial products on the construction site and assess their safety, productivity, and quality performance. Xu et al. (2021) used a case study to evaluate the performance of the drilling robot “Jaibot” to drill holes for the installation hangers on the MEP systems according to the BIM models and suggested possible enhancements to the platform. Wetzel et al. (2022) used Boston Dynamics’ four-legged robot “Spot” to test the quality and efficiency of LiDAR Scanning on Active Construction Sites. Tribelhorn and Dodds (2007) evaluated the opportunities and limitations of an “iRobot” platform for construction research and tasks.

These cases show that the adoption of robots on construction sites is increasing, but it remains low compared to other fields. The current robots used in construction are limited to one specific task on a small scale. On top of that, the functionalities provided rely heavily on the stock features and characteristics of the commercial products. Most currently developed platforms are intended for general use in multiple fields, with only some specifically designed for a construction task, such as the Tybot for tying rebars (García de Soto and Skibniewski, 2020) or the Canvas platform aimed at drywall finishing for wall painting (Cronin et al., 2019). A general-purpose system needs further development or modification to make it suitable for a construction site, which results in tedious work for researchers and contractors.

Previous research depends heavily on commercially developed robotic platforms, which are limited by the manufacturer’s options and equipment. These studies look into adopting existing platforms in construction projects instead of finding a way to systematically connect different construction functionalities into a more generic robotic platform. Commercially available platforms come hassle-free and ready to be used with minor tweaking, but they often do not fully suit the client’s needs.

2.4.2 Existing “open-source” platforms

In contrast, open-source platforms are more customizable to the required needs and applications. With access to open-source platforms, researchers have plenty of room to focus on developing the robotic platform themselves (Adán et al., 2019). For example, some researchers have started to integrate new navigation algorithms to advance autonomous control in dynamic, evolving environments (Kim et al., 2018a; Labbé and Michaud, 2014; Kohlbrecher et al., 2011), to use the reprogrammed platform with new sensing modules to extract information for 3D mapping and reconstruction of the construction site (Kim et al., 2018b; Kurazume et al., 2017; Prieto et al., 2017), or to investigate the communication schemes between different components embedded on top of the robotic platforms (Mayoral et al., 2017). Still, someone getting started in robotics could easily get lost in the number of variables to be considered. Few studies describe in detail the steps to develop an open system customized for a particular application. With researchers still focusing on the robotic platforms available in the market, the lack of resources for construction experts to develop robots has limited the widespread integration of construction robotic applications. To the authors’ knowledge, no previous literature in the construction domain provides a systematic approach to developing such platforms. Thus, a guide for construction practitioners without expertise in robotics would greatly benefit the development and integration of robotics in the construction field.

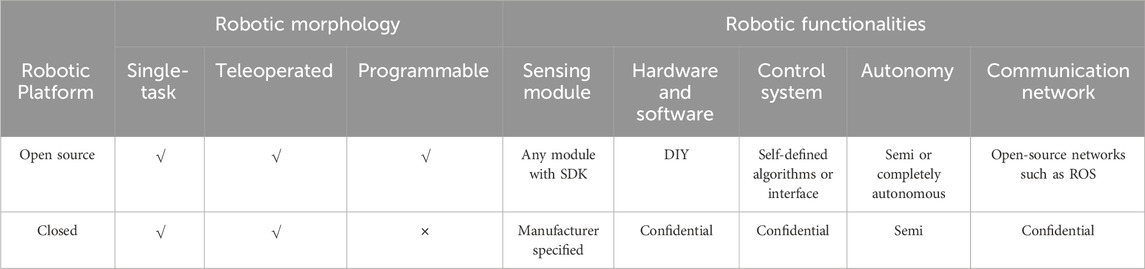

A comparison is summarized in Table 3 to help understand the different characteristics between the “closed” and “open-source” platforms.

2.5 Comparison with the state-of-the-art works

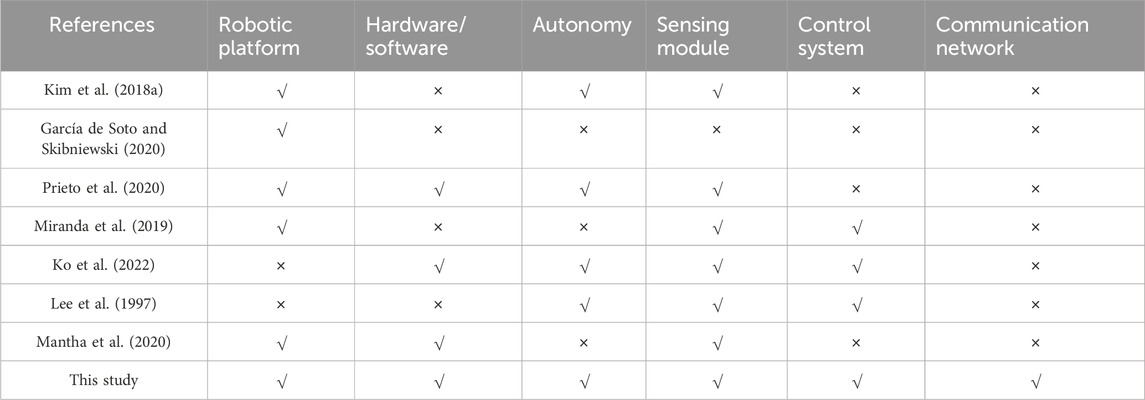

As stated in the previous literature, building a construction robot requires considering all aspects, including the robotic platform to build on, the hardware and software integration, the autonomy the robot requires, the different sensing modules on board (i.e., sensors and computers), the control strategies and systems, and the communication network. However, to the authors’ knowledge, previous studies on construction robots mainly focused on one or two specific aspects instead of giving a general integration of all the elements required to build the system. Table 4 gathers information from state-of-the-art studies on construction robots, summarizing whether the elements discussed above are present in their research work. This paper aims to integrate all elements to design a construction robot from scratch, providing easy-to-follow steps that allow construction practitioners without a robotics background to design their own robot based on the application requirements.

3 Methodology

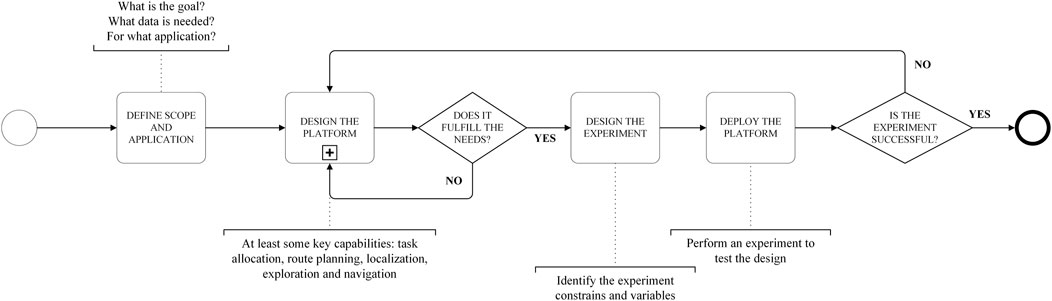

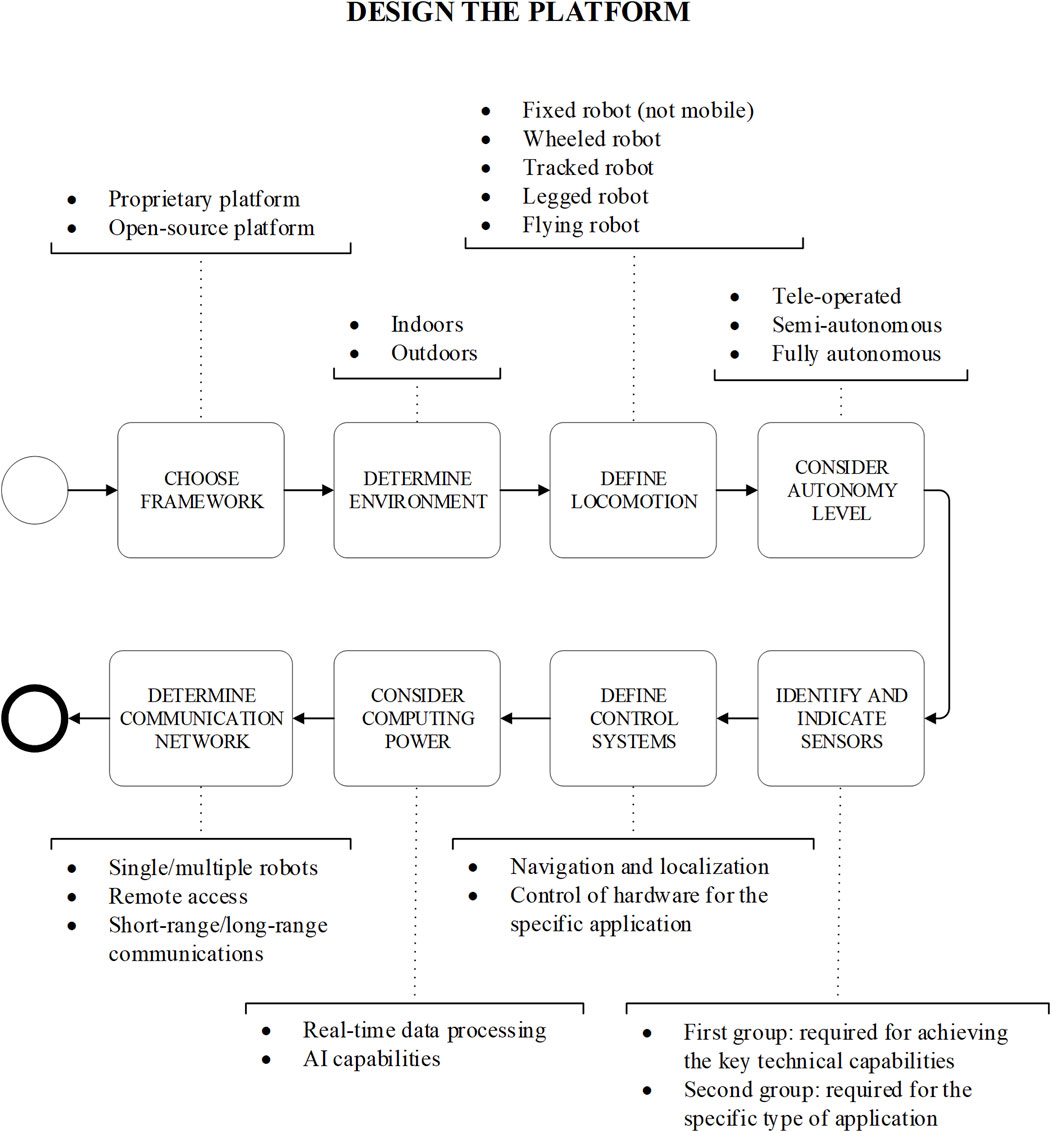

Robotic platforms come in different forms and are embedded with various functionalities to achieve different goals. This section provides a systematic methodology to cover all the possible choices and assist construction professionals interested in determining which robotic platform might be useful in their projects. Our methodology aligns with the principles of Design Science Research (DSR) (Hevner et al., 2004), following an iterative process of design, development, and evaluation to address the identified challenges. The main steps of the methodology are summarized in Figure 1. The principal component is the actual design of the robotic platform, which consists of an iterative process ensuring that all the robot components are chosen considering their specific purpose. Each step is explained in detail in this section.

3.1 Define the scope and application

The first step is to define the scope and the goals/application of the robotic platform. Questions such as “What is the robot intended to do?” or “What tasks will be performed by the system?” should be addressed in this step. The answers will be a determining factor for the other choices regarding the actual design.

Defining the application is a process in its own right, but it is beyond the scope of this study, which focuses solely on the design process of the robotic platform. Therefore, it is assumed that the application and scope of the robotic platform are clear and well-defined at this point. For example, in the case of an application focused on masonry work, the goal of the robot should be clearly defined and not too broad, such as laying bricks with a set of pre-defined parameters (i.e., length, width, height, and position of the wall to be built).

3.2 Design the platform

Regardless of the specifics of the individual application defined in the previous step, a robotic platform with minimum autonomy must fulfill a set of key capabilities that are common to every case (Mantha et al., 2020). First, to achieve the desired application, the platform needs to allocate tasks, i.e., break down the main goal into smaller tasks that need to be processed in a given order. In addition, the robot needs to be able to locate itself within the work environment, knowing at every moment the relationship between the local coordinate system of the platform and the global coordinate system of the scenario. This can be achieved by different means, such as passive localization (Nahangi et al., 2018; Park and Hashimoto, 2009) (e.g., markers, AprilTags, RFID) or active localization (Fox et al., 1998) (e.g., visual odometry, particle filters). Following localization, both navigation and route planning are required. If the robot is to perform autonomous, unsupervised movement, it must be equipped with the necessary sensors and algorithms. To ensure that all the different aspects are considered, a more detailed breakdown of this step is shown in Figure 2. The following subsections provide a detailed explanation of each step.
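The relationship between the local coordinate system of the platform and the global coordinate system of the scenario can be illustrated with a planar rigid-body transform. The following is a minimal sketch, assuming a 2D pose `(x, y, theta)`; the function name `local_to_global` and the example values are illustrative and not part of the methodology.

```python
import math


def local_to_global(robot_pose, point_local):
    """Transform a point from the robot frame to the global (site) frame.

    robot_pose:  (x, y, theta) of the robot in the global frame, theta in radians.
    point_local: (x, y) of a point expressed in the robot frame.
    """
    x, y, theta = robot_pose
    px, py = point_local
    # Rotate the local point by the robot heading, then translate by its position.
    gx = x + px * math.cos(theta) - py * math.sin(theta)
    gy = y + px * math.sin(theta) + py * math.cos(theta)
    return gx, gy


# Hypothetical example: a robot at (2, 1) facing the global +Y direction
# detects an obstacle 1 m straight ahead of it.
obstacle = local_to_global((2.0, 1.0, math.pi / 2), (1.0, 0.0))
```

In this example the obstacle lies at approximately (2, 2) in the global frame. A localization method, whether passive or active, is what supplies the `robot_pose` used here.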

3.2.1 Choose framework

In some cases, if the application has no unusual or highly customized requirements, the options available from robotic service retailers (e.g., Boston Dynamics, iRobot, Universal Robots) could meet the needs of the user. However, proprietary platforms ready to be deployed are, for the most part, limited to the capabilities provided by the developer/manufacturer, with little room for expansion or customization. One advantage of such platforms is that they do not require an operator with robotics knowledge or special skills to set up and deploy the platform for the desired application.

On the other hand, choosing an open-source platform, or even developing one from scratch, offers far more customization and freedom regarding hardware components and overall control. For a platform to be fully open-source, not only the Operating System (OS) of the devices controlling the platform but also the middleware running on that OS needs to be open-source. The OS manages the interaction between the different hardware components through device drivers. The middleware, in turn, is a framework that facilitates the implementation of a complex distributed application involving many interacting components and logic. The most popular middleware used in robotics is the Robot Operating System (ROS) (Bock and Linner, 2016). ROS is an open-source middleware system dedicated to robots; its first version (ROS1) is widespread throughout the research community and provides multiple tools for researchers to control every aspect of a robotic platform. Its successor, ROS2, builds upon feedback from the community and addresses shortcomings of its predecessor, such as the cybersecurity component. Other alternatives are rising in popularity, such as the NVIDIA Isaac Middleware (Nvidia, 2022), Robot Construction Kit (Rock) (Rock-robotics, 2022), Urbi (Softbank Robotics Europe, 2022), and Orca (Orca-robotics, 2022; Feng et al., 2015), to name a few. Arduino platforms are also popular within the hobbyist community, given how widespread they are and the flexibility they offer. Although they could be used for specific and simple tasks, such as IoT applications and connecting different sensors throughout the construction site, they do not scale well to bigger and more complex systems. Based on the authors’ experience, ROS is the middleware most commonly used in the research community and the industry and is, therefore, the one used in this study.

Proprietary commercial and open-source platforms have advantages and disadvantages, and a detailed comparison is beyond the scope of this study. However, the three main differentiating factors are the cost, customization capacity, and skills required for deployment. In general, the proprietary commercially available platform is costlier and less customizable but easier to deploy and has the added benefit of technical support availability. For this study, customization and flexibility are preferred, so only an open-source platform is considered.

3.2.2 Determine environment

An important aspect to consider is whether the application to be performed by the platform happens indoors or outdoors. This will not significantly affect the software part of the robot but will most likely affect any choice regarding the hardware of the robot.

The most significant consideration in an outdoor scenario is the ability to cope with weather conditions. In some parts of the world, the climate can be extreme and challenging, with very high or very low temperatures, high humidity, and rain, conditions that are often unsuitable for most hardware components. Ingress Protection (IP) ratings, such as IP65, IP66, and IP67, therefore need to be considered for outdoor devices, since they certify that a device to be included in the robotic platform is protected against both dust and water.
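To make the ratings above easier to read, the two digits of an IP code can be decoded separately: the first digit refers to protection against solids (dust) and the second to protection against water. The following is a minimal sketch covering only the digits relevant to the ratings mentioned here; the helper name `decode_ip` and the abbreviated descriptions are illustrative, and the applicable standard (IEC 60529) should be consulted for the exact test conditions.

```python
# Abbreviated meanings of the digits used by the ratings discussed above.
SOLIDS = {
    5: "dust-protected",
    6: "dust-tight",
}
LIQUIDS = {
    4: "splashing water",
    5: "water jets",
    6: "powerful water jets",
    7: "temporary immersion",
}
UNKNOWN = "not covered in this sketch"


def decode_ip(code):
    """Return (solids, liquids) protection descriptions for a code such as 'IP65'."""
    code = code.strip().upper()
    if len(code) != 4 or not code.startswith("IP") or not code[2:].isdigit():
        raise ValueError(f"unsupported IP code: {code!r}")
    solids_digit, liquids_digit = int(code[2]), int(code[3])
    return SOLIDS.get(solids_digit, UNKNOWN), LIQUIDS.get(liquids_digit, UNKNOWN)


ratings = {code: decode_ip(code) for code in ("IP65", "IP66", "IP67")}
```

Note that the water digits are not strictly cumulative: an immersion rating does not by itself imply that the device was also tested against water jets, so the expected exposure on site (rain, washdown, or standing water) should guide the choice.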

The type of scenario can also affect how the robotic platform interacts with the environment, not only in how it traverses the scenario but also in how it collects data from it. Certain outdoor scenarios require different characteristics for the locomotion aspect, and some specific sensors, such as 3D scanners, are often not suitable for large open outdoor scenarios, not only for their range limitations but also for their poor performance with open skies or direct sunlight exposure (Tredinnick et al., 2019). In the example of a robot aimed to perform masonry work, both outdoor and indoor environments should be taken into consideration. In addition, debris on the floor should be expected, as well as the presence of heavy dust conditions.

3.2.3 Define the locomotion system

Once the environment has been defined, the locomotion of the robot can be determined. Depending on how they move (or do not move) through the scenario, robotic systems can be categorized into fixed robots (non-mobile platforms), wheeled robots, tracked robots, legged robots, and flying robots (Quintana et al., 2021; Quintana et al., 2021).

Fixed robots are not that common in the construction industry, except for applications where pre-fabricated structures are being used (i.e., gantry systems and fixed robots for 3D concrete printing applications).

Flying robots are often limited to outdoor scenarios, with only specific cases able to operate fully indoors (Grzonka et al., 2009; Sato et al., 2016). They often perform best when height is the controlling factor, such as when inspecting building facades or where a top-down aerial view is needed. The control and localization of a flying robot often rely on external elements, such as the availability of GPS.

Wheeled robots, on the other hand, are the most common in indoor scenarios. This does not mean they are unsuitable for outdoor environments, but they are more reliable and easier to operate indoors. They are often easy to control and offer reliable, high-precision maneuverability. A distinction can be made for holonomic platforms equipped with mecanum or omnidirectional wheels. These allow the robotic platform to move along both the X and Y axes (i.e., to perform lateral movements). Holonomic platforms are limited to environments with relatively flat surfaces where the grip between the floor and the wheel is high enough for the holonomic action to take effect. These configurations often perform poorly in environments with a high presence of loose gravel or sand.

Tracked robots are better for more challenging environments that cannot ensure a flat surface or enough friction with the wheels (Ducros et al., 2017; Lever et al., 2006). Tracks offer a more reliable movement for scenarios rich in obstacles and different elevations, allowing the robotic platform to surpass small obstacles or uneven terrain. However, they do not provide as much precision and maneuverability as wheels.

Finally, legged robots are a good compromise if the robot is to perform both indoors and outdoors. Legged locomotion offers the best solution for dealing with obstacles of all kinds, including going up and down stairs. It can, however, be a more expensive choice and the hardest to control, owing to the inherent complexity of the different gaits available for a legged robot (Erden and Leblebicioǧlu, 2008; Plagemann et al., 2008). With proper control, legged robots can be as precise as a holonomic wheeled platform, or even more so. However, when speed is a requirement, a legged robot might not be able to achieve high speeds as reliably and efficiently as a wheeled or tracked robot. In the case of a masonry robot, a compromise between precision and the ability to navigate rough terrain reliably needs to be achieved. Tracks provide good accuracy in linear movement, which would be the main movement needed for a brick-laying robot, and they would be ideal for overcoming any debris present in the working area.

3.2.4 Consider the autonomy level

The level of autonomy of the robotic platform will greatly impact the choice of the sensors and the computing power and communication devices on board the robot. Three main categories can be differentiated in this aspect: completely manual (teleoperated), semi-autonomous, and completely autonomous robots (Mantha et al., 2020).

Teleoperated robots are the simplest ones regarding control and the least demanding regarding sensing capabilities. A distinction must be made between teleoperation with a direct line of sight to the robot and remote teleoperation without one (Chen et al., 2007; Lin and Kuo, 1997). The latter needs enough sensing capabilities to provide feedback to the user controlling the platform, whereas the former does not need sensors at all. Despite being simple in sensory load and control algorithms, teleoperated robots require a skilled operator to control them. They are mostly used in monitoring and rescue missions in hazardous environments where the presence of human beings is not an option.

Semi-autonomous robotic platforms are the most commonly used ones. They offer some autonomous capabilities, which vary depending on the application, but rely on human interaction for other tasks, such as charging, objective identification, or adjusting multiple parameters throughout the application. Some of the most common tasks performed autonomously are movements and anything that involves trajectory planning, repetitive actions, or task allocation (Van Henten et al., 2002; Peungsungwal et al., 2001).

Completely autonomous robots can perform the entire process without human intervention. Ideally, the robot gets an initial environment setup once, and there is no longer a need to interact with the platform again. Completely autonomous robots need a higher payload of sensors and require a greater complexity when designing the control system since they have to be reliable even when facing dynamic environments. In the case of a brick-laying robot, some manual process would be needed in order to keep the robotic platform supplied with materials (i.e., bricks). The brick-laying process could be designed to be made completely autonomous.

3.2.5 Identify and choose sensors on board

In general, sensors are needed to collect the necessary data from the surroundings and for the robot to react to the environment. For the sensory load of the robotic platform, two main groups of sensors can be differentiated. The first group consists of all the sensors necessary to fulfill the minimum key capabilities previously indicated, i.e., mapping, localization, and navigation tasks. These are referred to as basic sensors. Sensors within this group can be of different types, but they all share the ultimate goal of providing feedback from the environment to either the robot or the user controlling it. Some of the most typical sensors for this purpose are wheel encoders (to provide odometry), inertial measurement units (IMU), global positioning system (GPS) receivers, light detection and ranging (LiDAR) devices, ultrasonic ranging devices, and cameras. The second group comprises sensors specific to the application (i.e., task-specific sensors). These could be any type of sensor and should be chosen based on the specific application. For example, if the objective of the robot is to monitor the comfort level of a building (Quintana et al., 2021), it would have to be equipped with CO2, humidity, and temperature sensors, to mention a few. In some cases, the line between the first and second groups can be fuzzy, since sensors from the first group could also be used as task-specific sensors. However, the distinction is made based on their usage for the minimum key capabilities (i.e., mapping, localization, and navigation tasks). If a sensor is specifically used to fulfill these capabilities, then it belongs to the first group, even though the data coming from the sensor could also be used for task-specific applications.

In general, the more sensors there are, the better equipped the platform will be. However, three main limiting factors determine the number of sensors a platform can carry. These factors are the platform payload, the computing power needed to handle all the incoming data, and the cost of the devices. For example, if the platform does not have a big payload capacity, specific sensors, such as long-range 3D scanners, can be too heavy to be combined with other sensors. Taking into consideration these three factors, for more complicated tasks, it is advisable to split the sensory load amongst multiple robotic agents (Prieto et al., 2021). In the particular case of a brick-laying robot, sensors regarding autonomous close-range movement would be needed (i.e., LiDAR and short-range ultrasonic ranging devices). A way to detect the 3D space in front of the robot to keep track of the building wall would be needed (i.e., LiDAR or depth camera). In addition, a robotic arm and a specifically designed gripper would be needed to handle the bricks and lay them properly.

3.2.6 Define control systems

Having defined the tasks needed to fulfill the application requirements, the level of autonomy the robotic platform needs to have, and the sensors on board the platform to fulfill all the required capabilities, the proper control system to handle everything needs to be implemented.

As mentioned in Section 3.2.1, choosing the right middleware is a key step to fully control the robotic platform. Most middleware options come with predesigned and ready-to-use solutions that can take care of most of the basic capabilities a robot must be able to perform, such as odometry computations, localization and mapping (e.g., SLAM), or autonomous navigation. For the specifics of the application, most of the commercially available sensors come prepared with a Software Development Kit (SDK) that allows the user to write specific code to control the sensor. In most cases, a driver can be written for the interaction of the proper middleware with the sensor, allowing the robot platform to fully control all the devices on board.

3.2.7 Consider computing power

The computing hardware and software components play a central role in implementing the logic and ensuring the proper behavior of autonomous robots. In terms of size and complexity, hardware components can range from small microcontroller units (MCUs) to full-featured computers. MCUs have limited computing capacity but are equipped with several interfaces to connect sensors, actuators, and external peripherals. They are, thus, used for low-level interfacing with such devices. Embedded computers are more powerful. They typically run OSs, such as Linux, but they have limited support for direct interfacing with sensors and actuators. Common architectures in complex applications include several MCUs and computers.

The amount of computing power to be added to the robotic platform will be determined by different factors, such as the amount of data coming from the different sensors on board and how much data processing will happen in real time on the platform. Given the rapid growth of Artificial Intelligence (AI) and Machine Learning (ML) algorithms in data processing, it is safe to assume that any platform requiring real-time data processing will need a fair amount of computing power to run these algorithms. Therefore, the addition of Graphics Processing Units (GPUs), which are better suited for this type of processing, also needs to be considered. A brick-laying robot, for example, would not prioritize high-speed real-time computing, and therefore, GPUs would not be necessary.
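A rough estimate of the raw data rate produced by the sensors can help size the on-board computer. The following is a back-of-the-envelope sketch using two figures quoted for the platform in Section 4 (a LiDAR producing up to 5.2 million points per second and a 640 × 480 RGB camera). The bytes per point, bytes per pixel, and frame rate are assumptions made only for illustration and should be replaced with the values from the sensor datasheets and driver configuration.

```python
def lidar_rate_mb_s(points_per_second, bytes_per_point=16):
    """Raw LiDAR data rate in MB/s.

    bytes_per_point=16 assumes x, y, z, and intensity stored as 32-bit floats
    (an assumption, not a sensor specification).
    """
    return points_per_second * bytes_per_point / 1e6


def camera_rate_mb_s(width, height, fps, bytes_per_pixel=3):
    """Raw (uncompressed) camera data rate in MB/s; 3 bytes per pixel assumes 8-bit RGB."""
    return width * height * bytes_per_pixel * fps / 1e6


lidar = lidar_rate_mb_s(5.2e6)               # maximum point rate quoted in Section 4.2.5.1
camera = camera_rate_mb_s(640, 480, fps=30)  # fps=30 is an assumed frame rate
total = lidar + camera
print(f"LiDAR: {lidar:.1f} MB/s, camera: {camera:.1f} MB/s, total: {total:.1f} MB/s")
```

Under these assumptions, the LiDAR alone produces roughly 83 MB/s and the camera roughly 28 MB/s of raw data, before any processing. Estimates of this kind indicate whether data can be processed on board in real time or should be recorded and processed offline.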

3.2.8 Determine the communication network

There are a few elements that the communication network needs to fulfill, such as providing reliable and stable communication between the user and the robot or between the robot and any other agent it needs to interact with (e.g., a data server or another robot). The characteristics of this basic communication network will be determined by some of the aspects mentioned before, such as the level of autonomy the platform needs to have or the environment.

The complexity of the communication network for the robotic platform will increase with the number of agents within the network (Doriya et al., 2015). This is why choosing the number of robots (if a robotic swarm is needed) or elements within the network (e.g., multiple command stations or servers) is crucial to designing a manageable and reliable communication network.

Different communication topologies have been suggested for robot communication, such as star, tree, ring, mesh, line, bus, and hybrid topologies (Amiripalli and Bobba, 2019). In addition, there are different possible models of communication; for example, communication can be coordinated by a master or by a rotating token. Determining the appropriate communication system depends on the mission circumstances and on the communication hardware and its limitations, such as the distance between units, communication media and technologies, bandwidth limitations, delay, noise, and communication speed.
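One way to see how the complexity of the network grows with the number of agents is to count the point-to-point links each topology needs. The following is a minimal sketch for a few of the topologies listed above; the function name `link_count` is illustrative, the mesh is assumed to be fully connected, and bus and hybrid topologies are left out because their link count depends on the shared medium or on the specific combination used.

```python
def link_count(topology, n):
    """Number of point-to-point links needed to connect n agents."""
    if n < 1:
        raise ValueError("n must be at least 1")
    if topology in ("star", "tree", "line"):
        # Any topology without cycles needs exactly n - 1 links.
        return n - 1
    if topology == "ring":
        # A ring closes the line with one extra link (meaningful for n >= 3).
        return n if n >= 3 else n - 1
    if topology == "mesh":
        # Fully connected mesh: one link per pair of agents.
        return n * (n - 1) // 2
    raise ValueError(f"unsupported topology: {topology!r}")


for n in (3, 5, 10):
    counts = {t: link_count(t, n) for t in ("star", "ring", "mesh")}
    print(n, counts)
```

The number of links in star and ring topologies grows linearly with the number of agents, whereas in a full mesh it grows quadratically, which is one reason the number of robots and network elements should be fixed early in the design.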

Another aspect to consider is whether the robot is confined to a closed, local communication network or is exposed to the Internet. The former ensures a controlled communication environment, limiting exposure to external risks and maintaining data security. However, it restricts the robot’s access to broader data resources and may limit its functional capabilities, especially in tasks requiring real-time, global data. The latter exposes robots to the Internet through 5G connectivity. This aligns them more closely with concepts like the Internet of Things (IoT) and the Internet of Everything (IoE). The advantages include real-time data access, remote control capabilities, and integration with a wide array of online services, sensors, and databases. However, this approach raises significant concerns regarding cybersecurity, data privacy, and the need for robust, uninterrupted Internet connectivity.

3.3 Test and deploy the platform

Once the platform has been defined based on the design requirements indicated in Section 3.2, it is necessary to test that the result can fulfill all the specifications laid out in the initial stage. This process is carried out at the end of the design process, so there is an opportunity to test the full system every time something new gets implemented. During this iterative process, based on the test results, if the platform is successful and performs as intended, then it can be deployed. If the test is not successful, the process loops back to the beginning of the design process to modify whatever is necessary before another test.

Once the design is completed and the test has been successful, the platform can be deployed for its intended application, ensuring that enough user training is provided to the people who would be in contact with the platform, both in terms of use and safety.

4 Implementation: development of a semiautonomous data collection system

To illustrate the use of the methodology presented in Section 3, this section compiles the steps required to develop a platform for automating the collection of data (e.g., taking scans including point cloud data, RGB, and thermal images) on construction sites based on open-source platforms and flexible systems (i.e., not dependent on proprietary/closed products). This particular application was chosen because data collection robots have been applied to different construction activities, such as progress monitoring, construction operation, surveying, reconstruction of on-site elements, and maintenance of buildings. However, there is a gap in integrating these two processes to advance the functionality of such systems. In addition, the development of data acquisition hardware, such as cameras, IMUs, LiDAR, and lasers, provides researchers with higher-dimensional data streams in the data acquisition and inspection process, which requires extra expertise to process and manage data in different formats (Milberg and Tommelein, 2003). A summary of relevant research on on-site construction data collection platforms is provided in Appendix A.

4.1 Define the scope and application

The particular application is data collection on construction sites. General tasks such as data collection for progress monitoring purposes are an ideal application for autonomous robots since, for the most part, they do not involve very structured or extremely dynamic task requirements. In this case, the developed platform will have to autonomously traverse a construction environment during all the different stages of the construction process, from the early stages of construction to operations and maintenance. While navigating the construction site, the robot will have to be able to collect different types of data from the environment, mainly 3D geometric data, (color) pictures, and infrared information. The application requires the platform to be completely autonomous during operation, with only minimum interaction from the human operator while setting the task. Based on this, the platform needs to have the following characteristics:

• Good mobility in small indoor spaces.

• Limited interaction from a human operator.

• The ability to add sensors and peripherals to collect data from the site (i.e., have enough payload).

• The ability to customize software and algorithms in control of the robot’s behavior.

• Sufficient battery life to perform the application.

• Compliance with any required safety requirements.

4.2 Design the platform

There are multiple commercially available robotic platforms (FARO, 2022; Leica, 2022); however, for purposes of this study, customization and flexibility are preferred, so only an open-source platform is considered, and discussion of off-the-shelf robotic platforms is not part of the scope.

4.2.1 Choose the proper framework

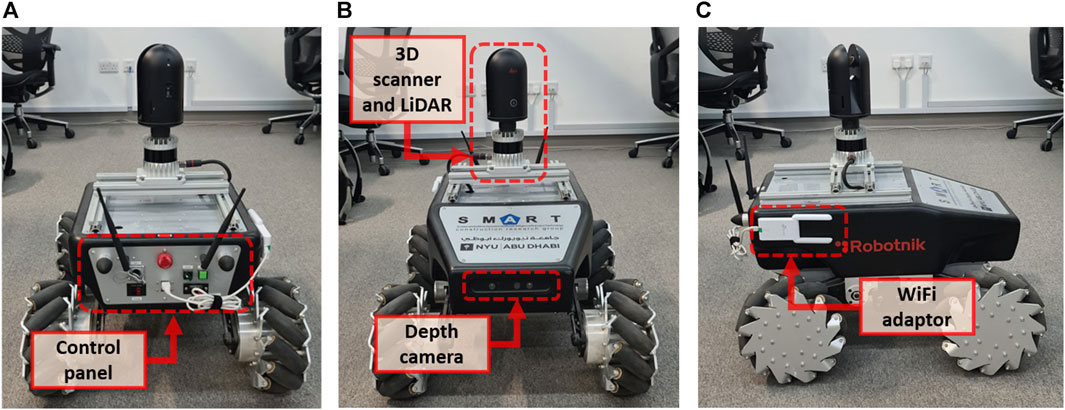

An integrated framework is needed to set up communication between different modules of the robotic platform and the information flow between them. Since the level of customization and control needed for the specific application is relatively high, an open architecture ROS-based platform has been chosen. The basic hardware corresponds to a SUMMIT-XL robotic platform from Robotnik (2022). Different sensors and customizations (Figure 3), discussed in detail next, have been added to the basic platform, making it suitable to address the above characteristics.

FIGURE 3. Main components of the robotic platform. (A) Rear view showing the control panel, (B) front view of the robot with the depth camera, 3D scanner, and LiDAR, and (C) side view (right) showing the Wi-Fi adaptor.

4.2.2 Determine environment

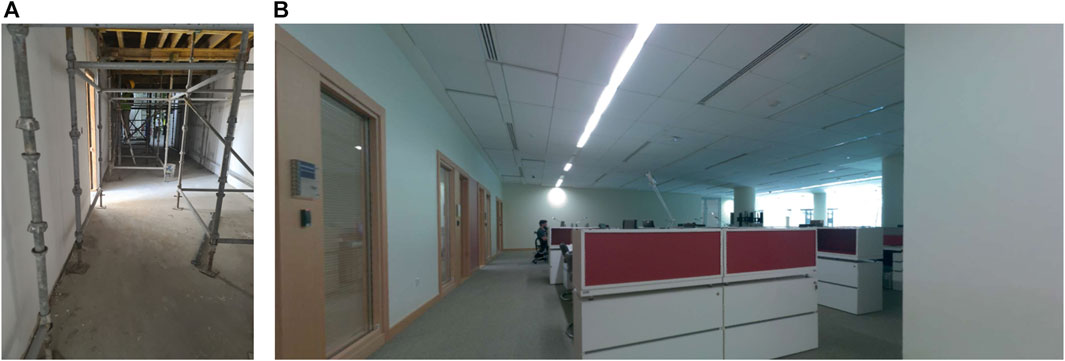

The robotic platform will mostly collect data in indoor environments for the specific required application. In general, most of the inspections during the construction stage (except for the very early ones) will happen indoors. It is expected that some of the key aspects of the environment where the robotic platform will perform most of the time are a high presence of walls and narrow passages, flat floors with a minimum amount of debris or small clutter, and well-illuminated areas. The environment can differ depending on the stage of the lifecycle of the building, such as an ongoing construction site or during operation (e.g., Figure 4). Different stages will present different challenges, such as the presence of obstacles (static or moving) that might affect mobility.

FIGURE 4. Example views of places where the platform is expected to be used during the different construction stages. General view of (A) ongoing construction site and (B) occupied office building.

4.2.3 Define the locomotion system

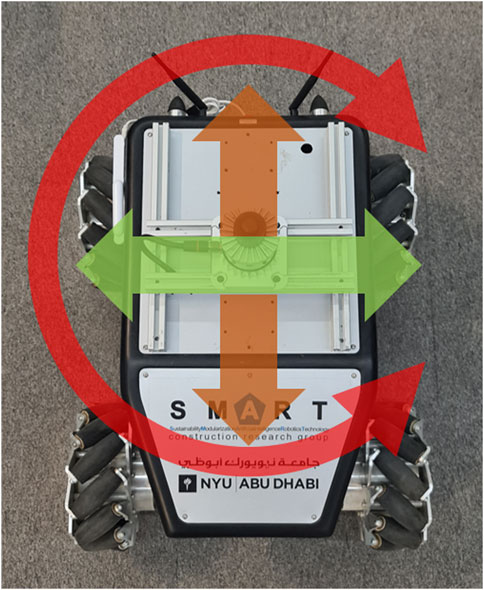

In general, construction environments contain narrow passages, so it is expected that the platform will require high precision in its movements. Legged platforms are a good option but have limitations related to maintenance and are generally more expensive than wheeled ones. A holonomic platform would allow the robotic system to maneuver amongst the narrow passages and avoid any clutter or obstacles that might be present. Using this locomotion, the robot can move in both directions of the planar space (Figure 5), presenting a wider range of movements in relatively small spaces without having to perform complicated maneuvers. This particular feature becomes very useful when traversing narrow door frames and avoiding clutter on the construction site. However, this approach might be challenging in spaces where a lot of clutter or obstructions are expected or in floors with poor traction (such as places with sand or gravel flooring conditions).

FIGURE 5. Diagram of the movement axis for a holonomic platform (X-axis: orange arrow, Y-axis: green arrow, yaw movement: red arrow).
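The planar motion shown in Figure 5 can be related to the individual wheel speeds through the inverse kinematics of a four-wheel mecanum base. The following is a minimal sketch, assuming the common convention of X forward, Y to the left, and counter-clockwise yaw, with the rollers in the usual arrangement. The function name and the geometry values (wheel radius `r`, half wheelbase `lx`, half track width `ly`) are illustrative placeholders and not specifications of the SUMMIT-XL.

```python
def mecanum_wheel_speeds(vx, vy, wz, r=0.1, lx=0.2, ly=0.2):
    """Wheel angular velocities (rad/s) for a desired body velocity.

    vx, vy: linear velocity along X (forward) and Y (left), in m/s.
    wz:     yaw rate, counter-clockwise positive, in rad/s.
    Returns (front_left, front_right, rear_left, rear_right).
    """
    k = lx + ly  # lever arm that couples the yaw rate to each wheel
    front_left = (vx - vy - k * wz) / r
    front_right = (vx + vy + k * wz) / r
    rear_left = (vx + vy - k * wz) / r
    rear_right = (vx - vy + k * wz) / r
    return front_left, front_right, rear_left, rear_right


forward = mecanum_wheel_speeds(1.0, 0.0, 0.0)  # all wheels turn at the same speed
lateral = mecanum_wheel_speeds(0.0, 1.0, 0.0)  # diagonal wheel pairs turn in opposite directions
spin = mecanum_wheel_speeds(0.0, 0.0, 1.0)     # left and right sides turn in opposite directions
```

The lateral case shows why traction matters: sideways motion relies on opposing wheel forces cancelling out, so wheel slip on sand or gravel directly degrades the resulting movement.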

4.2.4 Consider the autonomy level

In general, a high level of autonomy is required. This means that the platform must not only navigate autonomously from one point to another but, ideally, also perform the entire data collection process autonomously. The only input required is minimal interaction from the human operator at the beginning of the process (e.g., providing the robotic platform with enough data, such as the BIM model, to perform the assessment). For simplicity, it is expected that the platform will not be controlled remotely from outside the construction site; instead, all interactions with the platform will take place within the site. Another important aspect to consider when defining the autonomy level is the battery life of the robotic platform. The autonomous process might be interrupted if the battery life is not long enough for the platform to perform one full operation. The SUMMIT-XL platform has a standard battery life of 4–5 h, which should be sufficient to perform standard data collection in a construction environment.

4.2.5 Identify and indicate sensors on board

The desired level of autonomy is fundamental to the selection of 1) the sensors for mobility, or basic sensors (i.e., those used for navigation, localization, and positioning), and 2) the sensors for data collection, or task-specific sensors (e.g., thermal camera, scanner).

4.2.5.1 Basic sensors

The first group comprises sensors responsible for localization and autonomous navigation. Construction sites are characterized by being unstructured and dynamic. This creates extraordinary challenges for robots to localize and navigate in such environments, which is why specialized sensors must be added to solve this challenge. Since the robot will be working in a dynamic and cluttered environment, it must be able to avoid dynamic and static obstacles while executing a given task.

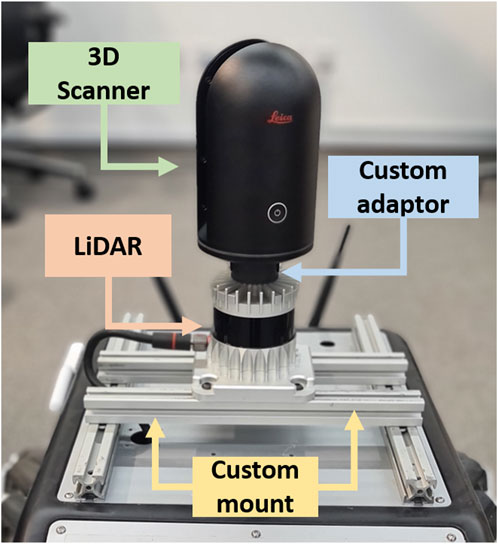

The most common way to solve both localization and navigation is with Simultaneous Localization and Mapping (SLAM) algorithms. There are many implementations of 2D and 3D SLAM with different sensor configurations. Most hardware configurations employ a dedicated sensor for 2D SLAM and other sensors to gather 3D data. The SUMMIT-XL platform comes with a 2D Hokuyo LiDAR, which is of limited use in dynamic and complex environments. To overcome this limitation, the 2D LiDAR was replaced by a 3D Ouster OS1 LiDAR (Figure 6). This LiDAR has a minimum range of 0.8 m and a maximum range of 120 m, a vertical field of view (FOV) of 33.2° and a 360° horizontal FOV, with a maximum of 5.2 million points per second and a precision of up to ±0.7 cm. The LiDAR gives the robotic platform a sufficiently detailed awareness of its surroundings, which can be used for localization, navigation, and dynamic obstacle avoidance (Figure 7). On top of this, the localization of the robot is improved by fusing the LiDAR data with the odometry from the wheels’ encoders and the Pixhawk Inertial Measurement Unit (IMU) installed in the robot.

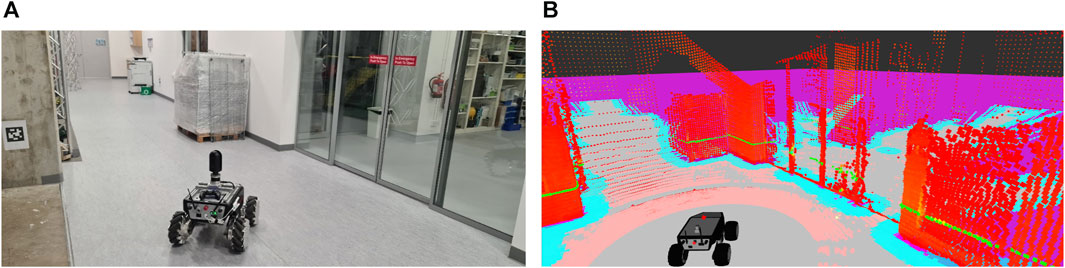

FIGURE 7. (A) View of the robot in the scenario. (B) Real-time visualization of the sensors’ data in Rviz.

4.2.5.2 Task-specific sensors

For the second group of sensors, the application requires geometric 3D data, color, and infrared information. For this particular purpose, the Leica BLK360 3D scanner (Figure 6) was chosen. The 3D scanner can collect data with a high FOV (360° × 300°) and a much higher 3D point accuracy (8 mm @ 20 m). The maximum range of the BLK360 is 60 m, which is enough for the application requirements. It runs on batteries and weighs just 1 kg, which makes it ideal to be mounted on top of mobile robots. The scanner not only gathers 3D points but is also equipped with three 5 MP cameras to generate an HDR full-dome RGB image and a thermal infrared FLIR camera to provide thermal information. The data collected from the BLK360 is also complemented with an RGB-D camera installed in the front of the robot that can be used when single pictures need to be taken for monitoring purposes. The depth camera is an Orbbec Astra with a maximum range of 8 m, a FOV of 60° H × 49.5° V × 73° D, and an RGB and depth resolution of 640 × 480.

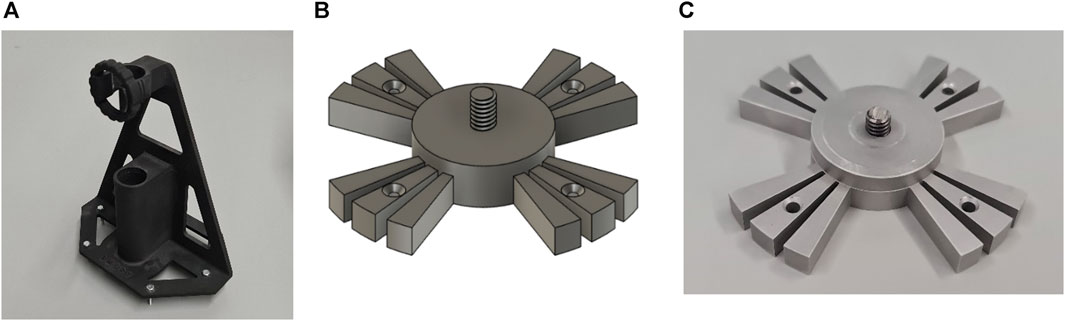

A special mount was designed to fit the BLK360 on top of the OS1 LiDAR so that the full 360° of the LiDAR can be used (Figure 8). A series of iterations and rapid prototyping was needed before the final piece was machined in aluminum so as not to interfere with the cooling of the OS1 LiDAR. The first prototype fixed the BLK360 behind the LiDAR, occluding the rear part of the robot. The final piece allows the BLK360 to be mounted without occluding any of the available FOV of the OS1 LiDAR, taking advantage of the low weight of the 3D scanner. A custom modular rail system was also designed to mount the sensory system on board the mobile robot.

FIGURE 8. (A) First prototype designed for the BLK360 mount. (B) 3D CAD model of the final design. (C) Final piece machined in aluminum.

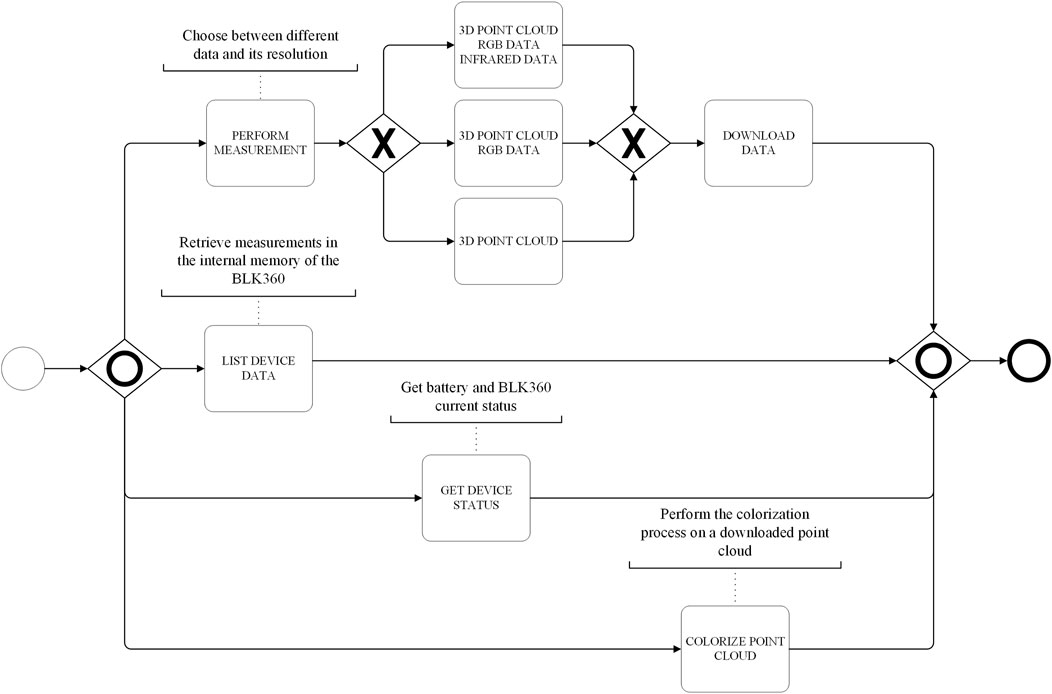

To automate the data collection, a custom ROS node was created to control the BLK360 3D scanner using the API provided by the vendor. This makes the 3D scanner controllable through the platform and brings all the devices on board into the same framework (ROS), which makes it very convenient for the platform to autonomously control all the sensors on board.

4.2.6 Define control systems

As previously indicated, the control algorithms/drivers can be classified into the same two categories (i.e., basic and task-specific sensors).

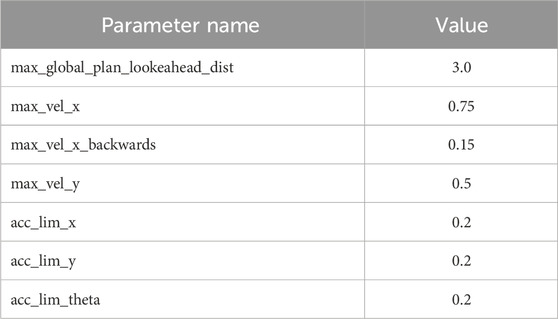

The platform developed in this study needs to perform SLAM to obtain a map of the environment and localize itself within the created map for further autonomous navigation. ROS already provides ready-made solutions for all these applications, requiring minimal configuration to adapt the algorithm to the particular hardware of the robotic platform. Thanks to how ROS is structured and configured (i.e., nodes and topics), these solutions are designed to work with topics containing a certain type of information (e.g., point cloud topics, laser scan topics, odometry topics). As long as the data is properly formatted in a ROS message structure, the source of the data is irrelevant to the ROS node running the application. For example, there are multiple solutions for the SLAM problem, such as Gmapping (Grisetti et al., 2007), Google Cartographer (Hess et al., 2016), or Hector SLAM (Kohlbrecher et al., 2011). Gmapping was chosen in this study due to its robustness, good performance, and the minimal adjustments required to obtain working results out of the box (i.e., the only necessary changes are those specific to the robot, such as the name of the topic publishing the scan information and the names of the different transformation systems). Gmapping generates an obstacle map of the environment that the robotic platform uses to navigate the scenario autonomously. To further increase the accuracy of the localization, an Adaptive Monte Carlo Localization (AMCL) approach was chosen (Fox et al., 1999). Algorithms based on particle filters, such as AMCL, have been used for many years and have proven reliable in providing accurate position estimates out of the box. The position estimate provided by AMCL is further fused with the odometry from the wheels’ encoders and the data from the IMU to obtain an even more accurate position estimate.

The navigation is solved using a global planner and a local planner. The global planner is in charge of computing an obstacle-free path from point A to point B. The local planner computes the velocity commands needed for the robotic platform to follow the path generated by the global planner while dealing with dynamic obstacles that might not have been accounted for in the global path. For this particular application, two well-known algorithms available in the ROS default navigation stack have been used for their reliability and performance. The Navfn algorithm serves as the global planner, using a Dijkstra approach (Dijkstra, 1959) to compute the path to follow. A Time-Elastic-Band (TEB) approach (Rösmann et al., 2012) was chosen as the local planner because of its performance with holonomic systems like the one developed in this study. Both algorithms work out of the box with the minimal changes mentioned before (i.e., topics and transformation systems’ names), although the TEB local planner can be further tuned to the specific environment in which the robot operates to improve navigation performance and efficiency. For this particular application, the navigation system was completely unaware of the quality of the data being collected by the 3D scanner. If one of the requirements of the system is to minimize the travel time of the robot, further optimization and tuning of the navigation system are needed so that it maximizes the amount of information collected at each scan location. Some of the main specific parameters used for this robotic platform are summarized in Table 5.
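To illustrate the idea behind a Dijkstra-based global planner, the following minimal Python sketch computes a shortest obstacle-free path on a small occupancy grid. It is not the Navfn implementation: the function name `dijkstra_grid`, the 4-connected neighborhood, and the unit step cost are assumptions made for this example only.

```python
import heapq


def dijkstra_grid(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = obstacle).

    Returns the list of (row, col) cells from start to goal, or None if the
    goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    prev = {}
    queue = [(0, start)]
    while queue:
        d, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if d > dist[cell]:
            continue  # stale queue entry, a shorter route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                nd = d + 1  # assumed uniform cost per step
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    prev[(nr, nc)] = cell
                    heapq.heappush(queue, (nd, (nr, nc)))
    if goal not in dist:
        return None
    # Walk the predecessor links back from the goal to rebuild the path.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]
```

In a real deployment, the grid would come from the obstacle map produced during SLAM, and the resulting path would be handed to the local planner, which converts it into velocity commands.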

TABLE 5. Some of the specific parameters used for the TEB local planner with the implemented robotic platform.

For the task-specific sensors, two main ROS nodes are used. The first one controls the Ouster OS1 LiDAR. This ROS node is provided by Ouster and consists of a driver that allows the ROS system to interact with the data flow from the LiDAR. The node publishes all the available information (i.e., the actual point cloud, reflectance information, 2D panoramic maps) as different topics that can be used by the other nodes responsible for SLAM and navigation. The published 3D point cloud is further processed to condense the information into a single 2D slice at the height of the LiDAR that can be used with the AMCL localization algorithm. This is done with the help of the “pointcloud_to_laserscan” ROS node (wiki.ros.org, 2022a).
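The reduction of a 3D point cloud to a 2D scan can be sketched as follows. This is a simplified illustration of the general technique and not the code of the “pointcloud_to_laserscan” node: the function name and its parameters are chosen for this example, and the sketch assumes points expressed in the sensor frame with the z axis pointing up.

```python
import math


def pointcloud_to_scan(points, min_height, max_height, angle_increment, range_max):
    """Collapse (x, y, z) points into one range value per angular bin.

    Only points whose height lies in [min_height, max_height] are kept, and
    each bin stores the closest return, as a planar laser scanner would.
    Bins without any return hold math.inf.
    """
    n_bins = int(round(2 * math.pi / angle_increment))
    ranges = [math.inf] * n_bins
    for x, y, z in points:
        if not (min_height <= z <= max_height):
            continue  # outside the horizontal slice of interest
        r = math.hypot(x, y)
        if r == 0.0 or r > range_max:
            continue
        angle = math.atan2(y, x) + math.pi  # shift from [-pi, pi] to [0, 2*pi]
        idx = min(int(angle / angle_increment), n_bins - 1)
        ranges[idx] = min(ranges[idx], r)
    return ranges
```

A narrow height band around the sensor approximates the single 2D slice described above, while a wider band would also project obstacles located above or below the LiDAR plane into the scan.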

The BLK360 3D scanner is also controlled by a ROS node. In this case, Leica provides an SDK, but a ROS wrapper (i.e., a node created to interface non-ROS code with the rest of the system) had to be developed to integrate the scanner with the ROS system. The developed ROS node needs to be able to interact with the BLK360 and provide at least the following capabilities:

• Perform a measurement (3D scan, RGB, and thermal data) with the ability to choose the concerned parameters (i.e., choose what data needs to be collected and its resolution).

• Download the measurement to the robot computer.

• Perform the necessary data processing (i.e., colorizing the 3D point cloud with the RGB images).

• Perform data management operations (i.e., download, manage, delete, list, and rename data inside the BLK360).

• Perform device management operations (e.g., get device and battery status).

The node has been developed in C++, following the latest ROS coding conventions (wiki.ros.org, 2022b), and it can integrate the BLK360 with the ROS system, providing all the previously stated functionalities in the form of ROS services. These developed ROS services can be called by any other ROS node, allowing the robotic platform full control over the 3D scanner. The overall structure of the node can be seen in Figure 9.

Finally, another ROS node was developed to integrate everything. This node is responsible for allocating all the different tasks within the data collection process. It allows the user to interact with the created map to indicate the positions where the robot needs to stop and collect data, and it creates a state machine to control the robotic platform at all times. The state machine is responsible for systematically sending the robot to the different goals, making sure the robot has successfully reached each goal and has come to a complete stop, and commanding the BLK360 scanner to perform a measurement. The robot returns to an initial resting position when all the goals have been visited.
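The mission logic described above can be sketched as a small state machine. The Python code below is an illustrative sketch only, not the node developed in this study: the state names and the callbacks `go_to`, `is_stopped`, and `scan` are hypothetical stand-ins for the navigation and scanner interfaces, and skipping the scan when a goal cannot be reached is an assumption of this example.

```python
from enum import Enum, auto


class State(Enum):
    NAVIGATE = auto()
    VERIFY_STOP = auto()
    SCAN = auto()
    RETURN_HOME = auto()
    DONE = auto()


def run_mission(goals, home, go_to, is_stopped, scan):
    """Visit each goal, scan once the robot is stopped, then return home.

    go_to(pose) -> bool, is_stopped() -> bool and scan() -> result are
    hypothetical callbacks supplied by the caller.
    """
    log = []
    pending = list(goals)
    current = None
    state = State.NAVIGATE if pending else State.RETURN_HOME
    while state is not State.DONE:
        if state is State.NAVIGATE:
            current = pending.pop(0)
            reached = go_to(current)
            log.append(("goal", current, reached))
            if reached:
                state = State.VERIFY_STOP
            else:
                # Assumed policy: skip the scan and move on to the next goal.
                state = State.NAVIGATE if pending else State.RETURN_HOME
        elif state is State.VERIFY_STOP:
            # Polls until the robot reports zero velocity; a real system
            # would add a timeout here.
            if is_stopped():
                state = State.SCAN
        elif state is State.SCAN:
            log.append(("scan", current, scan()))
            state = State.NAVIGATE if pending else State.RETURN_HOME
        elif state is State.RETURN_HOME:
            log.append(("home", home, go_to(home)))
            state = State.DONE
    return log
```

Keeping the robot-specific behavior behind callbacks means the same sequencing logic could be exercised in simulation before being connected to the actual navigation and scanner services.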

In addition to the previous ROS nodes, multiple other nodes are in charge of the behavior and control of the different components on board the robot. A simplified version of the ROS network with the main nodes discussed here is shown in Figure 10, which depicts the main nodes responsible for the data collection process (grey ovals) and the data they publish in different topics (orange rectangles).

4.2.7 Computing power

The robot has an internal computer that controls all the sensors on board and runs the main algorithms for navigation and data collection. The computer is an embedded PC with an Intel i3 8th Gen processor, 8 GB of RAM, and 250 GB of SSD storage. Since the platform is currently intended for data collection, no heavy real-time data processing is required. The collected data is processed ad hoc on an external device. With that in mind, the equipped computer has plenty of power to run the ROS architecture that controls the robot navigation and data collection. The computer is accessed remotely to give commands and retrieve the collected data.

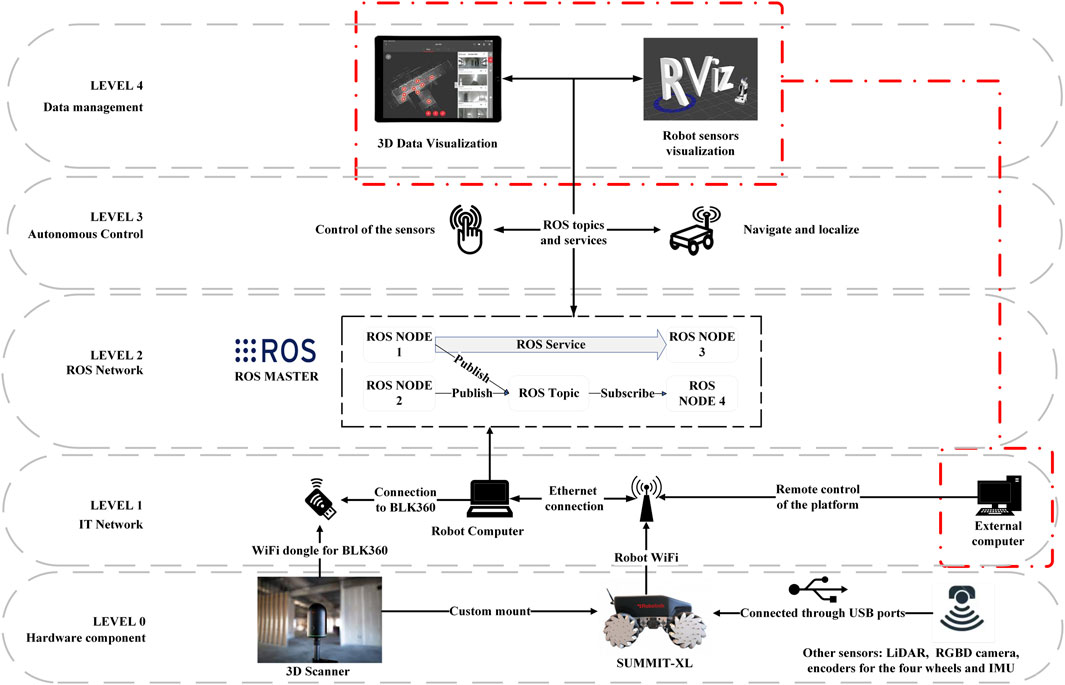

4.2.8 Communication network

The robotic platform has its own closed (i.e., without Internet access) Wi-Fi network for communication among all internal components and for remote control. A good understanding of the internal communication structure among the different components is needed. In this case, the integration of the different components and communication links within the robotic platform is divided into five levels to better illustrate how all the components are connected with each other (Figure 11). These five levels represent different aspects of the communication network, starting from the hardware and physical connections (Level 0) up to a more abstract level, such as data visualization (Level 4). These levels have been established purely for visualization and explanation purposes. In reality, the boundaries between the different levels are fuzzy, and everything belongs to the same network.

FIGURE 11. Structure of the different external and internal communication strategies of the robotic platform.

Level 0 comprises the hardware components of the robot, both for acquiring data and interacting with the environment (i.e., sensors and actuators). Within this level, devices such as the 3D scanner and all the sensors embedded in the robot (i.e., LiDAR, RGBD camera, encoders, and IMU) can be found. Most of the sensors on board the robot are connected through USB. The robotic platform itself is also part of this level, housing all the components and providing locomotion.

Level 1 corresponds to the IT network of the system. The robotic platform is equipped with a router that creates a network (both wired and wireless) through which all the different components communicate with each other. The embedded computer is connected via Ethernet to the internal router to make everything accessible within the network created by the platform. One of the downsides of the BLK360 is that it only offers wireless communication to interact with the device. Since the embedded computer of the robot does not have a wireless adaptor, a Wi-Fi dongle was needed to connect the computer to the 3D scanner. To provide a Human-Machine Interaction (HMI) interface to control the system and visualize the data, an external computer can be connected to the network.

Level 2 contains the Robot Operating System (ROS) network. The key component within the ROS network is the master node. For other nodes to exchange information and communicate with each other, a master needs to supervise all the information exchange. In this case, the internal robot computer runs the master node, and any external computer can connect to the ROS network to interact with it. This interaction works both ways, allowing the robotic platform to listen to any command from the external computer and the external computer to visualize any data coming from the robotic platform. Inside the ROS network, besides the master node, multiple other nodes are in charge of the different sensors and robot capabilities, as seen in the previous sections. All these nodes exchange information with each other in the form of messages that use topics as transport. The nodes can also request actions from each other by means of services.

Level 3 involves the semi-autonomous control of the robot. Within this level, the different ROS nodes responsible for task allocation and autonomous applications request information from the nodes controlling the sensors and send commands to the nodes controlling the actuators (i.e., wheels).

Level 4 is the data management layer. A graphical user interface (GUI) can be used to interact with and visualize the collected data. The default GUI used in ROS is RViz. It runs on the external computer to visualize any real-time information from the robotic platform, so as not to overload the embedded robot computer.

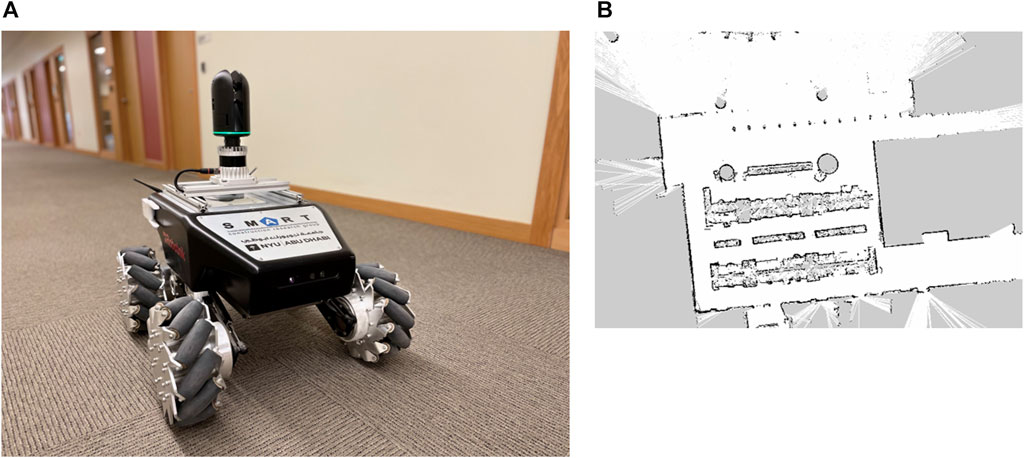

4.3 Deployment and testing

Since the developed platform is intended to work at every stage of a building’s lifecycle, it has first been tested in a finished, in-operation building, simulating a maintenance operation. Data collection during the maintenance stage can be useful for monitoring the building over time, or when a refurbishment operation is planned and no updated BIM model exists. The chosen test site is an office space in one of the buildings of a university campus. Since the BIM model of the building is not available, the robotic platform was used to generate a 2D map of the floor (Figure 12) that was then used for the autonomous navigation of the robot.

FIGURE 12. (A) View of the robotic platform performing an autonomous mission of collecting data (using the 3D scanner) in an office space. (B) Image of the obstacle map generated by the robot during SLAM while autonomously collecting data.

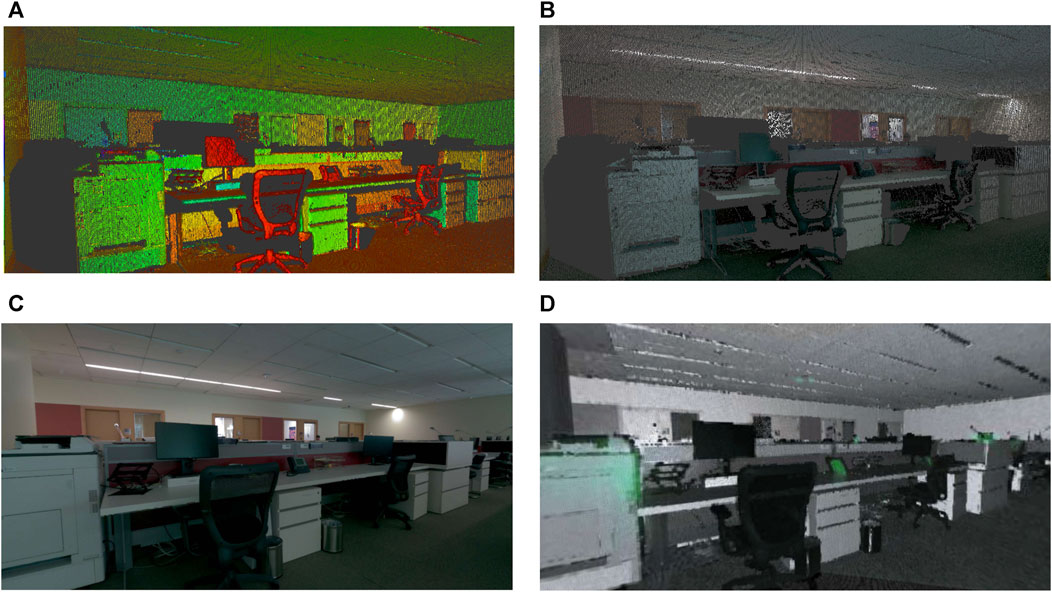

The robotic platform autonomously navigated through multiple positions scattered around the entire floor (Figure 13) to completely digitize and collect not only 3D geometric information but also RGB images used to colorize the resultant point cloud.

FIGURE 13. (A) 3D geometry from the point cloud with reflectance information, (B) 3D colored point cloud collected during the data collection inspection, (C) panorama RGB image, and (D) thermal data collected.

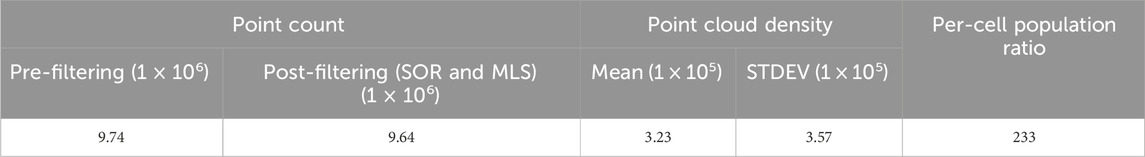

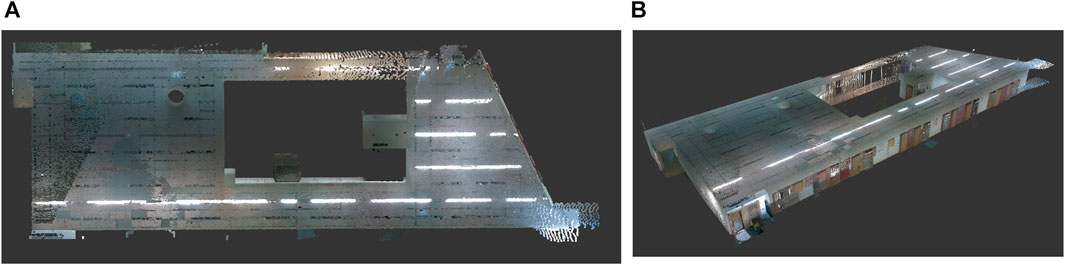

The entire inspection took approximately 30 min and successfully digitized the entire office space (approx. 500 m²) with minimal human intervention: just one individual to indicate the waypoints and monitor the robot. The resultant floor point cloud (Figure 14) has more than 50 million points and could be used to automatically generate an as-is model of the current state of the building, which could be useful for maintenance purposes or future refurbishment operations. To further assess the quality of the collected data, a quantitative analysis of the point cloud density, the percentage of noise collected by the scanner, and the point cloud coverage was carried out. As can be seen from the results in Table 6, the difference between the point count before and after noise removal is minimal, meaning that the original point cloud did not contain much noise overall. A point cloud’s volume is measured based on the volumetric space enclosed within a set of neighboring points. Using a 0.077 m search radius, the density results are summarized as the mean and standard deviation obtained from the volumetric space analysis. Finally, the per-cell population ratio represents the average distribution of points in equal-sized cells with a size of 0.05 m.
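As an illustration of how such metrics can be computed, the Python sketch below estimates a volume density per point and an average per-cell population. It reflects one common reading of these metrics and is not necessarily the exact procedure used for Table 6: counting each point among its own neighbors, dividing by the volume of the search sphere, and averaging over occupied cells only are all assumptions of this example, as are the function names.

```python
import math


def volume_density(points, radius):
    """Points per cubic metre inside a sphere of `radius` around each point.

    Brute-force O(N^2) search, adequate only for small examples; a cloud with
    tens of millions of points would need a spatial index such as a k-d tree.
    """
    volume = 4.0 / 3.0 * math.pi * radius ** 3
    r2 = radius ** 2
    densities = []
    for p in points:
        # The count includes the query point itself (assumption).
        n = sum(1 for q in points
                if sum((a - b) ** 2 for a, b in zip(p, q)) <= r2)
        densities.append(n / volume)
    return densities


def mean_std(values):
    """Mean and population standard deviation of a list of numbers."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(var)


def mean_points_per_cell(points, cell_size):
    """Average number of points per occupied cubic cell of side `cell_size`."""
    cells = {}
    for x, y, z in points:
        key = (math.floor(x / cell_size),
               math.floor(y / cell_size),
               math.floor(z / cell_size))
        cells[key] = cells.get(key, 0) + 1
    return sum(cells.values()) / len(cells)
```

With the values reported above, the calls would be `volume_density(points, 0.077)` followed by `mean_std(...)`, and `mean_points_per_cell(points, 0.05)`.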

FIGURE 14. (A) Top-down view (i.e., floor layout) and (B) perspective view of the 3D colored point cloud collected in the office space.

5 Limitations