- 1Singapore-ETH Centre (SEC), Singapore, Singapore

- 2School of Architecture, Civil and Environmental Engineering (ENAC), Swiss Federal Institute of Technology (EPFL), Lausanne, Switzerland

With increasing urbanization and depleting reserves of raw materials for construction, sustainable management of existing infrastructure will be an important challenge in this century. Structural sensing has the potential to increase knowledge of infrastructure behavior and improve engineering decision making for asset management. Model-based methodologies such as residual minimization (RM), Bayesian model updating (BMU) and error-domain model falsification (EDMF) have been proposed to interpret monitoring data and support asset management. Application of these methodologies requires approximations and assumptions related to model class, model complexity and uncertainty estimations, which ultimately affect the accuracy of data interpretation and subsequent decision making. This paper introduces methodology maps in order to provide guidance for appropriate use of these methodologies. The development of these maps is supported by in-house evaluations of nineteen full-scale cases since 2016 and a two-decade assessment of applications of model-based methodologies. Nineteen full-scale studies include structural identification, fatigue-life assessment, post-seismic risk assessment and geotechnical-excavation risk quantification. In some cases, much, previously unknown, reserve capacity has been quantified. RM and BMU may be useful for model-based data interpretation when uncertainty assumptions and computational constraints are satisfied. EDMF is a special implementation of BMU. It is more compatible with usual uncertainty characteristics, the nature of typically available engineering knowledge and infrastructure evaluation concepts than other methodologies. EDMF is most applicable to contexts of high magnitudes of uncertainties, including significant levels of model bias and other sources of systematic uncertainty. EDMF also provides additional practical advantages due to its ease of use and flexibility when information changes. In this paper, such observations have been leveraged to develop methodology maps. These maps guide users when selecting appropriate methodologies to interpret monitoring information through reference to uncertainty conditions and computational constraints. This improves asset-management decision making. These maps are thus expected to lead to lower maintenance costs and more sustainable infrastructure compared with current practice.

Introduction

Annual spending of the architecture, engineering and construction (AEC) industry is over 10 trillion USD (Xu et al., 2021). It is the largest consumer of non-renewable raw materials and accounts for up to 40% of world’s total carbon emissions (World Economic Forum and Boston Consulting Group 2016; Omer and Noguchi 2020). Additionally, each year, the gap between supply (new plus existing) and demand for infrastructure is increasing (World Economic Forum 2014). Therefore, sustainable and economical management of existing civil infrastructure is currently an important challenge (ASCE 2017; Amin and Watkins 2018; Huang et al., 2018; Tabrizikahou and Nowotarski 2021).

Civil infrastructure elements are designed using justifiably conservative models. Therefore, most civil infrastructure has reserve capacity beyond that was intended by safety factors (Smith 2016). This is provided at the expense of unnecessary use of materials and resources. However, without quantifying this reserve capacity, decisions on managing civil infrastructure may be prohibitively conservative, leading to uneconomical and unsustainable actions. Reserve capacity, in this context, is defined as the capacity available that is beyond code-specified requirements related to the critical limit state (Proverbio et al., 2018c). Improving understanding of structural behavior through monitoring helps avoid such actions.

Today, the availability of inexpensive sensing (Lynch and Loh 2006; Taylor et al., 2016; Wade 2019; Vishnu et al., 2020) and computing tools (Frangopol and Soliman 2016; Jia et al., 2022) has made it feasible to monitor civil infrastructure. However, use of monitoring for asset management is limited by lack of methods for accurate, precise and efficient interpretation of data to support decision making. Asset management activities, such as in-service infrastructure evaluations, under contexts such as increased loading and events such as code changes, may involve structural behavior extrapolation predictions at unmeasured locations under conditions that are different from those present during monitoring. Extrapolating behavior predictions outside the domain of data requires physics-based behavior models; data-driven model-free strategies are not intended to be used in such situations.

Structural identification is the task of interpreting monitoring data using physics-based models. In probabilistic structural identification, parameters of physics-based models (usually finite-element representations) are updated using monitoring data and by taking into account uncertainties from various sources. In the context of civil infrastructure, simulation models are approximate and typically conservative. These approximations may be related to modelling of boundary conditions, structural geometry, material properties etc. Assumptions related to such modelling decisions, usually result in significant and biased modelling uncertainties (Steenackers and Guillaume 2006; Goulet et al., 2013). In these situations, the accuracy of model-based data interpretation depends upon how well biased uncertainties are quantified (Goulet and Smith 2013; Pasquier and Smith 2015; Astroza and Alessandri 2019).

Much research has been carried out to develop model-based data interpretation methodologies, for example (Worden et al., 2007; Beck 2010; Cross et al., 2013; Moon et al., 2013). Methodologies that have been studied comprehensively are residual minimization (RM) (Beven and Binley 1992; Alvin 1997), traditional Bayesian model updating (BMU) (Beck and Katafygiotis 1998; Behmanesh et al., 2015a) and error-domain model falsification (EDMF) (Goulet and Smith 2013; Pasquier and Smith 2015). EDMF is a special implementation of BMU that has been developed to be compatible with the form of typically available engineering knowledge and infrastructure evaluation concepts (Pai et al., 2019). These methodologies differ in the criteria used to update models using data and assumptions related to quantification of uncertainties. Since every civil infrastructure element is unique in its geometry, function, and utility, no one data-interpretation methodology is suitable for all infrastructure management contexts. No guidelines are available in literature for selecting the most appropriate methodology for data interpretation based on uncertainty estimations and other more practical constraints, such as flexibility when information changes.

In this paper, methodology maps have been developed for selection of appropriate data-interpretation methodologies based on uncertainty estimations and practical constraints such as computational cost and ease of recalculation when information changes. These maps have been developed through synthesizing knowledge gained from many research projects and by reviewing hundreds of research articles over the past 20 years. This review includes comparisons between RM, traditional BMU and EDMF in terms of their ability to support asset management of built infrastructure. Finally, open-access software that supports use of EDMF and subsequent validation is presented.

Methodologies for Model-Based Sensor Data Interpretation

Monitoring of civil infrastructure enhances understanding of structural behavior. Using this improved knowledge, asset managers and engineers have the possibility to enhance decision making. The value of monitoring has improved with the availability of many cheap sensing and computing tools. Developments in data storage capabilities and increased ability to transfer and store data has made infrastructure monitoring feasible in practice.

While infrastructure monitoring is feasible, important challenges exist when interpreting monitoring data to make accurate and informed decisions. Interpretation of data requires development of appropriate physics-based models and knowledge-intensive assessments of uncertainties related to modelling and measurements. In this section, the importance of uncertainties and various methodologies for data interpretation is explained.

Uncertainties in Data Interpretation

Design of civil infrastructure involves many conservative (safe) choices. These choices lead to existing structures that are safer and have higher serviceability than design requirements. However, conservative models used for design may not be safe for the inverse task of management of existing structures (Pai and Smith 2020). Management of built infrastructure (Pai and Smith 2020) involves predicting structural behavior based on observations in order to make decisions such as repair strategies and loading limitations.

Acknowledging model uncertainty may help ensure accurate predictions and conservative management (Smith 2016; Pai et al., 2019). Quantification of model uncertainty is challenging in the context of incomplete knowledge of model fidelity and the physical principles that govern real structural behavior. Uncertainties, including those from modelling sources, are generally estimated to be distributed normally.

Civil-infrastructure elements are typically built to meet the design requirements as a lower bound. For example, on-site inspectors would not allow a reinforced-concrete beam with dimensions smaller than design requirements. Conversely, a beam that is slightly larger (within reasonable tolerance limits) and consequently stiffer would pass inspection. Construction practice often leads to stiffer-than-designed built infrastructure. Therefore, models developed with design information are biased compared with built infrastructure behavior and this leads to biased uncertainties related to model predictions.

Civil infrastructure is built to at least meet design specifications. The uncertainty following construction can therefore be estimated to have a lower bound approximately equal to the design value (excluding unforeseen construction errors). The upper bound may be estimated with engineering information such as heuristics, site-inspection results and local knowledge of material properties such as concrete stiffness. In most full-scale situations, bound-value estimations are the only available engineering information. While practicing engineers often refer to maximum and minimum bounds, they are typically unable to provide values for more sophisticated metrics such as mean and standard deviation. Also, throughout the service life, important information may change and engineers may not be able to modify accurately such metrics. The most appropriate choice for uncertainty quantification in this context is thus a bounded uniform distribution (Jaynes 1957). Assuming uniform distributions for data interpretation using models has other advantages, such as robustness to changes in correlations amongst measurement locations (Pasquier 2015; Pai 2019). In the presence of additional knowledge, other probability distributions may also be used for quantifying uncertainties (Cooke and Goossens 2008) such a modulus of elasticity. Quantification of uncertainty in parameters such as boundary conditions as a Gaussian distribution is challenging compared with using a uniform distribution. Additionally, use of more sophisticated distributions may require quantification of poorly known quantities, such as correlations between measurement locations in the presence of bias (Simoen et al., 2013).

Residual Minimization

Residual minimization (RM), also called model updating, model calibration and parameter estimation, originated from the work of Gauss and Legendre in the 19th century (Sorenson 1970). In RM, a structural model is calibrated by determining model parameter values that minimize the error between model prediction and measurements. In this method, the difference between model predictions and measurements is assumed to be governed only by the choice of parameter values (Mottershead et al., 2011), that is, there is no other source of model uncertainty.

In this method, the systematic model bias from typically conservative assumptions related to modelling are not taken into account. Additionally, uncertainties are assumed to be independent and have zero means. These assumptions may not be satisfied, particularly in the presence of significant uncertainty bias (Rebba and Mahadevan 2006; Jiang and Mahadevan 2008; McFarland and Mahadevan 2008). RM may not provide accurate identification when inherent assumptions are not satisfied in reality (Beven 2000). Moreover, due to the ill-posed nature of structural identification task, unique solutions are inappropriate due principally to parameter-value compensation (Neumann and Gujer 2008; Beck 2010; Goulet and Smith 2013; Moon et al., 2013; Atamturktur et al., 2015).

RM may occasionally result in accurate identification. However, models updated using RM are limited to the domain of data used for calibration (Schwer 2007). While updated models may be suitable for interpolation (predictions within the domain of data used for calibration) (Schwer 2007), they are not suitable for extrapolation (predictions outside the domain of data used for calibration) (Beven 2000; Mottershead et al., 2011).

Despite limitations related to accuracy of solutions obtained with RM, this method is widely used in practice due to its simplicity and fast computation time (Brownjohn et al., 2001, 2003; Rechea et al., 2008; Zhang et al., 2013; Chen et al., 2014; Mosavi et al., 2014; Feng and Feng 2015; Sanayei et al., 2015; Hashemi and Rahmani 2018). This underscores the needs for ease of use, as well as accuracy, to ensure practical adoption of more modern data-interpretation methodologies to support the extrapolations that are needed for good asset management.

Traditional Bayesian Model Updating

BMU is a probabilistic data-interpretation methodology that is based on Bayes’ Theorem (Bayes, 1763). Structural identification using Bayesian model updating gained popularity in late 1990’s (Alvin 1997; Beck and Katafygiotis 1998; Katafygiotis and Beck 1998). In BMU, prior information of model parameters, p(θ), is updated using a likelihood function, p(y|θ), to obtain a posterior distribution of model parameters, p(θ|y), as shown in Eq. 1.

In Eq. 1, p(y) is a normalization constant. The likelihood function, p(y|θ) is the probability of observing the measurement data, y, given a specific set of model-parameter values, θ.

Traditionally, BMU has been carried out using a zero-mean L2-norm-based Gaussian probability-distribution function (PDF) as a likelihood function, which is shown in Eq. 2.

While employing this likelihood function, uncertainties at measurement locations are estimated as zero-mean Gaussian (no model bias with a bell-shaped normal distribution). Additionally, traditional application of this likelihood function assumes independence between measurement uncertainties (no correlations) (Beck et al., 2001; Ching and Beck 2004; Katafygiotis et al., 1998; Muto and Beck 2008; Yuen et al., 2006). Also, variance in uncertainty, σ2 (diagonal terms of the covariance matrix Σ in Eq. 2, is assumed to be the same for all measurement locations. Assumptions made for the development of traditional BMU are rarely satisfied in civil engineering (Tarantola 2005; Simoen et al., 2013), and this leads to inaccurate identification (Goulet and Smith 2013; Pasquier and Smith 2015). Such challenges have motivated improvements to BMU, some of which are described in the next section.

Bayesian Model Updating With Parameterized Model-Error

Measurement data may be used to identify characteristics of the model error to avoid incorrect assumptions related to development of the likelihood function. This procedure of parameterizing the model error for BMU applications is called as parameterized BMU in this paper to distinguish it from the traditional application discussed in Traditional Bayesian Model Updating.

Typically, the standard deviation terms, σ2, of the covariance matrix, Σ, are parameterized and estimated using measurements as part of the BMU framework (Ching et al., 2006; Christodoulou and Papadimitriou 2007; Goller et al., 2011; Goller and Schueller 2011). This attempts to overcome the challenge related to estimating the magnitude of model error. Simoen et al. (2013) demonstrated that determining the values of the non-diagonal correlation terms in the covariance matrix using measurement data improved accuracy of structural identification.

Many researchers have parameterized and estimated the model-error terms in a hierarchical application of BMU, for example (Behmanesh et al., 2015a; Behmanesh and Moaveni 2016). Hierarchical BMU overcomes further challenges related to estimating the model error and bias. However, estimating model error terms involves estimation of additional parameters, which lead to identifiability and computational challenges (Prajapat and Ray-Chaudhuri 2016).

Magnitudes of systematic bias and correlations are related (Goulet and Smith 2013) and cannot be estimated independently. Additionally, the magnitudes of systematic bias, variance and correlations differ from one measurement location to another. Assuming these parameters to be the same at all locations may not be accurate. Due to these challenges, solutions obtained with BMU, while possibly suitable for damage assessment applications, are not suitable to support extrapolation predictions in civil-engineering contexts (Song et al., 2020).

Error-Domain Model Falsification

Error-domain model falsification (EDMF) is a population-based data-interpretation methodology. This methodology was developed by Goulet and Smith, 2013) and builds on more than a decade of research (Robert-Nicoud et al., 2005a; Saitta et al., 2008; Smith and Saitta 2008). The application of this methodology has been evaluated with applications to over ten full-scale case studies (Smith 2016).

In this methodology, model instances (physics-based model with instances of parameter-values as input) that provide predictions that are incompatible with observations (measurements) are falsified (refuted). Compatibility is assessed using thresholds (tolerance) on residuals between model predictions and measurements. These threshold values are computed based on uncertainty associated with the interpretation task at each measurement location.

Threshold values for each measurement location are calculated based on the combined uncertainty at each measurement location. This uncertainty is a combination of uncertainties from many sources such as measurement noise, modelling error and parametric uncertainty (from sources not included in the interpretation task). A few of these uncertainties, such as measurement noise and some material properties, may be estimated as normal random variables when sufficient information is available. Other uncertainties from sources such as geometrical assumptions, modelling of load and boundary conditions are unique to the model and are usually biased, as discussed earlier. With incomplete knowledge, these uncertainties are best quantified as uniform random variables (Jaynes 1957).

Threshold bounds from combined uncertainty PDFs are calculated based on a user-defined target reliability of identification. This user-defined metric determines the confidence (probability) that solutions of data-interpretation include the correct solution (real model). Model instances that provide predictions within threshold values of measurements, for all measurement locations, are accepted. Model instances whose predictions lie outside threshold bounds on measurement for any measurement location are rejected.

Model instances accepted by EDMF (not refuted by measurements) form the candidate model set (CMS). All model instances within this set are assumed to be equally likely, i.e., no model instance is more likely to be the correct solution than other model instances. It is rare to have enough accurate information on uncertainty do conclude otherwise. Candidate models are then used for making predictions with reduced uncertainty compared with predictions with no measurements (Pasquier and Smith 2015).

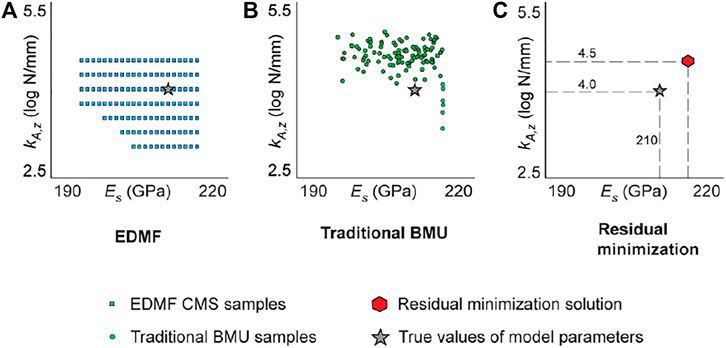

Using thresholds for falsification enables EDMF to be robust to correlation assumptions between uncertainties (Goulet and Smith 2013). Additionally, EDMF explicitly accounts for model bias based on engineering heuristics (Goulet and Smith 2013; Pasquier and Smith 2015). Consequently, EDMF, when compared with traditional BMU and RM, has been shown to provide more accurate identification (Goulet and Smith 2013) and prediction (Pasquier and Smith 2015; Reuland et al., 2017) when there is significant systematic uncertainty. In Figure 1, a comparison of solutions obtained using EDMF traditional BMU and RM is presented. These solutions have been obtained for a full-scale case-study with simulated measurements (known true values of model parameters) as described in Pai (2019). For this case study, as shown in Figure 1, EDMF and modified BMU provide accurate albeit less precise solutions compared with traditional BMU and residual minimization. Similar observations have been made by many researchers for various applications (Goulet and Smith 2013; Pasquier and Smith 2015; Pai and Smith 2017; Reuland et al., 2017; Pai et al., 2018). Accuracy in this context is defined as the correct value of the model parameter to be identified. In reality, the correct value of model parameters is not known. Therefore, the assessment of accuracy can be performed using cross-validation by comparing updated predictions with new measurements (measurements not included in identification) as described by Pai and Smith (2021). Precision is defined as the relative reduction in model-parameter-value uncertainty width due to information obtained using measurements. This is also quantified using the relative reduction in prediction uncertainty due to updated knowledge of model parameters, as described in Pai and Smith (2021).

FIGURE 1. Comparison of data-interpretation solutions obtained using (A) EDMF, (B) traditional BMU, and (C) residual minimization for the Ponneri Bridge case study. The results are adapted from the Ponneri Bridge case study described in Pai (2019). EDMF and modified BMU provide accurate, albeit less precise, solutions compared with traditional BMU, and residual minimization.

EDMF has been shown analytically and empirically to be equivalent to BMU when a box-car shaped likelihood function is used for incorporating information from measurements (Reuland et al., 2017; Pai et al., 2018, 2019). This likelihood function is determined using the same EDMF thresholds. As these thresholds are calculated based on explicit quantification of bias, the function is robust to incomplete knowledge of correlations, as is EDMF. Therefore, BMU with a modified likelihood function, similar to EDMF, provides more accurate solutions compared with traditional BMU and RM. EDMF may be interpreted as a practical implementation of BMU with uniform uncertainty characterization for model-based data interpretation.

Challenges in Sensor Data Interpretation

Use of sensor data for updating knowledge of structural behavior enhances asset management. However, many challenges exist related to development of measurement systems, data processing and development of appropriate physics-based models. Most data-interpretation studies have been limited to laboratory experiments and simulated hypothetical cases. Extending conclusions to full-scale cases must be done with care. In this section, a few common challenges are discussed along with methods to overcome weaknesses.

Outliers in Data

Use of data interpretation methodologies typically involves the assumption that measurement datasets do not include spurious data. Outliers are anomalous measurements that may occur due to sensor malfunction (Beckman and Cook 1983) and other factors such as environmental and operation variability (Hawkins 1980). The presence of outliers in measurement datasets reduces accuracy and performance of structural identification methods (Worden et al., 2000; Pyayt et al., 2014; Reynders et al., 2014).

Most developments related to outlier detection are focused on continuous monitoring applications (Burke 2001; Hodge and Austin 2004; Ben-Gal 2006; Posenato et al., 2010; Vasta et al., 2017; Deng et al., 2019). These methods are not suitable to detect outliers in datasets that consist of sparse measurements recorded during static load tests (Pasquier et al., 2016). Suggested an outlier-detection method for EDMF based on sensitivity of identified solutions to each measurement. A measurement data point that falsifies uncharacteristically high number of models was flagged as a potential outlier. A drawback of this approach is that when one measurement data point is much more informative than other measurement data points, this data point could be labelled as an outlier even when the measurement is valid. Exclusion of the most informative data point from identification procedures may severely limit the information gained from monitoring.

Proverbio et al. (2018a) suggested comparing the expected performance of a sensor configuration (as estimated with a sensor placement algorithm) with observed performance based on monitoring data. Measurement data that showed large variations from expected performance were flagged as outliers and excluded during identification. This approach is able to detect outliers in sparse datasets and overcomes several limitations of other outlier-detection methodologies (Proverbio et al., 2018a).

Measurement System Design

The use of information entropy for measurement system design has been studied extensively (Papadimitriou et al., 2000; Papadimitriou 2004; Robert-Nicoud et al., 2005b; Kripakaran and Smith 2009). However, most researchers have not accounted for the possibility of mutual information between sensor locations while designing measurement systems (Papadimitriou and Lombaert 2012; Barthorpe and Worden 2020). Papadopoulou et al. (2014) developed a hierarchical sensor-placement algorithm that accounts for mutual information. This algorithm was demonstrated for designing a measurement system to study wind around buildings (Papadopoulou et al., 2015, 2016). Bertola et al. (2017) extended this algorithm for multi-type sensor placement to monitor civil infrastructure systems under several static load tests.

Typically, measurement data for structural identification is acquired by conducting either dynamic or static load tests. Information from dynamic and static load tests may be either unique or redundant (Schlune et al., 2009). Many studies have been carried out to maximize information gained through dynamic and static load tests (Goulet and Smith 2012; Argyris et al., 2017). However, little research has been carried out for design of measurement systems involving static and dynamic load tests. Bertola and Smith (2019) suggested an information entropy-based methodology for designing measurement systems when dynamic and static load tests are planned.

There are several challenges involved with designing measurement systems. The task of sensor placement is computationally expensive and requires use of adaptive search techniques such as global (Chow et al., 2011) and greedy (Kammer 1991; Bertola and Smith 2018) searches. Also, since measurement-system design methodologies cannot be easily validated, Bertola et al. (2020a) developed a validation strategy using hypothesis testing. Then, the optimal measurement-system design depends on several performance criteria such as information gain, monitoring costs and robustness of information gain to sensor failure. Bertola et al. (2019) introduced a framework where measurement-system recommendations are made based on a multi-criteria decision analysis that accounts for several performance-criterion evaluations as well as asset-manager preferences.

The task of measurement system design is critical to ensure that measurement data for structural identification is informative and leads to reduction in uncertainty related to system behavior (Peng et al., 2021a). Poor design of measurement systems will lead to weak justification for monitoring and ultimately, to uninformed asset-management decision making. Measurement systems must thus be justified using cost-benefit analyses. Bertola et al. (2020b) proposed a framework to evaluate the value-of-information of measurement systems based on the influence of the information collected on the bridge reserve-capacity estimation.

Measurement-system-design methodologies has the potential to select informative data among large existing data sets. Wang et al. (2021) proposed an entropy-based methodology to reduce the number of measurements used for data interpretation. Using the reduced sets of data (up to 95% reduction) led to additional information gain compared with using complete data sets.

Model-Class Selection

Physics-based models include parameters that represent physical phenomena affecting structural behavior. For real-world applications, not all phenomena affecting infrastructure behavior are known. Engineering knowledge is important for development of physics-based models and inclusion of appropriate parameters.

As structural behavior is not known perfectly, some parameters of physics-based models are quantified as random variables. As previously described, appropriate quantification of their values is necessary for accurate data interpretation. The task of model-based data interpretation is to reduce uncertainty related to parameter values that govern structural behavior.

Model-based data interpretation involves searching for solutions (parameters that lead to model behavior that is compatible with measurements) in a large model-parameter space. The larger the parameter space to be explored, the greater the computational cost of finding solutions. Moreover, all measurements are not informative for all parameters. Hence, selecting parameters that are compatible with the information that is available from measurements is important to improve computational efficiency while maintaining precision.

For a given physics-based model, from a large set of potential model parameters, many smaller subsets of parameters can be selected for identification. Each of these subsets defines a model class for identification. The task of selecting an appropriate model class for identification and subsequent predictions of structural behavior is called model-class selection (or feature selection) (Liu and Motoda 1998; Bennani and Cakmakov 2002; Guyon and Elisseeff 2006).

Selection of a model-class for identification without utilizing information from measurements is called as a-priori model class selection. Traditionally, selection of a model-class has been carried out using sensitivity analysis based on linear-regression models (Friedman 1991). Other methods include assessment of coefficient of variation, analysis of variance (Van Buren et al., 2013; Van Buren et al., 2015), information criterion such as Akaike information criterion (Akaike 1974) and Bayesian information criterion (Schwarz, 1978) and regularization.

Most methods available in literature are restricted to linear models and Gaussian uncertainties. Also, these methods focus on finding a good subset of parameters that influence model response at one measurement location. For civil infrastructure, model response at various measurement locations may not be governed by the same set of parameters. To select an optimal model class that is suitable for all measurement locations, either the importance of parameters to model responses have been averaged (Matos et al., 2016) or an intersection of parameters important to response at all sensor locations (Van Buren et al., 2015) have been assumed. However, novel sensor placement strategies (Papadopoulou et al., 2015; Argyris et al., 2017; Bertola et al., 2017) have been developed that minimize number of sensors and maximize information from each sensor. Ideally, these strategies result in each sensor providing new information about model parameters. Under such conditions, use of averaged sensitivities is not an appropriate metric for model-class selection.

Pai et al. (2021) proposed a novel model-class selection method to overcome many challenges related to existing methods, especially in context of developments related to novel sensor placement strategies. In this method, the selection of parameters is not carried out by evaluating the importance of parameters to response at each measurement location. Instead, a global understanding of structural behavior is evaluated using clustering. As parameter values vary, global changes in structural behavior are estimated using clustering. Parameters governing these clusters of response are identified using a classifier whose features are selected based on a greedy search.

Validation

EDMF, when compared with traditional BMU and RM, has been shown to provide accurate model updating for theoretical cases using simulated measurements (Goulet and Smith 2013; Pasquier and Smith 2015; Reuland et al., 2017). In these theoretical comparisons, the ground-truth values are known. For assessment of accuracy of full-scale structures, cross-validation methods have the potential to demonstrate quantitative validation.

Comparisons of EDMF with traditional BMU and RM have been made for full-scale case studies using leave-one-out cross-validation (Pai et al., 2019) and hold-out cross-validation (Pai et al., 2018). In these comparisons, one or more measurements (data points) are excluded during identification. Subsequent to identification, the updated parameter values are used to predict response at measurement locations that were excluded. If the predicted response is similar to the measurement value, then structural identification is assumed to be validated (Vernay et al., 2018).

However, in cross-validation methods (Golub et al., 1979; Kohavi, 1995) such as leave-one-out and hold-out cross-validation (Hong and Wan 2011), the data points left out may or may not contain new information. If information contained in the validation dataset is not exclusive, then validation with redundant data is not suitable for assessment of accuracy. Therefore, information entropy metrics (Papadopoulou et al., 2015; Bertola and Smith 2019) may be used to assess exclusivity of information in validation data and suitability of validated solutions for making further predictions to support asset management decision-making.

Pai and Smith (2021) demonstrated the utility of assessing mutual information between data used for identification and validation to ensure appropriate assessment of accuracy. Structural identification of a steel-concrete composite bridge was carried out using the three data-interpretation methodologies described in Methodologies for Model-Based Sensor Data Interpretation. To assess accuracy of the identification results, leave-one-out and hold-out cross-validation strategies were carried out. As validation data became more exclusively informative (less mutual information with identification data), the number of cases where identification was assessed to be accurate was observed to reduce. Therefore, using exclusive information (not redundant information) for validation may lead to better assessment of accuracy of structural identification.

Case Studies

Model-based data interpretation enables use of updated knowledge of structural behavior for prognosis and estimation of capacity available beyond design calculations. The use of a physics-based model enables propagation of uncertainty from various sources during prognosis to support the extrapolation that is needed for asset management decision-making. These decisions may be related to remaining fatigue life estimation, retrofit design, load-carrying capacity, post-earthquake capacity, localization of damage and ultimately, replacement.

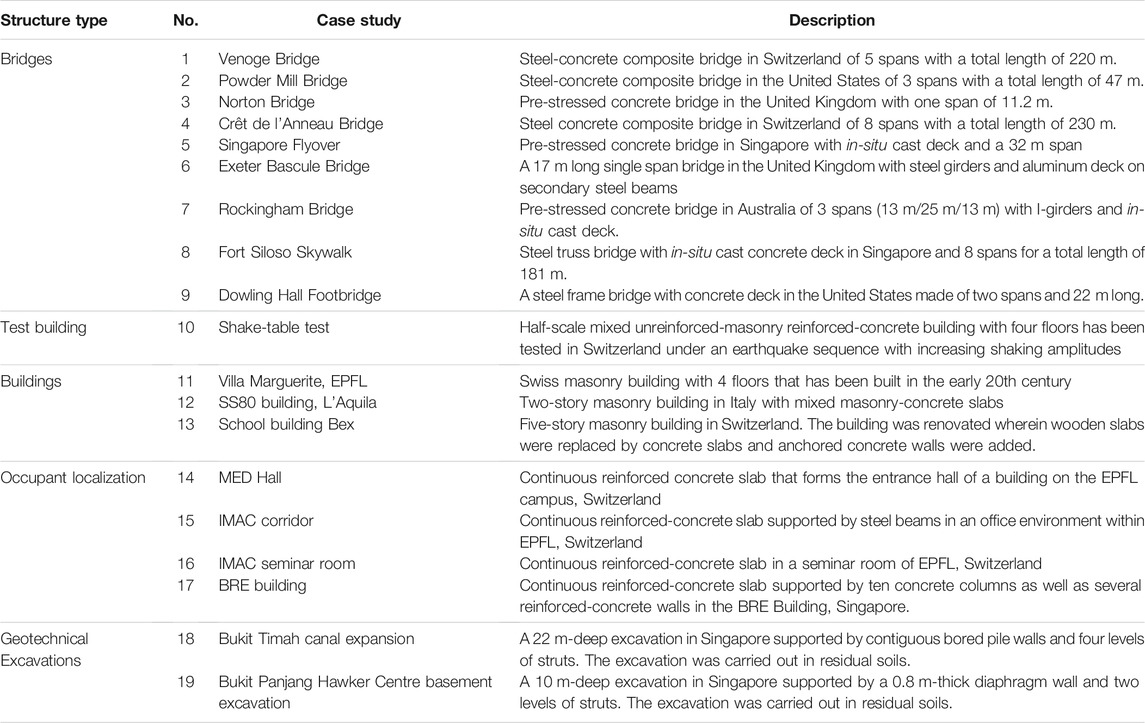

Challenges in Sensor Data Interpretation outlines key challenges in practical implementation of the three data-interpretation methodologies that are described in Methodologies for Model-Based Sensor Data Interpretation. Laboratory experiments are designed to reduce unknowns and uncertainties cannot contribute to challenges that are typically encountered in real world-applications. In this section, a summary of case studies that have been evaluated from 2015 to 2020 are described. A list of these case studies is provided in Table 1 with a brief description.

TABLE 1. Full-scale case studies evaluated with monitoring data since 2015. Case studies evaluated before 2015 are described in Smith (2016).

Reserve Capacity Estimation

Some of the case studies listed in Table 1 have been studied to improve understanding of reserve capacity in built infrastructure. Reserve capacity is defined as the capacity available in built infrastructure beyond code-specified requirements related to the critical limit state (Proverbio et al., 2018c). Typical limit states are defined to be either fatigue failure, or serviceability limits. For the ultimate limit state, a special strategy has been developed (Proverbio et al., 2018c). Quantifying reserve capacity provides useful information for performing asset-management tasks such as comparing repair scenarios with replacement. Case studies listed in Table 2 have been investigated to identify reserve capacity related to the ultimate limit state (ULS), the serviceability limit state (SLS) and fatigue.

TABLE 2. Methodologies compared and reserve capacity assessments of civil infrastructure cases according to the critical limit state in parentheses and determined using EDMF.

In Table 2, the data-interpretation methodology used to interpret monitoring data (static and dynamic) is indicated. Typically, data is interpreted using multiple methodologies and solutions obtained are compared and validated using methods described in Validation. Only solutions that have been validated are used to predict the reserve capacity. References that provide more details regarding these evaluations have been provided in the table. The reserve capacities are calculated so that unity is the situation where calculations with all relevant safety factors exactly attains the critical limit state. A reserve capacity that is greater than unity indicates that there is additional safety built into the structure beyond the critical limit state.

Reserve capacities listed in Table 2 suggest that most built infrastructure has significant reserve capacity compared with code-based requirements, and this confirms similar observations made previously (Smith 2016). Quantifying this reserve capacity using monitoring data enhances decision making related to asset-management actions. Overdesign reflected by reserve capacity values that are over one also leads to unnecessary material and construction costs as well as unnecessarily high carbon footprints.

For two case studies listed in Table 2, Singapore Flyover and Rockingham Bridge, the reserve capacity assessment was compared with the present verification code (CEN 2012a; b; NAASRA 2017) and the design code at the time of construction (NAASRA 1970; BSI 1978, 1984). Both infrastructure elements were significantly over-designed during construction with respect to the prevalent codes at the design stage. Additionally, for the Singapore Flyover, not only did the reserve capacity change, the critical limit state at design stage was different from the new-code-verification stage (Proverbio et al., 2018c). Monitoring and reserve-capacity assessment with enhanced models can potentially avoid unnecessary repair actions in such situations.

For most of the case studies listed in Table 2, EDMF was able to provide accurate data interpretation upon validation to support asset management. This observation was made for various types of bridges, from different decades, built with a range of materials and monitored to provide heterogeneous data, including static and dynamic data. Validation was possible because of the explicit quantification of multi-sourced biased uncertainties as well as the intrinsic robustness of EDMF to unknown correlations as described in Error-Domain Model Falsification.

Other Applications

Interpreting monitoring data with physics-based models can also be used to enhance decision making in other application areas. For example, applications in post-earthquake hazard assessment, risk mitigation during excavations, damage detection and occupant localization have been studied.

Post-earthquake monitoring data has been used to update physics-based models and predict residual capacity (Reuland et al., 2019a). Residual capacity is defined as the ability of an infrastructure to resist aftershocks and subsequent earthquake events. While EDMF has been used for post-earthquake evaluation of many case studies (Reuland 2018; Reuland et al., 2015, 2017), due to limitations in data available from real earthquakes, validation with data from aftershocks could not be performed for every case study. However, data-interpretation results from the shake table test were validated to show that EDMF provided the accurate identification (Reuland et al., 2019b). Models updated with measurement data reduce uncertainty in predictions and improve understanding of structural capacity. This enhanced knowledge can be used to avoid unnecessary post-hazard closures of buildings while supporting a risk-based assessment to either close or retrofit essential buildings.

Wang et al. (2020, 2019) studied the use of data-interpretation methodologies for reducing uncertainty related to excavations. Data from one stage of excavation is used to predict behavior at further stages of excavation leading to less conservative practices. In addition, Wang et al. (2021) combined EDMF and a hierarchical algorithm based on a joint-entropy objective function to select field response measurements that provide the most useful knowledge of material parameter values in a model-based data interpretation exercise. Also, (Cao et al., 2019a), combined information from monitoring data and physics-based models to predict damage location in train wheels to improve infrastructure management.

Another application of monitoring data is localizing occupants within buildings. Information about occupancy in buildings can be used for security needs and non-intrusive monitoring in care-homes and other applications. Drira et al. (2019) investigated the use of model updating to localize occupants in buildings. These investigations were performed on floor slabs of buildings with various structural and occupancy characteristics. EDMF was found to be able to accurately localize and track occupants in buildings with floor-vibration data from sparse sensor configurations.

In this section, applications of data-interpretation to real-world case studies are briefly presented. More information regarding these studies can be found in the references provided in Tables 2, 3. Challenges encountered while evaluating these case studies along with relevant research are presented briefly in Challenges in Sensor Data Interpretation.

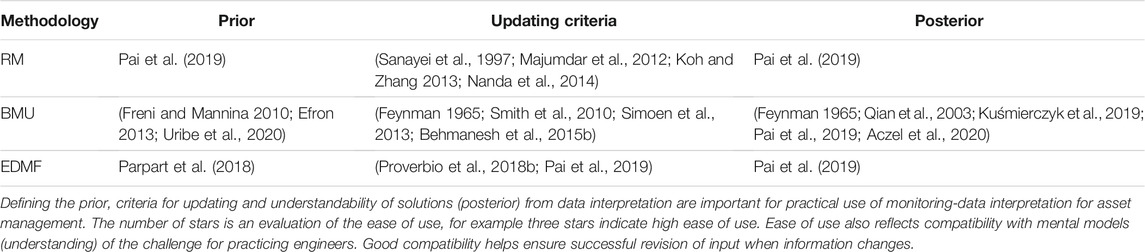

TABLE 3. Comparison of ease of use of model-based data interpretation methodologies at three stages of application.

In the next section, methodology maps have been developed following experience with the case studies presented in this section. Significant literature was also reviewed while evaluating these case studies and knowledge acquired from these reviews was also used to develop these maps. While the maps are in no way absolute indications of the best method for all cases, they are intended to guide users towards appropriate choices of methodologies to interpret data.

Methodology Maps

In this section, methodology maps are presented that guide the choice of the best data interpretation methodology based on the following four criteria:

• Magnitude of uncertainty

• Magnitude of bias

• Model complexity

• Ease of implementation in practice

The magnitude of uncertainty refers to the total uncertainty (difference between model and measured response) affecting the task of data interpretation at each measurement location. This includes combination of uncertainties from sources such as sensor noise, operational conditions, modelling assumptions, parametric uncertainty, geometrical simplifications and numerical errors. For example, if the combined uncertainty is assumed to be zero-mean Gaussian then the magnitude of uncertainty is defined by the standard deviation of the distribution. If the combined uncertainty is assumed to be uniformly distributed, then the magnitude of uncertainty is defined by the range of the distribution (upper bound minus the lower bound value).

The magnitude of bias refers to the systematic bias between model response and real system behavior. As most civil engineering models are safe for design, implicit assumptions made during model development are biased to provide conservative predictions of system behavior. For example, if the combined uncertainty is assumed to be Gaussian, then the magnitude of bias is defined by the non-zero mean of the distribution. If the combined uncertainty is defined as a uniform distribution then the bias magnitude is defined as the mid-point of the uniform distribution (0.5 times range of the distribution plus the lower bound value).

Model complexity refers to the level of model detail and fidelity of the model to real system behavior. For example, a three-dimensional FE model of a bridge is more complex than an analytical one-dimensional model based on Euler-Bernoulli beam theory. The computational cost associated with obtaining solutions for the task of structural identification is often related to the model complexity. A complex model is computationally more expensive than a simpler model. Complex models (for example FE models) are often defined by more parameters than simple models (for example a Euler-Bernoulli beam) that may have to be identified, which would increase number of simulations required, thereby increasing the computational cost. However, a complex model could have better fidelity with real system behavior compared with a simpler model.

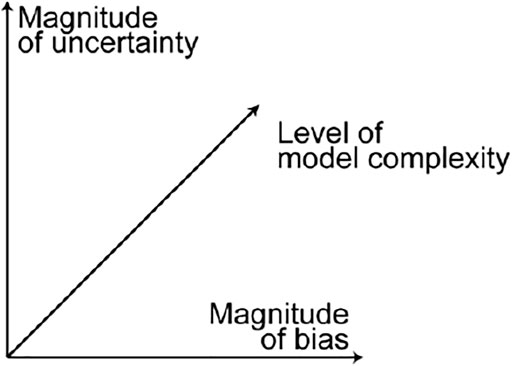

Three methodology maps are developed with magnitude of uncertainty and systematic bias as the two axis. These methodology maps correspond to three levels of model complexity: low (Low Model Complexity), medium (Medium Model Complexity) and high (High Model Complexity). Maps in these sections are two-dimensional projections of a three-dimensional map, which is schematically shown in Figure 2. This three-dimensional map is separated into three maps along the axis of model complexity. For the purpose of developing methodology maps in this paper, the discrimination between low, medium and high model complexity, uncertainty and bias are quantified based on the experience of authors gained by evaluating many case studies as described in 4. Using the Methodology Maps includes examples on the use of these methodology maps to select an appropriate data-interpretation methodology.

FIGURE 2. Schematic representation of the three dimensions of a methodology map to guide practitioners in selecting the appropriate data-interpretation methodology.

Other than the three criteria of the magnitude of uncertainty, systematic bias, and model complexity, another criterion, ease of implementation, governs the choice of data interpretation methodologies. Ease of implementation in practice refers to the challenges associated with the data interpretation task. This includes computational costs, prior knowledge, developing the strategy for updating (for example the likelihood function, see Traditional Bayesian Model Updating) and understanding solutions of the interpretation task. Typically, this criterion governs whether data-interpretation can be applied in practical contexts.

In the next sections, methodology maps that support users in selecting appropriate methodologies based on these criteria are presented. The data interpretation methodologies that are considered are RM (see Residual Minimization), BMU (see Traditional Bayesian Model Updating and Bayesian Model Updating With Parameterized Model-Error) and EDMF (see Error-Domain Model Falsification). The objective of these maps is to help users select a good data-interpretation methodology according to criteria related to uncertainty (amount as well as bias), model complexity and transparency (ease-of-use).

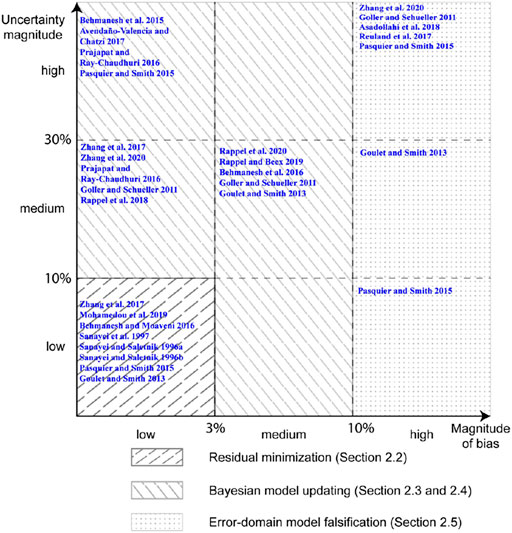

Low Model Complexity

A methodology map to select an appropriate data interpretation methodology considering magnitude of uncertainty and magnitude of systematic bias for interpretation using models with low complexity (computational cost) is presented in Figure 3. Low complexity models are analytical models such as beam deflection equations and simple 1D beam models. Therefore, models that have a complexity O(n) and finite element models with a small number of nodes and elements (such as beam element models with O(n3) complexity) can be considered as low complexity models, where n is number of degrees of freedom.

FIGURE 3. Methodology map describing best use of data-interpretation methodologies in relation to systematic bias and magnitude of uncertainty when using models with low complexity (computational cost).

The two axes of the map shown in Figure 3 are uncertainty magnitude and bias in uncertainty. In civil engineering contexts, the main contribution for this uncertainty involves model error, which is the difference between response obtained using a physics-based model and real system behavior (not known). For the purpose of developing methodology maps in this paper, the discrimination between low, medium and high uncertainty as bias as shown in Figure 3 is quantified based on the experience of authors in evaluating many case studies as described in 4.

RM (or calibration), as shown in Figure 3, is suitable for tasks involving low bias (less than 3% bias from zero mean) and low uncertainty magnitudes (less than 10%). Such conditions require models that are high-fidelity approximations of reality, which is not common in full-scale civil engineering evaluations. However, RM is also suitable for developing regression models and data-only approaches (Bogoevska et al., 2017; Hoi et al., 2009; Laory et al., 2013; Neves et al., 2017; Posenato et al., 2008, 2010). Calibration in this context is typically limited to estimating coefficients of regression models where interpolation predictions are required.

BMU, involving either traditional or more advanced applications, is suitable for tasks that have low to medium bias (up to 10% deviation from zero-mean) and low to high uncertainty magnitudes. Traditional implementation of BMU is suitable for tasks with low model bias. This encompasses tasks that may be performed using RM such as Bayesian optimization of data-only models (Gardoni et al., 2002). BMU is best employed for analyzing laboratory experiments conducted in controlled environments (Prajapat and Ray-Chaudhuri 2016; Zhang et al., 2017; Rappel et al., 2018, 2020; Mohamedou et al., 2019; Rappel and Beex 2019) to reduce bias from modelling assumptions. BMU is also appropriate for analyzing large-scale systems when the physics-based models developed are unbiased approximations of reality as is often the case for mechanical-engineering applications (Abdallah et al., 2017; Avendaño-Valencia and Chatzi 2017; Hara et al., 2017; Argyris et al., 2020; Cooper et al., 2020; Patsialis et al., 2020).

When the bias is neither low nor high (between 3 and 10% deviation from zero-mean), advanced BMU methods can be used for data-interpretation. These include parameterization of the model error terms (Kennedy and O’Hagan 2001) as explained in Bayesian Model Updating With Parameterized Model-Error. Users have to be careful while employing these advanced methods as they may provide more precise solutions than EDMF (Goulet and Smith 2013; Pasquier and Smith 2015), while also being prone to unidentifiability challenges (Prajapat and Ray-Chaudhuri 2016; Song et al., 2020) due to requirements of estimating many parameters relative to information available from measurements.

While RM is the best method when uncertainty and bias magnitudes are low, EDMF is suitable (not necessarily optimal) for all tasks ranging from low to high uncertainty magnitudes and bias. However, EDMF was developed specifically for analyzing tasks with high magnitude of bias such as civil infrastructure (Goulet and Smith 2013). Models of civil infrastructure typically involve conservative modelling assumptions that lead to large systematic biases between model and real behavior (Goulet et al., 2013). Application of EDMF to tasks with large magnitude of bias and uncertainty have been listed in Table 2.

Validation of solutions obtained with data-interpretation is important as explained in Validation. Many researchers have used data-interpretation methodologies under conditions where the inherent assumptions are not satisfied in reality. RM does not account for model bias in the traditional form as described in Residual Minimization. However, applications of RM to tasks with large uncertainty magnitudes and large bias are common in literature (Chen et al., 2014; Sanayei et al., 2011; Sanayei and Rohela 2014). Similarly, traditional BMU has also been applied to tasks involving large systematic bias (Behmanesh et al., 2015a; b). While solutions obtained in such applications may be suitable for damage detection (interpolation) (Li et al., 2016), they are not suitable for asset management tasks where extrapolation predictions are required (Brynjarsdóttir and O’Hagan, 2014; Song et al., 2020).

Low model complexity ensures that not only deterministic RM but also probabilistic BMU and EDMF may be used to interpret data. Increasing model complexity increases the computational cost associated with practically implementing probabilistic data-interpretation methodologies such as BMU and EDMF.

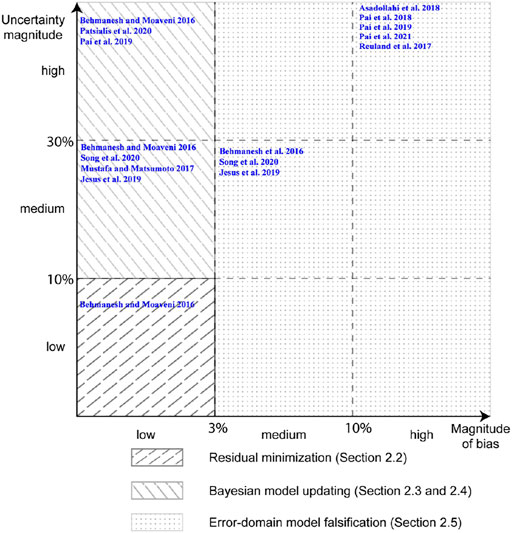

Medium Model Complexity

In this section, a methodology map to select an appropriate data interpretation methodology while using medium complexity models is presented. Figure 4 presents a methodology map to select the most appropriate data interpretation methodology based on the criteria of systematic bias and model uncertainty when the complexity of models available to interpret data is medium. Medium complexity models include finite element models involving two and three-dimensional elements such as shell and brick elements. These models when used for static and modal analysis involving matrix inversion have a complexity of O(n3), where n is the number of degrees of freedom in the model.

FIGURE 4. Methodology map describing best use of data-interpretation methodologies in relation to systematic bias and magnitude of uncertainty when using models with medium complexity (computational cost).

RM, as described in Residual Minimization, is suitable for tasks with low systematic bias and low magnitude of uncertainty using models with medium complexity (Behmanesh and Moaveni 2016). While medium complexity models are computationally more expensive than low complexity models, efficient application of RM for model updating is possible using adaptive sampling methods (Bianconi et al., 2020).

BMU in its traditional forms may be used for tasks with low levels of systematic bias and low-to-high uncertainty magnitudes. However, use of medium-complexity models for probabilistic evaluation is challenging. Adaptive sampling methods such as Markov Chain Monte Carlo (MCMC) sampling (Qian et al., 2003), transitional MCMC (Ching and Chen 2007; Betz et al., 2016) and Gibbs sampling (Huang and Beck 2018) may help to reduce the computational cost. However, the increase in number of parameters to be identified (in addition to the model parameters) while using the advanced forms of BMU (Kennedy and O’Hagan 2001; Behmanesh et al., 2015a) may be prohibitive for practical data interpretation using medium complexity models. Additionally, complex sampling and interpretation methods increase difficulty of practical implementation, which will be discussed in Suitability for use in Practice.

EDMF is suitable for all levels of systematic bias and uncertainty magnitudes, particularly cases involving high systematic bias (Reuland et al., 2017; Proverbio et al., 2018b; Pai et al., 2018, 2019, 2021; Reuland et al., 2019a; Reuland et al., 2019b; Drira et al., 2019; Wang et al., 2020). Depending upon the computational constraints, EDMF can be implemented with either a grid sampling approach or an adaptive sampling (Raphael and Smith 2003; Proverbio et al., 2018b). Although grid sampling is computationally expensive, it is convenient when data interpretation has to be revised with new information (Pai et al., 2019). For tasks involving medium levels of systematic bias, BMU may be used with its advanced forms. However, using these implementations with medium complexity models may be inefficient. EDMF is suitable for interpreting tasks with medium levels of systematic bias and provides choices for implementation of adaptive and grid-based sampling approaches to reduce computational cost while interpreting with models of medium complexity.

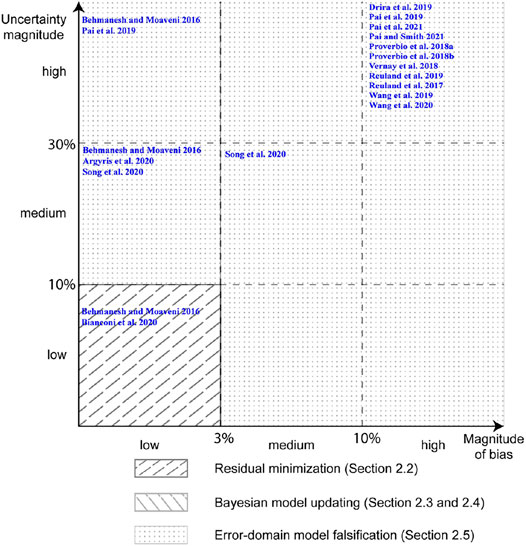

High Model Complexity

In this section, a methodology map to select an appropriate data interpretation methodology while using high complexity models is presented. In Figure 5, a methodology map is presented to select the most appropriate data interpretation methodology based on the criteria of systematic bias and model uncertainty when the complexity of models available to interpret data is high. Models such as finite element models that incorporate complex physics such as material non-linearity, geometric non-linearity as well as analysis involving contact mechanics and transient analysis can be considered as high complexity models. These models have many degrees of freedom and involve iterations either over time domain (transient analysis) or for convergence related to non-linear simulations. Each of these iterations with a model of complexity O(n3) leads to high computational cost.

FIGURE 5. Methodology map describing best use of data-interpretation methodologies in relation to systematic bias and magnitude of uncertainty when using models with high complexity (computational cost).

RM, as described in Residual Minimization, is suitable for tasks with low magnitudes of uncertainty and systematic bias. However, as previously mentioned, this is a popular methodology in practice (Bogoevska et al., 2017; Hoi et al., 2009; Laory et al., 2013; Neves et al., 2017; Posenato et al., 2008, 2010) due to its ease of implementation and its low computations cost. The computational cost of using RM with high complexity models is usually much lower than other probabilistic methodologies. Strategies such as particle swarm optimization (Gökdaǧ and Yildiz, 2012), genetic algorithms (Chou and Ghaboussi 2001; Gökdaǧ, 2013) and other sampling methods (Zhang et al., 2010; Majumdar et al., 2012) have been used by researchers to make RM over many dimensions a cost-effective data interpretation methodology.

While BMU may be used for data-interpretation tasks involving medium-to-high systematic bias and uncertainties, the cost of using high complexity models is prohibitive. Therefore, EDMF is a better choice for interpretation tasks involving medium-to-high systematic bias (greater than 3%) and uncertainties (greater than 10%) using high complexity models. EDMF, similar to BMU, is suitable for medium-to-high magnitudes of uncertainty with models of all levels of complexity and can be implemented with grid sampling approach and adaptive sampling (Raphael and Smith 2003; Proverbio et al., 2018b). EDMF has the added advantage of being computationally efficient when data interpretation has to be revised compared with BMU (Pai et al., 2019). The task of data interpretation in practice is typically iterative in nature due to changing operational and environmental conditions and also, as new information becomes available (Pasquier and Smith 2016; Reuland et al., 2019b; Pai et al., 2019).

Scalability of RM to various levels of model complexity and the array of sampling methodologies available for BMU demonstrate that significant research effort has been directed at improving computational efficiency. While this is sufficient for individual analyses at a given moment, such as damage detection, the task of asset management and prognosis is iterative in nature due to changing conditions and emergence of new information. Non-adaptive sampling methods and heuristic searches (Sanayei et al., 1997) are more amenable for use in practice for repeated data interpretation compared with sampling methods that would require a complete restart (Pai et al., 2019). RM and EDMF are most suitable for use of non-adaptive sampling methods making them better choices when using high-complexity models.

Suitability for use in Practice

Methodology maps presented in Figures 3–5 assist a user in selecting an appropriate data interpretation methodology considering uncertainty magnitudes, bias magnitudes and model complexity. However, practical implementation of these methodologies presents additional challenges. Application of any data interpretation methodology has three components:

• Estimation of prior distribution of model parameters.

• Updating strategy (objective function for RM, likelihood function for BMU and falsification thresholds for EDMF) as well as solution-exploration methods (for example, optimization techniques, adaptive sampling etc.).

• Interpretability of posterior (updated) distribution of model parameters for decision making.

The above three components are in addition to the task of data collection and quality control checks, such as assessing sensor noise and detection of outliers, that have to be performed.

The BMU methodology has gained popularity in the research community with an objective to support real-world data-interpretation tasks. Therefore, applicability of BMU is discussed first in this section. Subsequently, applicability of RM and EDMF is discussed relative to challenges in practical implement of BMU.

The choice of prior distribution for BMU is one of the first steps (see Traditional Bayesian Model Updating). Appropriate quantification of prior distributions of parameters in a model class can significantly influence results obtained with BMU (Freni and Mannina 2010; Efron 2013; Uribe et al., 2020). In the context of civil infrastructure, model parameters related to aspects such as boundary conditions are specific to each case and cannot be generalized. This complicates the task of informatively quantifying the prior of model parameters.

Traditional BMU, as described in Traditional Bayesian Model Updating, does not include parameters related to the model error in the model class. Novel BMU developments such as hierarchical BMU (Behmanesh et al., 2015b) estimate errors to improve accuracy, as explained in Bayesian Model Updating With Parameterized Model-Error. However, a small number of studies allow for various sources of uncertainty for specific measurement locations. Simoen et al. (2013) studied the effect of correlations on the posterior of model parameters, albeit with same error variance for all data points. Explicit modelling of the prediction error (variance, bias, and correlations) for specific measurement locations increases dimensionality of the inverse task. Consequently, this may lead to identifiability challenges and high computational costs (Prajapat and Ray-Chaudhuri 2016).

Another implementation step involved in carrying out BMU is the development of the likelihood function that incorporates information from measurements. Traditionally, this is defined by a zero-mean independent Gaussian PDF as shown in Eq. 2. Many researchers have demonstrated that the use of a zero-mean independent Gaussian likelihood function provides inaccurate solutions in the context of civil infrastructure (Simoen et al., 2012; Goulet and Smith 2013; Pasquier and Smith 2015). The choice of the likelihood function and its developments affect the accuracy of model-parameter estimation in BMU (Smith et al., 2010). Aczel et al. (Aczel et al., 2020) emphasized the need to perform checks for robustness to this choice while selecting a likelihood function (and priors). Appropriateness of choices made in estimating priors and developing the likelihood function can only be measured by testing predictions against reality (Feynman 1965), which in the absence of situations of either simple theoretical or experimental cases, requires statistical knowledge.

The results obtained with BMU are a joint posterior PDF of model parameters. Interpretation of this PDF is important for making decisions based on updated knowledge acquired from measurements. However, for appropriate decision making, asset managers have to be provided with information of the joint posterior PDF along with assumptions and choices that were made to define it, such as the prior, likelihood function and the physics-based model. Additionally, statistical knowledge is necessary for interpreting the posterior PDF (Aczel et al., 2020).

Application of BMU also suffers due to the requirement of adaptive samplers such as Markov Chain Monte Carlo sampling (Tanner 2012) and other variants (Ching and Chen 2007; Angelikopoulos et al., 2015; Huang and Beck 2018), which are difficult to implement (Pai et al., 2019). Random sampling such as Monte Carlo sampling may lead to poor and inaccurate estimation of the posterior (Qian et al., 2003) leading to inappropriate and unsafe asset management (Kuśmierczyk et al., 2019).

RM is widely used in practice despite providing inaccurate solutions in presence of large magnitudes of uncertainty and systematic bias as detailed in Residual Minimization, Low Model Complexity, Medium Model Complexity, High Model Complexity. This is due to the simplicity in application of RM. In RM, there is no strong requirement regarding the estimation of priors for model parameters. In typical civil-engineering contexts, a uniform prior is assumed for RM. The updating criteria is a minimization of error between model response and measurements. This minimization task may be performed using optimization algorithms (Majumdar et al., 2012; Koh and Zhang 2013; Nanda et al., 2014) as well as simple trial and error methods (Sanayei et al., 1997). Finally, the solution is a unique set of model-parameter values that provide the least error between model response and measurements. Admittedly, uniqueness of the result reduces the possibility of error in interpretation of solution due to lack of statistical knowledge.

EDMF, in a similar way to RM, has advantages over BMU due to ease of implementation. Additionally, EDMF may be used for a wider range of applications with large magnitudes of uncertainty and bias, as explained in Low Model Complexity, Medium Model Complexity, High Model Complexity. In EDMF, the prior PDF of model parameters is estimated using engineering knowledge and is typically assumed to be uniform. This is compatible with observations by researchers that using heuristic information can lead to quantification of appropriate priors (Parpart et al., 2018).

The updating procedure in EDMF is based on the philosophy of falsification as hypothesized by Karl Popper (Popper 1959). In EDMF, model instances that provide responses that are incompatible with measurements are rejected (falsified). The criteria for compatibility are defined based on the uncertainty associated with the interpretation task from sources such as modelling imperfections and sensor noise. As the engineer has to quantify this uncertainty and determine the falsification criteria, basic knowledge of statistical bounds is required. Fortunately, practicing engineers often use bounds to describe uncertainty.

The solution obtained with EDMF is a population of model instances that are compatible with observations. Due to lack of complete knowledge of uncertainties, all model instances in this solution set are assumed to be equally likely. The asset manager may use this population to make decisions. Unlike RM, the asset manager, while using EDMF, has to either use the entire population of solutions for decision making or may use specific model instances for decision making based on expert opinion and statistical knowledge. Working with a population of solutions rather than a joint posterior PDF (as obtained with BMU) requires less statistical knowledge and is more transparent for use by decision makers.

Application of EDMF is typically carried out with grid sampling (Pai et al., 2019), which has practical advantages over adaptive sampling methods (Proverbio et al., 2018b) when information has to be interpreted iteratively. Other sampling methods, such as Monte Carlo sampling and Latin hypercube sampling, have also been used to perform EDMF (Cao et al., 2019b). Unlike BMU, EDMF does not require sampling from the posterior to achieve accuracy, which allows use of simpler sampling techniques.

RM is the easiest method for use in practice, albeit with a limited range of applications as described in Residual Minimization, Low Model Complexity, Medium Model Complexity, High Model Complexity. BMU has a wider range of applications based on uncertainty considerations but is limited by challenges for use in practice due to expertise required in implementation. EDMF may be employed for a wide range of applications and overcomes many practical limitations associated with BMU for probabilistic data-interpretation. A summary of this discussion is presented schematically in Table 3.

In Table 3, the checkmarks indicate ease of implementation related to the specific step involved such as estimating the prior. The number of checkmarks from one to three indicate increasing ease of implementation. EDMF and RM have greater ease of implementation compared with BMU while performing the steps involved such as estimating the prior, updating, sampling and interpreting the posterior (solutions). EDMF, with possibilities for a wider range of applications as explained in Residual Minimization, Low Model Complexity, Medium Model Complexity, High Model Complexity, and relatively simpler implementation should thus be the methodology of choice for interpreting data related to most civil-infrastructure assessment tasks.

To aid in application of EDMF to full-scale case studies, an open access software called MeDIUM (Measurement Data Interpretation Using Models) has been developed. The software is available for downloading at the following link: https://github.com/MeDIUM-FCL/MeDIUM. MeDIUM facilitates predictions (prognoses), particularly extrapolations, through representing ranges of values of variables. MeDIUM is a software implementation of EDMF with additional tools for validation and assessment of uncertainty estimations. MeDIUM improves accessibility of EDMF to new users of data interpretation methods especially for the context of data interpretation when managing civil infrastructure. With this software, users may interpret monitoring data to obtain validated and updated distributions of model parameters to support asset management decision making. The welcome tab of the software is shown in Figure 6.

FIGURE 6. A software for measurement data interpretation using uncertain models (MeDIUM) for effective and sustainable asset management.

The software provides users functionalities such as performing what-if analysis. Users may assess the impact of uncertainty estimation on solutions of EDMF with simple sliders that controls factors such as magnitude of uncertainty and target reliability of identification. Users are also provided with options to perform cross-validation of solutions by leaving out (holdout) measurements. The users have full control over the measurements to be left out and uncertainty definitions. The results of performing EDMF and validation may also be visualized with the software.

Using the Methodology Maps

Methodology maps presented in Low Model Complexity, Medium Model Complexity, High Model Complexity, have been developed with knowledge of the data-interpretation methodologies that are described in Residual Minimization, Traditional Bayesian Model Updating, Bayesian Model Updating With Parameterized Model-Error, Error-Domain Model Falsification as well as the experience acquired through interpreting data from multiple case studies as outlined in Case Studies. The objective of these maps is to help users select an appropriate data-interpretation methodology.

In this section, the methodology maps are used to select an appropriate data-interpretation methodology for three examples based on uncertainty conditions and model complexity. Additionally, practical aspects as discussed in Suitability for use in Practice influence selection of the most appropriate methodology.

Example 1—Low Bias, Low Magnitude of Uncertainty and Low Model Complexity

Consider a cantilever beam of length, l = 3 m, loaded at the free end by a point load, P = 5 kN. The beam has a square cross section of 300 × 300 mm with a moment of inertia, I = 6.75 × 108 mm4. The true modulus of elasticity of the beam, E, is not known and hence it is modelled as a random variable with a uniform distribution and bounds 20 and 100 GPa.

The deformation of the beam under the point load is recorded with two deflection sensors placed 1.75 and 3 m from the clamped end of the beam. The measurements recorded with these sensors are affected by noise that is normally distributed with zero mean and a standard deviation of 0.02 mm.

The objective in this example is to interpret the true value (distribution) of the modulus of elasticity of the beam. The model for data-interpretation is that of an idealized cantilever beam loaded at the free end, derived using Euler-Bernoulli beam theory. The model response at any location x on the beam is given by Eq. 3.

The measurement data used to interpret the distribution of modulus of elasticity is simulated for this example. The simulated measurements are obtained using a true (hypothetical) modulus of elasticity of 80 GPa in Eq. 3 and then adding measurement uncertainty based on the assumed sensor noise (normally distributed with zero mean and standard deviation of 0.02 mm).

The model described in Eq. 3 is computationally inexpensive and of low complexity. As the same model is used to simulate measurements and real structural behavior, the magnitude of systematic bias is low. Additionally, the only source of uncertainty affecting the interpretation task is the measurement noise, which is also low. For low model complexity, refer to the methodology map in Figure 3. In this figure, for low systematic bias and magnitude of uncertainty, the appropriate choice of data interpretation methodology is RM.

The example described in this section is adapted from Goulet and Smith (2013). The uncertainty conditions assumed are those studied as the first scenario in the paper. For this scenario, using two measurements, RM provides an accurate estimation of modulus of elasticity. BMU and EDMF also provide accurate estimation of modulus of elasticity albeit less precise and at a larger computational cost. As indicated in Table 3, RM is the most practical choice of data-interpretation methodology when its uncertainty assumptions are strictly satisfied. Therefore, RM is the appropriate choice of data interpretation methodology in this situation.

Example 2–Medium Uncertainty, Low Bias and Low Model Complexity

Consider a multiple degree of freedom model of a four-story building. Let a hypothetical “real” model of this building be defined by a partially lumped mass at each floor and distributed vertically. Let plasticity be concentrated at each floor level with non-linear hysteretic rotational springs defined by the modified Takeda model (Takeda et al., 1970). This model is used to simulate the real behavior of the four-story building during the main shock of the Alkion, Greece, earthquake of 24 February 1981.

To ease the computational load, the simulation model for this building was developed using assumptions that are different from the model used to simulate the real behavior. In this behavior model, the mass is only lumped at each floor level. The hysteretic behavior of plasticity springs is defined by a Gamma-model (Lestuzzi and Badoux 2003).

The model class for identification includes parameters such as flexural stiffness, rotational stiffness of springs, base yield moment, post-yield stiffness of rotational springs and the Gamma factor of the hysteretic behavior model. Variations in mass distribution and hysteretic models between the simulation model and the true behavior model lead to low bias and medium uncertainty conditions. Additionally, the simplified lumped mass models with non-linearity can be considered to be low complexity models. Therefore, the user has to refer to Figure 3 to select an appropriate data interpretation methodology.

In Figure 3, the appropriate choice of data interpretation methodology for low bias and medium uncertainty condition is traditional BMU. Reuland et al. (2017) evaluated this example and concluded that under the assumed uncertainty conditions traditional BMU provides accurate identification while accounting for this uncertainty. Therefore, the methodology selected using Figure 3 is the initial choice.

Reuland et al. (2017) also concluded that EDMF provides accurate identification for this scenario. Based on Table 3, EDMF would be practically more advantageous to use than BMU. EDMF involves a simpler updating criteria and identification bounds. Additionally, Reuland et al. (2017) used a sequential grid sampling approach for EDMF to reduce computational cost of identification. This approach is more robust to changes and availability of new information compared with adaptive sampling algorithms such as MCMC sampling (Pai et al., 2019). In this example new information could be in the form of additional modal data and changes in structural condition after the main shock for post-hazard assessment. Therefore, using Table 3, EDMF would be practically a more appropriate choice for this example.

Example 3—High Uncertainty, High Bias, and Medium Model Complexity