- 1School of Electronic Information Engineering, Changsha Social Work College, Changsha, China

- 2Hunan Victor Petrotech Service Co., Ltd., Changsha, China

To address the low docking accuracy of existing robotic wheelchair/beds, this study proposes an automatic docking framework integrating light detection and ranging (LIDAR), visual positioning, and laser ranging. First, a mobile chassis was designed for an intelligent wheelchair/bed with independent four-wheel steering. In the remote guidance phase, the simultaneous localization and mapping (SLAM) algorithm was employed to construct an environment map, achieving remote guidance and obstacle avoidance through the integration of LIDAR, inertial measurement unit (IMU), and an improved A* algorithm. In the mid-range pose determination and positioning phase, the IMU module and vision system on the wheelchair/bed collected coordinate and path information marked by quick response (QR) code labels to adjust the relative pose between the wheelchair/bed and bed frame. Finally, in the short-range precise docking phase, laser triangulation ranging was utilized to achieve precise automatic docking between the wheelchair/bed and the bed frame. The results of multiple experiments show that the proposed method significantly improves the docking accuracy of the intelligent wheelchair/bed.

1 Introduction

To improve the quality of life of people with lower limb motor dysfunction and reduce the labor intensity of nursing staff, the development of assistive robots for the elderly and disabled has received significant attention from both industry and academia. The intelligent wheelchair/bed, which integrates the functionalities of a multifunctional nursing bed and an intelligent electric wheelchair, has become an important area of research in assistive robotics for the elderly and disabled. High-precision docking between the bed and wheelchair is a key challenge in the development of intelligent wheelchair/beds. Wheelchair/bed autonomous docking methods have evolved from magnetic strip navigation, through machine vision combined with color tape navigation and machine vision with quick-response (QR) code navigation (Chen et al., 2018), to light detection and ranging (LIDAR) navigation capable of autonomous path planning (Jiang et al., 2021) and multi-sensor fusion (Mishra et al., 2020). In the existing literature, pressure sensors have been employed for docking the wheelchair and bed (Mascaro and Asada, 1998), whereas other studies (Pasteau et al., 2016; Chavez-Romero et al., 2016; Li et al., 2019) have used machine vision to dock the wheelchair and bed, and some (Ning et al., 2017; Xu et al., 2022) have utilized LIDAR for wheelchair/bed autonomous docking. North Carolina State University designed a multi-sensor fusion simultaneous localization and mapping (SLAM) method based on depth cameras and LIDAR (Juneja et al., 2019). Shanghai University of Science and Technology introduced graph optimization algorithms for positioning in unknown environments, combining odometry and inertial measurement unit (IMU) data through extended Kalman filtering (EKF) for accurate autonomous indoor docking of intelligent wheelchair/beds (Su et al., 2021). However, the docking gap remains above 6 mm, requiring further improvements.

This study proposes an intelligent wheelchair/bed docking architecture based on multi-source information measurement. First, the mobile chassis of an intelligent robotic wheelchair/bed was improved with an independent four-wheel steering system, which structurally facilitates real-time omnidirectional correction of errors detected during the docking process. Second, the traditional A* algorithm was improved to avoid diagonal crossings of obstacle vertices encountered during navigation. During the remote guidance phase, a LIDAR and IMU fusion navigation system fixed on the wheelchair determined the wheelchair’s pose in the world coordinate system. This compensated for the onboard camera’s inability to directly observe the docking target, thus enabling visual guidance. In the medium-range navigation stage, a camera and an IMU fixed on the wheelchair were used for attitude positioning. At the close-range docking stage, a camera attached to the wheelchair enabled horizontal observation of landmark features on the auxiliary bed to determine the relative position and orientation between the wheelchair and the bed, thus enabling close-range visual docking.

2 Chassis design

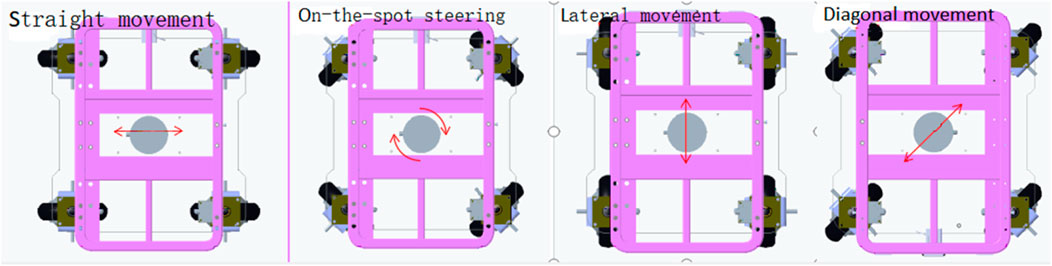

To meet the high-precision docking requirements of intelligent robotic wheelchair/beds, the mobile chassis must support on-the-spot steering to enable free maneuvering in narrow spaces, such as confined indoor home environments. The traditional wheel system of two active wheels plus two omni wheels has a large turning radius and lacks lateral movement capability, rendering it insufficient for high-precision docking of intelligent robotic wheelchair/beds. Therefore, the chassis of an intelligent robotic wheelchair/bed was upgraded to a four-wheel omnidirectional drive system, with four independently steered drive motors mounted in front and rear positions. Each drive motor is independently powered and controlled, enabling each wheelset to move independently. Figure 1 illustrates this structure.

Using this four-wheel independent drive steering configuration, the mobile chassis can perform straight, lateral, and diagonal movements, as well as on-the-spot steering, whereby the pose of the wheelchair/bed can be adjusted and corrected arbitrarily.
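The relationship between a desired chassis motion and the individual wheel commands can be illustrated with a minimal kinematic sketch. It is not the authors' controller: the function name `wheel_commands`, the wheel mounting coordinates in `WHEELS`, and the sign conventions are assumptions made for illustration. Each wheel's steering angle and speed follow from the rigid-body velocity of its mounting point.

```python
import math

def wheel_commands(vx, vy, omega, wheel_positions):
    """Return a (steering_angle_rad, speed) pair for each wheel.

    vx, vy: desired chassis velocity in the chassis frame (m/s).
    omega: desired yaw rate (rad/s), counter-clockwise positive.
    wheel_positions: (x, y) mounting points of the wheels in the chassis frame (m).
    """
    commands = []
    for x, y in wheel_positions:
        # Rigid-body velocity of the mounting point: v + omega x r.
        wx = vx - omega * y
        wy = vy + omega * x
        speed = math.hypot(wx, wy)
        # A stopped wheel has no defined heading; report 0 rad in that case.
        angle = math.atan2(wy, wx) if speed > 1e-9 else 0.0
        commands.append((angle, speed))
    return commands

# Hypothetical geometry: wheels at the corners of a 0.6 m x 0.5 m rectangle.
WHEELS = [(0.3, 0.25), (0.3, -0.25), (-0.3, 0.25), (-0.3, -0.25)]

lateral = wheel_commands(0.0, 0.2, 0.0, WHEELS)  # pure sideways translation
spin = wheel_commands(0.0, 0.0, 0.5, WHEELS)     # on-the-spot steering
```

In this sketch, a pure lateral command turns all four wheels to 90° at the same speed, while an on-the-spot rotation points each wheel tangentially to a circle around the chassis center.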

3 Proposed intelligent wheelchair/bed docking system

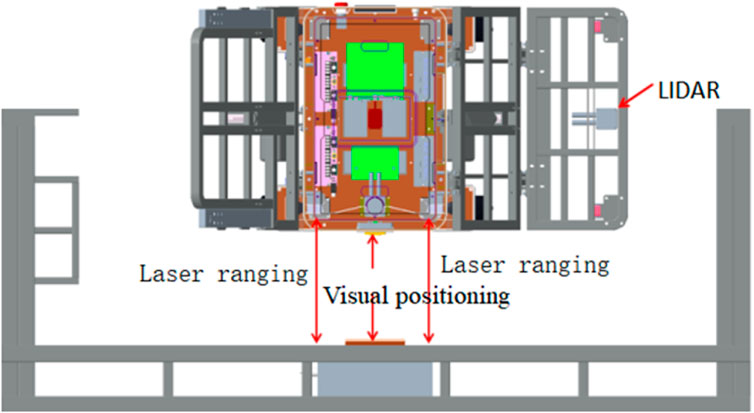

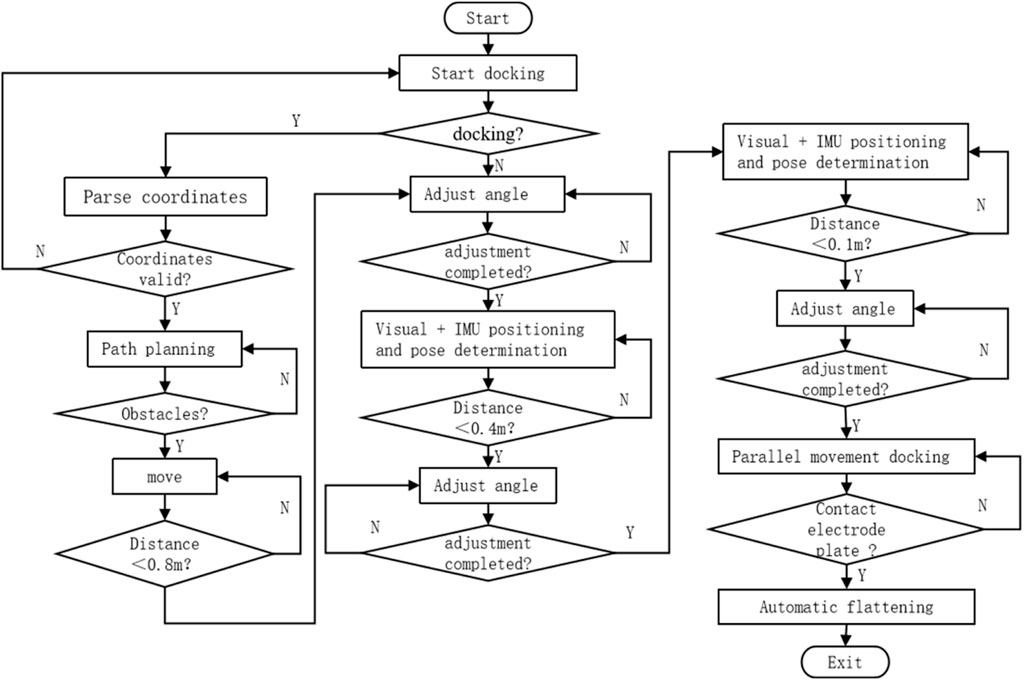

The intelligent wheelchair/bed docking system works in three phases: remote guidance, mid-range pose determination and positioning, and short-range precise docking. The schematic diagram and flowchart of the docking process are shown in Figures 2 and 3, respectively.

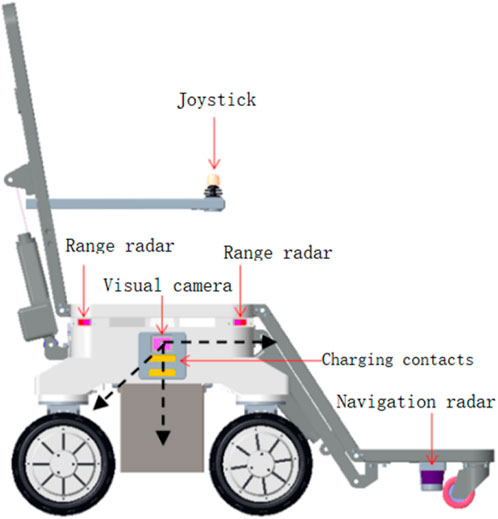

1) Remote Guidance Phase: When the distance between the intelligent electric wheelchair and fixed bed frame exceeds 80 cm, pressing the “one-click recall” button on the bed frame initiates the remote guidance through path planning. The LIDAR, WLR-716 from Vanjee Technology, is mounted at the bottom of the footrest of the intelligent electric wheelchair, and the IMU (GNW-SurPass-A100) operates on an industrial personal computer (IPC) with a main control panel, M67_I526L (6360U), executing the relevant algorithms.

2) Mid-Range Pose Determination and Positioning Phase: When the distance between the intelligent electric wheelchair and fixed bed frame is 10–80 cm, the docking process employs machine vision combined with IMU for positioning and pose determination. The camera on the intelligent wheelchair/bed has a resolution of 1920 × 1080 pixels and a frame rate of 30 fps.

3) Short-Range Precise Docking Phase: When the distance between the intelligent electric wheelchair and fixed bed frame is less than 10 cm, the camera in the machine vision system is in a defocused state. Precise docking is achieved through the signal feedback between a laser rangefinder and contact electrodes. The laser rangefinder, TF40, has an accuracy of ± 2 mm, a distance resolution of 1 mm, and a repeatability of less than 2 mm.
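The three phases above amount to a distance-based mode switch. The following sketch shows one way to express it, using the 80 cm and 10 cm thresholds stated in the text. The names `Phase` and `select_phase` are hypothetical, and assigning the boundary values (exactly 80 cm and exactly 10 cm) to the mid-range phase is an assumption based on the "10–80 cm" wording.

```python
from enum import Enum

class Phase(Enum):
    REMOTE_GUIDANCE = "remote guidance (LIDAR + IMU)"
    MID_RANGE = "mid-range pose determination (vision + IMU)"
    SHORT_RANGE = "short-range precise docking (laser ranging)"

REMOTE_THRESHOLD_CM = 80.0  # above this distance: remote guidance
SHORT_THRESHOLD_CM = 10.0   # below this distance: short-range docking

def select_phase(distance_cm):
    """Pick the docking phase from the wheelchair-to-bed-frame distance."""
    if distance_cm < 0:
        raise ValueError("distance must be non-negative")
    if distance_cm > REMOTE_THRESHOLD_CM:
        return Phase.REMOTE_GUIDANCE
    if distance_cm >= SHORT_THRESHOLD_CM:
        return Phase.MID_RANGE
    return Phase.SHORT_RANGE
```

A real system would likely add hysteresis around the thresholds so that measurement noise does not cause rapid switching between phases.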

4 Construction of control system

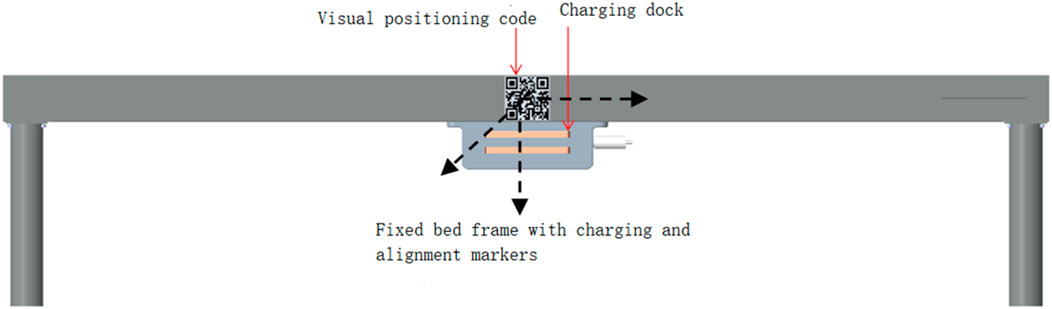

When the intelligent electric wheelchair, guided by LIDAR, is approximately 80 cm from the fixed bed frame, the camera mounted on the electric wheelchair (Figure 4) can clearly observe the QR code on the bed frame (Figure 5). Subsequently, the position and pose of the intelligent electric wheelchair are determined through visual and inertial fusion.

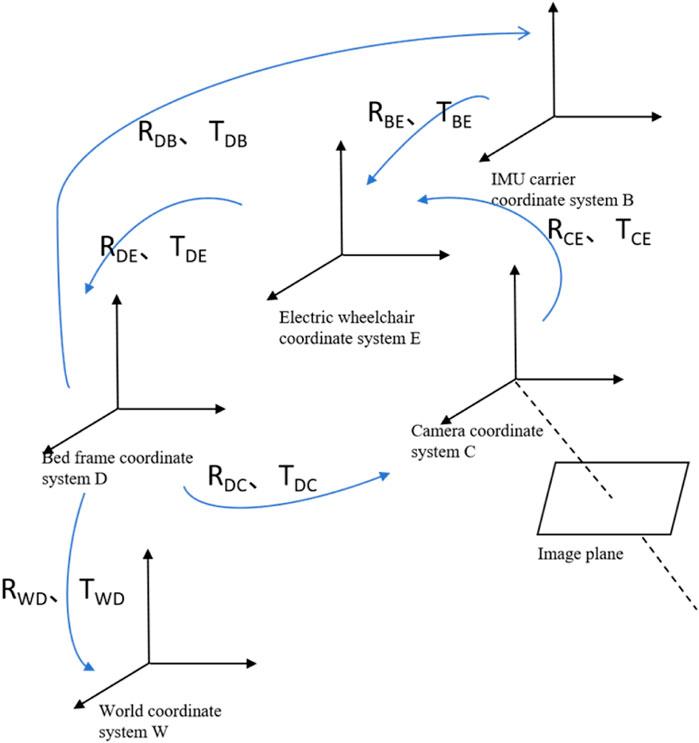

The coordinate systems of the camera, IMU carrier, intelligent electric wheelchair, fixed bed frame, and the world are related to one another through rotation and translation. The coordinate transformation relationships are shown in Figure 6. With the world coordinate system set at the origin of the fixed bed frame coordinate system, the rotation matrix $R_{WD}$ and translation matrix $T_{WD}$ convert data from the bed frame coordinate system D to the world coordinate system W. Similarly, the rotation matrices $R_{DE}$, $R_{EB}$, and $R_{BC}$ and the translation matrices $T_{DE}$, $T_{EB}$, and $T_{BC}$ convert data among their respective coordinate systems. Because the coordinate systems lie on the same horizontal plane, 3D coordinates can be reduced to 2D coordinates. The external parameters, namely the transformation between the fixed bed frame and camera coordinate systems, are obtained by optimization with the Levenberg–Marquardt algorithm.

Based on Equations 1 and 2, the relative pose relationships between the electric wheelchair, IMU carrier, and world coordinate system $O_W$-$X_WY_WZ_W$ can be determined.

After obtaining the rotation matrix $R$ and translation matrix $T$, the pose of the electric wheelchair in the world coordinate system $O_W$-$X_WY_WZ_W$ is expressed as $(x_w, z_w, \theta_w)$.
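Because all frames lie in one horizontal plane, the chain of rotations and translations reduces to composing planar transforms of the form (x, z, θ). The sketch below illustrates this under stated assumptions: the helper names `compose` and `invert`, the example offsets, and the convention that θ is measured counter-clockwise from the x-axis toward the z-axis are all hypothetical and not taken from the paper.

```python
import math

def compose(a_from_b, b_from_c):
    """Chain two planar transforms (x, z, theta): returns a_from_c."""
    x1, z1, t1 = a_from_b
    x2, z2, t2 = b_from_c
    c, s = math.cos(t1), math.sin(t1)
    x = x1 + c * x2 - s * z2
    z = z1 + s * x2 + c * z2
    # Wrap the summed heading to (-pi, pi].
    theta = math.atan2(math.sin(t1 + t2), math.cos(t1 + t2))
    return (x, z, theta)

def invert(a_from_b):
    """Return b_from_a for a planar transform (x, z, theta)."""
    x, z, theta = a_from_b
    c, s = math.cos(theta), math.sin(theta)
    return (-(c * x + s * z), s * x - c * z, -theta)

# Hypothetical values: the camera sits 0.2 m ahead of the wheelchair origin,
# and the QR code on the bed frame is seen 0.6 m straight ahead of the camera.
wheelchair_from_camera = (0.2, 0.0, 0.0)
camera_from_bed = (0.6, 0.0, 0.0)

wheelchair_from_bed = compose(wheelchair_from_camera, camera_from_bed)
# With the world frame placed at the bed frame, this is the wheelchair pose
# (x_w, z_w, theta_w) in world coordinates.
world_from_wheelchair = invert(wheelchair_from_bed)
```

Here the wheelchair ends up 0.8 m behind the bed-frame origin along the x-axis with zero heading difference.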

5 High-precision docking algorithm

5.1 Remote guidance algorithm

The intelligent wheelchair/bed employs LIDAR and IMU fusion navigation for remote guidance. The system framework is shown in Figure 7.

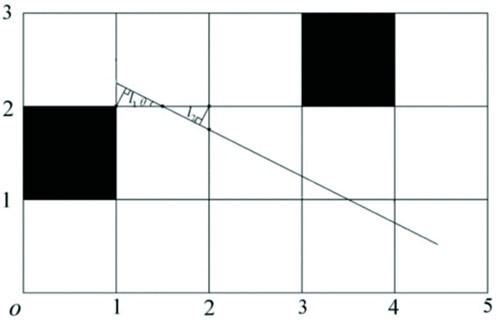

As a classic heuristic graph search algorithm (Yue et al., 2021), the A* algorithm can be considered an extension of Dijkstra’s algorithm. It is one of the most effective algorithms in static global path planning (Dong et al., 2023; Zhang et al., 2020). The algorithm has been widely used in various applications. However, the traditional A* algorithm has some limitations, such as neglecting the robot’s shape and size, generating paths that may be too close to obstacles, and creating unsmooth paths composed of multiple discrete points.

To address these limitations, the path equation was set as

By continuously searching surrounding nodes and updating the cost function, the shortest path can be obtained. The best solution is evaluated using the path cost estimation function (Mishra et al., 2020):

$$f(n) = g(n) + h(n) \tag{3}$$

In Equation 3, $g(n)$ is the accumulated cost from the starting point to node $n$, and $h(n)$ is the heuristic function, representing the estimated optimal path cost from node $n$ to the target point.

Subsequently, a weighting factor $\gamma$ ($\gamma > 1$) is introduced to optimize the cost estimation function, as shown in Equation 4.

$$f(n) = g(n) + \gamma\, h(n) \tag{4}$$

Introducing the weighting factor γ increases the weight of the unknown path in the total path cost, thereby enhancing the search depth of the algorithm and facilitating the solution of the optimal path.
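A compact grid-based sketch can make the two modifications concrete: the weighted cost f(n) = g(n) + γ·h(n) and the rejection of diagonal moves that would cut across an obstacle vertex. This is an illustrative implementation under assumptions (an 8-connected occupancy grid, a Euclidean heuristic, and the hypothetical function name `weighted_a_star`), not the authors' code.

```python
import heapq
import math

def weighted_a_star(grid, start, goal, gamma=1.5):
    """Search an occupancy grid (1 = obstacle) with f(n) = g(n) + gamma * h(n)."""
    rows, cols = len(grid), len(grid[0])

    def free(r, c):
        return 0 <= r < rows and 0 <= c < cols and grid[r][c] == 0

    def h(node):
        return math.hypot(node[0] - goal[0], node[1] - goal[1])

    moves = [(-1, 0), (1, 0), (0, -1), (0, 1),
             (-1, -1), (-1, 1), (1, -1), (1, 1)]
    g = {start: 0.0}
    parent = {start: None}
    open_heap = [(gamma * h(start), start)]
    closed = set()
    while open_heap:
        _, node = heapq.heappop(open_heap)
        if node in closed:
            continue
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        closed.add(node)
        r, c = node
        for dr, dc in moves:
            nxt = (r + dr, c + dc)
            if nxt in closed or not free(*nxt):
                continue
            # Reject diagonal steps that would cut across an obstacle vertex:
            # both orthogonally adjacent cells must be free.
            if dr != 0 and dc != 0 and not (free(r + dr, c) and free(r, c + dc)):
                continue
            cost = g[node] + math.hypot(dr, dc)
            if cost < g.get(nxt, math.inf):
                g[nxt] = cost
                parent[nxt] = node
                heapq.heappush(open_heap, (cost + gamma * h(nxt), nxt))
    return None  # no path exists

grid = [
    [0, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
path = weighted_a_star(grid, (0, 0), (2, 2))
```

With γ > 1 the search is drawn more strongly toward the goal and typically expands fewer nodes, at the price that the returned path may be somewhat longer than the true shortest path.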

5.2 Mid-range pose determination and positioning algorithm

5.2.1 Visual model

This study used a monocular high-speed camera to collect QR code information. Under the pinhole model projection, the 3D points are projected onto the normalized plane, yielding the coordinates of the 3D points on the image plane:

Using homogeneous coordinates, Equation 5 can be transformed into Equation 6

where $K$ is the matrix of the camera’s intrinsic parameters; $f_x$ and $f_y$ are the scaling factors for the x and y-axes between the imaging plane and pixel coordinate system, respectively (pixel); $C_x$ and $C_y$ are the translation amounts (pixel); and $[x, y, z]^T$ are the coordinates of point $P$ in 3D space.

The manufacturing errors in lenses and cameras may cause the collected image to be distorted radially and tangentially during translation and scaling. Therefore, Equation 7 is used to correct these distortions on the normalized plane.

where $k_1$, $k_2$, and $k_3$ are the radial distortion coefficients; $p_1$ and $p_2$ are the tangential distortion coefficients; $[x, y]$ and $[x_{correct}, y_{correct}]$ are the coordinates before and after distortion correction, respectively; and $r = \sqrt{x^2 + y^2}$ is the distance between the point and the image center on the normalized plane.

To obtain the pixel points on the pixel plane, the distortion-corrected points are projected onto the pixel plane using the camera’s intrinsic parameters, as shown in Equation 8.
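The projection chain described in this subsection (normalize by depth, apply radial and tangential distortion terms, then map to pixels with the intrinsic parameters) can be sketched as follows. The sketch assumes the widely used radial-tangential model with radial coefficients k1, k2, k3 and tangential coefficients p1, p2, as in the OpenCV naming convention; the function name `project_point` and all numeric values are hypothetical, and the paper's own equations may differ in detail.

```python
def project_point(point_3d, fx, fy, cx, cy,
                  k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Project a 3D point in the camera frame to pixel coordinates (u, v)."""
    X, Y, Z = point_3d
    if Z <= 0:
        raise ValueError("point must lie in front of the camera")
    # 1) Projection onto the normalized image plane.
    x, y = X / Z, Y / Z
    # 2) Radial and tangential distortion terms on the normalized plane.
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_c = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_c = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    # 3) Mapping to the pixel plane with the intrinsic parameters.
    u = fx * x_c + cx
    v = fy * y_c + cy
    return u, v

# Hypothetical intrinsics for a 1920 x 1080 image with the principal point at the center.
u, v = project_point((0.1, -0.05, 1.0), fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)
```

In practice the intrinsic and distortion parameters come from a calibration procedure rather than from assumed values such as these.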

5.2.2 Inertial model

Inertial data are obtained from the IMU, and deterministic errors caused by biases, temperature, and scale-factor errors can be excluded through prior calibration. Therefore, only random errors, namely Gaussian white noise and bias random walk, need to be considered between the IMU and world coordinate systems. The IMU’s acceleration and angular velocity are modeled as shown in Equation 9.

From Equation 9, the kinematic equation of the intelligent wheelchair/bed can be obtained, in which the terms α, β, and γ are defined in Equation 12.
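The random-error model described above (a measurement equal to the true value plus a slowly drifting bias plus white noise) can be illustrated for a single gyroscope axis. This is a simplified, planar sketch and not the paper's formulation: the function names, the per-sample noise parameters, and the fixed seed are assumptions made for illustration.

```python
import random

def simulate_gyro(true_rates, dt, noise_std, bias_walk_std, bias0=0.0, seed=0):
    """Simulate yaw-rate measurements: true rate + bias + white noise.

    noise_std: standard deviation of the per-sample white noise (rad/s).
    bias_walk_std: random-walk intensity of the bias (rad/s per sqrt(s)).
    """
    rng = random.Random(seed)
    bias = bias0
    measured = []
    for w in true_rates:
        measured.append(w + bias + rng.gauss(0.0, noise_std))
        # The bias performs a random walk between samples.
        bias += rng.gauss(0.0, bias_walk_std) * dt ** 0.5
    return measured

def integrate_heading(measured_rates, dt, bias_estimate=0.0, theta0=0.0):
    """Dead-reckon the heading by integrating bias-compensated yaw rates."""
    theta = theta0
    for w in measured_rates:
        theta += (w - bias_estimate) * dt
    return theta

# Hypothetical run: 1 s of constant 0.1 rad/s rotation sampled at 100 Hz.
rates = [0.1] * 100
noisy = simulate_gyro(rates, dt=0.01, noise_std=0.002, bias_walk_std=0.0005, bias0=0.01)
heading = integrate_heading(noisy, dt=0.01, bias_estimate=0.01)
```

Because the integrated heading drifts as the bias wanders, inertial data alone are not sufficient over time, which is one motivation for fusing them with visual observations.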

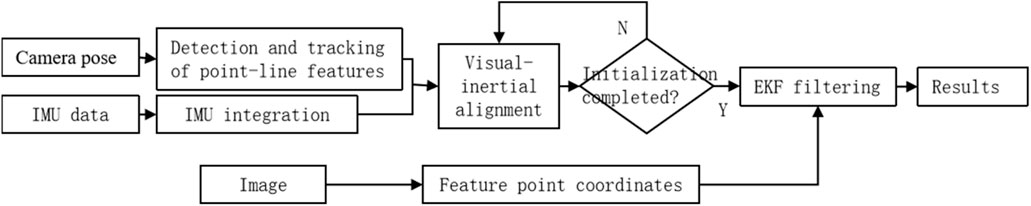

5.2.3 Visual/IMU positioning and pose determination algorithm

The algorithms proposed by Niu et al. (2023) and Li et al. (2023) can achieve the expected positioning accuracy, meeting the precision requirements for the mid-range positioning and pose determination of the intelligent wheelchair/bed. Inspired by this, a tightly coupled visual/IMU algorithm was adopted for the mid-range docking of the intelligent wheelchair/bed. The algorithm framework is illustrated in Figure 9. First, initialization is performed to completely calibrate IMU drift, gravity vector, and external parameters from the camera to the IMU; this ensures alignment of the sensors. Subsequently, the camera detects changes in the coordinates of the target in the system to obtain pose information. The obtained pose information is compared with the desired pose information, driving the intelligent wheelchair/bed’s motion system toward the fixed bed frame. Mid-range positioning and pose determination are terminated when the visual sensor is out of focus.
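The step in which the measured pose is compared with the desired pose to drive the motion system can be sketched as a simple proportional controller for an omnidirectional chassis. The gains, velocity limits, and function names below are hypothetical; the paper does not specify the control law, so this is only one plausible reading.

```python
import math

def wrap_angle(angle):
    """Wrap an angle to (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def clamp(value, limit):
    return max(-limit, min(limit, value))

def velocity_command(current, desired, k_lin=0.8, k_ang=1.5, v_max=0.15, w_max=0.3):
    """Map a planar pose error to chassis-frame velocities (forward, lateral, yaw rate).

    current, desired: poses (x, z, theta) in the world frame.
    """
    x, z, theta = current
    ex, ez = desired[0] - x, desired[1] - z
    e_theta = wrap_angle(desired[2] - theta)
    # Rotate the world-frame position error into the chassis frame.
    c, s = math.cos(theta), math.sin(theta)
    forward = c * ex + s * ez
    lateral = -s * ex + c * ez
    return (clamp(k_lin * forward, v_max),
            clamp(k_lin * lateral, v_max),
            clamp(k_ang * e_theta, w_max))

cmd = velocity_command((0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
```

Clamping each axis separately keeps the sketch short; a practical controller would more likely scale the whole velocity vector so that the direction of motion is preserved.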

5.3 Short-range precise docking algorithm

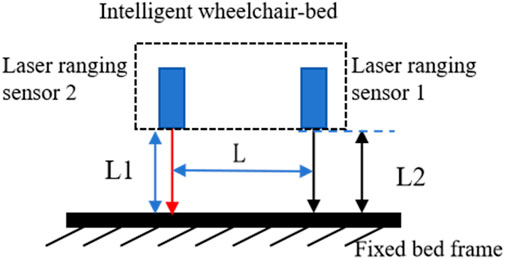

When the selected visual sensor is out of focus at a distance of 10 cm from the fixed bed frame, two laser-ranging sensors mounted on the intelligent wheelchair/bed are used to measure the distance between the intelligent wheelchair/bed and fixed bed frame. Thus, the tilt angle between the intelligent wheelchair/bed and fixed bed frame is calculated, achieving short-range precise docking.

As shown in Figure 10, the laser triangulation ranging system consists of two laser rangefinders mounted on the intelligent wheelchair/bed and fixed bed frame. The distance between the laser rangefinders is L; the distance between laser rangefinder 1 and fixed bed frame is L1; and the distance between laser rangefinder 2 and fixed bed frame is L2. The tilt angle between the intelligent wheelchair/bed and fixed bed frame is calculated using Equation 13.
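The geometry above suggests a direct computation: two parallel range readings L1 and L2, taken a baseline L apart, differ by L·tan(θ) when the wheelchair/bed is tilted by θ relative to the bed frame. The sketch below assumes this arctangent relationship, which is the natural reading of the setup but is not confirmed by the source; the function names and numeric values are hypothetical.

```python
import math

def tilt_angle_deg(l1_mm, l2_mm, baseline_mm):
    """Tilt between wheelchair/bed and bed frame from two parallel range readings."""
    if baseline_mm <= 0:
        raise ValueError("baseline must be positive")
    return math.degrees(math.atan2(l1_mm - l2_mm, baseline_mm))

def mean_gap_mm(l1_mm, l2_mm):
    """Average remaining gap, measured midway between the two rangefinders."""
    return 0.5 * (l1_mm + l2_mm)

# Hypothetical readings: rangefinders 400 mm apart reading 52 mm and 48 mm.
angle = tilt_angle_deg(52.0, 48.0, 400.0)
gap = mean_gap_mm(52.0, 48.0)
```

With a rangefinder accuracy of ±2 mm, the angular resolution of this estimate improves as the baseline between the two sensors increases.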

Based on the tilt angle and distance, the four-wheel drive chassis adjusts the intelligent wheelchair/bed’s tilt angle, longitudinal offset, and lateral offset relative to the fixed bed frame. The automatic docking stops when the docking feedback signal is received.

6 Experimental results and analysis

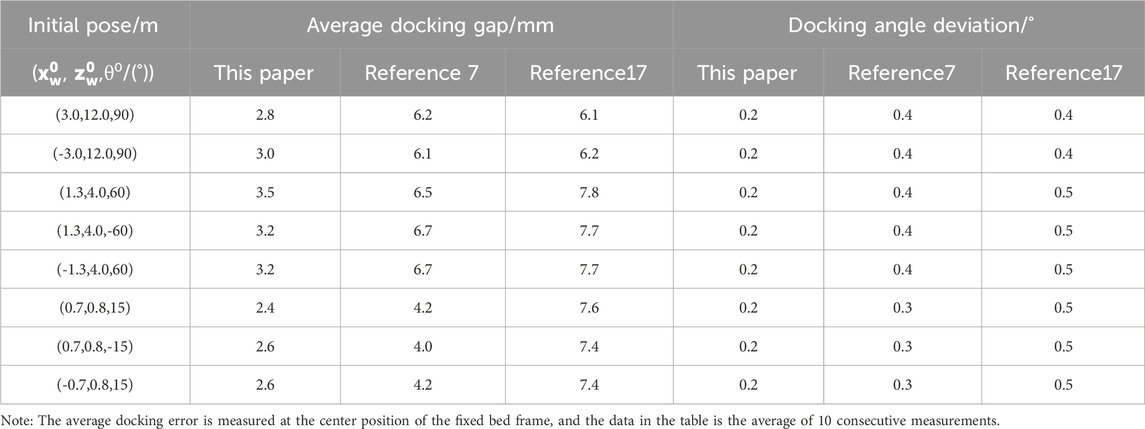

To verify the effectiveness of the proposed method, autonomous docking experiments were conducted on a self-developed intelligent wheelchair/bed prototype (Figure 11). A coordinate system was established with the fixed bed frame as a reference. The results of docking from different starting points of the mobile part of the intelligent wheelchair/bed are presented in Table 1. The initial pose in Table 1 was measured manually, and the final pose was the pose after the intelligent wheelchair/bed completed docking and automatically charged.

Table 1 indicates that in the final position, the proposed three-phase docking method with multi-sensor fusion achieves an average docking gap error of less than 5 mm and a docking angle deviation of less than 0.5°, outperforming the methods proposed by Li et al. (2019) and Fang et al. (2022). The proposed method can guide the mobile part of the intelligent wheelchair/bed to the specified position with high precision.

7 Conclusion

(1) A mobile chassis with independent four-wheel drive and steering capabilities was designed to structurally solve the free movement problem of the wheelchair/bed, thereby offering a mechanical structure that guarantees high-precision docking.

(2) A three-phase docking method integrating multi-source information was proposed to enable the high-precision docking of the wheelchair/bed. In the remote guidance phase, a docking approach combining LIDAR/IMU and an improved A* algorithm was employed to achieve indoor positioning, navigation, and obstacle avoidance of the wheelchair. During the mid-range pose determination and positioning phase, visual/IMU fusion was used to guide the intelligent wheelchair/bed to a distance of 100 mm from the bed frame. When the distance between the wheelchair/bed and the bed frame was less than 100 mm, laser triangulation ranging was employed to achieve autonomous docking between the wheelchair and the bed.

(3) Practical prototype testing revealed that the designed three-stage docking method improved the docking accuracy of the intelligent wheelchair/bed to within 4 mm. This represents a significant improvement compared to the methods described by Li et al. (2019) and Fang et al. (2022), thereby verifying the feasibility of the proposed method.

(4) To achieve safe autonomous movement and high-precision docking of intelligent wheelchair/beds in unstructured indoor dynamic environments, future research will focus on key technologies such as autonomous positioning and semantic mapping, as well as collision-free automatic high-precision docking control between the wheelchair and bed frame or bucket.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

XL: Writing–original draft, Writing–review and editing. CT: Funding acquisition, Methodology, Writing–review and editing. XT: Formal Analysis, Investigation, Writing–review and editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. The author would like to acknowledge funding from 2024 Hunan Provincial Natural Science Foundation Project (2024JJ8074); China Disabled Persons’ Federation Project (2023CDPFAT-20); and Research Project of Professors and Doctors at Changsha Social Work College (2023JB02).

Conflict of interest

Author XT was employed by Hunan Victor Petrotech Service Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Chavez-Romero, R., Cardenas, A., Mendez, M. E. M., Sanchez, A., and Piovesan, D. (2016). Camera space particle filter for the robust and precise indoor localization of a wheelchair. J. Sens. 2016, 1–11. doi:10.1155/2016/8729895

Chen, Y., Chen, R., Liu, M., Xiao, A., Wu, D., and Zhao, S. (2018). Indoor visual positioning aided by CNN-based image retrieval: training-free, 3D modeling-free. Sensors 18 (8), 2692. doi:10.3390/s18082692

Dong, Y. N., Cao, J. S., and Li, G. (2023). Automatic guided vehicle path planning based on A-Star and DWA dual optimization algorithms. Sci. Technol. Eng. 23 (30), 12994–13001.

Fang, Z. D., Mei, L., and Jin, L. (2022). Research on autonomous docking Technology and methods of robotic wheelchair/beds based on multi-source information fusion. Chin. J. Ergonomics 28 (4), 56–61. doi:10.1109/RCAR52367.2021.9517453

Jiang, P., Chen, L., Guo, H., Yu, M., and Xiong, J. (2021). Novel indoor positioning algorithm based on Lidar/inertial measurement unit integrated system. Int. J. Adv. Robot. Syst. 18 (2), 172988142199992. doi:10.1177/1729881421999923

Juneja, A., Bhandari, L., Mohammadbagherpoor, H., Singh, A., and Grant, E. (2019). A comparative study of slam algorithms for indoor navigation of autonomous wheelchairs. IEEE Int. Conf. Cyborg Bionic Syst. 2019, 261–266. doi:10.1109/CBS46900.2019.9114512

Li, L., Zhang, Z., Yang, Y., Liang, L., and Wang, X. (2023). Ranging method based on point-line vision/inertial SLAM and object detection. J. Chin. Inert. Technol. 31 (3), 237–244. doi:10.13695/j.cnki.12-1222/o3.2023.03.004

Li, X. Z., Liang, X. N., Jia, S. M., and Li, M. A. (2019). Visual measurement based automatic docking for intelligent wheelchair/bed. Chin. J. Sci. Instrum. 40 (4), 189–197. doi:10.19650/j.cnki.cjsi.J1804420

Mascaro, S., and Asada, H. H. (1998). Docking control of holonomic omnidirectional vehicles with applications to a hybrid wheelchair/bed system. Int. Conf. Robotics Automation 1998, 399–405. doi:10.1109/ROBOT.1998.676442

Mishra, R., Vineel, C., and Javed, A. (2020) “Indoor navigation of a service robot platform using multiple localization techniques using sensor fusion,” in 2020 6th international conference on control, automation and robotics. Singapore, 124–129.

Ning, M., Ren, M., Fan, Q., and Zhang, L. (2017). Mechanism design of a robotic chair/bed system for bedridden aged. Adv. Mech. Eng. 9 (3), 168781401769569–8. doi:10.1177/1687814017695691

Niu, X. J., Wang, T. W., Ge, W. F., and Kuang, J. (2023). A high precision indoor positioning and attitude determination method based on visual two-dimensional code/inertial information. J. Chin. Inert. Technol. 31 (11), 1067–1075. doi:10.4028/www.scientific.net/AMM.571-572.183

Pasteau, F., Narayanan, V. K., Babel, M., and Chaumette, F. (2016). A visual servoing approach for autonomous corridor following and doorway passing in a wheelchair. Robot. Auton. Syst. 75 (Part A), 28–40. doi:10.1016/j.robot.2014.10.017

Su, Y., Zhu, H., Zhou, Z., Hu, B., Yu, H., and Bao, R. (2021). Research on autonomous movement of nursing wheelchair based on multi-sensor fusion. IEEE Int. Conf. Real-time Comput. Robotics (RCAR) 2021, 1202–1206. doi:10.1109/RCAR52367.2021.9517453

Xu, X., Zhang, L., Yang, J., Cao, C., Wang, W., Ran, Y., et al. (2022). A review of multi-sensor fusion slam systems based on 3D LIDAR. Remote Sens. 14 (12), 2835. doi:10.3390/rs14122835

Yue, G. F., Zhang, M., Shen, C., and Guan, X. (2021). Bi-directional smooth A-star algorithm for navigation planning of mobile robots. Sci. Sin. Technol. 51 (4), 459–468. doi:10.1360/SST-2020-0186

Keywords: automatic docking, intelligent wheelchair/bed, visual positioning, lidar, IMU

Citation: Lei X, Tang C and Tang X (2024) High-precision docking of wheelchair/beds through LIDAR and visual information. Front. Bioeng. Biotechnol. 12:1446512. doi: 10.3389/fbioe.2024.1446512

Received: 10 June 2024; Accepted: 23 August 2024;

Published: 04 September 2024.

Edited by:

Wujing Cao, Chinese Academy of Sciences (CAS), China

Copyright © 2024 Lei, Tang and Tang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiangxiao Lei

Xiangxiao Lei

Chunxia Tang1