- 1Laboratory of Visuomotor Control and Gravitational Physiology, IRCCS Santa Lucia Foundation, Rome, Italy

- 2Laboratory of Neuromotor Physiology, Istituto di Ricovero e Cura a Carattere Scientifico—Scientific Institute for Research, Hospitalization and Healthcare, Santa Lucia Foundation, Rome, Italy

- 3Department of Systems Medicine and Centre of Space Bio-medicine, University of Rome Tor Vergata, Rome, Italy

Background: The vestibular end organs (semicircular canals, saccule and utricle) monitor head orientation and motion. Vestibular stimulation by means of controlled translations, rotations or tilts of the head is a routine manoeuvre to test the vestibular apparatus in a laboratory or clinical setting. In diagnostics, it is used to assess oculomotor, postural or perceptual responses, whose abnormalities can reveal subclinical vestibular dysfunction due to pathology, aging or drugs.

Objective: The assessment of vestibular function requires aligning the motion stimuli as closely as possible with reference axes of the head, for instance the cardinal naso-occipital, interaural and cranio-caudal axes. This is often achieved with a head restraint, such as a helmet or strap holding the head tightly in a predefined posture that guarantees this alignment. However, such restraints may be quite uncomfortable, especially for elderly or claustrophobic patients. Moreover, it might be desirable to test vestibular function under more natural conditions in which the head is free to move, as when subjects track a visual target or stand erect on a moving platform. Here, we document algorithms that deliver motion stimuli aligned with head-fixed axes under head-free conditions.

Methods: We implemented and validated these algorithms using a MOOG-6DOF motion platform under two conditions: 1) the participant kept the head in a resting, fully unrestrained posture, while inter-aural, naso-occipital or cranio-caudal translations were applied; 2) the participant moved the head continuously while a naso-occipital translation was applied. Head and platform motion were monitored in real time using a Vicon motion capture system.

Results: The results for both conditions showed excellent agreement between the theoretical spatio-temporal profile of the motion stimuli and the corresponding profile of actual motion as measured in real-time.

Conclusion: We propose our approach as a safe, non-intrusive method to test the vestibular system under the natural head-free conditions required by the experiential perspective of the patients.

1 Introduction

Vestibular information plays a crucial role in our sense of spatial orientation and in postural control. It is fundamental to encode self-motion information (heading direction and speed) (Angelaki and Cullen, 2008; Cullen, 2011; 2012), and to disambiguate visual object motion from self-motion (MacNeilage et al., 2012). In each ear, the vestibular end organs include the 3 semicircular canals, sensing angular accelerations, and the 2 otolith organs (saccule and utricle), sensing linear accelerations. Over the last several years, different methods have been used to stimulate the vestibular system in both basic science and clinical applications (Ertl and Boegle, 2019; Diaz-Artiles and Karmali, 2021). These methods range from galvanic or caloric vestibular stimulation to passive full-body accelerations. The latter allow more naturalistic vestibular stimulation, to the extent that they simulate the conditions of everyday life (Lacquaniti et al., 2023). Since sensory information other than vestibular can also contribute to passive self-motion perception, appropriate measures are generally taken to minimize non-vestibular cues, for instance by masking visual and auditory cues and by minimizing somatosensory cues.

In diagnostics, controlled translations, rotations or tilts of the head are used to assess oculomotor, postural or perceptual responses (e.g., Merfeld et al., 2010). The vestibulo-ocular reflex (VOR) tends to stabilize gaze during head motion by producing eye movements in the direction opposite to that of head motion. On the other hand, perceptual tests can assess vestibular function independently of oculomotor responses. In particular, these tests can determine the subjective thresholds for detection or discrimination of head and body motion. Abnormal thresholds can reveal subclinical vestibulopathies or vestibular hypofunction due to aging or drugs (Valko et al., 2012; Kobel et al., 2021b; Diaz-Artiles and Karmali, 2021).

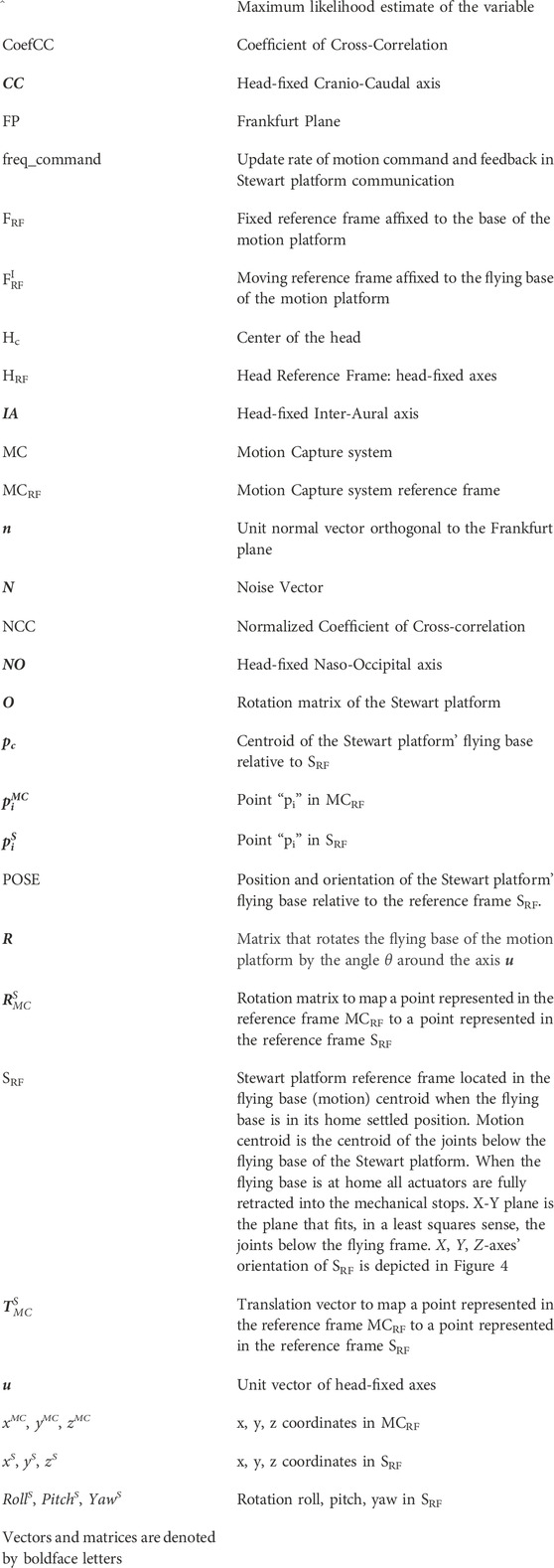

The adequate stimuli for naturalistic vestibular stimulation are angular and linear accelerations of the head and static head tilt relative to gravity. In order to engage all vestibular sensors together or selectively, one must be able to move the person passively in all six degrees of freedom (DOF), by translating or rotating the head in 3-dimensional space. Stewart platforms are typically used for this purpose, since they generate these 6-DOF movements with high temporal and spatial precision (e.g., Grabherr et al., 2008; MacNeilage et al., 2010; Crane, 2013; Ertl et al., 2022; La Scaleia et al., 2023). A Stewart platform consists of two rigid bodies (referred to as the base frame and the flying frame) connected through six extensible legs, each with spherical joints at both ends or with a spherical joint at one end and a universal joint at the other. The six extensible legs (hydraulic or electric linear actuators) are attached in pairs to three positions on the fixed base frame, crossing over to three mounting points below the flying frame (Dasgupta and Mruthyunjaya, 2000; Furqan et al., 2017). The person to be tested is placed on top of the flying frame, which can be moved through 3D space in 6 DOF (Figure 1).

FIGURE 1. Different exemplary positions and/or orientations of the participant’s head relative to the motion platform. Participant seated straight in the chair (A), seated reclined in the chair (B) or standing erect on the platform (C) with safety straps.

In principle, primary stimulation of the vestibular end organs might require the best possible alignment of the externally imposed accelerations (rotational and/or translational) with the anatomical geometry of the semicircular canals and otoliths (Wagner et al., 2022). The direction of the stimuli is also considered critical when using and interpreting the video head impulse test (vHIT) in vestibular testing (Curthoys et al., 2023). However, the orientation of the vestibular sensors within the head varies considerably across individuals, and it is generally unknown unless high-resolution magnetic resonance imaging of the inner ear is obtained (Bögle et al., 2020; Wu et al., 2021). Moreover, ipsilateral semicircular canals are not strictly orthogonal to one another, and bilateral pairs of canals are not strictly parallel (Wu et al., 2021). Also, saccules and utricles are not planar and contain hair cells with diverse orientations (Goldberg et al., 2012).

Critically, however, the vestibular apparatus is designed to respond to stimuli in arbitrary directions and to report the instantaneous orientation and motion of the head. Therefore, in practice, the motion stimuli should be aligned as closely as possible with the cardinal axes of the head (naso-occipital, interaural, cranio-caudal). A common solution consists of holding the participant’s head in a predefined place via a restraint, such as a helmet carefully centered relative to the axes of rotation. However, this procedure could be uncomfortable for some participants. It might also be inaccurate, with errors of ∼1 cm (Grabherr et al., 2008; Mallery et al., 2010; Valko et al., 2012), and head position and orientation may change during the experimental stimulation. Another critical issue is that a tight restraint (such as a helmet) may achieve satisfactory stabilization of head position, but may also produce unwanted somatosensory inputs. In fact, minimal head shifts within the restraint and/or contact forces arising at the head-restraint interface generate potentially strong somatosensory stimuli. If the stimulation procedure is aimed at testing vestibular motion perception, such somatosensory stimuli represent a confound, since they can provide significant motion cues in addition to the vestibular cues (Chaudhuri et al., 2013).

Importantly, clinical applications of vestibular stimulation, such as the quantitative assessment of motion perception deficits due to vestibulopathies or aging, often require the least stressful setup. Wearing a tight helmet may engender anxiety, due to claustrophobic or other emotionally negative feelings. Moreover, health-related restrictions on tight-fitting head instruments, such as those introduced during the recent COVID-19 pandemic, may prohibit their use altogether (for instance, this was the case in our laboratory).

Finally, the need to monitor head position and orientation in the absence of external constraints arises if the experiment involves a protocol in which participants can freely move their head, for instance to track a visual or auditory target or to explore the environment. This would also be the case in the study of whole-body postural perturbations of standing subjects using a motion platform (see Figure 1C). In such a case, since the head typically moves as a result of the perturbation, it would be important to monitor its motion and feed it back to update the motion of the platform and/or that of visual stimuli in real time. Head-centric motion stimulation is also relevant in the context of human-machine integrations aimed at employing sensations that provide feedback to facilitate high bodily ownership and agency (Mueller et al., 2021).

To address these issues, we propose an approach to deliver platform motion stimuli aligned with head-fixed axes under head-free conditions. The problem of updating the motion profile of a platform based on the subject’s movements has been investigated in a few previous studies. For instance, Huryn et al. (2010) and Luu et al. (2011) developed and validated algorithms to move a Stewart platform, programmed with the mechanics of an inverted pendulum, in order to control the movement of the body of a standing participant in response to a change in the applied ankle torque. However, in their algorithms, the position and orientation of the participants relative to the Stewart platform were fixed, and the only tested motion profile was a pitch rotation around the axis passing through the ankle joints. Here, by contrast, we propose a procedure to control a motion platform using a moving reference frame (i.e., head-fixed axes under head-free conditions). To the best of our knowledge, this is the first attempt to address this issue.

We document algorithms for the identification of head-fixed axes (HRF) using a motion capture system, and for the definition of an appropriate motion profile to perform translations and/or rotations of the HRF with a motion platform. To accomplish the latter goal, the HRF axes are mapped to the platform reference frame (SRF). We report the following steps: a) a spatial calibration procedure to estimate the roto-translation matrix required to map the 3D data acquired in the motion capture reference frame (MCRF) into the platform reference frame (SRF), b) identification of head axes based on the Frankfurt plane in SRF, c) definition of a mobile reference frame HRF with the three orthogonal axes of the head as coordinate axes, d) definition of the motion profile providing the required vestibular stimulation (rotation and/or translation relative to the HRF), e) remapping of the head motion profile in HRF into a motion profile of the Stewart platform in SRF, f) a feasibility check of the motion profile to verify its compatibility with the physical limits of the platform, and g) real-time execution of the motion profile and monitoring of head motion. We test the procedures in two conditions: 1) the participant keeps the head in a resting position relative to the flying base, i.e., the head can assume a fully unrestrained posture with an arbitrary orientation relative to the flying base, while inter-aural, naso-occipital or cranio-caudal translations are applied; 2) the participant moves the head continuously while a naso-occipital translation is applied. The motion profile used to validate the procedure is a single cycle of sinusoidal acceleration, the typical motion profile used in protocols to evaluate vestibular motion perception (e.g., Benson et al., 1986; Valko et al., 2012; Bermúdez Rey et al., 2016; Bremova et al., 2016; Kobel et al., 2021a; La Scaleia et al., 2023).

2 System integration–hardware and software framework

2.1 The system

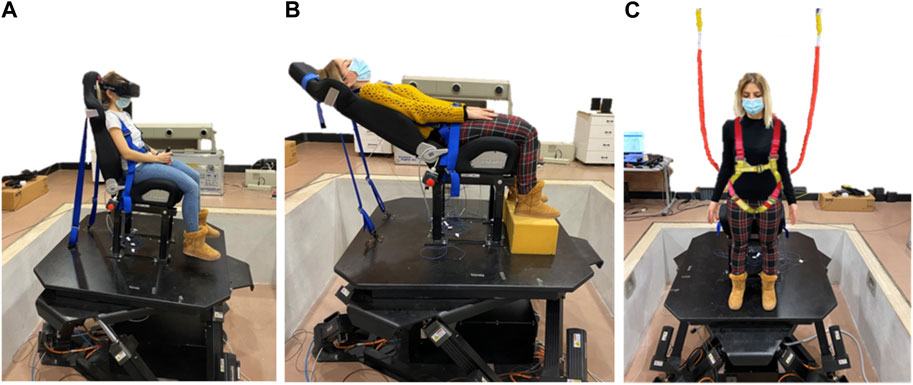

The system includes a Stewart platform, a motion capture system, and a control server (Central Control Unit) that enables system integration and communication, as well as the validation of the feasibility of the desired movement. The schematic setup and the block diagram are illustrated in Figures 2A, B, respectively.

FIGURE 2. Schematic set-up. (A) The Central Command station integrates the motion capture system (e.g., Vicon) and the motion platform (e.g., MOOG MB-E-6DOF/12/1000). The different reference frames are shown for the participant’s head (HRF), the motion capture system centered in the calibration wand (MCRF), and the motion platform (SRF) in blue, black, and red respectively. (B) Schematic of the design of hardware interactions with the Central Control Unit. The Central Control Unit connects to the motion platform and to the motion capture system via UDP. The Central Control Unit is also in charge of determining the head reference frame, producing the specific head-centric motion stimuli, validating the movement’s feasibility and recording the entire communication into a log-file, which can be accessed for post-processing analysis.

The six actuators of the Stewart platform are powered to modify their lengths, thereby changing the POSE (position and orientation) of the flying base in a controlled way. The origin of SRF is the position of the motion centroid of the flying base when the flying base is in its home (settled) position. The motion centroid is the centroid of the joints below the flying base of the Stewart platform. When the flying base is at home, all actuators are fully retracted (within a predefined tolerance) against their mechanical stops. The X-Y plane is the plane that fits, in a least-squares sense, the joints below the flying frame. The Z-axis is orthogonal to the flying base and oriented toward the fixed base of the platform. The POSE of the platform corresponds to the position of the flying base centroid (pc) and the orientation of the flying base (Roll, Pitch, Yaw) in SRF (

2.2 Spatial calibration procedure

The spatial calibration procedure estimates the transformation between the motion capture reference frame (MCRF) and the platform reference frame (SRF), in order to map the 3D data acquired in MCRF into SRF, i.e., it finds the relative rotation between the XYZ axes of the flying base reference system (SRF) and the XYZ axes of the motion capture reference system (MCRF). The procedure also finds the position of the origin of MCRF relative to the origin of SRF.

A typical spatial calibration procedure consists in finding the rigid Euclidean transformation that aligns two sets of corresponding 3D data collected in two different reference frames, i.e., computing the rotation matrix (

where piS and piMC are the position vectors at time ti of a point with respect to the origin of the reference frame SRF and MCRF, respectively, Ni is the noise vector that accounts for measurement noise;

To overcome the above-mentioned critical issues, we take advantage of the platform motion to estimate

The predefined motion profile (pure translation or rotation), sampled at 1 kHz, is sent to the motion control system of the Stewart platform as an ordered sequence of point-to-point positions of the flying base's motion centroid. The actual positions of this motion centroid (pc) are recorded in SRF using the feedback POSE from the platform (the actual positions of the motion centroid versus time, sampled at 1 kHz, are estimated from the feedback of the encoders built into the 6 parallel actuators and are collected by the Moog Control Unit, see Figure 2B). The same motion can be expressed in MCRF by means of a motion capture system that acquires the position of one or more markers rigidly fixed to the motion platform (pMarker(s)). Markers can be fixed anywhere on the platform as long as they remain visible to the motion capture system during both sets of platform movements and move rigidly with the platform.

Using the platform motion, we can collect more than one point with the platform feedback (

2.2.1 Evaluation of rotation matrix

The rotation matrix is calculated from the motion data acquired during the execution of pure translation movements of the platform. Since the flying base orientation is fixed during these movements, the centroid and the markers undergo the same displacement, even though they are distinct physical points. The motion of the flying base relative to its initial position and orientation at the beginning of the motion (in SRF) and the motion of the markers fixed to the Moog platform relative to their initial positions (in MCRF) can therefore be used to estimate the relative rotation between the axes of the two reference systems SRF and MCRF.

In order to minimize errors in the rotation matrix estimation, the 3D points collected in SRF (

To reduce the duration of the calibration procedure, here we propose to use one pure translation movement of the platform for each axis of SRF (antero-posterior, lateral, and vertical). We use three orthogonal translations to guarantee that the collected points do not lie in a single plane and therefore cannot be robustly fitted by a plane. A single trial consists of 5 consecutive cycles of sinusoidal motion along xS, yS, or zS, in order to obtain multiple measurements of the same spatial points. The sinusoidal motion profile has zero phase and starts from a predefined initial position (IP) of the flying base centroid, so that the oscillation is centered on the IP. The IP can be any point of the workspace of the flying base centroid; we choose the neutral position at the center of the workspace, which leaves the largest volume to explore during the calibration movements. The motion parameters are chosen as follows: the sinusoidal frequency is 0.1 Hz, well below the acquisition frequencies of the motion platform and of the motion capture system, to ensure accurate capture of the executed motion profile; the amplitude is 0.1 m, to explore a significant portion of the workspace of the flying base centroid while keeping the speed and acceleration of the movement moderate; and the markers must remain visible to the motion capture system throughout the motion.
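A minimal sketch of the calibration trials described above, assuming NumPy and a 1 kHz command rate. The function `calibration_profile` and the neutral position used in the example are hypothetical; they are not part of the MOOG or Vicon software. Each trial is a zero-phase sinusoid of amplitude A = 0.1 m and frequency f = 0.1 Hz along one SRF axis, centered on the IP. With these values the peak velocity is A·2πf ≈ 0.063 m/s and the peak acceleration is A(2πf)² ≈ 0.039 m/s², which shows why the movement stays gentle.

```python
import numpy as np


def calibration_profile(ip, axis, amplitude=0.1, freq=0.1, cycles=5, fs=1000.0):
    """Zero-phase sinusoidal translation of the flying base centroid along one SRF axis.

    ip: initial position (IP) of the centroid in SRF [m], shape (3,)
    axis: 0, 1 or 2 for xS, yS or zS
    Returns time stamps [s] and centroid positions [m], shape (n, 3).
    """
    duration = cycles / freq              # 5 cycles at 0.1 Hz -> 50 s
    n = int(round(duration * fs)) + 1     # include the final sample at t = duration
    t = np.arange(n) / fs
    positions = np.tile(np.asarray(ip, dtype=float), (n, 1))
    # Oscillation centered on the IP: it starts and ends at the IP
    positions[:, axis] += amplitude * np.sin(2 * np.pi * freq * t)
    return t, positions


# Hypothetical neutral position; Z points toward the fixed base, so "above home" is negative z
neutral = np.array([0.0, 0.0, -0.2])
# One trial per SRF axis, all centered on the same IP
trials = [calibration_profile(neutral, axis) for axis in range(3)]
```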

For each trial, the 3D position data of the flying base centroid (

We must ensure that the collected data (obtained from both platform feedback and motion capture system) start with the coordinates of the platform when it is motionless in IP. The data sampled with the lower frequency are interpolated at the frequency of the data sampled at the higher acquisition rate. The 3D data are then time-aligned (a possible approach is in Supplementary Appendix A1, paragraph S.1) in order to have the paired-3D data (

In order to find the transformation between the motion capture reference frame (MCRF) and the platform reference frame (SRF), i.e., in order to map the 3D data acquired in MCRF into SRF, we first estimate the relative rotation between the XYZ axes of motion capture reference system (MCRF) and the XYZ axes of the flying base reference system (SRF) as follows.

The position of the platform centroid in MCRF for each ith sample can be expressed as:

where

Since we use pure translation movements, for a given marker,

where

The least squares problem (Eq. 3) can be simplified by finding the centroids of both datasets (

If more than one marker is collected by the motion capture system, the mean position of the recorded markers, for each ith point, is assessed before the evaluation of

We can solve Eq. 3 by finding the maximum likelihood estimate of the rotation matrix

Several efficient methods have been developed to compute

One possible approach to solve Eq. 5 was developed by Arun et al. (1987). It is based on computing the singular value decomposition (SVD) of a covariance matrix, obtained from the matrix product of the two datasets after moving the origin of each coordinate system to the centroid of its point set. Another related approach, based instead on the orthonormality of the rotation matrix, computes the eigensystem of a different derived matrix (Horn et al., 1988). Another algorithm, also developed by Horn (1987), computes the eigensystem of a matrix related to the representation of the rotational component as a unit quaternion.

Here we summarize the method developed by Arun et al. (1987).

Eq. 5 can be written as:

Eq. 6 is minimized when the last term is maximized:

where pdetrend is the 3×m matrix with

where the columns of U and V (orthogonal matrices) consist of the left and right singular vectors, respectively, and S is a 3 × 3 diagonal matrix, with non-negative elements, whose diagonal entries are represented by the singular values of H.

By substituting the decomposition into the trace that we have to maximize, we obtain:

Since S is a diagonal matrix with non-negative values, and V,

Of course, the larger the dataset of non-aligned points (m), the better the estimation of H, and therefore the smaller the error resulting from the minimization procedure in the rotation matrix computation.
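The SVD solution summarized above fits in a few lines of NumPy. This is an illustrative sketch of the approach of Arun et al. (1987), not the authors' code: `estimate_rotation` is a hypothetical name, H is built here as the sum of outer products of the centered MCRF and SRF samples (so that the quantity to maximize is trace(R H)), and the determinant check that prevents returning a reflection (det = −1) instead of a rotation is an added safeguard. Columns of `p_mc` and `p_s` are paired samples, e.g., the mean marker positions in MCRF and the platform-feedback centroid positions in SRF collected during the three translation trials.

```python
import numpy as np


def estimate_rotation(p_mc, p_s):
    """Least-squares rotation R with (p_s - mean) ~= R @ (p_mc - mean), via SVD.

    p_mc, p_s: arrays of shape (3, m); column i of each is the same sample i,
    expressed in MCRF and in SRF respectively.
    """
    p_mc = np.asarray(p_mc, dtype=float)
    p_s = np.asarray(p_s, dtype=float)
    # Move both point sets to their centroids (the "detrended" data)
    q_mc = p_mc - p_mc.mean(axis=1, keepdims=True)
    q_s = p_s - p_s.mean(axis=1, keepdims=True)
    # H = sum_i q_mc_i q_s_i^T; the optimal R maximizes trace(R H)
    H = q_mc @ q_s.T
    U, _, Vt = np.linalg.svd(H)
    V = Vt.T
    # Flip the last singular direction if V U^T would be a reflection (det = -1)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(V @ U.T))])
    return V @ D @ U.T
```

Because the calibration relies on pure translations, only displacements enter this estimate; the translation vector is obtained separately from the rotation trials.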

2.2.2 Evaluation of translation vector

The translation vector

We propose to use five cycles of sinusoidal rotation with frequency 0.1 Hz and amplitude 10° for RollS, PitchS, and YawS rotations around

With these motion profiles, each marker moves along circular arcs lying on a marker-specific sphere, whose radius corresponds to the distance between the marker and pc. When more than one marker is used for this purpose, the resulting spheres have different radii but the same center, which corresponds to pc.

With a least-squares sphere fit, we calculate the center [xcjMC, ycjMC, zcjMC]T of the sphere over which the marker j has moved during the rotational movements (Jennings, 2022). For a given set of m markers, we obtain m estimates of the position of the flying base motion centroid in the motion capture reference system MCRF.

where xcjMC, ycjMC and zcjMC are the spatial coordinates in the MCRF of the center of the sphere over which the marker j has moved, and m is the number of recorded markers.
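One common way to implement a least-squares sphere fit is the algebraic (linear) formulation sketched below; whether it matches the cited routine (Jennings, 2022) is an assumption, and the function names are hypothetical. Expanding |p − c|² = r² gives 2p·c + (r² − |c|²) = |p|², which is linear in the center c and in k = r² − |c|², so a single `numpy.linalg.lstsq` call suffices. Averaging the m marker-wise centers then yields one estimate of pc in MCRF.

```python
import numpy as np


def fit_sphere(points):
    """Algebraic least-squares sphere fit.

    points: (n, 3) positions of one marker in MCRF during the rotation trials.
    Returns (center, radius).
    """
    p = np.asarray(points, dtype=float)
    # Unknowns: center c (3 values) and k = r^2 - |c|^2
    A = np.column_stack([2.0 * p, np.ones(len(p))])
    b = np.sum(p**2, axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    center, k = sol[:3], sol[3]
    radius = np.sqrt(k + center @ center)
    return center, radius


def centroid_in_mcrf(marker_tracks):
    """Mean of the m sphere centers, one per marker: an estimate of pc in MCRF."""
    centers = np.array([fit_sphere(track)[0] for track in marker_tracks])
    return centers.mean(axis=0)
```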

The best-fitting translation vector

3 Identification of head-fixed axes

3.1 Frankfurt plane of the head

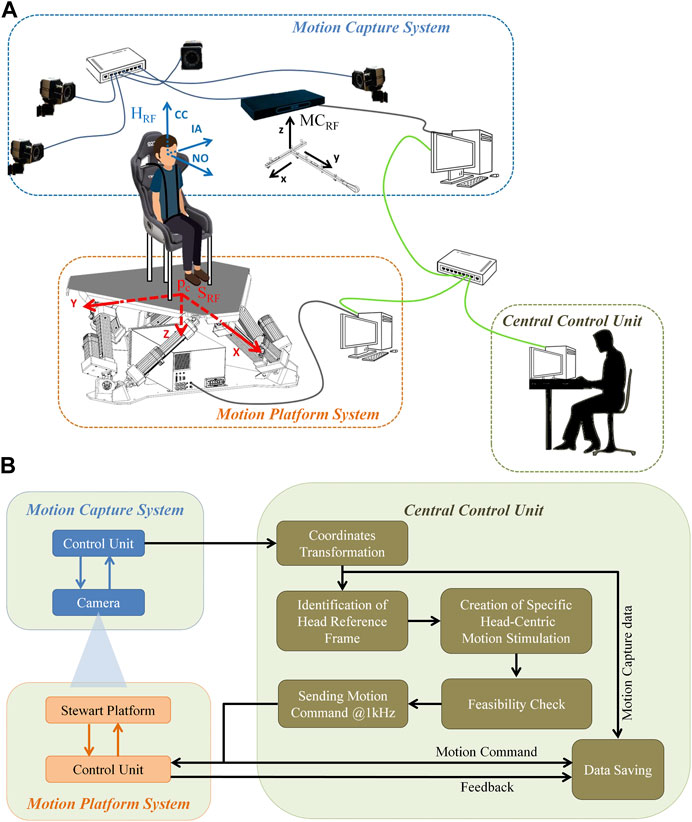

The anatomical coordinate system of the head can be based on the Frankfurt plane (FP), i.e., the plane through the inferior margin of the left orbit (OL) and the external auditory meatus of both ears (TL, TR). FP is often used to orient the head so that the plane is horizontal in standardized examinations (Moorrees and Kean, 1958). To identify the Frankfurt plane in each participant, we place 5 markers on the head over the following anatomical landmarks (see Figure 3): left orbital inferior margin (OL), right tragus (TR), left tragus (TL), right orbital inferior margin (OR), and occiput (OP). An additional marker, not lying in the FP, is placed in an arbitrary position on the forehead (M6). This marker is placed asymmetrically with respect to the other markers, and it is used to identify the orientation of the cranio-caudal axis. To reduce the effect of measurement noise, the FP is identified by minimizing, in a least-squares sense, the normal distances of the 5 markers to the plane. The motion capture system is used to record the position of the 5 markers (

FIGURE 3. Marker placement to identify head position and orientation. (A) The left and right orbitales (OL, OR) are the lowest points of the lower margin of the left and right orbits (eye sockets). The porions correspond to the left and right superior margins of the tragus (TL, TR), the most lateral points of the roofs of the left and right ear canals. Near the middle of the squamous part of the occipital bone is the external occipital protuberance, occiput (OP). The marker M6 is placed in an asymmetric position compared to the other markers and is used to identify the orientation of the cranio-caudal axis. Blue, red and green arrows represent the naso-occipital, inter-aural and cranio-caudal axes reconstructed with the aforementioned markers. The lateral semicircular canal is typically oriented at about 30° from the horizontal plane (FP). (B) The Frankfurt plane (FP) is defined as a plane that passes through the orbitales and the porions. FP is identified using the least-squares method of the normal distance of

The general form of the equation of a plane is:

where a, b, c are the Cartesian components of the unit normal vector n = [a b c]T. The column vector n is parallel to the cranio-caudal axis of the head (CC). Estimates of the unknown coefficients a, b, c are determined by minimizing the sum of squared orthogonal distances between the points qiS (

A possible procedure to identify FP and n is detailed below.

We must specify a priori a single point on the plane; a plausible choice is the centroid of all measurements, denoted as CS. Assuming that measurement errors (for example, due to inaccurate positioning of the markers) are random and unbiased, the plane should pass through the centroid of the measurements; indeed, the plane that minimizes the sum of squared orthogonal distances always passes through the centroid of the points.

Accordingly, we define:

where xS,yS and zS are the spatial coordinates in SRF, and k = 5 is the number of measured points.

Let

The distance Disti of qiS to the plane is equal to the length of the projection of

In order to identify the FP, we have to find the vector n = (a, b, c)T that minimizes:

Given the matrix V and k markers

We can express Eq. 18 in matrix form

with $A = V^T V$. Eq. 20 has the form of a Rayleigh quotient (Strang, 1988). Thus, the vector n that minimizes Eq. 20 is the minimum eigenvector (i.e., the eigenvector corresponding to the minimum eigenvalue) of matrix A.
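Numerically, the plane fit reduces to a 3 × 3 symmetric eigenproblem. A minimal NumPy sketch, assuming the k marker positions qiS are stacked as the rows of an array; `fit_frankfurt_plane` is a hypothetical name. `numpy.linalg.eigh` returns eigenvalues in ascending order, so the first eigenvector minimizes the Rayleigh quotient. The sign of n is arbitrary at this stage and is fixed later with marker M6.

```python
import numpy as np


def fit_frankfurt_plane(q):
    """Total least-squares plane through k head markers.

    q: (k, 3) marker positions in SRF (OL, OR, TL, TR, OP).
    Returns (C, n): the marker centroid and a unit normal of the plane.
    """
    q = np.asarray(q, dtype=float)
    C = q.mean(axis=0)                    # point on the plane (centroid CS)
    V = q - C                             # rows: q_i - C
    A = V.T @ V                           # 3x3 symmetric matrix
    eigvals, eigvecs = np.linalg.eigh(A)  # eigenvalues in ascending order
    n = eigvecs[:, 0]                     # eigenvector of the smallest eigenvalue
    return C, n / np.linalg.norm(n)
```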

3.2 Head-fixed axes

We use a right-handed reference system to describe the head-fixed axes, naso-occipital (NO), inter-aural (IA) and cranio-caudal (CC), positive to the front, toward the left ear, and upward, respectively (see Figure 3). We first identify the inter-aural axis passing through the right and left tragus. To this end, we compute the orthogonal projections onto the FP of the markers on the left and right tragus [TiS = (xiS, yiS, ziS), with i = 1, 2 corresponding to markers TL and TR in SRF (Figure 3B)].

Using the previously calculated point CS and the unit normal vector n of the FP, the projection of TiS onto the FP is calculated as:

The inter-aural axis (IA) is defined as:

which has a unit vector:

The center of the head (Hc) is the midpoint of the line segment defined by

The orientation of the cranio-caudal axis CC (see Figure 3) can be obtained by finding the orientation of the vector

or

The naso-occipital axis (NO) lies on the FP, is orthogonal to the inter-aural axis and cranio-caudal axis, and passes through the Hc:
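The construction of the head axes can be summarized in code. This sketch is illustrative: the function name is hypothetical, the projection uses the standard formula T′ = T − ((T − CS)·n) n, and the sign rule for CC is an assumption, namely that M6 lies on the forehead above the FP (as in Figure 3), so CC is taken as the normal oriented toward M6. With NO, IA and CC positive forward, leftward and upward, a right-handed frame requires NO = IA × CC.

```python
import numpy as np


def head_axes(C, n, t_left, t_right, m6):
    """Head centre Hc and unit NO, IA, CC axes in SRF from the FP and three markers."""
    C = np.asarray(C, dtype=float)
    n = np.asarray(n, dtype=float)

    def project(p):
        # Orthogonal projection onto the plane through C with unit normal n
        p = np.asarray(p, dtype=float)
        return p - ((p - C) @ n) * n

    tl, tr = project(t_left), project(t_right)
    ia = (tl - tr) / np.linalg.norm(tl - tr)  # positive toward the left ear
    hc = 0.5 * (tl + tr)                      # midpoint of the projected tragi
    # Assumption: M6 (forehead) lies above the FP, so CC is the normal pointing toward it
    cc = n if (np.asarray(m6, dtype=float) - hc) @ n > 0 else -n
    no = np.cross(ia, cc)                     # right-handed frame: NO = IA x CC, positive forward
    return hc, no, ia, cc
```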

4 Head-centric vestibular stimulation

In theory, the assessment of vestibular function may require aligning the motion stimuli with the anatomical orientation of the sensory receptors in the inner ear. However, in practice this is often not feasible due to the significant inter-individual variability in the anatomy of the vestibular apparatus and the lack of high-resolution imaging of the inner ear (see Introduction). Therefore, the simplest solution is to align the motion stimuli with the cardinal axes of the head (see Section 3.2), taking advantage of the fact that the vestibular apparatus is designed to respond to stimuli in arbitrary directions and to report the instantaneous orientation and motion of the head. In our case, the motion platform is translated along (or rotated about) the head axis u (interaural, naso-occipital, or cranio-caudal), with $u_x^2 + u_y^2 + u_z^2 = 1$, which passes through Hc. POSE(t) = [

4.1 Translations

Here we translate the participant’s head along the selected head axis u = (ux, uy, uz) with a motion profile s(t).

We define the incremental displacement ds(ti) as:

with t = [0:dt:tend] (tend is the total duration in seconds of the motion profile, and dt = 1/freq_command, with ‘freq_command’ equal to the control frequency of the motion platform),

The algorithm requires that, at the beginning of stimulation (t = 0), the position and orientation of the platform is determined (POSE(t = 0) = [

The configuration of the platform that guarantees a translation of s(t) along the axis u passing through the Hc is given by:
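As a sketch of the translation case, assuming NumPy, a platform orientation that stays fixed during the translation, and hypothetical function names, the code below builds a single cycle of sinusoidal acceleration a(t) = A sin(2πt/T) (the validation profile mentioned in the Introduction) and accumulates the increments ds(ti) along u. The incremental update pc(ti+1) = pc(ti) + ds(ti)·u(ti) is an assumed form, not a transcription of Eq. 28. Integrating twice from rest gives s(t) = (A/ω)(t − sin(ωt)/ω), with ω = 2π/T, so the head starts and ends at rest after a net displacement of AT²/(2π). Passing one axis per sample lets u(ti) follow a moving head.

```python
import numpy as np


def single_cycle_sine(peak_acc, period, dt):
    """Displacement s(t) for one cycle of a(t) = A sin(2*pi*t/T), starting at rest."""
    n = int(round(period / dt))
    t = np.arange(n + 1) * dt      # t = [0:dt:tend], dt = 1/freq_command
    w = 2 * np.pi / period
    s = peak_acc / w * (t - np.sin(w * t) / w)
    return t, s


def translate_along_head_axis(pc0, s, u):
    """Platform centroid positions for a translation s(t) along the unit head axis u.

    Assumed incremental update: pc(t_{i+1}) = pc(t_i) + ds(t_i) * u(t_i),
    with ds(t_i) = s(t_{i+1}) - s(t_i). u is (3,) for a still head, or (n, 3)
    with one re-measured axis per sample if the head moves.
    """
    ds = np.diff(s)
    u = np.broadcast_to(np.asarray(u, dtype=float), (len(s), 3))
    pc = np.empty((len(s), 3))
    pc[0] = pc0
    for i, d in enumerate(ds):
        pc[i + 1] = pc[i] + d * u[i]
    return pc


# Illustrative values: A = 0.2 m/s^2, T = 2 s, 1 kHz control rate, head axis along yS
t, s = single_cycle_sine(0.2, 2.0, 0.001)
pc = translate_along_head_axis(np.array([0.0, 0.0, -0.2]), s, [0.0, 1.0, 0.0])
```

With these illustrative values, the net displacement is 0.2 · 2² / (2π) ≈ 0.127 m.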

4.2 Rotations

Here we rotate the participant’s head by the angle θ(t) around the head axis u passing through Hc. As before, the algorithm requires identifying the POSE of the motion platform at the start of the stimulation, and then defining the head orientation (u(t = 0)) and position (Hc(t = 0)) within that motion platform configuration and during the stimulation (u(t), Hc(t)).

Next, the incremental rotation dθ(ti), defined as the desired rotation over the interval $dt = t_{i+1} - t_i$, is applied around the chosen head axis u(ti), centered at Hc(ti), by translating and rotating the flying base of the motion platform.

Accordingly, the platform centroid position that ensures the correct head-centric vestibular stimulation is obtained by:

1) translating Hc(ti) to the origin of the motion platform system, so that the rotation axis u(ti) = (

2) rotating the flying base of the motion platform by the angle dθ(ti) around the axis u(ti) with

3) bringing Hc(ti) back to its initial position, that is, applying the inverse of the translation described in point 1) above (Eq. 29):

that is:

with

The platform orientation is given by the platform orientation at ti (rotation matrix O(ti)) followed by the incremental rotation (R(ti)):

with

where:

so:

Thus, the overall transformation of the POSE of the platform that guarantees a rotation of θ(t) about the head axis u that passes through the Hc is given by Eqs. 32–35.
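A compact sketch of one rotation step (points 1 to 3 above, plus the orientation update), assuming NumPy, Rodrigues' formula for the incremental rotation R(ti), and that R(ti) is expressed in SRF so that it premultiplies O(ti). The function names are hypothetical. Because Hc lies on the rotation axis, it stays fixed while the platform centroid moves on a circle around that axis.

```python
import numpy as np


def axis_angle_matrix(u, angle):
    """Rodrigues' formula: rotation matrix for `angle` [rad] about axis u."""
    u = np.asarray(u, dtype=float)
    u = u / np.linalg.norm(u)
    K = np.array([[0.0, -u[2], u[1]],
                  [u[2], 0.0, -u[0]],
                  [-u[1], u[0], 0.0]])   # cross-product matrix of u
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


def rotate_about_head_axis(pc, O, hc, u, dtheta):
    """One incremental head-centric rotation step of the flying base.

    pc: platform centroid (3,), O: platform orientation matrix (3, 3),
    hc: head centre Hc (3,), u: head axis (3,), dtheta: increment [rad].
    """
    R = axis_angle_matrix(u, dtheta)
    pc_new = R @ (pc - hc) + hc    # 1) move Hc to the origin, 2) rotate, 3) move back
    O_new = R @ O                  # O(t_i) followed by the incremental rotation R(t_i)
    return pc_new, O_new


# Example: 90 deg about the z axis through a head centre 10 cm from pc, in 0.1 deg steps
pc, O = np.zeros(3), np.eye(3)
hc, u = np.array([0.1, 0.0, 0.0]), np.array([0.0, 0.0, 1.0])
for _ in range(900):
    pc, O = rotate_about_head_axis(pc, O, hc, u, np.deg2rad(0.1))
```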

5 Feasibility check of the stimulation profile

Before executing the vestibular stimulation motion profile (Eq. 28 for translations or Eqs. 32–35 for rotations), we record the head position and orientation and simulate the entire selected motion profile with that head configuration. The simulation is necessary to verify the feasibility of the motion profile and to prevent the platform from stalling midway. In the feasibility check, we assume that u(t) = u(t = 0). The limits of lengthening and shortening of the platform actuators constrain the range of motion. The feasibility check therefore involves computing the elongation of the actuators during the execution of the motion profile: starting from a specified motion profile of the platform (in terms of position and orientation), we solve the inverse kinematic problem to estimate the corresponding time history of length changes of the six actuators. The presented algorithm is based on the geometrical approach of Hulme and Pancotti (2014). If the motion profile is not compatible with the physical limits of the actuators, an iterative algorithm is used to find a “better-starting position” of the platform. If even the “better-starting position” does not guarantee a compatible motion, the motion profile is discarded.

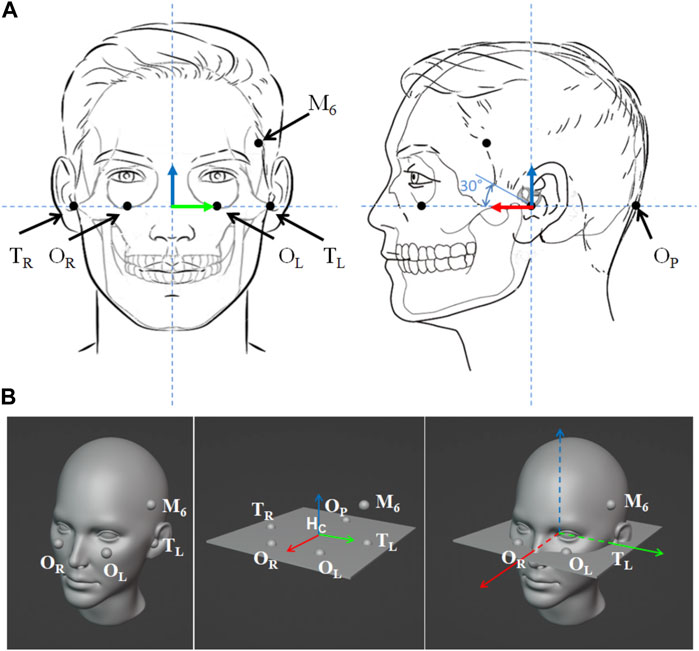

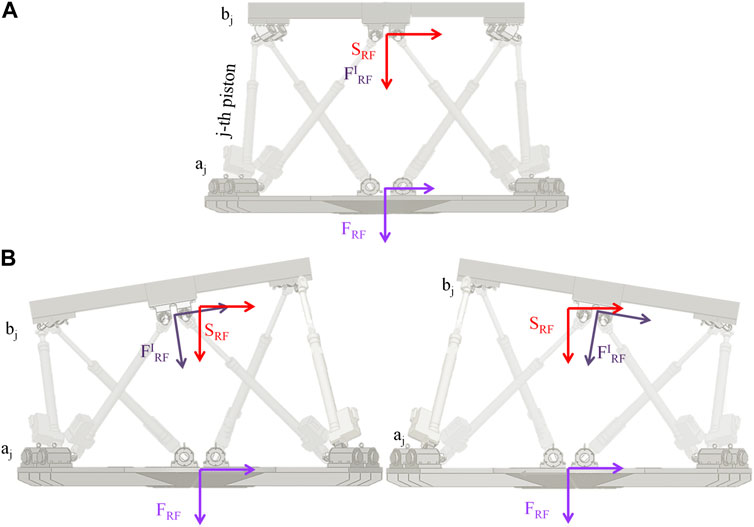

Consider a fixed reference frame (FRF) aligned with the base of the motion platform, and a moving reference frame (FIRF) aligned with the flying base of the motion platform and centered at pc (see Figure 4). The position of the origin of FIRF with respect to that of FRF is defined by the vector:

FIGURE 4. Moving platform reference frames. (A) The platform in REST position (all actuators fully retracted). The fixed reference frame (FRF) affixed to the base of the motion platform, the moving reference frame (FIRF) affixed to the flying base of the motion platform, and the Stewart platform fixed reference frame (SRF) are shown in light violet, dark violet and red, respectively. In REST position, FIRF and SRF coincide. (B) Same format as (A), but with the platform in two different POSEs.

The contact point of the jth actuator with the flying base of the motion platform (bj) can be expressed in FRF as:

The jth actuator length is computed as the length of the vector connecting the jth piston contact point with the base of the motion platform (

with j = 1, 2, …, 6 indexing the actuators.
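A minimal sketch of this inverse-kinematics step, assuming that the flying-base anchor points are known in FIRF, the base anchor points are known in FRF, and the platform POSE is given as the position p of the FIRF origin plus a rotation matrix O. The anchor coordinates used in any example are hypothetical; the real values come from the platform geometry, which is not reproduced here.

```python
import numpy as np


def actuator_lengths(p, O, base_pts, top_pts_local):
    """Inverse kinematics of a Stewart platform for one POSE.

    p             : position of the FIRF origin in FRF, shape (3,)
    O             : orientation of FIRF relative to FRF, shape (3, 3)
    base_pts      : actuator anchor points on the fixed base, in FRF, shape (6, 3)
    top_pts_local : actuator anchor points on the flying base, in FIRF, shape (6, 3)
    Returns the six actuator lengths, shape (6,).
    """
    # b_j = p + O @ b_j_local: flying-base contact points expressed in FRF
    b = np.asarray(p, float) + np.asarray(top_pts_local, float) @ np.asarray(O, float).T
    # Length of the vector from each base contact point to its flying-base contact point
    return np.linalg.norm(b - np.asarray(base_pts, float), axis=1)


def actuator_length_history(positions, orientations, base_pts, top_pts_local):
    """Actuator lengths over a whole motion profile, shape (n_samples, 6)."""
    return np.array([actuator_lengths(p, O, base_pts, top_pts_local)
                     for p, O in zip(positions, orientations)])
```

Looping over the samples keeps the sketch readable; for long 1-kHz profiles the same computation could be vectorized with `numpy.einsum`.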

This calculation is repeated over the entire motion profile to obtain the length of every actuator, from the base contact points to those of the flying frame, throughout the movement. The elongation is then evaluated by subtracting the fully retracted actuator length (

Finally, the actuator elongation is:

The movement can be performed if the computed elongation for each motion instruction of the profile falls within the allowable range [Lj_min, Lj_max] of the actuators. Otherwise, the following approach is used to find a “better-starting point” of the motion platform centroid that is suitable for the specified motion profile:

1. Relocate the initial position of

2. Evaluate the motion profile that starts from the new initial position

3. Calculate the elongation of each actuator (Eq. 39)

4. Check the movement feasibility

5. If the new motion is still incompatible with the actuators physical constraints, repeat steps 2–4 after shifting the initial position (

6. If the motion remains incompatible after exploring the whole motion system workspace (changing the position of
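The feasibility check and the search for a “better-starting point” can be sketched as follows. The exact relocation rule in the steps above is only partly recoverable here, so this sketch substitutes a simple assumption: a grid search over rigid shifts of the whole profile, tried in order of increasing distance from the requested start. The step size, search radius, retracted length and limits are hypothetical parameters, not the values used with the MOOG platform.

```python
import itertools

import numpy as np


def actuator_lengths(p, O, base_pts, top_pts_local):
    # Flying-base contact points in FRF, minus base contact points, then vector norms
    b = np.asarray(p, float) + np.asarray(top_pts_local, float) @ np.asarray(O, float).T
    return np.linalg.norm(b - np.asarray(base_pts, float), axis=1)


def is_feasible(positions, orientations, geom, L_retracted, L_min, L_max):
    """True if every actuator elongation stays within [L_min, L_max] over the profile.

    geom is a (base_pts, top_pts_local) pair; L_retracted is the fully
    retracted length (scalar or one value per actuator).
    """
    for p, O in zip(positions, orientations):
        elong = actuator_lengths(p, O, *geom) - L_retracted  # elongation (cf. Eq. 39)
        if np.any(elong < L_min) or np.any(elong > L_max):
            return False
    return True


def find_better_start(positions, orientations, geom, L_retracted, L_min, L_max,
                      step=0.01, max_shift=0.10):
    """Grid search for a rigid shift of the profile that makes it feasible.

    Returns the shift (3,) to add to every commanded position, or None if
    the whole explored workspace fails (the profile is then discarded).
    """
    positions = np.asarray(positions, float)
    offsets = np.arange(-max_shift, max_shift + step / 2, step)
    # Smallest shifts first, so the accepted start stays close to the requested one
    candidates = sorted(itertools.product(offsets, repeat=3),
                        key=lambda d: np.linalg.norm(d))
    for d in candidates:
        shift = np.array(d)
        if is_feasible(positions + shift, orientations, geom, L_retracted, L_min, L_max):
            return shift
    return None
```

Sorting candidates by distance is one reasonable design choice. A coarse-to-fine search or an optimizer over the start position would also work, but those would depart further from the step-by-step procedure described above.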

6 Exemplary applications of head-centric stimulations under head-free conditions

To assess the vestibular function during passive head and body movements, one can restrain the participant’s head in a fixed position (for instance, with a helmet or tight forehead strap) and then output command motion signals to the platform relative to that fixed reference frame. Here, we consider the alternative option of asking participants to freely choose a comfortable position of their unrestrained head, and then not to move the head voluntarily during the stimulation. Importantly, participants are not asked to stiffen their neck to keep the head stationary, but only to avoid moving the head on purpose. Since we monitor head position and orientation during the stimulation, we can verify, either off-line or on-line, how much the head moved. There are two different approaches to achieve head-centric stimuli under head-free conditions, depending on whether one wants to account for head movement during the trial off-line or on-line.

The first solution (off-line) involves a “Fixed head-reference-frame.” In this case, the desired head-centric motion profile (POSE(t)) of each trial is generated using the head-centered reference frame evaluated at the start of the trial. To this end, one applies Eq. 28 for translations (with u(ti) = u(t = 0)) and Eqs. 32–35 for rotations (with u(ti) = u(t = 0) and Hc(ti) = Hc(t = 0)). For a given POSE(t), the Central Control Unit sends the instantaneous desired POSE(ti) to the platform controller while also recording platform feedback. Simultaneously, the Central Control Unit acquires the 3D position and orientation of the head and platform from the motion capture system (see Figure 2). During platform motion, a running average of 3D head position and orientation is computed over consecutive 50-ms intervals. If, over any 50-ms interval, the head shifts (in x, y or z) or rotates (in roll, pitch or yaw) relative to the chair (and platform) by more than a predefined tolerance window, the trial can be discarded and repeated (La Scaleia et al., 2023). The tolerance window is specified a priori based on the task and the participant’s characteristics (for instance, the tolerance may be greater for patients with significant cognitive or sensorimotor impairments).
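A minimal sketch of this off-line tolerance check. It assumes that the head pose relative to the chair is sampled at 200 Hz, that the 50-ms windows are consecutive and non-overlapping (10 samples each), that the first window is the trial-start reference, and that the Euler angles are unwrapped (no ±180° jumps, which matters when roll sits near 180°). The tolerance values are hypothetical placeholders, since the text leaves them task- and participant-dependent.

```python
import numpy as np


def head_moved_beyond_tolerance(head_pose, fs=200.0, window_s=0.050,
                                pos_tol=0.01, ang_tol=2.0):
    """Return True if the trial should be discarded.

    head_pose : array (N, 6) of head pose relative to the chair,
                columns x, y, z (m) then roll, pitch, yaw (deg, unwrapped).
    pos_tol, ang_tol : hypothetical tolerance windows (m and deg).
    """
    head_pose = np.asarray(head_pose, float)
    n = int(round(window_s * fs))          # samples per window (10 at 200 Hz)
    n_win = head_pose.shape[0] // n        # any incomplete final window is ignored
    # Average each consecutive 50-ms window of the six pose components
    means = head_pose[: n_win * n].reshape(n_win, n, 6).mean(axis=1)
    dev = np.abs(means - means[0])         # deviation from the trial-start window
    return bool(np.any(dev[:, :3] > pos_tol) or np.any(dev[:, 3:] > ang_tol))
```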

The second solution (on-line) involves a “Moving head-reference-frame.” In this case, the desired head-centric motion profile (POSE(t)) is created using the position (Hc(ti)) and orientation (u(ti)) of the head measured on-line during the trial (using Eq. 28 for translations and Eqs. 32–35 for rotations). This latter solution is more general than the first one, and can be used to test vestibular function both when the participant keeps the head stationary relative to the chair and when the participant moves the head voluntarily during externally applied motions.
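For translations, one way to read the moving head-reference-frame is that, at each control tick, the increment of the desired displacement is applied along the head axis measured at that tick. This keeps the platform path tangent to the instantaneous axis, as noted for Figure 6. Eq. 28 itself is not reproduced here, so the following is a hedged sketch of that reading and not the authors' implementation. The function name and arguments are hypothetical, and u is assumed to be already expressed in the platform's fixed frame.

```python
import numpy as np


def online_translation_step(p_cmd, s_prev, s_now, u_now):
    """One control tick of a moving head-reference-frame translation (sketch).

    p_cmd         : current commanded platform position, shape (3,)
    s_prev, s_now : desired displacement s(t) at the previous and current tick
    u_now         : head axis (e.g. NO) measured at this tick, same fixed frame
    """
    u = np.asarray(u_now, float)
    u = u / np.linalg.norm(u)                        # use a unit axis
    return np.asarray(p_cmd, float) + (s_now - s_prev) * u
```

If the head does not move, u is constant and the accumulated increments reduce to s(t)·u, which is the fixed head-reference-frame case.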

7 Algorithms validation

We implemented and validated the algorithms using the two approaches mentioned above (Fixed and Moving head-reference-frame) with a MOOG MB-E-6DOF/12/1000 kg (East Aurora, New York, United States) as the Stewart platform. We used a Vicon 3D motion capture system (Vicon Motion Systems Ltd., United Kingdom), equipped with 10 Vero 2.2 IR cameras, to track head and platform motion in real time. In our set-up, the MOOG is controlled in Degrees Of Freedom mode by a server (Central Control Unit) that implements UDP communication with the motion platform at 1 kHz (freq_command) according to the interface manual (CDS7330, MOOG Interface Definition Manual For Motion Bases). The server also opens a UDP socket for the connection with the motion capture system. All position profiles were programmed in LabVIEW 2023 (National Instruments, Austin, Texas, United States) with custom-written software and input to the MOOG controller at 1 kHz. 3D data provided by the motion capture system were sampled at 200 Hz, as was the platform feedback, except during the spatial calibration (see Sections 2.2.1 and 2.2.2), when the platform feedback was acquired at 1 kHz. To find the corresponding paired points (from the Stewart platform and the motion capture system, respectively) for the Least-Squares Fitting of Two 3D Point Sets algorithm (Arun et al., 1987), we interpolated the motion capture data to 1 kHz using the Matlab function interp1 with the ‘spline’ method.
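The rigid registration between paired platform and motion capture points can be computed with the SVD-based method of Arun et al. (1987). Below is a minimal NumPy sketch of that method. It assumes that the paired points have already been time-aligned (for instance, after resampling the 200-Hz motion capture data to 1 kHz as described above). It illustrates the published technique and is not the authors' LabVIEW/Matlab code.

```python
import numpy as np


def fit_rigid_transform(P, Q):
    """Least-squares rotation R and translation t with Q ≈ P @ R.T + t (Arun et al., 1987).

    P, Q : paired 3-D points, shape (N, 3), e.g. platform-reported and
           motion-capture positions at the same instants.
    """
    P = np.asarray(P, float)
    Q = np.asarray(Q, float)
    p_bar, q_bar = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p_bar).T @ (Q - q_bar)      # 3x3 cross-covariance of the centred point sets
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:             # reflection case: flip the last singular vector
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = q_bar - R @ p_bar                # translation that aligns the centroids
    return R, t
```

The determinant check matters when the points are nearly coplanar or noisy, because the plain SVD solution can otherwise return a reflection instead of a rotation.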

In our tests, we used single cycles of sinusoidal acceleration, consistent with several previous studies of vestibular motion perception (e.g., Benson et al., 1986; Valko et al., 2012; Bermúdez Rey et al., 2016; Bremova et al., 2016; Kobel et al., 2021a; La Scaleia et al., 2023). We restricted our tests to translational motions, but our approach can be easily applied to rotational motions or a combination of translations and rotations.

The equations defining a translation along a cardinal axis u (interaural, naso-occipital or cranio-caudal) are as follows:

Acceleration (a(t)), speed (v(t)), and position (s(t)) are all proportional to each other. We set the sinusoidal acceleration frequency at f = 0.2 Hz, the duration of the motion cycle at T = 5 s and Tnorm =
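The equations of the profile are not preserved here. The sketch below uses a common form of the single-cycle sinusoidal acceleration profile from the vestibular threshold literature, and it is an assumption that the article's equations take this form. In this form, a(t), v(t) and s(t) all scale linearly with the same amplitude A. The net displacement D is a hypothetical input, and T = 1/f = 5 s matches the values stated above.

```python
import numpy as np


def single_cycle_profile(D, f=0.2, fs=200.0):
    """Single cycle of sinusoidal acceleration producing a net displacement D.

    a(t) = A sin(2*pi*f*t) for 0 <= t <= T = 1/f; integrating twice from rest:
    v(t) = A/(2*pi*f) * (1 - cos(2*pi*f*t))
    s(t) = A/(2*pi*f) * (t - sin(2*pi*f*t) / (2*pi*f))
    so s(T) = A*T**2/(2*pi), i.e. A = 2*pi*D/T**2, and peak speed 2*D/T at T/2.
    """
    T = 1.0 / f
    w = 2.0 * np.pi * f
    A = 2.0 * np.pi * D / T**2
    t = np.arange(0.0, T + 0.5 / fs, 1.0 / fs)   # sample grid including t = T
    a = A * np.sin(w * t)
    v = A / w * (1.0 - np.cos(w * t))
    s = A / w * (t - np.sin(w * t) / w)
    return t, a, v, s
```

Speed starts and ends at zero, so the platform is at rest before and after the stimulus, and the peak speed 2D/T occurs at mid-cycle.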

For the “Fixed head-reference-frame” condition, we tested a participant seated in the padded racing chair. During each trial, the participant kept the head in a resting position, without any external restraint. The motion platform was translated naso-occipitally (100 trials) using the NO head axis assessed at the start of each trial. We tracked the 3D position and orientation of the head and platform with Vicon. To this end, we attached 4 markers to the chair and 6 markers to the head, i.e., the 5 markers of Figure 3 plus one in an arbitrary position to introduce asymmetry (M6); for our tests, we placed this additional marker on the left pterion. The POSE of the motion platform (motion command and feedback), as well as the position and orientation of the head and chair, were all recorded synchronously at 200 Hz.

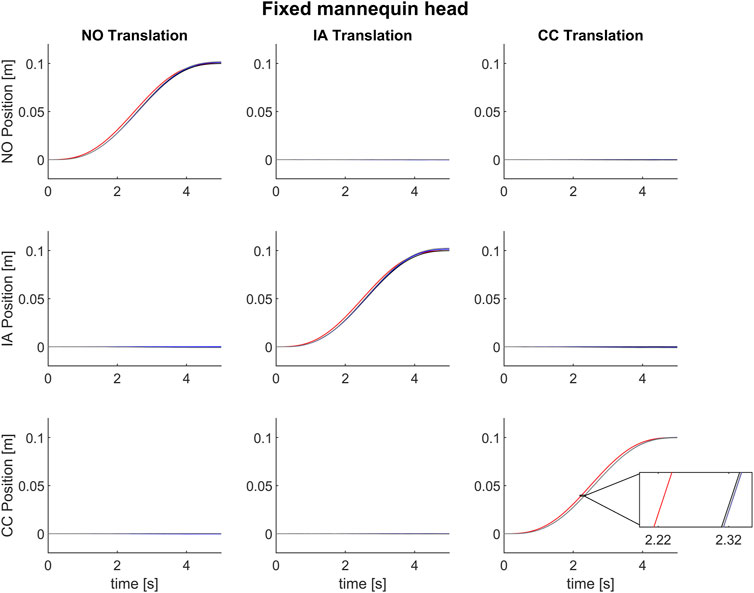

For the “Moving head-reference-frame” condition, we performed two different experiments. First, we evaluated the delays inherent in the on-line algorithm implementation. To obtain a highly controlled condition without head movements, we firmly attached a mannequin head to the headrest of the platform chair, which was then translated along the inter-aural, naso-occipital or cranio-caudal direction. The mannequin head was fixed in a position roughly corresponding to that of the head of participants in typical tests of vestibular motion discrimination (e.g., La Scaleia et al., 2023). We then controlled the motion platform using the head orientation monitored in real time. Specifically, we tracked the 3D position and orientation of the mannequin head (with the same marker placement as in the previous condition) and of the platform at 200 Hz using Vicon. Using Eqs. 26, 28 and 41, the motion platform was controlled on-line after choosing the head axis for stimulation (

We then carried out the same procedure used with the mannequin head on a head-free participant seated in the chair. In this experiment, the participant’s task was to move the head smoothly to explore the surroundings. In each trial, while the participant moved the head, we applied a naso-occipital translation of the motion platform (100 trials) using the NO head axis assessed in real time during the trial (Eqs. 26, 28 and 41).

7.1 Data analysis

The 3D coordinates (x, y, z) of the motion platform recorded by the motion platform system (command and feedback data), as well as the 3D coordinates of the chair and the head (mannequin or real) recorded by Vicon were numerically low-pass filtered (bidirectional, first-order Butterworth filter, 15 Hz cut-off). For each trial, we computed the head orientation (u(t)) and the first time derivative of the platform command (
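For reference, a bidirectional first-order Butterworth low-pass filter can be sketched in a few lines of NumPy. This version assumes the 200-Hz sampling rate and 15-Hz cut-off stated above, and derives the coefficients with the bilinear transform (with frequency pre-warping). Unlike Matlab’s filtfilt, it does not pad the signal edges, so samples near the ends may differ slightly. It filters one 1-D signal, so each coordinate is filtered separately.

```python
import numpy as np


def butter1_lowpass_filtfilt(x, fc=15.0, fs=200.0):
    """Zero-phase (forward-backward) first-order Butterworth low-pass filter.

    Bilinear-transform coefficients with pre-warping:
    K = tan(pi*fc/fs), b0 = b1 = K/(1+K), a1 = (K-1)/(K+1).
    """
    K = np.tan(np.pi * fc / fs)
    b0 = K / (1.0 + K)
    a1 = (K - 1.0) / (K + 1.0)

    def one_pass(sig):
        y = np.empty_like(sig)
        y[0] = sig[0]                      # start at steady state to limit edge transients
        for n in range(1, len(sig)):
            y[n] = b0 * (sig[n] + sig[n - 1]) - a1 * y[n - 1]
        return y

    x = np.asarray(x, dtype=float)
    # Forward pass, then the same filter on the time-reversed output: zero net phase lag
    return one_pass(one_pass(x)[::-1])[::-1]
```

Running the filter in both directions doubles the attenuation (in dB) and cancels the phase delay, which matters here because the analysis measures time lags of a few milliseconds.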

To assess how well the actual motion profiles matched the desired motion profile, for each trial we computed the cross-correlation between the desired motion profile of the platform (Eq. 41) and the command motion profiles (Eq. 43), and between the latter and the actual motion profiles executed as recorded by the MOOG feedback (Eq. 44) or by the Vicon. For the latter, we considered both the chair motion (Eq. 45) and the head motion (Eq. 46).

For the “Static head-reference-frame” condition (the Fixed head-reference-frame approach), we computed the time lag as the lag at which the absolute value of the cross-correlation was maximal (5-ms resolution, given the 200-Hz sampling frequency). A positive time lag indicates a delay of the actual motion with respect to the reference motion. We defined the coefficient of cross-correlation (CoefCC) as the value of the cross-correlation at the time lag. The normalized coefficient of cross-correlation (NCC) was then calculated as CoefCC divided by the autocorrelation of the desired motion profile (Eq. 41) at zero lag. NCC quantifies the similarity between the two compared motion profiles: the closer NCC is to one, the greater the similarity.
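The lag and NCC computation described above can be sketched as follows. Assumptions: both profiles are equal-length 1-D speed signals sampled at 200 Hz, the cross-correlation is the raw sum of products returned by `numpy.correlate`, and the normalization uses the zero-lag autocorrelation of the desired profile, as in the text. The function name is hypothetical.

```python
import numpy as np


def lag_and_ncc(reference, actual, desired, fs=200.0):
    """Time lag (s) and normalized cross-correlation coefficient (NCC).

    reference, actual : 1-D speed profiles (e.g. command vs feedback)
    desired           : desired profile whose zero-lag autocorrelation normalizes CoefCC
    A positive lag means `actual` is delayed relative to `reference`.
    """
    reference = np.asarray(reference, float)
    actual = np.asarray(actual, float)
    desired = np.asarray(desired, float)
    # Full cross-correlation: entry i corresponds to lag i - (len(reference) - 1)
    xc = np.correlate(actual, reference, mode="full")
    lags = np.arange(-(len(reference) - 1), len(actual))
    k = np.argmax(np.abs(xc))                 # lag of maximal |cross-correlation|
    coef_cc = xc[k]                           # CoefCC: cross-correlation at that lag
    ncc = coef_cc / np.dot(desired, desired)  # divide by zero-lag autocorrelation
    return lags[k] / fs, ncc
```

For a profile delayed by 19 samples (95 ms at 200 Hz) and otherwise identical to the desired one, this returns a lag of 0.095 s and an NCC of 1.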

For the “Dynamic head-reference-frame” condition (the Moving head-reference-frame approach), we first computed the NCC and the time lag inherent in the on-line algorithm implementation using the fixed mannequin head. This time lag represents the cumulative delay of the algorithm implementation and of the overall system (i.e., motion platform and motion capture). Then, with the participant moving the head, we computed the NCC at the time lag measured with the fixed mannequin head. This evaluates the quality of the delivered stimulus in the presence of voluntary head movements while accounting for the algorithm’s inherent delay.

7.2 Statistical analysis

Statistical analyses were performed in Matlab 2021b. We used the Shapiro–Wilk test to assess the normality of the data and found that they were not normally distributed (see Results below). Accordingly, we report median values and 95% confidence intervals (CI). We used a non-parametric test (Friedman test) to evaluate whether the command motion profiles computed with the proposed algorithm (POSE, Eq. 28) depend on the selected head axis (NO, IA, CC).

8 Experimental results

8.1 Static head-reference-frame

In each trial of this experiment (100 trials), the participant kept the unrestrained head in a resting, comfortable position. The motion platform was translated naso-occipitally using the NO head axis assessed at the start of each trial. The median head orientation in SRF at trial start was 180.358°, 7.847° and −3.054° in roll, pitch and yaw, respectively {95% CI: [180.271°, 180.446°], [7.600°, 8.095°] and [−3.388°, −2.720°]; n = 100}. During the platform motion, the maximum head rotation relative to the head orientation at the start of the trial was 1.045°, 2.186° and 2.209° in roll, pitch and yaw, respectively. The median NCC between the desired motion profile of the platform (Eq. 42) and the command motion profiles (Eq. 43) was 0.997 [95% CI 0.997; 0.997].

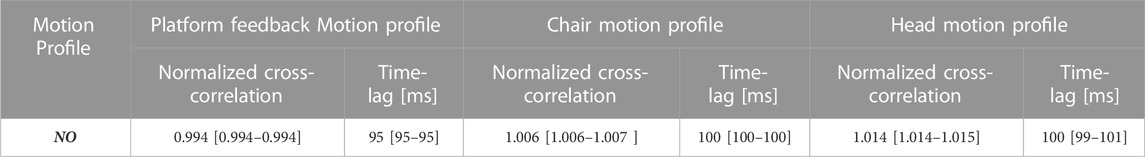

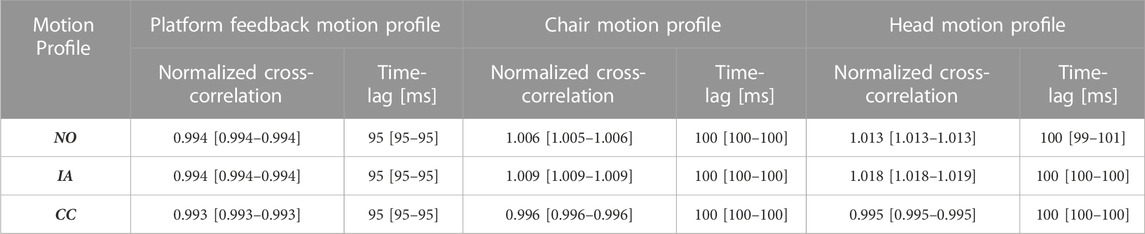

Table 1 reports the median (and 95% CI) of the normalized cross-correlation coefficients (NCC) and time lags between the command motion profiles (Eq. 43) and the actual motion profiles executed as recorded by the MOOG feedback (Eq. 44) or by Vicon. For the latter, we considered both the chair motion (Eq. 45) and the head motion (Eq. 46). The high NCC values show excellent agreement between the theoretical profile of the motion stimuli and the corresponding profile of actual motion as measured in real time. The measured time lag between command and feedback (about 95 ms) is intrinsic to the specific platform hardware we used (see specifications in Section 7) and is independent of the algorithm (Figure 2). This lag roughly agrees with the value of 117 ms reported by Huryn et al. (2010), who communicated with the platform at 60 Hz. Motion capture processing in our system adds a further delay of about 5 ms to the time lags measured at the chair and head.

TABLE 1. Static head-reference-frame: median [and (95% CI)] cross-correlation coefficient between the speed motion command profile and the actual speed motion profile (platform feedback, chair and head).

8.2 Dynamic head-reference-frame

8.2.1 Fixed mannequin head

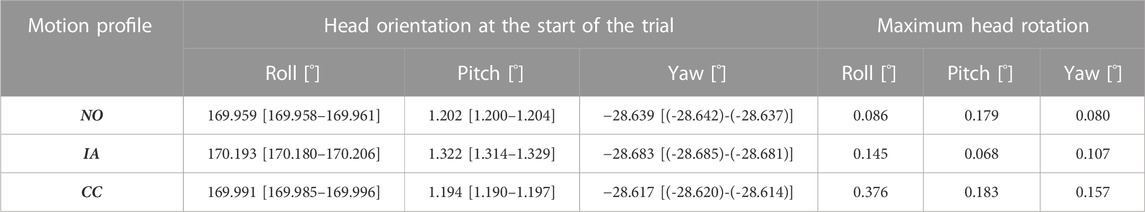

The head orientation in SRF at the start of the trial and the maximum head rotation during platform motion, relative to the initial head orientation, are shown in Table 2.

TABLE 2. Dynamic head-reference-frame with fixed mannequin head: median [and (95% CI)] head orientation in SRF at the start of the trial and maximum head rotation during platform motion, relative to the head orientation at the start of the trial.

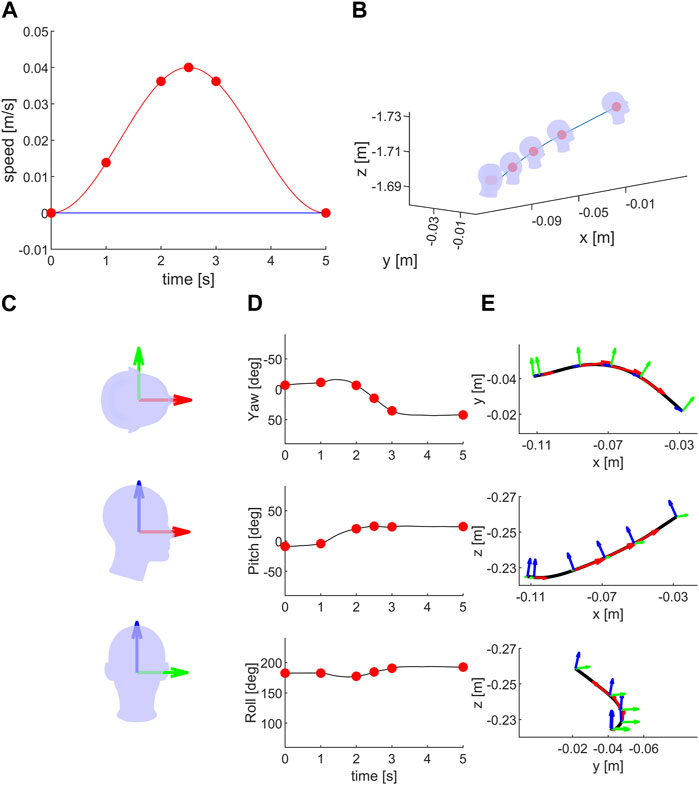

Figure 5 displays the mean motion profiles obtained over 100 trials along the head axes during stimulation in the NO, IA or CC direction. The motion command is shown in red, while the executed motion as revealed by the platform feedback, chair and head is shown in black, blue and gray, respectively. Apart from a time lag, there is excellent agreement between the command and the actual motion.

FIGURE 5. Mean motion profiles along the naso-occipital (top row), inter-aural (middle row) and cranio-caudal axis (bottom row) using the “Dynamic head-reference-frame” control. The motion stimulus consisted of a single cycle of 0.2 Hz sinusoidal acceleration in the naso-occipital (first column), inter-aural (second column) or cranio-caudal direction (third column) in the Stationary head condition. The red line represents the motion command sent to the motion platform. The black, blue and gray lines show the executed motion in terms of platform feedback, chair and head data (as recorded by the motion tracking system), respectively. The magnified inset shows a lag of about 95 ms between the command signal and the motion recorded by the platform feedback, and of about 5 ms between the platform feedback and the data recorded by the motion capture system.

We found a high correlation between the desired motion profile of the platform (Eq. 41) and the command motion profiles (Eq. 43) evaluated using the on-line orientation of the mannequin head. NCC values were not normally distributed for any of the 3 motion directions (NO, IA, CC, all p-values <0.001, Shapiro–Wilk test). The NCC did not depend significantly on the translation direction (NO, IA or CC) (Friedman test χ2(2) = 1.14, p = 0.5655). The median value of NCC between the desired motion profiles and the command motion profiles across all trials and tested translation directions was 0.997 [95%CI 0.997; 0.997].

Table 3 reports the median (and 95% CI) of the normalized cross-correlation coefficients (NCC) and time lags in the same format as Table 1. Both sets of values are comparable to those obtained in the Static head-reference-frame condition, indicating that the on-line estimation of the position and orientation of the mannequin head is robust and reliable.

TABLE 3. Dynamic head-reference-frame with fixed mannequin head: median [and (95% CI)] cross-correlation coefficient between the speed motion command profile and the actual speed motion profile (platform feedback, chair and head).

8.2.2 Moving head of the participant

Figure 6 shows an example of the condition in which the platform was translated along the NO axis while the participant voluntarily moved the head in arbitrary directions during the trial. The effect of voluntary head reorientation on the passive translation in 3D Cartesian space can be seen in panel B. Panel D depicts the time course of head orientation in 3D angular space associated with the voluntary rotation. Panel E depicts the platform path as well as the orientation of the head frame (HRFS) in top, lateral and front views.

FIGURE 6. Moving head during NO translation: (A) Ideal stimulus: speed motion profile along the naso-occipital, inter-aural and cranio-caudal axes in red, green and blue lines, respectively. The green and blue lines overlap. Red dots represent the speed values in the NO direction during the motion (1, 2, 2.5, 3 and 5 s after the start). (B) Passive head movement during the stimulation (blue line) in a single trial in SRF. The head orientation during the passive motion is shown at the start of the stimulation and at the times of the red dots in (A). The head schematic is not drawn to scale (∼1:15) relative to the passive movement (blue line). (C) Head and naso-occipital (red), inter-aural (green) and cranio-caudal (blue) axes in top, lateral and front views. (D) Head orientation (yaw, pitch and roll) during the same trial as in (B). (E) The black line represents the recorded platform movement, while the red, green and blue arrows represent the head orientation during the platform motion as in (B). The length of the red arrow is proportional to the stimulus speed (A). The platform movement was updated on-line using the recorded head orientation. The platform movement is tangential to the NO axis (for the head axes definition, see Figure 3).

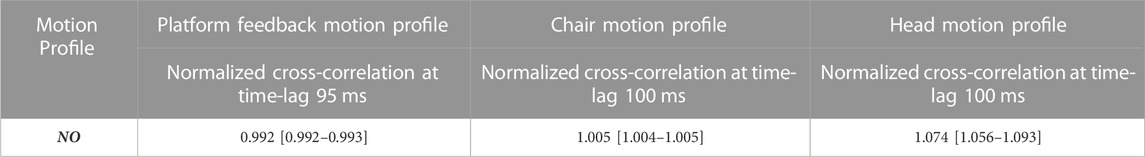

Table 4 reports the median (and 95% CI) of the normalized cross-correlation coefficients (NCC). In this case too, there is excellent agreement between the commanded motion stimuli and the actual motion as measured on-line.

TABLE 4. Dynamic head-reference-frame with moving head of the participant: median [and (95% CI)] cross-correlation coefficient between the speed motion command profile and the actual speed motion profile (platform feedback, chair and head).

9 Conclusion and perspectives

We have detailed and validated algorithms that generate arbitrary head-centered motion stimuli in 3D under head-free conditions. Motion stimuli were produced by a MOOG platform. We tested these algorithms using a Static or a Dynamic head-reference-frame, with the head either stationary relative to the platform or moving throughout the platform translation. In all tested cases, we found excellent agreement between the theoretical profile of the motion stimuli and the corresponding profile of actual motion as measured in real time.

It is worth mentioning that any deviation between the theoretical and actual profiles of the stimuli should be assessed in relation to the specific behavioral outcome being tested. For instance, if the test involves estimating vestibular thresholds, no corrections to the stimulus implementation are needed as long as the errors are smaller than the variability of the thresholds.

Our approach lends itself to vestibular tests that require head-free conditions, either because head restraints are not recommended or because the test involves voluntary or reflex head movements during the platform motion stimuli. Head-free testing is especially relevant in light of the growing interest in exploring vestibular function under ecological conditions (Carriot et al., 2014; Diaz-Artiles and Karmali, 2021; La Scaleia et al., 2023; Lacquaniti et al., 2023). Indeed, it is now known that the vestibular system performs best when faced with naturalistic inputs, such as those encountered during head-free conditions (Cullen, 2019; Carriot et al., 2022; Sinnott et al., 2023).

Body-centric computations, such as the head-centric algorithms we presented, are becoming crucial to explore human physiology from the subjective perspective of each individual, as well as for personalized health monitoring. We can also envisage applications of our algorithms in novel virtual realities for flight or driving simulators that aim at minimizing the probability of motion sickness (Groen and Bos, 2008).

From a diagnostic point of view, it can be very useful to administer vestibular stimuli with specific orientations relative to the semicircular canals, rather than rotational stimuli about the cardinal axes of the head. Individual high-resolution anatomical magnetic resonance images of the head make it possible to determine the orientation of the semicircular canals with respect to the Frankfurt plane. Since the proposed methodology allows vestibular stimuli to be administered in any direction and about any predefined axis relative to the head (within the range of motion of the platform), it also allows stimuli to be delivered about the rotation axes of specific semicircular canals. Evaluating the perception of vestibular stimuli tailored to each individual’s vestibular anatomy can facilitate the diagnosis of functional abnormalities of specific semicircular canals.

Future developments of our approach will involve compensation strategies to reduce the current delay between the command and the actual motion in our system. Although in the Dynamic head-reference-frame condition the motion profile was evaluated instantaneously using the on-line head orientation, it was executed after ∼100 ms. Most of this delay (about 95 ms) was intrinsic to the specific platform hardware that we used (the remaining 5 ms depended on our motion capture system). In general, substantial delays in the system limit the kind of head movements that can be taken into account in the Dynamic head-reference-frame condition. Indeed, the participant who tested this condition moved the head smoothly and relatively slowly, as typically occurs in smooth pursuit tracking of visual targets. Fast head movements, such as those involved in head saccades, would not be compensated in time to adjust the estimates of head axes reliably. To address this limitation, we envisage either driving the Stewart platform with faster motors or using predictive algorithms to extrapolate the current measurements of head position and orientation into the future (e.g., autoregressive or Kalman filter models). For instance, a lead-compensation strategy based on a heuristically tuned linear prediction of the desired position of the MOOG platform reduced the delay between the desired and actual position to 33 ms in a study of human balance (Luu et al., 2011), involving data communication with the platform at 60 Hz.
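As an illustration of the predictive approach envisaged above, and not something implemented or validated in this study, a constant-velocity Kalman filter can track one head angle and extrapolate it by the roughly 100-ms system delay. The noise parameters q and r below are hypothetical and would need tuning against recorded head movements. Each Euler angle (or each component of u) would need its own filter, or a joint state.

```python
import numpy as np


class ConstantVelocityPredictor:
    """Kalman filter on one head angle with a constant-velocity model (sketch).

    State x = [angle, angular velocity]. After each measurement update,
    predict(lead) extrapolates the angle `lead` seconds ahead.
    """

    def __init__(self, dt=1 / 200, q=50.0, r=0.05**2):
        self.F = np.array([[1.0, dt], [0.0, 1.0]])              # state transition
        # Process noise for white angular acceleration with spectral density q
        self.Q = q * np.array([[dt**3 / 3, dt**2 / 2], [dt**2 / 2, dt]])
        self.H = np.array([[1.0, 0.0]])                         # only the angle is measured
        self.R = np.array([[r]])                                # measurement noise variance
        self.x = np.zeros(2)
        self.P = np.eye(2) * 1e3                                # vague initial uncertainty

    def update(self, z):
        # Time update (prediction to the current sample)
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        # Measurement update with the new angle z
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T / S[0, 0]
        self.x = self.x + (K * (z - self.H @ self.x)).ravel()
        self.P = (np.eye(2) - K @ self.H) @ self.P

    def predict(self, lead=0.1):
        """Angle extrapolated `lead` seconds ahead of the last measurement."""
        return self.x[0] + lead * self.x[1]
```

A constant-velocity model suits the smooth, slow head movements tested here. For head saccades, its extrapolation would overshoot at movement onset and offset, which is consistent with the limitation discussed above.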

Data availability statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Institutional Review Board of Santa Lucia Foundation (protocol no. CE/PROG.757). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the participant for the publication of any potentially identifiable images or data included in this article.

Author contributions

BL: Conceptualization, Methodology, Software, Writing–original draft, Writing–review and editing. CB: Software, Writing–original draft, Writing–review and editing. FL: Conceptualization, Writing–original draft, Writing–review and editing. MZ: Conceptualization, Methodology, Writing–original draft, Writing–review and editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. The experimental work performed in the Laboratory of Visuomotor Control and Gravitational Physiology was supported by the Italian Ministry of Health (Ricerca corrente, IRCCS Fondazione Santa Lucia, Ricerca Finalizzata RF-2018-12365985 IRCCS Fondazione Santa Lucia), Italian Space Agency (grant 2014-008-R.0, I/006/06/0), INAIL (BRIC 2022 LABORIUS), and Italian University Ministry (PRIN grant 20208RB4N9_002, PRIN grant 2020EM9A8X_003, PRIN grant 2022T9YJXT_002, PRIN grant 2022YXLNR7_002 and #NEXTGENERATIONEU (NGEU) National Recovery and Resilience Plan (NRRP), project MNESYS (PE0000006)—A Multiscale integrated approach to the study of the nervous system in health and disease (DN. 1553 11.10.2022)). The Moog platform was bought with grant ASI 2014-008-R.0 and I/006/06/0.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fbioe.2023.1296901/full#supplementary-material

References

Angelaki, D. E., and Cullen, K. E. (2008). Vestibular system: the many facets of a multimodal sense. Annu. Rev. Neurosci. 31, 125–150. doi:10.1146/annurev.neuro.31.060407.125555

Arun, K. S., Huang, T. S., and Blostein, S. D. (1987). Least-squares fitting of two 3-D point sets. IEEE Trans. Pattern Analysis Mach. Intell. PAMI-9 (5), 698–700. doi:10.1109/TPAMI.1987.4767965

Benson, A. J., Spencer, M. B., and Stott, J. R. (1986). Thresholds for the detection of the direction of whole-body, linear movement in the horizontal plane. Aviat. Space Environ. Med. 57 (11), 1088–1096.

Bermúdez Rey, M. C., Clark, T. K., Wang, W., Leeder, T., Bian, Y., Merfeld, D. M., et al. (2016). Vestibular perceptual thresholds increase above the age of 40. Front. Neurol. 7, 162. doi:10.3389/fneur.2016.00162

Bögle, R., Kirsch, V., Gerb, J., and Dieterich, M. (2020). Modulatory effects of magnetic vestibular stimulation on resting-state networks can be explained by subject-specific orientation of inner-ear anatomy in the MR static magnetic field. J. Neurol. 267 (Suppl. 1), 91–103. doi:10.1007/s00415-020-09957-3

Bremova, T., Caushaj, A., Ertl, M., Strobl, R., Böttcher, N., and Strupp, M. (2016). Comparison of linear motion perception thresholds in vestibular migraine and Menière’s disease. Eur. Arch. Otorhinolaryngol. 273, 2931–2939. doi:10.1007/s00405-015-3835-y

Carriot, J., Jamali, M., Chacron, M. J., and Cullen, K. E. (2014). Statistics of the vestibular input experienced during natural self-motion: implications for neural processing. J. Neurosci. 34 (24), 8347–8357. doi:10.1523/JNEUROSCI.0692-14.2014

Carriot, J., McAllister, G., Hooshangnejad, H., Mackrous, I., Cullen, K. E., and Chacron, M. J. (2022). Sensory adaptation mediates efficient and unambiguous encoding of natural stimuli by vestibular thalamocortical pathways. Nat. Commun. 13 (1), 2612. doi:10.1038/s41467-022-30348-x

Chaudhuri, S. E., Karmali, F., and Merfeld, D. M. (2013). Whole body motion-detection tasks can yield much lower thresholds than direction-recognition tasks: implications for the role of vibration. J. Neurophysiol. 110 (12), 2764–2772. doi:10.1152/jn.00091.2013

Crane, B. T. (2013). Limited interaction between translation and visual motion aftereffects in humans. Exp. Brain Res. 224 (2), 165–178. doi:10.1007/s00221-012-3299-x

Cullen, K. E. (2011). The neural encoding of self-motion. Curr. Opin. Neurobiol. 21 (4), 587–595. doi:10.1016/j.conb.2011.05.022

Cullen, K. E. (2012). The vestibular system: multimodal integration and encoding of self-motion for motor control. Trends Neurosci. 35 (3), 185–196. doi:10.1016/j.tins.2011.12.001

Cullen, K. E. (2019). Vestibular processing during natural self-motion: implications for perception and action. Nat. Rev. Neurosci. 20 (6), 346–363. doi:10.1038/s41583-019-0153-1

Curthoys, I. S., McGarvie, L. A., MacDougall, H. G., Burgess, A. M., Halmagyi, G. M., Rey-Martinez, J., et al. (2023). A review of the geometrical basis and the principles underlying the use and interpretation of the video head impulse test (vHIT) in clinical vestibular testing. Front. Neurol. 14, 1147253. doi:10.3389/fneur.2023.1147253

Dasgupta, B., and Mruthyunjaya, T. (2000). The Stewart platform manipulator: a review. Mech. Mach. Theory 35 (1), 15–40. doi:10.1016/s0094-114x(99)00006-3

Diaz-Artiles, A., and Karmali, F. (2021). Vestibular precision at the level of perception. Eye movements, posture, and neurons. Neuroscience 468, 282–320. doi:10.1016/j.neuroscience.2021.05.028

Eggert, D., Lorusso, A., and Fisher, R. (1997). Estimating 3-D rigid body transformations: a comparison of four major algorithms. Mach. Vis. Appl. 9, 272–290. doi:10.1007/s001380050048

Ertl, M., and Boegle, R. (2019). Investigating the vestibular system using modern imaging techniques-A review on the available stimulation and imaging methods. J. Neurosci. Methods 326, 108363. doi:10.1016/j.jneumeth.2019.108363

Ertl, M., Prelz, C., Fitze, D. C., Wyssen, G., and Mast, F. W. (2022). PlatformCommander - an open source software for an easy integration of motion platforms in research laboratories. SoftwareX 17, 100945. doi:10.1016/j.softx.2021.100945

Furqan, M., Suhaib, M., and Ahmad, N. (2017). Studies on Stewart platform manipulator: a review. J. Mech. Sci. Technol. 31, 4459–4470. doi:10.1007/s12206-017-0846-1

Goldberg, J. M., Wilson, V. J., Cullen, K. E., Angelaki, D. E., Broussard, D. M., Buttner-Ennever, J., et al. (2012). The vestibular system: a sixth sense. New York, NY: Oxford University Press, 541.

Grabherr, L., Nicoucar, K., Mast, F. W., and Merfeld, D. M. (2008). Vestibular thresholds for yaw rotation about an earth-vertical axis as a function of frequency. Exp. Brain Res. 186 (4), 677–681. doi:10.1007/s00221-008-1350-8

Groen, E. L., and Bos, J. E. (2008). Simulator sickness depends on frequency of the simulator motion mismatch: an observation. Presence 17 (6), 584–593. doi:10.1162/pres.17.6.584

Horn, B. K. P. (1987). Closed-form solution of absolute orientation using unit quaternions. J. Opt. Soc. Am. A 4, 629–642. doi:10.1364/josaa.4.000629

Horn, B. K. P., Hilden, H. M., and Negahdaripour, S. (1988). Closed-form solution of absolute orientation using orthonormal matrices. J. Opt. Soc. Am. A 5, 1127–1135. doi:10.1364/josaa.5.001127

Hulme, K. F., and Pancotti, A. (2004). “Development of a virtual D.O.F. motion platform for simulation and rapid synthesis,” in 45th AIAA/ASME/ASCE/AHS/ASC structures, Structural Dynamics & Materials Conference. doi:10.2514/6.2004-1851

Huryn, T. P., Luu, B. L., Loos, H. F., Blouin, J., and Croft, E. A. (2010). “Investigating human balance using a robotic motion platform,” in IEEE International Conference on Robotics and Automation, 5090–5095. doi:10.1109/ROBOT.2010.5509378

Jennings, A. (2022). Sphere fit (least squared). MATLAB Central File Exchange. Available at: https://www.mathworks.com/matlabcentral/fileexchange/34129-sphere-fit-least-squared (Accessed October 5, 2022).

Kobel, M. J., Wagner, A. R., and Merfeld, D. M. (2021a). Impact of gravity on the perception of linear motion. J. Neurophysiol. 126, 875–887. doi:10.1152/jn.00274.2021

Kobel, M. J., Wagner, A. R., Merfeld, D. M., and Mattingly, J. K. (2021b). Vestibular thresholds: a review of advances and challenges in clinical applications. Front. Neurol. 12, 643634. doi:10.3389/fneur.2021.643634

Lacquaniti, F., La Scaleia, B., and Zago, M. (2023). Noise and vestibular perception of passive self-motion. Front. Neurol. 14, 1159242. doi:10.3389/fneur.2023.1159242

La Scaleia, B., Lacquaniti, F., and Zago, M. (2023). Enhancement of vestibular motion discrimination by small stochastic whole-body perturbations in young healthy humans. Neuroscience 510, 32–48. doi:10.1016/j.neuroscience.2022.12.010

Luu, B. L., Huryn, T. P., Van der Loos, H. M., Croft, E. A., and Blouin, J. S. (2011). Validation of a robotic balance system for investigations in the control of human standing balance. IEEE Trans. Neural Syst. Rehabil. Eng. 19 (4), 382–390. doi:10.1109/tnsre.2011.2140332

MacNeilage, P. R., Banks, M. S., DeAngelis, G. C., and Angelaki, D. E. (2010). Vestibular heading discrimination and sensitivity to linear acceleration in head and world coordinates. J. Neurosci. 30, 9084–9094. doi:10.1523/jneurosci.1304-10.2010

MacNeilage, P. R., Zhang, Z., DeAngelis, G. C., and Angelaki, D. E. (2012). Vestibular facilitation of optic flow parsing. PLoS One 7 (7), e40264. doi:10.1371/journal.pone.0040264

Mallery, R. M., Olomu, O. U., Uchanski, R. M., Militchin, V. A., and Hullar, T. E. (2010). Human discrimination of rotational velocities. Exp. Brain Res. 204 (1), 11–20. doi:10.1007/s00221-010-2288-1

Merfeld, D. M., Priesol, A., Lee, D., and Lewis, R. F. (2010). Potential solutions to several vestibular challenges facing clinicians. J. Vestib. Res. 20 (1-2), 71–77. doi:10.3233/ves-2010-0347

Moorrees, C. F. A., and Kean, M. R. (1958). Natural head position: a basic consideration in the interpretation of cephalometric radiographs. Am. J. Phys. Anthropol. 16, 213–234. doi:10.1002/ajpa.1330160206

Mueller, F. F., Lopes, P., Andres, J., Byrne, R., Semertzidis, N., Li, Z., et al. (2021). Towards understanding the design of bodily integration. Int. J. Hum. Comput. Stud. 152, 102643. doi:10.1016/j.ijhcs.2021.102643

Sarabandi, S., and Thomas, F. (2023). Solution methods to the nearest rotation matrix problem in ℝ3: a comparative survey. Numer. Linear Algebra Appl. doi:10.1002/nla.2492

Sinnott, C. B., Hausamann, P. A., and MacNeilage, P. R. (2023). Natural statistics of human head orientation constrain models of vestibular processing. Sci. Rep. 13 (1), 5882. doi:10.1038/s41598-023-32794-z

Valko, Y., Lewis, R. F., Priesol, A. J., and Merfeld, D. M. (2012). Vestibular labyrinth contributions to human whole-body motion discrimination. J. Neurosci. 32 (39), 13537–13542. doi:10.1523/JNEUROSCI.2157-12.2012

Wagner, A. R., Kobel, M. J., and Merfeld, D. M. (2022). Impacts of rotation axis and frequency on vestibular perceptual thresholds. Multisens. Res. 35 (3), 259–287. doi:10.1163/22134808-bja10069

Wu, S., Lin, P., Zheng, Y., Zhou, Y., Liu, Z., and Yang, X. (2021). Measurement of human semicircular canal spatial attitude. Front. Neurol. 12, 741948. doi:10.3389/fneur.2021.741948

Keywords: personalized vestibular tests, unrestrained movements, vestibulopathies, ageing, Stewart platform, semicircular canals, otoliths

Citation: La Scaleia B, Brunetti C, Lacquaniti F and Zago M (2023) Head-centric computing for vestibular stimulation under head-free conditions. Front. Bioeng. Biotechnol. 11:1296901. doi: 10.3389/fbioe.2023.1296901

Received: 19 September 2023; Accepted: 16 November 2023;

Published: 07 December 2023.

Edited by:

Stefano Ramat, University of Pavia, Italy

Reviewed by:

Paul MacNeilage, University of Nevada, Reno, United States
Dan M. Merfeld, The Ohio State University, United States

Copyright © 2023 La Scaleia, Brunetti, Lacquaniti and Zago. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Barbara La Scaleia, b.lascaleia@hsantalucia.it