
ORIGINAL RESEARCH article

Front. Bioeng. Biotechnol., 12 April 2023

Sec. Biomechanics

Volume 11 - 2023 | https://doi.org/10.3389/fbioe.2023.1104000

This article is part of the Research Topic Wearable Sensors in Vital Health Monitoring.

We propose a co-simulation framework comprising biomechanical human body models and wearable inertial sensor models to analyse gait events dynamically, depending on inertial sensor type, sensor positioning, and processing algorithms. A total of 960 inertial sensors were virtually attached to the lower extremities of a validated biomechanical model and shoe model. Walking of hemiparetic patients was simulated using motion capture data (kinematic simulation). Accelerations and angular velocities were synthesised according to the inertial sensor models. A comprehensive error analysis of detected gait events versus reference gait events was performed for each simulated sensor position across all segments. For gait event detection, we considered 1-, 2-, and 4-phase gait models. For hemiparetic patients, a sensor fusion of angular velocity and acceleration data showed superior gait event estimation performance, with lower nMAEs (9%) across all sensor positions, compared to estimation from acceleration data only. Depending on algorithm choice and parameterisation, gait event detection performance increased by up to 65%. Our results suggest that user personalisation of IMU placement should be pursued as a first priority for gait phase detection, while sensor position variation may be a secondary adaptation target. When comparing rotatory and translatory error components per body segment, larger interquartile ranges of rotatory errors were observed for all phase models, i.e., repositioning the sensor around the body segment axis was more harmful for gait phase detection than repositioning it along the limb axis. The proposed co-simulation framework is suitable for evaluating different sensor modalities, as well as gait event detection algorithms for different gait phase models. The results of our analysis open a new path for utilising biomechanical human digital twins in wearable system design and performance estimation before physical device prototypes are deployed.

Wearable inertial measurement units (IMUs), among other measuring methods, enable researchers in biomechanics and rehabilitation to measure human kinematics and thus expand our understanding of natural human movement (Derungs and Amft, 2020; Al Borno et al., 2022). In medicine, movement applications range from analysing walking performance (Balasubramanian et al., 2009), detecting functional impairment in patients after a stroke (Balaban and Tok, 2014), and regaining walking autonomy (Canning et al., 2006), to monitoring fall risk in older adults and patients (Tulipani et al., 2020). Another application area of IMUs is assistive devices, including the control of orthoses, exoskeletons, and prostheses (Sánchez Manchola et al., 2019). Practical deployment of wearable motion analysis often involves iterative physical testing to optimise and correct sensor positioning (Förster et al., 2009; Niswander et al., 2020), which is tedious due to repeated measurements and prone to error due to, e.g., variance in motion execution, fatigue, loss of motivation, and examiner-dependent errors (Pacini Panebianco et al., 2018; Nilsson et al., 2022). Furthermore, besides requiring considerable time from study participants/patients and the involved experts, iterative analyses allow only a limited number of sensor configurations to be evaluated at a time. Simulation-based analyses may offer an excellent tool to systematically address the challenges of finding suitable sensor configurations and signal interpretation methods within the extensive design space and parameterisation options of wearable IMU systems. Additionally, inter-individual characteristics and coping strategies substantially influence wearable system design and the performance of sensor-based movement interpretation (Altini et al., 2015; Derungs and Amft, 2020).
For a given wearable application, error effects related to sensor type, body positioning, parameterisation of signal interpretation algorithms, etc., are to date available only as expert knowledge, if at all. Modeling and simulation methods could enable researchers and practitioners to virtually select, augment, and exchange sensor devices and body positions, as well as to test different modalities and signal interpretation algorithms, without patient involvement. Derungs and Amft (2020) performed a first quantitative analysis of gait performance estimation from acceleration signals synthesised in a co-simulation of human body and sensor models. While that work demonstrated that simulation can reveal the full potential of wearable motion sensors and increase their application in clinical practice, it focused only on dynamic acceleration of the patient's upper leg and did not cover gait phase events.

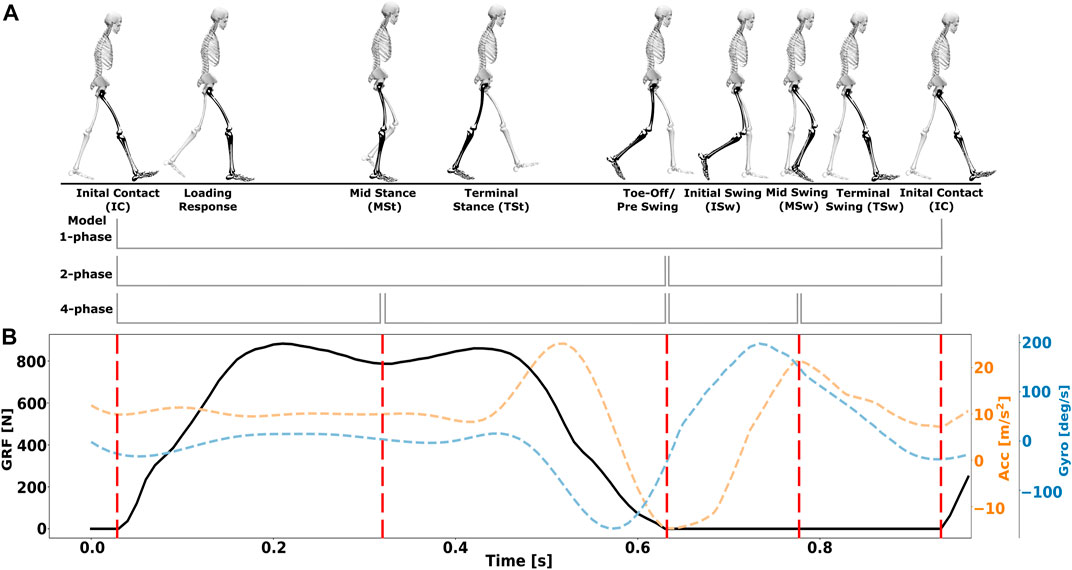

Gait phase assessment is used clinically to evaluate and diagnose gait disorders, thus helping clinicians to determine and provide optimal care and treatment for patients with walking impairments (Derungs et al., 2018; Han et al., 2019; Sánchez Manchola et al., 2019). Gait phases are described within a gait cycle, which spans from one initial contact to the next initial contact of the same leg (Perry and Burnfield, 2010). One gait cycle consists of two main phases, i.e., the stance and swing phases, which can be partitioned into eight subphases (Perry and Burnfield, 2010): Initial Contact (IC), Loading Response (LR), Mid Stance (MSt), Terminal Stance (TSt), Pre Swing/Toe off (PSw/TO), Initial Swing (ISw), Mid Swing (MSw), and Terminal Swing (TSw), as shown in Figure 1. A widespread approach to describe gait relies on a 4-phase model (Sánchez Manchola et al., 2019), although the events that determine gait phases differ across the literature. A variety of published detection algorithms and experimental studies have estimated and analysed gait phases using various IMU sensors and body positions. Nevertheless, these gait detection algorithms were limited to processing data derived from a specific body position, preventing performance comparison across placements (see also Section 2.3). Furthermore, synthesised sensor data is often used for model training in data augmentation tasks (see also Section 2.1), but the potential of synthesised data for performance analysis of different algorithms, as shown in this work, has hardly been considered. Additionally, there is no methodology to consolidate and analyse performance depending on the choice of algorithms, sensor modalities, and body mounting positions, which could improve wearable system design to match applications or individual characteristics.

FIGURE 1. Illustrations of human gait phases. (A) Gait cycle division into 1-, 2-, and 4-phase models. Adapted from Perry and Burnfield (2010). (B) Visualisation of the four gait events IC, MSt, TO, and MSw aligned with ground reaction force (GRF) data and synthesised accelerometer and gyroscope data of hemiparetic patients from our co-simulation framework.
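The three phase models can be read as different subsets of the four reference events. The following Python sketch assumes, based on Figure 1 and the events used later in the text, that the 1-phase model is delimited by IC only, the 2-phase model (stance/swing) by IC and TO, and the 4-phase model by IC, MSt, TO, and MSw. The names `PHASE_MODEL_EVENTS` and `segment_phases` are hypothetical and not part of the authors' framework.

```python
# Hypothetical mapping from phase model to the reference events that delimit
# its phases (assumption based on Figure 1: IC only; IC/TO; IC/MSt/TO/MSw).
PHASE_MODEL_EVENTS = {
    1: ("IC",),
    2: ("IC", "TO"),
    4: ("IC", "MSt", "TO", "MSw"),
}


def segment_phases(events, n_phases):
    """Split time-ordered (time_s, label) events into phases.

    Events not used by the chosen phase model are ignored. Each phase is
    returned as (start_label, start_time_s, duration_s) and lasts from one
    retained event to the next.
    """
    allowed = PHASE_MODEL_EVENTS[n_phases]
    kept = [(t, label) for t, label in sorted(events) if label in allowed]
    return [
        (label0, t0, round(t1 - t0, 3))
        for (t0, label0), (t1, _) in zip(kept, kept[1:])
    ]


# Illustrative event times for one gait cycle (not study data)
events = [(0.00, "IC"), (0.35, "MSt"), (0.70, "TO"), (0.95, "MSw"), (1.20, "IC")]
print(segment_phases(events, 2))  # [('IC', 0.0, 0.7), ('TO', 0.7, 0.5)]
```

The same event stream yields one, two, or four phases per cycle depending only on which labels are retained, which is why a single detector can be evaluated across all three models.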

This paper provides the following contributions:

1. We present a co-simulation framework for synthesising inertial sensor data based on two coupled and time-synchronised subsystems: 1) personalised inverse kinematic body (i.e., digital twin) and shoe models, and 2) IMU sensor models. Compared to earlier digital twin approaches, we focus on gait performance analysis of different algorithms and extend our inertial sensor models to include triaxial transducers describing static and dynamic acceleration as well as triaxial transducers of angular velocity.

2. We validated our framework against data from a measurement study with healthy volunteers to verify the correctness of our modeling and co-simulation approach. Subsequently, we show that the framework can evaluate more challenging gait patterns. To this end, we instantiated a total of 960 IMUs in simulation experiments, virtually attached to upper leg, lower leg, and shoe, to analyse gait phase detection of impaired walking in hemiparetic patients.

3. The proposed framework enables system designers to perform a comprehensive error analysis (timing error between detected gait events and reference gait events) of common gait event detection algorithms across 1-, 2-, and 4-phase gait models, considering sensor type and sensor positioning. We varied sensor configuration and algorithm parameterisation (user-dependent and sensor position-dependent parameterisation) and analysed errors that may originate from sensor position variation during wear.

The concept of human digital twins has already been applied, in particular with accurate musculoskeletal models that have been validated for human motion analysis and simulation. Among others, the open-source platform OpenSim (Delp et al., 2007) has been validated in various human motion studies (Rajagopal et al., 2016; Seth et al., 2018; Karimi et al., 2021; Yap et al., 2021). Biomechanical models provide the basis for evaluating wearable system design, as shown in previous work (Lambrecht et al., 2017; Derungs and Amft, 2020; Mundt et al., 2020; Al Borno et al., 2022). In healthcare and rehabilitation, the digital twin concept is increasingly used, e.g., to partially replace expensive laboratory experiments with in silico simulations; Kamel Boulos and Zhang (2021) provided an overview of digital twin concepts used in healthcare. Pizzolato et al. (2019) reviewed neuromusculoskeletal modeling approaches for recovery from spinal cord injury and concluded that the digital twin concept can be applied to people and assistive devices. Derungs and Amft (2020) were the first to leverage digital twins based on personalisable kinematic body models to synthesise sensor data and estimate sensor-dependent algorithm performance in co-simulations.

Collecting patient data can be tedious and error-prone. In addition, many detection models require training data; thus, the synthesis of virtual sensor data is gaining interest in the research community. For example, the dataset of Knarr et al. (2013), including walking of eight hemiparetic patients, has been used in previous simulation work by Derungs and Amft (2019). The latter authors demonstrated how biomechanical simulations and synthesised acceleration data could be used to estimate motion-related clinical assessment scores, comparable to data of physical sensors (Wang et al., 2015). Mundt et al. (2020) estimated joint kinematics and kinetics using musculoskeletal modeling to synthesise acceleration and angular velocity data from five selected sensor positions as input for an artificial neural network. Their results showed that the simulation approach is a valid method for data augmentation in biomechanics. Furthermore, generative adversarial networks (GANs), which originally emerged from computer vision research, were proposed to synthesise sensor data for human activities (Zhang and Alshurafa, 2020; Hoelzemann et al., 2021). Zhang and Alshurafa (2020) proposed two deep generative cross-modal architectures for synthesising accelerometer data from video sequences. Kwon et al. (2020, 2021) presented IMUTube, an automated processing pipeline for human activity recognition (HAR) that integrates existing computer vision and signal processing techniques to convert video of human activity into virtual streams of IMU data. A similar approach was chosen by Lämsä et al. (2022), who used neural networks with VIDEO2IMU to generate IMU signals and features from monocular videos of human activities. Their results suggested that HAR systems trained using virtual sensor data could perform considerably better than baseline models trained using only physical IMU data (Kwon et al., 2021; Lämsä et al., 2022). Esteban et al. (2017) deployed GANs to synthesise respiratory data, where a generator model is used to augment data and a discriminator attempts to distinguish between real and artificial data. Hoelzemann et al. (2021) used the latter technique with a long short-term memory (LSTM) layer. Their augmentation approach increased classification performance and produced simulated activity of daily living data similar to data derived from physical measurements. However, challenges persist concerning the validity and variability of data augmentation for sensor time series, e.g., random transformations are not applicable to every dataset, time-domain pattern mixing is only recommended for short time series, and time series-based neural networks respond comparatively weakly to data augmentation (Iwana and Uchida, 2021). In purely data-driven approaches, e.g., generative models based on neural networks, it is difficult to synthesise new, meaningful time series, as the relation to physical constraints cannot be assessed. In contrast, sensor synthesis from measurement-based inverse kinematic models implements a bottom-up knowledge ordering approach, thus introducing evidence at all model layers. So far, synthesised data has mostly been used for model training, whereas its potential for performance analysis of different algorithms, as shown in this work, has hardly been considered. In this work, we rely on validated, personalised biomechanical models to synthesise acceleration and angular velocity sensor data on multiple limbs. Compared to previous work, our simulation approach and algorithms aim to evaluate which sensor configurations and algorithm parameterisations, among several hundred virtual sensor positions, are suitable for estimating gait events.

Several approaches have been used to optimise movement monitoring and increase robustness against sensor repositioning. Previous work focused on activity recognition under varying sensor measurements, e.g., considering inter-individual differences and sensor displacement (Lester et al., 2006; Thiemjarus, 2010; Atallah et al., 2011; Banos Legran et al., 2012; Harms et al., 2012; Guo et al., 2016; Chen et al., 2017). Kunze and Lukowicz (2014) described rotation-independent signal features in the frequency domain to mitigate sensor positioning effects. Förster et al. (2009) proposed orientation-robust features in addition to self-calibration algorithms to overcome effects of sensor displacement in activity and gesture recognition.

While previous work was limited to physical sensor measurements, Derungs and Amft (2020) investigated sensor positioning and biomarker estimation from synthesised acceleration data, focussing on stride duration, cadence, and step count in athletes and patients after stroke. For hemiparetic patients, differences in gait marker estimation performance were shown between acceleration sensors virtually attached to the upper legs of the affected and less affected body side. Estimation errors of up to ∼44% confirmed a sensor position dependency of gait marker estimation. Optimal sensor positions changed over a period of movement therapy. On the less affected body side, a ventral region was proposed as favourable for sensor positioning. For the affected body side, the area of interest shifted medial-dorsally from before to after an intervention. While the results of Derungs and Amft (2020) showed that personalised and application-adapted wearable systems can considerably outperform non-adapted ones, only dynamic acceleration and selected gait markers were considered. Moreover, that work analysed only the upper legs of hemiparetic patients and did not provide a dedicated validation. In contrast, the present work considers complete acceleration and gyroscope sensor models and investigates gait event and stride duration estimation according to basic biomechanical definitions.

Due to the clinical relevance and the large number of gait disorders, gait phase analysis with IMUs has already entered practice in research movement labs (e.g., Zhao et al., 2019; Prasanth et al., 2021). Within this context, gait cycle segmentation algorithms can be divided into two main categories (Sánchez Manchola et al., 2019; Vu et al., 2020): rule-based algorithms (e.g., Catalfamo et al., 2010; Rueterbories et al., 2014; Behboodi et al., 2015; Sánchez Manchola et al., 2019) and data-based algorithms, e.g., Hidden Markov Models (HMMs), Artificial Neural Networks, and hybrids (Mannini and Sabatini, 2010; Taborri et al., 2015; Mannini et al., 2016; Zhao et al., 2019). While HMMs have demonstrated top performance, their training- and time-intensive implementation may be unnecessary (Taborri et al., 2016). Indeed, recent publications show that rule-based algorithms with parameterised thresholds are actively deployed for gait phase detection (Vu et al., 2020; Prasanth et al., 2021). In addition, vertical and antero-posterior linear acceleration and angular velocity in the sagittal plane show periodic, repeating signal patterns over gait cycles, which lend themselves to intuitive, rule-based algorithms (Taborri et al., 2016). Rule-based gait event and phase detection algorithms include time domain-based methods (e.g., Catalfamo et al., 2010; Rueterbories et al., 2014), time-frequency analysis methods (e.g., Zhou et al., 2016), and heuristic algorithms, e.g., using derivative peak detection and sign changes (Gouwanda and Gopalai, 2015). Gait event detection often builds on measurements from gyroscopes and accelerometers, individually or in combination (Taborri et al., 2016; Zhou et al., 2016; Vu et al., 2020). For example, Catalfamo et al. (2010) used a rule-based detection approach to segment gait into IC and TO events based on one gyroscope placed on the dominant lower leg. Walking at different inclination levels was analysed in seven healthy participants.
The algorithm showed an overall detection accuracy of 99% for level walking. Gouwanda and Gopalai (2015) used a lower leg angular velocity heuristic for real-time detection of two gait phases during walking with and without braces. Although all gait events were detected with an average error of less than 125 ms, abnormal gait patterns were simulated in healthy participants using ankle and knee braces rather than recorded in participants with pathological gait. Behboodi et al. (2015) extracted characteristics from lower leg angular velocity signals of four healthy individuals to detect seven gait events with a time difference of approx. 75 ms and a detection accuracy of 99.8%. Lambrecht et al. (2017) implemented different online peak detection algorithms for segmenting walking in healthy participants. The input signals for each algorithm were chosen from lower leg angular velocity, lower leg segment angle, ankle joint angle, heel linear velocity, toe linear velocity, lower leg position, foot angular acceleration, and toe linear velocity, all obtained in OpenSim. Timing delays ranged between 6 ms and 190 ms, depending on algorithm and gait phase. Sánchez Manchola et al. (2019) proposed a gait phase detection system for a lower limb exoskeleton and evaluated it in nine healthy participants and nine hemiparetic patients. Vertical acceleration and medio-lateral angular velocity of a single IMU attached to the foot instep were used. Among other methods, they used a rule-based algorithm to detect four gait phases (IC, FF, HO, TO). Compared to reference data from a pressure-sensing insole, the rule-based algorithm showed an overall accuracy of 65.43% and timing errors ranging from −28 ms to 9 ms. Chia Bejarano et al. (2015) placed two IMUs laterally on the lower legs to determine angular velocity in the sagittal plane and the lower leg flexo-extension angle.
They estimated three gait phases (IC, TO, MSw) with an accuracy of 87% and an average timing difference of up to 52 ms for ten stroke patients with severe impairment. Furthermore, Rueterbories et al. (2014) utilised a rule-based algorithm to identify four gait events from differential acceleration signals of one foot-worn sensor in hemiparetic patients. Their results showed a timing difference ranging from 65 ms to 104 ms, depending on the gait phase.

The above discussion shows that existing algorithms were restricted to processing data derived from a specific body position, thus preventing a comparison of performance depending on placement. Moreover, measuring several hundred sensor positions simultaneously is prohibitively expensive and infeasible in terms of practicality and burden for study participants, e.g., patients. In contrast, our co-simulation framework enables us to investigate motion estimation errors using sensors virtually attached to personalised biomechanical models. We demonstrate the co-simulation approach by evaluating estimation algorithms that use vertical acceleration, including static and dynamic acceleration components, as well as medio-lateral angular velocity, across a total of 960 sensors.

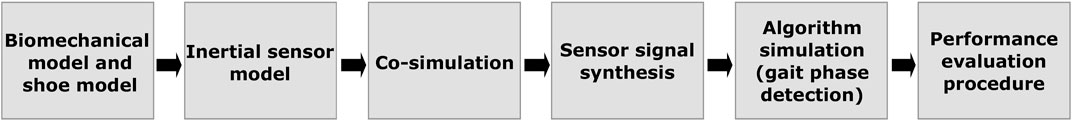

We propose a co-simulation framework comprising models that represent the human body as well as inertial sensors. After the body models are personalised according to anthropometric data and the inertial sensor placement is defined, the models are simulated with motion data (kinematic simulation). Based on the simulation's kinematic output, inertial sensor signals are synthesised for each virtual sensor. The synthesised data is then fed into selected detection algorithms. Here, we focus on gait event detection across 1-phase, 2-phase, and 4-phase gait models. Finally, algorithm performance is analysed in relation to sensor position and gait patterns to demonstrate the evaluation process based on co-simulation. Figure 2 provides an overview of the co-simulation components and processing steps. In the following subsections, we detail the framework components and their implementation.

FIGURE 2. Co-simulation framework overview. Inertial sensor model instances are distributed across biomechanical and shoe models. Motion data is used to co-simulate the models and synthesise inertial sensor data. Based on the various simulated sensors, performance of selected gait event detection algorithms can be analysed in relation to sensor configuration, algorithm parameterisation, and sensor positioning.

A biomechanical body model represents the basis for the sensor simulation. We build on models that can be personalised, e.g., according to anthropometric data, and used for motion simulation. In this work, we used the OpenSim (OpenSim, Version 3.3, Simbios, Simbios/SimTK, CA, United States) Gait2354 inverse kinematic model (Delp et al., 2007). Gait2354 is a dynamic 23-degree-of-freedom musculoskeletal human model including 54 musculotendon actuators of the lower body, torso, and head. The model was validated in healthy participants (John et al., 2013) and different patient groups (Richards and Higginson, 2010; Knarr et al., 2013). Personalisation of the biomechanical body model involved scaling, i.e., changing body mass properties and dimensions, by comparing static distance measurements between specified model landmarks with those between the corresponding registered markers (default weights) placed on study participants. Thus, the modeling input comprised body weight and measured motion capture markers.
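Measurement-based scaling, as offered by OpenSim's Scale tool, derives a segment scale factor from the ratio of marker-pair distances measured on the participant to the corresponding distances on the unscaled model, averaged over marker pairs. The sketch below illustrates only this ratio computation under that assumption; the marker names and coordinates are invented, and the subsequent marker-weighted pose fitting is omitted.

```python
import numpy as np


def measurement_scale_factor(model_markers, exp_markers, pairs):
    """Scale factor of one body segment from marker-pair distances.

    For each (a, b) pair, the distance measured on the participant in a static
    trial is divided by the distance between the corresponding virtual markers
    on the unscaled model; the ratios are averaged (assumed scaling rule).
    """
    ratios = []
    for a, b in pairs:
        d_model = np.linalg.norm(model_markers[a] - model_markers[b])
        d_exp = np.linalg.norm(exp_markers[a] - exp_markers[b])
        ratios.append(d_exp / d_model)
    return float(np.mean(ratios))


# Hypothetical thigh markers in metres (greater trochanter, lateral knee)
model = {"TROCH": np.array([0.0, 0.90, 0.08]), "KNEE": np.array([0.0, 0.50, 0.08])}
static = {"TROCH": np.array([0.02, 0.95, 0.09]), "KNEE": np.array([0.02, 0.51, 0.09])}
s_thigh = measurement_scale_factor(model, static, [("TROCH", "KNEE")])
print(round(s_thigh, 3))  # 1.1 (0.44 m measured vs 0.40 m on the model)
```

Averaging over several marker pairs per segment reduces the influence of a single misplaced marker on the personalised model dimensions.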

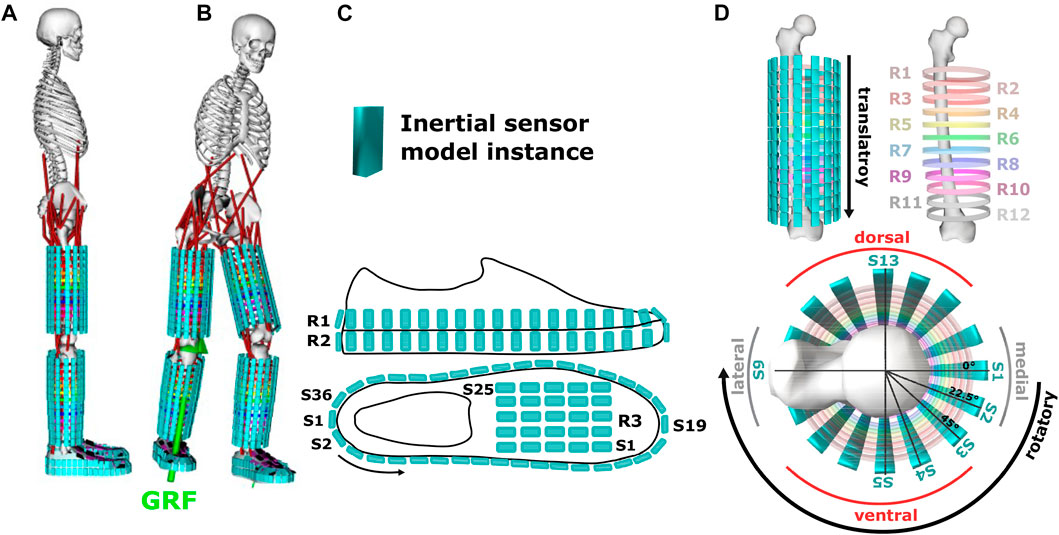

Since the original body model represents only bones, joints, and muscle links, an auxiliary cylindrical structure was designed to approximate limb silhouettes. On the auxiliary structure of each limb segment, 12 rings with 16 positions per ring were defined for sensor attachment on each body side (see Figure 3).

FIGURE 3. (A) Illustration of inertial sensor models attached to a biomechanical body model and a shoe model. The body model uses an auxiliary structure of rings (R) to approximate the limb silhouette. (B) Movement simulation inside OpenSim using ground reaction force (GRF) data as reference. (C) Shoe sensor positions. (D) Sensor positions (S) on the upper and lower legs and visualisation of sensor repositioning along the transversal (rotatory part) and longitudinal (translatory part) limb axes of body segments.

To represent the shoe, an inertia-free, non-deformable, featureless geometric model was designed to determine the shoe silhouette and sensor positioning (2 rings with 36 sensor positions and 1 ring with 25 sensor positions). The shoe model was attached to the body model at the foot segment and fixed via a direct link (WeldJoint).

A global 3D Cartesian coordinate frame G with coordinate axes (x,y,z) was defined in OpenSim. In addition, Cartesian coordinates of a body-local frame L were defined to support spatial representation of the body and shoe model as anterior-posterior (x-axis), vertical (y-axis), and medio-lateral (z-axis). Initial orientation of the vertical axes of the body-local frame L and the global frame G remained identical throughout all simulations.

An additive model was used to represent 3D acceleration signals, where

Vector

The static acceleration component

The unit vector

Angular velocity, as measured by gyroscope sensors, was synthesised by calculating the first derivative of the orientation estimate with respect to time:

where
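The equations of the additive acceleration model and of the gyroscope model are not reproduced in this version of the text. As a hedged illustration only, the following sketch synthesises both signals from a sensor's simulated pose under common assumptions: positions and rotation matrices are given in the global frame G with the y-axis pointing up, the accelerometer measures dynamic acceleration plus the static gravity component (specific force) expressed in the sensor frame, and the body-frame angular velocity follows from the orientation derivative via the skew-symmetric relation `R.T @ dR/dt = [w]x`. Derivatives are approximated with finite differences; the function name and sign conventions are our assumptions, not the authors' definitions.

```python
import numpy as np

# Assumed convention: global frame G with y-axis vertical (up); gravity points down.
GRAVITY_G = np.array([0.0, -9.81, 0.0])


def synthesise_imu(pos, rot, fs):
    """Synthesise triaxial accelerometer and gyroscope signals for one sensor.

    pos: (T, 3) sensor positions in G [m]
    rot: (T, 3, 3) rotation matrices mapping sensor-frame vectors into G
    fs:  sampling rate [Hz]
    Returns acc (T, 3) [m/s^2] and gyr (T, 3) [rad/s], both in the sensor frame.
    """
    dt = 1.0 / fs
    # Dynamic component: second time derivative of position (finite differences)
    acc_dyn = np.gradient(np.gradient(pos, dt, axis=0), dt, axis=0)
    # Additive model: dynamic part plus static part; a resting sensor reads
    # +9.81 m/s^2 along the upward axis (specific force = a - g)
    specific_force = acc_dyn - GRAVITY_G
    rot_t = np.transpose(rot, (0, 2, 1))  # R^T maps G -> sensor frame
    acc = np.einsum("tij,tj->ti", rot_t, specific_force)
    # Body-frame angular velocity from the orientation derivative: [w]x = R^T dR/dt
    omega_skew = rot_t @ np.gradient(rot, dt, axis=0)
    gyr = np.stack(
        [omega_skew[:, 2, 1], omega_skew[:, 0, 2], omega_skew[:, 1, 0]], axis=1
    )
    return acc, gyr


# Usage: sensor at a fixed position, rotating about the z-axis at 1 rad/s
fs = 100.0
t = np.arange(0.0, 1.0, 1.0 / fs)
pos = np.zeros((t.size, 3))
c, s = np.cos(t), np.sin(t)
rot = np.zeros((t.size, 3, 3))
rot[:, 0, 0], rot[:, 0, 1] = c, -s
rot[:, 1, 0], rot[:, 1, 1] = s, c
rot[:, 2, 2] = 1.0
acc, gyr = synthesise_imu(pos, rot, fs)
```

For this rotating but non-translating sensor, the accelerometer initially reads gravity only on the vertical axis, and the gyroscope z-axis reads approximately 1 rad/s away from the signal edges, where the one-sided finite differences are less accurate.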

Sensors were designed as 5 mm³ cubes without inertia and scaled in volume by factors of 0.001, 0.005, and 0.003 in the x-, y-, and z-axis, respectively, to match the scaled biomechanical model in OpenSim (see Figure 3). Our aim was to systematically evaluate position-dependent sensor information. Thus, a systematically arranged sensor grid (see Section 3.1) was defined with a maximum distance of 26 mm between individual sensors for a body height of 1.83 m, which corresponds to the tallest participant in our evaluation study. On each upper and lower leg, 192 sensors were virtually attached. In addition, 96 sensors were attached to each shoe, resulting in a total of 960 simulated sensors. Sensors were attached to the body and shoe models via a direct link (WeldJoint). Figure 3 illustrates the simulated sensor positions.
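The grid arithmetic can be checked with a short sketch. It assumes that the auxiliary structure is a plain cylinder with 12 equally spaced rings and 16 equally spaced positions per ring, and it takes the neighbour spacing as the larger of the chord between adjacent positions and the ring distance. The radius and length used below are hypothetical, not the participants' segment dimensions.

```python
import math

RINGS, POSITIONS_PER_RING = 12, 16  # per upper or lower leg segment
SHOE_SENSORS = 96                   # per shoe, as stated in the text


def ring_grid(radius_m, length_m):
    """Sensor sites on a cylinder approximating one limb segment.

    Assumption: rings equally spaced along the longitudinal (y) axis with ring R1
    at the proximal end, positions equally spaced around the circumference.
    Returns (ring, position, x, y, z) tuples with 1-based R/S indices.
    """
    sites = []
    for r in range(RINGS):
        y = -length_m * r / (RINGS - 1)
        for s in range(POSITIONS_PER_RING):
            phi = 2.0 * math.pi * s / POSITIONS_PER_RING
            sites.append((r + 1, s + 1, radius_m * math.cos(phi), y, radius_m * math.sin(phi)))
    return sites


def max_neighbour_spacing(radius_m, length_m):
    """Larger of the chord between adjacent positions and the ring spacing [m]."""
    chord = 2.0 * radius_m * math.sin(math.pi / POSITIONS_PER_RING)
    ring_spacing = length_m / (RINGS - 1)
    return max(chord, ring_spacing)


# Hypothetical segment dimensions (not taken from the study participants)
grid = ring_grid(radius_m=0.065, length_m=0.28)
spacing_mm = 1000.0 * max_neighbour_spacing(0.065, 0.28)

# Two body sides x (upper leg + lower leg + shoe)
total_sensors = 2 * (2 * RINGS * POSITIONS_PER_RING + SHOE_SENSORS)
print(len(grid), round(spacing_mm, 1), total_sensors)  # 192 25.5 960
```

With these assumed dimensions, both spacings stay below the 26 mm bound, and the per-segment and per-shoe counts add up to the 960 simulated sensors.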

Co-simulations of the models were performed using the OpenSim Inverse Kinematics tool with motion data as input (see Section 5.1). MoCap marker data was filtered with a 6 Hz low-pass Butterworth filter. Orientation estimates were derived as rotation matrices

We adapted and contrasted threshold-based gait detection algorithms that had been published with promising estimation results using at least one sensor position on the lower limbs. Algorithm (Algo.) A1 used acceleration data only (see Section 4.2.1) and was taken from Catalfamo et al. (2010), but its parameters, i.e., thresholds, were derived from training data (see Section 5.3) instead of visual inspection. We included Algo. A1 as a baseline. Algo. A2 used gyroscope data corrected by accelerometer data (see Sections 4.2.2–4.2.5). Algo. A2 combines the detection algorithm of Sánchez Manchola et al. (2019) for IC with that of Zhao et al. (2019) for MSt and MSw. As for Algo. A1, training data was used to derive the parameters of Algo. A2. In the aforementioned studies, inertial sensors were attached to the feet and lower legs to capture linear acceleration and/or angular velocity signals during gait. Instead, we defined multiple sensor positions according to the biomechanical body and shoe models described in Section 3.1.

Synthesised data was preprocessed using a second order Butterworth filter (cut-off: 17 Hz for
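Both filtering steps (6 Hz for MoCap markers, 17 Hz for synthesised IMU data) can be sketched with SciPy's `butter` and `filtfilt`. Whether the original pipeline filtered zero-phase (forward-backward, as `filtfilt` does, which doubles the effective order), which sampling rate was used, and which order the marker filter had are not stated here, so these choices are assumptions.

```python
import numpy as np
from scipy.signal import butter, filtfilt


def lowpass(x, cutoff_hz, fs_hz, order=2):
    """Butterworth low-pass filter applied forward and backward (zero phase).

    Assumption: zero-phase filtering via filtfilt; this squares the magnitude
    response, i.e., doubles the effective filter order.
    """
    b, a = butter(order, cutoff_hz, btype="low", fs=fs_hz)
    return filtfilt(b, a, x, axis=0)


fs = 100.0  # hypothetical sampling rate [Hz]
t = np.arange(0.0, 5.0, 1.0 / fs)
clean = np.sin(2 * np.pi * 1.0 * t)                 # ~1 Hz gait-like component
noisy = clean + 0.3 * np.sin(2 * np.pi * 40.0 * t)  # 40 Hz disturbance
markers_f = lowpass(noisy, cutoff_hz=6.0, fs_hz=fs)  # MoCap markers (order assumed)
imu_f = lowpass(noisy, cutoff_hz=17.0, fs_hz=fs)     # synthesised IMU data
```

Zero-phase filtering avoids shifting signal peaks in time, which matters when detected event times are later compared against reference events.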

In threshold-based gait event detection, peak detection is a fundamental element. Maxima were detected by a peak detection function

Θ refers to parameters of

The peak detection was parameterised by Θ = {θh, θd} during training, with minimum peak height θh and minimum distance between two consecutive peaks θd. Instead of visual inspection to find minimum peak height θh, as pursued in previous work (Kotiadis et al., 2010; Maqbool et al., 2016; Sánchez Manchola et al., 2019), in our training procedure, we initially applied peak detection without parameter θh, to find all candidate peaks
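Peak detection with a minimum height θh and a minimum distance θd maps directly onto `scipy.signal.find_peaks`, where `height` corresponds to θh and `distance` to θd expressed in samples. The sketch shows the two-step use described above: first all candidate peaks without θh, then detection with a given θh. How θh is derived from the candidates is not reproduced here, so θh is simply passed in; the signal and threshold values are illustrative, and `detect_maxima` is a hypothetical helper.

```python
import numpy as np
from scipy.signal import find_peaks


def detect_maxima(x, fs, theta_h=None, theta_d_s=0.0):
    """Indices of local maxima of x.

    theta_h:   minimum peak height (None: no height criterion, all candidates)
    theta_d_s: minimum distance between consecutive peaks [s]
    """
    distance = max(1, int(round(theta_d_s * fs)))  # find_peaks expects samples
    peaks, _ = find_peaks(x, height=theta_h, distance=distance)
    return peaks


fs = 100.0
t = np.arange(0.0, 5.0, 1.0 / fs)
# Illustrative signal: dominant 1 Hz peaks plus smaller 5 Hz ripples
x = np.sin(2 * np.pi * 1.0 * t) + 0.2 * np.sin(2 * np.pi * 5.0 * t)

candidates = detect_maxima(x, fs)                          # step 1: no theta_h
events = detect_maxima(x, fs, theta_h=0.8, theta_d_s=0.6)  # with theta_h, theta_d
print(len(candidates), t[events])
```

The ripple maxima appear among the candidates but are rejected once a suitable θh is applied, leaving one peak per cycle.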

A wait time tw between gait events was introduced to avoid bouncing effects and thus false detections, following Rueterbories et al. (2013) and Sánchez Manchola et al. (2019). Related work used wait times of 40 ms ≤ tw ≤ 300 ms (e.g., Maqbool et al., 2016; Sánchez Manchola et al., 2019) or 50% of the last stance phase duration (Catalfamo et al., 2010) to detect two to four gait phases. Accordingly, we set tw = 600 ms for the 1-phase model, tw = 300 ms for the 2-phase model, and tw = 150 ms for the 4-phase model.
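The wait time acts like a refractory period on the stream of detected events. A minimal sketch, assuming that tw is measured from the last accepted event (so rejected detections do not restart the timer); the function name `apply_wait_time` is hypothetical.

```python
# Wait times per phase model as stated above [s]
WAIT_TIME_S = {1: 0.600, 2: 0.300, 4: 0.150}


def apply_wait_time(event_times_s, n_phases):
    """Suppress events closer than tw to the last accepted event.

    Assumption: tw is measured from the last *accepted* event, so rejected
    (bouncing) detections do not restart the wait time.
    """
    tw = WAIT_TIME_S[n_phases]
    accepted = []
    for t in sorted(event_times_s):
        if not accepted or t - accepted[-1] >= tw:
            accepted.append(t)
    return accepted


raw = [0.10, 0.18, 0.55, 0.72, 1.40]  # illustrative candidate event times [s]
print(apply_wait_time(raw, 1))  # [0.1, 0.72, 1.4]
print(apply_wait_time(raw, 4))  # [0.1, 0.55, 0.72, 1.4]
```

Shorter wait times for finer phase models are necessary because consecutive events of a 4-phase model lie much closer together than consecutive ICs.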

For minima, peak detection

Subsequent sections detail the specific parameterisation depending on the algorithm (Algo. A1 or Algo. A2) and the phase granularity (1-, 2-, or 4-phase; see Section 1 and Figure 1).

Algo. A1 implemented a peak detection to obtain IC from acceleration signal

Minimal peak distance θd was set equal to tw = 600 ms between IC events.

The purely acceleration-based Algo. A1 was investigated only for the 1-phase model and is therefore described here for IC event detection only.

IC gait events were detected by Algo. A2 by a positive to negative signal sign change function

Algo. A2 reported an IC event if the sign change and positive peak conditions described above were met within a time range of 50 ms, following Sánchez Manchola et al. (2019). In addition, the wait time since the last detected IC event was set to tw = 600 ms. In the 2- and 4-phase models, we set tw = 300 ms and tw = 150 ms since the last detected gait event, respectively.
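The IC rule combines two conditions that must coincide within 50 ms, plus the wait time tw. Because the text above does not state which signal carries the sign change and which the positive peak, the sketch takes both as generic inputs; in the usage example, angular velocity provides the sign change and acceleration the peak purely for illustration. The function `detect_ic` and its arguments are hypothetical.

```python
import numpy as np
from scipy.signal import find_peaks


def detect_ic(sign_sig, peak_sig, fs, theta_h, window_s=0.05, tw_s=0.6):
    """Hypothetical IC rule: a positive-to-negative sign change in sign_sig and
    a positive peak (height >= theta_h) in peak_sig within window_s of each
    other, at least tw_s after the previously reported IC. Returns indices.
    """
    s = np.asarray(sign_sig, dtype=float)
    # Index of the first non-positive sample after a positive one
    crossings = np.flatnonzero((s[:-1] > 0) & (s[1:] <= 0)) + 1
    peaks, _ = find_peaks(peak_sig, height=theta_h)
    window = int(round(window_s * fs))
    ic, last = [], None
    for c in crossings:
        coincides = peaks.size > 0 and np.min(np.abs(peaks - c)) <= window
        if coincides and (last is None or c - last >= tw_s * fs):
            ic.append(int(c))
            last = c
    return np.array(ic, dtype=int)


# Illustrative signals (assignment of signals to roles is an assumption)
fs = 100.0
t = np.arange(0.0, 3.0, 1.0 / fs)
gyr = np.cos(2 * np.pi * t)  # sign changes + -> - near 0.25, 1.25, 2.25 s
acc = sum(np.exp(-(((t - tc) / 0.01) ** 2)) for tc in (0.26, 1.27, 2.40))
ic = detect_ic(gyr, acc, fs, theta_h=0.5)
```

The third sign change is rejected because the nearest acceleration peak lies 150 ms away, outside the 50 ms coincidence window.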

To find TO events with Algo. A2, negative peaks were detected in both acceleration

A2 reported a TO event if

MSw was determined from peaks of the angular velocity signal

while the acceleration signal of the contralateral side

MSt was detected by peaks of the angular velocity signal at the contralateral side

We set the minimum peak distance between MSt events to θd = 600 ms. A2 peak detection reported an MSt event if the acceleration signal exhibited minimal changes, determined from its gradient (second-order derivative within a tolerance of the 75th quantile) in signal segments of td = 50 ms.

Simulation data was extracted from a publicly available dataset (Knarr et al., 2013), comprising treadmill walking of eight hemiparetic patients after stroke. Patients walked at self-selected speed, before (PRE) and after (POST) an intervention therapy. Biomechanical motion references from marker-based video motion capture and measured ground reaction force (GRF) were used in our work. Our evaluation covers both body sides of hemiparetic patients, i.e., the affected and less-affected body side.

To obtain ground truth, we used GRF data, which provides details about gait phases (see Figure 1). GRF data served as reference to identify IC, MSt, TO, and MSw events for each study participant. IC and TO were extracted automatically by detecting signal sign changes of the GRF first derivative. MSt and MSw were visually labelled by an experienced sports scientist.

We apply five-fold cross-validation (CV) and Leave-One-Participant-Out CV as validation strategies to assess detection algorithm performance. The validation strategies target different evaluations, i.e., to estimate errors of user-dependent vs. user-independent parameterisation and errors of sensor position-dependent vs. sensor position-independent parameterisation at limb segments (see details below). During training, the respective algorithm parameters to find gait events (IC, TO, MSt, MSw, see Sections 4.2.1–4.2.4) were fitted, while the CV testing sets were used to evaluate performance. All algorithms were evaluated for PRE and POST intervention separately (see Section 5.1). Below we match validation strategies to algorithms, i.e., how minimum peak height θh was derived from training data and evaluated on testing data.

To assess event estimation performance with user-dependent and sensor position-dependent parameterisation, Algo. A1 and Algo. A2, variant a (A2a), were evaluated using 5-fold CV per participant and sensor position, i.e., in each fold, the data was split into 80% training data and 20% testing data. Training data was used to fit the minimum peak height θh to the synthesised sensor data. The training procedure was repeated five times so that each data fold was used once for testing, and the folds' results were averaged.

To assess event estimation performance in user-independent parameterisation, Algo. A2, variant b (A2b), was evaluated using Leave-One-Participant-Out procedure. Training data was used to fit minimum peak height θh according to synthesised sensor data per sensor position, but averaged across all study participants, except one participant. Data of the held-out participant was assigned for testing. Training procedure was repeated to hold-out every participant once and the folds’ results were averaged.

To assess event estimation performance in user-dependent, but sensor position-independent parameterisation, Algo. A2, variant c (A2c), was evaluated using 5-fold CV per participant, thus each fold was split into 80% training data and 20% testing data. Training data was used to fit minimum peak height θh according to synthesised sensor data, and the fitted values were subsequently averaged per body segment. According to the number of synthesised sensors per segment, 192 sensors on each upper and lower leg and 96 sensors on the shoe were averaged before applying CV. Training procedure was repeated five times to cover each data fold once for testing and the folds' results were averaged.
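The two averaging schemes used for Algo. A2b and Algo. A2c can be sketched as follows. The sketch assumes that per-participant, per-sensor thresholds θh have already been fitted. The dictionary layout, participant IDs, segment labels, and function names are hypothetical; only the averaging step follows the text.

```python
import numpy as np


def lopo_theta_h(theta_by_participant, held_out):
    """A2b-style threshold for the held-out participant.

    theta_by_participant maps a (hypothetical) participant ID to an array of
    fitted minimum peak heights, one per sensor position. The held-out
    participant's own values are excluded before averaging.
    """
    others = [np.asarray(v, dtype=float)
              for pid, v in theta_by_participant.items() if pid != held_out]
    return np.mean(others, axis=0)


def segment_theta_h(theta_per_sensor, segment_of_sensor):
    """A2c-style threshold: one value per body segment, averaged over its sensors."""
    theta = np.asarray(theta_per_sensor, dtype=float)
    segments = np.asarray(segment_of_sensor)
    return {str(s): float(theta[segments == s].mean()) for s in np.unique(segments)}


# Hypothetical thresholds for three participants and two sensor positions.
theta = {"P1": [1.0, 2.0], "P2": [3.0, 4.0], "P3": [5.0, 6.0]}
theta_for_p1 = lopo_theta_h(theta, held_out="P1")  # mean over P2 and P3

# Hypothetical per-sensor thresholds for two upper-leg and two shoe sensors.
per_segment = segment_theta_h([1.0, 3.0, 10.0, 20.0], ["UL", "UL", "S", "S"])
```

In the study, the segment average for A2c would span 192 sensors per leg segment or 96 shoe sensors rather than the two per segment used here.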

Performance of the gait event detection algorithms was evaluated by deriving the timing error, i.e., the mean absolute error (MAE) between detected and reference gait events.

Subsequently, the normalised MAE (nMAE) was obtained in percent by normalising the MAE with the reference average stride duration.
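The two metrics can be written as MAE = (1/N) Σ |t_det,i − t_ref,i| over N matched event pairs and nMAE = MAE / T̄_stride · 100%. The sketch below computes both. It makes two assumptions not stated here: detected and reference events are already matched one-to-one, and the average reference stride duration T̄_stride is taken as the mean interval between consecutive reference initial contacts.

```python
import numpy as np


def mae(detected, reference):
    """Mean absolute timing error between matched detected and reference events."""
    detected = np.asarray(detected, dtype=float)
    reference = np.asarray(reference, dtype=float)
    return float(np.mean(np.abs(detected - reference)))


def nmae_percent(detected, reference, reference_ic_times):
    """MAE normalised by the mean reference stride duration, in percent.

    Assumption: stride duration is the interval between consecutive
    reference initial contacts (IC).
    """
    ic = np.asarray(reference_ic_times, dtype=float)
    mean_stride = float(np.mean(np.diff(ic)))
    return 100.0 * mae(detected, reference) / mean_stride


ref = [1.0, 2.4, 3.8]   # hypothetical reference IC times (s)
det = [1.1, 2.3, 3.8]   # hypothetical detected IC times (s)
err = mae(det, ref)                    # about 0.067 s
err_pct = nmae_percent(det, ref, ref)  # about 4.8 % of a 1.4 s stride
```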

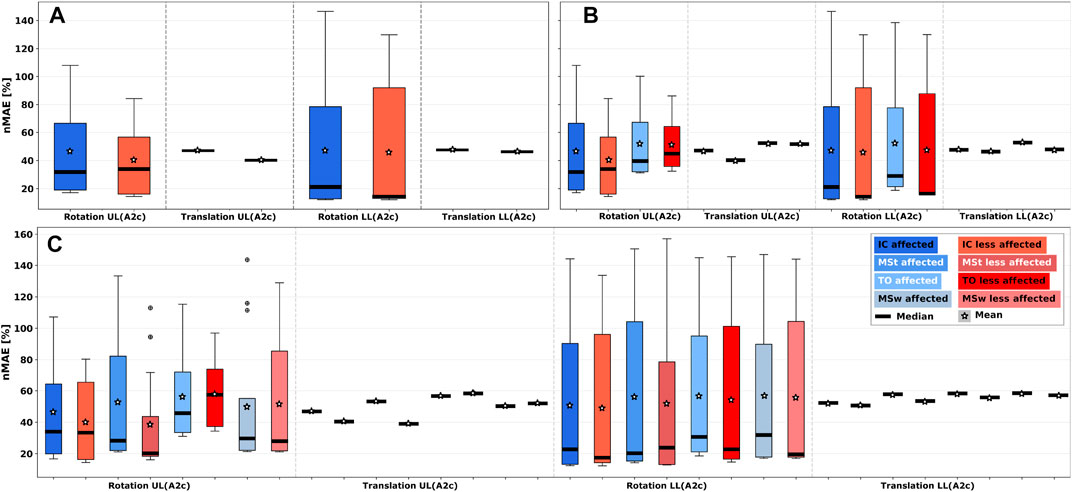

To investigate detection errors that may originate from position variation during sensor wearing, we analysed rotatory and translatory error subcomponents for Algo. A2c. Algo. A2c was designed to be sensor position-independent, i.e., the same minimum peak height θh was used for each sensor position per body segment (see Section 5.3.3). Rotatory errors were determined from estimated errors (nMAE) at sensor positions S1–S16 of auxiliary structure ring R1 (see Figure 3D), thus simulating a uniaxial sensor rotation around a limb segment. Correspondingly, translatory errors were determined from estimated errors (nMAE) at sensor position S1 of auxiliary structure rings R1–R12, thus simulating a uniaxial sensor translation along a limb axis. The error components can be interpreted as voluntary or involuntary sensor position shifts on longitudinal and transversal limb axes. By dissecting error components, we assessed how susceptible the detection algorithms are to specific types of sensor position shifts.
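The selection of rotatory and translatory components can be sketched as simple array slicing. The sketch assumes that a segment's nMAE values are stored in a hypothetical grid with rings R1–R12 as rows and sensors S1–S16 as columns. The spread of each component is then summarised by its IQR.

```python
import numpy as np


def iqr(values):
    """Interquartile range Q3 - Q1."""
    q1, q3 = np.percentile(values, [25, 75])
    return float(q3 - q1)


def error_components(nmae_grid):
    """Split a (rings x sensors) nMAE grid into rotatory and translatory parts.

    Assumed layout: row i is ring R(i+1), column j is sensor S(j+1).
    Rotatory: S1..S16 on ring R1 (sensor moved around the limb axis).
    Translatory: S1 on rings R1..R12 (sensor moved along the limb axis).
    """
    grid = np.asarray(nmae_grid, dtype=float)
    return grid[0, :], grid[:, 0]


# Hypothetical gyroscope-like case: nMAE depends only on the angular
# position around the limb, so every ring repeats the same 16 values.
per_angle = np.linspace(10.0, 40.0, 16)   # nMAE (%) for S1..S16
grid = np.tile(per_angle, (12, 1))        # 12 rings x 16 sensors
rotatory, translatory = error_components(grid)
```

In this idealised case, the translatory IQR is zero and the rotatory IQR is 15 percentage points.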

The model validation was performed with specifically recorded motion data to confirm correct synthesis of acceleration and gyroscope signals as most public datasets lacked synchronous MoCap marker data and physical IMU measurements, which are needed for a direct comparison. Synthesised and measured (reference) sensor data timeseries of ten healthy participants during walking were analysed to validate our modeling and co-simulation approach.

A total of 24 reflective spherical MoCap markers and six IMUs (MyoMotion, Noraxon, United States) at lower limbs and pelvis were selected. A synchronized and calibrated 11-camera marker-based MoCap system (Qualisys, Sweden) was used to acquire gold-standard MoCap data. Cameras and IMUs were time-synchronized at a frame rate of 100 Hz. See Supplementary Table S1 for participant details and Supplementary Figure S13 for participant setup during model validation. All participants gave written consent and ethics approval was granted by the ethics committee of Friedrich-Alexander University Erlangen-Nuremberg.

After a static calibration trial (upright standing), participants were instructed to walk straight for a distance of 9 m. On average, two gait cycles per body side and participant were captured for the analysis. MoCap data preprocessing included marker labelling and gap filling (Qualisys Track Manager, v. 2018). MoCap and IMU data were subsequently filtered with a 6 Hz lowpass Butterworth filter.
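The lowpass step can be sketched with SciPy's `butter` and `filtfilt`. The text specifies only a 6 Hz Butterworth lowpass at 100 Hz. The filter order (4) and the use of zero-phase forward-backward filtering are assumptions made for this sketch.

```python
import numpy as np
from scipy.signal import butter, filtfilt


def lowpass(x, fs=100.0, cutoff=6.0, order=4):
    """Zero-phase Butterworth lowpass along the time axis (axis 0).

    order=4 and zero-phase filtering are assumptions, not reported values.
    """
    b, a = butter(order, cutoff, btype="low", fs=fs)
    return filtfilt(b, a, x, axis=0)


# Hypothetical 5 s signal at 100 Hz: 1 Hz movement plus 30 Hz noise.
t = np.arange(0.0, 5.0, 0.01)
x = np.sin(2 * np.pi * 1.0 * t) + 0.5 * np.sin(2 * np.pi * 30.0 * t)
y = lowpass(x)  # the 30 Hz component is strongly attenuated
```

Zero-phase filtering avoids shifting signal peaks in time, which matters when filtered signals are later used for event timing.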

The Gait2354 inverse kinematic model was used to create personalised body models following the steps described in Sections 3.1 and 3.3. The best matching sensor positions (determined manually as lowest error and highest correlation across all participants) were selected at the upper legs (S4 on R12), lower legs (S3 on R12), and foot (S22 on R3) for comparison with real measurements (see Figure 3).

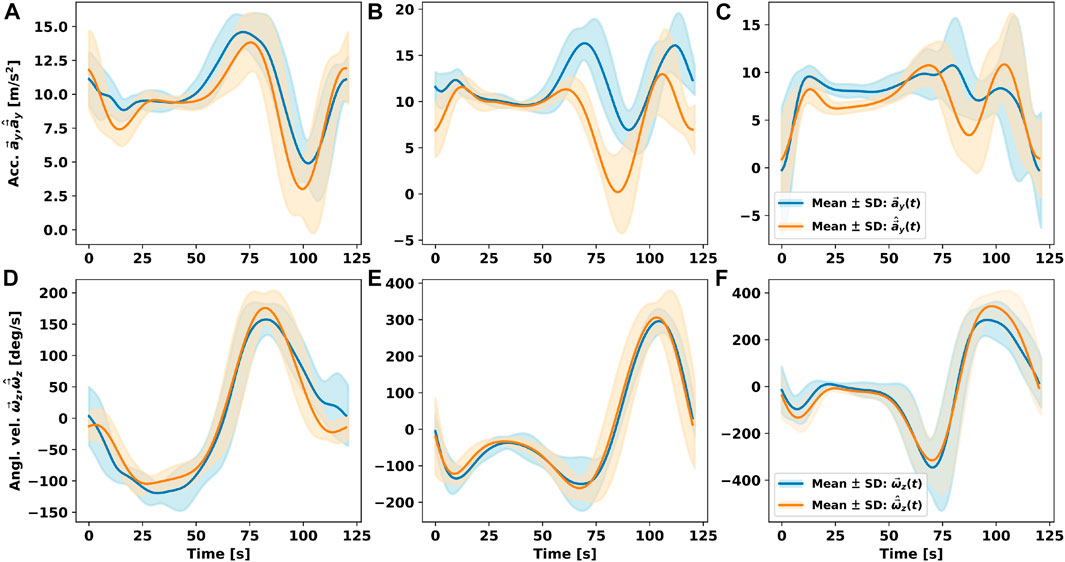

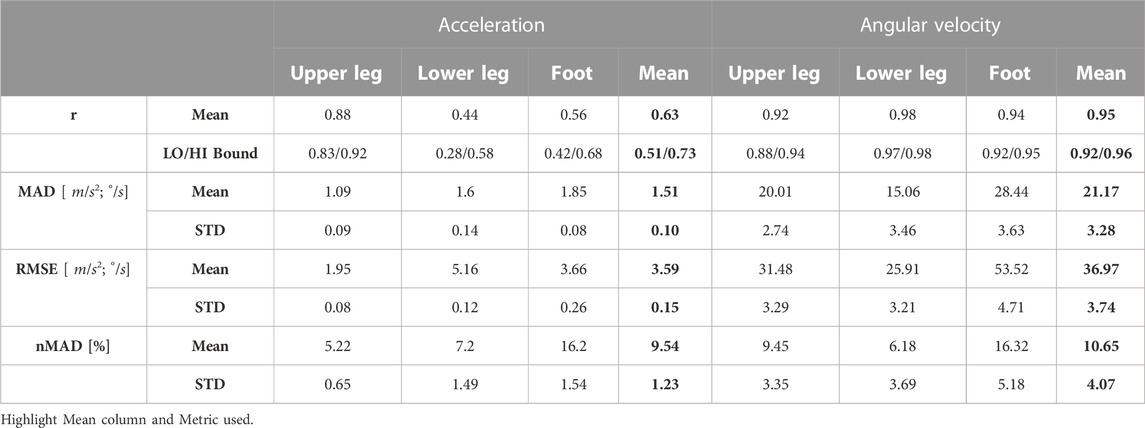

Figure 4 shows measured and simulated signals averaged per gait cycle for upper and lower legs and foot instep. Data of left and right body sides were combined, resulting in a total of 48 gait cycles per segment to be analysed. Synthesised and measured accelerometer data showed moderate average agreement, with a Pearson's correlation coefficient of r = 0.62 across all three sensor positions (cf. Figures 4A–C). Pearson's correlation coefficients were derived between synthesised and measured sensor timeseries data per participant and sensor position. All Pearson correlation r values were transformed with Fisher's z transform, as not all correlations were normally distributed. We calculated the mean and 95% confidence interval (lower and upper bounds) from the transformed distributions. Subsequently, the derived means and bounds were back-transformed (see Table 1). Correlation was r = 0.88 for upper legs, r = 0.44 for lower legs, and r = 0.56 for feet. Absolute signal minima and maxima varied between synthesised and measured timeseries; however, the mean median absolute deviation (mean MAD) was 1.51 m/s², the mean RMSE was 3.59 m/s², and the mean normalised median absolute deviation (mean nMAD) was below 10% (see Table 1). Reduced correlation at lower legs and feet compared to upper legs may be due to the auxiliary structures used to approximate limb shapes: the cylindrical auxiliary structures had a larger circumferential difference at the lower legs than at the upper legs, making it harder to map sensor positions. Similarly, the shoe model may have resulted in larger variation of foot sensor approximation compared to barefoot walking (cf. Figures 4A–C; Table 1). For angular velocity signals, correlations were r = 0.98 for lower legs, r = 0.94 for feet, and r = 0.92 for upper legs. Figures 4D–F illustrate the agreement of simulation and measurements. The lowest mean nMAD was 7% for lower legs; the overall mean nMAD was ∼10.7% (see Table 1).
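The Fisher z averaging described above can be sketched as follows. The text does not state how the 95% confidence interval was computed on the z scale. This sketch assumes a normal approximation, mean ± 1.96 × standard error of the per-participant z values, before back-transforming with tanh.

```python
import numpy as np


def fisher_mean_ci(r_values, z_crit=1.96):
    """Average correlations via Fisher's z and back-transform mean and CI.

    Assumption: CI = mean_z +/- z_crit * standard error of the z values
    (normal approximation); the paper's exact CI method is not stated.
    """
    z = np.arctanh(np.asarray(r_values, dtype=float))  # Fisher z transform
    mean_z = z.mean()
    se = z.std(ddof=1) / np.sqrt(z.size)
    lo, hi = mean_z - z_crit * se, mean_z + z_crit * se
    return float(np.tanh(mean_z)), float(np.tanh(lo)), float(np.tanh(hi))


# Hypothetical per-participant correlations for one sensor position.
r_mean, r_lo, r_hi = fisher_mean_ci([0.40, 0.55, 0.62, 0.48])
```

Because tanh is monotonic, the back-transformed bounds still enclose the back-transformed mean. However, the interval is generally asymmetric on the r scale.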

FIGURE 4. Comparison of accelerometer (Acc.) and gyroscope (Angl. vel.) measurements timeseries

TABLE 1. Pearson correlation coefficients r, mean MAD, mean RMSE, mean nMAD, and STD between measured and synthesised sensor timeseries across all participants. LO/HI Bound: Lower and upper bound of 95% confidence interval.

Overall, angular velocity signal agreement exceeded that of acceleration. Nevertheless, signal patterns between synthesised and measured acceleration signals clearly showed similarity, too. The results indicate that acceleration is more susceptible to individual movement patterns and potential sensor position differences. An overall agreement between signal patterns is evident, suggesting that our co-simulation framework provides meaningful fidelity for sensor data synthesis of acceleration and angular velocity data.

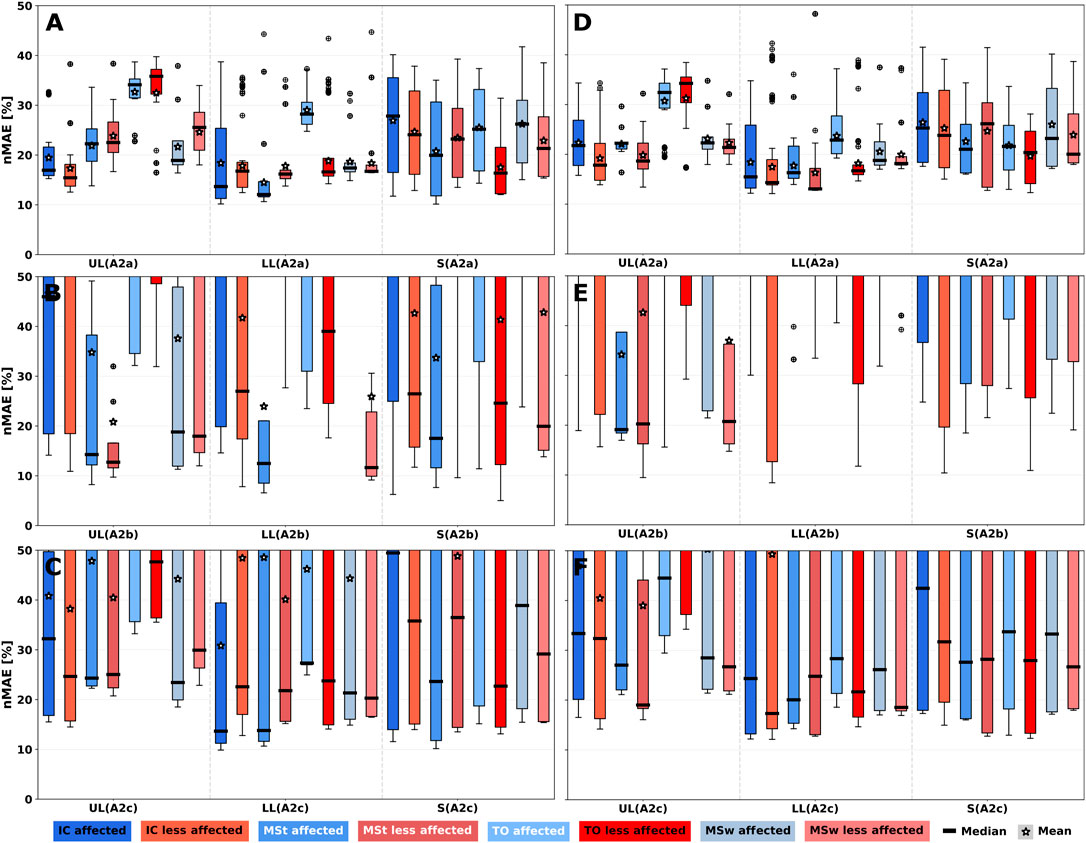

We summarise estimation results of gait event detection based on 1-, 2-, and 4-phase models. Result diagrams use a common boxplot style to show error ranges over all simulated sensors per limb, with whiskers extending to 1.5× the interquartile range (IQR) below/above the lower/upper quartile (Q1/Q3). Since error estimates were not always normally distributed, we compare median and IQR, but visualise error means too. We limit visible error ranges to highlight the performance of practically relevant sensors per limb with nMAE below 50%.
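The boxplot statistics used in the result diagrams can be sketched as below. The sketch assumes the common Tukey convention, in which whiskers stop at the most extreme observation within 1.5 × IQR of Q1/Q3 and points beyond are shown as outliers. The function name `box_stats` and the input values are hypothetical.

```python
import numpy as np


def box_stats(values):
    """Quartiles, Tukey whiskers (1.5 x IQR), mean, and outliers for one box."""
    x = np.asarray(values, dtype=float)
    q1, median, q3 = np.percentile(x, [25, 50, 75])
    iqr = q3 - q1
    lower_limit, upper_limit = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = x[(x >= lower_limit) & (x <= upper_limit)]
    return {
        "q1": float(q1), "median": float(median), "q3": float(q3),
        "mean": float(x.mean()),
        # whiskers end at the most extreme observations within the limits
        "whiskers": (float(inside.min()), float(inside.max())),
        "outliers": x[(x < lower_limit) | (x > upper_limit)],
    }


# Hypothetical nMAE values (%) of six sensors on one limb segment.
stats = box_stats([10.0, 12.0, 14.0, 16.0, 18.0, 90.0])
```

The single large value pulls the mean (about 26.7%) well above the median (15%). This illustrates why median and IQR are compared for non-normally distributed errors.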

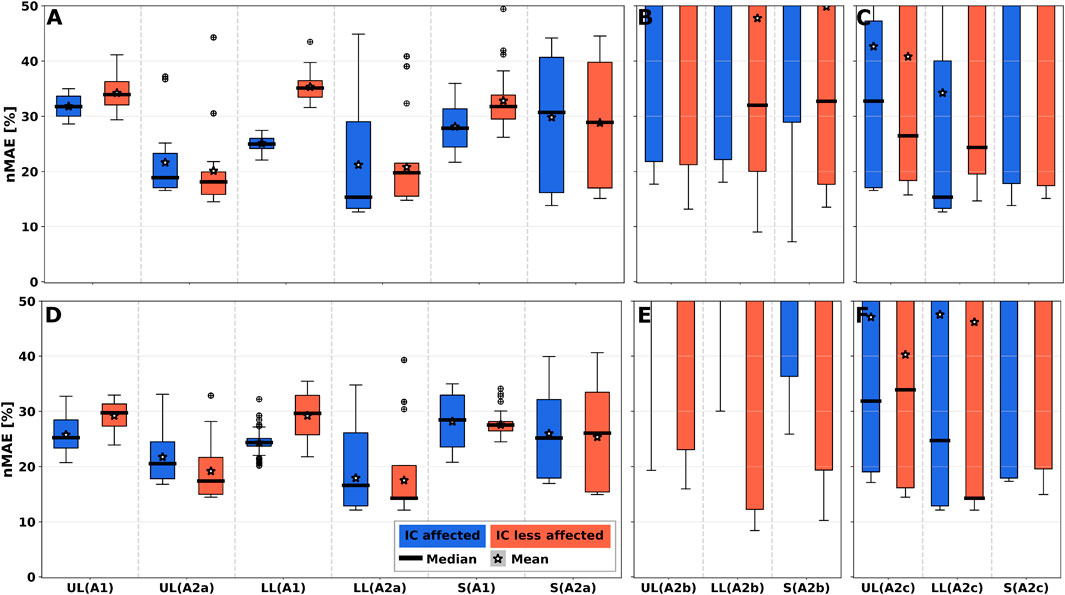

Figure 5 shows the nMAE between ground truth and predicted gait events of all simulated sensor positions on both body sides PRE intervention (see Figures 5A–C) and POST intervention (see Figures 5D–F). Event detection error ranged between 10% and 50%, corresponding to approx. 140–700 ms, depending on the body segment. Algo. A1 showed larger median nMAEs for the upper and lower leg, but lower IQR compared to Algo. A2a. Overall, median nMAE decreased from PRE to POST by 5%–10% on average.

FIGURE 5. 1-phase gait event nMAE of all simulated sensor positions summarised per body segment for all algorithms. nMAE axes were limited to highlight practically relevant sensor performances with nMAE below 50%. For full-range diagrams, please see Supplementary Figure S5. A1 shows larger nMAE in medians for all segments. (A–C) PRE intervention. (D–F) POST intervention. (A,D) User-dependent and sensor position-dependent parameterisation (Algo. A1, Algo. A2a). (B,E) User-independent parameterisation by averaging parameters across training set patients (Algo. A2b). (C,F) User-dependent and sensor position-independent parameterisation by averaging parameters across body segments (Algo. A2c). UL: Upper leg; LL: Lower leg; S: Shoe.

Figures 5B, E show nMAEs of user-independent parameterisation of Algo. A2b, i.e., with parameters averaged across training set patients. Figures 5C, F show nMAEs of user-dependent and sensor position-independent parameterisation of Algo. A2c, i.e., with parameters averaged across body segments. User independence or sensor position independence increased nMAE median and IQR. Depending on body segment and algorithm choice, personalised parameterisation improved median nMAE by up to approximately 65% (see Supplementary Table S2). The performance degradation results from the substantially increased nMAE IQR across sensor positions for Algo. A2b and Algo. A2c. Still, selected sensor positions (e.g., S5/S13 on upper and lower legs or S9/S15 on R3 on the shoe) could be identified that exhibit performance similar to the personalised algorithm variant (A2a), as shown in Supplementary Figure S8.

nMAE values larger than 100% occurred when peaks were missed in at least one stride or additional peaks were detected, both of which increased event time differences with respect to ground truth. Supplementary Figure S12 illustrates detection errors for two selected sensor positions.

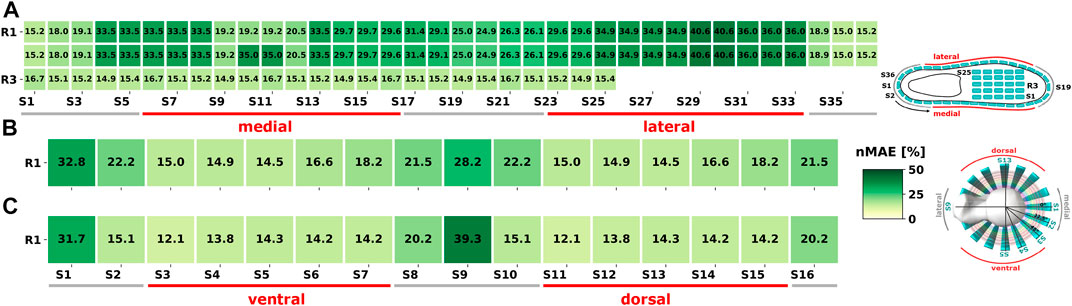

Shoe sensor positions showed larger error IQRs compared to upper and lower legs (see Figure 5), which can be attributed to the varying orientation of the shoe-mounted sensors (see also Figure 3). Shoe sensor heatmaps showed an nMAE decrease of ∼50% for instep and heel sensor positions compared to shoe positions on the lateral and medial side (see Figure 6). Upper and lower leg positions show circular error patterns, because gyroscope-based detection methods, i.e., Algo. A2, are insensitive to sensor shifts along the limb axis. Therefore, Figures 6B, C show one selected sensor ring only to illustrate the error patterns. Medial and lateral sides of body segments were more prone to errors. The error pattern can be explained by the shift in the main sensor rotation axis during walking: medial and lateral sensors rotate around an axis orthogonal to that of dorsal and ventral sensors. In general, sensor orientation signals are inverted for opposing limb positions, which will affect detection performance for algorithms without position-adapted parameters or models. More detailed error analyses of all body segments can be found in the Supplementary Material.
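The axis shift and sign inversion can be illustrated with an idealised rigid cylinder. The sketch makes three assumptions: the limb axis is the segment's z axis, the sensor frame is the segment frame rotated by an angle φ about z, and walking produces a rotation about the segment x axis. Under these assumptions, the gyroscope reads R_z(φ)ᵀ ω. The function names are hypothetical.

```python
import numpy as np


def rot_z(phi):
    """Rotation matrix about the limb (z) axis by angle phi (rad)."""
    c, s = np.cos(phi), np.sin(phi)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def sensor_gyro(omega_segment, phi):
    """Angular velocity in the frame of a sensor rotated by phi about z.

    Assumed mounting: sensor frame = segment frame rotated by phi around
    the limb axis, so the reading is R_z(phi)^T @ omega_segment.
    """
    return rot_z(phi).T @ np.asarray(omega_segment, dtype=float)


# Hypothetical rotation of 2 rad/s about the segment x axis.
omega = np.array([2.0, 0.0, 0.0])
pos_0 = sensor_gyro(omega, 0.0)          # [ 2, 0, 0]
pos_90 = sensor_gyro(omega, np.pi / 2)   # rotation now appears on sensor y
pos_180 = sensor_gyro(omega, np.pi)      # [-2, 0, 0]: inverted signal
```

A fixed-axis, fixed-sign detector tuned at φ = 0 therefore sees no signal on its axis at φ = 90° and an inverted signal at φ = 180°.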

FIGURE 6. 1-phase model: Exemplary nMAE heatmaps POST intervention on the less-affected body side for Algo. A2a. (A) All simulated shoe sensor positions. (B) Selected sensor ring at the upper leg. (C) Selected sensor ring at the lower leg. R: Ring; S: Sensor.

Since the 1-phase analysis showed superior results for gyroscope-based algorithms compared to the acceleration-only Algo. A1, only Algo. A2 variants (A2a, A2b, and A2c) were considered for increased phase granularity. Figure 7 shows the nMAE of both predicted gait events of the 2-phase model for all simulated sensor positions on both body sides PRE (see Figures 7A–C) and POST intervention (see Figures 7D–F), separately for the three algorithm variants. User-dependent and sensor position-dependent parameter fitting (Algo. A2a) showed the best performance of all variants (see Figures 7A, D). In contrast, user-independent parameterisation (Algo. A2b) resulted in the largest median and IQR for both gait events (see Figures 7B, E; Supplementary Table S3).

FIGURE 7. 2-phase gait event nMAE of all simulated sensor positions summarised per body segment for Algo. A2 variants. nMAE axes were limited to highlight practically relevant sensor performances with nMAE below 50%. For full-range diagrams, please see Supplementary Figure S6. Gait events are Initial Contact (IC) and Toe off (TO). (A–C) PRE intervention. (D–F) POST intervention. (A,D) User-dependent and sensor position-dependent parameterisation (A2a). (B,E) User-independent parameterisation by averaging parameters across training set patients (A2b). (C,F) User-dependent and sensor position-independent parameterisation by averaging parameters across body segments (A2c). UL: Upper leg; LL: Lower leg; S: Shoe.

TO events showed larger median errors for upper and lower legs compared to IC, but smaller IQR, while shoe sensor position median and IQR were smaller for TO events compared to IC events. The result can be explained by the signal characteristic of synthesised data. As Supplementary Figure S16 illustrates, the negative signal peak is more pronounced on shoe sensor positions compared to the other leg segments and thus events can be recognised more robustly than at the leg.

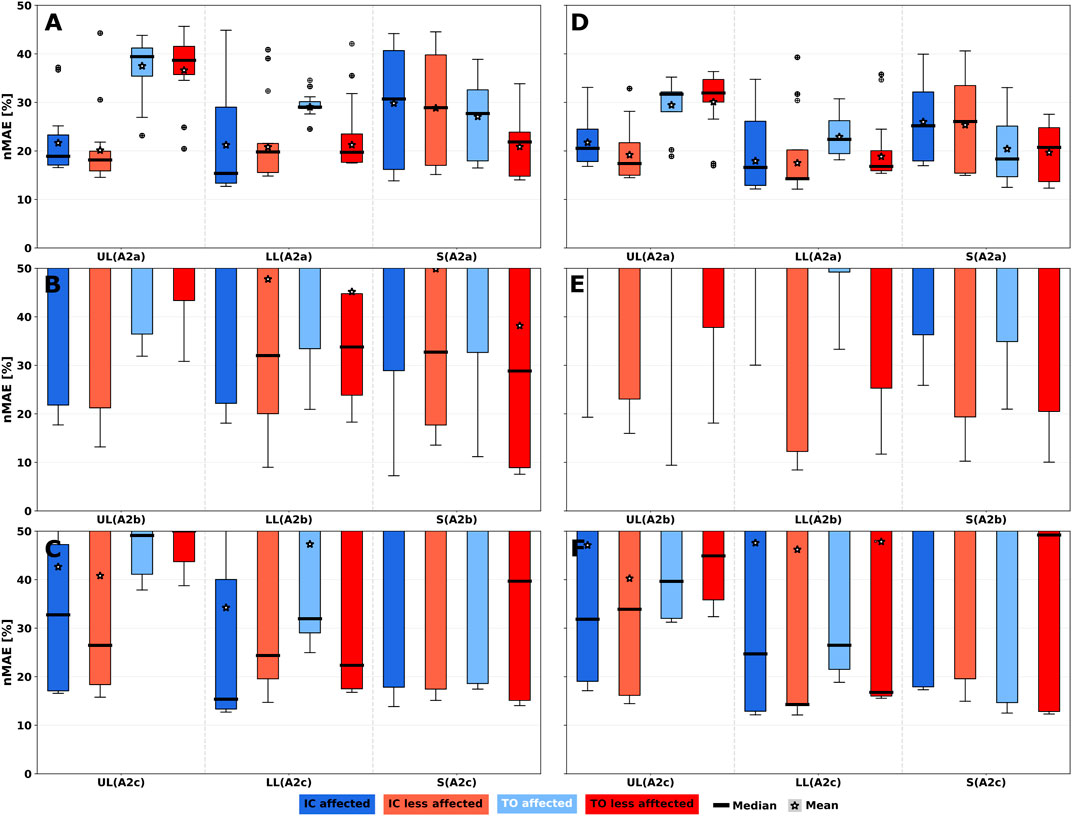

Results of the 4-phase gait event detection are shown in Figure 8. As the phase granularity increases from the 2-phase to the 4-phase model, detection algorithms become more complex with more event dependencies (see Sections 4.2.2 and 4.2.3). As a result, estimates vary for events that are common to both phase models, e.g., IC. For example, IC event detection yielded 14.3% nMAE in the 2-phase model vs. 17.3% in the 4-phase model, and for TO, we observed 16.8% vs. 21.7%, all on the less-affected lower leg using Algo. A2c (see Supplementary Tables S3, S4). IQRs mostly decreased POST intervention, which is particularly evident at shoe sensor positions. On average, TO showed larger median nMAE at UL and LL for both body sides compared to the other gait events. Among Algo. A2 variants, user-dependent and sensor position-dependent parameterisation (A2a) improved nMAE median and IQR considerably for all gait events of the 4-phase model. User-independent parameterisation of Algo. A2b (see Figures 8B, E) resulted in the largest medians and IQRs for all gait events, indicating that a homogeneous patient group is beneficial when relying on parameters averaged across patients.

FIGURE 8. 4-phase gait event nMAE of all simulated sensor positions summarised per body segment for Algo. A2 variants. nMAE axes were limited to highlight practically relevant sensor performances with nMAE below 50%. For full-range diagrams, please see Supplementary Figure S7. Gait events are Initial Contact (IC), Mid Stance (MSt), Toe off (TO) and Mid Swing (MSw). (A–C) PRE intervention. (D–F) POST intervention. (A,D) User-dependent and sensor position-dependent parameterisation (A2a). (B,E) User-independent parameterisation. (C,F) User-dependent and sensor position-independent parameterisation. UL: Upper leg, LL: Lower leg, S: Shoe.

Gyroscope-based algorithms, i.e., Algo. A2, are more prone to rotatory errors when the sensor is repositioned around the axis of the body segment than when it is moved along the limb axis. Consequently, larger IQRs of rotatory errors can be observed in Figure 9 for all phase models. Since gyroscope data are invariant to sensor translation along a body segment, translatory repositioning along a limb axis produced no error spread (zero IQR), and the median error remained constant. Shoe sensor positions were excluded from the analysis due to their asymmetric distribution. In 2- and 4-phase models (see Figures 9B, C), TO events on both the affected and less-affected body sides showed larger median errors than all other gait events. Increased IQRs for detected gait events can be attributed to sensor rotation around a body segment. Compared to upper legs, a more uniformly distributed but larger IQR was observed at lower legs, even though error minima were smaller.
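The translatory invariance of gyroscope data follows from rigid-body kinematics. Every point on a rigid segment shares the same angular velocity ω. In contrast, the acceleration at offset r is a_P = a_O + α × r + ω × (ω × r). The sketch below evaluates both quantities for two hypothetical sensors on the limb axis. For simplicity, it omits gravity and assumes both sensors share the segment's orientation.

```python
import numpy as np


def point_kinematics(a_origin, alpha, omega, r):
    """Angular velocity and acceleration at offset r on a rigid segment.

    Rigid-body relations (gravity and sensor-frame rotation omitted):
        omega_P = omega
        a_P = a_origin + alpha x r + omega x (omega x r)
    """
    omega = np.asarray(omega, dtype=float)
    alpha = np.asarray(alpha, dtype=float)
    r = np.asarray(r, dtype=float)
    a_p = (np.asarray(a_origin, dtype=float)
           + np.cross(alpha, r) + np.cross(omega, np.cross(omega, r)))
    return omega.copy(), a_p


# Hypothetical segment state; sensors 0.1 m and 0.3 m below the origin.
state = dict(a_origin=[0.0, 0.0, 0.0], alpha=[1.0, 0.0, 0.0], omega=[2.0, 0.0, 0.0])
gyro_1, acc_1 = point_kinematics(r=[0.0, 0.0, -0.1], **state)
gyro_2, acc_2 = point_kinematics(r=[0.0, 0.0, -0.3], **state)
```

Both sensors report identical angular velocity, while their accelerations differ (here [0, 0.1, 0.4] vs. [0, 0.3, 1.2] m/s²). Moving a gyroscope along the limb therefore cannot change a gyroscope-only detector's output.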

FIGURE 9. Sensor repositioning error analysis considering rotatory and translatory error components for Algo. A2c in all gait phase models. (A) 1-phase model. (B) 2-phase model. (C) 4-phase model. UL: Upper leg; LL: Lower leg. Larger IQRs for rotatory errors can be observed compared to translatory errors, as well as larger error IQRs in the 4-phase model compared to 1- and 2-phase models.

When comparing gait phase models, event detection for the 4-phase model showed a subtle increase in rotatory errors for IC and TO events, which could be attributed to the increased algorithm complexity and dependency of gait events compared to the other two phase models. Furthermore, the 4-phase model showed a more uniform error distribution, but larger IQR compared to 1- and 2-phase models. Thus, with increasing phase granularity, error IQRs across sensor positions increased, while the minimum error remained almost constant.

We introduced a comprehensive co-simulation framework to model body motion, i.e., gait patterns of hemiparetic patients, and inertial sensors, in order to interpret position- and algorithm-dependent performance for 1-, 2-, and 4-phase gait event detection. In addition, we investigated performance depending on rotatory and translatory sensor position changes in all three gait phase models to demonstrate the versatility of our co-simulation framework.

Our comparative model validation (Section 6) aimed at quantifying similarities between synthesised and measured sensor signal patterns and at confirming matching value ranges for acceleration and angular velocity signals. Model validation showed that (1) simulated sensors can approximate healthy walking, and (2) synthesised sensor signals showed moderate correlations (r = 0.63) for acceleration signals and high correlations (r = 0.95) for angular velocity signals, with nMAD below 11% for both.

Simulations are unlikely to yield perfect agreement with measured inertial sensor signals due to non-modelled, dynamic phenomena, e.g., IMU placement and fixation variability and marker loss. Nevertheless, our results across all sensor signals suggested meaningful synthesis performance. We attributed deviations for acceleration at lower legs and feet (see Figures 4B, C) to positioning variability along the gravitational axis that could naturally occur for sensor mounts and were not adequately captured by our sensor model's auxiliary structures. RMSE and correlations observed in our validation analysis are within ranges reported in literature. Sharifi Renani et al. (2021) compared synthetic and measured IMU data at the pelvis, thigh, shank, and foot during walking, where RMSE for angular velocity ranged between 22.9 and 58.4°/s depending on sensor positioning, with correlations between r = 0.3 and 0.9. RMSE for acceleration ranged between 0.6 and 2.5 m/s², with correlations between r = 0.7 and 0.9. Zimmermann et al. (2018) reported average correlations of r = 0.6 for accelerometers and r = 0.9 for gyroscopes at feet, shanks, thighs, and pelvis during walking. Average RMSE was 4.0 m/s² and 35°/s for acceleration and angular velocity, respectively. The authors attributed the synthetic gap to additional artifacts due to clothing and soft tissue that cause additional accelerations. Future work may focus on natural body shape representation by surface modeling, which can decrease model deviations (Uhlenberg and Amft, 2022).

For the present analysis, we selected parameterisable online (i.e., sliding window-based) algorithms that could operate in real time. The selected algorithm family extracts gait cycle events after detecting the initial contact that follows the respective gait cycle. Hence, the algorithms incur an event reporting delay of approximately one gait cycle. Algorithm parameters were initially fitted in a cross-validation procedure but remained constant during the evaluation. Algo. A2a represents a typical personalised parameterisation, but requires an initial parameter fitting based on user-specific data. Algo. A2b was user-independent, with parameters fitted from population data, and thus might be easier to deploy. Finally, for Algo. A2c sensor position dependency was removed, hence training data could originate from other positions on the same limb segment. The analysed algorithms are widely applied for gait event detection. Nevertheless, our co-simulation framework is not limited to one algorithm family. Other gait event detection algorithms, e.g., based on Hidden Markov Models (HMM), as well as extended gait phase models, e.g., with additional gait phases, can be analysed using the proposed framework. Algorithms considered in our analysis rely on a bipedal detection approach adapted from Zhao et al. (2019), which may be debatable with regard to body side-dependent gait patterns of hemiparetic patients. Our evaluation results, however, show that using the contralateral side to support gait event detection did not influence the detection error of MSt and MSw events. The distribution of recognition errors in the 4-phase model was relatively constant across all phases. Similarly, the estimation errors of IC and TO in the 4-phase model were comparable to those of the 2-phase model (see Supplementary Tables S3, S4), even though the detection algorithms become more complex with additional gait events and gait phase granularity. Thus, we encourage further investigations of bipedal detection algorithms in hemiparetic patients.

Results showed better performance of Algo. A2a compared to Algo. A1 for event detection of the 1-phase model, which agrees well with previous measurement studies, e.g., (Lau and Tong, 2008; Kotiadis et al., 2010). A primary explanation of detection performance differences is that angular velocity signals used by A2 algorithms show more periodic and repeatable patterns along the gait cycle than acceleration signals, which confirms previous observations, e.g., made by Taborri et al. (2016).

Among Algo. A2 variants, user-dependent and sensor position-dependent parameterisation (Algo. A2a) showed the lowest nMAE median (13.1%–41.4%) and IQR (1.4%–25.6%) for all gait events and gait models. User-dependent and sensor position-independent parameterisation (Algo. A2c) led to performance deterioration (increase in median nMAE by 5.5%–19.5%). This deterioration was less severe than for user-independent parameterisation (Algo. A2b), which increased median nMAE by 24.9%–80.2%. Parameter personalisation clearly adds algorithm complexity. Consequently, individual gait pattern variability should be specifically considered in future studies of gait event detection algorithms, e.g., by comparing algorithms under gait and sensor positioning variations and incorporating algorithm personalisation methods where necessary. Most gait detection investigations to date have focused on healthy volunteers, fixed sensor positions, and algorithm parameterisation with manually adjusted parameters or once-trained statistical models (Catalfamo et al., 2010; Kotiadis et al., 2010; Rueterbories et al., 2014; Maqbool et al., 2016; Sánchez Manchola et al., 2019). Our results, however, show that for hemiparetic patients a major potential to minimise event detection errors lies in adapting (e.g., personalising) the main rotation axis, medial-lateral vs. dorsal-ventral, to the sensor position. We achieved the intended user-dependency by splitting the per-participant synthesised timeseries into folds. User-dependency is generally considered to maximise detection performance, at the risk of reduced generalisability. Due to the 5-fold CV splits of the timeseries, testing data may be overly dependent on training data. Nevertheless, personalisation of different gait detection models has recently been applied successfully for other neurological disorders and gait rehabilitation robots (Martindale et al., 2018; Ye et al., 2020; Ingelse et al., 2022). Based on our results, further work on algorithm personalisation is warranted. Furthermore, we observed that particular sensor positions, e.g., at the shoe, show consistently robust signal patterns, suggesting minimal detection errors (see Supplementary Figures S11 and S16). Sensor positions found with our co-simulation framework may be favourable for gait event detection or may serve as alternative reference positions in measurement studies where minimal instrumentation is required.

nMAE median and IQR decreased on all body segments from PRE to POST intervention for all phase models, which could indicate an improvement in gait regularity at POST intervention. The hypothesised gait improvement is supported by findings of Knarr et al. (2013) and Derungs and Amft (2020), who respectively showed increased muscle activation of plantar flexors and decreased stride duration after rehabilitation intervention for the same dataset.

To compare our results with those of Derungs and Amft (2020), we additionally analysed stride duration using the normalised root mean square error (nRMSE) as described in Supplementary Equation S2 and Supplementary Figure S5. However, that work analysed upper leg sensor positions and dynamic acceleration only. Furthermore, its ground truth data was taken from the heel MoCap marker rather than from GRF as in our work, which limits the comparison. Compared to Algo. A1 in our work, Derungs and Amft (2020) showed smaller nRMSE for the best performing sensor (0% vs. 17%), but larger errors for the worst performing sensor (44% vs. 36%). These differences may be due to the combined static and dynamic acceleration model and the GRF-based ground truth used in our work. Algo. A2 showed similar nRMSE for the best sensor (0.1%) and considerably smaller errors for the worst performing sensor (8%). Thus, Algo. A2 improved the error bound compared with acceleration-based detection.

Timing error across the hemiparetic patient dataset varied considerably between algorithm variants and sensor positions, ranging from 16% to 160% mean nMAE, corresponding to temporal event deviations of approximately 220–2,240 ms. Timing errors reported by previous measurement studies ranged between −28 ms and +190 ms, e.g., (Behboodi et al., 2015; Chia Bejarano et al., 2015; Gouwanda and Gopalai, 2015; Zhou et al., 2016; Lambrecht et al., 2017; Sánchez Manchola et al., 2019). We expect that elevated error ranges may be observed for particular patient groups and specific study designs (e.g., PRE and POST intervention). However, a substantial share of the larger error ranges found in our simulations can be explained by our use of an absolute error metric instead of averaged signed errors. When individual signed errors are averaged, early and late event detection errors across individual gait cycles may compensate each other, resulting in artificially small reported mean errors. Similarly, metrics derived from signed errors, including accuracy, precision, and recall, may conceal the actual event timing error. Therefore, we suggest that MAE, or its variant relative to stride duration, i.e., nMAE as defined in Eq. 16, should be applied for analyses of sensor positioning and algorithm variants.
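The compensation effect of signed errors can be shown with a small numeric example. The four per-cycle timing errors below are hypothetical. Detections alternate between early and late, so the signed mean vanishes while the MAE stays large.

```python
import numpy as np

# Hypothetical timing errors (ms) of one event over four gait cycles:
# detections alternate between early (negative) and late (positive).
errors_ms = np.array([-200.0, 180.0, -150.0, 170.0])

mean_signed_error = errors_ms.mean()            # 0.0 ms: early and late cancel
mean_absolute_error = np.abs(errors_ms).mean()  # 175.0 ms
```

A report based on the signed mean would suggest perfect timing, although each individual detection is off by at least 150 ms.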

We consider our approach robust and reproducible: we used previously applied and validated biomechanical models in the well-established and validated musculoskeletal modeling environment OpenSim for our analysis (Delp et al., 2007; Seth et al., 2018; Karimi et al., 2021; Yap et al., 2021). We applied default personalisation procedures, using participant body weight and MoCap markers with unchanged default weights. Our sensor models were derived using fundamental physics-based equations. We validated our co-simulation approach by comparing synthesised data and actual IMU measurements (i.e., IMU data without modeling biases), resulting in meaningful synthesis performance. Nevertheless, further work may include sensitivity analyses, e.g., on MoCap marker weights and further algorithm parameters, to explore simulation variability.

According to rigid-body dynamics, sensor translation along a body segment's longitudinal axis affects neither rotation nor angular velocity. Thus, with a symmetric sensor distribution around limb axes, synthesised angular velocity signals and the resulting timing errors of gyroscope-based detection are identical at each ring of upper and lower legs (e.g., S1 on R1 vs. S1 on R2, see Figure 3). Our error analysis revealed that A2 algorithms were more likely to incur rotational errors than translational errors, which is a direct effect of gyroscope-based measurement. To add further co-simulation fidelity, sensor placement may follow natural body shapes more accurately, e.g., captured from silhouette data. Further biomechanical research is needed to adapt silhouette modeling concepts, e.g., those currently investigated for animation purposes (Loper et al., 2015). A particular challenge of animation models is to maintain anatomically correct representation during motion (Baronetto et al., 2021). We deployed non-deformable, featureless geometric silhouette models for shoes, which may insufficiently represent natural sensor signal variation. Similar to the auxiliary structure at limbs, the shoe model served as a substrate for sensors and was not individually validated. Shoe design and mechanical properties vary, including materials, fixtures, and rigid structures. We believe that our co-simulation framework enables researchers to investigate design variations, e.g., of shoes and clothing, by adding corresponding mesh models into the simulation.

A direct-link weld joint was used to simulate sensor attachment. While a fixed sensor-bone fixation may not be practically useful, it enabled us to contrast placement and algorithm parameterisations in the present analysis, which emphasised relative differences. Derungs and Amft (2020) showed that simulating accelerometer attachment variation by adding sinusoidal signal noise had only minor effects (

The proposed co-simulation framework focuses on optimising information retrieval. For a wearable system realisation, other sensor positioning priorities, e.g., according to user sensor wearing preferences and technical feasibility, could be considered. However, we consider that our information-driven simulation framework can provide system designers with an initial prioritisation to select sensor positions, algorithms, and parameters. Furthermore, co-simulations enable us to represent temporal dynamics of individual motion and compensation patterns that are challenging to describe analytically.

Our methodology enables wearable system designers and algorithm developers to find suitable sensor types, positions, and algorithm parameterisations when MoCap data or similar body orientation data are available. To implement an arbitrary algorithm analysis, developers may follow the methods described in Sections 3 and 5.

Results of our analysis confirm a new pathway for wearable system development and in silico performance evaluation using human digital twins. Dynamic, model-based simulations of on-body systems could be used as a preliminary assessment before testing in the physical world. For example, our co-simulation framework could be used to assess wearable inertial sensor systems for clinical testing, athletic tracking, and further proofs of concept. In addition, initial simulation-based exploration could help in planning remote monitoring applications before physical prototypes are deployed or even fabricated. The human digital twins developed in this work describe biomechanics and motion phenomena, not any underlying neurological deficits. However, neural control affects kinematics, which were captured in the included study data.

We introduce a co-simulation framework that explores how personalised biomechanical and motion sensor models can assist in gait detection algorithm analysis and wearable system design to achieve optimal body placement. Initially, we validated sensor signal synthesis by comparison against measurements in the same participants, confirming suitable performance of our co-simulation approach. Subsequently, we evaluated common gait event detection algorithms within our co-simulation framework and reported absolute detection errors.

Gait phase analysis in hemiparetic patients demonstrated that our framework can deal with highly complex gait patterns. The best gait event detection performance was observed for a user-dependent and sensor-position-dependent algorithm parameterisation (Algo. A2a), an increase of 65% compared to an algorithm with user-independent parameterisation (Algo. A2b). For comparison, sensor-position-dependent parameterisation added only 25% compared to position-independent detection (Algo. A2c). Our simulations confirmed that gyroscope-based gait event detection algorithms outperformed their acceleration-based counterparts. However, the rotatory sensor error analysis revealed a limitation of gyroscope-based gait phase detection: for gyroscope-based detection (Algo. A2c), we found larger IQRs when repositioning sensors around the main body segment axis than when repositioning them along the limb axis. We showed that our framework can be used to evaluate different algorithms as well as different gait phase models. For the commonly used algorithms considered in this work, our analysis showed a larger dependency on the user than on sensor position. Thus, user personalisation of gait phase detection should be considered as a first priority, while sensor position variation may be a secondary adaptation target.
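Percentage gains and IQR comparisons of the kind reported above can be computed along the following lines. This sketch assumes that a performance increase means the relative reduction of an error metric, such as nMAE, with respect to a baseline parameterisation. It uses Python's `statistics.quantiles` for the IQR. Both the definition and the toy error values are illustrative assumptions, not the study's exact computation or data.

```python
from statistics import quantiles


def relative_improvement(baseline_error, new_error):
    """Percentage reduction of an error metric relative to a baseline (assumed definition)."""
    return 100.0 * (baseline_error - new_error) / baseline_error


def iqr(values):
    """Interquartile range using statistics.quantiles (default 'exclusive' method)."""
    q1, _, q3 = quantiles(values, n=4)
    return q3 - q1


# Illustrative, made-up error values (not results from this study).
print(relative_improvement(0.5, 0.4))

rotatory_errors = [0.02, 0.05, 0.09, 0.14, 0.20]     # sensor rotated around the segment axis
translatory_errors = [0.03, 0.04, 0.05, 0.06, 0.07]  # sensor shifted along the limb axis
print(iqr(rotatory_errors), iqr(translatory_errors))
```

Quartile estimates differ slightly between interpolation methods ('exclusive' vs. 'inclusive'), so the chosen method should be stated when IQRs are compared across studies.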

Our co-simulation framework allowed us to evaluate arbitrary sensor positions on the body. For example, sensor positions on the shoe can lead to larger errors than positions on the upper and lower legs. In addition, we can evaluate specific geometries, including shoe shape, to determine optimal placement. For the shoe, instep and heel positions were more suitable than lateral and medial positions.

Our approach opens a new pathway for utilising dynamic human digital twins in wearable system design and performance estimation, before physical prototypes are deployed or manufactured.

Publicly available datasets were analyzed in this study. This data can be found here: https://simtk.org/projects/fesprediction.

The studies involving human participants were reviewed and approved by the ethics committee of Friedrich-Alexander-University Erlangen-Nuremberg. The patients/participants provided their written informed consent to participate in this study.

LU performed framework development, algorithm development, data analysis, and manuscript writing. AD supported sensor data synthesis and provided validation data of hemiparetic patients. OA supervised all development activities and helped in all facets of the manuscript preparation. All authors read and approved the final manuscript.

We acknowledge support by the Open Access Publication Fund of the University of Freiburg. We thank Knarr et al. (2013) for sharing motion capture data via OpenSimTK. Additionally, we thank Sánchez Manchola et al. (2019) for making their code and data available to us.

Author AD is employed by F. Hoffmann–La Roche Ltd., but declares that there is no commercial or financial interest in the research that represents a conflict of interest.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fbioe.2023.1104000/full#supplementary-material

Al Borno, M., O’Day, J., Ibarra, V., Dunne, J., Seth, A., Habib, A., et al. (2022). OpenSense: An open-source toolbox for Inertial-measurement-unit-based measurement of lower extremity kinematics over long durations. J. NeuroEng. Rehabil. 19, 22. doi:10.1186/s12984-022-01001-x

Altini, M., Penders, J., Vullers, R., and Amft, O. (2015). Estimating energy expenditure using body-worn accelerometers: A comparison of methods, sensors number and positioning. IEEE J. Biomed. Health Inf. 19, 219–226. doi:10.1109/JBHI.2014.2313039

Atallah, L., Lo, B., King, R., and Yang, G.-Z. (2011). Sensor positioning for activity recognition using wearable accelerometers. IEEE Trans. Biomed. Circuits Syst. 5, 320–329. doi:10.1109/TBCAS.2011.2160540

Balaban, B., and Tok, F. (2014). Gait disturbances in patients with stroke. PM R J. Inj. Funct. Rehabil. 6, 635–642. doi:10.1016/j.pmrj.2013.12.017

Balasubramanian, C. K., Neptune, R. R., and Kautz, S. A. (2009). Variability in spatiotemporal step characteristics and its relationship to walking performance post-stroke. Gait posture 29, 408–414. doi:10.1016/j.gaitpost.2008.10.061

Banos Legran, O., Damas, M., Pomares, H., Rojas, I., Toth, M., and Amft, O. (2012). “A benchmark dataset to evaluate sensor displacement in activity recognition,” in SAGAware 2012: International Workshop on Situation, Activity and Goal Awareness (ACM), 1026–1055. doi:10.1145/2370216.2370437

Baronetto, A., Uhlenberg, L., Wassermann, D., and Amft, O. (2021). “Simulation of garment-embedded contact sensor performance under motion dynamics,” in ACM International Symposium on Wearable Computers (Virtual Conference). doi:10.1145/3460421.3480423

Behboodi, A., Wright, H., Zahradka, N., and Lee, S. C. K. (2015). “Seven phases of gait detected in real-time using shank attached gyroscopes,” in 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 5529–5532. doi:10.1109/EMBC.2015.7319644

Bogaarts, G., Zanon, M., Dondelinger, F., Derungs, A., Lipsmeier, F., Gossens, C., et al. (2021). “Simulating the impact of noise on gait features extracted from smartphone sensor-data for the remote assessment of movement disorders,” in 2021 43rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 6905–6910. doi:10.1109/EMBC46164.2021.9630594

Canning, C. G., Ada, L., and Paul, S. S. (2006). Is automaticity of walking regained after stroke? Disabil. Rehabilitation 28, 97–102. doi:10.1080/09638280500167712

Catalfamo, P., Ghoussayni, S., and Ewins, D. (2010). Gait event detection on level ground and incline walking using a rate gyroscope. Sensors (Basel, Switz.) 10, 5683–5702. doi:10.3390/s100605683

Chen, Z., Zhu, Q., Soh, Y. C., and Zhang, L. (2017). Robust human activity recognition using smartphone sensors via CT-PCA and online SVM. IEEE Trans. Industrial Inf. 13, 3070–3080. doi:10.1109/TII.2017.2712746

Chèze, L., Fregly, B. J., and Dimnet, J. (1995). A solidification procedure to facilitate kinematic analyses based on video system data. J. Biomech. 28, 879–884. doi:10.1016/0021-9290(95)95278-D

Chia Bejarano, N., Ambrosini, E., Pedrocchi, A., Ferrigno, G., Monticone, M., and Ferrante, S. (2015). A novel adaptive, real-time algorithm to detect gait events from wearable sensors. IEEE Trans. Neural Syst. Rehabil. Eng. 23, 413–422. doi:10.1109/TNSRE.2014.2337914

Delp, S. L., Anderson, F. C., Arnold, A. S., Loan, P., Habib, A., John, C. T., et al. (2007). OpenSim: Open-Source software to create and analyze dynamic simulations of movement. IEEE Trans. Biomed. Eng. 54, 1940–1950. doi:10.1109/TBME.2007.901024

Derungs, A., and Amft, O. (2019). “Synthesising motion sensor data from biomechanical simulations to investigate motion sensor placement and orientation variations,” in 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (Berlin, Germany: IEEE), 6391–6394. doi:10.1109/EMBC.2019.8857386

Derungs, A., and Amft, O. (2020). Estimating wearable motion sensor performance from personal biomechanical models and sensor data synthesis. Sci. Rep. 10, 11450. Available at: https://rdcu.be/b5ynj. doi:10.1038/s41598-020-68225-6

Derungs, A., Schuster-Amft, C., and Amft, O. (2018). Physical activity comparison between body sides in hemiparetic patients using wearable motion sensors in free-living and therapy: A case series. Front. Bioeng. Biotechnol. 6, 136. doi:10.3389/fbioe.2018.00136

Esteban, C., Hyland, S. L., and Rätsch, G. (2017). Real-valued (medical) time series generation with recurrent conditional GANs. arXiv preprint arXiv:1706.02633.

Förster, K., Roggen, D., and Tröster, G. (2009). “Unsupervised classifier self-calibration through repeated context occurences: Is there robustness against sensor displacement to gain?,” in ISWC 2009: International Symposium on Wearable Computers (Washington, DC, USA: IEEE press), 77–84. doi:10.1109/ISWC.2009.12

Gouwanda, D., and Gopalai, A. A. (2015). A robust real-time gait event detection using wireless gyroscope and its application on normal and altered gaits. Med. Eng. Phys. 37, 219–225. doi:10.1016/j.medengphy.2014.12.004

Guo, Y., Wei, Z., Keating, B. J., and Hakonarson, H. (2016). Machine learning derived risk prediction of anorexia nervosa. BMC Med. Genomics 9, 4. doi:10.1186/s12920-016-0165-x

Han, Y. C., Wong, K. I., and Murray, I. (2019). Gait phase detection for normal and abnormal gaits using IMU. IEEE Sens. J. 19, 3439–3448. doi:10.1109/JSEN.2019.2894143

Harms, H., Amft, O., and Tröster, G. (2012). “Does loose fitting matter? Predicting sensor performance in smart garments,” in Bodynets 2012: Proceedings of the International Conference on Body Area Networks (ACM), 1–4.

Hoelzemann, A., Sorathiya, N., and Van Laerhoven, K. (2021). “Data augmentation strategies for human activity data using generative adversarial neural networks,” in 2021 IEEE International Conference on Pervasive Computing and Communications Workshops and Other Affiliated Events (PerCom Workshops), 8–13. doi:10.1109/PerComWorkshops51409.2021.9431046

Ingelse, L., Branco, D., Gjoreski, H., Guerreiro, T., Bouça-Machado, R., Ferreira, J. J., et al. (2022). Personalised gait recognition for people with neurological conditions. Sensors 22, 3980. doi:10.3390/s22113980

Iwana, B. K., and Uchida, S. (2021). An empirical survey of data augmentation for time series classification with neural networks. PLoS ONE 16, e0254841. doi:10.1371/journal.pone.0254841

John, C. T., Anderson, F. C., Higginson, J. S., and Delp, S. L. (2013). Stabilisation of walking by intrinsic muscle properties revealed in a three-dimensional muscle-driven simulation. Comput. Methods Biomech. Biomed. Eng. 16, 451–462. doi:10.1080/10255842.2011.627560

Kamel Boulos, M., and Zhang, P. (2021). Digital twins: From personalised medicine to precision public health. J. Personal. Med. 11, 745. doi:10.3390/jpm11080745

Karimi, M. T., Hemmati, F., Mardani, M. A., Sharifmoradi, K., Hosseini, S. I., Fadayevatan, R., et al. (2021). Determination of the correlation between muscle forces obtained from OpenSim and muscle activities obtained from electromyography in the elderly. Phys. Eng. Sci. Med. 44, 243–251. doi:10.1007/s13246-021-00973-9

Knarr, B. A., Kesar, T. M., Reisman, D. S., Binder-Macleod, S. A., and Higginson, J. S. (2013). Changes in the activation and function of the ankle plantar flexor muscles due to gait retraining in chronic stroke survivors. J. NeuroEng. Rehabil. 10, 12. doi:10.1186/1743-0003-10-12

Kotiadis, D., Hermens, H., and Veltink, P. (2010). Inertial Gait Phase Detection for control of a drop foot stimulator: Inertial sensing for gait phase detection. Med. Eng. Phys. 32, 287–297. doi:10.1016/j.medengphy.2009.10.014

Kunze, K., and Lukowicz, P. (2014). Sensor placement variations in wearable activity recognition. IEEE Pervasive Comput. 13, 32–41. doi:10.1109/MPRV.2014.73

Kwon, H., Tong, C., Haresamudram, H., Gao, Y., Abowd, G. D., Lane, N. D., et al. (2020). IMUTube: Automatic extraction of virtual on-body accelerometry from video for human activity recognition. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 4. doi:10.1145/3411841

Kwon, H., Wang, B., Abowd, G. D., and Plötz, T. (2021). Approaching the real-world: Supporting activity recognition training with virtual IMU data. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 5, 111:1–111:32. doi:10.1145/3478096

Lambrecht, S., Harutyunyan, A., Tanghe, K., Afschrift, M., De Schutter, J., and Jonkers, I. (2017). Real-time gait event detection based on kinematic data coupled to a biomechanical model. Sensors 17, 671. doi:10.3390/s17040671

Lämsä, A., Tervonen, J., Liikka, J., Álvarez Casado, C., and Bordallo Lopez, M. (2022). Video2IMU: Realistic IMU features and signals from videos.

Lau, H. Y. A., and Tong, R. K.-Y. (2008). The reliability of using accelerometer and gyroscope for gait event identification on persons with dropped foot. Gait posture 27, 248–257. doi:10.1016/j.gaitpost.2007.03.018

Lester, J., Choudhury, T., and Borriello, G. (2006). “A practical approach to recognizing physical activities,” in Pervasive 2006: Proceedings of the 4th International Conference on Pervasive Computing. Editors K. P. Fishkin, B. Schiele, P. Nixon, and A. Quigley (Springer Berlin, Heidelberg), 1–16. Lecture notes in computer science. doi:10.1007/11748625_1

Loper, M., Mahmood, N., Romero, J., Pons-Moll, G., and Black, M. J. (2015). SMPL: A skinned multi-person linear model. ACM Trans. Graph. 34, 1–16. doi:10.1145/2816795.2818013

Mannini, A., and Sabatini, A. M. (2010). Machine learning methods for classifying human physical activity from on-body accelerometers. Sensors (Basel, Switz.) 10, 1154–1175. doi:10.3390/s100201154

Mannini, A., Trojaniello, D., Cereatti, A., and Sabatini, A. M. (2016). A machine learning framework for gait classification using inertial sensors: Application to elderly, post-stroke and huntington’s disease patients. Sensors 16, 134. doi:10.3390/s16010134

Maqbool, F., Husman, M., Awad, M., Abouhossein, A., Iqbal, N., and Dehghani-Sanij, A. (2016). A real-time gait event detection for lower limb prosthesis control and evaluation. IEEE Trans. Neural Syst. Rehabil. Eng. 1, 1500–1509. doi:10.1109/TNSRE.2016.2636367

Martindale, C. F., Roth, N., Gassner, H., Jensen, D., Kohl, Z., and Eskofier, B. (2018). “Mobile gait analysis using personalised hidden Markov models for hereditary spastic paraplegia patients,” in 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 5430–5433.