- 1Key Laboratory of Metallurgical Equipment and Control Technology of Ministry of Education, Wuhan University of Science and Technology, Wuhan, China

- 2Research Center for Biomimetic Robot and Intelligent Measurement and Control, Wuhan University of Science and Technology, Wuhan, China

- 3Hubei Key Laboratory of Mechanical Transmission and Manufacturing Engineering, Wuhan University of Science and Technology, Wuhan, China

- 4Precision Manufacturing Research Institute, Wuhan University of Science and Technology, Wuhan, China

- 5Hubei Key Laboratory of Hydroelectric Machinery Design and Maintenance, China Three Gorges University, Yichang, China

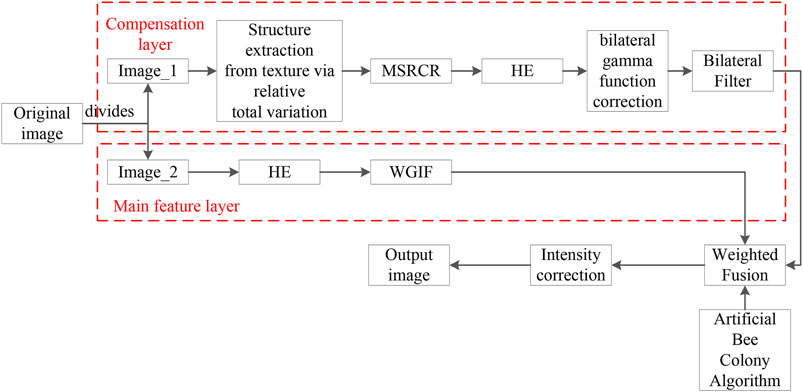

To address poor image quality, loss of detail, and excessive brightness enhancement when enhancing images captured in low-light environments, this paper proposes a low-light image enhancement algorithm based on an improved multi-scale Retinex and Artificial Bee Colony (ABC) optimization. First, the algorithm makes two copies of the original image. For the first copy, the irradiation component of the original image is obtained using structure extraction from texture via relative total variation and combined with the multi-scale Retinex algorithm to obtain the reflection component, which is then enhanced by histogram equalization, bilateral gamma correction, and bilateral filtering. Next, the second copy is enhanced by histogram equalization and edge-preserving Weighted Guided Image Filtering (WGIF). Finally, the two images are fused with weights optimized by the ABC algorithm. The mean Information Entropy (IE), Average Gradient (AG), and Standard Deviation (SD) of the enhanced images are 7.7878, 7.5560, and 67.0154, respectively, improvements of 2.4916, 5.8599, and 52.7553 over the original images. Experimental results show that the proposed algorithm mitigates the loss of illumination during enhancement, improves sharpness, highlights image details, restores color, and reduces noise while preserving edges, giving the images a better visual appearance.

Introduction

The vast majority of the information humans acquire comes from vision. Images, as the main carrier of visual information, play an important role in three-dimensional reconstruction, medical detection, autonomous driving, target detection and recognition, and other perception tasks (Li B. et al., 2019; Wang et al., 2019; Yu et al., 2019; Huang et al., 2021; Liu et al., 2022a; Tao et al., 2022a; Yun et al., 2022a; Bai et al., 2022). With the rapid development of optical and computer technology, image acquisition equipment is constantly being updated, and images often contain a wealth of valuable information waiting to be discovered and used (Jiang et al., 2019a; Huang et al., 2020; Hao et al., 2021a; Cheng and Li, 2021). However, owing to lighting, weather, and the imaging equipment, images captured in real life are often dark, noisy, and low in contrast, with details partially obscured (Sun et al., 2020a; Tan et al., 2020; Wang et al., 2020). Such images make regions of interest difficult to identify, degrading image quality and the visual impression for human observers (Jiang et al., 2019b; Hu et al., 2019); they also hamper the extraction and analysis of image information and make it considerably harder for computers and other vision devices to perform target detection and recognition (Su and Jung, 2018; Sun et al., 2020b; Cheng et al., 2020; Luo et al., 2020; Hao et al., 2021b). It is therefore necessary to enhance low-light images (Jiang et al., 2019c; Sun et al., 2020c) so as to highlight the detailed features of the original image, improve contrast, reduce noise, make blurred and hard-to-recognize images clear, improve their recognizability and interpretability, and meet the requirements of specific applications (Tao et al., 2017; Ma et al., 2020; Jiang et al., 2021a; Tao et al., 2021; Liu et al., 2022b). 
Metaheuristic algorithms have great advantages in multi-objective problem solving and parameter optimization (Li et al., 2020a; Yu et al., 2020; Chen et al., 2021a; Liu X. et al., 2021; Wu et al., 2022; Xu et al., 2022; Zhang et al., 2022; Zhao et al., 2022), while multiple-subject clustering and subject extraction, K-means clustering, steady-state analysis, numerical simulation, quantification, and regression methods are also widely used in data processing (Li et al., 2020b; Sun et al., 2020d; Chen et al., 2022). Artificial Bee Colony (ABC) is an optimization method that imitates the foraging (honey-harvesting) behavior of a bee colony and is a specific application of swarm intelligence. Its main feature is that it requires no special knowledge of the problem; it only needs to compare the quality of candidate solutions (Li C. et al., 2019; He et al., 2019; Duan et al., 2021). Through the local search of each individual bee, the global optimum eventually emerges in the population, and the algorithm converges quickly (Chen et al., 2021b; Yun et al., 2022b).

To address the problems above and exploit this advantage of ABC, this paper proposes a low-illumination image enhancement algorithm based on improved multi-scale Retinex and ABC optimization. Grounded in Retinex theory and layered image processing, the algorithm improves the multi-scale Retinex algorithm with structure extraction from texture via relative total variation, and duplicates the original image to obtain a main feature layer and a compensation layer. During fusion, the ABC algorithm optimizes the fusion weight of each layer and selects the optimal solution to enhance the low-illumination image. Finally, the effectiveness of the algorithm is verified by experiments on the LOLdataset.

The rest of this paper is organized as follows. Related Work reviews low-illumination image enhancement methods and the Artificial Bee Colony algorithm; Basic Theory describes the fundamentals of Retinex; The Algorithm Proposed in This Paper presents the low-illumination image enhancement algorithm based on improved multi-scale Retinex and ABC optimization; Experiments and Results Analysis compares the proposed method with traditional Retinex-based algorithms and analyzes the results with the Friedman test and the Wilcoxon signed-rank test; and Conclusion summarizes the paper.

Related Work

Image enhancement algorithms fall into two main categories: spatial-domain and frequency-domain algorithms (Vijayalakshmi et al., 2020). Spatial-domain methods mainly include histogram equalization (Tan and Isa, 2019) and the Retinex algorithm.

Histogram Equalization (HE) enhances contrast by remapping the gray levels of the original image so that they are spread evenly over more gray levels, but it often amplifies noise and loses detail (Nithyananda et al., 2016). The Retinex method proposed by Land E H (Land, 1964) agrees well with the visual properties of the human eye, particularly for low-illumination enhancement, and performs well overall compared with other conventional methods. Based on Retinex theory, Jobson D J et al. (Jobson et al., 1997) proposed the Single-Scale Retinex (SSR) algorithm, which obtains better contrast and detail by estimating the illumination map but can lose detail during enhancement. Researchers subsequently proposed Multi-Scale Retinex (MSR); images enhanced by MSR still suffer from color bias, locally unbalanced enhancement, and the "halo" phenomenon (Wang et al., 2021). Rahman Z et al. (Rahman et al., 2004) therefore proposed Multi-Scale Retinex with Color Restoration (MSRCR), which reduces the halo and color problems. Applying convolutional neural networks (deep learning) has improved enhancement and recognition, but the difficulty of constructing the networks and of collecting training datasets makes this approach hard to implement (Liu et al., 2021b; Sun et al., 2021; Weng et al., 2021; Yang et al., 2021; Tao et al., 2022b; Liu et al., 2022c). Building on Retinex, Wang D et al. (Wang et al., 2017) used Fast Guided Image Filtering (FGIF) to estimate the irradiation component of the original image and combined it with bilateral gamma correction to adjust the image; this preserved image details and colors to some extent, but the overall brightness remained low. Zhai H et al. (Zhai et al., 2021) proposed an improved Retinex with multi-image fusion that processes three copies of the image separately and then fuses them; the results show some improvement in brightness and contrast, but noise remains and some details are lost.

Frequency-domain enhancement methods mainly include the Fourier transform, wavelet transform, Kalman filtering, and image pyramids (Li et al., 2019c; Li et al., 2019d; Huang et al., 2019; Chang et al., 2020; Tian et al., 2020; Liu et al., 2021c). These algorithms can effectively enhance the structural features of an image, but the target details remain blurred after enhancement. Image layering enhancement, proposed in recent years, has led to increasingly wide use of improved low-light enhancement methods based on this principle (Liao et al., 2020; Long and He, 2020). Layered enhancement decomposes the input image into base-layer and detail-layer components, enhances each layer separately, and finally fuses them with appropriate weighting factors. Commonly used edge-preserving filters include bilateral filtering, Guided Image Filtering (GIF), and Fast Guided Image Filtering (Singh and Kumar, 2018). Because GIF uses the same linear model and regularization factor for every region of the image, it cannot adapt to differences in texture between regions. To resolve this, Li Z et al. (Li et al., 2014) proposed Weighted Guided Image Filtering (WGIF), which adds an adaptive weighting factor based on local variance to traditional guided filtering; this improves edge preservation and reduces the "halo artifacts" caused by image enhancement.

Inspired by the foraging behavior of bee colonies, Karaboga (Karaboga, 2005) proposed the Artificial Bee Colony (ABC) algorithm in 2005, a global optimization algorithm based on swarm intelligence. Since then, ABC has attracted the attention of many scholars and has been analyzed comparatively. Karaboga et al. (Karaboga and Basyurk, 2008) compared the performance of ABC with other intelligent algorithms on multidimensional and multimodal numerical problems and studied the effect of the scale of the ABC control parameters. Karaboga et al. (Karaboga and Akay, 2009) were the first to carry out a detailed, comprehensive performance analysis of ABC, testing it on 50 numerical benchmark functions and comparing it with well-known evolutionary algorithms such as Genetic Algorithms (GA), Particle Swarm Optimization (PSO), Differential Evolution (DE), and Ant Colony Optimization (ACO). Akay et al. (Akay and Karaboga, 2009) analyzed the effect of parameter variation on ABC performance. Singh et al. (Singh, 2009) proposed an ABC algorithm for the minimum spanning tree problem and verified its superiority on such problems. Ozurk et al. (Ozurk and Karaboga, 2011) proposed a hybrid of the ABC algorithm and the Levenberg-Marquardt algorithm for training neural networks. Karaboga et al. (Karaboga and Gorkemli, 2014) modified the nectar search formula so that new sources are sought near the exploited source (within a set radius), improving the local search ability of the algorithm.

Basic Theory

Fundamentals of Retinex

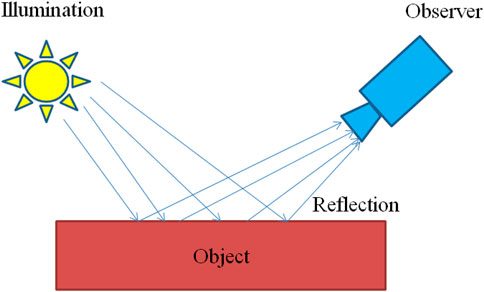

Retinex is a common image enhancement method grounded in scientific experiments and analysis, proposed by Edwin H. Land in 1963 (Land and McCann, 1971). In this theory, two factors determine the observed color of an object, as shown in Figure 1: the reflective properties of the object and the intensity of the light around it. According to the theory of color constancy, the inherent properties of an object are not affected by illumination, and the object's ability to reflect light of different wavelengths largely determines its color (Zhang et al., 2018).

The theory holds that the color of an object is constant: it depends on the object's ability to reflect different wavelengths, is independent of the absolute intensity of the reflected light, and is unaffected by non-uniform illumination; Retinex is therefore based on color constancy. Whereas traditional linear and nonlinear methods enhance only one type of image feature, Retinex can balance dynamic range compression, edge enhancement, and color constancy, enabling adaptive image enhancement.

The Retinex method assumes that the original image is the product of the reflection image and the illumination image:

$$I(x,y) = R(x,y)\cdot L(x,y) \tag{1}$$

In Eq. 1, I(x,y) is the original image, R(x,y) is the reflection component, which carries the details of the target object, and L(x,y) is the irradiation component, which carries the intensity of the surrounding light.

To reduce the computational complexity of traditional Retinex, logarithms (base 10) are usually taken on both sides of Eq. 1, converting multiplication and division in the real domain into addition and subtraction in the logarithmic domain:

$$\log I(x,y) = \log R(x,y) + \log L(x,y) \tag{2}$$

$$\log R(x,y) = \log I(x,y) - \log L(x,y) \tag{3}$$

Traditional Retinex Algorithm

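The SSR method uses a Gaussian kernel as the center/surround function: convolving it with the original image gives an estimate of the illumination component, which is then subtracted in the logarithmic domain to obtain the reflection component:

$$r(x,y) = \log I(x,y) - \log\big[F(x,y) * I(x,y)\big] \tag{4}$$

$$F(x,y) = \lambda\, e^{-(x^2+y^2)/c^2} \tag{5}$$

$$\iint F(x,y)\,dx\,dy = 1 \tag{6}$$

In Eqs 4–6, r(x,y) is the reflection component in the logarithmic domain, * denotes convolution, F(x,y) is the Gaussian surround function, c is the Gaussian surround scale, and λ is a normalization constant chosen so that F integrates to 1.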

To maintain high image fidelity while compressing the dynamic range of the image, researchers proposed the Multi-Scale Retinex (MSR) method on the basis of SSR (Peiyu et al., 2020). MSR takes a weighted sum of SSR outputs at several Gaussian surround scales:

$$r_{MSR}(x,y) = \sum_{k=1}^{K} w_k \Big\{\log I(x,y) - \log\big[F_k(x,y) * I(x,y)\big]\Big\} \tag{7}$$

In Eq. 7, K is the number of Gaussian center/surround functions, F_k is the surround function at the k-th scale, and w_k is its weight, with the weights summing to 1. When K = 1, MSR degenerates to SSR.
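The SSR and MSR steps above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' MATLAB/Python code: it assumes a single-channel image, edge padding at the borders, a truncated kernel of radius about 2.5c, and the natural logarithm (changing the base to 10 only rescales the output by a constant). The offset of 1 added before taking logarithms is an assumption to avoid log(0) on black pixels.

```python
import numpy as np


def gaussian_blur(img: np.ndarray, c: float) -> np.ndarray:
    """Convolve img with a normalized Gaussian surround exp(-(x^2 + y^2) / c^2)."""
    radius = int(np.ceil(2.5 * c))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / c ** 2)
    k /= k.sum()  # normalization: the surround sums to 1
    padded = np.pad(img, radius, mode="edge")
    # The 2-D surround is separable: filter every row, then every column.
    rows = np.array([np.convolve(r, k, mode="valid") for r in padded])
    return np.array([np.convolve(col, k, mode="valid") for col in rows.T]).T


def ssr(channel: np.ndarray, c: float, offset: float = 1.0) -> np.ndarray:
    """Single-Scale Retinex: log I - log(F * I) for one channel."""
    img = channel.astype(np.float64) + offset  # offset avoids log(0) on black pixels
    return np.log(img) - np.log(gaussian_blur(img, c))


def msr(channel: np.ndarray, scales=(15, 80, 250), weights=None) -> np.ndarray:
    """Multi-Scale Retinex: weighted sum of SSR outputs over several scales."""
    if weights is None:
        weights = [1.0 / len(scales)] * len(scales)  # equal weights by default
    return sum(w * ssr(channel, c) for w, c in zip(weights, scales))
```

The default scales (15, 80, 250) mirror the MSR settings used in the experiments; the equal weights are an assumption. On a constant image the blur returns the same constant, so SSR outputs zero, and MSR with a single scale and weight 1 reproduces SSR exactly.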

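Because SSR and MSR suffer from color bias, researchers developed MSRCR (Weifeng and Dongxue, 2020), which adds a color restoration factor to MSR to adjust the color ratios between channels:

$$R_{MSRCR_i}(x,y) = C_i(x,y)\, r_{MSR_i}(x,y) \tag{8}$$

$$C_i(x,y) = \beta \left\{ \log\big[\alpha I_i(x,y)\big] - \log\Big[\sum_{j=1}^{N} I_j(x,y)\Big] \right\} \tag{9}$$

In Eq. 9, I_i is the i-th color channel of the original image, N is the number of channels (3 for RGB), α controls the strength of the nonlinearity, and β is a gain constant.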
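The color restoration factor of MSRCR can be sketched as below. This is a minimal NumPy illustration under stated assumptions: the input is an H x W x 3 array, an offset of 1 is added to avoid log(0), and the per-channel MSR output is computed elsewhere and passed in. The final gain/offset and stretching to a display range are omitted because the text does not specify them.

```python
import numpy as np


def color_restoration(rgb: np.ndarray, alpha: float = 125.0, beta: float = 46.0,
                      offset: float = 1.0) -> np.ndarray:
    """Color restoration factor C_i(x, y) for every channel of an H x W x 3 image."""
    img = rgb.astype(np.float64) + offset            # offset avoids log(0)
    channel_sum = img.sum(axis=2, keepdims=True)     # sum over the N channels
    return beta * (np.log(alpha * img) - np.log(channel_sum))


def msrcr(rgb: np.ndarray, msr_output: np.ndarray, alpha: float = 125.0,
          beta: float = 46.0) -> np.ndarray:
    """Scale each channel's precomputed MSR output by its restoration factor."""
    return color_restoration(rgb, alpha, beta) * msr_output
```

The defaults α = 125 and β = 46 match the MSRCR settings reported in the experiments. For a gray pixel (all channels equal) every channel receives the same factor β·log(α/3), so the restoration only changes the balance between channels where the original colors differ.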

The Algorithm Proposed in This Paper

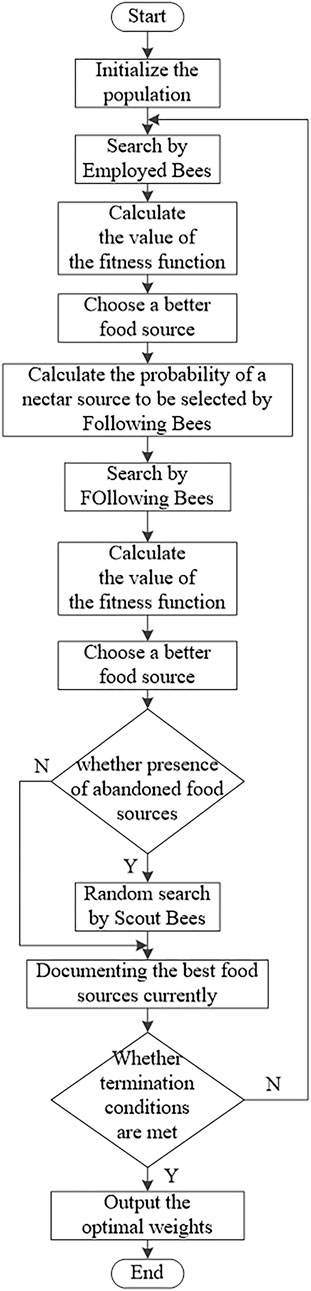

The proposed low-illumination image enhancement algorithm, based on improved multi-scale Retinex and ABC optimization, duplicates the image into a main feature layer and a compensation layer. For the main feature layer, HE is first used for enhancement and WGIF is then applied for edge-preserving noise reduction. For the compensation layer, the irradiation component of the original image is first obtained using structure extraction from texture via relative total variation; the original image is then processed with the MSRCR algorithm to obtain the color-restored reflection component, to which histogram equalization, bilateral gamma correction, and edge-preserving filtering are applied. Finally, the main feature layer and the compensation layer are fused with optimal weights obtained adaptively by the ABC algorithm, achieving enhancement under low illumination. The flow chart of the algorithm is shown in Figure 2.

Main Feature Layer

Weighted Guided Image Filtering

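The Guided Image Filter, proposed by He K et al. (He et al., 2012), is an image smoothing filter based on a local linear model. Its basic assumption is that, within a local window, the output image is a linear transform of the guidance image:

$$q_i = a_k I_i + b_k, \quad \forall i \in \omega_k \tag{10}$$

To find the linear coefficients in Eq. 10, the following cost function is introduced:

$$E(a_k, b_k) = \sum_{i \in \omega_k}\Big[(a_k I_i + b_k - p_i)^2 + \varepsilon a_k^2\Big] \tag{11}$$

Minimizing the cost function by least squares gives

$$a_k = \frac{\frac{1}{|\omega|}\sum_{i \in \omega_k} I_i p_i - \mu_k \bar{p}_k}{\sigma_k^2 + \varepsilon} \tag{12}$$

$$b_k = \bar{p}_k - a_k \mu_k \tag{13}$$

In Eqs. 10–13, I is the guidance image, p is the input image, q is the output image, ω_k is a local window centered at pixel k, |ω| is the number of pixels in the window, μ_k and σ_k² are the mean and variance of I in ω_k, p̄_k is the mean of p in ω_k, and ε is the regularization factor.

Because each pixel of the output image is covered by several windows, each giving its own linear coefficients, the coefficients are averaged:

$$q_i = \bar{a}_i I_i + \bar{b}_i \tag{14}$$

where ā_i and b̄_i are the means of a_k and b_k over all windows that contain pixel i.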
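The guided filter steps above can be sketched for a single-channel image as follows. This is a minimal NumPy illustration, not the authors' implementation: window means are computed with an integral image and edge padding (an assumption; He et al.'s reference code normalizes by the true window size at borders instead), and the defaults r = 4 and eps = 1e-3 are hypothetical values that assume intensities scaled to [0, 1].

```python
import numpy as np


def box_mean(img: np.ndarray, r: int) -> np.ndarray:
    """Mean over the (2r+1) x (2r+1) window around each pixel, via an integral image."""
    h, w = img.shape
    size = 2 * r + 1
    padded = np.pad(img, r, mode="edge")
    integral = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    window_sum = (integral[size:size + h, size:size + w]
                  - integral[:h, size:size + w]
                  - integral[size:size + h, :w]
                  + integral[:h, :w])
    return window_sum / size ** 2


def guided_filter(guide: np.ndarray, src: np.ndarray, r: int = 4,
                  eps: float = 1e-3) -> np.ndarray:
    """Gray-scale guided filter with a uniform regularization factor eps."""
    guide = guide.astype(np.float64)
    src = src.astype(np.float64)
    mean_i = box_mean(guide, r)                       # mu_k
    mean_p = box_mean(src, r)                         # mean of p in each window
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    var_i = box_mean(guide * guide, r) - mean_i ** 2  # sigma_k^2
    a = cov_ip / (var_i + eps)                        # least-squares slope a_k
    b = mean_p - a * mean_i                           # intercept b_k
    # Each pixel lies in many windows: average their coefficients.
    return box_mean(a, r) * guide + box_mean(b, r)
```

Using the image as its own guide (guide = src) turns this into an edge-preserving smoother: where the local variance is much larger than eps, a_k stays close to 1 and the structure is kept; in flat regions a_k approaches 0 and the output approaches the local mean.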

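GIF uses a uniform regularization factor ε for every window, so it cannot adapt to differences in texture between regions. WGIF (Li et al., 2014) introduces an edge-aware weighting based on the local variance of the guidance image in 3 × 3 windows:

$$\Gamma_I(k) = \frac{1}{N}\sum_{i=1}^{N}\frac{\sigma_{I,1}^2(k) + \varepsilon'}{\sigma_{I,1}^2(i) + \varepsilon'} \tag{15}$$

and replaces ε in the cost function of Eq. 11 with ε/Γ_I(k), so that the slope coefficient becomes

$$a_k = \frac{\frac{1}{|\omega|}\sum_{i \in \omega_k} I_i p_i - \mu_k \bar{p}_k}{\sigma_k^2 + \varepsilon/\Gamma_I(k)} \tag{16}$$

In Eqs. 15, 16, σ²_{I,1}(k) is the variance of I in the 3 × 3 window centered at pixel k, N is the number of pixels in the image, and ε' is a small constant, typically (0.001 × L)² with L the dynamic range of the image. Γ_I(k) is larger than 1 at edges and smaller than 1 in flat regions, so edges are smoothed less and flat regions more.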
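The edge-aware weighting of WGIF can be sketched by dividing the guided filter's regularization by a per-pixel weight built from 3 × 3 local variances. This is a minimal NumPy sketch under the same assumptions as a plain guided filter (single channel, intensities in [0, 1], edge padding); the defaults r = 4, eps = 1e-3, and dynamic_range = 1.0 are hypothetical, and box_mean is repeated so the block runs on its own.

```python
import numpy as np


def box_mean(img: np.ndarray, r: int) -> np.ndarray:
    """Mean over the (2r+1) x (2r+1) window around each pixel, via an integral image."""
    h, w = img.shape
    size = 2 * r + 1
    padded = np.pad(img, r, mode="edge")
    integral = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    window_sum = (integral[size:size + h, size:size + w]
                  - integral[:h, size:size + w]
                  - integral[size:size + h, :w]
                  + integral[:h, :w])
    return window_sum / size ** 2


def edge_aware_weight(guide: np.ndarray, dynamic_range: float = 1.0) -> np.ndarray:
    """WGIF edge-aware weighting from 3 x 3 local variances of the guidance image."""
    guide = guide.astype(np.float64)
    eps_w = (0.001 * dynamic_range) ** 2
    var3 = box_mean(guide ** 2, 1) - box_mean(guide, 1) ** 2
    var3 = np.maximum(var3, 0.0) + eps_w   # clamp rounding noise, then regularize
    # Gamma(k) = (1/N) * sum_i var3(k) / var3(i) = var3(k) * mean(1 / var3)
    return var3 * np.mean(1.0 / var3)


def weighted_guided_filter(guide, src, r=4, eps=1e-3, dynamic_range=1.0):
    """Guided filter whose regularization eps is divided by the edge-aware weight."""
    guide = guide.astype(np.float64)
    src = src.astype(np.float64)
    gamma = edge_aware_weight(guide, dynamic_range)
    mean_i = box_mean(guide, r)
    mean_p = box_mean(src, r)
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    var_i = box_mean(guide * guide, r) - mean_i ** 2
    a = cov_ip / (var_i + eps / gamma)   # edges (gamma > 1) are regularized less
    b = mean_p - a * mean_i
    return box_mean(a, r) * guide + box_mean(b, r)
```

On a vertical step edge the weight exceeds 1 next to the edge and falls below 1 in the flat areas, which is exactly the behavior that lets WGIF keep edges while smoothing homogeneous regions.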

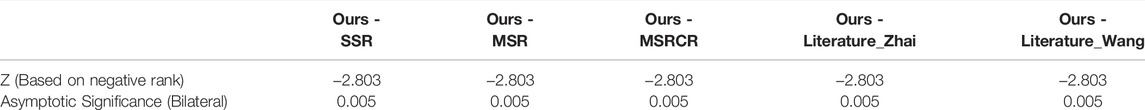

Figure 3 compares the results of WGIF and FGIF. The FGIF-processed images still contain some noise, whereas the WGIF results are clearly improved in this respect.

FIGURE 3. The first row is the image obtained after FGIF processing; The second row is the image obtained after WGIF processing.

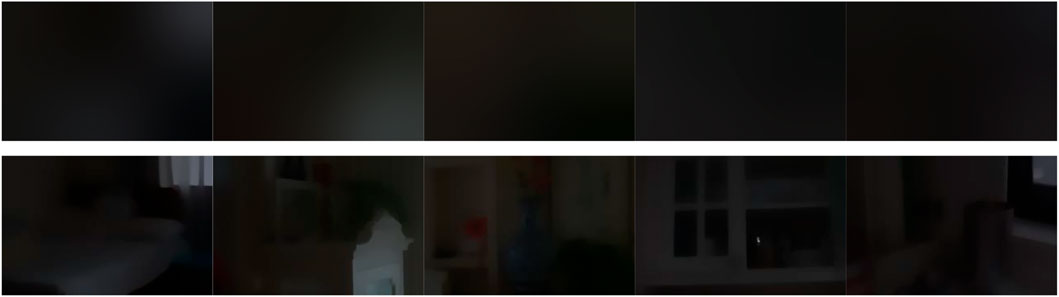

Obtaining the Main Feature Layer

HE is used for image enhancement and WGIF for edge-preserving noise reduction; the result of each step is shown in Figure 4. The HE result is improved compared with the original image, but HE also amplifies the noise in the image. WGIF filters out part of the noise (along with some fine detail), and because it takes the texture differences between regions into account, it avoids the "halo" phenomenon and the "artifacts" caused by gradient reversal.
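The histogram equalization step can be sketched as a lookup table built from the normalized cumulative histogram. This is a minimal NumPy version of global HE for one 8-bit channel; whether the paper equalizes each RGB channel or a luminance channel is not stated, so applying it per channel is an assumption.

```python
import numpy as np


def histogram_equalization(gray: np.ndarray) -> np.ndarray:
    """Global HE for an 8-bit single-channel image via the normalized CDF."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]        # CDF value of the darkest occupied gray level
    denom = cdf[-1] - cdf_min
    if denom == 0:                   # single gray level: nothing to stretch
        return gray.copy()
    # Map each gray level so the occupied levels spread over the full 0..255 range.
    lut = np.round((cdf - cdf_min) / denom * 255).clip(0, 255).astype(np.uint8)
    return lut[gray]
```

For example, an image containing only the levels 0, 50, 100, and 150 (one pixel each) is mapped to 0, 85, 170, and 255. The same stretching applies to any noise present in the image, which is why the WGIF step follows.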

FIGURE 4. Results of obtaining the main feature layer. (A) Image to be processed (B) Histogram Equalization (C) WGIF.

Compensation Layer

Structure Extraction From Texture via Relative Total Variation

As described in Traditional Retinex Algorithm, the traditional Retinex algorithm convolves a Gaussian kernel with the original image to estimate the irradiation component and takes the remaining reflection component as the enhancement result. However, the Gaussian estimate is easily biased at image edges, producing the "halo" phenomenon, and the resulting loss of illumination information leads to unnatural enhancement results. To address this, this paper obtains the irradiation component of the compensation layer using structure extraction from texture via relative total variation (RTV), proposed by Xu L et al. (Xu et al., 2012) in 2012, which better preserves the main edges of the image and thus reduces the halo in edge-rich regions. The model of the method is as follows:

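$$\arg\min_{S}\sum_{p}\left[(S_p - I_p)^2 + \lambda\left(\frac{D_x(p)}{L_x(p) + \varepsilon} + \frac{D_y(p)}{L_y(p) + \varepsilon}\right)\right] \tag{17}$$

$$D_x(p) = \sum_{q \in R(p)} g_{p,q}\,\big|(\partial_x S)_q\big| \tag{18}$$

$$D_y(p) = \sum_{q \in R(p)} g_{p,q}\,\big|(\partial_y S)_q\big| \tag{19}$$

$$L_x(p) = \Big|\sum_{q \in R(p)} g_{p,q}\,(\partial_x S)_q\Big| \tag{20}$$

$$L_y(p) = \Big|\sum_{q \in R(p)} g_{p,q}\,(\partial_y S)_q\Big| \tag{21}$$

In Eqs 17–21, S is the output image, I is the input image, p is the pixel index, and λ is the weighting factor that adjusts the degree of smoothing: the larger λ, the smoother the image. ε is a small positive constant that avoids division by zero; D_x and D_y are the windowed total variations and L_x and L_y the windowed inherent variations in the x and y directions; R(p) is the rectangular region centered at p; and g_{p,q} is a Gaussian weight of the spatial distance between p and q.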
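The relative total variation term can be illustrated by computing its per-pixel value for a given image. This sketch evaluates only the regularizer of the RTV model, not the full iterative solver of Xu et al.; the Gaussian window scale sigma = 3, the constant eps = 1e-3, forward differences, and edge padding are assumptions made for illustration.

```python
import numpy as np


def gaussian_window_sum(values: np.ndarray, sigma: float) -> np.ndarray:
    """Sum over R(p) of g_{p,q} * values_q with a separable, normalized Gaussian g."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    k /= k.sum()
    padded = np.pad(values, radius, mode="edge")
    rows = np.array([np.convolve(row, k, mode="valid") for row in padded])
    return np.array([np.convolve(col, k, mode="valid") for col in rows.T]).T


def rtv_penalty(s: np.ndarray, sigma: float = 3.0, eps: float = 1e-3) -> np.ndarray:
    """Per-pixel RTV term D_x / (L_x + eps) + D_y / (L_y + eps)."""
    s = s.astype(np.float64)
    dx = np.diff(s, axis=1, append=s[:, -1:])    # forward differences, 0 at the border
    dy = np.diff(s, axis=0, append=s[-1:, :])
    d_x = gaussian_window_sum(np.abs(dx), sigma)  # windowed total variation
    d_y = gaussian_window_sum(np.abs(dy), sigma)
    l_x = np.abs(gaussian_window_sum(dx, sigma))  # windowed inherent variation
    l_y = np.abs(gaussian_window_sum(dy, sigma))
    return d_x / (l_x + eps) + d_y / (l_y + eps)
```

The design idea shows up directly: on a smooth ramp (structure) the gradients in a window share one sign, so D and L are nearly equal and the ratio stays below 1, while on a checkerboard (texture) the signed gradients cancel, L becomes tiny, and the penalty is large. Minimizing Eq. 17 therefore removes texture but keeps main structures.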

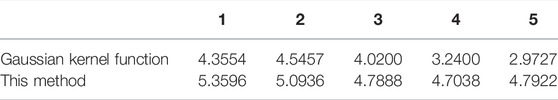

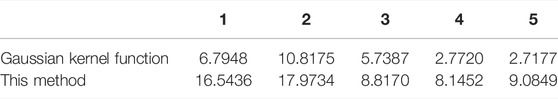

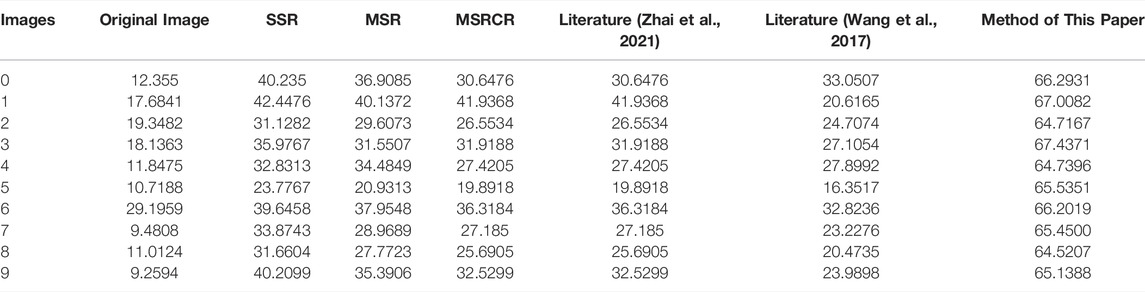

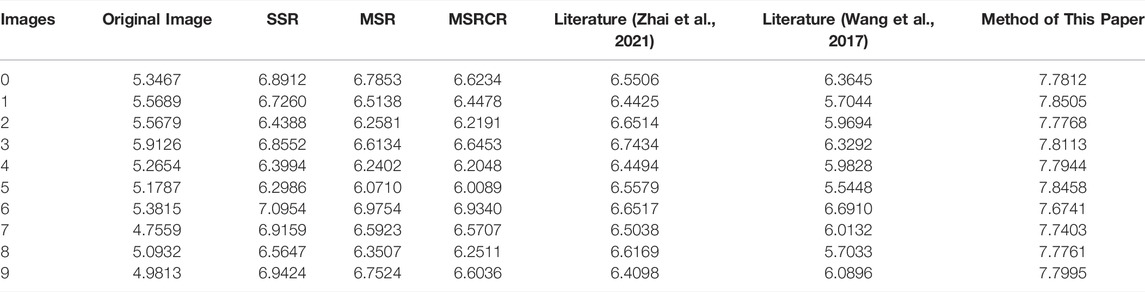

To demonstrate the practical advantages of this method, irradiation components were obtained from LOLdataset images both by structure extraction from texture via relative total variation and by convolution with the Gaussian kernel of the traditional Retinex algorithm; the results are shown in Figure 5. Information Entropy and Standard Deviation were used to assess their quality, with the results listed in Tables 1 and 2. Figure 5 shows that the relative total variation method preserves the irradiation component better, and the evaluation metrics show that its IE and SD exceed those of the Gaussian convolution method, indicating that it preserves more image information when acquiring the irradiation component.

FIGURE 5. The first row is the irradiation component obtained by Gaussian kernel function; the second row is the irradiation component obtained by the structure extraction from texture via relative total variation method.

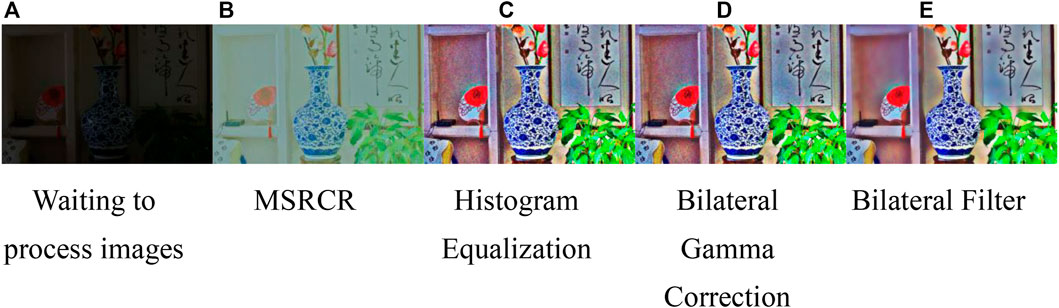

Obtaining the Compensation Layer

For the original image, a duplicate layer is created as the image to be processed. Structure extraction from texture via relative total variation is used to obtain its irradiation component, and Retinex theory with color restoration is used to obtain the reflection component, which then undergoes histogram equalization, bilateral gamma correction, and bilateral filtering. The result of each step is shown in Figure 6. MSRCR essentially recovers the image content, but the saturation is insufficient to reproduce the real scene. HE restores the color to some extent, but the light-dark transition regions are poorly handled. Therefore, this paper uses an improved bilateral gamma function (Wang et al., 2021). The mathematical expression of the traditional gamma function is as follows:

FIGURE 6. Results of obtaining the compensation layer. (A) Image to be processed (B) MSRCR (C) Histogram Equalization (D) Bilateral Gamma Correction (E) Bilateral Filter.

In Eq. 23,

Because the traditional bilateral gamma function can only apply a fixed, mechanical enhancement, an improved bilateral gamma function proposed by other researchers, which takes the distribution of the illumination function into account, is used instead; its mathematical expression is as follows:

In Eq. 24, The value of

The improved bilateral gamma function thus adaptively corrects the luminance transition regions. Finally, bilateral filtering is applied for edge-preserving noise reduction to obtain the final compensation layer.
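The final edge-preserving step can be sketched with a brute-force bilateral filter, which weights each neighbor by both spatial distance and intensity difference. The paper does not report its bilateral filter settings, so radius = 3, sigma_s = 2.0, and sigma_r = 0.1 (for intensities in [0, 1]) are hypothetical values, and edge padding at the borders is an assumption.

```python
import numpy as np


def bilateral_filter(img: np.ndarray, radius: int = 3, sigma_s: float = 2.0,
                     sigma_r: float = 0.1) -> np.ndarray:
    """Brute-force bilateral filter for a single-channel float image in [0, 1]."""
    img = img.astype(np.float64)
    h, w = img.shape
    padded = np.pad(img, radius, mode="edge")
    acc = np.zeros_like(img)
    norm = np.zeros_like(img)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = padded[radius + dy: radius + dy + h, radius + dx: radius + dx + w]
            w_s = np.exp(-(dx * dx + dy * dy) / (2 * sigma_s ** 2))       # spatial closeness
            w_r = np.exp(-((shifted - img) ** 2) / (2 * sigma_r ** 2))    # intensity similarity
            acc += w_s * w_r * shifted
            norm += w_s * w_r
    return acc / norm
```

Because the range weight collapses for neighbors across a strong edge, a step edge passes through almost unchanged, while small fluctuations in flat regions are averaged away.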

Image Fusion

Selection of the Fitness Function

After the processing above, the main feature layer and the compensation layer are obtained and fused in the final step of the proposed method. To guide the fusion, an image evaluation system is established with three evaluation indexes: Information Entropy, Standard Deviation, and Average Gradient.

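The Standard Deviation (SD) reflects the dispersion of the image pixel values: the larger the standard deviation, the wider the dynamic range and the more gray levels the image has. SD is calculated as

$$SD = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\big(F(i,j) - \mu\big)^2} \tag{25}$$

In Eq. 25, M and N are the numbers of rows and columns of the image, F(i,j) is the gray value at pixel (i,j), and μ is the mean gray value.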

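The Average Gradient (AG) represents the variation of fine details across directions in the image: the larger the AG, the sharper the details and the stronger the sense of depth. For an M × N image with gray values F(i,j), AG is calculated as

$$AG = \frac{1}{(M-1)(N-1)}\sum_{i=1}^{M-1}\sum_{j=1}^{N-1}\sqrt{\frac{\big(F(i+1,j) - F(i,j)\big)^2 + \big(F(i,j+1) - F(i,j)\big)^2}{2}} \tag{26}$$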

The Information Entropy (IE) of an image measures the amount of information it contains: the greater the IE, the more informative and detailed the image and the higher its quality. IE is calculated as

$$IE = -\sum_{x=0}^{R} P(x)\log_2 P(x) \tag{27}$$

In Eq. 27, R is the maximum gray level, usually R = 2^8 − 1 = 255, and P(x) is the probability that a pixel in the image has gray value x.
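The three evaluation indexes can be computed as follows. This is a minimal NumPy sketch assuming 8-bit grayscale input; the text does not say whether color images are converted to gray or evaluated per channel, so that choice is left to the caller.

```python
import numpy as np


def standard_deviation(img: np.ndarray) -> float:
    """SD: population standard deviation of the gray values."""
    return float(np.std(img.astype(np.float64)))


def average_gradient(img: np.ndarray) -> float:
    """AG: mean of sqrt((dx^2 + dy^2) / 2) over the (M-1) x (N-1) grid."""
    f = img.astype(np.float64)
    dx = f[:-1, 1:] - f[:-1, :-1]   # F(i, j+1) - F(i, j)
    dy = f[1:, :-1] - f[:-1, :-1]   # F(i+1, j) - F(i, j)
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


def information_entropy(img: np.ndarray) -> float:
    """IE: Shannon entropy in bits of the 8-bit gray-level histogram."""
    hist = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]                    # 0 * log 0 is taken as 0
    return float(-(p * np.log2(p)).sum())
```

As a quick check, the 2 × 2 image [[0, 255], [255, 0]] has two equally likely gray levels (IE = 1 bit), SD = 127.5, and a single gradient cell with AG = 255.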

Following the concept of multi-objective optimization (Li et al., 2019e; Liao et al., 2021; Xiao et al., 2021; Liu et al., 2022d; Yun et al., 2022c), IE, AG, and SD are combined with equal weights, treating the three indexes as equally important in image evaluation. The resulting fitness function is as follows:

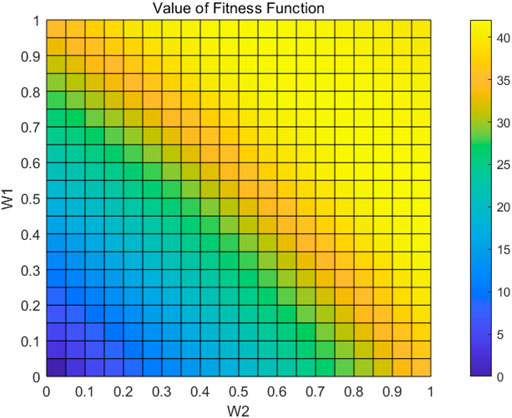

Weighted fusion of the main feature layer and the compensation layer with different weights yields different fitness values, as shown in Figure 7. The figure shows that the fitness value varies with the weights and that the maximum value should be generated in

Traditional nonlinear optimization algorithms, such as Gradient Descent, Newton's Method, and Quasi-Newton Methods, update the solution according to derivative-based rules. For multi-objective nonlinear optimization problems they struggle to meet the requirements, because following their prescribed steps is computationally expensive. Gradient Descent slows down as it approaches a minimum and needs many iterations; Newton's Method converges quadratically (second order) and is fast, but each step requires inverting the Hessian matrix of the objective function, which is computationally expensive.

Metaheuristic algorithms model the optimization problem on the laws of biological activity and natural physical phenomena. Evolution-based metaheuristics draw on the population's previous experience in solving the problem and retain the strategies that have worked well, so that individuals improve over the iterations and the best solution is eventually reached. Given the computational complexity of the objective function and this property of metaheuristics, the artificial bee colony algorithm is chosen for the optimization.
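The objective handed to the optimizer can be sketched as weighted fusion followed by an equal-weight combination of IE, AG, and SD, negated because the optimizer minimizes. This is a hypothetical sketch: the exact fitness formula is not reproduced in this text, so averaging the three indexes with weights of 1/3 each, fusing as w1·main + w2·compensation, and clipping to 8 bits are all assumptions.

```python
import numpy as np


def fuse(main_layer: np.ndarray, comp_layer: np.ndarray, w1: float, w2: float) -> np.ndarray:
    """Hypothetical weighted fusion of the two layers, clipped back to 8 bits."""
    fused = w1 * main_layer.astype(np.float64) + w2 * comp_layer.astype(np.float64)
    return np.clip(np.round(fused), 0, 255).astype(np.uint8)


def fitness(img: np.ndarray) -> float:
    """Assumed equal-weight (1/3 each) combination of IE, AG and SD for a uint8 image."""
    f = img.astype(np.float64)
    p = np.bincount(img.ravel(), minlength=256) / img.size
    p = p[p > 0]
    ie = -(p * np.log2(p)).sum()
    dx = f[:-1, 1:] - f[:-1, :-1]
    dy = f[1:, :-1] - f[:-1, :-1]
    ag = np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0))
    sd = f.std()
    return float((ie + ag + sd) / 3.0)


def objective(weights, main_layer, comp_layer) -> float:
    """Quantity to minimize: the negated fitness of the fused image."""
    w1, w2 = weights
    return -fitness(fuse(main_layer, comp_layer, w1, w2))
```

A flat image scores 0 on all three indexes, so any fusion that adds contrast or detail yields a negative (better) objective value.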

Artificial Bee Colony Algorithm

Inspired by the foraging behavior of bee colonies, Karaboga (2005) proposed the Artificial Bee Colony (ABC) algorithm, a global optimization algorithm based on swarm intelligence. Its bionic principle is that bees perform different activities during nectar collection according to their division of labor and share colony information to find the best nectar source. In ABC, the population is divided into three types of bees: employed bees, scout bees, and follower (onlooker) bees. When an employed bee finds a nectar source, it shares it with follower bees with a certain probability; a scout bee follows no other bee and searches for nectar sources alone, and once it finds one it becomes an employed bee and recruits follower bees; when a follower bee is recruited by several employed bees, it chooses one of them to follow until the nectar source is exhausted.

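Determination of the initial location of the nectar source:

$$x_{ij} = x_j^{\min} + \mathrm{rand}(0,1)\,\big(x_j^{\max} - x_j^{\min}\big) \tag{29}$$

In Eq. 29, x_{ij} is the j-th dimension of the i-th nectar source (i = 1, 2, …, SN; j = 1, 2, …, D), SN is the number of nectar sources, D is the number of variables, and x_j^{min} and x_j^{max} are the lower and upper bounds of dimension j.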

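Employed bees searching for new nectar sources:

$$v_{ij} = x_{ij} + \phi_{ij}\,\big(x_{ij} - x_{kj}\big) \tag{30}$$

In Eq. 30, v_{ij} is the candidate nectar source, k is a randomly chosen source index with k ≠ i, j is a randomly chosen dimension, and φ_{ij} is a random number in [−1, 1]. The candidate replaces x_i only if its fitness is better (greedy selection).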

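Probability of follower bees selecting an employed bee:

$$P_i = \frac{fit_i}{\sum_{n=1}^{SN} fit_n} \tag{31}$$

where fit_i is the fitness of nectar source i and SN is the number of nectar sources.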

Scout bees searching for new nectar sources:

During the search, if a nectar source has not been improved after a number of trials reaching the threshold T, the source is abandoned and a scout bee finds a new nectar source. The flow chart of the artificial bee colony algorithm is shown in Figure 8.
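The employed, follower, and scout phases described above can be combined into a compact minimizer. This is a minimal sketch of the standard ABC scheme, not the authors' code; the defaults n_food = 20, max_iter = 100, limit = 50, and the fitness transform 1/(1 + cost) for non-negative costs are conventional choices assumed here.

```python
import numpy as np


def abc_minimize(f, lower, upper, n_food=20, max_iter=100, limit=50, seed=0):
    """Minimal Artificial Bee Colony search for the minimum of f inside box bounds."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=np.float64)
    upper = np.asarray(upper, dtype=np.float64)
    dim = lower.size
    # Initial nectar sources: uniform random positions inside the bounds.
    foods = lower + rng.random((n_food, dim)) * (upper - lower)
    costs = np.array([f(x) for x in foods], dtype=np.float64)
    trials = np.zeros(n_food, dtype=int)   # consecutive searches without improvement

    def quality(c):
        # Standard ABC transform of a cost (to be minimized) into a fitness.
        return 1.0 / (1.0 + c) if c >= 0 else 1.0 + abs(c)

    def try_neighbour(i):
        k = rng.choice([m for m in range(n_food) if m != i])   # partner source, k != i
        j = rng.integers(dim)                                  # one random dimension
        candidate = foods[i].copy()
        candidate[j] += rng.uniform(-1.0, 1.0) * (foods[i, j] - foods[k, j])
        candidate = np.clip(candidate, lower, upper)
        cost = f(candidate)
        if cost < costs[i]:                  # greedy selection
            foods[i], costs[i], trials[i] = candidate, cost, 0
        else:
            trials[i] += 1

    best = costs.argmin()
    best_x, best_c = foods[best].copy(), costs[best]
    for _ in range(max_iter):
        for i in range(n_food):              # employed-bee phase
            try_neighbour(i)
        q = np.array([quality(c) for c in costs])
        for i in rng.choice(n_food, size=n_food, p=q / q.sum()):  # follower-bee phase
            try_neighbour(i)
        stale = trials.argmax()
        if trials[stale] > limit:            # scout-bee phase: abandon and re-seed
            foods[stale] = lower + rng.random(dim) * (upper - lower)
            costs[stale] = f(foods[stale])
            trials[stale] = 0
        best = costs.argmin()
        if costs[best] < best_c:
            best_x, best_c = foods[best].copy(), costs[best]
    return best_x, float(best_c)
```

For the weight search in this paper, f would be the negated fitness of the fused image over two variables. Mapping the reported n-pop = 45 onto n_food is an assumption, because the text does not say whether n-pop counts all bees or only nectar sources.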

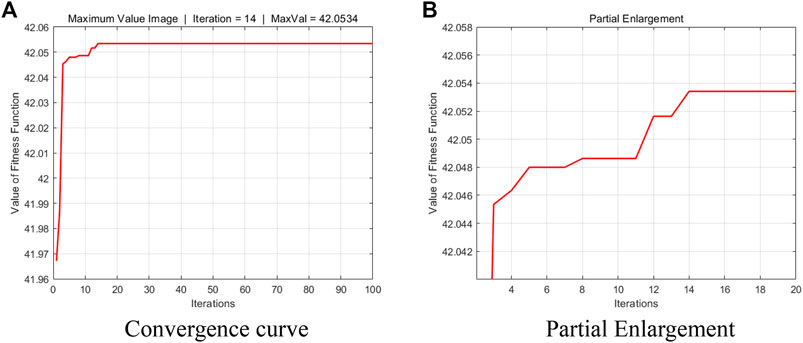

The fitness function above is optimized iteratively with the artificial bee colony algorithm, with the number of variables set to 2, max-iter to 100, n-pop to 45, and the maximum number of exploitation trials per nectar source (the abandonment threshold) to 90. The convergence curve of the optimal weight parameters is shown in Figure 9. Because the optimization algorithm searches for a minimum, the fitness values are negated; Figure 9 shows that the maximum value of the fitness function is 42.0534 and that convergence is reached after 14 iterations.

FIGURE 9. Convergence curve of artificial bee colony algorithm. (A) Convergence curve (B) Partial Enlargement.

Experiments and Results Analysis

The experiments were run on a 64-bit Windows 10 operating system with an Intel(R) Core(TM) i5-6300HQ CPU at 2.30 GHz, an NVIDIA 960M GPU with 2 GB of memory, and 8 GB of RAM. All algorithms were run in MATLAB 2021b and in Python 3.7 on the PyCharm platform, and the statistical analysis was performed with IBM SPSS Statistics 26.

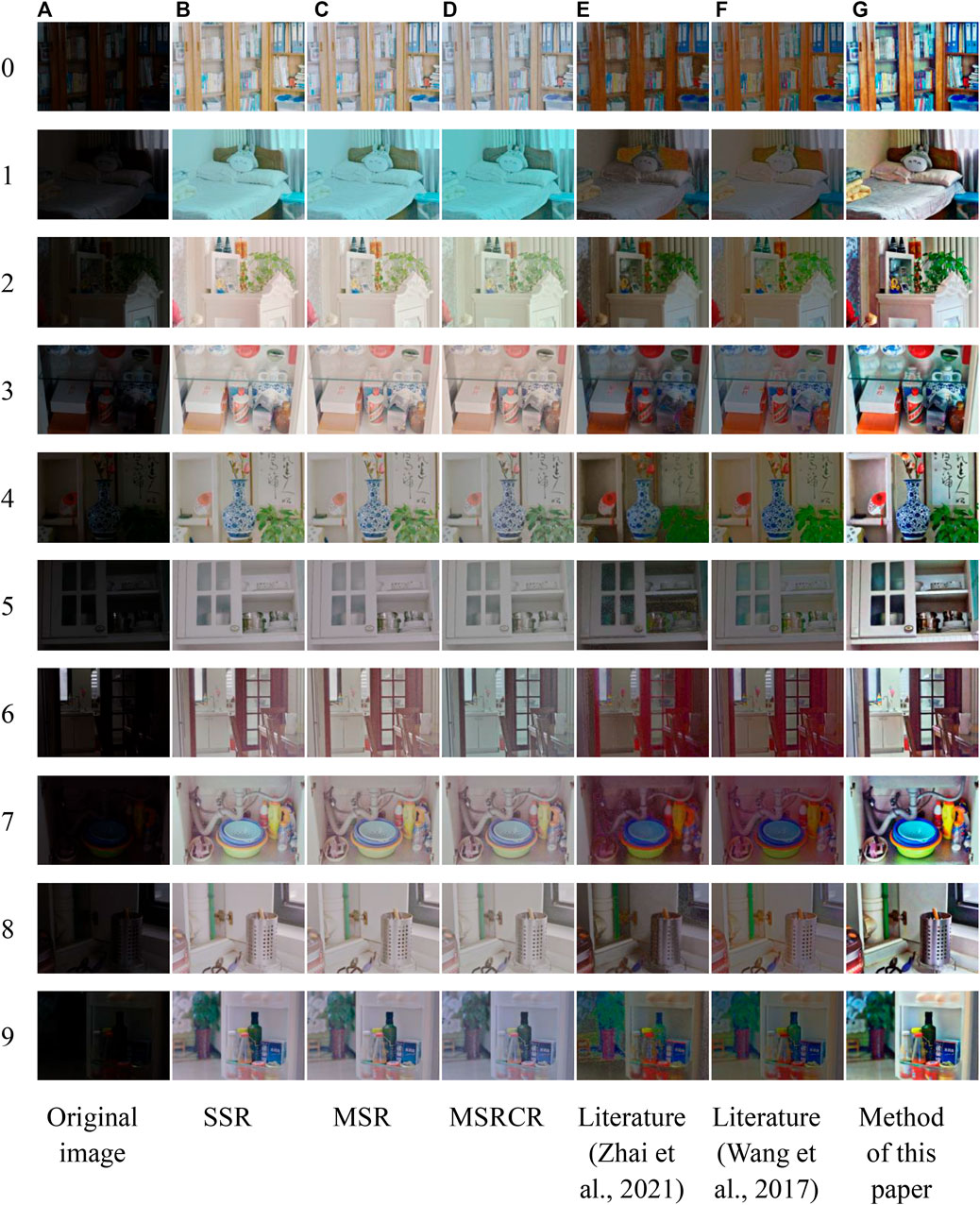

All experimental images come from the LOLdataset: 200 low-illumination images were randomly selected and processed one by one with each algorithm, and representative images were chosen to compare the processing effects. The proposed algorithm is compared with SSR, MSR, MSRCR, and the algorithms of literature (Zhai et al., 2021) and literature (Wang et al., 2017). The Gaussian surround scale of SSR is set to 100; the Gaussian surround scales of MSR are set to 15, 80, and 250; those of MSRCR are set to 15, 80, and 250, with α = 125 and β = 46. The algorithms of literature (Zhai et al., 2021) and literature (Wang et al., 2017) were reimplemented from their papers as faithfully as possible. The enhancement results of the different methods are analyzed by subjective and objective evaluation, and the processing results are shown in Figure 10.

FIGURE 10. Low-illumination image processing results under different algorithms. (A) Original image (B) SSR (C) MSR (D) MSRCR (E) Literature (Zhai et al., 2021) (F) Literature (Wang et al., 2017) (G) Method of this paper.

Subjective Evaluation

Figure 10 shows that SSR and MSR improve brightness compared with the original image, but their color retention is poor: the images look whitish and color loss is severe. MSRCR also improves brightness and restores color to some extent, but color reproduction is limited and details are lost. The results of literature (Zhai et al., 2021) reproduce color better, but brightness enhancement is modest and some details that are not effectively enhanced remain buried in the dark areas, for example the end of the bed in Figure 10-(e)-1, the shadow of the cabinet in the lower left corner of 10-(e)-4, and the cabinet in the middle of 10-(e)-8; a small amount of noise also remains in places, for example the edge of 10-(e)-1 and the glass in 10-(e)-5. The results of literature (Wang et al., 2017) improve brightness somewhat, with almost no noise and relatively good color retention, but the images lack a sense of depth, for example the bed sheet in 10-(f)-1 and the cabinet in 10-(f)-4; some detail is also lost, for example the restored shadows in 10-(f)-4 and 10-(f)-8.

Overall, the images enhanced by the proposed algorithm have higher color fidelity, more prominent details and better-preserved structural information, and are more consistent with human visual perception.

Objective Evaluation

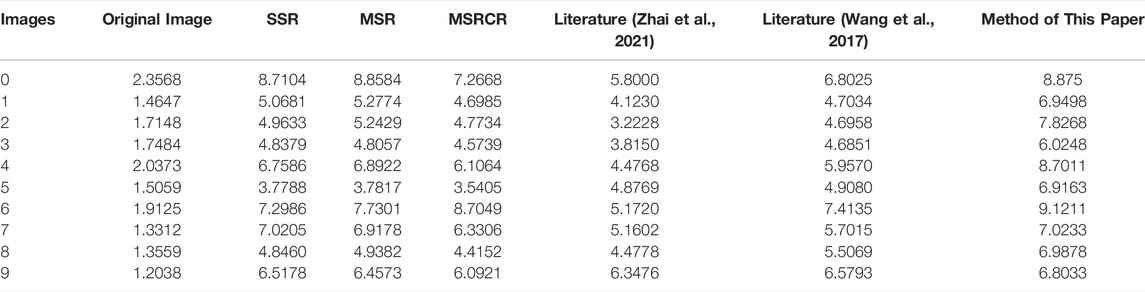

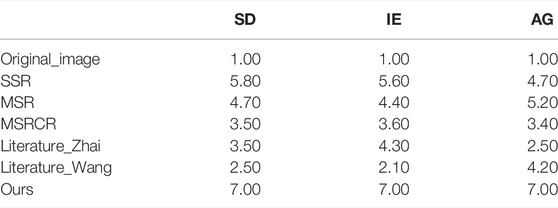

Subjective evaluation is susceptible to interference from other factors and varies from person to person. To compare the quality of the enhancement results of the different methods more rigorously and to ensure the reliability of the experiments, Standard Deviation (SD), Information Entropy (IE) and Average Gradient (AG) are used as evaluation metrics. SD reflects the dispersion of the image pixel values: the greater the SD, the greater the dynamic range of the image. IE measures the amount of information in an image: the higher the IE, the more information the image contains. AG reflects the variation of fine details across the image, measured through local gray-level gradients: the larger the AG, the sharper the image and the stronger its sense of depth. The evaluation results of low-illumination image enhancement with the different algorithms are shown in Tables 3–5.
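The three metrics can be computed as follows. This is a minimal pure-Python sketch using commonly cited definitions, which are assumptions here because this section does not restate the paper's formulas: SD is the population standard deviation of the gray levels, IE is the Shannon entropy (in bits) of the gray-level histogram, and AG averages sqrt((dv² + dh²)/2) over forward differences. The image is assumed to be a single channel of integer gray levels (0 to 255); for color images one would typically evaluate a grayscale version or average over channels.

```python
import math
from collections import Counter


def standard_deviation(img):
    """Population standard deviation of all gray levels (SD)."""
    pixels = [v for row in img for v in row]
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((v - mean) ** 2 for v in pixels) / len(pixels))


def information_entropy(img):
    """Shannon entropy in bits of the gray-level histogram (IE)."""
    pixels = [int(v) for row in img for v in row]
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())


def average_gradient(img):
    """Mean of sqrt((dv^2 + dh^2) / 2) over forward differences (AG)."""
    m, n = len(img), len(img[0])
    total = 0.0
    for i in range(m - 1):
        for j in range(n - 1):
            dv = img[i + 1][j] - img[i][j]  # vertical (next row) difference
            dh = img[i][j + 1] - img[i][j]  # horizontal (next column) difference
            total += math.sqrt((dv * dv + dh * dh) / 2.0)
    return total / ((m - 1) * (n - 1))
```

A typical use is to compute each metric for both the original and the enhanced image, so that the improvement can be reported as a difference.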

Statistical analysis is performed on the data in Tables 3–5. The Friedman test is used to determine whether the results of the compared methods differ significantly, and the Wilcoxon signed-rank test is used to assess whether the proposed method differs from each of the other methods.

The Friedman test, proposed by M. Friedman in 1937, is a nonparametric test for differences among multiple related samples; its test statistic approximately follows a chi-squared distribution. It requires that: (1) the data are at least ordinal; (2) there are three or more groups; (3) the groups are related (matched); and (4) the values are a random sample from the population. The data in Tables 3–5 satisfy all of these requirements.
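The Friedman statistic itself is simple to compute. The sketch below, which is not the SPSS implementation, ranks the methods within each image (averaging tied ranks), forms Q = 12/(nk(k+1)) ΣR_j² - 3n(k+1) from the per-method rank sums R_j, and converts Q to an asymptotic p-value with the chi-squared distribution on k - 1 degrees of freedom, using the closed-form upper tail for integer degrees of freedom (Abramowitz and Stegun 26.4.4 and 26.4.5). It assumes a table with one row per image and one column per method, and it omits the tie correction that SPSS applies, so results can differ slightly when ties occur; all function names are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values ascending (1 = smallest), giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def chi2_sf(x, df):
    """Upper-tail chi-squared probability P(X > x) for integer df."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{r < df/2} (x/2)^r / r!
        term, total = 1.0, 1.0
        for r in range(1, df // 2):
            term *= (x / 2.0) / r
            total += term
        return math.exp(-x / 2.0) * total
    # Odd df: 2Q(chi) + 2Z(chi) * sum_{r=1}^{(df-1)/2} chi^(2r-1) / (1*3*...*(2r-1))
    chi = math.sqrt(x)
    z = math.exp(-x / 2.0) / math.sqrt(2.0 * math.pi)  # normal pdf at chi
    total, term = 0.0, chi
    for r in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)
    return math.erfc(chi / math.sqrt(2.0)) + 2.0 * z * total


def friedman(table):
    """table[i][j] = metric of method j on image i. Returns (Q, df, p)."""
    n, k = len(table), len(table[0])
    rank_sums = [0.0] * k
    for row in table:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    q = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    return q, k - 1, chi2_sf(q, k - 1)
```

With six methods the statistic has five degrees of freedom, so a Q above roughly 11.07 corresponds to p < 0.05.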

Under the Friedman test, the following hypotheses are set:

H0: There is no difference among the six methods compared.

H1: There are differences among the six methods compared.

The data were imported into SPSS for analysis, and the results are shown in Tables 6 and 7.

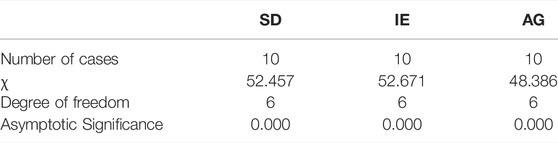

The Wilcoxon signed-rank test was proposed by F. Wilcoxon in 1945. For paired data, the test takes the difference between the two observations in each pair (that is, the deviation from the center specified by the null hypothesis), ranks the absolute values of these differences, and sums the ranks separately for the positive and the negative differences; these signed rank sums form the test statistic.
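As an illustration of the statistic just described, the following minimal sketch computes the rank sums W+ and W- for two paired lists of metric values (for example, the proposed method and one baseline evaluated on the same images) and a two-tailed p-value from the usual normal approximation, which is similar in spirit to the asymptotic significance SPSS reports. It assumes that zero differences are discarded and omits the tie correction to the variance; the function names are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values ascending (1 = smallest), giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x, y):
    """Return (W_plus, W_minus, z, two-tailed p) for paired samples x and y."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 0.0, 1.0
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    # Under H0, W+ has mean n(n+1)/4 and variance n(n+1)(2n+1)/24.
    mean = n * (n + 1) / 4.0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (w_plus - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-tailed normal p-value
    return w_plus, w_minus, z, p
```

If the proposed method scores higher on every image, all differences are positive, W- is 0 and the p-value becomes small as the number of images grows.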

Tables 3–5 show that the metric values of the proposed method are numerically greater than those of the other algorithms, so the following hypotheses are set for the Wilcoxon signed-rank test:

H0: The images enhanced by the proposed method do not differ from those enhanced by the other methods.

H1: The images enhanced by the proposed method differ from those enhanced by the other methods.

The data were imported into SPSS for analysis, and the results are shown in Tables 8 and 9.

The data in Tables 3–5 show that the proposed algorithm achieves large improvements in SD, IE and AG and is clearly better than the other five algorithms. The Friedman test results in Tables 6 and 7 show that the asymptotic significance is less than 0.001 for all three evaluation metrics, so the null hypothesis is rejected and the differences among the methods are highly statistically significant. The Wilcoxon signed-rank test results in Tables 8 and 9 show that the two-tailed asymptotic significance is less than 0.01 for all three evaluation metrics, so the null hypothesis is rejected: the proposed method differs significantly from each of the other methods. Together with its higher metric values, this shows that the images enhanced by the proposed algorithm have increased brightness, richer details, less distortion and better overall quality, which verifies the effectiveness of the proposed algorithm.

Conclusion

To address poor image quality and loss of detail information in low-illumination image enhancement, this paper proposes a low-illumination image enhancement algorithm based on improved multi-scale Retinex and ABC optimization. The original image is duplicated into two layers. The main feature layer is processed by HE and WGIF to enhance brightness, restore color and suppress noise while avoiding gradient-reversal artifacts. On the compensation layer, structure extraction from texture via relative total variation is used to estimate the irradiation component, which is combined with bilateral gamma correction and other operations to avoid halo artifacts. Finally, the Artificial Bee Colony algorithm optimizes the weights for the weighted fusion of the two layers. The experimental results verify the soundness of the proposed algorithm, which achieves better results than the five compared methods in both subjective and objective evaluations.
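For readers who want to experiment with the fusion step summarized above, the following is a minimal sketch of the basic Artificial Bee Colony algorithm (employed, onlooker and scout phases, following Karaboga's formulation) minimizing a cost over a box, together with a linear two-layer fusion F = wA + (1 - w)B. It is not the authors' implementation: the linear fusion form, the cost function, the colony size, the abandonment limit and the cycle count are all assumptions. In practice the cost would be an image-quality criterion evaluated on the fused image (for example, a negated combination of IE, SD and AG); the function names are hypothetical.

```python
import random


def fuse(layer_a, layer_b, w):
    """Hypothetical linear fusion of two flattened layers: w*A + (1 - w)*B."""
    return [w * a + (1.0 - w) * b for a, b in zip(layer_a, layer_b)]


def abc_minimize(cost, lower, upper, n_sources=20, limit=20, cycles=200, seed=0):
    """Basic ABC: minimise cost(x) for x inside the box [lower, upper]."""
    rng = random.Random(seed)
    dim = len(lower)

    def random_source():
        return [rng.uniform(lower[d], upper[d]) for d in range(dim)]

    def fitness(c):
        # Karaboga's transform from a cost (lower is better) to a fitness.
        return 1.0 / (1.0 + c) if c >= 0 else 1.0 + abs(c)

    sources = [random_source() for _ in range(n_sources)]
    costs = [cost(s) for s in sources]
    trials = [0] * n_sources

    def explore(i):
        # Neighbour v_ij = x_ij + phi * (x_ij - x_kj), phi in [-1, 1], k != i.
        k = rng.choice([m for m in range(n_sources) if m != i])
        j = rng.randrange(dim)
        candidate = sources[i][:]
        candidate[j] += rng.uniform(-1.0, 1.0) * (sources[i][j] - sources[k][j])
        candidate[j] = min(max(candidate[j], lower[j]), upper[j])
        c = cost(candidate)
        if c < costs[i]:  # greedy selection keeps the better source
            sources[i], costs[i], trials[i] = candidate, c, 0
        else:
            trials[i] += 1

    best_i = min(range(n_sources), key=costs.__getitem__)
    best_x, best_c = sources[best_i][:], costs[best_i]
    for _ in range(cycles):
        for i in range(n_sources):  # employed-bee phase
            explore(i)
        weights = [fitness(c) for c in costs]
        for _ in range(n_sources):  # onlooker phase: fitness-proportional choice
            explore(rng.choices(range(n_sources), weights=weights)[0])
        for i in range(n_sources):  # memorise the best source found so far
            if costs[i] < best_c:
                best_x, best_c = sources[i][:], costs[i]
        stale = max(range(n_sources), key=trials.__getitem__)
        if trials[stale] > limit:  # scout phase: abandon an exhausted source
            sources[stale] = random_source()
            costs[stale] = cost(sources[stale])
            trials[stale] = 0
    return best_x, best_c
```

For instance, `abc_minimize(lambda w: (w[0] - 0.3) ** 2, [0.0], [1.0])` should return a weight close to 0.3. A single fusion weight is a one-dimensional search, so the colony mainly serves to avoid getting stuck when the image-quality cost is not smooth in w.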

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This work was supported by grants of the National Natural Science Foundation of China (Grant Nos. 52075530, 51575407, 51505349, 51975324, 61733011, 41906177); the Grants of Hubei Provincial Department of Education (D20191105); the Grants of National Defense Pre-Research Foundation of Wuhan University of Science and Technology (GF201705); the Open Fund of the Key Laboratory for Metallurgical Equipment and Control of Ministry of Education in Wuhan University of Science and Technology (2018B07, 2019B13); and the Open Fund of Hubei Key Laboratory of Hydroelectric Machinery Design & Maintenance in Three Gorges University (2020KJX02, 2021KJX13).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Akay, B., and Karaboga, D. (2009). “Parameter Tuning for the Artificial Bee Colony Algorithm,” in International Conference on Computational Collective Intelligence. Editors N. T. Nguyen, B. H. Hoang, C. P. Huynh, D. Hwang, B. Trawiński, and G. Vossen (Berlin, Heidelberg: Springer), 608–619. doi:10.1007/978-3-642-04441-0_53

Bai, D., Sun, Y., Tao, B., Tong, X., Xu, M., Jiang, G., et al. (2022). Improved Single Shot Multibox Detector Target Detection Method Based on Deep Feature Fusion. Concurrency Comput. 34 (4), e6614. doi:10.1002/CPE.6614

Chang, L., Haifeng, Z., and Wu, C. (2020). Gaussian Pyramid Transform Retinex Image Enhancement Algorithm Based on Bilateral Filtering. Laser Optoelectronics Prog. 57 (16), 161019. doi:10.3788/LOP57.161019

Chen, T., Peng, L., Yang, J., Cong, G., and Li, G. (2021a). Evolutionary Game of Multi-Subjects in Live Streaming and Governance Strategies Based on Social Preference Theory during the COVID-19 Pandemic. Mathematics 9 (21), 2743. doi:10.3390/math9212743

Chen, T., Qiu, Y., Wang, B., and Yang, J. (2022). Analysis of Effects on the Dual Circulation Promotion Policy for Cross-Border E-Commerce B2B Export Trade Based on System Dynamics during COVID-19. Systems 10 (1), 13. doi:10.3390/systems10010013

Chen, T., Yin, X., Yang, J., Cong, G., and Li, G. (2021b). Modeling Multi-Dimensional Public Opinion Process Based on Complex Network Dynamics Model in the Context of Derived Topics. Axioms 10 (4), 270. doi:10.3390/axioms10040270

Cheng, Y., Li, G., Yu, M., Jiang, D., Yun, J., Liu, Y., et al. (2021). Gesture Recognition Based on Surface Electromyography-Feature Image. Concurrency Comput. Pract. Experience 33 (6), e6051. doi:10.1002/cpe.6051

Cheng, Y., Li, G., Li, J., Sun, Y., Jiang, G., Zeng, F., et al. (2020). Visualization of Activated Muscle Area Based on sEMG. Ifs 38 (3), 2623–2634. doi:10.3233/JIFS-179549

Duan, H., Sun, Y., Cheng, W., Yun, J., Jiang, D., Liu, Y., et al. (2021). Gesture Recognition Based on Multi‐modal Feature Weight. Concurrency Comput. Pract. Experience 33 (5), e5991. doi:10.1002/cpe.5991

Hao, Z., Wang, Z., Bai, D., and Zhou, S. (2021b). Towards the Steel Plate Defect Detection: Multidimensional Feature Information Extraction and Fusion. Concurrency Comput. Pract. Experience 33 (21), e6384. doi:10.1002/CPE.6384

Hao, Z., Wang, Z., Bai, D., Tao, B., Tong, X., and Chen, B. (2021a). Intelligent Detection of Steel Defects Based on Improved Split Attention Networks. Front. Bioeng. Biotechnol. 9, 810876. doi:10.3389/fbioe.2021.810876

He, K., Sun, J., and Tang, X. (2013). Guided Image Filtering. IEEE Trans. Pattern Anal. Mach. Intell. 35 (6), 1397–1409. doi:10.1109/TPAMI.2012.213

He, Y., Li, G., Liao, Y., Sun, Y., Kong, J., Jiang, G., et al. (2019). Gesture Recognition Based on an Improved Local Sparse Representation Classification Algorithm. Cluster Comput. 22 (Suppl. 5), 10935–10946. doi:10.1007/s10586-017-1237-1

Hu, J., Sun, Y., Li, G., Jiang, G., and Tao, B. (2019). Probability Analysis for Grasp Planning Facing the Field of Medical Robotics. Measurement 141, 227–234. doi:10.1016/j.measurement.2019.03.010

Huang, L., Fu, Q., He, M., Jiang, D., and Hao, Z. (2021). Detection Algorithm of Safety Helmet Wearing Based on Deep Learning. Concurrency Computat Pract. Exper 33 (13), e6234. doi:10.1002/cpe.6234

Huang, L., Fu, Q., Li, G., Luo, B., Chen, D., and Yu, H. (2019). Improvement of Maximum Variance Weight Partitioning Particle Filter in Urban Computing and Intelligence. IEEE Access 7, 106527–106535. doi:10.1109/ACCESS.2019.2932144

Huang, L., He, M., Tan, C., Jiang, D., Li, G., and Yu, H. (2020). Jointly Network Image Processing: Multi‐task Image Semantic Segmentation of Indoor Scene Based on CNN. IET image process 14 (15), 3689–3697. doi:10.1049/iet-ipr.2020.0088

Jiang, D., Li, G., Sun, Y., Hu, J., Yun, J., and Liu, Y. (2021b). Manipulator Grabbing Position Detection with Information Fusion of Color Image and Depth Image Using Deep Learning. J. Ambient Intell. Hum. Comput 12 (12), 10809–10822. doi:10.1007/s12652-020-02843-w

Jiang, D., Li, G., Sun, Y., Kong, J., Tao, B., and Chen, D. (2019a). Grip Strength Forecast and Rehabilitative Guidance Based on Adaptive Neural Fuzzy Inference System Using sEMG. Pers Ubiquit Comput. (10). doi:10.1007/s00779-019-01268-3

Jiang, D., Li, G., Sun, Y., Kong, J., and Tao, B. (2019b). Gesture Recognition Based on Skeletonization Algorithm and CNN with ASL Database. Multimed Tools Appl. 78 (21), 29953–29970. doi:10.1007/s11042-018-6748-0

Jiang, D., Li, G., Tan, C., Huang, L., Sun, Y., and Kong, J. (2021a). Semantic Segmentation for Multiscale Target Based on Object Recognition Using the Improved Faster-RCNN Model. Future Generation Comput. Syst. 123, 94–104. doi:10.1016/j.future.2021.04.019

Jiang, D., Zheng, Z., Li, G., Sun, Y., Kong, J., Jiang, G., et al. (2019c). Gesture Recognition Based on Binocular Vision. Cluster Comput. 22 (Suppl. 6), 13261–13271. doi:10.1007/s10586-018-1844-5

Jobson, D. J., Rahman, Z., and Woodell, G. A. (1997). Properties and Performance of a center/surround Retinex. IEEE Trans. Image Process. 6 (3), 451–462. doi:10.1109/83.557356

Karaboga, D., and Akay, B. (2009). A Comparative Study of Artificial Bee colony Algorithm. Appl. Math. Comput. 214 (1), 108–132. doi:10.1016/j.amc.2009.03.090

Karaboga, D. (2005). An Idea Based on Honey Bee Swarm for Numerical Optimization. Technical Report TR06. Kayseri, Turkey: Erciyes University, Engineering Faculty, Computer Engineering Department, 1–10.

Karaboga, D., and Basturk, B. (2008). On the Performance of Artificial Bee colony (ABC) Algorithm. Appl. soft Comput. 8 (1), 687–697. doi:10.1016/j.asoc.2007.05.007

Karaboga, D., and Gorkemli, B. (2014). A Quick Artificial Bee colony (qABC) Algorithm and its Performance on Optimization Problems. Appl. Soft Comput. 23, 227–238. doi:10.1016/j.asoc.2014.06.035

Land, E. H. (1964). The Retinex. Am. Scientist 52 (2), 247–264. Available at: http://www.jstor.org/stable/27838994.

Land, E. H., and McCann, J. J. (1971). Lightness and Retinex Theory. J. Opt. Soc. Am. 61 (1), 1–11. doi:10.1364/JOSA.61.000001

Li, B., Sun, Y., Li, G., Kong, J., Jiang, G., Jiang, D., et al. (2019a). Gesture Recognition Based on Modified Adaptive Orthogonal Matching Pursuit Algorithm. Cluster Comput. 22 (Suppl. 1), 503–512. doi:10.1007/s10586-017-1231-7

Li, C., Li, G., Jiang, G., Chen, D., and Liu, H. (2020a). Surface EMG Data Aggregation Processing for Intelligent Prosthetic Action Recognition. Neural Comput. Applic 32 (22), 16795–16806. doi:10.1007/s00521-018-3909-z

Li, C., Liu, J., Liu, A., Wu, Q., and Bi, L. (2019b). Global and Adaptive Contrast Enhancement for Low Illumination gray Images. IEEE Access 7, 163395–163411. doi:10.1109/ACCESS.2019.2952545

Li, C., Sun, Y., Li, G., Jiang, D., Zhao, H., and Jiang, G. (2020b). Trajectory Tracking of 4-DOF Assembly Robot Based on Quantification Factor and Proportionality Factor Self-Tuning Fuzzy PID Control. Ijwmc 18 (4), 361–370. doi:10.1504/IJWMC.2020.108536

Li, G., Jiang, D., Zhou, Y., Jiang, G., Kong, J., and Manogaran, G. (2019c). Human Lesion Detection Method Based on Image Information and Brain Signal. IEEE Access 7, 11533–11542. doi:10.1109/ACCESS.2019.2891749

Li, G., Li, J., Ju, Z., Sun, Y., and Kong, J. (2019d). A Novel Feature Extraction Method for Machine Learning Based on Surface Electromyography from Healthy Brain. Neural Comput. Applic 31 (12), 9013–9022. doi:10.1007/s00521-019-04147-3

Li, G., Zhang, L., Sun, Y., and Kong, J. (2019e). Towards the SEMG Hand: Internet of Things Sensors and Haptic Feedback Application. Multimed Tools Appl. 78 (21), 29765–29782. doi:10.1007/s11042-018-6293-x

Liao, S., Li, G., Wu, H., Jiang, D., Liu, Y., Yun, J., et al. (2021). Occlusion Gesture Recognition Based on Improved SSD. Concurrency Comput. Pract. Experience 33 (6), e6063. doi:10.1002/cpe.6063

Liao, S., Li, G., Li, J., Jiang, D., Jiang, G., Sun, Y., et al. (2020). Multi-object Intergroup Gesture Recognition Combined with Fusion Feature and KNN Algorithm. Ifs 38 (3), 2725–2735. doi:10.3233/JIFS-179558

Liu, X., Jiang, D., Tao, B., Jiang, G., Sun, Y., Kong, J., et al. (2021a). Genetic Algorithm-Based Trajectory Optimization for Digital Twin Robots. Front. Bioeng. Biotechnol. 9, 793782. doi:10.3389/fbioe.2021.793782

Liu, Y., Jiang, D., Duan, H., Sun, Y., Li, G., Tao, B., et al. (2021c). Dynamic Gesture Recognition Algorithm Based on 3D Convolutional Neural Network. Comput. Intelligence Neurosci. 2021, 4828102. doi:10.1155/2021/4828102

Liu, Y., Jiang, D., Tao, B., Qi, J., Jiang, G., Yun, J., et al. (2022c). Grasping Posture of Humanoid Manipulator Based on Target Shape Analysis and Force Closure. Alexandria Eng. J. 61 (5), 3959–3969. doi:10.1016/j.aej.2021.09.017

Liu, Y., Jiang, D., Yun, J., Sun, Y., Li, C., Jiang, G., et al. (2021b). Self-tuning Control of Manipulator Positioning Based on Fuzzy PID and PSO Algorithm. Front. Bioeng. Biotechnol. 9, 817723. doi:10.3389/fbioe.2021.817723

Liu, Y., Li, C., Jiang, D., Chen, B., Sun, N., Cao, Y., et al. (2022a). Wrist Angle Prediction under Different Loads Based on GA‐ELM Neural Network and Surface Electromyography. Concurrency Comput. 34 (3), e6574. doi:10.1002/CPE.6574

Liu, Y., Xiao, F., Tong, X., Tao, B., Xu, M., Jiang, G., et al. (2022d). Manipulator Trajectory Planning Based on Work Subspace Division. Concurrency Comput. Pract. Experience 34 (5), e6710. doi:10.1002/cpe.6710

Liu, Y., Xu, M., Jiang, G., Tong, X., Yun, J., Liu, Y., et al. (2022b). Target Localization in Local Dense Mapping Using RGBD SLAM and Object Detection. Concurrency Comput. 34 (4), e6655. doi:10.1002/CPE.6655

Long, X., and He, G. (2020). Image Enhancement Method Based on Multi-Layer Fusion and Detail Recovery. Appl. Res. Comput. 37 (02), 584–587. doi:10.19734/j.issn.1001-3695.2018.06.0572

Luo, B., Sun, Y., Li, G., Chen, D., and Ju, Z. (2020). Decomposition Algorithm for Depth Image of Human Health Posture Based on Brain Health. Neural Comput. Applic 32 (10), 6327–6342. doi:10.1007/s00521-019-04141-9

Ma, R., Zhang, L., Li, G., Jiang, D., Xu, S., and Chen, D. (2020). Grasping Force Prediction Based on sEMG Signals. Alexandria Eng. J. 59 (3), 1135–1147. doi:10.1016/j.aej.2020.01.007

Nithyananda, C. R., and Ramachandra, A. C. (2016). “Review on Histogram Equalization Based Image Enhancement Techniques,” in 2016 International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT) (IEEE), 2512–2517. doi:10.1109/ICEEOT.2016.7755145

Ozturk, C., and Karaboga, D. (2011). “Hybrid Artificial Bee Colony Algorithm for Neural Network Training,” in Proceedings of the 2011 IEEE congress of evolutionary computation (CEC), New Orleans, LA, USA, June 2011 (Piscataway, New Jersey, United States: IEEE), 84–88. doi:10.1109/CEC.2011.5949602

Parihar, A. S., and Singh, K. (2018). “A Study on Retinex Based Method for Image Enhancement,” in Proceedings of the 2018 2nd International Conference on Inventive Systems and Control (ICISC), Coimbatore, India, January 2018 (Piscataway, New Jersey, United States: IEEE), 619–624. doi:10.1109/ICISC.2018.8398874

Peiyu, Z., Weidong, Z., Jinyu, S., and Jingchun, Z. (2020). Underwater Image Enhancement Algorithm Based on Fusion of High and Low Frequency Components. Adv. Lasers Optoelectronics 57 (16), 161010. doi:10.3788/LOP57.161010

Rahman, Z., Jobson, D. J., and Woodell, G. A. (2004). Retinex Processing for Automatic Image Enhancement. J. Electron. Imaging 13 (1), 100–110. doi:10.1117/1.1636183

Singh, A. (2009). An Artificial Bee colony Algorithm for the Leaf-Constrained Minimum Spanning Tree Problem. Appl. Soft Comput. 9 (2), 625–631. doi:10.1016/j.asoc.2008.09.001

Singh, D., and Kumar, V. (2018). Dehazing of Outdoor Images Using Notch Based Integral Guided Filter. Multimed Tools Appl. 77 (20), 27363–27386. doi:10.1007/s11042-018-5924-6

Su, H., and Jung, C. (2018). Perceptual Enhancement of Low Light Images Based on Two-step Noise Suppression. IEEE Access 6, 7005–7018. doi:10.1109/ACCESS.2018.2790433

Sun, Y., Hu, J., Li, G., Jiang, G., Xiong, H., Tao, B., et al. (2020a). Gear Reducer Optimal Design Based on Computer Multimedia Simulation. J. Supercomput 76 (6), 4132–4148. doi:10.1007/s11227-018-2255-3

Sun, Y., Tian, J., Jiang, D., Tao, B., Liu, Y., Yun, J., et al. (2020c). Numerical Simulation of thermal Insulation and Longevity Performance in New Lightweight Ladle. Concurrency Computat Pract. Exper 32 (22), e5830. doi:10.1002/cpe.5830

Sun, Y., Weng, Y., Luo, B., Li, G., Tao, B., Jiang, D., et al. (2020b). Gesture Recognition Algorithm Based on Multi‐scale Feature Fusion in RGB‐D Images. IET image process 14 (15), 3662–3668. doi:10.1049/iet-ipr.2020.0148

Sun, Y., Xu, C., Li, G., Xu, W., Kong, J., Jiang, D., et al. (2020d). Intelligent Human Computer Interaction Based on Non Redundant EMG Signal. Alexandria Eng. J. 59 (3), 1149–1157. doi:10.1016/j.aej.2020.01.015

Sun, Y., Yang, Z., Tao, B., Jiang, G., Hao, Z., and Chen, B. (2021). Multiscale Generative Adversarial Network for Real‐world Super‐resolution. Concurrency Computat Pract. Exper 33 (21), e6430. doi:10.1002/CPE.6430

Tan, C., Sun, Y., Li, G., Jiang, G., Chen, D., and Liu, H. (2020). Research on Gesture Recognition of Smart Data Fusion Features in the IoT. Neural Comput. Applic 32 (22), 16917–16929. doi:10.1007/s00521-019-04023-0

Tan, S. F., and Isa, N. A. M. (2019). Exposure Based Multi-Histogram Equalization Contrast Enhancement for Non-uniform Illumination Images. IEEE Access 7, 70842–70861. doi:10.1109/ACCESS.2019.2918557

Tao, B., Wang, Y., Qian, X., Tong, X., He, F., Yao, W., et al. (2022b). Photoelastic Stress Field Recovery Using Deep Convolutional Neural Network. Front. Bioeng. Biotechnol. 10, 818112. doi:10.3389/fbioe.2022.818112

Tao, B., Huang, L., Zhao, H., Li, G., and Tong, X. (2021). A Time Sequence Images Matching Method Based on the Siamese Network. Sensors 21 (17), 5900. doi:10.3390/s21175900

Tao, B., Liu, Y., Huang, L., Chen, G., and Chen, B. (2022a). 3D Reconstruction Based on Photoelastic Fringes. Concurrency Computat Pract. Exper 34 (1), e6481. doi:10.1002/CPE.6481

Tao, W., Ningsheng, G., and Guixiang, J. (2017). “Enhanced Image Algorithm at Night of Improved Retinex Based on HIS Space,” in Proceedings of the 2017 12th International Conference on Intelligent Systems and Knowledge Engineering (ISKE), Nanjing, China, November 2017 (Piscataway, New Jersey, United States: IEEE), 1–5. doi:10.1109/ISKE.2017.8258829

Tian, J., Cheng, W., Sun, Y., Li, G., Jiang, D., Jiang, G., et al. (2020). Gesture Recognition Based on Multilevel Multimodal Feature Fusion. Ifs 38 (3), 2539–2550. doi:10.3233/JIFS-179541

Vijayalakshmi, D., Nath, M. K., and Acharya, O. P. (2020). A Comprehensive Survey on Image Contrast Enhancement Techniques in Spatial Domain. Sens Imaging 21 (1), 1–40. doi:10.1007/s11220-020-00305-3

Wang, D., Yan, W., Zhu, T., Xie, Y., Song, H., and Hu, X. (2017). “An Adaptive Correction Algorithm for Non-uniform Illumination Panoramic Images Based on the Improved Bilateral Gamma Function,” in Proceedings of the 2017 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Sydney, NSW, Australia, December 2017 (Piscataway, New Jersey, United States: IEEE), 1–6. doi:10.1109/DICTA.2017.8227404

Wang, F., Zhang, B., Zhang, C., Yan, W., Zhao, Z., and Wang, M. (2021). Low-light Image Joint Enhancement Optimization Algorithm Based on Frame Accumulation and Multi-Scale Retinex. Ad Hoc Networks 113, 102398. doi:10.1016/j.adhoc.2020.102398

Wang, W., Wu, X., Yuan, X., and Gao, Z. (2020). An experiment-based Review of Low-Light Image Enhancement Methods. IEEE Access 8, 87884–87917. doi:10.1109/ACCESS.2020.2992749

Wang, Y.-F., Liu, H.-M., and Fu, Z.-W. (2019). Low-light Image Enhancement via the Absorption Light Scattering Model. IEEE Trans. Image Process. 28 (11), 5679–5690. doi:10.1109/TIP.2019.2922106

Weifeng, Z., and Dongxue, Y. (2020). Low-Illumination-Based Enhancement Algorithm of Color Images with Fog. Adv. Lasers Optoelectronics 57 (16), 161021. doi:10.3788/LOP57.161021

Weng, Y., Sun, Y., Jiang, D., Tao, B., Liu, Y., Yun, J., et al. (2021). Enhancement of Real-Time Grasp Detection by Cascaded Deep Convolutional Neural Networks. Concurrency Comput. Pract. Experience 33 (5), e5976. doi:10.1002/cpe.5976

Wu, X., Jiang, D., Yun, J., Liu, X., Sun, Y., Tao, B., et al. (2022). Attitude Stabilization Control of Autonomous Underwater Vehicle Based on Decoupling Algorithm and PSO-ADRC. Front. Bioeng. Biotechnol. 10, 843020. doi:10.3389/fbioe.2022.843020

Xiao, F., Li, G., Jiang, D., Xie, Y., Yun, J., Liu, Y., et al. (2021). An Effective and Unified Method to Derive the Inverse Kinematics Formulas of General Six-DOF Manipulator with Simple Geometry. Mechanism Machine Theor. 159, 104265. doi:10.1016/j.mechmachtheory.2021.104265

Xu, L., Yan, Q., Xia, Y., and Jia, J. (2012). Structure Extraction from Texture via Relative Total Variation. ACM Trans. Graph. 31 (6), 1–10. doi:10.1145/2366145.2366158

Xu, M., Zhang, Y., Wang, S., and Jiang, G. (2022). Genetic-Based Optimization of 3D Burch-Schneider Cage with Functionally Graded Lattice Material. Front. Bioeng. Biotechnol. 10, 819005. doi:10.3389/fbioe.2022.819005

Yang, Z., Jiang, D., Sun, Y., Tao, B., Tong, X., Jiang, G., et al. (2021). Dynamic Gesture Recognition Using Surface EMG Signals Based on Multi-Stream Residual Network. Front. Bioeng. Biotechnol. 9, 779353. doi:10.3389/fbioe.2021.779353

Yu, M., Li, G., Jiang, D., Jiang, G., Tao, B., and Chen, D. (2019). Hand Medical Monitoring System Based on Machine Learning and Optimal EMG Feature Set. Pers Ubiquit Comput (9983). doi:10.1007/s00779-019-01285-2

Yu, M., Li, G., Jiang, D., Jiang, G., Zeng, F., Zhao, H., et al. (2020). Application of PSO-RBF Neural Network in Gesture Recognition of Continuous Surface EMG Signals. Ifs 38 (3), 2469–2480. doi:10.3233/JIFS-179535

Yun, J., Jiang, D., Liu, Y., Sun, Y., Tao, B., Kong, J., et al. (2022b). Real-time Target Detection Method Based on Lightweight Convolutional Neural Network. Front. Bioeng. Biotechnol. doi:10.3389/fbioe.2022.861286

Yun, J., Liu, Y., Sun, Y., Huang, L., Tao, B., Jiang, G., et al. (2022c). Grab Pose Detection Based on Convolutional Neural Network for Loose Stacked Object. Front. Bioeng. Biotechnol. doi:10.3389/fbioe.2022.884521

Yun, J., Sun, Y., Li, C., Jiang, D., Tao, B., Li, G., et al. (2022a). Self-adjusting Force/Bit Blending Control Based on Quantitative Factor-Scale Factor Fuzzy-PID Bit Control. Alexandria Eng. J. 61 (5), 4389–4397. doi:10.1016/j.aej.2021.09.067

Zhai, H., He, J., Wang, Z., Jing, J., and Chen, W. (2021). Improved Retinex and Multi-Image Fusion Algorithm for Low Illumination Image Enhancement. Infrared Technol. 43 (10), 987–993.

Zhang, J., Zhou, P., and Xue, M. (2018). Low-light Image Enhancement Based on Directional Total Variation Retinex. J. Computer-Aided Des. Comput. Graphics 30 (10), 1943–1953. doi:10.3724/SP.J.1089.2018.16965

Zhang, X., Xiao, F., Tong, X., Yun, J., Liu, Y., Sun, Y., et al. (2022). Time Optimal Trajectory Planing Based on Improved Sparrow Search Algorithm. Front. Bioeng. Biotechnol. doi:10.3389/fbioe.2022.852408

Zhao, G., Jiang, D., Liu, X., Tong, X., Sun, Y., Tao, B., et al. (2022). A Tandem Robotic Arm Inverse Kinematic Solution Based on an Improved Particle Swarm Algorithm. Front. Bioeng. Biotechnol. doi:10.3389/fbioe.2022.832829

Keywords: multi-scale retinex, weighted guided image filtering, ABC algorithm, bilateral gamma function, image enhancement

Citation: Sun Y, Zhao Z, Jiang D, Tong X, Tao B, Jiang G, Kong J, Yun J, Liu Y, Liu X, Zhao G and Fang Z (2022) Low-Illumination Image Enhancement Algorithm Based on Improved Multi-Scale Retinex and ABC Algorithm Optimization. Front. Bioeng. Biotechnol. 10:865820. doi: 10.3389/fbioe.2022.865820

Received: 30 January 2022; Accepted: 01 March 2022;

Published: 11 April 2022.

Edited by:

Zhihua Cui, Taiyuan University of Science and Technology, China

Reviewed by:

Marco Mollinetti, MTI ltd., Japan

Souhail Dhouib, University of Sfax, Tunisia

Rodrigo Lisbôa Pereira, Federal Rural University of the Amazon, Brazil

Copyright © 2022 Sun, Zhao, Jiang, Tong, Tao, Jiang, Kong, Yun, Liu, Liu, Zhao and Fang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zichen Zhao, zzc1196753471@163.com; Du Jiang, jiangdu@wust.edu.cn; Xiliang Tong, tongxiliang@wust.edu.cn; Zifan Fang, fzf@ctgu.edu.cn
