ORIGINAL RESEARCH article

Front. Bioeng. Biotechnol., 21 March 2022

Sec. Bionics and Biomimetics

Volume 10 - 2022 | https://doi.org/10.3389/fbioe.2022.841958

Subtype classification is critical in the treatment of gliomas because different subtypes lead to different treatment options and postoperative care. Although many radiological- or histological-based glioma classification algorithms have been developed, most of them focus on single-modality data. In this paper, we propose a two-stage model to classify gliomas into three subtypes (i.e., glioblastoma, oligodendroglioma, and astrocytoma) based on radiology and histology data. In the first stage, our model classifies each image as glioblastoma or non-glioblastoma. In the second stage, the model distinguishes astrocytoma from oligodendroglioma among the non-glioblastoma images. The radiological images and histological images pass through the two-stage design with 3D and 2D models, respectively. Then, an ensemble classification network is designed to automatically integrate the features of the two modalities. We validated our method in the MICCAI 2020 CPM-RadPath Challenge, where it won first place. Our proposed model achieves a balanced accuracy of 0.889, a Cohen’s kappa of 0.903, and an F1-score of 0.943 on the validation set. Our model could advance multimodal-based glioma research and assist pathologists and neurologists in diagnosing glioma subtypes. The code is publicly available at https://github.com/Xiyue-Wang/1st-in-MICCAI2020-CPM.

Glioma is one of the most common tumors originating in the brain and accounts for about 80% of malignant brain tumors in adults (Banerjee et al., 2020). It is characterized by high morbidity, high recurrence, high mortality, and a low cure rate (Ostrom et al., 2018). Glioma can be divided into three subtypes (Louis et al., 2016): glioblastoma, oligodendroglioma, and astrocytoma. Timely detection and treatment of each glioma subtype can effectively reduce mortality. For patients, different glioma subtypes mean different risks (Mesfin and Al-Dhahir, 2019; Wang et al., 2019; Ostrom et al., 2020). For neurologists, accurate classification of glioma subtypes is critical for tailoring therapeutic intervention (Decuyper et al., 2018). Therefore, glioma subtype classification has important clinical implications.

Before 2016, the classification of glioma subtypes relied mainly on purely histopathological criteria (Louis et al., 2007). In the 2016 report of the World Health Organization (WHO) on the classification of central nervous system (CNS) tumors, molecular parameters were used for the first time to diagnose CNS tumors. Isocitrate dehydrogenase gene mutations, 1p/19q codeletion, and histone H3 gene mutations became decisive markers for the classification of diffuse gliomas (Louis et al., 2016). However, tools for molecular analysis of tumors are not readily available in settings with limited medical resources. This leaves room for glioma diagnosis based only on histopathological analysis (Louis et al., 2016; Pei et al., 2021). Non-invasive radiology images (e.g., magnetic resonance imaging, MRI) offer another alternative for tumor classification (Reza et al., 2019).

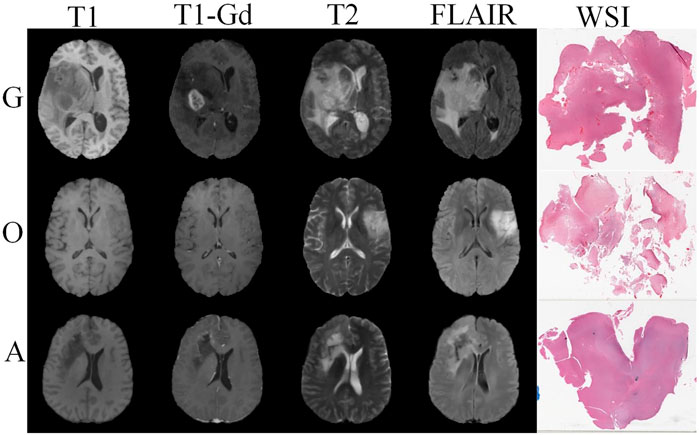

There are two common ways to observe gliomas (Mesfin and Al-Dhahir, 2019), as shown in Figure 1. MRI is a non-invasive technique that provides images of the brain in 2D and 3D formats (Abdelaziz Ismael et al., 2020). Generally, there are four MRI sequences: T1-weighted (T1), T1-weighted gadolinium-contrasted (T1-Gd), T2-weighted (T2), and T2-weighted fluid-attenuated inversion recovery (FLAIR). Different glioma subtypes have different radiological features, but radiological images alone may not be sufficient to reliably distinguish them (van Lent et al., 2020). In addition to MRI, hematoxylin and eosin (H&E) stained tissue biopsy images offer another way to observe brain tumors. They provide histological features (e.g., necrosis, hemorrhage, polymorphism, and nuclear heterogeneity) that help distinguish glioma subtypes (Wesseling and Capper, 2018). Histopathological images are often considered the gold standard for tumor diagnosis (Jothi and Rajam, 2017). However, they provide only histological information and are not comprehensive enough on their own. Clinical studies have shown that combining information from MRI scans and tissue biopsies is more helpful in the diagnosis of gliomas than using unimodal images (Young et al., 2015). However, reviewing multimodal images is time-consuming and subjective. Even among experts, the diagnosis for the same tumor image (especially samples with complex feature information) is often inconsistent, which is called interobserver variability (Ker et al., 2019; Faust et al., 2019; van den Bent, 2010). With the increasing number of patients and the limited number of pathologists, this variability needs to be addressed urgently (Ohgaki et al., 2004; Okamoto et al., 2004).

Deep learning is a powerful tool that can not only provide physicians with more objective clinical references but also improve the efficiency of tumor classification (LeCun et al., 2015; Mobadersany et al., 2018; Faust et al., 2019). Using deep neural networks to learn different types of image features is therefore a promising approach to glioma classification.

FIGURE 1. Visualization of glioblastoma (G), oligodendroglioma (O), and astrocytoma (A) in four sequences of MRI images and paired pathology images.

In this paper, we train a 3D fully convolutional network for radiology image classification (3D MRI model) and a 2D fully convolutional network for histopathological whole-slide image (WSI) classification (2D WSI model). In our experiments, we encounter the same problem as clinicians: the features of glioblastoma are easy to learn, while astrocytoma and oligodendroglioma are difficult to distinguish. This is due to the presence of “mixed gliomas” (Huse et al., 2015). Some gliomas contain mixed features of astrocytoma and oligodendroglioma, which makes the classification of these two subtypes extremely challenging. To address this problem, we adopt a two-stage strategy in which each stage focuses on a different classification task. In the first stage, the model classifies brain tumors into glioblastoma and others. In the second stage, the model focuses on learning the differences between oligodendroglioma and astrocytoma. This approach also alleviates the data imbalance problem to some extent. The MRI and WSI data pass through the two-stage design with their corresponding 3D and 2D models, respectively. Then, an ensemble classification network is designed to automatically integrate the features of the two modalities.

The contributions of our work are summarized as follows:

• We design two complementary MRI- and WSI-based models with ensemble learning to achieve higher diagnostic performance than most glioma grading methods.

• To address the clinical problem of differentiating mixed gliomas, we propose a two-stage strategy that allows the model to focus on learning mixed features between astrocytoma and oligodendroglioma with good performance.

• Our method achieves the best classification performance in the MICCAI 2020 CPM-RadPath Challenge. Our model and results can be used as benchmarks for automatic glioma classification algorithms. The code is publicly available for others to conduct reproducible research.

The rest of the paper is organized as follows: Section 2 shows the related work; Section 3 describes our algorithm implementation; Section 4 presents the data and experimental results; Section 5 summarizes our work.

Deep learning has achieved remarkable success in the computer vision community (Badrinarayanan et al., 2017; Isola et al., 2017; Pelt and Sethian, 2018). Benefiting from its superior performance, deep learning has also been widely used in medical image processing, such as prostate MRI analysis (Milletari et al., 2016; Rundo et al., 2019; Rundo et al., 2020), neuronal structure segmentation (Ronneberger et al., 2015), and brain tumor detection (Han et al., 2019). For computer-assisted brain glioma diagnosis, most current methods are unimodal (MRI or WSI), and multimodality-based glioma classification studies are still lacking. Next, we review MRI- and WSI-based glioma classification methods in turn.

Many methods have been proposed to classify gliomas using MRI images through deep learning methods based on radiological characteristics (Mohan and Subashini, 2018).

Some studies focus on the glioma classification with single sequence images, such as the T1 sequence images (Hsieh et al., 2017; Kaya et al., 2017; Ge et al., 2018; Yang et al., 2018; Abdelaziz Ismael et al., 2020), the T1-Gd sequence images (Koley et al., 2016; Singh et al., 2017; Jang et al., 2018; Dikici et al., 2020; Zhou et al., 2020), the T2 sequence images (Wu et al., 2017; Yogananda et al., 2020), and the FLAIR sequence images (Gao et al., 2016; Wu et al., 2018; Su et al., 2019). Single sequence image diagnosis often relies on limited radiological features (Arbizu et al., 2011).

There are also some studies that classify tumors based on multiple sequences (T1 with T1-Gd, T1 with T2, and others) in MRI (Wu et al., 2019; Alis et al., 2020; Chen et al., 2020; Hamghalam et al., 2020; Lu et al., 2020; Zhang et al., 2020). However, most of these studies simply divide gliomas into high-grade gliomas (HGG) and low-grade gliomas (LGG). Further work on subtype classification is relatively scarce, which is due to the difficulty of subtype characterization using MRI (Reifenberger et al., 2017).

Some other methods have also been proposed to automatically classify gliomas based on histological features by deep learning methods, which will greatly improve diagnostic efficiency and improve patient outcomes (Jothi and Rajam, 2017; Mobadersany et al., 2018; Jin et al., 2020).

Several studies report excellent performance in WSI-based binary glioma classification. Ertosun and Rubin first proposed a modular approach that applies convolutional neural networks to histopathological glioma classification (Ertosun and Rubin, 2015). Then, Yonekura et al. further investigated automated glioma analysis with deep learning techniques (Yonekura et al., 2017). Rathore et al. distinguished gliomas by learning phenotypic information (Rathore et al., 2020). Hou et al. trained patch-level classifiers to accomplish glioma classification (Hou et al., 2016), while Zhu et al. learned patient-specific information from WSIs for classification (Zhu et al., 2017).

All these methods use The Cancer Genome Atlas (TCGA) public dataset. However, limited by image annotation, they only classify gliomas into glioblastoma (GBM) and LGG, which does not provide substantial help for better treatment. Other studies have created their own private datasets (Ker et al., 2019; Jin et al., 2020); for example, Ker et al. (2019) used a fully convolutional neural network (CNN) to distinguish normal brain, LGG, and HGG.

Jin et al. demonstrated that a more refined glioma subtype classification would benefit the design of treatment plans (Jin et al., 2020). They developed a weighted cross-entropy based DenseNet model to automatically classify five glioma subtypes: oligodendroglioma, anaplastic oligodendroglioma, astrocytoma, anaplastic astrocytoma, and glioblastoma, with a patient-level accuracy of 87.5%. Nevertheless, the private dataset lacks third-party verification, and the results may not be publicly reproducible.

Traditional glioma subtype classification under a single modality relies on insufficient information. Unlike previous studies, automatic classification methods based on multimodal brain images have recently been investigated. These related works mainly came out of the MICCAI 2019 and 2020 CPM-RadPath Challenges (Chan et al., 2019; Pei et al., 2019; Hamidinekoo et al., 2020; Lerousseau et al., 2020; Pei et al., 2020; Yin et al., 2020; Zhao et al., 2020). Based on the CPM-RadPath data, we aim to develop an accurate automatic classification method based on multimodal data.

This paper applies two kinds of fully convolutional networks to achieve an end-to-end glioma subtype classification. In the following, we introduce the image preprocessing and network framework in detail.

The MICCAI 2020 CPM-RadPath Challenge provides publicly available H&E stained digital histopathology images and matched multi-sequence radiology images, including three subtypes of gliomas: astrocytoma, glioblastoma, and oligodendroglioma.

Each image in the WSI dataset is very large (e.g., 95,200 × 87,000 pixels) and cannot be directly fed into our network. We crop these WSIs into patches of 1,024 × 1,024 pixels. Then, the Otsu method is adopted to remove non-tissue regions (Otsu, 1979). Following current studies (Ma and Jia, 2019; Pei et al., 2019; Pei et al., 2020; Pei et al., 2021), we exclude uninformative tissue using a simple but effective thresholding technique. Specifically, we first calculate the mean value and standard deviation of each patch in RGB space and retain patches with a mean value between 100 and 220 and a standard deviation above 20 (Pei et al., 2019; Pei et al., 2020; Pei et al., 2021). Then, we convert each patch to the hue-saturation-value (HSV) space and exclude patches whose mean value in the H channel is below 50 (Ma and Jia, 2019).
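The patch-filtering rules above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' released code: the function names `hue_opencv_scale` and `keep_patch` are hypothetical, the mean and standard deviation are assumed to be computed over all three RGB channels jointly, and the hue threshold of 50 is assumed to refer to OpenCV's 8-bit hue scale (0 to 179). The Otsu-based background removal step is omitted.

```python
import numpy as np


def hue_opencv_scale(rgb):
    """Hue of an (H, W, 3) uint8 RGB array on an assumed 0-179 scale (degrees / 2)."""
    rgb = rgb.astype(np.float64) / 255.0
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    cmax = rgb.max(axis=-1)
    delta = cmax - rgb.min(axis=-1)
    safe = np.where(delta == 0, 1.0, delta)  # avoid division by zero on gray pixels

    is_r = cmax == r
    is_g = (cmax == g) & ~is_r
    is_b = ~is_r & ~is_g

    sextant = np.zeros_like(cmax)
    sextant = np.where(is_r, ((g - b) / safe) % 6.0, sextant)
    sextant = np.where(is_g, (b - r) / safe + 2.0, sextant)
    sextant = np.where(is_b, (r - g) / safe + 4.0, sextant)
    sextant = np.where(delta == 0, 0.0, sextant)
    return sextant * 30.0  # 60 degrees per sextant, halved to fit 0-179


def keep_patch(patch):
    """Apply the RGB mean/std rule and the hue rule described in the text."""
    mean = patch.mean()
    std = patch.std()
    if not (100 <= mean <= 220 and std > 20):
        return False
    return bool(hue_opencv_scale(patch).mean() >= 50)


# Toy example: a two-tone purple/pink patch versus a blank white patch.
tissue = np.zeros((4, 4, 3), dtype=np.uint8)
tissue[:2] = (200, 120, 180)
tissue[2:] = (90, 40, 130)
background = np.full((4, 4, 3), 255, dtype=np.uint8)
print(keep_patch(tissue), keep_patch(background))
```

In this toy example the stained patch passes all three checks, while the white patch is rejected by the mean-intensity rule before the hue is ever computed.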

The original MRI volume is 240 × 240 × 155 voxels. The slices at the beginning and end of each scan are removed because they contain little brain tissue, which reduces the number of slices per sequence to 128. We also remove the black background at the edges. The volumes of the four sequences are finally cropped to 192 × 192 × 128 voxels, which improves computational efficiency.
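The cropping step can be illustrated as follows. The text does not state which slices or borders are discarded, so this sketch assumes a simple center crop; `center_crop` and `stack_sequences` are hypothetical helper names, and the channel-first stacking of the four sequences is likewise an assumption.

```python
import numpy as np


def center_crop(volume, target=(192, 192, 128)):
    """Crop a 3D volume to `target` around its center (assumed strategy)."""
    starts = [(size - t) // 2 for size, t in zip(volume.shape, target)]
    window = tuple(slice(s, s + t) for s, t in zip(starts, target))
    return volume[window]


def stack_sequences(t1, t1gd, t2, flair):
    """Crop each MRI sequence and stack them as input channels."""
    return np.stack([center_crop(v) for v in (t1, t1gd, t2, flair)], axis=0)


# Dummy volumes with the original 240 x 240 x 155 shape.
volumes = [np.zeros((240, 240, 155), dtype=np.uint8) for _ in range(4)]
network_input = stack_sequences(*volumes)
print(network_input.shape)  # (4, 192, 192, 128)
```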

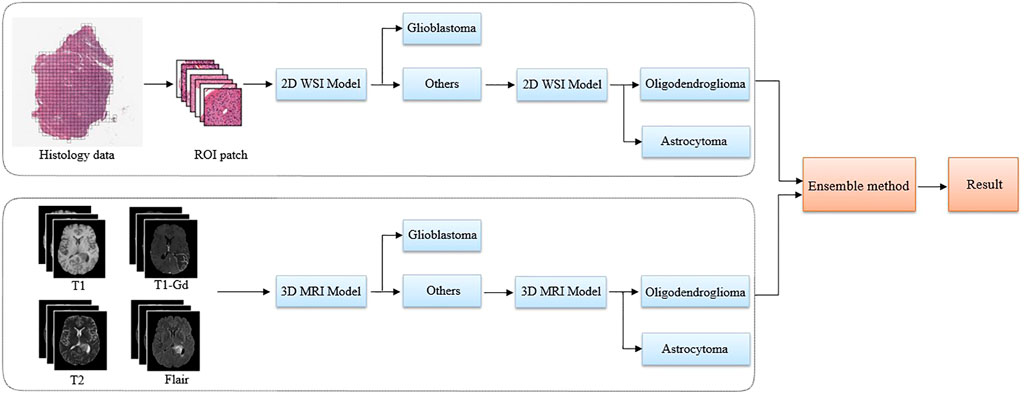

Figure 2 illustrates the overall architecture of our proposed glioma classification system, which is composed of a 2D WSI model (Figure 3) and a 3D MRI model (Figure 4). Both models adopt our two-stage strategy. The first stage classifies MRI images or histopathological images as glioblastoma and non-glioblastoma. Based on the obtained non-glioblastoma data, the second stage aims to distinguish astrocytoma and oligodendroglioma. The reason for using the two-stage strategy is the uneven data distribution. The differences between astrocytoma and oligodendroglioma are so subtle that it can be difficult to distinguish between the two. In contrast, glioblastoma is easier to identify. As confirmed by our experimental results, our two-stage strategy helps to improve the overall accuracy.
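The decision logic of the two-stage strategy can be summarized in a short sketch. The function name `two_stage_predict` and the 0.5 decision threshold are assumptions made for illustration; the text does not specify how the stage outputs are thresholded.

```python
def two_stage_predict(p_glioblastoma, p_oligodendroglioma, threshold=0.5):
    """Combine the two binary stages into one of three subtype labels.

    p_glioblastoma: stage-1 probability that the case is glioblastoma.
    p_oligodendroglioma: stage-2 probability of oligodendroglioma,
        consulted only when stage 1 predicts non-glioblastoma.
    """
    if p_glioblastoma >= threshold:
        return "glioblastoma"
    if p_oligodendroglioma >= threshold:
        return "oligodendroglioma"
    return "astrocytoma"


print(two_stage_predict(0.91, 0.40))  # glioblastoma
print(two_stage_predict(0.12, 0.77))  # oligodendroglioma
print(two_stage_predict(0.12, 0.23))  # astrocytoma
```

Because the second stage is only trained and evaluated on non-glioblastoma cases, its classifier can devote its full capacity to the subtle astrocytoma versus oligodendroglioma distinction.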

FIGURE 2. The proposed pipeline using multi-modality data to classify glioma subtypes. A two-stage classification strategy is applied to both the 2D pathology (WSI) and 3D MRI images. Glioblastoma, which has a more severe anatomical presentation, is detected in the first step. Then, in the second step, our algorithm focuses on the classification of astrocytoma and oligodendroglioma.

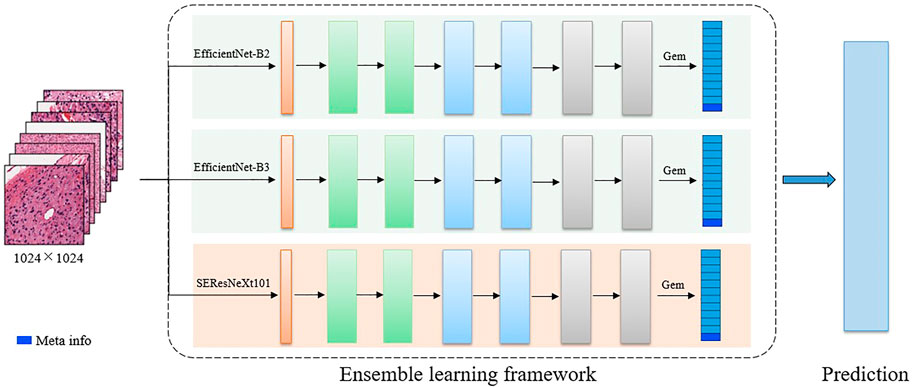

FIGURE 3. The detailed 2D CNN network. The backbone includes EfficientNet-B2, EfficientNet-B3, and SEResNeXt101. In the final feature representation, the meta-information (age) is included.

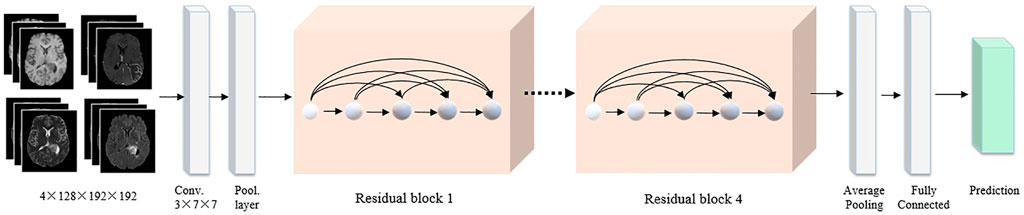

FIGURE 4. The detailed 3D CNN network. The four MRI modalities are integrated as the network input. All images are cropped to a fixed size of 128 × 192 × 192 pixels. The backbone adopts the 3D ResNet, followed by a global average pooling and a fully connected layer to classify the brain tumor.

Multiple related tasks can help learn from each other by potentially sharing representations, thus improving generalization ability. Therefore, for the 2D WSI model, we develop several multi-task-based convolutional neural network models, where the backbones include EfficientNet-B2, EfficientNet-B3 (Tan and Le, 2019), and SEResNeXt101 (Hu et al., 2018). Specifically, EfficientNet is a benchmark network that achieves performance gains by scaling network width, network depth, and resolution, which greatly reduces the number of parameters and computation complexity of the model. EfficientNet-B1 to B7 are obtained by synthetically optimizing the width, depth, and resolution of the EfficientNet. SEResNeXt101 is derived by embedding squeeze-and-excitation (SE) blocks into the ResNeXt model. ResNeXt replaces the original residual learning block (He et al., 2016) with parallel blocks of the same topology, which improves the model performance without significantly increasing the parameters. The SE block obtains the weight of each feature channel and assigns more weights to important features while suppressing features that are not useful for the current task. The outputs of these three classification models are averaged to obtain the final classification results.

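Before the fully connected classification layer, generalized-mean (GeM) pooling (Radenović et al., 2018) is applied to the learned features, which is defined as

$$ f = \left( \frac{1}{|X|} \sum_{x \in X} x^{p} \right)^{1/p} $$

where X is the input features and p is the pooling exponent: p = 1 gives average pooling, and p → ∞ approaches max pooling.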
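As a rough illustration of generalized-mean pooling, the sketch below pools each channel of a feature map as (mean of x^p)^(1/p). The function name `gem_pool`, the fixed exponent p = 3, and the clamping constant `eps` are assumptions chosen for the example; in practice the exponent is often a learnable parameter.

```python
import numpy as np


def gem_pool(features, p=3.0, eps=1e-6):
    """Generalized-mean pooling over the spatial axes of a (C, H, W) array.

    Each channel is reduced to (mean(x ** p)) ** (1 / p). Values are clamped
    to `eps` so that fractional powers stay well defined.
    """
    x = np.clip(features, eps, None)
    return np.mean(x ** p, axis=(1, 2)) ** (1.0 / p)


features = np.array([[[1.0, 2.0], [3.0, 4.0]],
                     [[2.0, 2.0], [2.0, 2.0]]])
print(gem_pool(features, p=1.0))   # equals average pooling: [2.5, 2.0]
print(gem_pool(features, p=3.0))   # lies between the mean and the max
```

With p = 1 the operation reduces to global average pooling, and larger exponents weight strong activations more heavily, moving the result toward the channel maximum.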

After the GeM operation, the age of the patient should also be taken into account (Reni et al., 2017; Ostrom et al., 2018). The reason is that glioma subtypes have different age distributions: astrocytoma is more often seen in young men, while glioblastoma is more frequent in older groups (Tamimi and Juweid, 2017). In our work, age is used as an additional feature, which is concatenated with the extracted image features for the final classification task. Finally, these combined features are fed to a classification branch and a regression branch. The two branches adopt a cross-entropy loss ($L_{BCE}$) and a smooth L1 loss ($L_{loc}$), respectively, to achieve a more robust brain tumor classification.

For the 3D MRI model, the input consists of four MRI sequences: T1, T1-Gd, T2, and FLAIR. Each 3D MRI image is cropped to 192 × 192 × 128 voxels. 3D ResNet, which has become a standard feature extraction network in the computer vision community, is adopted as the backbone to learn the residual representation between the input and output. Then, global average pooling and fully connected layers are used to generate the final classification results. We also employ the cross-entropy and smooth L1 loss functions. Finally, the output probabilities of the 2D WSI and 3D MRI models are averaged to derive the final classification results.
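The probability averaging described here can be sketched as follows. The helper name `ensemble_average`, the class order, and the example probabilities are all made up for illustration; equal weights across models are assumed.

```python
def ensemble_average(prob_vectors):
    """Average per-class probabilities across models (equal weights assumed)."""
    n_models = len(prob_vectors)
    return [sum(column) / n_models for column in zip(*prob_vectors)]


CLASSES = ("glioblastoma", "non-glioblastoma")  # stage-1 labels

wsi_probs = [0.30, 0.70]  # hypothetical 2D WSI model output
mri_probs = [0.60, 0.40]  # hypothetical 3D MRI model output

averaged = ensemble_average([wsi_probs, mri_probs])
prediction = CLASSES[max(range(len(CLASSES)), key=averaged.__getitem__)]
print(averaged, prediction)
```

The same helper applies to the three 2D backbones, whose outputs are averaged in the same way before being combined with the MRI model.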

In this section, we first present the utilized data from the MICCAI 2020 CPM-RadPath Challenge and describe the evaluation metrics and experimental setups. Then, we show the detailed classification results and validate the benefits of our proposed multimodal framework and two-stage strategy for glioma classification. Finally, we show the visualization results of three different glioma subtypes in the classification process.

We utilize a public dataset released by the MICCAI 2020 CPM-RadPath Challenge¹, which targets the classification of three glioma subtypes: glioblastoma, oligodendroglioma, and astrocytoma. It includes paired radiology scans and digitized histopathology images with global image-level labels. The CPM-RadPath Challenge splits these data into three parts: training (270 cases), validation (35 cases), and testing (73 cases).

It is noted that only the annotations of training data are released. Thus, our model is developed depending on the training set. The validation and test sets have not released their labels, which can be regarded as two unseen test sets. All these data are collected using multi-parametric MRI (mp-MRI) and digital pathology scanners at 16 international institutions.

Specifically, all mp-MRI scans are acquired using 1–3T scanners. The MRI scans are provided as NIfTI files (.nii.gz). Each case includes four sequences: T1, T1-Gd, T2, and FLAIR. All MRI images have been preprocessed, co-registered to the same anatomical template, and interpolated to the same resolution (1 mm³) in all three directions. Annotations are generated by board-certified neuroradiologists, neurosurgeons, and neuropathologists with at least 4 years of experience.

Meanwhile, tissue specimens are made from tissues removed from the patient during surgery and then stained with hematoxylin and eosin (H&E), which are scanned at 20× or 40× magnification to generate digital histopathology images called WSIs. The color and intensity of WSIs varied across images due to different acquisition times, image fading, or image acquisition artifacts. All WSIs are stored in tiled tiff format. Sixteen professional neuropathologists with at least 4 years of experience annotate these cases by referring to the 2016 WHO classification scheme.

The algorithmic performance is evaluated using three metrics: F1-score, balanced accuracy, and Cohen’s kappa. The F1-score is the harmonic mean of precision and recall, which takes into account both false positives and false negatives. The balanced accuracy score is a more appropriate metric for data with imbalanced categories and is defined as the arithmetic mean of the proportion of correct predictions in each category. Cohen’s kappa is used for consistency testing and can also be used to measure classification accuracy. A higher kappa coefficient means that the classifier is more effective.

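Let TP, FP, and FN denote the numbers of true positives, false positives, and false negatives, respectively. The three metrics can be computed as follows.

$$ F_1 = \frac{2\,TP}{2\,TP + FP + FN} $$

$$ \text{Balanced accuracy} = \frac{1}{c} \sum_{i=1}^{c} \frac{TP_i}{TP_i + FN_i} $$

$$ \kappa = \frac{p_o - p_e}{1 - p_e} $$

In the balanced accuracy score, c is the number of classes. In Cohen’s kappa, $p_o$ is the observed agreement between predictions and labels, and $p_e$ is the agreement expected by chance.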
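A minimal sketch of the three metrics in plain Python is given below. It assumes the standard definitions (a micro-averaged F1-score over pooled TP/FP/FN counts, balanced accuracy as mean per-class recall, and Cohen's kappa as (p_o - p_e) / (1 - p_e)); the challenge's exact averaging convention is not stated in the text, and the function name and toy labels are hypothetical.

```python
from collections import Counter


def classification_metrics(y_true, y_pred):
    """Return (micro F1, balanced accuracy, Cohen's kappa) for label lists."""
    labels = sorted(set(y_true) | set(y_pred))
    n = len(y_true)
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)

    total_tp = sum(tp.values())
    total_fp = sum(pred_counts[c] - tp[c] for c in labels)
    total_fn = sum(true_counts[c] - tp[c] for c in labels)
    f1 = 2 * total_tp / (2 * total_tp + total_fp + total_fn)

    # Mean recall over the classes that actually occur in y_true.
    present = [c for c in labels if true_counts[c] > 0]
    balanced_acc = sum(tp[c] / true_counts[c] for c in present) / len(present)

    p_o = total_tp / n  # observed agreement
    p_e = sum(true_counts[c] * pred_counts[c] for c in labels) / n ** 2  # chance
    kappa = (p_o - p_e) / (1 - p_e)
    return f1, balanced_acc, kappa


# Toy example: G = glioblastoma, O = oligodendroglioma, A = astrocytoma.
y_true = ["G", "G", "G", "G", "O", "O", "A", "A"]
y_pred = ["G", "G", "G", "G", "O", "A", "A", "A"]
f1, balanced_acc, kappa = classification_metrics(y_true, y_pred)
print(round(f1, 3), round(balanced_acc, 3), round(kappa, 3))
```

In the toy example, one oligodendroglioma case is mislabeled as astrocytoma, so the per-class recalls are 1.0, 0.5, and 1.0, and balanced accuracy penalizes the error on the minority class more than plain accuracy would.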

Our training data are augmented by horizontal flipping, vertical flipping, random scaling and rotation, and random jitter. We use ImageNet pre-trained weights to initialize the 2D WSI and 3D MRI models, and the weights of the decoder part are initialized randomly. We use the Adam optimizer (Kingma and Ba, 2015) with an initial learning rate of 3 × 10⁻⁴ for all experiments. The learning rate is divided by 10 at the 50th and 80th epochs. The training batch size is set to 24. All networks are implemented in the PyTorch framework and trained using four NVIDIA Tesla P40 GPU cards.
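The step-decay schedule can be written out explicitly. This sketch assumes zero-based epoch indexing, with the drop taking effect from epoch 50 and epoch 80 onward; the function name `learning_rate` is hypothetical. In PyTorch, the same behavior is typically obtained with `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[50, 80], gamma=0.1)`.

```python
def learning_rate(epoch, base_lr=3e-4, milestones=(50, 80), gamma=0.1):
    """Step decay: multiply the base rate by `gamma` at each milestone passed."""
    drops = sum(epoch >= m for m in milestones)
    return base_lr * gamma ** drops


for epoch in (0, 49, 50, 79, 80):
    print(epoch, learning_rate(epoch))
```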

We used 5-fold cross-validation based only on the training set to find the optimal network parameters for each deep learning model. The best-performing fold is taken as the final training model for the given architecture. It is noted that multiple models with different backbones are trained at each stage of the 2D WSI model, and their ensemble is used to obtain the final results.
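A 5-fold split of the 270 training cases might be generated as follows. The text does not say whether the folds are stratified by subtype or how cases are shuffled, so this sketch uses a plain shuffled split with a fixed seed; `kfold_splits` is a hypothetical helper.

```python
import random


def kfold_splits(n_cases, k=5, seed=0):
    """Yield (train_indices, val_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_cases))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i, val in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val


splits = list(kfold_splits(270))
for fold_id, (train, val) in enumerate(splits):
    print(fold_id, len(train), len(val))  # 216 training and 54 validation cases
```

Each case appears in exactly one validation fold, so every model is evaluated on data it has not seen during training.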

Table 1 shows the results of our ablation experiments conducted on the validation data in the CPM-RadPath challenge. Table 2 shows the contribution of different components in our 2D classification framework. These networks are trained using the same parameter settings as described in the previous section.

As shown in Table 1, our two-stage classification strategy yields higher scores on all three evaluation metrics. Moreover, the MRI and WSI classification models complement each other to obtain more robust and accurate results. We conducted a corresponding ablation study to verify the benefits of our proposed multimodal framework and two-stage strategy. We use the same training schedule and parameter settings as the full method described in Section 4.3 for comparison with the ablated variants (i.e., with the corresponding components removed).

The experiments demonstrate that our proposed multimodal framework can help improve the accuracy of glioma subtype classification. To validate the importance of multimodal image information, we use MRI or WSI alone as the training set under the most ideal conditions (two-stage), and the balanced accuracy is reduced by 6.7% or even 15.6%. It is well known that in such tasks with unbalanced data, the balanced accuracy provides a better measure of the performance of the algorithm (Brodersen et al., 2010). In addition, there is also a greater than 5% drop in F1 score in this case and a greater than 10% drop in Kappa. In contrast, the multimodal complementary model can reach the best results with 88.9% of the balanced accuracy on the validation set.

We also demonstrate the benefit of our two-stage scheme by comparing it with a single-stage alternative, in which the first-stage network is used directly to classify the three glioma subtypes. As shown in the corresponding rows of Table 1, compared to the two-stage approach, the single-stage strategy results in a 3.3% and 5.5% decrease in balanced accuracy on the MRI validation set and the WSI validation set, respectively. This result further demonstrates the advantages of our proposed two-stage solution. The results also show that our network can integrate feature information from MRI and WSI data.

In addition, we validate the advantages of the multi-task learning strategy in the 2D WSI model. We use three classification networks to train the 2D WSI model: EfficientNet-B2, EfficientNet-B3, and SEResNeXt101. As shown in Table 2, combining the classification branch (cls), the regression branch (reg), and GeM pooling (gem) gives better results than the other configurations.

Table 3 lists the results of the top six teams in the MICCAI 2020 CPM-RadPath Challenge. As shown in Table 3, our method has obtained the best classification performance in the testing phase.

In particular, we outperform the second-place team, Tabulo, by 8.8% in terms of balanced accuracy. According to a report submitted to the challenge organizer, Tabulo utilizes two additional publicly available datasets: the Multimodal Brain Tumor Segmentation Challenge 2019 (BraTS-2019)² and MoNuSAC³. In contrast, our method does not use any external data, and none of the models in our classification pipeline is pre-trained on medical data. Plmoer, a team from the University of Pittsburgh Medical Center, processes noisy labels in many patches from the WSIs of each category. Its approach is similar to ours, but our two-stage strategy brings a clear improvement. The Marvinler team used multi-instance learning for WSI processing, which tends to have high memory requirements. In addition, the Hanchu team requires an additional image segmentation task, yet this does not improve the model performance. The Azh2 team trains a specific configuration of the densely connected DenseNet, which also performs much worse than our model.

It should be noted that we were not able to upload the 3D MRI model in time, so the test result was obtained with the 2D WSI model and the two-stage strategy only. However, Table 1 shows that combining the 2D WSI model with the 3D MRI model improves the result substantially, so we believe that better results would be obtained with the ensemble model. Since the test dataset is not yet open, we would like to report the results of the ensemble model with the two-stage strategy once the test dataset is released.

Table 4 summarizes relevant work on the MICCAI 2019 and 2020 CPM-RadPath Challenge. These results are all obtained on the validation set and are all in the one-stage classification framework.

Early work in 2019 (Chan et al., 2019; Pei et al., 2019) segmented the tumors before classifying them; however, the final classification results were not satisfactory. In the MICCAI 2020 CPM-RadPath Challenge, Pei et al. (2020), Yin et al. (2020), and Zhao et al. (2020) still use a segmentation-before-classification framework, with a significant improvement over the 2019 results. The performance of these methods is affected by the segmentation results. The methods of Hamidinekoo et al. (2020) and Lerousseau et al. (2020) and our solution do not require the segmentation process.

Because neither method segments the images, a direct comparison between our method and that of Lerousseau et al. (2020) is feasible, and the two perform similarly on the validation set. However, in the MICCAI 2020 CPM-RadPath Challenge, the method of Lerousseau et al. (2020) does not perform well on the test set, which suggests limited generalization ability. Similarly, although the methods of Yin et al. (2020) and Zhao et al. (2020) perform as well as or better than our method on the validation set, our method obtains first place on the final competition test set.

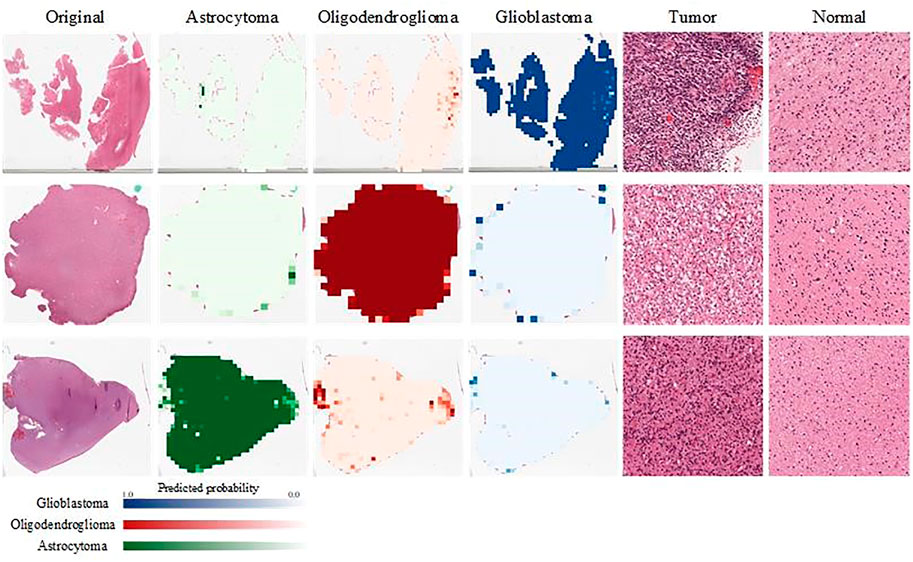

We visualize the output of our classification model on pathological images of the three glioma subtypes. Each input patch of 1,024 × 1,024 pixels is passed through our model to calculate the predicted probability for each glioma subtype, and each patch is labeled with the color that represents its probability. Finally, all patches are integrated to obtain the overall probability that the WSI belongs to glioblastoma, oligodendroglioma, or astrocytoma. As shown in Figure 5, probabilities are converted to color maps in logarithmic form. Therefore, lower probabilities (<50%) are shown as very light colors, and higher probabilities (≥50%) are shown as dark colors.

FIGURE 5. Visualization of the probabilities of the output results. We evaluate the probability that each patch belongs to A/O/G. Green represents A, red represents O, and blue represents G. In addition, we show the patches of different glioma subtypes and normal tissues separately.

Two pathologists were invited to view these pathological images and their corresponding patches, and they confirmed the presence of the typical glioma subtype patches and normal patches, which are shown in the right two columns of Figure 5.

In this paper, we propose a two-stage glioma classification algorithm that integrates multimodal image information to classify brain glioma into three subtypes: astrocytoma, glioblastoma, and oligodendroglioma. We train a 2D WSI model and a 3D MRI model to learn histopathological image information and radiological image information, respectively. Our classification algorithm is designed based on the feature difference between lower and severe glioma grades. In our two-stage strategy, the first stage separates out the more severe glioblastoma, and the second stage focuses only on learning the difference between astrocytoma and oligodendroglioma. Our two-stage strategy is applied to the 2D WSI model and the 3D MRI model, respectively. The ablation experiments show that our proposed multimodal framework and two-stage strategy have achieved more accurate classification performance compared to the unimodal approach and one-stage classification approach. In addition, the 2D WSI model employs an ensemble strategy, which shows higher classification accuracy compared to directly training a single backbone.

Our method has been validated in the publicly available MICCAI 2020 CPM-RadPath Challenge and has ranked first in the challenge, which indicates that the proposed method has the potential to help neurologists or physicians make a fast and accurate glioma diagnosis. However, the limited data is a drawback of this work. Collecting paired multimodal imaging data is difficult due to patient privacy concerns and the heavy clinical workload of physicians. In future work, we will continue to focus on the disclosure of such multimodal data and perform algorithm validation on more data. Further, we will attempt to adopt unsupervised or self-supervised learning techniques to reduce the tedious annotation workload of pathologists.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Conceptualization, XW and RW; methodology, SY and XW; software, SY; validation, RW, SY; formal analysis, RW and XW; investigation, RW; resources, RW; data curation, XW and JZ; writing—original draft preparation, RW and XW; writing—review and editing, XW, RW, MW, and JZ; visualization, SY; supervision, DZ; project administration, JZ and XH. All authors have read and agreed to the published version of the manuscript.

This work was partly supported by the Natural Science Foundation of Shaanxi Province (No. 2020JM-073), the Science and Technology Department of Sichuan Province (No. 2020YFG 0081), and the Fundamental Research Funds for the Central Universities (No. xzy022020054).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

MICCAI 2020 CPM-RadPath held the glioma classification challenge, providing WSI and matched MRI data with annotations provided by pathologists.

1 https://miccai.westus2.cloudapp.azure.com/competitions/1

2 https://www.med.upenn.edu/cbica/brats2019/data.html

3 https://academictorrents.com/details/c87688437fb416f66eecbd8c419aba00dd12997f

Abdelaziz Ismael, S. A., Mohammed, A., and Hefny, H. (2020). An Enhanced Deep Learning Approach for Brain Cancer MRI Images Classification Using Residual Networks. Artif. Intelligence Med. 102, 101779. doi:10.1016/j.artmed.2019.101779

Alis, D., Bagcilar, O., Senli, Y. D., Isler, C., Yergin, M., Kocer, N., et al. (2020). The Diagnostic Value of Quantitative Texture Analysis of Conventional MRI Sequences Using Artificial Neural Networks in Grading Gliomas. Clin. Radiol. 75 (5), 351–357. doi:10.1016/j.crad.2019.12.008

Arbizu, J., Domínguez, P. D., Diez-Valle, R., Vigil, C., García-Eulate, R., Zubieta, J. L., et al. (2011). Neuroimagen de los tumores cerebrales. Revista Española de Medicina Nucl. 30 (1), 47–65. doi:10.1016/j.remn.2010.11.001

Badrinarayanan, V., Kendall, A., and Cipolla, R. (2017). SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39 (12), 2481–2495. doi:10.1109/tpami.2016.2644615

Banerjee, S., Mitra, S., Masulli, F., and Rovetta, S. (2020). Glioma Classification Using Deep Radiomics[J]. SN Computer Sci. 1 (4), 1–14. doi:10.1007/s42979-020-00214-y

Brodersen, K. H., Ong, C. S., Stephan, K. E., and Buhmann, J. M. (2010). “The Balanced Accuracy and its Posterior Distribution[C],” in 2010 20th International Conference on Pattern Recognition (IEEE), 3121–3124.

Chan, H.-W., Weng, Y.-T., and Huang, T.-Y. (2019). “Automatic Classification of Brain Tumor Types with the MRI Scans and Histopathology Images,” in International MICCAI Brainlesion Workshop (Cham: Springer International Publishing), 353–359. doi:10.1007/978-3-030-46643-5_35

Chen, Q., Wang, L., Wang, L., Deng, Z., Zhang, J., and Zhu, Y. (2020). Glioma Grade Prediction Using Wavelet Scattering-Based Radiomics. IEEE Access 8, 106564–106575. doi:10.1109/access.2020.3000895

Decuyper, M., Bonte, S., and Van Holen, R. (2018). “Binary Glioma Grading: Radiomics versus Pre-trained CNN Features,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (Cham: Springer International Publishing), 498–505. doi:10.1007/978-3-030-00931-1_57

Dikici, E., Ryu, J. L., Demirer, M., Bigelow, M., White, R. D., Slone, W., et al. (2020). Automated Brain Metastases Detection Framework for T1-Weighted Contrast-Enhanced 3D MRI. IEEE J. Biomed. Health Inform. 24 (10), 2883–2893. doi:10.1109/jbhi.2020.2982103

Ertosun, M. G., and Rubin, D. L. (2015). Automated Grading of Gliomas Using Deep Learning in Digital Pathology Images: A Modular Approach with Ensemble of Convolutional Neural Networks. AMIA Annu. Symp. Proc. 2015, 1899–1908.

Faust, K., Bala, S., van Ommeren, R., Portante, A., Al Qawahmed, R., Djuric, U., et al. (2019). Intelligent Feature Engineering and Ontological Mapping of Brain Tumour Histomorphologies by Deep Learning. Nat. Mach Intell. 1 (7), 316–321. doi:10.1038/s42256-019-0068-6

Gao, Y., Shi, Z., Wang, Y., and Yu, J. (2016). “Histological Grade and Type Classification of Glioma Using Magnetic Resonance Imaging[C],” in 2016 9th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (IEEE), 1808–1813.

Ge, C., Qu, Q., Gu, I. Y-H., and Jakola, A. S. (2018). “3D Multi-Scale Convolutional Networks for Glioma Grading Using MR Images[C],” in 2018 25th IEEE International Conference on Image Processing (IEEE), 141–145.

Hamghalam, M., Lei, B., and Wang, T. (2020). High Tissue Contrast MRI Synthesis Using Multi-Stage Attention-GAN for Segmentation. Aaai 34 (04), 4067–4074. doi:10.1609/aaai.v34i04.5825

Hamidinekoo, A., Pieciak, T., Afzali, M., Akanyeti, O., and Yuan, Y. (2020). “Glioma Classification Using Multimodal Radiology and Histology Data,” in International MICCAI Brainlesion Workshop (Cham: Springer International Publishing), 508–518. doi:10.1007/978-3-030-72087-2_45

Han, C., Rundo, L., Araki, R., Nagano, Y., Furukawa, Y., Mauri, G., et al. (2019). Combining Noise-To-Image and Image-To-Image GANs: Brain MR Image Augmentation for Tumor Detection. IEEE Access 7, 156966–156977. doi:10.1109/access.2019.2947606

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep Residual Learning for Image Recognition[C],” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 770–778.

Hou, L., Samaras, D., Kurc, T. M., Gao, Y., Davis, J. E., Saltz, J. H., et al. (2016). “Patch-based Convolutional Neural Network for Whole Slide Tissue Image Classification[C],” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2424–2433.

Hsieh, K. L.-C., Lo, C.-M., and Hsiao, C.-J. (2017). Computer-aided Grading of Gliomas Based on Local and Global MRI Features. Computer Methods Programs Biomed. 139, 31–38. doi:10.1016/j.cmpb.2016.10.021

Hu, J., Shen, L., and Sun, G. (2018). “Squeeze-and-excitation Networks[C],” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 7132–7141.

Huse, J. T., Diamond, E. L., Wang, L., and Rosenblum, M. K. (2015). Mixed Glioma with Molecular Features of Composite Oligodendroglioma and Astrocytoma: a True "oligoastrocytoma"? Acta Neuropathol. 129 (1), 151–153. doi:10.1007/s00401-014-1359-y

Isola, P., Zhu, J. Y., Zhou, T., and Etros, A. (2017). “Image-to-image Translation with Conditional Adversarial Networks[C],” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (IEEE), 4–12.

Jang, B.-S., Jeon, S. H., Kim, I. H., and Kim, I. A. (2018). Prediction of Pseudoprogression versus Progression Using Machine Learning Algorithm in Glioblastoma. Sci. Rep. 8 (1), 12516. doi:10.1038/s41598-018-31007-2

Jin, L., Shi, F., Chun, Q., Chen, H., Ma, Y., Wu, S., et al. (2020). Artificial Intelligence Neuropathologist for Glioma Classification Using Deep Learning on Hematoxylin and Eosin Stained Slide Images and Molecular Markers. Neuro-Oncology 23 (1), 44–52. doi:10.1093/neuonc/noaa163

Jothi, J. A. A., and Rajam, V. M. A. (2017). A Survey on Automated Cancer Diagnosis from Histopathology Images[J]. Artif. Intelligence Rev. 48 (1), 31–81.

Kaya, I. E., Pehlivanlı, A. Ç., Sekizkardeş, E. G., and Ibrikci, T. (2017). PCA Based Clustering for Brain Tumor Segmentation of T1w MRI Images. Computer Methods Programs Biomed. 140, 19–28. doi:10.1016/j.cmpb.2016.11.011

Ker, J., Bai, Y., Lee, H. Y., Rao, J., and Wang, L. (2019). Automated Brain Histology Classification Using Machine Learning. J. Clin. Neurosci. 66, 239–245. doi:10.1016/j.jocn.2019.05.019

Kingma, D. P., and Ba, J. (2015). “Adam: A Method for Stochastic Optimization[C],” in International Conference on Learning Representations.

Koley, S., Sadhu, A. K., Mitra, P., Chakraborty, B., and Chakraborty, C. (2016). Delineation and Diagnosis of Brain Tumors from post Contrast T1-Weighted MR Images Using Rough Granular Computing and Random forest. Appl. Soft Comput. 41, 453–465. doi:10.1016/j.asoc.2016.01.022

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep Learning. Nature 521 (7553), 436–444. doi:10.1038/nature14539

Lerousseau, M., Deutsch, E., and Paragios, N. (2020). “Multimodal Brain Tumor Classification,” in International MICCAI Brainlesion Workshop (Cham: Springer International Publishing), 475–486. doi:10.1007/978-3-030-72087-2_42

Louis, D. N., Ohgaki, H., Wiestler, O. D., Cavenee, W. K., Burger, P. C., Jouvet, A., et al. (2007). The 2007 WHO Classification of Tumours of the Central Nervous System. Acta Neuropathol. 114 (2), 97–109. doi:10.1007/s00401-007-0243-4

Louis, D. N., Perry, A., Reifenberger, G., von Deimling, A., Figarella-Branger, D., Cavenee, W. K., et al. (2016). The 2016 World Health Organization Classification of Tumors of the Central Nervous System: a Summary. Acta Neuropathol. 131 (6), 803–820. doi:10.1007/s00401-016-1545-1

Lu, Z., Bai, Y., Chen, Y., Su, C., Lu, S., Zhan, T., et al. (2020). The Classification of Gliomas Based on a Pyramid Dilated Convolution Resnet Model. Pattern Recognition Lett. 133, 173–179. doi:10.1016/j.patrec.2020.03.007

Ma, X., and Jia, F. (2019). “Brain Tumor Classification with Multimodal MR and Pathology Images[C],” in International MICCAI Brainlesion Workshop (Cham: Springer), 343–352.

Mesfin, F. B., and Al-Dhahir, M. A. (2019). Cancer, Brain Gliomas[M]. Treasure Island (FL): StatPearls Publishing.

Milletari, F., Navab, N., and Ahmadi, S-A. (2016). “Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation[C],” in 2016 Fourth International Conference on 3D Vision (IEEE), 565–571.

Mobadersany, P., Yousefi, S., Amgad, M., Gutman, D. A., Barnholtz-Sloan, J. S., Velázquez Vega, J. E., et al. (2018). Predicting Cancer Outcomes from Histology and Genomics Using Convolutional Networks. Proc. Natl. Acad. Sci. USA 115 (13), E2970–E2979. doi:10.1073/pnas.1717139115

Mohan, G., and Subashini, M. M. (2018). MRI Based Medical Image Analysis: Survey on Brain Tumor Grade Classification. Biomed. Signal Process. Control. 39 (jan), 139–161. doi:10.1016/j.bspc.2017.07.007

Ohgaki, H., Dessen, P., Jourde, B., Horstmann, S., Nishikawa, T., Di Patre, P.-L., et al. (2004). Genetic Pathways to Glioblastoma. Cancer Res. 64 (19), 6892–6899. doi:10.1158/0008-5472.can-04-1337

Okamoto, Y., Di Patre, P.-L., Burkhard, C., Horstmann, S., Jourde, B., Fahey, M., et al. (2004). Population-based Study on Incidence, Survival Rates, and Genetic Alterations of Low-Grade Diffuse Astrocytomas and Oligodendrogliomas. Acta Neuropathol. 108 (1), 49–56. doi:10.1007/s00401-004-0861-z

Ostrom, Q. T., Cote, D. J., Ascha, M., Kruchko, C., and Barnholtz-Sloan, J. S. (2018). Adult Glioma Incidence and Survival by Race or Ethnicity in the United States from 2000 to 2014. JAMA Oncol. 4 (9), 1254–1262. doi:10.1001/jamaoncol.2018.1789

Ostrom, Q. T., Patil, N., Cioffi, G., Waite, K., Kruchko, C., and Barnholtz-Sloan, J. S. (2020). CBTRUS Statistical Report: Primary Brain and Other Central Nervous System Tumors Diagnosed in the United States in 2013-2017. Neuro-Oncology 22 (Suppl. ment_1), iv1–iv96. doi:10.1093/neuonc/noaa200

Otsu, N. (1979). A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man. Cybern. 9 (1), 62–66. doi:10.1109/tsmc.1979.4310076

Pei, L., Hsu, W.-W., Chiang, L.-A., Guo, J.-M., Iftekharuddin, K. M., and Colen, R. (2020). “A Hybrid Convolutional Neural Network Based-Method for Brain Tumor Classification Using mMRI and WSI,” in International MICCAI Brainlesion Workshop (Cham: Springer International Publishing), 487–496. doi:10.1007/978-3-030-72087-2_43

Pei, L., Jones, K. A., Shboul, Z. A., Chen, J. Y., and Iftekharuddin, K. M. (2021). Deep Neural Network Analysis of Pathology Images with Integrated Molecular Data for Enhanced Glioma Classification and Grading. Front. Oncol. 11, 668694. doi:10.3389/fonc.2021.668694

Pei, L., Vidyaratne, L., Hsu, W.-W., Rahman, M. M., and Iftekharuddin, K. M. (2019). “Brain Tumor Classification Using 3D Convolutional Neural Network,” in International MICCAI Brainlesion Workshop (Cham: Springer International Publishing), 335–342. doi:10.1007/978-3-030-46643-5_33

Pelt, D. M., and Sethian, J. A. (2018). A Mixed-Scale Dense Convolutional Neural Network for Image Analysis. Proc. Natl. Acad. Sci. USA 115 (2), 254–259. doi:10.1073/pnas.1715832114

Radenović, F., Tolias, G., and Chum, O. (2018). Fine-tuning CNN Image Retrieval with No Human Annotation[J]. IEEE Trans. Pattern Anal. Machine Intelligence 41 (7), 1655–1668.

Rathore, S., Niazi, T., Iftikhar, M. A., and Chaddad, A. (2020). Glioma Grading via Analysis of Digital Pathology Images Using Machine Learning. Cancers (Basel) 12 (3), 1–578. doi:10.3390/cancers12030578

Reifenberger, G., Wirsching, H.-G., Knobbe-Thomsen, C. B., and Weller, M. (2017). Advances in the Molecular Genetics of Gliomas - Implications for Classification and Therapy. Nat. Rev. Clin. Oncol. 14 (7), 434–452. doi:10.1038/nrclinonc.2016.204

Reni, M., Mazza, E., Zanon, S., Gatta, G., and Vecht, C. J. (2017). Central Nervous System Gliomas. Crit. Rev. Oncology/Hematology 113, 213–234. doi:10.1016/j.critrevonc.2017.03.021

Reza, S. M. S., Samad, M. D., Shboul, Z. A., Jones, K. A., and Iftekharuddin, K. M. (2019). Glioma Grading Using Structural Magnetic Resonance Imaging and Molecular Data. J. Med. Imaging (Bellingham) 6 (2), 024501. doi:10.1117/1.JMI.6.2.024501

Ronneberger, O., Fischer, P., and Brox, T. (2015). “U-net: Convolutional Networks for Biomedical Image Segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (Springer), 234–241. doi:10.1007/978-3-319-24574-4_28

Rundo, L., Han, C., Nagano, Y., Zhang, J., Hataya, R., Militello, C., et al. (2019). USE-net: Incorporating Squeeze-And-Excitation Blocks into U-Net for Prostate Zonal Segmentation of Multi-Institutional MRI Datasets. Neurocomputing 365, 31–43. doi:10.1016/j.neucom.2019.07.006

Rundo, L., Han, C., Zhang, J., Hataya, R., Nagano, Y., Militello, C., et al. (2020). “CNN-based Prostate Zonal Segmentation on T2-Weighted MR Images: A Cross-Dataset Study,” in Neural Approaches to Dynamics of Signal Exchanges (Singapore: Springer), 269–280. doi:10.1007/978-981-13-8950-4_25

Singh, M., Verma, A., and Sharma, N. (2017). Optimized Multistable Stochastic Resonance for the Enhancement of Pituitary Microadenoma in MRI. IEEE J. Biomed. Health Inform. 22 (3), 862–873. doi:10.1109/JBHI.2017.2715078

Su, C., Jiang, J., Zhang, S., Shi, J., Xu, K., Shen, N., et al. (2019). Radiomics Based on Multicontrast MRI Can Precisely Differentiate Among Glioma Subtypes and Predict Tumour-Proliferative Behaviour. Eur. Radiol. 29 (4), 1986–1996. doi:10.1007/s00330-018-5704-8

Tamimi, A. F., and Juweid, M. (2017). “Epidemiology and Outcome of Glioblastoma,” in Glioblastoma [Internet]. Editor S. D. Vleeschouwer (Brisbane, Australia: Codon Publications), 143–153. doi:10.15586/codon.glioblastoma.2017.ch8

Tan, M., and Le, Q. V. (2019). “Efficientnet: Rethinking Model Scaling for Convolutional Neural Networks,” in International conference on machine learning (New York: ACM), 6105–6114.

van den Bent, M. J. (2010). Interobserver Variation of the Histopathological Diagnosis in Clinical Trials on Glioma: a Clinician's Perspective. Acta Neuropathol. 120 (3), 297–304. doi:10.1007/s00401-010-0725-7

van Lent, D. I., van Baarsen, K. M., Snijders, T. J., and Robe, P. A. J. T. (2020). Radiological Differences between Subtypes of WHO 2016 Grade II-III Gliomas: a Systematic Review and Meta-Analysis. Neurooncol. Adv. 2 (1), vdaa044. doi:10.1093/noajnl/vdaa044

Wang, X., Wang, D., Yao, Z., Bowen, X., Bao, W., Chuanjin, L., et al. (2019). Machine Learning Models for Multiparametric Glioma Grading with Quantitative Result Interpretations[J]. Front. Neurosci. 12, 1–1046. doi:10.3389/fnins.2018.01046

Wesseling, P., and Capper, D. (2018). WHO 2016 Classification of Gliomas. Neuropathol. Appl. Neurobiol. 44 (2), 139–150. doi:10.1111/nan.12432

Wu, Y. P., Lin, Y. S., Wu, W. G., Yang, C., Gu, J. Q., Bai, Y., et al. (2017). Semiautomatic Segmentation of Glioma on Mobile Devices. J. Healthc. Eng. 2017, 8054939. doi:10.1155/2017/8054939

Wu, Y., Liu, B., Wu, W., Lin, Y., Yang, C., and Wang, M. (2018). Grading Glioma by Radiomics with Feature Selection Based on Mutual Information. J. Ambient Intell. Hum. Comput 9 (5), 1671–1682. doi:10.1007/s12652-018-0883-3

Wu, Y., Hao, H., Li, J., Wu, W., Lin, Y., and Wang, M. (2019). Four-Sequence Maximum Entropy Discrimination Algorithm for Glioma Grading. IEEE Access 7, 52246–52256. doi:10.1109/access.2019.2910849

Yang, Y., Yan, L.-F., Zhang, X., Han, Y., Nan, H.-Y., Hu, Y.-C., et al. (2018). Glioma Grading on Conventional MR Images: A Deep Learning Study with Transfer Learning. Front. Neurosci. 12, 804. doi:10.3389/fnins.2018.00804

Yin, B., Cheng, H., Wang, F., and Wang, Z. (2020). “Brain Tumor Classification Based on MRI Images and Noise Reduced Pathology Images,” in International MICCAI Brainlesion Workshop (Cham: Springer International Publishing), 465–474. doi:10.1007/978-3-030-72087-2_41

Yogananda, C. G. B., Shah, B. R., Yu, F. F., Pinho, M. C., Nalawade, S. S., Murugesan, G. K., et al. (2020). A Novel Fully Automated MRI-Based Deep-Learning Method for Classification of 1p/19q Co-deletion Status in Brain Gliomas. Neuro-Oncology Adv. 2 (Suppl. ment_4), iv42–iv48. doi:10.1093/noajnl/vdaa066

Yonekura, A., Kawanaka, H., Prasath, V. B. S., and Aronow, B. J. (2017). “Improving the Generalization of Disease Stage Classification with Deep CNN for Glioma Histopathological Images[C],” in 2017 IEEE International Conference on Bioinformatics and Biomedicine (IEEE), 1222–1226.

Young, R. M., Jamshidi, A., Davis, G., and Sherman, J. H. (2015). Current Trends in the Surgical Management and Treatment of Adult Glioblastoma. Ann. Transl Med. 3 (9), 121. doi:10.3978/j.issn.2305-5839.2015.05.10

Zhang, Z., Xiao, J., Wu, S., Lv, F., Gong, J., Jiang, L., et al. (2020). Deep Convolutional Radiomic Features on Diffusion Tensor Images for Classification of Glioma Grades. J. Digit Imaging 33 (4), 826–837. doi:10.1007/s10278-020-00322-4

Zhao, B., Huang, J., Liang, C., Liu, Z., and Han, C. (2020). “CNN-based Fully Automatic Glioma Classification with Multi-Modal Medical Images,” in International MICCAI Brainlesion Workshop (Cham: Springer International Publishing), 497–507. doi:10.1007/978-3-030-72087-2_44

Zhou, Z., Sanders, J. W., Johnson, J. M., Gule-Monroe, M., Chen, M., Briere, T. M., et al. (2020). MetNet: Computer-Aided Segmentation of Brain Metastases in post-contrast T1-Weighted Magnetic Resonance Imaging. Radiother. Oncol. 153, 189–196. doi:10.1016/j.radonc.2020.09.016

Keywords: convolutional neural networks, deep learning, glioma, pathology, magnetic resonance image

Citation: Wang X, Wang R, Yang S, Zhang J, Wang M, Zhong D, Zhang J and Han X (2022) Combining Radiology and Pathology for Automatic Glioma Classification. Front. Bioeng. Biotechnol. 10:841958. doi: 10.3389/fbioe.2022.841958

Received: 23 December 2021; Accepted: 23 February 2022; Published: 21 March 2022.

Edited by: Leyi Wei, Shandong University, China

Reviewed by: Linmin Pei, National Cancer Institute at Frederick (NIH), United States

Copyright © 2022 Wang, Wang, Yang, Zhang, Wang, Zhong, Zhang and Han. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jing Zhang, jing_zhang@scu.edu.cn

†These authors have contributed equally to this work
