- ¹ Faculty of Law, Bond University, Gold Coast, QLD, Australia

- ² Institute for Human Resource Management, WU Vienna, Vienna, Austria

ChatGPT, a new language model developed by OpenAI, has garnered significant attention in various fields since its release. This literature review provides an overview of early ChatGPT literature across multiple disciplines, exploring its applications, limitations, and ethical considerations. The review encompasses Scopus-indexed publications from November 2022 to April 2023 and includes 156 articles related to ChatGPT. The findings reveal a predominance of negative sentiment across disciplines, though subject-specific attitudes must be considered. The review highlights the implications of ChatGPT in many fields including healthcare, raising concerns about employment opportunities and ethical considerations. While ChatGPT holds promise for improved communication, further research is needed to address its capabilities and limitations. This literature review provides insights into early research on ChatGPT, informing future investigations and practical applications of chatbot technology, as well as development and usage of generative AI.

1. Introduction

ChatGPT, developed by OpenAI, is a new language model that has generated significant buzz within the technology industry and beyond. With the launch of the artificial intelligence-based Chat Generative Pre-trained Transformer (ChatGPT), OpenAI has taken the academic community by storm, forcing scientists, editors and publishers of scientific journals to rethink and adjust their publication policies and strategies. Although access to ChatGPT has been restricted in some jurisdictions (e.g., China, Italy), its emergence, like the creation of the internet, may mark the beginning of a new era, and scholars need to embrace this technological development. Since its release, researchers have been exploring its capabilities and limitations across various fields such as healthcare, business, psychology, and computer science, building on the research of earlier language models (Testoni et al., 2022; Rocca et al., 2023; Roy et al., 2023). This literature review aims to provide an overview of early ChatGPT literature in multiple disciplines, analyzing how it is being used and what implications this has for future research and practical applications.

The literature reviewed in this study includes a range of perspectives on ChatGPT, from its potential benefits and drawbacks to ethical considerations related to the technology (Seth et al., 2023). The findings suggest that while early research is still limited by the scope of available data, there are already some clear implications for future research and practical applications in various fields. For example, many scholars have raised concerns about ChatGPT's potential impact on employment opportunities across different industries (Qadir, 2022; Ai et al., 2023). While early studies suggest promising results for chatbot technology in healthcare settings, there are still significant ethical considerations (Rahimi and Talebi Bezmin Abadi, 2023) to be addressed before widespread implementation can occur.

ChatGPT uses advanced machine learning techniques to generate natural language responses, making it an attractive tool for various industries seeking more efficient communication with customers or clients. Its potential applications range from customer service chatbots to virtual assistants in healthcare settings (Sallam, 2023). However, as ChatGPT is still a relatively new technology, there are many questions about its capabilities and limitations that need to be addressed by researchers across different fields. This literature review aims to provide insights into how early research on ChatGPT has evolved, highlighting key findings from sentiment analysis of articles related to chatbot technology in various academic areas.

2. Methodology

While most of the discussion takes place in the media, in committee meetings or informal fora, we also see that systematic scholarly research has started to emerge rapidly. From the launch of ChatGPT in late November 2022 until April 2023 there were 156 publications, of which only two were released in 2022 and 154 in 2023. While we appreciate all the research dedicated explicitly to new technologies, for quality assurance we limit our review to sources indexed in the rigorously monitored Scopus database.

The sentiment analysis, conducted in two popular software packages using different dictionaries, showed a dominance of negative sentiment in all papers examined and across all disciplines. We, however, refrain from concluding that sentiment is negative in general, since words expressing attitudes are subject-specific. Therefore, we selected a sub-sample of papers in the three disciplines (using the Scopus classification) in which the authors have both formal education and research experience: (1) economics, econometrics, and finance, (2) business, management, and accounting, and (3) social sciences. We read these papers paragraph by paragraph, assessing the sentiment of each paragraph as positive, neutral, or negative.
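To make the dictionary-based step concrete, the following is a minimal sketch of how a lexicon can label paragraphs as positive, neutral, or negative. It is an illustration only: the review does not name the software packages or dictionaries used, so the word lists `POSITIVE` and `NEGATIVE` and the function names below are hypothetical.

```python
import re
from collections import Counter

# Hypothetical toy lexicon; the dictionaries actually used are not named in the review.
POSITIVE = {"benefit", "promising", "improve", "effective", "opportunity"}
NEGATIVE = {"risk", "bias", "concern", "plagiarism", "limitation"}


def score_paragraph(text: str) -> int:
    """Count positive-lexicon words minus negative-lexicon words in one paragraph."""
    words = re.findall(r"[a-z]+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def label_paragraph(text: str) -> str:
    """Map the score to one of the three labels used in the manual reading."""
    score = score_paragraph(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def label_paper(paragraphs: list[str]) -> Counter:
    """Tally paragraph labels for one paper."""
    return Counter(label_paragraph(p) for p in paragraphs)


paper = [
    "ChatGPT may improve communication and is promising.",
    "There is a risk of bias and plagiarism.",
    "The model was released in 2022.",
]
tally = label_paper(paper)
```

A general-purpose lexicon of this kind counts a word such as "bias" as negative even where it is a neutral technical term, which is one way to see why dictionary results may not transfer across disciplines.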

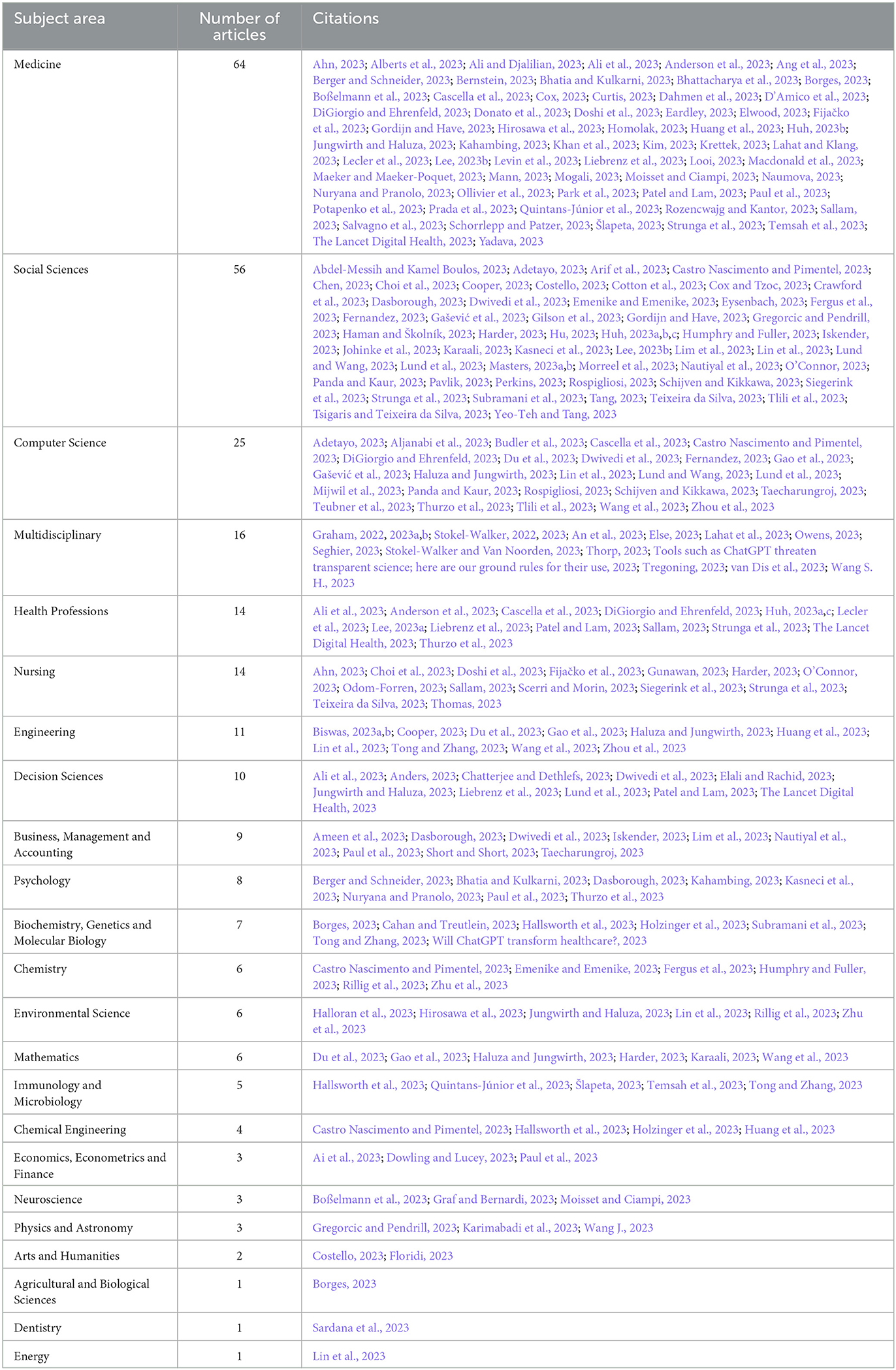

When reviewing publications for this paper, we followed the usual procedures recommended for literature reviews in new and emerging fields of research (Gancarczyk et al., 2022; Liang et al., 2022). Having set the scope of the research to only Scopus-indexed publications published between November 2022 and April 2023, we first identified papers that contain the name “ChatGPT” in the title, abstract, or keywords. This resulted in 156 entries. Next, we sorted the resulting pool of papers into 22 subject areas. One hundred forty publications fitted into the pre-established categories, while the remaining 16 were classified as multidisciplinary. For details on the distribution and actual publications, see Table 1.
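The selection and sorting steps described above can be sketched as a simple filter and tally. This is a hedged illustration, not the procedure actually run: the records, field names, the exact date bounds (whole months are assumed), the subset of subject areas, and the rule that anything outside the pre-established areas counts as multidisciplinary are all assumptions made for the example.

```python
from collections import Counter
from datetime import date

# Assumed review window: whole months from November 2022 to April 2023.
START, END = date(2022, 11, 1), date(2023, 4, 30)

# Illustrative subset of subject areas; the review uses 22 pre-established areas.
SUBJECT_AREAS = {"Medicine", "Social Sciences", "Economics, Econometrics and Finance"}

# Hypothetical records standing in for a database export.
records = [
    {"title": "ChatGPT in surgical practice", "abstract": "", "keywords": [],
     "published": date(2023, 3, 1), "area": "Medicine"},
    {"title": "Large language models in finance",
     "abstract": "We test ChatGPT on forecasting tasks.", "keywords": [],
     "published": date(2023, 2, 10), "area": "Economics, Econometrics and Finance"},
    {"title": "Chatbots in libraries", "abstract": "", "keywords": ["chatbot"],
     "published": date(2023, 1, 5), "area": "Social Sciences"},
    {"title": "ChatGPT and everything", "abstract": "", "keywords": [],
     "published": date(2023, 4, 20), "area": "General"},
    {"title": "ChatGPT retrospectives", "abstract": "", "keywords": [],
     "published": date(2023, 6, 1), "area": "Medicine"},
]


def mentions_chatgpt(rec: dict) -> bool:
    """True if 'ChatGPT' appears in the title, abstract, or keywords."""
    haystack = " ".join([rec["title"], rec["abstract"], *rec["keywords"]])
    return "chatgpt" in haystack.lower()


def in_scope(rec: dict) -> bool:
    """Apply the date window and the name search together."""
    return START <= rec["published"] <= END and mentions_chatgpt(rec)


def classify(rec: dict) -> str:
    """Assumed rule: areas outside the pre-established set are multidisciplinary."""
    return rec["area"] if rec["area"] in SUBJECT_AREAS else "Multidisciplinary"


selected = [r for r in records if in_scope(r)]
by_area = Counter(classify(r) for r in selected)
```

In the review itself, the same kind of tally yields 140 publications in the pre-established categories and 16 multidisciplinary ones, which together account for the 156 entries returned by the search.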

3. Limitations

Inevitably, given the time scope of our review, the research reviewed here is all based on the 3rd version of ChatGPT and its various iterations. Version 4, released in mid-March 2023, offers considerable improvements; for instance, it accepts image input and is capable of generating longer texts (Bhattacharya et al., 2023). Even though the fundamental assumptions and the basis on which ChatGPT works remain comparable, the greater variety of uses will lead to a more profound impact on the work of scholars and on what scientific institutions can achieve, as well as on recipients of academic research. Consequently, we expect further research on ChatGPT to emerge quickly, and this review should serve only as a record of initial reactions in the scholarly literature.

4. Discussion

The early research on ChatGPT suggests a range of potential benefits and drawbacks across various fields such as healthcare, business, psychology, and computer science, among others. Like the beginnings of the internet or the creation of digital assets (Lawuobahsumo et al., 2022; Kapengut and Mizrach, 2023; Watters, 2023), ChatGPT and its underlying technology have the potential for both positive and negative disruption. Many scholars have raised concerns about the impact of ChatGPT on employment opportunities in different industries, and there are also significant ethical considerations to be addressed before widespread implementation can occur.

The negative sentiment expressed in the literature toward ChatGPT is noteworthy, as it suggests that there are concerns or challenges associated with using this technology in various fields. While some studies have highlighted the potential benefits of ChatGPT, such as its ability to generate human-like responses and improve user experience, others have raised ethical and practical issues related to privacy, bias, transparency, and accountability. For instance, some researchers have argued that although OpenAI pays special attention to eliminating abusive vocabulary and hate speech by design, generative AI tools trained on text from the open Internet may still perpetuate or even amplify existing biases in language use and data representation, leading to discriminatory outcomes for certain groups of people (e.g., people who do not fit a binary gender classification, or ethnic minorities). While important for language models, this issue overlaps with concerns surrounding social media and other sources of information (Thornhill et al., 2019; Kurpicz-Briki and Leoni, 2021), the impact on policy making (Lamba et al., 2021), and the risk of fake news (Wu and Liu, 2018; Shu et al., 2019). Others have pointed out the limitations of current models in terms of their ability to handle complex social interactions, emotional expressions, and cultural nuances that are essential for effective communication with humans. Therefore, it is essential to ensure that chatbot technology is trained on diverse datasets that represent different demographics and cultures. Additionally, privacy concerns arise when personal information is collected by ChatGPT during conversations with users. It is crucial to establish clear guidelines for data collection and usage to protect user privacy. Furthermore, transparency and accountability are essential in chatbot technology to ensure that users understand how their data is being used and who has access to it.
As researchers continue to explore this new technology, it will be important to consider both the benefits and drawbacks of chatbot technology to fully understand its implications for future research and practical applications.

Early research on ChatGPT suggests that while there are clear implications for future research and practical applications in various fields, further studies need to be conducted to fully understand its capabilities and limitations. This includes addressing ethical considerations such as privacy concerns and bias in data sets used by ChatGPT. Despite the potential benefits of chatbot technology, early research is still limited by the scope of available data. However, as ChatGPT continues to evolve and become more advanced, it has the potential to revolutionize communication across various industries. For instance, customer service chatbots can provide 24/7 support to customers, reducing wait times and improving overall satisfaction. While one might expect a positive reception of transformative technologies in the academic literature, the negative sentiment in the early literature may be explained by the types of articles published. Approximately 12% of articles had ethics as a keyword and just over 8% had plagiarism. Not only is it logical that addressing ethical issues would produce articles with a negative sentiment, but these articles may also be published faster.

There is an increasing number of articles using LLMs and other AI-based solutions to benchmark hypothetical physical theories (Adesso, 2023), to process data, or for integration into medical practice. However, these studies usually take more time to conduct and, in the case of those involving humans or animals, have additional delays in receiving research ethics approval. Despite medicine being the largest category, the majority of articles were theoretical and discussed possible applications of ChatGPT. Necessarily, these types of articles address potential problems, whereas later scientific articles may focus more on solutions and therefore show a more positive sentiment. Not only is it logical that new technology would be treated with skepticism in the academic world, but it perhaps should not be surprising that early literature addresses the ethical concerns of researchers and postulates the problems that will need to be addressed in future research.

In healthcare settings, virtual assistants can help patients schedule appointments (Chow et al., 2023), answer medical questions, and even monitor vital signs. Use of AI in the medical context has also been a focus of literature outside the context of ChatGPT (Merhbene et al., 2022). However, the limitations of current models in terms of their ability to handle complex social interactions, emotional expressions, and cultural nuances that are essential for effective communication with humans need to be addressed before widespread implementation can occur. The literature suggests that the technology is not yet ready for clinical use, due to its limited ability and privacy issues (Au Yeung et al., 2023; De Angelis et al., 2023) and legal concerns (Dave et al., 2023). As researchers continue to explore this new technology, it will be important to consider both the benefits and drawbacks of chatbot technology in order to fully understand its implications for future research and practical applications.

There is a notable lack of legal scholarship addressing ChatGPT and large language models, which is surprising considering that legal considerations are addressed in many of the articles. This, however, may be explained by Scopus's lack of legal coverage. Law articles tend to have low citation rates as they cite the law itself more than other articles and may be local in nature (Eisenberg and Wells, 1998). Therefore, aside from law and society topics that receive higher citation rates, legal scholarship is largely ignored by the Scopus and Web of Science databases. Nevertheless, in addition to ethical issues such as plagiarism, legal issues including intellectual property rights are often discussed (D'Amico et al., 2023). Intellectual property is perhaps a greater issue with AI creating visual art than with most outputs of LLMs, especially if the LLM is trained on a sufficiently large dataset. Additionally, inaccuracy is arguably an even larger risk than plagiarism. D'Amico et al. (2023) state that “ChatGPT had been listed as the first author of four papers, without considering the possibility of ‘involuntary plagiarism’ or intellectual property issues surrounding the output of the model.” The approach taken by these papers (ChatGPT Generative Pre-trained Transformer and Zhavoronkov, 2022; O'Connor, 2022; King and ChatGPT, 2023) is understandable considering it is unknown what standards will be adopted in the future. However, using the output is not all that different from pulling from one's own knowledge. Academics must cite all sources of information not only for ethical reasons but also because it strengthens the claims of a paper. Not only does a failure to cite a source of information constitute plagiarism, but it also weakens the paper. However, over time people learn, and they may make statements without remembering the original source. Thus, as something becomes common knowledge, the source becomes less likely to be cited.
When using LLMs, if something is outside of the knowledge of an author, they will need to look it up and in so doing will be ethically compelled to cite the source confirming the knowledge. We therefore argue that the primary danger is that authors will publish material produced by an LLM without ensuring its accuracy. It is not unethical to use an LLM, but authors must ensure the veracity of the final work regardless of whether they use an LLM like ChatGPT, the spelling and grammar checkers built into text editing software like MS Word, or other AI solutions to assist with writing. More research, therefore, should focus on the risk of fake resources, including journals publishing articles falsely purporting to be from famous academics, a problem that will undoubtedly increase with the proliferation of LLM technology.

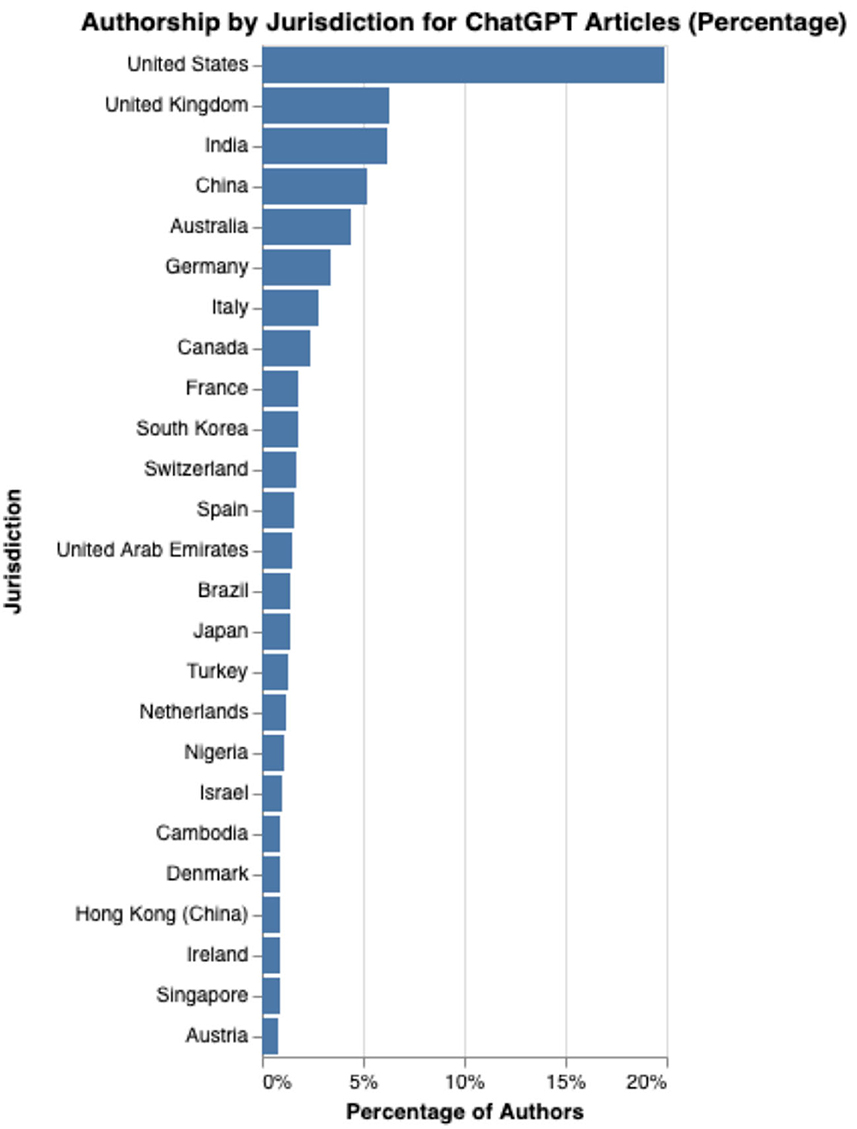

One surprising factor was the geographic universality of the findings. As can be seen in Figure 1, the top 25 countries by authorship span all six inhabited continents. In fact, the top three countries, the United States, United Kingdom, and India, are each on different continents. While there is a stronger representation of English-speaking countries, mainland China ranks fourth in the number of authors. It is perhaps not surprising that, despite not being English-speaking countries, China, Japan and South Korea, all leaders in technological development, would be amongst the top 15 regions.

Overall, the early literature on ChatGPT suggests that while it has great potential for improving communication across various industries, there are still many questions to be answered before its full impact can be realized. As researchers continue to explore this new technology, it will be important to consider both the benefits and drawbacks of chatbot technology to fully understand its implications for future research and practical applications. For instance, while ChatGPT has the potential to improve customer service by providing quick responses to frequently asked questions, there is a risk that customers may become frustrated if they encounter complex issues that cannot be resolved through automation. Additionally, chatbot technology may not be suitable for all industries or contexts, and it will be important to identify which applications are most effective in different settings. As ChatGPT continues to evolve and become more advanced, researchers must remain vigilant about the ethical considerations associated with its use, including privacy concerns (Masters, 2023a), bias in data sets used by chatbots (Thornhill et al., 2019), transparency, accountability, and cultural sensitivity. By addressing these issues head-on, we can ensure that ChatGPT and similar solutions are deployed responsibly and effectively. The fact that all disciplines show negative sentiment toward ChatGPT in the early literature implies that scholars are embracing this cautious approach.

5. Conclusion

In conclusion, the early literature on ChatGPT suggests that while there are promising results for its potential applications in various fields, there are also significant ethical considerations to be addressed before widespread implementation can occur. The negative sentiment across all academic areas related to early ChatGPT literature may be explained by limitations in current research or ethical concerns related to the use of GPT technology. As ChatGPT is still a relatively new technology, there are many questions about its capabilities and limitations that need to be addressed by researchers across different fields. The geographical dispersion of authors' institutions and their standing in university rankings signal that the interest is global in scope and a matter of importance for all sorts of institutions. In addition, the lack of comprehensive studies or datasets that can provide more nuanced insights into its capabilities and limitations beyond simple language processing tasks may contribute to negative sentiment across different disciplines. Overall, while early research is still limited by the scope of available data, there are already some clear implications for future research and practical applications in various fields. As ChatGPT technology continues to evolve, it will be important for researchers and stakeholders to work together to address these ethical considerations and ensure that this powerful tool is used responsibly and effectively across different industries.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdel-Messih, M. S., and Kamel Boulos, M. N. (2023). ChatGPT in clinical toxicology. JMIR Med. Educ. 9. doi: 10.22541/au.167715747.75006360/v1

Adesso, G. (2023). Towards the ultimate brain: exploring scientific discovery with ChatGPT AI. Authorea Preprints. doi: 10.22541/au.167701309.98216987/v1

Adetayo, A. J. (2023). Artificial intelligence chatbots in academic libraries: the rise of ChatGPT. Library Hi Tech News 40, 18–21. doi: 10.1108/LHTN-01-2023-0007

Ahn, C. (2023). Exploring ChatGPT for information of cardiopulmonary resuscitation. Resuscitation 185, 109729. doi: 10.1016/j.resuscitation.2023.109729

Ai, H., Zhou, Z., and Yan, Y. (2023). The impact of smart city construction on labour spatial allocation: evidence from China. Appl. Econ. doi: 10.1080/00036846.2023.2186367

Alberts, I. L., Mercolli, L., Pyka, T., Prenosil, G., Shi, K., Rominger, A., et al. (2023). Large language models (LLM) and ChatGPT: what will the impact on nuclear medicine be? Eur. J. Nucl. Med. Mol. Imaging 50, 1549–1552. doi: 10.1007/s00259-023-06172-w

Ali, M. J., and Djalilian, A. (2023). Readership awareness series–paper 4: Chatbots and ChatGPT - ethical considerations in scientific publications. Semin. Ophthalmol. 38, 403–404. doi: 10.1080/08820538.2023.2193444

Ali, S. R., Dobbs, T. D., Hutchings, H. A., and Whitaker, I. S. (2023). Using ChatGPT to write patient clinic letters. Lancet Digit. Health 5, e179–e181. doi: 10.1016/S2589-7500(23)00048-1

Aljanabi, M., Ghazi, M., Ali, A. H., Abed, S. A., and ChatGpt. (2023). ChatGpt: open possibilities. Iraqi J. Comput. Sci. Math. 4, 62–64. doi: 10.52866/20ijcsm.2023.01.01.0018

Ameen, N., Viglia, G., and Altinay, L. (2023). Revolutionizing services with cutting-edge technologies post major exogenous shocks. Serv. Ind. J. 43, 125–133. doi: 10.1080/02642069.2023.2185934

An, J., Ding, W., and Lin, C. (2023). ChatGPT: tackle the growing carbon footprint of generative AI. Nature 615, 586. doi: 10.1038/d41586-023-00843-2

Anders, B. A. (2023). Is using ChatGPT cheating, plagiarism, both, neither, or forward thinking? Patterns 4, 100694. doi: 10.1016/j.patter.2023.100694

Anderson, N., Belavy, D. L., Perle, S. M., Hendricks, S., Hespanhol, L., Verhagen, E., et al. (2023). AI did not write this manuscript, or did it? Can we trick the AI text detector into generated texts? The potential future of ChatGPT and AI in sports and exercise medicine manuscript generation. BMJ Open Sport Exerc. Med. 9, e001568. doi: 10.1136/bmjsem-2023-001568

Ang, T. L., Choolani, M., See, K. C., and Poh, K. K. (2023). The rise of artificial intelligence: addressing the impact of large language models such as ChatGPT on scientific publications. Singapore Med. J. 64, 219–221. doi: 10.4103/singaporemedj.SMJ-2023-055

Arif, T. B., Munaf, U., and Ul-Haque, I. (2023). The future of medical education and research: is ChatGPT a blessing or blight in disguise? Med. Educ. Online 28, 2181052. doi: 10.1080/10872981.2023.2181052

Au Yeung, J., Kraljevic, Z., Luintel, A., Balston, A., Idowu, E., Dobson, R. J., et al. (2023). AI chatbots not yet ready for clinical use. Front. Digit. Health 5, 1161098. doi: 10.3389/fdgth.2023.1161098

Berger, U., and Schneider, N. (2023). How ChatGPT will change research, education and healthcare? PPmP Psychother. Psychosom. Med. Psychol. 73, 159–161. doi: 10.1055/a-2017-8471

Bernstein, J. (2023). Not the Last Word: ChatGPT can't perform orthopaedic surgery. Clin. Orthop. Relat. Res. 481, 651–655. doi: 10.1097/CORR.0000000000002619

Bhatia, G., and Kulkarni, A. (2023). ChatGPT as co-author: are researchers impressed or distressed? Asian J. Psychiatr. 84, 103564. doi: 10.1016/j.ajp.2023.103564

Bhattacharya, K., Bhattacharya, A. S., Bhattacharya, N., Yagnik, V. D., Garg, P., Kumar, S., et al. (2023). ChatGPT in surgical practice—a new kid on the block. Indian J. Surg. doi: 10.1007/s12262-023-03727-x

Biswas, S. S. (2023a). Potential use of Chat GPT in global warming. Ann. Biomed. Eng. 51, 1126–1127. doi: 10.1007/s10439-023-03171-8

Biswas, S. S. (2023b). Role of Chat GPT in public health. Ann. Biomed. Eng. 51, 868–869. doi: 10.1007/s10439-023-03172-7

Borges, R. M. (2023). A braver new world? Of chatbots and other cognoscenti. J. Biosci. 48, 10. doi: 10.1007/s12038-023-00334-6

Boßelmann, C. M., Leu, C., and Lal, D. (2023). Are AI language models such as ChatGPT ready to improve the care of individuals with epilepsy? Epilepsia 64, 1195–1199. doi: 10.1111/epi.17570

Budler, L. C., Gosak, L., and Stiglic, G. (2023). Review of artificial intelligence-based question-answering systems in healthcare. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 13, e1487. doi: 10.1002/widm.1487

Cahan, P., and Treutlein, B. (2023). A conversation with ChatGPT on the role of computational systems biology in stem cell research. Stem Cell Rep. 18, 1–2. doi: 10.1016/j.stemcr.2022.12.009

Cascella, M., Montomoli, J., Bellini, V., and Bignami, E. (2023). Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. J. Med. Syst. 47, 33. doi: 10.1007/s10916-023-01925-4

Castro Nascimento, C. M., and Pimentel, A. S. (2023). Do large language models understand chemistry? A conversation with ChatGPT. J. Chem. Inf. Model. 63, 1649–1655. doi: 10.1021/acs.jcim.3c00285

ChatGPT Generative Pre-trained Transformer and Zhavoronkov, A. (2022). Rapamycin in the context of Pascal's Wager: generative pre-trained transformer perspective. Oncoscience 9, 82. doi: 10.18632/oncoscience.571

Chatterjee, J., and Dethlefs, N. (2023). This new conversational AI model can be your friend, philosopher, and guide… even your worst enemy. Patterns 4, 100676. doi: 10.1016/j.patter.2022.100676

Chen, X. (2023). ChatGPT and its possible impact on library reference services. Internet Ref. Serv. Q. 27, 121–129. doi: 10.1080/10875301.2023.2181262

Choi, E. P. H., Lee, J. J., Ho, M. H., Kwok, J. Y. Y., and Lok, K. Y. W. (2023). Chatting or cheating? The impacts of ChatGPT and other artificial intelligence language models on nurse education. Nurse Educ. Today 125, 105796. doi: 10.1016/j.nedt.2023.105796

Chow, J. C. L., Sanders, L., and Li, K. (2023). Impact of ChatGPT on medical chatbots as a disruptive technology. Front. Artif. Intell. 6, 1166014. doi: 10.3389/frai.2023.1166014

Cooper, G. (2023). Examining science education in ChatGPT: an exploratory study of generative artificial intelligence. J. Sci. Educ. Technol. 32, 444–452. doi: 10.1007/s10956-023-10039-y

Costello, E. (2023). ChatGPT and the educational AI chatter: full of bullshit or trying to tell us something? Postdigital Sci. Educ. 2, 863–878. doi: 10.1007/s42438-023-00398-5

Cotton, D. R. E., Cotton, P. A., and Shipway, J. R. (2023). Chatting and cheating: ensuring academic integrity in the era of ChatGPT. Innov. Educ. Teach. Int. doi: 10.1080/14703297.2023.2190148

Cox Jr, L. A. (2023). Causal reasoning about epidemiological associations in conversational AI. Glob. Epidemiol. 5, 1–6. doi: 10.1016/j.gloepi.2023.100102

Cox, C., and Tzoc, E. (2023). ChatGPT Implications for academic libraries. Coll. Res. Libr. News 84, 99–102. doi: 10.5860/crln.84.3.99

Crawford, J., Cowling, M., and Allen, K. A. (2023). Leadership is needed for ethical ChatGPT: character, assessment, and learning using artificial intelligence (AI). J. Univ. Teach. Learn. Pract. 20. doi: 10.53761/1.20.3.02

Curtis, N. (2023). To ChatGPT or not to ChatGPT? The impact of artificial intelligence on academic publishing. Pediatr. Infect. Dis. J. 42, 275. doi: 10.1097/INF.0000000000003852

Dahmen, J., Kayaalp, M. E., Ollivier, M., Pareek, A., Hirschmann, M. T., Karlsson, J., et al. (2023). Artificial intelligence bot ChatGPT in medical research: the potential game changer as a double-edged sword. Knee Surg. Sports Traumatol. Arthrosc. 31, 1187–1189. doi: 10.1007/s00167-023-07355-6

D'Amico, R. S., White, T. G., Shah, H. A., and Langer, D. J. (2023). I asked a ChatGPT to write an editorial about how we can incorporate chatbots into neurosurgical research and patient care…. Neurosurgery 92, 663–664. doi: 10.1227/neu.0000000000002414

Dasborough, M. T. (2023). Awe-inspiring advancements in AI: the impact of ChatGPT on the field of organizational behavior. J. Organ. Behav. 44, 177–179. doi: 10.1002/job.2695

Dave, T., Athaluri, S. A., and Singh, S. (2023). ChatGPT in medicine: an overview of its applications, advantages, limitations, future prospects, and ethical considerations. Front. Artif. Intell. 6, 1169595. doi: 10.3389/frai.2023.1169595

De Angelis, L., Baglivo, F., Arzilli, G., Privitera, G. P., Ferragina, P., Tozzi, A. E., et al. (2023). ChatGPT and the rise of large language models: the new AI-driven infodemic threat in public health. Front. Public Health 11, 1166120. doi: 10.3389/fpubh.2023.1166120

DiGiorgio, A. M., and Ehrenfeld, J. M. (2023). Artificial intelligence in medicine and ChatGPT: de-tether the physician. J. Med. Syst. 47, 32. doi: 10.1007/s10916-023-01926-3

Donato, H., Escada, P., and Villanueva, T. (2023). The transparency of science with ChatGPT and the emerging artificial intelligence language models: where should medical journals stand? Acta Med. Port. 36, 147–148. doi: 10.20344/amp.19694

Doshi, R. H., Bajaj, S. S., and Krumholz, H. M. (2023). ChatGPT: temptations of progress. Am. J. Bioethics 23, 6–8. doi: 10.1080/15265161.2023.2180110

Dowling, M., and Lucey, B. (2023). ChatGPT for (Finance) research: the bananarama conjecture. Finance Res. Lett. 53, 103662. doi: 10.1016/j.frl.2023.103662

Du, H., Teng, S., Chen, H., Ma, J., Wang, X., Gou, C., et al. (2023). Chat with ChatGPT on intelligent vehicles: an IEEE TIV perspective. IEEE Trans. Intell. Veh. 8, 2020–2026. doi: 10.1109/TIV.2023.3253281

Dwivedi, Y. K., Kshetri, N., Hughes, L., Slade, E. L., Jeyaraj, A., Kar, A. K., et al. (2023). “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manage. 71, 102642. doi: 10.1016/j.ijinfomgt.2023.102642

Eardley, I. (2023). ChatGPT: what does it mean for scientific research and publishing? BJU Int. 131, 381–382. doi: 10.1111/bju.15995

Eisenberg, T., and Wells, M. T. (1998). Ranking and explaining the scholarly impact of law schools. J. Legal Stud. 27, 373–413. doi: 10.1086/468024

Elali, F. R., and Rachid, L. N. (2023). AI-generated research paper fabrication and plagiarism in the scientific community. Patterns 4, 100706. doi: 10.1016/j.patter.2023.100706

Else, H. (2023). Abstracts written by ChatGPT fool scientists. Nature 613, 423. doi: 10.1038/d41586-023-00056-7

Elwood, T. W. (2023). Technological impacts on the sphere of professional journals. J. Allied Health 52, 1. Available online at: https://pubmed.ncbi.nlm.nih.gov/36892853/

Emenike, M. E., and Emenike, B. U. (2023). Was this title generated by ChatGPT? Considerations for artificial intelligence text-generation software programs for chemists and chemistry educators. J. Chem. Educ. 100, 1413–1418. doi: 10.1021/acs.jchemed.3c00063

Eysenbach, G. (2023). The role of ChatGPT, generative language models, and artificial intelligence in medical education: a conversation with ChatGPT and a call for papers. JMIR Med. Educ. 9, e46885. doi: 10.2196/46885

Fergus, S., Botha, M., and Ostovar, M. (2023). Evaluating academic answers generated using ChatGPT. J. Chem. Educ. 100, 1672–1675. doi: 10.1021/acs.jchemed.3c00087

Fernandez, P. (2023). “Through the looking glass: envisioning new library technologies” AI-text generators as explained by ChatGPT. Library Hi Tech News. 40, 11–14. doi: 10.1108/LHTN-02-2023-0017

Fijačko, N., Gosak, L., Štiglic, G., Picard, C. T., and John Douma, M. (2023). Can ChatGPT pass the life support exams without entering the American Heart Association course? Resuscitation 185, 109732. doi: 10.1016/j.resuscitation.2023.109732

Floridi, L. (2023). AI as agency without intelligence: on ChatGPT, large language models, and other generative models. Philos. Technol. 36, 15. doi: 10.1007/s13347-023-00621-y

Gancarczyk, M., Łasak, P., and Gancarczyk, J. (2022). The fintech transformation of banking: governance dynamics and socio-economic outcomes in spatial contexts. Entrep. Bus. Econ. Rev. 10, 143–165. doi: 10.15678/EBER.2022.100309

Gao, Y., Tong, W., Wu, E. Q., Chen, W., Zhu, G., Wang, F., et al. (2023). Chat with ChatGPT on interactive engines for intelligent driving. IEEE Trans. Intell. Veh. 8, 2034–2036. doi: 10.1109/TIV.2023.3252571

Gašević, D., Siemens, G., and Sadiq, S. (2023). Empowering learners for the age of artificial intelligence. Comput. Educ. Artif. Intell. 4, 100130. doi: 10.1016/j.caeai.2023.100130

Gilson, A., Safranek, C. W., Huang, T., Socrates, V., Chi, L., Taylor, R. A., et al. (2023). How does ChatGPT perform on the United States Medical Licensing Examination? The implications of large language models for medical education and knowledge assessment. JMIR Med. Educ. 9, e45312. doi: 10.2196/45312

Gordijn, B., and Have, H. (2023). ChatGPT: evolution or revolution? Med. Health Care Philos. 26, 1–2. doi: 10.1007/s11019-023-10136-0

Graf, A., and Bernardi, R. E. (2023). ChatGPT in research: balancing ethics, transparency and advancement. Neuroscience 515, 71–73. doi: 10.1016/j.neuroscience.2023.02.008

Graham, F. (2022). Daily briefing: will ChatGPT kill the essay assignment? Nature doi: 10.1038/d41586-022-04437-2

Graham, F. (2023a). Daily briefing: science urgently needs a plan for ChatGPT. Nature doi: 10.1038/d41586-023-00360-2

Graham, F. (2023b). Daily briefing: the science underlying the Turkey–Syria earthquake. Nature doi: 10.1038/d41586-023-00373-x

Gregorcic, B., and Pendrill, A. M. (2023). ChatGPT and the frustrated Socrates. Phys. Educ. 58, 035021. doi: 10.1088/1361-6552/acc299

Gunawan, J. (2023). Exploring the future of nursing: insights from the ChatGPT model. Belitung Nurs. J. 9, 1–5. doi: 10.33546/bnj.2551

Halloran, L. J. S., Mhanna, S., and Brunner, P. (2023). AI tools such as ChatGPT will disrupt hydrology, too. Hydrol. Process. 37. doi: 10.1002/hyp.14843

Hallsworth, J. E., Udaondo, Z., Pedrós-Alió, C., Höfer, J., Benison, K. C., Lloyd, K. G., et al. (2023). Scientific novelty beyond the experiment. Microb. Biotechnol. 16, 1131–1173. doi: 10.1111/1751-7915.14222

Haluza, D., and Jungwirth, D. (2023). Artificial intelligence and ten societal megatrends: an exploratory study using GPT-3. Systems 11, 120. doi: 10.3390/systems11030120

Haman, M., and Školník, M. (2023). Using ChatGPT to conduct a literature review. Account. Res. 1–3. doi: 10.1080/08989621.2023.2185514

Harder, N. (2023). Using ChatGPT in simulation design: what can (or should) it do for you? Clin. Simul. Nurs. 78, A1–A2. doi: 10.1016/j.ecns.2023.02.011

Hirosawa, T., Harada, Y., Yokose, M., Sakamoto, T., Kawamura, R., Shimizu, T., et al. (2023). Diagnostic accuracy of differential-diagnosis lists generated by generative pretrained transformer 3 chatbot for clinical vignettes with common chief complaints: a pilot study. Int. J. Environ. Res. Public Health 20, 3378. doi: 10.3390/ijerph20043378

Holzinger, A., Keiblinger, K., Holub, P., Zatloukal, K., and Müller, H. (2023). AI for life: trends in artificial intelligence for biotechnology. N. Biotechnol. 74, 16–24. doi: 10.1016/j.nbt.2023.02.001

Homolak, J. (2023). Opportunities and risks of ChatGPT in medicine, science, and academic publishing: a modern Promethean dilemma. Croat. Med. J. 64, 1–3. doi: 10.3325/cmj.2023.64.1

Hu, G. (2023). Challenges for enforcing editorial policies on AI-generated papers. Account. Res. 1–3. doi: 10.1080/08989621.2023.2184262

Huang, J., Yeung, A. M., Kerr, D., and Klonoff, D. C. (2023). Using ChatGPT to predict the future of diabetes technology. J. Diabet. Sci. Technol. 17, 853–854. doi: 10.1177/19322968231161095

Huh, S. (2023a). Are ChatGPT's knowledge and interpretation ability comparable to those of medical students in Korea for taking a parasitology examination?: a descriptive study. J. Educ. Eval. Health Prof. 20, 1. doi: 10.3352/jeehp.2023.20.01

Huh, S. (2023b). Emergence of the metaverse and ChatGPT in journal publishing after the COVID-19 pandemic. Sci. Edit. 10, 1–4. doi: 10.6087/kcse.290

Huh, S. (2023c). Issues in the 3rd year of the COVID-19 pandemic, including computer-based testing, study design, ChatGPT, journal metrics, and appreciation to reviewers. J. Educ. Eval. Health Prof. 20, 5. doi: 10.3352/jeehp.2023.20.5

Humphry, T., and Fuller, A. L. (2023). Potential ChatGPT use in undergraduate chemistry laboratories. J. Chem. Educ. 100, 1434–1436. doi: 10.1021/acs.jchemed.3c00006

Iskender, A. (2023). Holy or unholy? Interview with Open AI's ChatGPT. Eur. J. Tour. Res. 34, 3414. doi: 10.54055/ejtr.v34i.3169

Johinke, R., Cummings, R., and Di Lauro, F. (2023). Reclaiming the technology of higher education for teaching digital writing in a post-pandemic world. J. Univ. Teach. Learn. Pract. 20. doi: 10.53761/1.20.02.01

Jungwirth, D., and Haluza, D. (2023). Artificial intelligence and public health: an exploratory study. Int. J. Environ. Res. Public Health 20, 4541. doi: 10.3390/ijerph20054541

Kahambing, J. G. (2023). ChatGPT, ‘polypsychic’ artificial intelligence, and psychiatry in museums. Asian J. Psychiatr. 83, 103548. doi: 10.1016/j.ajp.2023.103548

Kapengut, E., and Mizrach, B. (2023). An event study of the Ethereum transition to proof-of-stake. Commodities 2, 96–110. doi: 10.3390/commodities2020006

Karaali, G. (2023). Artificial intelligence, basic skills, and quantitative literacy. Numeracy 16, 9. doi: 10.5038/1936-4660.16.1.1438

Karimabadi, H., Wilkes, J., and Roberts, D. A. (2023). The need for adoption of neural HPC (NeuHPC) in space sciences. Front. Astron. Space Sci. 10, 1120389. doi: 10.3389/fspas.2023.1120389

Kasneci, E., Sessler, K., Küchemann, S., Bannert, M., Dementieva, D., Fischer, F., et al. (2023). ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 103, 102274. doi: 10.1016/j.lindif.2023.102274

Khan, R. A., Jawaid, M., Khan, A. R., and Sajjad, M. (2023). ChatGPT-Reshaping medical education and clinical management. Pak. J. Med. Sci. 39, 605–607. doi: 10.12669/pjms.39.2.7653

Kim, S. G. (2023). Using ChatGPT for language editing in scientific articles. Maxillofac. Plast. Reconstr. Surg. 45, 13. doi: 10.1186/s40902-023-00381-x

King, M. R., and ChatGPT (2023). A conversation on artificial intelligence, chatbots, and plagiarism in higher education. Cell. Mol. Bioeng. 16, 1–2. doi: 10.1007/s12195-022-00754-8

Krettek, C. (2023). ChatGPT: milestone text AI with game changing potential. Unfallchirurgie 126, 252–254. doi: 10.1007/s00113-023-01296-y

Kurpicz-Briki, M., and Leoni, T. (2021). A world full of stereotypes? Further investigation on origin and gender bias in multi-lingual word embeddings. Front. Big Data 4, 625290. doi: 10.3389/fdata.2021.625290

Lahat, A., and Klang, E. (2023). Can advanced technologies help address the global increase in demand for specialized medical care and improve telehealth services? J. Telemed. Telecare. doi: 10.1177/1357633X231155520

Lahat, A., Shachar, E., Avidan, B., Shatz, Z., Glicksberg, B. S., Klang, E., et al. (2023). Evaluating the use of large language model in identifying top research questions in gastroenterology. Sci. Rep. 13, 4164. doi: 10.1038/s41598-023-31412-2

Lamba, H., Rodolfa, K. T., and Ghani, R. (2021). An empirical comparison of bias reduction methods on real-world problems in high-stakes policy settings. SIGKDD Explor. Newsl. 23, 69–85. doi: 10.1145/3468507.3468518

Lawuobahsumo, K. K., Algieri, B., Iania, L., and Leccadito, A. (2022). Exploring dependence relationships between Bitcoin and commodity returns: an assessment using the Gerber cross-correlation. Commodities 1, 34–49. doi: 10.3390/commodities1010004

Lecler, A., Duron, L., and Soyer, P. (2023). Revolutionizing radiology with GPT-based models: current applications, future possibilities and limitations of ChatGPT. Diagn. Interv. Imaging 104, 269–274. doi: 10.1016/j.diii.2023.02.003

Lee, J. Y. (2023a). Can an artificial intelligence chatbot be the author of a scholarly article? J. Educ. Eval. Health Prof. 20, 6. doi: 10.3352/jeehp.2023.20.6

Lee, J. Y. (2023b). Can an artificial intelligence chatbot be the author of a scholarly article? Sci. Edit. 10, 7–12. doi: 10.6087/kcse.292

Levin, G., Meyer, R., Kadoch, E., and Brezinov, Y. (2023). Identifying ChatGPT-written OBGYN abstracts using a simple tool. Am. J. Obstet. Gynecol. MFM 5, 100936. doi: 10.1016/j.ajogmf.2023.100936

Liang, Y., Watters, C., and Lemański, M. K. (2022). Responsible management in the hotel industry: an integrative review and future research directions. Sustainability 14, 17050. doi: 10.3390/su142417050

Liebrenz, M., Schleifer, R., Buadze, A., Bhugra, D., and Smith, A. (2023). Generating scholarly content with ChatGPT: ethical challenges for medical publishing. Lancet Digit. Health 5, e105–e106. doi: 10.1016/S2589-7500(23)00019-5

Lim, W. M., Gunasekara, A., Pallant, J. L., Pallant, J. I., and Pechenkina, E. (2023). Generative AI and the future of education: ragnarök or reformation? A paradoxical perspective from management educators. Int. J. Manag. Educ. 21, 100790. doi: 10.1016/j.ijme.2023.100790

Lin, C. C., Huang, A. Y. Q., and Yang, S. J. H. (2023). A review of AI-driven conversational chatbots implementation methodologies and challenges (1999–2022). Sustainability 15, 4012. doi: 10.3390/su15054012

Lund, B. D., and Wang, T. (2023). Chatting about ChatGPT: how may AI and GPT impact academia and libraries? Library Hi Tech News. 74. doi: 10.2139/ssrn.4333415

Lund, B. D., Wang, T., Mannuru, N. R., Nie, B., Shimray, S., Wang, Z., et al. (2023). ChatGPT and a new academic reality: artificial intelligence-written research papers and the ethics of the large language models in scholarly publishing. J. Assoc. Inf. Sci. Technol. 74, 570–581. doi: 10.1002/asi.24750

Macdonald, C., Adeloye, D., Sheikh, A., and Rudan, I. (2023). Can ChatGPT draft a research article? An example of population-level vaccine effectiveness analysis. J. Glob. Health 13, 01003. doi: 10.7189/jogh.13.01003

Maeker, E., and Maeker-Poquet, B. (2023). ChatGPT: a solution for producing medical literature reviews? NPG Neurol. Psychiat. Geriatr. 23, 137–143. doi: 10.1016/j.npg.2023.03.002

Mann, D. L. (2023). Artificial intelligence discusses the role of artificial intelligence in translational medicine: a JACC: Basic to Translational Science interview with ChatGPT. JACC: Basic Transl. Sci. 8, 221–223. doi: 10.1016/j.jacbts.2023.01.001

Masters, K. (2023a). Ethical use of artificial intelligence in health professions education: AMEE Guide No.158. Med. Teach. 45, 574–584. doi: 10.1080/0142159X.2023.2186203

Masters, K. (2023b). Response to: Aye, AI! ChatGPT passes multiple-choice family medicine exam. Med. Teach. 45, 666. doi: 10.1080/0142159X.2023.2190476

Merhbene, G., Nath, S., Puttick, A. R., and Kurpicz-Briki, M. (2022). BURNOUT ensemble: augmented intelligence to detect indications for burnout in clinical psychology. Front. Big Data 5, 863100. doi: 10.3389/fdata.2022.863100

Mijwil, M. M., Aljanabi, M., and ChatGPT (2023). Towards artificial intelligence-based cybersecurity: the practices and ChatGPT generated ways to combat cybercrime. Iraqi J. Comput. Sci. Math. 4, 65–70. doi: 10.52866/ijcsm.2023.01.01.0019

Mogali, S. R. (2023). Initial impressions of ChatGPT for anatomy education. Anat. Sci. Educ. doi: 10.1002/ase.2261

Moisset, X., and Ciampi de Andrade, D. (2023). Neuro-ChatGPT? Potential threats and certain opportunities. Rev. Neurol. 179, 517–519. doi: 10.1016/j.neurol.2023.02.066

Morreel, S., Mathysen, D., and Verhoeven, V. (2023). Aye, AI! ChatGPT passes multiple-choice family medicine exam. Med. Teach. 45, 665–666. doi: 10.1080/0142159X.2023.2187684

Naumova, E. N. (2023). A mistake-find exercise: a teacher's tool to engage with information innovations, ChatGPT, and their analogs. J. Public Health Policy 44, 173–178. doi: 10.1057/s41271-023-00400-1

Nautiyal, R., Albrecht, J. N., and Nautiyal, A. (2023). ChatGPT and tourism academia. Ann. Tour. Res. 99, 103544. doi: 10.1016/j.annals.2023.103544

Nuryana, Z., and Pranolo, A. (2023). ChatGPT: the balance of future, honesty, and integrity. Asian J. Psychiatr. 84, 103571. doi: 10.1016/j.ajp.2023.103571

O'Connor, S. (2022). Open artificial intelligence platforms in nursing education: tools for academic progress or abuse? Nurse Educ. Pract. 66, 103537. doi: 10.1016/j.nepr.2022.103537

O'Connor, S. (2023). Corrigendum to “Open artificial intelligence platforms in nursing education: tools for academic progress or abuse?” [Nurse Educ. Pract. 66, 103537]. Nurse Educ. Pract. 67, 103572. doi: 10.1016/j.nepr.2023.103572

Odom-Forren, J. (2023). The role of ChatGPT in perianesthesia nursing. J. Perianesth. Nurs. 38, 176–177. doi: 10.1016/j.jopan.2023.02.006

Ollivier, M., Pareek, A., Dahmen, J., Kayaalp, M. E., Winkler, P. W., Hirschmann, M. T., et al. (2023). A deeper dive into ChatGPT: history, use and future perspectives for orthopaedic research. Knee Surg. Sports Traumatol. Arthrosc. 31, 1190–1192. doi: 10.1007/s00167-023-07372-5

Owens, B. (2023). How Nature readers are using ChatGPT. Nature 615, 20. doi: 10.1038/d41586-023-00500-8

Panda, S., and Kaur, N. (2023). Exploring the viability of ChatGPT as an alternative to traditional chatbot systems in library and information centers. Library Hi Tech News. 40, 22–55. doi: 10.1108/LHTN-02-2023-0032

Park, I., Joshi, A. S., and Javan, R. (2023). Potential role of ChatGPT in clinical otolaryngology explained by ChatGPT. Am. J. Otolaryngol. Head Neck Med. Surg. 44, 103873. doi: 10.1016/j.amjoto.2023.103873

Patel, S. B., and Lam, K. (2023). ChatGPT: the future of discharge summaries? Lancet Digit. Health 5, e107–e108. doi: 10.1016/S2589-7500(23)00021-3

Paul, J., Ueno, A., and Dennis, C. (2023). ChatGPT and consumers: benefits, pitfalls and future research agenda. Int. J. Consum. Stud. 47, 1213–1225. doi: 10.1111/ijcs.12928

Pavlik, J. V. (2023). Collaborating with ChatGPT: considering the implications of generative artificial intelligence for journalism and media education. Journal. Mass Commun. Educ. 78, 84–93. doi: 10.1177/10776958221149577

Perkins, M. (2023). Academic integrity considerations of AI large language models in the post-pandemic era: ChatGPT and beyond. J. Univ. Teach. Learn. Pract. 20. doi: 10.53761/1.20.02.07

Potapenko, I., Boberg-Ans, L. C., Stormly Hansen, M., Klefter, O. N., van Dijk, E. H. C., and Subhi, Y. (2023). Artificial intelligence-based chatbot patient information on common retinal diseases using ChatGPT. Acta Ophthalmol. doi: 10.1111/aos.15661

Prada, P., Perroud, N., and Thorens, G. (2023). Artificial intelligence and psychiatry: questions from psychiatrists to ChatGPT. Rev. Med. Suisse 19, 532–536. doi: 10.53738/REVMED.2023.19.818.532

Qadir, J. (2022). Engineering Education in the Era of ChatGPT: Promise and Pitfalls of Generative AI for Education. Available online at: https://www.researchgate.net/publication/366712815_Engineering_Education_in_the_Era_of_ChatGPT_Promise_and_Pitfalls_of_Generative_AI_for_Education

Quintans-Júnior, L. J., Gurgel, R. Q., Araújo, A. A. S., Correia, D., and Martins-Filho, P. R. (2023). ChatGPT: the new panacea of the academic world. Rev. Soc. Bras. Med. Trop. 56, e0060. doi: 10.1590/0037-8682-0060-2023

Rahimi, F., and Talebi Bezmin Abadi, A. (2023). ChatGPT and publication ethics. Arch. Med. Res. 54, 272–274. doi: 10.1016/j.arcmed.2023.03.004

Rillig, M. C., Ågerstrand, M., Bi, M., Gould, K. A., and Sauerland, U. (2023). Risks and benefits of large language models for the environment. Environ. Sci. Technol. 57, 3464–3466. doi: 10.1021/acs.est.3c01106

Rocca, R., Tamagnone, N., Fekih, S., Contla, X., and Rekabsaz, N. (2023). Natural language processing for humanitarian action: opportunities, challenges, and the path toward humanitarian NLP. Front. Big Data 6, 1082787. doi: 10.3389/fdata.2023.1082787

Rospigliosi, P. A. (2023). Artificial intelligence in teaching and learning: what questions should we ask of ChatGPT? Interact. Learn. Environ. 31, 1–3. doi: 10.1080/10494820.2023.2180191

Roy, K., Gaur, M., Soltani, M., Rawte, V., Kalyan, A., Sheth, A., et al. (2023). ProKnow: process knowledge for safety constrained and explainable question generation for mental health diagnostic assistance. Front. Big Data 5, 1056728. doi: 10.3389/fdata.2022.1056728

Rozencwajg, S., and Kantor, E. (2023). Elevating scientific writing with ChatGPT: a guide for reviewers, editors… and authors. Anaesth. Crit. Care Pain Med. 42, 101209. doi: 10.1016/j.accpm.2023.101209

Sallam, M. (2023). ChatGPT utility in healthcare education, research, and practice: systematic review on the promising perspectives and valid concerns. Healthcare 11, 887. doi: 10.3390/healthcare11060887

Salvagno, M., Taccone, F. S., Gerli, A. G., and ChatGPT (2023). Can artificial intelligence help for scientific writing? Crit. Care 27, 75. doi: 10.1186/s13054-023-04380-2

Sardana, D., Fagan, T. R., and Wright, J. T. (2023). ChatGPT: a disruptive innovation or disrupting innovation in academia? J. Am. Dent. Assoc. 154, 361–364. doi: 10.1016/j.adaj.2023.02.008

Scerri, A., and Morin, K. H. (2023). Using chatbots like ChatGPT to support nursing practice. J. Clin. Nurs. 32, 4211–4213. doi: 10.1111/jocn.16677

Schijven, M. P., and Kikkawa, T. (2023). Why is serious gaming important? Let's have a chat! Simul. Gaming 54, 147–149. doi: 10.1177/10468781231152682

Schorrlepp, M., and Patzer, K. H. (2023). ChatGPT in der Hausarztpraxis: die künstliche Intelligenz im Check. MMW Fortschr. Med. 165, 12–16. doi: 10.1007/s15006-023-2473-3

Seghier, M. L. (2023). ChatGPT: not all languages are equal. Nature 615, 216. doi: 10.1038/d41586-023-00680-3

Seth, I., Bulloch, G., and Angus Lee, C. H. (2023). Redefining academic integrity, authorship, and innovation: the impact of ChatGPT on surgical research. Ann. Surg. Oncol. 30, 5284–5285. doi: 10.1245/s10434-023-13642-w

Short, C. E., and Short, J. C. (2023). The artificially intelligent entrepreneur: ChatGPT, prompt engineering, and entrepreneurial rhetoric creation. J. Bus. Ventur. Insights 19, e00388. doi: 10.1016/j.jbvi.2023.e00388

Shu, K., Bernard, H. R., and Liu, H. (2019). “Studying fake news via network analysis: detection and mitigation,” in Emerging Research Challenges and Opportunities in Computational Social Network Analysis and Mining, eds N. Agarwal, N. Dokoohaki, and S. Tokdemir (Cham: Springer International Publishing), 43–65. doi: 10.1007/978-3-319-94105-9_3

Siegerink, B., Pet, L. A., Rosendaal, F. R., and Schoones, J. W. (2023). ChatGPT as an author of academic papers is wrong and highlights the concepts of accountability and contributorship. Nurse Educ. Pract. 68, 103599. doi: 10.1016/j.nepr.2023.103599

Šlapeta, J. (2023). Are ChatGPT and other pretrained language models good parasitologists? Trends Parasitol. 39, 314–316. doi: 10.1016/j.pt.2023.02.006

Stokel-Walker, C. (2022). AI bot ChatGPT writes smart essays — should academics worry? Nature. doi: 10.1038/d41586-022-04397-7

Stokel-Walker, C. (2023). ChatGPT listed as author on research papers: many scientists disapprove. Nature 613, 620–621. doi: 10.1038/d41586-023-00107-z

Stokel-Walker, C., and Van Noorden, R. (2023). What ChatGPT and generative AI mean for science. Nature 614, 214–216. doi: 10.1038/d41586-023-00340-6

Strunga, M., Urban, R., Surovková, J., and Thurzo, A. (2023). Artificial intelligence systems assisting in the assessment of the course and retention of orthodontic treatment. Healthcare 11, 683. doi: 10.3390/healthcare11050683

Subramani, M., Jaleel, I., and Krishna Mohan, S. (2023). Evaluating the performance of ChatGPT in medical physiology university examination of phase I MBBS. Adv. Physiol. Educ. 47, 270–271. doi: 10.1152/advan.00036.2023

Taecharungroj, V. (2023). “What can ChatGPT do?” Analyzing early reactions to the innovative AI chatbot on Twitter. Big Data Cogn. Comput. 7, 35. doi: 10.3390/bdcc7010035

Tang, G. (2023). Letter to editor: academic journals should clarify the proportion of NLP-generated content in papers. Account. Res. 1–2. doi: 10.1080/08989621.2023.2180359

Teixeira da Silva, J. A. (2023). Is ChatGPT a valid author? Nurse Educ. Pract. 68, 103600. doi: 10.1016/j.nepr.2023.103600

Temsah, M. H., Jamal, A., and Al-Tawfiq, J. A. (2023). Reflection with ChatGPT about the excess death after the COVID-19 pandemic. New Microbes New Infect. 52, 101103. doi: 10.1016/j.nmni.2023.101103

Testoni, A., Greco, C., and Bernardi, R. (2022). Artificial intelligence models do not ground negation, humans do. GuessWhat?! Dialogues as a case study. Front. Big Data 4, 736709. doi: 10.3389/fdata.2021.736709

Teubner, T., Flath, C. M., Weinhardt, C., van der Aalst, W., and Hinz, O. (2023). Welcome to the Era of ChatGPT et al.: the prospects of large language models. Bus. Inf. Syst. Eng. 65, 95–101. doi: 10.1007/s12599-023-00795-x

The Lancet Digital Health (2023). ChatGPT: friend or foe? Lancet Digit. Health 5, e102. doi: 10.1016/S2589-7500(23)00023-7

Thomas, S. P. (2023). Grappling with the implications of ChatGPT for researchers, clinicians, and educators. Issues Ment. Health Nurs. 44, 141–142. doi: 10.1080/01612840.2023.2180982

Thornhill, C., Meeus, Q., Peperkamp, J., and Berendt, B. (2019). A digital nudge to counter confirmation bias. Front. Big Data 2, 11. doi: 10.3389/fdata.2019.00011

Thorp, H. H. (2023). ChatGPT is fun, but not an author. Science 379, 313. doi: 10.1126/science.adg7879

Thurzo, A., Strunga, M., Urban, R., Surovková, J., and Afrashtehfar, K. I. (2023). Impact of artificial intelligence on dental education: a review and guide for curriculum update. Educ. Sci. 13, 150. doi: 10.3390/educsci13020150

Tlili, A., Shehata, B., Adarkwah, M. A., Bozkurt, A., Hickey, D. T., Huang, R., et al. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 10, 15. doi: 10.1186/s40561-023-00237-x

Tong, Y., and Zhang, L. (2023). Discovering the next decade's synthetic biology research trends with ChatGPT. Synth. Syst. Biotechnol. 8, 220–223. doi: 10.1016/j.synbio.2023.02.004

Tools such as ChatGPT threaten transparent science; here are our ground rules for their use (2023). Nature 613, 612. doi: 10.1038/d41586-023-00191-1

Tregoning, J. (2023). AI writing tools could hand scientists the ‘gift of time’. Nature. doi: 10.1038/d41586-023-00528-w

Tsigaris, P., and Teixeira da Silva, J. A. (2023). Can ChatGPT be trusted to provide reliable estimates? Account. Res. 1–3. doi: 10.1080/08989621.2023.2179919

van Dis, E. A. M., Bollen, J., Zuidema, W., van Rooij, R., and Bockting, C. L. (2023). ChatGPT: five priorities for research. Nature 614, 224–226. doi: 10.1038/d41586-023-00288-7

Wang, F. Y., Miao, Q., Li, X., Wang, X., and Lin, Y. (2023). What does ChatGPT say: the DAO from algorithmic intelligence to linguistic intelligence. IEEE/CAA J. Autom. Sin. 10, 575–579. doi: 10.1109/JAS.2023.123486

Wang, J. (2023). ChatGPT: a test drive. Am. J. Phys. 91, 255–256. Available online at: https://pubs.aip.org/aapt/ajp/article/91/4/255/2878655/ChatGPT-A-test-drive

Wang, S. H. (2023). OpenAI — explain why some countries are excluded from ChatGPT. Nature 615, 34. doi: 10.1038/d41586-023-00553-9

Watters, C. (2023). When criminals abuse the blockchain: establishing personal jurisdiction in a decentralised environment. Laws 12, 33. doi: 10.3390/laws12020033

Wu, L., and Liu, H. (2018). “Tracing fake-news footprints: characterizing social media messages by how they propagate,” in Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining (Marina Del Rey, CA). doi: 10.1145/3159652.3159677

Yadava, O. P. (2023). ChatGPT—a foe or an ally? Indian J. Thorac. Cardiovasc. Surg. 39, 217–221. doi: 10.1007/s12055-023-01507-6

Yeo-Teh, N. S. L., and Tang, B. L. (2023). Letter to editor: NLP systems such as ChatGPT cannot be listed as an author because these cannot fulfill widely adopted authorship criteria. Account. Res. 1–3. doi: 10.1080/08989621.2023.2177160

Zhou, J., Ke, P., Qiu, X., Huang, M., and Zhang, J. (2023). ChatGPT: potential, prospects, and limitations. Front. Inf. Technol. Electron. Eng. doi: 10.1631/FITEE.2300089

Keywords: ChatGPT, large language model (LLM), transformer, GPT, disruptive technology, artificial intelligence, AI

Citation: Watters C and Lemanski MK (2023) Universal skepticism of ChatGPT: a review of early literature on chat generative pre-trained transformer. Front. Big Data 6:1224976. doi: 10.3389/fdata.2023.1224976

Received: 18 May 2023; Accepted: 10 July 2023; Published: 23 August 2023.

Edited by:

José Valente De Oliveira, University of Algarve, Portugal

Reviewed by:

Ziya Levent Gokaslan, Brown University, United States

Gerardo Adesso, University of Nottingham, United Kingdom

Copyright © 2023 Watters and Lemanski. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Casey Watters, cwatters@bond.edu.au