ORIGINAL RESEARCH article

Front. Big Data, 18 July 2022

Sec. Recommender Systems

Volume 5 - 2022 | https://doi.org/10.3389/fdata.2022.898050

This article is part of the Research Topic Industrial Recommender Systems

Intelligent personal assistants (IPAs) enable voice applications that facilitate people's daily tasks. However, due to the complexity and ambiguity of voice requests, some requests may not be handled properly by the standard natural language understanding (NLU) component. In such cases, a simple reply like “Sorry, I don't know” hurts the user's experience and limits the functionality of the IPA. In this paper, we propose a two-stage shortlister-reranker recommender system to match third-party voice applications (skills) to unhandled utterances. In this approach, a skill shortlister retrieves candidate skills from the skill catalog by calculating both lexical and semantic similarity between skills and user requests. We also illustrate how to build a new system by using observed data collected from a baseline rule-based system, and how exposure bias can create a discrepancy between offline and human metrics. Lastly, we present two relabeling methods that handle the incomplete ground truth and mitigate exposure bias. We demonstrate the effectiveness of our proposed system through extensive offline experiments. Furthermore, we present online A/B testing results that show a significant boost in user satisfaction.

Intelligent personal assistants (IPAs) such as Amazon Alexa, Google Assistant, Apple Siri, and Microsoft Cortana, which allow people to communicate with devices through voice, are becoming an increasingly important part of people's daily lives. IPAs enable people to ask for information about weather, maps, schedules, and recipes, and to play games. The essential part of an IPA is the Spoken Language Understanding (SLU) system, which interprets user requests and matches voice applications (a.k.a. skills) to them. SLU consists of an automatic speech recognition (ASR) component and a natural language understanding (NLU) component. ASR first converts the speech signal of a customer request (utterance) into text. The NLU component then assigns an appropriate domain for further response (Tur and De Mori, 2011).

However, utterance texts can be diverse and ambiguous, and they sometimes contain errors from the speaker or from ASR. As a result, many utterances cannot be handled by the standard NLU system on a daily basis. They trigger NLU errors such as low confidence scores, parsing failures, and launch errors. We call these utterances “unhandled utterances.” IPAs typically respond to them with phrases such as “Sorry, I don't understand.” However, such responses are unsatisfying to customers and harm the flow of the conversation. This paper focuses on developing a deep neural network based (DNN-based) recommender system (RS) that addresses this hard problem by recommending third-party skills to answer customers' unhandled requests.

Because our system uses a voice-based interface, only the top-1 skill is suggested to the customer. The whole process is illustrated in Figure 1. The recommender system first tries to match a skill to the customer utterance. If it succeeds, the IPA responds with “Sorry, I don't know that, but I do have a skill you might like. It's called <skill_name>. Wanna try it?” instead of simply saying “Sorry, I don't know.” If the customer responds “Yes,” we call it a successful suggestion. Our goal is to improve two quantities: the customer acceptance rate for skill suggestions from the recommender system, and the overall suggestion rate (the percentage of unhandled utterances for which the RS suggests a skill).

We emphasize that building the above skill recommender system is not an easy task. One reason is that third-party skills are independently developed by third-party developers without a centralized ontology and many skills have overlapping capabilities. For example, to handle the utterance “play the sound of thunder,” skills such as “rain and thunder sounds,” “gentle thunder sounds,” “thunder sound,” can all handle this request well. Another reason is that third-party skills are frequently added, and currently Alexa has more than one hundred thousand skills. Therefore, it is impossible to rely on human annotation to collect the ground truth labels for training.

Before launching our new DNN-based recommender system, we first built a rule-based recommender system to solve the “skill suggestion task for unhandled utterances.” The rule-based system works as follows:

(1) Given a customer utterance, it invokes a keyword-based shortlister (Elasticsearch; Gormley and Tong, 2015) to generate K skill candidates.

(2) A rule-based system picks one skill from the candidate list and suggests it to the customer for feedback.

(3) If the customer responds “Yes,” the system launches this skill.

This system is also the source of our training data. One limitation of this automatically labeled dataset is that, for a given utterance, we only collect the customer's response to a single skill. Thus, our ground truth labels are incomplete.

The rule-based system's shortlister focuses only on the lexical similarity between the customer utterance and the skill, so it may omit good skill candidates. To remedy this limitation, we build a model-based shortlister that is able to capture semantic similarity. We then combine both lists of skill candidates to form the final list.

Our proposed DNN-based skill recommender system is composed of two stages, shortlisting and reranking. The shortlisting stage includes two components, the shortlister and the combinator. The reranking stage has a single component, the reranker. The system works as follows:

(1) Given the customer utterance, the model-based shortlister retrieves the top K1 most relevant skills from the skill pool.

(2) The combinator merges these skills with the K2 skills returned from the keyword-based shortlister of the rule-based RS to form our final skill list.

(3) The reranker ranks all skills in the final skill list.

(4) Based on the model score of the top-1 ranked skill, the skill recommender system decides whether to suggest this skill to the customer.

Biases are common in recommender systems because the collected data is observational rather than experimental. Exposure bias arises because users are exposed to only a subset of items, so unobserved interactions do not necessarily represent negative preference (Chen et al., 2020). When building our DNN-based recommender system, we found exposure bias to be a major obstacle.

Specifically, we collect our training/testing data from the rule-based system. Its rule-based exposure mechanism degrades the quality of the collected data, because positive labels are only received on skills suggested by the rule-based system. For example, for one utterance we may only have the customer's true response to skill A. Yet it is highly likely that another, more appropriate skill B also exists, for which we collect no positive customer response.

A simple approach such as treating unobserved (utterance, skill) pairs as negative is problematic and hurts the model's performance, because the model is then likely to mimic the rule-based system's decision to suggest skill A instead of skill B. We address this with relabeling techniques, either collaborative or self-training based, which are described in Section 2.5. Furthermore, we find that exposure bias creates a discrepancy between offline evaluation on test data and evaluation based on human annotation. Therefore, we rely mainly on human annotation to draw our final conclusions.

To sum up, the contribution of this work is three-fold:

• A new architecture is proposed to generate a skill shortlist by combining a keyword-based Shortlister (SL) focused on lexical similarity and a model-based SL focused on semantic similarity. We also propose a robust model-based SL with multi-task learning, which naturally incorporates skill meta information into the prediction. The new model-based SL outperforms the keyword-based SL on both human annotation metrics and offline metrics computed on test data.

• Two relabeling approaches are proposed to solve the incomplete ground truth label and exposure bias problems. Both approaches significantly improve the reranker model's performance based on human annotation metrics.

• Recommender systems have transformed people's daily lives through many important applications. However, most existing work focuses on developing complex architectures to better fit the observed data. When biases exist, this approach may not lead to better online metrics. We provide a concrete case study demonstrating that exposure bias can lead to significant discrepancies between offline and online metrics.

Our proposed architecture consists of two stages, shortlisting and reranking. In the shortlisting stage, for each utterance text (u), we call the shortlister module to get the top K candidate skills (S = {s1, s2, …, sK}). The primary goal of this stage is a candidate list with high recall, covering all relevant skills, at low computational latency. In the second, reranking stage, the reranker module assigns a relevancy score to each skill in the candidate list. Finally, we choose the skill with the highest relevancy score and compare this score to a pre-defined cutoff value. If the score is larger than the cutoff, we suggest this skill to the customer. Otherwise, the customer receives a generic rejection sentence, e.g., “Sorry, I don't know.”
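The decision rule above can be sketched as follows. This is a minimal illustration, not the production code. `recommend`, `toy_shortlist` and `toy_rerank` are hypothetical names standing in for the two stages, and the skill names and scores are made up.

```python
from typing import Callable, List, Optional

Shortlister = Callable[[str], List[str]]             # utterance -> candidate skill ids
Reranker = Callable[[str, List[str]], List[float]]   # (utterance, candidates) -> scores


def recommend(utterance: str, shortlist: Shortlister, rerank: Reranker,
              cutoff: float) -> Optional[str]:
    """Return the top-1 reranked skill if its score exceeds the cutoff, else None."""
    candidates = shortlist(utterance)
    if not candidates:
        return None
    scores = rerank(utterance, candidates)
    best = max(range(len(candidates)), key=lambda i: scores[i])
    if scores[best] > cutoff:
        return candidates[best]
    return None  # caller falls back to the generic "Sorry, I don't know."


# Toy stand-ins for the two stages (hypothetical skills and scores).
def toy_shortlist(utterance: str) -> List[str]:
    return ["rain_sounds", "thunder_sounds", "trivia_game"]


def toy_rerank(utterance: str, candidates: List[str]) -> List[float]:
    table = {"rain_sounds": 0.64, "thunder_sounds": 0.81, "trivia_game": 0.05}
    return [table[c] for c in candidates]


print(recommend("play the sound of thunder", toy_shortlist, toy_rerank, cutoff=0.5))
# thunder_sounds
```

Raising `cutoff` trades suggestion rate for precision. With `cutoff=0.9` the toy example returns `None`.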

We consider two types of shortlisters (SL): a keyword-based shortlister and a model-based shortlister. Both can be formulated as follows. Let Ns be the size of the skill set (the set of skill_ids). Given the input utterance u, the SL computes a function fSL(u, θ) that returns an Ns-dimensional score vector O = (o1, …, oNs). Each ok represents how likely skill k is a good match for utterance u. The SL then returns the K skill candidates with the highest scores, ordered by score in descending order.

In the keyword-based shortlister, we first index each skill using keywords collected from various metadata (skill name, skill description, example phrases, etc.). We then call a search engine to find the K skills most relevant to the utterance. We use Elasticsearch (Gormley and Tong, 2015) as our search engine because it is widely adopted in industry and we find it to be both accurate and efficient. Specifically, Elasticsearch measures the similarity between each utterance-skill pair by computing a TF-IDF-based matching score (Ramos, 2003). The top K skills with the highest similarity scores are returned as the keyword-based shortlister list Srule.
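To make the TF-IDF matching concrete, here is a small in-memory sketch under stated assumptions. It is a plain TF-IDF cosine scorer written from scratch, not Elasticsearch's own scoring function. The class name `KeywordShortlister`, the whitespace tokenizer, the smoothed IDF variant and the example skills are all hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    return text.lower().split()


class KeywordShortlister:
    """Toy TF-IDF shortlister over skill keyword text (illustrative stand-in for a search index)."""

    def __init__(self, skill_keywords: Dict[str, str]):
        self.skill_ids = list(skill_keywords)
        docs = [Counter(tokenize(skill_keywords[s])) for s in self.skill_ids]
        n_docs = len(docs)
        df = Counter(term for doc in docs for term in doc)
        # Smoothed inverse document frequency (one common variant).
        self.idf = {t: math.log((1 + n_docs) / (1 + d)) + 1.0 for t, d in df.items()}
        self.vectors = [self._weigh(doc) for doc in docs]

    def _weigh(self, counts: Counter) -> Dict[str, float]:
        """L2-normalised TF-IDF vector; terms unseen in the index get weight 0."""
        vec = {t: c * self.idf.get(t, 0.0) for t, c in counts.items()}
        norm = math.sqrt(sum(w * w for w in vec.values())) or 1.0
        return {t: w / norm for t, w in vec.items()}

    def shortlist(self, utterance: str, k: int) -> List[str]:
        """Return the k skills with the highest cosine similarity to the utterance."""
        query = self._weigh(Counter(tokenize(utterance)))
        scores = [sum(w * vec.get(t, 0.0) for t, w in query.items()) for vec in self.vectors]
        ranked = sorted(range(len(self.skill_ids)), key=lambda i: scores[i], reverse=True)
        return [self.skill_ids[i] for i in ranked[:k]]


sl = KeywordShortlister({
    "thunder_sounds": "thunder sound storm sleep",
    "rain_sounds": "rain sound relax sleep",
    "trivia_game": "trivia quiz game questions",
})
print(sl.shortlist("play the sound of thunder", k=2))  # ['thunder_sounds', 'rain_sounds']
```

Because the scorer only sees shared surface tokens, an utterance phrased with synonyms would score zero everywhere. This is the lexical-only limitation that motivates the model-based shortlister.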

In the model-based shortlister, we use a DNN-based model to compute the similarity scores. The model takes the utterance text u as input and $Y = (y_1, \ldots, y_{\tilde{N}_s})$ as the ground truth label. Here $\tilde{N}_s$ is the size of the skill set used to train the SL model, and yk = 1 if the k-th skill was suggested to and accepted by the customer and 0 otherwise. In our training data, exactly one component of Y equals one, since we exclude samples for which customers provided negative feedback. Because the model-based SL's skill set only contains skills that appear in our training data, $\tilde{N}_s$ is significantly smaller than the Ns used in the keyword-based shortlister ($\tilde{N}_s$ is <10% of Ns).

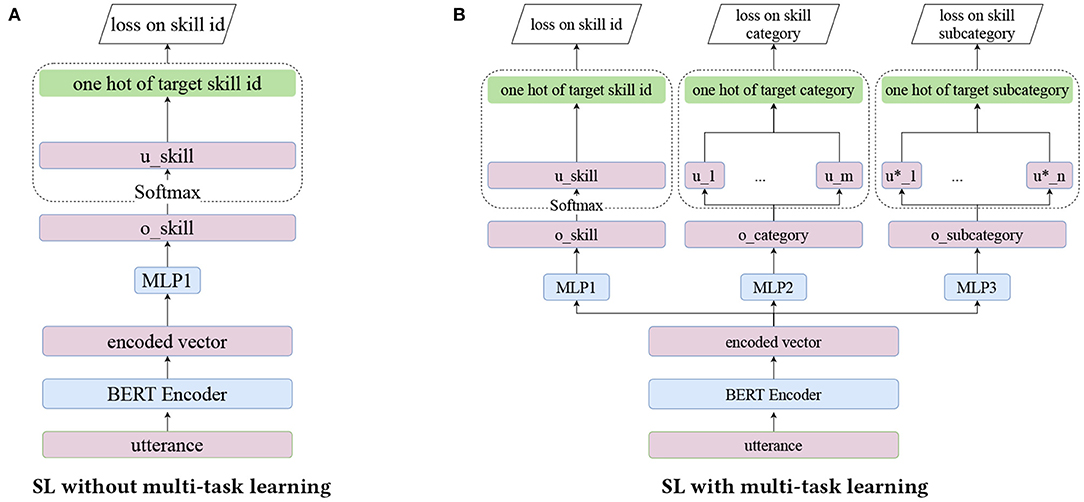

The model-based shortlister works as follows. The utterance text u is first fed to an encoder. We then feed the encoded vector to a two-layer multi-layer perceptron (MLP) of size (128, 64) with ReLU activation and dropout rate 0.2. The output is multiplied by a matrix of size $64 \times \tilde{N}_s$ to compute the score vector $O$. For the encoder, we experiment with an RNN-based encoder, a CNN-based encoder, and an in-house BERT (Devlin et al., 2018) encoder fine-tuned on Alexa data. We find that the BERT encoder performs best. We use the first hidden vector of the BERT output, corresponding to the [CLS] token, as the encoded vector. In this paper, we only present results with the BERT encoder. See Figure 2A for an illustration.
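The shortlister head described above can be sketched in pure Python as follows. This is an untrained, randomly initialised illustration only. The BERT encoder is not reproduced, so the [CLS] vector is simply passed in. `ShortlisterHead`, the He-style initialisation and the toy dimensions are assumptions, not the paper's code.

```python
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def dense(x: Sequence[float], W: Matrix, b: Sequence[float]) -> List[float]:
    """Affine map W x + b, with W stored as a list of output rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]


def relu(v: Sequence[float]) -> List[float]:
    return [max(0.0, x) for x in v]


def dropout(v: List[float], rate: float, training: bool, rng: random.Random) -> List[float]:
    """Inverted dropout: active only during training, identity at inference."""
    if not training or rate == 0.0:
        return v
    keep = 1.0 - rate
    return [x / keep if rng.random() < keep else 0.0 for x in v]


class ShortlisterHead:
    """MLP (128, 64) with ReLU and dropout 0.2, then a score matrix with one row per skill."""

    def __init__(self, enc_dim: int, n_skills: int, hidden: Tuple[int, ...] = (128, 64),
                 dropout_rate: float = 0.2, seed: int = 0):
        self.rng = random.Random(seed)
        self.dropout_rate = dropout_rate
        sizes = [enc_dim, *hidden]
        self.hidden = [self._layer(n_in, n_out) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
        # Output projection: a plain matrix (no bias) of shape n_skills x 64.
        self.out = self._layer(sizes[-1], n_skills)[0]

    def _layer(self, n_in: int, n_out: int) -> Tuple[Matrix, List[float]]:
        scale = (2.0 / n_in) ** 0.5  # He-style initialisation for ReLU layers
        W = [[self.rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        return W, [0.0] * n_out

    def scores(self, cls_vector: Sequence[float], training: bool = False) -> List[float]:
        """Map an encoder [CLS] vector to the score vector O (one entry per skill)."""
        h = list(cls_vector)
        for W, b in self.hidden:
            h = dropout(relu(dense(h, W, b)), self.dropout_rate, training, self.rng)
        return dense(h, self.out, [0.0] * len(self.out))

    def top_k(self, cls_vector: Sequence[float], k: int) -> List[int]:
        """Indices of the k highest-scoring skills."""
        o = self.scores(cls_vector)
        return sorted(range(len(o)), key=lambda i: o[i], reverse=True)[:k]


head = ShortlisterHead(enc_dim=16, n_skills=5)  # a real BERT [CLS] vector would be larger
print(head.top_k([0.1] * 16, k=3))
```

Storing the output matrix as one row per skill makes the top-K lookup a simple sort over per-skill scores. It is equivalent to multiplying the 64-dimensional hidden vector by a $64 \times \tilde{N}_s$ matrix.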

Figure 2. Model architecture of shortlister model. (A) SL without multi-task learning. (B) SL with multi-task learning.

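We experiment with two types of loss functions,

$$L_1 = -\sum_{k=1}^{\tilde{N}_s} \left[ y_k \log \sigma(o_k) + (1 - y_k) \log\left(1 - \sigma(o_k)\right) \right], \tag{1}$$

$$L_2 = -\sum_{k=1}^{\tilde{N}_s} y_k \log \mathrm{softmax}(O)_k, \tag{2}$$

where $\sigma(\cdot)$ denotes the sigmoid function and $\mathrm{softmax}(O)_k$ represents the k-th component of the vector O after a softmax transformation. Here L1 is the one-vs.-all logistic loss and L2 is the multi-class logistic loss. In our experiments, the choice of loss function has little impact on the model's performance, so we only show results based on the multi-class logistic loss.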

Multi-task learning is a useful technique to boost model performance by optimizing multiple objectives simultaneously with shared layers. For our problem, skill category and subcategory are useful auxiliary information about a skill besides its skill id. Category and subcategory are tags that developers assign to their skills based on the skills' functionalities. Thus, in addition to the multi-class logistic loss in Equation (2), which only considers the skill id, we also experiment with a multi-task learning based SL model that minimizes the combined loss

$$L = w_1 L^{\text{skill}} + w_2 L^{\text{category}} + w_3 L^{\text{subcategory}},$$

where the second and third loss functions have the same form as Equation (2), with ground truths given by the skill category and subcategory, respectively. Here, we treat (w1, w2, w3) as hyper-parameters. The model architecture is illustrated in Figure 2B. In our experiments, we find that applying multi-task learning slightly improves the SL model's performance. Thus, in this paper we only report results of models trained with multi-task learning. The selected model has (w1, w2, w3) = (1/3, 1/3, 1/3), based on a grid search.

One limitation of the current model-based SL is that when a large number of new skills are added to the skill catalog, we need to update the SL model by retraining with the newly collected data from the updated skill catalog. A practical solution is to retrain the SL model every month.

In the DNN-based RS, unlike the rule-based RS, we do not directly feed the skill candidate list returned from the shortlister component (Smodel) to the reranker. The list returned from the model-based SL only contains skills that appear in our training data, which were suggested to customers by the rule-based RS. It is therefore incomplete and does not cover all important skills. Instead, we combine it with the skill candidate list returned from the keyword-based SL (Srule) by appending Srule to it. Duplicates are removed during the combination: any skill in Srule that also appears in Smodel is dropped.
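The combinator amounts to an order-preserving concatenation with de-duplication. The sketch below is illustrative, and the function name `combine` is hypothetical. Model-based candidates keep their positions, and each keyword-based candidate is appended only if it has not been seen yet.

```python
from typing import List, Sequence


def combine(s_model: Sequence[str], s_rule: Sequence[str]) -> List[str]:
    """Append S_rule to S_model, dropping any skill already in the combined list."""
    combined: List[str] = []
    seen = set()
    for skill in list(s_model) + list(s_rule):
        if skill not in seen:
            seen.add(skill)
            combined.append(skill)
    return combined


print(combine(["thunder_sounds", "storm_sounds"], ["rain_sounds", "thunder_sounds"]))
# ['thunder_sounds', 'storm_sounds', 'rain_sounds']
```

A shorter model-based list (e.g., only its top 10 candidates) can be combined the same way by passing `s_model[:10]`.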

The reranker model reranks the list of K skill candidates returned from the shortlisting stage to produce a better ranking. We consider two types of models, a pointwise reranker and a listwise reranker, whose architectures are shown in Figure 3.

The model encodes both the utterance and the skill name with the same BERT-based encoder. Additionally, the skill encoder uses the following variables:

• skill id;

• skill score bin (a three-level binned score returned from the shortlisting stage);

• skill category;

• skill popularity (0/1);

• skill flag (a binary indicator of whether the skill was returned by the keyword-based SL or the model-based SL).

These variables are encoded via an embedding layer, then concatenated and fused (through an MLP layer) to form the skill vectors. The utterance vector and skill vectors are concatenated and fed to an MLP layer to produce the predicted scores. Both architectures share the same loss function, the binary cross-entropy loss between the target label Y = (y1, …, yK) and the predicted score Ŝ = (ŝ1, …, ŝK), i.e.,

$$L = -\sum_{k=1}^{K} \left[ y_k \log \hat{s}_k + (1 - y_k) \log\left(1 - \hat{s}_k\right) \right].$$
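The skill-side feature fusion and the binary cross-entropy objective can be sketched as below. This is a toy, untrained pointwise scorer under several simplifying assumptions:

• the utterance and skill-name vectors are taken as given, standing in for the shared BERT encoder;
• `EMB_DIM`, the feature keys, `PointwiseReranker` and the single linear output layer (instead of an MLP) are hypothetical;
• the listwise bi-LSTM is omitted.

```python
import math
import random
from typing import Dict, List, Sequence

EMB_DIM = 8  # hypothetical embedding size for each categorical skill feature
FEATURES = ("skill_id", "score_bin", "category", "popular", "from_model_sl")


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class PointwiseReranker:
    """Toy pointwise reranker: feature embeddings + name vector -> fused skill vector,
    then [utterance ; skill] -> one linear layer + sigmoid (a simplification of the MLP)."""

    def __init__(self, enc_dim: int, seed: int = 0):
        self.rng = random.Random(seed)
        self.tables: Dict[str, Dict[object, List[float]]] = {f: {} for f in FEATURES}
        fuse_in = enc_dim + EMB_DIM * len(FEATURES)
        self.W_fuse = [[self.rng.gauss(0.0, 0.1) for _ in range(fuse_in)] for _ in range(EMB_DIM)]
        self.w_out = [self.rng.gauss(0.0, 0.1) for _ in range(enc_dim + EMB_DIM)]

    def _embed(self, feature: str, value: object) -> List[float]:
        table = self.tables[feature]
        if value not in table:  # lazily create an (untrained) embedding row
            table[value] = [self.rng.gauss(0.0, 0.1) for _ in range(EMB_DIM)]
        return table[value]

    def skill_vector(self, name_vec: Sequence[float], feats: Dict[str, object]) -> List[float]:
        """Concatenate name vector and feature embeddings, then fuse with a ReLU layer."""
        x = list(name_vec)
        for f in FEATURES:
            x += self._embed(f, feats[f])
        return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in self.W_fuse]

    def score(self, utt_vec: Sequence[float], name_vec: Sequence[float],
              feats: Dict[str, object]) -> float:
        z = list(utt_vec) + self.skill_vector(name_vec, feats)
        return sigmoid(sum(w * zi for w, zi in zip(self.w_out, z)))


def bce_loss(y: Sequence[int], s_hat: Sequence[float], eps: float = 1e-7) -> float:
    """Binary cross-entropy summed over the K candidates (probabilities clipped for safety)."""
    total = 0.0
    for yk, sk in zip(y, s_hat):
        sk = min(max(sk, eps), 1.0 - eps)
        total -= yk * math.log(sk) + (1 - yk) * math.log(1.0 - sk)
    return total


ranker = PointwiseReranker(enc_dim=4)
feats = {"skill_id": "thunder_sounds", "score_bin": 2, "category": "ambient",
         "popular": 1, "from_model_sl": 1}
s = ranker.score([0.1, 0.3, -0.2, 0.5], [0.2, 0.1, 0.0, 0.4], feats)
print(s, bce_loss([1], [s]))
```

A pointwise model like this scores each candidate in isolation. The listwise variant differs only in letting candidate representations interact before scoring.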

The only difference between the listwise and pointwise rerankers is that the former has an additional bi-LSTM layer, which lets information flow between different skills. Thus, the final ranking of the listwise model considers all K skill candidates jointly. In our experiments, the listwise approach outperforms the pointwise one.

We emphasize that the left tower of our architectures only utilizes the utterance. This part can be easily extended to incorporate user preference, session details or other contextual information to make more personalized recommendations. This is left for future exploration.

As pointed out in Section 1, our ground truth target Y is incomplete: for each utterance, only one skill has a ground truth label, based on customer feedback to the rule-based RS. Furthermore, because the distribution of suggested skills is determined by the rule-based RS, our data contains exposure bias. Our setting is closest to multi-label positive and unlabeled (multi-label PU) learning (Sun et al., 2010; Yu et al., 2014; Kanehira and Harada, 2016; Jain et al., 2017; Kim and Kim, 2020), with one major difference: our observed targets are not all positive and can be negative as well.

A naive solution to this incomplete label problem is to assign zeros (negatives) to all unobserved skills. However, this approach is unreliable: based on manual annotation, we find that multiple “good” skills frequently appear together in the skill candidate list. Assigning a positive target to only one of them gives the model conflicting supervision and hurts its overall performance. Thus, we experiment with two relabeling approaches to alleviate this issue: collaborative relabeling and self-training relabeling. These two approaches borrow ideas from pseudo labeling (Lee, 2013) and self-training (Yarowsky, 1995; Rosenberg et al., 2005; Ruder and Plank, 2018), which are commonly used in semi-supervised learning. In particular, self-training relabeling has been shown to succeed on a similar domain classification problem in IPAs (Kim and Kim, 2020).

Collaborative relabeling is a relabeling approach borrowed from kNN (k-nearest neighbors). For each target utterance, we find similar utterances in the training data and use the ground truth labels of these neighbors to relabel the target utterance. The procedure is as follows:

(1) We compute an embedding for each utterance using a separate pre-trained BERT encoder.

(2) For each target utterance, we compute its cosine similarity to all other utterances and keep only the top m neighbors with similarity at least r.

(3) We combine the target information from these filtered neighbors into a list of tuples {(skill1, p1, n1), …, (skillk, pk, nk)}, where (skilli, pi, ni) indicates that ni neighbors had skilli suggested, with average accept rate pi.

(4) We filter out all skills with ni smaller than nc and pi smaller than pc.

(5) For each remaining skill that appears in the target utterance's shortlist and has a missing label, we label it as a positive (negative) example with probability pi (1−pi).

Here m, r, nc, and pc are hyperparameters chosen through grid search. In our experiments, m = 100, r = 0.995, nc = 6, and pc = 0.45 achieve the best performance on the validation dataset.
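A minimal sketch of collaborative relabeling for a single target utterance follows, assuming the neighbor embeddings and their (suggested skill, accepted) feedback are already available in memory. `collaborative_relabel` and `cosine` are hypothetical names. The filter wording above could also be read as removing only skills that fail both tests. This sketch assumes a skill must pass both (ni ≥ nc and pi ≥ pc).

```python
import math
import random
from collections import defaultdict
from typing import Dict, List, Optional, Sequence, Tuple

Neighbor = Tuple[Sequence[float], str, int]  # (embedding, suggested skill, accepted 0/1)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def collaborative_relabel(target_emb: Sequence[float], shortlist: Sequence[str],
                          labels: Dict[str, int], neighbors: List[Neighbor],
                          m: int = 100, r: float = 0.995, n_c: int = 6, p_c: float = 0.45,
                          rng: Optional[random.Random] = None) -> Dict[str, int]:
    """Fill missing labels in `shortlist` from the feedback of similar utterances.

    `neighbors` should exclude the target utterance itself."""
    rng = rng or random.Random(0)
    scored = [(cosine(target_emb, emb), skill, acc) for emb, skill, acc in neighbors]
    close = sorted((t for t in scored if t[0] >= r), key=lambda t: t[0], reverse=True)[:m]

    counts: Dict[str, int] = defaultdict(int)    # n_i per skill
    accepts: Dict[str, int] = defaultdict(int)   # accepted suggestions per skill
    for _, skill, acc in close:
        counts[skill] += 1
        accepts[skill] += acc

    relabeled = dict(labels)
    for skill, n_i in counts.items():
        p_i = accepts[skill] / n_i
        if n_i < n_c or p_i < p_c:  # assumed reading: require both support and accept rate
            continue
        if skill in shortlist and skill not in relabeled:
            relabeled[skill] = 1 if rng.random() < p_i else 0  # positive with probability p_i
    return relabeled


neighbors = ([([1.0, 0.0], "B", 1)] * 7 + [([1.0, 0.0], "C", 1)] * 5
             + [([0.0, 1.0], "A", 0)] * 10)
print(collaborative_relabel([1.0, 0.001], ["A", "B", "C"], {"A": 1}, neighbors))
# {'A': 1, 'B': 1}
```

In the toy run, skill "C" is skipped because only 5 neighbors support it (fewer than n_c = 6). Existing observed labels such as "A" are never overwritten.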

Self-training relabeling is a relabeling method that uses the model's predictions to relabel the ground truth target. The algorithm is summarized in Algorithm 1. We experiment with a constant threshold (ci = c) and with an adaptive threshold that increases slowly over the iterations, ci = c + 0.1i. We find that the adaptive threshold works better. As the iteration i increases, the training data contains more and more positive labels due to relabeling, so the threshold must increase simultaneously to avoid adding false positive labels. The optimal iteration number i* and the optimal threshold are selected by a hyper-parameter search that minimizes the loss on validation data. We grid-searched the threshold c over the values 0.1, 0.3, …, 0.9. Based on our experiments, i* = 5 and c = 0.3 work best.
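Algorithm 1 itself is not reproduced in this text, so the loop below is only our reconstruction from the description above. The following choices are all assumptions:

• the iteration index starts at 0, so c0 = c;
• relabeled positives accumulate across iterations;
• observed customer feedback is never overwritten;
• the trainer interface (`train`, `toy_train`) is hypothetical.

The toy trainer returns fixed scores so that the thresholding logic is easy to follow.

```python
from typing import Callable, Dict, List, Tuple

Pair = Tuple[str, str]                         # (utterance, skill)
Model = Callable[[Pair], float]                # predicted relevance in [0, 1]
Trainer = Callable[[Dict[Pair, int]], Model]   # labels -> trained model


def self_training_relabel(observed: Dict[Pair, int], candidates: List[Pair],
                          train: Trainer, c: float = 0.3, n_iters: int = 5,
                          step: float = 0.1) -> Dict[Pair, int]:
    """Iteratively add positive pseudo-labels with an increasing threshold c_i = c + step * i."""
    labels = dict(observed)
    for i in range(n_iters):
        model = train(labels)          # retrain on current (partly pseudo) labels
        threshold = c + step * i       # adaptive cutoff grows with the iteration
        for pair in candidates:
            if pair not in observed and model(pair) > threshold:
                labels[pair] = 1
    return labels


# Toy trainer that ignores its input and returns fixed scores (illustration only).
FIXED = {("u1", "B"): 0.65, ("u1", "C"): 0.25, ("u1", "D"): 0.9}


def toy_train(labels: Dict[Pair, int]) -> Model:
    return lambda pair: FIXED.get(pair, 0.0)


observed = {("u1", "A"): 1, ("u1", "D"): 0}
print(self_training_relabel(observed, list(FIXED), toy_train))
# {('u1', 'A'): 1, ('u1', 'D'): 0, ('u1', 'B'): 1}
```

With a real trainer, each retraining sees the newly added positives, which is why the cutoff is raised as the iterations proceed.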

Our dataset consists of two months of data (April 2020 and July 2020) collected from Alexa traffic under the rule-based recommender system. It contains unhandled English utterances only, from devices in the United States. Individual users are de-identified in this dataset. The last week of the dataset is used for testing, and the second-to-last week is used for validation. The rest is used for training. All models are trained on an AWS EC2 p3.2xlarge instance.
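A time-based split like the one described can be sketched as follows. The sketch assumes that a "week" means the 7 calendar days ending on the dataset's last date. `split_last_weeks` and the toy records are hypothetical.

```python
from datetime import date, timedelta
from typing import Iterable, List, Tuple, TypeVar

T = TypeVar("T")


def split_last_weeks(records: Iterable[Tuple[date, T]], last_day: date
                     ) -> Tuple[List[T], List[T], List[T]]:
    """Last 7 days -> test, the 7 days before -> validation, everything earlier -> train."""
    test_start = last_day - timedelta(days=6)
    val_start = last_day - timedelta(days=13)
    train: List[T] = []
    val: List[T] = []
    test: List[T] = []
    for day, example in records:
        if day >= test_start:
            test.append(example)
        elif day >= val_start:
            val.append(example)
        else:
            train.append(example)
    return train, val, test


records = [(date(2020, 4, 10), "a"), (date(2020, 7, 17), "b"), (date(2020, 7, 18), "c"),
           (date(2020, 7, 24), "d"), (date(2020, 7, 25), "e"), (date(2020, 7, 31), "f")]
print(split_last_weeks(records, last_day=date(2020, 7, 31)))
```

Splitting by time rather than at random keeps evaluation closer to deployment, where the model always predicts on traffic newer than its training data.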

Using this test data alone to evaluate model performance introduces severe exposure bias, because of the partial observation described above. More specifically, a matched skill can have ground truth label 0 only because the rule-based RS did not suggest it to the customer. Thus, we randomly sample 1,300 utterances from our test dataset to form our human annotation dataset. We pool the predictions of all our models (including the various shortlisters) on this dataset, and human annotators manually assign the binary labels.

We experiment with two skill set sizes for the model-based SL. The first contains the 2,000 most frequently observed skills in the training dataset ($\tilde{N}_s$ = 2,000). The second contains all skills observed at least twice.

Table 1 summarizes the shortlister models' performance. Due to Alexa's data confidentiality policy, we cannot report absolute metric scores. Instead, we report the normalized relative difference of each method compared to the baseline method “keyword-based SL.” We use two common information retrieval metrics, Precision@K and NDCG@K, to evaluate the models. Recall metrics are not provided because they cannot be computed: most utterances have more than one relevant skill, and we do not have access to this ground truth.

From Table 1, we see that the model-based SL outperforms the keyword-based SL on both human annotation metrics and offline metrics computed on test data. An exception is Precision@40 on test data. There, the positive skill comes from the rule-based RS and is always in the skill candidate list (length = 40) generated by the keyword-based SL. As a result, the keyword-based SL attains the highest possible Precision@40 and exceeds the model-based SL. This is an artifact of how the test labels were collected and does not show that the keyword-based SL is better.

Furthermore, we find that using the larger skill set (all skills observed at least twice) improves the SL model's performance. Thus, we use the SL with the larger skill set in the two-stage RS comparison.

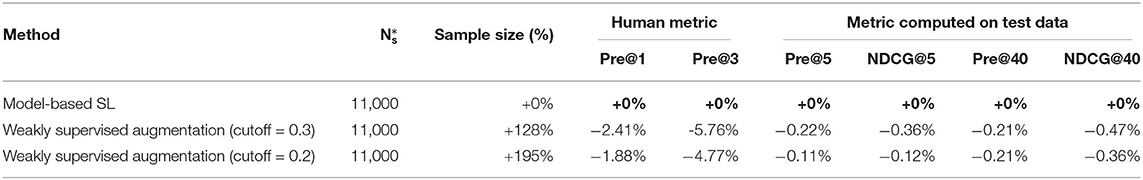

We also experiment with weakly supervised data augmentation to boost the shortlister model's performance. Specifically, we first choose an optimal reranker model and add all (skill, utterance) pairs with predicted scores above a cutoff to the shortlister's training dataset. We then retrain the shortlister models. The results are outlined in Appendix Table A1. We find that weakly supervised data augmentation does not improve the shortlister model's performance, potentially due to the false positive samples added to the training dataset.

In addition, we experiment with collaborative relabeling (the same procedure as in Section 2.5.1) as a data augmentation approach for the shortlister model. We fix m = 100 and check the performance of the collaborative-relabeling-based shortlister under several combinations of (r, nc, pc) values. The results are listed in Appendix Table A2. Overall, we do not find that collaborative relabeling boosts the shortlister's performance.

We considered the following four reranker models, and compared their performance by reranking on the skill shortlist obtained from keyword-based SL and model-based SL (for model-based SL, we use the combined skill shortlist as illustrated in Section 2.3), respectively.

• Pointwise: reranker model with pointwise architecture as introduced in Section 2.4.

• Listwise: reranker model with listwise architecture as introduced in Section 2.4.

• Collaborative: reranker model with listwise architecture and trained with collaborative relabeling (Section 2.5).

• Self-training: reranker model with listwise architecture and trained with self-training relabeling (Section 2.5).

Table 2 summarizes the two-stage recommender systems' performance. As in the previous section, we only report the normalized relative difference of each method when compared to the baseline method “Listwise + keyword-based SL.” We present precision, recall, F1-score of the model at cutoff point 0.5, and the precision of the model at different suggestion rates (25, 40, 50, 75%) as our metrics. Here we control the model's suggestion rate by changing the cutoff value. For example, if we want a higher suggestion rate, we decrease the cutoff value and vice versa.
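Controlling the suggestion rate through the cutoff can be sketched as follows. Lowering the cutoff until a fraction `rate` of utterances clear it is equivalent, ties aside, to suggesting for the highest-scoring round(rate × n) utterances. The function name and the toy data are hypothetical. `top1_correct` marks whether each utterance's top-1 skill was judged a good suggestion.

```python
from typing import Sequence


def precision_at_suggestion_rate(top1_scores: Sequence[float], top1_correct: Sequence[int],
                                 rate: float) -> float:
    """Precision among the round(rate * n) highest-scoring utterances (ties ignored)."""
    n = len(top1_scores)
    n_suggest = max(1, round(rate * n))
    order = sorted(range(n), key=lambda i: top1_scores[i], reverse=True)
    return sum(top1_correct[i] for i in order[:n_suggest]) / n_suggest


scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
correct = [1, 1, 0, 1, 0, 0, 1, 0]
for rate in (0.25, 0.5, 0.75):
    print(rate, precision_at_suggestion_rate(scores, correct, rate))
```

Comparing models at a fixed suggestion rate sidesteps the fact that a fixed cutoff such as 0.5 yields very different suggestion rates for differently calibrated models.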

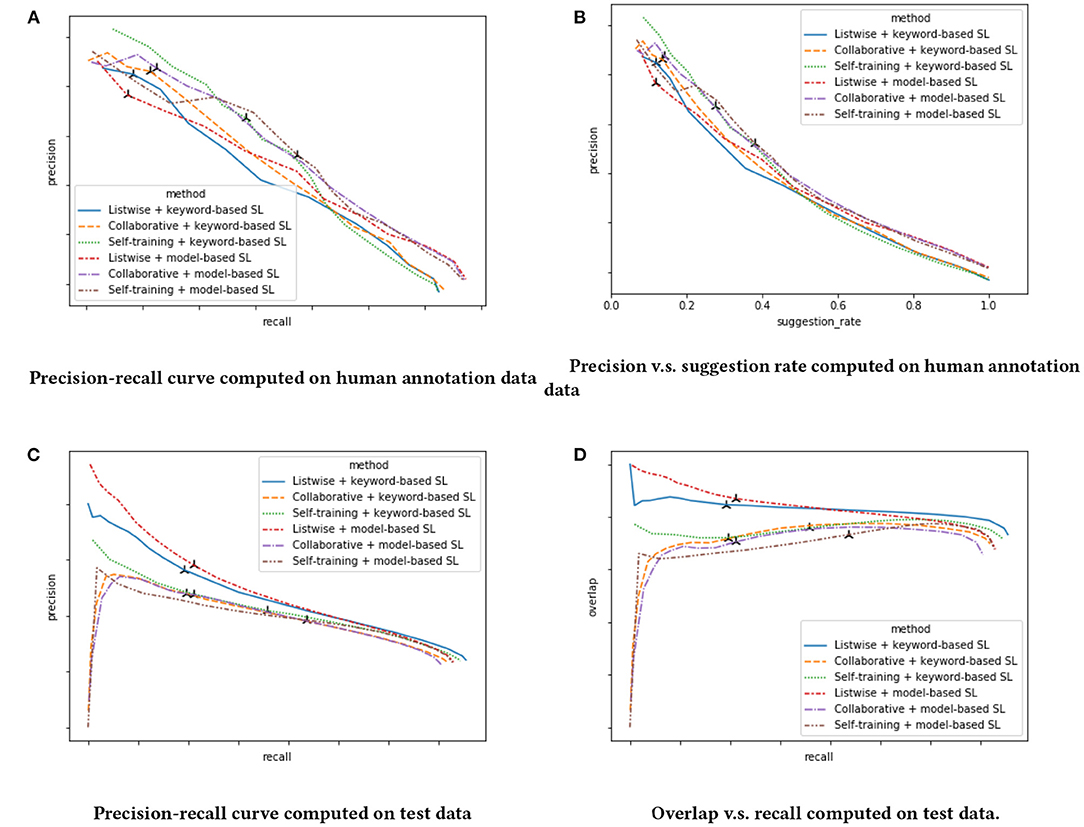

From Table 2, we find that it is hard to compare models based on precision, recall, and F1-score, because different models have very different recall levels. Thus, we also draw their precision-recall curves in Figure 4. From these figures, we find a significant mismatch between human annotation metrics and metrics computed on offline test data. For example, on human annotation metrics, both collaborative and self-training relabeling improve model performance, whereas the opposite trend is observed on metrics computed on test data.

In Figure 4D, we plot overlap (the probability that the model suggests the same skill as the rule-based RS) vs. recall. We find that metrics computed on test data tend to overestimate a model's performance when its overlap with the rule-based RS is high. This is intuitive: all positive ground truth labels are observational and can only be found among skills suggested by the rule-based RS. This mismatch in metrics is due to exposure bias. Other works in the literature find similar patterns and conclude that conventional metrics computed on observational data suffer from exposure bias and may not accurately evaluate a recommender system's performance (Schnabel et al., 2016; Yang et al., 2018; Chen et al., 2020). In our experiments, we use human annotation metrics for a fair comparison between models.
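The link between overlap and offline precision can be made concrete with a small sketch. It assumes overlap is measured over the utterances for which the model makes a suggestion. Because offline labels exist only for the rule-based skill, a suggestion can only count as a hit when it agrees with the rule-based RS, so offline precision can never exceed overlap. The function name and toy data are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def overlap_and_offline_precision(model_top1: Sequence[Optional[str]],
                                  rule_top1: Sequence[str],
                                  accepted: Sequence[bool]) -> Tuple[float, float]:
    """model_top1[i] is None when the model makes no suggestion for utterance i."""
    suggested = [i for i, s in enumerate(model_top1) if s is not None]
    if not suggested:
        return 0.0, 0.0
    same = [i for i in suggested if model_top1[i] == rule_top1[i]]
    overlap = len(same) / len(suggested)
    # Offline labels exist only for the rule-based skill, so a hit requires agreement.
    hits = sum(1 for i in same if accepted[i])
    return overlap, hits / len(suggested)


print(overlap_and_offline_precision(["A", "B", None, "D"], ["A", "C", "X", "D"],
                                    [True, True, True, False]))
```

In the toy data, the model's suggestion "B" for the second utterance counts as a miss offline even if it is a good skill. Human annotation would not penalize it.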

Figure 4. Model performance of the reranker model. The model's metrics at cutoff point 0.5 are marked. (A) Precision-recall curve computed on human annotation data. (B) Precision vs. suggestion rate computed on human annotation data. (C) Precision-recall curve computed on test data. (D) Overlap vs. recall computed on test data.

We find that both collaborative and self-training relabeling improve the model's performance. Reranker models using the skill list from the model-based SL (combined list) outperform those using the skill list from the keyword-based SL, which also justifies using the model-based SL rather than the keyword-based SL. We also find that the listwise reranker architecture significantly outperforms the pointwise architecture. The overall winner is Collaborative + model-based SL.

For inference in production, we use an AWS EC2 c5.xlarge instance. The 90th percentile of the model-based RS's total latency is <300 ms.

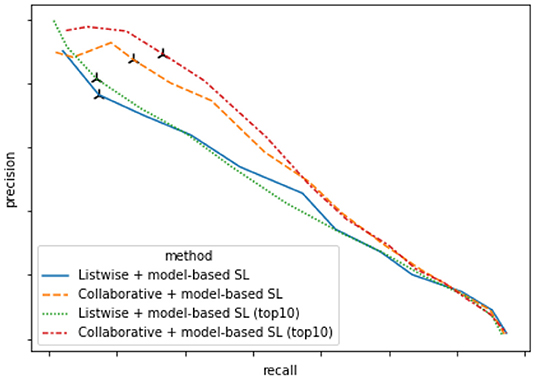

In the shortlisting stage, both the keyword-based SL and the model-based SL first return a skill candidate list of length 40. The model-based SL's candidate list is then combined with the keyword-based SL's list, and the combined list is fed to the reranker model. Based on human annotation, we find that the most relevant skills are often among the top 10 candidates of the model-based SL's list. In this section, we analyze whether reducing the length of the model-based SL's candidate list from 40 to 10 affects overall RS performance. If the difference is not significant, one can instead rely on the top 10 candidates from the model-based SL and enjoy faster inference at runtime.

Figure 5 compares the DNN-based RS's performance with skill candidate length 40 vs. 10. We find that both settings have roughly the same performance. At low recall, collaborative relabeling with candidate length 40 (yellow line) appears worse than with candidate length 10 (red line). However, this is mainly due to sampling variation, since only a small number of human-annotated examples are available for evaluation at low recall levels.

Figure 5. Precision-recall curve of DNN-based RS's performance with skill candidate length 40 vs. 10 computed on human annotation data.

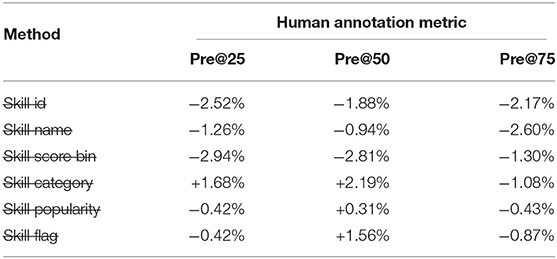

In this section, we study the contribution of each feature of the skills to the reranker model's performance. We choose the best model “Collaborative + model-based SL” as our baseline, and remove features one at a time while keeping all other features. Table 3 shows the result. We find that features like skill id, skill name, and skill score bin are the most important and removing them has a big negative impact on the model's performance.

Table 3. Summary of the ablation study. It reports the normalized relative difference when each feature is removed from the baseline model.

After observing the improvement in offline metrics, we compare our DNN-based RS with the rule-based RS through online A/B testing. We find that the new DNN-based RS significantly increases the average accept rate by 1.65%. It also reduces the overall customer friction rate and the customer interruption rate by 0.41 and 3.39%, respectively. The new DNN-based RS also suggests more diverse skills: with the new model, customers discover and enable more skills. The increase in the average number of enabled skills per customer can also improve user engagement with Alexa in the long run. From the A/B testing, we find that the number of days on which a customer interacted with at least one skill increased by 0.11% with the DNN-based RS.

Recommender systems are the last line of defense against information overload, proactively suggesting items that users might like. Recommender systems are mainly categorized into three types: content-based, collaborative filtering, and hybrids of both. Content-based RSs recommend based on user and item features. They are best suited to cold-start problems, where new items without user-item interaction data need to be recommended. Collaborative filtering (Sarwar et al., 2001; Linden et al., 2003), on the other hand, recommends by learning from past user-item interactions through either explicit feedback (user ratings, etc.) or implicit feedback (user click history, etc.). Hybrid recommender systems integrate two or more recommendation techniques to gain better performance with fewer of the drawbacks of any single technique (Burke, 2002). Burke (2002) and Zhang et al. (2019) provide thorough reviews of recommender systems.

Traditional recommender techniques include matrix factorization (He et al., 2016), factorization machines (Rendle, 2010), etc. In recent years, deep learning techniques have been integrated with recommender systems to better utilize the inherent structure of the features and to train the system end-to-end. Some important works in this realm include NeuralCF (He et al., 2017), DeepFM (Guo et al., 2017), the Wide&Deep model (Cheng et al., 2016), and DIEN (Zhou et al., 2019). Deep learning based recommender systems have also achieved great success in industry. For example, Covington et al. (2016) proposed a two-stage recommender system for YouTube, separated into a deep candidate generation model and a deep ranking model. Other notable works include Grbovic and Cheng (2018), Zhou et al. (2018, 2019), and Naumov et al. (2019).

In our work, collecting ground truth labels through human annotation is infeasible due to the large number of skills. Therefore, we rely on observational data collected from a rule-based system to train our model. This introduces exposure bias, because the rule-based system controls which skills are suggested to users and hence which labels are collected. Such exposure bias creates a discrepancy between offline and online metrics (Schnabel et al., 2016; Yang et al., 2018; Chen et al., 2020). Previous works address this issue using propensity scores in evaluation (Yang et al., 2018) or sampling strategies in training (Chen et al., 2019; Ding et al., 2019).
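To make the propensity-score idea concrete, the sketch below shows the standard inverse propensity scoring (IPS) estimator and its self-normalized variant (SNIPS), which estimate from logged feedback the mean reward (e.g., accept rate) that a new policy would obtain. This is a generic textbook estimator, not the exact method of Yang et al. (2018), and the `LoggedEvent` fields are hypothetical. IPS requires a positive logging propensity for every action the new policy may take; a deterministic rule-based system assigns probability zero to every skill it does not suggest, which is one reason randomized exposure is attractive when collecting feedback.

```python
from dataclasses import dataclass
from typing import Callable, Hashable, Sequence


@dataclass
class LoggedEvent:
    context: Hashable   # hypothetical: e.g. a normalized utterance
    action: str         # skill suggested by the logging (old) system
    propensity: float   # probability that the logging system chose `action`
    reward: float       # observed feedback, e.g. 1.0 if accepted else 0.0


# target_prob(context, action) -> probability that the new policy picks `action`
TargetPolicy = Callable[[Hashable, str], float]


def ips_estimate(logs: Sequence[LoggedEvent], target_prob: TargetPolicy) -> float:
    """IPS estimate of the target policy's mean reward.

    Unbiased when the logged propensities are correct and every action the
    target policy can take has a positive logging probability.
    """
    total = 0.0
    for e in logs:
        if e.propensity <= 0.0:
            raise ValueError("IPS requires a positive propensity for each logged action")
        total += e.reward * target_prob(e.context, e.action) / e.propensity
    return total / len(logs)


def snips_estimate(logs: Sequence[LoggedEvent], target_prob: TargetPolicy) -> float:
    """Self-normalized IPS: divide by the sum of importance weights instead of N."""
    num, den = 0.0, 0.0
    for e in logs:
        if e.propensity <= 0.0:
            raise ValueError("IPS requires a positive propensity for each logged action")
        weight = target_prob(e.context, e.action) / e.propensity
        num += weight * e.reward
        den += weight
    return num / den if den > 0.0 else 0.0


def always_s1(context: Hashable, action: str) -> float:
    """Toy deterministic target policy that always suggests skill 's1'."""
    return 1.0 if action == "s1" else 0.0


if __name__ == "__main__":
    logs = [
        LoggedEvent("play a game", "s1", 0.50, 1.0),
        LoggedEvent("play a game", "s2", 0.50, 0.0),
        LoggedEvent("bedtime story", "s1", 0.25, 1.0),
        LoggedEvent("bedtime story", "s3", 0.75, 0.0),
    ]
    print(ips_estimate(logs, always_s1), snips_estimate(logs, always_s1))
```

On the toy log in the usage section, IPS returns 1.5 while SNIPS returns 1.0. Plain IPS is unbiased but high-variance and can leave the valid reward range; SNIPS trades a small bias for lower variance.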

Our work is also closely related to domain classification in SLU, an important component of standard NLU for intelligent personal assistants. Domain classification is usually formulated as a multi-class classification problem. A traditional NLU component usually covers tens of domains with a shared schema, but it can be extended to cover thousands of domains (skills) (Kim et al., 2018). Xu and Sarikaya (2014) propose contextual domain classification using a recurrent neural network. Chen et al. (2016) study end-to-end memory networks with knowledge carryover for multi-turn spoken language understanding. Kim et al. (2018) propose a two-stage shortlister-reranker model for large-scale domain classification in a setup with 1,500 domains with overlapping capabilities. Kim and Kim (2020) propose using pseudo labeling and negative system feedback to enhance the ground truth labels.
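Pseudo labeling is one way to enrich incomplete ground truth. A minimal multi-label sketch in the spirit of Lee (2013), assuming a trained model that outputs a confidence per skill: skills scored at or above a threshold are added as extra positive labels, except those the user explicitly rejected. The threshold, the cap on added labels, and the skill names are illustrative assumptions; this is not the procedure of Kim and Kim (2020) or the relabeling methods of this paper.

```python
from typing import Dict, Set


def pseudo_label(
    observed_positive: Set[str],
    rejected: Set[str],
    scores: Dict[str, float],
    threshold: float = 0.9,
    max_extra: int = 2,
) -> Set[str]:
    """Augment an incomplete multi-label target with confident pseudo-labels.

    observed_positive: skills with observed positive feedback (e.g. accepted)
    rejected:          skills the user explicitly declined; never pseudo-labeled
    scores:            model confidence per skill, in [0, 1]
    """
    candidates = [
        (skill, p) for skill, p in scores.items()
        if p >= threshold and skill not in observed_positive and skill not in rejected
    ]
    # Most confident first; ties broken by name so the result is deterministic.
    candidates.sort(key=lambda item: (-item[1], item[0]))
    extra = {skill for skill, _ in candidates[:max_extra]}
    return set(observed_positive) | extra


if __name__ == "__main__":
    # Hypothetical skill names and model scores.
    scores = {"weather_pro": 0.95, "weather_lite": 0.92, "trivia_night": 0.97, "recipes": 0.40}
    print(sorted(pseudo_label({"weather_pro"}, {"trivia_night"}, scores)))
```

Excluding rejected skills is a simple way to fold negative feedback into the target: a skill the model is confident about but the user declined is not turned into a positive label.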

In this paper, we propose a two-stage shortlister-reranker recommender system that matches skills (voice apps) to unhandled utterances for intelligent personal assistants. We demonstrate that by combining the candidate lists returned by a keyword-based SL and a model-based SL, the system generates a better skill list that covers both lexical and semantic similarity. We describe how to build a new system using observational data collected from a baseline rule-based system, and how exposure bias creates a discrepancy between offline and human metrics. We also propose two relabeling methods to handle incomplete ground truth targets, and show empirically that they improve the system's performance. Extensive experiments demonstrate the effectiveness of the proposed system. In future work, we plan to incorporate contextual and personal information to learn better utterance representations. We will also investigate more advanced approaches to the exposure bias problem. For example, one could replace the rule-based system with a model-based system that randomly assigns one of the top-ranked skills to customers to collect feedback data, and then gradually adjust the assignment probabilities toward their optimal values in a contextual bandit setting.
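As an illustration of combining candidate lists from a keyword-based SL and a model-based SL, the sketch below interleaves the two ranked lists, removes duplicates, and keeps at most k candidates, after which a second-stage reranker orders the merged shortlist. The interleaving rule, the function names, and the stand-in scoring function are assumptions made for this example; the paper's actual merge rule and DNN reranker are not reproduced here.

```python
from itertools import zip_longest
from typing import Callable, List, Sequence


def merge_shortlists(keyword_sl: Sequence[str], model_sl: Sequence[str], k: int) -> List[str]:
    """Interleave two ranked candidate lists, drop duplicates, keep at most k skills."""
    if k <= 0:
        return []
    merged: List[str] = []
    seen = set()
    for pair in zip_longest(keyword_sl, model_sl):  # the shorter list is padded with None
        for skill in pair:
            if skill is not None and skill not in seen:
                seen.add(skill)
                merged.append(skill)
                if len(merged) == k:
                    return merged
    return merged


def rerank(candidates: Sequence[str], score: Callable[[str], float], top_n: int) -> List[str]:
    """Second stage: order shortlisted skills by a (hypothetical) reranker score."""
    # sorted() is stable, so equal scores keep their shortlist order.
    return sorted(candidates, key=score, reverse=True)[:top_n]


if __name__ == "__main__":
    keyword_sl = ["cat_facts", "cat_sounds", "pet_trivia"]  # lexical matches
    model_sl = ["cat_sounds", "animal_quiz"]                 # semantic matches
    shortlist = merge_shortlists(keyword_sl, model_sl, k=4)
    toy_scores = {"cat_facts": 0.2, "cat_sounds": 0.9, "animal_quiz": 0.6, "pet_trivia": 0.4}
    print(rerank(shortlist, toy_scores.__getitem__, top_n=2))
```

Interleaving guarantees that both lexical and semantic matches reach the reranker even when one shortlister returns many more candidates than the other; a union followed by score-based truncation would be an equally plausible alternative.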

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

WX researched the methods, ran the experiments, performed the analysis, and wrote the first draft of the manuscript. QH, TM, ZG, and MA contributed to the discussion of the methodologies, especially the reranker section. XG and RA contributed to the data preparation. All authors contributed to manuscript revision, read, and approved the submitted version.

WX, QH, TM, ZG, XG, RA, and MA were employed by Amazon Alexa AI.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We thank Emre Barut, Melanie Rubino, and Andrew Arnold for their valuable feedback on this paper.

Burke, R. (2002). Hybrid recommender systems: survey and experiments. User Model. User-Adapt. Interact. 12, 331–370. doi: 10.1023/A:1021240730564

Chen, J., Dong, H., Wang, X., Feng, F., Wang, M., and He, X. (2020). Bias and debias in recommender system: a survey and future directions. arXiv preprint arXiv:2010.03240.

Chen, J., Wang, C., Zhou, S., Shi, Q., Feng, Y., and Chen, C. (2019). “SamWalker: social recommendation with informative sampling strategy,” in The World Wide Web Conference (San Francisco, CA), 228–239. doi: 10.1145/3308558.3313582

Chen, Y.-N., Hakkani-Tür, D., Tür, G., Gao, J., and Deng, L. (2016). “End-to-end memory networks with knowledge carryover for multi-turn spoken language understanding,” in Interspeech (San Francisco, CA), 3245–3249. doi: 10.21437/Interspeech.2016-312

Cheng, H.-T., Koc, L., Harmsen, J., Shaked, T., Chandra, T., Aradhye, H., et al. (2016). “Wide & deep learning for recommender systems,” in Proceedings of the 1st Workshop on Deep Learning for Recommender Systems, 7–10. doi: 10.1145/2988450.2988454

Covington, P., Adams, J., and Sargin, E. (2016). “Deep neural networks for YouTube recommendations,” in Proceedings of the 10th ACM Conference on Recommender Systems (Boston, MA), 191–198. doi: 10.1145/2959100.2959190

Devlin, J., Chang, M.-W., Lee, K., and Toutanova, K. (2018). BERT: pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.

Ding, J., Quan, Y., He, X., Li, Y., and Jin, D. (2019). “Reinforced negative sampling for recommendation with exposure data,” in IJCAI (Macao), 2230–2236. doi: 10.24963/ijcai.2019/309

Gormley, C., and Tong, Z. (2015). Elasticsearch: The Definitive Guide: A Distributed Real-Time Search and Analytics Engine. O'Reilly Media, Inc.

Grbovic, M., and Cheng, H. (2018). “Real-time personalization using embeddings for search ranking at Airbnb,” in Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (London), 311–320. doi: 10.1145/3219819.3219885

Guo, H., Tang, R., Ye, Y., Li, Z., and He, X. (2017). DeepFM: a factorization-machine based neural network for CTR prediction. arXiv preprint arXiv:1703.04247. doi: 10.24963/ijcai.2017/239

He, X., Liao, L., Zhang, H., Nie, L., Hu, X., and Chua, T.-S. (2017). “Neural collaborative filtering,” in Proceedings of the 26th International Conference on World Wide Web (Geneva), 173–182. doi: 10.1145/3038912.3052569

He, X., Zhang, H., Kan, M.-Y., and Chua, T.-S. (2016). “Fast matrix factorization for online recommendation with implicit feedback,” in Proceedings of the 39th International ACM SIGIR Conference on Research and Development in Information Retrieval (New York, NY), 549–558. doi: 10.1145/2911451.2911489

Jain, V., Modhe, N., and Rai, P. (2017). “Scalable generative models for multi-label learning with missing labels,” in International Conference on Machine Learning, 1636–1644.

Kanehira, A., and Harada, T. (2016). “Multi-label ranking from positive and unlabeled data,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Las Vegas, NV), 5138–5146. doi: 10.1109/CVPR.2016.555

Kim, J.-K., and Kim, Y.-B. (2020). “Pseudo labeling and negative feedback learning for large-scale multi-label domain classification,” in ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 7964–7968. doi: 10.1109/ICASSP40776.2020.9053728

Kim, Y.-B., Kim, D., Kim, J.-K., and Sarikaya, R. (2018). A scalable neural shortlisting-reranking approach for large-scale domain classification in natural language understanding. arXiv preprint arXiv:1804.08064. doi: 10.18653/v1/N18-3003

Lee, D.-H. (2013). “Pseudo-label: the simple and efficient semi-supervised learning method for deep neural networks,” in Workshop on Challenges in Representation Learning, ICML, Vol. 3 (Atlanta, GA).

Linden, G., Smith, B., and York, J. (2003). Amazon.com recommendations: item-to-item collaborative filtering. IEEE Intern. Comput. 7, 76–80. doi: 10.1109/MIC.2003.1167344

Naumov, M., Mudigere, D., Shi, H.-J. M., Huang, J., Sundaraman, N., Park, J., et al. (2019). Deep learning recommendation model for personalization and recommendation systems. arXiv preprint arXiv:1906.00091.

Ramos, J. (2003). “Using TF-IDF to determine word relevance in document queries,” in Proceedings of the First Instructional Conference on Machine Learning, Vol. 242, 29–48.

Rendle, S. (2010). “Factorization machines,” in 2010 IEEE International Conference on Data Mining (Sydney, NSW), 995–1000. doi: 10.1109/ICDM.2010.127

Rosenberg, C., Hebert, M., and Schneiderman, H. (2005). “Semi-supervised self-training of object detection models,” in Proceedings of the Seventh IEEE Workshops on Application of Computer Vision (Breckenridge, CO: IEEE). doi: 10.1109/ACVMOT.2005.107

Ruder, S., and Plank, B. (2018). Strong baselines for neural semi-supervised learning under domain shift. arXiv preprint arXiv:1804.09530. doi: 10.18653/v1/P18-1096

Sarwar, B., Karypis, G., Konstan, J., and Riedl, J. (2001). “Item-based collaborative filtering recommendation algorithms,” in Proceedings of the 10th International Conference on World Wide Web (New York, NY), 285–295. doi: 10.1145/371920.372071

Schnabel, T., Swaminathan, A., Singh, A., Chandak, N., and Joachims, T. (2016). “Recommendations as treatments: debiasing learning and evaluation,” in International Conference on Machine Learning (New York, NY), 1670–1679.

Sun, Y.-Y., Zhang, Y., and Zhou, Z.-H. (2010). “Multi-label learning with weak label,” in Twenty-fourth AAAI Conference on Artificial Intelligence (Atlanta, GA).

Tur, G., and De Mori, R. (2011). Spoken Language Understanding: Systems for Extracting Semantic Information From Speech. John Wiley & Sons. doi: 10.1002/9781119992691

Xu, P., and Sarikaya, R. (2014). “Contextual domain classification in spoken language understanding systems using recurrent neural network,” in 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (Florence), 136–140. doi: 10.1109/ICASSP.2014.6853573

Yang, L., Cui, Y., Xuan, Y., Wang, C., Belongie, S., and Estrin, D. (2018). “Unbiased offline recommender evaluation for missing-not-at-random implicit feedback,” in Proceedings of the 12th ACM Conference on Recommender Systems (Vancouver), 279–287. doi: 10.1145/3240323.3240355

Yarowsky, D. (1995). “Unsupervised word sense disambiguation rivaling supervised methods,” in 33rd Annual Meeting of the Association for Computational Linguistics (Cambridge, MA), 189–196. doi: 10.3115/981658.981684

Yu, H.-F., Jain, P., Kar, P., and Dhillon, I. (2014). “Large-scale multi-label learning with missing labels,” in International Conference on Machine Learning (Beijing), 593–601.

Zhang, S., Yao, L., Sun, A., and Tay, Y. (2019). Deep learning based recommender system: a survey and new perspectives. ACM Comput. Surveys 52, 1–38. doi: 10.1145/3158369

Zhou, G., Mou, N., Fan, Y., Pi, Q., Bian, W., Zhou, C., et al. (2019). “Deep interest evolution network for click-through rate prediction,” in Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 33 (Honolulu, HI), 5941–5948. doi: 10.1609/aaai.v33i01.33015941

Zhou, G., Zhu, X., Song, C., Fan, Y., Zhu, H., Ma, X., et al. (2018). “Deep interest network for click-through rate prediction,” in Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (London), 1059–1068. doi: 10.1145/3219819.3219823

Table A1. Summary of the shortlister models' performance with weakly supervised data augmentation. The normalized relative difference of each method compared with the baseline method “model-based SL” is presented. Positive values (+) imply that the method outperforms the baseline method.

Keywords: intelligent personal assistant, recommender system, pseudo labeling, bias, deep learning

Citation: Xiao W, Hu Q, Mohamed T, Gao Z, Gao X, Arava R and AbdelHady M (2022) Two-Stage Voice Application Recommender System for Unhandled Utterances in Intelligent Personal Assistant. Front. Big Data 5:898050. doi: 10.3389/fdata.2022.898050

Received: 16 March 2022; Accepted: 17 June 2022;

Published: 18 July 2022.

Edited by:

Jianpeng Xu, Walmart Labs, United States

Reviewed by:

Xiaohan Li, University of Illinois at Chicago, United States

Copyright © 2022 Xiao, Hu, Mohamed, Gao, Gao, Arava and AbdelHady. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Wei Xiao, weixiaow@amazon.com

