94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Big Data, 14 August 2019

Sec. Data Mining and Management

Volume 2 - 2019 | https://doi.org/10.3389/fdata.2019.00027

This article is part of the Research TopicBig Data for Food, Energy and WaterView all 8 articles

We consider multi-response and multi-task regression models, where the parameter matrix to be estimated is expected to have an unknown grouping structure. The groupings can be along tasks, or features, or both, the last one indicating a bi-cluster or “checkerboard” structure. Discovering this grouping structure along with parameter inference makes sense in several applications, such as multi-response Genome-Wide Association Studies (GWAS). By inferring this additional structure we can obtain valuable information on the underlying data mechanisms (e.g., relationships among genotypes and phenotypes in GWAS). In this paper, we propose two formulations to simultaneously learn the parameter matrix and its group structures, based on convex regularization penalties. We present optimization approaches to solve the resulting problems and provide numerical convergence guarantees. Extensive experiments demonstrate much better clustering quality compared to other methods, and our approaches are also validated on real datasets concerning phenotypes and genotypes of plant varieties.

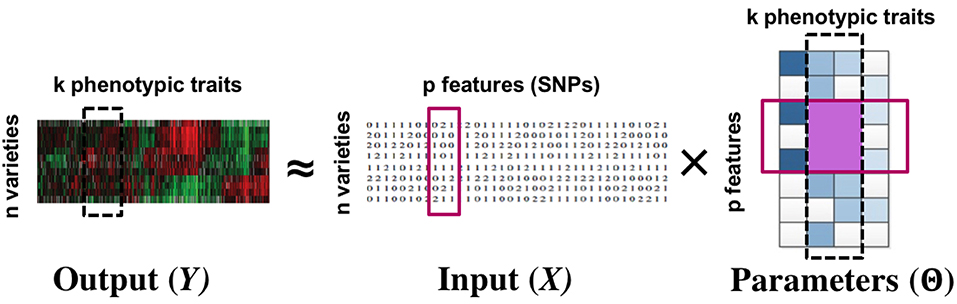

We consider multi-response and multi-task regression models, which generalize single-response regression to learn predictive relationships between multiple input and multiple output variables, also referred to as tasks (Borchani et al., 2015). The parameters to be estimated form a matrix instead of a vector. In several applications, there exist joint group relationships between inputs and outputs. A motivating example is that of multi-response Genome-Wide Association Studies (GWAS) (Schifano et al., 2013), where for instance a group of Single Nucleotide Polymorphisms or SNPs (input variables or features) might influence a group of phenotypes (output variables or tasks) in a similar way, while having little or no effect on another group of phenotypes. Similarly, as another example, stocks values of related companies can affect the future value of a group of stocks similarly. In such cases, the model parameters belonging to the same input-output group tend to be close to each other, and it is desirable to uncover and exploit these structures in estimating the parameter matrix. See Figure 1 for an example.

Figure 1. Multi-response GWAS: the simultaneous grouping relationship between phenotypic traits and SNPs manifest as a block structure (row + column groups) in the parameter matrix. The row and column groups are special cases of the more general block structure. Our proposed approach infers the parameter matrix as well as the group structures.

In this work, we develop formulations that simultaneously learn: (a) the parameters of multi-response/task regression models, and (b) the grouping structure in the parameter matrix (row or column or both) that reflects the group relationship between inputs and outputs. We present optimization approaches to efficiently solve the resulting convex problems, and show their numerical convergence. We describe and justify several hyperparameter choices we make during this optimization. Our methods are validated empirically on synthetic data and on real-world datasets concerning phenotypes and genotypes of plant varieties. From the synthetic data experiments, we find that our methods provide a much better and more stable (i.e., lesser standard error) recovery of the underlying group structure. In real-world data experiments, our approaches reveal natural groupings of phenotypes and checkerboard patterns of phenotype-SNP groups that inform us of the joint relationship between them.

We emphasize that the parameters as well as the grouping structures are fully unknown a-priori, and inferring them simultaneously is our major contribution. This is in contrast to the naive way of estimating the parameters first and then clustering. This naive approach has the danger of propagating the estimation error into clustering, particularly in high dimensions, where the estimator is usually inaccurate due to lack of sufficient samples. Moreover, the clustering step of the naive approach does not use the full information of the data. The joint estimation-clustering procedure we propose naturally promotes sharing of information within groups. Our formulations adopt the convex bi-clustering cost function (Chi et al., 2014) as the regularizer to encourage groupings between columns (tasks) and rows (features) in the parameter matrix. Note that, Chi et al. (2014) assume that the data matrix to be used for bi-clustering is known a-priori, which is obviously not the case for our setting. As a result, Chi et al. (2014) can deal only with fixed data and cannot estimate unknown model parameters, while our approaches can simultaneously estimate parameters and discover the clustering structure in them.

To the best of our knowledge, this is the first method that can simultaneously cluster and estimate the parameters efficiently in a unified optimization. We emphasize that our main goal is to discover the underlying parameter bi-cluster structure without compromising estimation accuracy. Experiments show that our clusterings are better than other methods while the estimation accuracy is no worse or sometimes even better.

The premise in multi-task learning is that appropriate sharing of information can benefit all the tasks (Caruana, 1998; Obozinski et al., 2006; Yu et al., 2018). Assuming all tasks to be closely related can be excessive as it ignores the underlying specificity of the mappings. There have been several extensions to multi-task learning that address this problem. The authors in Jalali et al. (2010) propose a dirty model for feature sharing among tasks, wherein a linear superposition of two sets of parameters—one that is common to all tasks, and one that is task-specific—are used. Kim and Xing (2010) leverages a predefined tree structure among the output tasks (e.g., using hierarchical agglomerative clustering) and imposes group regularizations on the task parameters based on this tree. The approach proposed in Kumar and Daume (2012) learns to share by defining a set of basis task parameters and posing the task-specific parameters as a sparse linear combination of these. Jacob et al. (2009) and Kang et al. (2011) assume that the tasks are clustered into groups and proceed to learn the group structure along with the task parameters using a convex and an integer quadratic program, respectively. However, these approaches do not consider joint clustering of the features. In addition, the mixed integer program of Kang et al. (2011) is computationally intensive and greatly limits the maximum number of tasks that can be considered. Another pertinent approach is the Network Lasso formulation presented in Hallac et al. (2015). This formulation, however, is limited to settings where only clustering among the tasks is needed.

As mentioned before, convex bi-clustering method (Chi et al., 2014) aims at grouping observations and features in a data matrix; while our approaches aim at discovering groupings in the parameter matrix of multi-response regression models while jointly estimating such a matrix, and the discovered groupings reflect groupings in features and responses.

In section 2, we will discuss the proposed joint estimation-clustering formulations; in section 3, we will present the optimization approaches. The choice of hyperparameters used and their significance is discussed in section 4. We illustrate the solution path for one of the formulations in section 4.3. We will provide results for estimation with synthetic data, and two case studies using multi-response GWAS with real data in sections 5, 6, respectively. We conclude in section 7. Additional details and convergence proofs for the optimization approaches are provided in the Supplementary Material.

We will motivate and propose two distinct formulations for simultaneous parameter learning and clustering with general supervised models involving matrix valued parameters. Our formulations will be developed around multi-task regression in this paper. We are interested in accurate parameter estimation as well as understanding the bi-cluster or checkerboard structure of the parameter matrix. More formally, denote by Z the observed data, Θ the model parameters to be estimated, and L(Z; Θ) a general loss function, and R(Θ) to be the regularization.

In multi-task regression, Z = {X, Y} where are the design matrices and are the response vectors for each task s = 1, …, k. Θ is a matrix in ℝp × k containing the regression coefficients for each task. A popular choice for L is the squared loss: . For regularization R(Θ), the ℓ1 norm, denoted as ||Θ||1, is commonly used. Here we wish to discover the bi-cluster structure among features and responses, respectively the rows and columns of Θ.

We begin with the simplest formulation, which, as we shall see, is a special case of the latter one.

Here L(Z; Θ) is the loss function, R(Θ) is a regularizer, and and Θ·i is the ith column of Θ. ΩW(Θ) is inspired by the convex bi-clustering objective (Chi et al., 2014, Equation 1) and it encourages sparsity in differences between columns of Θ. Similarly, encourages sparsity in the differences between the rows of Θ. When the overall objective is optimized, we can expect to see a checkerboard pattern in the model parameter matrix. Note that W and are non-negative weights that reflect our prior belief on the closeness of the rows and columns of Θ.

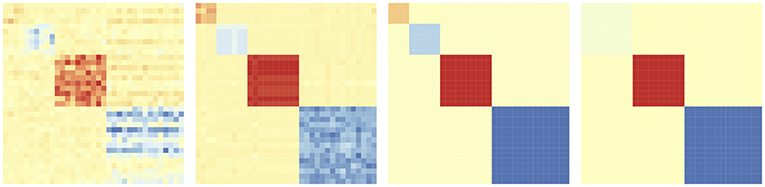

The degree of sharing of parameters and hence that of bi-clustering, is controlled using the tuning parameter λ2. When λ2 is small, each element of Θ will be its own bi-cluster. As λ2 increases, more elements of Θ fuse together, the number of rectangles in the checkerboard pattern will reduce. See Figure 2 for the change of the checkerboard structure as λ2 increases. Further, by varying λ2 we get a solution path instead of just a point estimate of Θ (see section 4.3). In the rest of the paper, we will use the same design matrix X across all tasks for simplicity, without loss of generality.

Figure 2. Evolution of the bi-clustering structure of model coefficient matrix Θ as regularization parameter λ2 increases.

For sparse multi-task linear regression, we have L(Z; Θ) = L(X, Y; Θ) and formulation 1 can be instantiated as,

Here the rows of Θ correspond to the features, i.e., the columns of X, and the columns of Θ correspond to the tasks, i.e., the columns of Y. Therefore, the checkerboard pattern in Θ provides us insights on the groups of features that go together with the groups of tasks.

Formulation 1 is natural and simple, but it forces the parameters belonging to the same row or column cluster to be equal, and this may be limiting. To relax this requirement, we introduce a surrogate parameter matrix Γ that will be used for bi-clustering. This will be mandated to be close to Θ. For multitask regression this yields the objective

Remark 1. To interpret this more carefully, let us assume that in Equation (3). In other words, Θ·i has a global component , and the component Γ·i that participates in the clustering. As λ2 → ∞, , and hence Θ·i → Γ·i. Now, formulation 2 reduces to formulation 1. Further, if λ1 and λ2 are held constant while only λ3 increases, , since Γ·i → Γ for all i. The key difference between formulation 2 and 1 is the presence of a task-specific global component , which lends additional flexibility in modeling the individual tasks even when λ3 → 0. Whereas, in Equation (2), when λ2 → ∞, all the components of Θ·i take the same value for all i, and the tasks are forced to share the same coefficients without any flexibility.

Remark 2. In certain applications, it might make sense to cluster together features/tasks whose effects have the same amplitude but different signs. This can be accommodated by considering where ci,j ∈ {−1, 1} are predefined constants reflecting whether the features or tasks are expected to be negatively or positively correlated.

We describe the optimization procedures to solve the two proposed formulations. Note that as long as the loss function L(X, Y; Θ) and the regularization R(Θ) are convex, our formulations are also convex in Θ and Γ, and hence can be solved using modern convex optimization approaches. Here we adopt two computationally efficient approaches.

For our formulation 1 we use the proximal decomposition method introduced in Combettes and Pesquet (2008). This is an efficient algorithm for minimizing the sum of several convex functions. Our general objective function (1) involves three such functions: f1 being L(X, Y; Θ), f2 being R(Θ), and f3 being the term that multiplies λ2. At a high level, the algorithm iteratively applies proximal updates with respect to these functions until convergence.

We stack the regression matrix Θ into a column vector . The proximal operator is given by: , where a and b are pk-dimensional vectors. The proximal operator of the regularized loss can be computed according to the specific L and R functions. The overall optimization procedure is given in Algorithm 1 and with the following update rules.

• Update for : Let (a1; …; ak) = proxσf1(b1; …; bk), For each s ∈ {1, …, k}, we have

This step corresponds to the closed-form formula of a ridge regression problem. For very large p we can employ efficient approaches, such as Lu and Foster (2014) and McWilliams et al. (2014).

• Update for : Let (a1; …; ak) = proxσf2(b1; …; bk), For each s ∈ {1, …, k}, j ∈ {1, …, p},

• Updates for : This is the standard bi-clustering problem on Θ and can be solved efficiently using the COnvex BiclusteRing Algorithm (COBRA) introduced in Chi et al. (2014), and described in Algorithm 3 (Supplementary Material) for completeness.

For our formulation 2 we use an alternating minimization method on Θ and Γ; i.e., we alternatively minimize over Θ and Γ with the other fixed. The first alternating step is to estimate Θ while fixing Γ. This minimization problem is separable for each column and each sub-problem can be easily written as a standard Lasso problem:

by defining and and hence can be solved efficiently and in parallel for each column. In the second step, we fix Θ and optimize for Γ. The optimization is

which is a standard bi-clustering problem on Θ and can be solved efficiently using the COnvex BiclusteRing Algorithm (COBRA) introduced in Chi et al. (2014), and described in Algorithm 3 (Supplementary Material) for completeness. The overall procedure is given in Algorithm 2.

We establish the following convergence result for our algorithms, when the loss function L(X, Y; Θ) is convex in Θ. The proofs are given in the Supplementary Material.

Proposition 1. The algorithm described in section 3.1 converges to the global minimizer.

Proposition 2. The algorithm described in section 3.2 converges to the global minimizer.

For both formulations 1 and 2, computational complexity is dominated by the use of the COBRA algorithm. COBRA solves a sequence of convex clustering problems. The subroutine used to solve each convex clustering subproblem scales in storage and computational operations as O(kpq), where k is the number of tasks, p is the number of features and q is the number of non-zero weights. In our case q is much smaller than p2 and k2. Indeed as we shall see in section 4.1, our weights are based on κ = 5 nearest neighbors.

We describe and justify the various hyperparameters choices for formulations 1 and 2.

The choice of the column and row similarity weights W and can affect the quality of the clustering results and we follow the suggestion in Chi et al. (2014) to set these. However, in their case the data matrix to be clustered is fixed and known, but in our case, the coefficient matrix Θ we want to cluster is not known. We will get a rough estimate by just minimizing the regularized loss function L(Z; Θ) + λ1R(Θ). For example, with multi-task regression in Equations (2) and (3), we can solve

where λ1 is tuned by cross-validation (CV) and re-used in the rest of the algorithm. From our multi-task regression experiment, we find that the clustering results are robust to the choice of λ1.

With the estimated we are ready to compute W and . The weights for the columns i and j are computed as where is 1 if j is among i's κ-nearest-neighbors or vice versa and 0 otherwise. Here ϕ is non-negative and ϕ = 0 corresponds to uniform weights. In our synthetic and real data experiments we fix ϕ = 20. is computed analogously. It is important to keep the two penalty terms ΩW(Θ) and on the same scale, else the row or column objective will dominate the solution. We normalize so that the column weights sum to and the row weights sum to .

We set the penalty multipliers (λ1, λ2, and λ3) for both the formulations using a CV approach. We randomly split our samples into a training set and a hold-out validation set, fitting the models on the training set and then evaluating the root-mean-squared error (RMSE) on the validation set to choose the best values. In order to reduce the computational complexity, we estimate the multipliers greedily, one or two at a time. From our simulations, we determined that this is a reasonable choice. We recognize that these can be tuned further on a case-by-case basis.

λ1 is set to the reasonable value as determined in section 4.1 for both formulations, since the clustering results are robust to this choice. For formulation 1, we estimate the best λ2 by CV using Equation (1). For formulation 2, the tuning process is similar, but we pick a sequence of λ2 and λ3. We estimate both and , but calculate RMSE with , since it is used in the clustering objective. When the path of bi-clusterings is computed, we fix λ2 to the CV estimate and vary only λ3.

One can obtain the entire solution paths for the estimated coefficients Θ by varying the penalty multipliers. Here we provide an example using a synthetic dataset generated as follows. We consider the multi-task regression model: Y = XΘ* + E with . All the entries of design matrix X are generated as iid from N(0, 1). The true regression parameter Θ* has a bi-cluster (checkerboard) structure. To simulate sparsity, we set the coefficients within many of the blocks in the checkerboard to 0. For the non-zero blocks, we follow the generative model recommended in Chi et al. (2014): the coefficients within each cluster are generated as θij = μrc + ϵij with to make them close but not identical, where μrc is the mean of the cluster defined by the rth row partition and cth column partition. We set n = 50, p = 20, and k = 15. For the non-zero blocks, we set μrc ~ Uniform{−2, −1, 1, 2} and set σϵ = 0.25. We set σ = 1.5. We use relatively small values for p and k since there will be a total of pk solution paths to visualize.

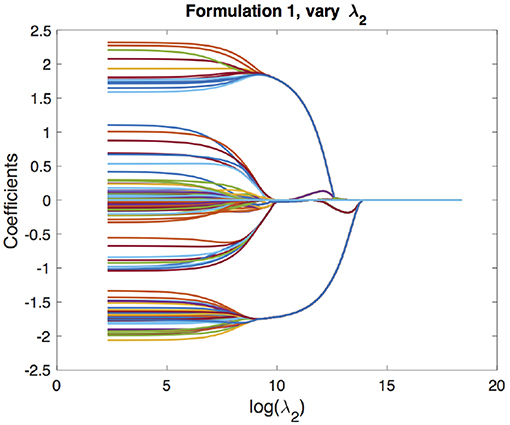

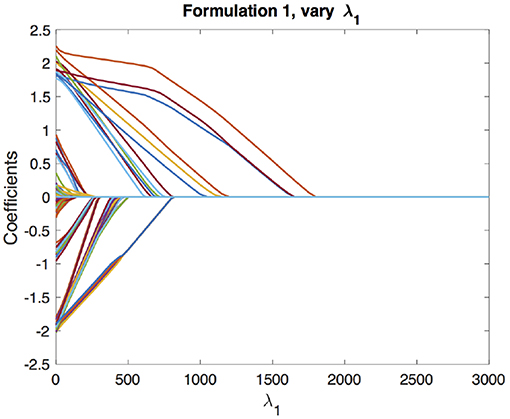

We begin with the solution paths for formulation 1. We first fix a reasonable λ1 and vary λ2 to get solution paths for all the coefficients. In our experiment, we chose λ1 based on cross-validation as described in section 4.1. These paths are shown in Figure 3. We can see that as λ2 increases, the coefficients begin to merge and eventually for large enough λ2 they are all equal. The solution paths are smooth in λ2. Similarly, we fix λ2 based on the cross-validation scheme described in section 4.2 and vary λ1 to get solution paths for all the coefficients. This is shown in Figure 4. It is well-known that the solution paths for LASSO are piecewise linear (Rosset and Zhu, 2007), when L is least squares loss. Here, we see that the solution paths are not piecewise linear, but rather a smoothed version of it. This smoothness is imparted by the convex clustering regularization, the third term in Equation (2).

Figure 3. Solution paths for formulation 1, fixing λ1 and varying λ2. Each line indicates a distinct coefficient.

Figure 4. Solution paths for formulation 1, fixing λ2 and varying λ1. Each line indicates a distinct coefficient.

We can obtain the solution path for formulation 2 as functions of two variables. We first fix a reasonable λ1 and vary λ2, λ3 to get solution paths for all the coefficients. These paths are shown in Figure 5. The solution paths are smooth in λ2 and λ3. Similarly, we fix a reasonable λ2 and vary λ1, λ3 to get solution paths for all the coefficients. These paths are shown in Figure 6. The solution paths are smooth in λ1 and λ3. The reasonable values are obtained using cross-validation.

It is well-known that LASSO tends to select too many variables (Meinshausen and Yu, 2009). Hence ||Θ·i − Θ·j||2 may not be exactly zero in most cases, and we may end up identifying too many clusters as well. In Chi et al. (2014) the authors defined the measure vij = ||Θ·i − Θ·j||2 and placed the ith and jth columns in the same group if vij ≤ τ for some threshold τ, inspired by Meinshausen and Yu (2009). In our formulation 1, after selecting the best tuning parameters and estimating Θ, we place the ith and jth rows in the same group if ||Θi· − Θj·||2 ≤ τr. Similarly, if ||Θ·i − Θ·j||2 ≤ τc we place the ith and jth columns in the same group. For formulation 2, we repeat the same approach using Γ instead of Θ.

To compute the thresholds τr and τc, we first calculate [vcol]ij = ||Θ·i − Θ·j||2 and stack this matrix to vector vcol; similarly we calculate [vrow]ij = ||Θi· − Θj·||2 and stack to vector vrow. In the case of sparse linear regression, τ should be on the order of the noise (Meinshausen and Yu, 2009): , where σ is typically estimated using the standard deviation of residuals. In general we could set τ proportional to the standard deviation of vrow or vcol.

However in our case, we have an additional regression loss term for estimating the parameters and hence there are two sources of randomness, the regression residual and the error in v. Taking these into account, we set and . We set the multiplier to , following the usual conservative heuristics.

Although formulations 1 and 2 have been developed for row-column bi-clustering, they can be easily specialized to clustering columns or rows alone, by respectively using only ΩW(Θ) or in Equation (2), or using only ΩW(Γ) or in Equation (3).

We demonstrate our approach using experiments with synthetic data on the problem of multi-task learning. As emphasized before, our main focus is on bi-clustering result instead of parameter estimation. We begin by describing the performance measures used to evaluate the clustering and estimation performance.

Assessing the clustering quality can be hard. In this paper, we use the following three measures to evaluate the quality of clustering: the adjusted Rand index (Hubert and Arabie, 1985) (ARI), the F-1 score (F-1), and the Jaccard index (JI).

Assume is the true clustering, define TP to be the number of pairs of elements in S that are in the same subset in and in the same subset in . This is the true positive and similarly we can define TN, FN, FP as true negative, false negative, and false positive, respectively. Define and , the F-1 score is defined as:

Using the same notation as F-1 score, the Jaccard Index is defined as:

For all these three measures, a value of 1 implies the best possible performance, and a value of 0 means that we are doing poorly. In order to compute ARI, F-1, and JI, we choose the value of the multiplier λ2 in formulation 1, and {λ2, λ3} in formulation 2 using the approach described in section 4.2, and obtain the estimated clusterings.

The estimation accuracy is measured by calculating the RMSE on an independent test set, and also the parameter recovery accuracy, where and Θ* are the estimated and true coefficient matrices.

We focus on multi-task regression: Y = XΘ* + E with . All the entries of design matrix X are generated as iid from N(0, 1). The true regression parameter Θ* has a bi-cluster (checkerboard) structure. To simulate sparsity, we set the coefficients within many of the blocks in the checkerboard to 0. For the non-zero blocks, we follow the generative model recommended in Chi et al. (2014): the coefficients within each cluster are generated as θij = μrc + ϵij with to make them close but not identical, where μrc is the mean of the cluster defined by the rth row partition and cth column partition. We set n = 200, p = 500, and k = 250 in our experiment. For the non-zero blocks, we set μrc ~ Uniform{−2, −1, 1, 2} and set σϵ = 0.25. We try the low-noise setting (σ = 1.5), where it is relatively easy to estimate the clusters, and the high-noise setting (σ = 3), where it is harder to obtain them.

We compare our formulations 1 and 2 with a 2-step estimate-then-cluster approach: (a) Estimate first using LASSO, and (b) perform convex bi-clustering on . is estimated by solving (6) while selecting the best λ1 as discussed in section 4.1, and the convex bi-clustering step is implemented using COBRA algorithm in Chi et al. (2014). Our baseline clustering performance is the best of: (a) letting each coefficient be its own group, and (b) imposing a single group for all coefficients.

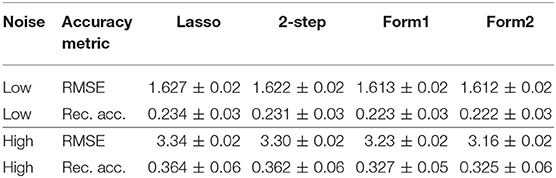

The average clustering quality results on 50 replicates are shown in Table 1 for low and high noise settings. Most performance measures are reported in the format mean±std.dev. In both tables, the first, second, and third blocks correspond to performances of row, column and row-column bi-clusterings, respectively. We optimize only for bi-clusterings, but the row and the column clusterings are obtained as by-products.

From Table 1 we see that both our formulation 1 and 2 give better results on row clustering, column clusterings, and row-column bi-clustering compared to the 2-step procedure. Moreover, the clustering results given by our formulations are more stable, with lesser spread in performance.

It is also instructive to note that the performance obtained for columns is substantially higher compared to those obtained with rows. This could be because of two reasons: (a) the columns of Θ have a one-to-one correspondence to the columns of the task responses Y, and hence any relationship between the tasks is easily inherited, (b) the rows of Θ can be noisier than the columns, since each row contributes to all the tasks.

The performance boost obtained with high noise is much higher compared to that with low noise. This makes sense because when noise level is low, the estimation step in the 2-step approach is more accurate and the error propagated into the clustering step is relatively small. However, at high noise levels, the estimation can be inaccurate. This estimation error propagates into the clustering step and makes the clustering result of 2-step approach unreliable. Since our formulations jointly perform estimation and clustering, they obtain more reliable and stable results.

The RMSEs evaluated on the test set and the parameter recovery accuracy are provided in Table 2. The oracle RMSE (with Θ known) is 1.5 for the low noise setting and 3.0 for the high noise setting in Table 2, and we can see that the proposed methods provide improvements over the others. We also observe improvements in the parameter recovery accuracy. Although the improvement is marginal, it demonstrates that we are not losing estimation accuracy because of the biclustering structure we considered.

Table 2. RMSE and parameter recovery accuracy of the estimation schemes for low noise (σ = 1.5) and high noise (σ = 3) settings.

We demonstrate the proposed approaches using real datasets obtained from experiments with Sorghum crops (Tuinstra, 2016). We consider two specific problems from this pipeline: (a) predictive modeling of plant traits using features from remote sensed data (section 6.1), (b) GWAS using the reference traits (section 6.2).

The experimental data was obtained from 18 Sorghum varieties planted in 6 replicate plot locations, and we considered the trait of plant height. The 18 variety names are given in the Supplementary Material.

From the RGB and hyperspectral images of each plot, we extract features of length 206. Hence n = 6, p = 206, and the number of tasks k = 18, for each trait considered. The presence of multiple varieties with replicates much smaller in number than predictors poses a major challenge: building separate models for each variety is unrealistic, while a single model does not fit all. This is where our proposed simultaneous estimation and clustering approach provides the flexibility to share information among tasks that leads to learning at the requisite level of robustness. Note that here we use the column-only clustering variant of formulation 1.

The dendrogram for task clusters obtained by sweeping the penalty multiplier λ2 is given in Figure 7. This provides some interesting insights from a plant science perspective. As highlighted in Figure 7, the predictive models (columns of Θ) for thicker medium dark plants are grouped together. Similar grouping is seen for thinner tall dark plants, and thick tall plants with many light leaves.

To compute RMSE, we perform 6-folds CV where each fold consists of at least one example from each variety. As we only have n = 6 samples per variety (i.e., per task), it is unrealistic to learn separate models for each variety. For each CV split, we first learn a grouping using one of the compared methods, treat all the samples within a group as i.i.d, and estimate their regression coefficients using Lasso. The methods compared with our approach include: (a) single model, which learns a single predictive model using Lasso, treating all the varieties as i.i.d., (b) No group multitask learning, which learns a traditional multitask model using Group Lasso where each variety forms a separate group, and (c) Kang et al. (2011), which uses a mixed integer program to learn shared feature representations among tasks, while simultaneously determining “with whom” each task should share. Results reported in Table 3, indicate the superior quality of our groupings in terms of improved predictive accuracy.

We apply our approach in a multi-response Genome-Wide Association Study (GWAS). While traditional GWAS focuses on associations to single phenotypes, we would like to automatically learn the grouping structure between the phenotypes as well as the features (columns and rows of Θ) using our proposed method. We use the proposed formulations 1 and 2 (bi-clustering variant) in this experiment.

The design matrix X consisted of SNPs of Sorghum varieties. We consider n = 911 varieties and over 80,000 SNPs. We remove duplicate SNPs and also SNPs that do not have significantly high correlation to at least one response variable. Finally, we end up considering p = 2,937 SNPs. The output data Y contains the following 6 response variables (columns) for all the n varieties collected by hand measurements:

1. Height to panicle (h1): The height of the plant up to the panicle of the Sorghum plant.

2. Height to top collar (h2): The height of the plant up to the top most leaf collar.

3. Diameter top collar (d1): The diameter of the stem at the top most leaf collar.

4. Diameter at 5 cm from base (d2): The diameter of the stem at 5 cm from the base of the plant.

5. Leaf collar count (l1): The number of leaf collars in the plant.

6. Green leaf count (l2): The total number of green leaves. This will be <l1 since some leaves may have senesced and will not be green anymore.

For each variety, each trait can be an average of measurements from up to four plants.

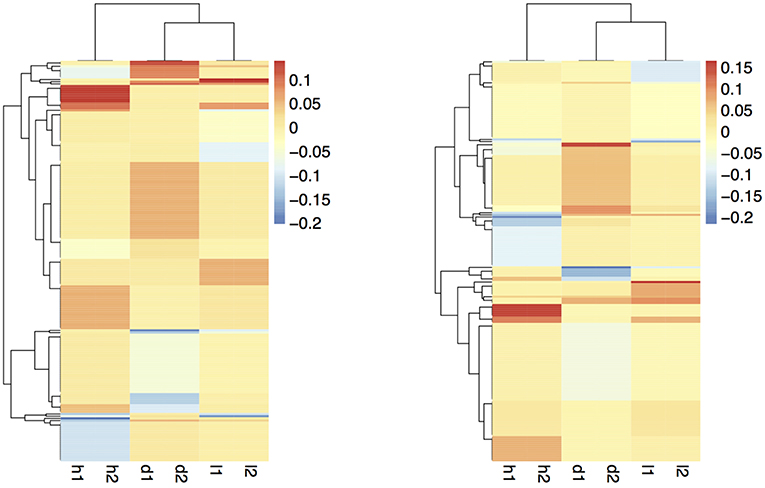

The coefficient matrix given by our formulations are visualized in Figure 8. To make the figure easier to interpret, we exclude the rows with all zero coefficients and take the average over the coefficients within each bi-cluster. The light yellow regions are coefficients close to zero; red and blue areas are positive and negative coefficients, respectively. The rows and columns are reordered to best show the checkerboard patterns. We wish to emphasize again that these checkerboard patterns in the coefficient matrices are automatically discovered using our proposed procedures, and are not readily evident, or trivially discoverable from the data.

Figure 8. Smoothed coefficient matrix obtained from formulations 1 (left) and 2 (right), revealing the bi-clustering structure.

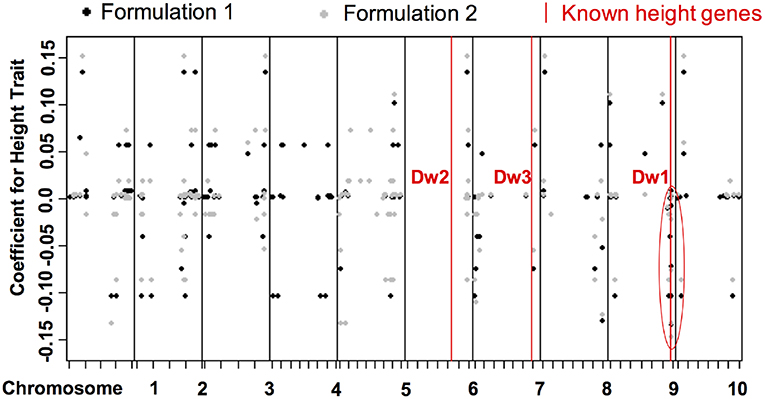

The two formulations reveal similar bi-clustering patterns up to reordering. For column clusters, the plant height tasks (h1 and h2), the stem diameter tasks (d1 and d2), and the leaf tasks (l1 and l2) group together. Also, the stem diameter and leaf tasks are more related to each other compared to the height tasks. The bi-clustering patterns reveal the groups of SNPs that influence similar phenotypic traits. Coefficients for height features in the GWAS (Figure 9) study show SNPs with strong effects coinciding with locations of Dwarf 3 (Multani et al., 2003) and especially Dwarf 1 (Hilley et al., 2016) genes known to control plant height that are segregating and significant in the population. The lack of any effect at the Dwarf 2 (Hilley et al., 2017) locus supports previous work indicating that this gene is not a strong contributing factor in this population. This demonstrates that we are able to discover existing factors. We also identify potentially new SNPs for further investigation and biological validation, since many coefficients align with loci outside of the previously identified known height genes.

Figure 9. Distribution of coefficients for height traits for all SNPs. The x-axis shows the positions of genetic variants on the chromosomes. The y-axis are the values of the coefficients for the discovered associations with height trait.The red lines are loci of known height genes, namely genes that are known to be associated to height, and the black and gray dots correspond to coefficients of formulations 1 and 2, respectively. Some correspond to known locations, some correspond to new locations of associated SNPS.

To evaluate predictive accuracy, we split our data set into three parts: 70% training, 15% validation, and 15% test. We estimate the coefficient matrices by optimizing our formulations on the training set, select the tuning parameters based on the validation set (sections 4.2, 4.4), and then calculate the RMSE on the test set. Table 4 shows the RMSE on test set.

We also estimate the RMSE of the proposed formulations and compare it with the RMSE provided by a simple Lasso model and 2-step procedure. This is shown in Table 4. We see that the RMSE of our formulations are slightly less than that of the Lasso and 2-step procedure. Hence, for similar estimation performance, we are able to discover additional interesting structure in the input-output relationship using our proposed methods.

In this paper we introduced and studied formulations for joint estimation and clustering (row or column or both) of the parameter matrix in multi-response models. By design, our formulations imply that coefficients belonging to the same (bi-)cluster are close to one another. By incorporating different notions of closeness between the coefficients, we can tremendously increase the scope of applications in which similar formulations can be used. Some future applications could include sparse subspace clustering and community detection.

Recently there has been a lot of research on non-convex optimization formulations, both from theoretical and empirical perspectives. It would be of interest to see the performance of our formulations on non-convex loss functions. Another extension would be to construct confidence intervals and perform hypothesis testing for the coefficients in each cluster.

The raw data supporting the conclusions of this manuscript will be made available by the authors, without undue reservation, to any qualified researcher.

MY discussed the idea, wrote the codes, ran the experiments, and wrote the second part of the paper. KN discussed the idea and wrote the first part of the paper. AT provided the real data and interpreted the real data experiments. AL proposed the original research idea, and wrote the first part of the paper.

The information, data, or work presented herein was funded in part by the Advanced Research Projects Agency-Energy (ARPA-E), U.S. Department of Energy, under the Award Number DE-AR0000593. The views and opinions of authors expressed herein do not necessarily state or reflect those of the United States Government or any agency there of.

KN and AL were employed by IBM.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors acknowledge the contributions of the Purdue and IBM project teams for field work, data collection, processing, and discussions. They thank Dr. Mitchell Tuinstra and Dr. Clifford Weil for leading and coordinating the planning, experimental design, planting, management, and data collection portions of the project. They thank Dr. Naoki Abe for scientific discussions.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fdata.2019.00027/full#supplementary-material

Borchani, H., Varando, G., Bielza, C., and Larrañaga, P. (2015). A survey on multi-output regression. Wiley Interdiscip. Rev. Data Mining Knowl. Discov. 5, 216–233. doi: 10.1002/widm.1157

Caruana, R. (1998). “Multitask learning,” in Learning to Learn (Boston, MA: Kluwer Academic Publishers), 95–133.

Chi, E. C., Allen, G. I., and Baraniuk, R. G. (2014). Convex biclustering. Biometrics 73, 10–19. doi: 10.1111/biom.12540

Combettes, P. L., and Pesquet, J.-C. (2008). A proximal decomposition method for solving convex variational inverse problems. Inverse Probl. 24:065014. doi: 10.1088/0266-5611/24/6/065014

Hallac, D., Leskovec, J., and Boyd, S. (2015). “Network lasso: clustering and optimization in large graphs,” in Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (Sydney, NSW: ACM), 387–396.

Hilley, J., Truong, S., Olson, S., Morishige, D., and Mullet, J. (2016). Identification of dw1, a regulator of sorghum stem internode length. PLoS ONE 11:e0151271. doi: 10.1371/journal.pone.0151271

Hilley, J. L., Weers, B. D., Truong, S. K., McCormick, R. F., Mattison, A. J., McKinley, B. A., et al. (2017). Sorghum dw2 encodes a protein kinase regulator of stem internode length. Sci. Rep. 7:4616. doi: 10.1038/s41598-017-04609-5

Hubert, L., and Arabie, P. (1985). Comparing partitions. J. Classif. 2, 193–218. doi: 10.1007/BF01908075

Jacob, L., Vert, J.-P., and Bach, F. R. (2009). “Clustered multi-task learning: a convex formulation,” in Advances in Neural Information Processing Systems, eds D. Koller, D. Schuurmans, Y. Bengio, and L. Bottou (Vancouver, BC: Curran Associates, Inc.), 745–752.

Jalali, A., Sanghavi, S., Ruan, C., and Ravikumar, P. K. (2010). “A dirty model for multi-task learning,” in Advances in Neural Information Processing Systems, eds J. D. Lafferty, C. K. I. Williams, J. Shawe-Taylor, R. S. Zemel, and A. Culotta (Vancouver, BC: Curran Associates, Inc.), 964–972.

Kang, Z., Grauman, K., and Sha, F. (2011). “Learning with whom to share in multi-task feature learning,” in Proceedings of the 28th International Conference on Machine Learning (ICML-11) (Bellevue, WA), 521–528.

Kim, S., and Xing, E. P. (2010). “Tree-guided group lasso for multi-task regression with structured sparsity,” in Proceedings of the 27th International Conference on Machine Learning (ICML-10) (Haifa), 543–550.

Kumar, A., and Daume III, H. (2012). “Learning task grouping and overlap in multi-task learning,” in Proceedings of the 29th International Conference on International Conference on Machine Learning, ICML'12 (Edinburgh: Omnipress), 1723–1730. Available online at: http://dl.acm.org/citation.cfm?id=3042573.3042793

Lu, Y., and Foster, D. P. (2014). Fast ridge regression with randomized principal component analysis and gradient descent. arXiv [Preprint]. arXiv:1405.3952.

McWilliams, B., Heinze, C., Meinshausen, N., Krummenacher, G., and Vanchinathan, H. P. (2014). Loco: distributing ridge regression with random projections. Stat 1050:26. arXiv [Preprint]. arXiv:1406.3469

Meinshausen, N., and Yu, B. (2009). Lasso-type recovery of sparse representations for high-dimensional data. Ann. Stat. 37, 246–270. doi: 10.1214/07-AOS582

Multani, D. S., Briggs, S. P., Chamberlin, M. A., Blakeslee, J. J., Murphy, A. S., and Johal, G. S. (2003). Loss of an MDR transporter in compact stalks of maize br2 and sorghum dw3 mutants. Science 302, 81–84. doi: 10.1126/science.1086072

Obozinski, G., Taskar, B., and Jordan, M. (2006). Multi-Task Feature Selection. Berkeley, CA: Statistics Department, UC Berkeley, Tech. Rep, 2.

Rosset, S., and Zhu, J. (2007). Piecewise linear regularized solution paths. Ann. Stat. 35, 1012–1030. doi: 10.1214/009053606000001370

Schifano, E. D., Li, L., Christiani, D. C., and Lin, X. (2013). Genome-wide association analysis for multiple continuous secondary phenotypes. Am. J. Hum. Genet. 92, 744–759. doi: 10.1016/j.ajhg.2013.04.004

Tuinstra, M. R. (2016). “Automated sorghum phenotyping and trait development platform,” in Proceedings of KDD Workshop on Data Science for Food, Energy, and Water (San Francisco, CA).

Keywords: high-throughput phenotyping, multitask learning, convex clustering, bi-clustering, sparse linear regression, genome-wide association studies

Citation: Yu M, Natesan Ramamurthy K, Thompson A and Lozano AC (2019) Simultaneous Parameter Learning and Bi-clustering for Multi-Response Models. Front. Big Data 2:27. doi: 10.3389/fdata.2019.00027

Received: 05 April 2019; Accepted: 18 July 2019;

Published: 14 August 2019.

Edited by:

Ranga Raju Vatsavai, North Carolina State University, United StatesCopyright © 2019 Yu, Natesan Ramamurthy, Thompson and Lozano. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Aurélie C. Lozano, YWNsb3phbm9AdXMuaWJtLmNvbQ==

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.