REVIEW article

Front. Big Data, 11 July 2019

Sec. Data Mining and Management

Volume 2 - 2019 | https://doi.org/10.3389/fdata.2019.00013

This article is part of the Research Topic: Critical Data and Algorithm Studies

Social data in digital form, including user-generated content, expressed or implicit relations between people, and behavioral traces, are at the core of popular applications and platforms, driving the research agenda of many researchers. The promises of social data are many, including understanding “what the world thinks” about a social issue, brand, celebrity, or other entity, as well as enabling better decision-making in a variety of fields including public policy, healthcare, and economics. Many academics and practitioners have warned against the naïve usage of social data. There are biases and inaccuracies occurring at the source of the data, but also introduced during processing. There are methodological limitations and pitfalls, as well as ethical boundaries and unexpected consequences that are often overlooked. This paper recognizes that the rigor with which these issues are addressed by different researchers varies across a wide range. We identify a variety of menaces in the practices around social data use, and organize them in a framework that helps to identify them.

“For your own sanity, you have to remember that not all problems can be solved. Not all problems can be solved, but all problems can be illuminated.” –Ursula Franklin1

We use social data as an umbrella concept for all kinds of digital traces produced by or about users, with an emphasis on content explicitly written with the intent of communicating or interacting with others. Social data typically comes from social software, which provides an intermediary or a focus for a social relationship (Schuler, 1994). It includes a variety of platforms, such as those for social media and networking (e.g., Facebook), question answering (e.g., Quora), or collaboration (e.g., Wikipedia), and serves purposes ranging from finding information (White, 2013) to keeping in touch with friends (Lampe et al., 2008). Social software enables the social web, a class of websites “in which user participation is the primary driver of value” (Gruber, 2008).

The social web enables access to social traces at a scale and level of detail, both in breadth and depth, impractical with conventional data collection techniques, like surveys or user studies (Richardson, 2008; Lazer et al., 2009). On the social web users search, interact, and share information on a mix of topics including work (Ehrlich and Shami, 2010), food (Abbar et al., 2015), or health (De Choudhury et al., 2014); leaving, as a result, rich traces that form what Harford (2014) calls found data: “the digital exhaust of web searches, credit card payments and mobiles pinging the nearest phone mast.”

People provide these data for many reasons: these platforms allow them to achieve some goals or receive certain benefits. Motivations include communication, friendship maintenance, job seeking, or self-presentation (Lampe et al., 2006; Joinson, 2008), which are often also key to understanding ethical facets of social data use.

Social data opens unprecedented opportunities to answer significant questions about society, policies, and health, being recognized as one core reason behind progress in many areas of computing (e.g., crisis informatics, digital health, computational social science) (Crawford and Finn, 2014; Tufekci, 2014; Yom-Tov, 2016). They are believed to provide insights into both individual-level and large human phenomena, with a plethora of applications and substantial impact (Lazer et al., 2009; Dumais et al., 2014; Harford, 2014; Tufekci, 2014). Concomitantly, there is also a growing consensus that while the ever-growing datasets of online social traces offer captivating insights, they are more than just an observational tool.

In this paper we aim to strengthen prior calls, including boyd and Crawford (2012), Ruths and Pfeffer (2014), Tufekci (2014), Ekbia et al. (2015), and Gillespie (2015), to carefully scrutinize the use of social data against a variety of possible data and methodological pitfalls. Social data are being leveraged to make inferences about how much to pay for a product (Hannak et al., 2014), about the likelihood of being a terrorist, or about users' health (Yom-Tov, 2016) and employability (Rosenblat et al., 2014).2 While such inferences are increasingly used in decision- and policy-making, they can also have important negative implications (Diakopoulos, 2016; O'Neil, 2016). Yet, such implications are not always well understood or recognized (Tufekci, 2014; O'Neil, 2016), as many seem to assume that these data, and the frameworks used to handle them, are adequate, often as-is, for the problem at hand, with little or no scrutiny. A key concern is that research agendas tend to be opportunistically driven by access to data, tools, or ease of analysis (Ruths and Pfeffer, 2014; Tufekci, 2014; Weller and Gorman, 2015); or, as Baeza-Yates (2013) puts it, “we see a lot of data mining for the sake of it.”

In the light of Google Flu Trends' initial success (Ginsberg et al., 2009), the provocative essay “The End of Theory” (Anderson, 2008) sparked intense debates by saying: “Who knows why people do what they do? The point is they do it, and we can track and measure it with unprecedented fidelity. With enough data, the numbers speak for themselves.” Yet, while the ability to capture large volumes of data brings along important scientific opportunities (Lazer et al., 2009; King, 2011), size by itself is not enough. Indeed, such claims were debunked by many critics (boyd and Crawford, 2012; Harford, 2014; Lazer et al., 2014; Giardullo, 2015; Hargittai, 2015), who emphasize that they ignore, among others, that size alone does not necessarily make the data better (boyd and Crawford, 2012) as “there are a lot of small data problems that occur in big data” which “don't disappear because you've got lots of the stuff. They get worse” (Harford, 2014).

Regardless of how large or varied social data are, there are also lingering questions about what can be learned from them about real-world phenomena (online or offline)—which have yet to be rigorously addressed (boyd and Crawford, 2012; Ruths and Pfeffer, 2014; Tufekci, 2014). Thus, given that these data are increasingly used to drive policies, to shape products and services, and for automated decision making, it is critical to gain a better understanding of the limitations around the use of various social datasets and of the efforts to address them (boyd and Crawford, 2012; O'Neil, 2016). Overlooking such limitations can lead to wrong or inappropriate results (boyd and Crawford, 2012; Kıcıman et al., 2014), which could be consequential particularly when used for policy or decision making.

At this point, a challenge for both academic researchers and applied data scientists using social data, is that there is not enough agreement on a vocabulary or taxonomy of biases, methodological issues, and pitfalls of this type of research. This review paper is intended for those who want to examine their own work, or that of others, through the lens of these issues.

While there is much research investigating social data and the various social and technical processes underlying its generation, most of the relevant studies do not position themselves within a framework that guides other researchers in systematically reasoning about possible issues in the social datasets and the methods they use. To this end, our goal is to gather evidence of a variety of different kinds of biases in social data, including their underlying social, technical and methodological underpinnings. While some of this evidence comes from studies that explicitly investigate a specific kind or source of bias; most of it comes from research that is leveraging social data with the goal of answering social science and social computing questions. That is, the evidence of bias and of broader implications about potential threats to the validity of social data research is often implicit in the findings of prior work, rather than a primary focus of it.

Through a synthesis of prior social data analyses, as well as literature borrowed from neighboring disciplines, we draw the connection between the patterns measured in various sources of online social data across diverse streams of literature and a variety of data biases, methodological pitfalls, and ethical challenges. We broadly categorize these biases and pitfalls as manifestations and causes of bias in order to better guide researchers who wish to systematically investigate bias-related risks as a result of their data and methods choice, as well as their implications for the stated research goals.

Other systematic accounts of biases and dilemmas. We recognize that some of the issues covered here are not unique to “social data,” but instead relevant to data-driven research more broadly, and that aspects of these issues have been covered in other contexts as well (Friedman and Nissenbaum, 1996; Pannucci and Wilkins, 2010; Torralba and Efros, 2011). For instance, Pannucci and Wilkins (2010) study clinical trials that include a series of steps such as planning, implementation, and analysis/publication, with opportunities for bias at every step (similar to our idealized data pipeline in section 2.3). Inspecting biases in data collections for object recognition, Torralba and Efros (2011) found that similar datasets merged together can be easily separated due to built-in biases: one can identify the dataset a specific data entry comes from. Broadly discussing bias in computer systems, Friedman and Nissenbaum (1996) characterize it according to its source, such as societal, technical, or usage related. Theirs is, to the best of our knowledge, the first attempt to comprehensively characterize the issue of bias in computer systems, over 20 years ago.

Because of far-reaching impact, biases in social data require renewed attention. Social data has shaped entirely new research fields at the intersection of computer science and social sciences such as computational social science and social computing, fields that have also branched out into many neighboring application areas including crisis informatics, computational journalism, and digital health. As a cultural phenomenon, social media and other online social platforms have also provided a new expressive media landscape for billions of people, businesses, and organizations to communicate and connect, providing a window into social and behavioral phenomena at a large scale. Biases in social data and the algorithmic tools used to handle it can have, as a result, far-reaching impact. Further, while social datasets exhibit built-in biases due to how the datasets are created (González-Bailón et al., 2014a; Olteanu et al., 2014a), as is the case for other types of data, e.g., Torralba and Efros (2011), they also exhibit biases that are specific to social data, such as behavioral biases due to community norms (section 3.3).

The term “bias.” We also remark that “bias” is a broad concept that has been studied across many disciplines such as social science, cognitive psychology or law, and encompasses phenomena such as confirmation bias and other cognitive biases (Croskerry, 2002), as well as systemic, discriminatory outcomes (Friedman and Nissenbaum, 1996) or harms (Barocas et al., 2017). Oftentimes, however, it is difficult to draw clear boundaries between the more normative connotations and the statistical sense of the term—see Campolo et al. (2017) for a discussion on some of the competing meanings of the term “bias.” In this paper, we use the term mainly in its more statistical sense to refer to biases in social data and social data analyses (see our working definition of data bias in section 3.1).

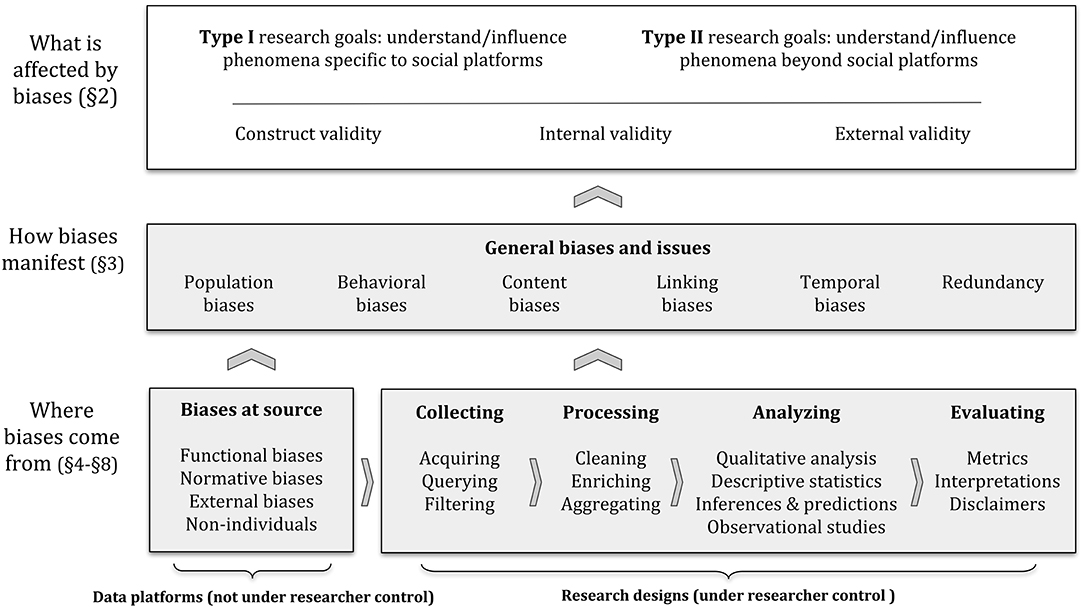

We begin our review by noting that whether a research method is adequate or not depends on the questions being asked and the data being used (section 2), and by covering a series of general biases and other issues in social data (section 3). While we note that research rarely happens in a linear fashion, we describe challenges along an idealized data pipeline, depicted in Figure 1. We first analyze problems at the data source (section 4) and those introduced during data collection (section 5). Next, we describe issues related to data processing (section 6) and analysis (section 7), and issues that arise during the evaluation or interpretation of results (section 8). Finally, we discuss ethical issues of social data (section 9), before wrapping up with a brief overview of future directions (section 10).

Figure 1. Depiction of the framework we use to describe biases and pitfalls when working with social data. The arrows indicate how components in our framework directly tend to affect others, indicating that reaching certain social data analysis goals (section 2.1) requires research to satisfy certain validity criteria (section 2.2), which can be compromised by biases and other issues with social data (section 3). These biases and issues may occur at the source of the data (section 4), or they may be introduced along the data analysis pipeline (sections 5–8). See section 2.3 for a more detailed description.

Evaluating whether a dataset is biased or a methodology is adequate depends on the context in which research takes place, and fundamentally on the goals of the researcher(s). To better grasp how data and methodological issues might affect or shape research outcomes, we first describe the prototypical goals (section 2.1) and classes of validity threats (section 2.2) to social data research. We then overview our framework to describe biases and pitfalls in social data analysis along an illustrative vignette (section 2.3), showing how they can compromise research validity and goals.

Researchers and practitioners have explored the potential benefits of social data on a variety of domains and for many applications, of which we can broadly identify two classes of research goals:

I. to understand or influence phenomena specific to social software platforms, often with the objective of improving them; or

II. to understand or influence phenomena beyond social software platforms, seeking to answer questions from sociology, psychology, or other disciplines.

Type I research focuses on questions about social software platforms, including questions specific to a single platform or a family of related platforms, comparative analyses of platforms and of behaviors across platforms. This research typically applies methods from computer science fields such as data mining or human-computer interaction. This includes, for instance, research on maximizing the spread of “memes,” on making social software more engaging, and on improving the search engine or the recommendation system of a platform.

Type II research is about using data from social software platforms to address questions about phenomena that happen outside these platforms. This research may occur in emerging interdisciplinary domains, such as computational social science and digital humanities. Researchers addressing this type of problem may seek to use social data to answer questions and identify interventions relevant to media, governments, non-governmental organizations, and business, or to work on problems from domains such as health, economics, and education. Sometimes, the research question can be about the impact of social software platforms in these domains, e.g., to describe the influence of social media in a political election. In other cases, the goal may lie entirely outside social media itself, e.g., to use social data to help track the evolution of contagious diseases by analyzing symptoms reported online by social media users.

To discuss validity threats to social data research, for illustrative purposes, let us assume that a researcher is analyzing social data to test a given hypothesis.3 A challenge, then, is an unaddressed issue within the design and execution of a study that may put the proof or disproof of the hypothesis into question. Social data research is often interdisciplinary, and as such, the vocabulary and taxonomies describing such challenges are varied (Howison et al., 2011; boyd and Crawford, 2012; Lazer et al., 2014). Without prejudice, we categorize them along the following classes of threats to the validity of research conclusions:

Construct validity or Do our measurements over our data measure what we think they measure? (Trochim and Donnelly, 2001; Quinn et al., 2010; Howison et al., 2011; Lazer, 2015). In general, a research hypothesis is stated as some assertion over a theoretical construct, or an assertion over the relationships between theoretical constructs. Construct validity asks whether a specific measurement actually measures the construct referred to in the hypothesis.

Example: If a hypothesis states that “self-esteem” increases with age, research tracking self-esteem over time from social media postings must ask whether its assessment of self-esteem from the postings actually measures self-esteem, or if instead it measures some other related or unrelated construct. In other words, we need to know whether the observed behaviors (such as words or phrases used in postings) are driven primarily by users' self-esteem vs. by community norms (section 4.2), system functionalities (section 4.1), or other reasons (section 3.3). Construct validity is especially important when the construct (self-esteem) is unobservable/latent and has to be operationalized via some observed attributes (words or phrases used).

Internal validity or Does our analysis correctly lead from the measurements to the conclusions of the study? Internal validity focuses on the analysis and assumptions about the data (Howison et al., 2011). This survey covers subtle errors of this kind, such as biases that can be introduced through data cleaning procedures (section 6), the use of machine learned classifiers, mistaken assumptions about data distributions, and other inadvertent biases introduced through common analyses of social media (section 7).

Example: An analysis of whether “self-esteem” increases with age may not be internally valid if text filtering operations accidentally remove terms expressing confidence (section 5.3); or if machine learned classifiers were inadvertently trained to recognize self-esteem only in younger people (section 7). Of course, while we do not dwell on them, researchers should also be aware of more blatant logical errors—e.g., comparing the self-esteem of today's younger population to the self-esteem of today's older population would not actually prove that self-esteem increases with age (section 3.6).

External validity or To what extent can research findings be generalized to other situations? Checking external validity requires focusing on ways in which the experiment and the analysis may not represent the broader population or situation (Trochim and Donnelly, 2001). For example, effects observed on a social platform may manifest differently on other platforms due to different functionalities, communities, or cultural norms (Wijnhoven and Bloemen, 2014; Malik and Pfeffer, 2016). The concept of external validity includes what is sometimes called ecological validity, which captures to what extent an artificial situation (constrained social media platform) properly reflects a broader real-world phenomenon (Ruths and Pfeffer, 2014). It also includes temporal validity, which captures to what extent constructs change over time (Howison et al., 2011) and may invalidate previous conclusions about societal and/or platform phenomena; e.g., see the case of Google Flu Trends (Lazer et al., 2014).

Example: Even after we conclude a successful study of “self-esteem” in a longitudinal social media dataset collected from a given social media platform (section 4), its findings may not generalize to a broader setting as people who chose that particular platform may not be representative of the broader population (section 3.2); or perhaps their behaviors online are not representative of their behaviors in other settings (section 3.3).

Each of these validity criteria is complex to define and evaluate, being general to many types of research beyond social data analyses; the interested reader can consult (Trochim and Donnelly, 2001; Quinn et al., 2010; Howison et al., 2011; Lazer, 2015). Specific challenges are determined by the objectives and the research questions one is trying to answer. For instance, a study seeking to improve the ordering of photos shown to users on one photo sharing site may not need to be valid for other photo sharing sites (external validity). In contrast, a study concerned with how public health issues in a country are reflected on social media sites may aspire to ensure that results are independent of the websites selected for the study.

As depicted in Figure 1, social data analysis starts with certain goals (section 2.1), such as understanding or influencing phenomena specific to social platforms (Type I) and/or phenomena beyond social platforms (Type II). These goals require that research satisfies certain validity criteria, described earlier (section 2.2). These criteria, in turn, can be compromised by a series of general biases and issues (section 3). These challenges may depend on the characteristics of each data platform (section 4), which are often not under the control of the researcher, and on the research design choices made along a data processing pipeline (sections 5 to 8), which are often under the researcher's control.

In this paper, for each type of bias we highlight, we include a definition (provided at the general level), the implications of the issue in terms of how it affects research validity and goals, and a list of common issues that we have identified based on prior work. We believe this organization facilitates the adoption by researchers and practitioners, as for practical reasons they can be assumed to know details about their own research design choices, even if other elements in the framework may be less explicitly considered.

Example/vignette: Let us consider another brief hypothetical example. Suppose we are interested in determining the prevalence of dyslexia in different regions or states of a country, and we decide to use observations of writing errors in social media postings to try to answer this question.4 First, we determine that the research goal is of type II, as it seeks to answer a question that is external to social data platforms. Second, we consider different aspects of research validity. With respect to construct validity we observe that the literature on dyslexia indicates that this disorder is often, but not always, noticeable in the way people write; hence we note the type of dyslexia we will be able to capture. With respect to internal validity we need to determine and describe the extent to which our method for content analysis will reflect this type of dyslexia. With respect to external validity we need to note the factors that may affect the generalization of our results.

Third, we consider each potential data bias; for illustrative purposes we mention population biases (section 3.2), content biases (section 3.4), functional biases (section 4.1), and normative biases (section 4.2). With respect to population biases, we need to understand to what extent the demographic characteristics of the population we sample from social media reflect those of the country's population, e.g., whether users are skewed toward younger age groups. Regarding content biases, we note the need to consider the effects of the context in which people write on social media and the attention they put into writing correctly. For functional biases, we need to note whether the platforms we study include functionalities that may affect our results, such as a spell-checker, and whether those functionalities are enabled by default. Regarding normative biases, we need to account for how users are expected to write on a site, which would be different, e.g., on a job search site vs. an anonymous discussion site.

Fourth, we map these issues to choices in our research design. For example, data querying (section 5) should not be based on keywords that might be misspelled. Data cleaning (section 6) should not involve text normalization operations that may affect the writing patterns we want to capture. During the data analysis (section 7) we need to correctly separate different factors that may lead to a writing mistake, seeking ways to isolate dyslexia (e.g., by using a sample of texts written by people with dyslexia). Finally, the interpretation of our results (section 8) needs to be consistent with the elements that may affect our research design.

General challenges for research using social data include population biases (section 3.2), behavioral biases (section 3.3), content biases (section 3.4), linking biases (section 3.5), temporal variations (section 3.6), and redundancy (section 3.7). To situate these issues within the wider concept of data quality, we begin by briefly overviewing known data quality issues.

Data quality is a multifaceted concept encompassing an open-ended list of desirable attributes such as completeness, correctness, and timeliness; and undesirable attributes such as sparsity and noise, among others. The impact of these attributes on specific issues varies with the analysis task. In general, data quality bounds the questions that can be answered using a dataset. When researchers gather social datasets from platforms outside their control, they often have little leverage to control data quality.

Two well-known shortcomings in social data quality are sparsity and noise:

– Sparsity. Many measures follow a power-law or heavy-tailed distribution,5 which makes them easier to analyze on the “head” (frequent elements or phenomena), but difficult on the “tail” (rare elements or phenomena) (Baeza-Yates, 2013). This can be exacerbated by platform functionality design (e.g., limiting the length of users' posts) (Saif et al., 2012), and may affect, for instance, data retrieval tasks (Naveed et al., 2011).6

– Noise. Noise refers to content that is incomplete, corrupted, contains typos/errors, or content that is not reliable or credible (Naveed et al., 2011; boyd and Crawford, 2012). The distinction between what is “noise” and what is “signal” is often unclear, subtly depending on the research question (Salganik, 2017). The problem is rather one of finding the right data (Baeza-Yates, 2013): simply adding more data may increase the level of noise and reduce the quality and reliability of results.
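To make the sparsity point concrete, the following minimal sketch builds a synthetic Zipf-like count distribution and measures how much of the total volume falls in the “head” and how many items in the “tail” are too rare to analyze. The function names, the exponent of 1.0, the 1% head cutoff, and the minimum count of 30 are all illustrative assumptions, not values taken from the cited studies.

```python
def zipf_counts(n_items: int, total: int, exponent: float = 1.0) -> list[int]:
    """Rounded expected counts for items ranked 1..n_items under a Zipf-like law.

    Synthetic data only: the count of the item at rank r is proportional
    to 1 / r**exponent, scaled so that counts sum to roughly `total`.
    """
    weights = [1.0 / (rank ** exponent) for rank in range(1, n_items + 1)]
    norm = sum(weights)
    return [round(total * w / norm) for w in weights]


def head_share(counts: list[int], head_fraction: float) -> float:
    """Fraction of all occurrences contributed by the most frequent items."""
    ordered = sorted(counts, reverse=True)
    k = max(1, int(len(ordered) * head_fraction))
    return sum(ordered[:k]) / sum(ordered)


def sparse_fraction(counts: list[int], min_count: int) -> float:
    """Fraction of items observed fewer than `min_count` times."""
    return sum(1 for c in counts if c < min_count) / len(counts)


if __name__ == "__main__":
    # Hypothetical setting: 10,000 distinct items (e.g., hashtags), 1M occurrences.
    counts = zipf_counts(n_items=10_000, total=1_000_000)
    print(f"share of volume in top 1% of items: {head_share(counts, 0.01):.2f}")
    print(f"items with fewer than 30 occurrences: {sparse_fraction(counts, 30):.2f}")
```

Under these assumptions, a small head accounts for a large share of all observations while most items have too few observations to support reliable estimates, which is the asymmetry the sparsity item describes.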

Another important data quality issue, and the main focus of this paper, is data bias.

Definition (Data bias). A systematic distortion in the sampled data that compromises its representativeness.

Most research on social data uses a fraction of all available data (a “sample”) to learn something about a larger population. Sampling is so prevalent that we rarely question it. Thus, in many of these scenarios, the samples should be representative of a larger population of interest, defined with respect to criteria such as demographic characteristics (e.g., women over 55 years old) or behavior (e.g., people playing online games). Samples should also represent well the content being produced by different groups.

Determining representativeness is complicated when the available data does not fully capture the relevant properties of either the sampled users or the larger population. Considering the classification in section 2.1, sample representativeness affects research questions of type I (internal to a platform) that may need to focus on certain subgroups of users. Yet, identifying such groups is not trivial, as the available data may not capture all relevant properties of users. Research questions of type II (about external human phenomena) are further complicated. Often, they come along with a definition of a target population of interest (Ruths and Pfeffer, 2014) such as estimating the political preferences of young female voters or of citizens with a college degree.

Furthermore, there may also be multiple ways to express representativeness objectives (sections 3.2–3.6), including with respect to some target population, to some notion of relevant content, or to some reference behavior. However, obtaining a uniform random sample may be difficult or impossible when acquiring social data (see section 5). Data quality will depend on the interplay of sample sizes and various sample biases; for a detailed and formal treatment of these issues, see Meng (2018). Data biases are often evaluated by comparing a data sample with reference samples drawn from different sources or contexts. Thus, data bias is rather a relative concept (Mowshowitz and Kawaguchi, 2005). For instance, when we speak of “content production biases” (section 3.4), we often mean that the content in two social datasets may systematically differ, even if the users writing those contents overlap.
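As a rough illustration of evaluating bias relative to a reference, the sketch below compares the group composition of a sample against a reference distribution. It is only one possible operationalization: the total variation distance and the per-group representation ratio are choices made here for illustration, and the age groups and numbers are invented.

```python
def shares(counts: dict[str, int]) -> dict[str, float]:
    """Convert raw group counts into proportions that sum to 1."""
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def total_variation(p: dict[str, float], q: dict[str, float]) -> float:
    """Total variation distance between two distributions over groups.

    0 means identical composition; 1 means completely disjoint.
    """
    groups = set(p) | set(q)
    return 0.5 * sum(abs(p.get(g, 0.0) - q.get(g, 0.0)) for g in groups)


def representation_ratios(
    sample: dict[str, float], reference: dict[str, float]
) -> dict[str, float]:
    """Sample share divided by reference share, per group.

    Values above 1 indicate over-representation relative to the reference.
    """
    return {g: sample.get(g, 0.0) / r for g, r in reference.items() if r > 0}


if __name__ == "__main__":
    # Invented numbers: users by age group in a collected sample,
    # and the corresponding shares in a hypothetical reference population.
    sample = shares({"18-29": 500, "30-49": 350, "50+": 150})
    reference = {"18-29": 0.20, "30-49": 0.35, "50+": 0.45}

    print("total variation distance:", total_variation(sample, reference))
    for group, ratio in representation_ratios(sample, reference).items():
        print(group, round(ratio, 2))
```

The result depends entirely on which reference is chosen, which is the sense in which data bias is a relative concept: the same sample can look balanced against one reference and skewed against another.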

Definition (Population biases). Systematic distortions in demographics or other user characteristics between a population of users represented in a dataset or on a platform and some target population.

The relationship between a studied population (e.g., adults on Twitter declaring to live in the UK) and a target population (e.g., all adults living in the UK) is often unknown. In general, both early surveys from the Pew Research Center and academic studies (Hargittai, 2007; Mislove et al., 2011) show that the demographic composition of major social platforms differs both with respect to each other, and with respect to the offline or Internet population (see e.g., Duggan, 2015 for the US).7 In other words, they show that individuals do not randomly self-select when using social media platforms (Hargittai, 2015), with demographic attributes such as age, gender, race, socioeconomic status, and Internet literacy correlating with how likely someone is to use a social platform.

Implications. Population biases may affect the representativeness of a data sample and, as a result, may compromise the ecological/external validity of research. They are particularly problematic for research of type II (section 2.1), where conclusions about society are sought from social data, such as studies of public opinion. They can also impact the performance of algorithms that make inferences about users (Johnson et al., 2017), further compromising the internal validity of both type I and type II research.

Common issues. Three common manifestations of population bias are the following:

– Different user demographics tend to be drawn to different social platforms. Prior surveys and studies on the use of social platforms show differences in gender representation across platforms (Anderson, 2015), as well as race, ethnicity, and parental educational background (Hargittai, 2007). For instance, Mislove et al. (2011) found that Twitter users significantly over-represent men and urban populations, while women tend to be over-represented on Pinterest (Ottoni et al., 2013).

– Different user demographics use platform mechanisms differently. Prior work showed that people with different demographic, geographic, or personality traits sometimes use the same platform mechanisms for different purposes or in different ways. For instance, users of different countries tend to use Twitter differently—Germans tend to use hashtags more often (suggesting a focus on information sharing), while Koreans tend to reply more often to each other (suggesting a focus on conversations) (Hong et al., 2011). Another example is a question-answering site where the culture encourages hostile corrections, driving many users to remain “unregistered and passive.8” Thus, studies assuming a certain usage may misrepresent certain groups of users.

– Proxies for user traits or demographic criteria vary in reliability. Most users do not self-label along known demographic axes. For example, a study interested in the opinion of young college graduates about a new law may rely on a proxy population: those reporting on a social platform to be alumni of a given set of universities. This choice can end up being an important source of bias (Ruths and Pfeffer, 2014). In the context of predicting users' political orientations, researchers have shown that the choice of the proxy population drastically influences the performance of various prediction models (Cohen and Ruths, 2013).
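As an illustration of how the gap between a studied and a target population might be quantified, the following minimal sketch computes, for each demographic group, the ratio between its share in a sample and its share in the target population. The function name, the age groups, and all numbers are hypothetical and chosen only for illustration; they are not taken from any of the studies cited above.

```python
from collections import Counter


def representation_ratios(sample_labels, population_shares):
    """Return sample share / population share for each group.

    A ratio above 1 means the group is over-represented in the sample
    relative to the target population; a ratio below 1 means it is
    under-represented.
    """
    counts = Counter(sample_labels)
    total = sum(counts.values())
    ratios = {}
    for group, pop_share in population_shares.items():
        sample_share = counts.get(group, 0) / total
        ratios[group] = sample_share / pop_share
    return ratios


# Hypothetical sample of 100 platform users labeled by age group.
sample = ["18-29"] * 50 + ["30-49"] * 35 + ["50+"] * 15
# Hypothetical age distribution of the target population.
population = {"18-29": 0.20, "30-49": 0.35, "50+": 0.45}

ratios = representation_ratios(sample, population)
for group, ratio in ratios.items():
    print(f"{group}: {ratio:.2f}")
```

Such ratios only describe the mismatch along attributes that are known for both populations. As noted above, these attributes are often unavailable on social platforms and must be inferred through proxies of varying reliability.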

Definition (Behavioral biases). Systematic distortions in user behavior across platforms or contexts, or across users represented in different datasets.

Behavioral biases appear across a wide range of user actions, including how they connect and interact with each other, how they seek information, and how they create and appraise content.9 For instance, studies looking at similarities and differences among social platforms found differences with respect to user personalities (Hughes et al., 2012), news spreading (Lerman and Ghosh, 2010), or content sharing (Ottoni et al., 2014).

Implications. Behavioral biases affect the ecological/external validity of research, as they may condition the results of a study on the chosen platform or context. They are not entirely dependent on population biases, and when (explicit or implicit) assumptions are made about the users' behavioral patterns, they can affect both type I and II research (section 2.1) that, e.g., looks into users' needs or interests, among others.

Common issues. We separately discuss behavioral biases affecting the generation of content by users (section 3.4) and those affecting linking patterns between users (section 3.5). Three other common classes of behavioral biases involve interactions among users, interactions between users and content, and the biases that cause users to be included or excluded from a study population.

– Interaction biases affect how users interact with each other. Differences in how people communicate are influenced by shared relationships, norms, and platform affordances. Studies indicate that how users interact depends on the type of relation they share (Burke et al., 2013) and on shared characteristics (i.e., homophily) (McPherson et al., 2001).

– Content consumption biases affect how users find and interact with content, due to differences in their interests, expertise, and information needs. Studying web search behavior, Silvestri (2010) found that it varies across semantic domains, and Aula et al. (2010) found that it changes as the task at hand becomes more difficult. By observing the interplay between what people search and share about health on social media, De Choudhury et al. (2014) found information seeking and sharing practices to vary with the characteristics of each medical condition, such as its severity.

Further, people's consumption behavior is correlated with their demographic attributes and other personal characteristics: Kosinski et al. (2013) link “likes” on Facebook with personal traits, while web page views vary across demographics, e.g., age, gender, race, income (Goel et al., 2012). Since users tend to consume more content from like-minded people, such consumption biases were linked to the creation of filter bubbles (Nikolov et al., 2015).

– Self-selection and response bias may occur due to behavioral biases. Studies relying on self-reports may be biased due to what users choose to report or share about, when they report it, and how they choose to do it. This can happen either because their activities are not visible (e.g., a dataset may not include people who only read content without writing), or due to self-censorship (e.g., not sharing or deleting a post) as a result of factors such as online harassment, privacy concerns, or some sort of social repercussion (Wang et al., 2011; Das and Kramer, 2013; Matias et al., 2015).

Apart from “missing” reports, inaccurate self-reports can also bias social datasets, a problem often termed response bias.10 Zhang et al. (2013) found that about 75% of Foursquare check-ins do not match users' real mobility, being influenced by Foursquare's competitive game-like mechanisms (Wang et al., 2016a). Further, users are more likely to talk about extreme or positive experiences than common or negative experiences (Kícíman, 2012; Guerra et al., 2014). Response bias may also affect the use of various platform mechanisms; Tasse et al. (2017) show that while some users do not know they are geotagging their social media posts, many users consciously use geotagging to, e.g., show off where they have been.

When overlooked, such reporting biases can also lead to discrimination (Crawford, 2013; Barocas, 2014). For example, data-driven public policies may only succeed in economically advantaged, urban, and data-rich areas (Hecht and Stephens, 2014; Shelton et al., 2014), if efforts are not made to improve data collection elsewhere (see the “digital divide” in section 9.4).

Definition (Content production biases). Behavioral biases that are expressed as lexical, syntactic, semantic, and structural differences in the content generated by users.

Implications. The same as for behavioral biases: content production biases affect ecological/external validity of both type I and II research. Further, these biases raise additional concerns as they affect several popular tasks, such as user classification, trending topics detection, language identification, or content filtering (Cohen and Ruths, 2013; Olteanu et al., 2014a; Blodgett et al., 2016; Nguyen et al., 2016), and may also impact users' exposure to a variety of information types (Nikolov et al., 2015).

Common issues. Variations in user generated content, particularly text, are well-documented across and within demographic groups.

– The use of language(s) varies across and within countries and populations. By mapping the use of languages across countries, Mocanu et al. (2013) observed seasonal variations in the linguistic composition of each country, as well as between geographical areas at different granularity scales, even at the level of city neighborhoods. Such variations were also observed across racial or ethnic groups (Blodgett et al., 2016).

– Contextual factors impact how users talk. The use of language is shaped by the relations among people. Further, Schwartz et al. (2015) show that the temporal orientation of messages (emphasizing the past, present, or future) may be swayed by factors like openness to new experiences, number of friends, satisfaction with life, or depression.

– Content from popular or “expert” users differs from regular users' content. For instance, Bhattacharya et al. (2014) found that on Twitter “expert” users tend to create content mainly on their topic of expertise.

– Different populations have different propensities to talk about certain topics. For instance, by selecting political tweets during the 2012 US election, Diaz et al. (2016) noticed a user population biased toward Washington, DC; while Olteanu et al. (2016) found African-Americans more likely to use the #BlackLivesMatter Twitter hashtag (about a large movement on racial equality in the US).

Definition (Linking biases). Behavioral biases that are expressed as differences in the attributes of networks obtained from user connections, interactions or activity.

Implications. The social networks (re)constructed from observed patterns in social datasets may be fundamentally different from the underlying (offline) networks (Schoenebeck, 2013a), posing threats to the ecological/external validity. This is particularly problematic for type II research and, in cases where user interaction or linking patterns vary with time or context, it can also affect type I research. Linking biases impact the study of, e.g., social networks structure and evolution, social influence, and information diffusion phenomena (Wilson et al., 2009; Cha et al., 2010; Bakshy et al., 2012). On social platforms, they may also result in systematically biased perceptions about users or content (Lerman et al., 2016).

Common issues. Manifestations of linking biases include:

– Network attributes affect users' behavior and perceptions, and vice versa. For instance, Dong et al. (2016) found age-specific social network distances (“degrees of separation”), with younger people being better connected than older generations. Further, homophily, the tendency of similar people to interact and connect (McPherson et al., 2001), can systematically bias the perceptions of networked users, resulting in under- or over-estimations of the prevalence of user attributes within a population (Lerman et al., 2016).

In social datasets, linking biases can be further exacerbated by how data is collected and sampled, and by how links are defined, impacting the observed properties of a variety of network-based user attributes, such as their centrality within a social network (Choudhury et al., 2010; González-Bailón et al., 2014b) (see also section 5).

– Behavior-based and connection-based social links are different. Different graph construction methods can lead to different structural graph properties in the various kinds of networks that can be constructed from social data (Cha et al., 2010). Exploring the differences between social networks based on explicit vs. implicit links among users, Wilson et al. (2009) also showed that the network constructed based on explicit links was significantly denser than the one based on user interactions.

– Online social network formation also depends on factors external to the social platforms. Geography has been linked to the properties of online social networks (Poblete et al., 2011; Scellato et al., 2011), with the likelihood of a social link decreasing with the distance among users, with consequences for information diffusion (Volkovich et al., 2012). Further, the type and the dynamics of offline relationships influence users' propensity to create social ties and interact online (Subrahmanyam et al., 2008; Gilbert and Karahalios, 2009).
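The difference between connection-based and behavior-based links discussed above can be made concrete with a small sketch that builds two undirected graphs over the same set of users, one from explicit links and one from observed interactions, and compares their density. The user names and edge lists are hypothetical toy data, and this is not the procedure used by Wilson et al. (2009); it only illustrates how the choice of link definition changes a basic structural property.

```python
def density(n_nodes, edges):
    """Density of an undirected simple graph: |E| / (n * (n - 1) / 2).

    Edge direction and repeated edges are ignored, and self-loops
    are dropped.
    """
    unique = {frozenset(edge) for edge in edges if edge[0] != edge[1]}
    possible = n_nodes * (n_nodes - 1) / 2
    return len(unique) / possible


users = ["a", "b", "c", "d", "e"]

# Hypothetical explicit links (e.g., declared friendships).
explicit_links = [
    ("a", "b"), ("a", "c"), ("a", "d"), ("b", "c"),
    ("b", "d"), ("c", "e"), ("d", "e"),
]
# Hypothetical observed interactions (e.g., replies) among the same users.
interactions = [("a", "b"), ("b", "a"), ("c", "e")]

explicit_density = density(len(users), explicit_links)
interaction_density = density(len(users), interactions)
print(explicit_density, interaction_density)
```

In this toy example the explicit-link graph is denser than the interaction graph, so any measure that depends on density or connectivity would differ depending on which graph is analyzed.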

Definition (Temporal Biases). Systematic distortions across user populations or behaviors over time.

Data collected at different points in time may differ along diverse criteria, including who is using the system, how the system is used, and which affordances the platform offers. Further, these differences may exhibit a variety of patterns over time, including with respect to granularity and periodicity.

Implications. Temporal biases affect both the internal and ecological/external validity of social data research. They are problematic for both type I and II research (section 2.1), as they may affect the generalizability of observations over time (e.g., what factors vary and how they confound with the current patterns in the data). If a platform and/or the offline context are not stable, it may be impractical to disentangle the effects due to a specific variable of interest from variations in other possible confounding factors.

Common issues. How one aggregates and truncates datasets along the temporal axes impacts what type of patterns are observed and what research questions can be answered.

– Populations, behaviors, and systems change over time. Studies on both Facebook (Lampe et al., 2008) and Twitter (Liu et al., 2014) have found evidence of such variations. Even the user demographic composition and participation on a specific topic (Guerra et al., 2014; Diaz et al., 2016) or their interaction patterns (Viswanath et al., 2009) are often non-stationary. There are often complex interplays between behavioral trends on a platform (e.g., in the use of language) and the online communities' makeup and users' lifecycles (Danescu-Niculescu-Mizil et al., 2013), which means sometimes change happens at multiple levels. For instance, there are variations on when and for how long users focus on certain topics that may be triggered by current trends, seasonality or periodicity in activities, or even noise (Radinsky et al., 2012). Such trends can emerge organically or be engineered through platform efforts (e.g., marketing campaigns and new features).

– Seasonal and periodic phenomena. These can trigger systematic variations in usage patterns (Radinsky et al., 2012; Grinberg et al., 2013). When analyzing geo-located tweets, Kıcıman et al. (2014) found that different temporal contexts (day vs. night, weekday vs. weekend) changed the shapes of inferred neighborhood boundaries, while Golder and Macy (2011) observed links between the sentiment of tweets and cycles of sleep and seasonality.

– Sudden-onset phenomena affect populations, behaviors, and platforms. Examples include suddenly emerging data patterns (e.g., a spike or drop in activity) due to external events (e.g., an earthquake or accident) or platform changes. For instance, real-world events like crisis situations may result in short-lived activity peaks (Crawford and Finn, 2014).

– The time granularity can be too fine-grained to observe long-term phenomena. Examples include phenomena maintaining fairly constant patterns or evolving over long periods (Richardson, 2008; Crawford and Finn, 2014). For instance, while social datasets related to real-world events are often defined around activity peaks, distinct events may have different temporal fingerprints that such datasets may miss (e.g., disasters may have longer-term effects than sport events). The temporal fingerprints of protracted situations like wars may also be characterized by multiple peaks. Others observed that long-term search logs (vs. short-term, within-session search information) provide richer insights into the evolution of users' interests, needs, or how their experiences unfold (Richardson, 2008; Fourney et al., 2015).

– The time granularity can be too coarse-grained to observe short-lived phenomena. This is important when tracking short-lived effects of some experience, or smaller phenomena at the granularity of, e.g., hours or minutes. How users' timelines are aligned and truncated may also influence what patterns they capture. Further, some of the patterns and correlations observed in social data may be evolving or may be only short-lived (Starnini et al., 2016).

– Datasets decay and lose utility over time. Social data decays with time as users delete their content and accounts (Liu et al., 2014; Gillespie, 2015), and platform APIs' terms of service prevent sharing of datasets as they are collected. This often makes it impractical to fully reconstruct datasets over time, leaving important holes (“Swiss Cheese” decay) (Bagdouri and Oard, 2015). For instance, Almuhimedi et al. (2013) found that about 2.4% of tweets posted during 1 week in 2013 by about 300 million users were later deleted (with about half of users deleting at least one tweet from that period).

There are several mechanisms rendering a message unavailable later on (Liu et al., 2014): it was explicitly deleted by the user; the user switched their account to “protected” or private; the user's account was suspended by the platform; or the user deactivated their entire account. Yet, often, it may be unclear why certain posts were removed and may be hard to gauge their impact on analysis results.
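To illustrate the effect of time granularity discussed among the common issues above, the sketch below aggregates the same set of post timestamps by day and by hour. The data is synthetic and hypothetical: two days with an identical number of posts, one spread evenly and one containing a one-hour burst. The function `bin_counts` is an illustrative helper, not part of any library.

```python
from collections import Counter
from datetime import datetime, timedelta


def bin_counts(timestamps, granularity):
    """Count events per time bin; granularity is 'hour' or 'day'."""
    if granularity == "hour":
        zeroed = {"minute": 0, "second": 0, "microsecond": 0}
    elif granularity == "day":
        zeroed = {"hour": 0, "minute": 0, "second": 0, "microsecond": 0}
    else:
        raise ValueError("granularity must be 'hour' or 'day'")
    # Truncate each timestamp to the start of its bin, then count.
    return Counter(t.replace(**zeroed) for t in timestamps)


# Synthetic day 1: two posts per hour, 48 posts in total.
day1_start = datetime(2019, 1, 1)
day1 = [
    day1_start + timedelta(hours=h, minutes=m)
    for h in range(24)
    for m in (0, 30)
]

# Synthetic day 2: one post per hour, except a burst of 25 posts
# between 14:00 and 15:00; again 48 posts in total.
day2_start = day1_start + timedelta(days=1)
burst_hour = day2_start + timedelta(hours=14)
day2 = [day2_start + timedelta(hours=h) for h in range(24) if h != 14]
day2 += [burst_hour + timedelta(minutes=m) for m in range(25)]

posts = day1 + day2
daily = bin_counts(posts, "day")
hourly = bin_counts(posts, "hour")

print(daily[day1_start], daily[day2_start])  # identical daily totals
print(max(hourly.values()))                  # the burst shows up only hourly
```

At daily granularity the two days are indistinguishable, while the hourly view reveals the burst. The reverse also holds: a window of a few hours around the burst would say nothing about longer-term patterns.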

Definition (Redundancy). Single data items that appear in the data in multiple copies, which can be identical (duplicates), or almost identical (near duplicates).

Implications. Redundancy, when unaccounted for, may affect both the internal and ecological/external validity of research, in both type I and type II research (section 2.1). It may negatively impact the utility of tools (Radlinski et al., 2011), and distort the quantification of phenomena in the data.

Common issues. Lexical (e.g., duplicates, re-tweets, re-shared content) and semantic (e.g., near-duplicates or same meaning, but written differently) redundancy often accounts for a significant fraction of content (Baeza-Yates, 2013), and may occur both within and across social datasets.

Other sources of content redundancy often include non-human accounts (section 4.4) such as the same entity posting from multiple accounts or platforms (e.g., spam), multiple users posting from the same account (e.g., organization accounts), or multiple entities posting or re-posting the same content (e.g., posting quotes, memes, or other types of content). This can sometimes distort results; yet redundancy can also be a signal by itself. For instance, reposting may be a signal of importance.
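As a minimal sketch of how lexical redundancy might be detected, the following code flags pairs of posts whose sets of word shingles have a high Jaccard similarity. The shingle size, the threshold, and the example posts are assumptions made for illustration; this simple approach captures duplicates and lexical near-duplicates, but not semantic redundancy (same meaning, written differently).

```python
import re


def shingles(text, k=3):
    """Set of k-word shingles from lowercased text, ignoring punctuation."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)}
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets: |a & b| / |a | b|."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicate_pairs(posts, threshold=0.5, k=3):
    """Return index pairs (i, j), i < j, with similarity >= threshold."""
    sets = [shingles(post, k) for post in posts]
    pairs = []
    for i in range(len(posts)):
        for j in range(i + 1, len(posts)):
            if jaccard(sets[i], sets[j]) >= threshold:
                pairs.append((i, j))
    return pairs


# Hypothetical posts: an original, a re-share, a reformatted copy,
# and an unrelated message.
posts = [
    "Breaking: major earthquake reported near the coast",
    "RT Breaking: major earthquake reported near the coast",
    "breaking major earthquake reported near the coast!!!",
    "Lovely weather for a picnic today",
]

pairs = near_duplicate_pairs(posts)
print(pairs)
```

The pairwise comparison is quadratic in the number of posts, so it only suits small samples. Whether flagged items should be removed, merged, or kept as a signal (e.g., of importance) depends on the research question.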

The behaviors we observe in online social platforms are partially determined by platform capabilities, which are engineered toward certain goals (Van Dijck, 2013a; Tufekci, 2014; Gillespie, 2015; Salganik, 2017). We first overview biases due to platform design and affordances (section 4.1) and due to behavioral norms that exist or emerge on each platform (section 4.2). Then, we examine factors external to social platforms, but which may influence user behavior (section 4.3). Finally, we briefly discuss the presence of non-individual accounts (section 4.4).

Definition (Functional biases). Biases that are a result of platform-specific mechanisms or affordances, that is, the possible actions within each system or environment.

Platform affordances are often driven by business considerations and interests (Van Dijck, 2013a; Salganik, 2017), and influenced by the politics, assumptions, and interests of those designing and building these platforms (Van Dijck, 2013a; West et al., 2019). Platform affordances and features are sometimes purposefully introduced to “nudge” users toward certain behaviors (Thaler and Sunstein, 2008). Each platform uses unique, proprietary, and often undocumented platform-specific algorithms to organize and promote content (or users), affecting user engagement and behavior. Ideally, research should use social data samples from different platforms, but due to limits in the availability of data, much research is concentrated on data from a few platforms, most notably Twitter. Its usage as a sort of “model organism” by social media research has been criticized (Tufekci, 2014).

Implications. Functional biases make conclusions from research studies difficult to generalize or transfer, as each platform exhibits its own structural differences (Tufekci, 2014), which can lead to platform-specific phenomena (Ruths and Pfeffer, 2014) that are often overlooked. The fact that most research is done using data from a handful of platforms makes this issue even more severe. Functional biases are problematic for type II research (section 2.1), affecting the external/ecological validity of social data research; and, when e.g., they change over time, they can also affect type I research. Their influence on behavior and adoption patterns, however, is often subtle and hard to disentangle from other factors.

Common issues. The functional peculiarities of social platforms may introduce population (section 3.2) and behavioral biases (section 3.3) by influencing which user demographics are drawn to each platform and the kind of actions they are more likely to perform (Tufekci, 2014; Salganik, 2017). Manifestations of functional biases include:

– Platform-specific design and features shape user behavior. Through randomized experiments (A/B tests) and longitudinal observations, one can observe how new features and feature changes impact usage patterns on social platforms. For example, Facebook observed that decreasing the size of the input area for writing a reply to a posting resulted in users sending shorter replies, faster, and more frequently.11 On Twitter, Pavalanathan and Eisenstein (2016) found that the introduction of emojis led to a decrease in the usage of emoticons.

The effects of platform design on user behavior can also be observed through comparative studies. For example, two platforms that both allow book reviewing and rating may still differ in the content of the reviews, the ratings, and how reviews get promoted. Users seem aware of such differences across platforms, highlighting key features in their adoption of a platform such as interface aesthetics, voting functionality, community size, as well as the diversity, recency and the quality of the available content (Newell et al., 2016b).

– Algorithms used for organizing and ranking content influence user behavior. User engagement with content and other users is influenced by algorithms that determine what information is shown, when it is shown, and how it is shown. This has been dubbed “algorithmic confounding” (Salganik, 2017). An illustrative example is a ranked list of content (e.g., search results) that “buries” content found in the lower positions, due to click and sharing bias or users' perception of higher ranked content as being more trustworthy (Hargittai et al., 2010). This may provide an advantage to, e.g., certain ideological or opinion groups (Liao et al., 2016). Personalized rankings further complicate these issues. Hannak et al. (2013) observe that, on average, about 12% of Google search results exhibit differences due to personalization. This has important societal implications as it can lead to less diverse exposure to content, or to being less exposed to content that challenges one's views (Resnick et al., 2013).

– Content presentation influences user behavior. How different aspects of a data item are organized and emphasized, or how various data items are represented, also impact how users interact with and interpret them across platforms. For instance, Miller et al. (2016) show that variations in how emojis are displayed across smartphones can lead to confusion among users, as different renderings of the same concept are so different that they might be interpreted as having different meanings and emotional valence.

Definition (Normative biases). Biases that are a result of written or unwritten norms and expectations of acceptable patterns of behavior on a given online platform or medium.

Platforms are characterized by their behavioral norms, usually in the form of expectations about what constitutes acceptable use. These norms are shaped by factors including the specific value proposition of each platform, and the composition of their user base (boyd and Ellison, 2007; Ruths and Pfeffer, 2014; Newell et al., 2016b). For instance, Sukumaran et al. (2011) show how users of news websites conform to informal standards set by others when writing comments, such as length or number of covered aspects.

Implications. As with functional biases, normative biases affect the ecological/external validity of research, and are problematic for type II research (section 2.1), since research results may depend on the particular norms of each platform. They can also distort user behavior and tend to vary with context, time, or across sub-communities, also affecting type I research. Overlooking the impact of norms can impact any social data analysis studying or making assumptions about user behavior (Tufekci, 2014).

Common issues. There is a complex interplay between norms, platforms, and behaviors:

– Norms are shaped by the attitudes and behaviors of online communities, which may be context-dependent. Other elements, such as design choices, explicit terms of use, moderation policies, and moderator activities, also affect norms. Users may exhibit different behavioral patterns on different platforms (Skeels and Grudin, 2009): e.g., they may find it acceptable to share family photos on Facebook, but not on LinkedIn (Van Dijck, 2013b).12 Norms are also sensitive to context, as the meaning of the same action or mechanism may change under different circumstances (Freelon, 2014). They may also change over time due to, e.g., demographic shifts (McLaughlin and Vitak, 2012; Ruths and Pfeffer, 2014).

– The awareness of being observed by others impacts user behavior. People try to influence the opinion that others form about them by controlling their own behavior (Goffman, 1959), depending on who they are interacting with, and the place and the context of the interactions. In online scenarios, users often navigate how to appropriately present themselves depending on their target or construed audience (Marwick and Boyd, 2011). Besides self-presentation, privacy concerns may also affect what users do or share online (Acquisti and Gross, 2006).

Online, the observers may include platform administrators, platform users, or researchers, in what we dub the “online” Hawthorne effect.13 For instance, users are more likely to share unpopular opinions or to make sensitive or personal disclosures in private or anonymous spaces, than they are to do so in public ones (Bernstein et al., 2011; Schoenebeck, 2013b; Shelton et al., 2015). It has been observed that users who disclose their real name are less likely to post about sensitive topics, compared to users who use a pseudonym (Peddinti et al., 2014). Users were also found to be more likely to “check-in” at public locations (e.g., restaurants) than at private ones (e.g., a doctor's office) (Lindqvist et al., 2011).

– Social conformity and “herding” happen in social platforms, and such behavioral traits shape user behavior. For instance, prior ratings and reviews introduce significant bias in individual rating behavior and writing style, creating a herding effect (Muchnik et al., 2013; Michael and Otterbacher, 2014).

Definition (External biases). Biases resulting from factors outside the social platform, including considerations of socioeconomic status, ideological/religious/political leaning, education, personality, culture, social pressure, privacy concerns, and external events.

Social platforms are open to the influence of a variety of external factors that may affect both the demographic composition and the behavior of their user populations.

Implications. External biases are a broad category that may affect construct, internal, and external validity of research, and be problematic for both type I and type II research (section 2.1). In general, external factors may impact various quality dimensions of social datasets, including coverage and representativeness, yet they can also be subtle and easy to overlook, and affect the reliability of observations drawn from these datasets (Silvestri, 2010; Kícíman, 2012; Olteanu et al., 2015).

Common issues. We cover several types of extraneous factors including social and cultural context, external events, semantic domains and sources.

– Cultural elements and social contexts are reflected in social datasets. The demographic makeup of a platform's users has an effect on the languages, topics, and viewpoints that are observed (Preoţiuc-Pietro et al., 2015) (see also population biases, section 3.2). The effect of a particular culture is typically demonstrated through transversal studies comparing a platform's usage across countries. However, as the social context changes in each country, these effects may vary over time.

For instance, a user's country of origin was shown to be a key factor in predicting their questioning and answering behavior (Yang et al., 2011), and may explain biases observed in geo-spatial social datasets such as OpenStreetMap (Quattrone et al., 2015). Hence, it is important for guiding the design of cross-cultural tools (Hong et al., 2011; Yang et al., 2011), and for understanding socio-economic phenomena (Garcia-Gavilanes et al., 2013). In addition to cultural idiosyncrasies, the broader social context of users (including socio-economic or demographic factors) also plays a role in users' behaviors and interactions. For instance, the way in which users are perceived affects their interaction patterns (e.g., the volume of shared content or of followees), as well as their visibility on a platform (e.g., how often they are followed, added to lists, or retweeted) (Nilizadeh et al., 2016; Terrell et al., 2016).

– Like other media, social media contains misinformation and disinformation. Misinformation is false information unintentionally spread, while disinformation is false information that is deliberately spread (Stahl, 2006). Users post misinformation due to errors of judgment, while disinformation is often posted purposefully (e.g., for political gain). Disinformation can take forms beyond the production of hoaxes or “fake news” (Lazer et al., 2018), and include other types of manipulation of social systems, such as Google bombs to associate a keyword to a URL by repeatedly searching for the keyword and clicking on the URL, or review spam (Jindal and Liu, 2008) to manipulate the reputation of a product/service.14

Both types of false information can distort social data, sometimes in subtle ways. Of the two, one could argue that disinformation is harder to deal with, since it occurs in an adversarial setting and the adversaries can engage in an escalation of countermeasures to avoid detection. While past studies show that such content is rarely shared, its consumption is concentrated within certain groups like older users (Grinberg et al., 2019; Guess et al., 2019). Approaches to mitigate the effects of misinformation and disinformation exist, including graph-based (Ratkiewicz et al., 2011) and text-based (Castillo et al., 2013) methods.

– Contents on different topics are treated differently. This is notable with respect to sharing, attention, and interaction patterns. In addition, due to both automated mechanisms and human curation, social media also exhibits common forms of bias present in traditional news media (Lin et al., 2011; Saez-Trumper et al., 2013), including gatekeeping (preference for certain topics), coverage (disparity in attention), and statement bias (differences in how an issue is presented) (D'Alessio and Allen, 2000).

– High-impact events, whether anticipated or not, are reflected on social media. Just as in news media, high-impact sudden-onset events (e.g., disasters) and seasonal cultural phenomena (e.g., Ramadan or Christmas) tend to be covered prominently on social media. Their prominence affects not only how likely users are to mention them, but also what they say (Olteanu et al., 2017b); just as the characteristics of crisis events leave a distinctive “print” on social media with respect to time and duration, including variations in the kind of information being posted and by whom (Saleem et al., 2014; Olteanu et al., 2015).

Definition (Non-individual agents). Interactions on social platforms that are produced by organizations or automated agents.

Implications. Researchers often assume each account is an individual; when this does not hold, internal and external validity can be affected in both type I and type II research (section 2.1). For instance, studies using these datasets to make inferences about the prevalence of different opinions among the public may be particularly affected.

Common issues. There are two common types of non-individual accounts:

– Organizational accounts. Researchers have noted that “studies of human behavior on social media can be contaminated by the presence of accounts belonging to organizations” (McCorriston et al., 2015). Note that it is common practice for organizations (such as NGOs, government, businesses, and media) to have an active presence on social media. For instance, in a study of the #BlackLivesMatter movement, about 5% of the Twitter accounts that included the #BlackLivesMatter hashtag in their tweets were organizations (Olteanu et al., 2016). Furthermore, organizational accounts may produce more content than regular accounts (over 60% of the overall content in one study; Olteanu et al., 2015).

– Bots. Bots and spamming accounts are increasingly prevalent (Abokhodair et al., 2015; Ferrara et al., 2016). Such accounts use tricks such as hijacking “trending” hashtags or keywords to gain visibility (Thomas et al., 2011), and can (purposefully or accidentally) distort the statistics of datasets collected from social platforms (Morstatter et al., 2016; Pfeffer et al., 2018).

However, not all automated accounts are malicious. Some of them are used to post important updates about weather or other topics, such as emergency alerts. Others are designed by third party researchers to understand and audit system behavior (Datta et al., 2015). Broadly, the challenge is how to effectively separate them from accounts operated by individual users (boyd and Crawford, 2012; Ruths and Pfeffer, 2014). In fact, some users mix manual postings with automated ones, resulting in accounts that blend human with bot behavior—dubbed cyborgs (Chu et al., 2012). These cases are particularly difficult to detect and account for in an analysis. Further, simply identifying and removing bots from the analysis may be insufficient, as the behavior of such accounts (e.g., what content they post or who they “befriend”) influences the behavior of human accounts as well (Wagner et al., 2012).
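The effect of non-individual accounts on prevalence estimates can be illustrated with a minimal sketch. It assumes that every post already carries an account-type label (in practice this would require a classifier or manual annotation), and the accounts, labels, and stances below are hypothetical toy data. The sketch contrasts the share of posts expressing a stance with the share of individual accounts holding it. As noted above, dropping non-individual accounts does not remove their influence on human users, so this is a sensitivity check rather than a correction.

```python
from collections import Counter

# Hypothetical toy data: one (account_id, account_type, stance) tuple per post.
posts = [
    ("org_1", "organization", "support"),
    ("org_1", "organization", "support"),
    ("org_1", "organization", "support"),
    ("org_1", "organization", "support"),
    ("u_1", "individual", "oppose"),
    ("u_2", "individual", "oppose"),
    ("u_3", "individual", "support"),
    ("bot_1", "bot", "support"),
    ("bot_1", "bot", "support"),
    ("u_4", "individual", "oppose"),
]


def share_per_post(posts, stance):
    """Fraction of all posts that express the given stance."""
    return sum(1 for _, _, s in posts if s == stance) / len(posts)


def share_per_individual(posts, stance):
    """Fraction of individual accounts whose most frequent stance matches."""
    by_account = {}
    for account, kind, s in posts:
        if kind == "individual":
            by_account.setdefault(account, Counter())[s] += 1
    majority = [c.most_common(1)[0][0] for c in by_account.values()]
    return sum(1 for s in majority if s == stance) / len(majority)


post_share = share_per_post(posts, "support")
individual_share = share_per_individual(posts, "support")
print(f"per post: {post_share:.2f}, per individual: {individual_share:.2f}")
```

In this toy example a single prolific organization and one bot dominate the post-level estimate, while the account-level estimate over individuals points the other way.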

Definition (Data collection biases). Biases introduced due to the selection of data sources, or by the way in which data from these sources are acquired and prepared.

Social datasets are strongly affected by their origin due to platform-specific phenomena: users of different platforms may have different demographics (population biases, section 3.2), and may behave differently (section 3.3) due to functional (section 4.1) and normative biases (section 4.2). This section examines issues resulting from data acquisition (section 5.1), from querying data APIs (section 5.2), and from (post-)filtering (section 5.3).

Implications. The ways in which the choice of certain data sources affects the observations one makes, and thus the research results, can be described as source selection bias, affecting the external/ecological validity of type II research (section 2.1). Beyond source selection bias, several aspects related to how data samples are collected from these sources have been questioned, including their representativeness and completeness (González-Bailón et al., 2014b; Hovy et al., 2014), which is problematic for both type I and type II research.

Acquisition of social data is often regulated by social platforms, and hinges on the data they capture and make available, on the limits they may set to access, and on the way in which access is provided.

Common issues. The sometimes adversarial nature of data collection leads to several challenges:

– Many social platforms discourage data collection by third parties. Social media platforms may offer no programmatic access to their data, prompting researchers to use crawlers or “scrapers” of content, or may even actively discourage any type of data collection via legal disclaimers and technical counter-measures. The latter may include detection methods that block access to clients suspected of being automatic data collection agents, resulting in an escalation of covert (“stealth”) crawling methods (Pham et al., 2016). Platforms may also display different data to suspected data collectors, creating a gap between the data a crawler collects and what the platform shows to regular users (Gyongyi and Garcia-Molina, 2005).

– Programmatic access often comes with limitations. Some platforms provide Application Programming Interfaces (APIs) to access data, but they set limitations on the quantity of data that can be collected within a given timeframe, and provide query languages of limited expressiveness (Morstatter et al., 2013; González-Bailón et al., 2014b; Olteanu et al., 2014a) (we discuss the latter in section 5.2). In general, legal and technical restrictions on API usage prevent third parties from collecting up-to-date, large, and/or comprehensive datasets. A likely reason for these limits is that data is a valuable asset to these platforms, and having others copy large portions of it may reduce their competitive advantage.

– The platform may not capture all relevant data. Development efforts are naturally driven by the functionalities that are central to each platform. Hence, user traces are kept for the actions that are most relevant to the operation of a platform, such as posting a message or making a purchase. Other actions may not be recorded, e.g., to save development costs, minimize data storage costs, or even due to privacy-related concerns. For instance, we often know what people write, but not what they read, and we may know who clicked on or “liked” something, but not who read it or watched it (Tufekci, 2014). While these may seem to capture different behavioral cues, they can sometimes be used to answer the same question, e.g., both what people write and read can be used to measure their interest in a topic. Yet, using one or another may result in different conclusions.

– Platforms may not give access to all the data they capture. Some data collection APIs' restrictions stem from agreements between the platform and its users. For instance, social media datasets typically include only public content that has not been deleted, to which users have not explicitly forbidden access by setting their account as private, or to which users have given explicit access (e.g., through agreements or by accepting a social connection) (boyd and Crawford, 2012; Maddock et al., 2015).

– Sampling strategies are often opaque. Depending on the social platform, the available APIs for sampling data further limit what and how much of the public data we can collect, often offering few guarantees about the properties of the provided sample (Morstatter et al., 2013; Maddock et al., 2015). For instance, an API may return up to k elements matching a criterion, but not specify exactly how those k elements are selected (stating only that they are the “most relevant”). Further, in the case of Twitter, much research relies on APIs that give access to at most 1% of the data (González-Bailón et al., 2014a; Joseph et al., 2014; Morstatter et al., 2014). Data from these APIs have been compared against the full data stream, finding statistical disparities (Yates et al., 2016).
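One simple way to quantify a disparity between an API sample and a reference collection is to compare how often each category (e.g., a hashtag) occurs in both. The sketch below uses the total variation distance as an illustrative measure; it is not the method of the studies cited above, and it assumes that some reference collection is available at all, which is rarely the case. The data are hypothetical.

```python
from collections import Counter


def distribution(items):
    """Relative frequency of each distinct item."""
    counts = Counter(items)
    total = sum(counts.values())
    return {key: value / total for key, value in counts.items()}


def total_variation(p, q):
    """Total variation distance between two discrete distributions (0 to 1)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


# Hypothetical hashtag occurrences in a reference collection and in an API sample.
full_stream = ["#a"] * 50 + ["#b"] * 30 + ["#c"] * 20
api_sample = ["#a"] * 7 + ["#b"] * 3  # "#c" is absent from the sample

tvd = total_variation(distribution(full_stream), distribution(api_sample))
print(f"total variation distance: {tvd:.2f}")
```

A distance of 0 means the two frequency distributions coincide; larger values indicate that the sample over- or under-represents some categories.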

Data access through APIs usually involves a query specifying a set of criteria for selecting, ranking, and returning the data being requested. Different APIs may support different types of queries.

Common issues. There are a number of challenges related to the formulation of these queries:

– APIs have limited expressiveness regarding information needs. Many APIs support various types of predicates to query data, such as geographical locations/regions, keywords, temporal intervals, or users; and the combination of possible predicates determines their expressiveness (González-Bailón et al., 2014a). The specific information required for a particular task, however, might not be expressible within a specific API, which may result in data loss and/or bias in the resulting dataset.

For instance, keywords-based sampling may over-represent content by traditional media (Olteanu et al., 2014a) or content posted by social-media literate users, while geo-based samples may be biased toward users in large cities (Malik et al., 2015). Further, not all relevant content necessarily includes the chosen keywords (Olteanu et al., 2014a) and not all relevant content might be geo-tagged.15

– Information needs may be operationalized (formulated) in different ways. The operationalization of information needs in a query language is known as query formulation. There may be multiple possible formulations for a given information need, and distinct query formulations may lead to different results.

For instance, in location-based data collections, different strategies to match locations, such as using message geo-tags or the author's self-declared location, affect the user demographics and contents of a sample (Pavalanathan and Eisenstein, 2015). Studies relying on geo-tagged tweets often assume that geo-tags “correspond closely with the general home locations of its contributors;” yet, Johnson et al. (2016) found this assumption holds only in about 75% of cases in a study of three social platforms.

In user-based data collections, the selection criteria may include features held at a lower rate by members of certain groups (Barocas and Selbst, 2016), and they may over- or under-emphasize certain categories of users, such as those that are highly active on a target topic (Cohen and Ruths, 2013). As a result, the proxy population represented in the resulting dataset might fail to correctly capture the population of interest (Ruths and Pfeffer, 2014).

Query formulation strategies can also introduce linking biases (section 3.5), affecting the networks reconstructed from social media posts; query formulations may affect network properties (e.g., clustering, degree of correlation) more than API limitations (González-Bailón et al., 2014b).

– The choice of keywords in keyword-based queries shapes the resulting datasets. A recurrent discussion has been the problematic reliance on keyword-based sampling for building social media datasets (Magdy and Elsayed, 2014; Tufekci, 2014).

What holds for keywords holds for hashtags; plus, different hashtags used in the same context (e.g., during a political event) may be associated with distinct social, political or cultural frames, and, thus, samples built using them may embed different dimensions of the data (Tufekci, 2014). Ultimately, hashtags are a form of social tagging (or folksonomies), and even if we assume that all relevant data is tagged, their use is often inconsistent (varying formats, spellings or word ordering) (Potts et al., 2011).16 While some attempts to standardize the use of hashtags in certain contexts exist [e.g., see OCHA (2014) for humanitarian crises or Grasso and Crisci (2016) for weather warnings], hashtag-based collections may overlook actors that do not follow these standards.
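Part of the inconsistency in hashtag formats can be reduced by mapping variants to a canonical key before matching. The following is a minimal sketch under the assumption that only case and separator differences matter; the function name `normalize_hashtag` is hypothetical, and the approach does not address misspellings, word reordering, or the distinct frames that different hashtags may carry.

```python
import re
import unicodedata


def normalize_hashtag(tag):
    """Map case and separator variants of a hashtag to one canonical key."""
    # Unify Unicode compatibility forms and ignore case.
    tag = unicodedata.normalize("NFKC", tag).casefold()
    # Drop the leading hash sign, then any non-alphanumeric separators.
    tag = tag.lstrip("#")
    return re.sub(r"[\W_]+", "", tag)


variants = [
    "#BlackLivesMatter",
    "#blacklivesmatter",
    "#Black_Lives_Matter",
    "#BLACKLIVESMATTER",
]
canonical = {normalize_hashtag(v) for v in variants}
print(canonical)
```

Merging variants in this way is itself a processing choice: it may conflate tags that communities use with different meanings, so the mapping should be inspected rather than applied blindly.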

There are efforts to improve and automate data retrieval strategies to generate better queries (Ruiz et al., 2014), with the goal of mitigating possible biases by improving sampling completeness or representativeness. These include expanding and adapting user queries (Magdy and Elsayed, 2014), exploiting domain patterns for query generation and expansion (Olteanu et al., 2014a), splitting the queries and running them in parallel (Sampson et al., 2015), and employing active learning techniques (Li et al., 2014; Linder, 2017).
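The impact of a query formulation can be estimated on a small labeled set by measuring how much relevant content a keyword query retrieves (recall) and how much of what it retrieves is relevant (precision). The sketch below compares a seed query with a manually expanded one; the messages, labels, and keywords are hypothetical, and the whitespace tokenization is a simplifying assumption rather than any of the cited expansion methods.

```python
def matches(text, keywords):
    """True if any keyword appears as a whitespace-delimited token."""
    tokens = set(text.lower().split())
    return any(k in tokens for k in keywords)


def recall(labeled, keywords):
    """Share of relevant messages that the keyword query retrieves."""
    relevant = [text for text, is_relevant in labeled if is_relevant]
    hits = sum(1 for text in relevant if matches(text, keywords))
    return hits / len(relevant)


def precision(labeled, keywords):
    """Share of retrieved messages that are relevant."""
    retrieved = [rel for text, rel in labeled if matches(text, keywords)]
    return sum(retrieved) / len(retrieved) if retrieved else 0.0


# Hypothetical labeled messages: (text, is_relevant_to_a_flood_event).
labeled = [
    ("flood waters rising downtown", True),
    ("river overflowed near the bridge", True),
    ("streets are underwater send help", True),
    ("flood of emails this morning", False),
    ("lovely weather today", False),
]

seed = {"flood"}
expanded = {"flood", "overflowed", "underwater"}

for name, query in [("seed", seed), ("expanded", expanded)]:
    print(name, round(recall(labeled, query), 2), round(precision(labeled, query), 2))
```

Even in this toy case the seed query misses relevant messages that never use the chosen keyword, while still retrieving an off-topic use of it.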

Data filtering entails the removal of irrelevant portions of the data, which sometimes cannot be done during data acquisition due to the limited expressiveness of an API or query language. The data filtering step at the end of a data collection pipeline is often called post-filtering, as it is done after the data has been acquired or obtained by querying (hence the prefix “post-”).

Common issues. Typically, the choice to remove certain data items implies an assumption that they are not relevant for a study. This is helpful when the assumption holds, and harmful when it does not.

– Outliers are sometimes relevant for data analysis. Outlier removal is a typical filtering step. A common example is to filter out inactive and/or unnaturally active accounts or users from a dataset. In the case of inactive accounts, Gong et al. (2015, 2016) found that a significant fraction of users, though interested in a given topic, choose to remain silent. Depending on the analysis task, there are implications to ignoring such users. Similarly, non-human accounts (discussed in section 4.4) often have anomalous content production behavior, but despite not being “normal” accounts, they can influence the behavior of “normal” users (Wagner et al., 2012), and filtering them out may hide important signals.

– Text filtering operations may bound certain analyses. A typical filtering step for text, including that extracted from social media, is the removal of functional words and stopwords. Even if such words might not be useful for certain analyses, for other applications they may embed useful signals about, e.g., authorship and/or emotional states (Pennebaker et al., 2003; Saif et al., 2014); removing them can therefore threaten the validity of the research (Denny and Spirling, 2016).
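The loss of signal caused by stopword removal can be made concrete with a small sketch. It assumes a hypothetical stopword list and uses the rate of first-person pronouns as an illustrative feature of the kind that function words may carry; neither is taken from the cited studies.

```python
# Hypothetical stopword list; real lists are longer and vary between tools.
STOPWORDS = {"i", "me", "my", "we", "the", "a", "is", "am", "so", "and", "of"}
FIRST_PERSON = {"i", "me", "my"}


def tokenize(text):
    """Lowercase whitespace tokenization (a simplifying assumption)."""
    return text.lower().split()


def first_person_rate(tokens):
    """Share of tokens that are first-person singular pronouns."""
    if not tokens:
        return 0.0
    return sum(t in FIRST_PERSON for t in tokens) / len(tokens)


text = "I am so tired and my head hurts"
raw_tokens = tokenize(text)
filtered_tokens = [t for t in raw_tokens if t not in STOPWORDS]

rate_raw = first_person_rate(raw_tokens)
rate_filtered = first_person_rate(filtered_tokens)
print(rate_raw, rate_filtered, filtered_tokens)
```

After filtering, the feature is zero for every message, so any analysis that depends on it can no longer distinguish between authors or states.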

Definition (Data processing biases). Biases introduced by data processing operations such as cleaning, enrichment, and aggregation.

Assumptions in the design of data processing pipelines can affect datasets, altering their content, structure, organization, or representation (Barocas and Selbst, 2016; Poirier, 2018). Biases and errors might be introduced by operations such as cleaning (section 6.1), enrichment via manual or automatic procedures (section 6.2), and aggregation (section 6.3).

Implications. Bad data processing choices are particularly likely to compromise the internal validity of research, but they may also affect the ecological/external validity. For example, crowdsourcing is one of the dominant mechanisms to enrich data and build “ground truth” or “gold standard” datasets, which can then be used for a variety of modeling or learning tasks. However, some “gold standards” have been found to vary depending on who is doing the annotation, and this, in turn, may affect the algorithmic performance (Sen et al., 2015). As a result, they can affect both type I and II research (section 2.1).

The purpose of data cleaning is to ensure that data faithfully represents the phenomenon being studied (e.g., to ensure construct validity). It aims to detect and correct errors and inconsistencies in the data, typically until “cleaned” data can pass consistency or validation tests (Rahm and Do, 2000). Data cleaning is not a synonym for data filtering: while data cleaning may involve the removal of certain data elements, it can also encompass data normalization by correction or substitution of incomplete or missing values.

Common issues. Data cleaning procedures can embed the scientist's beliefs about a phenomenon and the broader system into the dataset. While well-founded alterations improve a dataset's validity, data cleaning can also result in incorrect or misleading data patterns, for example:

– Data representation choices and default values may introduce biases. Data cleaning involves mapping items, possibly from distinct data sources, to a common representation.17 Such mappings may introduce subtle biases that affect the analysis results. For instance, if a social media platform allows “text” and “image” postings, interpreting that an image posting without accompanying text has (i) null text, or (ii) text of zero length, can yield different results when computing the average text length.
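The text-length example can be worked through in a few lines. The sketch below uses hypothetical postings and computes the average text length under the two interpretations: treating the missing text of an image posting as null (and skipping it), or as a string of zero length (and counting it).

```python
# Hypothetical postings; image postings carry no accompanying text.
posts = [
    {"type": "text", "text": "hello world"},  # 11 characters
    {"type": "image", "text": None},
    {"type": "text", "text": "hi"},           # 2 characters
    {"type": "image", "text": None},
]


def mean_length_null(posts):
    """Interpretation (i): missing text is null and is left out of the mean."""
    lengths = [len(p["text"]) for p in posts if p["text"] is not None]
    return sum(lengths) / len(lengths)


def mean_length_empty(posts):
    """Interpretation (ii): missing text is an empty string of length zero."""
    lengths = [len(p["text"] or "") for p in posts]
    return sum(lengths) / len(lengths)


mean_null = mean_length_null(posts)
mean_empty = mean_length_empty(posts)
print(mean_null, mean_empty)
```

With these four postings the two interpretations yield 6.5 and 3.25 characters, respectively, a twofold difference that stems only from the chosen representation.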