
OPINION article

Front. Behav. Neurosci., 11 January 2023

Sec. Emotion Regulation and Processing

Volume 16 - 2022 | https://doi.org/10.3389/fnbeh.2022.951974

This article is part of the Research Topic Credition - An Interdisciplinary Approach to the Nature of Beliefs and Believing

Since the Enlightenment, beliefs have widely been considered incompatible with science. This has fostered a reservation toward the notion of beliefs in contemporary Western societies, and in the natural sciences in particular. More recently, however, it has been recognized that belief formation and believing can be a topic of growing interest for scientific discourse. This may have resulted from the observation that religious beliefs have served as a motivation for outbursts of violence. It is important to realize, however, that beliefs are not limited to narrative-based religious and political beliefs, but also comprise so-called primal beliefs, which do not depend on language functions because they concern objects and events in the environment (Seitz, 2022).

Believing is composed of cerebral processes involving the perception of external information and its spontaneous appraisal in terms of subjective value or meaning (Seitz et al., 2018). An important type of external information is the human face, because facial expressions are considered a means by which humans convey their emotional state (Russell, 1994). While previous research on facial expressions of emotion focused on six basic categories, i.e., happiness, surprise, anger, sadness, fear, and disgust, more than 20 compound facial expressions of emotion have recently been identified that can be both produced and recognized (Du et al., 2014). In fact, humans are highly skilled at recognizing emotions in the rapidly changing facial expressions of other people (Fiske et al., 2007). Recognition of faces and facial expressions can be impaired in psychopathic disorders and alexithymia (Kyranides et al., 2022) as well as by face masks that cover the nose and mouth (Kleiser et al., 2022). Importantly, however, the observing subject believes that she/he has recognized the facial expression of the other person and trusts this belief (Brashier and Marsh, 2020). Moreover, upon recognition of the emotion in the other person's facial expression, the facial muscles of the observing subject change in a corresponding fashion. This phenomenon, demonstrated by electromyographic recordings, is called facial mimicry (Franz et al., 2021). Accordingly, believing has an immediate impact on the expressive behavior of the believing subject (Seitz et al., 2022). The more pronounced the facial expressions are, the more likely it is that the observing subject has recognized the observed emotion correctly, and the more certain she/he can be of that belief.

Here, we asked healthy subjects to recognize the emotions in facial expressions that were displayed to them in video clips. Because we were interested in determining when the subjects believed they had recognized the emotions, we used video clips in which the emotional facial expressions evolved out of a neutral face within 20 s. This allowed us to analyze the process of emotion recognition as compared with viewing the neutral facial expressions and with empathizing with the emotion seen in the face in the video clip. Empathy, the ability to take another person's perspective, is considered a key element in maintaining interpersonal relationships (Bird and Viding, 2014). In a functional magnetic resonance imaging study, we found that recognizing an emotion in another person's facial expression activates large-scale cortico-subcortical circuits related to visual perception, emotion regulation, and action generation.

Overall, 16 healthy subjects (8 females, 8 males; age 25 ± 6 years; normal or corrected-to-normal vision) passed a screening for alexithymia (TAS-20; Bagby et al., 1994) and empathic capability (SPF, http://psydok.sulb.unisaarland.de/volltexte/2009/2363/pdf/SPF_Artikel.pdf). All subjects gave written informed consent to participate in the study, which was approved by the local ethics committee and conducted in accordance with the Declaration of Helsinki. The stimuli were depersonalized frontal black-and-white images of male and female facial expressions of happiness, sadness, fear, and anger from the Averaged Karolinska Directed Emotional Faces (AKDEF). Each emotion was presented as a sequence evolving from a neutral facial expression up to the strongest expression of that emotion (30 images of 750 ms each). The subjects were instructed to press a button as soon as they recognized the emotion or felt that they empathized with the emotional expression.
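
To make the mapping from a button press to the degree of evolution concrete, the following Python sketch converts a response time into the percentage of the emotion expressed at that moment. It uses only the numbers stated above (30 images of 750 ms each); the equal-step morph from 0% (neutral) to 100% (strongest expression) and all function names are assumptions for illustration, not the authors' analysis code.

```python
# Stimulus timing taken from the text: 30 images of 750 ms each.
FRAME_MS = 750
N_FRAMES = 30
CLIP_MS = FRAME_MS * N_FRAMES  # 22,500 ms per clip


def frame_at(rt_ms: float) -> int:
    """0-based index of the image on screen rt_ms after clip onset."""
    if not 0 <= rt_ms < CLIP_MS:
        raise ValueError("button press falls outside the clip")
    return int(rt_ms // FRAME_MS)


def percent_evolved(rt_ms: float) -> float:
    """Degree of emotion evolution at the button press (hypothetical helper).

    ASSUMPTION: the morph grows in equal steps, with the first image
    neutral (0 %) and the last image the strongest expression (100 %).
    """
    return 100.0 * frame_at(rt_ms) / (N_FRAMES - 1)


if __name__ == "__main__":
    for rt in (8_800, 17_400):  # hypothetical button-press times in ms
        print(f"press at {rt} ms -> {percent_evolved(rt):.1f} % evolved")
```

Under this assumption the script prints `press at 8800 ms -> 37.9 % evolved` and `press at 17400 ms -> 79.3 % evolved`, so a press roughly 17 s into a clip corresponds to about 80% evolution and a press roughly 9 s in to about 40%.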

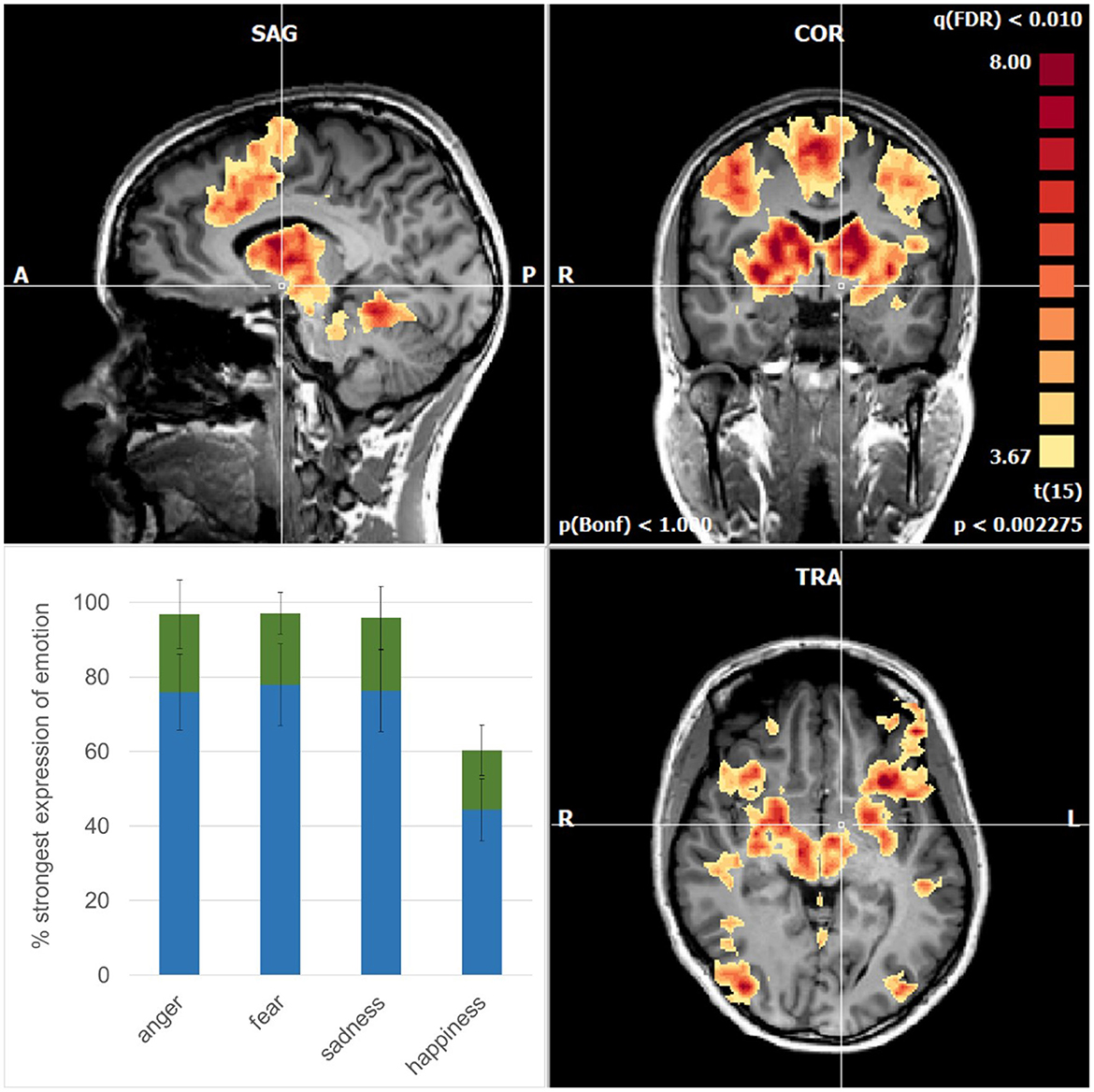

On average, the subjects recognized anger, fear, and sadness when each emotion had evolved to ~80% in the video clips (Figure 1). Happiness, by comparison, was recognized already when it had evolved to some 40%, in accordance with other studies (Adolphs, 2002). Interestingly, empathizing occurred while the facial expressions were still evolving, with a similar delay across the four basic emotions (Figure 1).

Figure 1. Mean activations related to the recognition of the emotions in sagittal (upper left), coronal (upper right), and axial (lower right) planes. Note the symmetric pattern involving cortical areas and subcortical structures such as the basal ganglia, thalamus, amygdala, and brainstem nuclei. The cross at the stereotactic coordinates (x −4, y −4, z −6) marks the left hypothalamus, which is spared. Lower left: degree of evolution of the emotional facial expressions at which the subjects recognized (blue) and empathized with (green) the emotions; error bars: standard deviations.

The focus of this functional magnetic resonance imaging (fMRI) study was to map the brain regions involved in emotion recognition in stereotactic space (Talairach and Tournoux, 1988). As validated in non-human primates, the changes in blood oxygenation measured with fMRI are temporally and spatially related to local field potential changes in neuronal assemblies following a defined sensory stimulus (Logothetis et al., 2001). fMRI was performed on a 3T MRI scanner (Siemens Magnetom Skyra) while the subjects lay comfortably and viewed the video clips via a mirror mounted above them. Each block consisted of a 22,500 ms stimulation period and a 10,500 ms control period, giving a total of 33,000 ms per block. A fixation cross shown between trials served as the control stimulus and allowed the blood-oxygen-level-dependent (BOLD) signal to return to baseline. Each emotion was presented six times for each of the two sexes, yielding 48 blocks (six repetitions × four emotions × two sexes) and thus a total measurement time of 26.4 min. Whole-brain image analysis was performed with the BrainVoyager QX software package, version 21.4 (Brain Innovation, Maastricht, the Netherlands), as detailed elsewhere (Kleiser et al., 2017, 2022).
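
The block arithmetic above can be checked with a short Python sketch that derives the number of blocks, the session length, and the block onsets, and builds a simple stimulation/control boxcar. The names, the 1,500 ms sampling interval, and the clip-then-fixation ordering within each block are hypothetical; the text reports neither the repetition time nor the exact block order.

```python
# Block timing taken from the text.
STIM_MS = 22_500     # evolving-face video clip
CONTROL_MS = 10_500  # fixation cross
BLOCK_MS = STIM_MS + CONTROL_MS  # 33,000 ms

EMOTIONS = ("happiness", "sadness", "fear", "anger")
FACE_SEXES = ("female", "male")
REPEATS = 6


def design() -> tuple[int, float, list[float]]:
    """Return (number of blocks, session length in min, block onsets in s)."""
    n_blocks = len(EMOTIONS) * len(FACE_SEXES) * REPEATS
    total_min = n_blocks * BLOCK_MS / 60_000
    onsets_s = [i * BLOCK_MS / 1000 for i in range(n_blocks)]
    return n_blocks, total_min, onsets_s


def boxcar(dt_ms: int = 1_500) -> list[int]:
    """Stimulation (1) vs. control (0) indicator sampled every dt_ms.

    ASSUMPTIONS: dt_ms is a hypothetical sampling interval (no TR is
    reported), and each block starts with the clip followed by the cross.
    """
    n_blocks, _, _ = design()
    return [1 if t % BLOCK_MS < STIM_MS else 0
            for t in range(0, n_blocks * BLOCK_MS, dt_ms)]


if __name__ == "__main__":
    n, minutes, onsets = design()
    print(n, minutes, onsets[:3])
```

Running it prints `48 26.4 [0.0, 33.0, 66.0]`, matching the 48 repetitions and 26.4 min stated in the text. In a general linear model analysis, such a boxcar would normally be convolved with a hemodynamic response function before fitting; that step is omitted here.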

At the time point when the subjects indicated, by pressing a button, that they had recognized the emotions, there was a strong and widespread activation pattern involving cortical and subcortical brain structures (Figure 1). Before this time point, by comparison, the activations were far weaker (p < 0.05; FDR-corrected) and occurred in brain areas of the ventral pathway for the processing of shape, color, and faces, including V4 and the fusiform gyrus (Courtney and Ungerleider, 1997). In addition, areas of the dorsal pathway related to motion processing, such as visual area V5, the lateral parietal cortex, and the frontal eye fields, were involved. In the phase when the subjects indicated empathy with the emotions, there were activations (p < 0.05; FDR-corrected) in areas known to be engaged in empathic processing, such as the inferior frontal gyrus and the superior temporal gyrus (Shamay-Tsoory et al., 2009; Schurz et al., 2017). It is quite possible that the subjects differed in how intensely and for how long they were able to maintain empathy.
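
FDR correction controls the expected proportion of false positives among the voxels declared active. As a minimal sketch, assuming the standard Benjamini–Hochberg step-up procedure (the text does not specify which FDR variant was applied), the Python code below finds the p-value cutoff for a given q and flags the surviving tests; the p-values in it are invented for illustration.

```python
from typing import Optional, Sequence


def bh_cutoff(p_values: Sequence[float], q: float = 0.05) -> Optional[float]:
    """Benjamini-Hochberg step-up: largest p_(k) with p_(k) <= k * q / m.

    Returns None when no test survives the correction.
    """
    ranked = sorted(p_values)
    m = len(ranked)
    cutoff = None
    for k, p in enumerate(ranked, start=1):
        if p <= k * q / m:
            cutoff = p  # keep the largest rank k that meets its bound
    return cutoff


def bh_reject(p_values: Sequence[float], q: float = 0.05) -> list[bool]:
    """Flag each test whose p-value is at or below the BH cutoff."""
    cutoff = bh_cutoff(p_values, q)
    if cutoff is None:
        return [False] * len(p_values)
    return [p <= cutoff for p in p_values]


if __name__ == "__main__":
    # Invented p-values for ten hypothetical voxels.
    p = [0.0004, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216]
    print(sum(bh_reject(p, 0.05)), sum(bh_reject(p, 0.01)))
```

With these invented values the script prints `2 1`: two tests survive at q = 0.05 but only one at q = 0.01, illustrating why a stricter FDR level retains only the strongest effects.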

Importantly, however, the activations at the time point of emotion recognition reached an even stricter level of significance (p < 0.01; FDR-corrected). In the cerebral cortex, they involved the dorsomedial frontal cortex, including the supplementary motor area (SMA) and pre-SMA, the dorsolateral frontal cortex, the inferior frontal cortex, and the inferior temporal cortex, in a virtually symmetric pattern in both cerebral hemispheres (Figure 1). In addition, there were strong activations in the basal ganglia, the entire thalamus, the amygdala, and midbrain nuclei including the red nucleus. Finally, the cerebellar vermis was also involved. These activations are compatible with the engagement of parallel cortico-subcortical and cerebellar circuits (DeLong et al., 1984).

We have shown in healthy subjects that believing one has recognized the emotions in facial expressions engages a widespread and distinctive network involving occipital, parietal, and frontal cortical areas. These areas are known to participate in face recognition (Xu et al., 2021), oculomotor control (Pierrot-Deseilligny et al., 2004), and imitation of movement (Heiser et al., 2003). Moreover, strong activity was also found in subcortical structures such as the basal ganglia and the thalamus, which form part of a highly developed cortico-subcortical relay circuitry supporting these functions (DeLong et al., 1984). Furthermore, activity was found in the amygdala, a central neural component of emotion processing (Packard et al., 2021). Notably, this widespread pattern of cortical, subcortical, and cerebellar activations was similar to that recently observed in 944 participants during memory encoding of emotional pictures (Fastenrath et al., 2022). It is tempting to speculate that such an extended pattern of enhanced brain activity reflects a complexity of cerebral processing that may underlie human conscious awareness (Greenfield and Collins, 2005).

With our experimental design, we were able to extend the duration of face presentation before the subjects recognized the emotion in the video clips. This allowed us to analyze the process of believing and to determine when the emotions were recognized correctly. Remarkably, this point was reached only after the emotions had been expressed to some 80%; only happiness was recognized far earlier, consistent with similar findings by others (Adolphs, 2002). The belief that the emotions had been recognized correctly was subsequently substantiated as the emotion became more pronounced. Thus, the subjects' trust in their recognition of the emotion was confirmed almost instantaneously as the expression continued to intensify. This aspect probably also contributed to the strength of the observed activation pattern. In fact, these activations were far stronger than those related to viewing the faces while they still appeared neutral or to empathizing with the emotional facial expressions. Possibly, the activation at this time point reflects a correlate of the oscillatory binding of brain activity that occurs when subjects become aware of the information being processed (Engel and Singer, 2001). That empathizing occurred only shortly afterward suggests that awareness of the emotion was the bottleneck process preceding empathizing. Moreover, the subjects' own reports support the assumption that empathizing with the emotions was strengthened by the slow progression into the emotion, as compared with the immediate exposure to a full-blown emotion when viewing static images of facial expressions.

People process sensory information with ease, which makes them susceptible to trusting these perceptions (Brashier and Marsh, 2020). Concerning affect recognition, children, in contrast to adults, have been reported to attend to both the eyes and the mouth (Guarnera et al., 2018). Because happy faces typically have an open mouth that reveals the teeth, this may serve as a cue that allows the observer to identify happiness faster than the other emotional states. The other basic emotions, namely sadness, anger, and fear, were recognized with similar ease (Kleiser et al., 2022). Importantly, however, humans believe that their perceptions are true reflections of the emotional states of the people around them. This enables them to filter the multitude of their sensations down to those that are subjectively relevant to them and to select their subsequent actions accordingly (Seitz et al., 2022). Consequently, believing has not only a perceptive aspect related to the subject's past experience, but also a prospective aspect concerning decisions among alternative actions, with the associated predictions of what these actions will lead to and how the environment may react to them. The findings presented here provide empirical evidence for a putative neural basis of such processes of believing, which afford intuitive, prelinguistic action generation (Seitz, 2022). Ultimately, they appear to support the concept that believing is a fundamental brain function (Angel and Seitz, 2016).

RK and DP contributed to the conception and design of the study. RK and CW carried out the measurements. RK, CW, and MS performed data post-processing and statistical analysis. RS, RK, and MS wrote sections of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

This work was funded through RS by the Volkswagen Foundation, Siemens Healthineers, and the Betz Foundation. The funders were not involved in the study design, collection, analysis, interpretation of data, the writing of this article, or the decision to submit it for publication.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Adolphs, R. (2002). Neural systems for recognizing emotion. Curr. Opin. Neurobiol. 12, 169–177. doi: 10.1016/S0959-4388(02)00301-X

Angel, H. F., and Seitz, R. J. (2016). Process of believing as fundamental brain function: the concept of credition. SFU Res. Bull. 3, 1–20. doi: 10.15135/2016.4.1.1-20

Bagby, R. M., Parker, J. D., and Taylor, G. J. (1994). The twenty-item Toronto Alexithymia Scale-I. Item selection and cross-validation of the factor structure. J. Psychosom. Res. 38, 23–32. doi: 10.1016/0022-3999(94)90005-1

Bird, G., and Viding, E. (2014). The self to other model of empathy: providing a new framework for understanding empathy impairments in psychopathy, autism, and alexithymia. Neurosci. Biobehav. Rev. 47, 520–532. doi: 10.1016/j.neubiorev.2014.09.021

Brashier, N. M., and Marsh, E. J. (2020). Judging truth. Annu. Rev. Psychol. 71, 499–515. doi: 10.1146/annurev-psych-010419-050807

Courtney, S. M., and Ungerleider, L. G. (1997). What fMRI has taught us about human vision. Curr. Opin. Neurobiol. 7, 554–561. doi: 10.1016/S0959-4388(97)80036-0

DeLong, M. R., Alexander, G. E., Georgopoulos, A. P., Crutcher, M. D., Mitchell, S. J., Richardson, R. T., et al. (1984). Role of basal ganglia in limb movements. Hum. Neurobiol. 2, 235–244.

Du, S., Tao, Y., and Martinez, A. M. (2014). Compound facial expressions of emotion. Proc. Natl. Acad. Sci. USA 111, E1454–E1462. doi: 10.1073/pnas.1322355111

Engel, A. K., and Singer, W. (2001). Temporal binding and the neural correlates of sensory awareness. Trends Cogn. Sci. 5, 16–25. doi: 10.1016/S1364-6613(00)01568-0

Fastenrath, M., Spalek, K., Coynel, D., Loos, E., Milnik, A., Egli, T., et al. (2022). Human cerebellum and corticocerebellar connections involved in emotional memory enhancement. Proc. Natl. Acad. Sci. USA 119, e2204900119. doi: 10.1073/pnas.2204900119

Fiske, S. T., Cuddy, A. J., and Glick, P. (2007). Universal dimensions of social cognition: warmth and competence. Trends Cogn. Sci. 11, 77–83. doi: 10.1016/j.tics.2006.11.005

Franz, M., Nordmann, M. A., Rehagel, C., Schäfer, R., Müller, T., Lundqvist, D., et al. (2021). It is in your face—alexithymia impairs facial mimicry. Emotion. 21, 1537–1549. doi: 10.1037/emo0001002

Greenfield, S. A., and Collins, T. F. T. (2005). A neuroscientific approach to consciousness. Prog. Brain Res. 150, 11–23. doi: 10.1016/S0079-6123(05)50002-5

Guarnera, M., Magnano, P., Pellerone, M., Cascio, M. I., Squatrito, V., Buccheri, S. L., et al. (2018). Facial expressions and the ability to recognize emotions from the eyes or mouth: a comparison among old adults, young adults, and children. J. Genet. Psychol. 179, 297–310. doi: 10.1080/00221325.2018.1509200

Heiser, M., Iacoboni, M., Maeda, F., Marcus, J., and Mazziotta, J. C. (2003). The essential role of Broca's area in imitation. Eur. J. Neurosci. 17, 1123–1128. doi: 10.1046/j.1460-9568.2003.02530.x

Kleiser, R., Raffelsberger, T., Trenkler, J., Meckel, S., and Seitz, R. J. (2022). What influence do face masks have on reading emotions in faces? NeuroImage Rep. 2, 100141. doi: 10.1016/j.ynirp.2022.100141

Kleiser, R., Stadler, C., Wimmer, S., Matyas, T., and Seitz, R. J. (2017). An fMRI study of training voluntary smooth circular eye movements. Exp. Brain Res. 235, 819–831. doi: 10.1007/s00221-016-4843-x

Kyranides, M. N., Christofides, D., and Çetin, M. (2022). Difficulties in facial emotion recognition: taking psychopathic and alexithymic traits into account. BMC Psychol. 10, 239. doi: 10.1186/s40359-022-00946-x

Logothetis, N. K., Pauls, J., Augath, M., Trinath, T., and Oeltermann, A. (2001). Neurophysiological investigation of the basis of the fMRI signal. Nature 412, 150–157. doi: 10.1038/35084005

Packard, M. G., Gadberry, T., and Goodman, J. (2021). Neural systems and the emotion-memory link. Neurobiol. Learn. Mem. 185, 107503. doi: 10.1016/j.nlm.2021.107503

Pierrot-Deseilligny, C., Milea, D., and Müri, R. M. (2004). Eye movement control by the cerebral cortex. Curr. Opin. Neurol. 17, 17–25. doi: 10.1097/00019052-200402000-00005

Russell, J. A. (1994). Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychol. Bull. 115, 102–141. doi: 10.1037/0033-2909.115.1.102

Schurz, M., Tholen, M. G., Perner, J., Mars, R. B., and Sallet, J. (2017). Specifying the brain anatomy underlying temporo-parietal junction activations for theory of mind: a review using probabilistic atlases from different imaging modalities. Hum. Brain Mapp. 38, 4788–4805. doi: 10.1002/hbm.23675

Seitz, R. J. (2022). Believing and beliefs—neurophysiological underpinnings. Front. Behav. Neurosci. 16, 880504. doi: 10.3389/fnbeh.2022.880504

Seitz, R. J., Angel, H. F., Paloutzian, R. F., and Taves, A. (2022). Believing and social interactions: effects on bodily expressions and personal narratives. Front. Behav. Neurosci. 16, 894219. doi: 10.3389/fnbeh.2022.894219

Seitz, R. J., Paloutzian, R. F., and Angel, H. F. (2018). From believing to belief: a general theoretical model. J. Cogn. Neurosci. 30, 1254–1264. doi: 10.1162/jocn_a_01292

Shamay-Tsoory, S., Aharon-Peretz, J., and Perry, D. (2009). Two systems for empathy: a double dissociation between emotional and cognitive empathy in inferior frontal gyrus versus ventromedial prefrontal lesions. Brain 132, 617–627. doi: 10.1093/brain/awn279

Talairach, J., and Tournoux, P. (1988). Co-Planar Stereotactic Atlas of the Human Brain. New York: Thieme Medical Publishers.

Keywords: emotion, empathy, beliefs, face expression, fMRI

Citation: Sonnberger M, Widmann C, Potthoff D, Seitz RJ and Kleiser R (2023) Emotion recognition in evolving facial expressions: A matter of believing. Front. Behav. Neurosci. 16:951974. doi: 10.3389/fnbeh.2022.951974

Received: 24 May 2022; Accepted: 21 December 2022;

Published: 11 January 2023.

Edited by:

Sushil K. Jha, Jawaharlal Nehru University, India

Reviewed by:

Shakeel Mohamed, Technical University of Malaysia Malacca, Malaysia

Copyright © 2023 Sonnberger, Widmann, Potthoff, Seitz and Kleiser. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Raimund Kleiser, raimund.kleiser@kepleruniklinikum.at
