- 1 UMR6552 – Ethologie Animale et Humaine, CNRS, Rennes, France

- 2 UMR6552 – Ethologie Animale et Humaine, Université Rennes 1, Rennes, France

It is well known that visual information can affect auditory perception, as in the famous “McGurk effect,” but little is known concerning the processes involved. To address this issue, we used the best-developed animal model to study language-related processes in the brain: songbirds. European starlings were exposed to audiovisual compared to auditory-only playback of conspecific songs, while electrophysiological recordings were made in their primary auditory area (Field L). The results show that the audiovisual condition modulated the auditory responses. Enhancement and suppression were both observed, depending on the stimulus familiarity. Seeing a familiar bird led to suppressed auditory responses while seeing an unfamiliar bird led to response enhancement, suggesting that unisensory perception may be enough if the stimulus is familiar while redundancy may be required for unfamiliar items. This is to our knowledge the first evidence that multisensory integration may occur in a low-level, putatively unisensory area of a non-mammalian vertebrate brain, and also that familiarity of the stimuli may influence modulation of auditory responses by vision.

Introduction

Multisensory interactions are a common feature of communication in humans and animals (Partan and Marler, 1999). Seeing a speaker, for example, considerably improves identification of acoustic speech in noisy conditions (Sumby and Pollack, 1954), but it can also, under good listening conditions, lead to audiovisual illusions such as the famous “McGurk effect,” in which seeing someone producing one phoneme while hearing another one leads to the perception of a third, intermediate phoneme (McGurk and MacDonald, 1976). Multisensory signals often lead to redundancy, that is, the spatially coordinated and temporally synchronous presentation of the same information across two or more senses (Bahrick et al., 2004). Redundancy enables the perception of simultaneous events as unitary even in noisy environments (e.g., Gibson, 1966), often through enhancement of responses (e.g., Evans and Marler, 1991; Partan et al., 2009). It has been suggested that this could be related to one sensory modality inducing an enhanced attention toward another one: in the presence of a vibratory courtship signal, female wolf spiders are, for example, more receptive to more visually ornamented males (Hebets, 2005), suggesting that, in this case, the somatosensory modality may induce an enhanced attention toward the visual modality. Such an influence of one modality on another one can vary according to congruence of the stimulation. In horses, for example, congruence between visual and auditory cues enhances individual recognition (Proops et al., 2009). It can also vary according to familiarity. Horses show more head turns when they hear unfamiliar whinnies than when they hear familiar ones (Lemasson et al., 2009), suggesting that they may seek visual information when they hear an unfamiliar individual but not when they hear a familiar one. 
However, the “binding problem,” that is, how an organism combines different sources of information (e.g., visual and auditory) to create a coherent percept (Partan and Marler, 1999), remains acute, especially when it comes to understanding where and how in the brain information is integrated across two or more senses.

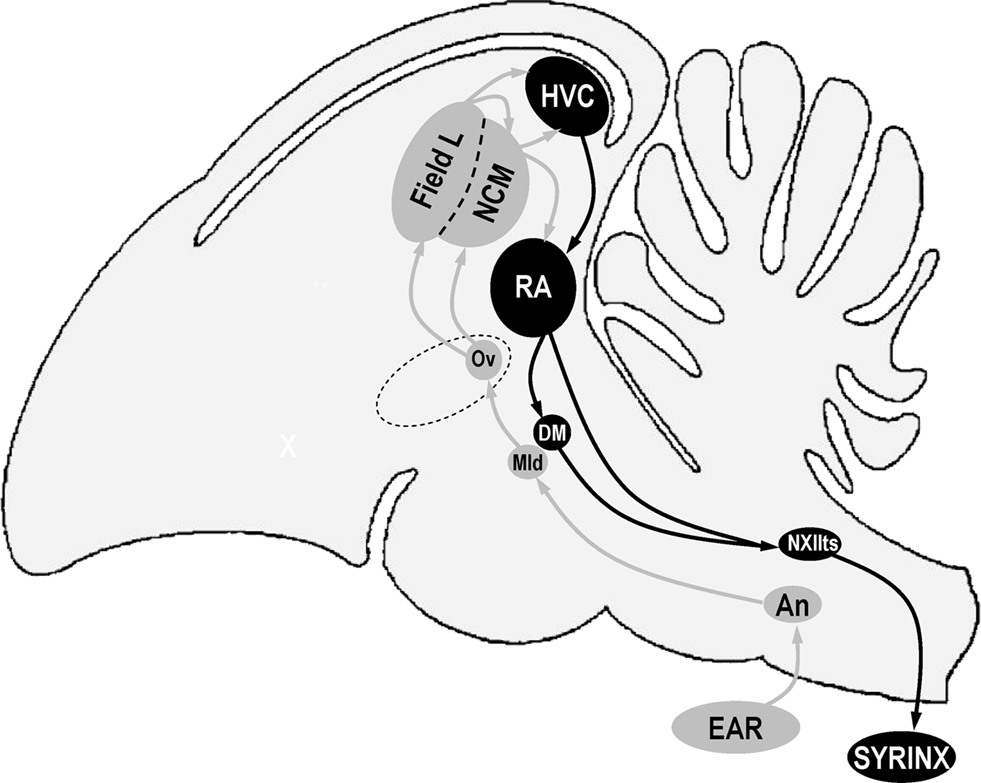

Studies of the cat’s superior colliculus have shown that audiovisual stimuli lead to multisensory response enhancement, suppression or no change in the firing rate of neurons (Meredith and Stein, 1983). In humans and monkeys, multisensory integration (e.g., “seeing speech”) may occur at very early stages of neural processing, in putatively unisensory areas (Calvert et al., 1997; Fort and Giard, 2004), suggesting for example that visual stimulation may tune the primary auditory cortex to the acoustic features of speech via attentional mechanisms and anticipation (Ojanen, 2005). Such a phenomenon could explain why socially deprived songbirds show not only hearing deficits but also impaired organization and selectivity of their primary auditory area (Sturdy et al., 2001; Cousillas et al., 2006). Birdsong is the best-developed model for the study of language development and its neural bases (e.g., Doupe and Kuhl, 1999; Jarvis, 2004), and it shares with human language the need for social influences (i.e., multimodal stimulation; Snowdon and Hausberger, 1997) in order to develop. Behavioral studies have shown that visual stimuli could enhance song learning (Todt et al., 1979; Bolhuis et al., 1999; Hultsch et al., 1999), and there appears to be a complex interplay between vision and vocal output in the courtship behavior of male songbirds (Bischof et al., 1981; George et al., 2006). Moreover, social context-dependent brain modulation is especially strong in relation to communicative behavior in songbirds, where brain activity during the production of specific vocalizations is dependent on the presence of a communicative target individual (e.g., Jarvis et al., 1998; Hessler and Doupe, 1999; Vignal et al., 2005). 
Finally, and most importantly, social segregation (that is the mere fact of not interacting with adults that are present in the environment) can, as much as physical separation (that is the total absence of adults in the environment), alter not only the development of song (Poirier et al., 2004) but also the development of the primary auditory area (Cousillas et al., 2006, 2008). Thus, given that stimulations with no auditory component can influence song behavior or development, and its neural bases, there must be some neural structures in the vocal-auditory system that are sensitive to non-auditory feedback. Visually evoked and visually enhanced acoustically evoked responses have indeed been recorded in the HVC (used as a proper name; see Reiner et al., 2004; Figure 1), which is a highly integrative vocal nucleus (Bischof and Engelage, 1985). However, the impact of direct social contacts (which involve at least audiovisual stimulations) could be observed as early as at the level of a cortical-like primary auditory area (namely the Field L; Cousillas et al., 2006, 2008; Figure 1) that is functionally connected to HVC (Shaevitz and Theunissen, 2007). One can therefore wonder whether multisensory interactions could already be observed at the level of the Field L, which is a putative unisensory area that is involved in the processing of complex sounds and that is analogous to the primary auditory cortex of mammals (Leppelsack and Vogt, 1976; Leppelsack, 1978; Muller and Leppelsack, 1985; Capsius and Leppelsack, 1999; Sen et al., 2001; Cousillas et al., 2005). In mammals, there has been evidence of multisensory integration in low-level putatively unisensory brain regions in a variety of species, including humans (review in Schroeder and Foxe, 2005). However, to date, no study could provide evidence of multisensory interactions in sensory regions involved in the perception of song (e.g., Avey et al., 2005; Seki and Okanoya, 2006). 
Here we tested the hypothesis that multisensory integration may occur in the Field L and that familiarity of the stimuli may modulate it.

Figure 1. Schematic representation of a sagittal view of a bird’s brain showing some of the nuclei that are involved in song perception (in gray) and production (in black). Anterior is left, dorsal is up. Structure names are based on decisions of the Avian Brain Nomenclature Forum as reported in Reiner et al. (2004). An, nucleus angularis; DM, dorsal medial nucleus of the intercollicular complex; HVC, used as a proper name; Mld, dorsal part of the lateral mesencephalic nucleus; NCM, caudal medial nidopallium; NXIIts, tracheosyringeal part of the hypoglossal nucleus; Ov, nucleus ovoidalis; RA, robust nucleus of the arcopallium.

Materials and Methods

Experimental Animals

Five wild-caught adult male starlings were kept together with other adult starlings (males and females) in an indoor aviary for 4 years. At the start of the experiment, birds were placed in individual soundproof chambers in order to record their song repertoire, and a stainless steel pin was then attached stereotaxically to the skull with dental cement, under halothane anesthesia. The pin was located precisely with reference to the bifurcation of the sagittal sinus. Birds were given a 2-day rest after implantation. From this time, they were kept in individual cages with food and water ad libitum. During the experiments, the pin was used for fixation of the head and as a reference electrode.

The experiments were performed in France (license no. 005283, issued by the departmental direction of veterinary services of Ille-et-Vilaine) in accordance with the European Communities Council Directive of 24 November 1986 (86/609/EEC).

Acoustic and Visual Stimuli

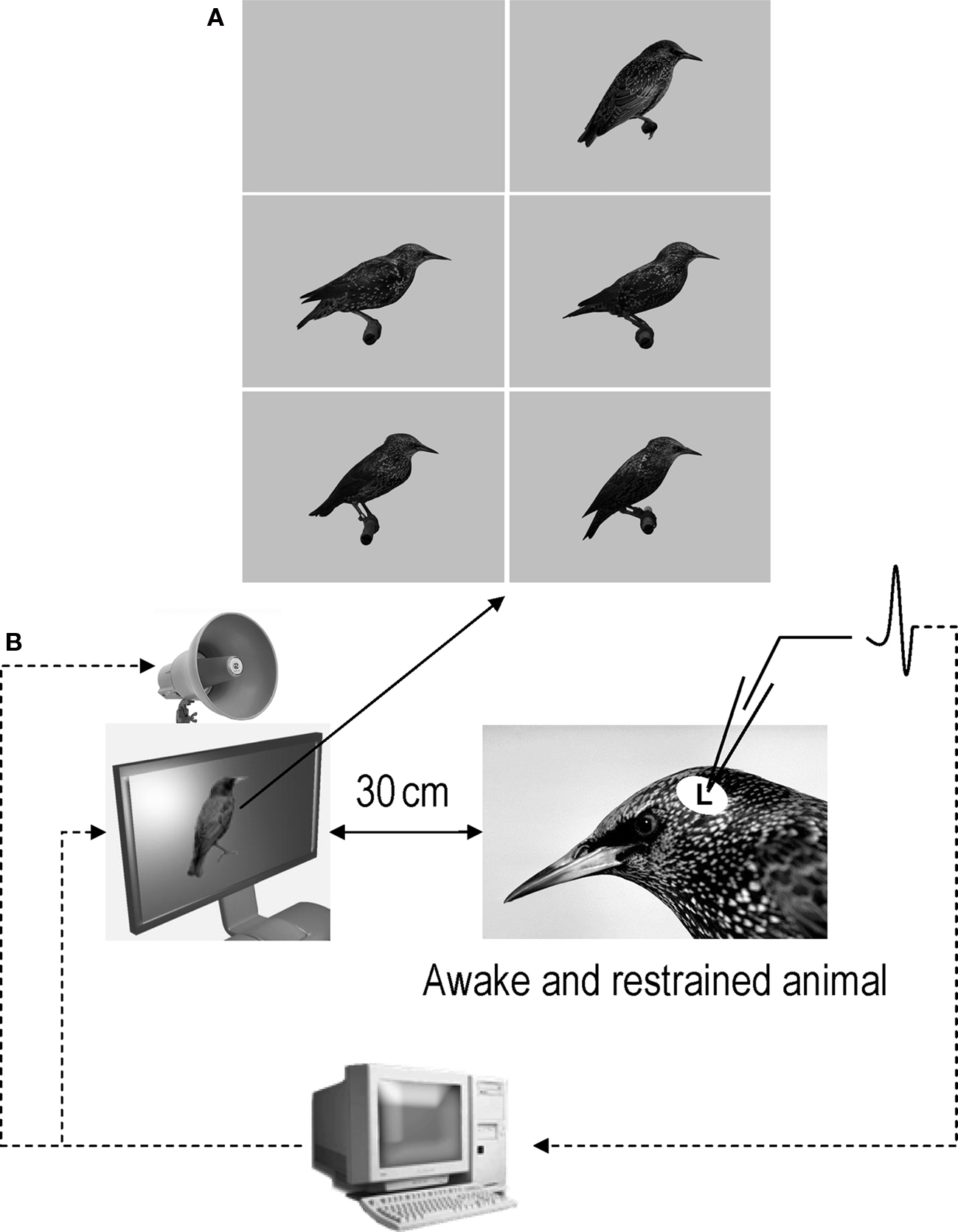

Acoustic stimuli were broadcast through a loudspeaker located over a 15″ TFT screen (placed 30 cm in front of the bird’s head) that displayed either a constant gray background (audio condition: A) or life-size images of starlings, perched, in profile, with the beak closed, in the center of the screen over the gray background (audiovisual condition: AV; Figure 2). Images were a picture of an unfamiliar starling found on the internet (© 1996–2010 www.oiseaux.net, Marcel Van der Tol) and pictures of the experimental birds taken in a plastic cage (71 cm × 40 cm × 40 cm) equipped with a perch and a front side made of Plexiglas. Pictures were all taken outside the breeding season, when the birds’ beaks were black. Starlings were then cut out and pasted onto a uniform gray background in order to obtain 300 × 300 dpi, 1018 × 746 pixel, 16.7 million color (24 bits per pixel) images. We chose to take pictures of starlings perched, in profile, with the beak closed because these features were reproducible and easy to keep constant across birds.

Figure 2. (A) Images that were used as background and visual stimuli. The top-left image is the constant gray background that was displayed when acoustic stimuli were tested in audio condition. The other images were the images that were displayed in audiovisual condition. The top-right image is the image of an unfamiliar bird (© 1996–2010 www.oiseaux.net, Marcel Van der Tol) and the four other images are images of four of the five birds used in this study. Although images appear here in grayscale, they were displayed in colors during the experiments. (B) Schematic representation of the recording conditions.

Images on TFT screens have been shown to be realistic enough to elicit courtship behavior in male zebra finches (Ikebuchi and Okanoya, 1999), and approach behavior in female house finches (Hernandez and MacDougall-Shackleton, 2004; see also Bovet and Vauclair, 2000 for a review on picture recognition in animals). It has also been shown that a static zebra finch male is an appropriate stimulus with which to investigate the effects of audiovisual compound training on song learning (Bolhuis et al., 1999).

As acoustic and visual stimuli do not have to be in exact synchrony to be integrated (e.g., Munhall et al., 1996), visual stimuli appeared before (315.6 ± 25.5 ms) the onset of the acoustic stimuli in order to check for responses to visual stimuli only. Although it would have been better to present the visual stimulus on its own as a separate condition to control for visual responses, the presentation of the visual stimulus alone during 315.6 ± 25.5 ms before the onset of the acoustic stimulus was enough to detect visually evoked responses (peak latency of visual responses in the HVC, which is upstream to Field L in the stage of neural processing, has been shown to be about 140 ms; Bischof and Engelage, 1985). Moreover, to our knowledge, no visual responses have ever been reported in the avian Field L, and a recent study has shown the absence of direct projections between visual and auditory primary sensory areas in the telencephalon of pigeons (Watanabe and Masuda, 2010). Every acoustic stimulus was presented twice: once in A and once in AV condition, with a peak sound pressure of 85 dB SPL at the bird’s ears.

Acoustic and visual stimuli were always congruent: unfamiliar songs were presented along with the image of an unfamiliar starling (never seen before), and familiar songs with the image of the corresponding familiar birds (that is, the image that was displayed was the image of the individual that produced the broadcast acoustic stimulus – see below; Figure 2A). Given that we did not have pictures of the birds that produced the unfamiliar songs, we chose to use only one picture of an unfamiliar starling. This avoided arbitrary associations between images and songs and extensive testing of all the possible combinations, and thus limited the number of stimuli (the higher the number of stimuli, the longer the recording sessions).

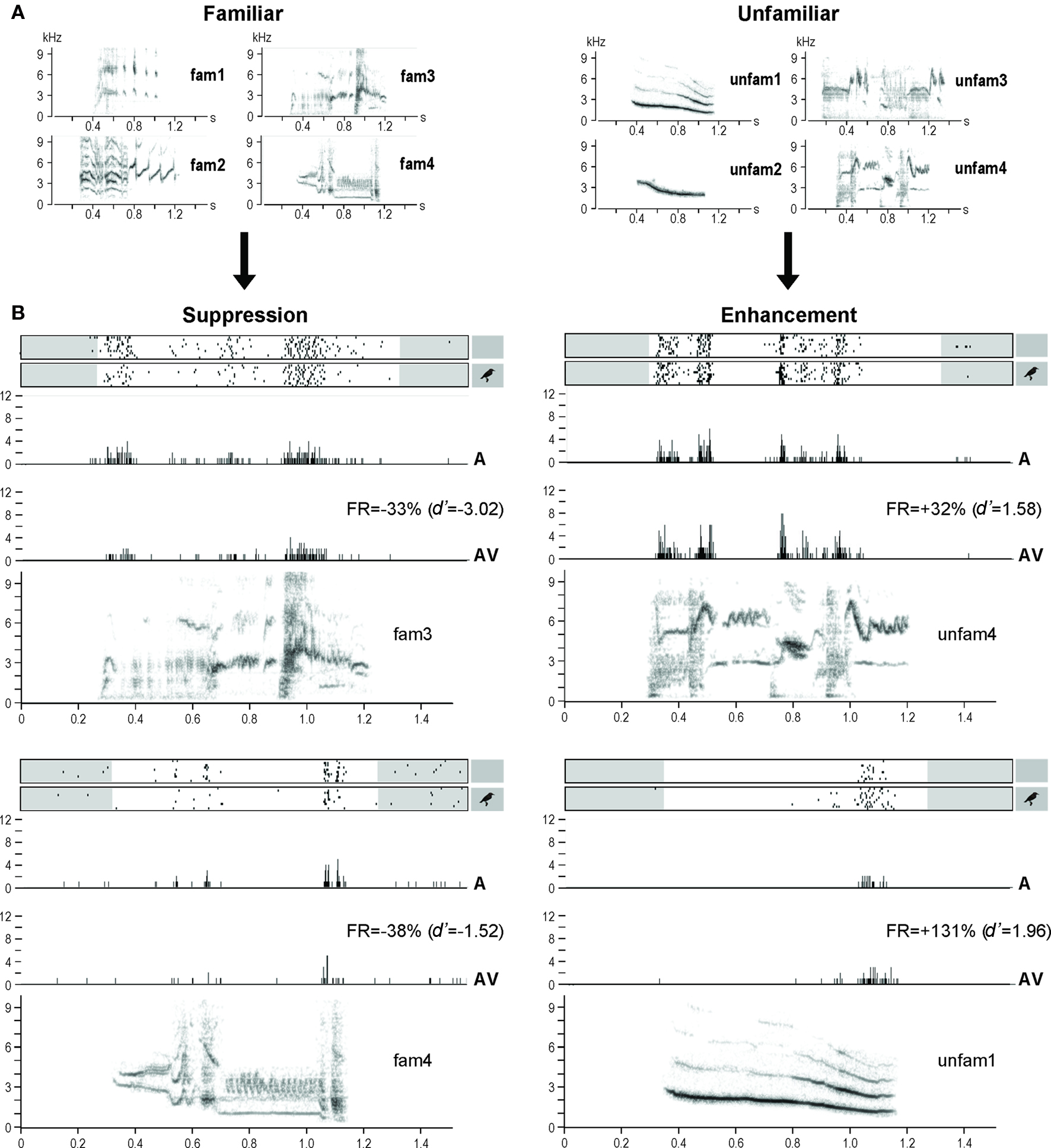

We used 8 acoustic stimuli that were the same for all birds (see Figure 3A): 4 unfamiliar songs (2 species-specific whistles and 2 warbling motifs that our experimental birds had never heard before the experiment, all coming from song libraries recorded in our laboratory; species-specific whistles came from wild starlings that were recorded in the field, in Rennes, in the 1980s, and warbling motifs came from wild-caught starlings that were recorded in the lab in 2002) and 4 familiar or bird’s own songs (BOS; all warbling motifs from our experimental birds; although we did our best to select motifs that were unique to each bird, it appeared that one of these motifs was shared by two birds; however, this did not appear to influence our results). The unfamiliar songs came from different birds. However, it is very unlikely that our birds could detect that these songs were produced by different individuals. Indeed, it has been shown that starlings learn to recognize the songs of individual conspecifics by memorizing sets of motifs that are associated with individual singers, and a critical role for voice characteristics in individual song recognition has been eliminated (Gentner, 2004). Moreover, given that starlings produce two main types of songs: whistles and warbles (e.g., Hausberger, 1997), we chose to have a representation of these two song types in our stimuli. Whistles were represented by class-I songs, which are short, simple, and loud whistles that are sung by all male starlings and that are used in species and population recognition in the wild (Hausberger, 1997). However, since class-I whistles are hardly produced in captivity (Henry, 1998), we could not obtain familiar and bird’s own whistles, and therefore used only warbles as familiar stimuli (see Results and Discussion for potential effect of this difference between unfamiliar and familiar stimuli on our results). 
Finally, regarding the use of BOS as familiar stimuli, it has been shown that, although Field L neurons respond selectively to complex sounds (Leppelsack and Vogt, 1976; Leppelsack, 1978; Muller and Leppelsack, 1985; Capsius and Leppelsack, 1999; Sen et al., 2001; Cousillas et al., 2005), they typically do not exhibit BOS-selective responses (e.g., Margoliash, 1986; Lewicki and Arthur, 1996; Janata and Margoliash, 1999; Grace et al., 2003). Moreover, a behavioral study has shown that female starlings do not show any special reaction to their own unique songs (Hausberger et al., 1997). It is therefore unlikely that the use of BOS as familiar stimuli has had an effect on our results.

Figure 3. (A) Sonograms of the acoustic stimuli used in the experiment: familiar stimuli recorded from the experimental birds, and unfamiliar stimuli recorded from unknown starlings. (B) Examples of response modulations. Neuronal activity is represented as raster plots corresponding to the 10 repetitions of the stimulation (white areas indicate the time windows considered for auditory responses, that is from the beginning of the acoustic stimulus to 100 ms after its end; small inserts on the right indicate whether the acoustic stimulus was presented with – AV condition – or without – A condition – a visual stimulus), and as peri-stimulus time histograms (PSTHs) of the action potentials (that is, number of action potentials per 2-ms time bin) corresponding to the raster plots presented above. The sonograms of the acoustic stimuli (x axis: time in seconds; y axis: frequency in kHz) are presented below the PSTHs. All traces are time aligned. FR, firing rate.

The songs used were 439–1214 ms long (mean ± SD = 862 ± 191 ms). The 8 stimuli were randomly interleaved into a single sequence of stimuli that was repeated 10 times at each recording site, and the A and AV trials for each stimulus were interleaved. The duration of the whole sequence of 16 stimuli (8 acoustic stimuli presented twice) was about 30 s. The mean (±SD) interval between stimuli was 688 ± 144 ms, with a minimum of 436 ms.
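The presentation scheme described above can be sketched as follows. This is a minimal illustration and not the authors' stimulation software: the function name `build_sequence`, the fixed seed, and the representation of trials as `(stimulus, condition)` pairs are assumptions made for the example.

```python
import random

N_STIMULI = 8             # 4 unfamiliar + 4 familiar songs
CONDITIONS = ("A", "AV")  # audio-only and audiovisual
N_REPEATS = 10            # repetitions of the sequence at each recording site


def build_sequence(seed=0):
    """Return one randomly interleaved sequence of (stimulus, condition) trials.

    The seed is a hypothetical choice, used here only for reproducibility.
    """
    rng = random.Random(seed)
    trials = [(stim, cond)
              for stim in range(1, N_STIMULI + 1)
              for cond in CONDITIONS]
    rng.shuffle(trials)  # interleaves stimuli as well as A and AV trials
    return trials


sequence = build_sequence()
trials_per_site = len(sequence) * N_REPEATS

# Rough duration estimate from the reported means only
# (mean song duration 862 ms, mean inter-stimulus interval 688 ms).
approx_duration_s = len(sequence) * (0.862 + 0.688)
```

The estimate from the two reported means alone is a little under 25 s. If the visual lead on AV trials and the baseline period are not already counted in the inter-stimulus interval, adding them would bring the total closer to the reported value of about 30 s.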

Data Collection

Neuronal activity during acoustic and visual stimulation was recorded systematically throughout Field L, using the same approach as George et al. (2003). In brief, we used an array of 4 microelectrodes (2 in each hemisphere) made of tungsten wires insulated by epoxylite (FHC n°MX41XBWHC1), each spaced 1.2 mm apart in the longitudinal plane and 2 mm apart in the sagittal plane. Electrode impedance was in the range of 3–6 MΩ.

Recordings were made outside the breeding season (October) in an anechoic, soundproof chamber, in awake-restrained starlings, in one sagittal plane in each hemisphere, at 1 mm from the medial plane. Recordings in the left and right hemispheres were made simultaneously, at symmetrical locations. Each recording plane consisted of 10 to 12 penetrations systematically placed at regular intervals of about 230 μm in a rostrocaudal row, between 1000–1645 and 3355–4000 μm from the bifurcation of the sagittal sinus. Use of these coordinates ensured that recordings were made over all the functional areas of the Field L as described by Capsius and Leppelsack (1999) and Cousillas et al. (2005).

Only one session per day, lasting 3–4 h, was made, leading to 5–6 days of data collection for each bird. Between the recording sessions, birds went back to their cage, and a piece of plastic foam was placed over the skull opening in order to protect the brain. Birds were weighed before each recording session, and their weight remained stable over the whole data collection.

Neuronal activity was recorded systematically every 200 μm, dorso-ventrally along the path of a penetration, independently of the presence or absence of responses to the stimuli we used, between 1 and 5.6 mm below the surface of the brain.

Data Analysis

Spike arrival times were obtained by thresholding the extra-cellular recordings with a custom-made time- and level-window discriminator (which means that a spike was identified and recorded if and only if the neural waveform amplitude exceeded the user-defined trigger point and passed below the same threshold in more than 45 μs and less than 2 ms; see George et al., 2003). Single units or small multiunit clusters of 2–4 neurons were recorded in this manner. Since several studies found that analyses resulting from single and multi units were similar (Grace et al., 2003; Amin et al., 2004), the data from both types of units were analyzed together.
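The time- and level-window criterion can be illustrated in software, although the discriminator used in the study was a custom-made device. The sketch below assumes a digitized waveform; the function name `detect_spikes`, the sampling rate, and the choice of the upward threshold crossing as the spike time are all assumptions made for the example.

```python
def detect_spikes(samples, fs_hz, threshold,
                  min_width_s=45e-6, max_width_s=2e-3):
    """Return spike times (s) for threshold crossings of acceptable width.

    A spike is kept only if the waveform rises above `threshold` and
    falls back below it after more than `min_width_s` and less than
    `max_width_s`. The spike time is taken as the upward crossing,
    which is an assumption of this sketch.
    """
    spike_times = []
    start = None  # index of the current upward crossing, if any
    for i, value in enumerate(samples):
        above = value > threshold
        if above and start is None:
            start = i
        elif not above and start is not None:
            width_s = (i - start) / fs_hz
            if min_width_s < width_s < max_width_s:
                spike_times.append(start / fs_hz)
            start = None
    return spike_times


# Hypothetical trace sampled at 100 kHz (10 microseconds per sample)
fs = 100_000
trace = [0.0] * 1000
trace[100:110] = [1.0] * 10   # 100 microseconds wide: accepted
trace[300:302] = [1.0] * 2    # 20 microseconds wide: too short
trace[500:800] = [1.0] * 300  # 3 ms wide: too long
spikes = detect_spikes(trace, fs, threshold=0.5)
```

With these made-up values, only the first of the three events satisfies both the level and the duration criterion.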

The computer that delivered the stimuli also recorded the times of action potentials and displayed on-line rasters of the spike data for the four electrodes simultaneously. At each recording site, spontaneous activity was measured during 1.55 s before the presentation of the first stimulus of each sequence, which resulted in 10 samples of spontaneous activity (15.5 s).

Neuronal responsiveness was assessed as in George et al. (2005) by comparing activity level (number of action potentials) during stimulation and spontaneous activity, using binomial tests. Only responsive sites were further analyzed.

The difference between the response to an acoustic stimulus presented in AV condition and the response to the same stimulus presented in A condition was described with the psychophysical measure d′ (Green and Swets, 1966) such that:

$$d' = \frac{2\,(RS_{AV} - RS_{A})}{\sqrt{\sigma^2_{AV} + \sigma^2_{A}}}$$

where $RS_{AV}$ and $RS_{A}$ were the mean response strengths (RS) to the same stimulus in AV and A conditions, and $\sigma^2$ was the variance of each mean RS. The RS of a neuronal site to a stimulus was the difference between the firing rate during that stimulus and the background rate (during 1.55 s before the stimulus sequence). The RS was measured for each stimulus trial and then averaged across trials to get the neuronal site’s RS to that stimulus, expressed in spikes per second. A d′ ≥ 1 indicated a response enhancement in AV compared to A condition, while a d′ ≤ −1 reflected a response suppression. d′ was only calculated for neuronal sites that exhibited at least one significant response in one of the two conditions (see also Theunissen and Doupe, 1998; Solis and Doupe, 1999).
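As a worked illustration of the d′ measure, the sketch below computes response strengths and d′ for one stimulus at one site. It assumes the form d′ = 2(RS_AV − RS_A)/√(σ²_AV + σ²_A), following the convention of the cited songbird studies, and it estimates each variance as the sample variance of RS across trials; both points are assumptions of this example, as are the function names and the firing rates.

```python
import math
from statistics import mean, variance


def response_strengths(stim_rates, background_rates):
    """Per-trial RS: stimulus firing rate minus background rate (spikes/s)."""
    return [s - b for s, b in zip(stim_rates, background_rates)]


def d_prime(rs_av, rs_a):
    """d' between AV and A responses to the same stimulus.

    Positive values indicate stronger responses in the AV condition.
    Variances are sample variances across trials (an assumption).
    """
    pooled = variance(rs_av) + variance(rs_a)
    if pooled == 0:
        raise ValueError("d' is undefined when both variances are zero")
    return 2.0 * (mean(rs_av) - mean(rs_a)) / math.sqrt(pooled)


# Hypothetical firing rates (spikes/s) over three trials
rs_av = response_strengths([22, 24, 26], [10, 10, 10])
rs_a = response_strengths([18, 20, 22], [10, 10, 10])
example_d = d_prime(rs_av, rs_a)
```

Swapping the two arguments flips the sign of d′, so enhancement and suppression are symmetric around zero under this definition.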

Z-scores were used to assay the strength of neuronal responses within each condition. Z-scores are the difference between the firing rate during the stimulus and that during the background activity divided by the standard deviation of this difference quantity (see Theunissen and Doupe, 1998).
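A minimal sketch of this Z-score is given below. It assumes that stimulus and background firing rates are paired trial by trial, so that the standard deviation of the difference can be taken directly from the per-trial differences; the function name and the example rates are hypothetical.

```python
from statistics import mean, stdev


def z_score(stim_rates, background_rates):
    """Mean (stimulus - background) rate divided by the SD of that difference."""
    diffs = [s - b for s, b in zip(stim_rates, background_rates)]
    sd = stdev(diffs)  # sample SD of the per-trial differences
    if sd == 0:
        raise ValueError("Z-score is undefined when the difference has zero SD")
    return mean(diffs) / sd


# Hypothetical firing rates (spikes/s) over four trials
example_z = z_score([20, 24, 22, 26], [10, 10, 12, 12])
```

Taking the standard deviation of paired differences is equivalent to combining the two variances and subtracting twice their covariance, which is why no separate covariance term appears in the code.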

The mean values calculated for individual birds (n = 5) were used for statistical comparisons. Multi-factor repeated measures ANOVAs (Statistica 7.1 for Windows, StatSoft Inc.) were performed to test for potential modality (A and AV), stimulus (unfam 1–4, fam 1–4; Figure 2A), familiarity and/or hemisphere effects, independently for d′ and Z-scores. Since no difference between hemispheres was found for any measure, data from both hemispheres were pooled. These analyses were followed, when appropriate, by post hoc comparisons with PLSD Fisher tests (Statistica 7.1 for Windows, StatSoft Inc.). Unless otherwise indicated, data are presented as mean ± SEM.

Results

Multielectrode systematic recordings allowed us to record the electrophysiological activity of 2121 sites throughout the Field L of 5 awake-restrained adult male starlings (mean ± SEM = 424 ± 15 sites/bird). Recordings were made in both hemispheres but, since no difference between hemispheres was found, data from both hemispheres were pooled (see Materials and Methods). Fifty-two percent of the 2121 recorded sites displayed at least one significant response. No responses to visual stimuli alone were observed (responses were obtained only during acoustic stimulation). Only these auditory-responsive sites (n = 1104; mean ± SEM = 221 ± 19 sites/bird) were further analyzed.

The AV condition modulated the auditory responses of almost half (42%; n = 465, mean ± SEM = 93 ± 9 neuronal sites/bird) of the 1104 responsive neuronal sites recorded (221 ± 19 sites/bird), leading to an enhancement (d′ ≥ 1; 40.1 ± 2.8% of the cases) or a suppression (d′ ≤ −1; 40.5 ± 2.5% of the cases) of the responses. Moreover, both enhancement and suppression could be observed at the same site (19.4 ± 3.8% of the cases), depending on the stimulus broadcast. On average, response enhancement corresponded to an increase of 150 ± 15% when comparing firing rates obtained in AV and A conditions, while response suppression corresponded to a decrease of 46 ± 2% (see Figure 3B for examples).
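The classification of sites and the percentage changes reported above can be sketched as follows. The thresholds of ±1 on d′ come from the text; the function names, the labels, and the definition of percentage change as (AV − A)/A × 100 are assumptions made for this illustration.

```python
def classify_site(d_primes, criterion=1.0):
    """Label a site from its per-stimulus d' values.

    'enhanced' if any d' >= criterion, 'suppressed' if any d' <= -criterion,
    'both' if the site shows each effect for different stimuli.
    """
    enhanced = any(d >= criterion for d in d_primes)
    suppressed = any(d <= -criterion for d in d_primes)
    if enhanced and suppressed:
        return "both"
    if enhanced:
        return "enhanced"
    if suppressed:
        return "suppressed"
    return "unmodulated"


def percent_change(rate_av, rate_a):
    """Change in firing rate from A to AV, in percent of the A rate (assumed definition)."""
    return 100.0 * (rate_av - rate_a) / rate_a


# Consistency check on the reported counts
share_responsive = 100.0 * 1104 / 2121  # responsive sites among all recorded sites
share_modulated = 100.0 * 465 / 1104    # modulated sites among responsive sites
```

The two ratios round to 52% and 42%, matching the proportions given in the text, and the three modulation categories (40.1%, 40.5%, and 19.4%) sum to 100%.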

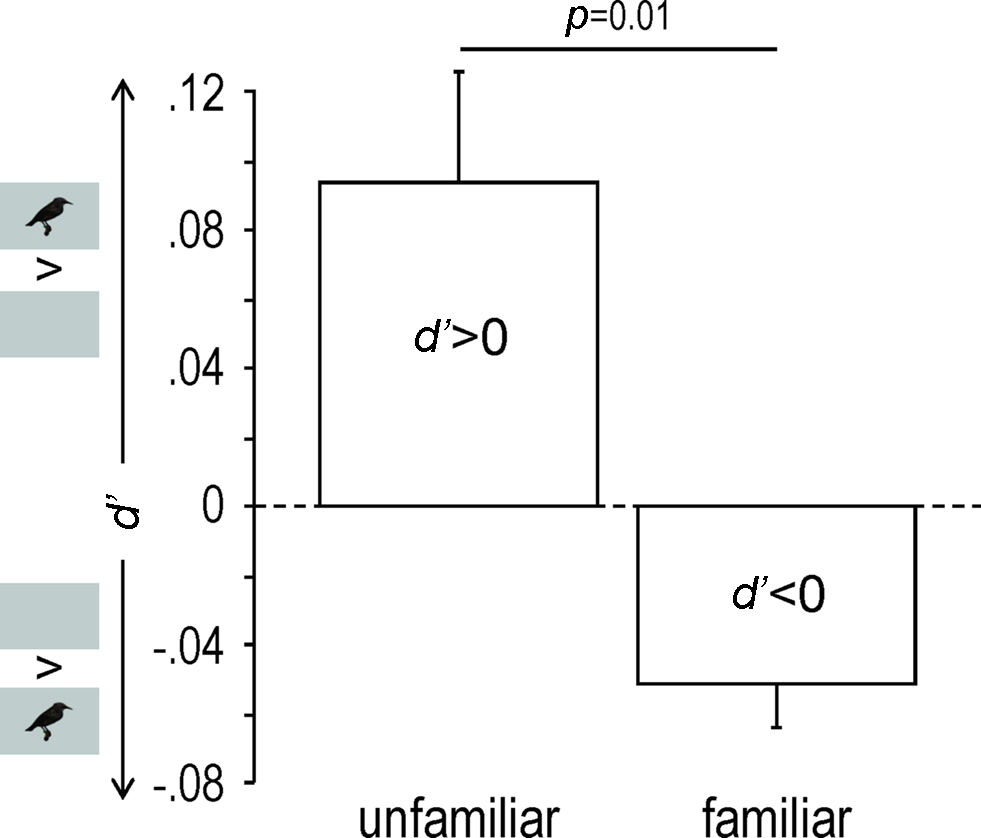

Familiarity appeared as a major factor in the response modulation (as measured by d′), which clearly depended on the stimulus (One-way repeated measures ANOVA, F7,4 = 11.65, p < 0.0001). Thus unfamiliar stimuli induced response enhancement (d′ > 0; One-sample t-test, df = 4, t = 2.88, p = 0.04) while familiar stimuli induced response suppression (d′ < 0; One-sample t-test, df = 4, t = −3.98, p = 0.02; unfamiliar versus familiar: One-way repeated measures ANOVA, F1,4 = 21.00, p = 0.01; Figures 3B and 4).

Figure 4. Response modulation as shown by mean (+SEM) d′ values obtained across birds for unfamiliar and familiar stimuli. Only neuronal sites exhibiting a |d′| value of 1 or more were taken into account. Small inserts along the y axis indicate whether the acoustic stimuli were presented with (AV condition) or without (A condition) visual stimuli.

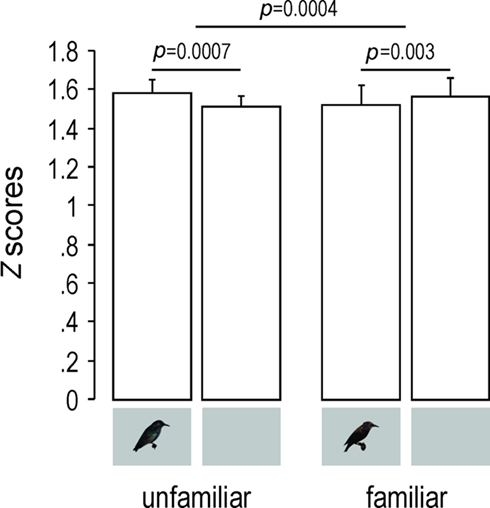

Response magnitude, as measured by Z-scores, was similarly influenced (Two-way repeated measures ANOVA, familiarity–modality interaction: F1,4 = 124.38, p = 0.0004; Figure 5). The AV condition, compared to the A one, elicited significantly stronger responses for unfamiliar stimuli (PLSD Fisher test, df = 4, p = 0.0007) and significantly weaker responses for familiar stimuli (PLSD Fisher test, df = 4, p = 0.003).

Figure 5. Response magnitude as shown by mean (+SEM) Z-score values obtained across birds for unfamiliar and familiar stimuli. Only neuronal sites exhibiting a |d′| value of 1 or more were taken into account. Small inserts under the bars indicate whether the acoustic stimuli were presented with (AV condition) or without (A condition) visual stimuli.

Given that unfamiliar stimuli were made of whistles and warbles, we checked for any effect of stimulus type. No significant effect was observed, either for d′ (One-way repeated measures ANOVA, F1,4 = 5.23, p = 0.08) or for Z-scores (Two-way repeated measures ANOVA, main effect: F1,4 = 3.74, p = 0.12, interaction: F1,4 = 4.64, p = 0.10).

Discussion

Our results show that auditory responses of almost half of the responsive neuronal sites recorded in the primary auditory area were modulated by the AV condition. Moreover, the type of modulation was influenced by stimulus familiarity. Unfamiliar stimuli induced response enhancement while familiar stimuli induced response suppression. This is to our knowledge the first evidence that multisensory integration may occur in a low-level, putatively unisensory brain region in a non-mammalian vertebrate species (review for mammals in Schroeder and Foxe, 2005).

The fact that we used only one picture of an unfamiliar bird may have been a limiting factor. However, if this had had an effect on our results, it would have been a habituation effect (since the unfamiliar image was presented more often than familiar images), which would suggest that the response enhancement that we observed for AV condition in the case of unfamiliar stimuli could actually be an underestimate. Moreover, this image was displayed with unfamiliar songs coming from different birds. However, it is very unlikely that our birds could detect that these songs were produced by different individuals. Indeed, it has been shown that starlings learn to recognize the songs of individual conspecifics by memorizing sets of motifs that are associated with individual singers, and a critical role for voice characteristics in individual song recognition has been eliminated (Gentner, 2004). Finally, unfamiliar acoustic stimuli were made of whistles and warbles, which might have influenced our results. However, no significant effect of the stimulus type (whistles or warbles) was observed (see Results).

Previous songbird studies failed to find multisensory interactions in sensory regions involved in the perception of song, which may be due to the measures (expression of ZENK gene, not expressed in Field L; Avey et al., 2005) or the birds’ state (anesthetized in Seki and Okanoya, 2006). Here the birds were awake and potentially attentive to stimulation. Moreover, our experiment was done outside the breeding season and we cannot exclude that our results could have been different if recordings had been done during the breeding season, especially as structural seasonal changes have been very recently observed in regions involved in auditory processing (De Groof et al., 2009; however see Duffy et al., 1999).

Selective attention has been proposed as a key mechanism in the process of song learning from adults (Poirier et al., 2004; Bertin et al., 2007), and both social segregation (lack of attention) and physical separation (unisensory stimulation) have been shown to impair the development of the primary auditory area (Cousillas et al., 2006). Attention may also explain the effect of familiarity observed here on auditory responses of adults. Thus, one could imagine that, when presented with an image of an unfamiliar starling, our birds somehow focused on the upcoming acoustic stimuli (which could help them identify the unknown individual), leading to response enhancement, whereas when presented with an image of a familiar starling, attention to the following acoustic stimulus was more diffuse (or distributed), leading to response suppression. Alternatively, it could be that birds reacted differently to a single (unfamiliar) image associated with four different songs compared to several (familiar) images each associated with a unique song. Further experiments using the same number of unfamiliar and familiar images will be needed to clarify this point. However, behavioral data from horses support the familiarity hypothesis: horses display lower visual attention after hearing familiar than unfamiliar individuals (Lemasson et al., 2009), suggesting that they may seek visual information when hearing unfamiliar individuals but not when hearing familiar ones.

Attention may not only alter perception at the peripheral level (Puel et al., 1988) but also modulate multisensory integration processes. Thus, seeing speech is supposed to tune the primary auditory cortex of humans to the acoustic features of speech, via multisensory attentional mechanisms and anticipation (Ojanen, 2005). Moreover, Talsma et al. (2007), using a combined audiovisual streaming design and a rapid serial visual presentation paradigm, have found, in the human brain, reversed integration effects for attended versus unattended stimuli – that is, when both the visual and auditory senses were attended, a superadditive effect was observed (multisensory response > unisensory sum), whereas, when unattended, this effect was reversed (multisensory response < unisensory sum). According to them, this effect appeared to reflect late attention-related processing that had spread to encompass the auditory component of the multisensory object. In the same way, we could imagine that, in our birds, the opposite effect observed for unfamiliar versus familiar stimuli could reflect attention-related processing taking place in the HVC (a highly integrative vocal nucleus where both visually and auditory-evoked responses have been recorded; Bischof and Engelage, 1985) that had spread to the Field L (which is functionally connected to HVC; Shaevitz and Theunissen, 2007).

Overall, our findings add to the parallels between birdsong and human speech: in both cases, vision modulates audition in auditory areas of the brain, and they also open the way to promising lines of research on the attention-related integration of multisensory information and the role of audio/visual components in identifying the source of a signal.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank C. Aubry and L. Henry for their help with the birds. This study was supported by a grant from the French CNRS to Isabelle George.

References

Amin, N., Grace, J. A., and Theunissen, F. E. (2004). Neural response to bird’s own song and tutor song in the zebra finch field L and caudal mesopallium. J. Comp. Physiol. A 190, 469–489.

Avey, M. T., Phillmore, L. S., and MacDougall-Shackleton, S. A. (2005). Immediate early gene expression following exposure to acoustic and visual components of courtship in zebra finches. Behav. Brain Res. 165, 247–253.

Bahrick, L. E., Lickliter, R., and Flom, R. (2004). Intersensory redundancy guides the development of selective attention, perception, and cognition in infancy. Curr. Dir. Psychol. Sci. 13, 99–102.

Bertin, A., Hausberger, M., Henry, L., and Richard-Yris, M.-A. (2007). Adult and peer influences on starling song development. Dev. Psychobiol. 49, 362–374.

Bischof, H. J., Böhner, J., and Sossinka, R. (1981). Influence of external stimulation on the quality of the song of the zebra finch (Taeniopygia guttata castanotis Gould). Z. Tierpsychol. 57, 261–267.

Bischof, H. J., and Engelage, J. (1985). Flash evoked responses in a song control nucleus of the zebra finch (Taeniopygia guttata castanotis). Brain Res. 326, 370–374.

Bolhuis, J. J., Van Mil, D. P., and Houx, B. B. (1999). Song learning with audiovisual compound stimuli in zebra finches. Anim. Behav. 58, 1285–1292.

Bovet, D., and Vauclair, J. (2000). Picture recognition in animals and humans. Behav. Brain Res. 109, 143–165.

Calvert, G. A., Bullmore, E. T., Brammer, M. J., Campbell, R., Williams, S. C., McGuire, P. K., Woodruff, P. W., Iversen, S. D., and David, A. S. (1997). Activation of auditory cortex during silent lipreading. Science 276, 593–596.

Capsius, B., and Leppelsack, H. J. (1999). Response patterns and their relationship to frequency analysis in auditory forebrain centers of a songbird. Hear. Res. 136, 91–99.

Cousillas, H., George, I., Henry, L., Richard, J.-P., and Hausberger, M. (2008). Linking social and vocal brains: could social segregation prevent a proper development of a central auditory area in a female songbird? PLoS ONE 3, e2194. doi: 10.1371/journal.pone.0002194

Cousillas, H., George, I., Mathelier, M., Richard, J.-P., Henry, L., and Hausberger, M. (2006). Social experience influences the development of a central auditory area. Naturwissenschaften 93, 588–596.

Cousillas, H., Leppelsack, H. J., Leppelsack, E., Richard, J.-P., Mathelier, M., and Hausberger, M. (2005). Functional organization of the forebrain auditory centres of the European starling: a study based on natural sounds. Hear. Res. 207, 10–21.

De Groof, G., Verhoye, M., Poirier, C., Leemans, A., Eens, M., Darras, V. M., and Van der Linden, A. (2009). Structural changes between seasons in the songbird auditory forebrain. J. Neurosci. 29, 13557–13565.

Doupe, A. J., and Kuhl, P. K. (1999). Birdsong and human speech: common themes and mechanisms. Annu. Rev. Neurosci. 22, 567–631.

Duffy, D. L., Bentley, G. E., and Ball, G. F. (1999). Does sex or photoperiodic condition influence ZENK induction in response to song in European starlings? Brain Res. 844, 78–82.

Evans, C. S., and Marler, P. (1991). On the use of video images as social stimuli in birds: audience effects on alarm calling. Anim. Behav. 41, 17–26.

Fort, A., and Giard, M. H. (2004). “Multiple electrophysiological mechanisms of audiovisual integration in human perception,” in The Handbook of Multisensory Processes, eds G. Calvert, C. Spence, and B. E. Stein (Cambridge, MA: The MIT Press), 503–514.

Gentner, T. Q. (2004). Neural systems for individual song recognition in adult birds. Ann. N. Y. Acad. Sci. 1016, 282–302.

George, I., Cousillas, H., Richard, J.-P., and Hausberger, M. (2003). A new extensive approach to single unit responses using multisite recording electrodes: application to the songbird brain. J. Neurosci. Methods 125, 65–71.

George, I., Cousillas, H., Richard, J.-P., and Hausberger, M. (2005). New insights into the auditory processing of communicative signals in the HVC of awake songbirds. Neuroscience 136, 1–14.

George, I., Hara, E., and Hessler, N. A. (2006). Behavioral and neural lateralization of vision in courtship singing of the zebra finch. J. Neurobiol. 66, 1164–1173.

Grace, J. A., Amin, N., Singh, N. C., and Theunissen, F. E. (2003). Selectivity for conspecific song in the zebra finch auditory forebrain. J. Neurophysiol. 89, 472–487.

Hausberger, M. (1997). “Social influences on song acquisition and sharing in the European starling (Sturnus vulgaris),” in Social Influences on Vocal Development, eds C. T. Snowdon and M. Hausberger (Cambridge: Cambridge University Press), 128–156.

Hausberger, M., Forasté, M., Richard-Yris, M.-A., and Nygren, C. (1997). Differential response of female starlings to shared and nonshared song types. Etologia 5, 31–38.

Hebets, E. A. (2005). Attention-altering signal interactions in the multimodal courtship display of the wolf spider Schizocosa uetzi. Behav. Ecol. 16, 75–82.

Henry, L. (1998). Captive and free living European starlings use differently their song repertoire. Rev. Ecol.-Terre Vie 53, 347–352.

Hernandez, A. M., and MacDougall-Shackleton, S. A. (2004). Effects of early song experience on song preferences and song control and auditory brain regions in female house finches (Carpodacus mexicanus). J. Neurobiol. 59, 247–258.

Hessler, N. A., and Doupe, A. J. (1999). Social context modulates singing-related neural activity in the songbird forebrain. Nat. Neurosci. 2, 209–211.

Hultsch, H., Schleuss, F., and Todt, D. (1999). Auditory-visual stimulus pairing enhances perceptual learning in a songbird. Anim. Behav. 58, 143–149.

Ikebuchi, M., and Okanoya, K. (1999). Male zebra finches and Bengalese finches emit directed songs to the video images of conspecific females projected onto a TFT display. Zool. Sci. 16, 63–70.

Janata, P., and Margoliash, D. (1999). Gradual emergence of song selectivity in sensorimotor structures of the male zebra finch song system. J. Neurosci. 19, 5108–5118.

Jarvis, E. D. (2004). Learned birdsong and the neurobiology of human language. Ann. N. Y. Acad. Sci. 1016, 749–777.

Jarvis, E. D., Scharff, C., Grossman, M. R., Ramos, J. A., and Nottebohm, F. (1998). For whom the bird sings: context-dependent gene expression. Neuron 21, 775–788.

Lemasson, A., Boutin, A., Boivin, S., Blois-Heulin, C., and Hausberger, M. (2009). Horse (Equus caballus) whinnies: a source of social information. Anim. Cogn. 12, 693–704.

Leppelsack, H. J. (1978). Unit responses to species-specific sounds in the auditory forebrain center of birds. Fed. Proc. 37, 2336–2341.

Leppelsack, H. J., and Vogt, M. (1976). Responses of auditory neurons in forebrain of a songbird to stimulation with species-specific sounds. J. Comp. Physiol. 107, 263–274.

Lewicki, M. S., and Arthur, B. J. (1996). Hierarchical organization of auditory temporal context sensitivity. J. Neurosci. 16, 6987–6998.

Margoliash, D. (1986). Preference for autogenous song by auditory neurons in a song system nucleus of the white-crowned sparrow. J. Neurosci. 6, 1643–1661.

Meredith, M. A., and Stein, B. E. (1983). Interactions among converging sensory inputs in the superior colliculus. Science 221, 389–391.

Muller, C. M., and Leppelsack, H. J. (1985). Feature extraction and tonotopic organization in the avian auditory forebrain. Exp. Brain Res. 59, 587–599.

Munhall, K. G., Gribble, P., Sacco, L., and Ward, M. (1996). Temporal constraints on the McGurk effect. Percept. Psychophys. 58, 351–362.

Ojanen, V. (2005). Neurocognitive Mechanisms of Audiovisual Speech Perception. Unpublished doctoral dissertation, Helsinki University of Technology, Espoo.

Partan, S. R., Larco, C. P., and Owens, M. J. (2009). Wild tree squirrels respond with multisensory enhancement to conspecific robot alarm behaviour. Anim. Behav. 77, 1127–1135.

Poirier, C., Henry, L., Mathelier, M., Lumineau, S., Cousillas, H., and Hausberger, M. (2004). Direct social contacts override auditory information in the song-learning process in starlings (Sturnus vulgaris). J. Comp. Psychol. 118, 179–193.

Proops, L., McComb, K., and Reby, D. (2009). Cross-modal individual recognition in domestic horses (Equus caballus). Proc. Natl. Acad. Sci. U.S.A. 106, 947–951.

Puel, J. L., Bonfils, P., and Pujol, R. (1988). Selective attention modifies the active micromechanical properties of the cochlea. Brain Res. 447, 380–383.

Reiner, A., Perkel, D. J., Bruce, L. L., Butler, A. B., Csillag, A., Kuenzel, W., Medina, L., Paxinos, G., Shimizu, T., Striedter, G., Wild, M., Ball, G. F., Durand, S., Güntürkün, O., Lee, D. W., Mello, C. V., Powers, A., White, S. A., Hough, G., Kubikova, L., Smulders, T. V., Wada, K., Dugas-Ford, J., Husband, S., Yamamoto, K., Yu, J., Siang, C., and Jarvis, E. D. (2004). Revised nomenclature for avian telencephalon and some related brainstem nuclei. J. Comp. Neurol. 473, 377–414.

Schroeder, C. E., and Foxe, J. (2005). Multisensory contributions to low-level, “unisensory” processing. Curr. Opin. Neurobiol. 15, 454–458.

Seki, Y., and Okanoya, K. (2006). Effects of visual stimulation on the auditory responses of the HVC song control nucleus in anesthetized Bengalese finches. Ornithol. Sci. 5, 39–46.

Sen, K., Theunissen, F. E., and Doupe, A. J. (2001). Feature analysis of natural sounds in the songbird auditory forebrain. J. Neurophysiol. 86, 1445–1458.

Shaevitz, S. S., and Theunissen, F. E. (2007). Functional connectivity between auditory areas Field L and CLM and song system nucleus HVC in anesthetized zebra finches. J. Neurophysiol. 98, 2747–2764.

Snowdon, C. T., and Hausberger, M. (1997). Social Influences on Vocal Development. Cambridge: Cambridge University Press.

Solis, M. M., and Doupe, A. J. (1999). Contributions of tutor and bird’s own song experience to neural selectivity in the songbird anterior forebrain. J. Neurosci. 19, 4559–4584.

Sturdy, C. B., Phillmore, L. S., Sartor, J. J., and Weisman, R. G. (2001). Reduced social contact causes perceptual deficits in zebra finches. Anim. Behav. 62, 1207–1218.

Sumby, W. H., and Pollack, I. (1954). Visual contribution to speech intelligibility in noise. J. Acoust. Soc. Am. 26, 212–215.

Talsma, D., Doty, T. J., and Woldorff, M. G. (2007). Selective attention and audiovisual integration: is attending to both modalities a prerequisite for early integration? Cereb. Cortex 17, 679–690.

Theunissen, F. E., and Doupe, A. J. (1998). Temporal and spectral sensitivity of complex auditory neurons in the nucleus HVc of male zebra finches. J. Neurosci. 18, 3786–3802.

Todt, D., Hultsch, H., and Heike, D. (1979). Conditions affecting song learning in nightingales. Z. Tierpsychol. 51, 23–35.

Vignal, C., Andru, J., and Mathevon, N. (2005). Social context modulates behavioural and brain immediate early gene responses to sound in male songbird. Eur. J. Neurosci. 22, 949–955.

Keywords: multisensory, audiovisual, primary auditory area, familiarity, European starling

Citation: George I, Richard J-P, Cousillas H and Hausberger M (2011) No need to talk, I know you: familiarity influences early multisensory integration in a songbird’s brain. Front. Behav. Neurosci. 4:193. doi: 10.3389/fnbeh.2010.00193

Received: 24 June 2010;

Accepted: 23 December 2010;

Published online: 25 January 2011.

Edited by:

Martin Giurfa, CNRS – Université Paul Sabatier – Toulouse III, France

Reviewed by:

Sarah Partan, Hampshire College, USA

Copyright: © 2011 George, Richard, Cousillas and Hausberger. This is an open-access article subject to an exclusive license agreement between the authors and Frontiers Media SA, which permits unrestricted use, distribution, and reproduction in any medium, provided the original authors and source are credited.

*Correspondence: Isabelle George, UMR6552 – Ethologie Animale et Humaine, Université Rennes 1 – CNRS, 263 Avenue du Général Leclerc, Campus de Beaulieu – Bât. 25, 35042 Rennes Cedex, France. e-mail: isabelle.george@univ-rennes1.fr