- ¹ Department of Computer Science, Christ University, Bengaluru, India

- ² Independent Practitioner, San Francisco, CA, United States

Anxiety disorders are psychiatric conditions characterized by prolonged and generalized anxiety experienced in response to various events or situations. They are currently regarded as the most widespread psychiatric disorders globally. Medication and various forms of psychotherapy are the primary therapeutic modalities used in clinical practice to treat anxiety disorders, and combining the two is known to yield greater benefits than medication alone. However, resources are scarce and psychotherapy options are limited in underdeveloped areas. Psychotherapy methods encompass relaxation techniques, controlled breathing exercises, visualization exercises, controlled exposure exercises, and cognitive interventions such as challenging negative thoughts. These methods are vital in treating anxiety disorders, but delivering them proficiently can be demanding. Moreover, some medications prescribed for anxiety disorders can cause withdrawal symptoms. Additionally, face-to-face psychotherapy is not widely available, and the capacity to predict and monitor the health, behavioral, and environmental aspects of individuals with anxiety disorders during the early phases is limited. In recent years, there has been notable progress in developing and deploying artificial intelligence (AI)-based applications and environments to improve the precision and sensitivity of diagnosing and treating various categories of anxiety disorders. This study therefore aims to assess the efficacy of AI-enabled environments in addressing existing challenges in managing anxiety disorders and reducing reliance on medication, and to investigate the potential advantages, issues, and opportunities of integrating AI-assisted healthcare for anxiety disorders and enabling personalized therapy.

1 Introduction

Anxiety disorders are prevalent psychiatric conditions characterized by excessive and prolonged anxiety in response to various stimuli. The average lifetime prevalence of any anxiety disorder is approximately 16%, with a 12-month prevalence of around 11% (Kessler et al., 2009). These rates vary across studies, with higher estimates in developed Western countries than in developing nations. Anxiety disorders are among the most prevalent mental health conditions, and their burden is likely to grow (Su et al., 2022). In recent years, a notable segment of the population, predominantly younger people, has suffered from mental health disorders, including anxiety and depression. Anxiety disorders, along with related conditions such as depression, significantly affect medical care consumption: when these conditions go undiagnosed, medical utilization often increases as healthcare providers search for physical explanations for the symptoms.

Additionally, when depression co-exists with other general medical conditions, patient adherence to treatment worsens, reducing the chances of improvement or recovery from the other condition (Goldman et al., 1999). Yet adequate treatment remains out of reach for many in this group. This predicament reflects a broader societal context in which individuals facing mental health challenges hesitate to acknowledge their condition because of social norms and pressure. Furthermore, for those who overcome this stigma, the significant financial cost of professional help remains an obstacle to access (Furqan et al., 2020).

In a study exploring the prevalence of anxiety disorders, the authors analyzed the findings of a global survey conducted by the World Health Organization (WHO). The survey revealed that mental health conditions such as depression, schizophrenia, and personality disorders are over-represented in inpatient treatment settings because certain features of these disorders require hospitalization (Sartorius et al., 1993). In contrast, patients with anxiety disorders are generally underrepresented in inpatient care, as their condition rarely necessitates hospitalization. These findings suggest that anxiety disorders are substantially underestimated and undertreated, indicating a need for increased awareness and appropriate interventions (Bandelow and Michaelis, 2015). Moreover, anxiety disorder management guidelines across countries endorse psychotherapy as a primary therapeutic approach, including modalities such as cognitive behavioral therapy, relaxation therapy, exposure and response prevention, and several other psychotherapeutic interventions (Katzman et al., 2014; Bandelow et al., 2022). However, the limited availability of qualified therapists, especially in remote or developing areas, restricts many patients’ access to standardized and appropriate treatment (Su et al., 2022).

Despite robust empirical evidence supporting specific psychological interventions, implementing them widely in the field remains a significant obstacle (Sadeh-Sharvit et al., 2023). Furthermore, therapists in mental health centers carry significant administrative obligations, which contribute to poor work-life balance and compassion fatigue among frontline mental health workers. These circumstances hinder the successful implementation of evidence-based practices (Moreno et al., 2020; Lluch et al., 2022; Wilkinson et al., 2017). Because particular psychotherapeutic modalities are difficult to deliver proficiently, patients may show varying levels of engagement, and some may resist treatment. In such scenarios, therapists must adopt flexible strategies to deliver interventions effectively. Given these challenges in managing anxiety disorders, integrating innovative technologies such as artificial intelligence (AI) environments and platforms becomes essential. These technologies could help therapists manage and treat patients with anxiety disorders and support precision-based therapeutic approaches while reducing therapist workload (Bickman, 2020). Additionally, adopting novel technologies to assess the impact of behavioral interventions, and evaluating their efficacy regularly, are important steps toward better patient outcomes. This highlights the need for scalable AI-enabled mental healthcare delivery systems that can objectively measure patient progress, streamline workflows, support treatment customization, and automate administrative tasks (Sadeh-Sharvit and Hollon, 2020).

The effective delivery of therapy relies on human connection, empathy, and careful consideration of each patient’s needs. While these human elements are invaluable, AI-driven tools can complement therapy by enhancing clinicians’ capacity to provide personalized care. AI technologies can support this by executing tasks that traditionally demand human intelligence, such as analyzing complex patterns in patient language, behavior, and emotional expressions. This enables clinicians to dedicate more attention to the human aspects of the therapeutic relationship, such as empathy and understanding, while using AI to refine treatment approaches, monitor progress, and make data-informed modifications that improve therapy outcomes (Graham et al., 2019). From the patient’s standpoint, AI technologies also show promise because they can be incorporated into mental health treatment to enhance accessibility and involvement, ultimately improving the quality of care. AI can generate predictions from treatment data that help clinicians interpret that data and make data-driven clinical decisions (Kellogg and Sadeh-Sharvit, 2022). Despite these advantages, widespread use of AI-enabled digital decision support systems, tools, and environments to effectively manage anxiety disorders and other mental health problems has not yet been achieved (Bertl et al., 2022; Antoniadi et al., 2021).

To address the gap in current research, this study aims to critically analyze the effectiveness of AI technologies in mental healthcare, particularly in managing anxiety disorders. Although there is ongoing research on the use of AI in mental health, there is a significant lack of understanding regarding the comparative effectiveness of AI-based interventions versus traditional therapeutic methods, specifically concerning personalized care, user engagement, and ethical considerations. This study seeks to fill this gap by exploring several critical research questions, including:

• What is the current state of research on the transformative potential of AI-based interventions in managing anxiety disorders, and how do these interventions compare with traditional therapeutic approaches in terms of efficacy?

• In what ways do AI-based interventions enhance precision and personalization in the treatment of anxiety disorders, particularly through adaptive and data-driven approaches tailored to individual needs?

• How do AI-driven environments, including technologies like VR and chatbots, transform user engagement and treatment adherence in the management of anxiety disorders?

• What are the key ethical, privacy, and regulatory challenges associated with the transformative use of AI technologies in managing anxiety disorders?

• Could the integration of AI-based interventions significantly reduce the reliance on medication for individuals with anxiety disorders, and what are the potential long-term outcomes and implications of such a shift?

• What are the key challenges and opportunities in automating mental healthcare services through AI, and how can these technologies be implemented to maximize their transformative impact in clinical practice?

• What transformative insights can be drawn from recent clinical trials on the efficacy of AI in mental healthcare, and how do these findings inform future research and the development of next-generation AI-driven therapeutic tools?

This review centers on exploring the transformative potential of artificial intelligence (AI) technologies in the management of anxiety disorders, emphasizing their ability to enhance personalized care, improve treatment accessibility, and address current limitations in traditional therapeutic methods.

2 Materials and methods

2.1 Strategy

A narrative review approach was chosen for its suitability in exploring specific areas within the subject domain and synthesizing current theories and concepts (Ferrari, 2015). Adopting a narrative review allows us to identify recurring patterns, emerging trends, and gaps in the existing literature. The study also incorporates several elements of systematic reviews, including search strategies, inclusion and exclusion criteria, study selection, and data extraction. For comprehensive coverage, we relied on established narrative review frameworks successfully implemented in previous studies (Sianturi et al., 2023; Green et al., 2006; Bourhis, 2017). We performed an extensive literature search across several databases, including Scopus, Web of Science, IEEE Digital Library, ACM, PubMed, Google Scholar, and NIH, covering literature published over the previous decade up to the present. This review provides a comprehensive synthesis of knowledge and findings in this field and offers valuable insights and perspectives.

The search process began with compiling a list of keywords and terms such as “artificial intelligence in mental healthcare,” “AI tools for managing anxiety disorders,” “AI-driven environment for treating anxiety disorders,” “use of AI as therapeutic tools in depression and anxiety,” “customizing anxiety treatment with AI technologies,” “digital AI interventions for anxiety,” “AI in psychotherapeutic approaches to anxiety disorders,” and “managing generalized anxiety disorder with AI-based cognitive tools.” These terms were used to search several databases and search engines, including Google Scholar, Web of Science, and Scopus. The search was supplemented by a manual review of the reference lists of selected studies to capture all pertinent literature, particularly studies on interlinked concepts such as AI’s role in managing anxiety disorders, AI-based platforms for anxiety treatment, AI’s application in psychiatry, AI’s enhancement of mental health management, and the evaluation of psychological factors in anxiety disorders through AI tools. This search methodology identified a broad spectrum of research on the application of AI in the treatment of anxiety disorders and in broader mental healthcare.
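As an illustration of how a keyword list like the one above can be turned into a single search string, the sketch below quotes each phrase and joins the phrases with OR. The helper name `build_boolean_query` is hypothetical, and each database (Scopus, Web of Science, PubMed, and so on) has its own field tags and date-filter syntax, so the output is a starting point to adapt per database rather than the exact query used in this review.

```python
# Hypothetical helper: combine the review's keyword list into one Boolean string.
# Field tags, truncation, and date filters differ by database, so adapt the
# output per database (e.g. apply the 2014-2024 year range via its own filter).
SEARCH_TERMS = [
    "artificial intelligence in mental healthcare",
    "AI tools for managing anxiety disorders",
    "AI-driven environment for treating anxiety disorders",
    "use of AI as therapeutic tools in depression and anxiety",
    "customizing anxiety treatment with AI technologies",
    "digital AI interventions for anxiety",
    "AI in psychotherapeutic approaches to anxiety disorders",
    "managing generalized anxiety disorder with AI-based cognitive tools",
]


def build_boolean_query(terms):
    """Quote each non-empty phrase and join the phrases with OR."""
    quoted = [f'"{t.strip()}"' for t in terms if t.strip()]
    if not quoted:
        raise ValueError("at least one search term is required")
    return "(" + " OR ".join(quoted) + ")"


if __name__ == "__main__":
    print(build_boolean_query(SEARCH_TERMS))
```

Quoting each phrase keeps multi-word terms together as exact phrases; dropping the quotes would broaden recall at the cost of precision.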

2.2 Inclusion and exclusion criteria

The review centered on a comprehensive evaluation of the efficacy of AI environments in managing anxiety disorders, which required precise inclusion and exclusion criteria to select studies that contribute significantly to this field. The inclusion criteria cover studies that prioritize the use of AI technologies, machine learning algorithms, virtual reality simulations, and chatbots for treating and managing anxiety disorders. The review covers a range of anxiety disorders, namely generalized anxiety disorder (GAD), social anxiety disorder (SAD), panic disorder, phobias, obsessive-compulsive disorder (OCD), and post-traumatic stress disorder (PTSD). Because much of the literature addresses anxiety together with interrelated conditions such as depression and other mental health disorders, such studies were also considered.

Moreover, particular attention was given to empirical investigations, such as randomized controlled trials, quasi-experimental designs, and observational studies, as they provide comprehensive data on the efficacy of AI environments in treating anxiety disorders. Selected studies had to evaluate outcomes relevant to anxiety management, including reduction of anxiety symptoms, improvement in quality of life, and treatment adherence. The review examined articles published in the past decade to keep the literature current. Limiting the review to English-language articles also improves its accessibility and practicality for a broad readership. To keep the evaluation focused on AI-enabled interventions, studies that investigate only conventional therapies without incorporating AI technologies were excluded. Similarly, articles that address only mental health conditions other than anxiety disorders were left out to preserve the review’s specificity. Non-empirical sources, including editorials, opinion pieces, conference abstracts, dissertations, and preprints, were excluded to uphold the reliability of the synthesized evidence. A human-centric focus was maintained by excluding studies without direct implications for managing anxiety disorders in humans. Finally, duplicate publications and redundant data from the same study populations were excluded to prevent data duplication and bias and thereby strengthen the validity of the findings.

2.3 Study selection

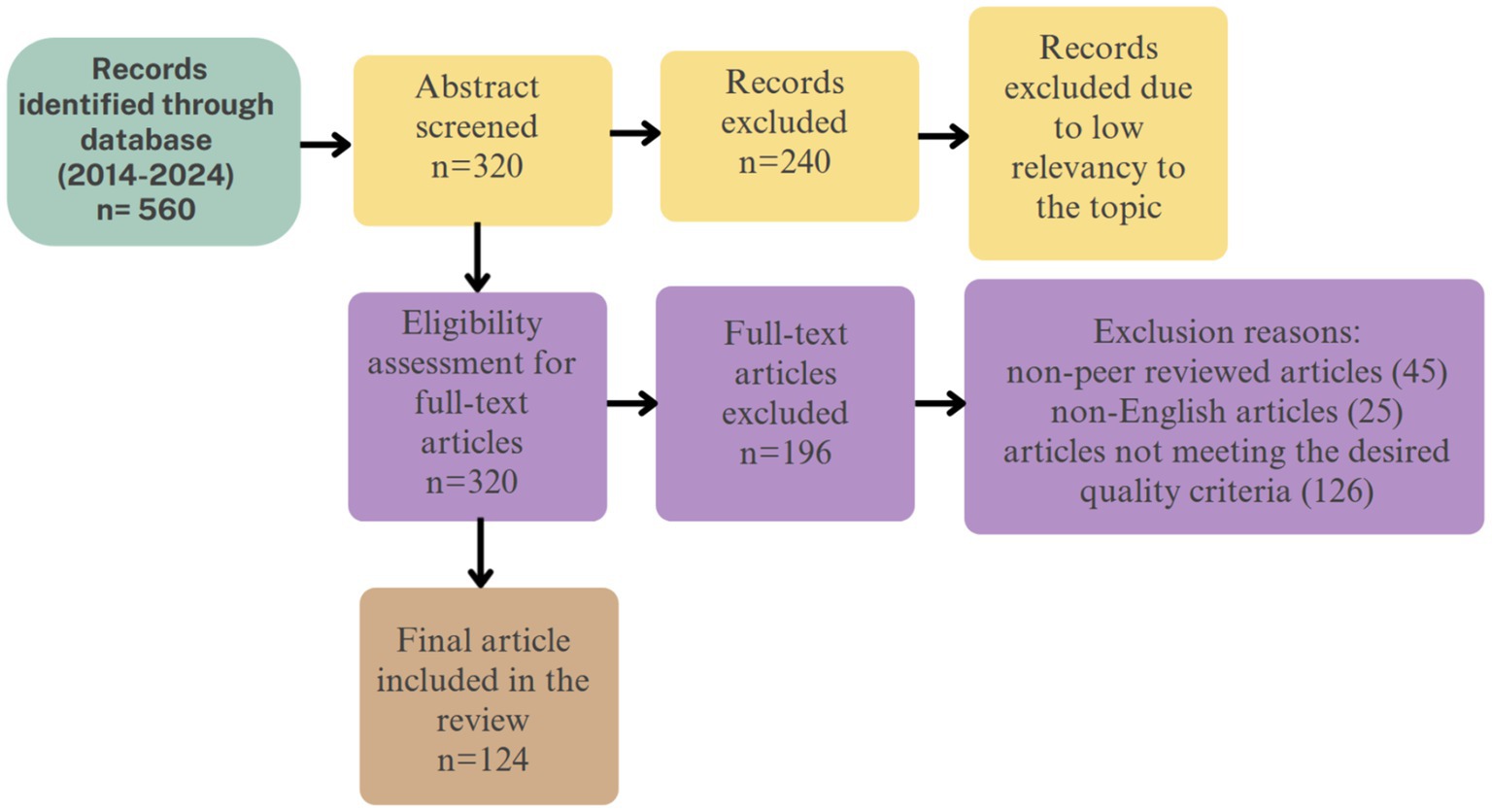

The selection of studies was conducted in a systematic two-stage process. Initially, titles and abstracts were screened from a comprehensive database search spanning Web of Science, Scopus, PubMed, ACM Digital Library, Google Scholar, IEEE, and PsycINFO. This initial screening aimed to identify studies published between 2014 and 2024, resulting in 560 literature sources. Two reviewers independently assessed these records against predetermined inclusion and exclusion criteria. The criteria specified that the studies be either case studies, randomized controlled trials, or practical experiments focused primarily on assessing the efficacy of artificial intelligence technologies in managing anxiety disorders. These studies needed to detail interventions involving AI technologies with participants assigned to treatment and control groups. Additionally, only studies published in English and available in full text were included, thereby excluding conference papers, preprints, or papers only available in abstract form.

Beyond database searches, the selection process was enriched by consultations with field experts who recommended pivotal studies and unpublished reports. We also hand-searched conference proceedings and scanned the reference lists of identified papers to ensure comprehensive coverage of relevant studies. This approach captured critical literature that may not have been indexed in the primary databases.

Following the initial screening, 320 studies were selected for a more detailed review. Full-text articles were meticulously examined to confirm relevance and methodological rigor. Discrepancies between the reviewers were resolved through discussion or, if necessary, by consulting a third reviewer.
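The two-reviewer screening with escalation described above can be sketched as a small decision function. `Screening`, `resolve`, and the `discuss`/`third_reviewer` callables are hypothetical names standing in for human judgment; the sketch only encodes the order in which disagreements are settled, not how any reviewer decides.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Screening:
    """Independent include (True) / exclude (False) decisions for one record."""
    record_id: str
    reviewer_a: bool
    reviewer_b: bool


def resolve(
    s: Screening,
    discuss: Callable[[Screening], Optional[bool]],
    third_reviewer: Callable[[Screening], bool],
) -> bool:
    """Agreement stands; otherwise try discussion, then defer to a third reviewer."""
    if s.reviewer_a == s.reviewer_b:
        return s.reviewer_a
    consensus = discuss(s)  # None means discussion did not settle the disagreement
    if consensus is not None:
        return consensus
    return third_reviewer(s)


if __name__ == "__main__":
    # Reviewers disagree and discussion fails, so the third reviewer's call is used.
    print(resolve(Screening("r1", True, False), lambda s: None, lambda s: True))
```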

This detailed examination narrowed the selection down to 196 studies for further analysis. Articles were excluded if they were non-peer-reviewed (Rundo et al., 2020), not in English (Graham et al., 2019), or failed to meet other quality criteria (Kessler et al., 2009). Finally, 125 peer-reviewed articles were thoroughly analyzed to extract insights and evidential support regarding the effectiveness of AI technologies in treating anxiety disorders. Figure 1 depicts the flowchart for the study selection and inclusion process.
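The counts reported in this section imply how many records were removed at each stage. The short calculation below makes that arithmetic explicit, assuming the stages are strictly sequential and no records were added between them; the text does not report a per-reason breakdown, so only totals are derived.

```python
# Record counts reported at each stage of the selection process.
STAGES = [
    ("Titles and abstracts screened", 560),
    ("Selected for full-text review", 320),
    ("Retained after full-text examination", 196),
    ("Included in the final analysis", 125),
]


def exclusions_per_stage(stages):
    """Pair each later stage with the number of records removed to reach it."""
    return [
        (label, prev_count - count)
        for (_, prev_count), (label, count) in zip(stages, stages[1:])
    ]


removed = exclusions_per_stage(STAGES)
for label, n in removed:
    print(f"{label}: {n} removed")
print("Total removed:", sum(n for _, n in removed))
```

Under that assumption, 240, 124, and 71 records are removed at the three transitions, 435 in total (560 minus 125).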

2.4 Data extraction

The study employed a narrative approach to accommodate the expected variation in study designs and results, qualitatively summarizing the data from the included studies.

The study characteristics comprised authors, publication year, and study location, which establish the context for each study and its conclusions. The study design field recorded the research design, such as randomized controlled trials or quasi-experimental approaches, together with participant demographics, including gender and age, to enhance contextual understanding. Sample size recorded the number of participants in each study. Particular emphasis was placed on intervention details, specifically the AI applications used, including their purpose and method of implementation. We also extracted the reported impact on therapy outcomes and the management of anxiety disorders. Finally, key findings captured each study’s primary outcomes and conclusions regarding the influence of AI interventions.
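The extraction fields listed above map naturally onto a structured record. The dataclass below is a hypothetical extraction form, not the review’s actual instrument; field names are illustrative, and the example values are placeholders rather than data from any included study.

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class ExtractionRecord:
    """Hypothetical extraction form mirroring the fields described in the text."""
    # Study characteristics
    authors: str
    year: int
    location: str
    # Study design and participants
    design: str                       # e.g. "randomized controlled trial"
    sample_size: int                  # number of participants
    age_range: Optional[str] = None
    gender_breakdown: Optional[str] = None
    # Intervention details: which AI application, its purpose and implementation
    ai_application: str = ""
    # Impact on therapy outcomes and anxiety management, plus key findings
    therapy_outcomes: List[str] = field(default_factory=list)
    key_findings: str = ""

    def __post_init__(self) -> None:
        if self.sample_size < 0:
            raise ValueError("sample_size cannot be negative")


# Placeholder values only; not data from any included study.
example = ExtractionRecord(
    authors="<authors>", year=2023, location="<location>",
    design="randomized controlled trial", sample_size=0,
)
print(asdict(example))
```

A fixed schema like this is one way to support the consistency goal of a standard extraction approach: every study is described by the same fields, and missing items stay visible as empty values.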

2.5 Data synthesis

Due to the varying nature of study designs and outcome measures, we used a qualitative narrative framework to synthesize data in this review. This method allowed for a comprehensive examination of the data without the restrictions of meta-analysis techniques, which may not be appropriate when there is high heterogeneity. The narrative synthesis involved a detailed assessment of each included study, focusing on identifying and understanding the diverse impacts of the interventions studied.

The analysis involved finding key patterns, themes, and trends. This included examining how different variables influenced the outcomes and identifying commonalities across studies to draw broader conclusions about the topic. During the analysis, we paid particular attention to contextual and methodological differences that could affect the interpretation of results, such as the settings where AI technologies were implemented and the populations targeted. We integrated the findings to construct a coherent narrative that reflects the potential and limitations of AI tools and environments in managing anxiety disorders. This approach highlights AI technologies’ effects and provides a solid foundation for future research, ensuring that investigations can build on a clear understanding of what has been established previously.

2.6 Bias evaluation

Bias evaluation is critical for upholding the validity and reliability of the findings, as it addresses potential biases in study selection and data interpretation. A meticulous protocol was designed to mitigate such biases throughout the review process. First, a structured and transparent method was adopted to formulate the research questions and set the inclusion and exclusion criteria, ensuring that study selection was not influenced by the researchers’ preferences or preconceived notions. Data extraction was then performed using a standard approach to reduce variability and maintain consistency across studies.

To counter publication bias and achieve comprehensive coverage, a diverse range of databases, including widely recognized sources, was searched. During the data synthesis phase, discrepancies and conflicts were managed by involving an independent reviewer who facilitated critical discussion and consensus building, enhancing the credibility and objectivity of the outcomes. This process aimed not only to reduce bias but also to support the transparency and reproducibility of the research.

3 Findings

Researchers have shown considerable interest in AI’s potential to enhance anxiety disorder management. AI-enabled interventions span a variety of digital tools, such as AI-enabled virtual reality (VR) environments, chatbots that deliver cognitive behavioral therapy, and machine learning (ML) algorithms designed to tailor treatment plans to individual needs. The findings below address the research questions and outline the diverse studies identified during the review.

3.1 Standard AI techniques for managing anxiety and other mental health disorders

AI has the potential to transform mental health care by adhering to the tenets of personalized care and enhanced accessibility. Existing studies have highlighted the capability of AI technologies to provide continuous support, facilitate early detection of mental health disorders, and reduce the extensive wait times associated with traditional consultations. Furthermore, AI tools enable informed decision-making through robust data analysis, significantly enhancing mental health interventions (Zafar et al., 2024).

Machine learning (ML) has been employed to leverage insights from unstructured data sources, such as clinician notes, to enhance the accuracy of predictions related to anxiety disorders (Thieme et al., 2020). ML techniques enable the development of predictive models that assess the probability of individuals developing mental health disorders by analyzing various clinical factors, including historical data. These predictive outcomes can be used to personalize treatment approaches, recommending specific therapies, medications, or other interventions that align with each individual’s unique characteristics and response patterns (Graham et al., 2019). Furthermore, researchers have also applied ML in classifying mental health conditions using techniques such as support vector machines (SVM), logistic regression, random forests, decision trees, and artificial neural networks (Chung and Teo, 2022). Additionally, there is an emerging pattern of utilizing electronic health records (EHRs) to diagnose mental health disorders by employing ML or hybrid AI techniques. Finally, ML is also being utilized in the development of automated screening tools to aid in the identification of individuals who may be susceptible to specific mental health disorders (Iyortsuun et al., 2023).
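To make the classification setting concrete, the sketch below trains logistic regression, one of the techniques listed above, with plain batch gradient descent. Everything here is an assumption for illustration: the two features are unnamed synthetic scores drawn from two Gaussian groups, not clinical variables, and a real screening model would need validated data, held-out evaluation, and calibration.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def make_synthetic(n: int, seed: int = 0):
    """Two Gaussian groups of 2-D points: label 1 around (1, 1), label 0 around (-1, -1)."""
    rng = random.Random(seed)
    X, y = [], []
    for i in range(n):
        label = i % 2
        center = 1.0 if label else -1.0
        X.append([rng.gauss(center, 0.5), rng.gauss(center, 0.5)])
        y.append(label)
    return X, y


def train_logistic_regression(X, y, lr: float = 0.1, epochs: int = 500):
    """Fit weights and bias by batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w, b, x) -> float:
    """Estimated probability that x belongs to class 1."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


X, y = make_synthetic(200)
w, b = train_logistic_regression(X, y)
# Training accuracy only; a real evaluation would use held-out data.
accuracy = sum((predict_proba(w, b, xi) >= 0.5) == bool(yi) for xi, yi in zip(X, y)) / len(y)
print(f"training accuracy: {accuracy:.2f}")
```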

ML can be broadly categorized into two main approaches: supervised and unsupervised learning. Supervised ML trains algorithms on pre-labeled data to categorize cases or predict outcomes. Training draws on multiple inputs to predict results accurately, including biological features (such as genetic information), patient medical records, and lifestyle features (Vora et al., 2023). These predictions take two primary forms: classification, which identifies the type of mental disorder, and quantitative prediction, which estimates the severity of the disease. In contrast, unsupervised learning operates without labeled data and focuses on identifying inherent patterns and relationships within the dataset, which makes it valuable for analyzing complex datasets such as neuroimaging data. By identifying these patterns, unsupervised learning can aid in discovering new subtypes of mental disorders and may lead to more effective treatment options (Thakkar et al., 2024).
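For the unsupervised case, k-means clustering is one common way to look for candidate subgroups without labels; the text does not name a specific algorithm, so k-means is a swapped-in example. The sketch assumes each patient is represented by a numeric feature vector (the toy profiles below are hypothetical), and any clusters it finds would still need clinical validation before being treated as subtypes.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid update."""
    rng = random.Random(seed)
    centroids = [tuple(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        updated = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                updated.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                updated.append(centroids[c])  # keep an empty cluster's centroid in place
        if updated == centroids:
            break  # converged: assignments will no longer change
        centroids = updated
    return centroids, labels


# Hypothetical 2-D feature profiles forming two well-separated groups.
profiles = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
centroids, labels = kmeans(profiles, k=2)
print(labels)
```

The number of clusters `k` must be chosen in advance; in practice it is usually compared across several values rather than fixed.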

Deep learning (DL) is a specialized branch of machine learning that excels at identifying complex patterns and categorizing data. DL can operate in an unsupervised manner, but it is also highly effective in supervised tasks where labeled data is used for training. This flexibility has drawn researchers aiming to improve precision in diagnosing and managing health disorders. DL builds on artificial neural networks (ANNs), which are inspired by the neuronal structure of the human brain and process complex data across multiple layers. In mental health research, these models have become a valuable addition, particularly for image classification of neuroimaging data from CT and MRI scans to identify potential markers of mental health conditions (Thakkar et al., 2024).
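The layered processing described above can be illustrated with a minimal forward pass through a fully connected network. The weights here are hand-set for demonstration and the function names are hypothetical; practical deep learning models learn their weights from data with a framework, and neuroimaging work typically uses convolutional rather than purely fully connected layers.

```python
import math
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weight matrix, bias vector)


def dense(x: Sequence[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Fully connected layer: each output unit is a weighted sum of inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v: Sequence[float]) -> List[float]:
    """Rectified linear activation applied element-wise."""
    return [max(0.0, z) for z in v]


def forward(x: Sequence[float], layers: List[Layer]) -> List[float]:
    """Pass x through stacked layers: ReLU after hidden layers, sigmoid at the output."""
    h = list(x)
    for i, (weights, bias) in enumerate(layers):
        h = dense(h, weights, bias)
        if i < len(layers) - 1:
            h = relu(h)
    return [1.0 / (1.0 + math.exp(-z)) for z in h]


# Hand-set weights: 2 inputs -> 2 hidden units -> 1 output score in (0, 1).
toy_layers: List[Layer] = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]
print(forward([2.0, 1.0], toy_layers))
```

Each layer transforms the previous layer’s output, and the non-linear activation between layers is what lets a deeper stack represent patterns a single linear layer cannot.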

Reinforcement learning (RL) trains algorithms to optimize decision-making by rewarding desired behaviors (Su et al., 2022), which allows a model to adjust treatment plans dynamically based on user feedback. In mental healthcare, the core principle of RL is to assign each trainee an RL agent that assists with personalized tasks or exercises. As the activities are carried out, the agent interacts with the trainee and learns from each interaction using specific RL algorithms and characteristics, which supports the development of personalized cognitive systems. The system progressively develops a personalized policy based on the trainee’s ongoing performance, which acts as the reward signal driving policy adaptation. Long-term optimization of the trainee’s performance is achieved by adjusting the parameters that determine the difficulty level. Consequently, the system can independently adapt and learn the optimal approach to cognitive training for each trainee (Zini et al., 2022; Stasolla and Di Gioia, 2023).
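A simplified way to see reward-driven difficulty adaptation is an epsilon-greedy multi-armed bandit, which is a stateless special case of RL rather than the specific algorithms used in the cited work. In this sketch each arm is a difficulty level, and the reward probabilities are hypothetical stand-ins for how often a session at that level yields a performance gain for a simulated trainee.

```python
import random
from typing import List, Sequence, Tuple


def run_difficulty_bandit(
    gain_prob: Sequence[float], steps: int = 5000, epsilon: float = 0.1, seed: int = 0
) -> Tuple[List[float], List[int]]:
    """Epsilon-greedy bandit over difficulty levels.

    gain_prob[i] is the (hypothetical) chance that a session at level i produces a
    performance gain for a simulated trainee; a gain yields reward 1, otherwise 0.
    """
    rng = random.Random(seed)
    n_levels = len(gain_prob)
    counts = [0] * n_levels
    values = [0.0] * n_levels  # running mean reward per level
    for _ in range(steps):
        if rng.random() < epsilon:
            level = rng.randrange(n_levels)  # explore a random level
        else:
            level = max(range(n_levels), key=lambda a: values[a])  # exploit best estimate
        reward = 1.0 if rng.random() < gain_prob[level] else 0.0
        counts[level] += 1
        values[level] += (reward - values[level]) / counts[level]  # incremental mean
    return values, counts


values, counts = run_difficulty_bandit([0.3, 0.7, 0.4])  # easy, moderate, hard
print(counts)
```

With these assumed probabilities the agent concentrates most sessions on the moderate level (index 1); `epsilon` controls how often it keeps sampling the other levels in case the trainee’s response changes.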

Similarly, VR environments integrated with AI techniques have been used to treat and assess mental health conditions. VR-based exposure therapy is considered beneficial because it lets individuals confront a feared situation or context in a safe and controlled manner (Bell et al., 2020), resulting in less distress and better therapeutic outcomes. Computer vision, in turn, interprets visual data and analyzes non-verbal cues, such as facial expressions and gestures, to infer emotional states (Huang et al., 2023). This technology plays a critical role in mental health assessments and can support individuals with neurodevelopmental disorders (Hashemi et al., 2021; de Belen et al., 2020).
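One way an AI layer could adapt a VR exposure session is to move up or down a graded hierarchy of scenes based on the user’s reported distress. The sketch assumes distress is rated on the 0-100 Subjective Units of Distress Scale (SUDS), a convention not mentioned in the text, and the function name and thresholds are hypothetical illustration values, not clinical guidance.

```python
from typing import Sequence


def next_exposure_level(
    current_level: int,
    suds_ratings: Sequence[float],
    max_level: int,
    habituation_threshold: float = 30,
    abort_threshold: float = 90,
) -> int:
    """Choose the next scene in a graded VR exposure hierarchy.

    suds_ratings are 0-100 distress ratings collected during the current scene.
    Both thresholds are hypothetical illustration values, not clinical guidance.
    """
    if not suds_ratings:
        raise ValueError("at least one distress rating is required")
    if max(suds_ratings) >= abort_threshold:
        return max(current_level - 1, 0)  # distress too high: step back to a milder scene
    if suds_ratings[-1] <= habituation_threshold:
        return min(current_level + 1, max_level)  # distress subsided: advance
    return current_level  # distress still elevated: repeat the same scene


print(next_exposure_level(2, [60, 45, 25], max_level=5))
```

Keeping the rule explicit makes each step of the hierarchy auditable by the clinician supervising the session.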

By providing predictive insights, immediate feedback, and personalized care, AI could transform mental health treatment, which makes adopting these methodologies a significant step forward for mental health care.

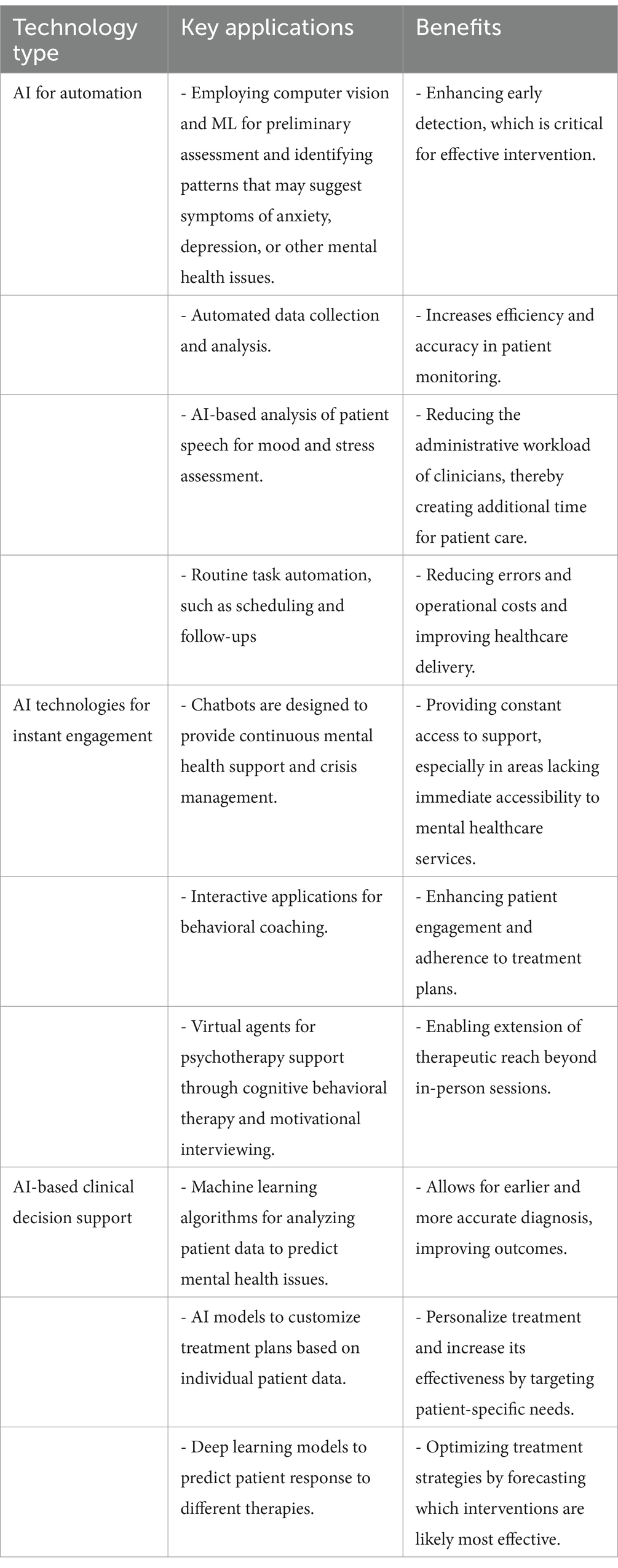

3.2 AI technologies for automating mental healthcare services

There are three primary categories of technologies relevant to mental health management: automation technologies, engagement technologies, and clinical decision support technologies. AI-enabled automation technologies optimize healthcare management processes and service delivery using machine learning and computer vision systems, often analyzing structured data such as electronic health records. Meanwhile, recent advances point to AI-based engagement technologies, such as Natural Language Processing (NLP), which can power chatbots and intelligent agents that converse with and engage patients using unstructured data such as spoken language or text (Bickman, 2020). Platforms that combine NLP and ML can also offer chatbot-based screening and assist with routine tasks that clinicians might neglect because of their many responsibilities. Technologies like computer vision, NLP, and ML can perform a wide range of tasks, such as recognizing emotions and conversation styles, which allows initial screening to be automated. This information can then inform recommendations for treatment or specific types of therapy based on individual needs (Chang et al., 2021). AI-based technologies can further automate data collection and analysis before, during, or after sessions, enabling digital surveys and extracting insights from patterns in patients’ speech tone and word choice (Kellogg and Sadeh-Sharvit, 2022).
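As a small example of automating a digital survey, the sketch below scores the GAD-7, a widely used seven-item anxiety questionnaire that the text does not specifically name. Each item is rated 0-3, and the total (0-21) is mapped to the commonly used minimal, mild, moderate, and severe bands with cut-offs at 5, 10, and 15. The function name is hypothetical, and the output is a screening aid for a clinician, not a diagnosis.

```python
from typing import Sequence, Tuple

# Commonly used GAD-7 severity bands (total score ranges are inclusive).
GAD7_BANDS = [(0, 4, "minimal"), (5, 9, "mild"), (10, 14, "moderate"), (15, 21, "severe")]


def score_gad7(responses: Sequence[int]) -> Tuple[int, str]:
    """Sum the seven item ratings (0-3 each) and map the total to a severity band."""
    if len(responses) != 7:
        raise ValueError("the GAD-7 has exactly seven items")
    if any(r not in (0, 1, 2, 3) for r in responses):
        raise ValueError("each item must be rated 0, 1, 2, or 3")
    total = sum(responses)
    for low, high, label in GAD7_BANDS:
        if low <= total <= high:
            return total, label
    raise AssertionError("unreachable: totals always fall in 0-21")


print(score_gad7([1, 2, 1, 1, 2, 1, 0]))
```

Validating the input before scoring matters in an automated pipeline, since a silently truncated or mis-coded response would otherwise shift a patient into the wrong band.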

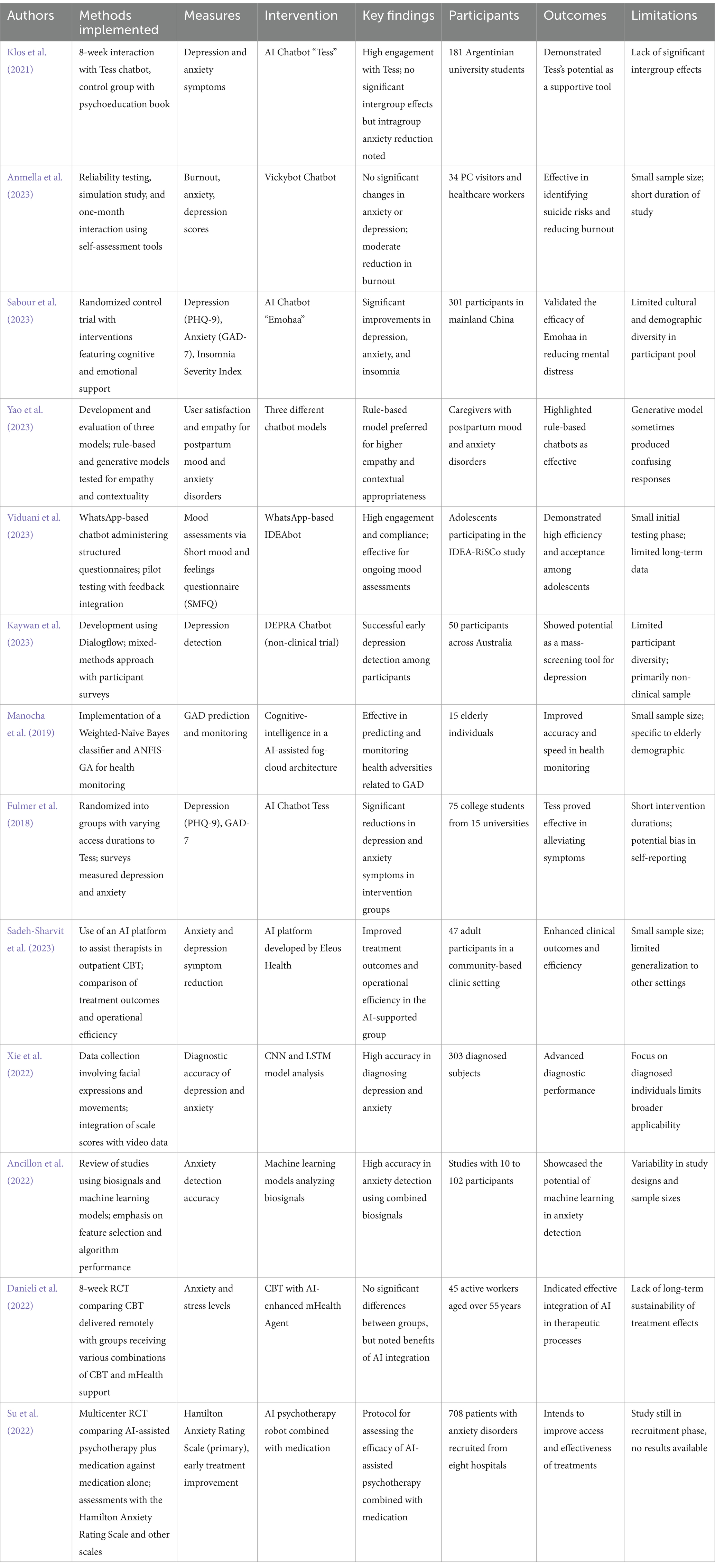

A key aim of AI-enabled screening tools is to improve the accuracy, accessibility, and scalability of interventions (Smrke et al., 2021). Nonetheless, these technologies are designed to assist, rather than replace, the expertise of clinicians and to help address mental health workforce shortages (Balcombe and De Leo, 2021). These tools also facilitate evaluating patient responses to specific interventions, identifying cases where improvement is insufficient, and prioritizing more intensive care when necessary (Lim et al., 2021). Key findings from evaluating the automation aspect of AI in mental healthcare settings are detailed in Table 1.
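Identifying patients whose improvement is insufficient can be expressed as a simple rule over repeated symptom scores. The sketch below assumes that a reduction of less than 50% from baseline counts as insufficient response; that threshold, the helper names, and the patient IDs are illustrative assumptions, and a flag only prompts clinician review rather than changing care automatically.

```python
from typing import Dict, List, Tuple


def needs_escalation(baseline: float, current: float, min_reduction: float = 0.5) -> bool:
    """True if the score has not fallen by at least min_reduction (fraction of baseline)."""
    if baseline <= 0:
        return current > 0  # no baseline symptoms: flag any new symptoms
    return (baseline - current) / baseline < min_reduction


def flag_caseload(scores: Dict[str, Tuple[float, float]], min_reduction: float = 0.5) -> List[str]:
    """Return IDs (sorted) whose (baseline, current) scores suggest insufficient response."""
    return sorted(
        pid for pid, (base, cur) in scores.items()
        if needs_escalation(base, cur, min_reduction)
    )


# Hypothetical patient IDs and scores, for illustration only.
print(flag_caseload({"p01": (16, 6), "p02": (16, 12), "p03": (10, 5)}))
```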

3.3 Challenges of automating mental healthcare services using AI

While AI-enabled tools and environments offer clear benefits for managing anxiety disorders and other mental health issues, there are notable drawbacks to keep in mind. Implementing AI-based automation in mental healthcare can lead to a dependence on automated systems, resulting in decreased clinician attentiveness. This issue may become acute in complex care situations where automated systems fail to grasp subtle patient needs. Additionally, many AI tools incorporate auto-complete functions that could lead clinicians to overlook errors in medication or therapy names, alerts, or unusual patient responses due to diminished vigilance (Kellogg and Sadeh-Sharvit, 2022; Parasuraman and Manzey, 2010).

Moreover, if AI systems take over tasks such as initial screening or data analysis, clinicians may feel that their roles are diminished or their professional importance undermined (Fiske et al., 2019). Another critical challenge is the unclear allocation of tasks between AI technologies and clinicians, an uncertainty that can reduce adoption of and trust in AI technologies and thus their effectiveness in clinical settings. Integrating AI into mental healthcare also raises ethical and practical issues. Clinicians and patients may have concerns about data security and privacy, and biases inherent in AI models may limit their clinical applicability (Asan and Choudhury, 2021). Finally, patients may still miss the human element of care, which further hinders the adoption of AI, especially in critical settings such as managing anxiety disorders (Rundo et al., 2020).

3.4 Natural language processing and chatbots in mental healthcare settings

Natural language processing (NLP) is a branch of AI that enables tools and environments to support human-like interactions; in mental healthcare, it underpins chatbots that provide real-time support and psychoeducational content. These chatbots process user inputs dynamically and deliver responses that simulate therapeutic interactions, enhancing user engagement while remaining continuously available, a critical feature in regions underserved by mental health professionals (Ahmed et al., 2023). NLP is currently used for a range of tasks, from social media analysis and customer support to real-time mental health monitoring based on the emotional content of text and speech patterns (Thakkar et al., 2024; Khurana et al., 2023; Zhang et al., 2022). It thus enables chatbots that offer instantaneous interaction, immediate support, and valuable insights.

Chatbots can also analyze user inputs to identify signs of distress, anxiety, or depression (Bendig et al., 2019). AI chatbots could help overcome barriers to seeking mental healthcare by providing personalized, easily accessible, cost-effective, stigma-free, and confidential support. This can facilitate early intervention and generate critical data for research and policy development (Singh, 2023). Another significant benefit is that AI chatbots offer interactions free from human judgment, which is particularly important for individuals who hesitate to seek help for fear of stigmatization or bias (Khawaja and Bélisle-Pipon, 2023). Several applications that incorporate chatbots, such as Ada, Wysa, Replika, and Youper, have been examined in the context of mental health. However, existing research has focused broadly on mental health applications, and comparatively few studies have addressed specific disorders such as anxiety. A thorough review is therefore needed to assess the range of features these applications offer (Ahmed et al., 2021).
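To make the idea of screening user inputs for signs of distress concrete, the sketch below matches messages against a small keyword lexicon. It is a deliberately simplified, hypothetical example: the lexicon, the categories, and the `screen_message` function are invented for illustration and do not describe the internals of Ada, Wysa, Replika, Youper, or any other application named above.

```python
import re

# Hypothetical, deliberately tiny lexicon for illustration only.
DISTRESS_LEXICON = {
    "anxiety": ["anxious", "panic", "worried", "nervous", "on edge"],
    "low_mood": ["hopeless", "empty", "worthless", "sad"],
    "sleep": ["can't sleep", "insomnia", "awake all night"],
}


def screen_message(text: str) -> dict:
    """Return each lexicon category whose phrases occur in `text` as whole words."""
    lowered = text.lower()
    hits = {}
    for category, phrases in DISTRESS_LEXICON.items():
        found = [
            phrase for phrase in phrases
            if re.search(r"\b" + re.escape(phrase) + r"\b", lowered)
        ]
        if found:
            hits[category] = found
    return hits


print(screen_message("I feel anxious and I can't sleep before exams"))
print(screen_message("Had a great day"))
```

Keyword matching misses paraphrase and ignores negation (for example, "I'm not anxious" still matches "anxious"), which is one reason context-aware NLP models are preferred for this task.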

NLP-based chatbots are gaining traction in mental health interventions. These AI systems are designed for one-on-one interaction and aim to provide human-like responses and support. A common data source for these chatbots is user interactions within dedicated mental health apps, such as mindfulness training apps or chatbots designed specifically for anxiety or depression management (Zhang et al., 2022). In mental healthcare, AI-driven tools combining NLP and ML can be used to analyze data for emerging trends in anxiety disorders, risk factors, and potential solutions (Zafar et al., 2024; Straw and Callison-Burch, 2020). However, a recent study emphasized that AI chatbots need to incorporate human values such as empathy and ethical considerations, because doing so can address the limitations of AI systems and improve their interactions with users, making them more effective and trustworthy in sensitive domains such as mental health (Balcombe, 2023). Researchers have also highlighted a pressing need for guidelines and regulations that ensure AI chatbots are developed and deployed responsibly, following principles of fairness, accountability, and transparency in AI design and operation (Timmons et al., 2023; Joyce et al., 2023). Furthermore, education and training on the capabilities and limitations of AI chatbots are vital for both users and providers, as they can help temper unrealistic expectations and promote effective use of the technology in mental healthcare (Balcombe, 2023).

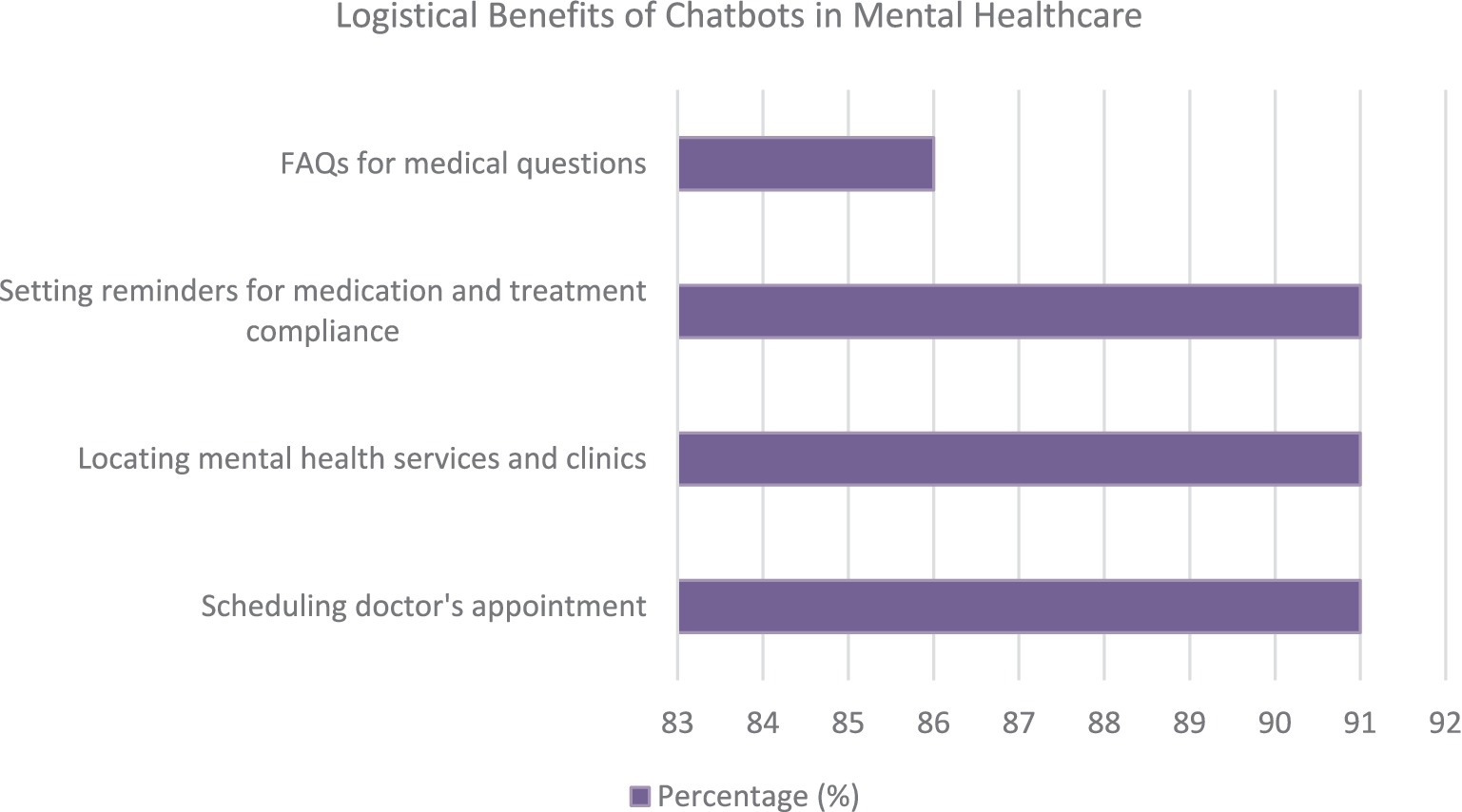

An investigation by Sweeney et al. examined the views of mental health professionals on integrating chatbots as a means of supporting mental health services. Most participants recognized the advantages of AI-enabled chatbots in mental healthcare, and some saw potential for chatbots to assist with mental health management. Nonetheless, a prevailing concern was the perceived inability of chatbots to understand or express human emotions. However, professionals’ confidence in the efficacy of healthcare chatbots for aiding patient self-management grew with their experience (Sweeney et al., 2021). The primary benefits of chatbots for mental healthcare service delivery are highlighted in Figure 2.

The study by Sweeney et al. also highlighted potential logistical advantages of incorporating chatbots into mental healthcare, which could enhance mental healthcare service delivery. Figure 3 displays these benefits.

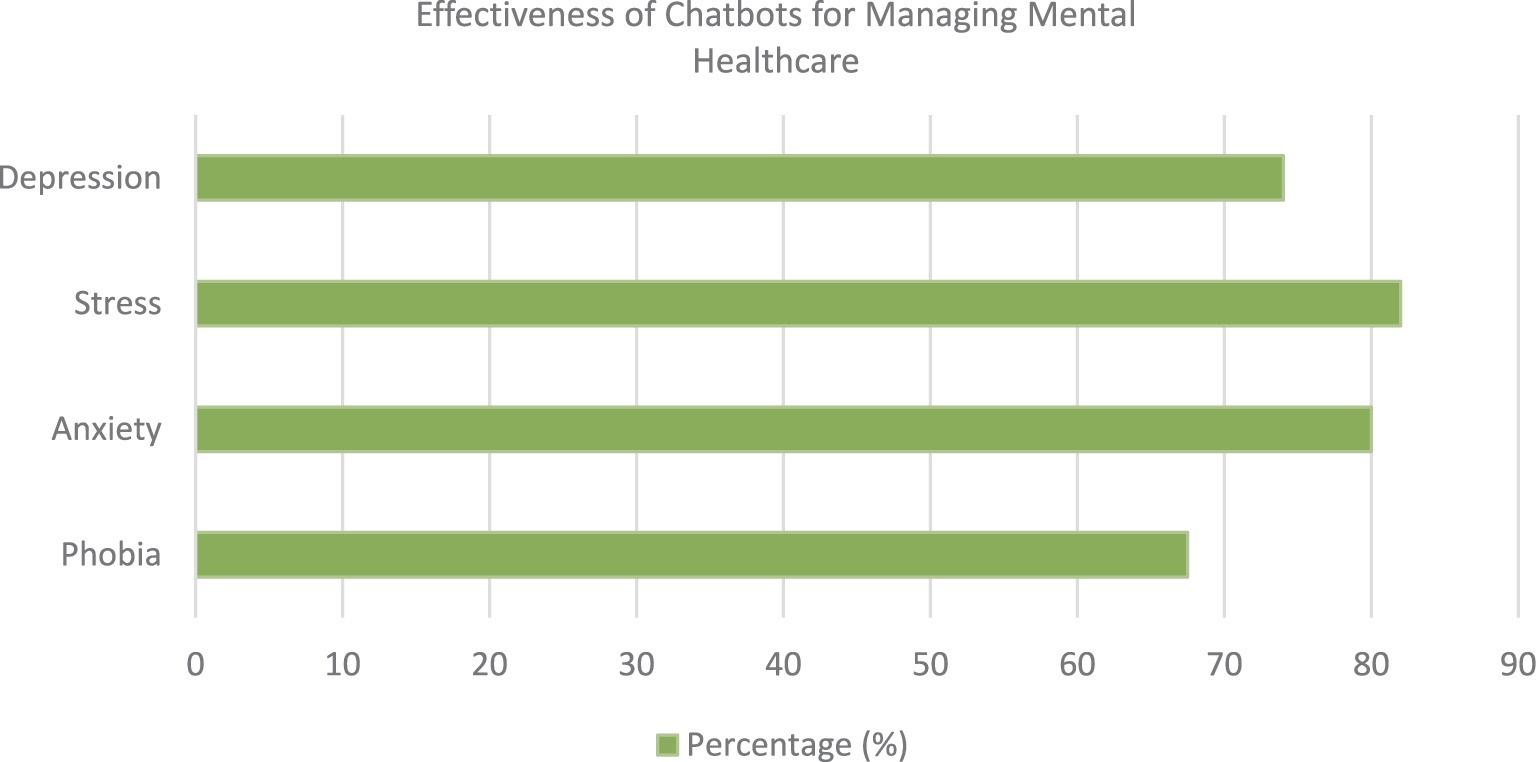

Respondents also viewed chatbots as potentially valuable tools for self-management (39%), education (36%), and training (35%). Chatbots were additionally recognized as beneficial aids in cognitive behavioral therapy, with nearly a quarter of professionals affirming their capacity to provide continuous support with minimal user involvement (Sweeney et al., 2021). Respondents further indicated that they felt chatbots could effectively manage several mental health conditions, as depicted in Figure 4.

Despite these advantages, there are obstacles to implementing mental healthcare chatbots. A notable proportion of participants (54%) raised multiple concerns, such as chatbots’ limited ability to understand or express human emotions (86%), inadequate provision of comprehensive care (77%), and insufficient intelligence to assess clients accurately (73%). Moreover, a considerable proportion of participants (59%) raised concerns about data privacy and confidentiality (Sweeney et al., 2021).

3.5 Wearables for managing anxiety disorders

A recent systematic review and meta-analysis assessed the performance of wearable AI technologies in detecting and predicting anxiety disorders and found it promising yet suboptimal. The meta-analysis included 21 studies and yielded a pooled mean accuracy of 0.82, with pooled mean sensitivity and specificity of 0.79 and 0.92, respectively. These findings suggest that wearable AI can correctly identify the presence or absence of anxiety in most cases. Nevertheless, given its current performance limitations, wearable AI is not recommended as a stand-alone clinical tool; instead, it should be combined with conventional diagnostic methods to improve accuracy. The study further encourages the inclusion of additional data sources, such as neuroimaging, to improve the diagnostic capabilities of wearable technologies (Abd-alrazaq et al., 2023).
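The three metrics reported above can be illustrated with a confusion matrix. The counts in the sketch below are hypothetical, chosen only so that sensitivity and specificity equal the pooled values of 0.79 and 0.92; they are not data from Abd-alrazaq et al. (2023). The example also shows that accuracy, unlike sensitivity and specificity, depends on how common anxiety is in the sample, which is why a pooled accuracy need not follow directly from the pooled sensitivity and specificity.

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute standard screening metrics from confusion-matrix counts."""
    return {
        # Proportion of people with anxiety whom the model detects.
        "sensitivity": tp / (tp + fn),
        # Proportion of people without anxiety whom the model correctly clears.
        "specificity": tn / (tn + fp),
        # Proportion of all predictions that are correct.
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


def accuracy_at_prevalence(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Expected accuracy when a fraction `prevalence` of the sample has anxiety."""
    return sensitivity * prevalence + specificity * (1 - prevalence)


# Hypothetical sample: 100 participants with anxiety, 100 without.
metrics = diagnostic_metrics(tp=79, fp=8, tn=92, fn=21)
print(metrics)  # sensitivity 0.79, specificity 0.92, accuracy 0.855

# Same sensitivity and specificity, lower prevalence -> different accuracy.
print(accuracy_at_prevalence(0.79, 0.92, prevalence=0.2))  # about 0.894
```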

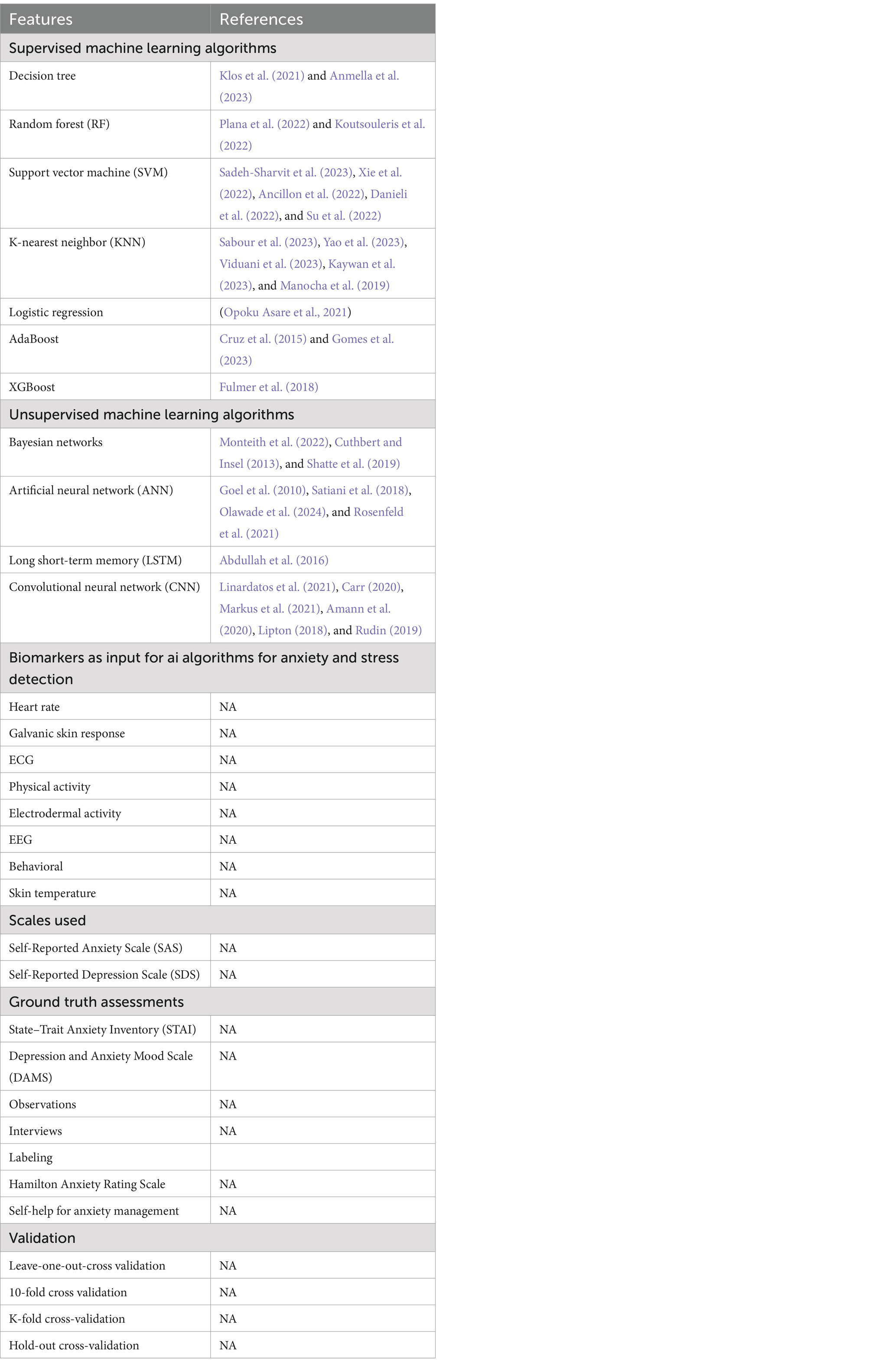

Numerous wearable devices incorporate ML algorithms to process and analyze the data they collect, providing users with insights and real-time feedback on their health and wellness and enabling continuous health monitoring and management (Nahavandi et al., 2022). A review by Gedam and Paul examined the application of ML algorithms to stress detection using data from multiple wearable sensors and identified heart rate and galvanic skin response as the most effective sensor signals (Gedam and Paul, 2021). Cruz et al. emphasized the importance of detecting and predicting panic in individuals with panic disorder (PD), using anomaly detection algorithms to distinguish panic from non-panic states (Cruz et al., 2015). The literature on applying ML to physiological data for mental health monitoring through wearable technology is growing, although it remains at an early stage (Gomes et al., 2023). Table 2 summarizes the most effective AI models and other features used in wearable-sensor and hybrid approaches to detecting and monitoring anxiety and other mental health disorders.
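The anomaly-detection idea can be sketched in a few lines: establish a personal resting baseline for each signal and flag moments when readings deviate far from it. The sketch below is not the method of Cruz et al. (2015); it is a hypothetical z-score rule over heart rate and galvanic skin response, the two signals Gedam and Paul (2021) identified as most effective, using made-up readings and an assumed threshold of 2.5 standard deviations.

```python
from statistics import mean, stdev


def zscores(values, baseline):
    """Standardize readings against a person's own resting baseline."""
    mu, sigma = mean(baseline), stdev(baseline)
    return [(v - mu) / sigma for v in values]


def flag_anomalies(hr, gsr, hr_baseline, gsr_baseline, threshold=2.5):
    """Return indices where heart rate AND skin conductance are both anomalously high."""
    hr_z = zscores(hr, hr_baseline)
    gsr_z = zscores(gsr, gsr_baseline)
    return [
        i
        for i, (h, g) in enumerate(zip(hr_z, gsr_z))
        if h > threshold and g > threshold
    ]


# Hypothetical resting baseline for one wearer.
hr_baseline = [68, 70, 72, 71, 69, 70]          # heart rate, beats per minute
gsr_baseline = [2.0, 2.1, 1.9, 2.0, 2.2, 1.8]  # skin conductance, microsiemens

# Hypothetical incoming readings, one per time window.
hr_stream = [71, 73, 95, 98, 72]
gsr_stream = [2.0, 2.6, 3.5, 2.1, 2.0]

print(flag_anomalies(hr_stream, gsr_stream, hr_baseline, gsr_baseline))  # [2]
```

Requiring both signals to rise together is one simple way to reduce false alarms: in window 3 the heart rate is high but skin conductance is not, so it is not flagged. Heart rate also rises with exercise, so practical systems typically add context such as accelerometer data.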

Table 2. Commonly used AI models and algorithms and key features for anxiety detection and mental health monitoring.

Table 2 presents an extensive overview of the AI algorithms commonly used for anxiety detection and mental health monitoring, including support vector machines (SVM), random forests (RF), AdaBoost, decision trees, artificial neural networks (ANN), k-nearest neighbors (KNN), Bayesian networks, logistic regression, long short-term memory networks (LSTM), XGBoost, and convolutional neural networks (CNN). These algorithms recur across studies, which demonstrate their applicability to interpreting complex physiological and behavioral data for mental health assessment.

Key biomarkers such as heart rate, galvanic skin response (GSR), electrocardiogram (ECG) signals, physical activity, and others serve as model inputs and improve the precision of the models. These algorithms are validated against established scales such as the State–Trait Anxiety Inventory (STAI) and the Self-Rating Anxiety Scale (SAS), which provide reference labels for assessing their accuracy and reliability in clinical settings. This integration of advanced computational methods and multiple biomarkers reflects the evolving landscape of mental health diagnostics and could improve personalized treatment plans and real-time monitoring of mental health conditions.
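To illustrate how one of the algorithms in Table 2 might be validated against a self-report scale, the sketch below trains a k-nearest neighbors classifier on two wearable features and uses STAI-derived labels as ground truth, scoring it with leave-one-out cross-validation. The records are invented, and the STAI cutoff of 40 is an assumption (cutoffs used in practice vary across studies); the sketch does not reproduce any specific study in Table 2.

```python
import math
from collections import Counter
from statistics import mean, stdev

STAI_CUTOFF = 40  # assumed cutoff for "anxious"; studies use different values

# Hypothetical records: (mean heart rate in bpm, mean skin conductance in
# microsiemens, STAI-State score collected in the same session).
RECORDS = [
    (68, 2.1, 31), (72, 2.4, 35), (70, 1.9, 29), (75, 2.6, 38),
    (88, 4.2, 52), (92, 4.8, 58), (85, 3.9, 47), (90, 4.5, 55),
]
X = [(hr, gsr) for hr, gsr, _ in RECORDS]
y = [stai >= STAI_CUTOFF for _, _, stai in RECORDS]  # True = anxious per STAI


def knn_predict(train_X, train_y, x, k=3):
    """Majority vote among the k training records closest to x."""
    # z-score each feature with training-set statistics so units are comparable
    stats = [(mean(col), stdev(col)) for col in zip(*train_X)]

    def scale(row):
        return [(v - m) / s for v, (m, s) in zip(row, stats)]

    target = scale(x)
    nearest = sorted(
        (math.dist(scale(row), target), label)
        for row, label in zip(train_X, train_y)
    )[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def leave_one_out_accuracy(X, y, k=3):
    """Agreement between kNN predictions and STAI labels, holding out one record at a time."""
    correct = 0
    for i in range(len(X)):
        train_X, train_y = X[:i] + X[i + 1:], y[:i] + y[i + 1:]
        correct += knn_predict(train_X, train_y, X[i], k) == y[i]
    return correct / len(X)


print(leave_one_out_accuracy(X, y))
print(knn_predict(X, y, (87, 4.0)))
```

Z-scoring with training-fold statistics keeps heart rate (tens of beats per minute) from dominating skin conductance (a few microsiemens) in the distance calculation, and recomputing those statistics inside each fold avoids leaking information from the held-out record.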

3.6 Outcomes of randomized trials and practical experiments

To understand the efficacy of AI in managing anxiety disorders and related mental health disorders, we examined various randomized controlled trials, pilot studies, and practical implementations. In recent years, the integration of AI into mental health interventions has attracted significant attention, yielding novel approaches to treatment and mental healthcare service delivery that emphasize improved accessibility and effectiveness. Our aim is not only to assess the measurable outcomes of these trials but also to understand the broader implications of integrating AI into routine clinical practice for anxiety and other mental health disorders. The outcomes are summarized in Table 3.

Table 3 offers insights into the diverse applications and outcomes of AI and ML in mental healthcare. Some studies, such as the evaluation of the “Emohaa” chatbot in China, demonstrated notable efficacy in managing mental health symptoms. Others, such as the Vickybot trial, highlighted the variability of AI’s effectiveness, particularly in altering core symptoms such as anxiety and depression, even though the chatbot showed promise in reducing burnout among healthcare workers. The findings of the various randomized controlled trials (RCTs) indicate that future trials need a more inclusive research approach to ensure that their findings apply across diverse populations. This is critical because the concentration of these trials in high-resource settings may limit their applicability in lower-resource environments. In this context, Plana et al. (2022) identified significant methodological shortcomings in RCTs of medical machine learning, such as a high risk of bias and poor demographic inclusivity, which could affect the generalizability of the results.

While the studies collectively suggest that AI and ML could substantially improve mental healthcare outcomes and patient care, their implementation in clinical settings depends on overcoming significant challenges related to trial design, data integrity, and ethical reporting standards. These insights are critical for understanding the current landscape of AI in healthcare and underscore the need for methodological rigor, greater reporting transparency, and more inclusive research designs. This synthesis can serve as a resource for healthcare and technology stakeholders, guiding the refinement and implementation of AI-driven interventions in clinical settings.

4 Discussion

The integration of AI into mental healthcare represents a significant transformation in the management of conditions such as anxiety disorders, offering advanced capabilities for diagnosis, treatment personalization, and broader accessibility. However, applying these technologies in real-world clinical settings poses significant challenges. While AI can enhance predictive accuracy, a notable gap remains between AI-generated results and the practical demands of healthcare environments, particularly in mental healthcare service delivery (Koutsouleris et al., 2022). Although ML algorithms have shown promise in mental health applications, their improvements over existing models may not be large enough to change clinical decision-making significantly; evidence from other prediction domains likewise shows that such gains can be modest (Goel et al., 2010). There is therefore a critical need to refine AI models so that they align more closely with clinical evaluations. This alignment is essential to expand access to diagnostic and prognostic services, particularly during crises or emergencies (Satiani et al., 2018).

AI can also enhance mental health service delivery by supporting routine screening, telehealth services, and personalized therapy, which improves the accessibility and efficacy of treatment (Olawade et al., 2024). A key benefit of AI is its ability to analyze large volumes of data and identify patterns that may be difficult for clinicians to detect manually. The resulting comprehensive view of a patient’s mental health can help clinicians devise personalized treatment plans, potentially benefiting those reluctant to seek help because of stigma (Rosenfeld et al., 2021). AI can also support continuous symptom monitoring and early relapse detection, allowing clinicians to focus more on therapeutic relationships and quality of care (Monteith et al., 2022).

Developing AI systems for specific tasks in mental healthcare settings can enhance intervention strategies and therapy recommendations. In this context, narrow or “weak” AI, which is built for specific tasks, may be suitable because it is more controllable and better suited to mental health treatment needs (Koutsouleris et al., 2022; Cuthbert and Insel, 2013). While some fear that AI could replace human expertise, others believe that healthcare professionals will continue to play a critical role and that combining human expertise with AI can improve patient care. Such collaboration could enhance patient management, with AI assisting in identifying symptoms and supporting evidence-based decision-making (Koutsouleris et al., 2022). The potential of AI for diagnosing and treating mental health conditions such as anxiety and depression is considerable (Shatte et al., 2019). Traditional diagnostic methods, which often rely on self-report and clinician assessment, are hampered by stigma, social desirability bias, and a general tendency toward underdiagnosis (Opoku Asare et al., 2021). AI can mitigate these challenges by using large volumes of behavioral and linguistic data to identify subtle indicators of mental health issues. AI algorithms may detect conditions earlier and more accurately, thereby preventing symptom escalation and reducing the burden on healthcare systems (Zafar et al., 2024; Abdullah et al., 2016).

However, implementing AI in mental healthcare requires careful consideration of ethical and practical challenges. AI and ML models inherently introduce a degree of uncertainty that must be managed when they fail to perform as expected in real-world scenarios. AI technologies should therefore be evaluated not solely on their predictive capabilities but also on their practical applicability, considering the trade-offs between model simplicity, speed, explainability, and accuracy (Linardatos et al., 2021; Carr, 2020). Explainability is essential in clinical settings because it makes AI-driven decisions transparent, especially when those decisions affect patient outcomes (Markus et al., 2021). Explainability can be an inherent feature of simpler models, such as linear or logistic regression, or approximated for more complex models such as ANNs. Inherent explainability offers more faithful insights because it directly reflects the model’s decision-making process. In contrast, approximation methods are designed to make sense of “black-box” models such as ANNs, whose millions of internal parameters can make interpretation challenging (Amann et al., 2020). This creates a trade-off between the high performance of advanced models and their interpretability, posing a significant challenge for the development of clinical decision support systems. As Lipton (2018) observes, the opacity of black-box models makes it difficult for stakeholders to comprehend the reasoning behind AI predictions, undermining confidence in these systems. Moreover, Rudin (2019) highlights that models with higher explainability provide transparent reasoning processes, which is critical when clinicians must justify or communicate AI-driven decisions to patients.
These studies indicate that balancing model simplicity (which enables quicker deployment), speed (crucial for real-time applications), accuracy (essential for precise predictions), and explainability (essential for clinical transparency) is critical to improving the usability and acceptance of AI in mental healthcare.
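The inherent explainability of logistic regression can be shown directly: the model’s log-odds is the intercept plus one additive term per feature, so each term is an exact account of how much that feature pushed the prediction. The coefficients and feature values in this sketch are hypothetical, not estimates from any cited study. Black-box models such as ANNs offer no such exact decomposition, which is why approximation methods are needed for them.

```python
import math

# Hypothetical fitted logistic regression on z-scored features (illustrative values only).
INTERCEPT = -0.4
COEFFICIENTS = {
    "heart_rate_z": 1.2,
    "skin_conductance_z": 0.9,
    "sleep_hours_z": -0.6,  # more sleep than average lowers predicted risk
}


def explain_prediction(features: dict) -> tuple:
    """Return (probability of anxiety, per-feature contributions to the log-odds)."""
    contributions = {name: coef * features[name] for name, coef in COEFFICIENTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    return probability, contributions


# Hypothetical patient: elevated heart rate and skin conductance, short sleep.
prob, parts = explain_prediction(
    {"heart_rate_z": 1.5, "skin_conductance_z": 1.0, "sleep_hours_z": -1.0}
)
print(f"predicted probability: {prob:.2f}")
for name, value in sorted(parts.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>20}: {value:+.2f} log-odds")
```

Because the contributions sum exactly to the log-odds (minus the intercept), a clinician can be told precisely which inputs drove a given prediction, which is the transparent reasoning Rudin (2019) argues for.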

Furthermore, AI’s impersonal nature may cause it to overlook emotional nuances that are crucial in mental health assessment (Li et al., 2023). This challenge is compounded by concerns, highlighted across the research, about data security, informed consent, confidentiality, trust, and the management of cognitive biases, all of which are particularly significant in healthcare applications (Thakkar et al., 2024; Rigby, 2019; Lovejoy et al., 2019; McCradden et al., 2023). While AI has made strides in many areas, its ability to consistently account for the emotional subtleties of human interactions remains limited. Algorithmic bias is a further concern, as AI systems are only as objective as the data they are trained on. Such biases may be mitigated through mixed-initiative interfaces that enhance shared decision-making (Bickman, 2020; Lattie et al., 2020). In addition, the accuracy of AI tools, including wearables, can vary, raising concerns about their reliability in real-world applications. Acceptability also varies across cultural and geographic boundaries, with some communities hesitant about machine-led care because of cultural perceptions and trust issues (Zafar et al., 2024). Finally, the limited availability of technology in resource-limited settings, another concern raised in the research, may create a digital divide in mental healthcare access (Nilsen et al., 2022).

Given these concerns, realizing the potential of AI requires a considered and ethical approach to implementation that addresses data privacy, clinician training in interpreting AI outputs, and the broader ethical implications of technology in healthcare. Advancing mental healthcare requires a collaborative approach in which AI complements the knowledge and skills of healthcare professionals, enhancing rather than replacing human capabilities. By fostering a symbiotic relationship between human empathy and the analytical capabilities of AI, the mental health sector can use these technologies to improve the quality and reach of care and, ultimately, to substantially enhance support for individuals with mental health conditions.

4.1 Strategic recommendations for implementing AI in mental healthcare

1. Development of mixed-initiative interfaces: Integrate AI systems that assist clinicians with comprehensive, real-time data analysis while preserving clinicians’ authority over the final decision. Incorporating personalized patient data into broader clinical knowledge is expected to improve shared decision-making.

2. Addressing training and skill acquisition: Educational programs and ongoing training are needed to help clinicians understand and proficiently use AI tools. These programs should emphasize AI’s capabilities and limitations, equipping healthcare professionals to use these tools responsibly and efficiently.

3. Regulatory and ethical frameworks: Strict regulations and ethical guidelines are needed to oversee the application of AI in mental healthcare. These frameworks should ensure that AI tools respect patient autonomy, protect confidentiality, and support unbiased decision-making, thereby safeguarding clinical outcomes. Developers and healthcare institutions must align AI tools with existing regulatory standards, such as the European Union’s General Data Protection Regulation (GDPR), which governs data privacy and security (GDPR, 2016). In addition, the U.S. Food and Drug Administration (FDA) has issued guidance on software as a medical device (SaMD) that emphasizes the transparency, validation, and accuracy of AI tools before clinical implementation (Center for Devices and Radiological Health, 2017).

From an ethical perspective, the principles of fairness, accountability, and transparency (FAT) should guide AI development to avoid reinforcing biases in AI-driven systems (Barocas et al., 2024). AI developers also need to ensure informed consent, uphold patient confidentiality, and address potential algorithmic bias to build trust among users and clinicians (Mittelstadt et al., 2016). Ethical guidance from the World Health Organization (WHO) on digital health and AI offers further direction, helping ensure that AI-based interventions align with global public health priorities and human rights standards (World Health Organization, 2021). Incorporating these regulatory and ethical frameworks will therefore be vital to creating AI systems that are both effective and socially responsible.

4. Enhancing patient-clinician relationships: Use AI technology to strengthen, rather than replace, the therapeutic alliance between patients and clinicians. AI should be seen as a means of supporting this alliance by offering well-informed and empathetic assistance, thereby reinforcing the trust that is essential for effective mental healthcare.
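Recommendations 1 and 4 both imply a design in which AI output is advisory and the clinician’s decision is what gets recorded. The following Python sketch shows one hypothetical way to encode that: an AI suggestion carries its confidence and a rationale for the clinician, and only a clinician can turn it into a decision, with overrides requiring a note for later audit. All class names, field names, and the override-note rule are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AISuggestion:
    patient_id: str
    suggested_action: str  # e.g. "schedule CBT follow-up"
    confidence: float      # model's estimated probability, 0-1
    rationale: str         # human-readable explanation shown to the clinician


@dataclass(frozen=True)
class ClinicalDecision:
    patient_id: str
    action: str
    decided_by: str
    followed_ai: bool
    note: str


def finalize(suggestion: AISuggestion, clinician: str,
             action: Optional[str] = None, note: str = "") -> ClinicalDecision:
    """Record the clinician's decision; the AI suggestion is advisory only.

    action=None accepts the suggestion; any other action overrides it.
    Overrides must carry a note so disagreements can be audited.
    """
    chosen = suggestion.suggested_action if action is None else action
    followed = chosen == suggestion.suggested_action
    if not followed and not note.strip():
        raise ValueError("An override must include a clinician note.")
    return ClinicalDecision(suggestion.patient_id, chosen, clinician, followed, note)


s = AISuggestion("P1", "schedule CBT follow-up", 0.72, "GAD-7 unchanged over 6 weeks")
print(finalize(s, clinician="Dr. A"))
print(finalize(s, clinician="Dr. A", action="refer to psychiatry", note="Comorbid depression"))
```

Logging whether the clinician followed or overrode each suggestion keeps final authority with the clinician while producing data that could later be used to evaluate and refine the AI component.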

5 Conclusion

Despite the notable benefits of AI in mental healthcare, such as improved accessibility, personalized treatment, and enhanced operational efficiency, several challenges must be addressed, including privacy concerns, potential biases, and the necessity of preserving a human touch in delivering care. Achieving a balance between these advantages and disadvantages necessitates the establishment of strong ethical frameworks, ongoing monitoring and enhancement of AI systems, and a concerted effort to integrate AI tools in a manner that complements rather than supplants human care. The objective of future research and policy development should be to tackle these challenges, ensuring that AI contributes positively to mental health outcomes while maintaining the highest standards of care and safeguarding patient privacy and dignity. The effective incorporation of AI into mental healthcare will rely on our capacity to navigate these complexities, establishing an environment where technology and human expertise work together to enhance service delivery and patient care.

Author contributions

KD: Conceptualization, Formal analysis, Investigation, Methodology, Project administration, Resources, Validation, Visualization, Writing – original draft, Writing – review & editing. PG: Conceptualization, Data curation, Formal analysis, Investigation, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abd-alrazaq, A., AlSaad, R., Harfouche, M., Aziz, S., Ahmed, A., Damseh, R., et al. (2023). Wearable artificial intelligence for detecting anxiety: systematic review and meta-analysis. J. Med. Internet Res. 25:e48754. doi: 10.2196/48754

Abdullah, S., Matthews, M., Frank, E., Doherty, G., Gay, G., and Choudhury, T. (2016). Automatic detection of social rhythms in bipolar disorder. J. Am. Med. Inform. Assoc. 23, 538–543. doi: 10.1093/jamia/ocv200

Ahmed, A., Ali, N., Aziz, S., Abd-alrazaq, A. A., Hassan, A., Khalifa, M., et al. (2021). A review of mobile chatbot apps for anxiety and depression and their self-care features. Comput. Methods Programs Biomed. Update 1:100012. doi: 10.1016/j.cmpbup.2021.100012

Ahmed, A., Hassan, A., Aziz, S., Abd-alrazaq, A. A., Ali, N., Alzubaidi, M., et al. (2023). Chatbot features for anxiety and depression: a scoping review. Health Informatics J. 29:14604582221146719. doi: 10.1177/14604582221146719

Amann, J., Blasimme, A., Vayena, E., Frey, D., and Madai, V. I.; the Precise4Q Consortium (2020). Explainability for artificial intelligence in healthcare: a multidisciplinary perspective. BMC Med. Inform. Decis. Mak. 20:310. doi: 10.1186/s12911-020-01332-6

Ancillon, L., Elgendi, M., and Menon, C. (2022). Machine learning for anxiety detection using biosignals: a review. Diagnostics 12:1794. doi: 10.3390/diagnostics12081794

Anmella, G., Sanabra, M., Primé-Tous, M., Segú, X., Cavero, M., Morilla, I., et al. (2023). Vickybot, a chatbot for anxiety-depressive symptoms and work-related burnout in primary care and health care professionals: development, feasibility, and potential effectiveness studies. J. Med. Internet Res. 25:e43293. doi: 10.2196/43293

Antoniadi, A. M., Du, Y., Guendouz, Y., Wei, L., Mazo, C., Becker, B. A., et al. (2021). Current challenges and future opportunities for XAI in machine learning-based clinical decision support systems: a systematic review. Appl. Sci. 11:5088. doi: 10.3390/app11115088

Asan, O., and Choudhury, A. (2021). Research trends in artificial intelligence applications in human factors health care: mapping review. JMIR Hum. Factors 8:e28236. doi: 10.2196/28236

Balcombe, L. (2023). AI chatbots in digital mental health. Informatics 10:82. doi: 10.3390/informatics10040082

Balcombe, L., and De Leo, D. (2021). Digital mental health challenges and the horizon ahead for solutions. JMIR Ment. Health 8:e26811. doi: 10.2196/26811

Bandelow, B., and Michaelis, S. (2015). Epidemiology of anxiety disorders in the 21st century. Dialogues Clin. Neurosci. 17, 327–335. doi: 10.31887/DCNS.2015.17.3/bbandelow

Bandelow, B., Werner, A. M., Kopp, I., Rudolf, S., Wiltink, J., and Beutel, M. E. (2022). The German guidelines for the treatment of anxiety disorders: first revision. Eur. Arch. Psychiatry Clin. Neurosci. 272, 571–582. doi: 10.1007/s00406-021-01324-1

Barocas, S., Hardt, M., and Narayanan, A. (2024). Fairness and machine learning. MIT Press. Available at: https://mitpress.mit.edu/9780262048613/fairness-and-machine-learning/ (Accessed September 9, 2024).

Bell, I. H., Nicholas, J., Alvarez-Jimenez, M., Thompson, A., and Valmaggia, L. (2020). Virtual reality as a clinical tool in mental health research and practice. Dialogues Clin. Neurosci. 22, 169–177. doi: 10.31887/DCNS.2020.22.2/lvalmaggia

Bendig, E., Erb, B., Schulze-Thuesing, L., and Baumeister, H. (2019). The next generation: chatbots in clinical psychology and psychotherapy to foster mental health – a scoping review. Verhaltenstherapie 32, 64–76. doi: 10.1159/000501812

Bertl, M., Ross, P., and Draheim, D. (2022). A survey on AI and decision support systems in psychiatry – uncovering a dilemma. Expert Syst. Appl. 202:117464. doi: 10.1016/j.eswa.2022.117464

Bickman, L. (2020). Improving mental health services: a 50-year journey from randomized experiments to artificial intelligence and precision mental health. Admin. Pol. Ment. Health 47, 795–843. doi: 10.1007/s10488-020-01065-8

Bourhis, J. (2017). “Narrative literature review,” in The SAGE encyclopedia of communication research methods. Thousand Oaks, CA: SAGE Publications, Inc.

Carr, S. (2020). ‘AI gone mental’: engagement and ethics in data-driven technology for mental health. J. Ment. Health 29, 125–130. doi: 10.1080/09638237.2020.1714011

Center for Devices and Radiological Health (2017). Software as a medical device (SaMD): clinical evaluation. Available at: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/software-medical-device-samd-clinical-evaluation (Accessed September 9, 2024).

Chang, Z., Di Martino, J. M., Aiello, R., Baker, J., Carpenter, K., Compton, S., et al. (2021). Computational methods to measure patterns of gaze in toddlers with autism spectrum disorder. JAMA Pediatr. 175, 827–836. doi: 10.1001/jamapediatrics.2021.0530

Chung, J., and Teo, J. (2022). Mental health prediction using machine learning: taxonomy, applications, and challenges. Appl. Comput. Intell. Soft Comput. 2022:9970363. doi: 10.1155/2022/9970363

Cruz, L., Rubin, J., Abreu, R., Ahern, S., Eldardiry, H., and Bobrow, D. G. (2015). “A wearable and mobile intervention delivery system for individuals with panic disorder,” in Proceedings of the 14th International Conference on Mobile and Ubiquitous Multimedia (MUM ’15). New York, NY: Association for Computing Machinery, 175–182.

Cuthbert, B. N., and Insel, T. R. (2013). Toward the future of psychiatric diagnosis: the seven pillars of RDoC. BMC Med. 11:126. doi: 10.1186/1741-7015-11-126

Danieli, M., Ciulli, T., Mousavi, S. M., Silvestri, G., Barbato, S., Natale, L. D., et al. (2022). Assessing the impact of conversational artificial intelligence in the treatment of stress and anxiety in aging adults: randomized controlled trial. JMIR Ment. Health 9:e38067. doi: 10.2196/38067

de Belen, R. A. J., Bednarz, T., Sowmya, A., and Del Favero, D. (2020). Computer vision in autism spectrum disorder research: a systematic review of published studies from 2009 to 2019. Transl. Psychiatry 10, 1–20. doi: 10.1038/s41398-020-01015-w

Ferrari, R. (2015). Writing narrative style literature reviews. Med. Writ. 24, 230–235. doi: 10.1179/2047480615Z.000000000329

Fiske, A., Henningsen, P., and Buyx, A. (2019). Your robot therapist will see you now: ethical implications of embodied artificial intelligence in psychiatry, psychology, and psychotherapy. J. Med. Internet Res. 21:e13216. doi: 10.2196/13216

Fulmer, R., Joerin, A., Gentile, B., Lakerink, L., and Rauws, M. (2018). Using psychological artificial intelligence (Tess) to relieve symptoms of depression and anxiety: randomized controlled trial. JMIR Ment. Health 5:e64. doi: 10.2196/mental.9782

Furqan, M., Aleem, Z., and Akhtar, A. (2020). Detecting and relieving anxiety on-the-go using machine learning techniques. Available at: https://www.researchgate.net/publication/362231028_Detecting_and_Relieving_anxiety_on-the-go_using_Machine_Learning_Techniques?channel=doi&linkId=62de5012aa5823729ee0ade1&showFulltext=true

GDPR (2016). General data protection regulation (GDPR) – legal text. Available at: https://gdpr-info.eu/ (Accessed September 9, 2024).

Gedam, S., and Paul, S. (2021). A review on mental stress detection using wearable sensors and machine learning techniques. IEEE Access 9, 84045–84066. doi: 10.1109/ACCESS.2021.3085502

Goel, S., Hofman, J. M., Lahaie, S., Pennock, D. M., and Watts, D. J. (2010). Predicting consumer behavior with web search. Proc. Natl. Acad. Sci. USA 107, 17486–17490. doi: 10.1073/pnas.1005962107

Goldman, L. S., Nielsen, N. H., and Champion, H. C., for the Council on Scientific Affairs, AMA (1999). Awareness, diagnosis, and treatment of depression. J. Gen. Intern. Med. 14, 569–580. doi: 10.1046/j.1525-1497.1999.03478.x

Gomes, N., Pato, M., Lourenço, A. R., and Datia, N. (2023). A survey on wearable sensors for mental health monitoring. Sensors 23:1330. doi: 10.3390/s23031330

Graham, S., Depp, C., Lee, E. E., Nebeker, C., Tu, X., Kim, H.-C., et al. (2019). Artificial intelligence for mental health and mental illnesses: an overview. Curr. Psychiatry Rep. 21:116. doi: 10.1007/s11920-019-1094-0

Green, B. N., Johnson, C. D., and Adams, A. (2006). Writing narrative literature reviews for peer-reviewed journals: secrets of the trade. J. Chiropr. Med. 5, 101–117. doi: 10.1016/S0899-3467(07)60142-6

Hashemi, J., Dawson, G., Carpenter, K. L. H., Campbell, K., Qiu, Q., Espinosa, S., et al. (2021). Computer vision analysis for quantification of autism risk behaviors. IEEE Trans. Affect. Comput. 12, 215–226. doi: 10.1109/taffc.2018.2868196

Huang, Z.-Y., Chiang, C.-C., Chen, J.-H., Chen, Y.-C., Chung, H.-L., Cai, Y.-P., et al. (2023). A study on computer vision for facial emotion recognition. Sci. Rep. 13:8425. doi: 10.1038/s41598-023-35446-4

Iyortsuun, N. K., Kim, S.-H., Jhon, M., Yang, H.-J., and Pant, S. (2023). A review of machine learning and deep learning approaches on mental health diagnosis. Healthcare 11:285. doi: 10.3390/healthcare11030285

Joyce, D. W., Kormilitzin, A., Smith, K. A., and Cipriani, A. (2023). Explainable artificial intelligence for mental health through transparency and interpretability for understandability. Npj Digit. Med. 6:6. doi: 10.1038/s41746-023-00751-9

Katzman, M. A., Bleau, P., Blier, P., Chokka, P., Kjernisted, K., Van Ameringen, M., and the Canadian Anxiety Guidelines Initiative Group on behalf of the Anxiety Disorders Association of Canada/Association Canadienne des troubles anxieux and McGill University (2014). Canadian clinical practice guidelines for the management of anxiety, posttraumatic stress and obsessive-compulsive disorders. BMC Psychiatry 14 Suppl 1:S1. doi: 10.1186/1471-244X-14-S1-S1

Kaywan, P., Ahmed, K., Ibaida, A., Miao, Y., and Gu, B. (2023). Early detection of depression using a conversational AI bot: a non-clinical trial. PLoS One 18:e0279743. doi: 10.1371/journal.pone.0279743

Kellogg, K. C., and Sadeh-Sharvit, S. (2022). Pragmatic AI-augmentation in mental healthcare: key technologies, potential benefits, and real-world challenges and solutions for frontline clinicians. Front. Psych. 13:990370. doi: 10.3389/fpsyt.2022.990370

Kessler, R. C., Aguilar-Gaxiola, S., Alonso, J., Chatterji, S., Lee, S., Ormel, J., et al. (2009). The global burden of mental disorders: an update from the WHO world mental health (WMH) surveys. Epidemiol. Psichiatr. Soc. 18, 23–33. doi: 10.1017/s1121189x00001421

Khawaja, Z., and Bélisle-Pipon, J.-C. (2023). Your robot therapist is not your therapist: understanding the role of AI-powered mental health chatbots. Front. Digit. Health 5:1278186. doi: 10.3389/fdgth.2023.1278186

Khurana, D., Koli, A., Khatter, K., and Singh, S. (2023). Natural language processing: state of the art, current trends and challenges. Multimed. Tools Appl. 82, 3713–3744. doi: 10.1007/s11042-022-13428-4

Klos, M. C., Escoredo, M., Joerin, A., Lemos, V. N., Rauws, M., and Bunge, E. L. (2021). Artificial intelligence-based chatbot for anxiety and depression in university students: pilot randomized controlled trial. JMIR Form. Res. 5:e20678. doi: 10.2196/20678

Koutsouleris, N., Hauser, T. U., Skvortsova, V., and Choudhury, M. D. (2022). From promise to practice: towards the realisation of AI-informed mental health care. Lancet Digit. Health 4, e829–e840. doi: 10.1016/S2589-7500(22)00153-4

Lattie, E. G., Nicholas, J., Knapp, A. A., Skerl, J. J., Kaiser, S. M., and Mohr, D. C. (2020). Opportunities for and tensions surrounding the use of technology-enabled mental health services in community mental health care. Admin. Pol. Ment. Health 47, 138–149. doi: 10.1007/s10488-019-00979-2

Li, H., Zhang, R., Lee, Y.-C., Kraut, R. E., and Mohr, D. C. (2023). Systematic review and meta-analysis of AI-based conversational agents for promoting mental health and well-being. Npj Digit. Med. 6:236. doi: 10.1038/s41746-023-00979-5

Lim, H. M., Teo, C. H., Ng, C. J., Chiew, T. K., Ng, W. L., Abdullah, A., et al. (2021). An automated patient self-monitoring system to reduce health care system burden during the COVID-19 pandemic in Malaysia: development and implementation study. JMIR Med. Inform. 9:e23427. doi: 10.2196/23427

Linardatos, P., Papastefanopoulos, V., and Kotsiantis, S. (2021). Explainable AI: a review of machine learning interpretability methods. Entropy 23:18. doi: 10.3390/e23010018

Lipton, Z. C. (2018). The mythos of model interpretability. Commun. ACM 61, 36–43. doi: 10.1145/3233231

Lluch, C., Galiana, L., Doménech, P., and Sansó, N. (2022). The impact of the COVID-19 pandemic on burnout, compassion fatigue, and compassion satisfaction in healthcare personnel: a systematic review of the literature published during the first year of the pandemic. Healthcare 10:364. doi: 10.3390/healthcare10020364

Lovejoy, C. A., Buch, V., and Maruthappu, M. (2019). Technology and mental health: the role of artificial intelligence. Eur. Psychiatry 55, 1–3. doi: 10.1016/j.eurpsy.2018.08.004

Manocha, A., Singh, R., and Bhatia, M. (2019). Cognitive intelligence assisted fog-cloud architecture for generalized anxiety disorder (GAD) prediction. J. Med. Syst. 44:7. doi: 10.1007/s10916-019-1495-y

Markus, A. F., Kors, J. A., and Rijnbeek, P. R. (2021). The role of explainability in creating trustworthy artificial intelligence for health care: a comprehensive survey of the terminology, design choices, and evaluation strategies. J. Biomed. Inform. 113:103655. doi: 10.1016/j.jbi.2020.103655

McCradden, M., Hui, K., and Buchman, D. Z. (2023). Evidence, ethics and the promise of artificial intelligence in psychiatry. J. Med. Ethics 49, 573–579. doi: 10.1136/jme-2022-108447

Mittelstadt, B. D., Allo, P., Taddeo, M., Wachter, S., and Floridi, L. (2016). The ethics of algorithms: mapping the debate. Big Data Soc. 3:2053951716679679. doi: 10.1177/2053951716679679

Monteith, S., Glenn, T., Geddes, J., Whybrow, P. C., Achtyes, E., and Bauer, M. (2022). Expectations for artificial intelligence (AI) in psychiatry. Curr. Psychiatry Rep. 24, 709–721. doi: 10.1007/s11920-022-01378-5

Moreno, C., Wykes, T., Galderisi, S., Nordentoft, M., Crossley, N., Jones, N., et al. (2020). How mental health care should change as a consequence of the COVID-19 pandemic. Lancet Psychiatry 7, 813–824. doi: 10.1016/S2215-0366(20)30307-2

Nahavandi, D., Alizadehsani, R., Khosravi, A., and Acharya, U. R. (2022). Application of artificial intelligence in wearable devices: opportunities and challenges. Comput. Methods Prog. Biomed. 213:106541. doi: 10.1016/j.cmpb.2021.106541

Nilsen, P., Svedberg, P., Nygren, J., Frideros, M., Johansson, J., and Schueller, S. (2022). Accelerating the impact of artificial intelligence in mental healthcare through implementation science. Implement. Res. Pract. 3:26334895221112033. doi: 10.1177/26334895221112033

Olawade, D. B., Wada, O. Z., Odetayo, A., David-Olawade, A. C., Asaolu, F., and Eberhardt, J. (2024). Enhancing mental health with artificial intelligence: current trends and future prospects. J. Med. Surg. Public Health 3:100099. doi: 10.1016/j.glmedi.2024.100099

Opoku Asare, K., Terhorst, Y., Vega, J., Peltonen, E., Lagerspetz, E., and Ferreira, D. (2021). Predicting depression from smartphone behavioral markers using machine learning methods, hyperparameter optimization, and feature importance analysis: exploratory study. JMIR Mhealth Uhealth 9:e26540. doi: 10.2196/26540

Parasuraman, R., and Manzey, D. H. (2010). Complacency and bias in human use of automation: an attentional integration. Hum. Factors 52, 381–410. doi: 10.1177/0018720810376055

Plana, D., Shung, D. L., Grimshaw, A. A., Saraf, A., Sung, J. J. Y., and Kann, B. H. (2022). Randomized clinical trials of machine learning interventions in health care: a systematic review. JAMA Netw. Open 5:e2233946. doi: 10.1001/jamanetworkopen.2022.33946

Rigby, M. J. (2019). Ethical dimensions of using artificial intelligence in health care. AMA J. Ethics 21, 121–124. doi: 10.1001/amajethics.2019.121

Rosenfeld, A., Benrimoh, D., Armstrong, C., Mirchi, N., Langlois-Therrien, T., Rollins, C., et al. (2021). “6 - Big data analytics and artificial intelligence in mental healthcare” in Applications of big data in healthcare. eds. A. Khanna, D. Gupta, and N. Dey (Academic Press), 137–171. Available at: https://www.researchgate.net/profile/Dr-Manjula/publication/350808710_APPLICATIONS_OF_BIG_DATA_IN_HEALTHCARE/links/6073d76492851c8a7bbe9745/APPLICATIONS-OF-BIG-DATA-IN-HEALTHCARE.pdf

Rudin, C. (2019). Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 1, 206–215. doi: 10.1038/s42256-019-0048-x

Rundo, L., Pirrone, R., Vitabile, S., Sala, E., and Gambino, O. (2020). Recent advances of HCI in decision-making tasks for optimized clinical workflows and precision medicine. J. Biomed. Inform. 108:103479. doi: 10.1016/j.jbi.2020.103479

Sabour, S., Zhang, W., Xiao, X., Zhang, Y., Zheng, Y., Wen, J., et al. (2023). A chatbot for mental health support: exploring the impact of Emohaa on reducing mental distress in China. Front. Digit. Health 5:1133987. doi: 10.3389/fdgth.2023.1133987

Sadeh-Sharvit, S., Camp, T. D., Horton, S. E., Hefner, J. D., Berry, J. M., Grossman, E., et al. (2023). Effects of an artificial intelligence platform for behavioral interventions on depression and anxiety symptoms: randomized clinical trial. J. Med. Internet Res. 25:e46781. doi: 10.2196/46781

Sadeh-Sharvit, S., and Hollon, S. D. (2020). Leveraging the power of nondisruptive technologies to optimize mental health treatment: case study. JMIR Ment. Health 7:e20646. doi: 10.2196/20646

Sartorius, N., Üstün, T. B., Costa e Silva, J. A., Goldberg, D., Lecrubier, Y., Ormel, J., et al. (1993). An international study of psychological problems in primary care. Preliminary report from the World Health Organization collaborative project on “psychological problems in general health care”. Arch. Gen. Psychiatry 50, 819–824. doi: 10.1001/archpsyc.1993.01820220075008

Satiani, A., Niedermier, J., Satiani, B., and Svendsen, D. P. (2018). Projected workforce of psychiatrists in the United States: a population analysis. Psychiatr. Serv. 69, 710–713. doi: 10.1176/appi.ps.201700344

Shatte, A. B. R., Hutchinson, D. M., and Teague, S. J. (2019). Machine learning in mental health: a scoping review of methods and applications. Psychol. Med. 49, 1426–1448. doi: 10.1017/S0033291719000151

Sianturi, M., Lee, J.-S., and Cumming, T. M. (2023). Using technology to facilitate partnerships between schools and indigenous parents: a narrative review. Educ. Inf. Technol. 28, 6141–6164. doi: 10.1007/s10639-022-11427-4

Singh, O. P. (2023). Artificial intelligence in the era of ChatGPT - opportunities and challenges in mental health care. Indian J. Psychiatry 65, 297–298. doi: 10.4103/indianjpsychiatry.indianjpsychiatry_112_23

Smrke, U., Mlakar, I., Lin, S., Musil, B., and Plohl, N. (2021). Language, speech, and facial expression features for artificial intelligence-based detection of cancer survivors’ depression: scoping meta-review. JMIR Ment. Health 8:e30439. doi: 10.2196/30439

Stasolla, F., and Di Gioia, M. (2023). Combining reinforcement learning and virtual reality in mild neurocognitive impairment: a new usability assessment on patients and caregivers. Front. Aging Neurosci. 15:1189498. doi: 10.3389/fnagi.2023.1189498

Straw, I., and Callison-Burch, C. (2020). Artificial intelligence in mental health and the biases of language based models. PLoS One 15:e0240376. doi: 10.1371/journal.pone.0240376

Su, Z., Sheng, W., Yang, G., Bishop, A., and Carlson, B. (2022). Adaptation of a robotic dialog system for medication reminder in elderly care. Smart Health 26:100346. doi: 10.1016/j.smhl.2022.100346

Su, S., Wang, Y., Jiang, W., Zhao, W., Gao, R., Wu, Y., et al. (2022). Efficacy of artificial intelligence-assisted psychotherapy in patients with anxiety disorders: a prospective, National Multicenter Randomized Controlled Trial Protocol. Front. Psych. 12:799917. doi: 10.3389/fpsyt.2021.799917

Sweeney, C., Potts, C., Ennis, E., Bond, R., Mulvenna, M. D., O’Neill, S., et al. (2021). Can chatbots help support a person’s mental health? Perceptions and views from mental healthcare professionals and experts. ACM Trans. Comput. Healthcare 2, 1–25. doi: 10.1145/3453175

Thakkar, A., Gupta, A., and De Sousa, A. (2024). Artificial intelligence in positive mental health: a narrative review. Front. Digit. Health 6:1280235. doi: 10.3389/fdgth.2024.1280235

Thieme, A., Belgrave, D., and Doherty, G. (2020). Machine learning in mental health: a systematic review of the HCI literature to support the development of effective and implementable ML systems. ACM Trans. Comput. Hum. Interact. 27, 1–53. doi: 10.1145/3398069

Timmons, A. C., Duong, J. B., Simo Fiallo, N., Lee, T., Vo, H. P. Q., Ahle, M. W., et al. (2023). A call to action on assessing and mitigating bias in artificial intelligence applications for mental health. Perspect. Psychol. Sci. 18, 1062–1096. doi: 10.1177/17456916221134490

Viduani, A., Cosenza, V., Fisher, H. L., Buchweitz, C., Piccin, J., Pereira, R., et al. (2023). Assessing mood with the identifying depression early in adolescence Chatbot (IDEABot): development and implementation study. JMIR Hum. Factors 10:e44388. doi: 10.2196/44388

Vora, L. K., Gholap, A. D., Jetha, K., Thakur, R. R. S., Solanki, H. K., and Chavda, V. P. (2023). Artificial intelligence in pharmaceutical technology and drug delivery design. Pharmaceutics 15:1916. doi: 10.3390/pharmaceutics15071916

Wilkinson, C. B., Infantolino, Z. P., and Wacha-Montes, A. (2017). Evidence-based practice as a potential solution to burnout in university counseling center clinicians. Psychol. Serv. 14, 543–548. doi: 10.1037/ser0000156

World Health Organization (WHO) (2021). Ethics and governance of artificial intelligence for health. Available at: https://www.who.int/publications/i/item/9789240029200 (Accessed September 9, 2024).