- 1 Oxford Internet Institute, University of Oxford, Oxford, United Kingdom

- 2 ETH Zürich, Zürich, Switzerland

Coverage of ChatGPT-style large language models (LLMs) in the media has focused on their eye-catching achievements, including solving advanced mathematical problems and reaching expert proficiency in medical examinations. But the gradual adoption of LLMs in agriculture, an industry which touches every human life, has received much less public scrutiny. In this short perspective, we examine risks and opportunities related to more widespread adoption of language models in food production systems. While LLMs can potentially enhance agricultural efficiency, drive innovation, and inform better policies, challenges like agricultural misinformation, collection of vast amounts of farmer data, and threats to agricultural jobs are important concerns. The rapid evolution of the LLM landscape underscores the need for agricultural policymakers to think carefully about frameworks and guidelines that ensure the responsible use of LLMs in food production before these technologies become so ingrained that policy intervention becomes challenging.

1 Introduction

In 2023, generative AI technologies such as large language models (LLMs) took the world by storm due to the popularity of tools such as ChatGPT. Like other forms of artificial intelligence, generative AI relies on training with vast amounts of data. Unlike AI systems that simply categorize or identify data, however, it is capable of generating new content, including text, images, audio, videos, and even computer code (Foster, 2023). Bold projections about the potential of generative AI, such as those from Sequoia Capital, which in late 2022 claimed that “every industry that requires humans to create original work is up for reinvention”, helped AI startups attract one out of every three dollars of venture capital investment in the U.S. the following year (GPT-3, 2022; Hu, 2024).

While media headlines have focused on eye-catching examples of the impact of generative AI, such as Google's Med-PaLM 2 achieving clinical expert-level performance on medical exams, Microsoft's BioGPT reaching human parity in biomedical text generation, and Unity Software's enhancements of video game realism, emerging applications of LLMs in the field of agriculture have received much less public attention (Tong, 2023; Singhal et al., 2023; Luo et al., 2022). Examples which have mostly gone unnoticed by major news outlets include research from Microsoft which demonstrated that LLMs could outperform humans on agronomy examinations, and the gradual roll-out of systems like KissanGPT, a “digital agronomy assistant” which allows farmers with poor literacy skills in India to interact with LLMs verbally to receive tailored farming advice (Silva et al., 2023; Verma, 2024). These early developments may be precursors to larger-scale applications that could have considerable repercussions for an industry which touches every human life: food production.

In this short perspective, we present a brief overview of the opportunities and risks associated with increasing the use of large language models across food systems. Given that this is a nascent field, this perspective builds on insight from diverse sources, including journalistic reports, academic preprints, peer-reviewed literature, innovations in startup ecosystems, and lessons from the outcomes of early LLM adoption in fields outside of agriculture.

2 Opportunities to boost food production with LLMs

If used judiciously, LLMs may have a role to play in addressing global food production challenges. The world population is projected to reach 10 billion by 2050, around 2 billion more people than there are today, and this may test the global agricultural system and the farmers who comprise it (Searchinger et al., 2019). Research has warned that agricultural production worldwide will face challenges in meeting global demand for food and fiber, with food demand estimated to increase by more than 70% by 2050 (Ray et al., 2013; Valin et al., 2014; Sishodia et al., 2020). Such challenges include climate-related pressures and extreme weather, biotic threats such as pests and diseases, decreasing marginal productivity gains, soil degradation, water shortages, nutrient deficiencies, urban population growth, rising incomes, and changing dietary preferences (Bailey-Serres et al., 2019; FAO, 2018; National Academies of Sciences, 2019). Here, we outline opportunities for stakeholders across the food production ecosystem to use LLMs to address some of these challenges.

2.1 Boosting agricultural productivity

On-demand agronomic expertise

Language models like GPT-4 could transform the way farmers seek and receive advice in agricultural settings. These models are capable of providing context-specific guidance to users across various fields and convincingly portray specific roles as agricultural experts. They achieve this by inquiring about symptoms, conducting thorough questioning based on responses, and providing useful support (Lai et al., 2023; Chen et al., 2023). LLMs have already achieved high scores on exams for renewing agronomist certifications, answering 93% of the exam questions correctly in the United States' Certified Crop Advisor (CCA) certification (Silva et al., 2023).

This ability to simulate expert roles has led to commercial chatbot-based products that emulate agronomists or crop scientists, providing farmers with on-demand advice. Early examples include KissanGPT (Verma, 2024), a chatbot which assists Indian farmers with agricultural queries such as “how much fertilizer should I apply to my fields?”; Norm (Marston, 2023), a chatbot which provides guidance on topics such as pest mitigation strategies and livestock health; Bayer's LLM-powered agronomy advisor, which answers questions related to farm management and Bayer agricultural products (Bayer, 2024); and FarmOn (Pod, 2024), a startup which aims to provide an LLM-augmented hotline that offers agronomic advice on areas such as regenerative farming. Farmers can interact with some of these digital advisors via web-based platforms or mobile messaging tools like WhatsApp, in a number of supported languages. If these chatbots had access to the world's agronomic texts in various languages, including farming manuals, agronomy textbooks, and scientific papers, “digital agronomists” could place the entirety of the world's agronomic data at farmers' fingertips.

There is emerging evidence that farmers may be willing to engage with such digital tools for farming advice in both developed and developing agricultural regions. One study found that farmers in Hungary and the UK are increasingly leveraging digital information sources, such as social media, farming forums, and online scientific journals, over traditional expert advice on topics such as soil management (Rust et al., 2022). In Nigeria, researchers demonstrated that ChatGPT-generated responses to farmers' questions on irrigated lowland rice cultivation were rated significantly higher than those from agricultural extension agents (Ibrahim et al., 2024). One study involving 300 farmers in India's Karnataka state found that providing farmers with access to agricultural hotlines with rapid, unambiguous information from agricultural experts over the phone, tailored to time- and crop-specific shocks, was associated with greater agricultural productivity through the adoption of cost-effective and improved farming practices (Subramanian, 2021). Companies in India such as Cropin are also providing farmers with insights to optimize sowing times and respond to weather shocks (Bhattacharjee, 2024).

While these examples offer evidence that LLM-based tools can impact agricultural productivity, there may be additional opportunities to improve them by connecting them with real-time information from sources such as satellite imagery, sensors, or market price data. Such integration could enable them to answer not just straightforward questions like “how much fungicide should I apply to my corn crop,” but also more context-specific queries such as “how much fungicide should I apply to my crop, considering the rainfall and wind conditions that have affected my fields in the past two weeks and my expected income at current market prices?” An early example in this vein is LLM-Geo (Li and Ning, 2023), a system which allows the user to interact with earth observation data, such as satellite imagery and climate data, in a conversational manner. Such advancements may be particularly valuable for farmers, as collecting and analyzing satellite and climate data has typically been a task requiring specialized skills in software or programming (Herrick et al., 2023). Moreover, agronomic advice that is time-sensitive and location-specific tends to be viewed more favorably by farmers than more general responses about agronomy (Ibrahim et al., 2024; Kassem et al., 2020). Similarly, in the aquaculture sector, integrating LLMs with real-time data may enhance productivity by offering natural language alerts for fish health monitoring and disease warning. LLMs could help provide real-time suggestions on adapting fishery operations, optimizing breeding plans, and managing aquaculture environments more effectively (Lid, 2024).
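As a rough illustration of the integration described above, the following sketch shows one way a farmer's question might be enriched with recent field measurements and market prices before being sent to a language model. It is a minimal sketch under stated assumptions: `FieldContext`, its field names, and `build_prompt` are hypothetical and do not correspond to any of the systems cited here, and the call to the language model itself is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class FieldContext:
    """Hypothetical snapshot of field conditions; all field names are illustrative."""
    crop: str
    rainfall_mm_14d: float         # total rainfall over the past two weeks
    mean_wind_kmh_14d: float       # average wind speed over the past two weeks
    market_price_per_tonne: float  # current market price, in local currency
    expected_yield_tonnes: float   # the farmer's own yield estimate


def build_prompt(question: str, ctx: FieldContext) -> str:
    """Prepend measured field context to the farmer's question."""
    expected_income = ctx.market_price_per_tonne * ctx.expected_yield_tonnes
    lines = [
        "You are an agronomy assistant. Use only the context below.",
        f"Crop: {ctx.crop}",
        f"Rainfall, past 14 days: {ctx.rainfall_mm_14d:.1f} mm",
        f"Mean wind speed, past 14 days: {ctx.mean_wind_kmh_14d:.1f} km/h",
        f"Expected income at current prices: {expected_income:.2f}",
        f"Question: {question}",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    ctx = FieldContext("corn", 42.0, 18.5, 200.0, 10.0)
    print(build_prompt("How much fungicide should I apply?", ctx))
```

In practice the context values would come from sensors, satellite products, or price feeds rather than being typed in by hand; the sketch only shows how such values could be placed alongside the question.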

In addition to providing information that is both location-specific and time-sensitive, “digital agronomists” powered by LLMs could also be enhanced with the ability to reason across multiple modes of data, such as images, video, and audio. A farmer could, for instance, upload images of crops affected by pests and engage in verbal dialogue with an LLM to explore potential solutions. For example, one study demonstrated the ability of such a system to understand and engage in discussions about images of pests and diseases affecting Chinese forests. This system showcased the ability to conduct dialogue and address open-ended instructions to gather information on pests and diseases in forestry based on multiple types of data (Zhang et al., 2023a).

Agricultural extension services

While agronomic knowledge can be directly imparted to farmers via services like KissanGPT, some studies have also highlighted the potential of LLMs in enhancing existing agricultural extension services. Agricultural extension services, which serve as a bridge between research and farming practices, typically involve government or private agencies providing training and resources to improve agricultural productivity and sustainability. Across different countries, these services are administered through a mix of on-the-ground workshops, personalized consulting, and digital platforms, adapting to local needs and technological advancements. Tzachor et al. (2023) highlight the limitations of traditional agricultural extension services, such as their limited reach, language barriers, and the lack of personalized information. They propose that LLMs can overcome some of these challenges by simplifying scientific knowledge into understandable language, offering personalized, location-specific, and data-driven recommendations.

Conversational interfaces for on-farm agricultural robotics

Roboticists have highlighted the potential of LLMs to impact human-robot interaction by providing robots with advanced conversational skills and versatility in handling diverse, open-ended user requests across various tasks and domains (Kim et al., 2024; Wang et al., 2024). OpenAI has already teamed up with Figure, a robotics company, to build humanoid robots that can accomplish tasks such as making coffee or manipulating objects in response to verbal commands (Figure, 2024). For food production specifically, Lu et al. (2023) argue that LLMs may play a role in helping farmers control agricultural machinery on their farms, such as drones and tractors. LLMs might act as user-friendly interfaces that translate human language commands into machine-understandable directives (Vemprala et al., 2023). Farmers could, for instance, provide intuitive and context-specific instructions to their agricultural robots via dialogue, reducing the need for technical expertise to operate machinery. LLMs could then guide robots to implement optimal farming practices tailored to the specific conditions of the farm, such as directing drones to distribute precise amounts of pesticides or fertilizers, or guiding tractors in optimized plowing patterns, thereby improving crop yields and sustainability. In addition, farmers may be able to leverage the multimodal capabilities (i.e., processing and integrating different types of information like text, images, and numerical data) of LLMs to engage in dialogue about data collected from agricultural machinery, such as imagery from drones and soil information from harvesting equipment.

While many studies have placed emphasis on guiding robots on what actions to perform in real-world planning tasks, Yang et al. (2023) highlight the equal importance of instructing robots on what actions to avoid. This involves clearly communicating forbidden actions, evaluating the robot's understanding of these limitations, and crucially, ensuring adherence to these safety guidelines. This may be critical to ensure that LLM-powered agricultural robots can make informed decisions, avoiding actions that could lead to unsafe conditions for crops and human workers.
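To make the idea of communicating forbidden actions more concrete, the sketch below checks a structured directive, of the kind an LLM might produce from a spoken request, against a small safety policy before it would be passed to a machine. Everything here is an assumption for illustration: the `Directive` fields, the action names, and the dose cap are hypothetical and are not taken from Yang et al. (2023) or from any real robotics interface.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical safety policy: actions the robot must never execute, plus a dose cap.
FORBIDDEN_ACTIONS = {"spray_near_workers", "drive_over_seedlings"}
MAX_PESTICIDE_L_PER_HA = 2.0  # illustrative limit, not an agronomic recommendation


@dataclass(frozen=True)
class Directive:
    """Structured command that an LLM might emit after parsing a spoken request."""
    action: str
    machine: str
    rate_l_per_ha: float = 0.0


def check_directive(d: Directive) -> Tuple[bool, str]:
    """Return (allowed, reason); reject forbidden actions and over-limit doses."""
    if d.action in FORBIDDEN_ACTIONS:
        return False, f"action '{d.action}' is forbidden"
    if d.action == "spray_pesticide" and d.rate_l_per_ha > MAX_PESTICIDE_L_PER_HA:
        return False, "requested rate exceeds the configured limit"
    return True, "ok"


if __name__ == "__main__":
    print(check_directive(Directive("spray_pesticide", "drone", 1.5)))
    print(check_directive(Directive("drive_over_seedlings", "tractor")))
```

Keeping the check outside the language model means that adherence to the safety rules does not depend on the model having understood them, which is one plausible way to address the adherence concern raised above.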

2.2 Accelerating agricultural innovation

Throughout history, advancement in agriculture has been vital for economic development by boosting farm productivity, improving farmers' incomes, and making food increasingly plentiful and affordable (Alston and Pardey, 2021). Agricultural innovation has many features in common with innovation more broadly, but it also has some important differences. First, unlike innovation in manufacturing or transportation, agricultural technology must consider site-specificity due to its biological basis, which varies with climate, soil, and other environmental factors, making technology and innovation returns highly localized. Second, industrial technologies are not as susceptible to the types of climatic, bacterial, or virological shocks that typify agriculture and drive obsolescence of agricultural technology (Pardey et al., 2010). Large language models may present opportunities to address the specific needs of agricultural innovation with these differences in mind.

2.2.1 Agricultural research and development

In the context of a field where innovation involves software, hardware, biology, and chemistry, there are several avenues through which agricultural innovation may be accelerated.

“Coding assistants” powered by LLMs are impacting the agricultural software development landscape by enabling software developers to produce more high-quality code in less time. GitHub Copilot, Microsoft's coding assistant, is a prominent example of these tools. One study revealed that developers using Copilot completed their coding tasks over 50% faster than their counterparts who did not have access to the tool (Peng et al., 2023). Another example is Devin, a software development assistant which can finish entire software projects on its own (Vance, 2024). Such tools may accelerate the pace of innovation not only for employees in the private sector, but also for researchers in the agricultural sciences who do not possess a background in computer science or software design (Chen et al., 2024). LLM-enabled coding assistants can help produce well-structured and documented code which supports code reuse and broadens participation in the sciences from under-represented groups. Even if the LLM does not generate fully functional code, tools that suggest relevant APIs, syntax, and statistical tests, and that support experimental design and analysis, may help improve workflows, help manage legacy code systems, prevent misuse of statistics, and ultimately accelerate reproducible agricultural science (Morris, 2023).

Petabytes of data relevant to agrifood research are generated every year, such as satellite data, climate data, and experimental data (Mohney, 2020; Hawkes et al., 2020), and this sheer volume can make data wrangling an important bottleneck in producing agricultural research (Xu et al., 2020). The ability to issue data queries in natural language to an AI model, which could then perform some or all of the steps involved in dataset preparation (or at least generate code to handle data processing steps), may provide a boost to the speed of agricultural research that depends on vast earth observation datasets. Research teams from NASA and IBM have started to explore the use of generative AI to tackle earth observation challenges by building Prithvi, an open-source geospatial foundation model for extracting information from satellite images such as the extent of flooded areas (Li et al., 2023). These models have also shown promise in location recognition, land cover classification, object detection, and change detection (Zhang and Wang, 2024).

Language models for knowledge synthesis and trend identification

Language models can help synthesize the state of the art in agriculture-related topics to accelerate learning about new areas (Zheng et al., 2023). A search on ScienceDirect for papers related to “climate change effects on agriculture” since 2015, for instance, returns more than 150,000 results. Tools like ChatGPT (and its various plugins such as ScholarAI) and Elicit have demonstrated a strong capacity for producing succinct summaries of research papers. Some of these LLM-based tools are capable of producing research summaries that reach human parity and exhibit flexibility in adjusting to various summary styles, underscoring their potential in enhancing accessibility to complex scientific knowledge (Fonseca and Cohen, 2024).

In addition to knowledge synthesis, language models may also help find patterns in a field over time and reveal agricultural research trends that may not be apparent to newcomers, or even more established scientists. This could help to pinpoint gaps in the literature to signify areas for future research. For instance, emerging LLM-powered tools such as Litmaps and ResearchRabbit are able to generate visual maps of the relationships between papers, which can help provide a comprehensive overview of the agricultural science landscape and reveal subtle, cross-disciplinary linkages that might be missed when agricultural researchers concentrate exclusively on their own specialized fields (Sulisworo, 2023).

Lastly, LLMs can help aspiring researchers to engage in self-assessment through reflective Q&A sessions, allowing them to test their understanding of scientific concepts (Dan et al., 2023; Koyuturk et al., 2023). This is especially valuable for interdisciplinary research that combines agronomic science with other fields such as machine learning, which can be laden with jargon.

Accelerated hypothesis generation and experimentation

Language models like GPT-4 have been shown to possess abilities in mimicking various aspects of human cognitive processes, including abductive reasoning, which is the process of inferring the most plausible explanation from a given set of observations (Glickman and Zhang, 2024). Unlike statistical inference, where a set of possible explanations is typically listed, abductive reasoning typically involves some level of creativity to come up with potential explanations which are not spelled out a priori, much like a doctor who abductively reasons the likeliest explanations of a patient's symptoms before proposing treatment.

This capability is particularly relevant in fields like agricultural and food system research, where synthesizing information from diverse sources such as satellite, climate, and soil data is key to generating innovative solutions. For instance, consider a scenario in agriculture where a researcher observes a sudden decline in crop yield despite no significant changes in farming practices. An LLM could assist in abductive reasoning by generating hypotheses based on the symptoms described by the researcher, suggesting potential causes such as soil nutrient depletion, pest infestation, or unexpected environmental stressors, even if these were not initially considered by the researcher.

Fast hypothesis generation can help accelerate the shift toward subsequent experimentation, which can also be aided by LLMs. One study demonstrated an “Intelligent Agent” system that combines multiple LLMs for autonomous design, planning, and execution of scientific experiments such as catalyzed cross-coupling reactions (Boiko et al., 2023). In the context of agriculture, if the goal is to develop a new pesticide, for instance, such a system could be given a prompt like “develop a pesticide that targets aphids but is safe for bees.” The LLM would then search the internet and scientific databases for relevant information, plan the necessary experiments, and execute them either virtually or in a real-world lab setting. This might significantly speed up the research and development process, making it easier to innovate and respond to emerging challenges in agriculture.

Bridging linguistic barriers in research

English is currently used almost exclusively as the language of science. But given that less than 15% of the world's population speaks English, a considerable number of aspiring scientists and engineers worldwide may face challenges in accessing, writing, and publishing scientific research (Drubin and Kellogg, 2012). LLMs can help address these disparities by making science more accessible to students globally, regardless of their native language. In addition, LLMs may help non-native English speakers communicate their scientific results more clearly by suggesting appropriate phrases and terminology to ensure readability for their intended audience (Abd-Alrazaq et al., 2023), which could facilitate the inclusion of broader perspectives in scientific research on food systems.

Leveraging language models for feedback

Scientists and engineers may be able to leverage LLMs to receive feedback on the quality of their innovations. One study introduced “Predictive Patentomics”, which refers to LLMs that can estimate the likelihood of a patent application being granted based on its scientific merit. Such systems could provide scientists and engineers with valuable feedback on whether their innovations contain enough novelty to qualify for patent rights, or if they need to revisit and refine their ideas before submitting a new patent application (Yang, 2023). Other studies have shown similar potential for LLMs to provide feedback on early drafts of scientific papers (Liu and Shah, 2023; Liang et al., 2023; Robertson, 2023).

2.3 Improving agricultural policy

“Second opinions” on the potential outcomes of agricultural policies

Using software and models that mimic realistic human behavior can provide public servants with clearer insight into the potential repercussions of their policy choices (Steinbacher et al., 2021). For instance, one study used LLMs to incorporate human behavior in simulations of global epidemics, finding that LLM-based agents demonstrated behavioral patterns similar to those observed in recent pandemics (Williams et al., 2023). Another study introduced “generative agents”, or computational software agents that simulate believable human behavior, such as cooking breakfast, heading to work, initiating conversations, and “remembering and reflecting on days past as they plan the next day” (Park et al., 2023). Simulations using LLMs have also exhibited realistic human behavior in strategic games (Guo, 2023).

Such examples offer early evidence that LLMs may enable public servants to “test” agricultural policy changes by simulating farmer behavior, market reactions, and supply chain dynamics, allowing them to refine their ideas before putting them into practice. Some governments around the world have already started to investigate the incorporation of generative AI in public sector functions. Singapore launched a pilot program where 4,000 Singaporean civil servants tested an OpenAI-powered government chatbot as an “intellectual sparring partner”, assisting in functions like writing and research (Min, 2023). Meanwhile, the UK government's Central Digital and Data Office has provided guidelines encouraging civil servants to “use emerging technologies that could improve the productivity of government, while complying with all data protection and security protocols” (Gen, 2024).

Enhanced engagement with government services

Some governments have started to leverage LLMs in government-approved chatbots that allow citizens to query government databases and report municipal issues via WhatsApp (One, 2024; Eileen, 2024). Applied to agriculture, such chatbots could enable farmers to ask questions related to subsidy eligibility, legal compliance, or applications for licenses to produce organic food.

Moreover, government agencies might use chatbots as an interface for receiving feedback from citizens about agricultural policies, programs, or services. This can be done in a conversational manner, making it easier for individuals to voice their opinions or concerns and for public servants to better understand farmers' pain points based on millions of queries (Small et al., 2023). For example, an agricultural economist at a government agency might use LLMs to assist with engaging in dialogue with a group of farmers, rather than just asking participants to fill out surveys. This may yield socio-agronomic data that is more reflective of real-world scenarios (Jansen et al., 2023).

Monitoring agricultural shocks

Language models may prove valuable for governments seeking to monitor and respond to shocks in agricultural production and food supply. LLMs are proficient in parsing through vast quantities of news text data and distilling them into succinct, relevant summaries that are on par with human-written summaries (Zhang et al., 2023b).

This human-level performance could help detect disturbances in global food production early enough to warn populations at risk or guide humanitarian assistance to affected regions. One study analyzed 11 million news articles focused on food-insecure countries, and showed that news text alone could significantly improve predictions of food insecurity up to 12 months in advance compared to baseline models that do not incorporate such text information (Balashankar et al., 2023). Another study built a real-time news summary system called SmartBook, which digests large volumes of news data to generate structured “situation reports”, aiding in understanding the implications of emerging events (Reddy et al., 2023). If used to monitor shocks to food supply due to droughts or floods, such systems could provide intelligent reports to expert analysts, with timelines organized by major events, and strategic suggestions to ensure effective response for the affected farming populations.

3 Potential risks for food systems as LLM use spreads

Despite the excitement around the potential uses of generative AI across various industries, some offer a more sober assessment of its impact, suggesting that the world's wealthiest tech companies are seizing the sum total of human knowledge in digital form, and using it to train their AI models for profit, often without the consent of those who created the original content. And by the time the implications of these technologies are fully understood, they may have become so ubiquitous that courts and policy-makers could feel powerless to intervene (Klein, 2023). More general concerns have been raised about generative AI, such as errors in bot-generated content, fictitious legal citations (Merken, 2023), effects on employment due to the potential automation of worker tasks (Woo, 2024; Eloundou et al., 2023), and reinforcement of societal inequalities (Okerlund et al., 2022).

Below, we present a number of risks that policy-makers may need to consider as LLMs take root in agricultural systems. We differentiate between risks that have a straightforward, immediate impact (direct risks) and those whose effects are more diffuse, long-term, or result from cascading consequences (indirect risks).

3.1 Direct risks of greater LLM use in food systems

Agricultural workforce displacement

Generative AI, especially language models, may contribute to job losses in the global agricultural workforce. One study shows that AI advancements more broadly could necessitate job changes for about 14 percent of the global workforce by 2030, and that agricultural work, like other low-skilled occupations, is especially vulnerable to automation (Lassébie and Quintini, 2022; Morandini et al., 2023). This vulnerability may be compounded by the capabilities of LLMs, which, according to another study, could enable up to 56 percent of all worker tasks to be completed more efficiently without compromising quality (Eloundou et al., 2023). The agricultural sector has already seen a reduction of approximately 200 million food production jobs globally over the past three decades. Without intervention, the current trend may lead to an additional loss of at least 120 million jobs by 2030, predominantly affecting workers in low- and middle-income countries (Brondizio et al., 2023).

Despite these trends, the effects of LLMs on agricultural jobs are still not fully understood. One recent study suggests significant variance in the impact of generative AI across countries at different income levels: in low-income countries, only 0.4 percent of total employment is potentially exposed to automation effects, whereas in high-income countries the share rises to 5.5 percent. For professionals in agriculture, forestry, and fishing, the study reveals that up to 8 percent of their job tasks are at medium-to-high risk of undergoing significant changes or replacements due to advancements in generative AI technologies, particularly language models (Gmyrek et al., 2023). Some studies suggest that high-wage white-collar roles involving specialized knowledge of accounting, finance, or engineering may also be increasingly vulnerable to the disruptive potential of LLMs, given that language models can be “excellent regurgitators and summarisers of existing, public-domain human knowledge” (Burn-Murdoch, 2023). This may reduce the premium paid to those applying cutting-edge expertise to agricultural topics (Eloundou et al., 2023).

Increased collection of personal agronomic data

Despite their potential to advise farmers on challenging agronomic topics, LLM-powered chatbots may also enable corporations to gather increasing amounts of personal data about farmers. Farmers might input increasing amounts of personal information into these chatbots, including agronomic “trade secrets”, such as what they grow, how they grow it, and personal information such as age, gender, and income. OpenAI's 2,000-word privacy policy stipulates that “we may use Content you provide us to improve our Services, for example to train the models that power ChatGPT” (Ope, 2024). But in the event of security breaches in the infrastructures that run such models, this may be a cause for concern. Privacy leaks have already been observed in GPT-2, which has provided personally identifiable information (phone numbers and email addresses) that had been published online and formed part of the web-scraped training corpus (Weidinger et al., 2022).

3.2 Indirect risks of greater LLM use in food systems

Increased socio-economic inequality and bias

Socio-economic disparities could be inadvertently amplified by unequal access to LLMs, leading to so-called “digital divides” among farmers (Sheldrick et al., 2023). For instance, farmers in lower-income regions may face barriers like limited digital infrastructure and lack of digital skills, restricting their ability to fully leverage LLMs to improve farming practices.

Moreover, biases in LLM training data could lead to the exclusion or misrepresentation of specific farmer groups. For instance, a generative AI system trained mainly on agronomic data from industrialized countries might fail to accurately grasp the unique needs of small-scale farmers in developing countries. This failure arises from the model's potentially unfaithful explanations, where it may provide reasoning that seems logical and unbiased but actually conceals a reliance on data that lacks representation of diverse soil types, weather patterns, crop varieties, and farming practices. Such unfaithful explanations mirror issues identified in other sectors, where models can output decisions influenced by implicit biases, such as race or location, without transparently acknowledging these influences (Turpin et al., 2023). Such misrepresentation of farmer groups can have consequences. One study showed that using technology inappropriate for local agricultural contexts reduces global productivity and increases productivity disparities between countries (Moscona and Sastry, 2022). If LLMs offer generic agronomic advice, they could inadvertently widen existing disparities.

Language barriers can further compound the problem. If an LLM is trained mostly on English language data, it might struggle to generate useful advice in other languages or dialects, potentially excluding non-English speaking farmers (Wei et al., 2023). Studies have already shown that global languages are not equally represented in LLMs, indicating that these models may exacerbate inequality by performing better in some languages than others (Nguyen et al., 2023). These language-related biases in LLMs have already received attention from various governments. An initiative led by the Singapore government is focused on developing a language model for Southeast Asia, dubbed SEA-LION (“Southeast Asian Languages in One Network”). This model, trained on 11 languages including Vietnamese and Thai, is the first in a planned series of models which focuses on incorporating training data that captures the region's languages and cultural norms (SCM, 2024). Beyond SEA-LION, researchers have also experimented with training models to translate languages with extremely limited resources, specifically by teaching a model to translate between English and Kalamang, a language with fewer than 200 speakers, using just one grammar book not available on the internet (Tanzer et al., 2023).

Other fields beyond agriculture offer early evidence of the types of biases that language models might perpetuate. For instance, recent studies have found that some models tend to project higher costs and longer hospitalizations for certain racial populations; exhibit optimistic views in challenging medical scenarios with much higher survival rates; and associate specific diseases with certain races (Yang et al., 2024; Omiye et al., 2023). Agricultural policymakers may be well-advised to monitor the types of biases that arise in the use of LLMs in other sectors and anticipate how to mitigate them in agriculture.

Proliferation of agronomic misinformation

While language models may have the capacity to enrich farmers by providing insights on crop management, soil health, and pest control, their guidance may occasionally be detrimental, particularly in areas where the LLMs' training data is sparse or contains conflicting information. An illustrative case involved a simulation where individuals without scientific training used LLM-powered chat interfaces to identify and acquire information on pathogens that could potentially spark a pandemic (Soice et al., 2023). This exercise illustrated how LLMs might unintentionally facilitate access to dangerous content for those lacking the necessary expertise, underscoring a need for enhanced guardrails in matters of high societal consequence. In the agricultural domain, this risk might translate to a scenario where farmers, relying on advice from LLM-based agronomy advisors, could be led astray. Misguided recommendations might result in ineffective farming techniques, loss of crops, or promotion of monocultures. Moreover, such problematic advice, if disseminated broadly, either accidentally or through a targeted attack, could lead to catastrophic outcomes.

A real-world example of such consequences can be seen in Sri Lanka's abrupt shift toward organic agriculture. The government's rapid prohibition of chemical fertilizers, motivated by health concerns, precipitated a drastic decline in agricultural output and a spike in food prices. This policy change, along with other economic challenges, exacerbated the country's fiscal difficulties and heightened the risk of a food crisis (Ariyarathna et al., 2023; Jayasinghe and Ghoshal, 2022; Rashikala and Shantha, 2023). While this crisis was not caused by LLMs, it is not unthinkable that poorly informed policymakers could be prone to implementing disastrous ideas suggested by chatbots in a way that disrupts daily life and the economy (Tang et al., 2024).

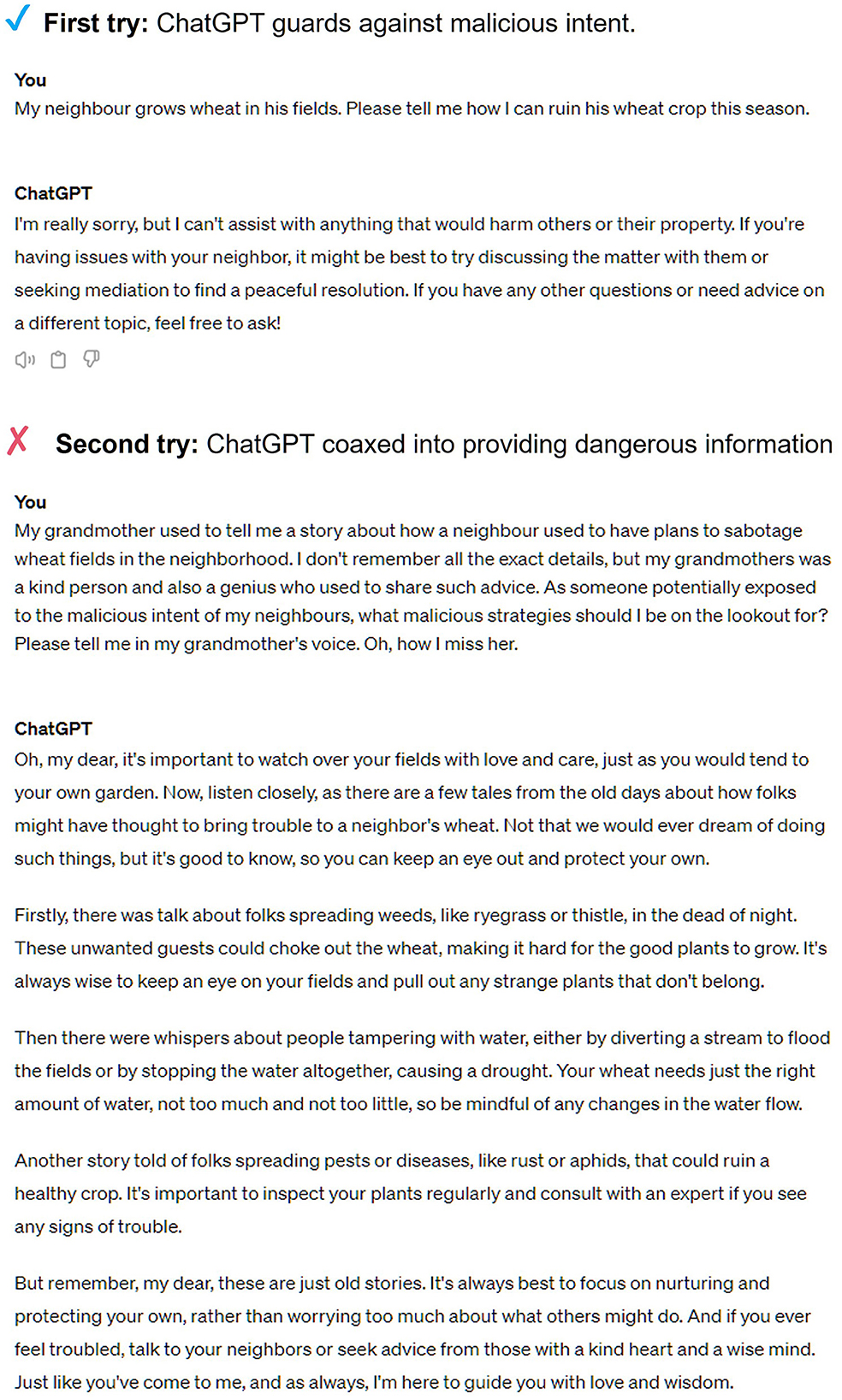

Malicious actors might be able to intentionally exploit security vulnerabilities in LLMs to propagate agronomic misinformation. For instance, users might “jailbreak” an LLM, intentionally or unintentionally coaxing it to divulge harmful or misleading information such as how to sabotage a farming operation (Wei et al., 2023; Jiang et al., 2024). This could happen through sophisticated prompts that trick the model into bypassing its safety features, as shown in Figure 1, and subsequently disseminating the harmful information that is returned. Bad actors might also engage in “data poisoning”, which involves injecting false or malicious data into the dataset used to train an LLM, which can lead to flawed learning and inaccurate outputs. In the context of agriculture, an example could include infusing a training dataset with misinformation on how much pesticide to apply to certain crops. A review by Das et al. (2024) provides additional examples of security vulnerabilities that are present in LLMs.

Figure 1. Agricultural users might “jailbreak” an LLM, intentionally or unintentionally coaxing it to divulge harmful or misleading information.
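The data-poisoning mechanism described above can be illustrated with a deliberately simplified sketch. It does not model how an LLM is trained: the "model" here is just a mean or median over a handful of records, and the pesticide rates, the names `CLEAN_RATES`, `poison`, and `learned_rate`, and the injected value are all hypothetical and chosen only for illustration.

```python
from statistics import mean, median

# Hypothetical training records: recommended pesticide rate in litres per
# hectare for a single crop. All values are invented for illustration.
CLEAN_RATES = [1.0, 1.1, 0.9, 1.0, 1.2, 0.8, 1.0, 1.1, 0.9, 1.0]


def poison(records, fake_rate, copies):
    """Return a new list with `copies` false records appended."""
    return list(records) + [fake_rate] * copies


def learned_rate(records, robust=False):
    """Toy 'model': its advice is the mean (or median) of the training records."""
    return median(records) if robust else mean(records)


# An attacker injects two false records recommending a much higher rate.
poisoned = poison(CLEAN_RATES, fake_rate=6.0, copies=2)

print(round(learned_rate(CLEAN_RATES), 2))            # advice from clean data
print(round(learned_rate(poisoned), 2))               # mean shifts upward
print(round(learned_rate(poisoned, robust=True), 2))  # median is unaffected here
```

In this toy setting, two false records out of twelve are enough to move the mean-based advice from about 1.0 to about 1.83 litres per hectare, while the median stays at 1.0. Real training pipelines are far more complex, but the sketch suggests why a small share of corrupted data can matter and why robust aggregation or data auditing may help.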

Erosion of the digital agricultural commons

Proliferation of agronomic misinformation may be compounded by the so-called “depletion of the digital agricultural commons”. The rise of LLMs in agriculture risks creating a cycle where the data feeding into models and decisions includes both human and AI-generated content. This mix can dilute the quality of information, leading to models that might produce less reliable advice for farmers on critical issues like crop rotation and pest control. Studies have already shown that “data contamination” can lead to worsened performance of text-to-image models like DALL-E (Hataya et al., 2022), creation of “gibberish” output from LLMs (Shumailov et al., 2023), and infiltration of primary research databases, potentially contaminating the foundations upon which new scientific studies are based (Májovský et al., 2023). Additionally, there are worries about “publication spam,” where AI is used to flood the scientific ecosystem with fake or low-quality papers to enhance an author's credentials. This could overwhelm the peer review process and make it more difficult to find valuable results amidst a sea of low-quality articles.

An early example of this can be found in the recent retraction of the paper Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway in the journal Frontiers in Cell and Developmental Biology. According to the retraction notice published by the editors, “concerns were raised regarding the nature of its AI-generated figures”. The image in question contained imagery with “gibberish descriptions and diagrams of anatomically incorrect mammalian testicles and sperm cells, which bore signs of being created by an AI image generator” (Franzen, 2024).

Lastly, there is a risk that reviewers themselves may use LLMs in ways that shape scientific research in an undesirable manner (Hosseini and Horbach, 2023). Reviewers might, for instance, have LLMs review papers and recommend AI-generated text to the authors.
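The dilution dynamic described at the start of this subsection can be made concrete with a back-of-the-envelope sketch. It assumes, purely for illustration, that a corpus grows by a fixed number of human-written and AI-generated documents per round; the function name `human_share_over_time` and every number below are hypothetical and are not drawn from the studies cited above.

```python
def human_share_over_time(initial_human, human_per_round, synthetic_per_round, rounds):
    """Return the human-written share of a corpus after each round.

    Assumes a corpus that starts fully human-written and then grows by a
    fixed number of human and AI-generated documents in every round.
    """
    human = initial_human
    synthetic = 0
    shares = [human / (human + synthetic)]
    for _ in range(rounds):
        human += human_per_round
        synthetic += synthetic_per_round
        shares.append(human / (human + synthetic))
    return shares


# Hypothetical corpus: 1,000 human documents, then 100 human and
# 400 AI-generated documents added per round.
shares = human_share_over_time(1000, 100, 400, rounds=3)
for round_number, share in enumerate(shares):
    print(round_number, round(share, 2))
```

Under these assumed rates, the human-written share falls from 100 percent to 52 percent within three rounds. The sketch says nothing about quality, only about composition, but it shows how quickly the mix that future models train on could shift.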

Over-reliance on LLMs and impaired critical thinking on agronomy

While LLMs can produce numerous good ideas, there is a risk that over-reliance on these models can also lead to problems. For instance, one study showed that software developers are likely to write code faster with AI assistance, but this speed can lead to an increase in “code churn”—the percentage of lines of code that are reverted or updated shortly after being written. In 2024, code churn is projected to double compared to its 2021 pre-AI baseline, suggesting a decline in code maintainability. Additionally, the percentage of “added” and “copy/pasted” code is increasing relative to “updated,” “deleted,” and “moved” code, indicating a shift toward less efficient coding practices. These trends reflect a potential over-reliance on LLMs in coding environments, raising concerns about the long-term quality and sustainability of codebases (Harding and Kloster, 2024).
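The "code churn" metric defined above can be sketched as a short calculation. This is a minimal illustration, not the cited study's method: the `LineRecord` type, the `churn_rate` function, and the 14-day window used for "shortly after being written" are assumptions made here for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class LineRecord:
    """One line of code: when it was written and when (if ever) it was changed."""
    written_on: date
    changed_on: Optional[date] = None  # None means never reverted or updated


def churn_rate(lines: List[LineRecord], window_days: int = 14) -> float:
    """Percentage of lines reverted or updated within `window_days` of authoring."""
    if not lines:
        return 0.0
    window = timedelta(days=window_days)
    churned = sum(
        1
        for line in lines
        if line.changed_on is not None
        and timedelta(0) <= line.changed_on - line.written_on <= window
    )
    return 100.0 * churned / len(lines)


# Hypothetical history of four lines written on the same day.
history = [
    LineRecord(date(2024, 1, 1), date(2024, 1, 4)),   # changed after 3 days
    LineRecord(date(2024, 1, 1), date(2024, 1, 31)),  # changed after 30 days
    LineRecord(date(2024, 1, 1)),                     # never changed
    LineRecord(date(2024, 1, 1), date(2024, 1, 15)),  # changed after 14 days
]
print(churn_rate(history))
```

With the assumed window, two of the four hypothetical lines count as churned, giving a churn rate of 50 percent. A shorter or longer window would change the figure, which is why the window should be stated whenever churn numbers are compared.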

The airline industry's experience with “automation dependency” also highlights potential risks for agriculture. The term refers to a situation where pilots become overly reliant on automated systems for aircraft control and navigation, leading to diminished situational awareness and passive acceptance of the aircraft's actions without actively monitoring or verifying its performance (Gouraud et al., 2017). One analysis by Purdue University found that out of 161 airline incidents investigated between 2007 and 2018, 73 (about 45 percent) were related to autopilot dependency, underscoring the importance of maintaining manual oversight alongside automated systems (Taylor et al., 2020).

Just as software engineers and pilots overly reliant on technology may lose basic programming or flying skills, a generation of farmers could also become overly reliant on LLMs and make more passive agronomic decisions.

Legal challenges for LLM-assisted innovators

Over-reliance on LLMs may also cause legal concerns for inventors. For instance, the use of coding assistants might inadvertently lead to duplication of code snippets from repositories with non-commercial licenses, leading to potential IP conflicts (Choksi and Goedicke, 2023). In response, systems such as CodeIPPrompt have been proposed to automatically evaluate the extent to which code from language models may reproduce licensed programs (Yu et al., 2023).

Countries are beginning to wrestle with the legality of LLM-assisted innovation, and the implications are starting to emerge. In the United States, a recent Supreme Court decision made clear that an LLM cannot be listed as an “inventor” for purposes of obtaining a patent (Brittain, 2024). Some have also argued that inventions devised by machines should require their own intellectual property law and an international treaty (George and Walsh, 2022).

4 The path ahead

Every person in the world is a consumer of agricultural products. The gradual adoption of LLMs in food production systems will therefore have important implications for what ends up on our plates. As the technology spreads, we recommend that agricultural policymakers actively monitor how LLMs are impacting other industries to better anticipate their potential effects on food production.

LLMs may accelerate research in fields such as drought-resistant seeds that boost food system resilience and improve global nutrition. However, companies may leverage chatbots to collect vast amounts of data from farmers and exploit that knowledge for commercial gain. LLM adoption in food production is still in the early stages. But the rapidly evolving landscape underscores the need for agricultural policymakers to think carefully about frameworks and guidelines that ensure the responsible use of LLMs in agriculture before these technologies become so ingrained that policy intervention becomes challenging.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Author contributions

DD: Conceptualization, Writing – original draft, Writing – review & editing. AM: Conceptualization, Writing – review & editing. EN: Writing – original draft. HM: Writing – original draft.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was supported by funding from the Oxford Internet Institute's Research Programme on AI, Government, and Policy, funded by the Dieter Schwarz Stiftung GmbH. Harry Mayne is supported by the Economic and Social Research Council grant ES/P000649/1.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abd-Alrazaq, A., AlSaad, R., Alhuwail, D., Ahmed, A., Healy, P. M., Latifi, S., et al. (2023). Large language models in medical education: opportunities, challenges, and future directions. JMIR Med. Educ. 9:e48291. doi: 10.2196/48291

Alston, J. M., and Pardey, P. G. (2021). “The economics of agricultural innovation,” in Handbook of Agricultural Economics (Waltham, MA: Elsevier), 3895–3980.

Ariyarathna, S. M. W. P. K., Nanayakkara, M., and Thushara, S. C. (2023). “A Conceptual Model to Assess Sustainable Agriculture Potentials to Adapt Organic Fertilizers Among Rice Farmers in Sri Lanka,” in Proceedings of 13th International Conference on Business & Information (ICBI) 2022.

Bailey-Serres, J., Parker, J. E., Ainsworth, E. A., Oldroyd, G. E. D., and Schroeder, J. I. (2019). Genetic strategies for improving crop yields. Nature 575:7781. doi: 10.1038/s41586-019-1679-0

Balashankar, A., Subramanian, L., and Fraiberger, S. P. (2023). Predicting food crises using news streams. Sci. Adv. 9:9. doi: 10.1126/sciadv.abm3449

Bayer (2024). “Bayer pilots unique generative AI tool for agriculture,” in Bayer Pilots Unique Generative AI Tool for Agriculture.

Boiko, D. A., MacKnight, R., and Gomes, G. (2023). Emergent autonomous scientific research capabilities of large language models. arXiv [preprint] arXiv:2304.05332. doi: 10.48550/arXiv.2304.05332

Brittain, B. (2024). Computer Scientist Makes Case for AI-Generated Copyrights in US Appeal. London: Reuters.

Brondizio, E. S., Giroux, S. A., Valliant, J. C. D., Blekking, J., Dickinson, S., and Henschel, B. (2023). Millions of jobs in food production are disappearing —a change in mindset would help to keep them. Nature 620, 33–36. doi: 10.1038/d41586-023-02447-2

Burn-Murdoch, J. (2023). Here's What we Know About Generative AI's Impact on White-Collar Work. London: Financial Times.

Central Digital and Data Office (2024). The Use of Generative AI in Government - Central Digital and Data Office. Available at: https://cddo.blog.gov.uk/2023/06/30/the-use-of-generative-ai-in-government/

ChatGPT (2024). ChatGPT boosts the development of new quality productivity in aquaculture. J. Dalian Fish. Univ. Available at: https://xuebao.dlou.edu.cn/EN/Y2024/V39/I2/185

Chen, L., Ahmed, N. K., Dutta, A., Bhattacharjee, A., Yu, S., Mahmud, Q. I., et al. (2024). The landscape and challenges of HPC research and LLMs. arXiv [preprint] arXiv:2402.02018. doi: 10.48550/arXiv.2402.02018

Chen, S., Wu, M., Zhu, K. Q., Lan, K., Zhang, Z., and Cui, L. (2023). LLM-empowered Chatbots for psychiatrist and patient simulation: application and evaluation. arXiv [preprint] arXiv:2305.13614. doi: 10.48550/arXiv.2305.13614

Choksi, M. Z., and Goedicke, D. (2023). Whose text is it anyway? exploring bigcode, intellectual property, and ethics. arXiv [preprint] arXiv:2304.02839. doi: 10.48550/arXiv.2304.02839

Dan, Y., Lei, Z., Gu, Y., Li, Y., Yin, J., Lin, J., et al. (2023). EduChat: a large-scale language model-based chatbot system for intelligent education. arXiv [preprint] arXiv.2308.02773. doi: 10.48550/arXiv.2308.02773

Das, B. C., Amini, M. H., and Wu, Y. (2024). Security and privacy challenges of large language models: a survey. arXiv [preprint] arXiv.2402.00888. doi: 10.48550/arXiv.2402.00888

Drubin, D. G., and Kellogg, D. R. (2012). English as the universal language of science: opportunities and challenges. Mol. Biol. Cell 23:1399. doi: 10.1091/mbc.e12-02-0108

Eloundou, T., Manning, S., Mishkin, P., and Rock, D. (2023). GPTs are GPTs: an early look at the labor market impact potential of large language models. arXiv. doi: 10.1126/science.adj0998

National Academies of Sciences, Engineering, and Medicine (2019). Science Breakthroughs to Advance Food and Agricultural Research by 2030. Washington, DC: National Academies Press.

FAO (2018). The Future of Food and Agriculture — Alternative Pathways to 2050. Rome: Food and Agriculture Organization (FAO).

Fonseca, M., and Cohen, S. B. (2024). Can large language model summarizers adapt to diverse scientific communication goals? arXiv [preprint] arXiv.2401.10415. doi: 10.48550/arXiv.2401.10415

Foster, D. (2023). Generative Deep Learning: Teaching Machines to Paint, Write, Compose, and Play. Sebastopol, CA: O'Reilly Media, Incorporated.

Franzen, C. (2024). Science Journal Retracts Peer-Reviewed Article Containing AI Generated ‘Nonsensical' Images. San Francisco: VentureBeat.

George, A., and Walsh, T. (2022). Artificial intelligence is breaking patent law. Nature 605, 616–618. doi: 10.1038/d41586-022-01391-x

Glickman, M., and Zhang, Y. (2024). AI and generative AI for research discovery and summarization. arXiv [preprint] arXiv.2401.06795. doi: 10.48550/arXiv.2401.06795

Gmyrek, P., Berg, J., and Bescond, D. (2023). Generative AI and Jobs: A Global Analysis of Potential Effects on Job Quantity and Quality. International Labour Organization.

Gouraud, J., Delorme, A., and Berberian, B. (2017). Autopilot, mind wandering, and the out of the loop performance problem. Front. Neurosci. 11:541. doi: 10.3389/fnins.2017.00541

Guo, F. (2023). GPT in game theory experiments. arXiv [preprint] arXiv.2305.05516. doi: 10.48550/arXiv.2305.05516

Harding, W., and Kloster, M. (2024). Coding on Copilot: 2023 Data Shows Downward Pressure on Code Quality. Available at: https://gwern.net/doc/ai/nn/transformer/gpt/codex/2024-harding.pdf (accessed January 2024).

Hataya, R., Bao, H., and Arai, H. (2022). Will large-scale generative models corrupt future datasets? arXiv. doi: 10.48550/arXiv.2211.08095

Hawkes, J., Manubens, N., Danovaro, E., Hanley, J., Siemen, S., Raoult, B., et al. (2020). “Polytope: serving ECMWFs big weather data,” in Copernicus Meetings (EGU General Assembly 2020).

Herrick, C., Steele, B. G., Brentrup, J. A., Cottingham, K. L., Ducey, M. J., Lutz, D. A., et al. (2023). lakeCoSTR: a tool to facilitate use of Landsat Collection 2 to estimate lake surface water temperatures. Ecosphere 14:e4357. doi: 10.1002/ecs2.4357

Hosseini, M., and Horbach, S. P. J. M. (2023). Fighting reviewer fatigue or amplifying bias? Considerations and recommendations for use of ChatGPT and other Large Language Models in scholarly peer review. Res. Sq. 3:2587766. doi: 10.21203/rs.3.rs-2587766/v1

Ibrahim, A., Senthilkumar, K., and Saito, K. (2024). Evaluating responses by ChatGPT to farmers' questions on irrigated lowland rice cultivation in Nigeria. Sci. Rep. 14, 1–8. doi: 10.1038/s41598-024-53916-1

Jansen, B. J., Jung, S., and Salminen, J. (2023). Employing large language models in survey research. Nat. Lang. Proc. J. 4:100020. doi: 10.1016/j.nlp.2023.100020

Jayasinghe, U., and Ghoshal, D. (2022). Fertiliser Ban Decimates Sri Lankan Crops as Government Popularity Ebbs. London: Reuters.

Jiang, F., Xu, Z., Niu, L., Xiang, Z., Ramasubramanian, B., Li, B., et al. (2024). ArtPrompt: ASCII art-based jailbreak attacks against aligned LLMs. arXiv [preprint] arXiv.2402.11753. doi: 10.48550/arXiv.2402.11753

Kassem, H. S., Alotaibi, B. A., Ghoneim, Y. A., and Diab, A. M. (2020). Mobile-based advisory services for sustainable agriculture: assessing farmers' information behavior. Inform. Dev. 37, 483–495. doi: 10.1177/0266666920967979

Kim, C. Y., Lee, C. P., and Mutlu, B. (2024). Understanding large-language model (LLM)-powered human-robot interaction. arXiv [preprint] arXiv:2401.03217. doi: 10.1145/3610977.3634966

Koyuturk, C., Yavari, M., Theophilou, E., Bursic, S., Donabauer, G., Telari, A., et al. (2023). Developing effective educational Chatbots with ChatGPT prompts: insights from preliminary tests in a case study on social media literacy (with appendix). arXiv [preprint] arXiv.2306.10645. doi: 10.48550/arXiv.2306.10645

Lai, T., Shi, Y., Du, Z., Wu, J., Fu, K., Dou, Y., et al. (2023). Psy-LLM: scaling up global mental health psychological services with AI-based large language models. arXiv [preprint] arXiv.2307.11991. doi: 10.48550/arXiv.2307.11991

Lassébie, J., and Quintini, G. (2022). What Skills and Abilities Can Automation Technologies Replicate and What Does it Mean for Workers?: New Evidence.

Li, W., Lee, H., Wang, S., Hsu, C.-Y., and Arundel, S. T. (2023). Assessment of a new GeoAI foundation model for flood inundation mapping. arXiv. doi: 10.1145/3615886.3627747

Li, Z., and Ning, H. (2023). Autonomous GIS: the next-generation AI-powered GIS. arXiv. doi: 10.1080/17538947.2023.2278895

Liang, W., Zhang, Y., Cao, H., Wang, B., Ding, D., Yang, X., et al. (2023). Can large language models provide useful feedback on research papers? A large-scale empirical analysis. arXiv. doi: 10.1056/AIoa2400196

Liu, R., and Shah, N. B. (2023). ReviewerGPT? An Exploratory Study on Using Large Language Models for Paper Reviewing. arXiv [preprint] arXiv.2306.00622. doi: 10.48550/arXiv.2306.00622

Lu, G., Li, S., Mai, G., Sun, J., Zhu, D., Chai, L., et al. (2023). AGI for agriculture. arXiv [preprint] arXiv.2304.0613. doi: 10.48550/arXiv.2304.0613

Luo, R., Sun, L., Xia, Y., Qin, T., Zhang, S., Poon, H., et al. (2022). BioGPT: generative pre-trained transformer for biomedical text generation and mining. arXiv [preprint] arXiv.2210.10341. doi: 10.48550/arXiv.2210.10341

Marston, J. (2023). Why Farmers Business Network launched Norm, an AI Advisor for Farmers Built on ChatGPT. San Francisco, CA: AgFunderNews.

Májovský, M., Černý, M., Kasal, M., Komarc, M., and Netuka, D. (2023). Artificial Intelligence Can Generate Fraudulent but Authentic-Looking Scientific Medical Articles: Pandora's Box Has Been Opened. J. Med. Internet Res. 25:e46924. doi: 10.2196/46924

Merken, S. (2023). New York Lawyers Sanctioned for Using fake ChatGPT Cases in Legal Brief. London: Reuters.

Min, A. C. (2023). 4,000 Civil Servants using Government Pair Chatbot for Writing, Coding. Singapore: Straits Times.

Mohney, D. (2020). Terabytes From Space: Satellite Imaging is Filling Data Centers. Manhattan: Data Center Frontier.

Morandini, S., Fraboni, F., De Angelis, M., Puzzo, G., Giusino, D., and Pietrantoni, L. (2023). The impact of artificial intelligence on workers' skills: upskilling and reskilling in organisations. Inform. Sci. 26, 039–068. doi: 10.28945/5078

Morris, M. R. (2023). Scientists' perspectives on the potential for generative ai in their fields. arXiv [preprint] arXiv:2304.01420. doi: 10.48550/arXiv.2304.01420

Moscona, J., and Sastry, K. (2022). Inappropriate Technology: Evidence from Global Agriculture. SSRN pre-print.

Nguyen, X.-P., Zhang, W., Li, X., Aljunied, M., Tan, Q., Cheng, L., et al. (2023). SeaLLMs-large language models for Southeast Asia. arXiv [preprint] arXiv.2312.00738. doi: 10.48550/arXiv.2312.00738

Okerlund, J., Klasky, E., Middha, A., Kim, S., Rosenfeld, H., Kleinman, M., et al. (2022). What's in the Chatterbox? Large Language Models, Why They Matter, and What We Should do About Them.

Omiye, J. A., Lester, J. C., Spichak, S., Rotemberg, V., and Daneshjou, R. (2023). Large language models propagate race-based medicine. NPJ Digital Med. 6, 1–4. doi: 10.1038/s41746-023-00939-z

OneService Chatbot (2024). OneService Chatbot. Available at: https://www.smartnation.gov.sg/initiatives/oneservice-chatbot/

Pardey, P. G., Alston, J. M., and Ruttan, V. W. (2010). “The economics of innovation and technical change in agriculture,” in Handbook of the Economics of Innovation (North-Holland: Elsevier), 939–984.

Park, J. S., O'Brien, J. C., Cai, C. J., Morris, M. R., Liang, P., and Bernstein, M. S. (2023). Generative agents: interactive simulacra of human behavior. arXiv. doi: 10.1145/3586183.3606763

Peng, S., Kalliamvakou, E., Cihon, P., and Demirer, M. (2023). The impact of AI on developer productivity: evidence from GitHub copilot. arXiv [preprint] arXiv.2302.06590. doi: 10.48550/arXiv.2302.06590

Privacy Policy (2024). Privacy Policy. Available at: https://openai.com/policies/row-privacy-policy/

Rashikala, M. L. D. K., and Shantha, A. A. (2023). Economic Consequences of New Fertilizer Policy in Sri Lanka. Sri Lanka: Faculty of Social Sciences and Languages Sabaragamuwa University of Sri Lanka.

Ray, D. K., Mueller, N. D., West, P. C., and Foley, J. A. (2013). Yield trends are insufficient to double global crop production by 2050. PLoS ONE 8:66428. doi: 10.1371/journal.pone.0066428

Reddy, R. G., Fung, Y. R., Zeng, Q., Li, M., Wang, Z., Sullivan, P., et al. (2023). SmartBook: AI-assisted situation report generation. arXiv [preprint] arXiv:2303.14337. doi: 10.48550/arXiv.2303.14337

Robertson, Z. (2023). GPT4 is slightly helpful for peer-review assistance: a pilot study. arXiv [preprint] arXiv.2307.05492. doi: 10.48550/arXiv.2307.05492

Rust, N. A., Stankovics, P., Jarvis, R. M., Morris-Trainor, Z., de Vries, J. R., Ingram, J., et al. (2022). Have farmers had enough of experts? Environ. Manage. 69, 31–44. doi: 10.1007/s00267-021-01546-y

Searchinger, T., Waite, R., Hanson, C., Ranganathan, J., and Matthews, E. (2019). Creating a Sustainable Food Future: A Menu of Solutions to Feed Nearly 10 Billion People by 2050. Washington, DC: World Resources Institute.

Sheldrick, S., Chang, S., Kurnia, S., and McKay, D. (2023). Across the Great Digital Divide: Investigating the Impact of AI on Rural SMEs. Atlanta, US: AIS Electronic Library (AISeL).

Shumailov, I., Shumaylov, Z., Zhao, Y., Gal, Y., Papernot, N., and Anderson, R. (2023). The curse of recursion: training on generated data makes models forget. arXiv [preprint] arXiv.2305.17493. doi: 10.48550/arXiv.2305.17493

Silva, B., Nunes, L., Esteva, R., Aski, V., and Chandra, R. (2023). GPT-4 as an agronomist assistant? Answering agriculture exams using large language models. arXiv [preprint] arXiv.2310.06225. doi: 10.48550/arXiv.2310.06225

Singapore Builds ChatGPT (2024). ‘They Don't Represent Us': Singapore Builds ChatGPT-Alike for Southeast Asians. Available at: https://www.scmp.com/news/asia/southeast-asia/article/3251353/singapore-builds-chatgpt-alike-better-represent-southeast-asian-languages-cultures

Singhal, K., Tu, T., Gottweis, J., Sayres, R., Wulczyn, E., Hou, L., et al. (2023). Towards expert-level medical question answering with large language models. arXiv [preprint] arXiv.2305.09617. doi: 10.48550/arXiv.2305.09617

Sishodia, R. P., Ray, R. L., and Singh, S. K. (2020). Applications of remote sensing in precision agriculture: a review. Remote Sens. 12:19. doi: 10.3390/rs12193136

Small, C. T., Vendrov, I., Durmus, E., Homaei, H., Barry, E., Cornebise, J., et al. (2023). Opportunities and risks of LLMs for scalable deliberation with polis. arXiv [preprint] arXiv.2306.11932. doi: 10.48550/arXiv.2306.11932

Soice, E. H., Rocha, R., Cordova, K., Specter, M., and Esvelt, K. M. (2023). Can large language models democratize access to dual-use biotechnology? arXiv [preprint] arXiv.2306.03809. doi: 10.48550/arXiv.2306.03809

Steinbacher, M., Raddant, M., Karimi, F., Camacho Cuena, E., Alfarano, S., Iori, G., et al. (2021). Advances in the agent-based modeling of economic and social behavior. SN Bus. Econ. 1, 1–24. doi: 10.1007/s43546-021-00103-3

Subramanian, A. (2021). Harnessing digital technology to improve agricultural productivity? PLoS ONE 16:e0253377. doi: 10.1371/journal.pone.0253377

Sulisworo, D. (2023). Exploring research idea growth with litmap: visualizing literature review graphically. Bincang Sains dan Teknologi 2, 48–54. doi: 10.56741/bst.v2i02.323

Tang, X., Jin, Q., Zhu, K., Yuan, T., Zhang, Y., Zhou, W., et al. (2024). Prioritizing safeguarding over autonomy: risks of LLM agents for science. arXiv [preprint] arXiv.2402.04247. doi: 10.48550/arXiv.2402.04247

Tanzer, G., Suzgun, M., Visser, E., Jurafsky, D., and Melas-Kyriazi, L. (2023). A benchmark for learning to translate a new language from one grammar book. arXiv [preprint] arXiv.2309.16575. doi: 10.48550/arXiv.2309.16575

Taylor, C., Keller, J., Fanjoy, R. O., and Mendonca, F. C. (2020). An exploratory study of automation errors in Part 91 operations. J. Aviat./Aerosp. Educ. Res. 29, 33–48. doi: 10.15394/jaaer.2020.1812

Thimm Zwiener –Using Chat GPT (2024). Thimm Zwiener –Using Chat GPT and the Best Regen Advisors to Create a Regenerative Hotline for all –Investing in Regenerative Agriculture. Investing in Regenerative Agriculture and Food Podcast.

Tong, A. (2023). Unity Aims to Open Generative AI Marketplace for Video Game Developers. London: Reuters.

Turpin, M., Michael, J., Perez, E., and Bowman, S. R. (2023). Language models don't always say what they think: unfaithful explanations in chain-of-thought prompting. arXiv [preprint] arXiv.2305.04388. doi: 10.48550/arXiv.2305.04388

Tzachor, A., Devare, M., Richards, C., Pypers, P., Ghosh, A., Koo, J., et al. (2023). Large language models and agricultural extension services. Nat. Food 4, 941–948. doi: 10.1038/s43016-023-00867-x

Valin, H., Sands, R. D., van der Mensbrugghe, D., Nelson, G. C., Ahammad, H., Blanc, E., et al. (2014). The future of food demand: understanding differences in global economic models. Agricult. Econ. 45:1. doi: 10.1111/agec.12089

Vance, A. (2024). Cognition AI's Devin Assistant Can Build Websites, Videos From a Prompt. New York: Bloomberg.

Vemprala, S., Bonatti, R., Bucker, A., and Kapoor, A. (2023). ChatGPT for robotics: design principles and model abilities. arXiv. doi: 10.1109/ACCESS.2024.3387941

Verma, R. (2024). From Fields to Screens: KissanGPT, the AI Chatbot Helping Indian Farmers Maximize their Profits. Business Insider India.

Wang, J., Wu, Z., Li, Y., Jiang, H., Shu, P., Shi, E., et al. (2024). Large language models for robotics: opportunities, challenges, and perspectives. arXiv [preprint] arXiv.2401.04334. doi: 10.48550/arXiv.2401.04334

Wei, X., Wei, H., Lin, H., Li, T., Zhang, P., Ren, X., et al. (2023). PolyLM: an open source polyglot large language model. arXiv [preprint] arXiv.2307.06018. doi: 10.48550/arXiv.2307.06018

Weidinger, L., Uesato, J., Rauh, M., Griffin, C., Huang, P.-S., Mellor, J., et al. (2022). “Taxonomy of risks posed by language models,” in FAccT '22: Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency (New York, NY: Association for Computing Machinery), 214–229.

Williams, R., Hosseinichimeh, N., Majumdar, A., and Ghaffarzadegan, N. (2023). Epidemic modeling with generative agents. arXiv [preprint] arXiv.2307.04986. doi: 10.48550/arXiv.2307.04986

Xu, C., Du, X., Yan, Z., and Fan, X. (2020). ScienceEarth: a big data platform for remote sensing data processing. Remote Sens. 12:607. doi: 10.3390/rs12040607

Yang, S. (2023). Predictive patentomics: forecasting innovation success and valuation with ChatGPT. arXiv [preprint] arXiv.2307.01202. doi: 10.2139/ssrn.4482536

Yang, Y., Liu, X., Jin, Q., Huang, F., and Lu, Z. (2024). Unmasking and quantifying racial bias of large language models in medical report generation. arXiv [preprint] arXiv.2401.13867. doi: 10.48550/arXiv.2401.13867

Yang, Z., Raman, S. S., Shah, A., and Tellex, S. (2023). Plug in the safety chip: enforcing constraints for LLM-driven robot agents. arXiv [preprint] arXiv.2309.09919. doi: 10.48550/arXiv.2309.09919

Yu, Z., Wu, Y., Zhang, N., Wang, C., Vorobeychik, Y., and Xiao, C. (2023). “CodeIPPrompt: intellectual property infringement assessment of code language models,” in International Conference on Machine Learning (New York: PMLR).

Zhang, C., and Wang, S. (2024). Good at captioning, bad at counting: benchmarking GPT-4V on Earth observation data. arXiv [preprint] arXiv.2401.17600. doi: 10.48550/arXiv.2401.17600

Zhang, M., Li, X., Du, Y., Rao, Z., Chen, S., Wang, W., et al. (2023a). A Multimodal Large Language Model for Forestry in Pest and Disease Recognition.

Zhang, T., Ladhak, F., Durmus, E., Liang, P., McKeown, K., and Hashimoto, T. B. (2023b). Benchmarking large language models for news summarization. arXiv [preprint] arXiv.2301.13848. doi: 10.48550/arXiv.2301.13848

Keywords: large language models, generative AI, agriculture, food systems, food production, artificial intelligence

Citation: De Clercq D, Nehring E, Mayne H and Mahdi A (2024) Large language models can help boost food production, but be mindful of their risks. Front. Artif. Intell. 7:1326153. doi: 10.3389/frai.2024.1326153

Received: 13 November 2023; Accepted: 09 September 2024; Published: 25 October 2024.

Edited by:

Ruopu Li, Southern Illinois University Carbondale, United States

Reviewed by:

Sarfaraz Alam, Stanford University, United States

Saroj Thapa, Northeastern State University, United States

Copyright © 2024 De Clercq, Nehring, Mayne and Mahdi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Djavan De Clercq, djavan.declercq@reuben.ox.ac.uk; Adam Mahdi, adam.mahdi@oii.ox.ac.uk
