PERSPECTIVE article

Front. Artif. Intell., 27 September 2023

Sec. AI in Business

Volume 6 - 2023 | https://doi.org/10.3389/frai.2023.1252897

This article is part of the Research Topic “Human-Centered AI at Work: Common Ground in Theories and Methods.”

As part of the Research Topic “Human-Centered AI at Work: Common Ground in Theories and Methods,” we present a perspective article that looks at human-AI teamwork from a team-centered AI perspective, i.e., we highlight important design requirements that the technology must fulfill in order to be accepted by humans and fully utilized in the role of a team member. Drawing on the model of an idealized teamwork process, we discuss the teamwork requirements for successful human-AI teaming in interdependent and complex work domains, including responsiveness, situation awareness, and flexible decision-making. We emphasize the need for team-centered AI that aligns goals, communication, and decision making with humans, and outline the requirements for such team-centered AI from a technical perspective, such as cognitive competence, reinforcement learning, and semantic communication. In doing so, we highlight the challenges and open questions associated with its implementation that need to be solved in order to enable effective human-AI teaming.

Mars is a target for future long-duration space missions (Salas et al., 2015). Both governments and private space companies are fascinated by the Red Planet and aim to send teams of astronauts on a mission to Mars in the late 2030s (Buchanan, 2017; NASA, 2017). Survival and successful operation on Mars will require, among other things, a habitat equipped with intelligent systems, such as integrative artificial intelligence (Kirchner, 2020), and robots (e.g., for outdoor operations). To avoid unnecessary exposure to radiation, the crew will spend most of their time in the habitat. There, they will collaborate with technical systems whose capabilities resemble human cognitive abilities more closely than those of previous support systems. Advances in machine learning and artificial intelligence (AI) have led to systems that can handle uncertainty, adjust to changing situations, and make intelligent decisions independently (O'Neill et al., 2022). Intelligent autonomous agents can exist either as virtual entities or embodied in a physical system such as a robot. Although much of the decision-making paradigm may be similar in both cases, robots must properly account for their physical spatio-temporal constraints when making decisions (Kabir et al., 2019). In the given context, autonomous agents perform tasks such as adaptively controlling light, temperature, and oxygen levels. In addition, they can gather important information about the outdoor environment and guide the crew's task planning by indicating, for example, when an outdoor mission is most advantageous given weather conditions such as isotope storms. For outdoor activities, multi-robot teams (Cordes et al., 2010) will facilitate efficient exploration of areas with low accessibility, transportation of materials, and analysis and transmission of information to the human crew.

In the scenario described above, we are concerned with human-AI teaming (cf. Schecter et al., 2022), also often referred to as human-agent teaming (cf. Schneider et al., 2021) or human-autonomy teaming (cf. O'Neill et al., 2022), all abbreviated as HAT. These are systems in which humans and intelligent, autonomous agents work interdependently toward common goals (O'Neill et al., 2022). Such forms of hybrid teamwork (cf. Schwartz et al., 2016a,b) are already present in some industries and workplaces and are becoming increasingly relevant, for example, in aviation, civil protection, firefighting, and medicine. They offer opportunities to increase workplace safety and productivity, thus supporting human and organizational performance.

A well-known example from the International Space Station is the astronaut assistant CIMON-2 (Crew Interactive MObile companioN), which has already worked with astronauts on board. CIMON-2 is voice-controlled and is intended primarily to support astronauts with experiments, maintenance, and repair work. Astronauts can also activate linguistic emotion analysis so that the agent can respond empathically to its conversation partners (DLR, 2020). Another example of AI at work is the chatbot CARL (Cognitive Advisor for Interactive User Relationship and Continuous Learning), which has been used in the human resources department of Siemens AG. CARL provides information on a wide range of human resources topics and thus serves as a direct point of contact for all employees. The human resources Shared Service Experts themselves also use CARL as a source of information in their work. CARL understands, advises, and guides, and is used extensively within the company. It has been positively received by employees as well as by the human resources experts, and it eases their work much as a colleague would (IBM and ver.di, 2020). Artificial agents are also used in the medical sector, for example, when nurses and robots collaborate efficiently in the Emergency Department during high-workload situations such as resuscitation or surgery.

The question that emerges is how to design such a novel form of teamwork effectively, so that it fully meets the needs of humans in a successful teamwork process (Seeber et al., 2020; Rieth and Hagemann, 2022). This perspective therefore highlights the role of AI as a team member interacting with humans, rather than as merely a highly developed tool. Accordingly, it aims at a comprehensive and interdisciplinary exploration of the key factors that contribute to successful collaboration between humans and AI. We (1) illustrate the requirements for successful teamwork in interdependent and complex work domains based on the model of an idealized teamwork process, (2) identify the implications of these requirements for successful human-AI teaming, and (3) outline the requirements for AI to be team-centered from a technical perspective. Our goal is to draw attention to the teamwork-related requirements for effective human-AI teaming, including in hybrid multi-team systems, and at the same time to raise awareness of what this means for the design of technology.

As described above, the question arises as to which aspects need to be considered in human-centered AI for teamwork, with respect to both the human crew and the “team” of artificial agents, to achieve effective and safe team performance. Consider a major disaster on Earth. Here, there will not be just one team with one or two humans and one agent, but several teams of people, e.g., police, firefighters, and rescue services, and several agents, e.g., assistance systems in the control center, robots in buildings, and drones in the air, all of whom must communicate and collaborate successfully.

Thus, HATs also exist in a larger context and work interdependently with other teams. Such human-AI multi-team systems are called hybrid multi-team systems (HMTS) and refer to “multiple teams consisting of n-number of humans and n-number of semi-autonomous agents [i.e., AI] having interdependence relationships with each other” (Schraagen et al., 2022, p. 202). They consist of sub-teams, with each individual and team striving to achieve hierarchically structured goals. Lower-level goals require coordination processes within a single team, whereas higher-level goals require coordination with other teams. Their interaction is shaped by the varying degrees of task interdependence between the sub-teams (Zaccaro et al., 2012). HMTS highlight the complexity of the overall teamwork situation, as sub-teams may consist of humans only, agents only, or both. Therefore, teamwork-relevant constructs such as communication (Salas et al., 2005), building and maintaining effective situation awareness (Endsley, 1999) and shared mental models (Mathieu et al., 2000), and decision making (Waller et al., 2004) will be highly relevant not only in the human crew, but also in the AI teams (Schwartz et al., 2016b) and in the human-AI teams (cf. e.g., Carter-Browne et al., 2021; Stowers et al., 2021; National Academies of Sciences, Engineering, and Medicine, 2022; O'Neill et al., 2022; Rieth and Hagemann, 2022).

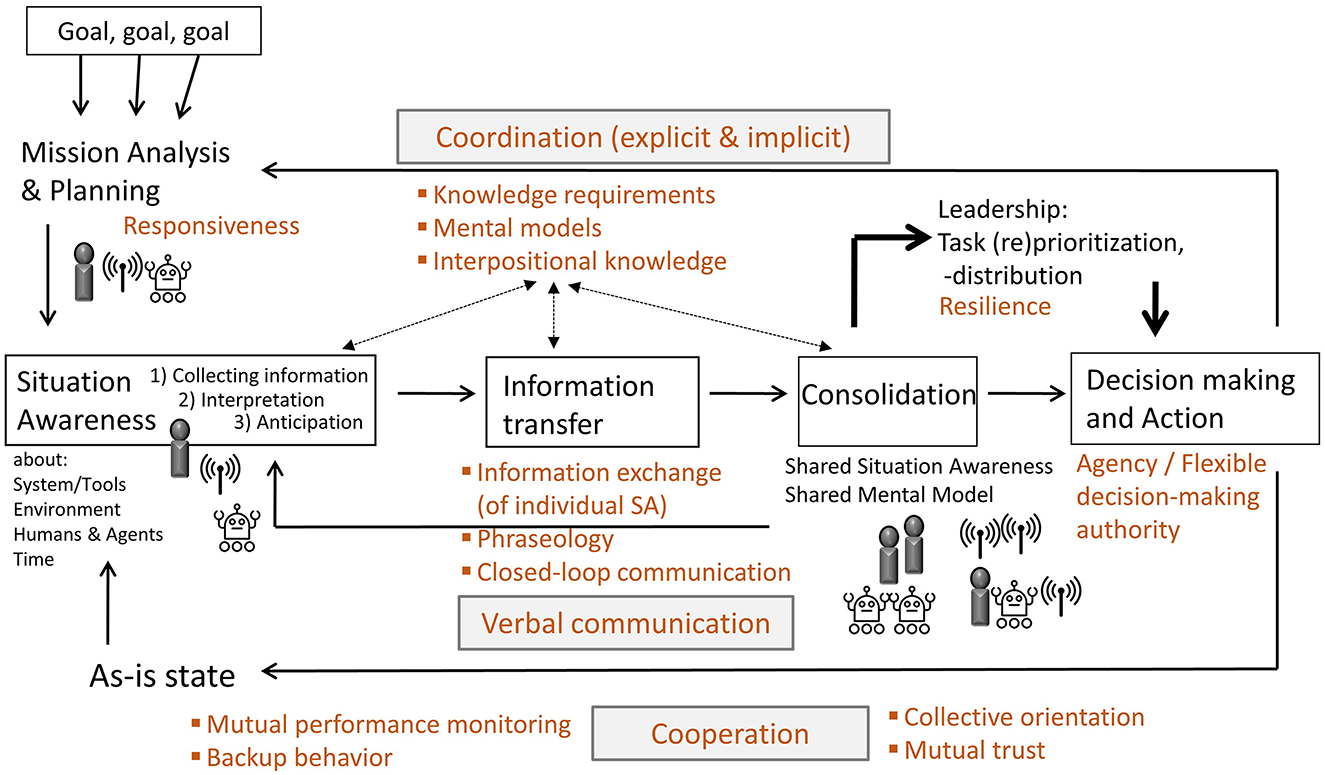

To date, research has focused on individual facets of successful HAT, largely neglecting the Input-Process-Output (IPO) framework (Hackman, 1987) of teamwork research (cf. O'Neill et al., 2023), which acknowledges the pivotal role of group processes (e.g., shared mental models or communication) in converting inputs (e.g., autonomy or task) into desired outcomes (e.g., team performance or work satisfaction). The focus is often on single aspects such as trust (Lyons et al., 2022), agent autonomy (Ulfert et al., 2022), shared mental models (Andrews et al., 2023), or speech (Bogg et al., 2021). Examining individual facets is important for understanding human-AI teaming, yet we would like to point out that successful teamwork does not consist of individual components per se, but of the big picture, i.e., the interaction of inputs, processes, and outcomes. We would therefore like to think of team-centered AI holistically and discuss relevant aspects of successful human-AI teaming from a psychological and a technical perspective, using the model of the idealized teamwork process (Hagemann and Kluge, 2017; see the black elements in Figure 1).

Figure 1. Model of an idealized teamwork process of hybrid multi-team systems. Adapted from Hagemann and Kluge (2017), licensed under CC BY 4.0.

The cognitive requirements for effective teamwork and the team process demands are consolidated in the model of an idealized teamwork process (Kluge et al., 2014; Hagemann and Kluge, 2017). Figure 1 shows an adapted version of this model. The black elements are from the original model by Hagemann and Kluge (2017). The brown elements are additions which, based on literature analyses, are essential for team-centered AI that addresses human needs and thus for successful human-AI teaming. These elements are discussed in more detail in the remainder of this article. Following the IPO model, our proposed model does not focus solely on one component, such as the input, but holistically on all three. Central elements of the model are situation awareness, information transfer, consolidation of individual mental models, leadership, and decision making (for a detailed explanation, see Hagemann and Kluge, 2017). Human-AI teams are responsible for reaching specific goals (see top left of the model), for example, searching for, transporting, and caring for injured persons during a large-scale emergency, or extinguishing fires. Based on the overall goals, various subgoals exist for the all-human teams, the agent teams, and the human-AI teams; these are identified at the beginning of the teamwork process and communicated within the HMTS. For routine situations, there will be standard operating procedures known to all humans and agents. Novel or unforeseen situations, for which standard operating procedures do not yet exist, are more challenging. Here, an effective start requires an intensive exchange on mission analysis, goal specification, and strategy formulation, which are important teamwork processes during planning activities (cf. Marks et al., 2001).
Such planning activities are a major challenge, especially in multi-team systems, since between-team coordination is more difficult to achieve than within-team coordination, yet also more important for effective multi-team system teamwork (Schraagen et al., 2022). Thus, the responsiveness of the agents will be important for team-centered AI (see upper left corner of the model), meaning that the agents are able to align their goals and interaction strategies with the shifting goals and intentions of others and with changes in the environment (Lyons et al., 2022).

As depicted in our model, the defined goals are the starting point from which all teams build effective situation awareness, which is important for successful collaboration within teams (Endsley, 1999; Flin et al., 2008). Situation awareness means collecting information from systems, tools, humans, agents, and environments, interpreting this information, and anticipating future states. Continuous assessment of the situation by all humans and agents is important, as they work both independently and interdependently, and each team needs to achieve accurate situation awareness and share it within the HMTS. For example, high-performing teams have been shown to spend more time sharing information and less time deciding on a plan (Uitdewilligen and Waller, 2018). This underscores the importance of sound and comprehensive situation awareness shared between humans and agents (cf. McNeese et al., 2021b), accompanied by a goal-oriented and continuous exchange of information in the HMTS. To develop shared situation awareness, information transfer consists of sending and receiving each member's individual situation awareness. Aligned phraseology between humans and agents (i.e., shared language and terminology) and closed-loop communication (i.e., verifying accurate message understanding through feedback: statement, repetition, reconfirmation; Salas et al., 2005) are essential for effective teamwork. However, the effects of closed-loop communication have not yet been investigated in HATs. It is therefore unclear, for example, whether this form of communication is more likely to be perceived as disruptive in joint work and whether it should be used only in specific situations, such as when performing particularly important or sensitive tasks.
Nevertheless, these communication requirements are important to consider for successful teamwork in a HAT, as performance and perceived teamwork have been shown to be significantly higher with verbal communication in a HAT (Bogg et al., 2021). Therefore, in team-centered AI, agents should communicate with human team members naturally, in spoken language.
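The statement, repetition, and reconfirmation cycle of closed-loop communication can be made concrete with a small Python sketch. It is purely illustrative and rests on our own assumptions: the function names, the retry limit, and the treatment of a normalized string match as "accurate understanding" are hypothetical simplifications, not a protocol taken from Salas et al. (2005) or from any existing HAT system.

```python
from typing import Callable


def normalize(message: str) -> str:
    """Hypothetical comparison rule: ignore case and surplus whitespace."""
    return " ".join(message.lower().split())


def closed_loop_exchange(
    statement: str,
    read_back: Callable[[str], str],
    max_attempts: int = 3,
) -> tuple[bool, list[tuple[str, str, bool]]]:
    """Statement -> repetition -> reconfirmation, repeated until the read-back matches.

    Returns whether the loop was closed and a log of
    (statement, repetition, confirmed) triples, one per attempt.
    """
    log: list[tuple[str, str, bool]] = []
    for _ in range(max_attempts):
        repetition = read_back(statement)  # receiver repeats what it understood
        confirmed = normalize(repetition) == normalize(statement)  # sender checks it
        log.append((statement, repetition, confirmed))
        if confirmed:  # reconfirmation closes the loop
            return True, log
    return False, log  # loop not closed: the sender must escalate or rephrase


if __name__ == "__main__":
    # Hypothetical receiver that mishears the first transmission.
    heard = iter(["Close valve D", "close  valve B"])
    ok, log = closed_loop_exchange("Close valve B", lambda _: next(heard))
    print(ok, len(log))  # True 2
```

A deployed agent would need a semantic rather than a literal comparison, and whether such read-backs help or disrupt joint work in HATs remains the open question noted in the text.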

The expectations of all humans and agents, based on their mental models and interpositional knowledge, influence the situation awareness, information transfer, and consolidation phases. Mental models are cognitive representations of, for example, system states, tasks, and processes, and help humans and agents to describe, explain, and predict situations (Mathieu et al., 2000). Interpositional knowledge refers to knowledge of the tasks and needs of all team members, which allows one to understand how one's actions affect the actions of other team members and vice versa. It lays a foundation for understanding the information needs of others and the assistance they require (Smith-Jentsch et al., 2001). Interpositional knowledge and mental models are important prerequisites for effective coordination in HMTS, i.e., actions that are orchestrated appropriately in time and space (Andrews et al., 2023). Thus, for team-centered AI, it is highly relevant that the agents hold a comprehensive and up-to-date mental model of the tasks and needs of the other human and artificial team members.

Based on effective information transfer, a common understanding of the tasks, tools, procedures, and competencies of all team members develops in the consolidation phase in the form of shared mental models. These shared knowledge structures help teams adapt quickly to changes during high-workload situations (Waller et al., 2004) and increase their performance (Mathieu et al., 2000). The advantage of shared mental models is that a HMTS can shift from time-consuming explicit coordination to implicit coordination in such situations (cf. Schneider et al., 2021). For example, observable behaviors or explicit statements may prompt appropriate behavior from the agent: a robot observes that the human has reached a certain point in an experiment and already prepares the materials the human will need in the next step. Accordingly, team-centered AI must be able to coordinate with the humans in the team not only explicitly but also implicitly. In addition, the agents in a HMTS must be able to detect when collaboration between humans and agents, or between different agents, breaks down, and intervene so that the team can return to explicit coordination.
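The robot example of implicit coordination can be sketched as follows, assuming (hypothetically) that the shared mental model is reduced to an ordered list of experiment steps and the materials each step needs. The procedure, step names, and function name are invented for illustration. When an observation does not fit that model, the sketch signals a breakdown so that the agent can fall back to explicit coordination.

```python
from typing import Optional

# Hypothetical experiment procedure: ordered steps with the materials each step needs.
PROCEDURE: list[tuple[str, list[str]]] = [
    ("prepare sample", ["pipette", "sample tube"]),
    ("centrifuge", ["centrifuge rotor"]),
    ("analyze", ["microscope slide", "stain"]),
]


def materials_for_next_step(
    observed_step: str,
    procedure: list[tuple[str, list[str]]] = PROCEDURE,
) -> Optional[list[str]]:
    """Implicit coordination: infer from the observed step what the human needs next.

    Returns the materials to prepare, an empty list if the observed step is the
    last one, or None if the observation does not match the shared model, in which
    case the agent should switch back to explicit coordination (e.g., ask the human).
    """
    step_names = [name for name, _ in procedure]
    if observed_step not in step_names:
        return None  # breakdown: observation not covered by the shared model
    index = step_names.index(observed_step)
    if index + 1 == len(procedure):
        return []  # final step reached, nothing left to prepare
    return list(procedure[index + 1][1])  # copy, so callers cannot alter the model
```

The `None` branch is the design choice that matters here: rather than guessing, the agent treats an unexplained observation as a cue to coordinate explicitly again.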

Following the consolidation phase, the HMTS, or its leading humans and agents, must make decisions in order to take action. It is therefore important that the artificial agents have agency, i.e., that they have control over their actions and the decision authority to execute them (Lyons et al., 2022). For effective collaboration between humans and agents, the HMTS needs flexible decision-making authority, that is, authority that shifts dynamically among the humans and agents in response to complex and changing situations (Calhoun, 2022; Schraagen et al., 2022). Requirements in this phase include prioritizing and distributing tasks, as well as re-prioritizing and redistributing them according to changes in the situation or plan (Waller et al., 2004). The resilience of the system is thus also important for team-centered AI, so that the agents can adapt to changing processes and tasks (Lyons et al., 2022). In this phase, it is essential that the agents can interpret the statements of all other members and continue to reason about the situation together with the humans. Only in this way can a HMTS be as successful as high-performing all-human teams. This is because, in the decision-making phase, high-performing teams use more interpretation-interpretation sharing sequences than low-performing teams: an initial statement by one human or agent is followed by an interpretative response from another member, which leads to a subsequent statement by the first member that builds on and extends the reasoning, thereby creating collective sensemaking (Uitdewilligen and Waller, 2018).
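Task prioritization and redistribution after a change in the situation can be illustrated with a simple greedy allocator. This is a minimal sketch under our own assumptions, not a method proposed by Waller et al. (2004): each human or agent takes at most one task, each task requires a single skill, and the team members, skills, and priorities are hypothetical. Re-prioritization here simply means running the allocation again with updated inputs.

```python
import heapq


def distribute_tasks(
    tasks: dict[str, tuple[int, str]],
    members: dict[str, set[str]],
) -> dict[str, str]:
    """Greedy allocation: highest-priority task first, to the first free capable member.

    tasks maps task name -> (priority, required skill); members maps member
    name (human or agent) -> set of skills. Each member takes at most one task;
    tasks that no free member can take stay unassigned.
    """
    queue = [(-priority, name, skill) for name, (priority, skill) in tasks.items()]
    heapq.heapify(queue)  # min-heap on negated priority = highest priority first
    free = dict(members)  # shallow copy, so the caller's team is not modified
    assignment: dict[str, str] = {}
    while queue and free:
        _, task, skill = heapq.heappop(queue)
        for member in sorted(free):  # deterministic tie-breaking by name
            if skill in free[member]:
                assignment[task] = member
                del free[member]
                break
    return assignment


if __name__ == "__main__":
    team = {"drone": {"mobility"}, "medic": {"medical", "mobility"}, "robot": {"mobility"}}
    tasks = {"triage": (3, "medical"), "search building": (2, "mobility"), "map area": (1, "mobility")}
    print(distribute_tasks(tasks, team))
    # Situation changes: mapping becomes urgent and the robot drops out.
    tasks["map area"] = (5, "mobility")
    del team["robot"]
    print(distribute_tasks(tasks, team))
```

In the second call, the now-urgent mapping task is served first and the lowest-priority task is left unassigned, which is the kind of re-prioritization the text describes; a real HMTS would also weigh workload, authority, and human preferences.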

The results of decision making and action flow back into individual situation awareness, and the original goals are compared with the actual state achieved. This model of a continuous, idealized teamwork process includes diverse feedback loops that enable a HMTS to adapt to changing environments and goals. For the described processes to be completed successfully, cooperation is required within the HMTS. This includes, for example, mutual performance monitoring, in which humans and agents keep track of each other while performing their own tasks in order to detect and prevent possible mistakes at an early stage (Paoletti et al., 2021). Cooperation also requires backup behavior, i.e., discretionary help from other human or artificial team members, as well as a distinct collective orientation of all members (Salas et al., 2005; Hagemann et al., 2021; Paoletti et al., 2021). For team-centered AI, the agents must be able to provide this backup behavior to the other team members. A successful pass through the teamwork process model also depends on the trust of each team member (Hagemann and Kluge, 2017; McNeese et al., 2021a). Reliable performance, i.e., as few errors as possible, ideally none, is important for human trust in agents (Hoff and Bashir, 2015; Lyons et al., 2022). Nevertheless, the agent should not only be highly reliable; for team-centered AI, it should also be able to turn to all members of the HMTS in new situations and request an exchange when it cannot proceed on its own.

Increased autonomy enables agents to make decisions independently in different situations, i.e., to develop situation awareness, even where human intervention is possible only to a limited extent. For agents to be part of a HMTS, they must achieve a level of cognitive competence that allows them to grasp the intentions of their teammates (Demiris, 2007; Trick et al., 2019). This requirement is much easier stated than met, as it requires agents to have mental models comparable to those that humans rely on, especially when they exchange information. However, such models cannot simply be preprogrammed and implanted into agents. One reason is that the process by which mental models are formed in humans is still under investigation (Westbrook, 2006; Tabrez et al., 2020). Even though this cognitive competence is required for team-centricity, the process is difficult to reproduce artificially. Moreover, there is usually not just a single isolated model (or brain process) that generates human behavior, but rather an ensemble of models that are active at any given time and influence the observable outcome. Whereas humans function on the basis of cognitive decision-making, intuition, and the like, machines are digital and act on the basis of experience, their understanding of the current situation, and prediction models. These models must improve over time, starting from a limited set of prerecorded data, to yield more accurate and robust systems. For future developments in HATs, it would thus be important to design models with higher predictive power, which we define as how well a model can predict the outcome of its decisions based on the situation, experience, and team behavior (see also Raileanu et al., 2018).
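One possible, hypothetical way to operationalize this notion of predictive power is a rolling hit rate: the share of recent decisions whose predicted outcome matched the observed one. The class name, the window size, and the exact-match criterion are our assumptions; outcomes that are graded rather than categorical would call for an error measure instead.

```python
from collections import deque


class PredictivePowerTracker:
    """Rolling share of decisions whose predicted outcome matched the observed outcome.

    Hypothetical operationalization: only the most recent `window` decisions are
    kept, so the score shows whether the agent's model improves with experience.
    """

    def __init__(self, window: int = 50) -> None:
        self._hits: deque[bool] = deque(maxlen=window)  # oldest entries drop out

    def record(self, predicted: object, observed: object) -> None:
        """Store whether the prediction for one decision came true."""
        self._hits.append(predicted == observed)

    @property
    def score(self) -> float:
        """Hit rate over the current window (0.0 before any decision is recorded)."""
        return sum(self._hits) / len(self._hits) if self._hits else 0.0
```

For example, with `window=4`, recording three correct and one incorrect prediction yields a score of 0.75; one further miss pushes the oldest hit out of the window and lowers the score to 0.5.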

Moreover, agents must be competent and empowered to make decisions when needed, without having to wait for instructions from humans, especially in extreme environments where humans must adapt to particular conditions (Hambuchen et al., 2021) and resources are scarce. System resilience is also of high importance, as the consequences of failure on either side (human or agent) could be catastrophic. Whenever human lives are potentially at risk, HMTS can prove more effective than homogeneous human or AI teams. During search and rescue operations on Earth (Govindarajan et al., 2016), responsiveness, coordination, and effective communication are crucial requirements for HMTS. Through teleoperation and on-site collaboration, HATs can thus help mitigate the impact of a disaster. HATs are also found in modern medical applications that demand cooperation and high precision. For example, nurses and robots in the Emergency Department can efficiently handle high workloads and safety-critical procedures such as surgery and resuscitation using a new reinforcement learning system design (Taylor, 2021). Reinforcement learning is a class of machine learning algorithms in which the agent receives either a reward or a penalty depending on how favorable the outcome of a particular action is, and learns over repeated trials to prefer actions that maximize its cumulative reward.
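The reward-and-penalty principle can be illustrated in its simplest form: a single-state task (a multi-armed bandit) learned with epsilon-greedy exploration. This sketch is not the system design of Taylor (2021); the function name, the action names, the reward rule, and all parameter values are hypothetical.

```python
import random
from typing import Callable


def learn_action_values(
    reward: Callable[[str], float],
    actions: list[str],
    episodes: int = 500,
    epsilon: float = 0.1,
    alpha: float = 0.1,
    seed: int = 0,
) -> dict[str, float]:
    """Epsilon-greedy value learning for a single-state task (a multi-armed bandit).

    Each action's value estimate moves toward the rewards (positive) or
    penalties (negative) it produces, so favorable actions come to dominate.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    values = {action: 0.0 for action in actions}
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(actions)  # explore: try a random action
        else:
            action = max(actions, key=values.__getitem__)  # exploit best estimate
        outcome = reward(action)
        values[action] += alpha * (outcome - values[action])  # incremental update
    return values
```

For instance, with the made-up rule `lambda a: 1.0 if a == "hand over" else -1.0` and the actions `["wait", "hand over"]`, the learned value of handing over ends up higher than that of waiting, so the greedy choice becomes handing over. Multi-step settings such as those in the Emergency Department would require state-dependent methods (e.g., Q-learning) rather than this single-state sketch.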

In future HATs, we will thus have to work with agents that can learn to adjust to human behavior and shape their models of the environment and of other team members over time. This learning approach should enable agents to exchange substantial information using very few bits; in other words, content and meaning, rather than bits and bytes, will be exchanged between humans and agents. This process, known as semantic communication, is currently being investigated by different groups with approaches ranging from information theory through symbolic reasoning to what is called integrative artificial intelligence (Kirchner, 2020). Beck et al. (2023) approach this problem by modeling semantic information as hidden random variables to achieve reliable communication under limited resources. This is a valuable step toward coping with communication losses and latencies in applications such as space missions and exploration of remote areas. In a HAT setup, some decisions regarding the nature of the team must be made, either a priori or dynamically. As in purely human systems, assigning specific roles and defining hierarchies among agents within a team and between teams can enhance the overall mission strategy. Role-based task allocation is especially useful when the team consists of heterogeneous (Dettmann et al., 2022) and/or reconfigurable (Roehr et al., 2014) agents. In HMTS, having every member try to communicate with every other member is highly impractical, resource-intensive, and chaotic. This issue is further complicated when all members are authorized to act as they see fit. Implementing an organized hierarchical team structure (Vezhnevets et al., 2017) is therefore imperative for team-centered AI to collaborate successfully with humans.
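Why all-to-all communication becomes impractical can be quantified with a short calculation. Assuming, hypothetically, a two-level structure in which members talk only to their sub-team leader and only the leaders coordinate with one another, the number of communication channels grows far more slowly than the n(n-1)/2 pairwise links of a fully connected team. This illustrates the argument only; it is not the hierarchical method of Vezhnevets et al. (2017), and the function names are our own.

```python
def all_to_all_links(n: int) -> int:
    """Pairwise channels if every member talks to every other member: n(n-1)/2."""
    return n * (n - 1) // 2


def leader_based_links(team_sizes: list[int]) -> int:
    """Channels in a hypothetical two-level hierarchy.

    Within each sub-team, members talk only to their leader (size - 1 links);
    between sub-teams, only the leaders coordinate, all-to-all among themselves.
    """
    within_teams = sum(size - 1 for size in team_sizes)
    between_leaders = all_to_all_links(len(team_sizes))
    return within_teams + between_leaders
```

For a hypothetical HMTS of four sub-teams with five members each, `all_to_all_links(20)` gives 190 channels, whereas `leader_based_links([5, 5, 5, 5])` gives 16 + 6 = 22. The first count grows quadratically with the number of members; the second grows linearly with members and quadratically only with the much smaller number of sub-teams.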

To achieve seamless interaction between humans and agents, the latter must display behavioral traits that are acceptable to humans. An agent is truly team-centered when it can intelligently adapt to the situation and the team's requirements in a team-oriented manner (Salas et al., 2005) rather than a dominant or submissive one. Agents need predictive capabilities regarding their teammates and the environment in order to account for variation, as in Raileanu et al. (2018). In the autonomous vehicle domain, for instance, it is crucial that the vehicle can accurately predict the behavior of pedestrians and other agents to enable seamless navigation (Rhinehart et al., 2019). According to Teahan (2010), behavior is defined by how an agent acts while interacting with its environment. Interaction entails communication, which can be verbal or non-verbal. It will be interesting to investigate large language models such as ChatGPT more deeply and to find out whether these approaches can be extended to general interactive behavior (Park et al., 2023) beyond text and images. Apart from language, verbal communication is also characterized by the acoustics of the voice and the style of speech. Moreover, movement is a fundamental component of the behavior of any team member. Depending on their design, agents are already capable of performing and recognizing gestures (Wang et al., 2019; Xia et al., 2019) and of recognizing emotions (Arriaga et al., 2017). Motion analyses have shown that the intention behind an action is intrinsically embedded in the style of movement, for instance, in the dynamics of the arm (Niewiadomski et al., 2021; Gutzeit and Kirchner, 2022). In all these scenarios, one of the biggest challenges faced by HMTS is the trustworthiness of the team. Cooperation requires building trust-based relationships between team members.
Bazela and Graczak (2023) evaluated, among other factors, “the team's willingness to consider it [the Kalman autonomous rover, an astronaut assistant] a partially conscious team member” (p. 369). The converse also holds: agents must maintain a high level of trust in their human teammates, i.e., be able to trust humans, as this trust significantly influences the decision-making process (Chen et al., 2018). For instance, the agent's trust can be improved through reliable communication when the human switches strategies. From a technical perspective, humans are the chaos factor in the HAT equation, and even though this can be modeled to a certain extent on the agent side, effective collaboration largely depends on the predictability of human actions. A summary of all the requirements mentioned for team-centered, successful human-AI teaming that addresses human needs, with definitions and example references, can be found in Supplementary Table 1.

The aim of this contribution is to discuss the central teamwork facets for successful HATs in an interdisciplinary way. Starting from a psychological perspective addressing human needs, the importance of team-centered AI becomes apparent. However, its technical feasibility is challenging. From the standpoint of technical cognition, it is an open problem whether AI systems can ever be regarded and/or accepted as actual team members, as this poses a very fundamental question of AI: the challenge of replicating in technical systems the kind of intelligence we know only from humans. This long-standing question was addressed by Turing (1948) in his famous paper on “intelligent machinery” in the middle of the last century. As a mathematician, he concluded that it is not possible to build such systems ad hoc. One loophole that he identified in this paper is to create highly articulated robotic systems that learn, in an open-ended process, from interaction with a real-world environment. He assumed that somewhere along this open-ended process, some of the features we associate with intelligence may emerge, so that the resulting system will eventually be able to simulate intelligence well enough to be regarded as intelligent by humans. Whether this is actually feasible has never been tested, but it could be a worthwhile experiment to perform with 21st-century technologies. For the time being, however, teamwork attributes such as responsiveness, situation awareness, closed-loop communication, mental models, and decision making remain buzzwords in this context: they are technical features that we will be able to implement only to a limited extent in technical systems, enabling these systems to act as valuable tools for humans in well-defined environments and contexts. Whether this will qualify the agents as team members is so far unknown (cf. also Rieth and Hagemann, 2022).
This would in fact require a much deeper understanding of the processes that enable cognition in humans than we have today, and even with that understanding, it would remain an open question whether an understanding of mental processes is also a blueprint or design approach for achieving the same in technical systems. Overall, this manuscript provides insights into the team-centered requirements for effective collaboration in HATs and underscores the importance of considering teamwork-related factors in the design of technology. Our proposed guidelines can be used to design and evaluate future concrete interactive systems. Experimental testing of the individual facets discussed for a truly team-centered and successful HAT that considers the needs of its humans will raise many highly specific further research questions, whose scientific treatment will be of great importance for implementing future HATs. Further research in this area is thus needed to address the challenges and unanswered questions associated with HMTS. Solving them will open doors to applying hybrid systems, i.e., human-AI multi-team systems, in diverse setups, thus leveraging the advantages of both human and agent members.

VH had the idea for this perspective article and took the lead in writing it. MR, AS, and FK contributed to the discussions and writing of this paper. All authors contributed to the writing and review of the manuscript and approved the version submitted.

FK was employed by DFKI GmbH.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frai.2023.1252897/full#supplementary-material

Andrews, R. W., Lilly, J. M., Srivastava, D., and Feigh, K. M. (2023). The role of shared mental models in human-AI teams: a theoretical review. Theor. Iss. Ergon. Sci. 24, 129–175. doi: 10.1080/1463922X.2022.2061080

Arriaga, O., Valdenegro-Toro, M., and Plöger, P. (2017). Real-time convolutional neural networks for emotion and gender classification. arXiv preprint arXiv:1710.07557. doi: 10.48550/arXiv.1710.07557

Bazela, N., and Graczak, P. (2023). “HRI in the AGH space systems planetary rover team: A study of long-term human-robot cooperation,” in Social Robots in Social Institutions: Proceedings of Robophilosophy, eds R. Hakli, P. Mäkelä, and J. Seibt (Amsterdam: IOS Press), 361–370.

Beck, E., Bockelmann, C., and Dekorsy, A. (2023). Semantic information recovery in wireless networks. arXiv preprint arXiv:2204.13366. doi: 10.3390/s23146347

Bogg, A., Birrell, S., Bromfield, M. A., and Parkes, A. M. (2021). Can we talk? How a talking agent can improve human autonomy team performance. Theor. Iss. Ergon. Sci. 22, 488–509. doi: 10.1080/1463922X.2020.1827080

Calhoun, G. L. (2022). Adaptable (not adaptive) automation: forefront of human-automation teaming. Hum. Fact. 64, 269–277. doi: 10.1177/00187208211037457

Carter-Browne, B. M., Paletz, S. B., Campbell, S. G., Carraway, M. J., Vahlkamp, S. H., Schwartz, J., et al. (2021). There is No “AI” in Teams: A Multidisciplinary Framework for AIs to Work in Human Teams. College Park, MD: Applied Research Laboratory for Intelligence and Security (ARLIS).

Chen, M., Nikolaidis, S., Soh, H., Hsu, D., and Srinivasa, S. (2018). Planning with trust for human-robot collaboration. Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction (Chicago, IL), 307–315. doi: 10.1145/3171221.3171264

Cordes, F., Kirchner, F., and Bremen, R. I. C. (2010). Heterogeneous robotic teams for exploration of steep crater environments. International Conference on Robotics and Automation (ICRA), Anchorage, Alaska.

Demiris, Y. (2007). Prediction of intent in robotics and multi-agent systems. Cogn. Process. 8, 151–158. doi: 10.1007/s10339-007-0168-9

Dettmann, A., Voegele, T., Ocón, J., Dragomir, I., Govindaraj, S., De Benedetti, M., et al. (2022). COROB-X: A Cooperative Robot Team for the Exploration of Lunar Skylights. Available online at: https://hdl.handle.net/10630/24387 (accessed September 11, 2023).

DLR (2020). Auch CIMON-2 meistert seinen Einstand auf der ISS [CIMON-2 also masters its debut on the ISS]. Available online at: https://www.dlr.de/de/aktuelles/nachrichten/2020/02/20200415_auch-cimon-2-meistert-seinen-einstand-auf-der-iss (accessed September 11, 2023).

Endsley, M. R. (1999). “Situation awareness in aviation systems,” in Handbook of Aviation Human Factors, eds D. J. Garland, J. A. Wise, and V. D. Hopkin (Mahwah, NJ: Lawrence Erlbaum Associates Publishers), 257–276.

Govindarajan, V., Bhattacharya, S., and Kumar, V. (2016). “Human-robot collaborative topological exploration for search and rescue applications,” in Distributed Autonomous Robotic Systems, eds N. Y. Chong and Y.-J. Cho (Springer), 17–32. doi: 10.1007/978-4-431-55879-8_2

Gutzeit, L., and Kirchner, F. (2022). Unsupervised segmentation of human manipulation movements into building blocks. IEEE Access 10, 125723–125734. doi: 10.1109/ACCESS.2022.3225914

Hackman, J. R. (1987). “The design of work teams,” in Handbook of Organizational Behavior, ed J. W. Lorsch (Englewood Cliffs, NJ: Prentice-Hall), 315–342.

Hagemann, V., and Kluge, A. (2017). Complex problem solving in teams: the influence of collective orientation on team process demands. Front. Psychol. 8, 1730. doi: 10.3389/fpsyg.2017.01730

Hagemann, V., Ontrup, G., and Kluge, A. (2021). Collective orientation and its implications for coordination and team performance in interdependent work contexts. Team Perform. Manag. 27, 30–65. doi: 10.1108/TPM-03-2020-0020

Hambuchen, K., Marquez, J., and Fong, T. (2021). A review of NASA human-robot interaction in space. Curr. Robot. Rep. 2, 265–272. doi: 10.1007/s43154-021-00062-5

Hoff, K. A., and Bashir, M. (2015). Trust in automation: integrating empirical evidence on factors that influence trust. Hum. Fact. 57, 407–434. doi: 10.1177/0018720814547570

IBM and ver.di (2020). Künstliche Intelligenz: Ein sozialpartnerschaftliches Forschungsprojekt untersucht die neue Arbeitswelt [Artificial intelligence: a social-partnership research project examines the new world of work]. Available online at: https://www.verdi.de/++file++5fc901bc4ea3118def3edd33/download/20201203_KI-Forschungsprojekt-verdi-IBM-final.pdf (accessed September 11, 2023).

Kabir, A. M., Kanyuck, A., Malhan, R. K., Shembekar, A. V., Thakar, S., Shah, B. C., et al. (2019). Generation of synchronized configuration space trajectories of multi-robot systems. International Conference on Robotics and Automation (ICRA) (Montreal: IEEE), 8683–8690. doi: 10.1109/ICRA.2019.8794275

Kirchner, F. (2020). AI-perspectives: the turing option. AI Perspect. 2, 2. doi: 10.1186/s42467-020-00006-3

Kluge, A., Hagemann, V., and Ritzmann, S. (2014). “Military crew resource management – Das Streben nach der bestmöglichen Teamarbeit [Striving for the best of teamwork],” in Psychologie für Einsatz und Notfall [Psychology for mission and emergency], eds G. Kreim, S. Bruns, and B. Völker (Bernard and Graefe in der Mönch Verlagsgesellschaft mbH), 141–152.

Lyons, J. B., Jessup, S. A., and Voc, T. Q. (2022). The role of decision authority and stated social intent as predictors of trust in autonomous robots. Top. Cogn. Sci. 4, 1–20. doi: 10.1111/tops.12601

Marks, M. A., Mathieu, J. E., and Zaccaro, S. J. (2001). A temporally based framework and taxonomy of team processes. Acad. Manag. Rev. 26, 356–376. doi: 10.2307/259182

Mathieu, J. E., Heffner, T. S., Goodwin, G. F., Salas, E., and Cannon-Bowers, J. A. (2000). The influence of shared mental models on team process and performance. J. Appl. Psychol. 85, 273–283. doi: 10.1037/0021-9010.85.2.273

McNeese, N. J., Demir, M., Chiou, E. K., and Cooke, N. J. (2021a). Trust and team performance in human–autonomy teaming. Int. J. Elect. Comm. 25, 51–72. doi: 10.1080/10864415.2021.1846854

McNeese, N. J., Demir, M., Cooke, N. J., and She, M. (2021b). Team situation awareness and conflict: a study of human–machine teaming. J. Cogn. Engin. Dec. Mak. 15, 83–96. doi: 10.1177/15553434211017354

NASA (2017). NASA's Journey to Mars. Available online at: https://www.nasa.gov/content/nasas-journey-to-mars (accessed September 11, 2023).

National Academies of Sciences, Engineering, and Medicine (2022). Human-AI Teaming: State-of-the-Art and Research Needs. Washington, DC: The National Academies Press.

Niewiadomski, R., Suresh, A., Sciutti, A., and Di Cesare, G. (2021). Vitality forms analysis and automatic recognition. TechRxiv preprint. doi: 10.36227/techrxiv.16691476.v1

O'Neill, T. A., Flathmann, C., McNeese, N. J., and Salas, E. (2023). Human-autonomy teaming: need for a guiding team-based framework? Comput. Human Behav. 146, 107762. doi: 10.1016/j.chb.2023.107762

O'Neill, T. A., McNeese, N., Barron, A., and Schelble, B. (2022). Human–autonomy teaming: a review and analysis of the empirical literature. Hum. Fact. 64, 904–938. doi: 10.1177/0018720820960865

Paoletti, J., Kilcullen, M., and Salas, E. (2021). “Teamwork in space exploration,” in Psychology and Human Performance in Space Programs, eds L. B. Landon, K. Slack, and E. Salas (CRC Press), 195–216. doi: 10.1201/9780429440878-10

Park, J. S., O'Brien, J. C., Cai, C. J., Morris, M. R., Liang, P., Bernstein, M. S., et al. (2023). Generative agents: interactive simulacra of human behavior. arXiv preprint arXiv:2304.03442. doi: 10.48550/arXiv.2304.03442

Raileanu, R., Denton, E., Szlam, A., and Fergus, R. (2018). Modeling others using oneself in multi-agent reinforcement learning. International Conference on Machine Learning (Stockholm: PMLR), 4257–4266.

Rhinehart, N., McAllister, R., Kitani, K., and Levine, S. (2019). “Precog: prediction conditioned on goals in visual multi-agent settings,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2821–2830. doi: 10.1109/ICCV.2019.00291

Rieth, M., and Hagemann, V. (2022). Automation as an equal team player for humans? A view into the field and implications for research and practice. Appl. Ergon. 98, 103552. doi: 10.1016/j.apergo.2021.103552

Roehr, T. M., Cordes, F., and Kirchner, F. (2014). Reconfigurable integrated multirobot exploration system (RIMRES): heterogeneous modular reconfigurable robots for space exploration. J. Field Robot. 31, 3–34. doi: 10.1002/rob.21477

Salas, E., Sims, D., and Burke, S. (2005). Is there a “Big Five” in Teamwork? Small Group Res. 36, 555–599. doi: 10.1177/1046496405277134

Salas, E., Tannenbaum, S. I., Kozlowski, S. W. J., Miller, C. A., Mathieu, J. E., Vessey, W. B., et al. (2015). Teams in space exploration: a new frontier for the science of team effectiveness. Curr. Dir. Psychol. Sci. 24, 200–207. doi: 10.1177/0963721414566448

Schecter, A., Hohenstein, J., Larson, L., Harris, A., Hou, T.-Y., Lee, W.-Y., et al. (2022). Vero: an accessible method for studying human-AI teamwork. Comput. Human Behav. 141, 107606. doi: 10.1016/j.chb.2022.107606

Schneider, M., Miller, M., Jacques, D., Peterson, G., and Ford, T. (2021). Exploring the impact of coordination in human–agent teams. J. Cogn. Engin. Dec. Mak. 15, 97–115. doi: 10.1177/15553434211010573

Schraagen, J. M., Barnhoorn, J. S., van Schendel, J., and van Vught, W. (2022). Supporting teamwork in hybrid multi-team systems. Theor. Iss. Ergon. Sci. 23, 199–220. doi: 10.1080/1463922X.2021.1936277

Schwartz, T., Feld, M., Bürckert, C., Dimitrov, S., Folz, J., Hutter, D., et al. (2016a). Hybrid Teams of humans, robots and virtual agents in a production setting. Proceedings of the 12th International Conference on Intelligent Environments (IE-16) (London: IEEE). doi: 10.1109/IE.2016.53

Schwartz, T., Zinnikus, I., Krieger, H. U., Bürckert, C., Folz, J., Kiefer, B., et al. (2016b). “Hybrid teams: flexible collaboration between humans, robots and virtual agents,” in Proceedings of the 14th German Conference on Multiagent System Technologies, eds M. Klusch, R. Unland, O. Shehory, A. Pokahr, and S. Ahrndt (Klagenfurt: Springer, Series Lecture Notes in Artificial Intelligence), 131–146.

Seeber, I., Bittner, E., Briggs, R., de Vreede, T., de Vreede, G.-J., Elkins, G. J., et al. (2020). Machines as teammates: a research agenda on AI in team collaboration. Inform. Manag. 57, 1–22. doi: 10.1016/j.im.2019.103174

Smith-Jentsch, K. A., Baker, D. P., Salas, E., and Cannon-Bowers, J. A. (2001). “Uncovering differences in team competency requirements: the case of air traffic control teams,” in Improving Teamwork in Organizations. Applications of Resource Management Training, eds E. Salas, C. A. Bowers, and E. Edens (Mahwah, NJ: Lawrence Erlbaum Associates Publishers), 31–54.

Stowers, K., Brady, L. L., MacLellan, C., Wohleber, R., and Salas, E. (2021). Improving teamwork competencies in human-machine teams: perspectives from team science. Front. Psychol. 12, 590290. doi: 10.3389/fpsyg.2021.590290

Tabrez, A., Luebbers, M. B., and Hayes, B. (2020). A survey of mental modeling techniques in human–robot teaming. Curr. Rob. Rep. 1, 259–267. doi: 10.1007/s43154-020-00019-0

Taylor, A. (2021). Human-Robot Teaming in Safety-Critical Environments: Perception of and Interaction with Groups (Publication No. 28544730) [Doctoral dissertation, University of California]. ProQuest Dissertations and Theses Global.

Trick, S., Koert, D., Peters, J., and Rothkopf, C. A. (2019). Multimodal uncertainty reduction for intention recognition in human-robot interaction. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Macau), 7009–7016. doi: 10.1109/IROS40897.2019.8968171

Uitdewilligen, S., and Waller, M. J. (2018). Information sharing and decision making in multidisciplinary crisis management teams. J. Organ. Behav. 39, 731–748. doi: 10.1002/job.2301

Ulfert, A.-S., Antoni, C. H., and Ellwart, T. (2022). The role of agent autonomy in using decision support systems at work. Comput. Human Behav. 126, 106987. doi: 10.1016/j.chb.2021.106987

Vezhnevets, A. S., Osindero, S., Schaul, T., Heess, N., Jaderberg, M., Silver, D., et al. (2017). Feudal networks for hierarchical reinforcement learning. International Conference on Machine Learning (Sydney), 3540–3549.

Waller, M. J., Gupta, N., and Giambatista, R. C. (2004). Effects of adaptive behaviors and shared mental models on control crew performance. Manage. Sci. 50, 1534–1544. doi: 10.1287/mnsc.1040.0210

Wang, L., Gao, R., Váncza, J., Krüger, J., Wang, X. V., Makris, S., et al. (2019). Symbiotic human-robot collaborative assembly. CIRP Annals 68, 701–726. doi: 10.1016/j.cirp.2019.05.002

Westbrook, L. (2006). Mental models: a theoretical overview and preliminary study. J. Inform. Sci. 32, 563–579. doi: 10.1177/0165551506068134

Xia, Z., Lei, Q., Yang, Y., Zhang, H., He, Y., Wang, W., et al. (2019). Vision-based hand gesture recognition for human-robot collaboration: a survey. 5th International Conference on Control, Automation and Robotics (ICCAR) (Beijing), 198–205. doi: 10.1109/ICCAR.2019.8813509

Keywords: human-agent teaming, hybrid multi-team systems, cooperation, communication, teamwork, integrative artificial intelligence

Citation: Hagemann V, Rieth M, Suresh A and Kirchner F (2023) Human-AI teams—Challenges for a team-centered AI at work. Front. Artif. Intell. 6:1252897. doi: 10.3389/frai.2023.1252897

Received: 04 July 2023; Accepted: 04 September 2023;

Published: 27 September 2023.

Edited by:

Uta Wilkens, Ruhr-University Bochum, Germany

Reviewed by:

Riccardo De Benedictis, National Research Council (CNR), Italy

Copyright © 2023 Hagemann, Rieth, Suresh and Kirchner. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Vera Hagemann, vhagemann@uni-bremen.de

