SYSTEMATIC REVIEW article

Front. Artif. Intell., 29 September 2023

Sec. AI in Business

Volume 6 - 2023 | https://doi.org/10.3389/frai.2023.1250725

This article is part of the Research Topic "Human-Centered AI at Work: Common Ground in Theories and Methods".

Introduction: With the advancement of technology and the increasing utilization of AI, the nature of human work is evolving, requiring individuals to collaborate not only with other humans but also with AI technologies to accomplish complex goals. This requires a shift in perspective from technology-driven questions to a human-centered research and design agenda putting people and evolving teams in the center of attention. A socio-technical approach is needed to view AI as more than just a technological tool, but as a team member, leading to the emergence of human-AI teaming (HAIT). In this new form of work, humans and AI synergistically combine their respective capabilities to accomplish shared goals.

Methods: The aim of our work is to uncover current research streams on HAIT and derive a unified understanding of the construct through a bibliometric network analysis, a scoping review, and the synthesis of a definition from a socio-technical point of view. In addition, antecedents and outcomes examined in the literature are extracted to guide future research in this field.

Results: Through network analysis, five clusters with different research focuses on HAIT were identified. These clusters revolve around (1) human and (2) task-dependent variables, (3) AI explainability, (4) AI-driven robotic systems, and (5) the effects of AI performance on human perception. Despite these diverse research focuses, the current body of literature is predominantly driven by a technology-centric and engineering perspective, with no consistent definition or terminology of HAIT emerging to date.

Discussion: We propose a unifying definition that combines a human-centered and a team-oriented perspective, and we summarize what is still needed in future research on HAIT. This work thus supports the aim of the Frontiers Research Topic to establish a theoretical and conceptual basis for human work with AI systems.

With the rise of technologies based on artificial intelligence (AI) in everyday professional life (McNeese et al., 2021), human work is increasingly affected by the use of AI, with a growing need to cooperate or even team up with it. AI technologies are intelligent systems that execute human cognitive functions such as learning, interacting, solving problems, and making decisions, which enables their use in a similarly flexible manner as human employees (e.g., Huang et al., 2019; Dellermann et al., 2021). Thus, the emerging capabilities of AI technologies allow them to be implemented directly in team processes with other artificial and human agents or to take over functions that support humans in the way team partners would. This form of work can be referred to as human-AI teaming (HAIT; McNeese et al., 2018). HAIT constitutes a human-centered approach to AI implementation at work, as its aspiration is to leverage the respective strengths of each party. The diverse but complementary capabilities of human-AI teams foster effective collaboration and enable the achievement of complex goals while ensuring human wellbeing, motivation, and productivity (Kluge et al., 2021). Other synergies resulting from human-AI teaming facilitate strategic decision making (Aversa et al., 2018), the development of individual capabilities, and thus employee motivation in the long term (Hughes et al., 2019).

Up to now, the concept of HAIT has been investigated from various disciplinary perspectives, e.g., engineering, data sciences or psychology (Wilkens et al., 2021). An integration of these perspectives seems necessary at this point to design complex work systems as human-AI teams with technical, human, task, organizational, process-related, and ethical factors in mind (Kusters et al., 2020). In addition to this, a conceptual approach with a unifying definition is needed to unite research happening under different terms, but with a potentially similar concept behind it. To evolve from multi- to interdisciplinarity, the field of HAIT research needs to overcome several obstacles:

(1) The discipline-specific definitions and understandings of HAIT have to be brought together or separated clearly.

(2) Different terms used for the same concept, e.g., human-autonomy teaming (O'Neill et al., 2022) and human-AI collaboration (Vössing et al., 2022), have to be identified to enable knowledge transfer and integration of empirical and theoretical work.

(3) The perspectives on either the technology or the human should be seen as complementary, not as opposing.

As “construct confusion can [...] create difficulty in building a cohesive body of scientific literature” (O'Neill et al., 2022, p. 905), it is essential that different disciplines find the same language to talk about the challenges of designing, implementing and using AI as a teammate at work. Therefore, the goal of this scoping review is to examine the extent, range, and nature of current research activities on HAIT. Specifically, we want to give an overview of the definitory understandings of HAIT and of the current state of empirically investigated and theoretically discussed antecedents and outcomes within the different disciplines. Based on a bibliometric network analysis, research communities will be mapped and analyzed regarding their similarities and differences in the understanding of HAIT and related research activities. By this, our scoping review reaches synergistic insights and identifies research gaps in examining human-AI teams, promoting the formation of a common understanding.

As technologies progress and AI becomes more widely applied, humans will no longer work together only with other humans but will increasingly need to use, interact with and leverage AI technologies to achieve complex goals. Increasingly “smart” AI technologies entail characteristics that require new forms of work and cooperation between human and technology (Wang et al., 2021), developing from “just” technological tools to teammates of human workers (Seeber et al., 2020). According to the CASA paradigm, people tend to perceive computers as social actors (Nass et al., 1996), which is probably even more true of highly autonomous, AI-driven technologies that are seen as very agentic. This opens opportunities to move the understanding of AI from a helpful technological application to a team member that works interdependently with the employee toward a shared and valued goal (Rix, 2022). Thus, human-AI teams evolve as a new form of work, pairing the human workforce and its abilities with those of AI.

Why is a shift in parameters needed? Our proposed answer is that it offers a new, humane approach to AI implementation at work that respects employees' needs, feeling of belongingness and experience (Kluge et al., 2021). Additionally, employees' acceptance of and positive attitude toward working with an AI can improve when it is seen as a teammate (see, e.g., Walliser et al., 2019). Thus, HAIT provides an opportunity to create attractive and sustainable workplaces by harnessing people's capabilities and enabling learning and mutual support. This in turn leads to synergies (Kluge et al., 2021) and to increased motivation and wellbeing on the part of humans, who can spend more time on identity-forming and creative tasks, while safety-critical and monotonous tasks can be handed over to the technology (Jarrahi, 2018; Kluge et al., 2021; Berretta et al., 2023). In addition to the possibility of creating human-centered workplaces, the expected increase in efficiency and performance due to the complementary capabilities of humans and AI technologies, described as synergies, is a further important reason for the parameter shift (Dubey et al., 2020; Kluge et al., 2021).

However, those advantages connected to the human workforce and the performance do not just come naturally when pairing humans with AI systems. The National Academies of Sciences, Engineering, and Medicine (2021) defines four conditions for a human-AI team to profit from these synergies:

(1) The human part has to be able to understand and anticipate the behaviors of the deployed intelligent agents.

(2) To ensure appropriate use of AI systems, the human should be able to establish an appropriate relationship of trust.

(3) The human part can make accurate decisions when using the output information of the deployed systems and

(4) has the ability to control and handle the systems appropriately.

These conditions demonstrate that successful teaming depends on technical (e.g., design of the AI system) as well as human-related dimensions (e.g., trust in the system) and additionally on interaction and teamwork aspects (e.g., form of collaboration). This makes HAIT an inherently multidisciplinary field that should be explored in the spirit of joint optimization to achieve positive results in all dimensions (Vecchio and Appelbaum, 1995). Nevertheless, joint consideration and optimization are still not common practice in the development of technologies or the design of work systems (Parker et al., 2017), so much research looks at HAIT from only one perspective. The following section introduces two perspectives on teams in work contexts that are relevant for the proposed joint HAIT approach.

The field of human-technology teaming encompasses a number of established concepts, including human-machine interaction (e.g., Navarro et al., 2018) or human-automation interaction (e.g., Parasuraman et al., 2000). These constructs can, but do not have to, include aspects of teaming: they describe a meta-level of people working in some kind of contact with technologies. More specific concepts differ along two aspects: the interaction aspect and the technology aspect. The term “interaction” as a broad concept is increasingly replaced by terms that detail the type of interaction, such as co-existence, cooperation and collaboration (Schmidtler et al., 2015), usually understood as increasingly close and interdependent contact. Maximally interdependent collaboration that includes an additional aspect of social bonding (team or group cohesion, see Casey-Campbell and Martens, 2009) is called teaming. In terms of the technology aspect, categories range from general terms like technology, machines or automation, which can be broad or specific depending on the context (Lee and See, 2004), to more specific categories such as autonomy, referring to adaptive, self-governed learning technologies (Lyons et al., 2021), robots or AI.

A recent and central concept in this research field is human-autonomy teaming, as introduced by O'Neill et al. (2022) in their review. Although using a different term than HAIT, this concept plays a crucial role in consolidating and unifying research on the teaming of humans and autonomous, AI-driven systems. Their defining elements of human-autonomy teaming include:

(1) a machine with high agency,

(2) communicativeness of the autonomy,

(3) conveying information about its intent,

(4) evolving shared mental models,

(5) and interdependence between humans and the machines (O'Neill et al., 2022).

However, there are several critical aspects to consider in this review: The term “human-autonomy teaming” can elicit associations that may not contribute to the construct of HAIT. The definition of autonomy varies between different fields and the term alone can be misleading, as it can be understood as the human's autonomy, the autonomy of a technical agent, or as the degree of autonomy in the relationship. Additionally, O'Neill et al.'s (2022) reliance on the levels of automation concept (Parasuraman et al., 2000) reveals a blind spot in human-centeredness, because the theory fails to consider different perspectives (Navarro et al., 2018) and is not selective enough to describe complex human-machine interactions. Furthermore, the review primarily focuses on empirical research, neglecting conceptual work on teaming between humans and autonomous agents. As a result, the idea of teaming is—despite the name—not as prominent as expected, and the dynamic, mutually supportive aspect of teams is overshadowed by the emphasis on technological capabilities for human-autonomy teaming.

In addition to the emerging problem of research focusing solely on technology aspects, which is important, but insufficient to fully describe and understand a multidimensional system like HAIT, different definitions exist to describe what we understand by human-AI teams. Besides the already mentioned definition of human-autonomy teaming, Cuevas et al. (2007) for example describe HAIT as “one or more people and one or more AI systems requiring collaboration and coordination to achieve successful task completion” (p. 64). Demir et al. (2021, p. 696) define that in HAIT “human and autonomous teammates promptly interact with one another in response to information flow from one team member to another, adapt to the dynamic task, and achieve common goals”. While these definitions share elements, such as the idea of working toward a common goal with human and autonomous agents, there are also dissimilarities among the definitions, for example, in the terminology used, as seemingly similar terms like interaction and collaboration represent different constructs (Wang et al., 2021).

In an evolving research field, terminological ambiguity can inspire different research foci, but it also poses challenges. Different emerging research fields might refer to the same phenomenon using various terms (e.g., human-AI teaming vs. human-autonomy teaming, or interaction vs. teaming), which is known as the jangle fallacy and can cause problems in research (Flake and Fried, 2020). Such conceptual blurring may hinder interdisciplinary exchange and the integration of findings from different disciplines due to divergent terminology (O'Neill et al., 2022).

Another important perspective to consider is that of human teams, which forms the foundation of team research. Due to its roots in psychology and the social sciences, the perspective on teams is traditionally a human-centered one and therefore offers relevant insights into the blind spot of human-technology teaming research. The term “team” refers to two or more individuals interacting interdependently to reach a common goal and experiencing a sense of “us” (Kauffeld, 2001). Each team member is assigned a specific role or function, usually for a limited lifespan (Salas et al., 2000). Teamwork allows for the combination of knowledge, skills, and specializations, the sharing of larger tasks, mutual support in problem-solving or task execution, and the development of social structures (Kozlowski and Bell, 2012).

The roots of research on human teams can be traced to the Hawthorne studies conducted in the 1920s and 1930s (Mathieu et al., 2017). Originally designed to examine the influence of physical work conditions (Roethlisberger and Dickson, 1939), these studies unexpectedly revealed the impact of group dynamics on performance outcomes, leading to a shift in focus toward interpersonal relationships between workers and managers (Sundstrom et al., 2000). In this way, psychology's understanding of teamwork and its effects has since stimulated extensive theory and research on group phenomena in the workplace (Mathieu et al., 2017). Following over a century of research, human teamwork, once a “black box” (Salas et al., 2000, p. 341), is now well-defined and understood. According to Salas et al. (2000), teams are characterized by three main elements: Firstly, team members have to be able to coordinate and adapt to each other's requirements in order to work effectively as a team. Secondly, communication between team members is crucial, particularly in uncertain and dynamic environments, where information exchange is vital. Lastly, a shared mental model is essential for teamwork, enabling team members to align their efforts toward a common goal and motivate each other. Moreover, successful teamwork requires specific skills, such as adaptability, shared situational awareness, team management, communication, decision-making, coordination, feedback, and interpersonal skills (Cannon-Bowers et al., 1995, see Supplementary Table 1 for concept definitions).

Commonalities of human-human teams and human-AI teams have already been identified in terms of relevant features and characteristics that contribute to satisfactory performance, including shared mental models, team cognitions, situational awareness and communication (Demir et al., 2021). Using insights from research on human-human teams as a basis for HAIT offers access to well-established and tested theories and definitions, but leaves open which characteristics and findings can be effectively transferred to HAIT research and what the vital differences are (McNeese et al., 2021).

A consideration of both the human-human and human-technology teaming perspectives serves as a useful and necessary starting point for exploring human-AI teams. In order to advance our understanding, it is crucial to combine the findings from these perspectives and integrate them within a socio-technical systems approach. The concept of socio-technical systems recognizes that the human part is intricately linked to the technological elements in the workplace, with both systems influencing and conditioning each other (Emery, 1993). Therefore, a comprehensive understanding of human-AI teams can only be achieved through an integrative perspective that considers the interplay between humans and technology, as well as previous insights from both domains regarding teaming. In our review, we aim to address the lack of integration by…

• establishing the term human-AI teaming (HAIT) as an umbrella term for teamwork with any sort of artificially intelligent, (partially) autonomously acting system.

• omitting a theoretical basis for embedding our literature search and analysis. We want to neutrally identify how (different) communities understand and use HAIT and what might be at its core, without prior assumptions about its characteristics.

• nevertheless taking a human-centered perspective and using the ideas of socio-technical system design to discuss our findings.

• including a broad range of scientific literature, which contains conceptual and theoretical papers—thereby being able to cover a deeper examination of HAIT-related constructs.

• seeing if the understanding of teaming has developed since the review by O'Neill et al. (2022) and if there are papers considering especially the team level and dynamics associated with agents sharing tasks.

The goal of this paper is to examine the scope, breadth, and nature of the most current research on HAIT. In this context, we are interested in understanding the emerging research field, the streams and disciplines involved, by visualizing and analyzing current research streams using clusters based on a bibliometric network analysis (“who cites who”). The aim is to use mathematical methods to capture and analyze the relationships between pieces of literature, thereby representing the quantity of original research and its citation dependencies to related publications (Kho and Brouwers, 2012). The investigation of resulting networks can reveal research streams and trends in terms of content and methodology (Donthu et al., 2020). Concisely, the objective of the network analysis is to investigate the following research question:

RQ1: Which clusters can be differentiated regarding interdisciplinary and current human-AI teaming research based on their relation in the bibliometric citation network?

Further, the publications of the identified clusters will be examined based on a scoping review concerning the definitory understanding of human-AI teams as well as their empirically investigated or theoretically discussed antecedents and outcomes. This should contribute to answering the subsequent research questions:

RQ2: Which understandings of human-AI teaming emerge from each cluster in the network?

RQ3: Which antecedents and outcomes of human-AI teaming are currently empirically investigated or theoretically discussed?

This second part of the analysis should lead to a consideration of the quality of publications in the network in addition to the quantity within the network analysis (Kho and Brouwers, 2012). We want to give an overview of what is seen as the current core of HAIT within different research streams and identify differences and commonalities. On the one hand, making differences in the understanding of HAIT explicit is important, as it allows future research to develop into decidedly distinct research strands. On the other hand, the identification of similarities creates a basis for the development of a common language about HAIT, which will allow the establishment of common ground in the future so that the interdisciplinary exchange on what HAIT is and can be grows in stringency. To also contribute to this aspect, we aim to identify a definition of HAIT that serves the need for a common ground. In doing so, the definition is intended to extend that of O'Neill et al. (2022), reflecting the latest state of closely related research as well as addressing and considering the problems identified earlier. If we are not able to find this kind of a definition within the literature that focuses on the teaming aspect, we want to use the insights from our research to newly develop such a definition of HAIT. Thus, our fourth research question, which we will be able to answer after collecting all other results and discussing their implications, is:

RQ4: How can we define HAIT in a way that is able to bridge different research streams?

This is expected to help researchers from different disciplines find a shared ground in definitions and concepts and make divergences in understanding explicit. By identifying the current state of research streams and corresponding understandings of HAIT, as well as the antecedents and outcomes, synergistic insights and research gaps can be identified. A unifying definition will further help stimulate and align further research on this topic.

To identify research networks and to analyze their findings on HAIT, the methods of bibliometric network analysis and scoping review were combined. The pre-registration for this study can be accessed here: https://doi.org/10.23668/psycharchives.12496.

The basis for the network analysis and the scoping review was a literature search in the databases Web of Science (WoS; Clarivate Analytics) and Scopus (Elsevier). These were chosen because they represent the main databases for general-purpose scientific publications, spanning articles, conference proceedings and more (Kumpulainen and Seppänen, 2022). The literature search was conducted and is reported according to the PRISMA reporting guidelines for systematic reviews (Moher et al., 2009), more specifically the extension for scoping reviews (PRISMA-ScR; Tricco et al., 2018). Figure 1 provides an overview of the integrated procedure.

The literature search was conducted on 25 January 2023. The keywords for our literature search (see Table 1) were chosen to include all literature in the databases that relates to HAIT in the workplace. Thus, the components “human,” “AI,” “teamwork,” and “work” all needed to be present in some (synonymous) form. Furthermore, only articles published since the year 2021 were extracted. This limited time frame was chosen because the goal was to map the most current research front, using the European industrial strategy “Industry 5.0” (Breque et al., 2021) as a starting point. Its focus on humans, their needs, and capabilities instead of technological system specifications represents a shift in attention to the individual that is accompanied by the explicit mention of creating a team of human(s) and technical system(s) (Breque et al., 2021), therefore marking a good starting point for a joint human-AI teaming understanding. Accordingly, only the most current literature published since the introduction of Industry 5.0 and not yet included in the review of O'Neill and colleagues is taken into account in our review (note that by analyzing the references in bibliometric coupling and qualitatively evaluating the referred concepts of HAIT, we also gain information on older important literature). Included text types were peer-reviewed journal articles, conference proceedings and book chapters (not limited to empirical articles) in English or German. As shown in the PRISMA diagram in Figure 2, the search retrieved n = 1,963 articles. After removing n = 440 duplicates, abstract screening was conducted using the web tool Rayyan (Ouzzani et al., 2016). In case of duplicates, the WoS version was kept for its preferable data structure.

Six researchers familiar with the subject screened the abstracts, with every article being judged by at least two blind raters. Articles that did not deal with the topic “human-AI teaming in the workplace”, or that were incorrectly labeled in the database and did not fit our eligibility criteria, were excluded. In case of disagreement or uncertainty, raters discussed and compared their reasoning and reached a shared decision, and/or consulted the other raters. In total, 1,159 articles (76%) were marked for exclusion. Exclusion criteria were: (a) publication in a language other than English or German, (b) publication in the form of a book (monograph or anthology), (c) work published before 2021, (d) work not addressing human-AI teaming in title, abstract or keywords, (e) work not addressing the work context in title, abstract or keywords. The remaining articles (n = 364, 24%) were used in the network analysis (see Figure 2).
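The reported screening figures can be cross-checked with a few lines of arithmetic. The following is only an illustrative Python sketch (the study itself used R); the variable names are ours, and the numbers are those stated in the text above.

```python
# Screening flow figures as reported in the text.
retrieved = 1963   # articles returned by the database search
duplicates = 440   # duplicates removed before screening
excluded = 1159    # articles marked for exclusion during abstract screening
included = 364     # articles passed on to the network analysis

# Abstracts that entered screening after duplicate removal.
screened = retrieved - duplicates

# Excluded and included articles should account for every screened abstract.
assert excluded + included == screened

# Shares relative to the screened abstracts, rounded to whole percent.
excluded_share = round(100 * excluded / screened)
included_share = round(100 * included / screened)
print(screened, excluded_share, included_share)
```

The percentages in the text are therefore relative to the 1,523 screened abstracts, not to the 1,963 retrieved records.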

To map and cluster the included literature and thus describe the network that structures the research field of HAIT, a bibliometric mapping approach and clustering algorithm had to be chosen. Networks consist of publications that are mapped, called nodes, and the connection between those nodes, which are called edges (Hevey, 2018). Which publications appear in the nodes and how the edges are formed depends on the mapping approach used. The variety includes direct citation, bibliometric coupling, and co-citation networks (Boyack and Klavans, 2010), but bibliometric coupling analysis has been shown to be the most accurate (Boyack and Klavans, 2010). It works by first choosing a sample of papers, serving as the network nodes. The edges are then created by comparing the references of the node-papers, adding edges between two publications if they share references (Jarneving, 2005). Thus, the newest publications are mapped, while the cited older publications themselves are not included in the network (Boyack and Klavans, 2010; Donthu et al., 2021). Since our goal was to map and cluster the current research front, we chose bibliometric coupling for our network analysis approach.
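To make the coupling principle concrete, the following minimal Python sketch builds weighted edges from shared references. It is an illustration under our own assumptions, not the authors' R code: the function name `coupling_edges`, the paper identifiers and the reference keys are all hypothetical toy values.

```python
from itertools import combinations


def coupling_edges(references_by_paper):
    """Return {(paper_a, paper_b): number_of_shared_references} for coupled pairs."""
    edges = {}
    # Compare every unordered pair of node papers exactly once.
    for a, b in combinations(sorted(references_by_paper), 2):
        shared = len(set(references_by_paper[a]) & set(references_by_paper[b]))
        if shared > 0:
            # The edge weight is the count of references both papers cite.
            edges[(a, b)] = shared
    return edges


# Hypothetical toy corpus: node paper -> keys of the references it cites.
toy_corpus = {
    "P1": ["R1", "R2", "R3"],
    "P2": ["R2", "R3", "R4"],
    "P3": ["R4"],
    "P4": ["R9"],
}

edges = coupling_edges(toy_corpus)
print(edges)
```

In this toy corpus, P1 and P2 are coupled through two shared references, P2 and P3 through one, and P4 remains isolated. The cited references (R1 to R9) never become nodes themselves, which is why the resulting network maps only the sampled, recent publications.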

The article metadata from WoS and Scopus, together with the reference lists, were prepared for network analysis using R (version 4.2; R Core Team, 2022). From each cited reference, we extracted the first author (including initials), the publishing year, the starting page, and the volume, and combined this information into a new format string. In total, n = 17,323 references containing at least first author and year were generated. Of those, 8,955 references had missing data on the starting page, the volume, or both. To minimize the risk of two different articles coincidentally having the same reference string, we excluded all references that lacked both volume and starting page information (n = 3,794). We kept all references that lacked only the starting page (n = 1,384) or only the volume (n = 3,794), because single duplicates are improbable and would have little influence.
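The matching rule described above can be sketched as follows. This is a hedged Python illustration, not the authors' implementation: the function name `reference_key`, the separator, the `?` placeholder for a missing field and the example author are assumptions made here for demonstration.

```python
def reference_key(first_author, year, volume=None, start_page=None):
    """Build a matching string, or return None if the reference is too sparse."""
    if not first_author or not year:
        return None  # first author and year are the minimum requirement
    if volume is None and start_page is None:
        return None  # both fields missing: excluded to avoid chance collisions
    # A single missing field is tolerated and marked with a placeholder.
    vol = f"V{volume}" if volume is not None else "V?"
    page = f"P{start_page}" if start_page is not None else "P?"
    return f"{first_author.upper()}|{year}|{vol}|{page}"


# Hypothetical references, not taken from the study's data.
complete = reference_key("Doe J", 2021, volume=12, start_page=45)
page_missing = reference_key("Doe J", 2021, volume=12)
both_missing = reference_key("Doe J", 2021)
print(complete, page_missing, both_missing)
```

Uppercasing the author string is one simple way to reduce spelling variation between databases; whether the original code normalized names in this way is not stated in the text.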

Using the newly created format, we conducted a coupling network analysis using R and the packages igraph (version 1.3.1; Csárdi et al., 2023) and bibliometrix (version 4.1.0; Aria and Cuccurullo, 2017). The code used can be accessed here: https://github.com/BjoernGilles/HAIT-Network-Analysis. Bibliometrix was used to create the first weighted network with no normalization. The network was then converted into igraph format, and all isolated nodes (degree = 0) were removed. Degree centrality refers to the number of edges that connect a node to other nodes, while weighted degree centrality adapts this measure by weighting each edge with its strength (Donthu et al., 2021). Then, the multilevel community clustering algorithm was used to identify the dominant clusters. Multilevel clustering was chosen because the network's mixing parameter was impossible to predict a priori and because it shows stable performance for a large range of clustering structures (Yang et al., 2016). The stability of our clustering solution was checked by comparing our results with 10,000 recalculations of the multilevel algorithm on our network data.
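The two centrality measures and the removal of isolated nodes can be illustrated with a short Python sketch. It assumes that the weighted degree of a node is the sum of the weights of its edges; the function name and the toy network are hypothetical, and the clustering step itself is not reproduced here.

```python
def degree_measures(nodes, weighted_edges):
    """Return ({node: degree}, {node: weighted_degree}) for an undirected graph."""
    degree = {n: 0 for n in nodes}
    weighted_degree = {n: 0 for n in nodes}
    for (a, b), weight in weighted_edges.items():
        for n in (a, b):
            degree[n] += 1               # one more edge touches this node
            weighted_degree[n] += weight  # add the strength of that edge
    return degree, weighted_degree


# Hypothetical toy network: edge weights are counts of shared references.
nodes = ["P1", "P2", "P3", "P4"]
edges = {("P1", "P2"): 2, ("P2", "P3"): 1}

degree, weighted_degree = degree_measures(nodes, edges)

# Isolated nodes (degree 0) are dropped before clustering.
connected = [n for n in nodes if degree[n] > 0]
print(degree, weighted_degree, connected)
```

Here P2 has the highest weighted degree (3) because it shares references with two other papers, while P4 shares none and is removed.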

Afterwards, all clusters containing ≥ 20 nodes were selected and split into subgraphs. The top 10% of papers with the highest weighted degree in each subgraph were selected for qualitative content analysis (representing the most connected papers of each cluster). Additionally, we selected the top 10% of papers with the highest weighted degree in the main graph for content analysis (representing the most connected papers across all clusters, i.e., in the whole network). We decided to use the weighted degree as a measure of centrality because our goal was to identify the most representative and most strongly connected nodes in each cluster.
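The selection rule can be sketched as follows. This Python illustration rests on assumptions that the text does not specify: the share is rounded up to at least one paper, ties are broken by paper identifier, and all names and data are hypothetical.

```python
def top_share(weighted_degree, members, percent=10):
    """Return the most connected members covering `percent` of the cluster."""
    # Integer ceiling of percent% of the cluster size, with a minimum of one paper.
    k = max(1, (len(members) * percent + 99) // 100)
    # Sort by weighted degree (descending), then by id for a stable order.
    ranked = sorted(members, key=lambda n: (-weighted_degree[n], n))
    return ranked[:k]


def central_papers(clusters, weighted_degree, min_size=20, percent=10):
    """Map cluster id -> central papers, keeping clusters with >= min_size nodes."""
    return {
        cluster_id: top_share(weighted_degree, members, percent)
        for cluster_id, members in clusters.items()
        if len(members) >= min_size
    }


# Hypothetical toy data: cluster A has 20 papers, cluster B only 5.
clusters = {
    "A": [f"A{i}" for i in range(20)],
    "B": [f"B{i}" for i in range(5)],
}
weighted_degree = {
    paper: rank for members in clusters.values() for rank, paper in enumerate(members)
}

selected = central_papers(clusters, weighted_degree)
print(selected)
```

Cluster B is dropped because it has fewer than 20 nodes, and the two papers with the highest weighted degree are kept for cluster A. Applying `top_share` to all nodes of the main graph would give the papers that are central within the whole network.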

To analyze the content of our literature network and the respective clusters, we chose the scoping review approach. It is defined as a systematic process to map existing literature on a research object, with the distinctive feature of including all kinds of literature relevant to the topic, not only empirical work (Arksey and O'Malley, 2005). It is especially useful for emerging topics and evolving research questions (Armstrong et al., 2011) and for identifying or describing certain concepts (Munn et al., 2018). Its aims are to show which evidence is present, to clarify concepts, how they are defined and what their characteristics are, to explore research methods, and to find knowledge (Munn et al., 2018), and thus match our research goals. While this approach led our systematic literature selection, as described before, it was also our guideline in analyzing the content of the network and the selected publications within it.

To understand the network that the respective analysis produced, we looked at the top 10% of publications with the highest weighted degree in each cluster, analyzing both the metadata, such as authors and journals involved, and the content of those papers. For this, we read the full texts of all those publications that were available to us (n = 41), as well as the abstracts of the literature without full-text access (n = 4). To find the full texts, we searched the databases and journals that were available to us as university members as well as open access publication websites, e.g., ResearchGate. For those articles we could not find initially, we contacted the authors. Nevertheless, we could still not get access to four papers, namely Jiang et al. (2022) (cluster 1), Silva et al. (2022) (cluster 3), Tsai et al. (2022) (central within network), and Zhang and Amos (2023) (central within network). As these were amongst the most connected publications based on the bibliometric clustering, we considered at least the information from their titles and abstracts.

We first synthesized the main topics of each of the clusters, identifying a common sense or connecting elements within. To then differentiate the clusters, we described them based on standardized categories including the perspective of the articles, research methods used, forms of AI described, role and understanding of AI, terms for and understandings of HAIT and contexts under examination. This, in addition to the network analysis itself, helped to answer RQ1 on clusters within interdisciplinary HAIT research.

The focus then was on answering RQ2 about the understandings of HAIT represented within the network. For this, we read the full texts central within the clusters and within the whole network, marking all phrases describing, defining, or giving terms for HAIT, and presenting the results on a descriptive basis. We also sorted the network-related papers by the terms they used and the degree of conceptuality behind the constructs to get an idea of terminology across the network.

To answer RQ3 about antecedents and outcomes connected to HAIT, we marked all passages in the literature naming or giving information about antecedents and outcomes. Under antecedents, we understood those variables that have been shown to be preconditions for a successful (or unsuccessful) HAIT. We included those variables that were discussed or investigated by the respective authors as preceding or being needed for teaming (experience), without having a pre-defined model of antecedents and outcomes in mind. For the outcomes, we summarized the variables that have been found to be affected by the implementation of HAIT in terms of the human and technical part, team and task level, performance, and context. We only looked at those variables that were under examination empirically or centrally discussed within the non-empirical publications. Antecedents or outcomes only named in the introductions or theoretical background were not included, as those did not appear vital within the literature. We synthesized the insights for all clusters and gave an overview of all antecedents and outcomes, quantifying their appearance. This was done by listing each publication's individual variables and then grouping and sorting the variables within our research team to achieve a differentiated, yet abstracted picture of all factors under examination within the field of HAIT.

After removing isolated nodes (n = 63) without connections and two articles with missing reference meta-data, the network consisted of 299 nodes (i.e., papers) and 2,607 edges (i.e., links between the publications). Each paper had on average 17.44 edges connected to it. This is in line with the expected network structure, given that a well-defined and curated part of the literature was analyzed, where most papers share references with other papers. The strength (corrected mean strength = 18.23) was slightly higher than the average degree (17.44), showing a small increase in information gained by using a weighted network instead of an unweighted one. The uncorrected mean strength was 200.55. Transitivity, also known as the global clustering coefficient, measures the tendency of nodes to cluster together and can range between 0 and 1, with larger numbers indicating greater interconnectedness (Ebadi and Schiffauerova, 2015). The observed transitivity was 0.36, which is much higher than the transitivity of 0.06 of a random graph with the same number of edges and nodes. The network diameter (longest shortest path between two nodes) was 6, and the density (ratio of observed to possible edges) was 0.06. Overall, this shows that the papers analyzed are part of a connected network that also displays clustering, providing further insights about the network's character.
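The average degree and density reported above follow directly from the node and edge counts, and transitivity can be illustrated on a small toy graph. The following is a minimal sketch in plain Python, assuming an undirected, unweighted simple graph; the function names and the toy edge list are hypothetical illustrations and are not taken from the analysis pipeline used in this review.

```python
from itertools import combinations


def mean_degree(n_nodes, n_edges):
    """Average number of edges per node in an undirected graph."""
    return 2 * n_edges / n_nodes


def density(n_nodes, n_edges):
    """Ratio of observed edges to the n(n-1)/2 possible edges."""
    return n_edges / (n_nodes * (n_nodes - 1) / 2)


def transitivity(edges):
    """Global clustering coefficient: closed triples / all connected triples."""
    adjacency = {}
    for u, v in edges:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    closed = 0
    triples = 0
    for neighbours in adjacency.values():
        # Every pair of neighbours of a node forms one connected triple.
        for a, b in combinations(neighbours, 2):
            triples += 1
            if b in adjacency[a]:
                closed += 1
    return closed / triples if triples else 0.0


# Counts reported in the text: 299 nodes, 2,607 edges.
print(round(mean_degree(299, 2607), 2))
print(round(density(299, 2607), 2))

# Hypothetical toy graph: a triangle (a, b, c) with one pendant node d.
toy_edges = [("a", "b"), ("b", "c"), ("a", "c"), ("c", "d")]
print(transitivity(toy_edges))
```

Counting triples per centre node means each triangle is counted three times in the numerator, which matches the usual definition of transitivity as three times the number of triangles divided by the number of connected triples.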

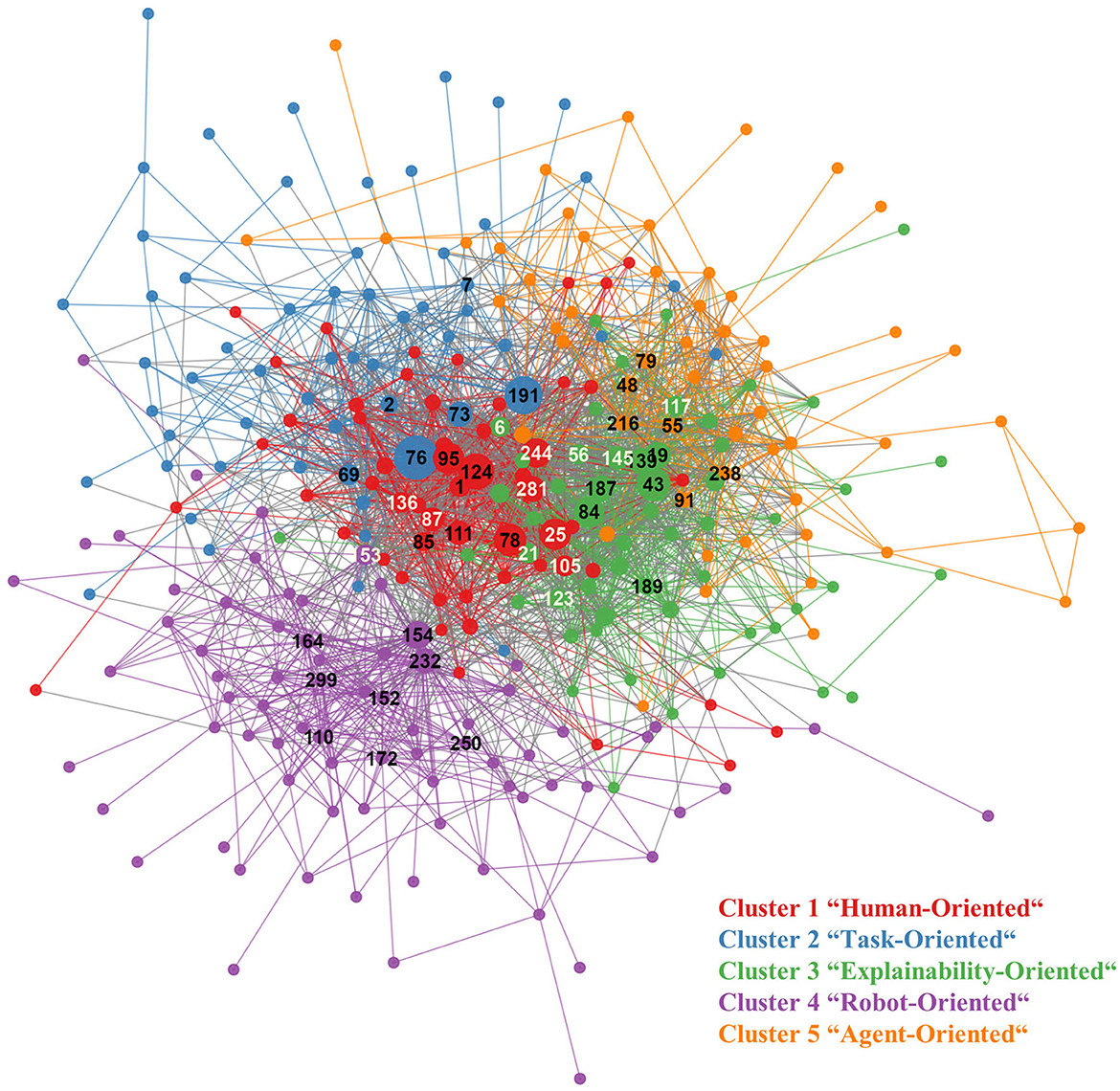

In total, multilevel community clustering identified five clusters that fit our criterion of a cluster size of ≥20 nodes (see Figure 3). The sizes for the five clusters were: n1 = 55, n2 = 58, n3 = 55, n4 = 75, n5 = 54. Thus, all except two nodes could be grouped in these clusters. The modularity of the found cluster solution was 0.36. Modularity is a measure introduced by Newman and Girvan (2004) that describes the quality of a clustering solution. A modularity of 0 indicates no better clustering solution than random, while the maximum value of 1 indicates a very strong clustering solution. Our observed modularity of 0.36 fell in the lower range of commonly observed modularity measures of 0.3–0.7 (Newman and Girvan, 2004).
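To make the modularity measure more concrete, the sketch below computes it for a given partition as the sum, over communities, of the share of edges falling inside the community minus the share expected from the community's total degree. It is a minimal illustration in plain Python that assumes an undirected, unweighted graph (the network analyzed here is weighted); the function name, the toy edge list and the community labels are hypothetical.

```python
def modularity(edges, community_of):
    """Newman-Girvan modularity of a partition of an undirected, unweighted graph.

    edges        -- list of (u, v) pairs, each undirected edge listed once
    community_of -- dict mapping every node to its community label
    """
    m = len(edges)
    internal_edges = {}  # edges with both endpoints in the community
    degree_sum = {}      # summed degree of all nodes in the community
    for u, v in edges:
        cu, cv = community_of[u], community_of[v]
        degree_sum[cu] = degree_sum.get(cu, 0) + 1
        degree_sum[cv] = degree_sum.get(cv, 0) + 1
        if cu == cv:
            internal_edges[cu] = internal_edges.get(cu, 0) + 1
    return sum(
        internal_edges.get(c, 0) / m - (d / (2 * m)) ** 2
        for c, d in degree_sum.items()
    )


# Hypothetical toy graph: two triangles joined by a single bridging edge.
toy_edges = [(1, 2), (2, 3), (1, 3), (4, 5), (5, 6), (4, 6), (3, 4)]
two_groups = {1: "A", 2: "A", 3: "A", 4: "B", 5: "B", 6: "B"}
one_group = {node: "A" for node in two_groups}

print(modularity(toy_edges, two_groups))  # partition into the two triangles
print(modularity(toy_edges, one_group))   # everything in one community
```

Placing all nodes in a single community yields a modularity of 0, which corresponds to the "no better than random" baseline described above.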

Figure 3. Graph of the bibliometric network. Numbers indicate publications included in the content analysis. Publications are matched to their reference numbers in Table 2. White numbers represent papers included based on their relevance for the whole network, black numbers represent papers selected based on their relevance in their cluster. The clusters' titles will be further explained in section 4.2.

Overall, the network involved about 1,400 authors (including the editors of conference proceedings and anthologies). While most of them were the authors of one to two publications within the network, some stood out with four or more publications: Jonathan Cagan (five papers), Nathan J. McNeese (eight papers) & Beau G. Schelble (four papers), Andre Ponomarev (four papers), Myrthe L. Tielman (four papers) and Dakuo Wang (four papers; see Table 2).

Looking at publication organs, Figure 4 lists the journals, conference proceedings and anthologies of only the 10% most connected publications within and across the clusters, for reasons of economy. To give further insights, we classified those publication organs according to their thematic focus using color coding.

Figure 4. Publication organs within the analyzed papers in the network, sorted by their point of orientation. Pr., proceedings; Con, conference; Int., international.

For the content analysis, we decided to include the publications with the 10% highest weighted degree from each cluster to deduce the focus in terms of content and research of these identified clusters and in general. Thus, we read six representative contributions for clusters 1, 2, 3, and 5, eight publications from the larger cluster 4, and for the 10% of articles with the highest weighted degree across the network, another 13 publications were screened, resulting in n = 45 publications within the network being reviewed concerning the topic of human-AI teaming.
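The sample size can be retraced from the cluster sizes reported earlier. The following sketch is a hedged illustration: it assumes that "the 10% highest weighted degree" was rounded up to the next whole publication, a rule that is not stated explicitly but reproduces the reported counts. The function names and the example weighted-degree values are hypothetical.

```python
def top_count(n_items, percent=10):
    """Number of items in the top `percent`, rounded up (assumed rounding rule)."""
    return -((-n_items * percent) // 100)


def top_by_weighted_degree(weighted_degree, percent=10):
    """Return the papers with the highest weighted degree (ties broken by name)."""
    k = top_count(len(weighted_degree), percent)
    ranked = sorted(weighted_degree, key=lambda p: (-weighted_degree[p], p))
    return ranked[:k]


# Cluster sizes as reported in the text.
cluster_sizes = {1: 55, 2: 58, 3: 55, 4: 75, 5: 54}
per_cluster = {c: top_count(n) for c, n in cluster_sizes.items()}

# Additional network-wide central publications, as reported in the text.
additional_network_wide = 13
total_reviewed = sum(per_cluster.values()) + additional_network_wide

print(per_cluster)
print(total_reviewed)

# Hypothetical weighted degrees for three papers, purely for illustration.
print(top_by_weighted_degree({"paper_1": 3.0, "paper_2": 9.5, "paper_3": 1.0}))
```

Integer arithmetic is used for the ceiling to avoid floating-point surprises when a cluster size is an exact multiple of ten.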

Regarding RQ1, we subsequently provide a description of the thematic focus within the five clusters. However, it should be acknowledged that the content of these clusters exhibits a high degree of interconnectedness, making it more challenging to distinguish between them than originally anticipated. The distinctions among the clusters are based on subtle variations in research orientation or the specific AI systems under investigation. A noteworthy commonality across all clusters is the prevailing technical orientation observed in current HAIT research. This orientation is also reflected in the disciplinary backgrounds of the researchers involved, with a predominant presence of computer science and engineering expertise across the clusters and in the whole network, and partially in the publication organs. The nuanced aspects of this predominantly one-sided perspective, which we were able to discern, are outlined in the subsequent section. Table 2 provides information on the composition of each cluster, including the contributing researchers and the weighted degree of each contribution.

The 10% most central articles within this first cluster were all journal articles, mostly from ergonomics and psychology-oriented journals: Three of them appeared in Computers in Human Behavior, while the others were from Human Factors, Ergonomics and Information Systems Frontiers. Two articles shared the two authors McNeese and Schelble. The papers are not regionally focused, with contributions from the US, Germany, Australia, China and Canada. All take a human-oriented approach to HAIT, looking at or discussing a number of subjective outcomes of HAIT such as human preferences, trust and situation awareness. All the papers seem to follow the goal of finding key influencing factors on the human side for acceptance and willingness to team up with an AI. One exception was the paper by O'Neill et al. (2022), which is based more on the traditional technology-centered LOA model in its argumentation, but still reports on many studies looking at human-centered variables.

Whilst the 10% most central articles did not have much in common considering geographic origin, authors, journals and conferences, they share a rooting in information science. All the papers, except for Yam et al. (2023), discuss different types of intelligence automation or roles of AI. They argued from a task perspective, with a focus on the application context and specific ideas for collaboration strategies dependent on the task at hand.

The 10% most central articles from Cluster 3 were conference proceedings (four) and journal articles (two) all within the field of human-computer interaction. Three of the articles incorporated practitioner cooperations (with practitioners from Microsoft, Amazon, IBM and/or Twitter). The authors were mainly from the USA, the UK and Canada. Methodologically, the articles were homogeneous in that they all reported laboratory experiments in which a human was tasked with a decision-making scenario during which they were assisted by an AI. The articles took a technical approach to the question of how collaboration, calibrated trust and decision-making can be reached through AI explainability (e.g., local or global explanations, visualizations). Explainability can be defined as an explainer giving a corpus of information to an addressee that enables the latter to understand the system in a certain context (Chazette et al., 2021). The goal of the articles was to facilitate humans to adequately accept or reject AI recommendations based on the explainability of the system. AI has been characterized as an advisor/helper or assistant and the understanding of AI is focused on the algorithm/machine-in-the-loop paradigm, involving algorithmic recommendation systems that inform humans in their judgements. This is seen as a fundamental shift from full automation toward collaborative decision-making that supports rather than replaces workers.

Cluster 4 can be described as a technology-oriented cluster, which focused primarily on robots as the technology under study. Of the 10% most central articles in this cluster, the majority were journal articles (six), supplemented by two conference contributions. The papers were mainly related to computer science and engineering and similar in their methods, as most of the papers (six) provided literature and theoretical reviews. No similarities could be found regarding the location of publication: While a large part of the articles included in Cluster 4 were published in Europe (Portugal, Scotland, UK, Sweden, and Italy), there were also contributions from Canada, Brazil, and Russia. All included papers dealt with human-robot collaboration as a specific, embodied form of AI, with an overarching focus on the safety aspects of this collaboration. The goal of the incorporated studies was to identify factors that are important for a successful, modern human-robot collaboration. In this context, communication emerged as an important influencing component, taking place also on a physical level in the case of embodied agents, which necessitates special consideration of safety aspects. Furthermore, the articles had a rather technology-oriented approach to safety aspects in common, and most of them made concrete suggestions for the development and application of robot perception systems. Nevertheless, the papers also discussed the importance of taking human aspects into account in this specific form of collaboration. Additionally, they shared a common understanding of the robot as a collaborative team partner whose cooperation with humans goes beyond simple interaction.

The 10% most connected articles within the cluster consisted of conference proceedings (five) and one journal article, all from the fields of human-machine systems and engineering. The authors were mostly from the USA, but also from Germany, Australia, Japan, China and Indonesia and from the field of technology/engineering or psychology. Methodologically, the papers all reported on laboratory or online experiments/simulations. A connecting element between the articles was the exploration of how human trust and confidence in AI is formed based on AI performance/failure. One exception is the paper by Wang et al. (2021), which is a panel invitation on the topic of designing human-AI collaboration. Although it announced a discussion on a broader set of design issues for effective human-AI collaboration, it also addressed the question of AI failure and human trust in AI. In general, the articles postulated that with increasing intelligence, autonomous machines will become teammates rather than tools and should thus be seen as collaboration partners and social actors in human-AI collaborative tasks. The goal of the articles was to investigate how the technical accuracy of AI affects human perceptions of AI and performance outcomes.

The main focus of the clusters, similarities as well as differences are summarized in Table 3. Taken together, the description of the individual clusters reveals slightly different streams of current research on HAIT and related constructs, within the scope of more technology-driven research yet interested in the interaction with humans.

To answer RQ2 on understandings of human-AI teaming and to find patterns in terminology and definitions potentially relevant for the research question on a common ground definition, the following section deals with the understandings of human-AI teams that emerged from the individual clusters and the overarching 10% highly weighted papers.

Within cluster 1, there were several definitions and defining phrases in the papers. The most prominent and elaborate within the cluster might be that of O'Neill et al. (2022), underlining that “If [the AI systems] are not recognized by humans as team members, there is no HAT” (p. 907) and defining human-autonomy teaming as “interdependence in activity and outcomes involving one or more humans and one or more autonomous agents, wherein each human and autonomous agent is recognized as a unique team member occupying a distinct role on the team, and in which the members strive to achieve a common goal as a collective” (p. 911). This definition is also referred to by McNeese et al. (2021). To this, the latter added the aspects of dynamic adaptation and changing task responsibility. Endsley (2023) differentiated two different views on human-AI work: one being a supportive AI enhancing human performance (which is more of where Saßmannshausen et al., 2021 and Vössing et al., 2022 position themselves), and one being human-autonomy teams with mutual support and adaptivity (thereby referring to the National Academies of Sciences, Engineering, and Medicine, 2021). What unites those papers' definitions of HAIT are the interdependency, the autonomy of the AI, a shared goal, and dynamic adaptation.

In cluster 2, there were not many explicit definitions of HAIT, but a number of terms used to describe it, with “teaming” not being of vital relevance. Overall, the understanding of HAIT—or cooperation—is very differentiated in this cluster, with multiple papers acknowledging that “various modes of cooperation between humans and AI emerge” (Li et al., 2022, p. 1), comparable to when humans cooperate. The focus in these papers lies on acknowledging and describing those differences. Jain et al. (2022) pointed out that there can be different configurations in the division of labor, dependent on work design, “with differences in the nature of interdependence being parallel or sequential, along with or without the presence of specialization” (p. 1). Li et al. (2022) differentiated between inter- and independent behaviors based on cooperation theory (Deutsch, 1949), describing how the preference for those can be dependent on the task goal. Having this differentiation in mind, intelligence augmentation could happen in different modes or by different strategies, as well as mutually, with AI augmenting human or humans augmenting AI (Jain et al., 2021). This led to different roles evolving for humans and robots, although the distinct, active role of AI was underlined as a prerequisite for teaming (Li et al., 2022; Chandel and Sharma, 2023). The authors claimed that research is needed on the different cooperation modes.

In cluster 3, the central papers argued that the pursuit of complete AI automation is changing toward the goal of no longer aspiring to replace domain workers, but that AI “should be used to support” their decisions and tasks (Fan et al., 2022, p. 4) by leveraging existing explainability approaches. In that, the aspiration to reach collaborative processes between humans and AI was understood as a “step back” from full automation, which becomes necessary due to ethical, legal or safety reasons (e.g., Lai et al., 2022). Collaboration, along with explainability, is a central topic in cluster 3, which Naiseh et al. (2023, p. 1) broadly defined as “human decision-makers and [...] AI system working together”. The goal of human-AI collaboration was defined as “‘complementary performance' (i.e., human + AI > AI and human + AI > human)” (Lai et al., 2022, p. 3), which should be reached by explainability or “algorithm-in-the-loop” designs, i.e., a paradigm in which “AI performs an assistive role by providing prediction or recommendation, while the human decision maker makes the final call” (Lai et al., 2022, p. 3). Thus, the understanding of human-AI teaming was based on the perspective that AI should serve humans as an “assistant” (Fan et al., 2022; Lai et al., 2022; Tabrez et al., 2022) or “helper” (Rastogi et al., 2022); the notion of AI being a “team member” was only used peripherally in the cluster and HAIT was not explicitly defined as a central concept by the selected papers of cluster 3.

In cluster 4, which focused mainly on robots as technological implementations of AI, the term teaming was not used once to describe the way humans and AI (or humans and robots) work together. The terms “human-robot interaction” (HRI) and “human-robot collaboration” (HRC) were used much more frequently, with a similar understanding throughout the cluster: An interaction was described as “any kind of action that involves another human being or robot” (Castro et al., 2021, p. 5), where the actual “connection [of both parties] is limited” (Othman and Yang, 2022, p. 1). Collaboration, instead, was understood as “a human and a robot becom[ing] partners [and] reinforcing [each other]” (Galin and Meshcheryakov, 2021, p. 176) in accomplishing work and working toward a shared goal (Mukherjee et al., 2022). Thus, the understanding of collaboration in cluster 4 is similar to the understanding in Cluster 3, differentiating between distinct roles in collaboration as in Cluster 2. The roles that were distinguished in this cluster are the human as a (a) supervisor, (b) subordinate part or (c) peer of the robot (Othman and Yang, 2022). A unique property of cluster 4 involved collaboration that could occur through explicit physical contact or also in a contactless, information-based manner (Mukherjee et al., 2022). The authors shared the understanding that “collaboration [is] one particular case of interaction” (Castro et al., 2021, p. 5; Othman and Yang, 2022) and that this type of interaction will become even more relevant in the future, aiming to “perceive the [technology] as a full-fledged partner” (Galin and Meshcheryakov, 2021, p. 183). However, more research on human-related variables would be needed to implement this in what has been largely a technology-dominated research area (Semeraro et al., 2022).

In cluster 5, the understanding of HAIT is based on the central argument that advancing technology means that AI is no longer just a “tool” but, due to anthropomorphic design and intelligent functions, becomes an “effective and empowering” team member (Chong et al., 2023, p. 2) and thus a “social actor” (Kraus et al., 2021, p. 131). The understanding of AI as a team member was only critically reflected in the invitation to the panel discussion by Wang et al. (2021) who mentioned potential “pseudo-collaboration” and raised the question of whether the view of AI as a team member is actually the most helpful perspective for designing AI systems. The shift from automation to autonomy has been stressed as a prerequisite for effective teaming. Thus, rather than understanding HAIT as a step back from full automation (see cluster 3), incorporating autonomous agents as teammates into collaborative decision-making tasks was seen as the desirable end goal that becomes realistic due to technological progress.

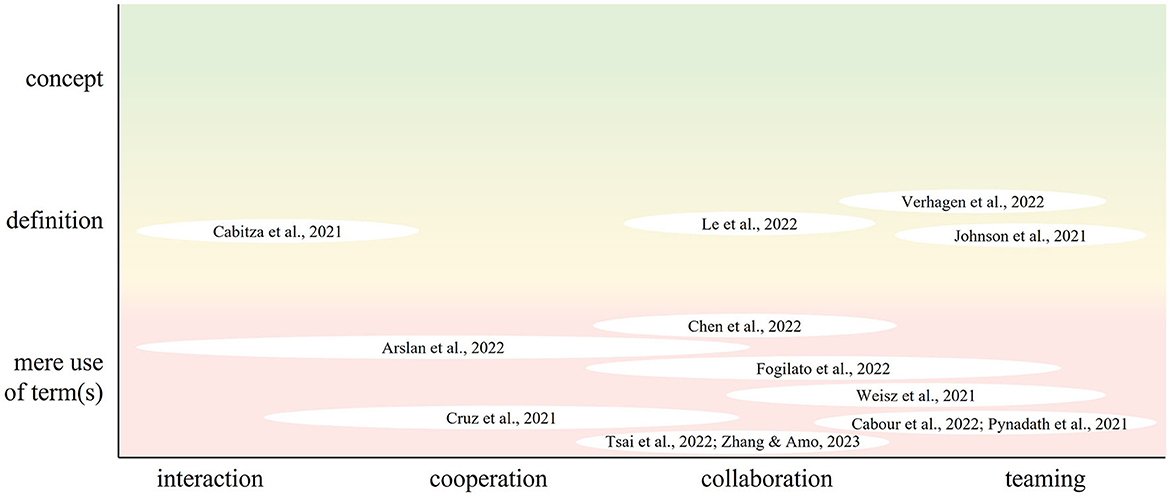

In addition to the clusters and their interpretation of teaming, we looked at the 10% of papers with the highest weighted degree in the whole network, i.e., the papers that had the most central reference lists across all the literature on HAIT. We expected those papers to deliver some “common sense” about the core topic of our research, as they are central within the network and connected with papers from all clusters. Contrary to our expectations, none of those articles focused on trying to classify and differentiate the concept of HAIT from other existing terminologies in order to create a common understanding across disciplines. See Figure 5 for a classification of the articles based on the extent to which the construct was defined in relation to the term used to depict collaboration.

Figure 5. Papers with the most impactful connections within the network on HAIT, classified according to their definitory approach and their use of terms for teaming. “Mere use of term(s)” refers to using one of the listed terms without employing or referencing a definition. “Definition” includes the articles in which the understanding of the used teaming term is specified. “Concept” refers to a deep understanding toward the used term, e.g., by differentiating it from other terms or deriving/proposing a definition.

Four of the central papers showed attempts to define HAIT or related constructs: In the context of human-robot teaming, Verhagen et al. (2022) explored the concept of HART (human-agent/robot team), which encompassed the collaboration and coordination between humans and robots in joint activities, either acting independently or in a synchronized manner. A key aspect emphasized by the authors is the need for mutual trust and understanding within human-robot teams. Similarly, the study conducted by Le et al. (2023) also used robots as interaction partners, although the terminology used was “collaboration”. They drew a comparison between the streams of research focusing on human-robot collaboration, which is technically oriented, and human-human collaboration, which is design oriented. To develop their approach to human-robot collaboration, they considered not only the relevant literature on collaboration, but also the theory of interdependence (Thibaut and Kelley, 1959). In turn, Johnson et al. (2021) discussed the concept of human-autonomy teaming and emphasized the importance of communication, coordination, and trust at the team level, similar to Verhagen et al. (2022). Their perspective was consistent with the traditional understanding of teaming, recognizing these elements as critical factors for successful teamwork. Another perspective was taken by Cabitza et al. (2021) who used the term “interaction” to a large extent including AI not only for dyadic interaction with humans but also as a supportive tool for human decision teams. They emphasized a contrast to the conventional understanding of human-AI interaction, which views AI either as a tool or as an autonomous agent capable of replacing humans (Cabitza et al., 2021).

The remaining papers referred to HAIT or related constructs in their work but provided minimal to no definition or references for their understanding: Arslan et al. (2022) emphasized that AI technologies are evolving “beyond their role as just tool[s]” (Arslan et al., 2022, p. 77) and are becoming visible players in their own right. They primarily used the term “interaction” and occasionally “collaboration”, focusing on the team level without delving into the characteristics and processes of actual teaming. Cabour et al. (2022), similar to Cruz et al. (2021), discussed HAIT only within the context of explainable AI, without providing a detailed definition or explanation. Cruz et al. (2021) specifically used the term “human-robot interaction” rather than teaming, where the robot provides explanations of its actions to a human who is not directly involved in the task. Emphasizing the “dynamic experience” (Chen et al., 2022, p. 549) of both parties adapting to each other, Chen et al. (2022) used mostly the term “human-AI collaboration”. They adopted a human-centered perspective on AI and the development of collaboration. In addition, the paper by Tsai et al. (2022) discussed human-robot work, primarily using the notion of collaboration to explore different roles that robots can take, including follower, partner, or leader. The paper by Zhang and Amos (2023) focused on collaboration between humans and algorithms. Fogliato et al. (2022) focused on “AI-assisted decision-making” (p. 1362) and used mainly the term “collaboration” to describe the form of interaction. They only used the term “team” to describe the joint performance output without further elaboration on its characteristics or processes. Weisz et al. (2021) took the notion of teaming a step further, discussing future potential of generative AI as a collaborative partner or teammate for human software engineers. They used terms such as “partnership,” “team,” and “collaboration” to describe the collaborative nature of AI working alongside human engineers. Finally, Pynadath et al. (2022) discussed human-robot teams and emphasized the “synergistic relationship” (p. 749) between robots and humans. However, they also did not provide additional explanations or background information on their understanding of teamwork.

What we see overall is that there are different streams of current research on HAIT, examining different aspects or contexts of HAIT. Whilst there is one cluster centered around human perception of HAIT, with a tendency to use the term teaming, the other clusters focus more on the AI technology or on the task, describing teaming in a sense of cooperation or collaboration, partially envisioning the AI as a supportive element. Also, within the network's most connected papers, we find this diversity in understandings and terminology and, yet again, a lack in conceptual approaches and definitions.

To structure the antecedents and outcomes under examination within the clusters for RQ3, we developed a structural framework that groups them according to the part of the (work) system they refer to. We used the structuring of Saßmannshausen et al. (2021) as an orientation, who differentiate AI characteristics, human characteristics and (decision) situation characteristics as categories for antecedents. As our reference was HAIT and not only the technology part (as with Saßmannshausen et al., 2021), we needed to broaden this scheme and chose the categories of human, AI, team, task (and performance for outcomes) and context to describe the whole sociotechnical system. We also added a perception category for each category to clearly distinguish between objectively given inputs (see also O'Neill et al., 2022) and their subjective experience, both being potential (and independent) influence factors or outcomes of HAIT. Note that all antecedents and outcomes were classified as such by the authors of the respective publications (e.g., by stating that “X is needed to form a successful team”) and can relate to either building a team, being successful as a team, creating a feeling of team cohesion etc. The concrete point of reference differs depending on the publication's focus but is always related to teaming of human and AI.

Cluster 1 contained a high number of antecedents of HAIT, i.e., variables considered necessary for it. Amongst these were the (dynamic) autonomy of the AI and trust, but also aspects relating to explainability of the AI and situation awareness. Two of the papers took a more systematic view on antecedents, structuring them into categories. The review by O'Neill et al. (2022), contained in this cluster, sorts the antecedents they found into characteristics of the autonomous agents, team composition, task characteristics, individual human variables and training. Communication was found to serve as a mediator. Saßmannshausen et al. (2021) structure their researched antecedents (of trust in the AI team partner) into AI characteristics, human characteristics and decision situation characteristics. For outcomes, cluster 1 included, next to a number of performance and behavioral outcomes, many different subjective outcomes, e.g., perceptions of the AI characteristics, perceived decision authority, mental workload or willingness to collaborate. O'Neill et al. (2022) did not provide empirical data on outcomes of HAIT itself, but presented an overview of the literature on various outcomes, including performance on the individual and team level (70 studies), workload (39 studies), trust (24 studies), situation awareness (23 studies), team coordination (15 studies) and shared mental models (six studies).

Cluster 2 incorporated relatively few antecedents and outcomes of teaming, as most papers focused on the structure or mode of teaming itself. These cooperation modes could be considered as the central antecedent of the cluster. AI design, explainability as well as the specificity of the occupation, task (and goal) or the organizational context were also named. They were supposed to affect subjective variables such as trust, role clarity, attitude toward cooperation and preference for a feedback style, but also broad organizational aspects such as competitive advantages.

In cluster 3, AI explainability emerged as the main antecedent considered by all central articles. The articles differed in the way that explainability was technologically implemented (e.g., local vs. global explanations), but all considered it as an antecedent for explaining outcomes related to calibrated decision-making (objective, i.e., accuracy of decisions as well as subjective, i.e., confidence/trust in decision).

The majority of the contributions in cluster 4 consisted of theoretical reviews and frameworks, in which antecedents of a successful human-robot collaboration were derived and discussed. Identified antecedents, primarily related to the physical component of a robotic system, were robot speed, end-effector force/torque, and operational safety aspects. Further antecedents, which were discussed and can also be applied to non-embodied AI systems, were the ability of the system to learn and thus to generalize knowledge and apply it to new situations, as well as effective communication between the cooperation partners, a shared mental model to be able to work toward the same goal, and (bidirectional) trust. In addition, the usability of the system, its adaptability and ease of programming, the consideration of the psychophysiological state of the human (e.g., fatigue, stress) and the existing roles in the workplace were identified as prerequisites for creating a harmonious collaboration between humans and technologies. When considering the antecedents addressed, expected outcomes included increased productivity and efficiency in the workplace, reduced costs, and better data management.

The articles in cluster 5 considered or experimentally manipulated AI performance (accuracy, failure, changes in performance) and the general behavior of the system (proactive dialogue). The articles argued that this is a central antecedent for explaining how trust is developed, lost or calibrated in human-AI teams.

Overall, the antecedents and outcomes of HAIT have received considerable research interest, and a number of variables have already been studied in this context (see Tables 4, 5 for an overview).

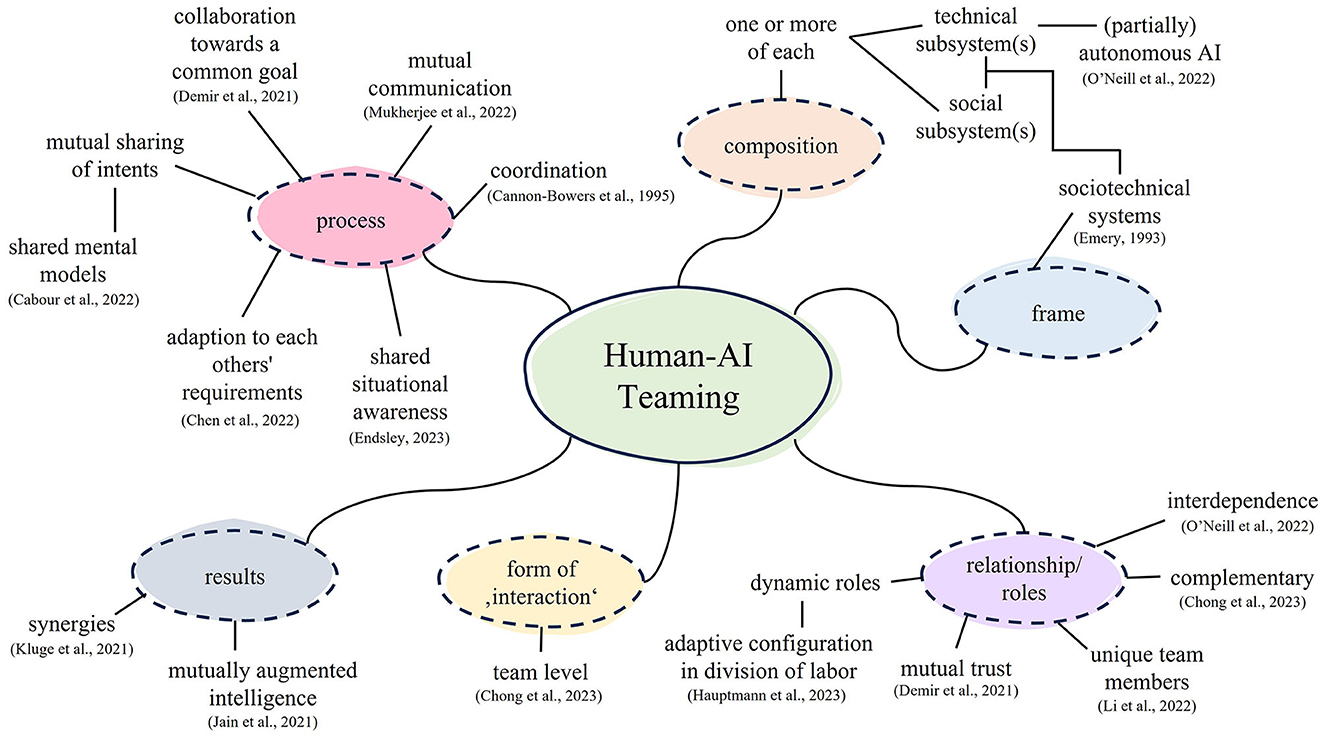

Our final RQ4 was to identify, if feasible, a cohesive definition that would bridge the diverse aspects addressed in current HAIT research. However, as evident from the results of the other research questions, a lack of defining approaches and concepts is apparent throughout the network. We found only one elaborate definition, that of O'Neill et al. (2022), which was also cited, but not by the breadth of publications. Notably, the included publications, including O'Neill et al. (2022), predominantly adopt a perspective that focuses on one of the two subsystems within a team (i.e., the human or the AI), and tend to be primarily technology-oriented. That is, they mainly examine which conditions a technical system needs for teaming, or which characteristics the human being should bring along and how these can be promoted for collaboration. This one-sided inclination is also reflected in the addressed antecedents and outcomes (see Tables 4, 5).

However, in order to foster a seamless teaming experience and promote effective collaboration, it is crucial to consider the team-level perspective as a primary focus. Questions regarding the requisite qualities for optimal human-AI teams and the means to measure or collect these qualities remain largely unaddressed in the included publications, resulting in a blind spot in the network and the current state of HAIT research, despite the fundamental reliance on the concept of teaming. While the review of O'Neill et al. (2022) on human-autonomy teaming dedicates efforts toward defining the concept and offering insights into their understanding, an extension of this concept, particularly with regard to the team-level perspective, is needed. The subsequent sections of the discussion will expound on the reasons for this need in greater detail and propose an integrative definition that endeavors to unite all relevant perspectives.

In this work, we aimed to examine the current scope and breadth of literature of HAIT as well as research streams to comprehend the study field, the existing understandings of the term and important antecedents and outcomes. For this purpose, we conducted a bibliometric network analysis revealing five main clusters, followed by a scoping review examining the content and quality of the research field. Before delving into the terminology and understanding of HAIT and what we can conclude from the antecedents and outcomes under examination, we point out the boundaries and connected risks of our work. This serves as the background for our interpretation and the following idea of conceptualizing and defining the construct of HAIT, which is complemented by demands for future research from a perspective on humane work-design and socio-technics.

Choosing our concrete approach of a bibliometric network analysis and follow-up scoping review helped us answer our research questions, although it also placed some boundaries on the insights we could gain. First of all, the chosen methods determined the kind of insights possible. Network analyses rely on citation data to establish connections between publications (Bredahl, 2022). The quality and completeness of the citation data may vary, leading to missing or insufficient citations of certain publications, thus causing bias and underrepresentation of certain papers or research directions (Kleminski et al., 2022). We are not aware of a bias toward certain journals, geographic regions or disciplines within our network, but do not know if this also holds for the cited literature. This might lead to certain areas of HAIT research, such as literature on the teaming level, not being considered by the broad body of literature or by the most connected papers (maybe also due to the mentioned inconsistent terminology), which would also be reflected in the papers' content and reveal blind spots. Furthermore, bibliometric network analyses focus mainly on the structural properties of the network and hence often disregard contextual information (Bornmann and Daniel, 2008), which is why we decided to additionally conduct a scoping review. Scoping reviews are characterized by a broad coverage of the research area (Arksey and O'Malley, 2005), which is both a strength and a weakness of the method: On the one hand, a comprehensive picture of the object of investigation emerges, but on the other hand, a limitation in the depth of detail as well as in the transparency of quality becomes apparent. Only being able to look into the 10% most connected papers within each cluster also limited our opportunity to go into more detail and map the whole field of research, again with the risk of leaving blind spots that are actually covered by literature.
Hence, we also considered the most connected papers within the whole network to get a broader picture.
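
To illustrate the selection step described above, the following minimal sketch picks the most connected share of papers within each cluster of a citation network. It is not the procedure used in this review: the function name `top_connected`, the toy data, and the use of plain degree (number of citation links) as the connectivity measure are all assumptions made for illustration.

```python
import math
from collections import defaultdict


def top_connected(edges, cluster_of, share=0.10):
    """Return, per cluster, the `share` most connected papers.

    Assumption: connectivity is measured as plain degree, i.e., the
    number of citation links a paper has anywhere in the network.
    """
    # Count links per paper over the whole network.
    degree = defaultdict(int)
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1

    # Group papers by their cluster label.
    by_cluster = defaultdict(list)
    for paper, cluster in cluster_of.items():
        by_cluster[cluster].append(paper)

    selected = {}
    for cluster, papers in by_cluster.items():
        # Keep at least one paper per cluster, rounding the share up.
        k = max(1, math.ceil(share * len(papers)))
        # Sort by descending degree; ties are broken alphabetically
        # so that the result is deterministic.
        ranked = sorted(papers, key=lambda p: (-degree[p], p))
        selected[cluster] = ranked[:k]
    return selected


# Hypothetical toy network: five papers in two clusters.
toy_edges = [("A", "B"), ("A", "C"), ("A", "D"), ("D", "E")]
toy_clusters = {"A": 1, "B": 1, "C": 1, "D": 2, "E": 2}
toy_selection = top_connected(toy_edges, toy_clusters)
```

The sketch makes the limitation visible: with a fixed share per cluster, a paper with few links is never reviewed in depth, regardless of its content.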

The basis of our network analysis and review was a literature search in WoS and Scopus. Although these are the most comprehensive databases available (Kumpulainen and Seppänen, 2022), there is a possibility that some relevant works are not listed there or were not identified by our search and screening strategy. More than in the databases, this problem might lie in restricting our search to publications published from 2021 onwards. It might be that important conceptual and definitional approaches can be found in the prior years, although we found no indications for that within the qualitative analyses of terminology or referenced definitions. Confining our search strongly to the last 2 years of research enabled us to address a relatively wide spectrum of the latest literature in a field that is very hyped and has a large output of articles and conference contributions. While there is a risk associated with excluding “older” research, we sought to partially balance it out by analyzing the papers' content, including their references to older definitions and concepts. Nonetheless, it remains a concern that our conclusions may primarily apply to the very latest research stream, potentially overlooking an influential stream of, for instance, team-level research on HAIT that held prominence just a year earlier. Therefore, it is important to view our results as representing the latest research streams in HAIT.

Finally, bibliometric studies analyze only the literature of a given topic and time period (Lima and de Assis Carlos Filho, 2019), which can limit our results because of research not being found under the selected search terms, and the clustering algorithms used are based on partially random processes (Yang et al., 2016), which limits transparency on how results are achieved. We tried to balance this out by properly documenting our whole analysis procedure and all decisions taken within the analysis.

Another limitation was discovered in our results during the analyses. Our primary idea was to find different clusters in the body of literature which illuminate the construct HAIT from different disciplinary perspectives. From this, we wanted to extract the potentially discipline-specific understandings of HAIT and compare them among the clusters. Although we identified five clusters approaching HAIT with different research foci, they did not differ structurally in their disciplinary orientation. The differences in terminology and understanding within the clusters were sometimes just as large as those between clusters. Almost all of the identified publications, as well as most of the clusters, took a more technology-centered perspective, which means that some disciplines are not broadly covered in our work. For example, psychological, legal, societal, and ethical perspectives are poorly represented in our literature network. An explanation for this may be that there has been little research on HAIT from these disciplines, or that these perspectives were highlighted by publications within the network that were not included in the review on a content basis, or by literature from former years not included in our network. Finally, it should be noted that even though very different aspects are researched and focused on within the clusters, the understanding of the construct of HAIT within which the research takes place is either not addressed in detail or only in very specific aspects, limiting our ability to answer our RQ2 adequately.

Summarizing the findings within our literature network on HAIT under examination or discussion, we can identify some general trends, but also some research gaps and contradictions.

To answer RQ1 about human-AI teaming research clusters, we identified five distinct clusters with varying emphases. Despite their shared focus on technological design while considering human aspects, which is also reflected in the network metrics, subtle differences in research foci and the specific AI systems under investigation were discernible: Cluster 1 focuses mainly on human variables that are important for teaming. Cluster 2 examines task-dependent variables. Cluster 3 especially investigates the explainability of AI systems, cluster 4 concentrates on robotic systems as special AI applications, and cluster 5 deals mainly with the effects of AI performance on humans' perception. Except for cluster 1, the publications exhibit a focus on technology and are grounded in engineering principles. This is reflected in the publication organs, which are mainly technically oriented, with many at the intersection of human and AI, but primarily adopting a technological perspective. While other perspectives exist, they are not as prevalent. Although this is reasonable given that technological system development originated in this field (Picon, 2004), research should allocate equal or even more attention to the human and team component in socio-technical systems. Human perceptions can impact performance (Yang and Choi, 2014), contrasting with technological systems that perform independently of perceptions and emotions (Šukjurovs et al., 2019). However, current research streams continue to emphasize the technological aspects.

Regarding RQ2, both terminologies and their comprehension within the clusters were examined to investigate the understanding of HAIT. A broad range of terms is used, often inconsistently within publications. While “teaming” is occasionally used, broader terms like “interaction” and “cooperation” prevail, with “collaboration” being the most common. Interestingly, many terms used do not focus on the relational or interactional part of teaming but instead highlight technology as support, a partner or a teammate, reflecting the technology-centeredness once again. In parallel, it becomes apparent that the phenomena of work between humans and AI systems are rarely defined or classified by the authors. Instead, the terms “cooperation,” “collaboration,” “interaction,” and “teaming” are used in a taken-for-granted and synonymous manner. Paradoxically, a differentiated understanding emerges in some of the papers: “interaction” denotes shared workspace and task execution with sequential order or just any contact between human and AI, “cooperation” involves access to shared resources to gather task-related information, but retains separate work interests, and “collaboration” entails humans and technologies working together on complex, common tasks. However, this differentiation, which is well established in human-robot interaction research (see, e.g., Othman and Yang, 2022), is not consistently reflected in the majority of papers within our network. Except for O'Neill et al.'s (2022) paper, the term “teaming” is underdefined or unclassified in other works. Possible reasons include the dominance of a technology-centric perspective (Semeraro et al., 2022) in current research efforts, as collaboration aspects are likely to attract more interest in other research domains, such as psychology or occupational science (Bütepage and Kragic, 2017). The publication organs of these domains are underrepresented in our network.
Another possible reason could be the novelty of the research field of teaming with autonomous agents (McNeese et al., 2021). Compared to the other definable constructs, the concept of teaming has only been increasingly used in recent years, which means that research in this field is still in its infancy and, thus, it has not yet fully crystallized what the defining aspects of teaming are. However, it raises questions about conducting high-quality research in the absence of a well-defined construct, as terms like “teammate” or “partner” alone lack the scientific clarity required for construct delineation.

One interesting idea shown in some of the publications offers a way to unite the different terms used within the field: the concept of existing collaboration modes or different views on human-AI work. Authors such as McNeese et al. (2021), Li et al. (2022), Chandel and Sharma (2023), and Endsley (2023) address that there might be different ways (or degrees) of AI and humans collaborating: Some aim to support the human, which reflects more of a cooperative perspective with distinct, not necessarily mutually interdependent tasks. Others are conceptualized as human-AI teams from the very beginning, with mutual intelligence augmentation, dynamic adaptation to one another and collaborative task execution. One can discuss if these should be seen as different categories of interaction, or if they are considered different points on a continuum of working together.

To answer RQ3 on antecedents and outcomes of HAIT, we note that for antecedents, nearly all components of a human-AI team were under examination or discussion at least in a few publications, except for team and human perception. Research on AI characteristics dominated the field, with many constructs under research, ranging from explainability, apparently the most important topic (10 publications), to dynamics and levels of automation of AI. For team variables, most papers looked at team interaction as well as the conglomerate of (shared) situation awareness and mental models. What we can see overall is a focus on characteristics of the work system, but also quite a few perceptual and subjective antecedents under investigation. This shows the importance of considering not only objectively given or changeable characteristics, e.g., in AI design, but also how humans interact with those characteristics and how they perceive them on a cognitive and affective level.