95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

REVIEW article

Front. Artif. Intell. , 19 September 2023

Sec. AI in Food, Agriculture and Water

Volume 6 - 2023 | https://doi.org/10.3389/frai.2023.1203546

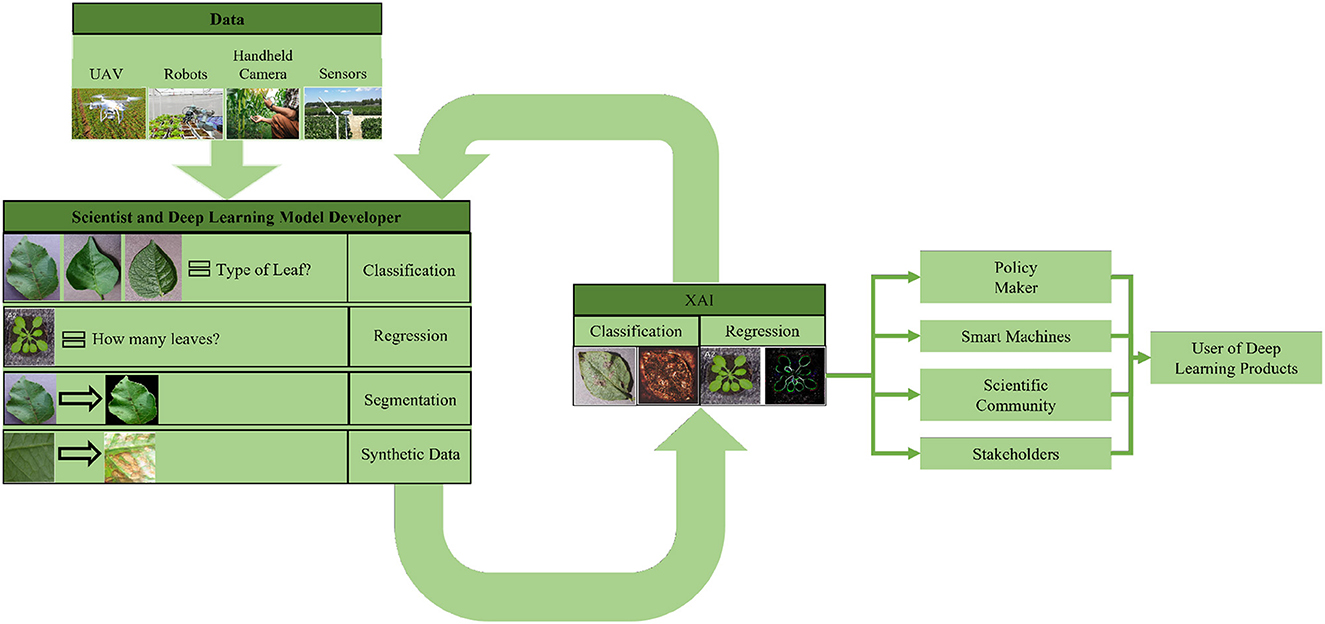

The increasing human population and variable weather conditions, due to climate change, pose a threat to the world's food security. To improve global food security, we need to provide breeders with tools to develop crop cultivars that are more resilient to extreme weather conditions and provide growers with tools to more effectively manage biotic and abiotic stresses in their crops. Plant phenotyping, the measurement of a plant's structural and functional characteristics, has the potential to inform, improve and accelerate both breeders' selections and growers' management decisions. To improve the speed, reliability and scale of plant phenotyping procedures, many researchers have adopted deep learning methods to estimate phenotypic information from images of plants and crops. Despite the successful results of these image-based phenotyping studies, the representations learned by deep learning models remain difficult to interpret, understand, and explain. For this reason, deep learning models are still considered to be black boxes. Explainable AI (XAI) is a promising approach for opening the deep learning model's black box and providing plant scientists with image-based phenotypic information that is interpretable and trustworthy. Although various fields of study have adopted XAI to advance their understanding of deep learning models, it has yet to be well-studied in the context of plant phenotyping research. In this review article, we reviewed existing XAI studies in plant shoot phenotyping, as well as related domains, to help plant researchers understand the benefits of XAI and make it easier for them to integrate XAI into their future studies. An elucidation of the representations within a deep learning model can help researchers explain the model's decisions, relate the features detected by the model to the underlying plant physiology, and enhance the trustworthiness of image-based phenotypic information used in food production systems.

The emergence of deep learning has allowed researchers to perform tasks that were previously thought to be impossible. Deep learning is popularly used in the fields of computer-aided diagnosis (Xie et al., 2021), drug discovery (Chen et al., 2018), healthcare (Esteva et al., 2019), law enforcement (Raaijmakers, 2019), autonomous vehicles (Rao and Frtunikj, 2018), robotics (Sünderhauf et al., 2018), and so on. Research (2020) predicted that the global market value of the deep learning industry will increase to $60.5 billion by 2025 from $12.3 billion in 2020, an increase in the growth rate of 37.5%. Among industrial sectors, agriculture is one of the slowest to adopt deep learning but has a high potential for its use to combat global food insecurity.

The increasing world population poses a threat to food security. According to the Food and Agricultural Organizations of the United Nations, global food production needs to increase by 70% to provide for 9 billion people by the year 2050 (Askew, 2017). However, this increased food production must be done on about the same amount of farmland used today. The only way to ensure food security is to increase the production of the crops. According to the world food summit in 1996, food security is defined as, “Food security exists when all people, at all times, have physical and economic access to sufficient, safe and nutritious food that meets their dietary needs and food preferences for an active and healthy life” (Godfray et al., 2010). So, ensuring increased food production is not enough for food security. We need to provide nutritious food (Tripathi et al., 2019). We can ensure food security by breeding new cultivars of crops that have higher quality, higher yield, better nutrition, and greater resilience to variable environmental conditions. Food security can also be enhanced by introducing better management systems to maximize the outcome of our food production systems (Jiang and Li, 2020). Scientists have been working relentlessly to introduce new ways of achieving food security, and they believe that the inclusion of technology in agriculture can help us achieve this goal. The study of plant phenotyping can not only help us in designing better crop management systems but also provide new ways of improving crop characteristics, such as yield. Along with increasing food production, we also need to ensure food quality and safety, as well as the economic and environmental sustainability of the food production system. Plant phenotyping plays an important role by informing both crop breeding and crop management.

Plant phenotyping is the assessment of complex plant traits such as growth, development, abiotic and biotic tolerance and resistance, architecture, physiology, ecology, yield, and the basic measurement of individual quantitative parameters that form the basis for complex trait assessment (Li et al., 2014). Recently, there has been significant improvement in plant phenotyping studies. The inclusion of smart farming (Wolfert et al., 2017) and precision agriculture (Gebbers and Adamchuk, 2010) have allowed the extension of conventional tools and provided farmers aware systems that are autonomous, context-aware, and can be controlled remotely. Big data technology is playing an essential role in this development (Wolfert et al., 2017), and deep learning models are an integral part of it. Recently, there has been an increased interest in deep learning-based plant phenotyping studies due to their superiority over traditional analysis (Chandra et al., 2020; Jiang and Li, 2020; Ren et al., 2020; Kolhar and Jagtap, 2021; Arya et al., 2022).

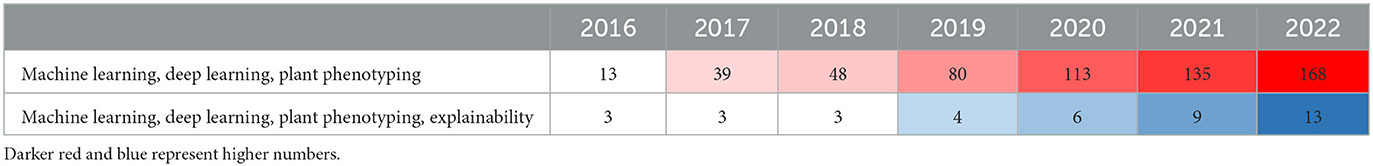

We use deep learning models to process a large amount of data to build decision systems without properly understanding the decision-making process (Guidotti et al., 2018). In May 2018, the General Data Protection Regulation law was enforced in the European Union and European Economic Area, which indicates that whoever uses automated systems for profiling and/or decision making has to ensure fairness, transparency and provide anyone with a meaningful explanation of the logic used (EU, 2018). As a result, in recent years, there has been significant growth in the study of explainable deep learning models (Biran and Cotton, 2017; Preece, 2018; Vilone and Longo, 2021), more commonly known as Explainable AI (XAI). XAI is being adopted in different fields of study to explain existing models and develop better models (Tonekaboni et al., 2019; Bhatt et al., 2020; Bai et al., 2021; Gulum et al., 2021; Thomas et al., 2021). Table 1 shows the number of publications each year where the studies used deep learning or XAI for plant phenotyping. We retrieved the data from a PubMed search with the keywords machine learning or deep learning and plant phenotyping, and machine learning or deep learning and plant phenotyping and explainability. We considered other keywords (e.g., explainable AI, transparent AI, XAI) during our search, however, we found that the combination of the mentioned keywords returned the most relevant papers. From Table 1 it is evident that more plant phenotyping studies are adopting deep learning. Although the trend shows that more researchers are using XAI for studies, it is still in its early stages.

Table 1. Evolution of the number of publications that refers to deep learning in plant phenotyping and XAI in plant phenotyping.

The availability of deep learning algorithms has allowed plant scientists to easily incorporate them into their studies and achieve impressive results on challenging problems. However, due to the black-box nature of deep learning models, plant scientists are sometimes unaware of how such results were achieved. As a result, any mistake in the development of a deep learning model remains unnoticed, which might affect the generalisability of the models. This black box nature also limits the ability of plant scientists to understand the relation between the results of a deep learning model and plant traits. Additionally, the lack of understanding makes it difficult for scientists to explain the results of a deep learning model to the user of the resulting tool or services. XAI has the capability of explaining the decisions of a model. Such explanations can be utilized to better understand the model and relate the features detected by the model to the plant traits.

The motivation of this study is to provide a detailed overview of the recent developments in XAI techniques so that researchers working in plant phenotyping are able to develop explainable and transparent deep learning models. We thus focus on achieving the following objectives.

• Review XAI techniques in deep learning studies: This objective focuses on reviewing existing XAI techniques that may assist researchers in interpreting the predictions and explaining the decisions of a deep learning model. This comprehensive review is to help researchers understand the capabilities of different XAI techniques and the appropriate contexts and modalities for their application.

• Explore the application of deep learning in plant phenotyping: This objective focuses on reviewing popular studies in the field of plant phenotyping that have utilized deep learning models, to provide researchers insights into how deep learning is advancing and improving the outcomes of plant phenotyping studies.

• Investigate the limitations and opportunities of XAI techniques in plant phenotyping: This objective includes a review of plant phenotyping studies utilizing XAI techniques. Also, an exploration of how XAI can reveal plant traits by analyzing large plant datasets, as well as help build trust in the predicted traits for use in downstream experiments and sections in breeding programs.

Researchers have been using intelligent and automated systems for a long time. Moore and Swartout (1988) was the first to point out the necessity of explaining intelligent systems. During the same period, Swartout (1983) developed a system to justify the decision of a code, and used it to explain the behavior of a Digitalis Therapy Advisor. However, Van Lent et al. (2004) was the first to use the term XAI to describe the architecture and reasoning capabilities of a U.S. Army's training system. We have come a long way since then. In this section, we first define XAI, then describe its categories, and finally provide an overview of existing XAI techniques.

Despite the recent development, researchers are still divided on the definitions and terminologies used in XAI techniques. XAI refers to tools, techniques, and methods that help humans understand, interpret and trust the decision of an artificial intelligence model (Adadi and Berrada, 2018; Gunning et al., 2021; Vilone and Longo, 2021). XAI methods are also commonly known as interpretable AI, which is a system where the users can interpret how the input and output are mathematically related (Bellucci et al., 2021). Although the tools provide an interpretation of the features a model uses for its decision-making, the terms interpretability and explainability are used interchangeably in the literature. However, Adadi and Berrada (2018) stated that the interpretable model could be considered explainable if humans can understand the operation of the model. Additionally, Linardatos et al. (2020) mentioned that the depth of human understanding of the internal procedure of a model depends on the quality of the explanation. In the context of machine learning, explainability techniques summarize the behavior of the models and describe the system's internal reasoning and dynamics (Gilpin et al., 2018). On the other hand, interpretability is considered to be the degree to which a human can understand the reason for a machine learning model's decision (Miller, 2019). Although the techniques described in the following sections are more concerned with interpretation than explanation, for consistency with other literature, we address them as XAI techniques.

The ethical aspects of a deep learning model have given rise to the term responsible AI. Explainability techniques that deal with the social impact and ethical and moral obligations are called responsible AI (Dignum, 2017; Arrieta et al., 2020). Transparent AI is another common term that is related to explainability techniques. Lipton (2018) considered an AI model transparent if a domain expert is able to calculate the model's prediction in a reasonable time using the input data and model parameters. Although this definition applied to linear models, through transparent AI, researchers try to build non-linear models whose decisions can be explained even when they behave unexpectedly (Lyons, 2013; Larsson and Heintz, 2020).

There are several ways to categorize different XAI techniques. The most common ways of categorizing XAI techniques are based on the scope of explanation, the level of implementation, and the transferability of algorithms. Figure 1 shows an overview of the different categories of XAI Techniques.

Depending on the explanation's scale, several researchers have categorized the explanation as either global or local (Ribeiro et al., 2016; Adadi and Berrada, 2018; Ghorbani et al., 2019b; Ibrahim et al., 2019; Burns et al., 2020; Mohseni et al., 2021). Global explainability techniques provide a comprehensive explanation of how the model works (Liu et al., 2016; Nguyen et al., 2016; Kim et al., 2018; Ghorbani et al., 2019b; Ibrahim et al., 2019). Although global explanations allow the user to gain an overview of the model and help them quickly identify what features might be impacting the model's performance, the global explanation's efficiency is largely dependent on the complexity of the model. On the other hand, the explanation of the model's decision for a specific instance is considered to be a local explanation (Ribeiro et al., 2016; Lundberg and Lee, 2017; Mehdiyev and Fettke, 2021; Huang et al., 2022). Local explainability techniques can easily be adapted for a complex model but lack generalizability. We can use local explainability techniques to help users understand how a model performs for different examples. In our study, we found that local explanation techniques are more popular than global explanation methods. This may be due to the complexity of the process of how a deep learning model makes a decision. Designing an algorithm to explain the model's decision for a single example is generally easier than designing the algorithm considering the decisions for all the instances. In future, the innovation of global XAI techniques can provide detailed insight into the inner working of the models.

Another important categorization of XAI techniques relates to how the XAI method is implemented in the model. It can either be implemented within the model or implemented as an external algorithm (Samek and Müller, 2019; Danilevsky et al., 2020; Das and Rad, 2020; Belle and Papantonis, 2021). XAI techniques that are model dependent and embedded within the model, are called intrinsic XAI techniques (Schetinin et al., 2007; Grosenick et al., 2008; Caruana et al., 2015; Letham et al., 2015). Intrinsic techniques are usually applicable to linear models. Such techniques are nontransferable, and any change in the architecture can cause the XAI technique to fail. A more popular way of explaining deep learning models is called post-hoc explanations. An XAI technique is considered post-hoc if an external explainer is used on a trained model to understand the reasoning behind its decision (Bach et al., 2015; Lundberg and Lee, 2017; Tan et al., 2018; Brunese et al., 2020; Soares et al., 2020). We found that most XAI techniques designed for deep learning models are post-hoc. The intrinsic explanations require the techniques to be embedded within the model. In deep learning models, there are a large number of parameters which are distributed over different layers and the layers are non-linearly correlated to each other. As a result, it is difficult to develop intrinsic explanations for deep learning models. So, researchers tend to use post-hoc external algorithms to explain models.

Depending on the transferability of an XAI method, it can either be model-specific or model-agnostic. A model-agnostic XAI technique does not depend on the model architecture. These methods can be applied to any deep learning model (Ribeiro et al., 2016, 2018; Li et al., 2018). Model-agnostic techniques provide a trade-off between the accuracy of the explanation and generalizability. Explanations produced by a model-specific XAI technique are restricted to a specific model or dataset. Such techniques are not transferable to other models (Hendricks et al., 2016; Lapuschkin et al., 2016; Soares et al., 2020; Agarwal et al., 2021). As a result, they tend to be more accurate in explaining a specific model's decision. So, researchers prefer model-specific techniques rather than model-agnostic ones.

The first deep learning algorithm was developed by Ivakhnenko (1968), where the authors proposed a multilayer artificial neural network that was capable of updating its own architecture and complexity. Fukushima (1988) was the developer of the first Convolutional Neural Network (CNN) model, Necognition, that used reinforcement learning for training and used pooling layers and convolutional layers. Inspired by Necognition, LeCun et al. (1989) proposed ConvNet, a deep learning model using supervised training and backpropagation for analyzing image data. Since then, researchers have developed more complex and more capable models, such as Generative Adversarial Networks (Goodfellow et al., 2014), Inception (Szegedy et al., 2015) architectures, and Transformers (Vaswani et al., 2017). In comparison to the development of more accurate and efficient models, there have been far fewer prior works focusing on explaining such complex models. However, there has been a recent increase in interest in model explainability techniques (Arrieta et al., 2020).

The notions used in XAI studies lack a proper and consistent definition, and therefore researchers without domain knowledge may find it difficult to understand XAI concepts. To close the gap of understanding, a popular structure for review papers on this topic are to introduce notions, taxonomies, and relatable concepts and then review the related articles (Adadi and Berrada, 2018; Gilpin et al., 2018; Arrieta et al., 2020; Das and Rad, 2020; Vilone and Longo, 2020). Arrieta et al. (2020) reviewed 400 articles and proposed a novel definition of explainability, and emphasized that XAI is necessary to ensure security. In machine learning, fairness is considered a subsection of machine learning interpretability and addresses the social and ethical consequences of machine learning algorithms (Tian et al., 2022). Linardatos et al. (2020) studied the fairness of machine learning models where the authors mentioned that researchers favor groups of individuals with different attributes over ensuring individuals are treated similarly; thus, the importance of individuals is often ignored. Chatzimparmpas et al. (2020) reviewed the studies of visualization and visual interpretation of machine learning models by categorizing them and qualitatively representing them, and finally identified the research gaps in the utilization of XAI and proposed ways of integrating them. Liu et al. (2017) provided an overview and summary of interactive models in deep learning, which can help users better explain models by interacting with them. In their study, Adadi and Berrada (2018) described the need for XAI in different fields and the implication of adapting it to the current AI systems. A methodological approach to evaluate XAI based on a taxonomy of interpretability was proposed by Gilpin et al. (2018). Preece (2018) reviewed the latest XAI techniques and demonstrated that the fundamental problems associated with machine learning algorithms have a long history and the elements of earlier research can help advance today's XAI models.

There are also domain-specific reviews of XAI studies (Danilevsky et al., 2020; Jiménez-Luna et al., 2020; Tjoa and Guan, 2020). Danilevsky et al. (2020) reviewed the recent advancement of XAI techniques in natural language processing and found that researchers prefer local XAI over global ones. This preference is influenced by the increased usage of the black box models in comparison to the white box models. Tjoa and Guan (2020) studied the state-of-the-art XAI techniques and suggested how the techniques can be utilized in the medical domain. The authors emphasized the importance of accountability and transparency in deep learning models within the medical sector and how XAI can help ensure these attributes. In the review conducted by Jiménez-Luna et al. (2020), the authors explore the application of XAI in the field of drug discovery and highlight the potential of XAI techniques to address the challenges faced in this domain. They emphasize that despite the popularity of deep learning models, the mathematics behind the model still remains elusive to most researchers, and XAI can help expand our understanding by providing interpretability and insights into these models. In Table 2, we have summarized the reviews on explainability techniques, which can help us understand the structure of the reviews and the techniques discussed.

To study the prospect of XAI techniques in plant phenotyping, we believe it is important to have knowledge of the existing XAI techniques. We have categorized the existing XAI techniques into six different categories based on the explanation generated by the techniques. Table 3 provides an overview of the models discussed in this section.

Visualizing the decisions made by different parts of a deep learning model or visualizing the learned features that contributed to the prediction of an instance is a popular way of explaining a model. Layer-Wise Relevance Propagation (LRP) is a deep learning model explanation technique which helps to quickly find relevant features responsible for the prediction (Bach et al., 2015). LRP can be used for various deep learning architectures and data types, which makes it popular in XAI. Lapuschkin et al. (2016) analyzed the reasoning behind the prediction of a Fisher Vector and Deep Neural Network (DNN) models. The authors used a heat mapping technique to find the pixels contributing to the prediction which can be used to determine whether or not the model uses relevant features for prediction. Hendricks et al. (2016) combined natural language processing with visual analytics to generate explainable systems that humans easily understand. The authors trained to separate systems where the first system was trained for image classification, and the second system was trained to generate text descriptions of the discriminating features of a class. The loss function is an essential part of a deep learning model. Instead of optimizing the loss functions, Li et al. (2018) proposed a loss landscape visualization technique that can better capture the sharpness and flatness in the landscape. They also showed that the visualization technique is more intuitive and easily understandable than other techniques. Gradient-weighted class activation mapping (GradCAM) uses the class-specific gradient flowing through a CNN's final convolutional layers to visualize the input's important features in a saliency map. Assaf and Schumann (2019) used GradCAM to explain a model trained on multivariate time-series data.

GradCAM++ is an extension of GradCAM (Chattopadhay et al., 2018). It allows for the visualization of multiple objects of the same class in the image as it uses a weighted combination of positive partial derivatives of the last convolutional layer to generate the visualizations. Ghorbani et al. (2019b) proposed a concept-based approach called Automated Concept-based Explanations (ACE) which can help reveal whether a model's prediction correlates to any unwanted features. Zintgraf et al. (2017) proposed a saliency map generation technique, where for each prediction, a relevance value is assigned to each input feature with respect to the class. Simonyan et al. (2013) created saliency maps representing the discriminative features of a class by passing a single backpropagation through a CNN. Guided Backpropagation (GBP) is a gradient-based visualization technique that allows the visualization of the image features that activate the neurons in a deep learning model (Springenberg et al., 2014). In the field of medical science, Lee et al. (2019) proposed a deep learning-based explainable acute intracranial hemorrhage system that generated saliency maps showing the relevant features in a class.

A surrogate model is a simple model that is used to explain a complex model. Local Interpretable Model-agnostic Explanation (LIME) (Ribeiro et al., 2016) is a popular example of a surrogate model, that can help identify regions in the input essential for the prediction. Ribeiro et al. (2016, 2018) proposed extensions of LIME called Sub-modular Pick LIME (SP-LIME) and Anchors, respectively. Shapley additive explanations (Lundberg and Lee, 2017) is another example of using the surrogate model to explain a deep learning model, which assigns an importance value to each feature for an instance. Tan et al. (2018) proposed Distill-and-Compare that can explain an inaccessible black-box model by training a model with labeled data with risk factors and then training another model to predict the outcome. Che et al. (2015) used knowledge distillation from deep learning models to explain the features and prediction rules with gradient boosting trees. Soares et al. (2020) proposed a rule-based surrogate XAI model for deep reinforcement learning where the results of the reinforcement learning model are replicated with an if-then rule-based model.

The benefit of a global explanation is that it allows for a description of the neural network using a single set of features. Global Attributions Mapping proposed by Ibrahim et al. (2019) allows granularity of analysis by increasing or decreasing the size of the subpopulation. Erion et al. (2019) developed a framework called attribution prior using the feature attribution method that enforces a deep learning model to train based on prior expectations and allows encoding of human intuitions without the necessity of knowing unimportant features beforehand.

Generalized additive models are a class of linear model (Lou et al., 2012) that combines multiple models where each model is trained with individual features. A drawback of this model is that it fails to work with non-linear functions. An extension of this approach was proposed by Agarwal et al. (2021), called Neural Additive Models (NAM), where, a linear combination of neural networks models is used to generate a prediction. NAM can help generate an explanation of individual features for a prediction.

In a perturbation-based XAI technique, explanations are generated by probing a trained model with different variations of the input data. The Interpretability Randomization Test and the One-Shot Feature Test proposed by Burns et al. (2020) are perturbation-based XAI methods. The intermediate layers of a CNN model were visualized by Zeiler and Fergus (2014). The authors hide different parts of an input image and used a Deconvolutional Neural Network to regenerate the input. The saliency maps generated by this process represent the features responsible for the activation of the feature map. Angelov and Soares (2020) proposed a generative explainable deep learning model that is automatically built from the training data without defining parameters, problem-specific thresholds, and intervention. The swap test is an explainable deep learning model that generates heatmaps representing the area of interest in the MRI images of Alzheimer's patients (Nigri et al., 2020).

Recently several XAI techniques have been developed and applied in different fields of studies (Cabitza et al., 2017; Bhatt et al., 2020; Bai et al., 2021; Gulum et al., 2021; Puyol-Antón et al., 2021; Thomas et al., 2021). In our review, we found that researchers prefer the visualization-based XAI and saliency map-based XAI techniques over others. These models are capable of explaining the decisions of a model through the generation of different visualization maps and images, therefore researchers find them easy to understand and adopt. In Table 3 we summarize the XAI techniques described above. In addition, Table 3 offers valuable insights into the dataset employed and the deep learning models utilized in each study, along with the scope and type of the explanations. Specifically, we provided information regarding where the dataset was first proposed, enabling researchers to acquire comprehensive knowledge about its intricacies.

An XAI technique aims to generate explanations that can help humans understand how the decisions are made in a deep learning model (Gerlings et al., 2020). Existing XAI techniques are more focused on explaining the models, or variables responsible for the decisions of a model, and there is a lack of XAI techniques that utilize the explanations to improve the model's performance. Furthermore, although XAI techniques are designed to explain the decision of deep learning models, they are used less frequently in deep learning studies than expected. The inadequate adoption of the XAI techniques can be attributed to the lack of proper XAI evaluation techniques, the unreliability of XAI techniques, and the unavailability of XAI platforms and tools. Although researchers have proposed a few XAI evaluation techniques (Arras et al., 2016, 2017; Samek et al., 2016; Ancona et al., 2017; Adebayo et al., 2018a,b; Alvarez Melis and Jaakkola, 2018; Mohseni et al., 2018; Ribeiro et al., 2018; Yang and Kim, 2019; Holzinger et al., 2020), the techniques usually suffer from limited generalizability and inconsistency. Furthermore, researchers found that the explanations for similar models vary from one XAI technique to another thus promoting reliability concerns (Adebayo et al., 2018b; Ghorbani et al., 2019a; Kindermans et al., 2019; Weerts et al., 2019). Finally, compared to the deep learning models, there is a lack of platforms and resources that can help researchers easily adopt XAI in their studies. Explaining the decisions of a deep learning model is crucial for fostering user trust and facilitating transparency. This practice can enable researchers from diverse domains to confidently integrate deep learning models into their studies, ensuring a robust foundation for their investigations. We believe the implementation of XAI techniques in the analysis of plant phenotyping data can help plant scientists develop a better understanding of data-derived plant traits. We explore this topic in the following section.

Plant phenotyping is the study of characterizing and quantifying the physical and physiological traits of a plant (Chandra et al., 2020). Plant phenotyping can help us understand plant characteristics like chlorophyll content, leaf surface temperature, leaf size, leaf count, shoot biomass, photosynthesis efficiency, plant growth rate, germination time, and emergence time of leaves (Kolhar and Jagtap, 2021). The results of plant phenotyping studies allow us to develop a better crop management system (Bauer et al., 2019). We can detect plant disease, type of plant, the water content in the plant, and flowering of plants, and take necessary steps if a problem arises (DeChant et al., 2017; Ghosal et al., 2019; Arya et al., 2022). Recently, scientists have started applying deep learning techniques to plant phenotyping studies (Almahairi et al., 2018; Ghosal et al., 2019; Mortensen et al., 2019). Deep learning models can analyze large amounts of data, find previously thought impossible features, and do all these more accurately than ever before. As a result, researchers are increasingly adopting deep learning into their studies. However, deploying deep learning techniques requires domain knowledge of machine learning algorithms as numerous models perform various tasks. It creates a dilemma among plant scientists in deciding which model to choose, how to use it, and how to incorporate the results into their studies.

There are few reviews that look into the advancements of deep learning techniques in plant phenotyping. Jiang and Li (2020), Arya et al. (2022), Chandra et al. (2020), Ren et al. (2020), Kolhar and Jagtap (2021) provide detailed overviews of how deep learning is advancing plant phenotyping studies. Although they used different categorization techniques to discuss the papers, the motivation was to introduce plant scientists to deep learning (Ubbens and Stavness, 2017). A more domain-specific study was conducted by Singh et al. (2018), where the authors reviewed deep learning for plant stress phenotyping and suggested that deep learning models utilizing image data in plant phenotyping hold significant potential for early diagnosis of plant stress. The study conducted by Atkinson et al. (2019) emphasizes the importance of integrating deep learning-based 2D systems with large-scale quantitative genetic data analysis as a pivotal progression in the field of root phenotyping, offering valuable insights for understanding root biology and its implications. The modern deep learning-based plant disease detection techniques were discussed in Lee et al. (2020). The authors found that models trained on disease and independent of crops performed better than crop disease pair, and for transfer learning, a popular concept in plant phenotyping, pre-training with a plant-specific task can help reduce the effect of overfitting. The review on the advancement of deep learning for pest and leaf disease detection by Ngugi et al. (2021) reported a notable challenge faced by the deep learning models used in plant phenotyping is the model's inability to generalize across diverse datasets and field conditions. Hasan et al. (2021) reviewed the machine learning techniques for weed detection and classification and revealed the necessity of a large labeled dataset specifically designed for weed detection to overcome the limitations of the current studies that require pre-trained models to improve the detection accuracy and only utilize the existing small datasets. Danilevicz et al. (2022) addresses the challenges of applying machine learning models for predicting phenotypic traits using genetic markers and presents the advantages and disadvantages of using explainable model structures in plant phenotyping. Additionally, the authors reiterated the necessity of labeled data in plant phenotyping studies and suggested that the model accuracy can be improved if the weights of the existing models are updated by training on new datasets.

To help the researchers understand the capabilities of deep learning models and their usage in plant phenotyping, we reviewed the popular deep learning studies in this area in the following sections. We categorized the papers based on the application of deep learning models.

Plant disease can severely damage the quality and production of crops. Timely and accurate disease detection can help take proper steps to prevent or stop its spread. A simple LeNet (LeCun et al., 1989) based architecture was used by Amara et al. (2017) to classify banana disease using the PlantVillage dataset. Despite its simple architecture, the model performed well under varying conditions.

In plant phenotyping, the most popular dataset for classification tasks is the PlantVillage dataset. The dataset contains images of leaves of 14 crop species and 26 diseases. The dataset consists of colored, grayscale, and segmented images of the leaves which were captured in a controlled environment. There are 39 classes in the PlantVillage dataset and the distribution of images among different classes is shown in Figure 2 which also provides information about the different diseases and species covered in the dataset. In their paper, Mohanty et al. (2016) introduced the dataset and also classified the images using AlexNet and GoogleNet. The authors demonstrated the models' performance for different training and test combinations and showed that the models performed exceptionally well. However, the classification of their best-trained model dropped to 31% when tested on field data. Such results might be because images of individual leaves in the dataset were taken in controlled conditions and against a constant background. So, the trained models failed to generalize and performed inadequately when there were changes in illumination, background, or number of leaves. However, this dataset is still used frequently in plant phenotyping and helps scientists develop their models.

DeChant et al. (2017) proposed a deep learning-based framework capable of detecting northern leaf blight-infected maize plants from images acquired by unmanned aerial vehicles (UAV). The framework consists of training several models and combining their results for prediction. At first, the authors trained five deep learning models on image patches to detect the presence of lesions and achieved 94% accuracy. Next, they generated heatmaps showing the probability of infection of every region in an image using the trained models. Finally, they trained another CNN model to classify whether there were infected leaves in the image and the inclusion of the heatmaps increased the classification to 97.8%.

A different approach for analyzing the plant phenotyping traits was adopted by Taghavi Namin et al. (2018). They proposed a CNN and Long Short Term Memory (LSTM) based classifier where the plant growth information was used for the classification. A sequence of plant images representing different stages of growth was used as the input for the CNN model. The CNN model extracted features of an individual plant and passed them on to LSTM. LSTM analyzes this sequence of features by considering the temporal features and using them to classify plants. CNN-LSTM can use the plant's growth information and model the phenotypic and genotypic information. The combination of CNN and LSTM helped the authors achieve 93% accuracy in comparison to 76.8% accuracy by using just CNN. A similar structure was adopted by Ubbens et al. (2020) to detect and quantify a plant's response to the treatment.

Lodging is a state of the crop where it bends and does not return to its original state, often the shoot lays on the ground and is subject to disease and decomposition. LodgeNet is a CNN classifier that can classify images of lodged crops from five spectral channel orthomosaic images of canola and wheat plants (Mardanisamani et al., 2019) where the images are captured using UAVs. In LodgeNet, a seven-layer CNN model is used to extract texture features from the orthomosaic images. In addition, two texture feature extraction algorithms (local binary patterns and gray-level co-occurrence matrix) are also used to extract additional features. Features extracted from the CNN and the texture descriptors were combined to train a deep learning classifier and achieved 97.70 and 99.06% accuracy for wheat and canola, respectively. The results of the LodgeNet were compared with other popular deep learning models and the authors reported an improvement of classification accuracy by 8.84%. The authors concluded that simple deep learning models are capable of performing as efficiently as complex models. The capability of simple deep learning models were also tested by Hati and Singh (2021) where the authors implemented Residual Network (ResNET) based classifiers for the classification of different species and plant health conditions. The results of the ResNet-based classifier were compared with AlexNet and ResNet provided 16% higher F1-score than AlexNet.

In deep learning, regression is used to investigate the relationship between independent variables or features and a dependent variable or outcome (Kuleshov et al., 2018), and segmentation is used to partition an image into different parts or regions depending on the image pixel's characteristics (Haralick and Shapiro, 1985). In a deep learning-based regression, segmentation is usually used to detect the objects and count them. To accomplish this, an annotated dataset is required. The lack of annotated datasets for plant phenotyping magnifies the challenges and limitations faced in this field of study. To resolve this issue, Dobrescu et al. (2017) proposed a ResNet-based leaf counter that only requires the total leaf count per plant. Another interesting contribution is that the authors combined a dataset of different sources and species to perform better than previous models. In the analysis, the authors observed that using a pre-trained ResNet on the ImageNet dataset performed better than training the model from scratch. It shows that transfer learning can be a viable option to resolve the insufficient data problem.

The authors of Aich and Stavness (2017) used a combination of deconvolutional networks and convolutional networks to count rosette leaves. The networks were trained separately but not independently. At first, a segmentation network was trained to generate binary masks representing the leaves in the image. Next, the binary mask and the images were used to train a counting network. The segmentation was developed using SegNet (Badrinarayanan et al., 2017), and the counting network was developed using VGG16. The study's results showed that the proposed network's generalization capability was better than other state-of-the-art leaf counters. Ubbens et al. (2018) also developed a rosette leaf counter using deep learning. The authors also showed that the synthetic 3D plants could be used to generate augmented training data for the deep learning model when the dataset is not large enough.

A deep learning model is initially trained in a weakly supervised training process with few labeled data. Then the trained model is again retrained with unlabeled data. Ghosal et al. (2019) used weak training to develop sorghum head detection and a counting network. The deep learning model was based on RetinaNet (Lin et al., 2017) and ResNet50. In this work, the first stage was to detect the sorghum heads in the image, and the next stage was to count those heads. In addition, a regression model was used to generate bounding boxes around the sorghum heads. To understand the learning of the residual network, feature maps were visualized. The authors considered the visualization a “trust mechanism”, which showed that the model extracted a significant amount of features from the plant head. The authors proposed a counting framework that can work when there is a shortage of labeled data for the deep learning model.

Pound et al. (2017) proposed an hourglass (Newell et al., 2016) based deep learning architecture capable of localizing wheat spikes and spikelets with 4.09 and 0.34% error, respectively. Another important contribution of the paper is the introduction of the ACID dataset which consists of wheat crop images with annotation and labeling. The model could also classify awned wheat with 99% accuracy in the segmented images. The accuracy curve of the model indicates that it achieved peak accuracy at ~200 epochs out of the total 500 epochs of training, raising the possibility of overfitting as the model at the 500th epoch was utilized for the analysis.

Segmentation plays a crucial role in plant phenotyping. In field conditions, the crop or leaf of a plant is usually accompanied by other plant parts. Proper segmentation is often required to detect the object of interest and use the object of interest for other purposes. However, another interesting application of segmentation is to generate annotated datasets. An oil radish growth dataset was presented by Mortensen et al. (2019), which contained images of oil radish collected over weeks. In the study, the authors used the fully connected neural network proposed by Long et al. (2015) for semantic segmentation of the oil radish and other plants and achieved 71.2% mean intersection over union (mIoU). For annotation in the GrassClover image dataset, Skovsen et al. (2019) used a similar network for semantic segmentation of grass and clover from the field images, where both crops were mixed and reached a mIoU of 55.0%. Bernotas et al. (2019) used recurrent neural network (RNN) (Ren and Zemel, 2017) and Mask R-convolutional neural network (Mask R-CNN) (He et al., 2017) for instance segmentation of rosettes and individual leaves to monitor the growth of the plant. Using RNN and Mask R-CNN Keller et al. (2018) also segmented soybean leaf using color-based thresholding, random forest classifier and deep convolutional network and achieved 87.52, 51.24, and 78.65% mIoU, respectively.

Two stages of CNN models were used for citrus plant detection in Ampatzidis and Partel (2019) by utilizing multispectral images from a UAV. In the first stage, a YOLOv3 model was used to detect tree locations in the images. After using computer vision algorithms on the detected trees, another YOLOv3 was used to find trees in the locations that the first model might have missed. These images were then used in a threshold-based algorithm for image segmentation. The proposed method detected trees with 99.8% accuracy, tree gaps with 94.2% accuracy, and estimated individual tree canopy area with 85.5% accuracy. In Vit et al. (2019), object detection and point of interest identification models were introduced to measure the height of a banana tree and the length, width, and aspect ratio of a banana leaf. The models were developed using Mask R-CNN and Faster RNN and obtained an average deviation of 3% for detecting the height of the tree and 7–8% deviation for leaf width and length estimation.

To train deep learning models, a large dataset is required. In plant phenotyping, there is a lack of such datasets. Also, collecting data for the datasets is very time-consuming and costly. So, Generative Adversarial Network (GAN) to synthesize images to mitigate the scarcity of plant images for deep learning is a popular choice. Cycle-GAN, an image-to-image translator developed by Almahairi et al. (2018), has inspired researchers to adopt this in plant phenotyping studies to generate augmented plant phenotyping data.

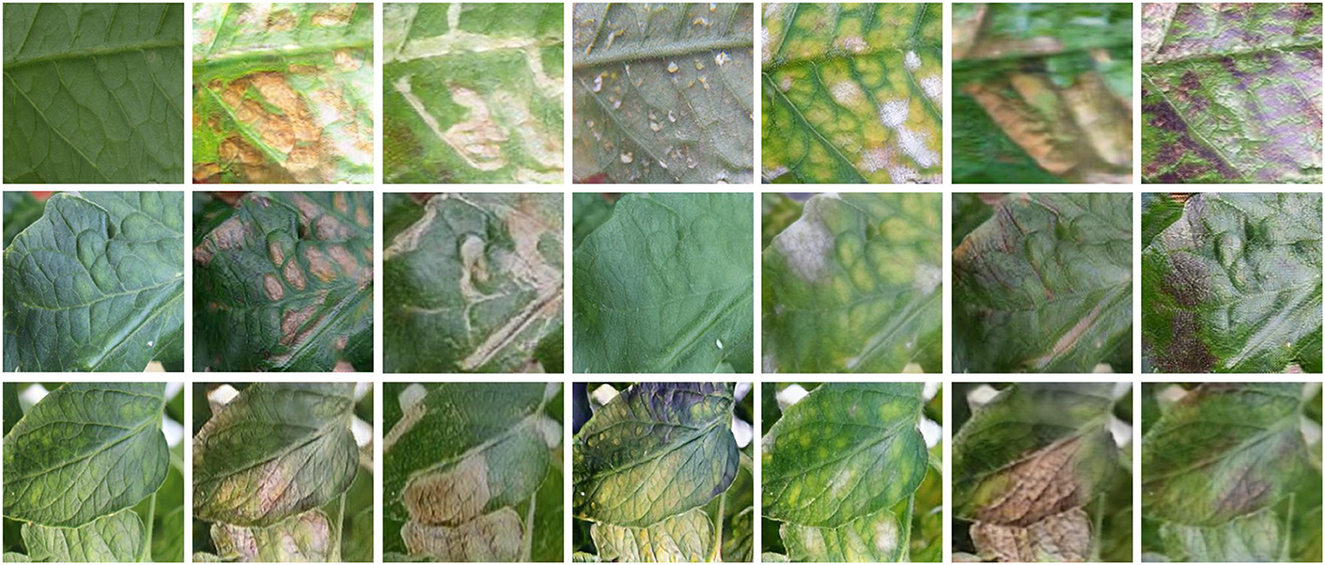

Nazki et al. (2020) proposed an unsupervised image-to-image translator called AR-GAN by adopting and improving the concept of CycleGAN. The authors transferred diseased patches from an unhealthy tomato leaf to a healthy tomato leaf. The leaf texture in the synthetic data was consistent with the actual data. The synthetic data was mixed with the training data, and a ResNet model was trained to classify different types of disease. The inclusion of the synthetic data increased the classification accuracy by 5.2%. AR-GAN can help plant phenotyping-based deep learning studies generate cost-effective, larger, and more diverse datasets. Figure 3 shows examples of images generated by AR-GAN.

Figure 3. Examples of synthetic images generated by AR-GAN. The leftmost column shows the real images and the rest of the columns show synthetic images with effects of canker, miner, whitefly, powdery mildew, plague, and low temperature (left to right), respectively (Nazki et al., 2020).

Cap et al. (2022) showed that AR-GAN failed to synthesize images when the images had a complex background similar to field conditions. So the authors proposed LeafGAN, an image-to-image translating GAN model. Compared to the AR-GAN, LeafGAN utilizes a proposed image segmentation technique, LFLSeg, which segments the leaf from the background. LFLSeg is a CNN that is trained to classify between whole leaf, partial leaf (parts of the whole leaf), and non-leaf. After training the CNN model, for a prediction, Grad-CAM is used to generate a heatmap of the features, which are supposed to be the leaf pixels in this case. The heatmap is turned into a binary mask, and the input image is segmented. The purpose of LFLSeg is to guide the CycleGAN model to focus on the leaf instead of the background. In comparison with CycleGAN, LeafGAN performed superiorly. However, for a new dataset training, the LFLSeg might be difficult. As the partial leaf class was hand-picked, it may require considerable time to generate. The Grad-CAM itself still lacks reliability. Analyzing individual masks generated by Grad-CAM might be impossible for a large dataset.

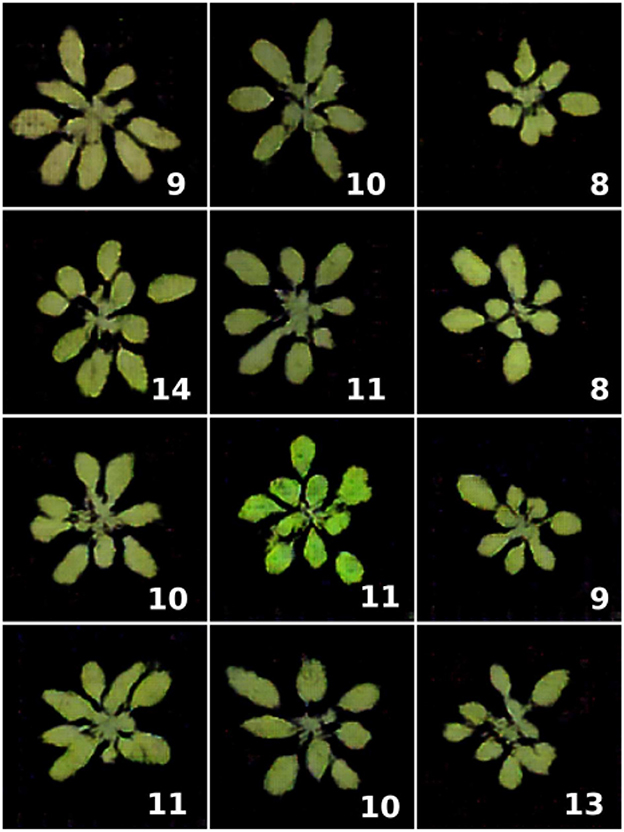

To generate rosette plant leaves, Valerio Giuffrida et al. (2017) developed ARIGAN, a GAN-based synthetic image generator. This DCGAN (Radford et al., 2015) based model takes the number of leaves that should be present in the image, uses random noise, and transforms it into the image of a rosette plant with a given number of leaves. The authors used the model to generate 57 images of rosette plants with a varying number of leaves. However, if we look closely at the images in Figure 4, the leaves' variability seems minimal.

Figure 4. Samples of augmented images generated by ARIGAN. The bottom right represents the number of leaves (Valerio Giuffrida et al., 2017).

The focus of this review has been on shoot phenotyping as the majority of the deep learning-based plant phenotyping analyses in the literature have focused on shoot phenotyping. Recently, there has been an extensive increase in deep learning-based root phenotyping. Root phenotyping or the study of plant root architecture and morphology aims at understanding the role of genetic differences in root system architecture in more efficient acquisition of mineral nutrients and water, and response to climate and soil change (Gong et al., 2021). Roots are harder to study in situ as roots grow in opaque and complex soil. Along with growing the roots in a transparent media or soil-filled rhozoboxes (Lube et al., 2022), researchers also have focused on developing nondestructive tools and systems to analyze the roots and deep learning has proved to be a great asset for such studies (Mairhofer et al., 2013; Shen et al., 2020).

Segmentation of the plant roots from images captured using different nondestructive imaging techniques is an important part of root phenotyping studies. Thesma and Mohammadpour Velni (2022) proposed a binary semantic segmentation model to segment plant root images of the Arabidopsis thaliana using SegNet (Badrinarayanan et al., 2017). Although SegNet was designed to consume less memory during inference time, it achieved comparable segmentation performance with a mean intersection over union of 60.10%. RootNav 2.0 is an automatic root system extraction tool proposed by Yasrab et al. (2019). Along with providing root architecture from images, this autoencoder based deep learning model can locate seeds, and first order and second order root tips with 66.1% mean intersection over union. Seidenthal et al. (2022) proposed an iterative deep learning architecture ITErRoot that allows the refinement of the detected roots during each iteration of model training. The iterative approach of the model can accurately detect the thin and branched root system and generate high quality segmented root images. The authors also proposed a 2D root image dataset with ground truths.

Falk et al. (2020) proposed a high-throughput, cost-effective end-to-end root phenotyping pipeline. The authors developed a low-cost growth chamber to observe the growth of plant roots in a non-destructive manner, a CNN to segment the root structure, and finally used an automatic root imaging analysis tool on the segmented images to study the plant traits. Yasrab et al. (2021) took a different approach to study root phenotyping by developing a GAN model that can forecast the growth of a plant and generate segmentation masks of root and shoot systems by using the forecast data. A simple deep learning classifier was developed by Xu et al. (2022) to classify root types into branch type, taproot type, and an intermediate taproot-branch type. Xu et al. (2022) compared the results of the deep learning model with supervised and semi-supervised traditional machine learning models and concluded that deep learning models perform better.

Deep learning models demonstrate superior performance compared to shallow and traditional machine learning algorithms across a wide range of tasks due to their ability to learn intricate representations and capture complex patterns in data (Hu et al., 2021; Janiesch et al., 2021; Sarker, 2021). Despite its efficiency, deep learning models are rarely adopted to perform plant phenotyping tasks. The main reasons for the lack of adoption of deep learning in plant phenotyping can be attributed to the absence of proper tools and the lack of large adequately labeled, task-specific plant datasets. Researchers require domain knowledge of the models to build and apply the models to their studies. Furthermore, the development of a generalizable deep learning model relies heavily on the quality and quantity of available data. Substantial computational resources are also necessary to train and deploy deep learning models. To resolve the lack of proper tools, Ubbens and Stavness (2017) developed an open-source deep learning tool called Deep Plant Phenomics. This tool contains pre-trained deep learning models for leaf counting, mutant classification, and age regression. The models were trained for canola and rosette leaves. Hypocotyl UNet by Dobos et al. (2019) is another publicly available tool that can estimate the hypocotyl length in seedlings and can be adapted for different datasets. Nakhle and Harfouche (2021) developed an interactive tutorial with open-source libraries to analyze plant phenotyping data using deep learning models. The authors believe that the tool can benefit early career researchers and students in extracting biologically meaningful information from deep learning models. They also reviewed the tools, techniques, and services available to study plant phenotyping with XAI based image analysis. Plant scientists may consider incorporating these tools into their studies to obtain previously thought impossible results.

In this review paper, we have summarized the plant phenotyping studies utilizing deep learning, as presented in Table 4. The table encapsulates essential details about the purpose of the deep learning model and the specific phenotyping task performed by the researchers. Furthermore, detailed information about the dataset and deep learning model employed in each study has been incorporated into the table. This includes information as to whether the researchers proposed a novel model or dataset, utilized an existing model and dataset, or employed well-established, commonly known datasets and models.

As discussed earlier, explainability is becoming an important part of deep learning models as it allows better understanding and provides model optimization capabilities. XAI is needed in plant phenotyping studies as plant scientists need to verify the predictions and be confident in the result so that the model can be applied in practice. Explaining the prediction of the model works as an extra layer of security for plant scientists. In comparison with other fields of study, XAI is still in its earlier stage in plant phenotyping. To the best of our knowledge, the article by Harfouche et al. (2022) is the only other review that discusses XAI in plant phenotyping. However, the focus of the review was to analyze the contribution of XAI in data bias, the infrastructure needed to accommodate XAI in plant phenotyping and the responsibility of humans to utilize XAI. The focus of this study is to discuss XAI techniques and their use cases. In this section, we discuss the studies where XAI was used in plant phenotyping for validation and analysis of results.

Classification is an important and common task in plant phenotyping studies concerned with identifying plant species, rating plant traits, or rating disease severity. Consequently, the majority of the XAI techniques in plant phenotyping are tailored for the classification models, enabling researchers to better understand the important features for model development and validate the results produced by deep learning models.

Deep learning models have significantly improved the efficiency of detecting plant and leaf diseases from plant images, surpassing the traditional image analysis methods. A stress identification and classification framework for soybean were developed by Ghosal et al. (2018). The proposed framework had two parts. In the first part, a modified version of the CNN proposed by Krizhevsky et al. (2017) was used for stress identification and classification. In the second part, the authors proposed a visualization technique to identify the features in the input image responsible for a prediction. For the visualization, the authors used all the feature maps for all of the healthy leaf images of a low-level layer and calculated a stress activation threshold by computing the probability distribution of the mean activation levels of the feature maps. Next, a feature importance score was assigned to every feature map based on each feature map's mean activation level, computed over those pixels with activation levels above the threshold computed earlier. Finally, based on the importance score, k-feature maps were selected, and an explanation map was generated by computing the weighted average of the top-k feature maps. The average intensity of the explanation map worked as the percentage of the stress level. The framework worked well for plant stress identification and quantification. The authors mentioned it to be a model-agnostic technique with transfer learning ability. So, it may be used to identify stress in other plants. However, the dataset used in the study was collected in a lab environment where the picture of individual leaves was taken by placing them in front of a black background. In calculating the stress activation threshold, only the foreground pixels were used. In a field condition, several leaves may stay together, and it is hard to distinguish the foreground from the background in such cases. Moreover, we might need a very large dataset to apply the framework to other plants. The plant phenotyping community still lacks such large labeled datasets.

In deep learning-based plant phenotypic studies, researchers often use popular pre-trained models (e.g., InceptionV3, GoogleNet, AlexNet, ResNet) to perform a task (Ngugi et al., 2021). However, these models are designed for large datasets and are very complex in design. Toda and Okura (2019) studied plant disease classification and using visualization techniques showed that complex models do not necessarily contribute to the inference. At first, the authors developed an InceptionV3 (Szegedy et al., 2016) based classifier to classify plant diseases using the Plant Village (Hughes and Salathé, 2015) dataset. Next, the authors used four different classes of visualization techniques, i.e., hidden layer output visualization, feature visualization, semantic dictionary, and perturbation-based visualization, to explain the model. They visualized every layer of the model to understand the learning of the model. Based on the visualization, the authors removed 75% of the network parameters and achieved similar performance. The authors also found that GradCAM (Assaf and Schumann, 2019) and Explanation Map (Krizhevsky et al., 2017) were the most descriptive and cost-effective explanation techniques for visualizing feature maps. Although the study showed that XAI could help select a desirable model depth for plant phenotypic tasks, using the framework in practice could be time-consuming. Domain expertise and understanding of the deep learning architecture might be required to perform the analysis and develop the desired model.

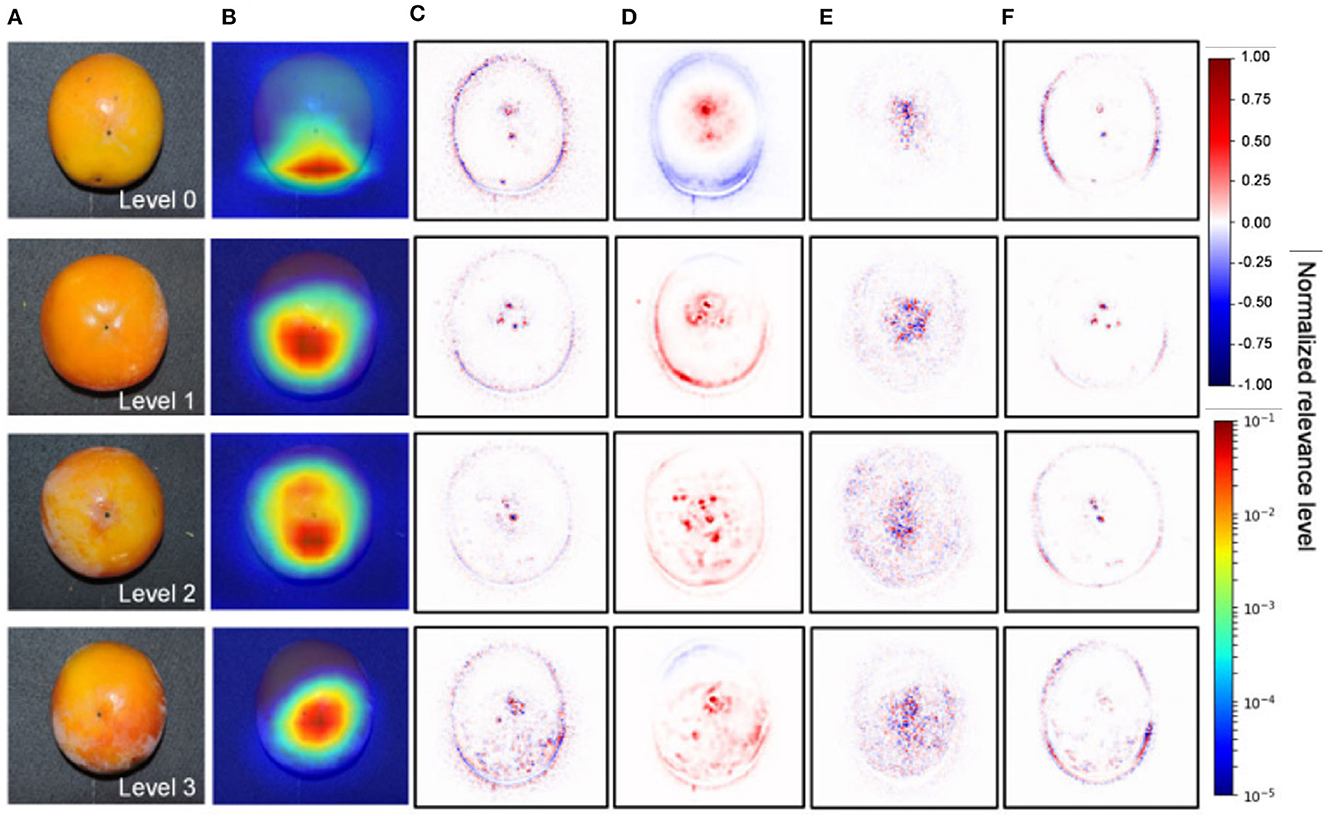

The visualization of the feature maps of a deep learning model has helped plant scientists diagnose the internal disorder in persimmon fruit which the experts even missed. Akagi et al. (2020) developed a deep learning classifier capable of classifying calyx-end cracking in persimmon fruit. They used five different CNNs for the classification between healthy and cracking and achieved 90% accuracy. In the final step, the authors visualized the feature maps to detect cracking (Figure 5). The visualization showed higher relevance around the apex area and peripheral of the fruits, which might be related to particular stress. The lack of a large dataset to validate the findings is a significant drawback of the study.

Figure 5. Visualization of the feature maps representing significant features contributing to the diagnosis of calyx-end cracking for the VGG16 model (Akagi et al., 2020). (A) Original image. (B) Grad-CAM. (C) Guided backpropagation. (D) LRP-Sequential B. (E) LRP-Epsilon. (F) Guided Grad-CAM.

The contribution of the appearance characteristics and the texture characteristics of leaf lesions during the feature extraction process of a deep learning model was studied by Wei et al. (2022). The authors trained VGG, GoogleNet, and ResNet for disease classification and used GradCAM, LIME, and Smoothgrad (Smilkov et al., 2017) to visualize the features learned by the models. The visualization showed that the pixels of the lesion position are the most important for the prediction. However, the authors were inconclusive of the contribution of the appearance of the leaves. During the comparison of the explanations generated by different XAI techniques, the authors found that the explanations generated by GradCAM were more intuitive and easy to understand than the Smoothgrad and LIME.

Mostafa et al. (2021, 2022) studied the relation between the depth of a deep learning model to its performance by using GBP. The authors proposed using a SSIM cut curve, which can help select the required depth of a model to achieve the desired performance by utilizing the structural similarity index (SSIM) of the feature maps generated at different depths of the model. In the study, different plant datasets were used to verify the results of the SSIM cut curve. Using the proposed algorithm the authors showed that higher depth deep learning models do not necessarily contribute to better performance.

Ghosal et al. (2017) proposed a classification model for foliar stresses in the soybean plant. The authors used GradCAM to isolate the visual symptoms that contribute to the model's prediction. Nagasubramanian et al. (2020) also proposed a DenseNet-121-based soybean stresses classifier and used several XAI techniques to understand the features learned by the model. The authors observed that sometimes the deep learning models learn features that might not be relevant to the infection in the plant.

In plant phenotyping, hyperspectral imaging (HSI) plays an important role. It allows for capturing plants' abiotic, biotic, chemical, and quality traits along with spatial and spectral information. In Nagasubramanian et al. (2019), the authors utilized HSI to develop a soybean disease classifier. They proposed a 3DCNN to utilize the HSI images' spectral and spatial information for the classification. Next, a saliency map (Simonyan et al., 2013) was used to detect the regions in the images that contributed to the prediction. Due to the use of HSI, the saliency maps helped detect the wavelength channel that maximally activated the feature maps. Using this information, a histogram showed the distribution of wavelengths across all the pixels. The use of saliency maps helped the authors authenticate the proposed method. This study opens new avenues of plant disease classification using HSI and deep learning.

Schramowski et al. (2020) proposed explanatory interactive learning, a framework for deep learning models in plant phenotyping. The authors used HSI images to demonstrate that inclusion of explanations of the decisions of a deep learning model into the model development process can help reveal Clever Hans (utilization of insignificant features within datasets) like behavior. The authors developed an interactive deep learning model where users can control the model development based on the explanations.

Desai et al. (2019) proposed a classifier-based paddy rice's flowering panicle counter. Desai et al. (2019) used a sliding window that passes over the training image and extracts image patches. The patches were then used in a classifier to detect whether a flowering panicle was present in the patch. Depending on the presence of the flowering region, a bounding box is generated. Finally, the flowering regions are counted to get an estimation of the number of flowering in the image. The authors used GradCAM to observe the regions that the model used for classification and found that the flower regions mainly influenced the prediction.

A different approach for plant classification was adopted by Grinblat et al. (2016), where a vein morphological pattern was used to classify white bean, red bean, and soybean plants using deep learning models. The hit or miss algorithm by Soille (1999) was used to extract the veins and create a binary image. Next, patches of veins were cropped from the images, and the images were used to train a deep learning model, which was proposed in this study. In addition, the feature maps for different classes were visualized using the saliency map visualization by Zeiler and Fergus (2014). The visualization helped the authors realize that the model extracted features from different parts of veins for the prediction of different classes.

Minamikawa et al. (2022) proposed a method to automatically measure the morphological features of citrus fruits by the image analysis of cross-sectional images of citrus fruits. The authors used GradCAM to visualize the features in the fruit images that were important for the classification of peeling and fruit hardness. The authors combined GradCAM visualization with the information of the fruit morphological features to reveal key features important for the prediction. The authors proposed that it is important to connect the visualization results with knowledge on plant physiology and breeding to increase the reliability of deep learning models and understand the molecular mechanism of targeted traits.

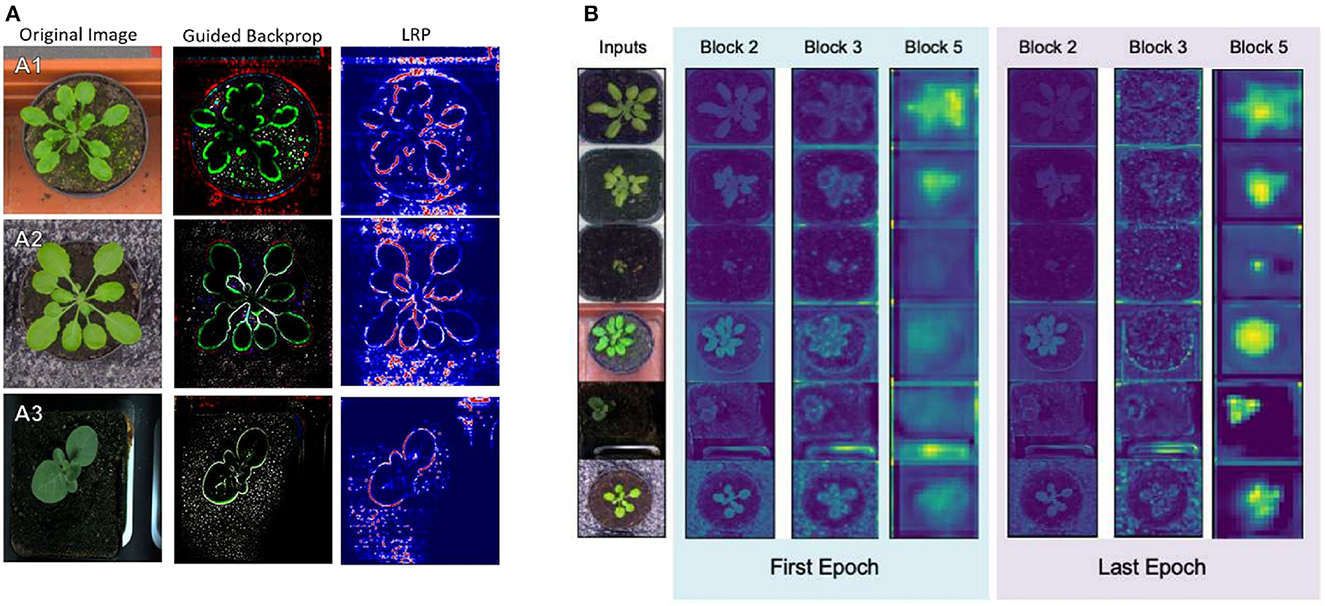

In Dobrescu et al. (2017), the authors proposed a leaf counter for rosette plants. The salient regions that contributed to the regression were shown in work using a simple heatmap technique. It helped the authors emphasize that the model was learning from the leaf regions as obstructing the leaf generated error and was visible in the heatmap. To investigate the learning of the VGG16 based regression model counting plant leaves, Dobrescu et al. (2019) used GBP and LRP to visualize the feature maps of the model (Figure 6). The authors found that the initial layers learn low-level features, the deeper layers focus on the leaf edge, and the final layer produces the highest activation in the plant region. Experiments also showed that the regression model discards the leaf surface and uses the leaf edge information for counting. In a regression model, the visualization techniques may ensure that the model is indeed learning from the object of interest, allowing users to gain confidence in the results but also helps them explain the model accurately.

Figure 6. Visualization of the learning of a VGG16 based regression model using GBP and LRP (Dobrescu et al., 2019). (A) GBP and LRP visualization of different plants. (B) Average activations at the end of several convolutional blocks at the start and end of training.

TasselNetV3 is a plant counting deep learning model that uses model explanation to enhance the human-level interpretability (Lu et al., 2021). In TasselNetV3, the authors proposed dynamic unfolding that assigns weights to the local region by learning from the ground-truth density maps. Dynamic unfolding replaces the averaging of the local count into the receptive field, which greatly improves the model performance. The authors also visualized the feature maps that helped them find which instances were counted. Such visualization helped to find what might have caused the model to fail.

Regression plays a crucial role in high-throughput plant phenotyping, facilitating rapid and precise counting of plants and plant organs (e.g., flowers, leaves, spikes, kernels). Through the XAI techniques, researchers have gained insight into the importance of object edges in regression and identified the probable cause for model failures. However, further investigation and validation across diverse datasets consisting of various imaging and field conditions are necessary to generalize and replicate these findings effectively.

Drees et al. (2022) proposed TransGrow, a conditional generative adversial network that can generate time-dependent high-quality and realistic images of irregular and incomplete sequences in above-ground plant phenotypes. TransGrow allows farmers to predict future above-ground phenotype at any time point in the growing season. In addition to proposing TransGrow, the authors visualized the pixel-wise uncertainty of plant growth for each time and found noticeable differences at the leaf edges, where the variance of plant growth is naturally the highest.

The lack of large plant phenotyping dataset has already been discussed. An overview of XAI studies in plant phenotyping is provided in Table 5.

As the field of plant sciences continues to embrace the potential of deep learning, it is essential to recognize the ethical implications and biases that can arise in the development and use of these deep learning models. These biases can impact various aspects, including data collection, analysis, decision-making, and the overall outcomes of plant research. In this section, we explore the potential benefits of using deep learning, the challenges associated with it, and how explainability can help in addressing some of these challenges in adopting deep learning.

Deep learning algorithms excel at analyzing large scale datasets and finding patterns within these datasets to gain insights that might not be feasible with traditional approaches to analyzing data. This can significantly reduce the amount of time required in decision-making processes and potentially uncover hidden insights that were previously thought not feasible to identify. Deep learning models can help in predictions for improving crop varieties by optimizing crops for disease and drought resistance, mineral nutrient uptake and planning to adapt to and also mitigate environmental change. By harnessing the power of deep learning algorithms, researchers can improve crops to be more resilient to environmental challenges and for better resource use efficiency.

Image-based analysis can be deployed in multiple scenarios to assess and enhance the performance of the plants in different conditions. For example, in disease and pest detection, images can be used to categorize and quantitatively assess diseases on a plant. In plant phenotyping to improve shoot and root architecture traits using plant breeding programs, deep learning algorithms can be used to improve plant image quality and data consistency while reducing the workload of researchers so they can focus on other important research components. Traditional image processing algorithms can be rigid to specific applications, while deep learning algorithms can model to provide generalization capability for processing images that aid plant phenotyping research. Employing deep learning for analyzing plant phenotyping datasets can significantly improve the throughput of the research as there is less time required to design tools specifically for all different applications. Hence, the application of deep learning to process images (plant phenotyping) related to changes in DNA sequence (plant genotyping) can help in accelerating breeding programs, ultimately leading to reduced time in generating improved varieties of plants.

Moreover, deploying deep learning models for analysis can help uncover insights that may have been thought too difficult to achieve before. For example, in plant research, the use of deep learning models can help uncover a phenotype in an abstract domain (such as latent space) that may help understand treatment effects on genetically different plants of the same species. In another example, a functional phenotype of root architecture can help understand variations in root architecture by comparing how similar they are to each other.

The adoption of deep learning in plant science is impacted by many challenges associated with understanding how these models operate and provide insights into collected data. Moreover, it is critical to fully incorporate plant biologists who are experts in the specific area of research the deep learning model is being applied to, as plant growth is a complex process with a number of factors involved. Specifically, understanding Genotype by Environment (GxE) interaction to produce a phenotype is an active area of research. Plants have evolved elegant response systems to adapt to changes in their local environment. One example, in the soil, there can be a very complex heterogeneity of the concentrations of specific soil mineral nutrients the plant roots must absorb to thrive [especially the major fertilizer nutrients, nitrogen (N), phosphorous (P), and potassium (K)]. For example, the primary form of nitrogen absorbed from the spoil is the nitrate (NO3) anion. Nitrate is very mobile in soils and can move with the groundwater. Hence there can be patches of soil that are low or high in nitrate. Plant roots have evolved an elegant nitrate sensing and response system. This involves complex gene and protein networks that note when the soil nitrate is low and shut down lateral root growth. This enables more of the root carbon to be used by the primary root to grow faster and find regions of high soil nitrate. When these regions are accessed by the primary root, these networks then turn on and stimulate lateral root growth to absorb this much-needed nutrient (Remans et al., 2006; Wang Y.-Y. et al., 2018; Maghiaoui et al., 2020). Hence, relatively minor changes in the plant environment or plant genetic makeup could produce quite different plant phenotypes, be they above or below ground.

As plant researchers embrace advances in deep learning to aid in enhancing crops for sustainable production of food, the relatively black box nature of the deep learning algorithms can deter some plant researchers from adopting these tools and techniques in their research programs.

Due to a lack of available and accessible data, some of the trained deep learning models augment datasets with simulated plant datasets. The field of plant research is always evolving, and it is difficult to model plant growth to simulate plant images. Some available tools for simulating plants digitally do not consider all the different factors involved in plant growth. For example, a tool developed by (OpenSimRoot), simulates plant root growth based on multiple parameters. However, it assumes that when drought-stressed, bean roots tend to grow deeper, which might not be completely true. Drought often quickly inhibits both root and shoot growth (Reinelt et al., 2023). When a deep learning model is trained with such simulated datasets, it raises the question of whether the bias of the simulator is built into the deep learning model as well and may prevent other researchers from using it. In a different scenario, if it was used by a research group, the built-in bias would propagate through other research projects and lead to improper outcomes.

Abstract phenotypes obtained by using machine learning models proposed by Ubbens et al. (2020), (based on latent space phenotyping) may not help plant scientists understand what that means even after finding a region of interest other than that there is some difference in latent space phenotype for treated vs non-treated plants of the same line. Being able to interpret what that actually means in the realms of plant physiology may be more useful to other researchers. There is a huge potential for new phenotypes, such as latent space phenotype, in understanding plant function, but having a translation of what that actually means in real-world physiology is as important.

The deep learning models are trained with large datasets of images or other data related to plant phenotyping. These trained models can inherit the bias present in the dataset itself. For example, training a model with an image dataset that consists of data points collected in highly controlled environments such as growth chambers with highly regulated lighting and temperature growth conditions can make it biased toward predicting better with images acquired in those conditions, but could fail to predict when the deep learning algorithm is subjected to images from real-world conditions where light and temperature (and other conditions) can vary significantly throughout the day/night cycle. From our experience, for phenotyping plant roots grown in hydroponics, even in the more controlled growth chambers, root growth can be affected by a number of factors, including changes in temperatures, light and humidity in different growth chambers, use of different materials for germination, the pH of nutrient solutions, and proper aeration within the growth solution. When imaging the plant root systems, factors that can impact datasets, which may not be directly related to genetic variation, include proper lighting conditions, the color of the filter paper used to provide optimal contrast with the white roots, imaging roots placed under water vs out of the water, camera parameters such as exposure, aperture and shutter speed, and presence of a meniscus along roots that are grown in water but imaged in air, can lead to generating biased datasets that can result in deep learning model favoring one imaging condition than other.

These biased datasets raise concerns about the generalizability and reliability of deep learning models and can lead to a skeptical view about the efficacy of these approaches by some plant researchers. Another possibility is that the use of these models as de facto state-of-the-art models in assessing plant varieties can result in poor decision-making and skewed, possibly wrong, results being published in high-quality journals, which are followed by other researchers. This can have a negative ripple effect on the future of plant research.