- 1Department of Treasury, Thurgauer Kantonalbank, Weinfelden, Switzerland

- 2Centre for Banking and Finance, Eastern Switzerland University of Applied Sciences, St. Gallen, Switzerland

- 3Master Programme UZH ETH in Quantitative Finance, Zürich, Switzerland

- 4Entris Banking, Berne, Switzerland

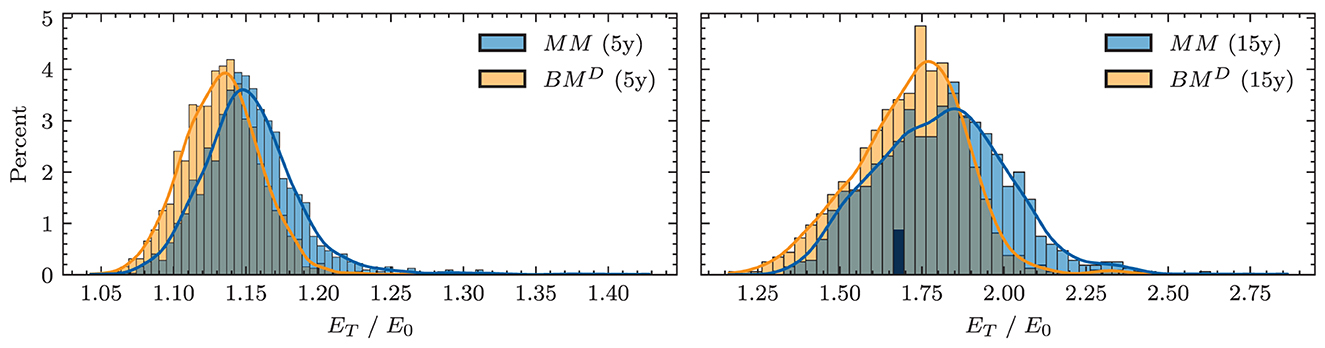

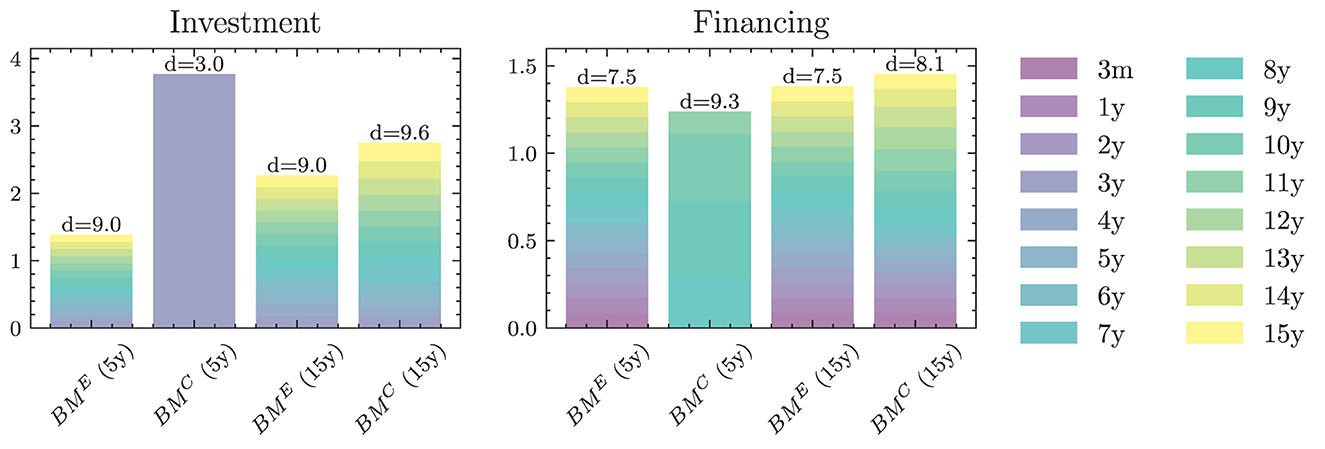

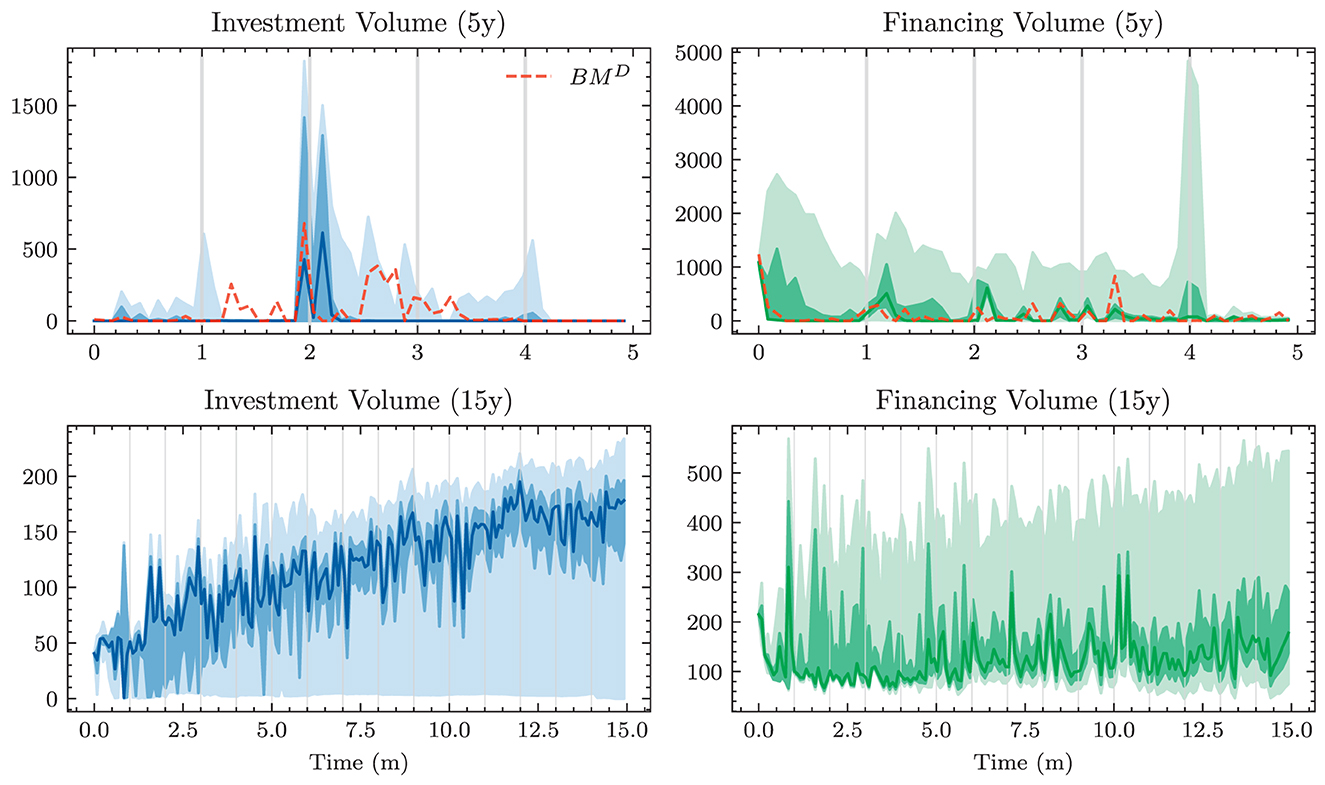

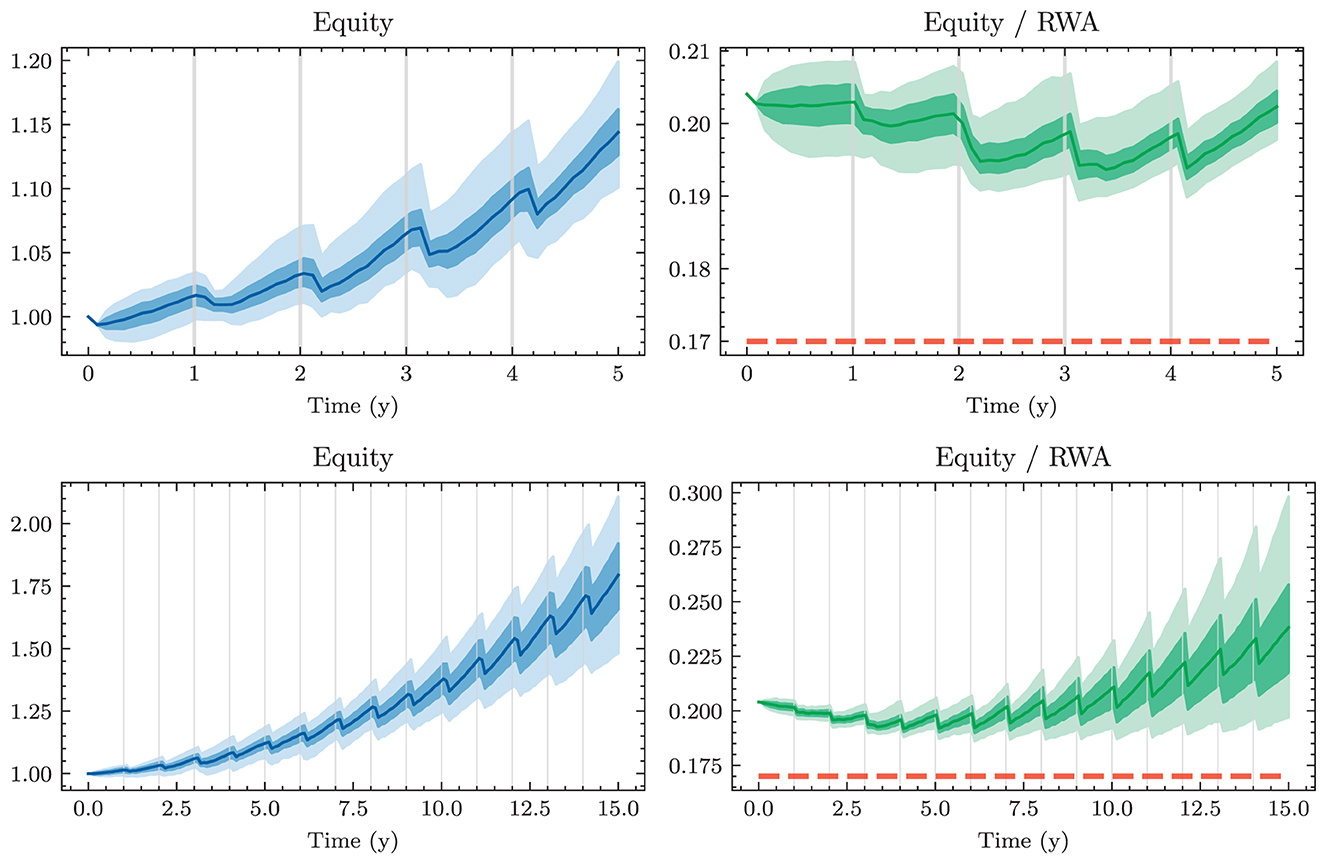

Retail banks use Asset Liability Management (ALM) to hedge interest rate risk associated with differences in maturity and predictability of their loan and deposit portfolios. The opposing goals of profiting from maturity transformation and hedging interest rate risk while adhering to numerous regulatory constraints make ALM a challenging problem. We formulate ALM as a high-dimensional stochastic control problem in which monthly investment and financing decisions drive the evolution of the bank's balance sheet. To find strategies that maximize long-term utility in the presence of constraints and stochastic interest rates, we train neural networks that parametrize the decision process. Our experiments provide practical insights and demonstrate that the approach of Deep ALM deduces dynamic strategies that outperform static benchmarks.

1. Introduction

1.1. Background

Recently, deep learning-based techniques have successfully been applied to stochastic control problems in finance. As opposed to classical approaches that rely on the analytical tractability of the problem, recent approaches such as deep stochastic control feature a high flexibility. Intricate impediments such as constraints, frictions, and arbitrarily complex stochastic dynamics can be accounted for without further ado. The field of Asset Liability Management (ALM) can particularly profit from the flexibility of this new modeling paradigm. In the context of retail banking, ALM has the task of managing the bank's interest rate risk, which arises from the maturity mismatch of loans and deposits. To this end, banks invest their customers' funds, raise money to finance lending, and enter into interest rate derivatives such as swaps. At the same time, banks have to adhere to regulatory constraints and follow several concurrent objectives. ALM is consequently a challenging problem to both model and solve. This article approaches these two tasks: we develop a modeling framework for ALM and use deep learning techniques to find optimal investment and financing decisions.

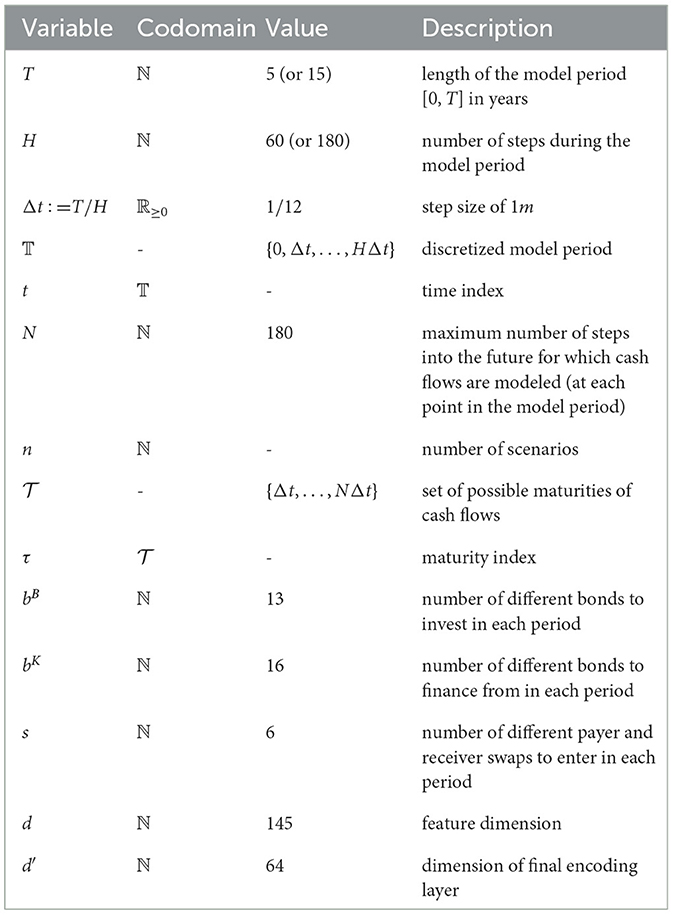

Retail banks face interest rate risk because cash flows that originate from their loans on the asset side and deposits on the liability side differ in terms of their maturity structure and predictability. If the term structure of interest rates changes, the economic value of the bank's assets might change to a different extent than that of its liabilities, leading to a change in the net position: the bank's equity. Banks do not want to be susceptible to the volatility of interest rates and use ALM to reduce interest rate risk. This involves reducing the discrepancy in the cash flow characteristics of assets and liabilities, and modeling how interest rates might change in future. Yield curve modeling becomes particularly important when applying deep learning-based techniques to the problem. These techniques are 'data-hungry' in the sense that their optimization requires a large bundle of scenarios that specify how interest rates might evolve in future. For this purpose, this article discusses different models for yield curve simulation including a method that generates a variety of yield curve shapes and paths within the HJM framework; see Heath et al. (1992). Figure 1 depicts the historical development of the CHF yield curve1 over the last couple of decades.

Figure 1. Historical CHF term structures—The optimal balance sheet structure and the interest rate exposure of a bank highly depend on the current and future states of the yield curve. Historically, the term structure featured extremely high and inverted yields in the early 90s. Since the mid 90s, there has been a long-term trend of falling yields with presumed trend reversal during the year 2022.

The yield curve scenarios are used to optimize an ALM strategy that is parametrized with neural networks (Deep ALM). This deep learning-based optimization approach, as presented by Han and Weinan (2016) in a general stochastic control setting, is motivated by the success of deep hedging (Buehler et al., 2019), which uses neural network-based strategies to hedge financial derivatives. Deep ALM focuses on the problem of hedging interest rate risk of the asset and liability portfolios of banks. In the case of hedging a runoff portfolio, Krabichler and Teichmann (2020) demonstrate that their deep learning-based strategy outperforms a static replication approach as commonly used in practice. This article expands on their approach of hedging a single portfolio and applies deep stochastic control in a more comprehensive model of the ALM problem. This comprises bond portfolios on either side of the balance sheet, decisions on investments and financing, and more realistic constraints. The Deep ALM framework has been developed in collaboration with a Swiss retail bank, hereafter simply referred to as the bank. Because the numerical experiments use data provided by the bank, results are sometimes presented in aggregation or on a relative scale.

1.2. Asset liability management

The core business of retail banks consists of borrowing and lending funds from and to customers at a variety of maturities. This means that a majority of the bank's assets and liabilities, the so-called banking book, consists of long and short positions in future cash flows. The economic value2 of this portfolio is given by discounting the cash flows based on the current term structure of interest rates. The value of a bank is thereby largely driven by the external factor of the prevailing yield curve, which can be quite volatile. It is not in the interest of banks and their investors that equity as the net position of assets and liabilities is susceptible to a high market volatility. Instead, banks aim to hedge this interest rate risk. This is the core responsibility of ALM. At the same time, banks often keep some exposure to interest rate risk, which allows them to profit from upward slopes in the yield curve. Managing this exposure while adhering to constraints and expectations from different stakeholders makes ALM a challenging problem.

Interest rate risk occurs because cash flows from assets and liabilities differ in several characteristics. First, cash flows occur at different times, and contracts are entered into for different maturities. For instance, mortgages are usually granted for long maturities while deposits are a source of short-term financing. This maturity mismatch leads to a duration gap between assets and liabilities. A second fundamental difference lies in the predictability of future cash flows. For most assets in the banking book, banks know what future interest payments they supposedly receive. For instance, interest payments of fixed-rate mortgages are determined when the mortgage is granted to the customer. On the other side of the balance sheet, future interest rates on deposits are unknown. They relate to market interest rates (such as interbank rates) through competition between banks. If interbank rates increase, some banks will offer higher interest rates to their customers, forcing other banks to follow until an equilibrium is reached. In times of positive interest rates, this equilibrium rate is typically lower than that from the interbank market. During the recent negative interest rates regime, customer rates were often floored at 0%, implying that customers essentially held a real option on interest rate payments. Furthermore, most deposits are not placed for a fixed maturity and can be withdrawn by customers at any time. Regarding non-maturing deposits, future interest rates are not only unknown but also the timing of when the notional becomes due. This imbalance of deterministic (or at least 'foreseeable') cash flows from assets and stochastic cash flows from deposits is one of the key challenges of ALM.

A common ALM approach for hedging interest rate risk is found on the notion of replicating portfolios. Liabilities in the banking book with undetermined cash flows are invested in a bond portfolio that replicates the interest rate sensitivity of the liability portfolio, such that the net interest rate risk is minimal. Similarly, assets with undetermined interest rate payments can be financed with matching replicating portfolios. The difficulty of this approach lies in selecting a suitable mix of maturities in the replicating portfolios. For instance, to replicate the deposit position, the bank should choose maturities such that the interest earned on the replicating portfolio moves parallel with the interest paid to customers. The risk of rising interest rates can be mitigated by investing in short maturities; higher interest payments on deposits can be financed from the replicating portfolio that is renewed continually. Nonetheless, investing in longer maturities usually offers higher yields (at the cost of a more pronounced interest rate risk).

Banks typically keep some interest rate exposure to exploit spreads that banks charge customers when lending and borrowing money. Most often, banks keep a higher duration3 on their assets than on their liabilities; long-term investments through rolled over short-term funding. If the yield curve features a positive slope, it allows banks to lend funds at the far end of the curve while borrowing funds at short maturities with smaller rates. If yields stay relatively constant over time, this carry trade generates a profit for the bank. But this maturity transformation is subject to the risk of rising interest rates. If the yield curve shifts upwards, refinancing at the short end becomes more expensive while the interest earned on previously issued loans remains unaffected. This leads to an obliteration of projected revenue.

1.3. Deep learning for stochastic control problems

The following introduces a general stochastic control problem in discrete and finite time with time instances t ∈ {0, 1, 2, …, T} on the filtered probability space . The observable information that characterizes the control problem at time t is summarized via the -measurable and d-dimensional state variable xt. The state xt evolves to state xt+1 according to a transition function bt. If the control problem is Markovian, as in the setting of Han and Weinan (2016), bt maps the current state , the control , and a random shock εt+1 to the next state xt+1. We assume a slightly more general setting, where the transition might also depend on the history ht of the previously attained states.4 At each time step, the function assigns utility or a reward with the current state-action pair. The optimization aims to optimize the cumulative utility in expectation while respecting potential inequality constraints and equality constraints . In summary, this gives the stochastic control problem

Deep learning can be used to approximately solve stochastic control problems. By parametrizing controls with neural networks, these controls can be optimized using gradient descent. This method, hereafter referred to as deep stochastic control (DSC)5, is the basis of deep hedging (Buehler et al., 2019), deep replication (Krabichler and Teichmann, 2020), and the Deep ALM approach developed in this article.

Let L, N1, N2, …, NL ∈ ℕ with L ≥ 2, σ:ℝ → ℝ and let be an affine function. A feedforward neural network is a function such that

for the layers l = 1, 2, …, L−1. The activation function σ is applied componentwise. The entries of the matrices {Wl}l = 1, 2, …, L−1 and vectors {bl}l = 1, 2, …, L−1 are called the weights of the neural network. These weights are referred to as θ and the dependence of the neural network on its weights is highlighted via the notation gθ.

The key idea behind DSC is to parametrize the action at at each time instance t with a neural network that determines the action based on the relevant and available information at time t. Assuming this information can be captured by the state xt and a memory cell , the neural network maps the concatenated input to the action space, i.e., . The objective (Equation 1a) can now be formulated as a maximization over the parameters of all neural networks , i.e.,

Assuming knowledge and differentiability of the transition dynamics {bt}t = 0, 1, …, T−1, the optimization can be approached using techniques based on gradient descent. First, parameters {θt}t = 0, 1, …, T−1 are initialized randomly. Subsequently, given the initial state x0 and the ability to sample the random shocks {εt}t = 1, 2, …, T, one can collect complete roll-outs, i.e., paths of states, actions, and rewards that have occurred over the entire model period. This is achieved by chaining the forward passes through the decision networks as well as the transitions {bt}t = 0, 1, …, T−1 in their temporal order. Utilizing the collected rewards {ut}t = 1, 2, …, T, one can calculate a loss signal for each path that is backpropagated through the entire computational graph.

Simply optimizing for the cumulative reward would generally neglect the constraints of the stochastic control problem, if they are not accounted for otherwise.6 In that case, one prevalent approach for dealing with constraints is to consider negative reward signals, whenever those are violated. The cumulative loss or cost until and including time t is then given by

for suitable penalty weights λ, σj ≥ 0 and penalty functions Pe(·) and Pie(·) that monitor the occurrence and magnitude of breaches. The final loss signal to be minimized is then given by CT.

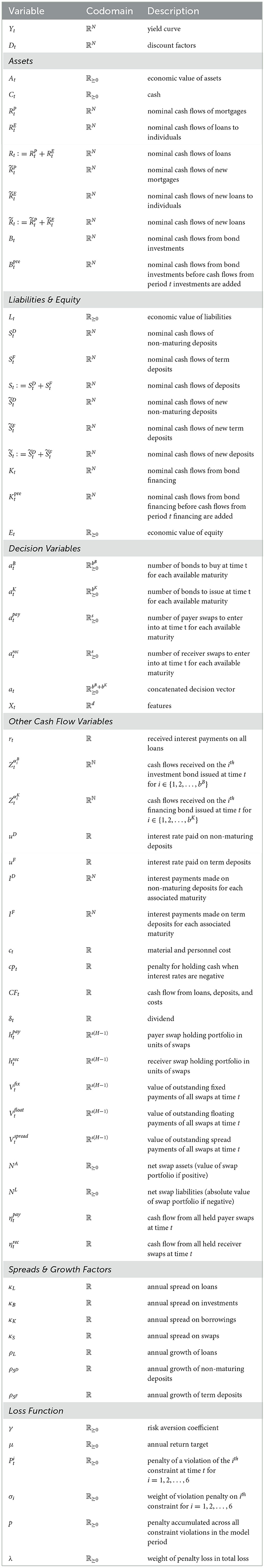

Han and Weinan (2016) illustrate that the concatenated computations to calculate a single roll-out can be regarded as a single deep neural network where the transitions {bt}t = 0, 1, …, T−1 are differentiable layers without trainable parameters (see Figure 2). As outlined later, it might make sense to share weights between the neural networks, i.e., setting . In that case, the computational graph reminds one of the computations in a recurrent neural network with the addition of the transition layer. In this context, backpropagating the final error signal by unfolding the recurrent structure (as illustrated in Figure 2) is referred to as backpropagation through time; see Werbos (1990). This connection becomes noticeable when applying DSC to ALM, where gradients are found to be vanishing (see, Hochreiter, 1998). This is a common problem in training recurrent neural network architectures and is not surprising considering the computational similarities.

Figure 2. Computational flow (DSC)—This figure illustrates the order of computations made in the DSC algorithm to reach the final state xT from the initial state x0. The figure is largely based on Figure 1 from Han and Weinan (2016), but extended by the memory cells h0, h1, …, hT−1.

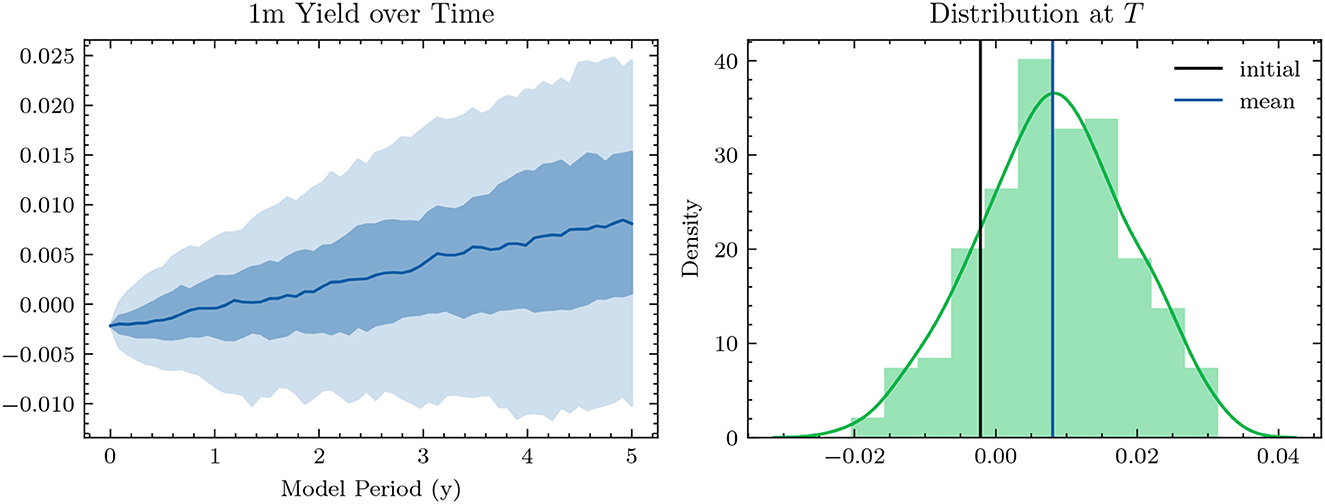

Figure 3. Simulated 1m-Yields (HJM-PCA)—In the plot on the left-hand side, the solid line represents the median 1m-yield over all scenarios. The darker shaded area is enclosed by lines representing the 25% quantile and the 75% quantile. The lighter shaded area is enclosed by the 5% quantile and the 95% quantile.

Generally speaking, reinforcement learning is about training agents to execute action sequences that maximize cumulative rewards in a possibly non-continuous, non-differentiable, partially observable environment (see, Faccio et al., 2022). In this sense, the stochastic control setting presented earlier is a reinforcement learning problem with the particularity that the dynamics of the environment are known and differentiable. The lack of these two properties motivates most of reinforcement learning, where the environment is either assumed to be a black box (model-free paradigm) or has to be learnt (model-based paradigm). Being able to immediately take the gradient of the reward with respect to the policy's parameters eliminates the need to reparametrize the gradient (Williams, 1992) or resort to purely correlating actions with rewards; see Lillicrap and Santoro (2019) for a discussion.

1.4. Machine learning in finance

Particularly for applications arising in finance, it is certainly meaningful to tackle intricate optimization problems with the efficiency and natural simplicity of the DSC algorithm. It can be implemented by utilizing the amenities of automatic differentiation engines in modern deep learning libraries that handle all gradient computations. Instead of solving the full problem for all points in time and space (and being exposed to the so-called curse of dimensionality, under which the running time of an algorithm grows exponentially in the number of dimensions), one learns a convincing strategy with respect to an initial state x0 and a bundle of scenarios. This trades off generality as models have to be retrained once the initial state, the transition logic, or the scenario generator have changed. Whereas, this might be undesirable in some applications, e.g., when deep-hedging many different derivatives on different underlyings issued recurringly (Buehler et al., 2022b), it is less problematic in the case of Deep ALM since, in practice, there is indeed only one entity subject to a single initial state.

Deep hedging (Buehler et al., 2019) has received significant attention from both academics and practitioners because it often outperforms classical methods relying on tractable models. As opposed to the classical modeling and problem-solving paradigm, deep hedging offers a generic approach for finding an approximately optimal hedging strategy: parametrization of the hedging strategy with neural networks and training thereupon to minimize the hedging error on a set of simulated market trajectories. The modeling task is split into a simulation task and an optimization task, that is conditional on the simulated paths. This allows for using all kinds of techniques for generating scenarios; e.g., see Buehler et al. (2020) and Wiese et al. (2020). The flexibility of the deep hedging framework does not only lie in the choice of the market simulator but also in the simple adaptability to arbitrary (possibly path-dependent) payoffs and in the extensibility to account for market frictions such as transaction cost and liquidity squeezes. Even a fundamental change to the problem such as replacing a Markovian market simulator with non-Markovian dynamics can be accounted for by replacing the feed-forward neural networks with recurrent neural networks (see, Horvath et al., 2021).

While most of the work on deep hedging focuses on managing financial derivatives, Krabichler and Teichmann (2020) apply the DSC approach to the problem of funding and hedging a runoff portfolio. Exemplarily, it represents a not yet unwound liability on the balance sheet of a property and casualty insurance company. Practitioners do not want to keep these positions unhedged and look rather for a strategy to maximize risk-adjusted returns of the net portfolio (i.e., equity). Investment decisions become high-dimensional because at each point in time, a whole series of bonds with different maturities is issued along the term structure. Krabichler and Teichmann (2020) demonstrate that applying the DSC approach in due course leads to a dynamic strategy that outperforms a static replication scheme which is commonly used in practice. Since the replication of a bond portfolio is a fundamental task within ALM on either side of the balance sheet for investment and financing decisions, the success of deep replication motivates the application of DSC to a full description of the ALM problem, which we denote as Deep ALM. Incorporating all components of the ALM problem while finding the right balance between all the different goals without adversely affecting the robustness of the learning process entails some engineering work; see Krabichler and Teichmann (2020). In this article, we pursue this engineering work, develop a realistic ALM framework, and apply the DSC approach within it. We expand on the stylized replication problem because we make investment decisions for non-maturing portfolios involving stochastic depreciation, extend the hedging instruments to swaps, and replace the simple liquidity constraint by more realistic counterparts. More precisely, these comprise a minimum reserve, standard liquidity measures, a leverage constraint, risk limits in terms of an interest rate sensitivity, and a minimum target return.

Other ALM problems that have been approached with machine learning and reinforcement learning techniques are different from our setting. There is an extensive literature on the use of deep learning for mere investment portfolio optimization (whereby funding and other intricacies of ALM are not treated); e.g., see Zhang et al. (2020). Fontoura et al. (2019) consider the problem of determining the allocation of funds toward asset classes such that a portfolio of liabilities can be paid off using these assets. Their problem setting is different from ours as they consider a runoff setting (no going concern), only optimize relative investment decisions (not the scale), do not consider financing decisions or constraints, and use a binary objective of whether assets are sufficient to pay all debts or not. Cheridito et al. (2020) use neural networks to approximate the value and thereby the risk of a liability portfolio consisting, for instance, of options or variable annuities. This deviates from our setting since we face asset and liability portfolios that consist mainly of bonds and other deterministic as well as stochastic cash flows.

1.5. Structure of the article

Section 2 elaborates on the ALM problem. Subsequently, the Deep ALM approach and its implementation is outlined in Section 3. Section 4 presents the main results and a comprehensive set of in-depth analyses. Section 5 provides a brief summary of the key findings and lists remaining issues before deploying Deep ALM. An overview of all variables and parameters is attached in the Appendix.

2. The ALM optimization problem

2.1. States and actions

2.1.1. Setting the scene

To apply deep learning techniques to ALM, one needs a comprehensive mathematical description of the balance sheet roll-forward. In this section, ALM is formulated as a stochastic control problem. This inevitably involves simplifying assumptions on components of ALM that are potentially much more complex in reality. Many of the simplifications (e.g., deterministic depositing behavior) can be replaced by more complex models in a straightforward manner such that the Deep ALM approach is still feasible. To obtain convincing results after refining the problem setting, additional work such as the incorporation of additional features may well be required.

While many reinforcement learning problems can be formalized as Markov decision processes (MDP), we do not frame the ALM problem as an MDP as we do not restrict the transition function to be Markovian. Still, the following sections describe—analogously to the description of an MDP—what variables are modeled (state), what decisions can be made (actions), how these decisions impact the model state (transition), and how given states are evaluated (rewards) to optimize the actions. Since we are going to introduce a considerable number of variables, we provide a comprehensive overview of the notation in the Appendix.

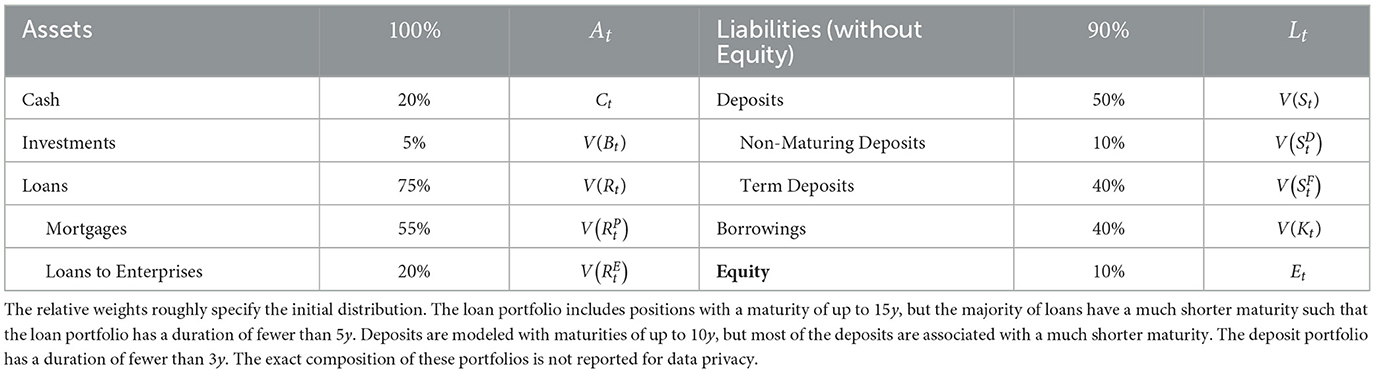

This section provides a brief overview of the model variables. They consist of positions on an aggregated and simplified balance sheet of a bank, the so-called banking book as specified in Table 1, other variables that impact the bank's income statement, and the yield curve. Each variable is modeled over H equidistant time steps t ∈ 𝕋 : = {0, Δt, …, T} with T = (H−1)Δt on the filtered probability space , where . The time discretization is chosen such that the step size Δt = 1/12 corresponds to 1 month. We distinguish between variables representing nominal cash flows and variables representing the value of a cash flow (i.e., the discounted cash flow). At any time t, a nominal cash flow is tracked up to N time steps Δt into the future and modeled as an N-dimensional (random) vector, where the ith entry refers to a cash flow at the global time step t+i, i.e., a cash flow being due after i steps when viewed from time t. A cash flow being settled i time steps has consequently a maturity of τ = iΔt and the set of all maturities is denoted as . Some of the nominal cash flows are assumed to be deterministic, while all discounted cash flows are random variables due to their dependence on the yield curve.

Table 1. Economic balance sheet—Loans and deposits are demand-driven, whereas investments and borrowings are subject to the control of the bank.

2.1.2. Yield curve and discount factors

In our formulation of the ALM optimization problem, the yield curve is the most important component because it is the only source of randomness. The yield curve determines bond prices (or rather coupons), discounted values of nominal cash flows, as well as additional effects on the bank's income such as depreciation and penalties on cash. The yield curve is modeled as the random vector , where the ith entry of Yt denotes the yield prevailing at time t for a maturity of iΔt. We sometimes refer to this yield as Y(iΔt). As the yield curve is the single source of randomness in this model, it is -measurable by the definition of . The yield curve Yt determines the discount factors for all maturities as

The discount factors are used to value nominal cash flows. The value of a nominal cash flow at time t is denoted as V(Xt). It is given by the inner product with the discount factors at time t, i.e., V(Xt) = 〈Dt, Xt〉.

2.1.3. Balance sheet items

2.1.3.1. Cash and cash equivalents

This position represents all highly liquid assets of a bank. It changes at each model step as loans are issued and paid out, deposits are posted and withdrawn, and costs are settled. It decreases with additional bond investments and increases when raised by issuing bonds. It is highly dependent on the exact decisions made and thus modeled as a random variable Ct:Ω → ℝ. The cash position essentially represents cash flows of maturity zero such that V(Ct) = Ct.

2.1.3.2. Loans

The bank issues two types of loans: mortgages and loans to enterprises. The number of new loans that the bank grants each period is assumed to be driven by demand and not influenced by any decision made by the bank. Loan defaults occur whenever the yield curve shifts significantly over a single year. The cash flows of mortgages and loans to enterprises outstanding at time t are modeled as random vectors and , respectively. Aggregated loans are referred to as

2.1.3.3. Investments

We assume that the bank can only invest in bonds. At the beginning of the model period, the bank has a legacy portfolio of bonds. At each model step, the bank has the opportunity to invest in several newly issued bonds with different maturities up to a maturity of N steps. We assume that bonds cannot be sold (including no short-selling) and are always held-to-maturity. The aggregated cash flows of all outstanding bonds the bank has invested in up to and including time t are denoted by the random vector . We further distinguish this bond portfolio Bt, that includes payoffs from bonds bought in period t, from the bond portfolio which does not include payoffs from the period t investments.

2.1.3.4. Deposits

Customers can make two types of deposits: non-maturing and term deposits. Deposits are assumed to be driven by deterministic demand and not influenced by decisions made by the bank.7 While cash flows originating from term deposits naturally have a maturity associated with them, cash flows from non-maturing deposits technically do not have a maturity. Customers can simply withdraw their money whenever they want.8 At the same time, it is unlikely that all non-maturing deposits are withdrawn in any single period. Hence, we assume a maturity structure for the non-maturing deposits. “Outstanding” cash flows from non-maturing deposits at time t can then be modeled as a deterministic vector . Similarly, cash flows from term deposits due at time t are given by , and aggregated deposits are defined as .

2.1.3.5. Financing

In addition to the funding from deposits, we assume that the bank can only raise additional capital by issuing bonds, which are modeled analogously to those on the asset side. Correspondingly, the bank has at each model step the opportunity to issue several new bonds and to add them to its existing financing portfolio. The financing portfolio is modeled analogously to the investment portfolio. Financing positions are always held-to-maturity and cannot be unwound prematurely. The random vector denotes the aggregated cash flows originating from all outstanding bonds, that the bank has issued before time t, and denotes the financing portfolio including the bonds issued at time t.

2.1.3.6. Aggregation

Cash, investments, and loans constitute the bank's assets. Its value At:Ω → ℝ is thus given by At: = Ct+V(Rt)+V(Bt). With an abuse of notation, liabilities consist of deposits and financing. The value of liabilities is referred to as Lt:Ω → ℝ and given by Lt: = V(St)+V(Kt). Consequently, the bank's equity Et:Ω → ℝ is the residue Et: = At−Lt. The final value of equity ET is the quantity that the optimization aims to maximize. Note again that we only keep track of the economic balance sheet and ensure that the balance sheet is indeed balanced under this valuation regime. We leave out other accounting aspects such as accruals and amortized cost, which are typically considered in ALM depending on the accounting standard and legislation.

2.1.4. Actions

As previously mentioned, the bank faces investment and financing decisions each period. It can invest in bB bonds and borrow from bK bonds that all trade at par and have different maturities.9 Once bought or issued, bonds must be held until maturity. Short-selling is not allowed, which includes that the bank cannot invest in its own issued bonds. Both investment and financing can be done fractionally. We denote the actions made at time t by the vector . Its first bB entries represent the number of bonds bought at each of the available investment maturities, also referred to as . Its last bK entries represent the number of bonds issued at each of the available financing maturities, also referred to as .

2.2. Transition of decision-independent variables

The model state at a given time t ∈ 𝕋 is captured by the variables introduced earlier. The next two sections describe how the state transitions from time t to the next discretized instance t+Δt. In the language of DSC, we specify how the transition function bt acts on the state xt. Because the state in our setting is quite high dimensional and the transition function is a concatenation of many calculations, we omit this notation in the following. Instead, it is more comprehensible to directly describe the evolution of the model variables that make up the model state. We structure the description of the transitions based on whether the transition of a model variable depends on the decisions or not. For decision-independent variables, transitions can later be calculated outside of the optimization. We start by introducing some notation following Krabichler and Teichmann (2020). Let

where 0 ∈ ℝN−1 is the zero vector and the identity matrix. When applied to an N-dimensional vector X, U shifts all entries up by one, eliminates the first entry, and appends a zero as the new last entry. Moreover, let π(k):ℝN → ℝ denote the projection onto the kth component of an N-dimensional vector.

2.2.1. Yield curve, discount factors, and bonds

The transition of the yield curve can generally be given by any term structure model, such as those presented in Section 2.7. Discount factors are then recalculated via (Equation 4). In each period, bB new investment bonds and bK new financing bonds are issued. Following the setup in Krabichler and Teichmann (2020), bonds pay a semi-annual coupon that is chosen such that bonds trade at par at issuance. The corresponding coupon payments are calculated as follows. For a given investment bond i ∈ {1, 2, …, bB} issued at time t, we denote its payout structure as with semi-annual coupon . Denoting with the kth entry of , the payout structure is defined for all as

As indicated, is chosen such that the bond trades at par, i.e., it is the solution to the linear equation

Note that the bank actually receives less than this fair coupon on this investment as it faces an annualized spread of κB = −15 bps. 10 The cash flow adjusted by spreads is in the following referred to as . Financing bonds are treated analogously: the spread-adjusted (κK = 15 bps) cash flow of the ith financing bond issued at time t, where i ∈ {1, 2, …, bK}, is referred to as .

2.2.2. Loans

The initial loan portfolios for both mortgages and loans to enterprises are provided by the bank and assumed to evolve according to a simple growth scheme. In each period, loans mature leading to repayments of the loaned amount which increases cash. At the same time, new loans are granted such that . Granting new loans leads to a reduction in cash. The total amount of new loans granted in period t is assumed to be

The loan position grows by slightly more than ρL = 3% per year. The amount of new loans is split over several maturities. For the loans to enterprises, new loans are assumed to be granted equally for maturities of 1m–3m. New mortgages are attributed to 11 different maturities of 2y–12y based on a distribution provided by the bank that mimics realistic customer behavior. This distribution is assumed to be deterministic and the same for each model period, which corresponds to the assumption that there is neither stochastic nor interest rate sensitive borrowing behavior of the bank's customers. Mortgages are assumed to be default-free, whereas loans to individuals have some default risk in times of quickly increasing yield curves: at the end of the year, the bank has to depreciate loans to enterprises by a factor of (k−2%), if the 6m interest rate has increased by k > 2% over the past year. The depreciation amount is split proportionally over the current portfolio of loans to enterprises. The dependence of depreciation on the yield curve makes the loan portfolio stochastic.

All loans are assumed to bear fixed interest payments. The monthly interest payment on a loan issued at time t with time to maturity τ is calculated based on Yt(τ), i.e., the yield prevailing at time t for time to maturity τ. In addition, the bank is assumed to charge its customers an annual spread of κL > 0 and never offers its customers negative interest on loans. The latter assumption is reasonable as most Swiss banks did not offer loans with negative coupons in recent years. Finally, the monthly interest rate payment r for a loan granted at time t with maturity τ is calculated as

The sum of all interest payments that the bank receives at time t on its loans is denoted by rt. For simplicity, interest payments from loans in the legacy portfolio are calculated in the same way using the initial yield curve Y0, as opposed to calculating them from the yield curve history. Once a loan has been depreciated, it does not pay interest any longer.

2.2.3. Deposits

As mentioned earlier, we assume a maturity structure for non-maturing deposits such that non-maturing and term deposits are treated equivalently from the computational viewpoint. The distribution of deposits over different maturities is simulated via a rolling scheme. Each deposit is associated with a maturity of years. Once a deposit with face amount A and reference maturity τ matures, the amount gets reinvested in equal parts into monthly tranches up to the maturity τ. Thus, gets assigned to each maturity Δt, 2Δt, …, τ in the total deposit portfolio. The initial assignment of deposits to the reference maturities is provided by the bank. In addition, new non-maturing deposits with face amount

where , are placed with the bank. They are assigned to the reference maturities via the same distribution used for the initial deposits portfolio. Term deposits increase analogously by with growth rate . New total deposits are then given by . Interest paid on deposits varies with the level of a reference rate. The latter is defined as the 3m moving average of the 6m-yield Yt(0.5). This approximates the 6m-CHF-OIS, a relevant reference swap rate in practice. In addition, the bank imposes caps (and floors) on the paid interest rates depending on the type of the deposit. The time t interest rates for non-maturing deposits and term deposits are given by

The interest on non-maturing deposits is less than the interest on term deposits because non-maturing deposits are more liquid than term deposits. The time t interest payment on a non-maturing deposit (analogous for term deposits) with nominal 1 is then given by

Interest payments are assumed to be reinvested rather than paid out. The reinvestment of interest payments is treated in the same way as the reinvestment of maturing deposits. Deposits are the equivalent of loans on the right-hand side of the balance sheet as they can be seen as short positions in loans. The reinvestment of interest on deposits introduces an asymmetry between these two items, as interest on loans is assumed to be paid out.

2.2.4. Decision-independent cash flow

All other costs that impact the bank's income are summarized as personnel and material costs. These have to be paid at each time step. Material costs are assumed to be the same for each time step, whereas personnel costs grow by 2% annually. The total costs paid at time t are denoted by ct ∈ ℝ. Cash flows resulting from changes in loans and deposits, interest received on loans rt, and costs are independent of model decisions. Hence, the aggregated cash flow

Can be calculated outside of the training loop.

2.3. Transition of decision-dependent variables

This section describes the evolution of model variables that depend on the investment and financing decisions made. This includes the value of the bank's equity at the final model step, which determines the reward (loss) assigned to a given set of actions. Consequently, the decision-dependent model variables have to be recalculated during the training to optimize the actions. The transitions of model variables in this section follow logically from fundamental relationships of double-entry accounting. The decision-dependent variables are the cash position, the investment portfolio, the financing portfolio, and consequently, the bank's assets, liabilities, and equities. Their transition can be split into the following iterative scheme: in each period, the balance sheet is rolled forward, investment and financing decisions are made, and the balance sheet gets restructured based on those decisions.

2.3.1. Income statement

On the one hand, the balance sheet is always with respect to a snapshot in time and can be interpreted as a state variable. On the other hand, the income statement is always with respect to a certain time period and builds the bridge from the initial to the final balance sheet of that period. While all revenues and most costs result from cash flows of the balance sheet items listed earlier, operational costs such as personnel and material costs have to be accounted for in each model step. Furthermore, profit distributions are made annually to shareholders. Thus, it is essential to monitor equity over time. For our purpose, we do not need to break down the profit & losses (P&L) into explanatory components such as net interest income, depreciations, and operational costs. Instead, we simply track the gross and net P&L before and after dividends, respectively, on an aggregated basis; see later.

2.3.2. Roll-forward step

At the beginning of each period, the cash flows associated with all balance sheet positions are realized. This step does not occur in period t = 0, implying that the initial balance sheet is given with no outstanding settlements. Recall that the cash flow resulting from maturing and newly issued loans and deposits, interest received on loans, and other costs has already been computed as the quantity CFt outside of the training loop; see Equation (13). Furthermore, cash flows resulting from coupon and nominal payments in both the investment and borrowing bonds are realized. While the cash position is assumed not to earn positive interest, the bank might have to pay interest on its cash: in times when the short end of the yield curve is negative, the bank is granted a maximal allowance to deposit cash at the central bank which is exempted from negative interest. This amount is limited based on the minimum reserves MR of the bank; see Equation (24b) in the following for the exact terms. If the bank exceeds this limit in cash, it has to pay the market interest rate at the short end of the yield curve (i.e., for the maturity Δt). This mechanism is modeled by charging the bank a cash penalty cpt that corresponds to the negative interest the bank has to pay, namely

which, due to its dependence on Ct, is decision-dependent. Thus, during the roll-forward step, all cash flows together result in the cash update

where pre indicates that is not yet the cash at the end of period t+Δt, but rather an intermediate quantity as it has not been updated yet by the bond transactions initiated at time t+Δt.

As cash flows are realized, balance sheet positions have to be updated. Loans and deposit portfolios evolve as discussed in Section 2.2. Investment and borrowing bond portfolios also have to be rolled forward: the due amounts (i.e., the first entry in the vectors Bt and Kt) are removed and all other payoffs are moved forward in time (entries in vectors are shifted up by one position), restoring the interpretation that the kth entry of the vector Bt+Δt represents cash flows being settled in period t+(1+k)Δt. Finally, all cash flows need to be reevaluated under the prevailing yield curve at time t+Δt. Therefore, the state of the balance sheet positions after the roll-forward step is thus given by

2.3.3. Restructure step

With the roll-forward of the balance sheet, the new period t+Δt has now started, and the bank can make its investment decisions and financing decisions . These decisions could be the result of any policy the bank wants to pursue, including one that directly parametrizes the actions with neural networks, i.e., the Deep ALM approach as presented in Section 3.2. The restructure step updates the balance sheet according to the investment and financing decisions made. This involves updating the bond portfolios by adding the cash flows of the newly bought and issued bonds to the existing portfolios at the correct maturities, i.e.,

Consistently, cash is updated as

Bond transactions affect bank's equity since transaction costs need to be borne (in terms of a spread). This implies that the decision-making and restructuring steps are not income-neutral, and we generally have . The value of equity at the end of the period can be calculated as

The last restructure step is conducted at time T−Δt. It is followed by a terminal roll-forward, whose resulting equity component will be decisive in the optimization exercise.

2.3.4. Annual closing step

At the end of each year, the bank distributes a dividend δt amounting to 50% of its profits over the present year. The distributed cash directly decreases the bank's equity. On the monthly time scale, this translates into performing every 12th time steps an additional update

where t ∈ (𝕋\ {T}) ∩ℕ and post indicates that these are the cash and equity values after the dividend has been paid out.

2.4. Constraints

The bank operates in a highly regulated environment that imposes constraints on the bank's decisions. We are seeking for optimized control when adhering to all rules. We already restricted the bank's behavior inherently via the assumptions that all bonds are held-to-maturity and that short sales are not allowed. In addition, we take into account five regulatory constraints inspired by Basel III (see Basel Committee on Banking Supervision 2011), whose compliance is controlled whenever the balance sheet has been restructured. The weights below were determined in close collaboration with the bank to reflect the real weighting based on a more detailed accounting basis as closely as possible.

2.4.1. Leverage constraints

To limit the leverage of banks, the Basel III framework divides the bank's capital into different tiers and places leverage constraints on each tier of capital. For model tractability, we summarize these constraints into a single leverage constraint on the ratio between equity Et and risk-weighted assets RWAt. The latter is a weighted sum of the bank's assets, where the weights reflect the risk associated with each class. The constraint is defined as

2.4.1.1. Liquidity constraints

As opposed to previous regulations, the introduction of the Basel III framework placed a significant focus on liquidity risks that became particularly apparent during the financial crisis in 2008. In our framework, liquidity risks are monitored by two ratios, the liquidity coverage ratio (LCR) and the net stable funding ratio (NSFR). The LCR ensures that the bank has enough liquidity to cover the net cash outflow during a 30d stress period, denoted by . This outflow is approximated as a linear combination of the outstanding deposits and financing. High-quality liquid assets (HQLA) are required to exceed the net outflows by a buffer of at least 5%. More precisely,

The NSFR aims to enforce liquidity over a longer horizon. It considers the ratio between the available stable funding (ASF) and the required stable funding (RSF) of the balance sheet. Similarly,

In addition to LCR and NSFR, Swiss banks have to hold a minimum reserve at the SNB. In times of negative interest rates, the SNB demands higher reserves than usual. We frame this constraint via the cash to minimum reserve ratio (CMR) as

2.4.1.2. Interest rate sensitivity

The final constraint that is motivated from a regulatory perspective restricts the interest rate risk. To this end, one calculates the sensitivity of the bank's equity toward a parallel shift of the yield curve by ±100 bps. Let denote the residual equity if all other balance sheet items are reevaluated under the discount factors implied by the shifted yield curve. It is imposed that

2.4.1.3. Minimum annual return

Finally, we impose a lower bound on the annual revenue, which the bank is not supposed to undercut. It is motivated by preventing losses under any circumstances. The profit ought to exceed at least the basic operational cost plus an additional buffer of mCHF 6. Formulated in terms of the excess yearly return-on-equity (EYR), it reads

This constraint is calculated on an annual basis during the annual closing step for t ∈ (𝕋\ {T}) ∩ℕ.

2.5. Objective

Formulating reward functions for real-world reinforcement learning applications is challenging, since one has to capture human preferences on the policy and its outcomes via a single number. ALM involves many stakeholders that have detailed and potentially different preferences on the ALM policy and the resulting evolution of balance sheet positions. Even the fundamental goal of ALM is ambiguous because the bank must trade off profitability versus hedging; see Spillmann et al. (2019, Chapter 2). As profits are recognized in equity, we act as if preferences in the ALM problem could actually be reduced to characteristics of the bank's equity distribution at the horizon T. The prerequisite that constraints should not be violated is additionally incorporated into the loss signal.

The assumption of solely focusing on the value of the bank's equity at time T might not truly capture preferences in this setting. Indeed, not all paths with the same final equity value ET are valued equally from a practical perspective. Banks prefer their equity to be steadily increasing along its path to time T and are concerned with its maximum drawdown. This path preference is to some extent accounted for in the constraints; Equation (26) implies that equity paths with elevated drawdowns feature a higher loss provided that the minimum annual return has ever been violated at all. Otherwise, two equity paths will be evaluated as indifferent if they have the same final equity value. A possible remedy could entail to replace the single reward with compounded rewards based on, e.g., E1, E2, …, ET. We restrict our analysis to loss functions based only on ET because even rewarding intermediate equity values does not entirely solve the more pressing issue of neglecting how well the bank will do after time T. Ignoring long-term success is not in alignment with true preferences as the bank will not be liquidated after time T but is a going concern. Ideally, this should not be problematic as all balance sheet items are valued fairly. If the cash flow structure of balance sheet positions is determined to be suboptimal for the bank after time T, it could simply be restructured without decreasing the bank's equity. In the presence of market frictions and short-selling constraints, it becomes questionable whether restructuring at negligible cost is possible. The experiments presented below indicate that the time horizon T has an impact on the learnt strategies. Furthermore, the bank seeks to avoid significant restructuring within short time periods.

The going concern principle motivates modeling the problem as an infinite decision problem, in which discounted rewards are issued periodically. DSC, as presented earlier, is not well suited for a problem with an infinite time horizon. We would require a different type of algorithm. Therefore, we restrict our formulation of the ALM problem to a finite time horizon T. If cutting off the problem leads to degenerate behavior toward the end of the model period, increasing the horizon T might make it less relevant: as long as there is enough time between today and time T, current actions might be unrelated to this behavior, and thus still be useful. We will investigate this issue later by comparing strategies for different model horizons T.

2.5.1. Constant relative risk aversion

If preferences are rational11, maximizing preferences on the distribution of ET becomes equivalent to maximizing the expected utility 𝔼[u(ET)], where the so-called Bernoulli utility u(x):ℝ → ℝ assigns a real value to a given realization x of ET. The assumption that investors are risk averse translates into the requirement that u is concave and non-decreasing. Since the underlying preference structure of the bank's shareholders is elusive, it is unclear what Bernoulli utility u describes the risk appetite most accurately. This problem is commonly approached by restricting u to be from a specific class of utility functions that are characterized by a small number of parameters. This includes the class of utility functions with constant relative risk aversion (CRRA), where u is of the form

As indicated by the name, relative risk aversion, defined by −xu″(x)/u′(x), equals γ for all x > 0. For DSC, the parameter γ is reverse engineered such that the terminal equity distribution of the learnt strategies is balanced. Because the ALM problem is framed as a minimization problem, we define the utility loss component ℓu as the negative utility of the equity ratio, i.e.,

The equity ratio is floored at 0 < ε/E0 ≪ 1 to ensure that the CRRA utility remains well defined.

2.5.2. Target return

Alternatively to the formulation as a utility maximization problem, Krabichler and Teichmann (2020) suggest framing the ALM problem as a hedging problem. Given an annual return target μ, this approach aims at minimizing the difference between the bank's final equity and the implied target value. This target loss is defined as

Economically, this loss function encodes a preference for adequate risk-adjusted returns. It has the advantage that the hyperparameter μ is easily interpretable as opposed to the abstract notion of the risk aversion coefficient.

2.5.3. Penalties

The bank aims to maximize investor utility while sticking to several constraints. We encode this in the loss function by penalizing any violation of one of the six constrained quantities from Section 2.4. Denoting by the constrained quantity (e.g., LCRt) at time t and by βi the bound corresponding to the constraint (e.g., 105% for LCR), the extent of the ith breach at time t is calculated as

where i = 5 in the order of Section 2.4 corresponds to the interest rate sensitivity constraint. Taking the square of the violations encodes the preference that large violations are “more than linearly” worse than small violations. The intuition is that slight violations of a specific constraint are bad for the bank, while significant violations are detrimental.

The accumulated penalty p is defined as the weighted sum of all violations . It is used to calculate the loss component for constraint violations

The penalty is squared again, implying that large violations over the entire model period are “more than linearly” worse than small violations. The weights σi can be chosen to adjust for different magnitudes of the constrained quantities. Moreover, one can use these weights to encode preferences over the relative importance of different constraints. For instance, the weight of the penalty for violations of the minimum return is relatively small as this constraint is less binding than the regulatory constraints.12

2.5.4. Loss

Finally, the loss associated with a single path i is given as a weighted sum of the utility loss and the penalty loss, i.e.,

where λ > 0 determines the impact of the penalty on the total loss. The ALM problem is given by

where ET and p result from the transition dynamics outlined in this section. Calculating the loss concludes the forward computations in the ALM framework. Algorithm 1 provides an overview in which order the presented steps are executed to obtain the final loss signal.

2.6. Swaps

This section describes a model refinement by additionally incorporating plain-vanilla interest rate swaps into the decision process. Interest rate swaps are contracts between two counterparties that exchange floating payments for fixed payments. At predetermined times, one party (payer) pays a fixed payment and receives a floating payment, and the other party (receiver) receives the fixed payment and makes the floating payment. The fixed payments are called fixed as their amount is determined when the contract is entered into. The floating payments are determined throughout the duration of the contract based on a floating rate (e.g., LIBOR, up until recently, and compounded overnight rates). A brief and relevant introduction to swaps can be found in Filipović (2009, Chapter 1).

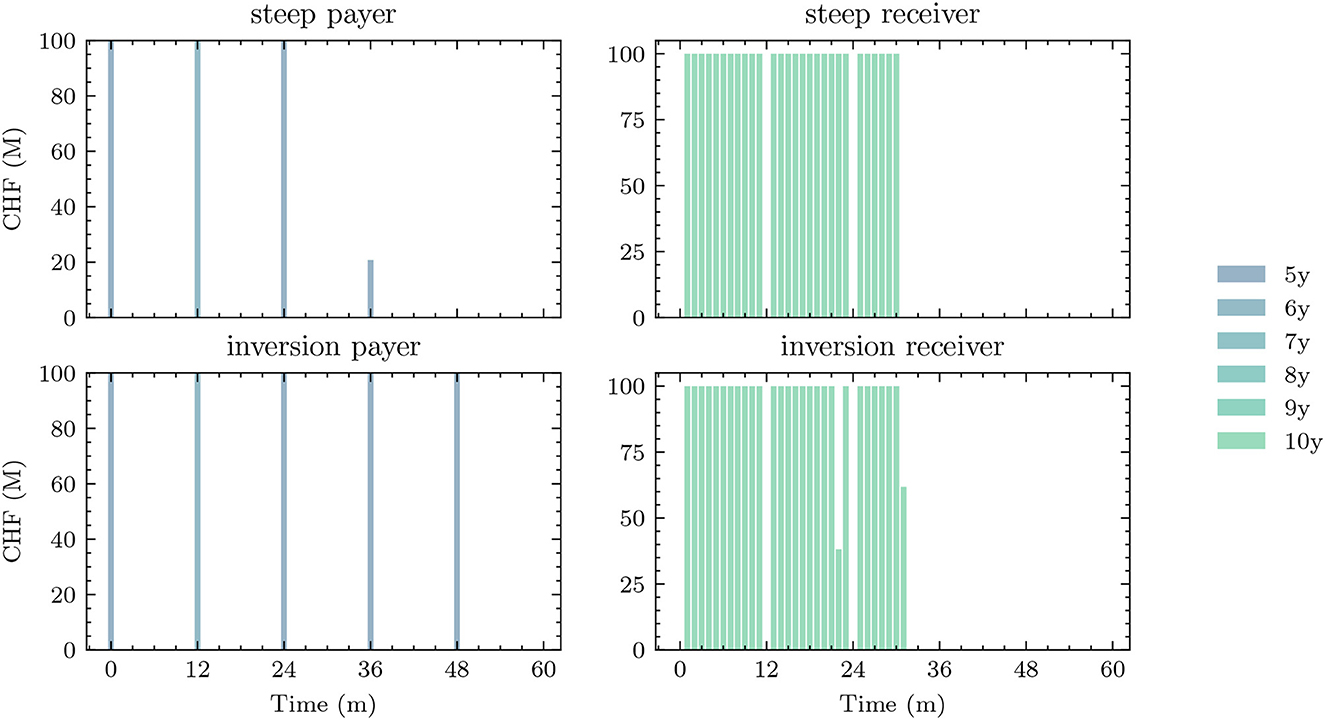

The basic ALM framework presented above is limited by the assumption that the bank can only invest in bonds and issue bonds. This neglects the bank's ability to control its interest rate exposure via swaps. In a second step, we extend the ALM framework by the introduction of interest rate swaps. Each period, the bank can additionally enter into s = 6 payer and receiver swaps, respectively, with maturities 5y–10y. Similarly to bonds, swaps cannot be sold in the secondary market and must be held until expiration. Swap payments occur on an annual basis starting exactly 1 year after issuance. The floating payments are determined 1 year before their payment and are given by the simple 1y spot rate prevailing at that time. The fixed payments are chosen such that the initial value of the swap is zero. The bank has to pay an annual spread of κS = 0.02% on both payer and receiver swaps. In absolute terms, the spread on swaps is smaller than the spread on bonds.

Recall that we keep track of bond positions by simply adding the entire cash flow of a bought or issued bond to the aggregated cash flow of the bond portfolio. This mechanism does not work for swaps as the cash flows of the floating leg are not known at issuance and are scenario-dependent. Instead, the position in each swap has to be kept separately. Computationally, this is done by keeping track of the holding portfolios and that denote the number of payer and receiver swaps, respectively, that are owned at a given time t ∈ 𝕋. The first dimension of is the number of payer swaps that exist over the entire model horizon. Hence, each entry of the denotes the volume with which a specific payer swap has been entered into at time t. The holding portfolios are initialized with zeros at all entries, i.e., we assume that there are no legacy swaps. At any time step t, the bank can then decide on the number of new payer swaps and new receiver swaps it wants to enter into. In the restructuring step, () is added to () at the correct indices.13 Also note that and have to be included in the control at. In the extended setting, at is therefore of dimension 2(b+s) and a concatenation of , , , and .

The change in cash within the roll-forward step has to be adjusted to account for payoffs originating from swaps. If a swap i ∈ {1, 2, …, s(H−1)} has an exchange of cash flows in period t and the fixed payoff is given by ki, the net cash flow from the position in this swap is given by

depending on whether we are dealing with a payer or receiver swap. The cash flows , from all swaps i ∈ {1, 2, …, s(H − 1)} have to be added to the cash update in Equation (15). While the initial value of any swap contracts is zero (with the exception of a spread), swap positions have to be reevaluated in every roll-forward (Equation 16) and restructuring step (Equation 19). The fixed leg, the floating leg, and the associated spreads of each swap are valued using standard techniques involving the forward rate curve. The net value of all swap positions is considered to be an asset or liability for the bank if it is positive or negative, respectively. To calculate the required replacement values, we define the net swap assets NA and net swap liabilities NL as

and add NA to the asset calculation and NB to the liability calculation in the valuation step (Equation 16) and restructuring step (Equation 19), respectively.

We assume that the general calculation of constraints from Section 2.4 does not need to be adjusted in the extended setting. In particular, the impact of swaps on LCR, NSFR, and RWA is negligible. Still, the inclusion of swaps in the balance sheet impacts the leverage constraint on the ratio Et/RWAt. Furthermore, the interest rate sensitivity constraint is of course significantly impacted by the inclusion of swaps. The value of the swap portfolio impacts both the value of equity Et and the value of equity under the shifted yield curve . In the presence of swaps, this constraint becomes particularly important as the model could otherwise enter into positions with large exposures to interest rate risk.

While most balance sheet constraints remain unchanged, the number of payer swaps () and receiver swaps () that the bank can enter into in each period is constrained. Next to the solely computational requirement that these must be non-negative14, we place the liquidity constraint that and . This implies that each month, the bank can only enter into payer and receiver swaps involving a notional amount up to mCHF 100. Finding counterparties for larger swap positions may not be easily possible in due course. Moreover, the total sum of outstanding payer and receiver swaps is required to be less than mCHF 3 800 and mCHF 2 800, respectively. More precisely, the constraints

must be satisfied for all t ∈ 𝕋\{T}. This is a simplification of a typical requirement from hedge accounting. The volume of payer and receiver swaps should not exceed the volume of unhedged assets and liabilities, respectively, at a given maturity. Correspondingly, swaps are intended to hedge outstanding interest rate risk, in contrast to taking on interest rate risk. The upper bounds were provided by the bank. They represent approximatively the volume of unhedged assets and liabilities that have maturities between 5y and 10y. While a dynamic recalculation of such limits would be more precise, we suspect that it should not impact results heavily, considering that loans and deposits evolve almost deterministically.

2.7. Term structure models

All approaches make use of a Monte Carlo approximation of the expected loss (Equation 33). This requires simulating a set of scenarios for the evolution of the yield curve, as discussed in Section 2.7. In principle, the approaches presented later can be applied to any set of yield curve scenarios. This general applicability does not mean that the “performance” of the different approaches does not differ based on the choice of simulated yield curves. Indeed, the contrary is the case: our experiments demonstrate how the yield curve simulator induces a bias in the model's decisions.

While treating the yield curve as a function R(t, ·):[t, ∞) → ℝ is mathematically convenient, prices are observed in practice for several types of bonds, but only for a limited number of maturities. For the Deep ALM framework, we need to model the yield curve at only N maturities. We therefore refer to the N-dimensional vector Y as the yield “curve”, where the kth entry of Y is equal to R(t, t+kΔτ) for a maturity step size Δτ. Apart from the last paragraph in Section 2.7.4, the following can be skipped by the knowledgeable reader.

2.7.1. Simulation

We approach the ALM problem with a Monte Carlo-based deep learning method. The method uses a collection of scenarios to optimize the ALM decisions. Each scenario specifies the future development of variables that are relevant to the ALM problem. While some of those variables evolve deterministically, others are stochastic, i.e., differ between scenarios. The most important stochastic variable in ALM is the yield curve as it determines the rates at which the bank can lend and borrow money from both customers and investors. In fact, in our model of the ALM problem, the yield curve is the only source of randomness. Yield curve scenarios can be obtained by specifying a model for interest rate dynamics and then sampling from it. Ideally, this model satisfies the following criteria. First, it is financially reasonable to impose absence of arbitrage in the simulated bond market. Even statistical arbitrage is undesirable when using the simulation for training deep learning-based traders. They are likely to find and exploit risk-free profits that exist under the training distribution. But the simulated training distribution relies on an estimation of the mean returns of the traded assets (here bonds). If the estimation is flawed, trading strategies that were profitable under the simulated distribution are certainly not guaranteed to be so in practice; for a discussion in the related context of deep hedging, see Buehler et al. (2022a).

In the ALM framework developed in Section 2, the bank faces significant trading restrictions. This means that even if there exists arbitrage in the market, the bank might not be able to exploit this opportunity (at all, or at least on an arbitrarily large scale). This is pointed out similarly in the context of deep hedging by Buehler et al. (2019). First, the bank faces spreads when interacting with the market. Hence, an arbitrage opportunity can only occur when the payoff of this trading strategy net of the initial transaction costs is almost surely non-negative. Second, the bank is restricted to long-only buy-and-hold-strategies in its bond portfolios. This naturally restricts the set of trading strategies available to the bank.

When training a deep learning model on these paths, it is likely beneficial, if not necessary, to have a sufficiently rich class of yield curve scenarios; see also Reppen and Soner (2023). Having variability among scenarios helps the model explore the space of future attainable yield curves. This likely helps the performance of the deep learning model at inference on the real-world scenario. From an empirical perspective, it might be desirable to have a yield curve simulator that reproduces patterns observed in the past. Such stylized facts include that the yield curve tends to be shaped upwards, that short-maturity yields tend to fluctuate more than long-maturity yields, and that yield curve inversions usually happen when short-term rates are high; see Pedersen et al. (2016, p. 11).

The Deep ALM method splits the optimization into two parts: simulating a set of yield curve scenarios and then solving the optimization conditional on the simulated data. This means that irrespective of what model is chosen to simulate yield curves, the choice itself represents a source of model risk in the Deep ALM framework. This type of model risk is not unique to the Deep ALM framework but is present in many deep learning applications in quantitative finance; see Cohen et al. (2021).

2.7.2. Svensson model

While for some maturities one might observe multiple prices in real-world fixed income markets as bonds are issued by different institutions, for other maturities they might not observe any bond prices at a given point in time. Hence, to obtain 'the' yield curve, some form of interpolation (or even extrapolation) is necessary. To this end, central banks such as the ECB or the SNB fit specific exponential-polynomial functions with a parsimonious parametrization to observed market yields. A popular choice is the model proposed by Svensson (1994), where the yield for a maturity m > 0 is given by

The six parameters β0, β1, β2, β3, τ1, and τ2 are calibrated to fit observed market yields. Both ECB and SNB provide daily data of the fitted parameters from which historical yields for any maturity can be obtained. This is useful in the Deep ALM framework when calibrating a yield curve simulator to historical yield curves.

2.7.3. Principal component analysis

From the previous analysis it is obvious that, at least for a fine grid size Δτ, the yield curve is a high-dimensional random vector with high dependencies between its elements. For modeling yield curve dynamics, it is natural to consider a lower-dimensional representation of the increments via a dimensionality reduction technique like principal component analysis (PCA); e.g., see Murphy (2012, Chapter 12). The yield curve dynamics can often be sufficiently described via its first three principal components; e.g., see Litterman and Scheinkman (1991). In this article, the approach to simulate yield curves is slightly different compared to classical PCA models. First, we apply a PCA on historical CHF forward curves and infer a deterministic, term-dependent volatility of a three-dimensional, risk-neutral HJM-type term structure. Second, PCA is utilized directly on simulated yield curves to obtain a low-dimensional representation of the yield curve as a feature for Deep ALM.

2.7.4. Heath-Jarrow-Morton framework

Let the stochastic basis be a filtered probability space in continuous time. Heath et al. (1992) proposed modeling the term structure of interest rates by specifying the stochastic evolution of the entire instantaneous forward rate curve. Under the no-arbitrage condition, these dynamics are fully specified by the volatility structure. A brief to the HJM framework can be found in Filipović (2009, Chapter 6).

Let α and σ be two stochastic processes, taking values in ℝ and ℝd that depend on two indices t and T, i.e., α = α(ω, t, T) and σi = σi(ω, t, T) for all i = 1, 2, …, d. The forward rate process {f(t, T)}t≥0 for 0 ≤ t ≤ T is assumed to follow the dynamics

where W denotes a d-dimensional Brownian motion under the objective measure ℙ. Equation (38) is well defined under some measurability and integrability assumptions; see Filipović (2009, Chapter 6) for further details. The initial forward curve f(0, T) is a model input and can be chosen to reflect the prevailing yield curve in the market. Heath et al. (1992) show that under an equivalent local martingale measure ℚ for the discounted bond price process, the forward rate dynamics 0 ≤ t ≤ T are given by

where W* is a d-dimensional Brownian motion under ℚ. The HJM framework is very general and many classical interest rate models can be derived within it; see Brigo and Mercurio (2007, Chapter 5). Because the initial forward curve is a model input, HJM models match the initial term structure without any calibration. This is important in a practical application like ours and an advantage over simple short-rate models such as, e.g., Vašiček (1977). But there are also practical challenges associated with the large degree of freedom that the HJM framework offers. This includes the important choice of the volatility structure σ. For a general choice of σ, the short rate r(t) = f(t, t) is not Markovian, which, while undesirable for many practical applications, is not necessary for the Deep ALM framework. For the simple tenor-dependent volatility structure used in Section 2.7.5, the dynamics of the short rate actually are Markovian with respect to a finite-dimensional state; see Cheyette (2001).

For the remainder of this article, we make the simplifying assumption that the real-world measure and the risk-neutral measure coincide, i.e., ℙ = ℚ. This assumption could be relaxed by exogenously specifying the market price of risk. While this would not change the general ALM framework developed in the next sections, it would impact the learnt strategies and possibly lead to different interpretations.

2.7.5. Tenor-dependent HJM model

Specifying a yield curve model that meets all the requirements from Section 2.7.1 is not trivial. Simple short rate models, such as Vašiček (1977), are not well suited as their few degrees of freedom have to be used for calibration to the initial yield curve. The HJM-type model that we use to simulate yield curves assumes a simple structure of the instantaneous volatilities. These are assumed to be constant over time and tenor-dependent. The method matches the initial yield curve inherently and generates a variety of shapes.

In the following, the forward curve refers to the random N-dimensional vector Ft instead of the function f(t, T). The jth entry of Ft is equal to f(t, t+jΔτ) for some discretization size Δτ. Similarly, denotes the vectorized version of α(t, T) and denotes the vectorized version of σ(t, T). Note that the latter is a matrix as σ(t, T) already is a d-dimensional vector. Evaluating (38) at all the tenors of Ft yields the N-dimensional stochastic differential equation

describing the dynamics of the forward curve vector. In this form, the instantaneous volatility structure is captured by the matrix Vt, which fully determines the drift At under the risk-neutral measure; see Equation (39). For modeling purposes, one has to specify the dynamics of {Vt}t≥0. We use a particularly simple model where the instantaneous volatility structure is assumed to be constant over time, i.e., Vt ≡ V. This means that the forward curve is exposed to the d-dimensional shock Wt with a possibly different magnitude at each tenor. The jth column vector of V, denoted V(j), specifies the tenor-dependent exposure to . The matrix V is fitted to historical data by decomposing the historical forward curve increments into its principal components. Moreover, this relies on the assumption ℙ = ℚ. The precise fitting method is as follows:

1. Estimate the covariance matrix of weakly forward curve changes {ΔFt}t = 0, 1, …, T and annualize it by multiplying by 52.

2. Apply an eigendecomposition on the scaled, estimated covariance matrix, i.e., , where the columns of Q ∈ ℝN×N are the eigenvectors of and Λ ∈ ℝN×N is the diagonal matrix containing the eigenvalues of .

3. Keep the first d = 3 eigenvectors and scale them by their eigenvalues, i.e., for j = 1, 2, …, d.

4. To regularize the estimate, approximate the vectors as polynomial functions of the tenors. The degree of the polynomials is a modeling choice; we use cubic polynomials.

5. Set the jth column vector of V equal to the jth fitted polynomial evaluated at the relevant tenors.

We model σ(t, T) as a d-dimensional vector where the entries are polynomials as functions of the tenor T−t. Following the HJM approach, the risk-neutral drift At ≡ A is given by the drift in Equation (39) and can be approximated using the trapezoidal rule. Future forward curve paths can be simulated via

where ∂τFt is approximated as the forward difference.15 Finally, the kth entry of the vector of zero coupon bond prices Dt (discount factors) is calculated by the approximation from Glasserman (2003, Chapter 3)

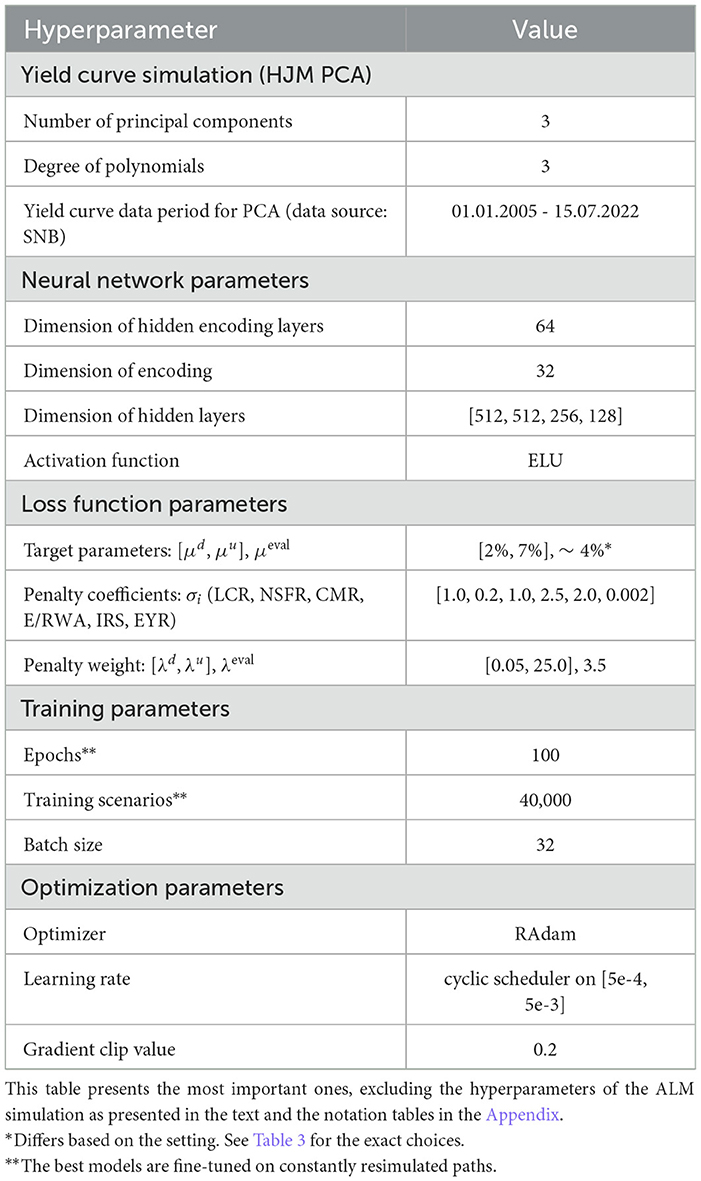

where the superscripts refer to the components of the respective vectors. We refer to this method as the HJM-PCA approach. The following hyperparameters are associated with it: the start and end date of the historical data used to fit the principal components, the time discretization (here weekly), the number of principal components fitted, and the degrees of the polynomials fitted to the principal components. See Table 2 for our concrete choices.

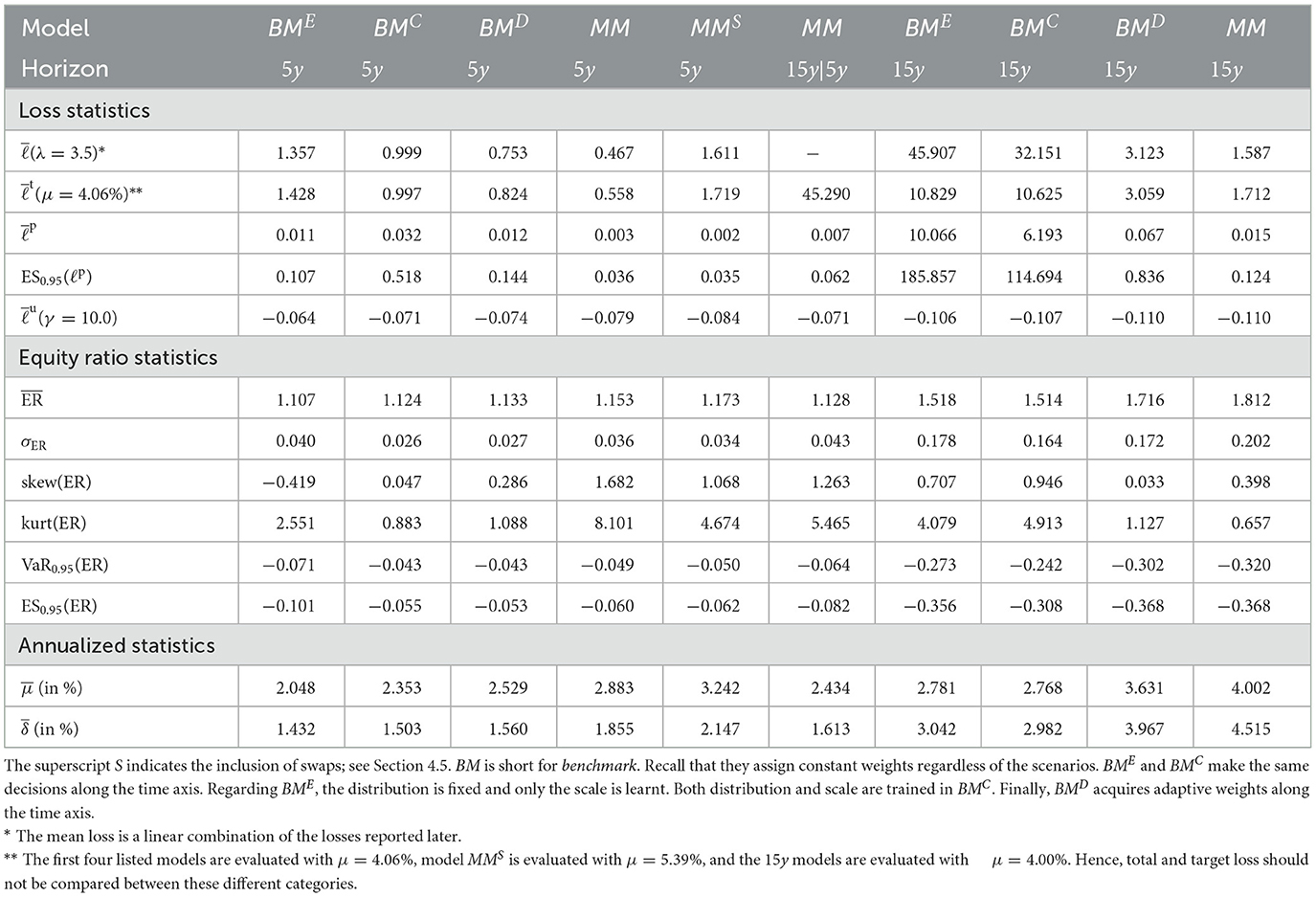

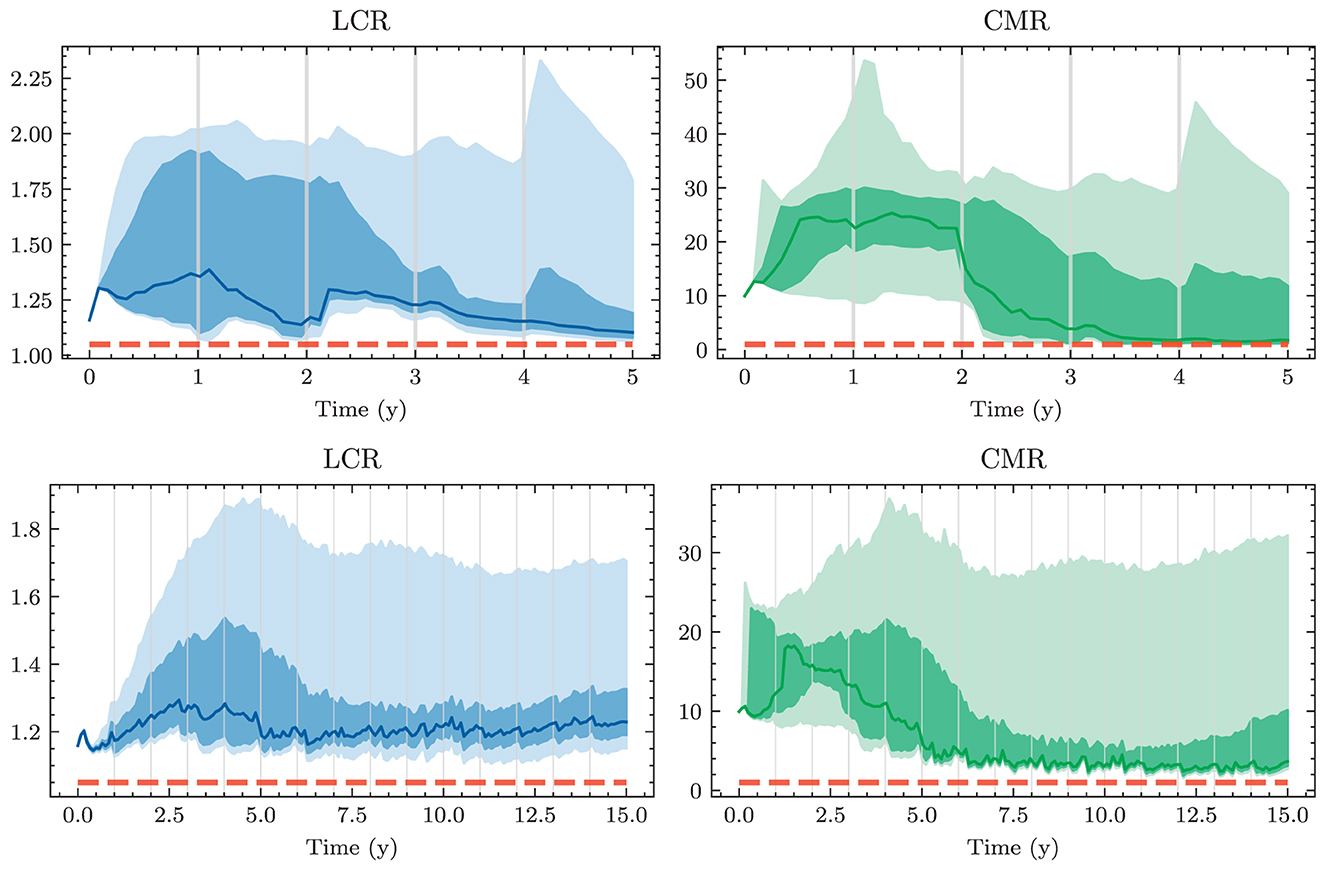

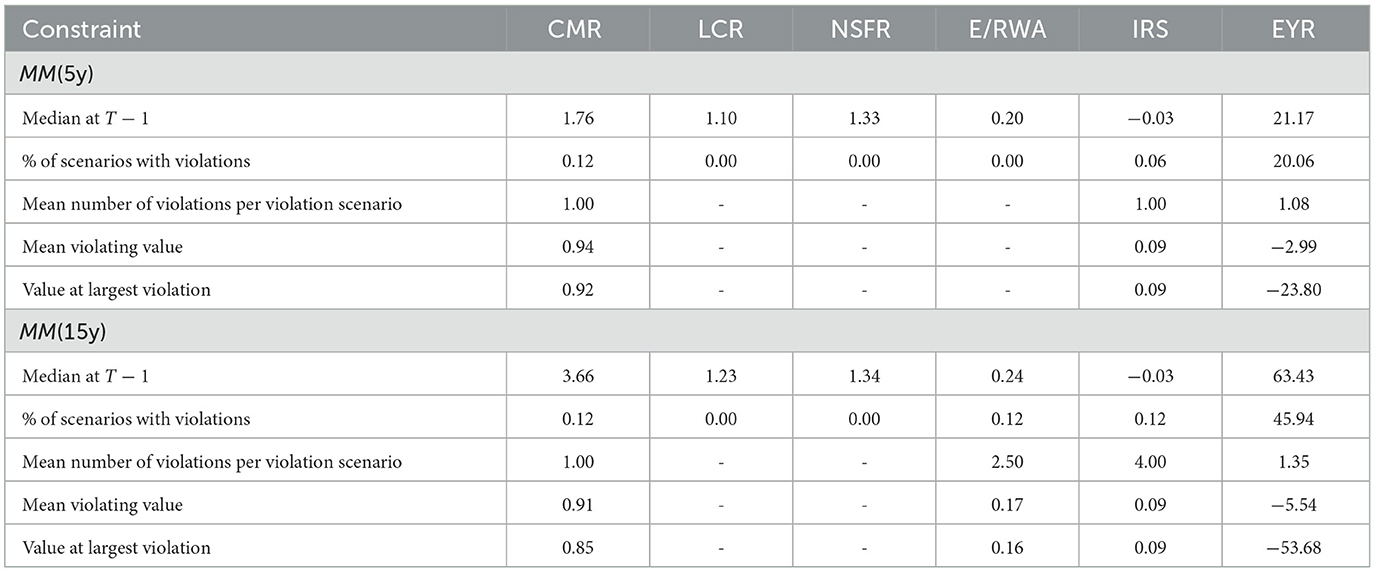

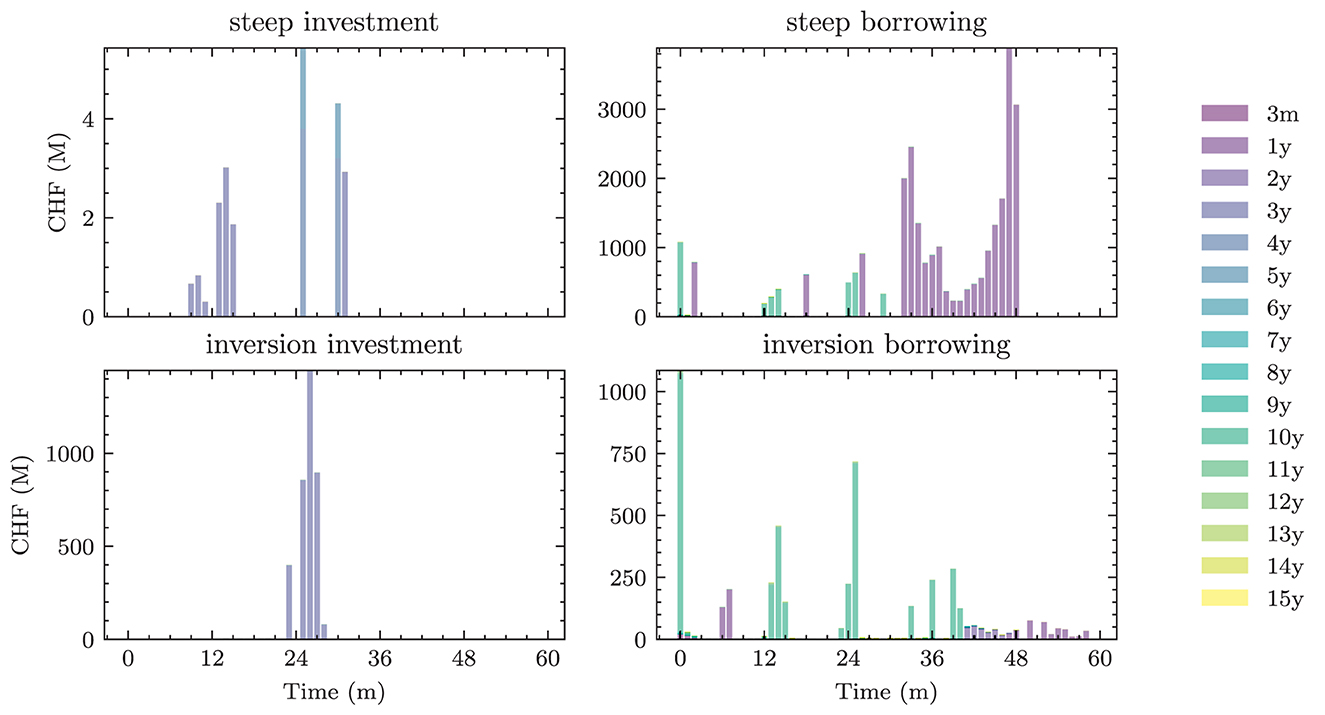

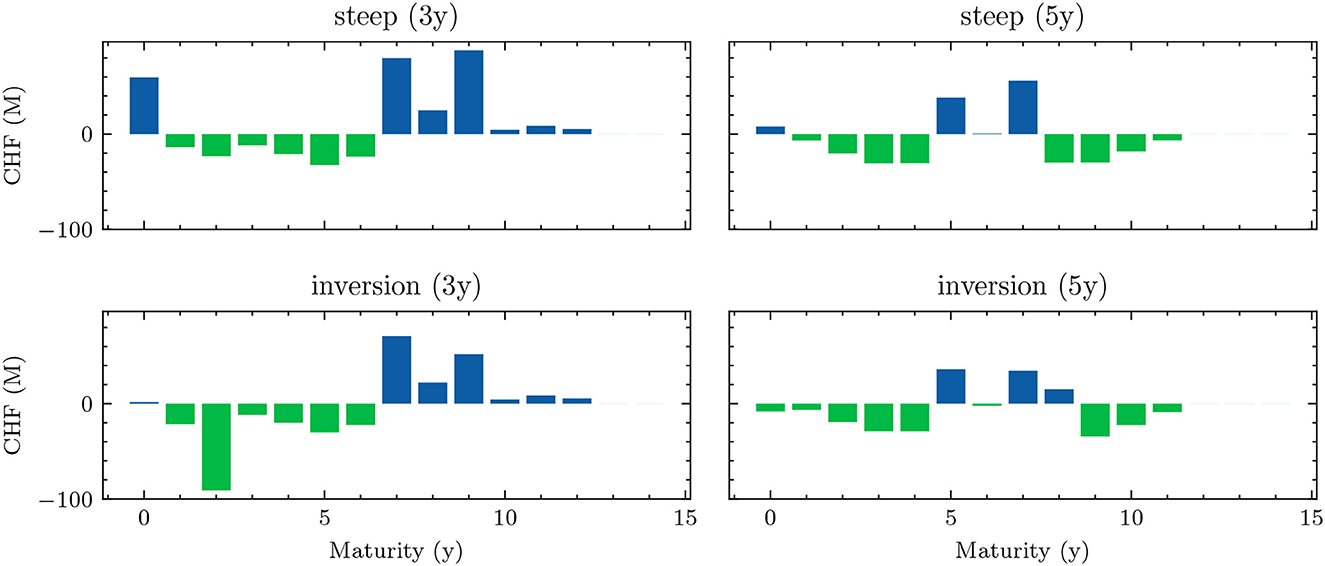

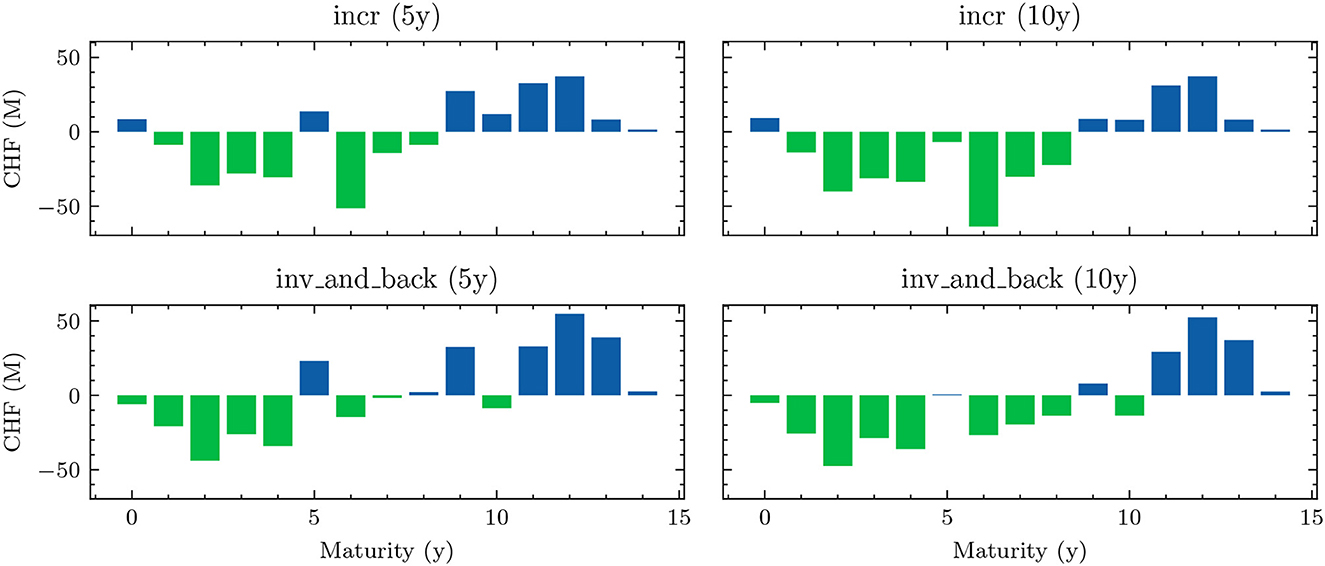

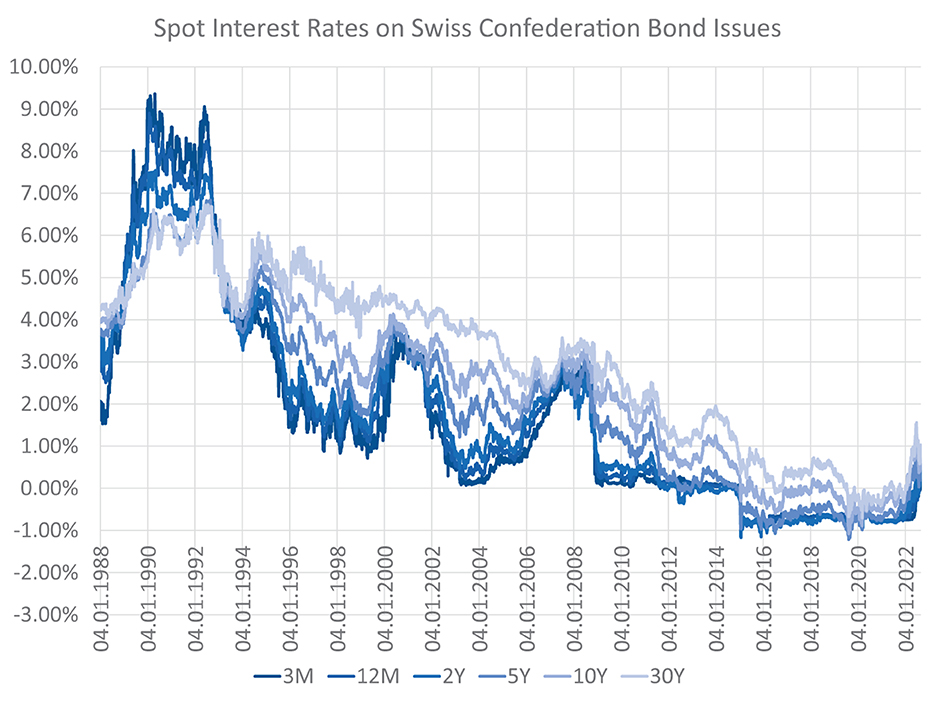

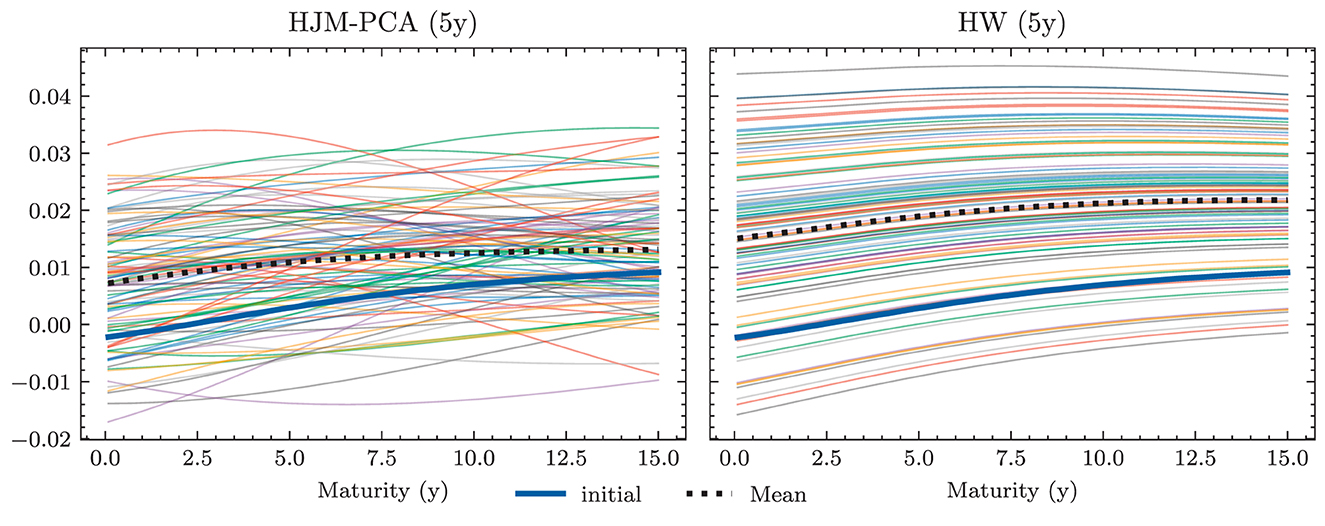

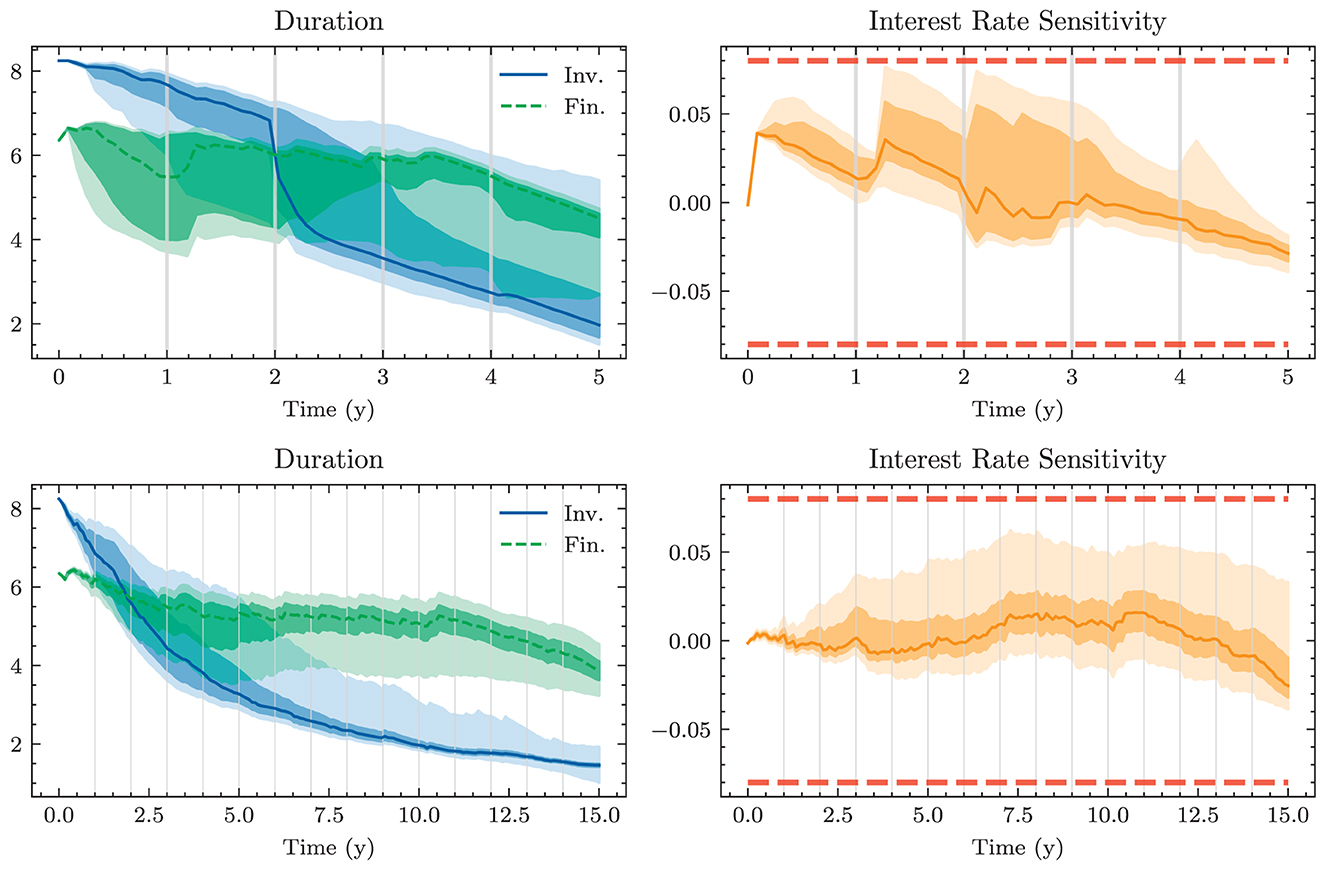

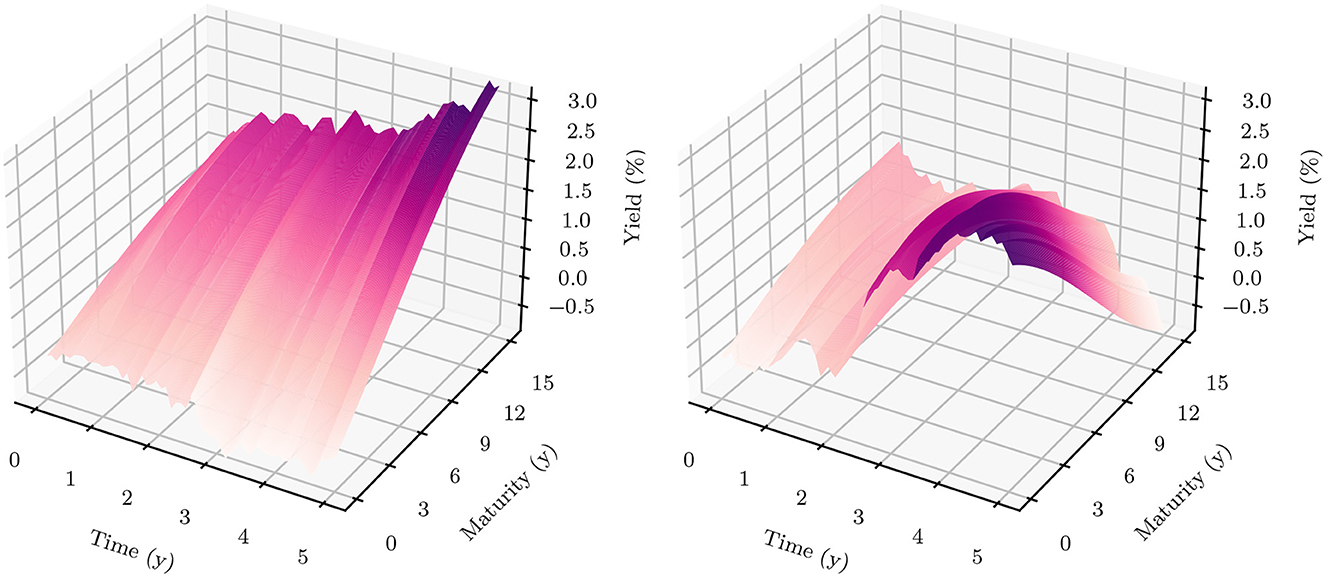

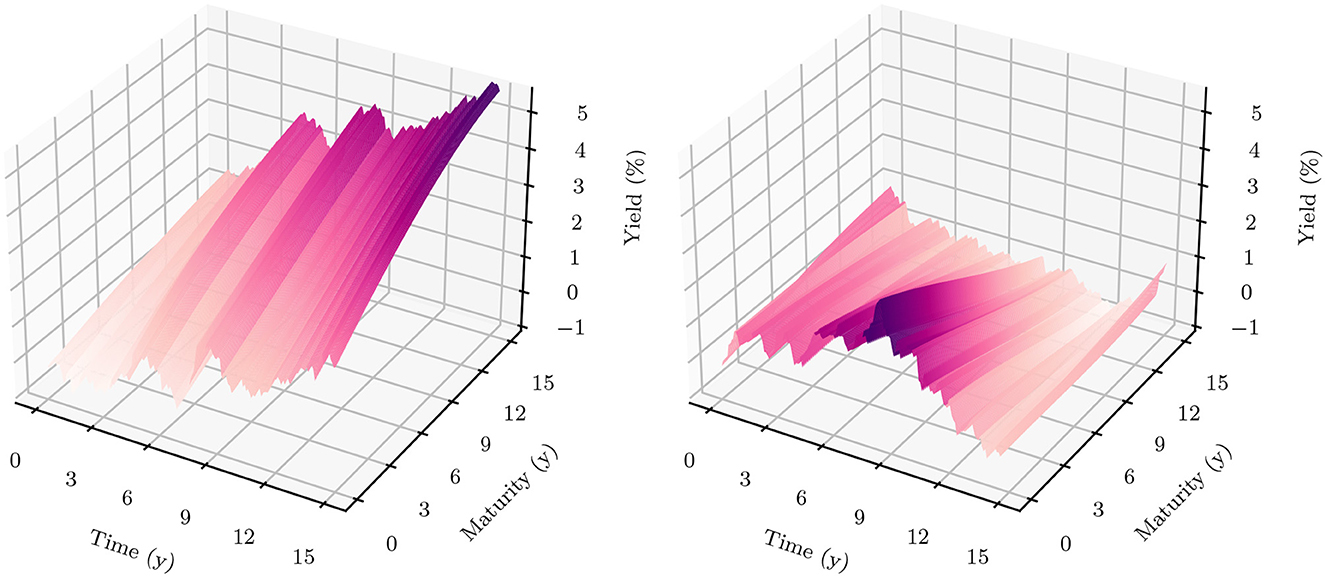

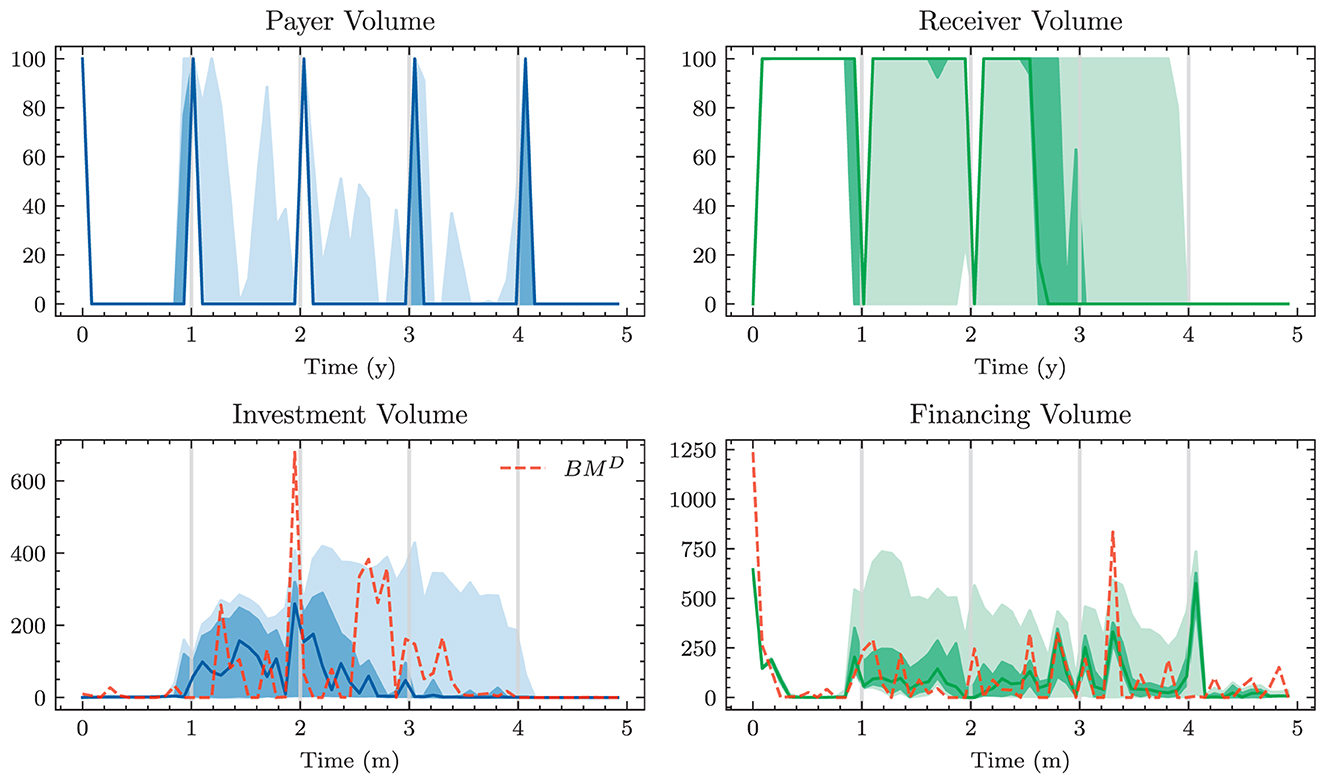

Figure 4 shows the 1m-yields simulated using the HJM-PCA approach. Short-term rates are increasing in most scenarios which is roughly in line with expectations expressed by the bank on physical realizations of future yields. In more than 95% of the scenarios, the short-term yield stays above −1%. This is desirable for our application as many of the modeling assumptions in the ALM framework would be poor if yields were to fall to historically unprecedented negative levels. The left-hand side of Figure 5 shows entire yield curves attained using the HJM-PCA approach to simulate 5 years into the future. Most yield curves lie above the initial curve and there is a decent variety of yield curve shapes. In the mean, yield curves have a slightly positive slope that is smaller than that of the initial yield curve. According to the bank, this is a reasonable assumption as the yield curve has been artificially steep in times of negative interest rates. Overall, the simulated yield curves seem reasonable and suited for our application. While the HJM-PCA model is certainly not perfect, yield curve simulation is not the main focus of this article. Instead, the focus lies on optimizing an ALM policy conditional on a set of simulated yield curves. The striking feature of our Deep ALM approach is that one can easily substitute the HJM-PCA model by any other method for simulating yield curve movements.

Figure 4. Simulated yield curves in 5y—The left-hand side shows a random sample of the terminal term structure simulated with HJM-PCA over a horizon of 5y. We encounter a rich family of different shapes. On the right-hand side, we see a random sample generated by a Hull-White-extended Vašiček model calibrated to the recent past; e.g., see Brigo and Mercurio (2007, Chapter 5). We chose the long-term mean time-dependent to match the initial yield curve and left the mean reverting rate as well as the instantaneous volatility constant. Regarding Deep ALM, the encountered diversity is not sufficient to get convincing results.

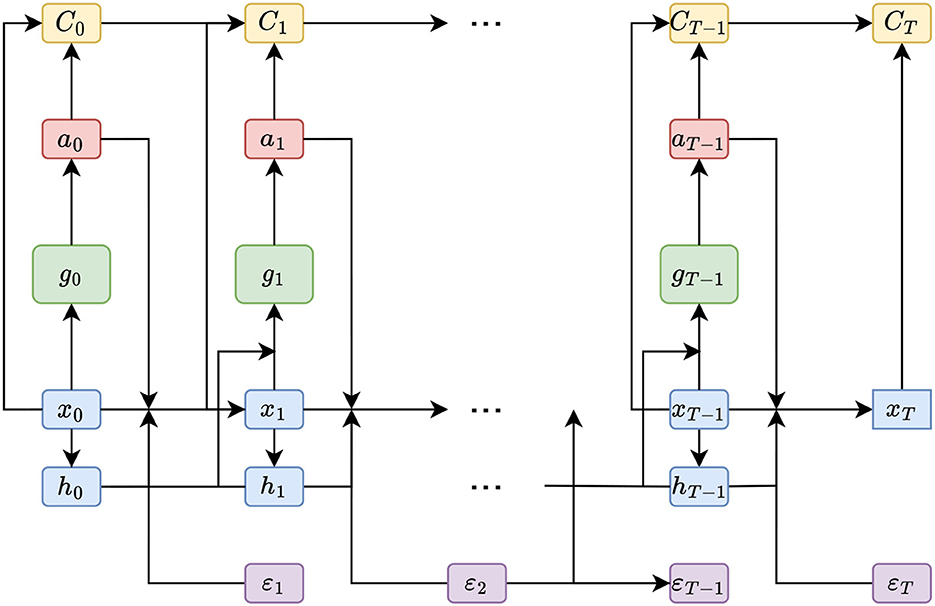

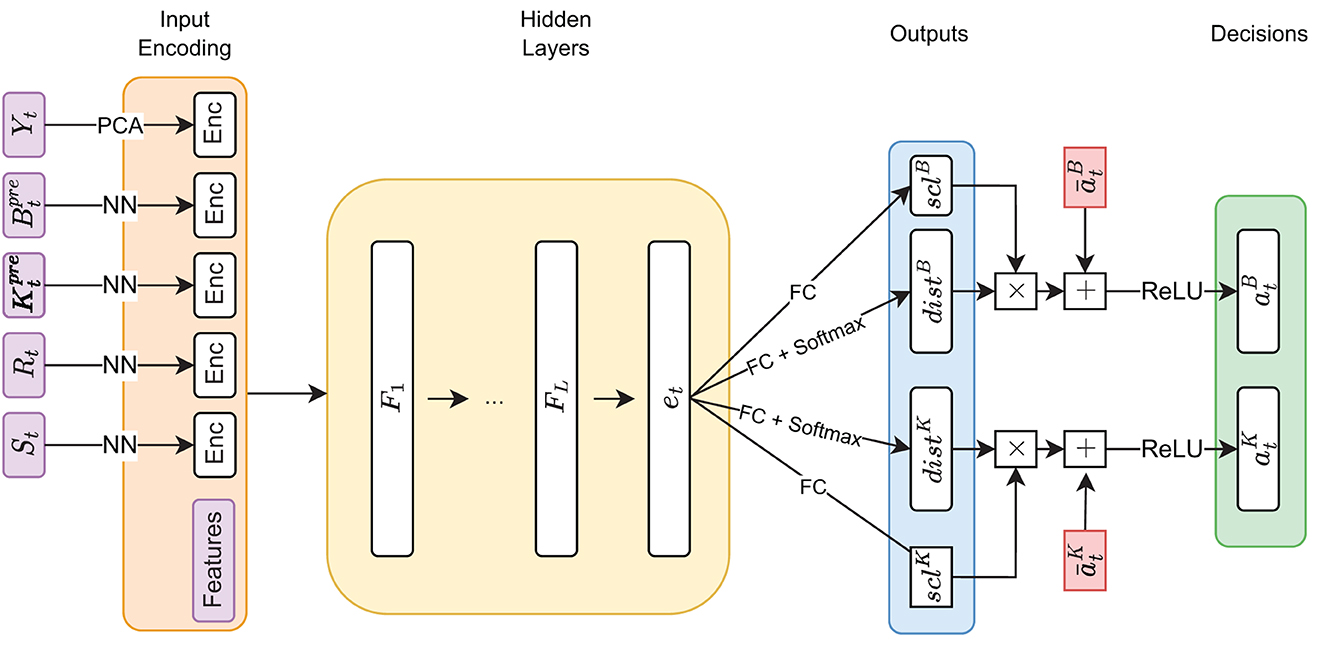

Figure 5. Decision network architecture—arrows annotated with “NN” denote a forward pass through a shallow neural network, the annotation “FC” denotes a single fully connected layer. The notations scl and dist are used to indicate the scale and distribution decisions made by the model.

3. Deep ALM

In this section, we present approaches for solving the ALM problem defined in Section 2: how should the investment and financing decisions (at)t ∈ 𝕋\{T} be made to minimize the expected loss (Equation 33)? We start by presenting simple benchmarks (Section 3.1) and then describe the full Deep ALM approach for tackling the basic ALM setting without swaps.

3.1. Benchmarks

Defining an algorithmic benchmark that mimics how banks make ALM decisions in practice is virtually impossible. These decisions are often made through a combination of simple models and expert judgment. Having a strategy that can easily be computed is of course one of the main motivations behind Deep ALM. For benchmarking purposes, we therefore define and optimize strategies endogenously in the ALM framework. The benchmarks were especially valuable during the development of the deep learning models because the benchmarks are much simpler to optimize.

3.1.1. Equal allocation

A naive approach for choosing the investment and financing maturities is the 1/N strategy. Each period, an amount and is split equally among all investment and financing bonds, respectively. In the simplest case, this amount is the same in each period leading to the constant policies and . Hence, this policy is characterized by only two parameters ∥aB∥1 and ∥aK∥1. Having to invest or borrow the same amount in each period is very restrictive. Instead, one can pursue a 1/N strategy where the scales and are time-dependent. This strategy has 2(H−1) parameters, as there are H−1 periods where decisions are made.

3.1.2. Optimal constant allocation

A slightly more sophisticated benchmark strategy can be built by relaxing the 1/N assumption and choosing a potentially more optimal distribution over the investment and financing maturities instead. Such a benchmark strategy can be specified with different degrees of freedom. In the simplest case, where decisions are assumed to be constant over time, this strategy has bB+bK parameters. When decisions are allowed to vary over time, the number of parameters increases to (bB + bK) × (H − 1). When investment and financing maturities differ, as it is the case in our setup, equal allocation introduces an asymmetry in the duration of investment and financing decisions. In our case, where the maturities 3m, 1y, and 2y are only available for financing purposes, investments under the 1/N strategy have a higher duration than borrowings.

Each of these benchmarks makes the same decision in each scenario at a given model step t ∈ 𝕋\{T}. This is in contrast to the Deep ALM approach presented in Section 3.2, which tries to optimally adapt a given strategy to the current balance sheet structure and interest rate environment. The simplicity of the benchmark strategies makes them useful for more than just comparison purposes. Because they are easy to interpret, these strategies can provide valuable insights for ALM practitioners. The parameters of the benchmark strategies are optimized using gradient descent (see the optimizer in Table 2), i.e., in the same way that weights of neural networks are optimized in the Deep ALM approach. While this is straightforward within our ALM framework, it is already more complex than many prevalent tools in practice.