ORIGINAL RESEARCH article

Front. Artif. Intell., 14 October 2022

Sec. Pattern Recognition

Volume 5 - 2022 | https://doi.org/10.3389/frai.2022.989860

Emotion recognition is useful in many applications such as preventing crime or improving customer satisfaction. Most current methods rely on facial features, which require close-up face information. Such information is difficult to capture with normal security cameras. The advantage of using gait and posture over conventional biometrics such as facial features is that gaits and postures can be obtained unobtrusively from far away, even in a noisy environment. This study aims to investigate and analyze the relationship between human emotions and their gaits or postures. We collected a dataset from 49 participants for our experiments. Subjects were instructed to walk naturally in a circular walking path while watching emotion-inducing videos on Microsoft HoloLens 2 smart glasses. An OptiTrack motion-capturing system was used for recording the gaits and postures of participants. The angles between body parts and walking straightness were calculated as features for comparison of body-part movements while walking under different emotions. Results of statistical analyses show that the subjects' arm swings are significantly different among emotions, and that the arm swings on one side of the body reveal subjects' emotions more clearly than those on the other side. Our results suggest that arm movements, together with information on arm side and walking straightness, can reveal the subjects' current emotions while walking. That is, human emotions are unconsciously expressed by arm swings, especially by the left arm, when people walk in a non-straight walking path. We found that arm swings under a happy emotion are larger than arm swings under a sad emotion. To the best of our knowledge, this study is the first to perform emotion induction by showing emotion-inducing videos to the participants using smart glasses during walking instead of showing videos before walking. This induction method is expected to be more consistent and more realistic than conventional methods. Our study will be useful for implementation of emotion recognition applications in real-world scenarios, since our emotion induction method and the walking direction we used are designed to mimic the real-time emotions of humans as they walk in a non-straight walking direction.

Recently, research on emotion recognition and analysis has gained much popularity due to its usefulness. This technology makes it possible to implement several types of applications, which include improving the quality of human-robot interaction (Yelwande and Dandavate, 2020), evaluating customer satisfaction (Bouzakraoui et al., 2019), detecting suspicious behaviors for crime prevention (Anderez et al., 2021), and assessing student engagement during online classes (Tiam-Lee and Sumi, 2019).

Due to the popularity of emotion analysis research, a specific research field called Affective Computing (Picard, 2000) has emerged. This research field focuses on giving computers the capability to understand human emotion as well as to generate human-like affects for various applications. Several affective computing applications have been proposed in recent years. For example, in the education field, an affective computing algorithm can be applied to a program of online exercises by analyzing students' emotions and interacting with the students so they can study more effectively while also improving their mental health (Tiam-Lee and Sumi, 2019).

In security applications, gait analysis is also useful in crime prevention. CCTV systems and security cameras are already standard equipment installed in many places, and advances in computer vision and machine learning technology allow human gait to be analyzed quickly using on-board computation devices. Identifying suspicious behaviors can thus be carried out effectively (Anderez et al., 2021). Smart Visual Surveillance applications can be implemented using gait analysis, including re-identification and forensic analysis, since gaits can be captured at a distance without the awareness or cooperation of the subjects (Bouchrika, 2018).

Human emotion analysis is also useful for improving the experience of human-robot interaction. Nowadays, robot usage is increasing in many tasks and situations including delivery tasks, warehousing tasks, and so on. Making robots move in crowded environments without disturbing or annoying humans, through choosing appropriate paths, remains a vital issue to tackle. By integrating emotion recognition of humans with robot movement strategy, a socially aware robot can be achieved. A socially aware robot can minimize its interference with humans while improving the user's quality of life (Yelwande and Dandavate, 2020).

In the past, emotion prediction could be performed using human observers (Montepare et al., 1987). However, using human observers is time consuming and not sufficiently consistent for use in real-world applications. Automatic emotion recognition, which is a more suitable and accurate approach, has thus been developed (Stephens-Fripp et al., 2017). Most publicly available methods nowadays use facial expressions as features for emotion analysis and prediction. Emotion prediction using facial features has good accuracy in some situations, but it still has limitations (Busso et al., 2004). For example, in situations such as a noisy environment, facial features are difficult to obtain, and high-quality facial images cannot be captured with standard security cameras. In some cases, if the subject has a mustache, beard, or eyeglasses, these can interfere with emotion recognition that depends on facial expressions. Emotion intensity is another important issue, since some subjects do not express intense feelings on their faces. Due to these limitations, emotion prediction techniques based on facial features are suitable for use only in limited situations. If face images can be clearly captured, such as when the subject is facing forward near the camera, facial emotion recognition is an appropriate choice. If the face images cannot be clearly captured, other features might be better for implementation of emotion recognition in reality.

Gait and posture are known as movement patterns of the human body as people walk or perform activities. There are no requirements for high-resolution images or video. Gait and posture features can be collected without interfering with the normal life of humans. Furthermore, gait and posture data can be collected without the subjects' awareness. These features make gait and posture recognition successful in many applications such as human identification (Khamsemanan et al., 2017; Limcharoen et al., 2020), human re-identification (Limcharoen et al., 2021), age estimation (Lu and Tan, 2010; Zhang et al., 2010; Nabila et al., 2018; Gillani et al., 2020), and gender recognition (Isaac et al., 2019; Kitchat et al., 2019). Therefore, human gait and posture are appropriate features for recognition of human emotions as shown in several previous studies (Montepare et al., 1987; Janssen et al., 2008; Roether et al., 2009; Karg et al., 2010; Barliya et al., 2013; Venture et al., 2014; Li B. et al., 2016; Li S. et al., 2016; Zhang et al., 2016; Chiu et al., 2018; Quiroz et al., 2018).

The objective of this study is to analyze the differences in human gaits and postures under different emotions as subjects walk in a non-straight walking path. Several experiments were conducted to investigate the differences in body-part movement under different emotions and to verify whether these differences in movement could be used to identify the current emotion of a subject. The participants in our experiments consist of male and female undergraduate university students. They were asked to walk with their natural postures in a non-straight walking path while watching emotional videos. Conventional methods where videos are shown to the subjects before walking pose the risks of the induced emotions not being consistent and not lasting until the end of walking (Kuijsters et al., 2016). Moreover, if we show videos on a normal screen while the subject walks in a non-straight path, the subject would need to bend or turn his or her head to watch the videos on the screen. With the proposed method, the subjects can see the videos directly on HoloLens 2, so they can walk in a natural way and watch the video at the same time. In addition, the induced emotion will be more consistent and last until the end of walking. In this study, gait data were analyzed using one-way and multi-factor Analysis of Variance (ANOVA) as well as Linear Regression Analysis.

Experiments and analyses in this study were conducted to test two hypotheses. The first hypothesis is that different emotions have different effects on body-part movements while subjects walk, so the emotions of subjects can be recognized from their walking postures. The second hypothesis is that the movements of the left and right body parts are not symmetric if the subjects walk in a non-straight path, causing one side of the body to reveal the current emotion of a subject better than the other side. Because our study focuses on human gait analysis while the subjects walk in a non-straight walking path, it differs from most conventional studies, which analyze human gait only when the subjects walk in a straight walking path (Janssen et al., 2008; Michalak et al., 2009; Roether et al., 2009; Karg et al., 2010; Gross et al., 2012; Barliya et al., 2013; Destephe et al., 2013; Venture et al., 2014; Li B. et al., 2016; Li S. et al., 2016; Sun et al., 2017).

In short, our experiments and analyses show that human gait and posture are different under different emotions, especially with left arm-swing movements.

This article is organized as follows. Section 2 gives an overview of other works related to ours. Section 3 explains the method, equipment and materials we used to collect data for our analysis. Section 4 shows the detailed procedure of how we preprocessed the data we collected. Section 5 describes the method we used to extract gait features. Section 6 describes the statistical methods used to analyze our gait data and the results of each method. Section 7 discusses and analyzes the results we found from statistical analyses. Finally, Section 8 summarizes everything we have accomplished in this study.

Studies on emotion recognition are very popular, and several methods have been proposed in recent years due to their potential usefulness. Most of the proposed emotion recognition methods are based on facial expression. These techniques can achieve accurate results for specific applications. However, emotion recognition using facial features still has limitations in some real-world uses, as mentioned in Section 1. We found that fewer emotion recognition studies have used gait and posture features than facial features. In this section, related works that are useful and relevant to our study are reviewed.

Xu et al. (2020) conducted a survey to investigate many studies on gait analysis. They found that gait analysis could be used not only for identification of subjects but also for the prediction of subjects' current emotions. They found that humans walking under different emotions show different characteristics. By using this information, automatic emotion recognition can be achieved. There are several advantages to using gait compared with traditional biometrics such as facial features, speech features, and physiological features. Gait can be observed from far away without a subject's awareness. Gait is difficult to imitate. Gait can also be obtained without a subject's cooperation. Due to these advantages, gait is a very effective type of expression that can be used for automatic emotion recognition. Gait can be recorded using many types of devices. For example, a force plate can be used for recording velocity and pressure data (Janssen et al., 2008). Infrared light barrier systems also perform well in recording velocity data (Lemke et al., 2000; Janssen et al., 2008). Motion capturing systems, e.g., Vicon, can capture coordinate data accurately using markers attached to the body (Michalak et al., 2009; Roether et al., 2009; Karg et al., 2010; Gross et al., 2012; Barliya et al., 2013; Destephe et al., 2013; Venture et al., 2014). Microsoft Kinect is another efficient tool that can capture the human skeleton by processing a depth image with a color image to predict the position of body joints (Li B. et al., 2016; Li S. et al., 2016; Khamsemanan et al., 2017; Sun et al., 2017; Kitchat et al., 2019; Limcharoen et al., 2020, 2021). An accelerometer sensor on a wearable device such as a smartphone or smart watch can also record the movement data for gait analysis (Zhang et al., 2016; Chiu et al., 2018; Quiroz et al., 2018). After gait data collection, there are several preprocessing steps that can be used. 
For instance, the data can be filtered using a low-pass Butterworth filter (Destephe et al., 2013; Kang and Gross, 2015, 2016) or sliding-window Gaussian filtering (Li B. et al., 2016; Li S. et al., 2016). Transformations of the data from the time domain to other domains, such as the Discrete Fourier Transform (Li B. et al., 2016; Li S. et al., 2016; Sun et al., 2017) or the Discrete Wavelet Transform, are also widely used (Ismail and Asfour, 1999; Nyan et al., 2006; Baratin et al., 2015). Gait features are categorized into Spatiotemporal Features, such as stride length, velocity, step width, and step length, and Kinematic Features, such as coordinate data, joint angles, and angular range of motion. Some approaches involve dimension reduction of gait features, such as Principal Component Analysis (Shiavi and Griffin, 1981; Wootten et al., 1990; Deluzio et al., 1997; Sadeghi et al., 1997; Olney et al., 1998). Finally, the emotion recognition phase can be performed using many popular techniques, e.g., Multilayer Perceptrons (Janssen et al., 2008), Naive Bayes (Karg et al., 2010; Li B. et al., 2016; Li S. et al., 2016), Nearest Neighbors (Karg et al., 2010; Ahmed et al., 2018), Support Vector Machine (Karg et al., 2010; Li B. et al., 2016; Li S. et al., 2016; Zhang et al., 2016; Chiu et al., 2018), and Decision Tree (Zhang et al., 2016; Ahmed et al., 2018; Chiu et al., 2018). As for the results, useful findings were derived from many of the studies they surveyed. For happiness, the subject steps faster (Montepare et al., 1987), strides are longer (Halovic and Kroos, 2018), arm movement increases (Halovic and Kroos, 2018), and joint angle amplitude increases (Roether et al., 2009). For sadness, the arm swing decreases (Montepare et al., 1987), torso and limb shapes contract (Gross et al., 2012), and joint angles are reduced in amplitude (Roether et al., 2009). Many gait analysis studies have been proposed in recent decades. Several applications can be achieved by analyzing human gait. 
The following examples are illustrative: human identification or re-identification (Khamsemanan et al., 2017; Limcharoen et al., 2020, 2021), gender prediction (Isaac et al., 2019; Kitchat et al., 2019), emotion prediction (Janssen et al., 2008; Xu et al., 2020), and mental illness prediction (Lemke et al., 2000; Michalak et al., 2009). Several of the above methods collected gait data by such means as a force plate, a light barrier, a motion-capturing system, a video camera, or an accelerometer. We focus only on methods that extract 3-dimensional coordinates, binary silhouette, and body part angles as gait features, since these gait features are sensitive to walking patterns. Most current studies propose using a straight walking path in their experiments to achieve high-quality gait data (Sadeghi et al., 1997; Lemke et al., 2000; Janssen et al., 2008; Michalak et al., 2009; Roether et al., 2009; Barliya et al., 2013; Venture et al., 2014; Kang and Gross, 2015, 2016; Li B. et al., 2016; Li S. et al., 2016; Sun et al., 2017; Chiu et al., 2018). However, a few studies have used a free-style walking path, where subjects can choose any walking pattern they want instead of straight walking (Khamsemanan et al., 2017; Kitchat et al., 2019; Limcharoen et al., 2020, 2021). By developing methods for free-style walking data, there are greater opportunities to implement the proposed methods in a real-world scenario, in which humans walk without awareness of being observed in public spaces. Such methods are also motivated by the difficulty of obtaining adequate straight-walking data in noisy environments compared with free-style walking data.

In our study, we show emotion-inducing videos to subjects using Microsoft HoloLens 2 smart glasses while they walk. We were therefore concerned about whether watching videos on smart glasses could interfere with human gait. Previous studies have measured the gait performance of subjects using smart glasses for attention-demanding tasks while walking, and some of their findings should be considered. For example, level-walking performance with smart glasses was not affected in comparison to using a paper-based display and baseline walking. In addition, subjects walked more conservatively and more cautiously when crossing obstacles (Kim et al., 2018). Adverse impacts such as walking instability can occur when using smart glasses, but the stability issue was not as significant as when using a smartphone or a paper-based system (Sedighi et al., 2018, 2020).

Based on these related studies, we decided to use Microsoft HoloLens 2 for displaying emotional videos to our participants while they walked in the recording area, despite the possibility that some adverse effects, such as walking instability, could occur when using smart glasses while walking. We coped with this issue by asking the participants to take one rehearsal walk through the walking area without wearing HoloLens 2 to make them familiar with the walking space and another rehearsal walk while wearing HoloLens 2 without displaying anything to make them familiar with walking while wearing smart glasses at the same time. For the walking pattern, straight walking should result in cleaner gait data but it has more limitations when implemented in real-world scenarios. On the other hand, walking freely without any path guidance would be difficult for the subjects. Since they have to concentrate on the video content shown by HoloLens 2 while walking, if they also need to determine the walking path at the same time, they cannot focus well on the video content and their gait can be affected by interference. Therefore, we decided to use a lax circular walking path for our experiments. By walking circularly in clockwise or counter-clockwise direction without marking the path line on the floor, we can have both straight walking and non-straight walking data in a single walking trial.

In most previous studies in the field of emotion recognition and analysis, participants were asked to walk in a straight line either after watching emotional movies or while thinking about personal experiences. Various issues arise in these settings. When participants walk after watching emotional videos, some may not sustain the same emotion until the end of the walk, or may not feel the intended emotion at all after watching the videos. These conditions can lead to inaccurate relationships between gaits and emotions. When participants are asked to evoke an emotion from their personal experiences, some may not recall their feelings well enough for them to be reflected in their body movements. These problems can lead to faulty information.

To avoid the above issues, which lead to faulty information and inaccurate relationships between gaits and emotions, our experiments are designed so that participants are constantly exposed to emotion-inducing videos while walking. We used the latest smart glasses technology, i.e., Microsoft HoloLens 2, to show videos to subjects while they were walking. To the best of our knowledge, no previous study has used this kind of emotion induction method. By using HoloLens 2 for viewing videos, subjects can see the room environment and the videos at the same time. Because we show emotional videos to participants while they walk, the results are closer to real-life situations in which a subject sees certain events and feels a certain emotion due to those events. In other words, we attempted to simulate the real-time emotions of the participants by showing emotion-inducing videos while they were walking. Moreover, the intensity of the induced emotions should be more consistent than with previous methods that showed emotional videos to subjects prior to walking trials.

Currently, there are two main types of motion-capturing equipment: marker-less and marker-based devices. Marker-less devices are more convenient to use in real-life situations because there is no need to attach any equipment to the subject's body. Coordinates of body parts are calculated by image processing technology using depth data recorded by an infrared camera together with RGB images from a color camera. For the marker-based type, several markers must be attached to the subject's body at the desired positions, such as on the head, hand, or elbow. A marker-based device is more complex to set up because it requires several cameras to capture the infrared reflection from the markers attached to the subject's body for reconstruction of the markers' coordinates in 3-dimensional space. However, the body-tracking accuracy of a marker-less device is lower than that of a marker-based type because a marker-less system predicts the position of each body part while a marker-based system uses the actual position obtained from several cameras.

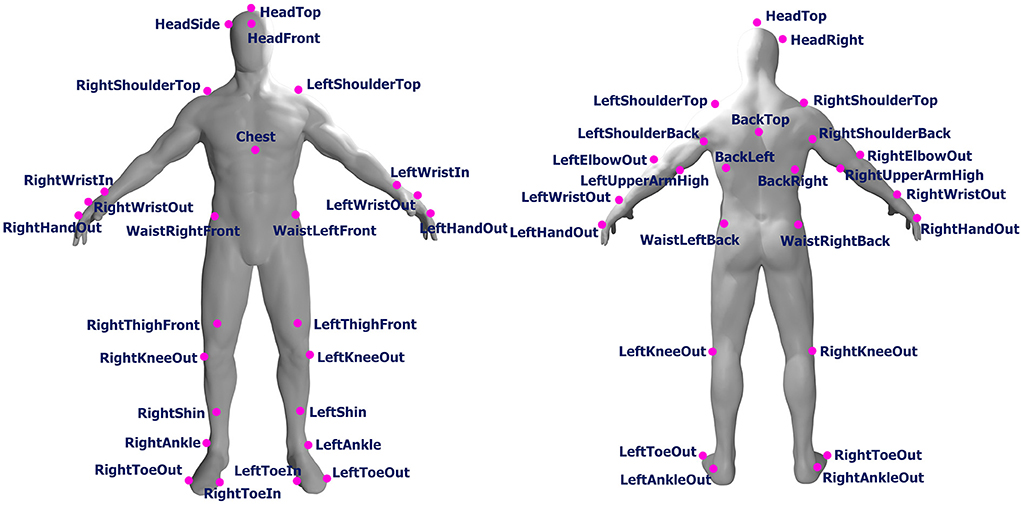

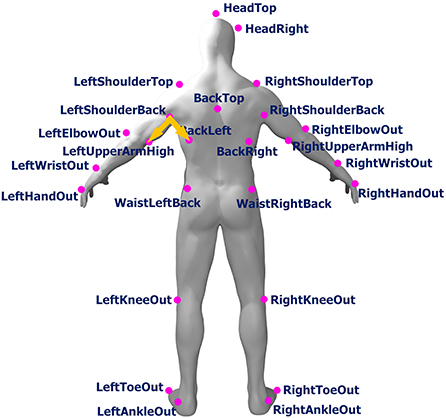

In this study, we used OptiTrack, a well-known marker-based motion-capturing system, for our data collection. Fourteen OptiTrack Flex 3 cameras were used in our experimental design. We used the baseline marker set, the standard configuration for human skeleton tracking, in which 37 markers are attached to each subject's body. The names of the markers are listed in Table 1, and the positions of the markers are shown in Figure 1. Figure 2 shows how the OptiTrack cameras were installed.

Figure 1. Position of front and back markers (original human figure source: dox012 on Sketchfab; see footnote 1).

Using black tape, we marked a rectangle on the floor as the walking area that can be captured by the OptiTrack motion tracking system, as shown in Figure 3. Fourteen OptiTrack Flex 3 motion-capture cameras were installed on seven camera stands, with two cameras mounted on each stand at different heights as shown in Figure 2. The seven camera stands were placed around the walking area as illustrated in Figure 4. The walking area measures 2.9 by 3.64 m.

We selected three videos as stimuli for emotion induction. These videos were shown to the subjects using HoloLens 2 as they walked through the recording area.

• Neutral video: A nature landscape video from YouTube titled Spectacular drone shots of Iowa corn fields, uploaded by the YouTube user The American Bazaar (see footnote 2).

• Negative video: An emotional movie from the LIRIS-ACCEDE database titled Parafundit, by Riccardo Melato.

• Positive video: An emotional movie from the LIRIS-ACCEDE database titled Tears of steel, by Ian Hubert and Ton Roosendaal.

A neutral video was selected from landscape videos on YouTube, based on the assumption that it would not induce any emotion.

The neutral video is a nature view of a corn field located in Des Moines, Iowa, USA. It was recorded by a drone camera, so it shows an aerial view of the corn field, which is mostly green for the entire video. This video does not have any sound. For these reasons, we expected that it would not induce any emotion. Positive (inducing happy emotion) and negative (inducing sad emotion) videos were selected from the public annotated movie database LIRIS-ACCEDE (see footnote 3) published by Baveye et al. (2015). This database contains many Creative Commons movies and their emotional annotations. In this study, we used the Continuous LIRIS-ACCEDE collection, which contains 30 movies and emotion annotations in Valence-Arousal ranking. Most movies contain both positive and negative valence in the same movie. We carefully selected one movie that has positive valence throughout and one movie that has negative valence throughout, so that each complete walking trial contains only one emotion.

The negative video we selected is a short movie named Parafundit. This movie is the story of a man living alone who commits suicide with a gun at the end of the movie. In addition to the valence score, which is negative for the entire movie, we judged the mood and tone of this video to be very suitable for inducing sadness. For the positive video, we selected Tears of steel, a short science fiction movie in which a group of scientists attempts to save the world from destructive robots. The attempt succeeds at the end of the movie. We therefore chose this movie to induce happiness, and its valence score from the LIRIS-ACCEDE annotation is also positive for the entire movie. We were also concerned about the length of each video, so we decided that none of the videos used would exceed 15 min in length. The lengths of the neutral video, negative movie, and positive movie are 5:04, 13:10, and 12:14 (min:s), respectively. The audio of the negative and positive videos contains music, sound effects, and conversation in English. Subjects could hear the videos' sound from the HoloLens 2 built-in speakers as they walked. The neutral video does not contain any sound, to ensure that it does not induce any emotion.

The procedures of our data collection are shown in Figure 5. First, each participant was asked to answer the health questionnaire and sign the consent form before participating in our experiments. The health questionnaire consists of the following questions.

1. Do you have any neurological or mental disorders?

2. Do you have a severe level of anxiety or depression?

3. Do you have hearing impairment that cannot be corrected?

4. Do you have any permanent disability or bodily injury that affects your walking posture?

5. Do you feel sick now? (e.g., fever, headache, stomachache)

6. If you have any problem with your health condition, please describe it.

Based on the answers to this questionnaire, a subject could be excluded from participation in our experiment if he or she had any health issue. In this study, all subjects were healthy, so all of them could participate in our experiment. After we confirmed that the subject was physically and mentally healthy, the subject was asked to walk in a circular pattern inside the recording area marked by black tape on the floor as shown in Figure 3. All participants could choose to walk either clockwise or counter-clockwise inside the walking area. In addition, the subjects could switch their walking direction from clockwise to counter-clockwise or vice versa whenever they wanted, any number of times.

Before performing the actual recording, each subject was asked to walk naturally in the recording area for 3 min without wearing HoloLens 2 as Rehearsal Walk #1 to make the subject feel familiar with the walking space. Then, each subject was asked to wear the HoloLens 2 while it did not show anything and walk again for 3 min as Rehearsal Walk #2 to make the subject feel familiar with walking while wearing HoloLens 2. In this walk, the HoloLens 2 is just a pair of transparent smart glasses showing no content at all. As reported in previous studies, including Kim et al. (2018), Sedighi et al. (2018), and Sedighi et al. (2020), if participants have never used smart glasses while walking, adverse effects could occur and gait performance could be unstable. To cope with this issue, we asked all subjects to take the rehearsal walks with and without wearing HoloLens 2 before the actual recording.

To perform the actual recording, the Neutral Video was displayed on HoloLens 2. Each subject was asked to walk and watch the video at the same time. The goal of this experiment is to capture a Neutral Walk. Participants started walking when the video started playing and stopped walking when the video ended. Then, we recorded the first Emotional Walk using a method similar to that used for the Neutral Walk. In this experiment, each subject was asked to walk while watching the Positive Video or Negative Video selected from the LIRIS-ACCEDE database as mentioned in Section 3.3. After finishing the first Emotional Walk, each subject was asked to leave the experiment room and take a 10-min break to reset the induced emotion back to a normal condition. After the break, we performed the second Emotional Walk experiment by showing the other emotional video on HoloLens 2 while the subject walked. If the first Emotional Walk used the Positive Video, the second used the Negative Video, and vice versa. The order of the Negative Walk and Positive Walk was swapped for each successive subject. Note that each subject was asked to answer the self-reported emotion questionnaire before and after walking and watching each video. The questions were as follows.

1. Please choose your current feeling: Happy, Sad, Neither (Not Sad and Not Happy)

2. How intense is your feeling: 1 (Very Little) to 5 (Very Much).

There were 49 participants in this dataset: 41 male and 8 female subjects. The average age of participants was 19.69 years, with a standard deviation of 1.40 years. The average height was 168.49 cm, with a standard deviation of 6.34 cm. The average weight was 58.88 kg, with a standard deviation of 10.84 kg. The spread of subjects' ages was small since all participants were undergraduate university students. Separate statistics for female and male subjects are as follows.

For female subjects:

• Number of subjects: 8 participants

• Average/SD of Age: 19.25/0.89 years

• Average/SD of Height: 160.38/3.58 cm

• Average/SD of Weight: 51.25/3.28 kg.

For male subjects:

• Number of subjects: 41 participants

• Average/SD of Age: 19.78/1.47 years

• Average/SD of Height: 170.07/5.51 cm

• Average/SD of Weight: 60.37/11.18 kg.
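As a quick consistency check, the overall averages reported for all 49 participants can be recovered as subject-weighted means of the female and male subgroup averages. The sketch below is only an illustration of that arithmetic; the helper name `pooled_mean` is hypothetical and not part of the study.

```python
def pooled_mean(groups):
    """Weighted mean of subgroup means; groups is a list of (n, mean) pairs."""
    total_n = sum(n for n, _ in groups)
    return sum(n * mean for n, mean in groups) / total_n

# (n, mean) pairs copied from the female and male lists above.
age = pooled_mean([(8, 19.25), (41, 19.78)])
height = pooled_mean([(8, 160.38), (41, 170.07)])
weight = pooled_mean([(8, 51.25), (41, 60.37)])

print(round(age, 2), round(height, 2), round(weight, 2))
# prints: 19.69 168.49 58.88
```

These values agree with the overall averages of 19.69 years, 168.49 cm, and 58.88 kg stated for the full dataset.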

Each subject walked and watched three videos including Neutral Video, Negative Video, and Positive Video. In all, a total of 147 walking trials were conducted.

For the order of videos shown to the subjects, 24 subjects watched Negative Video before Positive Video, and 25 subjects watched Positive Video before Negative Video.
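The alternating order described in the procedure can be sketched as follows. This is a minimal illustration under two assumptions that the text does not state explicitly: the swap was strict (every successive subject received the opposite order), and the first subject watched the Positive Video first. The function name `emotional_video_order` is hypothetical.

```python
def emotional_video_order(subject_index, first_order=("Positive", "Negative")):
    """Return the order of the two emotional videos for a 0-based subject index.

    Even-indexed subjects get first_order; odd-indexed subjects get the reverse.
    The choice of first_order is an assumption, not taken from the study.
    """
    if subject_index % 2 == 0:
        return tuple(first_order)
    return tuple(reversed(first_order))

orders = [emotional_video_order(i) for i in range(49)]
positive_first = sum(1 for order in orders if order[0] == "Positive")
negative_first = len(orders) - positive_first

print(positive_first, negative_first)
# prints: 25 24
```

Under these assumptions, strict alternation over 49 subjects yields the same 25/24 split as the counts reported above.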

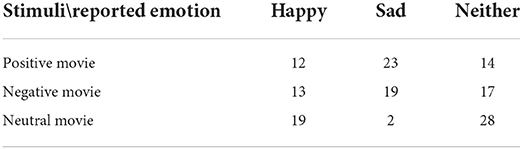

According to the answers from the self-reported emotion questionnaire completed after subjects finished walking and watching each video, we had 44 Sad walking trials, 44 Happy walking trials, and 59 Neither walking trials. These emotion tags (Reported Emotion) were used in our analysis instead of the emotion tags of the videos (Expected Emotion) because not all subjects felt Happy after watching Positive Video and not all subjects felt Sad after watching Negative Video. Table 2 shows the numbers of subjects who felt Happy, Sad, and Neither from the self-reported emotion questionnaire for each video stimulus.

Table 2. Comparison of expected emotion from stimuli and reported emotion from self-reported questionnaire.

Sample images of a subject walking in circular patterns in the recording area while watching a video on HoloLens 2 are shown in Figure 6, and a photo of a subject wearing the OptiTrack Motion Capture Suit with 37 markers and HoloLens 2 is shown in Figure 7.

From a total of 147 walking trials, one was corrupted during recording, so we had 146 usable walking trials. For the direction of walking, we had 99 counter-clockwise walking trials, 21 clockwise walking trials, and 26 walking trials with both clockwise and counter-clockwise directions in one walk.

After extracting 3-dimensional coordinates of 37 body markers captured by OptiTrack, we performed preprocessing steps to clean up the data and remove unusable data and noise as follows.

First, we removed 1 min from the beginning and 1 min from the end of each walking trial's data. Since the OptiTrack motion-capturing system can capture marker coordinate data at 100 frames per second, the first 6,000 frames and the last 6,000 frames from each walking trial were removed.
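The trimming step can be sketched as follows. The frame rate and the 1 min margin come from the text; the function name `trim_trial` and the decision to return an empty list for trials that are too short are assumptions made for illustration.

```python
FPS = 100          # capture rate stated in the text
TRIM_SECONDS = 60  # 1 min removed at each end of a trial


def trim_trial(frames, fps=FPS, trim_seconds=TRIM_SECONDS):
    """Drop the first and last `trim_seconds` of a trial (6,000 frames each at 100 fps)."""
    n = fps * trim_seconds
    if len(frames) <= 2 * n:
        return []  # assumption: a trial shorter than the two margins has no usable frames
    return frames[n:len(frames) - n]
```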

In the feature extraction process, we extracted Walking Straightness and Body Part Angles. The feature extraction process is explained in Section 5. However, Walking Straightness and Body Part Angles have different preprocessing steps as shown in Figure 8. Therefore, the preprocessing steps of Walking Straightness and Body Part Angles are explained separately below.

After removing the first 6,000 and the last 6,000 frames, for Walking Straightness feature extraction, we used the position of Left Foot and Right Foot. Therefore, we checked each frame for whether the coordinate data of LeftAnkleOut and RightAnkleOut were available. If these two markers' data were missing in any frame, we excluded that frame from the straightness calculation.

For Body Part Angles feature extraction, in addition to removing the first and last 6,000 frames, we also removed any frame that contained arm movement that was not part of natural walking. Examples of these movements are when subjects raised their arms to check the time on their watch, subjects tried to adjust the position of the HoloLens 2 smart glasses, or subjects scratched their head while walking. This preprocessing step was done by checking the Y-coordinates of arm-related markers, i.e., left and right HandOut, WristOut, WristIn, and ElbowOut. For each arm-related marker, we calculated mean value and standard deviation value of its Y-coordinate for each walking trial. If the Y-coordinate in any frame was less than Mean − 2 × SD or more than Mean + 2 × SD, we removed that frame from the angle calculation.
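A minimal sketch of this outlier rule is given below. It assumes that the Y-coordinates of each arm-related marker are available as one list per marker for a single walking trial, and that the sample standard deviation is used; the text does not say whether the sample or population form was applied. The function name `natural_arm_frames` is hypothetical.

```python
from statistics import mean, stdev


def natural_arm_frames(y_by_marker, k=2.0):
    """Return indices of frames in which every arm marker's Y lies within mean ± k*SD.

    y_by_marker maps a marker name (e.g. "LeftHandOut") to its Y-coordinates,
    one value per frame of a single walking trial.
    """
    n_frames = len(next(iter(y_by_marker.values())))
    keep = [True] * n_frames
    for ys in y_by_marker.values():
        mu, sd = mean(ys), stdev(ys)  # assumption: sample SD
        low, high = mu - k * sd, mu + k * sd
        for i, y in enumerate(ys):
            if y < low or y > high:
                keep[i] = False  # one out-of-range marker removes the whole frame
    return [i for i, ok in enumerate(keep) if ok]
```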

After the preprocessing of coordinate data, some frames were missing within each walking trial, so the trial was no longer continuous. Before proceeding to the next step, we dealt with this issue by splitting each walking trial into multiple chunks based on the missing frames. If there were more than 25 contiguous missing frames, we split the trial into a new walking chunk at that point. If the run of missing frames was shorter than 25 frames, we kept the next available frames in the same chunk. A diagram showing chunk splitting is given in Figure 9. However, if a chunk was shorter than 50 frames (0.5 s), we discarded that chunk since it was too short to be usable.
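The chunk-splitting rule can be sketched as below. The input is assumed to be the sorted list of frame indices that survived preprocessing. Two details are not specified in the text and are chosen here for illustration: a gap of exactly 25 missing frames does not trigger a split, and chunk length is counted in surviving frames.

```python
def split_into_chunks(frame_ids, max_gap=25, min_len=50):
    """Split sorted frame indices into chunks wherever more than `max_gap`
    consecutive frames are missing, then drop chunks shorter than `min_len`."""
    chunks, current = [], []
    for f in frame_ids:
        # number of missing frames between the previous kept frame and this one
        if current and f - current[-1] - 1 > max_gap:
            chunks.append(current)
            current = []
        current.append(f)
    if current:
        chunks.append(current)
    return [c for c in chunks if len(c) >= min_len]
```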

To calculate the straightness of a walking trial, we first detected the steps of the walk. For each chunk, we calculated the distance between the left foot and the right foot in the top view for all frames. That is, we calculated the Euclidean distance between the (X, Z) coordinates of the left foot and the right foot. Then, we smoothed the data with a Savitzky-Golay filter before detecting the peaks of the distance values in each chunk. By finding the peaks of the distance between the two feet, we could detect walking steps. If a chunk had fewer than three steps, we also discarded that chunk.
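The step-detection idea can be illustrated with a small standard-library sketch. The text does not give the filter's window length or polynomial order, so the 5-point quadratic Savitzky-Golay kernel used here is an assumption, as are the function names and the simple local-maximum peak rule. In practice a library routine such as SciPy's `savgol_filter` and `find_peaks` would likely be used instead.

```python
import math

# Standard 5-point quadratic/cubic Savitzky-Golay smoothing coefficients.
SG5 = (-3 / 35, 12 / 35, 17 / 35, 12 / 35, -3 / 35)


def foot_distance(left_xz, right_xz):
    """Top-view Euclidean distance between the feet for every frame."""
    return [math.hypot(lx - rx, lz - rz)
            for (lx, lz), (rx, rz) in zip(left_xz, right_xz)]


def savgol5(values):
    """Smooth interior samples; the two samples at each edge are left unchanged."""
    out = list(values)
    for i in range(2, len(values) - 2):
        out[i] = sum(c * values[i + k - 2] for k, c in enumerate(SG5))
    return out


def local_maxima(values):
    """Indices of samples that exceed the previous sample and are not below the next."""
    return [i for i in range(1, len(values) - 1)
            if values[i - 1] < values[i] >= values[i + 1]]


def detect_steps(left_xz, right_xz):
    """Frame indices at which the smoothed foot-to-foot distance peaks."""
    return local_maxima(savgol5(foot_distance(left_xz, right_xz)))
```

A chunk for which `detect_steps` returns fewer than three indices would then be discarded, following the rule above.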

We calculated straightness of walking in each chunk by using three consecutive walking steps. An example diagram of straightness calculation from three consecutive steps is shown in Figure 10. In this figure, Step #1 to Step #2 is left step, and Step #2 to Step #3 is right step. Therefore, the first straightness value for Step #1 to Step #3 is the angle between the vector of left step (Step #1 to Step #2) and right step (Step #2 to Step #3). The detailed process of straightness calculation is as follows.

1. If Step #1 to Step #2 is left step, define the left step vector vleft12 as a vector from (X, Z) point of Step #1 to (X, Z) point of Step #2

2. Define the right step vector vright23 as a vector from (X, Z) point of Step #2 to (X, Z) point of Step #3

3. Calculate angle θ13 of vleft12 to vright23

4. Define the next left step vector vleft34 as a vector from (X, Z) point of Step #3 to (X, Z) point of Step #4

5. Calculate angle θ24 of vleft34 to vright23

6. Define the next right step vector vright45 as a vector from (X, Z) point of Step #4 to (X, Z) point of Step #5

7. Calculate angle θ35 of vleft34 to vright45

8. If Step #1 to Step #2 is right step, the vector from (X, Z) point of Step #1 to (X, Z) point of Step #2 is vright12, and the vector from (X, Z) point of Step #2 to (X, Z) point of Step #3 is vleft23. This rule applies to all consecutive steps, i.e., θ13 is angle between vright12 and vleft23, θ24 is angle between vright34 and vleft23 and θ35 is angle between vright34 and vleft45.

9. Continue calculating the angle between left and right vector until each chunk is finished

10. Angle between left and right step vector is used as straightness value of the frames having these steps.
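The core of this procedure is an angle between two consecutive step vectors in the (X, Z) plane. The sketch below computes a signed turning angle from the earlier step vector to the later one. This is a simplification: the list above always names the left step vector first, and the sketch does not attempt to reproduce that ordering. Which sign corresponds to clockwise walking depends on the orientation of the capture system's X and Z axes, which the text does not state, so the sign convention here is an assumption.

```python
import math


def signed_angle_deg(v1, v2):
    """Signed angle in degrees from 2-D vector v1 to v2, in (-180, 180]."""
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    return math.degrees(math.atan2(cross, dot))


def straightness_values(steps_xz):
    """One turning angle per group of three consecutive step positions.

    steps_xz is a list of (X, Z) step positions within one chunk; entry k of
    the result describes the steps k, k+1, and k+2.
    """
    vectors = [(b[0] - a[0], b[1] - a[1]) for a, b in zip(steps_xz, steps_xz[1:])]
    return [signed_angle_deg(v1, v2) for v1, v2 in zip(vectors, vectors[1:])]
```

For perfectly straight walking, all returned values are zero, which corresponds to the −5° to 5° group.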

After obtaining the walking straightness for each group of three consecutive steps, we assigned the straightness values to all frames belonging to the corresponding steps, so that every frame had its own straightness value. Then, for each frame, we calculated the angles formed by triplets of body markers. These include, for example, the angle at LeftShoulderBack between the direction to LeftUpperArmHigh and the direction to BackLeft, the angle at BackTop between the direction to BackLeft and the direction to HeadTop, and so forth. In total, we calculated 24 angles, each defined by three markers. All angles we used in this study are listed in Table 3.
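An angle defined by three markers can be computed from the two vectors that share the middle marker as their origin. The sketch below assumes each marker is a 3-D coordinate tuple and that the middle marker of a name such as BackLeft-LeftShoulderBack-LeftUpperArmHigh is the vertex; the function name is hypothetical.

```python
import math


def joint_angle_deg(a, vertex, b):
    """Angle in degrees at `vertex` between the directions to markers a and b."""
    u = [p - q for p, q in zip(a, vertex)]
    v = [p - q for p, q in zip(b, vertex)]
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    # clamp to guard against rounding slightly outside [-1, 1]
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))
```

The standard deviation of such an angle over the frames of a trial is what the later analysis interprets as movement magnitude, for example arm swing magnitude.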

Next, a frame that consists of 24 angles was grouped into seven straightness groups based on the straightness value of that frame. Our straightness groups include one group for straight walking and six groups for curved walking as listed below. Every frame in a walking trial was grouped using its straightness value.

Because we used three consecutive steps to calculate a straightness value, the frames of connecting steps for each group of three consecutive steps had two straightness values, and in this case, the average of the two straightness values for those frames was used. For example, the straightness value of Step #1 to Step #3 is the angle between Step #1 and Step #2 and Step #2 and Step #3, and the straightness value of Step #2 to Step #4 is the angle between Step #2 and Step #3 and Step #3 and Step #4; in this case, the frames of Step #2 to Step #3 had two straightness values. Therefore, the average straightness value of Step #1 to Step #3 and Step #2 to Step #4 was assigned to those frames.
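The averaging of overlapping straightness values can be sketched as follows. It assumes that the k-th straightness value covers every frame from step k to step k+2 inclusive, and that a frame covered by several values receives their mean; treating the boundary frames as inclusive is an assumption.

```python
def frame_straightness(step_frames, angles):
    """Map each frame index to the mean of all straightness values covering it.

    step_frames[k] is the frame index of step k; angles[k] is the straightness
    of the steps k, k+1, and k+2.
    """
    sums, counts = {}, {}
    for k, theta in enumerate(angles):
        for f in range(step_frames[k], step_frames[k + 2] + 1):
            sums[f] = sums.get(f, 0.0) + theta
            counts[f] = counts.get(f, 0) + 1
    return {f: sums[f] / counts[f] for f in sums}
```

For steps at frames 0, 10, 20, and 30 with straightness values 4° and 8°, the frames between the second and third steps receive the average of the two values.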

After grouping frames into seven straightness groups, the mean value and standard deviation value of each angle in each group were calculated for each walking trial.

• −35° to −25° (Large Curved Walking - Clockwise)

• −25° to −15° (Moderate Curved Walking - Clockwise)

• −15° to −5° (Small Curved Walking - Clockwise)

• −5° to 5° (Straight Walking)

• 5° to 15° (Small Curved Walking - Counter-Clockwise)

• 15° to 25° (Moderate Curved Walking - Counter-Clockwise)

• 25° to 35° (Large Curved Walking - Counter-Clockwise).
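The grouping and the per-group statistics can be sketched as below. Each group is identified by its center (−30 to 30 in steps of 10). The text does not say how values exactly on a boundary such as 5° are assigned, so the half-open intervals used here are an assumption, as is the use of the sample standard deviation.

```python
from statistics import mean, stdev

GROUP_CENTERS = (-30, -20, -10, 0, 10, 20, 30)


def straightness_group(theta):
    """Return the center of the group containing theta, or None if out of range."""
    for center in GROUP_CENTERS:
        if center - 5 <= theta < center + 5:  # assumption: half-open bins
            return center
    return None


def group_stats(thetas, angle_values):
    """Mean and SD of one body-part angle within each straightness group.

    thetas and angle_values hold one entry per frame of a walking trial.
    """
    buckets = {}
    for theta, value in zip(thetas, angle_values):
        group = straightness_group(theta)
        if group is not None:
            buckets.setdefault(group, []).append(value)
    return {g: (mean(v), stdev(v) if len(v) > 1 else 0.0)
            for g, v in buckets.items()}
```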

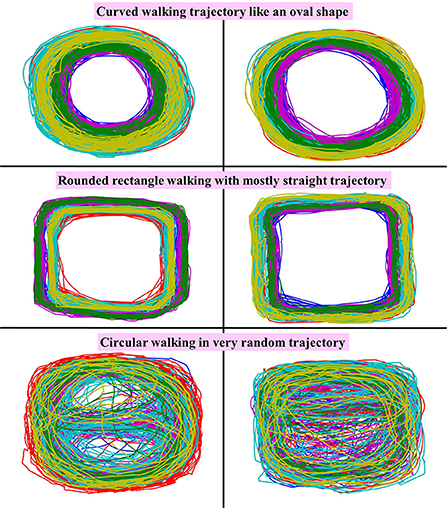

We also checked the walking direction of each walking trial, since subjects were instructed to walk in a circular path inside the recording area but there was no designated path. Each subject chose his or her own path to walk inside the recording area, and we found that some subjects walked in an extremely curved pattern while other subjects walked in a very straight pattern. Consequently, there were multiple walking curvatures in one walking trial, e.g., straight walking part and non-straight (curved) walking part. Samples of walking trajectories for six subjects are shown in Figure 11, which reveals various walking trajectories. The walking paths of the first two subjects are curvy and closer to an oval shape, whereas the walking paths of the next two subjects are very straight for most of the parts, with some curved part only when they turned. These walking paths look more like a rounded-rectangle shape. However, for the last two subjects, their walking paths were very random but still in a circular pattern. In other words, these two subjects walked in a very random circle size with random trajectories. According to our dataset, we found that the walking paths of most participants are quite regular like the first four examples. Very few subjects had highly random walking paths like the last two examples. Therefore, our dataset consists of many walking trajectories and curvatures. We can say that our dataset is direction-free, since the participants had the freedom to choose their walking path as they wished between clockwise and counter-clockwise directions.

Figure 11. Sample of walking path from six subjects in this dataset, including oval-shaped walking, rounded-rectangular walking, and random circular walking.

The following analyses were performed on the mean and standard deviations of each angle.

We performed 1-way ANOVA to check whether emotion differences affect the movements of each body part. Both Expected Emotion, which is the emotion label from annotated videos we used as stimuli, and Reported Emotion, which is the emotion label from self-reported questionnaire answers, were used in this analysis.

• Factor to test: Expected Emotion (Positive, Negative, Neutral) and Reported Emotion (Happy, Sad, Neither)

• Dependent variable: Mean value and SD value of each angle in each straightness group.

Mean and SD values of all angles for the two types of emotions, Expected Emotion and Reported Emotion, were compared separately according to their straightness groups in this analysis.
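For readers unfamiliar with the test, the F statistic of a one-way ANOVA can be computed as in the sketch below, where each group would hold, for one emotion, the per-trial mean (or SD) of one angle in one straightness group. This is a generic textbook formulation rather than the authors' analysis code; obtaining the p-value additionally requires the F distribution, for which a statistics library such as SciPy (`scipy.stats.f_oneway`) would typically be used.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of samples, one per group."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```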

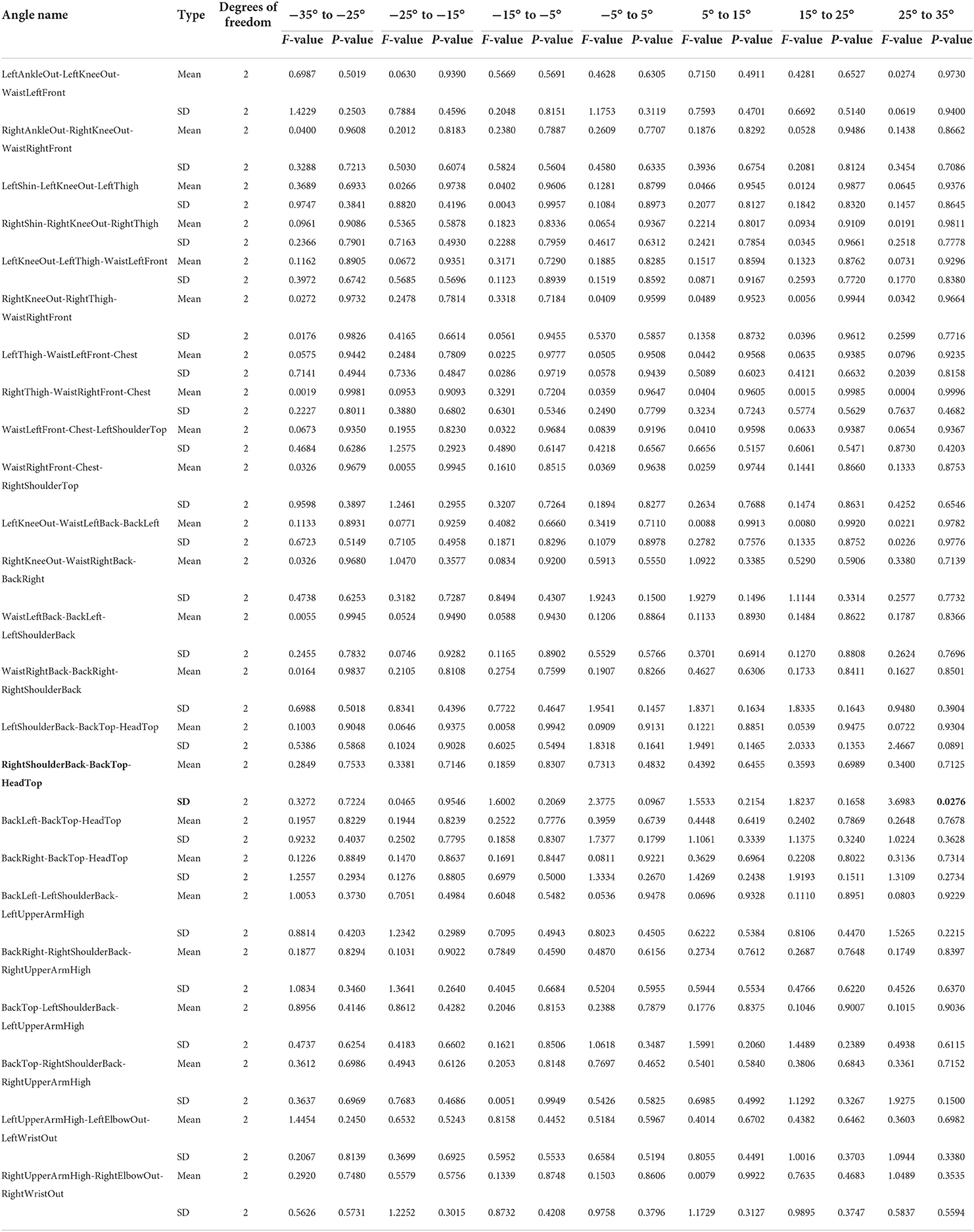

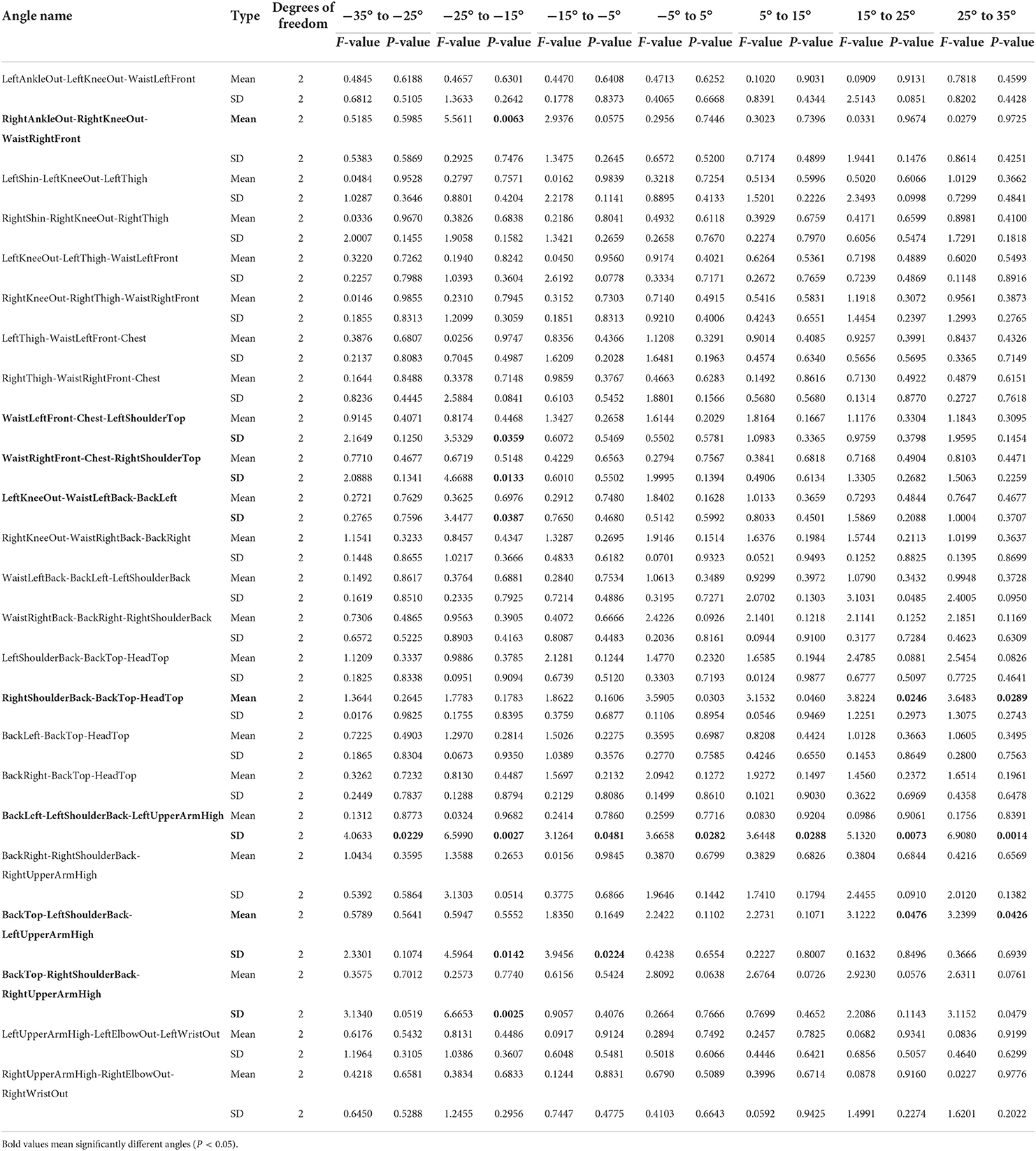

One-way ANOVA was used to check the effects of Expected Emotion and Reported Emotion on the movements of body parts. Detailed results of the ANOVA test on the mean and SD of each angle in each straightness group are shown in Table 4 for Expected Emotion and Table 5 for Reported Emotion. If the mean or SD value of any angle in any straightness group was significantly different among emotions, we performed a Tukey test on that mean or SD to find the pair of emotions with a significant difference in body movements, e.g., Happy vs. Sad or Neither vs. Sad. Tukey test results are shown in Table 6 for Expected Emotion and Table 7 for Reported Emotion.

Table 4. Results of 1-way ANOVA for mean and SD of each angle in each straightness group (factor: expected emotion).

Table 5. Results of 1-way ANOVA for mean and SD of each angle in each straightness group (factor: reported emotion).

Table 6. Tukey test results of significantly different mean and SD of each angle in each straightness group (factor: expected emotion).

As shown in Tables 4 and 6, across all straightness groups, the difference in Expected Emotion affected the SD of only one angle, namely RightShoulderBack-BackTop-HeadTop in the 25° to 35° walking straightness group. In addition, the pair with a significant difference is Negative Video vs. Neutral Video. In other words, only high-curvature walking in the counter-clockwise direction shows a different magnitude of head movement between Negative Video and Neutral Video.

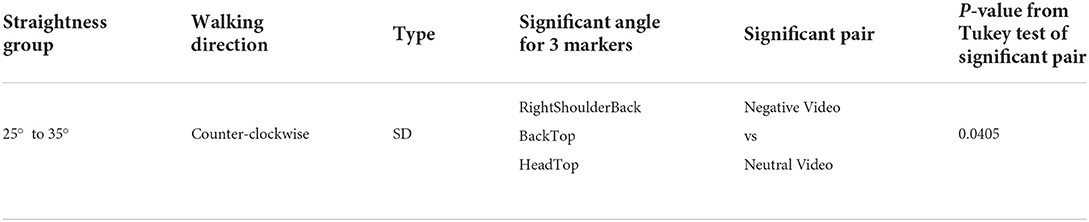

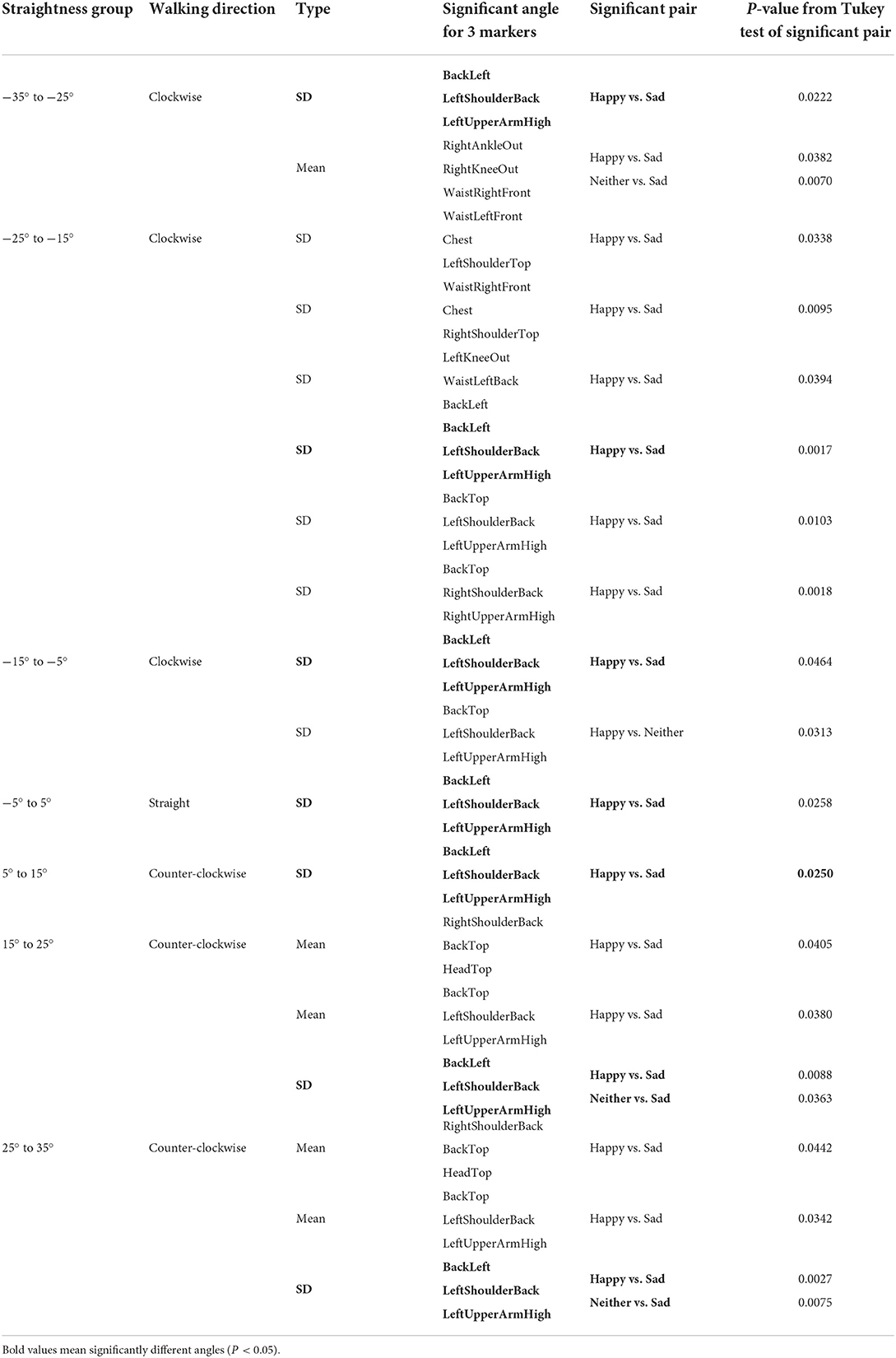

For Reported Emotions analysis, according to Table 5, there are many mean or SD values of angles that are significantly different among the different reported emotions. We also performed the Tukey test with each mean or SD of an angle to check the pairs of emotions that have a significant effect on body movements. Table 7 shows the results from the Tukey test of each mean or SD of an angle. We found that all walking straightness groups have at least one mean or SD of an angle that was affected significantly by happy emotion compared with sad emotion. However, there is only one SD of body part angle that is significantly affected by the emotional differences between happy and sad regardless of walking straightness, i.e., the SD of BackLeft-LeftShoulderBack-LeftUpperArmHigh, which can be interpreted as Left Arm Swing Magnitude. This angle is illustrated in Figure 12.

Table 7. Tukey test results of significantly different mean and SD of each angle in each straightness group (factor: reported emotion).

Figure 12. Angle of BackLeft-LeftShoulderBack-LeftUpperArmHigh (arm swing) in body skeleton image (original human figure source: dox012 on Sketchfab4).

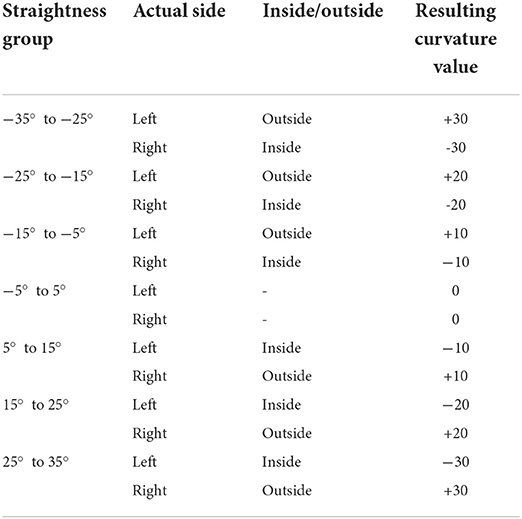

When subjects walk in a circle, one arm is on the inside of the walking path and the other arm is on the outside. We also checked the relationship between the behavior of left and right arm swings in each emotion, the inside-outside status of that arm, and the walking curvature of the subjects. The inside-outside status of the left and right arms can be determined from the walking direction. That is, the left arm is outside and the right arm is inside when subjects are walking in the clockwise direction, and in the counter-clockwise direction, the left arm is inside and the right arm is outside. Additionally, the curvature level can be determined from the straightness group. For example, if the angle of straightness is between −35° and −25° or between 25° and 35°, it can be considered large curved walking, and −5° to 5° can be considered straight walking. For the direction, a minus sign means clockwise, while a plus sign means counter-clockwise. A list of all curvature levels and walking directions is also shown in Section 5. We plotted the arm swing magnitude of the left arm and right arm in all emotions together with the curvature level and inside-outside status in Figures 13 and 14, respectively.

Figure 13 shows the left arm swing magnitude with its inside-outside status and the curvature level. We can see from this figure that when the arm is outside in high curvature walking, the difference between happy and sad is quite obvious. Although the difference between happy and sad is reduced when the curvature decreases, the difference is still large enough to distinguish these emotions. For inside-outside status, we found that when the arm is inside, the difference between happy and sad is smaller than when the arm is outside.

The right arm swing magnitude according to its inside-outside status and the walking curvature are shown in Figure 14. For right arm swing, the differences between happy and sad are smaller than left arm swing at all curvature levels. However, when the subject walks at a high curvature level and the right arm is outside, the arm swing is still higher than when the subject walks in a smaller curvature and the right arm is inside.

Based on the results of 1-way ANOVA, Reported Emotion shows more differences in body part movements between emotions than does Expected Emotion. Results of Expected Emotion are listed in Table 6, and only one straightness group has a significantly different mean or SD of angle. In Table 7, Reported Emotion has one or more significantly different mean or SD of angle in all straightness groups. Therefore, we focused on the analysis of Reported Emotion only.

In one-way ANOVA analysis of Reported Emotion, some straightness groups had multiple mean or SD values of angle affected by the emotional difference between happy and sad, while other straightness groups had only one mean or SD value affected. The results in Table 7 show that only the left arm swing magnitude (BackLeft-LeftShoulderBack-LeftUpperArmHigh) was affected by emotional difference regardless of curvature level and inside-outside status of the arm. In multi-factor ANOVA, we tested several factors including Reported Emotion, Curvature, Angle Side, and combinations of these factors to find the relationship between the factors and the left and right arm swing magnitudes. Additionally, we performed multi-factor ANOVA analysis with the arm swing magnitude data of all straightness groups at once.

The factors we used in multi-factor analysis are as follows.

• Reported Emotion: Happy, Sad, Neither

• Curvature: -30, -20, -10, 0, 10, 20, 30

• Angle Side: Left, Right

• Gender: Male, Female

• Reported Emotion × Curvature: Happy/-30, Sad/0, Neither/10, etc.

• Reported Emotion × Angle Side: Happy/Left, Happy/Right, Sad/Left, Sad/Right, etc.

• Curvature × Angle Side: -30/Left, -20/Right, 10/Left, etc.

• Reported Emotion × Gender: Happy/Male, Sad/Male, Happy/Female, etc.

First, the Reported Emotion factor is for checking whether the arm swing magnitudes are affected by different emotions if we do not consider any other factor.

For the Curvature factor, we converted the straightness groups to curvature values before performing multi-factor ANOVA. The sign of curvature value (plus or minus) was selected by checking whether the left arm and right arm were Inside or Outside when the subjects are walking in a circular walking path. For all curved walking groups, outside arm used plus sign and inside arm used minus sign as shown in Table 8. For straight walking group, the curvature was 0 for both left arm and right arm, since there was no inside or outside in straight walk. By checking the curvature factor, we could verify whether the curvatures of walking, including small curve, moderate curve, and large curve, and the inside-outside status of that arm have significant effects on arm swing magnitude. Since our dataset has a circular walking path that has never been used in conventional studies, we are uncertain whether outside arm swing and inside arm swing during circular walking are symmetric. If the two sides are not symmetric, this implies that one side can be used to more clearly distinguish a subject's current emotion. Moreover, we believe that the curvature of walking can affect arm swinging. For these reasons, we decided to perform an analysis on curvature and the inside-outside status of the arm.

Table 8. Conversion from straightness group to curvature for multi-factor ANOVA (plus sign for outside and minus sign for inside).
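The sign rule described above can be expressed compactly. The sketch below reconstructs the conversion from the prose alone (Table 8 itself is not reproduced here): a negative straightness group means clockwise walking, where the left arm is outside, and a positive group means counter-clockwise walking, where the right arm is outside. The function name and the string labels are hypothetical.

```python
def curvature_for_arm(group_center, arm_side):
    """Convert a straightness group center (-30..30) to the signed curvature
    of one arm: plus for the outside arm, minus for the inside arm."""
    if arm_side not in ("Left", "Right"):
        raise ValueError("arm_side must be 'Left' or 'Right'")
    if group_center == 0:
        return 0  # straight walking has no inside or outside arm
    clockwise = group_center < 0
    outside = (arm_side == "Left") if clockwise else (arm_side == "Right")
    magnitude = abs(group_center)
    return magnitude if outside else -magnitude
```

Under this rule, the left-arm curvature is simply the negated straightness group center, and the right-arm curvature equals the group center.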

For the Angle Side factor, because we found from a one-way ANOVA test that only left arm swing is significantly different between happy and sad emotions, we checked whether the arm side (left or right) affects the arm swing magnitude.

The gender of subjects may also affect their arm swings while walking. Therefore, multi-factor ANOVA was also performed with the Gender factor to check whether this factor has a significant effect on arm swing magnitude.

The results from multi-factor ANOVA analysis are listed in Table 9. We found that some factors have a significant effect on arm swing magnitude, including Reported Emotion, Curvature, and Gender. In addition, we found interaction effects between Reported Emotion and Angle Side, between Curvature and Angle Side, and between Reported Emotion and Gender.

We also checked the Reported Emotion factor by performing a Tukey test. The results from the Tukey test are shown in Table 10. According to this table, every pair of emotions, including Happy vs. Neither, Happy vs. Sad, and Neither vs. Sad, differs significantly in arm swing magnitude, regardless of any other factor.

The Gender factor was also checked by a Tukey test. Table 11 shows the result of the Tukey test for this factor. As shown in this table, male subjects and female subjects have significantly different arm swing magnitudes, regardless of any other factor.

The behaviors of left arm swings and right arm swings are different, i.e., the left arm has statistically significant arm swing differences among emotions while the right arm does not show such significant differences. Therefore, we performed linear regression analysis of the left arm and right arm to check whether the regression slopes of each arm side are similar. The regression equation is as follows.

Y = α + βX

In this equation, α is the intercept, β is the slope, X is the curvature value, and Y is the predicted arm swing magnitude. That is, we find the most suitable α and β value for each emotion in each arm side that can minimize the differences between predicted arm swing magnitude (YPredict) and actual arm swing magnitude (Y) for each curvature value.

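The regression equations for left arm swing magnitude in each emotion are as follows.

YHappyLeft = αHappyLeft + βHappyLeft X

YSadLeft = αSadLeft + βSadLeft X

YNeitherLeft = αNeitherLeft + βNeitherLeft X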

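The regression equations for right arm swing magnitude in each emotion are as follows.

YHappyRight = αHappyRight + βHappyRight X

YSadRight = αSadRight + βSadRight X

YNeitherRight = αNeitherRight + βNeitherRight X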

Accordingly, we find the α and β values of left arm swing and right arm swing for happy, sad, and neither emotion separately. If βHappyLeft, βSadLeft, and βNeitherLeft are equal, the slopes of all emotions are similar for the left arm. That is, the difference in emotions does not have a significant effect on the left arm swing magnitude in each curvature. For the right arm, we also checked whether βHappyRight, βSadRight, and βNeitherRight were equal. Consequently, we can follow the same rule used for the left arm to verify whether emotion differences significantly affect the right arm swing.

The results of linear regression of each emotion for the left arm are as follows.

• Happy: α = 4.5521, β = 0.0259

• Neither: α = 3.8916, β = 0.0017

• Sad: α = 3.1764, β = 0.0038.

For the right arm, the linear regression results are as follows.

• Happy: α = 4.2854, β = 0.0206

• Neither: α = 4.0596, β = 0.0227

• Sad: α = 3.6643, β = 0.0142.
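To make the fitted lines concrete, the sketch below shows an ordinary least-squares fit of Y = α + βX and evaluates the reported left-arm coefficients at the two extreme curvature values. The fitting function is a generic closed-form implementation, not the authors' code, and the names `fit_line`, `predict`, and `LEFT_ARM` are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares estimates (alpha, beta) for Y = alpha + beta * X."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    beta = sxy / sxx
    return mean_y - beta * mean_x, beta


def predict(alpha, beta, x):
    """Predicted arm swing magnitude at curvature x."""
    return alpha + beta * x


# Left-arm coefficients (alpha, beta) as reported above
LEFT_ARM = {
    "Happy": (4.5521, 0.0259),
    "Neither": (3.8916, 0.0017),
    "Sad": (3.1764, 0.0038),
}

for emotion, (alpha, beta) in LEFT_ARM.items():
    inside = predict(alpha, beta, -30)   # large curve, arm inside
    outside = predict(alpha, beta, 30)   # large curve, arm outside
    print(emotion, round(inside, 4), round(outside, 4))
```

A larger β means that the predicted arm swing changes more between the inside position (negative curvature) and the outside position (positive curvature).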

According to the above results, for left arm swings, the β values from linear regression of happy and sad differ considerably (β = 0.0259 and β = 0.0038, respectively). This suggests that the left arm swing magnitude when subjects feel happy differs markedly from the left arm swing magnitude when they feel sad, since the slopes for these two emotions are far apart. For right arm swings, the β values of happy and sad also differ (β = 0.0206 and β = 0.0142, respectively), but the difference between the slopes is smaller than for the left arm. Hence, the right arm swing magnitudes under happy and sad emotions also differ from each other, but not as much as they do for the left arm. Under the neither emotion, the slope of the left arm swings (β = 0.0017) differs greatly from the slope of the happy emotion and, to a lesser degree, from that of the sad emotion. However, the slope of the right arm swings (β = 0.0227) is quite similar to the slope of the happy emotion. Therefore, for the left arm, arm swings under the neither emotion differ from those under the happy and sad emotions, whereas for the right arm, arm swings under the neither emotion are quite similar to those under the happy emotion.

In summary, the slopes are affected by emotion differences largely for the left arm side, but not so much for the right arm side. These results are in agreement with those from one-way ANOVA.

From the results of all statistical analyses, we examine particularly noteworthy findings as follows.

First, from one-way ANOVA results, we found that human gait is noticeably more affected by the reported emotion, which is from a self-reported questionnaire, than by the expected emotion, which is the annotated emotion of the emotion induction video we used. When we performed a comparison between expected emotions, all subjects watched the same video of each emotion type including neutral, positive and negative video; in each video, the visual patterns, dialogues, sounds, and musical rhythms are identical. Consequently, the results suggest that the gait differences we found from our analyses are not based on the raw video or audio stimuli but on the subjects' reported feelings induced by these videos.

In addition, from one-way ANOVA results of reported emotion, the behaviors of several body part movements are affected by human emotion. These include, for example, the mean values of angles related to the head and shoulders of subjects and the standard deviation values of angles, i.e., movement magnitude related to the chest, waist, and arms of subjects. In particular, the left arm swing magnitude is obviously different among reported emotions regardless of walking curvature. However, only the left arm swing magnitude is significantly affected by the difference in emotions among all curvatures, whereas the right arm swing magnitude is not affected in this way. Since the participants in our study did not walk in a straight walking path but circularly with different curvatures, it is possible that this phenomenon occurred due to subjects' walking curvature.

From these results, we investigated the arm swing magnitude in different walking curvatures. We found from the plots of arm swing magnitude shown in Figures 13 and 14 that the inside arm has a smaller arm swing magnitude than the outside arm, regardless of reported emotion or arm side (left or right arm). That is, when comparing within the same emotion, for both the left arm and the right arm, the arm swing magnitude is smaller if the arm is inside than if the arm is outside. This effect is most obvious for the happy emotion. Additionally, in our dataset, arm swing magnitudes under the sad emotion are always smaller than those under the happy emotion for both the left and right arms. Although the arm swing is smaller when the arm is inside than when it is outside under the same emotion, the smallest arm swing of the happy emotion remains greater than the largest arm swing of the sad emotion, as shown in Figures 13 and 14. Therefore, we can distinguish between happy and sad emotions from the arm swing magnitudes even if we do not know the walking curvature and inside-outside status, although having this additional information makes it easier to distinguish between emotions. Our finding about the difference in arm swing magnitude between the happy and sad emotions agrees with previous work in related fields, including the studies by Montepare et al. (1987), Michalak et al. (2009), and Halovic and Kroos (2018), all of which found that subjects' arm swings are smaller when feeling sad than when feeling happy. Based on these one-way ANOVA results, we decided to focus only on arm swing magnitude for reported emotion in the remaining analyses, since we found that arm swing can reveal subjects' emotion better than other body parts. Moreover, the effects of walking curvature and arm side on arm swing magnitude can be investigated using multi-factor ANOVA.

Based on the results of multi-factor ANOVA, several issues should be considered. First, for the main effects, we found that the reported emotion and curvature have effects on arm swing magnitude. However, the angle side (left or right arm) does not have a significant effect on arm swing magnitude. Furthermore, we found two interaction effects related to the angle side: first, the Reported Emotion factor with the Angle Side factor (F = 5.3906, P = 0.0047); second, the Curvature factor with the Angle Side factor (F = 3.4769, P = 0.0020). It is possible that these differences are due to the dominant hand of the subjects. Unfortunately, in the dataset we collected, the dominant hand is not balanced, i.e., most subjects are right-handed. Therefore, this hypothesis cannot be verified, and it remains an open question for future investigation. Moreover, the reported emotion and walking curvature do not have interaction effects with each other.

Gender is another factor that should be investigated. To verify whether female and male subjects have different arm swing magnitudes, multi-factor ANOVA was performed with the Gender factor in addition to the Reported Emotion, Curvature, and Angle Side factors. According to the multi-factor ANOVA results, gender has a significant effect on arm swing magnitude. The Gender factor also has an interaction effect with the Reported Emotion factor (F = 55.7149, P < 0.0001). Among related studies, Venture et al. (2014), who used four professional actors (2 men and 2 women) in their experiment, found that inter-gender recognition is feasible and that the emotional expressions of male and female subjects are similar. In the study by Gross et al. (2012), most features were not affected by gender, e.g., gait velocity, cadence, range of motion at several joints, and lateral tilt of the pelvis and trunk. However, some other features differed between genders, such as stride length, elbow range of motion, and trunk extension. That study included 30 subjects, 50% of whom were female, so its results should be more reliable given the larger number of subjects. Also, in the study by Kang and Gross (2016), who performed their experiment with 11 women and 7 men, some features such as gait speed and stride length were not affected by gender, while other features such as the normal jerk score of the elbow and wrist were affected by gender. Hence, it is to be expected that some features are affected by gender while others are not. Unfortunately, our study has highly imbalanced numbers of male and female subjects, so we cannot draw a final conclusion on this issue.

Several other gait analysis studies have attempted to estimate subjects' ages from their gaits. These studies show that human gait can be used for age estimation (Lu and Tan, 2010; Zhang et al., 2010; Nabila et al., 2018; Gillani et al., 2020). This means that gait patterns differ between subjects in different age ranges. Consequently, emotion recognition accuracy might decrease if the age differences among subjects are large. In this study, the average age of participants is 19.69 years with a standard deviation of 1.40 years, as our participants are undergraduate students at our university. If the dataset contained more age diversity, the results of the investigation might change. Therefore, we note that the findings of our study are based on a sample group with a similar age range.

According to the linear regression analysis, we found that the arm swings in each emotion are affected differently between the left and right arm as the regression slopes of the left arm are very different under each emotion, whereas the regression slopes of the right arm are quite similar among all emotions. This means that the effects of emotions on the left and right arm swings appear differently. The results suggest that we should also consider the arm side in addition to the arm swing magnitude so that we can distinguish between different emotions more easily with higher accuracy.

In summary, according to the results from all analyses, we can confirm the following hypotheses. First, body part movements are different under different emotions. Hence, walking posture can reveal the emotion of subjects. Second, the body part movements of the left and right sides while walking in a non-straight walking path are not symmetric, and thus one side can reveal the emotion of a subject better than the other side.

This study reveals several useful findings for the emotion recognition research field. We found that human gait can reveal the current emotion of subjects while walking, even in a non-straight walking path. Arm swing magnitude shows the differences in subjects' emotions effectively. Consequently, if we know the walking curvature as well as the arm side, it will be easier to distinguish between emotions. The results from our study can be used to develop an emotion recognition system that performs accurately and unobtrusively in real-life situations where the subjects are walking in a crowded environment, without the need for high-quality cameras or specific equipment. Since human emotions can be detected by their arm swing magnitudes, we can use any camera with pose-estimation software to calculate the essential features for emotion prediction. Nevertheless, some issues remain unexplored due to the limitations of our dataset, including the effect of dominant hand and the differences between male and female subjects. We plan to collect more data so we can investigate these issues in the near future.

In this study, we investigated the differences in body part movements while subjects walked along a non-straight path and watched emotion-inducing videos on Microsoft HoloLens 2. Since the walking path is not straight, we can collect gait data for different curvatures. Body part movements were captured by the OptiTrack motion-capturing system with 37 markers. For emotion induction using emotion-inducing videos, we found that not all subjects felt the emotion that we expected them to feel; therefore, it is important to always ask subjects about their feelings after emotion induction. For gait features, we calculated 24 angles that describe the movements of body parts while subjects are walking. We also calculated walking straightness and the curvature level of walking, along with the inside-outside status of each body side (left or right). According to the results of one-way ANOVA, multi-factor ANOVA, and linear regression analyses on the gait data, the magnitudes of arm swing are larger when subjects walk while feeling happy than when they feel sad. In our opinion, these results agree with human nature: subjects move more slowly and with smaller magnitude when they feel sad than when they feel happy. Furthermore, if subjects walk along a non-straight path, emotion is easier to predict from one side of the body than from the other, since the left arm swings reveal subjects' current emotion better than the right arm swings, especially when the left arm is the outside arm and the subjects are walking at a high curvature level. From all of the analyses we conducted, we conclude that body movements while walking differ under different emotions, so subjects' emotions can be detected from their gait. In particular, arm swing magnitude reveals the current emotions of subjects better than the movement of any other body part.
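For readers unfamiliar with the one-way ANOVA mentioned above, the following minimal sketch computes the F statistic for arm swing magnitudes grouped by emotion. The function name `one_way_anova_f` and the sample values are hypothetical and serve only to show the arithmetic; they do not reproduce the study's data or results.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric samples."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more samples than groups")
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]
    # Between-group variation: how far each group mean is from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Within-group variation: scatter of samples around their own group mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variance; F is undefined")
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy arm swing magnitudes invented for illustration; NOT study data.
happy = [5.0, 6.0, 7.0]
neutral = [2.0, 3.0, 4.0]
sad = [1.0, 2.0, 3.0]

f_stat, df_b, df_w = one_way_anova_f([happy, neutral, sad])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A large F value indicates that the group means differ by more than the within-group scatter would explain. Obtaining a p-value additionally requires the F distribution, for example via `scipy.stats.f_oneway` if SciPy is available.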

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by Future University Hakodate. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

NJ conducted the data collection, data preprocessing, feature extraction, and statistical analyses, and wrote this manuscript. KS and AU contributed to the experimental design for data collection, gave suggestions and checked all processes of this study, and reviewed this manuscript before submission. NK and CN made suggestions and checked the data collection, data preprocessing, and feature extraction process, as well as checking this manuscript before submission. All authors contributed to the article and approved the submitted version.

This work was supported by JST Moonshot R&D Grant Number JPMJMS2011.

We would like to express our gratitude to all volunteers who participated in our experiments. Also, we would like to thank all members of the KS Laboratory at Future University Hakodate, who greatly helped us in our experiments by setting up the venue and equipment, translating written instructions and questions into Japanese, and interpreting for participants who preferred to talk in their native language.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. ^https://sketchfab.com/3d-models/man-5ae6bd9271ac4ee4905b96e5458f435d (last accessed: April 6, 2022).

2. ^https://www.youtube.com/watch?v=4R9HpESkor8 (last accessed: April 6, 2022).

3. ^https://liris-accede.ec-lyon.fr/ (last accessed: April 6, 2022).

4. ^https://sketchfab.com/3d-models/man-5ae6bd9271ac4ee4905b96e5458f435d (last accessed: April 6, 2022).

Ahmed, F., Sieu, B., and Gavrilova, M. L. (2018). “Score and rank-level fusion for emotion recognition using genetic algorithm,” in 2018 IEEE 17th International Conference on Cognitive Informatics & Cognitive Computing (ICCI*CC) (Berkeley, CA: IEEE), 46–53. doi: 10.1109/ICCI-CC.2018.8482086

Anderez, D. O., Kanjo, E., Amnwar, A., Johnson, S., and Lucy, D. (2021). The rise of technology in crime prevention: opportunities, challenges and practitioners perspectives. arXiv [Preprint]. arXiv:2102.04204. doi: 10.48550/arXiv.2102.04204

Baratin, E., Sugavaneswaran, L., Umapathy, K., Ioana, C., and Krishnan, S. (2015). Wavelet-based characterization of gait signal for neurological abnormalities. Gait Posture 41, 634–639. doi: 10.1016/j.gaitpost.2015.01.012

Barliya, A., Omlor, L., Giese, M. A., Berthoz, A., and Flash, T. (2013). Expression of emotion in the kinematics of locomotion. Exp. Brain Res. 225, 159–176. doi: 10.1007/s00221-012-3357-4

Baveye, Y., Dellandréa, E., Chamaret, C., and Chen, L. (2015). “Deep learning vs. kernel methods: Performance for emotion prediction in videos,” in 2015 International Conference on Affective Computing and Intelligent Interaction (ACII) (Xi'an: IEEE), 77–83. doi: 10.1109/ACII.2015.7344554

Bouchrika, I. (2018). “A survey of using biometrics for smart visual surveillance: gait recognition,” in Surveillance in Action (Springer), 3–23. doi: 10.1007/978-3-319-68533-5_1

Bouzakraoui, M. S., Sadiq, A., and Alaoui, A. Y. (2019). “Appreciation of customer satisfaction through analysis facial expressions and emotions recognition,” in 2019 4th World Conference on Complex Systems (WCCS) (Ouarzazate: IEEE), 1–5. doi: 10.1109/ICoCS.2019.8930761

Busso, C., Deng, Z., Yildirim, S., Bulut, M., Lee, C. M., Kazemzadeh, A., et al. (2004). “Analysis of emotion recognition using facial expressions, speech and multimodal information,” in Proceedings of the 6th International Conference on Multimodal Interfaces (State College, PA), 205–211. doi: 10.1145/1027933.1027968

Chiu, M., Shu, J., and Hui, P. (2018). “Emotion recognition through gait on mobile devices,” in 2018 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops) (Athens: IEEE), 800–805. doi: 10.1109/PERCOMW.2018.8480374

Deluzio, K. J., Wyss, U. P., Zee, B., Costigan, P. A., and Serbie, C. (1997). Principal component models of knee kinematics and kinetics: normal vs. pathological gait patterns. Hum. Movement Sci. 16, 201–217. doi: 10.1016/S0167-9457(96)00051-6

Destephe, M., Maruyama, T., Zecca, M., Hashimoto, K., and Takanishi, A. (2013). “The influences of emotional intensity for happiness and sadness on walking,” in 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (Osaka: IEEE), 7452–7455. doi: 10.1109/EMBC.2013.6611281

Gillani, S. I., Azam, M. A., and Ehatisham-ul Haq, M. (2020). “Age estimation and gender classification based on human gait analysis,” in 2020 International Conference on Emerging Trends in Smart Technologies (ICETST) (Karachi: IEEE), 1–6. doi: 10.1109/ICETST49965.2020.9080735

Gross, M. M., Crane, E. A., and Fredrickson, B. L. (2012). Effort-shape and kinematic assessment of bodily expression of emotion during gait. Hum. Movement Sci. 31, 202–221. doi: 10.1016/j.humov.2011.05.001

Halovic, S., and Kroos, C. (2018). Not all is noticed: kinematic cues of emotion-specific gait. Hum. Movement Sci. 57, 478–488. doi: 10.1016/j.humov.2017.11.008

Isaac, E. R., Elias, S., Rajagopalan, S., and Easwarakumar, K. (2019). Multiview gait-based gender classification through pose-based voting. Pattern Recogn. Lett. 126, 41–50. doi: 10.1016/j.patrec.2018.04.020

Ismail, A. R., and Asfour, S. S. (1999). Discrete wavelet transform: a tool in smoothing kinematic data. J. Biomech. 32, 317–321. doi: 10.1016/S0021-9290(98)00171-7

Janssen, D., Schöllhorn, W. I., Lubienetzki, J., Fölling, K., Kokenge, H., and Davids, K. (2008). Recognition of emotions in gait patterns by means of artificial neural nets. J. Nonverbal Behav. 32, 79–92. doi: 10.1007/s10919-007-0045-3

Kang, G. E., and Gross, M. M. (2015). Emotional influences on sit-to-walk in healthy young adults. Hum. Movement Sci. 40, 341–351. doi: 10.1016/j.humov.2015.01.009

Kang, G. E., and Gross, M. M. (2016). The effect of emotion on movement smoothness during gait in healthy young adults. J. Biomech. 49, 4022–4027. doi: 10.1016/j.jbiomech.2016.10.044

Karg, M., Kühnlenz, K., and Buss, M. (2010). Recognition of affect based on gait patterns. IEEE Trans. Syst. Man Cybernet. B 40, 1050–1061. doi: 10.1109/TSMCB.2010.2044040

Khamsemanan, N., Nattee, C., and Jianwattanapaisarn, N. (2017). Human identification from freestyle walks using posture-based gait feature. IEEE Trans. Inform. Forensics Sec. 13, 119–128. doi: 10.1109/TIFS.2017.2738611

Kim, S., Nussbaum, M. A., and Ulman, S. (2018). Impacts of using a head-worn display on gait performance during level walking and obstacle crossing. J. Electromyogr. Kinesiol. 39, 142–148. doi: 10.1016/j.jelekin.2018.02.007

Kitchat, K., Khamsemanan, N., and Nattee, C. (2019). “Gender classification from gait silhouette using observation angle-based geis,” in 2019 IEEE International Conference on Cybernetics and Intelligent Systems (CIS) and IEEE Conference on Robotics, Automation and Mechatronics (RAM) (Bangkok: IEEE), 485–490. doi: 10.1109/CIS-RAM47153.2019.9095797

Kuijsters, A., Redi, J., De Ruyter, B., and Heynderickx, I. (2016). Inducing sadness and anxiousness through visual media: measurement techniques and persistence. Front. Psychol. 7, 1141. doi: 10.3389/fpsyg.2016.01141

Lemke, M. R., Wendorff, T., Mieth, B., Buhl, K., and Linnemann, M. (2000). Spatiotemporal gait patterns during over ground locomotion in major depression compared with healthy controls. J. Psychiatr. Res. 34, 277–283. doi: 10.1016/S0022-3956(00)00017-0

Li, B., Zhu, C., Li, S., and Zhu, T. (2016). Identifying emotions from non-contact gaits information based on microsoft kinects. IEEE Trans. Affect. Comput. 9, 585–591. doi: 10.1109/TAFFC.2016.2637343

Li, S., Cui, L., Zhu, C., Li, B., Zhao, N., and Zhu, T. (2016). Emotion recognition using kinect motion capture data of human gaits. PeerJ 4, e2364. doi: 10.7717/peerj.2364

Limcharoen, P., Khamsemanan, N., and Nattee, C. (2020). View-independent gait recognition using joint replacement coordinates (JRCs) and convolutional neural network. IEEE Trans. Inform. Forensics Sec. 15, 3430–3442. doi: 10.1109/TIFS.2020.2985535

Limcharoen, P., Khamsemanan, N., and Nattee, C. (2021). Gait recognition and re-identification based on regional lstm for 2-second walks. IEEE Access 9, 112057–112068. doi: 10.1109/ACCESS.2021.3102936

Lu, J., and Tan, Y.-P. (2010). Gait-based human age estimation. IEEE Trans. Inform. Forensics Sec. 5, 761–770. doi: 10.1109/TIFS.2010.2069560

Michalak, J., Troje, N. F., Fischer, J., Vollmar, P., Heidenreich, T., and Schulte, D. (2009). Embodiment of sadness and depression–gait patterns associated with dysphoric mood. Psychosom. Med. 71, 580–587. doi: 10.1097/PSY.0b013e3181a2515c

Montepare, J. M., Goldstein, S. B., and Clausen, A. (1987). The identification of emotions from gait information. J. Nonverbal Behav. 11, 33–42. doi: 10.1007/BF00999605

Nabila, M., Mohammed, A. I., and Yousra, B. J. (2018). Gait-based human age classification using a silhouette model. IET Biometrics 7, 116–124. doi: 10.1049/iet-bmt.2016.0176

Nyan, M., Tay, F., Seah, K., and Sitoh, Y. (2006). Classification of gait patterns in the time-frequency domain. J. Biomech. 39, 2647–2656. doi: 10.1016/j.jbiomech.2005.08.014

Olney, S. J., Griffin, M. P., and McBride, I. D. (1998). Multivariate examination of data from gait analysis of persons with stroke. Phys. Therapy 78, 814–828. doi: 10.1093/ptj/78.8.814

Quiroz, J. C., Geangu, E., and Yong, M. H. (2018). Emotion recognition using smart watch sensor data: mixed-design study. JMIR Mental Health 5, e10153. doi: 10.2196/10153

Roether, C. L., Omlor, L., Christensen, A., and Giese, M. A. (2009). Critical features for the perception of emotion from gait. J. Vision 9, 15–15. doi: 10.1167/9.6.15

Sadeghi, H., Allard, P., and Duhaime, M. (1997). Functional gait asymmetry in able-bodied subjects. Hum. Movement Sci. 16, 243–258. doi: 10.1016/S0167-9457(96)00054-1

Sedighi, A., Rashedi, E., and Nussbaum, M. A. (2020). A head-worn display (“smart glasses”) has adverse impacts on the dynamics of lateral position control during gait. Gait Posture 81, 126–130. doi: 10.1016/j.gaitpost.2020.07.014

Sedighi, A., Ulman, S. M., and Nussbaum, M. A. (2018). Information presentation through a head-worn display (“smart glasses”) has a smaller influence on the temporal structure of gait variability during dual-task gait compared to handheld displays (paper-based system and smartphone). PLoS ONE 13, e0195106. doi: 10.1371/journal.pone.0195106

Shiavi, R., and Griffin, P. (1981). Representing and clustering electromyographic gait patterns with multivariate techniques. Med. Biol. Eng. Comput. 19, 605–611. doi: 10.1007/BF02442775

Stephens-Fripp, B., Naghdy, F., Stirling, D., and Naghdy, G. (2017). Automatic affect perception based on body gait and posture: a survey. Int. J. Soc. Robot. 9, 617–641. doi: 10.1007/s12369-017-0427-6

Sun, B., Zhang, Z., Liu, X., Hu, B., and Zhu, T. (2017). Self-esteem recognition based on gait pattern using kinect. Gait Posture 58, 428–432. doi: 10.1016/j.gaitpost.2017.09.001

Tiam-Lee, T. J., and Sumi, K. (2019). “Analysis and prediction of student emotions while doing programming exercises,” in International Conference on Intelligent Tutoring Systems (Kingston: Springer), 24–33. doi: 10.1007/978-3-030-22244-4_4