PERSPECTIVE article

Front. Artif. Intell., 14 July 2022

Sec. AI for Human Learning and Behavior Change

Volume 5 - 2022 | https://doi.org/10.3389/frai.2022.908261

This article is part of the Research Topic: Distributed Cognition in Learning and Behavioral Change – Based on Human and Artificial Intelligence

In the present article, we explore prospects for using artificial intelligence (AI) to distribute cognition via cognitive offloading (i.e., to delegate thinking tasks to AI-technologies). Modern technologies for cognitive support are rapidly developing and increasingly popular. Today, many individuals heavily rely on their smartphones or other technical gadgets to support their daily life but also their learning and work. For instance, smartphones are used to track and analyze changes in the environment, and to store and continually update relevant information. Thus, individuals can offload (i.e., externalize) information to their smartphones and refresh their knowledge by accessing it. This implies that using modern technologies such as AI empowers users via offloading and enables them to function as always-updated knowledge professionals, so that they can deploy their insights strategically instead of relying on outdated and memorized facts. This AI-supported offloading of cognitive processes also saves individuals' internal cognitive resources by distributing the task demands into their environment. In this article, we provide (1) an overview of empirical findings on cognitive offloading and (2) an outlook on how individuals' offloading behavior might change in an AI-enhanced future. More specifically, we first discuss determinants of offloading such as the design of technical tools and links to metacognition. Furthermore, we discuss benefits and risks of cognitive offloading. While offloading improves immediate task performance, it might also be a threat for users' cognitive abilities. Following this, we provide a perspective on whether individuals will make heavier use of AI-technologies for offloading in the future and how this might affect their cognition. On one hand, individuals might heavily rely on easily accessible AI-technologies which in return might diminish their internal cognition/learning. 
On the other hand, individuals might aim at enhancing their cognition so that they can keep up with AI-technologies and will not be replaced by them. Finally, we present our own data and findings from the literature on the assumption that individuals' personality is a predictor of trust in AI. Trust in modern AI-technologies might be a strong determinant of wider appropriation of and dependence on these technologies to distribute cognition and should thus be considered in an AI-enhanced future.

Today, modern technologies are an indispensable part of people's lives and it is hard to imagine living without smartphones and other technical gadgets. Currently, around 1.3 billion smartphones are sold worldwide per year (idc.com, 2022) and, for instance, in Austria about 83% of individuals above 15 years own a smartphone (KMU Forschung Austria, 2020). People use their technical devices for several reasons, such as staying in contact, using the internet, taking pictures, or playing games. Furthermore, technical devices can be used to externalize cognitive processes, which is referred to as cognitive offloading (Risko and Gilbert, 2016). Individuals offload cognitive processes by, for instance, relying on navigation applications instead of relying on their own spatial abilities or by storing appointments or shopping lists in their smartphones instead of memorizing them. Also, modern technologies can be used to access up-to-date knowledge and thus individuals do not need to rely on outdated, internally memorized information. Indeed, the majority of adults indicate using technical devices regularly or even very often as external memory stores (Finley et al., 2018). Also, empirical studies show that individuals flexibly distribute cognitive demands between internal and external resources when solving problems (e.g., Cary and Carlson, 2001). Distributed cognition using modern technologies should thereby facilitate task performance.

Besides the basic applications of technical devices mentioned above, smart applications that are based on artificial intelligence (AI) might also be used for offloading. For instance, smart speakers could be used to store appointments and to be reminded of them. Due to the effortless interaction with such smart applications, distributed cognition might reach an all-time high in the coming years. Here, we aim at discussing this development of distributed cognition with modern technologies. First, we give an overview of recent findings regarding cognitive offloading. We discuss determinants that foster the offloading of cognitive processes into modern technologies as well as possible benefits and risks of offloading. Second, we provide an outlook on how distributed cognition (and thus cognitive offloading) might change in the future, when AI-technologies are used on a daily basis by many people, especially with regard to the educational sector. Furthermore, we discuss whether distributed cognition will actually increase in the future or whether people will rather aim at enhancing their internal cognitive abilities. One crucial factor for using AI-technologies to distribute cognition might be people's trust in these technologies. Therefore, we also describe personality traits as potential predictors of trust in AI by summarizing findings from the literature and presenting our own data. Finally, we argue for more psychological research that could support and inform the development of modern technologies.

While cognitive offloading comes in many different forms (e.g., for short-term memory offloading see Meyerhoff et al., 2021; for navigation offloading see Fenech et al., 2010), investigations of the offloading of cognitive processes into modern technology can be grouped into two lines of research: (1) the determinants of cognitive offloading and (2) the consequences of offloading behavior.

Modern technologies could be used for offloading whenever they are available, but offloading might be applied more or less depending on certain conditions. On the one hand, offloading was shown to depend on external factors such as the design of technical tools (e.g., Gray et al., 2006; Grinschgl et al., 2020) or characteristics of the to-be-processed information (e.g., Schönpflug, 1986; Hu et al., 2019). Regarding tool design, studies observed that individuals offload more cognitive processes when the offloading process (i.e., the interaction with a technical tool) is fast rather than associated with temporal delays (e.g., Gray et al., 2006; Waldron et al., 2011; Grinschgl et al., 2020). Similarly, offloading increases when interacting with technical tools requires fewer rather than more operational steps (e.g., O'Hara and Payne, 1998; Cary and Carlson, 2001). Furthermore, Grinschgl et al. (2020) observed that when participants performed a task on a tablet using its touch function, they offloaded more working memory processes than when using a computer mouse. Regarding the characteristics of the to-be-processed information, it was shown that offloading increases when a task and its accompanying information are more complex, relevant, or difficult (e.g., Schönpflug, 1986; Hu et al., 2019). Additionally, a larger amount of to-be-processed information fosters offloading behavior (e.g., Gilbert, 2015a; Arreola et al., 2019). Overall, these external factors suggest that the distribution of cognitive processes between internal and external resources depends on situational cost-benefit considerations (e.g., Gray et al., 2006; Grinschgl et al., 2020).
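The situational cost-benefit consideration described above can be illustrated with a small toy model. The following Python sketch is purely illustrative and is not taken from any of the cited studies: the `Strategy` class, the `choose_strategy` function, and all numeric values are hypothetical assumptions. It only shows the general idea that an external tool becomes less attractive once access to it is delayed.

```python
from dataclasses import dataclass


@dataclass
class Strategy:
    """Hypothetical time costs (in seconds) of one way to handle an item."""
    name: str
    access_cost: float  # time needed to store or look up the item
    error_rate: float   # probability that the item is wrong and must be redone
    redo_cost: float    # time lost whenever such an error occurs

    def expected_cost(self) -> float:
        # Expected time = access time + expected penalty for errors.
        return self.access_cost + self.error_rate * self.redo_cost


def choose_strategy(internal: Strategy, external: Strategy) -> Strategy:
    """Return the strategy with the lower expected cost (ties favor internal)."""
    if external.expected_cost() < internal.expected_cost():
        return external
    return internal


# All values below are made up for illustration only.
memory = Strategy("internal memory", access_cost=0.5, error_rate=0.2, redo_cost=5.0)
fast_tool = Strategy("fast tool", access_cost=1.0, error_rate=0.02, redo_cost=5.0)
slow_tool = Strategy("delayed tool", access_cost=3.0, error_rate=0.02, redo_cost=5.0)

print(choose_strategy(memory, fast_tool).name)
print(choose_strategy(memory, slow_tool).name)
```

Under these assumed values, the fast tool is preferred over internal memory, whereas the delayed tool is not, mirroring the qualitative pattern that temporal delays reduce offloading. Real offloading decisions are likely shaped by further factors, such as metacognitive beliefs, that this sketch ignores.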

On the other hand, internal factors such as individuals' cognitive abilities and metacognitive beliefs can impact offloading. Studies observed more offloading when one's working memory capacity is lower (e.g., Gilbert, 2015b; Meyerhoff et al., 2021). Moreover, individuals commonly offload more when they believe that their internal performance is worse (Gilbert, 2015b; Boldt and Gilbert, 2019; but see Grinschgl et al., 2021a for conflicting results). To the best of our knowledge, an investigation of other individual differences with regard to cognitive offloading is still lacking (e.g., there is no research on the interplay between personality and offloading).

Together, these determinants of offloading behavior suggest that individuals do not maximally offload under all circumstances; instead, easy and fast access to modern technology in particular fosters offloading (e.g., Grinschgl et al., 2020). With an increase in offloading due to modern technologies, the question arises whether offloading is accompanied by positive and/or negative consequences. Cognitive offloading was shown to improve immediate task performance by accelerating it and/or reducing errors (e.g., Boldt and Gilbert, 2019; Grinschgl et al., 2021b). Furthermore, studies showed that offloading improves simultaneous secondary task performance (Grinschgl et al., 2022) and later performance of unrelated tasks (Storm and Stone, 2015; Runge et al., 2019). Hence, it is assumed that cognitive offloading releases internal cognitive resources that can be devoted to other, simultaneous or subsequent tasks. Furthermore, the retrieval and storage of information using modern technologies might help individuals to refresh their internal knowledge and thus to act as always-updated knowledge professionals.

Importantly, cognitive offloading is also accompanied by risks. In three experiments, Grinschgl et al. (2021b) observed a trade-off for cognitive offloading: while the offloading of working memory processes increased immediate task performance, it also decreased subsequent memory performance for the offloaded information. Similarly, the offloading of spatial processes by using a navigation device impairs spatial memory (i.e., route learning and subsequent scene recognition; Fenech et al., 2010). Thus, information stored in a technical device might be quickly forgotten (for an intentional/directed forgetting account see Sparrow et al., 2011; Eskritt and Ma, 2014) or might not be processed deeply enough so that no long-term memory representations are formed (cf. depth of processing theories; Craik and Lockhart, 1972; Craik, 2002). In addition to detrimental effects of offloading on (long-term) memory, offloading hinders skill acquisition (Van Nimwegen and Van Oostendorp, 2009; Moritz et al., 2020) and harms metacognition (e.g., Fisher et al., 2015, 2021; Dunn et al., 2021); for example, the use of technical aids can inflate estimates of one's own knowledge. In Dunn et al. (2021), the participants had to answer general knowledge questions by either relying on their internal resources or additionally using the internet. Metacognitive judgments showed that participants were overconfident when they were allowed to use the internet. Similarly, Fisher et al. (2021) concluded that searching for information online leads to a misattribution of external information to internal memory.

To summarize, cognitive offloading is accompanied by both benefits and risks. While it can improve immediate task performance, it might also be accompanied by detrimental long-term effects. However, the investigated time-frames were rather short (effects over hours or days). Thus, it remains unclear how offloading might impact cognition over the lifespan (for a discussion see Cecutti et al., 2021). While these authors view humans' development with modern technologies rather positively, others regard modern technologies as a threat to humans (e.g., Carr, 2008; Spitzer, 2012). Whether distributing cognition actually is a blessing or a threat for human cognition cannot be answered here, but we will provide a brief outlook on how distributed cognition might be affected by the rise of AI.

As the use of many AI-technologies such as smart speakers appears quite effortless, they might be used to easily store appointments, take notes, retrieve up-to-date knowledge, or perform other cognitive tasks (e.g., calculating, navigating). AI-technologies might thus be the “future” of distributed cognition by replacing classical offloading tools. With this prospect, it is important to consider how the omnipresence of AI as an offloading tool might impact human cognition and how we, as humans, might foster a worthwhile integration of AI into our lives.

To date, cognitive offloading research has shown positive and negative consequences of using modern technology to distribute demands between internal and external resources. However, these studies did not target the use of AI-technologies as offloading tools, but standard technologies such as tablets/computers without AI applications. To the best of our knowledge, research investigating distributed cognition in the context of AI is lacking. While we see a high potential for new studies on this matter, we also think that previous results can be transferred to the current and future use of AI. Therefore, AI-technologies should be used with caution as they might diminish cognitive abilities such as learning and memory, consequences that are especially relevant when it comes to children's education.

Studies suggest that even young children offload cognitive processes instead of completely relying on their internal resources (e.g., Armitage et al., 2020; Bulley et al., 2020). Thus, tools are already used in crucial learning phases during childhood to distribute cognitive demands between internal and external resources. Such offloading behavior might increase with the ever earlier access to modern AI-technologies in smartphones and computers. Especially regarding education, it must be discussed whether there should be a “ban” on cognitive offloading due to its potential detrimental effects or whether students need to learn how to properly use technical tools without harming their cognition (cf. Bearman and Luckin, 2020; Dawson, 2020). In line with these authors, we advocate teaching students how to use technical devices so that the devices satisfy their needs without (unintentionally) harming cognition. For instance, students need to learn how to differentiate between their own knowledge and externally stored knowledge, so that the effect of inflated knowledge is avoided. Furthermore, students should be made aware of their offloading behavior and of the fact that they will not be able to access their technical tools in critical situations such as during exams. This is especially important as a study showed that cognitive offloading was not detrimental to long-term memory when participants were forced to offload but were also instructed to internally memorize the relevant information (Grinschgl et al., 2021b). Thus, offloading is not always detrimental to building long-term memory representations; instead, detriments of offloading might be compensated for by proper learning instructions. Additionally, modern (AI-)technologies can benefit education by providing students with automated feedback to improve learning (Bearman and Luckin, 2020). Hence, the availability of modern (AI-)technologies is accompanied by both benefits and risks.

One alternative to strongly relying on AI to perform demanding tasks might be the enhancement of one's own cognition. The Transhumanism movement in philosophy in particular proposes the enhancement of human cognition, such as intelligence, so that we are able to solve global problems (e.g., the climate crisis; Liao et al., 2012; Sorgner, 2020). This enhancement should be achieved by methods such as taking smart drugs, stimulating the brain, or modifying genes (Bostrom and Sandberg, 2009). However, so far the effects of most enhancement methods are at best moderate (Hills and Hertwig, 2011; Jaušovec and Pahor, 2017). The future might bring new possibilities to foster human enhancement and this might enable humans to compete with AI (for a discussion on the implications of human enhancement and AI see Neubauer, 2021).

As human enhancement is not a promising strategy to become smarter yet, individuals might rather rely on the available technologies to improve their performance. As outlined before, individuals do not rely on technical tools all the time, but the distribution of cognitive processes onto internal and external resources rather depends on factors such as tool design, one's abilities, or metacognitive beliefs. Another factor that might strongly influence the reliance on AI might be the trust in these technologies. In a recent review, Matthews et al. (2021) identified trust as a major factor when it comes to human-machine interaction and suggested that trust in AI should be systematically investigated as humans are approaching a technology-enhanced future. Trust can be seen as the specific beliefs about technology and the willingness to rely on technology in risky situations (Siau and Wang, 2018). Thus, trust might determine if and how individuals interact with AI (see also Glikson and Woolley, 2020; Chong et al., 2022) for distributed cognition. The question arises whether there are individual differences when it comes to trusting AI. An important source for individual differences might be individuals' personality such as the Big 5 traits (Hoff and Bashir, 2015; Matthews et al., 2021). Therefore, we briefly summarize research investigating personality traits as potential predictors of trust in AI and present our own data on this matter.

On the one hand, several studies investigated trust regarding specific AI-technologies. Li et al. (2020) consistently observed correlations between openness and trust in automated driving. Participants with higher openness reported lower trust in automated driving in a questionnaire and also showed a higher monitoring frequency as well as more frequent, earlier, and longer “take overs” in an automated driving simulator. Individuals high in openness might strive for more intellectually demanding tasks and thus do not heavily rely on automated systems (but see Zhang et al., 2020, for conflicting results). Additionally, higher extraversion was related to less trust in the questionnaire but no other effects were observed (Li et al., 2020). In contrast, Kraus et al. (2021) did not observe a relationship between openness and trust in automated driving, but indirect effects of neuroticism, extraversion, and agreeableness. In a path model, higher neuroticism was related to less affinity for technology. A higher affinity for technology was positively related to trust in automated driving (see also Zhang et al., 2020). Moreover, higher extraversion and agreeableness were related to more interpersonal trust, which was related to more trust in automated driving. These findings suggest that there is a common factor underlying both trust in humans and trust in automated systems (Kraus et al., 2021). Besides automated driving, Sharan and Romano (2020) investigated trust in AI by providing participants with suggestions from an AI-algorithm when making decisions in a card game and found that none of the Big 5 traits correlated with any trust indicators.

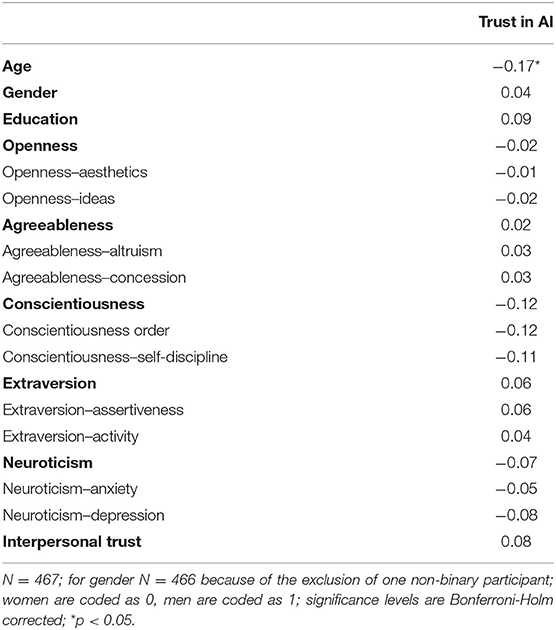

Other studies assessed general trust in AI- (or related) technologies. For instance, Chien et al. (2016) observed that higher agreeableness and conscientiousness were related to more trust in automated systems, with no significant relationships for the other Big 5 traits. Merrit and Ilgen (2008) observed that extraversion is positively related to the propensity to trust machines; additionally, age was negatively related to trust in machines. In our own exploratory study (N = 467; for details see Supplementary Material), we assessed general trust in AI with a 7-item questionnaire. Additionally, we measured participants' sociodemographic data (age, gender, and education), Big 5 traits and facets, and their interpersonal trust. The correlations of these variables with general trust in AI can be found in Table 1. In contrast to some previous studies (e.g., Chien et al., 2016), we did not observe any relationships between trust in AI and the Big 5 traits or their facets. Additionally, interpersonal trust was not related to trust in AI. Thus, we cannot confirm the findings of Kraus et al. (2021), which suggest that trust in others and trust in technology are positively correlated. However, in line with Merrit and Ilgen (2008), we observed a negative relationship between age and trust in AI as well as no gender effects. Older adults seem to have less trust in AI, potentially due to less experience with these systems and modern technology in general (but see Zhang et al., 2020, for diverging results).
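To make this kind of analysis more concrete, the following Python sketch shows how a questionnaire scale score and a Pearson correlation could be computed. It is a minimal illustration under stated assumptions and not the analysis code of the study: the function names `scale_score` and `pearson_r`, the assumed 1 to 5 response format, and all values are hypothetical and made up for demonstration.

```python
import math
from statistics import mean


def scale_score(item_responses):
    """Average one participant's item responses into a single scale score."""
    return mean(item_responses)


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return cov / math.sqrt(var_x * var_y)


# Made-up toy values, NOT data from the study.
# Each inner list holds one hypothetical participant's answers to 7 items
# on an assumed 1 to 5 response scale.
ages = [20, 30, 40, 50, 60]
responses = [
    [5, 4, 5, 4, 5, 4, 5],
    [4, 4, 4, 5, 4, 4, 4],
    [4, 3, 4, 3, 4, 3, 4],
    [3, 3, 3, 3, 4, 3, 3],
    [2, 3, 2, 3, 2, 3, 2],
]
trust_scores = [scale_score(r) for r in responses]

r = pearson_r(ages, trust_scores)
print(f"r(age, trust in AI) = {r:.2f}")
```

In this toy example, the coefficient is negative because the assumed trust scores decrease with age. With real data, the coefficient would usually be accompanied by a significance test and, given the number of correlations reported, possibly a correction for multiple comparisons.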

Table 1. Correlations between sociodemographic data, Big 5 traits and facets, interpersonal trust, and general trust in AI.

To summarize, results regarding personality traits as predictors of trust in AI seem rather inconsistent and depend on the targeted AI-technology (see also Schäfer et al., 2016). We thus call for more systematic research to identify personality traits and other factors that might predict the trust in and use of AI-technologies in general but also for distributed cognition more specifically. Besides personality, other factors might play a major role in trusting and using AI. For instance, the perceived usability of AI-technologies, computer anxiety, task characteristics, transparency, or perceived intelligence of AI might determine if and how AI-technologies are used (for an overview and further differentiation see Glikson and Woolley, 2020; Kaplan et al., 2021; Matthews et al., 2021).

While smartphones and other technical gadgets are already frequently used to distribute cognition, the rise of easy-to-use AI-technologies might further foster the offloading of cognitive processes. This development creates a need to investigate the consequences of using AI for distributed cognition and to inform the public about these consequences. Although AI is already often discussed by information scientists, politicians, and the general public, psychologists and psychological findings are barely integrated into these discussions. We see a high potential for psychological research to inform the public about the potential of modern technologies but also about the accompanying risks. Moreover, identifying individuals who would easily rely on AI (e.g., due to a high trust in these systems) could help in specifically targeting these individuals when it comes to potential negative consequences of heavily relying on technology. Thus, we argue for more individual differences research to systematically investigate which factors (e.g., personality) predict trust in and use of AI. Growing up with modern technologies will likely affect our cognition as well as our attitude toward technologies. Such findings should be considered both when designing modern technologies and when using them in different situations (e.g., in private life, educational settings), already now and in an AI-enhanced future.

The raw data supporting the conclusions of this article will be made available by the authors upon request, without undue reservation.

The studies involving human participants were reviewed and approved by the Ethics Committee of the University of Graz. The patients/participants provided their written informed consent to participate in this study.

SG: conceptualization, methodology, data curation, data analysis, and writing—original draft. AN: conceptualization, methodology, and writing—review and editing. All authors contributed to the article and approved the submitted version.

The authors acknowledge the financial support by the University of Graz for the Open Access Publication.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frai.2022.908261/full#supplementary-material

Armitage, K. L., Bulley, A., and Redshaw, J. (2020). Developmental origins of cognitive offloading. Proc. R. Soc. B 287:20192927. doi: 10.1098/rspb.2019.2927

Arreola, H., Flores, A. N., Latham, A., MacNew, H., and Vu, K.-P. L. (2019). “Does the use of tablets lead to more information being recorded and better recall in short-term memory tasks?,” In: Proceedings of the 21st HCI International Conference, Human Interface and Management of Information (Cham: Springer). doi: 10.1007/978-3-030-22660-2_20

Bearman, M., and Luckin, R. (2020). “Preparing university assessment for a world with AI: Tasks for human intelligence,” In: Re-Imagining University Assessment in a Digital World, eds M. Bearman, P. Dawson, R. Ajjawi, J. Tai, and D. Boud (Cham: Springer). doi: 10.1007/978-3-030-41956-1_5

Boldt, A., and Gilbert, S. (2019). Confidence guides spontaneous cognitive offloading. Cognit. Res. 4:45. doi: 10.1186/s41235-019-0195-y

Bostrom, N., and Sandberg, A. (2009). Cognitive enhancement: Methods, ethics, regulatory challenges. Sci. Eng. Ethics 15, 311–341. doi: 10.1007/s11948-009-9142-5

Bulley, A., McCarthy, T., Gilbert, S. J., Suddendorf, T., and Redshaw, J. (2020). Children devise and selectively use tools to offload cognition. Curr. Biol. 30, 3457–3464.e3. doi: 10.1016/j.cub.2020.06.035

Carr, N. (2008). Is Google Making Us Stupid? The Atlantic. https://www.theatlantic.com/magazine/archive/2008/07/is-google-making-us-stupid/306868/

Cary, M., and Carlson, R. A. (2001). Distributing working memory resources during problem solving. J. Experi. Psychol. 27, 836–848. doi: 10.1037/0278-7393.27.3.836

Cecutti, L., Chemero, A., and Lee, S. W. S. (2021). Technology may change cognition without necessarily harming it. Nat. Hum. Behav. 5, 973–975. doi: 10.1038/s41562-021-01162-0

Chien, S.-Y., Lewis, M., Sycara, K., Liu, J.-S., and Kumru, A. (2016). “Relation between trust attitudes toward automation, Hofstede's cultural dimensions, and Big Five personality traits,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting. doi: 10.1177/1541931213601192

Chong, L., Zhang, G., Goucher-Lambert, K., Kotovsky, K., and Cagan, J. (2022). Human confidence in artificial intelligence and in themselves: The evolution and impact of confidence on adoption of AI advice. Comput. Human Behav. 127:107018. doi: 10.1016/j.chb.2021.107018

Craik, F. I. M. (2002). Levels of processing: Past, present...and future? Memory 10, 305–318. doi: 10.1080/09658210244000135

Craik, F. I. M., and Lockhart, R. S. (1972). Levels of processing: A framework for memory research. J. Verbal Learning Verbal Behav. 11, 671–684. doi: 10.1016/S0022-5371(72)80001-X

Dawson, P. (2020). “Cognitive offloading and assessment,” In: Re-Imagining University Assessment in a Digital World, eds M. Bearman, P. Dawson, R. Ajjawi, J. Tai, and D. Boud (Cham: Springer). doi: 10.1007/978-3-030-41956-1_4

Dunn, T. L., Gaspar, C., McLean, D., Koehler, D. J., and Risko, E. F. (2021). Distributed metacognition: Increased bias and deficits in metacognitive sensitivity when retrieving information from the internet. Technol. Mind Behav. 2:39. doi: 10.1037/tmb0000039

Eskritt, M., and Ma, S. (2014). Intentional forgetting: Note-taking as naturalistic example. Mem. Cognit. 42, 237–246. doi: 10.3758/s13421-013-0362-1

Fenech, E. P., Drews, F. A., and Bakdash, J. Z. (2010). “The effects of acoustic turn by turn navigation on wayfinding,” In: Proceedings of the Human Factors and Ergonomics Society 54th Annual Meeting. doi: 10.1177/154193121005402305

Finley, J. R., Naaz, F., and Goh, F. W. (2018). Memory and Technology: How We Use Information in the Brain and in the World. Cham: Springer Nature Switzerland. doi: 10.1007/978-3-319-99169-6

Fisher, M., Goddu, M. K., and Keil, F. C. (2015). Searching for explanations: How the internet inflates estimates of internal knowledge. J. Experi. Psychol. 144, 674–678. doi: 10.1037/xge0000070

Fisher, M., Smiley, A. H., and Grillo, T. L. H. (2021). Information without knowledge: the effects of internet search on learning. Memory. 20, 375–387. doi: 10.1080/09658211.2021.1882501

Gilbert, S. J. (2015a). Strategic offloading of delayed intentions into the external environment. Q. J. Exp. Psychol. 68, 971–992. doi: 10.1080/17470218.2014.972963

Gilbert, S. J. (2015b). Strategic use of reminders: Influence of both domain-general and task-specific metacognitive confidence, independent of objective memory ability. Conscious. Cogn. 33, 245–260. doi: 10.1016/j.concog.2015.01.006

Glikson, E., and Woolley, A. W. (2020). Human trust in artificial intelligence: Review of empirical research. Acad. Manage. Ann. 14:57. doi: 10.5465/annals.2018.0057

Gray, W. D., Sims, C. R., and Fu, W.-T. (2006). The soft constraints hypothesis: A rational analysis approach to resource allocation for interactive behavior. Psychol. Rev. 113, 461–482. doi: 10.1037/0033-295X.113.3.461

Grinschgl, S., Meyerhoff, H. S., and Papenmeier, F. (2020). Interface and interaction design: How mobile touch devices foster cognitive offloading. Comput. Human Behav. 108:106317. doi: 10.1016/j.chb.2020.106317

Grinschgl, S., Meyerhoff, H. S., Schwan, S., and Papenmeier, F. (2021a). From metacognitive beliefs to strategy selection: Does fake performance feedback influence cognitive offloading? Psychol. Res. 85, 2654–2666. doi: 10.1007/s00426-020-01435-9

Grinschgl, S., Papenmeier, F., and Meyerhoff, H. S. (2021b). Consequences of cognitive offloading: Boosting performance but diminishing memory. Q. J. Experi. Psychol. 74, 1477–1496. doi: 10.1177/17470218211008060

Grinschgl, S., Papenmeier, F., and Meyerhoff, H. S. (2022). Mutual interplay between cognitive offloading and secondary task performance.

Hills, T., and Hertwig, R. (2011). Why aren't we smarter already: Evolutionary trade-offs and cognitive enhancements. Curr. Dir. Psychol. Sci. 20, 373–377. doi: 10.1177/0963721411418300

Hoff, K. A., and Bashir, M. (2015). Trust in automation: Integrating empirical evidence on factors that influence trust. Hum. Factors 57, 407–437. doi: 10.1177/0018720814547570

Hu, X., Luo, L., and Fleming, S. M. (2019). A role for metamemory in cognitive offloading. Cognition 193:104012. doi: 10.1016/j.cognition.2019.104012

idc.com (2022). Smartphone Shipments Declined in the Fourth Quarter But 2021 Was Still a Growth Year With 5.7% Increase in Shipments According to IDC. Available online at: https://www.idc.com/getdoc.jsp?containerId=prUS48830822

Kaplan, A. D., Kessler, T. T., Brill, J. C., and Hancock, P. A. (2021). Trust in artificial intelligence: Meta-analytic findings. Hum. Factors. 47, 915–926. doi: 10.1177/00187208211013988

KMU Forschung Austria (2020). E-Commerce-Studie Österreich 2020. https://www.kmuforschung.ac.at/e-commerce-studie-2020/

Kraus, J., Scholz, D., and Baumann, M. (2021). What's driving me? Exploration and validation of a hierarchical personality model for trust in automated driving. Hum. Fact. 63, 1076–1104. doi: 10.1177/0018720820922653

Li, W., Yao, N., Shi, Y., Nie, W., Zhang, Y., Li, X., et al. (2020). Personality openness predicts driver trust in automated driving. Automotive Innovation 3, 3–13. doi: 10.1007/s42154-019-00086-w

Liao, M. S., Sandberg, A., and Roache, R. (2012). Human engineering and climate change. Ethics Policy Environ. 15, 206–221. doi: 10.1080/21550085.2012.685574

Matthews, G., Hancock, P. A., Lin, J., Panganiban, A. R., Reinerman-Jones, L. E., Szalma, J. L., et al. (2021). Evolution and revolution: Personality research for the coming world of robots, artificial intelligence, and autonomous systems. Pers. Individ. Dif. 169:e109969. doi: 10.1016/j.paid.2020.109969

Merritt, S. M., and Ilgen, D. R. (2008). Not all trust is created equal: Dispositional and history-based trust in human-automation interactions. Hum. Factors 50, 194–210. doi: 10.1518/001872008X288574

Meyerhoff, H. S., Grinschgl, S., Papenmeier, F., and Gilbert, S. J. (2021). Individual differences in cognitive offloading: a comparison of intention offloading, pattern copy, and short-term memory capacity. Cognit. Res. 6:34. doi: 10.1186/s41235-021-00298-x

Moritz, J., Meyerhoff, H. S., and Schwan, S. (2020). Control over spatial representation format enhances information extraction but prevents long-term learning. J. Educ. Psychol. 112, 148–165. doi: 10.1037/edu0000364

Neubauer, A. C. (2021). The future of intelligence research in the coming age of artificial intelligence–with special consideration of the philosophical movements of trans- and posthumanism. Intelligence 87:101563. doi: 10.1016/j.intell.2021.101563

O'Hara, K. P., and Payne, S. J. (1998). The effects of operator implementation cost on planfulness of problem solving and learning. Cogn. Psychol. 35, 34–70. doi: 10.1006/cogp.1997.0676

Risko, E. F., and Gilbert, S. J. (2016). Cognitive offloading. Trends Cogn. Sci. 20, 676–688. doi: 10.1016/j.tics.2016.07.002

Runge, Y., Frings, C., and Tempel, T. (2019). Saving-enhanced performance: saving items after study boosts performance in subsequent cognitively demanding tasks. Memory 27, 1462–1467. doi: 10.1080/09658211.2019.1654520

Schaefer, K. E., Chen, J. Y. C., Szalma, J. L., and Hancock, P. A. (2016). A meta-analysis of factors influencing the development of trust in automation: Implications for understanding autonomy in future systems. Hum. Factors 58, 377–400. doi: 10.1177/0018720816634228

Schönpflug, W. (1986). The trade-off between internal and external information storage. J. Mem. Lang. 25, 657–675. doi: 10.1016/0749-596X(86)90042-2

Sharan, N. N., and Romano, D. M. (2020). The effects of personality and locus of control on trust in humans versus artificial intelligence. Heliyon 6:e04572. doi: 10.1016/j.heliyon.2020.e04572

Siau, K., and Wang, W. (2018). Building trust in artificial intelligence, machine learning, and robotics. Cutter Business Technol. J. 31, 47–53.

Sorgner, S. L. (2020). On Transhumanism. Penn State University Press. doi: 10.5040/9781350090507.ch-003

Sparrow, B., Liu, J., and Wegner, D. (2011). Google effects on memory: Cognitive consequences of having information at our fingertips. Science 333, 776–778. doi: 10.1126/science.1207745

Spitzer, M. (2012). Digitale Demenz: Wie wir uns und unsere Kinder um den Verstand bringen. Droemer.

Storm, B. C., and Stone, S. M. (2015). Saving-enhanced memory: The benefits of saving on the learning and remembering of new information. Psychol. Sci. 26, 182–188. doi: 10.1177/0956797614559285

Van Nimwegen, C., and Van Oostendorp, H. (2009). The questionable impact of an assisting interface on performance in transfer situations. Int. J. Ind. Ergon. 39, 501–508. doi: 10.1016/j.ergon.2008.10.008

Waldron, S. M., Patrick, J., and Duggan, G. B. (2011). The influence of goal-state access cost on planning during problem solving. Q. J. Exp. Psychol. 64, 485–503. doi: 10.1080/17470218.2010.507276

Keywords: technology, artificial intelligence (AI), distributed cognition, cognitive offloading, trust

Citation: Grinschgl S and Neubauer AC (2022) Supporting Cognition With Modern Technology: Distributed Cognition Today and in an AI-Enhanced Future. Front. Artif. Intell. 5:908261. doi: 10.3389/frai.2022.908261

Received: 30 March 2022; Accepted: 24 June 2022; Published: 14 July 2022.

Edited by:

Xiangen Hu, University of Memphis, United States

Reviewed by:

Paul Lefrere, Clear Communication Associates, United Kingdom

Copyright © 2022 Grinschgl and Neubauer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sandra Grinschgl, sandra.grinschgl@uni-graz.at; orcid.org/0000-0001-6666-9426

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
