ORIGINAL RESEARCH article

Front. Artif. Intell., 24 March 2020

Sec. AI for Human Learning and Behavior Change

Volume 3 - 2020 | https://doi.org/10.3389/frai.2020.00011

This article is part of the Research Topic: Article Collection on Human Aspects in Adaptive and Personalized Interactive Environments (HAAPIE)

This paper investigates how humans adapt next learning activity selection (in particular the knowledge it assumes and the knowledge it teaches) to learner personality and competence to inspire an adaptive learning activity selection algorithm. First, the paper describes the investigation to produce validated materials for the main study, namely the creation and validation of learner competence statements. Next, through an empirical study, we investigate the impact on learning activity selection of learners' emotional stability and competence. Participants considered a fictional learner with a certain competence, emotional stability, recent and prior learning activities engaged in, and selected the next learning activity in terms of the knowledge it used and the knowledge it taught. Three algorithms were created to adapt the selection of learning activities' knowledge complexity to learners' personality and competence. Finally, we evaluated the algorithms through a study with teachers, resulting in an algorithm that selects learning activities with varying assumed and taught knowledge adapted to learner characteristics.

Intelligent Tutoring Systems extend the traditional information-delivery learning system by considering learners' characteristics to improve the effectiveness of a learner's experience (Brusilovsky, 2003). Whilst traditional e-learning has contributed to flexibility in learning and reduced education costs, ITS attempt to fit the particular needs of each individual (Park and Lee, 2004; Brusilovsky and Millán, 2007; Siddappa and Manjunath, 2008; Dascalu et al., 2016).

Adapting ITS to individual learner characteristics helps learners to achieve learning goals and supports personalized learning (Brusilovsky, 1998a,b; Ford and Chen, 2000; Drachsler et al., 2008a; Santos and Boticario, 2010). Several studies have shown that the main problem with traditional e-learning is the lack of learner satisfaction due to delivering the same learning experience to all learners, irrespective of their prior knowledge, experience, and preferences (Ayersman and von Minden, 1995; Cristea, 2003; Rumetshofer and Wöß, 2003; Stewart et al., 2005; Di Iorio et al., 2006; Sawyer et al., 2008). Researchers have tried to address this dissatisfaction by attempting to personalize the learning experience for the learner. A personalized learning experience can help to improve learner satisfaction with the learning experience, learning efficiency, and educational effectiveness (Brusilovsky, 2001; De Bra et al., 2004; Huang et al., 2009). Most research on adaptive learning interaction shows an increase in learning outcomes (Anderson et al., 1995; Vandewaetere et al., 2011).

An important aspect of adaptive e-learning is the adaptive selection of learning activities. In fact, the main goal of adaptive e-learning was identified by Dagger et al. (2005) as “e-learning content, activities and collaboration, adapted to the specific needs and influenced by specific preferences of the learner and built on sound pedagogic strategies.” Studies have confirmed that the role of adaptation in e-learning is to improve the instruction content given to heterogeneous learner groups (Brusilovsky et al., 1998; Seters et al., 2011). Personalizing the selection of learning activities is needed to make learning more efficient (Camp et al., 2001; Salden et al., 2004; Kalyuga and Sweller, 2005).

Previous studies show that adaptive activity selection impacts factors, such as attitude and behavior (Ones et al., 2007), skills acquisition (Oakes et al., 2001), and productivity (Judge et al., 1999; Bozionelos, 2004). Considering individual differences among learners will improve learning achievement (Shute and Towle, 2003; Tseng et al., 2008). Personalized activity selection yields more effective and efficient learning outcomes, with researchers reporting a positive effect on learners' motivation and learning efficiency (Schnackenberg and Sullivan, 2000; Corbalan et al., 2008).

Learning is influenced by both characteristics of the learner (such as expertise, abilities, attitudes, performance, mental effort, personality) and characteristics of the learning activity (LA) (such as LA complexity, LA type, amount of learner support provided) (Lawless and Brown, 1997; Zimmerman, 2002; Salden et al., 2006; Okpo et al., 2018).

Previously, in six focus group studies (Alhathli et al., 2018b), we investigated what type of LAs to select for a particular learner. Results showed a clear impact of personality (self-esteem, openness to experience, emotional stability) on the use of prior knowledge and topics taught in LA selection. Focus group participants mentioned several other factors that should be considered when selecting a LA, such as a learner's academic record and ability and the LA's difficulty. Given the focus group results, we decided to investigate the impact of Emotional Stability (ES) and learners' competence on the selection of the next LA. In particular, this paper investigates the impact on the selected LA content: both the knowledge taught by the LA and the prior knowledge it uses. The LA style (e.g., visual vs. textual) is not included in this study, as we studied the impact of personality on the selected LA style before (Alhathli et al., 2018a).

Researchers have shown an increased interest in adapting to learner characteristics, such as personality traits, motivation, performance, cognitive efficiency, needs, and learning style (Miller, 1991; Wolf, 2003; Shute and Zapata-Rivera, 2007; Schiaffino et al., 2008; Komarraju et al., 2011; Vandewaetere et al., 2011; Richardson et al., 2012; Alhathli et al., 2016, 2017; Dennis et al., 2016; Okpo et al., 2016, 2017). There is considerable debate around which learner characteristics are worth modeling more than others1. Vandewaetere et al. (2011) classified individual characteristics into three groups:

• Cognitive, which is a collection of cognition related characteristics, such as the previous knowledge of the learner (Graesser et al., 2007), learners' abilities (Lee and Park, 2008), learning style (Germanakos et al., 2008), and learning objectives (Kelly and Tangney, 2002);

• Affective, which is a collection of feeling related attributes, such as learner mood (Beal and Lee, 2005b), self-efficacy (Mcquiggan et al., 2008), disappointment (Forbes-Riley et al., 2008) and confusion (Graesser et al., 2008); and

• Behavior, whereby a learner behaves differently when they are interacting with computers. These behavioral characteristics can be related to the need for help or feedback (Koutsojannis et al., 2001), the degree of self-regulated learning (Azevedo, 2005), and the number of attempts, tasks and learner experience (Hospers et al., 2003).

In our classification in Table 1, we broadened the affective category to psychological aspects, including also personality traits, motivation, and mental effort. We extended the cognitive category to include cognitive style as distinct from learning style. We renamed the behavioral category to include performance. We added an additional category called personal information for learner characteristics not covered by the classification of Vandewaetere et al. (2011), such as demographics and cultural background. Table 1 shows examples of research into adapting to these learner characteristics.

The learner characteristics investigated in this paper are Personality and Prior knowledge and Competence.

Previous studies have acknowledged that both personality and general cognitive ability influence learners' performance (Ree et al., 1994; Barrick et al., 2001; Barrick, 2005). It has been suggested that there is a convincing relation between personality and other factors, such as attitude and behavior (Ones et al., 2007), skill acquisition (Oakes et al., 2001), and productivity (Judge et al., 1999; Bozionelos, 2004). Personality can be defined as the individual differences in people's emotional, interpersonal, experiential, attitudinal and motivational styles (John and Srivastava, 1999). Researchers have shown an increased interest in adapting to personality traits (Miller, 1991; Komarraju et al., 2011; Richardson et al., 2012; Dennis et al., 2016; Okpo et al., 2016, 2017).

The most widely adopted model of personality is the Five-Factor Model (also known as the Big Five), which is based on five dimensions (Costa and McCrae, 1992a, 1995): (i) extroversion, (ii) agreeableness, (iii) conscientiousness, (iv) emotional stability, (v) openness to experience (McCrae, 1992). Extroversion refers to a higher degree of sociability, energy, assertiveness, and talkativeness. Emotional stability refers to the opposite of neuroticism, i.e., someone who is calm and not easily upset. Openness to experience refers to those who are independent-minded and intellectually strong. Conscientiousness refers to being disciplined, organized, and achievement-oriented. Finally, Agreeableness refers to being good-natured, helpful, trustful, and cooperative (Miller, 1991). These traits have been found across all cultures (McCrae and Costa, 1997; Salgado, 1997). In addition, these traits are relatively stable over time (Costa and McCrae, 1992b).

Several studies have shown the effect of personality on the learning process, and it has been found that certain personality traits consistently correlate with learner achievement, motivation, and success (Komarraju and Karau, 2005; Poropat, 2009; Clark and Schroth, 2010; Komarraju et al., 2011; Hazrati-Viari et al., 2012; Richardson et al., 2012).

Numerous terms have been used to refer to prior knowledge (e.g., current knowledge, expert knowledge, personal knowledge, and experiential knowledge) (Dochy, 1992, 1994). Interest in a learner's prior knowledge has appeared in many educational studies. An individual's prior knowledge is considered as a set of skills, or abilities that are present in the learning process (Jonassen and Grabowski, 1993; Shane, 2000). Previous investigations have demonstrated the potential impact of prior knowledge on cognitive processes, with positive and significant effects on learner's performance, abilities, and achievement (Byrnes and Guthrie, 1992; Dochy, 1994; Gaultney, 1995; Thompson and Zamboanga, 2003).

In our focus groups, we found that prior knowledge impacts the selection of the next LA. Thus, we decided to use learners' competence in terms of learner knowledge and ability.

Competence can be defined differently depending on the discipline. The dictionary defines competence as a condition or as quality of effectiveness. Competence refers to an individual's capability, sufficiency, ability, and successes. A large amount of competence research refers to the skills and requirements needed for a particular task or profession (Willis and Dubin, 1990; Parry, 1996). Competence is seen as a reflection of multiple concepts, such as performance. Competence and performance are related, with competence depicting the mean of better performance (Klemp, 1979; Woodruffe, 1993).

However, performance can be affected by other factors, such as motivation and effort (Schambach, 1994). Competencies are also considered as a core component of goal achievement. Achievement goals are defined as a cognitive representation of a competence efficiency and ability that an individual seeks to obtain (Elliot, 1999; Bong, 2001; Elliot and McGregor, 2001). Competence can be defined depending on the standard or referent that is used in evaluation (Elliot and Thrash, 2001). Competence may be evaluated according to three different standards, as follows: (1) absolute, the requirement of the task itself; (2) intra-personal, past or maximum attainment; and (3) normative, the performance of others (Butler, 1988; Elliot and McGregor, 2001).

Many aspects of an educational system can be adapted to a learner. For example, Masthoff (1997) argued for adapting navigation through the course content, exercise selection, feedback, instructions, provision of hints, and content presentation. For example, feedback has been adapted to performance and personality (Dennis et al., 2016) and culture (Adamu Sidi-Ali et al., 2019), difficulty level to performance, personality and effort (Okpo et al., 2018), navigational control to learner goals and knowledge (Masthoff, 1997), and learning content and presentation to learning styles (Bunderson and Martinez, 2000).

This paper focuses on adaptive learning activity selection. Table 2 provides examples of adaptive educational systems that include adaptive LA selection, the learner characteristics used to guide the adaptation, and the system control used to provide the adaptation. The following types of system control can be distinguished:

(1) Curriculum Sequencing provides learners with a planned sequence of learning contents and tasks (Brusilovsky, 2003),

(2) Adaptive Navigation Support helps learners to find their paths in the learning contents according to the goals, knowledge, and other characteristics of an individual learner (Brusilovsky, 1996),

(3) Collaborative Filtering (and other educational recommender systems' techniques) supports learners to find learning resources that are relevant to their needs and interests (Recker and Walker, 2003; Recker et al., 2003; Schafer et al., 2007; Drachsler et al., 2008a),

(4) Adaptive Presentation supports learners by providing individualized content depending on their preferences, learning style and other information stored in the learner model (Beaumont and Brusilovsky, 1995).

The LA selection in this paper is concerned with selecting activities that are well-suited to learners' personality, prior knowledge and competence. This is related to Adaptive Navigation and Educational Recommender Systems, given a LA is selected as most suitable for a learner based on the knowledge the LA assumes and teaches. The LA selected by the system can be used to support learners in finding what LA to do next or by an ITS to automatically present that LA.

The domain model in our studies contains the LAs, and in particular their topics and the type and quantity of knowledge they use and produce.

Educational systems which recommend or provide personalized learning contents often require information about the topics covered in the learning materials, courses, and assignments they select from (Liang et al., 2006; Soonthornphisaj et al., 2006; Prins et al., 2007; Yang and Wu, 2009; Ricci et al., 2011). Bloom's Taxonomy defines three overarching domains of LAs: Cognitive (e.g., teaching mental skills), Affective (e.g., teaching attitudes), and Psychomotor (e.g., teaching manual or physical skills) (Bloom, 1956). This paper focuses on the cognitive domain. Within the cognitive domain, there are many sub-domains. For example, educational recommender systems have been developed for programming (Mitrovic et al., 2002; Wünsche et al., 2018) and learning languages (Hsu, 2008; Wang and Yang, 2012). Even within such a sub-domain, multiple topics exist. For example, when teaching somebody English, one could have a LA on ordering food, and a different activity on buying groceries. Educational recommender systems often select based on learner interests, so they need detailed information on the topics covered in a LA.

Incorporating learner characteristics, such as the learner's knowledge, interests and goals in an adaptive educational system is a well-established approach discussed by Brusilovsky (2003, 2007). To adapt LA selection, a match needs to be made between the learner's knowledge, goals and interests and what LAs have to offer and require. In traditional education, LAs are often described in terms of prerequisites (the knowledge required of a learner to participate in a LA) and learning outcomes (the knowledge the learner will gain by successfully completing a LA) (Anderson et al., 2001).

This section describes the development and validation of competence statements used in later studies. Many statements can be used to describe different levels of competency, but no existing list clearly defined varying levels of individual competence. Initially, 26 statements were produced to cover five categories of learners' competence. All statements are commonly used to depict different competence levels. Table 3 shows the resulting statements and their initial categorization. These statements will be used in our investigations on the impact of personality and competence on the selection of LA.

Thirty participants (staff and students of the university) completed an on-line survey (7% aged 18–25, 53% 26–35, 40% 36–45), which took about 15 min to complete. The data from 18 participants were used for the final analysis (9 male, 9 female). The others were excluded due to the low quality of their responses: either straight-lining (giving the same answer to all statements), or not putting “No competence” toward the bottom of the scale as directed in the explanation.

Participants were shown 26 statements, and rated how much they felt these statements reflect the individual competence of a learner from 1 (no competence at all) to 10 (maximum competence). The order of the competence statements was randomized for each participant. Table 3 shows the percentage of participants who mapped a statement to a particular number.

Some statements (e.g., “limited,” “slight”) showed little agreement between participants, whilst others showed better agreement. We decided to use three statements (shown in bold) for the main studies, which are “very low,” “moderate,” and “outstanding.” These statements were selected, based on the average ratings and the median, to ensure a spread of learners' competence and good agreement between participants. We excluded “no competence” as it was used in the explanation of the scale that participants saw in the validation experiment, and we excluded “Medium competence” and “Extreme competence” as they could be affected by a comparison with other learners in the class. More statements could be used in future studies; for example “Little competence” (or “Low competence”) and “High competence” could be used if one needed five competence statements. For our future studies, we needed only three.

In this study, we investigate the impact of learners' emotional stability and competence on the selection of the next LA. In particular, we investigate the impact on the selection of both the knowledge taught by the LA and the prior knowledge it uses. We use three levels of competence: “very low,” “moderate,” and “outstanding.” Through an empirical study, we investigate how humans select the next LA for a learner with various levels of emotional stability and competence. Participants considered a fictional learner with certain levels of competence and emotional stability, recent and prior LA engaged in, and selected the next LA in terms of the knowledge it used and the knowledge it taught.

The independent variables used for the study are:

• Personality Trait Story: Participants were shown a story about a learner which portrayed a personality trait. Two stories were used, depicting Emotional Stability (ES) at either a low or a high level. The ES stories were developed and validated by Dennis et al. (2012) and Smith et al. (2019).

• Learner competence: Three levels of competence were used: very low, moderate, and outstanding.

The dependent variable for the study is:

• Learning activity selected: Participants were shown a table with each row containing a LA (numbered from 1 to 18). For each LA, the table showed the PRE knowledge the LA uses, with a distinction made between old knowledge (topics A and B) and recent knowledge (D, E). It also showed the POST knowledge the LA teaches; this could contain new (F and G), old (A, B), or recent knowledge (D, E). For example, for LA 9, it indicated that it uses old knowledge A and recent knowledge D, and teaches F and B. The LAs available for selection are shown in Figure 1. Participants selected one LA, and in doing so made a choice for PRE and POST knowledge. We will use PRE and POST as the dependent variables.

Participants had to pass an English fluency test (Cloze test, Taylor, 1953), to ensure that they could understand the study. Then, they were shown a description of the LA table with examples and two verification questions to ensure they had understood what the table indicated. Next, they were shown six scenarios with different learner competence (very low, moderate, and outstanding), three scenarios depicting Josh who was high on ES and another three depicting James who was low on ES. They were told that the learner has previously learned topics A and B, and recently finished a LA which taught topics D and E. For each scenario, they selected the next LA for that learner from the table described before e.g., “Which learning activity would you give to Josh to do next, if you know his competence in both old and recent knowledge is ‘Very low’?” Next, they rated how much they think the selected LA is suited to that learner on a scale from 1 (Not at all) to 5 (Totally suited), and to what extent the selected LA would be enjoyable, would increase skills and confidence (on a scale from 1 strongly disagree to 5 strongly agree).

Fifty-three participants responded to the on-line survey. Twenty-four responses were excluded from the study because the participants either did not pass the English test or answered the verification questions incorrectly. Twenty-nine participants successfully completed the study (16 female, 13 male; 2 aged 18–25, 11 aged 26–40, 10 aged 36–45, 6 over 46; 8 were students, 19 were teachers, 2 were trainee-teachers).

We hypothesized that:

• H1. Participants will select different LAs for high ES than low ES learners:

– H1.1. They will select LAs with less complicated POST for low ES than high ES.

– H1.2. They will select LAs with less complicated PRE for low ES than high ES.

• H2. Participants will select different LAs for learners with different competence levels:

– H2.1. They will select LAs with less complicated POST for lower levels of competence.

– H2.2. They will select LAs with less complicated PRE for lower levels of competence.

• H3. There will be an interaction between ES and competence on LA selection.

• H4. Participants will rate the suitability of a selected LA and the extent to which it increases confidence differently depending on the PRE and POST. In particular, we expect that for low ES:

– H4.1. They will rate the suitability of a selected LA higher when it has less complicated POST.

– H4.2. They will rate the suitability of a selected LA higher when it has less complicated PRE.

– H4.3. They will rate the extent to which the selected LA increases confidence higher when it has less complicated POST.

– H4.4. They will rate the extent to which the selected LA increases confidence higher when it has less complicated PRE.

Figure 2 shows the proportion of participants who selected a particular LA. The LAs available for selection are summarized in the second and third row of the figure, where PRE indicates the topics the LA uses, and POST indicates the topics the LA teaches. To make the results easier to read, we use more meaningful codes here instead of the A-G participants saw. For PRE we use: (O) only one old topic, (2O) two old topics, (OR) one old and one recent topic, (R) only one recent topic, and (2R) two recent topics. For POST we use: (N) one new topic, (NO) one new topic and one old topic, (NR) one new and one recent topic and (2N) two new topics. For example, the figure shows that 7% selected a LA with PRE knowledge O and POST knowledge N for the very low competence and high ES learner. From the figure, we observe the following:

• Very low competence: Participants tended to select LAs that required old knowledge (O, 2O, OR) for both ES levels. However, participants tended to select LAs that involved learning a combination of new and old knowledge (NO) for high ES, and more just new knowledge (N) for low ES.

• Moderate competence: Participants tended to select LAs that required old knowledge for both levels of ES, but mainly OR, with O and 2O not selected much. Interestingly, a higher proportion of participants selected LAs teaching NR or 2N for high ES, whilst more selected N for low ES.

• Outstanding competence: Participants selected LAs that involved less knowledge to learn (N vs. 2N) for low ES compared to high ES.

So, overall, there is evidence that participants changed their LA selection based on ES, and that they indeed selected LAs with less complicated POST for the low ES learner. This supports hypothesis H1.1. We do not find support here for H1.2.

There is also evidence in support of hypotheses H2.1 and H2.2, as Figure 2 clearly shows that the proportion of participants selecting more complicated PRE (particularly 2R) and more complicated POST (particularly 2N) increased with an increase in competence.

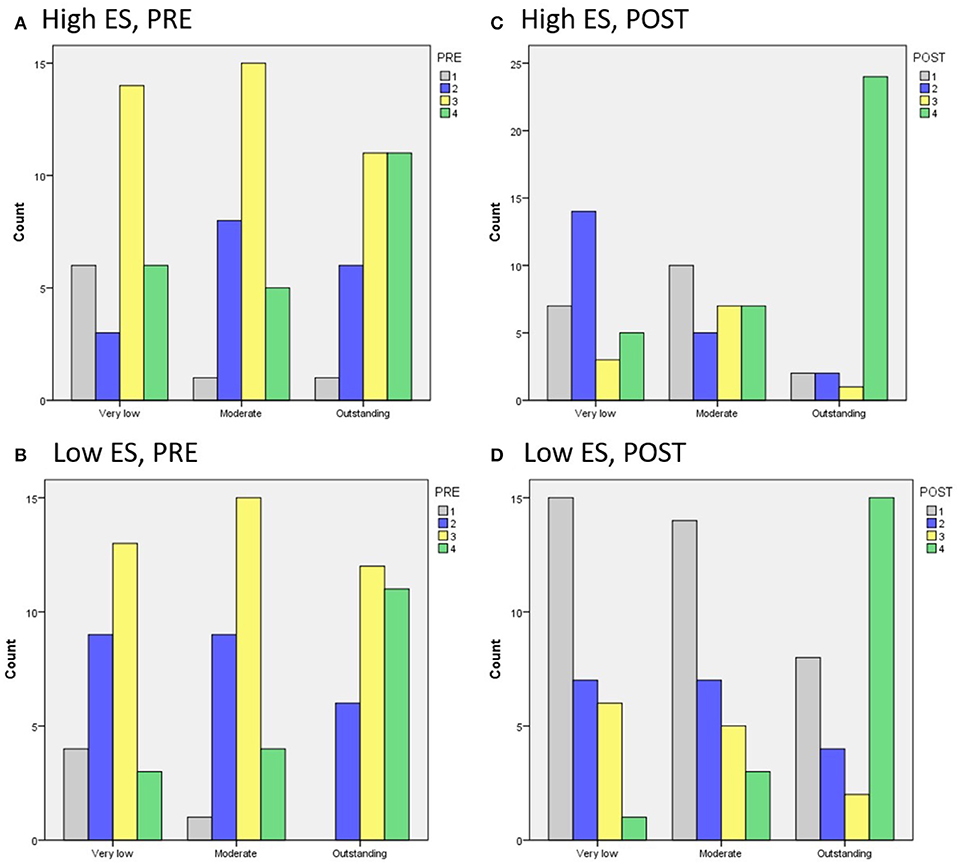

For the statistical analysis, we coded PRE and POST in such a way that a higher number indicates more (complicated) knowledge. For PRE, we coded O=1, 2O=2, R=2, OR=3 and 2R=4, so assigning higher numbers the more (complicated) knowledge is used2. For POST, we coded N=1, NO=2, NR=3, 2N=4, so assigning higher numbers the more (complicated) knowledge was taught. Figures 3–5 show the overall impact of ES and competence on PRE and POST.
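The coding described above can be written down as a small lookup, which may help when re-creating the analysis. This is a minimal sketch: the numeric codes are the ones stated in the text, whereas the names `PRE_CODES`, `POST_CODES`, and `encode_selection` are illustrative and not taken from the paper.

```python
# Ordinal codes as stated in the text: a higher number means that more
# (complicated) knowledge is used (PRE) or taught (POST).
PRE_CODES = {"O": 1, "2O": 2, "R": 2, "OR": 3, "2R": 4}
POST_CODES = {"N": 1, "NO": 2, "NR": 3, "2N": 4}


def encode_selection(pre_label: str, post_label: str) -> tuple[int, int]:
    """Return the (PRE, POST) ordinal codes for one selected learning activity.

    Hypothetical helper for illustration; raises KeyError for unknown labels.
    """
    return PRE_CODES[pre_label], POST_CODES[post_label]


# Example: an activity that uses one old and one recent topic and teaches one new topic.
print(encode_selection("OR", "N"))
```

Note that two old topics (2O) and one recent topic (R) share the same code, so the PRE scale has four levels rather than five.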

1. Emotional Stability: There was a significant main effect of ES on POST [F(1, 168) = 12.3, p < 0.005]3, but not on PRE. LAs selected for high ES taught more new knowledge than LAs selected for low ES. Figure 3 shows a trend for LAs with more PRE being selected for high ES than low ES. However, the difference was small and not significant. This supports hypothesis H1.1 but not H1.2.

2. Competence: There was a significant main effect of competence on both PRE and POST [F(2, 168) = 6.0, p < 0.005; F(2, 168) = 22.7, p < 0.0005, respectively]4. For POST, pairwise comparisons showed a significant difference between “very low” and “moderate” competence on the one hand, and “outstanding” competence on the other (p < 0.0005), with LAs with more POST selected for “outstanding” competence (mean difference = 1.24 and 1.09, respectively). For PRE, there was a significant difference only between “very low” and “outstanding” competence (p < 0.005), with more PRE selected for “outstanding” competence (mean difference = 0.53) (see Figure 4). This supports hypotheses H2.1 and H2.2.

3. Interaction between ES and competence: Figure 5 shows the PRE and POST per competency level for high and low ES. There was no significant interaction effect between ES and competence, so there is no evidence in support of H3.

Figure 5. The impact of competence on PRE for (A) high and (B) low ES, and on POST for (C) high and (D) low ES.

Figure 6 shows participants' suitability ratings for the most selected LAs. Table 4 shows participants' enjoyment, skills and confidence ratings for the most selected LAs for the different levels of competence and ES. Overall, there were no significant effects of ES and competence on suitability. There was a significant effect of ES on enjoyment [F(1, 168) = 9.6, p < 0.005] with a higher enjoyment rating for high ES (mean of 3.7 compared to 3.3), but not on skills and confidence. There was also a significant effect of competence on enjoyment [F(2, 168) = 4.3, p < 0.05], with a higher enjoyment rating for higher competence (mean of 3.8 for outstanding competence compared to 3.5 for moderate and 3.3 for very low), but not on skills and confidence. There were no significant interaction effects. Given participants' selection of LAs (and hence the LAs for which suitability, enjoyment, skills and confidence were rated) differed based on competence and ES, we also explored this in more detail, though the number of participants is too low for statistical tests.

For high ES, the LA which uses more recent knowledge (2R) was rated more suitable than those that used more old knowledge (O and OR) with the same POST (NO). The skills rating for this LA was also higher, whilst its confidence rating was lower. Participants may have felt that the high ES learner did not require a LA that would increase their confidence but rather their skills. For low ES, the LA that teaches less knowledge (N instead of NO) with the same PRE (OR) was rated more suitable. This LA also had a higher rating for confidence, which may mean that participants felt the low ES learner needed to gain more confidence.

For low ES, the LA which uses less knowledge (R) was rated more suitable than the one that uses more knowledge (OR) with the same POST (N). This LA also had a much higher rating for confidence. For high ES, the LA that teaches NR was rated more suitable than the ones teaching N or 2N, with the same PRE (OR). This LA also rated higher on the other aspects.

For low ES, the LA which teaches less knowledge (N) was rated more suitable than the one that teaches more knowledge (2N) with the same PRE (2R). This LA also had a higher rating for confidence, whilst it had a lower rating for skills.

Overall, this seems to suggest that LAs that are teaching less knowledge or using less knowledge are seen as more suitable for low ES, because they may increase confidence. This provides some support for hypotheses H4.1–H4.4.

The main concern of this paper was to investigate how to select the next LA for a learner with a particular level of ES and competence. Using the data presented in Figure 2, three initial approaches were used to produce algorithms for selecting LAs:

1. Most frequently chosen LA. For each combination of competence and ES, we considered which LA was most frequently selected (see summary in Table 5). In the case of outstanding competence and high ES, two LAs were chosen equally often. In this case we selected the one with the same PRE as had been selected for low ES, given there had not been a significant effect of ES on PRE. This resulted in Algorithm 1.

2. LA produced by combining the most frequently chosen PRE and the most frequently chosen POST. For each combination of competence and ES, we considered which PRE and which POST were most frequently selected (see summary in Table 5). Using the LA which combines the most frequently selected PRE and the most frequently selected POST produced the same results as using the most frequently selected LA5. Hence, Algorithm 1 is already in line with the outcome of this approach and no new algorithm was produced.

3. Top 3 LA exhibiting the largest increase in selection compared to the opposite ES case. The differences in frequency between the most selected LAs and the second (or even third) most selected LAs tended to be relatively small. Therefore, we also considered, for each combination of competence and ES, which of the top 3 LAs showed the largest increase in frequency of selection compared to the opposite ES case. For example, for outstanding competence and high ES, 2R→2N is the top 3 LA which shows the largest increase in frequency (31% for high ES and only 10% for low ES). This resulted in Algorithm 2.
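The first and third approaches can be sketched in a few lines of code. This is an illustration under stated assumptions rather than the procedure actually used: the function names are hypothetical, and the selection percentages are made up except for the 31% vs. 10% figure for 2R→2N quoted above. Ties are resolved simply by dictionary order, so the tie-breaking rule described for Algorithm 1 is not reproduced.

```python
def most_frequent(freq: dict[str, float]) -> str:
    """Approach 1: the LA selected by the largest share of participants."""
    return max(freq, key=freq.get)


def largest_increase(freq: dict[str, float], opposite: dict[str, float], top_n: int = 3) -> str:
    """Approach 3: among the top_n most selected LAs for this ES level, pick
    the one whose share exceeds its share in the opposite ES case the most."""
    top = sorted(freq, key=freq.get, reverse=True)[:top_n]
    return max(top, key=lambda la: freq[la] - opposite.get(la, 0.0))


# Illustrative percentages for outstanding competence. Only the 2R->2N values
# (31 for high ES, 10 for low ES) come from the text; the rest are invented.
high_es = {"2R->2N": 31, "2R->NR": 28, "OR->NR": 14, "OR->N": 7}
low_es = {"2R->2N": 10, "2R->NR": 24, "OR->NR": 17, "2R->N": 21}

print(most_frequent(high_es), largest_increase(high_es, low_es))
print(most_frequent(low_es), largest_increase(low_es, high_es))
```

With these example numbers the two approaches agree for high ES but differ for low ES, which is the kind of divergence that motivates keeping both algorithms for evaluation.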

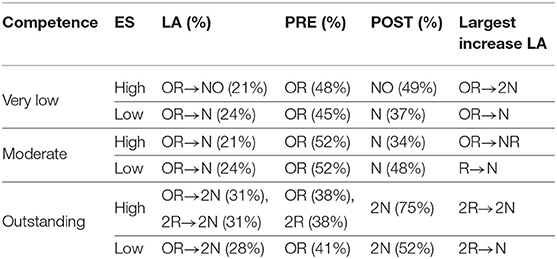

Table 5. Most frequently selected LA, PRE, and POST, and the percentage of participants who selected them, and LA with largest increase in selection.

In the next section, a more complicated statistical approach will be used, resulting in a third algorithm, and the three algorithms will be evaluated below.

Using the data of the study, two cumulative odds ordinal logistic regressions with proportional odds were run to predict PRE and POST from ES and competence (see footnote 6). The final models for PRE and POST statistically significantly predicted the PRE and POST level over and above the intercept-only models [χ2(2) = 10.458, p < 0.01; χ2(2) = 41.759, p < 0.0005, respectively]. The odds ratio of selecting a higher POST level for learners with high ES vs. low ES is 2.707 (95% CI, 1.531–4.787), a statistically significant effect, Wald χ2(1) = 11.740, p < 0.005. This supports hypothesis H1.1. An increase in competence was associated with selecting a higher POST level, with an odds ratio of 2.768 (95% CI, 1.919–3.983), Wald χ2(1) = 29.734, p < 0.0005. This supports H2.1 and also provides evidence that competence has slightly more impact on POST than ES. An increase in competence was also associated with selecting a higher PRE level, with an odds ratio of 1.756 (95% CI, 1.240–2.487), Wald χ2(1) = 10.059, p < 0.005. This supports H2.2. The odds ratio for high ES vs. low ES for PRE was not significant, so there is again no evidence for H1.2. The model uses an interaction between ES and competence, and so provides some support for H3.

The model provides coefficients to calculate a value, as well as thresholds to compare the calculated value against to produce cumulative odds for PRE and POST levels.

The model's coefficients result in the following formulae to calculate Value for PRE and POST:

• PRE:

– 0.563 × Competence + 0.175 if ES = High

– 0.563 × Competence if ES = Low

• POST:

– 1.018 × Competence + 0.996 if ES = High

– 1.018 × Competence if ES = Low
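As a minimal sketch, the Value formulae can be written as a small function. The names `COEFFS` and `value` are hypothetical, and competence is assumed to be coded 1 to 3 (very low to outstanding) as stated in footnote 6. The last lines illustrate that exponentiating a coefficient gives the corresponding odds ratio of a proportional odds model, for example exp(0.996) ≈ 2.707 for the effect of high ES on POST.

```python
import math

# Coefficients as printed in the formulae above.
COEFFS = {
    "PRE": {"competence": 0.563, "high_es": 0.175},
    "POST": {"competence": 1.018, "high_es": 0.996},
}


def value(outcome, competence, high_es):
    """Linear predictor ("Value") for outcome "PRE" or "POST".

    competence: 1 (very low), 2 (moderate) or 3 (outstanding), an assumed
    coding taken from footnote 6; high_es: True for high ES, False for low ES.
    """
    c = COEFFS[outcome]
    return c["competence"] * competence + (c["high_es"] if high_es else 0.0)


# Moderate competence, high ES: 1.018 * 2 + 0.996
print(round(value("POST", 2, True), 3))

# Each coefficient is the natural logarithm of the matching odds ratio.
print(round(math.exp(COEFFS["POST"]["high_es"]), 3))
print(round(math.exp(COEFFS["POST"]["competence"]), 3))
print(round(math.exp(COEFFS["PRE"]["competence"]), 3))
```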

The thresholds lead to the following formulae to calculate the natural logarithm of the cumulative odds for PRE and POST:

• PRE:

– ln(Odds(PRE ≤ 1)) = −1.378 − Value

– ln(Odds(PRE ≤ 2)) = 0.381 − Value

– ln(Odds(PRE ≤ 3)) = 2.471 − Value

• POST:

– ln(Odds(POST ≤ 1)) = 1.572 − Value

– ln(Odds(POST ≤ 2)) = 2.643 − Value

– ln(Odds(POST ≤ 3)) = 3.386 − Value

Using these formulae, for each combination of competence and ES we calculated:

• Value, see Table 6

• Odds(PRE ≤ d), for all PRE levels d

• Probability P(PRE ≤ d) for all PRE levels d

• P(PRE = d) for all PRE levels d, using that P(PRE = 1) = P(PRE ≤ 1) and P(PRE = d+1) = P(PRE ≤ d+1) − P(PRE ≤ d)

• Median PRE m such that P(PRE ≤ m) ≥ 0.5 ∧ P(PRE ≥ m) ≥ 0.5.

Similar calculations were performed for POST. Table 6 shows the calculated values for all our combinations of competence and ES for PRE and POST, respectively, and how these values map onto the median PRE and POST levels. The predicted median PRE and POST levels were used to produce Algorithm 3.
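The calculation steps listed above can be sketched as follows. This is an illustration that applies the thresholds exactly as printed; the function names are hypothetical, four ordered levels are assumed because there are three thresholds, and the outputs have not been checked against Table 6. The median is taken as the smallest level m with P(level ≤ m) ≥ 0.5, which also satisfies P(level ≥ m) ≥ 0.5.

```python
import math

# Thresholds as printed in the formulae above.
THRESHOLDS = {
    "PRE": [-1.378, 0.381, 2.471],
    "POST": [1.572, 2.643, 3.386],
}


def level_probabilities(outcome, value):
    """Return [P(level = 1), ..., P(level = 4)] for a given Value."""
    # ln(Odds(level <= d)) = threshold_d - Value, so P(level <= d) is the
    # logistic function of that difference.
    cumulative = [1.0 / (1.0 + math.exp(-(t - value))) for t in THRESHOLDS[outcome]]
    cumulative.append(1.0)  # the highest level closes the distribution
    probs = [cumulative[0]]
    probs += [cumulative[d] - cumulative[d - 1] for d in range(1, len(cumulative))]
    return probs


def median_level(outcome, value):
    """Smallest level m such that P(level <= m) >= 0.5."""
    running = 0.0
    for level, p in enumerate(level_probabilities(outcome, value), start=1):
        running += p
        if running >= 0.5:
            return level
    return len(THRESHOLDS[outcome]) + 1


# Moderate competence (coded 2) and high ES: Value = 1.018 * 2 + 0.996
post_value = 1.018 * 2 + 0.996
print([round(p, 3) for p in level_probabilities("POST", post_value)])
print(median_level("POST", post_value))
```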

This study investigated the impact of learner personality (emotional stability) and competence on the selection of an LA based on the knowledge it uses and the knowledge it teaches. ES and competence both impacted the selection of LAs. There were significant effects of ES on POST knowledge, and of competence on both PRE and POST knowledge. A further exploratory analysis suggests that selecting LAs with less POST or PRE knowledge is better for low ES learners in terms of suitability and to increase confidence. Based on the data analysis, three algorithms have been constructed to adapt LA selection to different levels of ES and competence (see summary in Table 7).

Above, we created three algorithms to adapt the selection of LAs to learner personality (ES) and competence. This section describes an evaluation of key aspects of these algorithms with teachers, resulting in a final algorithm.

Twenty-seven participants took part. Six were excluded from the study because they answered the verification question incorrectly. The final sample consisted of 21 participants (11 female, 9 male, 1 non-disclosed; 9 aged 26–35, 7 aged 36–45, 3 aged over 46, and 2 who preferred not to say; 10 teachers, 11 trainee teachers).

We used the following materials:

1. Two stories depicting ES at either a low or high level developed by Dennis et al. (2012).

2. Three validated levels of competence: very low, moderate, and outstanding.

3. Seven LAs selected based on the three algorithms produced above (LAs 8–12, 16, 18 from Figure 1). LAs were shown as before.

Ethical approval was obtained from the University of Aberdeen's Engineering and Physical Sciences ethics board. Before taking part, participants provided informed consent. Participants first provided demographic information (age, gender and occupation). They were shown two scenarios, one depicting Josh who was high on ES and another depicting James who was low on ES. They were told that the learners had previously learned topics A and B, and recently finished a learning activity which taught topics D and E. For each scenario, three questions were asked, each highlighting a different competence level (very low, moderate, outstanding). Participants ranked a subset of the seven LAs, based on their suitability for that learner. Table 8 shows for each level of competence and ES which LAs participants ranked, using the PRE and POST to describe the LAs. These LAs were chosen such that they included the LAs recommended by each of the three algorithms for that combination of competence and ES (as denoted in Table 8), as well as any LAs recommended by the algorithms for that level of competence but for the opposite ES.

We investigated the following research questions:

1. For each level of learner competence and ES, how highly do the teachers rank the LAs selected by Algorithm 1, Algorithm 2, and Algorithm 3, and which LA is ranked highest?

2. Which algorithm matches the rankings of the teachers best?

3. What modifications are needed to the best algorithm to be in line with teachers' preferences?

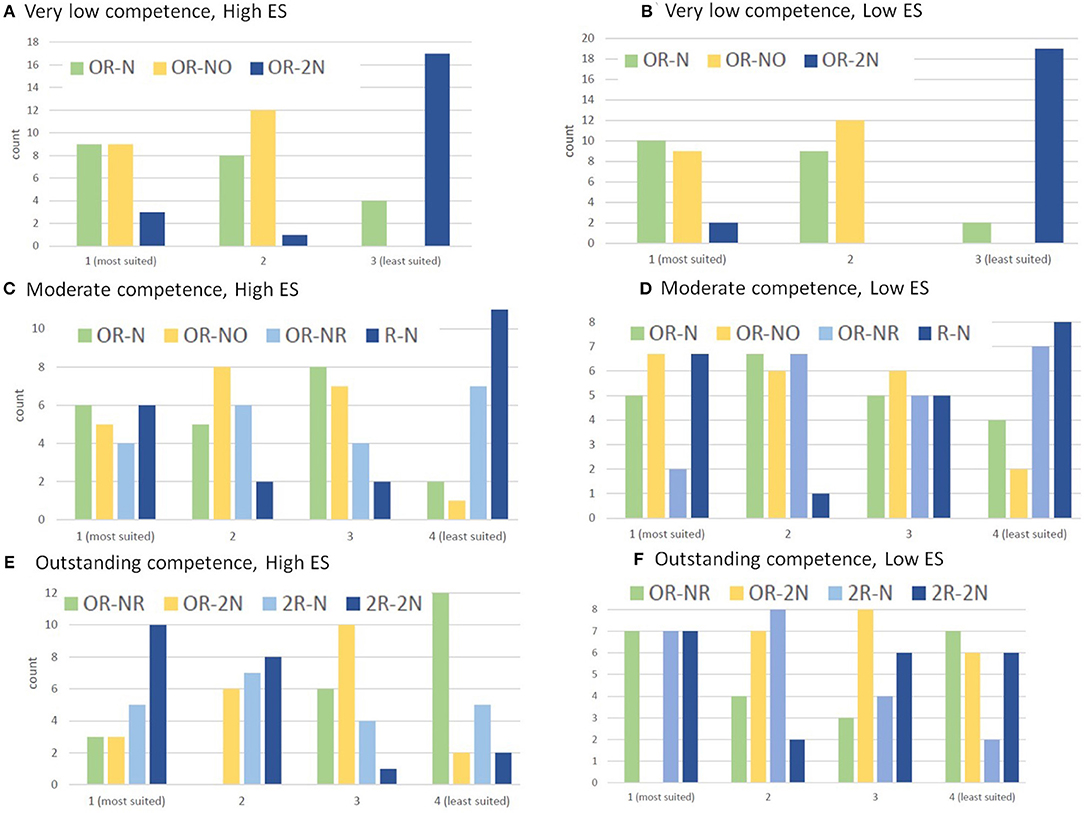

Table 8 and Figure 7 show the results of the ranking. We calculated both the average rank and the median rank.
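As a small illustration of the two summary statistics, the sketch below computes the average and median rank per LA. The rankings are hypothetical and are not the study data; `summarise_ranks` is an illustrative name, and rank 1 is assumed to mean most suitable.

```python
from statistics import mean, median


def summarise_ranks(rankings):
    """Map each LA to (average rank, median rank).

    rankings: one dict per participant, mapping LA label -> rank,
    where rank 1 is assumed to mean "most suitable".
    """
    summary = {}
    for la in rankings[0]:
        ranks = [r[la] for r in rankings]
        summary[la] = (mean(ranks), median(ranks))
    return summary


# Hypothetical rankings from three participants (not the study data).
rankings = [
    {"OR→N": 2, "OR→NO": 1, "OR→2N": 3},
    {"OR→N": 1, "OR→NO": 2, "OR→2N": 3},
    {"OR→N": 2, "OR→NO": 1, "OR→2N": 3},
]

summary = summarise_ranks(rankings)
best_by_median = min(summary, key=lambda la: summary[la][1])
print(summary)
print(best_by_median)
```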

Figure 7. Teachers' rankings for LAs for (A,B) very low competence, (C,D) moderate competence, and (E,F) outstanding competence, for (A,C,E) high ES and (B,D,F) low ES.

For very low competence and high ES, the teachers' ranking is best for OR→NO, in line with the predictions of Algorithms 1 and 3. For low ES, the teachers' ranking is also best for OR→NO, matching the prediction of Algorithm 3. The prediction by Algorithm 2 did badly in the high ES case, with teachers clearly preferring less complicated LAs than Algorithm 2 had predicted. In fact, LAs that involved more new knowledge to learn (2N) were deemed to be the least suited LAs for both ES levels. OR→NO and OR→N did about equally well in the low ES case, so overall the predictions by Algorithm 1 are also good. The teachers clearly were in two minds on whether adaptation to ES would be a good idea for learners with very low competence. Follow-on studies measuring learners' attainment and motivation should show whether it is better to use OR→NO or OR→N for low ES learners.

For moderate competence and high ES, the teachers' ranking is best for OR→NO. This is not predicted by any of the algorithms. Algorithm 1 predicted a less complicated LA, namely OR→N, whilst Algorithms 2 and 3 predicted a more complicated LA, namely OR→NR. Teachers went for an LA in between, with the ranking of that LA close to that predicted by Algorithm 1. For low ES, the teachers' ranking is best for OR→NO, in line with the prediction of Algorithm 3. Algorithm 2 did badly for both levels of ES. For moderate competence, there is no evidence of adapting to ES levels.

For outstanding competence and high ES, the teachers' ranking is best for 2R→2N, in line with the prediction by Algorithm 2. We recall that two LAs scored equally well when constructing Algorithm 1. We selected OR→2N at the time, given the lack of a statistically significant effect of PRE. The alternative was 2R→2N. The teachers clearly preferred the latter. For low ES, the teachers' ranking is best for 2R→N, again in line with the prediction of Algorithm 2, and showing that teachers are adapting their rankings based on ES, using less new knowledge to learn for the low ES learner. Overall, for outstanding competence, Algorithm 2's predictions were perfect. For both levels of ES, teachers ranked the two LAs that required only recent knowledge higher than the two LAs that required a combination of old and recent knowledge, showing an inclination to only use recent knowledge for outstanding competence.

We did not find that one algorithm performed better than the others. Algorithm 3 performed best for the very low competence case (with Algorithm 1 performing almost equally well), and also for low ES in the moderate competence case. In contrast, Algorithm 2 performed best for the outstanding competence case, but badly in the other ones. We decided to produce a new algorithm, combining elements from Algorithms 3 and 2. Table 8 shows the selections of LAs made for Algorithm 4, which were based on the best median rankings by the teachers. The resulting algorithm is shown in Algorithm 4.
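To show how such a rule set could be encoded in a tutoring system, the sketch below stores one (PRE, POST) pair per combination of competence and ES. The entries are the LAs that the text above reports as ranked best by the teachers; this is an assumption about what a lookup might contain, and Algorithm 4 and Table 8 in the paper remain the authoritative source. The table and function names are hypothetical.

```python
# Teacher-preferred LA per (competence, ES) combination, as described in the
# text above. Illustrative only: not copied from Algorithm 4 or Table 8.
TEACHER_PREFERRED_LA = {
    ("very low", "high"): ("OR", "NO"),
    ("very low", "low"): ("OR", "NO"),
    ("moderate", "high"): ("OR", "NO"),
    ("moderate", "low"): ("OR", "NO"),
    ("outstanding", "high"): ("2R", "2N"),
    ("outstanding", "low"): ("2R", "N"),
}


def select_next_la(competence, es):
    """Return the (PRE, POST) pair for a learner.

    Raises KeyError for competence or ES levels outside the table, since the
    study only covered three competence levels and two ES levels.
    """
    return TEACHER_PREFERRED_LA[(competence, es)]


pre, post = select_next_la("outstanding", "low")
print(f"{pre}→{post}")
```

Raising an error for unknown levels, rather than falling back to a default LA, keeps the sketch from implying recommendations for learner profiles that were not studied.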

This paper investigated the impact of learner personality (emotional stability) and competence on the selection of an LA based on the knowledge it uses and the knowledge it teaches. We also investigated the extent to which the selected LAs are perceived to be enjoyable and to increase learners' confidence and skills. ES and competence both impacted the selection of LAs. There were significant effects of ES on POST knowledge, and of competence on both PRE and POST knowledge. A further exploratory analysis suggests that selecting LAs with less POST or PRE knowledge is better for low ES learners in terms of suitability and to increase confidence.

Based on the data analysis and the evaluation with teachers, we obtained four algorithms for adapting LA selection to learners' personality and competence. Algorithms 3 and 4 are the most promising to investigate further, with Algorithm 4 best matching the teachers' preferences, and Algorithm 3 being the most aligned with the teachers' preferences among the algorithms based on the data in study 4. These algorithms can be used in an Intelligent Tutoring System or, as we recommend for future work, as a basis for further research. In addition, we obtained an insight into how teachers adapt LA selection and how this matches the algorithms developed. We found evidence that teachers take emotional stability into account when selecting different LAs.

This paper has several limitations and opportunities for future work. First, we did not measure actual enjoyment, increase in confidence, and increase in skills, but perceptions of those. Studies with learners and real learning tasks are needed to investigate actual impact. Second, the studies in this paper used an abstract notation for learning topics, using letters such as A and E to indicate which concepts need to be known to study something, and which concepts are learned in an activity. This was done on purpose, so that we could study learning activity selection without interference from participants' preconceived ideas about the difficulty level of individual concepts and learning domains. However, further studies clearly need to show to what extent what was learned in this paper can be generalized to real learning topics. Further studies are also needed to investigate the possible impacts of learning domains. Third, our algorithm requires a certain structure of the learning activities, namely what is taught (i.e., learning outcomes) and what is used (i.e., prerequisites) in a learning activity. It also requires a learner model in terms of these outcomes, so that we know what a learner has already studied. This may limit its applicability; however, the use of learning outcomes (and also prerequisites) is well-established and strongly advocated in educational science (Kennedy, 2006). Fourth, we only investigated three levels of competence, and ES only at the high and low level. The competence level validation reported in this paper would allow investigating another two levels. It would also be interesting to investigate finer gradations of ES. Fifth, other learner characteristics could be investigated, for example, the impact of learner goals and interests, or, as advocated by Zhu et al. (2019), participation levels. As initial research by Adamu Sidi-Ali et al. (2019) showed that cultural background may impact desired learner emotional support, we would also like to investigate whether cultural background should matter for learning activity selection. Sixth, we did not consider other personality traits. Based on previous research (Okpo et al., 2018), we expect learner self-esteem to also matter. Seventh, this paper does not consider how long ago previous topics were studied. A forgetting model will be needed to take into account the likelihood that a learner still masters a topic or that a topic may need to be used in order to prevent forgetting (see Ilbeygi et al., 2019 for an overview and recent work on forgetting models). Eighth, this paper only considered learning activity selection for individual learners. This becomes an even more complicated issue when learning activities need to be selected for groups of learners for a collaborative learning experience. Finally, we only considered PRE and POST knowledge, but did not explicitly address difficulty levels.

The datasets generated for this study are available on request to the corresponding author.

The studies involving human participants were reviewed and approved by a University of Aberdeen ethics committee. The participants provided their written informed consent to participate in the studies.

MA and JM designed and analyzed the studies, and wrote the paper. NB contributed to the study design and helped to improve the paper.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The Ph.D. of MA has been supported by Princess Nourah Bint Abdul Rahman University in Saudi Arabia.

1. ^This includes a debate about whether learning styles are a valid construct to consider at all (Kirschner, 2017). Our own research (Alhathli et al., 2017) in fact showed little impact of learning styles.

2. ^As it is hard to say whether 2O or R requires more knowledge, we coded them the same.

3. ^A similar significant effect was found using a non-parametric test.

4. ^Similar significant effects were found using non-parametric tests.

5. ^Similarly to the discussion above, for outstanding competence and high ES, the most frequently selected PRE could also have been 2R instead of OR.

6. ^ES was used as a factor. Competence was used as an ordinal covariate, coded 1–3 from very low to outstanding.

Adamu Sidi-Ali, M., Masthoff, J., and Dennis, M. (2019). “Adapting performance and emotional support feedback to cultural differences,” in 27th ACM Conference on User Modeling, Adaptation and Personalisation, UMAP 2019 (Larnaca). doi: 10.1145/3320435.3320444

Alhathli, M., Masthoff, J., and Siddharthan, A. (2016). “Exploring the impact of extroversion on the selection of learning materials,” in Proceedings of the 6th Workshop on Personalization Approaches in Learning Environments at UMAP. CEUR Workshop Proceedings (Halifax, NS).

Alhathli, M., Masthoff, J., and Siddharthan, A. (2017). “Should learning material's selection be adapted to learning style and personality?” in Adjunct Publication of the 25th Conference on User Modeling, Adaptation and Personalization (Bratislava: ACM).

Alhathli, M. A. E., Masthoff, J. F. M., and Beacham, N. A. (2018a). “The effects of learners' verbal and visual cognitive styles on instruction selection,” in Proceedings of Intelligent Mentoring Systems Workshop Associated With the 19th International Conference on Artificial Intelligence in Education, AIED 2018 (London).

Alhathli, M. A. E., Masthoff, J. F. M., and Beacham, N. A. (2018b). “Impact of a learner's personality on the selection of the next learning activity,” in Proceedings of Intelligent Mentoring Systems Workshop Associated With the 19th International Conference on Artificial Intelligence in Education, AIED 2018 (London).

Anderson, J. R., Corbett, A. T., Koedinger, K. R., and Pelletier, R. (1995). Cognitive tutors: lessons learned. J. Learn. Sci. 4, 167–207. doi: 10.1207/s15327809jls0402_2

Anderson, L. W., Krathwohl, D. R., Airasian, P. W., Cruikshank, K. A., Mayer, R. E., Pintrich, P. R., et al. (2001). A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom's Taxonomy of Educational Objectives, Abridged Edition. White Plains, NY: Longman.

Anderson, M., Ball, M., Boley, H., Greene, S., Howse, N., Lemire, D., et al. (2003). “Racofi: A Rule-Applying Collaborative Filtering System,” in Proceedings of IEEE/WIC COLA'03 (Halifax, NS).

Avancini, H., and Straccia, U. (2005). User recommendation for collaborative and personalised digital archives. Int. J. Web Based Commun. 1, 163–175. doi: 10.1504/IJWBC.2005.006061

Ayersman, D. J., and von Minden, A. (1995). Individual differences, computers, and instruction. Comput. Hum. Behav. 11, 371–390. doi: 10.1016/0747-5632(95)80005-S

Azevedo, R. (2005). Using hypermedia as a metacognitive tool for enhancing student learning? The role of self-regulated learning. Educ. Psychol. 40, 199–209. doi: 10.1207/s15326985ep4004_2

Barrick, M. R. (2005). Yes, personality matters: moving on to more important matters. Hum. Perform. 18, 359–372. doi: 10.1207/s15327043hup1804_3

Barrick, M. R., Mount, M. K., and Judge, T. A. (2001). Personality and performance at the beginning of the new millennium: What do we know and where do we go next? Int. J. Select. Assess. 9, 9–30. doi: 10.1111/1468-2389.00160

Beal, C., and Lee, H. (2005a). “Creating a pedagogical model that uses student self reports of motivation and mood to adapt its instruction,” in Workshop on Motivation and Affect in Educational Software, in Conjunction With the 12th International Conference on Artificial Intelligence in Education, Vol. 574 (Amsterdam).

Beal, C. R., and Lee, H. (2005b). “Creating a pedagogical model that uses student self reports of motivation and mood to adapt its instruction,” in Proceedings of the Workshop on Motivation and Affect in Educational Software (Amsterdam).

Beaumont, I., and Brusilovsky, P. (1995). “Educational applications of adaptive hypermedia,” in Human–Computer Interaction, eds K. Nordby, P. Helmersen, D. J. Gilmore, and S. A. Arnesen (Boston, MA: Springer), 410–414. doi: 10.1007/978-1-5041-2896-4_71

Bloom, B. S. (1956). Taxonomy of Educational Objectives: The Classification of Educational Goals. Book 1: Cognitive Domain. New York, NY: Longman.

Bong, M. (2001). Between-and within-domain relations of academic motivation among middle and high school students: self-efficacy, task value, and achievement goals. J. Educ. Psychol. 93:23. doi: 10.1037/0022-0663.93.1.23

Bozionelos, N. (2004). The relationship between disposition and career success: a British study. J. Occupat. Organ. Psychol. 77, 403–420. doi: 10.1348/0963179041752682

Brusilovsky, P. (1996). Methods and techniques of adaptive hypermedia. User Model. User Adapt. Interact. 6, 87–129. doi: 10.1007/BF00143964

Brusilovsky, P. (1998a). “Methods and techniques of adaptive hypermedia,” in Adaptive Hypertext and Hypermedia, eds P. Brusilovsky, A. Kobsa, and J. Vassileva (Dordrecht: Springer), 1–43. doi: 10.1007/978-94-017-0617-9_1

Brusilovsky, P. (1998b). “Adaptive educational systems on the world-wide-web: a review of available technologies,” in Proceedings of Workshop “WWW-Based Tutoring” at 4th International Conference on Intelligent Tutoring Systems (ITS'98) (San Antonio, TX).

Brusilovsky, P. (2001). Adaptive hypermedia: methods and techniques. Int. J. User Model. User Adapt. Interact. 11, 87–110. doi: 10.1023/A:1011143116306

Brusilovsky, P. (2003). Adaptive navigation support in educational hypermedia: the role of student knowledge level and the case for meta-adaptation. Br. J. Educ. Technol. 34, 487–497. doi: 10.1111/1467-8535.00345

Brusilovsky, P. (2007). “Adaptive navigation support,” in The Adaptive Web, eds P. Brusilovsky, A. Kobsa, and W. Nejdl (Berlin; Heidelberg: Springer), 263–290. doi: 10.1007/978-3-540-72079-9_8

Brusilovsky, P., Eklund, J., and Schwarz, E. (1998). Web-based education for all: a tool for development adaptive courseware. Comput. Netw. ISDN Syst. 30, 291–300. doi: 10.1016/S0169-7552(98)00082-8

Brusilovsky, P., and Millán, E. (2007). “User models for adaptive hypermedia and adaptive educational systems,” in The Adaptive Web, eds P. Brusilovsky, A. Kobsa, and W. Nejdl (Berlin; Heidelberg: Springer), 3–53. doi: 10.1007/978-3-540-72079-9_1

Brusilovsky, P., Schwarz, E., and Weber, G. (1996). “Elm-art: an intelligent tutoring system on world wide web,” in International Conference on Intelligent Tutoring Systems (Montreal, QC: Springer), 261–269. doi: 10.1007/3-540-61327-7_123

Bunderson, C. V., and Martinez, M. (2000). Building interactive world wide web (web) learning environments to match and support individual learning differences. J. Interact. Learn. Res. 11, 163–195.

Byrnes, J. P., and Guthrie, J. T. (1992). Prior conceptual knowledge and textbook search. Contemp. Educ. Psychol. 17, 8–29. doi: 10.1016/0361-476X(92)90042-W

Camp, G., Paas, F., Rikers, R., and van Merrienboer, J. (2001). Dynamic problem selection in air traffic control training: a comparison between performance, mental effort and mental efficiency. Comput. Hum. Behav. 17, 575–595. doi: 10.1016/S0747-5632(01)00028-0

Chang, H., Cohn, D., and Mccallum, A. K. (2000). “Learning to create customized authority lists,” in ICML'00: Proceedings of the Seventeenth International Conference on Machine Learning (Stanford, CA), 127–134.

Cheng, I., Shen, R., and Basu, A. (2008). “An algorithm for automatic difficulty level estimation of multimedia mathematical test items,” in Advanced Learning Technologies, 2008. ICALT'08 (Santander: IEEE), 175–179. doi: 10.1109/ICALT.2008.105

Clark, M., and Schroth, C. A. (2010). Examining relationships between academic motivation and personality among college students. Learn. Individ. Differ. 20, 19–24. doi: 10.1016/j.lindif.2009.10.002

Cocea, M., and Weibelzahl, S. (2006). “Motivation: included or excluded from e-learning,” in Cognition and Exploratory Learning in Digital Age, CELDA 2006 Proceedings (Barcelona: IADIS Press).

Corbalan, G., Kester, L., and Van Merriënboer, J. J. (2008). Selecting learning tasks: effects of adaptation and shared control on learning efficiency and task involvement. Contemp. Educ. Psychol. 33, 733–756. doi: 10.1016/j.cedpsych.2008.02.003

Costa, P., and McCrae, R. (1992a). Neo PI-R: Professional Manual: Revised Neo PI-R and Neo-FFI. Odessa, FL: Psychological Assessment Resources.

Costa, P. T., and McCrae, R. R. (1992b). Four ways five factors are basic. Pers. Individ. Differ. 13, 653–665. doi: 10.1016/0191-8869(92)90236-I

Costa, P. T., and McCrae, R. R. (1995). Solid ground in the wetlands of personality: a reply to block. Psychol. Bull. 117, 216–220; discussion 226–229. doi: 10.1037/0033-2909.117.2.216

Cristea, A. I. (2003). Adaptive patterns in authoring of educational adaptive hypermedia. Educ. Technol. Soc. 6, 1–5. doi: 10.2316/Journal.208.2004.4.208-0826

Dagger, D., Wade, V., and Conlan, O. (2005). Personalisation for all: making adaptive course composition easy. J. Educ. Technol. Soc. 8, 9–25.

Dascalu, M.-I., Bodea, C.-N., Mihailescu, M. N., Tanase, E. A., and Ordoñez de Pablos, P. (2016). Educational recommender systems and their application in lifelong learning. Behav. Inform. Technol. 35, 290–297. doi: 10.1080/0144929X.2015.1128977

Davidovic, A., Warren, J., and Trichina, E. (2003). Learning benefits of structural example-based adaptive tutoring systems. IEEE Trans. Educ. 46, 241–251. doi: 10.1109/TE.2002.808240

De Bra, P., Aroyo, L., and Cristea, A. (2004). “Adaptive web-based educational hypermedia,” in Web Dynamics, eds M. Levene and A. Poulovassilis (Berlin; Heidelberg: Springer), 387–410. doi: 10.1007/978-3-662-10874-1_16

Dennis, M., Masthoff, J., and Mellish, C. (2012). “The quest for validated personality trait stories,” in Proceedings of the 2012 ACM International Conference on Intelligent User Interfaces (Lisbon: ACM), 273–276. doi: 10.1145/2166966.2167016

Dennis, M., Masthoff, J., and Mellish, C. (2016). Adapting progress feedback and emotional support to learner personality. Int. J. Artif. Intell. Educ. 26, 877–931. doi: 10.1007/s40593-015-0059-7

Di Iorio, A., Feliziani, A. A., Mirri, S., Salomoni, P., and Vitali, F. (2006). Automatically producing accessible learning objects. Educ. Technol. Soc. 9, 3–16.

Dochy, F. (1994). “Prior knowledge and learning,” in International Encyclopedia of Education, 2nd Edn, eds T. Husen and T. N. Postlethwaite (Oxford; New York, NY: Pergamon Press), 4698–4702.

Dochy, F. J. R. C. (1992). Assessment of Prior Knowledge as a Determinant for Future Learning: The Use of Prior Knowledge State Tests and Knowledge Profiles. Milton Keynes: Centre for Educational Technology and Innovation, Open University.

Drachsler, H., Hummel, H., and Koper, R. (2008a). Identifying the goal, user model and conditions of recommender systems for formal and informal learning. J. Digit. Inf. 10, 4–24.

Drachsler, H., Hummel, H. G., and Koper, R. (2008b). Personal recommender systems for learners in lifelong learning networks: the requirements, techniques and model. Int. J. Learn. Technol. 3, 404–423. doi: 10.1504/IJLT.2008.019376

El-Bishouty, M. M., Chang, T.-W., Graf, S., and Chen, N.-S. (2014). Smart e-course recommender based on learning styles. J. Comput. Educ. 1, 99–111. doi: 10.1007/s40692-014-0003-0

Elliot, A. J. (1999). Approach and avoidance motivation and achievement goals. Educ. Psychol. 34, 169–189. doi: 10.1207/s15326985ep3403_3

Elliot, A. J., and McGregor, H. A. (2001). A 2 × 2 achievement goal framework. J. Pers. Soc. Psychol. 80:501. doi: 10.1037/0022-3514.80.3.501

Elliot, A. J., and Thrash, T. M. (2001). Achievement goals and the hierarchical model of achievement motivation. Educ. Psychol. Rev. 13, 139–156. doi: 10.1023/A:1009057102306

Forbes-Riley, K., Rotaru, M., and Litman, D. J. (2008). The relative impact of student affect on performance models in a spoken dialogue tutoring system. User Model. User Adapt. Interact. 18, 11–43. doi: 10.1007/s11257-007-9038-5

Ford, N., and Chen, S. Y. (2000). Individual differences, hypermedia navigation, and learning: an empirical study. J. Educ. Multimed. Hypermed. 9, 281–311.

Gaultney, J. F. (1995). The effect of prior knowledge and metacognition on the acquisition of a reading comprehension strategy. J. Exp. Child Psychol. 59, 142–163. doi: 10.1006/jecp.1995.1006

Germanakos, P., Tsianos, N., Lekkas, Z., Mourlas, C., and Samaras, G. (2008). Capturing essential intrinsic user behaviour values for the design of comprehensive web-based personalized environments. Comput. Hum. Behav. 24, 1434–1451. doi: 10.1016/j.chb.2007.07.010

Gilbert, J. E., and Han, C. Y. (1999). Adapting instruction in search of ‘a significant difference’. J. Netw. Comput. Appl. 22, 149–160. doi: 10.1006/jnca.1999.0088

Graesser, A. C., D'Mello, S. K., Craig, S. D., Witherspoon, A., Sullins, J., McDaniel, B., et al. (2008). The relationship between affective states and dialog patterns during interactions with autotutor. J. Interact. Learn. Res. 19, 293–312.

Graesser, A. C., Jackson, G. T., and McDaniel, B. (2007). AutoTutor holds conversations with learners that are responsive to their cognitive and emotional states. Educ. Technol. 47, 19–23.

Hazrati-Viari, A., Rad, A. T., and Torabi, S. S. (2012). The effect of personality traits on academic performance: the mediating role of academic motivation. Proc. Soc. Behav. Sci. 32, 367–371. doi: 10.1016/j.sbspro.2012.01.055

Henze, N., Naceur, K., Nejdl, W., and Wolpers, M. (1999). Adaptive hyperbooks for constructivist teaching. KI 13, 26–31.

Hospers, M., Kroezen, E., Nijholt, A., op den Akker, R., and Heylen, D. (2003). “An agent-based intelligent tutoring system for nurse education,” in Applications of Software Agent Technology in the Health Care Domain, eds A. Moreno and J. L. Nealon (Basel: Springer), 143–159. doi: 10.1007/978-3-0348-7976-7_10

Hsu, M.-H. (2008). A personalized english learning recommender system for esl students. Exp. Syst. Appl. 34, 683–688. doi: 10.1016/j.eswa.2006.10.004

Huang, Y.-M., Huang, T.-C., Wang, K.-T., and Hwang, W.-Y. (2009). A markov-based recommendation model for exploring the transfer of learning on the web. J. Educ. Technol. Soc. 12, 144–162.

Hwang, G.-J. (1998). A tutoring strategy supporting system for distance learning on computer networks. IEEE 41, 343–343. doi: 10.1109/TE.1998.787369

Ilbeygi, M., Kangavari, M. R., and Golmohammadi, S. A. (2019). Equipping the act-r cognitive architecture with a temporal ratio model of memory and using it in a new intelligent adaptive interface. User Model. User Adapt. Interact. 29, 943–976. doi: 10.1007/s11257-019-09239-2

John, O. P., and Srivastava, S. (1999). The big five trait taxonomy: history, measurement, and theoretical perspectives. Handb. Pers. Theory Res. 2, 102–138.

Jonassen, D., and Grabowski, B. (1993). Individual Differences and Instruction. New York, NY: Allen & Bacon.

Judge, T. A., Higgins, C. A., Thoresen, C. J., and Barrick, M. R. (1999). The big five personality traits, general mental ability, and career success across the life span. Pers. Psychol. 52, 621–652. doi: 10.1111/j.1744-6570.1999.tb00174.x

Kalyuga, S., and Sweller, J. (2005). Rapid dynamic assessment of expertise to improve the efficiency of adaptive e-learning. Educ. Technol. Res. Dev. 53, 83–93. doi: 10.1007/BF02504800

Kelly, D. (2008). Adaptive versus learner control in a multiple intelligence learning environment. J. Educ. Multimed. Hypermed. 17, 307–336.

Kelly, D., and Tangney, B. (2002). “Incorporating learning characteristics into an intelligent tutor,” in International Conference on Intelligent Tutoring Systems (Biarritz: Springer), 729–738. doi: 10.1007/3-540-47987-2_73

Kennedy, D. (2006). Writing and Using Learning Outcomes: A Practical Guide. Cork: University College Cork.

Kirschner, P. A. (2017). Stop propagating the learning styles myth. Comput. Educ. 106, 166–171. doi: 10.1016/j.compedu.2016.12.006

Klemp, G. O. Jr. (1979). Identifying, measuring, and integrating competence. New Direct. Exp. Learn. 3, 41–52.

Komarraju, M., and Karau, S. J. (2005). The relationship between the big five personality traits and academic motivation. Pers. Individ. Differ. 39, 557–567. doi: 10.1016/j.paid.2005.02.013

Komarraju, M., Karau, S. J., Schmeck, R. R., and Avdic, A. (2011). The big five personality traits, learning styles, and academic achievement. Pers. Individ. Differ. 51, 472–477. doi: 10.1016/j.paid.2011.04.019

Koutsojannis, C., Prentzas, J., and Hatzilygeroudis, I. (2001). “A web-based intelligent tutoring system teaching nursing students fundamental aspects of biomedical technology,” in Engineering in Medicine and Biology Society, 2001. Proceedings of the 23rd Annual International Conference of the IEEE, Vol. 4 (Istanbul: IEEE), 4024–4027. doi: 10.21236/ADA412356

Latham, A., Crockett, K., McLean, D., and Edmonds, B. (2012). A conversational intelligent tutoring system to automatically predict learning styles. Comput. Educ. 59, 95–109. doi: 10.1016/j.compedu.2011.11.001

Lawless, K. A., and Brown, S. W. (1997). Multimedia learning environments: issues of learner control and navigation. Instruct. Sci. 25, 117–131. doi: 10.1023/A:1002919531780

Lee, J., and Park, O. (2008). “Adaptive instructional systems,” in Handbook of Research on Educational Communications and Technology, eds J. M Spector, M. D. Merrill, J. van Merrienboer, and M. P. Driscoll (New York, NY: Lawrence Erlbaum), 469–484.

Liang, G., Weining, K., and Junzhou, L. (2006). “Courseware recommendation in e-learning system,” in International Conference on Web-Based Learning (Penang), 10–24. doi: 10.1007/11925293_2

Lo, J.-J., Chan, Y.-C., and Yeh, S.-W. (2012). Designing an adaptive web-based learning system based on students' cognitive styles identified online. Comput. Educ. 58, 209–222. doi: 10.1016/j.compedu.2011.08.018

Magoulas, G. D., Papanikolaou, Y., and Grigoriadou, M. (2003). Adaptive web-based learning: accommodating individual differences through system's adaptation. Br. J. Educ. Technol. 34, 511–527. doi: 10.1111/1467-8535.00347

Mampadi, F., Chen, S. Y., Ghinea, G., and Chen, M.-P. (2011). Design of adaptive hypermedia learning systems: a cognitive style approach. Comput. Educ. 56, 1003–1011. doi: 10.1016/j.compedu.2010.11.018

Masthoff, J. (1997). An Agent-Based Interactive Instruction System. Eindhoven: Technical University.

McCrae, R. (1992). The five-factor model: issues and applications. J. Pers. 60, 175–215. doi: 10.1111/j.1467-6494.1992.tb00970.x

McCrae, R. R., and Costa, P. T. Jr. (1997). Personality trait structure as a human universal. Am. Psychol. 52:509. doi: 10.1037/0003-066X.52.5.509

Mcquiggan, S. W., Mott, B. W., and Lester, J. C. (2008). Modeling self-efficacy in intelligent tutoring systems: an inductive approach. User Model. User Adapt. Interact. 18, 81–123. doi: 10.1007/s11257-007-9040-y

Melis, E., Andres, E., Budenbender, J., Frischauf, A., Goduadze, G., Libbrecht, P., et al. (2001). ActiveMath: a generic and adaptive web-based learning environment. Int. J. Artif. Intell. Educ. 12, 385–407.

Miller, A. (1991). Personality types, learning styles and educational goals. Educ. Psychol. 11, 217–238. doi: 10.1080/0144341910110302

Mitrović, A., Djordjević-Kajan, S., and Stoimenov, L. (1996). INSTRUCT: modeling students by asking questions. User Model. User Adapt. Interact. 6, 273–302. doi: 10.1007/BF00213185

Mitrovic, A., Martin, B., and Mayo, M. (2002). Using evaluation to shape ITS design: results and experiences with SQL-tutor. User Model. User Adapt. Interact. 12, 243–279. doi: 10.1023/A:1015022619307

Nunes, M. A. S., and Hu, R. (2012). “Personality-based recommender systems: an overview,” in Proceedings of the Sixth ACM Conference on Recommender Systems (Dublin: ACM), 5–6. doi: 10.1145/2365952.2365957

Oakes, D. W., Ferris, G. R., Martocchio, J. J., Buckley, M. R., and Broach, D. (2001). Cognitive ability and personality predictors of training program skill acquisition and job performance. J. Bus. Psychol. 15, 523–548. doi: 10.1023/A:1007805132107

Odo, C. R., Masthoff, J. F. M., Beacham, N. A., and Alhathli, M. A. E. (2018). “Affective state for learning activities selection,” in Proceedings of Intelligent Mentoring Systems Workshop Associated With the 19th International Conference on Artificial Intelligence in Education, AIED 2018 (London). doi: 10.1007/978-3-319-93846-2_98

Okpo, J., Dennis, M., Masthoff, J., Smith, K. A., and Beacham, N. (2016). “Exploring requirements for an adaptive exercise selection system,” in PALE 2016: Workshop on Personalization Approaches in Learning Environments (Halifax, NS).

Okpo, J., Masthoff, J., Dennis, M., Beacham, N., and Ciocarlan, A. (2017). “Investigating the impact of personality and cognitive efficiency on the selection of exercises for learners,” in Proceedings of the 25th Conference on User Modeling, Adaptation and Personalization, UMAP '17 (New York, NY: ACM), 140–147. doi: 10.1145/3079628.3079674

Okpo, J. A., Masthoff, J., Dennis, M., and Beacham, N. (2018). Adapting exercise selection to performance, effort and self-esteem. New Rev. Hypermed. Multimed. 24, 193–227. doi: 10.1080/13614568.2018.1477999

Ones, D. S., Dilchert, S., Viswesvaran, C., and Judge, T. A. (2007). In support of personality assessment in organizational settings. Pers. Psychol. 60, 995–1027. doi: 10.1111/j.1744-6570.2007.00099.x

Papanikolaou, K. A., Grigoriadou, M., Kornilakis, H., and Magoulas, G. D. (2001). “INSPIRE: an intelligent system for personalized instruction in a remote environment,” in Workshop on Adaptive Hypermedia (Berlin; Heidelberg: Springer), 215–225.

Park, O.-C., and Lee, J. (2004). “Adaptive instructional systems,” in Handbook of Research for Education Communications and Technology, 2nd Edn, ed D. H. Jonassen (Mahwah, NJ: Erlbaum), 651–684.

Petrovica, S. (2013). “Adaptation of tutoring to students' emotions in emotionally intelligent tutoring systems,” in 2013 Second International Conference on e-Learning and e-Technologies in Education (ICEEE) (Lodz: IEEE), 131–136. doi: 10.1109/ICeLeTE.2013.6644361

Pholo, D., and Ngwira, S. (2013). “Integrating explicit problem-solving teaching into ActiveMath, an intelligent tutoring system,” in 2013 International Conference on Adaptive Science and Technology (ICAST) (Pretoria: IEEE), 1–8. doi: 10.1109/ICASTech.2013.6707521

Poropat, A. E. (2009). A meta-analysis of the five-factor model of personality and academic performance. Psychol. Bull. 135:322. doi: 10.1037/a0014996

Prins, F., Nadolski, R., Berlanga, A., Drachsler, H., Hummel, H., and Koper, R. (2007). Competence description for personal recommendations: the importance of identifying the complexity of learning and performance situations. Educ. Technol. Soc. 11, 141–152.

Rafaeli, S., Barak, M., Dan-Gur, Y., and Toch, E. (2004). QSIA: a web-based environment for learning, assessing and knowledge sharing in communities. Comput. Educ. 43, 273–289. doi: 10.1016/j.compedu.2003.10.008

Ray, R. D., and Belden, N. (2007). Teaching college level content and reading comprehension skills simultaneously via an artificially intelligent adaptive computerized instructional system. Psychol. Rec. 57, 201–218. doi: 10.1007/BF03395572

Reategui, E., Boff, E., and Campbell, J. A. (2008). Personalization in an interactive learning environment through a virtual character. Comput. Educ. 51, 530–544. doi: 10.1016/j.compedu.2007.05.018

Recker, M. M., and Walker, A. (2003). Supporting “word-of-mouth” social networks through collaborative information filtering. J. Interact. Learn. Res. 14, 79–98.

Recker, M. M., Walker, A., and Lawless, K. (2003). What do you recommend? Implementation and analyses of collaborative information filtering of web resources for education. Instruct. Sci. 31, 299–316. doi: 10.1023/A:1024686010318

Ree, M. J., Earles, J. A., and Teachout, M. S. (1994). Predicting job performance: not much more than G. J. Appl. Psychol. 79:518. doi: 10.1037/0021-9010.79.4.518

Revilla, M. A., Manzoor, S., and Liu, R. (2008). Competitive learning in informatics: the UVA online judge experience. Olymp. Inform. 2, 131–148.

Ricci, F., Rokach, L., and Shapira, B. (2011). “Introduction to recommender systems handbook,” in Recommender Systems Handbook, eds F. Ricci, L. Rokach, B. Shapira, and P. Kantor (Boston, MA: Springer). doi: 10.1007/978-0-387-85820-3_1

Richardson, M., Abraham, C., and Bond, R. (2012). Psychological correlates of university students' academic performance: a systematic review and meta-analysis. Psychol. Bull. 138:353. doi: 10.1037/a0026838

Robison, J., McQuiggan, S., and Lester, J. (2010). “Developing empirically based student personality profiles for affective feedback models,” in International Conference on Intelligent Tutoring Systems (Pittsburgh, PA: Springer), 285–295. doi: 10.1007/978-3-642-13388-6_33

Rumetshofer, H., and Wöß, W. (2003). XML-based adaptation framework for psychological-driven e-learning systems. J. Educ. Technol. Soc. 6, 18–29.

Salden, R. J., Paas, F., Broers, N. J., and Van Merriënboer, J. J. (2004). Mental effort and performance as determinants for the dynamic selection of learning tasks in air traffic control training. Instruct. Sci. 32, 153–172. doi: 10.1023/B:TRUC.0000021814.03996.ff

Salden, R. J., Paas, F., and Van Merriënboer, J. J. (2006). Personalised adaptive task selection in air traffic control: effects on training efficiency and transfer. Learn. Instruct. 16, 350–362. doi: 10.1016/j.learninstruc.2006.07.007

Salgado, J. F. (1997). The five factor model of personality and job performance in the European Community. J. Appl. Psychol. 82:30. doi: 10.1037/0021-9010.82.1.30

Santos, O. C., and Boticario, J. G. (2010). Modeling recommendations for the educational domain. Proc. Comput. Sci. 1, 2793–2800. doi: 10.1016/j.procs.2010.08.004

Sawyer, R. K. (2008). Optimising learning: implications of learning sciences research. Innov. Learn Learn Innov. 45, 35–98. doi: 10.1787/9789264047983-4-en

Schafer, J. B., Frankowski, D., Herlocker, J., and Sen, S. (2007). “Collaborative filtering recommender systems,” in The Adaptive Web, eds P. Brusilovsky, A. Kobsa, and W. Nejdl (Berlin; Heidelberg: Springer), 291–324. doi: 10.1007/978-3-540-72079-9_9

Schambach, T. P. (1994). Maintaining Professional Competence: An Evaluation of Factors Affecting Professional Obsolescence of Information Technology Professionals. PhD Dissertation, University of South Florida, Tampa, FL, United States.

Schiaffino, S., Garcia, P., and Amandi, A. (2008). eTeacher: providing personalized assistance to e-learning students. Comput. Educ. 51, 1744–1754. doi: 10.1016/j.compedu.2008.05.008

Schnackenberg, H. L., and Sullivan, H. J. (2000). Learner control over full and lean computer-based instruction under differing ability levels. Educ. Technol. Res. Dev. 48, 19–35. doi: 10.1007/BF02313399

Seters, J. v., Ossevoort, M., Goedhart, M., and Tramper, J. (2011). Accommodating the difference in students' prior knowledge of cell growth kinetics. Electron. J. Biotechnol. 14:1. doi: 10.2225/vol14-issue2-fulltext-2

Shane, S. (2000). Prior knowledge and the discovery of entrepreneurial opportunities. Organ. Sci. 11, 448–469. doi: 10.1287/orsc.11.4.448.14602

Shen, L.-P., and Shen, R.-M. (2004). Learning Content Recommendation Service Based-on Simple Sequencing Specification. Berlin; Heidelberg: Springer Berlin Heidelberg. doi: 10.1007/978-3-540-27859-7_47

Shute, V., and Towle, B. (2003). Adaptive e-learning. Educ. Psychol. 38, 105–114. doi: 10.1207/S15326985EP3802_5

Shute, V. J. (1995). SMART: student modeling approach for responsive tutoring. User Model. User Adapt. Interact. 5, 1–44. doi: 10.1007/BF01101800

Shute, V. J., and Zapata-Rivera, D. (2007). Adaptive technologies. ETS Res. Rep. Ser. 2007:i-34. doi: 10.1002/j.2333-8504.2007.tb02047.x

Siddappa, M., and Manjunath, A. (2008). “Intelligent tutor generator for intelligent tutoring systems,” in Proceedings of World Congress on Engineering and Computer Science (San Francisco, CA), 578–583.

Smith, K. A., Dennis, M., Masthoff, J., and Tintarev, N. (2019). A methodology for creating and validating psychological stories for conveying and measuring psychological traits. User Model. User Adapt. Interact. 29, 573–618. doi: 10.1007/s11257-019-09219-6

Soonthornphisaj, N., Rojsattarat, E., and Yim-Ngam, S. (2006). “Smart e-learning using recommender system,” in Computational Intelligence, eds D. S. Huang, K. Li, and G. W. Irwin (Berlin; Heidelberg: Springer), 518–523. doi: 10.1007/978-3-540-37275-2_63

Specht, M., Weber, G., Heitmeyer, S., and Schöch, V. (1997). “AST: adaptive WWW-courseware for statistics,” in Proceedings of Workshop Adaptive Systems and User Modeling on the World Wide Web at UM97 (Chia Laguna), 91–95.

Stewart, C., Cristea, A. I., Brailsford, T., and Ashman, H. (2005). “‘Authoring once, delivering many’: creating reusable adaptive courseware,” in International Conference on Web-Based Education (Grindelwald).

Sugiyama, K., Hatano, K., and Yoshikawa, M. (2004). “Adaptive web search based on user profile constructed without any effort from users,” in Proceedings of World Wide Web Conference (ACM), 675–684. doi: 10.1145/988672.988764

Sun, P.-C., and Cheng, H. K. (2007). The design of instructional multimedia in e-learning: a media richness theory-based approach. Comput. Educ. 49, 662–676. doi: 10.1016/j.compedu.2005.11.016

Taylor, W. L. (1953). “Cloze procedure”: a new tool for measuring readability. J. Bull. 30, 415–433. doi: 10.1177/107769905303000401

Thompson, R. A., and Zamboanga, B. L. (2003). Prior knowledge and its relevance to student achievement in introduction to psychology. Teach. Psychol. 30, 96–101. doi: 10.1207/S15328023TOP3002_02

Triantafillou, E., Pomportsis, A., Demetriadis, S., and Georgiadou, E. (2004). The value of adaptivity based on cognitive style: an empirical study. Br. J. Educ. Technol. 35, 95–106. doi: 10.1111/j.1467-8535.2004.00371.x

Trotman, A., and Handley, C. (2008). Programming contest strategy. Comput. Educ. 50, 821–837. doi: 10.1016/j.compedu.2006.08.008

Tseng, J. C., Chu, H.-C., Hwang, G.-J., and Tsai, C.-C. (2008). Development of an adaptive learning system with two sources of personalization information. Comput. Educ. 51, 776–786. doi: 10.1016/j.compedu.2007.08.002

Tsiriga, V., and Virvou, M. (2004). Evaluating the intelligent features of a web-based intelligent computer assisted language learning system. Int. J. Artif. Intell. Tools 13, 411–425. doi: 10.1142/S0218213004001600

Vandewaetere, M., Desmet, P., and Clarebout, G. (2011). The contribution of learner characteristics in the development of computer-based adaptive learning environments. Comput. Hum. Behav. 27, 118–130. doi: 10.1016/j.chb.2010.07.038

Vassileva, D., and Bontchev, B. (2006). “Self adaptive hypermedia navigation based on learner model characters,” in Proceedings of IADAT-e2006 (Barcelona), 46–52.

Verdú, E., Regueras, L. M., Verdú, M. J., Leal, J. P., de Castro, J. P., and Queirós, R. (2012). A distributed system for learning programming on-line. Comput. Educ. 58, 1–10. doi: 10.1016/j.compedu.2011.08.015

Wang, M., Hauze, S., Olmstead, W., Aziz, B., Zaineb, B., and Ng, J. (2014). “An exploration of intelligent learning (ilearning) systems,” in IEEE International Conference on Teaching, Assessment and Learning for Engineering (TALE) (Wellington), 327–332. doi: 10.1109/TALE.2014.7062558

Wang, P.-Y., and Yang, H.-C. (2012). Using collaborative filtering to support college students' use of online forum for English learning. Comput. Educ. 59, 628–637. doi: 10.1016/j.compedu.2012.02.007

Widyantoro, D. H., Ioerger, T. R., and Yen, J. (1999). “An adaptive algorithm for learning changes in user interests,” in Proceedings of the Eighth International Conference on Information and Knowledge Management (Kansas City, MO: ACM), 405–412. doi: 10.1145/319950.323230

Willis, S., and Dubin, S. (1990). Competence Versus Obsolescence: Understanding the Challenge Facing Today's Professionals. Maintaining Professional Competence: Approaches to Career Enhancement, Vitality, and Success Throughout a Work Life. San Francisco, CA: Jossey-Bass.