- Department of Management Information Systems, College of Business Administration, King Faisal University, Al Ahsa, Saudi Arabia

In the era of advancing artificial intelligence (AI), its application in agriculture has become increasingly pivotal. This study explores the integration of AI for the discriminative classification of corn diseases, addressing the need for efficient agricultural practices. Leveraging a comprehensive dataset, the study encompasses 21,662 images categorized into four classes: Broken, Discolored, Silk cut, and Pure. The proposed model, an enhanced iteration of MobileNetV2, strategically incorporates additional layers—Average Pooling, Flatten, Dense, Dropout, and softmax—augmenting its feature extraction capabilities. Model tuning techniques, including data augmentation, adaptive learning rate, model checkpointing, dropout, and transfer learning, fortify the model's efficiency. Results showcase the proposed model's exceptional performance, achieving an accuracy of ~96% across the four classes. Precision, recall, and F1-score metrics underscore the model's proficiency, with precision values ranging from 0.949 to 0.975 and recall values from 0.957 to 0.963. In a comparative analysis with state-of-the-art (SOTA) models, the proposed model outshines counterparts in terms of precision, recall, F1-score, and accuracy. Notably, MobileNetV2, the base model for the proposed architecture, achieves the highest values, affirming its superiority in accurately classifying instances within the corn disease dataset. This study not only contributes to the growing body of AI applications in agriculture but also presents a novel and effective model for corn disease classification. The proposed model's robust performance, combined with its competitive edge against SOTA models, positions it as a promising solution for advancing precision agriculture and crop management.

1 Introduction

Evaluating the quality of agricultural products has long been a significant concern for various countries. The quality assessment of these products holds immense importance as it directly impacts various aspects of the agricultural industry and food supply chain [1]. In recent years, the emergence of precision agriculture has brought about stricter requirements and advanced techniques for assessing the quality of agricultural products. Precision agriculture utilizes innovative technologies such as remote sensing, drones, image classification and data analytics to gather detailed information about crops and their growing conditions. These advancements have enabled more precise and accurate evaluation of agricultural product quality [2].

The assessment of agricultural product quality is crucial for several reasons. Firstly, it ensures accurate identification and effective control of seed pests and diseases. By implementing stringent quality assessment practices, farmers and agricultural professionals can identify potential issues early on and take necessary measures to mitigate the spread of pests and diseases, safeguarding crop health and productivity [2, 3].

Secondly, the quality assessment of agricultural products plays a vital role in grain storage and distribution management. Precise evaluation helps in preserving seed quality, ensuring that only high-quality seeds are stored and distributed. This contributes to maintaining crop diversity and promoting better yields in subsequent seasons. Furthermore, evaluating agricultural product quality is essential in reducing food waste. Accurate assessment helps identify and separate products that meet the desired quality standards, minimizing waste throughout the supply chain. This not only benefits economic efficiency but also addresses environmental concerns associated with food waste.

Corn, also known as maize (Zea mays), is one of the most widely cultivated cereal crops worldwide. It is a staple food for many populations and plays a vital role in various industries, including agriculture, animal feed, and biofuel production. The United States Department of Agriculture (USDA) has estimated worldwide corn production for 2022/23 at 1,161.86 million metric tons, compared with 1,216.87 million tons in the previous year [4]. This corresponds to a predicted decrease of around 4.52% in worldwide corn production. Fusarium graminearum, Fusarium cepacia, Fusarium proliferatum, and Fusarium subglutinans are well-recognized pathogens that commonly contribute to the development of root, stalk, and cob rot in maize [5]. The presence of diseased seeds serves as a significant source of initial infestation, leading to plant diseases and facilitating the long-distance dissemination of such pathogens. This, in turn, adversely affects the germination rate of seeds [6]. Moreover, infected seeds pose challenges for storage, as they can contaminate other seeds, resulting in mold formation and substantial food losses. Furthermore, the compromised quality of these seeds renders them unsuitable for consumption [7].

Traditional approaches for assessing grain quality and safety often involve laborious and time-consuming microbial experiments, such as spore counting and enzyme-linked immunosorbent assays. While these methods exhibit high accuracy in disease detection, their drawbacks include their time-consuming nature, labor-intensive requirements, and destructive nature [8]. Phenotypic seed detection, as a non-destructive testing method, serves as a fundamental approach for evaluating seed quality. However, manual testing methods are subject to subjective factors, resulting in variations in test results among different individuals and yielding low detection efficiency, thereby increasing the likelihood of misjudgment [9, 10]. Consequently, quality inspectors urgently require a rapid and objective methodology to detect diseases in corn seeds.

Artificial Intelligence (AI), particularly deep learning, has demonstrated remarkable capabilities in extracting features efficiently and accurately from complex data [11]. This transformative technology has found applications across various domains [12], including healthcare [13–18], education [19, 20], e-commerce [21], agriculture [22, 23], and others [24, 25]. Deep learning holds great promise for disease identification and management in corn crops. With its ability to efficiently analyze large volumes of data and extract intricate patterns, deep learning models can assist in the early detection of diseases, enabling timely intervention and improved crop health.

From the literature, it is evident that many researchers have incorporated deep learning in agriculture. Tian et al. [26] proposed a deep learning model using the wavelet threshold method. The model was trained on six classes of corn diseases and achieved 96.8% accuracy. Mishra et al. [27] proposed a CNN model to classify corn leaf diseases and achieved 88.46% accuracy. A deep learning model based on VGG16 was proposed to classify 14 different types of seeds [28]. The modified CNN model incorporated several model-tuning techniques, such as transfer learning, model checkpointing, and data augmentation; with the help of these techniques, it achieved 99% accuracy. Yu et al. [29] performed a comparative study of the classification of three common corn diseases using different CNN models. They tested VGG-16, ResNet18, Inception v3, and VGG-19 on a dataset containing three classes; these models achieved accuracies of 84.42%, 83.75%, 83.05%, and 82.63%, respectively. Ahmad et al. [30] proposed a deep learning model for corn disease identification. They created a dataset using Unmanned Aerial System (UAS) imagery, collecting 59,000 images over three different corn fields. The dataset contains three common diseases found in corn. They claim that the model achieved 98.85% accuracy. In another comparative study [31], the authors compared state-of-the-art (SOTA) models on a dataset they created. VGG16, ResNet50, InceptionV3, DenseNet169, and Xception were trained to identify corn diseases. They claim that DenseNet169 achieved 100% accuracy and outperformed all other SOTA models. Albarrak et al. [32] proposed a modified deep learning model based on MobileNetV2 for classifying eight different types of date fruit. They incorporated transfer learning and added new layers to the base model to improve the accuracy. The modified model achieved 99% accuracy in identifying different types of date fruits. Fraiwan et al. [33] proposed a deep learning model for the classification of three common corn leaf diseases. They conducted their experiments using transfer learning, without any other feature extraction technique, and achieved 98.6% accuracy in identifying corn leaf diseases. In other studies [34–36], the authors classified different types of fruit using deep learning models. In study [34], the author classified forty different types of fruit with a deep learning model based on MobileNetV2 and achieved 99% accuracy, whereas in study [35], the authors trained a deep learning model based on the YOLO architecture to classify oil palm fruit and achieved 98.7% accuracy.

Masood et al. [37] proposed MaizeNet, a deep learning model for classifying maize leaf diseases. MaizeNet is based on ResNet50 and is trained on a public dataset called corn disease. The proposed model achieved 97.89% accuracy with an mAP value of 0.94. Ahmad et al. [38] trained five SOTA models (InceptionV3, ResNet50, VGG16, DenseNet169, and Xception) on five datasets containing images of corn diseases. They claim that DenseNet169 achieved the highest accuracy, 81.60%, among the models across all five datasets. Hatem et al. [39] used a Kaggle dataset containing three common corn diseases. They used the pretrained models GoogleNet, AlexNet, ResNet50, and VGG16 in their experiments and claim that these models achieved 98.57%, 98.81%, 99.05%, and 99.36% accuracy, respectively. Divyanth et al. [40] classified different types of corn diseases. Before classification, they evaluated three models, SegNet, UNet, and DeepLabV3+, for segmentation and found that UNet performed well. On that basis, they developed a two-stage deep learning model to classify common corn diseases. Rajeena et al. [41] proposed a modified version of the EfficientNet model for classifying corn leaf diseases. The authors claim that they achieved 98.85% accuracy during training.

This study presents an innovative approach to corn seed disease classification in precision agriculture, leveraging advanced Deep Learning techniques. The primary focus is on optimizing the MobileNetV2 architecture for enhanced accuracy in identifying distinct corn diseases, addressing the challenges of limited dataset size and class imbalances through strategic model tuning. The contributions of the study are as follows:

• Tailored MobileNetV2 architecture: the study introduces a modified MobileNetV2 architecture, incorporating additional layers like Average Pooling, Flatten, Dense, Dropout, and softmax. This tailored design optimally captures intricate features relevant to corn diseases, enhancing the model's discriminative capabilities.

• Effective data augmentation: to overcome data limitations, the research employs data augmentation techniques, generating diverse images from the original dataset. This process significantly expands the dataset, contributing to improved model training and robustness.

• Strategic model tuning techniques: the proposed work implements adaptive learning rate, model checkpointing, dropout, and transfer learning to fine-tune the model. These techniques collectively contribute to preventing overfitting, expediting training, and enhancing the model's adaptability to diverse patterns within the data.

• Comprehensive performance analysis: the study provides a detailed analysis of the proposed model's performance, including accuracy, precision, recall, and F1-score across various corn disease classes. The results showcase the model's efficiency in accurate classification and its ability to generalize well to unseen data.

• Comparison with state-of-the-art models: the proposed model's performance is benchmarked against state-of-the-art models (SOTA) and existing studies in the literature. The comparative analysis demonstrates the superiority of the proposed MobileNetV2-based model, emphasizing its competitiveness and efficacy in the field of agricultural disease classification.

2 Materials and methods

2.1 Dataset description

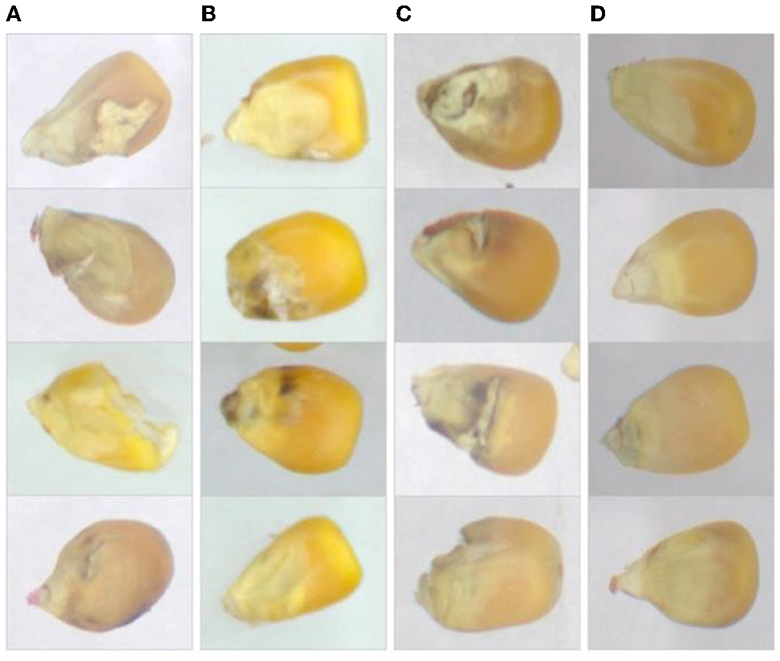

The dataset used in this study is the publicly available Corn Seeds Dataset [42] provided by a laboratory in Hyderabad, India. This dataset encompasses a collection of 17,801 images of corn seeds, which are classified into four distinct categories: pure, broken, discolored, and silkcut. Of the entire dataset, ~40.8% of the seeds are classified as healthy, while the remaining 59.2% are categorized as diseased. Within the whole dataset, 32% of the seeds are broken, 17.4% are discolored, and 9.8% are silkcut. The Corn Seeds Dataset [42] serves as a valuable resource for researchers and practitioners in the field of corn disease identification. It provides a diverse set of seed images, encompassing both healthy and diseased samples, enabling the development and evaluation of deep learning models specifically tailored for corn disease classification. The inclusion of various disease types, such as broken, discolored, and silkcut seeds, ensures the dataset's representation of real-world scenarios and enhances its utility in training accurate and robust classification models. Furthermore, the distribution of healthy and diseased seeds within the dataset reflects the prevalence of these conditions. Figure 1 presents sample images from the corn dataset. In the final dataset, the original training set is composed of 6,972 images of the pure class, 5,489 images of the broken class, 2,748 images of the discolored class, and 1,569 images of the silkcut class. Because the dataset is inherently imbalanced, a data augmentation approach is introduced, as detailed in the subsequent section.
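To make the imbalance concrete, the following minimal sketch computes the class shares and the largest-to-smallest class ratio from the training-set counts quoted above. The variable and function names are illustrative only and are not taken from the study's code.

```python
# Training-set counts as stated in the text (before augmentation).
train_counts = {"pure": 6972, "broken": 5489, "discolored": 2748, "silkcut": 1569}


def class_shares(counts):
    """Return each class's fraction of the total number of images."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def imbalance_ratio(counts):
    """Ratio of the largest class to the smallest class."""
    return max(counts.values()) / min(counts.values())


shares = class_shares(train_counts)
ratio = imbalance_ratio(train_counts)
```

With these counts, the pure class is a little over four times larger than the silkcut class, which is the kind of skew that augmentation of the minority classes is meant to reduce.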

2.2 Model selection

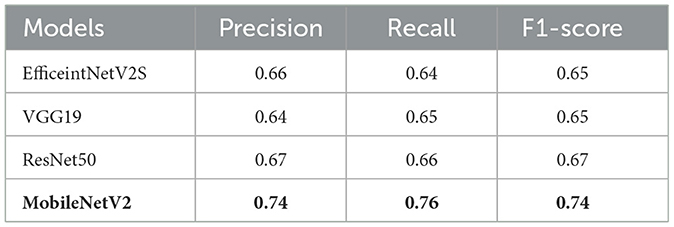

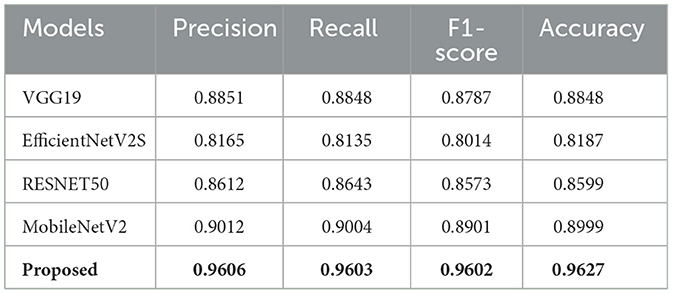

In the realm of image processing, CNNs have garnered increased attention due to their substantial economic potential and consistently high accuracy. Recognized CNN architectures, such as MobileNetV2 [43], EfficientNetV2S [44], VGG19 [45], and ResNet50 [46], enjoy widespread popularity in image processing and classification. Convolution operations play a pivotal role in any computer vision task, but they increase processing time and cost in larger, deeper networks such as EfficientNetV2S, VGG19, and ResNet. In contrast, MobileNetV2 distinguishes itself through an inverted residual structure and a linear bottleneck configuration, resulting in fewer convolution calculations. Its preference over other architectures is attributed to its simplicity and memory efficiency. Table 1 outlines the precision, recall, and F1-score of state-of-the-art (SOTA) models such as AlexNet, VGG16, InceptionV3, ResNet, and MobileNetV2. It is crucial to emphasize that all models were trained on the Corn dataset without any pre-processing techniques. The classification layer was the sole modification, adjusted to the number of classes in the dataset.

Examining the performance metrics of the SOTA models on the Corn Dataset, MobileNetV2 emerges as the best performer, achieving the highest accuracy among the models, with a precision of 0.74, a recall of 0.76, and an F1-score of 0.74. This performance can be attributed to its efficient architecture, featuring an inverted residual structure and a linear bottleneck configuration. These design elements not only reduce computational requirements but also make MobileNetV2 particularly well suited to resource-constrained environments, such as mobile devices. In light of its performance metrics and efficient design, MobileNetV2 has been selected as the base model for this study.

2.3 Proposed model

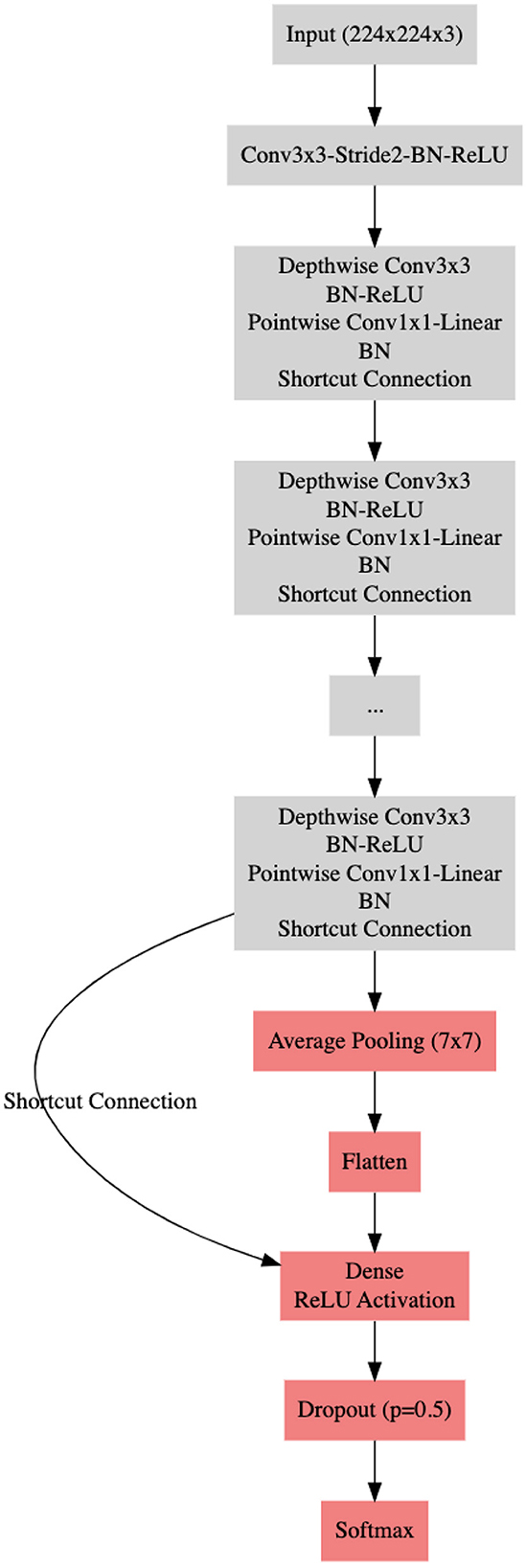

Our primary goal is the discriminative classification of various corn diseases. To achieve this objective, we selected MobileNetV2 as the base model on the basis of the initial screening. To further enhance the model's efficiency, we added the following layers on top of the base model: (i) an Average Pooling layer, (ii) a Flatten layer, (iii) a Dense layer, (iv) a Dropout layer, and (v) a softmax layer. The addition of these layers yields several benefits. The Average Pooling layer, with a pool size of (7 × 7), down-samples the spatial dimensions, reducing computational complexity while retaining essential features. The Flatten layer transforms the output from the preceding layers into a one-dimensional array, facilitating its input into the subsequent Dense layer. The Dense layer, with ReLU as its activation function, enhances the model's ability to capture complex patterns within the data. The Dropout layer, with a probability value of 0.5, helps prevent overfitting by randomly deactivating a proportion of neurons during training, promoting better generalization to unseen data. Finally, the classification layer contains four nodes, one for each class of corn seed.

Implementing these modifications has resulted in an enhanced version of the MobileNetV2 architecture, featuring four distinct nodes in its final (classification) layer. This configuration proves to be an optimal and well-suited model for addressing the specified problem in this study, offering improved efficiency, robustness, and a heightened capacity for accurate classification of diverse corn diseases. The proposed model is presented in Figure 2.
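The layer stack described above can be sketched in Keras as follows. This is a minimal illustration and not the study's actual code: the input size of 224 × 224 (which yields a 7 × 7 feature map, matching the stated pool size) and the Dense layer width of 128 are assumptions, since neither value is given in the text.

```python
from tensorflow.keras.applications import MobileNetV2
from tensorflow.keras.layers import AveragePooling2D, Dense, Dropout, Flatten
from tensorflow.keras.models import Model

NUM_CLASSES = 4               # Broken, Discolored, Silk cut, Pure
DENSE_UNITS = 128             # assumption: Dense width is not stated in the text
INPUT_SHAPE = (224, 224, 3)   # assumption: gives a 7x7 map before pooling


def build_model(weights="imagenet"):
    """Return (model, backbone): MobileNetV2 without its top plus the new head."""
    backbone = MobileNetV2(weights=weights, include_top=False,
                           input_shape=INPUT_SHAPE)
    x = AveragePooling2D(pool_size=(7, 7))(backbone.output)  # 7x7 -> 1x1
    x = Flatten()(x)                                         # 1-D feature vector
    x = Dense(DENSE_UNITS, activation="relu")(x)
    x = Dropout(0.5)(x)                                      # dropout probability 0.5
    outputs = Dense(NUM_CLASSES, activation="softmax")(x)    # four-node classifier
    return Model(inputs=backbone.input, outputs=outputs), backbone
```

Returning the backbone separately makes it straightforward to set `trainable = False` on its layers when only the newly added head should be trained.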

2.4 Model tuning

Within this study, various preprocessing and model tuning techniques have been incorporated to mitigate the risk of model overfitting. The following techniques are briefly explained:

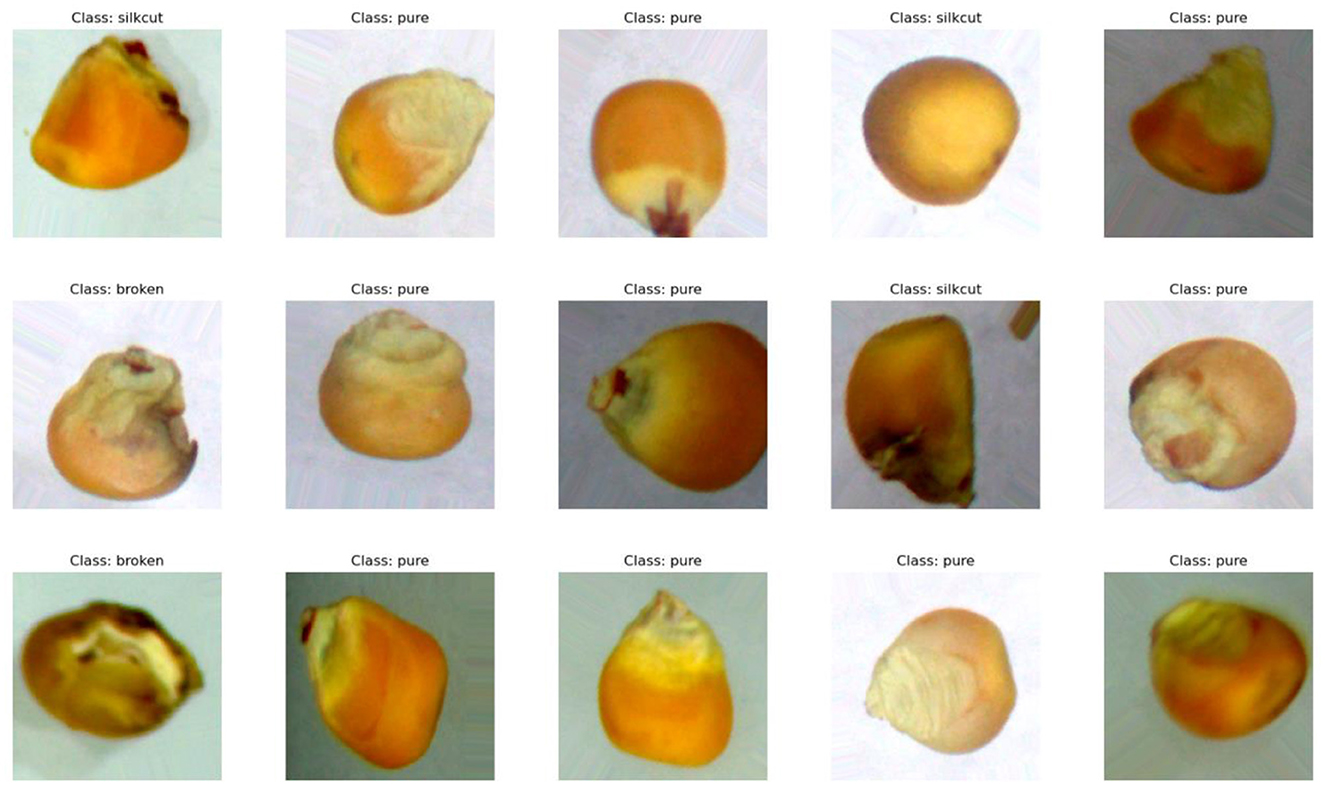

• Data augmentation: to address the challenge of limited data [47, 48], the data augmentation technique has been employed. This method generates random artificial images from the source data through processes such as shifts, shears, random rotations, and flips. In this study, a built-in function in the Keras library [35] has been utilized to create 10 images from each original image by applying random zooming of 20%, shifting height by 10%, shifting width by 10%, and rotating by 30% [48, 49]. Figure 3 shows the resulting augmented images after applying the transformations to the dataset. After data augmentation, the total number of images in the test set for corn seed is 1,237 for Broken, 1,414 for Discolored, 5,350 for Pure, and 813 for Silk cut. Following the augmentation process, the augmented dataset comprises a total of 6,972 images belonging to the pure class, 5,489 images representing the broken class, 5,494 images for the discolored class, and 4,707 images denoting the silk cut class. The entire dataset is divided in an 8:1:1 ratio for training, validation, and testing, respectively.

• Adaptive learning rate: this technique aims to expedite training and alleviate the burden of selecting a learning rate and schedule. In this work, the initial learning rate is set to INIT_LR = 0.001, and a decay of the form decay = INIT_LR/EPOCHS is implemented.

• Model checkpointing: during model training, a checkpoint callback monitors validation performance, and the model's weights are saved whenever it improves on the best value seen so far. In this research, a model checkpoint of the form checkpoint = ModelCheckpoint(fname, monitor="val_loss", mode="min", save_best_only=True, verbose=1) is employed. This callback monitors the validation loss, overwriting the saved model only when the loss decreases compared to the previous best model.

• Dropout: the dropout technique is employed to combat overfitting. During training, neurons are randomly selected and discarded, temporarily ignoring their contribution to the activation of downstream neurons. Weight changes are not applied to these neurons during the backward pass.

• Transfer learning: additionally, transfer learning is utilized as part of the model tuning process. This involves leveraging models pre-trained on large datasets and fine-tuning them for the specific task at hand [48, 50]. Transfer learning allows the model to benefit from the knowledge gained during training on a different but related task, enhancing its performance in the current context. In this research work, a hybrid approach has been adopted for transfer learning. Initially, during the early stages of training, only the newly added layers are trained using the corn seed dataset. Upon reaching the 20th iteration, the previously frozen existing layers are unfrozen. Subsequently, minor weight adjustments are applied to the trained layers of the model, aligning them with the characteristics of the specified dataset.
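The augmentation settings listed in the first bullet above could be expressed with the Keras `ImageDataGenerator` class roughly as follows. This is a sketch under assumptions: the text does not name the exact function used, the "30%" rotation is read here as a rotation range of 30 (which Keras interprets in degrees), and the helper name `augment` is hypothetical.

```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Settings taken from the text; their mapping onto these arguments is assumed.
augmenter = ImageDataGenerator(
    zoom_range=0.2,          # random zoom of 20%
    height_shift_range=0.1,  # vertical shift of up to 10% of the height
    width_shift_range=0.1,   # horizontal shift of up to 10% of the width
    rotation_range=30,       # random rotation; Keras reads this value as degrees
)


def augment(image, copies=10):
    """Return `copies` randomly transformed versions of one HxWxC image array.

    Note: the random affine transforms require SciPy to be installed.
    """
    return [augmenter.random_transform(image) for _ in range(copies)]
```

Calling `augment` on each original image with `copies=10` would correspond to the "10 images from each original image" described in the text.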

2.5 Experimental environment settings and performance evaluation metrics

The proposed model was implemented using Python (v. 3.8), OpenCV (v. 4.7), and the Keras library (v. 2.8) on Windows 10 Pro, on a system with an Intel i5 processor running at 2.9 GHz, an Nvidia RTX 2060 graphics processing unit, and 16 GB of RAM.

Various metrics were utilized to assess the effectiveness of the models in categorizing corn seed diseases, including commonly used indicators such as accuracy, precision, recall, and the F1-score. Accuracy represents the proportion of correctly identified samples across all classes, Recall measures the ratio of correctly classified positive instances among all actual positives, Precision quantifies the proportion of correctly identified positive instances out of all predicted positives, and the F1-score is the harmonic mean of Precision and Recall. These metrics were computed using Equations (1) through (4).
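The numbered equations do not appear in this text. For reference, the standard definitions of these metrics are given below in terms of the per-class counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN); the assignment of equation numbers to metrics is an assumption.

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}
$$

$$
\text{Precision} = \frac{TP}{TP + FP} \tag{2}
$$

$$
\text{Recall} = \frac{TP}{TP + FN} \tag{3}
$$

$$
\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}
$$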

3 Results and discussion

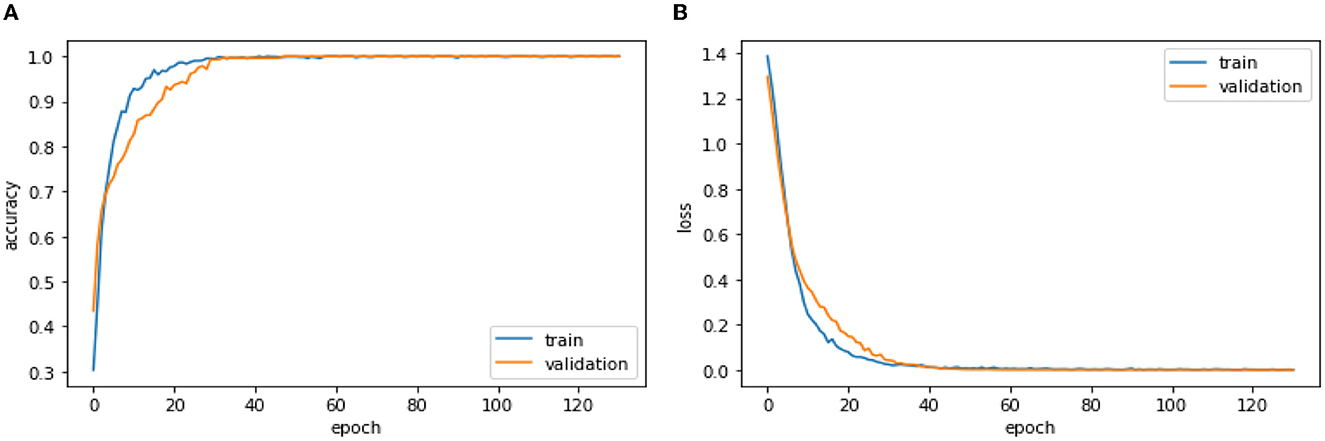

The results of the proposed model, an extended iteration of MobileNetV2, underscore its remarkable performance, achieving high accuracy rates through a strategic combination of architectural enhancements and meticulous model tuning techniques. Trained on augmented data and leveraging transfer learning, the proposed model's accuracy and loss dynamics are vividly depicted in Figure 4.

Figure 4A shows the proposed model's accuracy during the training and validation phases. During the initial training iterations, the model started at a modest 30% accuracy. The accuracy improved steadily with each epoch, reaching 87% by the 10th iteration. This upward trend continued beyond the 10th iteration, culminating in an accuracy of ~96% by the 35th iteration, after which it remained stable. The validation accuracy closely mirrored the training accuracy, starting at 43% and climbing steadily to 96.27% by the 37th iteration. This parity between training and validation accuracy indicates that the model generalizes well to unseen data. The absence of oscillation in the training and validation curves, together with their close agreement, suggests a lack of overfitting. We attribute this to the model tuning techniques applied, including data augmentation, transfer learning, and adaptive learning rate.

Figure 4B complements the accuracy visualization by presenting the loss dynamics of the proposed model. In the initial stages, both training and validation losses were relatively high. However, as the training progressed, a notable reduction in loss was observed, reaching a minimum at the 35th iteration. This substantial decrease in loss underscores the model's capacity to converge effectively, refining its predictive capabilities and minimizing errors. Importantly, the sustained low loss from the 35th iteration onward signifies the model's stability and robustness in capturing the underlying patterns within the data.

The high accuracy and low loss achieved by the proposed model can be attributed to the incorporation of additional layers (Average Pooling, Flatten, Dense, Dropout, and softmax) that enhance feature extraction and classification. Furthermore, the various model tuning techniques play a crucial role in strengthening the model's performance. The adaptive learning rate, with an initial rate set to INIT_LR = 0.001 and a decay calculated as decay = INIT_LR/EPOCHS, expedites training while dynamically adjusting the learning rate. This adaptability supports efficient convergence and alleviates the challenge of manually selecting an optimal learning rate and schedule.
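The text does not state how the decay value enters the optimizer. One common reading, sketched below, is the inverse-time rule that legacy Keras optimizers apply to their `decay` argument, where the step counts optimizer updates (batches) rather than epochs. The value of `EPOCHS` is hypothetical, since the epoch count is not stated, and the function name is illustrative.

```python
INIT_LR = 0.001            # initial learning rate stated in the text
EPOCHS = 40                # hypothetical: the epoch count is not given in the text
DECAY = INIT_LR / EPOCHS   # decay = INIT_LR / EPOCHS, as described


def decayed_lr(step, init_lr=INIT_LR, decay=DECAY):
    """Inverse-time decay: lr = init_lr / (1 + decay * step)."""
    return init_lr / (1.0 + decay * step)


# Learning rate at a few update steps (step 0 is the first update).
schedule = {step: decayed_lr(step) for step in (0, 1_000, 10_000, 40_000)}
```

Under this rule the learning rate starts at `INIT_LR` and shrinks smoothly as training proceeds, so no manual schedule needs to be chosen.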

Model checkpointing further enhances the model's robustness. This technique establishes checkpoints during training and, in the configuration used here, monitors the validation loss. By saving the model's weights only when the validation loss improves on the previous best, the checkpointing mechanism ensures that the trained model is preserved at its best-performing state, which guards against keeping an overfitted model and supports generalization to unseen data. The dropout technique also addresses overfitting. By randomly selecting and discarding neurons during training, the model avoids relying too heavily on specific neurons, promoting better generalization. This, in turn, helps the model remain resilient when faced with diverse datasets.
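The save-only-on-improvement behavior described above can be illustrated without any framework. The sketch below mimics the logic of a checkpoint that monitors validation loss in "min" mode; the function name and the loss values in the usage note are hypothetical.

```python
def epochs_that_save(val_losses):
    """Return the 1-based epochs at which a best-only checkpoint would save.

    A save happens only when the validation loss is strictly lower than
    the best value seen so far, so ties and regressions are skipped.
    """
    best = float("inf")
    saved = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            saved.append(epoch)
    return saved
```

For example, for the made-up loss sequence `[0.9, 0.7, 0.75, 0.6, 0.6]`, the checkpoint would be written at epochs 1, 2, and 4, and the file on disk at the end of training would hold the epoch-4 weights.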

Additionally, the incorporation of transfer learning, especially the adopted hybrid approach, contributes significantly to the model's success. The use of models pre-trained on extensive datasets, followed by fine-tuning on the corn seed dataset, gives the model knowledge obtained during training on related tasks. The hybrid transfer learning approach, in which the newly added layers are trained first and the existing layers are fine-tuned afterward, aligns the model with the characteristics of the specified dataset. This approach allows the model to leverage its pre-existing knowledge while adapting to the intricacies of the corn seed dataset, ultimately enhancing its overall performance and accuracy.
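The two-phase schedule of the hybrid approach can be sketched as a small helper that toggles the `trainable` flag on the pre-trained layers. It works on any objects exposing a `trainable` attribute, as Keras layers do; the function name and the 1-based epoch convention are assumptions, and in Keras the model would need to be recompiled after the flag changes for the change to take effect.

```python
UNFREEZE_AT = 20  # the text unfreezes the existing layers at the 20th iteration


def set_backbone_trainable(backbone_layers, epoch, unfreeze_at=UNFREEZE_AT):
    """Freeze pre-trained layers before `unfreeze_at`, unfreeze them from then on.

    `epoch` is 1-based. Returns the flag that was applied to every layer.
    """
    trainable = epoch >= unfreeze_at
    for layer in backbone_layers:
        layer.trainable = trainable
    return trainable
```

In the first phase only the newly added head receives weight updates; from the 20th epoch onward the whole network is fine-tuned, typically with a small learning rate so that the pre-trained weights change only slightly.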

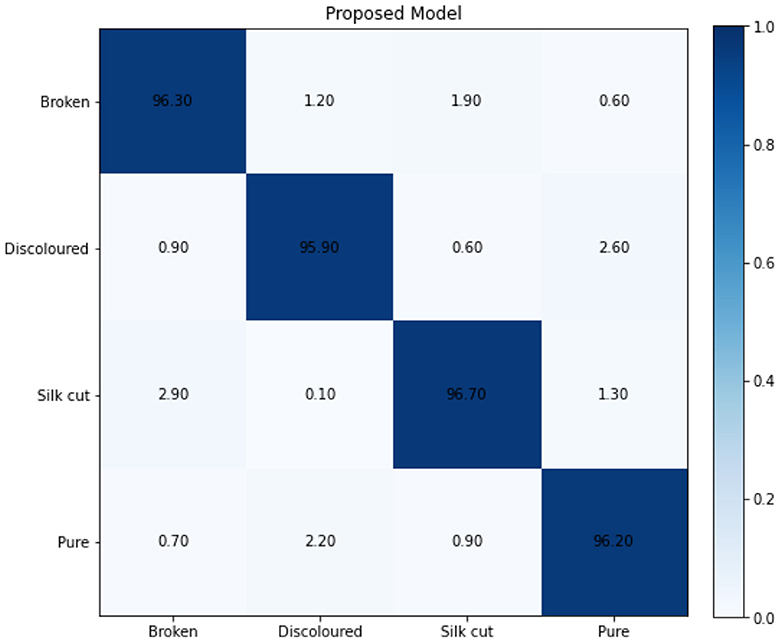

Figure 5 provides a detailed view of the confusion matrix generated during the validation phase of the proposed model, offering insights into its performance across the four distinct classes of the corn disease dataset: Broken, Discolored, Silk cut, and Pure. The diagonal elements of the confusion matrix represent the true positive rates for each class, signifying the instances correctly classified by the model. Notably, the proposed model excels in accurately identifying instances of Broken, Discolored, Silk cut, and Pure, with high percentages of 96.3%, 95.9%, 96.7%, and 96.2%, respectively. These high true positive rates underscore the model's proficiency in recognizing and classifying instances of each specific corn disease category.

However, a closer examination of the off-diagonal elements reveals some misclassification. For example, a small percentage (1.2%) of instances belonging to the Broken class are misclassified as Discolored. Similarly, 2.9% of instances from the Silk cut class are misclassified as Broken. These misclassifications could be attributed to the visual resemblance between Broken and Silk cut seeds, which makes them difficult for the model to distinguish accurately. Another noteworthy misclassification occurs between the Discolored and Pure classes, with 2.6% of Discolored instances incorrectly classified as Pure and 2.2% of Pure instances misclassified as Discolored. This is plausible given the visual similarities between some Discolored and Pure seeds. Because the model relies on visual features, it may misinterpret subtle differences or overlapping characteristics between these classes.

While the proposed model demonstrates high accuracy and true positive rates across the four classes, the confusion matrix sheds light on specific challenges in differentiating between classes with visual similarities. The misclassifications, particularly between Broken and Silk cut, and Discolored and Pure, highlight the intricacies of the task and suggest avenues for further refinement, such as incorporating additional features or leveraging more advanced techniques to enhance the model's discriminatory capabilities in visually challenging scenarios.

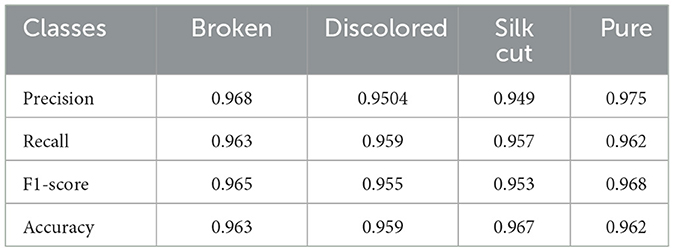

Table 2 presents a comprehensive overview of the evaluation metrics for the proposed model across the different classes of the corn disease dataset: Broken, Discolored, Silk cut, and Pure. These metrics, including Precision, Recall, F1-score, and Accuracy, provide a nuanced understanding of the model's performance in terms of both correctness and completeness in classification. Precision, as outlined in the table, measures the accuracy of positive predictions, indicating the proportion of instances correctly classified as belonging to a specific class. The proposed model exhibits high precision values across all classes, ranging from 0.949 for Silk cut to 0.975 for Pure. These high precision values underscore the model's effectiveness in minimizing false positives, demonstrating its capability to accurately identify instances belonging to each specific corn disease class. The Recall values, also known as Sensitivity or True Positive Rate, represent the proportion of actual positive instances correctly identified by the model. The proposed model achieves commendable Recall values, ranging from 0.957 for Silk cut to 0.963 for Broken. These values highlight the model's ability to capture a significant portion of the actual positive instances for each class, emphasizing its sensitivity to detecting instances of corn diseases.

F1-score, a harmonic mean of Precision and Recall, provides a balanced metric that considers both false positives and false negatives. The proposed model achieves high F1-scores across all classes, ranging from 0.953 for Silk cut to 0.968 for Pure. These scores indicate a strong balance between precision and recall, showcasing the model's overall effectiveness in achieving both accuracy and completeness in classification. The Accuracy values, representing the overall correctness of the model across all classes, are consistently high, ranging from 0.959 for Discolored to 0.967 for Silk cut. This demonstrates the model's proficiency in making correct predictions for the entire dataset. The evaluation metrics in Table 2 collectively highlight the proposed model's robust performance in classifying instances across various corn disease classes. The high precision, recall, and F1-score values underscore its effectiveness in achieving accurate and comprehensive classification. The consistent accuracy values across all classes further reinforce the model's overall reliability and suitability for the specified task.
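The per-class metrics discussed above can be derived from a confusion matrix as sketched below. The matrix values are hypothetical and chosen only for illustration; they are not the counts behind Figure 5 or Table 2. Rows are taken to be true classes and columns predicted classes, which is an assumption about the figure's layout.

```python
LABELS = ["Broken", "Discolored", "Silk cut", "Pure"]

# Hypothetical counts: rows = true class, columns = predicted class.
CM = [
    [96, 1, 2, 1],
    [1, 96, 0, 3],
    [3, 0, 97, 0],
    [1, 2, 0, 97],
]


def per_class_metrics(cm, labels):
    """Return ({label: {precision, recall, f1}}, overall_accuracy)."""
    n = len(labels)
    total = sum(sum(row) for row in cm)
    metrics = {}
    for k, label in enumerate(labels):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # true k predicted as other
        fp = sum(cm[i][k] for i in range(n)) - tp   # other predicted as k
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[label] = {"precision": precision, "recall": recall, "f1": f1}
    accuracy = sum(cm[k][k] for k in range(n)) / total
    return metrics, accuracy


metrics, accuracy = per_class_metrics(CM, LABELS)
```

In this layout, recall for a class is its diagonal entry divided by its row sum, and precision is the same entry divided by its column sum, which is why a class can have high recall but lower precision when other classes are often mistaken for it.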

3.1 Feature mapping analysis

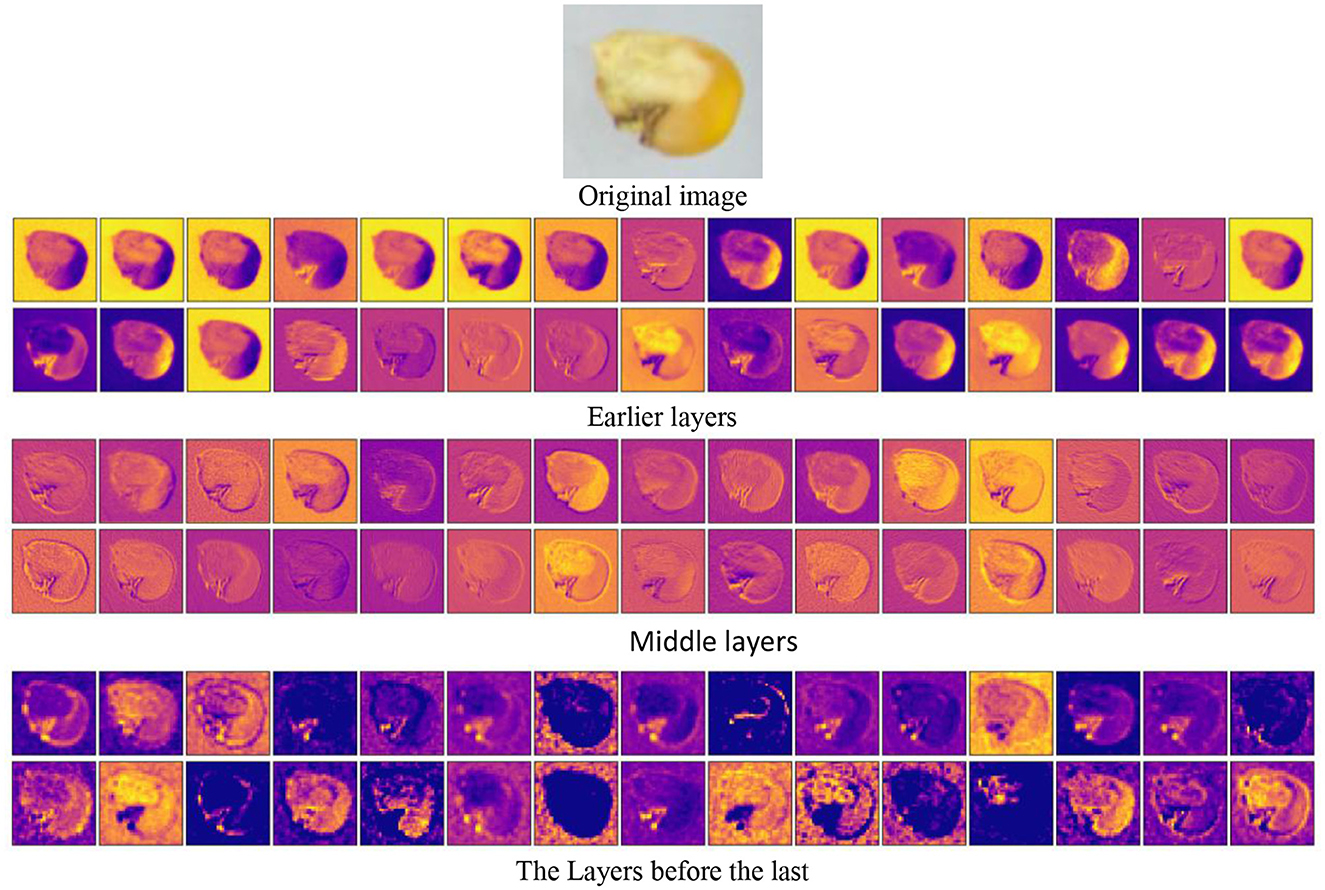

To examine how the proposed model processes an input, we visualize its feature maps for an image of the Silk cut class.

Figure 6 shows the feature maps produced at successive layers of the CNN, starting from the original Silk cut image. The earlier layers respond inconsistently, detecting diverse elements of the image. The middle layers focus on edges and boundaries, capturing the structure of the corn. The layers just before the last, despite a slight blurring effect from the activation functions, capture the small, fine details that characterize the Silk cut image. The visualization thus illustrates how the network's learned features evolve from layer to layer.
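To make the notion of a feature map concrete, the toy sketch below applies a single hand-picked edge filter to a tiny synthetic image and passes the result through a ReLU activation. This is only an illustration of the general mechanism: the image, the Sobel-like kernel, and the helper names `conv2d_valid` and `relu` are assumptions for this example and are not taken from the proposed model, whose filters are learned during training.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding (cross-correlation,
    as convolutional layers are commonly implemented)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output


def relu(feature_map):
    """Set negative activations to zero."""
    return [[max(0.0, value) for value in row] for row in feature_map]


# Synthetic 5x6 image: dark left half (0), bright right half (1).
IMAGE = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked Sobel-like kernel that responds to dark-to-bright vertical edges.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

feature_map = relu(conv2d_valid(IMAGE, VERTICAL_EDGE))
for row in feature_map:
    print(row)
```

The resulting map is non-zero only in the columns where the window straddles the dark-to-bright boundary, which is the kind of edge-focused response described for the middle layers.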

3.2 Comparative analysis of proposed model with state-of-the-art models

Table 3 compares the overall performance of the proposed model with that of state-of-the-art (SOTA) models for corn seed disease classification during the validation phase. The SOTA models were trained and validated using model tuning techniques such as data augmentation and transfer learning. The proposed model, as described in the previous section, was additionally modified by adding five layers, and further tuning techniques were applied: Adaptive Learning Rate, Model Checkpointing, Dropout, and Transfer Learning.

Table 3 reports Precision, Recall, F1-score, and Accuracy for each model. The proposed model is benchmarked against VGG19, EfficientNetV2S, ResNet50, and MobileNetV2. The proposed model, which uses MobileNetV2 as its base, achieves the highest values on all four metrics, indicating that it classifies the instances in the validation set more accurately than the SOTA models.

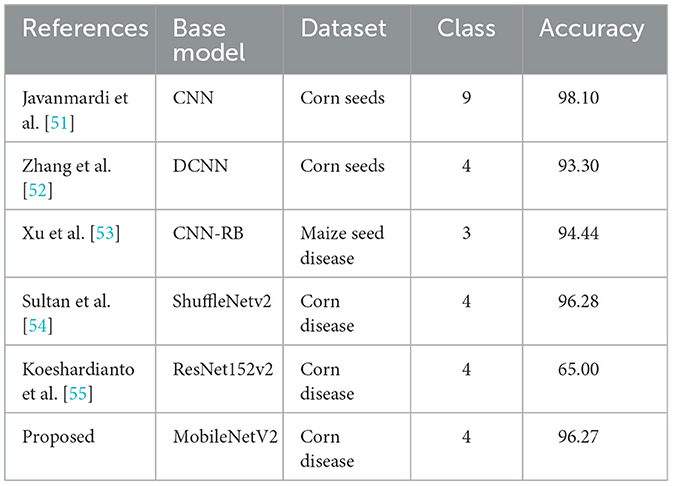

Beyond the direct comparison with SOTA models, Table 4 extends the evaluation to existing models from the literature. Each entry in Table 4 corresponds to one study and lists the base model, the dataset, the number of classes, and the accuracy achieved. The proposed model, with MobileNetV2 as its base, achieves an accuracy of 96.27% on a corn disease dataset with four classes, which is competitive with the existing models in the literature.

Together, Tables 3 and 4 show that the proposed model outperforms the SOTA models and is competitive with existing studies, which supports the effectiveness of the added layers and the tuning techniques. The proposed model, built on MobileNetV2, is therefore a promising solution for accurate and efficient corn seed disease classification in agriculture.

4 Conclusion

The proposed model addresses the discriminative classification of corn seed diseases and uses MobileNetV2 as its base model. Five layers were added: Average Pooling, Flatten, Dense, Dropout, and softmax. The Average Pooling layer reduces computational complexity, and the Flatten layer converts the feature maps into a one-dimensional array for input to the Dense layer. The Dense layer with ReLU activation captures complex patterns, and the Dropout layer reduces overfitting by randomly deactivating neurons during training. The softmax classification layer has four nodes, one per corn disease class. Model tuning techniques (data augmentation, adaptive learning rate, model checkpointing, dropout, and transfer learning) further contribute to the model's efficiency. Data augmentation generates artificial images, mitigating data limitations. The adaptive learning rate expedites training, model checkpointing helps guard against overfitting, and transfer learning leverages pre-trained models for task-specific fine-tuning. The proposed model reaches an accuracy of 96.27%, with accuracy improving steadily and loss remaining low over the course of training. Precision, recall, F1-score, and accuracy confirm its effectiveness across the Broken, Discolored, Silk cut, and Pure classes. The feature mapping analysis visualizes the model's learning process, showing how it comes to capture fine features.
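The interplay of an adaptive learning rate and model checkpointing can be sketched with a small simulation. The schedule used here (halving the learning rate after two epochs without improvement in validation loss) and the checkpoint rule (remember the epoch with the lowest validation loss) are assumptions chosen for illustration; the validation losses are invented, and the function name `simulate_training` is hypothetical rather than part of the study's code.

```python
def simulate_training(val_losses, initial_lr=1e-3, factor=0.5, patience=2):
    """Replay a sequence of validation losses and apply two tuning rules.

    - Adaptive learning rate (assumed reduce-on-plateau rule): multiply the
      learning rate by `factor` after `patience` epochs without improvement.
    - Checkpointing (assumed best-only rule): remember the epoch with the
      lowest validation loss; a real run would save the weights there.
    """
    lr = initial_lr
    best_loss = float("inf")
    best_epoch = None
    epochs_without_improvement = 0
    lr_history = []

    for epoch, loss in enumerate(val_losses):
        lr_history.append(lr)  # learning rate used during this epoch
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch  # checkpoint: weights would be saved here
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                lr *= factor  # plateau detected: lower the learning rate
                epochs_without_improvement = 0

    return best_epoch, best_loss, lr_history


# Invented validation losses for eight epochs; NOT the paper's training curve.
VAL_LOSSES = [0.90, 0.60, 0.45, 0.47, 0.46, 0.40, 0.41, 0.42]

best_epoch, best_loss, lr_history = simulate_training(VAL_LOSSES)
print("best epoch:", best_epoch, "best loss:", best_loss)
print("learning rates:", lr_history)
```

In this toy run the checkpoint ends up at the epoch with the lowest validation loss rather than at the final epoch, which is why checkpointing is commonly paired with a validation metric.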

Comparative analysis with SOTA models and existing studies shows that the proposed model achieves higher precision, recall, F1-score, and accuracy than its counterparts, making it a robust solution for corn seed disease classification and demonstrating the impact of the architectural enhancements and model tuning techniques. The main limitation is misclassification between visually similar classes, which indicates scope for further refinement. Future work may involve incorporating additional features, leveraging advanced techniques, and exploring diverse datasets.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

MA: Data curation, Formal analysis, Investigation, Project administration, Software, Supervision, Validation, Writing – original draft, Writing – review & editing. YG: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by the Deanship of Scientific Research, Vice Presidency for Graduate Studies and Scientific Research, King Faisal University, Saudi Arabia, under the Project Grant 5,215.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Phupattanasilp P, Tong SR. Augmented reality in the integrative internet of things (AR-IoT): application for precision farming. Sustainability. (2019) 11:2658. doi: 10.3390/su11092658

2. Kong J, Wang H, Wang X, Jin X, Fang X, Lin S, et al. Multi-stream hybrid architecture based on cross-level fusion strategy for fine-grained crop species recognition in precision agriculture. Comput Electron Agric. (2021) 185:106134. doi: 10.1016/j.compag.2021.106134

3. Hamid Y, Wani S, Soomro AB, Alwan AA, Gulzar Y. Smart seed classification system based on MobileNetV2 architecture. In: Proceedings of the 2022 2nd International Conference on Computing and Information Technology (ICCIT). Tabuk: IEEE (2022). p. 217–22. doi: 10.1109/ICCIT52419.2022.9711662

4. World Corn Production 2022/2023. Available online at: http://www.worldagriculturalproduction.com/crops/corn.aspx (accessed July 3, 2023).

5. Watson A, Burgess LW, Summerell BA, O'Keeffe K. Fusarium species associated with cob rot of sweet corn and maize in New South Wales. Australas Plant Dis Notes. (2014) 9:1–4. doi: 10.1007/s13314-014-0142-1

6. Sastry KS. Seed-Borne Plant Virus Diseases. New York, NY: Springer (2013). doi: 10.1007/978-81-322-0813-6

7. Schmidt M, Horstmann S, De Colli L, Danaher M, Speer K, Zannini E, et al. Impact of fungal contamination of wheat on grain quality criteria. J Cereal Sci. (2016) 69:95–103. doi: 10.1016/j.jcs.2016.02.010

8. Franco-Duarte R, Cernáková L, Kadam S, Kaushik KS, Salehi B, Bevilacqua A, et al. Advances in chemical and biological methods to identify microorganisms—from past to present. Microorganisms. (2019) 7:130. doi: 10.3390/microorganisms7050130

9. Lu Z, Zhao M, Luo J, Wang G, Wang D. Design of a winter-jujube grading robot based on machine vision. Comput Electron Agric. (2021) 186:106170. doi: 10.1016/j.compag.2021.106170

10. Li J, Wu J, Lin J, Li C, Lu H, Lin C, et al. Nondestructive identification of litchi downy blight at different stages based on spectroscopy analysis. Agriculture. (2022) 12:402. doi: 10.3390/agriculture12030402

11. Dhiman P, Bonkra A, Kaur A, Gulzar Y, Hamid Y, Mir MS, et al. Healthcare trust evolution with explainable artificial intelligence: bibliometric analysis. Information. (2023) 14:541. doi: 10.3390/info14100541

12. Hamid Y, Elyassami S, Gulzar Y, Balasaraswathi VR, Habuza T, Wani S, et al. An improvised CNN model for fake image detection. Int J Inf Technol. (2022) 15:1–11. doi: 10.1007/s41870-022-01130-5

13. Alam S, Raja P, Gulzar Y. Investigation of machine learning methods for early prediction of neurodevelopmental disorders in children. Wirel Commun Mob Comput. (2022) 2022:5766386. doi: 10.1155/2022/5766386

14. Anand V, Gupta S, Gupta D, Gulzar Y, Xin Q, Juneja S, et al. Weighted average ensemble deep learning model for stratification of brain tumor in MRI images. Diagnostics. (2023) 13:1320. doi: 10.3390/diagnostics13071320

15. Khan SA, Gulzar Y, Turaev S, Peng YSA. Modified HSIFT descriptor for medical image classification of anatomy objects. Symmetry. (2021) 13:1987. doi: 10.3390/sym13111987

16. Gulzar Y, Khan SA. Skin lesion segmentation based on vision transformers and convolutional neural networks—a comparative study. Appl Sci. (2022) 12:5990. doi: 10.3390/app12125990

17. Mehmood A, Gulzar Y, Ilyas QM, Jabbari A, Ahmad M, Iqbal S, et al. SBXception: a shallower and broader xception architecture for efficient classification of skin lesions. Cancers. (2023) 15:3604. doi: 10.3390/cancers15143604

18. Archana K, Kaur A, Gulzar Y, Hamid Y, Mir MS, Soomro AB, et al. Deep learning models/techniques for COVID-19 detection: a survey. Front Appl Math Stat. (2023) 9:1303714. doi: 10.3389/fams.2023.1303714

19. Sahlan F, Hamidi F, Misrat MZ, Adli MH, Wani S, Gulzar Y, et al. Prediction of mental health among university students. Int J Percept Cogn Comput. (2021) 7:85–91. Available online at: https://journals.iium.edu.my/kict/index.php/IJPCC/article/view/225

20. Hanafi MFFM, Nasir MSFM, Wani S, Abdulghafor RAA, Gulzar Y, Hamid YA, et al. Real time deep learning based driver monitoring system. Int J Percept Cogn Comput. (2021) 7:79–84. Available online at: https://journals.iium.edu.my/kict/index.php/IJPCC/article/view/224

21. Gulzar Y, Alwan AA, Abdullah RM, Abualkishik AZ, Oumrani MOCA. Ordered clustering-based algorithm for E-commerce recommendation system. Sustainability. (2023) 15:2947. doi: 10.3390/su15042947

22. Dhiman P, Kaur A, Balasaraswathi VR, Gulzar Y, Alwan AA, Hamid Y, et al. Image acquisition, preprocessing and classification of citrus fruit diseases: a systematic literature review. Sustainability. (2023) 15:9643. doi: 10.3390/su15129643

23. Malik I, Ahmed M, Gulzar Y, Baba SH, Mir MS, Soomro AB, et al. Estimation of the extent of the vulnerability of agriculture to climate change using analytical and deep-learning methods: a case study in Jammu, Kashmir, and Ladakh. Sustainability. (2023) 15:11465. doi: 10.3390/su151411465

24. Ayoub S, Gulzar Y, Reegu FA, Turaev S. Generating image captions using Bahdanau attention mechanism and transfer learning. Symmetry. (2022) 14:2681. doi: 10.3390/sym14122681

25. Khan F, Ayoub S, Gulzar Y, Majid M, Reegu FA, Mir MS, et al. MRI-based effective ensemble frameworks for predicting human brain tumor. J Imaging. (2023) 9:163. doi: 10.3390/jimaging9080163

26. Tian J, Zhang Y, Wang Y, Wang C, Zhang S, Ren TA, et al. Method of corn disease identification based on convolutional neural network. In: Proceedings of the 2019 12th International Symposium on Computational Intelligence and Design (ISCID), Vol. 1. Hangzhou: IEEE (2019). p. 245–8. doi: 10.1109/ISCID.2019.00063

27. Mishra S, Sachan R, Rajpal D. Deep convolutional neural network based detection system for real-time corn plant disease recognition. Procedia Comput Sci. (2020) 167:2003–10. doi: 10.1016/j.procs.2020.03.236

28. Gulzar Y, Hamid Y, Soomro AB, Alwan AA, Journaux LA. Convolution neural network-based seed classification system. Symmetry. (2020) 12:2018. doi: 10.3390/sym12122018

29. Yu H, Liu J, Chen C, Heidari AA, Zhang Q, Chen H, et al. Corn leaf diseases diagnosis based on K-means clustering and deep learning. IEEE Access. (2021) 9:143824–35. doi: 10.1109/ACCESS.2021.3120379

30. Ahmad A, Saraswat D, Gamal AE, Johal GS. Comparison of deep learning models for corn disease identification, tracking, and severity estimation using images acquired from UAV-mounted and handheld sensors. In: Proceedings of the American Society of Agricultural and Biological Engineers Annual International Meeting, ASABE 2021, Vol. 3. (2021). p. 1572–82. doi: 10.13031/aim.202100566

31. Ahmad A, Aggarwal V, Saraswat D, El Gamal A, Johal GS. GeoDLS: a deep learning-based corn disease tracking and location system using RTK geolocated UAS imagery. Remote Sens. (2022) 14:4140. doi: 10.3390/rs14174140

32. Albarrak K, Gulzar Y, Hamid Y, Mehmood A, Soomro AB. A deep learning-based model for date fruit classification. Sustainability. (2022) 14:6339. doi: 10.3390/su14106339

33. Fraiwan M, Faouri E, Khasawneh N. Classification of corn diseases from leaf images using deep transfer learning. Plants. (2022) 11:2668. doi: 10.3390/plants11202668

34. Gulzar Y. Fruit image classification model based on MobileNetV2 with deep transfer learning technique. Sustainability. (2023) 15:1906. doi: 10.3390/su15031906

35. Mamat N, Othman MF, Abdulghafor R, Alwan AA, Gulzar Y. Enhancing image annotation technique of fruit classification using a deep learning approach. Sustainability. (2023) 15:901. doi: 10.3390/su15020901

36. Aggarwal S, Gupta S, Gupta D, Gulzar Y, Juneja S, Alwan AA, et al. An artificial intelligence-based stacked ensemble approach for prediction of protein subcellular localization in confocal microscopy images. Sustainability. (2023) 15:1695. doi: 10.3390/su15021695

37. Masood M, Nawaz M, Nazir T, Javed A, Alkanhel R, Elmannai H, et al. MaizeNet: a deep learning approach for effective recognition of maize plant leaf diseases. IEEE Access. (2023) 11:52862–76. doi: 10.1109/ACCESS.2023.3280260

38. Ahmad A, Gamal AE, Saraswat D. Toward generalization of deep learning-based plant disease identification under controlled and field conditions. IEEE Access. (2023) 11:9042–57. doi: 10.1109/ACCESS.2023.3240100

39. Hatem AS, Altememe MS, Fadhel MA. Identifying corn leaves diseases by extensive use of transfer learning: a comparative study. Indones J Electr Eng Comput Sci. (2023) 29:1030–8. doi: 10.11591/ijeecs.v29.i2.pp1030-1038

40. Divyanth LG, Ahmad A, Saraswat D. A two-stage deep-learning based segmentation model for crop disease quantification based on corn field imagery. Smart Agric Technol. (2023) 3:100108. doi: 10.1016/j.atech.2022.100108

41. Rajeena FPP, Ashwathy SU, Moustafa SU, Ali MAS. Detecting plant disease in corn leaf using EfficientNet architecture—an analytical approach. Electronics. (2023) 12:1938. doi: 10.3390/electronics12081938

42. Nagar S, Pani P, Nair R, Varma G. Automated seed quality testing system using GAN & active learning. arXiv [preprint]. (2021). doi: 10.48550/arXiv.2110.00777

43. Sandler M, Howard A, Zhu M, Zhmoginov A, Chen L-C. MobileNetV2: inverted residuals and linear bottlenecks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Salt Lake City, UT: IEEE (2018). p. 4510–20. doi: 10.1109/CVPR.2018.00474

44. Tan M, Le QV. EfficientNet: rethinking model scaling for convolutional neural networks. In: 36th International Conference on Machine Learning, ICML 2019. Long Beach, CA (2019). p. 10691–700.

45. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. In: 3rd International Conference on Learning Representations, ICLR 2015 - Conference Track Proceedings 2015. San Diego, CA (2015).

46. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, NV: IEEE (2016). p. 770–8. doi: 10.1109/CVPR.2016.90

47. Ayoub S, Gulzar Y, Rustamov J, Jabbari A, Reegu FA, Turaev S, et al. Adversarial approaches to tackle imbalanced data in machine learning. Sustainability. (2023) 15:7097. doi: 10.3390/su15097097

48. Gulzar Y, Ünal Z, Aktas HA, Mir MS. Harnessing the power of transfer learning in sunflower disease detection: a comparative study. Agriculture. (2023) 13:1479. doi: 10.3390/agriculture13081479

49. Khan F, Gulzar Y, Ayoub S, Majid M, Mir MS, Soomro AB, et al. Least square-support vector machine based brain tumor classification system with multi model texture features. Front Appl Math Stat. (2023) 9:1324054. doi: 10.3389/fams.2023.1324054

50. Majid M, Gulzar Y, Ayoub S, Khan F, Reegu FA, Mir MS, et al. Enhanced transfer learning strategies for effective kidney tumor classification with CT imaging. Int J Adv Comput Sci Appl. (2023) 14:2023. doi: 10.14569/IJACSA.2023.0140847

51. Javanmardi S, Miraei Ashtiani SH, Verbeek FJ, Martynenko A. Computer-vision classification of corn seed varieties using deep convolutional neural network. J Stored Prod Res. (2021) 92:101800. doi: 10.1016/j.jspr.2021.101800

52. Zhang J, Dai L, Cheng F. Corn seed variety classification based on hyperspectral reflectance imaging and deep convolutional neural network. J Food Meas Charact. (2021) 15:484–94. doi: 10.1007/s11694-020-00646-3

53. Xu P, Fu L, Xu K, Sun W, Tan Q, Zhang Y, et al. Investigation into maize seed disease identification based on deep learning and multi-source spectral information fusion techniques. J Food Compos Anal. (2023) 119:105254. doi: 10.1016/j.jfca.2023.105254

54. Sultan M, Shamshiri RR, Ahamed S, Farooq M, Lu L, Liu W, et al. Lightweight corn seed disease identification method based on improved ShuffleNetV2. Agriculture. (2022) 12:1929. doi: 10.3390/agriculture12111929

Keywords: deep learning, corn, precision agriculture, image classification, corn seed, corn seed disease

Citation: Alkanan M and Gulzar Y (2024) Enhanced corn seed disease classification: leveraging MobileNetV2 with feature augmentation and transfer learning. Front. Appl. Math. Stat. 9:1320177. doi: 10.3389/fams.2023.1320177

Received: 11 October 2023; Accepted: 07 December 2023; Published: 03 January 2024.

Edited by:

Umar Muhammad Modibbo, Modibbo Adama University, Nigeria

Copyright © 2024 Alkanan and Gulzar. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yonis Gulzar, ygulzar@kfu.edu.sa
