
REVIEW article

Front. Appl. Math. Stat., 14 October 2022

Sec. Mathematical Biology

Volume 8 - 2022 | https://doi.org/10.3389/fams.2022.947053

This article is part of the Research Topic: Data science in neuro- and onco-biology

Andreas A. Ioannides¹*, Constantinos Kourouyiannis¹, Christodoulos Karittevlis¹, Lichan Liu¹, Ioannis Michos², Michalis Papadopoulos², Evangelos Papaefthymiou², Orestis Pavlou², Vicky Papadopoulou Lesta², Andreas Efstathiou²

In this article, we present a unified framework for the analysis and characterization of a complex system and demonstrate its application in two diverse fields: neuroscience and astrophysics. The framework brings together techniques from graph theory, applied mathematics, and dimensionality reduction through principal component analysis (PCA), separating linear PCA and its extensions. The implementation of the framework maps an abstract multidimensional set of data into reduced representations, which enable the extraction of its most important properties (features) characterizing its complexity. These reduced representations can be sign-posted by known examples to provide meaningful descriptions of the results that can spur explanations of phenomena and support or negate proposed mechanisms in each application. In this work, we focus on the clustering aspects, highlighting relatively fixed stable properties of the system under study. We include examples where clustering leads to semantic maps and representations of dynamic processes within the same display. Although the framework is composed of existing theories and methods, its usefulness is exactly that it brings together seemingly different approaches into a common framework, revealing their differences/commonalities, advantages/disadvantages, and suitability for a given application. The framework provides a number of different computational paths and techniques to choose from, based on the dimension reduction method to apply, the clustering approaches to be used, as well as the representations (embeddings) of the data in the reduced space. Although here it is applied to just two scientific domains, neuroscience and astrophysics, it can potentially be applied in several other branches of science, since it is not based on any specific domain knowledge.

Complex systems, a common framework for scientific studies of phenomena composed of more than one entity, hold a prominent place in scientific computing. It is hard to think of a single phenomenon that does not involve a number of interacting entities.

The notion of network modeling lies at the heart of systems science, providing a solid framework for the study of systems of many interacting entities that require no central control. In a network, simple rules of operation can give rise to sophisticated information processing and to adaptation via learning or evolution [1]. A network or graph [2] can be used to represent any system consisting of a set of entities (the nodes of the graph) that may be related to each other via pairwise relationships (the edges of the graph). The entities could be any set: atoms, molecules, brain centers, humans, societies, machines, countries, planets, stars, or galaxies. The edges are pairwise relations that may declare dependency among the involved entities, conflict, binding, allocation, assignment, (dis)similarity, friendship, positive or negative relationship, etc. Despite their simple definition, networks and network science have become one of the most powerful interdisciplinary frameworks for the study of complex systems. The strong mathematical foundation of graph theory supports the core of network science, while its flexibility and generality make it adaptable to applications in a wide and diverse range of domains of knowledge.

Defining what the nodes and the edges of a graph correspond to in the real system is a crucial step and can be a deceptively challenging task. For more details about structure/function relationships, emerging properties and other factors playing a critical role in the description of complex systems refer to Turnbull et al. [3].

In this work, we present a unified framework for analyzing complex systems and apply it to problems in two diverse fields: neuroscience and astrophysics. The framework brings together different approaches for the analysis of a complex system, i.e., principal component analysis (PCA) and graph theory, under common ground. It provides a number of different computational paths for the analysis of complex systems, which enables their juxtaposition. It also allows different visualizations of the core elements of the system, each revealing different aspects of it (physical properties, similarities, etc.).

In particular, the framework consists of the following steps: (i) the modeling of the data set as a system in a concrete mathematical form leading to a matrix representation (i.e., a similarity matrix); (ii) the representation of the system either as a graph or as a set of features in a space of few dimensions, where (some of the original) metric properties are preserved, with these reduced representations providing a fundamental skeleton that captures the most intrinsic and hidden properties of the system; (iii) the application of several kinds of clustering algorithms either directly on the graph or in the reduced feature space; and finally, (iv) the application of various embeddings of the clustered data in the reduced space or using the graph. The embeddings allow the comparison of the clustered data with other physical properties, the extraction of the most important features of the data set, possible embeddings of new data in the feature space, and the interpretation of the results with the use of domain knowledge. Overall, the framework allows the clarification of the underlying mechanisms and processes within each scientific domain. It can also unveil hidden theoretical similarities in the description of complex systems that can guide the quest for a better overall understanding of each individual system and highlight global patterns that run across scientific domains.

The applicability of the framework is demonstrated in the study of two distinct and apparently very different complex systems, each one affording clear definitions of the nodes and edges at distinct spatial and temporal scales. The first system comes from the domain of neuroscience, with structural elements on the large spatial scale of the entire brain, whose constituent parts are the cytoarchitectonic areas (CA) [4] on the cortical mantle and other areas in the deep brain nuclei. Two neuroscience problems are addressed, each one as a problem of clustering functional data from a number of CAs. The first one probes the organization of sleep, where the framework is utilized to characterize the relationship between sleep stages and to accommodate the periods of high activity within each sleep stage and the periods representing transitions between them. For the second neuroscience application, the framework is applied to probe the nature of evoked responses and the way the thalamus and cortex influence each other. The second system where the framework is applied deals with problems in astrophysics, where galaxies are the nodes. In particular, we study the evolution of ultraluminous infrared galaxies (ULIRGs) through the study of their spectral energy distributions (SEDs). The framework is employed here for the detection of galaxies with similar SEDs, which correspond to different galaxy evolution stages, and for the investigation of the relations among various physical properties of the galaxies and between these properties and the SEDs.

In Section 2, we first introduce the theoretical background we use and then present the proposed framework. The first two subsections of Section 2 introduce the related theory and methods of PCA together with one of its non-linear extensions (Section 2.1) and graph theory (Section 2.2). Section 2.3 then presents and discusses the proposed framework. Section 2.4 introduces two specific extensions of the framework which are used to good effect in the neuroscience applications. The applicability of the framework is demonstrated in Section 3, where it is applied to two problems in neuroscience (Section 3.1) and a problem in astrophysics (Section 3.2). The article concludes with Section 4, where we summarize the proposed framework, its advantages, and its applicability in diverse sciences.

Dimension Reduction (DR) techniques provide a mapping of the original data into a lower dimensional space which maintains its main features. PCA is the prototypical dimensionality reduction method, with applications in data clustering, pattern recognition, image analysis, etc. [5, 6]. It is a linear method with the data spread in a Euclidean space of N dimensions that yields an appropriate low-dimensional orthogonal coordinate system with axes defining the direction of the principal variances of the data.

Due to its limited applicability to linearly structured data, various extensions of PCA have been developed in order to cover data with non-linear dependencies [see [5, 7]]. Non-linear DR techniques can deal with highly complex data by assuming that the data lies on a highly non-linear manifold that can be described in a linear space of much lower dimensions than that of linear PCA. In this study, we will also explore a common non-linear dimension reduction technique, the Kernel PCA method [8].

Assume a set of data of N elements in a D-dimensional Euclidean space, called the Input space, represented by N column vectors $x_i$, $i \in [N]$¹, each of dimension D, constituting the D × N matrix $X$. For technical reasons, we consider centered initial data by subtracting the average vector $\mu$² from the data, obtaining the new variables $\bar{x}_i = x_i - \mu$ and the corresponding matrix $\bar{X} = [\bar{x}_1, \dots, \bar{x}_N]$.

The principal component analysis method attempts to project optimally³ the data points onto a linear subspace (affine subspace in the case of uncentered raw data) of $\mathbb{R}^D$ of dimension d (usually d ≪ D), which reduces to the eigenvalue decomposition of the D × D matrix $S = \bar{X}\bar{X}^{T}$ (the sample covariance matrix of the data, up to normalization). The principal directions of the variations of the data are given by the eigenvectors $u_j$ of the matrix $S$ with corresponding eigenvalues $\lambda_j$ that yield the projected variance⁴.

Since the matrix S is positive semi-definite, its eigenvalues are all non-negative numbers that can be arranged in descending order, and the corresponding eigenvectors are pairwise orthogonal, a fundamental property that provides linearly independent principal directions. The dimensional reduction of the problem is then achieved by considering only the d eigenvectors that correspond to the d largest eigenvalues, where d is a parameter that can be determined using the spectral gap heuristic, which is explained in Section 2.1.3. These eigenvectors constitute the principal directions or components of the variance of the data. As a result, the projections of the data onto the d most significant eigenvectors correspond to their embeddings in a d-dimensional space that captures their main features, i.e., in the feature space of the data.
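The linear PCA steps described above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the implementation used in this work: the function name `linear_pca`, the synthetic data, and the normalization of the covariance matrix by N are assumptions made for the example.

```python
import numpy as np

def linear_pca(X, d):
    """Sketch of linear PCA for a D x N data matrix X (one column per data point)."""
    mu = X.mean(axis=1, keepdims=True)       # average vector
    Xc = X - mu                              # centered data
    S = Xc @ Xc.T / X.shape[1]               # D x D covariance matrix (assumed 1/N normalization)
    eigvals, eigvecs = np.linalg.eigh(S)     # eigh returns ascending eigenvalues for symmetric S
    order = np.argsort(eigvals)[::-1]        # rearrange in descending order
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    U = eigvecs[:, :d]                       # the d principal directions
    Y = U.T @ Xc                             # d x N embedding of the data
    return eigvals, U, Y

# Hypothetical data: 200 points in 3 dimensions lying close to the direction (1, 2, 0).
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.vstack([t, 2.0 * t + 0.05 * rng.normal(size=200), 0.05 * rng.normal(size=200)])
eigvals, U, Y = linear_pca(X, d=1)
```

With this normalization, the variance of the data along each principal direction equals the corresponding eigenvalue, which is the sense in which the eigenvalues yield the projected variance.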

The Kernel PCA method (KPCA), introduced by Schölkopf et al. [8], is a particular non-linear extension of linear PCA. Many modern methods of analysis, e.g., machine learning, make extensive use of it [9]. It is based on the idea of embedding the data into a higher-dimensional space, called the Feature Space and denoted by F. Applying linear PCA in F allows the data to eventually become linearly separable. More formally, KPCA transforms the data from the input space to the feature space through a non-linear map $\phi: \mathbb{R}^D \to F$, which relates the feature variables to the input variables as $x_i \mapsto \phi(x_i)$. The feature space F can have an arbitrarily large dimension, which will be denoted by $D_F$. The new data matrix is the $D_F \times N$ matrix $\Phi$ formed by the columns of the centered transformed data, $\Phi = [\bar{\phi}(x_1), \dots, \bar{\phi}(x_N)]$ with $\bar{\phi}(x_i) = \phi(x_i) - \frac{1}{N}\sum_{j=1}^{N}\phi(x_j)$.

The principal directions in F correspond to the N eigenvectors $v_1, \dots, v_N$ of $\bar{S} = \Phi\Phi^{T}$, associated to the non-zero eigenvalues $\lambda_1 \geq \dots \geq \lambda_N$. The remaining eigenvectors correspond to the zero eigenvalues. This suggests that there exist N-column vectors $w_i$, $i \in [N]$, such that

$$v_i = \Phi w_i, \qquad i \in [N]. \tag{1}$$

Due to the increase in dimension of the problem from D to $D_F$, the order of $\bar{S}$ can be huge, posing computational challenges. However,⁵ the problem is reduced to the eigenvalue problem of the N × N matrix:

$$G = \Phi^{T}\Phi. \tag{2}$$

It can be shown that the eigenvectors of G are precisely the N-vectors $w_i$ of (1) [see [5]]. The latter allows the recovery of the principal directions (i.e., the vectors $v_i$) of the problem. We note that we choose to normalize $w_i$⁶ so that the principal axes $v_i$ become orthonormal.
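The reduction from the large eigenvalue problem in F to the N × N problem can be checked in a short derivation. The sketch below assumes that the matrix whose eigenvectors give the principal directions is $\bar{S} = \Phi\Phi^{T}$ and that $G = \Phi^{T}\Phi$, both without a normalization factor; with a common factor the argument is unchanged. Substituting $v_i = \Phi w_i$ into $\bar{S} v_i = \lambda_i v_i$ gives

$$
\begin{aligned}
\Phi\Phi^{T}\Phi\, w_i &= \lambda_i\, \Phi\, w_i
\;\;\Longleftrightarrow\;\; \Phi\,\bigl(G w_i - \lambda_i w_i\bigr) = 0, \\
\text{which holds whenever}\quad G w_i &= \lambda_i w_i .
\end{aligned}
$$

The orthonormality requirement on the principal axes then fixes the length of each $w_i$:

$$
v_i^{T} v_i = w_i^{T}\Phi^{T}\Phi\, w_i = w_i^{T} G\, w_i = \lambda_i \lVert w_i \rVert^2 = 1
\quad\Longrightarrow\quad \lVert w_i \rVert = \lambda_i^{-1/2}.
$$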

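In every non-linear PCA method, ϕ is in general an unknown map. The formulation of the KPCA method resolves this through a certain positive definite kernel function⁷ $K: \mathbb{R}^D \times \mathbb{R}^D \to \mathbb{R}$ such that

$$K(x_i, x_j) = \phi(x_i)^{T}\phi(x_j). \tag{3}$$

In terms of centered data, the corresponding kernel function, i.e., $\bar{K}(x_i, x_j) = \bar{\phi}(x_i)^{T}\bar{\phi}(x_j)$ with $\bar{\phi}(x_i)$ the centered image of $x_i$ in F, can be calculated by the matrix form expression $\bar{K} = HKH$, where $K$ and $\bar{K}$ also denote the N × N matrices of kernel values, $H = I - \frac{1}{N}\mathbf{1}$, $I$ is the N × N identity matrix, and $\mathbf{1}$ is the N × N matrix of ones. In view of (3), we can use an a priori positive definite kernel, which, in turn, will implicitly introduce a corresponding map ϕ from the input space to the feature space F [see [5, 11]].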

In the literature, there is a variety of kernels that can be used to extract the type of non-linear structures that govern the physical problem at hand. The most commonly used kernel, apart from the linear one (which is just the Euclidean dot product, so that KPCA naturally generalizes PCA), is the Gaussian kernel

$$K(x_i, x_j) = \exp\left(-\gamma \lVert x_i - x_j \rVert^2\right),$$

where $\gamma = 1/(2\sigma^2)$ and σ determines the width of the kernel [12]. We have chosen to apply the Gaussian kernel on our data. A common choice of σ is the standard deviation of the sample of the distances $\lVert x_i - x_j \rVert$ [see [13]].

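Having chosen the kernel function K, (2) now yields the following N × N Gramian matrix of the centered data:

$$G = \left[\bar{K}(x_i, x_j)\right]_{i, j \in [N]},$$

where $\bar{K}$ is the kernel of the centered data. The final step of the method states that for every data vector $x_i$, its l-th non-linear principal component is given by the number

$$v_l^{T}\,\bar{\phi}(x_i) = w_l^{T}\,\mathrm{Col}_i(G),$$

where $\bar{\phi}(x_i)$ is the centered image of $x_i$ in F and $\mathrm{Col}_i(G)$ denotes the i-th column of the matrix G.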
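The Gaussian KPCA procedure can be sketched as follows. This is a minimal illustration under stated assumptions rather than the code used in this work: the function name `gaussian_kpca`, the two synthetic point clouds, and the choice of σ as the standard deviation of all pairwise distances are illustrative, and the centering is done with the matrix $H = I - \frac{1}{N}\mathbf{1}$.

```python
import numpy as np

def gaussian_kpca(X, d, sigma=None):
    """Sketch of Gaussian kernel PCA for a D x N data matrix X (one column per point)."""
    N = X.shape[1]
    sq = np.sum(X**2, axis=0)
    # Squared Euclidean distances ||x_i - x_j||^2 (clipped at 0 against round-off).
    D2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X.T @ X, 0.0)
    if sigma is None:
        # Assumed choice: standard deviation of the sample of pairwise distances.
        sigma = np.sqrt(D2[np.triu_indices(N, k=1)]).std()
    gamma = 1.0 / (2.0 * sigma**2)
    K = np.exp(-gamma * D2)                  # Gaussian kernel matrix
    H = np.eye(N) - np.ones((N, N)) / N      # centering matrix
    G = H @ K @ H                            # Gramian matrix of the centered data
    lam, W = np.linalg.eigh(G)               # ascending eigenvalues
    order = np.argsort(lam)[::-1][:d]        # keep the d largest
    lam, W = lam[order], W[:, order]
    W = W / np.sqrt(lam)                     # ||w_l|| = 1/sqrt(lam_l): principal axes orthonormal
    Y = W.T @ G                              # Y[l, i] = w_l^T Col_i(G)
    return lam, Y

# Hypothetical data: two well separated clouds of 30 points each in the plane.
rng = np.random.default_rng(0)
X = np.hstack([rng.normal(0.0, 0.3, size=(2, 30)), rng.normal(3.0, 0.3, size=(2, 30))])
lam, Y = gaussian_kpca(X, d=2)
```

Each column of `Y` holds the first d non-linear principal components of one data point, so plotting two rows of `Y` against each other gives a two-dimensional embedding in the feature space.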

Successful application of both linear and Gaussian PCA (KPCA for the Gaussian kernel) depends on two important issues: the choice of the tuning parameter γ needed for Gaussian PCA, and the determination of the number d of principal components needed to encapsulate the main variations of the data (needed for both PCA and Gaussian PCA). To address these two issues, we need to find the optimal value γ* of γ and the optimal dimension d that maximize the variance of the data. In the literature [e.g., see [14]], it is commonly accepted that both γ* and d are dictated by the largest gap, known as the spectral gap, in the eigenvalue spectrum of the Gramian matrix G, i.e., the largest difference between consecutive eigenvalues when these are arranged in descending order. More precisely, γ* is the particular value of γ for which the spectral gap is maximized, if such a value exists, and the optimal dimension d is equal to the smallest index i* + 1 for which this maximization occurs. Additionally, more features of the data can be captured with a more detailed analysis of the spectral gap together with domain knowledge, leading to a slightly larger d. Finally, the number d of principal components obtained corresponds to the number of clusters partitioning the data.
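The selection of d from the spectral gap can be illustrated with a short sketch. The function name `spectral_gap_dimension` and the eigenvalues in the example are hypothetical, and the indexing convention is an assumption: indices are counted from zero, so that d = i* + 1 is the number of eigenvalues lying above the largest gap.

```python
import numpy as np

def spectral_gap_dimension(eigvals):
    """Return (d, gap): the number of eigenvalues above the largest gap, and that gap."""
    lam = np.sort(np.asarray(eigvals, dtype=float))[::-1]   # descending order
    gaps = lam[:-1] - lam[1:]                                # consecutive differences
    i_star = int(np.argmax(gaps))                            # smallest 0-based index of the maximum
    return i_star + 1, float(gaps[i_star])

# Hypothetical spectrum with a clear gap after the third eigenvalue.
d, gap = spectral_gap_dimension([5.0, 4.6, 4.1, 0.9, 0.7, 0.2])
```

For the Gaussian kernel, the same function could be evaluated on the spectrum of G over a grid of γ values, keeping the value of γ that gives the largest gap.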

Here, we present the graph theoretic background utilized in this work. We start with some basic graph theoretic notions and then we present the graph clustering problem and related algorithms utilized.

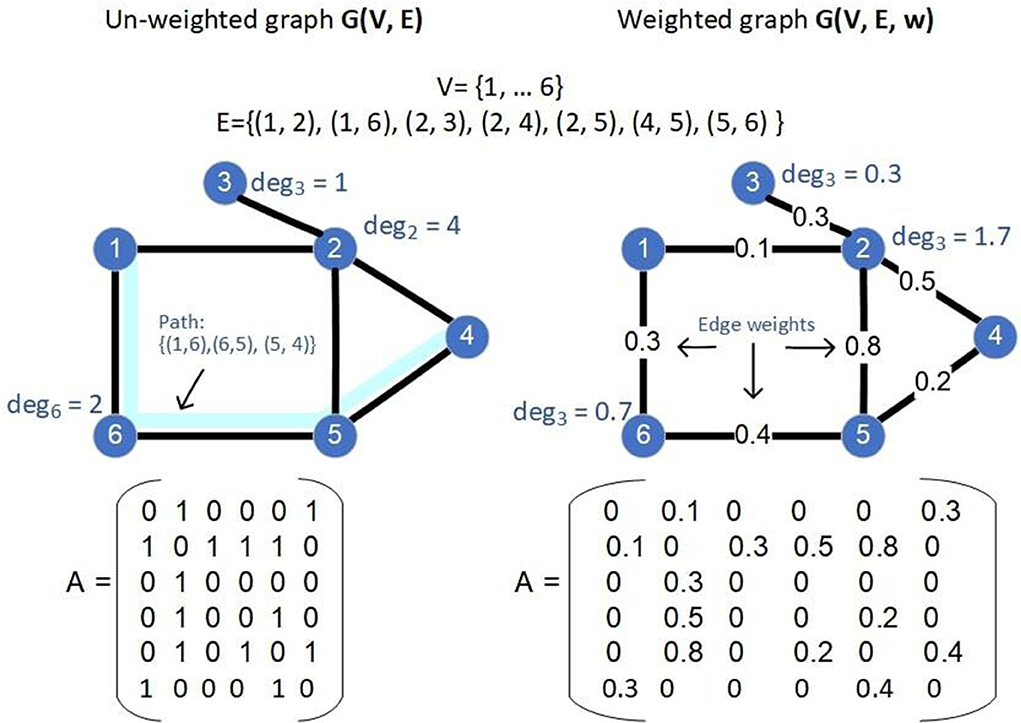

Graph Theory, a foundational concept in Network Science [15], is one of the oldest branches of Mathematics, with remarkable interdisciplinary applicability in diverse areas, spanning from Social and Political Sciences, Biology, and Chemistry to Neuroscience [16] and Astrophysics/Cosmology [17–19]. A graph can be used to model any system of entities that are pairwise related to each other. The entities are represented by the vertices (nodes) of the graph, while the pairwise (possibly weighted) relations between entities are captured by its edges, which connect the corresponding nodes. More formally, a graph G is an ordered pair G = G(V, E), where V is the set of vertices of G and E the set of its edges, i.e., a set of un-ordered pairs (i, j) of vertices of V. We denote by N the cardinality |V| of V. In a weighted graph G(V, E, w), each edge (i, j) is associated with a numerical value, w(i, j), specified by the function w : E → ℝ+. A binary or un-weighted graph is a graph in which all the weights are binary, i.e., w(i, j) ∈ {0, 1}. To represent a graph G = G(V, E, w), we use its (weighted) adjacency matrix: an N × N matrix $A = (a_{ij})$, where $a_{ij} \equiv w(i, j)$ for each edge e = {i, j} ∈ E and $a_{ij} = 0$ otherwise. The degree of the vertex i, denoted as $\deg_i$, is the sum of the weights of the edges incident to it, i.e., $\deg_i = \sum_{j=1}^{N} a_{ij}$.

A path of the graph G is a sequence of nodes $\{v_1, \dots, v_i, v_{i+1}, \dots, v_k\}$ such that $(v_i, v_{i+1}) \in E$ for every i = 1, …, k − 1. A complete graph G(V, E) is a graph in which (i, j) ∈ E for all distinct i, j ∈ V. A graph G′ = (V′, E′) is a sub-graph of a graph G = (V, E) if V′ ⊆ V and E′ ⊆ E. For any S ⊆ V, we denote by $\bar{S}$ its complement V\S in V. The (un-normalized) Laplacian matrix of G is defined as L = D − A, where D is the degree matrix of G⁸. Examples of un-weighted and weighted graphs and their various properties are shown in Figure 1.

Figure 1. Two examples of graphs: an un-weighted one (left) and a weighted one (right), the values of the sets V, E and edge weights (in case of the weighted graph, specified by the function w), the adjacency matrix A, the degree of some nodes as well as a path (in light blue) are shown in the figure.
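The matrix objects defined above can be made concrete with a small example. The weighted graph below is hypothetical (it is not the graph of Figure 1), and the degree matrix is taken to be the diagonal matrix of the vertex degrees.

```python
import numpy as np

# Hypothetical weighted graph on 4 nodes: {(i, j): w(i, j)}.
edges = {(0, 1): 0.9, (1, 2): 0.4, (2, 3): 0.7, (0, 2): 0.2}
N = 4

A = np.zeros((N, N))                 # weighted adjacency matrix
for (i, j), w in edges.items():
    A[i, j] = A[j, i] = w            # un-ordered pairs: A is symmetric

deg = A.sum(axis=1)                  # deg_i = sum_j a_ij
D = np.diag(deg)                     # degree matrix (assumed diagonal of degrees)
L = D - A                            # un-normalized Laplacian
```

Every row of `L` sums to zero, so the constant vector is an eigenvector of the Laplacian with eigenvalue 0, a property that the spectral clustering methods discussed later rely on.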

A similarity function associated with the edges of a graph is a function w : E → ℝ that measures how similar the nodes joined by each edge are. The corresponding graph and its adjacency matrix are called the similarity graph and the similarity matrix, respectively. Assume a set of N elements in a D-dimensional space, represented by $x_i \in \mathbb{R}^D$, for i = 1, …, N. A widely used non-linear similarity measure is the Gaussian kernel, defined in Section 2.1.2, where $w(i, j) = \exp\left(-\gamma \lVert x_i - x_j \rVert^2\right)$, $\lVert x_i - x_j \rVert$ is the Euclidean distance between the vectors, and $\gamma = 1/(2\sigma^2)$. There exist various suggestions for choosing the parameter σ, discussed in Section 2.1.2 and in more detail in Section 2.1.3.

Graph sparsification is a graph simplification method applied to the dense (complete) similarity graph in order to reduce its density, making the resulting graph sparse while keeping its most significant edges (those of relatively large weight). Two of the most common sparsification methods apply either a global or a local criterion for choosing which edges to remove. Using a global threshold on the whole graph, we keep only the edges whose weight is above that threshold [ϵ-neighborhood method [12]]. Using a local criterion, at each node we keep the k largest weighted edges incident to it [k-nearest neighbor (k-nn) method⁹ [12]]. There are no clear guidelines for choosing the parameters ϵ and k. However, a basic prerequisite is that the resulting graph should be connected. In this work, we utilize the k-nn method for graph sparsification, with a careful choice for the value of k. In particular, we choose the parameter k using the mutual k-nn, in order to guarantee high levels of connectivity with mutually significant edges between nodes, and then we apply the k-nn method for the construction of the sparsified graph, in order to guarantee connectivity between regions of different densities.
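A possible reading of this two-stage choice is sketched below. The exact rule used to pick k is not spelled out here, so the sketch makes an explicit assumption: k is taken as the smallest value for which the mutual k-nn graph is connected, and the final graph is then built with the ordinary k-nn rule. The function names, the random points, and the Gaussian similarity with σ set to the standard deviation of the distances are all illustrative.

```python
import numpy as np

def knn_sparsify(W, k, mutual=False):
    """Keep, for each node, its k largest-weight edges (k-nn) or only mutual ones."""
    W = np.array(W, dtype=float)
    np.fill_diagonal(W, 0.0)                               # no self-loops
    N = W.shape[0]
    keep = np.zeros((N, N), dtype=bool)
    nearest = np.argsort(-W, axis=1)[:, :k]                # k most similar nodes per row
    keep[np.repeat(np.arange(N), k), nearest.ravel()] = True
    keep = (keep & keep.T) if mutual else (keep | keep.T)  # mutual k-nn vs. k-nn
    return np.where(keep, W, 0.0)

def is_connected(A):
    """Depth-first search from node 0 over edges of positive weight."""
    seen, stack = {0}, [0]
    while stack:
        u = stack.pop()
        for v in np.flatnonzero(A[u] > 0):
            v = int(v)
            if v not in seen:
                seen.add(v)
                stack.append(v)
    return len(seen) == A.shape[0]

def choose_k(W):
    """Assumed rule: smallest k whose mutual k-nn graph is connected."""
    for k in range(1, W.shape[0]):
        if is_connected(knn_sparsify(W, k, mutual=True)):
            return k
    return W.shape[0] - 1

# Hypothetical data: 25 random points in the plane with Gaussian similarities.
rng = np.random.default_rng(1)
P = rng.normal(size=(25, 2))
D2 = ((P[:, None, :] - P[None, :, :]) ** 2).sum(axis=2)
sigma = np.sqrt(D2[np.triu_indices(25, k=1)]).std()
W = np.exp(-D2 / (2.0 * sigma**2))

k = choose_k(W)
M = knn_sparsify(W, k, mutual=True)    # mutual k-nn graph used to select k
A = knn_sparsify(W, k)                 # sparsified similarity graph
```

Because every mutual k-nn edge is also a k-nn edge, the k-nn graph built with this k is connected whenever the mutual graph is.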

The graph clustering problem is the task of finding a partition of the nodes of the graph into groups, called clusters or communities, such that the nodes within each group are highly connected to each other, while the connections between nodes of different groups are as few as possible [20]. In this work, we consider similarity graphs. A clustering in such a graph reveals a partitioning into groups of nodes with similar properties. Note that in graph clustering, the number of communities to be detected, denoted here by r, is not known a priori, in contrast to the similar problem of graph partitioning, where this information is part of the input of the problem¹⁰.

Modularity [21] is perhaps the most common measure of the quality of a graph clustering $\mathcal{C}$, denoted by $Q(\mathcal{C})$. It measures the fraction of the edges that fall within clusters minus the expected such fraction in an equivalent network where edges were distributed at random. Thus, the larger the value of the modularity is, the better the quality of the clustering. Note that $-1/2 \leq Q(\mathcal{C}) \leq 1$. The conductance of a cluster C [20] of a graph clustering $\mathcal{C}$, denoted as h(C), is the fraction of edges with only one end in the cluster over the total number of edges of the cluster C (with either one or two ends in the cluster). The conductance of the clustering is the maximum such value over its clusters, $h(\mathcal{C}) = \max_{C \in \mathcal{C}} h(C)$. Thus, the smaller the value of the conductance is, the better the quality of the clustering. Note that $0 \leq h(\mathcal{C}) \leq 1$.
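Both quality measures can be computed directly from the adjacency matrix, as in the sketch below. The modularity follows the usual weighted formula $Q = \frac{1}{2m}\sum_{ij}\left(a_{ij} - \frac{\deg_i \deg_j}{2m}\right)\delta(c_i, c_j)$ with $2m = \sum_{ij} a_{ij}$, which is assumed here to be the intended definition; the conductance follows the edge-counting definition given above. The function names and the example graph (two triangles joined by one edge) are illustrative.

```python
import numpy as np

def modularity(A, labels):
    """Weighted modularity of the clustering given by one label per node."""
    A = np.asarray(A, dtype=float)
    labels = np.asarray(labels)
    two_m = A.sum()                                   # twice the total edge weight
    deg = A.sum(axis=1)
    same = labels[:, None] == labels[None, :]         # delta(c_i, c_j)
    return float(((A - np.outer(deg, deg) / two_m) * same).sum() / two_m)

def conductance(A, labels):
    """Maximum over clusters of cut / (cut + internal) edge weight."""
    A = np.asarray(A, dtype=float)
    labels = np.asarray(labels)
    worst = 0.0
    for c in np.unique(labels):
        inside = labels == c
        internal = A[np.ix_(inside, inside)].sum() / 2.0   # edges with both ends in C
        cut = A[np.ix_(inside, ~inside)].sum()             # edges with exactly one end in C
        if internal + cut > 0:
            worst = max(worst, cut / (internal + cut))
    return worst

# Hypothetical graph: two triangles {0, 1, 2} and {3, 4, 5} joined by the edge (2, 3).
A = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    A[i, j] = A[j, i] = 1.0

labels = [0, 0, 0, 1, 1, 1]
Q = modularity(A, labels)       # 5/14 for this graph and clustering
h = conductance(A, labels)      # 1/4: one cut edge against three internal edges
```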

After the construction of the similarity graph and its sparsification, one can employ graph clustering algorithms in an attempt to simplify the system to significantly fewer entities (the clusters), which nevertheless maintain the fundamental properties of the initial entities. This allows a characterization of the clusters of similar entities, and ultimately the feature extraction of the initial complex system under investigation. Existing graph clustering algorithms follow several approaches for the detection of the clusters (or communities) in a given network: hierarchical ones [22], either agglomerative [23] or divisive [e.g., [21, 24]]; ones driven by various measures of clustering quality [e.g., modularity based [24, 25]]; ones using various graph properties, such as edges or cycles [e.g., [26]]; ones utilizing spectral graph theory [e.g., [27, 28]]; and ones that combine the aforementioned approaches.

Here, we choose to present the results of two of the aforementioned approaches, each following a distinct method for obtaining the clusters: the first is the Walktrap algorithm of Pons and Latapy [29], applied directly on the similarity graph, while the second is a particular spectral graph clustering algorithm, applied to the eigenvectors of the Laplacian matrix of the similarity graph. The outputs of both algorithms are evaluated using modularity and conductance as quality measures of the clustering obtained. The selected algorithms have been shown experimentally to obtain the most natural and representative results among several graph clustering algorithms tested.

The Walktrap algorithm is a hierarchical agglomerative algorithm. Using random walks to measure the similarity between two vertices, it detects network communities by building a hierarchy of clusters, starting from each node being a cluster of its own and merging pairs of clusters, trying to maximize the quality of the obtained clusters.
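The random-walk similarity on which Walktrap is built can be illustrated with a short sketch. The distance used below, $r_{ij} = \sqrt{\sum_k (P^t_{ik} - P^t_{jk})^2 / \deg_k}$ with P the transition matrix of the random walk, is the one defined by Pons and Latapy as recalled here; it is not stated in this article, and the walk length t = 2, the function name, and the example graph are assumptions for illustration. The agglomerative merging itself is not shown.

```python
import numpy as np

def walk_distance(A, i, j, t=2):
    """Random-walk distance between nodes i and j after t steps."""
    A = np.asarray(A, dtype=float)
    deg = A.sum(axis=1)
    P = A / deg[:, None]                      # transition matrix of the random walk
    Pt = np.linalg.matrix_power(P, t)         # t-step transition probabilities
    diff = Pt[i] - Pt[j]
    return float(np.sqrt(np.sum(diff**2 / deg)))

# Hypothetical graph: two triangles {0, 1, 2} and {3, 4, 5} joined by the edge (2, 3).
A = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    A[i, j] = A[j, i] = 1.0

d_same = walk_distance(A, 0, 1)    # nodes in the same triangle
d_other = walk_distance(A, 0, 5)   # nodes in different triangles
```

Nodes whose short random walks end up in the same places are close in this distance, which is why merging the closest clusters first tends to recover densely connected communities.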

The second type of algorithm we utilize is the spectral graph clustering algorithm [12], and in particular the algorithm of Shi and Malik [28]¹¹. The algorithm uses the eigen decomposition of the un-normalized Laplacian matrix to perform a dimension reduction of the data space to a lower dimensional space, such that similar items are embedded close to each other in that space. It extracts the r generalized eigenvectors of the un-normalized Laplacian of the graph corresponding to the r smallest eigenvalues, where r is the number of clusters to be detected. Finally, a standard clustering method, typically k-means clustering [30], is applied in this reduced space. We note that this algorithm, like all other spectral clustering algorithms, requires the number of clusters r to be given as input. Here, to specify the value of r, we utilize a popular method, the eigengap heuristic [12].
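The spectral clustering steps just described can be sketched as follows. This is an illustrative sketch, not the implementation used in this work: the generalized problem $Lu = \lambda Du$ is solved through the equivalent symmetric matrix $D^{-1/2} L D^{-1/2}$, the k-means routine is a minimal version of Lloyd's iterations, and the function names, the random seed, and the example graph are assumptions.

```python
import numpy as np

def spectral_embedding(A, r):
    """First r generalized eigenvectors of L u = lam D u for the graph with adjacency A."""
    A = np.asarray(A, dtype=float)
    deg = A.sum(axis=1)
    L = np.diag(deg) - A                                   # un-normalized Laplacian
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    L_sym = d_inv_sqrt[:, None] * L * d_inv_sqrt[None, :]  # D^{-1/2} L D^{-1/2}
    lam, Z = np.linalg.eigh(L_sym)                         # ascending eigenvalues
    U = d_inv_sqrt[:, None] * Z[:, :r]                     # u = D^{-1/2} z solves L u = lam D u
    return lam[:r], U

def kmeans(points, r, n_iter=100, seed=0):
    """Minimal Lloyd iterations; returns one cluster label per row of points."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=r, replace=False)]
    for _ in range(n_iter):
        dist = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        new_centers = centers.copy()
        for c in range(r):
            if np.any(labels == c):                        # keep old center if cluster is empty
                new_centers[c] = points[labels == c].mean(axis=0)
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels

# Hypothetical graph: two triangles {0, 1, 2} and {3, 4, 5} joined by the edge (2, 3).
A = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    A[i, j] = A[j, i] = 1.0

lam, U = spectral_embedding(A, r=2)
labels = kmeans(U, r=2)
```

Each row of `U` is the embedding of one node in the reduced space; the first coordinate is constant across nodes, so in this example the separation into the two triangles comes entirely from the second eigenvector.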

We now present a unified framework that brings together seemingly different approaches for modeling and analyzing complex systems, in particular the principal component and graph theoretic analyses described above, under a common ground. This common ground allows one to understand better the differences, advantages, disadvantages, and commonalities between various approaches that can be used for analyzing a complex system, such as dimension reduction, clustering, and embeddings. Additionally, the framework does not provide a single procedure to follow but a number of computational paths or branches (i.e., the PCA and graph theoretic approaches and, within each branch, other sub-branches). The framework is open to alternate usage of its elements. It can be used to analyze a complex system through a set of distinct questions. Each of these questions can be addressed by following different computational paths of the framework, as demonstrated in Section 3.1.2. Different computational paths can be tested and compared in order to choose the most suitable one for each question. Finally, although it can be customized and enriched with domain knowledge, its individual steps are generic and domain independent. As a result, it is applicable to a wide range of scientific domains.

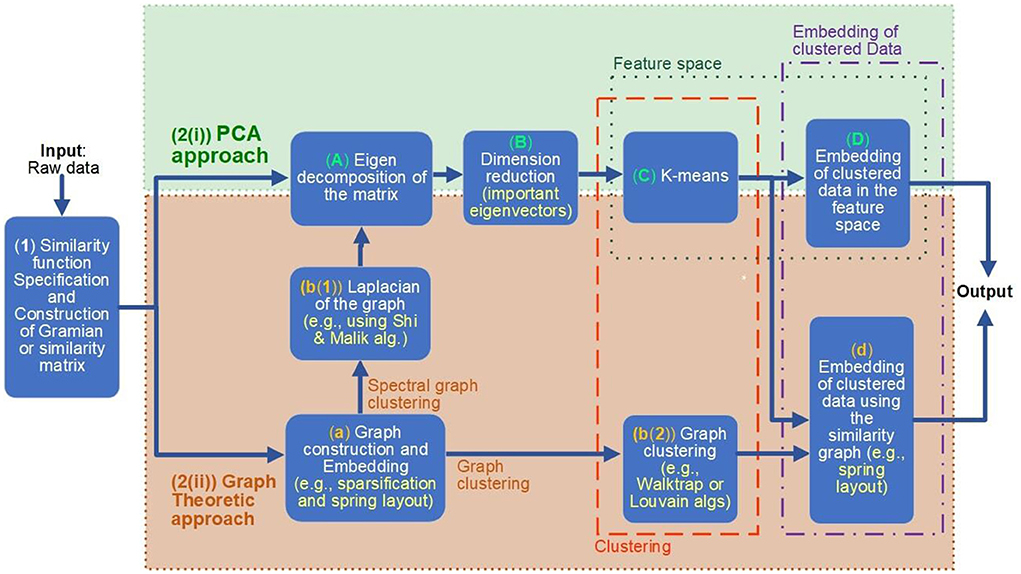

According to this framework, demonstrated in Figure 2, the analysis can be divided into the following steps and branches:

Figure 2. The unified framework for modeling, clustering, embedding, and feature extraction of complex systems. Various numbered boxes indicate the steps of the analysis. After the first step, where the appropriate similarity function is chosen and applied [box (1) of the figure], the framework allows two alternative computational paths to be followed; both of them allow a clustering (orange dotted surrounding box) and then an embedding of the (clustered) data (purple dotted surrounding box): the principal component analysis (PCA) approach [indicated with green dotted surrounding box, numbered as 2(i)] or the graph theoretic approach [indicated with brown dotted surrounding box, numbered as 2(ii)]. Following the PCA approach computational path, the Gramian matrix is mapped to the Eigen space (i.e., the Feature space), where clustering is performed [boxes (A–C) of the figure]. On the other hand, following the graph theoretic computational path, the clustering is performed on the constructed similarity graph [computed in step (a)], either directly on the graph [box b(2)], following the graph clustering option (indicated with corresponding labeled arrow), or indirectly on the eigen space of the Laplacian of the graph, following boxes [b(1) and then (A–C)]. Finally, the clustered data are embedded either in the feature space [step (D)], if the PCA approach is followed, or through various graph embeddings (including also the eigen space embedding), in the case where the graph theoretic approach is chosen.

(1) The first step of the analysis [box (1) of Figure 2] takes as input the raw data of the problem (i.e., a collection of values (vectors or scalars) of the data set). During this step, a suitable Kernel function or the similarity function (see Sections 2.2.1.1 and 2.1.2) is specified. This function is then applied to the input raw data for the computation of the Gramian or similarity matrix.

(2) In the second step of the analysis, we distinguish two computational paths: (i) the PCA approach and (ii) the graph theoretic approach. Both approaches perform the tasks of clustering and embedding the data to reveal features of the complex system. The steps of each of these computational paths are indicated with colored dotted boxes surrounding them: a green surrounding box, numbered as [2(i)] PCA approach and a brown surrounding box, numbered as [2(ii)] Graph Theoretic approach, of Figure 2.

2(i) PCA approach

(A) Compute the N (N is the number of entities of the system) eigenvectors of the Gramian matrix G. Let V be the corresponding N × N matrix containing the eigenvectors of G as columns [box (A) of Figure 2].

(B) Select the first d columns of V. This reduced matrix gives the reduced feature space of data, capturing their d most significant features [box (B) of Figure 2]. For the specification of parameter d, the spectral gap method can be applied (refer to Sections 2.1 and 2.1.3).

(C) Now, in the reduced feature space of the data set, apply a data clustering algorithm, usually the k-means [30] algorithm, to cluster the data into groups of similar properties, according to the similarity function or Kernel chosen [box (C) of Figure 2]. To specify the value of k needed for the k-means algorithm, the spectral gap can again be utilized, as explained in Section 2.1.3.

(D) The final step of the analysis is to embed the clustered data in the feature space, selecting two or three of the principal components identified [box (D) of Figure 2]. This will reveal possible relations both between the clusters obtained and between the clusters and other properties of the entities of the system.

2(ii) Graph Theoretic approach

(a) Alternatively, following a graph theoretic approach [surrounding dotted brown box 2(ii) of Figure 2], from the Gramian or similarity matrix computed in step (1), here we construct a sparsified version of the original dense graph (see Section 2.2), where the edges connecting pairs of entities with low similarity are removed. Finally, in this step, we display the constructed (sparse) graph using suitable embeddings, in order to visualize appropriate properties and relations between entities of the system under investigation. For example, we can use the force-directed layout [31], in order to place neighbor nodes closely in the plane. This step is shown in box (a) of Figure 2.

(b) The next step of the graph theoretic analysis is to apply graph clustering on the graph to obtain groups of entities (nodes in the graph) of similar properties [boxes b(1) and b(2) of Figure 2]. For this task, there are two alternative computational paths: either to move the graph in the eigenvectors space and perform the clustering in the reduced feature space or to apply graph clustering algorithms directly on the similarity graph. Each one of the two computation paths is indicated with corresponding labeled brown arrows in Figure 2.

- Spectral Graph clustering: Following this computational path, we basically apply the PCA method on the Laplacian of the graph, as described by some of the most popular spectral graph clustering algorithms, such as the Shi and Malik [28] algorithm: we first compute the Laplacian (or a variation of it) of the graph [step b(1)] and then (mainly) follow steps (A)–(C) of the PCA approach [surrounding box 2(i)] of the framework.

- Graph clustering: Following this computational path, we apply a graph clustering algorithm, such as the Louvain algorithm of Blondel et al. [25], the Leiden algorithm of Traag et al. [32], or the Walktrap algorithm of Pons and Latapy [29], directly to the (sparsified) similarity graph [box b(2) of Figure 2].

(c) The final step of the graph theoretic approach is, similarly to the PCA approach, to draw or embed the graph with the clustered nodes (entities). The graph theoretic approach allows the embedding of the graph to be done either in the reduced feature space (eigenspace) selecting two or three of the principal components of the eigen decomposition of the graph [step (D) of the PCA approach] or using an appropriate graph layout, such as the force-directed layout [31], in which neighbor nodes are placed closely in the plane.

As illustrated in Figure 2, the framework brings together different approaches for analyzing complex systems, i.e., a PCA approach [green surrounding box numbered as 2(i) PCA approach, of Figure 2] and a graph theoretic approach [brown surrounding box numbered as 2(ii) Graph Theoretic Approach, of Figure 2], under a common ground. The aim is to help analyzers compare and choose between alternative computational paths, and to support a better understanding of the commonalities, differences, advantages, and disadvantages of the various computational paths.

In particular, it distinguishes the different steps of the analysis, juxtaposing the characteristics of corresponding steps in each approach. For example, the representation of the system can be made through the eigen decomposition of the Gramian matrix, following the PCA approach [box (A) of the framework], or through the construction and corresponding embedding of the similarity graph, following the graph theoretic approach [box (a) of the framework]. The graph theoretic approach allows the visualization of the system data through the graph embedding, while the eigen decomposition enables the representation of the data in another space, i.e., the feature space.

After the representation of the system, according to the framework, the next main step is clustering the data [surrounding orange box labeled as Clustering and boxes (C) and b(2) within it]. This task can be performed in the feature space, following the PCA approach [box (C)] or it can be performed either directly in the similarity graph [box b(2)] or indirectly on the reduced representation of the system through the most important eigenvectors of the Gramian matrix [box b(1)].

Finally, the last step of the framework is the embedding of the clustered data, either in the reduced feature space or in the similarity graph (purple surrounding box, labeled as Embedding, of the framework). The PCA approach [green dotted surrounding box, numbered as 2(i)] allows the embedding to be done using combinations of the principal components (eigenvectors) of the reduced representation of the system. In contrast, the graph theoretic approach [brown dotted surrounding box, numbered as 2(ii)] allows either an embedding using selections of the principal components (eigenvectors), through the spectral graph clustering computational branch of the graph theoretic approach, or an embedding using various graph layouts, such as the spring-layout embedding that places neighbor nodes close together in the plane.

Both the PCA and the graph theoretic approach possess individual characteristics, which can be seen as advantages or disadvantages by the analyzer, depending on the specific goals of the analysis. Overall, the graph theoretic approach offers insights into the relations (similarities) between the entities of the system, through the various graph layouts, such as the force-directed layout [31]. However, these representations “lose” the real (initial) distances (dissimilarities) between the entities. In addition, most graph drawing methods use some randomization, which makes the corresponding layout non-deterministic.

As already pointed out, the framework is flexible. It can be used for exploratory data analysis and to provide displays of skeleton results as part of an iterative procedure, as used in the first extension of the framework described next. It can also allow a mixed representation of what we might loosely refer to as structural and functional properties, as described in the second extension of the framework.

In the general framework we have outlined so far, results are represented in a way that is practically independent of the specific domain from which the data come.

In the graph theory side of the representations, even the metric properties of the space are lost in the dimensionality reduction process. It is then difficult to assign a measure of how close the actual nodes which belong to a cluster are compared to nodes in other clusters. In the case of PCA, the metric properties are preserved and can be computed taking into account only the retained principal components. Nevertheless, there is no guarantee that nodes that appear close in the reduced space are not far apart in one or more of the hidden dimensions. If the reduced space is indeed representative of the natural patterns of interest in the data, large excursions in the hidden dimensions may represent noise or irrelevant information and hence the dimensionality reduction process can also be viewed as a noise elimination pre-processing.
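The remark about the hidden dimensions can be made explicit with a short worked equation. The notation is introduced here for illustration only: $y_i(x)$ denotes the coordinate of node $x$ along the $i$-th principal component, $n$ is the total number of components, and $k$ is the number of retained ones. Because the principal components form an orthonormal basis, the squared distance splits into a retained and a hidden part:

```latex
d^2(x, x') \;=\; \underbrace{\sum_{i=1}^{k} \bigl(y_i(x) - y_i(x')\bigr)^2}_{d_k^2(x,\,x')\ \text{(retained)}}
\;+\; \underbrace{\sum_{i=k+1}^{n} \bigl(y_i(x) - y_i(x')\bigr)^2}_{\text{hidden}}
\;\;\geq\;\; d_k^2(x, x')
```

The distance computed in the reduced space is therefore a lower bound on the full distance: two nodes that are far apart in the reduced space are certainly far apart in the full space, whereas two nodes that appear close may still differ in the hidden dimensions.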

In the non-linear kernel PCA, the same considerations hold regarding the metric properties within and beyond the retained spaces. In addition, the data are preferentially distributed in the native manifold described by the kernel function and the specific values chosen for its adjustable parameters. The images of the manifold (extracted from the data) can be useful tools. For example, consider two ways of introducing new data. First, new data can be generated by fresh measurements using the same sensors and processes as the ones used to record the original data. Second, new nodes can be generated by combining in some way a subset of nodes of the original data set, for example the nodes of predefined clusters. If the original data are dense and cover the underlying manifold well, we would expect that newly introduced data will lie close to the original manifold and can therefore be easily embedded in the existing displays. When the new data are generated to represent clusters identified in the data set, the representative node of each cluster can be defined by averaging the coordinates across the nodes of that cluster in each (reduced) dimension. All new nodes can then be added to the existing representation. In some applications, it is possible to make a preliminary assignment of each node on the basis of a priori information, accepting that some of the labels may be wrong. In such cases, for each cluster, a subset of the nodes within it can be used to define the representative node of that cluster. In this way, the original nodes can be hidden, maintaining in storage their coordinates and labels while leaving on the displays the representative nodes of each cluster and an image of the underlying manifold. The images of the cluster exemplars, which we will call CENTERS, can be displayed with the manifold derived from the original data.
The process transforms the abstract mathematical distributions into a semantic map, i.e., a labeled map of the data terrain.
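The definition of a representative node by averaging coordinates can be sketched in a few lines. The function name `cluster_centers`, the toy coordinates, and the labels are illustrative assumptions; the sketch does not include the optional exclusion of outliers.

```python
import numpy as np

def cluster_centers(Y, labels):
    """Average the reduced coordinates of the nodes of each cluster.

    Y      : (n_nodes, n_dims) coordinates in the reduced space
    labels : one cluster label per node
    Returns a dict mapping each label to the coordinates of its CENTER.
    """
    Y = np.asarray(Y, dtype=float)
    labels = np.asarray(labels)
    return {lab: Y[labels == lab].mean(axis=0)
            for lab in sorted(set(labels.tolist()))}

# Toy example: four nodes in a two-dimensional reduced space
Y = np.array([[0.0, 0.0], [2.0, 0.0], [10.0, 10.0], [12.0, 14.0]])
labels = ["ECW", "ECW", "NREM1", "NREM1"]
centers = cluster_centers(Y, labels)
# centers["ECW"] is [1, 0] and centers["NREM1"] is [11, 12]
```

Only the labels and the CENTER coordinates then need to be shown on the display, while the original node coordinates remain stored for later expansion.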

An iterative clustering approach can be defined to extract a skeleton of the underlying structure, derived directly from the input data. The linear PCA or the non-linear Gaussian Kernel PCA can first be used to define the reduced representation of the input data. A combination of prior information (if it is available) and clustering techniques can then be used to define distinct clusters. For each cluster, a representative prototype, the CENTER, can be defined (if necessary excluding outliers). The display of the CENTERS in the reduced representation of the first few principal components constitutes an initial semantic map of the underlying structure. Each CENTER can in turn be expanded (bringing back outliers). The distribution of the nodes of the expanded CENTER can be visually inspected to decide whether a single representative CENTER suffices or more than one CENTER must be defined, producing a sequence of refined semantic maps. Similar operations can be defined for merging nearby clusters (in the reduced representation), and the process is repeated to arrive at a more refined semantic map.

In many applications, the data separate into well-defined classes. The data between these classes define connecting pathways between the classes. Such pathways can be very important but, by nature, difficult to describe. The unified framework we propose offers a natural way of incorporating such pathways, as geodesic paths in the manifold defined by the combined data of the stable classes and the transition data. We simply embed the transition data into the existing manifold or recompute the manifold after augmenting the original data by adding the data belonging to transitions. In this way, the semantic maps of the last section become dynamic maps showing the transitions with the semantic maps in the background. This is particularly useful when different time scales are involved, which in the limit of very different dual time scales can describe structural/functional relationships. We will describe such an example in the first neuroscience application, where we show how the framework we propose can provide a powerful description of sleep with a semantic map providing the structural properties derived from periods of equilibrium (quiet periods of each sleep stage). Even during periods of apparently chaotic behavior, the dynamics can be described in a meaningful way. Nodes representing successive periods within a well-defined transition are connected in a strict chronological order and displayed as a path in the reduced space with labeled CENTERS serving as background (semantic map). A good example of such dual time-scale analysis is the first neuroscience application presented in Section 3.1.
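The first of the two options above, embedding transition data into an existing reduced space, can be sketched for the linear PCA case. This is a minimal illustration under stated assumptions: random numbers stand in for the stable-class and transition data, and the helper names `fit_pca` and `project` are hypothetical. For kernel PCA the projection step would use the kernel between new and original nodes instead.

```python
import numpy as np

def fit_pca(X, n_components=2):
    """Return the data mean and the leading principal directions (as rows)."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]

def project(X_new, mean, components):
    """Embed new rows into the existing reduced space without refitting."""
    return (X_new - mean) @ components.T

rng = np.random.default_rng(0)
stable = rng.normal(size=(40, 5))       # stand-in for data from the stable classes
transition = rng.normal(size=(3, 5))    # three successive periods, in chronological order

mean, comps = fit_pca(stable)
path = project(transition, mean, comps)          # shape (3, 2)
segments = list(zip(path[:-1], path[1:]))        # consecutive nodes joined into a path
```

Drawing the `segments` on top of the labeled CENTERS gives the dynamic map described above, with the chronological order of the transition preserved by construction.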

In this section, we demonstrate the application of the unified framework to three problems. The first two are from neuroscience, one dealing with a new approach to sleep staging and the other applying the framework for the clustering of early somatosensory evoked responses. The third one is from astrophysics and deals with the study of galaxy evolution.

Network analysis is highly relevant for modern neuroscience. Great advances in neuroimaging methods have demonstrated that brain function can be understood as a double parcellation of processing in space and time: “What appears as a noisy pattern when a single channel or a single area activation is observed from trial to trial, is seen to be less so when the activations between regions, across single trials, are examined” [33].

In the first parcellation, what we might call a structural network [34], the brain is partitioned into areas spread on the cortical mantle and in individual sub-cortical structures and their subdivisions. These areas are connected into well-defined networks. Some of these deal with processing signals from the sensory organs while others deal with attention, emotion arousal, and even the neural representation of self.

The second parcellation, what we might call a functional network [35], describes how particular processes evolve to accomplish specific tasks. Brain function involves an orchestrated organization in the time of cascades of activity within the nodes leading to diverging output from some nodes to many others and converging input on some nodes from many others. The normal operation of the functional network demands exquisite organization in time, which is achieved through multiplexing of interactions. We can think of them as cross-frequency coupling between the nodes of the network.

We distinguish two distinct ways of studying brain function and describe the application of our unified framework to one example from each one. In the first, we study periods of (apparent) quiescence which is further divided into resting states, awake state, and sleep stages; we show how the unified framework can guide the exploration of sleep stages and transitions between them. In the second, we study how the brain responds to well-defined stimulation; we show how the unified framework can help us separate responses to repeated identical median nerve stimulation into clusters. The input for this analysis will be special linear combinations of the raw magnetoencephalography (MEG) and electroencephalography (EEG) signals designed to selectively identify the first evoked responses at the level of the thalamus and cortex.

Sleep has been the subject of theorizing and speculation for millennia. The scientific study of sleep started in earnest in the early twentieth century with the pioneering studies of Dr. Nathaniel Kleitman in the first sleep laboratory, established in the 1920s at the University of Chicago. Three decades of work culminated in the 1950s with landmark discoveries, including the identification of Rapid Eye Movement (REM) sleep [36]. The detailed study of sleep in many laboratories using the then available EEG technology led to the notion of distinctive periods of sleep, which was codified by Allan Rechtschaffen and Anthony Kales in the first guideline for assigning periods of sleep into “sleep stages” [37]. The guidelines depend on the identification of high-amplitude events or oscillations at specific frequencies that become the hallmarks of each sleep stage. The identification of these hallmarks assigns each sleep period (usually 30 s) to undefined/movement, eyes closed waking before sleep (ECW), light or deep sleep, or REM. Non-REM (NREM) sleep is further divided into four parts, with NREM1 and NREM2 making up light sleep and NREM3 and NREM4 constituting deep sleep. Quiet periods with no graphoelements are found between periods with the characteristic graphoelements of a given sleep stage; these quiet periods inherit the sleep stage label of the preceding periods, thus contributing to the smoothness of the sleep staging outcome. During each night, a sleep cycle (SC), i.e., the progression from light to deep sleep and REM, repeats three to five times. The resulting summary of a night's sleep is called a hypnogram. The hypnogram has been the cornerstone for both sleep research and sleep medicine [35, 38].

A huge amount of research effort has been devoted to understanding the essence of each sleep stage through understanding its hallmarks, but with limited success. The key conclusion from this work has been the realization that each exemplar of a hallmark of a sleep stage is very different and that each one is made up of widely distributed and highly variable focal generators. In the case of spindles, the first and last focal generators were usually better detected with MEG, and only a fraction of spindles extended widely in between, sometimes appearing as synchronous EEG events [39].

Classical sleep staging was introduced more than half a century ago, revolutionizing sleep research and clinical medicine. Nevertheless, at the time of its introduction, knowledge about brain processes and ways of monitoring them were limited compared to what is routinely available today. Using some of the new information that can be routinely extracted with today's EEG and MEG technology allows us to go beyond classical sleep staging, to extend the description of sleep to periods that the standard hypnogram could not describe, and even to demonstrate that a different classification scheme may be more informative. For example, the sleep stage REM would separate into at least two clusters, as suggested recently [40]. Recent changes in sleep staging collapse the two divisions of deep sleep into the new NREM3 sleep stage [41] and prescribe ways of resolving ambiguities when hallmarks of different sleep stages are identified within the same 30 s period used for sleep staging. In our recent work, we have ignored these recent changes because, although they reinforce uniformity across sleep scoring by human experts, they have no other theoretical foundation and eliminate valuable details [42–45].

Classical sleep staging relies on human experts to interpret large excursions of the EEG recordings from the time domain descriptions. The implicit assumption that individual exemplars of each hallmark represented similar events is inconsistent with the Dehghani et al. [39] results and demonstrated to be wrong by more recent results using intracranial recordings [46–49] and tomography of MEG data [43, 45]. It, therefore, seems that the periods of large graphoelements are unlikely to be useful for revealing the key properties of each sleep stage because they are chaotic periods representing a system that has nearly gone out of control on its way back to equilibrium. A key result of our recent studies was the demonstration that the quiet periods of sleep that classical sleep staging ignores completely, far from being uninteresting and void of useful information, are in many respects the best representatives of the core characteristics of each sleep stage [50]. Our earlier results led us to the then disruptive claim that sleep staging is possible from the properties of core periods alone. We will provide evidence of the veracity of this claim in this subsection using the extensions of the framework we have described in Section 2.4. Since there is no time marker to time-lock events, this question is best addressed using spectral analysis as we will describe below. For this analysis, we used a unique set of whole night MEG data, collected from four subjects at the Brain Science Institute RIKEN, some 20 years ago [51]. We will show results from one subject which are typical of the results we obtained for each one of the four subjects.

The details of the analysis have been described elsewhere; magnetic field tomography (MFT) was used for extracting tomographic estimates of brain activity for each time slice of available MEG data [52, 53]. The full pipeline for preprocessing and MFT analysis, leading to regional spectra of each 2 s segment of tomographic estimates of activity and further statistical analysis, is described in detail in [43]. For the purpose of the analysis in this subsection, 29 regions of interest (ROIs) are defined in areas of the brain known to change appreciably during sleep. The normalized regional spectral power, hereafter simply referred to as the regional spectra, is then computed for each 2 s exemplar of MFT estimates of brain activity. We typically use 8 or more exemplars for each category of data (e.g., sleep stage). The regional spectra for each exemplar can be used over the entire spectrum 0–98 Hz in steps of 0.2 Hz or reduced to the power within each of the classical bands: delta (1–4 Hz), theta (4–8 Hz), alpha (8–12 Hz), sigma (11–17 Hz), beta (15–35 Hz), low gamma (35–45 Hz), and high gamma (55–95 Hz).
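The reduction of a full regional spectrum to band powers can be sketched as follows. The frequency grid and band edges are the ones quoted above. The normalization by total power and the half-open band intervals are assumptions made for this illustration (the actual normalization is the one described in [43]), and the names `BANDS` and `band_powers` are hypothetical.

```python
import numpy as np

# Classical bands as listed in the text (Hz); note that some bands overlap
BANDS = {
    "delta": (1, 4), "theta": (4, 8), "alpha": (8, 12), "sigma": (11, 17),
    "beta": (15, 35), "low_gamma": (35, 45), "high_gamma": (55, 95),
}

# Frequency grid: 0 to 98 Hz in steps of 0.2 Hz (491 points)
freqs = np.arange(491) / 5.0

def band_powers(spectrum, freqs=freqs, bands=BANDS):
    """Sum the normalized power inside each band, lower edge included, upper excluded."""
    spectrum = np.asarray(spectrum, dtype=float)
    normalized = spectrum / spectrum.sum()   # assumed normalization: unit total power
    return {name: float(normalized[(freqs >= lo) & (freqs < hi)].sum())
            for name, (lo, hi) in bands.items()}

# Toy spectrum with all power at 11.4 Hz, which lies in both alpha and sigma
spike = np.zeros_like(freqs)
spike[57] = 1.0
bp = band_powers(spike)
```

Because alpha (8–12 Hz) and sigma (11–17 Hz) overlap, as do sigma and beta, the band powers are not a partition of the spectrum and need not sum to one.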

The Gramian matrix [step (1) of the framework] is then constructed with each element computed as the Gaussian kernel of weighted overlaps of pairs of regional spectra. It is then used as the input for step (A) of the framework, for the computation of its eigen decomposition [step (B)], following the PCA approach [surrounding box 2(i)] of the framework. For the main clustering analysis [step (C) of the PCA approach of the framework], the input consists of three sets of data. The bulk of the data is from the noise data, ECW, and each sleep stage. The second set of events included in the analysis is from the hallmarks of NREM2, i.e., spindles and unitary k-complexes (KC1), avoiding KCs running in succession. Finally, new nodes representing transitions or other periods (e.g., representing hallmarks of individual sleep stages) are added. For each hallmark exemplar, two regional spectra are used, one from the 2 s before the start of the hallmark and the other from the 2 s beginning with the start of the hallmark. The final set consists of three successive 2 s periods of the undefined period between two sleep stages or between the awake state and sleep (i.e., transitions). The clustering is performed with ROI details presented at one of two resolutions. In the united ROI resolution, only the category of the time period is retained (e.g., sleep stage, transition, etc.), while the identity of each ROI is lost. In the un-united resolution, each node represents the 2 s period for each ROI separately, i.e., a single united node splits into a number (in our case 29) of distinct nodes, one for each ROI. In general, PCA is sufficient for the analysis at the united resolution level, while for the un-united resolution, KPCA is more appropriate. We will show examples of both next.
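The construction of a Gaussian-kernel Gramian and its eigen decomposition can be sketched as below. Note the substitution: the weighted overlap of regional spectra used in the actual analysis is not specified here, so this sketch swaps in the standard Gaussian kernel on squared Euclidean distances, $K_{ij} = \exp(-\gamma \lVert x_i - x_j \rVert^2)$. The function names, the toy data, and the value of `gamma` are illustrative assumptions.

```python
import numpy as np

def gaussian_gramian(X, gamma):
    """Gramian with K[i, j] = exp(-gamma * ||x_i - x_j||^2) (assumed kernel form)."""
    sq_norms = (X ** 2).sum(axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    sq_dists = np.maximum(sq_dists, 0.0)   # guard against tiny negative round-off
    return np.exp(-gamma * sq_dists)

def kernel_pca(K, n_components=3):
    """Center the Gramian in feature space and keep the leading eigenpairs."""
    n = K.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n    # centering matrix
    Kc = J @ K @ J
    eigvals, eigvecs = np.linalg.eigh(Kc)  # ascending order
    order = np.argsort(eigvals)[::-1][:n_components]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    coords = eigvecs * np.sqrt(np.maximum(eigvals, 0.0))
    return coords, eigvals

rng = np.random.default_rng(1)
X = rng.normal(size=(10, 4))               # stand-in for ten regional spectra
K = gaussian_gramian(X, gamma=0.5)
coords, eigvals = kernel_pca(K, n_components=3)
```

Each row of `coords` gives the position of one node in the reduced feature space, which is the input to the clustering step (C).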

An automatic approach to sleep staging is possible but tedious because the choice of frequencies and ROIs for best separation between pairs of sleep stages must be made and these can vary a little from subject to subject. Here, we followed a slightly different approach which is a natural progression from the classical procedures. The approach utilizes the Extension 2.4.1 of the framework. First, we used expert classification as a starting point. The centers for each sleep stage are then defined using all available exemplars, except extreme outliers. If transitions are included, then all exemplars of each transition are collapsed to their respective centers. At this point, the centers are defined by the list of indices of the chosen exemplars. The centers of each sleep stage are then embedded in the reduced space for the case of linear PCA or on the manifold in the case of the KPCA. The actual nodes are then hidden, leaving a skeleton display showing only the centers in the reduced dimensions or on the manifold.

A second iteration of the framework is then initiated, validating the expert classification for each sleep stage in turn. The nodes of each category, one category at a time, are expanded (made visible). Usually, either visual inspection of the distribution of nodes or a formal clustering approach is enough to make a confident decision on whether the single center is maintained or more than one center must be used to adequately describe poles with high node density. The outliers and the nodes of each new center are then hidden, leaving at the end of the second iteration a revised skeleton display of the new centers in the reduced space or on the manifold. Note that the centers representing transitions are not processed in the second iteration step. A final step can be added in which the outliers are returned to the display and any that are now close to one of the centers are incorporated into it; this allows the original expert classification to be completely reversed in a data-driven way. The final presentation can represent the centers either in the reduced space or on the manifold, with any one or more categories expanded to show how the nodes representing individual exemplars are spread. Also, one or more of the centers representing transitions can be expanded and the nodes of each transition linked into a path by joining successive nodes for which an ordering is appropriate (for example, close chronological order).

Finally, we show the topology of categories (e.g., sleep stages) relative to each other and the transitions between these categories in the labeled terrain, implementing extension 2.4.2 of the framework. The labeled terrain can be displayed across planes in the reduced dimensions of the linear space of the PCA components or as the manifold of the terrain formed by the KPCA analysis. Next, we present four displays showing results for the analysis of the sleep of one subject; for the first two, we employ the linear PCA analysis, switching to its Gaussian Kernel extension for the last two displays.

For the first analysis, we used eight exemplars of regional spectra for each set of the 29 ROIs of each sleep stage and the ECW condition. We also used the noise measurement of the MEG (recordings with the MEG system in exactly the same position as in the real MEG measurements, but with no subject in the shielded room). Exactly the same analysis is performed for the noise as for the real data to compute virtual brain activations in the time and frequency domain. The resulting virtual noise regional spectra are appended in the analysis as exemplars of an additional “noise” condition, which we interpret as a generalized origin. The actual spread of the images of the noise exemplars represents the spread of this zero level activity. Finally, the analysis is performed for eight exemplars for the periods before and during the spindles and unitary k-complexes (KC1). We also add three 2 s periods corresponding to a quiet period just before the first NREM1 period, representing the transition from the awake state to light sleep. Counting all these cases, we have 91 nodes available for the united ROI resolution analysis and 2,639 nodes for the un-united ROI resolution.
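The node counts quoted above can be checked with a short calculation. The breakdown into categories is our reading of the description (seven core categories, four hallmark categories, and three transition periods counted among the 91 nodes), and the variable names are illustrative.

```python
n_exemplars = 8   # exemplars per category
n_rois = 29       # regions of interest

# Core categories: noise, ECW, and the five sleep stages
core_categories = ["noise", "ECW", "NREM1", "NREM2", "NREM3", "NREM4", "REM"]
# NREM2 hallmarks: periods before and during spindles and KC1 events
hallmark_categories = ["spindle_pre", "spindle_during", "KC1_pre", "KC1_during"]
n_transition_periods = 3   # 2 s periods from ECW to just before NREM1

n_categories = len(core_categories) + len(hallmark_categories)
united_nodes = n_exemplars * n_categories + n_transition_periods
un_united_nodes = united_nodes * n_rois

print(united_nodes, un_united_nodes)  # 91 2639
```

Under this reading, 8 × 11 + 3 = 91 united nodes, and each united node splits into 29 ROI nodes, giving 91 × 29 = 2,639 un-united nodes.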

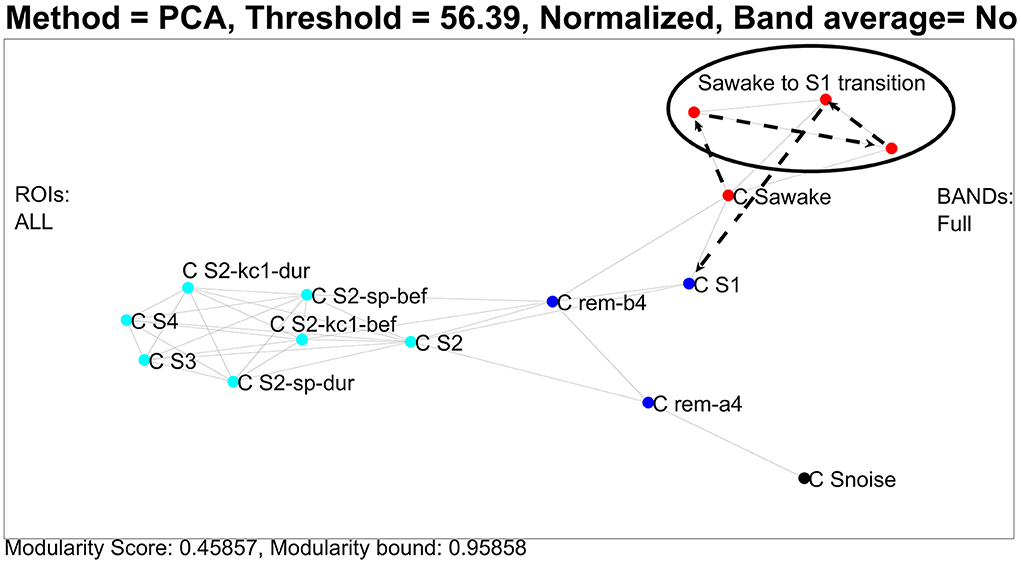

In the first analysis, we use the united ROI resolution and perform a PCA analysis of the 91 nodes using as components all ROIs and all frequency steps. The procedure described in the previous section is used to define centers, one for each category, except for REM, which we separate into two sub-categories. The results are displayed in Figure 3, which shows the clusters extracted by the analysis described above. This graph was generated by using the Euclidean distance on the feature space and an optimum threshold. The values above this threshold are set to zero. The non-zero elements of the resulting matrix are the edges of the graph, with edge weight equal to the inverse of each value (Euclidean distance). We also mark with separate colors the clusters extracted from the k-means algorithm [implementation of step (C) of the PCA approach 2(i) of the framework]. Using four clusters, the k-means algorithm groups the noise center and the noise nodes in a separate (black) cluster, the awake state and transition into a second (red) cluster, the two REM clusters and NREM1 into the third (deep blue) cluster, and all remaining ones [NREM2, deep sleep, and the periods before and during the hallmarks of NREM2 (spindle and KC events)] into the fourth (pale blue) cluster. The transition from the awake state to light sleep is highlighted by connecting with heavy black dashed arrows the ECW center (C Sawake) to the sequence of transition nodes, finally connecting in a similar way the last of the transition nodes to the NREM1 center (C S1), providing a visualization of the path in the graph [implementation of extension 2.4.2 of the PCA approach 2(i) of the framework].
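The graph construction and the clustering described above can be sketched as follows. The thresholding and inverse-distance weighting follow the description in the text; the k-means routine is a plain Lloyd iteration with random initialization, which is an assumption since the implementation used for Figure 3 is not specified. The function names, toy coordinates, and threshold value are illustrative.

```python
import numpy as np

def distance_graph(Y, threshold):
    """Weighted adjacency: distances above `threshold` are zeroed,
    remaining edges get weight 1 / distance."""
    diff = Y[:, None, :] - Y[None, :, :]
    D = np.sqrt((diff ** 2).sum(axis=-1))
    D[D > threshold] = 0.0
    W = np.zeros_like(D)
    nonzero = D > 0
    W[nonzero] = 1.0 / D[nonzero]
    return W

def kmeans(Y, k, n_iter=100, seed=0):
    """Plain Lloyd iteration (assumed variant of the k-means algorithm)."""
    rng = np.random.default_rng(seed)
    centers = Y[rng.choice(len(Y), size=k, replace=False)]
    for _ in range(n_iter):
        dists = np.linalg.norm(Y[:, None, :] - centers[None, :, :], axis=-1)
        labels = dists.argmin(axis=1)
        new_centers = np.array([
            Y[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

# Toy nodes in a two-dimensional reduced space: two well-separated pairs
Y = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]])
W = distance_graph(Y, threshold=2.0)
labels, centers = kmeans(Y, k=2)
```

Here only the two short edges survive the threshold, each with weight 1, and k-means assigns each pair to its own cluster. In practice the threshold and the number of clusters would be chosen from the data, as described in the text.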

Figure 3. Clustering and transitions from eyes closed waking (ECW) to Non-REM1 (NREM1) on the semantic map defined by CENTERS of key sleep categories. To produce the figure, 94 (united) nodes were used, which were made up of the 91 nodes from core periods, awake, noise, and NREM2 hallmarks (pre and during spindles and KC1 events), and three nodes from the transition period from ECW to just before the NREM1 period. The details of the computations and what is actually displayed are described in the main text. The CENTER representations of the main categories are displayed in the reduced space of the first two eigenvectors of the PCA analysis providing a semantic map, on which the transition from ECW to sleep is portrayed by connecting (in chronological order) the nodes representing that period. For clarity and to avoid clutter in the display, the labels of the CENTER nodes begin with C and then S for the NREM sleep stage (e.g., C S1 for CENTER of NREM1) and obvious notations for the rest (e.g., C S2-sp- dur for the CENTER of the NREM2 period during spindles).

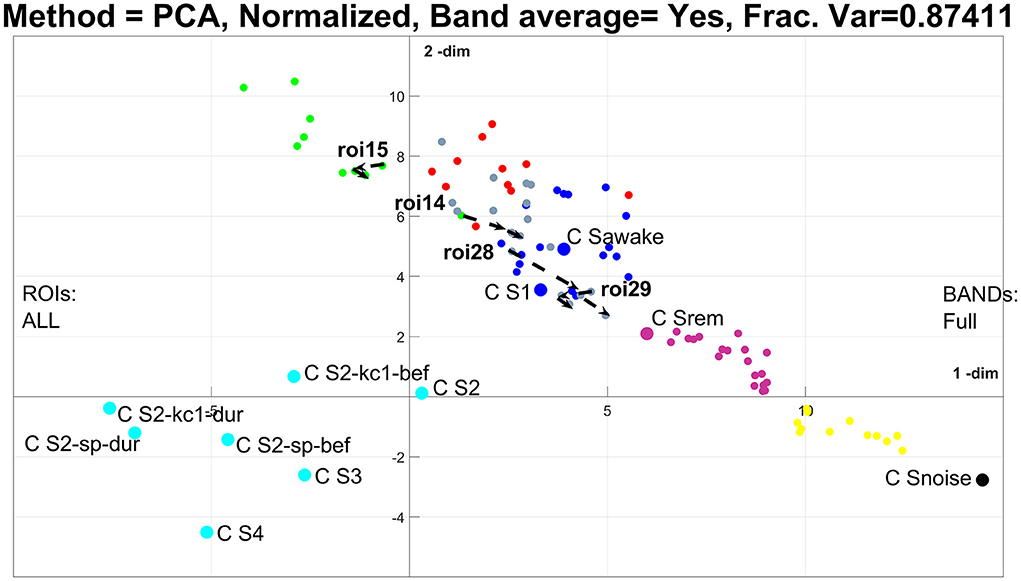

In the previous paragraph, the description of Figure 3 demonstrates how well the results of the united representation reflect the known properties of sleep stages, which in turn serve as a background for the dynamic display of the short transition from the awake state to sleep. The united approach collapses the details of regional spectra of each ROI for all exemplars of each category into one CENTER node. In the final representation, a single CENTER node represents the core period of each sleep stage or one of the other categories for which exemplars were included. This is a huge simplification, arrived at through an essentially unsupervised application of the framework. The resulting display portrays well the known similarity between the classical sleep stages in a novel way. The skeleton of the representation is defined by the CENTERS of the core periods of each sleep stage. Within this skeleton, the algorithm places in a very reasonable way two CENTERS for each hallmark of NREM2, one for the two-second periods before and one for the two seconds that start with the onset of each hallmark event. Furthermore, a representation of a virtual baseline is included that provides a natural minimal-level point of reference. The raw signals for this baseline are eight measurements, each of two seconds duration, recorded with no subject in the shielded room. These background noise measurements were processed in exactly the same way as the measurements with the subject in place. The regional spectra produced by these computations represent images of the background noise of the instrument and the ambient magnetic field inside the shielded room. The images of these eight noise exemplars were collapsed into a single CENTER, which is the best representation of the zero level of our data, and which we refer to as a virtual origin for our skeleton representation.
This static representation of the structure of sleep can serve as the background for the description of highly dynamic processes like transitions from the awake state to sleep and between sleep stages. The display thus summarizes an entire night's sleep of a single subject. A measure of what has been achieved can be appreciated by noting that, to the best of our knowledge, this is the first such representation of sleep, from either supervised or unsupervised analysis of sleep data. The result is also deceptively simple because underneath its simplicity lurks a more complex system, which can only be exposed if the un-united analysis is adopted, as we describe next. Figure 4 shows again the results of PCA analysis, but this time using the un-united ROI.

Figure 4. The results of PCA analysis using the un-united region of interest (ROI) resolution in the plane defined by the first two principal components. Exactly the same data are used as in the previous figure, and the same analysis, except that in collapsing the centers, each sleep stage, including REM, is represented by a single representative node (CENTER). The three nodes representing the transition from ECW to NREM1 in Figure 3 are now represented by 87 nodes, and the single transition path in Figure 3 is now represented by four paths, one for each of V1 and FG in each hemisphere.

In Figure 4, each category of sleep is collapsed into one node (collapsing all exemplars and ROI nodes into one including REM), leaving only the nodes for each ROI for the three transition periods (3 × 29 = 87 nodes). Because of the large number of nodes, no attempt is made to produce a graph, thus no edges are shown in Figure 4. For this case, the nonlinear PCA is often a better representation (as we will explore in the next two figures), but for now, we stay with PCA, so there is a better correspondence in the methods when we compare Figures 3 and 4. Note that the k-means clustering (using seven clusters) maintains some of the patterns of the previous figure; the noise is still in a separate (black) cluster, and a separate (pale blue) cluster groups together NREM2 core periods and hallmarks with deep sleep (NREM3 and NREM4). This time ECW, NREM1, and REM are grouped into one (deep blue) cluster. Importantly, the transition period separates into a set of clusters beginning close to the noise cluster and extending across and beyond the REM (magenta), ECW and NREM1 (deep blue) cluster and beyond. The k-means cluster assigns some of the 87 nodes to the deep blue cluster and others to five more (yellow, magenta, gray, red, and green) clusters. These results suggest that collapsing all ROIs together may provide a powerful grand summary of sleep albeit, at the expense of missing considerable detail about the behavior of each ROI. An optimal description that may demand the use of more complicated methods may be appropriate, e.g., multilayer networks. Nevertheless, some useful observations can be made for the ROIs that are known to show distinct regional spectra for the start and end points of transitions. 
For example, in the classification of sleep stages from regional spectra, the ROIs for left and right primary visual cortex (V1) (14 and 15, respectively) and left and right Fusiform Gyrus (FG) (28 and 29, respectively) are effective for separating the awake state and NREM1. In Figure 4, the paths of the four regional spectra for V1 and FG are displayed (by connecting the three exemplars for the transition in the correct chronological order), showing a consistent movement in the space of the first two principal components, which is more apparent on the right hemisphere (ROIs 14 and 28). Finally, we draw attention to the pattern identified here for the transition from ECW to NREM1, seen more clearly in the united representation of Figure 3: the initial movement away from ECW is directed away from the NREM1 center and, just before the NREM1 onset, turns around by 180°, jumping to the NREM1 neighborhood of the semantic map. This pattern is not a peculiarity of this subject; it is identified in most other subjects during the first transition from ECW to NREM1 in SC1. This feature has important implications that are beyond the scope of this methodological paper and will be fully described elsewhere.

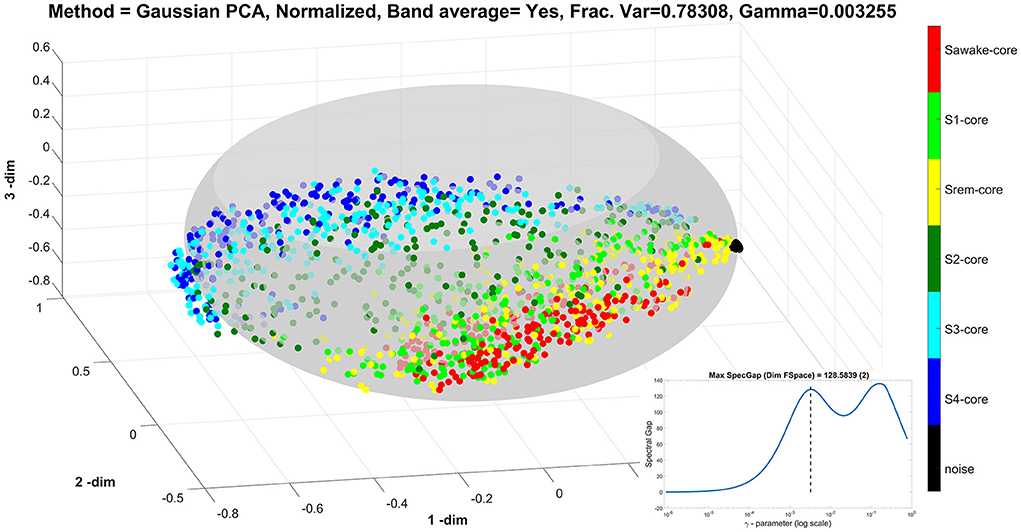

For the next two displays, Figures 5 and 6, we use the same data in the un-united ROI resolution, with the Gaussian kernel for the PCA analysis. We use only the noise, ECW, and the five sleep stages, which produce 1,624 nodes. The plot of the spectral gap shows two peaks, which correspond to two different scales in our data. The lower peak is found at γ = 0.003255 and corresponds to the scale appropriate for the variations encountered in the actual ROIs. The next display, Figure 5, shows the nodes spread over the ellipsoid, with different colors representing the noise, ECW, and the different sleep stages, as these were defined by the human sleep experts. Note the importance of the noise data, which on the scale of the real brain activations are minute and thus collapse to almost a point, which we can use as the marker for the origin from which each node can be measured. There is a clear tendency for the nodes to spread in two dimensions: the long axis of the ellipsoid describes a global increase, probably in the overall strength of activations, while the variation in the orthogonal direction along the ellipsoid's surface spreads the nodes according to sleep stage membership. The pattern shows three bands. The lower band contains nodes belonging to awake (red), REM (yellow), and NREM1 (light green). The higher band contains nodes belonging to deep sleep, i.e., NREM3 and NREM4, shown in different shades of pale blue. Most NREM2 nodes (dark green) fall in the middle band on the ellipsoid surface, between the two bands described above.

Figure 5. The results of Gaussian kernel PCA analysis using the un-united ROI resolution, in the 3D space defined by the first three principal components. There are over one and a half thousand nodes, which are now labeled according to the category to which each one belongs (noise, ECW, and the five sleep stages). The insert shows the spectral gap as a function of the γ-parameter value on a semi-log scale, which has two peaks. The display shows the results using the value of γ at the first peak, marked by the dashed vertical line in the insert (at γ = 0.00326). With this choice of γ, the nodes from the noise measurement collapse to almost a point, which serves as the origin of the representation since it represents the region of feature space with very small values. The nodes corresponding to measurements with the subject in place are well spread, forming a good representation of the underlying ellipsoid.

Figure 6. Same as Figure 5, but for the value of γ at the second peak, marked by the dashed vertical line in the insert (at γ = 0.1497). With this choice of γ, the ellipsoid is lost because the non-linear mapping emphasizes the distribution of the nodes representing the low-amplitude noise. In this case, the method selects the null (noise) direction of the data as the first principal direction (the most important direction), while the remainder of the data are distributed along the second and third principal directions, representing the amplitude of the deep and light sleep. The nodes representing the real data are thereby constrained to the 2D plane of the second and third principal directions, so that the ellipsoid is projected onto a plane.

The final display for the sleep analysis, Figure 6, shows the same analysis as Figure 5, but with γ = 0.1497, which is the value at the second, higher peak (also marked here in the insert of the semi-log plot of spectral gap vs. the γ-value). This γ value is appropriate for the range of values of the virtual brain activations generated by the analysis of the noise data. These noise variations are very small compared to the real brain activations, except in the directions that are in the null (noise) space of the real data, where they are scaled to adequately represent their range (which is actually over a very small real magnitude). Therefore, the noise components in the directions where the real data are silent are emphasized, forcing the first principal direction along the null space of the real data. The real data then branch off in two nearly orthogonal directions in the plane at right angles to the first principal direction defined by the noise measurements.
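The Gaussian kernel PCA embedding behind Figures 5 and 6 can be illustrated with a minimal NumPy sketch. It assumes the kernel has the form exp(-γ‖x − y‖²), which is our assumption because the parametrization is not restated in this section; the function name `gaussian_kernel_pca` and the toy data are hypothetical and are not the authors' implementation.

```python
import numpy as np

def gaussian_kernel_pca(X, gamma, n_components=3):
    """Embed the rows of X (nodes x features) with Gaussian-kernel PCA.

    Assumption: kernel k(x, y) = exp(-gamma * ||x - y||^2).
    Returns the node coordinates and the leading eigenvalues.
    """
    X = np.asarray(X, dtype=float)
    # Pairwise squared Euclidean distances between all nodes.
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    sq_dists = np.maximum(sq_dists, 0.0)  # guard against round-off
    K = np.exp(-gamma * sq_dists)

    # Centre the kernel matrix in feature space.
    n = K.shape[0]
    J = np.eye(n) - np.full((n, n), 1.0 / n)
    Kc = J @ K @ J

    # eigh returns eigenvalues in ascending order; keep the largest ones.
    eigvals, eigvecs = np.linalg.eigh(Kc)
    order = np.argsort(eigvals)[::-1][:n_components]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]

    # Coordinates of each node along the leading principal directions.
    coords = eigvecs * np.sqrt(np.maximum(eigvals, 0.0))
    return coords, eigvals
```

Calling the function for a range of γ values on data that mix a very tight group of rows (standing in for the noise measurement) with a widely spread group gives a feel for how the scale parameter changes which group dominates the leading directions. The spectral gap criterion used to select γ is not reproduced here.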

In the previous application, the brain was studied during sleep, when no external stimulus is presented to the subject. In contrast, in this application, early brain responses elicited by stimulation of the median nerve at the wrist are analyzed. Such somatosensory stimulation gives rise to the so-called somatosensory evoked potentials (SEP) and somatosensory evoked fields (SEF), which are recorded in the EEG and MEG signals, respectively. These kinds of brain responses are quite strong compared to the background activity of the brain, easily identified in the raw EEG/MEG signals, time-locked to the stimulus onset, and among the most reproducible brain responses to an external stimulus [54]. While this type of stimulation excites many areas across the brain, experiments with animals and humans have identified the times of the first arrival of the signal in the area of the thalamus [55–57] and in the cortical area S1 [55, 57–60].

Specifically, at around 14 ms after the presentation of the stimulus, a prominent positive peak appears in the SEPs, called the P14 component; this is related to the neural activity in the thalamus [55, 56]. A few milliseconds later, around 20 ms post stimulus, peaks can be seen in both SEPs and SEFs; these are related to the neural activity in S1 [55, 56, 60, 61]. The peaks at 20 ms are known as P20 for EEG and M20 for MEG, and they are seen as dipolar patterns rotated by 90° relative to each other. Both the P20 and the M20 have been localized independently in the primary somatosensory cortex, Brodmann area 3b [59, 60, 62]. For our purposes, the key point is that these are the first arrivals of the evoked response at the level of the thalamus and the cortex, and they are therefore the components that are least influenced by the later activity in the many other cortical areas.

In experiments with EEG/MEG recordings in response to somatosensory stimulation, the stimulus is presented to the subject many times with a predefined interstimulus interval. Each repetition of the stimulus is called a trial. Even though responses to somatosensory stimulation are quite strong and time-locked to the stimulus, there are still some variations from trial to trial, for reasons that are not yet understood. Here, we explore the causes of these variations in two steps. First, the concept of the virtual sensor (VS) is used to get a good estimate of the underlying sources at the single-trial level. Then, the framework is used to cluster the single-trial virtual sensor signals. The input to the unified framework for clustering is the time-domain signals extracted directly from the output of the VS applied to the raw signals.

The data used here are simultaneous EEG/MEG recordings from one human subject. The EEG/MEG recordings were made available to us in an anonymized form, without any MR images, by the corresponding author of the study [60]. The experiment involved 1,198 trials (repetitions) of electrical stimulation of the median nerve at the right wrist of the subject. The raw EEG and MEG data were cleaned of stimulation artifacts and line noise, and band-pass filtered in the frequency range 20–250 Hz. In the case of EEG, the mean of all channels was used for re-referencing the signals of the individual EEG electrodes. Once the data were cleaned and filtered, the continuous data were epoched into trials of 0.3 s total duration. Each trial was defined as the signals from 100 ms before (pre-stimulus period) to 200 ms after (post-stimulus period) stimulus onset. The next step was to group the trials into sets, such that each set contained trials with the head in the same position relative to the MEG sensors. This was done by identifying the periods with head movement from visual inspection of the continuous MEG signals. Since the MEG sensors are stationary above the head, even slight movements of the head cause large distortions of the signals that can be easily distinguished visually. Finally, one group of 239 trials with no head movement was selected for further analysis. From the mean of the 239 trials, the P14 and M20 components were identified as prominent peaks at 14 and 20 ms after stimulus onset, respectively.
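The epoching step described above (100 ms before to 200 ms after each stimulus onset) can be sketched as follows. This is a minimal illustration and not the authors' MATLAB code; the function name `epoch_trials`, the array layout (channels × samples), and the sampling rate passed in are assumptions made for the example.

```python
import numpy as np

def epoch_trials(data, onsets, sfreq, pre=0.1, post=0.2):
    """Cut continuous data (channels x samples) into trials around each onset.

    onsets: stimulus-onset sample indices (hypothetical input).
    Trials that would run past either end of the recording are skipped.
    Returns an array of shape (trials, channels, samples).
    """
    data = np.asarray(data)
    n_pre = int(round(pre * sfreq))    # samples before stimulus onset
    n_post = int(round(post * sfreq))  # samples after stimulus onset
    trials = [
        data[:, s - n_pre : s + n_post]
        for s in onsets
        if s - n_pre >= 0 and s + n_post <= data.shape[1]
    ]
    return np.stack(trials)
```

Averaging the result over its first axis, e.g. `epoch_trials(...).mean(axis=0)`, gives the mean evoked response in which peaks such as the P14 and M20 would be sought.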

We know that even for the relatively strong stimulus used here, there is some variation in the responses of each trial. In order to get a good estimate of the time course of the underlying sources, one VS was defined for each component. Analysis of evoked responses using virtual sensors has been employed for the identification of early sensory responses for the somatosensory, auditory, and visual cortex [63–65]. A VS is constructed using a very simple procedure founded on well-understood physics principles for the generation of electric and magnetic fields. In this work, we construct two VS: the EEG P14-VS, to estimate the thalamic activity at around 14 ms, and the MEG M20-VS, to estimate the activity of S1 at 20 ms, using the EEG and MEG raw signals, respectively. Here, the selection of the channels for the construction of the two VS is based on the signal power (SP) and signal-to-noise ratio (SNR) at the specific latencies (14 and 20 ms) across all 239 trials. The definition of each VS is fixed from the average of the ensemble of all trials, at the time points where the first thalamic and primary somatosensory cortex (BA 3b) responses show little variation. The thalamic and cortical (BA 3b) responses are then estimated from the signals of each individual trial.

For the strong median nerve stimulation used in this experiment, the evoked response at the level of the cortex is present in almost all single trials. The M20 corresponds to the first arrival at the cortex, and there is little interference from any other generators since all previous responses were at the level of the thalamus and the brainstem. We can therefore assume that the M20-VS estimates within the first 20 ms correspond to the activity of the primary somatosensory cortex. In contrast, the thalamic activity around 14 ms is likely to have some contribution from other deeper brain activations, corresponding to the arrival of the slower components of the evoked responses or even to different pathways. Hence, the EEG P14-VS estimates at the single-trial (ST) level will vary. We use the unified framework to cluster the 239 trials into groups. The clustering is applied to the signals from the two VS separately.

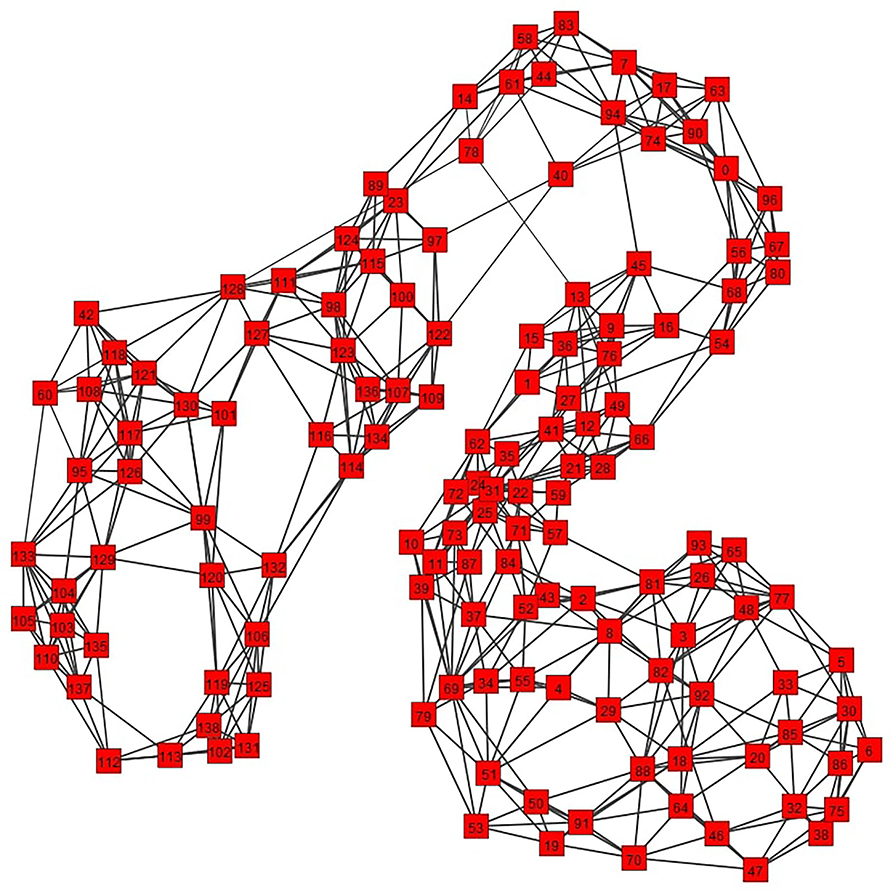

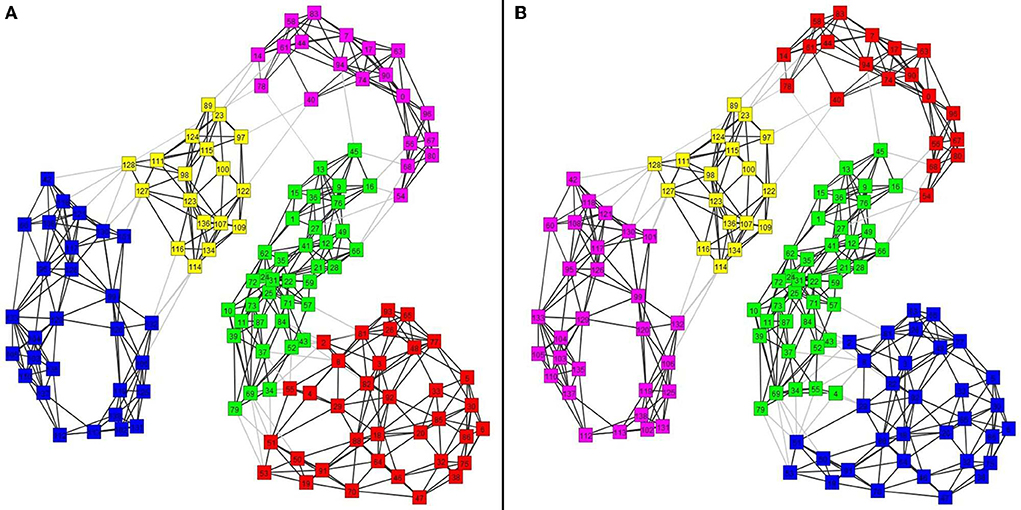

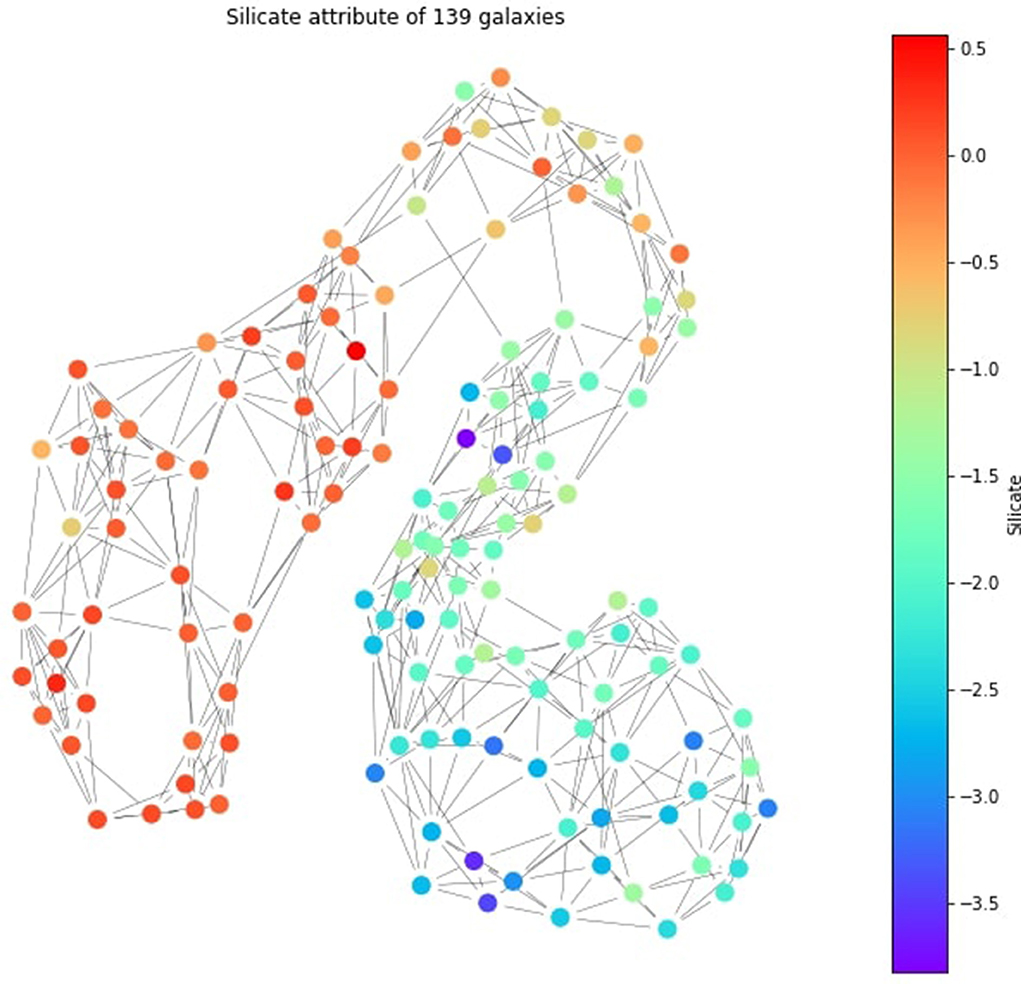

First, we use the Gaussian kernel as a similarity function on the trial signals to construct a similarity matrix [step (1) of the framework, see Figure 2]. In the resulting graph, the nodes represent the single trials, and the edges between nodes measure how similar the corresponding nodes (trials) are. Next, we apply graph sparsification [step (a) of the graph theoretic approach 2(ii) of the framework] in order to get a good visualization of the corresponding similarity graph. Then, we utilize the spectral graph clustering computational path, following steps [b(1)] and (A)–(C) of the framework, to detect nodes (trials) with similar signals. The last step of the graph theoretic analysis is to draw the graph using the force-directed layout [31] and show the detected clusters [step (d) of the framework]. Once the single trials (nodes) have been assigned to different clusters, we compute and show the average signal for each detected cluster.
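The pipeline above (similarity matrix, sparsification, spectral clustering) can be illustrated with a compact NumPy sketch. Several choices here are assumptions made only for illustration: the kernel form exp(-γ‖x − y‖²), a k-nearest-neighbour rule for sparsification, and a single two-way normalized cut in the spirit of Shi and Malik rather than the full multi-cluster procedure of the framework. All function names are hypothetical.

```python
import numpy as np

def gaussian_similarity(signals, gamma):
    """Similarity matrix W[i, j] = exp(-gamma * ||x_i - x_j||^2) (assumed form)."""
    X = np.asarray(signals, dtype=float)
    sq = np.sum(X ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    W = np.exp(-gamma * d2)
    np.fill_diagonal(W, 0.0)  # no self-loops
    return W

def knn_sparsify(W, k):
    """Keep, for every node, its k strongest edges, then symmetrise.

    Assumption: k-NN is used here only as one simple sparsification rule.
    """
    n = W.shape[0]
    keep = np.zeros_like(W, dtype=bool)
    idx = np.argsort(W, axis=1)[:, -k:]       # k largest weights per row
    keep[np.arange(n)[:, None], idx] = True
    keep = keep | keep.T
    return np.where(keep, W, 0.0)

def normalized_cut_bipartition(W):
    """Two-way normalised cut from the second eigenvector of
    I - D^{-1/2} W D^{-1/2}, mapped back through D^{-1/2}.

    Assumes a connected graph with strictly positive node degrees.
    """
    d = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(d)
    L_sym = np.eye(len(d)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    _, eigvecs = np.linalg.eigh(L_sym)        # ascending eigenvalues
    fiedler = d_inv_sqrt * eigvecs[:, 1]
    return (fiedler > 0).astype(int)
```

With single-trial signals stacked as rows, `normalized_cut_bipartition(knn_sparsify(gaussian_similarity(signals, gamma), k))` returns one label per trial. More than two clusters could be obtained by applying the cut recursively or by clustering several eigenvectors; which variant corresponds to steps (A)–(C) of the framework is not restated here.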

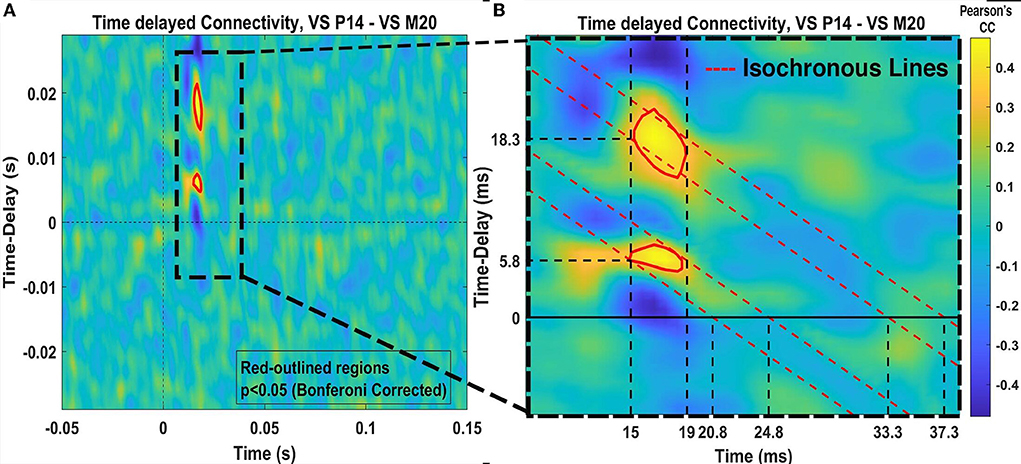

Finally, functional connectivity [3] analysis at the single-trial level is performed between the clusters of the two different VS, with the goal of identifying possible communication mechanisms between the two areas (thalamus and cortex) in response to somatosensory stimulation. Pearson's correlation coefficient (PCC), computed in a fixed window of 8 ms length with time delays introduced in steps of 0.833 ms, is used to quantify the time-delayed correlation between the time courses of two signals.
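A time-delayed, windowed Pearson correlation of this kind can be sketched as below. The function name and arguments are hypothetical, lags are expressed in samples, and the mapping to physical units is an assumption: a delay step of 0.833 ms would correspond to one sample at a sampling rate of 1,200 Hz, in which case an 8 ms window spans roughly 10 samples.

```python
import numpy as np

def delayed_correlation(x, y, start, win, max_lag):
    """Pearson correlation between x[start:start+win] and y shifted by each lag.

    Lags are in samples; a positive lag means the y window is taken later
    than the x window. Returns (lags, r) with one value of r per lag;
    lags whose window falls outside the signal are left as NaN.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    lags = np.arange(-max_lag, max_lag + 1)
    seg_x = x[start : start + win]
    r = np.full(lags.shape, np.nan)
    for i, lag in enumerate(lags):
        lo = start + lag
        if lo < 0 or lo + win > len(y):
            continue  # window would fall outside the signal
        r[i] = np.corrcoef(seg_x, y[lo : lo + win])[0, 1]
    return lags, r
```

For two VS time courses from the same trial, the lag at which `r` peaks indicates the delay at which the two signals are most similar within the chosen window; sliding `start` along the trial gives the time-resolved picture.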

For the implementation, we developed our own MATLAB code for extracting and pre-processing the necessary data from this data set and for the connectivity analysis. We also used Python and the package igraph for the graph theoretical analysis.

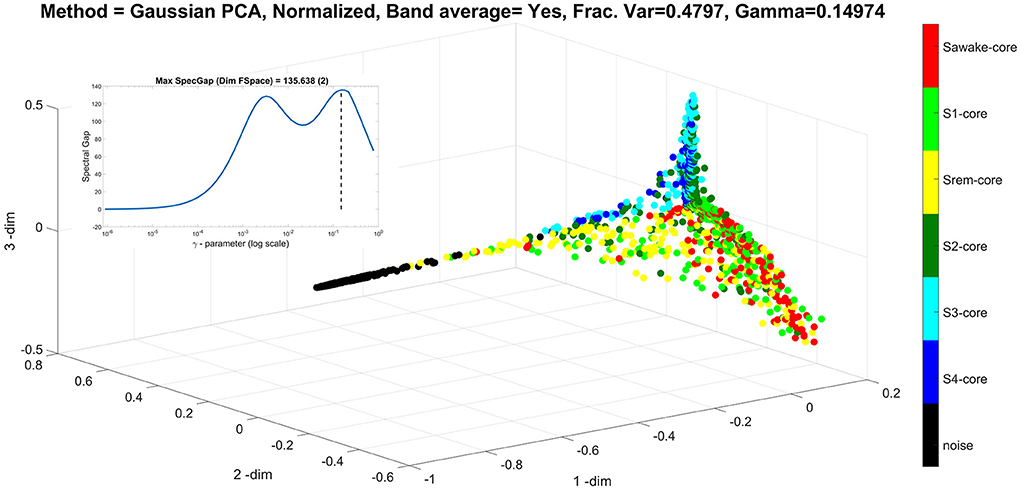

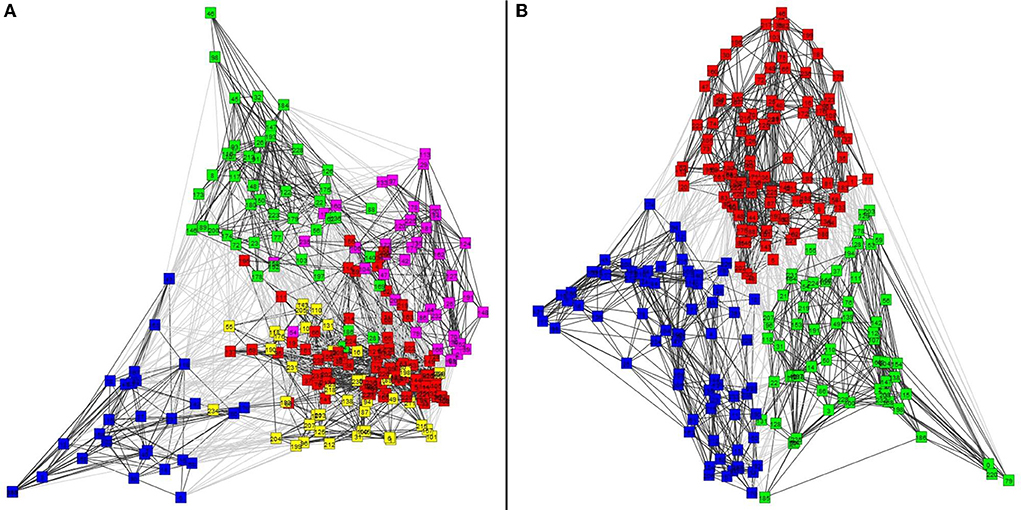

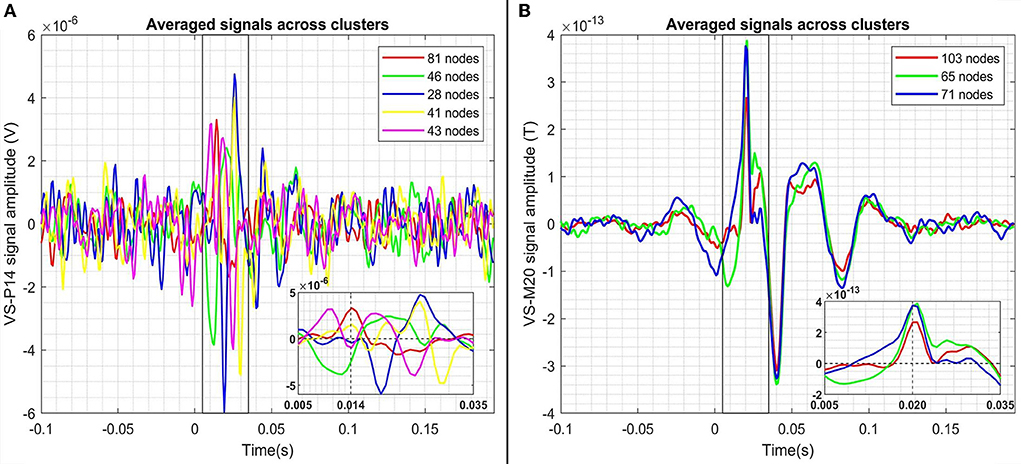

Figure 7 shows the results of the graph clustering of the VS single-trial signals for the case of the P14-VS (left plot) and the M20-VS (right plot). For the embedding of the (clustered) data, step (d) of approach [2(ii)] of the framework has been used. In order to explore the differences between the signals belonging to each cluster, we compute and plot the average time course across the signals (nodes) of the same cluster. The results are shown in Figure 8. As expected, in the case of the M20-VS signals (Figure 7B), there are fewer clusters (three clusters) compared to the P14-VS signals (five clusters). More importantly, all three clusters extracted from the M20-VS show the first peak at exactly the same latency, 20 ms. In contrast, the five clusters extracted from the P14-VS show distinct peaks for a range of latencies before and after the expected peak at 14 ms. This is an argument that the “thalamic” VS picks up strong contributions from more than one deep area.

Figure 7. The clusters obtained for the virtual sensor (VS) single-trial signals. (A) Five clusters for the P14-VS, shown in different colors, detected using the Shi and Malik [28] algorithm, with modularity = 0.36 and conductance = 0.44. (B) Three clusters for the M20-VS, detected using the Shi and Malik [28] algorithm, with modularity = 0.45 and conductance = 0.27.
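The two quality measures quoted in the caption can be computed from a weighted similarity matrix and a cluster assignment as in the sketch below. It uses the standard definitions of weighted modularity and of the conductance of a single cluster; how the article aggregates conductance over several clusters into one number is not stated in this section, so that step is deliberately left out. The function names are hypothetical.

```python
import numpy as np

def modularity(W, labels):
    """Weighted modularity Q = (1/2m) * sum_ij [W_ij - d_i d_j / 2m] * [c_i == c_j]."""
    W = np.asarray(W, dtype=float)
    labels = np.asarray(labels)
    d = W.sum(axis=1)                          # weighted degrees
    two_m = d.sum()                            # twice the total edge weight
    same = labels[:, None] == labels[None, :]  # pairs in the same cluster
    return float(((W - np.outer(d, d) / two_m) * same).sum() / two_m)

def conductance(W, labels, cluster):
    """Conductance of one cluster: cut weight / min(volume inside, volume outside)."""
    W = np.asarray(W, dtype=float)
    inside = np.asarray(labels) == cluster
    cut = W[inside][:, ~inside].sum()          # weight of edges leaving the cluster
    vol_in = W[inside].sum()                   # sum of degrees inside
    vol_out = W[~inside].sum()                 # sum of degrees outside
    return float(cut / min(vol_in, vol_out))
```

As a sanity check, two triangles joined by a single unit-weight edge and split into the two triangles give a modularity of 5/14 and a conductance of 1/7 for either cluster.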