- 1Department of Mathematics, Al-Aqsa University, Gaza, Palestine

- 2Department of Applied Statistics and Econometrics, Faculty of Graduate Studies for Statistical Research, Cairo University, Giza, Egypt

- 3Department of Quantitative Analysis, College of Business Administration, King Saud University, Riyadh, Saudi Arabia

In the linear regression model, multicollinearity makes the ordinary least squares (OLS) estimator inefficient. Several estimators have been proposed to address this problem, among them the recent Kibria-Lukman (KL) estimator. In this paper, a generalized version of the KL estimator is proposed, and its optimal biasing parameter is derived by minimizing the scalar mean squared error. The performance of the proposed estimator is compared theoretically with that of the OLS, the generalized ridge, the generalized Liu, and the KL estimators in terms of the matrix mean squared error. Furthermore, a simulation study and a numerical example are used to compare the proposed estimator with the OLS and the KL estimators. The results indicate that the proposed estimator outperforms the other estimators, especially when the standard deviation of the errors is large and the correlation between the explanatory variables is very high.

Introduction

The statistical consequences of multicollinearity in the linear regression model are well known. Multicollinearity refers to an approximate linear dependency among the columns of the matrix X in the following linear model:

$$y = X\beta + \varepsilon, \tag{1}$$

where y is an n × 1 vector of the dependent variable, X is a known n × p matrix of the explanatory variables, β is a p × 1 vector of unknown regression parameters, and ε is an n × 1 vector of disturbances. The ordinary least squares (OLS) estimator of β for the model (1) is given as:

$$\hat{\beta} = (X'X)^{-1}X'y.$$

Multicollinearity makes the OLS estimator inefficient and sometimes produces coefficients with the wrong signs [1, 2]. Many studies have been conducted to handle this problem. For example, Hoerl and Kennard [2] proposed the ordinary ridge (OR) and the generalized ridge (GR) estimators, while Liu [3] introduced the popular Liu and the generalized Liu (GL) estimators. Very recently, Kibria and Lukman [1] proposed a ridge-type estimator called the Kibria–Lukman (KL) estimator, which is defined by

$$\hat{\beta}_{KL} = (X'X + kI_p)^{-1}(X'X - kI_p)\hat{\beta}, \quad k > 0.$$

This estimator has been extended for use in different generalized linear models, such as Lukman et al. [4, 5], Akram et al. [6], and Abonazel et al. [7].
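As a rough illustration, the OLS and KL estimators can be computed directly from the data. The sketch below assumes the usual form of the KL estimator, (X′X + kI)⁻¹(X′X − kI) applied to the OLS estimate; the function names, the simulated data, and the value k = 0.5 are hypothetical choices made only for this example.

```python
import numpy as np

def ols_estimator(X, y):
    """OLS estimate: solves (X'X) b = X'y."""
    return np.linalg.solve(X.T @ X, X.T @ y)

def kl_estimator(X, y, k):
    """KL estimate, assumed form: (X'X + kI)^{-1} (X'X - kI) b_ols."""
    S = X.T @ X
    I = np.eye(S.shape[0])
    return np.linalg.solve(S + k * I, (S - k * I) @ ols_estimator(X, y))

# Hypothetical toy data, used only to exercise the two functions.
rng = np.random.default_rng(0)
X = rng.standard_normal((30, 3))
y = X @ np.array([0.5, 0.5, 0.7]) + rng.standard_normal(30)

b_ols = ols_estimator(X, y)
b_kl = kl_estimator(X, y, k=0.5)
```

With k = 0 the KL estimate reduces to OLS, and for k > 0 it has a smaller Euclidean norm than the OLS estimate, which is the shrinkage behaviour a ridge-type estimator is meant to provide.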

According to recent papers [8–10], the efficiency of a biased estimator can be improved by generalizing it so that the biasing parameters vary across the regression coefficients (ki and/or di) rather than being fixed (k and/or d). Hence, the main purpose of this paper is to develop a generalized form of the KL estimator to combat multicollinearity in the linear regression model.

The rest of this paper is structured as follows: Section Statistical Methodology presents the statistical methodology. In Section Superiority of the Proposed GKL Estimator, we theoretically compare the proposed generalized KL (GKL) estimator with each of the mentioned estimators. In Section The Biasing Parameter Estimator of the GKL Estimator, we estimate the biasing parameter of the proposed estimator. Different scenarios of a Monte Carlo simulation are studied in Section A Monte Carlo Simulation Study. A real dataset is analyzed in Section Empirical Application. Finally, Section Conclusion presents some conclusions.

Statistical Methodology

Canonical Form

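The canonical form of the model in equation (1) is as follows:

$$y = Z\alpha + \varepsilon,$$

where Z = XR, α = R′β, and R is an orthogonal matrix such that $Z'Z = R'X'XR = G = \mathrm{diag}(g_1, g_2, \ldots, g_p)$. Then, the OLS estimator of α is

$$\hat{\alpha} = G^{-1}Z'y,$$

and its matrix mean squared error (MMSE) is given as

$$\mathrm{MMSE}(\hat{\alpha}) = \sigma^2 G^{-1}.$$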
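The canonical transformation can be sketched numerically as follows. The example assumes that R holds the eigenvectors of X′X and that G is the diagonal matrix of its eigenvalues g1, ..., gp; the simulated data and variable names are hypothetical.

```python
import numpy as np

# Hypothetical toy data for illustration only.
rng = np.random.default_rng(1)
X = rng.standard_normal((40, 4))
beta = np.array([0.5, 0.5, 0.5, 0.5])
y = X @ beta + rng.standard_normal(40)

# Spectral decomposition X'X = R diag(g) R' with R orthogonal.
g, R = np.linalg.eigh(X.T @ X)

Z = X @ R                      # canonical regressors, so that Z'Z = diag(g)
alpha_hat = (Z.T @ y) / g      # canonical OLS estimate G^{-1} Z'y

# The OLS estimate in the original coordinates is recovered as R @ alpha_hat.
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)
```

Because G is diagonal, every estimator in this section acts on the canonical OLS estimate one component at a time, which is what makes the later comparisons tractable.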

Ridge Regression Estimators

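The ordinary ridge (OR) and the GR estimators of α are, respectively, defined as follows [2]:

$$\hat{\alpha}_{OR} = W_1 Z'y, \qquad \hat{\alpha}_{GR} = W_2 Z'y,$$

where $W_1 = [G + kI_p]^{-1}$, k > 0 and $W_2 = [G + K]^{-1}$, with K = diag(k1, k2, ..., kp), ki > 0, and i = 1, 2, ..., p.

The MMSE of the OR and the GR estimators are given, respectively, as:

$$\mathrm{MMSE}(\hat{\alpha}_{OR}) = \sigma^2 W_1 G W_1' + k^2 W_1 \alpha\alpha' W_1',$$

$$\mathrm{MMSE}(\hat{\alpha}_{GR}) = \sigma^2 W_2 G W_2' + W_2 K \alpha\alpha' K' W_2'.$$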

Liu Regression Estimators

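The Liu and the GL estimators of α are, respectively, defined as follows [3]:

$$\hat{\alpha}_{Liu} = F_1 \hat{\alpha}, \qquad \hat{\alpha}_{GL} = F_2 \hat{\alpha},$$

where $F_1 = [G + I_p]^{-1}[G + dI_p]$, 0 < d < 1 and $F_2 = [G + I_p]^{-1}[G + D]$, with D = diag(d1, d2, ..., dp), 0 < di < 1, and i = 1, 2, ..., p.

The MMSE of the Liu and the GL estimators are, respectively, given as:

$$\mathrm{MMSE}(\hat{\alpha}_{Liu}) = \sigma^2 F_1 G^{-1} F_1' + [F_1 - I_p]\alpha\alpha'[F_1 - I_p]',$$

$$\mathrm{MMSE}(\hat{\alpha}_{GL}) = \sigma^2 F_2 G^{-1} F_2' + [F_2 - I_p]\alpha\alpha'[F_2 - I_p]'.$$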
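In canonical form, the ridge and Liu families reduce to componentwise shrinkage of the OLS estimate. The following sketch assumes the standard definitions, namely the factor gi/(gi + ki) for the OR and GR estimators and (gi + di)/(gi + 1) for the Liu and GL estimators; the function names and numerical values are hypothetical.

```python
import numpy as np

def ridge_canonical(alpha_hat, g, k):
    """OR (scalar k) or GR (vector k): [G + K]^{-1} Z'y = g / (g + k) * alpha_hat."""
    return g / (g + k) * alpha_hat

def liu_canonical(alpha_hat, g, d):
    """Liu (scalar d) or GL (vector d): [G + I]^{-1} [G + D] alpha_hat."""
    return (g + d) / (g + 1.0) * alpha_hat

# Hypothetical eigenvalues and canonical OLS estimates.
g = np.array([25.0, 4.0, 0.2])
alpha_hat = np.array([1.0, -0.5, 3.0])

a_or = ridge_canonical(alpha_hat, g, 0.5)
a_gr = ridge_canonical(alpha_hat, g, np.array([0.1, 0.5, 0.2]))
a_liu = liu_canonical(alpha_hat, g, 0.5)
a_gl = liu_canonical(alpha_hat, g, np.array([0.9, 0.5, 0.1]))
```

The generalized versions differ from the ordinary ones only in allowing a separate biasing parameter for each canonical coefficient.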

Kibria–Lukman Estimator

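The KL estimator of α is given by Kibria and Lukman [1] as:

$$\hat{\alpha}_{KL} = W_1 M_1 \hat{\alpha},$$

where $W_1 = [G + kI_p]^{-1}$ and $M_1 = [G - kI_p]$, and the MMSE of this estimator is given as:

$$\mathrm{MMSE}(\hat{\alpha}_{KL}) = \sigma^2 W_1 M_1 G^{-1} M_1' W_1' + [W_1 M_1 - I_p]\alpha\alpha'[W_1 M_1 - I_p]'.$$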

The Proposed GKL Estimator

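Now, by replacing W1 with W2 and M1 with M2 = [G − K] in the KL estimator, we obtain the generalized KL (GKL) estimator as follows:

$$\hat{\alpha}_{GKL} = W_2 M_2 \hat{\alpha},$$

where $W_2 = [G + K]^{-1}$ and K = diag(k1, k2, ..., kp). The MMSE of the proposed GKL estimator is then

$$\mathrm{MMSE}(\hat{\alpha}_{GKL}) = \sigma^2 W_2 M_2 G^{-1} M_2' W_2' + [W_2 M_2 - I_p]\alpha\alpha'[W_2 M_2 - I_p]'.$$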
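A minimal numerical sketch of the GKL estimator and its MMSE is given below. It assumes W2 = [G + K]⁻¹ and M2 = [G − K], so that each canonical OLS component is multiplied by (gi − ki)/(gi + ki); the function names and all numerical values are hypothetical.

```python
import numpy as np

def gkl_canonical(alpha_hat, g, k):
    """GKL estimate W2 M2 alpha_hat, computed componentwise."""
    return (g - k) / (g + k) * alpha_hat

def gkl_mmse(alpha, g, k, sigma2):
    """MMSE matrix: sigma^2 A G^{-1} A' + bias bias', where A = W2 M2."""
    A = np.diag((g - k) / (g + k))
    bias = (A - np.eye(len(g))) @ alpha      # equals -2 k / (g + k) * alpha
    return sigma2 * A @ np.diag(1.0 / g) @ A.T + np.outer(bias, bias)

# Hypothetical eigenvalues, true canonical coefficients, and biasing parameters.
g = np.array([10.0, 1.0, 0.1])
alpha = np.array([0.6, 0.6, 0.5])
k = np.array([0.5, 0.2, 0.05])
sigma2 = 1.0

mmse = gkl_mmse(alpha, g, k, sigma2)
scalar_mse = np.trace(mmse)
```

The trace of this matrix is the scalar mean squared error, which splits into a variance part σ²(gi − ki)²/(gi(gi + ki)²) and a squared-bias part 4ki²αi²/(gi + ki)² for each component.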

Superiority of the Proposed GKL Estimator

In this section, we compare the proposed GKL estimator with each of the OLS, GR, GL, and KL estimators. First, we state some lemmas that are useful for these comparisons.

Lemma 1: Wang et al. [11]: Suppose M and N are n × n positive definite matrices. Then M > N if and only if (iff) $\lambda_{\max}(NM^{-1}) < 1$, where $\lambda_{\max}(NM^{-1})$ is the maximum eigenvalue of the matrix NM−1.

Lemma 2: Farebrother [12]: Let S be an n × n positive definite matrix, that is, S > 0, and let α be some vector. Then, S − αα′ > 0 iff α′S−1α < 1.

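Lemma 3: Trenkler and Toutenburg [13]: Let $\hat{\alpha}_i = U_i w$, i = 1, 2 be any two linear estimators of α. Suppose that $D = \mathrm{Cov}(\hat{\alpha}_1) - \mathrm{Cov}(\hat{\alpha}_2) > 0$, where $\mathrm{Cov}(\hat{\alpha}_i)$ is the covariance matrix of $\hat{\alpha}_i$ and $b_i = \mathrm{Bias}(\hat{\alpha}_i)$. Then,

$$\Delta(\hat{\alpha}_1 - \hat{\alpha}_2) = \mathrm{MMSE}(\hat{\alpha}_1) - \mathrm{MMSE}(\hat{\alpha}_2) = D + b_1 b_1' - b_2 b_2' > 0$$

iff $b_2'[D + b_1 b_1']^{-1} b_2 < 1$, where $\mathrm{MMSE}(\hat{\alpha}_i) = \mathrm{Cov}(\hat{\alpha}_i) + b_i b_i'$.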

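Theorem 1: $\hat{\alpha}_{GKL}$ is superior to $\hat{\alpha}$ iff

$$\alpha'[W_2 M_2 - I_p]'\left[\sigma^2\left(G^{-1} - W_2 M_2 G^{-1} M_2' W_2'\right)\right]^{-1}[W_2 M_2 - I_p]\alpha < 1.$$

Proof: The difference of the covariance matrices is written as

$$\mathrm{Cov}(\hat{\alpha}) - \mathrm{Cov}(\hat{\alpha}_{GKL}) = \sigma^2\left(G^{-1} - W_2 M_2 G^{-1} M_2' W_2'\right) = \sigma^2\,\mathrm{diag}\left\{\frac{1}{g_i} - \frac{(g_i - k_i)^2}{g_i (g_i + k_i)^2}\right\}_{i=1}^{p},$$

where $G^{-1} - W_2 M_2 G^{-1} M_2' W_2'$ becomes positive definite iff $(g_i + k_i)^2 - (g_i - k_i)^2 > 0$ or (gi + ki) − (gi − ki) > 0. It is clear that for ki > 0, i = 1, 2, ..., p, (gi + ki) − (gi − ki) = 2ki > 0. Therefore, the proof is completed by Lemma 3.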
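The comparison between the GKL and OLS estimators can also be checked numerically. The sketch below assumes that the GKL estimator shrinks each canonical OLS component by (gi − ki)/(gi + ki); it evaluates the quadratic form of the superiority condition and, separately, tests whether the MMSE difference is positive definite. The function name and inputs are hypothetical.

```python
import numpy as np

def gkl_vs_ols(alpha, g, k, sigma2):
    """Return the quadratic form b' D^{-1} b and whether MMSE(OLS) - MMSE(GKL) is PD."""
    shrink = (g - k) / (g + k)
    cov_diff = sigma2 * (1.0 - shrink ** 2) / g   # diagonal of Cov(OLS) - Cov(GKL)
    bias = (shrink - 1.0) * alpha                 # GKL bias, -2 k / (g + k) * alpha
    delta = np.diag(cov_diff) - np.outer(bias, bias)
    quad = float(np.sum(bias ** 2 / cov_diff))
    is_pd = bool(np.all(np.linalg.eigvalsh(delta) > 0))
    return quad, is_pd

g = np.array([10.0, 1.0, 0.1])

# Small biasing parameters with moderate signal: the condition holds.
quad_small, pd_small = gkl_vs_ols(np.array([0.6, 0.6, 0.5]), g,
                                  np.array([0.1, 0.05, 0.01]), sigma2=1.0)

# Large coefficients with little noise: the condition fails.
quad_large, pd_large = gkl_vs_ols(np.array([5.0, 5.0, 5.0]), g,
                                  np.array([5.0, 0.5, 0.05]), sigma2=0.01)
```

In both cases the quadratic form falls below one exactly when the MMSE difference is positive definite, in line with Lemma 2.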

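Theorem 2: When $\lambda_{\max}(NM^{-1}) < 1$, $\hat{\alpha}_{GKL}$ is superior to $\hat{\alpha}_{GR}$ iff

$$b_{GKL}'\left[V_1 + b_{GR} b_{GR}'\right]^{-1} b_{GKL} < 1,$$

where $V_1 = \sigma^2\left(W_2 G W_2' - W_2 M_2 G^{-1} M_2' W_2'\right)$, $b_{GR} = -W_2 K \alpha$, and $b_{GKL} = [W_2 M_2 - I_p]\alpha$.

Proof: The difference of the covariance matrices is written as

$$V_1 = \sigma^2 (M - N), \qquad M = W_2 G W_2', \quad N = W_2 M_2 G^{-1} M_2' W_2'.$$

For ki > 0, it is obvious that M > 0 and N > 0. Then, M − N > 0 iff $\lambda_{\max}(NM^{-1}) < 1$, where $\lambda_{\max}(NM^{-1})$ is the maximum eigenvalue of NM−1. So, the proof is completed by Lemma 1.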

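Theorem 3: $\hat{\alpha}_{GKL}$ is superior to $\hat{\alpha}_{GL}$ iff

$$b_{GKL}'\left[V_2 + b_{GL} b_{GL}'\right]^{-1} b_{GKL} < 1,$$

where $V_2 = \sigma^2\left(F_2 G^{-1} F_2' - W_2 M_2 G^{-1} M_2' W_2'\right)$, $F_2 = [G + I_p]^{-1}[G + D]$, $b_{GL} = [F_2 - I_p]\alpha$, and $b_{GKL} = [W_2 M_2 - I_p]\alpha$.

Proof: The difference of the covariance matrices is written as

$$V_2 = \sigma^2\,\mathrm{diag}\left\{\frac{(g_i + d_i)^2}{g_i (g_i + 1)^2} - \frac{(g_i - k_i)^2}{g_i (g_i + k_i)^2}\right\}_{i=1}^{p},$$

where $F_2 G^{-1} F_2' - W_2 M_2 G^{-1} M_2' W_2'$ becomes positive definite iff $(g_i + k_i)^2 (g_i + d_i)^2 - (g_i - k_i)^2 (g_i + 1)^2 > 0$ or (gi + ki)(gi + di) − (gi − ki)(gi + 1) > 0. For ki > 0 and 0 < di < 1, (gi + ki)(gi + di) − (gi − ki)(gi + 1) = ki(2gi + di + 1) + gi(di − 1), which is positive whenever ki(2gi + di + 1) > gi(1 − di). Under this condition, the proof is completed by Lemma 3.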

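Theorem 4: $\hat{\alpha}_{GKL}$ is superior to $\hat{\alpha}_{KL}$ iff

$$b_{GKL}'\left[V_3 + b_{KL} b_{KL}'\right]^{-1} b_{GKL} < 1,$$

where $V_3 = \sigma^2\left(W_1 M_1 G^{-1} M_1' W_1' - W_2 M_2 G^{-1} M_2' W_2'\right)$, $b_{KL} = [W_1 M_1 - I_p]\alpha$, and $b_{GKL} = [W_2 M_2 - I_p]\alpha$.

Proof: The difference of the covariance matrices is written as

$$V_3 = \sigma^2\,\mathrm{diag}\left\{\frac{(g_i - k)^2}{g_i (g_i + k)^2} - \frac{(g_i - k_i)^2}{g_i (g_i + k_i)^2}\right\}_{i=1}^{p},$$

where $W_1 M_1 G^{-1} M_1' W_1' - W_2 M_2 G^{-1} M_2' W_2'$ becomes positive definite iff $(g_i + k_i)^2 (g_i - k)^2 - (g_i - k_i)^2 (g_i + k)^2 > 0$ or (gi + ki)(gi − k) − (gi − ki)(gi + k) > 0. So, if ki > 0 and ki > k, (gi + ki)(gi − k) − (gi − ki)(gi + k) = 2gi(ki − k) > 0. So, the proof is completed by Lemma 3.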

The Biasing Parameter Estimator of the GKL Estimator

The performance of any estimator depends on its biasing parameter. Therefore, the determination of the biasing parameter of an estimator is an important issue. Different studies analyzed this issue (e.g., [2, 3, 8–10, 14–24]).

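Kibria and Lukman [1] proposed the biasing parameter estimator of the KL estimator as follows:

$$\hat{k} = \min_{i}\left\{\frac{\hat{\sigma}^2}{2\hat{\alpha}_i^2 + \hat{\sigma}^2 / g_i}\right\}.$$

Here, we estimate the optimal values of ki for the proposed GKL estimator. The optimal values of ki are obtained by minimizing

$$m(k_1, k_2, \ldots, k_p) = \mathrm{tr}\left(\mathrm{MMSE}(\hat{\alpha}_{GKL})\right) = \sigma^2 \sum_{i=1}^{p} \frac{(g_i - k_i)^2}{g_i (g_i + k_i)^2} + 4\sum_{i=1}^{p} \frac{k_i^2 \alpha_i^2}{(g_i + k_i)^2}.$$

Differentiating m(k1, k2, ..., kp) with respect to ki and setting $\partial m / \partial k_i = 0$, the optimal values of ki, after replacing σ2 and αi by their unbiased estimators, become

$$\hat{k}_i = \frac{\hat{\sigma}^2}{2\hat{\alpha}_i^2 + \hat{\sigma}^2 / g_i}, \quad i = 1, 2, \ldots, p.$$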
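The minimization can be verified numerically. The sketch below assumes that the scalar MSE of the GKL estimator has the componentwise form σ²(gi − ki)²/(gi(gi + ki)²) + 4ki²αi²/(gi + ki)², whose derivative vanishes at ki = σ²/(2αi² + σ²/gi). The function names and the numerical values are hypothetical, and true parameter values stand in for their estimates.

```python
import numpy as np

def gkl_optimal_k(alpha_hat, g, sigma2_hat):
    """Componentwise biasing parameters k_i = s^2 / (2 a_i^2 + s^2 / g_i)."""
    return sigma2_hat / (2.0 * alpha_hat ** 2 + sigma2_hat / g)

def scalar_mse_terms(k, alpha, g, sigma2):
    """Per-component scalar MSE of the GKL estimator (variance + squared bias)."""
    variance = sigma2 * (g - k) ** 2 / (g * (g + k) ** 2)
    bias_sq = 4.0 * k ** 2 * alpha ** 2 / (g + k) ** 2
    return variance + bias_sq

# Hypothetical eigenvalues, canonical coefficients, and error variance.
g = np.array([30.0, 2.0, 0.05])
alpha = np.array([0.8, 0.5, 0.3])
sigma2 = 4.0

k_opt = gkl_optimal_k(alpha, g, sigma2)
mse_at_opt = scalar_mse_terms(k_opt, alpha, g, sigma2)
```

Each optimal ki lies strictly between 0 and gi, so the implied shrinkage factor (gi − ki)/(gi + ki) stays between 0 and 1.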

A Monte Carlo Simulation Study

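The explanatory variables are generated as follows [25–27]:

$$x_{ji} = (1 - \rho^2)^{1/2} a_{ji} + \rho\, a_{j,p+1}, \quad j = 1, 2, \ldots, n, \; i = 1, 2, \ldots, p,$$

where aji are independent standard normal pseudo-random numbers and ρ is specified so that the correlation between any two explanatory variables is ρ². The dependent variable y is generated by:

$$y_j = \beta_1 x_{j1} + \beta_2 x_{j2} + \cdots + \beta_p x_{jp} + \varepsilon_j, \quad j = 1, 2, \ldots, n,$$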

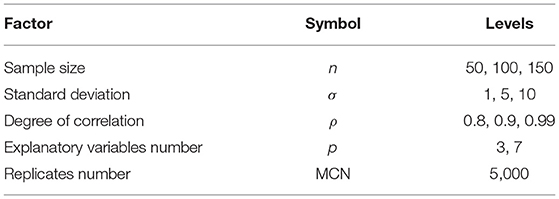

where εj are i.i.d. N(0, σ2). The values of β are chosen such that β′β = 1, as discussed in Dawoud and Abonazel [28], Algamal and Abonazel [29], Abonazel et al. [7, 30], and Awwad et al. [31]. All factors used in the simulation are given in Table 1.
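The data-generating scheme can be sketched as follows, assuming the commonly used construction in which each regressor is sqrt(1 − ρ²) times an independent standard normal draw plus ρ times a shared standard normal draw. The function name, the equal-weight choice of β (one simple way to satisfy β′β = 1), and the absence of an intercept are assumptions made for this illustration.

```python
import numpy as np

def generate_data(n, p, rho, sigma, rng):
    """Simulate collinear regressors and a response with N(0, sigma^2) errors."""
    a = rng.standard_normal((n, p + 1))
    # Each column mixes its own draw with the shared last column of a.
    X = np.sqrt(1.0 - rho ** 2) * a[:, :p] + rho * a[:, [p]]
    beta = np.ones(p) / np.sqrt(p)            # assumed choice with beta'beta = 1
    y = X @ beta + sigma * rng.standard_normal(n)
    return X, y, beta

rng = np.random.default_rng(0)
X, y, beta = generate_data(n=20000, p=3, rho=0.9, sigma=1.0, rng=rng)
sample_corr = np.corrcoef(X, rowvar=False)[0, 1]
```

Under this construction each regressor has unit variance and the pairwise correlation is ρ², so `sample_corr` should be close to 0.81 for ρ = 0.9.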

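To assess the performance of the OLS, KL, and the proposed GKL estimators with their biasing parameter estimators presented in Section The Biasing Parameter Estimator of the GKL Estimator, the estimated mean squared error (EMSE) is calculated over the simulation replicates for different values of σ, ρ, n, and p using the following formula:

$$\mathrm{EMSE}(\hat{\alpha}^{*}) = \frac{1}{L}\sum_{l=1}^{L} (\hat{\alpha}^{*}_{l} - \alpha)'(\hat{\alpha}^{*}_{l} - \alpha),$$

where $\hat{\alpha}^{*}_{l}$ is the estimated vector of α at the lth experiment of the simulation and L is the number of replications.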
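A compact Monte Carlo sketch of the EMSE comparison is shown below. It is not the authors' simulation code: it assumes equal-weight β, regenerates X in every replicate, estimates σ² by the residual mean square, uses ki = σ̂²/(2α̂i² + σ̂²/gi) for the GKL estimator and the minimum of these values for the KL estimator, and averages the squared error in canonical coordinates. The function name and the chosen settings are hypothetical.

```python
import numpy as np

def simulate_emse(n, p, rho, sigma, reps, seed=0):
    """Average squared estimation error of OLS, KL, and GKL over reps replicates."""
    rng = np.random.default_rng(seed)
    beta = np.ones(p) / np.sqrt(p)                 # assumed beta with beta'beta = 1
    totals = {"OLS": 0.0, "KL": 0.0, "GKL": 0.0}
    for _ in range(reps):
        a = rng.standard_normal((n, p + 1))
        X = np.sqrt(1.0 - rho ** 2) * a[:, :p] + rho * a[:, [p]]
        y = X @ beta + sigma * rng.standard_normal(n)

        g, R = np.linalg.eigh(X.T @ X)             # canonical form
        Z = X @ R
        alpha = R.T @ beta
        a_ols = (Z.T @ y) / g

        resid = y - Z @ a_ols
        s2 = resid @ resid / (n - p)               # residual mean square
        k_i = s2 / (2.0 * a_ols ** 2 + s2 / g)     # GKL biasing parameters
        k = k_i.min()                              # assumed KL biasing parameter

        estimates = {
            "OLS": a_ols,
            "KL": (g - k) / (g + k) * a_ols,
            "GKL": (g - k_i) / (g + k_i) * a_ols,
        }
        for name, a_hat in estimates.items():
            totals[name] += np.sum((a_hat - alpha) ** 2)
    return {name: total / reps for name, total in totals.items()}

emse = simulate_emse(n=50, p=3, rho=0.99, sigma=10.0, reps=200)
```

The setting above uses a large error standard deviation and a very high correlation, the regime in which a shrinkage estimator is expected to gain the most over OLS.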

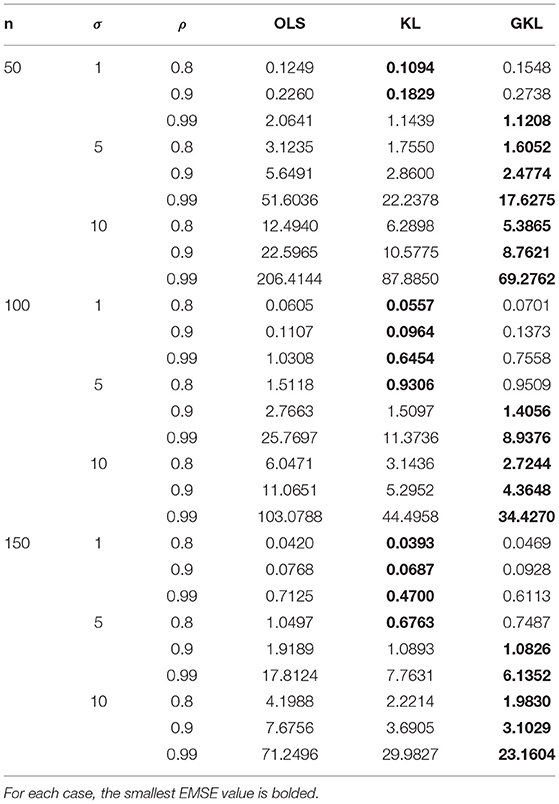

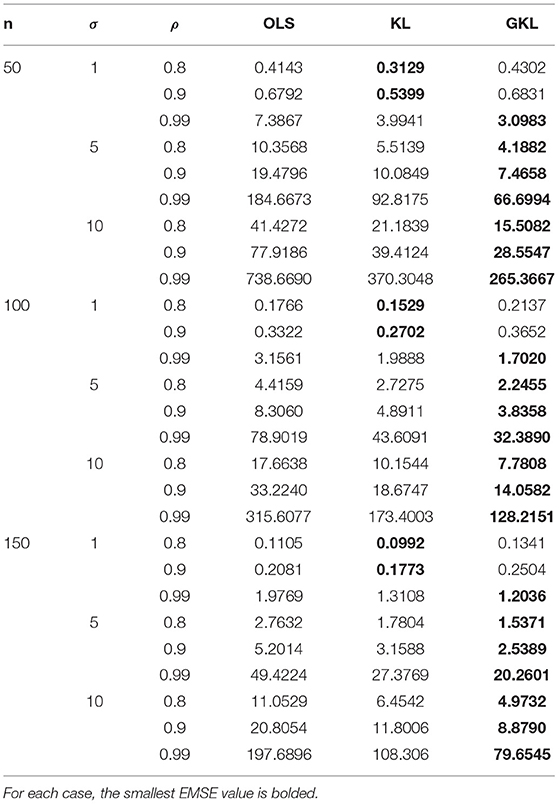

The EMSE values of the OLS, KL, and GKL estimators are presented in Tables 2 and 3. Based on the simulation results, we conclude the following:

1. The EMSE values of the estimators increase as the standard deviation (σ), the degree of multicollinearity (ρ), and the number of explanatory variables (p) increase.

2. The EMSE values of the estimators decrease as the sample size increases.

3. The GKL estimator is better than the OLS estimator for all values of the factors except when σ = 1 and ρ = 0.80, 0.90, for the considered values of p and n.

4. The GKL estimator is better than the KL estimator for all values of the factors except in the following cases: (i) for n = 50 when σ = 1 and ρ = 0.80, 0.90 with p = 3 or 7, (ii) for n = 100, 150 when σ = 1 for all presented values of ρ with p = 3 or when σ = 5 and ρ = 0.80 with p = 3, and (iii) for n = 100, 150 when σ = 1 and ρ = 0.80, 0.90 with p = 7.

5. Finally, the proposed GKL estimator is clearly efficient when the standard deviation is large and the correlation among the explanatory variables is very high.

Empirical Application

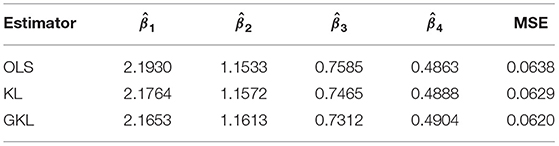

To illustrate the performance of the proposed GKL estimator, we use the Portland cement dataset, which is originally due to Woods et al. [32] and was also considered in Kibria and Lukman [1]. The dependent variable is the heat evolved after 180 days of curing, measured in calories per gram of cement. The first explanatory variable is tricalcium aluminate, the second is tricalcium silicate, the third is tetracalcium aluminoferrite, and the fourth is β-dicalcium silicate. The eigenvalues of the X′X matrix are 44,676.21, 5,965.42, 809.95, and 105.42. Then, the condition number is 20.58. Therefore, multicollinearity exists among the predictors. The estimated error variance $\hat{\sigma}^2$ shows high noise in the data. The estimated values of the optimal parameters in the GKL estimator are calculated as derived in Section The Biasing Parameter Estimator of the GKL Estimator. Also, the equation proposed by Kibria and Lukman [1] for estimating the biasing parameter of the KL estimator is used. The mean squared error (MSE) values of the OLS, KL, and GKL estimators are presented in Table 4. From Table 4, we note that the KL estimator is better than the OLS estimator, and the GKL estimator is better than both the OLS and KL estimators.
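The reported condition number can be checked from the reported eigenvalues. The short sketch below assumes that the condition number is defined as the square root of the ratio of the largest to the smallest eigenvalue of X′X; the text does not state the definition, so this is an assumption.

```python
import math

# Eigenvalues of X'X as reported for the Portland cement data.
eigenvalues = [44676.21, 5965.42, 809.95, 105.42]

# Assumed definition: sqrt(largest eigenvalue / smallest eigenvalue).
condition_number = math.sqrt(max(eigenvalues) / min(eigenvalues))
```

Under this definition the result agrees with the reported value of 20.58 to within rounding.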

Conclusion

In this paper, we proposed the GKL estimator. The performance of the proposed GKL estimator is compared theoretically with that of the OLS, GR, GL, and KL estimators in terms of the matrix mean squared error. Moreover, the optimal biasing parameter of the proposed GKL estimator is derived. A simulation study and a numerical example were used to compare the proposed GKL estimator with the OLS and KL estimators based on the estimated mean squared error criterion. The results indicate that the proposed estimator outperforms the other estimators, in particular when the standard deviation of the errors is large and the correlation between the explanatory variables is very high.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Author Contributions

ID, MA, and FA contributed to conception and structural design of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors would like to thank the Deanship of Scientific Research at King Saud University, represented by the Research Center, at CBA for supporting this research financially.

References

1. Kibria BMG, Lukman AF. A new ridge-type estimator for the linear regression model: simulations and applications. Scientifica. (2020) 2020:9758378. doi: 10.1155/2020/9758378

2. Hoerl AE, Kennard RW. Ridge regression: biased estimation for nonorthogonal problems. Technometrics. (1970) 12:55–67.

3. Liu K. A new class of biased estimate in linear regression. Commun Stat Theory Methods. (1993) 22:393–402.

4. Lukman AF, Algamal ZY, Kibria BG, Ayinde K. The KL estimator for the inverse Gaussian regression model. Concurr Comput Prac Exp. (2021) 33:e6222. doi: 10.1002/cpe.6222

5. Lukman AF, Dawoud I, Kibria BM, Algamal ZY, Aladeitan B. A new ridge-type estimator for the gamma regression model. Scientifica. (2021) 2021:5545356. doi: 10.1155/2021/5545356

6. Akram MN, Kibria BG, Abonazel MR, Afzal N. On the performance of some biased estimators in the gamma regression model: simulation and applications. J Stat Comput Simul. (2022) 1–23. doi: 10.1080/00949655.2022.2032059

7. Abonazel MR, Dawoud I, Awwad FA, Lukman AF. Dawoud–Kibria estimator for beta regression model: simulation and application. Front Appl Math Stat. (2022) 8:775068. doi: 10.3389/fams.2022.775068

8. Rashad NK, Hammood NM, Algamal ZY. Generalized ridge estimator in negative binomial regression model. J Phys. (2021) 1897:012019. doi: 10.1088/1742-6596/1897/1

9. Farghali RA, Qasim M, Kibria BM, Abonazel MR. Generalized two-parameter estimators in the multinomial logit regression model: methods, simulation and application. Commun Stat Simul Comput. (2021) 1–16. doi: 10.1080/03610918.2021.1934023

10. Abdulazeez QA, Algamal ZY. Generalized ridge estimator shrinkage estimation based on particle swarm optimization algorithm. Electro J Appl Stat Anal. (2021) 14:254–65. doi: 10.1285/I20705948V14N1P254

12. Farebrother RW. Further results on the mean square error of ridge regression. J R Stat Soc Ser B. (1976) 38:248–50.

13. Trenkler G, Toutenburg H. Mean squared error matrix comparisons between biased estimators-an overview of recent results. Stat Pap. (1990) 31:165–79.

14. Hoerl AE, Kennard RW, Baldwin KF. Ridge regression: some simulations. Commun Stat. (1975) 4:105–23.

15. Khalaf G, Shukur G. Choosing ridge parameter for regression problems. Commun Stat Theory Methods. (2005) 34:1177–82. doi: 10.1081/STA-200056836

16. Khalaf G, Månsson K, Shukur G. Modified ridge regression estimators. Commun Stat Theory Methods. (2013) 42:1476–87. doi: 10.1080/03610926.2011.593285

17. Månsson K, Kibria BMG, Shukur G. Performance of some weighted Liu estimators for logit regression model: an application to Swedish accident data. Commun Stat Theory Methods. (2015) 44:363–75. doi: 10.1080/03610926.2012.745562

18. Kibria BMG, Banik S. Some ridge regression estimators and their performances. J Mod Appl Stat Methods. (2016) 15:206–38. doi: 10.22237/jmasm/1462075860

19. Algamal ZY. A new method for choosing the biasing parameter in ridge estimator for generalized linear model. Chemometr Intell Lab Syst. (2018) 183:96–101. doi: 10.1016/j.chemolab.2018.10.014

20. Abonazel MR, Farghali RA. Liu-type multinomial logistic estimator. Sankhya B. (2019) 81:203–25. doi: 10.1007/s13571-018-0171-4

21. Qasim M, Amin M, Omer T. Performance of some new Liu parameters for the linear regression model. Commun Stat Theory Methods. (2020) 49:4178–96. doi: 10.1080/03610926.2019.1595654

22. Suhail M, Chand S, Kibria BG. Quantile based estimation of biasing parameters in ridge regression model. Commun Stat Simul Comput. (2020) 49:2732–44. doi: 10.1080/03610918.2018.1530782

23. Babar I, Ayed H, Chand S, Suhail M, Khan YA, Marzouki R. Modified Liu estimators in the linear regression model: an application to tobacco data. PLoS ONE. (2021) 16:e0259991. doi: 10.1371/journal.pone.0259991

24. Abonazel MR, Taha IM. Beta ridge regression estimators: simulation and application. Commun Stat Simul Comput. (2021) 1–13. doi: 10.1080/03610918.2021.1960373

25. McDonald GC, Galarneau DI. A Monte Carlo evaluation of some ridge-type estimators. J Am Stat Assoc. (1975) 70:407–16. doi: 10.2307/2285832

27. Kibria BMG. Performance of some new ridge regression estimators. Commun Stat Simul Comput. (2003) 32:419–35. doi: 10.1081/SAC-120017499

28. Dawoud I, Abonazel MR. Robust Dawoud–Kibria estimator for handling multicollinearity and outliers in the linear regression model. J Stat Comput Simul. (2021) 91:3678–92. doi: 10.1080/00949655.2021.1945063

29. Algamal ZY, Abonazel MR. Developing a Liu-type estimator in beta regression model. Concurr Comput Pract Exp. (2022) 34:e6685. doi: 10.1002/cpe.6685

30. Abonazel MR, Algamal ZY, Awwad FA, Taha IM. A new two-parameter estimator for beta regression model: method, simulation, and application. Front Appl Math Stat. (2022) 7:780322. doi: 10.3389/fams.2021.780322

31. Awwad FA, Dawoud I, Abonazel MR. Development of robust Özkale–Kaçiranlar and Yang–Chang estimators for regression models in the presence of multicollinearity and outliers. Concurr Comput Pract Exp. (2022) 34:e6779. doi: 10.1002/cpe.6779

Keywords: generalized liu estimator, multicollinearity, generalized ridge estimator, biasing parameter, ridge-type estimator

Citation: Dawoud I, Abonazel MR and Awwad FA (2022) Generalized Kibria-Lukman Estimator: Method, Simulation, and Application. Front. Appl. Math. Stat. 8:880086. doi: 10.3389/fams.2022.880086

Received: 21 February 2022; Accepted: 14 March 2022;

Published: 20 April 2022.

Edited by:

Min Wang, University of Texas at San Antonio, United States

Reviewed by:

Muhammad Suhail, University of Agriculture, Peshawar, Pakistan

Zakariya Yahya Algamal, University of Mosul, Iraq

Copyright © 2022 Dawoud, Abonazel and Awwad. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mohamed R. Abonazel, mabonazel@cu.edu.eg

Issam Dawoud, Mohamed R. Abonazel and Fuad A. Awwad