- ¹Department of Mathematics, Faculty of Mathematics and Natural Sciences, Universitas Indonesia, Depok, Indonesia

- ²School of Quantitative Sciences, Institute of Strategic Industrial Decision Modelling, Universiti Utara Malaysia, Sintok, Kedah, Malaysia

In this work, a new class of spectral conjugate gradient (CG) methods is proposed for solving unconstrained optimization models. The search direction of the new method uses the ZPRP and JYJLL CG coefficients and satisfies the descent condition independently of the line search. The global convergence properties of the proposed method under the strong Wolfe line search are proved under certain assumptions. Numerical experiments on a set of test functions show the efficiency of the proposed method compared with existing methods. An application of the proposed method to regression models of COVID-19 is also provided.

Mathematics subject classification: 65K10, 90C52, 90C26.

1. Introduction

The coronavirus disease, often called COVID-19, is an acute vector infectious disease that emerged in 2019. This disease is caused by the newly discovered coronavirus (SARS-CoV-2) and can be transmitted through droplets produced when an infected person exhales, sneezes, or coughs. Most people infected with the virus will experience mild to moderate symptoms, such as low-grade fever, runny nose, and difficulty breathing, and recover without special treatment [1].

Clinically, as of December 19, 2021, a total of 4,260,544 confirmed cases of COVID-19, with 4,111,619 recoveries and 144,002 deaths, were recorded from all regions in Indonesia since the disease was first reported in Wuhan, China [2]. To date, many studies have been carried out to model various aspects related to the coronavirus outbreak, and several researchers have also applied numerical methods to several COVID-19 models. For instance, Aggarwal et al. [3] proposed a partial differential equation model to calculate the number of COVID-19 cases in Punjab by using the modified cubic B-spline function and differential quadrature method. Other numerical methods which are applied to solve the COVID-19 model were proposed by Amar et al. [4] and Sulaiman et al. [5]. Amar et al. used various statistics and machine learning modeling approaches to forecast the COVID-19 spread in Egypt. Meanwhile, Sulaiman et al. proposed a new three-term conjugate gradient optimization method for the data from the global confirmed cases of COVID-19 from January to September 2020.

The conjugate gradient (CG) method plays an important role in solving large-scale optimization models because it requires little memory and has good convergence properties. The method was first introduced by Hestenes and Stiefel [33] for solving systems of linear equations. In 1964, Fletcher and Reeves [34] extended it to large-scale nonlinear systems of equations and unconstrained optimization problems. Their extension prompted researchers to propose new conjugate gradient methods that improve computational performance and convergence behavior [6]. In 2020, Jian et al. proposed a spectral conjugate gradient method, named the JYJLL method, which is a modification of the Fletcher-Reeves (FR) and conjugate descent (CD) methods [7]. The authors established the convergence of the JYJLL method and reported efficient computational performance. In addition, Zheng and Shi [8] proposed a three-term modification of the conjugate gradient method, denoted ZPRP. The ZPRP method extends the Polak-Ribière-Polyak (PRP) method [9, 10] by changing the denominator of the PRP parameter. Its reported computational performance is very efficient compared with the CG-Descent method [11]. Several other CG methods can be found in the literature [12–17]. Besides the CG method, the class of accelerated gradient descent schemes of quasi-Newton type also contains very efficient and robust methods and can be considered for solving optimization problems; highlights on the acceleration parameters can be found in other studies [18–22]. However, in this paper we restrict the discussion to the CG method.

The CG method has recently been used to solve various optimization-related problems. Examples include image reconstruction [23–25], compressed sensing [26], signal processing [27], robotic motion control [5, 15, 16, 28, 29], portfolio selection [5, 13, 14, 29–31], and regression analysis [5, 32].

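In this paper, we consider the general unconstrained optimization problem

$$\min_{r \in \mathbb{R}^n} f(r), \tag{1}$$

where $f : \mathbb{R}^n \to \mathbb{R}$ is a continuously differentiable function and its gradient is denoted by $h(r) = \nabla f(r)$. The iterative formula of the standard CG method can be formulated as

$$r_{k+1} = r_k + \alpha_k z_k \tag{2}$$

and

$$z_k = \begin{cases} -h_k, & k = 1, \\ -h_k + \beta_k z_{k-1}, & k \ge 2, \end{cases} \tag{3}$$

where $r_k$ is the current iterate, $h_k = h(r_k)$ is the gradient at $r_k$, $z_k$ is the search direction, $\beta_k$ is the conjugate parameter, and $\alpha_k > 0$ is the step size obtained by some line search technique. To calculate the step size $\alpha_k$, we can use the exact line search, the weak Wolfe line search, or the strong Wolfe line search. The exact line search computes $\alpha_k$ such that

$$f(r_k + \alpha_k z_k) = \min_{\alpha > 0} f(r_k + \alpha z_k).$$

The weak Wolfe line search computes $\alpha_k$ such that

$$f(r_k + \alpha_k z_k) \le f(r_k) + \delta \alpha_k h_k^T z_k, \tag{4}$$

$$h(r_k + \alpha_k z_k)^T z_k \ge \sigma h_k^T z_k, \tag{5}$$

and the strong Wolfe line search computes $\alpha_k$ such that

$$f(r_k + \alpha_k z_k) \le f(r_k) + \delta \alpha_k h_k^T z_k, \qquad |h(r_k + \alpha_k z_k)^T z_k| \le \sigma |h_k^T z_k|,$$

where 0 < δ < σ < 1.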
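The difference between the weak and strong Wolfe conditions can be checked numerically. The following is a minimal sketch, not the authors' MATLAB implementation: the helper name `wolfe_flags`, the test function, and the default values of δ and σ are illustrative assumptions.

```python
def dot(a, b):
    """Euclidean inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def wolfe_flags(f, grad, r, z, alpha, delta=0.01, sigma=0.1):
    """Return (sufficient_decrease, weak_curvature, strong_curvature) for a trial step.

    f and grad are the objective and its gradient, r is the current point,
    z is the search direction and alpha is the trial step size.
    """
    r_new = [ri + alpha * zi for ri, zi in zip(r, z)]
    slope_old = dot(grad(r), z)      # h_k^T z_k, negative for a descent direction
    slope_new = dot(grad(r_new), z)  # h(r_k + alpha z_k)^T z_k
    sufficient_decrease = f(r_new) <= f(r) + delta * alpha * slope_old
    weak_curvature = slope_new >= sigma * slope_old
    strong_curvature = abs(slope_new) <= sigma * abs(slope_old)
    return sufficient_decrease, weak_curvature, strong_curvature


# Illustrative problem (an assumption): f(r) = r_1^2 + r_2^2, steepest-descent direction.
f = lambda r: r[0] ** 2 + r[1] ** 2
grad = lambda r: [2.0 * r[0], 2.0 * r[1]]
r0 = [1.0, 1.0]
z0 = [-g for g in grad(r0)]

for alpha in (1e-3, 0.5, 0.9):
    print(alpha, wolfe_flags(f, grad, r0, z0, alpha))
```

In this example the very short step fails the curvature condition, the step 0.5 satisfies all three tests, and the step 0.9 satisfies the weak Wolfe conditions but not the strong curvature condition because it overshoots the minimizer along the line.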

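The most well-known standard CG methods are the Hestenes-Stiefel (HS) method [33], the Fletcher-Reeves (FR) method [34], the Polak-Ribière-Polyak (PRP) method [9, 10], the Conjugate-Descent (CD) method [35], the Dai-Yuan (DY) method [36], the Liu-Storey (LS) method [37], and the Rivaie-Mustafa-Ismail-Leong (RMIL) method [38]. Their $\beta_k$ parameters are

$$\beta_k^{HS} = \frac{h_k^T q_{k-1}}{z_{k-1}^T q_{k-1}}, \quad \beta_k^{FR} = \frac{\|h_k\|^2}{\|h_{k-1}\|^2}, \quad \beta_k^{PRP} = \frac{h_k^T q_{k-1}}{\|h_{k-1}\|^2}, \quad \beta_k^{CD} = -\frac{\|h_k\|^2}{z_{k-1}^T h_{k-1}},$$

$$\beta_k^{DY} = \frac{\|h_k\|^2}{z_{k-1}^T q_{k-1}}, \quad \beta_k^{LS} = -\frac{h_k^T q_{k-1}}{z_{k-1}^T h_{k-1}}, \quad \beta_k^{RMIL} = \frac{h_k^T q_{k-1}}{\|z_{k-1}\|^2},$$

respectively, where $q_{k-1} := h_k - h_{k-1}$ and $\|\cdot\|$ denotes the Euclidean norm on $\mathbb{R}^n$.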
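As an illustration of how the seven classical parameters named above are evaluated, the sketch below uses their standard forms from the general CG literature. The function name `classical_betas` and the sample vectors are assumptions made for this example only.

```python
def dot(a, b):
    """Euclidean inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def classical_betas(h_new, h_old, z_old):
    """Evaluate the classical conjugate parameters.

    h_new = h_k, h_old = h_{k-1}, z_old = z_{k-1}; q = h_k - h_{k-1}.
    No safeguards against zero denominators are included in this sketch.
    """
    q = [a - b for a, b in zip(h_new, h_old)]
    return {
        "HS": dot(h_new, q) / dot(z_old, q),
        "FR": dot(h_new, h_new) / dot(h_old, h_old),
        "PRP": dot(h_new, q) / dot(h_old, h_old),
        "CD": -dot(h_new, h_new) / dot(z_old, h_old),
        "DY": dot(h_new, h_new) / dot(z_old, q),
        "LS": -dot(h_new, q) / dot(z_old, h_old),
        "RMIL": dot(h_new, q) / dot(z_old, z_old),
    }


# Hypothetical data: first step z_1 = -h_1 and a new gradient orthogonal to z_1,
# which is what an exact line search would produce.
h_old = [1.0, 0.0]
z_old = [-1.0, 0.0]
h_new = [0.0, 2.0]
betas = classical_betas(h_new, h_old, z_old)
print(betas)
```

With these sample vectors all seven formulas return the same value, which reflects the well-known fact that the classical parameters coincide when the new gradient is orthogonal to the previous gradient and direction. They differ once the line search is inexact.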

The $z_k$ in formula (2) is the search direction used as a guide to move to the next point and must satisfy the descent direction property

$$h_k^T z_k < 0 \quad \text{for all } k \ge 1. \tag{6}$$

It should be noted that formula (6) is an important property for the CG method to be globally convergent.
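To make the roles of the step size, the conjugate parameter, and the search direction concrete, here is a minimal sketch of a CG iteration on a convex quadratic. It is not the method proposed in this paper: it uses the classical FR parameter and the exact line search, which has a closed form for quadratics. The function name `cg_quadratic` and the test matrix are assumptions.

```python
import math


def dot(a, b):
    """Euclidean inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def matvec(A, v):
    """Product of a dense matrix (list of rows) with a vector."""
    return [dot(row, v) for row in A]


def cg_quadratic(A, b, r, tol=1e-10, max_iter=50):
    """Minimize f(r) = 0.5 r^T A r - b^T r for a symmetric positive definite A.

    Illustrative only: FR parameter with the closed-form exact step size.
    """
    h = [ar - bi for ar, bi in zip(matvec(A, r), b)]        # gradient A r - b
    z = [-hi for hi in h]                                   # first direction: -h_1
    steps = 0
    while math.sqrt(dot(h, h)) > tol and steps < max_iter:
        Az = matvec(A, z)
        alpha = -dot(h, z) / dot(z, Az)                     # exact line search step
        r = [ri + alpha * zi for ri, zi in zip(r, z)]       # r_{k+1} = r_k + alpha_k z_k
        h_new = [ar - bi for ar, bi in zip(matvec(A, r), b)]
        beta = dot(h_new, h_new) / dot(h, h)                # FR conjugate parameter
        z = [-hn + beta * zi for hn, zi in zip(h_new, z)]   # z_{k+1} = -h_{k+1} + beta z_k
        h = h_new
        steps += 1
    return r, steps


# Hypothetical test problem whose minimizer is (1, -2).
A = [[2.0, 0.0], [0.0, 20.0]]
b = [2.0, -40.0]
solution, steps = cg_quadratic(A, b, [0.0, 0.0])
print(solution, steps)
```

For general nonlinear objectives the exact step is unavailable, which is why inexact rules such as the Wolfe conditions are used in practice.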

Inspired by the JYJLL method, in this study we propose a modified spectral CG method to improve computational performance. We also apply the new method to a model of COVID-19 in Indonesia, using data from March 2020 (the month of the first recorded case) until May 2022.

The paper is structured as follows. In Section 2, we describe the proposed method, algorithm, and convergence analysis. In Section 3, we present the numerical experiments to show the efficiency of our new method. Finally, the application of regression models of COVID-19 using the new method is illustrated in Section 4.

2. Proposed method, algorithm and convergence analysis

Recently, Jian et al. [7] proposed a new spectral JYJLL CG method where the method satisfies the descent condition without depending on any line search. The JYJLL method is globally convergent under a weak Wolfe line search and the numerical result is efficient compared with HZ [39], KD [40], AN1 [41], and LPZ [42] methods. This new method has search direction as follows:

where is the spectral parameter defined as

and is formulated as

Additionally, Zheng and Shi [8] proposed a modified three-term HS method by taking a modification to the denominator of the HS formula. The new method is named ZHS where the search direction is defined as follows:

and

The ZHS method satisfies the sufficient descent condition without relying on a certain line search. Under some conditions, the ZHS method fulfills global convergence properties under a weak Wolfe line search and the numerical results are better than the CG-DESCENT method [39].

Motivated by the JYJLL and ZHS parameters, in this paper, the new conjugate parameter is proposed in the form as follows:

that is, replacing the JYJLL denominator with the ZHS denominator and retaining the JYJLL numerator. In addition, we retain the same formula of the spectral parameters by the JYJLL method as in formula (7). So, the search direction of our proposed method is defined as follows:

Our proposed method is called the spectral FMSD (Fevi-Malik-Sulaiman-Dipo) method.

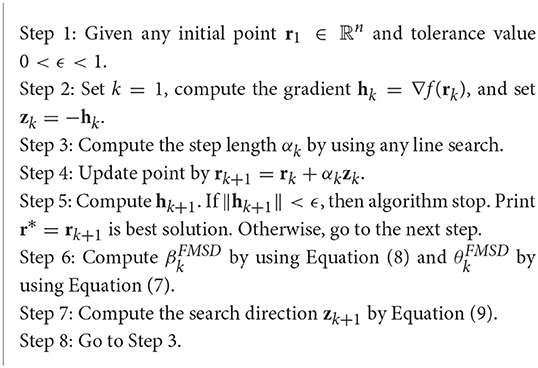

Next, we give the algorithm of our proposed method below.

The following lemma shows that the spectral FMSD always satisfies the descent direction condition regardless of any line search.

Lemma 2.1. Suppose that zk is generated by formula (9), then

1. the search direction $z_k$ satisfies the descent direction property, that is, $h_k^T z_k < 0$ for k ≥ 1.

2. .

Proof: We prove the lemma by induction. For k = 1, the claim is true, since $h_1^T z_1 = -\|h_1\|^2 < 0$. Now, assume that $h_{k-1}^T z_{k-1} < 0$ is true for k − 1; we prove that $h_k^T z_k < 0$ is true for k. With regard to formula (8), the proof is divided into two cases, as presented below:

• Case 1: if and μ > 0, then

it implies

Let θk be the angle between hk and zk−1, then

From formulas (8), (7), (9), (10) and (11), we have

• Case 2: if and μ > 0, then . Using formulas (8), (7), (9), and (11), we get

Hence, $h_k^T z_k < 0$ is satisfied for k ≥ 1.

Next, we will prove the interval of . From formulas (12) and (13), we obtain the relation . Furthermore, since , we have .

Now, from formulas (8) and (7), we get

Thus, holds. The proof is complete.

In the analysis below, we establish the global convergence properties of the spectral FMSD method. First, we need the following assumption, proposition, and Zoutendijk conditions.

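Assumption 2.2. (A1) The level set $\Omega = \{r \in \mathbb{R}^n : f(r) \le f(r_0)\}$ is bounded, where $r_0$ is the starting point; (A2) in a neighborhood $N$ of $\Omega$, the function f is continuously differentiable and its gradient is Lipschitz continuous on $N$. That is, we can find L > 0 such that

$$\|h(x) - h(y)\| \le L \|x - y\| \quad \text{for all } x, y \in N.$$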

Proposition 2.3. Suppose that $z_k$ is generated by formula (9) and Assumption 2.2 holds. If the step length $\alpha_k$ is calculated by the weak Wolfe line search (4) and (5), then

$$\alpha_k \ge \frac{(\sigma - 1)\, h_k^T z_k}{L \|z_k\|^2}, \tag{14}$$

where σ and L are the positive constants in formula (5) and Assumption 2.2, respectively.

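Proof: Subtracting $h_k^T z_k$ from both sides of formula (5), we get

$$(h_{k+1} - h_k)^T z_k \ge (\sigma - 1)\, h_k^T z_k.$$

Combining this with the Lipschitz continuity of the gradient, we obtain

$$(\sigma - 1)\, h_k^T z_k \le (h_{k+1} - h_k)^T z_k \le \|h_{k+1} - h_k\| \|z_k\| \le L \alpha_k \|z_k\|^2.$$

Since $z_k$ is a descent direction and σ < 1, formula (14) holds immediately.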

The Zoutendijk condition [43] is often used to prove the global convergence of the CG method. The following lemma shows that the Zoutendijk condition holds for the proposed method under the weak Wolfe line search conditions (4) and (5).

Lemma 2.4. Suppose Assumption 2.2 holds and consider any iterative expression of the form (2), where $z_k$ is generated by formula (9). If $\alpha_k$ is calculated by the weak Wolfe line search formulas (4) and (5), then the following so-called Zoutendijk condition holds:

$$\sum_{k=1}^{\infty} \frac{(h_k^T z_k)^2}{\|z_k\|^2} < \infty. \tag{15}$$

Proof: From the weak Wolfe condition (4), we have

$$f(r_k) - f(r_{k+1}) \ge -\delta \alpha_k h_k^T z_k.$$

Combining this with formula (14), we get

$$f(r_k) - f(r_{k+1}) \ge \frac{\delta (1 - \sigma)}{L} \frac{(h_k^T z_k)^2}{\|z_k\|^2}. \tag{16}$$

Summing both sides of formula (16) over k and applying condition (A1) in Assumption 2.2, under which f is bounded below on the level set, the Zoutendijk condition (15) holds.

Lemma 2.5. Suppose that Assumption 2.2 holds and that the sequences {hk} and {zk} are generated by Algorithm 1, where αk is calculated by the weak Wolfe line search (4)–(5). Then

Proof: From formula (9), we have

Squaring up both sides of formula (18) and using the first condition in Lemma 2.1, we obtain

multiplying up both sides by , we get

Since z1 = −h1 holds, we obtain

The proof is finished.

Based on Lemmas 2.1, 2.4, and 2.5, we can establish the theorem of global convergence of the FMSD method.

Theorem 2.6. Suppose that Assumption 2.2 is satisfied and that {rk} is generated by Algorithm 1, where αk is calculated by the weak Wolfe line search (4)–(5). Then

$$\liminf_{k \to \infty} \|h_k\| = 0. \tag{19}$$

Proof: We prove this theorem by contradiction. Suppose that formula (19) is not true. Then there exists a constant a > 0 such that

$$\|h_k\| \ge a \quad \text{for all } k \ge 1.$$

Using the above relation and formula (17), we obtain

It implies

which contradicts the Zoutendijk condition in formula (15). Hence, formula (19) is true. The proof is finished.

3. Numerical experiments

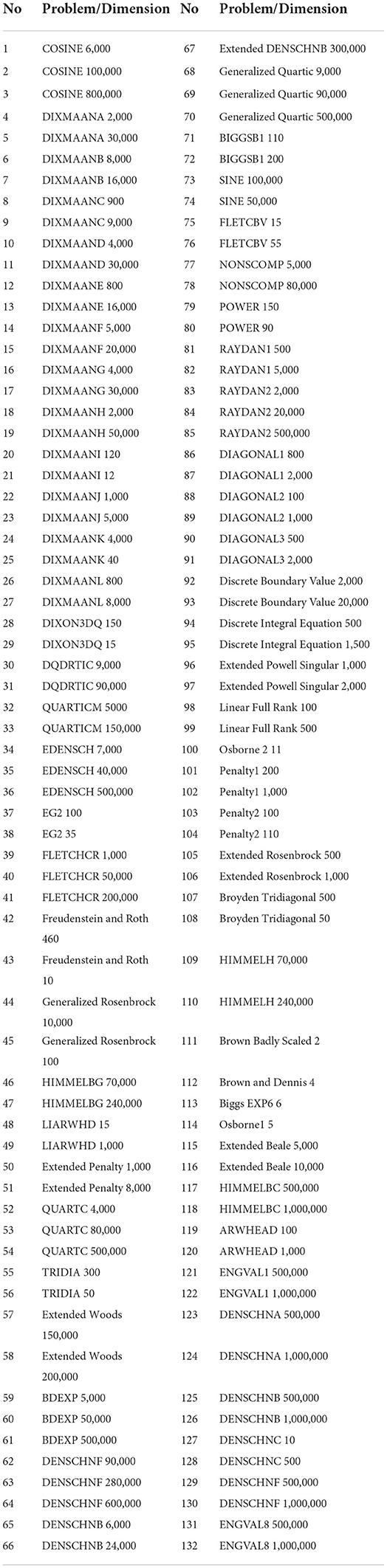

In this part, we report the numerical experiments of the FMSD method and compare its computational performance with that of the JYJLL method proposed by Jian et al. [7]. Both methods were coded in MATLAB 2019a and run on a personal computer with an Intel Core i7 processor, 16 GB RAM, and the 64-bit Windows 10 Pro operating system. The comparisons are made under the weak Wolfe line search (4)–(5) with σ = 0.2 and δ = 0.02 for the FMSD method and σ = 0.1 and δ = 0.01 for the JYJLL method. We tested 132 unconstrained problems in the CUTEr library suggested by Andrei [6, 44] and Moré et al. [45] with dimensions from 2 to 1,000,000. Mostly, we used two different dimensions for each problem, and the iteration stopped using the criteria. The initial points used for all problems can be seen in Jiang et al. [25]. Table 1 details the test functions and dimensions of the test problems.

Detailed numerical results are provided in Table 2 which include the number of iterations (NOI), the total number of function evaluations (NOF), and the CPU time in seconds (CPU). In Table 2, “-” indicates that the methods failed to solve the corresponding problems within 2000 iterations.

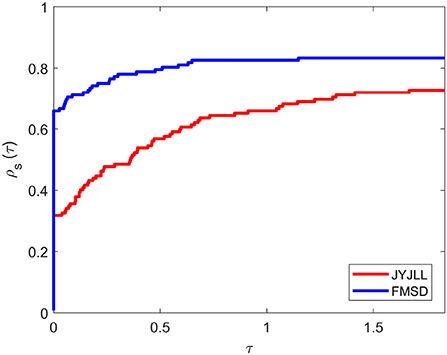

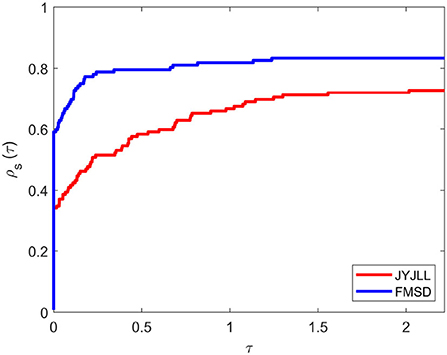

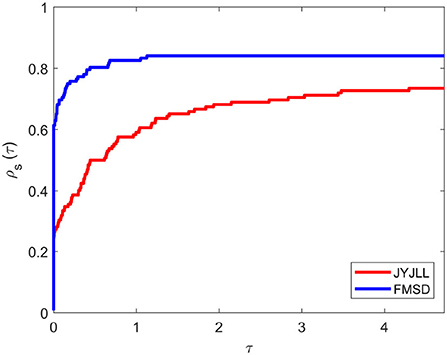

To determine clearly which method has better computational performance, we use the performance profiles suggested by Dolan and Moré [46] to show the performance under NOI, NOF, and CPU time, respectively. Comparison results are obtained by running a solver on a set P of problems and recording relevant information such as NOI, NOF, and CPU time. Suppose that S is the set of solvers under consideration, that S is made up of $n_s$ solvers, and that P is made up of $n_p$ problems. For each problem p ∈ P and solver s ∈ S, we denote by $t_{p,s}$ the CPU time (or NOI or NOF, etc.) required to solve problem p by solver s. The comparison between different solvers is based on the performance ratio

$$r_{p,s} = \frac{t_{p,s}}{\min\{t_{p,s} : s \in S\}}.$$

Let $\rho_s(\tau)$ be the probability for solver s ∈ S that a performance ratio $r_{p,s}$ is within a factor τ ∈ ℝ of the best possible ratio. For example, the value of $\rho_s(1)$ is the probability that the solver will win over the rest of the solvers. The formula of $\rho_s(\tau)$ is defined as follows:

$$\rho_s(\tau) = \frac{1}{n_p} \operatorname{size}\{p \in P : r_{p,s} \le \tau\}.$$

According to the rule of the performance profile above, we can describe the performance curves based on Table 2 as in Figures 1–3. Based on the three figures, we can see that the FMSD method is superior to the JYJLL method under the unconstrained problems in Table 1.
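The performance-profile rule of Dolan and Moré [46] described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the function name `performance_profile`, the solver labels, and the cost values are hypothetical and are not taken from Table 2. A failed run is encoded as `math.inf`, and all recorded costs are assumed to be positive.

```python
import math


def performance_profile(costs, taus):
    """Compute rho_s(tau) for each solver.

    costs maps a solver name to a list of costs t_{p,s}, one per problem,
    with math.inf marking a failure. Returns a dict mapping each solver
    to the list [rho_s(tau) for tau in taus].
    """
    solvers = list(costs)
    n_p = len(costs[solvers[0]])
    # Best (smallest) cost achieved on each problem by any solver.
    best = [min(costs[s][p] for s in solvers) for p in range(n_p)]
    profile = {}
    for s in solvers:
        ratios = [costs[s][p] / best[p] for p in range(n_p)]
        profile[s] = [sum(r <= tau for r in ratios) / n_p for tau in taus]
    return profile


# Hypothetical costs for two solvers on four problems.
costs = {
    "solver_a": [10.0, 20.0, math.inf, 8.0],
    "solver_b": [12.0, 10.0, 30.0, 8.0],
}
profile = performance_profile(costs, taus=[1.0, 2.0])
print(profile)
```

Reading the result at τ = 1 gives the fraction of problems on which each solver is at least tied for best, and the value for large τ indicates the fraction of problems the solver solves at all.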

4. Application to regression models of COVID-19

The SARS-CoV-2 infection, popularly known as COVID-19, was first reported on the Asian continent in the city of Wuhan, Hubei province, China, toward the end of 2019. As of 20 June 2022, almost all the countries in Asia except Turkmenistan have reported at least one case of the infection [47]. Countries including India, South Korea, Vietnam, Japan, and Iran recorded the highest rates of confirmed cases of the infection [48]. The first positive COVID-19 case in Indonesia was recorded on March 2, 2020, and within the first 6 weeks the presence of the virus had been confirmed in almost all the provinces of the country [49]. Despite the early wide-scale response from the government, the country has recorded a high number of deaths from the positive cases of the infection [50]. According to the WHO, Indonesia has so far recorded a total of 156,695 deaths from a total of 6,069,255 confirmed cases of the infection as of 20 June 2022 [51], of which more than 750 deaths were front-line health workers. Based on recent figures, we can say that Indonesia has been able to contain the disease outbreak. This can be attributed to the admirable resilience of the country's front-line health workers, strict health protocols, and successful vaccination programs. Data from the WHO show that the total number of vaccine doses administered as of 15 June 2022 stands at 417,522,347 [51].

In recent times, several works have employed different mathematical and numerical approaches for modeling the COVID-19 outbreak (see [5, 32, 52]). This paper aims to study the performance of the proposed method on a parameterized COVID-19 regression model. To derive the COVID-19 regression model, the study considers the total Indonesian monthly positive confirmed cases of the infection from March 2020 (the month of the first recorded case) until May 2022. The obtained data are transformed into an unconstrained optimization model, which is then solved using the proposed method.

A regression analysis function of the form

$$y = g(x_1, x_2, \ldots, x_p) + \varepsilon \tag{20}$$

has the response variable denoted by y, the error denoted by ε, and the predictors given as xi, i = 1, 2, …, p, p > 0, where g denotes the regression function. This type of function plays an important role in the statistical modeling of problems in applied sciences, physical sciences, management sciences, and more. Based on the above description, we can describe regression analysis as a statistical procedure employed to estimate the relationships between a dependent variable and one or several independent variables. For any given regression problem, the linear regression function can be derived by computing y such that

$$y = a_0 + a_1 x_1 + a_2 x_2 + \cdots + a_p x_p + \varepsilon, \tag{21}$$

with a0, …, ap representing the regression parameters. These parameters a0, a1, …, ap are estimated so as to minimize the error ε. According to several works in the literature, the linear regression process rarely applies in practice because most problems are nonlinear in nature. For this reason, studies usually consider the nonlinear regression process [5]. This and other considerations motivated the use of the nonlinear regression procedure in this study.

To construct the parameterized regression model, we considered the death cases recorded among those infected by the COVID-19 virus from March 2020, the month in which Indonesia confirmed its first case, until May 2022, a total of 27 months. The data were obtained from the Indonesia COVID Coronavirus Statistics page of Worldometer [53], and the model formulation process is described as follows. Note that the recorded statistics may be lower than the actual numbers, possibly as a result of limited testing. From the data presented in Table 3, the x-variable represents the months considered, while the y-variable represents the confirmed death cases for that month. Only the data for the first 26 months (March 2020 to April 2022) are used for data fitting; the data for May 2022 are reserved for error analysis.

Table 3. Statistics of confirmed positive cases and death recorded from COVID-19 infection in Indonesia from March 2020 to May, 2022.

Based on the data of x and y given in Table 3, the approximate function for the nonlinear least square method was obtained as follows:

The above function (22) will be utilized to approximate the y data values based on the x data values. Since this study considers monthly data, xj denotes the month and yj represents the confirmed cases for that month. Based on this information, the least squares method defined by function (22) is transformed into an unconstrained minimization problem of the form:

The data for the first 26 months from Table 3 will be used to derive the nonlinear quadratic function for the least square method. The derived function would be extended to construct the unconstrained optimization function. Based on the above discussion, it is obvious that there exist some parabolic relations between the regression parameters u0, u1, u2, the regression function (20) with the data xj and the value of yj.
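The transformation of a quadratic least squares fit into an unconstrained minimization problem can be sketched as follows. This assumes the common objective f(u) = Σ (u0 + u1 xj + u2 xj² − yj)², which matches the parabolic relation described above but is not copied from the paper's equations. The function name `make_least_squares` and the synthetic data are hypothetical and are not the values of Table 3.

```python
def make_least_squares(xs, ys):
    """Build the objective and gradient of a quadratic least squares fit.

    The model is u0 + u1*x + u2*x^2 and the objective is the sum of
    squared residuals over the data pairs (x_j, y_j).
    """
    def residuals(u):
        return [u[0] + u[1] * x + u[2] * x * x - y for x, y in zip(xs, ys)]

    def objective(u):
        return sum(e * e for e in residuals(u))

    def gradient(u):
        es = residuals(u)
        return [
            2.0 * sum(es),                                  # derivative w.r.t. u0
            2.0 * sum(e * x for e, x in zip(es, xs)),       # derivative w.r.t. u1
            2.0 * sum(e * x * x for e, x in zip(es, xs)),   # derivative w.r.t. u2
        ]

    return objective, gradient


# Synthetic data generated from y = 1 + 2x + 3x^2 (illustrative only).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [1.0 + 2.0 * x + 3.0 * x * x for x in xs]
objective, gradient = make_least_squares(xs, ys)

print(objective([0.0, 0.0, 0.0]), gradient([0.0, 0.0, 0.0]))
print(objective([1.0, 2.0, 3.0]), gradient([1.0, 2.0, 3.0]))
```

The pair `objective` and `gradient` is exactly what a CG solver needs: any method of the form discussed in Section 1 can be started from an initial point u and iterated until the gradient norm is small.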

To define the nonlinear quadratic unconstrained minimization model, Equation (24) is transformed using data from Table 3 as follows:

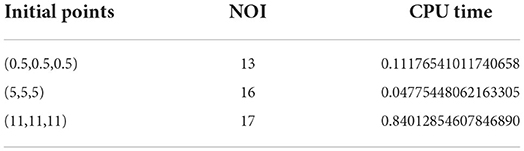

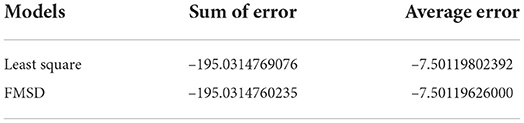

The above nonlinear quadratic model was constructed using data from the first month until the 26th month because the data for the 27th month was reserved for relative error analysis of the predicted data. Now, we can apply the proposed method to solve the model (25). The results presented in Table 4 illustrate the performance of the proposed FMSD algorithm for problem (25) under the weak Wolfe line search conditions (4)–(5).

The proposed method was employed as an alternative method to compute the values of u0, u1, u2 because of the difficulty faced when using matrix inverse. For the proposed method, different initial points were considered for the model. The iteration was terminated if the iterations exceeded 1,000 or the method was unable to solve the problem.

4.1. Trend line method

In finance and related areas, one of the easiest ways to boost the likelihood of making a successful trade is to understand the direction of an underlying trend, because it helps ensure that the overall market dynamics are in the trader's favor. Trend lines are bounding lines that traders use to connect a sequence of prices of a security on charts. A trend line is created when three or more price pivot points can be connected diagonally. In this section, the proposed FMSD method and the existing least squares method were employed to estimate the data from Table 3. Microsoft Excel was used to plot the trend line for the data of the first 26 months. The graph shown in Figure 4 was obtained by plotting the real data from Table 3, with x and y on the x-axis and y-axis, respectively.

The efficiency of the proposed method is further demonstrated by comparing the approximation functions of FMSD with those of the trend line and least squares methods. Table 5 presents the estimation points and relative errors for the three methods based on the reserved data for the 27th month.

From Table 5, we can see that the error ε has been minimized which agrees with the main purpose of regression analysis. This shows that the proposed FMSD method is efficient and promising, and thus, can find a wider range of other real-life applications.
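The held-out comparison can be illustrated with a short sketch. It assumes the common definition of relative error, |actual − estimate| / |actual|, which may differ from the exact definition used for Table 5. The function names, the coefficients, and the observed value below are hypothetical and are not taken from the paper.

```python
def predict(u, x):
    """Evaluate the quadratic model u0 + u1*x + u2*x^2 at x."""
    return u[0] + u[1] * x + u[2] * x * x


def relative_error(actual, estimate):
    """Relative error of an estimate with respect to a nonzero actual value."""
    return abs(actual - estimate) / abs(actual)


# Hypothetical fitted coefficients and a hypothetical held-out observation.
u_fitted = [1.0, 2.0, 3.0]
x_reserved = 6.0
y_reserved = 110.0

estimate = predict(u_fitted, x_reserved)
error = relative_error(y_reserved, estimate)
print(estimate, error)
```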

5. Conclusions

In this paper, we have presented a spectral conjugate gradient method for solving unconstrained optimization problems by modifying the conjugate parameter of the JYJLL method in Jian et al. [7] while retaining its spectral parameter. Under some conditions, the global convergence properties were established under a weak Wolfe line search. A numerical comparison of the proposed method with the JYJLL method on the tested problems shows that the proposed method is efficient, fast, and robust. Moreover, the proposed method can solve the COVID-19 case model in Indonesia.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

MM and FN: conceptualization. MM and IS: methodology, numerical experiments, and writing—original draft preparation. MM and DA: formal analysis. IS: application. All authors have read and agreed to the published version of the manuscript.

Funding

This research is funded by Hibah Riset Penugasan FMIPA UI (Grant No. 002/UN2.F3.D/PPM.00.02 /2022).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Coronavirus Disease (COVID-19) (2022). Available online at: https://www.who.int/health-topics/coronavirus (accessed June 7, 2022).

2. Data CCP (2022). Available online at: https://www.worldometers.info/coronavirus/#countries (accessed June 7, 2022).

3. Aggarwal V, Arora G, Emadifar H, Hamasalh FK, Khademi M. Numerical simulation to predict COVID-19 cases in punjab. Comput Math Methods Med. (2022) 2022:7546393. doi: 10.1155/2022/7546393

4. Amar LA, Taha AA, Mohamed MY. Prediction of the final size for COVID-19 epidemic using machine learning: a case study of Egypt. Infect Dis Model. (2020) 5:622–34. doi: 10.1016/j.idm.2020.08.008

5. Sulaiman IM, Malik M, Awwal AM, Kumam P, Mamat M, Al-Ahmad S. On three-term conjugate gradient method for optimization problems with applications on COVID-19 model and robotic motion control. Adv Continuous Discrete Models. (2022) 2022:1–22. doi: 10.1186/s13662-021-03638-9

6. Andrei N. Nonlinear Conjugate Gradient Methods for Unconstrained Optimization. Berlin; Heidelberg: Springer (2020).

7. Jian J, Yang L, Jiang X, Liu P, Liu M. A spectral conjugate gradient method with descent property. Mathematics. (2020) 8:280. doi: 10.3390/math8020280

8. Zheng X, Shi J. A modified sufficient descent Polak–Ribiére–Polyak type conjugate gradient method for unconstrained optimization problems. Algorithms. (2018) 11:133. doi: 10.3390/a11090133

9. Polak E, Ribiere G. Note sur la convergence de méthodes de directions conjuguées. Revue française d'informatique et de recherché opérationnelle Série rouge. (1969) 3:35–43. doi: 10.1051/m2an/196903R100351

10. Polyak BT. The conjugate gradient method in extremal problems. USSR Comput Math Math Phys. (1969) 9:94–112. doi: 10.1016/0041-5553(69)90035-4

11. Hager WW, Zhang H. A survey of nonlinear conjugate gradient methods. Pacific J Optim. (2006) 2:35–58.

12. Malik M, Mamat M, Abas SS, Sulaiman IM, Sukono F. A new coefficient of the conjugate gradient method with the sufficient descent condition and global convergence properties. Eng Lett. (2020) 28:704–14.

13. Malik M, Sulaiman IM, Mamat M, Abas SS, Sukono F. A new class of nonlinear conjugate gradient method for unconstrained optimization and its application in portfolio selection. Nonlinear Funct Anal Appl. (2021) 26:811–37. doi: 10.22771/nfaa.2021.26.04.10

14. Abubakar AB, Kumam P, Malik M, Chaipunya P, Ibrahim AH. A hybrid FR-DY conjugate gradient algorithm for unconstrained optimization with application in portfolio selection. AIMS Math. (2021) 6:6506–27. doi: 10.3934/math.2021383

15. Abubakar AB, Kumam P, Malik M, Ibrahim AH. A hybrid conjugate gradient based approach for solving unconstrained optimization and motion control problems. Math Comput Simulat. (2021) 201:640–57. doi: 10.1016/j.matcom.2021.05.038

16. Abubakar AB, Malik M, Kumam P, Mohammad H, Sun M, Ibrahim AH, et al. A Liu-Storey-type conjugate gradient method for unconstrained minimization problem with application in motion control. J King Saud Univer Sci. (2022) 34:101923. doi: 10.1016/j.jksus.2022.101923

17. Malik M, Mamat M, Abas SS, Sulaiman IM, Sukono F. A new spectral conjugate gradient method with descent condition and global convergence property for unconstrained optimization. J Math Comput Sci. (2020) 10:2053–69.

18. Petrović MJ, Stanimirović PS. Accelerated double direction method for solving unconstrained optimization problems. Math Problems Eng. (2014) 2014:965104. doi: 10.1155/2014/965104

19. Petrović MJ. An accelerated double step size model in unconstrained optimization. Appl Math Comput. (2015) 250:309–19. doi: 10.1016/j.amc.2014.10.104

20. Petrović M, Ivanović M, Ðorðević M. Comparative performance analysis of some accelerated and hybrid accelerated gradient models. Univers Thought Publicat Natural Sci. (2019) 9:57–61. doi: 10.5937/univtho9-18174

21. Petrović MJ. Hybridization rule applied on accelerated double step size optimization scheme. Filomat. (2019) 33:655–65. doi: 10.2298/FIL1903655P

22. Petrović MJ, Valjarević D, Ilić D, Valjarević A, Mladenović J. An improved modification of accelerated double direction and double step-size optimization schemes. Mathematics. (2022) 10:259. doi: 10.3390/math10020259

23. Mirhoseini N, Babaie-Kafaki S, Aminifard Z. A nonmonotone scaled fletcher-reeves conjugate gradient method with application in image reconstruction. Bull Malays Math Sci Soc. (2022) 45. doi: 10.1007/s40840-022-01303-2

24. Babaie-Kafaki S, Mirhoseini N, Aminifard Z. A descent extension of a modified Polak–Ribière–Polyak method with application in image restoration problem. Optim Lett. (2022) 2022. doi: 10.1007/s11590-022-01878-6

25. Jiang X, Liao W, Yin J, Jian J. A new family of hybrid three-term conjugate gradient methods with applications in image restoration. Num Algorith. (2022) 91. doi: 10.1007/s11075-022-01258-2

26. Ebrahimnejad A, Aminifard Z, Babaie-Kafaki S. A scaled descent modification of the Hestense-Stiefel conjugate gradient method with application to compressed sensing. J New Res Math. (2022). doi: 10.30495/jnrm.2022.65570.2211

27. Aminifard Z, Babaie-Kafaki S. Dai-Liao extensions of a descent hybrid nonlinear conjugate gradient method with application in signal processing. Num Algorith. (2022) 89:1369–87. doi: 10.1007/s11075-021-01157-y

28. Sulaiman IM, Malik M, Giyarti W, Mamat M, Ibrahim MAH, Ahmad MZ. The application of conjugate gradient method to motion control of robotic manipulators. In: Enabling Industry 4.0 Through Advances in Mechatronics. Singapore: Springer (2022). p. 435–45.

29. Awwal AM, Sulaiman IM, Malik M, Mamat M, Kumam P, Sitthithakerngkiet K. A spectral rmil+ conjugate gradient method for unconstrained optimization with applications in portfolio selection and motion control. IEEE Access. (2021) 9:75398–414. doi: 10.1109/ACCESS.2021.3081570

30. Deepho J, Abubakar AB, Malik M, Argyros IK. Solving unconstrained optimization problems via hybrid CD-DY conjugate gradient methods with applications. J Comput Appl Math. (2022) 405:113823. doi: 10.1016/j.cam.2021.113823

31. Malik M, Sulaiman IM, Abubakar AB, Ardaneswari G, Sukono F. A new family of hybrid three-term conjugate gradient method for unconstrained optimization with application to image restoration and portfolio selection. AIMS Math. (2022) 8:1–28. doi: 10.3934/math.2023001

32. Sulaiman IM, Bakar NA, Mamat M, Hassan BA, Malik M, Ahmed AM. A new hybrid conjugate gradient algorithm for optimization models and its application to regression analysis. Indon J Electr Eng Comput Sci. (2021) 23:1100–9. doi: 10.11591/ijeecs.v23.i2.pp1100-1109

33. Hestenes MR, Stiefel E. Methods of conjugate gradients for solving linear systems. J Res Natl Bureau Standards. (1952) 49:409–36. doi: 10.6028/jres.049.044

34. Fletcher R, Reeves CM. Function minimization by conjugate gradients. Comput J. (1964) 7:149–54. doi: 10.1093/comjnl/7.2.149

36. Dai YH, Yuan YX. A nonlinear conjugate gradient method with a strong global convergence property. SIAM J Optim. (1999) 10:177–82. doi: 10.1137/S1052623497318992

37. Liu Y, Storey C. Efficient generalized conjugate gradient algorithms, part 1: theory. J Optim Theory Appl. (1991) 69:129–37. doi: 10.1007/BF00940464

38. Rivaie M, Mamat M, June LW, Mohd I. A new class of nonlinear conjugate gradient coefficients with global convergence properties. Appl Math Comput. (2012) 218:11323–32. doi: 10.1016/j.amc.2012.05.030

39. Hager W, Zhang H. A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J Optim. (2005) 16:170–92. doi: 10.1137/030601880

40. Kou CX, Dai YH. A modified self-scaling memoryless Broyden-Fletcher-Goldfarb-Shanno method for unconstrained optimization. J Optim Theory Appl. (2015) 165:209–24. doi: 10.1007/s10957-014-0528-4

41. Andrei N. New accelerated conjugate gradient algorithms as a modification of Dai-Yuan's computational scheme for unconstrained optimization. J Comput Appl Math. (2010) 234:3397–410. doi: 10.1016/j.cam.2010.05.002

42. Liu JK, Feng YM, Zou LM. A spectral conjugate gradient method for solving large-scale unconstrained optimization. Comput Math Appl. (2019) 77:731–9. doi: 10.1016/j.camwa.2018.10.002

43. Zoutendijk G. Nonlinear programming, computational methods. In: Integer and Nonlinear Programming. Amsterdam (1970). p. 37–86.

44. Andrei N. An unconstrained optimization test functions collection. Adv Model Optim. (2008) 10:147–61.

45. Moré JJ, Garbow BS, Hillstrom KE. Testing unconstrained optimization software. ACM Trans Math Softw. (1981) 7:17–41. doi: 10.1145/355934.355936

46. Dolan ED, Moré JJ. Benchmarking optimization software with performance profiles. Math Program. (2002) 91:201–13. doi: 10.1007/s101070100263

47. Wikipedia. COVID-19 pandemic in Asia (2022). Available online at: https://en.wikipedia.org/w/index.php?title=COVID%2019%20pandemic%20in%20Asia&oldid=1089905138 (accessed June 21, 2022).

48. by Country | Asia CCC (2022). Available online at: https://tradingeconomics.com/country-list/coronavirus-cases?continent=asia (accessed June 21, 2022).

49. Aisyah DN, Mayadewi CA, Diva H, Kozlakidis Z, Siswanto, Adisasmito W. A spatial-temporal description of the SARS-CoV-2 infections in Indonesia during the first six months of outbreak. PLoS ONE. (2020) 15:e0243703. doi: 10.1371/journal.pone.0243703

50. ReliefWeb. Situation Update: Response to COVID-19 in Indonesia (As of 4 April 2022) (2022). Available online at: https://reliefweb.int/report/indonesia/situation-update-response-covid-19-indonesia-4-april-2022 (accessed June 7, 2022).

51. Indonesia: WHO Coronavirus Disease (COVID-19) Dashboard With Vaccination Data (2022). Available online at: https://covid19.who.int (accessed June 21, 2022).

52. Sulaiman IM, Mamat M. A new conjugate gradient method with descent properties and its application to regression analysis. J Num Anal Ind Appl Math. (2020) 12:25–39.

53. Worldometer. Indonesia COVID Coronavirus Statistics (2022). Available online at: https://www.worldometers.info/coronavirus/country/indonesia/ (accessed June 22, 2022).

Keywords: unconstrained optimization, descent condition, global convergence, regression models, spectral conjugate gradient method

Citation: Novkaniza F, Malik M, Sulaiman IM and Aldila D (2022) Modified spectral conjugate gradient iterative scheme for unconstrained optimization problems with application on COVID-19 model. Front. Appl. Math. Stat. 8:1014956. doi: 10.3389/fams.2022.1014956

Received: 09 August 2022; Accepted: 03 October 2022;

Published: 08 November 2022.

Edited by: Lixin Shen, Syracuse University, United States

Reviewed by: Youssri Hassan Youssri, Cairo University, Egypt; Milena Petrovic, University of Pristina, Serbia

Copyright © 2022 Novkaniza, Malik, Sulaiman and Aldila. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fevi Novkaniza, ZmV2aS5ub3ZrYW5pemFAc2NpLnVpLmFjLmlk
