- 1Max Planck Institute for Dynamics and Self-Organization, Göttingen, Germany

- 2Third Institute of Physics and Bernstein Center for Computational Neuroscience, University of Göttingen, Göttingen, Germany

- 3Tübingen AI Center, University of Tübingen, Tübingen, Germany

- 4Institute for the Dynamics of Complex Systems, University of Göttingen, Göttingen, Germany

- 5Institute of Pharmacology and Toxicology, University Medical Center Göttingen, Göttingen, Germany

- 6German Center for Cardiovascular Research (DZHK), Partner Site Göttingen, Göttingen, Germany

The mechanical contraction of the pumping heart is driven by electrical excitation waves running across the heart muscle due to the excitable electrophysiology of heart cells. With cardiac arrhythmias these waves turn into stable or chaotic spiral waves (also called rotors) whose observation in the heart is very challenging. While mechanical motion can be measured in 3D using ultrasound, electrical activity can (so far) not be measured directly within the muscle and with limited resolution on the heart surface, only. To bridge the gap between measurable and not measurable quantities we use two approaches from machine learning, echo state networks and convolutional autoencoders, to solve two relevant data modelling tasks in cardiac dynamics: Recovering excitation patterns from noisy, blurred or undersampled observations and reconstructing complex electrical excitation waves from mechanical deformation. For the synthetic data sets used to evaluate both methods we obtained satisfying solutions with echo state networks and good results with convolutional autoencoders, both clearly indicating that the data reconstruction tasks can in principle be solved by means of machine learning.

1 Introduction

Cardiac arrhythmias, such as ventricular or atrial fibrillation, are electro-mechanical dysfunctions of the heart that are associated with complex, chaotic spatio-temporal excitation waves within the heart muscle resulting in incoherent mechanical contraction and a significant loss of pump function [1–3]. Ventricular fibrillation (VF) is the most common deadly manifestation of a cardiac arrhythmia and requires immediate defibrillation using high-energy electric shocks. Atrial fibrillation (AF) is the most common form of a cardiac arrhythmia, affecting 33 million patients worldwide [62]. While not immediately life-threatening, AF is considered to be responsible for 15% of strokes if left untreated [63, 64]. The structural substrate and functional mechanisms that underlie the onset and perpetuation of VF and AF are not fully understood. It is generally agreed that imaging of the cardiac electrical and mechanical function is key to an improved mechanistic understanding of cardiac disease and the development of novel diagnosis and therapy. This has motivated the development of non-invasive and invasive electrophysiological measurement and imaging modalities. Electrical activity of the heart can (so far) non-invasively be measured on its surface, only. Direct measurements can be made in vivo inside the heart using so-called basket catheters with typically 64 electrodes or in ex-vivo experiments, where an extracted heart in a Langendorff perfusion set-up is kept beating and the cell membrane voltage on the epicardial surface is made visible using fluorescent dyes (a method also known as optical mapping) [4]. A method for indirect observation of electrical excitation waves is ECG imaging where an array of EEG-electrodes is placed on the body surface and an (ill-posed) inverse problem is solved to estimate the potential on the surface of the heart. Mechanical contraction and deformation of the heart tissue can be studied in full 3D using ultrasound, in 2D using real-time MRT [5] or (using optical mapping) by motion tracking in Langendorff experiments.

The reconstruction of patterns of action potential wave propagation in cardiac tissue from ultrasound has been introduced by Otani et al. [6, 7]. They proposed to use ultrasound to visualize the propagation of these waves through the mechanical deformations they induce and to reconstruct action potential-induced active stress from the deformation. Provost et al. [8] introduced electromechanical wave imaging to map the mechanical deformation of cardiac tissue at high temporal and spatial resolutions. The observed deformations resulting from the electrical activation were found to be closely correlated with electrical activation sequences. The cardiac excitation-contraction-coupling (ECC) [9] has also been studied in optical mapping experiments in Langendorff-perfused isolated hearts [10–12]. Using electromechanical optical mapping [12], it was shown that during ventricular tachyarrhythmias electrical rotors introduce corresponding rotating mechanical waves. These co-existing electro-mechanical rotors were observed on the epicardial surface of isolated Langendorff-perfused intact pig and rabbit hearts using optical mapping [13]. Using high-resolution ultrasound, these mechanical rotors were also observed inside the ventricular wall during ventricular tachycardia and fibrillation [13].

All these measurement modalities are limited, in particular those suitable for in vivo applications. Measurements with basket catheters are effectively undersampling the spatio-temporal wave pattern. Inverse ECGs suffer from ill-posedness and require regularization that may lead to loss of spatial resolution and blurring. Limited spatial resolution is also an issue with ultrasound measurements, but they are currently the only way to “look inside” the heart, albeit measuring only mechanical motion. Electrical excitation waves inside the heart muscle are so far not accessible by any measurement modality available.

These limitations motivated the search for algorithms to reconstruct electro-mechanical wave dynamics in cardiac tissue from measurable quantities. Berg et al. [14] devised synchronization-based system identification of extended excitable media, in which model parameters are estimated by minimizing the synchronization error. Using this approach, Lebert and Christoph [15] demonstrated that electro-mechanic wave dynamics of excitable-deformable media can be recovered from a limited set of observables using a synchronization-based data assimilation approach. Hoffman et al. reconstructed electrical wave dynamics using ensemble Kalman filters [16, 17]. In another approach, it was shown that echo state networks [18] and deep convolutional neural networks [19, 20] provide excellent cross estimation results for different variables of a mathematical model describing complex electrical excitation waves during cardiac arrhythmias. Following this approach, Christoph and Lebert [21] demonstrated the reconstruction of electrical excitation and active stress from deformation using a simulated deformable excitable medium. To continue this research and to address the general challenge of missing or impaired observations we consider in this article two tasks: (i) recovering electrical excitation patterns from noisy, blurred or undersampled observations and (ii) reconstructing electrical excitation waves from mechanical deformation. To solve the corresponding data processing and cross-prediction tasks two machine learning methods are employed and evaluated: echo state networks and convolutional autoencoders. Both algorithms are applied to synthetical data generated by prototypical models for electrophysiology and electromechanical coupling.

2 Methods

In this section we will first introduce in Sections 2.1 and 2.2 the mathematical models describing cardiac dynamics which were used to generate the example data for the two tasks to be solved: (i) recovering electrical wave pattern from impaired observations and (ii) cross-predicting electrical excitation from mechanical deformation. Then in Section 2.3 both machine learning methods used for solving these tasks, echo state networks (Section 2.3.1) and convolutional autoencoders (Section 2.3.2), will be briefly introduced.

2.1 Recovering Complex Spatio-Temporal Wave Patterns From Impaired Observations

For motivating, illustrating, and evaluating the employed methods for dealing with incomplete or distorted observations we shall use spatio-temporal time series generated with the Bueno-Orovio-Cherry-Fenton (BOCF) model [22] describing complex electrical excitation patterns in the heart during cardiac arrhythmias. The BOCF model is a set of partial differential equations (PDEs) with four variables and will be introduced in Section 2.1.1. In Section 2.1.2 a formal description of the data recovery tasks will be given.

2.1.1 Bueno–Orovio–Cherry–Fenton Model

Cardiac dynamics is controlled by electrical excitation waves triggering mechanical contractions of the heart. In the case of cardiac arrhythmias like lethal ventricular fibrillation, wave break-up and complex chaotic wave patterns occur resulting in significantly reduced pump performance of the heart. From the broad range of mathematical models describing this spatio-temporal dynamics [23] we chose the Bueno–Orovio–Cherry–Fenton (BOCF) model [22] to generate spatio-temporal time series that are used as a benchmark to validate our approaches for reconstructing complex wave patterns in excitable media from incomplete data. The BOCF model consists of four system variables whose evolution is given by four (partial) differential equations

The variable u represents the continuum limit representation of the membrane voltage of cardiac cells and the variables v, w, and s are gating variables controlling ionic transmembrane currents

Here

For simulating the dynamics we used the set of parameters given in Table 1 for which the BOCF model was found [22] to exhibit excitation wave dynamics similar to the Ten Tusscher–Noble–Noble–Panfilov (TNNP) model [24] describing human heart tissue.

TABLE 1. TNNP model parameter values for the BOCF model [22].

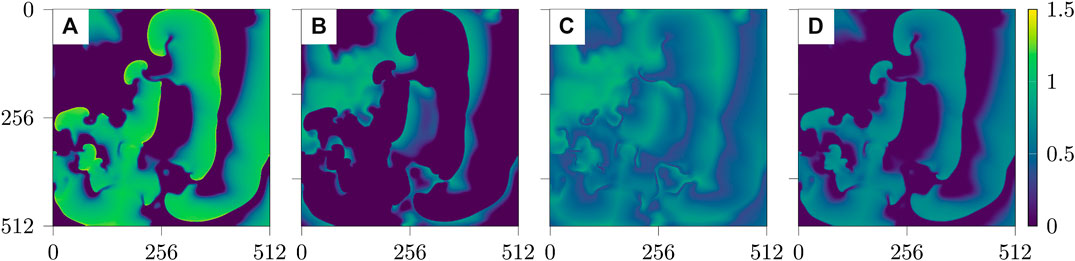

Typical snapshots of the four variables during a chaotic evolution are shown in Figure 1. The spatio-temporal chaotic dynamics of this system is actually transient chaos whose lifetime grows exponentially with system size [25, 26]. To obtain chaotic dynamics with a sufficiently long lifetime the system has been simulated on a domain of

FIGURE 1. Snapshots of the four fields u, v, w, s of the BOCF model Eq. (1) (from left to right).

2.1.2 Reconstruction Tasks

Experimental measurements of the dynamics of a system of interest often allow only the observation of some state variables (e.g., the membrane voltage) and may provide only incomplete or distorted information about the measured observable. Typical limitations are (additive) measurement noise and low-spatial resolution (due to the experimental conditions and/or the available hardware). Formally, measurements impaired due to noise, blurring or undersampling can be described as follows: Let

1. Noisy data: To add noise each element of

2. Blurred data: Date with reduced spatial resolution are obtained as Fourier low-pass filtered data

3. Undersampled data: To generate undersampled date

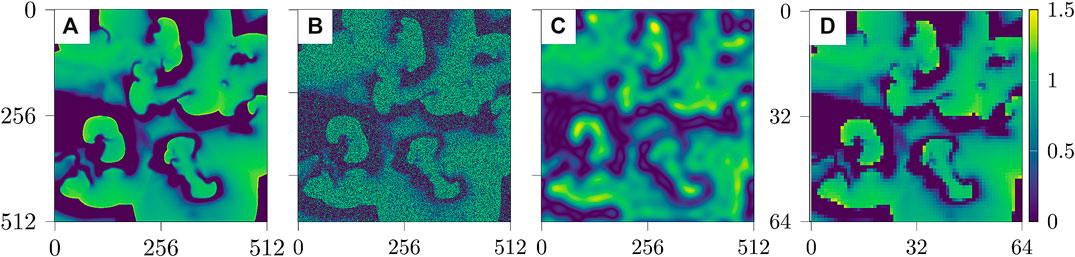

Figure 2 shows examples of the three types of impaired observations.

FIGURE 2. Snapshots of the three cases of impaired data based on u [from left to right: (A) reference data u, (B) noisy data, (C) blurred data, and (D) undersampled data].

2.2 Predicting Electrical Excitation From Mechanical Contraction

To learn the relation between mechanical deformation and electrical excitation inverse modelling data were generated by a conceptual electro-mechanical model consisting of an Aliev–Panfilov model describing the electrical activity and a driven mass-spring-system [15].

2.2.1 Aliev–Panfilov Model

Specifically developed to mimic cardiac action potentials in the myocardium, the Aliev–Panfilov model is a modification of the FitzHugh–Nagumo model, which reproduces the characteristic shape of electric pulses occurring in the heart [27]. It is given by a set of two differential equations,

in which u and v are the normalized membrane voltage and the recovery variable, respectively, and a, b and k are model parameters. The term

Within the heart muscle, the myocardium, cells contract upon electrical excitation through a passing action potential. At this point it is important to note that muscle fibre contracts along its principal orientation which has to be considered during the implementation of the mechanical part of the simulation. To couple the mechanical contraction of the muscle fibre to electrical excitation of a cell, as an extension to the Aliev–Panfilov model the active stress

where

Here,

2.2.2 Mass-Spring Damper System

The elasto-mechanical properties of the cardiac muscle fibre were implemented using a modified two-dimensional mass-spring damper system [31]. For the current study the mass-spring system was implemented in two dimensions because this allows shorter runtimes and primarily serves as a proof-of-principle for the evaluated reconstruction approach. In its two-dimensional form this mechanical model might correspond best to a cut-out of the atrium’s wall, since there the muscle tissue is less than 4 mm thick. However, this mass-spring system can easily be expanded to three dimensions (see [15]).

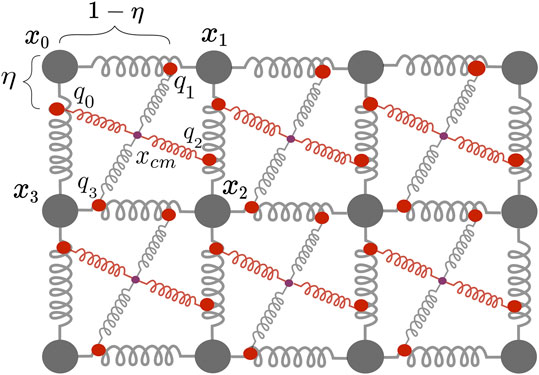

Placed on a regular lattice, one mechanical cell is made up of four particles

FIGURE 3. Two dimensional mass-spring damper system with one active spring modelling fibre orientation (red) and one passive spring (gray), the centre of mass

Using

Here

In addition, the mechanical grid is held together by structural forces between the corner particles, which can be computed using

with

Finally, with all the above forces acting on particle

with the sum

The area of each cell was calculated with a simple formula for a general quadrilateral using the positions of its four corners. As a measure of contraction, the relative change of area

has been used. The numerical algorithm for solving the full set of electro-mechanical ODEs is summarized in the Appendix.

2.2.3 Reconstruction Task

The inverse modelling data are generated by forward modelling

2.3 Machine Learning Methods

In this section we will introduce the two machine learning approaches, echo state networks (ESN) [32] and convolutional autoencoders (CAE) [33], that will be applied to solve the reconstruction tasks defined in Section 2.1.2.

2.3.1 Echo State Network

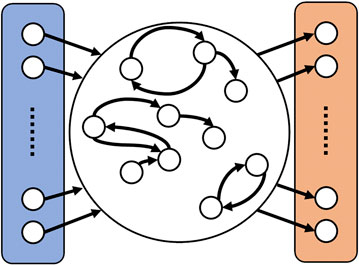

Echo state networks have been introduced in 2001 by Jaeger [32] as a simplified type of recurrent neural network, in which the weights describing the strength of the connections within the network are fixed. In its general composition an ESN subdivides into three sections [32], as illustrated in Figure 4. First of all, there is the input layer into which the input signal

FIGURE 4. Schematic representation of an ESN. On the left side (colored in blue) is the input layer where the input signal

The concatenated bias-input vector

in which

are adapted during the training process by minimizing the cost function [34]

where Tr denotes the trace of a matrix and λ controls the impact of the regularization term that prevents overfitting [35]. The final output matrix is given by the minimum of the cost function at

Both matrices

Reservoir computing using ESNs for predicting chaotic dynamics has already been demonstrated in 2004 by Jaeger and Haas [37]. Since then many studies appeared analyzing and optimizing this approach (see, for example [38–44], and references cited therein). In particular, it has been pointed out how reservoir computing exploits generalized synchronization of uni-directionally coupled systems [45, 46].

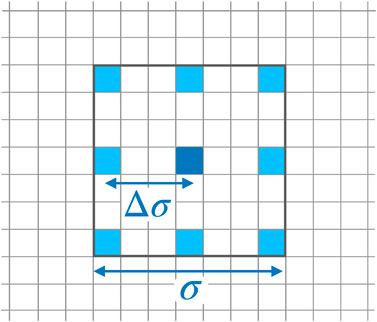

Recently, applications of ESNs to spatio-temporal time series have been presented [18, 47] employing many networks operating in parallel at different spatial locations based on the concept of (reconstructed) local states [48]. In particular, using this mode of reservoir computing it was possible to perform a cross-prediction between the four different variables of the BOCF model [18]. Therefore, for the current task of reconstructing data from impaired observations we build on the previous ESN design and modelling procedure. For each pixel an ESN is trained receiving input from neighboring pixels, only, representing the local state at the location of the reference pixel as illustrated in Figure 5. This design introduces two new hyperparameters σ and

FIGURE 5. Stencil for locally sampling data used as input of the ESN operating at the location of the dark blue pixel in the center. The stencil is characterized by its width σ and the spatial separation

2.3.2 Convolutional Autoencoder

A convolutional autoencoder [33] is a special architecture of a feed forward network (FFN) with convolutional layers similar to convolutional neural networks (CNNs) [49]. Generally a CAE learns a representation of the training set

Convolutional layers: Convolution of the input by a kernel sliding over the input. The number of rows and columns of the kernel are hyper-parameters, in this work they are set to be

Batch normalization layer: Normalization of the activations of the previous layer during training and for each batch. Batch normalization allows the use of higher learning rates, being computationally more efficient, and also acts as a regularizer [50].

Leaky ReLU [51] layer: Leaky version of a rectified linear unit (ReLU) [52], such that:

Max pooling layer: Sample-based operation for discretization based on a kernel that slides over the input like the convolutional operator but only the maximum value of the kernel is passed to the next layer. Width and height of the kernel are hyper-parameters (in this work

Dropout layer: Regularization method to prevent overfitting where during training some weights are set randomly to zero [53]. In this work the probability of setting the weights to zero is 0.05.

The eponymous part of the CAEs are the convolutional layers, a convolution of

with

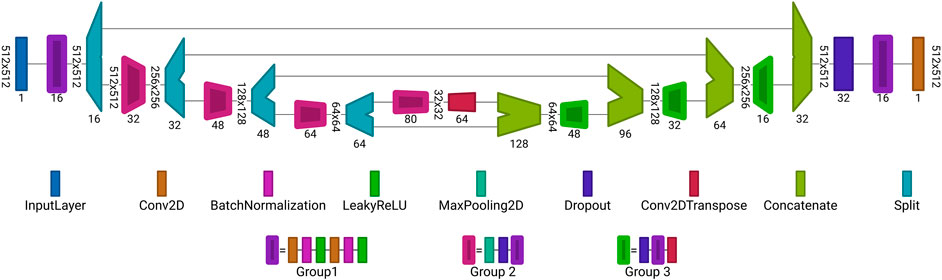

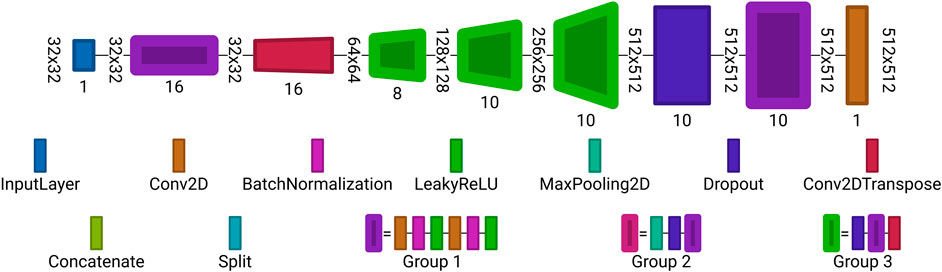

In this work two architectures are used. The first one employed to reconstruct the data from noisy, blurred and inverse modelling data is illustrated in Figure 6. The architecture is the same for the tasks in Section 2.1.2 and Section 2.2.3 but the sizes of

FIGURE 6. Proposed autoencoder architecture for reconstruction of data from noisy or blurred input. Each block is a set of layers. The values written vertically describe the dimension of the input for each layer, e.g., for noisy and blurred data r = 512, c = 512 and for the inverse modelling data r = 100, c = 100. The horizontally written values at the layers are the number of channels or number of filters. Group 1 is an combination of layers, consisting of: Conv2D, BatchNormlization, LeakyReLU, Conv2D, BatchNormlization and LeakyReLU layers. Group 2 is an extension of Group 1 where a MaxPooling2D and Dropout layer are placed before Group 1. Similar applies to Group 3, it consists of a Dropout layer followed by the layers from Group 1 and finalized by a Conv2DTranspose layer follows. The architecture was visualized with Net2Vis [55].

FIGURE 7. Autoencoder architecture used for reconstruction from undersampled observations. Each block is a set of layers. The layer labeling is the same as in Figure 6. Visualized with Net2Vis [55].

3 Results

In the following both machine learning methods will be applied to two tasks: (i) Reconstructing electrical excitation waves from noisy blurred and under sampled data (Section 3.1) and (ii) Predicting electrical excitation from mechanical contraction (Section 3.2).

3.1 Recovering Complex Spatio-Temporal Wave Patterns From Impaired Observations

To benchmark both reconstruction methods, using ESNs and CAEs, we use time series generated by the BOCF model introduced in Section 2.1.1. The same data were used for both methods, consisting of 5,002 samples in the training data set, 2,501 samples in the validation data set, and 2,497 samples in the test data set. The sampling time of all time series equalled

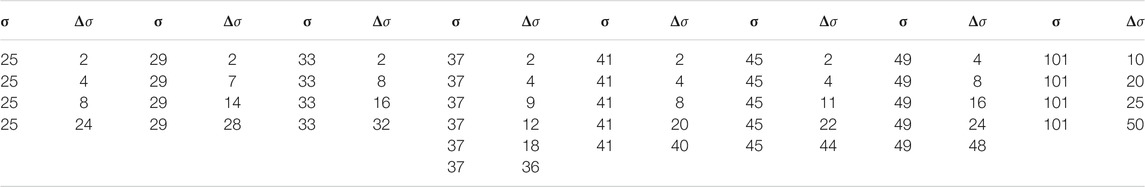

For the implemention of the ESN we used the software package easyesn [56]. To determine the optimal ESN hyperparameters a grid search is performed as described in [18] using the training and validation subsets of the data. This search consists of two stages: first, for each combination of the local states’ hyperparameters σ and

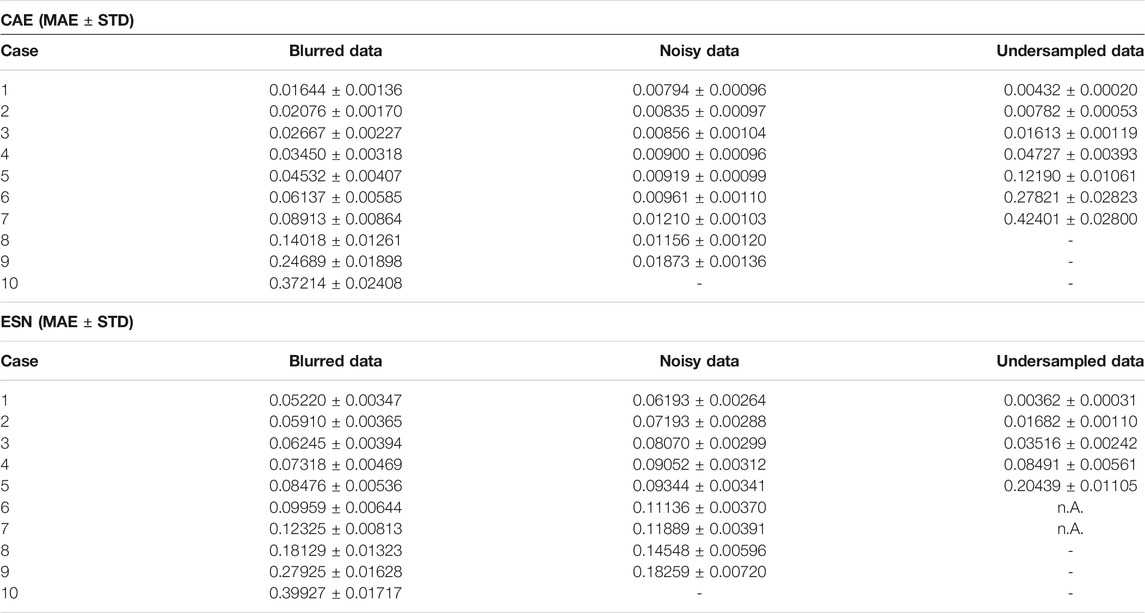

TABLE 3. Comparison of the MAE obtained when applying the CAE method and the ESN method to the test data set.

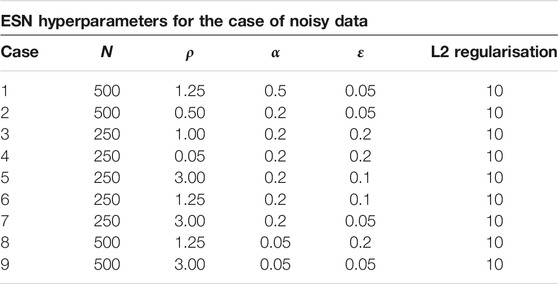

In the second stage, for each of the 37 sets of optimal hyperparameters determined before (for each combination of σ and

Next, the different optimal ESNs obtained for different stencils

The CAE was trained using the ADAM optimizer [57], implemented with TensorFlow [58] in version 2.3, with early stopping when the validation loss has not improved at least by

where N is the number of elements in the data set,

3.1.1 Noisy Data

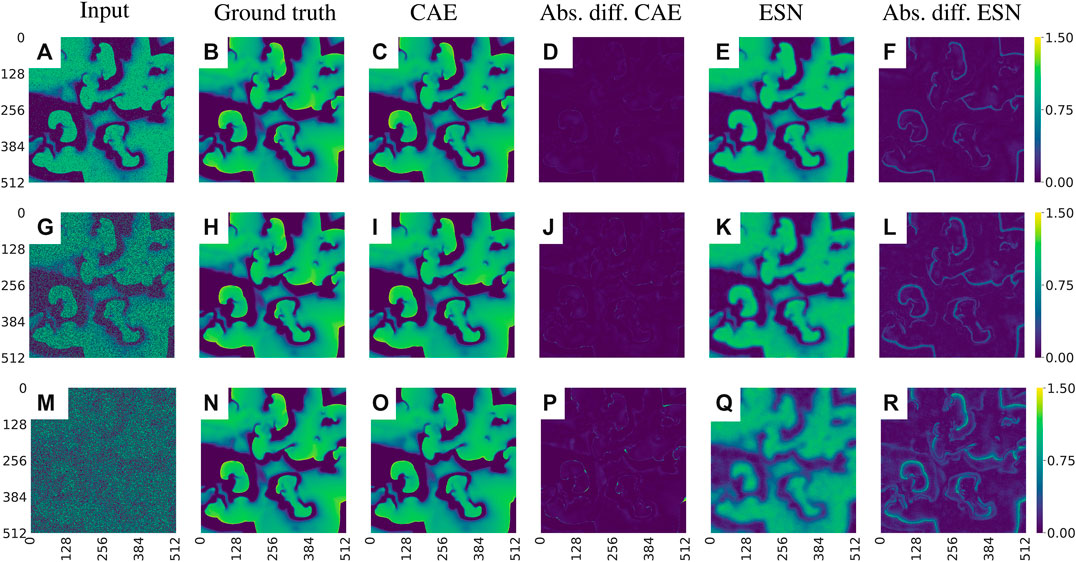

Figure 8 shows snapshots of the noisy input data, the corresponding ground truth, the outputs provided by the CAE and the ESN, respectively, and the absolute values of their prediction errors with respect to the ground truth.

FIGURE 8. Exemplary visualization of the input and output for both networks for data with different noise levels p: (A)–(F)p = 0.1, (G)–(L)p = 0.5, and (M)–(R)p = 0.9. Comparing the absolute differences between the prediction and the ground truth [(D), (J), (P) for the CAE and (F), (L), (R) for the ESN] one can see that the CAE is less sensitive to noise. Note that the errors develop primarily on the fronts of the waves.

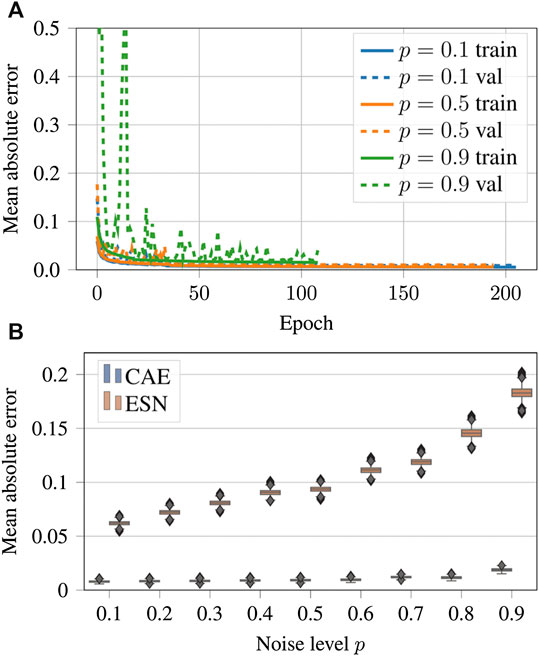

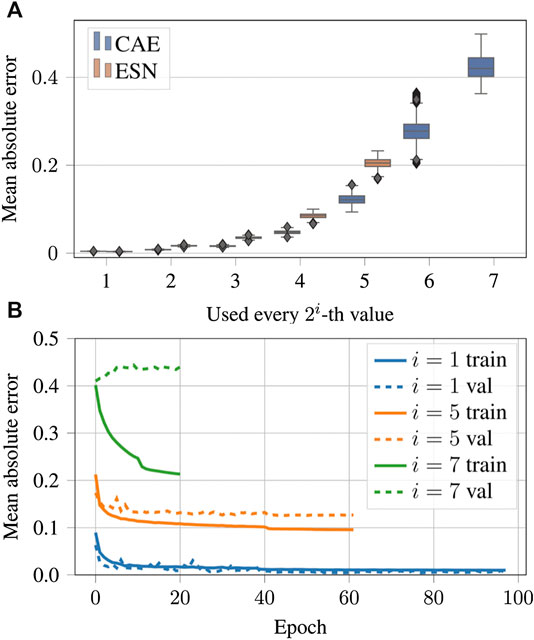

The evolution of the loss function during the training epochs is shown in Figure 9A. In all cases the error decreases and the training converges, but the duration of the training depends on the complexity of the case.

FIGURE 9. (A) Evolution of the loss function values over the epochs for noisy input data generated with noise levels

Figures 9B shows a comparison of the performance of the CAE and the ESN for noisy data with nine different noise levels

3.1.2 Blurred Data

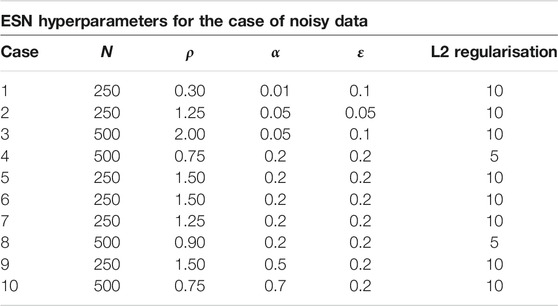

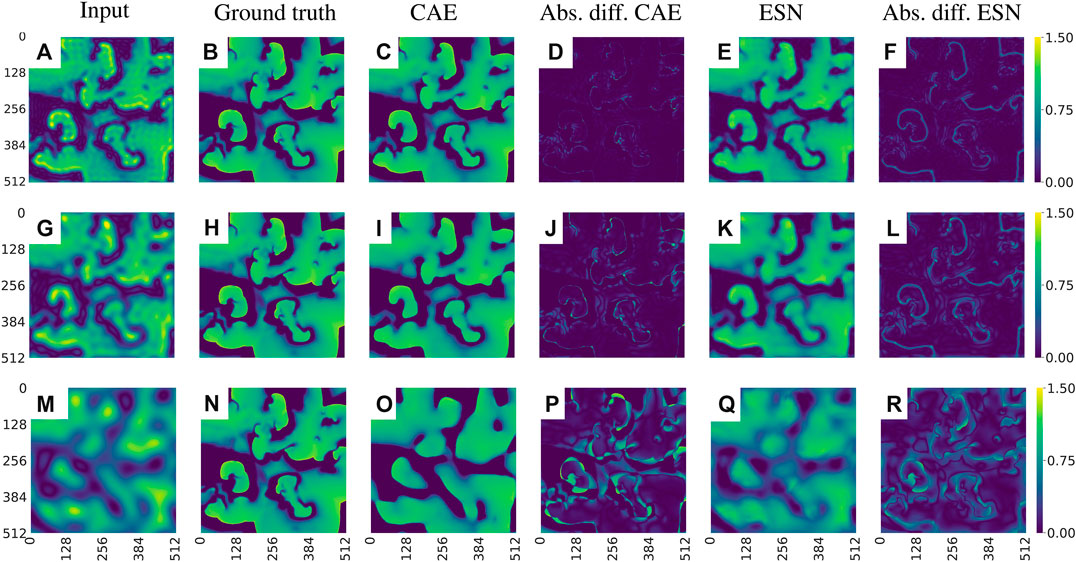

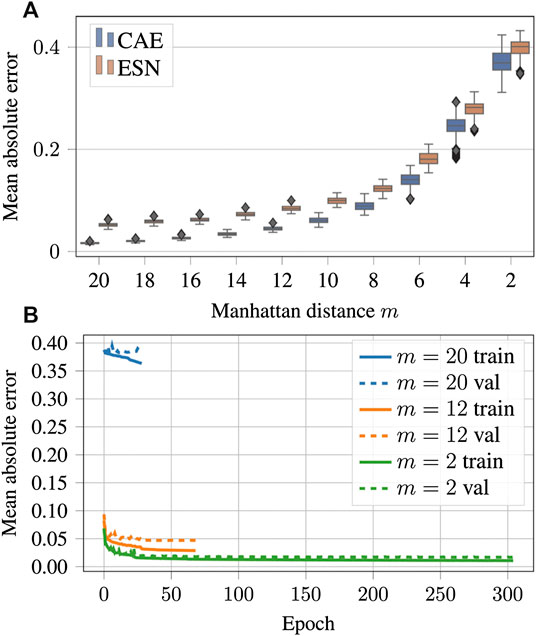

To evaluate the performance of CAE and ESN for recovering full resolution (ground truth) data from blurred observations we consider nine cases where the radius m of Fourier low-pass filtering ranges from

FIGURE 10. Exemplary visualization of the input and output for both networks, CAE and ESN, when recovering the original data from blurred measurements. (A)–(F) corresponds to case one, where

FIGURE 11. (A) Mean absolute errors of reconstructions using CAE or ESN from data blurred with different values of the low-pass filter parameter m. Like in Figure 9B boxes are horizontally shifted for better visibility. A tabular overview of the values can be found in Table 3. (B) Evolution of the loss function values over the training epochs for blurred data. Training was always terminated by reaching the early stopping criterion, defined in Section 3.1. One epoch trained approximately 108 s on a GTX 1080 Ti.

3.1.3 Undersampled Data

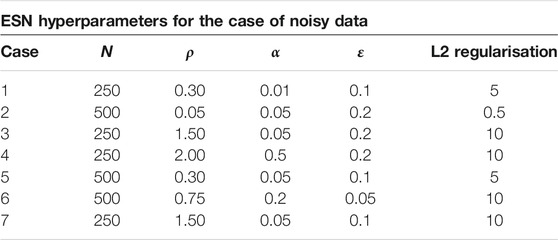

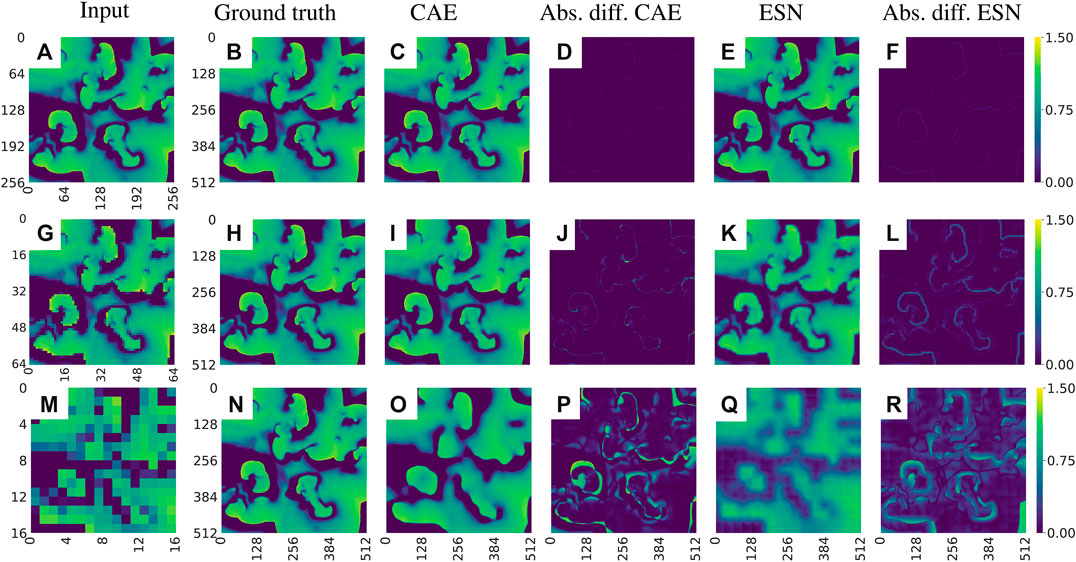

Figure 12 shows examples of data reconstructed from undersampled data. In total we considered seven cases of undersampling by

FIGURE 12. Exemplary visualization of the reconstruction of the u-field of the BOCF model from undersampled data. (A)–(F) corresponds to a sampling parameter

FIGURE 13. (A) Same as Figures 9B but for the case of the undersampled data. A tabular overview of the values can be found in Table 3. (B) Evolution of the loss function values over the epochs for undersampled input data.

3.2 Predicting Electrical Excitation From Mechanical Contraction

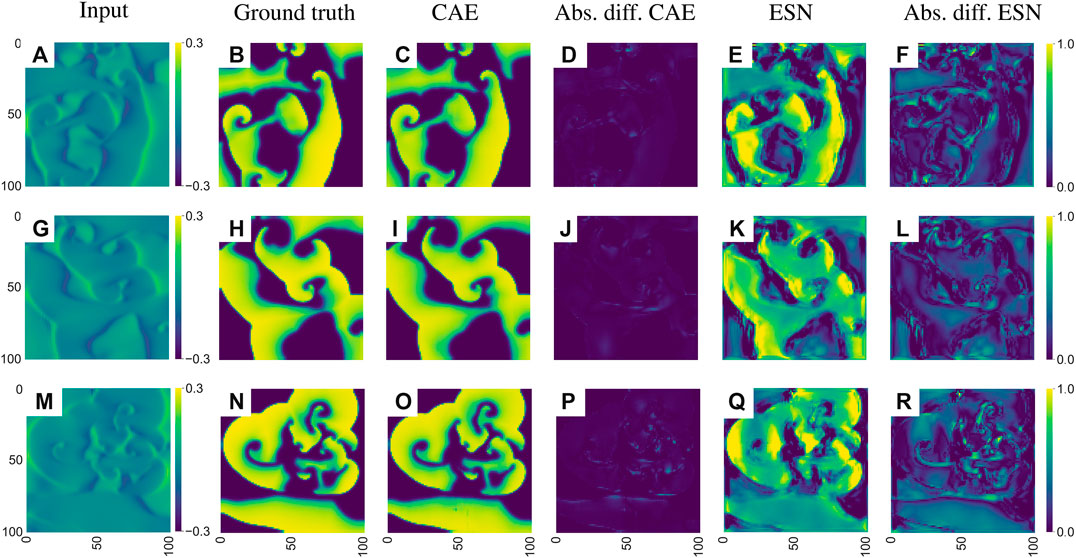

Echo state networks as well as convolutional autoencoders have been trained with time series generated by the electromechanical model introduced in Section 2.2 to predict the membrane voltage

FIGURE 14. Exemplary visualization of the input and output for both networks for the inverse reconstruction of the membrane voltage uEq. (4) based on the mechanical deformation

As illustrated in Figure 14 both networks can solve the inverse problem and reconstruct the electrical potential field u from Eq. (4). However, the reconstruction of the CAE is more precise, which is particularly noticeable at the edges of the reconstructed electrical potential field. Considering the median of the MAE over the entire test data the ESN approach achieves an error of

4 Conclusion

Using synthetic data generated with conceptual models describing complex cardiac dynamics we have demonstrated possible applications of machine learning methods to complete and enhance experimental observations. It was shown that echo state networks as well as convolution autoencoders provide promising results, where the latter turned out to be the method of choice in terms of more faithful reconstructions. At this point, however, we would like to stress, that we didn’t try to fully optimize the algorithms employed. One could, for example, increase the size of the ESNs used or extend and refine the grid search of hyperparameters. Also with the CAE several options exist to improve the performance even more. Instead of the MAE in the loss function one could use an adaptive robust loss function [59] or the Jensen-Shannon divergence [19]. The weights of the CAE could be optimized with a stochastic gradient descend approach instead of the ADAM algorithm [57]. But we expect such modifications would show only minor improvements (if at all) [60, 61].

A comparison of the computing times with ESNs and CAEs is unfortunately not immediately possible. For the CAE the training time depends on the convergence, as illustrated in Figures 9A, 11B and 13B. In contrast to this, for ESNs training times depend strongly on the search for optical hyperparameters, especially the size of the reservoir, and this search is strongly dependent on the search space size and the number of parameters. For the task where electrical excitation is predicted from mechanical contraction our computations took 3,382 s on two Intel Xeon CPU E5-4620. While the CAE simulations have been run on GPUs the training and application of the ESN was on CPUs which makes a direct comparison difficult. Furthermore, the runtime of the programmes used is highly dependent on the libraries used and how well they have been adapted to special system architectures. In general we would estimate that in this work the effort to train and search hyperparameters for an ESN was a bit less demanding, in the sense of computational resources, compared to the training of the CAE. However, we would not consider the difference big enough to be an advantage for the ESN approach. Once trained both approaches need comparable execution times when applied to new data and executed on a CPU. In future work, using more realistic numerical simulations (and experimental data) such an optimization should be performed to achieve the best possible result for the intended medical application. Since here we used only data from conceptual models we refrained from fully optimizing the machine learning methods applied. The fact that already a straight-forward application of known algorithms and architectures provided very good results for the considered reconstruction tasks is very promising and encourages to address in future work extended tasks (including other variables, like calcium concentration, mechanical stress and strain, etc.) and reconstruction tasks with more realistic synthetic data (from 3D models, for example) combined with experimental measurements.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation. The source code will be available at https://gitlab.gwdg.de/sherzog3/reconstructing-cardiac-excitation-waves.git.

Author Contributions

SH performed the CAE simulations and RZ and JA did the ESN modelling. All authors designed the study, analyzed the results, and wrote the manuscript.

Funding

SH acknowledges funding by the International Max Planck Research Schools of Physics of Biological and Complex Systems. UP and SL acknowledge funding by the Max Planck Society, the German Center for Cardiovascular Research (DZHK) partner site Goettingen, and the SFB 1002 Modulatory Units in Heart Failure (Project C03).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

SH and UP thank Florentin Wörgötter and Thomas Lilienkamp for continuous support and inspiring scientific discussions.

Appendix 1: solution of the electro-mechanical dynamics

The differential equations from the extended Aliev-Panfilov model in Eqs. A1–A3 have been integrated using the forward Euler method

where i, j are the indices of the grid points and h denotes the spacing constant between the cells.

For the excitation variable u in the electrical part of the simulation, no-flux boundary conditions have been used which were imposed by setting the two outermost cells to the same value, i.e.,

with the total force

A padding layer of ten electrically inactive cells was implemented outside the electrical grid to account for boundary effects in the mechanical network. In addition, the active stress variable

To improve numerical accuracy for each time step

References

1. Gray, RA, Pertsov, AM, and Jalife, J. Spatial and temporal organization during cardiac fibrillation. Nature (1998). 392:75–8. doi:10.1038/32164

2. Davidenko, JM, Pertsov, AV, Salomonsz, R, Baxter, W, and Jalife, J. Stationary and drifting spiral waves of excitation in isolated cardiac muscle. Nature (1992). 355:349–51. doi:10.1038/355349a0

3. Witkowski, FX, Leon, LJ, Penkoske, PA, Giles, WR, Spano, ML, Ditto, WL, et al. Spatiotemporal evolution of ventricular fibrillation. Nature (1998). 392:72–82. doi:10.1038/32170

4. Efimov, IR, Nikolski, VP, and Salama, G. Optical imaging of the heart. Circ Res (2004). 95:21–33. doi:10.1161/01.RES.0000130529.18016.35

5. Rosenzweig, S, Scholand, N, Holme, HCM, and Uecker, M. Cardiac and respiratory self-gating in radial mri using an adapted singular spectrum analysis (ssa-fary). IEEE Trans Med Imag (2020). 39:3029–3041.

6. Otani, N, Luther, S, Singh, R, and Gilmour, R. Transmural ultrasound-based visualization of patterns of action potential wave propagation in cardiac tissue. Ann Biomed Eng (2010a). 38:3112–23.

7. Otani, N, Luther, S, Singh, R, and Gilmour, R. Methods and systems for functional imaging of cardiac tissueInternational Patent. London: World Intellectual Property Organization (2010b).

8. Provost, J, Lee, WN, Fujikura, K, and Konofagou, EE. Imaging the electromechanical activity of the heart in vivo. Proc Natl Acad Sci USA (2011). 108:8565–70. doi:10.1073/pnas.1011688108

9. Bers, DM. Cardiac excitation-contraction coupling. Nature (2002). 415:198–205. doi:10.1038/415198a

10. Bourgeois, EB, Bachtel, AD, Huang, J, Walcott, GP, and Rogers, JM. Simultaneous optical mapping of transmembrane potential and wall motion in isolated, perfused whole hearts. J Biomed Optic (2011). 16:096020. doi:10.1117/1.3630115

11. Zhang, H, Iijima, K, Huang, J, Walcott, GP, and Rogers, JM. Optical mapping of membrane potential and Epicardial deformation in beating hearts. Biophys J (2016). 111:438–51. doi:10.1016/j.bpj.2016.03.043

12. Christoph, J, Schröder-Schetelig, J, and Luther, S. Electromechanical optical mapping. Prog Biophys Mol Biol (2017). 130:150–69. doi:10.1016/j.pbiomolbio.2017.09.015

13. Christoph, J, Chebbok, M, Richter, C, Schroeder-Schetelig, J, Stein, S, Uzelac, I, et al. Electromechanical vortex filaments during cardiac fibrillation. Nature (2018). 555:667–72. doi:10.1038/nature26001

14. Berg, S, Luther, S, and Parlitz, U. Synchronization based system identification of an extended excitable system. Chaos: An Interdisciplinary Journal of Nonlinear Science (2011). 21:033104. doi:10.1063/1.3613921

15. Lebert, J, and Christoph, J. Synchronization-based reconstruction of electromechanical wave dynamics in elastic excitable media. Chaos: An Interdisciplinary Journal of Nonlinear Science (2019). 29:093117. doi:10.1063/1.5101041

16. Hoffman, MJ, LaVigne, NS, Scorse, ST, Fenton, FH, and Cherry, EM. Reconstructing three-dimensional reentrant cardiac electrical wave dynamics using data assimilation. Chaos Interdiscipl J Nonlinear Sci (2016). 26:013107. doi:10.1063/1.4940238

17. Hoffman, MJ, and Cherry, EM. Sensitivity of a data-assimilation system for reconstructing three-dimensional cardiac electrical dynamics. Phil Trans Math Phys Eng Sci (2020). 378:20190388. doi:10.1098/rsta.2019.0388

18. Zimmermann, RS, and Parlitz, U. Observing spatio-temporal dynamics of excitable media using reservoir computing. Chaos Interdiscipl J Nonlinear Sci (2018). 28:043118. doi:10.1063/1.5022276

19. Herzog, S, Wörgötter, F, and Parlitz, U. Data-driven modeling and prediction of complex spatio-temporal dynamics in excitable media. Front Appl Math Stat (2018). 4:60. doi:10.3389/fams.2018.00060

20. Herzog, S, Wörgötter, F, and Parlitz, U. Convolutional autoencoder and conditional random fields hybrid for predicting spatial-temporal chaos. Chaos (2019). 29:123116. doi:10.1063/1.5124926

21. Christoph, J, and Lebert, J. Inverse mechano-electrical reconstruction of cardiac excitation wave patterns from mechanical deformation using deep learning. Chaos (2020). 30:123134. doi:10.1063/5.0023751

22. Bueno-Orovio, A, Cherry, EM, and Fenton, FH. Minimal model for human ventricular action potentials in tissue. J Theor Biol (2008). 253:544–60. doi:10.1016/j.jtbi.2008.03.029

23. Clayton, R, Bernus, O, Cherry, E, Dierckx, H, Fenton, F, Mirabella, L, et al. Mathematical and modelling foundations, models of cardiac tissue electrophysiology: progress, challenges and open questions Prog Biophys Mol Biol (2011). 104:22–48. doi:10.1016/j.pbiomolbio.2010.05.008

24. Ten Tusscher, K, Noble, D, Noble, P, and Panfilov, AV. A model for human ventricular tissue. Am J Physiol Heart Circ Physiol (2004). 286:H1573–H1589.

25. Strain, MC, and Greenside, HS. Size-dependent transition to high-dimensional chaotic dynamics in a two-dimensional excitable medium. Phys Rev Lett (1998). 80:2306–9. doi:10.1103/PhysRevLett.80.2306

26. Lilienkamp, T, Christoph, J, and Parlitz, U. Features of chaotic transients in excitable media governed by spiral and scroll waves. Phys Rev Lett (2017). 119:054101.

27. Aliev, RR, and Panfilov, AV. A simple two-variable model of cardiac excitation. Chaos Solit Fractals (1996). 7:293–301. doi:10.1016/0960-0779(95)00089-5

28. Nash, MP, and Panfilov, AV. Electromechanical model of excitable tissue to study reentrant cardiac arrhythmias. Prog Biophys Mol Biol (2004). 85:501–22. doi:10.1016/j.pbiomolbio.2004.01.016

29. Göktepe, S, and Kuhl, E. Electromechanics of the heart: a unified approach to the strongly coupled excitation–contraction problem. Comput Mech (2009). 45:227–43. doi:10.1007/s00466-009-0434-z

30. Eriksson, TSE, Prassl, A, Plank, G, and Holzapfel, G. Influence of myocardial fiber/sheet orientations on left ventricular mechanical contraction. Math Mech Solid (2013). 18:592–606. doi:10.1177/1081286513485779

31. Bourguignon, D, and Cani, MP. Controlling anisotropy in mass-spring systems. Eurographics. Vienna: Springer (2000). p. 113–23. doi:10.1007/978-3-7091-6344-3_9

32. Jaeger, HGMD Report. The ‘echo state’ approach to analysing and training recurrent neural networks—with an erratum note. Kaiserslauten: German National Research Institute for Computer Science (2001). p. 43.

33. Cheng, Z, Sun, H, Takeuchi, M, and Katto, J. Deep convolutional autoencoder-based lossy image compressionPicture Coding Symposium, PCS 2018—Proceedings. London: Institute of Electrical and Electronics Engineers Inc. (2018). p. 253–7. doi:10.1109/PCS.2018.8456308

34. Lukoševičius, M. A practical guide to applying echo state networks. Lecture notes in computer science. Berlin: Springer (2012). p. 659–86. doi:10.1007/978-3-642-35289-8_36

35. Lukoševičius, M, and Jaeger, H. Reservoir computing approaches to recurrent neural network training. Comput Sci Rev (2009). 3:127–49. doi:10.1016/j.cosrev.2009.03.005

36. Yildiz, IB, Jaeger, H, and Kiebel, SJ. Re-visiting the echo state property. Neural Netw (2012). 35:1–9. doi:10.1016/j.neunet.2012.07.005

37. Jaeger, H, and Haas, H. Harnessing nonlinearity: predicting chaotic systems and saving energy in wireless communication. Science (2004). 304:78–80. doi:10.1126/science.1091277

38. Lu, Z, Pathak, J, Hunt, B, Girvan, M, Brockett, R, and Ott, E. Reservoir observers: model-free inference of unmeasured variables in chaotic systems. Chaos Interdiscipl J Nonlinear Sci (2017). 27:041102. doi:10.1063/1.4979665

39. Carroll, TL, and Pecora, LM. Network structure effects in reservoir computers. Chaos Interdiscipl J Nonlinear Sci (2019). 29:083130. doi:10.1063/1.5097686

40. Griffith, A, Pomerance, A, and Gauthier, DJ. Forecasting chaotic systems with very low connectivity reservoir computers. Chaos Interdiscipl J Nonlinear Sci (2019). 29:123108. doi:10.1063/1.5120710

41. Thiede, LA, and Parlitz, U. Gradient based hyperparameter optimization in echo state networks. Neural Network (2019). 115:23–9. doi:10.1016/j.neunet.2019.02.001

42. Haluszczynski, A, Aumeier, J, Herteux, J, and Rth, C. Reducing network size and improving prediction stability of reservoir computing. Chaos Interdiscipl J Nonlinear Sci (2020). 30:063136. doi:10.1063/5.0006869

43. Carroll, TL. Dimension of reservoir computers. Chaos Interdiscipl J Nonlinear Sci (2020). 30:013102. doi:10.1063/1.5128898

44. Fan, H, Jiang, J, Zhang, C, Wang, X, and Lai, YC. Long-term prediction of chaotic systems with machine learning. Phys Rev Res (2020). 2:012080. doi:10.1103/PhysRevResearch.2.012080

45. Parlitz, U, and Hornstein, A. Dynamical prediction of chaotic time series. Chaos Complex Lett (2005). 1:135–44.

46. Lu, Z, Hunt, BR, and Ott, E. Attractor reconstruction by machine learning. Chaos Interdiscipl J Nonlinear Sci (2018). 28:061104. doi:10.1063/1.5039508

47. Pathak, J, Hunt, B, Girvan, M, Lu, Z, and Ott, E. Model-free prediction of large spatiotemporally chaotic systems from data: a reservoir computing approach. Phys Rev Lett (2018). 120. doi:10.1103/physrevlett.120.024102

48. Parlitz, U, and Merkwirth, C. Prediction of spatiotemporal time series based on reconstructed local states. Phys Rev Lett (2000). 84:1890–3. doi:10.1103/physrevlett.84.1890

49. LeCun, Y, Boser, BE, Denker, JS, Henderson, D, Howard, RE, Hubbard, WE, et al. Handwritten digit recognition with a back-propagation network. In: DS Touretzky, editor. Advances in neural information processing systems, 2 Burlington, MA: Morgan-Kaufmann (1990). p. 396–404.

50. Ioffe, S, and Szegedy, C. Batch normalization: accelerating deep network training by reducing internal covariate shift. Proc Int Conf Mach Learn (2015). 37:448456. doi:10.1609/aaai.v33i01.33011682

51. Maas, AL, Hannun, AY, and Ng, AY. “Rectifier nonlinearities improve neural network acoustic models Speech and Language Processing.” In ICML workshop on deep learning for audio (2013). p. 3.

52. Hahnloser, RH, Sarpeshkar, R, Mahowald, MA, Douglas, RJ, and Seung, HS. Digital selection and analogue amplification coexist in a cortex-inspired silicon circuit. Nature (2000). 405:947.

53. Srivastava, N, Hinton, G, Krizhevsky, A, Sutskever, I, and Salakhutdinov, R. Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res (2014). 15:1929–58. doi:10.1109/iwcmc.2019.8766500

54. Dumoulin, V, and Visin, F. A guide to convolution arithmetic for deep learning (2016). arXiv e-printsarXiv:1603.07285

55. Bäuerle, A, and Ropinski, T. Net2vis: transforming deep convolutional networks into publication-ready visualizations (2019). arXiv preprint arXiv:1902.04394.

56. Zimmermann, R, and Thiede, L. easyesn (2020). Available at: https://github.com/kalekiu/easyesn.

57. Kingma, DP, and Ba, J. Adam: a method for stochastic optimization (2014). arXiv e-printsarXiv:1412.6980.

58. Abadi, M, Agarwal, A, Barham, P, Brevdo, E, Chen, Z, Citro, C, et al. TensorFlow: large-scale machine learning on heterogeneous systems (2015). Software available from tensorflow.org.

60. Rosser, JB. Nine-point difference solutions for poisson's equation. Comput Math Appl (1975). 1:351–60. doi:10.1016/0898-1221(75)90035-8

61. Scherer, POJ. Computational physics: simulation of classical and quantum systems. Berlin: Springer (2010). p. 147.

62. Chugh, SS, Havmoeller, R, Narayanan, K, Singh, D, Rienstra, M, Benjamin, EJ, et al. Worldwide epidemiology of atrial fibrillation: a Global Burden of Disease 2010 Study. Circulation (2014) 129:837–847. doi:10.1161/CIRCULATIONAHA.113.005119

63. Wolf, PA, Dawber, TR, Thomas, HE, and Kannel, WB. Epidemiologic assessment of chronic atrial fibrillation and risk of stroke: the Framingham study. Neurology (1978) 28:973–977.

Keywords: reservoir computing, convolutional autoencoder, image enhancement, cross-prediction, cardiac arrhythmias, excitable media, electro-mechanical coupling, cardiac imaging

Citation: Herzog S, Zimmermann RS, Abele J, Luther S and Parlitz U (2021) Reconstructing Complex Cardiac Excitation Waves From Incomplete Data Using Echo State Networks and Convolutional Autoencoders. Front. Appl. Math. Stat. 6:616584. doi: 10.3389/fams.2020.616584

Received: 12 October 2020; Accepted: 07 December 2020;

Published: 18 March 2021.

Edited by:

Axel Hutt, Inria Nancy - Grand-Est Research Centre, FranceReviewed by:

Petia D. Koprinkova-Hristova, Institute of Information and Communication Technologies (BAS), BulgariaMeysam Hashemi, INSERM U110 6 Institut de Neurosciences des Systèmes, France

Copyright © 2021 Herzog, Zimmermann, Abele, Luther and Parlitz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ulrich Parlitz, dWxyaWNoLnBhcmxpdHpAZHMubXBnLmRl

Sebastian Herzog

Sebastian Herzog Roland S. Zimmermann

Roland S. Zimmermann Johannes Abele

Johannes Abele Stefan Luther

Stefan Luther Ulrich Parlitz

Ulrich Parlitz