- Department of Mathematics, University of Oslo, Blindern, Norway

CARMA(p, q) processes are compactly defined through a stochastic differential equation (SDE) involving q + 1 derivatives of the Lévy process driving the noise, despite this latter having in general no differentiable paths. We replace the Lévy noise with a continuously differentiable process obtained via stochastic convolution. The solution of the new SDE is then a stochastic process that converges to the original CARMA in an L2 sense. CARMA processes are largely applied in finance, and this article mathematically justifies their representation via SDE. Consequently, the use of numerical schemes in simulation and estimation by approximating derivatives with discrete increments is also justified for every time step. This provides a link between discrete-time ARMA models and CARMA models in continuous-time. We then analyze an Euler method and find a rate of convergence proportional to the square of the time step for discretization and to the inverse of the convolution parameter. These must then be adjusted to get accurate approximations.

1. Introduction

Continuous-time autoregressive moving-average processes, CARMA in short, represent the continuous-time version of the well-known ARMA models. Because of their linear structure, they are convenient for modeling empirical data, hence they find application in several fields, particularly in finance. Indeed, with a Lévy process as a driver for the noise, one obtains a rich class of possibly heavy-tailed continuous-time stationary processes, and such heavy tails are frequently observed in finance [1]. In Barndorff-Nielsen and Shepard [2], for example, a Lévy-driven Ornstein-Uhlenbeck process (corresponding to a CAR(1)) is employed in a stochastic volatility model, and [3] extend the idea to a wider class of Lévy-driven CARMA processes with non-negative kernel, allowing for non-negative, heavy-tailed processes with a larger range of autocovariance functions.

In view of financial applications, Brockwell et al. [4] derive efficient estimates for the parameters of a non-negative Lévy-driven CAR(1) process, and Brockwell et al. [5] generalize the technique to higher-order CARMA processes with a non-negative kernel. In Benth et al. [6] and Benth and Benth [7], the authors exploit the link between discrete-time ARMA and continuous-time ARMA to model the observed processes in a continuous manner, starting from discrete-time observations-based estimates, in the context of energy markets and weather derivatives. It is also worth mentioning that CARMA processes allow us to construct a wide range of long-memory processes, as shown, e.g., in Marquardt [8] and Marquardt and James [9].

Among the several financial applications, in Paschke and Prokopczuk [10, 11] a CARMA process is employed for the long-term equilibrium component in the two-factors spot price model introduced by Schwartz and Smith [12] (which uses an Ornstein-Uhlenbeck process), while Benth and Koekebakker [13] use a CARMA process for the short-term factor instead. In Benth et al. [14] and García et al. [15], a CARMA process models the spot price in the electricity market, while in Benth et al. [6] and Benth and Benth [7] it models weather variables, such as temperature and wind speed. Finally, Andresen et al. [16] apply CARMA processes to interest rates modeling and [17] to the dynamics of regional ocean freight rates.

Formally, CARMA(p, q) processes are compactly defined through a stochastic differential equation (SDE) involving q + 1 derivatives of the Lévy process driving the noise, despite the latter having in general no differentiable paths. Numerical schemes, such as the Euler scheme, are also applied both for simulations and estimation of CARMA processes. These schemes approximate derivatives with discrete increments with respect to a time step Δ, and their convergence for Δ approaching zero is indeed a well-studied topic. However, this opens up for a discrepancy since it is not mathematically clear what happens when the time step approaches zero, as, in general, the derivatives of a Lévy process are not well-defined.

We give a mathematical justification for the representation via SDE of a CARMA process, and, consequently, for the discrete approximations used for simulating and estimating the continuous-time dynamics of CARMA processes. This is done by replacing the Lèvy process L driving the noise with a process smooth enough so that the SDE makes mathematical sense. For ε > 0 we construct a continuously differentiable function Lε, which converges in the L2 sense to the original Lévy process in the space [0, T] × Ω, for T < +∞. More precisely, the process Lε is constructed via stochastic convolution of L with a smooth sequence of functions {ϕε}ε>0 depending on the convolution parameter ε > 0 and with first derivative converging to the delta-Dirac function when ε approaches zero. We show a rate of convergence of order 1.

For the Lε continuous differentiable, the framework developed solves the differentiability issue for CAR(p) processes in which no more than the first derivative of the driving noise process is required. We can, however, make use of the result in Brockwell et al. [5, Proposition 2]. This states that, under some conditions on the coefficients of the SDE, a CARMA(p, q) process can be decomposed into a sum of p dependent CAR(1) processes. The approach can then be extended to CARMA(p, q) processes of every order q ≥ 0 by considering p different approximations based on the continuous differentiable Lε and taking their sum. This justifies an Lε-only continuous differentiable, as the general case q ≥ 0 is still covered.

We thus replace the Lèvy process L with Lε in the SDE defining the CARMA process X. This gives a different SDE, which depends on ε, whose solution is the new process Xε. We show that Xε converges to X in L2([0, T] × Ω) and that the convergence is of order 2.

The ε-approximation of the Lévy process justifies the use of an Euler scheme for every time step Δ. We present a possible approach for simulating Lε starting from a simulated path of the Lèvy process L and then Xε starting from Lε. This, for a fixed time interval Δ, gives a new noise process and a third process . We show a rate of convergence for both and proportional to Δ2 and inversely so to ε. This highlights that the two parameters Δ and ε must be chosen in a way to get accurate simulations and prevent them from exploding.

We finally consider a possible Euler scheme approach for estimating the CARMA dynamics starting from the simulated time series. It is worth mentioning that the process Lε is not a Lèvy process. Due to the convolution operator, it indeed fails on the crucial property of independent increments. We show in particular that the increments depend on the ratio , and, as this ratio becomes smaller, the increments become more dependent on each other. This has a negative drawback when it comes to estimation. We find indeed that the Euler scheme recovers the true dynamics of the CARMA process only if ε is smaller than Δ.

All the results are deeply investigated and are also confirmed by numerical experiments. In particular, we consider two different Lèvy processes, a Brownian motion and a normal inverse Gaussian (NIG) process, and CARMA processes of two different orders, a Gaussian CAR(1) and a Gaussian CARMA(3, 2). Crucial to all the numerical simulations is the convolution property of both the Gaussian and the NIG distribution function, which allows us to compare path-wise different time grids by simulating only one path of the Lèvy process.

Since CARMA processes have significant application within finance, from the modeling of the electricity spot price to the modeling of wind speed and temperature, with the present study, we justify its mathematical formulation and application in a rigorous way. Moreover, this may open for further applications in the context of sensitivity analysis with respect to paths of the driving noise process. Indeed, since the new process Lε admits derivatives, one could potentially analyze the sensitivity of the CARMA process, and hence of the variable of interest (e.g., the spot price), with respect to the Lévy noise in a path-wise sense. It might also be that when observing a signal, the driving-noise process is more regular than, e.g., a Brownian motion. This might then be modeled by the regular process here introduced and studied.

The rest of the paper is organized as follows. In section 2 we construct the approximating process Lε by stochastic convolution, and we study the rate of convergence. In section 3 we introduce CARMA processes and their spectral representation. We then show the convergence of the new process Xε to the original CARMA process, and we briefly recover the general case q > 0. In section 4 we study an Euler scheme to simulate the process Xε starting from the simulations of Lε, and then to calibrate the parameters of the CARMA dynamics starting from the discrete time series. Appendix A in Supplementary Material contains the proofs of the main results.

2. Construction and Convergence Results

Let be a probability space. We consider a one-dimensional Lèvy process L, which we assume to be a square-integrable martingale with characteristic triplet (κ, Σ, ν), ν being the Lévy measure. From Cont and Tankov [18, Proposition 3.13], for every t > 0 the variance of L(t) is of the form

Moreover, from Cont and Tankov [18, Proposition 3.18], we also know that L is a martingale if, and only if,

We now consider a sequence of measurable functions {ϕε}ε>0 :[0, T] → ℝ such that, for every ε > 0, ϕε is twice continuously differentiable on the interval [0, T] and ϕε(0) = 0. We then construct the process {Lε(t), t ≥ 0} by stochastic convolution of the Lévy process L with ϕε:

Lε is well-defined in [0, T] for every T ∈ ]0, +∞[, and, in particular, the condition ϕε(0) = 0 is used to prove the following result, where the symbol * denotes the convolution operator.

Lemma 2.1. The process {Lε(t), t ≥ 0} in Equation (2.3) can be expressed by

Proof: See Appendix A.1 in Supplementary Material.

For , since ϕε(0) = 0, we write that so that ϕε is twice continuously differentiable for any continuously differentiable ψε. We introduce the function , and for every ε > 0 we define

Let us notice that , while the total area of the mollifier function usually equals 1. However, we construct the process {Lε(t), t ≥ 0} by stochastic convolution restricted to the interval [0, t], and, denoting by Φ the standard Gaussian cumulative distribution function, the area of ψε restricted to [0, t] is

so that the unitary total integral property for ψε is preserved in the limit.

By differentiating Equation (2.4) with respect to t, according to the Leibniz rule, we get

This shows that , though for any n > 1, because of the term L(t) which is not differentiable, unless , which is not our case. Hence, we start with the Lévy process L, which is not differentiable, and we end up with Lε, which is continuously differentiable. For a CARMA(p, q) process, we need . From Equation (2.7), this means to have for every 0 ≤ m ≤ q + 1, being the m-th derivative of ϕε. However, for our particular choice of the convolution function ψε, these conditions of null derivatives in zero are not satisfied. Another possible choice would have been, for example, to take a sequence of gamma densities with scale parameter θε converging to 0 as ε converges to 0 and shape parameter k > 0, such as For k ≥ q + 1, the first q derivatives of dε are indeed null in t = 0.

With Lε constructed above, we thus address the differentiability issue in the stochastic differential equation defining the CARMA process only for q = 0, namely when the process is a CAR(p). However, Brockwell et al. [5, Proposition 2] shows that, under some conditions, we can express a CARMA(p, q) process as the sum of p dependent and possibly complex-valued CAR(1) processes. The idea is then to construct the ε-approximation with Lε in Equation (2.3) for each of the p processes of CAR(1)-type and obtain the ε-CARMA(p, q) process by summing them up. We can thus deal with q > 0, but it must be done separately. With the gamma densities mentioned above, for example, the special treatment for q > 0 would not be necessary. However, we started this analysis from CAR(p) processes, and in this setting the scaled Gaussian densities ψε make the job. We shall analyze the case q > 0 in section 3.2.

A first important fact about Lε in Equation (2.3) is that the convolution operation does not preserve the properties of the Lévy process L.

Proposition 2.2. The process {Lε(t), t ≥ 0} is not a Lévy process.

Proof: See Appendix A.2 in Supplementary Material.

By Proposition 2.2, for L = B, a Brownian motion, the approximated process Bε is no longer a Brownian motion. We can, however, show that the distribution of B is preserved.

Lemma 2.3. For every ε > 0, the process {Bε(t), t ≥ 0} has Gaussian distribution with zero mean and variance

Proof: Bε is defined in Equation (2.3) as the stochastic integral of ϕε with respect to the Brownian motion B. Then Bε has Gaussian distribution with zero mean and variance , which can be explicitly calculated.□

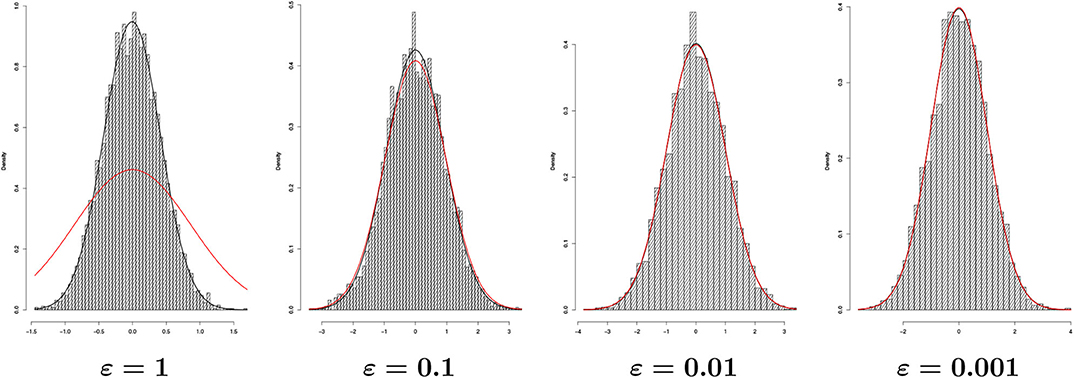

Let us point out that Lemma 2.3 is valid for every choice of the functions {ϕε}ε>0, with the obvious modification for the variance formula . Moreover, from Lemma 2.3, we get that , as one would expect. Figure 1 shows indeed the typical bell-shape for a Gaussian distribution. We sampled the process Bε at time t = 1 for 5, 000 times and different values of ε. The empirical (black line) and the theoretical (red line) distributions (according to Lemma 2.3) are also shown. For ε = 1, there is a certain discrepancy between empirical and theoretical distributions, which may be due to the fact that for big values of ε the increments of Bε are more dependent from each other than for smaller values of ε. The same phenomena will be encountered in section 4.3.

Figure 1. Histograms of Bε(t) for t = 1 after 5, 000 simulations with Δtrue = 2−10 (n = 1, 024 points) and different ε's. Together, we have empirical (black line) and theoretical distribution (red line).

2.1. Convergence of the Lévy Approximation

We prove now the convergence of the process Lε to the Lévy process L.

Theorem 2.4. The process {Lε(t), t ≥ 0} converges to {L(t), t ≥ 0} in L2([0, T] × Ω).

Proof: See Appendix A.3 in Supplementary Material. The proof is based on some results from Benth et al. [19, Appendix A].

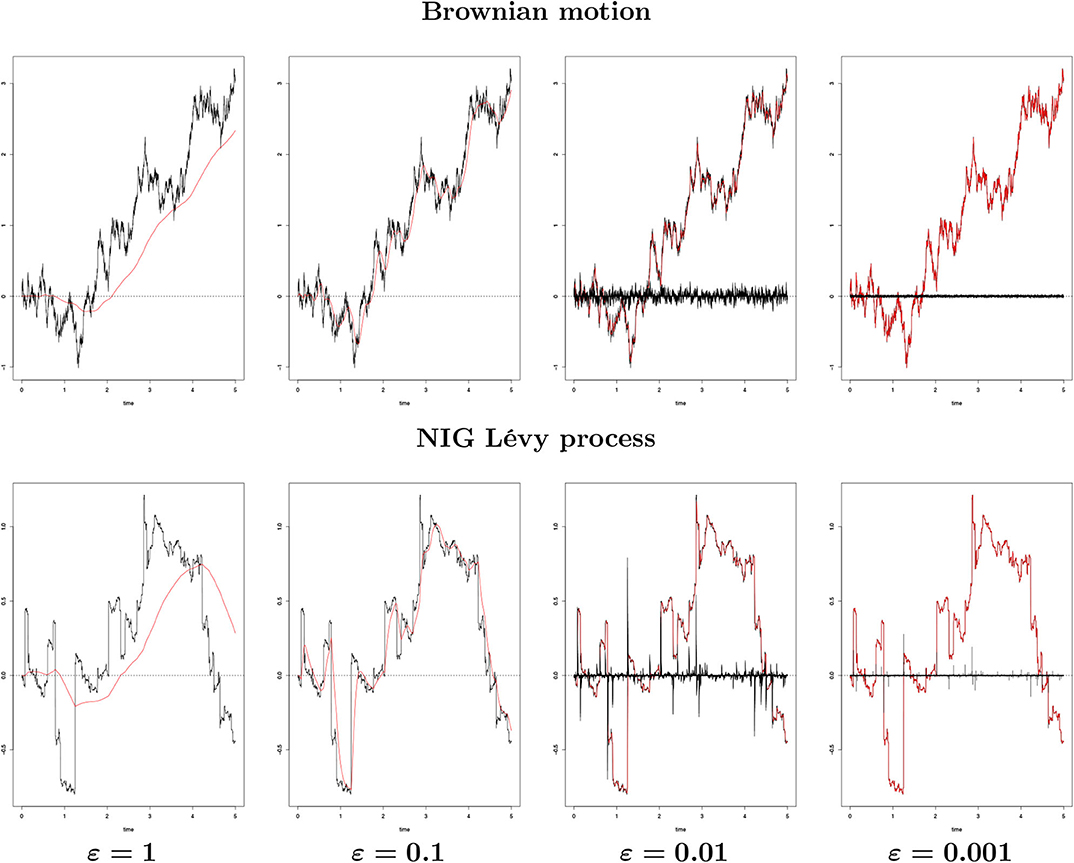

Figure 2 shows graphically the convergence stated in Theorem 2.4 for a Brownian motion and for a normal inverse Gaussian process with parameters (α, β, δ, μ) = (1, 0, Δtrue, 0), Δtrue being the time step used in the Euler discretization (see section 4). As ε decreases, the error between Lε and L decreases. Even with a large ε (ε = 1), the process Lε is able to capture the shape of L. It seems however to be slightly shifted to the right.

Figure 2. {L(t), 0 ≤ t ≤ 5} (black line) against {Lε(t), 0 ≤ t ≤ 5} (red line) for different ε's generated from a single path with Δtrue = 2−10 (n = 5, 120 points) for a Brownian motion and a NIG process with parameters (α, β, δ, μ) = (1, 0, Δtrue, 0). The black bar lines in the four rightmost images represent the difference between L and Lε.

We compute explicitly the squared error between Lε and L at the terminal point t = T.

Lemma 2.5. For every ε > 0 and T < +∞, the squared error between Lε and L is given by

for σ2 in Equation (2.1) and φ and Φ respectively the density and the cumulative distribution functions of a standard Gaussian random variable.

Proof: The proof applies the integration by parts formula several times.□

The following lemma concerning the cumulative Gaussian distribution function Φ is useful.

Lemma 2.6. The function Φ can be expanded by integration by parts into the series

!! denoting the double factorial. Moreover, for large x, the asymptotic expansion is also valid

We now use the expansion (2.8) to calculate the rate of convergence of Lε to the Lévy process L, starting from Lemma 2.5. The expansion (2.9) will be used further in the paper.

Proposition 2.7. For every ε > 0 and T < +∞, there exists a constant K1 > 0 such that

Proof: See Appendix A.4 in Supplementary Material.

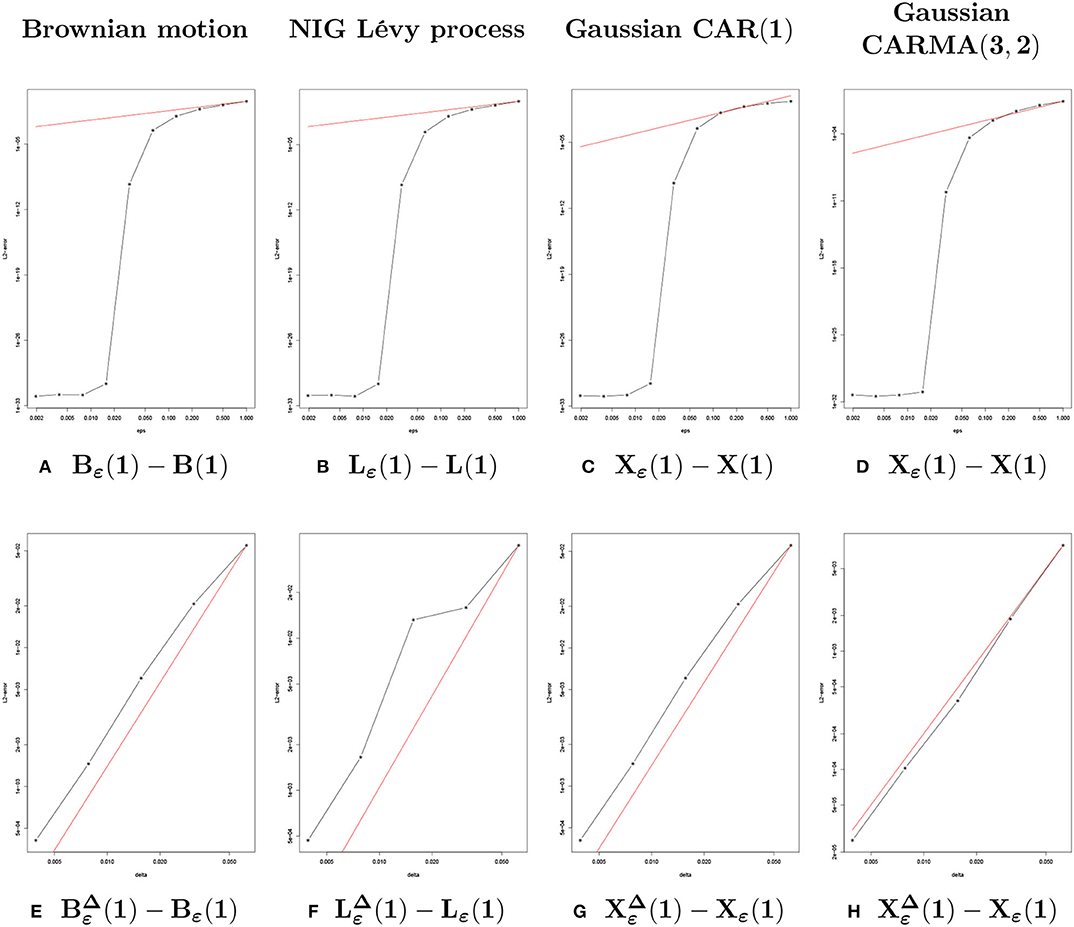

Similarly to Lemma 2.3, the rate of convergence found in Proposition 2.7 can be calculated explicitly for every choice of the functions {ϕε}ε>0, possibly obtaining a different order. The L2 norm of the approximation error studied in Proposition 2.7 as a function of ε is in Figure 3A for the Brownian motion and in Figure 3B for the NIG Lévy process. In the next section we shall introduce CARMA processes in a more rigorous way and study their ε-approximation.

Figure 3. Log-log plots for the squared approximation error after 1, 000 simulations. Above, the error is a function of ε with fixed Δ = 2−3. Below, the error is a function of Δ with fixed ε = 0.01.

3. CARMA Representation and Convergence Results

For p > q and t ≥ 0, a second-order Lévy-driven continuous-time ARMA process of order (p, q) is formally defined by the stochastic differential equation

where P and Q are the characteristic polynomials of the CARMA process of the form

with aj ≥ 0, j = 1, …, p, and ap > 0, bq = 1 and bj = 0 for q < j < p. Equation (3.1) is, however, usually interpreted by means of its state-space representation

where ⊤ denotes the transpose operator, and

By Itô's rule, the solution of the first equation in (3.3) at time t ≥ s is given by

Moreover, from Brockwell et al. [5, Proposition 1], if the eigenvalues λ1, …, λp of A satisfy the condition

then the process {Y(t), t ≥ 0} is strictly stationary and the CARMA(p, q) process is given by

The function g is referred to as the kernel function associated to the CARMA process. We also notice that the process X is a causal function of L, in the sense that X(t) is independent of {L(s) − L(t), s ≥ t} for all t ≥ 0. We shall assume that condition (3.4) is satisfied, referring to it as the causality condition or stationarity condition.

In a similar way, we introduce the state-space representation for Xε. This is the process obtained by replacing the Lévy process L with the smoother Lε, namely

The solution to Equation (3.7) can be expressed in terms of the kernel function g by

We point out that the kernel function g in Equation (3.6) is continuously differentiable. Its value at x = 0 is given in the following lemma, where δ denotes the Kronecker delta.

Lemma 3.1. For p > q, the value of the kernel function at x = 0 is equal to g(0) = δp,q+1.

Proof: The proof follows by direct verification.□

From the basic algebra analysis, if A has distinct eigenvalues, then the matrix exponential eAx can be decomposed into the matrix product

Λ and V being the eigenvalues and eigenvectors matrices. From Brockwell et al. [5, Remark 2], the eigenvalues λ1, …, λp of the matrix A coincide with the roots of the polynomial P in Equation (3.2), and the i-th eigenvector is of the form . From Brockwell et al. [5, Proposition 2], we also get that , where

P′ being the derivative of the polynomial P. We then find the following spectral representations for the kernel function g and its first derivative h(x): = g′(x).

Lemma 3.2. The functions g and h can be expressed in terms of the spectral representations:

for γi in Equation (3.10), and , j = 1, …, p.

Proof: The proof comes from Equations (3.6) and (3.9) by writing explicitly the matrix product and .□

These representations will be used for proving the convergence results in the next section.

3.1. Convergence of the CARMA Approximation

We prove the convergence of Xε to X. We first start with the following preliminary result.

Lemma 3.3. The processes {X(t), t ≥ 0} and {Xε(t), t ≥ 0} can be rewritten by

Here h(x) = g′(x) and , for g(0) given in Lemma 3.1.

Proof: See Appendix A.5 in Supplementary Material.

From Lemma 3.2, we also derive the spectral representation

and prove the first convergence result.

Proposition 3.4. For every x > 0, the function hε(x) converges pointwise to h(x).

Proof: See Appendix A.6 in Supplementary Material.

In Proposition 3.4, we do not require the limit to hold in x = 0. However, this does not affect the convergence in L2 since X and Xε are both integral functions, and {x = 0} is a set of measure 0. Indeed, we can prove the required convergence for Xε.

Theorem 3.5. The process {Xε(t), t ≥ 0} converges to {X(t), t ≥ 0} in L2([0, T] × Ω).

Proof: See Appendix A.7 in Supplementary Material.

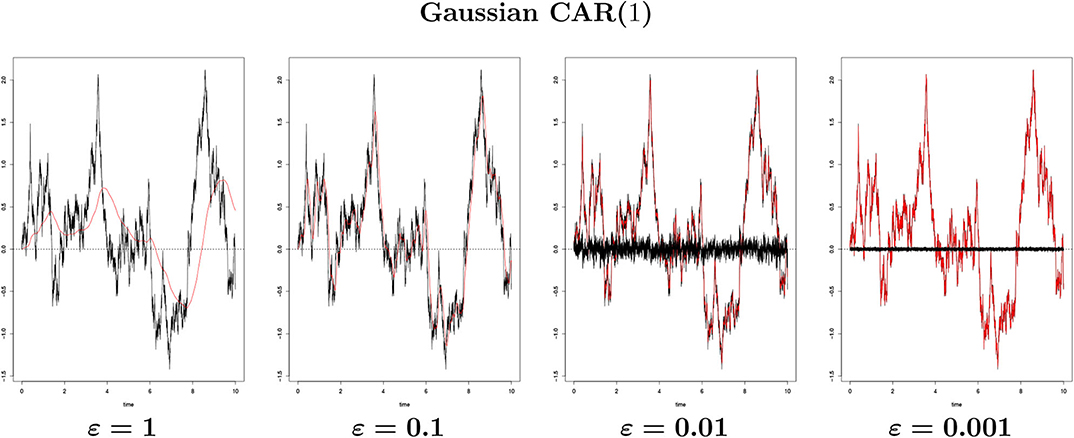

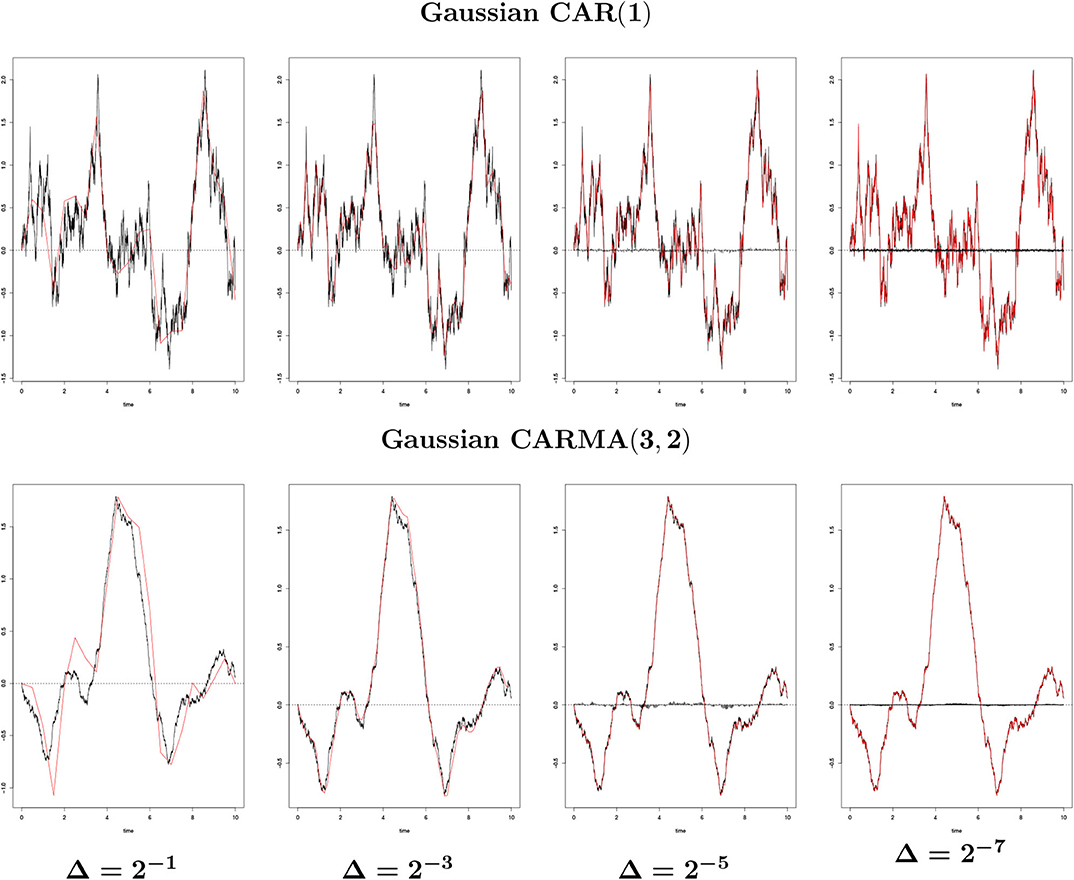

Figure 4 shows the convergence proven in Theorem 3.5 for a Gaussian CAR(1) process with parameter a = 0.6. We notice that Xε captures the shape of X even with ε large, and we observe a right-shifting of Xε with respect to X, as noticed already for the Lévy process approximation.

Figure 4. The Gaussian CAR(1) process {X(t), 0 ≤ t ≤ 10} (black line) with parameter a = 0.6 against {Xε(t), 0 ≤ t ≤ 10} (red line) for different ε's generated from a single path with Δtrue = 2−10 (n = 10, 240 points). The black bar lines in the two rightmost images represent the difference between X and Xε.

We now study explicitly the rate of convergence of Xε to the CARMA process X.

Proposition 3.6. For every ε > 0 and T < +∞, there exists a constant K2 > 0 such that

Proof: See Appendix A.8 in Supplementary Material.

The rate of convergence obtained in Proposition 3.6 is shown in Figure 3C for a CAR(1).

We point out that the results in this section have been stated and proved for a general CARMA(p, q) process. The convergence results are indeed valid for every q ≥ 0, even when Lε is not smooth enough to make the SDE having mathematical sense. We then present them for the general case. Since the same ideas apply to other families of convolution functions, such as the Gamma densities previously mentioned, this is useful for possible further applications.

We end this section with a distribution property holding for Xε when the original noise is driven by a Brownian motion. If the CARMA process X is driven by a Brownian motion B, then X has Gaussian distribution. We show that the same holds for Xε. This is indeed not a straightforward result since the process Bε is no longer a Brownian motion by means of Proposition 2.2. A rigorous proof is thus necessary.

Proposition 3.7. For every ε > 0, the process {Xε(t), t ≥ 0} has Gaussian distribution.

Proof: See Appendix A.9 in Supplementary Material.

3.2. When q > 0

The process Lε is continuously differentiable but is not twice continuously differentiable. However, at the right-hand side of the stochastic differential equation (3.1), we require q + 1 derivatives of the driving noise process. For the general case q > 0 we need the following result.

Proposition 3.8. Under the causality condition (3.4) and if the matrix A has distinct eigenvalues, the CARMA(p, q) process X can be expressed by the sum

Here, the X(r) are dependent and possibly complex-valued CAR(1) processes with .

Proof: See [5, Proposition 2].

By means of Proposition 3.8, starting from Lε in Equation (2.3), the idea is to construct p-approximating processes and obtain Xε as the sum of them, namely,

In particular, from Itô's calculus, we can prove that, for any r = 1, …, p and t ≥ 0, the process X(r), respectively , satisfies the stochastic differential equation

that will be used for numerical purposes in section 4. In Equation (3.12), we write ε in parentheses since it applies to both X(r) and .

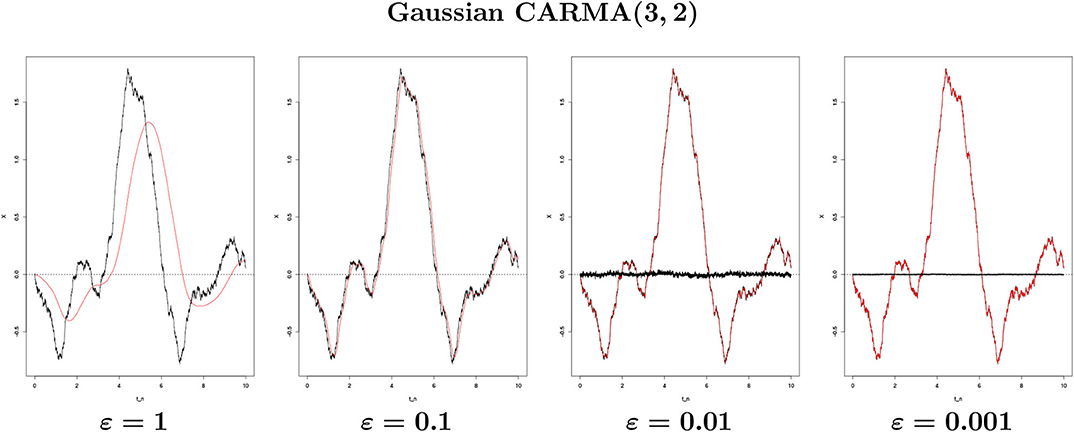

In Figure 5 we consider a Gaussian CARMA(3, 2) process with parameters (a1, a2, a3) = (2.043, 1.339, 0.177) taken from [6] where a CAR(3) is used to model the daily average temperature dynamics in Stockholm (Sweden) and (b0, b1, b2) = (2.0, 0.8, 1.0). In Figure 3D, we observe the rate of convergence as a function of ε.

Figure 5. The Gaussian CARMA(3, 2) process {X(t), 0 ≤ t ≤ 10} (black line) with parameters (a1, a2, a3, b0, b1, b2) = (2.043, 1.339, 0.177, 2.0, 0.8, 1.0) against {Xε(t), 0 ≤ t ≤ 10} (red line) for different ε's generated from a single path with Δtrue = 2−10 (n = 10, 240 points). The black bar lines in the two rightmost images represent the difference between X and Xε.

4. Numerical Schemes and Results

We consider a possible approach to simulate X and Xε for some CARMA parameters, and we then estimate these parameters starting from the simulated time series. In particular, we need first to simulate L, then Lε, and only afterwards can we simulate Xε. For n > 0, we shall consider the partition such that is constant, that is , and for each j = 0, …, n.

4.1. The Lévy Simulations

By definition, for a fixed time interval Δ, the increments of the Lèvy process , j = 1, …, n, are independent and identically distributed random variables following a certain distribution, which depends only on the time increment Δ. For example, for L = B, the increments have all Gaussian distribution with zero mean and variance Δ. A possible approach to simulate Lε in an exact way is to find the distribution of Lε(t), starting from the distribution of L(t). For example, for L = B, by means of Lemma 2.3, we can simulate Bε sampling directly from a Gaussian distribution with zero mean and variance in Lemma 2.3. However, with this approach, L and Lε are from two different ω's in Ω, and comparing the two paths we would not get the error measure required. We then need an alternative approach that simulates Lε and L from the same ω ∈ Ω.

From Equation (2.3), Lε is the stochastic integral of ϕε with respect to the Lévy process L. From the definition of the stochastic integral as a limit of approximating Riemann-Stieltjes sums [20], for t ∈ Π(n) such that , for m = m(t) and 1 ≤ m ≤ n, we approximate Lε(t) by approximating the integral with a sum, namely

where . The approximation scheme (4.1) adds an error to the simulation. It, however, constructs Lε starting from the simulations of L, allowing to compare the two paths for different Δ's. In the next proposition, we study the approximation error.

Proposition 4.1. For every Δ > 0, ε > 0 and T < +∞, there exists a constant K3 > 0 such that the simulation error is bounded by

Proof: See Appendix A.10 in Supplementary Material.

With Proposition 4.1, we estimated an upper bound for the error added by the Euler scheme for simulating the process Lε by means of the process . In particular, it shows that, for a fixed ε > 0, the simulation error decreases at the decreasing of the time step Δ, as we would expect. In Kloeden et al. [21, section 9.3], the authors study the rate of convergence for the Euler scheme when applied to an Itô process, with respect to the absolute error criterion, finding a strong rate of convergence equal to 1/2. Here, in line with the rest of the paper, we consider the squared error in Proposition 4.1 instead. This gives a rate of convergence equal to 2, corresponding to a strong order 1. From Proposition 4.1, we also notice that the bound depends on the inverse of ε, meaning that, for ε going to zero, the simulation error might explode.

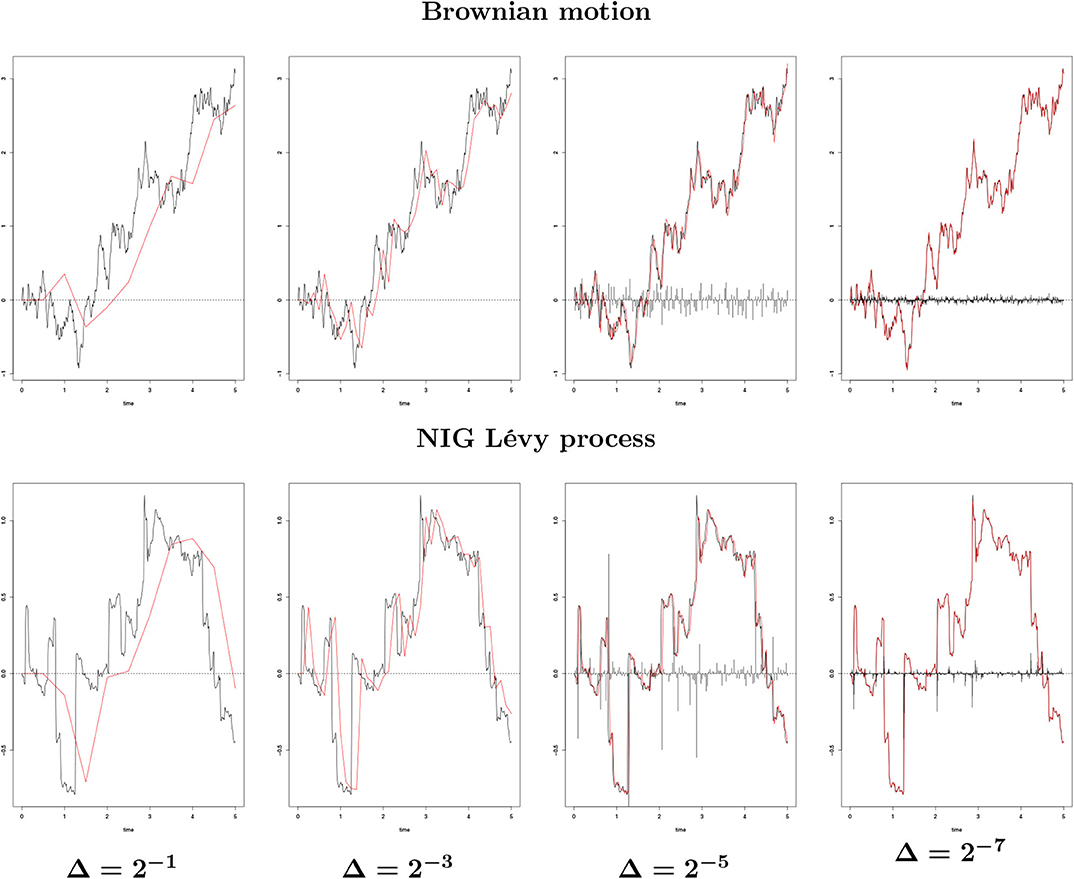

Figure 5 shows the convergence of the Euler scheme (4.1) for a Brownian motion and for the normal inverse Gaussian process introduced in section 2.1. Since an exact simulation is not possible, to generate Bε, we consider the scheme (4.1) with a small time step Δtrue = 2−10. Moreover, since we want to compare Bε and path-wise for different values of Δ, Bε and must be related to the same ω ∈ Ω, that is, to the same realization of the Brownian motion B. To get this, we exploit the following well-known property: given , for i = 1, …, k, independent Gaussian random variables, their sum follows the distribution . Thus, we start by simulating the true process Bε for a certain time step Δtrue << 1. For this, we sample the increments from a Gaussian distribution with zero mean and variance Δtrue and obtain Bε as in Equation (4.1). Then, we choose a time step Δ multiple of Δtrue, namely, Δ = kΔtrue for a certain k ∈ ℕ. The Brownian motion path to be used in Equation (4.1) for simulating is then obtained by summing up the increments of B (above simulated) at groups of k elements. These increments have indeed Gaussian distribution with zero mean and variance , as required. In Figure 6, we considered Δtrue = 2−10 and Δ ∈ {2−7, 2−5, 2−3, 2−1}. We see that, for a fixed ε, as Δ decreases, the error between and Bε decreases. Even with a large Δ (Δ = 2−1), the process captures the shape of Bε. In Figure 3E we show the rate of convergence of the Euler scheme proven in Proposition 4.1 as function of time step Δ and for a fixed ε (ε = 0.01).

Figure 6. {Lε(t), 0 ≤ t ≤ 5} (black line) against (red line) for different Δ's generated from a single path with ε = 0.01, for a Brownian motion and an NIG process with parameters (α, β, δ, μ) = (1, 0, Δtrue, 0). The black bar lines in the four rightmost images represent the difference between Lε and .

Similarly, for the NIG Lévy process, to simulate and compare Lε with path-wise, we use the same idea as in the Brownian motion case. We can indeed exploit the convolution property of the NIG distribution (see [22]): given Xi ~ NIG(α, β, δi, μi), for i = 1, …, k, independent normal inverse Gaussian random variables with common parameters α and β, but individual scale-location parameters δi and μi, their sum follows . In section 2.1, we introduced NIG distribution with parameters (1, 0, Δtrue, 0), for Δtrue being the time step used in the Euler discretization. As above, we thus simulate the true process Lε from Equation (4.1) by sampling the increments of L from a NIG distribution with parameters (1, 0, Δtrue, 0) for a certain Δtrue << 1. Then, we obtain by choosing Δ so that Δ = kΔtrue for a certain k ∈ ℕ, and summing up the increments of L (simulated above) at groups of k elements. These increments have indeed NIG distribution with parameters as required. We consider the same Δ's as for the Brownian motion. We notice that, for a fixed ε, captures the shape of Lε even with a large Δ. In Figure 3F we show the rate of convergence for the Euler scheme proved in Proposition 4.1 as function of the time step Δ and for a fixed ε (ε = 0.01).

From Proposition 2.7 and 4.1, we calculate the squared error for with respect to L.

Proposition 4.2. For every Δ > 0 and ε > 0 small enough, we get the following bound

Proof: This is a direct consequence of Proposition 2.7 and 4.1 by the inequality (a + b)2 ≤ 2(a2 + b2) for and b = Lε(T) − L(T).□

Proposition 4.2 shows that the squared error between and L decreases when Δ decreases. However, because of the term proportional to the inverse of ε, it might also explode for small values of ε. We then need to tune the parameters Δ and ε to find a combination that minimizes the error preventing it from taking large values.

4.2. The CARMA Simulations

It is possible to construct X and Xε starting from Equations (3.5) and (3.8) and considering the same kind of numerical approximation as done for Lε, that is, by replacing the integral with a suitable sum. However, to not add a second simulation error to the one already due to Lε, which is approximated by , we start from the state-space representations instead, Equations (3.3) and (3.7), respectively. By using the Euler scheme, according to [21, Chapter 9], we get (here we write ε in parenthesis since the equation holds both for X and Xε):

where , for j = 1, …, n. For the reasons already discussed, we apply the scheme (4.2) only for q = 0, that is, when b = e1. For q > 0, by means of Proposition 3.8, we instead apply the Euler scheme to the stochastic differential equation (3.12) for each of the p components of X(ε), and we get X(ε) as the sum of them, namely,

When simulating , we use the approximation in Equation (4.1), and this adds an error. We study the rate of convergence for to the true process Xε in the following proposition.

Proposition 4.3. For every ε > 0 and , there exist two constants K4, K5 > 0 such that the simulation error for the Euler scheme (4.2) is bounded by

Proof: See Appendix A.11 in Supplementary Material.

Let us notice that the bound on Δ in Proposition 4.3 has been introduced to obtain the estimate for the squared error. The scheme (4.2) works however for every Δ > 0.

In Figure 7 we show the convergence for the simulations of a Gaussian CAR(1) and a Gaussian CARMA(3, 2). In both cases we notice that for Δ decreasing, the Euler approximation converges to Xε, and, even with a large value of Δ (Δ = 2−1), captures the shape of Xε. In Figures 3G,H, we show the rate of convergence for the two processes as function of Δ2 and for a fixed value of ε (ε = 0.01).

Figure 7. {Xε(t), 0 ≤ t ≤ 10} (black line) against (red line) for different Δ's generated from a single path and ε = 0.01, for X a Gaussian CAR(1) with parameter a = 0.6 and a Gaussian CARMA(3, 2) with parameters (a1, a2, a3, b0, b1, b2) = (2.043, 1.339, 0.177, 2, 0.8, 1). The black bar lines in the four rightmost images represent the difference between Xε and .

From Proposition 3.6 and 4.3, we estimate the squared error for with respect to X.

Proposition 4.4. For every Δ > 0 and ε > 0 small enough, the following bound applies:

Proof: This is a direct consequence of Proposition 3.6 and 4.3 by the inequality (a + b)2 ≤ 2(a2 + b2) for and b = Xε(T) − X(T).□

4.3. CARMA Estimation

We introduce an approach to estimate the parameters (a1, …, ap, b0, …, bq) starting from the time series for {X(t), 0 ≤ t ≤ T} and {Xε(t), 0 ≤ t ≤ T} by applying the Euler scheme. Following Benth et al. [7, section 4.3], we focus on CAR models only, that is, on the process X(t) = Y1(t), for Y1(t) being the first coordinate of the vector Y(t) in Equation (3.3). Then, for a uniform time step Δ, we get the relation

ϵ(t) being the Lévy noise. The coefficients are defined as for i = 1, …, p, and, through the recursion , for j = 1, …, p−1 and i ≥ 2. We refer to Benth et al. [7, section 4.3] for more details. In particular, Equation (4.3) is a linear combination of Y1(t + pΔ), …, Y1(t) and the error term ϵ(t). Thus, we have an AR(p) representation model for Y1(t), and the parameters a1, …, ap can be estimated by means of relation (4.3) starting from the AR estimated parameters. We consider as examples p = 1 and p = 3. For p = 1, X(t) = Y1(t) is an Ornstein-Uhlenbeck process and Equation (4.3) becomes

which coincides with the Euler scheme (4.2) when p = 1. For p = 3, from Equation (4.3) we get

From the definition for , rearranging the terms, the last equation can be rewritten as

Then, one first estimates the AR coefficients θ1, θ2, θ3 and afterwards recover the CAR coefficients a1, a2, a3 by

This scheme holds for every Lévy process. To recover the case q > 0 different approaches have to be considered, see, e.g., [23] and [24].

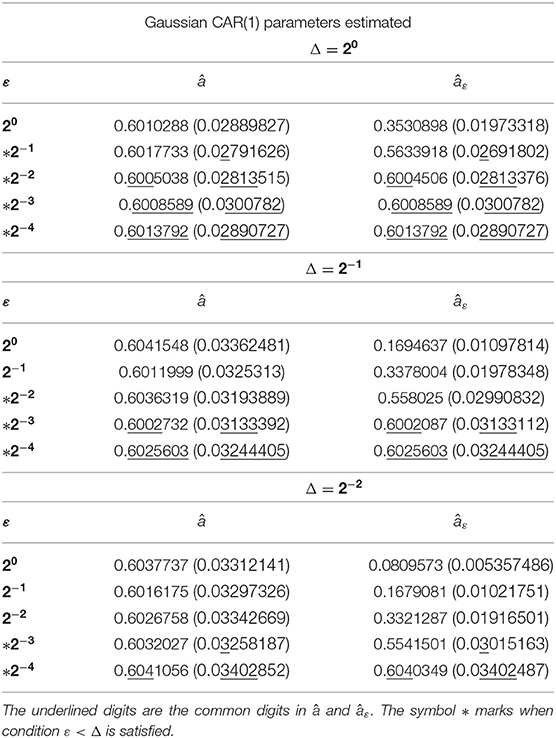

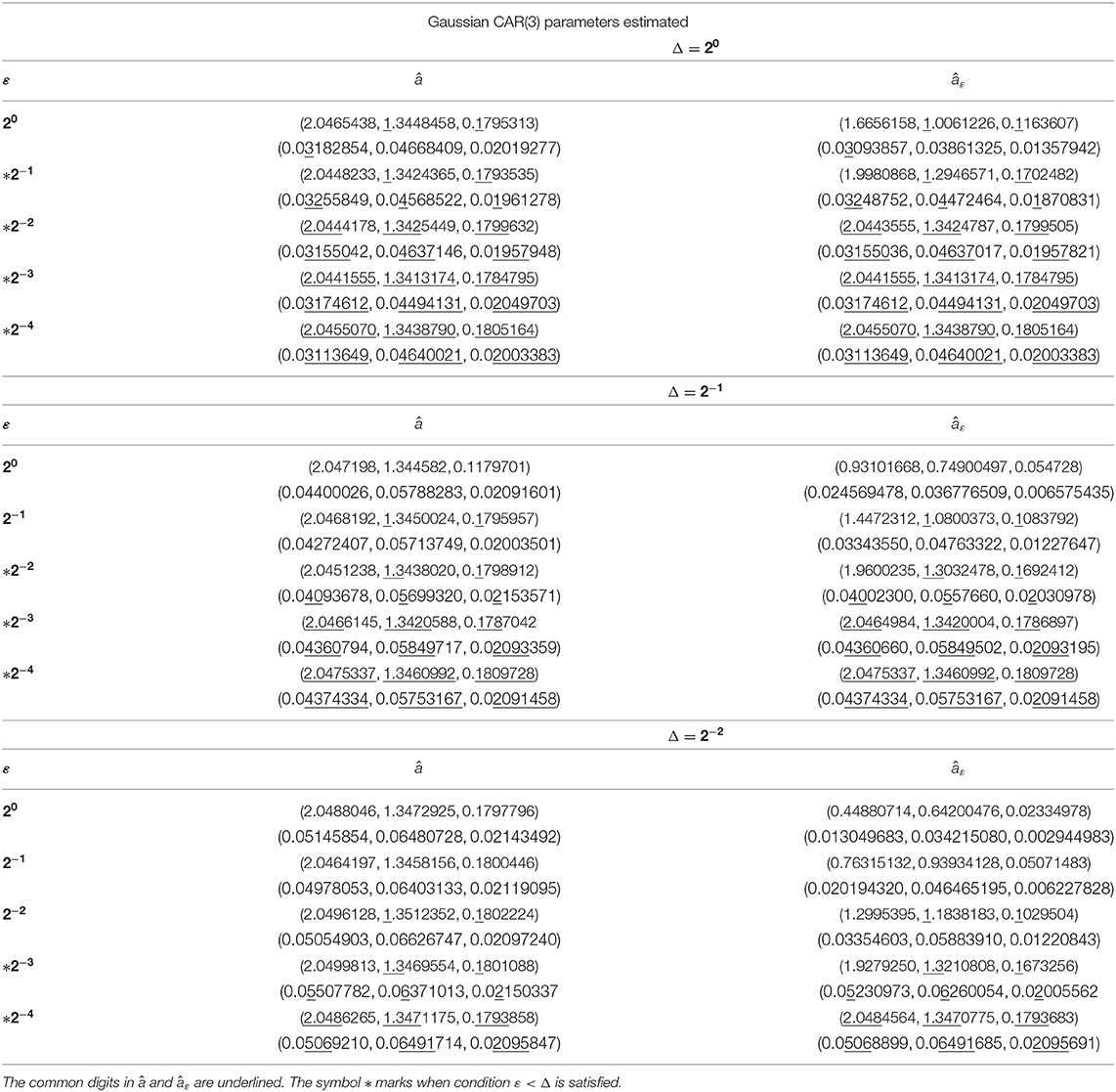

In Tables 1, 2 we report the parameters estimated for a Gaussian CAR(1) and a Gaussian CAR(3) for different ε's and different Δ's. In particular, â is the vector of parameters estimated starting from the time series for X, and âε is the vector of parameters estimated starting from the time series for Xε. The underlined digits are the common digits between â and âε, with respect to the same ε and the same Δ. The estimates get more accurate for a small ε, but they are not accurate if ε is bigger than Δ. We marked the cases corresponding to ε < Δ with the symbol *, and we notice that as soon as ε gets smaller than Δ, the accuracy improves and gets better for smaller values of ε. We summarize this empirical result with a proposition.

Table 1. Parameters estimated and standard deviation (in parenthesis) for different ε's and Δ's after 1, 000 simulations for a Gaussian CAR(1) with a = 0.6.

Table 2. Parameters estimated and standard deviation (every second row) for different ε's and Δ's after 1, 000 simulations for a Gaussian CAR(3) with a = (2.043, 1.339, 0.177).

Proposition 4.5. The Euler scheme (4.3) converges to the true CARMA parameters if ε < Δ.

Proof: See Appendix A.12 in Supplementary Material.

A more rigorous study should be performed regarding the condition in Proposition 4.5, analysing in details the estimation technique for ARMA processes, which is the starting point to estimate the CARMA parameters. This is, however, beyond the purposes of the current article.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material, further inquiries can be directed to the corresponding author.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The author acknowledges Fred Espen Benth for the several fruitful discussions that let to this paper and two referees for additional interesting comments.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fams.2020.00037/full#supplementary-material

References

1. Brockwell PJ. Lévy-driven CARMA processes. Ann Inst Stat Math. (2001) 53:113–24. doi: 10.1023/A:1017972605872

2. Barndorff-Nielsen OE, Shepard N. Non-Gaussian OU based models and some of their uses in financial economics. J R Stat Soc B Stat Methodol. (2001) 63:167–241. doi: 10.1111/1467-9868.00282

3. Brockwell PJ, Marquardt T. Lévy-driven and fractionally integrated ARMA processes with continuous time parameter. Stat Sin. (2005) 15:477–94. Available online at: https://www.jstor.org/stable/24307365

4. Brockwell PJ, Davis RA, Yang Y. Estimation for nonnegative Lévy-driven Ornstein-Uhlenbeck processes. J Appl Probab. (2007) 44:977–89. doi: 10.1239/jap/1197908818

5. Brockwell PJ, Davis RA, Yang Y. Estimation for non-negative Lévy-driven CARMA processes. J Bus Econ Stat. (2011) 29:250–9. doi: 10.1198/jbes.2010.08165

6. Benth FE, Benth JS, Koekebakker S. Stochastic Modelling of Electricity and Related Markets. Vol. 11. Singapore: World Scientific (2008).

7. Benth FE, Benth JS. Modelling and Pricing in Financial Markets for Weather Derivatives. Vol. 17. Singapore: World Scientific (2012).

8. Marquardt T. Fractional Lévy processes with an application to long memory moving average processes. Bernoulli. (2006) 12:1099–126. doi: 10.3150/bj/1165269152

9. Marquardt T, James LF. Generating Long Memory Models Based on CARMA Processes. Technical report (2007).

10. Paschke R, Prokopczuk M. Integrating multiple commodities in a model of stochastic price dynamics. J Energy Markets. (2009) 2:47–82. doi: 10.21314/JEM.2009.025

11. Paschke R, Prokopczuk M. Commodity derivatives valuation with autoregressive and moving average components in the price dynamics. J Bank Finance. (2010) 34:2742–52. doi: 10.1016/j.jbankfin.2010.05.010

12. Schwartz E, Smith JE. Short-term variations and long-term dynamics in commodity prices. Manage Sci. (2000) 46:893–911. doi: 10.1287/mnsc.46.7.893.12034

13. Benth FE, Koekebakker S. Pricing of forwards and other derivatives in cointegrated commodity markets. Energy Econ. (2015) 52:104–17. doi: 10.1016/j.eneco.2015.09.009

14. Benth FE, Klüppelberg C, Müller G, Vos L. Futures pricing in electricity markets based on stable CARMA spot models. Energy Econ. (2014) 44:392–406. doi: 10.1016/j.eneco.2014.03.020

15. García I, Klüppelberg C, Müller G. Estimation of stable CARMA models with an application to electricity spot prices. Stat Model. (2011) 11:447–70. doi: 10.1177/1471082X1001100504

16. Andresen A, Benth FE, Koekebakker S, Zakamulin V. The CARMA interest rate model. Int J Theor Appl Finance. (2014) 17:1450008. doi: 10.1142/S0219024914500083

17. Adland R, Benth FE, Koekebakker S. Multivariate modeling and analysis of regional ocean freight rates. Transport Res E. (2018) 113:194–221. doi: 10.1016/j.tre.2017.10.014

19. Benth FE, Di Persio L, Lavagnini S. Stochastic modelling of wind derivatives in energy markets. Risks. (2018) 6:56. doi: 10.3390/risks6020056

20. Øksendal BK. Stochastic Differential Equations: An Introduction With Applications. 5th ed. Heidelberg; New York, NY: Springer-Verlag (2003).

21. Kloeden PE, Platen E. Numerical Solution of Stochastic Differential Equation. Berlin; Heidelberg: Springer-Verlag (1992).

22. Hanssen A, Øigård TA. The normal inverse Gaussian distribution: a versatile model for heavy-tailed stochastic processes. In: 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings. Vol. 6. Salt Lake City, UT (2001). doi: 10.1109/ICASSP.2001.940717

23. Brockwell PJ, Davis RA. Introduction to Time Series and Forecasting. 2nd ed. New York, NY: Springer (2002). doi: 10.1007/b97391

Keywords: CARMA processes, stochastic differential equations, Lévy processes, Euler approximation, stochastic convolution, normal inverse Gaussian process

Citation: Lavagnini S (2020) CARMA Approximations and Estimation. Front. Appl. Math. Stat. 6:37. doi: 10.3389/fams.2020.00037

Received: 30 April 2020; Accepted: 27 July 2020;

Published: 11 November 2020.

Edited by:

Ehsan Azmoodeh, Ruhr University Bochum, GermanyCopyright © 2020 Lavagnini. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Silvia Lavagnini, c2lsdmFsQG1hdGgudWlvLm5v

Silvia Lavagnini

Silvia Lavagnini