ORIGINAL RESEARCH article

Front. Appl. Math. Stat., 25 June 2019

Sec. Dynamical Systems

Volume 5 - 2019 | https://doi.org/10.3389/fams.2019.00028

This article is part of the Research Topic Chimera States in Complex Networks

We investigated interactions within chimera states in a phase oscillator network with two coupled subpopulations. To quantify interactions within and between these subpopulations, we estimated the corresponding (delayed) mutual information that—in general—quantifies the capacity or the maximum rate at which information can be transferred to recover a sender's information at the receiver with a vanishingly low error probability. After verifying their equivalence with estimates based on the continuous phase data, we determined the mutual information using the time points at which the individual phases passed through their respective Poincaré sections. This stroboscopic view on the dynamics may resemble, e.g., neural spike times, that are common observables in the study of neuronal information transfer. This discretization also increased processing speed significantly, rendering it particularly suitable for a fine-grained analysis of the effects of experimental and model parameters. In our model, the delayed mutual information within each subpopulation peaked at zero delay, whereas between the subpopulations it was always maximal at non-zero delay, irrespective of parameter choices. We observed that the delayed mutual information of the desynchronized subpopulation preceded the synchronized subpopulation. Put differently, the oscillators of the desynchronized subpopulation were “driving” the ones in the synchronized subpopulation. These findings were also observed when estimating mutual information of the full phase trajectories. We can thus conclude that the delayed mutual information of discrete time points allows for inferring a functional directed flow of information between subpopulations of coupled phase oscillators.

Oscillatory units are found in a spectacular variety of systems in nature and technology. Examples in biology include flashing fireflies [1], cardiac pacemaker cells [2–6], and neurons [7–11]; in physics one may think of Josephson junctions [12–14], electric power grids [15–21], and, of course, pendulum clocks [22]. Synchronization plays an important role in the collective behavior of and the communication between individual units [23–25]. In the last two decades or so, many studies addressed the problem of synchronization in networks with complex structure, such as networks of networks, hierarchical networks and multilayer networks [26–29]. Alongside efforts studying synchronization on networks, a new symmetry breaking regime coined chimera state has been observed. In a chimera state an oscillator population “splits” into two parts, one being synchronized and the other being desynchronized [30–32], or more generally, different levels of synchronization [33]. This state is a striking manifestation of symmetry breaking, as it may occur even if oscillators are identical and coupled symmetrically; see [34, 35] for recent reviews. Chimera states have spurred much interest resulting in many theoretical investigations, but they have also been demonstrated in experimental settings using, e.g., mechanical and (electro-)chemical oscillators or lasers [36–39], and electronic circuits implementing FitzHugh-Nagumo neurons [40].

Chimera states can be considered patterns of localized synchronization. As such they may contribute to the coding of information in a network. This is particularly interesting since systems with chimera states typically display multi-stability, i.e., different chimera configurations may co-exist for identical parameters [41–44]. Such a network may hence be able to encode different stimuli through different chimera states without the need for adjusting parameters or altering network structure. It is even possible to dynamically switch between different synchronization patterns, thus allowing for dynamic coding of information [45, 46].

The mere existence of different levels of synchronization in the same network can also facilitate the transfer of information across a network, especially between subpopulations. Coherent oscillations between neurons or neural populations have been hypothesized to provide a communication mechanism while full neural synchronization is usually considered pathological [24, 47, 48]. A recent study reported chimera-like states in neuronal networks in humans, more specifically in electro-encephalographic patterns during epileptic seizures [49]. Admittedly, our understanding of these mechanisms is still in its infancy, partly because (the interpretations of) experimental studies often lack mathematical rigor, both regarding the description of synchronization processes and the resulting implications for the network's capacity for information processing. What is the information transfer within and between synchronized and desynchronized populations? To address this question, we investigated the communication channels between two subpopulations in a system of coupled phase oscillators. Since we designed that system to exhibit chimera states, we expected non-trivial interactions between the subpopulations. We employed the delayed version of mutual information, which measures the rate at which information can be sent and recovered with vanishingly low probability of error [50]. Furthermore, given the symmetry of our configuration, any directionality found in the system should be regarded as being of a functional nature. With this we sought not only to answer the aforementioned question but also to contribute to a more general understanding of how information can be transferred between subpopulations of oscillators. In view of potential applicability in other (experimental) studies, we also investigated whether we could gain sufficient insight into this communication by looking at the passage times through the oscillators' Poincaré sections rather than evaluating the corresponding continuous-time data. Such data may resemble, e.g., spike trains in neurophysiological assessments.

Our paper is structured as follows. First, we introduce our model in the study of chimera states [32, 51–53]. It consists of two subpopulations that either can be synchronized or desynchronized. We generalize this model by including distributed coupling strengths among the oscillatory units as well as additive Gaussian white noise. We briefly sketch the conditions under which chimera states can exist. Second, we outline the concept of delayed mutual information and detail how to estimate this for “event-based” data where we define events via Poincaré sections of the phase oscillators' trajectories. With this tool at hand, we finally investigate the flow of information within and between the two subpopulations, and characterize its dependency on the essential model parameters.

We build on a variant of the noisy Kuramoto-Sakaguchi model [54], generalized to M subpopulations of phase oscillators [55]. The phase ϕj,μ(t) of oscillator j = 1, …, N in population μ = 1, …, M evolves according to

$$d\phi_{j,\mu}(t) = \left[\omega_{j,\mu} + \sum_{\nu=1}^{M}\frac{1}{N}\sum_{k=1}^{N} C_{jk,\mu\nu}\,\sin\big(\phi_{k,\nu}(t)-\phi_{j,\mu}(t)-\alpha_{\mu\nu}\big)\right]dt + dW_{j,\mu}(t), \qquad (1)$$

where ωj,μ is the natural frequency of oscillator j in subpopulation μ. Throughout our study ωj,μ is drawn from a zero-centered Gaussian distribution with variance σω². Oscillator j in population μ and oscillator k in population ν interact sinusoidally with coupling strength Cjk,μν and a phase lag α. The phase lag α tunes the interaction function between more cosine-like (α → π/2) and more sine-like (α → 0) behavior and can be interpreted as a (small) transmission delay between units [34]. The additive noise term dWj,μ(t) represents mean-centered Gaussian noise with variance (strength) σW².

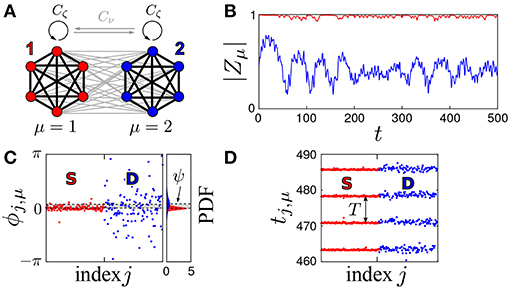

For the sake of legibility, we restrict this model to the case of M = 2 subpopulations of equal size N1 = N2 = N, as illustrated in Figure 1A. In the absence of noise and in the case of identical oscillators, uniform phase lag and homogeneous coupling, i.e., for σW = 0, σω = 0, αμν := α, and σC = 0, respectively, the aforementioned studies established the corresponding bifurcation diagrams [32, 51, 52].

Figure 1. (A) Chimera state in a network with M = 2 oscillator subpopulations and non-uniform coupling, simulated using Equation (1). (B) The time evolution of the order parameter, |Zμ(t)|, μ = 1, 2, reflects the fluctuations driven by the coupling and the external field. Despite temporary deviations off the synchronization manifold |Z1| = 1, the system remains near the chimera state attractor. That is, chimera states appear robust to both coupling heterogeneity and additive noise. Considering the time-average of the order parameters, the state may be classified as a stable chimera. (C) Snapshot in time of the phases ϕj,μ(t). The probability density functions (PDF) of the phases reveal a phase shift between subpopulations 1 and 2, given by ψ := arg(Z2/Z1), which remains on average constant in time, ψ = 0.26. (D) Event times given by the passages of the phases ϕj,μ(t) through their Poincaré sections. The time period of collective oscillation is defined as T := 1/Ω, where Ω is the mean oscillation frequency and 〈·〉t and 〈·〉j denote averages over time and oscillators, respectively. Parameters are: A = 0.275, β = 0.125, σC = 0.01, σω = 0.01.

To generalize the model toward more realistic, experimental settings, we included distributed coupling strengths via a non-uniform, (statistically) symmetric coupling between subpopulations. This can be cast in the following coupling matrix:

$$C = \begin{pmatrix} C_\zeta & C_\eta \\ C_\eta & C_\zeta \end{pmatrix}, \qquad (2)$$

with block matrices $C_\zeta, C_\eta \in \mathbb{R}^{N\times N}$. The self-coupling within a subpopulation and the neighbor coupling between subpopulations are represented by Cζ and Cη, respectively. Each block Cζ (or Cη) is composed of random numbers drawn from a normal distribution with mean ζ (or η) and variance σC². By this, we can tune the heterogeneity in the network. We would like to note that, for σC > 0, the chosen coupling is symmetric only in the sense of a statistical average, and that the expected coupling values ζ and η in each block can only be retrieved as the sample mean in the limit of large numbers. Although the symmetry between the two subpopulations 1↔2 is thus broken and only preserved in a statistical sense, numerical simulations for a particular choice of parameters confirmed that chimera states appeared in both configurations: subpopulation 1 synchronized and subpopulation 2 desynchronized (SD), and subpopulation 2 synchronized and subpopulation 1 desynchronized (DS). Another consequence of distributed coupling strengths is that a few oscillators may experience negative (inhibitory) coupling; these cases are so rare that they can be neglected.
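The block structure described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the code used in the study: the function name `coupling_matrix`, the seed, and the means ζ = 0.6375 and η = 0.3625 (which correspond to ζ − η = 0.275 with ζ + η = 1) are assumptions made for the example, and every entry is drawn independently.

```python
import numpy as np

def coupling_matrix(n, zeta, eta, sigma_c, rng):
    """Build the 2n x 2n block matrix [[C_zeta, C_eta], [C_eta, C_zeta]]."""
    def block(mean):
        # every entry is an independent draw from N(mean, sigma_c^2)
        return rng.normal(loc=mean, scale=sigma_c, size=(n, n))
    return np.block([[block(zeta), block(eta)],
                     [block(eta), block(zeta)]])

rng = np.random.default_rng(0)               # arbitrary seed (assumption)
zeta, eta, sigma_c = 0.6375, 0.3625, 0.01    # illustrative values (assumption)
C = coupling_matrix(128, zeta, eta, sigma_c, rng)

within_mean = C[:128, :128].mean()    # sample estimate of zeta
between_mean = C[:128, 128:].mean()   # sample estimate of eta
frac_negative = np.mean(C < 0)        # share of inhibitory entries
```

Because the diagonal and off-diagonal blocks are separate random draws, the matrix is symmetric under 1↔2 only on average, which mirrors the statistical symmetry discussed in the text.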

Following Abrams et al. [51], we parametrize the relation between the coupling strengths by A = ζ − η with ζ + η = 1, and the phase lag αμ,ν by βμ,ν := π/2 − αμ,ν, which here can be kept homogeneous for the entire network, i.e., βμ,ν := β. By this, we may recover the results reported in Montbrió et al. [32] and Abrams et al. [51] in the limit σC → 0, under the proviso of σW = σω = 0. In brief, increasing A from 0 while fixing α (or β := π/2 − α) yields the following bifurcation scheme: a “stable chimera” is born through a saddle-node bifurcation and becomes unstable in a supercritical Hopf bifurcation with a stable limit cycle corresponding to a “breathing chimera,” which eventually is destroyed in a homoclinic bifurcation. For all parameter values, the fully synchronized state exists and is a stable attractor; see also Figure A1. Subsequent studies demonstrated robustness of chimera states against non-uniformity of delays (αμν ≠ α) [33], heterogeneity of oscillator frequencies (σω > 0) [56], and additive noise [57]. Although non-uniform phase lags lead to less degenerate dynamics and give room for more complex dynamics [33, 53], we restrict ourselves to the case of uniform phase lags without compromising the essential phenomenology of chimera states.
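As a short worked example of this parametrization, the two constraints A = ζ − η and ζ + η = 1 can be solved for the mean coupling strengths, and β := π/2 − α fixes the phase lag. The numbers below assume the parameter values quoted in the caption of Figure 1 (A = 0.275, β = 0.125):

```latex
\zeta = \frac{1 + A}{2}, \qquad \eta = \frac{1 - A}{2}, \qquad \alpha = \frac{\pi}{2} - \beta ,
\\[4pt]
A = 0.275,\ \beta = 0.125 \;\Longrightarrow\;
\zeta = 0.6375, \qquad \eta = 0.3625, \qquad \alpha \approx 1.4458 .
```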

The macroscopic behavior, i.e., the synchronization of the two populations, may be characterized by complex-valued order parameters, which are either defined on the population level, i.e., $Z_\mu := N^{-1}\sum_{j=1}^{N} e^{i\phi_{j,\mu}}$, or globally, i.e., $Z := (2N)^{-1}\sum_{\mu=1}^{2}\sum_{j=1}^{N} e^{i\phi_{j,\mu}}$. As is common in studies of coupled phase oscillators, the level of synchrony can be given by Rμ := |Zμ|. Thus, Rμ = 1 implies that oscillators in population μ are perfectly phase synchronized (S), while for Rμ < 1 the oscillators are imperfectly synchronized or de-synchronized (D). For our two-subpopulation case, full synchrony (SS) hence occurs when R1 = 1 and R2 = 1, and chimera states are present if R1 < 1 and R2 = 1 or vice versa. The angular order parameter, Φμ := arg Zμ, keeps track of the average phase of the (sub)population. Fluctuations inherent to the model may affect the order parameter as illustrated in Figure 1B (please refer to section 3.1 for the numerical specifications). We therefore always considered averages over time, 〈Rμ〉t, when discussing the stability of a state. In fact, in our model chimera states remain stable for relatively large coupling heterogeneity, σC > 0, presuming σω > 0 and σW > 0, as is evidenced by numerical simulations; see also Figure A1. In the presence of these fluctuations, the perfect synchronization manifold with R = 1 cannot be reached; see Figure 1B. Further aspects of these noisy dynamics will be presented elsewhere.
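To make the order parameters concrete, the following sketch computes the level of synchrony and the relative phase ψ = arg(Z2/Z1) for two synthetic phase snapshots. The snapshots are invented for illustration (a narrow cluster and a uniform spread) and are not simulation output; the helper name `order_parameter` is likewise an assumption.

```python
import numpy as np

def order_parameter(phases):
    """Complex order parameter Z = mean(exp(i * phi)) over the last axis."""
    return np.mean(np.exp(1j * np.asarray(phases)), axis=-1)

rng = np.random.default_rng(1)                      # arbitrary seed (assumption)
sync = 0.3 + 0.05 * rng.standard_normal(128)        # narrow phase cluster
desync = rng.uniform(0.0, 2.0 * np.pi, size=128)    # phases spread over the circle

Z1, Z2 = order_parameter(sync), order_parameter(desync)
R1, R2 = abs(Z1), abs(Z2)        # levels of synchrony R_mu = |Z_mu|
psi = np.angle(Z2 / Z1)          # relative phase arg(Z2 / Z1)
```

Passing a two-dimensional array of shape (time, oscillators) returns one complex value per time step, which is convenient for the time averages 〈Rμ〉t used in the text.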

Adding noise and heterogeneity to the system may alter its dynamics. In the present work we concentrated on parameter regions characterized by the occurrence of dynamic states that did resemble stable chimeras, i.e., 〈R1〉t > 〈R2〉t, where 〈·〉t denotes the average over a duration of T = 9·10⁶ time steps (after removing a transient of T = 10⁵ time steps). Figure A1 provides an overview and explanation of how parameter points A and β were selected.

For the numerical implementation we employed an Euler-Maruyama scheme with Δt = 10⁻² for N = 128 phase oscillators per subpopulation that were evolved for T = 10⁶ [54]. We varied the coupling parameter A and the phase lag parameter β, while we fixed the width (standard deviation) of the natural frequency distribution to σω = 0.01 and of the coupling distribution to σC = 0.01. The additive noise had variance σW².
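A minimal Euler-Maruyama sketch of the two-subpopulation model is given below. It is not the implementation used in the study and rests on several assumptions that are flagged in the comments: the coupling sum is normalized by 1/N, the noise strength `sigma_w = 0.01` is a placeholder, the initial condition (subpopulation 1 in phase, subpopulation 2 random) is chosen by hand, and the population size and duration are reduced so that the example runs quickly.

```python
import numpy as np

def simulate(n=32, steps=2000, dt=1e-2, A=0.275, beta=0.125,
             sigma_omega=0.01, sigma_c=0.01, sigma_w=0.01, seed=0):
    """Euler-Maruyama integration of two coupled subpopulations.

    Returns an array of shape (steps, 2n) holding the unwrapped phases.
    """
    rng = np.random.default_rng(seed)
    alpha = np.pi / 2 - beta                    # phase lag from beta
    zeta, eta = (1 + A) / 2, (1 - A) / 2        # from A = zeta - eta, zeta + eta = 1
    means = np.block([[np.full((n, n), zeta), np.full((n, n), eta)],
                      [np.full((n, n), eta), np.full((n, n), zeta)]])
    C = rng.normal(means, sigma_c)              # heterogeneous coupling strengths
    omega = rng.normal(0.0, sigma_omega, 2 * n)  # natural frequencies
    # assumption: start with subpopulation 1 in phase, subpopulation 2 random
    phi = np.concatenate([np.zeros(n), rng.uniform(-np.pi, np.pi, n)])
    out = np.empty((steps, 2 * n))
    for s in range(steps):
        # diff[j, k] = phi_k - phi_j - alpha
        diff = phi[None, :] - phi[:, None] - alpha
        # assumption: coupling sum normalized by the subpopulation size n
        drift = omega + (C * np.sin(diff)).sum(axis=1) / n
        # Wiener increment with variance sigma_w^2 * dt
        noise = sigma_w * np.sqrt(dt) * rng.standard_normal(2 * n)
        phi = phi + drift * dt + noise
        out[s] = phi
    return out

phases = simulate()
R1 = np.abs(np.exp(1j * phases[:, :32]).mean(axis=1))   # |Z_1(t)|
R2 = np.abs(np.exp(1j * phases[:, 32:]).mean(axis=1))   # |Z_2(t)|
```

Whether a given run settles into a chimera depends on the initial condition and parameters, so the time averages of `R1` and `R2` should be inspected after discarding a transient before classifying the state.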

Mutual information I(X; Y), first introduced by Shannon and Weaver [58], is meant to assess the dependence between two random variables X and Y. It measures the amount of information that X contains about Y. In terms of communication theory, Y can be regarded as the output of a communication channel which depends probabilistically on its input, X. By construction, I(X; Y) is non-negative; it is equal to zero if and only if X and Y are independent. Moreover, I(X; Y) is symmetric, i.e., I(X; Y) = I(Y; X), implying that it is not directional. The mutual information may also be considered as a measure of the reduction in the uncertainty of X(Y) due to the knowledge of Y(X) or, in terms of communication, the rate at which information is being shared between the two [50]. The maximum rate at which one can send information over the channel and recover it at the output with a vanishingly low probability of error is called channel capacity, i.e., the maximum of I(X; Y) over all input distributions pX. In networks with oscillatory nodes, the random variables are the degree of synchronization of different subpopulations, and the rate of information shared will depend on the state of the system for each of the subpopulations.

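Mutual information can be defined via the Kullback-Leibler divergence or in terms of entropies,

$$I(X;Y) = \iint p_{XY}(x,y)\,\log\frac{p_{XY}(x,y)}{p_X(x)\,p_Y(y)}\,dx\,dy = H(X)+H(Y)-H(X,Y), \qquad (3)$$

where H(X) is the entropy of the random variable X, i.e., $H(X) = -\int p_X(x)\,\log p_X(x)\,dx$, with pX(x) being the corresponding probability density.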
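The entropy-based definition can be checked numerically on a small example. The sketch below uses discrete joint probability tables instead of the continuous densities considered in the text, which is a simplification for illustration; the two 2×2 tables are invented.

```python
import numpy as np

def entropy(p):
    """Shannon entropy in nats of a probability array (zero entries are skipped)."""
    p = np.asarray(p, dtype=float).ravel()
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))

def mutual_information(pxy):
    """I(X;Y) = H(X) + H(Y) - H(X,Y) for a joint probability table."""
    pxy = np.asarray(pxy, dtype=float)
    return entropy(pxy.sum(axis=1)) + entropy(pxy.sum(axis=0)) - entropy(pxy)

dependent = np.array([[0.4, 0.1],
                      [0.1, 0.4]])                      # X and Y tend to agree
independent = np.outer([0.5, 0.5], [0.3, 0.7])          # product of marginals

mi_dep = mutual_information(dependent)     # strictly positive
mi_ind = mutual_information(independent)   # zero up to rounding
```

Transposing a table swaps the roles of X and Y and leaves the result unchanged, which is the symmetry I(X; Y) = I(Y; X) noted above.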

For time-dependent random variables, one may generalize this definition to that of a delayed mutual information. This variant dates back to Fraser and Swinney [59], who used the delayed mutual information for estimating the embedding delay in chaotic systems; in that context the delayed auto-mutual information is often considered a “generalization” of the auto-correlation function [60]. Applications range from the study of coupled map lattices [61] via spatiotemporal [62] and general dependencies between time series [63] to the detection of synchronization [64]. With the notation IXY := I(X; Y) and HX := H(X), the delayed mutual information for a delay τ may read

$$I_{XY}(\tau) = I\big(X(t);\,Y(t+\tau)\big) = H_{X(t)} + H_{Y(t+\tau)} - H_{X(t)Y(t+\tau)}, \qquad (4)$$

which has the symmetry IXY(τ) = IYX(−τ) (see footnote 1). With this definition, one can measure the rate of information shared between X and Y as a function of the time delay τ. In fact, we are not particularly interested in the specific value of the mutual information but rather focus here on the time delay at which the mutual information is maximal. Hence, we define τmax := arg maxτ IXY(τ). A positive (negative) value of τmax implies that Y shares more information with a delayed (advanced) X. This means there is an information flow from X(Y) to Y(X).
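The role of τmax can be illustrated with a toy signal pair. The sketch below uses a simple histogram (plug-in) estimator instead of the kernel density estimators employed in this study, and it follows the convention IXY(τ) = I(X(t); Y(t + τ)) from the footnote; the signals, the bin count, and the 5-sample shift are assumptions chosen for the example.

```python
import numpy as np

def mutual_information(x, y, bins=16):
    """Histogram (plug-in) estimate of I(X;Y) in nats."""
    pxy, _, _ = np.histogram2d(x, y, bins=bins)
    pxy /= pxy.sum()
    px = pxy.sum(axis=1, keepdims=True)
    py = pxy.sum(axis=0, keepdims=True)
    nz = pxy > 0                                  # skip empty bins
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))

def delayed_mi(x, y, lags, bins=16):
    """I_XY(tau) = I(X(t); Y(t + tau)) for integer sample lags tau."""
    values = []
    for tau in lags:
        if tau >= 0:
            a, b = x[:len(x) - tau], y[tau:]
        else:
            a, b = x[-tau:], y[:len(y) + tau]
        values.append(mutual_information(a, b, bins))
    return np.array(values)

rng = np.random.default_rng(3)                    # arbitrary seed (assumption)
x = rng.standard_normal(20000)
# y repeats x five samples later (plus weak noise), so X leads Y
y = np.roll(x, 5) + 0.1 * rng.standard_normal(x.size)

lags = np.arange(-20, 21)
mi_xy = delayed_mi(x, y, lags)
tau_max = lags[np.argmax(mi_xy)]                  # positive: flow from X to Y
```

Swapping the arguments, `delayed_mi(y, x, lags)`, mirrors the curve about τ = 0, in line with the symmetry IXY(τ) = IYX(−τ).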

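When the time-dependent random variables are continuous time series uμ(t) and uν(t) associated with populations μ and ν, the delayed mutual information can be estimated from Equation (4) for X(t) = uμ(t) and Y(t) = uν(t),

$$\tilde I_{\mu\nu}(\tau) = \iint p_{\mu\nu}\big(u_\mu(t),u_\nu(t+\tau)\big)\,\log\frac{p_{\mu\nu}\big(u_\mu(t),u_\nu(t+\tau)\big)}{p_\mu\big(u_\mu(t)\big)\,p_\nu\big(u_\nu(t+\tau)\big)}\,du_\mu\,du_\nu. \qquad (5)$$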

We determined the probability densities using kernel density estimators with Epanechnikov kernels with bandwidths given through a uniform maximum likelihood cross-validation search. For our parameter settings (254 oscillators and 10⁶ samples), the resulting bandwidths ranged from about 0.10 to 0.18 rad. This software implementation is part of the KDE-toolbox; cf. [65] and Thomas et al. [66] for alternative schemes.

For the event signals in subpopulations 1 and 2, the discrete time points are defined as the moments at which the phases pass through their respective Poincaré sections. The probability densities entering the estimate of the mutual information are then densities of events, i.e., densities of times. We implemented the probability estimates as follows. Let Sμ be the set of event times, i.e., $S_\mu = \{t^{(m)}_{i,\mu}\}$, where $t^{(m)}_{i,\mu}$ stands for the time of the m-th event of oscillator i in subpopulation μ. Then, the probability density for an event to happen at time t in subpopulation μ is pμ(t) = P(t ∈ Sμ) and the probability of an event to happen at time t in subpopulation μ and at time t + τ in subpopulation ν is

$$p_{\mu\nu}(t, t+\tau) = P\big(t \in S_\mu,\; t+\tau \in S_\nu\big). \qquad (6)$$

The delayed mutual information can be given as

$$I_{\mu\nu}(\tau) = \int p_{\mu\nu}(t,t+\tau)\,\log\frac{p_{\mu\nu}(t,t+\tau)}{p_\mu(t)\,p_\nu(t+\tau)}\,dt. \qquad (7)$$

We again computed the probability densities using kernel density estimators [65], but now with Gaussian kernels. Due to the sparsity of the data, we also changed the bandwidth selection to a spherical, local maximum likelihood cross-validation; the resulting bandwidths ranged from about 25 to 35 time units. These results appeared robust when using the aforementioned uniform search; again we employed the KDE-toolbox.
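The idea of a Gaussian kernel density estimate of event times can be sketched with plain NumPy. This is not the KDE-toolbox and it does not perform the cross-validated bandwidth search described above: the bandwidth is fixed by hand at 30 time units (within the reported range), the event times are synthetic, and only the one-dimensional density pμ(t) is shown.

```python
import numpy as np

def event_density(event_times, grid, bandwidth):
    """Gaussian kernel density estimate of event times, evaluated on grid."""
    z = (grid[:, None] - event_times[None, :]) / bandwidth
    kernels = np.exp(-0.5 * z**2) / (bandwidth * np.sqrt(2.0 * np.pi))
    return kernels.mean(axis=1)      # average of one kernel per event

rng = np.random.default_rng(2)                          # arbitrary seed (assumption)
events = np.sort(rng.uniform(0.0, 1000.0, size=200))    # synthetic event times
grid = np.arange(-300.0, 1300.0, 1.0)                   # evaluation grid
density = event_density(events, grid, bandwidth=30.0)   # hand-picked bandwidth

total = density.sum() * (grid[1] - grid[0])   # Riemann sum, close to 1
```

The joint density of event pairs would need the two-dimensional analog, with one kernel per pair of event times.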

We analyzed the times t1,μ, t2,μ, …, tN,μ at which the individual phases ϕj,μ(t) passed through their respective Poincaré sections, each of which corresponds to a fixed reference phase. As mentioned above, every subpopulation μ thus generated an “event sequence” Sμ that may be considered reminiscent of spike trains; cf. Figure 1D.
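Extracting such event times from a sampled phase trajectory can be sketched as follows. The reference phase of the section (0 modulo 2π here), the linear interpolation between samples, and the function name `crossing_times` are assumptions for illustration; the sketch registers every sample-to-sample upward passage, so a noisy phase that slips back across the section can produce repeated events.

```python
import numpy as np

def crossing_times(phi, dt, section=0.0):
    """Times at which an unwrapped phase crosses section + 2*pi*k from below."""
    phi = np.asarray(phi, dtype=float)
    k = np.floor((phi - section) / (2.0 * np.pi))   # number of completed turns
    idx = np.nonzero(np.diff(k) > 0)[0]             # crossing between idx and idx + 1
    target = section + 2.0 * np.pi * k[idx + 1]     # phase value that was crossed
    frac = (target - phi[idx]) / (phi[idx + 1] - phi[idx])
    return (idx + frac) * dt                        # linearly interpolated times

dt = 0.01
t = np.arange(0.0, 10.0, dt)
phi = 0.5 + 2.0 * t               # toy oscillator with constant frequency
events = crossing_times(phi, dt)  # crossings of 2*pi, 4*pi and 6*pi
```

Applying the function to each oscillator of a subpopulation and pooling the results yields one event sequence per subpopulation.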

To determine the directionality of the information flow in the network, we computed the time-lagged mutual information within the subpopulations, I11(τ) and I22(τ), and between them, I12(τ) = I21(−τ).

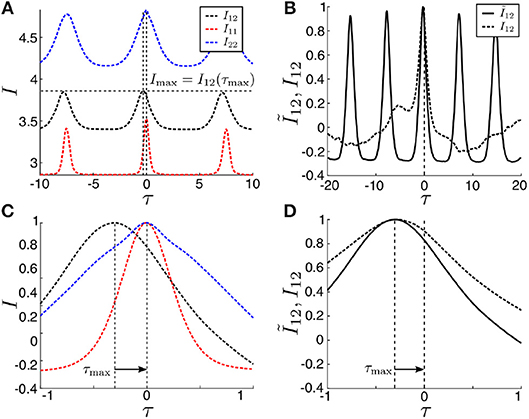

The first results for the event data outlined in section 3.5 are shown in Figure 2A. Since the recurrence of events mimicked the mean frequency of the phase oscillators, the mutual information turned out to be periodic. As expected, we observed (average) values of the mutual information that differed between I11 (red), I22 (blue), and I12 (black). This relates to the difference in entropy of the subpopulations: the less synchronized one (μ = 2) is more disordered and hence has higher entropy. However, since we were not interested in the explicit values of Iμν(τ), we could rescale the mutual information to its maximum value, which allowed for a comparative view when zooming in to the neighborhood of τ ≈ 0; see Figure 2C. The off-zero peak Imax := I12(τmax) at τ = τmax clearly indicated a directed information flow, i.e., there is a functional directionality despite the structural symmetry of our model.

Figure 2. (A) Mutual information Iμν as a function of τ between and within the two subpopulations μ and ν. (C) The zoomed-in curve reveals a directionality in the mutual information I12(τ) between the two subpopulations 1 and 2, with a maximum peak Imax := I12(τmax) located at τmax < 0. (B,D) Comparison of the average mutual information obtained from continuous time data (solid), Ĩ12, vs. event time data (dotted), I12. The locations of the peaks agree very well. (B–D) display the average mutual information with 〈I〉t denoting the average over time after discarding a transient (see text for details). Parameters across panels are A = 0.275, β = 0.125, σω = 0.01, σC = 0.01.

When comparing the estimates for the mutual information obtained from the event data, I, with those from the continuous-time data, Ĩ, we found little to no difference in peak location. That is, the positions τmax of the maximum peaks, Ĩmax and Imax, were nearly identical using either method as shown in Figures 2B,D. Thus, to study the effects of varying model parameters on Iμν(τ), our subsequent analysis may solely rely on the event data approach.

Varying the values of A and β revealed a strong relation between the location of the peak of the delayed mutual information τmax and the relative phase arg(Z1/Z2) between the two subpopulations; see Figure 3A. This shows that our approach to analyzing the event-based data reveals important information about the (otherwise continuous) dynamics of the subpopulations, here given by the phases (arguments) of the local order parameters. By contrast, the relative strength of local synchronization |Z1/Z2| had little to no effect on τmax; see Figure 3B. These dependencies were inverted when looking at the normalized peak value of the mutual information, Imax; see Figures 3C,D. Consequently, the value of mutual information was affected by the relative strength of local synchronization |Z1/Z2|; after all, the more a subpopulation is synchronized, the lower the corresponding entropy. However, effects were small and probably negligible when transferring to (selected) experimental data. More details about the normalization factor are discussed in Appendix B.

Figure 3. Dependency of (Imax, τmax) on the synchronization level and on the parameters β and A. (A) The maximizing time delay τmax has a nearly linear dependency on the angular phase difference of order parameters arg(Z1/Z2). (B) τmax appears largely independent of the ratio of the magnitude of order parameters |Z1/Z2|, though results are weakly dependent on β. (C,D) The normalized maximum peak of mutual information Imax shows a weak dependency on the ratio of order parameters. All other parameters are kept fixed.

The delayed mutual information from event data, here, the passing times through the oscillators' Poincaré sections, agreed with that of the continuous data. This is an important finding as it shows that studying discrete event time series allows for inferring information-related properties of continuous dynamics. This offers the possibility to bring our approach to experimental studies, be that in neuroscience, where spike trains are a traditional measure of continuous neuronal activity, or economics, where stroboscopic assessments of stocks are common practice. Here, we used this approach to explore the information flow within and between subpopulations of a network of coupled phase oscillators. This information flow turned out to be directed.

Mutual information is the traditional measure of shared information between two systems [58]. Our findings relied on the introduction of a time delay τ, which readily allows for identifying a direction of flow of information. This concept is closely related to the widely used transfer entropy [67, 68]. In fact, transfer entropy is the delayed conditional mutual information [69, 70]. It therefore differs from our approach primarily by a normalization factor. Since here we were not interested in the absolute amount of information (flow), we could simplify assessments and focus on delayed mutual information. Adding the conditional part jeopardizes the approximation of probabilities, i.e., estimating transfer entropy in a reliable manner typically requires more data than our delayed mutual information. A detailed discussion of these differences is beyond the scope of the current study.
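For orientation, one common way to write this relation is sketched below. It assumes that a single past sample of Y serves as the conditioning variable, which is a simplification of the general definitions in [67, 68], and it uses the convention IXY(τ) = I(X(t); Y(t + τ)):

```latex
T_{X \to Y}(\tau)
  = I\big(Y(t+\tau);\, X(t) \,\big|\, Y(t)\big)
  = H\big(Y(t+\tau) \,\big|\, Y(t)\big) - H\big(Y(t+\tau) \,\big|\, X(t),\, Y(t)\big),
\qquad
I_{XY}(\tau) = I\big(X(t);\, Y(t+\tau)\big).
```

The conditional entropies involve densities over three variables rather than two, which is why their estimation typically demands more data.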

For our model we found that the delayed mutual information between the synchronized subpopulation and the less synchronized one peaked at a finite delay τmax ≠ 0. Hence, there was a directed flow of information between the two subpopulations. Since we found that τmax < 0 for I12(τ), the direction of this flow was from the less synchronized subpopulation to the fully synchronized one, i.e., 2 → 1 or D → S. In fact, the delay largely resembled the relative phase between the corresponding local order parameters, as shown in Figure 3A. We could not only readily identify the relative phase from event data alone, but our approach also allowed for attaching a meaningful interpretation in an information theoretic sense. This is promising since event data are, as stated repeatedly, conventional outcome parameters in many experimental settings. This is particularly true for studies in neuroscience, for which the question of information processing is often central. There, networks are typically complex and modular. The complex collective dynamics switches at multiple scales, rendering neuronal networks especially exciting when it comes to information routing [71]. As of yet, our approach does not allow for unraveling dynamic information routing. This will require extending the (time-lagged) mutual information to a time-dependent version, e.g., by windowing the data under study. We plan to incorporate this in future studies.

Estimating the delayed mutual information based on time points at which the individual phases passed through their respective Poincaré sections allows for identifying the information flow between subpopulations of networks. If the network displays chimera states, the information flow turns out to be directed. In our model of coupled phase oscillators, the flow of information was directed from the less synchronized subpopulation to the fully synchronized one since the former preceded the latter. Our approach is a first step toward studying information transfer between spike trains. It can be readily adapted to static, well-defined modular networks and needs to be upgraded to a time-dependent version to be applied to real, biological data.

ND conducted the simulations and analysis and wrote the manuscript. AD set up the study, incorporated the kernel density estimation and wrote the manuscript. DB contributed to the first concepts of the study and wrote the manuscript. EM set up the study, conducted simulations and wrote the manuscript.

This study received funding from the European Union's Horizon 2020 research and innovation program under the Marie Skłodowska-Curie grant agreement #642563 (COSMOS).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

ND and AD want to thank Rok Cestnik and Bastian Pietras for fruitful discussions.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fams.2019.00028/full#supplementary-material

1. This follows from IXY(τ) = I(X(t); Y(t + τ)) = I(X(t − τ); Y(t)) = I(Y(t); X(t − τ)) = IYX(−τ).

1. Ermentrout GB, Rinzel J. Beyond a pacemaker's entrainment limit: phase walk-through. Am J Physiol-Regul Integr Compar. Physiol. (1984) 246:R102–6. doi: 10.1152/ajpregu.1984.246.1.R102

2. Guevara MR, Glass L, Shrier A. Phase locking, period-doubling bifurcations, and irregular dynamics in periodically stimulated cardiac cells. Science. (1981) 214:1350–3. doi: 10.1126/science.7313693

3. Guevara MR, Alonso F, Jeandupeux D, Van Ginneken ACG. Alternans in periodically stimulated isolated ventricular myocytes: experiment and model. In: Goldbeter A, editor. Cell to Cell Signalling. New York, NY: Elsevier; Academic Press (1989). p. 551–63.

4. Glass L, Mackey MC. From Clocks to Chaos: The Rhythms of Life. Princeton, NJ: Princeton University Press (1988).

5. Zeng WZ, Courtemanche M, Sehn L, Shrier A, Glass L. Theoretical computation of phase locking in embryonic atrial heart cell aggregates. J Theor Biol. (1990) 145:225–44. doi: 10.1016/S0022-5193(05)80128-6

6. Glass L, Shrier A. Low-dimensional dynamics in the heart. In: Theory of Heart. New York, NY: Springer-Verlag (1991). p. 289–312.

8. Gray CM, König P, Engel AK, Singer W. Oscillatory responses in cat visual cortex exhibit inter-columnar synchronization which reflects global stimulus properties. Nature. (1989) 338:334. doi: 10.1038/338334a0

9. MacKay WA. Synchronized neuronal oscillations and their role in motor processes. Trends Cogn Sci. (1997) 1:176–83.

10. Singer W, Gray CM. Visual feature integration and the temporal correlation hypothesis. Annu Rev Neurosci. (1995) 18:555–86. doi: 10.1146/annurev.ne.18.030195.003011

11. Stern EA, Jaeger D, Wilson CJ. Membrane potential synchrony of simultaneously recorded striatal spiny neurons in vivo. Nature. (1998) 394:475. doi: 10.1038/28848

12. Jain AK, Likharev K, Lukens J, Sauvageau J. Mutual phase-locking in Josephson junction arrays. Phys Rep. (1984) 109:309–426. doi: 10.1016/0370-1573(84)90002-4

13. Saitoh K, Nishino T. Phase locking in a double junction of Josephson weak links. Phys Rev B. (1991) 44:7070. doi: 10.1103/PhysRevB.44.7070

14. Valkering T, Hooijer C, Kroon M. Dynamics of two capacitively coupled Josephson junctions in the overdamped limit. Physica D Nonlinear Phenomena. (2000) 135:137–53. doi: 10.1016/S0167-2789(99)00116-5

15. Menck PJ, Heitzig J, Kurths J, Schellnhuber HJ. How dead ends undermine power grid stability. Nat Commun. (2014) 5:3969. doi: 10.1038/ncomms4969

16. Nardelli PHJ, Rubido N, Wang C, Baptista MS, Pomalaza-Raez C, Cardieri P, et al. Models for the modern power grid. Eur Phys J Spec Top. (2014) 223:2423–37. doi: 10.1140/epjst/e2014-02219-6

17. Motter AE, Myers SA, Anghel M, Nishikawa T. Spontaneous synchrony in power-grid networks. Nat Phys. (2013) 9:191. doi: 10.1038/nphys2535

18. Dörfler F, Chertkov M, Bullo F. Synchronization in complex oscillator networks and smart grids. Proc Natl Acad Sci USA. (2013) 110:2005–10. doi: 10.1073/pnas.1212134110

19. Lozano S, Buzna L, Díaz-Guilera A. Role of network topology in the synchronization of power systems. Eur Phys J B. (2012) 85:231. doi: 10.1140/epjb/e2012-30209-9

20. Susuki Y, Mezic I. Nonlinear Koopman modes and coherency identification of coupled swing dynamics. IEEE Trans Power Syst. (2011) 26:1894–904. doi: 10.1109/TPWRS.2010.2103369

21. Rohden M, Sorge A, Timme M, Witthaut D. Self-organized synchronization in decentralized power grids. Phys Rev Lett. (2012) 109:064101. doi: 10.1103/PhysRevLett.109.064101

22. Huygens C. Horologium Oscillatorium Sive de Motu Pendulorum ad Horologia Aptato Demonstrationes Geometricae. Paris: F. Muguet (1673).

23. Pikovsky A, Rosenblum M, Kurths J. Synchronization: A Universal Concept in Nonlinear Sciences. Vol. 12. Cambridge, UK: Cambridge University Press (2003).

26. Boccaletti S, Bianconi G, Criado R, Del Genio CI, Gómez-Gardenes J, Romance M, et al. The structure and dynamics of multilayer networks. Phys Rep. (2014) 544:1–122. doi: 10.1016/j.physrep.2014.07.001

27. Arenas A, Díaz-Guilera A, Kurths J, Moreno Y, Zhou C. Synchronization in complex networks. Phys Rep. (2008) 469:93–153. doi: 10.1016/j.physrep.2008.09.002

28. Kivelä M, Arenas A, Barthelemy M, Gleeson JP, Moreno Y, Porter MA. Multilayer networks. J Complex Netw. (2014) 2:203–71. doi: 10.1093/comnet/cnu016

29. Rodrigues FA, Peron TKD, Ji P, Kurths J. The Kuramoto model in complex networks. Phys Rep. (2016) 610:1–98. doi: 10.1016/j.physrep.2015.10.008

30. Kuramoto Y, Battogtokh D. Coexistence of coherence and incoherence in nonlocally coupled phase oscillators. Nonlinear Phenomena Complex Syst. (2002) 5:380–5. Available online at: http://www.j-npcs.org/abstracts/vol2002/v5no4/v5no4p380.html

31. Abrams DM, Strogatz SH. Chimera states for coupled oscillators. Phys Rev Lett. (2004) 93:174102. doi: 10.1103/PhysRevLett.93.174102

32. Montbrió E, Kurths J, Blasius B. Synchronization of two interacting populations of oscillators. Phys Rev E. (2004) 70:056125. doi: 10.1103/PhysRevE.70.056125

33. Martens EA, Bick C, Panaggio MJ. Chimera states in two populations with heterogeneous phase-lag. Chaos. (2016) 26:094819. doi: 10.1063/1.4958930

34. Panaggio MJ, Abrams DM. Chimera states: coexistence of coherence and incoherence in networks of coupled oscillators. Nonlinearity. (2015) 28:R67. doi: 10.1088/0951-7715/28/3/R67

35. Schöll E. Synchronization patterns and chimera states in complex networks: interplay of topology and dynamics. Eur Phys J Spec Top. (2016) 225:891–919. doi: 10.1140/epjst/e2016-02646-3

36. Martens EA, Thutupalli S, Fourriere A, Hallatschek O. Chimera states in mechanical oscillator networks. Proc Natl Acad Sci USA. (2013) 110:10563–7. doi: 10.1073/pnas.1302880110

37. Tinsley MR, Nkomo S, Showalter K. Chimera and phase-cluster states in populations of coupled chemical oscillators. Nat Phys. (2012) 8:662–5. doi: 10.1038/nphys2371

38. Hagerstrom AM, Murphy TE, Roy R, Hövel P, Omelchenko I, Schöll E. Experimental observation of chimeras in coupled-map lattices. Nat Phys. (2012) 8:658–61. doi: 10.1038/nphys2372

39. Wickramasinghe M, Kiss IZ. Spatially organized dynamical states in chemical oscillator networks: synchronization, dynamical differentiation, and chimera patterns. PLoS ONE. (2013) 8:e80586. doi: 10.1371/journal.pone.0080586

40. Gambuzza LV, Buscarino A, Chessari S, Fortuna L, Meucci R, Frasca M. Experimental investigation of chimera states with quiescent and synchronous domains in coupled electronic oscillators. Phys Rev E. (2014) 90:032905. doi: 10.1103/PhysRevE.90.032905

41. Martens EA. Bistable chimera attractors on a triangular network of oscillator populations. Phys Rev E. (2010) 82:016216. doi: 10.1103/PhysRevE.82.016216

42. Martens EA. Chimeras in a network of three oscillator populations with varying network topology. Chaos. (2010) 20:043122. doi: 10.1063/1.3499502

43. Shanahan M. Metastable chimera states in community-structured oscillator networks. Chaos. (2010) 20:013108. doi: 10.1063/1.3305451

44. Bick C. Heteroclinic switching between chimeras. Phys Rev E. (2018) 97:050201. doi: 10.1103/PhysRevE.97.050201

45. Bick C, Martens EA. Controlling chimeras. New J Phys. (2015) 17:033030. doi: 10.1088/1367-2630/17/3/033030

46. Martens EA, Panaggio MJ, Abrams DM. Basins of attraction for chimera states. New J Phys. (2016) 18:022002. doi: 10.1088/1367-2630/18/2/022002

47. Fries P. A mechanism for cognitive dynamics: neuronal communication through neuronal coherence. Trends Cogn Sci. (2005) 9:474–80. doi: 10.1016/j.tics.2005.08.011

48. Fell J, Axmacher N. The role of phase synchronization in memory processes. Nat Rev Neurosci. (2011) 12:105. doi: 10.1038/nrn2979

49. Andrzejak RG, Rummel C, Mormann F, Schindler K. All together now: analogies between chimera state collapses and epileptic seizures. Sci Rep. (2016) 6:23000. doi: 10.1038/srep23000

51. Abrams DM, Mirollo RE, Strogatz SH, Wiley DA. Solvable model for chimera states of coupled oscillators. Phys Rev Lett. (2008) 101:084103. doi: 10.1103/PhysRevLett.101.084103

52. Panaggio MJ, Abrams DM, Ashwin P, Laing CR. Chimera states in networks of phase oscillators: the case of two small populations. Phys Rev E. (2016) 93:012218. doi: 10.1103/PhysRevE.93.012218

53. Bick C, Panaggio MJ, Martens EA. Chaos in Kuramoto oscillator networks. Chaos. (2018) 28:071102. doi: 10.1063/1.5041444

54. Acebrón J, Bonilla L, Pérez Vicente C, et al. The Kuramoto model: a simple paradigm for synchronization phenomena. Rev Mod Phys. (2005) 77:137. doi: 10.1103/RevModPhys.77.137

55. Bick C, Laing C, Goodfellow M, Martens EA. Understanding synchrony patterns in biological and neural oscillator networks through mean-field reductions: a review. arXiv [preprint]. arXiv:1902.05307v2 (2019).

56. Laing CR. Chimera states in heterogeneous networks. Chaos. (2009) 19:013113. doi: 10.1063/1.3068353

57. Laing CR. Disorder-induced dynamics in a pair of coupled heterogeneous phase oscillator networks. Chaos. (2012) 22:043104. doi: 10.1063/1.4758814

58. Shannon CE, Weaver W. The Mathematical Theory of Communication. Urbana, IL: University of Illinois Press (1949).

59. Fraser AM, Swinney HL. Independent coordinates for strange attractors from mutual information. Phys Rev A. (1986) 33:1134–40. doi: 10.1103/PhysRevA.33.1134

60. Abarbanel H. Analysis of Observed Chaotic Data. New York, NY: Springer Science and Business Media (2012).

61. Kaneko K. Lyapunov analysis and information flow in coupled map lattices. Physica D Nonlinear Phenomena. (1986) 23:436–47. doi: 10.1016/0167-2789(86)90149-1

62. Vastano JA, Swinney HL. Information transport in spatiotemporal systems. Phys Rev Lett. (1988) 60:1773–6. doi: 10.1103/PhysRevLett.60.1773

63. Green ML, Savit R. Dependent variables in broad band continuous time series. Physica D Nonlinear Phenomena. (1991) 50:521–44. doi: 10.1016/0167-2789(91)90013-Y

64. Paluš M. Detecting phase synchronization in noisy systems. Phys Lett A. (1997) 235:341–51. doi: 10.1016/S0375-9601(97)00635-X

65. Ihler A, Mandel M. Kernel Density Estimation (KDE) Toolbox for Matlab. (2003). Available online at: http://www.ics.uci.edu/~ihler/code

66. Thomas RD, Moses NC, Semple EA, Strang AJ. An efficient algorithm for the computation of average mutual information: validation and implementation in Matlab. J Math Psychol. (2014) 61:45–59. doi: 10.1016/j.jmp.2014.09.001

67. Schreiber T. Measuring information transfer. Phys Rev Lett. (2000) 85:461. doi: 10.1103/PhysRevLett.85.461

68. Wibral M, Pampu N, Priesemann V, Siebenhühner F, Seiwert H, Lindner M, et al. Measuring information-transfer delays. PLoS ONE. (2013) 8:e55809. doi: 10.1371/journal.pone.0055809

69. Dobrushin RL. A general formulation of the fundamental theorem of Shannon in the theory of information. Uspekhi Matematicheskikh Nauk. (1959) 14:3–104.

70. Wyner AD. A definition of conditional mutual information for arbitrary ensembles. Inform Control. (1978) 38:51–9.

Keywords: chimera states, phase oscillators, coupled networks, mutual information, information flow

Citation: Deschle N, Daffertshofer A, Battaglia D and Martens EA (2019) Directed Flow of Information in Chimera States. Front. Appl. Math. Stat. 5:28. doi: 10.3389/fams.2019.00028

Received: 15 December 2018; Accepted: 21 May 2019;

Published: 25 June 2019.

Edited by:

Ralph G. Andrzejak, Universitat Pompeu Fabra, Spain

Reviewed by:

Jan Sieber, University of Exeter, United Kingdom

Copyright © 2019 Deschle, Daffertshofer, Battaglia and Martens. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nicolás Deschle, n.deschle@vu.nl; Andreas Daffertshofer, a.daffertshofer@vu.nl; Erik A. Martens, eama@dtu.dk

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
