- 1Department of Computer Science, Aalto University, Otakaari, Finland

- 2Department of Computer Science, Delft University of Technology, Delft, Netherlands

We present a novel condition, which we term the network nullspace property, which ensures accurate recovery of graph signals representing massive network-structured datasets from few signal values. The network nullspace property couples the cluster structure of the underlying network with the geometry of the sampling set. Our results can be used to design efficient sampling strategies based on the network topology.

1. Introduction

A recent line of work proposed efficient convex optimization methods for recovering graph signals which represent label information of network structured datasets (cf. [1, 2]). These methods rest on the hypothesis that the true underlying graph signal is nearly constant over well-connected subsets of nodes (clusters).

In this paper, we introduce a novel recovery condition, termed the network nullspace property (NNSP), which guarantees that convex optimization accurately recovers clustered (“piece-wise constant”) graph signals from knowledge of their values on a small subset of sampled nodes. The NNSP couples the clustering structure of the underlying data graph to the locations of the sampled nodes by interpreting the underlying graph as a flow network.

The presented results apply to an arbitrary partitioning, but are most useful for a partitioning such that nodes in the same cluster are connected with edges of relatively large weights, whereas edges between clusters have low weights. Our analysis reveals that if cluster boundaries are well-connected (in a sense made precise) to the sampled nodes, then accurate recovery of clustered graph signals is possible by solving a convex optimization problem.

Most of the existing work on graph signal processing applies spectral graph theory to define a notion of band-limited graph signals, e.g., based on principal subspaces of the graph Laplacian matrix, as well as sufficient conditions for recoverability, i.e., sampling theorems, for those signals [3, 4]. In contrast, our approach does not rely on spectral graph theory, but involves structural (connectivity) properties of the underlying data graph. Moreover, we consider signal models which amount to clustered (piece-wise constant) graph signals. These models have also been used in Chen et al. [5] for approximating graph signals arising in various applications.

Closest to our work are [6, 7], which provide sufficient conditions such that a variant of the Lasso method (network Lasso) accurately recovers clustered graph signals from noisy observations. However, in contrast to this line of work, we assume the graph signal values are only observed on a small subset of nodes.

The NNSP is closely related to the network compatibility (NCC) condition, which has been introduced by a subset of the authors in Jung et al. [8] for analyzing the accuracy of network Lasso. The NCC is a stronger condition in the sense that once the NCC is satisfied, the NNSP is also guaranteed to hold.

2. Problem Formulation

Many important applications involve massive heterogeneous datasets composed of heterogeneous data chunks, e.g., mixtures of audio, video and text data [9]. Moreover, datasets typically contain mostly unlabeled data points; only a small fraction is labeled data. An efficient strategy to handle such heterogeneous datasets is to organize them as a network or data graph whose nodes represent individual data points.

2.1. Graph Signal Representation of Data

In what follows we consider datasets which are represented by a weighted data graph $\mathcal{G} = (\mathcal{V}, \mathcal{E}, \mathbf{W})$ with nodes $\mathcal{V} = \{1, \ldots, N\}$, each node representing an individual data point. These nodes are connected by edges $\{i, j\} \in \mathcal{E}$. In particular, given some application-specific notion of similarity, the edges of the data graph connect similar data points $i, j \in \mathcal{V}$. In some applications it is possible to quantify the extent to which data points are similar, e.g., via the distance between sensors in a wireless sensor network [10]. Given two similar data points $i, j \in \mathcal{V}$, we quantify the strength of their connection by a non-negative edge weight $W_{i,j} \geq 0$, which we collect in the symmetric weight matrix $\mathbf{W} \in \mathbb{R}_{+}^{N \times N}$.

In what follows we will tacitly assume that the data graph $\mathcal{G}$ is oriented by declaring for each edge $e = \{i, j\}$ one node as the head $e^{+}$ and the other node as the tail $e^{-}$. For the oriented data graph we define the directed neighborhoods of a node $i \in \mathcal{V}$ as $\mathcal{N}_{+}(i) := \{j \in \mathcal{V} : e = \{i, j\} \in \mathcal{E}, e^{+} = i\}$ and $\mathcal{N}_{-}(i) := \{j \in \mathcal{V} : e = \{i, j\} \in \mathcal{E}, e^{-} = i\}$. We highlight that the orientation of the data graph is not related to any intrinsic property of the underlying data set. In particular, the weight matrix $\mathbf{W}$ is symmetric since the weights $W_{i,j}$ are associated with undirected edges $\{i, j\} \in \mathcal{E}$. However, using an (arbitrary but fixed) orientation of the data graph will be notationally convenient in order to formulate our main results.

Besides the edge structure $\mathcal{E}$, network-structured datasets typically also carry label information which induces a graph signal defined over $\mathcal{G}$. We define a graph signal $x[\cdot]$ over the graph $\mathcal{G}$ as a mapping $\mathcal{V} \rightarrow \mathbb{R}$, which associates (labels) every node $i \in \mathcal{V}$ with the signal value $x[i] \in \mathbb{R}$. In a supervised machine learning application, the signal values $x[i]$ might represent class membership in a classification problem or the target (output) value in a regression problem. We denote the space of all graph signals, which is also known as the vertex space (cf. [11]), by $\mathbb{R}^{\mathcal{V}}$.

2.2. Graph Signal Recovery

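We aim at recovering (learning) a graph signal $x[\cdot] \in \mathbb{R}^{\mathcal{V}}$ defined over the data graph $\mathcal{G}$, from observing its values $\{x[i]\}_{i \in \mathcal{M}}$ on a (small) sampling set $\mathcal{M} := \{i_1, \ldots, i_M\} \subseteq \mathcal{V}$, where typically $M \ll N$.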

The recovery of the entire graph signal $x[\cdot]$ from the incomplete information provided by the signal samples $\{x[i]\}_{i \in \mathcal{M}}$ is possible under a clustering assumption, which also underlies many supervised machine learning methods [12]. This assumption requires the signal values or labels of data points which are close, with respect to the data graph topology, to be similar. More formally, we expect the underlying graph signal to have a relatively small total variation (TV)

$$\|x[\cdot]\|_{\rm TV} := \sum_{\{i,j\} \in \mathcal{E}} W_{i,j} |x[j] - x[i]|.$$

The total variation of the graph signal $x[\cdot]$ obtained over a subset $\mathcal{S} \subseteq \mathcal{E}$ of edges is denoted $\|x[\cdot]\|_{\mathcal{S}} := \sum_{\{i,j\} \in \mathcal{S}} W_{i,j} |x[j] - x[i]|$.
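As a minimal sketch of the weighted total variation, the following Python function sums the weighted absolute signal differences over all edges or over a chosen subset of edges. The dictionary-based edge representation, the function name `total_variation`, and the 4-node toy chain are assumptions made for illustration only.

```python
def total_variation(edges, x, subset=None):
    """Weighted TV: sum of W_ij * |x[j] - x[i]| over the edges.

    edges  : dict mapping an edge (i, j) to its non-negative weight W_ij
    x      : list of signal values, indexed by node
    subset : optional set of edges; if given, only these edges are summed
    """
    total = 0.0
    for (i, j), weight in edges.items():
        if subset is None or (i, j) in subset:
            total += weight * abs(x[j] - x[i])
    return total


# Hypothetical toy graph: a 4-node chain with a weaker middle edge.
edges = {(0, 1): 4.0, (1, 2): 2.0, (2, 3): 4.0}
x = [1.0, 1.0, 5.0, 5.0]                       # piece-wise constant signal

tv_all = total_variation(edges, x)             # TV over all edges
tv_mid = total_variation(edges, x, {(1, 2)})   # TV over the middle edge only
tv_left = total_variation(edges, x, {(0, 1)})  # TV over an edge inside a cluster
```

For a piece-wise constant signal, only the edges across which the signal changes contribute, so `tv_all` and `tv_mid` coincide here.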

Some well-known examples of clustered graph signals include low-pass signals in digital signal processing, where time samples at adjacent time instants are strongly correlated, and images, where close-by pixels tend to have similar colors. Graph signals with a small total variation are sparse in the sense of changing significantly over few edges only. In particular, if we stack the signal differences $x[i] - x[j]$ (across the edges $\{i,j\} \in \mathcal{E}$) into a big vector of size $|\mathcal{E}|$, then this vector is sparse in the ordinary sense of having only few significantly large entries [13].

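In order to recover a signal with small TV $\|x[\cdot]\|_{\rm TV}$ from its signal values $\{x[i]\}_{i \in \mathcal{M}}$, a natural strategy is:

$$\hat{x}[\cdot] \in \arg\min_{\tilde{x}[\cdot] \in \mathbb{R}^{\mathcal{V}}} \|\tilde{x}[\cdot]\|_{\rm TV} \quad \text{s.t.} \quad \tilde{x}[i] = x[i] \text{ for all } i \in \mathcal{M}. \tag{1}$$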

There exist highly efficient methods for solving convex optimization problems of the form (1) (cf. [14–16] and the references therein).
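One standard way to see why (1) is tractable is to rewrite the minimization of the weighted total variation as a linear program. The sketch below writes $\mathcal{E}$ for the edge set and $\mathcal{M}$ for the sampling set, and introduces one auxiliary variable $t_{\{i,j\}}$ per edge; these auxiliary variables are notation assumed here for illustration and do not appear elsewhere in the paper.

$$
\begin{aligned}
\min_{\tilde{x}[\cdot],\, t} \quad & \sum_{\{i,j\} \in \mathcal{E}} W_{i,j}\, t_{\{i,j\}} \\
\text{s.t.} \quad & -t_{\{i,j\}} \leq \tilde{x}[j] - \tilde{x}[i] \leq t_{\{i,j\}} \quad \text{for all } \{i,j\} \in \mathcal{E}, \\
& \tilde{x}[i] = x[i] \quad \text{for all } i \in \mathcal{M}.
\end{aligned}
$$

Since the weights are non-negative, each $t_{\{i,j\}}$ can be taken equal to $|\tilde{x}[j] - \tilde{x}[i]|$ at an optimum, so the optimal value equals the minimum TV in (1).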

3. Recovery Conditions

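The accuracy of any learning method which is based on solving (1) depends on the deviations between the solutions $\hat{x}[\cdot]$ of the optimization problem (1) and the true underlying graph signal $x[\cdot] \in \mathbb{R}^{\mathcal{V}}$. In what follows, we introduce the NNSP as a sufficient condition on the sampling set and graph topology such that any solution of (1) accurately resembles an underlying clustered (piece-wise constant) graph signal of the form (cf. [5])

$$x_c[i] = \sum_{l=1}^{|\mathcal{F}|} a_l \mathcal{I}_{\mathcal{C}_l}[i], \quad \text{with } \mathcal{I}_{\mathcal{C}_l}[i] := 1 \text{ for } i \in \mathcal{C}_l \text{ and } \mathcal{I}_{\mathcal{C}_l}[i] := 0 \text{ otherwise}. \tag{2}$$

The signal model (2) is defined using a fixed partition $\mathcal{F} = \{\mathcal{C}_1, \ldots, \mathcal{C}_{|\mathcal{F}|}\}$ of the entire data graph $\mathcal{G}$ into disjoint clusters $\mathcal{C}_l \subseteq \mathcal{V}$. The signal model (2) has been studied in Chen et al. [5], where it was demonstrated that, compared to band-limited graph signal models, it allows for a more accurate approximation of datasets obtained from weather stations.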

While our analysis applies to an arbitrary partition $\mathcal{F}$, our results are most useful for partitions where the nodes within clusters are connected by many edges with large weight, while nodes of different clusters are loosely connected by few edges with small weights. Such reasonable partitions can be obtained by one of the recently proposed highly scalable clustering methods, e.g., [17, 18].

We highlight that the knowledge of the partition underlying the signal model (2) is only required for the analysis of signal recovery methods (such as sparse label propagation [15]) which are based on solving the recovery problem (1). However, the partition is not required for the actual implementation of those methods, as the recovery problem (1) itself does not involve the partition.

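We will characterize a partition $\mathcal{F}$ by its boundary

$$\partial\mathcal{F} := \{\{i,j\} \in \mathcal{E} : i \in \mathcal{C}_a, j \in \mathcal{C}_b, a \neq b\}, \tag{3}$$

which is the set of edges connecting nodes from different clusters. We highlight that the recovery problem (1) does not require knowledge of the partition $\mathcal{F}$.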
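The boundary of a partition is straightforward to compute once every node carries a cluster label. The following minimal sketch assumes a hypothetical list `cluster_of` holding one cluster index per node and a toy 4-node chain; neither is taken from the paper.

```python
def boundary_edges(edges, cluster_of):
    """Return the set of edges whose two endpoints lie in different clusters."""
    return {(i, j) for (i, j) in edges if cluster_of[i] != cluster_of[j]}


# Hypothetical 4-node chain split into two clusters of two nodes each.
edges = {(0, 1): 4.0, (1, 2): 2.0, (2, 3): 4.0}
cluster_of = [0, 0, 1, 1]

boundary = boundary_edges(edges, cluster_of)
```

Here the single edge between nodes 1 and 2 forms the boundary, since it is the only edge joining the two clusters.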

3.1. Network Nullspace Property

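Consider a clustered graph signal $x_c[\cdot]$ of the form (2). We observe its values $x_c[i]$ at the sampled nodes $i \in \mathcal{M}$ only. In order to have any chance of recovering the complete signal only from the samples $\{x_c[i]\}_{i \in \mathcal{M}}$, we have to restrict the nullspace of the sampling set, which we define as

$$\mathcal{K}(\mathcal{M}) := \{\tilde{x}[\cdot] \in \mathbb{R}^{\mathcal{V}} : \tilde{x}[i] = 0 \text{ for all } i \in \mathcal{M}\}. \tag{4}$$

Thus, the nullspace $\mathcal{K}(\mathcal{M})$ contains exactly those graph signals which vanish at all nodes of the sampling set $\mathcal{M}$. Clearly, we have no chance of recovering any signal which belongs to the nullspace $\mathcal{K}(\mathcal{M})$, as it cannot be distinguished from the all-zero signal $\tilde{x}[i] = 0$, for all nodes $i \in \mathcal{V}$, which results in exactly the same (vanishing) measurements $\tilde{x}[i] = 0$ for all $i \in \mathcal{M}$.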

In order to define the NNSP, we need the notion of a flow with demands [19].

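Definition 1. Given a graph $\mathcal{G} = (\mathcal{V}, \mathcal{E}, \mathbf{W})$, a flow with demands $g[i] \in \mathbb{R}$, for $i \in \mathcal{V}$, is a mapping $f[\cdot]: \mathcal{E} \rightarrow \mathbb{R}$ satisfying the conservation law

$$\sum_{j \in \mathcal{N}_{+}(i)} f[\{i,j\}] - \sum_{j \in \mathcal{N}_{-}(i)} f[\{i,j\}] = g[i] \tag{5}$$

at every node $i \in \mathcal{V}$.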

For a more detailed discussion of the concept of network flows, we refer to [19]. In this paper, we use the concept of network flows in order to characterize the connectivity properties or topology of a data graph $\mathcal{G}$ by interpreting the edge weights $W_{i,j}$ as capacity constraints that limit the amount of flow along the edge $e = \{i,j\} \in \mathcal{E}$.
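To make the notion of a flow with demands concrete, the sketch below computes the demand at every node as the net inflow, i.e., the flow on edges whose head is the node minus the flow on edges whose tail is the node. This sign convention, the `(tail, head)` edge encoding, and the toy chain are assumptions made for illustration.

```python
def demands(num_nodes, flow):
    """Net inflow g[i] at every node for a flow given as {(tail, head): value}."""
    g = [0.0] * num_nodes
    for (tail, head), value in flow.items():
        g[head] += value   # flow arriving at the head of the edge
        g[tail] -= value   # flow leaving the tail of the edge
    return g


# A constant flow of 1.5 routed along an oriented 4-node chain 0 -> 1 -> 2 -> 3.
flow = {(0, 1): 1.5, (1, 2): 1.5, (2, 3): 1.5}
g = demands(4, flow)
```

Only the two end nodes have non-zero demand; the interior nodes merely pass the flow on. This is the situation exploited by the NNSP, where non-zero demands are permitted only at sampled nodes.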

In particular, the notion of network flows with demands allows us to adapt the nullspace property, introduced within the theory of compressed sensing [20, 21] for sparse signals, to the problem of recovering clustered graph signals (cf. (2)).

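Definition 2. Consider a partition $\mathcal{F} = \{\mathcal{C}_1, \ldots, \mathcal{C}_{|\mathcal{F}|}\}$ of pairwise disjoint subsets of nodes (clusters) $\mathcal{C}_l \subseteq \mathcal{V}$ and a set of sampled nodes $\mathcal{M} \subseteq \mathcal{V}$. The sampling set is said to satisfy the NNSP relative to the partition $\mathcal{F}$, denoted NNSP-$\mathcal{F}$, if for any signature $\{\sigma_e\}_{e \in \partial\mathcal{F}} \in \{-1, 1\}^{\partial\mathcal{F}}$, which assigns the sign $\sigma_e$ to a boundary edge $e \in \partial\mathcal{F}$, there is a flow $f[e]$

• with demands $g[i] = 0$, for $i \notin \mathcal{M}$,

• its values satisfy

$$f[e] = \kappa \sigma_e W_e \text{ for } e \in \partial\mathcal{F}, \qquad |f[e]| \leq W_e \text{ for } e \in \mathcal{E} \setminus \partial\mathcal{F}, \tag{6}$$

with some constant $\kappa > 1$.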

It turns out that if a sampling set $\mathcal{M}$ satisfies NNSP-$\mathcal{F}$ for a given partition $\mathcal{F}$ of the data graph, then the nullspace $\mathcal{K}(\mathcal{M})$ (cf. (4)) of the sampling set cannot contain a non-zero clustered graph signal of the form (2).

A naive verification of the NNSP involves a search over all signatures, whose number is around $2^{|\partial\mathcal{F}|}$, which might be intractable for large data graphs. However, similar to many results in compressed sensing, we expect using probabilistic models for the data graph to render the verification of NNSP tractable [20]. In particular, we expect that probabilistic statements about how likely the NNSP is satisfied for random data graphs (e.g., conforming to a stochastic block model) can be obtained easily.
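For very simple topologies the search over signatures can be avoided altogether. On a chain graph, zero demand at unsampled nodes forces the flow to be constant between two consecutive sampled nodes and to vanish outside the outermost sampled nodes. The sketch below uses this observation to compute the largest constant κ for which the flow conditions of Definition 2 can be met on a chain; the condition holds if the returned value exceeds 1. It is a hand-derived shortcut for chain graphs only, not a general verification procedure, and the function name, the edge indexing, and the chosen sampling sets are assumptions made for illustration.

```python
import math


def chain_nnsp_kappa(weights, boundary, sampled):
    """Largest kappa achievable on a chain graph; 0.0 if no suitable flow exists.

    weights[k] : positive weight of edge {k, k+1} (nodes are 0, ..., len(weights))
    boundary   : set of edge indices k whose endpoints lie in different clusters
    sampled    : set of sampled node indices
    """
    num_nodes = len(weights) + 1
    s = sorted(sampled)
    if not s:
        return 0.0 if boundary else math.inf
    # Zero demand at unsampled end nodes forces zero flow outside [s[0], s[-1]].
    outer = list(range(0, s[0])) + list(range(s[-1], num_nodes - 1))
    if any(k in boundary for k in outer):
        return 0.0
    kappa = math.inf
    for a, b in zip(s, s[1:]):
        segment = range(a, b)  # edges between two consecutive sampled nodes
        cut = [k for k in segment if k in boundary]
        if len(cut) > 1:
            return 0.0  # one constant flow value cannot match opposite signs
        if len(cut) == 1:
            inner = [weights[k] for k in segment if k not in boundary]
            if inner:
                kappa = min(kappa, min(inner) / weights[cut[0]])
    return kappa


# Two-cluster chain with 8 nodes, boundary weight 1/delta, both end nodes sampled.
delta = 2.0
kappa_two = chain_nnsp_kappa([1, 1, 1, 1 / delta, 1, 1, 1], {3}, {0, 7})

# 100-node chain, clusters of 10 nodes, weights 4 (inside) and 2 (across clusters).
w = [2.0 if k % 10 == 9 else 4.0 for k in range(99)]
cuts = {k for k in range(99) if k % 10 == 9}
one_per_cluster = set(range(5, 100, 10))           # hypothetical choice of nodes
two_missing = (one_per_cluster - {25, 65}) | {6, 7}  # same size, two clusters unsampled
kappa_one_per_cluster = chain_nnsp_kappa(w, cuts, one_per_cluster)
kappa_two_missing = chain_nnsp_kappa(w, cuts, two_missing)
```

With one sampled node per cluster, every boundary edge is enclosed by two sampled nodes and κ equals the ratio of the intra-cluster to the inter-cluster weight. Once a cluster contains no sampled node, two boundary edges share one constant flow value and the condition fails.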

3.2. Exact Recovery of Clustered Signals

Now we are ready to state our main result, i.e., the NNSP ensures that the solution of (1) is unique and coincides with the true underlying clustered graph signal of the form (2).

Theorem 3. Consider a clustered graph signal $x_c[\cdot]$ (cf. (2)) which is observed only at the sampling set $\mathcal{M} \subseteq \mathcal{V}$. If the sampling set satisfies NNSP-$\mathcal{F}$, then the solution of (1) is unique and coincides with $x_c[\cdot]$.

Proof: see Appendix.

Thus, if we sample a clustered graph signal $x[\cdot]$ (cf. (2)) on a sampling set $\mathcal{M}$ which satisfies NNSP-$\mathcal{F}$, we can expect convex recovery algorithms which are based on solving (1) to accurately recover the true underlying graph signal $x[\cdot]$.

A partial converse. The recovery condition provided by Theorem 3 is essentially tight, i.e., if the sampling set does not satisfy NNSP-$\mathcal{F}$, then there are solutions to (1) which are different from the true underlying clustered graph signal.

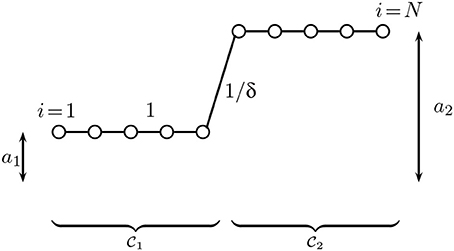

Consider a clustered graph signal

$$x_c[i] = a_1 \mathcal{I}_{\mathcal{C}_1}[i] + a_2 \mathcal{I}_{\mathcal{C}_2}[i] \tag{7}$$

defined over a chain graph containing an even number $N$ of nodes (Figure 1). We partition the graph into two equal-sized clusters $\mathcal{F} = \{\mathcal{C}_1, \mathcal{C}_2\}$ with $\mathcal{C}_1 = \{1, \ldots, N/2\}$ and $\mathcal{C}_2 = \{N/2+1, \ldots, N\}$. Nodes within the same cluster are connected by edges with unit weight, while the single edge $\{N/2, N/2+1\}$ connecting the two clusters has weight $1/\delta$. Let us assume that we sample the graph signal $x_c[i]$ on the sampling set $\mathcal{M} = \{1, N\}$.

Figure 1. A clustered graph signal (cf. (2)) defined over a chain graph which is partitioned into two equal-size clusters $\mathcal{C}_1$ and $\mathcal{C}_2$ which consist of consecutive nodes. The edges connecting nodes within the same cluster have weight 1, while the single edge connecting nodes from different clusters has weight $1/\delta$.

For any δ > 1, the sampling set $\mathcal{M}$ satisfies the NNSP-$\mathcal{F}$ with κ = δ > 1 (cf. (6)). Thus, as long as the boundary edge has weight 1/δ with δ > 1, Theorem 3 guarantees that the true clustered graph signal $x_c[i]$ can be perfectly recovered via solving (1).

If, on the other hand, the weight of the boundary edge is 1/δ with some δ ≤ 1, then the sampling set $\mathcal{M}$ does not satisfy the NNSP-$\mathcal{F}$. In this case, as can be verified easily, the true graph signal $x_c[i]$ is no longer the unique solution to (1). Indeed, for δ ≤ 1 it can be shown that the graph signal (7) has a TV norm at least as large as the graph signal which linearly interpolates between the sampled signal values $x_c[1]$ and $x_c[N]$.
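The comparison between the clustered signal and the linear interpolation can be checked numerically. The sketch below builds both signals on a chain with unit weights and a middle edge of weight 1/δ, and evaluates their total variation; the number of nodes (10) and the signal values 0 and 1 are arbitrary choices made for illustration.

```python
def chain_tv(x, boundary_weight):
    """TV on a chain with unit weights, except for the edge joining the two halves."""
    n = len(x)
    mid = n // 2 - 1  # 0-based index of the edge between the two clusters
    return sum((boundary_weight if k == mid else 1.0) * abs(x[k + 1] - x[k])
               for k in range(n - 1))


def compare(n, delta, a1=0.0, a2=1.0):
    """Return (TV of clustered signal, TV of linear interpolation between the ends)."""
    clustered = [a1] * (n // 2) + [a2] * (n // 2)
    interpolated = [a1 + (a2 - a1) * k / (n - 1) for k in range(n)]
    return chain_tv(clustered, 1 / delta), chain_tv(interpolated, 1 / delta)


tv_c_weak, tv_i_weak = compare(n=10, delta=2.0)      # weak boundary edge
tv_c_strong, tv_i_strong = compare(n=10, delta=0.5)  # strong boundary edge
```

For δ = 2 the clustered signal has the smaller TV, so it is preferred by (1). For δ = 0.5 the interpolating signal, which agrees with the clustered signal at both sampled end nodes, has a strictly smaller TV, so the clustered signal is no longer a solution of (1).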

3.3. Recovery of Approximately Clustered Signals

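The scope of Theorem 3 is somewhat limited as it applies only to graph signals which are precisely of the form (2). We now state a more general result applying to any graph signal $x[\cdot] \in \mathbb{R}^{\mathcal{V}}$.

Theorem 4. Consider a graph signal $x[\cdot] \in \mathbb{R}^{\mathcal{V}}$ which is observed only at the sampling set $\mathcal{M}$. If NNSP-$\mathcal{F}$ holds with κ = 2 in (6), any solution $\hat{x}[\cdot]$ of (1) satisfies (cf. (2))

$$\|\hat{x}[\cdot] - x[\cdot]\|_{\rm TV} \leq 6 \min_{a_l \in \mathbb{R}} \Big\| x[\cdot] - \sum_{l=1}^{|\mathcal{F}|} a_l \mathcal{I}_{\mathcal{C}_l}[\cdot] \Big\|_{\rm TV}. \tag{8}$$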

Proof: see Appendix.

Thus, as long as the underlying graph signal $x[\cdot]$ can be well approximated by a clustered signal of the form (2), any solution $\hat{x}[\cdot]$ of (1) is a graph signal which varies significantly only over the boundary edges $\partial\mathcal{F}$. We highlight that the error bound (8) only controls the TV (semi-)norm of the error signal $\hat{x}[\cdot] - x[\cdot]$. In particular, this bound does not directly allow us to quantify the size of the global mean squared error $(1/N) \sum_{i \in \mathcal{V}} (\hat{x}[i] - x[i])^2$. However, the bound (8) allows us to characterize identifiability of the underlying partition $\mathcal{F}$. Indeed, if the signal values $a_l$ in (2) differ sufficiently between neighboring clusters, we can read off the cluster boundaries from the signal differences $\hat{x}[i] - \hat{x}[j]$ (over edges $\{i,j\} \in \mathcal{E}$).

One particular use of Theorems 3 and 4 is to guide the choice of the sampling set $\mathcal{M}$. In particular, one should aim at sampling nodes such that the NNSP is likely to be satisfied. According to the definition of the NNSP, we should sample nodes which are well connected (in the sense of allowing for a large flow) to the boundary edges which connect different clusters. This approach has been studied empirically in [22, 23], verifying accurate recovery by efficient convex optimization methods using sampling sets which satisfy the NNSP (cf. Definition 2) with high probability.

4. Numerical Experiments

We now verify the practical relevance of our theoretical findings by means of two numerical experiments. The first experiment is based on a synthetic data set whose underlying data graph is a chain graph. A second experiment revolves around a real-world data set describing the roadmap of Minnesota [5, 24].

4.1. Chain Graph

We generated a synthetic data set whose data graph is a chain graph. This chain graph contains $N = 100$ nodes which are connected by $N - 1 = 99$ undirected edges $\{i, i+1\}$, for $i \in \{1, \ldots, 99\}$, and partitioned into 10 equal-size clusters $\mathcal{C}_1, \ldots, \mathcal{C}_{10}$, each cluster containing 10 consecutive nodes. The edges connecting nodes in the same cluster have weight $W_{i,j} = 4$, while those connecting different clusters have weight $W_{i,j} = 2$. For this data graph we generated a clustered graph signal $x[i]$ of the form $x[i] = \sum_{l=1}^{10} a_l \mathcal{I}_{\mathcal{C}_l}[i]$ (cf. (2)) with alternating coefficients $a_l \in \{1, 5\}$.
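The synthetic chain-graph data described above can be generated in a few lines. In this minimal sketch nodes are indexed from 0, and the alternating coefficients are assumed to start with the value 1 in the first cluster; the text does not specify which value comes first.

```python
N = 100            # number of nodes in the chain
CLUSTER_SIZE = 10  # consecutive nodes per cluster

# Cluster index of every node (0-based): nodes 0..9 -> 0, nodes 10..19 -> 1, ...
cluster_of = [i // CLUSTER_SIZE for i in range(N)]

# Edge weights: 4 inside a cluster, 2 across a cluster boundary.
weights = {
    (i, i + 1): 4.0 if cluster_of[i] == cluster_of[i + 1] else 2.0
    for i in range(N - 1)
}

# Alternating cluster coefficients in {1, 5} (starting value is an assumption).
coeffs = [1.0 if l % 2 == 0 else 5.0 for l in range(N // CLUSTER_SIZE)]
x = [coeffs[cluster_of[i]] for i in range(N)]

# Total variation of the clustered signal: only boundary edges contribute.
tv = sum(w * abs(x[j] - x[i]) for (i, j), w in weights.items())
num_boundary_edges = sum(1 for w in weights.values() if w == 2.0)
```

The signal jumps by 4 across each of the 9 boundary edges of weight 2, so the total variation is determined entirely by the cluster boundaries.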

The graph signal $x[i]$ is observed only at the nodes belonging to a sampling set, which is either $\mathcal{M}_1$ or $\mathcal{M}_2$. The sampling set $\mathcal{M}_1$ contains exactly one node from each cluster and thus, as can be verified easily, satisfies the NNSP (cf. Definition 2). While having the same size as $\mathcal{M}_1$, the sampling set $\mathcal{M}_2$ does not contain any node of two of the clusters.

In Figure 2, we illustrate the recovered signals obtained by solving (1) using the sparse label propagation (SLP) algorithm [15], which is fed with signal values on the sampling set (being either $\mathcal{M}_1$ or $\mathcal{M}_2$). The signal recovered from the sampling set $\mathcal{M}_1$, which satisfies the NNSP, closely resembles the true underlying clustered graph signal. In contrast, the sampling set $\mathcal{M}_2$, which does not satisfy the NNSP, results in a recovered signal which significantly deviates from the true signal.

Figure 2. Clustered graph signal $x[i]$ along with the recovered signals obtained from sampling sets $\mathcal{M}_1$ and $\mathcal{M}_2$.

4.2. Minnesota Roadmap

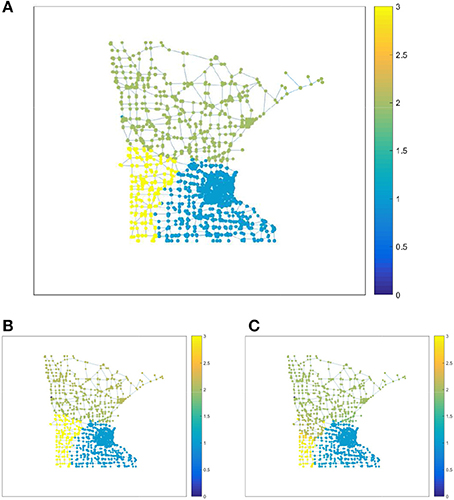

The second data set, with associated data graph , represents the roadmap of Minnesota [5]. The data graph consists of nodes, and edges. We generate a clustered graph signal defined over by randomly selecting three different nodes {i1, i2, i3} which are declared as “cluster centres” of the clusters . The remaining nodes are then associated to the cluster whose center is nearest in the sense of smallest geodesic distance. The edges connecting nodes within the same cluster have weight Wi, j = 4, and those connecting different clusters have weight Wi, j = 2.

We use SLP to recover the entire graph signal from its values obtained for the nodes in a sampling set. Two different choices $\mathcal{M}_1$ and $\mathcal{M}_2$ for the sampling set are considered: The sampling set $\mathcal{M}_1$ is based on the NNSP and consists of all nodes which are adjacent to the boundary edges between two different clusters. In contrast, the sampling set $\mathcal{M}_2$ is obtained by selecting uniformly at random a total of $|\mathcal{M}_1|$ nodes from $\mathcal{V}$, i.e., we ensure $|\mathcal{M}_2| = |\mathcal{M}_1|$.
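The two sampling strategies can be sketched as follows. The boundary-based set collects every node incident to an edge between two different clusters, and the random baseline draws the same number of nodes uniformly at random. The function names, the fixed random seed, and the small 2 × 4 grid graph are assumptions for illustration; the Minnesota roadmap data itself is not reproduced here.

```python
import random


def boundary_sampling_set(edges, cluster_of):
    """All nodes incident to an edge whose endpoints lie in different clusters."""
    sampled = set()
    for i, j in edges:
        if cluster_of[i] != cluster_of[j]:
            sampled.update((i, j))
    return sampled


def random_sampling_set(num_nodes, size, seed=0):
    """Uniformly random sampling set of the given size (baseline)."""
    return set(random.Random(seed).sample(range(num_nodes), size))


# Hypothetical 2 x 4 grid graph, split into a left and a right cluster.
edges = [(0, 1), (1, 2), (2, 3), (4, 5), (5, 6), (6, 7),
         (0, 4), (1, 5), (2, 6), (3, 7)]
cluster_of = [0, 0, 1, 1, 0, 0, 1, 1]

m1 = boundary_sampling_set(edges, cluster_of)          # NNSP-guided choice
m2 = random_sampling_set(len(cluster_of), len(m1))     # random set of equal size
```

Fixing the size of the random set to that of the boundary-based set keeps the comparison between the two strategies fair, as in the experiment described above.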

The resulting MSE is 0.0023 for the sampling set $\mathcal{M}_1$ (conforming with NNSP), while the recovery using the random sampling set $\mathcal{M}_2$ incurred an average (over 100 i.i.d. simulation runs) MSE of 0.0502. In Figure 3, we depict the recovered graph signals using signal samples from either $\mathcal{M}_1$ or $\mathcal{M}_2$ (one typical realization). Evidently, the sampling set $\mathcal{M}_1$ (which is guided by the NNSP) yields a more accurate recovery than the random sampling set $\mathcal{M}_2$.

Figure 3. True graph signal (A) and recovered signals for the Minnesota roadmap data set obtained when using (B) sampling set $\mathcal{M}_1$ or (C) sampling set $\mathcal{M}_2$.

5. Conclusions

We considered the problem of recovering clustered graph signals, defined over complex networks, from observing their signal values on a small set of sampled nodes.

By applying tools from compressed sensing, we introduced the NNSP as a sufficient condition on the graph topology and sampling set such that a convex recovery method is accurate. This recovery condition is based on the connectivity properties of the underlying network. In particular, it requires the existence of certain network flows with the edge weights of the data graph being interpreted as capacities.

The NNSP involves both the sampling set and the cluster structure of the data graph. Roughly speaking, it requires sampling more densely near the boundaries between different clusters. This intuition has been verified by means of numerical experiments on synthetic and real-world datasets.

Our work opens up several avenues for future research. In particular, it would be interesting to analyze how likely the NNSP holds for certain random network models and sampling strategies. The tightness of the resulting recovery guarantees could then be contrasted with fundamental lower bounds obtained from an information-theoretic approach to minimax-estimation. Moreover, we would like to study variations of the SLP recovery method which are more suitable for classification problems.

Author Contributions

AJ: Designing research. Deriving main theoretical results. Writing paper. MH: Numerical experiments. Proofreading paper.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Parts of the material underlying this work have been presented in Jung et al. [25]. However, this paper significantly extends [25] by providing detailed proofs as well as extended discussions of the main results. This manuscript is available as a pre-print [26] at the following address: https://arxiv.org/abs/1705.04379. Copyright of this pre-print version rests with the authors.

References

1. Jung A, Berger P, Hannak G, Matz G. Scalable graph signal recovery for big data over networks. In: Proceedings of IEEE-SP Workshop on Signal Processing Advances in Wireless Communications (SPAWC). Edinburgh (2016).

2. Hannak G, Berger P, Matz G, Jung A. Efficient graph signal recovery over big networks. In: Proceedings of Asilomar Conference Signals, Systems, Computers. Pacific Grove, CA (2016). p. 1–6.

3. Marques AG, Segarra S, Leus G, Ribeiro A. Sampling of graph signals with successive local aggregation. IEEE Trans Signal Process. (2016) 64:1832–43. doi: 10.1109/TSP.2015.2507546

4. Chen S, Varma R, Sandryhaila A, Kovačević J. Discrete signal processing on graphs: sampling theory. IEEE Trans Signal Process. (2015) 63:6510–23. doi: 10.1109/TSP.2015.2469645

5. Chen S, Varma R, Sandryhaila A, Kovačević J. Representations of piecewise smooth signals on graphs. In: Proceedings of IEEE ICASSP 2016. Shanghai (2016).

6. Sharpnack J, Rinaldo A, Singh A. Sparsistency of the edge lasso over graphs. In: Proceedings 15th International Conference on Artificial Intelligence and Statistics (AISTATS). La Palma (2012).

7. Wang Y-X, Sharpnack J, Smola A J, Tibshirani R J. Trend filtering on graphs. J Mach Lear Res. (2016) 17:120–45.

8. Jung A, Tran N, Mara A. When is network lasso accurate? Front Appl Math Stat. (2018) 3:28. doi: 10.3389/fams.2017.00028

9. Cui S, Hero A, Luo ZQ, Moura J. editors. Big Data over Networks. La Palma: Cambridge University Press (2016).

10. Zhao F, Guibas LJ. Wireless Sensor Networks: An Information Processing Approach. Amsterdam: Morgan Kaufmann (2004).

12. Chapelle O, Schölkopf B, Zien A. editors. Semi-Supervised Learning. Cambridge, MA: The MIT Press (2006).

13. Donoho DL. Compressed sensing. IEEE Trans Inf Theory (2006) 52:1289–306. doi: 10.1109/TIT.2006.871582

14. Zhu Y. An augmented ADMM algorithm with application to the generalized lasso problem. J Comput Graph Stat. (2017) 26:195–204. doi: 10.1080/10618600.2015.1114491

15. Jung A, Hero AO III, Mara A, Jahromi B. Semi-supervised learning via sparse label propagation. arXiv (2017) 315–39. Available online at: https://arxiv.org/abs/1612.01414

16. Chambolle A, Pock T. A first-order primal-dual algorithm for convex problems with applications to imaging. J Math Imaging Vis. (2011) 40:120–45. doi: 10.1007/s10851-010-0251-1

17. Spielman D, Teng S-H. A local clustering algorithm for massive graphs and its application to nearly linear time graph partitioning. SIAM J Comput. (2013) 42:1–26. doi: 10.1137/080744888

18. Fortunato S. Community detection in graphs. Phys Rep. (2010) 486:75–174. doi: 10.1016/j.physrep.2009.11.002

20. Foucart S, Rauhut H. A Mathematical Introduction to Compressive Sensing. New York, NY: Springer (2012).

21. Eldar YC. Sampling Theory - Beyond Bandlimited Systems. Cambridge: Cambridge University Press (2015).

22. Mara A, Jung A. Recovery conditions and sampling strategies for network lasso. In: Proceedings of Asilomar Conference Signals, Systems, Computers. Pacific Grove, CA (2017).

23. Basirian S, Jung A. Random walk sampling for big data over networks. In: Proceedings of International Conference on Sampling Theory and Applications (SampTA). Tallinn (2017). p. 427–431.

25. Jung A, Heimowitz A, Eldar YC. The network nullspace property for compressed sensing over networks. In: Proceedings of International Conference on Sampling Theory and Applications (SampTA). Tallinn (2017).

26. Jung A, Hulsebos M. The network nullspace property for compressed sensing of big data over networks. arXiv (2017).

27. Nam S, Davies M, Elad M, Gribonval R. The cosparse analysis model and algorithms. Appl Comput Harmon Anal. (2013) 34:30–56. doi: 10.1016/j.acha.2012.03.006

28. Kabanava M, Rauhut H. Cosparsity in compressed sensing. In: Boche H, Calderbank R, Kutyniok G, Vybiral J, editors, Compressed Sensing and Its Applications. New York, NY: Springer (2015). p. 315–39.

29. Kabanava M, Rauhut H. Analysis ℓ1-recovery with frames and gaussian measurements. Acta Appl Math. (2015) 140:173–95. doi: 10.1007/s10440-014-9984-y

Appendix

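The proofs for Theorem 3 and Theorem 4 rely on recognizing the recovery problem (1) as an analysis ℓ1-minimization problem [27]. A sufficient condition for analysis ℓ1-minimization to deliver the correct solution $x[\cdot]$ is given by the analysis nullspace property [27, 28]. In particular, the sampling set $\mathcal{M}$ is said to satisfy the stable analysis nullspace property w.r.t. an edge set $\mathcal{S} \subseteq \mathcal{E}$ if

$$\|u[\cdot]\|_{\mathcal{E} \setminus \mathcal{S}} \geq \kappa \|u[\cdot]\|_{\mathcal{S}} \quad \text{for any } u[\cdot] \in \mathcal{K}(\mathcal{M}) \tag{9}$$

for some constant κ > 1.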

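Lemma 5. Consider a data graph $\mathcal{G}$ and a fixed partitioning $\mathcal{F}$ of its nodes into clusters $\mathcal{C}_l$. We observe a clustered graph signal $x[\cdot]$ of the form (2) at the sampled nodes $\mathcal{M}$. If (9) holds for $\mathcal{S} = \partial\mathcal{F}$, then (1) has a unique solution given by $x[\cdot]$.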

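Proof. Consider a graph signal $\hat{x}[\cdot]$, which is different from the true underlying graph signal $x[\cdot]$, being feasible for (1), i.e., $\hat{x}[i] = x[i]$ for all sampled nodes $i \in \mathcal{M}$. Then, the difference $v[\cdot] := \hat{x}[\cdot] - x[\cdot]$ belongs to the kernel $\mathcal{K}(\mathcal{M})$ (cf. (4)). Note that, since $x[i]$ is constant for all nodes in the same cluster,

$$\|x[\cdot]\|_{\rm TV} = \|x[\cdot]\|_{\partial\mathcal{F}} \quad \text{and} \quad \|\hat{x}[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}} = \|v[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}}.$$

By the triangle inequality,

$$\|\hat{x}[\cdot]\|_{\rm TV} = \|\hat{x}[\cdot]\|_{\partial\mathcal{F}} + \|v[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}} \geq \|x[\cdot]\|_{\partial\mathcal{F}} - \|v[\cdot]\|_{\partial\mathcal{F}} + \|v[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}}$$

and, since $\|v[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}} > \|v[\cdot]\|_{\partial\mathcal{F}}$ (cf. (9)), in turn

$$\|\hat{x}[\cdot]\|_{\rm TV} > \|x[\cdot]\|_{\partial\mathcal{F}} = \|x[\cdot]\|_{\rm TV}.$$

Thus, we have shown that any graph signal $\hat{x}[\cdot]$ which is different from the true underlying graph signal $x[\cdot]$ but coincides with it at all sampled nodes $i \in \mathcal{M}$ must have a larger TV norm than the true signal $x[\cdot]$ and therefore cannot be optimal for the problem (1). □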

The next result extends Lemma 5 to graph signals which are not exactly clustered, but which can be well approximated by a clustered signal of the form (2).

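Lemma 6. Consider a data graph $\mathcal{G}$ and a fixed partition $\mathcal{F}$ of its nodes into disjoint clusters $\mathcal{C}_l$. We observe a graph signal $x[\cdot] \in \mathbb{R}^{\mathcal{V}}$ at the sampling set $\mathcal{M}$. If (9) holds for $\mathcal{S} = \partial\mathcal{F}$ and κ = 2, any solution $\hat{x}[\cdot]$ of (1) satisfies

$$\|\hat{x}[\cdot] - x[\cdot]\|_{\rm TV} \leq 6 \min_{a_l \in \mathbb{R}} \Big\| x[\cdot] - \sum_{l=1}^{|\mathcal{F}|} a_l \mathcal{I}_{\mathcal{C}_l}[\cdot] \Big\|_{\rm TV}.$$

Proof. The argument closely follows the proof of [29, Theorem 8]. First note that any solution $\hat{x}[\cdot]$ of (1) obeys

$$\|\hat{x}[\cdot]\|_{\rm TV} \leq \|x[\cdot]\|_{\rm TV}, \tag{12}$$

since $x[\cdot]$ is trivially feasible for (1). From (12), we have

$$\|\hat{x}[\cdot]\|_{\partial\mathcal{F}} + \|\hat{x}[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}} \leq \|x[\cdot]\|_{\partial\mathcal{F}} + \|x[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}}. \tag{13}$$

Since $\hat{x}[\cdot]$ is feasible for (1), i.e., $\hat{x}[i] = x[i]$ for every sampled node $i \in \mathcal{M}$, the difference $v[\cdot] := \hat{x}[\cdot] - x[\cdot]$ belongs to $\mathcal{K}(\mathcal{M})$ (cf. (4)). Applying the triangle inequality to (13),

$$\|v[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}} \leq \|v[\cdot]\|_{\partial\mathcal{F}} + 2 \|x[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}}. \tag{14}$$

Combining (14) with (9) (for the signal $u[\cdot] = v[\cdot]$),

$$\|v[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}} \leq 4 \|x[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}}.$$

Using (9) again,

$$\|\hat{x}[\cdot] - x[\cdot]\|_{\rm TV} = \|v[\cdot]\|_{\partial\mathcal{F}} + \|v[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}} \leq \tfrac{3}{2} \|v[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}} \leq 6 \|x[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}}.$$

For any clustered graph signal $x_c[\cdot]$ of the form (2), we have $x_c[i] - x_c[j] = 0$ for any $\{i,j\} \in \mathcal{E} \setminus \partial\mathcal{F}$ (note that $\mathcal{E} \setminus \partial\mathcal{F}$ contains only edges within clusters) and, in turn,

$$\|x[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}} = \|x[\cdot] - x_c[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}} \leq \|x[\cdot] - x_c[\cdot]\|_{\rm TV}.$$

□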

Let us now make Lemma 5 and Lemma 6 applicable to clustered graph signals $x[\cdot]$ of the form (2) by stating a condition on the graph topology and sampling set which ensures (9).

Lemma 7. If a sampling set $\mathcal{M}$ satisfies NNSP-$\mathcal{F}$, then it also satisfies the stable analysis nullspace property (9).

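Proof. Consider a signal $u[\cdot] \in \mathcal{K}(\mathcal{M})$ which vanishes at all sampled nodes, i.e., $u[i] = 0$ for all $i \in \mathcal{M}$. We will now show that $\|u[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}} \geq \kappa \|u[\cdot]\|_{\partial\mathcal{F}}$.

Let us assume that for each boundary edge $e \in \partial\mathcal{F}$, the flow $f[\cdot]$ in Definition 2 has the same sign as $u[e^{+}] - u[e^{-}]$. We are allowed to assume this since, according to Definition 2, if there exists a flow with $f[e'] > 0$ for a boundary edge $e' \in \partial\mathcal{F}$, there is another flow $\tilde{f}[\cdot]$ with $\tilde{f}[e'] = -f[e']$ for the same edge $e'$, but otherwise identical to $f[\cdot]$ on the boundary, i.e., $\tilde{f}[e] = f[e]$ for all $e \in \partial\mathcal{F} \setminus \{e'\}$.

Next, we construct an augmented graph $\tilde{\mathcal{G}}$ by adding an extra node $s$ to the data graph $\mathcal{G}$ which is connected to all sampled nodes $i \in \mathcal{M}$ via an edge $e_i = \{s, i\}$ which is oriented such that $e_i^{+} = s$. We assign to each edge $e_i = \{s, i\}$ the flow $f[e_i] = g[i]$ (cf. (5)). It can be verified easily that the flow over the augmented graph has zero demands for all nodes. Thus, setting $u[s] = 0$, we can apply Tellegen's theorem [30] to obtain $\sum_{e \in \mathcal{E}} f[e] (u[e^{+}] - u[e^{-}]) = 0$, where the added edges $e_i$ do not contribute since $u[\cdot]$ vanishes at their end nodes. Together with (6), this yields $\kappa \|u[\cdot]\|_{\partial\mathcal{F}} = \sum_{e \in \partial\mathcal{F}} f[e] (u[e^{+}] - u[e^{-}]) = -\sum_{e \in \mathcal{E} \setminus \partial\mathcal{F}} f[e] (u[e^{+}] - u[e^{-}]) \leq \|u[\cdot]\|_{\mathcal{E} \setminus \partial\mathcal{F}}$. □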
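The identity behind this last step can be checked numerically. For any flow and any node signal, summing the flow times the signal difference over all oriented edges equals the sum of the signal values weighted by the node demands, so the edge sum vanishes whenever all demands are zero. The sketch below verifies this on a small hypothetical graph; it assumes that demands are measured as net inflow (flow into the head minus flow out of the tail), and the graph, random values, and function name are illustrative only.

```python
import random


def tellegen_sides(num_nodes, edges, flow, u):
    """Return (sum_e f[e] * (u[head] - u[tail]), sum_i u[i] * g[i])."""
    g = [0.0] * num_nodes
    for (tail, head), f in zip(edges, flow):
        g[head] += f   # net inflow convention for the demands
        g[tail] -= f
    lhs = sum(f * (u[head] - u[tail]) for (tail, head), f in zip(edges, flow))
    rhs = sum(u[i] * g[i] for i in range(num_nodes))
    return lhs, rhs


rng = random.Random(0)
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]   # oriented as (tail, head)
flow = [rng.uniform(-1, 1) for _ in edges]          # arbitrary flow
u = [rng.uniform(-1, 1) for _ in range(4)]          # arbitrary node signal
lhs, rhs = tellegen_sides(4, edges, flow, u)

# A circulation around the cycle 0 -> 1 -> 2 -> 3 -> 0 has zero demands everywhere.
circ_lhs, circ_rhs = tellegen_sides(4, edges, [1.0, 1.0, 1.0, 1.0, 0.0], u)
```

Both sides agree up to rounding for the arbitrary flow, and both vanish for the circulation, which is the zero-demand case used in the proof.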

We obtain Theorem 3 by combining Lemma 7 with Lemma 5. In order to verify Theorem 4 we note that, by Lemma 7, the NNSP according to Definition 2 implies the stable nullspace property (9) for $\mathcal{S} = \partial\mathcal{F}$. Therefore, we can invoke Lemma 6 to reach (8).

Keywords: compressed sensing, big data, semi-supervised learning, complex networks, convex optimization

Citation: Jung A and Hulsebos M (2018) The Network Nullspace Property for Compressed Sensing of Big Data Over Networks. Front. Appl. Math. Stat. 4:9. doi: 10.3389/fams.2018.00009

Received: 03 November 2017; Accepted: 10 April 2018;

Published: 15 May 2018.

Edited by:

Yiming Ying, University at Albany, United States

Reviewed by:

Junhong Lin, École Polytechnique Fédérale de Lausanne, Switzerland

Guohui Song, Clarkson University, United States

Copyright © 2018 Jung and Hulsebos. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alexander Jung, YWxleGFuZGVyLmp1bmdAYWFsdG8uZmk=

Alexander Jung, Madelon Hulsebos