ORIGINAL RESEARCH article

Front. Aging Neurosci., 29 March 2022

Sec. Parkinson’s Disease and Aging-related Movement Disorders

Volume 14 - 2022 | https://doi.org/10.3389/fnagi.2022.728212

This article is part of the Research Topic: Technological Advancements in Aging and Neurological Conditions to Improve Physical Activity, Cognitive Functions, and Postural Control

Bianca Guglietti1, David A. Hobbs2,3, Bradley Wesson2, Benjamin Ellul1, Angus McNamara1, Simon Drum4, Lyndsey E. Collins-Praino1*

Whilst Parkinson’s disease (PD) is typically thought of as a motor disease, a significant number of individuals also experience cognitive impairment (CI), ranging from mild CI to dementia. One technique that may prove effective in delaying the onset of CI in PD is cognitive training (CT); however, evidence to date is variable. This may be due to the implementation of CT in this population, with the motor impairments of PD potentially hampering the ability to use standard equipment, such as pen-and-paper or a computer mouse. This may, in turn, promote negative attitudes toward the CT paradigm, which may correlate with poorer outcomes. Consequently, optimizing a system for the delivery of CT in the PD population may improve the accessibility of and engagement with the CT paradigm, subsequently leading to better outcomes. To achieve this, the NeuroOrb Gaming System was designed, coupling a novel accessible controller, specifically developed for use with people with motor impairments, with a “Serious Games” software suite, custom-designed to target the cognitive domains typically affected in PD. The aim of the current study was to evaluate the usability of the NeuroOrb through a reiterative co-design process, in order to optimize the system for future use in clinical trials of CT in individuals with PD. Individuals with PD (n = 13; mean age = 68.15 years; mean disease duration = 8 years) were recruited from the community and participated in three co-design loops. After implementation of key stakeholder feedback to make significant modifications to the system, system usability was improved and participant attitudes toward the NeuroOrb were very positive. Taken together, this provides rationale for moving forward with a future clinical trial investigating the utility of the NeuroOrb as a tool to deliver CT in PD.

Whilst Parkinson’s disease (PD) is primarily characterized as a motor disorder, many individuals also experience some degree of cognitive impairment (CI). Impairments in one or more cognitive domains may be observed even in early PD (<5 years) (Goldman et al., 2015), with many individuals also at risk of progression to mild CI (PD-MCI) and dementia (PD-D) (Litvan et al., 2012). Even in newly diagnosed individuals, PD-MCI is a common finding, with the ICICLE-PD study reporting that 42.5% of newly diagnosed individuals met criteria for PD-MCI using level 2 criteria (1.5 SDs lower than normative values) (Yarnall et al., 2014). By 3–5 years post-diagnosis, an estimated 20–57% of individuals qualify for diagnosis of PD-MCI (Caviness et al., 2007), with 20% converting to PD-D within 3 years (Saredakis et al., 2019). After 8 years, approximately 80% of individuals with PD develop PD-D (Aarsland et al., 2003). PD-D, classified as one of the Lewy body dementias, is thought to be at least partially related to Lewy body pathology within limbic and neocortical areas (Smith et al., 2019), with Braak et al. (2005) reporting a correlation between declining scores on the Mini-Mental State Exam (MMSE) and higher neuropathologic stage. Nevertheless, Lewy body pathology is only one contributor to the complex pathophysiology of PD-D, with other factors, including degeneration of neurotransmitter systems, co-occurrence with Alzheimer’s disease-related pathology, and genetic factors, also playing a role (for review, see Aarsland et al., 2021).

Cognitive impairment in PD may manifest in multiple domains, including executive function, attention, processing speed, visuospatial function, memory and verbal fluency, although not all domains are equally affected, particularly early in the course of the disease (Kehagia et al., 2010). Additionally, CIs, particularly those affecting executive function, are a key contributor to deficits in motor learning observed in PD, which may lead to increased gait and balance symptoms for individuals, and, ultimately, heightened risk of adverse events, such as falls (Olson et al., 2019). In support of this, the regulation of gait variability and rhythmicity, while an automatic process in healthy individuals, requires active attention in those with PD, with executive function deficits further exacerbating the increases in gait variability seen during dual-task performance (Yogev et al., 2005). Overall, CI represents the single biggest predictor of quality of life, mortality and caregiver burden for individuals with PD (Duncan et al., 2014).

Despite the prevalence and significant burden of CI in PD, however, interventions are limited. Whilst dopamine (DA) replacement therapies, such as levodopa, are effective at providing symptomatic relief for motor impairments, evidence for the treatment of CI is mixed, with some studies noting that intervention may paradoxically worsen cognition in certain domains (Schneider et al., 2013; Poston et al., 2016). The only currently approved treatment specifically for CI in PD is the use of cholinesterase inhibitors (Sun and Armstrong, 2021). These are, however, associated with prominent side effects (Ravina et al., 2005) and variable efficacy between patients (Emre et al., 2014), and may even exacerbate the motor symptoms of the disease (Collins et al., 2011). Furthermore, such therapies only address the symptomatic presentation of established CI and are unable to prevent or slow the development of cognitive dysfunction in PD (Cerasa and Quattrone, 2015). Consequently, current pharmacological interventions fall short in addressing CI in PD and, as such, interest has grown in non-pharmacological interventions, such as cognitive training (CT) (Guglietti et al., 2021; Sun and Armstrong, 2021).

Cognitive training is defined as training programs providing cognitive stimulation that offer structured practice on specific cognitive tasks (Clare and Woods, 2004). Multiple studies have established the efficacy of CT in improving or maintaining cognitive function in various neurological patient populations in areas such as global cognition, executive functions, learning, visuospatial abilities and memory (Sinforiani et al., 2004; Mohlman et al., 2011; Naismith et al., 2013; Petrelli et al., 2015; Folkerts et al., 2018). CT has also been shown to be effective both in cognitively healthy PD patients (Glizer and MacDonald, 2016) and in those with PD-MCI (Reuter et al., 2012; Maggio et al., 2018), and the benefits may be maintained for up to 12 months (Petrelli et al., 2015; Bernini et al., 2019). Despite this, however, recent reviews of the literature as a whole have reported mixed results on the benefits of CT in PD (see, for example, Guglietti et al., 2021), with a Cochrane review evaluating the effectiveness of CT for PD-MCI and PD-D reporting no difference between CT intervention and control groups in measures of global or specific cognitive skills (Orgeta et al., 2020). It is important to note, however, that this review had strict inclusion criteria, capturing only randomized controlled trials.

The variability in outcomes for CT in the PD population may potentially be linked to differences in implementation strategies between programs, with many not optimized for use specifically in individuals with PD. In particular, the motor impairments observed in PD may represent a significant barrier to traditional CT modalities, as activities requiring a high level of manual dexterity, such as the use of pen and paper, prove challenging for individuals with dyskinesia/akinesia (Thomas et al., 2017). Whilst the move from traditional pen-and-paper techniques to more computer-based programs may address some of these barriers, a 2010 survey found that nearly 80% of PC-users with PD have significant and severe difficulties using a computer due to their illness (Nes Begnum, 2010). In particular, muscle stiffness, inertia and tremor were frequent problems, resulting in significantly higher rates of severe difficulty using a standard mouse (42%) and keyboard (27%) (Nes Begnum, 2010). Similarly, previous studies have shown that one-third to half of computer users’ time is spent dragging a cursor via the mouse, which is considered a complex motor operation (Johnson et al., 1993) and represents a major obstacle to successful computer use for people with motor difficulties (Trewin and Pain, 1999; Kouroupetroglou, 2014). As such, this could represent a significant barrier to the delivery of CT in PD, potentially confounding the evaluation of outcomes. To address such barriers, assistive technologies and appropriate hardware, adapted for the PD population, are needed.

Engagement with the CT paradigm itself may also be key to the ultimate success of the intervention. In support of this, a study of CT in a cohort of psychiatric patients found that engagement during training was a significant independent predictor of cognitive gains, irrespective of simple exposure (Harvey et al., 2020). Given that individuals with PD experience decreased reward sensitivity in an off-dopaminergic medication state, as well as increased apathy (Muhammed et al., 2016), this may be a particularly relevant concern for use of CT in this population. One strategy that may improve engagement is the addition of game-like features (gamification) into CT programs (Lumsden et al., 2016; Van De Weijer et al., 2019). This can be attributed to the incorporation of features such as high-score and reward incentives, narrative, personalization, self-directed challenge, exploration, free-play, competition and graphics into the training platform (Nagle et al., 2015). Gamification may also have benefits beyond improvement of engagement alone, with a systematic review of the literature on gamification in CT highlighting seven reasons researchers opted to gamify CT programs, including increased usability/intuitiveness for target age groups, increased ecological validity, increased suitability for the target disorder, and increased brain stimulation (Lumsden et al., 2016). The review concluded that gamified training is highly engaging and motivational and found evidence that gamification may be effective at enhancing CT in the elderly and ADHD populations (Lumsden et al., 2016). Similarly, the recent Parkin’Play study showed enhanced global cognition scores after individuals with PD participated in a 24-week, home-based, gamified CT intervention (Van De Weijer et al., 2019).

To date, however, a system that targets the motor impairments that limit the use of traditional CT delivery methods in PD, while also incorporating elements of gamification that may improve engagement and treatment adherence, is lacking. In order to address this, we aimed to develop a novel “Serious Gaming” system, NeuroOrb, that would incorporate both assistive hardware and a custom gaming suite, designed to target the cognitive domains most affected in PD. Prior to deciding to embark on a large-scale trial to evaluate the potential cognitive benefits of CT delivered using the NeuroOrb system, we first engaged with individuals with PD in a reiterative co-design process, in order to evaluate the usability of the system in this population. Following incorporation of all feedback into system design, individuals were again asked to engage with the NeuroOrb system and evaluate the effectiveness of the changes. This manuscript details the outcomes of that co-design process, with future clinical trials planned to evaluate the cognitive benefit of the NeuroOrb system in individuals with PD.

Participants (n = 13) were recruited from the community via Parkinson’s South Australia. Participation was voluntary and no incentive to participate was provided. Inclusion criteria included a prior diagnosis of PD by a registered neurologist and fluency in English. Exclusion criteria included significant hearing and visual impairments not corrected by glasses/contacts, a neurological disorder other than PD, or a previous diagnosis of a learning disability. All participants provided written informed consent prior to testing and the research conducted was approved by the Human Research Ethics Committee of the University of Adelaide (H-2020-214).

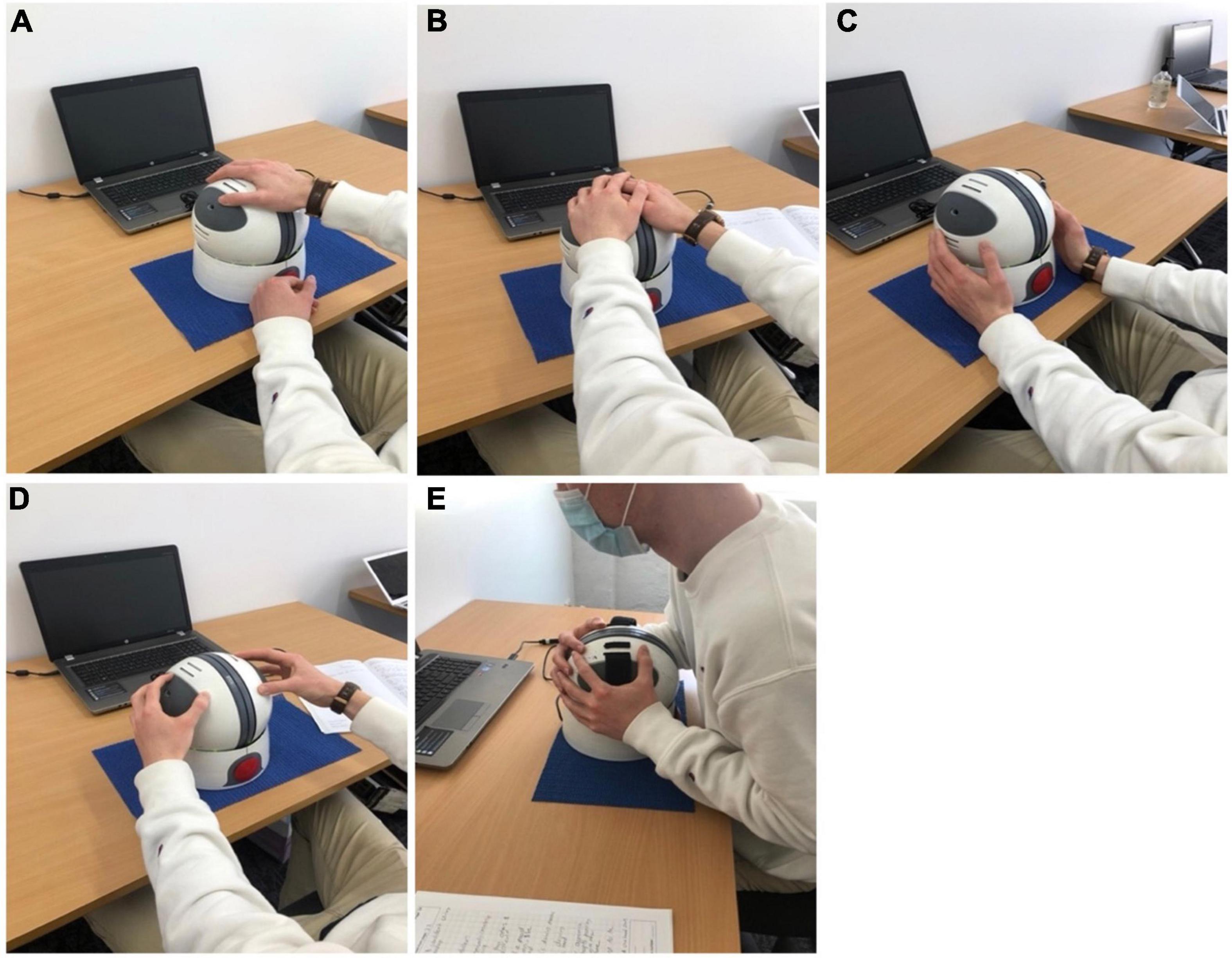

The NeuroOrb serious gaming system involves two main components. The first is assistive hardware in the form of the ‘Orby’ controller (Figure 1). Orby is a novel controller that was custom co-designed by one of the authors of the current study (DH) to address barriers associated with motor dysfunction, such as reduced fine motor control and tremor (Walker and Hobbs, 2014; Hobbs et al., 2019). The spherical “Orb” part of the controller is 200 mm in diameter and the top of the controller is 230 mm above the table surface. The spherical shape allows for ergonomic bimanual control, with grip pads on either side to indicate ideal hand placement. Importantly, the sensitivity of responsiveness can be adjusted for the individual, with the magnitude of movement recognized as “purposeful” altered to take into account the extent of motor impairment. This is particularly important for PD patients with resting tremor or drug-induced dyskinesias, as unintentional movements can be ignored, leaving only intentional movements to direct the controller during CT. The controller also includes vibration, which allows for haptic feedback triggered by actions within each game. Finally, the large red selection button minimizes the requirement for fine motor control that devices such as an iPad or keyboard may impose, which may otherwise be a concern for PD patients. Orby has previously been trialed successfully in individuals with hand impairments due to disability, such as cerebral palsy, and in adults post-stroke (Hobbs et al., 2017), but has not previously been trialed in individuals with PD.

Figure 1. The Orby Controller, which consists of several features specifically adapted for motor dysfunction, including: (1) a spherical shape to allow for ergonomic bimanual control, (2) gray grip pads (partially obscured during use) and optional hand straps to support hand placement, (3) an adjustable sensitivity threshold, to prevent random movements from being interpreted as purposeful, (4) vibration to provide haptic feedback to the player, and (5) a large red selection button on the front of the controller to reduce the fine motor requirement (not shown).
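The adjustable sensitivity threshold can be pictured as a simple dead-zone filter: deflections smaller than a per-user threshold are discarded, and larger ones are passed through as purposeful input. The sketch below is illustrative only and is not the Orby firmware; the class name `DeadzoneFilter`, the normalized deflection units and the example threshold of 0.2 are all assumptions made for the example.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class DeadzoneFilter:
    """Hypothetical dead-zone filter for a two-axis controller.

    threshold is an assumed per-user setting, expressed here as a
    normalized deflection between 0 and 1.
    """
    threshold: float

    def apply(self, dx: float, dy: float) -> Tuple[float, float]:
        # Overall size of the movement, regardless of direction.
        magnitude = math.hypot(dx, dy)
        if magnitude < self.threshold:
            # Too small to count as purposeful (e.g., tremor): ignore it.
            return (0.0, 0.0)
        # Large enough to count as purposeful: pass through unchanged.
        return (dx, dy)


# Example with an assumed threshold of 0.2.
tremor_filter = DeadzoneFilter(threshold=0.2)
small = tremor_filter.apply(0.05, 0.05)   # small, tremor-like deflection
large = tremor_filter.apply(0.6, 0.0)     # deliberate push to the right
```

Raising the threshold for an individual with more pronounced tremor would suppress more of the unintended movement, at the cost of requiring a larger deliberate movement to register input.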

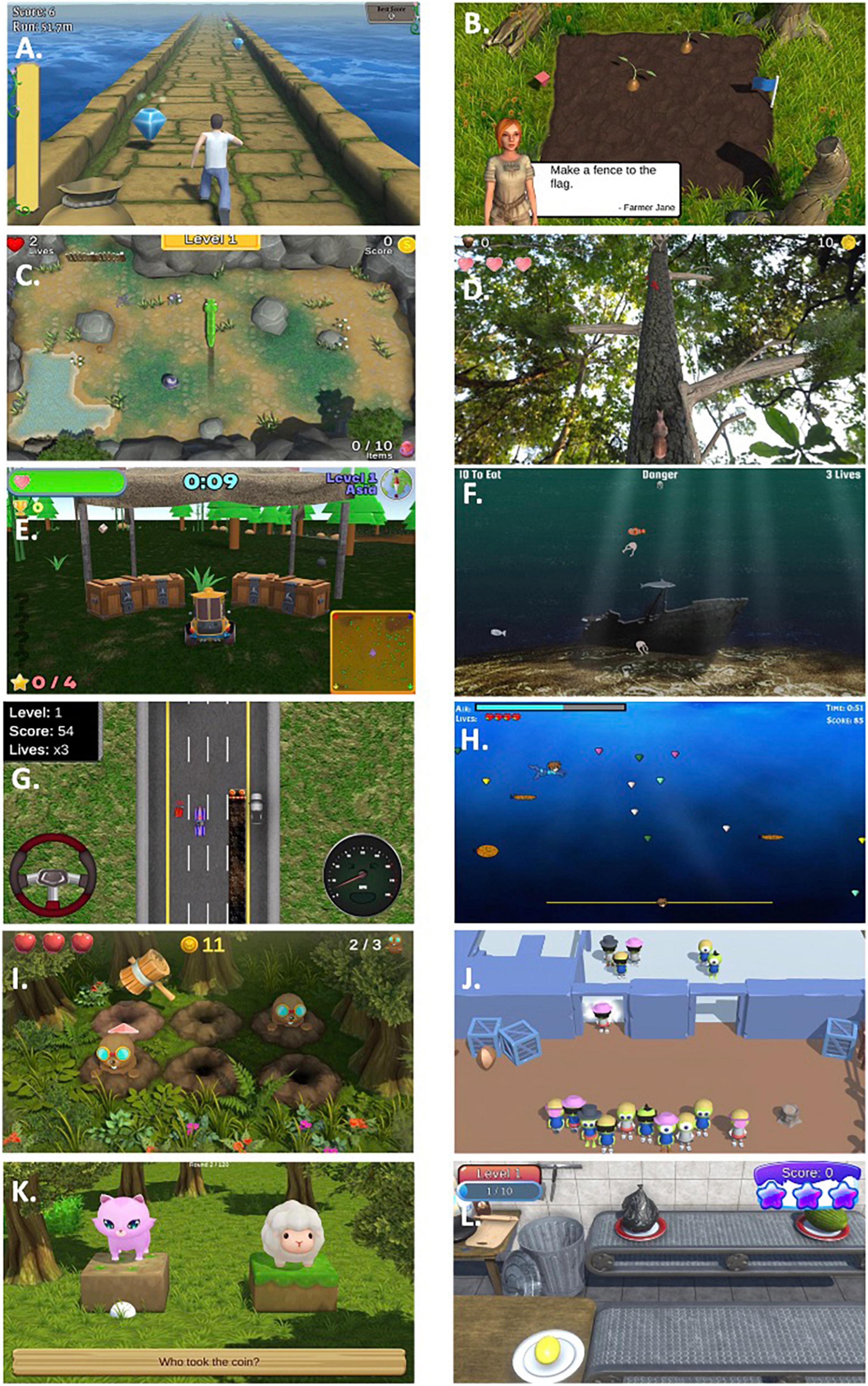

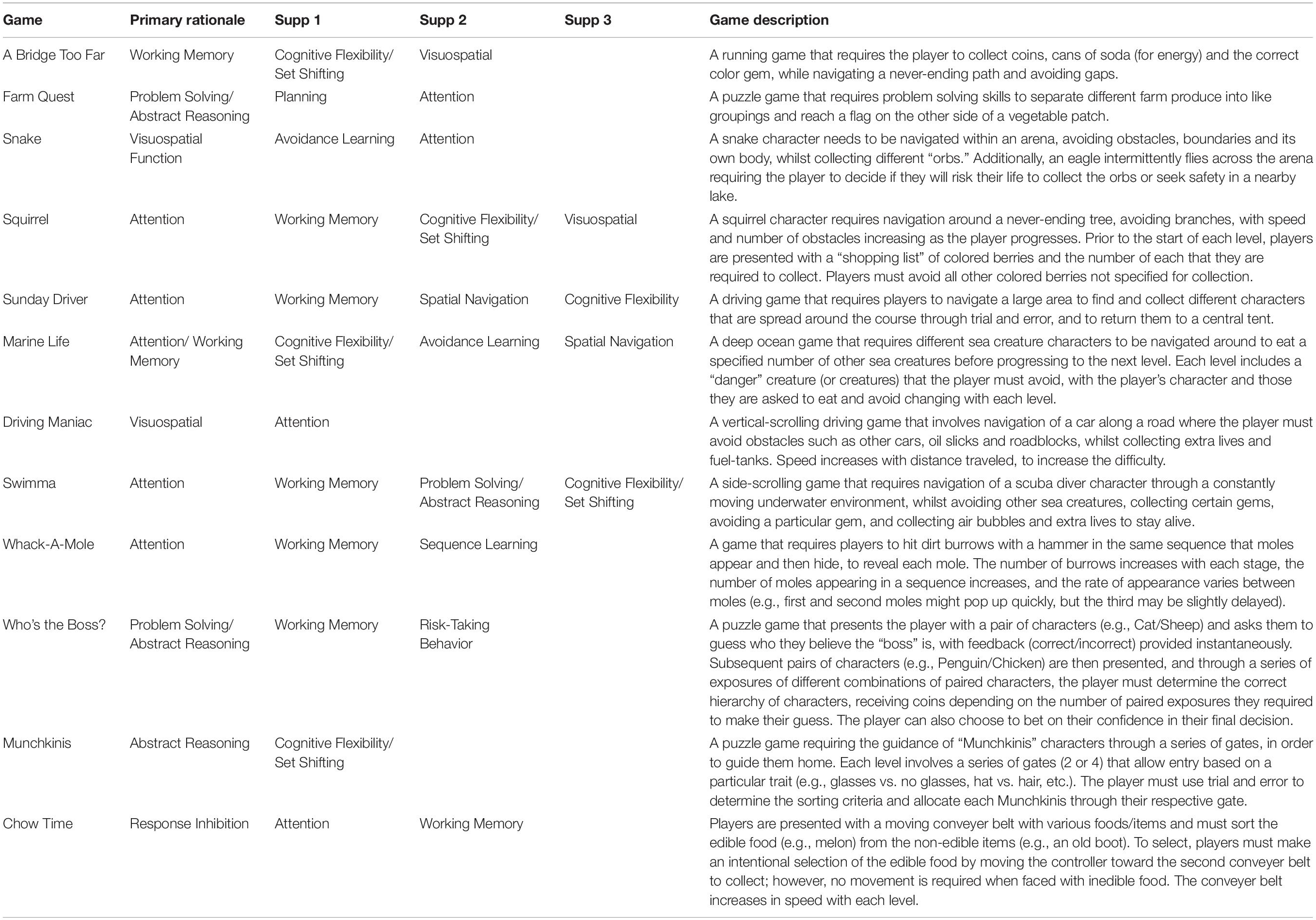

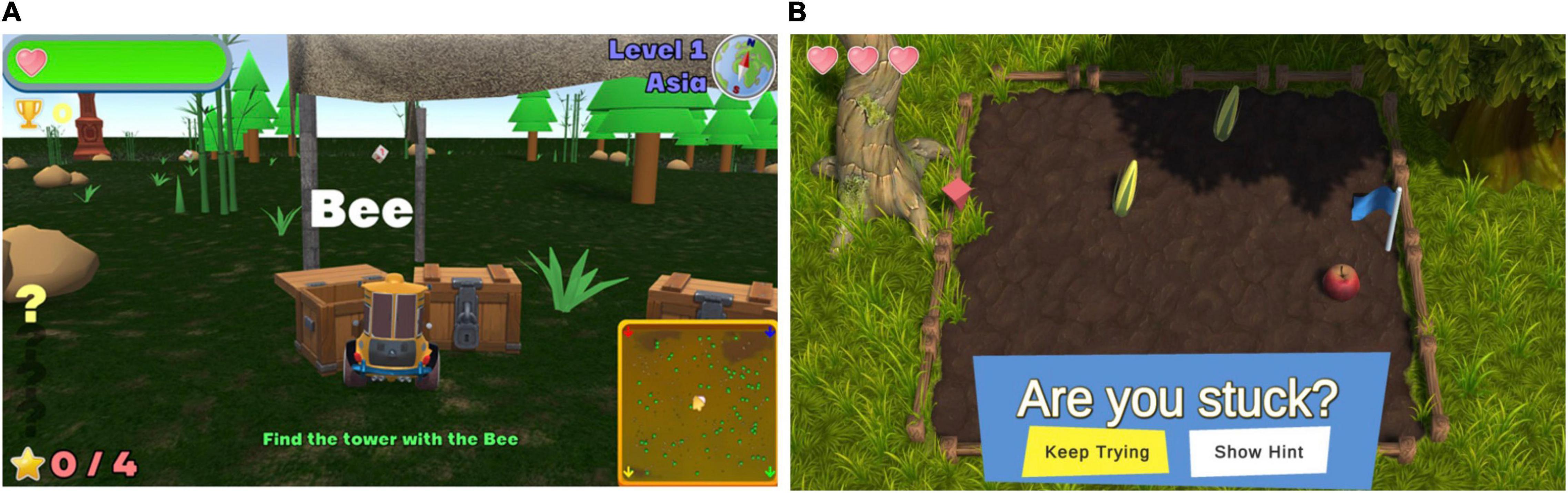

Secondly, to improve user engagement, a custom serious gaming suite was designed to target the cognitive domains most affected in PD, including executive function (working memory, attention, cognitive flexibility, problem solving), visuospatial function and learning (Watson and Leverenz, 2010; Figure 2). Some of these games were adapted from existing games designed by one of the authors of this study (DH). Other games were developed specifically for the NeuroOrb system. Each game is described below, with further information on the cognitive domains that each game targets summarized in Table 1. Due to the nature of gameplay, several of the games encompass training in multiple domains. This gamification of the CT paradigm introduces elements of high-user control, self-directed challenge, exploration and free-play, which have previously been shown to improve outcomes in home-based CT compared to more automated task delivery (Nagle et al., 2015).

Figure 2. Representative screenshots from each of the games included within the NeuroOrb suite: (A) A Bridge Too Far; (B) Farm Quest; (C) Snake; (D) Squirrel; (E) Sunday Driver; (F) Marine Life; (G) Driving Maniac; (H) Swimma; (I) Whack-a-Mole; (J) Munchkinis; (K) Who’s the Boss?; (L) Chow Time!

Table 1. Overview of primary and supplementary cognitive domains trained in the gaming suite and game descriptions.

In order to assess overall acceptability of NeuroOrb, the well-validated System Usability Scale (SUS) was administered both pre- and post-modification (Peres et al., 2013). Additionally, to investigate participants’ perceptions of the Orby controller, games catalog and NeuroOrb system overall, two surveys were developed in-house. These surveys were specifically developed for the purposes of this co-design trial and have not been externally validated.

The first of these assessed feedback on the NeuroOrb system, with questions regarding whether individuals enjoyed the system as a whole (calculated as % responding yes), whether they found the system challenging to use (calculated as % responding yes), how enjoyable/difficult they found the games/Orby controller to use (rated on a scale of 1–10, with 1 = very poor/easy and 10 = very enjoyable/challenging), and their confidence in whether they thought that routine engagement with the NeuroOrb system would result in improvement or maintenance of cognitive function (rated on a scale of 1–10, with 1 = not at all confident and 10 = very confident). This survey was administered both pre- and post-modification of the NeuroOrb system, with an additional question added post-modification asking whether participants felt that their comments had been addressed.

The second survey, given both pre- and post-modification of the NeuroOrb system, focused on the content and usability of the individual games themselves. Participants were asked whether they enjoyed each game, whether they found the game challenging, whether they found the instructions for each game clear, and whether they found the game easy to play (all calculated as % responding yes). Further, for each game, participants were asked to rate their enjoyment of the game, as well as how difficult they found each game (each rated on a scale of 1–10, with 1 = very poor/easy and 10 = very enjoyable/challenging). Finally, features of each game (i.e., color/animation/sound) and features of the game control were both rated on a scale of 1–3, with 1 = poor/not good, 2 = average and 3 = good.

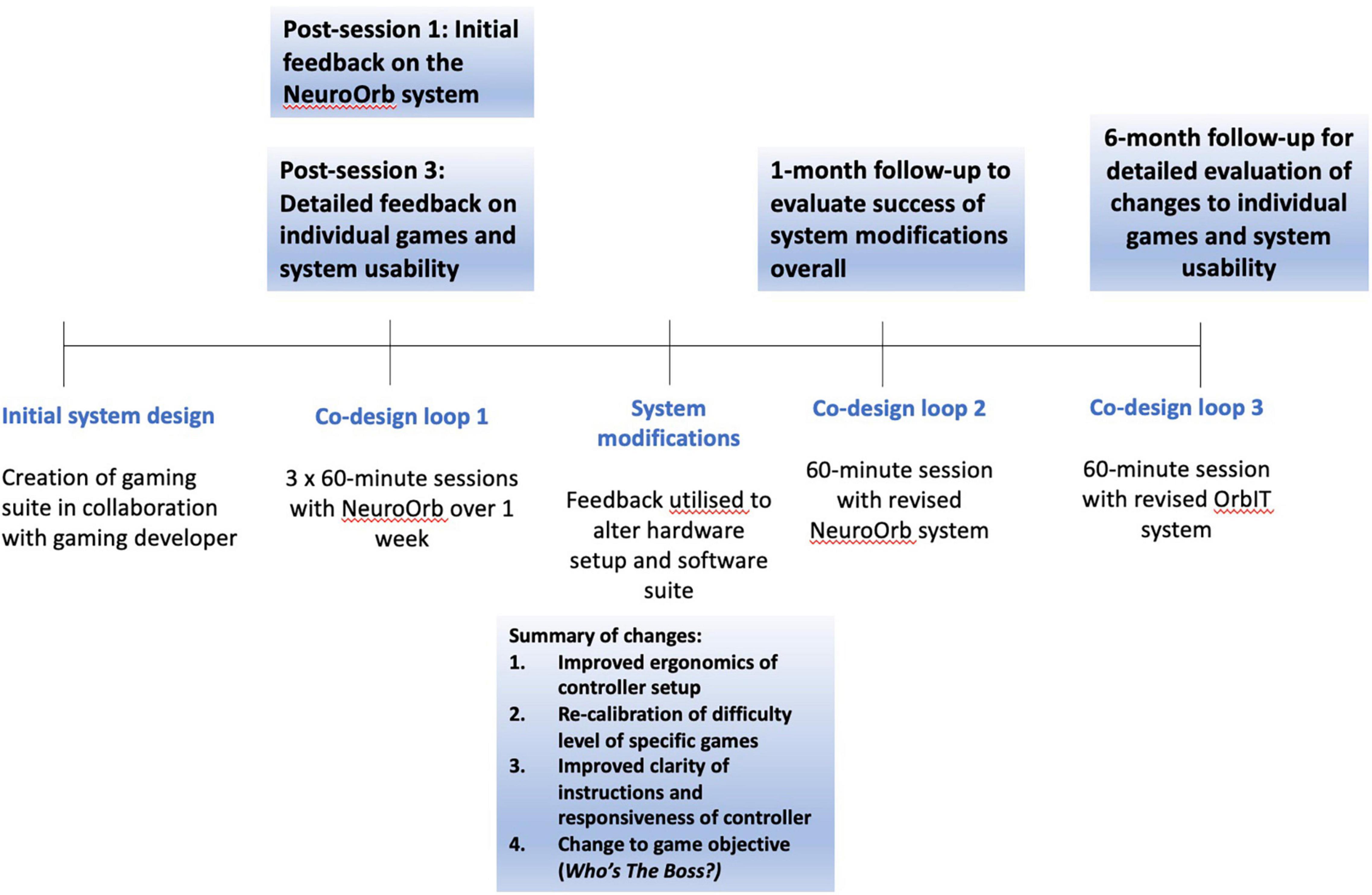

Eligible participants (n = 13) attended the Brain and Body Fitness Studio (BBFS) at Parkinson’s South Australia and completed three 60-min sessions with NeuroOrb over the course of a week (Figure 3). All participants were tested in the “on” state of their medications (i.e., the period in which motor symptoms are well-controlled by the medication). While caregivers were not present during the training sessions, participants were supervised during game play by a member of the research team with prior expertise in using the NeuroOrb system. A pre-exposure battery, consisting of demographic questions, the Mini-Mental State Examination (MMSE) to assess baseline cognitive function (Folstein et al., 1975), the Parkinson’s Disease Questionnaire-39 (PDQ-39) to assess disease-specific quality of life (QoL; Jenkinson et al., 1997) and the Geriatric Depression Scale (GDS) as a self-report measure to assess depression in older adults (Pocklington et al., 2016), was administered on day 1, followed by a 60-min NeuroOrb session. Day 2 involved 60 min of supervised gameplay and day 3 involved 60 min of gameplay, followed by a post-exposure battery. This post-exposure battery consisted of a series of questionnaires on previous game experience, system feedback and individual game feedback. Additionally, the System Usability Scale (SUS) was administered to assess overall acceptability of NeuroOrb (Peres et al., 2013).

Figure 3. A timeline of the co-design trial. The NeuroOrb system was first developed in collaboration with a gaming developer (BW). Participants were then invited to participate in three 60-min sessions over the course of a week, spaced 48 h apart. Extensive survey and verbal feedback was collected at the end of the first and third sessions. This feedback was then used to make significant changes to both the hardware and software of the NeuroOrb system. Following these changes (∼1-month post-exposure), participants were invited back for another 60-min gaming session and were encouraged to provide initial feedback on the changes made. They were then invited back a second time (∼6 months post-exposure) for a further 60-min session with the NeuroOrb system, followed by the completion of detailed feedback about the gaming suite and system as a whole.

Participants were provided with a brief controller demonstration and written instructions for each game and assistance was provided if requested during the first session to help familiarize individuals with the controller and game objectives. Play for each game was restricted to 15 min over the course of the week to ensure an even spread of training across multiple domains. Feedback was collected via both written surveys and verbal communication across the three sessions and adjustments to the gaming suite and controller setup were made based on this feedback.

Participants were invited back 1-month and 6-months later for two additional 60-min sessions with the adjusted setup to provide feedback on implementation of suggested changes. The 1-month session was conducted to allow individuals to assess the success of changes overall, while the original gameplay experience was still fresh in their minds. Conversely, the 6-month session was conducted to allow enough time to have passed from the initial system engagement to minimize carry-over effects that could potentially bias the final system and games evaluation. During co-design loop 2 (∼1-month post-engagement), follow-up feedback on the overall changes to NeuroOrb, as well as assessment of overall system enjoyment, was collected. During co-design loop 3 (∼6 months post-engagement), individuals again completed the SUS and were asked for feedback on the individual games. Throughout all sessions, observations were recorded and verbal feedback was noted by trained members of the research team.

Demographic data are presented as either Mean (±SD) or Range/Proportion, depending on the variable. For rating scales, each item was scored on either a 10-point or 3-point Likert scale. Data are presented as Mean (±SD), with the exception of measures presented as the percentage of participants endorsing “yes” for a particular measure. For pre- to post-modification improvement, the absolute increase in percentage for percentage-based measures and the absolute increase in points for scale-based measures were calculated. Data were analyzed using SPSS (Version 26).
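The descriptive measures described above are simple to reproduce. The following is a minimal sketch in Python rather than SPSS; the function names are hypothetical, the rating values are made up purely for illustration, and the use of the sample standard deviation (n − 1 denominator) is an assumption about how SD was computed.

```python
from statistics import mean, stdev


def mean_sd(values):
    """Return (mean, sample SD) rounded to two decimals.

    Uses the n - 1 denominator; this is an assumption, not a detail
    stated in the text.
    """
    return round(mean(values), 2), round(stdev(values), 2)


def percent_yes(responses):
    """Percentage of participants endorsing 'yes' (True) for an item."""
    return 100.0 * sum(responses) / len(responses)


def absolute_change(pre, post):
    """Absolute pre- to post-modification change, in points or percentage points."""
    return post - pre


# Made-up 10-point ratings, for illustration only (not study data).
example_ratings = [6, 8, 7, 9, 5]
rating_summary = mean_sd(example_ratings)

# Eleven 'yes' responses out of twelve, as in an 11-of-12 proportion.
attendance_pct = percent_yes([True] * 11 + [False])
```

Because the improvement measures are absolute differences, a percentage-based item moving from 50% to 75% would be reported as a 25-point increase, not a 50% relative increase.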

Thirteen participants were included in the pilot (6M/7F; mean age 68.15 ± 8.54 years), with an average disease duration of 8 ± 5.43 years. Demographics are summarized in Table 2. Twelve of the 13 participants completed all 3 sessions of the CT training period, with 1 completing 2/3 due to time constraints. Eleven of the 12 participants (92%) attended the 1-month follow-up and nine of the 12 participants (75%) attended the 6-month follow-up. Participants did not appear to be cognitively impaired, with MMSE scores all > 27 (Mean 29 ± 0.82). Despite not scoring in the CI range on the MMSE, a subjective survey revealed participants commonly reported cognitive concerns, including remembering events (30.1%), remembering information (38.5%), paying attention (15.4%), learning new tasks (15.4%), remembering words (15.4%) and managing day-to-day tasks (15.4%). In addition, 38.5% self-reported experiencing motor difficulties.

Unfortunately, neither the MDS-Unified Parkinson’s Disease Rating Scale (MDS-UPDRS) nor Hoehn and Yahr (HY) staging was available for the current sample. However, previous work has suggested that the median disease duration for HY staging is 4 years for Stage 1, 5 years for Stage 2, 7 years for Stage 3, 10 years for Stage 4 and 14 years for Stage 5, with individuals with disease duration from 6 to 10 years, which represents the majority of individuals in the current sample, evenly represented across all five stages (Skorvanek et al., 2017). From observations carried out during game play with the NeuroOrb system, motor impairments did not appear to prevent any participant from actively engaging with the system, and no participant expressed concerns to this effect in their comments.

At baseline, PD participants did not report a decrease in health-related QoL in any domain measured by the PDQ-39 compared with normative data (Jenkinson et al., 1997). Furthermore, participants’ mean GDS score (3.42 ± 4.08) did not indicate depression, with scores > 11 indicating depression on this measure (Mondolo et al., 2006).

With relation to previous game experience, 92.3% of participants reported playing games, with 53.8% reporting a frequency of 1 or more times daily. The preferred gaming formats reported were card games (76.9%), word and number games (76.9%) and puzzle/tile/board games (38.5%). In terms of computerized games, 30.8% reported playing games in an online format, whilst only 1 participant (7.7%) reported a preference for video games, indicating the sample group had minimal experience with computerized video games prior to engaging in the co-design process.

After one session, 91% of participants reported that they enjoyed the NeuroOrb gaming system, with an average rating of 7.58 ± 2.19/10 (Table 3). 100% of participants reported finding the games challenging, with the average difficulty level of the games rated as 6.25 ± 1.29/10. However, the games were still reported as being enjoyable, with an average overall enjoyment rating of 7.75 ± 2.18/10. Furthermore, participants reported a high degree of confidence in the ability of the NeuroOrb system to improve/maintain cognitive function (7.92 ± 1.78/10). One participant reported that they would not use the system at home, one reported occasional use, six reported use several times a week and five reported the likelihood of daily use.

With regard to accessibility of the Orby controller itself, this received only a moderate rating (5.83 ± 1.85/10) after the first exposure to the system. Observationally, participants appeared to become more comfortable with controller use by the second exposure and proficient by the third session, with one participant noting, “the gaming platform was not too awkward to operate once I became used to the tension of the [NeuroOrb] i.e., not to be too forceful gripping.” Encouragingly, with repeated use, participants also optimized their own use of the Orby controller. Despite being shown a traditional grip (one hand over each grip pad) in the initial session, throughout the trial and across different games, participants adopted several different techniques to control the device, including one-handed (Figure 4A), upper hold (Figure 4B) or lower hold (Figure 4C), fingertips (Figure 4D), and bear grip (Figure 4E) for those with more prominent motor dysfunction.

Figure 4. Despite being shown a traditional grip (one hand over each grip pad) in the initial session, following additional exposures to the Orby controller, participants adopted several different techniques to control the device, including one handed (A), upper hold (B) or lower hold (C), fingertips (D) and bear grip (E) for those with more prominent motor dysfunction.

Following completion of the third session of play, feedback was collected on the individual games. Data were separated into two categories: content (enjoyment, interest, challenge, difficulty and features) and usability (instructions, ease of play and controls). An overall system rating was also obtained. For each game, the number of participant responses received depended on whether the game was played during their sessions. Overall ratings for each game are summarized in Table 4.

Overall, the response to the games was positive, with all games (except Snake) receiving an above average (>5) overall rating. These results were probed further based on feedback regarding content and usability. Content was broken down into enjoyment, difficulty and game features. In terms of enjoyment, the majority of games were considered enjoyable, with >90% of participants reporting enjoyment of A Bridge Too Far (7.42 ± 1.82/10), Squirrel (7.17 ± 1.36/10), Marine Life (7 ± 2.23/10) and Driving Maniac (7.71 ± 2.18/10) and >50% of participants reporting enjoyment of Swimma (6.71 ± 1.50/10), Sunday Driver (6.44 ± 1.97/10), Whack-A-Mole (7.55 ± 1.58/10), Munchkinis (7.64 ± 2.33/10), Who’s the Boss? (5.85 ± 2.16/10) and Chow Time! (6.3 ± 2.64/10). Games with a below average (<50%) number of participants reporting enjoyment included Farm Quest (5.73 ± 2.31/10) and Snake (4.54 ± 1.83/10).

With regard to difficulty, we sought to balance achieving an effective challenge with reducing the “cognitive cost” of games by ensuring that all games fell into a difficulty level of between 6 and 8 on a 10-point scale. All games were within this range, with the exception of Chow Time!, with only 30% of participants reporting this game to be challenging. This was corroborated by observations and verbal feedback, with participants reporting that the speed of the conveyor belt started off “too slow” and did not become challenging until at least level 5. Conversely, although still within an acceptable range, Munchkinis was considered the most difficult game included in the suite. Based on observations during the first three sessions, this also appeared to be related to progression. For example, the first level of Munchkinis involved the sorting of features based on 2 criteria, before level 2 progressed to sorting based on multiple characteristics. This progression was reported to be “too steep,” with only one participant observed to successfully complete the second stage.

In terms of game features, including the use of color, animations and sound, all games were rated > 2.5 on a three-point scale, with no notable comments or observations made with regards to the visual features of the games’ design. Interestingly, one participant did comment on the reliance on color for sorting in many of the games, particularly Squirrel, as this would be a potential barrier for implementation in those with color blindness. Whilst this feedback was not directly addressed in the initial round of changes, future adaptations could include the use of shape, rather than color, to overcome this particular concern.

Usability of the games was assessed based on the ease of play, clarity of instructions provided and controller responsiveness for each game. A Bridge Too Far and Chow Time! were considered easy to play by 100% of participants. Whilst the ease of Chow Time! is likely attributable to the slow progression of the game, A Bridge Too Far appeared to be quite challenging for participants based on observations. Its high ease-of-play rating may be because the game is the first listed in the suite and, as such, all participants chose to begin with this game. This meant that, when observers were guiding participants through the features and use of the controller for the first time, it was via this game. This may have led users to feel particularly supported in how to play the game, raising their confidence level and inflating their perception of the ease of the game. Conversely, games which scored poorly (<60%) for ease of play included Farm Quest, Snake, Sunday Driver, Swimma and Munchkinis. Based on participant comments, this was attributable to poor clarity of the instructions and poor responsiveness of the controls. For example, participants specifically commented “instructions not clear” or “not easy to follow” for Farm Quest. For Sunday Driver, participants commented it was “not clear had to go to center tent first,” whereas comments for Snake included “didn’t seem to respond to controls” and “controlling the snake was difficult, needs refining.”

Concerningly, upon initial rating, only half of all games received an endorsement of >85% for clarity of instructions. For five of the remaining games (A Bridge Too Far, Farm Quest, Snake, Swimma and Who’s the Boss?), a more moderate percentage of participants (65–70%) reported that the instructions were clear. Overall, participants reported that “having instructions built into the program would be ideal.” This discrepancy was also noticed in observations, with considerable guidance required initially to assist participants in identifying the objectives and features of the games.

Following the third session, participants were asked to complete the SUS to assess overall usability of the NeuroOrb System. Results are summarized in Table 5. Scoring according to standard SUS guidelines yielded an overall score of 65.58/100. According to the SUS grading system, this is below the average score of 68 (Gomes and Ratwani, 2019). Furthermore, using the scale developed by Sauro (2011), this score equates to a grade of “C” (approximately 42nd percentile).
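For readers unfamiliar with how a SUS total is derived, the conventional scoring rule for the ten-item questionnaire can be sketched as follows. This is a minimal illustration only: the function name and the example responses are hypothetical and are not data from this study.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for a single respondent.

    `responses` holds the ten item ratings in questionnaire order,
    each on a 1 (strongly disagree) to 5 (strongly agree) scale.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1   # odd items are positively worded
        else:
            total += 5 - rating   # even items are negatively worded
    return total * 2.5            # rescale the 0-40 sum to 0-100


# Hypothetical respondent (illustrative values, not study data).
example = [4, 2, 4, 2, 3, 2, 4, 3, 4, 2]
print(sus_score(example))  # 70.0
```

A group-level score such as the one reported here would then be the mean of the individual scores, which can be compared against the commonly cited benchmark of 68.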

Based on participant feedback, this may, at least in part, be due to general setup/handling issues, with several participants noting comments such as, “I think the device needs to be anchored to a base, so it doesn’t move about the table.” Fatigue/discomfort may also have played a role, with two participants commenting that their shoulders/arms became tired or sore during the session. Additionally, one participant ended two of their three sessions 5 min early due to fatigue.

To address the issues identified during the co-design, significant alterations were made, including: (1) improved ergonomics of the controller setup, (2) re-calibration of the difficulty level of tasks within specific games, (3) improved clarity of instructions and responsiveness of controller and, in the case of “Who’s the Boss?” specifically, (4) a change to the game objective.

The controller setup itself was altered, in order to improve user experience and decrease fatigue associated with extended use. This included the introduction of a grip mat, improving how the controller remained in place on the table, as well as the introduction of the optional straps that the controller was designed with, in which participants could place their hands for improved grip/handling of the controller. The straps also enabled participants to “rest” their hands to reduce fatigue and shoulder strain whilst still maintaining control. This reduction of shoulder/arm fatigue was further enhanced through the use of height-adjustable ergonomic chairs with arm rests to provide additional support. Overall, changes to the ergonomics of the set-up and controller were well received, as demonstrated by comments such as, “mat beneath controller helps;” “straps help when moving object on game i.e., are great help when having to just select” and “using straps and rubber mat was good.” Most encouragingly, one participant noted, “the controller was much easier than a mouse and keyboard” and mentioned that, although they hadn’t taken their medication yet, “it was still easy to navigate on the [NeuroOrb].” This was also reflected in the follow-up survey where, on a scale of 1 (much worse) to 10 (much better), changes to the controller itself were rated 7.64 ± 1.75/10.

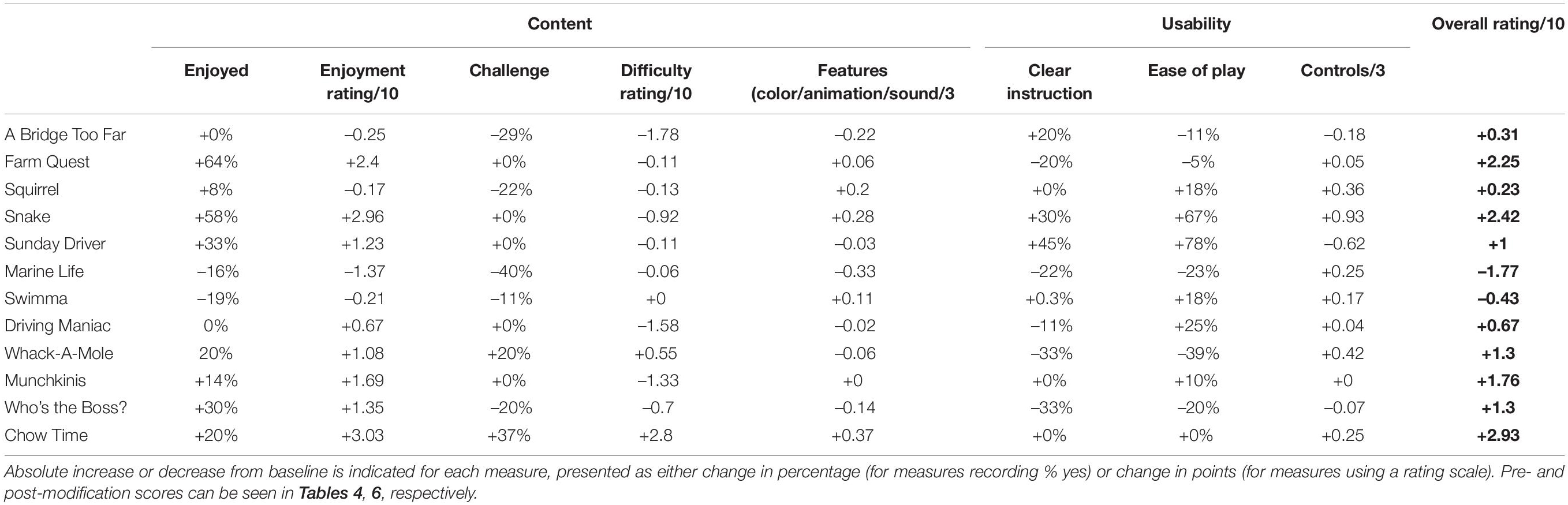

In order to ensure that all games fell within our ideal difficulty range of 6–8/10, task difficulty was adjusted for both Chow Time! and Munchkinis. For Chow Time!, the initial speed of the conveyor belt, and accordingly the required processing speed, was increased. Following modifications, the percentage of participants that reported finding the game challenging increased by 37 percentage points (from 30 to 67%) and the difficulty rating increased 2.8 points (from 4.2 ± 1.75/10 to 7.0 ± 1.00/10), bringing the game in line with our target difficulty. This was well received, as reflected in verbal feedback in the follow-up session, with participants commenting “starting speed is better,” which “made it more interesting.” Additionally, the overall rating of the game increased 2.93 points (from 6.4 ± 2.84/10 to 9.33 ± 0.58/10). For Munchkinis, conversely, an additional level comparable to the first one was added, in order to allow the participants more time to identify and familiarize themselves with the sorting criteria. Following these changes, observers noted an increase in the number of participants reaching the final level at follow-up. This was accompanied by a 1.33 point decrease in difficulty rating (from 8.0 ± 1.41/10 to 6.67 ± 2.31/10), as well as a 1.76 point increase in overall rating (from 7.57 ± 1.90/10 to 9.33 ± 0.58/10). Interestingly, at post-modification rating, three games fell just below the target range of 6–8: A Bridge Too Far (5.22 ± 1.56/10), Snake (5.75 ± 1.71/10) and Driving Maniac (5.75 ± 3.40/10). It is important to note, however, that this was based on a small number of ratings and may be influenced by previous exposure effects.
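The percentage changes reported for the games are absolute differences (i.e., percentage points) rather than relative changes. As a minimal worked sketch, using only the Chow Time! values quoted above and hypothetical helper names, the distinction can be computed as follows:

```python
def absolute_change(before, after):
    """Difference in the original units (points or percentage points)."""
    return after - before


def relative_change(before, after):
    """Change expressed as a percentage of the baseline value."""
    if before == 0:
        raise ValueError("relative change is undefined for a zero baseline")
    return (after - before) / before * 100


# Chow Time!: participants finding the game challenging (30% -> 67%).
print(absolute_change(30, 67))            # 37 (percentage points)
print(round(relative_change(30, 67), 1))  # 123.3 (% of baseline)

# Chow Time!: mean difficulty rating (4.2/10 -> 7.0/10).
print(round(absolute_change(4.2, 7.0), 2))  # 2.8 (points)
```

Reporting the absolute difference, as done throughout this section, avoids overstating effects when baseline percentages are low.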

One of the main changes implemented across all games was the incorporation of instructions into each game’s menu, rather than in a separate written booklet. Additionally, images were included in the instructions to assist in familiarizing the participants with elements they encounter during game play. This appeared to have an immediately beneficial effect, with participants commenting that they had not recognized elements of gameplay prior to reading the new on-screen instructions. The addition of explanatory pictures seemed to be a key driver of this, with one participant noting, “I love the pictures in the instructions so I knew what to look for.” Of all games, Sunday Driver received the poorest rating of instruction clarity at baseline (22.2%), a finding corroborated by observers, who needed to provide considerable guidance for players. Accordingly, for this game, instructions were incorporated to appear during game play itself, rather than solely at the beginning. This allowed the player to be guided by instructions based on their game play (e.g., if they stayed stationary, a prompt would appear informing them of their next goal) (Figure 5A), leading to enhanced clarity about what was required at each stage of game play and better engagement with the game during a follow-up session. This was reflected in the post-modification ratings, with an absolute increase of 45% (from 22 to 67%) in the percentage of individuals reporting that the instructions were clear and an absolute increase of 78% (from 22 to 100%) in the percentage of individuals endorsing the game as easy to play.

Figure 5. (A) One of the changes made to Sunday Driver included the addition of prompts to instruct individuals based on their gameplay. For example, players would be reminded of the goal if they remained stationary for too long. (B) One of the changes made to Farm Quest included the addition of a time-activated hint trigger, offering the player the option of a hint for the next move.
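The inactivity-based prompting described for Sunday Driver, and the time-activated hint for Farm Quest, could in principle be driven by a simple idle timer. The sketch below is purely illustrative and is not the NeuroOrb implementation: the class name, method names and the 5 s threshold are all assumptions, as the actual trigger times are not reported.

```python
class IdlePromptTimer:
    """Signals when the player has been stationary for too long.

    Hypothetical helper: the threshold value and the API are assumptions.
    """

    def __init__(self, idle_threshold_s):
        self.idle_threshold_s = idle_threshold_s
        self.last_movement_time = 0.0
        self.prompt_shown = False

    def update(self, now_s, player_moved):
        """Return True on the single update at which a prompt should appear."""
        if player_moved:
            # Any movement resets the timer and re-arms the prompt.
            self.last_movement_time = now_s
            self.prompt_shown = False
            return False
        idle_for = now_s - self.last_movement_time
        if idle_for >= self.idle_threshold_s and not self.prompt_shown:
            self.prompt_shown = True
            return True
        return False


timer = IdlePromptTimer(idle_threshold_s=5.0)  # assumed threshold
timer.update(1.0, player_moved=True)
print(timer.update(4.0, player_moved=False))   # False (idle for 3 s)
print(timer.update(6.0, player_moved=False))   # True  (idle for 5 s)
print(timer.update(7.0, player_moved=False))   # False (prompt already shown)
```

Showing the prompt only once per idle period, as in this sketch, is one way to avoid repeatedly interrupting a player who is deliberately pausing to think.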

Overall, changes to the instructions were well received at follow-up sessions, with one-third of games (A Bridge Too Far, Snake, Sunday Driver and Who’s the Boss?) showing an improvement in the percentage of individuals reporting that the instructions were clear compared to baseline. A further one-third of games (Squirrel, Swimma, Munchkinis, and Chow Time!) did not show a change from baseline, but this is likely reflective of the fact that all of these, with the exception of Swimma, had already obtained a 100% rating for instruction clarity at baseline. For the remaining one-third of games (Farm Quest, Marine Life, Driving Maniac, and Whack-a-Mole) that showed a decrease in the percentage of individuals reporting that instructions were clear, this may be biased by the small number of ratings (just four per game).

Given that three of these four games, with the exception of Marine Life, showed an improvement in overall rating compared to baseline, however, it may also indicate that factors other than instruction clarity were driving game feedback. In line with this, for Farm Quest, participants attributed their difficulty in understanding what was required to progress between levels not to the instructions, but instead to their perceived ability, with one stating, “problem was mainly me I feel” and going on to state that their dissatisfaction with the game was “just due to my comprehending or not understanding the rules.” Given that Farm Quest involves problem solving and abstract reasoning, this may reflect particular challenges with this cognitive domain in individuals with PD (Beatty and Monson, 1990; Cronin-Golomb et al., 1994; Young et al., 2010). As such, in order to minimize frustrations, a time-activated hint trigger was added, offering the player the option of a hint for the next move if required (Figure 5B). Encouragingly, this was associated with an absolute increase of 64% (from 36 to 100%) in the percentage of individuals reporting that they enjoyed the game and an increase of 2.25 points in the overall rating of the game (from 5.5 ± 2.36/10 to 7.75 ± 0.96/10).

Modifications were also made to the controller sensitivity and responsiveness. These were particularly noteworthy for Snake, which was the only game to initially receive a below average (<2) rating (1.82 ± 0.75/3) of the usability of specific controls within the game. This translated to poor measures of enjoyment, with only 41.7% of participants reporting that the game was enjoyable and an enjoyment rating of just 4.54 ± 1.83/10, the lowest for any game. Participants were not able to pass the first level and did not attempt to play the game after the first exposure. Accordingly, several changes were made, including optimization of the controller sensitivity to movement and directional changes, a reduction in speed of the snake, an increase in arena size and the removal of obstacles from the first three levels, in order to give the player a larger margin of error to make directional decisions and more time to adjust to the controls. These changes were well received, with participants reporting they could “feel [the controller] responding better,” and the rating of the usability of controls within the game increasing 0.93 points (from 1.82 ± 0.75/3 to 2.75 ± 0.50/3). Ratings of the game itself also changed strikingly post-modification, with an absolute increase of 58% (from 42 to 100%) in the percentage of individuals endorsing the game as enjoyable and an absolute increase of 67% (from 33 to 100%) in the percentage of those reporting that the game was easy to play. Similarly, the enjoyment rating of the game increased 2.96 points (from 4.54 ± 1.83/10 to 7.5 ± 0.58/10), with overall rating increasing 2.42 points (from 4.83 ± 1.80/10 to 7.25 ± 0.96/10).

Who’s the Boss? was originally the least successful of all games in the catalog, receiving an average enjoyment score of just 5.85 ± 2.16/10 and a difficulty rating of 7.3 ± 1.49/10. It also received the most negative comments of all games, with participants reporting the game to be “frustrating,” “hard to find a pattern” and “just a bit of random guesswork most of the time.” Accordingly, the game was re-pitched entirely, from a confusing and monotonous task based on reinforcement learning principles to a hierarchical structure that required problem solving/abstract reasoning for individuals to identify “the boss” based on the lowest number of exposed pairings, with more coins rewarded for faster guesses. The game was also divided into “stages” to incorporate an element of progression, with the number of new characters increasing by 2 with each stage (e.g., 4, 6, 8, etc.). Additionally, a betting element was added, with participants able to wager based on their confidence in their guess. These changes were well received during follow-up, with participants verbally reporting the game was a “better format” and “much better than last time.” This was also reflected in the post-modification ratings of the game, with an absolute increase of 30% in the percentage of individuals reporting the game as enjoyable (from 70 to 100%) and an overall increase of 1.3 points in the rating of the game (from 6.3 ± 1.34/10 to 7.6 ± 1.67/10). Importantly, these changes also meant that there was a second game available, in addition to Farm Quest, that focused on the training of problem solving/logical deduction.

This positive impact of the changes to the games was reflected in a follow-up survey where, on a scale of 1 (much worse) to 10 (much better), changes to the games were rated 7.91 ± 1.04/10.

Table 6 summarizes the individual and overall rating changes per game post-modification, and Table 7 shows the absolute increase or decrease (in either percentage or point values) from baseline for each individual game, following all modifications.

Table 7. Absolute increase/decrease from baseline for each individual game following modifications.

A post-session survey revealed a positive response to the changes made, with 100% of participants reporting they felt their comments were addressed. All participants (100%) reported confidence that repeated use of the NeuroOrb gaming system would be beneficial for cognitive function, and the overall enjoyment rating of the system was 8.18 ± 1.08/10, which represents an 8% improvement upon the initial system rating in the first session. Importantly, the revised SUS score increased from 65.58 to 74.17, which is above the average score of 68 on the SUS grading system (Gomes and Ratwani, 2019; Table 5). Using the scale developed by Sauro (2011), this score equates to a grade of “B” (70th percentile).

This study used a reiterative co-design process to develop a novel serious gaming system, NeuroOrb, for the delivery of CT in individuals with PD. Feasibility was assessed by evaluating a combination of outcomes, including enjoyment, accessibility and acceptability of both the software and hardware. Overall, the NeuroOrb system demonstrated positive feedback in all areas assessed, with integration of feedback resulting in high ratings of enjoyment and confidence in the benefits of the CT program. It is important to note, however, that the lack of a formal motor rating scale, such as the MDS-UPDRS, is a major limitation of the current study, as it is not possible to ascertain from our data whether degree of motor impairment affected participants’ perceptions of either the usability or enjoyability of the NeuroOrb, which could have impacted upon system ratings.

The cohort in the current study was high functioning, with no evidence of CI (average MMSE = 29), depression or motor impairment significant enough to interfere with daily activities. This is surprising, as the cohort included a broad range of disease duration, ranging from 1 to 19 years (average 8 years ± 5.43). Given that participants in the co-design trial were not specifically recruited based on cognitive function (i.e., PD without CI, PD-MCI, and PD-D), this may represent a selection bias, with only high functioning individuals volunteering to take part in the study. This may make it difficult to interpret how those with PD-MCI or PD-D would have engaged with the NeuroOrb system and whether they would have been able to understand the game instructions, even post-modification. Additionally, given the lack of sensitivity of the MMSE to detect either MCI or dementia in PD (Hoops et al., 2009), the group may have been more impaired than suggested based on MMSE scores alone. In support of this, a percentage of the participants self-reported cognitive difficulty [remembering events (30.1%), remembering information (38.5%), paying attention (15.4%), learning new tasks (15.4%), remembering words (15.4%), and managing day-to-day tasks (15.4%)]. It is possible that a measure more sensitive to detecting CI in PD, such as the Montreal Cognitive Assessment (MoCA) (Hoops et al., 2009), may have been better able to detect some cases of MCI or mild dementia in the current cohort. Nevertheless, while it may be difficult to extrapolate the results from this small sample to the wider PD population, including those with more severe motor and cognitive impairments, this is also likely to represent the target demographic who may derive the most benefit from CT. Consistent with this, recent research suggests that older adults with higher baseline cognitive function are more likely to benefit from CT than those who are already impaired (the so-called magnification effect) (Mohlman et al., 2011; Fu et al., 2020).

Encouragingly, initial impressions of the NeuroOrb system were positive, with the majority of participants reporting that they enjoyed the system and rating it highly. Indications of acceptability for the implementation of the CT program were also high, with most participants expressing confidence in the NeuroOrb system to improve or maintain cognitive function. These are important positive predictors, as game enjoyment and perceptions of cognitive benefit toward gamified CT in an older population have been correlated with motivation (Boot et al., 2016). Accordingly, high levels of initial enjoyment and confidence reported in the NeuroOrb system are likely to reflect motivation to engage further with the system. In support of this, 10 out of 13 of the participants in the current study reported that they would use the NeuroOrb system several times per week, or even daily, if it were available commercially. This is particularly relevant in PD, where ∼40% of individuals experience disorders of motivation (den Brok et al., 2015). Such motivation to engage may, in turn, translate to improvements in adherence to a long-term CT regime, enhancing the efficacy of the CT program overall.

Concerningly, the Orby controller itself received only a moderate rating for accessibility after the first exposure to the system. Given the challenges associated with motor function in the PD population (Mazzoni et al., 2012), it is important for the controller to be considered accessible, and initial accessibility feedback was not as positive as hoped. This rating could be related to several factors; for example, it may indicate issues with handling of the controller, sensitivity and responsiveness of the controller to specific games, or ergonomics of the overall set-up. Furthermore, this rating may be reflective of the minimal exposure and unfamiliarity of the participants to computerized games, specifically video games. Minimal experience with handling similar technologies suggests a steeper learning curve, which may also have impacted initial accessibility impressions of the controller. Over time, participants appeared to become more comfortable with controller use and were able to optimize their own use of the Orby controller. This versatility exemplifies a positive adaptive feature of the Orby controller, allowing it to cater for the heterogeneity of motor impairments in the PD population (Greenland et al., 2019).

Although individuals did become more comfortable with the system with repeated exposure, some problems persisted, with an overall below-average SUS score indicating compromised usability. While it is unclear exactly what may have driven these difficulties, they may have been due to either general setup/handling issues or to participant fatigue/discomfort. Fatigue is a commonly reported symptom in PD, with a reported prevalence of 50% (Siciliano et al., 2018) and is one of the three Rs (i.e., “Repeatedly”) suggested by Kouroupetroglou (2014) to represent particular barriers for computer use in motor impaired populations. As such, it may have affected the ability of participants to engage throughout the session and, in turn, affected their perception of the usability of the system overall. It is also possible, however, that difficulties with the games themselves negatively impacted on overall system usability, making it critical to interpret overall usability in the context of ratings of the gaming suite.

Overall, the response to the games was positive, with all games (except Snake) receiving an above average overall rating. In terms of enjoyment, the majority of games were considered enjoyable. This is important, as enjoyment is a strong motivator and positively associated with effortful engagement (Cacioppo et al., 1996). Games with a below average (<50%) number of participants reporting enjoyment included Farm Quest and Snake. Lower participant enjoyment of these games may have been reflective of a number of factors, such as inappropriate game difficulty or issues with game features.

The relationship between perceived task difficulty and performance is not well understood for CT in PD; however, studies suggest a balance is important. In older adults specifically, Selective Engagement Theory (Hess, 2014) proposes that increased “cognitive costs” associated with activities later in life result in a less favorable cost/benefit ratio, reducing the willingness of older adults to engage in demanding activities (Hess et al., 2016). It is critical to balance this, however, against the theoretical framework proposed by Lövdén et al. (2010) for achieving cognitive plasticity in adults. According to this model, the transfer of gains from CT across multiple cognitive domains or to real-world contexts depends on the difficulty of the training task, with sustained cognitive challenges required to induce lasting neural changes. This necessitates a continual mismatch between the demand of the task (i.e., the cognitive load) and the cognitive capacity of the individual. In support of this, adaptive training of working memory (i.e., where task demands are continually increased based on performance) resulted in far transfer to an untrained episodic memory task, as well as accompanying neural changes (Flegal et al., 2019). In light of these considerations, we sought to achieve an effective challenge, while also reducing “cognitive cost.” While we were able to achieve this for the majority of games, Chow Time! was rated as too easy and Munchkinis was rated as too difficult. Interestingly, however, this did not appear to affect enjoyment of these games.
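To make the notion of adaptive task demand concrete, one simple and widely used approach is a staircase rule that raises or lowers the level according to recent performance. The sketch below is a generic illustration under stated assumptions: the function name, the accuracy thresholds (0.8 and 0.5) and the level bounds are arbitrary choices, and are not taken from Flegal et al. (2019) or from the NeuroOrb software.

```python
def next_level(level, accuracy, min_level=1, max_level=10,
               raise_at=0.8, lower_at=0.5):
    """Return the difficulty level for the next block of trials.

    `accuracy` is the proportion of correct responses (0.0-1.0) in the
    block just completed. All thresholds are illustrative assumptions.
    """
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    if accuracy >= raise_at:
        level += 1   # task is too easy: increase demand
    elif accuracy <= lower_at:
        level -= 1   # task is too hard: reduce demand
    # Keep the level within the available range.
    return max(min_level, min(max_level, level))


level = 3
for block_accuracy in [0.9, 0.85, 0.6, 0.4]:
    level = next_level(level, block_accuracy)
    print(level)  # prints 4, 5, 5, 4 on successive lines
```

A rule of this kind keeps task demand slightly above current performance, which is the mismatch the framework above describes, while the lower threshold limits the frustration associated with excessive “cognitive cost.”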

Similarly, enjoyment of the games did not appear to be negatively affected by game features, with use of color, animation and sound all highly rated. This is important, as basic stimuli (images and texts) associated with traditional pen and paper CT can make therapy boring for patients (Alloni et al., 2017). The inclusion of 3D graphics in computerized training is considered beneficial, due to increased entertainment and involvement of the patient, as well as the introduction of new elements (such as spatial perception) into training, ultimately improving direct interaction compared to more abstract 2D counterparts (Alloni et al., 2017). Given that neither game difficulty nor features appeared to negatively impact either enjoyment or rating of the game, it may be that usability of the games themselves, including clarity of instructions provided and controller responsiveness for each game, was most important for determining the enjoyability and overall rating of the game. In support of this, both Farm Quest and Snake, the two lowest rated games for enjoyment, scored poorly for ease of play, with both also receiving only moderate ratings for clarity of instructions.

Encouragingly, through the co-design process and the subsequent alterations made to the NeuroOrb system, we seemed to successfully address all points raised, with 100% of respondents stating that they felt as though their feedback was addressed. Following implementation of these changes, participants rated changes to both the controller and the games extremely highly. Furthermore, overall enjoyment of the system as a whole increased and there was a notable improvement in SUS score from the 42nd to the 70th percentile (Sauro, 2011), indicating that NeuroOrb is likely to have high usability as a tool for individuals with PD.

Importantly, the number of issues that we were able to pre-emptively identify and address through this study highlights the importance of engaging in a co-design process with key stakeholders, prior to engaging in a large-scale intervention trial. Such a cooperative approach is in line with current best practice guidelines for the design of interventions for use in patient populations, and is anecdotally reported to lead to more effective services and better outcomes for individuals (although more rigorous assessment of outcomes and cost-benefit analysis is needed) (Clarke et al., 2017). Co-design has previously been successfully used in many healthcare indications. In PD specifically, co-design has been used to design eHealth services (Revenas et al., 2018), collaborative care (Kessler et al., 2019), and even smart home technology (Bourazeri and Stumpf, 2018). Most recently, and highly relevant to the current work, co-design was used to put forth recommendations for the design of a personalized gaming suite for use by individuals with PD (Dias et al., 2020). Within the current study, without consultation with key stakeholders in a reiterative co-design process, many of the issues we identified would have been missed. This could have had a disastrous impact on any intervention trials, as issues with the games themselves (e.g., understanding what the objective is) or with the hardware (e.g., navigating the controller or avoiding fatigue) could have negatively affected engagement with the system, or even successful completion of the trial. This, in turn, may have confounded the assessment of effects on cognitive function, potentially masking any benefits derived from NeuroOrb. Instead, following our extensive incorporation and evaluation of suggested modifications, we are now well-placed to proceed to a large-scale clinical trial using NeuroOrb to deliver customized CT in individuals with PD, evaluating any potential benefits using a sensitive and comprehensive cognitive assessment battery.

Considering the positive ratings of the controller, gaming suite and the NeuroOrb system overall, we have developed a customized “Serious Games” approach to CT, optimized for use in individuals with PD and ready for deployment in subsequent intervention trials designed to assess its efficacy. Through our co-design process, we believe that the incorporation of novel elements into both the hardware and software of the NeuroOrb system represents a significant improvement on other CT systems developed to date, which often use commercially available software packages or non-validated paradigms, without consultation from key stakeholders (Thompson et al., 2020). Instead, our system allows us to target the areas of cognitive function that present the most concern for individuals with PD in an accessible and highly engaging way. This makes us well-placed to obtain maximal benefit from use of the system to deliver CT in this population. While it ultimately remains to be determined if NeuroOrb will result in cognitive benefits for individuals with PD, or whether such benefits will last or transfer to activities of daily living (ADLs), this process nevertheless illustrates the importance of co-design and appropriate consultation of key stakeholders when designing future therapeutic strategies.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by Human Research Ethics Committee of the University of Adelaide (H-2020-214). The patients/participants provided their written informed consent to participate in this study.

BG coordinated the co-design trial, analyzed the data, and drafted the manuscript. DH designed the NeuroOrb system, contributed to the co-design trial, and supervised the project. BW was the game developer for the software suite. BE, AM, and SD contributed to the co-design trial and analyzed the data. LC-P contributed to the design of the software suite, led the co-design trial, conducted data analysis, supervised the project and substantially revised the manuscript. All authors contributed to the final version of the manuscript.

This work was supported by a grant to LC-P and DH from the Perpetual Impact Philanthropy Application Program from the estate of Olga Mabel Woolger and by an Australian Government Research Training Program (RTP) Scholarship to BG.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The authors wish to thank Simone Martin, Olivia Nassaris, and the volunteers of Parkinson’s South Australia for assistance with participant recruitment. Furthermore, the authors are grateful to Amelia Carter and Philippa Tsirgiotis for the feedback provided.

Aarsland, D., Andersen, K., Larsen, J. P., Lolk, A., and Kragh-Sorensen, P. (2003). Prevalence and characteristics of dementia in Parkinson disease: an 8-year prospective study. Arch. Neurol. 60, 387–392. doi: 10.1001/archneur.60.3.387

Aarsland, D., Batzu, L., Halliday, G. M., Geurtsen, G. J., Ballard, C., Ray Chaudhuri, K., et al. (2021). Parkinson disease-associated cognitive impairment. Nat. Rev. Dis. Primers 7:47.

Alloni, A., Sinforiani, E., Zucchella, C., Sandrini, G., Bernini, S., Cattani, B., et al. (2017). Computer-based cognitive rehabilitation: the CoRe system. Disabil. Rehabil. 39, 407–417. doi: 10.3109/09638288.2015.1096969

Beatty, W. W., and Monson, N. (1990). Problem solving in Parkinson’s disease: comparison of performance on the Wisconsin and California Card Sorting Tests. J. Geriatr. Psychiatry Neurol. 3, 163–171. doi: 10.1177/089198879000300308

Bernini, S., Alloni, A., Panzarasa, S., Picascia, M., Quaglini, S., Tassorelli, C., et al. (2019). A computer-based cognitive training in mild cognitive impairment in Parkinson’s disease. Neuro Rehabilitation 44, 555–567. doi: 10.3233/NRE-192714

Boot, W. R., Souders, D., Charness, N., Blocker, K., Roque, N., and Vitale, T. (2016). “The gamification of cognitive training: older adults’ perceptions of and attitudes toward digital game-based interventions,” in Human Aspects of IT for the Aged Population. Design for Aging, eds J. Zhou and G. Salvendy (Cham: Springer International Publishing), 290–300. doi: 10.1007/978-3-319-39943-0_28

Bourazeri, A., and Stumpf, S. (2018). “Co-designing smart home technology with people with dementia or Parkinson’s disease,” in Proceedings of the 10th Nordic Conference on Human-Computer Interaction, (New York, NY: ACM).

Braak, H., Rüb, U., Jansen Steur, E. N., Del Tredici, K., and De Vos, R. A. (2005). Cognitive status correlates with neuropathologic stage in Parkinson disease. Neurology 64, 1404–1410. doi: 10.1212/01.WNL.0000158422.41380.82

Cacioppo, J. T., Petty, R. E., Feinstein, J. A., and Jarvis, W. B. G. (1996). Dispositional differences in cognitive motivation: the life and times of individuals varying in need for cognition. Psychol. Bull. 119, 197–253. doi: 10.1037/0033-2909.119.2.197

Caviness, J. N., Driver-Dunckley, E., Connor, D. J., Sabbagh, M. N., Hentz, J. G., Noble, B., et al. (2007). Defining mild cognitive impairment in Parkinson’s disease. Mov. Disord. 22, 1272–1277.

Cerasa, A., and Quattrone, A. (2015). The effectiveness of cognitive treatment in patients with Parkinson’s disease: a new phase for the neuropsychological rehabilitation. Parkinson. Relat. Disord. 21:165. doi: 10.1016/j.parkreldis.2014.11.012

Clare, L., and Woods, R. T. (2004). Cognitive training and cognitive rehabilitation for people with early-stage Alzheimer’s disease: a review. Neuropsychol. Rehabil. 14, 385–401. doi: 10.1080/09602010443000074

Clarke, D., Jones, F., Harris, R., Robert, G., and Collaborative Rehabilitation Environments in Acute Stroke (CREATE) team (2017). What outcomes are associated with developing and implementing co-produced interventions in acute healthcare settings? A rapid evidence synthesis. BMJ Open 7:e014650. doi: 10.1136/bmjopen-2016-014650

Collins, L. E., Paul, N. E., Abbas, S. F., Leser, C. E., Podurgiel, S. J., Galtieri, D. J., et al. (2011). Oral tremor induced by galantamine in rats: a model of the parkinsonian side effects of cholinomimetics used to treat Alzheimer’s disease. Pharmacol. Biochem. Behav. 99, 414–422. doi: 10.1016/j.pbb.2011.05.026

Cronin-Golomb, A., Corkin, S., and Growdon, J. H. (1994). Impaired problem solving in Parkinson’s disease: impact of a set-shifting deficit. Neuropsychologia 32, 579–593. doi: 10.1016/0028-3932(94)90146-5

den Brok, M. G., Van Dalen, J. W., Van Gool, W. A., Moll Van Charante, E. P., De Bie, R. M., and Richard, E. (2015). Apathy in Parkinson’s disease: a systematic review and meta-analysis. Mov. Disord. 30, 759–769. doi: 10.1002/mds.26208

Dias, S. B., Diniz, J. A., Konstantinidis, E., Savvidis, T., Zilidou, V., Bamidis, P. D., et al. (2020). Assistive HCI-serious games co-design insights: the case study of i-PROGNOSIS personalized game suite for Parkinson’s disease. Front. Psychol. 11:612835. doi: 10.3389/fpsyg.2020.612835

Duncan, G. W., Khoo, T. K., Yarnall, A. J., O’Brien, J. T., Coleman, S. Y., Brooks, D. J., et al. (2014). Health-related quality of life in early Parkinson’s disease: the impact of nonmotor symptoms. Mov. Disord. 29, 195–202. doi: 10.1002/mds.25664

Emre, M., Poewe, W., De Deyn, P. P., Barone, P., Kulisevsky, J., Pourcher, E., et al. (2014). Long-term safety of rivastigmine in Parkinson disease dementia: an open-label, randomized study. Clin. Neuropharmacol. 37, 9–16. doi: 10.1097/wnf.0000000000000010

Flegal, K. E., Ragland, J. D., and Ranganath, C. (2019). Adaptive task difficulty influences neural plasticity and transfer of training. NeuroImage 188, 111–121. doi: 10.1016/j.neuroimage.2018.12.003

Folkerts, A.-K., Dorn, M. E., Roheger, M., Maassen, M., Koerts, J., Tucha, O., et al. (2018). Cognitive stimulation for individuals with Parkinson’s disease dementia living in long-term care: preliminary data from a randomized crossover pilot study. Parkinsons Dis. 2018, 1–9. doi: 10.1155/2018/8104673

Folstein, M. F., Folstein, S. E., and McHugh, P. R. (1975). “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 12, 189–198.

Fu, L., Kessels, R. P. C., and Maes, J. H. R. (2020). The effect of cognitive training in older adults: be aware of CRUNCH. Aging Neuropsychol. Cogn. 27, 949–962. doi: 10.1080/13825585.2019.1708251

Glizer, D., and MacDonald, P. A. (2016). Cognitive training in Parkinson’s disease: a review of studies from 2000 to 2014. Parkinsons Dis. 2016:9291713.

Goldman, J. G., Holden, S., Ouyang, B., Bernard, B., Goetz, C. G., and Stebbins, G. T. (2015). Diagnosing PD-MCI by MDS task force criteria: how many and which neuropsychological tests? Mov. Disord. 30, 402–406. doi: 10.1002/mds.26084

Gomes, K. M., and Ratwani, R. M. (2019). Evaluating improvements and shortcomings in clinician satisfaction with electronic health record usability. JAMA Netw. Open 2:e1916651. doi: 10.1001/jamanetworkopen.2019.16651

Greenland, J. C., Williams-Gray, C. H., and Barker, R. A. (2019). The clinical heterogeneity of Parkinson’s disease and its therapeutic implications. Eur. J. Neurosci. 49, 328–338. doi: 10.1111/ejn.14094

Guglietti, B., Hobbs, D., and Collins-Praino, L. E. (2021). Optimizing cognitive training for the treatment of cognitive dysfunction in Parkinson’s disease: current limitations and future directions. Front. Aging Neurosci. 13:709484. doi: 10.3389/fnagi.2021.709484

Harvey, P. D., Balzer, A. M., and Kotwicki, R. J. (2020). Training engagement, baseline cognitive functioning, and cognitive gains with computerized cognitive training: a cross-diagnostic study. Schizophr. Res. Cogn. 19:100150. doi: 10.1016/j.scog.2019.100150

Hess, T. M. (2014). Selective engagement of cognitive resources. Perspect. Psychol. Sci. 9, 388–407. doi: 10.1177/1745691614527465

Hess, T. M., Smith, B. T., and Sharifian, N. (2016). Aging and effort expenditure: the impact of subjective perceptions of task demands. Psychol. Aging 31, 653–660. doi: 10.1037/pag0000127

Hobbs, D. A., Hillier, S. L., Russo, R. N., and Reynolds, K. J. (2019). A custom serious games system with forced-bimanual use can improve upper limb function for children with cerebral palsy – results from a randomised controlled trial. Technol. Disabil. 31, 147–154.

Hobbs, D. A., Walker, A. W., Hughes, M. B., Watchman, S. K., Wilkinson, B. G., Hillier, S. L., et al. (2017). “Improving hand function through accessible gaming,” in Global Research, Education and Innovation in Assistive Technology (GREAT) Summit (Geneva: World Health Organisation [WHO]).

Hoops, S., Nazem, S., Siderowf, A., Duda, J., Xie, S., Stern, M., et al. (2009). Validity of the MoCA and MMSE in the detection of MCI and dementia in Parkinson disease. Neurology 73, 1738–1745. doi: 10.1212/wnl.0b013e3181c34b47

Jenkinson, C., Fitzpatrick, R., Peto, V., Greenhall, R., and Hyman, N. (1997). The Parkinson’s Disease Questionnaire (PDQ-39): development and validation of a Parkinson’s disease summary index score. Age Ageing 26, 353–357. doi: 10.1093/ageing/26.5.353

Johnson, P. W., Hewes, J., Dropkin, J., and Rempel, D. M. (1993). Office ergonomics: motion analysis of computer mouse usage. Am. Ind. Hyg. Assoc. 11:130.

Kehagia, A. A., Barker, R. A., and Robbins, T. W. (2010). Neuropsychological and clinical heterogeneity of cognitive impairment and dementia in patients with Parkinson’s disease. Lancet Neurol. 9, 1200–1213. doi: 10.1016/S1474-4422(10)70212-X

Kessler, D., Hauteclocque, J., Grimes, D., Mestre, T., Coted, D., and Liddy, C. (2019). Development of the Integrated Parkinson’s Care Network (IPCN): using co-design to plan collaborative care for people with Parkinson’s disease. Qual. Life Res. 28, 1355–1364. doi: 10.1007/s11136-018-2092-0

Kouroupetroglou, C. (2014). Enhancing the Human Experience Through Assistive Technologies and E-Accessibility. Beaverton: Ringgold, Inc.

Litvan, I., Goldman, J. G., Troster, A. I., Schmand, B. A., Weintraub, D., Petersen, R. C., et al. (2012). Diagnostic criteria for mild cognitive impairment in Parkinson’s disease: Movement Disorder Society Task Force guidelines. Mov. Disord. 27, 349–356. doi: 10.1002/mds.24893

Lövdén, M., Bäckman, L., Lindenberger, U., Schaefer, S., and Schmiedek, F. (2010). A theoretical framework for the study of adult cognitive plasticity. Psychol. Bull. 136, 659–676. doi: 10.1037/a0020080

Lumsden, J., Edwards, E. A., Lawrence, N. S., Coyle, D., and Munafò, M. R. (2016). Gamification of cognitive assessment and cognitive training: a systematic review of applications and efficacy. JMIR Serious Games 4:e11. doi: 10.2196/games.5888

Maggio, M. G., De Cola, M. C., Latella, D., Maresca, G., Finocchiaro, C., La Rosa, G., et al. (2018). What about the role of virtual reality in Parkinson disease’s cognitive rehabilitation? Preliminary findings from a randomized clinical trial. J. Geriatr. Psychiatry Neurol. 31, 312–318. doi: 10.1177/0891988718807973

Mazzoni, P., Shabbott, B., and Cortes, J. C. (2012). Motor control abnormalities in Parkinson’s disease. Cold Spring Harb. Perspect. Med. 2:a009282. doi: 10.1101/cshperspect.a009282

Mohlman, J., Chazin, D., and Georgescu, B. (2011). Feasibility and acceptance of a nonpharmacological cognitive remediation intervention for patients with Parkinson disease. J. Geriatr. Psychiatry Neurol. 24, 91–97. doi: 10.1177/0891988711402350

Mondolo, F., Jahanshahi, M., Grana, A., Biasutti, E., Cacciatori, E., and Di Benedetto, P. (2006). The validity of the hospital anxiety and depression scale and the geriatric depression scale in Parkinson’s disease. Behav. Neurol. 17, 109–115. doi: 10.1155/2006/136945

Muhammed, K., Manohar, S., Ben Yehuda, M., Chong, T. T.-J., Tofaris, G., Lennox, G., et al. (2016). Reward sensitivity deficits modulated by dopamine are associated with apathy in Parkinson’s disease. Brain 139, 2706–2721. doi: 10.1093/brain/aww188

Nagle, A., Riener, R., and Wolf, P. (2015). High user control in game design elements increases compliance and in-game performance in a memory training game. Front. Psychol. 6:1774. doi: 10.3389/fpsyg.2015.01774

Naismith, S. L., Mowszowski, L., Diamond, K., and Lewis, S. J. G. (2013). Improving memory in Parkinson’s disease: a healthy brain ageing cognitive training program. Mov. Disord. 28, 1097–1103. doi: 10.1002/mds.25457

Nes Begnum, M. E. (ed.) (2010). “Challenges for Norwegian PC-users with Parkinson’s disease – a survey,” in Lecture Notes in Computer Science, (Berlin: Springer), 292–299. doi: 10.1007/978-3-642-14097-6_47

Olson, M., Lockhart, T. E., and Lieberman, A. (2019). Motor learning deficits in Parkinson’s disease (PD) and their effect on training response in gait and balance: a narrative review. Front. Neurol. 10:62. doi: 10.3389/fneur.2019.00062

Orgeta, V., McDonald, K. R., Poliakoff, E., Hindle, J. V., Clare, L., and Leroi, I. (2020). Cognitive training interventions for dementia and mild cognitive impairment in Parkinson’s disease. Cochrane Database Syst. Rev. 2:CD011961.

Peres, S. C., Pham, T., and Phillips, R. (2013). Validation of the system usability scale (SUS). Proc. Hum. Fact. Ergon. Soc. Annu. Meet. 57, 192–196. doi: 10.1177/1541931213571043

Petrelli, A., Kaesberg, S., Barbe, M. T., Timmermann, L., Rosen, J. B., Fink, G. R., et al. (2015). Cognitive training in Parkinson’s disease reduces cognitive decline in the long term. Eur. J. Neurol. 22, 640–647. doi: 10.1111/ene.12621

Pocklington, C., Gilbody, S., Manea, L., and McMillan, D. (2016). The diagnostic accuracy of brief versions of the Geriatric Depression Scale: a systematic review and meta-analysis. Int. J. Geriatr. Psychiatry 31, 837–857. doi: 10.1002/gps.4407

Poston, K. L., YorkWilliams, S., Zhang, K., Cai, W., Everling, D., Tayim, F. M., et al. (2016). Compensatory neural mechanisms in cognitively unimpaired Parkinson disease. Ann. Neurol. 79, 448–463. doi: 10.1002/ana.24585

Ravina, B., Putt, M., Siderowf, A., Farrar, J. T., Gillespie, M., Crawley, A., et al. (2005). Donepezil for dementia in Parkinson’s disease: a randomised, double blind, placebo controlled, crossover study. J. Neurol. Neurosurg. Psychiatry 76, 934–939. doi: 10.1136/jnnp.2004.050682

Reuter, I., Mehnert, S., Sammer, G., Oechsner, M., and Engelhardt, M. (2012). Efficacy of a multimodal cognitive rehabilitation including psychomotor and endurance training in Parkinson’s disease. J. Aging Res. 2012:235765. doi: 10.1155/2012/235765

Revenas, A., Hvitfeldt Forsberg, H., Granstrom, E., and Wannheden, C. (2018). Co-designing an eHealth service for the co-care of Parkinson disease: explorative study of values and challenges. JMIR Res. Protoc. 7:e11278. doi: 10.2196/11278

Saredakis, D., Collins-Praino, L. E., Gutteridge, D. S., Stephan, B. C. M., and Keage, H. A. D. (2019). Conversion to MCI and dementia in Parkinson’s disease: a systematic review and meta-analysis. Parkinsonism Relat. Disord. 65, 20–31. doi: 10.1016/j.parkreldis.2019.04.020

Sauro, J. (2011). A Practical Guide to The System Usability Scale: Background, Benchmarks & Best Practices. Denver, CO: Measuring Usability LLC.

Schneider, J. S., Pioli, E. Y., Jianzhong, Y., Li, Q., and Bezard, E. (2013). Levodopa improves motor deficits but can further disrupt cognition in a macaque Parkinson model. Mov. Disord. 28, 663–667. doi: 10.1002/mds.25258

Siciliano, M., Trojano, L., Santangelo, G., De Micco, R., Tedeschi, G., and Tessitore, A. (2018). Fatigue in Parkinson’s disease: a systematic review and meta-analysis. Mov. Disord. 33, 1712–1723.

Sinforiani, E., Banchieri, L., Zucchella, C., Pacchetti, C., and Sandrini, G. (2004). Cognitive rehabilitation in Parkinson’s disease. Arch. Gerontol. Geriatr. 38, 387–391.

Skorvanek, M., Martinez-Martin, P., Kovacs, N., Rodriguez-Violante, M., Corvol, J. C., Taba, P., et al. (2017). Differences in MDS-UPDRS scores based on Hoehn and Yahr stage and disease duration. Mov. Disord. Clin. Pract. 4, 536–544. doi: 10.1002/mdc3.12476

Smith, C., Malek, N., Grosset, K., Cullen, B., Gentleman, S., and Grosset, D. G. (2019). Neuropathology of dementia in patients with Parkinson’s disease: a systematic review of autopsy studies. J. Neurol. Neurosurg. Psychiatry 90, 1234–1243. doi: 10.1136/jnnp-2019-321111

Sun, C., and Armstrong, M. J. (2021). Treatment of Parkinson’s disease with cognitive impairment: current approaches and future directions. Behav. Sci. 11:54. doi: 10.3390/bs11040054

Thomas, M., Lenka, A., and Kumar Pal, P. (2017). Handwriting analysis in Parkinson’s disease: current status and future directions. Mov. Disord. Clin. Pract. 4, 806–818. doi: 10.1002/mdc3.12552

Thompson, V. L. S., Leahy, N., Ackermann, N., Bowen, D. J., and Goodman, M. S. (2020). Community partners’ responses to items assessing stakeholder engagement: cognitive response testing in measure development. PLoS One 15:e0241839. doi: 10.1371/journal.pone.0241839

Trewin, S., and Pain, H. (1999). Keyboard and mouse errors due to motor disabilities. Int. J. Hum. Comput. Stud. 50, 109–144. doi: 10.1006/ijhc.1998.0238

Van De Weijer, S. C., Kuijf, M. L., De Vries, N. M., Bloem, B. R., and Duits, A. A. (2019). Do-It-Yourself gamified cognitive training: viewpoint. JMIR Serious Games 7:e12130. doi: 10.2196/12130

Walker, A., and Hobbs, D. (2014). An industrial design educational project: dedicated gaming controller providing haptic feedback for children with cerebral palsy. Int. J. Designed Objects 7, 11–21. doi: 10.18848/2325-1379/cgp/v07i03/38682

Watson, G. S., and Leverenz, J. B. (2010). Profile of cognitive impairment in Parkinson’s disease. Brain Pathol. 20, 640–645.

Yarnall, A. J., Breen, D. P., Duncan, G. W., Khoo, T. K., Coleman, S. Y., Firbank, M. J., et al. (2014). Characterizing mild cognitive impairment in incident Parkinson disease: the ICICLE-PD study. Neurology 82, 308–316. doi: 10.1212/WNL.0000000000000066

Yogev, G., Giladi, N., Peretz, C., Springer, S., Simon, E. S., and Hausdorff, J. M. (2005). Dual tasking, gait rhythmicity, and Parkinson’s disease: which aspects of gait are attention demanding? Eur. J. Neurosci. 22, 1248–1256. doi: 10.1111/j.1460-9568.2005.04298.x

Keywords: cognitive training, Parkinson’s, serious games, co-design, dementia, brain training, cognitive impairment