- 1National Clinical Research Center for Geriatrics, West China Hospital, Sichuan University, Chengdu, China

- 2Geriatric Health Care and Medical Research Center, Sichuan University, Chengdu, China

- 3Department of Geriatrics, West China Hospital, Sichuan University, Chengdu, China

- 4College of Electronics and Information Engineering, Sichuan University, Chengdu, China

- 5Department of Rehabilitation Medicine, West China Hospital, Sichuan University, Chengdu, China

- 6Geroscience and Chronic Disease Department, The 8th Municipal Hospital for the People, Chengdu, China

- 7Medical Examination Center, Aviation Industry Corporation of China 363 Hospital, Chengdu, China

- 8West China School of Basic Medical Sciences and Forensic Medicine, Sichuan University, Chengdu, China

- 9Medical College, Jiangsu University, Zhenjiang, China

- 10Public Health Department, Chengdu Medical College, Chengdu, China

- 11West China Biomedical Big Data Center, West China Hospital, Sichuan University, Chengdu, China

- 12Med-X Center for Informatics, Sichuan University, Chengdu, China

Background: Frail older adults have an increased risk of adverse health outcomes and premature death. They also exhibit altered gait characteristics in comparison with healthy individuals.

Methods: In this study, we created a casual walking video set of older adults labelled with Fried’s frailty phenotype (FFP), based on the West China Health and Aging Trend study. A series of hyperparameters in machine vision models were evaluated for body key point extraction (AlphaPose), silhouette segmentation (Pose2Seg, DPose2Seg, and Mask R-CNN), gait feature extraction (Gaitset, LGaitset, and DGaitset), and feature classification (AlexNet and VGG16), and were optimised during analysis of the gait sequences in the current dataset.

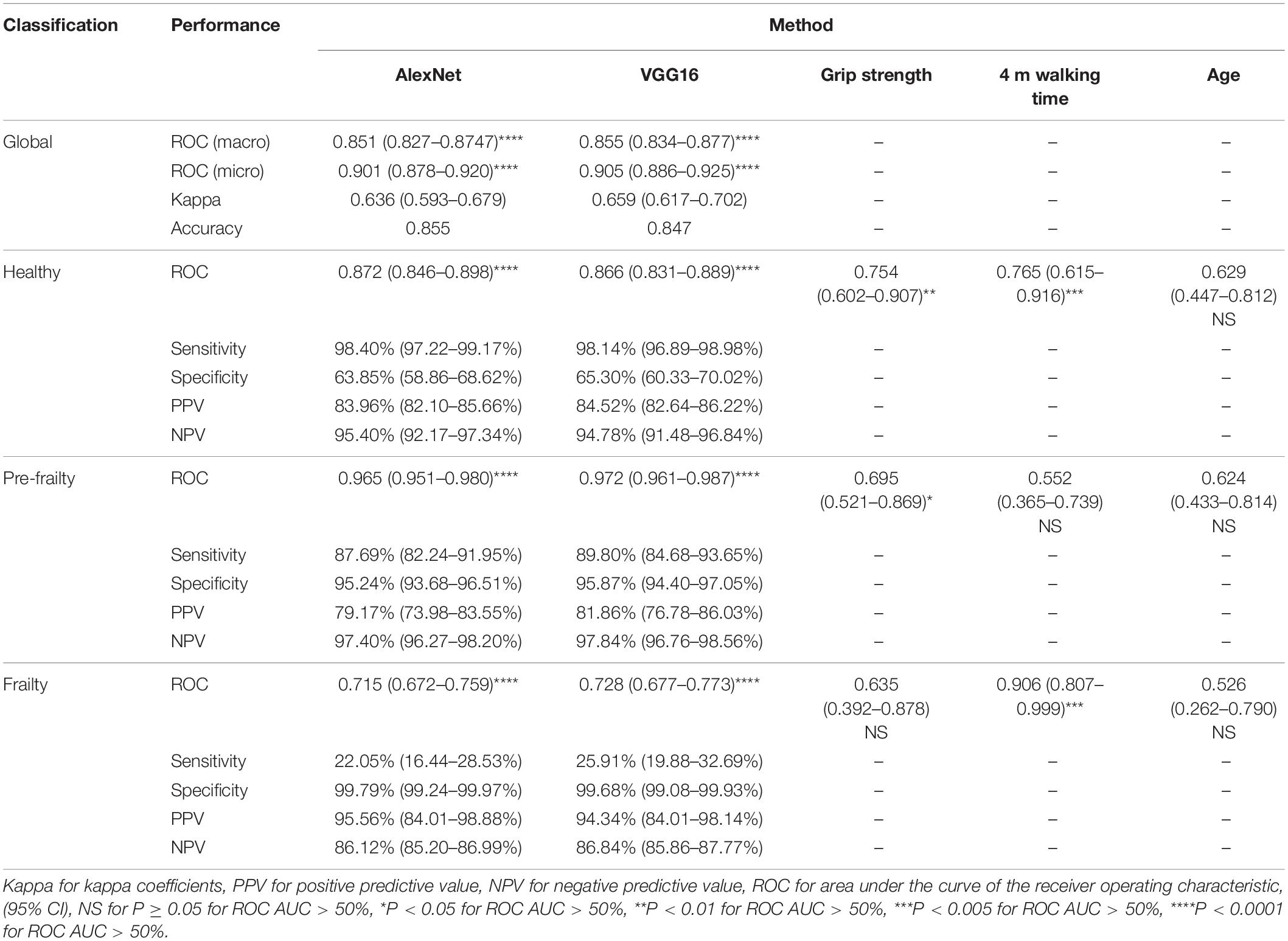

Results: The area under the curve (AUC) of the receiver operating characteristic (ROC) in the physical frailty state identification task was 0.851 (0.827–0.8747) and 0.901 (0.878–0.920) for AlexNet in macro and micro, respectively, and 0.855 (0.834–0.877) and 0.905 (0.886–0.925) for VGG16 in macro and micro, respectively. Furthermore, the machine vision method showed better overall predictive performance than age and grip strength, and better performance than the 4-m walking time in classifying the healthy and pre-frailty states.

Conclusion: The gait analysis method in this article has not been reported previously and provides a promising, original tool for frailty and pre-frailty screening that is convenient, objective, rapid, and non-contact. These methods can be extended to any gait-related disease identification process, as well as to in-home health monitoring.

Introduction

Frailty is a state of increased vulnerability to stress, which may lead to a diminished homeostatic capacity across multiple physiological systems (Fried et al., 2009). Frail older adults are at an increased risk of premature death and various adverse health outcomes, including falls, fractures, disability, and dementia, all of which could result in a poor quality of life and an increased cost of healthcare resources, such as emergency department visits, hospitalisation, and institutionalisation (Kojima et al., 2019). The comprehensive geriatric assessment (CGA), which serves as the basis for geriatric medicine and research, is primarily aimed at identifying and quantifying frailty by examining various risk-prone domains and body functions (Lee et al., 2020). Fried’s frailty phenotype (FFP), the most widely accepted face-to-face evaluation for frailty, includes five components, namely weakness, slowness, exhaustion, low physical activity, and unintentional weight loss; each case takes up 20 min of time from trained personnel (Fried et al., 2001). The Rockwood frailty index (RFI), which involves 70 clinical deficits and is usually generated from comprehensive health records, is less available to seniors with limited medical resources (Rockwood et al., 2005).

Human locomotion is a common daily activity and is also an acquired yet complex behaviour. It requires the involvement of the nervous system, many parts of the musculoskeletal apparatus, and the cardiorespiratory system (Adolph and Franchak, 2017). Individual gait patterns are influenced by age, personality, mood, and sociocultural factors. Some age-related neurological cases, such as sensory ataxia and Parkinson’s disorders, lead to unique gait disorders (Pirker and Katzenschlager, 2017). Furthermore, the preferred walking speed in older adults is a sensitive marker of general health and survival (Pirker and Katzenschlager, 2017).

However, recent research has focused on understanding the impact of the frailty state on various gait parameters beyond speed, because gait speed alone might not be sufficient to classify the frailty state of an individual. An improved classification can be achieved by referring to parameters such as the signal root mean square and total harmonic distortion instead of simply relying on the gait speed (Martinez-Ramirez et al., 2015). Previous studies have suggested that transitionally frail individuals exhibit reduced locomotive speed, cadence, and stride length, and increased stride time, double support (as a percentage of the gait cycle), and stride time variability compared with healthy individuals (Schwenk et al., 2014). Artificial neural networks might also help to further investigate gait in frailty. Jung et al. recently analysed gait statistics gathered from gyroscopes placed on the feet using a long short-term memory network-based classifier (Jung et al., 2021). Akbari et al. applied a Kinect-sensor-based machine learning method as a frailty classifier via functional assessment exercises including a walking test (Akbari et al., 2021).

Different approaches have been implemented to determine the gait characteristics in clinical research. Numerous studies were based on signals from floor sensors or wearable sensors, which can provide relatively precise temporal and spatial information. However, the need for these accessory devices limits their applicability, specifically in developing districts (Muro-de-la-Herran et al., 2014). Human gait recognition and behaviour understanding (GRBU), mostly without the use of contact sensors, has become a major research branch of machine vision using artificial neural network tools and has a wide range of applications in anti-terrorism, intelligent monitoring, access control, criminal investigation, pedestrian behaviour analysis, reality mining, and medical care (Luo and Tjahjadi, 2020).

Gait recognition and behaviour understanding methods are primarily divided into data-driven (model-free) and knowledge-based (model-based) methods, depending on whether human pose parameters are required for feature extraction. The idea underlying model-based methods is the application of mathematical constructs to analyse walking movement as a representation of gait appearance using several ellipses or segments (Yoo et al., 2002). The main advantages of the model-based approach are that it can reliably handle occlusion (particularly human body self-occlusion), noise, scale, and rotation; its weaknesses are poor robustness and a dependence on precise modelling of the human body. A model-free GRBU method extracts the statistical information of gait contours in a gait cycle and matches the contours reflecting the same shape and motion characteristics. The gait energy image (GEI) (Li et al., 2018), a classical representation of gait features, has given rise to many related energy images, such as frame difference energy images (Chen et al., 2009), gait optical flow images (Lam et al., 2011), and pose energy images (Roy et al., 2012). The advantages of these approaches are that: (1) they can obtain more comprehensive spatial information, focusing on each silhouette; and (2) they can gather more temporal information because specialised structures are utilised to extract sequential information. However, GEI-like methods require high computational power.
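As a minimal illustration of the GEI idea, the sketch below averages aligned binary silhouettes pixel by pixel over one gait cycle. The function name `gait_energy_image` and the toy 2 × 3 frames are hypothetical; real silhouettes would first be cropped, centred, and size-normalised, which is not shown here.

```python
def gait_energy_image(silhouettes):
    """Average aligned binary silhouettes pixel-wise.

    silhouettes: list of frames, each a list of rows of 0/1 values.
    Returns a frame of the same shape with values in [0, 1].
    """
    if not silhouettes:
        raise ValueError("need at least one silhouette")
    n = len(silhouettes)
    height, width = len(silhouettes[0]), len(silhouettes[0][0])
    return [
        [sum(frame[y][x] for frame in silhouettes) / n for x in range(width)]
        for y in range(height)
    ]


# Toy three-frame "gait cycle" (hypothetical data, not from the study).
frames = [
    [[0, 1, 0], [1, 1, 0]],
    [[0, 1, 0], [0, 1, 1]],
    [[0, 1, 1], [0, 1, 0]],
]
gei = gait_energy_image(frames)
```

Pixels that are foreground in every frame (the static body parts) end up at 1, whereas pixels swept by the limbs take intermediate values, which is how a single image can encode motion.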

A huge demand for telemedical care emerged owing to the spread of coronavirus disease 2019 (COVID-19), particularly among older adults. The application of deep learning algorithms enables real-time health analysis via video recording devices, such as monitoring cameras, web cameras, or smartphones (Banik et al., 2021). A machine vision-based self-reporting method has the potential to significantly enhance accessibility and reduce costs in frailty evaluation. A deep learning gait assessment based on a wearable sensor or force plates, conducted in a recent trial, is promising for disease screening and in-home monitoring (Zhang et al., 2020). However, older adults’ resistance to wearable devices and the additional cost of equipment might limit the scenarios in which these technologies can be used.

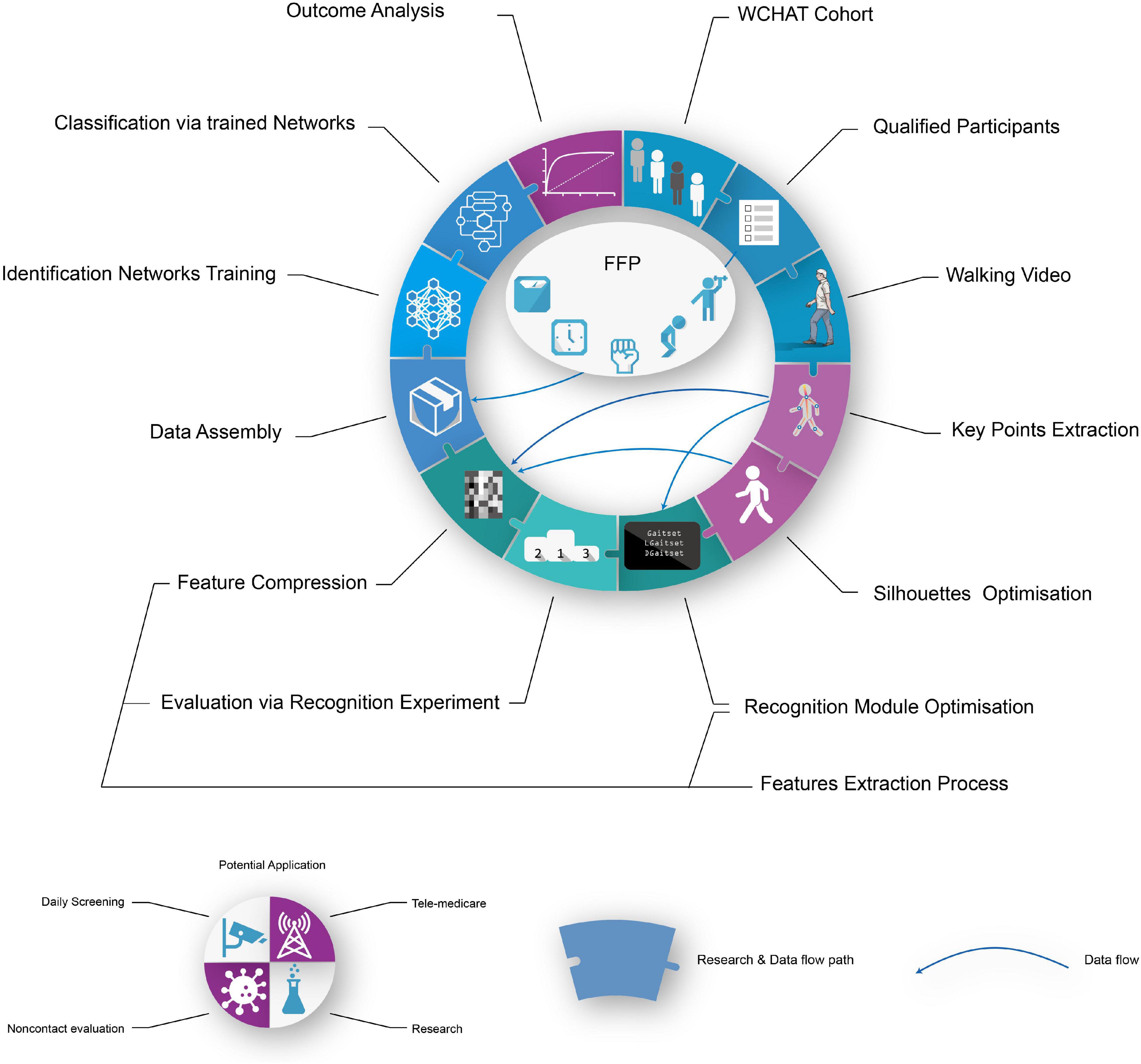

This study aimed to develop a machine vision driven gait identification method for geriatric disease without using a contact sensor or index, and to explore its potential as a frailty screening tool that is convenient, objective, rapid, and non-contact (Figure 1). In this study, a multidimensionally labelled gait video set of older adults was established. Then, a series of hyperparameters in machine vision networks were optimised and evaluated for gait feature extraction and identification. The predictive power of the frailty and pre-frailty (patients at risk for frailty who fulfil some, but not all, criteria for frailty) evaluation was measured using the area under the curve (AUC) of the receiver operating characteristic (ROC). The current approach provides previously unreported frailty and pre-frailty screening tools, which may potentially be generalised to other gait-related disease identification processes or to in-home health monitoring.

Materials and Methods

Participants

The current study involved a cross-sectional analysis of baseline data from the West China Health and Aging Trend (WCHAT) observational study that was designed to evaluate factors associated with healthy aging among community-dwelling adults aged 50 years and older in western China. From July to August 2019, we included a subset of 485 participants from five different locations in the Sichuan province. The final analysis consisted of 222 participants, after excluding 205 individuals aged <60 years, 31 individuals who had difficulty completing the FFP evaluation safely, 24 individuals with a medical history of Parkinson’s disease or stroke (which usually produce a distinctive gait), and 3 individuals with incomplete walking video records. The recordings of participants who did not meet the criteria were used for early-stage refinement of the current method, such as the analysis of the body key points and the segmentation of the gait silhouettes. All participants (or their proxy respondents) were recruited by convenience sampling and provided written informed consent to the researchers, and our institutional ethics review boards approved the study. All researchers followed local law and protocol to protect the privacy, portrait rights, and other interests of the study participants.

Frailty and Pre-frailty

Frailty and pre-frailty were defined using the FFP scale (Fried et al., 2001), comprising the following five elements: shrinking, slowness, weakness, exhaustion, and low physical activity. Those who meet three or more of the above criteria are termed frail, those who meet one or two are termed pre-frail, and those who meet none of the criteria are termed non-frail or healthy older adults. In this study, low physical activity was determined by the total kcal/week spent on commonly performed physical activities, as measured using the validated China Leisure Time Physical Activity Questionnaire (CLTPAQ) (Yanyan et al., 2019). Supplementary Table 1 presents more details on these criteria.
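The labelling rule above can be sketched as a small function. The identifiers `FFP_CRITERIA` and `ffp_state` are hypothetical, and treating a missing criterion as "not met" is an assumption made only for this illustration; the cut-offs for each individual criterion are in Supplementary Table 1 and are not reproduced here.

```python
FFP_CRITERIA = (
    "shrinking",
    "slowness",
    "weakness",
    "exhaustion",
    "low_physical_activity",
)


def ffp_state(criteria_met):
    """Map {criterion: bool} to 'healthy', 'pre-frail' or 'frail'.

    Assumption: a criterion absent from the dict counts as not met.
    """
    unknown = set(criteria_met) - set(FFP_CRITERIA)
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    score = sum(bool(criteria_met.get(name, False)) for name in FFP_CRITERIA)
    if score >= 3:
        return "frail"
    if score >= 1:
        return "pre-frail"
    return "healthy"


# Hypothetical participant who meets the slowness and weakness criteria.
example_state = ffp_state({"slowness": True, "weakness": True})
```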

Recording of Walking Video

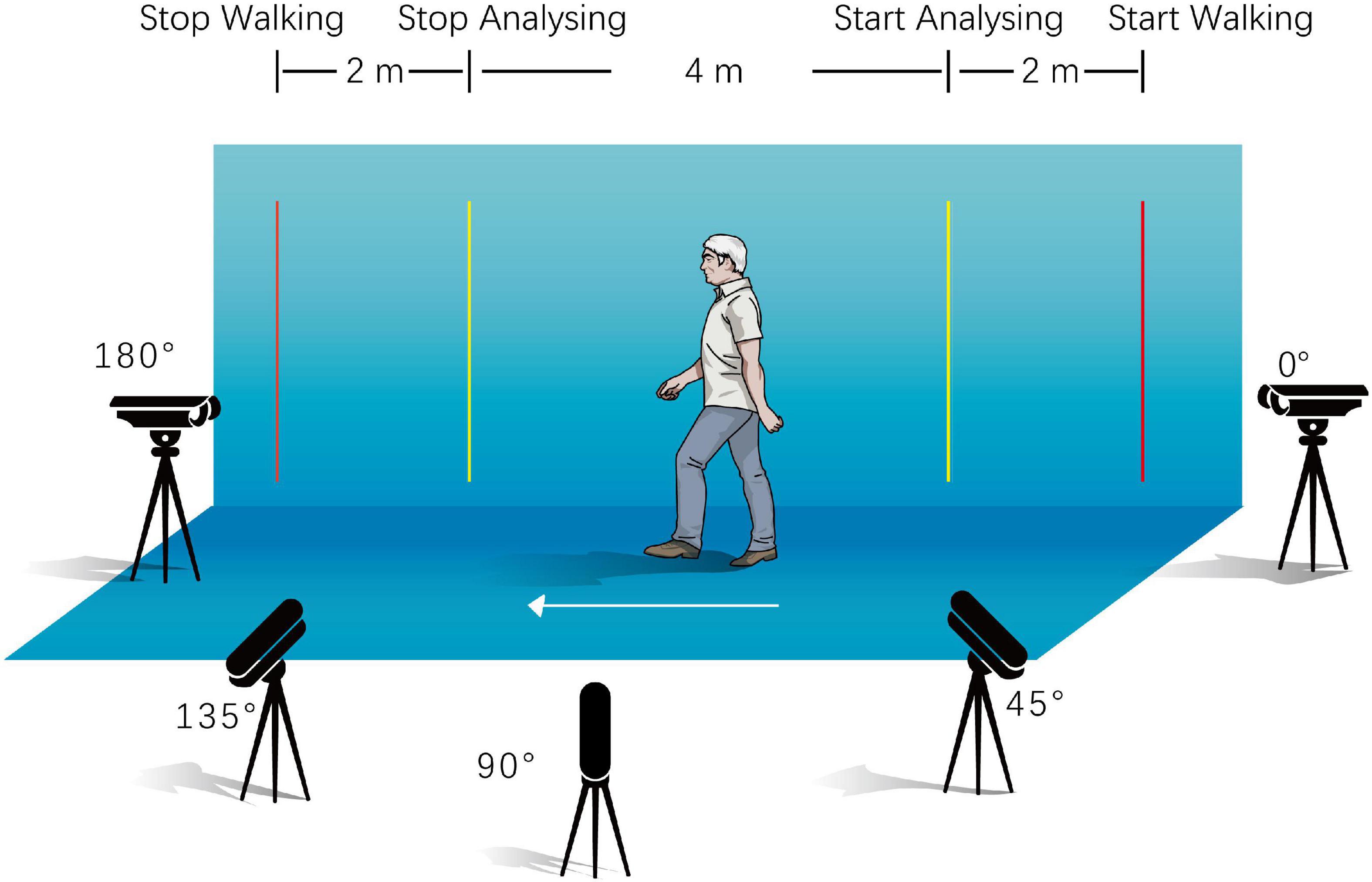

Gait videos are best shot in spacious, warm, well-lit indoor environments with flat ground. A green screen, two parallel yellow benchmarks placed 4 m apart, and five security cameras (F = 4 mm, DS-IPC-B12V2-I, Hikvision, Zhejiang, China) were fixed in place, as shown in Figure 2. The height of the cameras from the ground was approximately 1.3 m, and their angles were adjusted to ensure that the whole body could be filmed throughout the gait process between the benchmarks and stored by the recorder (DS-7816N-R2/8P, Hikvision, Zhejiang, China) in MP4 format at 1080p resolution. Participants were requested to start walking 2 m ahead of the first benchmark and stop 2 m behind the second benchmark, at their usual speed. A complete recording of each participant included six 4-m walking sequences and, for each sequence, synchronised video segments from five different camera stands, if possible. All the walking videos were manually edited, and only the footage of the walking movement between the two benchmarks was retained for the final analysis. Subsequently, the video file of every walking sequence was converted into static image frames.

Machine Vision Approach and Analysis

There were two primary tasks underlying the gait identification process, namely gait feature extraction and feature classification. Feature extraction plays an important role in the identification and recognition processes, and directly affects their accuracy. Gaitset, a network that takes a sequence of 64 × 64 walking silhouettes as input for a person recognition task, was used as the fundamental feature extraction network in the current study (Chao et al., 2021). In this study, the body key points were extracted before the silhouettes, because they carry different gait features and are used as input for some silhouette segmentation modules. Then, the original silhouette segmentation and feature extraction methods were optimised and evaluated. Two classic pre-trained feature classifier networks were applied for the final frailty state identification.

Approach for Body Key Points Extraction

AlphaPose, an open-source pose estimation network, was used to extract the spatial information of the body key points from the original gait video (Fang et al., 2017). The performance of the fully trained AlphaPose in key point extraction was evaluated on the current set by the quality of merged images, in which the visualised body key points were overlaid on the original image. The framework of AlphaPose is presented in Supplementary Section 2 and Supplementary Figure 3. After evaluation, the body key point information of all walking image sequences was extracted via the pre-trained AlphaPose network. The operating system used was Ubuntu 16.04, and the graphics processing unit (GPU) was an NVIDIA GeForce GTX1080Ti graphics card. The trained model and settings were downloaded from GitHub and Google Drive (Supplementary Table 2).

Development and Treatment of Silhouettes Segmentation

Pose2Seg (Zhang et al., 2019) is a posture-based approach to solve the segmentation problem of occluded human body instances (Figure 3). Firstly, the feature pyramid network (FPN) (Lin et al., 2017) extracts features from the input image and the key point coordinates. After an affine-align operation based on human posture templates, two types of skeleton features are generated for each human instance, namely part affinity fields and part confidence maps (Cao et al., 2017). The segmentation module is designed based on the same residual unit as in ResNet (He et al., 2016). Finally, a reverse affine-align operation is performed on each instance to obtain the final segmentation results.

Figure 3. Frame structure of Pose2Seg and DPose2Seg. FPN, feature pyramid network; 7 × 7: 7 × 7 convolution kernel; 1 × 1: 1 × 1 convolution kernel; DC3 × 3: 3 × 3 deconvolution kernel; SegModule, segmentation module; BN, batch normalisation; DB, dense block; US, up sample; TD, transition down; TU, transition up; C, concat.

Our main optimisation of Pose2Seg was to replace the original segmentation module with a module based on fully convolutional DenseNets (Huang et al., 2017); the resulting network was named DPose2Seg. The concept of DenseNets is based on the observation that if each layer is directly connected to every other layer in a feed-forward fashion, the accuracy of the network will be improved (Jégou et al., 2017). We also experimented with another widely applied human body segmentation algorithm, Mask R-CNN (He et al., 2017).

The average precision (AP) (Yilmaz and Aslam, 2006), a pixel-level evaluation index in the image processing field, was computed as the mean over 10 intersection-over-union (IoU) thresholds, from 0.5 to 0.95 in steps of 0.05. The mean average precision (mAP), defined as the AP averaged over all the different classes, and the AP for objects with an area larger than 96² pixels (AP large) were used to measure the silhouette predictive power via the human body segmentation task in the open-source datasets OCHuman (Zhang et al., 2019) and COCO2017 (COCO Consortium, 2017).
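To make the IoU thresholds concrete, the following sketch computes the IoU of two binary masks and lists the thresholds at which a predicted mask would count as a match. It is not the full AP computation, which additionally ranks predictions by confidence and integrates precision over recall; the helper names and toy masks are hypothetical.

```python
# The 10 IoU thresholds: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

# Area cut-off (in pixels) used by the COCO convention for "large" objects.
AREA_LARGE_MIN = 96 ** 2


def mask_iou(pred, truth):
    """Intersection over union of two equally sized binary masks."""
    intersection = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    return intersection / union if union else 0.0


def thresholds_passed(iou):
    """Thresholds at which a mask with this IoU counts as a true positive."""
    return [t for t in IOU_THRESHOLDS if iou >= t]


# Toy masks: the prediction misses one of three foreground pixels.
predicted = [[1, 1, 0]]
ground_truth = [[1, 1, 1]]
iou = mask_iou(predicted, ground_truth)
passed = thresholds_passed(iou)
```

A mask with an IoU of 2/3 is therefore a match at the four loosest thresholds only, which is why averaging over all 10 thresholds rewards tight silhouettes.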

After evaluation, spatial information of the body key points and silhouettes of all packages were extracted and segmented by the best-performing trained network. The study environment for extraction and segmentation was as follows: the operating system was Ubuntu 16.04, the GPU was an NVIDIA GeForce GTX1080Ti graphics card, and PyTorch was the deep learning framework. The settings and parameters were as follows: 16 for batch size, 55 epochs for the entire training stage, 0.0002 for the initial learning rate, 0.00002 for the learning rate after the 33rd epoch, and the Adam algorithm was used to optimise the remaining parameters. The pre-trained Pose2Seg model (trained with COCO2017 and OCHuman) and setting files were downloaded from GitHub (Supplementary Table 2).
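The step schedule described above can be written as a small function. This is a sketch under the assumption that epochs are counted from 1 and that the lower rate applies from epoch 34 onward; the function name `learning_rate` is hypothetical.

```python
def learning_rate(epoch, initial_lr=2e-4, decayed_lr=2e-5, decay_after=33):
    """Learning rate for a 1-indexed epoch under a single step decay."""
    if epoch < 1:
        raise ValueError("epochs are counted from 1 in this sketch")
    return initial_lr if epoch <= decay_after else decayed_lr


# Learning rate for each of the 55 training epochs.
schedule = [learning_rate(epoch) for epoch in range(1, 56)]
```

In PyTorch, a step decay of this kind is commonly configured with a scheduler such as `torch.optim.lr_scheduler.MultiStepLR`; whether the original training script did so is not stated in the text.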

Development and Treatment of Gait Features Extraction

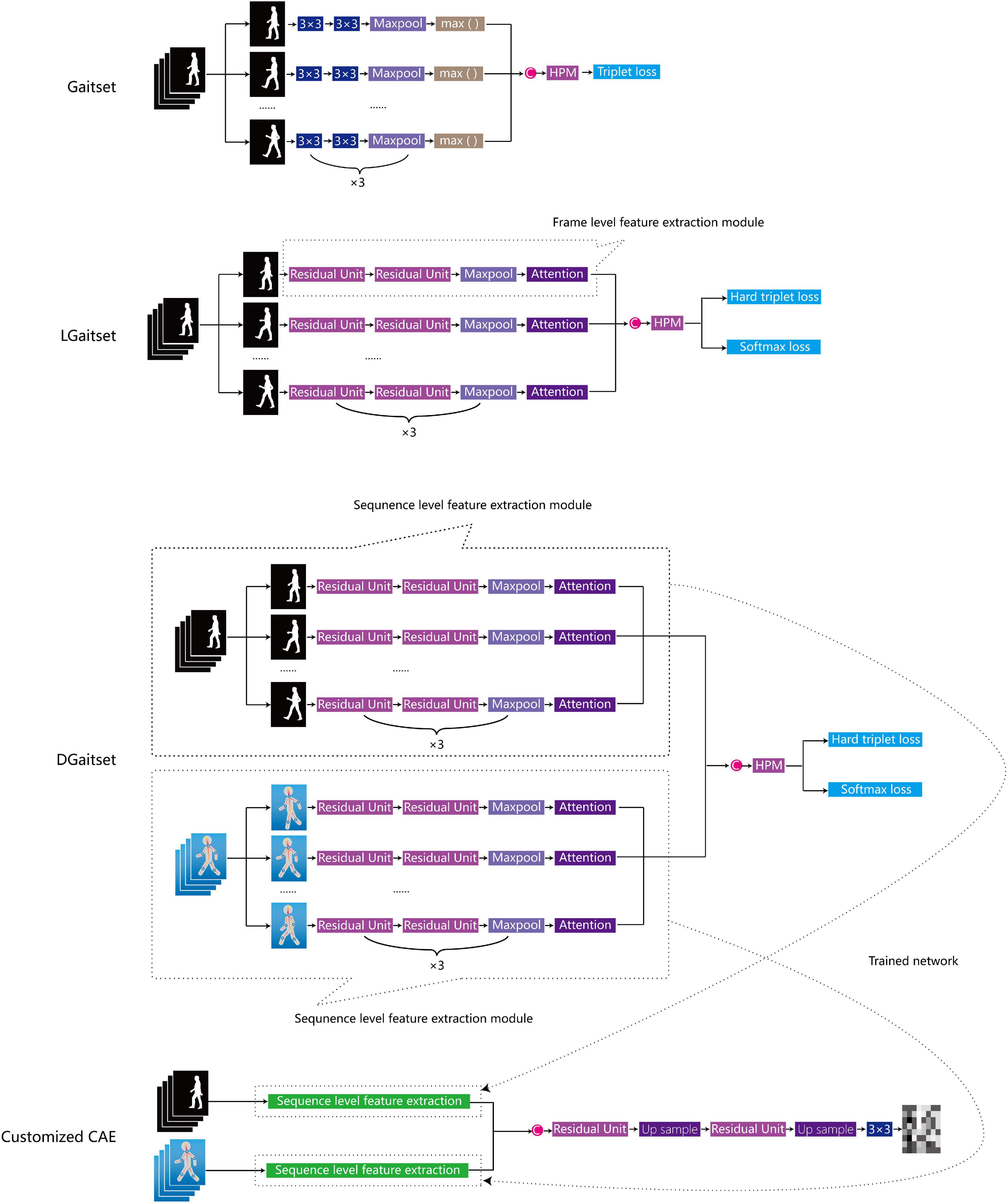

In the training model of the original Gaitset (Chao et al., 2019), a convolutional neural network (CNN) was first used to extract frame-level features from each silhouette frame independently (Figure 4). Second, a max pooling operation was performed to aggregate the frame-level features. Third, after three repetitions of the two previous steps and a concatenation of all frame-level outputs, a structure called horizontal pyramid mapping (HPM) (Fu et al., 2019) was implemented to map the sequence-level feature into a more discriminative space to obtain the final representation. Triplet loss was computed on the corresponding features among different samples and employed to train the network (Chao et al., 2021).

Figure 4. Overview of network structure of feature extraction methods. C, concat; HPM, horizontal pyramid mapping, attention: attention module, 3 × 3: 3 × 3 convolution kernel; CAE, convolutional auto-encoder.
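The max pooling step that aggregates frame-level features into a sequence-level feature can be sketched as an element-wise maximum over frames. The function name `set_max_pool` and the three-dimensional toy features are hypothetical; in the real network the features are CNN feature maps rather than short vectors.

```python
def set_max_pool(frame_features):
    """Element-wise maximum over a set of equal-length feature vectors."""
    if not frame_features:
        raise ValueError("need at least one frame")
    return [max(values) for values in zip(*frame_features)]


# Toy frame-level features for a four-frame sequence (hypothetical values).
frames = [
    [0.1, 0.9, 0.3],
    [0.4, 0.2, 0.8],
    [0.7, 0.5, 0.1],
    [0.2, 0.6, 0.6],
]
sequence_feature = set_max_pool(frames)

# Reordering the frames leaves the pooled feature unchanged.
reordered_feature = set_max_pool(list(reversed(frames)))
```

Because the maximum ignores frame order, the sequence is treated as an unordered set, so the result does not depend on where in the gait cycle the recording happens to start.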

The first-stage optimisation of Gaitset, named LGaitset, included renewal of the loss function at the sequence level, and application of the attention module and residual units at the frame level (both were mentioned in the Gaitset study as alternative optimisations). To improve the convergence and performance of the model, LGaitset replaced triplet loss with the weighted sum of softmax loss and hard triplet loss (Taha et al., 2020). The attention module (Chao et al., 2019; Chaudhari et al., 2019) replaced the max function to enhance learning and the extraction of global features. The residual units partly replaced the convolution operation in the original network to improve the feature extraction ability and avoid the vanishing gradient problem (He et al., 2016; Chao et al., 2019).
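A weighted sum of softmax loss and hard triplet loss can be sketched as follows. This uses one common "batch-hard" formulation, in which each anchor is paired with its farthest positive and its nearest negative; the exact variant, margin, and weights used in LGaitset are not given in the text, so `margin`, `w_softmax`, and `w_triplet` are placeholder assumptions.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def batch_hard_triplet_loss(embeddings, labels, margin=0.2):
    """Mean over anchors of max(0, margin + hardest_pos - hardest_neg)."""
    losses = []
    for i, (anchor, label) in enumerate(zip(embeddings, labels)):
        positives = [euclidean(anchor, e) for j, e in enumerate(embeddings)
                     if j != i and labels[j] == label]
        negatives = [euclidean(anchor, e) for j, e in enumerate(embeddings)
                     if labels[j] != label]
        if positives and negatives:
            losses.append(max(0.0, margin + max(positives) - min(negatives)))
    return sum(losses) / len(losses) if losses else 0.0


def softmax_cross_entropy(logits, label):
    """Negative log-probability of the true class (numerically stable)."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_sum - logits[label]


def combined_loss(embeddings, logits, labels,
                  w_softmax=1.0, w_triplet=1.0, margin=0.2):
    """Weighted sum of mean softmax loss and batch-hard triplet loss."""
    softmax_term = sum(
        softmax_cross_entropy(row, y) for row, y in zip(logits, labels)
    ) / len(labels)
    triplet_term = batch_hard_triplet_loss(embeddings, labels, margin)
    return w_softmax * softmax_term + w_triplet * triplet_term


# Toy batch: two identities, two one-dimensional embeddings each.
labels = [0, 0, 1, 1]
embeddings = [[0.0], [3.0], [1.0], [4.0]]
logits = [[0.0, 0.0]] * 4  # uninformative classifier outputs
triplet_value = batch_hard_triplet_loss(embeddings, labels)
total_value = combined_loss(embeddings, logits, labels)
```

The softmax term supplies a stable classification signal early in training, while the triplet term shapes the distances that the recognition task ultimately relies on.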

The second-stage optimisation, DGaitset, a dual-channel input (silhouette and body key point sequences) network structure based on LGaitset, was designed to achieve a better performance in feature extraction by focusing on the form of the input. This design was chosen because gait parameters, such as stride length, stride variation, and stride symmetry, are contained in the key point sequence and have been used as biomarkers in frailty evaluation.

The performance of these three methods was evaluated using an individual recognition task in the current walking dataset. The analysis set of the recognition task comprised data from 222 annotated individuals (1332 walking sequences), i.e., 6660 walking recordings, which were then shuffled and randomly separated into a 4/1 training/validation set split (Supplementary Figure 1). Recordings from an individual entered either the training set or the validation set, but not both, to avoid biased evaluation metrics. The validation set was kept static during this experiment. Every gait recording in the validation set was input in turn as a probe to the three trained networks with frozen weights and parameters; the remaining recordings in the validation set were then regarded as the gallery and compared with the probe. In every epoch of the learning period, the system randomly selected a few participants and their first four walking sequences, and the remaining recordings in the training set were regarded as the gallery set. The loss function’s objective was to calculate the distance from the probe recordings to a positive sample (belonging to the same individual) and a negative sample (not belonging to the same individual) in the gallery, and to adjust the weights and values in the model. A recognition was counted as successful when the gallery recording with the highest probability belonged to the same individual as the probe recording. The Chi-square test was conducted to test the significance of differences in the recognition ratio between the models.
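A participant-level split of this kind can be sketched as below: participants, not recordings, are shuffled and divided, so that no individual appears on both sides. The function name, the seed, and the tuple layout are hypothetical; the counts (222 participants × 6 sequences × 5 camera stands = 6660 recordings) follow the text, assuming every recording is complete.

```python
import random


def split_by_participant(recordings, validation_fraction=0.2, seed=0):
    """Split (participant_id, recording_id) pairs by participant.

    Returns (training, validation) lists with disjoint participants.
    """
    participants = sorted({pid for pid, _ in recordings})
    rng = random.Random(seed)
    rng.shuffle(participants)
    n_validation = round(len(participants) * validation_fraction)
    validation_ids = set(participants[:n_validation])
    training = [r for r in recordings if r[0] not in validation_ids]
    validation = [r for r in recordings if r[0] in validation_ids]
    return training, validation


# Hypothetical index: 222 participants, 6 sequences, 5 camera stands each.
recordings = [
    (pid, (sequence, camera))
    for pid in range(222)
    for sequence in range(6)
    for camera in range(5)
]
training, validation = split_by_participant(recordings)
```

Splitting by recording instead would let the same person's gait appear in both sets, which would inflate the recognition ratio measured on the validation set.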

The hardware and software environments were similar to those in the previous silhouette sections. For Gaitset and LGaitset, the batch size was (8, 4), and every epoch takes 32 silhouette sequences (eight participants, four camera stands for every participant). For DGaitset, the batch size was (6, 4), every training epoch takes 24 silhouette and key point sequences (six participants, four camera stands for every participant), the entire training stage comprised 80,000 epochs, and the initial learning rate was 0.0001. The Adam algorithm was used to optimise other parameters. Pre-trained Gaitset models were downloaded from the OneDrive platform (Supplementary Table 2).
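The two-part batch size can be read as "participants per batch, sequences per participant". The sampler below is a minimal sketch of that reading; the function name `sample_batch` and the toy index of camera stands are hypothetical and do not reproduce the original data loader.

```python
import random


def sample_batch(sequences_by_participant, n_participants, n_per_participant, rng):
    """Draw n_participants, then n_per_participant sequences from each."""
    chosen = rng.sample(sorted(sequences_by_participant), n_participants)
    batch = []
    for pid in chosen:
        picked = rng.sample(sequences_by_participant[pid], n_per_participant)
        batch.extend((pid, sequence) for sequence in picked)
    return batch


# Hypothetical index: 20 participants, one sequence per camera stand (5 stands).
index = {pid: list(range(5)) for pid in range(20)}
rng = random.Random(0)
batch_gaitset = sample_batch(index, 8, 4, rng)   # batch size (8, 4)
batch_dgaitset = sample_batch(index, 6, 4, rng)  # batch size (6, 4)
```

Sampling several sequences per participant guarantees that every batch contains both same-identity and different-identity pairs, which a triplet-style loss needs.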

The sequence-level feature extraction part of the best-performing trained method was saved to generate a convolutional auto-encoder (CAE) (Masci et al., 2011) that compresses the silhouette and/or key point sequences into a 64 × 64 matrix for the classification tasks in the next section.

Identification of Gait Features (Frailty State)

Two classic pre-trained image identification networks from the PyTorch platform, AlexNet (Krizhevsky et al., 2017) and VGG16 (Simonyan and Zisserman, 2015), were used for gait feature classification (Supplementary Table 2). A three-class classification of frailty, pre-frailty, and healthy gait features was designed to evaluate the performance of AlexNet and VGG16 as frailty classifiers for the current dataset. The ground truth state for all gait features in this experiment was labelled using a previously performed FFP assessment.

The analysis set comprised data from 222 annotated individuals, i.e., 6660 gait sequence features (64 × 64 resolution matrices), one from each walking recording, which were then shuffled and randomly separated into an 80/20% split between the training and test sets and the validation set. As depicted in Supplementary Figure 2, after the first split, the training and test sets contained 184 participants’ gait features, and the validation set contained those of 38 participants. The validation set was kept static throughout the experiment, and the test set was used to evaluate the model performance at the end of each epoch during training and for hyperparameter optimisation. Data from the training and test sets were randomly split in a 60/20% ratio by the unit of sequence at the beginning of every training epoch to maximise the training effect. During the training process, the parameters of the lower convolutional layers were frozen. Only the last three fully connected layers of both AlexNet and VGG16 were customised. All weights of the identification model were frozen after 200 training epochs. The environment was similar to that described in the previous section. For both AlexNet and VGG16, the batch size was 32 and the initial learning rate was 0.001. The Adam algorithm was used to optimise the other parameters. The training epochs for AlexNet and VGG16 were 300 and 1000, respectively. The learning effect of the model during the training period was measured by calculating the accuracy in the test set and the loss function in the training set. The fully trained AlexNet and VGG16 output a series of probabilities for the gait features in the validation set according to the classification of these features.

All details of the statistical analysis process are given in Supplementary Section 1.

Results

Characterisation of Participants in the Physical Frailty Status Subgroups and Analysis Sets

We compared the background information of participants in the training/test and validation sets (Table 1). We found no significant differences in age, gender, education level, marital status, or physical frailty status prevalence between the training/test and validation sets.

Table 1. Characterisation and physical status of participants among 222 older adults aged >60 in the three-class identification experiment.

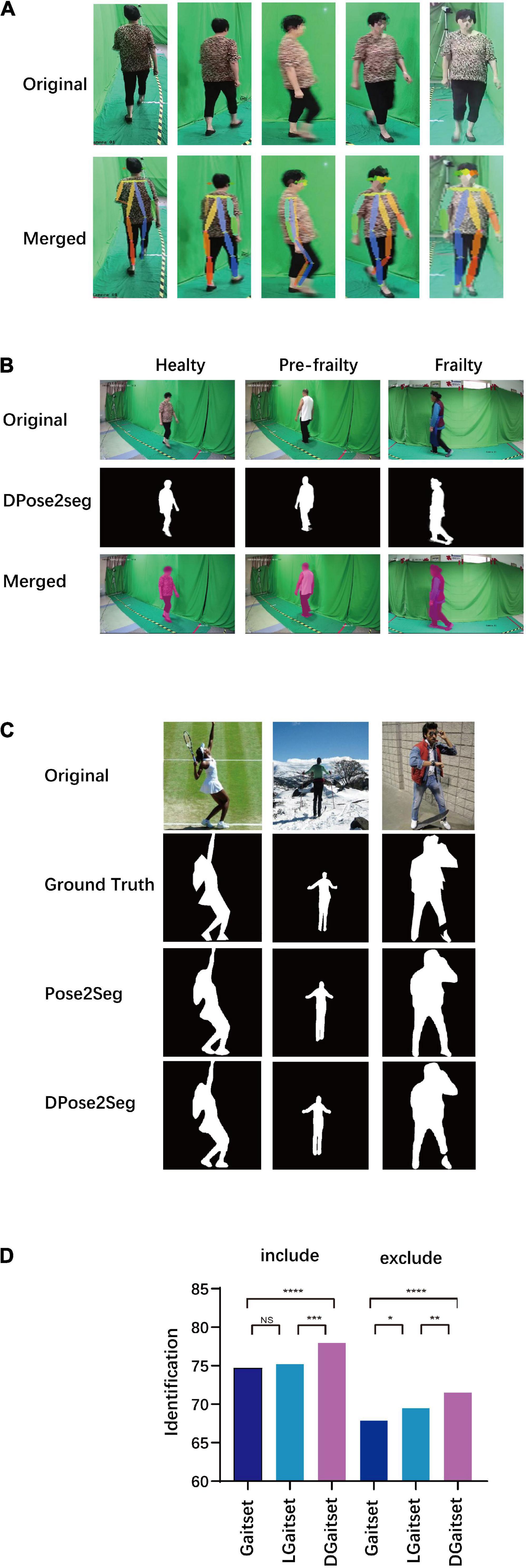

Body Key Points and Silhouettes

Figure 5A shows the outcome of applying AlphaPose to gait footage of older adults. Visual inspection of sample images from the current set, in which the key points were merged with the original image, indicated satisfactory performance in body key point recognition for the current method. The body key point information or skeleton information, obtained as output from AlphaPose, was used as a part of the input in the segmentation and feature module customisation process.

Figure 5. Results of key point extraction, silhouette segmentation, and gait feature extraction. (A) Sample of body key point extraction (original image and visualisation of key point parameters merged with the original). (B) Sample of silhouette segmentation in the current dataset. (C) Sample of silhouette segmentation in the COCO dataset. (D) Results of the gait identification task via different methods. Include: validation set including gait sequences filmed from the same camera stand as the probe. Exclude: validation set excluding gait sequences filmed from the same camera stand as the probe; NS, P-value for difference of successful identification ratio between methods ≥0.05; *P < 0.05; **P < 0.01; ***P < 0.005; ****P < 0.001.

Figures 5B,C and Supplementary Videos 1–6 present the silhouette segmentation samples in the open dataset and target dataset via DPose2Seg and Pose2Seg. In the comparison of the precision of the segmentation methods, DPose2Seg showed an advantage over Pose2Seg and Mask R-CNN when tested on the labelled human image datasets OCHuman and COCO2017 (Supplementary Table 3). Thus, the trained DPose2Seg was used to generate the silhouettes needed in the following experiments.

The computation time for the different machine vision analysis tasks is presented in Supplementary Table 4.

Recognition and Feature Extraction

A recognition comparison was conducted to examine the performance of the deep-learning models with respect to gait feature extraction in the target dataset (Figure 5D). DGaitset outperformed LGaitset and the original Gaitset in the individual recognition test, both when the gallery included sequences from the same camera stand as the probe and when it excluded them. Thus, the sequence-level feature extraction part of the CAE was taken from the DGaitset trained in the current experiment. All gait features contained in the body key point and silhouette sequences were arranged in a 64 × 64 matrix via the CAE.
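A chi-square comparison of two success ratios of the kind reported in Figure 5D can be sketched as follows. The counts are invented purely for illustration and are not results from this study; the sketch also assumes a 2 × 2 table without continuity correction, which may differ from the configuration described in Supplementary Section 1.

```python
import math


def chi_square_2x2(success_a, total_a, success_b, total_b):
    """Pearson chi-square test (1 degree of freedom, no continuity correction)."""
    a, b = success_a, total_a - success_a
    c, d = success_b, total_b - success_b
    n = a + b + c + d
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    if denominator == 0:
        raise ValueError("a row or column of the table is empty")
    statistic = n * (a * d - b * c) ** 2 / denominator
    # Survival function of the chi-square distribution with 1 df.
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


# Hypothetical counts: method A recognises 90/100 probes, method B 70/100.
statistic, p_value = chi_square_2x2(90, 100, 70, 100)
```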

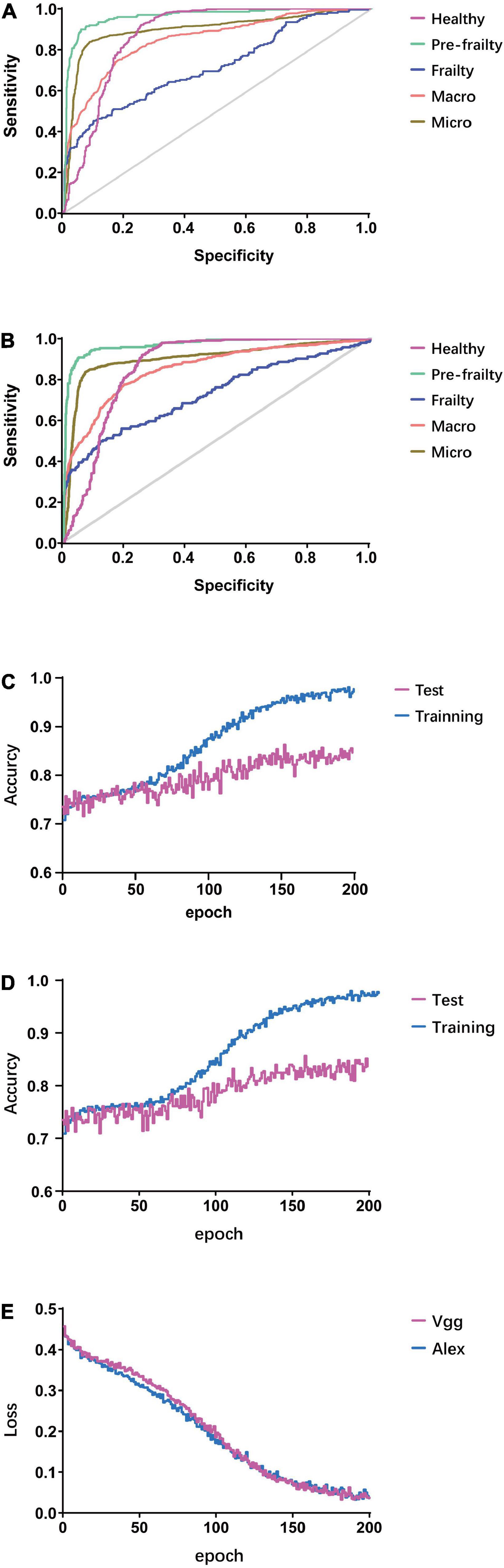

Performance of Gait Classification

In the three-class classification test, the AUC of ROC for AlexNet was 0.851 and 0.901 for macro and micro, respectively (Table 2 and Figures 6A,B), with 0.872 for health state identification, 0.965 for pre-frailty identification, and 0.715 for frailty identification; the AUC of ROC for VGG16 was 0.855 and 0.905 for macro and micro, respectively, with 0.866 for health state identification, 0.972 for pre-frailty, and 0.728 for frailty. The ROC AUC was significantly above 0.5 in all three classification tasks via AlexNet and VGG16 (all P-values < 0.0001).
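The difference between the macro and micro figures can be illustrated with a one-vs-rest sketch: the macro AUC is the unweighted mean of the per-class AUCs, whereas the micro AUC pools all class-versus-rest decisions before computing a single AUC. The function names and toy probabilities are hypothetical; the last lines only check that the reported macro values equal the mean of the reported per-class values.

```python
def binary_auc(labels, scores):
    """AUC as the probability that a positive outranks a negative (ties = 0.5)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


def macro_micro_auc(true_classes, probabilities, n_classes=3):
    """Return (per-class AUCs, macro AUC, micro AUC) for one-vs-rest tasks."""
    per_class, pooled_labels, pooled_scores = [], [], []
    for k in range(n_classes):
        labels = [1 if y == k else 0 for y in true_classes]
        scores = [p[k] for p in probabilities]
        per_class.append(binary_auc(labels, scores))
        pooled_labels.extend(labels)
        pooled_scores.extend(scores)
    macro = sum(per_class) / n_classes
    micro = binary_auc(pooled_labels, pooled_scores)
    return per_class, macro, micro


# Toy predictions for six recordings (0 healthy, 1 pre-frail, 2 frail).
true_classes = [0, 0, 1, 1, 2, 2]
probabilities = [
    [0.7, 0.2, 0.1], [0.4, 0.5, 0.1],
    [0.2, 0.6, 0.2], [0.3, 0.4, 0.3],
    [0.1, 0.3, 0.6], [0.5, 0.2, 0.3],
]
per_class, macro, micro = macro_micro_auc(true_classes, probabilities)

# Per-class AUCs reported in the text (healthy, pre-frailty, frailty).
reported = {"AlexNet": (0.872, 0.965, 0.715), "VGG16": (0.866, 0.972, 0.728)}
reported_macro = {name: round(sum(v) / 3, 3) for name, v in reported.items()}
```

The micro figure is higher than the macro figure in the reported results because pooling weights each decision equally, so the weaker frailty class pulls the average down less.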

Figure 6. Results of gait feature classification. (A) ROC AUC of validation set via AlexNet. (B) ROC AUC of validation set via VGG16. (C) Accuracy via AlexNet. (D) Accuracy via VGG16. (E) Loss function of training set.

The machine vision gait feature classification methods (AlexNet and VGG16) were non-inferior to the 4 m walking time in predicting the physical frailty state, and predicted it better than the participant’s age and grip strength. By converting the three-class classification task into three different binary classification tasks, the AUC of ROC for grip strength, age, and 4 m walking time in predicting the physical frailty state of participants in the validation set was calculated for comparison. The 4 m walking time exhibited a better predictive power than the other methods in frailty identification (0.906, 95% CI 0.807–0.999), but not in pre-frailty identification (0.552, 95% CI 0.365–0.739). However, both machine vision methods showed a clear advantage in pre-frailty classification over the other methods. Grip strength showed a significant predictive value in healthy identification. The age of participants did not present a significant predictive value in any of the three classifications (P > 0.05).

The accuracy in the initial training period for the test set of both models was around 0.71–0.72, and a high classification accuracy for the test set was achieved during the 152nd epoch for AlexNet (0.862) and 158th epoch for VGG16 (0.856) (Figures 6C,D). The loss function for both models was approximately 0.45 at the beginning of learning, and it was below 0.1 mostly after the 130th epoch for both methods. The lowest loss was 0.0332 and 0.0327 for VGG16 (182nd epoch) and AlexNet (191st epoch), respectively (Figure 6E).

Discussion

In the current study, a machine vision method without using a contact sensor was implemented to identify frailty and pre-frailty among older adults based on their walking behaviour. First, an FFP-state-labelled senior walking video dataset consisting of 222 participants was created. All images of their gait sequences were processed using the body key point extraction application AlphaPose. DPose2Seg, with a fully convolutional DenseNets segmentation module and trained on open-source human body image sets, was the silhouette segmentation method for this gait set. Gait body key points and silhouette information were used in a trained recognition network, DGaitset. The sequence-level feature extraction part of the trained DGaitset generated a customised CAE to compress the gait features into a 64 × 64 matrix using the key point and silhouette sequences. We found that both machine vision methods (AlexNet and VGG16) showed better overall predictive performance than age and grip strength, and better performance than the 4-m walking time in the healthy and pre-frailty classification tasks.

In the pre-treatment stage, open-source AlphaPose and optimised Pose2Seg were used as tools for body key point extraction and silhouette segmentation. The first step of body key point extraction is locating the human body, i.e., bounding the person within a box. The inevitable small errors in body localisation can cause failures in single-person body key point extraction. AlphaPose can handle inaccurate bounding boxes and redundant detections (Fang et al., 2017). The key point information output by the pre-trained AlphaPose not only provides mathematically constructed pose information to the three silhouette segmentation methods but also provides part of the input for DGaitset and for the customisation of the CAE. Replacing the layers of the residual unit with a fully convolutional DenseNets structure increased the precision of segmentation, which makes DPose2Seg, together with AlphaPose, a candidate silhouette generation method for other gait research.

As gait is a periodic motion, all silhouettes were represented within a single period. Our fundamental network, Gaitset, a model-free GRBU method, directly learns the representation of every frame silhouette independently via a CNN and set pooling instead of measuring the similarity between a pair of silhouette templates or sequences (Chao et al., 2019). Furthermore, a structure called HPM was used to map the set-level feature into a more discriminative space. Thereafter, recognition was completed by calculating the distance between the representations of different samples. Gaitset was faster and more effective in individual re-identification tests than previous model-free methods. The three major optimisations in DGaitset were as follows: replacement of the max function with an attention module (at the frame and sequence levels), replacement of the triplet loss with a weighted sum of the softmax loss and the hard triplet loss (at the sequence level), and a dual-channel input network (in the global structure). DGaitset, a hybrid of the model-based and model-free GRBU approaches, performed better than Gaitset and LGaitset in the recognition task, which suggests that DGaitset is a better candidate for gait feature extraction and compression from the original video.
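Two of these optimisations can be sketched in a few lines. The sketch below is illustrative only and is not the DGaitset implementation: the query vector, the weight `alpha`, the margin, and all function names are assumptions, and plain Python lists stand in for tensors.

```python
import math


def max_set_pool(frames):
    """Gaitset-style set pooling: element-wise max over per-frame features."""
    return [max(column) for column in zip(*frames)]


def attention_set_pool(frames, query):
    """Softmax-weighted average of frames; `query` is a hypothetical learned
    vector and each frame's score is its dot product with that vector."""
    scores = [sum(f * q for f, q in zip(frame, query)) for frame in frames]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # shift for numerical stability
    total = sum(exps)
    weights = [e / total for e in exps]
    pooled = [sum(w * frame[d] for w, frame in zip(weights, frames))
              for d in range(len(frames[0]))]
    return pooled, weights


def softmax_cross_entropy(logits, target):
    """Softmax loss for one sample: log-sum-exp minus the target logit."""
    top = max(logits)
    log_sum = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum - logits[target]


def batch_hard_triplet(embeddings, labels, margin=0.2):
    """Hard triplet loss: per anchor, farthest positive vs nearest negative."""
    losses = []
    for i, (anchor, label) in enumerate(zip(embeddings, labels)):
        pos = [math.dist(anchor, e) for j, (e, l) in
               enumerate(zip(embeddings, labels)) if l == label and j != i]
        neg = [math.dist(anchor, e) for e, l in zip(embeddings, labels)
               if l != label]
        if pos and neg:
            losses.append(max(0.0, margin + max(pos) - min(neg)))
    return sum(losses) / len(losses) if losses else 0.0


def combined_loss(softmax_loss, triplet_loss, alpha=0.5):
    """Weighted sum of the two losses; alpha is an assumed hyperparameter."""
    return alpha * softmax_loss + (1.0 - alpha) * triplet_loss


frames = [[1.0, 0.0], [0.0, 2.0], [0.5, 0.5]]  # three frames, two features
print(max_set_pool(frames))
print(attention_set_pool(frames, query=[0.0, 1.0]))
```

Max pooling keeps only the strongest response per feature, whereas attention pooling lets every frame contribute in proportion to its learned relevance; with a zero query the attention weights become uniform and the result reduces to mean pooling.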

AlexNet uses the rectified linear unit (ReLU) activation function after the convolutional layers and softmax for the output layer, and applies max pooling instead of average pooling (Gu et al., 2018). VGG uses very small convolutional filters and very deep (16 and 19 layers) models (Bajić et al., 2019). The design decisions in the VGG models have become the starting point for the simple and direct use of CNNs in general.
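The three building blocks named for AlexNet, as the architecture is commonly described (ReLU activation, max pooling, softmax output), can be shown on a toy feature map. This is a minimal sketch under simplifying assumptions: the values are made up, the helper names are hypothetical, and non-overlapping 2 × 2 windows are used for brevity, whereas AlexNet itself uses overlapping pooling windows.

```python
import math


def relu(feature_map):
    """Rectified linear unit: negative activations are set to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def pool2x2(feature_map, reduce_fn):
    """Non-overlapping 2x2 pooling over a 2-D list with even height/width."""
    return [[reduce_fn([feature_map[r][c], feature_map[r][c + 1],
                        feature_map[r + 1][c], feature_map[r + 1][c + 1]])
             for c in range(0, len(feature_map[0]), 2)]
            for r in range(0, len(feature_map), 2)]


def mean(values):
    return sum(values) / len(values)


def softmax(logits):
    """Convert class scores to probabilities that sum to one."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 4x4 feature map produced by a convolutional layer.
fmap = [[-1.0, 2.0, 0.0, -3.0],
        [4.0, -5.0, 1.0, 1.0],
        [0.0, 0.0, -2.0, -2.0],
        [3.0, -1.0, -2.0, 6.0]]

activated = relu(fmap)
print(pool2x2(activated, max))   # max pooling keeps the strongest response
print(pool2x2(activated, mean))  # average pooling dilutes it
print(softmax([2.0, 1.0, 0.1]))  # three-class output, e.g. the three states
```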

A limitation of the current programme is that the gait features were labelled by the FFP assessment alone, without prognostic events such as death, major cardiovascular events, or re-hospitalisation, which point directly to a state of frailty, because follow-up data of the WCHAT study are currently unavailable. The scale of the current walking video database and the unbalanced prevalence of physical frailty states in the community-based cohort also limited the performance of the machine vision frailty classifier. As potential clinical gait machine vision applications based on the current research may focus more on disease screening than on accurate diagnosis, discarding random samples from the healthy group in the data compilation stage, or increasing the cost of the frailty group in the algorithm modification stage, could increase the sensitivity to the frailty state in future development (Van Hulse et al., 2007; Krawczyk, 2016).
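Both remedies for class imbalance mentioned above can be sketched briefly. The example is hypothetical and was not part of this study: the label counts are invented, the function names are illustrative, and the inverse-frequency weighting is one common heuristic (the same formula scikit-learn uses for its "balanced" class weights), not a scheme taken from the cited references.

```python
import random
from collections import Counter


def undersample(samples, labels, majority, keep, seed=0):
    """Randomly discard majority-class samples until `keep` of them remain."""
    rng = random.Random(seed)
    majority_idx = [i for i, lab in enumerate(labels) if lab == majority]
    n_drop = max(0, len(majority_idx) - keep)
    dropped = set(rng.sample(majority_idx, n_drop))
    kept = [i for i in range(len(labels)) if i not in dropped]
    return [samples[i] for i in kept], [labels[i] for i in kept]


def inverse_frequency_weights(labels):
    """Per-class misclassification cost: n_samples / (n_classes * count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * count) for cls, count in counts.items()}


# Hypothetical, deliberately unbalanced label set (not the study's counts).
labels = ["healthy"] * 8 + ["pre-frailty"] * 3 + ["frailty"] * 1
samples = list(range(len(labels)))  # stand-ins for gait feature matrices

balanced_samples, balanced_labels = undersample(
    samples, labels, majority="healthy", keep=3)
print(Counter(balanced_labels))

weights = inverse_frequency_weights(labels)
print(weights)  # the rarest class receives the largest cost
```

Undersampling discards data and so suits larger datasets, while cost weighting keeps every sample but changes how strongly each error is penalised during training; either would be expected to trade some specificity for sensitivity to the frailty state.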

Although AlphaPose is a reliable method for building body key point images, its 17 key points (nose, left and right eyes, ears, shoulders, elbows, wrists, hips, knees, and ankles) did not include any points on the feet (Task Force Members et al., 2013). This deficit might cause an increase in noise around the feet compared with other body parts in silhouette segmentation. More recent body key point extraction algorithms, such as BlazePose and Zou’s method, can label the ankle, heel, and foot index, and might provide a better choice for the machine vision body reconstruction model in this field (Bazarevsky et al., 2020; Zou et al., 2020). As with most non-linear machine learning methods (Horst et al., 2019), parts of the analysis process in the current programme were not straightforward to understand or interpret.
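The missing foot points can be made concrete by listing the key points by name. The sketch assumes that the 17 key points follow the standard COCO keypoint layout; the foot landmark names are those used by BlazePose, and the variable names are illustrative.

```python
# Standard COCO 17-keypoint layout (assumed here to match the 17 key points
# described in the text).
COCO_KEYPOINTS = (
    "nose",
    "left_eye", "right_eye",
    "left_ear", "right_ear",
    "left_shoulder", "right_shoulder",
    "left_elbow", "right_elbow",
    "left_wrist", "right_wrist",
    "left_hip", "right_hip",
    "left_knee", "right_knee",
    "left_ankle", "right_ankle",
)

# Foot landmarks that BlazePose adds below the ankle.
FOOT_LANDMARKS = ("left_heel", "right_heel",
                  "left_foot_index", "right_foot_index")

# Every foot landmark is absent, so the ankle is the lowest labelled joint.
missing = [name for name in FOOT_LANDMARKS if name not in COCO_KEYPOINTS]
print(len(COCO_KEYPOINTS), "key points; missing foot landmarks:", missing)
```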

Our methodology shows a distinct advantage in identifying the pre-frailty state, which might provide a clue for developing a novel biomarker. Pre-frailty usually presents less typically than frailty, which limits proper preventive treatment, such as physical exercise, nutritional interventions, and implements (Serra-Prat et al., 2017). Furthermore, the current camera-based identification methods might extend their potential applications from frailty to other geriatric syndromes, such as cognitive impairment (Amboni et al., 2013).

A contact-free self-reported frailty assessment tool based on this method might help healthcare personnel (HCP) minimise their exposure to SARS-CoV-2-contaminated environments and equipment. It is well known that frailty status is a better predictor of prognosis than age in the COVID-19 therapy process (Hewitt et al., 2020). The evaluation of FFP depends on face-to-face assessment by HCP, and HCP might not be able to gather enough detailed information within 30 min to make a comprehensive RFI evaluation for elderly patients diagnosed with hearing, visual or cognitive impairment. Moreover, a longer cumulative exposure time of HCP to SARS-CoV-2 would increase the risk of transmission [National Center for Immunization and Respiratory Diseases (NCIRD), 2021].

After further mobile optimisation, our methodology might also expand to in-home application scenarios, given the rapid global growth in smart device ownership (Silver and Taylor, 2019). With the rapid and large-scale growth of the elderly population worldwide, there is a huge gap between the supply of and demand for health monitoring and disease screening. Solutions aimed at reducing the strain on elderly care facilities and promoting independence, such as technology-enabled home-care services, will become a major part of the elderly care model in the near future (Mesko et al., 2018). Machine vision with artificial neural network tools has produced opportunities for convenient at-home screening of geriatric diseases such as frailty (Ahmed et al., 2020).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Biomedical Ethics Committee of West China Hospital, Sichuan University. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

YL contributed to the design and carried out the current study. RW and YD contributed to modification of the experimental protocol. RH and QT contributed to optimisation of the silhouette segmentation module. XH and LQ contributed to key point extraction. BY and ZW contributed to the feature extraction module. YM, XLi, HW, XLiu, LZ, LD, ZX, and CX filmed and edited the gait videos. MG, XS, JJ, JC, XLin, and LX were involved in baseline information gathering. HG and HY analysed the experimental data. BD drafted the manuscript. XHH supervised the current research. All authors contributed to the article and approved the submitted version.

Funding

This study was supported by the Ministry of Science and Technology of China (grant numbers 2018YFC2002400, 2020YFC2005600, and 2020YFC2005602); West China Hospital, Sichuan University (grant numbers Y2018A44 and ZY2017201); Sichuan Provincial Department of Science and Technology (grant numbers 2021YFS0239, 2019YFS0277, and 2016JY0125); Chinese Cardiovascular Association (grant number 2021-CCA-HTN-61); and Chengdu Science and Technology Bureau (grant numbers 2019-YF09-00120-SN and 2021-YF05-02144-SN).

Conflict of Interest

XLi is employed by Aviation Industry Corporation of China 363 Hospital.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnagi.2021.757823/full#supplementary-material

References

Adolph, K. E., and Franchak, J. M. (2017). The development of motor behavior. Wiley Interdiscip. Rev. Cogn. Sci. 8:e1430. doi: 10.1002/wcs.1430

Ahmed, Z., Mohamed, K., Zeeshan, S., and Dong, X. (2020). Artificial intelligence with multi-functional machine learning platform development for better healthcare and precision medicine. Database 2020:baaa010. doi: 10.1093/database/baaa010

Akbari, G., Nikkhoo, M., Wang, L., Chen, C. P. C., Han, D. S., Lin, Y. H., et al. (2021). Frailty level classification of the community elderly using microsoft kinect-based skeleton pose: a machine learning approach. Sensors 21:4017. doi: 10.3390/s21124017

Amboni, M., Barone, P., and Hausdorff, J. M. (2013). Cognitive contributions to gait and falls: evidence and implications. Mov. Disord. 28, 1520–1533. doi: 10.1002/mds.25674

Bajić, F., Job, J., and Nenadić, K. (2019). “Chart classification using simplified VGG model,” in Proceedings of the 2019 International Conference on Systems, Signals and Image Processing (IWSSIP), (Osijek).

Banik, S., Melanthota, S. K., Arbaaz, Vaz, J. M., Kadambalithaya, V. M., Hussain, I., et al. (2021). Recent trends in smartphone-based detection for biomedical applications: a review. Anal. Bioanal. Chem. 413, 2389–2406. doi: 10.1007/s00216-021-03184-z

Bazarevsky, V., Grishchenko, I., Raveendran, K., Zhu, T., Zhang, F., and Grundmann, M. (2020). “BlazePose: on-device real-time body pose tracking,” in Proceedings of the CVPR Workshop on Computer Vision for Augmented and Virtual Reality, (Seattle, WA).

Cao, Z., Simon, T., Wei, S. E., and Sheikh, Y. (2017). “Realtime multi-person 2D pose estimation using part affinity fields,” in Proceedings of the Conference on Computer Vision and Pattern Recognition, (Piscataway, NJ: IEEE). doi: 10.1109/TPAMI.2019.2929257

Chao, H., He, Y., Zhang, J., and Feng, J. (2019). GaitSet: regarding gait as a set for cross-view gait recognition. Proc. AAAI Conf. Artif. Intell. 33, 8126–8133.

Chao, H., Wang, K., He, Y., Zhang, J., and Feng, J. (2021). GaitSet: cross-view gait recognition through utilizing gait as a deep set. IEEE Trans. Pattern Anal. Mach. Intell. 1–1. doi: 10.1109/TPAMI.2021.3057879

Chaudhari, S., Mithal, V., Polatkan, G., and Ramanath, R. (2019). An Attentive Survey of Attention Models. Available online at: https://arxiv.org/abs/1904.02874

Chen, C., Liang, J., Zhao, H., Hu, H., and Tian, J. (2009). Frame difference energy image for gait recognition with incomplete silhouettes. Pattern Recognit. 30, 977–984.

COCO Consortium (2017). COCO Common Objects in Context. Available online at: https://cocodataset.org/#termsofuse (accessed March 5, 2021).

Fang, H., Xie, S., Tai, Y., and Lu, C. (2017). “RMPE: regional multi-person pose estimation,” in Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), (Venice). doi: 10.1109/ICCV.2017.256

Fried, L. P., Tangen, C. M., Walston, J., Newman, A. B., Hirsch, C., Gottdiener, J., et al. (2001). Frailty in older adults: evidence for a phenotype. J. Gerontol. A Biol. Sci. Med. Sci. 56, M146–M156. doi: 10.1093/gerona/56.3.m146

Fried, L. P., Xue, Q. L., Cappola, A. R., Ferrucci, L., Chaves, P., Varadhan, R., et al. (2009). Nonlinear multisystem physiological dysregulation associated with frailty in older women: implications for etiology and treatment. J. Gerontol. A Biol. Sci. Med. Sci. 64, 1049–1057. doi: 10.1093/gerona/glp076

Fu, Y., Wei, Y., Zhou, Y., Shi, H., Huang, G., Wang, X., et al. (2019). Horizontal pyramid matching for person re-identification. Proc. AAAI Conf. Artif. Intell. 33, 8295–8302.

Gu, J., Wang, Z., Kuen, J., Ma, L., Shahroudy, A., Shuai, B., et al. (2018). Recent advances in convolutional neural networks. Pattern Recognit. 77, 354–377. doi: 10.1016/j.patcog.2017.10.013

He, K., Gkioxari, G., Dollár, P., and Girshick, R. (2017). “Mask R-CNN,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), (Venice), 2961–2969.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep residual learning for image recognition,” in Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), (Las Vegas, NV).

Hewitt, J., Carter, B., Vilches-Moraga, A., Quinn, T. J., Braude, P., Verduri, A., et al. (2020). The effect of frailty on survival in patients with COVID-19 (COPE): a multicentre, European, observational cohort study. Lancet Public Health 5, e444–e451. doi: 10.1016/S2468-2667(20)30146-8

Horst, F., Lapuschkin, S., Samek, W., Muller, K. R., and Schollhorn, W. I. (2019). Explaining the unique nature of individual gait patterns with deep learning. Sci. Rep. 9:2391. doi: 10.1038/s41598-019-38748-8

Huang, G., Liu, Z., Van Der Maaten, L., and Weinberger, K. Q. (2017). “Densely connected convolutional networks,” in Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), (Honolulu, HI).

Jégou, S., Drozdzal, M., Vazquez, D., Romero, A., and Bengio, Y. (2017). “The one hundred layers tiramisu: fully convolutional densenets for semantic segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, (Honolulu, HI).

Jung, D., Kim, J., Kim, M., Won, C. W., and Mun, K. R. (2021). Frailty assessment using temporal gait characteristics and a long short-term memory network. IEEE J. Biomed. Health Inform. 25, 3649–3658. doi: 10.1109/JBHI.2021.3067931

Kojima, G., Liljas, A. E. M., and Iliffe, S. (2019). Frailty syndrome: implications and challenges for health care policy. Risk Manag. Healthcare Policy 12, 23–30. doi: 10.2147/RMHP.S168750

Krawczyk, B. (2016). Learning from imbalanced data: open challenges and future directions. Prog. Artif. Intell. 5, 221–232. doi: 10.1007/s13748-016-0094-0

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2017). ImageNet classification with deep convolutional neural networks. Commun. ACM 60, 84–90. doi: 10.1145/3065386

Lam, T., Cheung, K. H., and Liu, J. N. K. (2011). Gait flow image: a silhouette-based gait representation for human identification. Pattern Recognit. 44, 973–987. doi: 10.1016/j.patcog.2010.10.011

Lee, H., Lee, E., and Jang, I. Y. (2020). Frailty and comprehensive geriatric assessment. J. Korean Med. Sci. 35:e16. doi: 10.3346/jkms.2020.35.e16

Li, W., Kuo, C. C. J., and Peng, J. (2018). Gait recognition via GEI subspace projections and collaborative representation classification. Neurocomputing 275, 1932–1945. doi: 10.1016/j.neucom.2017.10.049

Lin, T. Y., Dollár, P., Girshick, R., He, K., Hariharan, B., and Belongie, S. (2017). “Feature pyramid networks for object detection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), (Honolulu, HI).

Luo, J., and Tjahjadi, T. (2020). Gait recognition and understanding based on hierarchical temporal memory using 3D gait semantic folding. Sensors 20:1646. doi: 10.3390/s20061646

Martinez-Ramirez, A., Martinikorena, I., Gomez, M., Lecumberri, P., Millor, N., Rodriguez-Manas, L., et al. (2015). Frailty assessment based on trunk kinematic parameters during walking. J. Neuroeng. Rehabil. 12:48. doi: 10.1186/s12984-015-0040-6

Masci, J., Meier, U., Cireşan, D., and Schmidhuber, J. (2011). “Stacked convolutional auto-encoders for hierarchical feature extraction,” in Artificial Neural Networks and Machine Learning – ICANN 2011, Vol. 6791, eds T. Honkela, W. Duch, M. Girolami, and S. Kaski (Berlin: Springer).

Mesko, B., Hetenyi, G., and Gyorffy, Z. (2018). Will artificial intelligence solve the human resource crisis in healthcare? BMC Health Serv. Res. 18:545. doi: 10.1186/s12913-018-3359-4

Muro-de-la-Herran, A., Garcia-Zapirain, B., and Mendez-Zorrilla, A. (2014). Gait analysis methods: an overview of wearable and non-wearable systems, highlighting clinical applications. Sensors 14, 3362–3394. doi: 10.3390/s140203362

National Center for Immunization and Respiratory Diseases (NCIRD) (2021). Interim U.S. Guidance for Risk Assessment and Work Restrictions for Healthcare Personnel with Potential Exposure to SARS-CoV-2. Available online at: https://www.cdc.gov/coronavirus/2019-ncov/hcp/guidance-risk-assesment-hcp.html (accessed April 20, 2021).

Pirker, W., and Katzenschlager, R. (2017). Gait disorders in adults and the elderly: a clinical guide. Wien Klin. Wochenschr. 129, 81–95. doi: 10.1007/s00508-016-1096-4

Rockwood, K., Song, X., MacKnight, C., Bergman, H., Hogan, D. B., McDowell, I., et al. (2005). A global clinical measure of fitness and frailty in elderly people. CMAJ 173, 489–495. doi: 10.1503/cmaj.050051

Roy, A., Sural, S., and Mukherjee, J. (2012). Gait recognition using pose kinematics and pose energy image. Signal Process. 92, 780–792.

Schwenk, M., Howe, C., Saleh, A., Mohler, J., Grewal, G., Armstrong, D., et al. (2014). Frailty and technology: a systematic review of gait analysis in those with frailty. Gerontology 60, 79–89. doi: 10.1159/000354211

Serra-Prat, M., Sist, X., Domenich, R., Jurado, L., Saiz, A., Roces, A., et al. (2017). Effectiveness of an intervention to prevent frailty in pre-frail community-dwelling older people consulting in primary care: a randomised controlled trial. Age Ageing 46, 401–407. doi: 10.1093/ageing/afw242

Silver, L., and Taylor, K. (2019). Smartphone Ownership Is Growing Rapidly Around the World, but Not Always Equally. Available online at: https://www.pewresearch.org/global/wp-content/uploads/sites/2/2019/02/Pew-Research-Center_Global-Technology-Use-2018_2019-02-05.pdf (accessed April 19, 2021).

Simonyan, K., and Zisserman, A. (2015). “Very deep convolutional networks for large-scale image recognition,” in Proceedings of the 3rd International Conference on Learning Representations (ICLR2015), (San Diego, CA).

Taha, A., Chen, Y., Misu, T., Shrivastava, A., and Davis, L. (2020). “Boosting standard classification architectures through a ranking regularizer,” in Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), (Snowmass, CO).

Task Force Members, G., Sechtem, U., Achenbach, S., Andreotti, F., Arden, C., et al. (2013). 2013 ESC guidelines on the management of stable coronary artery disease: the Task Force on the management of stable coronary artery disease of the European Society of Cardiology. Eur. Heart J. 34, 2949–3003. doi: 10.1093/eurheartj/eht296

Van Hulse, J., Khoshgoftaar, T. M., and Napolitano, A. (2007). “Experimental perspectives on learning from imbalanced data,” in ICML ‘07: Proceedings of the 24th International Conference on Machine Learning, (New York, NY: Association for Computing Machinery), 935–942.

Yanyan, W., Ding, D., Yi, S., Taiping, L., Jirong, Y., and Ning, G. (2019). Development and validation of the China Leisure time physical activity questionnaire in the elderly. Pract. Geriatr. 3, 229–233. doi: 10.3969/j.issn.1003-9198.2019.03.006

Yilmaz, E., and Aslam, J. A. (2006). “Estimating average precision with incomplete and imperfect judgments,” in Proceedings of the 15th ACM International Conference on Information and Knowledge Management, (New York, NY: Association for Computing Machinery), 102–111.

Yoo, J.-H., Nixon, M. S., and Harris, C. J. (2002). “Extracting gait signatures based on anatomical knowledge,” in Proceedings of the BMVA Symposium on Advancing Biometric Technologies, (London), 596–606.

Zhang, H., Deng, K., Li, H., Albin, R. L., and Guan, Y. (2020). Deep learning identifies digital biomarkers for self-reported Parkinson’s disease. Patterns 1:100042. doi: 10.1016/j.patter.2020.100042

Zhang, S. H., Li, R., Dong, X., Rosin, P., Cai, Z., Han, X., et al. (2019). “Pose2Seg: detection free human instance segmentation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), (Long Beach, CA), 889–898.

Zou, Y., Yang, J., Ceylan, D., Zhang, J., Perazzi, F., and Huang, J. B. (2020). “Reducing footskate in human motion reconstruction with ground contact constraints,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), (Piscataway, NJ: IEEE), 459–468. doi: 10.1007/s11999-010-1633-9

Keywords: frailty, gait, machine vision, biomarkers, preventative health care, feature extraction

Citation: Liu Y, He X, Wang R, Teng Q, Hu R, Qing L, Wang Z, He X, Yin B, Mou Y, Du Y, Li X, Wang H, Liu X, Zhou L, Deng L, Xu Z, Xiao C, Ge M, Sun X, Jiang J, Chen J, Lin X, Xia L, Gong H, Yu H and Dong B (2021) Application of Machine Vision in Classifying Gait Frailty Among Older Adults. Front. Aging Neurosci. 13:757823. doi: 10.3389/fnagi.2021.757823

Received: 12 August 2021; Accepted: 18 October 2021;

Published: 16 November 2021.

Edited by:

Xiao-Yong Zhang, Fudan University, China

Reviewed by:

Cuili Wang, Peking University, China

Ghasem Akbari, Qazvin Islamic Azad University, Iran

Copyright © 2021 Liu, He, Wang, Teng, Hu, Qing, Wang, He, Yin, Mou, Du, Li, Wang, Liu, Zhou, Deng, Xu, Xiao, Ge, Sun, Jiang, Chen, Lin, Xia, Gong, Yu and Dong. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaohai He, hxh@scu.edu.cn; Birong Dong, birongdong123@outlook.com
