- 1Department of Information and Communication Engineering, Chosun University, Gwangju, South Korea

- 2Department of Neurology, Chonnam National University Medical School, Gwangju, South Korea

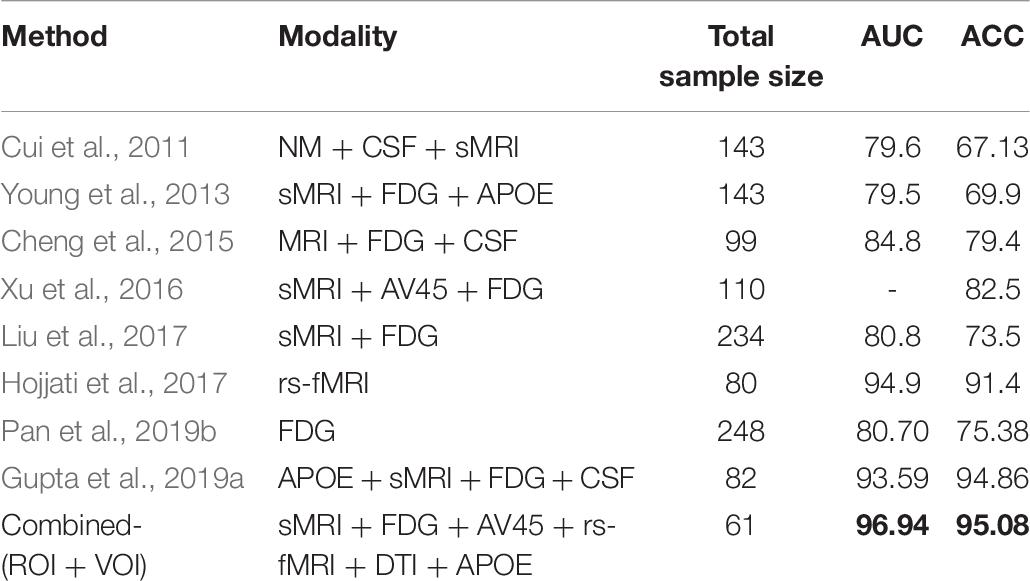

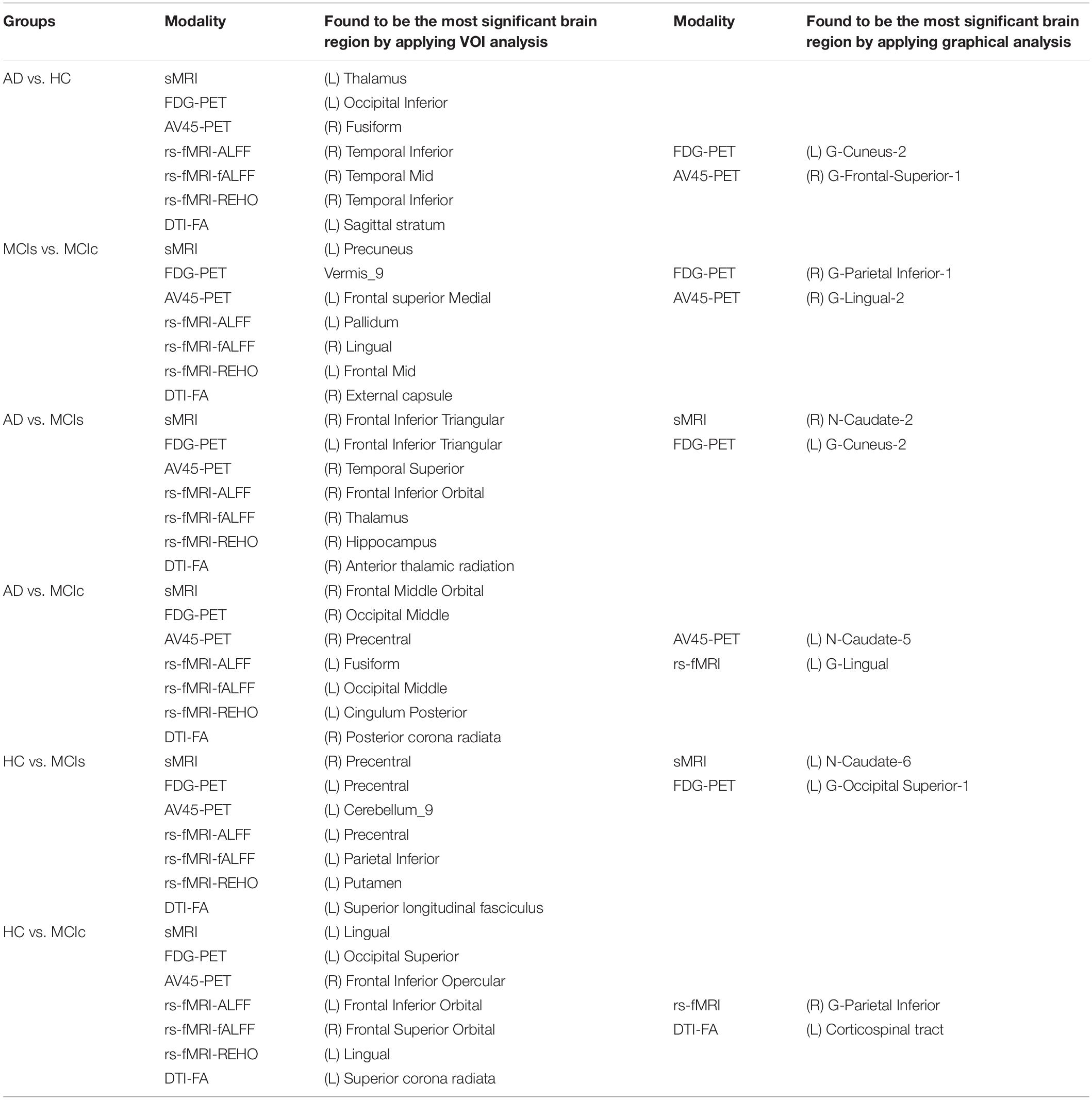

Graphical, voxel, and region-based analysis has become a popular approach to studying neurodegenerative disorders such as Alzheimer’s disease (AD) and its prodromal stage [mild cognitive impairment (MCI)]. These methods have been used previously for classification or discrimination of AD in subjects in a prodromal stage called stable MCI (MCIs), which does not convert to AD but remains stable over a period of time, and converting MCI (MCIc), which converts to AD, but the results reported across similar studies are often inconsistent. Furthermore, the classification accuracy for MCIs vs. MCIc is limited. In this study, we propose combining different neuroimaging modalities (sMRI, FDG-PET, AV45-PET, DTI, and rs-fMRI) with the apolipoprotein-E genotype to form a multimodal system for the discrimination of AD, and to increase the classification accuracy. Initially, we used two well-known analyses to extract features from each neuroimage for the discrimination of AD: whole-brain parcelation analysis (or region-based analysis), and voxel-wise analysis (or voxel-based morphometry). We also investigated graphical analysis (nodal and group) for all six binary classification groups (AD vs. HC, MCIs vs. MCIc, AD vs. MCIc, AD vs. MCIs, HC vs. MCIc, and HC vs. MCIs). Data for a total of 129 subjects (33 AD, 30 MCIs, 31 MCIc, and 35 HCs) for each imaging modality were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) homepage. These data also include two APOE genotype data points for the subjects. Moreover, we used the 2-mm AICHA atlas with the NiftyReg registration toolbox to extract 384 brain regions from each PET (FDG and AV45) and sMRI image. For the rs-fMRI images, we used the DPARSF toolbox in MATLAB for the automatic extraction of data and the results for REHO, ALFF, and fALFF. We also used the pyClusterROI script for the automatic parcelation of each rs-fMRI image into 200 brain regions. For the DTI images, we used the FSL (Version 6.0) toolbox for the extraction of fractional anisotropy (FA) images to calculate a tract-based spatial statistic. Moreover, we used the PANDA toolbox to obtain 50 white-matter-region-parcellated FA images on the basis of the 2-mm JHU-ICBM-labeled template atlas. To integrate the different modalities and different complementary information into one form, and to optimize the classifier, we used the multiple kernel learning (MKL) framework. The obtained results indicated that our multimodal approach yields a significant improvement in accuracy over any single modality alone. The areas under the curve obtained by the proposed method were 97.78, 96.94, 95.56, 96.25, 96.67, and 96.59% for AD vs. HC, MCIs vs. MCIc, AD vs. MCIc, AD vs. MCIs, HC vs. MCIc, and HC vs. MCIs binary classification, respectively. Our proposed multimodal method improved the classification result for MCIs vs. MCIc groups compared with the unimodal classification results. Our study found that the (left/right) precentral region was present in all six binary classification groups (this region can be considered the most significant region). Furthermore, using nodal network topology, we found that FDG, AV45-PET, and rs-fMRI were the most important neuroimages, and showed many affected regions relative to other modalities. We also compared our results with recently published results.

Introduction

Alzheimer’s disease (AD) is a neurodegenerative disorder that is characterized by chronic cortical atrophy (such as posterior cingulate atrophy and medial temporal atrophy), and by a progressive decline in cognitive function (Bishop et al., 2010; Albert, 2011). AD is typically diagnosed in people older than 65 years (Qiu and Kivipelto, 2009). As the life span of the population increases, the prevalence of AD and its costs to society are also increasing. Therefore, the detection of AD or its precursor forms, i.e., mild cognitive impairment (MCI) (Petersen, 2004) is an important aim in biomedical research for providing new therapeutics that help to slow the progression of AD. MCI is a transitional phase (which signifies an intermediate stage of functional and cognitive decline in normal aging and dementia patients) that is characterized by memory disturbance in the absence of dementia (Petersen, 2004; Angelucci et al., 2010), followed by widespread cognitive deficits in multiple domains until a disability threshold is reached. MCI is said to be prodromal AD (Petersen, 2004); subjects go on to develop an AD [this type of patient falls into an MCI-converting (MCIc) group]. Symptoms emerge, on average, within 2–3 years (Lopez et al., 2012). A prospective population-based study in the elderly showed that the conversion rate of MCIc patients to AD or to different forms of dementia is about 10–15% per year (Mitchell and Shiri-Feshki, 2009; Lopez et al., 2012; Wei et al., 2016). Despite our substantial knowledge of MCI converters, little is understood about the 47–67% (Ganguli et al., 2004; Lopez et al., 2012; Clem et al., 2017) of subjects diagnosed with MCI who neither return to normal cognition nor convert to dementia. In a study performed in a large community sample, 10 years identified as a MCI, 21% of those suspected to be at a greater risk for converting to dementia (Dubois and Albert, 2004; Jicha et al., 2006) managed to remain with a diagnosis of MCI (Ganguli et al., 2004; Clem et al., 2017). These studies suggest that certain subjects may not convert to AD, but rather remain diagnostically stable over a period of time (this type of patient falls into an MCI-stable, or MCIs group). Recent results from neuroimaging studies support the hypothesis that AD includes a disconnection syndrome (implying network-wide functional changes due to local structural changes) generated by a breakdown of the organized structure and functional connectivity (FC) of multiple brain regions, even in the early phase of MCI or before conversion to AD (Dubois and Albert, 2004; Bishop et al., 2010; Daianu et al., 2013; Clem et al., 2017; de Vos et al., 2018).

Previous studies have shown the potential of invasive and non-invasive biomarkers to predict conversion from MCI to AD dementia. For invasive markers, the APOE-ε4 genotype (for the carriers of the APOE-ε4 allele, brain alterations associated with AD may begin as early as infancy) (Liu et al., 2013; Dean et al., 2014), and amyloid-beta (Aβ) accumulation and neurofibrillary lesions are considered to be most important biomarkers for AD (Murphy and LeVine, 2010). Apolipoprotein-E (APOE) genotype polymorphism is considered to be the most common polymorphism in neurodegenerative diseases and has been consistently linked to normal cognitive decline in AD and MCI patients. APOE-ε4 is the strongest genetic risk factor, and it increases the risk for AD twofold to threefold. Furthermore, it lowers the age of AD onset (Michaelson, 2014). Recent developments in non-invasive neuroimaging techniques, including functional and structural imaging, have given rise to a variety of commonly used neuroimaging biomarkers for AD. Among the multiple neuroimaging modalities, structural magnetic resonance imaging (sMRI) has attracted significant interest due to its ready availability for mildly symptomatic patients and its high spatial resolution (Cuingnet et al., 2011; Salvatore et al., 2015; Wei et al., 2016; Long et al., 2017; Gupta et al., 2019b, c; Sun et al., 2019). sMRI can also reveal abnormalities in a wide range of brain areas, including gray matter (GM) atrophy in the medial temporal lobe and hippocampal/entorhinal cortex, which are identified as valuable AD-specific biomarkers for the discrimination or classification of AD patients (Cuingnet et al., 2011). In diffusion tensor imaging (DTI, or diffusion MRI), water diffusion in the brain is interpreted as an MR signal loss. Because neurodegenerative processes are accompanied by a loss of obstacles that restrict the motion of water molecules (Acosta-Cabronero and Nestor, 2014), DTI can reveal promising markers of microstructural white matter (WM) damage in AD and MCI patients. A connectivity-based analysis that applied graph theory to DTI data demonstrated disrupted topological properties of structural brain networks in AD, supporting the disconnection theory. Specifically, regional diffusion metrics for the limbic WM in the fornix, posterior cingulum, and parahippocampal gyrus have shown better performance than volumetric measurements of the GM in predicting MCI conversion (Sun et al., 2019). In clinical studies, fluorodeoxyglucose-positron emission tomography (FDG-PET), florbetapir-PET AV45 (amyloid protein imaging), and resting-state fMRI (rs-fMRI) are the most commonly used functional neuroimaging methods for AD diagnosis (Hojjati et al., 2018, 2017; Gupta et al., 2019a; Pan et al., 2019a, b). FDG-PET measures cerebral glucose metabolism via 18F-FDG in the brain, and helps to detect characteristic regional hypometabolism in AD patients, which reflects the neuronal dysfunctions in the brains of AD patients (Mosconi et al., 2008). In contrast, florbetapir-PET AV45 measures the accumulation of amyloid protein in AD brain homogenates and has faster in vivo kinetics. The use of florbetapir in amyloid imaging was recently validated in an autopsy study, and its safety profile allows its clinical application for brain imaging (Camus et al., 2012). Moreover, rs-fMRI imaging has been developed as a tool for mapping the intrinsic activity of the brain and for depicting the synchronization of interregional FC (Bi et al., 2018; de Vos et al., 2018). A recent rs-fMRI study showed that the FC pattern may be altered in some specific functional networks (default mode network) of AD and MCI patients. The authors found decreased FC between the hippocampus and several regions throughout the neocortex, i.e., reduced FC within the default mode networks and increased FC within the frontal networks (de Vos et al., 2018).

From the above studies, we observe that there is no clear evidence supporting the supremacy of any biomarker above another (CSF vs. APOE-ε4 vs. imaging) for the diagnostic estimation of AD. The choice of biomarkers mainly depends on price and availability. Nevertheless, some authors argue for the perfection of imaging above fluid biomarkers, given that imaging modalities can distinguish the different phases of the disease both anatomically and temporally (Khoury and Ghossoub, 2019; Márquez and Yassa, 2019). The above-mentioned studies used only a single modality to detect biomarkers for the detection of the conversion of MCI to AD. The proposed algorithm performance is approximately 80–90%, which is low compared with that of recently published multimodal studies (Zhang et al., 2011; Liu et al., 2014; Ritter et al., 2015; Xu et al., 2016; Gupta et al., 2019a). To date, it is true that no single imaging modality for biomarkers meets all of the diagnostic requirements set by previous studies (Hyman et al., 2012; Jack et al., 2018, 2016) (because each biomarker has their own advantage over others) and no single (whether genotype or fluid or imaging) biomarker can by itself correctly discriminate a heterogeneous disorder of AD with high accuracy, but several methods may provide complementary information, which leads to a call to develop a panel of neuroimaging biomarkers, or a combination of imaging and APOE or imaging with CSF data that merges information about the disease manner to improve diagnostic accuracy (Márquez and Yassa, 2019). Combining information (multimodal) from different types of neuroimaging (structural and functional) with genotype (APOE) or biochemical (CSF) information, as do sMRI, AV45-PET, FDG-PET, DTI, and rs-fMRI, can help to improve diagnostic performance for AD or MCI compared with single-modality methods (Zhang et al., 2011; Young et al., 2013; Schouten et al., 2016; Wei et al., 2016; Gupta et al., 2019a). Furthermore, it has been noted lately that a combination of biomarkers yields a powerful diagnostic technique for classifying the AD group with cognitively healthy subjects, with specificity and sensitivity scores reaching above 90% (Bloudek et al., 2011; Rathore et al., 2017; Khoury and Ghossoub, 2019).

Multiple studies have reported a combination of different neuroimaging modalities for investigating AD or MCI. Dai et al. (2012) used regional GM volumetric measures and functional measures (amplitude of low-frequency fluctuations, regional homogeneity, and regional FC strength) as features. They trained distinct maximum uncertainty LDA classifiers on functional and structural properties and merged the output of the classifiers by weighted voting. Zhang et al. (2011) used a multimodal method for the discrimination of healthy controls (HC) to AD patients. They used a kernel-based support vector machine (SVM) classifier for the classification, and they combined volumetric regional features with regional FDG-PET and CSF biomarkers. Young et al. (2013) proposed a method where they combined sMRI, FDG-PET, and APOE genotype data for the discrimination of AD with HC. These authors used Gaussian processes as a multimodal kernel method, and they applied an SVM classifier for the classification of MCIs vs. MCIc groups. However, their diagnostic accuracy was low. Another study proposed a system where multiple kernel learning (MKL) with the Fourier transform of the Gaussian kernels was applied to AD classification using both sMRI and rs-fMRI (Liu et al., 2014) neuroimages. Moradi et al. (2015) used GM density maps, age, and cognitive tests as features, and employed classification algorithms such as low-density separation and random forest for AD conversion discrimination. Another study proposed a novel method for the classification of AD using a multi-feature technique (regional thickness, regional correlative-calculated from thickness measures, and the APOE genotype) using an SVM classifier (Zheng et al., 2015). Schouten et al. (2016) combined regional volumetric measures, diffusion measures, and correlation measures between all brain regions calculated from functional MRI. They employed a logistic elastic net for classification. In addition, Liu et al. (2017) used independent component analysis and the COX model for the discrimination of MCIs to MCIc. In their study, they used sMRI and FDG-PET scans in combination with APOE data and some cognitive measures. Hojjati et al. (2018) combined the features extracted from sMRI (cortical thickness) and rs-fMRI (graph measures) for the detection of AD, employing SVM for the classification. It is worth noting that most of the above-presented multimodal methods used brain atrophy from a few manually extracted regions as a feature of sMRI and PET images for the detection of AD among different groups. However, using only a small number of brain regions as a feature in any imaging modality may not accurately reflect the spatiotemporal pattern of structural and physiological abnormalities as a whole (Fan et al., 2008). Furthermore, simply by increasing the number of modalities, combining modalities did not increase predictive power.

Therefore, the primary goal of this study was to combine five different imaging modalities (sMRI, AV45, FDG-PET, DTI, and rs-fMRI) with the APOE genotype to establish a multimodal system for the detection of AD. Moreover, in this study, we used three methods (that were completely different from each other) for the discrimination of AD from other groups. Moreover, we also aimed to discover which single modality of neuroimaging achieves high performance or plays a significant role in classifying all six binary classification groups (AD vs. HC, MCIs vs. MCIc, AD vs. MCIc, AD vs. MCIs, HC vs. MCIc, and HC vs. MCIs) based on these methods, and wanted to know which combined methods would perform well in the classification stage (whole-brain or voxel-wise analysis). Furthermore, we also aimed to discover the regions where these six binary groups massively differed from each other using voxel of interest (VOI) and graph methods. Whole-brain parcelation and voxel-wise methods were used to study regional and voxel differences in all six binary classification groups. We used NiftyReg (Young et al., 2013; Gupta et al., 2019a), the pyClusterROI script (Craddock et al., 2012), PANDA (Cui et al., 2013), DPRASF (Chao-Gan and Yu-Feng, 2010), and the CAT12 toolbox with the integration of SPM12 (Ashburner and Friston, 2001) for the extraction of features from the structural and functional neuroimaging data. Furthermore, graph-based analysis (John et al., 2017; Peraza et al., 2019) was performed to study the organization of (nodal and group) network connectivity using anatomical features (including GM volume, cortical thickness, and WM pathways between GM regions), and using the regional time series of the 200 brain regions included in the Craddock atlas. For this graph-based analysis, we used the BRAPH toolbox (Mijalkov et al., 2017). Later, we applied an MKL algorithm based on the EasyMKL (Aiolli and Donini, 2015; Donini et al., 2019) classifier for classification and for data fusion. This classifier works by simultaneously learning the predictor parameters and the kernel combination weights. Moreover, we applied a leave-one-out cross-validation technique that helps to find the optimal hypermeter for this MKL classifier. In this study, we also applied the radial basis function (RBF)-SVM classifier to compare its results with the results obtained from the EasyMKL. Our results showed that grouping different measurements (or complementary information) from the six different modalities exhibited much better performance for all six binary classification groups (using any combined-ROI, or combined-VOI, or a combination of all) than using both classifiers with the best individual modality.

Materials and Methods

Participants

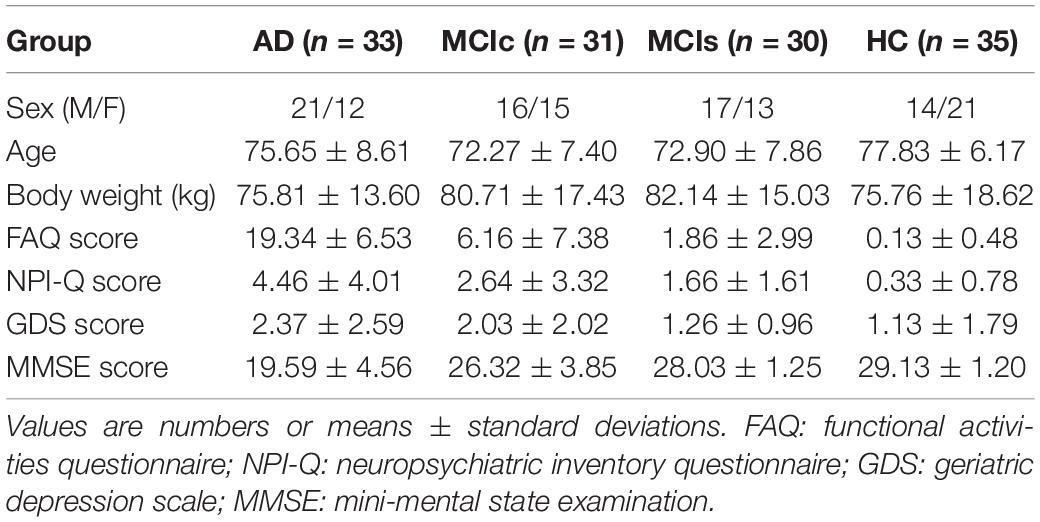

The participants included in this study were enrolled via the ADNI, which was launched in 2003 as a multicenter public-private partnership, guided by Principal Investigator Michael W. Weiner, MD. The participants were enrolled from 63 locations across the United States and Canada. The primary goal of ADNI was to test whether sMRI, PET, new biological markers, and clinical and neuropsychological evaluation could be combined to measure the development of MCI and early AD. The criteria used for the inclusion of subjects were those defined in the ADNI procedure.1 The enrolled subjects were between 56 and 92 (inclusive) years old, had a study partner able to offer an independent assessment of functioning, and spoke either Spanish or English. All subjects were willing and able to undergo all test trials, including neuroimaging, and agreed to a longitudinal follow-up. Specific psychoactive medications were excluded. For this study, we downloaded data from all subjects for whom all five imaging modalities (sMRI, rs-fMRI, FDG-PET, AV45-PET, and DTI) with their APOE genotype were available on the ADNI homepage. A total of 129 subjects were classified as either healthy controls (HC, n = 35), MCIc (n = 31), MCIs (n = 30), or AD (n = 33), with matched sex and age ratios. The groups were classified according to the criteria set by the ADNI consortium (Petersen et al., 2010). In the HC group, participants had global clinical dementia rating (CDR) scores of 0, mini-mental state examination (MMSE) scores between 27 and 30, functional activities questionnaire (FAQ) scores between 0 and 4, and geriatric depression scale (GDS) scores between 0 and 4. In the MCIs group, the MMSE score was between 25 and 30, the FAQ score between 0 and 16, and the GDS score was between 0 and 13. In the MCIc group, the MMSE score was between 19 and 30, the FAQ score between 0 and 18, and the GDS score was between 0 and 10.

In the AD group, patients had a global CDR score of 1, an MMSE score between 14 and 24, an FAQ score between 3 and 28, and a GDS score between 0 and 7 (Morris, 1993). We did not consider MCI subjects who had been tracked for less than 18 months and did not convert within this period. Table 1 shows participant demographic information, including the mean age and the sex ratios per group. To assess statistically significant changes in the demographics and clinical features between these groups, a Student’s t-test was used with the significance level set to 0.05. We found no significant differences (p−value > 0.05) between the groups for age or sex ratio. To attain unbiased estimates of performance, the classification groups were then randomly split into two clusters in a ratio of 70:30 for the training and testing sets. The model was trained on the training set, and the performance measures of diagnostic specificity and sensitivity were carried out on a separate testing set. The splitting procedure preserved the age and sex distribution.

sMRI Acquisition

We acquired 1.5-T T1-weighted MR images from the ADNI homepage. The MRI images were obtained from data centers using Philips, GE, or Siemens Medical system scanners. Because the acquisition protocol was different for each scanner, an image normalization step was carried out by ADNI. The image corrections included calibration, image geometry distortion due to gradient non-linearity (grad-warp), and reduction in the intensity non-uniformity due to waves, or residual intensity non-uniformity of the 1.5-T scans utilized on each image by ADNI. Further details about the sMRI images are available on the ADNI website.2 All scans had a resolution of 176×256×256 with 1mm spacing between each scan. In our study, we again pre-processed the obtained sMRI images using the FMRIB Software Library (FSL, v.6.0) (Smith, 2002) toolbox. For the anatomical sMRI images, this included the extraction of non-brain tissue from each image using the BET function. We then passed the skull-stripped images to the ANTs (Tustison et al., 2010) toolbox for N4 bias field correction to correct for inhomogeneous artifacts in each image. For co-registration to the standard Montreal Neurological Institute (MNI) 152 template (Grabner et al., 2006), we also used the FSL toolbox (Jenkinson et al., 2012).

FDG-PET Image Acquisition

ADNI provides four different types of FDG-PET samples, which are labeled as: (1) Co-registered Dynamic; (2) Co-registered, Averaged; (3) Co-reg, Avg, Standardized image and Voxel size; and (4) Co-reg, Avg, Std Img and Vox Siz, Uniform Resolution. The type (3) baseline FDG-PET images were downloaded from the ADNI homepage.

The downloaded baseline FDG-PET samples were in DICOM format. In the first step, we converted these DICOM format images to the Nifty format using the dcm2nii (Li et al., 2016) toolbox. Later, these images were spatially normalized to the MNI 152 template using the SPM12 toolbox (integrated within MATLAB 2019b) with a standard 91×109×91 tensor dimension image grid, having a voxel size of 2×2×2mm3. This image grid was oriented so that the subjects’ anterior-posterior (AC-PC) axis was parallel to the AC-PC line. The above normalization step was carried out on two levels: a global affine transformation, followed by a non-rigid spatial transformation. The general affine transformation requires a 12-parameter design, whereas the non-rigid spatial transformation uses a sequence of the lowest frequency elements of the three-dimensional cosine transform. Furthermore, the intensity normalization step was performed by splitting each voxel depth-wise with the average score of the global GM, which was obtained with the help of the AAL template image. More details concerning the FDG-PET imaging can be found on the ADNI homepage.3 Moreover, after the completion of these pre-processing steps, we co-registered the FDG-PET images to their corresponding sMRI T1-weighted images using the SPM12 toolbox.

AV45-PET Image Acquisition

Alzheimer’s Disease Neuroimaging Initiative provides four different types of AV45-PET samples, which are: (1) AV45 Co-registered Dynamic; (2) AV45 Co-registered, Averaged; (3) AV45 Co-reg, Avg, Standardized image and Voxel size; and (4) AV45 Co-reg, Avg, Std Img and Vox Siz, Uniform Resolution. The type (3) baseline AV45-PET images were downloaded from the ADNI homepage. For each scan, the 5-min frames (four for florbetapir, acquired 50–70 min post-injection) were co-registered to the frame (rigid-body translation/rotation, six degrees of freedom) using the NeuroStat “mcoreg” routine (followed by the ADNI organization) (Jagust et al., 2015). The downloaded baseline AV45-PET samples were in DICOM and ECAT formats. In the first step, we converted this DICOM and ECAT format images to the Nifty format using the dcm2nii (Li et al., 2016) toolbox. Later, these images were spatially normalized to the MNI 152 template using the SPM12 toolbox (integrated within MATLAB 2019b) with a standard 91×109×91 tensor dimension image grid, having a voxel size of 2×2×2mm3 using the same process we introduced in section “FDG-PET Image Acquisition” for the FDG-PET image. More details about AV45-PET imaging can be found on the ADNI website (see text footnote 3). Furthermore, after the completion of the above stated pre-processing steps, we co-registered the AV45-PET images to their corresponding sMRI T1-weighted images using the SPM12 toolbox.

Resting-State Functional MR Image Acquisition

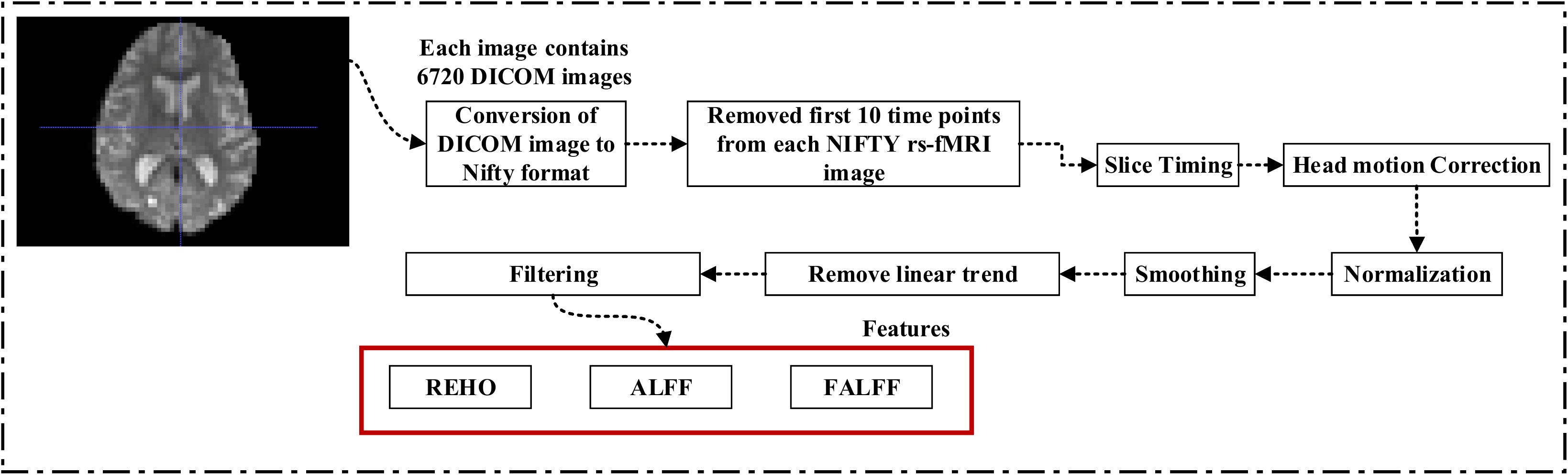

A 3.0-T Philips Medical sMRI scanner was used to acquire the fMRI images. All rs-fMRI images were obtained from the ADNI homepage. The sample for each subject consisted of 6720 DICOM images. The patients were required to relax, not to think, and to lie in the scanner during the scanning procedure. The sequence parameters were as follows: pulse sequence = GR, TR = 3000ms, TE = 30ms, flip angle = 80°, data matrix = 64×64, pixel spacing X, Y = 3.31mm and 3.31mm, slice thickness = 3.33mm, axial slices = 48, no slice gap, time points = 140. Because the signal-to-noise ratio (SNR) of the rs-fMRI images was limited, the collected data were pre-processed to reduce the impact of noise on the fMRI images. For the pre-processing of the rs-fMRI images, we used the Data Processing Assistant for Resting-state fMRI (DPARSF) (Chao-Gan and Yu-Feng, 2010) software, which can be downloaded from http://d.rnet.co/DPABI/DPABI_V2.3_170105.zip. For each subject, the entire pre-processing was divided into nine steps as follows: converting the DICOM format into the NIFTY format, removing the first ten time points, timing the slicing, head motion correction to adjust the time series of the images so that the brain was located in the same orientation in every image, normalization, smoothing by full width at half maximum (FWHM), removing the linear trend to eliminate the residual noise that systematically increases or decreases over time (Smith et al., 1999), temporal filtering to retain 0.01–0.08 Hz fluctuations, and removing covariates to eliminate physiological artifacts (Fransson, 2005), non-neuronal blood oxygen level-dependent (BOLD) fluctuations, and head motion.

Diffusion Tensor Imaging Image Acquisition

Diffusion tensor imaging images were also downloaded from the ADNI homepage. The DTI protocol used spin-echo diffusion-weighted echo-planar imaging with a TR/TE of 12000/1046ms, a voxel size of 0.9375×0.9375×2.35mm3, a matrix size of 256×256, 45 slices, 30 gradient directions, and a b-value of 1000s/mm2. More details about DTI imaging can be found on the ADNI webpage.4 This ADNI protocol was chosen after conducting a detailed comparison of several different DTI protocols to optimize the SNR in a fixed scan time (Jahanshad et al., 2013; Chen et al., 2018). The pre-processing of DTI data was performed using the diffusion toolbox of FSL (Version 6.0, FMRIB, Oxford, United Kingdom). FSL pre-processing included (i) corrections for eddy currents and head motion, (ii) skull stripping, and (iii) fitting the data to the diffusion tensor model to compute maps of fractional anisotropy (FA) and mean diffusivity (MD). A single diffusion tensor was fitted at each voxel of the eddy- and EPI-corrected DWI images using the FSL toolbox. Scalar anisotropy maps were obtained from the consequential diffusion tensor eigenvalues λ1,λ2,andλ3. FA, a measure of the degree of diffusion anisotropy, was defined in the standard way as

where < λ > is equal to the MD or average proportion of diffusion in all directions. The resulting images were smoothed with a Gaussian kernel of 5mm FWHM to improve the SNR and ensure a Gaussian distribution of the maps.

APOE Genotype

The APOE genotype for each subject was also obtained from the ADNI homepage. The APOE genotype is known to affect the risk of developing sporadic AD in carriers. The APOE genotype of each subject was noted as a pair of numbers representing which two alleles were present in the blood. This genetic feature was a single categorical variable for each participant and could have had one of five possible values: (ε2, ε3), (ε2, ε4), (ε3, ε3), (ε3, ε4), and (ε4, ε4). The most common allele is APOE ε3, but carriers of the APOE-ε4 variant have an increased risk of developing AD, whereas the APOE ε2 variant confers some protection on carriers (Michaelson, 2014). Of the three genetic polymorphisms, APOE shows the highest correlation with MCI status and stability (Brainerd et al., 2013). Several recent studies have linked the APOE-ε4 status to a relatively late risk of preclinical progression to AD. Until recently, ε4 variants (including ε4/ε4 and ε4/ε3 combinations) were inconsistently linked to the MCI status, likely reflecting both clinical and methodological differences in status classification. In this study, the genotype data were obtained from a 10 ml blood sample taken at the time of the scan and sent immediately to the University of Pennsylvania AD Biomarker Fluid Bank Laboratory for analysis.

Three Feature Extraction Processes

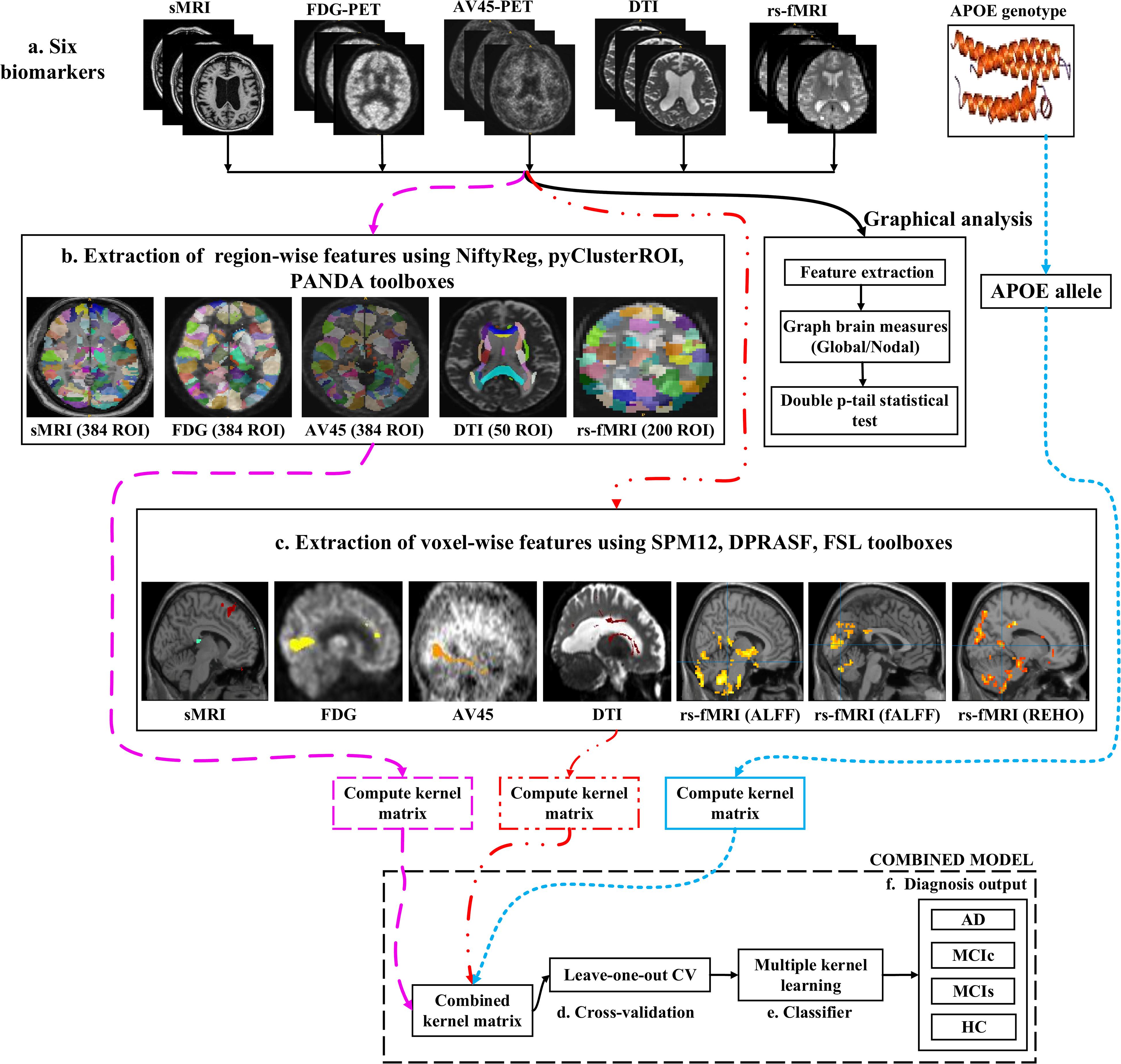

Figure 1 shows a block diagram of the proposed framework. In this study, we performed three types of analysis for the extraction of features from each imaging modality:

Figure 1. Overview of the multimodal framework. (A) Selection of five imaging modalities (sMRI, FDG-PET, AV45-PET, rs-fMRI, and DTI) and APOE genotype. (B) Extraction of regional features using NiftyReg, pyClusterROI, and PANDA toolboxes. (C) Extraction of voxel features using SPM12, DPARSF, and FSL toolboxes. (D) Leave-one-out cross-validation method. (E) Multiple kernel learning. (F) Diagnostic output.

(1) Whole-brain parcelation (or atlas-based segmentation) is a quantitative method that provides a non-invasive way to measure brain regions via neuroimaging. It works by assigning tissue labels to the unlabeled images using sMRI scans as well as the corresponding manual segmentation. For sMRI, FDG, and AV45-PET scans, we used the 2-mm Atlas of Intrinsic Connectivity of Homotopic Areas (AICHA) template image (Joliot et al., 2015) for the extraction of 384 regions of interest (ROIs) from each image, whereas for the rs-fMRI and DTI scans, we used the 2-mm Craddock atlas template (Craddock et al., 2012) for the extraction of 200 ROIs from each rs-fMRI image and the 2-mm Johns Hopkins University (JHU) WM labels atlas for the extraction of 50 ROIs from each DTI image (Hua et al., 2008).

(2) Voxel-wise analysis or morphometry (VBM) is a computational approach to neuroanatomy that measures differences in local concentrations of brain tissue through a voxel-wise comparison of multiple brain images. It uses statistics to recognize differences in brain structure between groups of patients, which in turn can be used to infer the presence of atrophy or normal-tissue expansion in patients with disease. For sMRI, FDG, and AV45-PET scans, we used the SPM12 toolbox to apply VBM, whereas, for the rs-fMRI scans, we used the DPRASF toolbox integrated with SPM12 to apply VBM. For the DTI scans, we used the tract-based spatial statistics (TBSS) function from FSL (v.6.0).

(3) Graph theory methods are powerful methods for quantifying the organization of a network by means of brain anatomical features, including cortical thickness, GM volume, and WM tracks between GM regions. When applying graph theory to different neuroimaging modalities, the outcome is a network with vertices or nodes that are represented by brain voxels or regions defined by a predetermined parcelation structure, while the edges are denoted by inter-individual data relations between the regions estimated, for example, as the intensity of correlation between the regional measurements. Note that the edges of a structural network are not always denoted by correlations between regional capacities, but by the density or number of WM tract-linking regions. In an analysis of sMRI, FDG, and AV45-PET networks, the nodes are generally defined using an anatomical segmentation or parcelation of the brain into different regions. In this study, we used the 2-mm AICHA atlas template image (which was already segmented into 384 distinct regions) for the extraction of 384 ROIs from each sMRI, FDG, and AV45-PET image. In the case of functional networks, for the rs-fMRI and DTI images, we used the 2-mm Craddock atlas template image for the extraction of 200 ROIs, and the 2-mm JHU-WM (ICBM-DTI-81) label atlas for the extraction of 50 ROIs from each DTI image. After the nodes of the network were defined, the edges indicating the relationship between different regions was computed. For this, we used the BRAPH toolbox (Mijalkov et al., 2017) integrated into MATLAB 2019a. In BRAPH, the edges are calculated in a GUI graphical analysis as the statistical correlation between the values of pairs of brain regions for an individual or for a group of subjects, depending on the neuroimaging technique.

Feature Extraction Using Atlas-Based Segmentation

After completing a series of image pre-processing steps for each imaging modality as shown in Figure 1, we extracted the features from each modality. The dashed pink line in Figure 1 shows the feature extraction for a whole-brain analysis. For sMRI, FDG, and AV45-PET images, we used the 2-mm AICHA atlas template image for the extraction of 384 ROIs from each image (Gupta et al., 2019a). We then processed these images using the open-source NiftyReg toolbox (Modat et al., 2010), which is a registration toolkit that performs fast diffeomorphic non-rigid registration on images. After the registration process, we obtained the subject-labeled image based on a template with 384 segmented regions. For each of the 384 ROIs in the labeled MR and PET images, we computed the GM volume and the relative cerebral metabolic rate of glucose from the baseline MRI, FDG, and AV45-PET data, respectively and later used it as a feature. Therefore, for each sMRI and PET image, we obtained 384 features. For the rs-fMRI images, we ran a pyClusterROI Python script, which was downloaded from https://ccraddock.github.io/cluster_roi/, for the extraction of 200 ROIs from each rs-fMRI image. This method employs a spatially constrained normalized-cut spectral clustering algorithm to generate individual-level and group-level parcelations. A spatial constraint was imposed to ensure that the resulting ROIs were spatially coherent, i.e., that the voxels in the resulting ROIs were connected. Moreover, for DTI images, we ran a pipeline to analyze the brain diffusion images (PANDA toolbox), which can be downloaded from https://www.nitrc.org/projects/panda/, integrated with MATLAB R2019a in the Ubuntu 18.04 operating system for the processing of diffusion MRI images. The PANDA pipeline uses the FMRIB Software Library (FSL), Pipeline System for Octave and MATLAB (PSOM), Diffusion Toolkit, and MRIcron packages for the extraction of diffusion metrics (e.g., FA and MD) that are ready for statistical analysis at the voxel level or atlas level. For the parcelation of DTI images, it uses the 2-mm JHU-WM (ICBM-DTI-81) label atlas, which is already segmented into 50 distinct ROIs. After completion of this process, we gained the subject-labeled image based on a template with 50 distinct segmented regions.

Feature Extraction Using Voxel-Wise Morphometry

The dashed and dotted red line in Figure 1 shows the pipeline for the voxel-wise analysis (or the extraction of features) from sMRI, FDG, AV45-PET, rs-fMRI, and DTI images. For the voxel-wise analysis of sMRI, FDG, and AV45 images, we used the statistical mapping method (SPM12) toolbox integrated with the computational anatomy toolbox (CAT version 12), which can be obtained from https://www.fil.ion.ucl.ac.uk/spm/software/spm12/ and http://www.neuro.uni-jena.de/cat/. First, the MRI data were anatomically standardized using the 12-parameter affine transformation offered by the SPM template to compensate for differences in brain size. We chose the East Asian brain image template and left all other factors at their default setting. The sMRI images were then parcellated into GM, WM, and CSF images using the unified tissue segmentation method after the image strength non-uniformity correction was complete. The obtained linearly transformed and parcellated images were then non-linearly distorted using diffeomorphic anatomical registration via exponentiated lie algebra (DARTEL) methods and modulated to create an improved template for a DARTEL-based MNI152 template image, followed by smoothing using an 8 mm FWHM kernel. The final step consisted of voxel-wise statistical assessments. To construct a statistical parametric map, we calculated contrast values based on general linear model-estimated regression parameters. This technique executes a two-sample t-test to determine if there are major regional density differences between two sets of GM images. We obtained these regional information values representing the major density differences between two sets of GM images after executing a false discovery rate (FDR) and family wise error rate (FWER) correction. Based on this data, cluster values were obtained to create ROI binary masks, which were subsequently used to acquire GM volumes from GM brain images for use as a morphometric feature. Moreover, before submitting FDG and AV45 images to VBM analysis, the first step was to register the FDG and AV45 images with their corresponding sMRI images. We used the SPM12 toolbox for this registration. After the registration, we followed the same method as was used for the sMRI VBM analysis. SPM12 was used to produce statistical maps of between-group alterations in the regional-to-whole-brain dimensions of the cerebral metabolic rate for glucose (CMRgl) consumption in likely AD, MCIs, MCIc, and HC groups.

For rs-fMRI images, to decrease the influence of the SNR, the selected data should be pre-processed. In this study, we used the DPARSF toolbox,5 which was integrated with MATLAB R2019a, to calculate the whole-brain ALFF, fractional (fALFF), and REHO feature maps, as shown in Figure 2. Spatial smoothing was performed with a 4×4×4mm FWHM Gaussian kernel before the ALFF, FALFF, and REHO calculation. To minimize the low-frequency drift, linear trending was removed from the process. To explore the ALFF, fALFF, and REHO differences between the AD patient group and the other groups, a random-effects two-sample t-test was implemented on the individual ALFF, fALFF, and REHO maps in a voxel-wise way by taking the patients’ age as a confounding covariate. The ALFF was calculated by filtering the time courses of each individual voxel of the subjects with a fast Fourier transformation of the frequency area, and the power range or spectrum was then gained. Because the power of a specified frequency is relative to the square of the amplitude of this frequency, the square root was computed at each frequency domain of the power range, and then the averaged square root was found across 0.01–0.08 Hz at each individual voxel. Later, this averaged square root was taken as the ALFF index. The fALFF was calculated as the ratio of the amplitude within the low-frequency spectrum (0.01–0.08 Hz) to the total amplitude over the full frequency spectrum (0–0.25 Hz). It is generally calculated as a fraction of the sum of amplitudes across the entire frequency range detectable in a given signal. REHO is a voxel-based measure of brain activity that evaluates the similarity or synchronization between the time series of a given voxel and its nearest neighbors (Zang et al., 2004). It was calculated using Kendall’s coefficient of concordance with the time series of every 27 neighboring voxels. Then, a Kendall’s coefficient of concordance value (ranging from 0 to 1) was assigned to each voxel center. Voxels of higher strength in the REHO maps show greater similarity with the neighboring voxel’s time series. For the DTI images, FA maps were then created using the DTIfit approach and were entered into the TBSS environment to investigate changes in diffusivity measures along the WM tract. First, all FA data were non-linearly aligned to a common space (FMRIB58_FA), then the normalized FA images were averaged to create the mean FA image and a threshold set (FA > 0.2) (to exclude voxels that were primarily GM or CSF) to create a mean FA skeleton. Next, each participant’s FA data were projected onto the mean FA skeleton, followed by voxel-wise statistical analysis. Voxel-wise statistical analysis of FA in the WM skeleton was performed using Randomize, FSL’s non-parametric permutation interference tool. Multiple comparisons were corrected for by using threshold-free cluster enhancement (p < 0.05). WM regions were identified with the JHU-WM (ICBM-DTI-81) label atlas included in FSL.

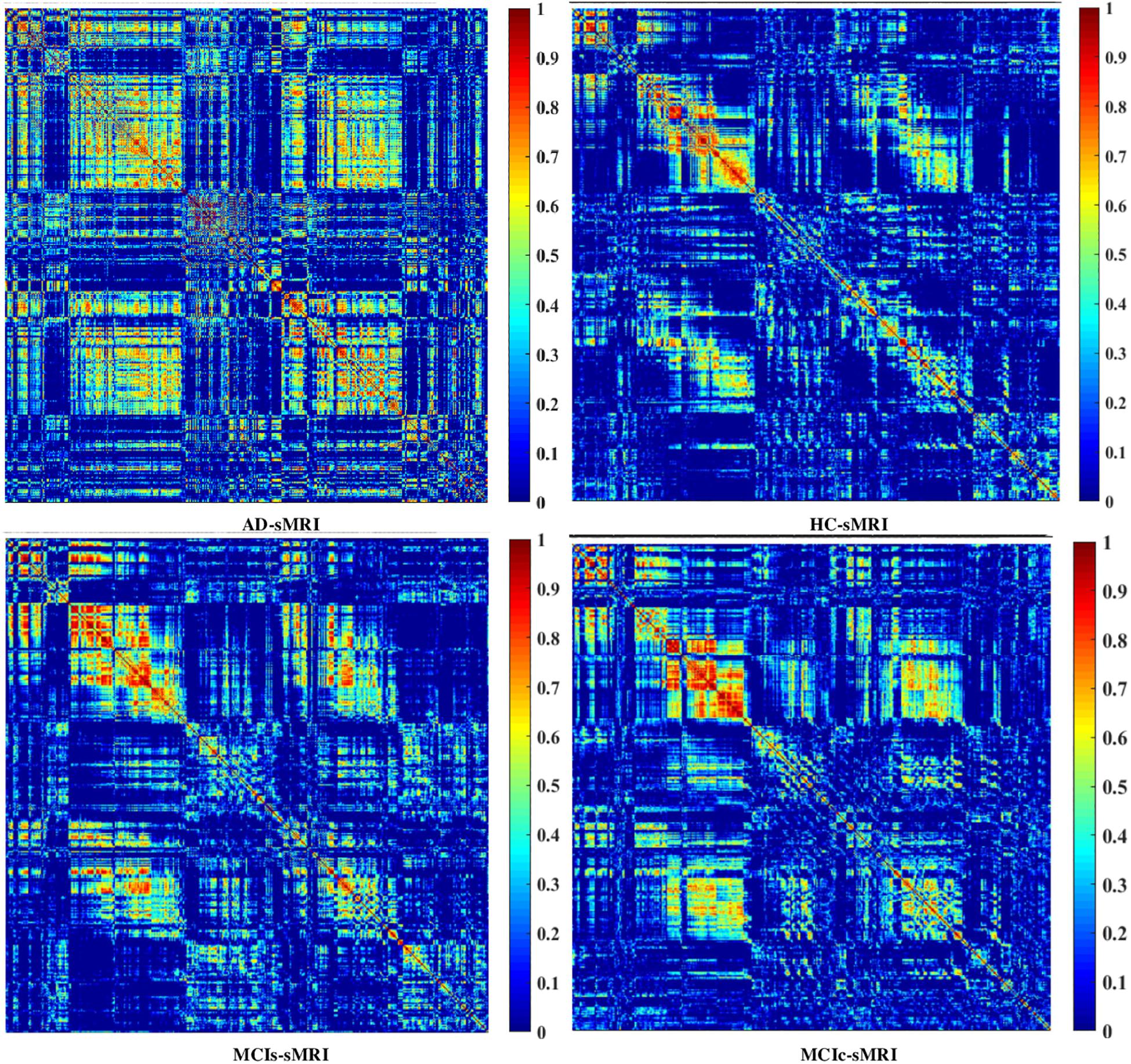

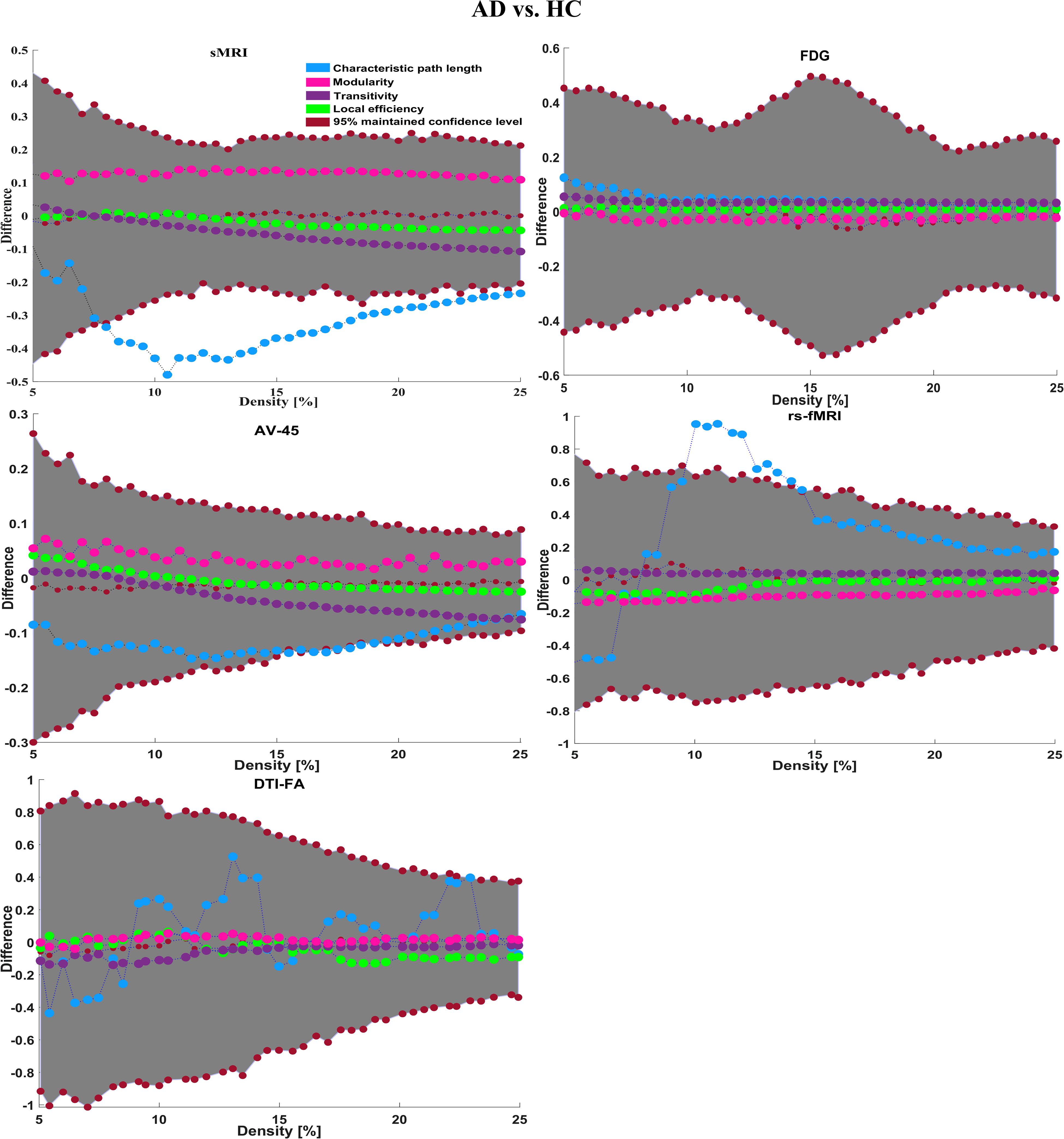

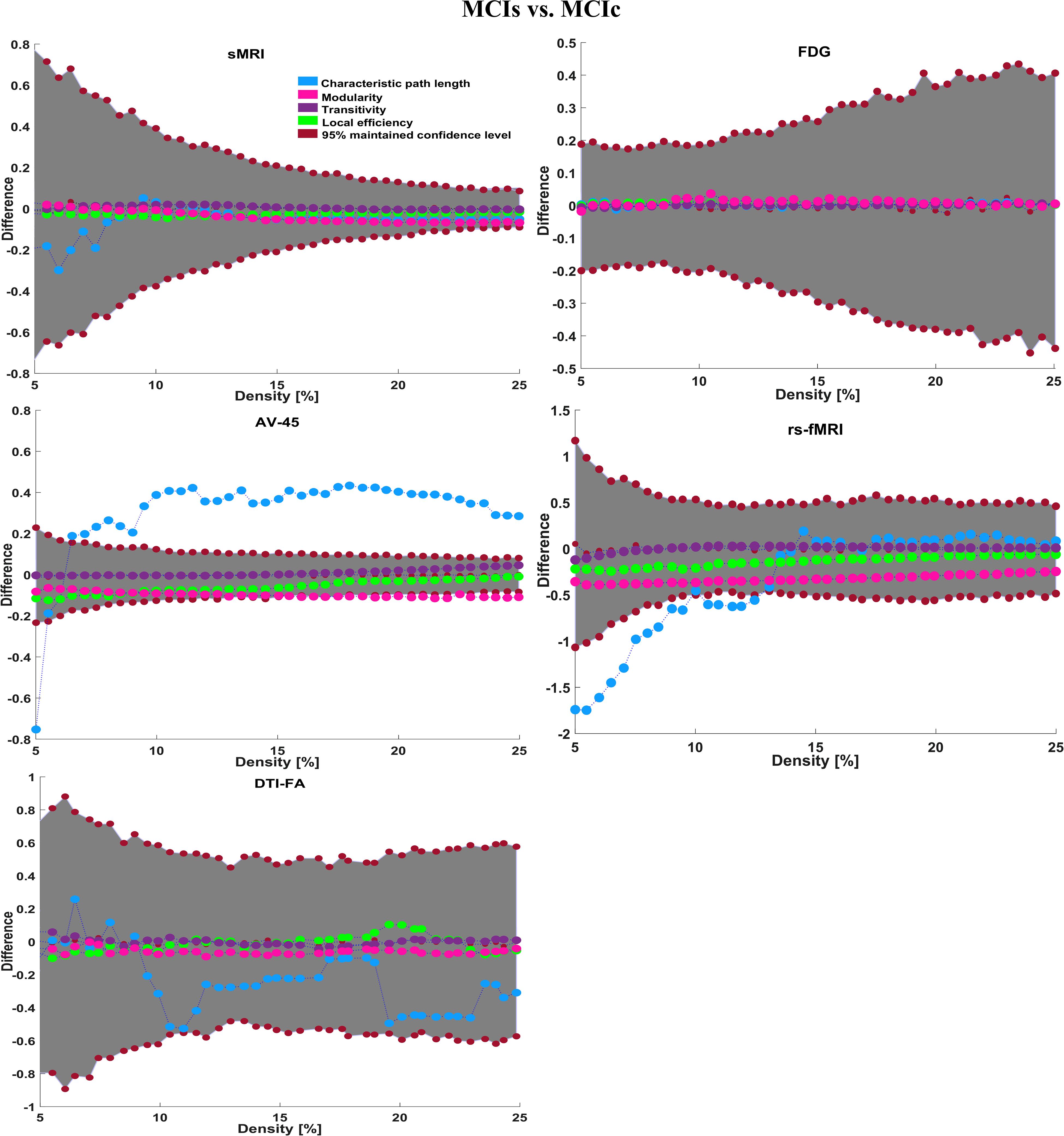

Graph Generation and Construction of sMRI, FDG-PET, AV45-PET, fMRI, and DTI Brain Networks

To assess the sMRI, FDG-PET, and AV45-PET network topology in AD, MCIs, MCIc, and HC subjects, the T1 images of these subjects were pre-processed using the NiftyReg toolbox with the integration of the 2-mm AICHA atlas template image. In total, 384 regions were extracted from each modality and included as a node in the network study. The edges between these brain regions were computed as a Pearson correlation, and the negative correlations were set to zero. The network connectivity analyses were carried out on the binary undirected graphs while controlling the number of networks across a range of densities from 5–25% with a step size of 0.5%. To assess the functional network topology of rs-fMRI images, we used the DPARSF toolbox integrated into MATLAB 2019a. Moreover, we followed the same method for the extraction of features that we followed for the rs-fMRI images in (see section “Feature Extraction Using Voxel-Wise Morphometry”). A regional time series of the 200 brain regions included in the Craddock template atlas was extracted for each patient. To compute the relationship between these regions, we used the Pearson coefficient and performed network analysis on the binary undirected graphs. To assess the DTI network topology in AD, MCIs, MCIc, and HC subjects, the DWI images of these subjects were pre-processed using the PANDA toolbox, which was integrated into MATLAB 2019b. The PANDA toolbox uses a 2-mm JHU-WM (ICBM-DTI-81) label atlas template image for the extraction of 50 WM regions from each DTI image. These extracted regions were later included as a node in the network analysis. The same procedure was followed with the same parameters as that described above for constructing a network for sMRI brain images. Moreover, several graph metrics were calculated to quantify the nodal or global topological organization of the structural and functional networks, including local efficiency, characteristic path length, transitivity, and modularity. Local efficiency is a measure of the average efficiency of data transfer within local subgraphs or regions, and it is defined as the converse of the shortest average path length between the regions of a given node and all other nodes. The local efficiency of node i is defined as: LEi = 1/di(di−1)∑j = Gi1/li,j, where di represents the number of nodes in the subgraph (Gi) and li,j is the length of the shortest path between nodes i and j. The distance between two vertices in a graph is the length of the shortest path between them if one exists. Otherwise, the distance is infinite, and the average of the shortest path between one node and all remaining other nodes is termed the characteristic path length. The characteristic path length lG is computed as: lG = 1/n(n−1)∑i≠jd(vi,vj), where n is the number of vertices (v) in a graph network, and d(vi,vj) denotes the shortest distance between vertices vi and vj. Transitivity (or the clustering coefficient) is defined as the ratio of paths that cross two edges to the number of triangles. Moreover, if a node is connected to a second node, which is in turn is linked to a third node, the transitivity reproduces the probability that the initial node is linked to the third node. It can be computed by: , where aij is the (i,j) entry of the binary connection matrix. aij = aji = 1 if there is a link between nodes i and j, and aij = aji = 0 otherwise. There are no self-loops in the network, thus aij = 0. Modularity is the fraction of the network edges that fall within the given groups, minus the expected fraction if edges were distributed at random. It also calculates to what extent a network can be divided into communities. It can be calculated by, , where the network is fully partitioned into M non-overlapping modules (or clusters), and qij represents the proportion of all links connecting nodes in module i with those in module j.

Classification Techniques

In supervised learning, classification resembles the task of determining to which category a new sample belongs, based on a training set of data containing instances for which associations have previously been identified. In neuroimaging, different information sources may contain different imaging modalities (e.g., sMRI, FDG, AV45, DTI, and rs-fMRI), different ways of extracting features from the same modality (e.g., ROI-based or voxel-based for every image), or a different feature subset. In the present study, we applied three different methods to extract features from the same neuroimaging modality as follows: whole-brain parcelation, voxel-wise analysis, and graphical representation. We were mainly interested in the first two approaches, where we used feature subsets as a kernel for each of the two methods. Later, we will combine (or concatenate) the approaches. We were particularly interested in examining models based on subsets of features extracted according to voxels or an anatomical criterion to attain predictions that enabled us to estimate the anatomical localization.

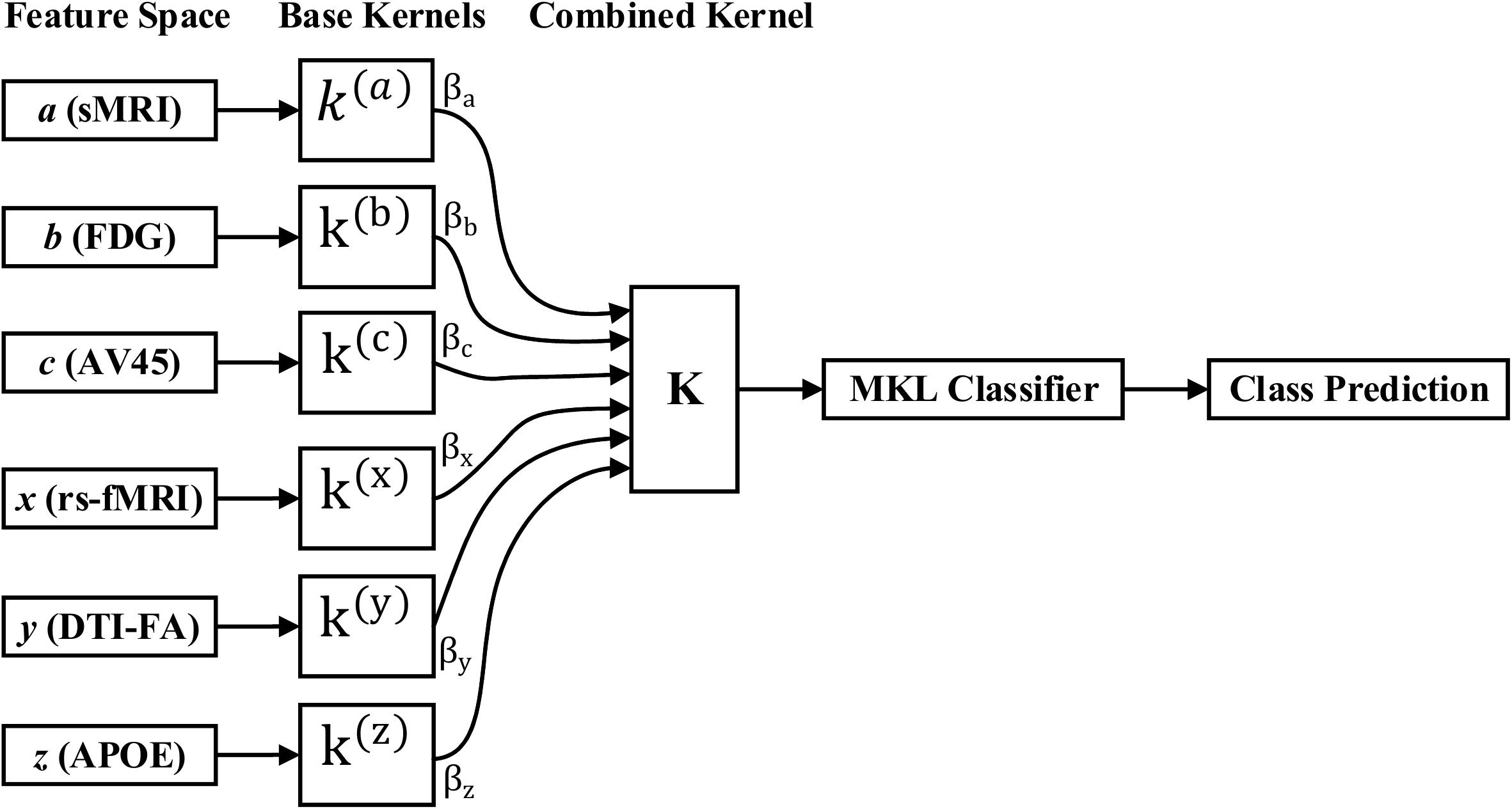

Multiple Kernel Learning

Kernel methods such as SVM (Cortes and Vapnik, 1995; Samper-González et al., 2018), which are based on similarity measures between data points, have been used with great success for dimensionality reduction and classification. Kernelization projects the native data space onto a higher-dimensional feature space. Non-linear relations between variables in the original space become linear in the transformed space. Let be the training sample, where is a data sample, M is the number of features from all modalities, and y(i)ε{1,−1} is the corresponding class label. The aim is to simultaneously acquire an optimal feature description and a max-margin classifier in kernel space because of its systematic and elegant way of forming complicated patterns. Therefore, to project the data, we will use the kernel trick. As we know, for any kernel (K) on an input space (X), there exists a Hilbert space (f), called the feature space, and the projection ∅ is given by the mapping ϕ:X→f, such that for any two-object (x,y) in X, K = (x,y) = < ϕ(x),ϕ(y) >, where <.,. > is the Euclidean or inner dot product of the data point. Examples of kernel functions include the linear, RBF, and others. Recent papers have shown that using multiple kernels rather than using a single kernel can improve the interpretability of a decision function, and in some instances, it improves the final performance. In MKL, the data are represented as a combination of base kernels. Each base kernel represents a different modality or feature of the entity. MKL seeks to find the optimal combination of base kernels so that the analysis tasks that follow are benefited the most. Classification tasks are represented especially well through MKL, as the optimal combination is the one that gives the maximum classification accuracy. The dual form of MKL optimization, as it is solved by conventional solvers like LIBSVM, is given as

where αi,αj are Lagrange multipliers, which are the variables obtained on converting the primal support vectors to the dual problem, and is the m-th kernel function, which is applied to each pair of the samples, and C is the regularization parameter that controls the distance between the hyperplane and the support vectors. From the set of n training samples, the features of the i-th sample from the m-th modality are in the vector , and its corresponding class label, yi, is either +1 or −1. The weight on the m-th modality kernel, represented as βm, is optimized using a grid search, or as a separate optimization problem with fixed α. For each new test sample, s, the kernel functions are computed against the training samples. An MKL overview is depicted in Figure 3. Recent research has shown that including the base datasets in more than one kernel, each differing in their selection of kernel parameters, improves performance. All kernels are then normalized to the unit trace through the formula . Here, we set the weights accordingly to conform with sMRI, FDG, AV45, DTI, rs-fMRI, and APOE genotype features. The combined kernels can be described as follows,

Then, we use the EasyMKL (Aiolli and Donini, 2015; Donini et al., 2019) solver to search for the combination of basic kernels that maximizes the classifier performances by optimizing a simple quadratic problem addressed by SVM by computing an optimal weighting. Besides its proven empirical success, a clear advantage of EasyMKL compared with other MKL approaches is its high scalability with respect to the number of kernels to be combined. It finds the coefficients η that maximize the edges in the training dataset, where the margin is calculated as the distance between the convex hull of the positive and negative samples. In particular, the general problem that EasyMKL aims to optimize is the following,

where y is a diagonal matrix with training samples on the diagonal, and λ is a regularization hyperparameter, whereas domain Γ signifies two probability distributions over the set of negative and positive samples in the training set, that is (Aiolli and Donini, 2015; Donini et al., 2019). Note that any element γεΓ resembles a pair of samples in the convex hull of the positive and negative training samples. At the solution, the first expression of an objective function denotes the obtained (squared) edge, which is the (squared) distance between a point in a convex hull of positive samples and a point in a convex hull of negative samples, in the considered feature space. It enforces sparsity across modalities while allowing more than one discriminative kernel to be chosen from the same modality. In other words, there is sparsity across modalities and non-sparsity within modalities, thereby making it a convex optimization problem. Moreover, in addition to the EasyMKL classifier, we also applied a RBF kernel (or Gaussian kernel) with SVM classifier for the comparison of our obtained results. An RBF kernel is a function with a score that depends on the distance from the origin (or from some points). It is represented by K(x1,x2) = e(∥x1−x2∥2/2σ2), where ∥x1−x2∥2 is the squared Euclidean distance between two data points x1 and x2. An RBF kernel has two parameters: gamma (γ) and C, and its performance depends on them. When the C value is small, the classifier is fine with misclassified input points (high bias, low variance), but when the C value is high, the classifier is severely penalized for misclassified data, and hence it leans over backward to avoid any misclassified input points (low bias, high variance). Moreover, when the γ value is low, the curve of the decision margin is quite low, and therefore the decision area is very large, but when the γ value is high, the curve of the decision margin is high, which creates decision-boundary bars around the input points. In our case, we applied the GridSearch method from a scikit-learn (v0.20) (Pedregosa et al., 2011) library to find the optimal hyperparameter (C and γ) value for the RBF-SVM classifier. The GridSearch was performed over the ranges of C = 1 to 9 and γ = 1e−4 to 7.

Cross Validation

Cross validation (CV) is one of the most widely utilized data resampling methods for estimating the generalization knowledge of a predictive design and for preventing under- or overfitting. CV is largely applied in settings where the aim is prediction and it is necessary to evaluate the accuracy of a predictive model. For a classification problem, a model is generally fitted with a known sample, called the training sample, and a set of unknown samples against which the model is examined, known as the test sample. The aim is to have a sample for testing the proposed model in the training period, and then present insight into how the particular model adapts to an independent sample. A round of CV involves the partitioning of samples into complementary subsets, then conducting analysis on an individual subset. After this, the study is verified on other subsets (called testing samples). To reduce variability, multiple rounds of CV are performed using several different partitions, and later an average of the outcomes is taken. CV is a powerful procedure in the evaluation of model performance. In this study, we utilized leave-one-out CV (LOOCV) from the scikit-learn (v0.20) (Pedregosa et al., 2011) library. LOOCV is a CV process in which the bulk of the fold is “1,” with “k” being fixed to the number of attributes in the dataset. This means that the number of folds equals the number of instances in the sample. Thus, the learning algorithm is employed once for each instance, utilizing all other instances as a training sample, and utilizing the selected instance as a single-item test sample. This type of CV is useful when the training samples are of limited size and the number of attributes to be verified is not high.

Implementation

Our classification framework and validation experiments were implemented in Python 3.5 using an interface to the scikit-learn v0.20 (Pedregosa et al., 2011) library to measure performance, and using MKLpy (v0.5)6 for the MKL framework. The main source code will be made available on the GitHub website.7 The dataset list will be available in the Supplementary Material. Nonetheless, please note that you must prepare the original image features independently.

Results

In this section, we present the performance results of each classification (AD vs. HC, MCIs vs. MCIc, AD vs. MCI, AD vs. MCIc, HC vs. MCIs, and HC vs. MCIc) for all five neuroimaging and APOE genotype modalities using whole-brain parcelation, the voxel-wise method, and the graphical method. We implemented the multimodal fusion approaches for the integration of the sMRI, FDG, AV45, rs-fMRI, DTI, and APOE genotype data. We used the combined representation to distinguish patients with AD from healthy subjects. The combined approach is considered successful if the classification task is performed with greater accuracy and higher AUC score, higher precision, and better sensitivity and specificity against unimodal classification. Along with the unimodal approaches, we evaluated the classification of a concatenated data vector comprising data from the five neuroimaging modalities and two APOE genotype modalities, and used it as a baseline. Moreover, after the completion of extracting features from each modality using whole-brain parcelation and voxel-wise analysis, we passed these extracted features through a polynomial kernel function to map the original non-linear low-dimensional features onto a higher-dimensional space in which they became separable. After that, a data fusion technique was used to combine the multiple kernel features into a single form before passing them through the EasyMKL classifier for the binary classification of the six groups. For an unbiased performance assessment, the classification groups were randomly split into two sets at a 70:30 ratio as training and testing sets, respectively. In a training set, finding the right values for the lambda (λ) parameter is quite difficult, and their values influence the classification result. Therefore, to find the optimal hyperparameter values for a lambda from 0 to 1 of an EasyMKL algorithm, we used the leave-one-out cross-validation technique on the training set. For each method, the optimized values obtained for the hyperparameter were then used to train the EasyMKL classifier using the training group. The performance of the resulting classifier was then estimated on the remaining 30% of the data in the testing dataset, which was not used during the training step. Here, cross validation was used to assess how the result of the classification analyses could generalize to an independent group. One round of cross validation includes partitioning the data sample into disjoint subsets of instances, performing the analysis on one subsection (the training group), and validating the study on the other subset (the testing or validation set). To reduce variability, numerous rounds of cross validation are done using different subsets, and the validation outcomes are averaged over the rounds. In the present study, we used an LOOCV, which involves separating a single instance (either control or patient) from the complete example for testing while the remaining instances are used for training purposes. This splitting is iterated so that each instance in the whole sample is used once for validation. After all iterations, the final accuracy is quantified as the mean of the accuracies gained across each fold. In this way, we attained unbiased estimations of the performance for each classification problem. Moreover, after the completion of whole-brain and voxel-wise analysis, we used the BRAPH toolbox to perform the brain graphical analysis for each classification group with the same five neuroimaging features (sMRI, FDG, AV45, rs-fMRI, and DTI), which we extracted in the whole-brain analysis. For each analysis, we measured the accuracy (ACC, calculated as the average of the proportion of correctly classified subjects from each class individually), the sensitivity (SEN, described as true positive – the number of subjects correctly classified), and the specificity (SPEC, described as true negative – the number of healthy controls correctly categorized), precision (PRE, referring to how well the measurements agree with each other across multiple tests), F1-score (explained as a weighted average of recall and precision, where an F1-score attains its best value at one and its worst at zero), and AUC-ROC [a receiver operating characteristic curve (ROC curve) is a graphical plot that illustrates the diagnostic aptitude of a binary classifier scheme as its differential threshold is varied]. An AUC-ROC curve is constructed by plotting the true positive rate (TPR) against the false positive rate (FPR). The TPR is the proportion of observations that were correctly predicted to be positive out of all positive observations [TP/(TP + FN)]. Likewise, the FPR is the proportion of observations that are incorrectly predicted to be positive out of all negative observations [FP/(TN + FP)]. The ROC curve shows the trade-off between sensitivity (or TPR) and specificity (1−FPR). Classifiers that give curves closer to the top-left corner show better performance. As a baseline, a random classifier is expected to give points lying along the diagonal (FPR = TPR). The closer the curve comes to the 45-degree diagonal of the ROC space, the less accurate the testing data are. For each classification group, we also measured Cohen’s kappa values, which measures the inter-rater reliability between two individuals (Cohen, 1960). Kappa measures the percentage of information scores in the main diagonal of a table and then adjusts these scores for the quantity of agreement that could be assumed due to chance alone. The formula for calculating Cohen’s kappa for two raters is given by K = p0−pc/1−pc, where p0 is the relative observed agreement among raters and pc is the hypothetical probability of chance agreement. Kappa is always less than or equal to 1. A value of 1 suggests perfect agreement, and scores less than 1 suggest less than the best agreement. In rare circumstances, Kappa can achieve a negative score. This signifies that the two groups agreed less than would be predicted by chance alone.

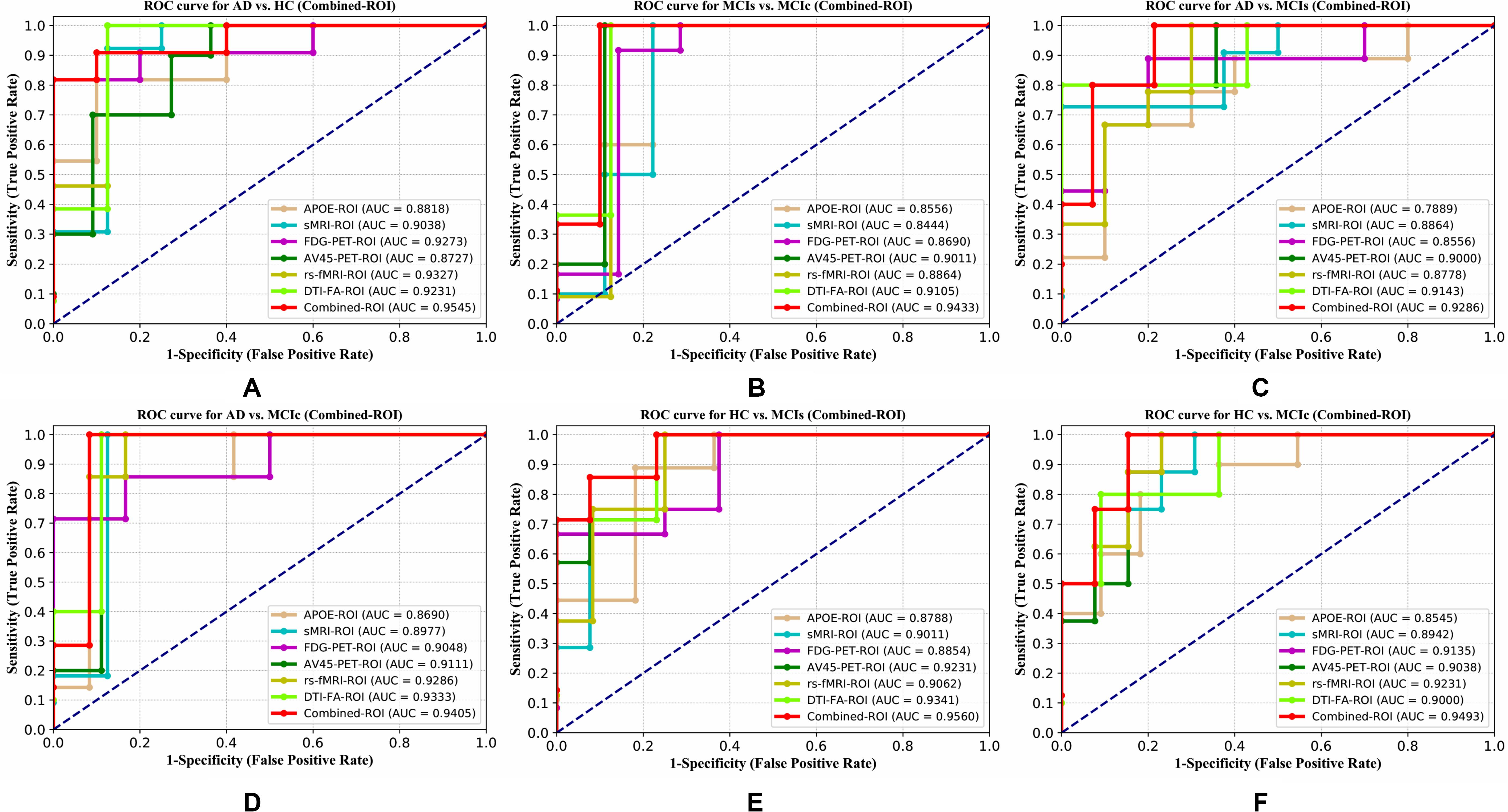

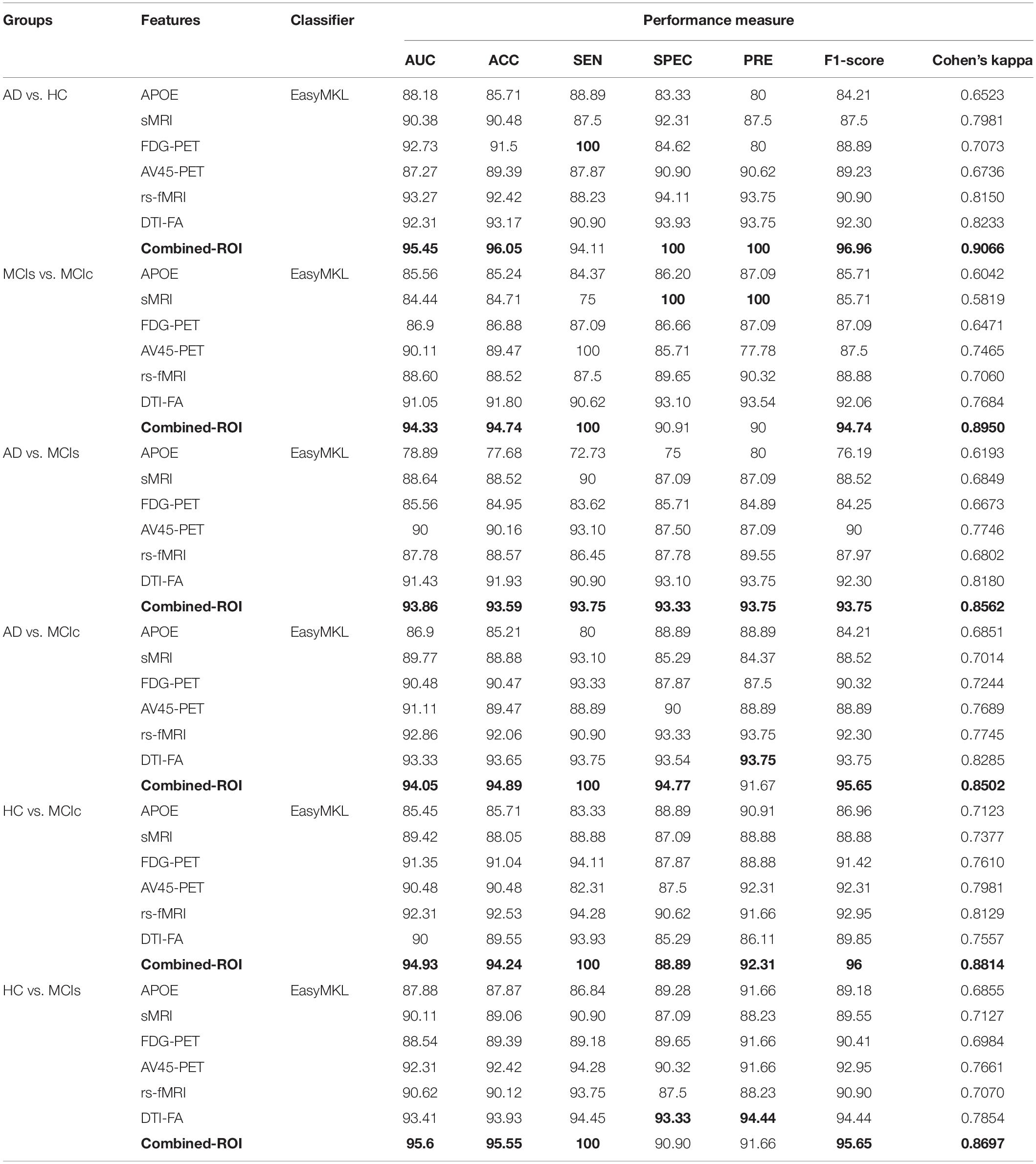

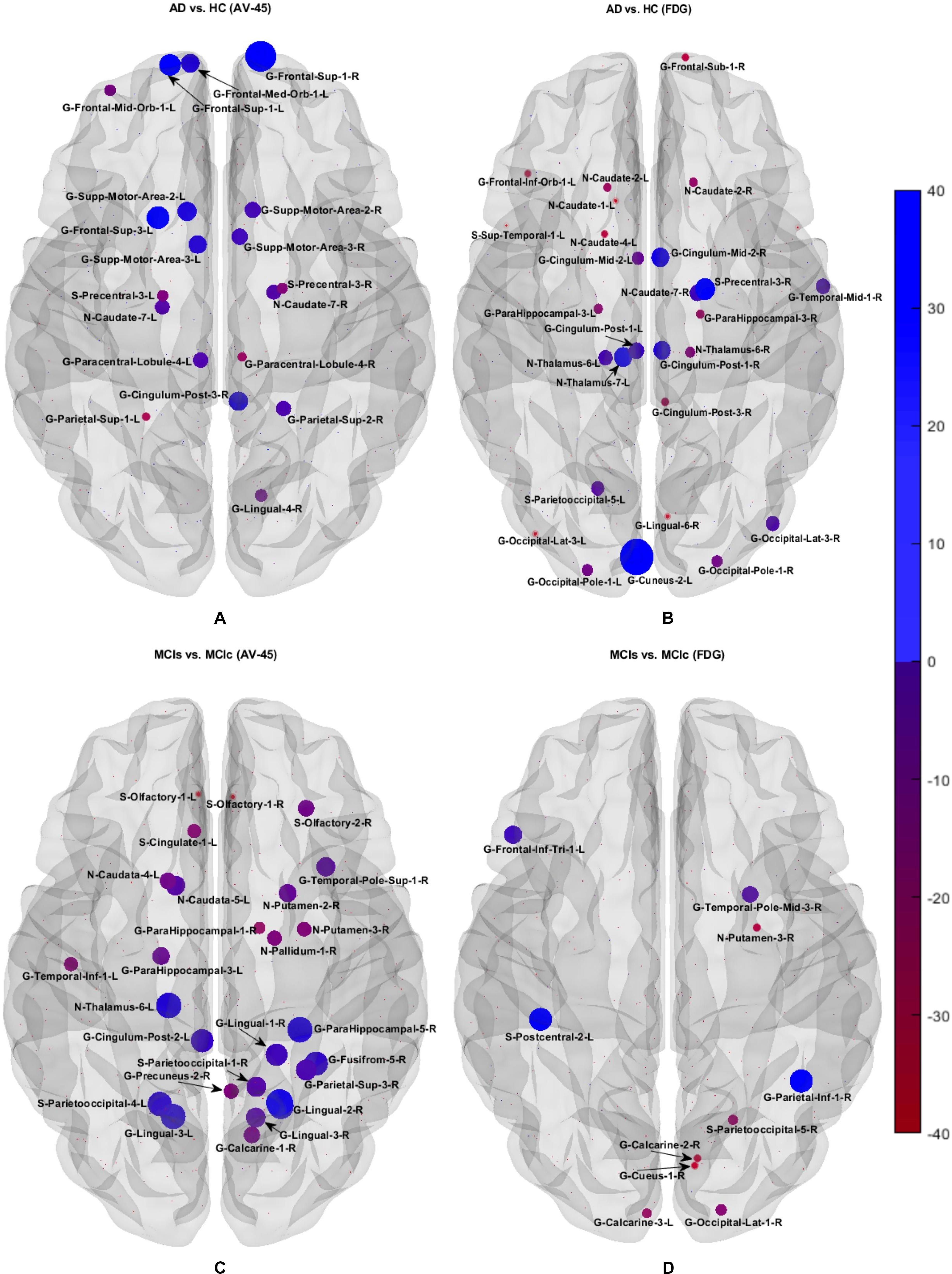

Classification Performance Across Single and Combined Modalities Using Whole-Brain Parcelation Analysis

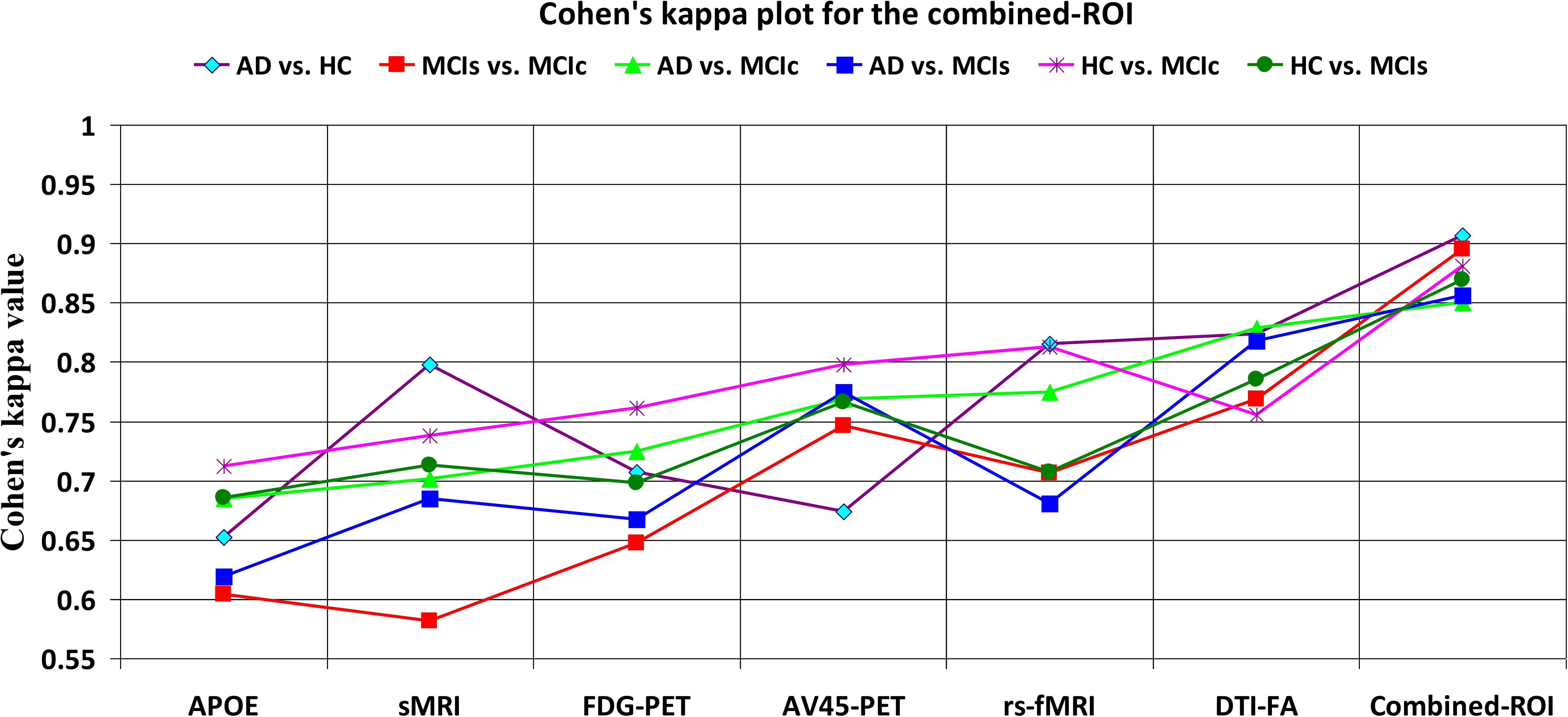

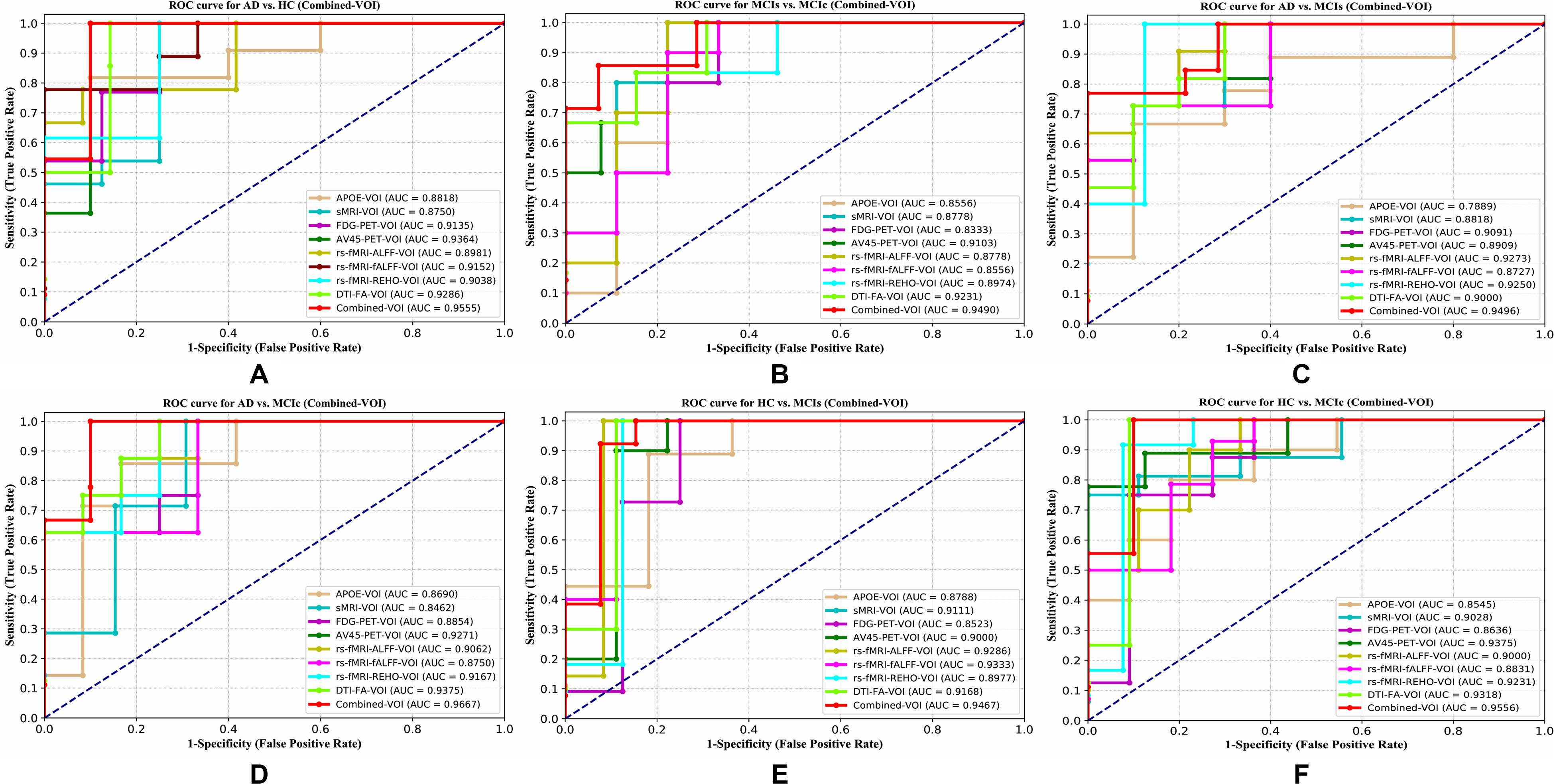

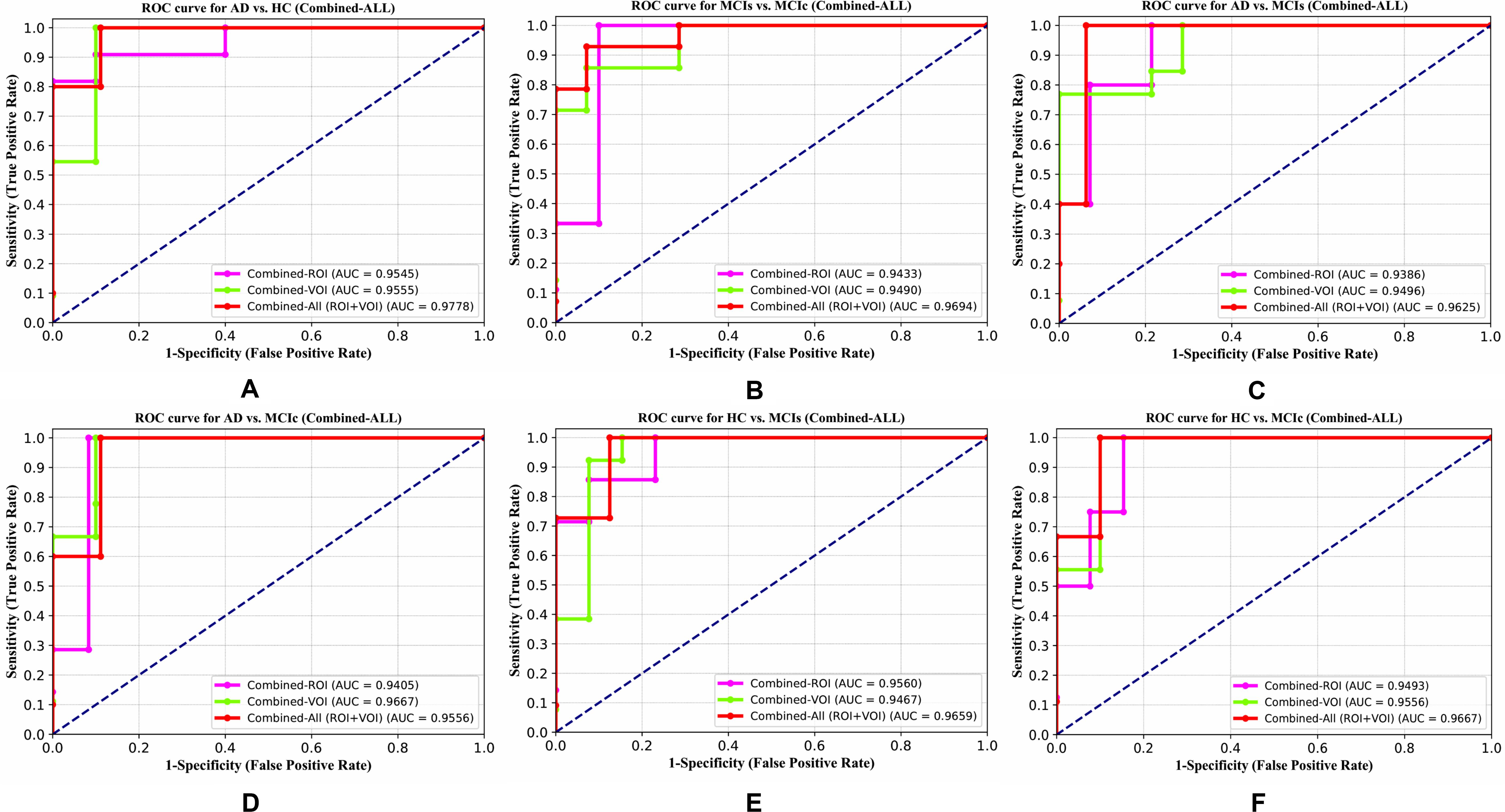

For the whole-brain analysis, we used the 2-mm AICHA atlas template image for sMRI, FDG, and AV45-PET images with the NiftyReg toolbox for the extraction of 384 ROIs from each neuroimaging modality (as shown in Figure 1B). For each rs-fMRI and DTI image, we used the 2-mm Craddock atlas template and the 2-mm JHU-WM (ICBM-DTI-81) label atlas for the extraction of 200 and 50 ROIs, from each rs-fMRI and DTI image, respectively, using the pyClusterROI Python script and the PANDA toolbox (as shown in Figure 1B). In total, we obtained 1404 features for a single image, 384 features from each sMRI, FDG, and AV45-PET image, 200 from each rs-fMRI image, 50 features from each DTI image, and two features from the APOE genotype data. Afterward, we passed these obtained features through a normalization technique to minimize the redundancy within the dataset. Furthermore, we passed these low-dimensional, normalized features from the polynomial kernel matrix to map them onto a high-dimensional feature space. Then we fused all these high-dimensional features in one form before passing them through the EasyMKL algorithm for classification. The obtained AUC-ROC graph and Cohen’s kappa scores are plotted in Figures 4, 5.

Figure 4. ROC curve for (A) AD vs. HC, (B) MCIs vs. MCIc, (C) AD vs. MCIs, (D) AD vs. MCIc, (E) HC vs. MCIs, and (F) HC vs. MCIc using whole-brain parcelation analysis. The red solid line shows the result of a combined-ROI curve with single modality features.

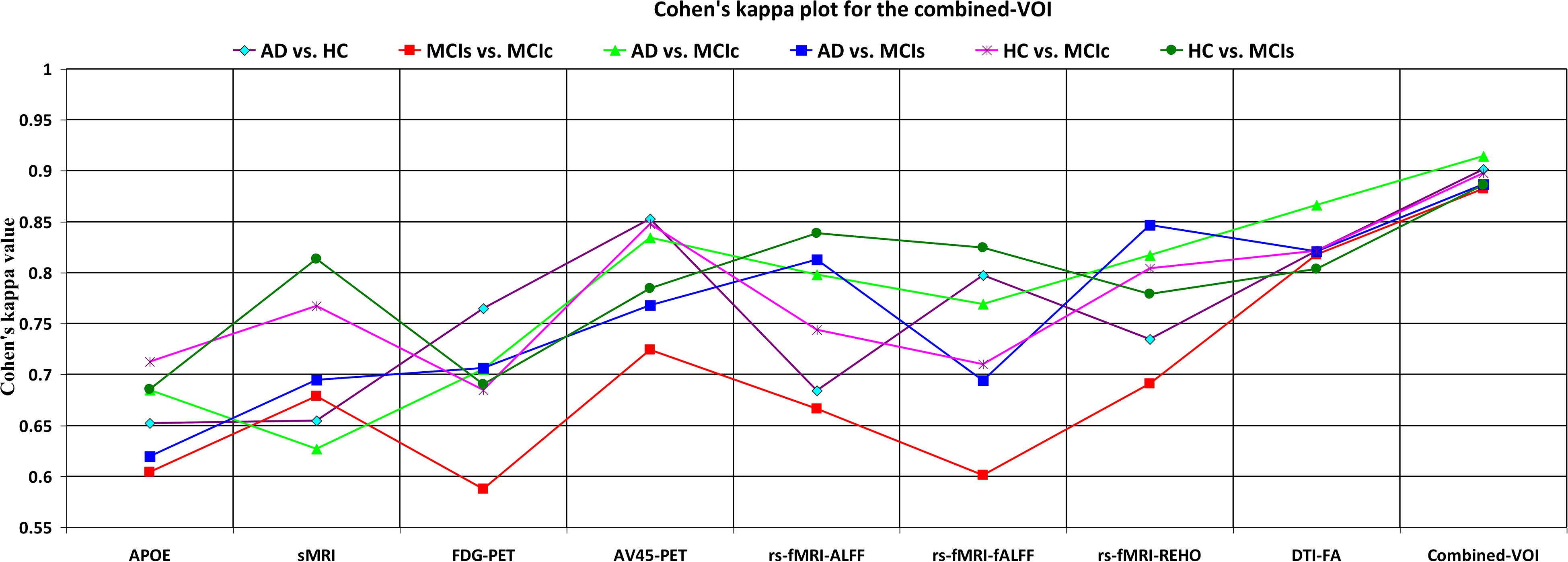

Figure 5. Cohen’s kappa plot for AD vs. HC, MCIs vs. MCIc, AD vs. MCIs, AD vs. MCIc, HC vs. MCIs, and HC vs. MCIc are grouped using whole-brain parcellation analysis. The above graph clearly shows the benefit of the combined-ROI modality over any single modality.

For the single modalities, whole-brain MKL analysis for AD vs. HC (Table 2), using only the APOE genotype, achieved 85.71% accuracy. Similar accuracy was obtained using sMRI (90.48%), FDG-PET (91.5%), AV45-PET (89.39%), and rs-fMRI (92.42%). Using DTI-FA, the accuracy was increased to 93.17% compared with both genotype and functional imaging. When the combined-ROI features were passed through the classifier, the accuracy increased to 96.05%. Additionally, the obtained Cohen’s kappa value was 0.9066, which is closer to 1 than the kappa values of the individual modalities. Figure 5 shows the Cohen’s kappa plot for the combined-ROI.

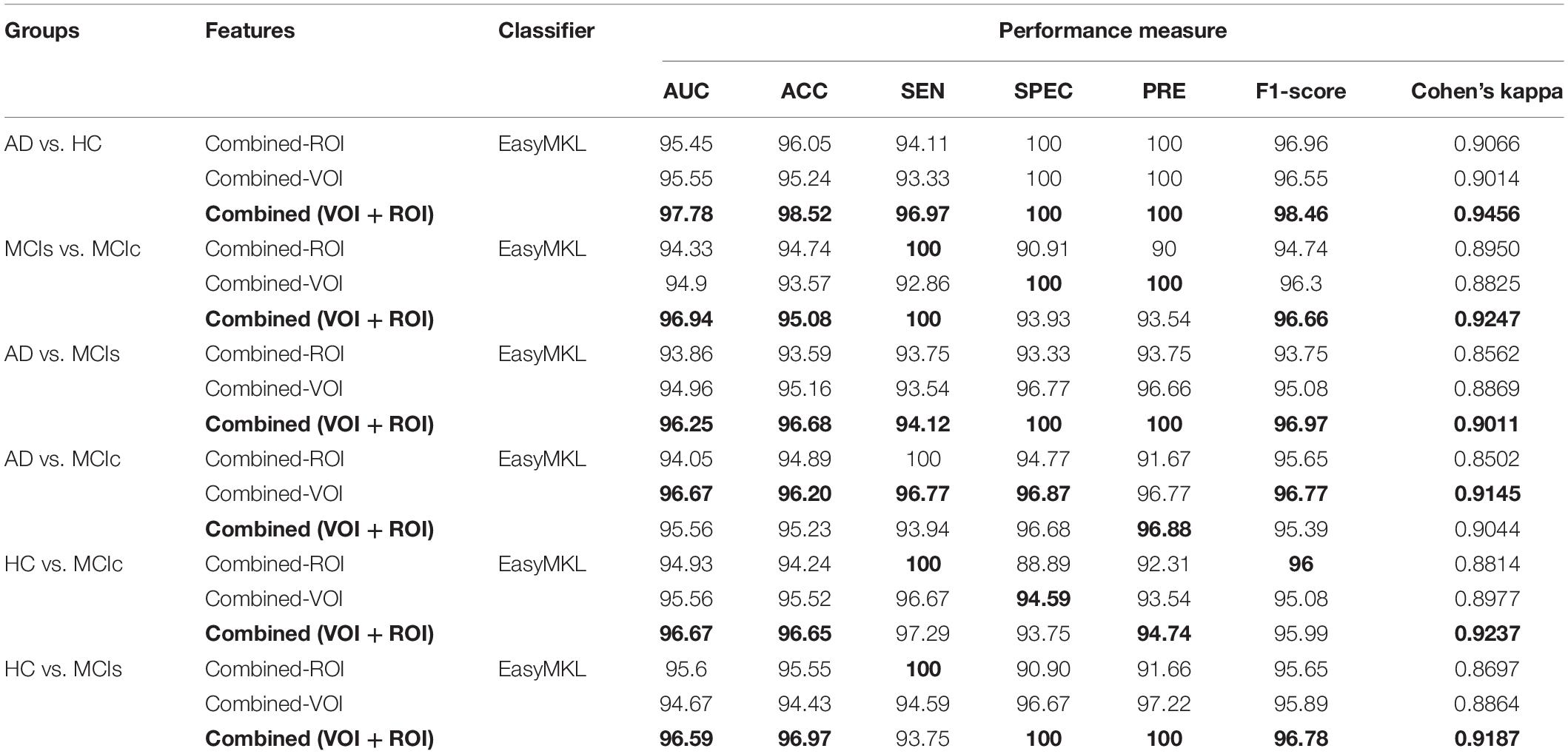

Table 2. Classification results for AD vs. HC, MCIs vs. MCIc, AD vs. MCIs, AD vs. MCIc, HC vs. MCIc, and HC vs. MCIs groups using ROI features (EasyMKL).

Here, for AD vs. HC classification, the combined-ROI features performed very well compared with the single modalities. Similarly, for the single modalities, whole-brain MKL analysis for MCIs vs. MCIc (Table 2) using only the APOE genotype achieved 85.24% accuracy. Similar accuracy was obtained using FDG-PET (86.88%), AV45-PET (89.47%), and rs-fMRI (88.52%). Using sMRI-extracted ROI features, the achieved accuracy was lower (84.71%) in comparison with other single modalities. Using DTI-FA, the accuracy increased to 91.80% in comparison with both genotype and functional images. Moreover, when the combined-ROI features were passed through the classifier, the accuracy increased to 94.74% and the obtained Cohen’s kappa value was 0.8950 (Figure 5), which is closer to 1 than that for the individual modalities. Here, for the MCIs vs. MCIc classification, the combined-ROI features performed very well compared with the single modalities. Likewise, for the AD vs. MCIc classification problem, the best performance was attained using a combination of six modalities of features, which achieved an accuracy of 94.89% with a Cohen’s kappa of 0.8502. In this case, the rs-fMRI and DTI-FA unimodal features performed better for classifying the (AD vs. MCIc) group than the other unimodal features, and their obtained accuracies were 92.06 and 93.65%. For the AD vs. MCIs group, our proposed technique achieved 93.59% accuracy, which was 1.66% higher than the best accuracy obtained by the DTI-FA (unimodal) feature for classifying this group. The Cohen’s kappa score obtained for the AD vs. MCIs group was 0.8562 (Figure 5) which is close to the maximum agreement value of 1. For the HC vs. MCIc classification problem, our proposed method combining all six modalities of a biomarker to distinguish between HC and MCIc achieved good results compared with the single modality biomarkers. For this classification problem, our proposed method achieved 94.24% accuracy with a Cohen’s kappa of 0.8814 (Figure 5). In this case, from Table 2, we can see that all three (FDG-PET, AV45-PET, and rs-fMRI) functional imaging features performed well compared with the other unimodal features, and their obtained Cohen’s kappa scores were 0.7610, 0.7981, and 0.8129, which are all close to 1. Likewise, for the HC vs. MCIs group, our proposed technique achieved 95.55% accuracy, which is 1.62% higher than the best accuracy obtained by the DTI-FA (unimodal) feature for classifying this group. The obtained Cohen’s kappa score for the HC vs. MCIs group was 0.8697 (Figure 5), which is close to the maximum agreement value of 1. Therefore, from Table 2 and Figures 4, 5, we can state that for all classification combinations, our proposed method attained a high level of performance compared with the individual modality of biomarkers, varying from 1 to 3%, and our proposed scheme also attained a higher level of agreement between all six classification combinations than the individual modality-based methods.

The number of extracted ROIs was slightly higher for the sMRI, FDG-PET, and AV45-PET images than for the other modalities, although the real number of features used as input for each single and combined model varied across the models. Except for the HC vs. MCIc group, in the (AD vs. HC, MCIs vs. MCIc, AD vs. MCIc, AD vs. MCIs, and HC vs. MCIs) classification sets, it is interesting to observe that even though the number of extracted ROI features for sMRI, FDG-PET, AV45-PET, and rs-fMRI were higher than the number of DTI-FA ROI features, the obtained accuracy was lower than for the DTI-FA ROI features for the stated classification groups. Out of the 1404 ROIs, 384 ROIs selected from sMRI, 384 ROIs selected from FDG-PET, 384 ROIs selected from AV45-PET, 200 ROIs from rs-fMRI, 50 ROIs from DTI-FA, and the remaining two ROIs from APOE genotype corresponded to 27.3% (each of sMRI, FDG-PET, and AV45-PET), 14.5% (rs-fMRI), 3.5% (DTI-FA), and 0.1% (APOE) of the total number of features. In Figure 4, we show the ROC curves (plots of the TPR vs. the FPR for dissimilar possible cut-points) for each study presented in Table 2. The obtained AUC is presented in each plot. Figure 4 shows that our proposed method achieved higher AUC values for all classification sets than did the individual modalities. For the AD vs. HC and HC vs. MCIs classification groups, our proposed method achieved an AUC greater than 95%, while for the MCIs vs. MCIc, AD vs. MCIs, AD vs. MCIc, and HC vs. MCIc groups, the proposed method achieved an AUC less than 95%, (MCIs vs. MCIc, AD vs. MCIs, AD vs. MCIc, and HC vs. MCIc) < 95% < (AD vs. HC and HC vs. MCIs). The obtained result using the RBF-SVM classifier can be found in Supplementary Table S31. From Supplementary Table S31, we can say that the combined-ROI features performed very well as compared with the individual modality outcomes for all six binary classification groups. Table 2 and Supplementary Table S31 clearly show the advantage of using combined features over individual ones (see Supplementary Table S31).

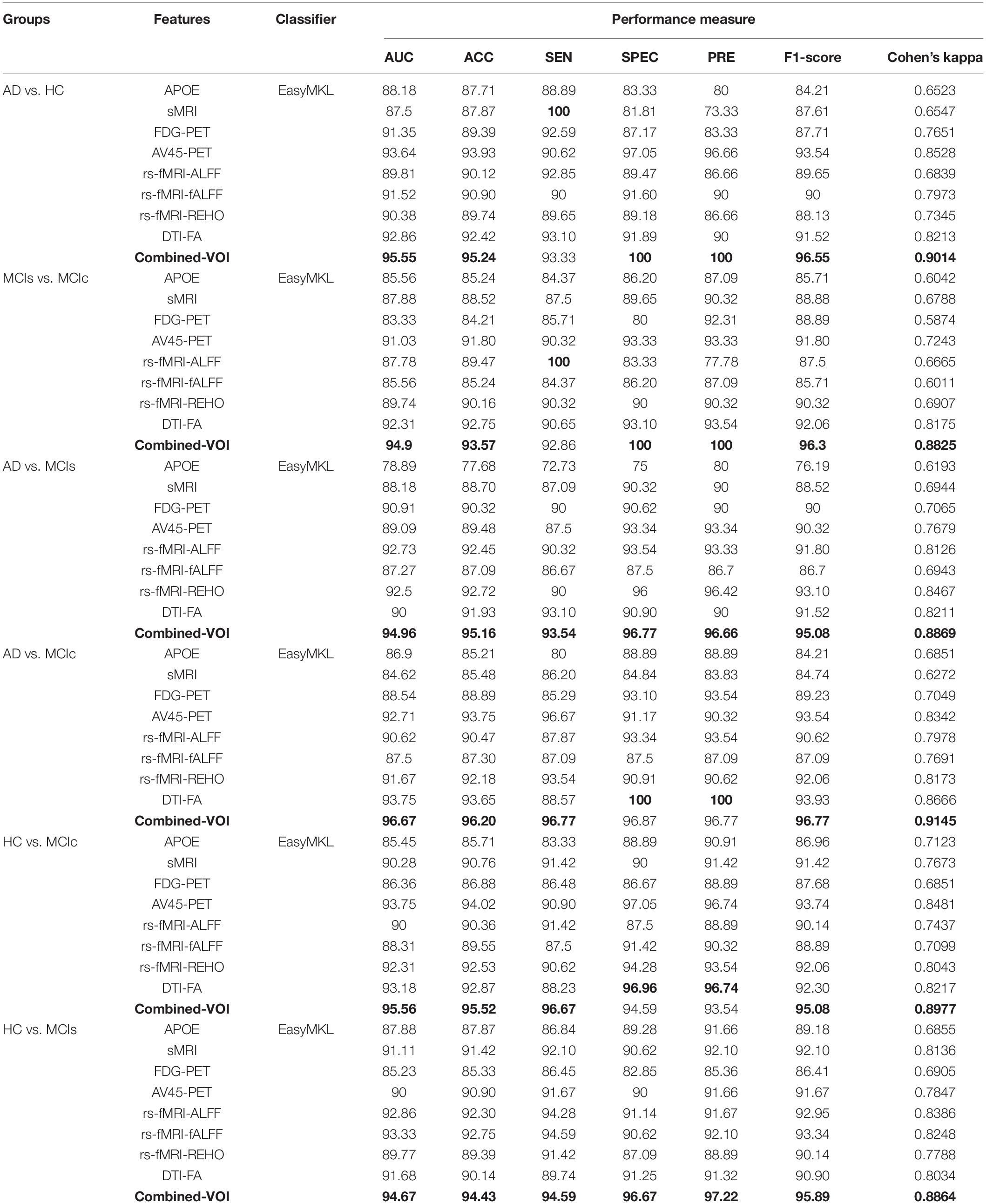

Classification Performance Across Single and Combined Modalities Using Voxel-Wise Analysis

For the voxel-wise analysis of the sMRI, FDG-PET, and AV45-PET images, we used the SPM12 toolbox with the integration of the CAT12 toolbox in MATLAB R2019a. For the DTI images, we used the DTIfit and TBSS functions from the FSL toolbox. For the voxel-wise analysis of rs-fMRI images, we used the DPARSF toolbox in MATLAB R2019a. Afterward, we passed these obtained features with two features from the APOE genotype data through a normalization technique to minimize the redundancy within the dataset. Furthermore, we passed these low-dimensional, normalized features from the polynomial kernel matrix to map them onto a high-dimensional feature space. Then we fused all these high-dimensional features in one form before passing them through the EasyMKL algorithm for classification. The obtained result are shown in Table 3 and Figure 6 shows the Cohen’s kappa plot for all six classification groups using voxel-wise analysis.

Table 3. Classification results for AD vs. HC, MCIs vs. MCIc, AD vs. MCIs, AD vs. MCIc, HC vs. MCIc, and HC vs. MCIs groups using voxel-wise (VOI) features (EasyMKL).

Figure 6. Cohen’s kappa plot for AD vs. HC, MCIs vs. MCIc, AD vs. MCIs, AD vs. MCIc, HC vs. MCIs, and HC vs. MCIc are grouped using voxel-wise analysis. The above graph clearly shows the benefit of the combined-VOI modality over any single modality.

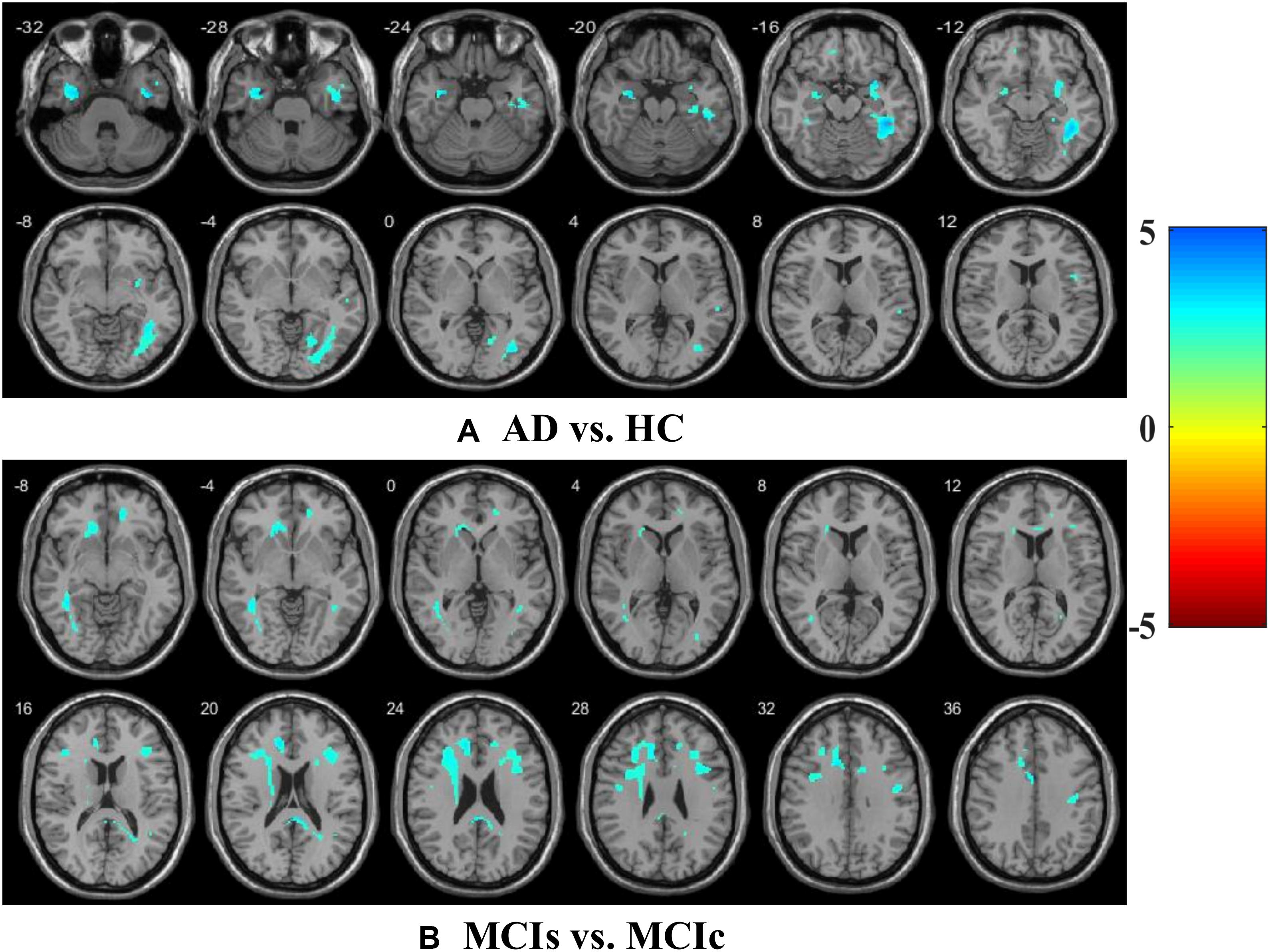

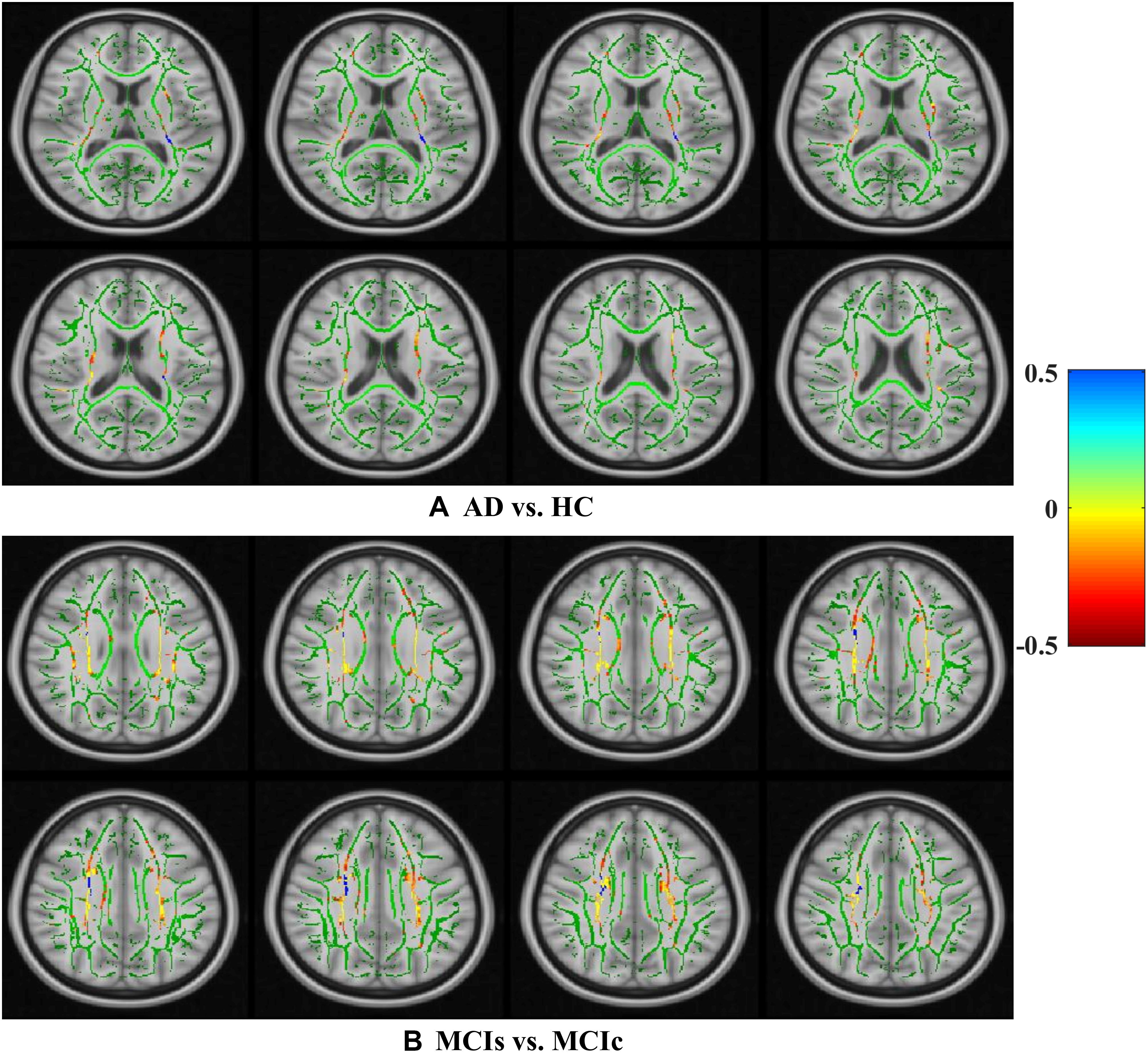

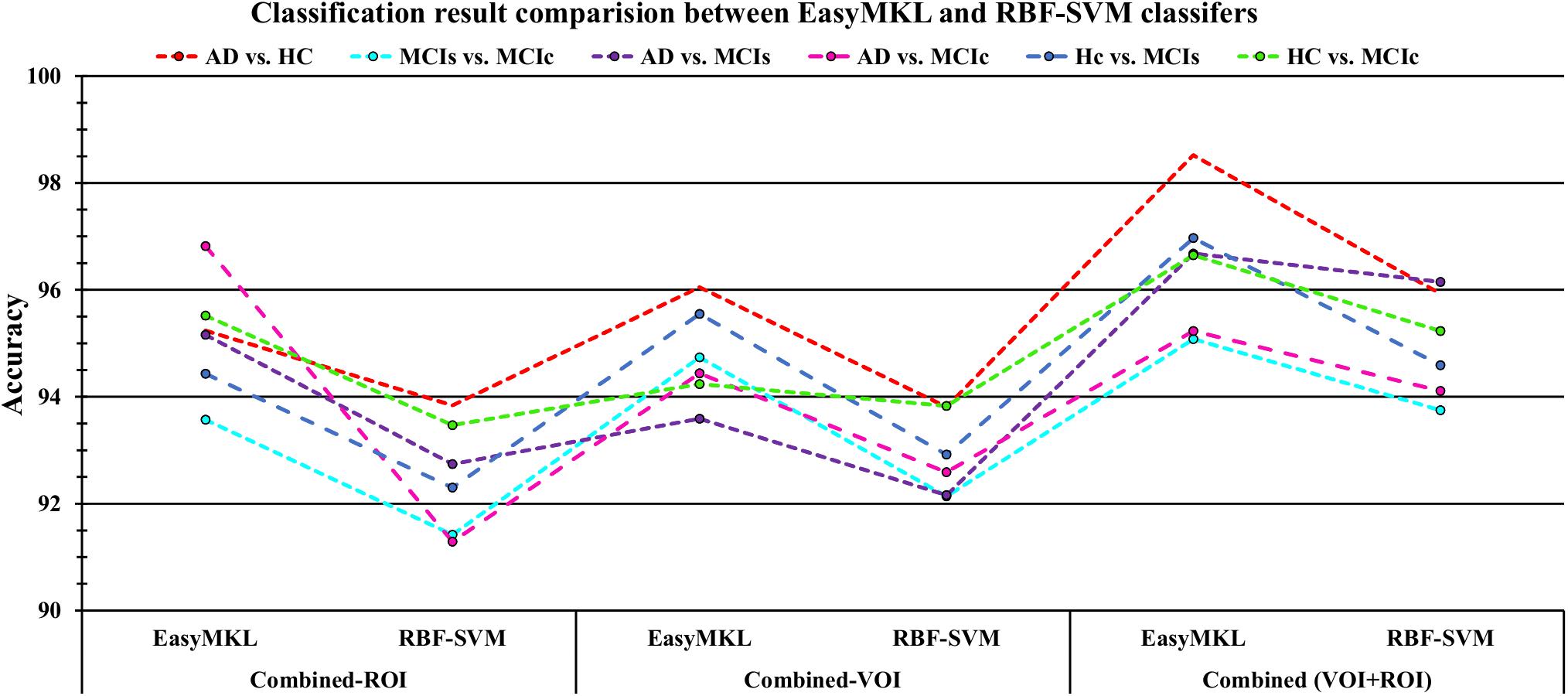

AD vs. HC

We calculated the statistical values that represented the significance levels of the groups in the activation map as presented in Supplementary Tables S1–S5 after comparing the outcomes of the statistical two-sample t-tests for the AD vs. HC group. These tables specify the main affected area observed in the AD vs. HC set and the obtained voxel cluster with detailed information, including its peak areas in the form of the MNI space, cluster-level p-score, and the peak intensity in the T-score of each group. We used an uncorrelated threshold value of puncorrected≤0.001 at the voxel level, an FDR value of pFDR = 0.05, and an FWER value of pFWER = 0.05 at the cluster level to achieve a bias alteration for multiple comparisons. An ROI binary mask was created from the selected clusters of each modality, and later the GM and WM volumes were removed from the two sets of images (AD vs. HC) (see Supplementary Tables S1–S5). Figure 7A shows the most significant regions where these groups differ from each other using an AV45-PET neuroimage, and the obtained voxel cluster is shown in Supplementary Table S3. Likewise, Figure 8A shows the most significant regions where these groups differ from each other using a DTI-FA neuroimage, and the obtained voxel cluster is shown in Supplementary Table S5 (see Supplementary Tables S3, S5).

Figure 8. Selected WM voxels for the (A) AD vs. HC, and (B) MCIs vs. MCIc classification using DTI image.

MCIs vs. MCIc

Supplementary Tables S6–S10 show the main affected areas in the MCIs vs. MCIc group, the obtained voxel clusters with detailed information about its peak coordinates in the MNI space, the cluster-level p-score, and the peak intensity in the T-score of each group. For the MCIs vs. MCIc group, we used an uncorrelated threshold value of puncorrected≤0.001 at the voxel level, an FDR value of pFDR = 0.05, and an FWER value of pFWER = 0.05 at the cluster level to accomplish a bias alteration for multiple comparisons. An ROI binary mask was created from the selected clusters of each modality and later GM and WM volumes were removed from the two sets of images (MCIs vs. MCIc) (see Supplementary Tables S6–S10). Figure 7B shows the most significant region where these groups differ from each other using an AV45-PET neuroimage, and the obtained voxel cluster is shown in Supplementary Table S8. Likewise, Figure 8B shows the most significant region where these groups differ from each other using a DTI-FA neuroimage, and the obtained voxel cluster is shown in Supplementary Table S10 (see Supplementary Tables S8, S10). Moreover, we followed the same procedure for the calculation of voxel clusters for the AD vs. MCIc, AD vs. MCIs, HC vs. MCIc, and HC vs. MCIs groups as we followed for the AD vs. HC and MCIs vs. MCIc groups. The obtained voxel clusters with detailed information about its peak coordinates in the MNI space, the cluster-level p-score, and the peak intensity in the T-score of each group are shown in Supplementary Tables S11–S30 (see Supplementary Tables S11–S30). Likewise, Supplementary Figures S1a,b show the most significant region for the AD vs. MCIc and HC vs. MCIc groups using the DTI-FA modality of the biomarker.

The obtained voxel cluster for these two groups is shown in Supplementary Tables S15, S25 (see Supplementary Figures S1a,b and Supplementary Tables S15, S25). After completing the extraction of the series of features from each individual modality, we passed these obtained features through the polynomial kernel matrix to map these low-dimensional features onto a high-dimensional feature space. We then fused all these high-dimensional features in one form before passing them through the EasyMKL algorithm for classification. Table 3 shows the classification results for the AD vs. HC group using voxel-wise features. It shows that the combined features performed very well for this group compared with the single-modality results. The combined features achieved a 95.55% AUC for classifying the AD vs. HC group, as shown in Figure 9, with a Cohen’s kappa value of 0.9014, which is close to 1, as shown in Table 3 and Figure 6. This indicates that these two groups have a good level of agreement between them. Table 3 shows the classification results for the MCIs vs. MCIc group using voxel-wise features. This table also shows that the combined features performed very well for classifying the MCIs vs. MCIc group compared with the single-modality performances. The combined features achieved an AUC of 94.90% when classifying the MCIs vs. MCIc group, as shown in Figure 9. The Cohen’s kappa value was 0.8825, which is close to 1, as shown in Table 3 and Figure 6. This indicates a good level of agreement between the two groups. Furthermore, for the AD vs. MCIc and AD vs. MCIs classification groups, our proposed system attained a high level of performance and agreement (0.9145 and 0.8869) compared with the individual modalities biomarkers. For the AD vs. MCIc group, AV45-PET and DTI-FA attained high classification accuracy compared with other unimodal biomarkers, but their gained accuracy was 3% less than the accuracy gained by the combined-VOI process, which was 96.20% with a 0.9145 (Figure 6) Cohen’s kappa score. Likewise, for the AD vs. MCIs group, the proposed method achieved 95.16% accuracy with a 0.8869 (Figure 6) Cohen’s kappa score. For the HC vs. MCIc classification group, the AV45-PET individual modality biomarkers performed very well compared with the other single modality biomarkers. The obtained accuracy and Cohen’s kappa score using AV45-PET biomarkers were 94.02% and 0.8481 (Figure 6). Moreover, we then passed the combined-VOI features through the EasyMKL classifier for the classification of the HC vs. MCIc group, and after the classifier was applied, the accuracy increased by 1.5%. This suggests that the combined-VOI features were beneficial for classifying this group. Likewise, for the HC vs. MCIs classification group, the rs-fMRI features of ALFF and fALFF achieved a good level of performance and agreement (0.8386 and 0.8248) compared with the other individual modality biomarkers. In this case, the individual features of the APOE genotype also performed well compared with the FDG-PET imaging modality biomarker, but the individual modality biomarker’s performance was not very good compared with the combined-VOI result (Table 3). The combined-VOI features achieved a 94.43% accuracy and an AUC of 94.67% with a 0.8864 Cohen’s kappa score for classifying the HC vs. MCIs group. Figure 9 shows the ROC curve for all six classification groups; the red solid line in every classification task indicates the combined-VOI features for that particular group. Consequently, from Table 3 and Figures 6, 9, we can state that for all classification combinations, our proposed method attained a high level of performance compared with the individual modality biomarkers, varying from 1 to 3%, and our proposed scheme also attained a high level of agreement between each of the six classification combinations compared with the individual modality-based methods. The obtained result using the RBF-SVM classifier can be found in Supplementary Table S32. From Supplementary Table S32, we can say that the combined-VOI features performed very well as compared with the individual modality outcomes for all six binary classification groups. Table 3 and Supplementary Table S32 clearly show the advantage of using combined features over individual ones (see Supplementary Table S32).

Figure 9. ROC curves for (A) AD vs. HC, (B) MCIs vs. MCIc, (C) AD vs, MCIs, (D) AD vs, MCIc, (E) HC vs. MCIs, and (F) HC vs. MCIc using voxel-wise (VOI) analysis. The red solid line shows the result of the combined-VOI curve, including all single-modality features.