- Department of Veterinary Pathobiology, College of Veterinary Medicine & Biomedical Sciences, Texas A&M University, College Station, TX, United States

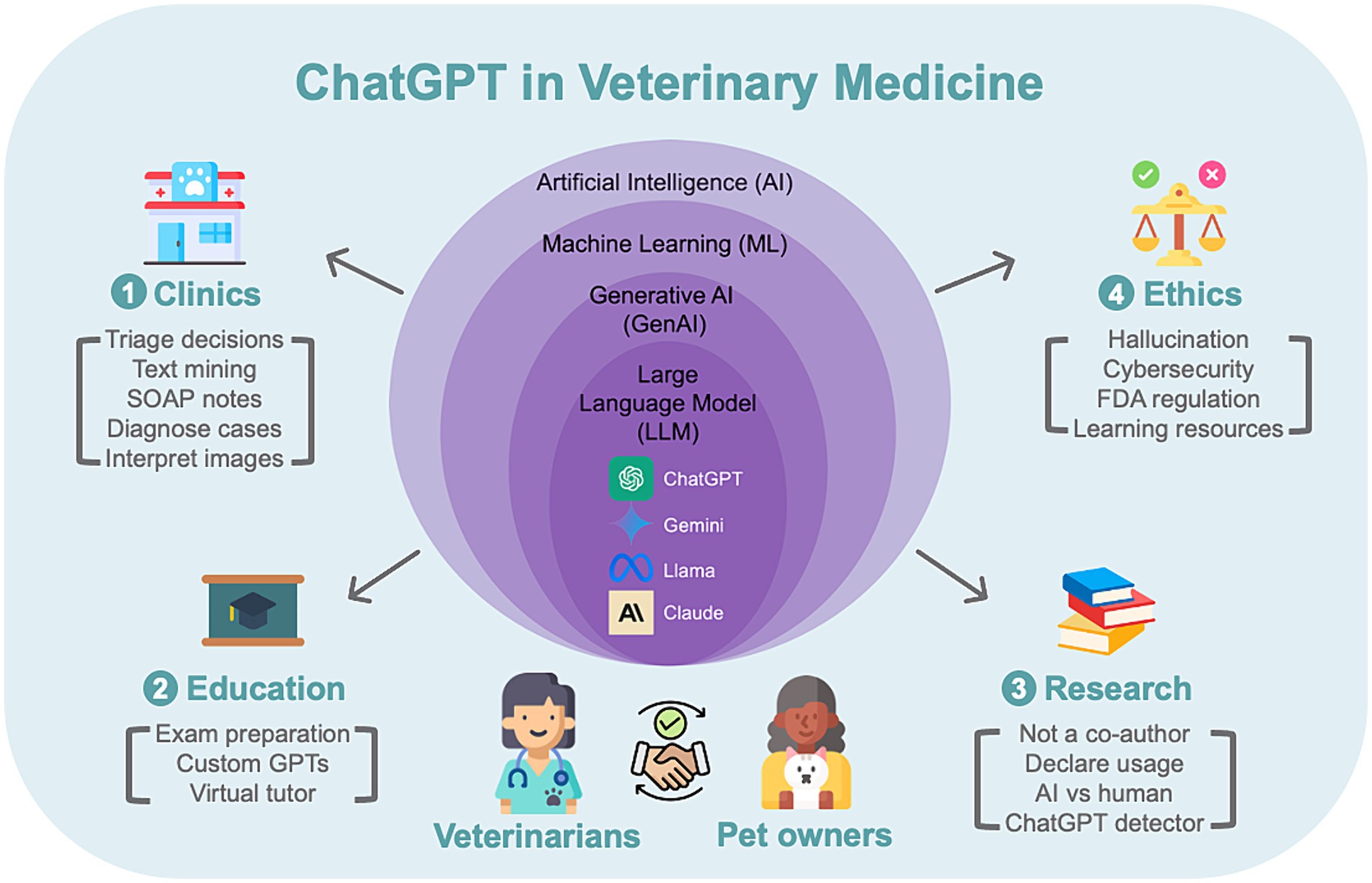

ChatGPT, the most accessible generative artificial intelligence (AI) tool, offers considerable potential for veterinary medicine, yet a dedicated review of its specific applications is lacking. This review concisely synthesizes the latest research and practical applications of ChatGPT within the clinical, educational, and research domains of veterinary medicine. It aims to provide specific guidance and actionable examples of how generative AI can be directly utilized by veterinary professionals without a programming background. For practitioners, ChatGPT can extract patient data, generate progress notes, and potentially assist in diagnosing complex cases. Veterinary educators can create custom GPTs for student support, while students can utilize ChatGPT for exam preparation. ChatGPT can aid in academic writing tasks in research, but veterinary publishers have set specific requirements for authors to follow. Despite its transformative potential, careful use is essential to avoid pitfalls like hallucination. This review addresses ethical considerations, provides learning resources, and offers tangible examples to guide responsible implementation. A table of key takeaways summarizes the review. By highlighting potential benefits and limitations, this review equips veterinarians, educators, and researchers to harness the power of ChatGPT effectively.

Introduction

Artificial intelligence (AI) is a trending topic in veterinary medicine. A recent survey on AI in veterinary medicine by Digitail and the American Animal Hospital Association, involving 3,968 veterinarians, veterinary technicians/assistants, and students, found that 83.8% of respondents were familiar with AI and its applications in veterinary medicine and 69.5% used AI tools daily or weekly (1). Yet 36.9% remained skeptical, citing concerns about the systems’ reliability and accuracy (70.3%), data security and privacy (53.9%), and lack of training (42.9%) (1).

Current applications of AI in veterinary medicine cover a wide range of topics, such as dental radiography (2), colic detection (3), and mitosis detection in digital pathology (4). Machine learning (ML), a subset of AI, enables systems to learn from data without being explicitly programmed (5). Generative AI (genAI), in turn, is a field within ML specializing in creating new content. Large language models (LLMs), a subset of genAI, are known for their human-like text generation capabilities. Notable LLMs include ChatGPT (OpenAI) (6), Llama 3 (Meta) (8), Gemini (Google) (9), and Claude 3 (Anthropic) (10); the GPT models behind ChatGPT are also utilized by Microsoft Copilot for Microsoft 365 (7). ChatGPT, initially powered by GPT-3.5, was made publicly accessible by OpenAI on November 30, 2022 (11). Within a year, ChatGPT had attracted approximately 100 million weekly users (12), making it the most popular LLM for newcomers to this technology. Based on PubMed search results, the number of academic articles mentioning ‘ChatGPT’ in the title or abstract grew from 4 in 2022 to 2,062 in 2023, indicating growing interest in ChatGPT in the medical field (13). Therefore, this review focuses on ChatGPT as the main example of generative AI and discusses its applications in veterinary clinics, education, and research.

GPT, or Generative Pre-trained Transformer, excels at generating new text, images, and other content formats rather than solely analyzing existing data. It is pre-trained on vast datasets of text and code, enabling it to recognize patterns and generate human-like responses. It employs the transformer neural network architecture, which is particularly adept at processing language and enables coherent, contextually relevant outputs (14). The free version of ChatGPT can answer questions, provide explanations, generate creative content, offer advice, conduct research, engage in conversation, support technical tasks, aid with education, and create summaries. On February 1, 2023, OpenAI released ChatGPT Plus, a subscription-based service later powered by GPT-4, with capabilities in text, image, and voice analysis and generation (15). OpenAI introduced GPT-4 Turbo with Vision on April 9, 2024 (16). This updated model is accessible to developers through the application programming interface (API). Its ability to take in images and answer questions about them has sparked interest in radiology (17, 18), pathology (19), and cancer detection (20, 21). On May 13, 2024, OpenAI released GPT-4o to the public. The ‘o’ stands for ‘omni,’ reflecting the new model’s abilities in reading, listening, writing, and speaking (22). Despite ChatGPT’s widespread use, a comprehensive review of its applications in veterinary medicine is lacking.

ChatGPT’s applications in medicine span a wide range of areas, including answering patient and professional inquiries, promoting patient engagement (23), diagnosing complex clinical cases (24), and creating educational material (25). A PubMed search for ‘ChatGPT AND veterinary’ yielded 14 results as of May 2024. After screening the titles and abstracts of all articles, 5 were deemed relevant to the subject and included in this review (26–30). In addition, an online search using the same keywords identified commercial software that integrates ChatGPT to enhance virtual assistance, diagnostic accuracy, communication with pet owners, and workflow optimization (31–37). While examples of ChatGPT applications are prevalent on social media and in various publications (38–40), the best way to understand its impact is through direct engagement. This article discusses the applications of ChatGPT in veterinary medicine, provides practical implementations, and examines its limitations and ethical considerations. The following content uses ‘ChatGPT’ as a general term; when information about a specific version is available, terms such as GPT-3.5 or GPT-4 are used. Highlights of each section are listed in Table 1 for a quick summary of the review.
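Readers who wish to repeat or update this literature search can query PubMed programmatically through NCBI’s public E-utilities service. The sketch below is a minimal illustration, not the method used for this review: it assumes only Python’s standard library, the function names are hypothetical, and the 2022 start date is an assumption. Counts returned today will differ from those reported here because PubMed continues to grow.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# NCBI E-utilities ESearch endpoint (public; NCBI asks for no more than ~3 requests/second without an API key)
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_esearch_url(term: str, min_date: str, max_date: str) -> str:
    """Build an ESearch URL that counts PubMed records matching `term`
    within a publication-date window (dates as YYYY, YYYY/MM, or YYYY/MM/DD)."""
    params = {
        "db": "pubmed",
        "term": term,
        "datetype": "pdat",   # filter on publication date
        "mindate": min_date,
        "maxdate": max_date,
        "retmax": 0,          # only the hit count is needed, not the PMIDs
        "retmode": "json",
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"


def count_from_payload(payload: dict) -> int:
    """Extract the hit count from a parsed ESearch JSON response."""
    return int(payload["esearchresult"]["count"])


def pubmed_count(term: str, min_date: str, max_date: str) -> int:
    """Query PubMed live (network access required) and return the number of matching records."""
    with urlopen(build_esearch_url(term, min_date, max_date)) as response:
        return count_from_payload(json.load(response))
```

For example, `pubmed_count("ChatGPT AND veterinary", "2022", "2024/05/31")` would return the current count for a window ending in May 2024, and `"ChatGPT[Title/Abstract]"` can be substituted as the term to follow the trend described in the Introduction.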

ChatGPT 101: prompts and prompt engineering

Understanding prompts is crucial before engaging with ChatGPT or other generative AI tools. Prompts act as conversation starters, consisting of instructions or queries that elicit responses from the AI. Effective prompts for ChatGPT integrate relevant details and context, enabling the model to deliver precise responses (28). Prompt engineering is the practice of refining inputs to produce optimal outputs. For instance, researchers instructing ChatGPT to identify body condition scores from clinical records began their prompts by detailing the data structure and desired outcomes: “Each row of the dataset is a different veterinary consultation. In the column ‘Narrative’ there is clinical text. Your task is to extract Body Condition Score (BCS) of the animal at the moment of the consultation if recorded. BCS can be presented on a 9-point scale, example BCS 6/9, or on a 5-point scale, example BCS 3.5/5. Your output should be presented in a short-text version ONLY, following the rules below: … (omitted) (28)”. Writing effective prompts involves providing contextual details clearly and specifically and being willing to refine them as needed.
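The BCS prompt quoted above follows a reusable pattern: describe the data, state the task, then spell out the output rules. A minimal Python sketch of that pattern is shown below, assuming the prompt is assembled as plain text before being pasted into ChatGPT or sent through the API. The context and task strings are taken from the quoted prompt; the function name and the two output rules are hypothetical placeholders, because the study’s actual rules are omitted in the source.

```python
def build_extraction_prompt(context: str, task: str, output_rules: list[str]) -> str:
    """Assemble a prompt in three parts: data context, the task, and numbered output rules."""
    numbered_rules = "\n".join(f"{i}. {rule}" for i, rule in enumerate(output_rules, start=1))
    return (
        f"{context}\n\n"
        f"Your task is to {task}\n\n"
        "Your output should be presented in a short-text version ONLY, "
        f"following the rules below:\n{numbered_rules}"
    )


prompt = build_extraction_prompt(
    context=(
        "Each row of the dataset is a different veterinary consultation. "
        "In the column 'Narrative' there is clinical text."
    ),
    task=(
        "extract Body Condition Score (BCS) of the animal at the moment of the "
        "consultation if recorded. BCS can be presented on a 9-point scale, "
        "example BCS 6/9, or on a 5-point scale, example BCS 3.5/5."
    ),
    # Hypothetical rules: the study's actual rules are omitted in the quoted prompt.
    output_rules=[
        "Return the score exactly as written, for example 6/9 or 3.5/5.",
        "If no BCS is recorded, return NA.",
    ],
)
print(prompt)
```

Keeping the three parts separate makes it easy to refine one element, such as tightening an output rule, while leaving the rest of the prompt unchanged.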

Moreover, incorporating ‘cognitive strategy prompts’ can direct ChatGPT’s reasoning more effectively (refer to Supplementary material for more details). For a comprehensive understanding of prompt engineering, readers are encouraged to refer to specialized literature and open-access online courses dedicated to this subject (41–44). Proper prompt engineering is pivotal for shaping conversations and obtaining the intended results, as illustrated by various examples in this review (Figure 1).

Using ChatGPT in clinical care

ChatGPT has the potential to provide immediate assistance upon a client’s arrival at the clinic. In human medicine, the pre-trained GPT-4 model can process chief complaints, vital signs, and medical histories entered by emergency medicine physicians and make triage decisions that align closely with established standards (45). Given that healthcare professionals in the United States spend approximately 35% of their time documenting patient information (46) and that note redundancy is rising (47), ChatGPT’s ability to distill crucial information from extensive clinical histories and generate clinical documents is particularly valuable (48). In veterinary medicine, a study using GPT-3.5 Turbo for text mining showed that it could pinpoint all overweight body condition score (BCS) instances within a dataset with high precision (28). However, some limitations were noted, such as the misclassification of lameness scores as BCS, an issue the researchers believe could be addressed through refined prompt engineering (28).
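Because LLM output can contain errors such as the lameness-score misclassification described above, extracted values can be cross-checked against a simple rule-based pass. The sketch below is an illustration under stated assumptions rather than the method of the cited study: the regular expression, the function names, and the overweight cut-offs (above 5/9 or above 3/5) are hypothetical and should be adapted to local recording conventions.

```python
import re

# Match "BCS" followed by a score and its scale, e.g. "BCS 6/9", "BCS: 3.5/5", "bcs 7 / 9".
# Requiring the "BCS" keyword keeps scores such as "lameness 3/5" from matching.
BCS_PATTERN = re.compile(r"\bBCS\s*:?\s*(\d+(?:\.\d+)?)\s*/\s*(5|9)\b", re.IGNORECASE)


def extract_bcs(narrative: str) -> list[tuple[float, int]]:
    """Return every (score, scale) pair explicitly labelled as BCS in a clinical note."""
    return [(float(score), int(scale)) for score, scale in BCS_PATTERN.findall(narrative)]


def is_overweight(score: float, scale: int) -> bool:
    """Assumed cut-offs: above 5 on the 9-point scale or above 3 on the 5-point scale."""
    ideal_upper = 5 if scale == 9 else 3
    return score > ideal_upper


note = "Lameness 3/5 left hind. BCS 7/9, weight 32 kg."
for score, scale in extract_bcs(note):
    print(score, scale, is_overweight(score, scale))  # only the BCS is extracted, not the lameness score
```

Notes that record BCS without the explicit label would be missed by this rule, so disagreements between the rule-based pass and the LLM are best resolved by a person reviewing the record.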

For daily clinical documentation, veterinarians can enter signalment, clinical history, and physical examination findings into ChatGPT to generate Subjective-Objective-Assessment-Plan (SOAP) notes (46). An illustrative veterinary case in the Supplementary material involves generating a SOAP note for a canine albuterol toxicosis case (49); ChatGPT efficiently identified the diagnostic tests performed in the case report, suggesting that it is a promising tool for streamlining veterinarians’ workflow.
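For readers who move from the chat interface to the API, the inputs described above can be packaged as a list of role/content messages, the format used by chat-style LLM APIs such as OpenAI’s Chat Completions endpoint. The sketch below only builds that list and does not call any service; the class name, function name, instruction wording, and clinical details are hypothetical, and any real record should be de-identified before it is sent.

```python
from dataclasses import dataclass


@dataclass
class Consultation:
    signalment: str     # species, breed, age, sex
    history: str        # owner-reported clinical history
    exam_findings: str  # physical examination findings


# Hypothetical instruction; adapt the wording to your practice's note format.
SYSTEM_INSTRUCTION = (
    "You are assisting a veterinarian with clinical documentation. "
    "Write a SOAP note (Subjective, Objective, Assessment, Plan) using ONLY the "
    "information provided. If something is not recorded, write 'not recorded' "
    "instead of guessing."
)


def build_soap_messages(case: Consultation) -> list[dict[str, str]]:
    """Return a role/content message list of the kind accepted by chat-style LLM APIs."""
    user_text = (
        f"Signalment: {case.signalment}\n"
        f"History: {case.history}\n"
        f"Physical examination: {case.exam_findings}"
    )
    return [
        {"role": "system", "content": SYSTEM_INSTRUCTION},
        {"role": "user", "content": user_text},
    ]


# Hypothetical, de-identified case for illustration only.
case = Consultation(
    signalment="3-year-old male neutered Labrador Retriever",
    history="Chewed an albuterol inhaler about 1 hour ago; restless and panting.",
    exam_findings="Heart rate 220 bpm, respiratory rate 60 breaths/min, muscle tremors.",
)
print(build_soap_messages(case)[1]["content"])
```

Instructing the model to write ‘not recorded’ rather than guess is one simple way to discourage it from inventing test results that were never performed.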

Moreover, recent research has investigated ChatGPT’s proficiency with human clinical challenges. One study found that GPT-4 correctly diagnosed 57% of complex medical cases presented as multiple-choice questions, outperforming 72% of human readers of medical journals (24). In another study, GPT-4’s top diagnosis agreed with the final diagnosis in 39% of cases, and the final diagnosis appeared among its top differential diagnoses in 64% of cases (50). In veterinary medicine, a notable case is a user of the social media platform X (formerly Twitter) who reported that ChatGPT saved his dog’s life by identifying immune-mediated hemolytic anemia, a diagnosis his veterinarian had missed (51). Veterinarians should recognize that pet owners may consult ChatGPT or similar AI chatbots for advice because of their accessibility (26). While the proliferation of veterinary information online can enhance clients’ general knowledge, it also risks spreading misinformation (52). Customizing ChatGPT could address these challenges (see ‘Using ChatGPT in veterinary education’ below).
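The two GPT-4 figures above (39% and 64%) reflect two different metrics: whether the model’s top diagnosis matches the final diagnosis, and whether the final diagnosis appears anywhere in its ranked differential list. The sketch below computes both for a set of cases. The function name and cases are hypothetical, the cut-off of five differentials is an assumption, and exact string matching is a simplification; published studies typically rely on expert judgment to decide whether two diagnoses match.

```python
def diagnostic_agreement(cases: list[tuple[list[str], str]], k: int = 5) -> tuple[float, float]:
    """Each case is (ranked differential diagnoses from the model, final diagnosis).
    Returns (top-1 agreement, top-k inclusion) as fractions of all cases."""
    if not cases:
        raise ValueError("no cases supplied")
    top1 = sum(1 for ddx, final in cases if ddx and ddx[0] == final)
    topk = sum(1 for ddx, final in cases if final in ddx[:k])
    return top1 / len(cases), topk / len(cases)


# Hypothetical cases for illustration only (not data from the cited studies).
cases = [
    (["IMHA", "Babesiosis", "Zinc toxicosis"], "IMHA"),                          # correct top diagnosis
    (["Hypoadrenocorticism", "Acute kidney injury"], "Acute kidney injury"),     # listed, but not first
    (["Pancreatitis", "Gastric foreign body"], "Hemorrhagic gastroenteritis"),   # missed entirely
    (["Hypothyroidism", "Hyperadrenocorticism"], "Hypothyroidism"),              # correct top diagnosis
]
top1, topk = diagnostic_agreement(cases, k=5)
print(f"top-1: {top1:.0%}, top-5 inclusion: {topk:.0%}")  # top-1: 50%, top-5 inclusion: 75%
```

Top-k inclusion is always at least as high as top-1 agreement, which is why a model can look considerably stronger as a source of differentials than as a single-answer diagnostician.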

In a human medicine study, GPT-4 outperformed other LLM tools in ECG interpretation, correctly interpreting 63% of ECG images (53). No comparable study has yet been reported in veterinary medicine. A veterinary example in the Supplementary material shows that GPT-4 did not identify an atypical atrial flutter with intermittent Ashman phenomenon in a 9-year-old Pug, even though asterisks had been added to the ECG to mark the wide and tall aberrant QRS complexes (35). This example emphasizes that, while ChatGPT is a powerful tool, it cannot replace specialized AI algorithms approved by the Food and Drug Administration (FDA) for ECG interpretation (54, 55). Nevertheless, veterinary-specific AI tools, such as a deep learning model for canine ECG classification, are in development and may become available soon (56). With the updated image upload function, GPT-4 and GPT-4o can also interpret images of blood work. The Supplementary material illustrates a veterinary example in which GPT-4 and GPT-4o analyzed a Case of the Month on eClinPath (57) and provided the correct top differential despite their limited ability to interpret the white blood cell dot plot.
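Through the API, an image such as an ECG strip or a blood work printout is sent alongside the question as a base64-encoded data URL. The sketch below builds such a request body in the content-part format described in OpenAI’s Chat Completions documentation at the time of writing; it does not send anything. The function name and file name are hypothetical, the format may change between API versions, and only de-identified images should be uploaded.

```python
import base64


def build_image_question(image_bytes: bytes, question: str, mime_type: str = "image/png") -> list[dict]:
    """Pair a question with an image (e.g. a de-identified ECG strip or blood work printout)
    using text and image_url content parts in a single user message."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
            ],
        }
    ]


# Usage (hypothetical file name): read the image and build the message list.
# with open("ecg_strip.png", "rb") as f:
#     messages = build_image_question(f.read(), "Describe the rhythm and any abnormal complexes.")
```

As the Pug example shows, a well-formed request does not guarantee a correct reading; specialized, validated tools remain preferable for ECG interpretation.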

Using ChatGPT in veterinary education

Recent studies of LLMs in medical examinations underscore their utility for educational support. In human medical education, GPT-3 performed commendably on 350 questions from the United States Medical Licensing Exam (USMLE) Steps 1, 2CK, and 3, scoring near or at the passing threshold for all three levels without specialized training (58). The evaluation presented the exam questions in various formats (open-ended, or multiple-choice with or without a forced justification) to gauge ChatGPT’s foundational medical knowledge. The AI-generated responses often included key insights, suggesting that ChatGPT’s output could benefit medical students preparing for the USMLE (58).

Another investigation in human medical education benchmarked GPT-4, Claude 2, and various open-source LLMs on multiple-choice questions from the Nephrology Self-Assessment Program. Success rates varied widely: open-source LLMs scored 17.1–30.6%, Claude 2 scored 54.4%, and GPT-4 led with 73.7% (59). A comparative analysis of GPT-3.5 and GPT-4 on the neonatal-perinatal medicine board examination showed that the newer version performed substantially better (60). In veterinary education, researchers at the University of Georgia used GPT-3.5 and GPT-4 to answer 495 faculty-generated multiple-choice and true/false questions from 15 courses in the third-year veterinary curriculum (27). Consistent with the previous study, GPT-4 (77% correct) performed substantially better than GPT-3.5 (55% correct); however, both performed significantly worse than veterinary students (86%). These studies highlight differences among LLMs’ knowledge bases, which could affect the quality of medical and veterinary education.
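Educators who want to run a similar check on their own question banks can score model answers against an answer key, overall and per course, as in the studies above. The sketch below assumes single-letter or true/false answers compared by exact, case-insensitive match; the function name and the example answers are hypothetical.

```python
from collections import defaultdict


def score_by_course(responses: list[tuple[str, str, str]]) -> dict[str, float]:
    """`responses` holds (course, model_answer, answer_key) triples.
    Returns percent correct for each course plus an 'overall' entry."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for course, answer, key in responses:
        is_correct = answer.strip().upper() == key.strip().upper()  # exact, case-insensitive match
        for group in (course, "overall"):
            total[group] += 1
            correct[group] += int(is_correct)
    return {group: 100 * correct[group] / total[group] for group in total}


# Hypothetical answers for illustration only.
responses = [
    ("Pharmacology", "A", "A"),
    ("Pharmacology", "b", "C"),
    ("Pathology", "True", "true"),
    ("Pathology", "D", "D"),
]
print(score_by_course(responses))
```

Reporting per-course scores alongside the overall rate shows where a model’s knowledge base is weakest, which matters when deciding whether students should rely on it for a particular subject.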

Beyond exam preparation, ChatGPT Plus subscribers can create customized versions of ChatGPT, referred to as GPTs (41), that are freely accessible to other users (61). Veterinarians, for instance, can use these tools to develop AI tutors that educate clients and support veterinary students’ learning. For client education, the Cornell Feline Health Center recently launched ‘CatGPT,’ a customized ChatGPT that draws information from its website and peer-reviewed scientific publications to answer owners’ inquiries (62). Another example is VetClinPathGPT, a specialized veterinary clinical pathology virtual tutor (63). This custom GPT draws from open-access textbooks legally available under Creative Commons licenses (64–66) and the eClinPath website (57), so that the information provided is sourced from credible references. Students are encouraged to ask any question pertinent to veterinary clinical pathology and can even request specific references or links to web pages. More information about this GPT is detailed in the Supplementary material.
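Custom GPTs answer from uploaded knowledge files by retrieving relevant passages before generating a response; OpenAI does not fully document how that retrieval works. The toy sketch below illustrates only the general idea of grounding an answer in, and citing, a specific source: it ranks passages by shared words, a deliberately simple stand-in for real retrieval methods. The function names, passages, and source labels are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question: str, passages: dict[str, str], top_n: int = 1) -> list[str]:
    """Rank passages by the number of words they share with the question
    (a toy stand-in for retrieval) and return the source labels of the best matches."""
    question_words = Counter(tokenize(question))

    def overlap(label: str) -> int:
        shared = question_words & Counter(tokenize(passages[label]))  # multiset intersection
        return sum(shared.values())

    return sorted(passages, key=overlap, reverse=True)[:top_n]


# Hypothetical mini knowledge base; a real custom GPT would hold licensed textbooks or web pages.
passages = {
    "Textbook A, chapter 3 (hypothetical)": "Reticulocytosis indicates a regenerative anemia.",
    "Web page B (hypothetical)": "A left shift (increased band neutrophils) suggests inflammation.",
}
print(retrieve("What does a left shift suggest?", passages))
```

Returning the source label rather than only the text is what allows an answer to be traced back to a citable reference.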

Using ChatGPT in academic writing

The incorporation of AI into academic writing, particularly in medical research, is considerably more controversial than the applications discussed in the previous sections. Ever since the development of GPT-3 in 2020, its text-generating ability has ignited debate within academia (67). Editing services enhance clarity and minimize grammatical errors in scientific manuscripts, which can improve their acceptance rate (68). While acknowledgments often credit editorial assistance, the use of spell-checking software is rarely disclosed. Today, AI-powered writing assistants integrate advanced LLM capabilities to provide nuanced suggestions on tone and context (45), blurring the line between original and AI-generated content. Generative AI tools such as ChatGPT go further by proposing titles, structuring papers, crafting abstracts, and summarizing research, raising questions about AI’s role in authorship under the International Committee of Medical Journal Editors’ guidelines (69) (Supplementary material). Traditional scientific journals remain cautious about AI, whereas NEJM AI stands out for its advocacy of LLM use (70). However, these journals still refrain from recognizing ChatGPT as a co-author because of accountability concerns over accuracy and ethical integrity (70–72). The academic community also remains wary of ChatGPT’s potential to overshadow faculty contributions (73).

Several veterinary journals have updated their guidelines in response to the emergence of generative AI. Of the top 20 veterinary medicine journals ranked by Google Scholar (74), 14 provide instructions on generative AI use (Supplementary material). All 14 advise against listing AI as a co-author and require disclosure of AI involvement in the Methods, Acknowledgments, or other designated sections. These requirements typically do not apply to basic grammar and editing tools (Supplementary material). AI could also improve writing efficiency and narrow productivity disparities, suggesting that broader acceptance of AI in academia might benefit less skilled writers and foster a more inclusive scholarly community (40).

The detectability of AI-generated content and the associated risk of erroneous academic judgments have become significant concerns. In one case, an ecologist at Cornell University had a manuscript rejected after a reviewer falsely dismissed her work as “obviously ChatGPT” (75). Such judgments are unreliable: one study found that reviewers correctly identified only 68% of ChatGPT-produced scientific abstracts and mistakenly flagged 14% of original works as AI-generated (76). In a veterinary study, veterinary neurologists distinguished AI-crafted abstracts from authentic works with a success rate of only 31–54% (30).
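The reported 68% and 14% correspond to two standard measures: sensitivity (the share of AI-written abstracts correctly flagged) and the false positive rate (the share of original abstracts wrongly flagged). The sketch below computes these together with precision, the share of flagged abstracts that really were AI-written. The function name is hypothetical, and the counts are hypothetical too, chosen only to reproduce the reported percentages; they are not the study’s sample sizes.

```python
def detection_rates(ai_flagged: int, ai_total: int, human_flagged: int, human_total: int) -> dict[str, float]:
    """Summarize how well a reader (or tool) separates AI-written from original abstracts."""
    sensitivity = ai_flagged / ai_total                # AI abstracts correctly flagged
    false_positive_rate = human_flagged / human_total  # original abstracts wrongly flagged
    flagged = ai_flagged + human_flagged
    # Of everything flagged, the share that really was AI-written; depends on the mix of texts.
    precision = ai_flagged / flagged if flagged else float("nan")
    return {
        "sensitivity": sensitivity,
        "false_positive_rate": false_positive_rate,
        "specificity": 1 - false_positive_rate,
        "precision": precision,
    }


# Hypothetical counts chosen to reproduce the reported 68% and 14% rates.
rates = detection_rates(ai_flagged=34, ai_total=50, human_flagged=7, human_total=50)
print({name: round(value, 2) for name, value in rates.items()})
```

With equal numbers of each type, 7 of the 41 flagged abstracts (about one in six) would be original work wrongly labeled as AI-generated, which illustrates how a false accusation like the one described above can arise.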

To counteract this, ‘ChatGPT detectors’ have been proposed. One ML tool uses distinguishing features such as paragraph complexity, sentence-length variability, punctuation marks, and frequently used words, achieving over 99% accuracy in identifying AI-authored texts (77). A subsequent refined model can further distinguish human writing from GPT-3.5 and GPT-4 writing in chemistry journals with 99% accuracy (78). While these tools are not publicly accessible, OpenAI is developing a classifier to flag AI-generated text (79), underscoring the importance of academic integrity and responsible AI use.
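The published detectors are not publicly available, but the kinds of features they rely on can be illustrated. The sketch below computes sentence-length statistics and a punctuation rate for a passage; it extracts features only and does not classify text. The function name, the punctuation set, and the naive sentence splitter are assumptions, and a working detector would additionally need word-frequency features and a classifier trained on labeled human and AI texts.

```python
import re
import statistics


def stylometric_features(text: str) -> dict[str, float]:
    """Compute simple style statistics of the kind AI-text detectors use as inputs.
    This only extracts features; it does not classify anything."""
    words = text.split()
    if not words:
        raise ValueError("empty text")
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    return {
        "n_sentences": len(sentences),
        "mean_sentence_length": statistics.mean(lengths),
        "sentence_length_sd": statistics.pstdev(lengths),  # sentence-length variability
        # Assumed punctuation set: semicolons, colons, parentheses, question marks, hyphens/dashes.
        "punct_per_100_words": 100 * len(re.findall(r"[;:()?-]", text)) / len(words),
    }


print(stylometric_features("Dogs bark. Cats purr loudly at night; owners sleep."))
```

Features like these are only informative in aggregate across many documents; a single short passage offers too little signal to judge authorship, which is one reason individual reviewers misjudge abstracts.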

ChatGPT’s limitations and ethical issues

Hallucination and inaccuracy

Hallucination, or artificial hallucination, refers to ChatGPT generating factually incorrect yet confident responses, and it poses a significant problem (80). ChatGPT is known to create fabricated references with incorrect PubMed IDs (PMIDs) (81), a problem somewhat mitigated in GPT-4 (18% error rate) compared with GPT-3.5 (55% error rate) (82). The Supplementary material illustrates an example in which GPT-4 provided inaccurate references, including PMIDs, underscoring its limitations for literature searches.
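Because fabricated or mismatched PMIDs are a common failure, references suggested by ChatGPT can be checked against PubMed before use. The sketch below uses NCBI’s E-utilities ESummary service to fetch the record for each PMID and compares its title with the title the chatbot supplied. The function names are hypothetical, and the check only confirms that a PMID exists and matches the claimed title; it cannot confirm that the article supports the statement it is cited for.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

ESUMMARY_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esummary.fcgi"


def fetch_summaries(pmids: list[str]) -> dict:
    """Retrieve PubMed summary records for the given PMIDs (requires network access)."""
    query = urlencode({"db": "pubmed", "id": ",".join(pmids), "retmode": "json"})
    with urlopen(f"{ESUMMARY_URL}?{query}") as response:
        return json.load(response)


def normalize_title(title: str) -> str:
    """Lower-case, collapse whitespace, and drop a trailing period for comparison."""
    return " ".join(title.lower().split()).rstrip(".")


def check_reference(pmid: str, claimed_title: str, payload: dict) -> str:
    """Compare the title attached to a PMID by the chatbot with the title PubMed holds."""
    record = payload.get("result", {}).get(pmid, {})
    actual = record.get("title")
    if not actual:
        return "PMID not found"
    if normalize_title(actual) == normalize_title(claimed_title):
        return "match"
    return f"mismatch: PubMed lists '{actual}'"


# Usage (network call), with pmids and titles copied from the chatbot's answer:
# payload = fetch_summaries(pmids)
# for pmid, title in zip(pmids, titles):
#     print(pmid, check_reference(pmid, title, payload))
```

A title match is a necessary but not sufficient check; the cited article should still be read before it is used.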

In the medical field, accuracy is paramount, and ChatGPT’s inaccuracy can have serious consequences for patients. A study evaluating GPT-3.5’s performance in medical decision-making across 17 specialties found that the model largely generated accurate information but was surprisingly wrong in multiple instances (83). Another study highlighted that while GPT-3.5 (Dec 15 version) can effectively simplify radiology reports for patients, it can produce obviously incorrect interpretations, potentially harming patients (84). Improvements in accuracy are expected with the deployment of GPT-4 and GPT-4o; nonetheless, these inaccuracies underscore the need to use ChatGPT cautiously and in conjunction with professional medical advice.

Intellectual property, cybersecurity, and privacy

As an LLM, ChatGPT is trained on undisclosed but purportedly publicly accessible online data and is refined through ongoing user interactions (85). This raises concerns about copyright infringement and privacy violations, as evidenced by ongoing lawsuits alleging that OpenAI used private or public information without permission (86–88). According to the OpenAI website, user-generated content is routinely collected and used to improve the service and for research purposes (89). This implies that any identifiable patient information entered into ChatGPT could be at risk. Robust cybersecurity measures are therefore necessary to protect patient privacy and ensure compliance with legal standards in medical settings (90). When analyzing clinical data with an AI chatbot, only de-identified datasets should be uploaded. Alternatively, local installations of open-source LLMs that are free for research use, such as Llama 3 or Gemma (Google), are recommended for enhanced security (91–94).
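As a first pass before uploading clinical text, obvious identifiers can be replaced with placeholders. The sketch below redacts supplied owner and patient names, email addresses, North American phone numbers, and 15-digit microchip numbers using regular expressions. The function name and patterns are assumptions and far from exhaustive (addresses, account numbers, and unusual formats will slip through), so the output still needs human review and does not by itself ensure legal compliance.

```python
import re

# Simple patterns for common direct identifiers in free-text notes (illustrative, not exhaustive).
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}\b"),  # assumes North American format
    "MICROCHIP": re.compile(r"\b\d{15}\b"),                           # assumes 15-digit ISO microchips
}


def redact(text: str, known_names: list[str]) -> str:
    """Replace known owner/patient names and pattern-matched identifiers with placeholders."""
    for name in known_names:
        text = re.sub(rf"\b{re.escape(name)}\b", "[NAME]", text, flags=re.IGNORECASE)
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


note = "Owner Jane Doe (979) 555-0123, jane.doe@example.com. Max, microchip 985112003456789, BCS 7/9."
print(redact(note, known_names=["Jane Doe", "Max"]))
```

Names are replaced from an explicit list because free-text name detection by pattern alone is unreliable; clinical content such as the BCS is left intact so the redacted note remains useful for analysis.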

US FDA regulation

While the FDA has approved 882 AI- and ML-enabled human medical devices, primarily in radiology (76.1%), followed by cardiology (10.2%) and neurology (3.6%) (95), veterinary medicine lacks specific premarket requirements for AI tools. AI- and ML-enabled veterinary products currently range from dictation and note-taking apps (34, 35), management and communication software (36, 37), and radiology services (31–33) to personalized chemotherapy (96), to name a few. These products may or may not have been scientifically validated (97–104) and may be used by veterinarians without clients’ consent or full understanding. In veterinary medicine, the absence of regulatory oversight, especially in diagnostic imaging, calls for ethical and legal safeguards to ensure patient safety in the United States and Canada (105, 106). LLM tools like ChatGPT pose specific regulatory challenges, such as patient data privacy, medical malpractice liability, and informed consent (107). Continuous monitoring and validation are key, as these models continue to learn and be updated after launch. As of this writing, the FDA has not authorized any medical devices that use genAI or LLMs.

Practical learning resources

Resources for learning about ChatGPT and generative AI are abundant, including AI companies’ documentation (108–110), online courses from Vanderbilt University and IBM on Coursera (41, 111), Harvard University’s tutorial for generative AI (112), and the University of Michigan’s guides on using generative AI for scientific research (113). These resources are invaluable for veterinarians seeking to navigate the evolving landscape of AI in their practice. Last but not least, readers are advised to engage ChatGPT with well-structured prompts, such as: ‘I’m a veterinarian with no background in programming. I’m interested in learning how to use generative AI tools like ChatGPT. Can you recommend some resources for beginners?’ (see Supplementary material).

The ongoing dialog

At the 2023 Responsible AI for Social and Ethical Healthcare (RAISE) Conference, held by the Department of Biomedical Informatics at Harvard Medical School, several principles for the judicious application of AI in human healthcare were highlighted (114). These principles could be effectively adapted to veterinary medicine. Integrating AI into veterinary practice should amplify the benefits to animal welfare, enhance clinical outcomes, broaden access to veterinary services, and enrich the patient and client experience. AI should support rather than replace veterinarians, preserving the essential human touch in animal care.

Transparent and ethical use of patient data is paramount; this includes offering opt-out mechanisms in data collection and safeguarding client confidentiality. AI tools in the veterinary field should be envisioned as adjuncts to clinical expertise, with roles that may expand progressively under stringent oversight. Growing demand for direct consumer access to AI in veterinary medicine promises advancements but requires meticulous regulation to reassure pet owners about data provenance and how AI is applied.

This review discussed the transformative potential of ChatGPT across clinical, educational, and research domains within veterinary medicine. Continuous dialog, awareness of limitations, and regulatory oversight are crucial to ensure generative AI augments clinical care, educational standards, and academic ethics rather than compromising them. The examples provided in the Supplementary material encourage innovative integration of AI tools into veterinary practice. By embracing responsible adoption, veterinary professionals can harness the full potential of ChatGPT to make the next paradigm shift in veterinary medicine.

Author contributions

CC: Conceptualization, Project administration, Resources, Visualization, Writing – original draft, Writing – review & editing, Investigation.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. Texas A&M University start-up funds were used for publication of this article.

Acknowledgments

GPT-3.5, GPT-4, and GPT-4o (https://chat.openai.com/) produced the Supplementary material and provided spelling, grammar, and general editing of the original human-written text. This manuscript was submitted to arXiv as a preprint on February 26, 2024 (https://doi.org/10.48550/arXiv.2403.14654).

Conflict of interest

The author declares that the research was conducted without any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fvets.2024.1395934/full#supplementary-material

References

1. Danylenko, G. AI in veterinary medicine: The next paradigm shift. (2024). Available at: https://digitail.com/blog/artificial-intelligence-in-veterinary-medicine-the-next-paradigm-shift/ [Accessed February 16, 2024]

2. Nyquist, ML, Fink, LA, Mauldin, GE, and Coffman, CR. Evaluation of a novel veterinary dental radiography artificial intelligence software program. J Vet Dent. (2024):8987564231221071. doi: 10.1177/08987564231221071

3. Eerdekens, A, Papas, M, Damiaans, B, Martens, L, Govaere, J, Joseph, W, et al. Automatic early detection of induced colic in horses using accelerometer devices. Equine Vet J. (2024). doi: 10.1111/evj.14069

4. Rai, T, Morisi, A, Bacci, B, Bacon, NJ, Dark, MJ, Aboellail, T, et al. Keeping pathologists in the loop and an adaptive F1-score threshold method for mitosis detection in canine perivascular wall tumours. Cancers. (2024) 16:644. doi: 10.3390/cancers16030644

5. Rahmani, AM, Yousefpoor, E, Yousefpoor, MS, Mehmood, Z, Haider, A, Hosseinzadeh, M, et al. Machine learning (ML) in medicine: review, applications, and challenges. Mathematics. (2021) 9:2970. doi: 10.3390/math9222970

6. OpenAI. GPT-4. (2023). Available at: https://openai.com/research/gpt-4 [Accessed February 17, 2024]

7. Microsoft. Microsoft Copilot for Microsoft 365 overview. (2024). Available at: https://learn.microsoft.com/en-us/copilot/microsoft-365/microsoft-365-copilot-overview [Accessed May 10, 2024]

8. Touvron, H, Martin, L, Stone, K, Albert, P, Almahairi, A, Babaei, Y, et al. Llama 2: Open foundation and fine-tuned chat models. arXiv. [Preprint] (2023). doi: 10.48550/arXiv.2307.09288

9. Gemini Team, Anil, R, Borgeaud, S, Wu, Y, Alayrac, J-B, Yu, J, et al. Gemini: A family of highly capable multimodal models. arXiv. [Preprint] (2023). doi: 10.48550/arXiv.2312.11805

10. Anthropic. Introducing the next generation of Claude. (2024). Available at: https://www.anthropic.com/news/claude-3-family [Accessed May 10, 2024]

11. OpenAI. Introducing ChatGPT. (2022). Available at: https://openai.com/index/chatgpt/ [Accessed May 10, 2024]

12. OpenAI. OpenAI DevDay: Opening keynote. (2023). Available at: https://www.youtube.com/watch?v=U9mJuUkhUzk [Accessed May 10, 2024]

13. PubMed. ChatGPT[Title/Abstract] - Search results. (2024). Available at: https://pubmed-ncbi-nlm-nih-gov.srv-proxy2.library.tamu.edu/?term=ChatGPT%5BTitle/Abstract%5D [Accessed May 10, 2024]

14. Kocoń, J, Cichecki, I, Kaszyca, O, Kochanek, M, Szydło, D, Baran, J, et al. ChatGPT: Jack of all trades, master of none. Inf Fusion. (2023) 99:101861. doi: 10.1016/j.inffus.2023.101861

15. OpenAI. Introducing ChatGPT Plus. (2023). Available at: https://openai.com/index/chatgpt-plus/ [Accessed May 10, 2024]

16. OpenAI. GPT-4 Turbo and GPT-4. (2024). Available at: https://platform.openai.com/docs/models/gpt-4-turbo-and-gpt-4 [Accessed May 10, 2024]

17. Zhou, Y, Ong, H, Kennedy, P, Wu, CC, Kazam, J, Hentel, K, et al. Evaluating GPT-V4 (GPT-4 with vision) on detection of radiologic findings on chest radiographs. Radiology. (2024) 311:e233270. doi: 10.1148/radiol.233270

18. Kim, H, Kim, P, Joo, I, Kim, JH, Park, CM, and Yoon, SH. ChatGPT vision for radiological interpretation: an investigation using medical school radiology examinations. Korean J Radiol. (2024) 25:403–6. doi: 10.3348/kjr.2024.0017

19. Miao, J, Thongprayoon, C, Cheungpasitporn, W, and Cornell, LD. Performance of GPT-4 vision on kidney pathology exam questions. Am J Clin Pathol. (2024) aqae030. doi: 10.1093/ajcp/aqae030

20. Cirone, K, Akrout, M, Abid, L, and Oakley, A. Assessing the utility of multimodal large language models (GPT-4 vision and large language and vision assistant) in identifying melanoma across different skin tones. JMIR Dermatol. (2024) 7:e55508. doi: 10.2196/55508

21. Sievert, M, Aubreville, M, Mueller, SK, Eckstein, M, Breininger, K, Iro, H, et al. Diagnosis of malignancy in oropharyngeal confocal laser endomicroscopy using GPT 4.0 with vision. Eur Arch Otorhinolaryngol. (2024) 281:2115–22. doi: 10.1007/s00405-024-08476-5

22. OpenAI. Hello GPT-4o. (2024). Available at: https://openai.com/index/hello-gpt-4o/ [Accessed May 29, 2024]

23. Mirza, FN, Tang, OY, Connolly, ID, Abdulrazeq, HA, Lim, RK, Roye, GD, et al. Using ChatGPT to facilitate truly informed medical consent. NEJM AI. (2024) 1:AIcs2300145. doi: 10.1056/AIcs2300145

24. Eriksen, AV, Möller, S, and Ryg, J. Use of GPT-4 to diagnose complex clinical cases. NEJM AI. (2023) 1:AIp2300031. doi: 10.1056/AIp2300031

25. Boscardin, CK, Gin, B, Golde, PB, and Hauer, KE. ChatGPT and generative artificial intelligence for medical education: potential impact and opportunity. Acad Med. (2024) 99:22–7. doi: 10.1097/ACM.0000000000005439

26. Jokar, M, Abdous, A, and Rahmanian, V. AI chatbots in pet health care: opportunities and challenges for owners. Vet Med Sci. (2024) 10:e1464. doi: 10.1002/vms3.1464

27. Coleman, MC, and Moore, JN. Two artificial intelligence models underperform on examinations in a veterinary curriculum. J Am Vet Med Assoc. (2024) 262:1–6. doi: 10.2460/javma.23.12.0666

28. Fins, IS, Davies, H, Farrell, S, Torres, JR, Pinchbeck, G, Radford, AD, et al. Evaluating ChatGPT text mining of clinical records for companion animal obesity monitoring. Vet Rec. (2024) 194:e3669. doi: 10.1002/vetr.3669

29. Abani, S, De Decker, S, Tipold, A, Nessler, JN, and Volk, HA. Can ChatGPT diagnose my collapsing dog? Front Vet Sci. (2023) 10:1245168. doi: 10.3389/fvets.2023.1245168

30. Abani, S, Volk, HA, De Decker, S, Fenn, J, Rusbridge, C, Charalambous, M, et al. ChatGPT and scientific papers in veterinary neurology; is the genie out of the bottle? Front Vet Sci. (2023) 10:1272755. doi: 10.3389/fvets.2023.1272755

31. MetronMind. Metron IQ: Veterinary AI radiology report software. (2023). Available at: https://www.metronmind.com/ [Accessed February 17, 2024].

32. PicoxIA. AI designed for veterinarians. (2022). Available at: https://www.picoxia.com/en/#scientific-validation [Accessed February 17, 2024].

33. Vetology. Veterinary radiology and artificial intelligence. (2023). Available at: https://vetology.ai/ [Accessed February 17, 2024].

34. Dragon Veterinary. Dragon veterinary. (2020). Available at: https://www.dragonveterinary.com/ [Accessed February 17, 2024].

35. ScribbleVet. AI for busy veterinarians. (2023). Available at: https://www.scribblevet.com/ [Accessed February 17, 2024].

36. PetsApp. Veterinary engagement and communication platform | PetsApp. (2020). Available at: https://petsapp.com/ [Accessed February 17, 2024].

37. Digitail. All-in-one veterinary practice management software. (2023). Available at: https://digitail.com/ [Accessed February 17, 2024].

38. Lee, P, Goldberg, C, and Kohane, I. The AI revolution in medicine: GPT-4 and beyond. US: Pearson (2023). 289 p.

39. Taecharungroj, V. “What can ChatGPT do?” analyzing early reactions to the innovative AI chatbot on Twitter. Big Data Cogn Comput. (2023) 7:35. doi: 10.3390/bdcc7010035

40. Noy, S, and Zhang, W. Experimental evidence on the productivity effects of generative artificial intelligence. SSRN Journal. (2023). doi: 10.2139/ssrn.4375283

41. White, J. Prompt engineering for ChatGPT. (2024). Available at: https://www.coursera.org/learn/prompt-engineering [Accessed February 14, 2024].

42. DAIR.AI. Prompt engineering guide. (2024). Available at: https://www.promptingguide.ai/ [Accessed February 19, 2024]

43. Ekin, S. Prompt engineering for ChatGPT: A quick guide to techniques, tips, and best practices. TechRxiv. [Preprint] (2023). Available at: https://www.techrxiv.org/users/690417/articles/681648-prompt-engineering-for-chatgpt-a-quick-guide-to-techniques-tips-and-best-practices [Accessed May 29, 2024].

44. Akın, FK. Awesome ChatGPT prompts. (2024). Available at: https://github.com/f/awesome-chatgpt-prompts [Accessed February 19, 2024].

45. Paslı, S, Şahin, AS, Beşer, MF, Topçuoğlu, H, Yadigaroğlu, M, and İmamoğlu, M. Assessing the precision of artificial intelligence in emergency department triage decisions: insights from a study with ChatGPT. Am J Emerg Med. (2024) 78:170–5. doi: 10.1016/j.ajem.2024.01.037

46. Nguyen, J, and Pepping, CA. The application of ChatGPT in healthcare progress notes: a commentary from a clinical and research perspective. Clin Transl Med. (2023) 13:e1324. doi: 10.1002/ctm2.1324

47. Rule, A, Bedrick, S, Chiang, MF, and Hribar, MR. Length and redundancy of outpatient progress notes across a decade at an academic medical center. JAMA Netw Open. (2021) 4:e2115334. doi: 10.1001/jamanetworkopen.2021.15334

48. Tierney, AA, Gayre, G, Hoberman, B, Mattern, B, Ballesca, M, Kipnis, P, et al. Ambient artificial intelligence scribes to alleviate the burden of clinical documentation. NEJM Catal Innov Care Deliv. (2024) 5:CAT.23.0404. doi: 10.1056/CAT.23.0404

49. Guida, SJ, and Bazzle, L. Rebound hyperkalemia in a dog with albuterol toxicosis after cessation of potassium supplementation. J Vet Emerg Crit Care. (2023) 33:715–21. doi: 10.1111/vec.13352

50. Kanjee, Z, Crowe, B, and Rodman, A. Accuracy of a generative artificial intelligence model in a complex diagnostic challenge. JAMA. (2023) 330:78–80. doi: 10.1001/jama.2023.8288

51. peakcooper. GPT4 saved my dog’s life. (2023). Available at: https://twitter.com/peakcooper/status/1639716822680236032?s=20 [Accessed May 13, 2024].

52. Souza, GV, Hespanha, ACV, Paz, BF, Sá, MAR, Carneiro, RK, Guaita, SAM, et al. Impact of the internet on veterinary surgery. Vet Anim Sci. (2020) 11:100161–1. doi: 10.1016/j.vas.2020.100161

53. Fijačko, N, Prosen, G, Abella, BS, Metličar, Š, and Štiglic, G. Can novel multimodal chatbots such as Bing chat Enterprise, ChatGPT-4 pro, and Google bard correctly interpret electrocardiogram images? Resuscitation. (2023) 193:110009. doi: 10.1016/j.resuscitation.2023.110009

54. AliveCor. FDA clears first of its kind algorithm suite for personal ECG. (2020). Available at: https://alivecor.com/press/press_release/fda-clears-first-of-its-kind-algorithm-suite-for-personal-ecg [Accessed February 20, 2024].

55. Yao, X, Rushlow, DR, Inselman, JW, McCoy, RG, Thacher, TD, Behnken, EM, et al. Artificial intelligence–enabled electrocardiograms for identification of patients with low ejection fraction: a pragmatic, randomized clinical trial. Nat Med. (2021) 27:815–9. doi: 10.1038/s41591-021-01335-4

56. Dourson, A, Santilli, R, Marchesotti, F, Schneiderman, J, Stiel, OR, Junior, F, et al. PulseNet: Deep Learning ECG-signal classification using random augmentation policy and continous wavelet transform for canines. arXiv. [Preprint] (2023). doi: 10.48550/arXiv.2305.15424

57. Stokol, T. Cornell University. eClinPath. (2024). Available at: https://eclinpath.com [Accessed May 29, 2024].

58. Kung, TH, Cheatham, M, Medenilla, A, Sillos, C, Leon, LD, Elepaño, C, et al. Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. PLOS Digit Health. (2023) 2:e0000198. doi: 10.1371/journal.pdig.0000198

59. Wu, S, Koo, M, Blum, L, Black, A, Kao, L, Fei, Z, et al. Benchmarking open-source large language models, GPT-4 and CLAUDE2 on multiple-choice questions in nephrology. NEJM AI. (2024) 1:AIdbp2300092. doi: 10.1056/AIdbp2300092

60. Sharma, P, Luo, G, Wang, C, Brodsky, D, Martin, CR, Beam, A, et al. Assessment of the clinical knowledge of ChatGPT-4 in neonatal-perinatal medicine: a comparative analysis with ChatGPT-3.5. J Perinatol. (2024). doi: 10.1038/s41372-024-01912-8

61. OpenAI. GPTs. (2024). Available at: https://chatgpt.com/gpts?oai-dm=1 [Accessed May 14, 2024].

62. Hartigan, H. Cornell Feline Health Center launches playful CatGPT. Cornell Chron (2024). Available at: https://news.cornell.edu/stories/2024/03/cornell-feline-health-center-launches-playful-catgpt?utm_content=286593390&utm_medium=social&utm_source=twitter&hss_channel=tw-2451561181 [Accessed May 13, 2024].

63. Chu, CP. VetClinPathGPT: Your veterinary clinical pathology AI tutor. Available at: https://chatgpt.com/g/g-rfB5cBZ6X-vetclinpathgpt [Accessed May 29, 2024].

64. Dreaver-Charles, K, and Mayer, M. The lymphatic system of the dog: translating and transitioning to an open textbook. Otessaconference (2022) 2:1–5. doi: 10.18357/otessac.2022.2.1.97

65. Burton, E. Clinical veterinary diagnostic laboratory. (2021). Available at: https://pressbooks.umn.edu/cvdl [Accessed May 29, 2024].

66. Jennings, R, and Premanandan, C. Veterinary histology (2017). Available at: https://ohiostate.pressbooks.pub/vethisto [Accessed May 29, 2024].

67. Stokel-Walker, C. AI bot ChatGPT writes smart essays — should professors worry? Nature (2022) d41586-022-04397–7. doi: 10.1038/d41586-022-04397-7

68. Warren, W. Beating the odds for journal acceptance. Sci Adv. (2022) 8:eadd9147. doi: 10.1126/sciadv.add9147

69. ICMJE. Recommendations for the conduct, reporting, editing, and publication of scholarly work in medical journals. (2024). Available at: https://www.icmje.org/recommendations/ [Accessed February 19, 2024].

70. Koller, D, Beam, A, Manrai, A, Ashley, E, Liu, X, Gichoya, J, et al. Why we support and encourage the use of large language models in NEJM AI submissions. NEJM AI. (2023) 1:AIe2300128. doi: 10.1056/AIe2300128

71. Thorp, HH. ChatGPT is fun, but not an author. Science. (2023) 379:313–3. doi: 10.1126/science.adg7879

72. Stokel-Walker, C. ChatGPT listed as author on research papers: many scientists disapprove. Nature. (2023) 613:620–1. doi: 10.1038/d41586-023-00107-z

73. Chrisinger, B. It’s not just our students — ChatGPT is coming for faculty writing. Chron High Educ (2023). Available at: https://www.chronicle.com/article/its-not-just-our-students-ai-is-coming-for-faculty-writing [Accessed February 22, 2024].

74. Google. Veterinary medicine: Google scholar metrics. (2024). Available at: https://scholar.google.com/citations?view_op=top_venues&hl=en&vq=med_veterinarymedicine [Accessed February 19, 2024].

75. Wolkovich, EM. ‘Obviously ChatGPT’ — how reviewers accused me of scientific fraud. Nature. (2024). doi: 10.1038/d41586-024-00349-5

76. Gao, CA, Howard, FM, Markov, NS, Dyer, EC, Ramesh, S, Luo, Y, et al. Comparing scientific abstracts generated by ChatGPT to real abstracts with detectors and blinded human reviewers. Npj Digit Med. (2023) 6:75–5. doi: 10.1038/s41746-023-00819-6

77. Desaire, H, Chua, AE, Isom, M, Jarosova, R, and Hua, D. Distinguishing academic science writing from humans or ChatGPT with over 99% accuracy using off-the-shelf machine learning tools. Cell Rep Phys Sci. (2023) 4:101426. doi: 10.1016/j.xcrp.2023.101426

78. Desaire, H, Chua, AE, Kim, M-G, and Hua, D. Accurately detecting AI text when ChatGPT is told to write like a chemist. Cell Rep Phys Sci. (2023) 4:101672. doi: 10.1016/j.xcrp.2023.101672

79. OpenAI. New AI classifier for indicating AI-written text. (2023). Available at: https://openai.com/index/new-ai-classifier-for-indicating-ai-written-text [Accessed June 3, 2024].

80. Ji, Z, Lee, N, Frieske, R, Yu, T, Su, D, Xu, Y, et al. Survey of hallucination in natural language generation. ACM Comput Surv. (2023) 55:1–38. doi: 10.1145/3571730

81. Alkaissi, H, and McFarlane, SI. Artificial hallucinations in ChatGPT: implications in scientific writing. Cureus. (2023) 15:e35179. doi: 10.7759/cureus.35179

82. Walters, WH, and Wilder, EI. Fabrication and errors in the bibliographic citations generated by ChatGPT. Sci Rep. (2023) 13:14045. doi: 10.1038/s41598-023-41032-5

83. Johnson, D, Goodman, R, Patrinely, J, Stone, C, Zimmerman, E, Donald, R, et al. Assessing the accuracy and reliability of AI-generated medical responses: an evaluation of the chat-GPT model. Res Sq. [Preprint] (2023) rs.3.rs-2566942. doi: 10.21203/rs.3.rs-2566942/v1

84. Jeblick, K, Schachtner, B, Dexl, J, Mittermeier, A, Stüber, AT, Topalis, J, et al. ChatGPT makes medicine easy to swallow: an exploratory case study on simplified radiology reports. Eur Radiol. (2023) 34:2817–25. doi: 10.1007/s00330-023-10213-1

85. OpenAI. What is ChatGPT? (2024). Available at: https://help.openai.com/en/articles/6783457-what-is-chatgpt [Accessed February 17, 2024].

86. Kahveci, ZÜ. Attribution problem of generative AI: a view from US copyright law. J Intellect Prop Law Pract. (2023) 18:796–807. doi: 10.1093/jiplp/jpad076

87. Grynbaum, MM, and Mac, R. The Times sues OpenAI and Microsoft over A.I. use of copyrighted work. N Y Times (2023). Available at: https://www.nytimes.com/2023/12/27/business/media/new-york-times-open-ai-microsoft-lawsuit.html [Accessed February 17, 2024].

88. The Authors Guild. The Authors Guild, John Grisham, Jodi Picoult, David Baldacci, George R.R. Martin, and 13 other authors file class-action suit against OpenAI. (2023). Available at: https://authorsguild.org/news/ag-and-authors-file-class-action-suit-against-openai/ [Accessed February 17, 2024].

89. OpenAI. Privacy policy. (2023). Available at: https://openai.com/policies/privacy-policy [Accessed February 17, 2024].

90. Rieke, N, Hancox, J, Li, W, Milletari, F, Roth, H, Albarqouni, S, et al. The future of digital health with federated learning. Npj Digit Med. (2020) 3:119. doi: 10.1038/s41746-020-00323-1

91. Hugging Face. Gemma release. (2024). Available at: https://huggingface.co/collections/google/gemma-release-65d5efbccdbb8c4202ec078b [Accessed February 22, 2024].

92. Schmid, P, Sanseviero, O, Cuenca, P, Belkada, Y, and von Werra, L. Welcome Llama 3 - Meta’s new open LLM. (2024). Available at: https://huggingface.co/blog/llama3 [Accessed May 29, 2024].

93. Meta. Llama. (2023). Available at: https://llama.meta.com/ [Accessed February 17, 2024].

94. Banks, J, and Warkentin, T. Gemma: Introducing new state-of-the-art open models. (2024). Available at: https://blog.google/technology/developers/gemma-open-models/ [Accessed February 22, 2024].

95. U.S. Food and Drug Administration. Artificial intelligence and machine learning (AI/ML)-enabled medical devices. (2024). Available at: https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-aiml-enabled-medical-devices [Accessed May 13, 2024].

96. ImpriMed. Find the best drugs for treating your dog’s lymphoma BEFORE treatment begins. (2024). Available at: https://www.imprimedicine.com/ [Accessed February 25, 2024].

97. Müller, TR, Solano, M, and Tsunemi, MH. Accuracy of artificial intelligence software for the detection of confirmed pleural effusion in thoracic radiographs in dogs. Vet Radiol Ultrasound. (2022) 63:573–9. doi: 10.1111/vru.13089

98. Boissady, E, de La Comble, A, Zhu, X, and Hespel, A-M. Artificial intelligence evaluating primary thoracic lesions has an overall lower error rate compared to veterinarians or veterinarians in conjunction with the artificial intelligence. Vet Radiol Ultrasound. (2020) 61:619–27. doi: 10.1111/vru.12912

99. Boissady, E, De La Comble, A, Zhu, X, Abbott, J, and Adrien-Maxence, H. Comparison of a deep learning algorithm vs. humans for vertebral heart scale measurements in cats and dogs shows a high degree of agreement among readers. Front Vet Sci. (2021) 8:764570. doi: 10.3389/fvets.2021.764570

100. Adrien-Maxence, H, Emilie, B, Alois, DLC, Michelle, A, Kate, A, Mylene, A, et al. Comparison of error rates between four pretrained DenseNet convolutional neural network models and 13 board-certified veterinary radiologists when evaluating 15 labels of canine thoracic radiographs. Vet Radiol Ultrasound. (2022) 63:456–68. doi: 10.1111/vru.13069

101. Kim, E, Fischetti, AJ, Sreetharan, P, Weltman, JG, and Fox, PR. Comparison of artificial intelligence to the veterinary radiologist’s diagnosis of canine cardiogenic pulmonary edema. Vet Radiol Ultrasound. (2022) 63:292–7. doi: 10.1111/vru.13062

102. Bohannan, Z, Pudupakam, RS, Koo, J, Horwitz, H, Tsang, J, Polley, A, et al. Predicting likelihood of in vivo chemotherapy response in canine lymphoma using ex vivo drug sensitivity and immunophenotyping data in a machine learning model. Vet Comp Oncol. (2021) 19:160–71. doi: 10.1111/vco.12656

103. Koo, J, Choi, K, Lee, P, Polley, A, Pudupakam, RS, Tsang, J, et al. Predicting dynamic clinical outcomes of the chemotherapy for canine lymphoma patients using a machine learning model. Vet Sci. (2021) 8:301. doi: 10.3390/vetsci8120301

104. Callegari, AJ, Tsang, J, Park, S, Swartzfager, D, Kapoor, S, Choy, K, et al. Multimodal machine learning models identify chemotherapy drugs with prospective clinical efficacy in dogs with relapsed B-cell lymphoma. Front Oncol. (2024) 14:1304144. doi: 10.3389/fonc.2024.1304144

105. Bellamy, JEC. Artificial intelligence in veterinary medicine requires regulation. Can Vet J. (2023) 64:968–70.

106. Cohen, EB, and Gordon, IK. First, do no harm. Ethical and legal issues of artificial intelligence and machine learning in veterinary radiology and radiation oncology. Vet Radiol Ultrasound. (2022) 63:840–50. doi: 10.1111/vru.13171

107. Meskó, B, and Topol, EJ. The imperative for regulatory oversight of large language models (or generative AI) in healthcare. Npj Digit Med. (2023) 6:120–6. doi: 10.1038/s41746-023-00873-0

108. Google. Gemini for Google Workspace prompt guide. (2024). Available at: https://inthecloud.withgoogle.com/gemini-for-google-workspace-prompt-guide/dl-cd.html [Accessed May 14, 2024].

109. Anthropic. Prompt library. (2024). Available at: https://docs.anthropic.com/en/prompt-library/library [Accessed May 14, 2024].

110. OpenAI. OpenAI help center. (2024). Available at: https://help.openai.com/en/ [Accessed February 17, 2024].

111. Coursera. Generative AI: Prompt Engineering Basics Course by IBM. (2024). Available at: https://www.coursera.org/learn/generative-ai-prompt-engineering-for-everyone [Accessed May 14, 2024].

112. Harvard University Information Technology. Generative artificial intelligence (AI). (2024). Available at: https://huit.harvard.edu/ai [Accessed February 17, 2024].

113. Michigan Institute for Data Science. A quick guide of using GAI for scientific research. (2024). Available at: https://midas.umich.edu/generative-ai-user-guide/ [Accessed May 14, 2024].

Keywords: artificial intelligence, AI, generative AI, GenAI, large language model, prompt engineering, machine learning, GPT-4

Citation: Chu CP (2024) ChatGPT in veterinary medicine: a practical guidance of generative artificial intelligence in clinics, education, and research. Front. Vet. Sci. 11:1395934. doi: 10.3389/fvets.2024.1395934

Edited by:

Alasdair James Charles Cook, University of Surrey, United Kingdom

Reviewed by:

Anna Zamansky, University of Haifa, Israel

Copyright © 2024 Chu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Candice P. Chu, cchu@cvm.tamu.edu
