- College of Veterinary Medicine, Iowa State University, Ames, IA, United States

We explored the relation of undergraduate GPA (UGPA) and Graduate Record Examination (GRE) Verbal scores to several indices of achievement in veterinary medical education across five cohorts of veterinary students (N per model ranging from 109 to 143). Achievement indices included overall grade point average in veterinary school (CVMGPA), scores on the North American Veterinary Licensing Examination (NAVLE), and scores on the Veterinary Educational Assessment (VEA). We calculated zero-order correlations among all measures and corrected the correlations for range restriction. In all cases, corrected correlations exceeded uncorrected ones. For each index of achievement, we conducted hierarchical regressions using the corrected correlations as input, entering UGPA in the first step and GRE Verbal in the second step. Overall, UGPA and GRE Verbal combined explained 70–84% of the variance in CVMGPA, 51–91% of the variance in VEA scores, and 41–92% of the variance in NAVLE scores. For 12 of 15 comparisons, the second step (including GRE Verbal scores) significantly improved R². Our results reaffirm the value of UGPA and GRE Verbal scores for predicting subsequent academic achievement in veterinary school.

Introduction

Graduate and professional programs seek to admit applicants who will succeed academically and in subsequent careers. Additionally, programs often utilize admissions policies to meet other objectives, such as recognizing important non-academic indicators of success or merit, and ensuring fairness for all applicants.

One common predictor of success in veterinary medical education programs is undergraduate grade point average (UGPA). Research supports UGPA's utility in predicting achievement in veterinary school (1–10).

The Graduate Record Examination (GRE), a standardized examination offered by Educational Testing Service, is intended to predict achievement in graduate and professional school and, like undergraduate GPA, is commonly used for admissions decisions in veterinary medical education. Researchers have explored the GRE's utility for predicting subsequent achievement in a wide range of disciplines. In a meta-analysis synthesizing research from 1,521 students, Kuncel et al. (11) found the GRE to have significant power to predict achievement in graduate programs, and Kuncel et al. (12) found that this predictive power held for both doctoral and master's level study across a wide range of disciplines.

Veterinary programs have long relied upon the GRE to inform admissions decisions, a practice that is supported in the literature, with decades of studies showing a positive relation between GRE scores or sub-scores and subsequent achievement (1–7, 13–15).

Some studies, however, have cast doubt on the efficacy of the GRE, finding that it provided little or no added predictive value when combined with other common predictors, such as grade point average, in fields including nurse anesthesiology (16), biomedical PhD programs (17, 18), physician assistant programs (19), nursing (20), construction management (21), and developmental biology (22). Additionally, the GRE has been criticized because it has been shown in some cases to under-predict success for women (23) and to present a barrier to application for otherwise qualified applicants overall (24) as well as for underrepresented applicants (25).

Defenders of the GRE point to its overall track record (26), and note that representation of historically disadvantaged groups in graduate study has increased even as the GRE has been utilized for admissions decisions (27). Furthermore, many studies underestimate its predictive power by relying on simple univariate correlative approaches that do not account for factors such as restriction of range (3, 26). Additionally, standardized tests, generally, might play an important role in making the admissions process fairer for applicants. For instance, Schwager et al. (28) found the GRE to be an important admissions tool because they were recruiting students from an international population, and the countries supplying their applicants differed systematically in grading practices, thereby making it difficult to compare applicants fairly using GPA alone. They found that GRE scores were useful in predicting graduate GPA above and beyond undergraduate GPA, independent of students' socioeconomic status.

Despite the available literature, therefore, more studies are needed that investigate the characteristics and usefulness of standardized tests such as the GRE, providing data to contribute to meta-analysis, and overcoming the shortcomings of many existing studies that failed to account for restriction of range. The present study sought to address this need by answering the following questions for a large veterinary college in the Midwestern United States:

1. Accounting for restriction of range, what is the predictive relation between GRE scores and indicators of achievement in veterinary medicine, including veterinary grade point average (CVMGPA), score on the Veterinary Educational Assessment (VEA), and score on the North American Veterinary Licensing Exam (NAVLE)?

2. Do GRE scores provide predictive power beyond undergraduate GPA (UGPA)?

Materials and Methods

This study was reviewed and declared exempt by the Iowa State University Institutional Review Board, #19-243-00.

Participants

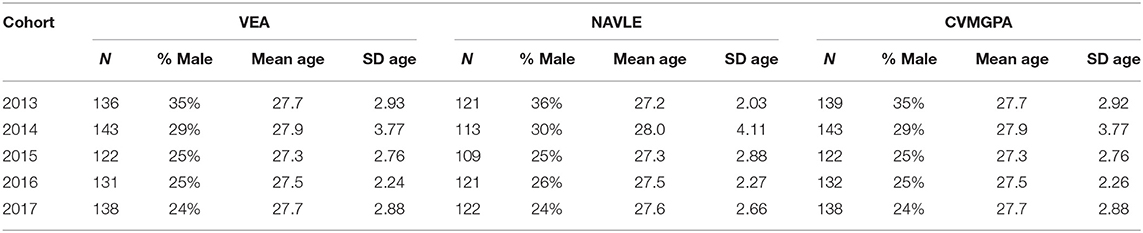

We obtained data from the admissions office of one veterinary medicine college in the Midwestern United States. Participants included 2013, 2014, 2015, 2016, and 2017 graduates who had complete data for undergraduate GPA, GRE scores, and three indices of subsequent achievement, including Veterinary Medicine Cumulative GPA (CVMGPA), Veterinary Educational Assessment (VEA) scores, and North American Veterinary Licensing Exam (NAVLE) scores. Table 1 presents the Ns, percent male, and mean age of the students at graduation, by cohort and outcome.

Measures

Undergraduate GPA

Undergraduate GPA (UGPA) is the final cumulative GPA from the student's undergraduate years. For the purpose of adjusting for restriction of range, the mean and standard deviation for UGPA in the unrestricted sample were obtained from a published article (29) and were based on data from 62,122 students at 26 institutions between 2000 and 2005.

GRE Scores

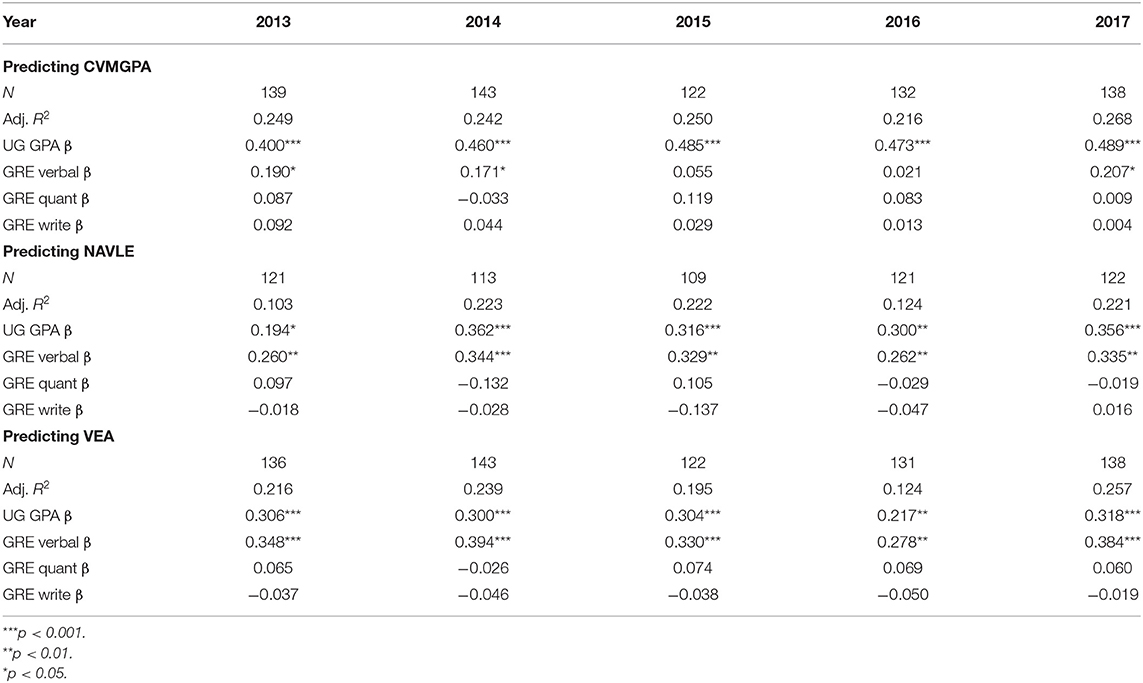

We conducted a series of regression analyses using observed correlations to predict our three indices of subsequent achievement (VEA, NAVLE, and CVMGPA) from UGPA and the three available GRE sub-scores (Verbal, Quantitative, and Writing). Results showed that GRE Verbal was the only GRE score that significantly predicted subsequent achievement (see Table 2). Therefore, for subsequent analyses, we used only the Verbal Reasoning subtest of the GRE. The mean and standard deviation for GRE Verbal in the unrestricted sample were obtained from the Educational Testing Service website (www.ets.org/s/gre/pdf/gre_guide.pdf) and were based on data from >1 million test-takers collected between 2016 and 2018.

Three Indices of Subsequent Achievement

The three indices of subsequent achievement were Veterinary Educational Assessment (VEA) scores, North American Veterinary Licensing Examination (NAVLE) scores, and Veterinary Medicine Cumulative GPA (CVMGPA).

The VEA is a 300-item, multiple-choice pre-clinical examination designed to assess basic proficiency in veterinary anatomy, physiology, pharmacology, microbiology, and pathology. It was developed by the International Council for Veterinary Assessment (ICVA) and the National Board of Veterinary Medical Examiners. The VEA is developed external to the College of Veterinary Medicine where the study was conducted and is purchased by the College to benchmark student achievement in the basic sciences.

The NAVLE scores are students' scores on the North American Veterinary Licensing Examination. The NAVLE is an electronically administered, 360-item, multiple-choice examination that is required for veterinary licensure in the United States and Canada. The NAVLE is developed and administered external to the college, and students were not required to release their NAVLE scores to the college.

Veterinary Medicine GPA (CVMGPA) is students' cumulative grade point average at the end of their 4 years in the veterinary college.

As seen in Table 1, Ns vary somewhat across measures, with NAVLE data generally available for fewer participants than VEA or CVMGPA data. In 2013 and 2016, slightly fewer VEA scores than CVMGPA values were also available (three fewer in 2013 and one fewer in 2016). Some NAVLE data were unavailable for each cohort because releasing NAVLE data to the college was voluntary, and not all students chose to release their scores. Four VEA scores were unavailable because those students were unable to take the exam due to illness.

Method

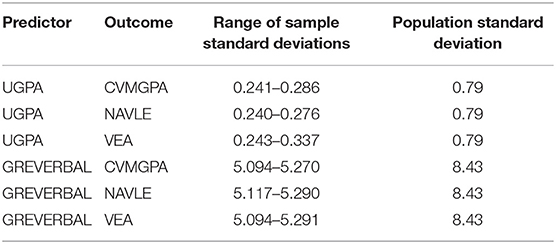

We computed correlations among UGPA, GRE Verbal Reasoning, and the three indices of achievement. Then, because these correlations were range restricted, we corrected them following Wiberg and Sundström (30), using a formula that has been “widely discussed and used (31–35). The formula uses the correlation of the restricted sample and the standard deviation of the independent variable (X) in the restricted sample and in the unrestricted sample to provide an estimate of the correlation in the population (p. 4).” Table 3 contains a comparison of standard deviations for both UGPA and GRE Verbal between the study cohorts and the wider population.
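The correction described above can be sketched in a few lines. This is a minimal illustration, assuming the formula in question is the standard Thorndike Case II correction for direct range restriction on the predictor; the text only describes the formula's inputs, so the exact form is an assumption here. The function name and all numeric values are hypothetical and are not taken from the study's data.

```python
import math


def correct_for_range_restriction(r_restricted, sd_restricted, sd_unrestricted):
    """Estimate the unrestricted correlation from a range-restricted one.

    Assumes the Thorndike Case II formula (direct restriction on X):
        r_c = r * u / sqrt(1 - r^2 + r^2 * u^2),  where u = SD_unrestricted / SD_restricted
    """
    if not -1.0 <= r_restricted <= 1.0:
        raise ValueError("correlation must lie in [-1, 1]")
    if sd_restricted <= 0 or sd_unrestricted <= 0:
        raise ValueError("standard deviations must be positive")
    u = sd_unrestricted / sd_restricted
    r2 = r_restricted ** 2
    return (r_restricted * u) / math.sqrt(1.0 - r2 + r2 * u ** 2)


# Hypothetical example: an observed correlation of 0.30 in an admitted cohort
# whose predictor SD is half that of the applicant population.
r_obs = 0.30
corrected = correct_for_range_restriction(r_obs, sd_restricted=0.25, sd_unrestricted=0.50)
print(f"observed r = {r_obs:.2f}, corrected r = {corrected:.3f}")
```

When the restricted standard deviation is smaller than the unrestricted one, the corrected correlation is larger in magnitude than the observed one, which is consistent with the pattern reported in this study.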

Finally, we conducted hierarchical regressions using the corrected correlations as input, entering UGPA in the first step and GRE Verbal in the second step. The NAVLE, VEA, and GPA would be expected to produce similar results across years with similar cohorts. In the present study, cohorts are similar, in that they were recruited to the same college of veterinary medicine using nearly identical admissions processes. Nonetheless, there are modest but systematic differences among cohorts. Because such differences could affect the results, we sought to eliminate the possibility that an exceptionally strong (or weak) relation within one or more cohorts might misleadingly distort the relation for another cohort. Therefore, we conducted these analyses separately for each cohort and each outcome.
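For readers unfamiliar with running a two-step hierarchical regression directly from correlations, the sketch below shows one way it can be done for two predictors. It uses the standard two-predictor formula for R² and the usual F test for the change in R². The function name, the input correlations, and the sample size are hypothetical, and using the cohort N as the sample size for corrected correlations is an assumption of this sketch rather than a detail stated in the text.

```python
def hierarchical_r2(r_y1, r_y2, r_12, n):
    """Two-step hierarchical regression computed from correlations.

    r_y1: correlation between outcome and predictor 1 (entered first, e.g. UGPA)
    r_y2: correlation between outcome and predictor 2 (entered second, e.g. GRE Verbal)
    r_12: correlation between the two predictors
    n:    sample size assumed for the significance test
    """
    if abs(r_12) >= 1.0:
        raise ValueError("predictors must not be perfectly correlated")
    if n <= 3:
        raise ValueError("n must exceed the number of predictors plus one")
    r2_step1 = r_y1 ** 2
    r2_step2 = (r_y1 ** 2 + r_y2 ** 2 - 2.0 * r_y1 * r_y2 * r_12) / (1.0 - r_12 ** 2)
    delta_r2 = r2_step2 - r2_step1
    df_resid = n - 3  # n - k - 1 with k = 2 predictors in the full model
    # F statistic for adding one predictor; compare against F(1, df_resid).
    f_change = delta_r2 / ((1.0 - r2_step2) / df_resid)
    return {
        "r2_step1": r2_step1,
        "r2_step2": r2_step2,
        "delta_r2": delta_r2,
        "f_change": f_change,
        "df": (1, df_resid),
    }


# Hypothetical inputs, not values from the study's tables.
result = hierarchical_r2(r_y1=0.60, r_y2=0.50, r_12=0.30, n=120)
print(result)
```

A p-value for the change in R² would come from the upper tail of the F distribution with the returned degrees of freedom; that step is omitted here to keep the sketch free of third-party dependencies.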

Results

VEA Scores

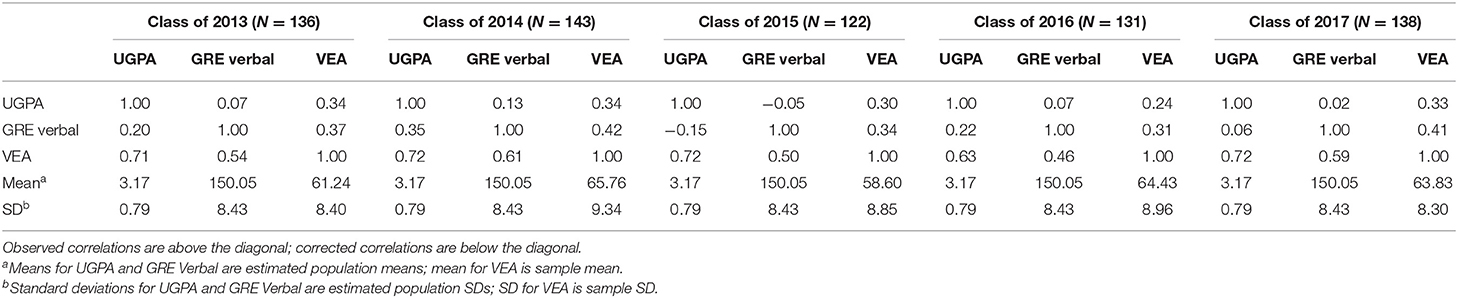

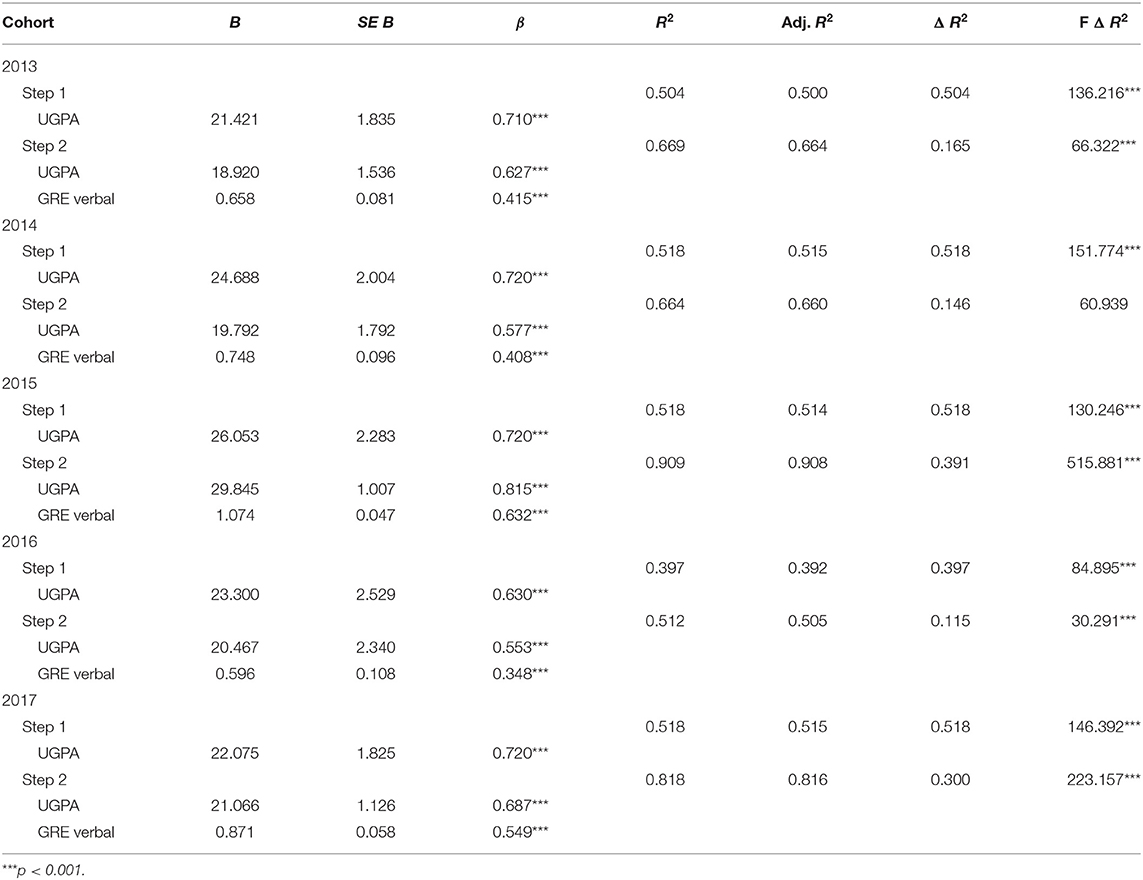

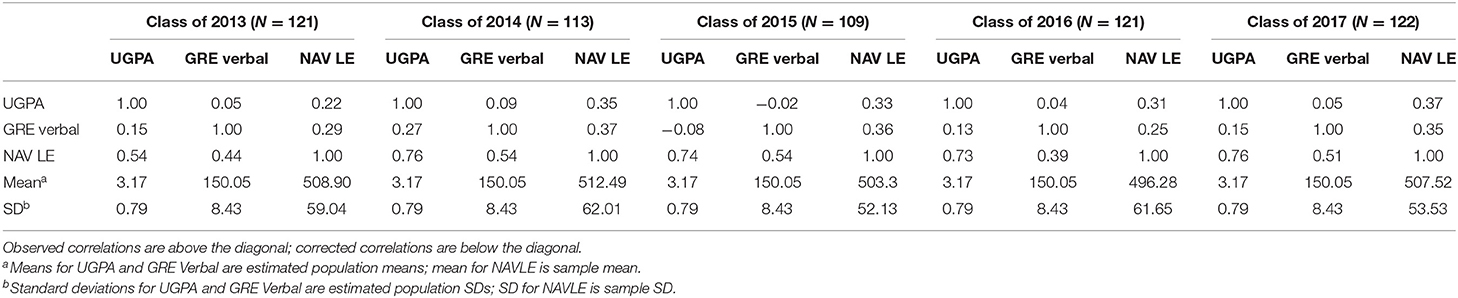

Table 4 contains the observed and corrected correlations, as well as the means and standard deviations for predicting VEA scores for each of the five cohorts. Table 5 contains the hierarchical regression results by cohort, using corrected correlations. Step 1 of the hierarchical regression contained UGPA. Step 2 included UGPA and GRE Verbal. With the exception of the class of 2014, the second step in the models significantly improved R².

Across the cohorts, UGPA accounted for 39–52% of the variance in VEA scores. When GRE Verbal was added, the models accounted for 51–91% of the variance in VEA scores.

NAVLE Scores

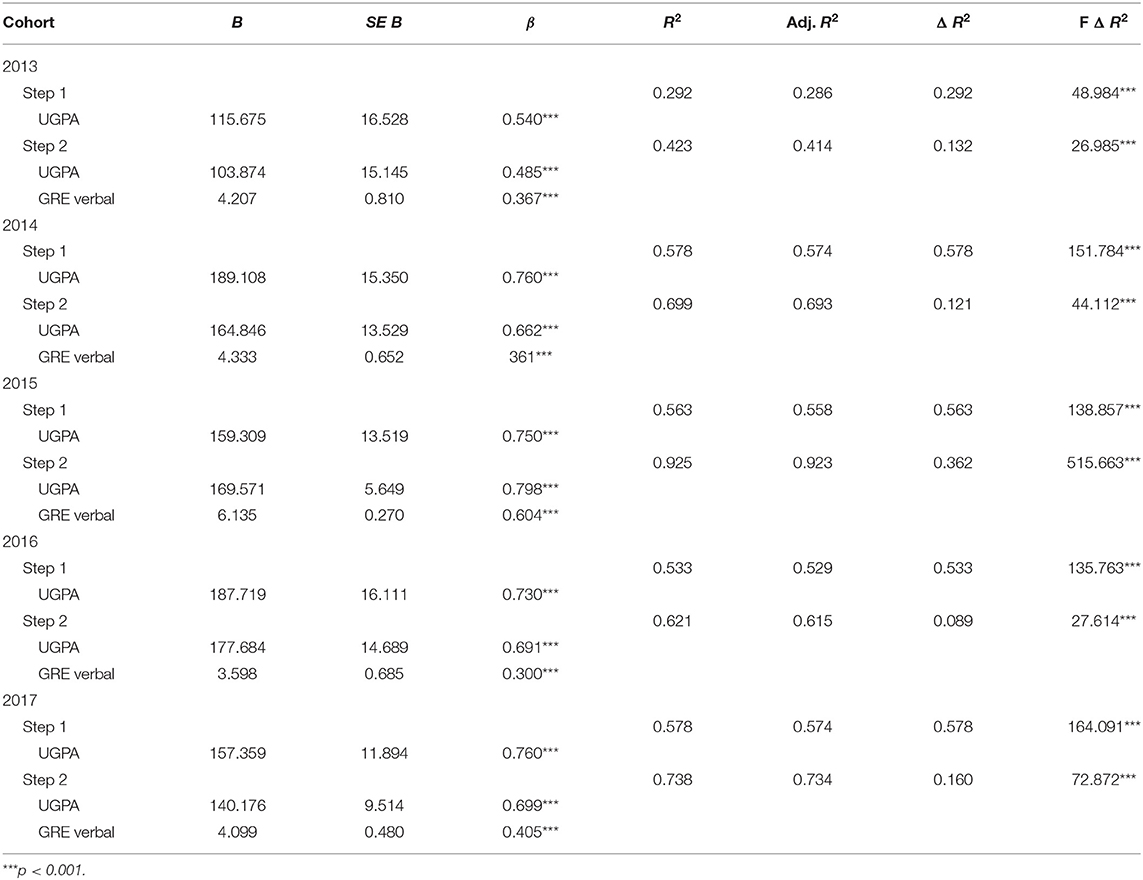

Table 6 contains the observed and corrected correlations, as well as the means and standard deviations for predicting NAVLE scores for each of the five cohorts. Table 7 contains the hierarchical regression results by cohort, using corrected correlations. Step 1 of the hierarchical regression contained UGPA. Step 2 included UGPA and GRE Verbal. The second step in the models significantly improved R² for all cohorts. Across the cohorts, UGPA accounted for 29–57% of the variance in NAVLE scores. When GRE Verbal was added, the models accounted for 41–92% of the variance in NAVLE scores.

CVMGPA

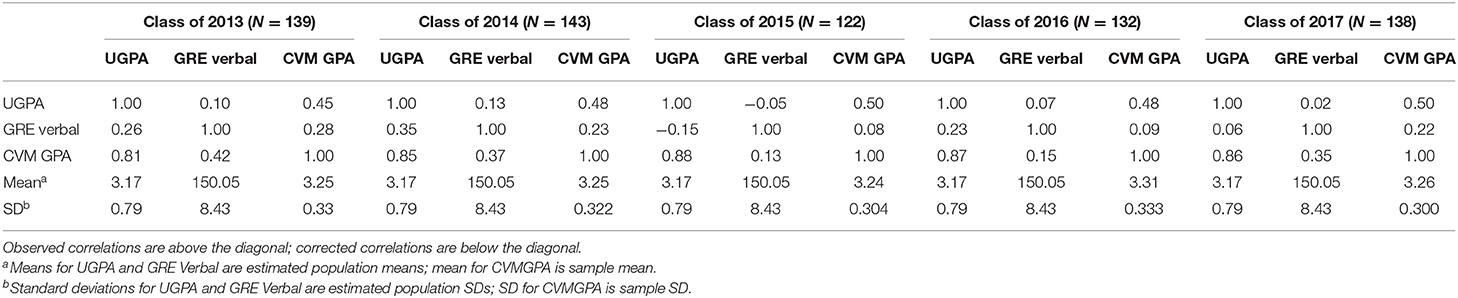

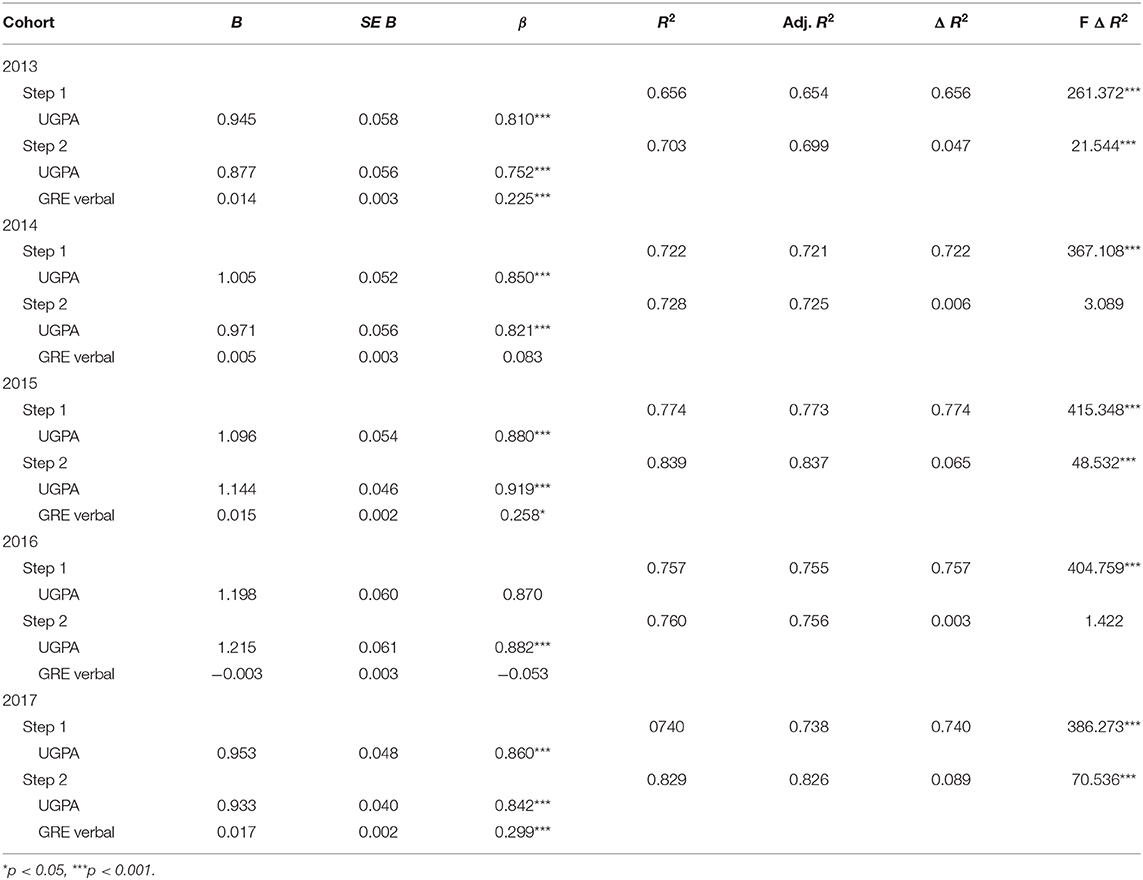

Table 8 contains the observed and corrected correlations, as well as the means and standard deviations for predicting CVMGPA for each of the five cohorts. Table 9 contains the hierarchical regression results by cohort, using corrected correlations. Step 1 of the hierarchical regression contained UGPA. Step 2 included UGPA and GRE Verbal. With the exception of the classes of 2014 and 2016, the second step in the models significantly improved R². Across the cohorts, UGPA accounted for 65–77% of the variance in CVMGPA. When GRE Verbal was added, the models accounted for 70–84% of the variance in CVMGPA.

Discussion

Overall, both UGPA and GRE Verbal contributed significantly to explaining achievement on the outcome variables. As seen in Tables 4, 6, and 8, the overall size of the effects from UGPA tended to be larger than the effects from GRE Verbal. Furthermore, because UGPA tends to precede GRE scores, and because it is generally more widely available than GRE scores, we entered UGPA into each regression model first. As a result of these two factors, UGPA consistently explained more variance in each model than did GRE Verbal scores.

Veterinary Educational Assessment

We found no research examining the VEA. However, two studies examined the Qualifying Exam (QE), an earlier version of the VEA. Danielson et al. (4) found that the QE was a significant predictor of success on the NAVLE, and a mediator for the effect of UGPA and GRE (combined) scores for explaining success on the NAVLE. Similarly, Fuentealba et al. (5) found that GRE Quantitative scores were good predictors of QE scores, and found that QE scores correlated with GPA in all years of the curriculum.

Like the QE, the VEA was designed to measure veterinary students' mastery of the basic science knowledge undergirding diagnostic and clinical reasoning. Undergraduate GPA, which reflects how well students perform in undergraduate courses prior to enrolling in veterinary school, could be associated with VEA scores in at least two ways. First, many of the undergraduate courses included in the UGPA calculation included content that was similar or prerequisite to content included in early courses in the veterinary curriculum. For instance, courses in General Chemistry, Organic Chemistry, Biochemistry, General Biology, Genetics, and Anatomy/Physiology contain knowledge undergirding subsequent learning of Veterinary Anatomy, Physiology, Pathology, Pharmacology, and Microbiology. Because VEA scores represent achievement in these subjects, it is reasonable to expect UGPA to predict VEA scores. Second, prerequisite undergraduate courses are focused on mastery of basic science rather than applied or clinical science. In this way, also, UGPA is aligned with the VEA. In the present study, UGPA explained anywhere from 40 to 52% of the variance in VEA scores across cohorts, explaining 50% or more of the variance in four out of five cohorts. This suggests that UGPA is a powerful predictor of students' ability to master basic science knowledge.

The GRE Verbal test is designed to assess the “ability to analyze and evaluate written material and synthesize information obtained from it, analyze relationships among component parts of sentences and recognize relationships among words and concepts” (36). Assuming the GRE Verbal test performed as designed, this ability should predict VEA scores independently of content knowledge or content-specific ability to analyze, evaluate, and synthesize information in medical basic sciences. The VEA uses formats that require analysis and evaluation of written material and synthesis of information, such as presenting scenarios involving disease or injury to animals and requiring the examinee to select the most likely cause or the anatomical structure most likely involved (37). Of course, this ability should also be highly correlated with undergraduate GPA, since analysis, evaluation, and synthesis of verbal information should be associated with students' overall ability to perform well on coursework and course-related exams. However, the GRE Verbal was able to explain variance in VEA scores independent of UGPA. Across cohorts, the GRE Verbal score contributed meaningfully to the regression models, explaining an additional 12–30% of variability in VEA scores beyond the variation explained by UGPA alone; as noted in the Results, this increment was statistically significant in every cohort except the class of 2014.

If the VEA is an accurate indicator of veterinary student mastery of key basic science knowledge, then UGPA and GRE Verbal together contributed powerfully to our ability in the present study to predict students' subsequent basic science knowledge, together explaining between 51 and 91% of the variance in VEA scores. Only in 2016 did these combined measures account for less than two-thirds of the variability in VEA scores.

NAVLE

The NAVLE is intended to measure veterinary graduates' preparation to practice veterinary medicine across 13 species and four competency domains including clinical practice, communication, professionalism/practice management/wellness, and preventive medicine/animal welfare (38).

Few studies have explored relations between common pre-admission variables and NAVLE scores. Roush et al. (15) found a strong positive relationship between NAVLE scores and veterinary school GPA and veterinary class rank. They found low, but significant correlations between the NAVLE and the Verbal GRE, Total GRE, and pre-admission science GPA. Similarly, Danielson et al. (4) found modest correlations that were only inconsistently significant between GRE and NAVLE scores as well as between UGPA and NAVLE scores. They also found that both GRE and UGPA were significant indirect predictors of NAVLE scores through QE scores and/or VGPA. Molgaard et al. (6) similarly found that both UGPA and GRE scores (all combined) contributed modestly, but significantly to explaining NAVLE scores. None of the above researchers corrected their correlations for restriction of range. Both Roush et al. and Danielson et al. hypothesized that NAVLE scores were more strongly correlated with veterinary school performance than pre-admission variables because NAVLE scores better reflected what students did in veterinary school than the knowledge and skills needed to perform well on pre-admission measures. However, the strong corrected correlations in the present study introduce an alternative hypothesis. Perhaps the relatively weaker correlations found between prerequisite measures and NAVLE scores in the prior studies were a result of restriction of range. While the present study does not allow us to confirm either hypothesis, we recommend it be explored further.

As noted earlier, in each cohort some NAVLE data were missing because students were not required to release their NAVLE scores. This could have affected the results of the analysis, particularly if students who chose to withhold their scores differed in some systematic way from other students. For instance, if the students who withheld their NAVLE scores tended, relative to their peers, to perform differently on the NAVLE than on the other variables, then the strength of the relations may be overestimated. Similarly, if consistently high- or low-scoring students chose to withhold their NAVLE scores, the strength of the correlations might be somewhat underestimated. However, the fact that the majority of students shared their NAVLE scores, combined with the strong correlations among NAVLE scores and other scores, suggests that enough students across a wide range of scores chose to share them that the overall nature of the relations among variables was detectable.

CVMGPA

Overall GPA during the veterinary school program should provide a broad indication of students' achievement in veterinary medicine across the basic and clinical sciences, and in both classroom and applied clinical contexts. As discussed earlier, long-standing research supports significant, though often modest, positive relationships between undergraduate GPA and grades in veterinary school. This holds true for a variety of GPA calculations, including after Year 1 (2, 3, 7, 10), at various points throughout the program (1, 5, 6), and at the end of the program (4, 8, 9). Of those studies, only Powers' study (3) corrected for restriction of range. The multiple correlations found by Powers for the effect of GRE (V, Q, and A) and UGPA were, on average, 0.76, similar to the range of 0.70–0.84 found in the present study. This finding suggests that the more modest relations between CVMGPA and pre-admission GPA found in prior studies may have been influenced by restriction of range.

GRE Sub-Scores

Prior studies have reported significant correlations between GRE sub-scores and achievement in veterinary school, with a variety of outcomes. Four studies (1–3, 5) found that the GRE Quantitative and Analytical scores were the best predictors of subsequent achievement. Confer and Lorenz (2) found that the GRE Biology sub-score was also a good predictor of subsequent achievement. A number of researchers (4, 6, 7, 14) combined multiple GRE sub-scores, and in all cases the combined scores were significant predictors of subsequent achievement. Like the current study, two prior studies found the GRE Verbal score to be a better predictor of subsequent achievement than other sub-scores for some dependent variables. Kelman (13) found that the GRE Verbal sub-score contributed significantly to a regression model predicting cumulative veterinary college GPA, whereas the Quantitative sub-score did not. Roush et al. (15) found that only the GRE Verbal sub-score was significantly correlated with NAVLE score, whereas only the GRE Quantitative and Analytical sub-scores correlated significantly with veterinary school GPA. In the present study, the GRE Verbal sub-score consistently correlated more strongly with subsequent measures than either the GRE Quantitative or GRE Writing scores and, when all three were combined in one regression analysis, was the only sub-score that contributed significantly to the regression models. It is unclear why GRE sub-scores do not appear to contribute consistently across studies. The variety of research approaches and dependent variables in existing studies does not reveal unambiguous patterns. This question deserves more study.

Implications

The present study highlights the value of correcting for range restriction when estimating the contribution of variables such as GPA and GRE to explaining subsequent achievement. Correcting for restriction of range, UGPA and GRE together explained as much as 84, 91, and 92% of the variance in CVMGPA, VEA, and NAVLE scores, respectively. There are at least two important implications of this finding. First, prior studies may have substantially underestimated the ability of pre-admission variables such as UGPA and GRE scores to predict subsequent achievement. Therefore, we echo others' calls for further studies and meta-analyses that investigate the use of the GRE and similar standardized tests (11, 28, 39) when accounting for range restriction. Notwithstanding potential weaknesses in such examinations, studies such as the present one highlight their practical utility. Second, assumptions regarding correlations among pre-admission variables and post-admission variables should be re-explored with range restriction in mind. In the present study, the average adjusted R² values for the regression models using UGPA and GRE Verbal to explain variance in VEA, CVMGPA, and NAVLE were 0.71, 0.77, and 0.73, respectively. These findings do not suggest significantly stronger relations between pre-admission variables and earlier measures of achievement in veterinary school (e.g., VEA) than later measures of achievement (e.g., NAVLE). Therefore, it is possible that relations among all of these variables have more to do with students' stable overall ability, or capacity to “test well,” than with any change in their achievement related to veterinary medicine.
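As a brief illustration of how an average adjusted R² across cohorts could be obtained, the sketch below applies the standard adjustment for sample size and number of predictors and then averages the results. The R² values and cohort sizes shown are hypothetical placeholders, not figures from Tables 5, 7, or 9, and the function name is introduced here only for illustration.

```python
def adjusted_r2(r2, n, k):
    """Standard adjusted R-squared for a model with k predictors and n cases."""
    if n <= k + 1:
        raise ValueError("n must exceed k + 1")
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)


# Hypothetical (R-squared, N) pairs, one per cohort; k = 2 predictors
# (UGPA and GRE Verbal) in the second-step model.
cohorts = [(0.70, 120), (0.80, 130), (0.65, 115)]
adjusted = [adjusted_r2(r2, n, k=2) for r2, n in cohorts]
mean_adjusted = sum(adjusted) / len(adjusted)
print(f"mean adjusted R^2 across cohorts = {mean_adjusted:.3f}")
```

With cohorts of more than 100 students and only two predictors, the adjustment is small, so adjusted and unadjusted values differ only slightly.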

Finally, because women constituted the overwhelming majority of participants in the study, and because the number of underrepresented individuals was negligibly small, the present study did not clarify questions regarding gender- or race-specific biases in the GRE. However, it is clear that many women were admitted notwithstanding the GRE requirement, and that the GRE effectively predicted subsequent achievement for women. Similarly, while students from racial groups historically underrepresented in veterinary medicine were relatively few in this study, they were represented in all cohorts, and therefore constituted part of the sample for which GRE Verbal sub-scores contributed significantly to predicting achievement. Therefore, programs with concerns regarding how GRE Verbal scores might impact admissions for women or racially underrepresented groups may consider mechanisms for mitigating potential subgroup differences other than eliminating the GRE Verbal altogether from their admissions requirements.

Limitations

There are several limitations to the present study. First, because it represents only one veterinary program, findings may not generalize to other veterinary programs, or to programs in other fields of study. Second, there are important dependent variables that may not be adequately measured by VEA scores, NAVLE scores, or overall CVMGPA, such as hands-on clinical skill, communication, and professionalism. We join the call for additional research that explores the relation between pre-admission variables and a broad array of relevant indicators of achievement. Finally, we relied on the best available data when correcting for restriction of range. However, because the available data for the unrestricted sample did not overlap at all (UGPA) or overlapped only partially (GRE Verbal) with the years covered by our dataset, it is possible that some error was present when calculating range restriction, and that the corrected correlations would have been different if data that exactly matched our cohorts had been available. Nonetheless, the fact that the corrected correlations found in the present study were very similar to those reported by Powers suggests that the corrected correlations are similar to what would have been found had exactly matching data been available.

Summary

The present study highlights several key implications for admissions decisions, as well as for future research. First, while both undergraduate GPA and GRE scores have been shown to significantly predict subsequent achievement in veterinary colleges, it seems likely that their predictive power has been consistently underestimated because range restriction often has not been taken into account. Veterinary programs that have not considered range restriction for these predictors in their own admissions models may be limiting their ability to predict which applicants will succeed in their programs. Second, additional research is needed to establish whether this pattern holds true for other veterinary colleges in other contexts. Finally, in the present study undergraduate GPA and GRE Verbal scores were able to explain a remarkable amount of the variance in overall veterinary GPA, VEA scores, and NAVLE scores. Nonetheless, there continues to be a paucity of evidence regarding how to reliably measure or predict achievement in veterinary clinical practice. Continued research is necessary to address this deficiency and bolster our ability to select and train future veterinarians who will succeed and thrive in their workplaces.

Data Availability Statement

The datasets presented in this article are not readily available because our research protocol does not permit raw data to be shared. Requests to access the datasets should be directed to jadaniel@iastate.edu.

Ethics Statement

The studies involving human participants were reviewed and approved by Institutional Review Board - Iowa State University. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author Contributions

JD and RB contributed to planning and conceptualizing the manuscript. RB conducted the data analysis. Both authors contributed to writing and reviewing the manuscript, with RB leading the methods/results sections, and JD leading the other sections.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Confer AW. Preadmission GRE scores and GPAs as predictors of academic performance in a college of veterinary medicine. J Vet Med Educ. (1990) 17:56–62.

2. Confer AW, Lorenz MD. Pre-professional institutional influence on predictors of first-year academic performance in veterinary college. J Vet Med Educ. (1999) 26:16–20.

3. Powers DE. Validity of graduate record examinations (GRE) general test scores for admissions to colleges of veterinary medicine. J Appl Psychol. (2004) 89:208–19. doi: 10.1037/0021-9010.89.2.208

4. Danielson JA, Wu TF, Molgaard LK, Preast VA. Relationships among common measures of student performance and scores on the North American veterinary licensing examination. J Am Vet Med Assoc. (2011) 238:454–61. doi: 10.2460/javma.238.4.454

5. Fuentealba C, Hecker KG, Nelson PD, Tegzes JH, Waldhalm SJ. Relationships between admissions requirements and pre-clinical and clinical performance in a distributed veterinary curriculum. J Vet Med Educ. (2011) 38:52–9. doi: 10.3138/jvme.38.1.52

6. Molgaard LK, Rendahl A, Root Kustritz MV. Closing the loop: using evidence to inform refinements to an admissions process. J Vet Med Educ. (2015) 42:297–304. doi: 10.3138/jvme.0315-045R

7. Karpen S, Brown S. Building and validating a predictive model for DVM academic performance. Educ Health Professions. (2019) 2:55–8. doi: 10.4103/EHP.EHP_20_19

8. Raidal SL, Lord J, Hayes L, Hyams J, Lievaart J. Student selection to a rural veterinary school. 2: predictors of student performance and attrition. Aust Vet J. (2019) 97:211–19. doi: 10.1111/avj.12816

9. Stelling AGP, Mastenbroek N, Kremer WDJ. Predictive value of three different selection methods for admission of motivated and well-performing veterinary medical students. J Vet Med Educ. (2019) 46:289–301. doi: 10.3138/jvme.0417-050r1

10. Hudson NPH, Rhind SM, Mellanby RJ, Giannopoulos GM, Dalziel L, Shaw DJ. Success at veterinary school: evaluating the influence of intake variables on year-1 examination performance. J Vet Med Educ. (2020) 47:218–29. doi: 10.3138/jvme.0418-042r

11. Kuncel NR, Hezlett SA, Ones DS. A comprehensive meta-analysis of the predictive validity of the graduate record examinations: implications for graduate student selection and performance. Psychol Bull. (2001) 127:162–81. doi: 10.1037//0033-2909.127.1.162

12. Kuncel NR, Wee S, Serafin L, Hezlett SA. The validity of the graduate record examination for master's and doctoral programs: a meta-analytic investigation. Educ Psychol Measur. (2010) 70:340–52. doi: 10.1177/0013164409344508

14. Rush BR, Sanderson MW, Elmore RG. Pre-matriculation indicators of academic difficulty during veterinary school. J Vet Med Educ. (2005) 32:517–22. doi: 10.3138/jvme.32.4.517

15. Roush JK, Rush BR, White BJ, Wilkerson MJ. Correlation of pre-veterinary admissions criteria, intra-professional curriculum measures, AVMA-COE professional competency scores, and the NAVLE. J Vet Med Educ. (2014) 41:19–26. doi: 10.3138/jvme.0613-087R1

16. Burns SM. Predicting academic progression for student registered nurse anesthetists. AANA J. (2011) 79:193–201.

17. Hall JD, O'Connell AB, Cook JG. Predictors of student productivity in biomedical graduate school applications. PLoS ONE. (2017) 12:e0169121. doi: 10.1371/journal.pone.0169121

18. Moneta-Koehler L, Brown AM, Petrie KA, Evans BJ, Chalkley R. The limitations of the GRE in predicting success in biomedical graduate school. PLoS ONE. (2017) 12:e0166742. doi: 10.1371/journal.pone.0166742

19. Higgins R, Moser S, Dereczyk A, Canales R, Stewart G, Schierholtz C, et al. Admission variables as predictors of PANCE scores in physician assistant programs: a comparison study across universities. J Physician Assist Educ. (2010) 21:10–7.

20. Suhayda R, Hicks F, Fogg L. A decision algorithm for admitting students to advanced practice programs in nursing. J Prof Nurs. (2008) 24:281–4. doi: 10.1016/j.profnurs.2007.10.002

21. Wao J, Ries R, Flood I, Lavy S, Ozbek M. Relationship between admission GRE scores and graduation GPA scores of construction management graduate students. Int J Construct Educ Res. (2016) 12:37–53. doi: 10.1080/15578771.2015.1050562

22. Weiner OD. How should we be selecting our graduate students? Mol Biol Cell. (2014) 25:429–30. doi: 10.1091/mbc.E13-11-0646

23. Fischer FT, Schult J, Hell B. Sex-specific differential prediction of college admission tests: a meta-analysis. J Educ Psychol. (2013) 105:478–88. doi: 10.1037/a0031956

24. Katz JR, Chow C, Motzer SA, Woods SL. The graduate record examination: help or hindrance in nursing graduate school admissions? J Prof Nurs. (2009) 25:369–72. doi: 10.1016/j.profnurs.2009.04.002

25. Wolf C. The effect of the graduate record examination on minority applications: experience at New York Institute of Technology. J Allied Health. (2014) 43:e65–67.

26. Klieger D, Frederick C, Steven H, Jennifer M, Florian L. New Perspectives on the Validity of the GRE® General Test for Predicting Graduate School Grades. Princeton, NJ: Educational Testing Service (2014).

27. Bleske-Rechek A, Browne K. Trends in GRE scores and graduate enrollments by gender and ethnicity. Intelligence. (2014) 46:25–34. doi: 10.1016/j.intell.2014.05.005

28. Schwager ITL, Hulsheger UR, Bridgeman B, Lang JWB. Graduate student selection: graduate record examination, socioeconomic status, and undergraduate grade point average as predictors of study success in a western European university. Int J Select Assess. (2015) 23:71–9. doi: 10.1111/ijsa.12096

29. Westrick PA. Reliability estimates for undergraduate grade point average. Educ Assess. (2017) 22:231–52. doi: 10.1080/10627197.2017.1381554

30. Wiberg M, Sundström A. A comparison of two approaches to correction of restriction of range in correlation analysis. Pract Assess Res Eval. (2009) 14:5. doi: 10.7275/as0k-tm88

31. Gross AL, McGanney ML. The restriction of range problem and nonignorable selection processes. J Appl Psychol. (1987) 72:604. doi: 10.1037/0021-9010.72.4.604

32. Holmes D. The robustness of the usual correction for restriction in range due to explicit selection. Psychometrika. (1990) 55:19–32.

33. Mendoza JL. Fisher transformations for correlations corrected for selection and missing data. Psychometrika. (1993) 58:601–15.

34. Chernyshenko OS, Ones DS. How selective are psychology graduate programs? The effect of the selection ratio on GRE score validity. Educ Psychol Measur. (1999) 59:951–61. doi: 10.1177/00131649921970279

35. Sackett PR, Yang H. Correction for range restriction: an expanded typology. J Appl Psychol. (2000) 85:112–8. doi: 10.1037/0021-9010.85.1.112

36. ETS. Overview of the Verbal Reasoning Measure. ETS. (2020) Available online at: https://www.ets.org/gre/revised_general/prepare/verbal_reasoning/ (accessed August 24, 2020).

37. ICVA. ICVA Veterinary Educational Assessment (VEA) Sample Questions 1 - 30. National Board of Veterinary Medical Examiners. Available online at: https://www.icva.net/image/cache/VEA_sample_questions.pdf (accessed August 24, 2020).

38. ICVA. North American Veterinary Licensing Examination Practice Analysis Executive Summary. ICVA. (2017) Available online at: https://www.icva.net/image/cache/ICVA_navle_practice_analysis_executive_summary_final.pdf (accessed August 24, 2020).

Keywords: academic achievement, admission predictors, GPA, GRE verbal, veterinary medical education, restriction of range, Veterinary Educational Assessment (VEA), North American Veterinary Licensing Exam (NAVLE)

Citation: Danielson JA and Burzette RG (2020) GRE and Undergraduate GPA as Predictors of Veterinary Medical School Grade Point Average, VEA Scores and NAVLE Scores While Accounting for Range Restriction. Front. Vet. Sci. 7:576354. doi: 10.3389/fvets.2020.576354

Received: 25 June 2020; Accepted: 22 September 2020; Published: 28 October 2020.

Edited by:

Erik Hofmeister, Auburn University, United States

Reviewed by:

Sharanne Lee Raidal, Charles Sturt University, Australia

Stephanie Shaver, Midwestern University, United States

Copyright © 2020 Danielson and Burzette. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jared A. Danielson, jadaniel@iastate.edu
