- 1School of Advanced Technology, Xi’an Jiaotong-Liverpool University, Suzhou, China

- 2Department of Computing, School of Advanced Technology, Xi’an Jiaotong-Liverpool University, Suzhou, China

- 3Department of Engineering, King’s College London, London, United Kingdom

- 4Department of Computer Science, The University of British Columbia, Kelowna, BC, Canada

First-person view (FPV) technology in virtual reality (VR) can offer in-situ environments in which teleoperators can manipulate unmanned ground vehicles (UGVs). However, both novice and expert robot teleoperators still have trouble controlling robots remotely in many situations. For example, obstacles are hard to avoid when teleoperating UGVs in dim, dangerous, and difficult-to-access areas, and unstable lighting can cause teleoperators to feel stressed. To help teleoperators operate UGVs efficiently, we adopted the yellow and black construction lines familiar from everyday life as a standard design space and customised the Sobel algorithm to develop VR-mediated teleoperation that enhances teleoperators' performance. Our results show that our approach improves user performance on avoidance tasks involving static and dynamic obstacles and reduces workload demands and simulator sickness. Our results also demonstrate that other adjustment combinations (e.g., removing the original image from edge-enhanced images with a blue filter and yellow edges) can reduce the negative effect of high exposure in a dark environment on operation accuracy. Our work can serve as a solid case for using VR to mediate and enhance teleoperation across a wider range of applications.

1 Introduction

The teleoperation of robots has drawn the attention of robotics engineers for a long time. Various approaches have been developed for remote control teleoperations. For example, studies have illustrated that engineers use teleoperations for search-and-rescue (Casper and Murphy, 2003; BBCClick, 2017; Peskoe-Yang, 2019) after natural disasters (Settimi et al., 2014; Norton et al., 2017), for subsea operations using underwater robots (Petillot et al., 2002; Hagen et al., 2005; Khatib et al., 2016; Paull et al., 2014; Caharija et al., 2016), for working in space (Sheridan, 1993; Wang et al., 2012; Ruoff, 1994; Wang, 2021) and for healthcare work using medical telerobotics systems (Avgousti et al., 2016; King et al., 2010). A common characteristic of these studies is that they focus on the work of expert teleoperators who manipulate and maneuver robots at a distance in the physical world, especially in extreme situations. Although there have been some advances in the design of teleoperations to support teleoperators’ work in real life, remote-controlling robots to complete various specific tasks is still challenging.

Previous research can be applied in VR-mediated teleoperation systems, and such designs have been shown to support controlling robots from a distance. However, the core problem is that these approaches are not designed to accommodate teleoperators' specific work environments. Researchers have reported that, even with these systems, essential collision avoidance remains challenging (Pan et al., 2022; Norton et al., 2017; Luo et al., 2022), as do reducing operators' stress (Baba et al., 2021) and increasing their work efficiency (Small et al., 2018) and accuracy (Li et al., 2022; Luo et al., 2023; Li et al., 2024). Thus, we argue that successful robot teleoperation may depend not only on the usability of the proposed engineering approaches but also on the usefulness of VR-mediated teleoperation systems in everyday practice.

Furthermore, despite the importance of maintaining telepresence in the environment surrounding an unmanned ground vehicle (UGV), for example for obstacle avoidance or navigation (Luo et al., 2022; 2023; Li et al., 2022), much VR research overlooks these interface problems and instead addresses the imitation learning of robots (Naceri et al., 2021; Hirschmanner et al., 2019; Sripada et al., 2018; Lee et al., 2022). Other studies address controlling the robot's actions through various interfaces, such as augmented reality, but have not investigated the problems associated with these approaches in detail (Senft et al., 2021; Fang et al., 2017; Xi et al., 2019; Havoutis and Calinon, 2019; Li et al., 2023). Some exceptions exist, such as the few studies on assisting human operators via VR with remote manipulation tasks (Kot and Novák, 2018; Koopmann et al., 2020; Wibowo et al., 2021; Martín-Barrio et al., 2020; Fu et al., 2021; Nakanishi et al., 2020; Omarali et al., 2020; Nakayama et al., 2021; Van de Merwe et al., 2019). However, regardless of which form of assistance is deployed, users' viewpoints can still be limited by time delay, among other issues (Opiyo et al., 2021).

Alternatively, researchers can provide more data, especially detailed spatial information about the robots, to give operators a sense of co-location. This alternative is expensive, and not only because of the amount of data: researchers must also spend time and use expensive hardware to process it. Unfortunately, the literature provides little guidance on what kind of data must be collected. As a foundation, we at least need to understand teleoperators' actual work practices and translate their real-life work routines into VR-mediated teleoperation systems. In that sense, we can provide valuable data for displaying stereoscopic images and offer an immersive environment that improves the teleoperator's presence in the remote environment (Luo et al., 2021; Chen et al., 2007). However, rendering images is difficult when objects of similar colours in a VR environment must be distinguished by the human eye: just as crypsis protects animals in nature, the human eye cannot easily distinguish objects from a background that shares their colour. For example, teleoperators conducting search-and-rescue work in dim environments have reported trouble discerning objects from the background. This problem is not unique to VR as a mediator for teleoperation. Although the human visual system can partly differentiate objects that share their background's color (e.g., through shading), a common issue in the physical world is that humans detect objects less accurately against static backgrounds than against moving ones (Zheng and He, 2011; Qin and Liu, 2020).

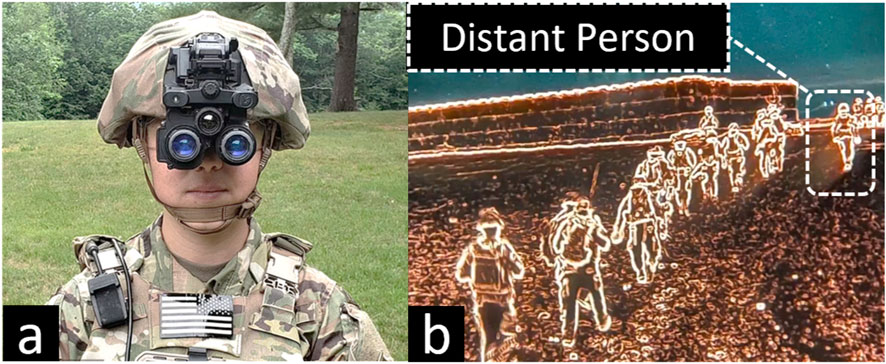

To solve this problem, we propose using edge detection to highlight object boundaries (McIlhagga, 2018). We were inspired by recent developments in military night vision goggles (NVGs) (see Figure 1A). Military NVGs originally produced green-and-black images; newer versions combine yellow edge enhancement with a black background to improve soldiers' ability to see objects, people, and the environment at night (see Figure 1B). This technology is based on edge enhancement and color selection and has been widely used in the military. In non-military scenarios, our participants also confirmed that lights are uniquely useful in their daily work. In addition, yellow and black are commonly used in workplaces. Therefore, we decided to apply a similar principle of visual augmentation to UGV teleoperation to help operators achieve high teleoperation performance with a good user experience. As seen in Figure 1B, color edge enhancement can highlight outlines clearly, even for distant objects. Therefore, in this work, we propose a VR interface design based on this approach and apply it to improve teleoperation tasks.

Figure 1. (A) An example of military night vision goggles (https://www.inverse.com/input/tech/the-armys-new-night-vision-goggle-upgrades-are-cyberpunk-as-hell); (B) the latest version of night vision imagery, from https://www.youtube.com/watch?v=ca4fgK5Axwk. *Note: the panels in (B) are not the same image with different processing.

We began by customising the Sobel algorithm to better support teleoperators immersed in the VR world. The present research aimed to study whether edge detection can improve users' performance and their ability to avoid obstacles when teleoperating a UGV. Obstacle avoidance is essential in UGV teleoperation and has critical applications. Thus, our work contributes to the literature by being the first study to examine the effectiveness of applying edge enhancement to objects to aid UGV teleoperation via a VR head-mounted display (HMD). In line with this goal, we chose yellow edges and a blue filter as the basis for helping teleoperators clearly distinguish the edges of objects under various work conditions. We conducted two user studies to evaluate our VR-mediated teleoperation system under different conditions. In Study One, we combined yellow edges with a blue filter to enhance the rendered images and explored how this design affected UGV teleoperation under daylight conditions. Results showed that our edge enhancement algorithm improved user performance in complex static and dynamic obstacle avoidance tasks and reduced workload demands and simulator sickness. UGVs are often driven in dark, suboptimal environments, where yellow edges with a blue filter are not ideal because the resulting images are highly exposed. Therefore, in Study Two, we explored how to enhance teleoperators' view of object edges in such environments to improve maneuverability. We found that removing the original image and leaving only the blue filter and yellow edges addresses the high-exposure issue. Results of the second study in a dark environment showed that this was a suitable solution that led to accurate teleoperation with low levels of simulator sickness. 
In short, our results show that our technique is practical, efficient, and usable in both daylight conditions (standard exposure) and suboptimal light conditions (high exposure). As such, this paper makes two important contributions: (1) a new edge enhancement algorithm that aids in perceiving the distance of static and dynamic objects in VR-mediated teleoperation, and (2) an evaluation of edge enhancement with multiple variations in UGV teleoperation via two user studies.

The rest of this paper is organised as follows. In Section 2, we present related work by describing the current research on human vision enhancement in VR HMD and edge detection in VR research as a basis for the investigation. Section 3 provides a system overview and detailed information about systems design. Section 4 outlines the experiment design, including task design, evaluation methods, and experimental procedures. Next, two user studies are presented, one in Section 5 and one in Section 6, each including information about the study participants, research hypotheses, and objective and subjective results. We present the main takeaways of this work and some directions for future work in Section 7. We conclude the paper with Section 8.

2 Related work

2.1 Human vision enhancement in virtual reality (VR)/augmented reality (AR) head-mounted displays (HMDs)

Many studies focus on designing and improving VR interfaces to better control robots rather than on using visual enhancement to improve the user's perception of the environment (Peppoloni et al., 2015; Lipton et al., 2018). Vision in robots and bionic vision in humans are very different: robotic vision is the concept that allows automated machines to ‘see’, whereas bionic vision in humans refers to ocular vision enhanced by technology (Grayden et al., 2022). We can use algorithms and cameras to process visual data from the environment to inform our decision-making. However, how can we deliver bionic vision in a VR context? Human vision can be enhanced in various ways. For example, Itoh and Klinker proposed an optical see-through HMD, combined with wearable sensing systems including image sensors, to mediate human vision (Itoh and Klinker, 2015). Their research showed that such an approach can correct optical defects in human eyes, especially defocus, by overlaying a compensation image on the user's actual view. Kasowski and Beyeler argued that many computational models of bionic vision lack biological realism, and they provided immersive VR simulations of bionic vision that allow sighted participants to see through the perspective of a bionic eye (Kasowski and Beyeler, 2022). Zhao et al. added that current VR applications do not support people with low vision (Zhao et al., 2019); thus, vision loss that falls short of complete blindness is not correctable by a VR HMD. However, VR can help with peripheral vision loss (Younis et al., 2017) if researchers carefully design supportive VR systems from a human-centric perspective (Chang et al., 2020). Similar work can also be found in other VR publications.

In addition, using real-time image processing algorithms, VR HMDs can address colour vision deficiencies through colour filters and augmentations (Chen and Liao, 2011). Defocus correction can also be implemented in VR HMDs (Itoh and Klinker, 2015), which can be widely used in rehabilitation and vision enhancement to address multiple patient issues (Ehrlich et al., 2017; Hwang and Peli, 2014). However, most VR vision-enhancement studies presume that users have sight disabilities and therefore need VR technology to support them (Sauer et al., 2022). That assumption may be useful, but those studies focused on rehabilitation and enhancement for people with vision deficiencies rather than on further improving the visual capabilities of people with normal or corrected-to-normal vision (Hwang and Peli, 2014). Nevertheless, combining suitable image processing algorithms with HMD technology can benefit people with normal vision, including their night vision (Waxman et al., 1998).

Furthermore, Stotko and colleagues (Stotko et al., 2019) presented an immersive VR-based robot teleoperation and scene exploration system. They used an RGB-D camera to reconstruct the depth information of objects in a 3D environment in real time and used RGB colors to represent depth. This approach requires substantial computing power and a strong signal transmission environment. However, a simple and inexpensive approach that improves the user's perception of object outlines and depth information using only binocular images is still worth exploring. In the present study, our focus is not on users who have sight issues or might lose vision during teleoperation; instead, our users are people somewhat familiar with teleoperation and without eyesight issues. We are interested in examining their work in daylight and dark environments via VR, specifically in distinguishing the edges of objects placed against a similar background. Our research aligns with approaches that, to some extent, give users additional abilities, ‘superhuman powers’, in VR (Xu et al., 2020; Granqvist et al., 2018; Ioannou et al., 2019), here to support the real-time teleoperation of UGVs. This purpose drove us to customise edge detection approaches as a basis for VR-mediated teleoperation that enhances users' view of the environment.

2.2 Edge detection

Edge detection is not a new concept in computer science and can be addressed through various methods. Regardless of whether a researcher applies search-based or zero-crossing-based principles, the goal is to identify edges and curves in a digital image where the image brightness changes sharply or, more formally, has discontinuities (Lindeberg, 2001). Image edge detection is also a critical technology in VR; for example, researchers have used the Canny algorithm and compared the advantages and disadvantages of various edge detection algorithms for suppressing impulse noise in images (Liu et al., 2020). However, the Canny algorithm performs poorly in real-time image processing compared with the Sobel algorithm (Lynn et al., 2021), which is often used to detect objects' edges in the horizontal and vertical directions (Sobel and Feldman, 1968). Although Canny edge detection is a common, high-performing approach (Canny, 1983; 1986), it is also less efficient than Sobel in this context (Jose et al., 2014). The Prewitt and Roberts algorithms were likewise designed for better performance and efficiency in computer vision applications. However, among these algorithms, the Sobel algorithm leads to lower information loss, rendering images that look more natural to the human eye (Jose et al., 2014; Prewitt, 1970; Hassan et al., 2008). Moreover, edge detection algorithms are typically meant to be used by applications rather than directly by people (Ziou and Tabbone, 1998). As such, exploration of edge detection for human-UGV teleoperation via a VR HMD is limited.
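To make the Sobel operator concrete, the sketch below convolves a greyscale image with the standard 3 × 3 horizontal and vertical Sobel kernels and combines the two responses into a gradient magnitude. It is a plain-Python illustration, not the shader used in this work; handling border pixels by clamping coordinates is our own choice.

```python
import math

# Standard 3x3 Sobel kernels (Sobel and Feldman, 1968).
# GX responds to horizontal intensity changes (i.e. vertical edges);
# GY responds to vertical intensity changes (i.e. horizontal edges).
GX = [[-1, 0, 1],
      [-2, 0, 2],
      [-1, 0, 1]]
GY = [[-1, -2, -1],
      [0, 0, 0],
      [1, 2, 1]]


def sobel_magnitude(img):
    """Return the Sobel gradient magnitude of a 2-D greyscale image.

    `img` is a list of equal-length rows of numbers. Out-of-range
    coordinates are clamped to the nearest valid pixel (edge replication).
    """
    h, w = len(img), len(img[0])

    def px(y, x):
        # Clamp so the 3x3 kernel never reads outside the image.
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = gy = 0.0
            for ky in range(3):
                for kx in range(3):
                    v = px(y + ky - 1, x + kx - 1)
                    gx += GX[ky][kx] * v
                    gy += GY[ky][kx] * v
            # Combine the horizontal and vertical responses.
            out[y][x] = math.hypot(gx, gy)
    return out
```

For a step image whose rows are `[0, 0, 1, 1]`, every row of the result is `[0.0, 4.0, 4.0, 0.0]`: the response is non-zero only next to the brightness discontinuity, which is exactly the boundary the operator is meant to highlight.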

2.3 Visual augmentation for UGV teleoperation

Several works have explored visual augmentation methods to aid the teleoperation of unmanned ground vehicles (UGVs) in challenging environments. Zhang (2010) proposed an augmented reality interface that fuses visual and depth data to overlay terrain information onto the live video feed during UGV teleoperation; the results showed improved navigation through hazardous terrain compared with standard video interfaces. Al-Jarrah et al. (2018) developed a system that detects hazards in UGV camera feeds using computer vision and highlights potential obstacles on the operator's screen, demonstrating enhanced situational awareness and accident avoidance in urban driving scenarios. Choi et al. (2013) augmented the teleoperation view with 3D point cloud data to provide operators with additional spatial awareness cues; in user studies, their augmented interface led to fewer collisions during UGV teleoperation along cluttered driving paths. These works demonstrate the potential of visual augmentation to enhance UGV teleoperation, but limitations remain in robustly detecting hazards and fusing multimodal sensor data into intuitive augmented, virtual, and mixed reality interfaces. Moreover, using edge detection to improve people's ability to obtain visual information is underexplored in the UGV teleoperation literature.

Thus, the research questions for the present study are as follows: RQ1) To what degree can edge detection techniques enhance teleoperators' perception in VR-mediated teleoperation? RQ2) To what degree can the Sobel algorithm with yellow edges and blue filters directly enhance human sight capacity in VR-mediated teleoperation?

3 System overview

To answer these research questions, we first designed a VR-mediated system. In this section, we describe the system design in detail, including the technical and non-technical instruments and the justification for choosing yellow and blue colours for edge enhancement. We also describe the design of the experimental setting and map out the relations between the experimental setting and the teleoperators' actual workplace.

3.1 Technique design

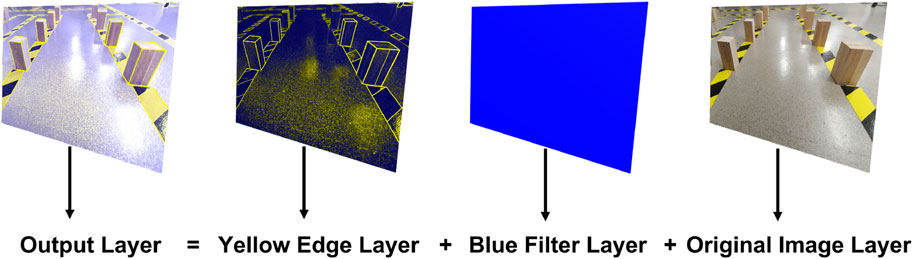

From the perspective of how the human eye works, complementary colours can play an essential role in highlighting details of objects in images and improving their identification (Chen et al., 2017; MacAdam, 1938). An important aspect is the human eye's different spectral sensitivities to colours. For example, the eye is more sensitive to yellow than to other colours, such as blue, which is yellow's complementary colour (Schubert, 2006; Chen et al., 2017; MacAdam, 1938). Yellow lines overlaid on a blue filter effectively make objects stand out and highlight their boundary contours (Chen et al., 2017). These insights into the eye's spectral sensitivities agree with what our participants reported. The participating teleoperators may experience various daylight conditions in their natural work environments, and without yellow–black construction lines (warning marks) on the floor, accomplishing their work would be challenging. However, warning marks cannot be placed in dangerous areas, and placing them in person after a disaster may not be possible. In such cases, the only way to help the teleoperators is to pre-set the warning marks once construction is completed; then, when teleoperation is needed, the teleoperator can remotely control robots to accomplish tasks. As noted previously, current VR technology focuses on creating situational environments for teleoperators; these systems require high-quality 2D maps converted into a 3D background to support teleoperators in tasks that are otherwise hampered by limited bandwidth, time delay, and sensor accuracy. Even with expensive instruments, teleoperators still have limited capacity to distinguish similar colours or to identify object edges under various lighting conditions. 
Thus, we proposed edge enhancement as a method to complement high-quality point cloud data and support efficient and accurate teleoperation practices (see Figure 2).

Figure 2. The proposed technical design. In addition to the construction lines, the yellow and blue colours are chosen to enhance the original images for teleoperation.

Because images processed by the Sobel algorithm are greyscale only, we conducted a pilot exploration of adding yellow edges and a blue filter to the images, with positive results. We used a Unity3D shader to overlay and blend three elements, (1) the original image layer from the camera, (2) the blue filter layer, and (3) the yellow enhanced-edge layer, to produce a final output layer for display, as shown in Figure 2. Our algorithm takes the original colors sampled at UV coordinates (“U” and “V” denote the axes of the 2D texture) on the texture in the fragment shader as the input color (Equation 1). The edges are gradients calculated by applying a scaled Sobel operator to the relative luminance of the RGB colors sampled at the UV coordinates on the texture (Equation 2) (Sobel and Feldman, 1968; Al-Tairi et al., 2014). Scale of Sobel kernel
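The sketch below approximates the described three-layer blend on the CPU so that its data flow is easy to follow. It is not the authors' Unity3D shader, and several choices are assumptions: Rec. 709 relative-luminance weights, linear interpolation toward pure blue for the filter layer, a clamped, scaled Sobel magnitude as the per-pixel weight of the yellow-edge layer, and the hypothetical parameters `blue_strength` and `edge_scale`. The `keep_original=False` option mirrors the Study Two variant in which the original image is removed and only the blue filter and yellow edges remain.

```python
import math

YELLOW = (1.0, 1.0, 0.0)
BLUE = (0.0, 0.0, 1.0)


def luminance(rgb):
    # Rec. 709 relative-luminance weights (assumed; not stated in the paper).
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def lerp(a, b, t):
    # Linear blend between two RGB tuples: t=0 gives a, t=1 gives b.
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def sobel_edges(lum, scale):
    """Scaled Sobel gradient magnitude of a luminance image, clamped to [0, 1]."""
    h, w = len(lum), len(lum[0])

    def px(y, x):
        # Clamp coordinates so border pixels reuse their nearest neighbour.
        return lum[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    edges = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = (px(y - 1, x + 1) + 2 * px(y, x + 1) + px(y + 1, x + 1)
                  - px(y - 1, x - 1) - 2 * px(y, x - 1) - px(y + 1, x - 1))
            gy = (px(y + 1, x - 1) + 2 * px(y + 1, x) + px(y + 1, x + 1)
                  - px(y - 1, x - 1) - 2 * px(y - 1, x) - px(y - 1, x + 1))
            edges[y][x] = min(1.0, scale * math.hypot(gx, gy))
    return edges


def enhance(image, blue_strength=0.3, edge_scale=0.25, keep_original=True):
    """Blend original, blue-filter, and yellow-edge layers into one image.

    `image` is a list of rows of (r, g, b) tuples in [0, 1].
    keep_original=False replaces the camera image with black, leaving
    only the blue filter and the yellow edges.
    """
    lum = [[luminance(p) for p in row] for row in image]
    edges = sobel_edges(lum, edge_scale)
    out = []
    for row, edge_row in zip(image, edges):
        out_row = []
        for pixel, e in zip(row, edge_row):
            base = pixel if keep_original else (0.0, 0.0, 0.0)
            filtered = lerp(base, BLUE, blue_strength)  # blue filter layer
            out_row.append(lerp(filtered, YELLOW, e))   # yellow edges on top
        out.append(out_row)
    return out
```

In a real shader, the same per-pixel logic would run in the fragment stage on the sampled texture; the point illustrated here is the layer order (camera image, then blue filter, then yellow edges weighted by edge strength), with flat regions keeping a blue tint and strong edges turning yellow.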

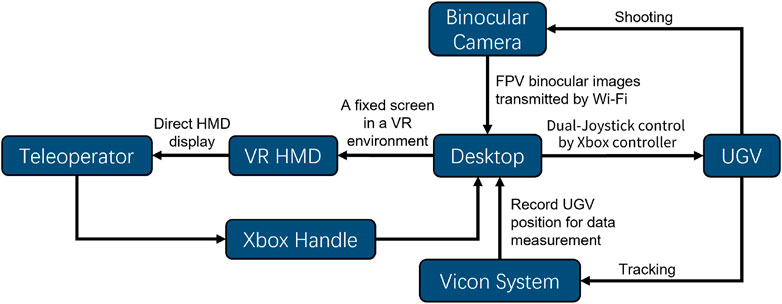

In Figure 3, we illustrate the overall VR-mediated teleoperation system workflow, which includes the use of a DJI RoboMaster S1 prototype as the mobile UGV. The transmission system consists of binocular cameras, a mini desktop, and a power bank. The specifications for the binocular camera included the model (CAM-OV9714-6), resolution (2560 × 720, 30fps), and nominal field of view (62° vertical, 38° horizontal). The first-person view (FPV) images from the camera were transmitted to a PC and rendered in an HTC VIVE Pro Eye, a commercial VR HMD with a dual 3.5-inch screen size of
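A 2560 × 720 binocular camera stream is commonly delivered as a side-by-side frame holding one 1280 × 720 view per eye. Assuming that layout (the paper does not state the frame format), the sketch below shows how each frame could be split into left- and right-eye images before they are rendered in the HMD. `split_side_by_side` is a hypothetical helper that operates on a list of pixel rows.

```python
def split_side_by_side(frame):
    """Split a side-by-side stereo frame into (left, right) eye images.

    `frame` is a list of pixel rows; the left half of every row is
    assumed to belong to the left eye and the right half to the right eye.
    """
    width = len(frame[0])
    if width % 2:
        raise ValueError("side-by-side frame width must be even")
    half = width // 2
    left = [row[:half] for row in frame]
    right = [row[half:] for row in frame]
    return left, right
```

Under this assumption, a 720-row frame that is 2560 pixels wide yields two 1280 × 720 views, one per HMD display.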

In our design, head tracking is done so that there is no severe vestibular vertigo (LaViola, 2000). We use VR HMDs to display FPV images to improve the sense of immersion and to show the stereoscopic images (Luo et al., 2021) captured by the binocular camera. Our design focuses on how to use filters and edge detection to create overlay layers and display them via VR HMDs.

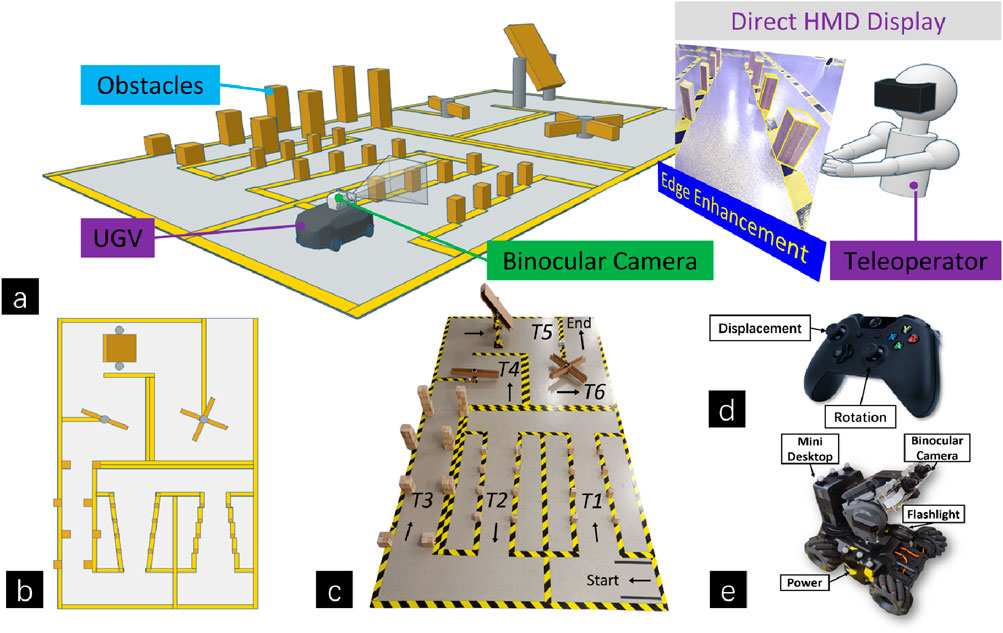

3.2 Scenario design

Our scenario is based on the telepresence and teleoperation of a UGV during actual operations in a wholesale store or storage facility. Users monitor the UGV from an FPV via a VR HMD and remotely control it through wireless signals. Because the HMD fully covers their vision, they cannot see the UGV or the physical environment. We designed a prototype and constructed an experimental site (see Figures 4C, E). Users monitor the FPV display in the VR HMD and remotely control our prototype to complete obstacle avoidance tasks under various conditions. In line with the operators' needs, we used yellow–black construction lines to simulate their actual workplace. The robots cannot cross the lines because shelves are located behind them. In addition, boxes sometimes fall from the shelves, so the robots must be able to avoid such obstacles (simulated by the wood in T5; see Figure 4). In real workplaces, other robots also move near corners; to simulate this, T4 and T6 contain moving wooden obstacles, and the cross-shaped piece of wood in T6 forces the robot to circle around it. However, T4 and T6 serve the same purpose; the different shapes of the pieces of wood are only used to help participants distinguish the moving obstacles.

Figure 4. An overview of the scenario design. (A) The teleoperator observes the stereoscopic FPV images in the VR environment captured by the UGV using a binocular camera and controls the robot to perform obstacle avoidance tasks with an Xbox controller. (B) a top view of the map (2 m × 3 m); (C) a photo of the map and the six task areas included (T1-T6); (D) the Xbox controller as the input device; (E) the UGV prototype setup used in the experiment which could capture stereoscopic FPV images.

As this experimental setting was designed to simulate an actual environment (e.g., a wholesale store), our VR-mediated teleoperation system required further testing for a proper investigation. A lab-based usability test can help identify errors in the system and determine whether teleoperators can finish the artificial tasks, and VR researchers have a responsibility to ensure that systems are helpful to users before rolling them out. However, directly investigating the usefulness of the system in real workplaces would be expensive. Damage that teleoperators might cause to the workplace if their UGV accidentally hits a structure can also be expensive. Moreover, if researchers must redesign the VR system after it is delivered, the time-consuming maintenance and revision will add further cost. At the same time, establishing good working partnerships between researchers and teleoperators is essential. Therefore, out of respect for our teleoperators, we can only deliver VR-mediated teleoperation systems once all teleoperators' needs are fulfilled. This motivation distinguishes our work from previous VR research and requires us to attend to both the usability and the usefulness of bug-free VR-mediated teleoperation for real use. In that light, the current artificial experimental setting is the most practical choice of research setting for the present study.

4 Experiment design

4.1 Tasks design

A maze with obstacles was built to simulate the environment in which the UGV navigates. The maze consisted of six different (local) obstacle avoidance tasks, T1–T6 (see Figure 4A–C). T1–T3 consisted of static obstacles made of blocks of wood. The narrowest parts (ends) of T2 and T3 were 5 mm wider than the UGV. Although the narrowest part of T1 was 5.5 cm wider than the UGV, T1 contained three bends, whereas T2 and T3 had none. T4 and T6 were horizontally rotating obstacles with different shapes, set at different speeds. Finally, T5 was a vertically rotating obstacle.

4.2 Evaluation metrics

4.2.1 Performance measures

To evaluate the performance and usability of the visual enhancement technique, we measured the number of collisions and completion time for each task. Collisions were defined as contact between the UGV and any obstacles in the environment. We used two metrics to quantify collisions: (1) Number of collision instances: Each distinct contact between the UGV and an obstacle was counted as one collision instance, regardless of duration. Multiple brief collisions with the same obstacle were counted as separate instances. (2) Total collision time: The total duration of all collision instances was summed to compute the total collision time. Any collision lasting less than 1 s was counted as 1 s of collision time. For collisions exceeding 1 s, the total duration was used.
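The two collision metrics can be computed from annotated contact intervals as sketched below. The `(start, end)` interval representation and the function name are hypothetical; the rule that each contact shorter than 1 s counts as 1 s follows the definition above.

```python
def collision_metrics(intervals, min_duration=1.0):
    """Return (number of collision instances, total collision time in s).

    `intervals` holds one (start_s, end_s) tuple per distinct contact;
    repeated brief contacts with the same obstacle are separate tuples.
    Contacts shorter than `min_duration` are counted as `min_duration`;
    longer contacts contribute their actual duration.
    """
    total = 0.0
    for start, end in intervals:
        if end < start:
            raise ValueError(f"collision ends before it starts: {(start, end)}")
        total += max(end - start, min_duration)
    return len(intervals), total
```

For contacts at (2.0, 2.3), (10.0, 12.5), and (12.9, 13.1) seconds, this yields 3 collision instances and 4.5 s of collision time (1 s + 2.5 s + 1 s).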

Collision instances and times were recorded by manually analyzing frame-by-frame footage from a side-mounted camera on the UGV. Completion time for each trial was measured using a 9-camera VICON motion tracking system surrounding the maze. Manually analyzing collisions from video footage provides only an approximate measure of collision time and may not be highly precise. However, because the same video analysis protocol was applied consistently across all experimental conditions and samples, relative comparisons of average collision times between conditions should still provide a valid performance evaluation despite this limitation. Potential inaccuracies in absolute collision time measurements are therefore not expected to bias the observed effects between conditions or the statistically significant differences in performance.

4.2.2 Subjective measures

4.2.2.1 NASA-TLX workload questionnaire

We used the NASA-TLX (Hart, 2006) to measure the workload demands of each task. This questionnaire used 11-point scales (from 0 to 10) to assess six elements of users’ workloads (mental, physical, temporal, performance, effort, and frustration).
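The paper does not state whether weighted or unweighted NASA-TLX scores were analysed. As an assumption, the sketch below computes the common unweighted ("raw TLX") score, the mean of the six subscale ratings on the 0–10 scale used here. It also assumes the performance subscale is anchored so that higher ratings mean worse perceived performance, so that higher always means more workload. The subscale keys are hypothetical identifiers.

```python
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings):
    """Unweighted NASA-TLX score: mean of the six 0-10 subscale ratings."""
    missing = [s for s in SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    for s in SUBSCALES:
        if not 0 <= ratings[s] <= 10:
            raise ValueError(f"{s} rating out of range: {ratings[s]}")
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)
```

For ratings of 6, 2, 4, 3, 5, and 4 on the six subscales, the raw TLX score is 24 / 6 = 4.0. A weighted TLX would instead multiply each rating by the number of times that subscale was chosen in 15 pairwise comparisons.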

4.2.2.2 Simulator sickness questionnaire (SSQ)

The SSQ (Kennedy et al., 1993) was used to measure the teleoperators' sickness levels during each task. This questionnaire contains questions with a five-point scale (from 0 to 5) and yields four scores describing a user's sickness level: nausea, oculomotor, disorientation, and total score. We also conducted informal, semi-structured interviews with the teleoperators after each session to investigate the usefulness of our VR-mediated teleoperation system from their viewpoint. The interviews focused on understanding how VR systems help teleoperators perform their tasks and on gathering suggestions for improvement.
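The sketch below shows how SSQ scores are conventionally computed, using the symptom-to-subscale mapping and the weights (9.54, 7.58, 13.92, and 3.74) from Kennedy et al. (1993). The symptom keys are hypothetical identifiers, symptoms that are not reported are treated as 0, and reading "delta SSQ" as post-condition minus pre-exposure scores is our assumption; the paper does not spell out its scoring procedure.

```python
# Symptom-to-subscale mapping from Kennedy et al. (1993); some symptoms
# contribute to two subscales.
NAUSEA = {"general_discomfort", "increased_salivation", "sweating", "nausea",
          "difficulty_concentrating", "stomach_awareness", "burping"}
OCULOMOTOR = {"general_discomfort", "fatigue", "headache", "eyestrain",
              "difficulty_focusing", "difficulty_concentrating", "blurred_vision"}
DISORIENTATION = {"difficulty_focusing", "nausea", "fullness_of_head", "blurred_vision",
                  "dizzy_eyes_open", "dizzy_eyes_closed", "vertigo"}


def ssq_scores(ratings):
    """Weighted SSQ subscale and total scores from per-symptom ratings.

    Symptoms absent from `ratings` are treated as 0 (not experienced).
    """
    n = sum(ratings.get(s, 0) for s in NAUSEA)
    o = sum(ratings.get(s, 0) for s in OCULOMOTOR)
    d = sum(ratings.get(s, 0) for s in DISORIENTATION)
    return {
        "nausea": n * 9.54,
        "oculomotor": o * 7.58,
        "disorientation": d * 13.92,
        "total": (n + o + d) * 3.74,
    }


def delta_ssq(pre, post):
    """Post-condition scores minus pre-exposure scores, per SSQ score."""
    before, after = ssq_scores(pre), ssq_scores(post)
    return {k: after[k] - before[k] for k in after}
```

Because "nausea" counts toward both the nausea and disorientation subscales, a post-condition report of slight nausea and slight eyestrain (each rated 1, with a symptom-free pre-SSQ) raises all three subscales and gives a total delta of (1 + 1 + 1) × 3.74 = 11.22.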

4.3 Procedure

Participants were required to drive two rounds under each condition in our two within-subjects studies (four conditions in Study One and two in Study Two). The order of conditions was counterbalanced using a Latin square design to mitigate carry-over effects. Participants completed training sessions to familiarise themselves with the teleoperation interfaces; the training covered the FPV screen and controlling the UGV. Participants were required to avoid colliding with any obstacle and to stay within the yellow/black tape while driving the UGV, as they would usually do in a workplace or rescue mission. Before starting, they completed a questionnaire on their demographics and past VR and UGV teleoperation experience. Participants also completed a pre-SSQ before beginning the first condition. After each condition, participants completed a delta SSQ and the NASA-TLX workload questionnaire. The next round began only after participants had rested adequately to prevent the accumulation of simulator sickness (SS), if any. We used a high-definition video camera and an 8-camera VICON system to capture the movement of the UGVs and check completion times and collisions with obstacles.
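One common way to build such a counterbalancing schedule is a balanced (Williams) Latin square. In this design, every condition appears once in each position and, for an even number of conditions, immediately follows every other condition exactly once. The paper only states that a Latin square was used, so the balanced construction, the helper names, and the assignment of participant i to row i mod n are assumptions for illustration. The condition labels are taken from Study One.

```python
def balanced_latin_square(n):
    """Williams-design balanced Latin square for an even number of conditions."""
    if n < 2 or n % 2:
        raise ValueError("this construction needs an even n >= 2")
    first = []
    for j in range(n):
        if j == 0:
            first.append(0)
        elif j % 2:               # odd positions count up: 1, 2, 3, ...
            first.append((j + 1) // 2)
        else:                     # even positions count down from n - 1
            first.append(n - j // 2)
    # Each subsequent row shifts every condition index by one (mod n).
    return [[(c + i) % n for c in first] for i in range(n)]


CONDITIONS = ["Baseline", "Blue Filter", "Yellow Edges", "Blue Filter + Yellow Edges"]


def order_for(participant, conditions=CONDITIONS):
    """Condition order for a 0-based participant index (row = index mod n)."""
    square = balanced_latin_square(len(conditions))
    return [conditions[k] for k in square[participant % len(square)]]
```

With four conditions, the rows are [0, 1, 3, 2], [1, 2, 0, 3], [2, 3, 1, 0], and [3, 0, 2, 1], so 16 participants would use each row four times. With the two conditions of Study Two, the construction reduces to the orders AB and BA.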

5 User Study One

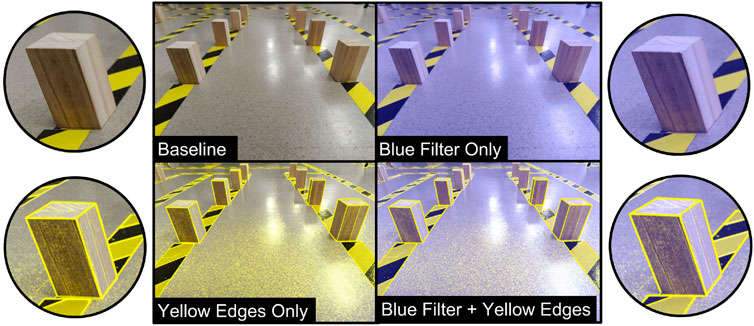

This study aimed to explore how yellow edges, together with a blue filter, would affect the teleoperation of UGVs. Four conditions derived from two independent variables (Without vs. With Yellow Edges and Without vs. With Blue Filter) were explored (see Figure 5): (1) Baseline: Original image without edge enhancement or filter; (2) Blue filter: Original image With Blue Filter only; (3) Yellow Edges: Original image With Yellow Edges only; (4) Blue Filter + Yellow Edges: Original image both With Blue Filter and With Yellow Edges. Overall system performance is illustrated in Figure 6. Our system can clearly guide teleoperators by adding a blue filter and yellow edges to the original images.

Figure 5. An overview of the performance of the system. The baseline is the original picture. After adding yellow edges only (lower left), the wood block may be somewhat difficult to recognise. However, after adding both a blue filter and yellow edges (lower right), the wood block is easier to recognise during real-time teleoperation.
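The section states only that a customised Sobel algorithm produces the yellow edges; the customisation itself is not described here. As a hedged illustration, the sketch below renders the four Study One conditions with a plain 3×3 Sobel operator, a fixed gradient-magnitude threshold, and an alpha blend toward blue for the filter. The function names, the threshold (100), and the blend factor (0.3) are hypothetical choices, not the values used in the studies.

```python
import numpy as np

# Standard 3x3 Sobel kernels for horizontal (x) and vertical (y) gradients.
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float32)
SOBEL_Y = SOBEL_X.T
YELLOW = np.array([255, 255, 0], dtype=np.float32)        # RGB
BLUE = np.array([0, 0, 255], dtype=np.float32)            # RGB
LUMA = np.array([0.299, 0.587, 0.114], dtype=np.float32)  # BT.601 grey weights


def sobel_magnitude(gray: np.ndarray) -> np.ndarray:
    """Gradient magnitude of a 2-D image, replicating border pixels."""
    h, w = gray.shape
    padded = np.pad(gray.astype(np.float32), 1, mode="edge")
    gx = np.zeros((h, w), dtype=np.float32)
    gy = np.zeros((h, w), dtype=np.float32)
    # Correlate with each kernel by summing weighted, shifted views of the image.
    for dy in range(3):
        for dx in range(3):
            window = padded[dy:dy + h, dx:dx + w]
            gx += SOBEL_X[dy, dx] * window
            gy += SOBEL_Y[dy, dx] * window
    return np.hypot(gx, gy)


def render_condition(rgb: np.ndarray, yellow_edges: bool, blue_filter: bool,
                     threshold: float = 100.0, blue_alpha: float = 0.3) -> np.ndarray:
    """Render one Study One condition from an HxWx3 uint8 RGB frame."""
    out = rgb.astype(np.float32)
    if blue_filter:
        # Blend every pixel toward pure blue (a simple colour overlay).
        out = (1.0 - blue_alpha) * out + blue_alpha * BLUE
    if yellow_edges:
        # Edges are detected on the unfiltered frame, then painted yellow on top.
        edges = sobel_magnitude(rgb.astype(np.float32) @ LUMA) > threshold
        out[edges] = YELLOW
    return np.clip(out, 0, 255).astype(np.uint8)


# Hypothetical mapping from the four conditions to (yellow_edges, blue_filter).
CONDITIONS = {
    "Baseline": (False, False),
    "Blue Filter": (False, True),
    "Yellow Edges": (True, False),
    "Blue Filter + Yellow Edges": (True, True),
}
```

Detecting edges on the unfiltered frame keeps the edge map identical across the With and Without Blue Filter conditions, so the two factors change the image independently.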

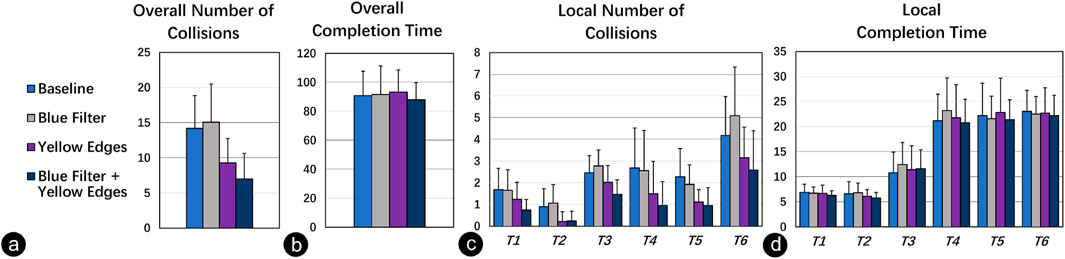

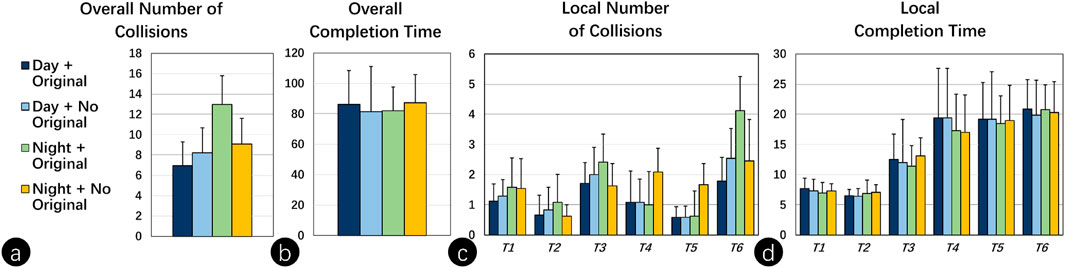

Figure 6. (A) Mean number of collisions; (B) mean completion times of overall tasks; (C) mean number of collisions; and (D) mean completion times of each local task. The error bars represent a 95% confidence interval (CI).

5.1 Hypotheses

Based on our experiment design, we formulated the following hypotheses:

5.2 Participants

We recruited 16 participants (eight males and eight females; aged 18–29, mean = 22) for this experiment. All reported being healthy, with no physical or other health issues. They had normal or corrected-to-normal vision and did not suffer from any known motion sickness in their daily activities. They were not colour blind and could distinguish the colours used in the study. All consented to participate voluntarily. None of the participants had experience driving a UGV in FPV through an HMD in a virtual world, so this experiment was the first time each participant operated a remote UGV in this manner. However, as stated earlier, participants were given training sessions to familiarise themselves with VR-based teleoperation before the formal experiments. Participants were instructed to complete the courses as quickly as possible while avoiding collisions. This experiment was reviewed and approved by our University Ethics Committee.

5.3 Results

All participants understood the nature of the tasks, and all recorded data were valid. No outliers were found using residuals that exceeded

5.3.1 Objective results

5.3.1.1 Overall task performance

A two-way RM-ANOVA showed an interaction effect of Blue Filter and Yellow Edge Enhancement on the number of collisions (F(1, 15) = 18.411, p < .01). We also found the following simple effects (see also Figure 6): without the blue filter, Without Yellow Edge Enhancement produced 4.938 more collisions than With Yellow Edge Enhancement (95% CI: 3.002–6.873, p < .0001); with the blue filter, Without Yellow Edge Enhancement produced 8.094 more collisions than With Yellow Edge Enhancement (95% CI: 5.935–10.252, p < .0001); and with yellow edge enhancement, Without Blue Filter produced 0.485 more collisions than With Blue Filter (95% CI: 1.247–3.315, p < .0001).

A separate RM-ANOVA on completion time found no main effect of either Blue Filter (F(1, 15) = 1.451, p > .05) or Yellow Edge Enhancement (F(1, 15) = 0.032, p > .05).

5.3.1.2 Local task performance

We found four main effects of Yellow Edge Enhancement on the number of collisions in local tasks. Without Yellow Edge Enhancement produced more collisions than With Yellow Edge Enhancement by 0.672 in T1 (95% CI: 0.309–1.035, F(1, 15) = 15.555, p < .01), by 0.750 in T2 (95% CI: 0.502–0.998, F(1, 15) = 41.538, p < .0001), by 1.391 in T4 (95% CI: 0.823–1.958, F(1, 15) = 27.308, p < .0001), and by 1.063 in T5 (95% CI: 0.542–1.583, p < .01).

We also found interaction effects in the complex task T3: with the blue filter, Without Yellow Edge Enhancement produced 1.313 more collisions than With Yellow Edge Enhancement (95% CI: 0.755–1.87, p < .01); without yellow edge enhancement, Without Blue Filter produced 0.312 fewer collisions than With Blue Filter (95% CI: 0.007–0.618, p < .05); and with yellow edge enhancement, Without Blue Filter produced 0.563 more collisions than With Blue Filter (95% CI: 0.163–0.962, p < .01).

In T6, we found the following simple effects: without the blue filter, Without Yellow Edge Enhancement produced 1.031 more collisions than With Yellow Edge Enhancement (95% CI: 0.375–1.687, p < .01); with the blue filter, Without Yellow Edge Enhancement produced 0.750 more collisions than With Yellow Edge Enhancement (95% CI: 0.502–0.998, p < .0001); and without yellow edge enhancement, Without Blue Filter produced 0.906 fewer collisions than With Blue Filter (95% CI: 0.502–0.998, p < .05). A separate RM-ANOVA showed two main effects on completion time: Without Yellow Edge Enhancement was 0.797 s slower than With Yellow Edge Enhancement in T2 (95% CI: 0.298–1.296, F(1, 15) = 11.587, p < .01), and Without Blue Filter was 0.891 s faster than With Blue Filter in T3 (95% CI: 0.067–1.715, F(1, 15) = 5.307, p < .05).

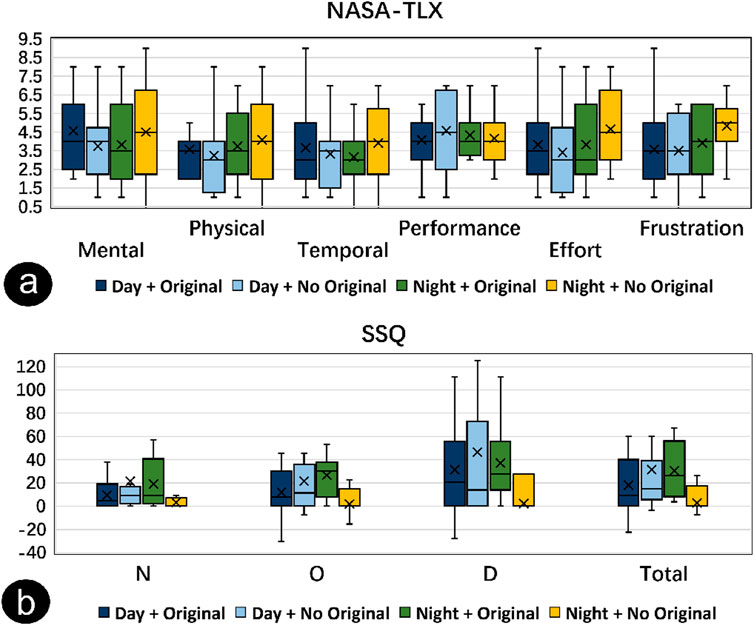

5.3.2 Subjective results

5.3.2.1 Workload demands

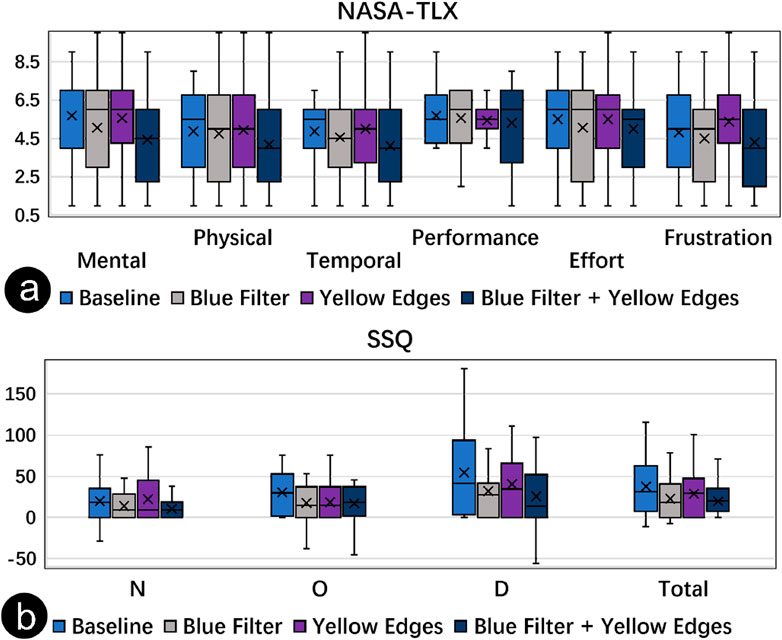

After applying the Aligned Rank Transform to the workload demands data, an RM-ANOVA showed three main effects (see Figure 7A): Mental demand was higher Without Yellow Edge Enhancement than With Yellow Edge Enhancement (F(1, 15) = 4.937, p < .05); Mental demand was also higher Without Blue Filter than With Blue Filter (F(1, 15) = 4.636, p < .05); and Physical demand was higher Without Blue Filter than With Blue Filter (F(1, 15) = 5.172, p < .05).

Figure 7. Box plots of (A) workload demands and (B) SSQ scores in Study One. The error bars represent 95% CI.
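The tool used for the Aligned Rank Transform is not specified (packages such as ARTool are common). For readers unfamiliar with the procedure, the sketch below illustrates its alignment-and-ranking step for a balanced 2×2 design such as Study One (Yellow Edge Enhancement × Blue Filter): each response keeps its residual plus only the effect of interest, the aligned values are ranked with average ranks for ties, and a standard RM-ANOVA is then run on each ranked column, interpreting only the effect it was aligned for. It is a minimal sketch assuming balanced cells and factors coded 0/1; the function names are hypothetical and the final ANOVA step is omitted.

```python
import numpy as np


def average_ranks(x: np.ndarray) -> np.ndarray:
    """1-based ranks, giving tied values the mean of the ranks they span."""
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x), dtype=np.float64)
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1  # mean of 1-based ranks i+1..j+1
        i = j + 1
    return ranks


def art_align_and_rank(y, a, b):
    """Aligned ranks for main effects A, B and interaction AxB (factors coded 0/1)."""
    y = np.asarray(y, dtype=np.float64)
    a = np.asarray(a, dtype=int)
    b = np.asarray(b, dtype=int)
    grand = y.mean()
    cell = {(i, j): y[(a == i) & (b == j)].mean() for i in (0, 1) for j in (0, 1)}
    mean_a = {i: y[a == i].mean() for i in (0, 1)}
    mean_b = {j: y[b == j].mean() for j in (0, 1)}
    # Residual = observation minus its full-factorial cell mean.
    residual = y - np.array([cell[(i, j)] for i, j in zip(a, b)])
    # Estimated effects (deviations from the grand mean) for each observation.
    eff_a = np.array([mean_a[i] - grand for i in a])
    eff_b = np.array([mean_b[j] - grand for j in b])
    eff_ab = np.array([cell[(i, j)] - mean_a[i] - mean_b[j] + grand
                       for i, j in zip(a, b)])
    return {
        "A": average_ranks(residual + eff_a),
        "B": average_ranks(residual + eff_b),
        "AxB": average_ranks(residual + eff_ab),
    }
```

Because every column keeps the same residuals, the three ranked responses differ only in which effect survives the alignment, which is what makes the ranked ANOVA for each effect interpretable.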

5.3.2.2 Simulator sickness

After applying the Aligned Rank Transform to the simulator sickness data, an RM-ANOVA showed three main effects of Yellow Edge Enhancement (see Figure 7B): scores were higher Without Yellow Edge Enhancement than With Yellow Edge Enhancement for Nausea (F(1, 15) = 5.56, p < .05), Disorientation (F(1, 15) = 6.894, p < .05), and the Total Score (F(1, 15) = 5.224, p < .05).
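The SSQ subscales reported here come from the questionnaire of Kennedy et al. (1993): 16 symptoms are rated from 0 (none) to 3 (severe), summed into three overlapping subscales, and multiplied by fixed weights. The sketch below shows that scoring. The dictionary keys are shortened symptom labels chosen for illustration, and computing the delta SSQ as the post-condition score minus the pre-exposure score is an assumption about how the paper's delta SSQ was derived.

```python
from typing import Dict, Tuple

# Symptom -> subscales it contributes to (N = Nausea, O = Oculomotor,
# D = Disorientation), following Kennedy et al. (1993). Several symptoms
# count towards two subscales.
SSQ_ITEMS: Dict[str, Tuple[str, ...]] = {
    "general discomfort": ("N", "O"),
    "fatigue": ("O",),
    "headache": ("O",),
    "eyestrain": ("O",),
    "difficulty focusing": ("O", "D"),
    "increased salivation": ("N",),
    "sweating": ("N",),
    "nausea": ("N", "D"),
    "difficulty concentrating": ("N", "O"),
    "fullness of head": ("D",),
    "blurred vision": ("O", "D"),
    "dizzy (eyes open)": ("D",),
    "dizzy (eyes closed)": ("D",),
    "vertigo": ("D",),
    "stomach awareness": ("N",),
    "burping": ("N",),
}
SUBSCALE_WEIGHTS = {"N": 9.54, "O": 7.58, "D": 13.92}
TOTAL_WEIGHT = 3.74


def score_ssq(ratings: Dict[str, int]) -> Dict[str, float]:
    """Weighted SSQ scores; symptoms missing from ratings count as 0 (none)."""
    unknown = set(ratings) - set(SSQ_ITEMS)
    if unknown:
        raise KeyError(f"unknown symptoms: {sorted(unknown)}")
    raw = {"N": 0, "O": 0, "D": 0}
    for symptom, subscales in SSQ_ITEMS.items():
        value = ratings.get(symptom, 0)
        if not 0 <= value <= 3:
            raise ValueError(f"rating for {symptom!r} must be between 0 and 3")
        for s in subscales:
            raw[s] += value
    return {
        "Nausea": raw["N"] * SUBSCALE_WEIGHTS["N"],
        "Oculomotor": raw["O"] * SUBSCALE_WEIGHTS["O"],
        "Disorientation": raw["D"] * SUBSCALE_WEIGHTS["D"],
        "Total": (raw["N"] + raw["O"] + raw["D"]) * TOTAL_WEIGHT,
    }


def delta_ssq(pre: Dict[str, int], post: Dict[str, int]) -> Dict[str, float]:
    """Assumed definition: post-condition score minus pre-exposure score."""
    before, after = score_ssq(pre), score_ssq(post)
    return {k: after[k] - before[k] for k in after}
```

For example, a single rating of 1 for nausea raises both the Nausea (9.54) and Disorientation (13.92) scores, because that symptom loads on both subscales.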

5.4 Summary of user Study One

Adding yellow edges, both with and without the blue filter, significantly reduced the number of collisions compared with the conditions without yellow edges. Moreover, combining the blue filter with yellow edges reduced the number of collisions more than yellow edges alone, which supports

Local tasks had different effects on user performance. In the simpler obstacle tasks T1, T2, T4, and T5, operation accuracy improved whenever the Yellow Edges enhancement was present, with or without the blue filter. In the more complex static obstacle task T3, Blue Filter + Yellow Edges improved accuracy compared with Yellow Edges only or Blue Filter only, whereas Blue Filter only reduced accuracy compared with the baseline. In the more complex task T6 with moving obstacles, accuracy improved whenever the images were enhanced with yellow edges, with or without the blue filter; as in T3, Blue Filter only reduced accuracy compared with the baseline.

The teleoperators reported that their vision was much clearer with Blue Filter + Yellow Edges than with the blue filter alone. Furthermore, the completion time data showed that efficiency increased whenever images were enhanced with yellow edges in T2. In contrast, in T3, efficiency decreased whenever the image was enhanced with the blue filter. These observations confirm 1.2. In short, the more complex the local task, the greater the advantage of Blue Filter + Yellow Edges in supporting user performance. Our workload demands data showed that yellow edges reduced the mental component of task pressure, but the blue filter increased both its mental and physical components, so the Blue Filter + Yellow Edges condition neither increased nor decreased task pressure, which supports

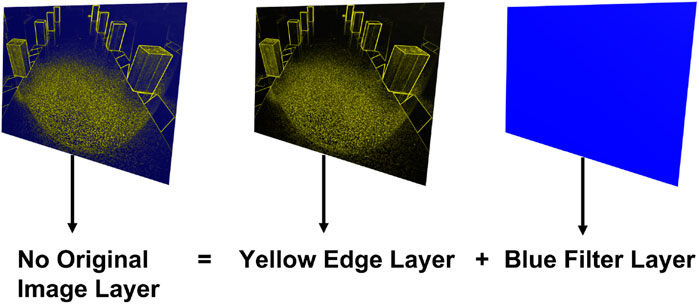

Based on the preceding discussion, we conclude that With Blue Filter + With Yellow Edges can improve users’ operation accuracy and efficiency, reduce mental and physical aspects of their task pressure, and reduce their dizziness during teleoperation. While Study One showed promising results, it also led to an important observation. The experiment was conducted under daylight conditions, but our approach may not work well for UGVs that have to be driven under non-optimal conditions (e.g., in dark environments). This observation led us to do some trials in a dark environment (without lights), and we found that, in that environment, the With Blue Filter + With Yellow Edges combination was not ideal. To compensate for this, we tried several variations and discovered that removing the original image (showing the resulting images with the blue filter layer and yellow edge layer only) could work well in dark environments with little or no light (see Figure 8). We ran a second user study to test this approach.

Figure 8. An example with the original image turned off in a dim environment. The yellow edges and blue filter can help to outline the wood block effectively.
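The "original image off" variant can be pictured as composing only the blue-filter layer and the yellow-edge layer. The sketch below is a hedged illustration that assumes an edge mask has already been computed from the frame (for example by thresholding a Sobel gradient magnitude) and uses a simple alpha blend for the blue filter; the function name and the blend factor are hypothetical.

```python
import numpy as np

YELLOW = (255.0, 255.0, 0.0)  # RGB colour for the edge layer


def compose_layers(rgb: np.ndarray, edge_mask: np.ndarray,
                   show_original: bool, blue_alpha: float = 0.3) -> np.ndarray:
    """Stack the blue-filter and yellow-edge layers, with or without the frame.

    rgb: HxWx3 uint8 camera frame; edge_mask: HxW bool edge map computed
    beforehand (e.g. by thresholding a Sobel gradient magnitude).
    """
    h, w = edge_mask.shape
    blue_layer = np.zeros((h, w, 3), dtype=np.float32)
    blue_layer[..., 2] = 255.0
    if show_original:
        # Original image on: blend the frame toward blue, as in Study One.
        out = (1.0 - blue_alpha) * rgb.astype(np.float32) + blue_alpha * blue_layer
    else:
        # Original image off: only the dimmed blue layer remains, so over-exposed
        # regions of the frame no longer affect the displayed colours.
        out = blue_alpha * blue_layer
    # The yellow edge layer is drawn on top in both modes.
    out[edge_mask] = YELLOW
    return np.clip(out, 0, 255).astype(np.uint8)
```

Because the edge mask is still derived from the frame, strong highlights can still produce spurious edges; the sketch only removes the frame's pixel intensities from the displayed image.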

6 User Study Two

Given that Study One showed positive results for the combination of yellow edges and the blue filter, we further explored the performance of our approach under different conditions of Lighting View (Day vs. Night View) and Original Image (With vs. Without Original Image) (see Figure 8). This study also followed a within-subjects design with 12 participants, and the conditions were counterbalanced. Under the Night View conditions, where the illuminance was below the minimum level of the binocular cameras (0.05 lux), a front flashlight attached to the UGV served as the light source.

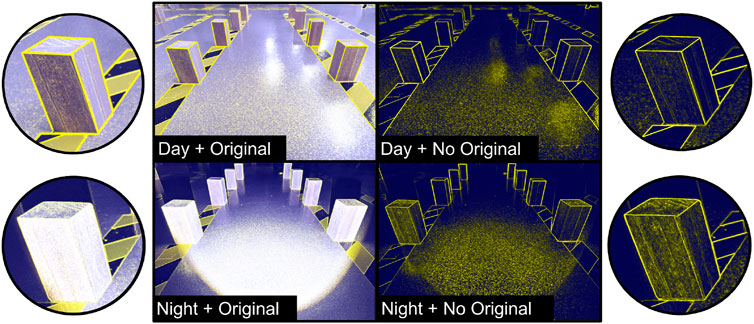

The following four conditions, which were premised on With Yellow Edges + With Blue Filter and were derived from two independent variables (Day vs. Night View and Without vs. With Original Image), were explored (see Figure 9): (1) Day + Orig. = With Original Image in Day View; (2) Day + No Orig. = Without Original Image in Day View; (3) Night + Orig. = With Original Image in Night View; and (4) Night + No Orig. = Without Original Image in Night View.

Figure 9. Screenshots of the effects explored in the second experiment. The top left image presents the Day View, where the wood block is clearly outlined once yellow edges and a blue filter are added; the combination increases the visibility of the objects. The bottom right image presents the Night View, where, after adding yellow edges and turning off the original image, the blue filter helps to outline the objects clearly.

6.1 Hypotheses

Based on our experiment design, we formulated the following hypotheses:

6.2 Participants

Another 12 volunteers (six males and six females; aged 20–30, mean = 20.5) were recruited for this study from the same organisation. All had normal or corrected-to-normal eyesight and no history of colour blindness or other health issues. All consented to participate voluntarily in the study and completed sufficient training sessions.

All participants understood the nature of the tasks, and all recorded data were valid. No outliers were found using residuals that exceeded

6.3 Results

6.3.1 Objective results

6.3.1.1 Overall performance

A two-way RM-ANOVA showed an interaction effect of Lighting View and Original Image on the number of collisions (F(1, 12) = 24.076, p < .0001). We then found the following simple effects (see also Figure 10A, B): with the original image, Day View produced 6.25 fewer collisions than Night View (95% CI: 4.652–7.348, p < .0001); in Day View, With Original Image produced 1.333 fewer collisions than Without Original Image (95% CI: 0.089–2.577, p < .05); and in Night View, With Original Image produced 3.875 more collisions than Without Original Image (95% CI: 1.247–3.315, p < .0001).

Figure 10. (A) Mean number of collisions; (B) mean completion times of overall tasks; (C) mean number of collisions; and (D) mean completion times of each local task. The error bars represent 95% CI.

A separate RM-ANOVA found no main effect of either Lighting View or Original Image on completion time.

6.3.1.2 Local task performance

We found two main effects of Lighting View on the number of collisions in local tasks (see also Figure 10C): Day View produced 0.708 fewer collisions than Night View in T4 (95% CI: 0.038–1.378, F(1, 12) = 5.416, p < .05) and 0.937 fewer in T5 (95% CI: 0.463–1.412, F(1, 12) = 18.893, p < .01). We also found interaction effects in the complex tasks T3 and T6. In T3: with the original image, Day View produced 0.708 fewer collisions than Night View (95% CI: 0.126–1.29, p < .05); without the original image, Day View produced 0.792 more collisions than Night View (95% CI: 0.295–1.289, p < .01). In T6: with the original image, Day View produced 2.333 fewer collisions than Night View (95% CI: 1.898–2.769, p < .0001); in Day View, With Original Image produced 0.75 fewer collisions than Without Original Image (95% CI: 0.2–1.3, p < .05); and without the original image, Day View produced 1.667 more collisions than Night View (95% CI: 0.958–2.375, p < .0001).

A separate RM-ANOVA found no effect of Lighting View or Original Image on completion time in local tasks (see Figure 10D).

6.3.2 Subjective results

6.3.2.1 Workload demands

After applying the Aligned Rank Transform to the workload data, an RM-ANOVA showed no significant effects on workload demands (see Figure 11A).

Figure 11. Box plots of (A) workload demands and (B) SSQ scores in Study Two. The error bars represent 95% CI.

6.3.2.2 Simulator sickness

After applying the Aligned Rank Transform to the simulator sickness data, an RM-ANOVA showed an interaction effect (see Figure 11B): without the original image, Oculomotor scores were higher in Day View than in Night View (F(1, 12) = 6.465, p < .05).

6.4 Summary of user Study Two

In terms of overall performance, the Night View conditions led to significantly lower accuracy than the Day View conditions when the original image was shown. The flashlight used as the light source in Night View created a high-brightness effect on the obstacles, which reduced users' ability to identify them. In Day View, removing the original image reduced operation accuracy compared with keeping it; in Night View, however, removing the original image increased accuracy. Teleoperators stated that they had trouble seeing the yellow edges clearly in images with high brightness. Accordingly, the original image should be turned off in high-brightness environments and kept on in everyday lighting environments. These results confirm

Regarding local task performance, the Night View conditions had significantly lower accuracy than the Day View conditions in T4 and T5, the tasks with moving obstacles. Although challenging, these tasks were less difficult than T6, which may explain why only main effects emerged. In T3, the most difficult static obstacle task, removing the original image reduced operation accuracy compared with keeping it in Day View but increased accuracy in Night View. For T6, the most complex moving obstacle task, the results showed the same pattern as in T3 and in overall performance. This indicates that the more difficult the task, the stronger the impact of lighting conditions and of the presence or absence of the original image on operation performance, which confirms

Teleoperators emphasised that they were able to complete their tasks and reported feeling relaxed when working in our VR system. This is consistent with our finding of no significant difference in task pressure (

7 Takeaways and future work

Before closing this paper, we present some takeaways for other researchers who share our interest in teleoperation and its combination with virtual reality, and summarise our future work.

One advantage of the present work is its simple algorithm. However, we must acknowledge that the simple structure of the Sobel algorithm-based VR interface is, to some extent, a limitation for operators viewing objects with complex patterns, such as the floor in Figure 8. This is a genuine issue that cannot simply be solved by an algorithm. Nevertheless, our study offers a showcase that other researchers can use to replicate our successful scenarios in their own work, extending the boundaries of VR-based teleoperation and opening room for further contributions.

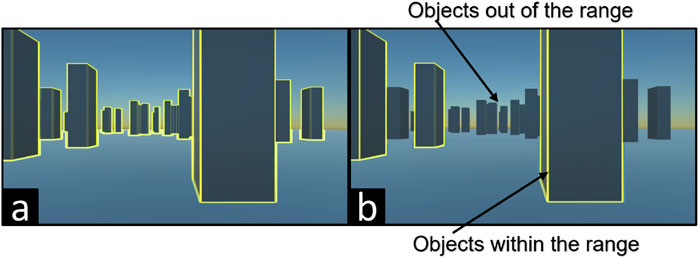

In addition, other image-altering techniques could be explored to assess their effect on teleoperators' performance and experience. For example, it may help to include visual enhancements that better distinguish objects at different depths. Depth information can be calculated in real time when a binocular or infrared depth camera is used. This would allow us to compare partial and complete edge enhancement: with partial enhancement, edges are shown only on near objects and not on distant ones (or vice versa) (see Figure 12). The teleoperator could then identify the colour of close objects and anticipate the position and shape of upcoming objects, which could improve efficiency significantly.

Figure 12. An example of (A) our technique; and (B) with the depth information. Additional depth information can help teleoperators narrow down their focus (e.g., on objects within a certain range or radius of the UGV).
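For a rectified binocular camera, depth follows from disparity under the standard pinhole model, Z = fB/d, where f is the focal length in pixels, B the baseline in metres, and d the disparity in pixels. The sketch below is a hedged illustration of the partial (depth-gated) enhancement idea, assuming a disparity map is already available from a stereo matcher; the function names, focal length, baseline, and range threshold are hypothetical and are not parameters of the cameras used in the studies.

```python
import numpy as np


def depth_from_disparity(disparity_px, focal_px: float, baseline_m: float) -> np.ndarray:
    """Pinhole stereo depth Z = f * B / d, in metres.

    Pixels with non-positive disparity (no stereo match) are set to +inf,
    i.e. treated as infinitely far away.
    """
    d = np.asarray(disparity_px, dtype=np.float64)
    depth = np.full(d.shape, np.inf)
    valid = d > 0
    depth[valid] = focal_px * baseline_m / d[valid]
    return depth


def gate_edges_by_depth(edge_mask: np.ndarray, depth_m: np.ndarray,
                        max_range_m: float, near: bool = True) -> np.ndarray:
    """Keep edges only within max_range_m (near=True) or beyond it (near=False)."""
    in_range = depth_m <= max_range_m
    return edge_mask & (in_range if near else ~in_range)
```

With near=True, unmatched pixels (depth +inf) never receive edges, which errs on the side of showing less; whether that suits obstacle avoidance is a design question the sketch does not settle.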

Also, considering the promising results obtained by visually augmenting the images only, it would be worthwhile to investigate the integration of visual and haptic modalities in teleoperation (Cai et al., 2021; Lee et al., 2019; Luo et al., 2022). By generating corresponding haptic data based on enhanced visual scenes, users could receive tactile cues and signals to perceive potential obstacles and distances better. This type of multimodal integration has been explored in some prior work, such as providing tactile feedback for teleoperated grasping based on visual inputs (Cai et al., 2021). The combination of enhanced visuals and realistic haptics has the potential to significantly enhance teleoperation efficiency, especially in challenging low-light conditions (Lee et al., 2019; Guo et al., 2023). Furthermore, multimodality can involve capturing and using other biometric cues from users, such as eye gaze and head movement direction, to determine users’ focus and provide augmented images of areas where the user is paying attention. This approach has been shown to improve interaction accuracy and user experience in VR scenarios [e.g. (Yu et al., 2021; Wei et al., 2023)] and can also be applied to teleoperation, improving user experience and efficiency.
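As a hedged sketch of the gaze-contingent idea, assuming the headset's eye tracker provides a gaze point already projected into image pixel coordinates, the edge enhancement can be restricted to a circular region around that point. The function names and the circular region of interest are illustrative assumptions, not part of the present system.

```python
import numpy as np


def gaze_region_mask(shape, gaze_xy, radius_px: float) -> np.ndarray:
    """Boolean HxW mask of pixels within radius_px of the gaze point (x, y)."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    gx, gy = gaze_xy
    return (xs - gx) ** 2 + (ys - gy) ** 2 <= radius_px ** 2


def enhance_where_looking(edge_mask: np.ndarray, gaze_xy, radius_px: float) -> np.ndarray:
    """Restrict an edge mask to the region around the user's gaze."""
    return edge_mask & gaze_region_mask(edge_mask.shape, gaze_xy, radius_px)
```

The same masking pattern could combine with the depth gate, for example by intersecting both masks, although how to weigh the two cues would need its own evaluation.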

Finally, our technique is more applicable when fast obstacle avoidance is required than when objects' details must be identified. Users should therefore be able to switch our techniques on and off themselves; this principle will guide our future work. While the study results are promising, the studies took place in a lab setting because it gave us control over environmental factors (e.g., the type of floor and lighting) and allowed us to ensure suitable Wi-Fi connectivity and that the two video cameras and the VICON tracking system captured useful information. Our results showed a clear case for using edge enhancement to aid obstacle avoidance. Hence, we aim to design and run a series of studies outside lab settings in the future, simulating more realistic scenarios (e.g., search and rescue). Results from these studies could inform a wide range of techniques and applications.

8 Conclusion

In this paper, we developed VR-based teleoperation with a focus on teleoperators' everyday work practices. To verify the usefulness and usability of the system, we conducted two user studies evaluating our image enhancement approach under different conditions. In Study One, we combined yellow edges with a blue filter to enhance the rendered images and explored how this combination affected the teleoperation of a UGV in daylight conditions. Results showed that the edge enhancement approach could improve user performance in complex scenarios involving both static and dynamic obstacles and could reduce workload demands and simulator sickness. In Study Two, because UGVs often operate in dark, sub-optimal environments where yellow edges with a blue filter were not ideal due to the high exposure of the enhanced images, we explored how to enhance objects' edges to improve maneuverability in such conditions. We found that removing the original image and keeping only the blue filter and yellow edges could address the high-exposure issue. Results of the second study in a dark environment showed that this was a suitable solution, leading to accurate teleoperation with low levels of simulator sickness. In short, enhancing images via a VR interface has great potential to improve the teleoperation of UGVs, and the findings of this work can inform the design of VR systems that support teleoperation.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

YL: Conceptualization, Methodology, Software, Formal analysis, Investigation, Writing–original draft, Writing–review and editing; JW: Methodology, Software, Data curation, Visualization, Writing–review and editing; YP: Investigation, Data curation, Validation, Writing–review and editing; SL: Formal analysis, Visualization, Writing–review and editing; PI: Conceptualization, Resources, Supervision, Writing–review and editing; H-NL: Conceptualization, Methodology, Supervision, Project administration, Funding acquisition, Writing–original draft, Writing–review and editing.

Funding

This work was supported in part by the Suzhou Municipal Key Laboratory for Intelligent Virtual Engineering (#SZS2022004), the National Natural Science Foundation of China (#62272396), and XJTLU Research Development Fund (#RDF-17-01-54 and #RDF-21-02-008).

Acknowledgments

We thank our participants in the present study. Without them, this work would have been impossible to achieve. We also thank the reviewers for their detailed comments and insightful suggestions that have helped improve our paper’s quality.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Al-Jarrah, R., Al-Jarrah, M., and Roth, H. (2018). A novel edge detection algorithm for mobile robot path planning. J. Robotics 2018, 1–12. doi:10.1155/2018/1969834

Al-Tairi, Z. H., Rahmat, R. W., Iqbal Saripan, M., and Sulaiman, P. S. (2014). Skin segmentation using YUV and RGB color spaces. J. Inf. Process. Syst. 10, 283–299. doi:10.3745/JIPS.02.0002

Avgousti, S., Christoforou, E. G., Panayides, A. S., Voskarides, S., Novales, C., Nouaille, L., et al. (2016). Medical telerobotic systems: current status and future trends. Biomed. Eng. online 15, 96–44. doi:10.1186/s12938-016-0217-7

Baba, J., Song, S., Nakanishi, J., Yoshikawa, Y., and Ishiguro, H. (2021). Local vs. avatar robot: performance and perceived workload of service encounters in public space. Front. Robotics AI 8, 778753. doi:10.3389/frobt.2021.778753

Caharija, W., Pettersen, K. Y., Bibuli, M., Calado, P., Zereik, E., Braga, J., et al. (2016). Integral line-of-sight guidance and control of underactuated marine vehicles: theory, simulations, and experiments. IEEE Trans. Control Syst. Technol. 24, 1623–1642. doi:10.1109/TCST.2015.2504838

Cai, S., Zhu, K., Ban, Y., and Narumi, T. (2021). Visual-tactile cross-modal data generation using residue-fusion GAN with feature-matching and perceptual losses. IEEE Robotics Automation Lett. 6, 7525–7532. doi:10.1109/LRA.2021.3095925

Canny, J. (1986). A computational approach to edge detection. IEEE Trans. Pattern Analysis Mach. Intell. PAMI-8, 679–698. doi:10.1109/TPAMI.1986.4767851

Canny, J. F. (1983). Finding edges and lines in images. Report No: AITR-720. Massachusetts Inst of Tech Cambridge Artificial Intelligence Lab.

Casper, J., and Murphy, R. (2003). Human-robot interactions during the robot-assisted urban search and rescue response at the world trade center. IEEE Trans. Syst. Man, Cybern. Part B Cybern. 33, 367–385. doi:10.1109/TSMCB.2003.811794

Chang, C., Bang, K., Wetzstein, G., Lee, B., and Gao, L. (2020). Toward the next-generation VR/AR optics: a review of holographic near-eye displays from a human-centric perspective. Optica 7, 1563–1578. doi:10.1364/OPTICA.406004

Chen, J. Y., Haas, E. C., and Barnes, M. J. (2007). Human performance issues and user interface design for teleoperated robots. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 37, 1231–1245. doi:10.1109/TSMCC.2007.905819

Chen, Y., Li, D., and Zhang, J. Q. (2017). Complementary color wavelet: a novel tool for the color image/video analysis and processing. IEEE Trans. Circuits Syst. Video Technol. 29, 12–27. doi:10.1109/tcsvt.2017.2776239

Chen, Y. C., and Liao, T. S. (2011). Hardware digital color enhancement for color vision deficiencies. ETRI J. 33, 71–77. doi:10.4218/etrij.11.1510.0009

Choi, C., Trevor, A. J. B., and Christensen, H. I. (2013). “RGB-D edge detection and edge-based registration,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, (Tokyo, Japan: IEEE), 03-07 November 2013, 1568–1575. doi:10.1109/IROS.2013.6696558

Ehrlich, J. R., Ojeda, L. V., Wicker, D., Day, S., Howson, A., Lakshminarayanan, V., et al. (2017). Head-mounted display technology for low-vision rehabilitation and vision enhancement. Am. J. Ophthalmol. 176, 26–32. doi:10.1016/j.ajo.2016.12.021

Fang, B., Sun, F., Liu, H., Guo, D., Chen, W., and Yao, G. (2017). Robotic teleoperation systems using a wearable multimodal fusion device. Int. J. Adv. Robotic Syst. 14, 172988141771705. doi:10.1177/1729881417717057

Fu, Y., Lin, W., Huang, J., and Gao, H. (2021). Predictive display for teleoperation with virtual reality fusion technology. Asian J. Control 23, 2261–2272. doi:10.1002/asjc.2690

Granqvist, A., Takala, T., Takatalo, J., and Hämäläinen, P. (2018). “Exaggeration of avatar flexibility in virtual reality,” in Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play (New York, NY, USA: Association for Computing Machinery), 201–209. doi:10.1145/3242671.3242694

Guo, J., Ma, J., Ángel, F.G.-F., Zhang, Y., and Liang, H. (2023). A survey on image enhancement for low-light images. Heliyon 9, e14558. doi:10.1016/j.heliyon.2023.e14558

Hagen, P., Storkersen, N., Marthinsen, B.-E., Sten, G., and Vestgard, K. (2005). Military operations with HUGIN AUVs: lessons learned and the way ahead. Eur. Oceans 2005 2, 810–813. doi:10.1109/OCEANSE.2005.1513160

Hart, S. G. (2006). NASA-Task Load Index (NASA-TLX); 20 years later. Proc. Hum. Factors Ergonomics Soc. Annu. Meet. 50, 904–908. doi:10.1177/154193120605000909

Hassan, M. A. A., Khalid, N. E. A., Ibrahim, A., and Noor, N. M. (2008). Evaluation of Sobel, Canny, Shen & Castan using sample line histogram method. 2008 Int. Symposium Inf. Technol. 3, 1–7. doi:10.1109/ITSIM.2008.4632072

Havoutis, I., and Calinon, S. (2019). Learning from demonstration for semi-autonomous teleoperation. Aut. Robots 43, 713–726. doi:10.1007/s10514-018-9745-2

Hirschmanner, M., Tsiourti, C., Patten, T., and Vincze, M. (2019). “Virtual reality teleoperation of a humanoid robot using markerless human upper body pose imitation,” in 2019 IEEE-RAS 19th international conference on humanoid robots (humanoids), (Toronto, ON, Canada: IEEE), 15-17 October 2019, 259–265. doi:10.1109/Humanoids43949.2019.9035064

Hwang, A. D., and Peli, E. (2014). An augmented-reality edge enhancement application for Google Glass. Optometry Vis. Sci. official Publ. Am. Acad. Optometry 91, 1021–1030. doi:10.1097/opx.0000000000000326

Ioannou, C., Archard, P., O’Neill, E., and Lutteroth, C. (2019). “Virtual performance augmentation in an immersive jump & run exergame,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 19, 1–15. doi:10.1145/3290605.3300388

Itoh, Y., and Klinker, G. (2015). Vision enhancement: defocus correction via optical see-through head-mounted displays. ACM Int. Conf. Proceeding Ser. 11, 1–8. doi:10.1145/2735711.2735787

Jose, A., Deepa Merlin Dixon, K., Joseph, N., George, E. S., and Anjitha, V. (2014). “Performance study of edge detection operators,” in International Conference on Embedded Systems (Coimbatore, India: IEEE), (03-05 July 2014), 7–11. doi:10.1109/EmbeddedSys.2014.6953040

Kasowski, J., and Beyeler, M. (2022). “Immersive virtual reality simulations of bionic vision,” in Augmented humans 2022 (New York, NY, USA: Association for Computing Machinery), 82–93. AHs 2022. doi:10.1145/3519391.3522752

Kennedy, R. S., Lane, N. E., Berbaum, K. S., and Lilienthal, M. G. (1993). Simulator sickness questionnaire: an enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 3, 203–220. doi:10.1207/s15327108ijap0303_3

Khatib, O., Yeh, X., Brantner, G., Soe, B., Kim, B., Ganguly, S., et al. (2016). Ocean one: a robotic avatar for oceanic discovery. IEEE Robotics & Automation Mag. 23, 20–29. doi:10.1109/MRA.2016.2613281

King, C.-H., Killpack, M. D., and Kemp, C. C. (2010). “Effects of force feedback and arm compliance on teleoperation for a hygiene task,” in Haptics: generating and perceiving tangible sensations. Editors A. M. L. Kappers, J. B. F. van Erp, W. M. Bergmann Tiest, and F. C. T. van der Helm (Berlin, Heidelberg: Springer Berlin Heidelberg), 248–255.

Kot, T., and Novák, P. (2018). Application of virtual reality in teleoperation of the military mobile robotic system taros. Int. J. Adv. Robotic Syst. 15, 172988141775154. doi:10.1177/1729881417751545

LaViola, J. J. (2000). A discussion of cybersickness in virtual environments. SIGCHI Bull. 32, 47–56. doi:10.1145/333329.333344

Lee, H., Kim, S. D., and Amin, M. A. U. A. (2022). Control framework for collaborative robot using imitation learning-based teleoperation from human digital twin to robot digital twin. Mechatronics 85, 102833. doi:10.1016/j.mechatronics.2022.102833

Lee, J.-T., Bollegala, D., and Luo, S. (2019). “touching to see” and “seeing to feel”: robotic cross-modal sensory data generation for visual-tactile perception. International Conference on Robotics and Automation (ICRA), (Montreal, Canada: IEEE), 4276–4282. doi:10.1109/ICRA.2019.8793763

Li, Z., Luo, Y., Wang, J., Pan, Y., Yu, L., and Liang, H.-N. (2022). “Collaborative remote control of unmanned ground vehicles in virtual reality,” in 2022 international conference on interactive media, smart systems and emerging technologies (IMET), (Limassol, Cyprus: IEEE), 04-07 October 2022, 1–8. doi:10.1109/IMET54801.2022.9929783

Li, Z., Luo, Y., Wang, J., Pan, Y., Yu, L., and Liang, H.-N. (2024). Feasibility and performance enhancement of collaborative control of unmanned ground vehicles via virtual reality. Personal Ubiquitous Comput., 1–17. doi:10.1007/s00779-024-01799-4

Li, Z., Qin, Z., Luo, Y., Pan, Y., and Liang, H.-N. (2023). “Exploring the design space for hands-free robot dog interaction via augmented reality,” in 2023 9th international conference on virtual reality (ICVR), (Xianyang, China: IEEE), 12-14 May 2023, 288–295. doi:10.1109/ICVR57957.2023.10169556

Lipton, J. I., Fay, A. J., and Rus, D. (2018). Baxter’s homunculus: virtual reality spaces for teleoperation in manufacturing. IEEE Robotics Automation Lett. 3, 179–186. doi:10.1109/LRA.2017.2737046

Liu, J., Chen, D., Wu, Y., Chen, R., Yang, P., and Zhang, H. (2020). Image edge recognition of virtual reality scene based on multi-operator dynamic weight detection. IEEE Access 8, 111289–111302. doi:10.1109/ACCESS.2020.3001386

Luo, Y., Wang, J., Liang, H.-N., Luo, S., and Lim, E. G. (2021). “Monoscopic vs. stereoscopic views and display types in the teleoperation of unmanned ground vehicles for object avoidance,” in 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN) (Vancouver, BC, Canada: IEEE), 08-12 August 2021, 418–425.

Luo, Y., Wang, J., Pan, Y., Luo, S., Irani, P., and Liang, H.-N. (2023). “Teleoperation of a fast omnidirectional unmanned ground vehicle in the cyber-physical world via a VR interface,” in Proceedings of the 18th ACM SIGGRAPH International Conference on Virtual-Reality Continuum and Its Applications in Industry (VRCAI ’22) (New York, NY, USA: Association for Computing Machinery). doi:10.1145/3574131.3574432

Luo, Y., Wang, J., Shi, R., Liang, H.-N., and Luo, S. (2022). In-device feedback in immersive head-mounted displays for distance perception during teleoperation of unmanned ground vehicles. IEEE Trans. Haptics 15, 79–84. doi:10.1109/TOH.2021.3138590

Lynn, N. D., Sourav, A. I., and Santoso, A. J. (2021). Implementation of real-time edge detection using Canny and Sobel algorithms. IOP Conf. Ser. Mater. Sci. Eng. 1096, 012079. doi:10.1088/1757-899x/1096/1/012079

MacAdam, D. L. (1938). Photometric relationships between complementary colors. JOSA 28, 103–111. doi:10.1364/josa.28.000103

Martín-Barrio, A., Roldán, J. J., Terrile, S., del Cerro, J., and Barrientos, A. (2020). Application of immersive technologies and natural language to hyper-redundant robot teleoperation. Virtual Real. 24, 541–555. doi:10.1007/s10055-019-00414-9

McIlhagga, W. (2018). Estimates of edge detection filters in human vision. Vis. Res. 153, 30–36. doi:10.1016/j.visres.2018.09.007

Naceri, A., Mazzanti, D., Bimbo, J., Tefera, Y. T., Prattichizzo, D., Caldwell, D. G., et al. (2021). The VICARIOS virtual reality interface for remote robotic teleoperation. J. Intelligent & Robotic Syst. 101, 80–16. doi:10.1007/s10846-021-01311-7

Nakanishi, J., Itadera, S., Aoyama, T., and Hasegawa, Y. (2020). Towards the development of an intuitive teleoperation system for human support robot using a VR device. Adv. Robot. 34, 1239–1253. doi:10.1080/01691864.2020.1813623

Nakayama, A., Ruelas, D., Savage, J., and Bribiesca, E. (2021). Teleoperated service robot with an immersive mixed reality interface. Inf. Automation 20, 1187–1223. doi:10.15622/ia.20.6.1

Norton, A., Ober, W., Baraniecki, L., McCann, E., Scholtz, J., Shane, D., et al. (2017). Analysis of human–robot interaction at the DARPA Robotics Challenge Finals. Int. J. Robotics Res. 36, 483–513. doi:10.1177/0278364916688254

Omarali, B., Denoun, B., Althoefer, K., Jamone, L., Valle, M., and Farkhatdinov, I. (2020). “Virtual reality based telerobotics framework with depth cameras,” in 2020 29th IEEE international conference on robot and human interactive communication (Naples, Italy: IEEE), 31 August 2020 - 04 September 2020, 1217–1222. doi:10.1109/RO-MAN47096.2020.9223445

Opiyo, S., Zhou, J., Mwangi, E., Kai, W., and Sunusi, I. (2021). A review on teleoperation of mobile ground robots: architecture and situation awareness. Int. J. Control, Automation Syst. 19, 1384–1407. doi:10.1007/s12555-019-0999-z

Pan, M., Li, J., Yang, X., Wang, S., Pan, L., Su, T., et al. (2022). Collision risk assessment and automatic obstacle avoidance strategy for teleoperation robots. Comput. & Industrial Eng. 169, 108275. doi:10.1016/j.cie.2022.108275

Paull, L., Saeedi, S., Seto, M., and Li, H. (2014). AUV navigation and localization: a review. IEEE J. Ocean. Eng. 39, 131–149. doi:10.1109/JOE.2013.2278891

Peppoloni, L., Brizzi, F., Avizzano, C. A., and Ruffaldi, E. (2015). Immersive ROS-integrated framework for robot teleoperation. 2015 IEEE Symposium on 3D User Interfaces (3DUI), 177–178. doi:10.1109/3DUI.2015.7131758

Peskoe-Yang, L. (2019). Paris firefighters used this remote-controlled robot to extinguish the Notre Dame blaze. IEEE Spectr. Technol. Eng. Sci. News.

Petillot, Y., Reed, S., and Bell, J. (2002). Real time AUV pipeline detection and tracking using side scan sonar and multi-beam echo-sounder. OCEANS ’02 MTS/IEEE 1, 217–222. doi:10.1109/OCEANS.2002.1193275

Prewitt, J. M. S. (1970). Object enhancement and extraction. Pict. Process. Psychopictorics 10, 15–19.

Qin, S., and Liu, S. (2020). Efficient and unified license plate recognition via lightweight deep neural network. IET Image Process. 14, 4102–4109. doi:10.1049/iet-ipr.2020.1130

Ruoff, C. F. (1994). Teleoperation and robotics in space. Washington DC: American Institute of Aeronautics and Astronautics, Inc.

Sauer, Y., Sipatchin, A., Wahl, S., and García García, M. (2022). Assessment of consumer VR-headsets’ objective and subjective field of view (FoV) and its feasibility for visual field testing. Virtual Real. 26, 1089–1101. doi:10.1007/s10055-021-00619-x

Schubert, E. F. (2006). Human eye sensitivity and photometric quantities. Light-emitting diodes, 275–291.

Senft, E., Hagenow, M., Welsh, K., Radwin, R., Zinn, M., Gleicher, M., et al. (2021). Task-level authoring for remote robot teleoperation. Front. Robotics AI 8, 707149. doi:10.3389/frobt.2021.707149

Settimi, A., Pavan, C., Varricchio, V., Ferrati, M., Mingo Hoffman, E., Rocchi, A., et al. (2014). “A modular approach for remote operation of humanoid robots in search and rescue scenarios,” in International Workshop on Modelling and simulation for autonomous systems (Springer), 192–205.

Sheridan, T. (1993). Space teleoperation through time delay: review and prognosis. IEEE Trans. Robotics Automation 9, 592–606. doi:10.1109/70.258052

Small, N., Lee, K., and Mann, G. (2018). An assigned responsibility system for robotic teleoperation control. Int. J. Intelligent Robotics Appl. 2, 81–97. doi:10.1007/s41315-018-0043-0

Sobel, I., and Feldman, G. (1968). “A 3x3 isotropic gradient operator for image processing,” in A talk at the Stanford Artificial Project, 271–272.

Sripada, A., Asokan, H., Warrier, A., Kapoor, A., Gaur, H., Patel, R., et al. (2018). “Teleoperation of a humanoid robot with motion imitation and legged locomotion,” in 2018 3rd international conference on advanced robotics and mechatronics (ICARM), (Singapore: IEEE), 18-20 July 2018, 375–379. doi:10.1109/ICARM.2018.8610719

Stotko, P., Krumpen, S., Schwarz, M., Lenz, C., Behnke, S., Klein, R., et al. (2019). “A VR system for immersive teleoperation and live exploration with a mobile robot,” in 2019 IEEE/RSJ international conference on intelligent robots and systems (IROS), (Macau, China: IEEE), 03-08 November 2019, 3630–3637. doi:10.1109/IROS40897.2019.8968598

Van de Merwe, D. B., Van Maanen, L., Ter Haar, F. B., Van Dijk, R. J. E., Hoeba, N., and Van der Stap, N. (2019). “Human-robot interaction during virtual reality mediated teleoperation: how environment information affects spatial task performance and operator situation awareness,” in Virtual, augmented and mixed reality. Applications and case studies. Editors J. Y. Chen, and G. Fragomeni (Cham: Springer International Publishing), 163–177.

Wang, X., Liu, H., Xu, W., Liang, B., and Zhang, Y. (2012). A ground-based validation system of teleoperation for a space robot. Int. J. Adv. Robotic Syst. 9, 115. doi:10.5772/51129

Waxman, A. M., Aguilar, M., Fay, D. A., Ireland, D. B., and Racamato, J. P. (1998). Solid-state color night vision: fusion of low-light visible and thermal infrared imagery. Linc. Laboratory J. 11, 41–60.

Wei, Y., Shi, R., Yu, D., Wang, Y., Li, Y., Yu, L., et al. (2023). “Predicting gaze-based target selection in augmented reality headsets based on eye and head endpoint distributions,” in Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery). doi:10.1145/3544548.3581042

Wibowo, S., Siradjuddin, I., Ronilaya, F., and Hidayat, M. N. (2021). Improving teleoperation robots performance by eliminating view limit using 360 camera and enhancing the immersive experience utilizing VR headset. IOP Conf. Ser. Mater. Sci. Eng. 1073, 012037. doi:10.1088/1757-899x/1073/1/012037

Wobbrock, J. O., Findlater, L., Gergle, D., and Higgins, J. J. (2011). The aligned rank transform for nonparametric factorial analyses using only anova procedures. Proc. SIGCHI Conf. Hum. Factors Comput. Syst., 143–146.

Xi, B., Wang, S., Ye, X., Cai, Y., Lu, T., and Wang, R. (2019). A robotic shared control teleoperation method based on learning from demonstrations. Int. J. Adv. Robotic Syst. 16, 172988141985742. doi:10.1177/1729881419857428

Xu, W., Liang, H.-N., Ma, X., and Li, X. (2020). VirusBoxing: a HIIT-based VR boxing game. New York, NY, USA: Association for Computing Machinery, 98–102.

Younis, O., Al-Nuaimy, W., Al-Taee, M. A., and Al-Ataby, A. (2017). “Augmented and virtual reality approaches to help with peripheral vision loss,” in 2017 14th international multi-conference on systems, signals & devices (SSD), (Marrakech, Morocco: IEEE), 28-31 March 2017, 303–307. doi:10.1109/SSD.2017.8166993

Yu, D., Lu, X., Shi, R., Liang, H.-N., Dingler, T., Velloso, E., et al. (2021). “Gaze-supported 3D object manipulation in virtual reality,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery). doi:10.1145/3411764.3445343

Zhang, W. (2010). “Lidar-based road and road-edge detection,” in 2010 IEEE intelligent vehicles symposium, 845–848. doi:10.1109/IVS.2010.5548134

Zhao, Y., Cutrell, E., Holz, C., Morris, M. R., Ofek, E., and Wilson, A. D. (2019). “SeeingVR: a set of tools to make virtual reality more accessible to people with low vision,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 1–14. doi:10.1145/3290605.3300341

Zheng, L., and He, X. (2011). “Character segmentation for license plate recognition by k-means algorithm,” in Image analysis and processing – ICIAP 2011. Editors G. Maino, and G. L. Foresti (Berlin, Heidelberg: Springer Berlin Heidelberg), 444–453.

Keywords: vision augmentation, edge enhancement, virtual reality, teleoperation, unmanned ground vehicles

Citation: Luo Y, Wang J, Pan Y, Luo S, Irani P and Liang H-N (2024) Visual augmentation of live-streaming images in virtual reality to enhance teleoperation of unmanned ground vehicles. Front. Virtual Real. 5:1230885. doi: 10.3389/frvir.2024.1230885

Received: 29 May 2023; Accepted: 27 August 2024;

Published: 07 October 2024.

Edited by:

Oliver Staadt, University of Rostock, Germany

Reviewed by:

Shaoyu Cai, National University of Singapore, Singapore

Vladimir Goriachev, VTT Technical Research Centre of Finland Ltd., Finland

Copyright © 2024 Luo, Wang, Pan, Luo, Irani and Liang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hai-Ning Liang, haining.liang@xjtlu.edu.cn

†Present address: Hai-Ning Liang, Computational Media and Arts Thrust, Information Hub, The Hong Kong University of Science and Technology (Guangzhou), Guangzhou, China
